- Department of Education, University of Cyprus, Nicosia, Cyprus

The present study addresses the lack of an instructional design methodology that guides the integration of technology in music listening and composition activities, and enriches the framework of Technological Pedagogical Content Knowledge (TPCK), an essentially cognitive model, with the affective domain. The authors herein provide many examples that illustrate the music design principles and the expanded Technology Mapping instructional design process that have been proposed in previously published work. The practical examples provide concrete ideas on how to transform the musical materials into more understandable forms and how to associate them with emotions using technology. Besides its practical contribution, the research also has theoretical significance for TPCK, as it examines the interrelations between music content, technology, cognition, and affect, and identifies discipline-specific aspects of TPCK that include the affective domain. Empirical evidence from 191 secondary school students, gathered in the context of a music composition task using the software MuseScore, indicates that both the TPCK framework and the proposed music guidelines can effectively guide teachers in designing lessons with technology while incorporating affect. Through the 4E perspective, technology and the proposed approach are viewed as agents of a distributed system that can support embodied minds in developing musical and emotional understanding.

Introduction

Despite the fact that the transformative (Beckstead, 2001; Brown and Dillon, 2007; Ruthmann, 2007) and efficiency (Beckstead, 2001; Brown, 2007; Savage, 2007; Webster, 2007; Mroziak and Bowman, 2016) role of digital technologies has long been established in music education research, technology’s use in the classroom is still limited while its potential is not utilized to serve curricular objectives but often peripheral purposes, such as preparing handouts, musical scores, or presentations, showing videos, and recording student classroom activities (Bauer et al., 2003; Savage, 2010; Greher, 2011; Wise et al., 2011; Mroziak and Bowman, 2016). This is attributed to teachers’ lack of knowledge relating to the affordances of music and non-music technologies and to insufficient guidance on instructional approaches for incorporating technology in music pedagogy (Savage, 2007, 2010; Webster, 2007; Bauer, 2014; Mroziak and Bowman, 2016).

About 15 years ago, Technological Pedagogical Content Knowledge (TPCK or TPACK) was proposed by researchers in the field of instructional technology as a framework for systematically guiding the integration of technology in teaching and learning (Angeli and Valanides, 2005, 2009, 2013, 2015; Mishra and Koehler, 2006; Niess, 2011). While different TPCK models have been introduced in the literature during the last 15 years, this study adopted the TPCK framework proposed by Angeli and Valanides (2005; 2009; 2013) in order to investigate subject-specific aspects of the model within the field of music education. The authors herein identified that the existing body of research associated with TPCK focused on the cognitive domain of learning, and judged that the affective domain was severely overlooked (Macrides and Angeli, 2018a, b).

Aiming to extend the framework reported by Angeli and Valanides (2005; 2009; 2013), Macrides and Angeli (2018a; 2018b) proposed a set of instructional design guidelines and an expanded Technology Mapping model situated in music learning. The methodology, which constituted an approach for musical learning and creativity using technology, addressed the role of affect in the design of learning activities and uncovered connections between emotions, cognition, musical content, pedagogy, and technological tools.

The terms affect and emotions are used according to the definitions provided by Juslin and Sloboda (2011) in their introduction to the Handbook of music and emotion (2011: 10). According to these authors, affect is an “umbrella” term that covers different affective states, including emotions, moods, feelings, preferences, and aesthetics. The term emotion refers to an “intense affective reaction” (e.g., happiness or sorrow) that typically involves a number of manifestations that happen more or less simultaneously, including subjective feeling, physical stimulation, expression, action tendency, and regulation (Juslin and Sloboda, 2011). The term “musical emotions” denotes emotions that are somehow induced by music and includes emotions felt or perceived (Juslin and Sloboda, 2011; Juslin et al., 2011).

Despite the broad definition of the term affect, in practice the field is still referred to as Music and Emotion (Juslin and Sloboda, 2011). This is reflected in many journals (e.g., Cognition and Emotion, Motivation and Emotion) and books (e.g., Music and emotion, 2001; Handbook of music and emotion, 2011) that use the word “emotion” rather than the word “affect.” Hallam’s article on “Music Education: the role of affect” (2011) is an example in which both terms are used interchangeably. In the present article, the term affect is used to denote affective phenomena in general, while more precise terms (i.e., emotion, mood, and feeling) are used to refer to specific emotional states that are evoked by music.

Embodied music cognition (EMC) is a theory that considers the human body as a mediator between mind and physical environment in which music can be heard and assumes that musical perception is formed through bodily movements (Leman, 2008; Leman and Maes, 2014). Furthermore, multimedia interactive technologies and sensor interfaces are viewed as extensions of the human body and can serve as supporting mediating vehicles in forming cognitive and emotive musical understanding and in shaping mental representations through corporeal actions (Camurri et al., 2005). The EMC approach relies on the supposition that musical understanding and production is based on an embodied sensory motor engagement and is thus the result of corporeal actions (bodily movements) of the listener/performer, including, dance movements, tapping the beat, expressive-supportive gestures of a performer, expressive-responding gestures of a listener, feeling emotional empathy with the emotions expressed by the music, and so on.

Although the EMC is an interesting and widely cited theory, critical views on the embodied music approach to cognition indicate that the EMC hypothesis is rather abstract, narrow and self-contradictory, and requires clarification on various issues (Schiavio and Menin, 2013; Matyja, 2016). The assumption that understanding is formulated through the actions of a physical mediator, i.e., the body, and its interactions with the music is quite undefined and unclear especially for pedagogical purposes. For example, the theory does not explicitly identify (methodologically, theoretically and empirically) how embodiment and specific bodily movements influence specific cognitive processes and musical understanding, and does not clearly define the boundaries of disembodied musical processing from the embodied musical perception (Matyja, 2016). Critics point out that (1) by focusing more on the music listener the theory does not sufficiently account for a broad range of musical experiences; (2) the action-oriented process described does not overcome the dualistic perspective of mind and matter as it claims it does, but reinforces the subjective (mind)-objective (music) dualism creating an inconsistency in the proposed theory; and (3) the theory lacks support from substantial empirical evidence (Matyja and Schiavio, 2013; Schiavio and Menin, 2013; Geeves and Sutton, 2014; Matyja, 2016). Thus, unless the theory of EMC becomes more precise as to how the musical content can be better facilitated, explained, and understood, it is difficult to translate and apply the EMC approach into the teaching practice.

The 4E perspective (embodied, embedded, enactive, and extended) considers cognition as a dynamic, social, distributed and interactive procedure in which mental operations, bodily or psychomotor movements, and environmental agents, such as collaboration with peers, use of instruments, and computers, interrelate and contribute to learning, creativity and sense-making in music (Van der Schyff et al., 2018). According to the 4E approach, embodied minds or human beings (in which mind and body are neither detached nor one commands the other, but rather act together as one entity) are embedded (or exist) within an environment or physical and socio-cultural system. In this musical system or context, embodied minds can enact (engage, initiate, relate, adapt, interact, negotiate, and so forth) with the environmental agents, and at the same time can be extended by the agents of that system (e.g., technological tools and approaches), thus contributing to the generation of musical ideas, emotions, and musical learning. In music education literature, i.e., textbooks, teacher guides and research, music cognition may be demonstrated and assessed in many different ways, such as describing musical characteristics, critically reflecting on different forms, interpreting the meaning of music verbally or with bodily movements, creating visual representations or concept maps, and so on (Statton et al., 1980, 1988; Bond et al., 1995, 2003; Dunn, 2008; Kerchner, 2013; Bauer, 2014).

This study explores musical cognition and emotions in a dynamic, distributed and interactive process where students interact with computers, their peers, the musical materials and their emotional effects, and investigates the interrelations of emotional understanding, feeling and expression with the musical content, the development of musical ideas, and technology. For the purposes of this study, we consider cognitive aspects of music that are related to the use, manipulation, and interpretation of musical materials (such as, melody, rhythm, timbre, texture, tempo, dynamics) and features (i.e., musical instruments, sounds, constructs, and forms) within the context of musical listening-and-analysis and composition activities. Using the notation program MuseScore, the authors illustrate the proposed music guidelines with examples that show how various uses, combinations, and changes in certain elements and constructs can influence different emotion inductions.

In addition to its practical significance, this research study also makes a theoretical contribution to the conceptualization of TPCK. First, the study advances the theory about the technological knowledge (TK) element of the TPCK construct by identifying the affordances of technological tools with which educators can create powerful and understandable representations of the content, an aspect which is currently underexplored (Angeli and Valanides, 2018). Second, this study explores the links between basic elements of the TPCK structure, including cognition or musical content, technology, and pedagogy, and the affective domain. Lastly, the learning outcomes of student compositions indicate that both the TPCK model as a construct and the proposed instructional guidelines for music can provide effective guidance in the design of music lessons.

Background

Technological Pedagogical Content Knowledge and Music Education

About 15 years ago, when different researchers introduced Technological Pedagogical Content Knowledge (TPACK or TPCK) as a framework for guiding technology integration in teaching, they extended Shulman’s (1986) theoretical model about Pedagogical Content Knowledge (PCK) by adding the component of technology (Angeli and Valanides, 2005, 2009; Koehler and Mishra, 2005; Niess, 2005, 2011; Mishra and Koehler, 2006). These initial models, as well as others that were proposed some years later, have different theoretical views about the composition, nature and growth of TPCK. These differences include the components of the framework, i.e., the forms of knowledge required to teach effectively using digital technologies and their relationships, how teachers need to be trained in order to better develop their personal TPCK, and how TPCK is measured and assessed (Angeli et al., 2016; Angeli and Valanides, 2018). Among these models, two have been the most prevalent: the model proposed by Koehler and Mishra (2005) and Mishra and Koehler (2006), which is more closely linked to the TPACK nomenclature and the integrative conceptualization, and the model suggested by Angeli and Valanides (2005; 2009; 2013), which is usually referred to as TPCK and is associated with the transformative interpretation.

This research study adopted the model of Angeli and Valanides (2005; 2009; 2013) because it explicitly guided the design process of technology-enhanced learning with clear instructional design guidelines. These principles include:

(1) Identify content for which teaching with technology can have an added value, i.e., topics that students have difficulties in grasping or teachers have difficulties in presenting/teaching.

(2) Identify representations for transforming the content to be taught or learned into more understandable forms that are not possible to implement without technology.

(3) Identify teaching methods that are impossible or difficult to implement with traditional means and without technology.

(4) Select appropriate tools with the right set of affordances.

(5) Design and develop learner-centered activities for integrating technology in the classroom.

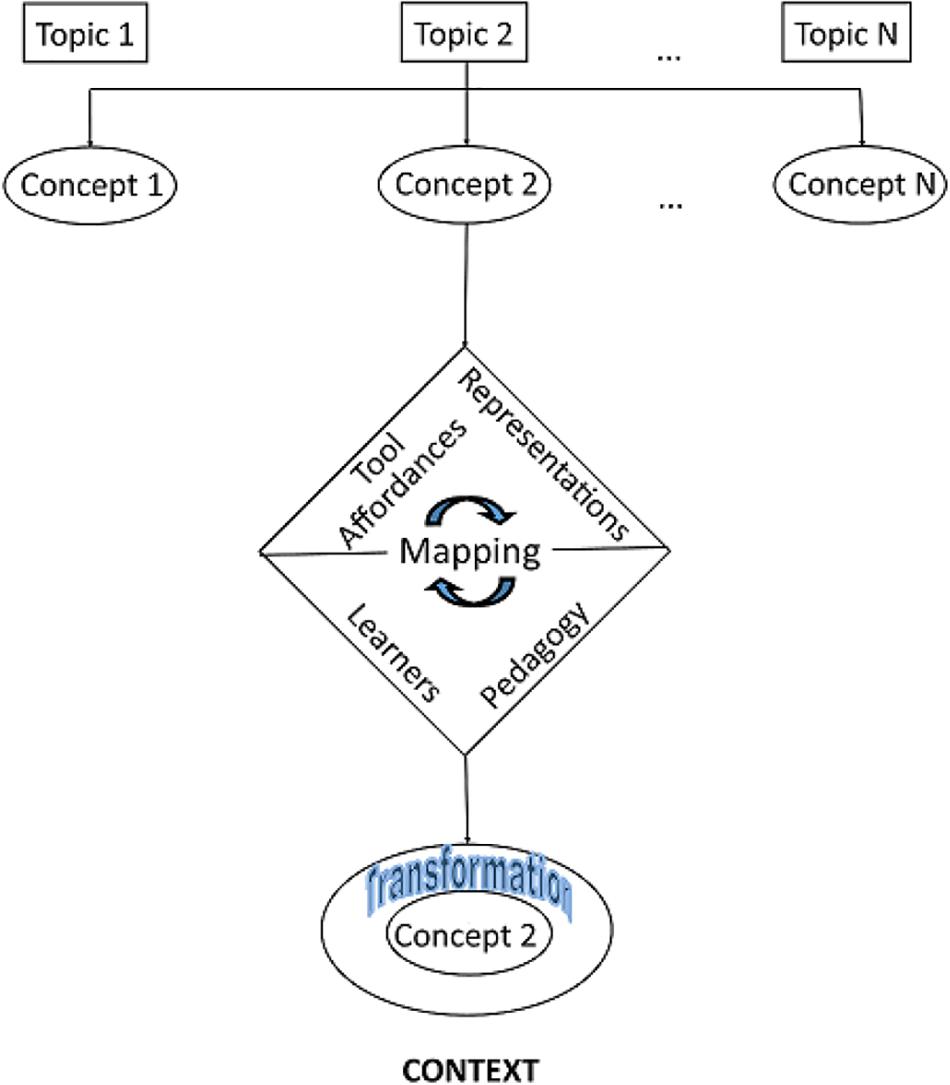

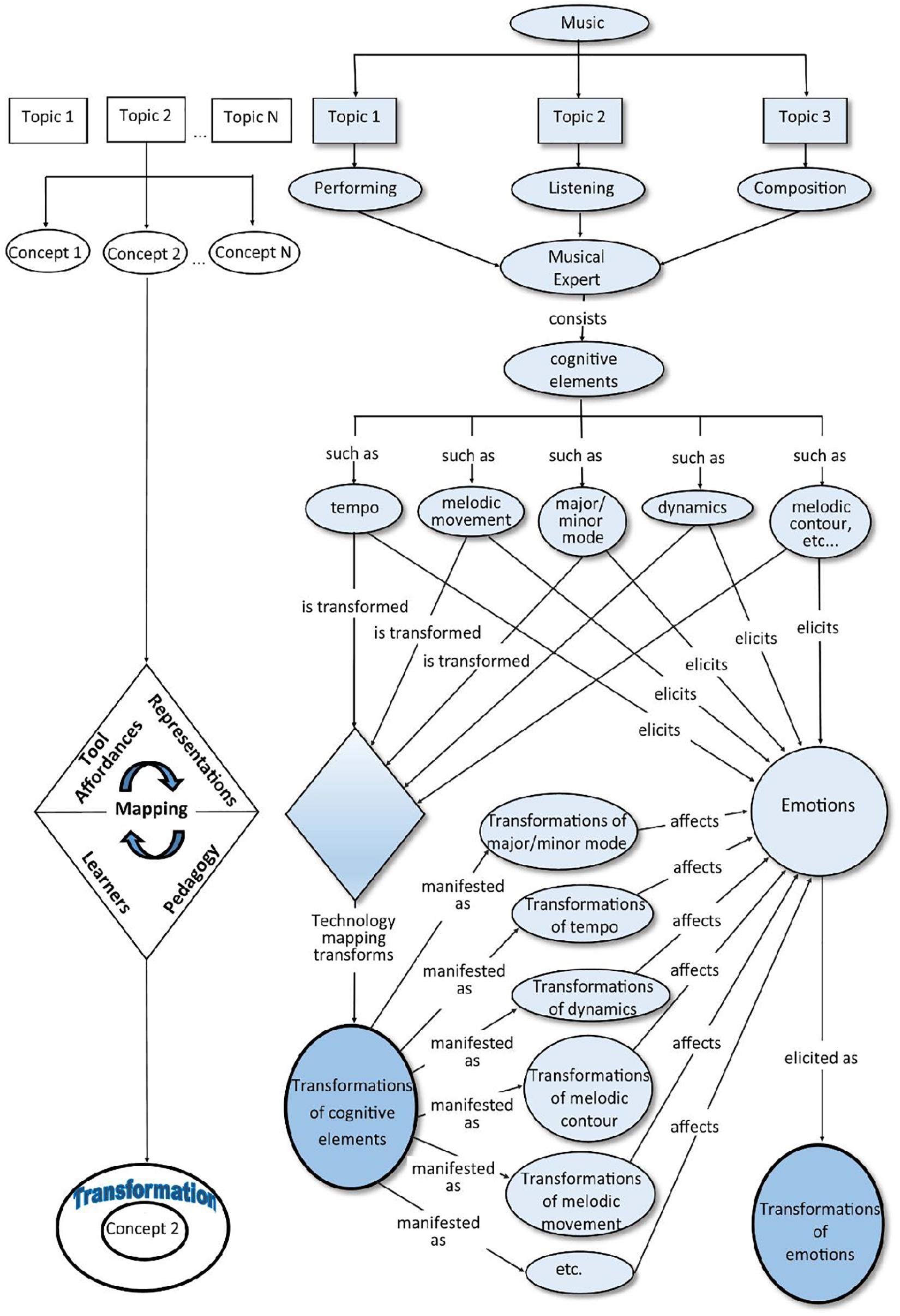

Along with these five principles, Angeli and Valanides (2013) developed an instructional design approach, known as Technology Mapping (shown in Figure 1), aiming to provide further guidance for the complex procedure of creating learning designs with technology. The main objective of this design process was to support teachers in mapping or identifying the necessary connections between the content or subject area, pedagogical affordances of a particular technology and possible representations, pedagogical approaches, students’ content-related misconceptions and difficulties and context (Angeli and Valanides, 2009, 2013; Ioannou and Angeli, 2013).

Figure 1. Technology Mapping model (adopted from Angeli and Valanides, 2013).

Furthermore, other researchers indicated that investigating discipline-specific aspects of TPCK, including the affective domain, was necessary for developing the theory around the TPCK framework and for better supporting teacher educators and practitioners in their efforts to create effective technology-enhanced teaching designs (Chai et al., 2013; Voogt et al., 2013; Angeli et al., 2016).

While investigating whether the TPCK theory was sufficient to guide music teachers through their learning designs, the authors identified that the model did not provide any guidance on how to incorporate affect, an all-important aspect of music pedagogy, in teaching music with technology, because the model was, and remains, essentially cognitive (Macrides and Angeli, 2018a, b). The authors were also confronted with a second gap: in the area of music education, too, they encountered a lack of substantial research on the inclusion of emotions in teaching and learning. Therefore, although the authors herein adopted the general TPCK instructional design principles proposed by Angeli and Valanides (2005, 2009), they turned to another field, specifically music psychology, for guidance regarding the affective domain.

Music and Emotional Response

According to the literature, the two main contexts in which music listening experiences take place are (a) everyday life or ordinary contexts, such as managing jobs, traveling, shopping, exercising, studying, and relaxing from work, and (b) specialized music-listening contexts, such as concerts, weddings, and funerals (Sloboda, 2011; Sloboda et al., 2011). In the first category, music plays a background or supporting role and emotions arise from the surrounding materials, context and activities of the listener, whereas in the second category music is in the foreground and emotions arise from the nature of the musical materials and aesthetic reactions to the music itself. Hence, everyday musical emotions are brief, multiple, and less memorable, have basic, self-referring content (e.g., happy, sad, calm), have low intensity and a higher proportion of negative force, are listener-focused, and are influenced by non-musical content. On the other hand, in specialized musical settings emotions are high in intensity, more memorable, sustained and integrated, more complex, mainly positive, music-referenced, music-focused, and influenced by musical content (Sloboda, 2011; Sloboda et al., 2011).

Musical emotions can be stimulated mainly by the musical works and their particular characteristics (e.g., singer’s voice, tempo, melody, virtuosic performance), the listeners’ situations, circumstances, and pre-existing moods (e.g., a significant event, the weather, the social environment), memory influences (emotions elicited from memories or imagination that the music evokes) and the lyrics (Juslin et al., 2011). In addition to these conditions, emotions evoked during classroom listening activities are also associated with students’ musical preferences and familiarity with the pieces and styles presented (Todd and Mishra, 2013), the mode of activity (auditory, visual, kinesthetic) and students’ learning style and abilities (Dunn, 2008).

Several researchers identified a distinction between perception and arousal of emotion and argued that the process by which listeners perceive emotions expressed in the music differs from the process by which music arouses felt emotions in listeners (Gabrielsson, 2002; Zentner et al., 2008; Juslin, 2019). Perceiving the emotional meaning or expression in a musical work involves a cognitive identification of a musical emotion, such as understanding that a specific piece expresses sadness. In contrast, the induction of a felt musical emotion involves information that has emotional effects and elicits an emotional reaction which may or may not be related to the perceived emotion, such as a personal emotional memory triggered by the music (Juslin, 2019). For example, the emotion perceived in the music (e.g., sadness) might be different from the emotion induced by the same music (e.g., nostalgia).

Although the perceived and felt emotions are different psychological processes, they both take place “in the listener” and both depend on the musical features or acoustic and structural qualities of the music. Perceived and felt emotions may both occur together and sometimes influence each other while listening to music, or they may overlap generating the contagion mechanism. Contagion is an internal emotional reaction to musical features that matches the emotion expressed in the music, i.e., feeling empathy or mirroring the perceived emotion (Juslin, 2019).

Gabrielsson and Lindström (2010) suggested that there are certain factors in musical structure that contribute to the perceived emotional expression, including various devices and constructs (e.g., repetition, variation, transposition) and musical features that are usually represented by designations in a musical score, such as tempo markings, dynamics markings, pitch, intervals, mode, melody, rhythm, and harmony. Numerous studies investigated the emotional effects of single musical factors (musical elements) either individually or in additive and interactive ways (e.g., mode × tempo). However, musical expression in a piece of music is usually influenced by several musical features acting together.

According to empirical research, the most distinct results regarding the expression of emotion in music are related to the effects of tempo, mode, loudness and timbre/spectrum, i.e., tone quality of a sound based on its frequency-amplitude content (Gabrielsson, 2009). An increase in any of these elements results in higher activation. Faster tempo is associated with happier states while slow tempo is associated with sadness (Juslin and Sloboda, 2001). For the effects of mode, researchers reported happy states for major mode and sad/melancholic states for minor mode, but when mode interacts with faster tempo, the emotional states change (Juslin and Laukka, 2004; Webster and Weir, 2005). In an empirical investigation, Webster and Weir (2005) found that increasing tempo clearly increased ratings of happiness for both modes, although there is a different critical speed for each mode at which the influence of tempo on happiness is weakened (for major mode) or strengthened (for minor mode). Studies of musical timbre have focused more on the underlying acoustical properties (spectrum, transients, etc.) than on the perceived timbre as such, and thus there is still little research on the role timbre (instrumental/vocal tone color) plays in musical structure and musical expression (Gabrielsson, 2009; Gabrielsson and Lindström, 2010). Regarding more typical musical elements, results are less clear or there is little empirical research available. For example, ascending melody may be associated with dignity (pride), serenity, tension, happiness, fear, surprise, anger, and potency (power, energy, strength), and loudness with intensity/power, excitement, tension, anger, and joy (Gabrielsson, 2009; Gabrielsson and Lindström, 2010).

A theoretical framework, known as the BRECVEM model (Juslin and Vastfjall, 2008; Juslin et al., 2011) was introduced to address the gap regarding how musical emotions are evoked. This model features seven underlying mechanisms which are responsible for the induction of emotion through music listening: (1) brain stem reflexes (basic acoustical characteristics of the music, such as, dissonance or sudden changes in loudness and speed induce arousal in the listener); (2) rhythmic entrainment (synchronization/adjustment of an internal body rhythm—e.g., heart rate or respiration—to a strong musical rhythm and dissemination of this arousal to other components of emotion—e.g., feeling, expression); (3) evaluative conditioning (linking a musical stimulus to a non-musical stimulus or event because they occurred several times simultaneously); (4) emotional contagion (feeling empathy or psychological identification with the presumed emotional expression of the music); (5) visual imagery (imagining visual images about the music, such as, a beautiful scenery); (6) episodic memory (eliciting affectively loaded memories from the past, e.g., a nostalgic event); and (7) musical expectancy (perceiving expected or unexpected musical events in the discourse of musical structure relating to the building up of tension and resolution). The BRECVEM framework was later expanded to BRECVEMA in order to explain both “everyday emotions” and “aesthetic emotions” (Juslin, 2013). The updated model includes an additional mechanism labeled as (8) aesthetic judgment to better account for typical “appreciation emotions” such as admiration and awe.

Scherer (2004) proposed that music can provoke emotions through five routes of emotion induction, three central and two peripheral. The central mechanisms include cognitive appraisal, memory, and empathy (contagion), while the peripheral processes are proprioceptive feedback (due to rhythmic entrainment, i.e., synchronization of the body’s internal rhythms or external motor movements to the music’s rhythm and beat) and the expression of pre-existing emotions. Apart from seeking to understand the processes underlying the induction of emotions when listening to music, researchers in music psychology devoted effort to understanding the nature of the musically induced emotion itself. They argued that the general-purpose models and approaches used in emotion research to describe everyday non-musical emotions, such as the dimensional and categorical methods, are not adequate for capturing and characterizing musically induced emotions (Scherer and Zentner, 2001; Scherer, 2004).

After systematically investigating how a wide range of listeners describe the emotions evoked by different genres of music, Zentner et al. (2008) derived the Geneva Emotional Music Scale (GEMS), a taxonomy of forty-five labels or characteristic feeling terms that can be used to classify, characterize and measure music-evoked emotional states. These emotive labels can be grouped into nine musical emotion factors or categories (wonder, transcendence, tenderness, nostalgia, peacefulness, power, joyful activation, tension and sadness), which in turn can be reduced to three higher-order factors.
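The two-level grouping described above can be sketched as a small lookup structure. The nine factor names come from the text; the names of the three broader groups (sublimity, vitality, unease) and the assignment of factors to them are not given in this article and are recalled from Zentner et al. (2008), so they should be checked against that source before being relied upon. The function name is hypothetical.

```python
# Assumed grouping of the nine GEMS factors into three broader groups.
# Group names and membership are NOT stated in this article; verify against
# Zentner et al. (2008) before use.
GEMS_GROUPS = {
    "sublimity": ["wonder", "transcendence", "tenderness", "nostalgia", "peacefulness"],
    "vitality": ["power", "joyful activation"],
    "unease": ["tension", "sadness"],
}


def broader_group(factor: str) -> str:
    """Return the broader group that contains the given emotion factor."""
    wanted = factor.strip().lower()
    for group, factors in GEMS_GROUPS.items():
        if wanted in factors:
            return group
    raise KeyError(f"unknown emotion factor: {factor!r}")


if __name__ == "__main__":
    for name in ("nostalgia", "power", "tension"):
        print(name, "->", broader_group(name))
```

A structure of this kind could, for instance, let a teacher tally student responses at either the nine-factor or the three-group level.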

Recent research concerning music emotion recognition (MER) invested efforts in training a machine to automatically recognize the emotion of a musical piece. The purpose of MER systems is to organize and retrieve musical information from large online music playlists, streaming services, digital music libraries, and databases using emotions (Yang and Chen, 2012). Although emotion-based music retrieval has received increasing attention in both academia and the industry, there are still many open issues to be resolved. These include the lack of consensus on the conceptualization and categorization of emotions, the different factors influencing emotion perception, and the absence of publicly accessible ground-truth data on musically induced emotion (Yang and Chen, 2012).

The categorization of emotions is problematic because there is no consensus on the approach or model (i.e., dimensional or categorical) and the number of taxonomies that should be used for accurately describing emotions, especially since each model or approach accounts for different aspects of emotions (i.e., arousal or intensity of emotions, valence or positive-negative impact, and labeling). Whether emotions should be modeled as categories (categorical approach) or continua (dimensional approach) has long been debated in psychology. Nevertheless, Yang and Chen (2012) suggested that since each approach describes different qualities of emotions which are complementary to each other, it would be possible to employ both approaches in the development of MER systems and in emotion-based music retrieval.
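To make the complementarity of the two approaches concrete, the sketch below converts a dimensional rating (valence and arousal) into a coarse categorical label. It is only an illustration: the [-1, 1] rating range, the quadrant boundaries at zero, and the four label names are assumptions chosen for this example and are not taken from Yang and Chen (2012).

```python
def quadrant_label(valence: float, arousal: float) -> str:
    """Map a dimensional rating to a coarse categorical label.

    Both inputs are assumed to lie in [-1, 1]; the label names are
    illustrative placeholders, not an established taxonomy.
    """
    if valence >= 0:
        return "happy/excited" if arousal >= 0 else "calm/content"
    return "angry/tense" if arousal >= 0 else "sad/depressed"


if __name__ == "__main__":
    # Hypothetical ratings for four listening excerpts.
    for valence, arousal in [(0.7, 0.6), (0.5, -0.4), (-0.6, 0.8), (-0.5, -0.7)]:
        print((valence, arousal), "->", quadrant_label(valence, arousal))
```

The conversion loses information, since two excerpts with very different intensities can share a label, which is one reason the two descriptions are better treated as complementary than as interchangeable.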

Another problem in the development of MER systems is the subjectivity of emotion perception. Emotional responses to music are dependent on the interplay between musical, personal, and situational factors (Juslin and Sloboda, 2001; Gabrielsson, 2002; Hargreaves, 2012; Hargreaves et al., 2012), including musical materials, cultural background, age, gender, personality, musical training, familiarity, and personal musical preferences. A characteristic example of this complexity is that even a single person’s emotion perception of the same song can vary depending on personal and situational factors. A further complication in identifying musical emotions is that, although different musical elements are usually associated with different emotion perceptions, emotion perception rarely depends on a single musical factor but rather on a combination of factors (Juslin and Laukka, 2004; Gabrielsson, 2009).

Thus, in order to accurately represent the acoustic properties of individual musical pieces, MER systems utilize various musical features, such as aspects of melody (pitch and mode), timbre (spectrum and harmony), loudness, and rhythm (meter, tempo, and phrasing). Through different computational methods, these musical features are extracted from the audio signals (i.e., songs or musical pieces) stored in the database of a system and are fed as input to machine learning algorithms. These algorithms train the machine to associate the extracted musical features of each song with the corresponding emotion label(s) assigned by listeners during an emotion annotation process. The ground-truth emotional labeling of the songs in a music dataset is usually obtained by averaging the emotional responses of all the participating subjects, which are categorized in the system according to a specified taxonomy of musical emotions. In addition to the information on musical characteristics, some MER systems employ algorithms that take into consideration listener and situational characteristics, such as a listener’s mood or the loudness of the environment. In conclusion, MER systems apply computational methods to explore the underlying mechanisms (musical features) in the perception of musical emotions, and subsequently use them for automatic emotion-based classification and retrieval of music (Yang and Chen, 2012).
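The pipeline described above (averaging listener annotations into a ground-truth label, pairing it with extracted features, and learning the association) can be sketched in a few lines. Everything in this example is hypothetical: the clip names, the ratings, and the two "features" are invented for illustration, and a nearest-neighbour lookup stands in for the machine learning algorithms used in actual MER systems, which the text does not specify.

```python
from math import dist
from statistics import mean

# Hypothetical listener ratings per clip: (valence, arousal), each in [-1, 1].
annotations = {
    "clip_a": [(0.8, 0.6), (0.6, 0.8), (0.7, 0.7)],
    "clip_b": [(-0.6, -0.4), (-0.8, -0.6), (-0.7, -0.5)],
}

# Hypothetical extracted features per clip: (normalized tempo, normalized loudness).
features = {
    "clip_a": (0.9, 0.8),
    "clip_b": (0.3, 0.2),
}


def ground_truth(ratings):
    """Average all listeners' ratings for one clip into a single label."""
    valences, arousals = zip(*ratings)
    return (mean(valences), mean(arousals))


# Each training example pairs a clip's features with its averaged label.
training_set = [(features[clip], ground_truth(annotations[clip])) for clip in annotations]


def predict(query_features, examples):
    """Return the label of the training clip nearest to the query in feature space."""
    _, label = min(examples, key=lambda pair: dist(pair[0], query_features))
    return label


if __name__ == "__main__":
    # A fast, loud unseen clip is matched to the nearest annotated clip.
    print(predict((0.85, 0.75), training_set))
```

Averaging hides disagreement between listeners, which is precisely the subjectivity problem noted earlier; a fuller treatment would keep the spread of the ratings as well as their mean.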

Models and Practices for Teaching Composition and Musical Creativity

The term creativity creates confusion not only because it has many possible meanings and is complex and versatile (Saetre, 2011; Burnard, 2012; Nielsen, 2013), but also because teaching approaches and methods of creative activities lack consistency (Webster, 2009) and are wide-ranging and diverse (Saetre, 2011; Burnard, 2012). Furthermore, while it may be easy to explain practical aspects of composition work, it is difficult to describe the process itself (Gall and Breeze, 2005).

According to Hickey and Webster (2001) the creative thinking process begins with an idea or intention and ends with a creative product. One of the earliest studies on the process of creativity was the work of Wallas (1926) who proposed four stages of creative thinking and greatly influenced the views of Webster (Hickey and Webster, 2001; Gall and Breeze, 2005). Webster’s model of creative thinking process in music represents the entire process of composition and also consists of the four stages conceived by Wallas (1926): preparation—thinking of materials and ideas; incubation (or time away)—mixing and assimilating ideas subconsciously; illumination (working through)—generating a great idea in mind; and verification—bringing ideas together and trying out the creative product (Webster, 1990, 2003). After initially verifying the creative idea by listening to the entire piece, the creator(s) should edit or revise their work by going through the four thinking stages again.

Creative music making is an interactive process between the participants’ musical experience and competence, their cultural practice, the tools, the instruments, and the instructions (Nilsson and Folkestad, 2005). Hickey and Webster (2001) suggested the use of a memory aid (paper manuscript or computer notation software) to keep track of ideas during the illumination stage and a hearing run-through of the composition at the final stage, performed either by students or by a synthesizer. Similarly, other authors (Reimer, 1989; Beckstead, 2001; Freedman, 2013) supported that technology can better facilitate the creative process and can be more efficient than traditional settings with musical instruments, particularly for students with limited notation and performance skills. Using computer software enables students to create, recall, revise, and develop musical ideas in succeeding lessons, and facilitates the process of providing meaningful and constructive peer and teacher feedback on specific places in the music (Breeze, 2011; Freedman, 2013; Nielsen, 2013).

Although creative thinking and creative activities in music have been discussed by music educators since the inception of public music education, there are major differences between the past, the present and the future of creative thinking activities in music classrooms (Hickey, 2001). First, our knowledge about how children learn with regard to active, meaningful, contextual, authentic, and collaborative learning from both within and outside the field of music education has expanded greatly over the past 30 years. For example, there is a growing interest in the literature about the social and collaborative aspects of creative musical activities (Green, 2007) and especially concerning the use of online collaborative technologies (Burnard, 2007). Second, advances in music and other digital technologies provide more powerful and easily accessible tools than ever (Brown, 2007; Bauer, 2014). Third, our understanding of the role of emotion in creative activities has expanded over the past years (Webster, 2002; Hallam, 2011).

Wiggins (2007) suggested that when designing activities for composing in music education, teachers and researchers need to recognize and validate the following principles: (1) the holistic nature of the compositional process (embedding the generation of musical ideas in the context of a whole musical work with an intended character, structure, and meaning); (2) the intentional and cognitive nature of the conception and generation of musical ideas; (3) the need for personal agency within socio-cultural and musical contexts; (4) the need for setting composition assignments that are as close as possible to the composers’ ways of practice; (5) the need for engaging learners in compositional experiences to foster musical thinking; (6) the knowledge constructed from musical experiences both in and out of school; and (7) the dynamic qualities of collaborative composing experiences.

Researchers agree that providing restrictions, guidelines, or a pedagogical framework for the process triggers the initiation of the creative procedure more easily than composing a free task (Folkestad, 2005; Hallam, 2006; Bauer, 2014). Folkestad (2005) suggested that it is crucial to formulate instructions that are feasible to implement in the creative activity and in ways that prompt creativity and transform a pedagogical framing into a musical framing enabling musical identity, self-expression, and communication in music. Thinking in sound involves imagining and remembering sounds or sound structures (Hickey and Webster, 2001) and hearing or conceiving music in one’s mind (Swanwick, 1988; Wiggins, 2007). Hickey and Webster (2001) suggested activities and strategies for nurturing the creative thinking processes, which include opportunities for sound exploration, manipulation, organization of musical material through composition, playing around with sounds, and brainstorming solutions to musical problems. Furthermore, Hickey and Webster (2001) recommended that reflective thoughts and revisions are crucial in developing intentionality in a student.

Barrett and Gromko (2007) advocated that the process of creativity emerges in a system involving social interaction, joint interpretation and creative collaboration. Other studies (Burnard and Younker, 2002, 2004; Wiggins, 2007) revealed that students’ composing processes or “pathways” may vary according to a number of factors, including socio-cultural practices and students’ musical background, and suggested that teachers should take into consideration their particular educational settings and context of learning.

In her book Musical Creativities in Practice (2012), Burnard presented creative practices of music bands, singer–songwriters, DJ culture, and new technologies that do not belong to one genre or culture. She proposed a framework for understanding musical creativities through a sociological context and a pluralistic view in which different types of creativities are essentially varied pathways of composing situated in real-world contemporary practices. In this view of musical creativity, Burnard suggested incorporating into traditional music curricula current and divergent musical practices drawn from the diverse collection of case studies presented in the book. The author argued that in educational practice musical creativities can be fostered by utilizing digital technologies in the same way that technologically mediated music practices (such as DJ practices, computer game audio design, live coding, etc.), digital societies, and music-making communities arise through interconnections between cultures, communities, and consumers (Dobson, 2015).

The huge diversity of creative practices, the complicated view of creativity with its many types, and the lack of formalized models of composition processes make the design of experimental investigations and quantitative methodologies extremely difficult (Lefford, 2013). Furthermore, the lack of an overarching working definition of creativity, together with the ambiguity and lack of specificity of the creative process, prevents the inclusion of detailed and clear attributes of creativity in music curricula (Lefford, 2013).

Challenges in Teaching Music and the Importance of Affect

The three fundamental activities in music education are musical performance (including singing and playing instruments), composition (including improvisation), and listening (Paynter, 1992; Swanwick and Franca, 1999; Swanwick, 2011). Creative thinking, or thinking in sound, is a cognitive ability central to the art of music and an essential principle of music teaching and learning, and it is therefore linked to any type of musical activity, i.e., listening, composing, or performing (Hickey and Webster, 2001).

Although composition has long been established as a core curricular activity, understanding creativity in student compositions continues to be a complicated matter (Burnard and Younker, 2002; Webster, 2016). Teachers consider composition the most problematic activity and admit difficulties or lack of knowledge in planning and implementing creative activities that can promote music learning and creative thinking in the classroom (Dogani, 2004; Saetre, 2011; Coulson and Burke, 2013; Bauer, 2014). The realization of creative activities, whether these are improvisation-based or short structured composition tasks, is also problematic for students. For example, Coulson and Burke (2013) report that elementary students had difficulty incorporating the same rhythmic/melodic motifs in their antecedent-consequent improvisations using bar instruments and lacked the ability to hear the music in their head before playing it. Some researchers argue that students’ difficulties in carrying out creative tasks are related to lack of confidence in playing musical instruments and in using them to explore and engage in creative processes, lack of musical skills and knowledge, and lack of effective modeling and support from teachers (Burnard and Younker, 2002, 2004; Crow, 2008). Other authors refer to the large percentage of students in music classrooms who do not have a musical background as “the other 80%” (Bauer, 2014, p. 60). As a consequence, students’ limited musical training and understanding of musical notation constrain the imaginative and expressive use of musical materials (Burnard and Younker, 2004) and also restrict them from writing down their creative work.

Music listening activities (also known as audience-listening, or listening-and-analysis) are another area of music practice that poses problems. Most students do not remain focused during listening activities because, of all the arts, music is the most abstract and requires a period of time to unfold in order for the listener to experience it (Kravitt, 1972; Deliege et al., 1996; Todd and Mishra, 2013). Furthermore, students’ personal preferences for specific genres of music interfere with their receptiveness and negatively influence their attentiveness and engagement (Swanwick, 2011; Todd and Mishra, 2013). Thus, students struggle to identify and describe musical elements and constructs, to retain musical events and compare sections of the music, or to critically reflect on the music (Tan and Kelly, 2004; Dunn, 2008; Todd and Mishra, 2013). Although the process of “thinking in sound” is considered a cognitive task, it also includes another essential aspect, that of communicating feelings or being inspired by feelings. People listen to music because of its ability to influence emotions, i.e., to change moods, to release emotions, to have fun or relax, to match their current emotional state with the music, etc. (Juslin and Sloboda, 2011). Performers and composers use musical elements and structure to communicate, induce, and express emotions in all aspects of music making. Renowned music educators argued that both cognition and emotion are essential in the process of musical composition (Webster, 2002), and that students should have control of the musical materials, form, and the expressive character of music in all core activities (Swanwick, 2011). Therefore, a real musical experience, i.e., musical performance, audiation, or composition, should not separate the music’s expressive character and emotional influence from the learning of its cognitive aspects (Paynter, 1992).

Despite the wide acceptance of the emotional impact of music and its importance in music education, there is a lack of substantial guidance relating to the inclusion of affect in music learning, including studies that deal with self-expression and emotion in student compositions (Dogani, 2004; Hallam, 2011). Hallam (2011) suggested that initiating positive emotional experiences and personal fulfillment in the classroom, encouraging expression of emotions and identity, and placing the emphasis on enjoyment throughout the basic activities would increase engagement and positively influence learning and attainment in music. She also argued that motivation and enthusiasm will grow by placing the emphasis on understanding the role of emotion in music rather than on the acquisition of skills (Dogani, 2004; Hallam, 2011).

Juslin (2019) argued that music is constantly interpreted by the listener, whether implicitly or explicitly, and that music and emotion are both linked to musical meaning and perception. According to Swanwick (2016), music is a symbolic language that uses metaphors to convey meanings and emotions. Music can be experienced and understood through engagement and interaction with its musical materials, expressive character, and structure (Swanwick, 2016). The empirical findings in psychology of music and neuroscience suggest that music activates and moves us intellectually, emotionally, and physiologically (Koelsch, 2014). Thus, emotions and intellect in music are interdependent and interwoven. The problem, however, is not with making our musical thinking and engagement (composing, listening, or performing) more “embodied” to facilitate better understanding, because musical thought and understanding are a full-bodied affair due to the nature and features of the music. Musical creativity, composition, listening, and performing entail embodied, embedded, enactive, and extended forms of participation and sense-making activities that take place within a dynamic, distributed, multi-organism living system. In this system or context, interacting, self-organizing agents influence each other and use tools which extend and scaffold musical understanding. The issue at stake, thus, is discovering approaches and tools to make musical properties more understandable and participants more aware of the qualities of the music and more efficient in using them creatively.

Teaching Music Composition and Listening-and-Analysis With Technology

The most commonly documented composition technologies include either the use of musical notation software and sequencers or music production and digital audio applications (Bauer, 2014). Websites for online music production and collaboration1,2,3,4 and “apps” (such as GarageBand, Logic Pro, Reaper, Studio One, Pro Tools, or any other audio software) can be used offline or over a network, enabling users to record and upload their musical ideas, invite others to collaborate, explore and remix the music of others, and sell their finished collaborations. Some researchers suggested that these kinds of informal experiences are very promising and can transform music education (Brown and Dillon, 2007; Ruthmann, 2007; Gall and Breeze, 2008). Digital music applications and devices, including loop-based technologies that use chunks of pre-recorded music, DJ mixing programs, MP3 files, and composition tools that generate algorithms, are also attractive to young people because they do not require notation-reading and performing skills (Crow, 2006; Gall and Breeze, 2008; Mellor, 2008; Wise et al., 2011). However, these approaches were criticized because they involve a limited number of musical materials and styles, do not support a deep understanding of musical characteristics and structure, and produce long, automated music that lacks imagination, originality, and expressiveness (Crow, 2006; Savage, 2007; Swanwick, 2011). Therefore, some authors suggested that these technologies should be used at a starting level, after which students should be familiarized with more creative approaches (Freedman, 2013; Bauer, 2014).

According to research evidence, compositional approaches that use music notation or sequencing applications enable the development of musical literacy and knowledge and allow students who do not read musical notation to fully engage in the creative process (Nilsson and Folkestad, 2005; Breeze, 2011). Because of their immediate playback feature, these technologies allow students to easily follow their saved musical ideas, and to edit, extend, and share them with others for feedback (Savage, 2010; Breeze, 2011; Wise et al., 2011; Freedman, 2013).

Bamberger (2003) investigated the compositional processes of non-musically trained college students using the interactive program “Impromptu.” The application supports musical analysis and composition activities, such as the reconstruction and composition of melodies without harmonic accompaniment (Downton et al., 2012; Portowitz et al., 2014). In addition to the immediate playback feature, the software enables multiple representations of melodies, including patterned blocks which symbolize melodic fragments of 5−8 notes, a graphical illustration of the melodic contour that documents pitch and note duration, and a notebook in which students take notes of their decisions, thinking processes, steps taken in making their melodies, and reflective comments. According to research evidence, this software can promote the development of musical conceptual knowledge, including the production of coherent tonal melodies, and reveal cultural perceptions of music (Bamberger, 2003; Downton et al., 2012; Portowitz et al., 2014).

Composition activities using algorithmic and electroacoustic techniques require sound processing technologies and programming skills, and therefore their application in secondary education classrooms is rare (Brown and Dillon, 2007; Field, 2007), while to the best of these authors’ knowledge their efficiency has not been examined.

Kassner (2007) described listening maps as graphical depictions of musical works that are used during listening activities as a means to draw students’ attention and perception to important events, elements, and sections of the music. Listening maps may include pictures, text, piano-roll representations, musical signs and elements, musical notation, and various lines and shapes representing pitch, duration of notes, and melodic contour. Animated maps have an added value compared to static maps because they feature musical events and musical concepts at the same time as the music progresses. In addition, they intrigue learners through interesting graphics, images, cartoons, line drawings, and colors (Kassner, 2007). Animated or interactive listening maps can be found in music textbook series, such as Spotlight on Music (Bond et al., 2006, 2008, 2011), or in multimedia CD sets, such as Making Music: Animated Listening Maps (Burdett, 2006), and may incorporate interactive games and activities in addition to the animations of musical works. Existing publications examined the effectiveness of static listening guides, proposed related teaching strategies (Gromko and Russell, 2002; Tan and Kelly, 2004; Dunn, 2008; Kerchner, 2013), or investigated the creation of musical maps as a tool for developing students’ musical understanding while listening to music (Gromko and Russell, 2002; Blair, 2007). However, there is a lack of studies that investigate the teaching of music with animated and interactive listening maps while taking affect into consideration.

Affective Guidance in Teaching Music With Technology: A Design Methodology Based on an Extended View of TPCK

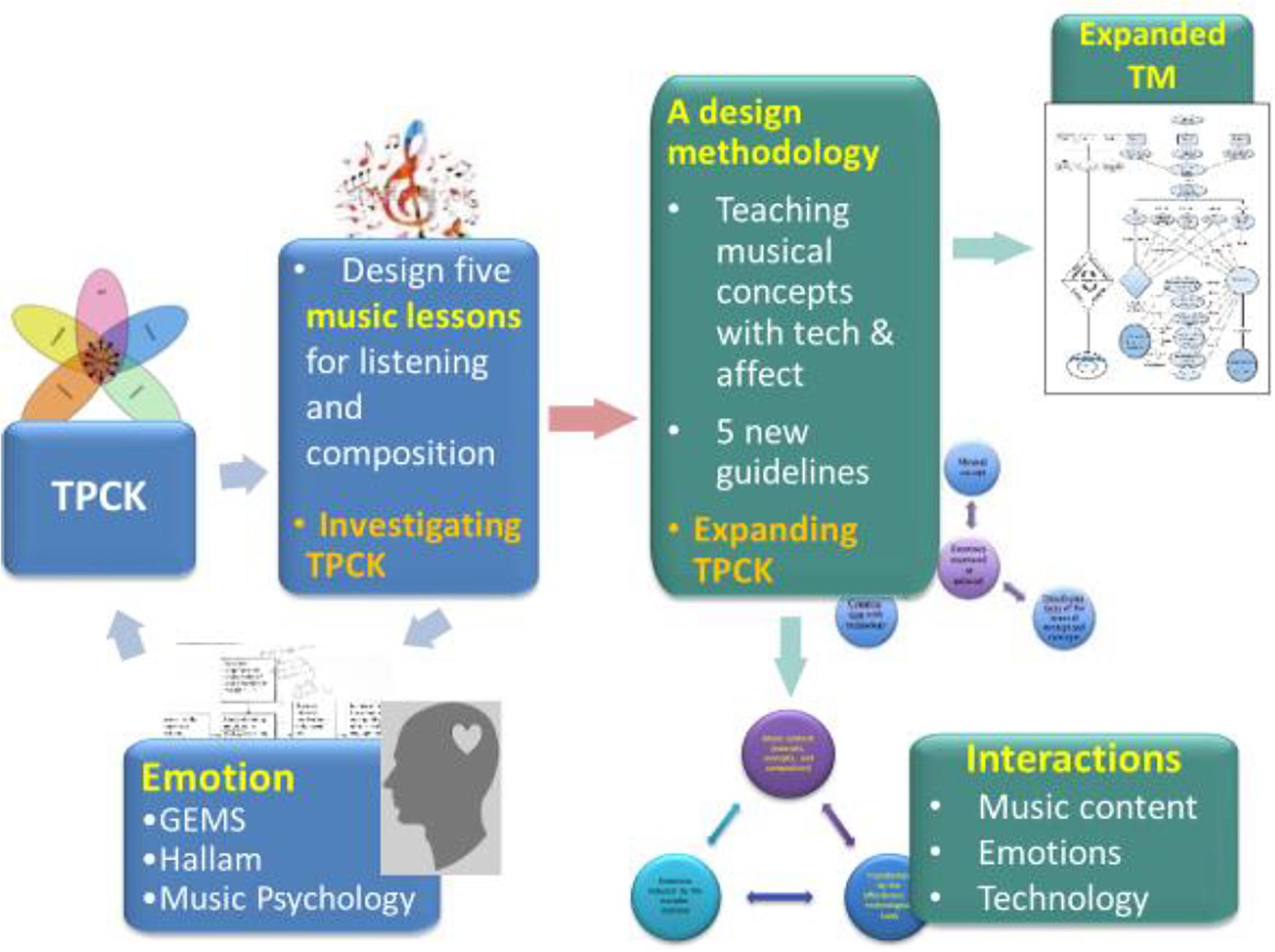

The research procedures of this study, shown in Figure 2, began with a design process of five lessons in the domain of music, taking as a basis the design guidelines of the general TPCK framework (Angeli and Valanides, 2009, 2013). This design process, which focused on the activities of listening and composition, aimed at investigating the adequacy of the model in guiding instructional design in music education. However, the weakness of the TPCK framework in guiding the inclusion of affect, together with the lack of substantial studies addressing the integration of emotions in the process of composition and the teaching and learning of music (Webster, 2002; Hallam, 2011), shifted the investigation to the area of music psychology.

Figure 2. Developing a design methodology through the process of investigating TPCK in music education.

Thus, the need for considering both the cognitive and the affective domains in the design process of music lessons from the very beginning of this effort, resulted in the development of a new design methodology for music elaborated by a set of five instructional design principles and the expansion of Technology Mapping to include affect. The term design methodology refers to the development of a method or process for designing technology-enhanced learning within the domain of music education which constitutes an approach of teaching musical content and concepts and relating them with emotions through the use of technology. The proposed design methodology is the outcome of an extended literature review integrated with personal teaching experiences and individual design processes. The proposed instructional principles clarify for music teachers and designers the second and third general design principles proposed by Angeli and Valanides (2009, 2013).

The inclusion of affect in the design methodology was grounded on the GEMS framework (Zentner et al., 2008), Hallam’s (2011) model of how emotion could enhance commitment and attainment in music, and music psychology experimental research on emotional responses (Gabrielsson, 2009; Gabrielsson and Lindström, 2010). Many of the emotional labels included in the GEMS model, the only instrument for classifying musically induced emotions, were used in student handouts to help learners describe more accurately the emotions induced during listening activities. More explicitly, a list of musical emotions (or synonyms), taken from the nine categories of the GEMS scale, was provided in student handouts to help students select the appropriate emotional expression while hearing the listening selections, and there was also an option for supplying one’s own emotional description. The principles of Hallam’s (2011) model, “understanding emotion in music” and “expressing oneself through music,” have been infused in the design methodology, guidelines, and activities that were implemented in this research. Specifically, the proposed guidelines and the designed activities require that students recognize emotions expressed or felt, relate cognitive and emotional aspects of the music, or express moods or feelings through their compositions.

In psychology of music there is also a body of experimental research that investigated the emotional effects of single musical elements, such as texture, tempo, melody, mode, and dynamics (Juslin and Laukka, 2004; Gabrielsson, 2009). A few studies also investigated the interactions among two or three such musical elements (Webster and Weir, 2005). The researcher borrowed the idea of experimenting with different dimensions of musical parameters in some of the designed activities in order to help students understand both the cognitive aspects and the emotional effects of certain musical elements. An example of such an activity is described later in this section for the element of tempo and its interactive effect with mode.

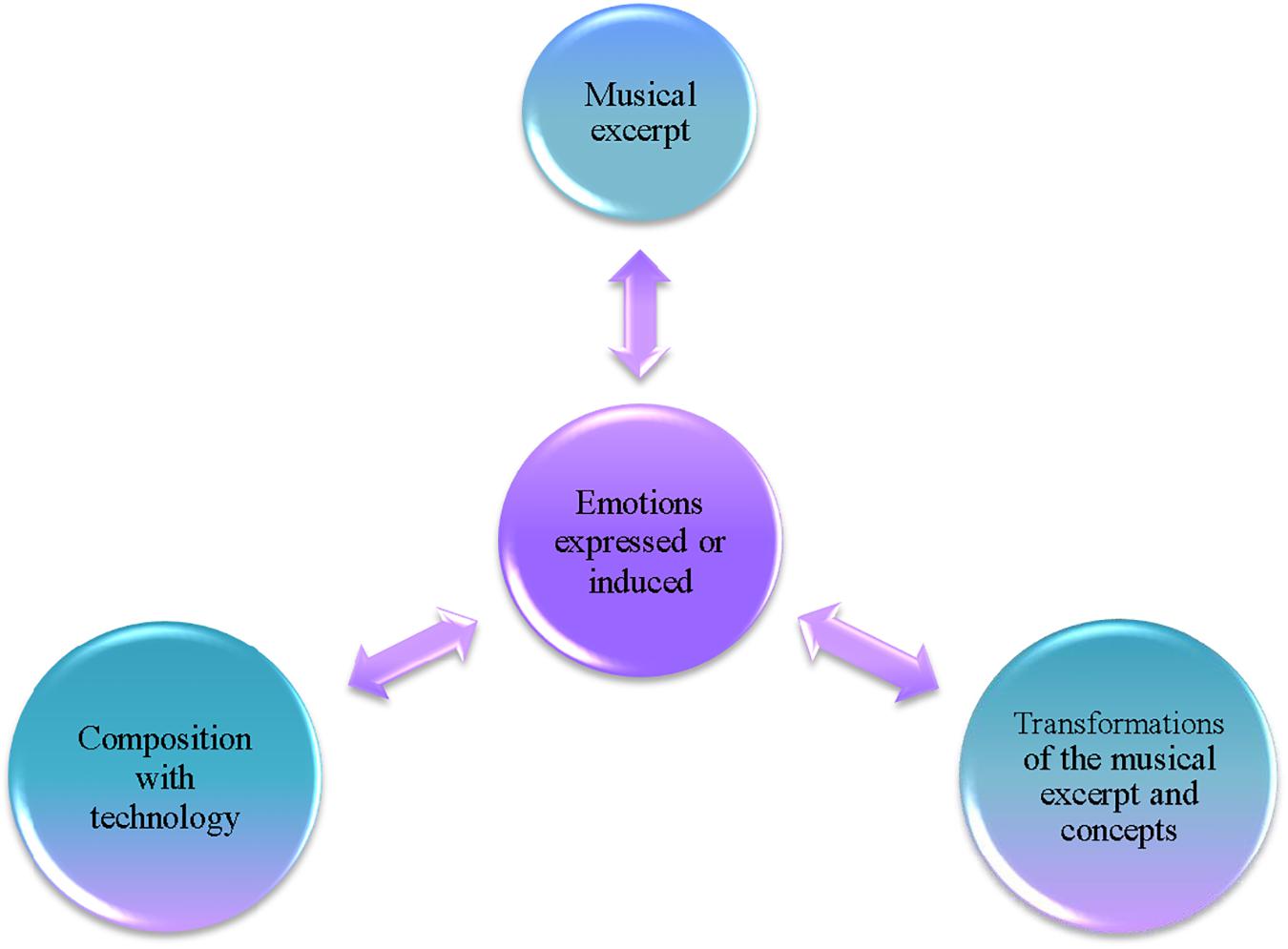

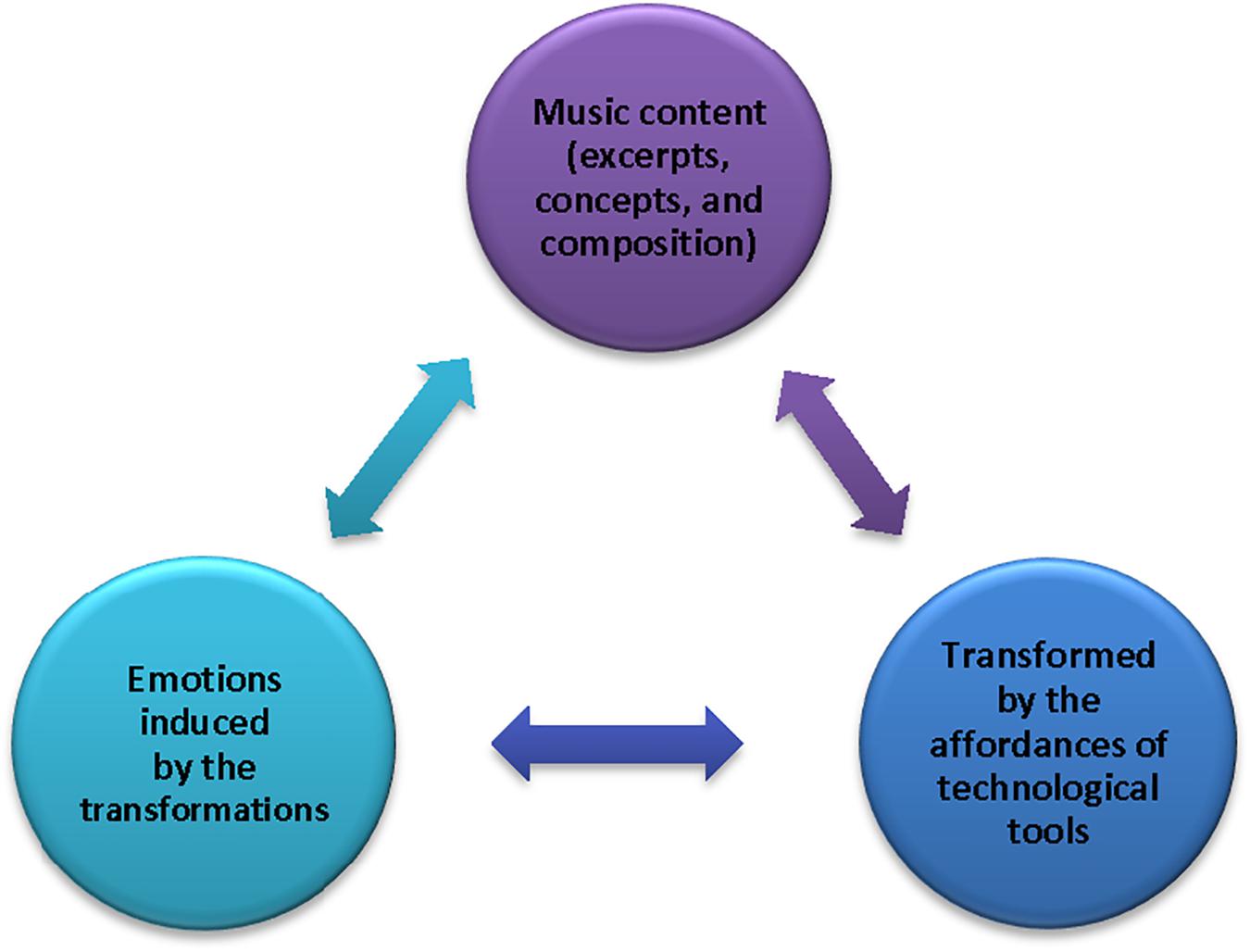

Part of the consideration for the inclusion of the affective domain in the design methodology focused on understanding the relationship between music cognition, technology, and affect, according to the guidelines of the original Technology Mapping process depicted in Figure 1. Figure 3 shows a representation of the design methodology proposed by Macrides and Angeli (2018a, b), while Figures 4 and 5 denote the interactions occurring between musical content, emotions, and technology during the learning process. The authors elucidated the design methodology with instructional guidelines (Macrides and Angeli, 2018a, b), a final set of which is presented herein. The examples provided further illustrate the expanded Technology Mapping model and the proposed guidelines, as well as some pedagogical affordances of MuseScore, including the piano-roll depiction, the tempo slider, the transpose function, the Palettes, and the Mixer window.

Figure 3. A methodology for designing technology-enhanced learning in listening and composition: Teaching musical concepts and relating them with emotions through the use of technology (adopted from Macrides and Angeli, 2018a, b).

Figure 4. Interactions among musical content, emotions, and technology (adopted from Macrides and Angeli, 2018a, b).

Figure 5. An expanded model of Technology Mapping showing the interrelations of musical elements and concepts, technology, and affect (adopted from Macrides and Angeli, 2018a, b).

As Figure 3 shows, during the first hearing of a listening selection (upper circle) students identify felt or expressed emotions that the music elicits (inner circle) without having any visual stimuli. In subsequent hearings, visualizations through digital technologies, such as animated listening maps and notation software, support the exploration and understanding of the cognitive aspects of music, according to the learning objectives (right hand side circle). While supporting music learning through experimentations and transformations of the content, technology supports the association of emotive expressions with different and contrasting treatments of musical characteristics and promotes the understanding of feelings with respect to specific musical materials or mixes of them (inner circle). At the final stage of this process, students engage in a short composition activity using technology (left hand side circle). Students are guided to decide about how to use the musical materials and constructs learned to communicate the desired emotion or feeling (inner circle).

In the procedure presented above, there are interactions among musical content, emotions, and technology, which are designated in Figure 4. Because technology affords the ability to isolate and experiment with musical materials using multiple dynamic representations, and to immediately observe, hear, and feel the modifications made (including changes in tempo, sounds, rhythmic patterns, pitch, dynamics, articulation, etc.), students can relate the particular changes to respective changes in moods or emotions. Thus, technology can facilitate more clearly and immediately not only the understanding of the cognitive aspects of music but also the understanding of their emotional effects. Empirical findings of students’ experimentation with tempo using MuseScore were reported by Macrides and Angeli (2018a, b). In an experimental study, they found that students investigating tempo through technology scored higher in both the understanding of tempo (i.e., the speed of the music) and its emotional effects than students carrying out the same activity with musical instruments.

Furthermore, since the computer can play back whatever users create, students can focus on the creative process and how to shape their ideas and communicate feelings instead of concentrating or even struggling to perform their works and synchronize with each other. Lastly, technology’s rich resources and affordances facilitate the creation of new emotions and ideas during the entire composition process.

Accordingly, based on the processes described in Figures 3, 5, the authors herein propose a design methodology consisting of a set of design guidelines that extend the second and third instructional design guidelines proposed by Angeli and Valanides (2009, 2013), providing, this way, explicit guidance in the application of TPCK theory in the teaching of music.

(1) Use affect (emotion elicited from a musical excerpt) to motivate students to engage in analysis and exploration of musical excerpts and related concepts.

(1.1) Ask students to identify emotions felt or expressed by the music and write them on their handout without having any visual stimuli.

(2) Use technology to help visualize, explore, and support understanding of the cognitive aspects of music (structures and elements) according to curricular objectives, such as melodic contour, dynamics, melodic motives, ostinato, phrases, sections, etc.

(2.1) Present an interactive/animated listening map of a short musical excerpt.

(2.2) Include pre-listening visualizations and explorations of individual musical features.

(2.2.1) Students, working in dyads, explore the animation’s resources and complete short questions on their handout. They are also provided with a printed version of the map.

(2.3) Alternatively, play reductions of musical excerpts using notation software, and/or provide different representations of concepts using the affordances of the software (e.g., piano-roll editor view, mixer, palette).

(2.3.1) Students identify contrasting or different treatment of musical materials and complete very short questions.

(3) Use the different transformations that become possible with the affordances of technology to relate cognitive and emotional aspects, i.e., understand how musical elements influence emotion induction (affect).

(3.1) Provide a mapping or round up of the emotional expression(s) with the musical features identified in the listening selection.

(3.2) Discuss which musical or structural elements most likely affected the emotions identified earlier, or how the mood might change if these elements change.

(3.3) Use a notation file that has been prepared before the lesson, and have students (a) experiment with contrasting dimensions of a musical element in order to understand how a change of feeling or mood can be induced, and/or (b) apply the new device or element in a short task using a semi-completed template file so that students can become more familiar with technical, cognitive, and affective aspects of a particular concept or a combination of two or three concepts (e.g., soft vs. loud dynamics, thin vs. thicker texture, ascending vs. descending melody, conjunct vs. disjunct melody, etc.).

(4) Prompt students to create musical compositions with emotions in order to express or communicate feelings and mood, using elements and structural devices explored in the unit.

(4.1) Use a template composition file and provide a handout with restrictions and guiding questions about the treatment of musical characteristics explored in the unit.

(5) Repeat steps 1−4 to teach new concepts, gradually engaging students in more musically and emotionally coherent and technically informed compositions.

To better illustrate the preceding discussion and guidelines, the authors henceforth provide

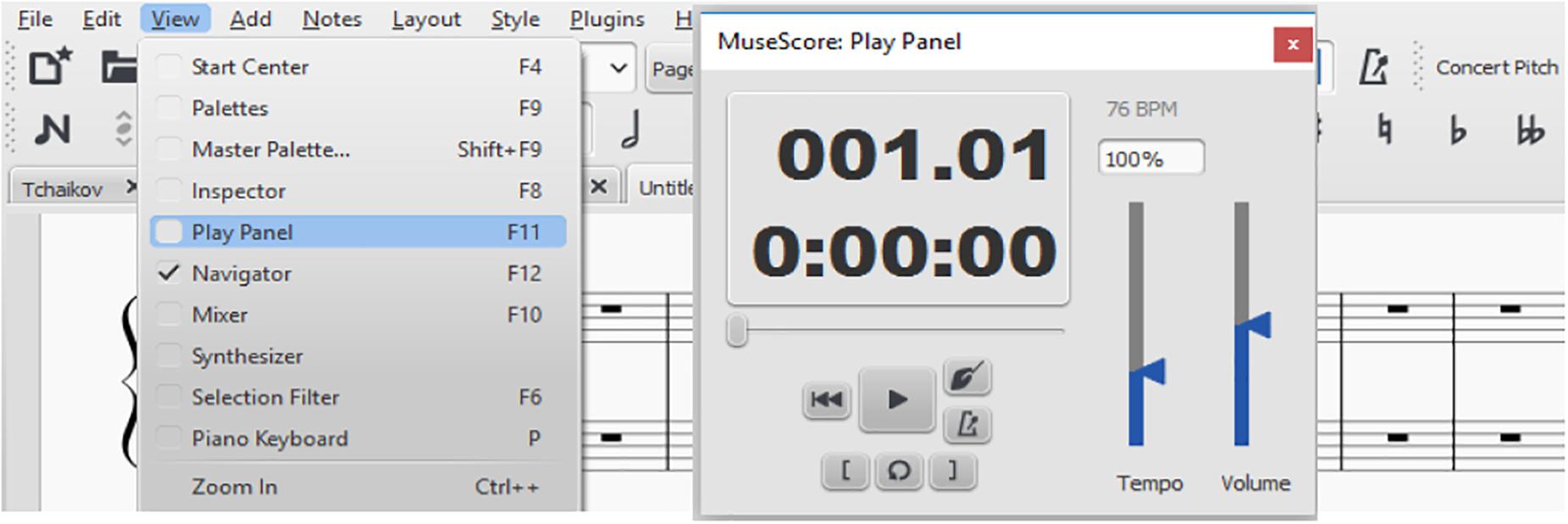

several examples. In the first example, students experiment with tempo (guideline 3) through the MuseScore application. They are provided with a file containing a melancholic folk song in A Minor set at the actual tempo (70 bpm) of the song. After hearing the song at the original slow speed, students are guided to open the Play Panel from the View Menu (see Figure 6), adjust the tempo slider at 130 bpm, and listen again to the same song. Due to the interactive effects of tempo × mode, students experience a happier mood at the second hearing (i.e., faster speed) despite the minor mode of the song. Then students are asked to write how can tempo changes evoke different emotions. Similarly, other explorations can be carried out with various elements or combinations of elements using short excerpts in MuseScore. For example, students may explore the emotions elicited by sequentially trying out soft and then loud dynamics on a single melody, and, hence, by repeating the process on a harmonized melody. Exploration may continue by adding a third parameter, i.e., by changing the instrument sounds in the given arrangement.
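To make the size of the tempo change in this activity concrete, the following minimal sketch computes the duration of one beat and of a short excerpt at the two tempi stated above (70 and 130 bpm). It is only an arithmetic illustration and does not use or represent MuseScore itself; the function names and the excerpt length of 32 beats are assumptions introduced here, not details of the study.

```python
def beat_seconds(bpm: float) -> float:
    """Return the duration of one beat in seconds at the given tempo."""
    if bpm <= 0:
        raise ValueError("tempo must be a positive number of beats per minute")
    return 60.0 / bpm


def playback_seconds(num_beats: int, bpm: float) -> float:
    """Return how long num_beats take to play at the given tempo."""
    return num_beats * beat_seconds(bpm)


ORIGINAL_BPM = 70    # tempo of the folk song as stated in the text
FASTER_BPM = 130     # tempo slider setting as stated in the text
EXAMPLE_BEATS = 32   # hypothetical excerpt length (e.g., 8 bars of 4/4)

if __name__ == "__main__":
    for bpm in (ORIGINAL_BPM, FASTER_BPM):
        print(
            f"{bpm} bpm: one beat lasts {beat_seconds(bpm):.3f} s, "
            f"{EXAMPLE_BEATS} beats last {playback_seconds(EXAMPLE_BEATS, bpm):.1f} s"
        )
    # Ratio of the two tempi: how many times faster the second hearing is.
    print(f"speed-up factor: {FASTER_BPM / ORIGINAL_BPM:.2f}")
```

Under these assumptions, the second hearing is almost twice as fast as the first, which helps explain why the change of mood is readily perceived even though the mode stays minor.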

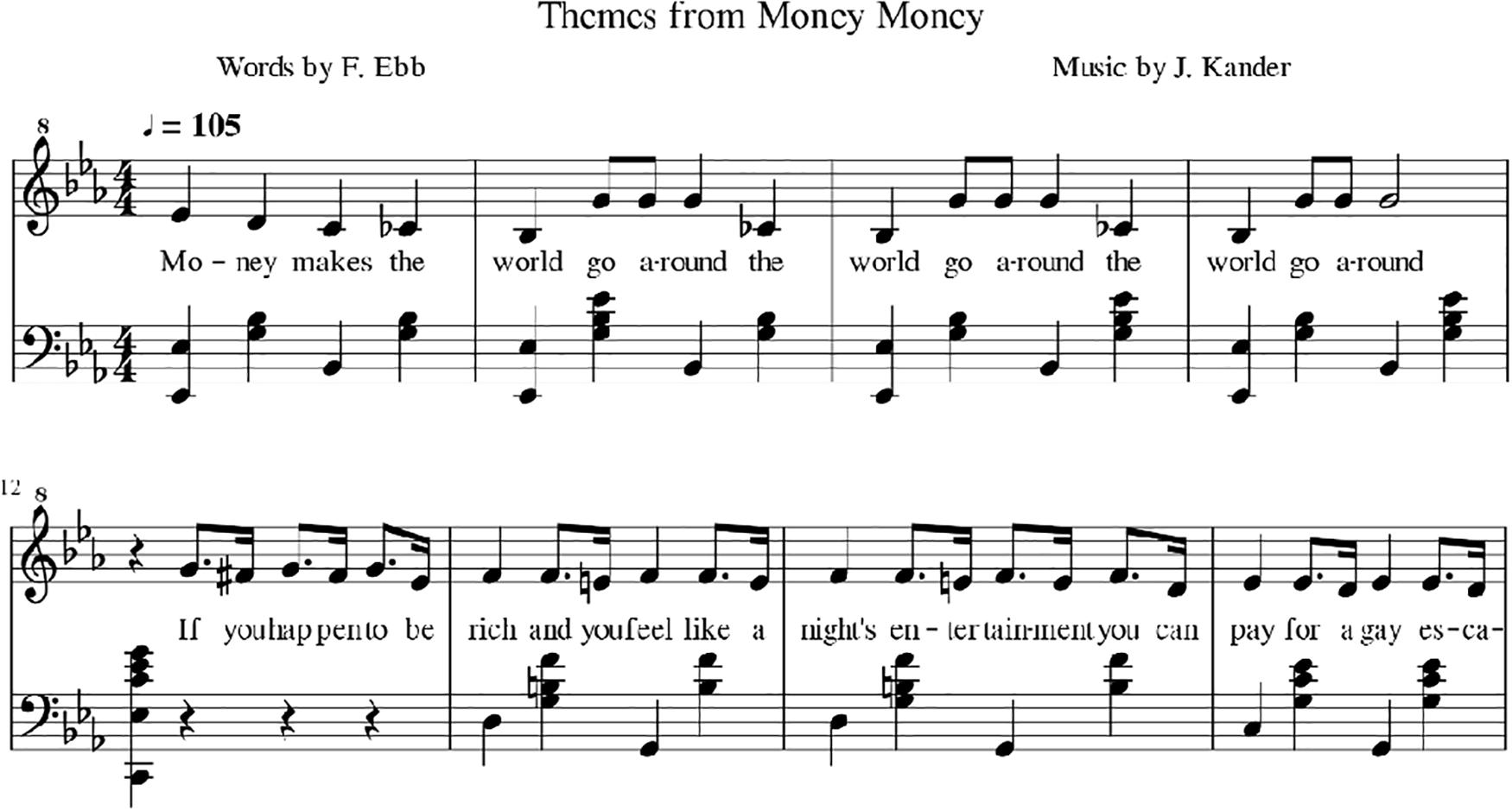

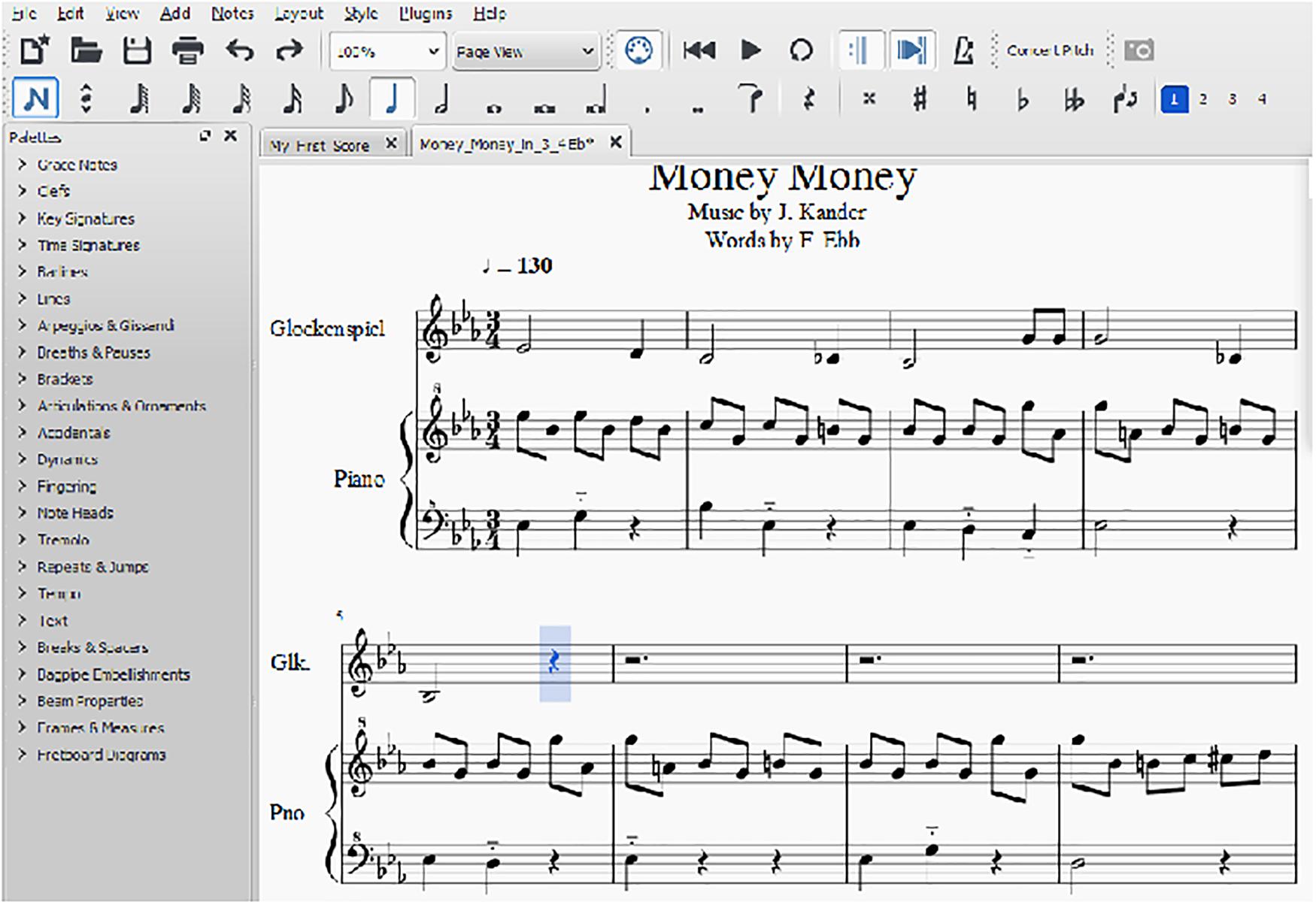

The second example shows how the software MuseScore can help in identifying changes in the melody (i.e., melodic rhythm, motion, and contour) and in relating these changes to emotions. Before using this notation program, students jot down their emotions while listening, without any visual stimuli, to a recording of the song “Money Money” [from the musical Cabaret] composed by John Kander (guideline 1), and afterward they sing or play the song. Subsequently, they view a short piano reduction (guideline 2) of the same song, played through the software’s notation view. The reduction consists of two sections. The first section is made up of an eight-measure verse, while the second section includes a three-measure transition leading to the refrain, which is repeated at a higher pitch and in a different tonality. Figure 7 shows the first four-measure phrase from each section.

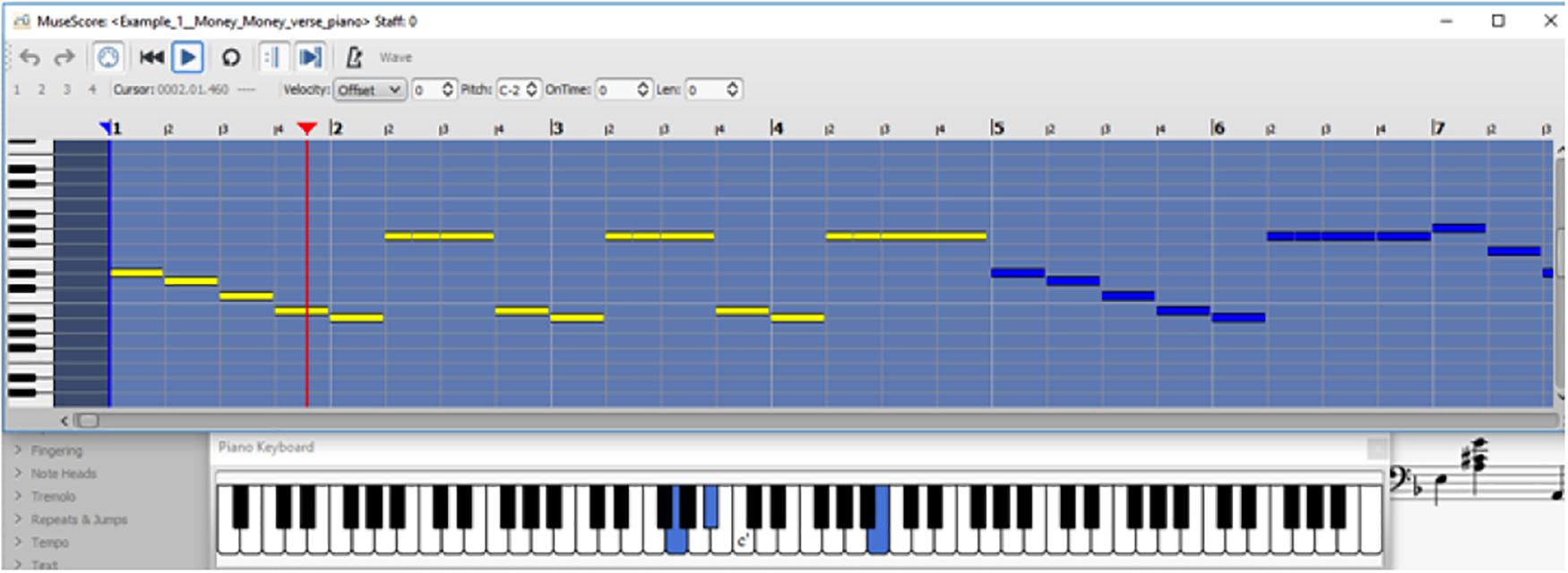

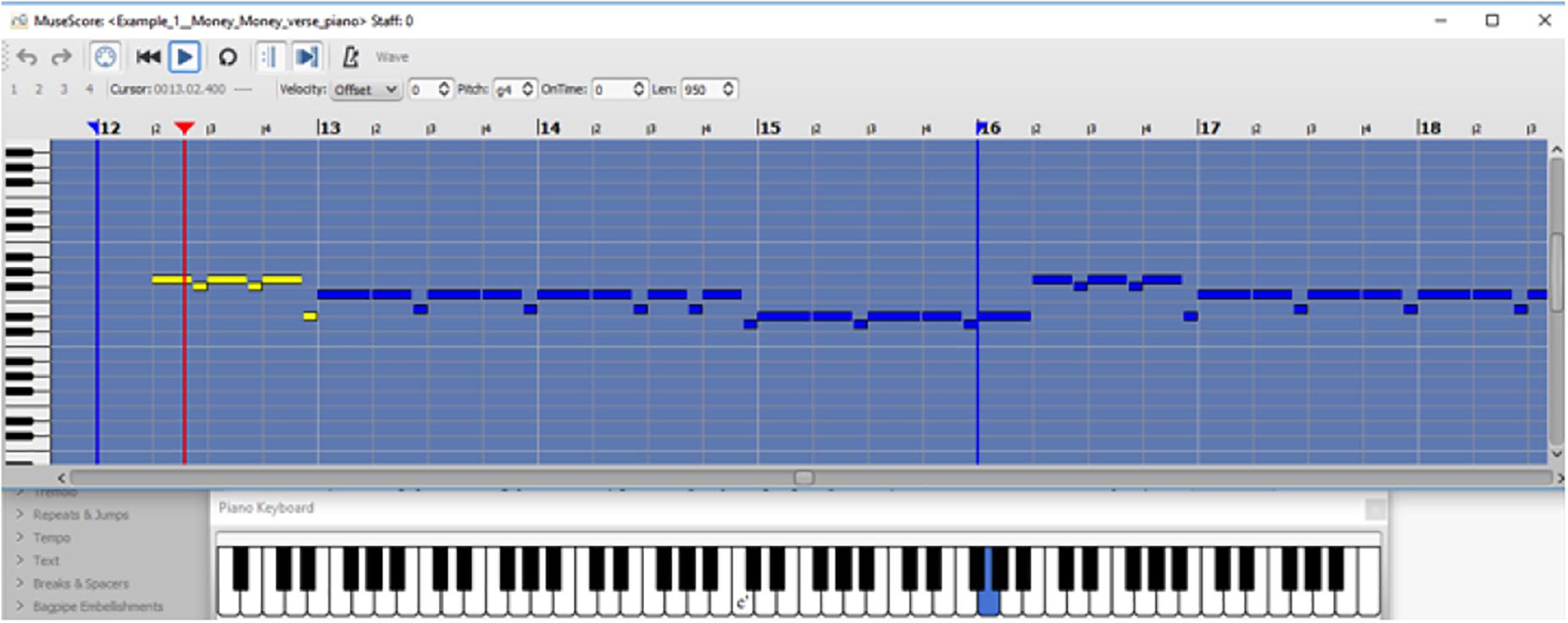

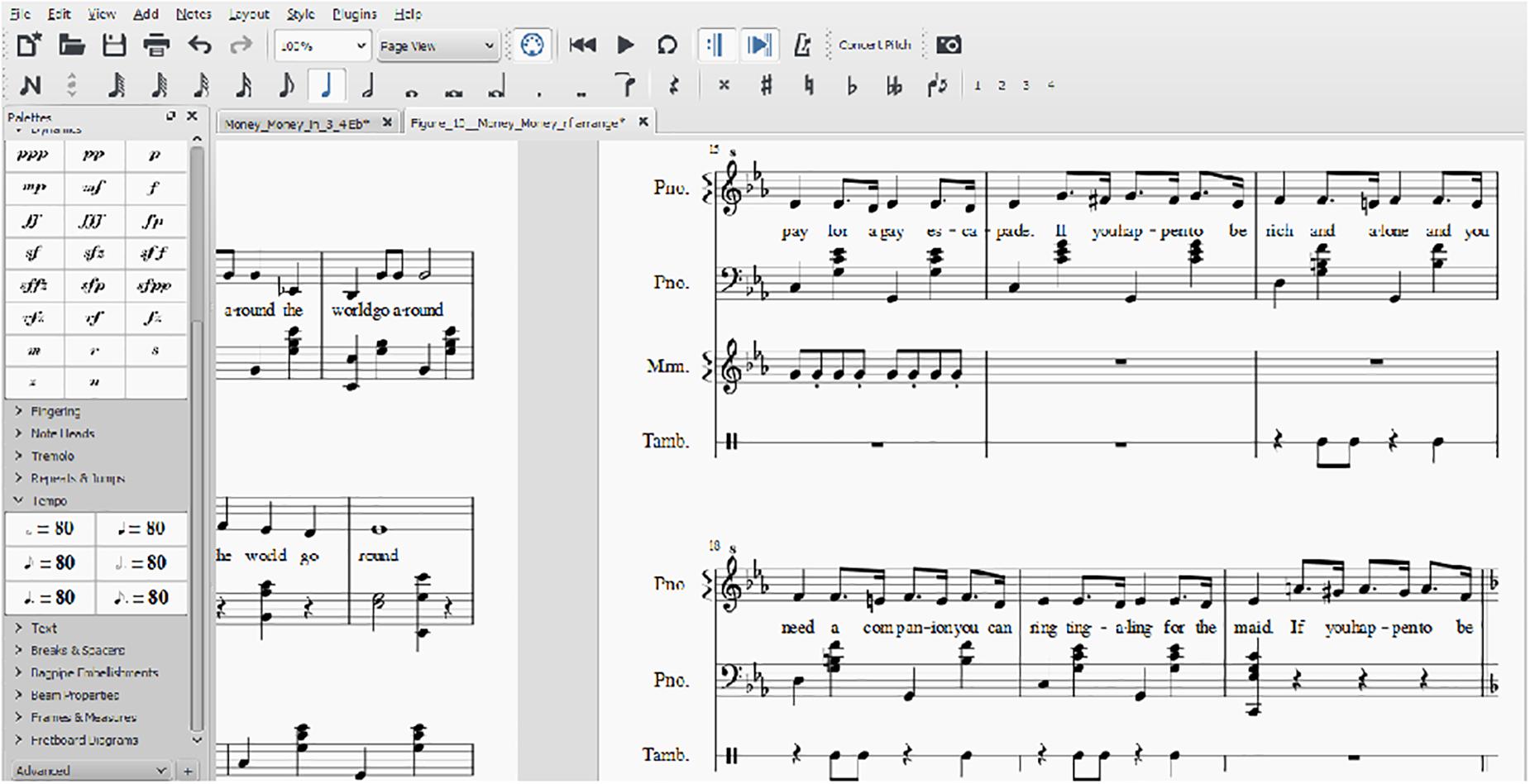

After viewing the reduced version, students recognize subtle emotional changes that may occur between the verse and refrain due to differences in the melodic shape and rhythm, and then identify the durations employed in the melody of each section (i.e., primarily quarter notes and some eighths in the verse and a dotted eighth followed by a sixteenth note figure in the refrain). In order to better help students visualize the changes in the melodic motion, shape, and rhythm, the teacher mutes the accompaniment from the Mixer window and switches to the piano-roll editor view, which is a graphical representation of the melody, as shown in Figure 8. In the verse, students can observe a five-note stepwise descending melodic motion followed by upward and downward leaps (i.e., major and minor sixth intervals) that form a “V”-shaped melody. In contrast, the melodic movement in the second section is very conjunct, consisting primarily of descending chromatic semitones (see Figure 9). The prominent dotted eighth-sixteenth note rhythmic figure in the refrain is repeated three times on the same pitch before being reiterated down a step and then again down a third, creating a terraced falling shape. Jeanneret and Britts (2007) argued that piano-roll representation can improve students’ attentiveness, support the identification of pitch patterns, and enable the development of students’ abilities to verbalize what they are hearing, including structural and textural aspects. Thus, students can better visualize, hear, feel, and understand how contrasts in the melody between the two musical sections, including different rhythmical activity, different melodic motion, and a shift in pitch, can elicit a change in mood (i.e., in this case a more cheerful or agitated feeling).
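The idea behind a piano-roll view, and the distinction between conjunct and disjunct motion discussed above, can be sketched in a few lines of code. The example below is a minimal illustration and not MuseScore's implementation: the function names are introduced here, notes are given as MIDI pitch numbers on a simple time grid, and the two pitch lists are hypothetical shapes (a stepwise descent followed by leaps of a sixth, and a chromatic descent), not transcriptions of the song.

```python
def piano_roll(notes):
    """Render notes as a text piano roll, highest pitch on the top row.

    notes: list of (midi_pitch, start, duration) tuples in grid steps.
    """
    if not notes:
        return ""
    high = max(pitch for pitch, _, _ in notes)
    low = min(pitch for pitch, _, _ in notes)
    width = max(start + dur for _, start, dur in notes)
    rows = []
    for pitch in range(high, low - 1, -1):
        cells = ["."] * width
        for p, start, dur in notes:
            if p == pitch:
                for t in range(start, start + dur):
                    cells[t] = "#"
        rows.append(f"{pitch:3d} " + "".join(cells))
    return "\n".join(rows)


def interval_labels(pitches, max_step=2):
    """Label each melodic interval as 'repeat', 'step', or 'leap'.

    Intervals of up to max_step semitones count as steps (conjunct motion);
    anything larger counts as a leap (disjunct motion).
    """
    labels = []
    for a, b in zip(pitches, pitches[1:]):
        size = abs(b - a)
        if size == 0:
            labels.append("repeat")
        elif size <= max_step:
            labels.append("step")
        else:
            labels.append("leap")
    return labels


# Hypothetical shapes for illustration only (not the actual song pitches).
DESCENT_THEN_LEAPS = [67, 65, 64, 62, 60, 69, 61]  # steps, then two sixths
CHROMATIC_DESCENT = [72, 71, 70, 69]               # semitone steps only

if __name__ == "__main__":
    grid = [(p, i, 1) for i, p in enumerate(DESCENT_THEN_LEAPS)]
    print(piano_roll(grid))
    print(interval_labels(DESCENT_THEN_LEAPS))
    print(interval_labels(CHROMATIC_DESCENT))
```

Printed this way, the first shape shows a falling staircase followed by a wide jump up and back down, whereas the chromatic shape is a single unbroken diagonal, which mirrors the visual contrast that students are asked to observe between the two sections.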

Underneath the piano-roll views in Figures 8, 9, a piano keyboard view is shown in which the corresponding piano keys are highlighted as the melodic theme is played. This representation can be used additionally but not simultaneously to support an understanding of some melodic intervals or as a simulator for learning to play the tunes on keyboard instruments.

Apart from enabling visualization and identification of musical materials and emotions, the software can further enhance cognitive and emotional understanding through creative tasks. The authors provide an example of a semi-completed task (guideline 3) aiming to help students understand and feel how changes in rhythm, meter, and accompaniment influence emotions. In this exercise, students can work in dyads to complete a different arrangement of the previously studied song by re-writing the melodic theme of the verse in 3/4 time instead of 4/4. Students are provided with a MuseScore file (see Figure 10) that contains a new accompaniment for the verse, namely a variation of the theme in eighth notes with a bass line, while the refrain remains unchanged in 4/4 time.

Students also have at their disposal two types of files: (a) a MuseScore file of the previously studied example in 4/4 from which they can copy the melody notes, and (b) an audio recording file or a link to a video of a performance for hearing the song in triple time. The melody in 3/4 time consists of longer notes, i.e., half notes followed by quarter notes, and therefore elicits a more relaxed or lyrical feeling, as opposed to the rigid quarter-note verse melody and the jovial refrain in quadruple time. Thus, this arrangement reinforces the juxtaposition of the two sections (verse-refrain) by creating greater contrasts in mood and also in musical materials, including different accompanying rhythmic patterns (an eighth-note countermelody vs. the “oom-pah” pattern), changes in meter (triple vs. quadruple), and different melodic figures. An optional extension to this activity may include exploration of other musical elements that create contrasts, while at the same time familiarizing students with various functions of the software. These may include applying different dynamics and tempi in the two sections (from the Palette) or creating a thicker texture by adding a rhythmic ostinato or a vocal pedal-note melody in the refrain, as shown in Figure 11.

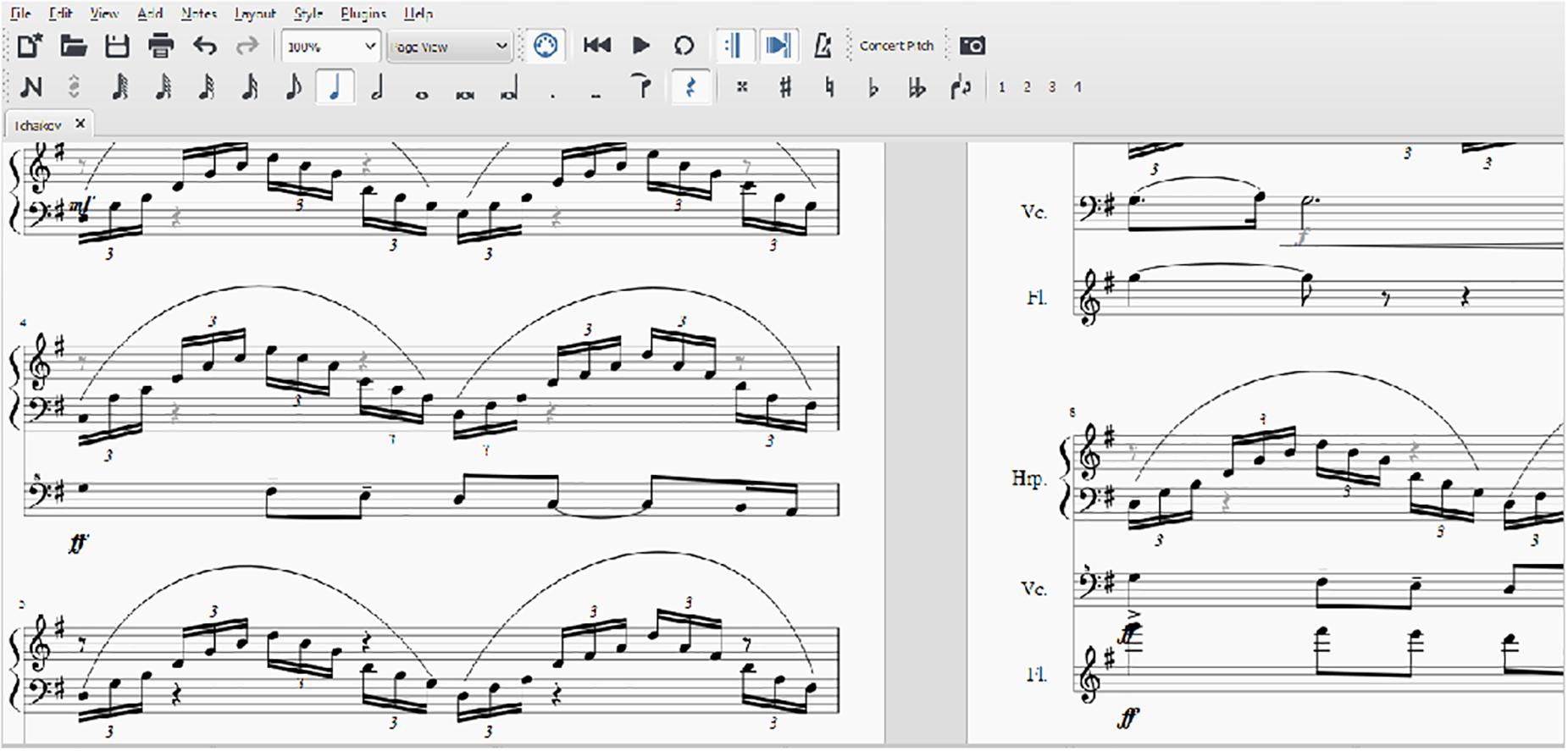

The following example is an excerpt from Tchaikovsky’s Pas de Deux, Intrada from the Nutcracker ballet (see Figure 12). By featuring another accompaniment pattern, the excerpt further demonstrates how different types of accompaniment induce different emotions (guideline 2). Moreover, this reduction highlights three timbres (harp, cellos, and flute), high and low registers, and two types of melodic motion, which also evoke different feelings. The harp accompaniment opens the excerpt (as in the original piece) with arpeggiated sixteenth-note triplets that form an arch-shaped pattern (shown in Figure 12), while the cello and later the flute join in with a descending-scale melody that forms repeated falling line shapes. Although the sixteenth-note triplets create busy rhythmic activity, the slow tempo, the harp sound, and the arpeggiated motion create a romantic or dreamy mood.

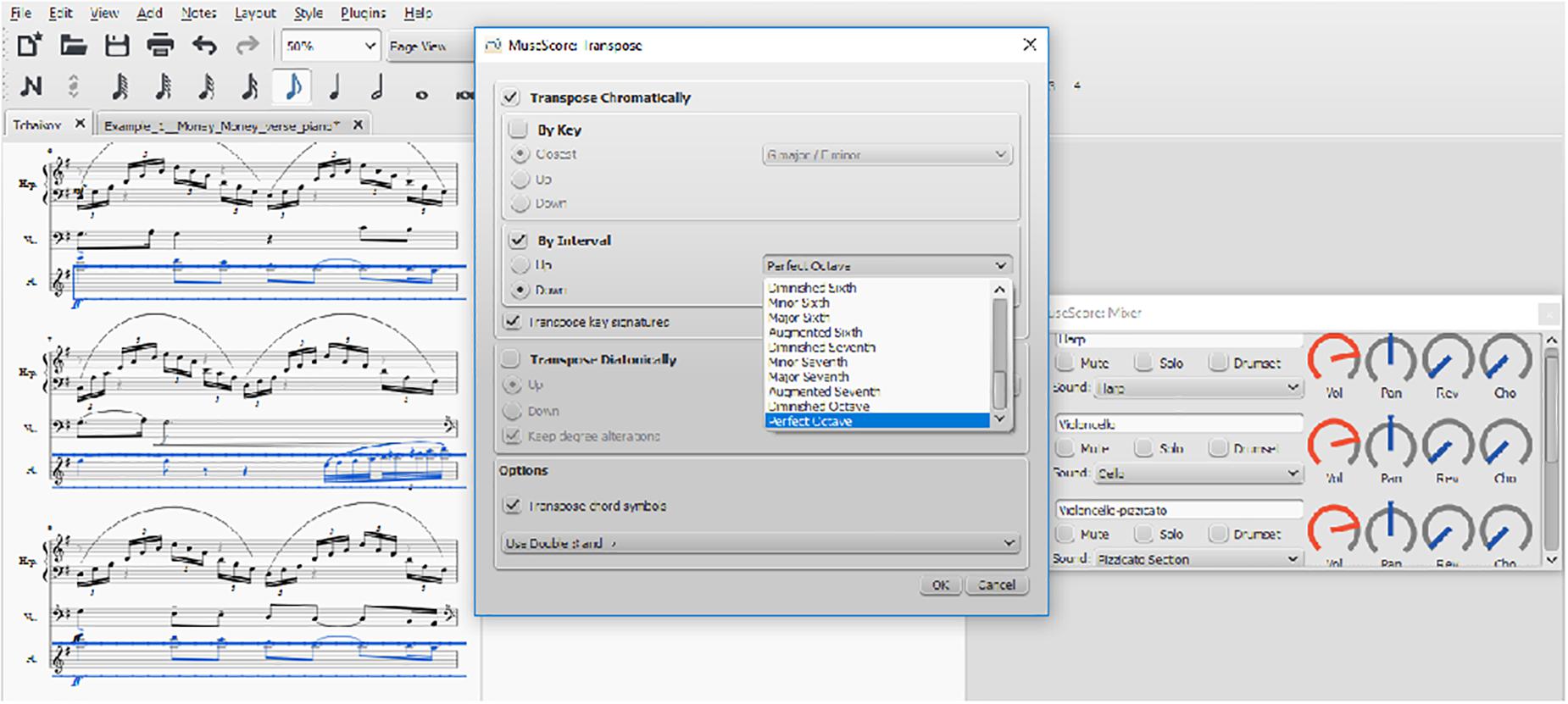

In addition to identifying specific musical elements with the above file, students can also experiment with them using the same file (guideline 3). For example, they can shift registers or change the sounds of melodic instruments and experience how these changes may affect their feelings. Specifically, using the Transpose window, students may easily move a selected passage up or down by a specified interval, as shown in Figure 13. Furthermore, they can explore sounds for a particular instrument line through its “Sound” drop-down menu in the Mixer window (shown on the right-hand side of Figure 13).
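
To make the register shift concrete, the following minimal Python sketch shows what moving a passage by a fixed interval amounts to when notes are represented as MIDI pitch numbers. It is an illustration only and does not use MuseScore or any of its interfaces; the function names and the sample passage are hypothetical, and the sketch ignores enharmonic spelling and key signatures, which a notation program would also need to handle.

```python
# Hypothetical illustration: notes are MIDI pitch numbers (60 = middle C).
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def transpose(pitches, semitones):
    """Shift every MIDI pitch by the same number of semitones."""
    shifted = [p + semitones for p in pitches]
    if any(p < 0 or p > 127 for p in shifted):
        raise ValueError("transposition leaves the MIDI pitch range 0-127")
    return shifted


def name(pitch):
    """Return a pitch name with octave, using the convention MIDI 60 = C4."""
    return f"{NOTE_NAMES[pitch % 12]}{pitch // 12 - 1}"


# A descending scale fragment invented for illustration (not taken from the score).
passage = [79, 77, 76, 74, 72]            # G5 F5 E5 D5 C5
octave_down = transpose(passage, -12)     # same contour, one octave lower
print([name(p) for p in passage])
print([name(p) for p in octave_down])
```

Because every note moves by the same amount, the melodic shape is preserved and only the register changes, which is the contrast students are asked to listen for.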

However, since the example presented here is an excerpt, containing only the theme of the piece, it cannot convey the increasingly dramatic character of the music as the work progresses. Consequently, an animated listening map (guideline 2) can provide a better understanding of the musical work’s structure and of how musical materials are used to evoke emotions as the music evolves, including changes in dynamics, mode and tonality, instrument sounds, pitch, and register.

The goal of simplifying and reducing original musical pieces is to help students zoom in on specific elements and constructs for the purpose of understanding and learning to manipulate them. However, the procedure of eliminating musical materials from large-scale works and studying or exploring them independently entails the danger of oversimplification and fragmentation. Therefore, while it is helpful to work with excerpts and reductions using the software, zooming out to the authentic musical pieces is also necessary in order to better relate the musical devices studied to the works from which they were extracted, and to experience the full breadth of emotions and character of the music.

At the end of the unit, students should be able to create a short composition applying the knowledge, skills, and experiences acquired (guideline 5). To save time and set some limits, students can work with a template file (guideline 4) that contains the number of measures, key and time signatures, and instrument lines with selected timbres, although any of these settings can be changed in the process. At the beginning of the composition task, students must decide what emotion or mood they wish to express or convey to the audience through their music, and how they will use musical elements and building blocks to achieve their target, including, for example, major/minor mode, melodic movement, dynamics, tempo, timbre/sounds, motives, ostinato, and pedal point.

Even though the approach presented here using MIDI technology has many advantages, teachers must also be aware of some limitations of this technology in order to use it more effectively in teaching. Perhaps the biggest disadvantage of MIDI is that its instruments do not sound as realistic as acoustic instruments, because the technology lacks certain natural elements of acoustic performance, such as strumming a guitar, bowing a violin, or blowing air through a wind instrument. Furthermore, the fact that all instruments play with great precision results in an unnatural feeling that is not encountered in live orchestral settings. Due to these constraints, music played by MIDI instruments sounds less expressive than music performed by humans using real instruments.

Despite the aforementioned weaknesses of MIDI technology, musical concepts and emotions can be expressed and understood using music notation software, particularly MuseScore. To achieve better results when creating examples or designing activities, apart from using good sound cards and sound fonts, teachers should keep some tips in mind and encourage students to follow them. Concerning instrument sounds, percussion and keyboard instruments have good or realistic timbres. Instruments that sound unnatural include solo brass and strings, although orchestral legato strings may sound acceptable in an orchestration with appropriate balance and mixing. In addition, students should be guided to explore synthetic or electronic sounds and to experiment with the Palette, Synthesizer, and Mixer windows, adding dynamics and articulation and adjusting volume, effects (echo, reverb), and tempo to achieve a good-sounding and balanced orchestration. Furthermore, changing playback devices may cause instrument timbres to sound very different; exporting final products to an audio format, such as MP3, is therefore another option.

Empirical Evidence

The derived design methodology was tested and revised in a three-cycle design-based research study that aimed to determine the effectiveness of the five proposed music guidelines through the implementation of the five lesson designs in control and experimental secondary education classrooms with 516 participating students (Macrides and Angeli, 2018a, b). The aim of the design-based research was to reach a robust methodology for designing technology-enhanced learning within the context of listening and composition activities in music that would include the affective domain.

The three cycles spanned one school year, while the implementation of the lesson designs and the data collection procedures for each cycle were completed over a period of 7 weeks during the regular weekly 40-min music class meeting. Prior to the application of the lesson designs, a pre-test and a questionnaire concerning student demographics and musical background information were administered. The first two lessons focused on listening to and analyzing two excerpts using animated listening maps. Lessons three and four focused on the teaching of musical materials and constructs using the notation program MuseScore while engaging students in two short composition exercises. Lesson five consisted of a composition task involving the use of musical materials presented in all the previous lessons and was completed in two meetings.

The proposed guidelines and methodology were tested by assessing students’ learning gain on musical knowledge through a post-test that was administered at the end of each lesson and a delayed test given a week later. Furthermore, music composition products were collected at the end of the final lesson and evaluated according to the Composition Assessment Form. The results presented herein concern the composition task. This investigation sought to determine if there were any statistically significant differences in the musical quality, coherence, originality and emotional expressivity of student compositions between the experimental and control groups.

Method

Participants

The participants in the study were secondary school students (N = 516) who were enrolled in Grade 8 (N = 114), Grade 9 (N = 366), and Grade 10 (N = 36). Students were between 14 and 16 years of age and were selected from five schools, one private and four public, in three different towns. In the first cycle, there were 50 participants, all of whom were enrolled at School A in Grade 9. In the second cycle, the total number of participants was 148, of whom 72 were enrolled at School B in Grade 9 and 76 at School C in Grade 9 (N = 40) and Grade 10 (N = 36). The total number of participants in the third cycle was 318, including 228 students enrolled at School D in Grades 8 (N = 90) and 9 (N = 138) and 90 students at School E in Grades 8 (N = 24) and 9 (N = 66).
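
The breakdown above can be cross-checked with a short tally. The following Python sketch is offered only as a reading aid: the enrolment figures are those reported in the paragraph, while the data layout and the helper name `totals_by` are assumptions introduced for illustration.

```python
# (cycle, school, grade) -> number of students, as reported in the text.
ENROLMENT = {
    (1, "A", 9): 50,
    (2, "B", 9): 72,
    (2, "C", 9): 40,
    (2, "C", 10): 36,
    (3, "D", 8): 90,
    (3, "D", 9): 138,
    (3, "E", 8): 24,
    (3, "E", 9): 66,
}


def totals_by(index):
    """Sum enrolment over one key position (0 = cycle, 1 = school, 2 = grade)."""
    totals = {}
    for key, count in ENROLMENT.items():
        totals[key[index]] = totals.get(key[index], 0) + count
    return totals


by_cycle = totals_by(0)
by_grade = totals_by(2)
grand_total = sum(ENROLMENT.values())

print("per cycle:", by_cycle)
print("per grade:", by_grade)
print("all participants:", grand_total)
```

Summing by cycle and by grade reproduces the per-cycle and per-grade figures given in the text, and both add up to the 516 participants.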

Cycle 2 had the highest percentage of students studying music privately (30%) and the highest percentage of students continuing their private music instruction after their fourth year of study (14%). Cycle 3 had the second highest percentage of students studying music outside school (28.5%); however, only 6% continued after the fourth year. In cycle 1, only 12.5% received private music lessons, most of whom dropped out before their fourth year.

Students, who belonged to intact classes, were randomly divided into two groups to form the control and experimental groups for each cycle. There were 26 intact classes, 11 of which were assigned as control and 16 as experimental. The number of students per class in public schools usually ranged from 22 to 25, whereas in the private school the number of students per class was usually 15. The teaching in the two groups was equivalent in terms of teaching procedures and materials. The difference in approach between the control and experimental groups was the use of technological tools during the implementation of some activities for the experimental group.

Due to the length of the study and the large amount of data that had to be collected and analyzed, only the compositions of 191 students (control group, N = 86; experimental group, N = 105), or about 40% of the student compositions, have been graded, and their results are presented in this paper. These students came from three schools and belonged to nine intact control and experimental classes, three of which were in cycle 2 and six in cycle 3.

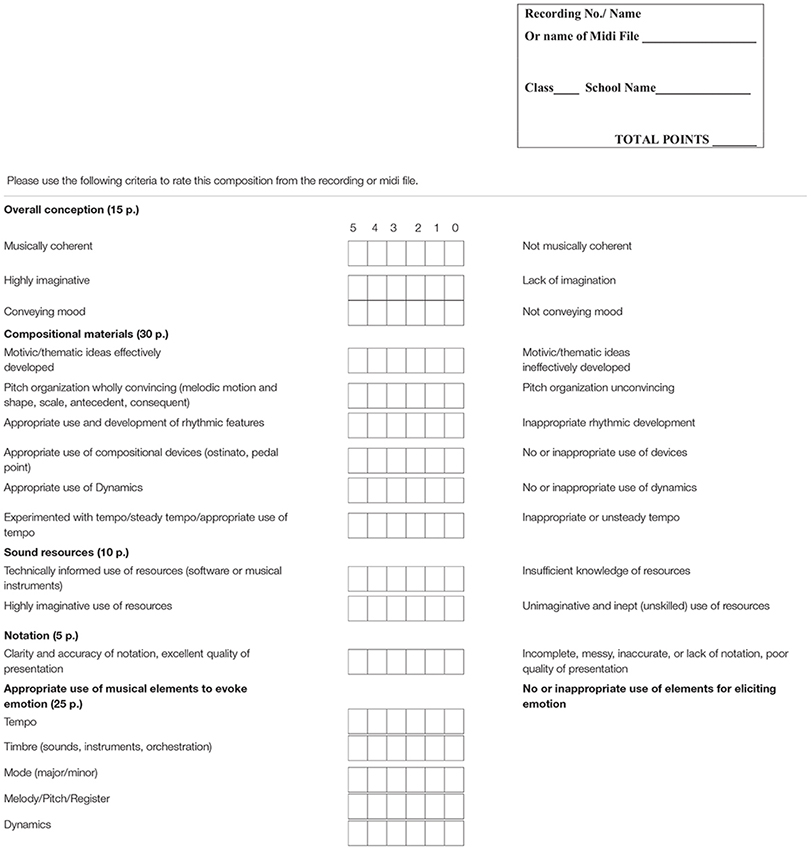

Composition Assessment Form

The Composition Assessment Form (CAF), as shown in the Appendix, was created by the researchers to assess students’ compositional tasks. This scoring form was based on a similar instrument created by MacDonald et al. (2006) in a tertiary education music department in Scotland. The criteria in the MacDonald et al. evaluation form included the use of compositional devices and musical materials (such as motivic/thematic development, pitch/melodic organization, rhythmic development), the technically informed and creative use of sound sources, the clarity of notation and quality of presentation, and the overall conception (musical coherence and musical imagination). The CAF used in this study includes most of the above criteria and some additional musical concepts and materials that were presented in the lesson designs, as well as criteria for evaluating the expression or induction of musical emotions or mood.

The CAF consisted of seventeen items, each rated on a scale from 0 to 5. Thus, the maximum score a student composition could receive was 85 marks. The criteria were grouped in five sub-categories, namely, overall conception (OC), compositional materials (CM), sound resources (SR), notation (N), and emotions evoked (EE).

The OC sub-score was derived from the sum of three items, i.e., musical coherence, musical imagination, and emotional expression/conveying mood. The CM sub-score was made up of six elements, i.e., motivic/thematic development, pitch organization, rhythmic development, compositional devices (such as ostinato and pedal point), dynamics, and tempo. The score for the SR sub-category was based on two criteria, i.e., technical knowledge and imaginative/skilled use of resources (musical instruments or software), while the sub-score for N was based on just one item, that is, clarity and accuracy of notation. The final sub-score concerned the use of musical materials to evoke emotion (EE) and was formed from the total score on five criteria, i.e., tempo, timbre (sounds, instruments), mode, melody, and dynamics.
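
The scoring arithmetic described above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the researchers' actual scoring procedure: the item labels are abbreviated from the text, the function name `score_composition` and the data layout are hypothetical, and the example ratings are invented.

```python
# Sub-categories and items of the CAF as listed in the text (17 items in total).
CAF_ITEMS = {
    "OC": ["musical coherence", "musical imagination", "emotional expression"],
    "CM": ["motivic/thematic development", "pitch organization",
           "rhythmic development", "compositional devices", "dynamics", "tempo"],
    "SR": ["technical knowledge", "imaginative/skilled use of resources"],
    "N": ["clarity and accuracy of notation"],
    "EE": ["tempo", "timbre", "mode", "melody", "dynamics"],
}
MAX_PER_ITEM = 5  # each item is rated on a scale from 0 to 5
MAX_TOTAL = MAX_PER_ITEM * sum(len(items) for items in CAF_ITEMS.values())


def score_composition(ratings):
    """Return (sub_scores, total) for one composition.

    `ratings` maps each sub-category code to a dict of {item: rating}.
    """
    sub_scores = {}
    for category, items in CAF_ITEMS.items():
        given = ratings[category]
        if set(given) != set(items):
            raise ValueError(f"{category}: expected ratings for {items}")
        for item, value in given.items():
            if not 0 <= value <= MAX_PER_ITEM:
                raise ValueError(f"{category}/{item}: rating {value} is out of range")
        sub_scores[category] = sum(given.values())
    return sub_scores, sum(sub_scores.values())


# Invented example: a composition that receives a rating of 3 on every item.
example = {cat: {item: 3 for item in items} for cat, items in CAF_ITEMS.items()}
sub_scores, total = score_composition(example)
print(sub_scores, total, "out of", MAX_TOTAL)
```

Keeping the items grouped by sub-category makes the unequal weighting visible: CM and EE together account for eleven of the seventeen items, whereas N contributes a single item.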

Procedures for Lesson Five: Music Composition

Students of both groups were given a composition worksheet which included the requirements of the assignment, a table named “Diagram of composition,” and a three-part system. The composition task required creating a melody line and two simple accompanying lines, including a rhythmic and a melodic accompaniment, eight measures in length, that would convey an emotion or mood. Students were expected to effectively manipulate musical elements or concepts that had been presented in Lessons 1−4 in order to create an expressive musical sentence. These materials and constructs included characteristics of melody, melodic motion, pitch, major/minor mode, dynamics, tempo, motive and motivic development, melodic and rhythmic ostinato, and pedal note.