- University of Maine, Orono, ME, United States

To address gaps in knowledge and to tackle complex social–ecological problems, scientific research is moving toward studies that integrate multiple disciplines and ways of knowing to explore all parts of a system. Yet, how these efforts are being measured and how they are deemed successful is an up-and-coming and pertinent conversation within interdisciplinary research spheres. Using a grounded theory approach, this study addresses how members of a sustainability science-focused team at a Northeastern U.S. university funded by a large National Science Foundation (NSF) grant contend with deeply normative dimensions of interdisciplinary research team success. Based on semi-structured interviews (N = 24) with researchers (e.g., faculty and graduate students) involved in this expansive, interdisciplinary team, this study uses participants’ narrative accounts to progress our understanding of success on sustainability science teams and addresses the tensions arising between differing visions of success present within the current literature and perpetuated by U.S. funding agencies like NSF. Study findings reveal that team members are forming definitions of interdisciplinary success that both align with, and depart from, those appearing in the literature. More specifically, some respondents’ notions of team success appear to mirror currently recognized outcomes in traditional academic settings (i.e., purpose driven outcomes—citations, receipt of grant funding, etc.). At the same time, just as many other respondents describe success as involving elements of collaborative research not traditionally acknowledged as forms of “success” in their own right (i.e., capacity building processes and outcomes—relationship formation, deep understandings of distinct epistemologies, etc.).
Study results contribute to more open and informed discussions about how we gauge success within sustainability science collaborations, forming a foundation for appreciation and exploration of the disciplinary and normative dimensions of this work.

Introduction

Forms of interdisciplinary collaboration have grown in recent years as funding agencies, universities, and research units recognize the need to fill gaps in knowledge and to tackle complex societal problems that cannot be adequately addressed by single disciplines alone. As this mode of research organization is increasingly being used to investigate the dynamic and interdependent needs of science and society, a growing body of literature is focused on the processes of team success. Studies that focus on the processes of these interdisciplinary teams present understandings of the capacities, contexts, and resources that collaborators draw upon in their collaborative interactions that contribute to interdisciplinary team success. Scholars in the “science of team science” field, for example, have developed conceptual frameworks, establishing classifications of contextual influences that serve as indicators of the success of collaborative endeavors as well as practical parameters to measure team process and integration (Stokols et al., 2003; Wagner et al., 2011; Armstrong and Jackson-Smith, 2013). Likewise, literature from the field of communication has focused on processes, structures, and outcomes associated with interdisciplinary teams (Thompson, 2007, 2009; Fraser and Schalley, 2009; McGreavy et al., 2013, 2015). Taking a systems approach, these researchers assess how patterns of interaction can influence the success of these teams, identifying patterns of communication behavior and the quality of interpersonal relationships that affect how group goals are accomplished (Thompson, 2009; McGreavy et al., 2015). 
At the same time, however, several scholars studying these teams contend that current definitions of research success are narrowly limited to outputs that are easy to measure (i.e., publications, citation rates) (Sonnenwald, 2007; Stokols et al., 2008a,b; Cheruvelil et al., 2014; Goring et al., 2014; Bark et al., 2016), thus leaving process-orientated measures—often assessing interpersonal relationships—out of the conversation (Wagner et al., 2011). In turn, calls for new definitions of research success have been made, with scholars pushing the boundaries of defining research success, including a regard for the collaborative process (Cheruvelil et al., 2014).

Spurred by the recent calls for expanded measures of success, and the apparent tension between differing measures, this research asks, “How do collaborators themselves construct and pursue the idea of success?” The following study examines how collaborators define success, providing evidence of how collaborators contend with deeply normative dimensions of interdisciplinary success, and providing insight into how scientists and research agencies might shape research agendas and their relationship to society moving forward. Based on semi-structured interviews with sustainability scientists from an interdisciplinary, social–ecological systems-driven, National Science Foundation (NSF)-funded grant in the Northeast U.S., this study uses participants’ narrative accounts to progress our understanding of success on sustainability science teams and address the tensions arising between differing visions of success. In so doing, we propose not simply to identify rigid formulations of success and put them into boxes; rather, we intend to create a basis for a “deeper dialog among sustainability scientists” (Miller, 2012). That is, we intend study results to contribute to more open and informed discussions about how we gauge success within sustainability science collaborations, forming a foundation for appreciation and exploration of the disciplinary and normative dimensions of this work.

Literature Review

Many terms exist to describe collaborative research, including multidisciplinary, interdisciplinary, and transdisciplinary. These terms distinguish between levels of working with and across diverse expertise and disciplinary assumptions [see Stock and Burton (2011) for contextual information on this terminology]. This paper uses the terms “interdisciplinary” and “collaborative,” often interchangeably, when discussing the research. For the present purpose, we define interdisciplinary and collaborative research as an approach that involves a group made up of researchers from different disciplines or fields who are working together to integrate some aspect(s) of their own disciplinary approach and method in order to jointly tackle a research problem as a team. The term IDR is used throughout the manuscript to denote interdisciplinary research teams.

As society faces key issues that increasingly resemble “wicked problems” (Kreuter et al., 2004), or tensions within complex systems in which each solution causes new and often unforeseen consequences, a field like sustainability science, with its commitment to the continued pursuit of solutions to complex problems, becomes relevant and useful. Sustainability science, as a term, was in part established by Kates et al.’s (2001) momentous paper in Science, which launched a conceptual and analytical framework of sustainability science (Kates and Clark, 1999; McGreavy and Hart, 2017). For the context of this study, we define sustainability science as a process of inquiry that works to engage multiple stakeholders and their varying patterns of thought, opinion, approach, and identity in order to foster a space that propagates knowledge creation designed to inform and support action (Lindenfeld et al., 2012; McGreavy et al., 2013).

Understandings of success may diverge among the key players within a given sustainability science collaborative team. While no known research has directly considered any of the following examples, they are nonetheless suggestive of ways in which visions of success may differ between those involved in these interdisciplinary teams. Consider, for example, the following: several researchers are working together on a collaborative team, tasked with examining an emergent issue in a coastal region. Researcher #1 considers the pragmatic outcomes of a new coastal management practice, such as improvements in leasing policies, as “successful.” On the other hand, Researcher #2 values knowledge generation goals and publication outputs. All the while, Researcher #3, though valuing and working toward the measures of success mentioned above, is also concerned with the nature of the process needed to achieve these goals. Which researcher is correct in his/her vision of success? Is each vision of success equally useful on its own terms, and/or is one version “better” or “less” than the other? Who decides? Further, if funding agencies are involved, how do these answers affect resource allocation? The following section begins to explore these areas by reviewing how success on IDR teams has been characterized in the literature and then suggests how these ideas contribute to the present study.

Process-Orientated Views of IDR Teams

Science of Team Science

Largely in response to concerns about the value and effectiveness of public- and private-sector investments in team-based science, the “science of team science” field has emerged in recent years (Bennett et al., 2010). Incorporating a blend of conceptual and methodological strategies, the science of team science field focuses on expanding our understanding and enhancing the outcomes of large-scale collaborative research programs through an emphasis on the antecedent, process, and outcome factors involved in these efforts (Stokols et al., 2008a; Bennett et al., 2010; Armstrong and Jackson-Smith, 2013). Recognizing the “readiness” of a team to succeed (Hall et al., 2008), antecedent factors reflect user-centered factors such as values, expectations, and prior experience, as well as structural and institutional contexts (Wagner et al., 2011; Armstrong and Jackson-Smith, 2013). Process factors include capacity building actions, whether intentional or unintentional, which facilitate or improve interpersonal or intrapersonal relationships among members who are expected to collaborate (Stokols et al., 2008a,b). Outcomes of team science processes can be immaterial, such as mutual understanding and feelings of trust, or include quantifiable indicators of scientific productivity, such as publications and successful external granting (Armstrong and Jackson-Smith, 2013).

Recent studies also investigate the facilitating and constraining factors on collaborative teams, establishing a classification of contextual influences that can determine the success of collaborative endeavors as well as be used as practical parameters to measure team process and integration. For instance, in a formative review of empirical evidence for contextual determinants of team performance across varying areas of team science research literature, Stokols et al. (2008b) present a six-pronged success typology, including: intrapersonal, interpersonal, organizational/institutional, physical/environmental, technologic, and sociopolitical factors. Additionally, Cheruvelil et al. (2014), drawing from the authors’ collective experience on such teams, and the science of team science literature (Stokols et al., 2008a), describe the characteristics of “high performing” teams and strategies for maintaining such teams. They describe diversity (e.g., ethnicity, gender, culture, career stage, points of view, disciplinary affiliation); interpersonal skills (e.g., social sensitivity, emotional engagement); team functioning (e.g., creativity, conflict resolution), and team communication (e.g., talking and listening) as the characteristics of these successful teams.

Systems View of Collaborative Teams

It is important to note that the overall landscape and boundaries of the science of team science field are challenging to determine (Syme, 2008; Bennett et al., 2010) and that not all research endeavors that examine team processes identify under the auspices of this field. Other recent studies have also investigated and identified processes that lead to success in sustainability science collaborations (Fraser and Schalley, 2009), but have taken a systems approach. Research in this tradition has found that success is related to the patterns of communication behavior and the quality of the relationships formed as a product of the teams (Fraser and Schalley, 2009; Thompson, 2009; McGreavy et al., 2015). In a formative, ethnographic study of a large interdisciplinary team, Thompson (2009) reports that interactions described as “collective communication competencies” (CCC) on the team level influence the collaborative endeavor and its movement toward objectives. Challenging statements in a positive manner, inviting opportunities for reflexive talk, demonstrating presence, and using humor are processes that influence the team’s ability to communicate effectively. Conversely, acts of blatant boredom, intentional challenging of expertise, and sarcasm can compromise CCC (Thompson, 2009).

McGreavy et al. (2015) take this research a step further to identify important communication dimensions of sustainability science teams, when viewed as complex systems. These researchers explore how communication within sustainability science teams influences the results related to team learning and progress toward group goals. Building on the work completed by Thompson (2009), McGreavy et al. (2015) utilize a mixed methods approach, developing quantitative instruments to measure CCCs. Their results demonstrate that differing styles of decision making and communication competencies influence mutual understanding, inclusion of diverse ideas, motivations to engage, and progress toward sustainability related objectives.

The Call for Expansion

Beyond process approaches to IDR teams, scholars looking at the more commonly used rubrics of success have gone on to suggest that the measures of interdisciplinary success typically used to evaluate interdisciplinary teams remain a challenge (Hasan and Dawson, 2014; Balvanera et al., 2017). Several scholars contend that current definitions of research success are narrowly defined as outputs that are easy to quantify (Sonnenwald, 2007; Stokols et al., 2008a,b; Cheruvelil et al., 2014; Goring et al., 2014). One of the most conventional indicators of research success is bibliometrics (Bark et al., 2016). In essence, bibliometric methods utilize a quantitative approach in order to describe, evaluate, and monitor published research (Zupic and Čater, 2015). Traditional bibliometric measures include citation-based indicators such as co-authorship, citations, and co-citations (Wagner et al., 2011).

A limited body of research examines these mainstream measures of success, such as bibliometrics, within interdisciplinary collaborative settings and calls for expanded measures that focus specifically on the value of process. Goring et al. (2014) identify and problematize two traditional forms of success within academic research careers. They note that the number of grants secured and dollar amount awarded, and peer-reviewed publications, do not adequately reflect contributions of team members, arguing that collaborative team effort measurements need to “evolve to explicitly value all of the outcomes of successful interdisciplinary work” (Goring et al., 2014, p. 43). These broadened views of success within research scholarship include: creating broader impacts beyond traditional publication metrics, recognizing and rewarding administrative and mentoring duties, as well as communicating and sharing the knowledge created within these efforts to the general public.

Along these lines, in a seminal literature review on both quantitative and qualitative measurements of outputs of IDR teams, Wagner et al. (2011) find a need for more holistic metrics to measure IDR teams. They note that the current measures of success within IDR, which rely heavily on output measures, may offer an inaccurate assessment of IDR teams, as IDR practices are dynamic and encompass more than just the end products (Wagner et al., 2011). These scholars point toward integrated approaches to IDR measurement, linking “process” orientated approaches by utilizing tenets from the science of team science tradition with “output” measures (i.e., bibliometrics). Likewise, Cheruvelil et al. (2014) conclude their “high performing team” proposition (described above) by calling for new definitions of collaborative success—ones that promote, recognize, and value collaborative processes.

Summary: Making Sense of IDR Success

As the literature reviewed above has shown, the understanding of IDR team success is a central and relevant focus of much contemporary research; however, in many ways, what researchers mean by “success” remains black-boxed—that is, not sufficiently problematized. Current literature on the success of these teams reveals a tension between various attributes of success, including both product- and process-oriented outcomes, and what is traditionally valued in academic settings. While these studies allow us to understand the differing ways success can be viewed within the IDR team setting, we do not necessarily understand how those who are a part of these teams are making sense of the seemingly abstract notion of success. We couple this notion with the calls for expanded measures, driving our study toward better understanding how those involved in these collaborations choose to construct and pursue (possibly differing) visions of success. More specifically, we ask:

RQ1: How do collaborators form definitions and make sense of success on a large, sustainability science, interdisciplinary team?

Materials and Methods

Sampling and Recruitment

The sampling frame for this study included graduate students and faculty researchers at a mid-sized public university in New England currently involved in a large, 5-year, $20 million NSF-funded grant aimed at increasing research and development activities that will assist in the further growth of the aquaculture industry in this New England state. The authors of this study are affiliated with the team being studied and obtained Institutional Review Board (IRB) approval before embarking on the research. This research is part of a larger study conducted under the auspices of the grant, which involves a quantitative analysis of survey data.

The team studied is comprised of approximately 60 faculty and staff and 20 graduate students spread across more than 9 academic and research institutions. The team’s “architecture” includes four sub-groups or “themes” organized around specific aspects of the project, including (a) ecological and sociological carrying capacity, (b) aquaculture in a changing ecosystem, (c) innovations in aquaculture, and (d) human dimensions. Each theme includes members from varying academic disciplines, including: marine sciences, computing and information science, aquaculture biology, engineering, food science, chemistry, economics, anthropology, and communication.

Respondents included graduate students, including those pursuing MA, MS, and Ph.D. degrees, and faculty, including assistant, associate, full professors, and one post-doctorate, employed by a variety of institutions involved in the grant. Other respondents included two individuals involved in the management and facilitation of the grant, as these individuals had significant experience working as a part of these teams. Due to the team’s wide-ranging disciplinary affiliations, ranks, and institutional affiliations, a purposive sampling approach was used to ensure a representative sample on several dimensions (i.e., disciplinary affiliation, rank, institutional affiliation) (Welman and Kruger, 1999; Tracy, 2013). Two interviews of the 26 were removed due to the respondents not explicitly answering the questions pertaining to the present research, leaving 24 interviews to be used in the analysis.

Interviews

Following a grounded theory approach (Corbin and Strauss, 2008) to data collection, 26 in-depth, in-person, semi-structured interviews were conducted between June and November 2016. While our study was deemed exempt by our institution’s Institutional Review Board, we did provide “consent to participate” forms via email and in person before interviews. As is standard practice for many IRBs, these forms described the study, along with the risks and benefits of participation, and steps being taken to protect identities (e.g., the use of numbers to identify participants, rather than names). Interviews ranged from 24 min to an hour and a half, with an average length of 37 min. While interviews fluctuated in length and question order, all respondents were asked questions under three broad categories, which included: (1) identity as a researcher and as an interdisciplinary researcher; (2) perceptions of interdisciplinary work; (3) attribution of communication in interdisciplinary work [i.e., (how) does communication play a role in IDR research]. The interview protocol was developed on the basis of the academic literature, as well as the needs of the research team, per specifications written into the NSF grant under which this study received funding. The full extent of results from all three categories described above is not used within this paper, as this work is part of a broader study. That study included a survey, distributed to all team members prior to the qualitative interviews, that gathered information about team members’ communicative preferences and motivations for participating in large-scale, interdisciplinary teams, as well as individual-level and sociodemographic information (e.g., years at the university, knowledge of social–ecological systems research prior to joining the present team). The survey data results were developed into a technical report for use by the team.
Participants were made aware of the broader intentions of this study and told that interview data would be analyzed to provide both action-orientated and theoretical insights. For the purposes of this paper, we consider only responses under the category of “perceptions of interdisciplinary work,” specifically, responses related to respondent perceptions of success, including the question “what counts as success on interdisciplinary collaborations?” and narrative accounts in response to the prompt, “can you tell me a story of a time or experience when you felt successful on an IDR team?”

Analysis

Interviews were recorded and transcribed, then coded initially line-by-line. In the process, the first author recorded memos (Corbin and Strauss, 2008), giving form to emergent codes. NVivo qualitative data analysis software was used to keep track of and gather quotations within emergent codes. This work subscribed to validity measures consistent with grounded theory technique, including a high level of methodology and coding transparency, such as labeling and categorizing phenomena, grouping concepts at an abstract level and then moving to developing main categories and their sub-categories (Corbin and Strauss, 2008). This led to an extensive, iterative process of working closely with the data and the literature to pursue alternate justifications for data trends, while also working with the model in progress to develop categories by recording and comparing connections and divergence from the initial codes and recurrently refining the categories (Corbin and Strauss, 2008). Because the authors of this study were both observers and participants in the team (i.e., the first author as a graduate student research assistant and the second author as a participating faculty member), they were compelled to repeatedly negotiate their positionality while gathering and analyzing data, such as their lived experience of the research team. By laying claim to contextual values at play, Glaser and Strauss (1967) would argue that the grounded theory researcher does not jeopardize the validity inherent in the cataloging of the “emergent” themes that constitute the data set. Rather, the principles of grounded theory privilege integrating the researcher’s current knowledge and experience into making sense of the data. Nonetheless, throughout this process, both researchers acknowledged and discussed how their unique, dual “insider” and “outsider” identities might influence their interpretation of the data.

Limitations

As with any case study focused on a singular team, and qualitative investigations in general, there are limits to extrapolating our findings. Here, we highlight three limitations of this study. First, the present investigation involves responses from only one medium-sized, sustainability science-focused collaborative team. The nature of the grant that our respondents are working on is driven by the need to solve issues within the community and state, as the scientific vision of the grant includes the development of innovative solutions to a myriad of social–ecological system challenges posed by the state’s coastal social, economic, and environmental nexus. Therefore, the culture of this team and the values that members hold may be very different from those of IDR teams lacking a sustainability science focus.

Second, the lead author served as the sole coder, and, as such, the initial tool of analysis and interpretation, though the second author assisted in interpretation. To counter this limitation, the lead author frequently discussed preliminary data interpretation with other researchers who have engaged in IDR-related research, shared initial findings with the second author, and made changes based on this feedback throughout the process.

Last, this study interviewed participants in year three of a 5-year grant. While results described were not limited to describing the “successes” of this particular grant, interviewing respondents at a later stage in the grant’s lifetime could affect how respondents answer, as many researchers were in beginning stages of their work.

Results

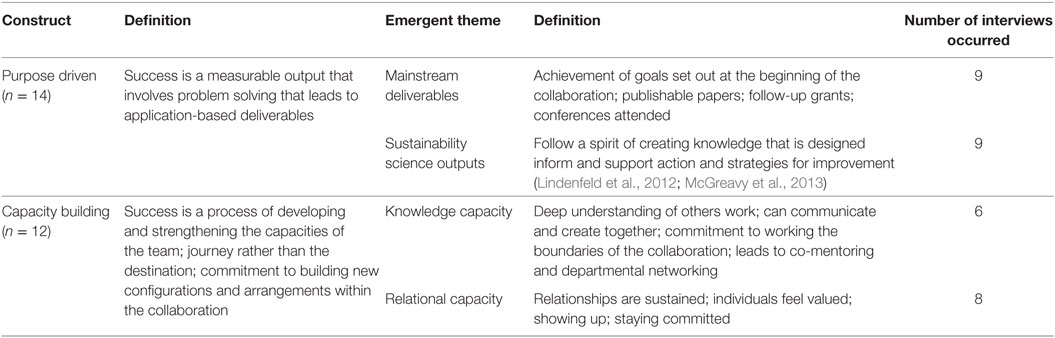

In this section, we present themes that emerged from interviews that serve to illustrate varying approaches to and implications for participant-defined collaborative success. The presentation of results (Table 1) is organized around what we characterize as two forms of success emerging from the interviews: (1) purpose driven and (2) capacity building. Respondents’ definitions of success almost always conformed exclusively to one or the other category, with the exception of two respondents. These individuals “had their feet in both rivers”—responding in ways that suggested elements of both purpose driven and capacity building definitions of success. Given their unique standing, these respondents will be discussed separately, below.

The first construct, purpose driven forms of success, concerns the degree to which goals and measurable outputs are achieved. Respondents described deliverables that ranged from broad level accomplishments, such as the achievement of project goals, to more specific examples, such as academic and application-based deliverables. The second construct of success concerns the development and sustaining of relationships and knowledge capacities—in other words, working to build a network of researchers who understand one another’s work and can rely on each other in professional and interpersonal ways. Each construct and the emergent themes within are described below.

Purpose-Driven Forms of Success

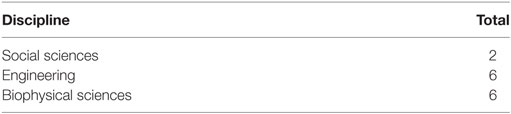

The first construct focuses on purpose driven forms of success, with 14 respondents (social sciences: 2; engineering: 6; biophysical sciences: 6) describing this form of success (hereafter, n indicates the number of participants who mentioned, and thus are grouped under each construct or emergent theme). When making sense of success, respondents in this group described it in terms of measurable outputs, often involving problem solving that led to demonstrable deliverables. A linear tone was set within these responses, as respondents described the end product of their work as representing the success.

For example, a faculty member (F1) from engineering noted, “a simple yardstick for how well the collaboration has worked is whether we achieved the goals we set out from the beginning.” The necessity to produce outcomes was frequently mentioned as one of the determining factors of collaborative success and this purpose-driven definition of success runs throughout this construct. Respondents identified two interrelated forms of purpose driven success: mainstream measures of success and sustainability science goals. These emergent themes are described below.

Mainstream Measures of Success

The first emergent theme within the construct of purpose driven success included kinds of outcomes that are recognized as mainstream measures of success within IDR teams (n = 9). These outcomes included the achievement of project goals and academic deliverables. Broadly speaking, respondents described success as the completion of a set goal. As a graduate student from engineering (GS2) put it, “I think that the accomplishment of a given goal defines success. I think that it should be verifiable.” Part of this construct also had to do with deliverables that tend to be valued in academic settings. For instance, respondents cited publishable papers, follow-up grant money, and conferences attended as examples corresponding to this theme. As one faculty member (F7) from the biophysical sciences described, the measurement of success starts with solving a problem and then leads to academic deliverables:

There’s just being able to answer the question, but then, get outcomes that are again like publishable papers or new research grants as follow-on from those collaborations. Those would all be, I think, metrics for success.

Further, when asked if she could tell a story of collaborative success, a faculty member from the biophysical sciences (F7) recounted a meeting that resulted in talk about future academic deliverables. As she said:

I think we made a lot of progress…. this was across institution too. And we talked about a paper, and we talked about some follow-on research, and actually we wrote two follow-on proposals shortly after that, so it was—there were—a lot came out of it. It was—it felt like—I think everybody was like “Oh.” We came away from the day feeling like “That was really productive.” [Laughs] And it was.

As mentioned above, part of this emergent theme was focused on publications, which included discourse that could be characterized as both supporting and challenging the notion that these products be viewed as quintessential metrics for success. One biophysical scientist faculty member (F4), recognizing publications as counting as success, noted that he would expect “collaborative successes being recorded systematically,” with the author indexing value going up for collaborators. Further, he contended that these publications should reach outside collaborators’ home disciplines, stating:

You hope to see new publications using new collaboration teams and not in journals that you would necessarily expect. So you may see a chemical journal publishing a sea lice paper based on this polymer. You may see an engineering journal publishing a micro fluidics paper on sea lice, and that I would count as a success.

Relevant to this discussion, and explored further in the theme we refer to as “sustainability science outputs” (below), other respondents pushed back against the metric of academic deliverables, specifically published papers. Demonstrating this, one biophysical graduate student (GS5) noted, “there’s a lot of other things other than academic papers.” Other respondents noted similar understandings, often “othering” themselves from those who believe in such measurements. A faculty member from the biophysical sciences (F6) noted that academic articles do not always reach the audiences for whom the research might be most impactful, stating:

I was just reading—well, I’ve stopped reading it [review board assessments], but I noticed that they really did rely on bibliometrics, so they’re going to measure success by what we publish. And, you know, I know the commissioner of marine research pretty well. I’ve known a few of them—I can’t think of any of them that subscribe to a scientific journal. Their staff might, but the person in that hot seat isn’t going to read scholarly works, just not going to happen. So that’s not even a good measure of success, I don’t think.

Sustainability Science Outputs

The theme of sustainability science outputs (n = 9) was the second emergent theme within the construct of purpose driven success. Responses indicated that, on a broad level, individuals subscribing to this perspective see success in terms of sustainability science research outputs. Echoing discussion above, several respondents pushed back against “mainstream measures of success,” positioning themselves in a way that we identify as representative of sustainability scientists—specifically, by describing problem-focused approaches to working across disciplines and with diverse stakeholders in order to “link knowledge to action” (Cash et al., 2006; Miller, 2012). As a faculty member (F13) from the biophysical sciences stated:

From a researcher’s perspective success is in the paper, that theoretically, other people can make an argument that no one ever reads. I like to think of success as either in terms of, (A) to just help improve policy, tweaking existing systems, the overall benefit to a large group of people, and then I think there’s an economic success story to this—does this information we produce, for instance, about the environment, help people make economically sustainable and environmentally sustainable decisions about sighting aquaculture? That would be, I think, success.

Others expressing opinions categorized within this theme, while not pushing back as explicitly against “mainstream measures,” described success as making a difference with the information produced and saw providing real-world solutions as central to this practice. One graduate student (GS1) from the biophysical sciences noted that success is doing work that goes beyond “research for the sake of research,” explaining, “I think that successful integration of gathering all of the information and then trying to get an answer that’s useful for people, I guess that’s a good baseline to have.”

Capacity Building Forms of Success

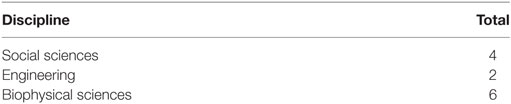

Responses from interviews (n = 12; social sciences: 4; engineering: 2; biophysical sciences: 6) suggested that some collaborators tended to consider what we refer to broadly as “capacity building” as a form of success within interdisciplinary settings. When making sense of success, respondents in this group recognized a commitment to constructing new configurations and arrangements within the collaboration in order to build capacities for sustainability work to take place. This capacity work included the development and sustaining of the relationships and knowledge capacities, as respondents described efforts to build a network of researchers who understand one another’s work and can rely on each other in professional and interpersonal ways. Distinct but not completely unrelated to purpose driven success, this viewpoint still recognizes deliverables as a desirable consequence of success but primarily focuses on the connections that take place along the way—in many ways, capturing the essence of “the journey rather than the destination” mindset. According to a graduate student (GS7) from the social sciences:

I think a lot of the success comes from the process rather than the outcomes. For us, because it’s a grant, we have to have certain outcomes achieved and certain things met…. If you’re only focusing on your own research and trying to tie it into the bigger framework at the end, you’re not—when this grant dissolves, you’re not going to have a sustainable system of researchers.

Similarly, respondents seemed to be focused on the pragmatic side of capacity building, not just the “touchy-feely” quality of relationship building. That is, respondents recognized that with bolstered capacities, both in terms of interdisciplinary relationships and robust knowledge bases (e.g., of varying epistemologies, research methods, etc.), the team would be poised to accomplish more. According to a faculty member (F10) from the biophysical sciences:

I think that it’s [success] when the research becomes fun and everybody’s excited about it and not just when something gets accomplished. I mean, yes, it adds to that excitement when you can get a grant funded and when the publications start to come out of that work, but I think it’s about putting together a group of people, students included, that have mutual respect, and they know that they can ask questions and they can move through the process quicker.

As alluded to above, respondents recognized the two cross-cutting themes that we refer to as “relational capacities” and “knowledge capacities” within this construct of success. These emergent themes are described and explored below.

Knowledge Capacities

Respondents described connecting to and understanding fellow collaborators who hail from disparate disciplines and backgrounds as a form of success (n = 6). This included going beyond representational explanations of another’s discipline in order to form an understanding of the nuances of the discipline and the ability to communicate with others on an academic level. One faculty member from the social sciences (F2) recognized “deep understandings” of fellow researchers’ epistemological values as a success. As she expressed:

I think sort of an even deeper level [of success] is when you and a colleague from different disciplines can sit down and say, “Okay we’re gonna study this because this. And so what are some questions we could ask?” And even start to have an understanding of what your colleague’s questions might be, and even some of the start to how they might address it.

In this same vein, one respondent, a graduate student from the social sciences (GS7), noted the process of “constantly showing up” in order to develop these deep understandings. Her use of this phrase surpassed being present physically, as she explains:

I think the process of constantly working together and showing up and actually understanding where other researchers and other themes are coming from and finding connections to your own work, or connecting to someone else.

When asked to tell a story of success, a faculty member from the social sciences (F15) recounted an experience with a natural scientist in which neither of the parties had an exact idea of the other’s research methods at the beginning of the collaboration. As she described, “It took us a while to get there where we understand each other’s methods to the point where we can talk about things.” And when her collaborator finally came to the understanding that social science is not synonymous with providing outreach, this researcher explained feeling the most successful. As she describes:

So I felt that moment of wow, we get it. That was a successful moment for me. Oh, now she understands, and she said what she said. Like oh, she (the social scientist) has questions and research that she is doing. So I think just moments where it’s clear that oh, you get what I’m doing. That’s sort of a moment of success…you understand why I’m asking that question. You understand why I need a sample like this.

Relational Capacities

Engendering, as well as maintaining, relationships (n = 8) was also an emergent theme within the interviews that described success as capacity building. According to the respondents, part of interdisciplinary success is building relational capacities in order to sustain and advance the research taking place. Within this grouping, several respondents described success in collaborative settings as being contingent on the “people”—that is, both people with strengths in separate areas, as well as people on whom you can rely in professional and interpersonal ways. According to a faculty researcher (F8) from the biophysical sciences:

So interdisciplinary research: yes, it is about the science; it is about the work; but also it’s about the people and the relationships. And I think the best—at least in my case, I’ve worked with many—this is my 20th year here. I don’t know, I’ve probably done research with 30, 40 different people. But the most successful ones were the ones that I actually liked hanging out with ‘em, with people. Those are always the most successful ones.

Tying back into knowledge capacities, several respondents described successful collaborations as involving people who respect and care for the work that fellow collaborators are taking part in. Respondents described teams that have not been “harmed” by the varying patterns of thought, opinion, approach, goals, and identity within the collaborative setting. As one graduate student (GS3) from the biophysical sciences put it, “I would say just having a research project, 3–5 years, whatever, that at the end, everybody’s still on good terms, and you felt like you met the goals of each person within that.” In this same vein, when describing a successful collaboration, a faculty member (F9) from engineering said:

Everyone feels like they’ve gotten what they set out to get out of the initial collaboration, that the science is improved because you’re collaborating, and that the relationship isn’t hurt because of the collaboration and the different points of view on how to do anything.

Additionally, respondents gave examples of what we call “productive environments,” citing feelings of ease to ask “dumb” questions, respect for deadlines, and appreciation for one another’s work. Along these lines, a faculty member (F14) from the biophysical sciences noted:

…if you’re comfortable with certain persons, they’re really good at responding to an email, they care what you look for, you know, they understand what are the pieces of work you can do and how you can solve it.

Furthermore, respondents pointed out that the relationships that prompted these productive environments are not just about making friends, but rather that the connections made within the collaboration translate into opportunities for networking that often lead to pragmatic outcomes. Multiple respondents coupled knowledge and relational capacity formation through stories they told about relationships with collaborators from outside of their own discipline that turned into valuable learning and networking opportunities. One faculty member from engineering (F3) told a story about a collaborative relationship between himself and a biophysical scientist that was built over time and resulted in departmental connections:

And in fact through our work in [the grant] together with our student and we also co-advise some undergrads. We have invited [X] to become a cooperative faculty in our department, because he is co-advising students with me—because he teaches many of our undergrads a course, an elective course, and because he has experience.

In this same vein, a faculty member from the biophysical sciences (F12) told a story of networking that resulted in connections for her home department. Describing an event that had recently taken place in her home department, this respondent recounted how her “network” of researchers from other departments helped her contribute to a hiring process within her home department by recommending researchers that others would otherwise not have known:

Anyway, but building networks…. I feel like oh (the grant) aside this issue coming up has nothing to do with aquaculture or sustainability but I felt like because of this network that I was able to really contribute something and I felt really happy and I felt like that was a success…

“Foot in Each River”

Respondents almost always identified success as distinctly purpose driven or capacity driven, with the exception of two respondents, whom we identify as having “a foot in each river.” These respondents described visions of success that were clearly focused on both the “process” and the “product.” These respondents hailed from distinct disciplinary backgrounds: social science and engineering. One respondent, a faculty member from the social sciences (F15), identified strongly with capacity building, focusing on success as the development and sustaining of relationships and knowledge capacities, but simultaneously seemed to exemplify purpose driven success when describing a caveat in her view of success:

The other is getting the work done. Right? Answering the questions at hand and so if it’s an applied question solving the problem and contributing new information or something that will help move that solution to that problem or if it’s an academic question, papers, presentations, outputs, having made some outputs that are important and successful. So if it’s a project that doesn’t produce anything, yes, it’s great that everyone sat together and worked and collected data, but if they didn’t do anything with it that’s not very successful to me.

Further, the other respondent (F9), an engineer, seemed to describe success in terms of capacity-building, as noted above, when stating that success was linked to relationships and the ability to sustain such relationships. Yet, illustrating purpose-driven success, this respondent went on to tell a story of success that focused on the fact that the project that was pitched was funded; indeed, she emphasized that the most successful collaboration that she has participated in involved the receipt of further funding. In essence, these respondents understand collaborative work in a non-bifurcated manner, as they see the collaborative setting as dynamic and as an iterative process. Implications and avenues for future research related to these observations will be described below.

Discussion

This study has worked toward two goals: first, to describe how those involved in a sustainability science IDR team made sense of success, and second, to contribute to ongoing discussion in the academic literature about gauging success within sustainability science collaborations. Interview findings revealed that those involved in this IDR team are forming distinct definitions of interdisciplinary success. Interestingly, the definitions formed appear to align with those currently recognized in traditional academic settings as success (i.e., purpose driven), as well as with others that have been less often acknowledged (i.e., capacity building). This distinction between the two groupings, the “even” grouping, with neither group being larger than the other, and researcher diversity—that is, the distinction did not adhere to disciplinary or university rank lines (see Tables 2 and 3)—is important to note. Below, we discuss the findings from this study and implications for future research within these parameters.

Purpose Driven

Respondents who articulated purpose driven forms of success described success in terms of measurable outputs, often involving problem solving that led to some type of deliverable. This form of success is in line with measures of success that are currently recognized in academic culture such as bibliometric measures (Wagner et al., 2011; Hasan and Dawson, 2014), professional success measures (Goring et al., 2014), and criteria such as NSF’s two overarching aims of knowledge generation and broader impact integration. This construct does, however, offer an interesting conundrum—while both mainstream deliverables and sustainability science outputs fall under the umbrella of being measurable and leading to confirmable deliverables, there is a tension between the two, as mainstream deliverables are reported to be more widely understood in both academia and funding agencies like NSF than sustainability science outputs.

The mainstream deliverables respondents described include the completion of academically verifiable outputs, such as the achievement of project goals, research funding, and outputs related to bibliometrics. These types of deliverables are the most recognizable form of success (Wagner et al., 2011; Hasan and Dawson, 2014) and fall under the NSF’s first merit review principle of, “All NSF projects should be of the highest quality and have the potential to advance, if not transform, the frontiers of knowledge” (NSF, p. 63). Our interviews point to an interplay between mainstream deliverables and sustainability science outputs, both in that they are related and can go hand-in-hand, but also in that they can run counter to one another. Before exploring this tension and its implications, we describe NSF’s understanding of sustainability science outputs and compare them with our respondents’ understandings.

The sustainability science outputs that respondents described work to engage multiple stakeholders and their varying patterns of thought, opinion, approach, and identity in order to foster a space that propagates knowledge creation designed to inform and support action (Lindenfeld et al., 2012; McGreavy et al., 2013). These outputs are recognizable in NSF’s broader impact criterion (BIC) requirements, which in many ways overlap with what we are calling sustainability science outputs. Essentially, the BIC is a scientific outreach exercise carried out by researchers funded by NSF, designed to have the potential to benefit society and contribute to the achievement of specific, desired societal outcomes (National Science Foundation, 2017). Having evolved throughout the years, BIC presently includes five core, long-term outcomes: teaching and education, broadening participation of underrepresented groups, enhancing infrastructure, public dissemination, and other benefits to society (Wiley, 2014). While this type of output is recognized, measured, and encouraged by funding agencies like NSF, there has been considerable recognition in the IDR community of the criterion’s pitfalls. In many ways, the criterion has been met with “considerable confusion and dread” (Lok, 2010) as many involved in collaborative research have cited issues with the criterion being neither transparent nor practical (Bornmann, 2013). The research surrounding the BIC indicates that these difficulties run deep and include complaints that answering and fulfilling the criterion does not allow for individual efficacy, as well as the belief that it is not within researchers’ duties to engage in science communication and outreach (Alpert, 2009; Bozeman and Boardman, 2009; Holbrook and Frodeman, 2011; Wiley, 2014).

The results we share provide a significant nuance to the literature on NSF’s BIC. Many of our respondents seem to find “BIC-like” criteria (i.e., sustainability science outputs) meaningful to their personal definitions of success, which, in many ways, stands in contrast to the literature. If researchers, especially those working on sustainability science endeavors such as our respondents, are identifying these forms of success, it becomes necessary for funding agencies, such as NSF, to better understand how to measure these types of outputs and improve existing measurement structures. Not only do we need to take heed of this development, but we must also critically consider the apparent tension both cited in the literature and indicated by our respondents. Even more than capacity building forms of success, sustainability science outputs stand in stark contrast to the mainstream deliverables. Take, for example, the several instances of respondents pushing back against measures not classified as sustainability science outputs, such as the faculty member criticizing scientific journals’ publication metrics because stakeholders (i.e., those in need of the information) neither subscribe to nor read such publications. The fact that respondents are explicitly “calling out” mainstream deliverables as insufficient further suggests the need for sustainability science outputs and the BIC to be explored. Foremost, our research suggests that there is perhaps a need for “traditional” measures of success used both in academic settings and by funding agencies to include adequate space for, and weighting of, broader measures of success, such as what we have referred to as sustainability science outputs. Additional research is needed to examine how collaborators are reporting their findings, and whether this finding is isolated to sustainability science-focused IDR teams.

Capacity Building

Respondents who described capacity building forms of success focused on the development of new connections within the collaboration. The capacity building construct does not fit as neatly into current measures of success recognized within IDR culture and by funding agencies such as NSF; however, it does coincide with much of the “science of team science” and systems-centered work appearing within the IDR literature. This described form of success, and its connection to previous literature concerned with the variables of success, both provides evidence of the process-based work that has been done in the past and responds to the calls for these forms of success within IDR and academic culture. This construct of success and the connections that are present bring up various questions related to the way collaborators are making sense of success, while also standing (in some ways) in stark contrast with purpose-driven forms of success.

Respondents recognized that the building of capacities results in pragmatic outcomes for and beyond the collaboration. In many ways, this practically oriented capacity-building echoes assertions from the science of team science literature. One instance of this can be seen as respondents appeared to recognize, through their definitions and narratives of success, the three stages of collaboration, as described in the literature: antecedents, processes, and outcomes (Wagner et al., 2011; Armstrong and Jackson-Smith, 2013). Although the stages are not necessarily recognized in the “order” described by the authors (i.e., antecedent first, processes second, and outcomes third), and each stage is not described in full, taken together, the stages are evident within respondents’ descriptions of success. Instances of the antecedent stage are apparent when respondents’ definitions reflect user-centered factors such as success being contingent on the “people.” The process stage is largely present within the accounts of development and sustaining of the relationships and knowledge capacities. Last, outcomes were described as both material (i.e., networks and learning environments established and maintained) and immaterial (i.e., feelings of ease, trust, happiness). Additionally, many of the characteristics of “high performing teams” cited by Cheruvelil et al. (2014) and the contextual typologies cited by Stokols et al. (2008b) are present in responses, specifically: interpersonal skills, diversity, team functioning, and team communication.

Further, these responses can be looked at as signs of researchers recognizing IDR team settings as complex systems, as respondents described constructing configurations, arrangements, and communication behaviors within the collaboration that have the ability to influence the pragmatic outcomes of the team (Thompson, 2009; McGreavy et al., 2015). In many ways, respondents identified success in terms of CCCs (Thompson, 2009). Our respondents described environments wherein opportunities for researchers to negotiate understandings of knowledge and identity were available, and presence was demonstrated (Thompson, 2009). An example of this includes the described “productive environments,” wherein respondents appeared to embrace feelings of ease and ability to learn about one another’s disciplines and appreciation for one another’s work. In addition to demonstrating their understanding of the successful processes, respondents were also tapping into the unsuccessful ones, alluding to compromising behaviors that were not engaged in, as they could harm the team (Thompson, 2009). In this way, respondents were drawing attention to the complex nature of communication processes on these teams and how unsuccessful processes fit into the application of systems thinking. An example of this includes respondents describing teams as “unharmed” by the collaborative research process; take, for instance, the faculty member from engineering who described a successful team as one that has intact relationships—unaffected by the varying patterns of thought present within the research team.

Moreover, in terms of knowledge capacity, respondents recognized epistemological pluralism (Miller et al., 2008) as a form of capacity building. Respondents demonstrated that, beyond recognizing that there is more than one way to know, putting this recognition of varied knowledge into action can be seen as a measure of success. For example, one faculty member told the story of her relationship with a biophysical scientist, where “success” was made possible by the continued communication about each researcher’s discipline and methods, and resulted in a deeper understanding of the seemingly disparate disciplines. In this same vein, and worth mentioning, is the description of “deep understanding,” leading to the ability to communicate with others on academic levels, that a faculty member used when providing her own definition of success.

While our respondents are recognizing capacity building as a form of success, it can be argued that these forms of success do not currently have a place at the IDR table. Despite the fact that these forms are recognized in the literature as “processes,” “factors,” or “variables” of success, by many they are not seen as measurable outputs to be recognized as a success (Cheruvelil et al., 2014). This result of the capacity building construct does beg to be understood, as it seems that some respondents are tapping into indicators of well-being of the team, and recognizing that—if not for certain practices—collaborative work would not get off the ground. Moreover, this research responds to the calls in the literature, specifically by Cheruvelil et al. (2014) to begin expanding measures. That is, we provide empirical evidence of researchers involved in these collaborative projects recognizing forms of success that are distinct from purpose based forms—adding to the conversation on and delivering substantiation to expanded measures of success.

Foot in Each River

The two respondents who described visions of success that were clearly focused on both the “process” and the “product” provide an interesting counterpoint to the either-or trend that emerged in the other 22 responses. As mentioned, the respondents who described both were from distinct disciplinary backgrounds, social science and engineering. In many ways, these respondents embody the claim made by Wagner et al. (2011) when they describe IDR taking place as “a dynamic process operating at a number of levels” (Wagner et al., 2011: p. 19), as these respondents seem to recognize that IDR success is both process and output. Better understanding these respondents and their views of interdisciplinary success would entail expanded sampling and new research methods, both of which are described in the following section.

Implications and Future Research

The implications of this study are broad and deserve future research in order to expand this type of work. Moving forward, we contend that additional work will need to be done through both research and practice. First, in terms of research, we see the need to expand this study in an effort to better understand how the definitions and measures of success used by agencies such as NSF match researchers’ perceptions. This could include studies that ask researchers explicitly about these measures and their experiences and perceptions of them, and how these results accord with current measures. Second, this line of work would also benefit from research that encompasses more than one IDR team and that goes beyond the focus on interdisciplinary collaboration to incorporate a transdisciplinary viewpoint, that is, a focus on stakeholders and other “non-academic” knowledge and practice contributors within these teams. Third, moving forward, there are many more pieces of this “process” form of success that need to be explored, as well as a need for funding agencies to consider the value, role, and prospect of this form of success. For example, process measures raise the question of whether success based on capacity building can be measured and how funding agencies like NSF will or can blend these types of measures into their criteria. And last, future research should also ask how these “output” and “process” based forms move together in practice. Current collaboration literature focused on stakeholder groups holds some suggestive directions in how both process and output based forms of success can be incorporated. Recognizing the importance of both material and immaterial forms of success, Roux et al. (2010) present a framework for co-reflecting on the accomplishments of transdisciplinary research programs. In this same vein, Allegretti et al. (2015) provide accountability indicators for IDR and transdisciplinary teams that may also be used for measuring both forms of success. Our “foot in each river” respondents provide some notion of how individuals might embrace both of these conceptions of success at once, but it would also be interesting to see how and if others demonstrate this duality in day-to-day interactions. Extended ethnographic observations would be one way to move forward on this research avenue.

In terms of practice, we intend that this work will add value to the conversation about IDR measurements of success. As many scholars in the literature note, in order for measures to gain traction, we must start on the level of academic culture. Our results indicate that this shift might already be taking place. Our user-centered approach allowed for illustrative examples of many instances of emergent shifts within respondents’ words. About half of our respondents focused their responses on measures of success that are unmistakably distinct from “mainstream” outputs. The focus on sustainability science outputs and the range of capacity building forms of success provide an empirically grounded response to the calls for expanded measures. However, the prospect of an expanded and more richly integrative approach to IDR success is one that is needed, and we hope that this work spurs future research and moves this dialog forward.

Conclusion

Understandings of success diverge among the key players within sustainability science collaboration teams. Through this study, we have seen some indication that collaborators are forming distinct definitions of success that do not always match up with measures that are currently employed. Results indicate that collaborators are carving out a role for collaborative work and shaping the ways this work is valued. For some researchers, success takes a “purpose” form, with definitions and narratives that concern the degree to which goals and measurable outputs are achieved. For others, success is looked at through the lens of “capacity building” as researchers take “the journey rather than the destination” mindset. Combined, these distinct, participant-defined collaborative successes help to understand the nuances of IDR success. Ultimately, our work provides a basis for a “deeper dialog amongst sustainability scientists” (Miller, 2012)—that is, our empirical results contribute to a more open and informed discussion about how we gauge success within sustainability science collaborations, forming a foundation for appreciation and exploration of the disciplinary and normative dimensions of this work.

Ethics Statement

This study was carried out in accordance with the recommendations of the University of Maine Institutional Review Board with written informed consent from all subjects. All subjects gave written informed consent in accordance with the Declaration of Helsinki. The protocol was approved by the University of Maine Institutional Review Board, application number 2016-05-07.

Author Contributions

AJR conceptualized the study, gathered and analyzed the data, and wrote 90% of the manuscript. LNR assisted in conceptualizing the study, assisted with the gathering and analysis of data, and wrote 10% of the manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This project was conducted as part of the first author’s master’s thesis research (Roche, 2017).

Funding

This project was funded by The National Science Foundation (NSF) award #1355457 to Maine EPSCoR at the University of Maine.

References

Allegretti, A. M., Thompson, J., and Laituri, M. (2015). Engagement and accountability in transdisciplinary space in Mongolia: principles for facilitating a reflective adaptive process in complex teams. Knowl. Manage. Dev. J. 11, 23–43.

Alpert, C. L. (2009). Broadening and deepening the impact: a theoretical framework for partnerships between science museums and STEM research centres. Soc. Epistemol. 23, 267–281. doi: 10.1080/02691720903364142

Armstrong, A., and Jackson-Smith, D. (2013). Forms and levels of integration: evaluation of an interdisciplinary team-building project. J. Res. Pract. 9:Article M1.

Balvanera, P., Daw, T. M., Gardner, T. A., Martín-López, B., Norström, A. V., Ifejika Speranza, C., et al. (2017). Key features for more successful place-based sustainability research on social-ecological systems: a programme on ecosystem change and society (PECS) perspective. Ecol. Soc. 22, 14. doi:10.5751/ES-08826-220114

Bark, R. H., Kragt, M. E., and Robson, B. J. (2016). Evaluating an interdisciplinary research project: lessons learned for organisations, researchers and funders. Int. J. Proj. Manage. 34, 1449–1459. doi:10.1016/j.ijproman.2016.08.004

Bennett, L. M., Gadlin, H., and Levine-Finley, S. (2010). Collaboration & Team Science: A Field Guide. Bethesda, MD: National Institutes of Health. NIH Publication No 10-7660.

Bornmann, L. (2013). What is societal impact research and how can it be assessed? A literature survey. J. Am. Soc. Inf. Sci. Technol. 64, 217–233. doi:10.1002/asi.22803

Bozeman, B., and Boardman, C. (2009). Broad impacts and narrow perspectives: passing the buck on science and social impacts. Soc. Epistemol. 23, 183–198. doi:10.1080/02691720903364019

Cash, D. W., Borck, J. C., and Patt, A. G. (2006). Countering the loading-dock approach to linking science and decision making: comparative analysis of El Niño/Southern Oscillation (ENSO) forecasting systems. Sci. Technol. Hum. Values 31, 465–494. doi:10.1177/0162243906287547

Cheruvelil, K. S., Soranno, P. A., Weathers, K. C., Hanson, P. C., Goring, S. J., Filstrup, C. T., et al. (2014). Creating and maintaining high-performing collaborative research teams: the importance of diversity and interpersonal skills. Front. Ecol. Environ. 12, 31–38. doi:10.1890/130001

Corbin, J., and Strauss, A. (2008). Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory. Los Angeles: SAGE.

Fraser, H., and Schalley, A. C. (2009). Communicating about communication: intercultural competence as a factor in the success of interdisciplinary collaboration. Aust. J. Linguist. 29, 135–155. doi:10.1080/07268600802516418

Glaser, B. G., and Strauss, A. L. (1967). The Discovery of Grounded Theory: Strategies for Qualitative Research. New York: Aldine de Gruyter.

Goring, S. J., Weathers, K. C., Dodds, W. K., Soranno, P. A., Sweet, L. C., Cheruvelil, K. S., et al. (2014). Improving the culture of interdisciplinary collaboration in ecology by expanding measures of success. Front. Ecol. Environ. 12, 39–47. doi:10.1890/120370

Hall, K. L., Stokols, D., Moser, R. P., Taylor, B. K., Thornquist, M. D., Nebeling, L. C., et al. (2008). The collaboration readiness of transdisciplinary research teams and centers: findings from the National Cancer Institute’s TREC year-one evaluation study. Am. J. Prev. Med. 35(2 Suppl.), S161. doi:10.1016/j.amepre.2008.03.035

Hasan, H. M., and Dawson, L. (2014). “Appreciating, measuring and incentivising discipline diversity: meaningful indicators of collaboration in research,” in Proceedings of the 25th Australasian Conference on Information Systems (New Zealand: Auckland University of Technology), 1–10.

Holbrook, J. B., and Frodeman, R. (2011). Peer review and the ex ante assessment of societal impacts. Res. Eval. 20, 239–246. doi:10.3152/095820211X12941371876788

Kates, R. W., and Clark, W. C. (1999). Our Common Journey: A Transition toward Sustainability. Washington, DC: National Academy Press.

Kates, R. W., Clark, W. C., Corell, R., Hall, J. M., Jaeger, C. C., Lowe, I., et al. (2001). Sustainability science. Science 292, 641–642. doi:10.1126/science.1059386

Kreuter, M. W., Rosa, C. D., Howze, E. H., and Baldwin, G. T. (2004). Understanding wicked problems: a key to advancing environmental health promotion. Health Educ. Behav. 31, 441–454. doi:10.1177/1090198104265597

Lindenfeld, L., Hall, D. M., McGreavy, B., Silka, L., and Hart, D. (2012). Creating a place for environmental communication research in sustainability science. Environ. Commun. 6, 23–43. doi:10.1080/17524032.2011.640702

McGreavy, B., and Hart, D. (2017). Sustainability science and climate change communication. Oxford Res. Encycl. Clim. Sci. doi:10.1093/acrefore/9780190228620.013.563

McGreavy, B., Hutchins, K., Smith, H., Lindenfeld, L., and Silka, L. (2013). Addressing the complexities of boundary work in sustainability science through communication. Sustainability 5, 4195–4221. doi:10.3390/su5104195

McGreavy, B., Lindenfeld, L., Bieluch, K. H., Silka, L., Leahy, J., and Zoellick, B. (2015). Communication and sustainability science teams as complex systems. Ecol. Soc. 20, 2. doi:10.5751/ES-06644-200102

Miller, T. R., Baird, T. D., Littlefield, C. M., Kofinas, G., Chapin, F. III, and Redman, C. L. (2008). Epistemological pluralism: reorganizing interdisciplinary research. Ecol. Soc. 13, 46. doi:10.5751/ES-02671-130246

Miller, T. R. (2012). Constructing sustainability science: emerging perspectives and research trajectories. Sustainability Sci. 8, 279–293. doi:10.1007/s11625-012-0180-6

National Science Foundation. (2017). Proposal and Award Policies and Procedures Guide. Arlington, VA: National Science Foundation. (OMB control number: 3145-0058).

Roche, A. (2017). How Do We Collaborate? A Look into the Sustainable Ecological Aquaculture Network. Master’s thesis, University of Maine, Orono, ME.

Roux, D. J., Stirzaker, R. J., Breen, C. M., Lefroy, E. C., and Cresswell, H. P. (2010). Framework for participative reflection on the accomplishment of transdisciplinary research programs. Environ. Sci. Policy 13, 733–741. doi:10.1016/j.envsci.2010.08.002

Sonnenwald, D. H. (2007). Scientific collaboration. Annu. Rev. Inf. Sci. Technol. 41, 643–681. doi:10.1002/aris.2007.1440410121

Stock, P., and Burton, R. J. (2011). Defining terms for integrated (multi-inter-trans-disciplinary) sustainability research. Sustainability 3, 1090–1113. doi:10.3390/su3081090

Stokols, D., Fuqua, J., Gress, J., Harvey, R., Phillips, K., Baezconde-Garbanati, L., et al. (2003). Evaluating transdisciplinary science. Nicotine Tob. Res. 5(Suppl_1), S21–S39. doi:10.1080/14622200310001625555

Stokols, D., Hall, K. L., Taylor, B. K., and Moser, R. P. (2008a). The science of team science: overview of the field and introduction to the supplement. Am. J. Prev. Med. 35(2 Suppl.), S77–S89. doi:10.1016/j.amepre.2008.05.002

Stokols, D., Misra, S., Moser, R. P., Hall, K. L., and Taylor, B. K. (2008b). The ecology of team science: understanding contextual influences on transdisciplinary collaboration. Am. J. Prev. Med. 35, S96–S115. doi:10.1016/j.amepre.2008.05.003

Syme, S. L. (ed) (2008). The science of team science: assessing the value of transdisciplinary research. Am. J. Prev. Med. 35(2 Suppl.), S94–S95. doi:10.1016/j.amepre.2008.05.017

Thompson, J. L. (2007). Interdisciplinary Research Team Dynamics – A Systems Approach to Understanding Communication and Collaboration in Complex Teams. Saarbrücken, Germany: VDM Verlag.

Thompson, J. L. (2009). Building collective communication competence in interdisciplinary research teams. J. Appl. Commun. Res. 37, 278–297. doi:10.1080/00909880903025911

Tracy, S. J. (2013). Qualitative Research Methods: Collecting Evidence, Crafting Analysis, Communicating Impact. Chichester, West Sussex, UK: Wiley-Blackwell.

Wagner, C. S., Roessner, J. D., Bobb, K., Klein, J. T., Boyack, K. W., Keyton, J., et al. (2011). Approaches to understanding and measuring interdisciplinary scientific research (IDR): a review of the literature. J. Informetr. 5, 14–26. doi:10.1016/j.joi.2010.06.004

Welman, J. C., and Kruger, S. J. (1999). Research Methodology for the Business and Administrative Sciences. Johannesburg, South Africa: International Thompson.

Wiley, S. L. (2014). Doing Broader Impacts? The National Science Foundation (NSF) Broader Impacts Criterion and Communication Based Activities. Master’s thesis. Iowa State University, Ames, IA.

Keywords: interdisciplinary communication, sustainability science, grounded theory, collaborative research team, interdisciplinary research

Citation: Roche AJ and Rickard LN (2017) Cocitation or Capacity-Building? Defining Success within an Interdisciplinary, Sustainability Science Team. Front. Commun. 2:13. doi: 10.3389/fcomm.2017.00013

Received: 28 July 2017; Accepted: 22 September 2017; Published: 11 October 2017

Edited by:

Todd Norton, Boise State University, United States

Reviewed by:

Ashley Rose Mehlenbacher, University of Waterloo, Canada

Arren Mendezona Allegretti, Santa Clara University, United States

Copyright: © 2017 Roche and Rickard. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Abby J. Roche, abby.roche@maine.edu
