- 1School of Communication, Journalism and Marketing, Massey University, Wellington, New Zealand

- 2National Research University Higher School of Economics, Moscow, Russia

Twitter is a powerful tool for world leaders to disseminate public health information and to reach citizens. While Twitter, like other platforms, affords world leaders the opportunity to rapidly present information to citizens, the discourse is often politically framed. In this study, we analysed how the leaders of the Five Eyes intelligence-sharing group used Twitter to frame the COVID-19 virus. Specifically, four research questions were explored: 1) How frequently did each leader tweet about COVID-19 in 2020? 2) Which frames emerged from the tweet content of each leader regarding COVID-19? 3) What was the overall tweet valence of each leader regarding COVID-19? and 4) To what extent can leaders’ future tweets be predicted by the data? We used natural language processing (NLP) and conducted sentiment analysis in Python to identify frames and to compare the leaders’ messaging. Results showed that, of the leaders, President Trump tweeted the most and Prime Minister Morrison the least. The majority of each leader’s tweets were positive, while President Trump had the most negative tweets. Predictive modelling of tweet behavior was highly accurate.

Introduction

In March 2020, the World Health Organization (WHO) declared COVID-19 a global pandemic. Since December 2019, more than 170 million people have been infected with the virus and more than 3.5 million have died (Johns Hopkins Coronavirus Resource Center, updated daily). As the virus spread, billions went into lockdowns. Schools were shut down, businesses closed, international travel virtually halted, and many people transitioned to working from home. As governments and citizens grapple with lockdowns and social distancing, people have increasingly used social media to communicate their thoughts, opinions, beliefs, feelings, and information about the virus (Wicke and Bolognesi, 2020). Similarly, leaders have increasingly turned to social media to disseminate information about the virus and government response plans, to promote public health, and to connect with citizens (Hubner, 2021; Rufai and Bunce, 2020).

During the COVID-19 pandemic, Twitter hashtags such as #coronavirus, #COVID19, #pandemic, and others multiplied as people began to debate, discuss, and discover the COVID-19 world. Twitter has become a powerful tool for world leaders to disseminate public health information and to reach their citizens. Rufai and Bunce (2020), for example, found that, of the G7 world leaders, only Angela Merkel did not have a Twitter account, while the leaders of Canada, France, Italy, Japan, the United Kingdom, and the United States were active users. Though Twitter, like other platforms, affords leaders (and other users) the opportunity to rapidly present information to citizens, the discourse is often politically framed (Burch et al., 2015; Ross and Caldwell, 2020). COVID-19 is one such issue that has been politically framed via media (traditional and social), as the pandemic has become a health, economic, and political issue (Croucher et al., 2020; Hubner, 2021).

This study explores how the leaders of the “Five Eyes” nations framed the COVID-19 discourse. The “Five Eyes” is a multilateral intelligence sharing and gathering group comprising the U.S., the United Kingdom, Australia, New Zealand (NZ), and Canada. At the end of World War II, the United Kingdom and the U.S. signed the British-U.S. Communication Agreement (BRUSA), later known as UKUSA. The UKUSA Agreement initially focused on intelligence gathering about the Soviet Union. Canada joined the alliance in 1948, followed by Australia and New Zealand in 1956 (Pfluke, 2019). The group’s members gather and share intelligence vital to defence planning and to responding to global crises (Craymer, 2021). During the COVID-19 pandemic, the group expanded its coordination to include consular assistance, increased sharing of scientific information, and joint economic responses to the pandemic (Manch, 2020). Of the Five Eyes nations, only the NZ leader is not active on Twitter.

In contrast, the other four leaders actively use Twitter to connect with their citizens and to communicate urgent messages such as public health and pandemic information. Previous research shows that social media messaging has framed COVID-19 in various ways (Cinelli et al., 2020; Wicke & Bolognesi, 2020), sometimes facilitating and sometimes hindering public health efforts (Oladap et al., 2020). This article applies sentiment analysis to the Twitter activity of the Five Eyes leaders to examine how they framed COVID-19. Understanding how political leaders convey messages via platforms like Twitter will help inform future real-time public policy and crisis messaging.

Framing

The practice of media and politicians framing information or news is widely documented and has been shown to affect public opinion (e.g., de Vreese, 2004; Scheufele, 1999; Wicks, 2005). Frames are mental schemas that enable the processing of information. In the communicative process of framing, frames or issues are presented “in such a way as to promote a particular problem definition, causal interpretation, moral evaluation, and/or treatment recommendation” (Entman, 1993, p. 52). Framing thus involves selecting, and placing emphasis on, particular aspects of reality (frames) and making them more salient to the audience. Consistent use of frames helps guide audiences and publics towards certain perspectives on issues. Focusing on news events, Gamson and Modigliani (1989) described framing as emphasising specific aspects of reality while pushing other aspects into the background. de Vreese and Lecheler (2012) identified two types of frames used in the coverage of an issue: generic and issue-specific frames. Generic frames cut across thematic areas and topics and are applicable in all situations. Issue-specific frames are relevant only to a specific topic or event, such as the framing of the Vancouver riots on Twitter (Burch et al., 2015) or world leaders’ response to COVID-19 on Twitter (Wicke & Bolognesi, 2020).

In addition, the valence or the extent to which media frames are positive or negative in nature has a significant effect on the persuasiveness of framing (Levin et al., 1998). It is through framing messages that political leaders are able to influence opinions and attitudes on a particular issue (Cappella & Jamieson, 1997; Levin et al., 1998).

Strategic framing analyses strategic messages by looking at who is communicating, what they are saying (here, the content of the tweet), the receiver, and the culture (Guenther et al., 2020). The focus of the frame is to understand what is foregrounded and what is neglected in the message. For this study we focus specifically on how these leaders composed their tweets on COVID-19, though future work could explore a larger corpus that includes the threaded conversations.

Unlike more traditional mass media, social media provide a sense of immediacy, connectedness and dialogue (Papacharissi, 2016). Frames draw on wider knowledge, memory, and attitude, and thus are shaped over time. Twitter is also dialogic, meaning frames are amplified, reinforced and refined. Therefore, to understand how leaders use frames, it is necessary to look at their social media activity over a significant period to assess the nature and influence of the frames brought to bear on individual and collective messaging strategies.

Prior research has also found a relationship between frames propagated via Twitter and frames emerging in mainstream media (An et al., 2012; Moon and Hadley, 2014). Twitter informs not only general publics and populations, but also journalists who construct news out of the Twitter message or even the tweet itself. Though only a handful of studies have explored this link (Nerghes and Lee, 2019), it underscores the importance of understanding the frames of Twitter messaging in dynamic and complex situations such as the evolving pandemic crisis. That political actors carefully compose tweets to appeal to certain frames is already well understood (Johnson, Jin, and Goldwasser, 2017). While earlier studies used manual techniques to explore emerging frames, large-scale computational techniques are only now being used to gain insight into Twitter frames, by treating the sentiment of the language used as representative of the frames being foregrounded.

Political leaders and public actors use Twitter as a key communication space because of its directness and immediacy. As Hemphill, Culotta, and Heston (2013) note, Twitter frames differ from mass media frames in that they come directly from key sources. As such, they reflect direct attempts to influence public opinion and debate and to help shape what is included in and excluded from the public imagination. Furthermore, Twitter frames were often most clearly applied to divisive or complex issues (Hemphill et al., 2013). By creating a consistent frame over time through regularly repeating key phrases and language, maintaining tone and sentiment, and focusing on clear priorities, political leaders can use Twitter to steer the national conversation towards what they see as the main priorities in complex and dynamic issues, including pandemics.

Prior research has looked at how COVID-19 has been framed on Twitter generally (Dubey, 2020), for or against specific groups or populations (Shurafa, Darwish, and Zaghouani, 2020; Wicke and Bolognesi, 2021), or within limited contexts (Wicke and Bolognesi, 2020). Few studies have compared Twitter use during the pandemic across national leaders.

Study Rationale

Research has demonstrated the ability of framing to influence public opinions and attitudes on issues. While framing research has mainly focused on traditional media, social media platforms like Twitter have also been shown to serve as venues for framing. As the world has grappled with COVID-19, many world leaders took to social media platforms such as Twitter to communicate with their citizens. The language used by world leaders is significant in shaping and guiding public health measures (Rufai and Bunce, 2020). The Twitter messaging from world leaders, as well as from the public, in 2020 ranged from informative, to political, to negative (Wicke and Bolognesi, 2020). Thus, in line with calls to further clarify how COVID-19 is framed (Rufai & Bunce, 2020; Wicke & Bolognesi, 2020), and building on methodological developments that use sentiment analysis and natural language processing to explore human language and communication (Burscher et al., 2014; Evans et al., 2019; Oz, et al., 2018; Havens, and Bisgin, 2018), we pose the following research questions about the Twitter behaviour of the Five Eyes leaders regarding COVID-19:

RQ1: How frequently did each leader tweet about COVID-19 in 2020?

RQ2: Which frames emerged from tweet content of each leader regarding COVID-19?

RQ3: What was the overall tweet valence of each leader regarding COVID-19?

RQ4: To what extent can leaders’ future tweets be predicted by the data?

Method

This research explores leaders’ opinions on Twitter regarding the COVID-19 pandemic through sentiment analysis using natural language processing (NLP). Data were processed in Python and visualized in Excel. Twitter boasts 330 million monthly active users, which allows leaders to reach a broad audience and connect with their people without intermediaries (Tankovska, 2021). Sentiment analysis involves monitoring emotions in conversations on social media platforms; it uses NLP and machine learning to make sense of human language (Klein et al., 2018). Sentiment analysis is the process of mining meaningful patterns from textual data. This kind of analysis examines attitudes and emotions and gathers information about their context so as to make accurate computational predictions and decisions based on large textual datasets. One method of sentiment analysis assigns a polarity to a piece of text, for example, positive, negative, or neutral (Agarwal et al., 2011). Connecting sentiment analysis tools directly to Twitter allows researchers to monitor tweets as they arrive, 24/7, and to obtain up-to-the-minute insights from these social mentions. Sentiment analysis is gaining prominence in communication research (Burscher et al., 2014; Robles et al., 2020; Rudkowsky et al., 2018). Sentiment analysis using NLP is a novel and robust form of data analysis used to understand various frames in media, including Twitter and other forms of social media (Akyürek et al., 2020; Luo, Zimet, and Shah, 2019).

Data Collection

A total of 15,848 posts were collected from the five leaders’ Twitter accounts using the Twitter Streaming API (Application Programming Interface). The Twitter API allows researchers to access and interact with public Twitter data. We used Tweepy, a Python library for the Twitter API, to connect to Twitter data streams and gather the tweets and retweets containing keywords and hashtags posted by these five leaders, together with their numbers of likes and retweets and their posting times. The sampling window for all tweets was January 1, 2020 to December 21, 2020. Data for Johnson (United Kingdom), Trudeau (Canada), Ardern (NZ), and Morrison (AUS) were successfully collected through the Twitter streaming API.
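The date filter implied by the sampling window can be sketched in Python. This is a minimal illustration, not the authors' collection code: the record layout (a hypothetical dict with a `created_at` datetime) and the treatment of both bounds as whole days in UTC are our assumptions, since the time zone used is not reported.

```python
from datetime import datetime, timezone

# Sampling window from the text: January 1 to December 21, 2020, inclusive.
# ASSUMPTION: both bounds are whole days in UTC; the paper does not state a time zone.
WINDOW_START = datetime(2020, 1, 1, tzinfo=timezone.utc)
WINDOW_END = datetime(2020, 12, 22, tzinfo=timezone.utc)  # exclusive upper bound


def in_window(created_at: datetime) -> bool:
    """Return True if a timezone-aware timestamp falls inside the sampling window."""
    return WINDOW_START <= created_at < WINDOW_END


def filter_window(tweets):
    """Keep tweet records (hypothetical dicts with a 'created_at' datetime) inside the window."""
    return [t for t in tweets if in_window(t["created_at"])]


sample = [
    {"id": 1, "created_at": datetime(2019, 12, 31, 23, 59, tzinfo=timezone.utc)},
    {"id": 2, "created_at": datetime(2020, 3, 11, 12, 0, tzinfo=timezone.utc)},
    {"id": 3, "created_at": datetime(2020, 12, 21, 23, 0, tzinfo=timezone.utc)},
    {"id": 4, "created_at": datetime(2020, 12, 22, 0, 0, tzinfo=timezone.utc)},
]
print([t["id"] for t in filter_window(sample)])  # [2, 3]
```

Using an exclusive upper bound of December 22 keeps every tweet posted on December 21, whatever the time of day.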

During data collection in January 2021, Twitter announced that the account of former President Donald Trump (US) had been permanently suspended on January 8, 2021 (Permanent, 2021). The suspension removed Trump’s prior tweets from public view. Retweets of his messages were removed from the forwarding users’ timelines, and quote tweets were replaced with the message “Account suspended,” followed by the sentence “Twitter suspends accounts which violate the Twitter Rules.” As a result, it was no longer possible to navigate his likes, retweets, and quote tweets, or to see all replies to his tweets in one place. Trump’s data were therefore collected from the “Trump Twitter Archive” (Trump, n.d.). This archive allows the public to search more than 56,000 tweets posted to his account between 2009 and 2021 and to filter them by date and by the device used; it has also archived tweets that Trump posted and later deleted, starting in September 2016 (Where, 2021). In total, 11,880 of Trump’s posts, including tweets and retweets containing keywords and hashtags, together with their numbers of likes and retweets and their posting times, were collected for this research.

On reviewing the collected data, we found that NZ Prime Minister Ardern posted only three tweets during the sample period, none of them related to COVID-19. Her Twitter data were therefore excluded from this study. We also found that Canadian Prime Minister Trudeau tweeted in two languages, English and French. Because French-only tweets could affect the results, we dropped them; the many tweets that combined English and French were retained. The final analysis therefore included Trump, Trudeau, Johnson, and Morrison.
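The rule of dropping French-only tweets while retaining mixed English/French ones can be illustrated with a simple word-list heuristic. The paper does not report how French-only tweets were identified; the marker-word sets and the `language_profile` helper below are hypothetical, and a dedicated language-identification tool would be more robust.

```python
import re

# HYPOTHETICAL marker-word lists; the paper does not describe its language filter.
FRENCH_MARKERS = {"le", "la", "les", "des", "et", "est", "nous", "pour", "avec", "une", "du", "dans"}
ENGLISH_MARKERS = {"the", "and", "is", "we", "for", "with", "of", "to", "our", "are"}


def language_profile(text: str) -> str:
    """Label a tweet 'en', 'fr', 'bilingual', or 'unknown' by counting marker words."""
    words = re.findall(r"[a-zà-ÿ']+", text.lower())
    fr = sum(w in FRENCH_MARKERS for w in words)
    en = sum(w in ENGLISH_MARKERS for w in words)
    if fr and en:
        return "bilingual"
    if fr:
        return "fr"
    if en:
        return "en"
    return "unknown"


def drop_french_only(tweets):
    """Remove French-only tweets; keep English, bilingual, and unclassified ones."""
    return [t for t in tweets if language_profile(t) != "fr"]


tweets = [
    "We are working with the provinces to keep you safe.",
    "Nous travaillons avec les provinces pour vous protéger.",
    "Thank you to our health workers. Merci à nos travailleurs de la santé.",
]
print([language_profile(t) for t in tweets])  # ['en', 'fr', 'bilingual']
```

Short tweets with no marker words fall into "unknown" and are kept, which errs on the side of retaining data.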

Data Processing

Social media data are unstructured and need to be cleaned before analysis. TextBlob is a Python library for processing textual data, built on top of the Natural Language Toolkit (NLTK). First, we applied a “clean_tweet” method to remove links, special characters, and similar noise from each tweet. We then analysed each tweet by creating a TextBlob object, a four-step process. First, each tweet is tokenized, i.e., split into individual words. Second, stop-words are removed from the tokens; stop-words are commonly used words that are irrelevant in text analysis, such as “I,” “am,” “you,” and “are.” Third, part-of-speech (POS) tagging is conducted, labelling each token so that significant features such as adjectives and adverbs can be identified. Fourth, the tokens are passed through a sentiment classifier, which classifies the tweet as positive or negative by assigning it a polarity between −1.0 and 1.0.
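To make the four-step process concrete, the following sketch shows a generic lexicon-based scorer in the same spirit. It is not TextBlob's implementation: the stop-word list and lexicon values are hypothetical placeholders, and membership in the lexicon stands in for the POS-tagging step, which needs a trained tagger.

```python
import re

# HYPOTHETICAL stop-word list and lexicon, for illustration only;
# these are not TextBlob's resources or values.
STOP_WORDS = {"i", "am", "you", "are", "we", "the", "a", "to", "and", "is", "our"}
LEXICON = {"great": 0.8, "safe": 0.5, "thank": 0.4, "sad": -0.5, "terrible": -1.0, "fake": -0.5}


def tokenize(text):
    """Step 1: split the text into lower-cased word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def remove_stop_words(tokens):
    """Step 2: drop common function words."""
    return [t for t in tokens if t not in STOP_WORDS]


def polarity(text):
    """Steps 3-4, simplified: average the lexicon scores of sentiment-bearing tokens.

    POS tagging is skipped; membership in LEXICON stands in for
    'is a sentiment-bearing word'. The result lies in [-1.0, 1.0]
    because every lexicon value does. Texts with no lexicon words score 0.0.
    """
    scores = [LEXICON[t] for t in remove_stop_words(tokenize(text)) if t in LEXICON]
    return sum(scores) / len(scores) if scores else 0.0


print(polarity("Terrible fake news"))    # -0.75
print(polarity("Stay home, stay safe"))  # 0.5
```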

Pre-processing a Twitter dataset involves a series of tasks, such as removing irrelevant information like emojis, special characters, and extra blank spaces. It also involves improving the format and deleting duplicate tweets and tweets shorter than three characters. To clean the data and prepare it for key-phrase extraction, we applied the following pre-processing steps in Python. First, we defined a utility function that cleans the text of a tweet by using regular expressions to remove links and special characters, including URLs and hashtags. Second, we removed all retweets. Third, we removed all non-English tweets, except Trudeau’s mixed English/French tweets. Fourth, we reduced repeated characters (e.g., “toooooool” becomes “tool”). Fifth, we removed numeric words.
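The cleaning steps listed above can be approximated with regular expressions. The patterns below are assumptions rather than the authors' code: a retweet is taken to be any text beginning with "RT @", only all-digit tokens count as numeric words, and the "#" and "@" symbols are removed as special characters while the words after them are kept (consistent with the worked example that follows, where "IngrahamAngle" and "FoxNews" survive). Note that an ASCII-only character class also strips accented letters, which matters for bilingual tweets.

```python
import re

URL_RE = re.compile(r"https?://\S+|www\.\S+")
SPECIAL_RE = re.compile(r"[^A-Za-z0-9\s]")   # not a letter, digit, or whitespace (drops emojis, '#', '@')
REPEAT_RE = re.compile(r"([A-Za-z])\1{2,}")  # a letter repeated three or more times
NUMERIC_RE = re.compile(r"\b\d+\b")          # ASSUMPTION: 'numeric word' = all-digit token


def is_retweet(text: str) -> bool:
    """ASSUMPTION: a retweet is any text starting with the classic 'RT @' prefix."""
    return text.startswith("RT @")


def clean_tweet(text: str) -> str:
    text = URL_RE.sub(" ", text)         # remove links
    text = SPECIAL_RE.sub(" ", text)     # remove special characters
    text = REPEAT_RE.sub(r"\1\1", text)  # 'toooooool' -> 'tool'
    text = NUMERIC_RE.sub(" ", text)     # remove numeric words such as '10' and '00'
    return " ".join(text.split())        # collapse extra blank spaces


def preprocess(tweets):
    """Drop retweets, clean the rest, then drop short (< 3 chars) and duplicate results."""
    seen, kept = set(), []
    for raw in tweets:
        if is_retweet(raw):
            continue
        cleaned = clean_tweet(raw)
        if len(cleaned) >= 3 and cleaned not in seen:
            seen.add(cleaned)
            kept.append(cleaned)
    return kept


print(clean_tweet("toooooool"))  # tool
print(clean_tweet("Tonight at 10:00 on @FoxNews. Enjoy! https://t.co/xyz"))  # Tonight at on FoxNews Enjoy
```

Stop-word removal ("at", "on") is a separate later step, so it is deliberately left out of `clean_tweet`.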

After the pre-processing tasks were completed and non-English and duplicate tweets had been removed, the total number of tweets was reduced to 8,485. We then extracted meaningful keywords or topics to convey the subject matter of each tweet. For example, Trump shared a post at 10:20:32 PM on 2020-08-31 (NZDT) whose original content reads “Will be interviewed by Laura Ingraham (@IngrahamAngle) tonight at 10:00 P.M. Eastern on @FoxNews. Enjoy!.” During pre-processing, the special characters (the parentheses, “@,” and “!”) and the abbreviation “P.M.” were dropped, the numeric word “10:00” was dropped, and the non-meaningful words “will,” “be,” “by,” “at,” and “on” were dropped as well. The remaining text identified as meaningful keywords for this post is therefore “interviewed Laura Ingraham IngrahamAngle tonight Eastern FoxNews Enjoy.”

Sentiment Level Analysis

To identify opinionated words, a scoring module computes a sentiment level ranging from −1 to 1 for each post using the lexicon-based VADER algorithm (Borg and Boldt, 2020). Each theme is then assigned a polarity based on its sentiment level using the criteria in Table 1.
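For reference, the published VADER implementation sums the valence scores of the lexicon words in a text (after its rule-based adjustments for negation, intensifiers, and punctuation) into a raw score x and maps it onto the −1 to 1 range with the normalisation below, where α = 15 is the package default. Whether the authors kept the default α is an assumption.

```latex
\text{compound} = \frac{x}{\sqrt{x^{2} + \alpha}}, \qquad \alpha = 15
```

Because the denominator grows with |x|, the score approaches ±1 only asymptotically; for example, x = 3 gives 3/√24 ≈ 0.61.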

To classify sentiment level, we followed three steps. First, we created a column recording a positive or negative attitude for each tweet. Second, we parsed the tweets, classifying each one as positive or negative. Third, we trained the sentiment analysis model by tagging each tweet as positive or negative based on the polarity of the opinion. We treated a sentiment level greater than or equal to 0 as positive and a sentiment level below 0 as negative.
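The labelling rule can be expressed directly. In this sketch, the hypothetical `sentiment_shares` helper turns a list of sentiment levels into positive and negative percentages of the kind reported in Figure 3; the input values are illustrative, not study data.

```python
def label(sentiment_level: float) -> str:
    """Rule from the text: >= 0 is positive, < 0 is negative."""
    return "positive" if sentiment_level >= 0 else "negative"


def sentiment_shares(levels):
    """Return (percent positive, percent negative) for a list of sentiment levels."""
    labels = [label(x) for x in levels]
    pos = labels.count("positive")
    return 100.0 * pos / len(labels), 100.0 * (len(labels) - pos) / len(labels)


# Illustrative sentiment levels, not study data.
print(sentiment_shares([0.6, 0.0, -0.3, 0.1]))  # (75.0, 25.0)
```

Under this rule a tweet with a sentiment level of exactly 0, for example one containing no lexicon words, is counted as positive, which is worth keeping in mind when reading the positive shares.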

Positive and negative features are extracted from each positive and negative tweet, so the training data consist of labelled positive and negative features. In the following, we refer to the annotated data set as a corpus and to each annotated tweet as a document. Since our goal is to evaluate opinion on the COVID-19 pandemic and its impact, we focus on targeted topics, that is, those expressing or suggesting positive or negative emotions. To use these data for prediction, we applied eight steps in Python. First, we split the data into a training set containing 95% of the data and a test set containing 5%. Second, we fitted a TF-IDF vectoriser on the X_train dataset; the vectoriser converts a collection of raw documents into a matrix of TF-IDF (term frequency-inverse document frequency) features, a statistical measure of how relevant a word is to a document within a corpus. Third, we transformed the X_train and X_test datasets into matrices of TF-IDF features using the fitted vectoriser. Fourth, we defined a model-evaluation function. Fifth, we ran a Bernoulli Naive Bayes (BernoulliNB) model1, a linear Support Vector Classification (LinearSVC) model, and a logistic regression (LR) model. Sixth, we evaluated model accuracy. Seventh, we selected the best-fitting model. Eighth, we computed and plotted a confusion matrix.
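The split and TF-IDF steps can be sketched without external libraries. The sketch assumes scikit-learn's default weighting (raw term counts, smoothed inverse document frequency ln((1 + n)/(1 + df)) + 1, and L2 row normalisation) but uses plain whitespace tokenisation rather than scikit-learn's token pattern. The idf weights are fitted on the training tweets only and reused for the test tweets. `split_95_5`, `fit_idf`, and `transform` are hypothetical helpers, not the authors' code.

```python
import math
import random
from collections import Counter


def split_95_5(items, seed=42):
    """HYPOTHETICAL helper: shuffle, then keep 95% for training and 5% for testing."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = math.ceil(len(shuffled) * 5 / 100)
    cut = len(shuffled) - n_test
    return shuffled[:cut], shuffled[cut:]


def fit_idf(train_docs):
    """Smoothed idf, as in scikit-learn's default: ln((1 + n) / (1 + df)) + 1."""
    tokenized = [doc.lower().split() for doc in train_docs]
    n = len(tokenized)
    df = Counter(term for toks in tokenized for term in set(toks))
    return {term: math.log((1 + n) / (1 + d)) + 1 for term, d in df.items()}


def transform(docs, idf):
    """Raw term count x idf, then L2-normalise each document vector.

    Terms not seen when fitting the idf are ignored.
    """
    vectors = []
    for doc in docs:
        counts = Counter(t for t in doc.lower().split() if t in idf)
        vec = {t: c * idf[t] for t, c in counts.items()}
        norm = math.sqrt(sum(v * v for v in vec.values()))
        vectors.append({t: v / norm for t, v in vec.items()} if norm else vec)
    return vectors


X_train = ["covid cases rise", "covid vaccine hope", "hope for the holidays"]
idf = fit_idf(X_train)  # fitted on training tweets only
X_test_vecs = transform(["covid hope today"], idf)
print(X_test_vecs[0])  # 'today' is unseen, so only 'covid' and 'hope' get weights
```

Fitting the idf on the training split alone keeps information from the 5% test split out of the features the classifiers learn from.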

Results

Frequency of Tweets

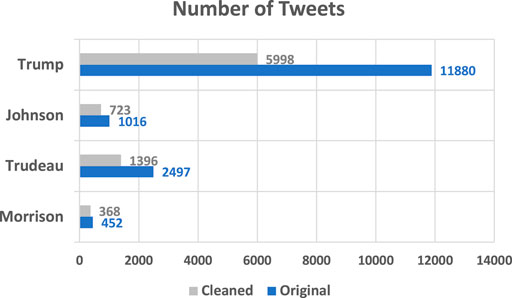

The verified Twitter accounts of the four leaders in the final sample were all active. Originally, 15,848 tweets were collected from these four national leaders; after data processing, 8,485 tweets remained for further analysis. The distribution of tweets for each leader is shown in Figure 1 above.

As shown in Figure 1, Trump was by a significant margin the most active Twitter user among the leaders, with 11,880 tweets in 2020. The other leaders tweeted much less; Morrison tweeted 452 times, the fewest of the four leaders. After cleaning, Trump’s tweets dropped to 5,998, about half of the original number. This suggests that he either retweeted heavily or shared URLs without any other content, since both retweets and links were removed during pre-processing.

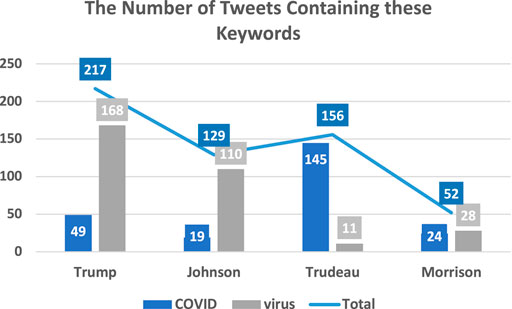

Figure 2 shows the number of tweets containing the two keywords “COVID” and “virus,” which are directly related to the COVID-19 pandemic. Trump used these keywords in 217 tweets in 2020, the highest count among the four national leaders. However, relative to the total number of tweets each leader posted in the cleaned dataset, he did not use them the most. Johnson and Trudeau used “COVID” and “virus” frequently on Twitter. Johnson, the Prime Minister of the United Kingdom, shared only 723 tweets in the cleaned dataset (Figure 1), yet mentioned COVID and virus in 129 of them in 2020, 17.84% of his total posts. Similarly, COVID- and virus-related tweets account for 14.13% of Morrison’s posts and 11.17% of Trudeau’s, but only 3.6% of Trump’s. Thus, although Trump used these two words in 217 tweets, he used them far less frequently per tweet. This frequency is discussed further in the Discussion.
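The normalisation argument can be checked with the counts given above. This short calculation uses only figures stated in the text (Trump's 217 keyword tweets out of 5,998 cleaned tweets, and Johnson's 129 out of 723); Trump's raw count is the larger, yet his share is roughly one fifth of Johnson's.

```python
# Counts reported in the text: (tweets containing the keywords, cleaned tweets).
# Only Trump and Johnson have both figures stated in this section.
reported = {
    "Trump": (217, 5998),
    "Johnson": (129, 723),
}


def keyword_share(hits: int, total: int) -> float:
    """Percentage of a leader's cleaned tweets that contain the keywords."""
    return 100.0 * hits / total


for leader, (hits, total) in reported.items():
    print(f"{leader}: {keyword_share(hits, total):.2f}%")
# Trump: 3.62%
# Johnson: 17.84%
```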

Tweet Sentiment

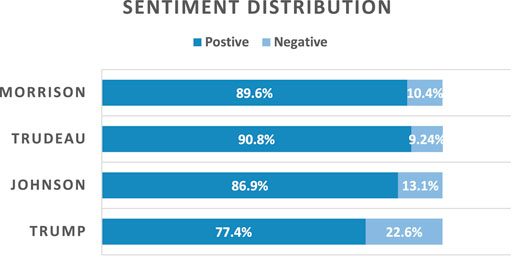

Figure 3 displays the sentiment distribution of the four national leaders. Trudeau shared the most positive tweets, accounting for 9.24%. Trump’s Twitter had the most negative tweets, accounting for 22.6% in total. Except for Trump, the other three leaders all shared more than 85% positive tweets.

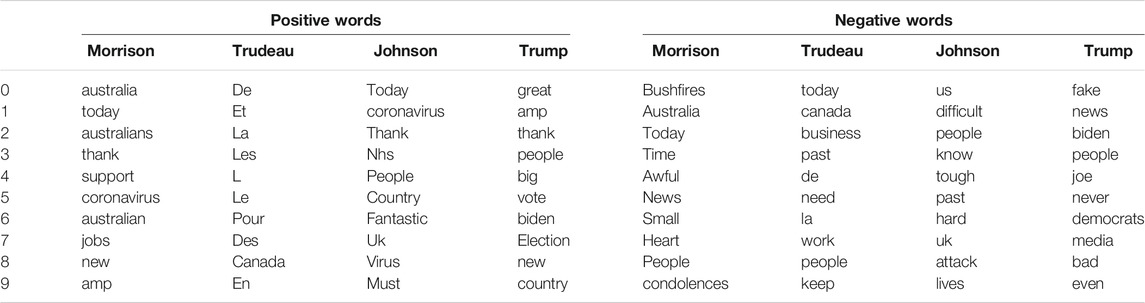

Expanding on the sentiment analysis, Table 2 shows the most common words in these four leaders’ tweets, scaled by emotional value from positive to negative. Table 3 shows the 10 most common positive and negative words used by the four leaders. It is worth mentioning that “bushfire,” “today,” “us,” and “fake” are among the top 10 negative words used by Morrison, Trudeau, Johnson, and Trump, respectively. Interestingly, the NLP tool automatically categorized the word “us” as negative. Similarly, “Australia” and “today” were labelled as positive words.

Predictive Modelling

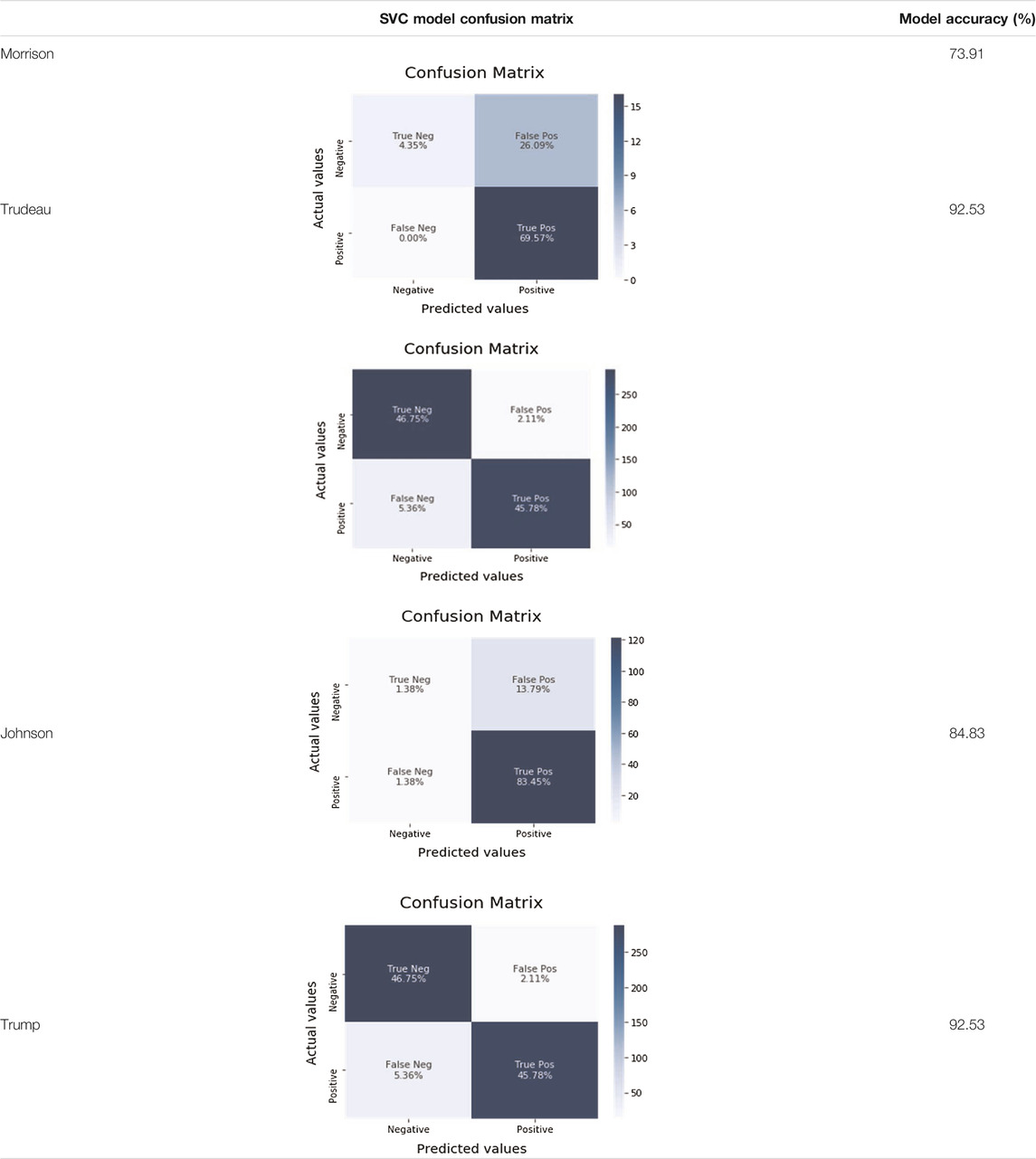

We plotted a confusion matrix to understand the predictive performance of our models (Rameshbhai and Paulose, 2019). After testing the model fit, the linear support vector classification (LinearSVC) model performed best. The confusion matrix and model accuracy for each leader are displayed in Table 4; the confusion matrices were produced with the linear SVC models. For a step-by-step guide to SVM and NLP, see Rameshbhai and Paulose (2019). As shown in Table 4, the models for Trump and Trudeau achieve at least 92% accuracy when classifying the sentiment of a tweet. These results indicate that these two models correctly classify the sentiment of at least 92% of held-out tweets, whereas Morrison’s model does so for 74%.
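The quantities in Table 4 can be illustrated with a small, library-free sketch. It assumes binary labels and follows scikit-learn's convention of rows as true labels and columns as predicted labels; the label values below are invented for illustration, not study data.

```python
from collections import Counter

LABELS = ("negative", "positive")


def confusion_matrix(y_true, y_pred, labels=LABELS):
    """Rows are true labels and columns are predicted labels."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in labels] for t in labels]


def accuracy(matrix):
    """Share of test tweets on the diagonal, i.e. correctly classified."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


# Illustrative labels for ten held-out tweets, not study data.
y_true = ["positive"] * 8 + ["negative"] * 2
y_pred = ["positive"] * 7 + ["negative", "negative", "positive"]
cm = confusion_matrix(y_true, y_pred)
print(cm)            # [[1, 1], [1, 7]]
print(accuracy(cm))  # 0.8
```

Because most leaders' tweets were labelled positive, a classifier that always predicts "positive" would also score 0.8 on this toy example; comparing reported accuracy against such a majority-class baseline is a simple sanity check.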

Discussion

In a digital global media landscape, the social media activities of political leaders have become an increasingly important source of information, perspective, and reassurance for their populations (Stier et al., 2018; Tromble, 2018). High-profile political leaders can have follower counts in the millions, giving them an extensive, always-on point of contact with their citizens and a global audience, one that can be used to reassure or to inflame. In times of crisis, their tweets are authoritative messages from those nominally in charge; they can help set the tone of public reaction and guide what constitutes a legitimate part of the discussion.

However, Twitter messages are brief by design and do not allow for significant information density, so how leaders tweet can be just as important as what they tweet (Ceron et al., 2014; Hong, Choi, & Kim, 2019). The ongoing flow of these micro-messages from leaders to publics helps set the tone of public debate and steer the conversation in ways that can have a significant effect in a crisis. Our research suggests some interesting implications for the frames found in the Twitter use of the Five Eyes leaders in the midst of a global pandemic.

The results revealed that Trump tweeted the most and Morrison the least (RQ1). A variety of positive and negative frames emerged from each leader’s tweets (RQ2), with the majority of each leader’s tweets being positive; President Trump, however, had the most negative tweets (RQ3). Predictive modelling was 73.91–92.54% accurate in predicting the sentiment of held-out tweets about COVID-19 from these leaders (RQ4).

Trump’s tweets stood out in the data, both in overall volume and in negative sentiment, but perhaps most interestingly in the comparatively infrequent use of the terms “covid” and “virus.” One possible explanation is that Trump was using different language to shift the frame away from these more neutral, scientifically grounded terms. Another possible explanation is that, by adopting a negative frame, he was attempting to elicit stronger attitude certainty and support, in this case in opposition to scientific advice. This would also be consistent with the strong negative sentiment across Trump’s tweets and a messaging style at odds with that of other global leaders.

The other leaders in our sample took a more positive tone, with messages of gratitude and support forming part of their overall COVID-related Twitter activity. However, closer examination of the data suggests some possible analytical issues: both bilingual phrasing and common terms such as “Australia” in the Australian Prime Minister’s tweets were coded with positive sentiment. Even so, the data suggest a generally positive framing by all but the U.S. leader in the sample over a considerable period of tweeting time.

This suggests a strategic choice by these leaders to use a more positive frame to influence opinion and action (such as to encourage compliance with lockdowns) and reserve more negatively-coded words to reflect on tragedy and loss of life, as seen in Table 3. The choice of positive frames here may in part be explained as an attempt to guide the national conversation away from seeking “blame” for the pandemic towards a collective approach necessary to support the public health strategies required to manage a pandemic.

Research exploring political leaders’ framing of COVID-19 has shown that this framing significantly shapes how the public understands the virus (Rufai and Bunce, 2020; Wicke and Bolognesi, 2020). Our findings suggest that, apart from Trump, the other Five Eyes leaders in our sample adopted frames that stress social cohesiveness and positively present compliance with often stressful social demands, such as lockdowns, as the norm.

While leaders frame the virus in varied ways via social media, the use of Twitter, which provides instant dialogue with world leaders, points to the significant potential of social media to influence public opinions and attitudes on issues. Future research should move beyond identifying these frames to explore how they influence the way publics, including other Twitter users and mainstream media actors such as journalists, perceive and understand issues such as COVID-19.

As noted, this research uncovered issues with how sentiment analysis coded some terms (such as bilingual content), and these need to be addressed by future research. This will be particularly important for any future research that engages with bilingual content or tweets in languages other than English. Future research could also expand beyond the Five Eyes group, which represents a reasonably homogeneous sample, to a wider set of political leaders who tweet or otherwise microblog. Additionally, while this sentiment analysis identified the frames of the Five Eyes leaders, future research could explore these frames within each nation’s political and socio-economic ideological foundations. Understanding the frames deployed by Five Eyes leaders in their tweets provides a grounding for a more nuanced analysis; such an analysis, which could also include the historical and political context in which the tweets were posted, might reveal new insights into the tweets and the tweeting behaviours.

A potential limitation of this project was how the NLP tool automatically categorised words. For example, the word “us” was coded as negative, while words such as “Australia” and “today” were coded as positive. Follow-up research could retrain the sentiment analysis model using lessons from the current analysis. While NLP is a novel and appropriate tool for analysing Twitter and other social media messages (Akyürek et al., 2020; Luo et al., 2019), incorporating qualitative analyses, such as discourse and/or narrative analysis, might provide further understanding of the tweets’ meanings, the act of framing, and the intentions of the political leaders.

In a future promising a range of looming global crises—economic, environmental and health—the ways in which leaders communicate key crisis messaging to dispersed publics will be a key part of wider crisis communication management. Twitter and other microblogging platforms, with their speed and brevity, will be a key part of this trend. Understanding the influence of composition of messages and how they frame and present complex but vital information for the public good will be as important as the content in shaping effective and productive micro-messaging strategies.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Author Contributions

This article is based on YW’s Master’s thesis; SMC and EP assisted her with the thesis and with preparing it for article submission. Both SMC and EP assisted with the literature review and discussion sections.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1Naive Bayes is a conditional probability model.

References

Agarwal, A., Xie, B., Vovsha, I., Rambow, O., and Passonneau, R. (2011). “Sentiment Analysis of Twitter Data,” in Proceedings of the Workshop on Language in Social Media (New York: Columbia University Press), 30–38.

Akyürek, A. F., Guo, L., Elanwar, R., Ishwar, P., Betke, M., and Wijaya, D. T. (2020). “Multi-label and Multilingual News Framing Analysis,” in Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, 8614–8624. doi:10.18653/v1/2020.acl-main.763

An, J., Cha, M., Gummadi, K., Crowcroft, J., and Quercia, D. (2012). “Visualizing Media Bias through Twitter,” in Sixth International AAAI Conference on Weblogs and Social Media.

Borg, A., and Boldt, M. (2020). Using VADER Sentiment and SVM for Predicting Customer Response Sentiment. Expert Syst. Appl. 162, 113746. doi:10.1016/j.eswa.2020.113746

Burch, L. M., Frederick, E. L., and Pegoraro, A. (2015). Kissing in the Carnage: An Examination of Framing on Twitter during the Vancouver Riots. J. Broadcasting Electron. Media 59 (3), 399–415. doi:10.1080/08838151.2015.1054999

Burscher, B., Odijk, D., Vliegenthart, R., De Rijke, M., and De Vreese, C. H. (2014). Teaching the Computer to Code Frames in News: Comparing Two Supervised Machine Learning Approaches to Frame Analysis. Commun. Methods Measures 8 (3), 190–206. doi:10.1080/19312458.2014.937527

Cappella, J. N., and Jamieson, K. H. (1997). Spiral of Cynicism: The Press and the Public Good. Oxford University Press.

Ceron, A., Curini, L., Iacus, S. M., and Porro, G. (2014). Every Tweet Counts? How Sentiment Analysis of Social Media Can Improve Our Knowledge of Citizens' Political Preferences with an Application to Italy and France. New Media Soc. 16 (2), 340–358. doi:10.1177/1461444813480466

Cinelli, M., Quattrociocchi, W., Galeazzi, A., Valensise, C. M., Brugnoli, E., Schmidt, A. L., et al. (2020). The COVID-19 Social Media Infodemic. Sci. Rep. 10, 16598. doi:10.1038/s41598-020-73510-5

Craymer, L. (2021). Five Eyes: What Is It? And How Has the Intelligence Group Expanded into More of a Diplomatic Mission? Stuff. Available at: https://www.stuff.co.nz/national/politics/124970043/five-eyes-what-is-it-and-how-has-the-intelligence-group-expanded-into-more-of-a-diplomatic-mission.

Croucher, S. M., Nguyen, T., and Rahmani, D. (2020). Prejudice toward Asian Americans in the Covid-19 Pandemic: The Effects of Social Media Use in the United States. Front. Commun. 5, 39. doi:10.3389/fcomm.2020.00039

de Vreese, C. H., and Lecheler, S. (2012). “News Framing Research: An Overview and New Developments,” in The SAGE Handbook of Political Communication. Editors H. A. Semetko, and M. Scammell (Thousand Oaks, CA: Sage), 292–306.

de Vreese, C. (2004). The Effects of Strategic News on Political Cynicism, Issue Evaluations, and Policy Support: A Two-Wave Experiment. Mass Commun. Soc. 7 (2), 191–214. doi:10.1207/s15327825mcs0702_4

Dubey, A. D. (2020). Twitter Sentiment Analysis during COVID19 Outbreak. Available at: https://ssrn.com/abstract=3572023. doi:10.2139/ssrn.3572023

Entman, R. M. (1993). Framing: Toward Clarification of a Fractured Paradigm. J. Commun. 43 (4), 51–58. doi:10.1111/j.1460-2466.1993.tb01304.x

Evans, L., Owda, M., Crockett, K., and Vilas, A. F. (2019). A Methodology for the Resolution of Cashtag Collisions on Twitter - A Natural Language Processing & Data Fusion Approach. Expert Syst. Appl. 127 (1), 353–369. doi:10.1016/j.eswa.2019.03.019

Gamson, W. A., and Modigliani, A. (1989). Media Discourse and Public Opinion on Nuclear Power: A Constructionist Approach. Am. J. Sociol. 95 (1), 1–37. doi:10.1086/229213

Guenther, L., Ruhrmann, G., Bischoff, J., Penzel, T., and Weber, A. (2020). Strategic Framing and Social Media Engagement: Analyzing Memes Posted by the German Identitarian Movement on Facebook. Soc. Media + Soc. 6, 205630511989877. doi:10.1177/2056305119898777

Hemphill, L., Culotta, A., and Heston, M. (2013). Framing in Social Media: How the US Congress Uses Twitter Hashtags to Frame Political Issues. Available at: https://ssrn.com/abstract=2317335. doi:10.2139/ssrn.2317335

Hong, S., Choi, H., and Kim, T. K. (2019). Why Do Politicians Tweet? Extremists, Underdogs, and Opposing Parties as Political Tweeters. Policy & Internet 11 (3), 305–323. doi:10.1002/poi3.201

Hubner, A. (2021). How Did We Get Here? A Framing and Source Analysis of Early COVID-19 Media Coverage. Commun. Res. Rep. 38 (2), 112–120. doi:10.1080/08824096.2021.1894112

Johns Hopkins Coronavirus Resource Center (updated daily). Tracking. Available at: https://coronavirus.jhu.edu/map.html.

Johnson, K., Jin, D., and Goldwasser, D. (2017). Modeling of Political Discourse Framing on Twitter. Proc. Int. AAAI Conf. Web Soc. Media 11 (1), 556–559. Available at: https://ojs.aaai.org/index.php/ICWSM/article/view/14958.

Klein, A. Z., Sarker, A., Cai, H., Weissenbacher, D., and Gonzalez-Hernandez, G. (2018). Social Media Mining for Birth Defects Research: A Rule-Based, Bootstrapping Approach to Collecting Data for Rare Health-Related Events on Twitter. J. Biomed. Inform. 87, 68–78. doi:10.1016/j.jbi.2018.10.001

Levin, I. P., Schneider, S. L., and Gaeth, G. J. (1998). All Frames Are Not Created Equal: A Typology and Critical Analysis of Framing Effects. Organizational Behav. Hum. Decis. Process. 76 (2), 149–188. doi:10.1006/obhd.1998.2804

Luo, X., Zimet, G., and Shah, S. (2019). A Natural Language Processing Framework to Analyse the Opinions on HPV Vaccination Reflected in Twitter over 10 Years (2008 - 2017). Hum. Vaccin. Immunother. 15 (7-8), 1496–1504. doi:10.1080/21645515.2019.1627821

Manch, T. (2020). Five Eyes Spy-alliance Countries to “Coordinate” Covid-19 Economic Response. Stuff. Available at: https://www.stuff.co.nz/national/politics/121786841/five-eyes-spyalliance-countries-to-coordinate-covid19-economic-response.

Moon, S. J., and Hadley, P. (2014). Routinizing a New Technology in the Newsroom: Twitter as a News Source in Mainstream Media. J. Broadcasting Electron. Media 58 (2), 289–305. doi:10.1080/08838151.2014.906435

Nerghes, A., and Lee, J.-S. (2019). Narratives of the Refugee Crisis: A Comparative Study of Mainstream-Media and Twitter. Media Commun. 7 (2), 275–288. doi:10.17645/mac.v7i2.1983

Oyebode, O., Ndulue, C., Adib, A., Mulchandani, D., Suruliraj, B., Orji, F. A., et al. (2020). Health, Psychosocial, and Social Issues Emanating from the COVID-19 Pandemic Based on Social Media Comments: Text Mining and Thematic Analysis Approach. JMIR Med. Inform. 9, e22734. doi:10.2196/22734

Oz, T., Havens, R., and Bisgin, H. (2018). Assessment of Blame and Responsibility through Social Media in Disaster Recovery in the Case of #FlintWaterCrisis. Front. Commun. Available at: https://www.frontiersin.org/articles/10.3389/fcomm.2018.00045/full.

Papacharissi, Z. (2016). Affective Publics and Structures of Storytelling: Sentiment, Events and Mediality. Inf. Commun. Soc. 19 (3), 307–324. doi:10.1080/1369118X.2015.1109697

Permanent (2021). Permanent Suspension of @realDonaldTrump. Twitter Inc. Available at: https://blog.twitter.com/en_us/topics/company/2020/suspension.html.

Pfluke, C. (2019). A History of the Five Eyes Alliance: Possibility for Reform and Additions. Comp. Strategy 38 (4), 302–315. doi:10.1080/01495933.2019.1633186

Rameshbhai, C. J., and Paulose, J. (2019). Opinion Mining on Newspaper Headlines Using SVM and NLP. Int. J. Electr. Comput. Eng. 9 (3), 2152–2163. doi:10.11591/ijece.v9i3.pp2152-2163

Robles, J. M., Velez, D., De Marco, S., Rodríguez, J. T., and Gomez, D. (2020). Affective Homogeneity in the Spanish General Election Debate. A Comparative Analysis of Social Networks Political Agents. Inf. Commun. Soc. 23 (2), 216–233. doi:10.1080/1369118X.2018.1499792

Ross, A. S., and Caldwell, D. (2020). ‘Going Negative’: An APPRAISAL Analysis of the Rhetoric of Donald Trump on Twitter. Lang. Commun. 70, 13–27. doi:10.1016/j.langcom.2019.09.003

Rudkowsky, E., Haselmayer, M., Wastian, M., Jenny, M., Emrich, Š., and Sedlmair, M. (2018). More Than Bags of Words: Sentiment Analysis with Word Embeddings. Commun. Methods Measures 12 (2-3), 140–157. doi:10.1080/19312458.2018.1455817

Rufai, S. R., and Bunce, C. (2020). World Leaders' Usage of Twitter in Response to the COVID-19 Pandemic: a Content Analysis. J. Public Health 42 (3), 510–516. doi:10.1093/pubmed/fdaa049

Scheufele, D. A. (1999). Framing as a Theory of Media Effects. J. Commun. 49 (1), 103–122. doi:10.1111/j.1460-2466.1999.tb02784.x

Shurafa, C., Darwish, K., and Zaghouani, W. (2020). “Political Framing: US COVID19 Blame Game,” in Social Informatics. SocInfo 2020. Lecture Notes in Computer Science. Editors S. Aref, K. Bontcheva, M. Braghieri, F. Dignum, F. Giannotti, F. Grisolia, et al. (Cham: Springer), 333–351. doi:10.1007/978-3-030-60975-7_25

Stier, S., Bleier, A., Lietz, H., and Strohmaier, M. (2018). Election Campaigning on Social Media: Politicians, Audiences, and the Mediation of Political Communication on Facebook and Twitter. Polit. Commun. 35 (1), 50–74. doi:10.1080/10584609.2017.1334728

Tankovska, H. (2021). Twitter: Number of Monthly Active U.S. Users 2010-2019. Statista. Available at: https://www.statista.com/statistics/274564/monthly-active-twitter-users-in-the-united-states/.

Tromble, R. (2018). Thanks for (Actually) Responding! How Citizen Demand Shapes Politicians' Interactive Practices on Twitter. New Media Soc. 20 (2), 676–697. doi:10.1177/1461444816669158

Trump (n.d.). Trump Twitter Archive. Available at: https://www.thetrumparchive.com/.

Where (2021). Where to Read Donald Trump’s Tweets Now that Twitter Has Closed His Account. Available at: https://qz.com/1955036/where-to-find-trumps-tweets-now-that-hes-banned-from-twitter/.

Wicke, P., and Bolognesi, M. M. (2021). Covid-19 Discourse on Twitter: How the Topics, Sentiments, Subjectivity, and Figurative Frames Changed over Time. Front. Commun. 6. doi:10.3389/fcomm.2021.651997

Wicke, P., and Bolognesi, M. M. (2020). Framing COVID-19: How We Conceptualize and Discuss the Pandemic on Twitter. PLoS ONE 15 (9), e0240010. doi:10.1371/journal.pone.0240010

Keywords: twitter, framing, natural language processing, sentiment analysis, COVID-19

Citation: Wang Y, Croucher SM and Pearson E (2021) National Leaders’ Usage of Twitter in Response to COVID-19: A Sentiment Analysis. Front. Commun. 6:732399. doi: 10.3389/fcomm.2021.732399

Received: 29 June 2021; Accepted: 23 August 2021;

Published: 08 September 2021.

Edited by:

Pradeep Nair, Central University of Himachal Pradesh, India

Reviewed by:

Rasha El-Ibiary, Future University in Egypt, Egypt

Kostas Maronitis, Leeds Trinity University, United Kingdom

Copyright © 2021 Wang, Croucher and Pearson. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Stephen M Croucher, s.croucher@massey.ac.nz
