Abstract

Laughter is a ubiquitous vocal behavior and plays an important role in social bonding, though little is known about whether it can also communicate romantic attraction. The present study addresses this question by investigating spontaneous laughter produced during a 5-min conversation in a heterosexual speed-dating experiment. Building on the posits of Accommodation Theory, we hypothesized that romantic attraction would coincide with a larger number of shared laughs, as a form of convergence in vocal behavior that reduces the perceived distance between the daters. Moreover, high-attraction dates were expected to converge toward the same laughter type. The results of the experiment demonstrate that (a) laughs are particularly frequent in the first minute of the conversation, (b) daters who are mutually attracted show a significantly larger degree of temporal overlap in laughs, and (c) a specific laughter type (a nasal, “snort-like” laugh) prevails in high-attraction dates, though shared laughs are not consistently of the same type. Based on this exploratory analysis (limited to cisgender, heterosexual couples), we conclude that laughter is a frequent phenomenon in speed dating and gives some indication of mutual romantic attraction.

Introduction

Laughter is a universal communicative behavior that is observed and recognized in all cultures around the world (Lefcourt, 2000; Provine, 2000; Kipper and Todt, 2001; Sauter et al., 2010; Bryant et al., 2016). Despite its prevalence in social interaction (e.g., Jefferson, 2004; Vettin and Todt, 2004; Tanaka and Campbell, 2011; Truong and Trouvain, 2014), the social meanings and communicative functions of laughter are still not fully understood. In a large cross-cultural study, Bryant et al. (2016) investigated the perception of laughter produced in conversations between friends or strangers and showed that listeners from 24 different cultures could reliably identify the familiarity level between speakers based on their laughs alone. This finding suggests that laughter might fulfill an important social function of signaling in-group/out-group relationships between conversational interlocutors. It has further been proposed that laughter might have a face-preserving function in situations of a conversational face threat (Grammer and Eibl-Eibsfeldt, 1990). Specifically, “mixed sex encounter have a high potential risk of face loss and rejection […]. A statement accompanied by laughter which has the metacommunicative function of signaling that this statement is a play statement could reduce this possibility” (Grammer and Eibl-Eibsfeldt, 1990, pp. 195–196). Laughter has also been suggested to reflect and reinforce positive emotion in social interaction (Owren and Bachorowski, 2003; Smoski and Bachorowski, 2003). In-group/out-group relations, face preservation, and positive emotion might all play an important role in the context of an emerging romantic attraction. The present paper aims to address the empirical question of how, and to what extent, laughter is involved in mutual romantic attraction by studying spontaneous laughter produced in the context of cisgender, heterosexual speed dating.

Acoustic and respiratory features of laughter

Bryant et al. (2016) also showed that laughter can be acoustically diverse, analyzing a number of laughs that had previously been investigated in a perception study. Laughter produced among friends had a shorter duration and larger variability in fundamental frequency and intensity than laughter produced among strangers.

In an earlier large-scale study, Bachorowski et al. (2001) analyzed acoustic features of 1,024 laughs produced by 97 young adults watching humorous video clips. The study dismantled the myth of a “stereotypical laugh”: a harmonically rich, vowel-like vocalization consisting of multiple repetitions of “ha,” “he” or “ho.” Merely 30% of all observed laughs were of this type. In contrast, around 50% of all recorded laughs in this study were unvoiced, with two sources of voiceless turbulence distinguishing a nasal, “snort-like” laugh from an oral, “grunt-like” laugh. Among the acoustic parameters that play a role in laughter, fundamental frequency, signal-to-noise ratio, glottal excitation features, vowel quality, intensity and duration are often mentioned (Grammer and Eibl-Eibsfeldt, 1990; Bachorowski et al., 2001; Kohler, 2008; Tanaka and Campbell, 2011), though there is disagreement about how these acoustic characteristics map onto auditory impressions of laughter and how they could inform an acoustically grounded laughter typology (Trouvain, 2003).

Physiological correlates of laughter can be found in a forced exhalation (Ruch and Ekman, 2001), sometimes described as “spasms of the diaphragm” (Scott et al., 2014), and in repeated contractions of the intercostal muscles within the chest wall (Kohler, 2008). Respiration is a strong physiological component of laughter, though only a very limited number of studies have addressed the respiratory kinematics of different laughs. Filippelli et al. (2001) studied respiration in participants watching a variety of humorous video clips and found a steep decrease in lung volume during laughter (in some individuals, to a level as low as the residual respiratory capacity). However, such respiratory changes might depend on the type of laughter, which was not controlled for in Filippelli et al. (2001). For example, a rapid drop in lung volume requires the glottis to be open, meaning that the vocal fold vibration that produces voicing cannot take place. This appears to be at odds both with the voiced hahaha-type of laughter reported in the literature (e.g., Provine, 1996, 2000; Bachorowski et al., 2001; Trouvain, 2003) and with the frequent co-occurrence of speech and laughter (Nwokah et al., 1999). A physiologically imposed limit applies to the frequency of pulses within a laugh cycle: a cycle can contain from 4 to 12 pulses, and their number is influenced by the individual lung volume (Ruch and Ekman, 2001).

Respiratory kinematics of laughter during spoken social interaction have rarely been addressed in previous research. McFarland (2001) investigated interpersonal synchrony of breathing kinematics using cross-correlation of data from spontaneous conversations between same-sex participants and reported many occurrences of simultaneous laughter close to turn changes. Interlocutors showed a high degree of joint respiratory synchronization (in-phase coordination of in-breaths) around 5 s before or after a turn change, or after a shared laugh. Shared laughter refers to those periods in a conversation when both interlocutors are laughing simultaneously. Even though the respiratory kinematics of individual or shared laughter are rather poorly documented, mutual influences between respiration and basic emotions have been frequently discussed, with laughter often seen as part of the basic emotion repertoire (specifically in the category of joy). Empirical evidence for the interplay between laughter, emotion and breathing dates back to the work of Feleky (1915), who found that basic emotions, among them joy with laughter, have specific breathing characteristics and may express themselves in the facial and respiratory muscles. Bloch et al. (1991) reported that joy-laughter shows a specific temporal relation between inhalations and exhalations: the ratio of inhalation duration to exhalation duration was ~0.4. This timing ratio was further accompanied by a small inhalation amplitude and saccadic patterns in the exhalation amplitude.
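The inhalation-to-exhalation duration ratio reported by Bloch et al. (1991) can be made concrete with a short sketch. It assumes a sampled lung-volume trace and treats rising samples as inhalation and falling samples as exhalation; this sign-based segmentation and the function name are our illustrative choices, not the procedure used by Bloch et al. Because both durations are counted in samples, the sampling rate cancels out.

```python
import numpy as np

def inhale_exhale_ratio(volume):
    """Ratio of total inhalation time to total exhalation time.

    volume: 1-D lung-volume trace (arbitrary units), uniformly sampled.
    Rising volume (positive first difference) counts as inhalation,
    falling volume as exhalation; flat samples are ignored.
    """
    dv = np.diff(np.asarray(volume, dtype=float))
    n_in = np.count_nonzero(dv > 0)   # samples spent inhaling
    n_ex = np.count_nonzero(dv < 0)   # samples spent exhaling
    if n_ex == 0:
        raise ValueError("no exhalation found in the signal")
    return n_in / n_ex
```

A trace that rises for two samples and then falls for five yields a ratio of 0.4, the value reported for joy-laughter.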

Frequency of individual and shared laughter in conversation

As far as the overall frequency of laughter in conversation is concerned, about 5 laughs per 10 min have been observed in daily interactions among close friends (Vettin and Todt, 2004), though laughter frequency can rise to as many as 75 laughs per 10 min in interactions between opposite-sex strangers, with women laughing slightly more frequently than men (Grammer, 1990). The most frequently observed laughs are known to be purely interactional and unrelated to the presence of humor (Jefferson, 2004; Vettin and Todt, 2004). These interactional laughs are often initiated by the person who has just spoken (Glenn, 1989, 1992; Vettin and Todt, 2004), occur at the end of utterances (Provine and Emmorey, 2006) and are said to express the speaker's emotion (Provine, 2004; Provine and Emmorey, 2006; Kohler, 2008). A prolonged period of laughter in a conversation offers the interlocutor an opportunity to join in and share the laugh (Glenn, 1989, 1992, following Jefferson, 1979, p. 3). The sequential order of shared laughs is sometimes described as an ‘accepted invitation to laugh.’ Such joint laughter has been identified as a cross-culturally universal means of signaling affiliation and expressing social cohesion (Bryant et al., 2016). Shared laughter has also been identified as a behavioral indicator of wellbeing in romantic relationships, where closeness and social support are positively associated with the frequency of shared laughs (Kurtz and Algoe, 2015).

Functions of laughter

Our present understanding of the potential role and functions of laughter in romantic communicative interaction is limited to cisgender, heterosexual dyads. A recent meta-analysis by Montoya et al. (2018) suggests that the social importance of laughter extends as far as fostering romantic bonds and expressing sexual attraction, though empirical evidence in support of this view is not always clear-cut. For example, Grammer (1990) found no correlation between the frequency of laughter and romantic interest in conversations between heterosexual interlocutors of the opposite sex. According to that study, laughter can signal either aversion or interest; it is rather body posture that clearly indicates romantic intentions (Grammer, 1990). In contrast, McFarland et al.'s (2013) study showed that heterosexual men, but not women, use laughter as an indicator of their attraction. This conflicting evidence might be due to cross-cultural differences in mating behavior: while Grammer and Eibl-Eibsfeldt's (1990) sample was German, McFarland et al. (2013) conducted their study with American participants. The discrepancy is also very likely to arise from differences in the data collection methods of the two studies, given that specifics of the interactional context and the surrounding environment can affect linguistic behavior (e.g., Hay and Drager, 2010; Hay et al., 2017). While Grammer (1990) did not inform the participants that a potential romantic interest arising during their interaction was the purpose of the experiment, McFarland et al. (2013) explicitly collected their data in a speed-dating setting.

Speed dating has been recognized as an ecologically valid means of studying the effect of interpersonal attraction on linguistic behavior (McFarland et al., 2013; Michalsky et al., 2017) and is a well-known method of studying mate selection in the social sciences (e.g., Finkel et al., 2007). A speed-dating event usually attracts singles who seek to meet many strangers within a short period of time, with a view to finding a romantic partner (Finkel and Eastwick, 2008; McFarland et al., 2013). Each date lasts just 2–3 min and offers two strangers a short window of opportunity to find out whether the interlocutor could become a romantic partner. When the time is up, a sound signal indicates that participants have to move on to a new dating partner. As McFarland et al. (2013, p. 1605) note, speed dates share many characteristics with initial romantic conversations in other contexts: “people meet and greet one another, they try to reveal positive features of themselves and learn about the other, they engage in efforts to relate and connect with one another, and they experience (a)symmetries of attraction.” A dater might meet up to 20 potential partners at such an event. After the event, a scoring card is filled in and returned to the organizers, who ensure that only daters who found each other attractive and both expressed an interest in seeing each other again receive each other's contact details.

Existing studies of the role of laughter in romantic attraction have rarely investigated different laughter types (in fact, it is often unclear whether only the vowel-like, stereotypical hahaha-laughter was included in the analyses). However, research by Grammer and Eibl-Eibsfeldt (1990) indicates that different types of laughter may play different roles in promoting attraction. In an experiment, Grammer and Eibl-Eibsfeldt (1990) observed the dating behaviors of cisgender, heterosexual couples and concluded that male participants tended to express more interest in seeing a female stranger again if she produced voiced, but not unvoiced, laughter during their brief interaction.

Aims and hypotheses of the study

By adopting a more nuanced, empirically elaborated laughter typology, the present study aims to better understand how laughter is used and produced in the context of an emerging romantic attraction during speed dating. It further aims to make the following novel contributions to the existing field:

It will clarify what types of laughter prevail in high vs. low levels of mutual attraction, and supply empirical evidence on temporal and distributional features of these laughter types;

It will examine breathing kinematics in different laughter types and evaluate the timing, amplitude and degree of respiratory overlap in shared laughter during speed dating;

It will assess whether or not shared laughter is moderated by mutual attraction among the daters.

We hypothesized that, given its apparent importance in fostering social bonds (Montoya et al., 2018) and improving relationship quality (Kurtz and Algoe, 2015), laughter would play an important role during speed dating and might help to express romantic attraction. Sonorous laughter rich in vocalic resonance was assumed to be particularly likely to accompany high-attraction dates (cf. Grammer and Eibl-Eibsfeldt, 1990). Moreover, attraction might be signaled via an increased number of shared laughs (cf. Kurtz and Algoe, 2015; Bryant et al., 2016). Building on the posits of Accommodation Theory (Giles, 1973; Giles et al., 1991), we predicted that we would find evidence of laughter convergence (cf. Ludusan and Wagner, 2022). Specifically, shared laughter and laughter of the same type were expected to prevail among daters with high, but not low, levels of mutual attraction. That is, we predicted that a high degree of laughter convergence would express attraction. Giles and Ogay (2007) specifically hypothesize that situations with a high romantic potential might show gendered accommodation patterns. With regard to acoustic features and breathing kinematics, we expected different laughter types to be consistently distinguished by duration and exhalation gradients. Synchronization of the physiological signals between the daters could be a further indicator of mutual attraction (cf. McFarland, 2001).

Methodology

Participants

All participants were registered in a database at the Leibniz-Center for General Linguistics in Berlin and were recruited in October 2017. The call for participation invited heterosexual, single males and females who were native speakers of German, aged between 20 and 30 years, and interested in speed dating. Two males (henceforth m1 and m2) and six females (henceforth f1–f6) volunteered to take part. They were informed about the purpose of the study, signed a consent form, and received a standard rate of €10/h as compensation for their time. Prior to the experiment, the participants completed an online questionnaire, providing detailed information about their relationship status and previous dating experience and answering questions from a range of socio-psychological scales.

According to the questionnaire, the participants had been single for variable periods of time, ranging from just 2 months (m1) to their whole adult life (m2 and f2). Apart from the females f4 and f6, all participants had some previous experience with online dating, but none of the participants had ever tried speed dating prior to the experiment.

Experimental setup

To create a romantic mood in the lab, the recording room was decorated with green plants, posters, and flowers (cf. Hay and Drager, 2010; see Figure 1). All computers were moved outside the lab without disrupting the set-up required for multi-channel recording in real time.

Figure 1

Laboratory setting during the experiments showing participants during a conversation (both participants were wearing head-mounted microphones, motion capture jackets and headbands, the respiratory belt was worn underneath the jacket).

All recordings were made with a multimedia setup. To record upper body movement (including head and arms), a motion capture system (OptiTrack, Motive Version 1.9.0) with 12 cameras (Prime 13) was used. An inductance plethysmograph enabled simultaneous recording of the respiratory movements of the rib cage and the abdomen using two belts. Head-mounted microphones and two free-standing microphones were used in parallel to support the temporal synchronization of the signals collected across the different systems. The dyadic conversations were recorded via the two head-mounted microphones (see Figure 1) and stored as a stereo signal on a digital audio tape (DAT) recorder. One of the free-standing microphones was linked to the motion capture data via OptiTrack, and the other was coupled with the inductance plethysmography signals on a multi-channel recorder. At the beginning and end of each experimental session, synchronization impulses were sent from the motion capture system to the computer connected to the plethysmograph via an OptiTrack synch box, which made subsequent signal synchronization possible. The acoustic data from the three channels (OptiTrack, plethysmograph and DAT) were also used to check the temporal synchronization of all recordings by cross-correlation.
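The cross-correlation check described above can be sketched as follows, assuming two audio channels with the same sampling rate held as NumPy arrays; the function name is hypothetical and the source does not describe its implementation. The lag at which the cross-correlation peaks estimates how far one channel is shifted relative to the other.

```python
import numpy as np
from scipy import signal

def estimate_lag(reference, other, fs):
    """Estimate the time shift (in s) of `other` relative to `reference`.

    A positive result means `other` lags behind `reference`.
    Both inputs are assumed to share the sampling rate fs (Hz).
    """
    ref = np.asarray(reference, dtype=float)
    oth = np.asarray(other, dtype=float)
    # Remove DC offsets so they do not dominate the correlation.
    ref = ref - ref.mean()
    oth = oth - oth.mean()
    xcorr = signal.correlate(oth, ref, mode="full")
    lags = signal.correlation_lags(oth.size, ref.size, mode="full")
    return lags[np.argmax(xcorr)] / fs
```

If the channels were recorded at different rates, one of them would first have to be resampled; that step is omitted here.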

Procedure

The participants were asked to come to the laboratory on two consecutive days. On the first day, they familiarized themselves with the lab, the experimental setting and the equipment, and could ask any questions about the procedure. Their motion-capture jackets and respiratory belts were fitted by a trained researcher. Baseline recordings were obtained, including a 5-min dialogue with a same-sex conversation partner (i.e., a male or a female confederate). The participants practiced using their smartphones to submit attraction ratings. At the beginning and end of the baseline recording, each participant rated how attractive they found the same-sex confederate on a 10-point Likert scale (from 1, the least attractive, to 10, the most attractive). In addition, the participants' chest-wall movements were recorded during quiet breathing and a series of respiratory maneuvers (iso-volume, vital capacity; see Hixon et al., 1973). The iso-volume maneuvers were needed to determine a constant relating the habitual movements of the rib cage and the abdomen during quiet breathing. Using this constant, the rib cage and abdomen signals could be summed to derive a joint signal representing a proportion of the overall lung volume. The vital capacity maneuver served as a reference for each speaker's individual lung volume. For each laugh, its duration (in ms), displacement (in V) and first velocity peak (in V/ms) were calculated from the respiratory data and used as dependent variables in the statistical modeling below.
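The calibration idea can be illustrated under the usual two-compartment assumption that a change in lung volume is a weighted sum of rib-cage (RC) and abdominal (AB) excursions. During an iso-volume maneuver the two excursions cancel, so their relative weight K can be estimated and then used to form the joint signal RC + K·AB. The least-squares estimate and the function names below are our assumptions; the source does not state how its constant was computed.

```python
import numpy as np

def isovolume_constant(rc, ab):
    """Relative abdominal gain K from an iso-volume maneuver.

    With lung volume held constant, d(RC) + K * d(AB) = 0, so K is the
    negative least-squares slope of the RC signal regressed on the AB signal.
    """
    slope, _intercept = np.polyfit(np.asarray(ab, dtype=float),
                                   np.asarray(rc, dtype=float), 1)
    return -slope

def summed_volume(rc, ab, k):
    """Joint signal proportional to lung volume (in RC units): RC + K * AB."""
    return np.asarray(rc, dtype=float) + k * np.asarray(ab, dtype=float)
```

Applied to the iso-volume recording itself, the summed signal should stay flat, which offers a quick sanity check of the estimated constant.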

The speed-dating experiment took place on the following day. Both male participants talked to all six female participants, resulting in 12 dyadic conversations in total. We ensured that participants would not see each other before the recording by directing them to a waiting room upon arrival (there were separate waiting rooms for male and female participants). While waiting, participants prepared for the recording session by putting on a motion-capture headband and jacket with markers. Two assistants were present to help with the set-up and the change-over between dates.

During each dating session, participants were first invited to sit down at a table. Their respiratory belts and head-mounted microphones were then connected to the recording computers via a set of leads. The experimenters checked all signals and left the recording room as quickly as possible to give the daters a feeling of privacy. Using a smartphone, the daters rated each partner on a 10-point Likert scale (from 1, the least attractive, to 10, the most attractive) before and after their 5-min dating conversation. An experimenter ensured that the initial attraction ratings were given as soon as the two daters sat at the table and before any conversation between them took place. The same experimenter ended each dating session after the allocated 5 min by entering the room and thanking the participants. It was agreed that the daters' contact details would be communicated by email if (and only if) there was a match, that is, a mutual expression of interest in a follow-up date. If only one of the daters expressed interest in a follow-up date, no contact details were released. Expressions of interest were collected on smartphones at the end of each experimental session, right after the final attraction scores were obtained.

The experiment yielded one perfect match: m1 and f2 were both interested in seeing each other again (see Table A3). In several pairs, the romantic interest was not mutual: f1 and f3 were interested in a second date with m1 (but not vice versa). Conversely, both male participants expressed an interest in another date with f2, f4, f5 and f6, but not with f1 or f3. Participant m2 was less popular with all female participants.

The above chemistry (or the lack thereof) was also reflected in the attraction scores collected before and after the speed date (see Tables A1, A2). Participant m1 received higher first-impression scores than m2 (mean scores before the date: 4.2 vs. 2.3, respectively), and all females increased their attraction scores by 1–3 points after their date with m1 (mean score after the date: 6.3). In contrast, females felt only slightly (if at all) more attracted to m2 after the date (mean score after the date: 3.2). A Wilcoxon signed-rank test showed significantly lower scores for m2 than for m1 after the date (V = 21, p < 0.05), though the difference in attraction scores before the date did not reach significance (V = 10, p = 0.098). Both men used the higher end of the 10-point attraction scale when rating the women: scores given by m1 averaged around 7.2. Similarly, scores given by m2 averaged around 7.0, with the lowest score of 4 (given to f1 before and after the date) and the highest score of 9 (given to f2, f4, f5 and f6 after the date). Overall, both males gave significantly higher attraction scores to the women after their date than before it (V = 4, p < 0.05), though the average numerical difference was rather small (7.4 vs. 6.7) and did not hold for f3, who received a slightly lower score from m1 after their date. These results suggest that the interaction during the date had an impact on the attraction among the daters.
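The first test above can be checked by hand. With six female raters, the largest possible signed-rank statistic is 1 + 2 + ... + 6 = 21, so V = 21 means that all six paired differences had the same sign. Assuming no zero or tied absolute differences (R's `wilcox.test` falls back to a normal approximation when ties are present), the exact two-sided p-value follows from counting, over all 2^n sign patterns, how many rank sums are at least as extreme. The function below is an illustrative sketch, not the analysis code used in the study.

```python
def exact_wilcoxon_p(v, n):
    """Exact two-sided p-value for the signed-rank statistic V (sum of the
    ranks of positive differences) with n non-zero, untied differences."""
    max_sum = n * (n + 1) // 2
    # counts[s] = number of subsets of ranks {1..n} whose sum is s
    counts = [0] * (max_sum + 1)
    counts[0] = 1
    for rank in range(1, n + 1):
        for s in range(max_sum, rank - 1, -1):  # descending: each rank used once
            counts[s] += counts[s - rank]
    total = 2 ** n
    lower = sum(counts[: v + 1]) / total  # P(V <= v) under the null
    upper = sum(counts[v:]) / total       # P(V >= v) under the null
    return min(1.0, 2 * min(lower, upper))
```

For example, `exact_wilcoxon_p(21, 6)` returns 2/64 = 0.03125, consistent with the reported p < 0.05.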

Using the after-date attraction scores and the expressed interest in a second date (0 = both partners uninterested, 1 = only one partner interested, 2 = mutually interested partners), we calculated a cumulative mutual attraction score for each dialogue. This derived score will serve as a predictor in the statistical analyses below. Individual attraction and mutual scores can be found in Tables A1–A3.

Data preparation

All dialogues were first transcribed in Praat (version 6.0.37; Boersma and Weenink, 2018) using the audio signals recorded by the plethysmograph. The transcribers (two native speakers of German) were also instructed to identify bouts of laughter for each speaker and to annotate them on a separate “non-verbal” tier. The laughter annotations were then inspected in more detail and corrected where necessary, using the stereo acoustic signal from the DAT recorder. Unfortunately, the recorder had a temporary failure that affected conversations of m2 with f1 and f2, resulting in the lack of stereo recordings for the m1_f1 and m1_f2 pairs. Accordingly, all annotations for these pairs were based on the mono signal from the respiratory system and are therefore less precise. To account for this issue, we added recording source as a random effect to our statistical modeling.

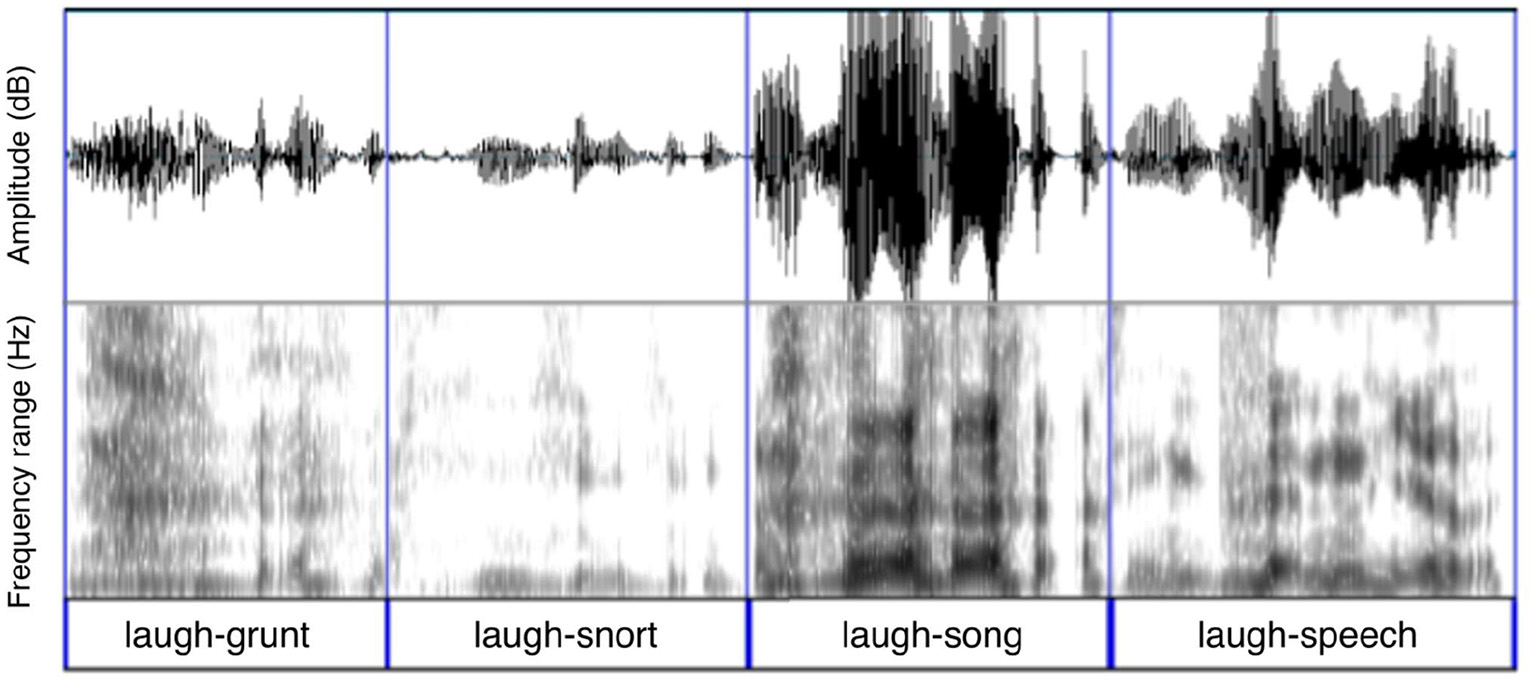

Following Bachorowski et al. (2001), we adopted a three-way classification of laughs. Voiced laughter with a high vocalic proportion was identified as “song-like.” Predominantly voiceless laughter was examined with respect to its perceptually salient noise and classified either as “snort-like” (if the turbulence appeared to originate in the nasal cavity) or as “grunt-like” (if the turbulence was produced in the oral or laryngeal tract, including breathy laughs and harsher cackles). As in Bachorowski et al. (2001), an audible inhalation or exhalation noise abutting a laugh episode was not included in the laughter interval. Given that our study, unlike the study by Bachorowski et al. (2001), involved spoken interactions, we also had to add “laughed speech” (or speech-laughs; Nwokah et al., 1999) as a fourth category to account for all observed occurrences of laughter. Following Nwokah et al. (1999), a speech-laugh was identified whenever speech and laughter occurred simultaneously. We did not split a long laugh into several intervals unless two consecutive bouts were separated by a prolonged silent pause (i.e., longer than 600 ms; cf. Jaffe and Feldstein, 1970).
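The 600-ms rule can be restated as an interval-merging step. A minimal sketch, assuming each annotated bout is an (onset, offset) pair in milliseconds and that the gaps between bouts are silent; in the study the segmentation was done manually in Praat, so this only formalizes the criterion. Bouts separated by 600 ms or less are joined into one laugh, and longer pauses start a new laugh.

```python
def merge_laugh_bouts(bouts, max_gap_ms=600):
    """Merge laughter bouts (onset_ms, offset_ms) separated by a silent pause
    of at most max_gap_ms into a single laugh interval."""
    merged = []
    for on, off in sorted(bouts):
        if merged and on - merged[-1][1] <= max_gap_ms:
            # Short pause: extend the current laugh.
            merged[-1][1] = max(merged[-1][1], off)
        else:
            # Pause longer than the threshold (or first bout): new laugh.
            merged.append([on, off])
    return [tuple(interval) for interval in merged]
```

For instance, bouts at 0–400, 900–1200 and 2000–2300 ms become two laughs, 0–1200 ms and 2000–2300 ms, because only the second pause exceeds 600 ms.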

A preliminary annotation was prepared by a trained junior phonetician and subsequently checked by both co-authors. No formal inter-rater agreement was computed. Instead, each laugh was checked by at least two raters, and less clear-cut cases had to be agreed upon by all raters. Some laughs were difficult to assign to one category because the quality of the laugh changed over the course of the interval. In such cases (rare, n < 10), we applied the preponderance principle and classified the laugh according to the temporally and auditorily predominant vocalization (see Figure 2 for examples).
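For the rare mixed cases, the temporal half of the preponderance principle can be sketched as choosing the laughter type with the largest summed duration. This is an illustrative simplification: the auditory half of the criterion (which vocalization sounded predominant) was a listener judgment and is not modeled, and the segment representation is hypothetical.

```python
from collections import defaultdict

def preponderant_type(segments):
    """Return the laughter type with the largest summed duration.

    segments: iterable of (laugh_type, onset_ms, offset_ms) sub-intervals
    of a single laugh whose quality changes over time.
    """
    totals = defaultdict(float)
    for laugh_type, on, off in segments:
        totals[laugh_type] += off - on
    if not totals:
        raise ValueError("no segments given")
    return max(totals, key=totals.get)
```

If two types have equal total duration, `max` returns the one encountered first, so a listener's judgment would still be needed to break the tie.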

Figure 2

Waveforms (top), spectrograms (center), and annotations (bottom) of the four laughter types produced by the male speaker m1 on his date with f1.

The respiratory signals of the speakers were time-aligned with reference to the synchronization impulses sent from the motion capture PC to the PC running the inductance plethysmograph, and cut using MATLAB (R2017b). After the rib cage and abdominal data had been combined into one signal representative of overall lung volume, the data were low-pass filtered with a 6th-order Butterworth filter (cut-off frequency: 10 kHz). The filtered respiratory signal was then used to calculate velocity as its first derivative.
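The filtering and differentiation step might look like this in Python with SciPy; the study used MATLAB, so this is a re-expression under stated assumptions, not the original code. The source reports a 6th-order Butterworth low-pass filter with a 10-kHz cut-off but not the sampling rate, so both are parameters here (SciPy requires the cut-off to lie below half the sampling rate). Whether the filter was applied forward only or forward and backward is not stated; the sketch assumes zero-phase filtering with `sosfiltfilt`, which avoids a time shift relative to the acoustic annotations but doubles the effective filter order.

```python
import numpy as np
from scipy import signal

def lowpass_and_velocity(volume, fs, cutoff_hz, order=6):
    """Low-pass filter a summed lung-volume trace and return it together
    with its velocity (first derivative, in signal units per ms).

    fs: sampling rate in Hz; cutoff_hz: filter cut-off in Hz (< fs / 2).
    """
    sos = signal.butter(order, cutoff_hz, btype="low", fs=fs, output="sos")
    # Assumed zero-phase (forward-backward) filtering.
    filtered = signal.sosfiltfilt(sos, np.asarray(volume, dtype=float))
    # np.gradient gives change per sample; * fs -> per s; / 1000 -> per ms.
    velocity = np.gradient(filtered) * fs / 1000.0
    return filtered, velocity
```

With the volume trace in volts, the returned velocity is in V/ms, the unit used for the first velocity peak.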

The auditory annotations of laughter described above served as points of reference for the examination of the accompanying respiratory curves. The exhalation phase coinciding with the acoustic duration of a laugh was identified as its respiratory signature. Its onset was defined as the zero crossing preceding a stark decrease in signal velocity. Its offset was more difficult to determine visually because the exhalation gradient was often shallow toward the end of an exhalation cycle, with zero crossings occurring rather frequently. If we had used a simple numeric threshold criterion of 10 or 20% to define the onset and offset of the breathing kinematics (as is common in articulatory analyses, e.g., Katsika et al., 2014), the algorithm would have stopped after the first bout. However, many laughs consisted of several bouts, each comprising a drop in displacement followed by a relatively stable part. We therefore considered the last zero crossing before the onset of the next inhalation to be the most consistent, reliable, and replicable landmark for the kinematic offset of each laugh.
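The onset and offset rules above can be expressed as a search over the velocity trace. A minimal sketch, assuming the velocity is a NumPy array aligned sample by sample with the acoustic annotation; the landmarks in the study were determined by visual inspection, and the two thresholds that operationalize a “stark decrease” and the “onset of the next inhalation” are hypothetical parameters, since the source describes these landmarks only qualitatively.

```python
import numpy as np

def zero_crossings(velocity):
    """Indices i at which the velocity changes sign between samples i and i+1."""
    neg = np.signbit(np.asarray(velocity, dtype=float))
    return np.flatnonzero(neg[:-1] != neg[1:])

def kinematic_bounds(velocity, ac_onset, ac_offset, drop_thresh, inhale_thresh):
    """Respiratory onset and offset (sample indices) of one laugh, or None.

    ac_onset, ac_offset: acoustic laugh boundaries as sample indices.
    drop_thresh: velocity magnitude treated as a stark decrease (hypothetical).
    inhale_thresh: positive velocity treated as the next inhalation (hypothetical).
    """
    v = np.asarray(velocity, dtype=float)
    zc = zero_crossings(v)
    # Onset: last zero crossing before the first stark drop at/after the acoustic onset.
    drops = np.flatnonzero(v[ac_onset:] < -drop_thresh)
    if drops.size == 0:
        return None
    drop_idx = ac_onset + drops[0]
    before = zc[zc < drop_idx]
    if before.size == 0:
        return None
    onset = before[-1]
    # Offset: last zero crossing before the next inhalation after the acoustic offset.
    inhale = np.flatnonzero(v[ac_offset:] > inhale_thresh)
    inhale_idx = ac_offset + inhale[0] if inhale.size else v.size - 1
    candidates = zc[(zc > onset) & (zc < inhale_idx)]
    offset = candidates[-1] if candidates.size else inhale_idx
    return int(onset), int(offset)
```

Because the offset is searched backward from the next inhalation rather than forward from the first bout, small zero crossings in the shallow tail of a multi-bout laugh do not end the interval prematurely.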

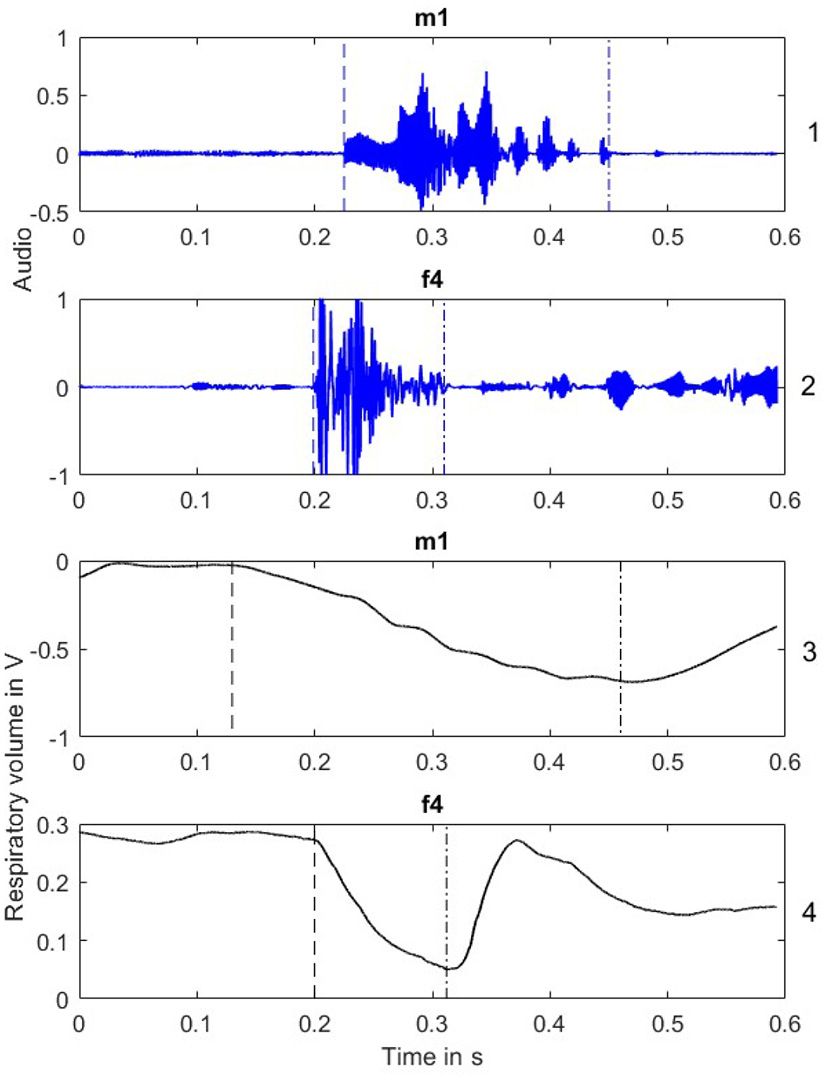

An example extracted from the dyad of m1 and f4 is shown in Figure 3. The two upper panels display the acoustic waveforms of the vocalizations produced by the two speakers. Laughter duration is indicated by the vertical dashed/dotted lines. Shared laughter starts with the onset of the laugh by m1 and ends with the offset of the laugh by f4. The two lower panels display the accompanying respiratory kinematics. The onset and the offset of the respiratory movements of the two laughs are also indicated by the vertical dashed/dotted lines. A visual comparison of the upper and the lower panels is indicative of the magnitude of potential discrepancies between the acoustics and the kinematics of laughter. In the given example, the acoustic and respiratory duration are tightly aligned in f4 but not in m1 whose respiratory maneuvers start before the acoustic onset of the laugh and end a few milliseconds after. Across all dyads, the duration of shared laughter identified in the acoustic waveforms ranged between 5 and 1,890 ms. The duration of the accompanying respiratory overlaps ranged between 26 and 1,492 ms.
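The interval measures used for shared laughter can be sketched as follows, assuming each laugh is represented by its acoustic onset and offset in milliseconds; the function and key names are illustrative. We read shared laughter as the intersection of the two intervals, i.e., the period during which both speakers laugh, which fits the very short minimum duration (5 ms) reported above. The same function could be applied to the respiratory intervals.

```python
def shared_laugh(laugh_a, laugh_b):
    """Overlap and lags of two laughs, each given as (onset_ms, offset_ms).

    Returns None if the laughs do not overlap in time.
    """
    (a_on, a_off), (b_on, b_off) = laugh_a, laugh_b
    start, end = max(a_on, b_on), min(a_off, b_off)
    if end <= start:
        return None  # no simultaneous laughter
    if a_on < b_on:
        leader = "a"
    elif b_on < a_on:
        leader = "b"
    else:
        leader = "tie"
    return {
        "overlap_ms": end - start,            # both speakers laughing
        "onset_lag_ms": abs(a_on - b_on),     # laughter preceding the shared bout
        "offset_lag_ms": abs(a_off - b_off),  # laughter following the shared bout
        "leader": leader,                     # who initiated the shared laugh
    }
```

For example, laughs at 1000–2000 ms and 1500–2600 ms overlap for 500 ms, with an onset lag of 500 ms, an offset lag of 600 ms, and the first speaker leading.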

Figure 3

Waveforms of a laugh produced by m1 (first panel, see right y-axis for panel number) and f4 (second panel). The accompanying respiratory kinematics (respiratory volume in V, arbitrary unit) are displayed in panel 3 (m1) and panel 4 (f4). Vertical dashed lines mark the onsets of laughter and dashed-dotted lines the offsets.

Differences in respiratory volume between the onset and offset of each laugh were normalized and expressed as a percentage of the speaker's vital capacity. Moreover, we measured the peak velocity of the initial respiratory drop (if several velocity peaks occurred, only the first one was considered) and identified the laughter duration preceding or following a shared bout (i.e., the time lags between the two onsets and between the two offsets of a shared laugh; cf. Figure 3).¹
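The two respiratory measures can be sketched as follows, assuming the summed volume trace and its velocity are NumPy arrays indexed by sample, that the onset and offset are the kinematic landmarks of one laugh, and that the speaker's vital capacity is available as a volume excursion in the same units (all names are hypothetical). The first velocity peak is taken as the first local minimum of velocity, found with `scipy.signal.find_peaks` on the negated trace; on noisy data its optional `prominence` argument would be needed to ignore spurious peaks.

```python
import numpy as np
from scipy.signal import find_peaks

def laugh_respiratory_measures(volume, velocity, onset, offset, vital_capacity):
    """Respiratory measures for one laugh spanning samples onset..offset.

    volume: summed lung-volume trace (V); velocity: its first derivative (V/ms);
    vital_capacity: the speaker's vital-capacity excursion in the same units (V).
    """
    volume = np.asarray(volume, dtype=float)
    displacement = volume[onset] - volume[offset]    # volume expelled (V)
    pct_vc = 100.0 * displacement / vital_capacity   # % of vital capacity
    # Exhalation has negative velocity, so peaks of -velocity mark the drops.
    seg = -np.asarray(velocity[onset:offset + 1], dtype=float)
    peaks, _ = find_peaks(seg)
    first_peak = float(-seg[peaks[0]]) if peaks.size else None
    return {"displacement": displacement, "pct_vc": pct_vc,
            "first_peak_velocity": first_peak}
```

Returning `None` for the first peak when no local extremum exists keeps a flat or monotonic segment from being silently assigned a boundary value.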

Data analyses

All statistical analyses reported here were carried out in RStudio (running R version 3.6.1) using the packages lme4 (Bates et al., 2015), lmerTest (Kuznetsova et al., 2017) and ordinal (Christensen, 2018).

All models were mixed-effects models evaluated at an alpha level of 0.05. Ordinal (for scales), Poisson (for counts) and linear (for duration, displacement and velocity) models were fitted as appropriate. In all mixed models, the random-effect structure included intercepts only, since models with the maximal structure frequently failed to converge (whenever possible, however, we also ran models including speaker-specific random slopes and verified all effects reported below). Dater pair, serial order of the dialogue within the day-long session, and, where appropriate, speaker were defined as random effects. If the model residuals were not normally distributed, the data were log-transformed (duration, displacement). Attraction scores (see Section: Distribution of shared and individual laughter), laughter types (see Section: Respiratory properties of shared and individual laughter) and the presence of laughter overlap (see Sections: Time course and duration of laughter, Respiratory properties of shared and individual laughter, and Is there a good predictor for growing attraction?) were fitted as fixed predictors in a forward-fitting procedure (i.e., starting with a simpler model and successively increasing its complexity by adding further factors).
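The source does not specify the criterion used to decide whether an added predictor improves a model in the forward-fitting procedure. One common choice, shown here purely as an assumption, is a likelihood-ratio test between nested models fitted by maximum likelihood; the log-likelihood values in the usage lines are hypothetical.

```python
from scipy.stats import chi2

def likelihood_ratio_test(loglik_reduced, loglik_full, df_diff):
    """Compare two nested models fitted by maximum likelihood.

    Returns the LR statistic 2 * (loglik_full - loglik_reduced) and its
    chi-square p-value with df_diff degrees of freedom (the number of
    parameters added in the fuller model).
    """
    stat = 2.0 * (loglik_full - loglik_reduced)
    return stat, chi2.sf(stat, df_diff)

# Hypothetical log-likelihoods for a model without and with one added predictor.
stat, p = likelihood_ratio_test(-100.0, -95.0, 1)
keep_predictor = p < 0.05  # alpha level used in the study
```

Under this criterion, a predictor that raises the log-likelihood by 5 units for one extra parameter would be retained.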

Results

Distribution of shared and individual laughter

An overview of the distribution of all individual and shared laughs is given in Table 1. These count data were analyzed using the Wilcoxon signed rank test for paired samples. Overall, female and male speakers did not significantly differ in the frequency of their laughter (19–22 times in 5 min on average, Wilcoxon signed rank test n.s.).
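R's wilcox.test reports the signed-rank statistic V, the sum of the ranks of the positive paired differences. The sketch below computes V in plain Python, with zero differences dropped and tied absolute differences given their average rank, and applies it to the per-date male and female laugh counts of Table 1. Whether the reported test paired the data per date in exactly this way is not stated, so the pairing is an assumption and no p-value is computed; for these columns the sketch yields V = 20.5.

```python
from typing import Dict, List


def wilcoxon_v(x: List[float], y: List[float]) -> float:
    """Signed-rank statistic V for paired samples (sum of ranks of positive x - y).

    Zero differences are dropped and tied absolute differences receive
    their average rank, as in R's wilcox.test.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    ordered = sorted(abs(d) for d in diffs)
    avg_rank: Dict[float, float] = {}
    i = 0
    while i < len(ordered):
        j = i
        while j + 1 < len(ordered) and ordered[j + 1] == ordered[i]:
            j += 1
        avg_rank[ordered[i]] = (i + j) / 2.0 + 1.0  # mean of 1-based ranks i+1..j+1
        i = j + 1
    return sum(avg_rank[abs(d)] for d in diffs if d > 0)


# Per-date laugh counts from Table 1 (male and female interlocutor)
LAUGHS_M = [18, 18, 24, 23, 19, 19, 14, 12, 15, 21, 31, 15]
LAUGHS_F = [28, 19, 15, 31, 18, 13, 25, 14, 9, 37, 38, 21]
```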

Table 1

| Pair | Attraction score | Laughs (total) | Laughs (m) | Laughs (f) | Shared (%) |
|---|---|---|---|---|---|
| m1_f1 | 4.7 | 46 | 18 | 28 | 57 |
| m1_f2 | 5.7 | 37 | 18 | 19 | 54 |
| m1_f3 | 4 | 39 | 24 | 15 | 46 |
| m1_f4 | 5.7 | 54 | 23 | 31 | 63 |
| m1_f5 | 5.3 | 37 | 19 | 18 | 43 |
| m1_f6 | 4.7 | 32 | 19 | 13 | 31 |
| m2_f1 | 2.3 | 39 | 14 | 25 | 15 |
| m2_f2 | 4.3 | 26 | 12 | 14 | 23 |
| m2_f3 | 2.7 | 24 | 15 | 9 | 33 |
| m2_f4 | 4 | 58 | 21 | 37 | 31 |
| m2_f5 | 5 | 69 | 31 | 38 | 34 |
| m2_f6 | 4 | 36 | 15 | 21 | 28 |
| Mean (SD) | 4.4 (1.1) | 41.5 (13.4) | 19.1 (5.2) | 22.3 (9.5) | 38.2 (14.5) |

An overview of the distribution of laughter across the 12 dating pairs (m, male; f, female).
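How the per-pair attraction score was computed is not spelled out in this section. One reconstruction that reproduces every value in the Attraction score column is the mean of the man's post-date rating (Table A1), the woman's post-date rating (Table A2) and the mutual-interest code (Table A3). The sketch below checks this; treat the formula as an inference from the appendix tables, not as the authors' documented procedure.

```python
FEMALES = ["f1", "f2", "f3", "f4", "f5", "f6"]
MALES = ["m1", "m2"]

# Table A1: post-date ratings given by each man to f1..f6
MALE_POST = {"m1": [6, 8, 6, 9, 9, 7], "m2": [4, 9, 6, 7, 9, 9]}
# Table A2: post-date ratings given by each woman to m1 and m2
FEMALE_POST = {"f1": [7, 3], "f2": [7, 3], "f3": [5, 2],
               "f4": [7, 4], "f5": [6, 5], "f6": [6, 2]}
# Table A3: mutual interest in a second date (0, 1 or 2), per man's dates with f1..f6
INTEREST = {"m1": [1, 2, 1, 1, 1, 1], "m2": [0, 1, 0, 1, 1, 1]}


def attraction_scores():
    """Hypothesized score: mean of both post-date ratings and the interest code."""
    scores = {}
    for m_idx, male in enumerate(MALES):
        for f_idx, female in enumerate(FEMALES):
            total = (MALE_POST[male][f_idx]
                     + FEMALE_POST[female][m_idx]
                     + INTEREST[male][f_idx])
            scores[f"{male}_{female}"] = round(total / 3, 1)
    return scores
```

Every value returned matches Table 1, which is what motivates the reconstruction; averaging a 0 to 2 interest code together with rating-scale values is part of the assumption rather than something the text confirms.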

Shared laughter (defined as a temporal overlap between the acoustically salient laughter bouts of the two daters, see Figure 3) was also influenced by attraction: as predicted, pairs with a higher degree of mutual attraction showed more overlaps (z = 3.3, p < 0.001). Men led these shared laughs more often than women did (65.3% of all cases, V = 70, p < 0.05). In other words, women joined in a laugh initiated by their interlocutor more often than men did, while men laughed more when they experienced a sense of attraction.
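The operational definition above (two bouts overlap in time; the speaker who starts first leads) and the onset and offset lags used in the duration analyses can be sketched as follows. Bouts are assumed to be (onset, offset) pairs in milliseconds, one list per speaker; the function names and example intervals are hypothetical, and how the authors handled one bout overlapping several partner bouts is not specified here.

```python
from typing import Dict, List, Optional, Tuple

Interval = Tuple[int, int]  # (onset_ms, offset_ms) of one laughter bout


def shared_laughs(a: List[Interval], b: List[Interval]) -> List[Dict[str, object]]:
    """Pair every bout of speaker a with every temporally overlapping bout of b."""
    records = []
    for a_on, a_off in a:
        for b_on, b_off in b:
            overlap = min(a_off, b_off) - max(a_on, b_on)
            if overlap <= 0:
                continue  # disjoint or merely touching bouts are not shared
            leader: Optional[str] = "a" if a_on < b_on else "b" if b_on < a_on else None
            records.append({
                "leader": leader,                     # None if both start together
                "overlap_ms": overlap,
                "onset_lag_ms": abs(b_on - a_on),     # solo laughter before the overlap
                "offset_lag_ms": abs(b_off - a_off),  # solo laughter after the overlap
            })
    return records


def leader_share(records: List[Dict[str, object]], who: str) -> float:
    """Percentage of shared laughs (with a clear leader) started by `who`."""
    led = [r for r in records if r["leader"] is not None]
    return 100.0 * sum(r["leader"] == who for r in led) / len(led) if led else 0.0
```

For example, with invented bouts m = [(1000, 1600), (5000, 5400)] and f = [(1320, 1960), (8000, 8500)], only the first pair overlaps (280 ms), speaker a leads, and the onset and offset lags are 320 ms and 360 ms.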

Of the four laughter types (see Section: Procedure), the most frequent was the grunt-like laugh (34%) and the least frequent the snort-like laugh (21%); this difference in frequency of occurrence was significant (V = 65.5, p < 0.05). Although rare, the snort-like laugh was the only laughter type whose occurrence was moderated by attraction between the conversation partners (z = 4.3, p < 0.001): it was more likely to occur when the partners felt attracted to each other. A song-like laugh initiated a shared bout significantly more often (34%) than a speech-laugh did (19%, V = 66.5, p < 0.05), but it did not differ significantly from a grunt-like laugh (21%, V = 45, n.s.) or a snort-like laugh (26%, V = 32, n.s.) in this respect. Only 25% of all overlapping laughs were of the same laughter type, and this proportion was not influenced by mutual attraction between the daters. Shared laughs initiated by the different laughter types did not differ in duration.

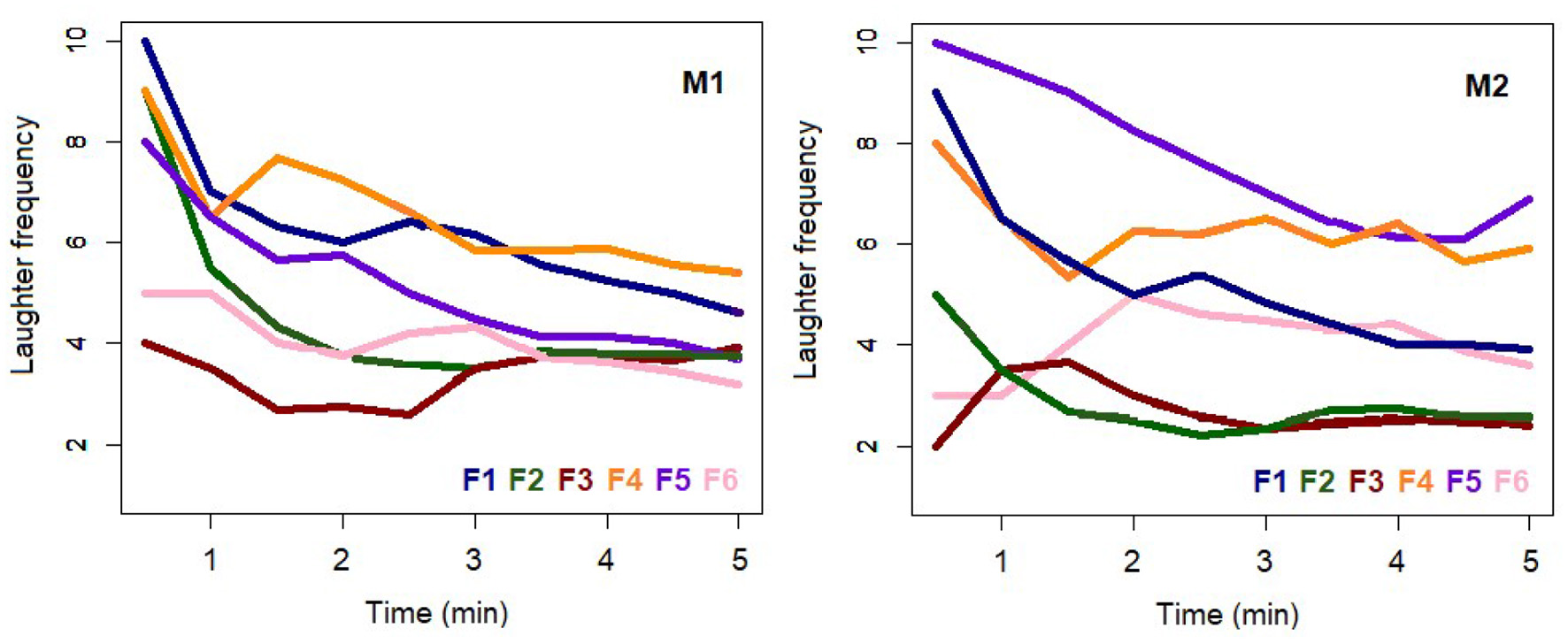

Time course and duration of laughter

As shown in Figure 4, laughter was most frequent at the very beginning of most dates and declined over the course of the 5 min. Overall, 26.7% of all laughs recorded in this study were produced within the first minute of the conversation, compared with merely 1.6% in the last minute. A Wilcoxon signed-rank test with continuity correction showed that the decrease in laughter frequency after the first minute of the date was significant (comparing frequencies recorded within the first minute with the average frequencies recorded later in the conversation, V = 74.5, p < 0.01). Frequent laughter at the start of a conversational encounter is unusual in same-sex dialogues (e.g., no more than 2.3% of all recorded laughter bouts reported in Vettin and Todt, 2004) and might therefore be an important feature of dating (cf. Grammer and Eibl-Eibsfeldt, 1990).
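The first-minute comparison described above needs, for each date, laugh counts per 1-min bin. A minimal sketch, assuming laugh onsets are given in seconds from the start of a 5-min date (the onset values in the example are invented):

```python
from typing import List, Tuple


def laughs_per_minute(onsets_s: List[float], n_minutes: int = 5) -> List[int]:
    """Count laugh onsets falling into consecutive 1-min bins."""
    counts = [0] * n_minutes
    for t in onsets_s:
        idx = int(t // 60)
        if 0 <= idx < n_minutes:  # onsets outside the date window are ignored
            counts[idx] += 1
    return counts


def first_minute_vs_later(counts: List[int]) -> Tuple[int, float]:
    """First-minute count and the average count over the remaining minutes."""
    later = counts[1:]
    return counts[0], sum(later) / len(later)
```

With onsets [5, 12, 30, 59.9, 75, 130, 200, 299], the bins are [4, 1, 1, 1, 1], so the date contributes the pair (4, 1.0) to the paired test, and 50% of its laughs fall in the first minute.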

Figure 4

Time course of laughter frequency during the twelve dates. Data of the six female speakers are color-coded; data of speaker m1 are shown on the left, those of m2 on the right.

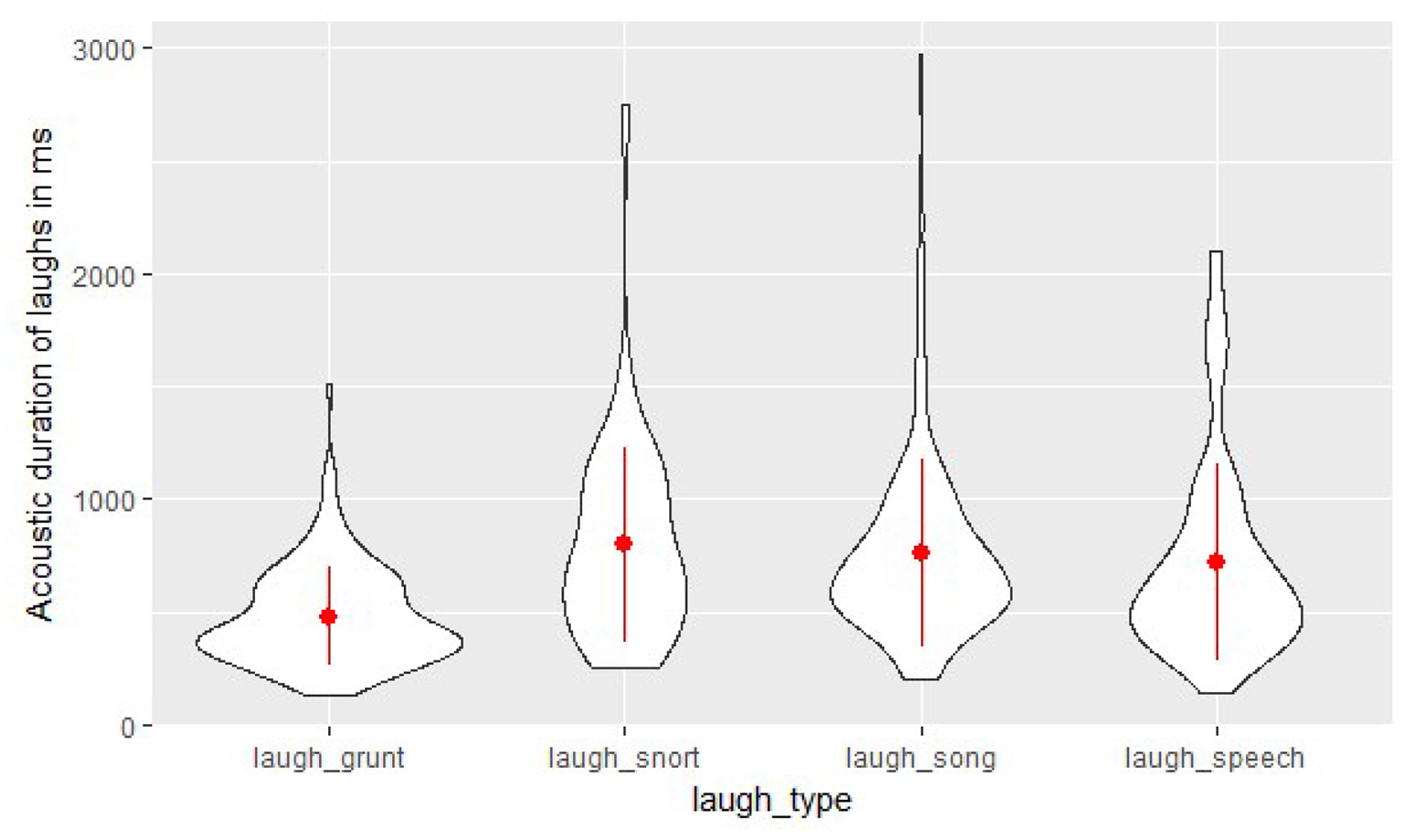

The acoustic duration of laughter did not differ between male and female speakers and was unaffected by their attraction. Instead, it varied systematically across the different laughter types [F(3) = 25.6, p < 0.001] and in the presence of an overlap [F(1) = 27.7, p < 0.001].

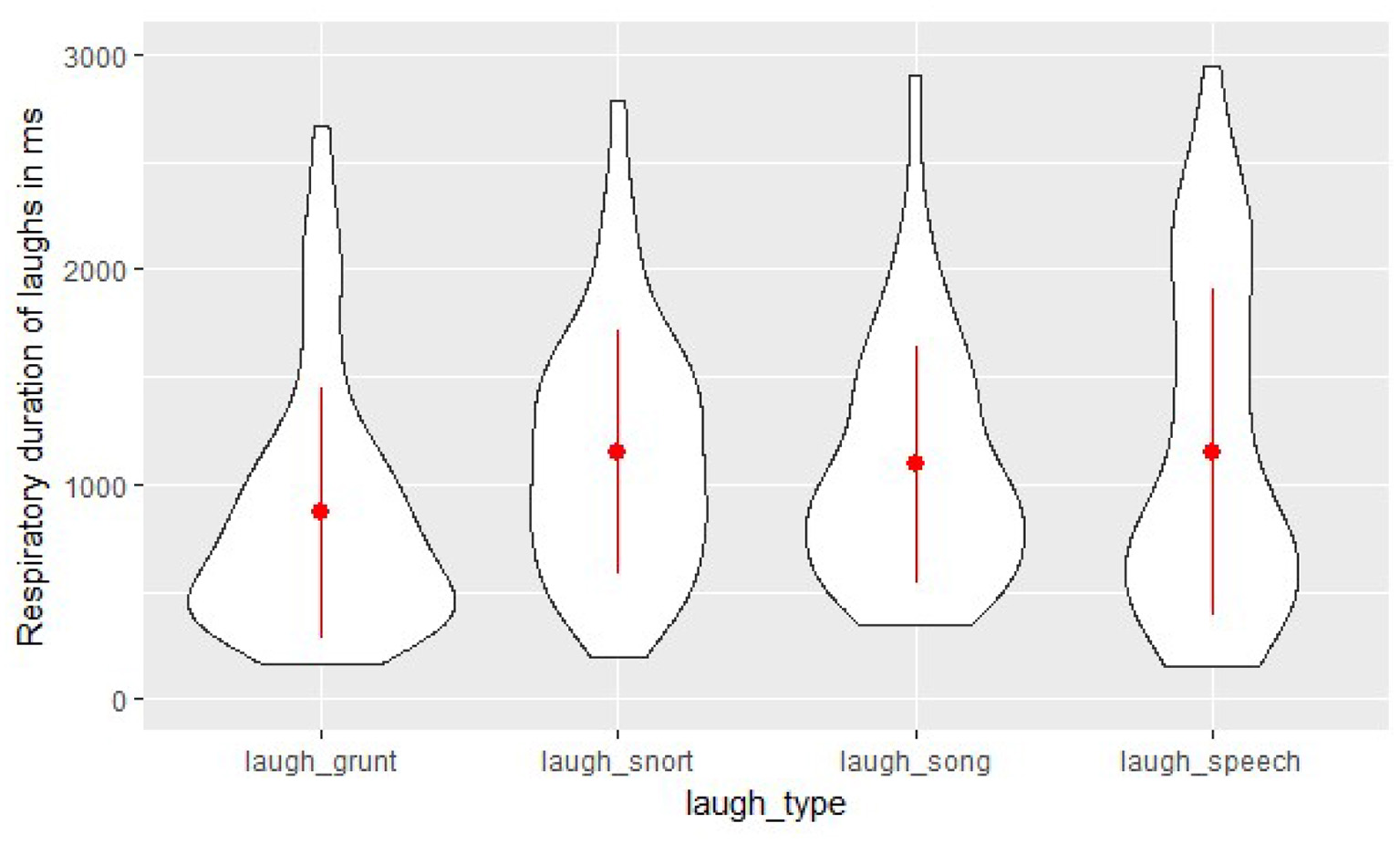

Raw acoustic durations of the four laughter types are plotted in Figure 5 and show that the grunt-like laugh (~430 ms on average) was 200–250 ms shorter than all other laughter types (all comparisons significant at t > 6.0, p < 0.001). The song-like laugh was the longest (~690 ms on average) but did not differ significantly from either the speech-laugh or the snort-like laugh. Shared laughs were about 215 ms longer than individual laughs (t = 5.4, p < 0.001), and this lengthening applied equally across all laughter types. The duration of the overlap itself was not influenced by the variables of interest, nor were the lags between the two onsets or between the two offsets of a shared laugh (see Figure 3). By and large, the incoming interlocutor joined an ongoing laugh ~320 ms after its start, and once one interlocutor stopped laughing, their partner continued to laugh alone for a further 360 ms on average. This pattern showed no effect of the interlocutor's gender or of the degree of mutual attraction.

Figure 5

Violin plots of acoustic durations (in ms) of the four laughter types. Red dots correspond to the means and red vertical lines to the standard deviations.

Respiratory properties of shared and individual laughter

As with the acoustic data, the duration of laughter bouts identified in the respiratory signal was influenced by laughter type [F(3) = 5.8, p < 0.001] and by the presence of an overlap [F(1) = 20.4, p < 0.001]. Although the respiratory laugh durations were longer than the acoustic ones, the overall patterns were similar across the two measurements. As shown in Figure 6, laughs classified as grunts were generally shorter (~860 ms on average) than all other laughter categories (~1,100 ms on average; all comparisons with grunt-like laughs were significant with t > 2.4, p < 0.05). Moreover, shared laughs (i.e., laughs in which the interlocutors' respiratory motions overlapped in time) were about 340 ms longer. Unlike the acoustic data, the respiratory data showed an effect of mutual attraction: respiratory laughter overlaps differed significantly across dating pairs, with pairs who scored higher on mutual attraction showing longer overlaps [F(1) = 4.6, p < 0.05].

Figure 6

Violin plots of respiratory durations (in ms) of the four laughter types under investigation. Red dots correspond to the means and red vertical lines to the standard deviations.

Other aspects of respiratory kinematics also varied systematically with the factors under investigation. The initial velocity peak depended on laughter type [F(3) = 9.8, p < 0.001]: it was slowest (i.e., closest to zero) in laughs categorized as laugh-snort compared with all other laughter types (all comparisons significant with t > 2.0, p < 0.05). The highest velocity peak was observed in laugh-songs, which differed significantly from both laugh-snorts (t = 5.1, p < 0.001) and laugh-speech (t = 2.7, p < 0.01).

The amplitude of respiratory displacement during laughter was likewise affected by laughter type [F(3) = 6.0, p < 0.001]. Displacement was smallest in laugh-grunts (mean: 7.2 V) and larger in laugh-snorts (mean: 11.4 V) and laugh-songs (mean: 10.2 V), although only the comparison between song-like and grunt-like laughs reached significance at the set alpha level (t = 3.4, p < 0.001).

To check for a fine-tuned overlap of the respiratory signals between the daters, we calculated the time delays between the onsets and between the offsets of shared laughter in each pair of speakers (see Figure 3) and tested whether these delays were affected by attraction. Contrary to expectation, these analyses did not show any systematic effect of attraction, which speaks against fine-grained respiratory synchronization between daters. Instead, the predicted effect of increased mutual attraction on respiration appears to operate at a broader level, in terms of more frequent laughter overlaps and longer overlap durations.

Is there a good predictor for growing attraction?

Following the aims of the study, the above sections addressed the role that attraction may play in laughter behavior during speed dating. In this final section, we explore which of the behaviors observed during the date might have contributed to growing mutual attraction. The increase in romantic attraction was computed by subtracting the score that participants gave their interlocutors before the conversation from the score given at the end of the 5-min date: the larger the resulting difference, the greater the potential contribution of conversational behavior to the development of attraction. This difference ranged from −1 (given by m1 to f3) to 3 (given by f2, f3 and f5 to m1). On average, women reported a greater increase in attraction after the date (1.5) than men did (0.75), though the difference did not reach significance (V = 37, p = 0.08).
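The increase scores described above follow directly from the appendix tables (post-date minus pre-date rating). The sketch below recomputes them, assuming each of the 24 ratings is weighted equally; the dictionary and function names are illustrative.

```python
# Table A1: ratings given by each man to f1..f6, before (pre) and after (post) the date
MEN_PRE = {"m1": [6, 7, 7, 7, 7, 7], "m2": [4, 8, 5, 6, 8, 8]}
MEN_POST = {"m1": [6, 8, 6, 9, 9, 7], "m2": [4, 9, 6, 7, 9, 9]}
# Table A2: ratings given by each woman to m1 and m2
WOMEN_PRE = {"f1": [6, 2], "f2": [4, 2], "f3": [2, 2],
             "f4": [5, 3], "f5": [3, 3], "f6": [5, 2]}
WOMEN_POST = {"f1": [7, 3], "f2": [7, 3], "f3": [5, 2],
              "f4": [7, 4], "f5": [6, 5], "f6": [6, 2]}


def increases(pre, post):
    """Post-date minus pre-date rating, one value per (rater, partner) pair."""
    return [after - before
            for rater in pre
            for before, after in zip(pre[rater], post[rater])]


def mean(values):
    return sum(values) / len(values)


men = increases(MEN_PRE, MEN_POST)
women = increases(WOMEN_PRE, WOMEN_POST)
```

Here mean(men) is 0.75 and mean(women) is 1.5, and the pooled values range from −1 to 3, matching the figures reported above.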

Based on the results discussed above, we tested the role of average laughter duration, the percentage of shared laughs, the percentage of voiced laughter (i.e., laugh-song vs. all other laughter types, following Grammer and Eibl-Eibsfeldt, 1990) and the percentage of snort-like laughs (since this was the only laughter type whose occurrence was moderated by attraction, see Section: Distribution of shared and individual laughter). An ordinal mixed-effects model fitted to these data revealed a single main effect of average laughter duration (z = 3.5, p < 0.001): daters who laughed longer were perceived as more attractive at the end of the conversation than at first sight.
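The four per-dater predictors listed above can be derived from a list of annotated laughs before model fitting. The sketch below is a minimal illustration: the record layout and the type labels "song", "speech", "grunt" and "snort" are hypothetical, and "voiced" follows the text's definition (laugh-song vs. all other types).

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Laugh:
    duration_ms: float
    laugh_type: str  # hypothetical labels: "song", "speech", "grunt", "snort"
    shared: bool     # True if the laugh overlapped with the partner's laugh


def dater_predictors(laughs: List[Laugh]) -> Dict[str, float]:
    """Average duration and percentage predictors for one dater in one date."""
    n = len(laughs)
    if n == 0:
        raise ValueError("no laughs to summarize")
    return {
        "mean_duration_ms": sum(l.duration_ms for l in laughs) / n,
        "pct_shared": 100.0 * sum(l.shared for l in laughs) / n,
        "pct_voiced": 100.0 * sum(l.laugh_type == "song" for l in laughs) / n,
        "pct_snort": 100.0 * sum(l.laugh_type == "snort" for l in laughs) / n,
    }
```

Each dater-by-date row of these predictors would then be paired with the corresponding attraction increase as the ordinal outcome.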

Discussion

The present study was conducted to investigate how non-verbal vocal behavior such as laughter might signal mutual attraction between cisgender, heterosexual interlocutors during speed dating. Different laughter types and their kinematic characteristics were considered. Given the complexity of multi-channel recordings in the present experimental set-up, the current results are limited to eight participants and 12 conversations in total and would benefit from replication with a larger sample and an increased number of male speakers and dyadic conversations. That said, the current findings are in full agreement with previous work (e.g., McFarland, 2001; McFarland et al., 2013; Michalsky and Schoormann, 2018), and the observed laughter frequencies are commensurate with those reported in similar research (e.g., Grammer and Eibl-Eibsfeldt, 1990; Vettin and Todt, 2004; Kurtz and Algoe, 2015). Moreover, our study adds new insights to the existing evidence and offers new perspectives on potential communicative functions of laughter during romantic interaction.

Laughter and attraction

Based on the present evidence, we may conclude that laughter plays an important role in moderating attraction in mixed-sex, heterosexual dyads, potentially promoting mutual attraction during a speed date. We combined individual attraction ratings and mutual attraction between the daters into one attraction score. While this score may have some limitations, it might be more robust for statistical analysis than single values. The findings support our hypotheses and reinforce the idea that laughter helps to foster social and romantic bonds (Kurtz and Algoe, 2015; Bryant et al., 2016; Montoya et al., 2018). Unlike in some previous literature (McAdams et al., 1984; Grammer, 1990), men and women in our study laughed equally often, which is likely due to the context of their conversations. A dating encounter in our study differs from same-sex interviews (McAdams et al., 1984) or non-romantic conversations with a stranger of the opposite sex (Grammer, 1990) in that the main purpose of the short conversations investigated here was to explore the possibility of a romantic bond.

We found many instances of shared laughter in our speed-dating corpus, and their presence was moderated by mutual attraction between the daters. This finding supports our initial hypothesis and is in line with previous research (Kurtz and Algoe, 2015; Bryant et al., 2016; Michalsky and Schoormann, 2018). In the context of the present results, laughter accommodation can be considered only partial (Giles, 1973; Giles et al., 1991; Giles and Ogay, 2007). Specifically, the prediction that shared laughs would be of the same type (cf. Ludusan and Wagner, 2022) was not borne out by the data. Merely 25% of all shared laughs were identified as being of the same category, and their presence was not moderated by attraction.

Instead, our analyses showed that certain laughter types, more specifically laugh-song (cf. Grammer and Eibl-Eibsfeldt, 1990) and laugh-snort (as opposed to laugh-speech or laugh-grunt in our classification), were significantly more likely to invite a joint laughter bout. These two laughter types differ quite substantially in their temporal, respiratory and intensity features (see Figure 2, cf. Curran et al., 2018). While laugh-song is a high-sonority, high-energy laugh of the prototypical hahaha-type, laugh-snort, with its low energy and relatively low sonority, is (at least in terms of acoustic salience) its exact opposite. The finding that both of these very different laughs were highly likely to invite a romantic interlocutor to join in is therefore intriguing and somewhat unexpected. Curran et al. (2018) investigated how laughter intensity affects whether laughter is perceived as spontaneous or volitional, showing that high vs. low levels of intensity cannot easily be mapped onto the dichotomy of spontaneous vs. volitional laughter. Low-intensity laughs are particularly ambiguous with respect to their spontaneity (Wild et al., 2003; Scott et al., 2014; McGettigan et al., 2015). However, Lavan et al. (2016) demonstrated that nasality is a highly salient perceptual feature of deliberate laughs. Such laughs are also rated lower on arousal than a voiced, open-mouthed laugh such as laugh-song in our classification (Ruch and Ekman, 2001; Lavan et al., 2016).

It seems that the shared laughs observed in our data fall into two categories. On the one hand, laugh-song, which is associated with higher arousal and greater spontaneity, might sound more contagious to the interlocutor and thus more inviting to join in. On the other hand, laugh-snort, with its less aroused and more volitional quality, might be experienced as a non-verbal invitation to establish rapport between the daters, thus playing to the cooperative nature of laughter (cf. Mehu and Dunbar, 2008; Davila-Ross et al., 2011; Curran et al., 2018). These observations warrant a dedicated follow-up study of the communicative functions of different laughter types in the context of dating. Future work would further benefit from investigating the relation between the linguistic content of verbal interactions and laughter occurrence (Mazzocconi et al., 2020), specifically the potential role of humorous content in individual and shared laughs (cf. Bachorowski et al., 2001; Holt, 2019).

In our data, men frequently initiated shared laughs, whereas female laughter was much less likely to elicit a laugh from a male interlocutor. These results closely match the gender effects reported for shared laughter in long-term couples by Kurtz and Algoe (2015) and are in line with previous research on humor and attraction (Bressler et al., 2006), which has shown that women tend to favor men who make them laugh, while men are more attracted to women who laugh at their jokes. Our study provides unique evidence on how these gender preferences may play out in the context of an emerging attraction and suggests that laughter fulfills similar functions at the beginning of a potential relationship as it does in established couples (Kurtz and Algoe, 2015).

Our study further showed that shared laughter prolonged the overall duration of a laugh and that an interlocutor's longer laughs increased the sense of attraction to them. Previous research has shown that the duration of a laugh plays a role in its perceptual interpretation, with longer laughs being judged as more spontaneous and authentic (Bryant and Aktipis, 2014; Lavan et al., 2016). In contrast, none of the factors investigated here influenced the timing of the beginning and end of shared laughter, though the latencies at both onsets and offsets of joint laughs fell into a comparable window of 320–360 ms. These latencies align with the delays observed in speech shadowing (Marslen-Wilson, 1973) and might reflect the time course of general mental processes that are not specific to laughter (cf. Marslen-Wilson, 1985). Discourse analysts suggest that contributions to dialogue follow strict timing patterns (Kendrick and Torreira, 2015); for example, a response delayed beyond the expected window may be interpreted as signaling a negative answer to a question (e.g., Clayman, 2002). Future work on the timing of laughter will help to uncover whether the alignment between an initial laugh and an incoming laugh can increase, or decrease, the sense of attraction and rapport between interlocutors.

Respiratory features of laughter

To date, only a limited number of studies have addressed the respiratory kinematics of laughter in social interaction. The core advantage of this method is that speech physiology offers a rich source of information about the speaker that does not necessarily leave an imprint in the acoustic signal (e.g., Lisker, 1986; Pouplier and Goldstein, 2005; Schaeffler et al., 2015; Cleland et al., 2019). Compared with the acoustic data discussed in Section: Distribution of shared and individual laughter, our respiratory findings were consistent with the overall patterns of laughter and its role in attraction. For example, shared laughs were longer in both acoustics and respiration. However, the subtle effect of attraction on the duration of shared laughter could not be ascertained in the acoustics, whereas the respiratory laughter overlap was clearly longer in pairs who scored higher on attraction. This underlines the importance of physiological data for understanding social interaction. Overall, the physiologically defined duration of laughter exhalation was longer than the acoustically measured duration. Such differences may arise because periods of acoustic silence during laughter preparation or at the end of a laughter bout can correspond to ongoing respiratory activity that is identifiable in the physiological signal only. Similar effects are well documented for acoustically silent periods in speech production, when the articulators are moving without producing audible acoustic cues (e.g., closure intervals in stop production, cf. Lisker, 1986, or pauses, e.g., Ramanarayanan et al., 2009; Krivokapić et al., 2020).

Previous work by McFarland (2001) examined respiratory kinematics in different conversational modes (speaking, listening, turn-taking) in same-sex dyads. All participants were familiar with each other and had been close friends for at least 3 years prior to the study, so romantic attraction was an extremely unlikely factor in those dialogues. The cross-correlation results of McFarland et al. (2013) showed a high degree of in-phase coordination in respiratory kinematics between conversational partners during laughter, especially in the proximity of a speaker change (i.e., ±5 s before or after turn-taking). In contrast, the participants in the 12 conversations studied here met each other for the first time, in a speed-dating experiment where attraction, or the lack thereof, was expected to influence the duration of respiratory overlap while laughing together. The kinematic measures employed here were more fine-grained than those of the McFarland et al. (2013) study, though we examined only the onset and offset of the breathing cycles in shared laughter. Using these measures, we did not observe an effect of attraction on temporal synchronization at the beginning or end of joint laughter. Further analyses of kinematic signals should, however, examine possible effects of attraction on the magnitude of respiratory movements during shared laughter.

The present study focused primarily on the exhalation phase, as it has been recognized as one of the most important phases in laughter (Filippelli et al., 2001). During exhalation in laughter, lung volume tends to decrease rapidly, and visual inspection of these data showed that the volume sometimes reached a much lower level than is typical of speech production. Such rapid respiratory changes are likely to depend on the type of laughter, a variable that was not assessed in previous research. In line with our hypothesis, the data reveal an effect of laughter type on the properties of the exhalation. More specifically, laugh-grunts were produced with the shortest duration and the smallest displacement compared with all other laughter types. The two respiratory parameters are clearly linked: at any given speed of respiratory motion, a shorter duration limits how large the respiratory displacement can become. However, the speed of respiratory motion, as measured by the initial velocity peak in the exhalation phase, was not particularly slow in laugh-grunts. Instead, the slowest peak was measured in laugh-snorts and the fastest in laugh-songs. These findings contradict our initial, physiologically motivated account of laughter, which predicted high velocity peaks in voiceless laughs, when the glottis is open and the airway is not obstructed by the vocal folds. Had this hypothesis been supported, we would have observed the opposite pattern: laugh-snorts and laugh-grunts would have shown higher velocity peaks, and laugh-songs the lowest. However, obstructions of airflow may occur not only at the glottal level but also elsewhere in the vocal tract.
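The link between exhalation duration and displacement invoked above can be stated as a simple bound. Writing $V(t)$ for lung volume and $T$ for the duration of an exhalation starting at $t_0$ (notation introduced here for illustration only), the displacement cannot exceed the duration times the peak speed:

$$
|\Delta V| = \left| \int_{t_0}^{t_0+T} \dot{V}(t)\,dt \right| \le \int_{t_0}^{t_0+T} \left|\dot{V}(t)\right| dt \le T \cdot \max_{t_0 \le t \le t_0+T} \left|\dot{V}(t)\right|
$$

At equal peak speed, the respiratory durations reported in the Results (about 860 ms for laugh-grunts vs. about 1,100 ms for the other types) would cap the displacement of a laugh-grunt at roughly 860/1,100 ≈ 78% of that of the other types. This is only an upper bound; actual displacements also depend on how long the speed stays near its peak.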
In light of the current results, future research on the respiratory physiology of laughter should consider laughter intensity and the corresponding subglottal pressure, along with phonation and aerodynamics.

Summary, conclusion, and outlook

Our small-scale study combined acoustic, physiological and psychological measurements of opposite-sex interlocutors engaged in romantic conversations during speed dating. Partially supporting the claim of Accommodation Theory that non-verbal conversational behaviors can show convergence (Giles and Ogay, 2007), we found that high-attraction dates had a higher number of shared laughs with longer kinematic overlaps, though, contrary to our prediction, these laughs were not of the same type. Laugh-snorts were more prevalent in high-attraction dates, while laugh-songs were more likely to induce a joint laughter bout, leading us to conclude that a nuanced view of laughter types would benefit the understanding of the many functions that laughter might serve in (romantic) communicative interactions. We emphasize that our study, like much previous research, is limited to cisgender, heterosexual behavior during speed dating. The present findings may not generalize to other contexts of mutual attraction in conversational dyads, and more work is needed to shed light on the social importance of laughter across diverse dating contexts.

Funding

This work was supported by an intramural travel grant from the University of Kent to TR and financial support of the Leibniz Association to SF.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Statements

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

Ethical review and approval were not required for the study on human participants in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

TR and SF designed the experiment, ran the recording, jointly annotated the data, and wrote and revised the manuscript in response to the reviewer comments. TR analyzed the acoustic data and conducted the statistical analyses. SF analyzed the respiratory data. Both authors contributed to the article and approved the submitted version.

Acknowledgments

We would like to thank our participants who were amazingly open, authentic, friendly and extremely supportive of the nervous experimenter crew throughout the duration of the experiment. We would also like to thank Jörg Dreyer for the technical assistance, Olivia Maky for the support during data collection and preparation, and James Brand for a good laugh. We are further grateful to Dani Byrd, one reviewer and the issue editor for a helpful and constructive discussion during the publication process. An earlier version of this study along with preliminary analyses of the data was discussed at the laughter workshop 2018 in Paris, which also published short conference proceedings.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

1. Since the internal clocks of the DAT and plethysmograph recorders showed slight discrepancies, subtle temporal misalignments between the two types of annotations (up to 35 ms per 5 min of speech) could not be avoided. Although the misalignment did not affect the comparability of the temporal measurements within each channel, the results describe either the acoustic or the respiratory channel, without directly comparing temporal relationships between the two channels.

Appendix

Table A1

| Dater | f1 pre | f1 post | f2 pre | f2 post | f3 pre | f3 post | f4 pre | f4 post | f5 pre | f5 post | f6 pre | f6 post |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| m1 | 6 | 6 | 7 | 8 | 7 | 6 | 7 | 9 | 7 | 9 | 7 | 7 |
| m2 | 4 | 4 | 8 | 9 | 5 | 6 | 6 | 7 | 8 | 9 | 8 | 9 |

Initial (pre) and final (post) scores given by the two male speakers (in rows) to the six female speakers (in columns).

Table A2

| Dater | m1 pre | m1 post | m2 pre | m2 post |
|---|---|---|---|---|
| f1 | 6 | 7 | 2 | 3 |
| f2 | 4 | 7 | 2 | 3 |
| f3 | 2 | 5 | 2 | 2 |
| f4 | 5 | 7 | 3 | 4 |
| f5 | 3 | 6 | 3 | 5 |
| f6 | 5 | 6 | 2 | 2 |

Initial (pre) and final (post) scores given by the six female speakers (in rows) to the two male speakers (in columns).

Table A3

| Dating pairs | Further interest in a date |
|---|---|
| m1-f1 | 1 |
| m1-f2 | 2 |
| m1-f3 | 1 |
| m1-f4 | 1 |
| m1-f5 | 1 |
| m1-f6 | 1 |
| m2-f1 | 0 |
| m2-f2 | 1 |
| m2-f3 | 0 |
| m2-f4 | 1 |
| m2-f5 | 1 |
| m2-f6 | 1 |

Scores for mutual interest in a second date: 2 = both would like to meet again; 1 = one interlocutor would like to meet again, but not the other; 0 = neither is interested in a further meeting.

References

Bachorowski, J.-A., Smoski, M. J., and Owren, M. J. (2001). The acoustic features of human laughter. J. Acoust. Soc. Am. 110, 1581–1597. doi: 10.1121/1.1391244

Bates, D., Maechler, M., Bolker, B., and Walker, S. (2015). Fitting linear mixed-effects models using lme4. J. Stat. Softw. 67, 1–48. doi: 10.18637/jss.v067.i01

Bloch, S., Lemeignan, M., and Aguilera, N. (1991). Specific respiratory patterns distinguish among human basic emotions. Int. J. Psychophysiol. 11, 141–154.

Boersma, P., and Weenink, D. (2018). Praat: Doing Phonetics by Computer [Computer Program]. Version 6.0.37. Available online at: http://www.praat.org/ (accessed February, 2018).

Bressler, E. B., Martin, R. A., and Balshine, S. (2006). Production and appreciation of humour as sexually selected traits. Evol. Hum. Behav. 27, 121–130. doi: 10.1016/j.evolhumbehav.2005.09.001

Bryant, G. A., and Aktipis, C. A. (2014). The animal nature of spontaneous human laughter. Evol. Hum. Behav. 35, 327–335. doi: 10.1016/j.evolhumbehav.2014.03.003

Bryant, G. A., Fessler, D. M. T., Fusaroli, R., Clint, E., Aarøe, L., Apicella, C. L., et al. (2016). Detecting affiliation in colaughter across 24 societies. Proc. Natl. Acad. Sci. U. S. A. 113, 4682–4687. doi: 10.1073/pnas.1524993113

Christensen, R. H. B. (2018). Package ‘ordinal’. Available online at: https://cran.r-project.org (accessed May 08, 2021).

Clayman, S. E. (2002). Sequence and solidarity, in Group Cohesion, Trust and Solidarity, eds S. R. Thye and E. J. Lawler (Oxford: Elsevier Science Ltd.), 229–253. doi: 10.1016/S0882-6145(02)19009-6

Cleland, J., Scobbie, J. M., Roxburgh, Z., Heyde, C., and Wrench, A. (2019). Enabling new articulatory gestures in children with persistent speech sound disorders using ultrasound visual biofeedback. J. Speech Lang. Hear. Res. 62, 1–18. doi: 10.1044/2018_JSLHR-S-17-0360

Curran, W., McKeown, G. J., Rychlowska, M., André, E., Wagner, J., and Lingenfelser, F. (2018). Social context disambiguates the interpretation of laughter. Front. Psychol. 8, 2342. doi: 10.3389/fpsyg.2017.02342

Davila-Ross, M., Allcock, B., Thomas, C., and Bard, K. A. (2011). Aping expressions? Chimpanzees produce distinct laugh types when responding to laughter of others. Emotion 11, 1013–1020. doi: 10.1037/a0022594

Feleky (1915). The influence of the emotions on respiration (M.A. thesis). Columbia University, New York, NY, United States.

Filippelli, M., Pellegrino, R., Iandelli, I., Misuri, G., Rodarte, J. R., Duranti, R., et al. (2001). Respiratory dynamics during laughter. J. Appl. Physiol. 90, 1441–1446. doi: 10.1152/jappl.2001.90.4.1441

Finkel, E. J., and Eastwick, P. W. (2008). Speed dating. Curr. Direct. Psychol. Sci. 17, 193–197. doi: 10.1111/j.1467-8721.2008.00573.x

Finkel, E. J., Eastwick, P. W., and Matthews, J. (2007). Speed-dating as an invaluable tool for studying romantic attraction: a methodological primer. Pers. Relationsh. 14, 149–166. doi: 10.1111/j.1475-6811.2006.00146.x

Giles, H. (1973). Accent mobility: a model and some data. Anthropol. Linguist. 15, 87–109.

Giles, H., Coupland, J., and Coupland, N. (1991). Contexts of Accommodation: Developments in Applied Sociolinguistics. Cambridge: CUP.

Giles, H., and Ogay, T. (2007). Communication accommodation theory, in Explaining Communication: Contemporary Theories and Exemplars, eds B. B. Whaley and W. Samter (Mahwah, NJ: Lawrence Erlbaum), 293–310.

Glenn, P. (1989). Initiating shared laughter in multi-party conversations. West. J. Speech Commun. 53, 127–149.

Glenn, P. (1992). Current speaker initiation of two-party shared laughter. Res. Lang. Soc. Interact. 25, 139–162.

Grammer, K. (1990). Strangers meet: laughter and nonverbal signs of interest in opposite-sex encounters. J. Nonverb. Behav. 14, 209–236.

Grammer, K., and Eibl-Eibsfeldt, I. (1990). The ritualisation of laughter, in Die Natürlichkeit der Sprache und der Kultur, ed W. A. Koch (Bochum: Brockmeyer), 192–214.

Hay, J., and Drager, K. (2010). Stuffed toys and speech perception. Linguistics 48, 865–892. doi: 10.1515/ling.2010.027

Hay, J., Podlubny, R., Drager, K., and McAuliffe, M. (2017). Car-talk: location-specific speech production and perception. J. Phonet. 65, 94–109. doi: 10.1016/j.wocn.2017.06.005

26

Hixon, T. J., Goldman, M. D., and Mead, J. (1973). Kinematics of the chest wall during speech production: volume displacements of the rib cage, abdomen, and lung. J. Speech Hear. Res. 16, 78–115.

Holt, E. (2019). Conversation analysis and laughter. Concise Encycl. Appl. Linguist. 275, 275–279. doi: 10.1002/9781405198431.wbeal0207.pub2

Jaffe, J., and Feldstein, S. (1970). Rhythms of Dialogue. New York, NY: Academic Press.

Jefferson, G. (1979). A technique for inviting laughter and its subsequent acceptance/declination, in Everyday Language: Studies in Ethnomethodology, ed G. Psathas (New York, NY: Irvington Publishers), 79–96.

Jefferson, G. (2004). A note on laughter in ‘male-female’ interaction. Discourse Stud. 6, 117–133. doi: 10.1177/1461445604039445

Katsika, A., Krivokapić, J., Mooshammer, C., Tiede, M., and Goldstein, L. (2014). The coordination of boundary tones and its interaction with prominence. J. Phonet. 44, 62–82. doi: 10.1016/j.wocn.2014.03.003

Kendrick, K. H., and Torreira, F. (2015). The timing and construction of preference: a quantitative study. Discourse Processes 52, 255–289. doi: 10.1080/0163853X.2014.955997

Kipper, S., and Todt, D. (2001). Variation of sound parameters affects the evaluation of human laughter. Behaviour 138, 1161–1178. doi: 10.1163/156853901753287181

Kohler, K. J. (2008). ‘Speech-smile’, ‘speech-laugh’, ‘laughter’ and their sequencing in dialogic interaction. Phonetica 65, 1–18. doi: 10.1159/000130013

Krivokapić, J., Styler, W., and Parrell, B. (2020). Pause postures: the relationship between articulation and cognitive processes during pauses. J. Phonet. 79, 100953. doi: 10.1016/j.wocn.2019.100953

Kurtz, L., and Algoe, S. (2015). Putting laughter in context: shared laughter as behavioral indicator of relationship well-being. J. Int. Assoc. Relationsh. Res. 22, 573–590. doi: 10.1111/pere.12095

Kuznetsova, A., Brockhoff, P. B., and Christensen, R. H. B. (2017). lmerTest package: tests in linear mixed effects models. J. Stat. Softw. 82, 1–26. doi: 10.18637/jss.v082.i13

Lavan, N., Scott, S., and McGettigan, C. (2016). Laugh like you mean it: authenticity modulates acoustic, physiological and perceptual properties of laughter. J. Nonverb. Behav. 40, 133–149. doi: 10.1007/s10919-015-0222-8

Lefcourt, H. M. (2000). Humor: The Psychology of Living Buoyantly. New York, NY: Plenum Publishers.

Lisker, L. (1986). “Voicing” in English: a catalogue of acoustic features signalling /b/ versus /p/ in trochees. Lang. Speech 29, 3–11.

Ludusan, B., and Wagner, P. (2022). Laughter entrainment in dyadic interactions: temporal distribution and form. Speech Commun. 136, 42–52. doi: 10.1016/j.specom.2021.11.001

Marslen-Wilson, W. (1973). Linguistic structure and speech shadowing at very short latencies. Nature 244, 522–523.

Marslen-Wilson, W. (1985). Speech shadowing and speech comprehension. Speech Commun. 4, 55–73.

Mazzocconi, C., Tian, Y., and Ginzburg, J. (2020). What's your laughter doing there? A taxonomy of the pragmatic functions of laughter. IEEE Trans. Affect. Comput. 1–19. doi: 10.1109/TAFFC.2020.2994533

McAdams, D. P., Jackson, R. J., and Kirshnit, C. (1984). Looking, laughing, and smiling in dyads as a function of intimacy motivation and reciprocity. J. Personal. 52, 261–273.

McFarland, D. H. (2001). Respiratory markers of conversational interaction. J. Speech Lang. Hear. Res. 44, 128–143. doi: 10.1044/1092-4388(2001/012)

McFarland, D. A., Jurafsky, D., and Rawlings, C. (2013). Making the connection: social bonding in courtship situations. Am. J. Sociol. 118, 1596–1649. doi: 10.1086/670240

McGettigan, C., Walsh, E., Jessop, R., Agnew, Z. K., Sauter, D. A., Warren, J. E., et al. (2015). Individual differences in laughter perception reveal roles for mentalizing and sensorimotor systems in the evaluation of emotional authenticity. Cereb. Cortex 25, 246–257. doi: 10.1093/cercor/bht227

Mehu, M., and Dunbar, R. I. M. (2008). Naturalistic observations of smiling and laughter in human group interactions. Behaviour 145, 1747–1780. doi: 10.1163/156853908786279619

Michalsky, J., and Schoormann, H. (2018). Phonetic entrainment of laughter in dating conversations: on the effects of perceived attractiveness and conversational quality, in Proceedings of Laughter Workshop, eds J. Ginzburg and C. Pelachaud (Paris: Sorbonne Université Paris), 40–44.

Michalsky, J., Schoormann, H., and Niebuhr, O. (2017). Turn transitions as salient places for social signals: local prosodic entrainment as a cue to perceived attractiveness and likability, in Proceedings of the Conference on Phonetics and Phonology of German-Speaking Areas (Berlin), 125–128.

Montoya, R. M., Kershaw, C., and Prosser, J. L. (2018). A meta-analytic investigation of the relation between interpersonal attraction and enacted behaviour. Psychol. Bull. 144, 673–709. doi: 10.1037/bul0000148

Nwokah, E. E., Hsu, H.-C., Davies, P., and Fogel, A. (1999). The integration of laughter and speech in vocal communication: a dynamic systems perspective. J. Speech Lang. Hear. Res. 42, 880–894.

Owren, M., and Bachorowski, J. (2003). Reconsidering the evolution of nonlinguistic communication: the case of laughter. J. Nonverb. Behav. 27, 183–200. doi: 10.1023/A:1025394015198

Pouplier, M., and Goldstein, L. (2005). Asymmetries in the perception of speech production errors. J. Phonet. 33, 47–75. doi: 10.1016/j.wocn.2004.04.001

Provine, R. R. (1996). Laughter. Am. Sci. 84, 38–45.

Provine, R. R. (2000). Laughter: A Scientific Investigation. New York, NY: Viking.

Provine, R. R. (2004). Laughing, tickling, and the evolution of speech and self. Curr. Direct. Psychol. Sci. 13, 215–218. doi: 10.1111/j.0963-7214.2004.00311.x

Provine, R. R., and Emmorey, K. (2006). Laughter among deaf signers. J. Deaf Stud. Deaf Educ. 11, 403–409. doi: 10.1093/deafed/enl008

Ramanarayanan, V., Bresch, E., Byrd, D., Goldstein, L., and Narayanan, S. S. (2009). Analysis of pausing behavior in spontaneous speech using real-time magnetic resonance imaging of articulation. J. Acoust. Soc. Am. 126, EL160–EL165. doi: 10.1121/1.3213452

Ruch, W., and Ekman, P. (2001). The expressive pattern of laughter, in Emotion, Qualia and Consciousness, ed A. Kaszniak (Tokyo: World Scientific), 426–443. doi: 10.1142/9789812810687_0033

Sauter, D. A., Eisner, F., Ekman, P., and Scott, S. K. (2010). Cross-cultural recognition of basic emotions through nonverbal emotional vocalizations. Proc. Natl. Acad. Sci. U. S. A. 107, 2408–2412. doi: 10.1073/pnas.0908239106

Schaeffler, S., Scobbie, J. M., and Schaeffler, F. (2015). Complex patterns in silent speech preparation, in Proceedings of the 18th International Congress of Phonetic Sciences, ed The Scottish Consortium for ICPhS 2015 (Glasgow: The University of Glasgow). ISBN 978-0-85261-941-4. Paper number 866, 1–5. Available online at: https://www.internationalphoneticassociation.org/icphs-proceedings/ICPhS2015/Papers/ICPHS0866.pdf (accessed August 05, 2021).

Scott, S. K., Lavan, N., Chen, S., and McGettigan, C. (2014). The social life of laughter. Trends Cognit. Sci. 18, 618–620. doi: 10.1016/j.tics.2014.09.002

Smoski, M. J., and Bachorowski, J.-A. (2003). Antiphonal laughter between friends and strangers. Cognit. Emot. 17, 327–340. doi: 10.1080/02699930302296

Tanaka, H., and Campbell, N. (2011). Acoustic features of four types of laughter in natural conversational speech, in Proceedings of the 17th International Congress of Phonetic Sciences (Hong Kong), 1958–1961.

Trouvain, J. (2003). Segmenting phonetic units in laughter, in Proceedings of the 15th International Congress of Phonetic Sciences, Barcelona (Adelaide: Causal Productions), 2793–2796.

Truong, K. P., and Trouvain, J. (2014). Investigating prosodic relations between initiating and responding laughs, in Proceedings of the 15th Annual Conference of the International Speech Communication Association (Interspeech), 1811–1815. doi: 10.21437/Interspeech.2014-412

Vettin, J., and Todt, D. (2004). Laughter in conversation: features of occurrence and acoustic structure. J. Nonverb. Behav. 28, 93–115. doi: 10.1023/B:JONB.0000023654.73558.72

Wild, B., Rodden, F. A., Grodd, W., and Ruch, W. (2003). Neural correlates of laughter and humour. Brain 126, 2121–2138. doi: 10.1093/brain/awg226

Keywords

vocal accommodation, laughter, speed dating, attraction, timing, respiration

Citation

Rathcke T and Fuchs S (2022) Laugh is in the air: An exploratory analysis of laughter during speed dating. Front. Commun. 7:909913. doi: 10.3389/fcomm.2022.909913

Received

31 March 2022

Accepted

18 July 2022

Published

04 August 2022

Volume

7 - 2022

Edited by

Oliver Niebuhr, University of Southern Denmark, Denmark

Reviewed by

Dani Byrd, University of Southern California, United States; Margaret Zellers, University of Kiel, Germany

Copyright

© 2022 Rathcke and Fuchs.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Tamara Rathcke, tamara.rathcke@uni-konstanz.de

This article was submitted to Language Sciences, a section of the journal Frontiers in Communication

Disclaimer

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.