- 1Department of Linguistics, University of Texas at Austin, Austin, TX, United States

- 2The University of Texas at Austin, Austin, TX, United States

The majority of adult learners of a signed language are hearing and have little to no experience with a signed language. Thus, they must simultaneously learn a specific language and how to communicate within the visual-gestural modality. Past studies have examined modality-unique drivers of acquisition within first and second signed language learners. In the former group, atypically developing signers have provided a unique axis, namely disability, for analyzing the intersection of language, modality, and cognition. Here, we extend the question of how cognitive disabilities affect signed language acquisition to a novel audience: hearing, second language (L2) learners of a signed language. We ask whether disability status influences the processing of spatial scenes (perspective taking) and short sentences (phonological contrasts), two aspects of the learning of a signed language. For the methodology, we conducted a secondary, exploratory analysis of a data set including college-level American Sign Language (ASL) students. Participants completed an ASL phonological discrimination task as well as non-linguistic and linguistic (ASL) versions of a perspective-taking task. Accuracy and response time measures for the tests were compared between a disability group with self-reported diagnoses (e.g., ADHD, learning disability) and a neurotypical group with no self-reported diagnoses. The results revealed that the disability group collectively had lower accuracy compared to the neurotypical group only on the non-linguistic perspective-taking task. Moreover, the group of students who specifically identified as having a learning disability performed worse than students who self-reported using other categories of disabilities affecting cognition. We interpret these findings as demonstrating, crucially, that the signed modality itself does not generally disadvantage disabled and/or neurodiverse learners, even those who may exhibit challenges in visuospatial processing.
We recommend that signed language instructors specifically support and monitor students labeled with learning disabilities to ensure development of visual-spatial skills and processing in signed language.

Introduction

Consider a hearing, monolingual, English-speaking individual with a diagnosis of dyslexia learning a spoken second language (L2), such as Spanish or French, in a formal classroom setting in the United States. This student will likely have both a neuropsychiatric and academic record of impairment in the written and spoken modalities of language (Individuals with Disabilities Education Act, 2004). Based on this record, this learner may have specific modifications made to their coursework so that it is accessible, such as oral administration of test materials (Kormos, 2017b). In some cases, this record would even sufficiently permit them to waive institutionally administered requirements for world language coursework (Lys et al., 2014).

Now consider the same dyslexic learner in a signed language classroom. This student will likely have no record of their personal abilities in the context of the signed modality of language. There is scant research on hearing, atypical L2 sign learners (though see Singleton and Martinez, 2015), either working from a framework of disability or neurodiversity1 (Singer, 1998; Bertilsdotter Rosqvist et al., 2020). Thus, there is little context to predict what unique experiences this learner, or any disabled2 or neurodiverse learner with diagnoses affecting cognitive and/or linguistic processes, will face while specifically undergoing signed L2 acquisition. Students with a range of conditions, be they medically diagnosed or self-identified, may find themselves in need of support in language classrooms, but cohesive, empirically based recommendations for accommodations are nearly nonexistent. Demonstrating this unmet need, many language instructors have opted to collaborate amongst each other to develop and share their own personal strategies for working with disabled second language students (Kormos, 2017a), though existing discussion appears to be limited to spoken language settings. Disability services coordinators have even recommended taking signed language courses as an alternative to spoken language coursework as a form of accommodation in itself, likely reflecting misguided conceptions about signed languages (Arries, 1999). Researchers have posited potential modality effects on sign-naïve L2 learning (Quinto-Pozos, 2011; Chen Pichler and Koulidobrova, 2016) and, importantly, have noted signed modality features that might either impede or facilitate learning for individuals with processing challenges (Quinto-Pozos, 2014). Determining if and in what direction modality effects exist for atypical L2 learners could set precedent for signed language classroom accommodations, while also informing theory of modality and language.

The landscape of disability types and labels is complex: categories are often non-discrete, and diagnosis draws on various approaches, including academic and psychological factors. In this introduction we highlight some of the complexity with disability categories and labels, with a focus on disabilities that are primarily cognitive in nature and may be particularly pertinent to learning (thus, excluding physical/motor disabilities, sensory disabilities such as blindness and deafness, and emotional or mental illness). First, we introduce general points about disability types and terminology, including how various labels are used by well-known diagnostic criteria. Then, we discuss disabilities affecting cognition in the context of language learning, including what we know from spoken language studies. As part of the landscape on this topic, we highlight the category of “learning disability,” a contentious topic in the context of world language coursework, and its own complexity. Finally, we discuss psycholinguistic factors related to signed L2 learning, with an emphasis on factors that are shown to be challenges with the disability groups examined for this paper. The goal of the introduction is to provide the reader with information about the landscape of disabilities related to cognition, learning, and language and what we know about their relationships with language learning. For a detailed reference on how and which specific disabilities are potentially implicated as risk factors to second language learning, see Kormos (2017a).

Most existing discussion of disability in second language learning is exclusive to spoken or written language and centers “learning disability,” which is not a cohesive term. Colloquially, people often refer to a wide range of disabilities as “learning disabilities,” including those that technically fall into different diagnostic groups from specified learning disorders (Learning Disabilities Association of America, 2013a). Related phrases like “cognitive disability(s)” are likewise used at times as interchangeable umbrella terms for disabilities related broadly to learning, cognition, and language, including Attention-Deficit Hyperactivity Disorder (ADHD), autism, and even aphasia (Sims and Delisi, 2019). However, intellectual disability (the modern term for what was formerly labeled cognitive disability) is a specific medical label separate from learning disorders, which is indicated by diminished “intellectual functioning,” challenges in “adaptive functioning,” and an onset in early childhood (American Psychiatric Association, 2013). This label is also associated historically with low IQ, though this is no longer a diagnostic requirement per the DSM-5. At any rate, the coopting of labels such as “learning disability” and “cognitive disability” as umbrella terms appears to reflect an inclination to group disabilities like learning disorders, ADHD, language impairment, and autism together, despite the fact that there isn't an existing universal term encompassing these diagnoses.3 Though maintaining the distinction of other diagnoses from specific learning disorders, Kormos (2017a) likewise includes ADHD, autism, and language impairment in their discussion of how learning differences affect second language learning processes, justified on the basis of impairment in both academic performance and language learning.

Technically, the most recent iteration of the Diagnostic and Statistical Manual, the DSM-5 (American Psychiatric Association, 2013), defines only one “Specific Learning Disorder” with potential subtypes in reading, writing, and mathematics (also referred to as dyslexia, dysgraphia, and dyscalculia, respectively).4 Per the DSM-IV (American Psychiatric Association, 1994), these would have simply been referred to as “learning disorders,” or “learning disabilities.” Thus, much of the literature reviewed here, contemporary with the era of the DSM-IV, uses these terms (we maintain usage from source material when referencing terminology). Other sources may opt for the term “learning differences” to reference these diagnoses (Kormos, 2017a). Learning disorder diagnoses are unique in that they are predicated on an educational context. They are defined broadly, encompassing, essentially, any pronounced difficulty in one or more respective academic subjects (reading, writing, mathematics) that is unexplained by a separate disability or environmental factors. Despite changes in diagnostic criteria over time, any iteration of the label for learning disorders presupposes an element of “unexpectedness” (Kormos, 2017a; Schaywitz and Schaywitz, 2017) of the demonstrated academic performance based on factors such as overall intelligence, physical capabilities, and environment. “IQ-achievement discrepancies” were once even a core criterion of these diagnoses, though these guidelines are no longer in practice (Sparks, 2016). 
Here, we emphasize a relevant distinction from intellectual disability and general cognitive impairment encoded into learning disorder diagnoses: these labels are typically used for individuals who are deemed as having relatively “low” support and accommodation needs.5 Given that literature on disability and language learning centers specific learning disorders, the nature of the diagnosis greatly affects the landscape of the types of participants included in this research. Namely, the sample populations are generally relatively high-achieving students, including in the present study, which is an important limitation to generalizability to keep in mind.

While there is a large body of research devoted to the psycho-linguistic underpinnings of specific learning disorders (especially dyslexia), diagnostic criteria for these labels are guided primarily by academic performance as assessed at an early age. Evaluations include review of academic records, behavioral interviews, and psychometric measures which are often performed within primary and secondary school systems by qualified counselors, teachers, and school psychologists (Learning Disabilities Association of America, 2013b). This is in contrast to a diagnosis like Auditory Processing Disorder (APD), for example, which is typically provided by an outpatient audiologist using specified auditory processing tasks of temporal processing, sound localization, and pattern recognition (American Speech Language Hearing Association, 2023). This is not to say that specific learning disorder diagnoses are not rigorous or valid. Among thirteen disability categories, accommodations for both specific learning disorders and ADHD are equally protected by the Individuals with Disabilities Education Act (IDEA), and auditory processing disorder, for example, was recently determined by court decision as a protected disability by IDEA as well (E. M. vs. Pajaro Valley United School District, 2014). The process of diagnosis for specific learning disorders, though (especially for dyslexia), entails an assessment of poor reading and/or written language skills, hence their prominence in disability and language learning literature.

Specific learning disorders are of interest to language learning literature due not only to their inherent relationship to reading and writing skills, but also to their prevalence. Alongside Attention-Deficit Hyperactivity Disorder (ADHD), they are among the most common neurodevelopmental disorders (American Psychiatric Association, 2013). Specific learning disorders and ADHD are also highly co-occurring. Thus, they both have received the bulk of attention in regard to disability in language learning literature, especially the dyslexic presentation of a specific learning disorder. Likewise, the labels “learning disorder,” “dyslexia,” and “ADHD” represent the most common diagnoses in our sample population.6 Therefore, these diagnoses will be the focus of this literature review.

Schneider and Crombie (2003) emphasize the nature of dyslexia as a multi-modal construct which could impact levels of L2 learning beyond reading and writing, including “oral, auditory, kinesthetic, and visual” difficulties. Arries (1999) likewise cites “salient characteristics” of “learning disabled” students that may impact their L2 learning, including phonological processing issues, reading difficulties, memory impairments, anxiety, challenges with maintaining attention, and poor metacognitive skills for classroom learning. Here, Arries is using a more colloquial definition of “learning disability,” including ADHD and even brain injuries as well as specific learning disorders like dyslexia. The DSM-5 describes ADHD as a learning difficulty (though not a specific learning disorder), as opposed to its description as a behavioral disorder in previous iterations (Kałdonek-Crnjaković, 2018). It also specifies that individuals with ADHD have “reduced school performance and academic attainment” (American Psychiatric Association, 2013). Kałdonek-Crnjaković (2018) discusses how features of ADHD could specifically impact language learning: inattentiveness may hinder incidental learning as well as novel word storage, retrieval, and coding, while impulsivity could impede high-level, pragmatic skills like conversational turn-taking. These suggestions are supported by the findings of Paling (2020), in which college-level students with ADHD self-reported significantly more negative experiences than a control group without ADHD in terms of language learning difficulty and progression.

Spoken language learning research on disability has primarily focused on auditory phonological measures. Thus, extrapolating findings to signed languages is not straightforward. In fact, the most recent legal definition of dyslexia in the United States invokes phonological processing difficulties as a reason dyslexic individuals may struggle with second language learning, but specifically in the auditory realm:

“[Dyslexia is] most commonly due to a difficulty in phonological processing (the appreciation of individual sounds of spoken language), which affects the ability of the individual to speak, read, spell, and often, learn a second language.”

[Senate Resolution. 114th CONGRESS, 2nd SESSION ed, 2016, as cited in Schaywitz and Schaywitz (2017)]

However, research on dyslexia does analyze visual language processing in the form of written language. As referenced in the Senate resolution above, the primary etiology for dyslexia is widely considered to be an issue of auditory phonology (American Psychiatric Association, 2013), but there is evidence in developmental literature that children with dyslexia also exhibit visual or visuospatial challenges (Valdois et al., 2004; Lipowska et al., 2011; Laasonen et al., 2012; Chamberlain et al., 2018). Valdois et al. (2004) argue that visual attentional challenges may be a secondary area of concern for characterizing different profiles of dyslexia. They explain that atypical visual attentional patterns may, for example, drive distinctiveness in visual scanning behaviors and span while reading. To be clear, signed language structure is assuredly different from that of written language. The latter is two-dimensional code, while the former is three-dimensional, natural language. Still, the evidence of distinct visual processing challenges in dyslexic populations raises the question of whether such differences will be domain general and thus affect signed language processing.

Though not examined in the context of language learning, developmental research also indicates that ADHD populations demonstrate specific challenges in visual processing. Children with ADHD show disrupted visual attention (Li et al., 2004), and in fact, may have more prominent challenges with attention in the visual modality versus the auditory modality (Lin et al., 2017). Furthermore, children with ADHD show impaired working memory in both visual and phonological (i.e., auditory) domains (Gallego-Martínez et al., 2018). Both verbal and non-verbal working memory impairments are hallmarks of language atypicality in children, and there is evidence that attentional impairments in these populations contribute to an inability to maintain, process, and synthesize linguistic information in short-term memory (Gillam et al., 2017). Given that these exact types of challenges are observed in ADHD populations, this raises the question of whether they similarly impact ADHD individuals' language learning processes, be that in the auditory or visual modality. Moreover, if challenges are more pronounced for this group in the visual modality as Lin et al. (2017) suggest, then it is worth investigating whether the visual modality of language yields specific detriments to ADHD learners.

There is even reference in practice to specific “learning disabilities” of visual processing in the (outdated) label of “non-verbal learning disability,” which ostensibly encompasses challenges specifically in visuospatial perception and reasoning as well as “social-emotional” skills (Garcia et al., 2014). In part due to the controversial nature of this diagnostic label (which is not in the DSM-5)7, findings are sparse and difficult to review, particularly in relation to language learning. However, research on these disabilities provides evidence of visuospatial working memory challenges that are parallel to the types of verbal working memory issues observed in dyslexic populations (Garcia et al., 2014). Relatedly, there is direct evidence from sign language research of how such challenges could affect native signed language acquisition. Quinto-Pozos et al. (2013) review the case of a deaf child signer of American Sign Language named “Alice,” who, based on educational and psychological records, had a marked impairment in visuospatial processing which was not explained by her general intelligence, language fluency, or language experience. The case study affirmed this assessment of Alice, as she exhibited difficulty with production and perception of spatial devices in ASL, especially those requiring shifts in visual perspective. Alice's processing difficulties were remarkable since she was exposed to ASL from birth and attended a bilingual (ASL-English) school.

Similar visual-spatial challenges to Alice's have yet to be reported in adult hearing learners of a signed language. Such learners may not even be aware of underlying visuospatial weaknesses, especially given labels for such impairments aren't used ubiquitously. Without a label, these students may not have a history of accessing classroom-based accommodations with respect to visual processing, thus leaving them without any reference for accommodation practices in a signed language classroom. For example, a formally diagnosed dyslexic student may have already engineered a set of personalized meta-learning accommodations over the course of years (oral administration of instructions, alternative fonts for written materials, etc.). An individual with visual processing difficulties lacking official diagnosis would not be aware of similar accommodations that could aid their learning, such as: three-dimensional models in lieu of abstracting from two-dimensional photos, moving visual information closer to their visual field, and visually streamlined (uncluttered) course materials (Ho, 2020).

The processing of visual perspective is particularly important in signed language learning, due to the visual-spatial nature of signed language productions. When describing a spatial scene in American Sign Language (ASL), signers often describe that scene from their own perspective, which means that interlocutors have to engage in mental perspective shifts in order to correctly interpret the layout of the scene. Additionally, various studies have revealed differences between males and females on perspective-taking tasks; generally, males outperform females on such non-linguistic tasks (Tarampi et al., 2016; Hegarty, 2017). However, differences among genders have been shown to disappear when participants are given perspective-taking tasks while engaged in linguistic processing (Brozdowski et al., 2019; Secora and Emmorey, 2020).

The bulk of research on even well-documented disabilities like dyslexia and ADHD, including that reviewed thus far, primarily concerns children, especially school-aged populations. As is the case with ADHD, characteristics of individuals in diagnostic groups might change as they age (Kormos, 2017a). There is mixed evidence as to whether and how visuospatial challenges persist into adulthood, and thus, would be relevant to an adult L2 learner. Bacon et al. (2013) find that dyslexic adults only performed worse than control groups on the reverse condition of a visuospatial memory task, a disadvantage remedied by explicit instruction. The authors argue that this suggests difficulty with executive function, but not visuospatial processing per se. Likewise, Łockiewicz et al. (2014) find that adults with dyslexia perform on par with control groups on 2D and 3D versions of a mental rotation task. These results, then, do not support sustained visual challenges in adult dyslexic populations.

In contrast, in an online measure of language processing, Armstrong and Muñoz (2003) find that adults with ADHD exhibit a prolonged “attentional blink” (the lag needed between two successive, rapid visual targets, e.g., alphabetic letters, to successfully identify both) compared to adult controls without ADHD. Additionally, regardless of the lag between the two targets, the ADHD group never reached performance commensurate with their performance on a single-target condition, while the control group did. Furthermore, the ADHD group tended to report targets that did not appear on screen in the trial, implying entirely failed perception that led to supplied guesses, where the control group would incorrectly identify stimuli actually preceding or following the target on screen. Finally, the ADHD group had an “unstable gaze” characterized by more eye movements overall, supporting the indication of non-perception errors.

Laasonen et al. (2012), though, find a prolonged attentional blink only for dyslexic adults, and not ADHD adults, compared to neurotypical controls. Likewise, there was no disadvantage for the ADHD group on a field of vision (Useful Field of View) or visual attention capacity (Multiple Object Tracking) task, but the dyslexic group had longer response times on the former. By way of explaining the lack of underperformance for the ADHD group, Laasonen et al. report that ADHD is a “multifactorial and heterogeneous disability,” and thus, the symptoms that implicate cognitive issues may vary between individual cases in number, type, and degree. Supporting this conclusion, Nilsen et al. (2013) find that ADHD adults with higher-severity symptoms (as determined by a standardized diagnostic scale) made more eye movements to distractors in a communicative perspective-taking task compared with adults with lower-severity symptoms. However, importantly, accuracy in the task itself was not affected by symptom degree.

In summary, studies of adult dyslexic and ADHD populations reveal the potential for sustained visuo-spatial processing challenges from childhood, especially in the temporal domain, but presentation may vary by the measure used and by individual performance. Both dyslexic and ADHD adults may show poor rapid visual processing, and multiple studies provide evidence of unstable eye gaze in adults with ADHD. The non-perception errors exhibited by individuals with ADHD in Armstrong and Muñoz (2003) exemplify a potential consequence of deficient temporal visuo-attentional mechanisms for online language processing, where portions of the linguistic signal may not be perceived at all. While Laasonen et al. (2012) do not propose a specific underlying mechanism, they show dyslexic adults exhibit temporal visual processing differences using the same attentional blink task, resulting in lowered performance. Especially as the rigor and expected receptive fluency in a language learning environment increase, issues with temporal processing could pose challenges to students' understanding of the linguistic signal. It's further worth noting that the differences between neurotypical and disabled groups in these studies were found in a controlled experimental setting, where distractions would be at a minimum. This setting may not be representative of real-life classrooms, which have more environmental variability. Finally, while medication can sometimes alleviate visual attention difficulties in ADHD groups, some visual-processing challenges appear to be medication resistant (Maruta et al., 2017), and there are no such standardly used medications for dyslexic or otherwise learning-disabled groups. For both these reasons, one can't assume that either accommodation or medication will completely alleviate language learning challenges caused by problems with visuospatial processing in these groups.

While the previously reviewed studies provide insight into the cognitive profiles of dyslexic and ADHD groups, the question remains as to whether particular cognitive challenges will impact overall language learning outcomes in a classroom setting. In one of the few existing studies of disabled second language learners, Sparks et al. (2008) directly compare public high school students in Spanish language classrooms who either have a diagnosis of “learning disability” (LD) or ADHD. Diagnoses for students in each group were formally administered by appropriate authorities, per United States federal guidelines. These two groups were compared with an additional set of peers lacking either diagnosis, who were divided by the researchers into either “high-achieving” or “low-achieving” groups for purpose of analysis. The achievement groups were determined by teacher recommendations (for “good L2 learners” and “poor L2 learners”) and the final grades of the students in the class, where those with a B or higher were in the high-achieving group. It's important to note that these comparison groups are not equal (i.e., there aren't “low-achieving” and “high-achieving” divisions for the LD and ADHD groups). The intent of the study was in part to determine whether poor language learners with disabilities are distinct from generally poor language learners without diagnoses, thus implying the need for specialized accommodations (as opposed to general supportive language learning practices in-classroom, for example).

The results for the Sparks et al. (2008) study were as follows. For L1 and L2 literacy measures as well as L2 proficiency measures, high-achieving students performed significantly better than low-achieving and LD students, but not ADHD students. However, high-achieving students did outperform ADHD students, as well as low-achieving and LD students, on the MLAT (Modern Language Aptitude Test), the L2 aptitude measure that was used for the study. ADHD students performed significantly better than LD students on all L2 linguistic tasks (e.g., word decoding, pseudoword decoding, spelling) and better than low-achieving students on all but one. Overall, there were no group differences between low-achieving and LD students on any measures. Sparks et al. interpret the fact that the non-disabled “low-achieving” and LD groups show no group differences as an indication of, essentially, equally deficient language learning capabilities and cognitive-linguistic skills. We find it worth noting, though, that especially in a group of LD students with formal diagnoses and accommodations to support their learning, one wouldn't expect students labeled as learning disabled to perform categorically like “poor learners”; variation should be expected within-group, as well. The results also provide evidence that ADHD students may not share this risk for language learning difficulties. To this point, the authors note the heterogeneity in individuals with ADHD, which was reflected in how performance in this group varied greatly by individual and task. In other words, they emphasize that the ADHD participants' performance as a group may not reflect individual challenges; this commentary echoes the findings of Laasonen et al. (2012), who also indicated that individual differences in ADHD participants may mask overall group differences.

The overview presented thus far on disability categories and (language) learners provides a backdrop for considering hearing learners of a second language, primarily in spoken language learning. In addition to the fact that the indicated disability groups here may present specific visual processing challenges, signed language classrooms need consideration due to their well-established popularity. American Sign Language (ASL) is an increasingly popular choice for college students seeking to fulfill post-secondary language requirements in the United States. According to the Modern Language Association survey of foreign language enrollment in higher education, enrollment in ASL courses increased by approximately 432 percent between 1998 and 2002, growing from 11,420 to 60,781 students (Welles, 2004). By 2013, ASL had become the third most studied language in U.S. higher education, following Spanish and French (Looney and Lusin, 2019). Disabled students are also a growing minority in higher education, generally (Sanford et al., 2011), increasing the chance that students in language classrooms have a disability affecting their learning experience. The general population of students has been shown to gravitate to signed language classrooms due to assumptions about the “ease” of learning signed languages (Jacobs, 1996). We speculate that disabled students could be particularly attracted by the misleading proposition that signed languages are more adaptable to their learning difficulties, which is supported by formal recommendations made to pursue signed language classrooms as alternatives to spoken language coursework (Arries, 1999). In a more positive vein, we also suggest disabled learners could be drawn to (and feel welcomed in) signed language learning spaces due to the Deaf community's proximity to disability communities.

The majority of adult ASL learners are what Chen Pichler and Koulidobrova (2016) define as M2L2 learners: L2 learners who are also acquiring that second language in a non-native modality. Among possible modality-driven learning effects for this group, Chen Pichler and Koulidobrova describe the challenge of acquiring a new phonological inventory within a simultaneously acquired, also non-native system of visual phonology. Of note here is the simultaneous vs. sequential arrangement of signed and spoken phonological parameters, which may in itself drive differences in L2 learning (Quinto-Pozos, 2011). Grammatical use of space, they add, such as pointing, spatial agreement on verbs, and classifiers (gesture-like signed language devices used to indicate movement, shape, and size via language-specific handshape constructions), also necessitates that sign-naïve individuals learn to operationalize their gestural space according to signed language grammar. Evidencing the difficulty of spatial grammar acquisition, classifier constructions in particular are shown to challenge M2L2 signers (Boers-Visker and Van Den Bogaerde, 2019); these constructions are also late-acquired in native signing (Morgan et al., 2008) and shown in at least one case study to be difficult for a visuospatially impaired native signer (Quinto-Pozos et al., 2013). This raises the question of whether M2L2 learners with similar visuospatial challenges will also encounter additional difficulty in spatial grammar, especially classifier constructions.

A handful of studies have directly assessed the relationship between cognitive factors like visuospatial or auditory memory and M2L2 signed language learning specifically. Williams et al. (2017) investigate cognitive-linguistic measures administered in the auditory L1 modality in relation to L2 signed language learners' ASL vocabulary and self-rated proficiency tested at the beginning and end of one semester of ASL instruction. The predictive measures included a forward and backward version of a digit span task, an English vocabulary test, and an English phonetic categorization task. Interestingly, Williams et al. find that the English vocabulary and phonetic categorization measures, and not the verbal memory measures, predicted ASL vocabulary growth and self-rating.

Martinez and Singleton (2018, 2019) also conducted a series of studies looking at factors for signed vocabulary learning in hearing, non-signing populations. In the first study (2018), the authors implement a series of short-term memory tasks, contrasting those that include sign or sign-like stimuli with those that contain visual stimuli which are not sign-like (for example, videos of body movements vs. patterns of shapes). The first set of these tasks were referred to as movement short-term memory (STM) tasks, which the authors hypothesized would be more related to sign learning than the other types of STM tasks due to the “encoding and binding of biological motion.” They find that all three administered measures of movement STM and both measures of visuospatial STM positively related to hearing non-signers' performance on a sign learning task. Additionally, the visuospatial memory tasks, which, in contrast to two of the movement tasks, did not resemble linguistic properties of signed language, accounted for variance in the sign learning task beyond that accounted for in the movement memory tasks. The authors take the latter finding to indicate the importance of perceptual-motor processes related to the visuospatial modality generally, rather than phonological properties of signed language.

Accordingly, Martinez and Singleton (2019) investigate both domain-general and modality-specific factors related to sign and word learning. In addition to investigating working memory and short-term memory via span tasks, they explore the effect of both crystallized and fluid intelligence. Here, crystallized intelligence refers to the learner's pre-established familiarity with facts and processes, where fluid intelligence refers to the individual's capacity to navigate and solve novel situations (Martinez and Singleton, 2019). These measures were obtained via a series of tasks testing general knowledge, pattern recognition abilities, and English vocabulary. They find that fluid intelligence related to both sign and word learning; however, the effects of phonological short-term memory (P-STM) were modality-specific (i.e., spoken P-STM related to word learning, and signed P-STM related to sign learning). The authors did not find a significant effect for Working Memory Capacity (WMC) as a whole on either sign or word learning. This finding is unexpected due to the fact that STM is a sub-component of WMC. The authors suggest that between the variance accounted for by both fluid intelligence, a skill highly related to WMC, and the P-STM tasks, the effect of WMC was potentially obscured. The influence of both WMC and P-STM on sign learning processes is particularly relevant to the present study due to the ubiquitous presence of challenges with working memory in target disability population groups. The two studies from Martinez and Singleton (2018, 2019) affirm that individual capabilities in the visuospatial short-term memory subcomponent of working memory are, in fact, at issue for signed vocabulary learning.

Due to the demonstrated impact of both modality-general and modality-specific factors on signed language learning, it is possible that M2L2 signers with disabilities that entail deficient general cognitive or visual processes will be negatively impacted in sign learning processes. Considering the literature reviewed on ADHD and dyslexic individuals specifically, potential areas of interest include visuospatial working memory, rapid visual processing, and inhibiting attention to visual distractors. However, it's also possible that the modality of signed language may be beneficial in general to many different kinds of atypical learners, particularly in comparison to L2 acquisition of spoken language. Quinto-Pozos (2014) predicts that for signed language users with compromised processing, some difficulties may include comprehension and production of complex forms with simultaneous morphology, rapid fingerspelling comprehension, and managing perspective shifts with respect to the signing space. Some benefits, though, may be the increased size of and visual access to articulators as well as slow signing speed in comparison to speech rates.

Singleton and Martinez (2015) provide, to the authors' knowledge, one of the only existing studies explicitly designed to investigate the experience of M2L2 learners with disabilities (see Singleton et al., 2019, for a review of atypicality with respect to signed language learning in adolescence and adulthood). The study was conducted at a private United States high school for academically gifted students with language and learning disabilities (here, used in a more general sense, encompassing disabilities like ADHD as well as specific learning disorders). The authors conducted interviews with students taking either Spanish or ASL. They also collected student self-ratings of the difficulty of learning the language in which they were enrolled. Some students reported a positive qualitative experience with ASL in relation to their disability, including one student with both dyslexia and ADHD:

“Yeah… it makes your eyes more focused. Like, maybe this is just for me. But like, obviously, dyslexia is a big part of my life, ‘cause this is what I have to live with for the rest of it. But like I said before, people on dyslexia they pick up on the small things. And people on ASL, like sign language, you have to look at the small things. And it's helped my focus. Because I have dyslexia and ADD. So, it helps me focus better. Like paying attention, ‘cause I have to pay attention to them to understand what they're saying.” (Singleton and Martinez, 2015)

They also found that students with ADHD, for example, on average rated Spanish as more difficult than ASL. However, the students in the ASL courses also had higher scores on IQ measures, so this could be explained by a cognitive advantage in the ASL student group. Extrapolating from the findings on the ratings, then, must be done with caution. However, the consistent, positive attitudes students held about their learning experience in ASL classrooms are informative. It's also valuable that these reports come directly from the experiences of the population of interest. At minimum, these students did not report a negative experience with respect to their diagnoses, in contrast to the findings of Paling (2020), who found that students with ADHD self-reported negative experiences with spoken language learning. In the above excerpt, the student goes so far as to describe the visual-manual mode of language as a benefit, rather than an obstacle, to their language learning experience.

In summary, there are aspects unique to the visual modality of signed language that may have negative or positive implications for M2L2 signed language learners. Additionally, cross-modal cognitive factors from learners' first languages, domain-general skills like fluid intelligence, and modality-specific perceptual and memory processes are all potentially implicated in sign learning (Williams et al., 2017; Martinez and Singleton, 2018, 2019). There is evidence that learners with certain diagnoses, especially ADHD and dyslexia, may show challenges in these domains, raising the question of whether they are at risk in a signed language learning setting compared to peers lacking these diagnoses.

We have outlined various ways in which language learners with disabilities encounter challenges with language processing, and phonological features of language have been investigated repeatedly. Specific to signed language, the processing of perspective has been shown to be particularly challenging for all learners (Brozdowski et al., 2019; Secora and Emmorey, 2020). This study investigates whether hearing L2 ASL signers with disabilities related to cognition, learning, and language perform differently than peers reporting no such diagnoses on three tasks: (1) a signed phonological discrimination task; (2) an ASL-based perspective-taking task; and (3) a non-linguistic perspective-taking task. Between these three tasks, we are able to compare participants' perception of: (1) phonological components of signed languages, and (2) visuospatial processing skills in both a linguistic and non-linguistic mode of delivery. For individuals with specific learning disorders in particular (especially dyslexia), there is ample evidence of phonological processing challenges in the spoken modality of language. Including a signed phonological processing measure allows us to assess whether this is the case in the signed modality as well. On the other hand, there is at least one documented case of a deaf adolescent native signer with visuospatial processing challenges that negatively affect their processing of perspective-taking constructions in ASL (Quinto-Pozos, 2011). Perspective-taking has also been shown to present challenges to deaf L2 signers (Brozdowski et al., 2019; Secora and Emmorey, 2020). In other words, these measures probe a uniquely challenging aspect of sign learning, which may be even more difficult for learners with visuospatial processing difficulties, as are documented in our target disability populations.

Our participant pool is composed of students from beginning and intermediate courses of an ASL program at a large public university. The majority of students in these courses (over 75%) during the semesters we collected data participated in data collection, which contributes to the ecological validity of the collected data. In other words, the data set is highly representative of typical ASL students at the points in time when data were collected. This is true, as well, for the range of disabilities reported by participants. As such, we did not actively recruit students from any disability category, which is reflected in the uneven distribution of participants across neurodiverse categories.

Most literature reviewed here is limited to only specific learning disorders (especially dyslexia) and ADHD. Here, we consider any disability reported that would primarily affect language, cognition, or learning, excluding categories such as deafness, mobility disabilities, and emotional or psychological disabilities. In this approach, we hope to contribute to establishing a lacking precedent in analyzing disability as a part of L2 acquisition research. Moreover, we hope that in including participants who directly correspond to the types of disabled students in ASL classrooms, our project is directly informative to practice. We seek to address which, if any, components of signed language and visual-spatial processing disadvantage L2 learners who are disabled and/or neurodiverse.

Materials and methods

Participants

Participants were hearing college students (n = 166, 134 female, 29 male, 3 N/A) enrolled in either beginners' (ASL I, n = 43) or intermediate (ASL III, n = 123) university ASL courses at the same public university. Students in these courses received credit for participating in a research experiment. Students also had the option of attending a colloquium presentation and writing a summary of that presentation if they did not want to participate in the research experiment. Some students did not participate in any way (either via completing the research experiment or attending the colloquium presentation), in which case they were not awarded credit for this aspect of the course. The participants in this analysis represent students in the ASL program who elected to fulfill this research credit by participating in the present study.

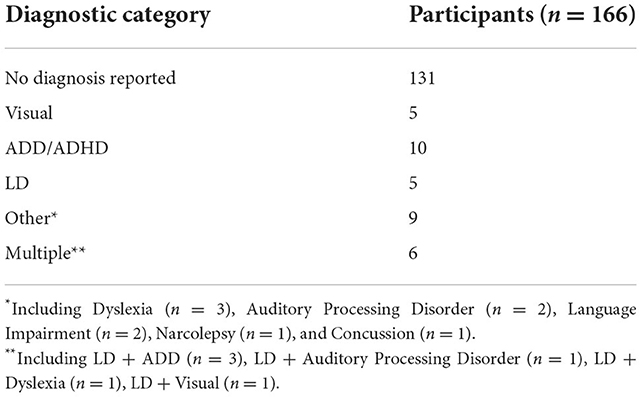

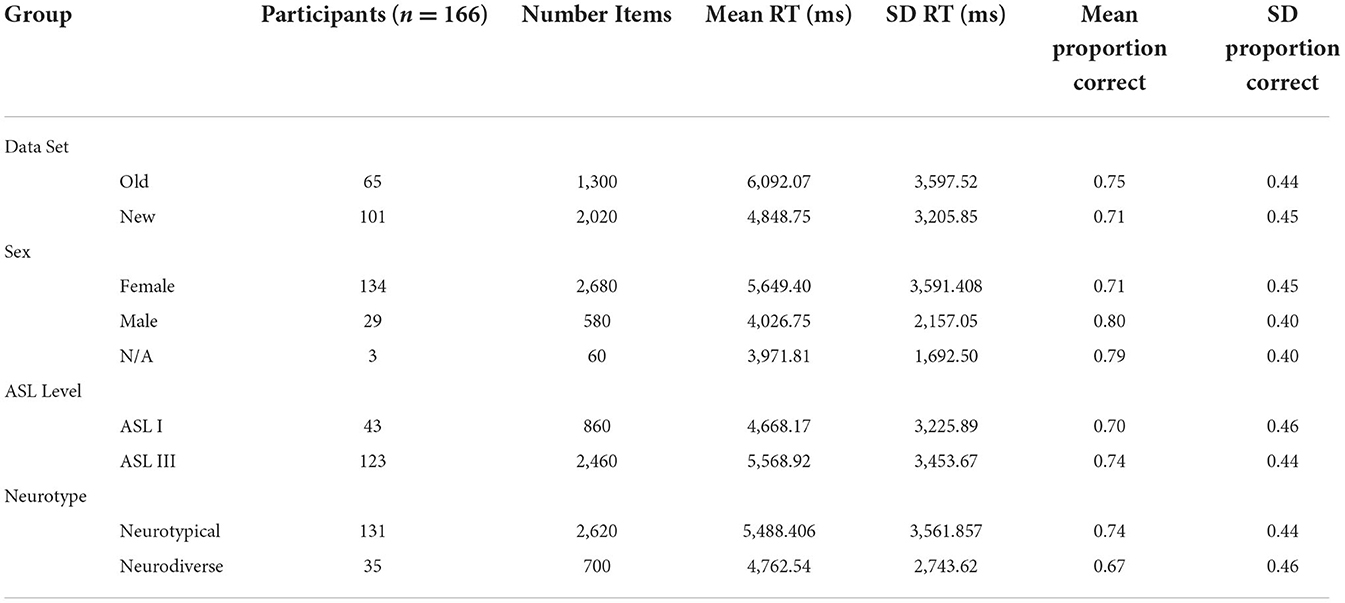

Prior to administration of the experimental tasks, all participants filled out a Qualtrics data form regarding demographic information, relationships to the Deaf community, and language backgrounds. Participants also self-reported diagnoses from a provided list, with the option to submit additional diagnoses not provided. Thirty-five participants reported one or more diagnoses (Table 1). The diagnoses listed in the survey consisted primarily of those that might belong to the neurodivergent community, such as autism, ADHD, learning disability/dyslexia, traumatic brain injuries, and auditory processing disorders (Singer, 1998; Bertilsdotter Rosqvist et al., 2020). Language and speech disorders were also included. The goal was to target diagnoses that represented individuals who primarily had learning, language, and processing difficulties. Motor disabilities, for example, were not included, nor were mood, emotional, and personality disorders. Participants were asked to report visual disabilities, which were included in the analysis.

While disability was not among the initial factors that motivated the data collection for this study, nearly 22% of respondents identified with one or more disabilities. Due to this notable percentage, we were compelled to engage in this exploratory analysis of the role of disability in signed language learning. Because we did not purposefully recruit students with disabilities (of any category), the result is that the categories of disability contain varying numbers of participants for the analysis.

Procedure

Participants completed the experiment on site in a single, hour-long session. The tests were administered in either English or ASL by a trained research assistant. Data collection occurred as part of research projects that were carried out in 2012 and 2018–2020; the data from the two time periods are compared statistically in Sections ASL-PTCT and NL-PTT. The order of the tasks was randomized for each participant. Informed consent was received from all participants.

Materials

The American Sign Language discrimination test

The American Sign Language Discrimination Test (ASL-DT) is a phonological discrimination task administered online with 48 items consisting of videos of paired sentences in ASL (Bochner et al., 2011). After viewing the temporarily displayed videos, participants report whether the sentences are the same or different by selecting an appropriate button. The sentences may be the same or differ in one of five morphophonological parameters on a single sign: handshape, orientation, location, movement, and complex morphology, where complex morphology refers to contrasts in directionality, numerical incorporation, noun classifier usage, and verb inflection. Each item consists of two pairs of sentences, and test-takers provide a judgment of the similarity or difference of the sentences in each pair. There are eight items for each of the six morphophonological contrast categories: five contrast conditions and one same condition, in which there is no difference between the two sentences in each stimulus pair. To reduce chance performance, participants must respond correctly to each pair in the item.
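The all-pairs-correct scoring rule described above can be sketched as follows. This is an illustration only, not the test's actual scoring software: the function names and the `"same"`/`"different"` response labels are hypothetical, and the chance calculation assumes a test-taker guesses uniformly and independently on each pair.

```python
from itertools import product


def score_item(responses, answers):
    """Return True only if every pair in the item was judged correctly."""
    return all(r == a for r, a in zip(responses, answers))


def percent_correct(items):
    """items: list of (responses, answers) tuples, one tuple per test item."""
    n_correct = sum(score_item(r, a) for r, a in items)
    return 100.0 * n_correct / len(items)


def chance_rate(n_pairs=2, n_options=2):
    """Probability of passing an item by uniform, independent guessing.

    Enumerates every possible response pattern; exactly one is fully correct.
    """
    patterns = list(product(range(n_options), repeat=n_pairs))
    return 1 / len(patterns)
```

Under these assumptions, requiring both pairs to be correct lowers the guessing rate per item from one in two to one in four, which is the sense in which the rule reduces chance performance.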

Three Deaf native signers produced the ASL sentences in the videos. Two were male and one was female. The first male always signs the first sentence, and one of the other two signers produces the comparison sentence that follows. Non-contrastive variation between the signers, such as phonetic articulatory differences (which do not alter the meaning of the sentence), was included in the recordings to increase the difficulty of the task.

Only participants in the later data collection session completed the ASL-DT (n = 101). Thus, there were 66 participants in the earlier data collection session who did not complete the ASL-DT. Only data on overall accuracy (in the form of a percentage correct on all items) and confidence intervals of the final score are provided for the test-takers; thus, these are the only measures available to us for analysis.

The American Sign Language perspective-taking comprehension test

The American Sign Language Perspective-Taking Comprehension Test (ASL-PTCT) consists of 20 items in which the participant views a video of a classifier description in ASL of two objects in relation to each other, then selects the appropriate picture of the corresponding items from four answer choices (Quinto-Pozos and Hou, 2013). The answer choices differ both in how the described objects are arranged and oriented with respect to each other within-trial. Arrangement refers to whether one object, for example, is set up to the left or right of the other (both objects always face the same direction). Orientation refers to the orientation of the object in its position, for example, whether a dog is standing up or lying on its side. The answer choices also vary across trials by the shift in perspective required between the stimulus and the answer choices. Namely, each of the five blocks of the experiment has a different degree of rotation from the original perspective of the photo, increasing in 45° increments from 0° in the first block (0°, 45°, 90°, 135°, 180°).

The prompt ASL video was also filmed either from the opposite perspective (the typical 180-degree shift in viewpoint in signing) or a side-by-side perspective (the signer shares a perspective with the participant), which allows the test-taker to have two views of the signer and the signs for the test. One of two versions of the ASL test was administered to each participant, each with a different model signer. All videos were filmed with a fluent, Deaf ASL signer as the signing model. Accuracy and response time are measured for each item. See https://osf.io/cnhq7/?view_only=7531d3529b1d453ebf04e93329321963 for all of the materials in the ASL-PTCT task.

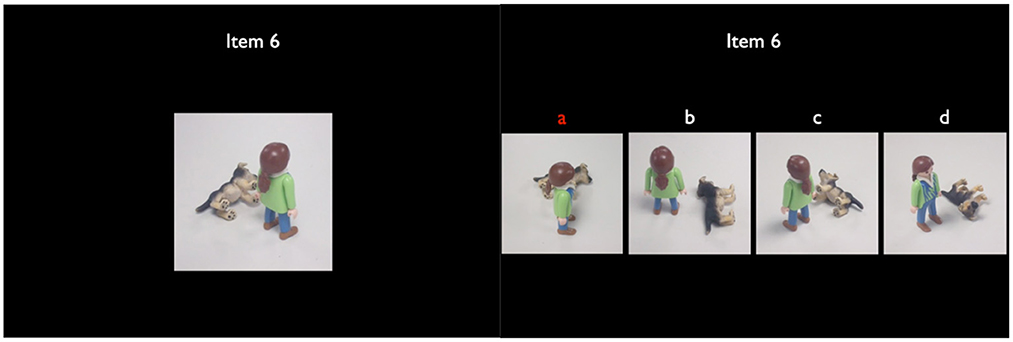

The non-linguistic perspective-taking test

The Non-Linguistic Perspective-Taking Test (NL-PTT) is structured like the ASL-PTCT, but uses still images of objects arranged in relation to each other as the stimulus prompts rather than videos of signed language describing the same scenes. Each of these stills stays on the screen for a total of 3 s at the beginning of the trial. Again, participants must select the correct answer choice from four photos that corresponds to the arrangement they saw in the prompt, where the arrangements and orientations between the objects in each answer choice are different, and the perspective shifts vary by block. For example, below, the participant sees a toy dog on its side facing a standing toy human in the prompt stimulus. The correct answer choice is A, which matches the arrangement in the prompt stimulus but is viewed from a 45° shift in perspective. All of the other answer choices show the dog in an incorrect orientation with respect to the human.

All participants took both the ASL-PTCT and NL-PTT. See https://osf.io/7z4g5/?view_only=00085ff216164d14bcdc79e3e47314cc for a full demonstration of the NL-PTT task.

Analysis

The method for the study was a secondary data analysis on the existing dataset. Data were analyzed in R using the following packages: tidyverse, lme4, sandwich, lmerTest, and effectsize.

For the purposes of the analysis, the participants who reported one or more diagnoses were assigned to the neurodiverse group, while those without a reported diagnosis were assigned to the neurotypical group. The ASL-DT was analyzed using a 2 × 2 ANOVA with total ASL-DT score per participant as the dependent variable and course level and neurotype as independent variables.

Response time and item accuracy in the perspective-taking tasks were analyzed using a series of linear and generalized linear mixed effects regression models, respectively, estimated via maximum likelihood methods. Each model had random effects for item and participant. Fixed effects included group (neurotype), sex, course level, and item perspective (ASL only).
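As a sketch, the accuracy model described above can be written out for participant $i$ and item $j$ as follows. The coefficient symbols are our own notation, and we assume the random effects are random intercepts, which the description does not state explicitly.

```latex
\operatorname{logit} P(y_{ij} = 1)
  = \beta_0
  + \beta_1\,\mathrm{group}_i
  + \beta_2\,\mathrm{sex}_i
  + \beta_3\,\mathrm{level}_i
  + \beta_4\,\mathrm{perspective}_j
  + u_i + v_j,
\qquad
u_i \sim \mathcal{N}(0, \sigma_u^2),\quad
v_j \sim \mathcal{N}(0, \sigma_v^2)
```

Under the same assumptions, the response time model uses the same linear predictor with an identity link and a residual term $\varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)$; the $\mathrm{perspective}_j$ term applies to the ASL-PTCT only.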

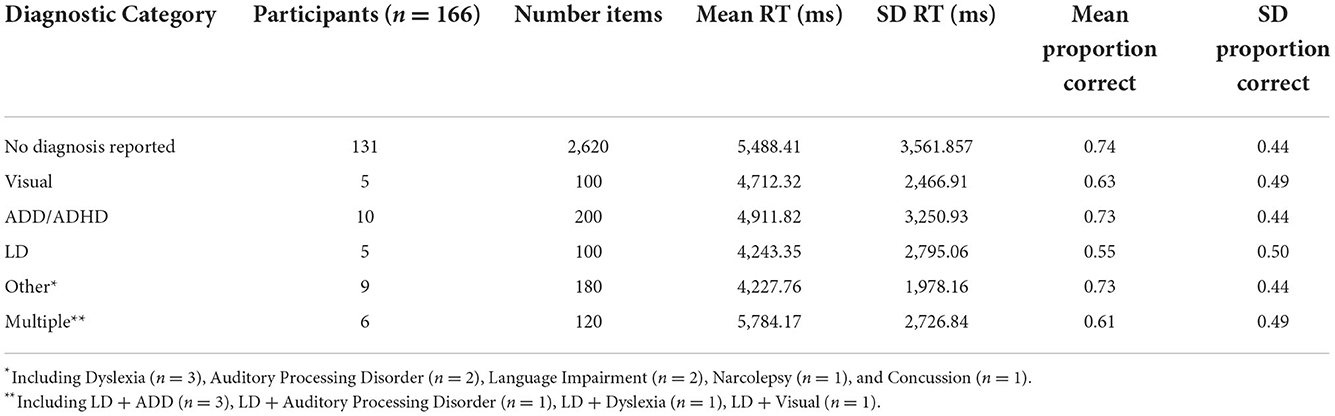

Furthermore, models were run where diagnostic statuses were coded individually into groups, rather than by overall assignment to a neurotype. The categories were as follows: Neurotypical (no diagnosis reported), Visual Impairment, ADD/ADHD, Learning Disability (LD)8, Other (including Auditory Processing Disorder, Concussion, Dyslexia9, Narcolepsy, and Language Impairment), and Multiple. While these categories are not ideal or necessarily homogenous, we are limited by the relatively small number of diagnoses reported, and the responses as provided by the participants.
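The two coding schemes (binary neurotype and individual diagnostic category) can be illustrated with the short sketch below. It is not the analysis code, which was written in R. The label spellings, the function names, and the decision to raise an error on unrecognized labels are assumptions made for illustration.

```python
# Diagnoses that form their own category when reported alone (labels assumed).
NAMED = {"Visual Impairment", "ADD/ADHD", "Learning Disability"}

# Diagnoses grouped under "Other" when reported alone (labels assumed).
OTHER = {
    "Auditory Processing Disorder",
    "Concussion",
    "Dyslexia",
    "Narcolepsy",
    "Language Impairment",
}


def code_neurotype(diagnoses):
    """Binary coding: any reported diagnosis places a participant in the neurodiverse group."""
    return "Neurodiverse" if diagnoses else "Neurotypical"


def code_category(diagnoses):
    """Category coding: none, one named diagnosis, one 'Other' diagnosis, or multiple."""
    unique = set(diagnoses)
    if not unique:
        return "Neurotypical"
    if len(unique) > 1:
        return "Multiple"
    (diagnosis,) = unique
    if diagnosis in NAMED:
        return diagnosis
    if diagnosis in OTHER:
        return "Other"
    # Assumption: fail loudly rather than silently misclassify an unknown label.
    raise ValueError(f"Unrecognized diagnosis label: {diagnosis!r}")
```

For example, `code_category(["ADD/ADHD", "Learning Disability"])` falls into the Multiple category, while `code_neurotype` places the same participant in the neurodiverse group.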

Wald confidence intervals were obtained for each regression factor; tests were set at the 0.05 level of significance and all p-values were adjusted using the Holm correction. We report odds ratios for the logistic regression analyses.
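For readers unfamiliar with the Holm correction, a minimal pure-Python sketch of the step-down procedure follows. It is illustrative only (the analysis itself was run in R), and the function name is hypothetical.

```python
def holm_adjust(p_values):
    """Return Holm-adjusted p-values in the same order as the input.

    The smallest p-value is multiplied by m, the next by m - 1, and so on;
    a running maximum keeps the adjusted values monotone, and each is capped at 1.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted
```

An adjusted value is then compared directly against the 0.05 threshold. Because every multiplier is at least 1, an adjusted p-value can never be smaller than its raw counterpart.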

Results

ASL-DT

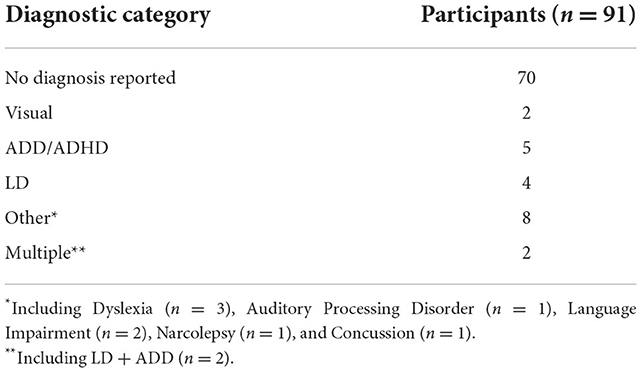

Seven of the participants' ASL-DT scores could not be recovered and three were indeterminate, thus n = 91 for the ASL-DT analysis, 21 of whom reported one or more diagnoses (Table 2). Additionally, five outliers (all in the ASL III group) were removed from the analysis because their scores were more than two standard deviations from the mean. There was a main effect for course level [F(1, 86) = 18.80, p < 0.001, partial eta-squared = 0.19], where ASL III students (M = 52.67, SD = 3.45, n = 48) had higher percentage accuracy scores than ASL I students (M = 49.08, SD = 4.16, n = 38). Neurotypical students outperformed neurodiverse students, but this difference did not reach significance.
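The two-standard-deviation exclusion rule can be sketched as below. This is an illustration under stated assumptions: the function name is hypothetical, the sample standard deviation (n − 1 denominator) is used, and the mean and standard deviation are computed over all scores passed in, since the text does not specify whether the criterion was applied to the full sample or within course level.

```python
from statistics import mean, stdev


def remove_outliers(scores, n_sd=2.0):
    """Keep only scores within n_sd sample standard deviations of the mean."""
    center = mean(scores)
    spread = stdev(scores)  # sample SD (n - 1 denominator); an assumption
    return [s for s in scores if abs(s - center) <= n_sd * spread]
```

Note that a single extreme score inflates both the mean and the standard deviation, so a fixed two-standard-deviation rule becomes more lenient as outliers become more extreme.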

ASL-PTCT

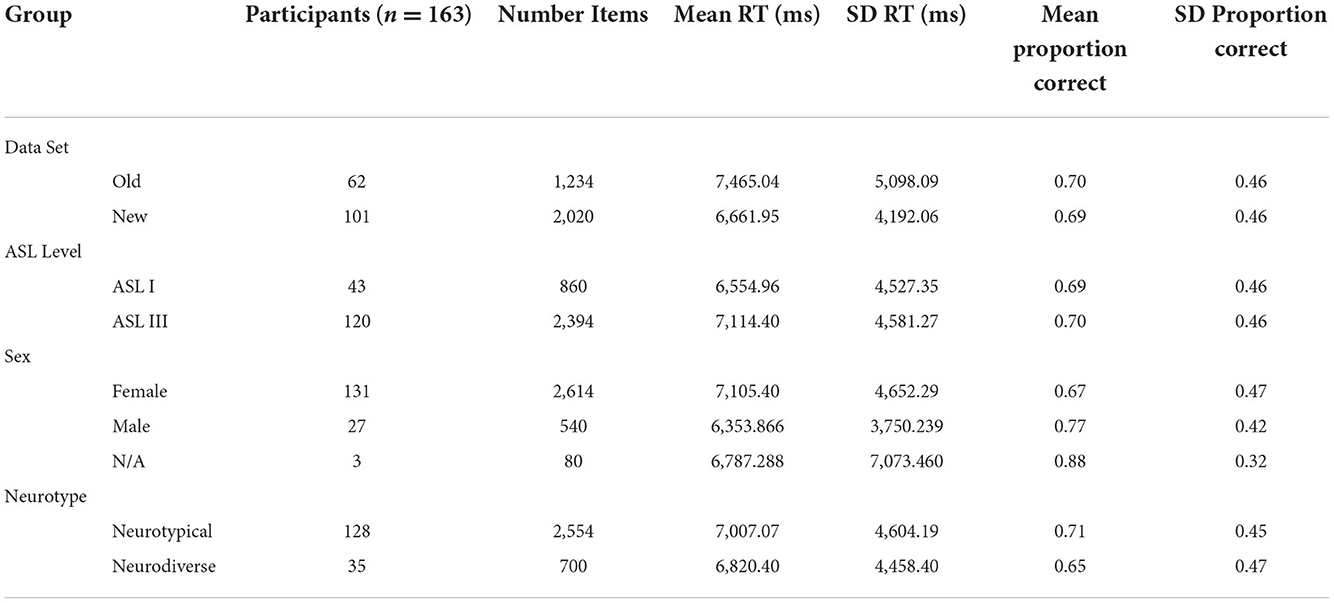

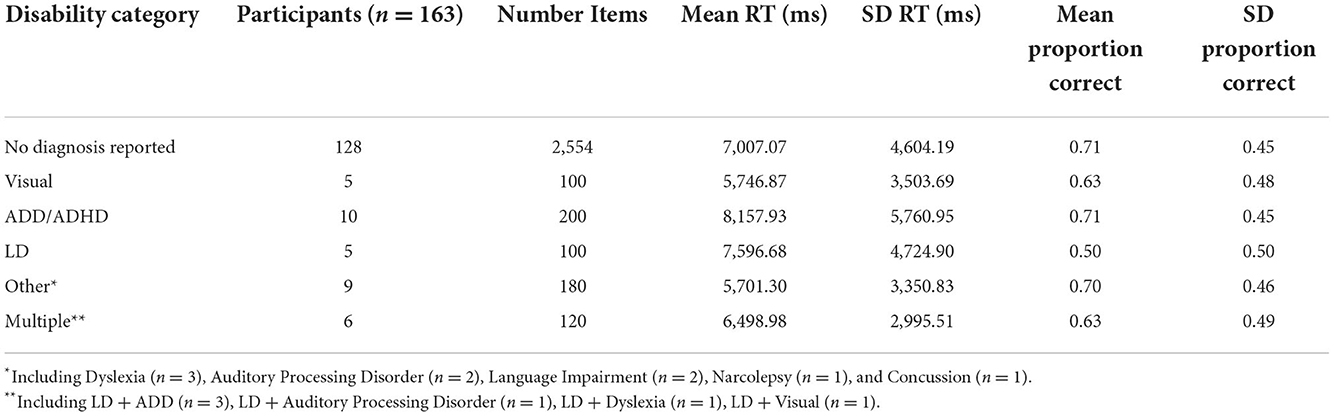

Four participants' ASL-PTCT data could not be retrieved, thus n = 162 for the ASL-PTCT analysis, 35 of whom reported one or more diagnoses (no individuals reporting a diagnosis were missing from the data set, so the breakdown by diagnosis is the same as in the general data set). Summary data are reported overall in Table 3 and by diagnostic category in Table 4. Initial regression analyses revealed no significant effects of time period of the data set (i.e., the 2012 or 2018–2020 session of data collection) or test version on accuracy or response time, so these factors were removed from further models in the ASL-PTCT analysis and the corresponding groups were collapsed. The linear model found no significant main effects for group (disability category), sex, course level, or item perspective. The logistic model found a significant main effect for item perspective (OR = 0.51, CI = [−0.91, −0.43], p < 0.001), where opposite perspective (M = 0.64, SD = 0.48) items were less accurate than side-by-side perspective items (M = 0.75, SD = 0.43). A main effect for sex was also found (OR = 1.92, CI = [0.19, 1.19], p = 0.05), where males (M = 0.77, SD = 0.42) were more accurate than females (M = 0.68, SD = 0.47). No interaction effects were found.
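A note on reading these values: the odds ratios and the bracketed intervals appear to sit on different scales. The midpoint of [−0.91, −0.43] is −0.67, and exp(−0.67) ≈ 0.51, which matches the reported odds ratio for item perspective. The sketch below assumes, as our reading rather than something stated in the text, that the intervals are symmetric Wald intervals on the log-odds scale. The function name is hypothetical.

```python
import math


def log_odds_ci_to_or(lower, upper):
    """Convert a symmetric Wald interval on the log-odds scale to the odds-ratio scale.

    Returns (odds_ratio, (or_lower, or_upper)). The point estimate is taken as the
    interval midpoint, which holds only for symmetric (Wald-type) intervals.
    """
    estimate = (lower + upper) / 2
    return math.exp(estimate), (math.exp(lower), math.exp(upper))


# Item-perspective effect reported for the ASL-PTCT accuracy model.
odds_ratio, (or_low, or_high) = log_odds_ci_to_or(-0.91, -0.43)
```

Under this assumption, the interval for item perspective on the odds-ratio scale runs from roughly 0.40 to 0.65, and so excludes 1.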

Next, the same models were run with diagnoses coded by group. The logistic model again found a significant main effect for item perspective (OR = 0.51, CI = [−0.91, −0.43], p < 0.001). No other effects were found.

NL-PTT

The same models as above were run. All participants were available, with 35 participants identifying as having a relevant disability. Summary data are reported overall in Table 5 and by diagnostic category in Table 6. Interestingly, there was a marginally significant main effect for data set on response time [F(1, 158.3) = 5.76, CI = [−1,724.30, −174.08], p = 0.05], such that the data collected in 2012 had a longer response time (M = 6,092.07 ms, SD = 3,597.52 ms) than the data from 2018 to 2020 (M = 4,848.75 ms, SD = 3,205.85 ms), so this factor was not removed for the NL-PTT (i.e., it was included in the statistical analyses as a factor). There was a main effect of sex on response time [F(1, 158.3) = 8.81, CI = [−2,226.77, −445.39], p = 0.01], such that males (M = 4,026.76 ms, SD = 2,157.05 ms) were faster than females (M = 5,649.40 ms, SD = 3,591.41 ms).

The logistic model found a main effect for sex (OR = 2.06, CI = [0.32, 1.13], p = 0.002), where males were more accurate (M = 0.80, SD = 0.40) than females (M = 0.71, SD = 0.45). There was also a main effect of group (OR = 0.60, CI = [−0.86, −0.15], p = 0.02), where disabled participants (M = 0.67, SD = 0.46) were less accurate than the neurotypical group (M = 0.74, SD = 0.44).

Finally, the models were once again run with diagnoses separated by groups rather than as a single binary variable. A main effect for sex was once again found in the main linear model [F(1, 154.4) = 9.36, CI = [−2,308.55, −505.55], p = 0.02] and in the logistic model (OR = 2.04, CI = [0.31, 1.11], p = 0.004). Additionally, there was a main effect for the learning disability group (OR = 0.32, CI = [−1.95, −0.34], p = 0.04) and a marginal effect of the multiple disabilities group (OR = 0.37, CI = [−1.72, −0.25], p = 0.053), where each group was less accurate (M = 0.55, SD = 0.50; M = 0.61, SD = 0.49) than the neurotypical group (M = 0.74, SD = 0.44).

Discussion

In this study, we compared the performance of neurodivergent and neurotypical college-level ASL learners on a signed language phonological discrimination task as well as both a non-linguistic and a linguistic (ASL) version of a perspective-taking task. We sought to investigate whether individuals in the neurodivergent group were disadvantaged by visual-spatial processing in linguistic and non-linguistic contexts.

There was a main effect for group (disability vs. neurotypical) on accuracy, where neurotypical students outperformed disabled students, in the non-linguistic version of the perspective-taking task only. Similarly, there was an effect for the learning disability group and a marginal effect for the multiple diagnoses group in the models comparing disability groups, where both of these groups performed more poorly than the other diagnostic groups, including the neurotypical group. All multiply diagnosed students reported “learning disability”10 as one of their diagnoses. Students who self-identified as learning-disabled and multiply diagnosed were among the lowest scorers on the ASL-DT as well (Figure 1). These observations align with studies of spoken L2 learning showing that “learning disabled” students perform most like their “low-achieving” peers, where other diagnoses such as ADHD might be spared (Sparks et al., 2008). Likewise, our findings also align with research on visuospatial skills in ADHD and dyslexic adults, where Laasonen et al. (2012) find that the latter group is impaired compared to a control group while the ADHD group is not.

The main effects of sex in the perspective-taking tasks echo, in part, similar findings in previous research that males outperform females on visual-spatial tasks primarily in non-linguistic contexts, and less so in linguistic settings. Notably, Emmorey et al. (1998) find that the effect of sex is only revealed in the non-linguistic, but not the ASL, versions of visual-spatial tasks. Likewise, Brozdowski et al. (2019) find that for deaf participants in a classifier-based spatial task, there is only a marginal (i.e., not statistically significant) effect of gender, where males outperformed females. Both Hegarty (2017) and Tarampi et al. (2016), by contrast, find robust effects of sex in non-linguistic mental rotation and spatial perspective-taking tasks in hearing groups. Tarampi et al. (2016) demonstrate that males only outperform females in the version of a visual-spatial processing task that is non-social. That is, when a figure of a human body was included, females no longer underperformed. They attributed this result, in part, potentially to stereotype threat. In comparison, we found no effect of sex on performance of the ASL-DT. Likewise, there was no effect of sex on response time for the ASL-PTCT, although there was one for the NL-PTT, where males were faster than females. We did find an effect of sex on accuracy in both the ASL-PTCT and NL-PTT, though it may be worth noting that the ASL-PTCT result bordered significance (p = 0.05). Because the ASL-PTCT contains live videos of a human, whereas the NL-PTT only contains a figure of a human, social as well as linguistic factors could similarly be affecting the differences between male and female participants. The fact that we did observe a statistically significant difference between male and female participants on the ASL version of the task, despite evidence from previous research with deaf participants that does not find this pattern, may also be due to the hearing status and ASL experience level of our participants.
Further research disentangling linguistic from social context, especially in a signed language where the signer's body must be visible, may prove useful in understanding the underlying factors driving performance differences between sexes.

The ASL III students outperformed the ASL I students on the ASL-DT, but not on either of the perspective-taking tasks. It is possible that three semesters of ASL is enough for signed phonological skills to significantly improve, but not visual-spatial skills (linguistic or otherwise). This analysis is supported by the fact that classifiers are late-mastered grammatical components for both first and second language signers (Morgan et al., 2008; Boers-Visker and Van Den Bogaerde, 2019). It is also possible that the sheer difficulty of the ASL-DT task helped to differentiate the two groups; the maximum score was only sixty-three percent, compared to a ceiling with perfect scores on the perspective-taking measures.

What is striking is that the effects of disability were not observed in the ASL version of the perspective-taking task, but were in the non-linguistic version. One possibility is that some component of linguistic context is generally equalizing for between-group discrepancies. In other words, some relatively universal factor is available from language context to support all learners and is enough to compensate for any potential population differences related to visual-spatial computation.

This would account for the fact that our result mirrors the findings on sex in linguistic vs. non-linguistic tasks as mentioned above (Emmorey et al., 1998; Tarampi et al., 2016; Hegarty, 2017; Brozdowski et al., 2019). However, such an analysis is complicated by the fact that both the neurotypical and the collective disability groups, as well as most specific diagnostic groups, had both lower accuracy and longer response times on the ASL-PTCT compared to the NL-PTT. Conversely, this fact also rules out an explanation that the NL-PTT was simply harder than the other tasks. In fact, the ASL-DT task seemed to be the most difficult task by far and did not yield any differences between neurotypical and disability groups.

An alternative interpretation is that some specific aspect of the NL-PTT is problematic for the disabled learners, and in particular, the learning disability group. For example, perhaps the difference between encoding and retrieving static images vs. video stimuli presents an inherent challenge for the disability groups. However, this is yet again complicated by the fact that the self-identified disabled learners overall and the learning disability group specifically both had higher accuracies and shorter response times for the NL-PTT compared to the ASL-PTCT.

Finally, another prominent possibility is that the tasks administered in the present study do not target compromised cognitive abilities in the given disability populations. It is possible that with a different set of tasks (e.g., those explicitly designed to probe working memory and short-term memory), there would be a more prominent distinction between neurotypical and disability groups, as well as in between specific disability categories.

A generous interpretation of the results is that the ASL version of the perspective-taking task relies on language skills to which the neurotypical and disabled learners have equal access. Such students might come into the ASL classroom, despite differences in visual-spatial processing skills, with a similar language foundation for learning a signed language (i.e., being M2L2 learners). While the process of learning the new language and modality may be taxing overall, the disabled learners are not more susceptible to these difficulties than the neurotypical group. Crucially, there is no evidence here that the signed modality is specifically disadvantageous to disabled learners. This aligns well with the anecdotal and quantitative reports provided by disabled signers in Singleton and Martinez (2015), who, if anything, indicated a positive experience with signed language learning. As mentioned by students with both ADHD and dyslexia in the aforementioned study, learners may simply find the modality more stimulating and engaging due to the method of articulation. Further investigation is warranted to determine whether, for example, the slower signing speed aids those with temporal processing deficits, as suggested by Quinto-Pozos (2014). It may also be worth empirically investigating the propensity for signed language stimuli to engage the attention of individuals with attentional challenges, particularly in comparison to non-linguistic visual stimuli.

These results have interesting implications for both M2L2 sign learning generally and that of neurodiverse and/or disabled learners. First, while phonological perception improves with experience, as we would expect, classifier perception does not. This may call for more prolonged attention to instruction in this domain beginning early on in ASL coursework, especially receptively. However, it also may reflect an appropriate L2 trajectory that is not yet fully realized within the course of three semesters. Instructors may want to intentionally incorporate receptive practice that varies perspective shifts and the position of the interlocutor with respect to the perceiver.

Disabled students, particularly those categorized as having some form of learning disability, may require additional instruction and support from ASL teachers in comparison to neurotypical peers when it comes to spatial processing. That these discrepancies were significant only in the NL-PTT could possibly be related to construing sign spatial arrangements from static representations, which might imply difficulty with, for example, photos in textbooks as opposed to videos. While there was no statistically significant difference according to neurotype (disability vs. neurotypical) in the ASL-PTCT, it is worth noting that the performance of the learning disability group is still very poor (having a mean of 0.50 for proportion accuracy, compared to the neurotypical mean proportion accuracy of 0.71), as is that of the visual impairment group and multiple diagnosis group, to a lesser degree (both having means of 0.63 for proportion accuracy). ASL instructors may want to provide additional, targeted opportunities for receptive practice to individuals with these specific diagnoses, perhaps aided by more explicit strategies for improving spatial reasoning and classifier skills.

There are a number of limitations to this project. First, as noted in the analysis, the categorization of the diagnostic types is far from ideal. These groups were also analyzed as part of an exploratory analysis, as opposed to being collected intentionally. Additionally, pooling all diagnostic types together in one “disability” category for the sake of comparison to the “neurotypical” group is limiting. In doing so, we have collapsed a very diverse set of individuals into one group, which we might not expect to be homogeneous (and in fact, the results suggest they are not). Finally, it is worth noting again that the self-identification of diagnoses by the participants does not necessarily align with how these disabilities are conceptually categorized. For example, individuals identified as being dyslexic without identifying a learning disability, and we chose to follow the indications of participants. Future work should explicitly target specific diagnostic groups (which may be very low incidence as M2L2 signed language learners) and prioritize thorough data collection with a battery of measures. Ideally, these measures would also include validated diagnostic assessments and standardized assessments of skills such as visual and auditory working memory, for example, to create baselines based on language-independent and language-dependent cognitive skills across potentially diverse groups.

A second limitation is that neither the ASL-PTCT nor the NL-PTT has validation and reliability measures. There was also a high degree of variance for both proportion correct and response time for all groups in both versions of the task. Relatedly, we did not have a validated measure of ASL proficiency for baseline comparison, such as a test of ASL production. Unfortunately, we do not have production data since there was limited time during data collection sessions. And finally, perhaps most importantly in terms of lacking measures, we did not provide a comparison to an active spoken language-based perspective-taking task.

In summary, we have found no evidence, even for diagnostic groups with poor non-linguistic visuospatial processing relative to their peers, that the modality of signed language is inherently disadvantageous to disabled M2L2 learners. It is promising that, despite minimal empirical background concerning disabled learners in M2L2 learning contexts, we find no evidence of a distinct signed language processing gap between neurotypical students and those with the most common categories of disabilities in signed language learning classrooms. While it is important to caution against the unfounded notion that signed languages are inherently less complex or easier to learn compared to spoken languages (Jacobs, 1996), it has been suggested in the past that specific features of the signed signal (signing speed, ease of access to articulators, etc.) could be supportive to individuals with processing impairments (Quinto-Pozos, 2014). There may, in fact, be something supportive about signed language learning to such disabled learners that leads to attenuating and/or masking differences between disabled and non-disabled groups.

Future work, as mentioned above, should move beyond this exploratory analysis by targeting specific disability groups and utilizing batteries of tasks selected to target specific challenges as evidenced by prior literature within these groups. It also remains to differentiate the influence of language context generally from the influence of signed language context specifically, which could be probed by either including groups with minimal or no sign exposure, or performing longitudinal analyses of sign learners.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by Institutional Review Board, University of Texas at Austin. The patients/participants provided their written informed consent to participate in this study. Written informed consent was not obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

DQ-P designed and oversaw collection of data, which was provided as a source for the presented secondary data analysis conducted by TJ. TJ designed the analysis and authored the manuscript under the advising of JS and DQ-P, both of whom provided direction and feedback in each domain. MD also provided input on the analysis. MD and TJ prepared and coded data for analysis and also wrote and executed the code for the statistical analyses. TJ prepared the manuscript with input from DQ-P and JS. TJ, DQ-P, and JS prepared the revised and approved version for publication. All authors contributed to the article and approved the submitted version.

Funding

The work of the author TJ was completed under the support of the Donald D. Harrington Doctoral Fellowship. The design of the ASL-PTCT and the NL-PTT was supported, in part, by the National Science Foundation Science of Learning Center Program, under cooperative agreement number SBE-0541953.

Acknowledgments

The authors would like to acknowledge the work of the undergraduate research assistants in the University of Texas at Austin Sign Lab, who assisted with collecting, managing, and preparing data. Additionally, the authors would like to acknowledge Dr. Lynn Hou, co-designer for both the NL-PTT and ASL-PTCT and language model for the ASL-PTCT measure. We would also like to thank the second ASL model, Dr. Carrie Lou Garberoglio. Likewise, we would like to acknowledge the creators of the ASL-DT for granting permission to use their measure for analysis: Dr. Joseph H. Bochner, Dr. Vincent J. Samar, Dr. Peter J. Hauser, Dr. Wayne M. Garrison, Dr. J. Matt Searls, and Dr. Cynthia A. Sanders.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Author disclaimer

Any opinions, findings, and conclusions or recommendations expressed are those of the authors and do not necessarily reflect the views of the National Science Foundation.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcomm.2022.920752/full#supplementary-material

Footnotes

1. ^That is, a framework of neurodiversity which includes but is not limited to diagnoses such as autism, dyslexia, ADHD, brain injuries, and personality disorders, as per both the original and other modern frameworks of neurodiversity (Singer, 1998; Bertilsdotter Rosqvist et al., 2020).

2. ^Here, we intentionally take after disability scholars and activists in following the Say The Word movement (Andrews et al., 2019), where we use identity-first language; i.e., disabled people versus person with a disability.

3. ^It is worth noting that these do all fall under the umbrella of “neurodevelopmental disabilities” in the DSM-5, however (American Psychiatric Association, 2013).

4. ^Incidentally, none of the participants in the present study self-identified with dysgraphia or dyscalculia; thus, we will not discuss these specific diagnoses in much detail.

5. ^We would like to acknowledge that many members of the disability community, especially the autistic community, find reference to terminology of “low” and “high” functioning and/or needs to be harmful (Bottema-Beutel et al., 2021); our reference to support needs reflects ways in which researchers and practitioners categorize these individuals. This performance may be contrary to true differences between individuals with learning and intellectual disabilities.

6. ^Much of the literature referring to “learning disability” or “learning disorder” appears to primarily focus on dyslexia; however, much of the referenced literature does not make a distinction between the types of learning disorder. Hence, the rest of this paper tends to refer to “learning disability” as referenced in the discussed literature. When a research finding specifically pertains to dyslexia and this is made explicit in the referenced text, the term “dyslexia” is used.

7. ^It's important to note that though the diagnoses may be outdated per standard resources like the DSM, diagnoses may still be assigned by practitioners at their discretion; further, individuals provided with diagnoses in childhood that later become out of date will still maintain that diagnostic label and are able to receive services for it. This is especially important to consider in the context of how participants self-identify their diagnoses.

8. ^It is worth noting, again, that the definition of “Learning Disability” is somewhat vague and may be used differently in colloquial speech to refer to a range of disabilities, while in research and practice, it has been used to refer very specifically to reading, writing, and mathematical disabilities. We chose to follow the reports provided to us by participants.

9. ^It is interesting that these individuals identified Dyslexia and not Learning Disability, as any definition of Learning Disability should include Dyslexia. However, again, we chose to follow the reports provided to us by participants.

10. ^It is worth noting, as stated earlier, that “learning disability” technically is used to refer to specific disabilities in reading, writing, and math (e.g., dyslexia, dysgraphia, dyscalculia); however, colloquially, people may use this to refer more generically to include other disabilities. Thus, without specific details, it's not clear what participants meant by reporting either “learning disability” or “dyslexia” without reporting the other option or further specifying their diagnosis, in the first case.

References

American Psychiatric Association. (1994). Diagnostic and Statistical Manual of Mental Disorders, 4th Edn.

American Psychiatric Association. (2013). Diagnostic and Statistical Manual of Mental Disorders, 5th Edn. doi: 10.1176/appi.books.9780890425596

American Speech Language Hearing Association. (2023). Central Auditory Processing Disorder. Available online at: https://www.asha.org/practice-portal/clinical-topics/central-auditory-processing-disorder/ (accessed April 2, 2023).

Andrews, E. E., Forber-Pratt, A. J., Mona, L. R., Lund, E. M., Pilarski, C. R., and Balter, R. (2019). SaytheWord: a disability culture commentary on the erasure of “disability.” Rehabil. Psychol. 64, 111–118. doi: 10.1037/rep0000258