- ¹Institute of Communication Science, University of Jena, Jena, Germany

- ²Department of Communication and Media Research, University of Zurich, Zürich, Switzerland

Social media platforms like YouTube can exacerbate the challenge of ensuring public adherence to health advisories during crises such as the COVID-19 pandemic, primarily due to the spread of misinformation. This study delves into the propagation of antivaccination sentiment on YouTube in Switzerland, examining how different forms of misinformation contribute to this phenomenon. Through content analysis of 450 German- and French-language YouTube videos, we investigated the prevalence and characteristics of completely and partially false information regarding COVID-19 vaccination within the Swiss context. Our findings show that completely false videos were more prevalent, often embedded with conspiracy theories and skepticism toward authorities. Notably, over one-third of the videos featured partially false information that masquerades as scientifically substantiated, associated with higher view counts and greater user engagement. Videos reaching the widest audiences were marked by strategies of commercialization and emotionalization. The study highlights the insidious nature of partially false information in Switzerland and its potential for greater impact due to its seemingly credible presentation. These findings underscore the need for a multifaceted response to misinformation, including enhancing digital literacy among the public, promoting accurate content creation, and fostering collaborations between health authorities and social media platforms to ensure that evidence-based information is prominently featured and accessible. Addressing the subtleties of misinformation is critical for fostering informed public behavior and decision-making during health emergencies.

Introduction

Since the onset of the COVID-19 pandemic in 2020, a proliferation of (mis)information has been observed globally (Altay et al., 2022), leading the World Health Organization (WHO) to refer to it as an “infodemic.”¹ This misinformation encompasses a wide range of topics, including denial of the pandemic, false symptom control measures (e.g., eating garlic), and conspiracy theories attributing the pandemic to foreign governments or economic elites (AFP et al., 2020; Brennen et al., 2020). Of particular concern is the misinformation related to vaccination, as it can potentially hinder efforts toward vaccination, which is crucial for pandemic containment (Lewandowsky et al., 2021). Furthermore, numerous studies have documented a negative association between belief in COVID-19 misinformation and vaccination intent (Bertin et al., 2020; Roozenbeek et al., 2020; Chadwick et al., 2021; Loomba et al., 2021).

Much of the misinformation disseminated during the first phase of the pandemic was visual and audiovisual content (Knuutila et al., 2020; Vaccari and Chadwick, 2020). Misinformation was mainly spread via social media platforms, such as YouTube and Facebook, or messenger services, such as WhatsApp (Li et al., 2020; Wilson and Wiysonge, 2020). A study showed that 27.5% of the most-viewed YouTube videos on COVID-19 contained misinformation (Li et al., 2020). Moreover, Li et al. (2022) found that ~11% of YouTube's most-viewed videos on COVID-19 vaccines, accounting for over 18 million views, contradicted the reference standard from the WHO or other public health institutions. YouTube videos by governmental organizations received significantly more dislikes than likes compared to entertainment videos, indicating a less favorable public perception of such content (Li et al., 2022). Although YouTube and social media companies, in collaboration with organizations such as the WHO, have committed to addressing such misinformation, implementation is still difficult and insufficient (Zarocostas, 2020). Despite efforts to combat health misinformation, a substantial portion of highly viewed YouTube content continues to contain misinformation regarding COVID-19 vaccines.

We investigated misinformation on YouTube in Switzerland to expand our understanding of this kind of content and ultimately facilitate its detection and moderation; 61% of the Swiss population regularly used YouTube during the first year of the pandemic (Newman et al., 2020), and the Swiss Science Barometer (2020) shows a significant, positive correlation between YouTube use and belief in COVID-19 misinformation. Moreover, it has been argued that online platforms such as YouTube provide new stages for antivaccination groups to spread their messages, expand their reach, and establish new follower networks (Vosoughi et al., 2018; Knuutila et al., 2020). The use of social media in this way is worrying because it can hinder collective action during a health crisis. Misinformed citizens are less likely to take action to mitigate the pandemic or get vaccinated (Allington et al., 2020; Bertin et al., 2020; Roozenbeek et al., 2020; Loomba et al., 2021).

To better understand how producers of misinformation try to deceive YouTube users, we conducted a quantitative content analysis of 450 videos containing misinformation about COVID-19 vaccination. We analyzed different types of misinformation (partially vs. completely false information) and examined views, user reactions, actors, and claims. In addition, we compared videos with an extensive reach to those with fewer views. Finally, we discuss the results considering potential future health crises in which it will likely be essential to detect misinformation early and educate the public about common deception strategies.

Literature review

Misinformation and disinformation dynamics in the public health context

The term “misinformation” is often utilized to denote information that is false or misleading, regardless of the intention behind its dissemination (Tandoc et al., 2018; Wardle, 2018). Distinct from misinformation, “disinformation” represents a subset of misleading information crafted and circulated with the explicit intent to deceive and inflict harm, such as exacerbating social divisions or influencing political decisions (Wardle, 2018). Citizens who accept disinformation as legitimate may base their perceptions and actions on fundamentally erroneous information, leading to significant real-world consequences. Societal challenges are further compounded by what Bennett and Livingston (2018) describe as a “disinformation order,” where subcultural frames and false narratives are systematically promoted, often by extreme ideological groups that exploit digital tools such as trolls and bots to broaden their reach (Marwick and Lewis, 2017).

In the contemporary landscape, the COVID-19 pandemic has acted as a catalyst for an unprecedented surge in misinformation, impacting public responses to health directives and fostering a climate of doubt and skepticism (Roozenbeek et al., 2020). The intent behind the dissemination of false information often remains opaque, complicating the task of discerning misinformation from disinformation; therefore, our review adopts a broad perspective, addressing all forms of false information under the term “misinformation” for the purposes of this analysis (Brennen et al., 2020).

Recent literature expands upon the dangers posed by misinformation, highlighting its capacity to shape public attitudes (Loomba et al., 2021; Sharma et al., 2023) and reinforce enduring misbeliefs (Hameleers et al., 2020). These issues become acutely problematic during health crises, where misinformation has been shown to dissuade people from vaccinating, raising individual risk levels and impeding collective efforts to manage the spread of disease (Wilson and Wiysonge, 2020).

The complexities surrounding vaccine safety narratives have been explored in studies like Lockyer et al. (2021), which delve into the qualitative aspects of COVID-19 misinformation and its implications for vaccine hesitancy within specific communities. Exposure to misinformation caused confusion, distress, and mistrust, fueled by safety concerns, negative stories, and personal knowledge. Further, Rhodes et al. (2021) examine the intentions behind vaccine acceptance or refusal, illuminating that vaccine acceptance is not static and can be influenced by a variety of factors, including perceptions of risk and the flow of information regarding vaccine safety and necessity.

Conspiracy theories have been identified as a common form of misinformation, particularly in the context of vaccine acceptance. Featherstone et al. (2019) examine the correlation between sources of health information, political ideology, and the susceptibility to conspiratorial beliefs about vaccines, showing that political conservatives and social media users are more susceptible to vaccine conspiracy beliefs. Moreover, Romer and Jamieson (2020) provide insights into how conspiracy theories have acted as barriers in controlling the spread of COVID-19 in the U.S., a pattern also observable in other contexts, such as the Netherlands (Pummerer et al., 2022).

Finally, emotional appeals play a crucial role in the dissemination and impact of misinformation. Carrasco-Farré (2022) underscores that misinformation often requires less cognitive effort and more heavily relies on emotional appeals compared to reliable information. This tactic can make deceptive content more appealing and persuasive to audiences, particularly in a context like social media where emotional resonance can enhance shareability. Sangalang et al. (2019) further emphasize the potential of narrative strategies in combatting misinformation. They propose that narrative correctives, which incorporate emotion-inducing elements, can be effective in countering the persuasive appeal of misinformation narratives. Additionally, Yeo and McKasy (2021) highlight the role of emotion and humor as potential antidotes to misinformation. Their research suggests that integrating emotional and humorous elements into accurate information can enhance its appeal and effectiveness in counteracting the influence of misleading content. Moreover, emotional appeals in misinformation serve a distinct purpose compared to neutral presentation (Carrasco-Farré, 2022). Emotional content is designed to engage users at a visceral level, tapping into their feelings and biases. This strategy can make misinformation more persuasive and shareable, as emotionally charged content often resonates deeply with users, compelling them to react and share. Such content, leveraging human emotions like fear, anger, or empathy, tends to have a higher potential for viral spread, thereby amplifying its reach and impact (Yu et al., 2022).

This body of research underscores the importance of understanding and strategically utilizing emotional appeals in both the propagation of misinformation and the development of interventions to counteract its influence.

Considering these issues, it becomes evident that misinformation is not a monolithic problem but a multifaceted challenge that intersects with safety, efficacy, and conspiracy theories and is deeply entwined with emotional appeals. Misinformation often leverages emotive narratives to capture attention and elicit reactions, making it more persuasive and shareable among users. The research discussed underscores the need for nuanced approaches to tackle misinformation. These insights are instrumental in devising strategies to counteract misinformation and foster an informed public that can critically engage with health information during health crises.

Misinformation on YouTube

Several authors argue that social media platforms such as YouTube facilitate the spread of misinformation (Li et al., 2022; Tokojima Machado et al., 2022). Users primarily search for entertainment on social media platforms, accidentally come across (false) information, and sometimes spread it carelessly (Boczkowski et al., 2018). Emotional and visual content attracts users' attention, and user reactions (e.g., likes, shares, and comments) increase their visibility due to how the algorithms work (Staender et al., 2021). Misinformation can be found on all major social media platforms, but research suggests that YouTube played a vital role relative to COVID-19. For example, in the United Kingdom, YouTube was the source of information most strongly associated with belief in conspiracy theories: Of those who believed that 5G networks caused COVID-19 symptoms, 60% said that much of their knowledge about the virus came from YouTube (Allington et al., 2020). Li et al. (2022) analyzed 122 highly viewed YouTube videos in English related to COVID-19 vaccination; 10.7% of these videos contained non-factual information, which accounted for 11% of the total viewership. The authors thus posit that the public may perceive information from more reputable sources as less favorable (Li et al., 2022). Furthermore, producers of misinformation often employ rhetorical strategies to enhance the appeal and persuasive power of their content on social media. These tactics include mimicking the style and presentation of reliable sources, using emotional and sensationalist elements to captivate audiences, and exploiting the dynamics of social media algorithms for wider dissemination (Staender et al., 2021).

While emotional content has been identified as a powerful tool in spreading misinformation, it is important to note that a neutral presentation can also enhance the believability of misinformation (Weeks et al., 2023). When misinformation is presented in a neutral, matter-of-fact manner, it may be perceived as more credible and less biased, making it easier for users to accept without skepticism. This subtlety of presentation can make neutral misinformation insidiously effective, as it can blend seamlessly with genuine information, evading immediate doubt or critical scrutiny. Tokojima Machado et al. (2022) found that misinformation producers use tactics to disguise, replicate and disperse content that impair automatic and human content moderation. According to the authors, the analyzed YouTube channels exploited COVID-19 misinformation to promote themselves, benefiting from the attention their content generated. Because of the strategic approaches adopted by content producers to enhance dissemination, YouTube played a significant role in the widespread distribution of misinformation during the pandemic.

Due to this massive spread of misinformation, YouTube revised its moderation policies in April 2020 to make credible content more visible and delete dubious content (YouTube, 2020). However, it took an average of 41 days for content with false information to be removed, so it could still reach many users (Knuutila et al., 2020). Moreover, monitoring misinformation in languages other than English continues to be a significant challenge for YouTube, and its functions must be improved. As Donovan et al. (2021) highlight, content creation models are necessary to identify “superspreader” networks and fight against organized manipulation campaigns.

Hypotheses and research question

Misinformation is disseminated with different goals, and its content can vary considerably (Staender and Humprecht, 2021). For example, half-truths can appear more credible and thus be more convincing than completely false content (Hameleers et al., 2021). Partly false information presents a unique challenge as it often closely resembles the truth, creating a veneer of verisimilitude that can mislead viewers. This type of misinformation subtly distorts facts or presents them in misleading contexts, making it difficult for users to discern inaccuracies (Möller and Hameleers, 2019). The proximity of this information to factual content makes it particularly insidious and challenging to counter. Given its resemblance to factual content, partly false information often evades scrutiny and challenges conventional fact-checking approaches. This makes correction efforts more crucial yet more complex, particularly when such content is designed to mimic reputable sources. The need for correction is paramount precisely because these subtleties can lead to widespread acceptance of inaccuracies under the guise of credibility (Hameleers et al., 2021).

Brennen et al. (2020) examined which types of disinformation were generated most often in the United Kingdom during the first phase of the COVID-19 pandemic. The most common types were messages that frequently contained accurate information but were slightly altered or reconfigured. For example, facts were presented in the wrong context or manipulated. However, Brennen et al. (2020) found that over a third of the disinformation studied contained completely fabricated and fake content.

No findings are yet available for Switzerland on the types of misinformation that were disseminated during the pandemic. Compared to other democratic countries, Switzerland is more likely to be resilient to misinformation (Humprecht et al., 2020) because of its political and media characteristics (i.e., high level of media trust, lower audience fragmentation and polarization, consensus-oriented political system). Therefore, producers of misinformation may try to mimic news media coverage and refer to actual events or facts to avoid being perceived as misleading. Against this background, we assume that partially false information about COVID-19 vaccination is more frequent on YouTube than completely false information (H1).

The challenge in automatically identifying misleading content on YouTube has made it difficult to fully understand the scope and tactics employed in such misinformation. The primary goal of video producers in this context is to maximize visibility, often measured in terms of view counts, thereby ensuring their deceptive messages reach a broad audience. Despite the prevalence of such content, there is still limited research on the specific types of arguments used in these widely viewed misleading videos. Pioneering work by Kata (2010) in analyzing anti-vaccination websites provides some insights. This research explored the nature of misinformation on these platforms, focusing on the themes and narratives employed to counter vaccine advocacy, including discussions on safety and efficacy, alternative medicine, civil liberties, conspiracy theories, and religious or moral objections. Such studies are crucial in shedding light on the strategies used in the dissemination of misinformation, particularly in the context of public health. Although Kata (2010) did not distinguish between types of misinformation, findings from studies on COVID-19 (Skafle et al., 2022) suggest that conspiracy theories and falsehoods about side effects are found primarily in entirely false content. Vaccination misinformation grounded in conspiracy theories frequently claims that corrupt elites run hidden power structures and network with pharmaceutical companies to make money or depopulate the world (Skafle et al., 2022). We therefore postulate that completely false information contains conspiracy narratives (H2a) more often than partially false information does. Similarly, we propose that completely false information contains claims about vaccination's side effects and safety aspects more frequently than partially false information does (H2b).

Kata (2010) demonstrated that vaccine misinformation frequently employs purported scientific evidence to lend a semblance of credibility to distorted information. This tactic typically involves blending actual scientific facts with fabrications, a characteristic predominantly seen in partially false information (Möller and Hameleers, 2019). Based on this understanding, we hypothesize that references to scientific evidence are more common in partially false videos than in completely false videos (H2c).

Research has shown that misinformation is often characterized by antielitism and includes criticisms of elite actors, such as politicians, or, especially in the context of health issues, medical actors (Hameleers, 2020). Such messages contain ideologically biased accusations; the actors are held responsible for the problem and accused of incompetence, malice, or unscrupulousness (Boberg et al., 2020). For example, medical actors were at the center of public debate during the pandemic, speaking out in the news media or advising policymakers. They also often recommended COVID-19 vaccination (Rapisarda et al., 2021). Therefore, antivaccine misinformation can be expected to criticize and blame them. Based on this reasoning, we assume that partially false information criticizes medical actors more often than completely false information does (H3a).

Media and political actors are also often attacked and discredited in misinformation, such as by accusing them of deliberately misleading citizens and deceiving them to their benefit (Boberg et al., 2020). Therefore, we postulate that misinformation on COVID-19 vaccination contains criticism of actors from media and politics (H3b).

Researchers have highlighted that misinformation with broad reach, attracting significant attention from social media users, is particularly concerning because users interact with and propagate it (Marwick and Lewis, 2017; Freelon and Wells, 2020). This type of misinformation transforms its negative consequences from an individual issue to a societal problem. On the one hand, the widespread reach of misinformation can be attributed to its emotionally charged content. Studies have highlighted how misinformation often leverages emotive narratives to capture attention and elicit strong reactions, thus increasing its shareability and impact. On the other hand, producers of misinformation also benefit financially due to the platforms' advertising logic, where sensational and emotionally engaging content often achieves higher viewership (Zollo et al., 2015; Staender et al., 2021). The emotional appeal of misinformation can be both a tool for increased dissemination and a factor in its believability, making it a crucial aspect to study. Therefore, we ask to what extent partially false and completely false videos with a broad reach (e.g., 20,000 views or more) differ in emotionally appealing and content-related design aspects from videos with a smaller reach (RQ1). Misinformation is not a one-size-fits-all phenomenon; it employs a variety of strategies to maximize reach and influence. Producers of misinformation may use a neutral tone to gain credibility and a sense of legitimacy, especially in contexts where overt emotionalism might trigger skepticism. Conversely, they may use emotional appeals to exploit cognitive biases and emotional reactions, ensuring rapid dissemination and engagement. Understanding the nuanced use of these rhetorical strategies is key to developing effective countermeasures against misinformation.

To investigate what misleading content users on YouTube were exposed to during the pandemic and what untruths were spread about the COVID-19 vaccine, we conducted a standardized content analysis of misinforming videos. In the following, we describe our approach in detail.

Methods and data

To test our hypotheses, we investigate which types of misinformation are present on YouTube, which statements such information contains, which speakers are present, and what blame attributions are made. We followed the procedure of Brennen et al. (2020) and created a data corpus with misinformation about COVID-19 vaccination. First, we identified leading actors from Switzerland who published misinformation on YouTube in German or French based on extensive research in the respective online ecosystem. In determining the leading actors among content creators, we employed specific criteria, including the number of subscribers, average views per video, frequency of content related to COVID-19 vaccination, and the level of user engagement (likes, comments, shares) their videos elicited. This approach allowed us to identify those creators who had a significant influence in shaping public discourse around COVID-19 vaccination on YouTube. Second, we used a snowball approach (references in videos or links in the comment sections) to identify accounts with similar content. These accounts also operated from Germany, Austria, or France. We found 200 accounts that published at least one video and examined whether their videos contained misinformation. Based on Humprecht (2019), we categorized misinformation as statements about COVID-19 that could be refuted by information from authorities and organizations (i.e., the Federal Office of Health, WHO) or fact-checkers. We sampled 450 German-language² and French-language videos with misinformation about the COVID-19 vaccine, which were published between July 2020 and November 2021.

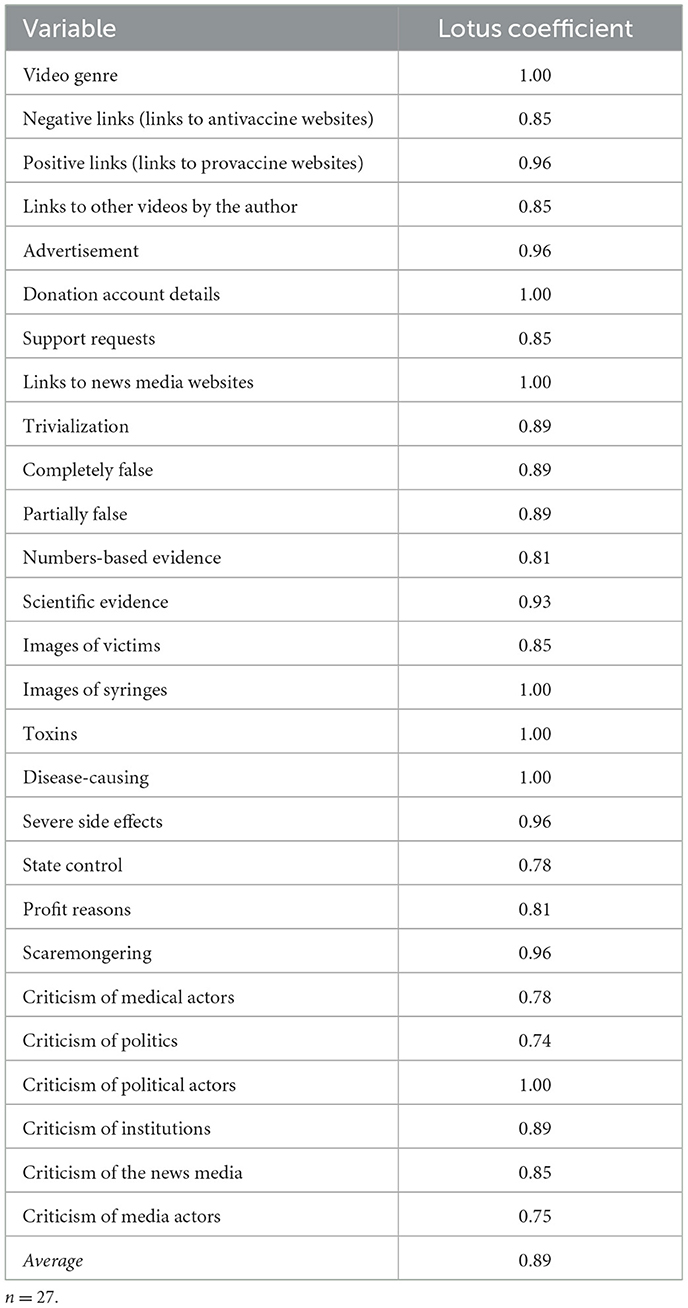

We conducted a quantitative content analysis. The intercoder reliability test of the three trained coders yielded satisfactory results: S-Lotus > 0.74 (Fretwurst, 2015)³ (see the Appendix for the full results). Next, we examined whether the videos' statements about vaccination were entirely fabricated or partially false (mixed correct and false information). For example, statements were coded as partially false if an image sequence was not manipulated but appeared in the wrong context (wrong description, wrong caption, originally from a different time/context) or if correct information was misinterpreted or shared in a false context. To ensure high reliability for the coding of partially and completely false information, one project leader and one student coder coded the videos in cases of uncertainty.
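The reliability coefficient can be illustrated with a minimal sketch. It assumes the common description of Lotus as the average share of coders who agree with the most frequently coded value per coding unit, and of S-Lotus as a chance-corrected version, (Lotus − 1/K) / (1 − 1/K) for K categories. The function names and the toy codings below are hypothetical; they are not the study's data or code.

```python
from collections import Counter

def lotus(codings):
    """Mean share of coders who agree with the modal code per unit (assumed definition)."""
    shares = []
    for unit in codings:
        modal_count = Counter(unit).most_common(1)[0][1]
        shares.append(modal_count / len(unit))
    return sum(shares) / len(shares)

def s_lotus(codings, n_categories):
    """Standardized Lotus: corrects for agreement expected by chance (1/K)."""
    chance = 1 / n_categories
    return (lotus(codings) - chance) / (1 - chance)

# Hypothetical example: three coders, four videos, one binary variable
toy_codings = [(1, 1, 1), (0, 0, 1), (1, 1, 1), (0, 0, 0)]
print(round(lotus(toy_codings), 3), round(s_lotus(toy_codings, 2), 3))
```

In this toy case only one of the four videos shows a disagreement, so both values are high; with more categories the chance correction becomes smaller.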

To code the main topic of a video, we analyzed its headline and teasers. Then, we relied on the COVID-19 Vaccine Handbook's categorization of misinformation topics (Lewandowsky et al., 2021). We coded the topics of safety, efficacy, side effects, scientific evidence, and the sources or speakers mentioned (e.g., authors/bloggers, scientists, politicians, physicians, and laypersons). To measure elements of misinformation about COVID-19 vaccination, we relied on Kata's (2010) study of antivaccination websites.

To measure conspiracy narratives, we coded whether videos contained elements of conspiracy theories. For example, such videos assert that a group of people is conspiring secretly to deceive society (e.g., politicians or businesspeople are organized in a secret society because they have evil intentions). Examples of such claims include Bill Gates developing the coronavirus to earn money or the Chinese government spreading it to harm the West. Moreover, we coded whether videos claimed that national vaccination campaigns are excessive state control that restricts civil rights; vaccination policy is based on profit (i.e., the government makes money from vaccinations); the dangers of diseases are exaggerated by those in power or the media to scare people (scaremongering; e.g., the coronavirus is not as bad as the media want to make people believe, to spread panic); vaccines contain poisons (e.g., rat poison); COVID-19 vaccines cause diseases (e.g., autism) or severe side effects (e.g., thrombosis, which is more dangerous than the symptoms of COVID-19); or COVID-19 is rare, non-contagious, or relatively harmless (trivialization).

References to science were measured using the following variables: numbers-based evidence (e.g., the relevant argumentation was supported by the mention of numbers) and scientific evidence (e.g., the argumentation was based on scientific evidence, such as references to scientific studies or reviews).

Antielitism was measured by coding criticism of actors from medicine, politics, and the news media. We coded whether videos blamed individual actors or groups of actors for current problems or accused them of not responding appropriately (e.g., “The government is curtailing our liberties with the certificate requirement;” “The media is hiding bad side effects of vaccinations”).

We categorized each video by genre, including discussions, interviews, animation, satire, educational (featuring an actor or offscreen narrator explaining a subject, similar to a documentary), news reports/broadcasts, commercials, individuals expressing their opinions, demonstrations, and other genres.

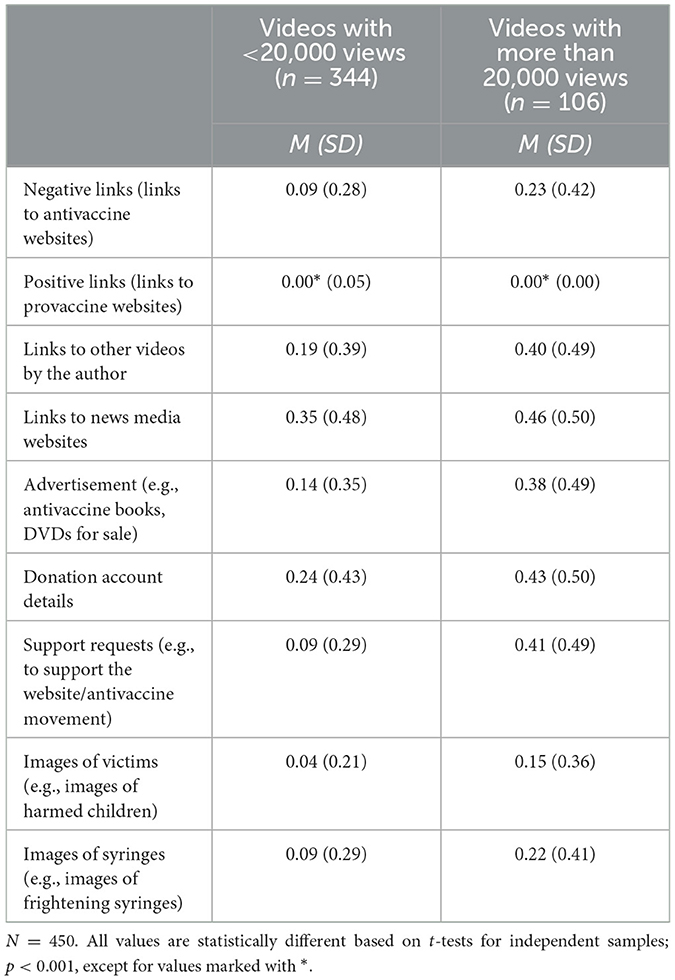

Finally, to answer RQ1 about differences between widely and less widely viewed misinformation videos, we measured views, user reactions (i.e., likes, dislikes, and comments), emotionally appealing images, and content-related design elements. Such elements from the video description included links to anti- and provaccine websites, links to videos by the author, advertising for antivaccine media (e.g., books or DVDs), requests for donations, and links to news media websites. Emotionally appealing images included images of victims (e.g., harmed children) or syringes. Based on an empirical assessment of our data, we set the threshold between widely and less widely viewed videos at 20,000 views. Our data show a significant gap between these groups: Most videos (n = 345) had only a few views (mostly below 100), and a smaller group (n = 105) received 20,000 views or significantly more.
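The split into widely and less widely viewed videos can be sketched as follows. This is a minimal illustration, assuming that a video with exactly 20,000 views counts as widely viewed (following the wording "20,000 or significantly more"); the function name, labels, and view counts are hypothetical.

```python
REACH_THRESHOLD = 20_000  # threshold reported in the text

def reach_group(views, threshold=REACH_THRESHOLD):
    """Label a video 'wide' if it reaches the threshold, otherwise 'narrow'."""
    return "wide" if views >= threshold else "narrow"

# Hypothetical view counts, not taken from the study's data
toy_views = [45, 80, 20_000, 152_300, 12]
groups = [reach_group(v) for v in toy_views]
print(groups)
```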

All content-related variables were collected as dummies and recoded into metric variables (ranging from 0 to 1).
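Because each content variable is coded 0 or 1, a group mean M can be read as the share of videos in that group that contain the feature. The sketch below illustrates this with hypothetical records; the field names and values are assumptions for illustration only.

```python
from statistics import mean

# Hypothetical coded videos: 1 = feature present, 0 = feature absent
toy_videos = [
    {"type": "completely", "conspiracy": 1},
    {"type": "completely", "conspiracy": 0},
    {"type": "completely", "conspiracy": 1},
    {"type": "partially", "conspiracy": 0},
    {"type": "partially", "conspiracy": 1},
]

def group_mean(videos, group, variable):
    """Mean of a 0/1 variable within one misinformation type (a proportion)."""
    values = [v[variable] for v in videos if v["type"] == group]
    return mean(values)

print(group_mean(toy_videos, "completely", "conspiracy"))
print(group_mean(toy_videos, "partially", "conspiracy"))
```

A mean of 0.5 in the second toy group, for instance, means that half of those videos carry the feature.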

Results

Our main interest is to compare different types of misinformation. Research has distinguished between partially and completely false information. The former is of particular concern because users recognize it less easily, so it may have greater potential for deception. In addition, platforms and fact-checkers have difficulty identifying and eliminating such content. As producers of antivaccine misinformation may want to convince many users of their narratives, H1 postulates that YouTube contains more partially than completely false information about the COVID-19 vaccine. To test H1, we analyzed different types of misinformation in the videos. Our analysis shows that completely false information was generally more frequent (61%; n = 363) than partial misinformation (39%; n = 177), in which true and false information are mixed or interpreted misleadingly. Both completely (42%; n = 110) and partially false (36%; n = 61) information appeared most often in videos by individuals who expressed their opinions. Explanatory videos accounted for 19% (n = 50) of the completely false and 19% (n = 32) of the partially false videos, followed by interviews, which accounted for 10% (n = 25) of the completely false and 17% (n = 30) of the partially false videos. Based on this finding, we reject H1.

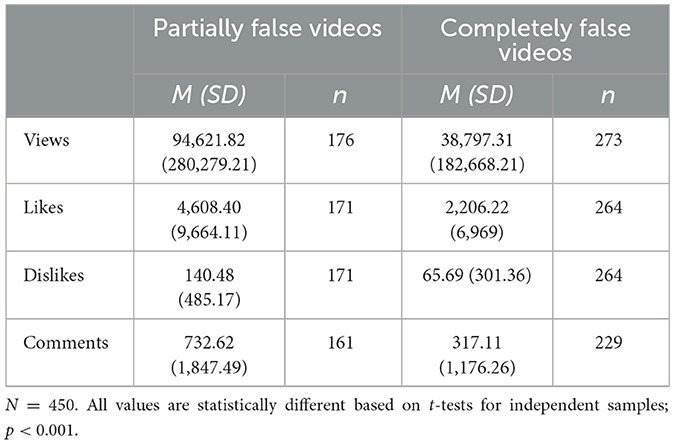

We compared views and user reactions to partially and completely false YouTube videos to understand how users responded to them (see Table 1). The results show that videos containing partially false information received more views (Mviews = 94,621.82), likes (Mlikes = 4,608.40), dislikes (Mdislikes = 140.48), and comments (Mcomments = 733.62) compared to completely false information (Mviews = 38,797.31; Mlikes = 2,206.22; Mdislikes = 65.69; Mcomments = 317.11).

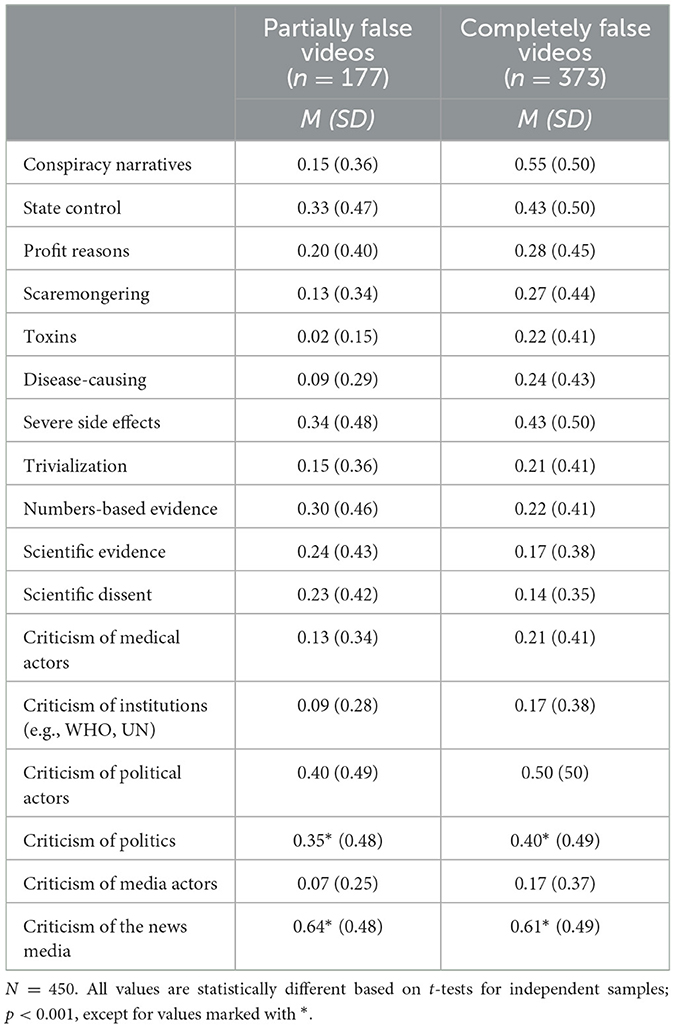

In the next step, we compared content features of partially vs. completely false information (see Table 2). Based on previous research, we hypothesized that completely false information more often includes conspiracy narratives (H2a) and claims about vaccination's side effects and safety (H2b). As Table 2 shows, completely false information contained significantly more references to conspiracy narratives (M = 0.55), accusations of excessive state control that restricts liberty rights (M = 0.43), accusations of profit motives (M = 0.28), and accusations of scaremongering (M = 0.27) compared to partially false information (Mconspiracy = 0.15; Mcontrol = 0.33; Mprofit = 0.20; Mscaremongering = 0.27), leading us to accept H2a.

Similarly, aspects of side effects and safety were present more often in completely than partially false videos. For example, such videos included claims that vaccines contain toxins (Mcompletely = 0.22; Mpartially = 0.02), cause severe diseases (Mcompletely = 0.24; Mpartially = 0.09), and have side effects that are more severe than COVID-19 (Mcompletely = 0.43; Mpartially = 0.34) and that side effects are trivialized (Mcompletely = 0.21; Mpartially = 0.15). Based on these findings, we accept H2b.

Antielitism in the form of criticism of different actor types also appeared more frequently in completely false videos. These videos included criticisms of medical actors (e.g., doctors; M = 0.21), supranational institutions (e.g., the WHO or the United Nations; M = 0.17), political actors (M = 0.50), and media actors (e.g., journalists; M = 0.17) more frequently compared to partially false videos (Mmedical = 0.13; Minstitutions = 0.09; Mpolitical = 0.40; Mmedia = 0.07). Criticisms of general elites, such as politics in general or the media, frequently appeared in both types of misinformation but slightly more often in completely false videos. Therefore, we accept H3a and H3b.

To answer RQ1, we compared videos with more than and fewer than 20,000 views. Our analysis shows that videos with a higher reach differed significantly from other videos: those with more than 20,000 views (n = 105) contained 54.3% partially false information (n = 57) and 45.7% completely false information (n = 48). The difference was even greater for videos with more than 50,000 views (n = 75). These contained 59.2% (n = 21) partially false and 40.8% completely false information. Finally, for videos with over 150,000 views (n = 35), 60% were partially false (n = 21), and 40% were completely false (n = 14).
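The shares per view tier follow directly from the counts. As a minimal sketch, the snippet below recomputes the percentage of partially false videos for the two tiers in which the text reports counts for both categories (more than 20,000 and more than 150,000 views); the dictionary layout and function name are illustrative assumptions.

```python
# Counts reported in the text for tiers where both categories are given.
# Keys are the view thresholds; the structure itself is hypothetical.
tiers = {
    20_000: {"partially_false": 57, "completely_false": 48},
    150_000: {"partially_false": 21, "completely_false": 14},
}


def share_partially_false(counts):
    """Percentage of partially false videos within one view tier."""
    total = counts["partially_false"] + counts["completely_false"]
    return round(100 * counts["partially_false"] / total, 1)


shares = {threshold: share_partially_false(c) for threshold, c in tiers.items()}
for threshold, share in shares.items():
    print(f"> {threshold:,} views: {share}% partially false")
```

The recomputed shares match the percentages reported for these two tiers.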

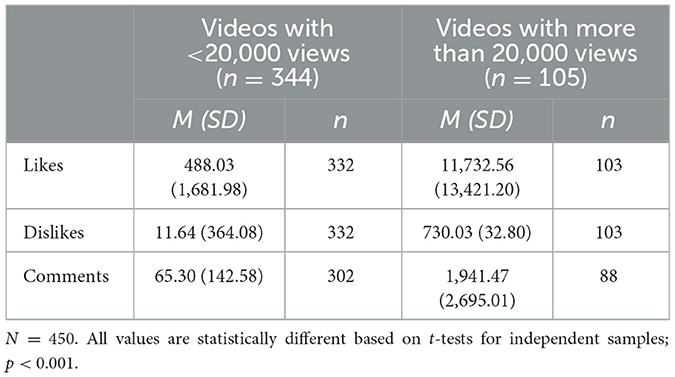

As Table 3 shows, videos with more than 20,000 views received significantly more user reactions in the form of likes, dislikes, and comments than videos viewed less often.

Those videos also stood out regarding elements of hyperlinking, emotionalization, and commercialization (see Table 4): Videos with more than 20,000 views more frequently contained links to antivaccine websites (Mcompletely = 0.23; Mpartially = 0.09), other YouTube videos by the author (Mcompletely = 0.40; Mpartially = 0.19), and news media websites (Mcompletely = 0.46; Mpartially = 0.35). Advertisements for antivaccination content, such as books or DVDs (Mcompletely = 0.38; Mpartially = 0.14), and requests for donations (Mcompletely = 0.43; Mpartially = 0.24) or support (Mcompletely = 0.41; Mpartially = 0.09) were also more frequent. In addition, emotionally appealing visuals of victims (Mcompletely = 0.15; Mpartially = 0.04) or syringes (Mcompletely = 0.22; Mpartially = 0.09) also appeared more frequently in completely false videos.

Table 4. Hyperlinking, commercialization, and emotionalization in widely and less widely viewed YouTube videos.

In sum, hyperlinking, commercialization, and emotionalization elements were found more frequently in videos with a broader reach (more than 20,000 views).

Discussion

Our research has predominantly identified completely false YouTube videos about COVID-19 vaccination, characterized by conspiracy theories, anti-elitism, and misinformation about vaccine side effects and safety. These videos aim to create doubt and mistrust by suggesting malicious motives behind the vaccine development and accusing news media of complicity.

Partially false information, while less frequent, typically involved misleading interpretations of scientific evidence and debates. These videos garnered more user attention, as indicated by views, likes, dislikes, and comments. This observation aligns with literature suggesting that partially false information can be perceived as more credible and persuasive (Hameleers et al., 2021), potentially due to its scientific framing and subtler allusions. However, our study does not establish causality but rather describes these observed patterns.

Furthermore, we found that videos with a broad reach (over 20,000 views) distinctly use emotional appeals and content-related strategies to enhance their reach. These high-reach videos received more user reactions and exhibited a higher degree of commercialization, such as donation requests and product advertising. They effectively engage in misdirection by redirecting users to related sites through links and appealing for support, which strengthens the antivaccine network. Moreover, high-reach videos frequently utilized emotionalizing imagery to capture attention and amplify their message. This strategy is particularly evident in partially false videos, which may remain online longer due to their subtle nature (Knuutila et al., 2020). The pervasive use of emotional appeal in the videos demonstrates a deliberate tactic to resonate with and engage viewers deeply, thereby amplifying their reach and impact on public opinion about vaccination.

In sum, our research contributes to the understanding of the nature and dynamics of COVID-19 vaccine misinformation on YouTube. It underscores the need for vigilant monitoring and proactive strategies by social media platforms and fact-checkers to address both completely and partially false information.

Conclusion

The proliferation of misinformation on platforms like YouTube significantly impedes public health efforts by undermining disease control and health promotion initiatives (Knuutila et al., 2020). Current research shows that users, especially those skeptical of vaccinations, are less likely to get vaccinated and have less confidence in vaccination after seeing misinformation on YouTube about COVID-19 vaccination (Kessler and Humprecht, 2023). Moreover, misinformed users are more likely to believe that alternative remedies, such as chloroquine, are more effective than vaccination (Bertin et al., 2020). Such a situation could be particularly problematic in countries such as Switzerland, where about a quarter of the population was initially skeptical of COVID-19 vaccination (Gordon et al., 2020). By May 2022, fewer than 70% had received at least two doses of vaccine.4 Vaccine hesitancy can vary from person to person and is influenced by a complex interplay of factors, such as misinformation, lack of trust in authorities and media, personal beliefs and values, and experiences with vaccination (Wilson and Wiysonge, 2020). However, if certain content is seen frequently, then the likelihood of it being seen as believable increases (Ecker et al., 2017).

We aimed to expand our understanding of different types of misinformation on COVID-19 vaccination on YouTube outside of the well-researched U.S. context. From our analysis of French- and German-language YouTube videos, we discovered a multifaceted landscape of misinformation characterized by varying degrees of factual distortion and a range of actors with differing intentions and strategies. Particularly concerning is our finding that, although completely false videos were more frequent, videos containing partially false information had greater reach and provoked more user reactions, suggesting that subtler forms of misinformation might be more insidious and influential. Such misinformation was disseminated by various actors, including individuals, groups, and (alternative) media outlets. The most common claims in the videos were related to vaccine safety and efficacy, with many videos promoting antivaccination sentiment and conspiracy theories. Our analysis also revealed that videos with a higher reach, as indicated by view counts, tended to have more elements of commercialization and emotionalization.

This study has several limitations that need to be considered. First, the content analysis was conducted on a sample of 450 French- and German-language YouTube videos containing misinformation about COVID-19 vaccination, which may represent only part of the misinformation on YouTube or across other social media platforms. Therefore, the findings may not be generalizable to different types of misinformation or misinformation in other languages. Second, the study focuses on YouTube and visual content, which may not capture the full extent of misinformation related to COVID-19 vaccination on other social media platforms or online sources. Third, the analysis was conducted at a specific time and may not capture changes in misinformation patterns or content on YouTube.

Finally, our study enriches the understanding of misinformation on YouTube, especially in the under-researched contexts of Swiss audiences. By highlighting specific patterns of misinformation in these languages, our research underscores the need for targeted strategies to address misinformation in diverse linguistic and cultural settings. While emphasizing the importance of collaborative efforts to combat misinformation, we also recognize the unique contribution of our findings. These insights not only contribute to a more global understanding of misinformation but also underline the importance of localized research in informing effective, culturally sensitive strategies.

In summary, our research calls for an appreciation of diverse linguistic and cultural perspectives in the fight against misinformation, advocating for both international cooperation and context-sensitive approaches.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

Ethical approval was not required for the study involving human data in accordance with the local legislation and institutional requirements. The social media data was accessed and analyzed in accordance with the platform's terms of use and all relevant institutional/national regulations.

Author contributions

EH and SHK made significant contributions throughout the research process, participated in the design of the study, and were involved in data collection and in ensuring the quality and reliability of the gathered information. EH took the lead in conducting the empirical analysis, employing statistical techniques and data interpretation to derive meaningful insights from the collected data, played a central role in the synthesis and organization of the findings, and crafted the initial version of the manuscript. All authors contributed to the article and approved the submitted version.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. The authors received financial support for the research for this article from the Swiss Federal Office of Communications. Moreover, they received support for the publication from the German Research Foundation (project no. 512648189) and the Open Access Publication Fund of the Thueringer Universitaets- und Landesbibliothek Jena.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1. ^https://www.who.int/health-topics/infodemic

2. ^German-language videos included Swiss-German content.

3. ^For a similar approach, see Blassnig et al. (2019).

References

AFP, CORRECTIV, Facta, P. P., Fact, F., and Maldita.es. (2020). Infodemic COVID-19 in Europe: a visual analysis of. AFP, CORRECTIV, Pagella Politica/Facta, Full Fact and Maldita.es. Available online at: www.covidinfodemiceurope.com (accessed February 20, 2024).

Allington, D., Duffy, B., Wessely, S., Dhavan, N., and Rubin, J. (2020). Health-protective behaviour, social media usage, and conspiracy belief during the COVID-19 public health emergency. Psychol. Med. 51, 1763–1769. doi: 10.1017/S003329172000224X

Altay, S., Nielsen, R. K., and Fletcher, R. (2022). The impact of news media and digital platform use on awareness of and belief in COVID-19 misinformation. PsyArXiv. doi: 10.31234/osf.io/7tm3s

Bennett, W. L., and Livingston, S. (2018). The disinformation order: disruptive communication and the decline of democratic institutions. Eur. J. Commun. 33, 122–139. doi: 10.1177/0267323118760317

Bertin, P., Nera, K., and Delouvée, S. (2020). Conspiracy beliefs, rejection of vaccination, and support for hydroxychloroquine: a conceptual replication-extension in the COVID-19 pandemic context. Front. Psychol. 11, 1–9. doi: 10.3389/fpsyg.2020.565128

Blassnig, S., Engesser, S., Ernst, N., and Esser, F. (2019). Hitting a nerve: populist news articles lead to more frequent and more populist reader comments. Polit. Commun. 36, 629–651. doi: 10.1080/10584609.2019.1637980

Boberg, S., Quandt, T., Schatto-Eckrodt, T., and Frischlich, L. (2020). Pandemic Populism: Facebook Pages of Alternative News Media and the Corona Crisis-A Computational Content Analysis. Available online at: http://arxiv.org/abs/2004.02566 (accessed February 20, 2024).

Boczkowski, P. J., Mitchelstein, E., and Matassi, M. (2018). “News comes across when I'm in a moment of leisure”: understanding the practices of incidental news consumption on social media. N. Media Soc. 20, 3523–3539. doi: 10.1177/1461444817750396

Brennen, A. J. S., Simon, F. M., Howard, P. N., and Nielsen, R. K. (2020). Types, Sources, and Claims of COVID-19 Misinformation (Reuters Institute Fact Sheets) (Reuters Institute for the Study of Journalism), 1–13. Available online at: https://ora.ox.ac.uk/objects/uuid:178db677-fa8b-491d-beda-4bacdc9d7069 (accessed February 20, 2024).

Carrasco-Farré, C. (2022). The fingerprints of misinformation: how deceptive content differs from reliable sources in terms of cognitive effort and appeal to emotions. Human. Soc. Sci. Commun. 9:162. doi: 10.1057/s41599-022-01174-9

Chadwick, A., Kaiser, J., Vaccari, C., Freeman, D., Lambe, S., Loe, B. S., et al. (2021). Online social endorsement and COVID-19 vaccine hesitancy in the United Kingdom. Soc. Media Soc. 7:205630512110088. doi: 10.1177/20563051211008817

Donovan, J., Friedberg, B., Lim, G., Leaver, N., Nilsen, J., and Dreyfuss, E. (2021). Mitigating medical misinformation: a whole-of-society approach to countering Spam, Scams, and Hoaxes. Technol. Soc. Change Res. Project 2021:3. doi: 10.37016/TASC-2021-03

Ecker, U. K. H., Hogan, J. L., and Lewandowsky, S. (2017). Reminders and repetition of misinformation: helping or hindering its retraction? J. Appl. Res. Mem. Cogn. 6, 185–192. doi: 10.1016/j.jarmac.2017.01.014

Featherstone, J. D., Bell, R. A., and Ruiz, J. B. (2019). Relationship of people's sources of health information and political ideology with acceptance of conspiratorial beliefs about vaccines. Vaccine 37, 2993–2997. doi: 10.1016/j.vaccine.2019.04.063

Freelon, D., and Wells, C. (2020). Disinformation as political communication. Polit. Commun. 37, 145–156. doi: 10.1080/10584609.2020.1723755

Fretwurst, B. (2015). LOTUS Manual. Reliability and Accuracy With SPSS. Zurich: University of Zurich.

Gordon, B., Craviolini, J., Hermann, M., Krähenbühl, D., and Wenger, V. (2020). 5. SRG Corona-monitor [5th SRG Corona monitor]. Available online at: https://www.srf.ch/news/content/download/19145688/file/5.SRGCorona-Monitor.pdf

Hameleers, M. (2020). Populist disinformation: exploring intersections between online populism and disinformation in the US and the Netherlands. Polit. Govern. 8, 146–157. doi: 10.17645/pag.v8i1.2478

Hameleers, M., Humprecht, E., Möller, J., and Lühring, J. (2021). Degrees of deception: the effects of different types of COVID-19 misinformation and the effectiveness of corrective information in crisis times. Inform. Commun. Soc. 2021, 1–17. doi: 10.1080/1369118X.2021.2021270

Hameleers, M., van der Meer, T. G. L. A., and Brosius, A. (2020). Feeling “disinformed” lowers compliance with COVID-19 guidelines: evidence from the US, UK, Netherlands and Germany. Harv. Kennedy School Misinform. Rev. 1:23. doi: 10.37016/mr-2020-023

Humprecht, E. (2019). Where 'fake news' flourishes: a comparison across four Western democracies. Inform. Commun. Soc. 22, 1973–1988. doi: 10.1080/1369118X.2018.1474241

Humprecht, E., Esser, F., and Van Aelst, P. (2020). Resilience to online disinformation: a framework for cross-national comparative research. Int. J. Press/Polit. 25, 493–516. doi: 10.1177/1940161219900126

Kata, A. (2010). A postmodern Pandora's box: anti-vaccination misinformation on the Internet. Vaccine 28, 1709–1716. doi: 10.1016/j.vaccine.2009.12.022

Kessler, S. H., and Humprecht, E. (2023). COVID-19 misinformation on YouTube: an analysis of its impact and subsequent online information searches for verification. Digit. Health 9:20552076231177131. doi: 10.1177/20552076231177131

Knuutila, A., Herasimenka, A., Au, H., Bright, J., and Howard, P. N. (2020). COVID-related misinformation on YouTube: the spread of misinformation videos on social media and the effectiveness of platform policies. Comprop. Data Memo 6, 1–7. doi: 10.5334/johd.24

Lewandowsky, S., Cook, J., Schmid, P., Holford, D. L., Finn, A. H. R., Lombardi, D., et al. (2021). The COVID-19 Vaccine Communication Handbook. Available online at: https://hackmd.io/@scibehC19vax/home

Li, H. O. Y., Bailey, A., Huynh, D., and Chan, J. (2020). YouTube as a source of information on COVID-19: a pandemic of misinformation? Br. Med. J. Glob. Health 5:e002604. doi: 10.1136/bmjgh-2020-002604

Li, H. O. Y., Pastukhova, E., Brandts-Longtin, O., Tan, M. G., and Kirchhof, M. G. (2022). YouTube as a source of misinformation on COVID-19 vaccination: a systematic analysis. Br. Med. J. Glob. Health 7:e008334. doi: 10.1136/bmjgh-2021-008334

Lockyer, B., Islam, S., Rahman, A., Dickerson, J., Pickett, K., Sheldon, T., et al. (2021). Understanding COVID-19 misinformation and vaccine hesitancy in context: findings from a qualitative study involving citizens in Bradford, UK. Health Expect. 24, 1158–1167. doi: 10.1111/hex.13240

Loomba, S., de Figueiredo, A., Piatek, S. J., de Graaf, K., and Larson, H. J. (2021). Measuring the impact of COVID-19 vaccine misinformation on vaccination intent in the UK and USA. Nat. Hum. Behav. 5, 337–348. doi: 10.1038/s41562-021-01056-1

Marwick, A., and Lewis, R. (2017). Media Manipulation and Disinformation Online (New York, NY: Data & Society Research Institute), 7–19.

Möller, J., and Hameleers, M. (2019). “Different types of disinformation, its political consequences and treatment recommendations for media policy and practice,” in Was ist Desinformation? Betrachtungen aus sechs wissenschaftlichen Perspektiven (Düsseldorf: Landesanstalt für Medien NRW), 7–14.

Newman, N., Fletcher, R., Schulz, A., Andi, S., and Nielsen, R. K. (2020). Reuters Institute Digital News Report 2020. Oxford: Reuters Institute for the Study of Journalism.

Pummerer, L., Böhm, R., Lilleholt, L., Winter, K., Zettler, I., and Sassenberg, K. (2022). Conspiracy theories and their societal effects during the COVID-19 pandemic. Soc. Psychol. Personal. Sci. 13, 49–59. doi: 10.1177/19485506211000217

Rapisarda, V., Vella, F., Ledda, C., Barattucci, M., and Ramaci, T. (2021). What prompts doctors to recommend COVID-19 vaccines: is it a question of positive emotion? Vaccines 9:578. doi: 10.3390/vaccines9060578

Rhodes, A., Hoq, M., Measey, M.-A., and Danchin, M. (2021). Intention to vaccinate against COVID-19 in Australia. Lancet Infect. Dis. 21:e110. doi: 10.1016/S1473-3099(20)30724-6

Romer, D., and Jamieson, K. H. (2020). Conspiracy theories as barriers to controlling the spread of COVID-19 in the U.S. Soc. Sci. Med. 263:113356. doi: 10.1016/j.socscimed.2020.113356

Roozenbeek, J., Schneider, C. R., Dryhurst, S., Kerr, J., Freeman, A. L. J., Recchia, G., et al. (2020). Susceptibility to misinformation about COVID-19 around the world. Royal Soc. Open Sci. 7:201199. doi: 10.1098/rsos.201199

Sangalang, A., Ophir, Y., and Cappella, J. N. (2019). The potential for narrative correctives to combat misinformation. J. Commun. 69, 298–319. doi: 10.1093/joc/jqz014

Sharma, P. R., Wade, K. A., and Jobson, L. (2023). A systematic review of the relationship between emotion and susceptibility to misinformation. Memory 31, 1–21. doi: 10.1080/09658211.2022.2120623

Skafle, I., Nordahl-Hansen, A., Quintana, D. S., Wynn, R., and Gabarron, E. (2022). Misinformation about COVID-19 vaccines on social media: rapid review. Open Sci. Framework 2022:37367. doi: 10.2196/37367

Staender, A., and Humprecht, E. (2021). Types (disinformation). Datab. Var. Content Anal. 1:4e. doi: 10.34778/4e

Staender, A., Humprecht, E., Esser, F., Morosoli, S., and Van Aelst, P. (2021). Is sensationalist disinformation more effective? Three facilitating factors at the national, individual, and situational level. Digit. Journal. 2021, 1–21. doi: 10.1080/21670811.2021.1966315

Swiss Science Barometer (2020). COVID-19 Special. Wissenschaft im Dialog. Available online at: https://www.wissenschaft-im-dialog.de/projekte/wissenschaftsbarometer/wissenschaftsbarometer-corona-spezial/ (accessed February 20, 2024).

Tandoc, E. C., Lim, Z. W., and Ling, R. (2018). Defining “fake news.” Digit. Journal. 6, 137–153. doi: 10.1080/21670811.2017.1360143

Tokojima Machado, D. F., Fioravante de Siqueira, A., Rallo Shimizu, N., and Gitahy, L. (2022). It-which-must-not-be-named: COVID-19 misinformation, tactics to profit from it and to evade content moderation on YouTube. Front. Commun. 7:1037432. doi: 10.3389/fcomm.2022.1037432

Vaccari, C., and Chadwick, A. (2020). Deepfakes and disinformation: exploring the impact of synthetic political video on deception, uncertainty, and trust in news. Soc. Media Soc. 6:205630512090340. doi: 10.1177/2056305120903408

Vosoughi, S., Roy, D., and Aral, S. (2018). The spread of true and false news online. Science 359, 1146–1151. doi: 10.1126/science.aap9559

Wardle, C. (2018). The need for smarter definitions and practical, timely empirical research on information disorder. Digit. Journal. 6, 951–963. doi: 10.1080/21670811.2018.1502047

Weeks, B. E., Menchen-Trevino, E., Calabrese, C., Casas, A., and Wojcieszak, M. (2023). Partisan media, untrustworthy news sites, and political misperceptions. N. Media Soc. 25, 2644–2662. doi: 10.1177/14614448211033300

Wilson, S. L., and Wiysonge, C. (2020). Social media and vaccine hesitancy. Br. Med. J. Glob. Health 5:e004206. doi: 10.1136/bmjgh-2020-004206

Yeo, S. K., and McKasy, M. (2021). Emotion and humor as misinformation antidotes. Proc. Natl. Acad. Sci. U. S. A. 2021:118. doi: 10.1073/pnas.2002484118

YouTube (2020). How Does YouTube Combat Misinformation? YouTube Scam and Impersonation Policies-How YouTube Works. Available online at: https://www.youtube.com/howyoutubeworks/our-commitments/fighting-misinformation/ (accessed February 20, 2024).

Yu, W., Payton, B., Sun, M., Jia, W., and Huang, G. (2022). Toward an integrated framework for misinformation and correction sharing: a systematic review across domains. N. Media Soc. 2022:146144482211165. doi: 10.1177/14614448221116569

Zarocostas, J. (2020). How to fight an infodemic. Lancet 395:676. doi: 10.1016/S0140-6736(20)30461-X

Zollo, F., Novak, P. K., Del Vicario, M., Bessi, A., Mozetič, I., Scala, A., et al. (2015). Emotional dynamics in the age of misinformation. PLoS ONE 10:e0138740. doi: 10.1371/journal.pone.0138740

Appendix

Keywords: misinformation, COVID-19 vaccination, YouTube, public health, content analysis

Citation: Humprecht E and Kessler SH (2024) Unveiling misinformation on YouTube: examining the content of COVID-19 vaccination misinformation videos in Switzerland. Front. Commun. 9:1250024. doi: 10.3389/fcomm.2024.1250024

Received: 29 June 2023; Accepted: 16 February 2024;

Published: 29 February 2024.

Edited by:

Christopher McKinley, Montclair State University, United States

Reviewed by:

Yi Luo, Montclair State University, United States

Anke van Kempen, Munich University of Applied Sciences, Germany

Copyright © 2024 Humprecht and Kessler. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Edda Humprecht
