Abstract

Introduction:

This paper investigates how 33 Turkish and 38 Russian university students perceive and experience artificial intelligence (AI) in scholarly communication, with a focus on their perspectives, experiences, and concerns regarding AI use in educational settings. Three main areas of inquiry are addressed: general views and experiences with AI technology, effects on academic communication and teamwork, and evaluation of AI-generated work in terms of academic integrity and plagiarism.

Methods:

The study participants were 71 university students, consisting of 33 Turkish students and 38 Russian students. Data were collected through open-ended questionnaires. The qualitative material was examined using a six-stage thematic analysis.

Results:

Our findings reveal both shared and divergent perspectives among Turkish and Russian students regarding AI’s role in their educational environment. Students from both countries recognize AI’s significant potential to streamline academic tasks and enhance access to information. However, they also voice concerns about its influence on critical thinking abilities and academic honesty. Turkish students exhibited a predominantly favorable outlook toward the collaborative capabilities of AI, whereas Russian students placed greater emphasis on concerns about privacy and data security.

Discussion:

The study highlights the complex interplay between the benefits and challenges of AI in educational environments. Students in both countries encounter ethical issues, namely plagiarism and the authenticity of AI-generated content. Our research underscores the importance of clearly defined institutional regulations and educational initiatives that offer guidelines for the use of AI in academia. This comparative study offers an analysis of the cultural factors influencing AI applications in higher education. It adds to the ongoing worldwide conversation about how technology affects future educational plans.

Introduction

Artificial intelligence (AI) has transformed both educational practices and communication patterns in higher education (Huang and Tan, 2023; Nikolopoulou, 2024; Thomas, 2024). Institutions are using everything from personalized learning to automated assessment systems to boost student success and institutional efficiency (Zawacki-Richter et al., 2019). However, this rapid transition raises important questions about the nature of intellectual discourse.

Academic communication is defined as the formal, orderly means of exchanging knowledge and ideas among academics, researchers, and students. It includes scholarly papers, research reports, conference presentations, and peer reviews, in both written and oral modes. Its main goals are to advance knowledge by sharing, reviewing, and preserving scholarly work so that people inside and outside academia can access it (American Library Association, 2006; Wolfram, 2019).

Recent studies have shown that AI can make higher education more interactive and collaborative (Aldosari, 2020; Son and Jin, 2024). However, it may also bring problems such as privacy and ethical issues (Devi et al., 2023; Foltynek et al., 2023). There is limited research on the impact of AI technologies on academic communication, especially in countries such as Turkey and Russia, which differ in technological adoption and social norms.

This article aims to bridge this gap by looking at opinions and experiences about AI among Turkish and Russian university students. Examining how Turkish and Russian students interpret and interact with AI technologies provides insights into how cultural factors influence AI implementation in higher education.

The study addresses three groups of research questions:

The use of AI tools in the academic settings of university students in Turkey and Russia, compared in terms of:

the AI tools used;

purposes and frequency of use;

perceived benefits and limitations.

Academic communication aided by AI in a cultural setting, analyzed in terms of:

effects on student–student contact;

support for group projects and cooperative learning;

opportunities and communication obstacles.

The views of students in two distinct cultural settings on:

ethical issues in the academic use of AI content;

academic integrity and plagiarism;

expectations of institutional regulation of AI use.

This study aims to improve our understanding of the cultural dimensions of the use of AI in higher education by comparing the views of Turkish and Russian students. The results can help us develop strategies that address cultural sensitivities to shape AI integration in universities.

Literature review: AI in academic communication

AI in higher education

Academic contexts in higher education are increasingly incorporating AI technologies to enhance the student experience and optimize teaching and learning processes. Students are using chatbots to access services and support their studies (Chen et al., 2023; Okonkwo and Ade-Ibijola, 2021). According to Iffath Unnisa Begum (2024), AI has grown from simple computer programs to include embedded computing systems, web-based intelligent systems, and humanoid robots that can perform different tasks. AI applications in higher education enable flexible learning opportunities that are accessible anytime and anywhere (Ahmad et al., 2021; Owoc et al., 2021).

Cox (2021) argues that AI is fundamentally transforming teaching and learning in higher education, with the potential to reshape global educational systems. Tomar and Verma (2021) state that AI technologies are integral to higher education’s teaching, learning, and research activities. Zawacki-Richter et al. (2019) evaluate these technologies carefully, noting that they offer both positive and negative elements. Research has demonstrated that AI applications in higher education are broad, ranging from intelligent tutoring systems to personalized learning.

Also, research highlights the beneficial impact of AI applications on student achievement and the development of immersive educational settings (Aldosari, 2020; Jaboob et al., 2024). According to Rui and Badarch (2022), the main reason for looking into AI applications in education is to help improve teaching in higher education institutions. AI technologies are also becoming well-known in academia since they offer creative ways for research, instruction, and service (Barros et al., 2023). ChatGPT, emerging as a student-driven innovation, has the potential to enhance the higher education learning environment (Dai et al., 2023). In recent years, there has been a growing emphasis on promoting AI literacy among students, which has garnered significant interest from governments and society (Yang and Xu, 2024).

Higher education institutions are using smart classrooms and AI-enhanced learning models to progressively include AI (Jia and Tu, 2024), thereby improving the academic experience. This includes enhancing library services (Okunlaya et al., 2022) and automating administrative tasks (Hannan and Liu, 2023; Villegas-Ch et al., 2021). Top institutions are creating AI labs and implementing AI into their courses even as they assess how it may affect academic integrity and student performance (Ali et al., 2024; Sullivan et al., 2023).

From English preparation courses (Zhang and Qi, 2024) to art and design education (Watanabe, 2024; Zhao and Xue, 2024), the transformation of teaching methodologies uses big data and adaptive learning (Khan et al., 2022). According to research on AI use in engineering classes and university courses, it helps students interact with each other and learn in a more personalized way (Abdelmagid et al., 2024). Studies also reveal how AI raises student self-regulation in language acquisition and motivation (Li B. et al., 2024; Tang, 2024).

Impact of AI on academic communication

Academic communication is increasingly integrating AI, which presents both opportunities and challenges. AI tools such as ChatGPT demonstrate capabilities in paraphrasing, summarizing, and improving writing efficiency (Yan, 2023). These tools have the potential to transform scholarly communication by aiding content creation, authorship, and academic integrity (Dergaa et al., 2023; Lund and Naheem, 2024).

AI technologies, including natural language processing and large language models, have ushered in a transformative era in scholarly publishing (Lund et al., 2023; Petroșanu et al., 2023). Students believe that AI systems will increase the quantity and quality of teaching communication because they can ask more questions without feeling self-conscious or like they are interrupting the instructor. AI also allows instructors to save time by answering simple, repetitive questions and thus focus on more meaningful communication with students (Seo et al., 2021).

As AI is used more and more in research and scholarly communication, academic libraries face both opportunities and problems (Cox, 2023; Dwivedi et al., 2021). From changes in library subscriptions to the open access movement and the shift to digital publishing, AI clearly influences academic communication (Shrivastava and Mahajan, 2021). Academic publishing and research settings are undergoing major changes, in large part because of AI-powered technology, which is why understanding its consequences within the academic community is becoming increasingly important (Payini et al., 2024). Moreover, the ethical use of AI in scientific content creation and education is receiving growing attention, which underscores the need for rules governing the use of AI technology (Foltynek et al., 2023).

Although AI can improve language, vocabulary, and style (Kacena et al., 2024), ethical concerns persist in academia. Passmore and Tee (2024) analyzed AI’s opportunities in knowledge synthesis, content creation, and coaching, highlighting both its benefits and disadvantages relative to traditional methods. AI enhances student–student and student–faculty interaction by creating more interactive and communicative settings (Thomas, 2024). AI-powered solutions support cooperative learning through real-time language translation and summarization (Porter and Grippa, 2020; Son and Jin, 2024). AI also supports peer review processes by raising the quality and consistency of student work and providing insightful comments (Darvishi et al., 2022). Still, concerns remain that AI may reduce in-person interaction and negatively affect social settings (Hohenstein et al., 2023).

Ethical considerations of AI in academia and academic integrity

With the development of AI technologies, issues of academic integrity and plagiarism have become increasingly important. AI tools such as text generators and literature review automators have facilitated academic writing but have also facilitated unethical practices such as plagiarism and cheating (Goel and Nelson, 2024; Yusuf et al., 2024). The ease with which students and researchers can create content using AI tools raises concerns about originality and citation (Cotton et al., 2024; Rabbianty et al., 2023). Generative AI poses significant threats to academic integrity by enabling practices such as plagiarism, cheating, fabrication, and unfair advantage (Song, 2024). The combination of AI and academic writing raises concerns about the reliability of research results, since fake scientific papers written by chatbots that do not do enough research can lead to issues of academic dishonesty and plagiarism (Zaitsu and Jin, 2023).

Higher education institutions are facing challenges in dealing with the widespread use of AI among students. This has led to an increase in academic misconduct due to the absence of official regulations and a lack of AI knowledge among students and instructors (Song, 2024). Academic institutions are required to revise their ethical guidelines and develop new methods for detecting plagiarism (Geethalakshmi, 2018). Additionally, it is advisable to promote knowledge about AI-supported writing and offer instruction on the ethical and appropriate utilization of these technologies (Miao et al., 2024).

It is recommended that institutions use suitable strategies to tackle plagiarism concerns and adapt to the changing environment influenced by AI technology (Bell, 2023; Khalil and Er, 2023; Perkins, 2023). Higher education institutions are being urged to reevaluate and improve their academic integrity policies because AI makes academic misconduct easier and more frequent (Fowler, 2023). To protect academic integrity, training students and researchers on plagiarism, proper citation styles, and ethical writing is increasingly important (Curtis and Vardanega, 2016; William, 2024). Furthermore, Curtis et al. (2013) underline the need for online academic integrity mastery training to improve students’ understanding of plagiarism and their views on academic dishonesty.

Educators play a crucial role in shifting the perception of AI from a shortcut to a useful tool. They achieve this by instructing students on how to use AI tools, like automatic text summarization, and emphasizing the importance of using credible sources (Simpson, 2023). By combining proactive education and rigorous policy execution, academic integrity can be preserved in the AI era, ensuring that technological advancements enhance rather than undermine scholarly communication (Goel and Nelson, 2024).

With the spread of AI applications in education, concerns about the privacy and security of student data are increasing (Huang, 2023). The integration of AI in education offers opportunities for personalized learning experiences and advanced teaching methods (Eden et al., 2024; Wang et al., 2023). However, because AI systems gather and evaluate students’ personal data, academic achievements, and learning patterns, this integration also gives rise to substantial concerns regarding data privacy and security (Sontan and Samuel, 2024; Alrayes et al., 2024; Li Y. et al., 2024; Risang Baskara, 2023). These concerns include the dissemination of personal information, data recognition, and security breaches, particularly in the handling of student data (Cahyanto, 2023). Unauthorized access could infringe upon students’ privacy and result in potentially detrimental outcomes (Devi et al., 2023; Guan et al., 2023).

The ethical risks posed by AI technology to student personal information require robust security measures and clear communication regarding data processing to ensure informed consent (Devi et al., 2023; Huang, 2023). Current privacy policies are still limited, and there are many challenges to be faced in the use of AI and e-learning, especially facial recognition and automatic decision-making (Cahyanto, 2023). Therefore, addressing these privacy and data security concerns requires a comprehensive approach that includes establishing robust ethical frameworks, increasing transparency, and ensuring equitable resource allocation to close gaps in AI education practices (Ma and Jiang, 2023).

Thus, educational institutions must establish comprehensive data protection policies, use data transparently and responsibly, and protect students’ data privacy rights (Meszaros and Ho, 2021). To lower these risks when AI-based education tools are used, it is important to consider fairness, ethics, and transparency (Harry, 2023; Nikolopoulou, 2024). Frameworks like Privacy by Design and Privacy Enhancing Technologies (PETs) give guidance on how to build privacy defenses into AI systems ahead of time (Sontan and Samuel, 2024). Ensuring equal access to new technologies and correcting biases in AI algorithms are also important considerations in educational AI applications (Sarwar et al., 2024). A careful use of AI in education can get the most out of these technologies while still protecting people’s privacy and data safety, so it is important to find a balance between the two.

Cultural factors in accepting AI in higher education

Factors influencing the acceptance of AI are as varied as they are with other educational technologies (Ismatullaev and Kim, 2024; Ye et al., 2019). Research has shown that sociodemographic factors can influence attitudes toward AI. The different patterns observed across factors such as country, age, and gender emphasize the importance of cultural context in shaping future perspectives on AI (Grassini and Ree, 2023).

Cultural factors play an important role in the acceptance of technological tools. For instance, Keller (2009) found that cultural factors significantly influence the acceptance of virtual learning environments. Positive or negative cultural influences can significantly affect the adoption of AI in educational settings. When looking at how AI is used in academia, it is important to consider the factors that shape people’s attitudes and intentions toward using AI, such as perceived risk, perceived ease of use, and expected effort (Jain et al., 2022).

The Unified Theory of Acceptance and Use of Technology (UTAUT) and the perceived risk theory (Wu et al., 2022) both identify many factors that affect how people feel about AI-supported learning environments. These factors include students’ interaction with AI, their risk perception, and the level of support provided by the learning environment. Furthermore, the UTAUT model links the cultural dimension to the social norm variable (Ismatullaev and Kim, 2024).

Perceived utility and user-friendliness influence the adoption of AI applications in Pakistan. The sociocultural elements have an impact on the views of university students toward the unconscious data collection carried out by AI technologies. Men, individuals with high incomes, and those majoring in business demonstrate lower levels of concern about such activities (Bokhari and Myeong, 2023).

Cultural factors play an important role in the acceptance of AI in higher education. For example, in Asian cultures, historical context, religious beliefs, and levels of exposure to technology may contribute to a higher level of acceptance toward AI. Countries like Japan have embraced AI-based solutions extensively to serve an elderly population, and cultural values have been essential in this process. On the other hand, in the West, individualism and cautious views on technology can hinder the acceptance of AI. These variations suggest that culture significantly influences the acceptance of technological breakthroughs (Na et al., 2023; Yam et al., 2023).

The integration of AI into sectors including public policy and higher education can depend heavily on cultural norms, especially trust, openness, and transparency. For instance, these principles serve as a major guide for policy development in Scandinavian nations to guarantee the harmonious integration of AI into society. Likewise, in Japanese society, collectivist ideas have helped public services like health and education to embrace AI. But cultural differences, including ethical and privacy issues, may hinder these activities (Liu, 2023; Robinson, 2020). The adoption of AI in higher education calls for not only technological advantages but also awareness of the cultural surroundings and user values. Policies that take cultural differences into account can make this process less difficult and lead to more people accepting AI as its use grows (Ho et al., 2023; Neumann et al., 2024).

In the healthcare sector, cultural perspectives significantly influence AI adoption. High uncertainty avoidance poses a significant barrier. Clinicians’ preference for face-to-face interactions over AI-based ones shows how cultural factors like avoiding uncertainty can make it harder for people to accept new technology (Krishnamoorthy et al., 2022). A complex interplay of cultural factors is evident in AI acceptance. Culturally sensitive approaches are required to improve the adoption and integration of AI technologies across different regions and sectors.

In conclusion, the literature review highlights the increasing integration of AI in higher education, which is transforming teaching, learning, and academic communication practices. AI tools offer benefits such as personalized learning experiences, improved student engagement, and enhanced efficiency in administrative tasks. However, the adoption of AI also raises important ethical issues, especially around academic integrity, plagiarism, and data privacy. The impact of AI on scholarly communication is evident in changes to scholarly publishing, collaborative learning environments, and the quality of student interactions. Cultural factors have a significant impact on attitudes toward and acceptance of AI in educational settings, and they differ across countries and the conditions in which AI is implemented. In light of advances in AI technology, educational institutions need to establish comprehensive regulations, protocols, and training initiatives to ensure the ethical and responsible use of these technologies while maximizing their potential to improve the educational experience. More research is needed to comprehensively understand the interplay between AI, academic discourse, and cultural influences in higher education.

Research methodology

We asked students attending universities in Turkey and Russia about their thoughts and experiences regarding the application of AI in academic communication, with the aim of analyzing these views and experiences. The study employed a qualitative research design aligned with its objectives. According to Creswell and Creswell (2017), qualitative research is well suited to investigating complex social issues because it gives a full picture of how people think, feel, and act in their own cultural setting. The qualitative approach enabled us to gain comprehensive and detailed insights into participants’ perspectives on AI at universities, and it was also selected for its potential to reveal cultural differences between Turkish and Russian students. The study employed a comparative case study design, which entails methodically comparing two or more cases to discern similarities, differences, and trends (Yin, 2018). The participants were university students from Turkey and Russia, and the comparison centered on their viewpoints and experiences regarding AI in academic communication.

Participants

The study participants were 71 university students, consisting of 33 Turkish students and 38 Russian students. The Russian participants study at a state university in Russia, and the Turkish participants study at a state university in one of the southeastern provinces of Turkey. We recruited the participants using a purposive sampling technique. The inclusion criteria were current enrollment in a university program in either Turkey or Russia and willingness to participate in the study. Female participants make up 76.3% of the Russian group and 78.8% of the Turkish group (Table 1); in both groups, female participants outnumber male participants.

Table 1

| Country | Female (N) | Female (%) | Male (N) | Male (%) | Total |
|---|---|---|---|---|---|
| Russia | 29 | 76.3 | 9 | 23.7 | 38 |
| Turkey | 26 | 78.8 | 7 | 21.2 | 33 |
| Total | 55 | | 16 | | 71 |

Demographic distribution of participants.
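The percentages in Table 1 follow directly from the reported counts. A minimal Python sketch recomputes them; the variable and function names are chosen here only for illustration and are not part of the study.

```python
# Participant counts taken from Table 1.
counts = {
    "Russia": {"female": 29, "male": 9},
    "Turkey": {"female": 26, "male": 7},
}


def gender_shares(group: dict[str, int]) -> dict[str, float]:
    """Return each gender's share of the group in percent, rounded to one decimal."""
    total = sum(group.values())
    return {gender: round(100 * n / total, 1) for gender, n in group.items()}


# Illustrative names: per-country shares and the overall participant count.
shares = {country: gender_shares(group) for country, group in counts.items()}
total_participants = sum(sum(group.values()) for group in counts.values())

if __name__ == "__main__":
    for country, share in shares.items():
        print(country, share)
    print("Total participants:", total_participants)
```

The recomputed shares match the values reported in Table 1, and the group totals sum to the 71 participants stated in the text.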

The primary criteria for sample selection were active university enrollment and voluntary participation in the study. Voluntary participation encouraged participants to share their experiences more openly, resulting in rich data. The choice of public universities in both nations and the similar group sizes (33 participants from Turkey and 38 from Russia) provided an appropriate foundation for cross-cultural comparison. In addition, the similar gender distribution in both countries (Turkey: 78.8% female, Russia: 76.3% female) strengthened the comparability between the groups. Given the qualitative nature of the study, the aim was to capture diversity of experience rather than statistical representation, and for this purpose students from different academic departments were included. However, a limitation of the study is that voluntary participation may have attracted students who are more interested in AI. Future studies can address this limitation by implementing a more systematic sampling strategy, for example by using stratified sampling to increase the diversity of participants. Because the study concerns current technologies, future researchers can also treat familiarity with and use of these technologies as a variable in participant selection.

We acknowledge that the sample size in this study limits generalization. However, the primary objective of this research is to provide an in-depth understanding, not to make statistical generalizations, and the sample size is sufficient for the thematic analysis approach employed (Braun and Clarke, 2006). Readers should consider this limitation when interpreting the study’s findings. The inclusion of a similar number of participants from the two countries (Turkey: 33, Russia: 38) provided a suitable basis for cross-cultural comparison. The data collected through open-ended questions yielded detailed information from each participant: 242 codes were generated under the theme of “General Perceptions and Experiences” alone. Similar qualitative and intercultural comparative studies in the literature indicate that 30 to 40 participants from each group are acceptable. In addition, considering the difficulty of collecting data from two different countries and in two different languages, a sample of 71 students from public universities in both countries provided comparable and valuable data. We recommend testing the findings with larger and more diverse samples in future studies.

Data collection tool

The present study used a survey with open-ended questions as the data collection instrument. We created the survey to allow students to share their perspectives and experiences with AI in academic communication. The survey is divided into three primary sections, each focusing on a specific aspect of the research questions.

Section 1: general perceptions and experiences

This section aimed to explore the participants’ general perceptions and experiences regarding the use of AI in academic communication. This section included the following open-ended questions:

What is your overall understanding and experience with using generative AI tools for academic communication?

How do you think the integration of generative AI in academic communication will impact your learning experience?

Can you envision any specific scenarios where generative AI tools could be particularly beneficial in your academic field or discipline?

Section 2: effects on academic communication and collaborations

This section focused on the perceived effects of AI-generated content on academic communication and collaborations. This section included the following open-ended questions:

Can you describe a scenario where AI-generated content could facilitate more effective communication among team members?

Can you describe any specific experiences or examples where generative AI has already impacted academic communication, either positively or negatively?

Section 3: evaluation of AI-generated content

The third section aimed to explore the participants’ evaluation of AI-generated content in terms of academic integrity and plagiarism. This section included the following open-ended questions:

What concerns do you have about the use of AI-generated content in academic settings?

Can you describe a situation where AI-generated content might not be suitable or appropriate for academic purposes?

How do you approach the issue of academic integrity and plagiarism when using generative AI for assignments or papers?

How comfortable would you feel submitting work that was partially generated by AI to your professors? Explain your answer.

The research team developed the open-ended survey through a comprehensive review of existing literature on AI in higher education and academic communication. To establish content validity, we obtained expert opinions from three faculty members in each country, who examined the questionnaire items and confirmed that they were appropriate. We translated the questionnaires into Russian and Turkish and administered them to the students in their native languages. We also conducted a pilot study with five students from each country, asking them to think aloud as they answered the questions and to identify any points they did not understand. We provided clear instructions to the participants and assured them of the confidentiality and anonymity of their responses. The students understood that their participation was entirely voluntary and that they could leave the study at any point during the research process. Additionally, we recorded the answer time for each question and optimized the questionnaire’s completion time. As a result of all these processes, the questionnaire was finalized. This validation process supports the appropriateness and reliability of the data collection tool for answering the research questions.

Because the questionnaire was open-ended, the study took a qualitative approach rather than quantifying student responses. Future studies could achieve data triangulation by collecting documents, observed behaviors, or academic work from students in addition to their opinions. Quantitative scales can also be developed to examine students’ opinions and attitudes; the data obtained in this questionnaire has the potential to form the basis for such a scale. In this context, we suggest that future researchers diversify their data collection tools.

Data analysis

The qualitative material from the 33 Turkish and 38 Russian university students was examined using Braun and Clarke's (2006) six-stage thematic analysis. All researchers participated in the analytical procedure, which followed a rigorous approach to ensure the quality and trustworthiness of the outcomes.

Stage 1: familiarizing with the data

Every survey response was translated into English. Two separate translators, one for Turkish-English and one for Russian-English, reviewed the accuracy of the translations to ensure semantic equivalence. The study team then read the translated responses several times to identify initial patterns and interpretations in the data.

Stage 2: initial coding

Using an inductive technique, all researchers independently performed the initial coding of the data. Under this method, codes emerged from the material instead of being predefined. Every researcher went through the whole data set methodically, identified meaningful text passages, and assigned initial codes. The coding was very detailed in order to capture cultural richness and differences. For instance, this process generated 242 codes solely under the theme of “General Perceptions and Experiences.”

Inter-coder reliability and consensus process

Three researchers independently coded the entire data set and produced 728, 705, and 710 codes, respectively. Inter-coder reliability was calculated using Miles and Huberman’s (1994) formula. Reliability scores between pairs of researchers are as follows:

Between the first and second researcher: 95.39% (33 code discrepancies).

Between the first and third researcher: 94.85% (37 code discrepancies).

Between the second and third researcher: 96.04% (28 code discrepancies).

The average inter-coder reliability was 95.43%. This rate indicates high coding consistency and is well above the 80% threshold recommended by Miles and Huberman (1994). Discrepancies were resolved in consensus sessions in which the researchers explained the reasoning behind their coding choices. Once all contested codes were resolved, the researchers developed the final coding scheme.
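The reliability calculation can be sketched in a few lines of Python. This is a minimal illustration, assuming the commonly cited form of the Miles and Huberman (1994) formula (agreements divided by the sum of agreements and disagreements). The function name and the example counts are hypothetical; only the three pairwise percentages are taken from the text.

```python
def percent_agreement(agreements: int, disagreements: int) -> float:
    """Reliability = agreements / (agreements + disagreements), as a percentage."""
    total = agreements + disagreements
    if total == 0:
        raise ValueError("no coding decisions to compare")
    return 100.0 * agreements / total


# Hypothetical counts, for illustration only (not taken from the study).
example_score = percent_agreement(agreements=95, disagreements=5)

# Pairwise reliability scores as reported in the text.
reported_pairwise = {
    ("researcher 1", "researcher 2"): 95.39,
    ("researcher 1", "researcher 3"): 94.85,
    ("researcher 2", "researcher 3"): 96.04,
}

# Mean of the three pairwise scores, rounded to two decimals.
mean_reliability = round(
    sum(reported_pairwise.values()) / len(reported_pairwise), 2
)
print(mean_reliability)
```

Averaging the three reported pairwise scores in this way reproduces the 95.43% figure given in the text.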

Phase 3: searching for themes

The research team then collaboratively examined the initial codes for recurring patterns. Codes were grouped into broader candidate themes according to their relationships, and the team examined how the codes related to each candidate theme.

Phase 4: reviewing themes

To ensure the validity of the themes, the research team conducted two levels of analysis:

Level 1: Comparison of themes with coded quotations.

Level 2: Examining the relationship of themes with the whole data set.

At this stage, the researchers adjusted, merged, or separated the themes as necessary. The researchers paid particular attention to ensuring that each theme was consistent and distinctive.

Phase 5: identifying and naming themes

The team defined each theme precisely so that it faithfully represents the data it covers. Theme names were chosen to be concise yet informative and to express the central idea of each theme.

The theoretical framework in data analysis

The Unified Theory of Acceptance and Use of Technology (UTAUT) model and the theory of perceived risk were used to interpret the results. Both theories address how people accept and use technology. We specifically used “social norm,” a sub-dimension of the UTAUT model, as a tool to interpret the data. Social norm refers to the social expectations that shape individuals’ behaviors; it therefore serves as an important guide to understanding individuals’ preferences and attitudes toward technology use. As one of the basic building blocks of the UTAUT model, it explains the influence of the social environment on individuals’ decisions about technology use. We evaluated the participants’ statements using the relevant codes in our framework. Perceived benefit within the model is another important element affecting the use of AI. In particular, “Performance Expectancy” concerns the potential of AI to accelerate learning processes, facilitate access to information, and increase academic achievement. These aspects contribute to users developing positive attitudes toward AI technology. In this context, we evaluated the positive and negative statements students made about the use of AI in academic communication. For example, statements such as “Facilitating research” and “Better presentation” were categorized as positive, while statements such as “Incorrect and inconsistent information” were categorized as negative. This evaluation provided a detailed understanding of the participants’ perceptions of AI.

Another theoretical framework is Hofstede’s cultural dimensions theory. The findings were analyzed in the context of the individualism–collectivism and uncertainty avoidance dimensions of this model. In the individualism–collectivism dimension, students’ preference to use AI in collaborative projects was associated with collectivism, while their preference to use it in individual tasks was associated with individualism. Codes that mentioned concerns about privacy and data security were associated with the uncertainty avoidance dimension.

These theoretical frameworks are well suited to making sense of the study’s data. The UTAUT model provides a strong theoretical basis for analyzing students’ acceptance and use of AI technology (Acosta-Enriquez et al., 2024), and its dimensions, such as performance expectancy and effort expectancy, were effective in explaining the differences in students’ attitudes toward AI. Hofstede’s cultural dimensions theory, in turn, provides an appropriate framework for making sense of the differences in the use of AI in two different cultural contexts. Taken together, these frameworks gave a fuller picture of how technology acceptance and cultural differences affect the use of AI.

Future researchers can analyze these data using different theoretical frameworks and approaches. Theoretical frameworks serve as a guide to making sense of and explaining the data, helping researchers to understand complex concepts more clearly. These frameworks can be considered a powerful analysis tool that illuminates different aspects of the data. Analyzing from different perspectives can naturally lead to different results.

Quality assurance measures

We employed several strategies to enhance the analysis’s reliability. The researchers held regular online meetings to discuss and refine the coding process. We documented all decisions made throughout the process in detail and created an audit trail to ensure transparency. Member checking was conducted with a subset of participants to confirm the accuracy of the interpretations obtained. In addition, peer review meetings were organized with external researchers to strengthen the objectivity of the study by questioning assumptions and interpretations in the research process.

While still sensitive to the subtleties of how Turkish and Russian students view and experience AI in academic settings, the study concentrated on spotting trends both inside and between the two cultural settings. Throughout the research process, the team stayed conscious of possible biases and often spoke about how they could influence data interpretation.

Results

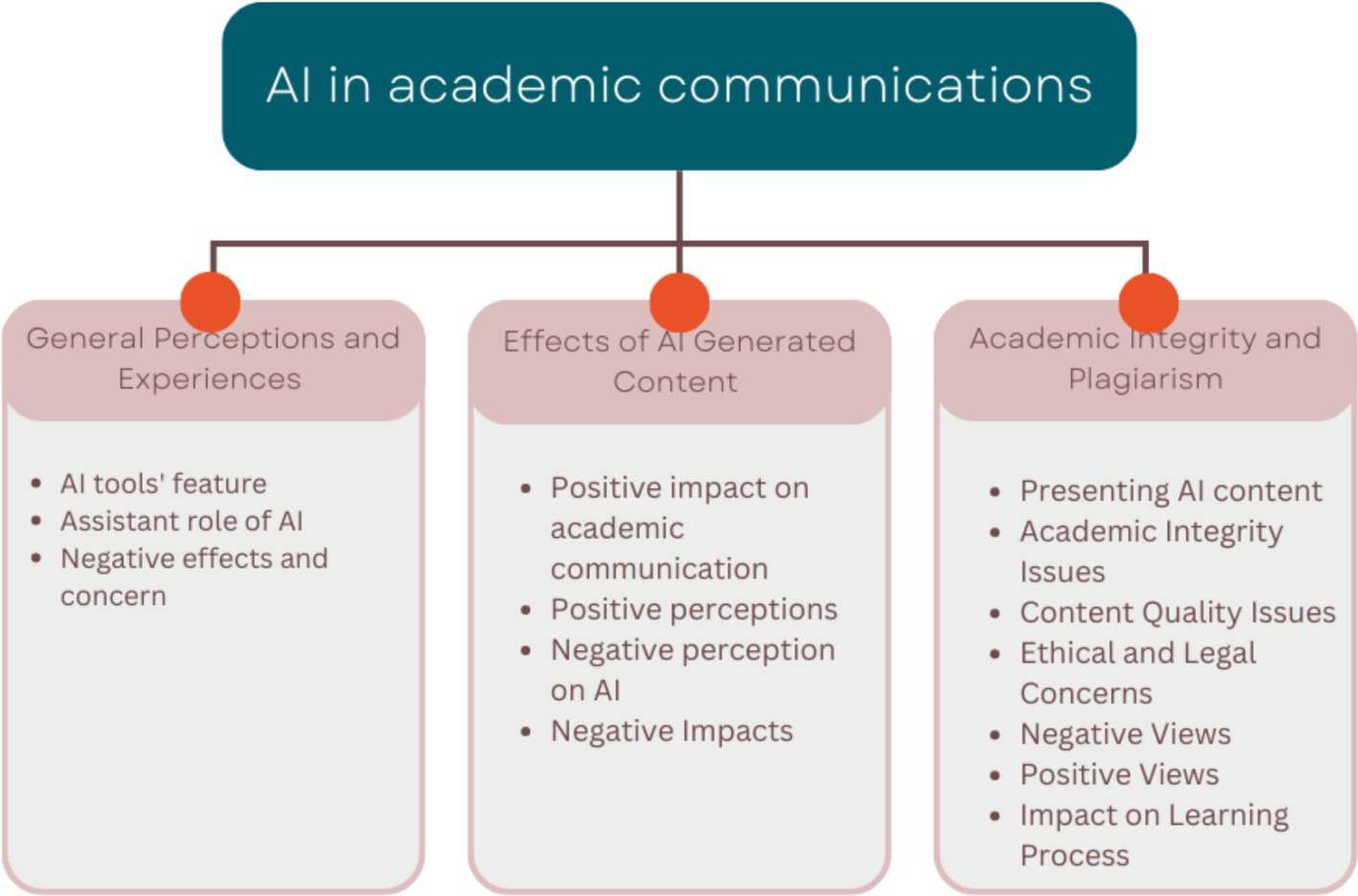

The General Perceptions and Experiences theme covers students’ overall views of AI tools, including their perceived usefulness, their role as an assistant in academic tasks, and related concerns. It also shows differing degrees of AI acceptance among students. The second theme, on academic communication and collaborations, analyzes the influence of AI on student interactions and collaborative work. It contrasts positive effects, such as better teamwork within groups, with negative perceptions, such as worries about weakened analytical thinking. The third theme, on academic integrity and plagiarism, focuses on the ethical issues of using AI in academia. It covers students’ degree of comfort with presenting AI-generated content, plagiarism concerns, problems with content quality, and more general ethical issues. Throughout, the study compares the perspectives of Turkish and Russian students, emphasizing the cultural and educational differences in their understanding and use of AI. Code diversity was kept as high as possible in order to observe cultural differences more easily (see Figure 1).

Figure 1

Concept map of themes and categories.

General perceptions and experiences regarding the use of AI in communication

The category of AI tools’ features relates to the way respondents characterize AI based on their experiences. Respondents from Turkey and Russia described AI tools in positive terms (Russia: 20, Turkey: 19). Participants in both nations described AI tools as “Useful” (Russia: 17, Turkey: 21) and “Beneficial” (Russia: 4, Turkey: 6). A Turkish student said, “I’ve used it before and it’s quite useful” (T_16), and a Russian student said, “It’s a very good and useful application” (R_19). Some students cited “Easily” (Russia: 6, Turkey: 4), “Effective” (Russia: 4, Turkey: 4), “Faster” (Russia: 4, Turkey: 4) and “Helpful” (Russia: 4, Turkey: 2). Another student from Turkey said, “I see it as a good and effective shortcut for my research,” and a Russian student said, “It helps in the preliminary preparation stage” (R_10). Another student from Turkey stated, “AI analyzes data sets quite effectively. For us students in writing and editing articles, it especially offers ease”; another student commented, “…I got help while doing my homework.” Furthermore, some respondents called AI tools “Productive” (Turkey: 1) and “Successful” (Russia: 1, Turkey: 1). These results suggest that respondents in both nations recognize and value the beneficial aspects of AI tools (see Table 2).

Table 2

| Codes | Russia | Turkey | Total |
|---|---|---|---|
| AI tools’ features | 56 | 60 | 116 |
| Positive | 20 | 19 | 39 |
| Useful | 17 | 21 | 38 |
| Beneficial | 4 | 6 | 10 |
| Easily | 6 | 4 | 10 |
| Effective | 4 | 4 | 8 |
| Faster | 4 | 4 | 8 |
| Successful | 1 | 1 | 2 |
| Productive | 0 | 1 | 1 |
| Assistant role of AI | 27 | 43 | 70 |
| Finding information and source | 7 | 15 | 22 |
| Assignment | 9 | 5 | 14 |
| Exploring unknown topic | 5 | 1 | 6 |
| Time-saving | 3 | 2 | 5 |
| Activities | 1 | 2 | 3 |
| Assistant | 1 | 2 | 3 |
| Writing theses and academic studies | 0 | 3 | 3 |
| Expand our world of thought | 0 | 2 | 2 |
| Idea generation | 1 | 1 | 2 |
| Correcting our writing mistakes | 0 | 1 | 1 |
| Creative writing | 0 | 1 | 1 |
| Data analysis | 0 | 1 | 1 |
| Helping attention and concentration | 0 | 1 | 1 |
| Helping daily routine | 0 | 1 | 1 |
| Increase logically thinking | 0 | 1 | 1 |
| Providing quality education for all | 0 | 1 | 1 |
| Reducing the human workload | 0 | 1 | 1 |
| Solving problems | 0 | 1 | 1 |
| Working systematically | 0 | 1 | 1 |
| Negative effects and concern | 17 | 13 | 30 |
| Negative experience | 2 | 3 | 5 |
| Concern | 1 | 3 | 4 |
| Nonpermanent-knowledge | 4 | 0 | 4 |
| Not be sufficient | 2 | 1 | 3 |
| Not effective | 2 | 0 | 2 |
| Reduce discipline | 0 | 2 | 2 |
| Should be improved | 2 | 0 | 2 |
| Addiction | 0 | 1 | 1 |
| Damages the desire for research and learning. | 1 | 0 | 1 |
| Eliminate our creativity | 1 | 0 | 1 |
| Incorrect information | 1 | 0 | 1 |
| Make people lazy | 0 | 1 | 1 |
| Reducing the quality of learning | 0 | 1 | 1 |
| Reducing the student’s thinking skills | 1 | 0 | 1 |
| Rote learning | 0 | 1 | 1 |
| No experience | 13 | 13 | 26 |
| Total | 113 | 129 | 242 |

Code frequencies for general perception theme.
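As a quick consistency check on Table 2, the per-code frequencies can be summed and compared with the category subtotals and the grand total. The sketch below is illustrative only: the counts are copied from Table 2, while the variable and function names are hypothetical and not part of the study's procedure.

```python
# (Russia, Turkey) counts for the four categories, copied from Table 2.
category_totals = {
    "AI tools' features": (56, 60),
    "Assistant role of AI": (27, 43),
    "Negative effects and concern": (17, 13),
    "No experience": (13, 13),
}

# Individual codes under "AI tools' features", copied from Table 2.
features_codes = {
    "Positive": (20, 19),
    "Useful": (17, 21),
    "Beneficial": (4, 6),
    "Easily": (6, 4),
    "Effective": (4, 4),
    "Faster": (4, 4),
    "Successful": (1, 1),
    "Productive": (0, 1),
}


def column_sums(rows):
    """Return (Russia total, Turkey total) for a mapping of code -> counts."""
    russia = sum(r for r, _ in rows.values())
    turkey = sum(t for _, t in rows.values())
    return russia, turkey


# The code-level counts should add up to the category subtotal.
features_sum = column_sums(features_codes)

# The category subtotals should add up to the table's grand totals.
russia_total, turkey_total = column_sums(category_totals)
print(features_sum, russia_total, turkey_total, russia_total + turkey_total)
```

The same check can be repeated for the other categories by listing their codes in the same form.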

The findings in the “AI Tools’ Features” category show that students’ general evaluations of AI are largely positive. In particular, the high frequency of the code “useful” (Russia: 17, Turkey: 21) indicates that students in both countries see AI as a practical tool in their academic studies. Notably, Turkish students used this code somewhat more often. This difference may be explained by a greater readiness of Turkish students to adapt to new technologies or by a greater openness of the Turkish educational system toward the integration of technology.

The assistant role of AI category covers respondents’ opinions about how AI tools simplify their lives or tasks. Respondents from Turkey emphasized the benefits of AI more than those from Russia (Turkey: 43, Russia: 27). According to respondents from Turkey, AI can be beneficial for “Finding information and source” (15), “Assignment” (5), “Writing theses and academic studies” (3), “Time-saving” (2) and “Exploring unknown topic” (1). Participants from Turkey stated, “I generally use it to find information that I cannot access otherwise. It’s quite useful for obtaining organized information for courses” (T_20), “I sometimes used it while making slides for my courses” (T_10) and “I view it positively and use it myself in terms of resources” (B_30). Turkish respondents also mentioned “Expand our world of thought” (2), “Idea generation” (1), “Correcting our writing mistakes” (1), “Creative writing” (1), “Data analysis” (1), “Helping attention and concentration” (1), “Helping daily routine” (1), “Increase logical thinking” (1), “Providing quality education for all” (1), “Reducing the human workload” (1), “Solving problems” (1) and “Working systematically” (1). Participants in Russia underlined that AI can support “Assignment” (9), “Finding information and source” (7), “Exploring unknown topic” (5) and “Time-saving” (3). Student comments on the matter include “Generally useful for my homework and research” (R_36) and “In my experience, ChatGPT can answer every question I ask. I have no trouble finding responses to my questions” (R_25). These results suggest that participants, especially in Turkey, believe that AI can serve several beneficial functions in scholarly communication.

When the “Assistant Role of AI” category is analyzed, Turkish students (43) appear to use AI for more diverse purposes than Russian students (27). In particular, the fact that Turkish students used the code “finding information and source” more often (15 vs. 7) suggests that they use AI more as a tool for searching for information and doing research. Russian students, by contrast, more often use AI for specific tasks such as homework (“assignment”: 9). Differences in the balance between research and assignments in the two countries’ educational systems could help to explain this disparity.

The negative effects and concerns category captures students’ worries and negative experiences with AI. Some participants in both nations expressed worries about the negative effects of AI applications. Participants in Russia mentioned “Nonpermanent-Knowledge” (4), “Not Be Sufficient” (2), “Not Effective” (2), “Should Be Improved” (2), “Eliminate Our Creativity” (1), “Incorrect Information” (1), “Damages the desire for research and learning” (1) and “Reducing the student’s thinking skills” (1). On this matter, they said, “However, it’s also an application that needs improvement, as it sometimes cannot answer questions” (R_24) and “It will not be permanent because it’s readily available information” (R_15). Participants in Turkey mentioned “Reduce Discipline” (2), “Addiction” (1), “Make People Lazy” (1), “Reducing the quality of learning” (1) and “Rote Learning” (1). One Turkish student (T_17) said, “…may reduce the quality of learning”; another (T_33) said, “I think it will lead to rote learning.” These results suggest that some respondents from both nations hold unfavorable opinions and worries about the use of AI.

In the “Negative Effects and Concern” category, Russian students (17) expressed more concerns than Turkish students (13). In particular, the code “nonpermanent-knowledge” appeared only among Russian students (4), which may reflect the importance that the Russian education system attaches to permanent learning. Turkish students’ concerns, on the other hand, focus more on behavioral issues such as loss of discipline (“reduce discipline”: 2).

In both Russia and Turkey, an equal number of respondents (13) indicated that they had no experience with AI tools. This result suggests that some respondents in both nations have not yet used AI tools or have only limited knowledge of them. This finding is unsurprising given the relatively recent emergence of AI applications in academic communication.

Overall, it seems that respondents in Russia and Turkey view and experience AI tools in somewhat different ways, albeit with certain parallels. Respondents in both nations value the advantages of AI tools and believe they can be rather useful for academic communication in several ways. While Turkish respondents concentrated more on the beneficial usage of AI, Russian respondents gave the negative effects and worries some more weight. Furthermore, some respondents in both nations appear to have never used AI tools. These results imply that although opinions and experiences of the use of AI technologies in academic communication may vary between countries, generally there is awareness of the possible effects of these tools.

Effects of AI-generated content on academic communication and collaborations

We generated 16 codes related to the positive effects of using AI on academic communication. Participants in Turkey mentioned the positive impact of AI on academic communication more than those in Russia (Turkey: 31, Russia: 18). In Turkey, codes such as “group working” (8), “positive communication” (7), “better presentation” (4) and “increase interaction” (3) came to the fore. Turkish students described AI’s contribution to communication in statements such as “It strengthens communication among classmates” (T_11) and “It helps to fill our knowledge gaps and makes us more confident in communication” (T_20). In Russia, the codes “facilitates communication” (3), “increases communication” (2) and “facilitating research” (2) were stressed more often. Russian students, for their part, reported that “an introverted individual with communication issues might try to communicate with friends using an AI-generated scenario example” (R_33) and that “it facilitates communication” (R_27). These findings suggest that Turkish participants in particular feel AI could increase interaction and cooperation in academic communication.

Positive perceptions comprise the positive statements students made about AI tools. Participants in both countries hold positive perceptions of AI (Russia: 24, Turkey: 22). “Access information” (Russia: 10, Turkey: 9) and “speed” (Russia: 2, Turkey: 4) are the most frequently mentioned positive perceptions in both countries. In this context, a Russian student stated, “Accessing more resources can lead to more effective communication” (R_14), whereas a Turkish student said, “we can access information more easily, quickly, and reliably” (T_04). Furthermore, participants in Turkey mentioned “useful” (2) and “generating ideas” (2), while participants in Russia underlined “easier” (3) and “positively” (3). A Turkish student said, “…having a language control tool is very useful for students studying in English” (T_14), and a Russian student said, “as I mentioned in my previous answers, [AI is] easy and practical” (R_19). These results show that respondents in both nations recognize the benefits of AI in scholarly communication.

We collected eleven different codes related to students’ negative perceptions of AI. Participants in Turkey had more negative perceptions about AI than those in Russia (Turkey: 20, Russia: 11). In both countries, “incorrect and inconsistent information” (Russia: 2, Turkey: 4), “ready-made solutions” (Russia: 1, Turkey: 4) and “reduce our thinking and research ability” (Russia: 2, Turkey: 3) were the most frequently mentioned negative perceptions. In this context, a Turkish student used the phrase “…producing misleading content… (T_13)” and similarly a Russian student used the phrase “it can provide incorrect and inconsistent information. (R_38).” Furthermore, while participants in Turkey stated that AI is “making people lazy” (2), “limits the individual’s creativity” (2) and “monotony” (1), participants in Russia stated that AI is “not permanent” (1) and “get in the way of people’s work” (1). One Turkish student (T_20) said “…making people lazy and leading them to incorrect information.” Also, Russian student R_08 said that “it can get in the way of people’s work.” These results imply that participants—especially in Turkey—have worries about AI possibly having some detrimental consequences on academic communication.

We identified seven codes under the category of negative effects of using AI in academic communication. In this context, the negative effects of AI on academic communication were mentioned by participants in Russia more frequently than in Turkey (Russia: 10, Turkey: 4). The most frequently mentioned negative impacts in both countries were “unfairness” (Russia: 3, Turkey: 1) and “reduce communication” (Russia: 2, Turkey: 2). A Russian student (R_04) stated “I do not think so; on the contrary, it might reduce communication,” while a Turkish student (T_06) similarly stated “I think such applications could negatively affect my interaction with classmates rather than increase it.” In addition, while in Russia participants emphasized issues such as “plagiarism” (2), “cheating” (1) and “personal information” (1), in Turkey “academic ethical issues” (1) were mentioned. Russian student (R_36) used the expression “it can lead to plagiarism.” The Turkish student (T_13) used the phrase “…producing misleading content and academic ethical issues.” These findings show that participants in both countries are aware that AI can lead to some negative consequences in academic communication.

Respondents from Russia reported a lack of experience with AI more often than those from Turkey (Russia: 14, Turkey: 8). This result suggests that Russian respondents have less experience using AI in academic communication.

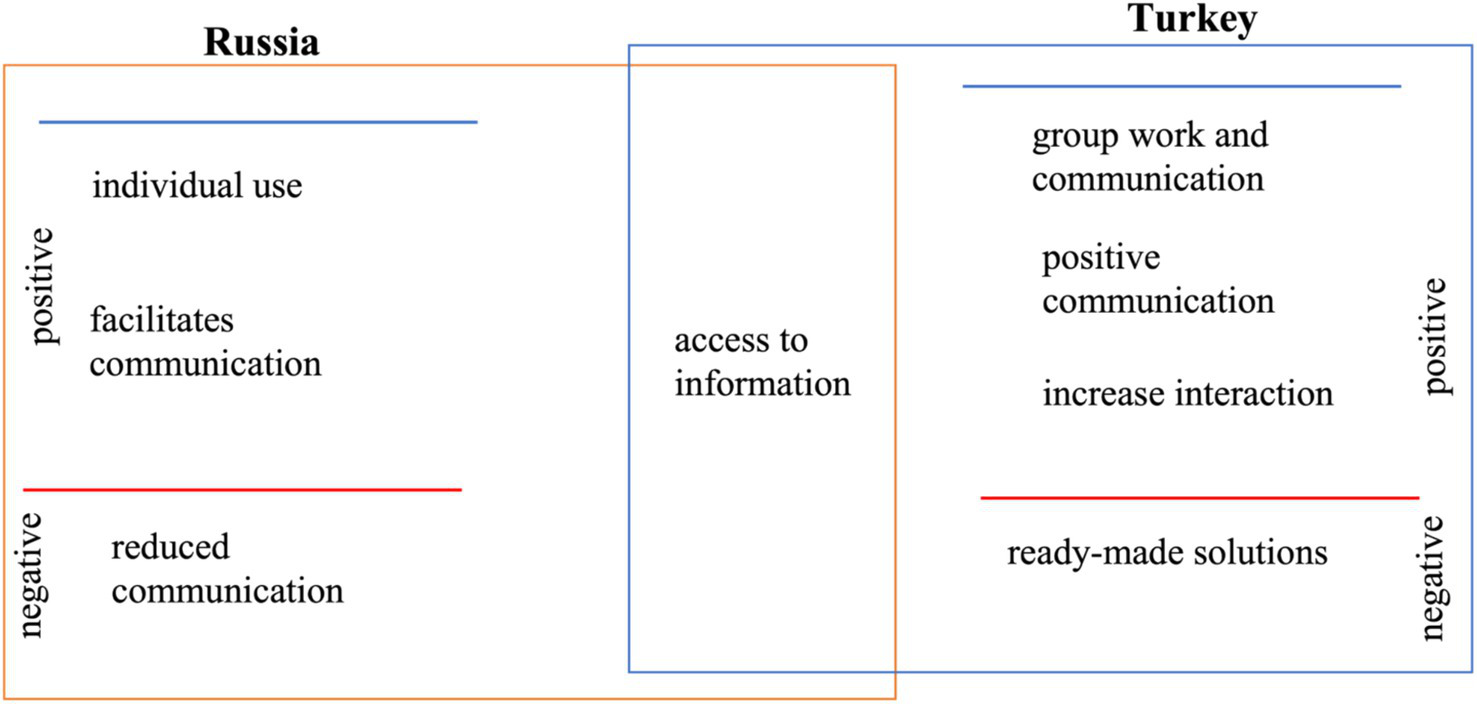

Regarding the effects of AI on academic communication, respondents in Turkey and Russia show both similarities and differences. Turkish participants worry about AI’s negative effects, but they also think it could improve academic collaboration. Russian participants, on the other hand, discussed both the advantages and disadvantages of AI. Participants in both countries recognize that AI can help with academic communication, but they also think that it can lead to “incorrect and inconsistent information” and “ready-made solutions” and can “reduce our thinking and research ability.” Participants in Russia also appear to have less experience with AI. These findings indicate that perceptions of and approaches to AI use in scholarly communication vary between countries. Both the advantages and the disadvantages of using AI in this way should be considered in each country (see Figure 2).

Figure 2

Relation map on academic communication and collaborations based on countries.

When the findings in Table 3 are examined with a focus on cultural context and communication, notable differences emerge. While Turkish students tend to see AI as a tool for group work and communication (“group working”: Turkey 8, Russia 2), Russian students focus more on individual use. This may reflect the tendency toward collective work in Turkish culture. A similar difference is seen in the perception of communication: Turkish students placed more emphasis on “positive communication” (7) and “increase interaction” (3), while Russian students emphasized “facilitates communication” (3). Although students from both countries value access to information (Russia: 10, Turkey: 9), Turkish students are more inclined to share and discuss this information. Cultural differences are also evident in negative perceptions. Turkish students are more concerned about ready-made solutions (4), while Russian students are more worried about reduced communication (2). Inexperience is more prevalent among Russian students (no experience: Russia 14, Turkey 8), which points to cultural differences in technology adaptation. In terms of ethical concerns, Russian students emphasized unfairness and plagiarism more, while Turkish students had a broader view of academic ethics. In terms of communication styles, Turkish students preferred presentation and interaction, while Russian students preferred direct communication, reflecting differences in cultural communication styles. Taken together, these findings suggest that cultural values influence the use of AI in academic communication.

Table 3

| Codes | Russia | Turkey | Total |
|---|---|---|---|
| Positive impact on academic communication | 18 | 31 | 49 |
| Group working | 2 | 8 | 10 |
| Positive communication | 2 | 7 | 9 |
| Facilitates communication | 5 | 0 | 5 |
| Better presentation | 1 | 4 | 5 |
| Increase interaction | 1 | 3 | 4 |
| Effective communication | 1 | 2 | 3 |
| Facilitating research | 2 | 1 | 3 |
| Projects and assignments | 1 | 2 | 3 |
| Better writing quality | 0 | 1 | 1 |
| Brainstorming with friends | 1 | 0 | 1 |
| Developing social skills | 0 | 1 | 1 |
| Impact on online lessons | 1 | 0 | 1 |
| More comprehensible language | 1 | 0 | 1 |
| Solving communication problem | 0 | 1 | 1 |
| Visualize the verbal presentation | 0 | 1 | 1 |
| Positive perceptions | 24 | 22 | 46 |
| Access information | 10 | 9 | 19 |
| Speed | 2 | 4 | 6 |
| Easier | 3 | 1 | 4 |
| Useful | 2 | 2 | 4 |
| Positively | 3 | 0 | 3 |
| Efficiency | 1 | 1 | 2 |
| Generating ideas | 0 | 2 | 2 |
| Answered more quickly and accurately | 1 | 0 | 1 |
| Checking project | 0 | 1 | 1 |
| Facilitate our work | 1 | 0 | 1 |
| Faster information discovery | 0 | 1 | 1 |
| Increase motivation | 0 | 1 | 1 |
| More creativity | 1 | 0 | 1 |
| Negative perception on AI | 11 | 20 | 31 |
| Incorrect and inconsistent information | 2 | 4 | 6 |
| Ready-made solutions | 1 | 4 | 5 |
| Reduce our thinking and research ability | 2 | 3 | 5 |
| Making people lazy | 1 | 2 | 3 |
| Negatively | 1 | 2 | 3 |
| Limits the individual’s creativity | 0 | 2 | 2 |
| Monotony | 1 | 1 | 2 |
| Not permanent | 1 | 1 | 2 |
| Get in the way of people’s work | 1 | 0 | 1 |
| Limit imagination | 0 | 1 | 1 |
| Prevents the acquisition of extra information | 1 | 0 | 1 |
| Negative impacts | 10 | 4 | 14 |
| Reduce communication | 2 | 2 | 4 |
| Unfairness | 3 | 1 | 4 |
| Plagiarism | 2 | 0 | 2 |
| Academic ethical issues | 0 | 1 | 1 |
| Cheating | 1 | 0 | 1 |
| Faulty | 1 | 0 | 1 |
| Personal information | 1 | 0 | 1 |
| No experience | 14 | 8 | 22 |
| Total | 77 | 85 | 162 |

Categories and codes related to AI-generated content on academic communication and collaborations.

Evaluation of AI-generated content for academic integrity and plagiarism

The number of codes on presenting AI-generated content was similar in both nations (Russia: 37, Turkey: 33). Still, participants in Turkey more often felt “comfortable” (Turkey: 8, Russia: 6) and “comfortable with my contribution” (Turkey: 9, Russia: 5) when presenting AI content. A Russian student (R_21) said, “I present the data after checking their source. I feel comfortable if it is precise and accurate,” and a Turkish student (T_10) said, “If I have prepared it really consciously and made honest use of AI, I can present it with confidence.” Only participants in Russia reported being “partially comfortable” (5). The most frequent response in both nations was “uncomfortable” (Russia: 19, Turkey: 16) with presenting AI-generated content. In this regard, a student from Turkey (T_15) said, “I feel uncomfortable, I think I tricked the lecturer,” while a student from Russia (R_03) said, “I am not comfortable that our entries are inspected in general.”

Respondents in Turkey mentioned academic integrity issues more often than those in Russia (Turkey: 39, Russia: 30). In both countries, “plagiarism” (Russia: 11, Turkey: 15) was the most frequently mentioned problem. For example, a student from Turkey (T_24) stated, “There is absolutely no honesty in taking advantage of the whole way; it is also plagiarism,” while a student from Russia (R_03) stated, “Plagiarism, it’s quite common.” In Turkey, the participants also emphasized issues such as “be honest” (6), “not appropriate for academic assignments” (4) and “need policies and rules” (3), while in Russia issues such as “non-honest” (3) and “lack of effort” (3) were mentioned. In this context, a Turkish student (T_15) stated, “Even though I try not to do it, many people of our generation do it and violate the rights of other students, and I think there should be some rules to prevent this.” A Russian student (R_30) used the expression “need to be honest and academically oriented.”

Participants in Turkey mentioned content quality issues more than those in Russia (Turkey: 22, Russia: 16). While “inconsistencies, and missing information” (11) was the most frequently mentioned problem in Turkey, “ready-made information” (6) came to the fore in Russia. Problems such as “monotony” (Russia: 1, Turkey: 2) and “unproductive” (Russia: 2, Turkey: 1) were expressed in both countries. In this context, a Russian student (R_07) said, “[AI] can combine unclear and meaningless arguments, which leads to an inconsistent and poor desired outcome,” while a Turkish student (T_33) said, “I think it is not accurate information.”

Participants in both nations expressed comparable worries regarding ethical and legal aspects of AI use (Russia: 8, Turkey: 8). Russia highlighted “privacy and data concerns” (4) more frequently, while Turkey highlighted “ethical issues” (4). In this regard, a Turkish student (T_22) said “Use of AI in important assignments or presentations is not appropriate as it is unethical.” One Russian student (R_13) said “We may experience some privacy issues.” Both Russia and Turkey voiced worries about “use for malicious purposes” (Russia: 2, Turkey: 1).

Respondents in Russia hold a more pessimistic perception of AI than those in Turkey (Russia: 22, Turkey: 16). The most cited unfavorable opinions in both Russia and Turkey are “laziness” (Russia: 6, Turkey: 6) and “losing profession” (Russia: 3, Turkey: 3). One Russian student (R_23), for instance, said, “It increases the tendency to be lazy.” A Turkish student (T_25) said, “I think artificial intelligence tools can overtake manpower.” While issues such as “hinder critical thinking” (2) and “accepting without critiques” (2) were stressed in Turkey, participants in Russia also expressed opinions such as “negatively” (4) and “restricts our research ability” (3). Accordingly, a student from Turkey (T_23) said, “Loss of critical or creative thinking in users,” while a student from Russia (R_05) said, “Excessive use negatively affects a person’s own learning.”

Respondents in Russia exhibited marginally more favorable attitudes toward AI than those in Turkey (Russia: 18, Turkey: 15). In Turkey, the most commonly expressed favorable opinions were “get support” and “acceptable,” while in Russia, “no concern” and “positive” were the most prominent. In this context, a Russian student (R_22) stated that they currently have no concerns or anxieties. A Turkish student (B_13) expressed that while AI can offer assistance, they retain the authority to make judgments and assess the outcomes.

Participants in both countries think that the suitability of AI for academic purposes depends on the context (Russia: 12, Turkey: 10). In Russia, “suitable” (5) and “suitable for academic purposes” (4) were emphasized more frequently, while in Turkey, opinions such as “be sufficient for objective issues” (2) and “depends on purposes” (2) were expressed. In this regard, a Russian student stated, “It may be appropriate if we offer different ideas.” A Turkish student (T_02) stated, “It’s crucial to consider the intended use of this content. A presentation for a lecture is appropriate, while a paper or article is not.”

In both countries, participants mentioned the impact of AI on the learning process (Russia: 8, Turkey: 9). “Access information” (Russia: 4, Turkey: 5) and “easier” (Russia: 1, Turkey: 2) are positive impacts mentioned in both countries. However, participants in Russia were more likely to mention “no-knowledge” (8). T_03, a Turkish student, expressed that the platform is highly convenient for efficiently retrieving material from previous studies as well as fresh findings. A Russian student (R_17) observed, “It is beneficial for us when we need to compose a summary or retrieve information…”

Regarding cultural background, the findings in Table 4 reveal notable differences between the two nations in how AI-generated material is presented. Turkish students are more at ease presenting such material (Comfortable: Turkey 17, Russia 13), whereas only Russian students reported being partially comfortable (Partially comfortable: Russia 5, Turkey 0). In the perception of academic honesty, it is noteworthy that Turkish students emphasize this issue more (Turkey 39, Russia 30), especially the issue of being honest (Be honest: Turkey 6, Russia 2). Cultural differences are also evident in terms of content quality, with Turkish students more concerned about inconsistency and incomplete information (Turkey 11, Russia 3), while Russian students see the use of ready-made information as more problematic (Russia 6, Turkey 4). We observe a similar divergence in ethical and legal concerns. Russian students were more concerned about privacy (privacy concerns: Russia 4, Turkey 2), while Turkish students were more worried about ethical issues (ethical issues: Turkey 4, Russia 1). Both groups shared a concern about laziness, with six mentions in each group, while Russian students were more concerned about their research abilities being limited. These differences may reflect the Turkish educational system’s emphasis on group work and sharing and the Russian education system’s emphasis on individual work and originality. In addition, these findings point to differences in cultural attitudes toward technology adaptation, the concept of academic honesty, and data security.

Table 4

| Codes | Russia | Turkey | Total |
|---|---|---|---|
| Presenting AI content | 37 | 33 | 70 |
| Comfortable | 13 | 17 | 30 |
| Comfortable | 6 | 8 | 14 |
| Comfortable with my contribution | 5 | 9 | 14 |
| Normal | 2 | 0 | 2 |
| Partially comfortable | 5 | 0 | 5 |
| Moderate comfort | 3 | 0 | 3 |
| Quoting from an article | 1 | 0 | 1 |
| Similarity license allows | 1 | 0 | 1 |
| Uncomfortable | 19 | 16 | 35 |
| Academic integrity issues | 30 | 39 | 69 |
| Plagiarism | 11 | 15 | 26 |
| Be honest | 2 | 6 | 8 |
| Not appropriate for academic assignments | 3 | 4 | 7 |
| Non-honest | 3 | 3 | 6 |
| Lack of effort | 3 | 1 | 4 |
| Need policies and rules | 0 | 3 | 3 |
| Effortless | 1 | 2 | 3 |
| Cheating | 1 | 1 | 2 |
| Copy and paste | 1 | 1 | 2 |
| Giving citations | 1 | 1 | 2 |
| Not appropriate to present AI output | 0 | 2 | 2 |
| Unfairness | 2 | 0 | 2 |
| Confirm the information | 1 | 0 | 1 |
| Destroys academic honesty | 1 | 0 | 1 |
| Content quality issues | 16 | 22 | 38 |
| Inconsistencies and missing information | 3 | 11 | 14 |
| Ready-made information | 6 | 4 | 10 |
| Monotony | 1 | 2 | 3 |
| Unproductive | 2 | 1 | 3 |
| Decrease originality | 0 | 2 | 2 |
| Do not trust | 1 | 1 | 2 |
| Everyone’s homework is the same | 1 | 1 | 2 |
| Lack of references | 1 | 0 | 1 |
| Rewording | 1 | 0 | 1 |
| Ethical and legal concerns | 8 | 8 | 16 |
| Privacy and data concerns | 4 | 2 | 6 |
| Ethical issue | 1 | 4 | 5 |
| Use for malicious purposes | 2 | 1 | 3 |
| Personal security | 1 | 0 | 1 |
| Property rights | 0 | 1 | 1 |
| Negative views | 22 | 16 | 38 |
| Laziness | 6 | 6 | 12 |
| Losing profession | 3 | 3 | 6 |
| Negatively | 4 | 0 | 4 |
| Prevent students from thinking | 3 | 1 | 4 |
| Hinder critical thinking | 1 | 2 | 3 |
| Restricts our research ability | 3 | 0 | 3 |
| Accepting without critiques | 0 | 2 | 2 |
| Reduction in learning | 2 | 0 | 2 |
| Cannot be sufficient for issues such as social norms | 0 | 1 | 1 |
| Loss of responsibility | 0 | 1 | 1 |
| Positive views | 18 | 15 | 33 |
| Get support | 3 | 6 | 9 |
| Acceptable | 3 | 5 | 8 |
| No concern | 5 | 3 | 8 |
| Positive | 5 | 0 | 5 |
| Useful | 2 | 1 | 3 |
| Suitability depends on context | 12 | 10 | 22 |
| Suitable for academic purposes | 4 | 3 | 7 |
| Suitable | 5 | 1 | 6 |
| Proper use | 2 | 1 | 3 |
| Be sufficient for objective issues | 0 | 2 | 2 |
| Depends on person | 1 | 1 | 2 |
| Depends on purposes | 0 | 2 | 2 |
| Impact on learning process | 8 | 9 | 17 |
| Access information | 4 | 5 | 9 |
| Easier | 1 | 2 | 3 |
| Better explanations | 1 | 0 | 1 |
| Getting help | 1 | 0 | 1 |
| Helping homework | 0 | 1 | 1 |
| Integrating course | 0 | 1 | 1 |
| Saves time | 1 | 0 | 1 |
| No-knowledge | 8 | 1 | 9 |

Categories and codes on evaluation of AI-generated content for academic integrity and plagiarism.
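Table 4 lists each category row ahead of the codes that belong to it, so the category counts can be read as sums of the code counts beneath them. The short Python sketch below illustrates that arithmetic for two categories. The grouping of rows into categories is an assumption inferred from the way the counts add up, and the names `table4`, `sum_codes`, and `check_totals` are illustrative rather than part of the study's analysis procedure.

```python
# Counts copied from Table 4 as (Russia, Turkey) pairs.
# Assumption: the nesting of codes under categories is inferred from the
# row order and the sums; only two categories are shown for brevity.
table4 = {
    "Presenting AI content": {
        "total": (37, 33),
        "codes": {
            "Comfortable": (13, 17),
            "Partially comfortable": (5, 0),
            "Uncomfortable": (19, 16),
        },
    },
    "Ethical and legal concerns": {
        "total": (8, 8),
        "codes": {
            "Privacy and data concerns": (4, 2),
            "Ethical issue": (1, 4),
            "Use for malicious purposes": (2, 1),
            "Personal security": (1, 0),
            "Property rights": (0, 1),
        },
    },
}


def sum_codes(codes):
    """Add up the (Russia, Turkey) counts of all codes in one category."""
    russia = sum(r for r, _ in codes.values())
    turkey = sum(t for _, t in codes.values())
    return russia, turkey


def check_totals(table):
    """Map each category to True if its code counts add up to its reported total."""
    return {
        name: sum_codes(cat["codes"]) == cat["total"]
        for name, cat in table.items()
    }


if __name__ == "__main__":
    for name, ok in check_totals(table4).items():
        print(f"{name}: {'consistent' if ok else 'mismatch'}")
```

The same check can be extended to the remaining categories by adding their rows to `table4`. Because the two groups differ in size (38 Russian and 33 Turkish students), raw counts are best compared with that difference in mind.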

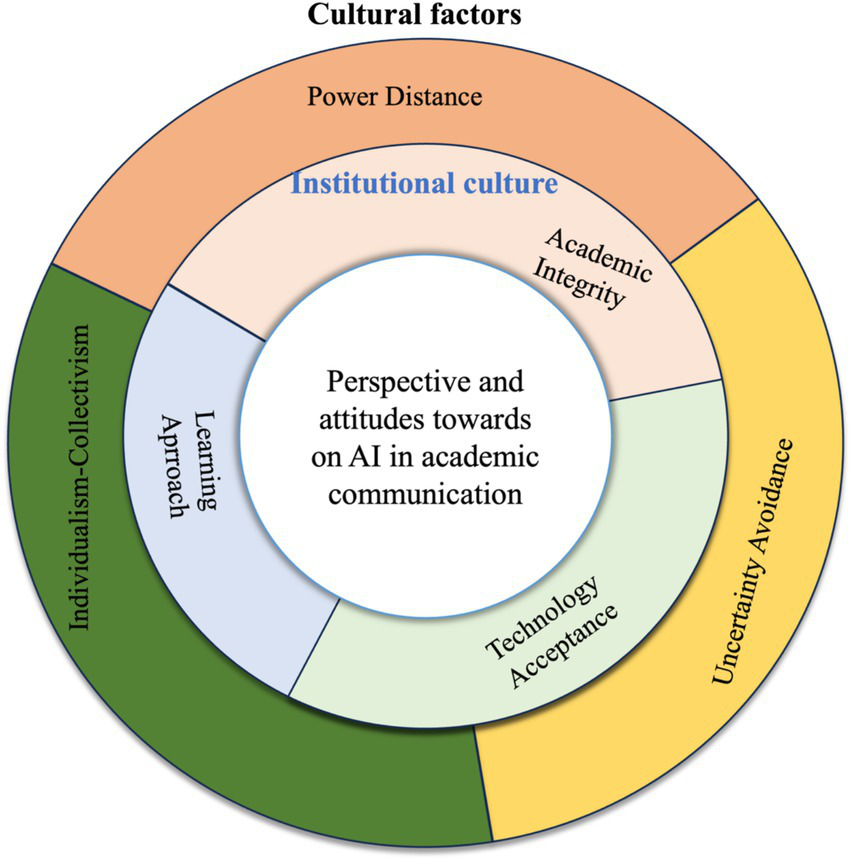

We observed significant cultural differences in the attitudes and experiences of Turkish and Russian students toward the use of AI. Turkish students tend to see AI as a means of group work and communication, which reflects the collectivist nature of Turkish culture. Information sharing and discussion are more welcome among Turkish students, and their approach to using AI is also more relaxed and entrepreneurial. Conversely, Russian students prioritize individual use. Their tendency to avoid uncertainty makes them more wary of AI, and they are more concerned about data security and privacy issues. Academic honesty is an important issue in both cultures. However, while Turkish students look at the issue from a broader perspective, Russian students express more specific concerns. Turkish students’ more active use of AI and their evaluation of it in various fields indicate a low uncertainty avoidance tendency. Russian students’ emphasis on individual learning and their cautious approach to the use of AI reflect high uncertainty avoidance tendencies. These findings are consistent with the collectivism–individualism and uncertainty avoidance dimensions of Hofstede’s cultural dimensions theory.

Discussion

Our study reveals complex relationships between AI technology, academic communication, and culture in Turkish and Russian university settings. Students from the two countries share some perceptions of AI tools and ways of using them in academic communication and differ in others. These similarities and differences are shaped by culture and by how people view technology.

General perceptions and experiences

In terms of general perceptions and experiences, students from both Turkey and Russia recognized the potential benefits of AI in increasing the accessibility of information and streamlining academic tasks. This finding is consistent with previous research highlighting the positive impact of AI on student learning experiences (Aldosari, 2020; Jaboob et al., 2024; Yan et al., 2024). According to Tierney et al. (2025) and Stöhr et al. (2024), university students tend to hold positive views of the use of AI. From the perspective of the UTAUT model, this can be associated with the performance expectancy dimension. Acosta-Enriquez et al. (2024) and Sergeeva et al. (2025) demonstrate that the perceived performance expectancy of AI technology is a strongly positive factor: the more positive students’ performance expectations, the more likely they are to continue using it. Turkish students have a more positive attitude toward the collaborative potential of AI. Kim and Lee (2023) showed that student-AI collaboration has a large impact on creativity in content, expressivity in expression, and public utility in effectiveness, and that these effects varied with how students felt about AI or how skilled they were at drawing. Russian students, by contrast, placed more emphasis on privacy and data security concerns. Cultural differences in uncertainty avoidance (Ismatullaev and Kim, 2024) and in attitudes toward using technology (Grassini and Ree, 2023; Sarwari et al., 2024) can explain these differences in focus.

Impact on academic communication and collaborations

The study’s findings demonstrate that AI exerts a substantial influence on academic communication and partnerships among university students. According to previous studies (Hohenstein et al., 2023; Sarwari et al., 2024), AI and technologies based on it have sped up communication and the gathering of information, created new ways for people to interact, and especially helped people who are lonely connect with each other. Specifically, Turkish participants highlighted the favorable impacts of AI on collaborative tasks, interpersonal engagement, and the overall standard of communication. Previous studies support this finding, showing that AI helps to create a collaborative learning environment (Porter and Grippa, 2020; Son and Jin, 2024). Students primarily use it as an assistant (e.g., reviewing and preparing for lectures, assisting with assignments), while educators use it as a content production assistant (e.g., personalizing content, writing lecture notes) (Clos and Chen, 2024). However, Russian students are more concerned about the negative impacts of AI, such as reduced critical thinking and a lack of originality. This assessment may stem from cultural differences in how students learn and what they value in education (Keller, 2009). The performance expectancy dimension of the UTAUT model is directly related to these findings. In particular, the positive statements emphasized by Turkish students indicate that they have high performance expectations. Russian students’ positive perceptions, such as easy access to information, can also be considered factors supporting performance expectancy. In this respect, both the positive and the negative attitudes of the students show the relevance of the UTAUT model for explaining student motivation and adoption behaviors.

Evaluation of AI-generated content

The findings of this study reveal that there are significant cultural differences in the evaluation of AI-generated content in terms of academic integrity and plagiarism. Participants in both Russia and Turkey highlighted the risks and opportunities of using AI in the academic environment, but these considerations are shaped by different priorities and approaches.

The findings show that respondents from Turkey approach the issue of academic integrity and plagiarism in the use of AI from a broader perspective. In particular, themes such as the emphasis on “being honest” and “the necessity of policies and rules” are prominent in Turkey. This is in line with the discussions by Foltynek et al. (2023) and Karkoulian et al. (2024) on the need to define the ethical framework of AI use. Goel and Nelson (2024) offer a similar perspective, arguing that the principles of academic integrity need to be reinterpreted in the face of emerging technologies. In Russia, academic integrity concerns are expressed in terms of the risks of slipping into “ready-madeness,” students losing the ability to think originally, and cheating. According to Stöhr et al. (2024), academic integrity and ethical issues stand out among students’ concerns about the use of AI. In that study, 61.9% of the students surveyed considered the use of AI-based chatbots in assignments and exams a form of cheating. Moreover, a majority of respondents (54.2%) expressed concerns about the potential impact of these technologies on future learning processes. These concerns centered on ethics, on whether AI tools undermine educational goals, and on how they would affect academic achievement. However, students were generally opposed to banning the use of AI altogether and recognized that these tools can provide certain benefits in education.

In this study, Turkish students are more concerned about inconsistency and incomplete information from AI, while Russian students find the use of “ready-made information” more problematic. This reflects differences in the understanding of learning in the two countries. It can be said that Turkish students try harder to internalize the information presented by AI in both group work and individual research, whereas Russian students see “ready-made” information as a threat to their own research process. Indeed, Cotton et al. (2024) similarly warn that the convenience offered by technological tools may weaken students’ research skills.

Although ethical and legal concerns were raised in both countries, Russian participants focused more on “privacy and data security” concerns, while Turkish participants drew attention to the potential for “ethical violations.” This distinction reveals that, as Bokhari and Myeong (2023) emphasize, the approach to the use of technology may vary in the educational cultures and legislative structures of different countries. Both Turkish and Russian students share a common concern about “malicious use,” underscoring the need for social and academic spheres to monitor technological developments.

Analysis of the findings within the framework of Hofstede’s cultural dimensions theory reveals striking differences. Participants in Turkey tend to see AI as a tool for sharing and group work with a more “collective” attitude. Moreover, in Turkey, there is relatively less uncertainty avoidance towards the use of AI for different purposes, which enables participants to experience AI in a more relaxed and “entrepreneurial” way. In Russia, on the other hand, the emphasis on individual work is characterized by a higher sensitivity to uncertainty (high uncertainty avoidance) and prioritization of data security concerns. Thus, Russian students are more likely to use AI with caution, worry about losing their own research skills, and have privacy concerns. These results support Hofstede’s collectivism–individualism and uncertainty avoidance dimensions.

The most frequently expressed issue in both countries’ negative perceptions of AI is that it causes laziness. Additionally, Russian students emphasize concerns such as “limitation of research ability” and “negative impact,” while Turkish students emphasize the risks of “preventing critical thinking” and “accepting without questioning.” However, it is also noteworthy that “not feeling fear or anxiety” and “finding it positive” are more common in Russia. In Turkey, on the other hand, the perceptions of “receiving support” and “acceptability” are slightly more prominent. This contrast reflects nuances in how students in each country position AI technologies in education and integrate them into their personal learning practices.