- Faculty of Information Technology, Monash University, Clayton, VIC, Australia

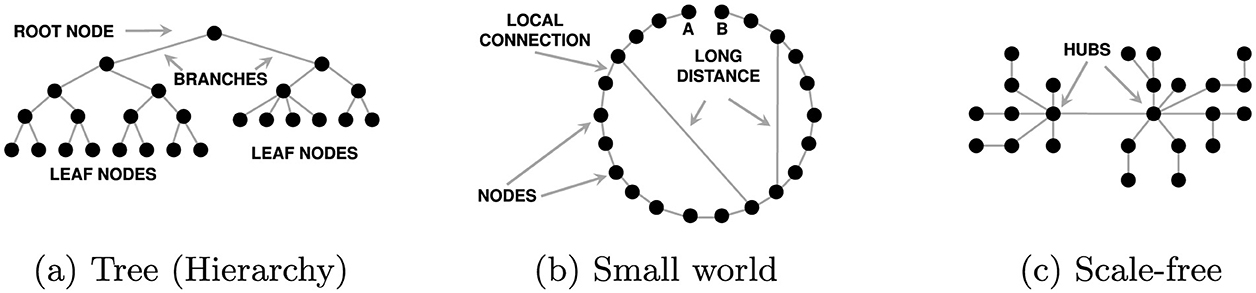

Cognitive dysfunction, and the resulting social behaviours, contribute to major social problems, ranging from polarisation to the spread of conspiracy theories. Most previous studies have explored these problems at a specific scale: individual, group, or societal. This study develops a synthesis that links models of cognitive failures at these three scales. First, cognitive limits and innate drives can lead to dysfunctional cognition in individuals. Second, cognitive biases and social effects further influence group behaviour. Third, social networks cause cascading effects that increase the intensity and scale of dysfunctional group behaviour. Advances in communications and information technology, especially the Internet and AI, have exacerbated established problems by accelerating the spread of false beliefs and false interpretations on an unprecedented scale, and have become an enabler for emergent effects hitherto only seen on a smaller scale. Finally, this study explores mechanisms used to manipulate people's beliefs by exploiting these biases and behaviours, notably gaslighting, propaganda, fake news, and promotion of conspiracy theories.

1 Introduction

Human society has long been plagued by dysfunctional behaviours that arise from faulty thinking and social interactions. Some of the most widespread cognitive failures occur within groups of people. Mackay (1841) painted a vivid picture of “popular delusions and the madness of crowds.”

A major social problem of our time is that digital media and Artificial Intelligence (AI) are exacerbating social problems that arise from dysfunctional thinking and perception. In particular, they allow conspiracy theories, and other false belief systems, to spread rapidly and create social problems (Shao et al., 2018; Bovet and Makse, 2019; Treen et al., 2020; Himelein-Wachowiak et al., 2021; Fisher, 2022; Ruffo et al., 2023).

Social media, notably Facebook and Twitter, had an immense impact on public opinion during the “Arab Spring” revolutions (Wolfsfeld et al., 2013). During the Tahrir Square protests, the regime was unable to control the information shared on social media, which played a pivotal role in shaping both the protests and their outcomes (Tufekci and Wilson, 2012). The powerful and pervasive influence of social media in shaping public opinion and driving activism has been corroborated by many other researchers (Tufekci, 2017; Bessi and Ferrara, 2016; Zhuravskaya et al., 2020; Gerbaudo, 2012; Lotan et al., 2011). Conversely, Morozov (2011) described how authoritarian regimes exploit social media for distributing malign propaganda, and for monitoring public attitudes and reactions.

Many faults in social cognition and decision-making arise from the ways in which individuals process and apply information about themselves, about other individuals, and about social situations. A gap in our understanding of how major social problems arise is the lack of identified mechanisms by which failures in cognition translate into social behaviour. Identifying how such links operate across scales also exposes gaps in each of the fields of research involved.

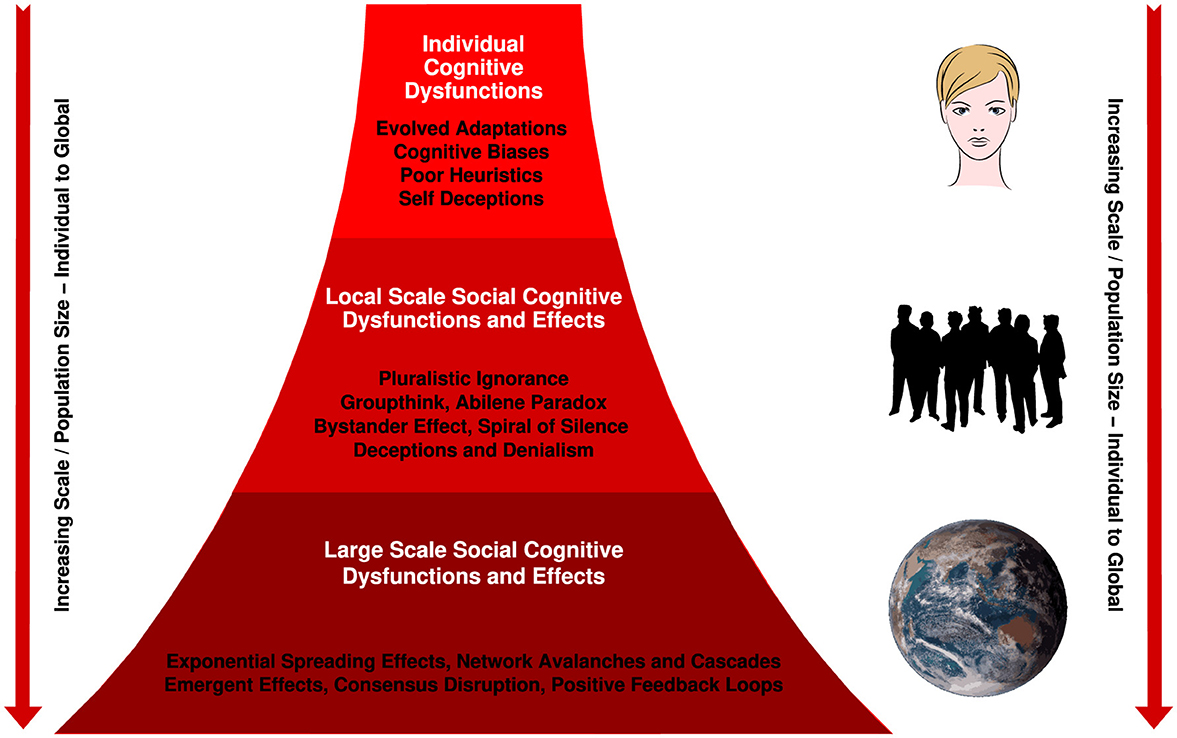

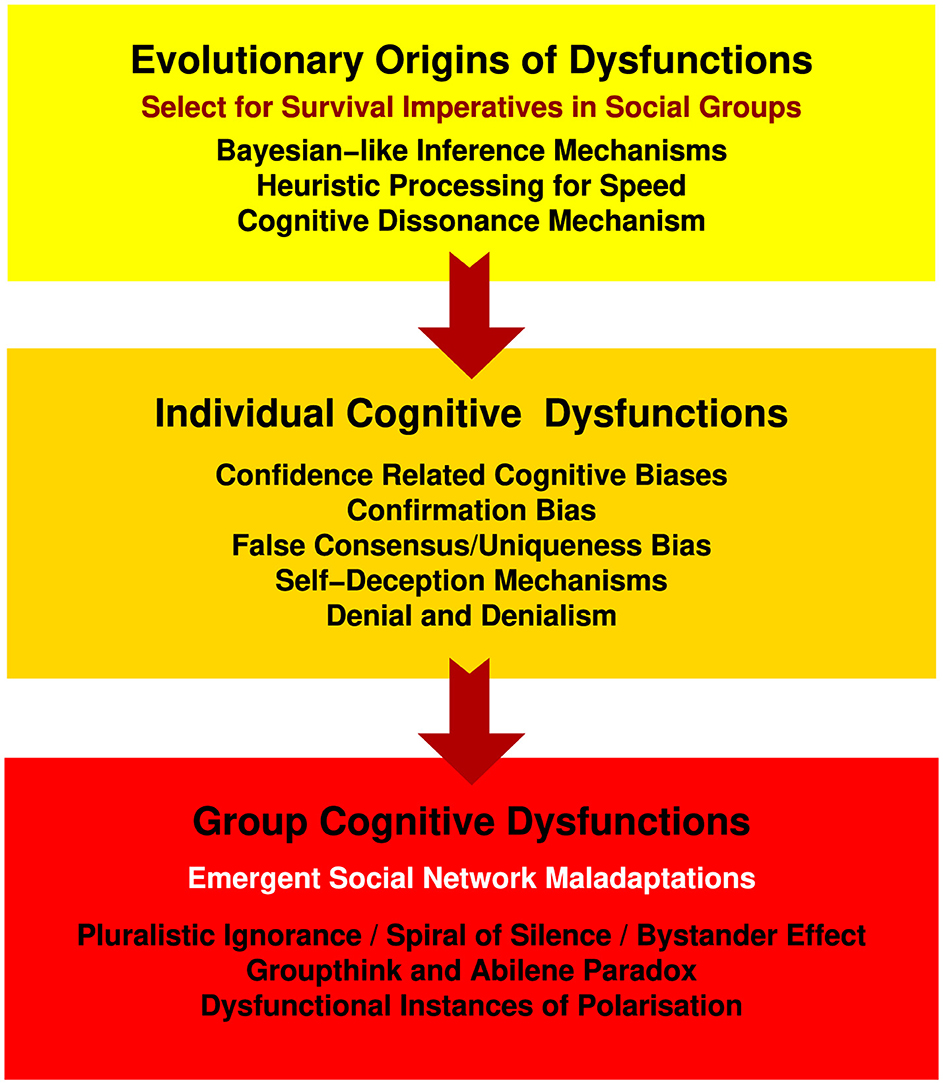

To begin closing the above gaps, this study presents a model to show how cognitive dysfunction and biases in individuals lead to dysfunctional effects within social groups and networks. We then show how modern networking technology magnifies and accelerates these effects, leading to large-scale dysfunctional behaviour (Figure 1). The scales span individuals, small social groups, and very large social groups, which may encompass entire societies. Underlying our model are insights gained from complex network theory and multi-agent models, as well as from research into the effects of modern communications, including social media.

Figure 1. Digital media with their speed and global footprint expand the populations exposed to the damaging social impacts of dysfunctional thinking.

The main points in our argument are as follows:

1. Evolved drives and simplifying mechanisms combine with cognitive limitations, creating the potential for cognitive errors and biases by individuals (Section 2). Combined with cognitive dissonance, they lead to fundamental dysfunctions, notably confidence-related biases (Section 2.3), confirmation bias (Section 2.4), false consensus and uniqueness bias (Section 2.5), self-deception (Section 2.6), and denial (Section 2.7).

2. Cognitive dysfunctions spread within groups, especially by peer-to-peer interactions (Section 3). Several mechanisms enable cognitive dysfunctions to propagate across social networks (Section 3.1), including percolation and cascades, and are constrained by network topology. The spread of cognitive dysfunction through social groups leads to malign social effects, such as Pluralistic Ignorance (Section 3.2), groupthink and the Abilene Paradox (Section 3.3).

3. Modern communications, especially digital networks and media, accelerate and expand the spread of false beliefs and interpretations, and enable social cognitive errors to spread across large populations (Section 4). Digital networks enable the emergence of phenomena that were previously not possible, including the exploitation of dysfunctions across large populations (Section 4.1). New technology, especially Artificial Intelligence (AI), can enhance and accelerate adverse impacts produced by digital networks (Section 4.2). These include social harms that emerge by accident such as misinformation, pluralistic ignorance and groupthink, and those that arise by intent through malign exploitation.

4. Biases and cognitive dysfunctions are vulnerabilities that have led to a plethora of methods evolving to permit malign exploitation (Section 5). These range from the distribution of disinformation, misinformation, malinformation, and false interpretations, to gaslighting, propaganda, post-truth, and fake news (Section 5.1), through to denialism, conspiracy theories, and disruptive propaganda (Section 5.2).

In summary, we argue that advances in digital technology and AI permit the formation of immense, highly connected social networks. Together they create conditions for rapid emergence of large-scale, dysfunctional group behaviours and increase the risk of malign exploitation.

2 Cognitive dysfunction and biases

Cognitive limitations, and evolved idiosyncrasies in the way humans process information, underlie social cognitive dysfunctions. In turn, they lead to many of the large-scale effects observed in social networks, especially when they are interconnected via digital media.

Here, the term cognitive dysfunction means any loss or failure of ability to perceive or interpret the world in the intended or expected manner. The term cognitive effect means any psychological mechanism that alters a person's cognition and resultant behaviour, thus effecting an internal state change. Cognitive effects include limitations and evolved idiosyncrasies in the way humans process information. Behaviour is defined here to be the reactions of individuals or groups to stimuli that include the social environment as it is perceived, or misperceived.

2.1 Evolutionary origins of cognitive errors and biases

Through evolution, humans are adapted to have drives and behaviours that ensure survival. They include affiliation with a group, status and reproductive success (Maslow, 1943; Kenrick et al., 2010). These deep-seated drives were essential for survival in the environments where they evolved. However, they often lead to dysfunctional cognition and behaviour (Maner and Kenrick, 2010) and are poorly adapted to modern societies (Li et al., 2018; Del Giudice, 2018).

Expanding and updating the work of Maslow (1943), Kenrick et al. (2010) showed that behaviours and adaptations evolved to maximise the probability of genome survival within social groups, a common strategy in many species. Survival in social groups favours adaptations for fast reaction times, as well as social identity maintenance and the imperative to improve status within a group.

When processing many stimuli to interpret and react to a complex situation, a common approach is to simplify the problem by trading away reasoning accuracy for speed (Kahneman and Tversky, 1972; Tversky and Kahneman, 1974; Kahneman, 2011). But mechanisms to simplify complicated problems often lead to cognitive errors. An example is the tendency to ascribe deliberate purpose to unexplained natural events, and to anthropomorphize complex causes behind unpredictable events (Epley et al., 2008). The search for motivated causes is seen in many contexts, such as witch hunts (Mackay, 1841), accident investigations (Green, 2014), and conspiracy theories. It appears to have its origins in our animal roots. Chimpanzees, for instance, rage at storms as though they are confronting rivals (Goodall, 1999).

Below, we discuss the most problematic cognitive effects and biases, and show how these are related to survival imperatives.

2.2 Cognitive limitations

Underlying most dysfunctional cognition and behaviours are deep-rooted mechanisms that are evident both in the way humans process information, and in behaviours (Kenrick et al., 2010; Maner and Kenrick, 2010).

The human brain and senses have limited capacity for interpreting complex information (Miller, 1956; Li et al., 2022), so environmental complexity makes it impossible to analyse every scenario quickly, and in detail. This is especially important when responding to immediate threats to survival. One consequence is that people respond to issues that they can act upon immediately, but often ignore long-term problems that they have not experienced directly, such as climate change or sea-level rise (Graham et al., 2013).

As noted earlier, an important adaptation is to use simplifying mechanisms (Halford et al., 1998). Evolution often favours solutions that are adequate rather than perfect. To cope with cognitive complexity, agents achieve “fast thinking” by using heuristics and other shortcuts that skip over often complex chains of causation (Tversky and Kahneman, 1974, 1983; Kahneman and Tversky, 1996).

Recent work shows that Bayesian-like mechanisms are central to human cognition (Zhong, 2022; Pilgrim et al., 2024). In the Bayesian model, prior knowledge is updated from observed evidence, so that subjective prior beliefs (or probability distributions) become posterior beliefs (or probability distributions). As genuine Bayesian inference is cognitively intensive and thus slow, simplified forms of Bayesian inference are now seen as a representative model. Simplification yields a faster result but sacrifices accuracy, so evolution for fast reaction times produces an implicit trade-off between accuracy and cognitive load (Griffiths et al., 2008; Bowers and Davis, 2012; Lake et al., 2015).
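The updating step described above can be illustrated with a minimal sketch of a single Bayesian update over two competing hypotheses. The scenario, the hypothesis names, and all probabilities are hypothetical values chosen only for illustration; they are not taken from the cited studies.

```python
def bayes_update(prior, likelihood):
    """Return the posterior over hypotheses.

    prior:      dict mapping hypothesis -> P(hypothesis)
    likelihood: dict mapping hypothesis -> P(evidence | hypothesis)
    """
    unnormalised = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalised.values())
    if evidence == 0:
        raise ValueError("evidence has zero probability under every hypothesis")
    return {h: p / evidence for h, p in unnormalised.items()}


# Hypothetical example: an agent hears a rustle in the grass and
# initially considers a predator unlikely.
prior = {"predator": 0.1, "wind": 0.9}
likelihood_of_rustle = {"predator": 0.8, "wind": 0.2}

posterior = bayes_update(prior, likelihood_of_rustle)
print(posterior)
```

Even this exact update requires a likelihood for every hypothesis under consideration, which hints at why approximate, cheaper forms of updating are attractive when hypotheses are numerous and time is short.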

Heuristic “fast thinking” reasoning mechanisms, often labelled “bounded rationality” or “rules of thumb,” can further improve reaction times, but often with further losses in accuracy. Poor choices of heuristics can result in dysfunctional cognition (Tversky and Kahneman, 1983; Gigerenzer, 1991; Verschuere et al., 2023). For instance, Orchinik et al. (2023) found that an adaptive heuristic could be fooled, where false messages were accepted when most messages were truthful, and vice versa. From an early age, children learn to filter random signals and remember contexts with immediate impact. The chief mechanisms are schemas: cognitive frameworks (“recipes”) for interpreting and responding to the environment (Piaget, 2013). Association plays a role in the assimilation of new experiences (Takeuchi et al., 2022), so existing schemas are reinforced and become generalised. Once a context is framed by a schema, heuristics may be used to infer consequences.

When observations of reality are inconsistent with a person's internal beliefs or expectations, Cognitive Dissonance occurs. When confronting cognitive dissonance, individuals seek to avoid the resulting discomfort (Festinger, 1962). They alter either their actions, their beliefs, or their interpretations (Harmon-Jones and Mills, 2019). The resulting inconsistency, and its psychological effects, underpin a number of dysfunctional biases and effects, such as confidence-related biases, self-deception, and denial behaviour (all discussed later) (Ramachandran, 1996; McGrath, 2017). Cognitive dissonance is a strong causal factor in confirmation bias (Festinger, 1964; Jonas et al., 2001; Knobloch-Westerwick et al., 2020; Miller and Cabell, 2024).

The faculty for cognitive dissonance, and its avoidance, are evolved survival mechanisms (Egan et al., 2007; Kaaronen, 2018). The ability to recognise and act upon situational changes that could be dangerous aids survival, and acting in the presence of confusing cognitions may also aid survival (Egan et al., 2007; Harmon-Jones et al., 2017).

2.3 Confidence-related biases

A well-studied problem in psychology and other literature is overconfidence, which some consider to be the most important cognitive bias (Kahneman, 2011; Moore, 2018). Moore and Healy (2008) identified three distinct definitions of overconfidence: overestimation of one's own performance (Kruger and Dunning, 1999); overestimation of one's own performance relative to others; and overconfidence in the accuracy of one's own beliefs (which they termed “over-precision”). They argued that:

... On difficult tasks, people overestimate their actual performances but also mistakenly believe that they are worse than others; on easy tasks, people underestimate their actual performances but mistakenly believe they are better than others.

Several studies have argued that the overconfidence error is an artefact of human Bayesian-like belief updating when information is uncertain (Griffiths and Tenenbaum, 2006; Griffiths et al., 2008; Moore and Healy, 2008).

Overconfidence biases can explain why agents over- or underestimate group opinions, and act so readily on these false perceptions of reality. They also explain how groupthink (see later) leads to wrong decisions being made with high confidence, without assessing risk, and without reviewing alternatives. In both cases, individuals fail to recognise their incompetence to perform the task and actively exacerbate a dysfunctional group interaction. Similarly, where the Dunning-Kruger effect (see later) is in play, cognitive dissonance may not arise if the afflicted individual is unable to apprehend differences between observations and expectations of reality.

Misapprehensions of own competence can manifest in other forms. A notable example is Illusory Superiority (Taylor and Brown, 1988), a cognitive bias in which people assume superiority, leading to dysfunctional behaviours that make them feel stronger. One result is that trying to argue against people's entrenched ideas can have the opposite effect of reinforcing them (Nyhan and Reifler, 2010; Nyhan, 2021).

Where manifested, this problem may be exacerbated by the Echo Chamber Effect (see later), where repeated exposure to beliefs that are coherent with prior beliefs further reinforces them (Del Vicario et al., 2016a; Cinelli et al., 2021).

Using measurements of neural activity, Rollwage et al. (2020) showed that choices made with a high confidence level lead to integration of evidence that confirms the choice, but not of evidence that disconfirms it. This shows a causal relationship between confidence-related biases and confirmation bias, discussed next.

2.4 Confirmation bias

Confirmation bias is perhaps the most problematic inferential error in human reasoning (Greenwald, 1980; Evans, 1989). Individuals experiencing confirmation bias inadvertently collect evidence selectively, accepting evidence and opinions that support their prior beliefs, and rejecting opposing evidence (Taber and Lodge, 2006). They also tend to overvalue arguments that are consistent with their prior beliefs (Nir, 2011). Avoidance of cognitive dissonance often motivates this bias (Festinger, 1957). The selection is unwitting, in contrast to litigants deliberately selecting supporting evidence to build a case (Nickerson, 1998).

Zhong (2022) argues that choices coherent with prior beliefs are optimal, thus showing that confirmation bias is a survival strategy that optimises both time and resources (Dorst, 2020; Page, 2023). This is coherent with recent work, which argues that Bayesian-like belief updating underpins confirmation bias, as the high resource demands of genuine Bayesian updating force the use of approximations (Pilgrim et al., 2024).

Both conclusions align with the observation that evolution often favours solutions that minimise resource expenditures, and provide a mathematical explanation for why confirmation bias is an evolved cognitive feature.
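To make the link between approximate belief updating and confirmation bias concrete, the following toy sketch compares an unbiased updater with one that discounts evidence contradicting its current belief. This is an illustrative assumption, not the model used by Zhong (2022) or Pilgrim et al. (2024); the function names, the `disconfirm_weight` parameter, and the evidence values are all hypothetical.

```python
import math


def update_log_odds(log_odds, evidence_llr, disconfirm_weight=1.0):
    """Add one piece of evidence, given as a log-likelihood ratio.

    Evidence pointing against the currently favoured hypothesis is
    scaled by disconfirm_weight (1.0 means no bias).
    """
    agrees = (evidence_llr >= 0) == (log_odds >= 0)
    weight = 1.0 if agrees else disconfirm_weight
    return log_odds + weight * evidence_llr


def final_belief(prior_prob, evidence, disconfirm_weight=1.0):
    """Return P(hypothesis) after processing a sequence of evidence."""
    log_odds = math.log(prior_prob / (1.0 - prior_prob))
    for llr in evidence:
        log_odds = update_log_odds(log_odds, llr, disconfirm_weight)
    return 1.0 / (1.0 + math.exp(-log_odds))


# A perfectly balanced stream: ten supporting and ten opposing items
# of equal strength (hypothetical values).
balanced_evidence = [+1.0, -1.0] * 10

unbiased = final_belief(0.6, balanced_evidence, disconfirm_weight=1.0)
biased = final_belief(0.6, balanced_evidence, disconfirm_weight=0.5)
print(f"unbiased: {unbiased:.3f}, biased: {biased:.3f}")
```

With balanced evidence the unbiased updater ends where it started, whereas the biased updater becomes steadily more confident in its initial leaning, although both saw exactly the same information.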

One consequence of confirmation bias is motivated cognition. That is, prior beliefs or predispositions motivate individuals to process information and evidence in a prejudiced manner (Strickland et al., 2011). They evaluate information positively if it is consistent with their values, but reject or demean conflicting information (Eagly and Chaiken, 1993). This behaviour does not appear to depend on cognitive ability, and measuring it has presented challenges (Stagnaro et al., 2023; Tappin et al., 2021).

Another consequence is selective exposure (Stroud, 2011). Individuals selectively gather information that resonates with their attitudes and helps them to arrive at a desired conclusion, which may not be factual (Kunda, 1990). Selective exposure leads to avoiding sources of contradictory evidence, thus reinforcing their existing attitudes, values, beliefs and predispositions (Iyengar and Hahn, 2009).

Individuals also attempt to maintain an “illusion of objectivity” by establishing pseudo-rational justifications that support their predispositions. In this way, they convince themselves that the process was fair and judicious (Kunda, 1990). This is clearly an instance of self-deception (see Section 2.6), wherein individuals deceive themselves. This bias can have lethal consequences (Kassin et al., 2013; Holstein, 1985; Norman et al., 2017).

2.5 False consensus and false uniqueness bias

Under false consensus bias, an individual's perception of group preferences becomes biased by their own preferences. They tend to overestimate support for their own views. Similarity between self and others is more readily accessed from memory than dissimilarity, thus creating an illusion of consensus on the individual's preferred position (Ross et al., 1977).

Individuals under false consensus bias are prone to interact with like-minded people. These interactions can produce an “Echo Chamber Effect” (Del Vicario et al., 2016a; Cinelli et al., 2021) and shape their perception about social preferences (Fiske and Taylor, 1991). An alternative explanation is that people exaggerate support for their preferred position because they focus on their own choice, rather than alternatives (Marks and Miller, 1987).

A commonly seen shortcut in evaluating multiple sources of information is “correlation neglect” where multiple repeated accounts from a single source are considered to be independently sourced. This can contribute to confirmation bias and false consensus bias by adding undue weight to propositions that are being widely repeated (Enke and Zimmermann, 2017; Bowen et al., 2021).
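A small worked sketch shows how correlation neglect inflates confidence. It assumes, purely for illustration, a single source whose report is four times more likely if a claim is true than if it is false, and a reader who hears that same report repeated five times. The function name and all numbers are hypothetical.

```python
def posterior_after_reports(prior, likelihood_ratio, n_counted_as_independent):
    """Posterior probability of a claim, in odds form.

    n_counted_as_independent is the number of reports the reader
    treats as independent pieces of evidence.
    """
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds * likelihood_ratio ** n_counted_as_independent
    return posterior_odds / (1.0 + posterior_odds)


prior = 0.5             # hypothetical: reader starts undecided
likelihood_ratio = 4.0  # hypothetical: strength of one genuine report
repeats = 5             # the same report, seen five times

# Correct treatment: five copies of one report carry one report's worth of evidence.
correct = posterior_after_reports(prior, likelihood_ratio, 1)

# Correlation neglect: each copy is counted as if independently sourced.
neglect = posterior_after_reports(prior, likelihood_ratio, repeats)

print(f"correct: {correct:.4f}, with correlation neglect: {neglect:.4f}")
```

Under these assumptions the repeated report moves the reader from moderate confidence to near certainty without any new information arriving.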

The opposite bias is false uniqueness bias where individuals mistakenly underestimate the prevalence of their own attitudes. That is, they perceive themselves to be almost unique, when they are not (Suls and Wan, 1987). A person's perception of their uniqueness can be either negative or positive, but generally this bias refers to individuals' tendency to see themselves as superior to others on desirable attributes and behaviours (Goethals et al., 1991; Marks and Miller, 1987).

2.6 Deception and self-deception

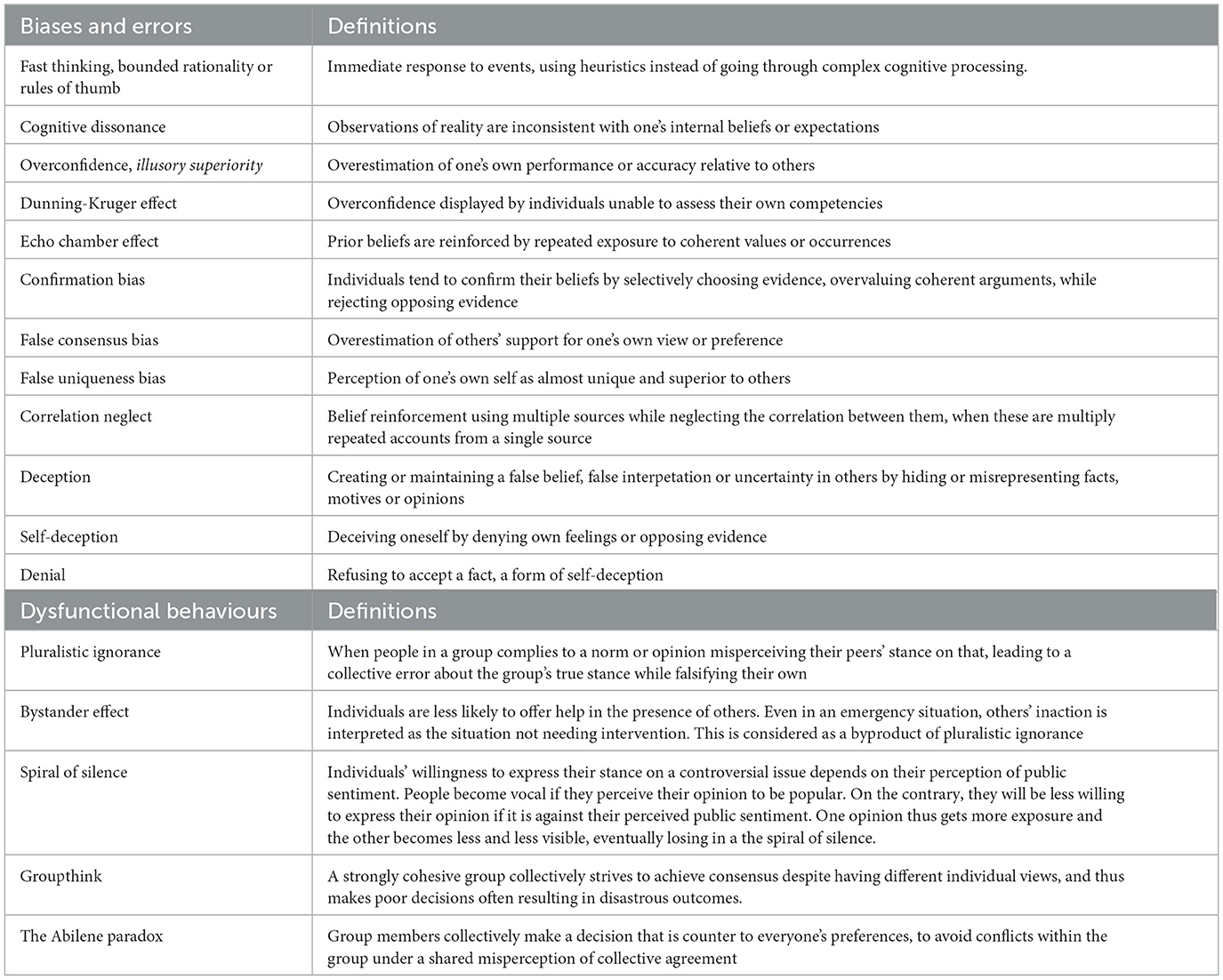

Deception evolved as a means to gain a survival advantage and is widespread in nature (Trivers, 2000). The mechanics of how deceptions work have been well studied empirically, and more recently quantitatively, and can be accurately modelled using information theory (Figure 2).

Figure 2. Information theoretic models of deception and their effects. The two prevalent deceptions in individual behaviour are degradation, where actual opinions are concealed in some fashion, and corruption, where the opinion of others is mimicked. Less frequent are denial, where the information channel is blocked, or subversion where interpretation is manipulated. The respective effects are false beliefs, or reduced confidence in true beliefs. Compound deceptions are common, mixing deception types to implant false perceptions and interpretations (Kopp et al., 2018).

Deceptive behaviours are implicit in most of the dysfunctional social behaviours explored in this paper (Kalbfleisch and Docan-Morgan, 2019). They occur when individuals create false beliefs in the minds of others by hiding or misrepresenting their actual agendas, motives, feelings, or opinions.

The four basic deception types (degradation, corruption, denial, and subversion) are defined in Kopp et al. (2018). Of these, the prevalent types in individual behaviour are degradation, where actual beliefs are concealed in some manner, and corruption, where the beliefs of others are mimicked. Individuals or groups attempting to exploit dysfunctional thinking will often employ subversion and compound deceptions, mixing deception types to implant false perceptions and interpretations (Kopp et al., 2018).

If we accept the Bayesian-like model of cognition, these four deception types target the production of a posterior belief from a prior belief. Where a prior belief is true, this is done by introducing false data or altering how true data is interpreted. Where a prior is already a false belief, this is done by reinforcing it with more false data, or by reinforcing a false interpretation with further interpretations coherent with it (Haswell, 1985; Brumley et al., 2005). Success or failure of any specific deception will depend upon the specific cognitive vulnerabilities of the targeted individual or population. Any cognitive faculty or behaviour that leads to a failure to detect and reject a deception is a vulnerability.

Deceptive behaviour is a characteristic feature of pluralistic ignorance (Section 3.2) and is often seen in groupthink (Section 3.3), where false beliefs are not internalised. However, self-deception predominates in other dysfunctional behaviours.

Three hypotheses have been proposed to explain this behaviour. Trivers (2000) argues that self-deception is an evolved function to improve an individual's ability to deceive others, while Ramachandran (1996) argues that individuals will self-deceive to avoid cognitive dissonance (Harmon-Jones and Mills, 2019). Jian et al. (2019) have shown that self-deception increases with cognitive load, as defined by Sweller (1988). This could be explained as a mechanism to reduce cognitive load and cognitive dissonance when faced with uncertainty (Kaaronen, 2018). Intentional deceivers may also become self-deceivers by believing their own falsehoods (Li, 2015). These hypotheses are not mutually exclusive. An individual internalising false beliefs can be concurrently avoiding cognitive dissonance, while also improving their ability to deceive others about their actual motivations and agendas (von Hippel and Trivers, 2011).

2.7 Denial and denialism

Bardon (2019) argues that denial and denialism are instances of motivated cognition, described as the “unconscious tendency of individuals to process information in a manner that suits some end or goal extrinsic to the formation of accurate beliefs” (Kahan, 2011). Whereas denial can be confined to a single unpalatable truth, denialism “... represents the transformation of the everyday practise of denial into a whole new way of seeing the world ...” (Kahan, 2011; Bardon, 2019). Varki (2009) argued that denial behaviours evolved to gain a survival advantage, but with a basis different from the hypothesis argued by Trivers (2000). Both hypotheses are strongly coherent with the finding by Brumley (2014) that misperception of reality can provide an evolutionary advantage.

The mechanisms that underpin denial and denialism include cognitive dissonance, the cognitive biases described above, and self-deceptions (Bardon, 2019). One driving motivation is avoidance of grief, denial being the first of the five stages in internally coping with unwanted objective truths (Kübler-Ross et al., 1972).

It is evident that self-deceptions related to denial mostly comprise denial and degradation (especially where inputs are rejected), corruption (where false perceptions are accepted), and subversion (where false interpretations and rationalisations are accepted). Compound self-deceptions, combining all four deceptions, are often employed (Brumley, 2014).

Both denial and denialism are important enablers of exploitation (Section 5). Notably, the contemporary digital environment characterised by ‘information overload’ creates conditions highly favourable for exploitation, facilitating deception and increasing susceptibility to self-deception (Kahneman, 2011).

3 Cognitive dysfunction in groups

Interactions between individuals in social groups require communication. This results in the formation of social networks, which allow false beliefs and interpretations to propagate across the group. Properties of these networks therefore have important implications for how some social cognitive dysfunctions arise and propagate.

To explain this, we first explore social networks (Section 3.1), as distinct from digital networks providing connectivity for social networks (Section 4). We then explore the best-known group cognitive dysfunctions: pluralistic ignorance (Section 3.2), groupthink and the Abilene paradox (Section 3.3). While polarisation, like these cognitive dysfunctions, is an emergent property, it is a behavioural effect rather than a dysfunction (Smith et al., 2024).

3.1 Propagation of beliefs across social networks

The term social network refers here to any set of individuals who interact with one another. Ideas and beliefs typically spread across a social network via local interactions between individuals (Smith et al., 2020). Studies using agent-based models show that consensus can emerge within networks by peer-to-peer interaction (Green, 2014; Flache et al., 2017; Stocker et al., 2001; Tang and Chorus, 2019; Seeme, 2020). In contrast, social fragmentation and thus lack of consensus typically occurs when networks exceed a critical size. Even a small group propagating false beliefs can disrupt consensus forming in a large population (Dunbar, 1995; Iñiguez et al., 2014; Kopp et al., 2018; Smith et al., 2020), and spread dysfunctional thinking throughout a social network (Seeme, 2020).
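As a minimal illustration of consensus emerging from purely local interaction, the sketch below places agents on a ring and repeatedly lets one randomly chosen pair of neighbours average their opinions. This pairwise-averaging rule is a deliberately simple stand-in chosen for this example; it is not the model used in any of the cited agent-based studies, and the network size, step count, and seeds are arbitrary assumptions.

```python
import random


def gossip_on_ring(opinions, steps, seed=0):
    """Repeatedly average the opinions of a random pair of ring neighbours."""
    rng = random.Random(seed)
    x = list(opinions)
    n = len(x)
    for _ in range(steps):
        i = rng.randrange(n)
        j = (i + 1) % n  # each agent only ever talks to an adjacent agent
        x[i] = x[j] = (x[i] + x[j]) / 2.0
    return x


# Hypothetical starting point: 20 agents with random opinions in [0, 1).
init_rng = random.Random(1)
initial = [init_rng.random() for _ in range(20)]
final = gossip_on_ring(initial, steps=20000)

spread_before = max(initial) - min(initial)
spread_after = max(final) - min(final)
print(f"opinion spread before: {spread_before:.3f}, after: {spread_after:.2e}")
```

No agent ever sees the whole population, yet the spread of opinions collapses towards a single shared value, which in this rule is the average of the starting opinions.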

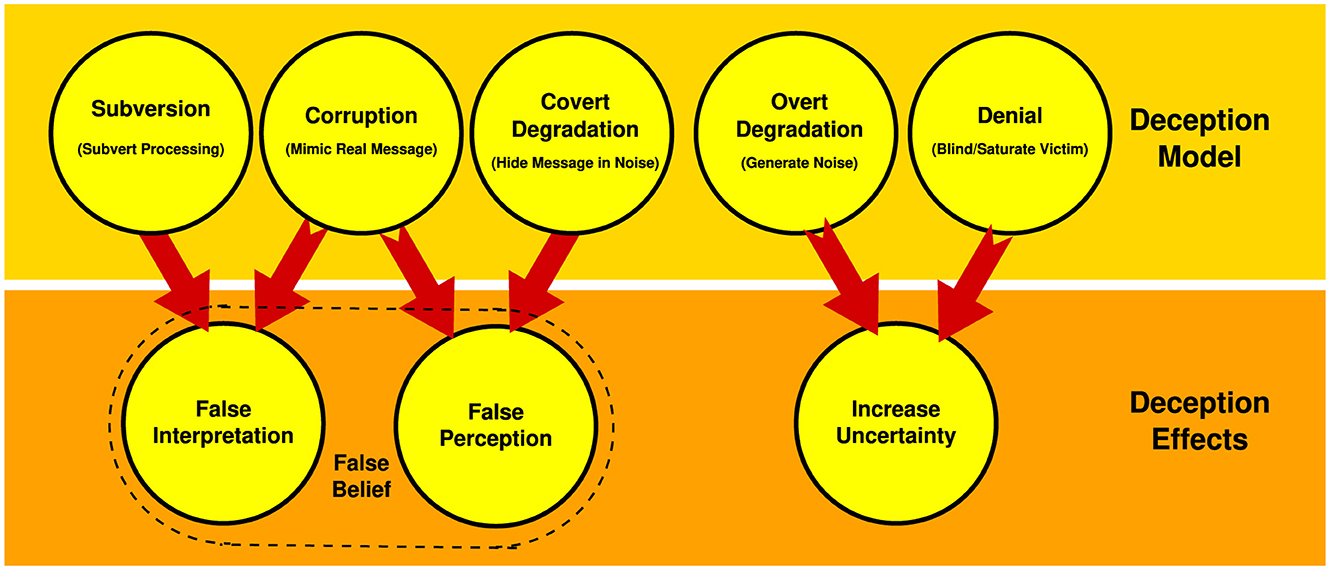

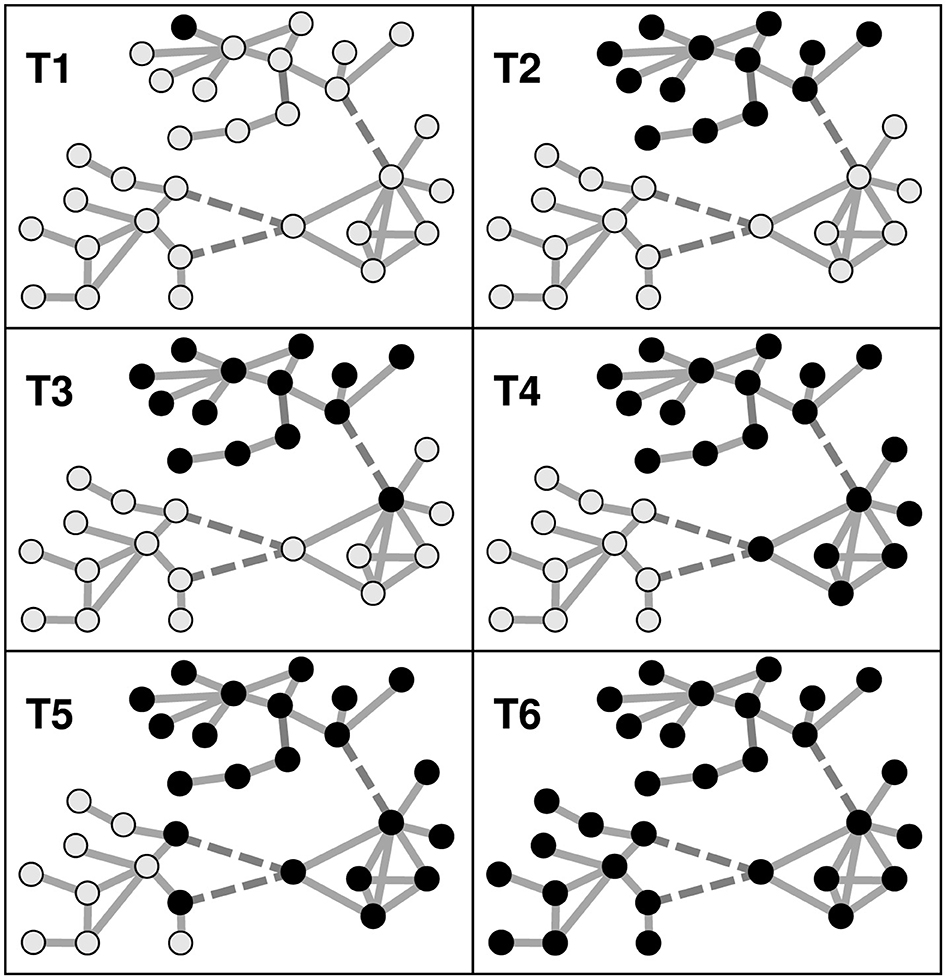

Social networks have long been studied as graphs (Barnes, 1969; Scott, 2012), in which individuals are “nodes” and relationships are “edges.” Graphs exhibit well-known topologies (Figure 3), which are patterns formed by their nodes and edges. In social networks, topologies are important for opinion formation (Burt, 1987; Choi et al., 2010; Shaw-Ching Liu et al., 2005). For instance, hierarchical organisations form tree structures (Figure 3a), in which influence often flows down. A tribal leader or company CEO would be represented by a root node in the topology of a hierarchy.

Figure 3. Common topologies in social networks. Here, circles denote nodes (individuals) and lines denote edges (links between pairs of individuals). The edges drawn here are undirected, but social interactions and influence are sometimes one-directional. (a) A hierarchy (tree graph) is common in organisations. It contains a root node and no cycles. For instance, in a typical company, the CEO is the root node and the leaf nodes are employees. (b) Small worlds are common in traditional societies. Most people interact only with others in nearby communities, shown here as local connections. However, some people travel between communities making long distance connections. Long-distance connections reduce the distance between individuals. In this example, they reduce the number of steps between A and B from 21 to 9. (c) Scale-free networks are common in online social groups. The degree of nodes (number of edges linking them to others) follows a power law and the network contains hubs of highly connected nodes. In a typical online social network, influencers would be the hubs, and their followers are the other nodes.

In traditional societies, interactions between individuals are limited by distance, so they typically form Small-World Networks (Watts and Strogatz, 1998), and long-distance travellers spread ideas between communities (Figure 3b). In small-world networks, there is a critical level of connectivity, beyond which opinion drifts and a single extreme appears (Amblard and Deffuant, 2004). Long-range ties potentially connect highly dissimilar local regions or individuals, and thus can trigger repulsive influence (Flache and Macy, 2011).
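The effect of a single long-distance connection on path length can be checked with a short breadth-first search. The sketch below uses a hypothetical ring of 40 individuals, each linked only to two immediate neighbours, and one added shortcut; the layout and node numbers are assumptions for illustration and do not reproduce the network drawn in Figure 3b.

```python
from collections import deque


def ring_lattice(n):
    """Each node is linked only to its two immediate neighbours."""
    return {i: {(i - 1) % n, (i + 1) % n} for i in range(n)}


def add_edge(graph, a, b):
    graph[a].add(b)
    graph[b].add(a)


def shortest_path_length(graph, source, target):
    """Breadth-first search; returns the number of steps, or None if unreachable."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            return dist[node]
        for neighbour in graph[node]:
            if neighbour not in dist:
                dist[neighbour] = dist[node] + 1
                queue.append(neighbour)
    return None


graph = ring_lattice(40)
steps_local_only = shortest_path_length(graph, 0, 20)

add_edge(graph, 5, 15)  # one hypothetical long-distance traveller
steps_with_shortcut = shortest_path_length(graph, 0, 20)

print(steps_local_only, steps_with_shortcut)
```

One extra link among 40 local ones nearly halves the separation between the two chosen individuals, which is the characteristic small-world effect.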

The advent of online social media has enabled the formation of huge online communities. These commonly take the form of Scale-Free Networks (Barabási and Albert, 1999) (Figure 3c). That is, the distribution of the number of agents connected to an individual follows a power law. This tendency of online communities to form scale-free networks led to the rise of “influencers,” highly connected and trusted individuals, who are very effective at spreading ideas and opinions (Bakshy et al., 2011).
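One well-known way in which hubs can arise is preferential attachment, the growth rule associated with Barabási and Albert (1999): each newcomer links to existing members with probability proportional to how many links those members already have. The sketch below is a simplified version of that rule; the network size, the number of links per newcomer, and the seed are arbitrary assumptions.

```python
import random
from statistics import median


def preferential_attachment(n, m, seed=0):
    """Grow a network of n nodes; each new node links to m existing nodes,
    chosen with probability proportional to their current degree."""
    rng = random.Random(seed)
    degree = {i: 0 for i in range(n)}
    edges = []
    stubs = []  # every node appears here once per link it has

    def connect(a, b):
        edges.append((a, b))
        degree[a] += 1
        degree[b] += 1
        stubs.extend((a, b))

    # Start from a small fully connected core of m + 1 nodes.
    for i in range(m + 1):
        for j in range(i + 1, m + 1):
            connect(i, j)

    for new in range(m + 1, n):
        targets = set()
        while len(targets) < m:
            # Drawing from stubs favours nodes that already have many links.
            targets.add(rng.choice(stubs))
        for target in sorted(targets):
            connect(new, target)
    return degree, edges


degree, edges = preferential_attachment(n=500, m=2)
print("median degree:", median(degree.values()))
print("largest hub degree:", max(degree.values()))
```

Most members end up with only a handful of links, while a few early arrivals accumulate many times more, playing the role that influencers play in online communities.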

Studies of network resilience have found that scale-free networks are usually robust to random “attacks,” but can be susceptible to targeted attacks that remove hubs (Albert et al., 2000; Artime et al., 2024). This suggests that “deplatforming” influencers could be an effective way to disrupt the spread of toxic ideas from online social networks (Section 7). Classical epidemiological studies show the strong effects arising from removal of highly connected individuals in scale-free networks (Keeling and Eames, 2005).
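The contrast between random and targeted removal can be seen even in a very small hand-built example. The sketch below uses a hypothetical hub-and-spoke network (three mutually linked hubs with ten followers each), which is far simpler than a genuine scale-free network and is intended only to show the mechanism.

```python
def hub_and_spoke(n_hubs=3, leaves_per_hub=10):
    """Hubs are all linked to each other; every leaf links to one hub only."""
    hubs = [f"hub{i}" for i in range(n_hubs)]
    graph = {h: {other for other in hubs if other != h} for h in hubs}
    for h in hubs:
        for k in range(leaves_per_hub):
            leaf = f"{h}-leaf{k}"
            graph[leaf] = {h}
            graph[h].add(leaf)
    return graph


def largest_component(graph, removed=frozenset()):
    """Size of the largest connected group once the removed nodes are deleted."""
    seen = set(removed)
    best = 0
    for start in graph:
        if start in seen:
            continue
        seen.add(start)
        stack, size = [start], 0
        while stack:
            node = stack.pop()
            size += 1
            for neighbour in graph[node]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    stack.append(neighbour)
        best = max(best, size)
    return best


graph = hub_and_spoke()
intact = largest_component(graph)

# Targeted attack: delete the single best-connected node.
top_hub = max(graph, key=lambda v: len(graph[v]))
after_targeted = largest_component(graph, {top_hub})

# Random attack: average outcome over every possible single-node deletion.
after_random = sum(largest_component(graph, {v}) for v in graph) / len(graph)

print(intact, after_targeted, round(after_random, 2))
```

Deleting a typical member barely changes the network, whereas deleting one hub cuts off all of that hub's followers at once.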

Although influencers are diffusive, they are not as persuasive as individuals with close-knit bonds, such as friends or relatives. Rumours survive longer and reach a larger population in a randomly growing network compared to a scale-free network (Zhou et al., 2007).

In any society, individual agents are often members of all three of the above network types (and others) at different times and places. For instance, they may be part of a hierarchy in their workplace, a small world in their home or neighbourhood, and a scale-free network when using social media. In this way, individuals create overlaps between different social networks, which form a “Network of Networks” (Gao et al., 2014).

The connections between different networks are often tenuous and have no effect under normal conditions. However, an individual will sometimes relay an idea from one network into another network. In this way, an idea can be transmitted from network to network, creating a cascade in which the idea spreads across the entire society (Figure 4). This model is equally true of foot traffic between villages and online networks that encompass the globe. It shows how unexpected inputs from outside can invade and disrupt a network.

Figure 4. In this hypothetical example, a belief cascades, spreading like an epidemic across three separate social networks. The circular dots denote people and the shading denotes two different beliefs. Lines between dots denote social relationships between pairs of individuals. Dashed lines denote intermittent social relationships between individuals in different networks. The boxes (T1–T6) show the state of the community at different times. Initially (T1), a new belief (coloured in black) is held by a single individual, but quickly spreads to all others in the same network (T2). It then infiltrates another network (T3). The same pattern repeats (T3 and T4, T5 and T6) until the belief has spread to all individuals in the community. In traditional societies, the links between networks may be infrequent, requiring (say) physical movement of people from one community to another. However, in online social networks, they depend only on digital links and can be extremely fast.
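The stepwise pattern of a cascade across linked networks can be reproduced with a few lines of code. The sketch below assumes three fully connected groups of five people, joined by single bridging ties, and a belief that every holder passes to all contacts at each time step. The group sizes, the bridge positions, and the certainty of transmission are simplifying assumptions made for this example.

```python
def build_community():
    """Three fully connected groups (nodes 0-4, 5-9, 10-14) joined by two bridges."""
    graph = {i: set() for i in range(15)}

    def link(a, b):
        graph[a].add(b)
        graph[b].add(a)

    for start in (0, 5, 10):
        members = range(start, start + 5)
        for a in members:
            for b in members:
                if a < b:
                    link(a, b)
    link(4, 5)    # bridge between the first and second group
    link(9, 10)   # bridge between the second and third group
    return graph


def spread(graph, seed_node):
    """At each step every believer passes the belief to all of its contacts.
    Returns the number of believers after each step."""
    believers = {seed_node}
    history = [len(believers)]
    while True:
        newly = {nb for node in believers for nb in graph[node]} - believers
        if not newly:
            break
        believers |= newly
        history.append(len(believers))
    return history


history = spread(build_community(), seed_node=0)
print(history)
```

The count of believers alternates between a jump, as a whole group is converted, and a single-person step, as the belief crosses a bridge into the next group.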

The more complex and interlinked different networks are, the more susceptible they are to invasion by alien ideas and beliefs (Pastor-Satorras et al., 2015; Jalili and Perc, 2017). The complexity of overlapping social networks allows unintended consequences to emerge (Green, 2014). These can bedevil attempts to mitigate the spread of dysfunctional thinking. Connections between different networks can enable beliefs and behaviours to emerge and cascade until they become widespread (Paperin et al., 2011; Hinds et al., 2013; Tsugawa and Ohsaki, 2014; Dumitrescu et al., 2017; Shao et al., 2018). For example, after being exposed to extremist views on social media, an individual might spread them within a family or neighbourhood.

Ideas spread across, and between, networks in several ways. These include: avalanches, sudden changes in network connectivity (Paperin et al., 2011); cascades, in which a state change spreads across a system from network to network (Bikhchandani et al., 1992); and positive feedback, in which local variations grow into global patterns (Green, 2014). Network processes also mean that a small change in some widely-shared cognitive bias or dysfunction can have a massive impact on large-scale social behaviour (Green, 2014). Rafail et al. (2024) showed how feedback loops contribute to polarisation.

The complexity of overlapping social networks creates a rich array of communication pathways, which increase the likelihood of unanticipated consequences (Merton, 1936). These occur in many contexts, including accidents (Rijpma, 2019), side effects of innovations, especially new technology (Green, 2014), and cascading failures of infrastructure (Valdez et al., 2020).

The phenomenon is explained by Complexity Theory. Complex systems are composed of “agents” (in social terms, these are individual members of a group) which interact with other agents. In a poorly connected system, the agents form small isolated groups. However, as the richness of connections between agents increases, a “connectivity avalanche” occurs (Paperin et al., 2011). This avalanche causes a phase change in the network, from fragmented to connected. Instead of small, isolated groups of agents, large components emerge in which pathways of connections exist between every pair of agents. The behaviour of such a system is chaotic, which leads to unpredictable outcomes and unanticipated consequences.
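The phase change described above can be illustrated with a minimal sketch. It assumes a simple random-graph model in which edges are added between uniformly chosen pairs of agents; this is a standard textbook idealisation, not the specific model of Paperin et al. (2011). The function name, network size and mean-degree values are hypothetical choices made for illustration only.

```python
import random


def largest_component_fractions(n, mean_degrees, seed=0):
    """Add random edges to n isolated agents and record the fraction of
    agents in the largest connected component at each target mean degree.

    Assumption: edges join uniformly random pairs (a random-graph model).
    Repeated edges are allowed; they are rare and do not alter the picture.
    """
    rng = random.Random(seed)
    parent = list(range(n))   # union-find forest
    size = [1] * n            # component size stored at each root

    def find(x):
        # Path-halving find
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    largest = 1
    edges = 0
    results = {}
    for c in sorted(mean_degrees):
        target_edges = int(c * n / 2)  # mean degree c means c*n/2 edges
        while edges < target_edges:
            a, b = rng.randrange(n), rng.randrange(n)
            if a == b:
                continue
            edges += 1
            ra, rb = find(a), find(b)
            if ra != rb:
                if size[ra] < size[rb]:
                    ra, rb = rb, ra
                parent[rb] = ra
                size[ra] += size[rb]
                largest = max(largest, size[ra])
        results[c] = largest / n
    return results


if __name__ == "__main__":
    for c, frac in largest_component_fractions(2000, [0.5, 1.0, 1.5, 2.0]).items():
        print(f"mean degree {c:.1f}: largest component holds {frac:.1%} of agents")
```

In this idealised model, the largest component stays small while the mean number of connections per agent is well below one, and then grows rapidly to span most of the network once that threshold is passed.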

3.1.1 Emergence in social networks

The advent of digital networks providing local or global connectivity between individuals has produced profound impacts. The geographical and temporal bounds on social networks that characterised human societies for millennia vanished in less than a decade. Individuals can connect locally or globally in mere seconds. This has produced opportunities for emergent behaviours to arise more frequently.

The topology of social networks emerges through behaviour of the agents involved (Wu et al., 2015; Ubaldi et al., 2021). As we saw above, trees, small-worlds and scale-free networks are well-known examples. Conversely, the topology of a social network can affect collective cognition and behaviour, such as the formation of beliefs and norms (Momennejad, 2022). Consensus is easier to achieve in some topologies than others (Baronchelli, 2018), and changes in topology, such as a shift in centrality, can lead to emergence of new behaviours (Gower-Winter and Nitschke, 2023).

Instances of emergent behaviour in social networks are Janis' groupthink and pluralistic ignorance, detailed below (Janis, 1972; Miller and McFarland, 1987). The implicit and common antecedent for both social cognitive dysfunctions is connectivity through a social network (Heylighen, 2013; Seeme and Green, 2016). The emergence of both behaviours in small social networks connected by word of mouth, print or digital media is well studied (Schafer and Crichlow, 1996; Mendes et al., 2017).

Ruffo et al. (2023) found that polarisation, where a population divides into groups with mutually opposed viewpoints that are often impossible to reconcile, exhibited emergent properties. Confirmation bias and Bayesian reasoning have been found to be causal factors in the emergence of polarisation (Jern et al., 2014; Del Vicario et al., 2016b; Lefebvre et al., 2024).

There is a wealth of literature on emergent behaviour in very large networks (Green, 2014, 2023), but little on emergent behaviour in large, digitally connected social networks (Ubaldi et al., 2021). These implicitly allow for much larger populations to become captured by social cognitive dysfunctions like groupthink and pluralistic ignorance. More importantly, the large footprints of such networks allow for populations of individuals who meet antecedent criteria to connect, and for new groups to emerge, captured by such dysfunctions.

3.2 Pluralistic ignorance

Pluralistic Ignorance (Katz et al., 1931) is a social cognitive error where:

“..virtually every member of a group or society privately rejects a belief, opinion, or practice, yet believes that virtually every other member privately accepts it.” (Prentice and Miller, 1996)

It is a group-level phenomenon (Sargent and Newman, 2021) that stems from a shared misperception among group members that gives rise to a collective error about the true opinion(s) of their peers (Miller, 2023; Kitts, 2003). O'Gorman (1988) argued that pluralistic ignorance involves two social cognitive errors. First, individuals believe that others hold a different opinion; second, they believe that they can assess others' opinions accurately.

Pluralistic ignorance appears in many forms, depending on the context, and leads to various social problems. It occurs most often in situations where people share their views about a collective position (Prentice and Miller, 1993). Pluralistic ignorance has negative impacts on health issues, education and workplace environment (Dunning et al., 2004). It also contributes to a host of social issues. These include alcohol consumption among college students (Prentice and Miller, 1993), attitudes towards dating and sex (Reiber and Garcia, 2010; van de Bongardt et al., 2015), gender bias amongst military cadets (Do et al., 2013), impediments to gender equality (Croft et al., 2021), academic underperformance by student athletes (Levine et al., 2014), avoidance of mental health services by police (Karaffa and Koch, 2016) and inaction on climate change (Geiger and Swim, 2016).

Pluralistic ignorance is usually caused by fear of rejection (Miller and McFarland, 1987, 1991), by following the herd, or by a desire to maintain group identity (Miller and Nelson, 2002). Pluralistic ignorance can lead to unpopular social norms (Prentice and Miller, 1996, 1993) and can manifest itself as other instances of dysfunctional behaviour, such as the bystander effect and the spiral of silence.

Mendes et al. (2017) found that false consensus and exclusivity biases, group polarisation, and social identity maintenance were common antecedents of pluralistic ignorance.

3.2.1 The bystander effect

The bystander effect occurs when people in groups merely observe a situation and fail to take action where they should. Despite being concerned about the victim, individuals fail to assess the need for intervention because other bystanders are also waiting for someone else to take action (Latané and Nida, 1981). There are many documented cases of passive bystanders witnessing violent crimes, life-threatening emergencies (Banyard, 2011; Schwartz and Gottlieb, 1976; McMahon, 2015), victimisation and bullying, both in real life and online (Bauman et al., 2020; Song and Oh, 2017; Machackova et al., 2015).

The familiar pattern of pluralistic ignorance is evident in this effect. Bystanders are concerned about the victim, but wait for others to intervene. They interpret others' inaction as a sign of the situation not calling for an intervention (Miller, 2023).

3.2.2 The spiral of silence

The perception that one's opinion is counter to the popular stance on an issue inhibits one's willingness to express that opinion, leading to even less visible support for it (Koriat et al., 2016).

Examples include legal stances on abortion, preferences for a political party in national elections, addressing racial inequality (Noelle-Neumann, 1993; Moy et al., 2001; Geiger and Swim, 2016), and suppression of antiwar sentiment (Mueller, 1993).

In contrast, the perception that one's opinion is popular creates the opposite effect. Vocal expression on the one side and silence on the other side creates a “spiral of silence” on the muted topic (Noelle-Neumann, 1993).

Some studies suggest that the spiral of silence theory addresses the impact of pluralistic ignorance on public disclosure (Noelle-Neumann, 1993; Taylor, 1982). The individual misperception that their opinion is not shared by others leads to the collective error of a silent majority being suppressed by a vocal minority (Miller, 2023). The spiral of silence in social media can suppress free expression of minority opinion (Hampton et al., 2014; Gearhart and Zhang, 2015).

3.3 Groupthink and the Abilene paradox

Groupthink (Janis, 1972) is a dysfunction of group decision-making. It occurs when members of a group collectively strive to achieve consensus, even if it means ignoring their own individual views, and fail to assess alternative courses of action realistically. It leads to poor decisions, often with disastrous outcomes.

Infamous examples in American history include the failure to anticipate the attack on Pearl Harbor in 1941, the failed invasion of the Bay of Pigs in 1961, the escalation of the Vietnam War, NASA's decision to launch the doomed Challenger Space Shuttle, and the Watergate cover-up (Janis, 1982). Groupthink can also lead to unethical practices within organisations (Sims, 1992), and the motivation to acquire or maintain political power may produce groupthink in government organisations (Kramer, 1998).

Based on historic case studies of failed decisions, Janis (1982) proposed several antecedents to groupthink, including intense group cohesion, avoiding conflicts, insulation from outside influence, and external threats. A revised model of groupthink (Turner and Pratkanis, 1998) suggests that the necessary conditions are strong cohesion among the group as a social entity, and defending against a collective threat aimed at their shared positive image of the group.

Analysing many instances of groupthink, Schafer and Crichlow (1996) found that the dominant antecedents were “leadership style and patterns of group conduct,” while Turner et al. (1992) and Turner and Pratkanis (1998) found that social identity maintenance was also a major factor.

In contrast, the Abilene paradox (Harvey, 1988) refers to a more passive group behaviour without any collective threat or strong group cohesion (Kim, 2001). It occurs when members of a group each make a decision that is counter to everyone's preferences, because each member seeks to avoid conflict with the group under a shared misperception of collective agreement (McAvoy and Butler, 2007; Rubin and Dierdorff, 2011).

3.4 Relationships between biases and dysfunctional group behaviours

It is apparent from the preceding discussion that many links exist between the biases and dysfunctional behaviours discussed above (Figure 5). In many cases, a prominent motive is the desire to belong to the group (Section 2.1), which is a powerful survival imperative in humans (Brewer and Caporael, 2006). It motivates behaviours such as hiding, suppressing or misrepresenting one's real views (Cialdini and Goldstein, 2004).

Figure 5. A model for dysfunctional cognition in groups connected by social networks. Evolved limitations result in a number of individual cognitive dysfunctions. When interacting with groups, individual cognitive dysfunctions can result in social cognitive dysfunctions. As the model shows, inherited traits and cognitive mechanisms underlie cognitive biases, which in turn drive cognitive dysfunctions within social groups. This implies that reducing dysfunctions that arise at fundamental levels can help prevent dysfunctions within social groups.

Fear of isolation or rejection from the group is the primary causal driver for many behaviours, e.g., groupthink, the Abilene paradox, pluralistic ignorance (Kim, 2001), and the spiral of silence. As noted in Section 3.2.2, some studies suggest that the spiral of silence theory addresses the impact of pluralistic ignorance on public disclosure (Noelle-Neumann, 1993; Taylor, 1982). The spiral of silence occurs because individuals misperceive public support for their true opinion and express a different opinion to conform to the perceived majority, which is characteristic of pluralistic ignorance.

The delusion that one's beliefs are unique and different from others is common to both false uniqueness (Section 2.5) and pluralistic ignorance. However, false uniqueness is an individual bias that may be motivated by a false sense of superiority that is not linked with group dynamics, unlike pluralistic ignorance. Similarly, individuals cannot collectively experience false uniqueness bias, whereas pluralistic ignorance does not refer to a single individual's misperception (Miller, 2023).

Mendes et al. (2017) found that the specific antecedents of pluralistic ignorance depended on whether it was defined as perceptual or inferential pluralistic ignorance, and identified both false uniqueness and false consensus biases as antecedents across the literature, while Sargent and Newman (2021) argue that the former is an antecedent.

Janis (1971) cites multiple instances of confirmation bias as antecedents of groupthink, while Schafer and Crichlow (1996) found that confidence-related biases were antecedents, leading to multiple types of cognitive error in group decisions.

4 Adverse impacts of advancing technologies

Problems now arising from digital technology will be further exacerbated as the technology evolves and creates more opportunities for exploitation. Historically, new communication technologies have always changed the way ideas spread. For instance, Gutenberg's printing press was used heavily to distribute propaganda, an effect later observed with mass media broadcasts (Hoff, 1990; Dewar, 2000; Bagchi, 2016). The observed adverse impacts of networking technology will be amplified by the rapid evolution and adoption of Artificial Intelligence (AI), which can be used to automate and accelerate many tasks that produce malign effects (Honigberg, 2022).

4.1 Impacts of digital networking technology

Digital networking technology contributes to malign exploitation by providing increasingly pervasive, dense and fast connectivity in social networks. The cause is exponential growth in technology, which multiplies performance and reduces costs over time (Kopp, 2000; Cherry, 2004).

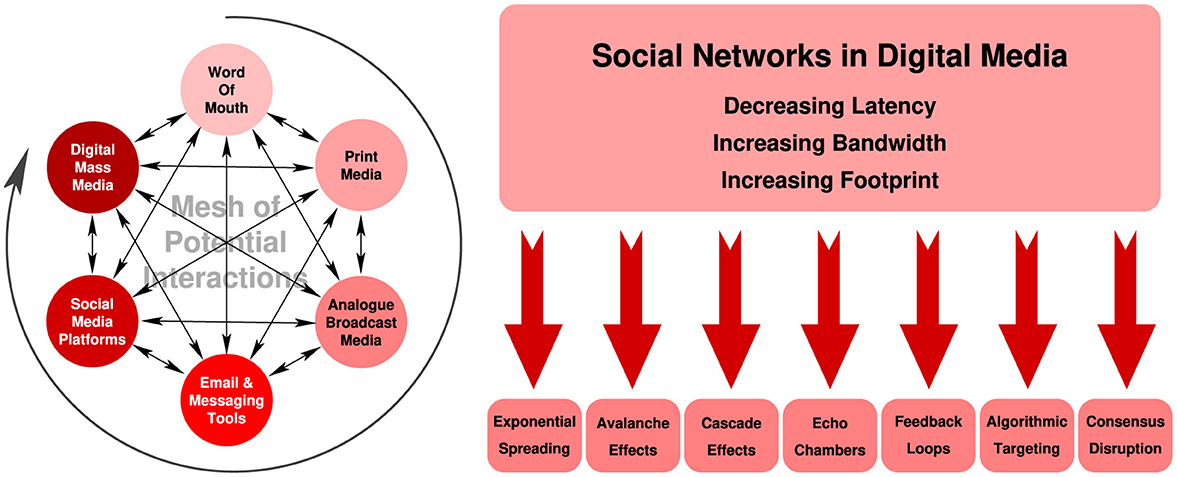

Faster and cheaper networking technology results in ever expanding geographical coverage, and thus ever increasing exposure of a global audience to potentially malign exploitation. This problem is exacerbated by the ability of digital media to migrate digital content rapidly between media types, resulting in a complex, and sometimes chaotic, unstructured, and random mesh of connections that propagate messages across different media types (Figure 6).

Figure 6. Left: Digital media permit content to migrate quickly between different channels, resulting in high connectivity within and between different media. Social networks residing in digital media display shorter latencies in message transmission, vastly greater footprints and connectivity, and more bandwidth than social networks predating the digital age. Right: The effects of dysfunctional social cognition are accelerated in time and extend across populations that are orders of magnitude larger. Many features of digital media create effects unseen in the analogue era (Kopp, 2024).

The potentially very high density of very fast long-distance interconnections in social networks that are formed across digital networks has important implications (Section 3.1), which will become worse as the technology evolves.

Spreading behaviour in large, online social networks, for instance X/Twitter, frequently follows epidemiological patterns observed with biological pathogens. Both measurements and simulations of spread display remarkably good fits to traditional compartment models used in epidemiology (Keeling and Eames, 2005; Brauer, 2008; Kopp et al., 2018; Castiello et al., 2023).

However, the large footprints and speed of digital networked media result in spreading effects many orders of magnitude greater than biological pathogens. Continuing exponential growth in digital networks will inevitably increase observed adverse effects as susceptible populations grow. Spreading effects of this magnitude implicitly increase the impact of highly connected influencers in social networks, who can propagate messages to global audiences, with increasingly large footprints over time.
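As a rough illustration of the compartment models mentioned above, the following sketch integrates the classic susceptible-infected-recovered (SIR) model with a simple Euler scheme. In the social reading, "infected" stands for users actively sharing a message and "recovered" for users who have lost interest. The function name and all parameter values are hypothetical and are not fitted to any of the cited datasets.

```python
def simulate_sir(beta=0.5, gamma=0.1, i0=0.001, dt=0.1, steps=1000):
    """Euler integration of the SIR compartment model on population fractions.

    beta  : transmission rate (assumed value, for illustration only)
    gamma : rate at which spreaders stop sharing (assumed value)
    i0    : initial fraction of the population actively spreading
    Returns a list of (s, i, r) tuples, one per time step.
    """
    s, i, r = 1.0 - i0, i0, 0.0
    history = [(s, i, r)]
    for _ in range(steps):
        new_spreaders = beta * s * i * dt  # susceptible -> infected
        new_recovered = gamma * i * dt     # infected -> recovered
        s -= new_spreaders
        i += new_spreaders - new_recovered
        r += new_recovered
        history.append((s, i, r))
    return history


if __name__ == "__main__":
    history = simulate_sir()
    peak = max(i for _, i, _ in history)
    print(f"peak fraction actively spreading: {peak:.2f}")
    print(f"fraction never reached: {history[-1][0]:.3f}")
```

Raising `beta`, which here stands in for faster and denser digital connectivity, produces an earlier and higher peak and leaves fewer individuals unreached.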

Social media platforms typically rank content by popularity, which has led to the widespread use of software robots, or bots, to create illusory popularity and further spreading behaviour. Bots propagate content by emulating the behaviour of human users sharing content, with each bot typically employing a user account with a fake identity. Bots have been found to enhance the spreading of content that might not have otherwise propagated well (Shao et al., 2018; Vosoughi et al., 2018; Gilani et al., 2019; Bovet and Makse, 2019; Himelein-Wachowiak et al., 2021).

Bots have been supplemented by social media “troll factories” where paid personnel using fake identities participate in social media debates to promote agendas or sow discord amongst legitimate social media users (Linvill and Warren, 2020).

4.2 Impacts of Artificial Intelligence technology

Artificial Intelligence (AI) technology is rapidly maturing. It is now deployed in many applications, including chatbots, natural language translation, image, speech and text recognition, and the synthesis of text, graphics and video. A major concern is that the AI algorithms and models underlying these systems have no understanding of truth, ethics or honesty, as they typically mimic human responses.

Early AI applications have shown disturbing parallels to well-known problems in human cognition. For instance, the “hallucination” effect, in which AI systems produce nonsensical answers to trivial problems, resembles “cognitive illusions” in humans (Kahneman and Tversky, 1996; Alkaissi and McFarlane, 2023). Cognitive biases and errors inherited from human-generated training sets and human labelling are well-known problems in the development of AI models (Schwartz et al., 2022).

AI models exhibit behaviours akin to human confirmation bias, where they show a preference for their own generated content (Yang et al., 2024). This raises the risk of a runaway positive feedback loop, leading to “Model Collapse,” in which the AI amplifies its own erroneous outputs over many training cycles (Shumailov et al., 2024).
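The runaway feedback loop can be illustrated with a deliberately simple toy analogue, which is an assumption made here for illustration and not the experimental setup of Shumailov et al. (2024). A "model" is just a Gaussian distribution that is repeatedly refitted to a small sample of its own output. The function name and parameters are hypothetical.

```python
import random
import statistics


def simulate_self_training(generations=200, sample_size=10, seed=0):
    """Repeatedly refit a Gaussian 'model' to samples drawn from itself.

    Each generation sees only the previous generation's output, so
    estimation errors compound. Returns the fitted variance per generation.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the 'true' data distribution
    variances = [sigma ** 2]
    for _ in range(generations):
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        mu = statistics.fmean(samples)      # refit the mean
        sigma = statistics.pstdev(samples)  # refit the spread (maximum-likelihood estimate)
        variances.append(sigma ** 2)
    return variances


if __name__ == "__main__":
    v = simulate_self_training()
    print(f"variance at generation 0: {v[0]:.3g}")
    print(f"variance at generation {len(v) - 1}: {v[-1]:.3g}")
```

In this toy setting, the fitted variance tends to shrink from one generation to the next, so the tails of the original distribution, the rarely seen content, are progressively lost.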

Peterson (2025) argues that the availability of AI content, and the tendency of AI models to ignore less frequently seen content, effectively dilutes the available base of knowledge. This leads to the “Knowledge Collapse” problem, where important content is lost. The “Model Collapse” and “Knowledge Collapse” problems could accelerate the established problem of “Truth Decay” (RAND Corporation, 2019).

Erroneous outputs and embedded bias errors are obstacles to legitimate applications of AI systems, but they do not present obstacles in the production of propaganda and disinformation, which are not concerned with the truth. Poisoning of AI model training datasets with propaganda is now an identified problem (Bagdasaryan and Shmatikov, 2022; Nguyen et al., 2024). Sadeghi et al. (2024) found multiple AI chatbots presenting propaganda narratives as fact as a result of training dataset poisoning by a nation state actor.

The integration of generative AI with web-based platforms is already being pursued as a vehicle for producing and sharing malign propaganda (Vykopal et al., 2024). The most disturbing recent instance involves training an AI model to digitally emulate a deceased ultra-nationalist propagandist (Radauskas, 2023).

Predicted a quarter of a century ago (Kopp, 2000), convincing “deep fake” imagery and video produced by generative AI now exhibit increasingly high fidelity and low production cost due to exponential growth in computer technology, and can enhance propaganda acceptance (Helmus, 2022).

Accurately assessing the effects of specific attacks is very difficult without standardised measures and detailed data showing the exposure and vulnerability of populations (Kopp, 2024). A nation-by-nation analysis of techniques employed has underscored this problem (Łabuz and Nehring, 2024). The authors explored the use of “deep fake” technology in election campaigns in eleven nations across the globe, specifically the USA, Turkiye, Argentina, Poland, UK, France, India, Bulgaria, Taiwan, Indonesia, and Slovakia. They also found non-election related political exploitation in Estonia, Germany, Israel, Japan, Serbia, Sudan, and other nations, showing that this technology has become a mainstream tool for malign manipulation of public opinion.

Łabuz and Nehring (2024) found the highest frequency of “deep fake” technology use in the Argentinian election of 2023, but concluded that despite intensive use by most campaigns, the election outcome was not strongly impacted. Their study shows that the use of “deep fake” technology in 2023 was haphazard, and techniques for maximising effects on audiences were mostly not refined. They found little evidence of precise message targeting, and in many instances the poor quality of fakes permitted easy debunking by media and political opponents.

Microtargeting, where generative AI is used to produce messages customised to the vulnerabilities of the specific individuals being targeted, has proven effective in political advertising (Simchon et al., 2024). The widely debated Cambridge Analytica scandal involved the abuse of personal information to enable microtargeting of voters in an election (Berghel, 2018).

The use of AI algorithms that suggest links based on a user's access history in Internet searches has caused further problems. Pariser identified the “filter bubble” effect, where users become isolated in groups that share common interests and viewpoints, and filtering hides alternative content (Pariser, 2011). Microtargeting and filtering can produce a positive feedback loop, in which existing cognitive biases are reinforced by further exposure to congruent information, thus increasing confidence in a belief that may be biased or false. For instance, after accessing a few biased items, a user may become immersed in a deluge of biased or false material (Green, 2014). Fisher (2022) details numerous cases where the spread of fake ideas and conspiracy theories in this way has spilled out into real-world violence.

Feedback loops have been shown to contribute to polarisation (Rafail et al., 2024). Recent studies have used highly polarised test populations to test hypotheses about feedback loops, echo chambers, filter bubbles and impacts of social media exposure (Asimovic et al., 2021, 2023; Guess et al., 2023; Nyhan et al., 2023; Törnberg, 2018; Cinelli et al., 2021; Dahlgren, 2021). The outcomes suggest weak impacts of these mechanisms, which in turn indicates that in a highly polarised population, mechanisms such as confirmation bias, social identity maintenance and pluralistic ignorance are dominant. Uncritical acceptance of false beliefs that appear to be coherent with prior beliefs accepted in the population is a common outcome. The paucity of studies on weakly polarised or unpolarised populations leaves the generality of claimed minor impacts only weakly tested.

Malign AI models, deep fakes, microtargeting, AI search algorithms and poisoning of training data sets exacerbate the implicit integrity problems seen in AI (Helmus, 2022; Radauskas, 2023; Cinà et al., 2023; Simchon et al., 2024). Increasing reliance on such systems for news and information is undermining sound decision-making (Winchester, 2023). AI can therefore directly contribute to dysfunctional cognition by inadvertently or intentionally injecting often persistent false beliefs, false interpretations and biases into its users (Honigberg, 2022; Vicente and Matute, 2023).

5 Malign exploitation of dysfunctional thinking

Dysfunctional thinking in individuals and groups presents opportunities for manipulation and exploitation. Such manipulation has a colourful history, involving especially propaganda, gaslighting, and political deception (Bernays, 1928; Davis and Ernst, 2019; Kahan, 2011; Bardon, 2019; MacKenzie and Bhatt, 2020).

The aim of an exploiter is to gain an advantage over victims and elicit behaviour that favours the exploiter. Opportunities arise especially where objective truth creates dissonance relative to the victim's prior beliefs or interpretations, or where understanding truth imposes a high cognitive load (Kaaronen, 2018; Sweller, 1988; Jian et al., 2019).

Gershman (2019) showed by the application of Bayesian reasoning that supporting beliefs, termed auxiliary hypotheses, can prevent disconfirmation of a belief when confronted with disconfirming evidence. Exploiters can therefore reinforce false beliefs or reduce confidence in true beliefs by focusing on supporting beliefs.
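The protective role of an auxiliary hypothesis can be shown with a small worked example of Bayes' rule over a central belief h and a supporting belief a. The prior and likelihood values below are hypothetical numbers chosen for illustration, and the function is a minimal sketch of this style of argument rather than Gershman's own model or code.

```python
from itertools import product


def posterior_with_auxiliary(prior_h, prior_a, likelihood):
    """Posterior probabilities of a central belief h and an auxiliary
    belief a after observing evidence d.

    likelihood[(h, a)] is P(d | h, a); h and a are independent a priori.
    Returns (P(h | d), P(a | d)).
    """
    joint = {}
    for h, a in product((True, False), repeat=2):
        p_h = prior_h if h else 1.0 - prior_h
        p_a = prior_a if a else 1.0 - prior_a
        joint[(h, a)] = p_h * p_a * likelihood[(h, a)]
    evidence = sum(joint.values())
    post_h = (joint[(True, True)] + joint[(True, False)]) / evidence
    post_a = (joint[(True, True)] + joint[(False, True)]) / evidence
    return post_h, post_a


# Hypothetical likelihoods: the evidence is improbable only if BOTH the
# central belief and the auxiliary belief are true.
likelihood = {
    (True, True): 0.05,
    (True, False): 0.5,
    (False, True): 0.5,
    (False, False): 0.5,
}

# Strong prior on h, uncertain auxiliary belief
post_h, post_a = posterior_with_auxiliary(0.9, 0.5, likelihood)

# Same evidence when the auxiliary belief is treated as certain
post_h_no_aux, _ = posterior_with_auxiliary(0.9, 1.0, likelihood)

if __name__ == "__main__":
    print(f"P(h | d) with uncertain auxiliary: {post_h:.3f}")
    print(f"P(a | d): {post_a:.3f}")
    print(f"P(h | d) with auxiliary held certain: {post_h_no_aux:.3f}")
```

With these assumed numbers, confidence in the central belief falls only from 0.9 to about 0.83, because most of the blame for the disconfirming evidence is shifted onto the auxiliary belief, which drops from 0.5 to about 0.16. If the auxiliary belief is held fixed, the same evidence reduces confidence in the central belief to about 0.47.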

Creative exploitation of dysfunctional thinking employs tools that are all instances of well-studied and understood compound deceptions. In propaganda, gaslighting and deceptive political practices, it is common to exploit the victim's prior false beliefs and interpretations, and to provide multiple channels of deception (Haswell, 1985; Brumley et al., 2005). Exploiters utilise false interpretations of objective truths, and if necessary, create new false posterior beliefs and interpretations (Haswell, 1985; Kalbfleisch and Docan-Morgan, 2019; Halbach and Leigh, 2020).

Once the victim is entrapped by a false belief or interpretation, the exploiter can introduce further false beliefs and interpretations. The victim then perceives an alternate reality, which is often sufficiently internally consistent to minimise cognitive dissonance (Malgin, 2014; Pies, 2017a).

Kahneman (2011) argued that humans have two modes of processing information: fast thinking, based on heuristic shortcuts, and slow thinking, based on reasoning. Empirical studies show that only some heuristics facilitate the detection of deceptions, while most are ineffective (Qin and Burgoon, 2007; Verschuere et al., 2023). The ineffective heuristics that fast thinking applies to detecting deceptions are themselves prior false beliefs and interpretations about deceptions. These include assumptions about the veracity of sources (Verschuere et al., 2023; Orchinik et al., 2023).

False prior beliefs are often exploited because confirmation bias makes the victim susceptible to falsehoods that are coherent with the false prior belief. Victims may accept false claims that reinforce their established bias, as this is a consequence of Bayesian-like belief updating (Pilgrim et al., 2024). Empirical studies that have shown the strong influence of prior beliefs, true or false, support the observations of Tappin et al. (2021) and Orchinik et al. (2023). In both advertising and propaganda, popular techniques involve exposing audiences to large-scale, repeated messages intended to influence their beliefs. This demonstrates the effort that is required to dislodge established beliefs, whether they are true or false (Kopp, 2005, 2006; Asimovic et al., 2021, 2023; Pilgrim et al., 2024).

Beliefs that are not falsifiable, and not subject to revision, can reinforce entrapment (Friesen et al., 2015; Bardon, 2019). Where prior beliefs and interpretations are not false, an exploiter will attack both. Often they accomplish this by deceptions that increase uncertainty, and by exploiting the false consensus bias, while logical fallacies are used to disrupt proper interpretations. Once the victim is uncertain about their correct prior beliefs and interpretations, they can be seduced with false beliefs and interpretations. The diversity of known deceptions used in exploitation is empirical proof that skilled exploiters often target all vulnerabilities in a victim's cognitive functions (Bernays, 1928; Dorpat, 1996; MacKenzie and Bhatt, 2020).

5.1 Gaslighting, propaganda, post-truth, and fake news

A notable instance of exploitation is gaslighting. This refers to the systematic practice of feeding a victim or victims with falsehoods, so that they begin to doubt their own memory, perception, or judgment (Dorpat, 1996). An exploiter will consistently deny facts and make blatantly false statements, thus undermining the victim's prior beliefs and interpretations and paving the way to promote their agenda. In effect, the exploiter is deceptively creating uncertainty in the victim's understanding.

Individuals have used gaslighting in many different contexts, for instance, to conceal extramarital affairs from a spouse (Gass and Nichols, 1988), in identity-related abuse of transgender children by their parents (Riggs and Bartholomaeus, 2018), and in racial gaslighting to maintain white supremacy in the United States (Davis and Ernst, 2019). Gaslighting has also played a part in domestic violence (Sweet, 2019) and in suppressing whistle-blowing within institutions (Ahern, 2018). Sweet (2019) suggested that gaslighting is more effective in cases where it is rooted in social inequalities, especially gender, race, and sexuality.

While the tools employed for different kinds of manipulation may be identical, the scale of the manipulated population varies widely. Like political manipulations, propaganda typically targets nation states or even global populations, while gaslighting typically targets sub-populations or individuals.

The common thread is the use of deceptions intended to inject false beliefs and false interpretations into the minds of the victims. Where successful, these deceptions put the victims into a perceptual alternate reality, where common dysfunctional thinking behaviours capture them and reinforce a system of false beliefs and interpretations.

The Nazi and Soviet propaganda systems were large, internally coherent machines for gaslighting victim populations (Krumiņš, 2018; Sinha, 2020). A notable feature of both was the intentional exclusion of external information sources. This precluded cognitive dissonance in the victim population, and enabled exploitation of prior cognitive biases and anxieties where available. By exploiting online news platforms and social media, gaslighting has also played a role in Western, especially American, politics (Carpenter, 2018).

As observed above, the transition to digital media, and the advent of social media, created immense new opportunities for exploitation, by accessing millions or billions of users globally in timescales of minutes. This presented unprecedented opportunities to propagate misinformation, disinformation, propaganda, and fake news among the public (Bovet and Makse, 2019).

The nature of social media platforms, with their “influencers,” “follower networks,” “echo chambers,” “filter bubbles,” and automated ranking algorithms, presents significant opportunities for exploitation. In marketing, influencers and viral promotion are well known and widely used (Miller and Lammas, 2010; Vaidya and Karnawat, 2023). Bakshy et al. (2011) showed that multiple strategies using influencers could produce cascades in social media traffic. Dubois and Blank (2018) showed that social media users who rely on a narrow range of sources are susceptible to “echo chambers,” although the prevalence was lower than earlier thought. Chitra and Musco (2020) found that for multiple kinds of social media platforms, Pariser's filter bubble effect could “greatly increase opinion polarisation in social networks.”

Fake user accounts, software bots and troll factories concurrently exploit confirmation bias and, especially, false consensus bias. Both empirical studies (Vosoughi et al., 2018; Linvill and Warren, 2020) and simulations (Xiao et al., 2015) demonstrate the effectiveness of these techniques. They show that deceptive messages propagate exponentially in a manner akin to biological pathogens, as noted earlier (Castiello et al., 2023). However, they spread much faster than biological pathogens and across much larger populations, reflecting both the well-known behaviour of connectivity avalanches (Paperin et al., 2011; Green, 2014; Akbarpour and Jackson, 2018) and the nature of the digital infrastructure (Nickerson, 1998; Pariser, 2011; Kalogeratos et al., 2018; Kopp et al., 2018; Toma et al., 2019).

For example, even if an individual's peers are not supporting an agenda, leaders could make consistent and repeated false statements about public opinion. This practice can undermine individuals' perceptions or judgments. They might begin to believe the propaganda, a situation previously explained using game theory (Press and Dyson, 2012). Thus, gaslighting a population by using propaganda, post-truth, and fake news will affect both the individual's opinion and their perception of public opinion.

Another recently popularised term is post-truth, which describes statements that appeal to public emotions, bypassing the truth and ignoring expert opinions or fact-checking. These false and misleading statements are used to gaslight the population. The intent is to make individuals ignore their own judgment, and eventually sway public opinion towards the promoted falsehood (Rocavert, 2019). While this term was popularised after the 2016 Brexit vote and the 2016 US presidential election, it was already an implicit feature of past Nazi and Soviet propaganda systems (McIntyre, 2018).

McIntyre (2018) suggested that the post-truth era is a result of people favouring “alternative facts” in place of actual facts, and of feelings having more weight than evidence:

What is striking about the idea of post-truth is not just that the truth is being challenged, but that it is being challenged as a mechanism for asserting political dominance.

Many recent studies have investigated the prevalence of post-truth in politics. It takes the form of “obfuscation of facts, abandonment of evidential standards in reasoning, and outright lying” (McIntyre, 2018; Sismondo, 2017; D'Ancona, 2017). Introducing new information with factual evidence is not enough to break this deep-seated spell of post-truth. Rather, it requires political intervention and sincere incentives that motivate the public to become well-informed instead of staying misinformed (Lewandowsky et al., 2017).

A curious aspect of the post-truth phenomenon is that improbable falsehoods are frequently accepted without question. In part, this may reflect the potential of falsehoods to minimise cognitive dissonance and load for an audience, whereas objective truth may increase it. This could explain why falsehoods tend to propagate better in social media (Vosoughi et al., 2018). Shannon's (1948) information theory showed that improbable but true messages contain much more information than highly probable messages. This is consistent with empirical findings (Ruffo et al., 2023) that disinformation worked “through a series of cognitive hooks that make the information appealing, triggering a psychological reaction in the reader,” where disinformation employed more novelty, was easier to process, and produced a stronger emotional reaction in the audience.
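Shannon's measure can be illustrated with a short worked example. This is a sketch only: the probabilities below are hypothetical values chosen for illustration and are not drawn from the studies cited above. The information content (self-information) of a message $m$ that occurs with probability $p(m)$ is

$$
I(m) = -\log_2 p(m) \quad \text{bits.}
$$

For an expected message with $p(m) = 0.5$ and a surprising message with $p(m) = 0.01$:

$$
I_{\text{expected}} = -\log_2 0.5 = 1 \ \text{bit}, \qquad I_{\text{surprising}} = -\log_2 0.01 \approx 6.64 \ \text{bits.}
$$

The measure depends only on how probable the message is to the receiver, not on whether it is true, so an improbable falsehood that is taken at face value registers as equally novel.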

If humans are instinctively drawn to the improbable, then individual and social cognitive dysfunctions create an unusually high susceptibility to improbable falsehoods.

5.2 The rise of denialism, conspiracy theories, and disruptive propaganda

As shown earlier, many kinds of dysfunctional thinking contribute to often-cited societal problems, such as the widespread influence of denialism (Section 2.7), conspiracy theories (Section 2.1), and “fake news” (Section 5.1) (Green, 2014; Beauvais, 2022). In many social contexts, especially politics, a party challenging an established belief, whether true or false, will capture attention and appear to be strong, while those defending appear weaker. This imbalance is a problem because false claims, being unburdened by facts, are easy to fabricate. Mass media and social media instantly propagate sensational claims, even if they are improbable. In contrast, disproving a false claim can take hours, days or even months. Also, disproof often requires technical evidence that is difficult to understand, and thus poorly reported.

The increasingly esoteric, technical nature of scientific information makes the above problems worse. Science has become increasingly difficult for lay audiences to comprehend (Plavén-Sigray et al., 2017). This problem has contributed to increasing distrust in science (Rutjens et al., 2018). Instead, people often turn to sources they trust, even sources whose trustworthiness is weak.

Kahneman (2011) showed that intuitive, fast thinking favours cognitive biases over reason (Kahneman and Tversky, 1996). Where “information overload” arises (Toffler, 1970), susceptibility to fast thinking and avoidance of cognitive dissonance are inevitably increased. Such cognitive “shortcuts” can lead to errors in perception and interpretation. For instance, people are prone to search for patterns, and may mistake random arrangements for deliberate design (see Section 2.1). They often confuse correlation with causation (Altman and Krzywinski, 2015) and are prone to mistake random events for deliberate intent (Waytz et al., 2010; Green, 2014).

In exploiting these vulnerabilities, conspiracy theorists often employ well-tried educational methods to spread falsehoods. For instance, trying to argue against entrenched ideas only reinforces them (Nyhan and Reifler, 2010). Leading people to discover ideas for themselves is far more effective (Bruner, 1961).

False interpretations of truthful accounts can be very persuasive. Allen et al. (2024) found that such reports had 46-fold more effect than reports identified as misinformation by a social media platform.

Susceptibility to malign messaging has been identified as a factor in the spread of conspiracy theories. Bowes et al. (2023) found that conspiratorial ideation correlates closely with subjective beliefs in threats and powerlessness, intolerance of ambiguity, receptivity to misinformation, and collective narcissism.

Social pressures also reinforce the acceptance of falsehoods. Pluralistic ignorance can drive members of social groups to believe that everyone in their group holds to a claim, so they advertise their membership by spreading the claim themselves. If an assertive advocate of the falsehood is present, groupthink may also arise.

Finally, digital technology exacerbates these problems by virtue of its pervasive coverage, dense network interconnections, and speed, as observed earlier. Falsehoods can be rapidly constructed using AI tools, rapidly and widely disseminated using digital mass media, social media and messaging platforms, and reinforced using “bots” and “troll farms” as observed above (Section 4). Algorithmic filtering may produce “filter bubbles” and positive feedback “echo chambers” that further reinforce prior biases and polarisation, acting in effect as passive censorship (Pariser, 2011).

These problems are exacerbated by absent or biased fact-checking, which legitimises falsehoods, whereby fact-checkers become proxy deceivers, thus supporting exploiters (Soprano et al., 2024).

Denialism, which amounts to reflexive rejection of objective truths, reinforces the previous problems. Denialists and exploiters of denialism employ the full gamut of traditional deception tactics to self-deceive, or recruit others to share their deceptions. These tactics include false allegations of conspiracy, fabrication of false evidence, cherry-picking objective truths, demanding unattainable proofs for counterargument, exploiting logical fallacies, and using deception to increase uncertainty (Hoofnagle, 2007; Diethelm and McKee, 2009; Green, 2014). The problem of denialism, exacerbated by the Dunning-Kruger effect, is central to the problem of pervasive public rejection of expert insights (Nichols, 2017).

In summary, exploiters possess multiple asymmetric advantages when individuals and groups are victims of cognitive dysfunctions.

6 Measures to defeat malign exploitation

Given the immense diversity of methods and techniques in use to effect malign exploitation, there is no simple panacea for this problem, and any such expectation is wishful thinking (Kopp, 2024).

As noted earlier, the way malign messages spread closely reflects models used in epidemiology (Castiello et al., 2023). Biological pathogens are typically defeated or controlled by measures analogous to those discussed below: (1) suppress the source, (2) control or suppress propagation, and (3) increase the immunity of the exposed population. There is a case to be made for this approach in dealing with malign exploitation (Kopp, 2024, 2025).
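The epidemiological analogy can be made concrete with a toy compartment model. The following is a minimal sketch, not the model used by Castiello et al. (2023) or Kopp (2024, 2025): the function name `peak_spreaders`, the update rule, and all parameter values are assumptions chosen purely for illustration. Each of the three measures corresponds to one parameter: `source_rate` (the source), `beta` (propagation between peers), and `immune_fraction` (immunity of the exposed population).

```python
def peak_spreaders(source_rate=0.001, beta=0.5, gamma=0.1,
                   immune_fraction=0.0, steps=300):
    """Toy SIR-style model of message spread (illustrative assumptions only).

    source_rate:     fraction of susceptibles reached directly by the originator per step
    beta:            peer-to-peer transmission rate per step
    gamma:           rate at which spreaders stop spreading per step
    immune_fraction: fraction of the population that resists the message from the start
    Returns the peak fraction of the population actively spreading the message.
    """
    susceptible = 1.0 - immune_fraction
    spreading = 0.0
    peak = 0.0
    for _ in range(steps):
        # New spreaders are recruited by peers (beta) and by the source itself.
        newly_convinced = (beta * spreading + source_rate) * susceptible
        lost_interest = gamma * spreading
        susceptible -= newly_convinced
        spreading += newly_convinced - lost_interest
        peak = max(peak, spreading)
    return peak


# Hypothetical scenarios, one per measure.
baseline = peak_spreaders()
no_source = peak_spreaders(source_rate=0.0)        # (1) suppress the source
slow_propagation = peak_spreaders(beta=0.05)       # (2) suppress propagation
inoculated = peak_spreaders(immune_fraction=0.6)   # (3) increase immunity

for name, value in [("baseline", baseline), ("no source", no_source),
                    ("slow propagation", slow_propagation),
                    ("inoculated", inoculated)]:
    print(f"{name}: peak spreading fraction = {value:.3f}")
```

In this sketch, each intervention lowers the peak fraction of active spreaders relative to the baseline. The model ignores network structure, message content, and individual differences, so it indicates only the direction of each effect, not its real-world size.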

Malign messaging intended for exploitation is typically designed for desired effects. It must be produced, delivered (directly or by proxies), and its effects must be assessed to learn whether it achieved the desired effects. Counter-measures can therefore target one or more phases in this process: the means of creating a malign message; its distribution to targeted individuals; or its processing by a targeted individual. The last of these provides the option of making a target population more resistant to malign messages (Roozenbeek et al., 2022).

In practical terms, each phase of the production and delivery cycle of malign messaging is susceptible to defeat or to measures that degrade its effects (Kopp, 2024, 2025).

Law enforcement can halt production in a persistent, or non-persistent, manner. Nation state producers present the biggest challenges as they typically employ national resources to both produce and protect their means of production and distribution (Kopp, 2025). They can be countered by cyber-attacks (Nakashima, 2019) or other means, such as regime change.

The distribution of malign messaging presents further opportunities for defeat or mitigation. The means of distribution are now primarily digital. In nation states subjected to malign messaging, the entities that operate digital distribution fall under the footprint of regulatory bodies, or of law enforcement.

Social media platforms, websites, and encrypted messaging platforms can be blocked to deny access. This approach has been widely employed, but can often be overcome by technological means. Effectiveness has varied widely, as polarised populations will often seek the denied content via other channels (Golovchenko, 2022; Okholm et al., 2024).

Another option to reduce the spread of disinformation is to “deplatform” malign influencers. However, such parties may simply migrate to another platform, if possible in another jurisdiction (Jhaver et al., 2021; Ribeiro et al., 2024). Removal of proxies who share malign messages could present legal challenges (Law Council of Australia, 2023), as they may believe the false messages.

Using fact checking to filter content presents practical challenges, especially the potential for bias and limitations on competency. These constraints also impact the use of AI for fact checking (Korb, 2022; Kopp, 2025). Fact checking frequently fails due to bias in a polarised audience (Hameleers and van der Meer, 2020).

Finally, immunisation or inoculation strategies aim to temporarily or permanently equip potential victims with the capability to identify malign messaging (Roozenbeek et al., 2022). However, recognising that a message contains malign disinformation does not guarantee it will be rejected (Pies, 2017b; Ecker et al., 2022). Persistence of effects is yet to be proven, and experience with the “forgetting curve” indicates that retention will be a challenge for this approach (Sarno et al., 2022; Murre and Dros, 2015).

7 Conclusion

This study proposed a synthesis of models that trace cognitive dysfunction from individuals to groups and societies. It also argued that advances in digital technology and AI permit the formation of immense, highly connected social networks. These create conditions for the rapid emergence of large-scale, dysfunctional group behaviours.

Most previous studies have focused on processes at a single scale. This account began by surveying a range of these models, which deal with cognitive dysfunction at each of the above scales. Individuals are affected by limitations on cognition, and by innate drives. Cognitive biases of individuals lead to dysfunctional effects in the behaviour of groups. In social networks, peer-to-peer interactions can propagate false beliefs. New technologies, especially the Internet, enable these interactions to cascade widely and rapidly. Finally, the study showed how malicious agents exploit cognitive dysfunctions.

The impacts of exponentially growing digital technologies present a daunting picture of the future. They have accelerated and expanded the scale of dysfunctional social cognition and its effects (Shao et al., 2018; Bovet and Makse, 2019; Treen et al., 2020; Himelein-Wachowiak et al., 2021; Fisher, 2022; Ruffo et al., 2023).

The future will see increasing global coverage by systems that integrate pervasive networking with AI systems capable of producing convincing content, including material that is false, misleading or malicious. The commodification of AI will further exacerbate such challenges, by providing effective tools for malign exploitation. Unless controlled, AI-generated content will rapidly displace human-generated content, and nonsensical and malign messaging will become pervasive. Opportunities for malign exploitation will abound, and there is immense potential for commercial and political abuse of such an environment.