- Department of Commerce, Delhi School of Economics, University of Delhi, New Delhi, India

The objective of this study was to conduct a meta-analysis of past research on effective teaching and its impact on student learning outcomes. Meta-analysis is a powerful tool for synthesizing previous research and empirically validating theoretical frameworks. For this purpose, the dynamic model of educational effectiveness was used as a guiding framework to select and organize 132 studies examining the impact of teaching factors on student learning outcomes. The teaching factors of the dynamic model were found to be moderately associated with learning outcomes. Moderators such as level of education and type of study were also identified. The study has theoretical, methodological and practical implications. It points out that, when it comes to teaching, imposing non-essential and superfluous dichotomies between different teaching approaches might be impractical. The findings may also inform practitioners and policymakers, especially in the domain of faculty development and education programs. They can give existing or prospective teachers an opportunity to practice and rehearse these factors in the classroom, reflect on them and receive feedback on how their practice can be improved.

Introduction

Educational effectiveness research (EER) investigates the factors, such as teaching methods, curriculum, leadership and the learning environment (Goldstein, 2003), that affect students’ learning outcomes. The first phase of EER emerged as a reaction to the studies by Coleman (1966) and Jencks et al. (1972), which concluded that schools had little impact on students compared with the effect of students’ own ability and social background. This conclusion was counter-intuitive and disquieting to many educators and educational researchers, and researchers such as Weber (1971), Rutter et al. (1979) and Mortimore et al. (1988) began to respond. These studies found “school effects” to be consistent and significant. The early educational effectiveness studies by Brookover and Lezotte (1979), Edmonds (1979) and Rutter et al. (1979) found that some schools were more effective than others even when student background characteristics were controlled for. They also presented evidence that these effects could be attributed to process characteristics of schooling such as school climate, educational leadership, curriculum and organisational characteristics. It has also been noted that a large proportion of the variance in student achievement can be explained by teachers’ behaviour in the classroom, that is, what teachers do in the classroom and how they interact with students (Creemers and Kyriakides, 2006). Deep learning cannot be achieved without effective teacher guidance. However, despite advances in the literature on teachers’ role in promoting student learning, more research is required to understand what exactly teachers do that promotes student learning outcomes. This is precisely the motivation for the present meta-analysis.

School effectiveness research has also been criticised for its lack of theoretical integration and of connection to other areas of education. Attempts have therefore been made to integrate the work done in EER and to identify the teaching factors related to student learning outcomes. Several theoretical models developed in past decades have integrated factors related to teaching-learning effectiveness (Walberg, 1984; Brophy and Good, 1986; Creemers and Reezigt, 1996). In the early 1990s, numerous attempts were made to explain why schools differed in their effects. Research moved from an “input-output” to an “input-process-output” paradigm (Teddlie and Stringfield, 1993), with the classroom emphasized as the key process variable.

Models of educational effectiveness were developed by Scheerens (1990), Creemers and Reezigt (1996) and Stringfield and Slavin (1992). EER then became a dynamic area of research that no longer treated education as an inherently stable set of arrangements; instead, educational systems were viewed as multiple interacting “levels” that jointly produce various outcomes. Scheerens and Creemers (1989) measured educational effectiveness at three levels: the policy level, the school level and the classroom level. This model became the basis for Creemers and Reezigt’s (1996) comprehensive model of educational effectiveness, which emphasised the factors describing the teacher’s instructional role that affect student learning outcomes. The eight factors included in the model are: orientation, structuring, questioning, teaching-modelling, application, management of time, the teacher’s role in making the classroom a learning environment, and classroom assessment. These eight factors have been found to be associated with student learning outcomes (e.g., Brophy and Good, 1986; Rosenshine and Stevens, 1986; Scheerens and Bosker, 1997; Darling-Hammond, 2000; Muijs and Reynolds, 2000). In contrast to the previous models mentioned above, the Creemers and Reezigt (1996) model is considered more comprehensive because it adopts an integrated approach to defining the quality of teaching. The model combines two approaches to teaching, direct and constructivist. It includes not only skills associated with direct teaching and learning, such as “structuring” and “questioning”, but also “orientation” and “teaching modelling”, which are in line with constructivist theories of teaching and with promoting the development of metacognitive skills (Slavin, 1983; Slavin and Cooper, 2001). The model also focuses not only on traditional learning but also on collaborative learning, which is emphasised in the overarching factor “contribution of the teacher to the establishment of the classroom learning environment”.

The dynamic model stresses the interrelated nature of the teaching factors and the importance of grouping specific factors. This helps simplify the complex nature of teaching and also provides specific strategies for improving teaching practice. The model has been supported by longitudinal studies conducted by Creemers and Kyriakides (2006) and Kyriakides et al. (2000). Drawing on this literature, a meta-analysis exploring the impact of teaching factors on student learning outcomes was conducted.

In this paper, the researchers have used the dynamic model of educational effectiveness (Creemers and Kyriakides, 2008) as the fundamental framework to organize and structure the sample studies related to factors affecting teaching and student learning outcomes.

This paper also tests the empirical validity of the dynamic model of educational effectiveness. The dynamic model facilitates the selection of studies and establishes the criteria for classifying the factors used within each study into different categories. This type of meta-analysis also helps reveal those aspects of theoretical models that have not been empirically tested and need more attention in future research. Furthermore, it helps policymakers by providing robust evidence with which to channel and prioritize their efforts and resources. This can be achieved by focusing on the factors most strongly associated with student learning, especially when fiscal constraints do not allow multiple factors to be integrated into intervention programs.

The next section provides a short account of the “Dynamic model of educational effectiveness” based on which this meta-analysis has been structured.

Dynamic Model of Educational Effectiveness

The dynamic model given by Creemers and Reezigt (1996) is multilevel in nature, encompassing four levels of factors that influence student learning: the context, the school, the classroom/teacher, and the student level. It is an extension of Carroll’s model of school learning (Carroll, 1989). Carroll’s model states that the degree of mastery of a field or subject is the ratio of the amount of time actually spent on the learning task to the total time required. According to Carroll (1989), the time actually spent on learning is the smallest of three quantities: opportunity (the time allowed for learning), perseverance (the time the learner is willing to spend) and aptitude (the time the learner needs to learn).
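Carroll’s ratio can be made concrete with a short calculation. The sketch below is a minimal illustration, assuming all three quantities are measured in the same time unit; the function name `carroll_degree_of_learning` is hypothetical and not part of any published specification of the model.

```python
def carroll_degree_of_learning(opportunity: float, perseverance: float,
                               time_needed: float) -> float:
    """Degree of learning under Carroll's model of school learning (illustrative).

    All arguments are times in the same unit (e.g. hours). time_needed reflects
    aptitude: the time this learner needs to master the task.
    """
    if time_needed <= 0:
        raise ValueError("time_needed must be positive")
    # Time actually spent is the smallest of opportunity, perseverance and time needed.
    time_spent = min(opportunity, perseverance, time_needed)
    # Degree of mastery = time actually spent / time needed (at most 1.0).
    return time_spent / time_needed


# Hypothetical learner: needs 10 hours, is allowed 8, is willing to spend 12.
print(carroll_degree_of_learning(opportunity=8, perseverance=12, time_needed=10))
```

Here opportunity is the binding constraint, so the learner reaches 80% of full mastery; raising the time allowed to 10 hours or more would bring the ratio to 1.0.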

In Creemers’ model, time and opportunity are taken into account at both the classroom and the school level. Factors at the school and context levels have both direct and indirect effects on student achievement, since they can influence student learning directly or through their impact on teaching and the learning environment. Teaching is central to the model through its classroom/teacher level. Based on the findings of teacher effectiveness studies (Rosenshine and Stevens, 1986; Frazer et al., 1987; Muijs and Reynolds, 2000), the model examines eight observable teacher behavior factors that can influence student learning: orientation, structuring, questioning, teaching modeling, application, time management, the classroom as a learning environment, and assessment. Since this meta-analysis concerns teaching factors, the dynamic model at the classroom/teacher level is briefly described below:

i) Orientation: This factor refers primarily to teacher behavior in providing clear objectives for the tasks conducted in class. Orientation makes tasks and lessons meaningful to students and, at the same time, encourages active participation in the classroom (De Corte, 2000; Paris and Paris, 2001).

ii) Structuring: According to Rosenshine and Stevens (1986), student achievement increases when teachers actively present content and structure it as follows:

a) Beginning with an overview and/or a statement of objectives.

b) Outlining the content to be covered and pointing out transitions between lessons.

c) Highlighting main ideas.

d) Reviewing main points at the end.

Summary reviews help integrate and reinforce the learning of major points (Brophy and Good, 1986). These structuring elements help students memorize information and comprehend it as an integrated whole, recognizing the interlinkages between its different parts.

iii) Questioning: This factor is defined in terms of five elements in the dynamic model. First, teachers should offer a mix of questions that require a single response and questions that require a detailed explanation. Second, the length of the pause after a question should vary according to its difficulty. Third, question clarity can be assessed by the extent to which students understand what is required of them. Fourth, teachers should expect correct answers to most questions and overt, if incomplete or incorrect, answers to the others, rather than no answer at all (Brophy and Good, 1986). Fifth, correct answers should be rewarded or acknowledged, as this motivates not only the students who gave them but other students as well. For incorrect answers, teachers should try to elicit an improved response by paraphrasing the question or giving cues rather than ending the interaction (Rosenshine and Stevens, 1986).

iv) Teaching modeling: This factor addresses the role of teachers in helping students devise problem-solving strategies. Effective teachers either present a clear strategy or help students develop their own strategies for solving different problems (Gijbels et al., 2006; Grieve, 2010).

v) Application: An effective teacher gives students adequate opportunities to practice a skill or procedure discussed in class (Borich, 1992). This factor also examines whether knowledge is applied to real-life problems. Application tasks in class should serve as starting points for the next step of teaching and learning.

vi) Classroom as a learning environment: According to the dynamic model, the types of interaction in the classroom should be examined rather than students’ perceptions of the teacher’s interpersonal behavior. This factor therefore consists of five elements: teacher-student interaction, student-student interaction, competition between students, the teacher’s treatment of students, and classroom disorder. The first two elements are crucial for measuring classroom climate (Cazden, 1986; Den Brok et al., 2004), while the other three reflect the teacher’s attempts to create a supportive environment for learning (Walberg, 1986).

vii) Time management: Efficient management of time is considered an indicator of a teacher’s ability to manage a classroom (Kyriakides and Tsangaridou, 2008). Effective teachers organize and manage the classroom environment according to the time available and maximize engagement rates (Creemers and Reezigt, 1996).

viii) Assessment: Assessment is one of the important aspects of teaching (Stenmark, 1992). Assessing students helps identify their needs and also helps teachers evaluate their own practice. This factor takes into account the frequency of assessment, the formative use of assessment, and reporting to parents.

It should be noted that the eight factors of the dynamic model do not form a single teaching approach. Rather, the model combines elements of different teaching approaches, on the assumption that effective teaching can draw on several approaches.

Research Questions

The review synthesizes research on effective teaching and student learning outcomes and addresses the following research questions:

a) Is there a correlation between teaching factors and student learning outcomes?

b) Does the association between teaching factors and learning outcomes vary with level of education, country (western/non-western) and study design?

Materials and Methods

Selection Criteria

To capture evidence relevant to the research questions, studies were considered eligible for inclusion in the meta-analysis if they met the following criteria:

a) Reported original data.

b) Assessed teaching factors as defined in the Dynamic model of educational effectiveness.

c) Measured student achievement in relation to cognitive, affective and psychomotor learning outcomes.

d) Were publicly available online or in library archives.

The studies included in this meta-analysis were searched for in databases including the Education Resources Information Center (ERIC), Scopus, ProQuest, Web of Science and PsycARTICLES. Unpublished dissertations were also included. In addition, numerous education-focused peer-reviewed journals, such as British Educational Research Journal, Oxford Review of Education, Learning and Instruction, Teaching in Higher Education, and Learning Environments Research, were scrutinized.

Search, Retrieval and Selection of Studies

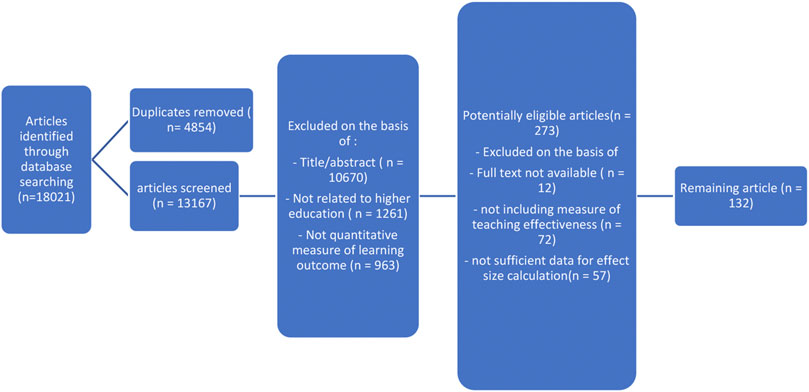

A total of 18,021 records published between 2000 and 2020 were identified through the databases. The last two decades were chosen because the major overhaul of the higher education sector took place in this period (Capano et al., 2019). The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines were followed in selecting studies (see Figure 1). Keywords used included: learning, teaching, learning outcome, and factors influencing teaching and learning. Records were screened on the basis of titles, abstracts and keywords, and only studies that met criteria a) to d) above were retained. The 372 articles that passed this initial screening were retrieved and their full texts evaluated. Finally, 132 studies were obtained and coded using a predefined coding form that elicited detailed information about each study, such as author, year, journal, type of study (longitudinal, cross-sectional, experimental), country (western/non-western), level of education (primary, secondary, college), number of participants, whether reliability was reported, and the statistics needed to compute effect sizes.
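A coding form of this kind can be represented as one structured record per coded effect. The sketch below is a hypothetical layout, not the authors’ actual form: the class `CodedStudy`, its field names and the example values are illustrative assumptions. It also assumes one record per teaching factor and outcome, since a single study may report several effects.

```python
from dataclasses import dataclass
from typing import Literal, Optional


@dataclass
class CodedStudy:
    """One coded effect from a primary study (hypothetical field names)."""
    author: str
    year: int
    journal: str
    study_type: Literal["longitudinal", "cross-sectional", "experimental"]
    region: Literal["western", "non-western"]
    education_level: Literal["primary", "secondary", "college"]
    n_participants: int
    reliability_reported: bool
    teaching_factor: str                      # e.g. "structuring", "time management"
    outcome_type: Literal["cognitive", "affective", "psychomotor"]
    effect_r: Optional[float] = None          # correlation, if reported
    effect_d: Optional[float] = None          # standardized mean difference, if reported instead


# A placeholder record; every value here is invented for illustration.
example = CodedStudy(
    author="Hypothetical, A.", year=2015, journal="Example Journal",
    study_type="cross-sectional", region="non-western", education_level="secondary",
    n_participants=250, reliability_reported=True,
    teaching_factor="questioning", outcome_type="cognitive", effect_r=0.25,
)
print(example)
```

Keeping the moderator variables (study type, region, education level) as fixed categories makes the later subgroup analyses a matter of grouping records by one field.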

Calculating Effect Sizes

To calculate the effect size for each teaching factor, the Fisher’s Z transformation of the correlation coefficient was computed. Because not all studies report their results as correlations, other effect size measures were converted to correlations using the formulas given by Rosenthal et al. (1994). For small correlations (−0.20 < r < 0.20), Zr and r differ very little (Hunter and Schmidt, 2004). Since the meta-analysis was based on effect sizes expressed as Cohen’s d, it was taken into account that when r is small, d is approximately twice r (Cohen, 1988).
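The conversions described above can be written out directly. This is a sketch assuming the common equal-group-size conversion between d and r, r = d/√(d² + 4); whether this exact formula was applied to every study here is an assumption, and the function names are illustrative.

```python
import math


def d_to_r(d: float) -> float:
    """Cohen's d to a correlation, assuming two groups of roughly equal size."""
    return d / math.sqrt(d * d + 4.0)


def r_to_d(r: float) -> float:
    """Correlation to Cohen's d; the exact inverse of d_to_r."""
    return 2.0 * r / math.sqrt(1.0 - r * r)


def fisher_z(r: float) -> float:
    """Fisher's z transformation, 0.5 * ln((1 + r) / (1 - r))."""
    return math.atanh(r)


for r in (0.10, 0.20, 0.37):
    print(f"r = {r:.2f}   z = {fisher_z(r):.3f}   d = {r_to_d(r):.3f}")
```

For r = 0.10 this gives z ≈ 0.100 and d ≈ 0.201, which illustrates both approximations mentioned in the text: z ≈ r and d ≈ 2r when r is small. As r grows the approximations loosen; for r = 0.37, z ≈ 0.388 and d ≈ 0.797.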

The procedure suggested by Mavridis and Salanti (2013), Quintana (2015) and Lambert et al. (2002) was adopted to conduct the meta-analysis in SPSS version 26.0. The MeanES macro was added using the SPSS INCLUDE command. This procedure has been used in various meta-analyses in the area of teacher effectiveness (Scheerens and Bosker, 1997; Kyriakides et al., 2000; Witziers et al., 2003). The analysis produced the unbiased mean effect size, its standard error, the 95% confidence interval, and heterogeneity statistics (Q, its p value and I²).

A meta-analysis can be conducted using either of two types of model: a fixed effect model or a random effects model. A fixed effect model assumes that all studies included in the meta-analysis share one true effect size, and that the studies’ estimates differ from it only through sampling error. A random effects model, on the other hand, assumes that the true effect size varies from one study to another (Lipsey and Wilson, 2001). Given the diversity in the ways effective teaching interventions are implemented and the variability of student learning outcomes, a random effects model was regarded as more appropriate (Borenstein et al., 2009).
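The difference between the two models, and the output statistics listed for the analysis, can be sketched in a few lines. This sketch assumes the DerSimonian–Laird method-of-moments estimator of between-study variance (τ²), a common choice; it is not a reproduction of the SPSS macro, and the function name and returned keys are hypothetical. Effect sizes are assumed to be Fisher’s z values with known sampling variances (1/(n − 3) for z).

```python
import math
from typing import Dict, Sequence


def random_effects_meta(effects: Sequence[float], variances: Sequence[float],
                        z_crit: float = 1.96) -> Dict[str, object]:
    """Pool effect sizes with inverse-variance weights (DerSimonian-Laird random effects)."""
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies, each with a sampling variance")
    # Fixed effect step: weights 1/v_i and the pooled fixed effect mean.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed_mean = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    # Heterogeneity: Cochran's Q, its degrees of freedom, and I^2 (floored at 0).
    q = sum(wi * (yi - fixed_mean) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    # Method-of-moments (DerSimonian-Laird) estimate of between-study variance tau^2.
    c = sum_w - sum(wi * wi for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)
    # Random effects step: add tau^2 to every within-study variance and re-pool.
    w_re = [1.0 / (v + tau2) for v in variances]
    random_mean = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    return {
        "fixed_mean": fixed_mean,
        "random_mean": random_mean,
        "se": se,
        "ci95": (random_mean - z_crit * se, random_mean + z_crit * se),
        "Q": q,
        "df": df,
        "I2": i2,
        "tau2": tau2,
    }


# Three hypothetical studies, effects on the Fisher's z scale.
result = random_effects_meta([0.2, 0.4, 0.6], [0.01, 0.01, 0.01])
print(result)
```

For these hypothetical inputs the function returns Q = 8 on 2 df, I² = 0.75 and τ² = 0.03, with a pooled mean of 0.4. Adding τ² to every study’s variance widens the confidence interval relative to the fixed effect model, which is the practical consequence of assuming that true effects vary. A pooled z can be back-transformed to r with `math.tanh`.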

Results

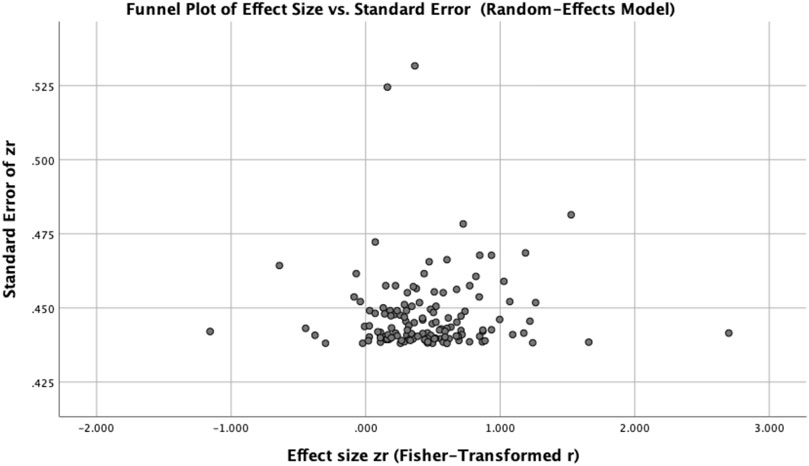

It is important to highlight that this meta-analysis, like any other, depends on the availability of existing literature. Some factors that are important for teaching and are currently under investigation may therefore have been omitted. Another recurring problem in meta-analyses is publication bias: unpublished studies, which are more likely to report small or non-significant effects, are seldom included in the analysis. To mitigate this limitation, several doctoral theses and dissertations were included in the meta-analysis. In addition, publication bias was checked using a funnel plot.

In a funnel plot, the x-axis shows the effect size of each study and the y-axis shows a measure of precision, such as the standard error or sample size (Egger et al., 1997). Bias is assessed on the premise that if all studies estimate the same underlying mean with only random error, the plot should be symmetrical. Figure 2 shows that the funnel plot obtained in this analysis was symmetrical except for two studies that gave extreme values. These two studies were removed to obtain the final count of 132 studies.
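Visual inspection can be complemented by the regression test proposed in the Egger et al. (1997) paper cited above, which regresses each study’s standardized effect on its precision; an intercept far from zero indicates funnel-plot asymmetry. The sketch below is an illustration under stated assumptions, not the procedure used in this study (which relied on the funnel plot): it uses NumPy least squares, the function name is hypothetical, the data are invented, and the p value (which would need a t distribution with k − 2 degrees of freedom) is left out.

```python
import numpy as np


def egger_test(effects, standard_errors):
    """Egger's regression test for funnel-plot asymmetry (illustrative sketch).

    Fits z_i = b0 + b1 * (1 / se_i) by ordinary least squares, where
    z_i = effect_i / se_i. Returns (b0, standard error of b0).
    """
    y = np.asarray(effects, dtype=float)
    se = np.asarray(standard_errors, dtype=float)
    if y.shape != se.shape or y.size < 3:
        raise ValueError("need at least three studies with matching standard errors")
    z = y / se                      # standardized effects
    precision = 1.0 / se            # inverse standard errors
    X = np.column_stack([np.ones_like(precision), precision])
    coef, *_ = np.linalg.lstsq(X, z, rcond=None)
    resid = z - X @ coef
    sigma2 = float(resid @ resid) / (y.size - 2)   # residual variance, k - 2 df
    cov = sigma2 * np.linalg.inv(X.T @ X)
    return float(coef[0]), float(np.sqrt(cov[0, 0]))


# Hypothetical data in which smaller (less precise) studies report larger effects.
se_example = [0.05, 0.10, 0.15, 0.20]
biased = [0.3 + 2.0 * s for s in se_example]
intercept, intercept_se = egger_test(biased, se_example)
print(f"Egger intercept = {intercept:.3f}")
```

If effects are unrelated to their standard errors, the intercept is near zero; when every effect equals 0.3 + 2·SE, as in the invented data above, the intercept is 2, the kind of small-study asymmetry a lopsided funnel plot would show.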

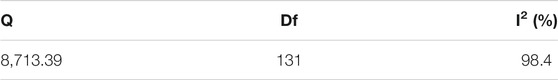

Tables 1 and 2 show the meta-analytic results obtained in this study. The mean effect size was g = 0.37 (p < 0.001), suggesting that teaching factors have a moderate, statistically significant association with student learning outcomes. Heterogeneity was significant, Q(131) = 8,713.39, p < 0.001, I² = 0.984, indicating that the findings across the sample studies were highly inconsistent. Much of the variability in individual effect sizes was therefore unexplained, so a moderator analysis of sample characteristics and methodological features was required to identify the factors that might account for it.
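As a quick check on internal consistency, I² can be recovered from the reported Q and its degrees of freedom (k − 1 = 131 for 132 studies) using the standard definition:

$$
I^2 = \frac{Q - \mathrm{df}}{Q} = \frac{8713.39 - 131}{8713.39} = \frac{8582.39}{8713.39} \approx 0.985
$$

This agrees with the reported value of about 0.98 and means that nearly all of the observed variation in effect sizes reflects between-study differences rather than sampling error.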

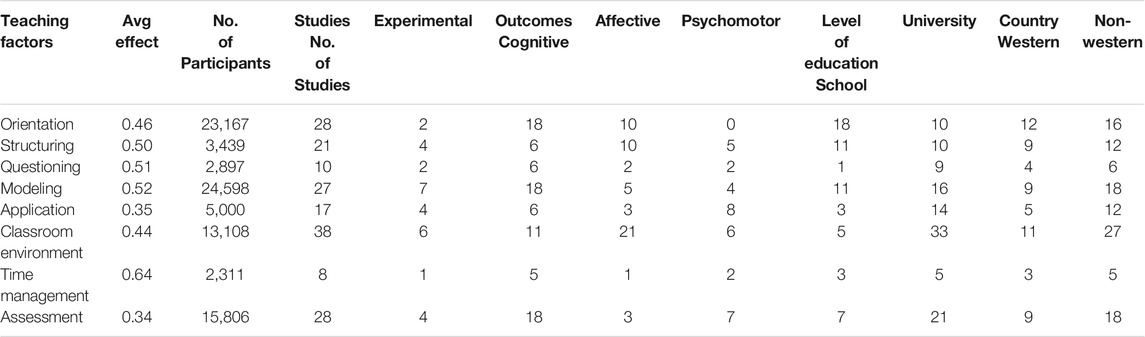

Table 3 shows the results of the meta-analysis by factor. In addition to listing the number of studies exploring each teaching factor and classifying the studies by type of learning outcome (cognitive, affective, psychomotor) and educational level (school and college), Table 3 reports the average effect size of each factor. Several observations follow.

TABLE 3. Characteristics of studies investigating the effect of teacher-level factors on student achievement and types of effects identified.

First, the number of studies was uneven across the factors explored. The factor “time management” was examined in only eight studies, while “classroom environment” was analyzed in 38. Although there is literature on the relation between students’ time management and their learning outcomes, studies of the impact of teachers’ time management in the classroom on student learning outcomes are scarce. Second, experimental and longitudinal studies made up a very small proportion of the studies examined in this analysis. Third, among the learning outcomes examined, cognitive outcomes had the largest share, with fewer studies of affective and psychomotor outcomes. Fourth, more than 60% of the studies were conducted at the primary (school) level, and very few at the tertiary (college) level. Further, most of the studies were conducted in non-western parts of the world.

Turning to the average effect sizes of the factors examined, four of the eight factors of the dynamic model had moderate effect sizes, in the range of 0.34–0.46. The largest effect size was observed for time management (0.64), although this factor was examined in only eight studies; further studies are therefore required before this result can be generalized.

The next step was to examine whether the reported effect sizes were moderated by the country in which the study was conducted (western or non-western), level of education (school or university) and study design (cross-sectional, longitudinal, experimental). The MetaF macro was used in SPSS to conduct the random effects moderator analysis. Three observations arise from Table 4. First, country did not moderate the relationship between teaching and student learning outcomes, since the average effect sizes differed only slightly (western: 0.34; non-western: 0.41). This supports the assumption that the factors included in the dynamic model are generic in nature; in other words, the teaching factors and their impact on student learning outcomes appear to be universal. Second, study design did moderate the average effect sizes: experimental studies reported a larger effect size (0.57) than cross-sectional (0.30) or longitudinal (0.19) studies. These findings suggest that researchers in educational effectiveness should consider conducting more experimental studies when exploring the impact of teaching on student learning outcomes. Third, level of education acted as a moderator: the effect size for university-level studies (0.79) was higher than for school-level studies (0.25). This leaves scope for further research on university-level education.
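The logic of a categorical moderator test can be sketched as an analog to ANOVA: studies are pooled within each subgroup, and Q_between measures how far the subgroup means lie from the overall mean. This is a hypothetical illustration, not the MetaF macro itself; the function name is invented, the data are made up, and using one shared τ² across subgroups is a simplifying assumption.

```python
from collections import defaultdict
from typing import Dict, Sequence, Tuple


def q_between(effects: Sequence[float], variances: Sequence[float],
              groups: Sequence[str], tau2: float = 0.0) -> Tuple[float, int, Dict[str, float]]:
    """Analog-to-ANOVA test for a categorical moderator (illustrative sketch).

    Each study is weighted by 1 / (v_i + tau2): tau2 = 0 gives the fixed effect
    version; a tau2 estimated from a random effects fit gives a mixed effects version.
    Returns Q_between, its degrees of freedom, and the weighted mean of each group.
    """
    sum_w: Dict[str, float] = defaultdict(float)
    sum_wy: Dict[str, float] = defaultdict(float)
    for y, v, g in zip(effects, variances, groups):
        w = 1.0 / (v + tau2)
        sum_w[g] += w
        sum_wy[g] += w * y
    group_means = {g: sum_wy[g] / sum_w[g] for g in sum_w}
    grand_mean = sum(sum_wy.values()) / sum(sum_w.values())
    # Weighted squared deviations of the group means from the grand mean.
    q_b = sum(sum_w[g] * (group_means[g] - grand_mean) ** 2 for g in sum_w)
    return q_b, len(sum_w) - 1, group_means


# Hypothetical example: two school-level and two university-level studies (Fisher's z).
q_b, df_b, means = q_between(
    [0.3, 0.3, 0.5, 0.5], [0.01] * 4, ["school", "school", "university", "university"]
)
print(f"Q_between = {q_b:.2f}, df = {df_b}, group means = {means}")
```

Q_between is compared with a chi-square distribution with (number of groups − 1) degrees of freedom; for two groups the 5% critical value is 3.84, so the hypothetical Q_between of 4 on 1 df would indicate a significant moderator.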

Discussion and Implications

Meta-analysis is a popular tool for summarizing, integrating and interpreting quantitative empirical research. In this study, it was used not only to integrate existing work on the contribution of teaching factors to student learning, but also to test the empirical validity of the theoretical dynamic model of educational effectiveness. For this purpose, the dynamic model was used as a framework to select and organize 132 studies examining the impact of teaching factors on student learning outcomes. This section summarizes the main findings of the meta-analysis and considers their theoretical and practical implications.

One finding of the meta-analysis is that the teaching factors found to have an impact on student learning outcomes (cognitive, affective, psychomotor) were not confined to either direct and active teaching approaches or constructivist approaches, the two categories into which many scholars divide teaching. Factors related to both the direct active teaching approach (structuring, time management) and the constructivist approach (orientation, modeling) contributed to student learning outcomes. From a theoretical standpoint, this finding suggests that, when it comes to teaching, imposing inessential and superfluous dichotomies between different teaching approaches might be impractical. Instead, it may be more effective to remain agnostic about the approach to teaching and to consider how teachers interact with students during the lesson, irrespective of approach. Grossman and McDonald (2008) echo this idea when they suggest that any framework for learning and teaching should not prioritise one approach over another but should instead be inclusive. They even contend that research on teaching suffers from a sort of historical amnesia in which the past is forgotten in the rush to invent something new. Along similar lines, this meta-analysis highlights the importance of focusing on teaching factors rather than teaching approaches. Good teaching is not necessarily tied to a particular teaching approach; rather, its quality lies in making judicious choices and using components of different approaches to benefit student learning (Creemers and Kyriakides, 2006).

From a theoretical perspective, the findings of this meta-analysis show that country was at most a weak moderator of the factors examined. In this respect, teaching factors can be considered to transcend different educational contexts and to have a generic character. However, this argument should be examined further in future studies incorporating results from a greater range of countries. Further studies could also investigate the moderating effect of level of education, since this meta-analysis found a greater effect in university education. The impact of teaching factors on learning outcomes can be explored further at each level, from primary to university, in future studies.

The findings of this study also have methodological implications for further research. First, the study suggests an additional purpose for conducting meta-analyses. Meta-analyses are usually conducted for two main reasons: to appraise and synthesize accumulated knowledge in a particular field, with the aim of identifying which factors contribute to a given outcome, and to capitalize on existing results in designing future studies or building new theory. The approach used in this study highlights the importance of a theory-driven approach to selecting, organizing and integrating existing literature. The dynamic model of educational effectiveness offered a framework that guided the selection, classification and structuring of the factors included in the analysis. This helped empirically validate the model and offered insights into how it can be extended. Theory-driven meta-analysis therefore not only synthesizes findings across studies but also contributes to building and modifying theory (Creemers and Kyriakides, 2006).

The second methodological implication of this meta-analysis is the strong need to conduct more experimental and longitudinal studies, which help trace the impact of teaching factors over time. The third is that future studies should move beyond cognitive outcomes, which dominated the studies in this meta-analysis. Future work should also focus more on tertiary education, which appears to have been neglected in comparison with other educational levels.

The findings of this meta-analysis may also have implications for practitioners and policymakers, especially in the domain of faculty development and education programs. Researchers are increasingly focusing on “high-leverage” or “core” teaching practices, which can be adopted irrespective of curriculum or instructional approach and have the potential to improve student learning. This meta-analysis provides empirical evidence, synthesized across studies, on the factors that influence student learning outcomes. It can give existing or prospective teachers an opportunity to practice and rehearse these factors in the classroom, reflect on them and receive feedback on how their practice can be improved. At the same time, attention should be paid to how successful these factors have been at different educational levels, a question that this meta-analysis could not fully answer and that leaves scope for further research.

Grossman et al. (2009), among others, argued that the main obstacle to redefining and improving teacher preparation and ongoing education programs lies in reaching consensus among educators on the set of core practices around which these programs should be built. The findings of this meta-analysis can help these stakeholders make informed decisions that are both evidence-based and theory-driven.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author Contributions

RS Singh is the principal author of the article. PC is the corresponding author.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Borenstein, M., Hedges, L. V., Higgins, J. P. T., and Rothstein, H. R. (2009). Introduction to Meta-Analysis. Chichester, UK: John Wiley & Sons. doi:10.1002/9780470743386

Brookover, W. B., and Lezotte, L. W. (1979). Changes in School Characteristics Coincident with Changes in Student Achievement. East Lansing: Michigan State University.

Brophy, J., and Good, T. L. (1986). “Teacher Behavior and Student Achievement,” in Handbook of Research on Teaching. Editor M. C. Wittrock. 3rd ed. (New York: Macmillan), 328–375.

Capano, G., Pritoni, A., and Vicentini, G. (2019). Do Policy Instruments Matter? Governments' Choice of Policy Mix and Higher Education Performance in Western Europe. J. Pub. Pol. 40, 375–401. doi:10.1017/S0143814X19000047

Carroll, J. B. (1989). The Carroll Model: A 25-Year Retrospective and Prospective View. Educ. Res. 18 (1), 26–31. doi:10.3102/0013189x018001026

Cazden, C. B. (1986). “Classroom Discourse,” in Handbook of Research on Teaching. Editor M. C. Wittrock (New York: Macmillan), 432–463.

Cohen, J. (1988). Statistical Power Analysis for the Behavioral Sciences. 2nd ed. New York: Academic Press.

Coleman, J. S. (1966). The Possibility of a Social Welfare Function. Am. Econ. Rev. 56 (5), 1105–1122.

Creemers, B. P. M., and Kyriakides, L. (2006). Critical Analysis of the Current Approaches to Modelling Educational Effectiveness: The Importance of Establishing a Dynamic Model. Sch. Effectiveness Sch. Improvement 17 (3), 347–366. doi:10.1080/09243450600697242

Creemers, B. P. M., and Kyriakides, L. (2008). The Dynamics of Educational Effectiveness: A Contribution to Policy, Practice and Theory in Contemporary Schools. London and New York: Routledge.

Creemers, B. P. M., and Reezigt, G. J. (1996). School Level Conditions Affecting the Effectiveness of Instruction. Sch. Effectiveness Sch. Improvement 7 (3), 197–228. doi:10.1080/0924345960070301

De Corte, E. (2000). Marrying Theory Building and the Improvement of School Practice: A Permanent Challenge for Instructional Psychology. Learn. Instruction 10 (3), 249–266. doi:10.1016/s0959-4752(99)00029-8

Den Brok, P., Brekelmans, M., and Wubbels, T. (2004). Interpersonal Teacher Behaviour and Student Outcomes. Sch. Effectiveness Sch. Improvement 15 (4), 407–442.

Egger, M., Smith, G. D., Schneider, M., and Minder, C. (1997). Bias in Meta-Analysis Detected by a Simple, Graphical Test. BMJ 315 (7109), 629–634.

Fraser, B. J., Walberg, H. J., Welch, W. W., and Hattie, J. A. (1987). Syntheses of Educational Productivity Research. Int. J. Educ. Res. 11, 145–252.

Gijbels, D., Van de Watering, G., Dochy, F., and Van den Bossche, P. (2006). New Learning Environments and Constructivism: The Students' Perspective. Instr. Sci. 34 (3), 213–226. doi:10.1007/s11251-005-3347-z

Goldstein, H. (2003). Multilevel Models in Educational and Social Research. 3rd ed. London: Edward Arnold.

Grieve, A. M. (2010). Exploring the Characteristics of 'Teachers for Excellence': Teachers' Own Perceptions. Eur. J. Teach. Education 33 (3), 265–277. doi:10.1080/02619768.2010.492854

Grossman, P., Hammerness, K., and McDonald, M. (2009). Redefining Teaching, Re‐imagining Teacher Education. Teach. Teach. 15 (2), 273–289. doi:10.1080/13540600902875340

Grossman, P., and McDonald, M. (2008). Back to the Future: Directions for Research in Teaching and Teacher Education. Am. Educ. Res. J. 45 (1), 184–205. doi:10.3102/0002831207312906

Hunter, J. E., and Schmidt, F. L. (2004). Methods of Meta-Analysis: Correcting Error and Bias in Research Findings. 2nd ed. Thousand Oaks, CA: Sage.

Jencks, C. S., Smith, M., Acland, H., Bane, M. J., Cohen, D., Gintis, H., et al. (1972). Inequality: A Reassessment of the Effect of the Family and Schooling in America. New York: Basic Books.

Kyriakides, L., Campbell, R. J., and Gagatsis, A. (2000). The Significance of the Classroom Effect in Primary Schools: An Application of Creemers' Comprehensive Model of Educational Effectiveness. Sch. Effectiveness Sch. Improvement 11 (4), 501–529. doi:10.1076/sesi.11.4.501.3560

Kyriakides, L., and Tsangaridou, N. (2008). Towards the Development of Generic and Differentiated Models of Educational Effectiveness: a Study on School and Teacher Effectiveness in Physical Education. Br. Educ. Res. J. 34 (6), 807–838. doi:10.1080/01411920802041467

Lambert, P. C., Sutton, A. J., Abrams, K. R., and Jones, D. R. (2002). A Comparison of Summary Patient-level Covariates in Meta-Regression With Individual Patient Data Meta-Analysis. J. Clin. Epidemiol. 55 (1), 86–94.

Mavridis, D., and Salanti, G. (2013). A Practical Introduction to Multivariate Meta-Analysis. Stat. Methods Med. Res. 22 (2), 133–158. doi:10.1177/0962280211432219

Mortimore, P., Sammons, P., Stoll, L., Lewis, D., and Ecob, R. (1988). School Matters: The Junior Years. Somerset: Open Books. (reprinted in 1995 by Paul Chapman: London).

Muijs, D., and Reynolds, D. (2000). School Effectiveness and Teacher Effectiveness: Some Preliminary Findings from the Evaluation of the Mathematics Enhancement Programme (Primary). Sch. Effectiveness Sch. Improvement 11 (3), 273–303. doi:10.1076/0924-3453(200009)11:3;1-g;ft273

Paris, S. G., and Paris, A. H. (2001). Classroom Applications of Research on Self-Regulated Learning. Educ. Psychol. 36 (2), 89–101. doi:10.1207/s15326985ep3602_4

Quintana, D. S. (2015). From Pre-registration to Publication: A Non-technical Primer for Conducting a Meta-Analysis to Synthesize Correlational Data. Front. Psychol. 6, 1549. doi:10.3389/fpsyg.2015.01549

Rosenshine, B., and Stevens, R. (1986). “Teaching Functions,” in Handbook of Research on Teaching. Editor M. C. Wittrock. 3rd ed. (New York: Macmillan), 85–107.

Rutter, M., Maughan, B., Mortimore, P., Ouston, J., and Smith, A. (1979). Fifteen Thousand Hours: Secondary Schools and Their Effects on Children. Cambridge, Mass: Harvard University Press.

Rosenthal, R. (1994). “Parametric Measures of Effect Size,” in The Handbook of Research Synthesis. Editors H. Cooper and L. V. Hedges (New York: Russell Sage Foundation), 231–244.

Slavin, R. E. (1983). When Does Cooperative Learning Increase Student Achievement? Psychol. Bull. 94 (3), 429–445.

Slavin, R. E., and Cooper, R. (2001). “Cooperative Learning Programs and Multicultural Education: Improving Intergroup Relations,” in Research on Multicultural Education and International Perspectives, 15–33.

Scheerens, J., and Bosker, R. J. (1997). The Foundations of Educational Effectiveness. Oxford: Pergamon.

Scheerens, J., and Creemers, B. P. M. (1989). Conceptualizing School Effectiveness. Int. J. Educ. Res. 13 (7), 691–706.

Scheerens, J. (1990). School Effectiveness Research and the Development of Process Indicators of School Functioning. Sch. Effectiveness Sch. Improvement 1 (1), 61–80. doi:10.1080/0924345900010106

Stenmark, J. K. (1992). Mathematics Assessment: Myths, Models, Good Questions and Practical Suggestions. Reston, VA: NCTM.

Stringfield, S. C., and Slavin, R. E. (1992). “A Hierarchical Longitudinal Model for Elementary School Effects,” in Evaluation of Educational Effectiveness. Editors B. P. M. Creemers and G. J. Reezigt (Groningen: ICO), 35–48.

Teddlie, C., and Stringfield, S. (1993). Schools Make a Difference: Lessons Learned from a Ten Year Study of School Effects. New York: Teachers College Press.

Walberg, H. J. (1986). “Synthesis of Research on Teaching,” in Handbook of Research on Teaching. Editor M. C. Wittrock. 3rd ed. (New York: Macmillan), 214–229.

Weber, G. (1971). Inner City Children Can Be Taught to Read: Four Successful Schools. Washington DC: Council for Basic Education.

Keywords: learning, teaching, meta analysis, higher education, quality

Citation: Chaudhary P and Singh RK (2022) A Meta Analysis of Factors Affecting Teaching and Student Learning in Higher Education. Front. Educ. 6:824504. doi: 10.3389/feduc.2021.824504

Received: 29 November 2021; Accepted: 27 December 2021;

Published: 21 February 2022.

Edited by:

Satyanarayana Parayitam, University of Massachusetts Dartmouth, United States

Reviewed by:

Alex Aruldoss, St. Joseph’s College of Arts and Science (Autonomous), India

Rajesh Elangovan, Bishop Heber College, India

Copyright © 2022 Chaudhary and Singh. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Priya Chaudhary, priya.chaudhary2108@gmail.com
