- 1Institute of Cognitive Neuroscience, National Research University Higher School of Economics, Moscow, Russia

- 2Department of Learning, Data Analytics and Technology, Section Cognition, Data and Education, Faculty of Behavioural, Management and Social Sciences, University of Twente, Enschede, Netherlands

Objective: Non-invasive Brain–Computer Interfaces provide accurate classification of hand movement lateralization. However, distinguishing activation patterns of individual fingers within the same hand remains challenging due to their overlapping representations in the motor cortex. Here, we validated a compact convolutional neural network for fast and reliable decoding of finger movements from non-invasive magnetoencephalographic (MEG) recordings.

Approach: We recorded MEG in healthy participants performing a serial reaction time task (SRTT), with buttons pressed by the left and right index and middle fingers. We devised classifiers to discriminate left vs. right hand movements and to discriminate among four finger movements using a recently proposed decoding approach, the Linear Finite Impulse Response Convolutional Neural Network (LF-CNN). We also compared LF-CNN to existing deep learning architectures such as EEGNet, FBCSP-ShallowNet, and VGG19.

Results: Sequence learning was reflected by a decrease in reaction times during SRTT performance. Movement laterality was decoded with an accuracy above 95% by all approaches, while individual finger movements were decoded with an accuracy in the 80–85% range. LF-CNN stood out for (1) its low computational time and (2) its interpretability in both spatial and spectral domains, which allows examination of neurophysiological patterns reflecting task-related motor cortex activity.

Significance: We demonstrated the feasibility of finger movement decoding with a tailored Convolutional Neural Network. The performance of our approach was comparable to that of complex deep learning architectures, while providing faster and more interpretable outcomes. This algorithmic strategy holds high potential for the investigation of the mechanisms underlying non-invasive neurophysiological recordings in cognitive neuroscience.

1 Introduction

Decoding human brain activity is crucial for understanding and enhancing motor learning outcomes, as it provides insights into the neural mechanisms underlying movement control, skill acquisition, and adaptation. Brain signal patterns are associated with motor planning, execution, and learning, enabling the development of advanced neurorehabilitation strategies and personalized training protocols. For instance, studies have demonstrated that neurons in the primary motor cortex convey more information about movement direction after learning, indicating improved neuronal coding post-training (Li et al., 2001). Decoding models are also instrumental in translating neuronal group activities into understandable stimulus features, such as stimulus orientation, which is essential for interpreting complex visual scenes or abstract semantic information (Mathis et al., 2024). This knowledge is valuable for individuals recovering from neurological disorders, athletes seeking to optimize performance, and broader applications like brain-computer interfaces (BCI).

Achieving optimal performance in BCI systems requires a careful balance between the interdependent factors of speed and accuracy when employing compact convolutional neural networks (CNNs) like EEGNet. While EEGNet is often reported to achieve higher accuracy than other CNNs (de Oliveira and Rodrigues, 2023; Rao et al., 2024), the computational demands associated with deep learning models can impact real-time decoding speed and hamper timely feedback in motor learning and control applications. Therefore, optimizing the architecture and implementing computationally efficient CNN strategies are essential to maintain a favorable balance between speed and accuracy for practical BCI applications. Recently, the Linear Finite Impulse Response Convolutional Neural Network (LF-CNN) (Zubarev et al., 2019) emerged as a promising approach to substantially reduce CNN model complexity while preserving high accuracy and interpretability. However, the LF-CNN has only been tested in motor imagery tasks and has yet to be validated in the context of a more complex motor sequence learning task. Decoding finger movements can provide essential information on the brain’s motor learning processes that can be applied to understand mechanisms of motor action, skill mastery, rehabilitation and training.

Understanding how the brain encodes and adapts during motor learning behavior is one of the main challenges in cognitive neuroscience. A key objective is to decompose complex, multidimensional brain data into interpretable representations, unveiling cognitive processes in motor learning (Bijsterbosch et al., 2020). One widely used paradigm to study implicit motor learning is the Serial Reaction Time Task (SRTT), which involves a sequence of key presses in response to spatially mapped visual stimuli (Nissen and Bullemer, 1987; Robertson, 2007). The stimuli appear random and follow a repeating 12-key sequence, making it difficult for participants to identify clear start or end points. As learning progresses, responses typically shift from slow, stimulus-driven reactions to faster, more accurate movements guided by an internalized sequence representation (Chan et al., 2018; O'Connor et al., 2022). The SRTT is particularly well-suited for investigating the neural correlates of motor learning, as it elicits well-characterized changes in cortical activity. Early learning stages are associated with increased engagement of frontal and occipital regions (Albouy et al., 2015), while later stages show a shift toward motor cortex dominance as the sequence becomes more ingrained (Immink et al., 2019; Verwey et al., 2019). These dynamics make the SRTT a valuable tool for validating cortical decoding approaches and systematically examining the neurophysiological mechanisms underlying skill acquisition (Keele et al., 2003). In this study, we recorded magnetoencephalography (MEG) data during SRTT performance to evaluate the capacity of decoding models to capture learning-related changes in cortical activity.

Decoding brain activity associated with hand and finger movements is pivotal in advancing BCI technologies, with essential advances needed for accurate interpretation of neural signals. Recent advances in neural decoding have increasingly emphasized the importance of interpretable deep learning models for capturing fine-grained motor information from non-invasive recordings. For example, Borra et al. (2023) demonstrated that convolutional neural networks can effectively decode movement kinematics from EEG while preserving interpretability, a critical feature for clinical applications. Similarly, Tian et al. (2025) and Jain and Kumar (2025) proposed regression-based and source-imaging approaches to estimate upper limb trajectories and grasp-lift dynamics, respectively, highlighting the growing interest in decoding continuous motor parameters and skill development. Borràs et al. (2025) further emphasized the role of repetition in modulating motor cortical activity, reinforcing the need for models that can adapt to dynamic neural states. Compact CNNs such as those proposed by Cui et al. (2022) and Petrosyan et al. (2021) have shown promise in cross-subject decoding tasks, underscoring the potential of lightweight architectures for real-world BCI deployment.

MEG-based studies have begun to demonstrate the feasibility of decoding complex motor behaviors with high temporal resolution. For instance, Lévy et al. (2025) used MEG to decode sentence-level typing behavior, revealing hierarchical neural dynamics that span motor and cognitive processes. Their Brain2Qwerty model achieved a peak accuracy of ~74% from MEG signals, narrowing the gap between invasive and non-invasive BCI approaches. In another instance, Bu et al. (2023) developed MEG-RPSnet, a convolutional neural network designed to classify hand gestures (rock, paper, and scissors) using MEG data. Using data from 12 participants, the authors combined a tailored preprocessing pipeline with a deep learning architecture trained on single-trial data. The model achieved an average classification accuracy of 85.6%, outperforming most traditional machine learning methods and EEG-based neural networks. Notably, the model maintained high performance even when restricted to central-parietal-occipital or occipitotemporal sensor regions, highlighting the spatial specificity of gesture-related neural activity. These recent studies underscore the potential of MEG for decoding fine motor actions such as finger movements and gestures and support the development of interpretable models that can bridge decoding performance with neuroscientific insight.

Despite advances in non-invasive BCIs, decoding individual finger movements using EEG remains challenging due to overlapping cortical representations (Alazrai et al., 2019; Gannouni et al., 2020), noise (Nam et al., 2008), artifacts (Guarnieri et al., 2018), and inter-individual variability (Allison et al., 2010; Volosyak et al., 2011). These factors can often degrade decoding accuracy and obscure causal relationships in neural signals (Mitra and Pesaran, 1999). Recent work by Kim et al. (2023) identified the specific cortical regions involved in processing acceleration, velocity, and position during directional reaching movements using MEG data and time-series deep neural network models. This approach not only facilitated the accurate decoding of kinematic parameters, but also used explainable AI to pinpoint the distinct and shared cortical regions responsible for each kinematic attribute, providing valuable insights into the neural underpinnings of motor control. Together, these studies underscore the value of MEG for decoding complex motor learning and support the development of interpretable, high-resolution models for gesture-based BCI applications.

Deep learning (DL) has emerged as a powerful solution to many of these challenges by integrating feature extraction and classification into a unified framework, reducing the need for manual data engineering (Mahmud et al., 2018). DL models offer improved performance but often demand large datasets and high computational resources (Gutierrez-Martinez et al., 2021; Rashid et al., 2020; Saha et al., 2021; Zhang et al., 2021), limiting their use in time-sensitive BCI applications such as real-time rehabilitation feedback (Yeager et al., 2016). Effective BCIs must therefore balance accuracy, adaptability, and efficiency in order to translate neural signals into timely control commands for assistive technologies (King et al., 2018).

Architectures such as FBCSP ShallowNet (Amin et al., 2019; Schirrmeister et al., 2017) and EEGNet (Al-Saegh et al., 2021; Gemein et al., 2020; Lawhern et al., 2018) have demonstrated strong performance in motor imagery classification, with EEGNet in particular showing high generalizability across paradigms and tasks. Recent work has shown that EEGNet can decode individual finger movements with promising accuracy (Rao et al., 2024). Similarly, the VGG19 architecture, originally developed for image classification, has been adapted for EEG decoding due to its ability to capture complex spatiotemporal features (Bagherzadeh et al., 2023; Simonyan and Zisserman, 2015; Vignesh et al., 2023).

Among these models, the Linear Finite Impulse Response Convolutional Neural Network (LF-CNN) introduced by Zubarev et al. (2019) stands out for its interpretability. Unlike conventional CNNs, LF-CNN employs fully linear spatial and temporal filters, allowing direct visualization of learned weights and facilitating neuroscientific insight. It has demonstrated competitive accuracy in motor imagery tasks while offering enhanced transparency and generalizability. Its lightweight design and interpretability make it particularly suitable for clinical applications where explainability is essential.

In the current study, we aimed to investigate the applicability of the LF-CNN beyond motor imagery by using MEG recordings of motor learning. We applied a two-layer LF-CNN to classify finger movements during the SRTT at the single-trial level. We hypothesized that LF-CNN would be capable of accurately decoding individual finger movements and that its performance would be comparable to more complex deep learning architectures. Additionally, we expected the model to offer faster computational times and interpretable patterns across spatial, temporal, and spectral domains, making it a promising tool for both research and clinical applications.

2 Methods and materials

2.1 Participants

Eight right-handed subjects (4 females, mean age = 25 years, SD = 6.8) participated in the study. Eligibility criteria included normal vision and no severe health issues or psychological, neurological, or medical disorders. The study was conducted according to the Institutional and Ethical Review Board (IRB) guidelines and regulations of the Higher School of Economics. All participants signed the informed consent form and participation agreement.

2.2 Experimental protocol

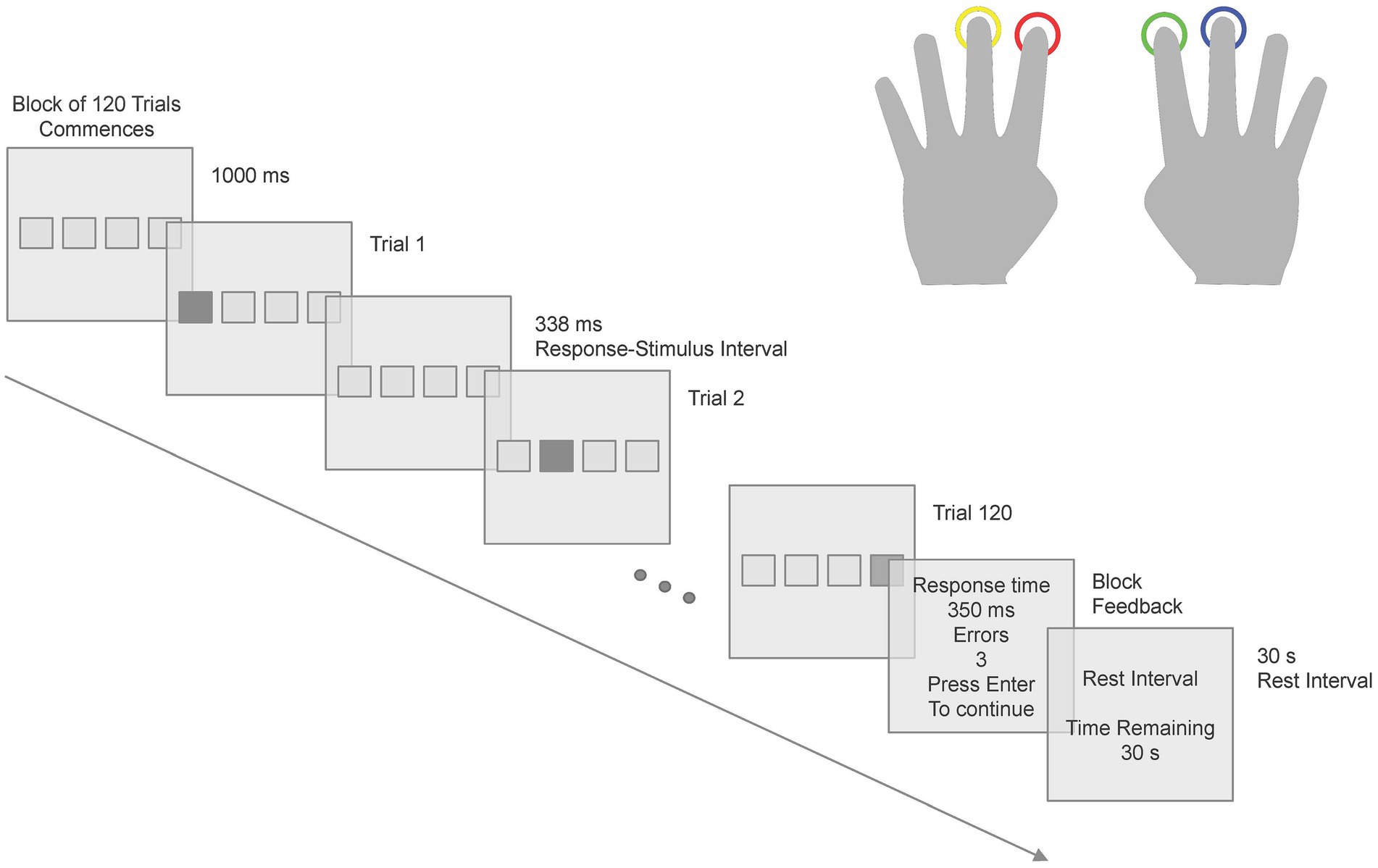

The Serial Reaction Time Task (SRTT) stimuli consisted of a series of four empty boxes, which were spatially mapped to four response keys on a color-coded controller pad: participants used their left middle finger for Key 1, left index finger for Key 2, right index finger for Key 3 and right middle finger for Key 4 (see Figure 1). Participants responded when one of the four boxes turned red. The monitor was set to 64-bit color and had a screen refresh rate of 120 Hz. Participants sat in the MEG chair at 1.5 m from the monitor. Each box subtended a visual angle of 2° × 2°, with a separation of 2° between boxes. Following each response, there was a 338 ms interval before the next stimulus was presented. The SRTT consisted of a 12-item sequence (121342314324) repeated for 10 cycles in each of 12 learning blocks, yielding 120 keypress trials per block and 1,440 keypress trials in total. After each block, participants received performance feedback, including the average reaction time and the number of error trials in that block. Following the feedback presentation, participants were given a rest interval of 30 s. The structure of the SRTT is depicted in Figure 1. The SRTT experiment was implemented in E-Prime® 2.0 Software (Psychology Software Tools Inc., Sharpsburg, USA). Participants were presented with on-screen written instructions for the SRTT and were explicitly told to use the designated fingers to press the corresponding response keys, prioritizing both speed and accuracy. The instructions emphasized that achieving a balance between response speed and accuracy was crucial for optimal performance.
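The trial structure described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic of the design (sequence, cycles, blocks and key-to-finger mapping); the function and variable names are hypothetical and are not taken from the E-Prime implementation.

```python
# Illustrative reconstruction of the SRTT trial list (names are hypothetical).
SEQUENCE = "121342314324"   # 12-item sequence of key numbers
CYCLES_PER_BLOCK = 10
N_BLOCKS = 12

# Key-to-finger mapping as stated in the protocol.
KEY_TO_FINGER = {
    1: "left middle",
    2: "left index",
    3: "right index",
    4: "right middle",
}


def build_trials():
    """Return a list of (block, trial_in_block, key, finger) tuples."""
    trials = []
    for block in range(1, N_BLOCKS + 1):
        keys = [int(ch) for ch in SEQUENCE] * CYCLES_PER_BLOCK
        for i, key in enumerate(keys, start=1):
            trials.append((block, i, key, KEY_TO_FINGER[key]))
    return trials


trials = build_trials()
trials_per_block = len(SEQUENCE) * CYCLES_PER_BLOCK
# How often each key occurs within one cycle of the sequence.
key_counts = {k: SEQUENCE.count(str(k)) for k in KEY_TO_FINGER}
print(trials_per_block, len(trials), key_counts)
```

Each key occurs three times per cycle, so the four finger classes are balanced by design before error trials and artifact rejection are taken into account.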

Figure 1. Scheme of one block of the SRTT. The stimulus presentation and subsequent responses followed a specific pattern across 12 learning blocks of the SRTT.

2.3 MEG data recording and preprocessing

MEG data were recorded using the Vectorview Neuromag system, which comprises 306 channels (204 gradiometers and 102 magnetometers), at a sampling frequency of 1,000 Hz. Data were then downsampled to 200 Hz. For subsequent analysis, only the gradiometer data were used. To mitigate artifacts such as eye blinks and heartbeats, an independent component analysis (ICA) was performed. Trials affected by muscle artifacts (Muthukumaraswamy, 2013) were removed by visual inspection, resulting in the rejection of 5.7 ± 2.5% of trials per participant. Following artifact removal, a high-pass finite impulse response (FIR) filter with a cutoff frequency of 0.5 Hz was applied to the signals to remove slow drifts.

2.4 Data analysis

2.4.1 Data preparation

The continuous MEG data were segmented into epochs of ± 500 ms around the button press (one epoch per trial) and labeled by movement type: left index finger, left middle finger, right index finger or right middle finger. Only trials with correct responses were considered for further analysis. These data were used in 4 classification tasks:

1. Left hand versus right hand (2 classes: left index and left middle is class 1; right index and right middle is class 2),

2. Classification within left hand (2 classes: left index is class 1; left middle is class 2),

3. Classification within right hand (2 classes: right index is class 1; right middle is class 2),

4. 4-fingers classification (4 classes: left index is class 1; left middle is class 2; right index is class 3; right middle is class 4).

Prior to the decoder training procedure, the data were z-scored in each epoch with respect to the mean and standard deviation computed across all channels. We present the analysis of response-locked rather than stimulus-locked trials to investigate the anticipation of the motor response. This is motivated by the fact that responses are triggered by visual input in the first blocks of the task (referred to as the learning phase), and anticipated on the basis of accumulated knowledge in the last blocks of the SRTT (referred to as the learned phase). For completeness, we included the outcome of four-finger decoding of the stimulus-locked trials in Supplementary Table S7.
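The data preparation steps can be illustrated with the following NumPy sketch, which uses synthetic data. It rests on several assumptions that are not specified in the text: the z-score uses a single mean and standard deviation per epoch, pooled over channels and time samples; a ± 500 ms window at 200 Hz is taken as 200 samples; class labels are 0-based; and all helper names are hypothetical.

```python
import numpy as np

SFREQ = 200           # Hz, after downsampling
HALF_WINDOW_S = 0.5   # epochs span +/- 500 ms around the button press
FINGERS = ["left index", "left middle", "right index", "right middle"]


def extract_epochs(data, press_samples, sfreq=SFREQ, half_window_s=HALF_WINDOW_S):
    """Cut response-locked epochs from data of shape (n_channels, n_times).

    Assumes every button press lies at least half a window from the edges.
    """
    half = int(round(half_window_s * sfreq))
    return np.stack([data[:, s - half:s + half] for s in press_samples])


def zscore_epochs(epochs):
    """Z-score each epoch with one mean/std pooled over channels and samples."""
    mean = epochs.mean(axis=(1, 2), keepdims=True)
    std = epochs.std(axis=(1, 2), keepdims=True)
    return (epochs - mean) / std


def task_labels(fingers, task):
    """Return (trial mask, 0-based labels) for one of the four tasks."""
    fingers = np.asarray(fingers)
    if task == "hand":                      # left hand vs. right hand
        mask = np.ones(len(fingers), dtype=bool)
        labels = np.array([0 if f.startswith("left") else 1 for f in fingers])
    elif task in ("left", "right"):         # index vs. middle within one hand
        mask = np.array([f.startswith(task) for f in fingers])
        labels = np.array([0 if f.endswith("index") else 1 for f in fingers[mask]])
    elif task == "four":                    # four-finger classification
        mask = np.ones(len(fingers), dtype=bool)
        labels = np.array([FINGERS.index(f) for f in fingers])
    else:
        raise ValueError(f"unknown task: {task}")
    return mask, labels


# Synthetic example: 204 gradiometers, 10 s of data, four button presses.
rng = np.random.default_rng(0)
data = rng.standard_normal((204, 2000))
presses = [400, 800, 1200, 1600]
fingers = ["left index", "left middle", "right index", "right middle"]

epochs = zscore_epochs(extract_epochs(data, presses))
mask_left, y_left = task_labels(fingers, "left")
mask_hand, y_hand = task_labels(fingers, "hand")
print(epochs.shape, y_left, y_hand)
```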

2.4.2 Data decoding

The generative model for MEG data can be formulated as in Equation 1:

$$x(t) = A\,s(t) + \varepsilon(t) \qquad (1)$$

where $x(t)$ is the data observed at the sensor level at the timepoint t, $s(t)$ are underlying sources projecting their activity to the sensors through the mixing matrix A, and ε is Gaussian white observation noise. Regression-based decoders are models that relate stimulus to encoded neural information. The basic principle of regression decoding (Warren et al., 2016) consists in computing a matrix of spatial filters (demixing matrix), such that:

$$\hat{s}(t) = W^{\top} x(t) \qquad (2)$$

where W is the demixing matrix, which allows retrieving the estimated sources $\hat{s}(t)$ from the data $x(t)$. The demixing matrix W of Equation 2 can be computed with an optimization technique that minimizes a predetermined cost function (for example, stochastic gradient descent) to extract components containing the largest possible amount of information relating the underlying neural activity to the recorded data. Importantly, we emphasize that the matrix W is not itself a representation of such neural activity, but just a transfer function enhancing source-related components and suppressing noise-related components. To reconstruct the activation patterns mimicking the source of interest, we adopted the methodology described in Haufe et al. (2014):

$$A = K_{xx}\,W\,K_{ss}^{-1} \qquad (3)$$

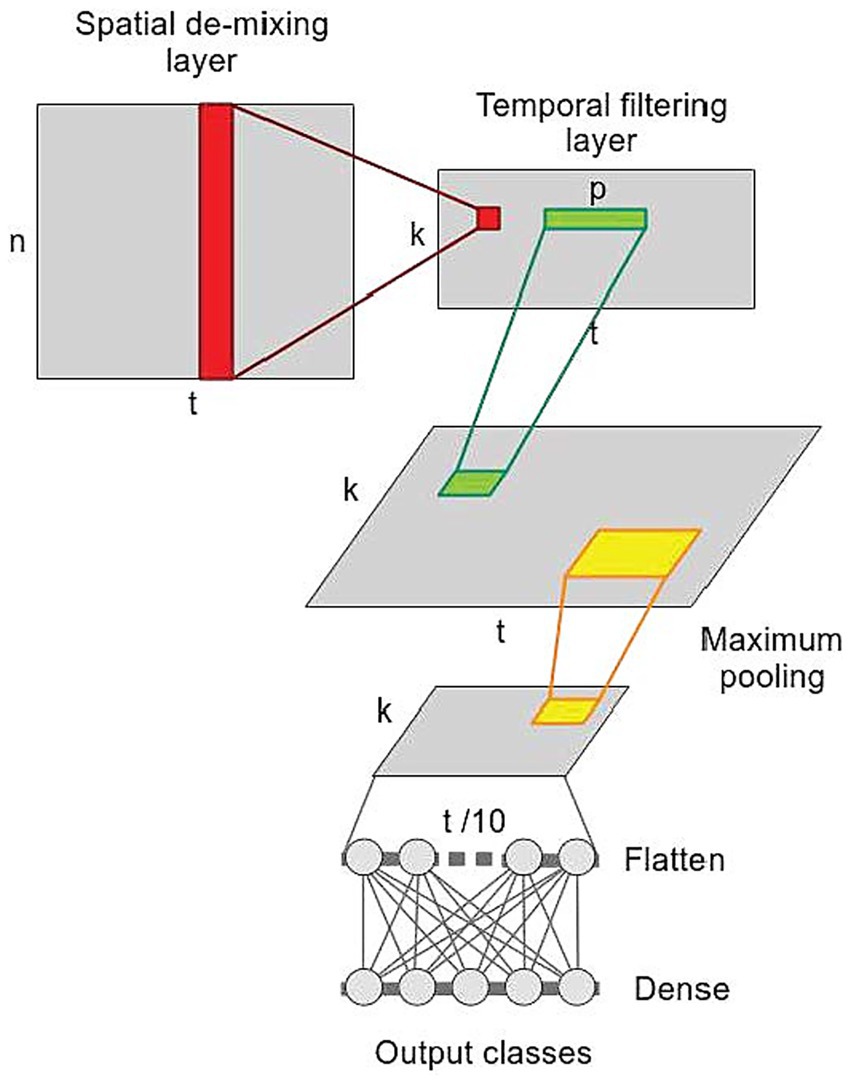

where A represents the activation patterns, Kxx is the covariance matrix of the data in sensor space and Kss is the covariance matrix of the latent sources (feature space). In our approach, the demixing matrix is obtained in two steps, decomposing and reconstructing the observed data in the spatial and temporal dimensions. To this aim, we validated the performance of the Linear Finite Impulse Response Convolutional Neural Network (LF-CNN) (Zubarev et al., 2019) on our SRTT dataset. The neural network consists of only 4 layers (Figure 2): (1) a spatial demixing layer applying k filters of size [n × 1] (red) to the input data of shape [n × t], producing latent sources of size [k × t]; (2) a temporal filtering layer convolving k filters of size [p × 1] (green) with the k latent sources extracted by the spatial demixing layer, producing a temporal filtering output of size [k × t]; (3) a temporal pooling layer (yellow) decreasing the temporal resolution by a factor of 10; (4) a fully connected layer with softmax activation to produce the prediction. Details on the hyperparameters of the LF-CNN are in Supplementary Table S3.

Figure 2. Linear Finite Impulse Response Convolutional Neural Network (LF-CNN). n, number of observations (MEG channels); k, number of extracted components; t, time (epoch length); p, length of the temporal convolutional filters in the second layer.

The spatial demixing layer allows deriving spatially interpretable patterns. Weights estimated by the temporal-filtering layer can be considered as finite-impulse-response filters applied to spatial features, providing insights into the spectral and temporal properties of the extracted components as described in Petrosyan et al. (2021).
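To make the four-layer structure concrete, the following NumPy sketch runs a forward pass for a single epoch with random weights. It is not the authors' implementation: max pooling is assumed for the temporal pooling step, no additional nonlinearity or regularization is modeled, the filter length p = 7 is a placeholder (the actual hyperparameters are in Supplementary Table S3), and all names are hypothetical.

```python
import numpy as np


def lfcnn_forward(x, w_spatial, h_temporal, w_dense, b_dense, pool=10):
    """Forward pass for one epoch x of shape (n_channels, n_times)."""
    # (1) Spatial demixing: k latent sources, shape (k, t).
    sources = w_spatial.T @ x
    # (2) Temporal filtering: one FIR kernel of length p per latent source.
    #     np.convolve performs true convolution (the kernel is flipped).
    filtered = np.stack([
        np.convolve(sources[i], h_temporal[i], mode="same")
        for i in range(sources.shape[0])
    ])
    # (3) Temporal pooling by a factor `pool` (max pooling is an assumption).
    k, t = filtered.shape
    t_trim = (t // pool) * pool
    pooled = filtered[:, :t_trim].reshape(k, t_trim // pool, pool).max(axis=2)
    # (4) Fully connected layer followed by softmax over classes.
    logits = pooled.reshape(-1) @ w_dense + b_dense
    logits = logits - logits.max()          # numerical stability
    probs = np.exp(logits) / np.exp(logits).sum()
    return sources, filtered, probs


# Random weights, only to check shapes: n channels, t samples, k components.
rng = np.random.default_rng(0)
n, t, k, p, n_classes = 204, 200, 32, 7, 4
x = rng.standard_normal((n, t))
w_spatial = rng.standard_normal((n, k)) * 0.01
h_temporal = rng.standard_normal((k, p))
w_dense = rng.standard_normal((k * (t // 10), n_classes)) * 0.01
b_dense = np.zeros(n_classes)

sources, filtered, probs = lfcnn_forward(x, w_spatial, h_temporal, w_dense, b_dense)
print(sources.shape, filtered.shape, probs.shape)
```

Because the first two layers are linear, the columns of `w_spatial` and the rows of `h_temporal` can be inspected directly, which is what makes the spatial and spectral interpretation possible.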

2.4.3 Results visualization

In the results section, we present representative patterns for each set of decoders obtained, showing:

1. Spatial patterns, obtained in accordance with Equation 3 (Haufe et al., 2014), with W being the weights obtained in the first layer of the LF-CNN. These patterns are depicted in the upper panels of Figures in sections 3.2–3.3.

2. Temporal patterns, obtained by convolving the output of the spatial filtering layer with the temporal filter weights (LF-CNN second layer). After applying the convolution separately to each trial, we averaged the time-resolved signal and also computed the average of the single-trial time–frequency representation. These two elements are superimposed in the middle panels of Figures in sections 3.2–3.3.

3. Spectral representation of input, transfer function and output of the second layer. The frequency spectra of the input to the temporal filter in the second layer (blue), the frequency spectra of the temporal filter convolution kernel, or filter response (green), and the frequency spectra of the output of the second layer (orange) are displayed in the bottom panels of Figures in sections 3.2–3.3.
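The first and third items can be sketched as follows, assuming the pattern formula of Haufe et al. (2014), in which the patterns equal the data covariance multiplied by the spatial filters and by the inverse covariance of the latent sources. The covariance estimator, the use of a pseudo-inverse, the FFT length and the function names are choices made for this illustration only.

```python
import numpy as np


def spatial_patterns(epochs, w_spatial):
    """Patterns A = K_xx W K_ss^{-1} from epochs of shape (n_epochs, n, t)."""
    n = epochs.shape[1]
    x = epochs.transpose(1, 0, 2).reshape(n, -1)     # (n, n_epochs * t)
    x = x - x.mean(axis=1, keepdims=True)
    k_xx = x @ x.T / (x.shape[1] - 1)                # sensor covariance (n, n)
    s = w_spatial.T @ x                              # latent sources (k, samples)
    k_ss = s @ s.T / (s.shape[1] - 1)                # source covariance (k, k)
    return k_xx @ w_spatial @ np.linalg.pinv(k_ss)   # patterns (n, k)


def filter_response(h, sfreq=200, n_fft=256):
    """Magnitude response of an FIR kernel h on a frequency grid in Hz."""
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sfreq)
    return freqs, np.abs(np.fft.rfft(h, n=n_fft))


# Sanity check on noise-free synthetic data: with exact demixing filters,
# the estimated patterns should recover the true mixing matrix.
rng = np.random.default_rng(0)
n_epochs, n, k, t = 20, 6, 2, 50
a_true = rng.standard_normal((n, k))
s_true = rng.standard_normal((n_epochs, k, t))
epochs = np.einsum("nk,ekt->ent", a_true, s_true)
w_exact = np.linalg.pinv(a_true).T                   # (n, k), W^T A = I
a_hat = spatial_patterns(epochs, w_exact)

# A 5-tap moving average is a simple low-pass FIR kernel.
freqs, response = filter_response(np.ones(5) / 5)
print(np.allclose(a_hat, a_true), freqs[-1])
```

The check illustrates why patterns rather than filter weights are plotted: the filters `w_exact` differ from `a_true`, while the patterns recover it.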

2.4.4 Cross validation schema

To rigorously assess model performance, we adopted a 6-fold split strategy. In each iteration, five folds (83.3%) were used for training, while the remaining fold (16.7%) served as the held-out test set. Within the training phase, we further applied a 5-fold cross-validation on the training portion to create dynamic validation sets. This nested validation approach allowed for continuous monitoring and fine-tuning of the model’s generalization capability across training runs.

This strategy was used to train a decoder for each individual subject. The number of trials used in each subject for training and test are listed in Supplementary Table S2.
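The nested scheme can be sketched as index bookkeeping. Whether folds were shuffled or stratified by class is not stated in the text, so the random shuffling below is an assumption, and the trial count is a placeholder (the actual counts per subject are in Supplementary Table S2).

```python
import numpy as np


def kfold_indices(n_items, n_splits, rng):
    """Shuffle indices and split them into n_splits nearly equal folds."""
    return np.array_split(rng.permutation(n_items), n_splits)


def nested_splits(n_trials, n_outer=6, n_inner=5, seed=0):
    """Yield (train_idx, val_idx, test_idx) for each outer/inner combination."""
    rng = np.random.default_rng(seed)
    outer = kfold_indices(n_trials, n_outer, rng)
    for i, test_idx in enumerate(outer):
        # The five remaining outer folds form the training portion.
        train_pool = np.concatenate([f for j, f in enumerate(outer) if j != i])
        inner = kfold_indices(len(train_pool), n_inner, rng)
        for m, val_pos in enumerate(inner):
            train_pos = np.concatenate([f for j, f in enumerate(inner) if j != m])
            yield train_pool[train_pos], train_pool[val_pos], test_idx


# Placeholder trial count, chosen so that all folds have equal size.
splits = list(nested_splits(n_trials=1200))
train_idx, val_idx, test_idx = splits[0]
print(len(splits), len(train_idx), len(val_idx), len(test_idx))
```

The held-out test fold never overlaps with the training or validation indices of the same iteration, which is the property the nested scheme is meant to guarantee.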

We benchmarked this approach against less interpretable deep-learning architectures: the shallow Filter-Bank Common Spatial Patterns network (FBCSP-ShallowNet) (Schirrmeister et al., 2017), EEGNet (Lawhern et al., 2018), and VGG19 (Simonyan and Zisserman, 2015).

2.4.5 Classification performance

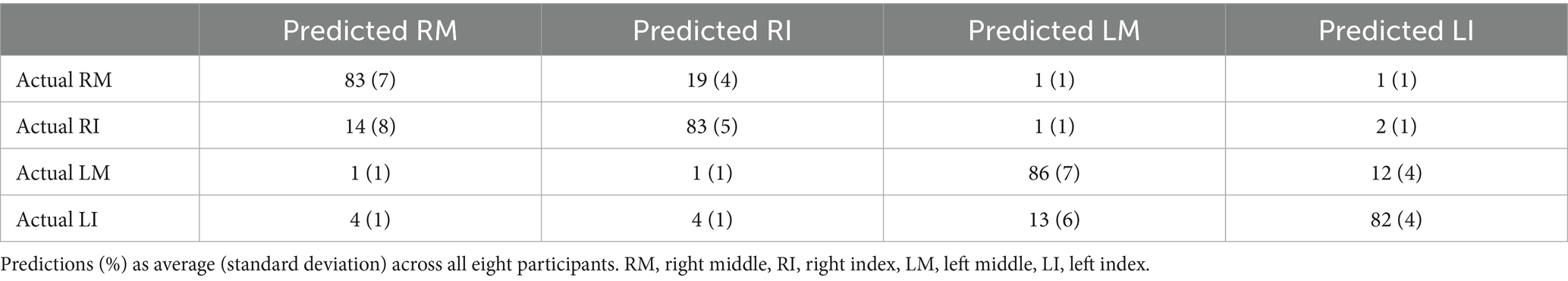

To evaluate the performance of each classifier, we computed the accuracy, defined as the percentage of correctly classified items over the total number of items in the test set. Successful decoding must provide a performance above chance level, which is the probability of a correct prediction when randomly choosing one of the options. Thus, the chance level is 50% for a two-class problem and 25% for a four-class problem. For the multiclass (four-class) problem we also provide a confusion matrix (Markoulidakis et al., 2021) to understand how classes relate to each other and which classes are easier to classify.
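These metrics are simple to compute. The sketch below uses made-up labels rather than study data, and the chance level formula assumes balanced classes.

```python
import numpy as np


def accuracy(y_true, y_pred):
    """Percentage of correctly classified items."""
    return 100.0 * np.mean(np.asarray(y_true) == np.asarray(y_pred))


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows index the true class, columns the predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for true_cls, pred_cls in zip(y_true, y_pred):
        cm[true_cls, pred_cls] += 1
    return cm


def chance_level(n_classes):
    """Chance accuracy in percent, assuming balanced classes."""
    return 100.0 / n_classes


# Made-up example: classes 0/1 are left-hand fingers, 2/3 right-hand fingers.
y_true = [0, 0, 1, 1, 2, 2, 3, 3]
y_pred = [0, 1, 1, 1, 2, 3, 3, 3]
acc = accuracy(y_true, y_pred)
cm = confusion_matrix(y_true, y_pred, n_classes=4)
print(acc, chance_level(4))
print(cm)
```

In this toy example both errors fall within the same hand, which shows up as off-diagonal counts inside the 2 × 2 blocks along the diagonal of the matrix.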

2.4.6 Effect of learning

To investigate the effect of motor learning, we divided the data into two phases: learning (while the subjects were acquiring the sequence) and learned (at the end of the task, when the subjects had already learned the sequence). To define the learning phase, we compared the last block (when subjects were trained to perform the experimental task) versus all previous blocks, and to define the learned phase we compared the first block (when subjects were not yet trained) versus all following blocks (paired t-test, α = 0.05). Next, we applied the decoding strategy to a subset of trials to evaluate the sensitivity of the decoding to changes in the underlying neural patterns. Specifically, we generated two classifiers, one trained on the learning phase and one trained on the learned phase. Both classifiers were tested against learning and learned test trials, and their performances were compared (paired t-test, α = 0.05).
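A paired t-test across participants reduces to a one-sample test on the per-participant differences. The sketch below computes the statistic with NumPy and compares it with the tabulated two-sided critical value for 7 degrees of freedom (8 participants); the accuracies are hypothetical numbers invented for the example, not results of the study.

```python
import numpy as np

# Two-sided critical value of Student's t for alpha = 0.05 and df = 7,
# taken from standard t tables.
T_CRIT_DF7 = 2.365


def paired_t(a, b):
    """Return the paired t statistic and degrees of freedom for a vs. b."""
    d = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    n = d.size
    t_stat = d.mean() / (d.std(ddof=1) / np.sqrt(n))
    return float(t_stat), n - 1


# Hypothetical per-participant accuracies (%), NOT values from the study.
acc_same_phase = [84.0, 81.5, 86.0, 79.0, 83.5, 82.0, 85.0, 80.5]
acc_cross_phase = [80.0, 79.5, 83.0, 77.0, 80.5, 79.0, 81.0, 78.5]

t_stat, df = paired_t(acc_same_phase, acc_cross_phase)
significant = df == 7 and abs(t_stat) > T_CRIT_DF7
print(f"t({df}) = {t_stat:.2f}, significant at alpha = 0.05: {significant}")
```

An exact p-value would require the cumulative distribution of Student's t, which statistics libraries provide; the critical-value comparison is used here only to keep the example dependency-free.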

Differences between the temporal latent sources of the learning and learned phases of the paradigm were quantified by non-parametric cluster statistics in terms of F-values. The similarity across spatial patterns was quantified in terms of the scalar product between spatial pattern coefficients.
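The scalar product between two spatial patterns can be computed as below. Scaling each pattern to unit norm first, so that the value lies between -1 and 1, is an assumption of this sketch; the text does not state whether the patterns were normalized.

```python
import numpy as np


def pattern_similarity(a, b):
    """Scalar product of two spatial patterns after scaling each to unit norm."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


# Toy patterns over four sensors (illustrative values only).
pattern_a = np.array([1.0, 2.0, 0.0, -1.0])
same_shape = pattern_similarity(pattern_a, 3.0 * pattern_a)    # same topography
orthogonal = pattern_similarity([1.0, 0.0], [0.0, 1.0])        # unrelated
flipped = pattern_similarity(pattern_a, -pattern_a)            # inverted polarity
print(same_shape, orthogonal, flipped)
```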

The code is available at: https://github.com/1101AlexZab1011/FingerMovementDecoder

3 Results

3.1 Behavioral performance

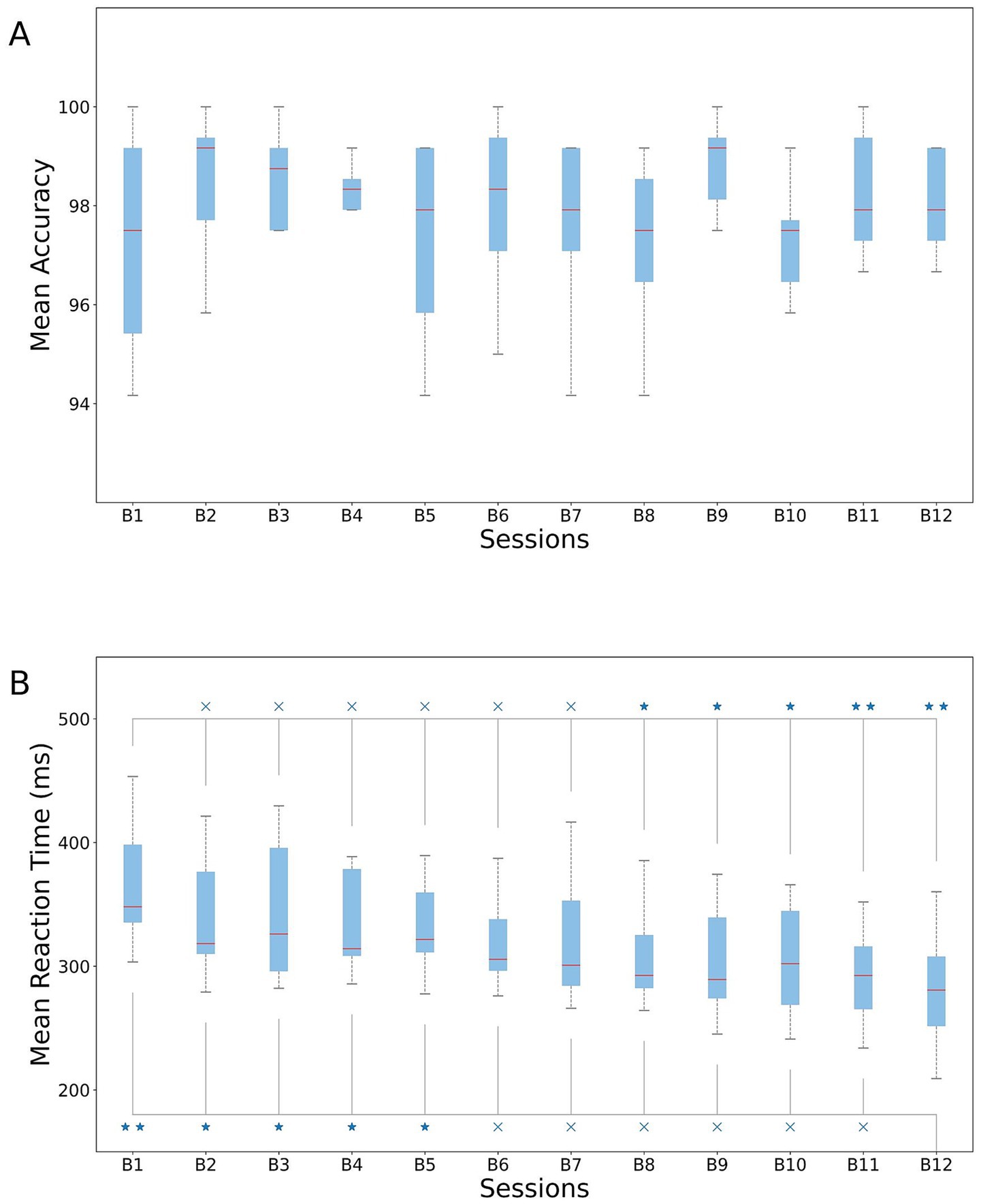

We first quantified behavioral responses across the 12 blocks of our experimental design. No significant differences were found in accuracy (1st vs. 12th block, paired t-test, p = 0.80), which was on average 97.48 ± 2.51% (Figure 3A). The profile of the response times mirrored the learning effect, with the slowest responses in the first blocks and the fastest responses in the last blocks of the experimental paradigm. We observed faster reaction times in blocks 8–12 compared with block 1 (Figure 3B) and in block 12 compared with blocks 1–5 (Figure 3B; Supplementary Table S1).

Figure 3. Behavioral performance in the SRTT. (A) Mean accuracy and (B) mean reaction times across subjects for each of the 12 blocks. Upper line: paired t-test results between 1st block and all the others. Bottom line: paired t-test results between 12th block and all the previous. *p < 0.05 and **p < 0.01.

From the results, we concluded that subjects were still in the learning phase until the end of block 5, and that they might have already learned the sequence by the start of block 8. To maximize the separation between these two phases, we subsequently considered Blocks 1–3 as the learning phase and Blocks 10–12 as the learned phase.

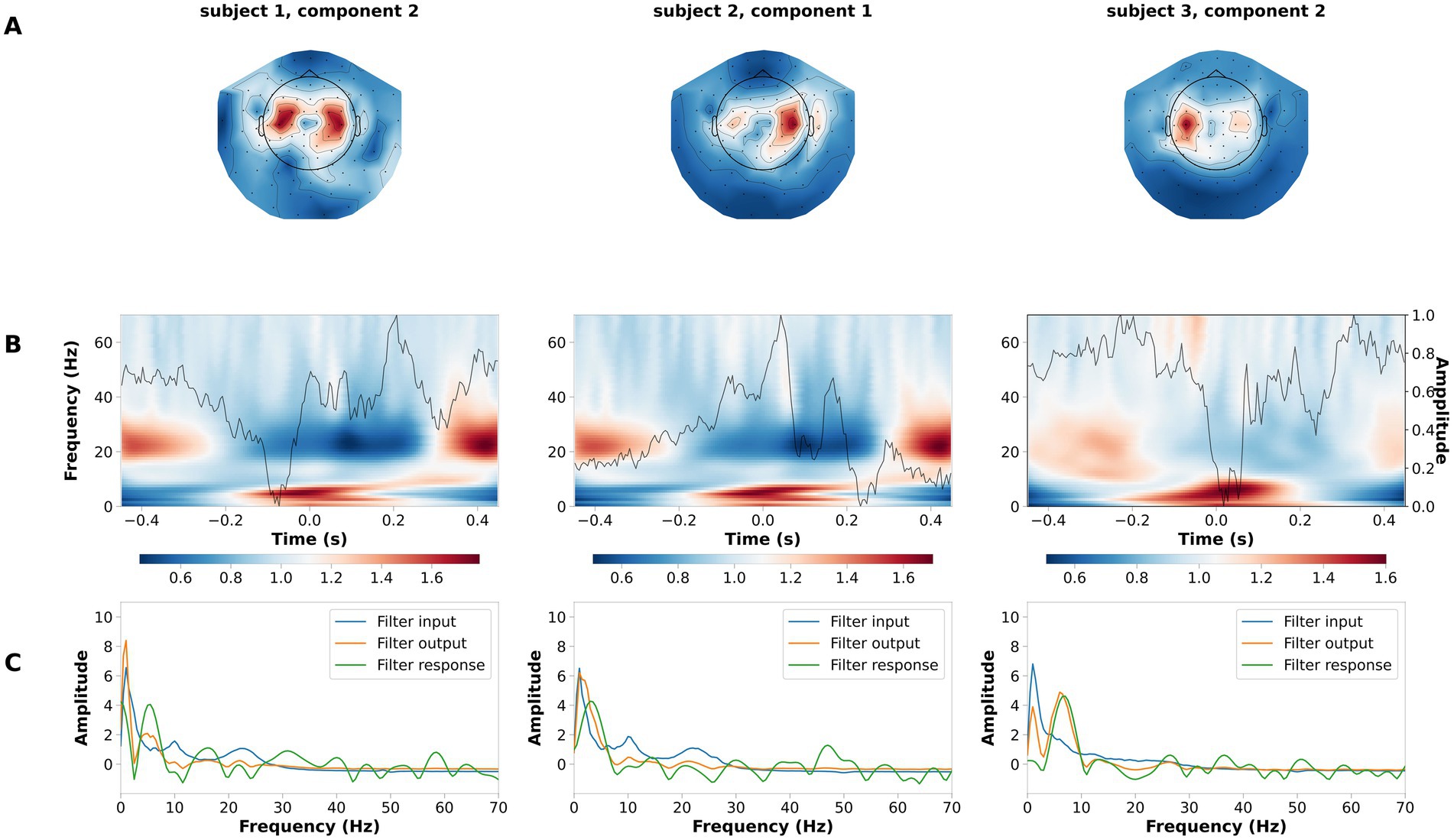

3.2 Left vs. right-hand movement decoding

We validated the performance of LF-CNN on the laterality of the button press (left vs. right hand). Across participants, the average performance of LF-CNN was above 95% (98.5 ± 1.2%). Typical patterns for the spatial, spectral and temporal filters optimized by the LF-CNN are depicted in Figure 4. The first layer of LF-CNN returns spatial patterns with focal maxima in central areas (Figure 4A), reflecting the relevance of motor activity. The second layer of LF-CNN returns time-frequency power patterns (Figure 4B), with modulations in the alpha (8–15 Hz), beta (15–30 Hz) and gamma (>30 Hz) bands around the button press (t = 0) (Mohseni et al., 2020). Interestingly, the spectral properties of the temporal filter extracted from the second layer (Figure 4C, green trace) show a prominent contribution of low-frequency power. Therefore, the LF-CNN not only provides high classification performance, but also returns physiologically interpretable patterns in the spatial, temporal and spectral domains.

Figure 4. Example of spatial, temporal and spectral patterns for left- vs. right-hand movement decoding. Each column is a representative component of the LF-CNN featuring: (A) spatial patterns; (B) latent sources: time-resolved average (gray line) and average of the single-trial time-frequency representation obtained with a complex Morlet wavelet (background). Time = 0 indicates response time; (C) FIR filter input (blue), filter response (green), and filter output (orange).

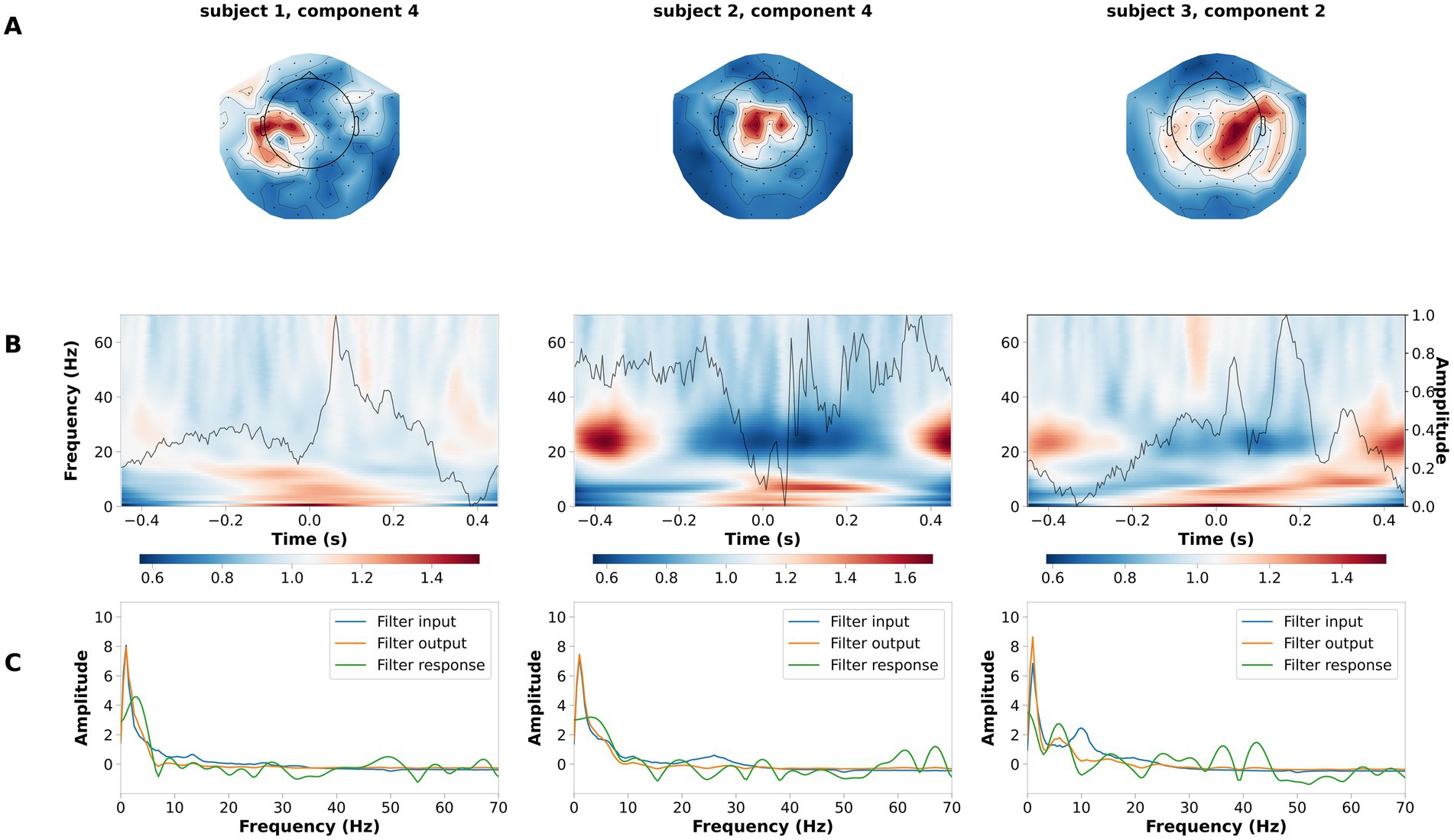

3.3 Four finger movement decoding

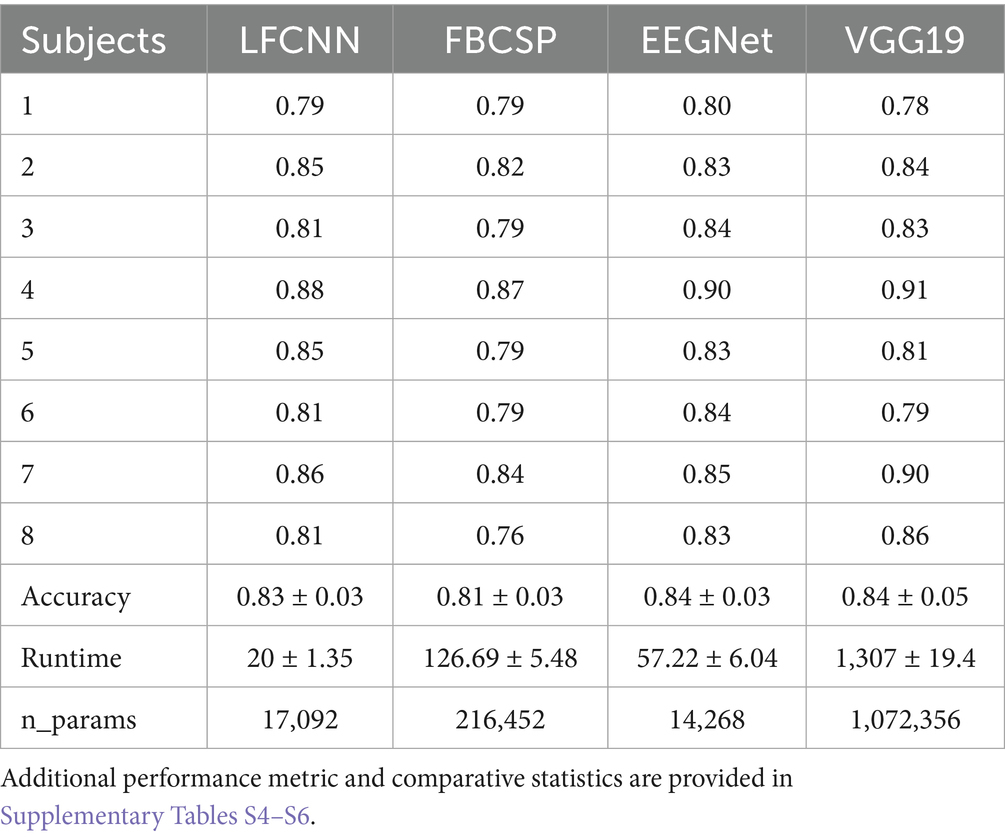

Next, we validated the LF-CNN on the classification of four finger movements. We obtained a test classification accuracy of 82.0 ± 0.3%, which was in line with the performance of more complex architectures such as FBCSP-ShallowNet, EEGNet and VGG19 (Table 1).

Table 1. Performance (test accuracy), runtime (training, validation and testing for all subjects, in seconds) and number of parameters for comparative deep-learning approaches for the decoding of finger movement in a motor sequence learning task.

Importantly, given its simpler architecture and the lower number of parameters to train, LF-CNN reached this level of accuracy with a runtime one order of magnitude shorter than FBCSP-ShallowNet and around two orders of magnitude shorter than VGG19. Interestingly, the runtime was also shorter than that of EEGNet, despite the comparable number of parameters to be trained. Runtimes reported here were evaluated on a workstation running Ubuntu 22.04.1 LTS, with an Intel® Xeon® CPU at 2.10 GHz and 128 GB of RAM.

We examined the distribution of misclassified trials by reporting the performance in a confusion matrix (Table 2), which shows that a finger is more often misclassified as the other finger of the same hand than as a finger of the opposite hand. Despite the highly overlapping anatomical representation of fingers of the same hand (Beisteiner et al., 2001; Liao et al., 2014), correct classification remains stable at or above 80%, with chance level at 25% for the four fingers and at 50% for fingers within the same hand.

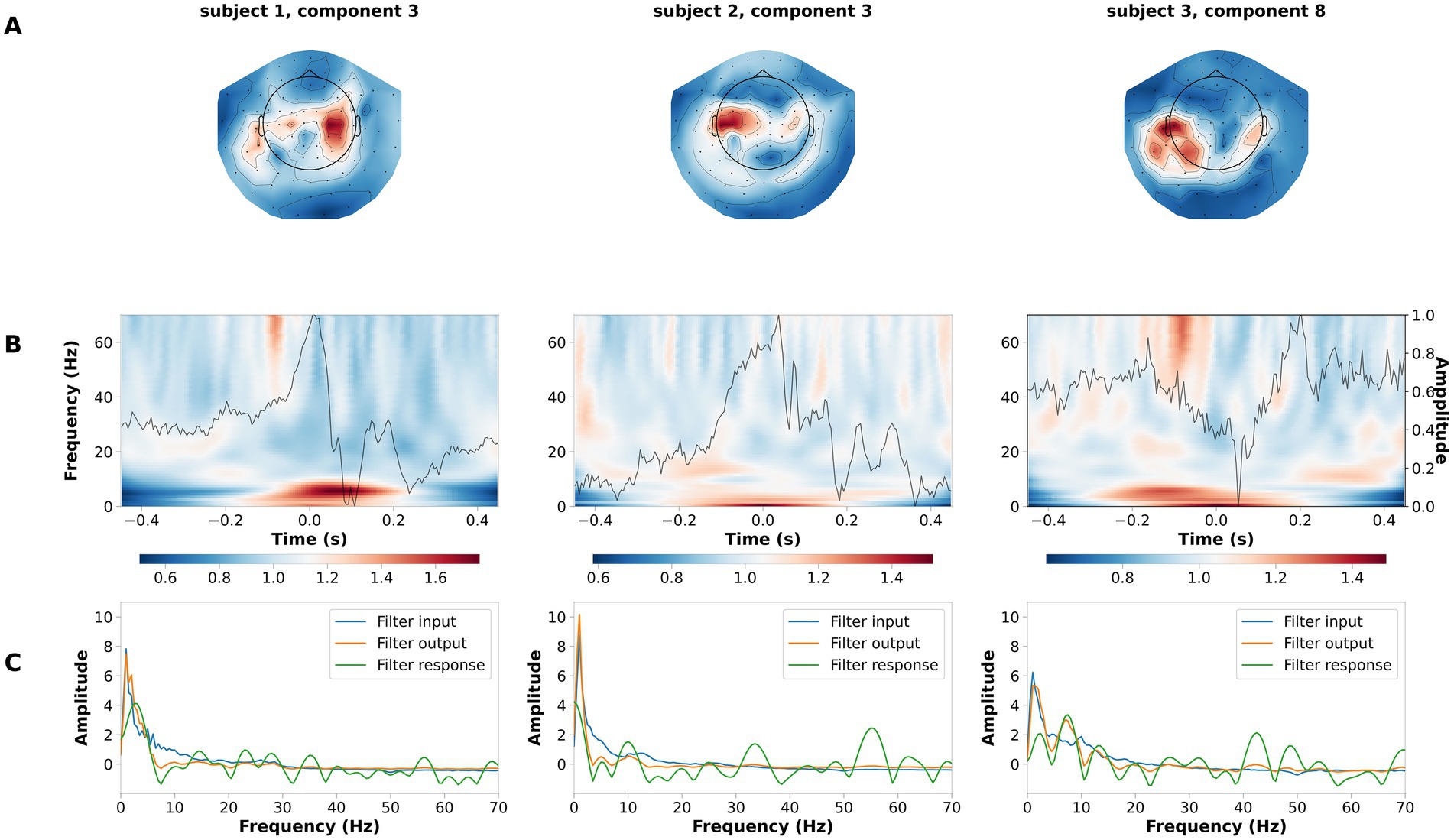

The LF-CNN decoder provides physiologically interpretable spatial, temporal and spectral patterns. The first layer of LF-CNN returns spatial patterns with the highest values over central areas (Figure 5A), while the second layer shows marked power modulation in the alpha and beta range (Figure 5B) and a temporal filter response with a low-pass spectral profile (Figure 5C). We performed an ablation study by systematically suppressing the contribution of the spatial, temporal and fully connected layers. This revealed a prominent role of the spatial and fully connected layers (Supplementary Table S9). We tested the relevance of low frequencies for decoding by high-pass filtering the data at 5 Hz, and observed a decrease in the LF-CNN accuracy to 64 ± 3%. We tested the temporal sensitivity of the decoding and observed maximal performance for a 300 ms window centered at 50 ms after the button press (accuracy 78.67 ± 5.53%, Supplementary Figure S1). As an additional control, we also trained LF-CNN to classify finger movements within the left hand and within the right hand. These models provided an accuracy of 77.95 ± 5.06% for within left-hand movements and 82.88 ± 5.19% for within right-hand movements (Figure 6).

Figure 5. Example of spatial, temporal and spectral patterns for four finger movement decoding. Each column is a representative component of the LF-CNN. (A) spatial patterns; (B) latent sources: time-resolved average (gray line) and average of the single-trial time-frequency representation (background). Time = 0 indicates response time; (C) FIR filter input (blue), filter response (green), and filter output (orange).

Figure 6. Example of spatial, temporal and spectral patterns for ipsilateral fingers decoding. Each column is a representative component of the LF-CNN. (A) spatial patterns; (B) latent sources: time-resolved average (gray line) and average of the single-trial time-frequency representation (background). Time = 0 indicates response time; (C) FIR filter input (blue), filter response (green), and filter output (orange).

3.4 MEG—cognitive status

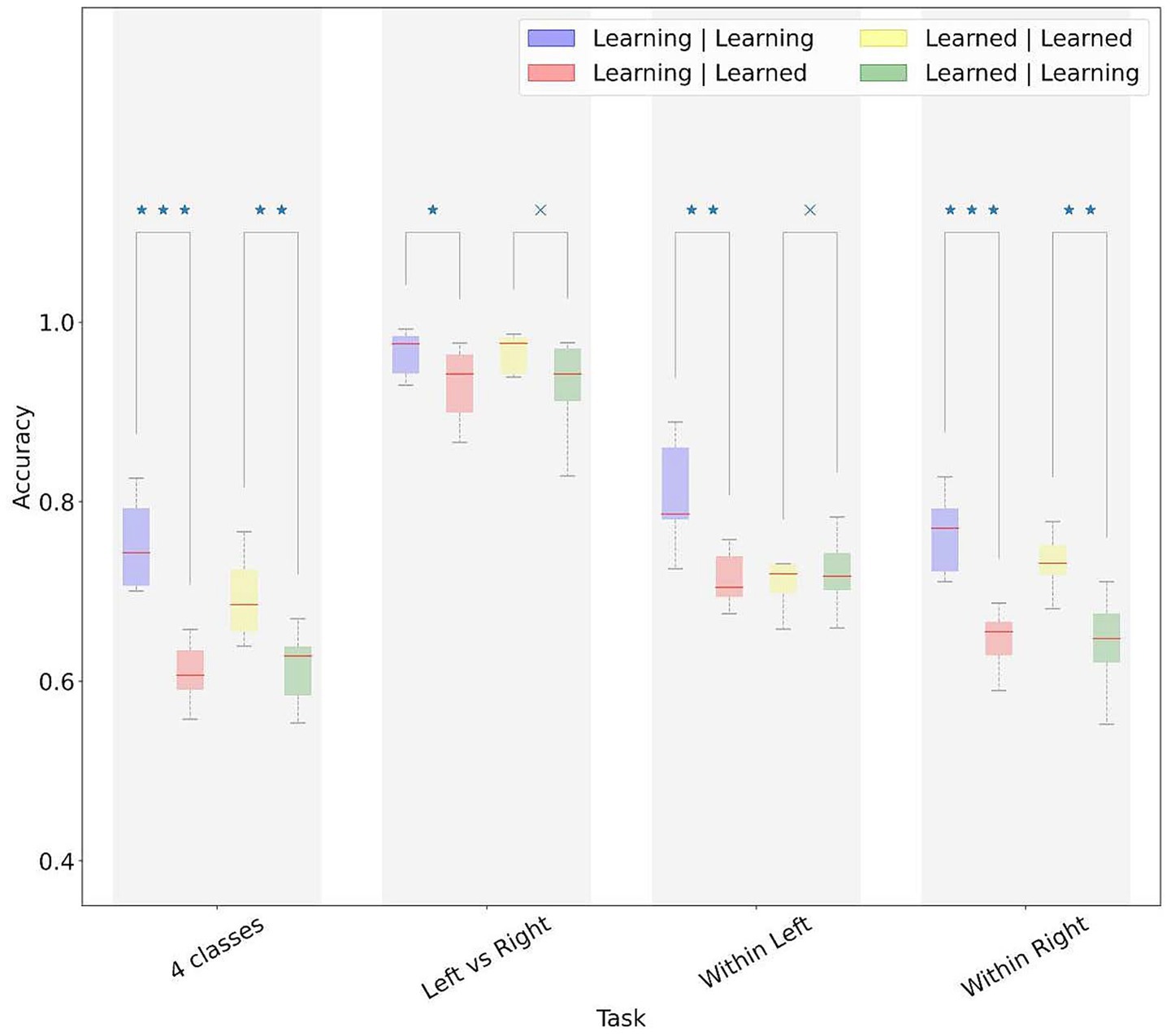

The SRTT is designed to induce sequence learning, with the motor planning strategy changing between the early and later blocks of the experiment. To evaluate the effect of learning on the decoding performance, we generated two sets of models, one trained on the learning phase (blocks 1–3) and one on learned phase (blocks 10–12, see 3.1). We then tested these two sets of models on test data from both phases.

In the finger movement decoding, both models performed better on test trials extracted from the same phase (learning or learned) used for model training (Figure 7). The effect of phase on decoding was less visible in the hand decoding. For decoding within the same hand, we observed a significant phase effect for the right but not the left hand.

Figure 7. Performance of models across learning and learned phases of the SRTT paradigm. Model performances were averaged across participants. In the legend, the first term refers to the phase (learning vs. learned) of the training data, and the second term refers to the phase of the test data. x, no significant difference; *p-value < 0.05, **p-value < 0.01, ***p-value < 0.001.

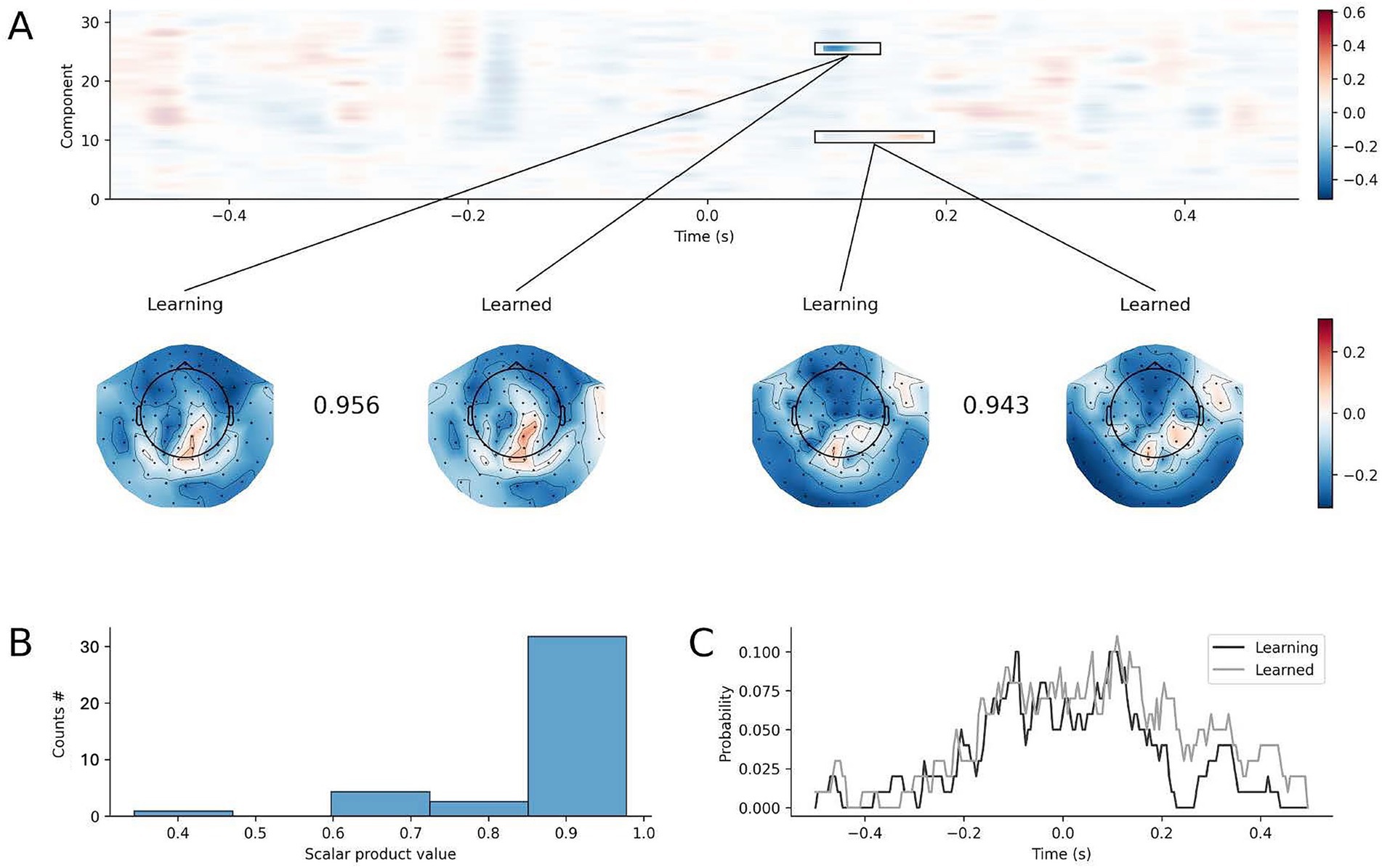

To explain the difference in decoding performance, we investigated the difference in latent sources obtained by feeding trials from the learning phase and the learned phase of the SRTT to the four-finger decoder trained on the learning phase. Figure 8A shows the comparison between the obtained latent sources over time. Across subjects, only a small number of temporal latent sources showed a significant difference (range 1 to 4 out of 32 estimated components in each subject). In the components whose temporal trend differed, the spatial patterns were highly similar, as shown by the scalar product in the inset of Figure 8A and by the distribution of the scalar product computed for all significant clusters in Figure 8B. The difference in the temporal latent sources obtained by feeding data from the learning vs. learned phases was distributed mostly in the −200 to +200 ms interval around the button press (Figure 8C: two curves for two decoders trained on the learning and learned phases). Therefore, the differences in the properties of the decoders were minimal, distributed across different components, and variable across individuals.
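As an illustration of how a scalar product can quantify the similarity of two spatial patterns, the sketch below scales each pattern to unit length before taking the product, so that values lie between −1 and 1. The normalization step, the function name `pattern_similarity`, the sensor count, and the synthetic patterns are assumptions made for this example; the text does not specify how the patterns were scaled.

```python
import numpy as np

def pattern_similarity(a, b):
    """Scalar product of two spatial patterns after scaling each to unit length."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

rng = np.random.default_rng(1)
n_sensors = 204  # hypothetical number of sensors

# Two nearly identical topographies and one unrelated topography (synthetic).
pattern_learning = rng.normal(size=n_sensors)
pattern_learned = pattern_learning + 0.1 * rng.normal(size=n_sensors)
pattern_unrelated = rng.normal(size=n_sensors)

sim_close = pattern_similarity(pattern_learning, pattern_learned)
sim_far = pattern_similarity(pattern_learning, pattern_unrelated)
print(f"similar patterns:   {sim_close:.3f}")
print(f"unrelated patterns: {sim_far:.3f}")
```

A value close to 1 indicates that two components share essentially the same topography, which is the situation described for the components with differing temporal trends.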

Figure 8. Difference in decoding models built from the learning and learned phases of the SRTT paradigm. (A) A representative example of the differences (F-values) between the temporal latent sources obtained by feeding a decoder trained on blocks 1–3 with data from blocks 1–3, and temporal sources obtained by feeding the same decoder trained on blocks 1–3 with data from blocks 10–12. Significant differences surviving the non-parametric cluster permutation test are highlighted and spatial pattern topographies are shown (the scalar product between the spatial patterns measures their similarity). (B) Scalar product of spatial patterns of significant clusters obtained from the difference between the latent temporal sources of the learning (blocks 1–3) and learned (blocks 10–12) phases of the SRTT paradigm, obtained using both the decoders trained on the learning (blocks 1–3) and learned (blocks 10–12) phases (x-axis: scalar product value; y-axis: count of scalar products for each bin). (C) Percentage of statistically significant differences in the temporal pattern of the 32 latent sources for models trained during the learning (black) and learned (gray) phase.

4 Discussion

In this study, we showed that it was possible to reliably decode finger movements from non-invasive neurophysiological data in healthy participants performing the SRTT, operationalized as a motor learning task. Through our compact deep learning model, the LF-CNN, we showcased high classification accuracy and physiologically interpretable results.

The accuracy of the LF-CNN was comparable to that of more complex deep learning architectures, with significantly reduced training time. This was due to the lower number of parameters to be optimized (Schirrmeister et al., 2017; Simonyan and Zisserman, 2015) and to the absence of a dense layer, which reduced time-consuming convolutions (Lawhern et al., 2018). Specifically, the LF-CNN was able to decode dynamically changing spatio-temporal parameters in approximately 20 s, compared to almost 60 s for EEGNet (Table 1). This gain in decoding efficiency and performance can improve the timing of essential information in applied rehabilitation settings, where feedback on cortical contributions to motor learning is needed. In the next sections, we detail the decoding structure learned by our model and its role in understanding motor and cognitive processing.

4.1 Finger movement decoding and motor learning

In the current study, the LF-CNN approach appears to utilize both spatial and temporal filters, represented by the weights of the trained network layers, to support the decoding of finger movements. While these weights may not directly reflect neural activity (Haufe et al., 2014; Petrosyan et al., 2021), they can offer insights into the underlying signal structure. The resulting patterns may inform a generative model that characterizes the potential relationship between sensor-level recordings and neural sources (Cui et al., 2022; Petrosyan et al., 2021).
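The distinction between filter weights and activation patterns can be made concrete with the transformation described by Haufe et al. (2014), in which patterns are obtained as the data covariance times the filters times the inverse covariance of the extracted sources. The sketch below applies it to synthetic data under a linear forward model; the dimensions, noise level, and variable names are hypothetical, and the filters are computed with a pseudo-inverse rather than learned by a network.

```python
import numpy as np

rng = np.random.default_rng(2)
n_sensors, n_sources, n_samples = 20, 3, 5000  # hypothetical dimensions

# Synthetic forward model: sensor data = mixing patterns @ sources + noise.
A_true = rng.normal(size=(n_sensors, n_sources))
S = rng.normal(size=(n_sources, n_samples))
X = A_true @ S + 0.1 * rng.normal(size=(n_sensors, n_samples))

# Spatial filters (one column per latent source); here from the pseudo-inverse.
W = np.linalg.pinv(A_true).T          # shape (n_sensors, n_sources)
S_hat = W.T @ X                       # latent sources extracted by the filters

# Haufe et al. (2014): pattern = Cov(X) @ W @ inv(Cov(S_hat)).
cov_X = np.cov(X)
cov_S = np.cov(S_hat)
A_hat = cov_X @ W @ np.linalg.inv(cov_S)

# Compare each recovered pattern, and each raw filter, with the true pattern.
for k in range(n_sources):
    r_pattern = np.corrcoef(A_hat[:, k], A_true[:, k])[0, 1]
    r_filter = np.corrcoef(W[:, k], A_true[:, k])[0, 1]
    print(f"source {k}: pattern r={r_pattern:.3f}, filter r={r_filter:.3f}")
```

In this toy setting the recovered patterns match the true mixing columns closely, whereas the raw filter weights generally do not, which is why patterns rather than weights are inspected for physiological interpretation.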

The first convolutional layer applies spatial filters across the MEG sensor array, performing a one-dimensional convolution to project the high-dimensional MEG signals into a lower-dimensional latent space. This projection captures dominant spatial features related to neural sources, significantly reducing the complexity of the data while preserving key information. Notably, the spatial patterns revealed prominent activity in sensorimotor cortices, areas known to be critical for motor planning and execution (Cheyne, 2013; Pfurtscheller and Lopes da Silva, 1999). These regions exhibited event-related synchronization (ERS) and desynchronization (ERD) in the alpha and beta frequency bands that are well-established markers of motor preparation and execution (Kilavik et al., 2013; Pfurtscheller and Neuper, 2003).
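ERD and ERS are commonly quantified as the percentage change in band power relative to a reference interval (Pfurtscheller and Lopes da Silva, 1999). The sketch below computes this on a synthetic 20 Hz oscillation whose amplitude halves around the response; the sampling rate, baseline window, trial count, and the function name `erd_percent` are assumptions for illustration and are not taken from the recordings described here.

```python
import numpy as np

def erd_percent(power, times, baseline=(-1.0, -0.5)):
    """Percent power change relative to the baseline mean (negative = ERD, positive = ERS)."""
    mask = (times >= baseline[0]) & (times < baseline[1])
    ref = power[mask].mean()
    return 100.0 * (power - ref) / ref

fs = 250.0                                    # hypothetical sampling rate (Hz)
times = np.arange(-1.0, 1.0, 1.0 / fs)        # time relative to button press (s)
rng = np.random.default_rng(3)
n_trials = 50

# Synthetic beta rhythm: amplitude drops to 50% within 200 ms of the response.
envelope = np.where(np.abs(times) < 0.2, 0.5, 1.0)
phases = rng.uniform(0.0, 2.0 * np.pi, size=(n_trials, 1))
trials = envelope * np.sin(2.0 * np.pi * 20.0 * times + phases)

# Trial-averaged instantaneous power, then percent change from baseline.
power = (trials ** 2).mean(axis=0)
erd = erd_percent(power, times)

window = np.abs(times) < 0.2
print(f"mean change around response: {erd[window].mean():.1f}%")
```

Halving the amplitude reduces power to a quarter, so the printed change is close to −75%, i.e., a desynchronization.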

The second convolutional layer uses temporal filters that behave like univariate autoregressive (AR) models, allowing for direct interpretation in the frequency domain. These temporal filters decompose the signal into oscillatory components, forming spectral patterns that reflect the dynamics of brain rhythms associated with motor control. The learned filters predominantly exhibited low-pass characteristics, emphasizing slow oscillations (<30 Hz) that have been repeatedly implicated in motor behavior and skill acquisition (Engel and Fries, 2010; Tan et al., 2016).
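One way to read a temporal filter in the frequency domain is to treat its kernel as FIR taps and compute the magnitude of its discrete Fourier transform. The sketch below does this for a 7-tap moving-average kernel, used here only as a stand-in for a learned low-pass filter; the tap values, filter length, sampling rate, and the function name `fir_magnitude_response` are assumptions and do not come from the trained models.

```python
import numpy as np

def fir_magnitude_response(taps, fs, n_fft=512):
    """Return frequencies (Hz) and the magnitude response |H(f)| of FIR taps."""
    H = np.fft.rfft(taps, n=n_fft)            # zero-padded DFT of the kernel
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / fs)
    return freqs, np.abs(H)

fs = 125.0                  # hypothetical sampling rate (Hz)
taps = np.ones(7) / 7.0     # hypothetical kernel: 7-tap moving average

freqs, gain = fir_magnitude_response(taps, fs)

low = gain[freqs < 10.0].mean()
high = gain[freqs > 30.0].mean()
print(f"mean gain below 10 Hz: {low:.2f}")
print(f"mean gain above 30 Hz: {high:.2f}")
```

A kernel with low-pass characteristics shows gain near 1 at low frequencies and clearly attenuated gain above roughly 30 Hz, which is the kind of spectral profile described for the learned filters.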

The key contribution of this study is the ability to distinguish movements of the ipsilateral index and middle fingers with a mean classification accuracy of 82%, performing as well as currently popular CNNs with a significant reduction in computation time. Early work demonstrated somatotopic segregation of individual fingers in the somatosensory cortex (Kleinschmidt et al., 1997), a finding later refined through modelling approaches using Gaussian receptive fields (Schellekens et al., 2018). Invasive recordings using ECoG revealed distinct broadband spectral patterns for individual finger movements and movement trajectories (Miller et al., 2009), leading to highly accurate finger decoding for prosthetic control (up to 92%; see Hotson et al., 2016). However, translating these results to non-invasive modalities like EEG and MEG typically reduces classification performance (Liao et al., 2014). Still, notable progress has been made: Quandt et al. (2012) initiated non-invasive finger decoding efforts, while more recent approaches, such as wavelet-based feature extraction with SVM-RBF classifiers (Alazrai et al., 2019) and optimized channel selection strategies (Gannouni et al., 2020), have achieved accuracies around 81–86% for finger classification. Our findings build on this momentum, demonstrating robust decoding of fine finger movements during dynamically progressing motor learning in a non-invasive MEG setting.

Our decoding results also showed higher accuracy when using broadband MEG data than when focusing solely on the alpha/beta range, which is considered the prominent motor-related frequency range. Notably, restricting analysis to alpha and beta bands did not improve performance. This aligns with prior findings showing that broadband features outperform narrowband (alpha/beta) approaches in non-invasive settings (Lee et al., 2022; Liao et al., 2014; Quandt et al., 2012). While some studies using alpha/beta power achieved moderate accuracy (Alazrai et al., 2019; Gannouni et al., 2020), broadband analysis consistently yielded better results, including in the present work. These findings suggest that low-frequency broadband activity captures richer, spatially localized information relevant to finger motor planning and execution.

Previous approaches (Alazrai et al., 2019; Gannouni et al., 2020; Lee et al., 2022; Quandt et al., 2012) typically employ a two-step pipeline: feature preselection followed by classification using standard machine learning algorithms. However, these methods often lack physiological interpretability of the selected features, potentially limiting their insight into neural processes. In contrast, our model learns spatial and temporal (spectral) features directly from the data, producing interpretable patterns that reflect underlying neural activity. More broadly, this approach could extend to decoding more complex motor tasks, such as wrist extension/flexion (Vuckovic and Sepulveda, 2008), differentiation of thumb, fist, and individual finger movements (Javed et al., 2017), or distinguishing grasp types like palmar, lateral, and pinch (Jochumsen et al., 2016). Enhancing finger decoding could also benefit BCI applications focused on movement trajectories, from 2D finger pointing (Toda et al., 2011) to 3D hand movements (Korik et al., 2018). This could lay the groundwork for precise kinematic decoding of speed, velocity, and complex tasks like center-out reaching or drawing (Bradberry et al., 2009; Kobler et al., 2019; Lv et al., 2010), which could in turn be extended to neuroprosthetics.

4.2 Sensitivity to the cognitive state changes and implications for future work

Although deep learning methods typically require large datasets, our study demonstrates that LF-CNN can achieve high classification accuracy even with a limited number of trials. Notably, the model was sensitive to learning-related changes within the SRTT paradigm. By comparing decoder patterns between the early learning and later learned phases, we investigated intra-subject variability and found minimal differences in latent sources and spatial distribution. On the other hand, the high performance for each individual and the low level of generalization across subjects highlight the model’s ability to capture subject-specific neural dynamics associated with motor learning. This underscores the model’s capacity to detect subtle, individualized changes in brain activity, which is an essential feature for probing the neurophysiological mechanisms of learning and behavior. Moreover, its sensitivity suggests strong potential for broader applications in identifying context-specific variations in neural activity. This study represents a step towards the development of adaptive algorithmic approaches for tackling intricate decoding challenges and elucidating the neurophysiological mechanisms of associated cognitive processes in complex motor tasks. The combination of spatial and spectral patterns supports a data-driven decoding model that captures how different brain rhythms and regions contribute to motor performance.

In the context of motor learning, this decoding approach may offer several valuable perspectives. First, as individuals acquire or refine motor skills, changes in the spatial distribution and strength of ERD/ERS patterns have been observed, potentially reflecting cortical reorganization (Brunner et al., 2024; Dayan and Cohen, 2011). The LF-CNN may be sensitive to such shifts in spatial representations, as suggested by the low level of generalization across subjects (Supplementary Table S8). While this reflects the subject-specific distribution of motor signals detectable through MEG, it also provides insight into learning-related changes in the cortex (Borràs et al., 2025). Secondly, by identifying task-relevant frequency bands and isolating the specific frequencies that improve movement decoding, the model reveals the rhythmic components most involved in motor learning (e.g., increased beta-band desynchronization during skilled performance). Thirdly, the decoding accuracy of motor-related states can serve as a proxy for monitoring learning progress and changes in cognitive states. As a subject becomes more proficient, the model’s ability to distinguish motor intentions or executions may improve, reflecting more robust and consistent neural representations. Lastly, the extracted spatial and spectral patterns can inform neurofeedback or brain-computer interface (BCI) applications aimed at enhancing motor learning by setting neurofeedback targets alongside timely feedback (Soekadar et al., 2015; Vidaurre et al., 2011).

Ultimately, the model’s sensitivity to neurophysiological variations holds promising implications for neurorehabilitation, which could be tested in stroke recovery. Fine motor impairments are common after stroke, with tools like the 9-Hole Peg Test routinely used to assess motor coordination (Oxford Grice et al., 2003). The ability to decode specific finger movements could enable objective, real-time monitoring of recovery progress (Guger et al., 2017; Khan et al., 2020). Previous studies have shown the effectiveness of BCI-driven interventions in improving motor control in stroke patients (Ramos-Murguialday et al., 2013; Romero-Laiseca et al., 2020). For example, detecting neural activation associated with index and middle finger movements may indicate cortical-level recovery, even in the absence of overt motion. Repeated engagement of these neural patterns over time could signal positive trends in fine motor function and support the prediction of motor kinematics with CNNs (Borra et al., 2023). Furthermore, accurate decoding of finger movements can support the precise control of prosthetic devices, for example by enabling pinch or grasp functions (Jain and Kumar, 2025), thereby providing a valuable tool for restoring functional independence in stroke patients. These findings align with our approach, which provides a promising avenue for enhancing neurorehabilitation through precise neural decoding and motor control (Tian et al., 2025).

5 Summary

We used a physiologically interpretable convolutional neural network (LF-CNN) to decode finger movements during the learning of the Serial Reaction Time Task (SRTT), where participants pressed specific buttons in response to visual cues showing a repeating sequence. The LF-CNN effectively identified distinct activation patterns across spatial, temporal, and spectral domains. Compared to more complex models, it offered a notable advantage in computational efficiency, with significantly reduced runtime, while maintaining interpretability of learned weights. Importantly, the LF-CNN was sensitive to different stages of motor learning, enabling individualized monitoring of learning dynamics for diagnostic purposes. In conclusion, the LF-CNN enables a principled decomposition of MEG signals into spatial and temporal components that are both interpretable and behaviorally relevant. This approach not only enhances decoding performance but also offers a powerful framework for investigating the neural basis of motor learning.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by Institutional and Ethical Review Board (IRB) guidelines and regulations of the Higher School of Economics. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

AZ: Visualization, Methodology, Validation, Investigation, Data curation, Software, Formal analysis, Writing – original draft. RC: Data curation, Conceptualization, Methodology, Writing – review & editing, Software. VM: Writing – review & editing. TF: Writing – review & editing, Methodology, Formal analysis, Validation, Project administration, Supervision, Data curation, Software, Conceptualization, Writing – original draft, Investigation.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work/article is an output of a research project implemented as part of the Basic Research Program at the National Research University Higher School of Economics (HSE University). Russell W. Chan was supported by the European Union’s Horizon 2020 research and innovation program under the Marie Skłodowska-Curie grant agreement No. 898286.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnins.2025.1623380/full#supplementary-material

References

Alazrai, R., Alwanni, H., and Daoud, M. I. (2019). EEG-based BCI system for decoding finger movements within the same hand. Neurosci. Lett. 698, 113–120. doi: 10.1016/j.neulet.2018.12.045

Albouy, G., Fogel, S., King, B. R., Laventure, S., Benali, H., Karni, A., et al. (2015). Maintaining vs. enhancing motor sequence memories: respective roles of striatal and hippocampal systems. NeuroImage 108, 423–434. doi: 10.1016/j.neuroimage.2014.12.049

Allison, B., Luth, T., Valbuena, D., Teymourian, A., Volosyak, I., and Graser, A. (2010). BCI demographics: how many (and what kinds of) people can use an SSVEP BCI? IEEE Trans. Neural Syst. Rehabil. Eng. 18, 107–116. doi: 10.1109/TNSRE.2009.2039495

Al-Saegh, A., Dawwd, S. A., and Abdul-Jabbar, J. M. (2021). Deep learning for motor imagery EEG-based classification: a review. Biomed. Signal Process. Control 63:102172. doi: 10.1016/j.bspc.2020.102172

Amin, S. U., Alsulaiman, M., Muhammad, G., Mekhtiche, M. A., and Shamim Hossain, M. (2019). Deep learning for EEG motor imagery classification based on multi-layer CNNs feature fusion. Futur. Gener. Comput. Syst. 101, 542–554. doi: 10.1016/j.future.2019.06.027

Bagherzadeh, S., Maghooli, K., Shalbaf, A., and Maghsoudi, A. (2023). A hybrid EEG-based emotion recognition approach using wavelet convolutional neural networks and support vector machine. Basic Clin. Neurosci. 14, 87–102. doi: 10.32598/bcn.2021.3133.1

Beisteiner, R., Windischberger, C., Lanzenberger, R., Edward, V., Cunnington, R., Erdler, M., et al. (2001). Finger Somatotopy in human motor cortex. NeuroImage 13, 1016–1026. doi: 10.1006/nimg.2000.0737

Bijsterbosch, J., Harrison, S. J., Jbabdi, S., Woolrich, M., Beckmann, C., Smith, S., et al. (2020). Challenges and future directions for representations of functional brain organization. Nat. Neurosci. 23, 1484–1495. doi: 10.1038/s41593-020-00726-z

Borra, D., Mondini, V., Magosso, E., and Müller-Putz, G. R. (2023). Decoding movement kinematics from EEG using an interpretable convolutional neural network. Comput. Biol. Med. 165:107323. doi: 10.1016/j.compbiomed.2023.107323

Borràs, M., Romero, S., Serna, L. Y., Alonso, J. F., Bachiller, A., Mañanas, M. A., et al. (2025). Assessing motor cortical activity: how repetitions impact motor execution and imagery analysis. Psychophysiology 62:e70090. doi: 10.1111/psyp.70090

Bradberry, T. J., Rong, F., and Contreras-Vidal, J. L. (2009). Decoding center-out hand velocity from MEG signals during visuomotor adaptation. NeuroImage 47, 1691–1700. doi: 10.1016/j.neuroimage.2009.06.023

Brunner, I., Lundquist, C. B., Pedersen, A. R., Spaich, E. G., Dosen, S., and Savic, A. (2024). Brain computer interface training with motor imagery and functional electrical stimulation for patients with severe upper limb paresis after stroke: a randomized controlled pilot trial. J. Neuroeng. Rehabil. 21:10. doi: 10.1186/s12984-024-01304-1

Bu, Y., Harrington, D. L., Lee, R. R., Shen, Q., Angeles-Quinto, A., Ji, Z., et al. (2023). Magnetoencephalogram-based brain-computer interface for hand-gesture decoding using deep learning. Cereb. Cortex 33, 8942–8955. doi: 10.1093/cercor/bhad173

Chan, R. W., Lushington, K., and Immink, M. A. (2018). States of focused attention and sequential action: a comparison of single session meditation and computerised attention task influences on top-down control during sequence learning. Acta Psychol. 191, 87–100. doi: 10.1016/j.actpsy.2018.09.003

Cheyne, D. O. (2013). MEG studies of sensorimotor rhythms: a review. Exp. Neurol. 245, 27–39. doi: 10.1016/j.expneurol.2012.08.030

Cui, W., Cao, M., Wang, X., Zheng, L., Cen, Z., Teng, P., et al. (2022). EMHapp: a pipeline for the automatic detection, localization and visualization of epileptic magnetoencephalographic high-frequency oscillations. J. Neural Eng. 19:055009. doi: 10.1088/1741-2552/ac9259

Dayan, E., and Cohen, L. G. (2011). Neuroplasticity subserving motor skill learning. Neuron 72, 443–454. doi: 10.1016/j.neuron.2011.10.008

de Oliveira, I. H., and Rodrigues, A. C. (2023). Empirical comparison of deep learning methods for EEG decoding. Front. Neurosci. 16:984. doi: 10.3389/fnins.2022.1003984

Engel, A. K., and Fries, P. (2010). Beta-band oscillations--signalling the status quo? Curr. Opin. Neurobiol. 20, 156–165. doi: 10.1016/j.conb.2010.02.015

Gannouni, S., Belwafi, K., Aboalsamh, H., AlSamhan, Z., Alebdi, B., Almassad, Y., et al. (2020). EEG-based BCI system to detect fingers movements. Brain Sci. 10:965. doi: 10.3390/brainsci10120965

Gemein, L. A. W., Schirrmeister, R. T., Chrabąszcz, P., Wilson, D., Boedecker, J., Schulze-Bonhage, A., et al. (2020). Machine-learning-based diagnostics of EEG pathology. NeuroImage 220:117021. doi: 10.1016/j.neuroimage.2020.117021

Guarnieri, R., Marino, M., Barban, F., Ganzetti, M., and Mantini, D. (2018). Online EEG artifact removal for BCI applications by adaptive spatial filtering. J. Neural Eng. 15:056009. doi: 10.1088/1741-2552/aacfdf

Guger, C., Coon, W., Swift, J., Allison, B., and Edlinger, G. (2017). A motor rehabilitation BCI with multi-modal feedback in chronic stroke patients (P5.300). Neurology 88:300. doi: 10.1212/WNL.88.16_supplement.P5.300

Gutierrez-Martinez, J., Mercado-Gutierrez, J. A., Carvajal-Gámez, B. E., Rosas-Trigueros, J. L., and Contreras-Martinez, A. E. (2021). Artificial intelligence algorithms in visual evoked potential-based brain-computer interfaces for motor rehabilitation applications: systematic review and future directions. Front. Hum. Neurosci. 15:772837. doi: 10.3389/fnhum.2021.772837

Haufe, S., Meinecke, F., Görgen, K., Dähne, S., Haynes, J.-D., Blankertz, B., et al. (2014). On the interpretation of weight vectors of linear models in multivariate neuroimaging. NeuroImage 87, 96–110. doi: 10.1016/j.neuroimage.2013.10.067

Hotson, G., McMullen, D. P., Fifer, M. S., Johannes, M. S., Katyal, K. D., Para, M. P., et al. (2016). Individual finger control of a modular prosthetic limb using high-density electrocorticography in a human subject. J. Neural Eng. 13:026017. doi: 10.1088/1741-2560/13/2/026017

Immink, M. A., Verwey, W. B., and Wright, D. L. (2019). “The neural basis of cognitive efficiency in motor skill performance across early learning to automatic stages” in Neuroergonomics. ed. C. S. Nam (Cham: Springer), 221–249. doi: 10.1007/978-3-030-34784-0_12

Jain, A., and Kumar, L. (2025). ESI-GAL: EEG source imaging-based trajectory estimation for grasp and lift task. Comput. Biol. Med. 186:109608. doi: 10.1016/j.compbiomed.2024.109608

Javed, A., Tiwana, M., Rashid, N., Iqbal, J., and Khan, U. (2017). Recognition of finger movements using EEG signals for control of upper limb prosthesis using logistic regression. Biomed. Res. 28, 7361–7369.

Jochumsen, M., Niazi, I. K., Dremstrup, K., and Kamavuako, E. N. (2016). Detecting and classifying three different hand movement types through electroencephalography recordings for neurorehabilitation. Med. Biol. Eng. Comput. 54, 1491–1501. doi: 10.1007/s11517-015-1421-5

Keele, S. W., Ivry, R., Mayr, U., Hazeltine, E., and Heuer, H. (2003). The cognitive and neural architecture of sequence representation. Psychol. Rev. 110, 316–339. doi: 10.1037/0033-295x.110.2.316

Khan, M. A., Das, R., Iversen, H. K., and Puthusserypady, S. (2020). Review on motor imagery based BCI systems for upper limb post-stroke neurorehabilitation: from designing to application. Comput. Biol. Med. 123:103843. doi: 10.1016/j.compbiomed.2020.103843

Kilavik, B. E., Zaepffel, M., Brovelli, A., MacKay, W. A., and Riehle, A. (2013). The ups and downs of beta oscillations in sensorimotor cortex. Exp. Neurol. 245, 15–26. doi: 10.1016/j.expneurol.2012.09.014

Kim, H., Kim, J. S., and Chung, C. K. (2023). Identification of cerebral cortices processing acceleration, velocity, and position during directional reaching movement with deep neural network and explainable AI. NeuroImage 266:119783. doi: 10.1016/j.neuroimage.2022.119783

King, J. R., Gwilliams, L., Holdgraf, C., Sassenhagen, J., Barachant, A., Engemann, D., et al. (2018). Encoding and Decoding Neuronal Dynamics: Methodological Framework to Uncover the Algorithms of Cognition. Available at: https://hal.science/hal-01848442 (Accessed March 3, 2025).

Kleinschmidt, A., Nitschke, M. F., and Frahm, J. (1997). Somatotopy in the human motor cortex hand area. A high-resolution functional MRI study. Eur. J. Neurosci. 9, 2178–2186. doi: 10.1111/j.1460-9568.1997.tb01384.x

Kobler, R., Hirata, M., Hashimoto, H., Dowaki, R., Sburlea, A. I., and Müller-Putz, G. (2019). Simultaneous decoding of velocity and speed during executed and observed tracking movements: an MEG study. 8th Graz brain-computer Interface conference 2019, Graz.

Korik, A., Sosnik, R., Siddique, N., and Coyle, D. (2018). Decoding imagined 3D hand movement trajectories from EEG: evidence to support the use of Mu, Beta, and low gamma oscillations. Front. Neurosci. 12:130. doi: 10.3389/fnins.2018.00130

Lawhern, V. J., Solon, A. J., Waytowich, N. R., Gordon, S. M., Hung, C. P., and Lance, B. J. (2018). EEGNet: a compact convolutional neural network for EEG-based brain-computer interfaces. J. Neural Eng. 15:056013. doi: 10.1088/1741-2552/aace8c

Lee, H. S., Schreiner, L., Jo, S.-H., Sieghartsleitner, S., Jordan, M., Pretl, H., et al. (2022). Individual finger movement decoding using a novel ultra-high-density electroencephalography-based brain-computer interface system. Front. Neurosci. 16:878. doi: 10.3389/fnins.2022.1009878

Lévy, J., Zhang, M., Pinet, S., Rapin, J., Banville, H., d’Ascoli, S., et al. (2025). Brain-to-text decoding: a non-invasive approach via typing. arXiv [Preprint]. doi: 10.48550/arXiv.2502.17480

Li, C.-S. R., Padoa-Schioppa, C., and Bizzi, E. (2001). Neuronal correlates of motor performance and motor learning in the primary motor cortex of monkeys adapting to an external force field. Neuron 30, 593–607. doi: 10.1016/S0896-6273(01)00301-4

Liao, K., Xiao, R., Gonzalez, J., and Ding, L. (2014). Decoding individual finger movements from one hand using human EEG signals. PLoS One 9:e85192. doi: 10.1371/journal.pone.0085192

Lv, J., Li, Y., and Gu, Z. (2010). Decoding hand movement velocity from electroencephalogram signals during a drawing task. Biomed. Eng. Online 9:64. doi: 10.1186/1475-925X-9-64

Mahmud, M., Kaiser, M. S., Hussain, A., and Vassanelli, S. (2018). Applications of deep learning and reinforcement learning to biological data. IEEE Trans. Neural Netw. Learn Syst. 29, 2063–2079. doi: 10.1109/TNNLS.2018.2790388

Markoulidakis, I., Rallis, I., Georgoulas, I., Kopsiaftis, G., Doulamis, A., and Doulamis, N. (2021). Multiclass confusion matrix reduction method and its application on net promoter score classification problem. Technologies 9:81. doi: 10.3390/technologies9040081

Mathis, M. W., Perez Rotondo, A., Chang, E. F., Tolias, A. S., and Mathis, A. (2024). Decoding the brain: from neural representations to mechanistic models. Cell 187, 5814–5832. doi: 10.1016/j.cell.2024.08.051

Miller, K. J., Zanos, S., Fetz, E. E., den Nijs, M., and Ojemann, J. G. (2009). Decoupling the cortical power Spectrum reveals real-time representation of individual finger movements in humans. J. Neurosci. 29, 3132–3137. doi: 10.1523/jneurosci.5506-08.2009

Mitra, P. P., and Pesaran, B. (1999). Analysis of dynamic brain imaging data. Biophys. J. 76, 691–708. doi: 10.1016/S0006-3495(99)77236-X

Mohseni, M., Shalchyan, V., Jochumsen, M., and Niazi, I. K. (2020). Upper limb complex movements decoding from pre-movement EEG signals using wavelet common spatial patterns. Comput. Methods Prog. Biomed. 183:105076. doi: 10.1016/j.cmpb.2019.105076

Muthukumaraswamy, S. D. (2013). High-frequency brain activity and muscle artifacts in MEG/EEG: a review and recommendations. Front. Hum. Neurosci. 7:138. doi: 10.3389/fnhum.2013.00138

Nam, C. S., Johnson, S., and Li, Y. (2008). Environmental noise and P300-based brain-computer Interface (BCI). Proc. Hum. Fact. Ergon. Soc. Annu. Meet. 52, 803–807. doi: 10.1177/154193120805201208

Nissen, M. J., and Bullemer, P. (1987). Attentional requirements of learning: evidence from performance measures. Cogn. Psychol. 19, 1–32. doi: 10.1016/0010-0285(87)90002-8

O'Connor, E. J., Murphy, A., Kohler, M. J., Chan, R. W., and Immink, M. A. (2022). Instantaneous effects of mindfulness meditation on tennis return performance in elite junior athletes completing an implicitly sequenced serve return task. Front. Sports Active Living 4:654. doi: 10.3389/fspor.2022.907654

Oxford Grice, K., Vogel, K. A., Le, V., Mitchell, A., Muniz, S., and Vollmer, M. A. (2003). Adult norms for a commercially available nine hole peg test for finger dexterity. Am. J. Occup. Ther. 57, 570–573. doi: 10.5014/ajot.57.5.570

Petrosyan, A., Sinkin, M., Lebedev, M., and Ossadtchi, A. (2021). Decoding and interpreting cortical signals with a compact convolutional neural network. J. Neural Eng. 18:026019. doi: 10.1088/1741-2552/abe20e

Pfurtscheller, G., and Lopes da Silva, F. H. (1999). Event-related EEG/MEG synchronization and desynchronization: basic principles. Clin. Neurophysiol. 110, 1842–1857. doi: 10.1016/s1388-2457(99)00141-8

Pfurtscheller, G., and Neuper, C. (2003). “Movement and ERD/ERS” in The Bereitschaftspotential: Movement-related cortical potentials. eds. M. Jahanshahi and M. Hallett (Cham: Springer US), 191–206.

Quandt, F., Reichert, C., Hinrichs, H., Heinze, H. J., Knight, R. T., and Rieger, J. W. (2012). Single trial discrimination of individual finger movements on one hand: a combined MEG and EEG study. NeuroImage 59, 3316–3324. doi: 10.1016/j.neuroimage.2011.11.053

Ramos-Murguialday, A., Broetz, D., Rea, M., Läer, L., Yilmaz, Ö., Brasil, F. L., et al. (2013). Brain–machine interface in chronic stroke rehabilitation: a controlled study. Ann. Neurol. 74, 100–108. doi: 10.1002/ana.23879

Rao, Y., Zhang, L., Jing, R., Huo, J., Yan, K., He, J., et al. (2024). An optimized EEGNet decoder for decoding motor image of four class fingers flexion. Brain Res. 1841:149085. doi: 10.1016/j.brainres.2024.149085

Rashid, M., Sulaiman, N., Abdul Majeed, P. P., Nasir, A. F., Bari, B. S., and Khatun, S. (2020). Current status, challenges, and possible solutions of EEG-based brain-computer interface: a comprehensive review. Front. Neurorobot. 14:25. doi: 10.3389/fnbot.2020.00025

Robertson, E. M. (2007). The serial reaction time task: implicit motor skill learning? J. Neurosci. 27, 10073–10075. doi: 10.1523/JNEUROSCI.2747-07.2007

Romero-Laiseca, M. A., Delisle-Rodriguez, D., Cardoso, V., Gurve, D., Loterio, F., Nascimento, J. H. P., et al. (2020). A low-cost lower-limb brain-machine Interface triggered by pedaling motor imagery for post-stroke patients rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 28, 988–996. doi: 10.1109/TNSRE.2020.2974056

Saha, S., Mamun, K. A., Ahmed, K., Mostafa, R., Naik, G. R., Darvishi, S., et al. (2021). Progress in brain computer Interface: challenges and opportunities. Front. Syst. Neurosci. 15:578875. doi: 10.3389/fnsys.2021.578875

Schellekens, W., Petridou, N., and Ramsey, N. F. (2018). Detailed somatotopy in primary motor and somatosensory cortex revealed by Gaussian population receptive fields. NeuroImage 179, 337–347. doi: 10.1016/j.neuroimage.2018.06.062

Schirrmeister, R. T., Springenberg, J. T., Fiederer, L. D. J., Glasstetter, M., Eggensperger, K., Tangermann, M., et al. (2017). Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Mapp. 38, 5391–5420. doi: 10.1002/hbm.23730

Simonyan, K., and Zisserman, A. (2015). Very deep convolutional networks for large-scale image recognition. Available at: https://arxiv.org/abs/1409.1556 (Accessed March 11, 2025).

Soekadar, S. R., Birbaumer, N., Slutzky, M. W., and Cohen, L. G. (2015). Brain–machine interfaces in neurorehabilitation of stroke. Neurobiol. Dis. 83, 172–179. doi: 10.1016/j.nbd.2014.11.025

Tan, H., Wade, C., and Brown, P. (2016). Post-movement Beta activity in sensorimotor cortex indexes confidence in the estimations from internal models. J. Neurosci. 36, 1516–1528. doi: 10.1523/jneurosci.3204-15.2016

Tian, M., Li, S., Xu, R., Cichocki, A., and Jin, J. (2025). An interpretable regression method for upper limb motion trajectories detection with EEG signals. IEEE Trans. Biomed. Eng. 2025:255. doi: 10.1109/TBME.2025.3557255

Toda, A., Imamizu, H., Kawato, M., and Sato, M.-A. (2011). Reconstruction of two-dimensional movement trajectories from selected magnetoencephalography cortical currents by combined sparse Bayesian methods. NeuroImage 54, 892–905. doi: 10.1016/j.neuroimage.2010.09.057

Verwey, W. B., Jouen, A.-L., Dominey, P. F., and Ventre-Dominey, J. (2019). Explaining the neural activity distribution associated with discrete movement sequences: evidence for parallel functional systems. Cogn. Affect. Behav. Neurosci. 19, 138–153. doi: 10.3758/s13415-018-00651-6

Vidaurre, C., Sannelli, C., Müller, K.-R., and Blankertz, B. (2011). Machine-learning-based Coadaptive calibration for brain-computer interfaces. Neural Comput. 23, 791–816. doi: 10.1162/NECO_a_00089

Vignesh, S., Savithadevi, M., Sridevi, M., and Sridhar, R. (2023). A novel facial emotion recognition model using segmentation VGG-19 architecture. Int. J. Inf. Technol. 15, 1777–1787. doi: 10.1007/s41870-023-01184-z

Volosyak, I., Valbuena, D., Luth, T., Malechka, T., and Graser, A. (2011). BCI demographics II: how many (and what kinds of) people can use a high-frequency SSVEP BCI? IEEE Trans. Neural Syst. Rehabil. Eng. 19, 232–239. doi: 10.1109/TNSRE.2011.2121919

Vuckovic, A., and Sepulveda, F. (2008). “A four-class BCI based on motor imagination of the right and the left hand wrist” in First international symposium on applied sciences on biomedical and communication technologies (Aalborg, Denmark: IEEE), 1–4.

Warren, D. J., Kellis, S., Nieveen, J. G., Wendelken, S. M., Dantas, H., Davis, T. S., et al. (2016). Recording and decoding for neural prostheses. Proc. IEEE 104, 374–391. doi: 10.1109/JPROC.2015.2507180

Yeager, L., Heinrich, G., Mancewicz, J., and Houston, M. (2016). Effective visualizations for training and evaluating deep models. In ICML Workshop on Visualization for Deep Learning.

Zhang, X., Yao, L., Wang, X., Monaghan, J., McAlpine, D., and Zhang, Y. (2021). A survey on deep learning-based non-invasive brain signals: recent advances and new frontiers. J. Neural Eng. 18:031002. doi: 10.1088/1741-2552/abc902

Keywords: finger movement decoding, MEG, convolutional neural networks, serial reaction time task, motor learning

Citation: Zabolotniy A, Chan RW, Moiseeva V and Fedele T (2025) Convolutional neural networks decode finger movements in motor sequence learning from MEG data. Front. Neurosci. 19:1623380. doi: 10.3389/fnins.2025.1623380

Edited by:

Yun Zhou, Shaanxi Normal University, China

Reviewed by:

Dylan Forenzo, Carnegie Mellon University, United States

Anant Jain, University of Utah, United States

Copyright © 2025 Zabolotniy, Chan, Moiseeva and Fedele. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Tommaso Fedele
