- ¹Chair of Work, Organizational and Business Psychology, Ruhr University Bochum, Bochum, Germany

- ²Research Centre ZESS, Ruhr University Bochum, Bochum, Germany

- ³Faculty of Management, Economics, and Society, Witten/Herdecke University, Witten, Germany

Introduction: In collaborative industrial work systems, the locus of authority, that is, whether control over system dynamics is initiated by the system (adaptive) or by the human operator (adaptable), can shape work experience and perceptions of the robotic partner. This exploratory study investigates how these two control schemes, compared with a static work system, influence key psychological factors, and which scheme should be favored in collaborative assembly tasks.

Methods: In an experimental laboratory study with n = 27 participants, a collaborative gearbox assembly task with a robot was used to compare adaptive and adaptable control schemes against a non-adjustable baseline. In the adaptive condition, the robot's speed adjusted automatically to human proximity; in the adaptable condition, participants adjusted the speed manually via interface buttons; in the baseline condition, the speed remained static. The primary endpoint was the longitudinal, comparative investigation of flow experience and perceived task demands, as these represent central indicators of employees' psychological experience in dynamic work systems. Additional dependent variables included autonomy perception and workplace fit (task perception); trust, safety, the robot's perceived intelligence, and collaboration satisfaction (robot interaction perception); and cycle time (performance). Variables were measured across four collaborative trials and five time points, enabling both group comparisons and the investigation of construct dynamics.

Results: Both control schemes yielded better collaboration experiences than the baseline condition. Participants in the adaptive condition reported higher flow experience and workplace fit and achieved the fastest production times across all trials, while participants in the adaptable condition reported higher autonomy and task demands. Additionally, trust in the robot increased over time, so that trust levels converged across conditions.

Discussion: Despite limitations related to an exploratory design and a small sample size potentially masking existing effects, the findings indicate that dynamic working conditions can improve worker experience. However, the findings do not present a consistent picture favoring either adaptive or adaptable control schemes. Further research is needed to determine which control scheme is preferable in different task contexts. To inspire future work, we derive a set of hypotheses from the study's initial findings.

1 Introduction

With Industry 5.0 as an EU-wide strategic approach toward an industrial landscape that not only strives for efficiency and cyber-physical connectedness (Industry 4.0) but also considers sustainability and resilience and places the human at the core of production processes (Breque et al., 2021; European Commission, n.d.–b), new opportunities (and necessities) arise to design technology-induced, yet humane, workplaces. One way to do so is to exploit the potential that comes with advanced sensors, systems' learning capabilities, no-code programming, and accessible robots: that is, work system dynamics. These dynamics can be used to create workplaces and work processes that fit not only current production requirements, but also the people working in them and the goals of sustainable, resource-conserving work. Through changeable work environments, task allocation, and technology behavior, they can ensure that the technology and the structure of work fit the current needs of the sociotechnical system.

These dynamics can be broken down into two major strategies: adaptability and adaptivity. Whereas adaptability is initiated by the human, adaptivity is triggered automatically by the technology employed (Kidwell et al., 2012). Both strategies thus allow work systems to account for humans' characteristics, situational states, and external requirements. Still, research on how such dynamic designs affect the people working in them is scarce, and knowledge of where and when adaptivity or adaptability is preferable is especially limited.

Therefore, our exploratory study takes a first step toward comparing static, adaptive, and adaptable work systems in the context of human-robot collaboration in production tasks. To investigate workers' perceptions of the work, the collaboration, and their wellbeing under these different conditions, we conducted a laboratory experiment implementing adaptable and adaptive (vs. fixed) robot trajectories, with the aim of enhancing human safety, robot productivity, and especially psychological resources. The objective of the study is to derive best practices for human-centered design in human-robot collaboration.

2 Theory on dynamic work systems and their effects on workers

When looking at Industry 5.0, one necessity becomes obvious: production and thus work systems will need to be inherently flexible so that they can continually adapt to changing resource availability (for the sake of sustainability), but also to human needs and performance requirements (Breque et al., 2021). Especially in the production sector, we see a rising demand for flexibility (e.g., Fragapane et al., 2022) and for spontaneous changes in teams. This flexibility can manifest on different levels and across different aspects of production, from job rotation for production workers to adjustments of cycle times.

Probably the best-researched area is that of task allocation, that is, the question of which agent executes which task within a work system at a given point in time (Older et al., 1997). Here, the term dynamic automation (DA) is used, referring to the dynamic assignment of tasks to human and technical actors, independent of the decision-maker (Bernabei and Costantino, 2023). In short, dynamic allocation determines “who is doing what, and when” (Bernabei and Costantino, 2023, p. 3528). Different terms are used to refer to such dynamics (in the context of task allocation, see also Older et al., 1997): mixed-initiative interaction (Hearst et al., 1999), collaborative control (Fong et al., 1999), coactive design (Johnson et al., 2011), adjustable autonomy (Kortenkamp et al., 2000), situation-dependent allocation (Greenstein and Revesman, 1986), flexible and adaptive allocation (Mouloua et al., 1993), adaptive aiding (Rouse, 1988), or self-organizing sociotechnical systems (Naikar and Elix, 2016).

Another area of research that partially overlaps with dynamics is automation behavior, with a particular focus on robot behavior. Under the term behavior adaptation of robots, one can find research and practical examples that try to meet the human expectation that interaction partners adapt to them (Mitsunaga et al., 2008), such as robots learning users' preferences regarding the robot's navigation (Inamura et al., 1999) from direct human feedback or by evaluating sensor data.

2.1 Two control schemes: adaptability and adaptivity

Kidwell et al. (2012) describe two distinct “control schemes” (p. 428) for automated systems: adaptivity and adaptation. Adaptation [also: adaptability] allows an operator “to specifically tailor the level of automation (LOA) to suit current and/or future workload” (p. 428) and to adapt the system to individual factors. Thus, an adaptable robot can be changed by the human to fit the situation or personal needs and requirements.

Adaptivity, on the other hand, means that a system balances changes in workload automatically (Kidwell et al., 2012). According to the more specific definition of Kaber et al. (2005), adaptive automation can be understood as the dynamic allocation of control over system functions to humans and computers, based on the state of the operator or the task. It can be seen as a process of using a machine only when a human worker needs support (Gramopadhye et al., 1997). Adaptivity can also be seen as the ability to either recommend or decide on changes in the level of automation when a predefined trigger (or set of triggers) occurs (Oppermann, 1994).

Hence, dynamics can be initiated from two directions, that is, there are two candidates for the “locus of authority that changes LOAs” (Calhoun, 2022, p. 271): one is the human, the other is a system that is at least semi-autonomous and potentially driven by AI. This distinction underpins a fundamental debate in the design of human–automation interaction about whether control should be dynamically adapted by the system itself (adaptive automation) or remain under human authority (adaptable automation). Interestingly, after co-authoring the 2012 conference paper titled “Adaptable and Adaptive Automation for Supervisory Control […]” with Kidwell, Ruff, and Parasuraman, Gloria Calhoun published a paper in 2022 titled “Adaptable (Not Adaptive) Automation: Forefront of Human–Automation Teaming,” indicating a shift in focus toward adaptable automation. This later work explicitly argues for prioritizing human authority, reflecting concerns about transparency, trust, and accountability in increasingly autonomous systems. This research history raises the questions of what the advantages and disadvantages of adaptability and adaptivity are, and which control scheme is preferable under which conditions. In the following, arguments for both are presented to establish a basis for the experimental comparison in a human-robot collaboration context.

2.1.1 Why adaptivity: reasons for technology as the locus of authority

As Dhungana et al. (2021, p. 200) report, “all these [task allocation] approaches […] leave the decision to the algorithm.” Thus, the standard case for flexible work systems is that of a technology, whether implemented in a robot or run on a separate computer, adapting processes and thus acting as the locus of authority. Under labels such as “human-aware robot behavior” (see, e.g., Svenstrup et al., 2009), researchers and developers use, e.g., camera systems and algorithms for movement prediction, or infer willingness to collaborate from human posture (Svenstrup et al., 2009), to make robots adapt their behavior to human collaborators. The advantages of robotic adaptivity listed by Chen et al. (2024) include successful interactions with humans, perception as a team partner, expression of understanding, adjustment to users' responses and to different situations, and the embodiment of various roles. The central argument for adaptivity in industrial robots is mostly that an adaptive robot can smoothly change its behaviors or movements, thereby creating a fit to human behavior that helps establish smooth and fast workflows (Karbouj et al., 2024). An experiment by Liu et al. (2016) shows that adaptive conditions are not only faster in task execution than fixed processes but are also evaluated more favorably, especially when the control avatar behaves predictively, going beyond reactive adaptation.

Another advantage of placing the locus of authority with the technology is that adaptation is possible without human intervention, without requiring people to reflect on their needs and preferences, and without the interruptions caused by user inputs to control a robot.

2.1.2 Why adaptability: reasons for humans as the locus of authority

The arguments for having the human decide on, or at least be involved in deciding, what automation does and how lie mainly in the psychological literature on the importance of autonomy, completeness, and empowerment. They rest on the principle of human-centeredness, which places the human at the center of a sociotechnical system (Huchler, 2015) and which is also vital for an Industry 5.0 way of thinking.

Additionally, central work-psychological models and theories, such as the criteria of humane work (Hacker, 2005), the job demands-resources model (Bakker and Demerouti, 2007), the job characteristics model (Hackman and Oldham, 1975), the more recent SMART Work Design model (Parker and Knight, 2024), and self-determination theory (Ryan and Deci, 2000), all underline the importance of varying demands, autonomy, and opportunities for learning and self-development as fundamental psychological needs or essential resources at work. These aspects are more likely to be preserved and actively supported through an adaptable approach.

Strengthening these resources is of vital importance, especially in the production sector, where work can often be classified as basic work (Bovenschulte et al., 2021), with low competence demands and predominantly manual activities. While such work is particularly suitable for robotic applications, it inherently suffers from low degrees of autonomy and from tasks with low cognitive stimulation that are partly physically straining or lead to continuous distress. Adaptability can thus be a path toward worker empowerment: it can help level out physical demands by enabling workers to dynamically change task allocation and hand over strenuous tasks to robots, and it can even strengthen autonomy and task completeness by giving workers oversight of a whole production sequence instead of only small task sequences decided upon in a top-down manner (see also Tausch and Kluge, 2023).

3 Research questions and empirical motivation

Despite the ongoing theoretical debate on adaptability and adaptivity, the empirical evidence base remains surprisingly limited. Calhoun's (2022) mini-review on opportunities to flexibly design human-automation systems reveals a pronounced research asymmetry: while adaptive automation has been the focus of extensive investigation, publications on adaptable automation remain scarce, with adaptive approaches outnumbering adaptable ones by a factor of ten. Although hybrid approaches appear promising, experimental comparisons between the two dynamic modes remain underexplored. Calhoun (2022) therefore calls for a shift in research away from “building and demonstrating” (Sheridan, 2016, p. 531) the optimal adaptive system toward empirically examining “adaptable automation enabled with efficient interface design” (Calhoun, 2022, p. 273). Moreover, existing research on flexible automation has primarily focused on identifying triggers for switching LOA, such as physiological arousal, critical events, performance breakdowns, or a combination thereof (see, e.g., Feigh et al., 2012). In contrast, there is a lack of studies that systematically examine the consequences of dynamic control schemes for the human experience, the perception of the robotic partner, and the interaction between the two.

Against this background, and in light of the guiding human-centered, sustainable, and resilient principles of Industry 5.0, there is a clear need for research that explores how different control schemes influence the quality of human collaboration with automation. Building on the theoretical foundations introduced in the previous sections, we assume that an adaptable approach may better support psychological resources such as autonomy, whereas adaptive automation might promote smoother task execution. Due to the limited empirical basis in this field, we refrain from formulating predefined hypotheses and instead derive research questions. These form the basis for the exploratory experimental study conducted in this work and are broken down further into specific constructs and empirical evidence in the following sections:

a) How do adaptive and adaptable work systems, in comparison to static systems, influence the perception of work characteristics, and how do they affect cognitive and affective reactions toward the robot and the interaction with it?

b) Which control scheme—adaptable or adaptive automation—is preferable for implementation in a human-robot workplace in production?

This approach provides a first step toward a joint consideration of adaptability and adaptivity and thus allows us to investigate the advantages and disadvantages of both control schemes in a nuanced manner within an industrial production context. In that sense, our study differs from prior research in the following points:

1) It experimentally compares adaptive and adaptable automation within the same design, which is still rare (Calhoun, 2022), and additionally provides a static baseline condition against which both forms of dynamic control can be contrasted. Our focus is thus not on robot initiative, as in prior studies (e.g., Baraglia et al., 2017; Liu et al., 2016; Noormohammadi-Asl et al., 2024), but specifically on the comparative effects of adaptable, adaptive, and static control over robot speed parameters.

2) Dynamics are implemented via robotic speed changes, which represents a smooth and non-obstructive means of conveying dynamism. This is in contrast to prior experiments using, e.g., verbal instructions necessary for the robot to act at all (Shah et al., 2011), or colliding human and robot movement paths resulting in waiting times when non-adaptable (Lasota and Shah, 2015).

3) Our task context differs from abstract or context-free paradigms (e.g., Nikolaidis et al., 2017; Noormohammadi-Asl et al., 2024) by situating the experiment in a realistic, low-effort production environment.

4) The interaction takes place in physical space and directly influences the shared work environment, unlike in simulation-based or web-based studies (e.g., Liu et al., 2016; Nikolaidis et al., 2017).

5) Our outcomes are not limited to performance metrics but center on perceptual variables that are critical for humane and meaningful work design.

Taken together, our contribution lies in systematically comparing adaptivity and adaptability to a static baseline in a setting that avoids obstructive or artificial task dynamics. By using a realistic production task that mirrors low-demand workplaces likely to be augmented by robots, our results provide insights into an easily implementable parameter of robot dynamism and thus contribute to the human-centered design of decent work in line with current societal efforts such as Industry 5.0.

3.1 Consequences of adaptivity and adaptability on experiencing one's work

Autonomy, besides being one of the central human motives according to self-determination theory (Ryan and Deci, 2000), is also a key motivational task characteristic that describes the freedom and independence in planning and sequencing work tasks, making self-directed decisions, and choosing work methods (Morgeson and Humphrey, 2006). As Morgeson and Humphrey (2006) show, autonomy is more pronounced in professional occupations and is thus likely harder to achieve in less complex, potentially low-skilled production jobs. Adaptability on the part of the human keeps workers in the loop while exploiting their anticipatory and preparatory capabilities (van Dongen and van Maanen, 2005); thus, higher felt autonomy can be expected in an adaptable (not adaptive) workplace.

Flow experience is another motivational state, characterized by absorption while concentrating on an activity and by a feeling of control over the situation (Csikszentmihalyi, 1975). It also occurs at work (e.g., Engeser and Baumann, 2014) and during cooperative activities (Magyaródi and Oláh, 2015). Its positive consequences are manifold (see Peifer and Wolters, 2017, for an overview); hence, flow should be supported, e.g., by offering autonomy as a central antecedent (Peifer and Wolters, 2017).

Still, work does not only provide resources but also places demands on people (JD-R model; Bakker and Demerouti, 2007). These demands can be regarded as “physical, social, or organizational aspects of the job that require sustained physical or mental effort and are therefore associated with certain physiological and psychological costs” (Demerouti et al., 2001, p. 501). If demands are high and not counterbalanced by adequate resources such as job control, job strain can develop (demand-control model; Karasek, 1979). Kidwell et al. (2012) state that adaptability can generally reduce complacency and increase monitoring and attention to task completion, as well as performance and operator confidence, while it can also increase the workload and demands placed on the human. From this, they conclude that “an adaptive scheme that automatically rebalances workload as the need arises might be more effective for optimal human-system performance” (Kidwell et al., 2012, pp. 428–429). Adaptivity has been shown to improve performance while reducing attention demands compared with static automation use (Calhoun, 2022; Parasuraman et al., 2009). Thus, from a demands perspective, adaptivity should be preferable because it does not pose additional demands and reduces the effort needed to execute tasks. However, particularly in low-demand environments such as routine production contexts, adaptability may be preferable, as it can raise task demands to a psychologically desirable level by offering opportunities for meaningful engagement, decision-making, and active participation in the work process.

In addition to their motivational and strain effects, workplace dynamics are also evaluated cognitively. This perception of workplace fit refers to the subjective impression that one's workplace, including the tools and technologies it involves, is more or less aligned with one's individual needs, abilities, and working style (Karmacharya et al., 2025). Based on the logic of human-technology fit (Goodhue and Thompson, 1995) and human-centered design (Wilkens et al., 2023), we assume that designing workplaces that match not only “standard” humans but the individual is another resource at work. Workplace fit might be equally affected by adaptivity and adaptability, and dynamic workplaces should generally be rated higher in fit than standardized workplaces with non-reactive technologies.

3.2 Consequences of adaptivity and adaptability on experiencing the robot and the interaction

Trust is a complex meta-construct addressed by models such as the TrAM (trustworthiness assessment model; Schlicker et al., 2025). Overall, it can be understood as an attitude or expectation that some form of automation will help one reach one's goals, especially in vulnerable and uncertain situations (Lee and See, 2004). To account for this complexity, aspects of trustworthiness, i.e., an AI's purpose, processes, and performance (Lee and See, 2004), can be integrated into an overall picture of trust in AI, which can, e.g., be subdivided into global trust and its facets, including perceived ability, integrity, transparency, unbiasedness, and vigilance (Wischnewski et al., 2025). A study by Nikolaidis et al. (2017) showed that trust was higher when the robot adapted its behaviors and that participants with higher initial trust were more willing to adapt the robot themselves. Similarly, Lasota and Shah (2015) found trust gains in an adaptive condition, in which the robot performed avoidance maneuvers to adjust to human actions, compared with a condition with static robot behavior.

One of the more global constructs regarding interaction experience is satisfaction with the collaboration with the robot. Satisfaction, transferred from the concept of job satisfaction, can be understood as a positive emotional state resulting from the appraisal of, in this case, the interaction with the robot and the work executed together (Locke, 1976). In the operationalization of Lasota and Shah (2015), it is based on a fluent, mutually understanding interaction that works out. Liu et al. (2016) showed that most people prefer working with a robot that adapts to their intentions. Sekmen and Challa (2013) showed the same by implementing adaptivity in a robot through a learning algorithm that updated after every interaction with a human. Using multiple inputs, such as human speech and its understanding, sound localization, a navigation system, face recognition, and an attention measure, their robot either adapted to its human interaction partners or did not, and adaptive interaction was preferred. Thus, enhanced collaboration satisfaction can be expected in dynamic work situations.

Collaboration satisfaction is closely related to perceived safety and comfort during interaction, understood as the perception that the robot moves adequately and does not endanger the human through its behaviors (Lasota and Shah, 2015). A study by Munzer et al. (2017) compared an instructed (i.e., human-controlled) with a semi-autonomous collaboration between a human and a collaborative robot, in which the robot learned from the prior trial which action was expected of it. The semi-autonomous condition was rated more helpful, although it also came with increased fear of the robot (Munzer et al., 2017). This can potentially be explained by the more fluid interaction resulting from the robot acting proactively, and by the loss of controllability on the part of the human. Thus, there are hints both of enhanced collaboration satisfaction and of potential impairments of safety perception, the latter of which might not occur in adaptable contexts.

Perceived adaptivity is a more concrete construct, referring specifically to a robot's perceived ability to adapt to one's presence and needs. Ahmad et al. (2017) describe adaptive robot systems as “capable of adapting based on the user actions […] based on their emotions, personality or memory of past interactions” (p. 1). Perceived adaptivity is assumed to relate to workplace fit (see Section 3.1) and can be understood as one possible precondition for enabling this fit. Nevertheless, Calhoun (2022) lists dangers of adaptivity, such as a potential loss of acceptance (Parasuraman and Riley, 1997) and of situation awareness, depending, e.g., on the predictability and reasonableness of automatic changes, potentially leading to irritation, performance drops, or safety issues (Miller and Hannen, 1999).

Another variable for evaluating a robot or any cognitive agent is its perceived intelligence, which can be described as the perceived competence of a robot and the sensibleness of its behaviors (see Bartneck et al., 2009). It can be regarded as a factor within the judgement of anthropomorphism, i.e., “the attribution of a human form, human characteristics, or human behavior to non-human things such as robots” (p. 74), and is mainly influenced by a robot's competence (Koda and Maes, 1996). It can be expected that more abilities, such as the ability to adapt to human posture and behavior, will lead to a higher perception of intelligence.

4 Materials and methods

The aim of this study is to explore how different control schemes, implemented across three study groups (adaptive, adaptable, static) in robot-assisted work settings, affect perception-related variables regarding the task, the robotic system, and the interaction. To address this, we conducted a laboratory experiment using a mixed within-between-subjects design, which allowed us to examine the derived research questions on adaptability and adaptivity in an exploratory manner in an application-oriented industrial production context.

4.1 Experimental design

The laboratory experiment investigated the effects of different robot control schemes (the independent variable) on perception-related variables of the task, robot, and interaction (the dependent variables; see Table 1 for an overview). The independent variable was manipulated by systematically varying the robot's speed control across two experimental groups and one control group during a collaborative gearbox assembly task. In the adaptive experimental group (EGrobot), the robot automatically adjusted its speed in real time based on its distance to the human. To enable this, a camera system continuously tracked the positions of the human's head and hands relative to the robot's Tool Center Point. Every 200 milliseconds, the minimum distance between these tracked points and the Tool Center Point was computed and used to scale the robot's speed linearly between two thresholds: the robot moved at full speed when the distance exceeded 0.75 meters, gradually slowed down as the human approached, and came to a complete stop at 0.25 meters or closer (for further information on the technical implementation, see Supplementary material). In the adaptable experimental group (EGhuman), participants had full manual control over the robot's speed throughout the task. Using three physical buttons on the robot's teach pendant, they could switch at any time between three predefined speed levels: low (30%), medium (65%), and high (100%) of the robot's maximum speed of 500 millimeters per second. By default, the robot operated at medium speed, but participants were free to adjust the speed according to their comfort and perceived task demands. In the control group (CG), the robot operated at a constant speed of 65% of its maximum.
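To make the three speed conditions concrete, the following Python sketch maps tracked body points to a commanded speed for one control cycle. It is a minimal illustration under stated assumptions, not the study's implementation (which is described in the Supplementary material): we assume Euclidean distances in meters between 3D points, read "scaled linearly" as linear interpolation between 0.25 m (0% speed) and 0.75 m (100% speed), and take "full speed" to mean the 500 mm/s maximum. All function and constant names are hypothetical.

```python
from math import dist  # Euclidean distance between two points (Python 3.8+)

# Hypothetical constants, values taken from the study description
MAX_SPEED_MM_S = 500.0   # robot maximum speed
STOP_DIST_M = 0.25       # at or below this distance: robot stops
FULL_DIST_M = 0.75       # at or above this distance: full speed (assumed = maximum)
CONTROL_PERIOD_S = 0.2   # distance re-evaluated every 200 ms

# Adaptable condition: button-selectable levels as fractions of maximum speed
ADAPTABLE_LEVELS = {"low": 0.30, "medium": 0.65, "high": 1.00}
STATIC_FRACTION = 0.65   # control group: constant 65 % of maximum


def adaptive_fraction(tcp, body_points):
    """Speed fraction (0..1) from the minimum distance between the
    tool center point and the tracked head/hand points (assumption)."""
    d = min(dist(tcp, p) for p in body_points)
    if d <= STOP_DIST_M:
        return 0.0
    if d >= FULL_DIST_M:
        return 1.0
    # Linear interpolation between the stop and full-speed thresholds
    return (d - STOP_DIST_M) / (FULL_DIST_M - STOP_DIST_M)


def commanded_speed_mm_s(condition, tcp=None, body_points=None, level="medium"):
    """Commanded speed in mm/s for one control cycle of a given condition."""
    if condition == "adaptive":
        fraction = adaptive_fraction(tcp, body_points)
    elif condition == "adaptable":
        fraction = ADAPTABLE_LEVELS[level]
    elif condition == "static":
        fraction = STATIC_FRACTION
    else:
        raise ValueError(f"unknown condition: {condition}")
    return fraction * MAX_SPEED_MM_S


if __name__ == "__main__":
    tcp = (0.0, 0.0, 0.0)
    # One simulated sample per 200 ms cycle: (head, left hand, right hand)
    frames = [
        [(1.2, 0.0, 0.5), (0.9, 0.1, 0.0), (1.0, -0.1, 0.0)],
        [(1.0, 0.0, 0.5), (0.5, 0.0, 0.0), (0.8, -0.1, 0.0)],
        [(0.9, 0.0, 0.5), (0.2, 0.0, 0.0), (0.6, -0.1, 0.0)],
    ]
    for i, points in enumerate(frames):
        speed = commanded_speed_mm_s("adaptive", tcp, points)
        print(f"t={i * CONTROL_PERIOD_S:.1f}s adaptive speed={speed:.0f} mm/s")
```

Running the script prints 500, 250, and 0 mm/s for the three simulated samples, as the closest hand moves from about 0.91 m to 0.5 m to 0.2 m from the tool center point. Whether the actual controller additionally smoothed or rate-limited speed changes between cycles is not stated in the text, so the sketch applies each computed value directly.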

4.2 Sample and participation

The study was conducted between February 18 and March 17, 2025. A total of 31 participants were recruited, of whom 27 were included in the final analysis after four were excluded during data cleaning for failing the manipulation check. Of the final sample, 59.26% were female, the mean age was M = 32.33 years (SD = 10.49), and the sample was generally highly educated, with 44% holding a master's degree and 11% holding a doctoral degree. In addition, 59% of participants reported prior experience with robotic systems.

Participation in the study was voluntary and based on informed consent, and participants received compensation of 15€ for approximately 1 h of involvement. Ethics approval (No. 976) was obtained from the local ethics committee of the Faculty of Psychology at Ruhr University Bochum, following German Research Foundation guidelines (Schönbrodt et al., 2017). Eligibility was limited to individuals aged 18 years or older. Individuals who had difficulties understanding German (the language used throughout the experiment) were excluded from participation.

Participants were randomly assigned to one of three study groups, with 10 participants in the static group (CG), 6 in the adaptive group (EGrobot), and 11 in the adaptable group (EGhuman). The groups did not differ in sex distribution, age, or experience with robots.

4.3 Experimental setup for gearbox assembly task

4.3.1 Laboratory setup and workstation

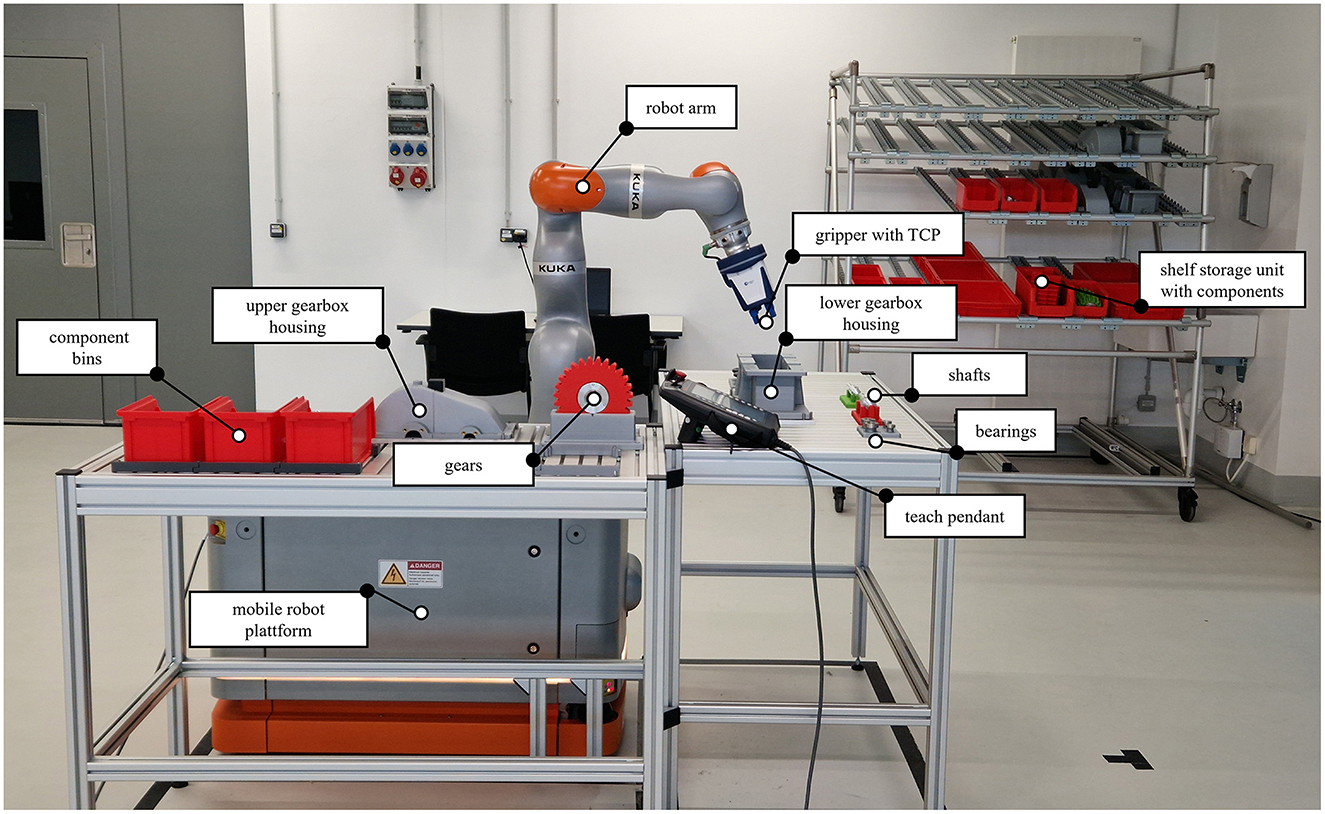

The experiment was conducted in a laboratory environment at the Centre for the Engineering of Smart Product-Service Systems (ZESS) using a collaborative robotic system for a semi-automated gearbox assembly task. The setup included the assembly workstation, a mobile robotic system (KUKA KMR 200 iiwa 14 R820), a 3D stereo camera (ZED2i, Stereolabs) for human body tracking, and a dedicated computing system for robot control and sensor data processing. Figure 1 illustrates the experimental setup.

Figure 1. Experimental setup for gearbox assembly. The 3D camera used for human body tracking was mounted on a tripod and not visible in the figure, as the image was taken from the camera's perspective.

The workstation contained all components needed for the gearbox assembly, including two gears, two shafts, four bearings, upper and lower gearbox housings, and eight screws and nuts to fasten the housings together. The end product is thus a functional yet simplified gearbox that is easy to handle and has a quick-to-learn assembly process. The workstation also comprised component bins for part provisioning and a shelf storage unit for additional components.

4.3.2 Assembly procedure

While the full experimental procedure is described later on, the robot-assisted assembly process involved the following steps: after verifying the presence of all required components, the robot was initialized by the participant via the teach pendant. The robot then grasped and held the first gear while the participant inserted the output shaft, followed by the insertion of two large bearings. After confirming this step by pressing a button on the robot, the robot placed the assembled output shaft and gear into the lower gearbox housing. Next, the robot grasped and held the second gear while the participant inserted the input shaft and two small bearings. After confirming completion again, the robot positioned the second gear into the lower gearbox housing. It proceeded to place the upper gearbox housing onto the lower part. In the final assembly steps, the robot inserted screws and washers into the designated holes, while the participant fastened each screw from below using back nuts. This was repeated for all eight screw locations. After assembly, the participant placed the completed gearbox into the designated storage area.
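The collaborative sequence above can be read as an ordered list of (actor, action) pairs, with two button confirmations by the participant before the robot proceeds. The Python sketch below encodes that reading; the step granularity, wording, and names are our own hypothetical choices rather than the robot program used in the study.

```python
from collections import Counter

N_SCREW_LOCATIONS = 8  # eight screw positions on the housing


def assembly_steps():
    """Return the robot-assisted assembly as ordered (actor, action) pairs."""
    steps = [
        ("human", "verify that all required components are present"),
        ("human", "initialize robot via teach pendant"),
        ("robot", "grasp and hold first gear"),
        ("human", "insert output shaft, then two large bearings"),
        ("human", "confirm step via button on robot"),
        ("robot", "place output shaft and gear into lower housing"),
        ("robot", "grasp and hold second gear"),
        ("human", "insert input shaft and two small bearings"),
        ("human", "confirm step via button on robot"),
        ("robot", "place second gear into lower housing"),
        ("robot", "place upper housing onto lower housing"),
    ]
    # Screwing phase: robot and human alternate at each screw location
    for i in range(1, N_SCREW_LOCATIONS + 1):
        steps.append(("robot", f"insert screw and washer at location {i}"))
        steps.append(("human", f"fasten screw {i} from below with nut"))
    steps.append(("human", "place completed gearbox in storage area"))
    return steps


if __name__ == "__main__":
    steps = assembly_steps()
    for n, (actor, action) in enumerate(steps, start=1):
        print(f"{n:2d}. [{actor}] {action}")
    # Number of steps per actor
    print(Counter(actor for actor, _ in steps))
```

Under this reading, the sequence has 28 steps, 15 performed by the participant and 13 by the robot, with the eight-location screwing phase accounting for more than half of all steps.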

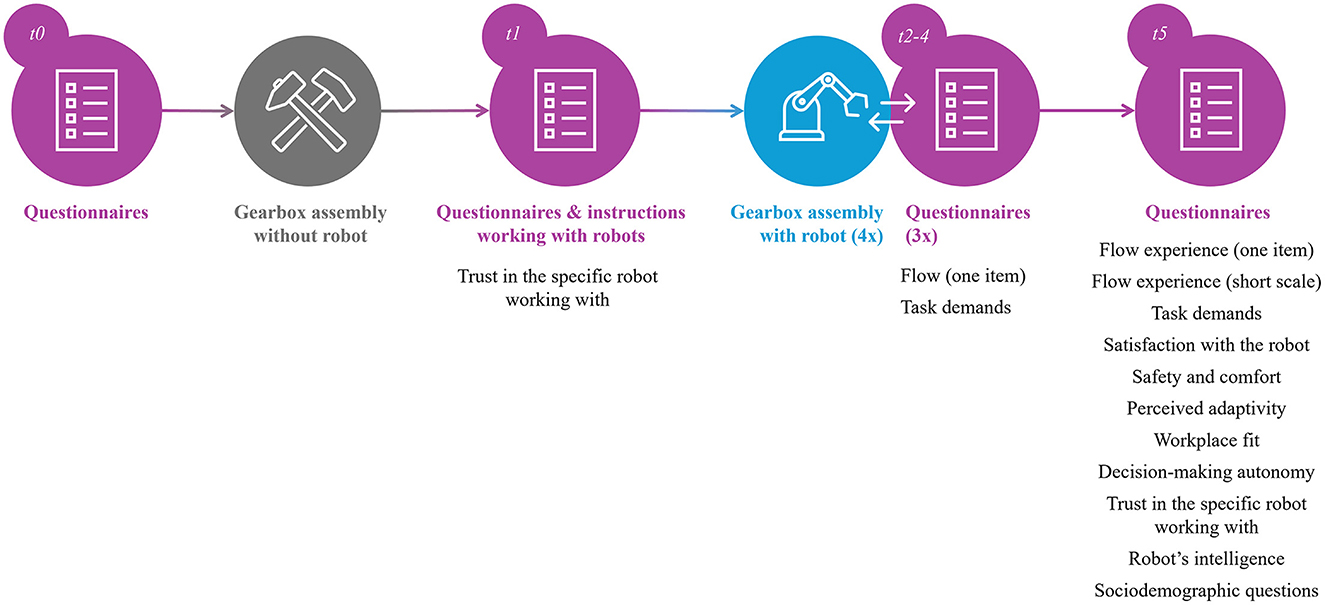

4.4 Experimental procedure

The experiment followed a structured, multi-phase procedure (see Figure 2 for an overview of the process and variables analyzed in this study; for a detailed description of all variables, see Table 1 in the Supplementary material). First, participants were verbally briefed, signed an informed consent form, and were introduced to the study. At baseline (t0), they completed initial questionnaires assessing several control variables.

To familiarize participants with the task and ensure a comparable baseline, each participant first completed one full gearbox assembly manually before the robot-assisted assembly. This initial trial was crucial to establish an understanding of the task and allowed for later evaluation of collaborative performance.

After completing the manual assembly, participants filled out a robot-specific trust questionnaire (t1) to measure their initial trust. They then received detailed instructions for the robot-assisted assembly, including safety guidance and information about the robot's behavior in their assigned condition, and performed four robot-assisted gearbox assemblies. After each assembly, short questionnaires (t2–t5) assessed their experience of the task and of the interaction with the robotic system. At t5, following the final assembly, participants additionally completed a post-task questionnaire that included various perception-related variables as well as questions on their sociodemographic background. The experimental procedure concluded with a debriefing, and participants were compensated for their time.

4.5 Dependent variables under exploration

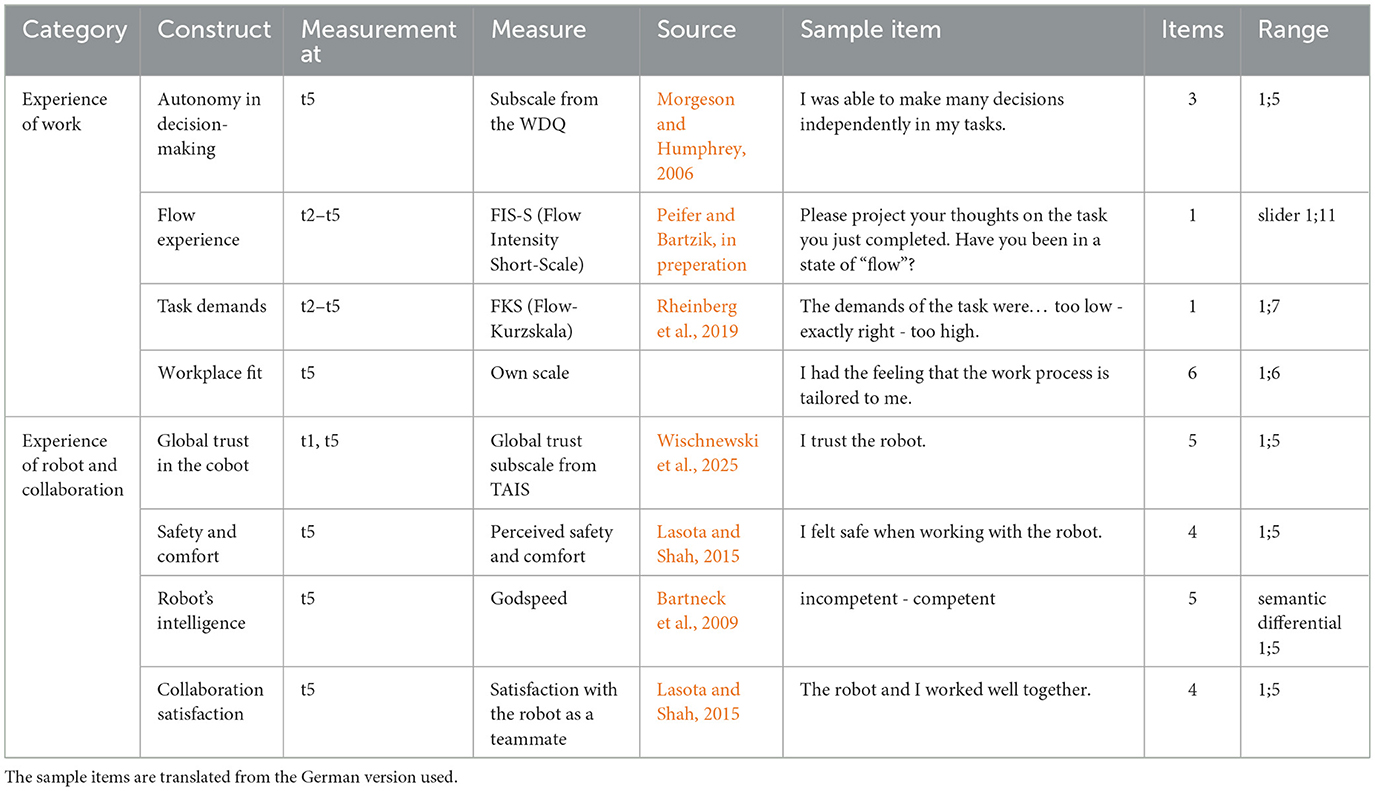

A set of validated and newly developed instruments was used during the experimental procedure to assess psychological variables concerning the perception of the task, the robot, and the collaboration, as well as traits, attitudes, and sociodemographics. While the full table of measurement instruments can be found in the Supplementary material, Table 1 lists only the variables analyzed in this manuscript, grouped into two categories: experience of (1) work and (2) the robot and the collaboration.

Although collaboration satisfaction was assessed after each collaborative task, this manuscript considers it only as a one-time measure at t5, i.e., after the full collaboration. This captures participants' overall evaluation of the collaboration rather than its temporal progression.

Additionally, a manipulation check asked participants whether the robot's speed during production had been static, automatically adapting, or adjustable by themselves. A further variable, perceived adaptivity (measured at t5), served as a manipulation check of whether participants perceived the experimental design as intended. It was measured with three self-developed items on a scale from 1 to 5, including “I had the feeling that the robot could adapt to my needs.” To characterize the sample, we assessed educational level, age, gender, and experience with robots.

For performance, the most promising measure is the cycle time in seconds for each assembly cycle. Cycle times represent the duration of the robot's movements from the initiation of the first motion command to the completion of the final motion step. Manual preparatory and subsequent human actions were not included, but interruptions (e.g., robot stops due to collisions caused by incorrectly placed parts) were part of the logged time. As such, the automatically logged, and thus objective, cycle time can be seen as a measure of performance speed, as it covers most of the time needed to assemble one gearbox. However, cycle time is not only an outcome variable but is also directly shaped by the experiment's manipulation of robot adaptation, which must be taken into account when interpreting this measure. As no other objective performance indicators were recorded in the present study, cycle time serves as the only available proxy for performance and is therefore used in the analyses.
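The cycle time definition above can be sketched as follows. This is a minimal illustration only, assuming a hypothetical log of timestamped events with invented labels `motion_start` and `motion_done`; the actual logging format of the robot system is not described here. Because only the first motion start and the last motion completion matter, any stop in between is counted automatically, while human actions outside that window are not.

```python
from dataclasses import dataclass


@dataclass
class LogEvent:
    """Hypothetical log record: timestamp in seconds and an event label."""
    t: float
    event: str  # assumed labels, e.g. "motion_start", "motion_done", "stop"


def cycle_time(events: list[LogEvent]) -> float:
    """Seconds from the first motion command to completion of the final motion step.

    Robot stops between these two points (e.g., after a collision) stay inside
    the interval; human actions before the first command or after the last
    completed motion fall outside it.
    """
    starts = [e.t for e in events if e.event == "motion_start"]
    dones = [e.t for e in events if e.event == "motion_done"]
    if not starts or not dones:
        raise ValueError("log contains no complete motion sequence")
    return max(dones) - min(starts)


# Invented example log: preparation, two motion segments with a stop in between, storage.
log = [
    LogEvent(0.0, "prep"),
    LogEvent(12.0, "motion_start"),
    LogEvent(40.0, "motion_done"),
    LogEvent(55.0, "stop"),
    LogEvent(70.0, "motion_start"),
    LogEvent(380.0, "motion_done"),
    LogEvent(400.0, "store_gearbox"),
]
print(cycle_time(log))  # 368.0
```

The preparation event at 0 s and the storage event at 400 s lie outside the measured window, mirroring the exclusion of manual preparatory and subsequent actions.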

4.6 Primary and secondary endpoints

Following best practices from clinical research, we formally defined primary and secondary endpoints to increase transparency, facilitate the interpretation of our explorative results, and clarify which results are most relevant for addressing the central research question (Eldawlatly and Meo, 2019; Willis, 2023). The primary endpoint in this study was defined as the longitudinal investigation of flow experience and perceived task demands across the four collaborative trials, depending on the dynamic control scheme. These variables represent central indicators of employees' psychological experience in dynamic work systems and provide insight into how participants adapt to different control schemes over time. Other variables, including autonomy, workplace fit, trust, safety, perceived robot intelligence, collaboration satisfaction, and cycle time, were defined as secondary endpoints. Their analyses complement the primary endpoint by offering a broader, more holistic perspective on the psychological, relational, and performance-related effects of different control schemes.

4.7 Exploratory statistical analyses

To obtain exploratory insights into the research questions, we examined descriptive statistics (means, standard deviations, and correlations) and conducted ANOVAs to test for differences between the three study conditions on the reported dependent variables. For variables assessed at multiple time points, repeated-measures ANOVAs were performed. To account for the multiple hypothesis tests implied by our primary and secondary endpoints, we applied the Bonferroni correction, which controls the family-wise error rate at α = 0.05 (Ranstam, 2016). This procedure ensures that the overall false-positive risk does not exceed the pre-specified α-level.
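As a concrete illustration of the Bonferroni logic, the following minimal sketch compares each of m p-values against α/m, which is equivalent to multiplying each p-value by m (capped at 1) and comparing it against α. The p-values in the example are invented and do not correspond to the study's family of tests.

```python
def bonferroni(p_values: list[float], alpha: float = 0.05) -> list[tuple[float, bool]]:
    """Bonferroni correction: (adjusted p, reject H0) for each of m tests.

    Multiplying each p by m (capped at 1) and comparing with alpha is the same
    as comparing the raw p with alpha / m; by Boole's inequality the probability
    of at least one false positive across all m tests is then at most alpha.
    """
    m = len(p_values)
    return [(min(1.0, p * m), p * m <= alpha) for p in p_values]


# Invented p-values for illustration only.
for p_adj, reject in bonferroni([0.004, 0.02, 0.30]):
    print(f"{p_adj:.3f} {reject}")
# 0.012 True
# 0.060 False
# 0.900 False
```

In practice, established implementations such as `multipletests(..., method="bonferroni")` from statsmodels perform the same adjustment.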

Because the study follows an exploratory experimental approach, the sample size was limited, which in some cases led to violations of statistical assumptions. When the normality assumption was violated, non-parametric Kruskal–Wallis tests were used instead. When variances were heterogeneous, Welch-corrected ANOVAs were applied to ensure robust and interpretable results despite the exploratory character of the study and its limited sample size.
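The Welch correction can be sketched with the standard library alone. This is a minimal sketch of the textbook Welch one-way ANOVA formula, not the software used in the study. Each group is weighted by its precision n_i / s_i², and the denominator degrees of freedom are reduced according to how unequal these weights are. The toy groups in the example are invented.

```python
from statistics import mean, variance


def welch_anova(*groups: list[float]) -> tuple[float, float, float]:
    """Welch's one-way ANOVA for k groups with possibly unequal variances.

    Returns (F, df1, df2); the p-value is the upper tail of the F(df1, df2)
    distribution. Each group needs at least two observations and non-zero variance.
    """
    k = len(groups)
    n = [len(g) for g in groups]
    m = [mean(g) for g in groups]
    w = [n_i / variance(g) for n_i, g in zip(n, groups)]  # weights n_i / s_i^2
    w_sum = sum(w)
    grand = sum(w_i * m_i for w_i, m_i in zip(w, m)) / w_sum  # precision-weighted mean
    between = sum(w_i * (m_i - grand) ** 2 for w_i, m_i in zip(w, m)) / (k - 1)
    lam = sum((1 - w_i / w_sum) ** 2 / (n_i - 1) for w_i, n_i in zip(w, n))
    denom = 1 + 2 * (k - 2) / (k**2 - 1) * lam
    return between / denom, k - 1, (k**2 - 1) / (3 * lam)


f, df1, df2 = welch_anova([1, 2, 3], [2, 3, 4], [3, 4, 5])
print(round(f, 4), df1, round(df2, 2))  # 2.5714 2 4.0
```

The p-value would then be obtained from the F distribution, e.g., with `scipy.stats.f.sf(f, df1, df2)`. For the non-parametric alternative, `scipy.stats.kruskal(*groups)` provides the Kruskal–Wallis test.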

5 Results

The exploratory data collection yielded a large amount of data, of which selected analyses are presented in the following. Regarding behavior in the experiment, participants in the adaptable condition (EGhuman) changed the robot's speed on average 1.07 times (SD = 1.33), with a maximum of five changes; one participant did not adjust the speed at all. Across all groups, the mean cycle time in the final trial was 6.05 min (SD = 1.40).

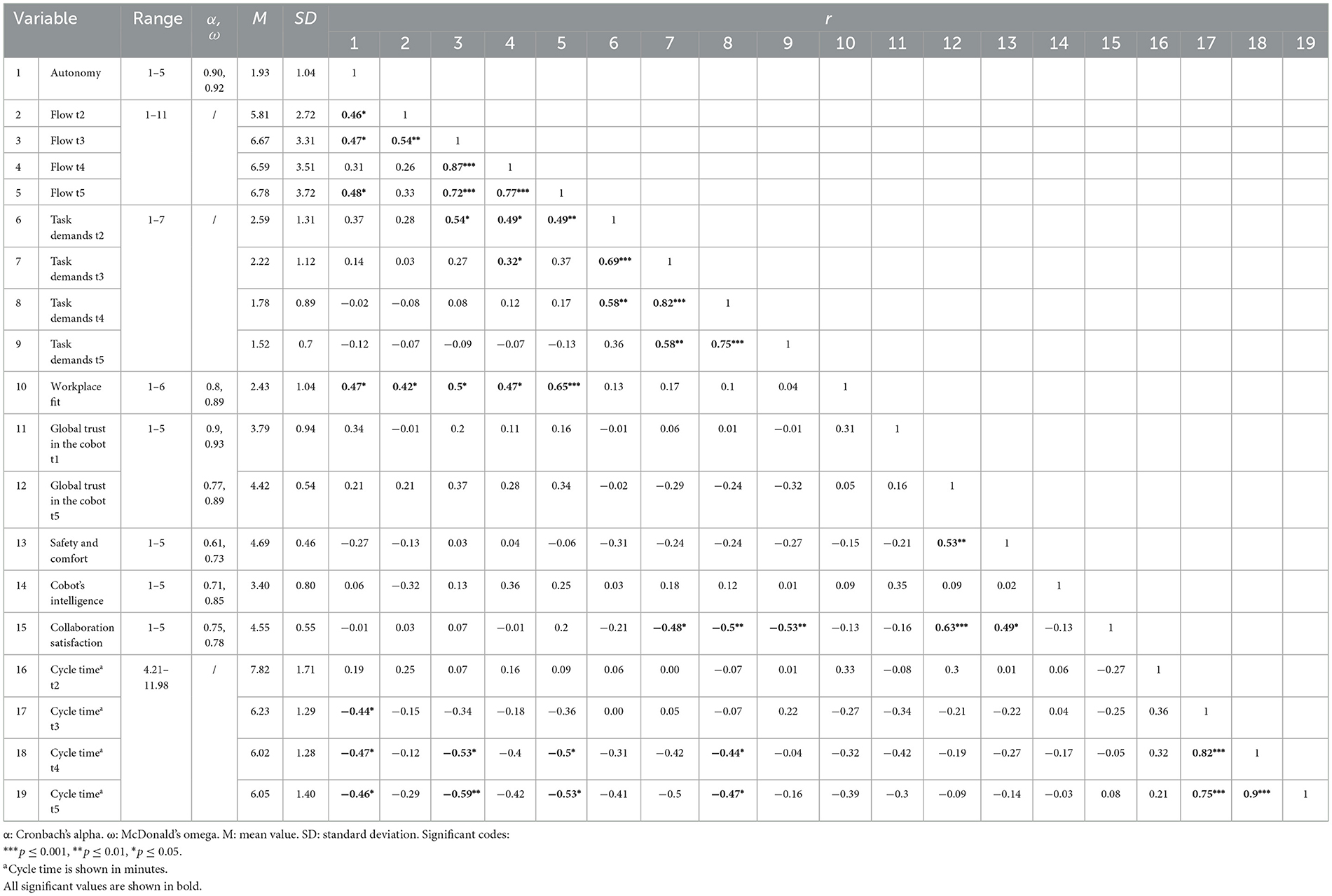

Table 2 gives an overview of the measures' means, reliabilities, and intercorrelations.

Table 2. Descriptive statistics, internal consistencies, and intercorrelations of the assessed variables.

5.1 Analyses of perceptual-related measures

5.1.1 Group effects on outcome variables

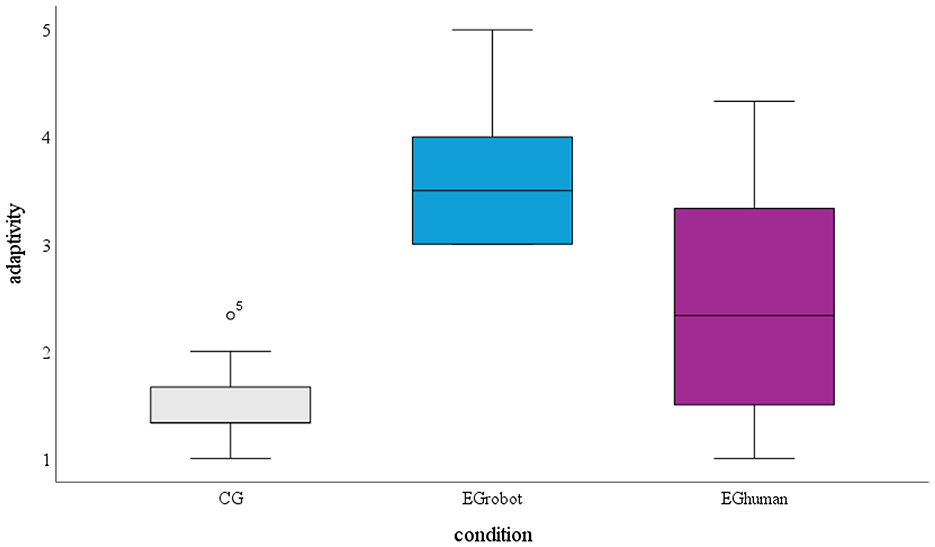

To verify the effectiveness of the experimental manipulation, we first examined participants' perception of the robot's adaptivity. As expected, the perceived adaptivity differed substantially between the three study groups (see also Figure 3): the control group reported a mean of M = 1.5 (SD = 0.42), the EGhuman M = 2.42 (SD = 0.42), and the EGrobot M = 3.67 (SD = 0.76). A robust Welch ANOVA confirmed significant group differences, F(2, 11.39) = 20.62, p < 0.001, ω² = 0.73, 95% CI [0.45, 0.87]. Corrected post-hoc t-tests revealed a significant difference between the control group and the EGrobot [p = 0.001, g = 3.63, 95% CI (1.95, 5.31)], as well as a marginally significant difference between the two experimental groups [p = 0.053, g = 2.13, 95% CI (0.88, 3.38)]. These results indicate that participants reliably perceived the intended differences in the robot's adaptivity, validating the manipulation.
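The post-hoc effects are reported as Hedges' g with 95% CIs. The following minimal sketch shows one common way to compute these values: Cohen's d with the pooled standard deviation, the approximate small-sample correction J = 1 − 3/(4(n₁ + n₂) − 9), and a normal-approximation confidence interval. Other variants exist, such as an exact J or noncentral-t intervals, and the software used for the reported values may differ, so this sketch is not guaranteed to reproduce the reported numbers. The toy data are invented.

```python
import math
from statistics import mean, variance


def hedges_g(x: list[float], y: list[float], z: float = 1.96) -> tuple[float, float, float]:
    """Hedges' g for two independent groups with an approximate (normal) 95% CI."""
    n1, n2 = len(x), len(y)
    # pooled standard deviation from the two sample variances (ddof = 1)
    s_pooled = math.sqrt(((n1 - 1) * variance(x) + (n2 - 1) * variance(y)) / (n1 + n2 - 2))
    d = (mean(x) - mean(y)) / s_pooled  # Cohen's d
    j = 1 - 3 / (4 * (n1 + n2) - 9)  # approximate small-sample bias correction
    g = j * d
    se = math.sqrt((n1 + n2) / (n1 * n2) + g**2 / (2 * (n1 + n2)))
    return g, g - z * se, g + z * se


g, lo, hi = hedges_g([4, 5, 6], [1, 2, 3])
print(round(g, 2), round(lo, 2), round(hi, 2))
```

With very small groups, J shrinks d noticeably; here, J = 0.8 turns d = 3 into g = 2.4.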

Figure 3. Boxplots of perceived robot adaptivity across the study groups. CG, control group; EGrobot, adaptive experimental group; EGhuman, adaptable experimental group.

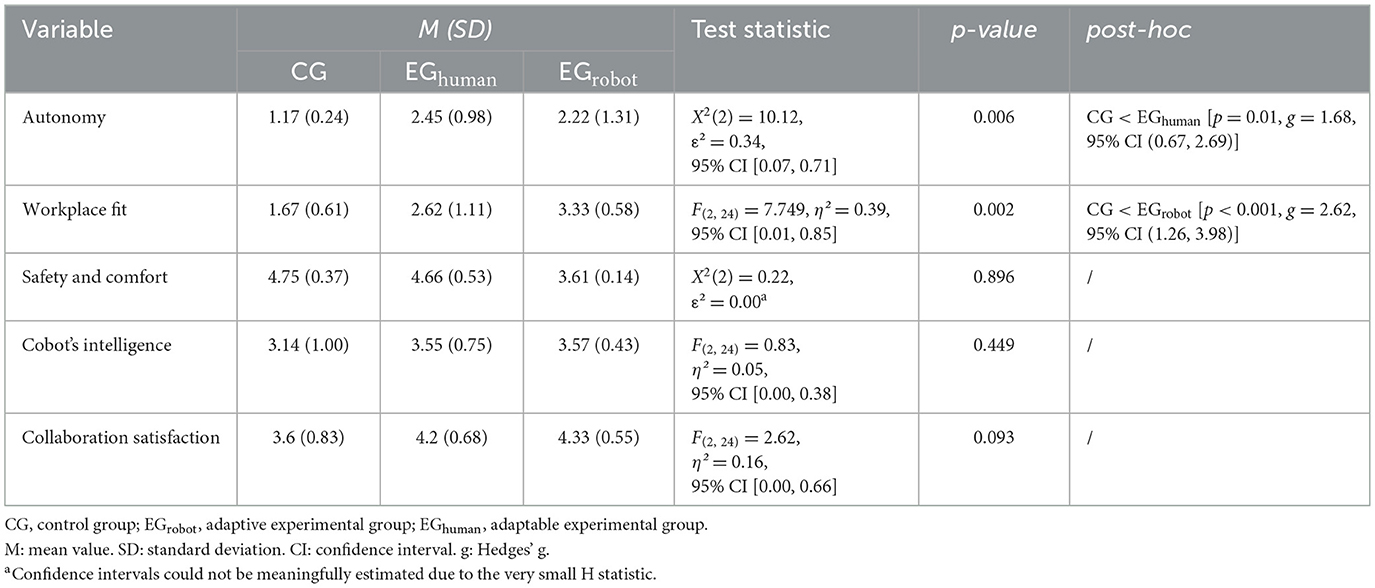

Following this manipulation check, we analyzed perception-related variables that were assessed once, after participants had completed the collaborative assembly task. Table 3 summarizes the results for perceived decision-making autonomy, workplace fit, safety and comfort, perceived intelligence of the cobot, and satisfaction with collaboration, presenting group means, standard deviations, test statistics, and post-hoc comparisons where applicable.

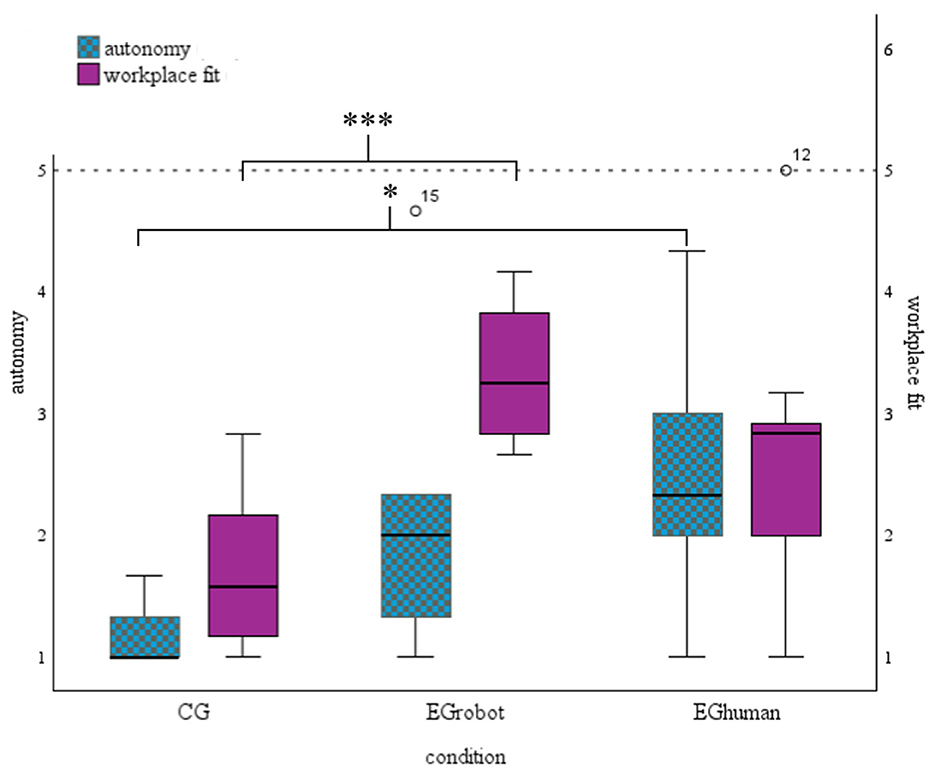

Significant group differences were found for perceived autonomy and workplace fit, with autonomy rated significantly higher in the EGhuman compared to the control group, and workplace fit rated significantly higher in the EGrobot compared to the control group (see Figure 4).

Figure 4. Boxplots of group differences in perceived decision autonomy and workplace fit. CG, control group; EGrobot, adaptive experimental group; EGhuman, adaptable experimental group. *p < 0.05, ***p < 0.001.

5.1.2 Effects of time and group on flow experience, task demands, and trust

Following the analyses of perception-related variables assessed at a single measurement point, we examined variables measured repeatedly across the experimental trials, namely flow experience, perceived task demands, and trust.

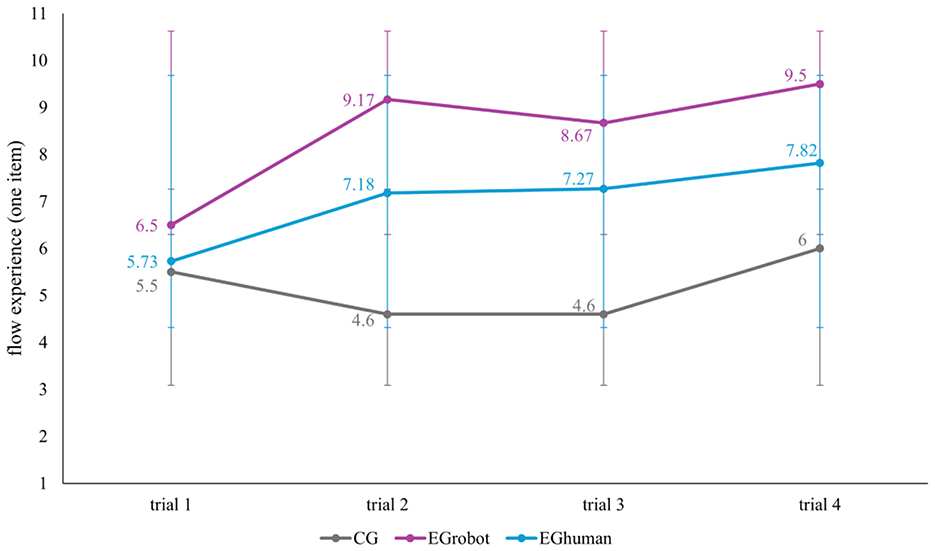

Flow experience was assessed repeatedly across all trials (see Figure 5). Across trials, mean flow experience (single-item measure) was M = 4.68 (SD = 2.84) in the control group, M = 8.46 (SD = 1.44) in the EGrobot, and M = 6.91 (SD = 2.44) in the EGhuman. Because sphericity was violated, a Greenhouse-Geisser correction was applied. The repeated-measures ANOVA revealed a significant main effect of group, F(2, 24) = 4.97, p = 0.016, η² = 0.21, 95% CI [0.01, 0.44], but no significant main effect of time, F(3, 72) = 1.82, p = 0.171, η² = 0.07, 95% CI [0.00, 0.17], and no significant interaction, F(6, 72) = 2.24, p = 0.077, η² = 0.16, 95% CI [0.00, 0.29] (see Figure 5). Post-hoc comparisons showed a significant difference between the CG and the EGrobot, p = 0.018, g = 1.73, 95% CI [0.29, 3.18].
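The Greenhouse-Geisser correction multiplies both degrees of freedom of the within-subject F test by an estimate ε̂ of the departure from sphericity. This estimate equals 1 when sphericity holds and has a minimum of 1/(k − 1) for k measurement occasions. The following dependency-free sketch implements the usual estimator ε̂ = tr(D)² / ((k − 1)·tr(D²)), where D is the double-centered k × k covariance matrix of the repeated measures. It assumes a layout with one row per participant and one column per trial. For a mixed design like the present one, the covariance matrix would normally be pooled within groups, a step the sketch omits.

```python
def covariance_matrix(data: list[list[float]]) -> list[list[float]]:
    """Sample covariance (ddof = 1) between columns; rows = participants, columns = trials."""
    n, k = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(k)]
    return [
        [sum((row[a] - means[a]) * (row[b] - means[b]) for row in data) / (n - 1) for b in range(k)]
        for a in range(k)
    ]


def gg_epsilon(data: list[list[float]]) -> float:
    """Greenhouse-Geisser epsilon from the double-centered covariance matrix."""
    s = covariance_matrix(data)
    k = len(s)
    row = [sum(r) / k for r in s]
    col = [sum(s[i][j] for i in range(k)) / k for j in range(k)]
    grand = sum(row) / k
    # double centering: D = C S C with C = I - J/k
    d = [[s[i][j] - row[i] - col[j] + grand for j in range(k)] for i in range(k)]
    trace = sum(d[i][i] for i in range(k))
    sum_sq = sum(v * v for r in d for v in r)  # equals tr(D @ D) because D is symmetric
    return trace**2 / ((k - 1) * sum_sq)


# Invented data whose trial columns are uncorrelated with equal variance (sphericity holds).
spherical = [[1, 1, 1], [1, -1, -1], [-1, 1, -1], [-1, -1, 1]]
print(gg_epsilon(spherical))
```

The corrected test then evaluates the observed F against F(ε̂·df₁, ε̂·df₂). For example, F(3, 72) for the effect of time would become F(3ε̂, 72ε̂).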

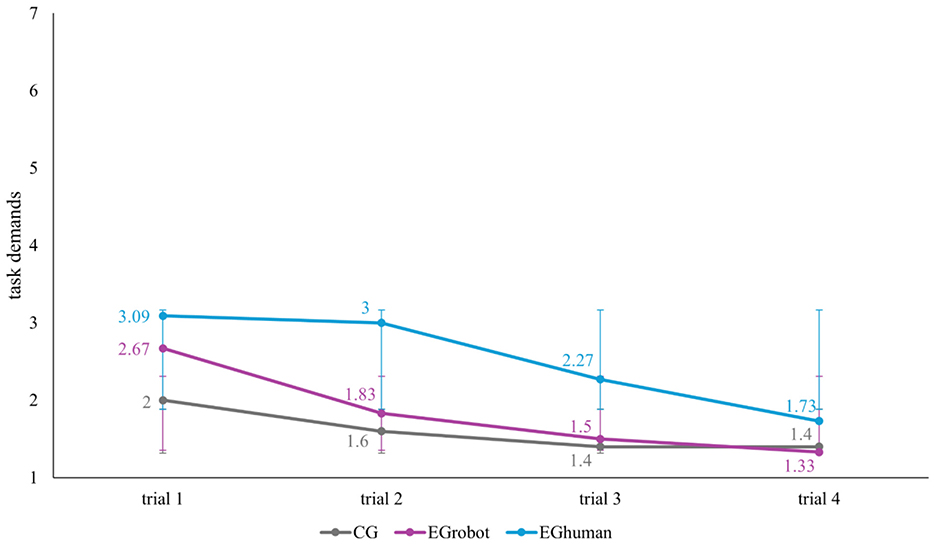

Figure 5. Mean flow experience across trials and groups. CG, control group; EGrobot, adaptive experimental group; EGhuman, adaptable experimental group. Trials 1–4 correspond to measurement points t2–5.

Perceived task demands were also assessed repeatedly across trials (see Figure 6). Across all trials, mean task demands were M = 1.7 (SD = 0.92) in the control group, M = 1.83 (SD = 0.41) in the EGrobot, and M = 2.52 (SD = 0.96) in the EGhuman. A repeated-measures ANOVA with Greenhouse-Geisser correction indicated significant main effects of group, F(2, 24) = 3.96, p = 0.033, η² = 0.18, 95% CI [0.01, 0.41], and time, F(3, 72) = 14.24, p < 0.001, η² = 0.17, 95% CI [0.06, 0.28]. Post-hoc comparisons with Bonferroni correction revealed that, for time, trials 1 and 2 differed significantly from trials 3 and 4 [p < 0.02, g = 0.08, 95% CI (−0.45, 0.61)], and, for group, the control group differed significantly from the EGhuman [p = 0.035, g = 0.86, 95% CI (0.01, 1.71)].

Figure 6. Mean task demands across trials and groups. CG, control group; EGrobot, adaptive experimental group; EGhuman, adaptable experimental group. Trials 1–4 correspond to measurement points t2–5.

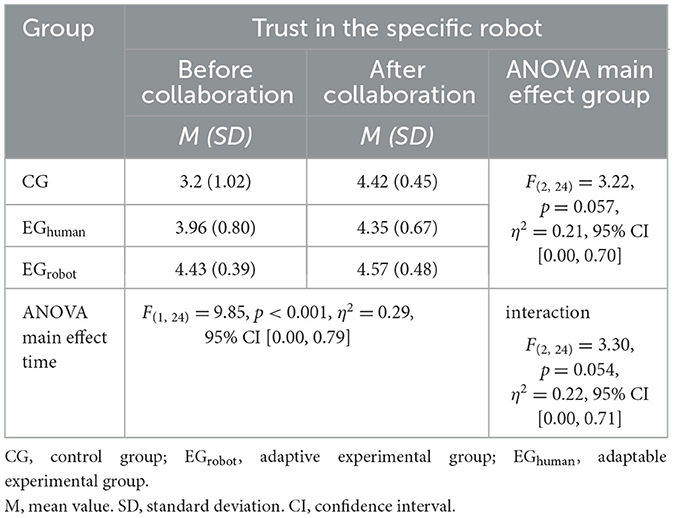

Trust was measured as trust in the specific robot participants collaborated with, once before the robot was introduced and once after all four trials. Means, standard deviations, and ANOVA results are presented in Table 4, showing a significant main effect of time [p = 0.004, g = 0.91, 95% CI (0.31, 1.51)].

5.2 Analyses of objective measures

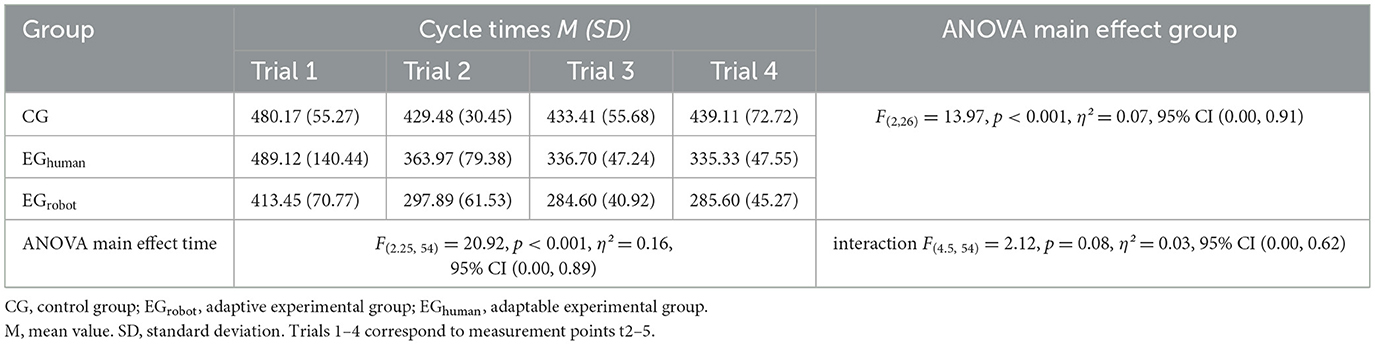

In addition to self-reported measures, cycle times were analyzed across the four trials as a potential measure of performance speed in the different conditions. Descriptive results (see Table 5) showed a decrease in mean cycle times across trials in all groups, with the shortest times in the EGrobot and the longest in the CG. Specifically, overall cycle times averaged 445.54 s (SD = 31.33) in the CG, 381.28 s (SD = 65.34) in the EGhuman, and 320.38 s (SD = 33.51) in the EGrobot.

A repeated-measures ANOVA with Greenhouse-Geisser correction revealed significant main effects of group and time, whereas the interaction effect did not reach significance (see Table 5). Post-hoc comparisons with Bonferroni correction showed significant differences between CG and EGrobot [p < 0.001, g = 3.90, 95% CI (2.13, 5.66)], between CG and EGhuman [p = 0.011, g = 1.23, 95% CI (0.29, 2.18)], and between EGhuman and EGrobot [p = 0.023, g = 1.07, 95% CI (0.01, 2.14)]. For the effect of time, cycle times in Trial 1 were significantly longer than in Trials 2 [p < 0.001, g = 0.90, 95% CI (0.45, 1.36)], 3 [p < 0.001, g = 0.92, 95% CI (0.46, 1.37)], and 4 [p < 0.001, g = 0.90, 95% CI (0.45, 1.36)].

6 Discussion

The present study aimed to examine how different control schemes (adaptive or adaptable) for human-robot collaboration in an assembly task influence participants' perceptions, experiences, and collaboration outcomes compared with a static work setting without dynamics (or control over them). The goal was to identify whether dynamics lead to positive consequences and which locus of authority over the dynamics should be preferred for industrial implementation. The results show that participants in the adaptable condition, who could change the robot's speed themselves, reported higher perceived autonomy and higher task demands than the static group with constant robot speed. Workplace fit and flow experience were rated higher in the adaptive condition, in which the robot changed its speed according to human proximity, than in the static group. Trust in the robot increased over time across all groups. Interestingly, adaptive automation (EGrobot) yielded the highest flow experience, whereas adaptable automation (EGhuman) resulted in the highest perceived demands, although demands were generally low across conditions. This finding aligns with the notion that manual control options, while increasing flexibility, may introduce additional cognitive load, particularly when participants must switch between the assembly task and system adjustment, whereas adaptive control leads to more fluent work processes. In line with these subjective patterns, objective cycle times indicated efficiency gains under both dynamic schemes compared with the static baseline, with the adaptive control scheme yielding the shortest processing times. Across all groups, cycle times further reflected familiarization effects: the first production cycle was markedly slower than the subsequent ones, which progressively accelerated before stabilizing.

Taken together, these results tentatively point toward a potential advantage of dynamic work systems in general, and of adaptive automation in particular, at least for outcomes such as flow facilitation and perceived alignment with user needs via automatic robot speed adaptation. Before these and additional descriptive findings are interpreted in more depth, they must be considered in light of the limitations inherent to the exploratory nature and small sample of this study.

6.1 Limitations of the study

This study's exploratory design, motivated by the lack of prior research jointly examining adaptive and adaptable control schemes alongside a static baseline, led to overarching research questions rather than specific hypotheses. While certain effects could be assumed, prior insights were too limited to formulate well-grounded hypotheses, and thus no formal sample size planning was conducted. Because these questions were to be explored in an authentic production setting with a working robot and actual adaptive and adaptable robot behavior, the experiment could only be conducted with a limited number of participants. Consequently, the small and uneven sample size limits statistical power and may mask existing group differences (Baguley, 2004).

Additionally, the gearbox assembly task was simplified to ensure technical feasibility, which lowered cognitive and physical demands and may have reduced the study's sensitivity to detect effects. Nevertheless, the significant differences found in perceived demands suggest that control schemes can influence demands even in low-demand scenarios. In such contexts, both adaptive and adaptable automation may be perceived as equally manageable, limiting the observable impact of the control scheme on constructs such as flow, workload, or perceived teaming.

Another limitation is the focus on perception-based variables without differentiated performance-related measures. This limited the ability to link subjective experiences to objective task outcomes, which is essential for deriving comprehensive insights for implementation (Isham et al., 2021). Measuring performance in dynamic scenarios is inherently challenging, as production cycles vary within and between participants due to the dynamic behavior of the work system. Although production times were recorded, comparing them across conditions is difficult, as both robot speed and participant behavior varied. Our cycle time analyses showed that, in general, the dynamic conditions performed better regarding speed than the static baseline. Still, this comparison is somewhat “unfair”, because the opportunity to accelerate the robot existed only in the dynamic conditions. Shorter cycle times were therefore to be expected due to potentially faster robot movements. This does not necessarily translate into real performance advantages; for example, had the static condition been designed differently, its speed could have been higher. Developing methods to validly compare static and (different) dynamic processes using objective performance parameters remains an open challenge.

Moreover, workplace adaptations were narrowly operationalized, limited to robot motion control by scaling the execution speed of predefined motion commands. This can also be seen as a strength of the study, as speed is a realistic and easy-to-implement parameter for adaptations in human-robot interaction. Still, other potentially impactful dynamic elements, such as flexible task allocation or task timing, were not included due to the exploratory character of the study and its setup. Limiting adjustments to a single collaboration parameter likely reduced the available cues for workplace fit or autonomy. Safety regulations for close human–robot interaction, as implemented in real production scenarios (here capping robot speed at 500 mm/s), further required conservative speed profiles, limiting participants' opportunity in the adaptable condition to adjust the speed to their own preferences.

These implementation constraints were accompanied by technical limitations of the adaptive control setup itself. In the adaptive condition, real-time, distance-based speed control worked reliably, but body tracking and calibration limitations (occlusions and position errors of up to ~0.2 m) required an additional safety buffer, resulting in even more conservative speed profiles during close human–robot collaboration. Furthermore, speed control was limited to three discrete levels (30/65/100%), constraining perceived speed differentiation and fine-grained user control.
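The following minimal sketch illustrates how the two control schemes could operate on the same three speed levels. In the adaptive scheme, a tracked human–robot distance is mapped to a level after subtracting a buffer for tracking error. In the adaptable scheme, the operator steps between levels with buttons, starting from 65%, which the discussion identifies as the static condition's speed. The text specifies only the three levels, the ~0.2 m error margin, and the 500 mm/s cap. The distance thresholds `NEAR_M` and `FAR_M`, all function and class names, and the nominal speed in the example are hypothetical. Any protective stop at very close range is not modeled.

```python
SPEED_LEVELS = (0.30, 0.65, 1.00)  # discrete speed overrides reported in the text
TRACKING_BUFFER_M = 0.2            # margin for body-tracking position errors (~0.2 m)
MAX_TCP_SPEED_MM_S = 500.0         # safety cap for close collaboration
NEAR_M, FAR_M = 0.6, 1.2           # hypothetical distance thresholds (assumption)


def adaptive_level(distance_m: float) -> float:
    """Adaptive scheme (sketch): the system picks the speed level from human proximity."""
    d = distance_m - TRACKING_BUFFER_M  # assume the human may be closer than measured
    if d < NEAR_M:
        return SPEED_LEVELS[0]
    if d < FAR_M:
        return SPEED_LEVELS[1]
    return SPEED_LEVELS[2]


class AdaptableSpeed:
    """Adaptable scheme (sketch): the operator steps through the same levels via buttons."""

    def __init__(self) -> None:
        self.index = 1  # start at 65%, the speed used in the static condition

    def press_up(self) -> float:
        self.index = min(self.index + 1, len(SPEED_LEVELS) - 1)
        return SPEED_LEVELS[self.index]

    def press_down(self) -> float:
        self.index = max(self.index - 1, 0)
        return SPEED_LEVELS[self.index]


def commanded_speed(nominal_mm_s: float, level: float) -> float:
    """Scale a (hypothetical) nominal motion speed and enforce the safety cap."""
    return min(nominal_mm_s * level, MAX_TCP_SPEED_MM_S)


print(adaptive_level(2.0), adaptive_level(1.0), adaptive_level(0.5))
```

Subtracting the buffer before applying the thresholds makes the robot slow down earlier than the raw distance would require. This reflects the trade-off described above: tracking uncertainty is absorbed at the cost of more conservative speed profiles.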

Despite these limitations, the study design has important strengths. Using a real robotic system addresses the intention-behavior gap common in simulated or vignette-based approaches (Hulland and Houston, 2021; Sheeran and Webb, 2016) and enhances external validity (Voit et al., 2019) compared with the common Wizard-of-Oz experiments. Situating the study in a low-demand production context with an embodied and functioning robotic system broadens the understanding of adaptive and adaptable control schemes beyond software-based systems or high-demand tasks. The extended experiment duration (over 1 h per participant with multiple task repetitions) not only allowed familiarity with the system to develop, yielding more stable perceptions and experiences, but also provided closer alignment with real work tasks and enabled the investigation of dynamics, e.g., in trust in the robot.

Within these boundaries, the study provides initial insights from a comparable evaluation of different control schemes in production work systems and supports the formulation of future research questions and hypotheses.

6.2 What we learned about work perception

Regarding work characteristics, both dynamic groups reported higher autonomy than participants in the condition in which the robot did not change speed. While the difference is significant for the group able to adjust speed manually, it is of comparable size but not significant when the robot behaves adaptively, and could thus be interpreted similarly given the smaller sample size and higher variance. Hence, adaptable and adaptive control schemes both seem to support autonomy, without the advantage for manually adjustable working conditions that might have been expected. This can be explained by participants indirectly controlling robot behavior in the adaptive condition as well: if participants understood how the robot adjusted its speed based on their movements, this could have given them control and thus created indirect adaptability. The importance of autonomy as a resource is further underlined by its medium to high correlations with both workplace fit and flow experience. Autonomy can be seen as a precondition for creating the balance of demands and abilities needed for flow, as well as for making the workplace fit one's own performance premises.

On the other hand, task demands are often considered less of a resource, potentially causing strain and exhaustion (Demerouti et al., 2001). In our data, demands are comparable, and very low, in the static and adaptive conditions. Only when robot speed was adaptable did participants report higher, yet still not high, demands. The time curve also shows a tendency toward convergence at the last measurement point. This suggests that adapting robotic behavior places additional demands on people, yet this effect might diminish over time and thus mainly matter when adaptable scenarios are newly introduced in work contexts. Still, the adaptability implemented here was rather simple, with only three speed levels to choose from. Generalizations about the adaptability of work systems therefore need to take the complexity of the adaptation options into account. Additionally, the increase in demands might even be beneficial for basic work that, as pointed out in the theoretical introduction, usually poses very low demands that might not be challenging enough to allow for, e.g., flow experiences or personal development. Interestingly, our data also show a medium to high positive correlation between initial task demands and flow experience. As such, raising demands by introducing ways to adapt one's own work may itself function as a resource, depending on the context.

Across all conditions, flow experience tended to increase throughout the production cycles. This pattern can be explained by growing routine, less need to think about procedures, increasing certainty about the production steps, and potentially clearer expectations about the robot's behavior, all of which can enhance absorption. Nevertheless, differences between control schemes also become apparent: our results suggest that adaptive robot behavior fosters a deeper state of flow than static work scenarios. Descriptively, flow values in the adaptable group are also higher than under static conditions, but the pronounced effect is evoked by the adaptive control scheme, which led to a deep flow experience especially in the last trial. Potentially, having the robot adapt its speed automatically helps workers stay immersed in the production task, as the robot works fluently and in line with their own motion. In an adaptable scenario, by contrast, workers must repeatedly take a cognitive meta-perspective on the work situation, asking whether the current speed is appropriate and whether and how it should be changed. According to Csikszentmihalyi (2014), such awareness and reflection disturb the maintenance of flow. As such, the results are well explainable within the construct. Still, workers with a certain flow metacognition, i.e., who are convinced that flow is a useful state, are more likely to invest in reaching this state (Weintraub et al., 2023), e.g., by adapting working conditions. Consequently, personal prerequisites might also play a role in (1) how adaptability is used and (2) the extent to which dynamic working conditions can be exploited in a flow-supportive manner.

As a potential enabler of flow experience, we can identify workplace fit. It shows a medium correlation with flow experience, as well as with autonomy, which can be a precondition for achieving fit. Overall, workplace fit is not very high, a logical consequence of only one parameter being adaptable, as already discussed in the limitations. Had more aspects been adaptable, or had the possible adaptations been of greater extent, we would have expected fit to be higher. Accordingly, perceived fit, just like the objectively given fit, is very low, especially in the static condition. Compared with the static condition, the mean is substantially higher in the group able to adapt robot speed, but the difference is significant, and large, only for the condition in which the robot behaved adaptively. In line with research conceptualizing interruptions as hindrance stressors (Baethge and Rigotti, 2013; Pachler et al., 2018), micro-interruptions during task execution can reduce perceived workplace fit by disrupting flow experience, raising strain, and undermining the sense that the environment matches one's way of working. Because adaptive, system-initiated adjustments occur without worker intervention, they minimize self-imposed task switching and thus interruptions, which supports both flow experience and workplace fit. In contrast, adaptable, human-initiated adjustments require periodic meta-decisions (i.e., whether, when, and how to change speed) that may be perceived as interruptions and can reduce perceived workplace fit relative to adaptive control, while still exceeding the static condition, in which no tailoring occurs. As with flow, this pattern is likely moderated by individual differences: e.g., tolerance for automation may mitigate the disruptive impact of such perceptions (Merritt and Ilgen, 2008), whereas impulsive personality traits may amplify it (Tett et al., 2021).

6.3 What we learned about robot and collaboration perception

Interestingly, no differences between the control schemes were found for satisfaction with the collaboration with the robot. Satisfaction is only descriptively slightly lower in the static work setting, and no subjective preference for one or the other control scheme can be deduced. Based on theory and research from work psychology, a preference for being able to control one's own work environment and engage in job crafting (Tims et al., 2012) would have been expected. In light of the previously discussed results, including enhanced autonomy and flow experience especially under the adaptive control scheme, these resources and positive states might lead to positive feelings and thoughts, contributing to satisfaction. This would be in line with research suggesting that constructs such as autonomy (see, e.g., the Job Characteristics Model; Hackman and Oldham, 1975), agency (S.M.A.R.T. model on agency; Parker and Knight, 2024), or flow (for an overview, see a review on flow experience and its (affective) correlates; Peifer et al., 2022) contribute to satisfaction. In our case, this effect could not be reproduced. One likely explanation lies in the task design and technical constraints of the study: due to safety regulations in close human-robot collaboration, the robot's working speed was generally kept low across all conditions. As a result, participants occasionally had to wait for the robot to complete its task steps, even when the speed was adjustable. Such waiting times may have lowered perceived productivity, which is normally associated with satisfaction (e.g., Kowalski et al., 2022). A moderating effect of low perceived performance could thus mask potential effects of our manipulation on satisfaction and related variables. Furthermore, correlational analyses indicate that satisfaction with the collaboration was positively related to perceived safety and to trust in the robot after the task. The relatively slow execution may have influenced these perceptions in contrasting ways: while slow operation may enhance feelings of safety and trust in the robot, it may simultaneously reduce perceived productivity, ultimately limiting satisfaction.

Safety and comfort were generally rated medium to high when working with the robot; descriptively, ratings tended to be lowest when the robot behaved adaptively and similar between the static and user-controlled (adaptable) scenarios, although the differences were not significant. Consistent with the control schemes, the adaptive condition automatically scaled speed with human proximity (moving faster when the human was farther away and slowing to a stop at close range), which may have reduced perceived controllability and thus tempered feelings of safety. This mechanism echoes prior observations, e.g., by Munzer et al. (2017), of proactive robot behavior sometimes elevating fear due to loss of control. By contrast, the static condition used a constant, conservative speed, whereas in the adaptable condition most participants quickly increased the speed from 65% (equal to the static robot's speed) to 100%; yet perceived safety and comfort remained similar between the two conditions. This suggests that perceived controllability supports feelings of safety in our setup. Complementary correlational evidence indicates that higher safety and comfort co-occur with higher trust in the robot and with greater collaboration satisfaction. Trust can thus be understood as a precondition for experiencing interactions with autonomous agents as safe and comfortable.

Interestingly, trust before and after the collaboration shows quite different patterns. Initial trust (see McKnight et al., 2011), largely based on expectations and the information provided in the situation, differed descriptively, though not significantly, depending on the adjustment opportunities: it was highest when the robot was initially presented as adaptive, lower when its speed was adaptable for participants, and lowest when it was presented as non-adjustable. This can be explained by the attribution of more competence and thus perceived trustworthiness (Schlicker et al., 2025), reflected in our data as a medium (n.s.) correlation of initial trust with perceived robot intelligence. Perceived intelligence, however, was not correlated with trust ratings after the collaboration. Overall, intelligence ratings were comparable between conditions, with descriptively slightly lower intelligence when no adjustments were possible. This is interesting, as a higher intelligence attribution for a robot behaving adaptively could have been expected. Possibly because adjustments were limited to the speed of the robot arm, and because participants had limited knowledge of the complexity of the adaptive movement scheme, intelligence perception was not affected by the objectively given competence differences between conditions.

Additionally, trust rose after the interaction, with a large effect; in the adaptable group, however, it remained at the same level. This assimilation effect underscores the importance of considering trust throughout interactions and examining trust trajectories (Alonso and La Puente, 2018), rather than only initial reactions or attitudes toward a technology. Trust after the collaboration, which can be understood as a form of calibrated trust, correlates negatively, though not significantly, with task demands, possibly because trusting the collaboration partner reduces the demands to monitor it and remain suspicious. It also shows a medium correlation with flow, whereas initial trust does not, hinting that flow experiences may promote trust by strengthening the bond between human and robot through pleasant and fluid interactions.

6.4 What we learned about production speed as a measure of task performance

Beyond subjective measures, we also examined cycle time as an objective indicator of task performance. The results showed a clear reduction in execution times across trials, with a marked decrease from trial 1 to subsequent trials and a plateau thereafter. This pattern suggests that learning and familiarization effects were concentrated in the early phase of the experiment, reflecting a gain in process fluency. In addition, the dynamic conditions outperformed the static baseline, with adaptive robot speed showing the shortest cycle times, i.e., the fastest execution.

Taken together, these results indicate that dynamic speed control, and particularly automatic adaptation, led to measurable efficiency gains in this collaborative assembly task. However, since speed adaptation was the very mechanism of dynamism in this study, performance speed and the experimental manipulation are not fully independent. This limits the generalizability of the performance findings to scenarios in which adaptivity and adaptability are operationalized differently. Nevertheless, the expected performance benefits of an automatically regulated speed profile become evident here, underscoring the potential of adaptive control schemes to facilitate more fluent and efficient human-robot collaboration.
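Why performance and manipulation are entangled can be shown with a deliberately simplified decomposition of cycle time. Assuming, for illustration only, that human and robot work steps within a cycle are performed sequentially, the cycle time is the human working time plus the robot's travel time, where $d_i$ are the path lengths of the robot motions, $v_{\max}$ is the robot's maximum speed, and $s$ is the speed fraction set by the control scheme:

$$T_{\text{cycle}} = T_{\text{human}} + \sum_i \frac{d_i}{s \, v_{\max}}$$

With hypothetical values of $\sum_i d_i = 4\,\text{m}$ and $v_{\max} = 0.5\,\text{m/s}$, the robot term becomes

$$\frac{4\,\text{m}}{0.65 \cdot 0.5\,\text{m/s}} \approx 12.3\,\text{s} \quad \text{vs.} \quad \frac{4\,\text{m}}{1.0 \cdot 0.5\,\text{m/s}} = 8\,\text{s},$$

so raising the speed fraction from 65% to 100% would shorten each cycle by about 4.3 s even if the human working time $T_{\text{human}}$ remained unchanged.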

6.5 Research and theory implications

This study contributes by jointly examining different loci of control, either with the human or with the technical system, in the context of human-robot collaboration, an approach that is still largely underrepresented in the literature (see Calhoun, 2022). It further contributes by examining a low-demand, production-based setting, thereby extending research on collaboration with technology beyond predominantly high-demand work contexts and beyond simulation-driven studies. This not only broadens empirical knowledge to scenarios that are highly relevant for designing locus-of-control arrangements and for improving work design in ways that matter, but also allows us to test whether existing theory transfers to these scenarios. This is especially important in light of the EU's Industry 5.0 program and the development goal of “decent work.” Such safe work with equal opportunities for everyone, offering a perspective for individual development (European Commission, n.d.–a), can, especially in the production sector, only be achieved by implementing dynamics and individualization of workplaces, technologies, and processes. Therefore, investigating and understanding control schemes and dynamic work conditions, including their distinct advantages and peculiarities, is vital for realizing human-centered work. Our research contributes to this goal by examining human cognition, emotion, and motivation under dynamic working conditions.

While Calhoun (2022) emphasizes the advantages of human-controlled adaptations, our findings indicate that system-controlled adaptations appear, to some extent, more advantageous in the highly structured, low-demand context investigated here. This suggests a more nuanced understanding of control schemes: the choice between adaptive and adaptable approaches should not be based solely on system capabilities or performance, but must also consider task structure, individual design consequences, and demands, in line with established work design models (see e.g., Hackman and Oldham, 1975; Parker and Knight, 2024). In highly structured, repetitive contexts, a smooth workflow, particularly one supported by system-initiated adjustments, may be valued over direct control, making this control scheme more favorable (e.g., more conducive to flow) than in high-risk or high-demand settings, where opportunities such as job crafting might be more beneficial and more in demand.

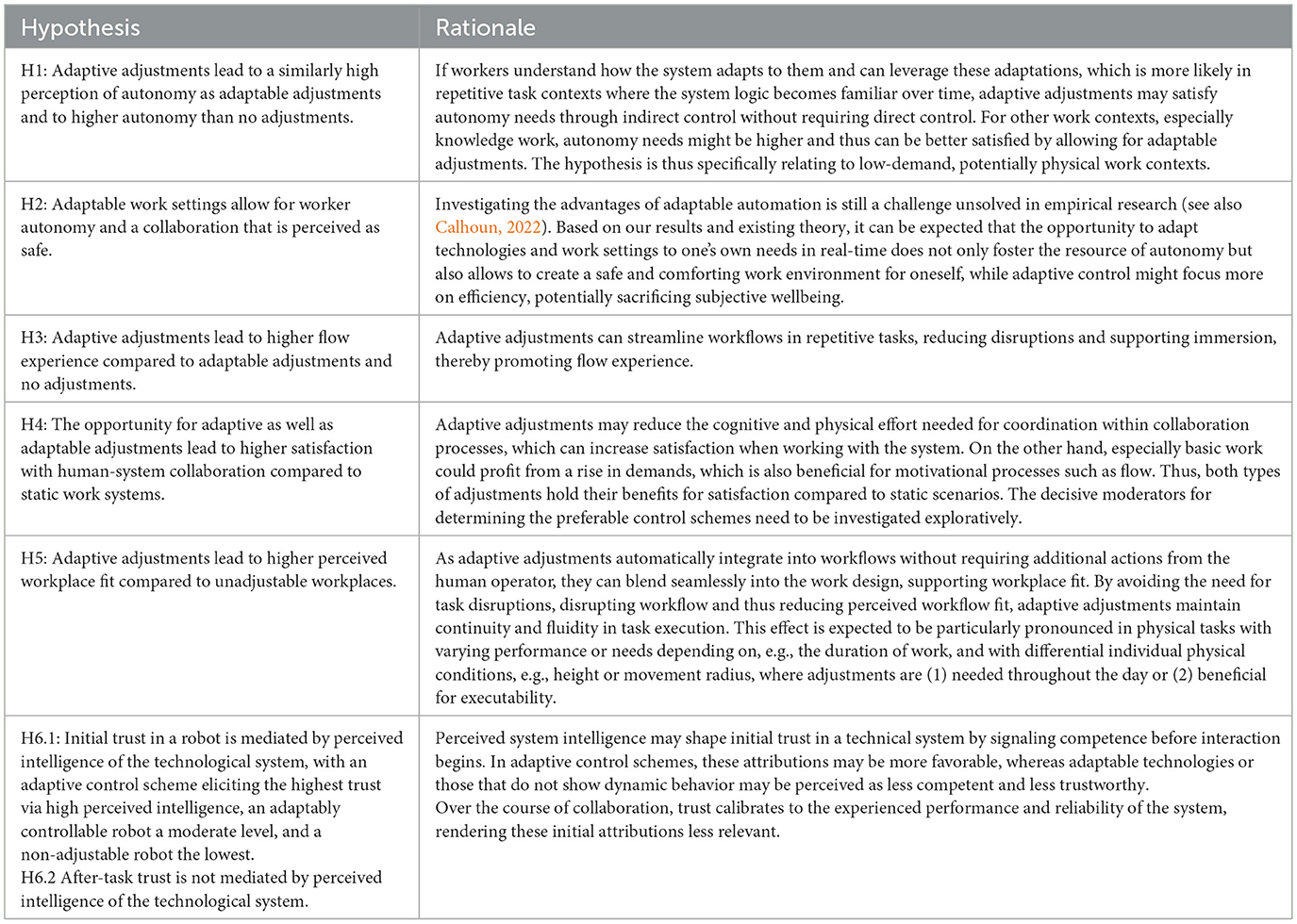

However, given the limitations of the present study, further research is required to validate this assumption and to explore its boundaries. As a starting point, and based on this exploratory study's insights, Table 6 presents a set of hypotheses for future work, focusing on variables relevant to workplace design and highlighting areas where our exploratory findings point to surprising patterns in the specific context of highly structured, low-demand tasks.

Future research should test these hypotheses in comparable low-demand, repetitive task contexts, ideally with larger samples, to corroborate the assumptions and preliminary findings of this study. Moreover, directly comparing these contexts with high-demand task settings could provide deeper insights into how task demands interact with control schemes, thereby offering a stronger empirical basis for determining whether adaptive or adaptable approaches are more suitable in specific work contexts.

6.6 Practical implications

From a practical standpoint, the results suggest that adaptive automation, when implemented with robust, low-latency sensing and control, can enhance flow experience in human-robot collaboration without imposing additional task demands, which may offer efficiency gains in industrial contexts while maintaining safety requirements (see e.g., Byner et al., 2019). Beyond that, our research suggests several levers to strengthen both the real and the perceived safety of adaptive or adaptable speed control. For adaptive automation, improving sensing and calibration, e.g., via full-body 38-keypoint tracking and multi-sensor fusion, can reduce positional uncertainty and thus allow higher robot speeds while preserving predictable behavior. In addition, the implementation details observed here suggest that conservative safety buffers and restricted speed profiles, while protective, shape how adaptation is experienced; careful tuning of these parameters may improve both perceived safety (which, descriptively, appeared to be a downside of adaptivity in our experiment) and responsiveness. Transparency-oriented design, such as visual previews of planned trajectories via mixed reality (Maccio et al., 2022; Shaaban et al., 2024), can further increase the predictability of robot behavior and help humans feel more at ease.
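The link between positional uncertainty and attainable robot speed can be illustrated with the protective separation distance used for speed and separation monitoring in ISO/TS 15066, $S_p = S_h + S_r + S_s + C + Z_d + Z_r$. Here $S_h$ is the distance the human covers during the robot's reaction and stopping time, $S_r$ the distance the robot covers during its reaction time, $S_s$ the robot's stopping distance, $C$ an intrusion distance, and $Z_d$ and $Z_r$ the position uncertainties of the human (from sensing) and of the robot. The Python sketch below solves a simplified, constant-speed version of this relation for the largest permissible robot speed. All parameter values, the uniform-deceleration assumption, and the names are hypothetical; this is neither the controller used in the study nor a certified safety implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SSMParams:
    """Hypothetical inputs to a simplified speed and separation monitoring (SSM) check."""
    human_speed: float = 1.6         # m/s, v_h: commonly assumed human approach speed (cf. ISO 13855)
    reaction_time: float = 0.1       # s, T_r: sensing and control reaction time (assumed)
    stopping_time: float = 0.3       # s, T_s: robot stopping time (assumed)
    intrusion: float = 0.1           # m, C: intrusion distance (assumed)
    robot_uncertainty: float = 0.02  # m, Z_r: robot position uncertainty (assumed)


def max_robot_speed(separation: float, sensing_uncertainty: float,
                    p: SSMParams = SSMParams()) -> float:
    """Largest robot speed (m/s) whose protective separation distance fits the separation.

    Uses S_p = v_h*(T_r + T_s) + v_r*T_r + v_r*T_s/2 + C + Z_d + Z_r, i.e. constant
    speeds during T_r + T_s and uniform robot deceleration (stopping distance v_r*T_s/2).
    """
    budget = (separation
              - p.human_speed * (p.reaction_time + p.stopping_time)
              - p.intrusion - sensing_uncertainty - p.robot_uncertainty)
    return max(0.0, budget / (p.reaction_time + p.stopping_time / 2))


if __name__ == "__main__":
    for z_d in (0.10, 0.03):  # coarse vs. refined human tracking (assumed values)
        print(f"Z_d = {z_d:.2f} m -> v_r,max = {max_robot_speed(1.0, z_d):.2f} m/s")
```

With these assumed values and a separation of 1.0 m, reducing the sensing uncertainty from 0.10 m to 0.03 m raises the permissible speed from about 0.56 to 0.84 m/s: better tracking leaves more of the separation budget for robot motion.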

In parallel, emerging approaches integrate richer human feedback into the control loop, moving beyond proximity-only rules. Zhang et al. (2022) have shown that machine learning can leverage physiological signals to modulate a robot's velocity in real time: a reinforcement-learning system using electroencephalography and electrooculography inputs tailored the speed of a teleoperated robot to the operator's mental state, improving task efficiency and safety. Likewise, novel control frameworks (e.g., Franceschi et al., 2025) adjust robot motion based on physical interaction cues. While traditional mechanisms such as speed and separation monitoring impose fixed limits when a human is nearby, more advanced schemes propose continuously scaling speed using interaction-force feedback to maintain safety while improving responsiveness. By incorporating such intelligent adaptation mechanisms, future robotic systems could extend our speed-adjustment methodology to more complex scenarios and diverse user profiles, enabling robots to balance productivity with human comfort and safety autonomously and in real time.
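The difference between a fixed limit and continuous scaling can be sketched with two toy speed rules in Python. Both rules, their thresholds (a 1.0 m zone, a 25% reduced speed, a 50 N force limit), and the function names are hypothetical illustrations; they do not reproduce the framework of Franceschi et al. (2025) or any standardized monitoring function.

```python
def zone_speed_limit(distance_m: float, zone_m: float = 1.0,
                     reduced_speed: float = 0.25, full_speed: float = 1.0) -> float:
    """Fixed-limit rule (assumed values): one reduced speed whenever a human is inside the zone."""
    return reduced_speed if distance_m < zone_m else full_speed


def force_scaled_speed(force_n: float, force_limit_n: float = 50.0,
                       full_speed: float = 1.0) -> float:
    """Continuous rule (assumed values): speed shrinks linearly as measured force nears a limit."""
    ratio = min(max(force_n / force_limit_n, 0.0), 1.0)  # clamp to [0, 1]
    return full_speed * (1.0 - ratio)


if __name__ == "__main__":
    for f in (0.0, 10.0, 25.0, 60.0):
        print(f"{f:5.1f} N -> speed fraction {force_scaled_speed(f):.2f}")
```

The fixed rule switches abruptly between two speeds, whereas the force-based rule yields intermediate speeds (e.g., half speed at 25 N under these assumptions), which is the kind of graded response that continuous schemes aim for.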

For adaptable automation, intuitive, low-effort user interfaces are crucial. Finer-grained and more intuitive control via gesture or voice commands could reduce the increase in perceived task demands and the interruptions to flow experience observed here (Campagna et al., 2025). Thus, a control scheme that allows workers to adapt their workplace, technologies, or processes need not lead to excessive demands or fragmented work processes. Well-designed adaptable automation even offers distinct practical benefits in line with the goals of Industry 5.0: it empowers workers with direct control, supports autonomy, allows personalized adjustments for varying skill levels or physical needs (which a robot might not be able to assess via sensors), and can be deployed in environments where sensing quality limits adaptive schemes. Ultimately, the choice between adaptable and adaptive approaches should be guided by the specific task context as well as by worker needs and capabilities. While this study highlights practical advantages of both from a human-centered viewpoint, its findings for the examined production setting lean toward adaptive adjustments as the more favorable option.
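The tension between fine-grained control and low interaction effort can be illustrated with a small Python model of a button-based speed override. The starting value of 65% and the ceiling of 100% follow the description of the adaptable condition; the step size, the lower bound, the class and function names, and the direct-set method standing in for a voice or gesture command are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SpeedOverride:
    """Hypothetical adaptable speed setting, in integer percent to avoid rounding drift."""
    value: int = 65     # starts at the static baseline speed (65%, from the text)
    step: int = 5       # change per button press (assumed)
    minimum: int = 30   # lowest selectable speed (assumed)
    maximum: int = 100  # full speed

    def _clamp(self, target: int) -> int:
        return min(self.maximum, max(self.minimum, target))

    def press(self, direction: int) -> int:
        """One '+' (direction=1) or '-' (direction=-1) button press, clamped to the range."""
        self.value = self._clamp(self.value + direction * self.step)
        return self.value

    def set_directly(self, target: int) -> int:
        """Jump straight to a target, as a voice or gesture command might (assumed interface)."""
        self.value = self._clamp(target)
        return self.value


def presses_needed(start: int, target: int, step: int) -> int:
    """Button presses needed to move from start to target (ceiling division)."""
    return -(-abs(target - start) // step)
```

Under these assumptions, reaching 100% from the 65% default takes 7 presses with 5-point steps but 35 presses with 1-point steps; finer granularity via buttons thus adds interaction effort, whereas a direct-set command reaches any value in a single action.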

7 Conclusion

This exploratory laboratory study jointly examined adaptable (human-initiated) and adaptive (system-initiated) control schemes against a static baseline in a structured, low-demand human-robot assembly task. Relative to static control, adaptive automation yielded higher workplace fit and higher flow experience across trials, indicating that proximity-based speed adaptation can support immersion and the perceived alignment of the work process with user needs. Adaptable automation, in turn, produced higher perceived autonomy than static control and was associated with somewhat higher, though generally low, task demands. Overall, the dynamic approaches led to more favorable results than a static work system. However, these conclusions must be viewed in light of the study's limitations, including small group sizes, a simplified task, and the restriction to speed as the only adaptation parameter.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://doi.org/10.17605/OSF.IO/QRUAF.

Ethics statement

The studies involving humans were approved by the local Ethics Committee of the Faculty of Psychology at Ruhr University Bochum. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

SB: Investigation, Conceptualization, Supervision, Visualization, Methodology, Data curation, Formal analysis, Writing – original draft, Writing – review & editing. AT: Supervision, Conceptualization, Investigation, Methodology, Visualization, Writing – original draft, Writing – review & editing. PG: Data curation, Investigation, Writing – original draft, Software.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. The mobile robotic system (KUKA KMR iiwa) used in this study was funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation; project number INST 213/1029-1 FUGB).

Acknowledgments

We would like to thank Dr.-Ing. Michael Herzog and Florian Bülow for accompanying the project and thinking with us about operationalizations and implementation as well as theoretical aspects of workplace dynamics.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/forgp.2025.1685961/full#supplementary-material

References

Ahmad, M., Mubin, O., and Orlando, J. (2017). A systematic review of adaptivity in human-robot interaction. Multimodal Technol. Interact. 1:14. doi: 10.3390/mti1030014

Alonso, V., and La Puente, P. de (2018). System transparency in shared autonomy: a mini review. Front. Neurorobot. 12:83. doi: 10.3389/fnbot.2018.00083

Baethge, A., and Rigotti, T. (2013). Interruptions to workflow: their relationship with irritation and satisfaction with performance, and the mediating roles of time pressure and mental demands. Work Stress 27, 43–63. doi: 10.1080/02678373.2013.761783

Baguley, T. (2004). Understanding statistical power in the context of applied research. Appl. Ergon. 35, 73–80. doi: 10.1016/j.apergo.2004.01.002

Bakker, A. B., and Demerouti, E. (2007). The job demands-resources model: state of the art. J. Manag. Psychol. 22, 309–328. doi: 10.1108/02683940710733115

Baraglia, J., Cakmak, M., Nagai, Y., Rao, R. P. N., and Asada, M. (2017). Efficient human-robot collaboration: when should a robot take initiative? Int. J. Robot. Res. 36, 563–579. doi: 10.1177/0278364916688253

Bartneck, C., Kulić, D., Croft, E., and Zoghbi, S. (2009). Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. Int. J. Soc. Robot. 1, 71–81. doi: 10.1007/s12369-008-0001-3

Bernabei, M., and Costantino, F. (2023). Design a dynamic automation system to adaptively allocate functions between humans and machines. IFAC-PapersOnLine 56, 3528–3533. doi: 10.1016/j.ifacol.2023.10.1509