Introduction

Adapting existing models for new uses is an attractive strategy for quantitative systems pharmacology research due to the presumed savings on cost, time, and resources vs. creating new models from scratch. However, the process can present significant technical and scientific challenges and should be undertaken with appropriate expectations.

Body

As mechanistic modeling becomes more widely adopted in drug development, models are increasingly available for possible reuse. Publications and websites such as the BioModels Database (www.biomodels.net) and Drug Disease Model Resources (www.ddmore.eu) offer hundreds of mechanistic representations of biological pathways. With these available resources, drug development professionals must decide whether to adapt one of these existing models for new research or create a model from scratch. Potential time, cost, and resource savings, along with the ability to leverage others' efforts and expertise, are motivating reasons to use existing mechanistic models or their components. But can published (or otherwise pre-existing) models be utilized for new QSP research?

Some prominent examples from the literature suggest that reuse of existing mechanistic models is feasible. For example, computational approaches to modeling blood pressure regulation were pioneered by Guyton et al. in the early 1970s (Guyton et al., 1972) and have been frequently updated and adapted (Hallow and Gebremichael, 2017). Similarly, a 2013 review (Ajmera et al., 2013) traced the genealogy of 100+ models of glucose homeostasis and diabetes developed over the past 50+ years. In bone biology, an early osteoclast/osteoblast model (Komarova et al., 2003) is cited in a later model incorporating additional pathways (Lemaire et al., 2004), which in turn is cited in later models of osteoporosis (e.g., Peterson and Riggs, 2012) and multiple myeloma-induced bone disease (e.g., Ji et al., 2014). In the authors' own organization, previously developed model components and prior existing models frequently inform the models developed for new research contexts.

Several recent publications have provided guidance on best practices for documenting and publishing models to support their future use. For example, Cucurull-Sanchez et al. (2019) present a “minimum set of recommendations that can enhance the quality, reproducibility and further applicability of QSP models” and suggestions for “how to document QSP models when published, framing a checklist of minimum requirements.” This perspective addresses the complementary angle: how to evaluate existing models and consider repurposing them for new research purposes and contexts.

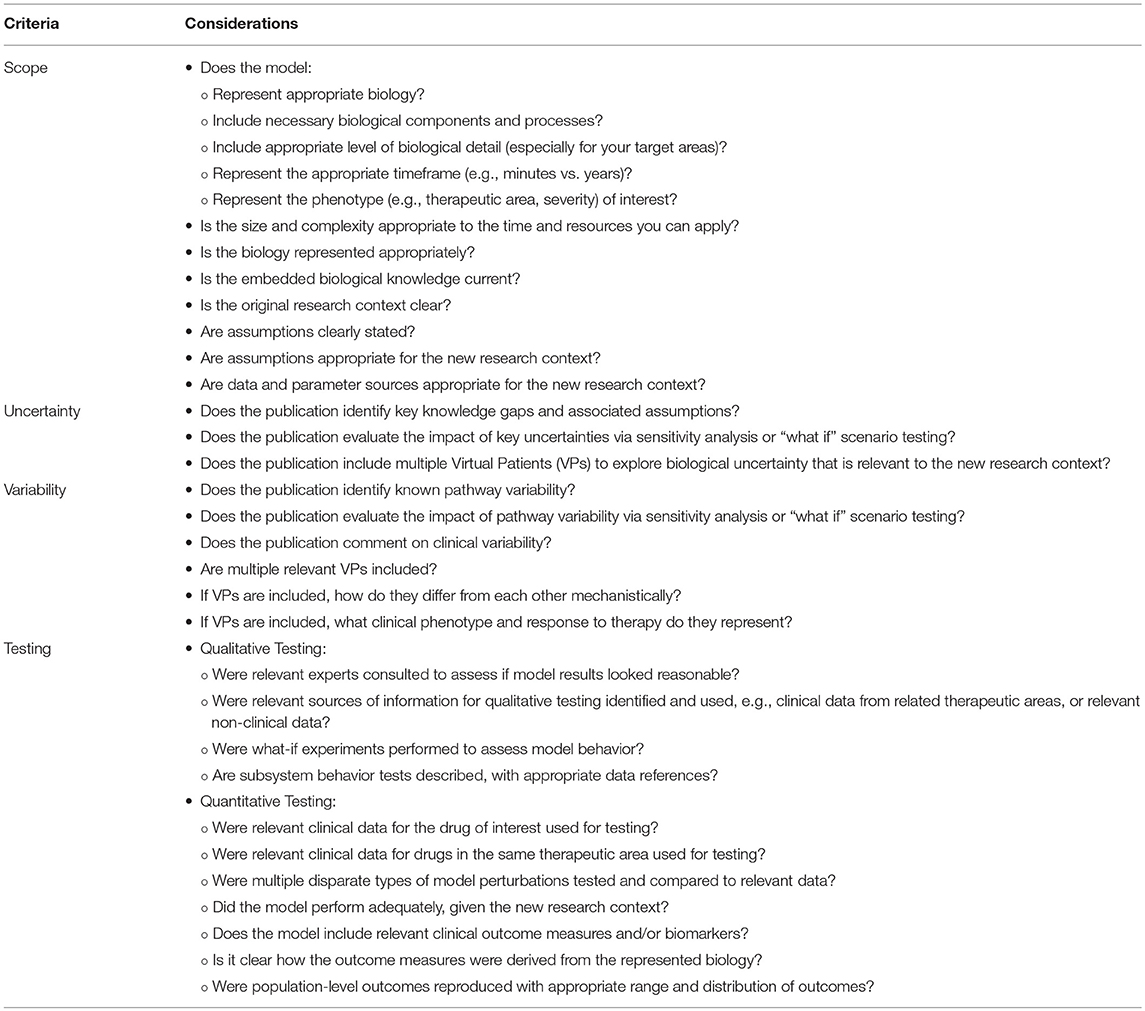

Adapting pre-existing models requires a high level of care. Models must be relevant, correct, and credible to ensure impact. Regardless of whether a model is new or pre-existing, modeling decisions should be made with the research context in mind. Components of the research context include: the key research question(s) or decision(s) to be made, available data and knowledge, time and resource constraints, and the cross-functional project team and management team members (Friedrich, 2016). Even the best models will not be of use if the decision makers do not have confidence in the results. Criteria for assessing if an existing model is fit for a research context should include technical considerations, model scope, biological uncertainty and variability, and model testing (Table 1). Each of these criteria is considered below.

Technical Considerations

Reproducibility is a fundamental requirement for reuse. However, in most cases, published models have technical issues that must be resolved before the model runs. For example, over 90% of curated models at one online repository could not be reproduced (Sauro, 2016). The most common challenges are related to typographical errors, incomplete specification of the model or simulation protocols, inconsistencies between the text and supplied model code, and the unavailability of model code or software environments. Even if the model source code is provided, it often is inadequately documented, or lacks the full specification of simulation protocols required to reproduce published results (Kirouac et al., 2019). These challenges have hindered the wide-scale use of online model databases, and the regular reuse of published models.

To overcome such issues, recent publications suggest adopting best practices for ensuring model reproducibility and reuse (Cucurull-Sanchez et al., 2019; Kirouac et al., 2019). Technical standards, such as Systems Biology Markup Language (SBML) and Simulation Experiment Description Markup Language (SED-ML), are being developed to address some of these issues. However, resolving technical issues is only the first step toward ensuring that a model is appropriate for use. Even if a model runs and reproduces published results, care must be taken to evaluate the model's suitability and the appropriate questions must be asked. Why was the model built? Under what conditions is the model valid? How was the model qualified? What biological uncertainties and variabilities were identified during the model development process? Has the impact of these uncertainties or variabilities on model outcomes been evaluated?
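As an illustration of the first step only (confirming that a re-implemented model reproduces published results), the following minimal Python sketch compares simulated outputs with reported values within a relative tolerance. Everything in it is a hypothetical placeholder: the one-compartment elimination model, the function names `simulate_concentration` and `find_mismatches`, the parameter values, and the "published" numbers are assumptions made for illustration and are not taken from any cited model.

```python
import math

def simulate_concentration(dose, k_elim, times):
    """Closed-form solution of dC/dt = -k_elim * C with C(0) = dose.

    Hypothetical toy model standing in for a re-implemented published model.
    """
    return [dose * math.exp(-k_elim * t) for t in times]

def find_mismatches(simulated, published, rel_tol=0.05):
    """Return (index, simulated, published) for every point outside rel_tol."""
    return [
        (i, s, p)
        for i, (s, p) in enumerate(zip(simulated, published))
        if not math.isclose(s, p, rel_tol=rel_tol)
    ]

# Placeholder "published" values at four time points (not real data).
times = [0.0, 1.0, 2.0, 4.0]
published = [100.0, 60.7, 36.8, 13.5]

simulated = simulate_concentration(dose=100.0, k_elim=0.5, times=times)
mismatches = find_mismatches(simulated, published)

if mismatches:
    for index, sim_value, pub_value in mismatches:
        print(f"t={times[index]}: simulated {sim_value:.2f} vs published {pub_value:.2f}")
else:
    print("All reported points reproduced within tolerance.")
```

A passing check of this kind shows only that the model runs and matches what was reported; it does not answer the suitability questions listed above.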

Model Scope Evaluation

Having clarity on the research context is essential before building or adapting a model because scope and modeling decisions must be made with the research context in mind. For example, a pathway may be modeled in more detail than it otherwise would be if a target on the pathway is to be modulated, or certain mechanisms relevant for long-term regulation may need to be added to extend a model's temporal scope. If a pre-existing model is to be used, researchers should assess what the original research context was and what adaptations are needed to ensure that the model is appropriate for the new research context.

Even if a model appears to be well-suited to be used in a new research context, it is advisable to conduct a formal process of answering and documenting the questions in Table 1 to ensure understanding and stakeholder buy-in. Stakeholder understanding of, and confidence in, the model is essential for ensuring impact of the modeling project.

As an example, consider adapting a model for use in diabetes research, which has a long history of mathematical modeling (Ajmera et al., 2013). The BioModels Database lists 64 diabetes models, and more are available on PubMed. The decision to select a published model might depend on desired outcome measures, study protocols, duration of study, intervention, and acceptance of the model by the broader research community. If the diabetes model is to be used, for example, for a multi-year prediction, then it should account for biological processes that change on the weeks-to-months timescale (e.g., insulin resistance), whereas these processes may be excluded (i.e., assumed constant) if the goal is to assess the acute impact of a novel target. Clearly defining the new research context and scope can make the seemingly overwhelming task of selecting an appropriate model more manageable. In the authors' experience, even if a suitable model is identified, some components will usually need to be added to adapt it to the new research context.

Biological Uncertainty and Variability

There is uncertainty in biology, so mechanistic models must make assumptions about uncertain pathways. Uncertainties may be qualitative (e.g., is there a compensatory feedback loop that may limit the effect of inhibiting a target?) or quantitative (e.g., what is the exact strength of the feedback effect?).

Uncertainty arises from lack of data; reproducibility of data; methods for generating, analyzing, and reporting data; as well as the scientist's or modeler's interpretation of the data (Fourier et al., 2015; McShane, 2017). For example, a recent publication summarized the significant variability and its specific sources in reports of circulating chemokine and immune cell levels in inflammatory bowel disease (IBD) patients (Rogers et al., 2018), including assay differences, choice of cell markers, summary analysis methods (e.g., grouping subjects as responders vs. non-responders), and diversity in the resulting summary statistics reported (e.g., mean or median, standard deviation or interquartile ranges, etc.).

Mechanistic models facilitate the evaluation of the relative importance of these uncertainties and the possible risks they may pose. Once the material uncertainties have been identified, additional modeling steps can help evaluate the impact of alternative hypotheses, e.g., by using Virtual Patients (VPs) (Friedrich and Paterson, 2004; Rullmann et al., 2005; Shoda et al., 2010; Kirouac, 2018). Briefly, a VP is one complete parameterization of a model that represents one feasible set of biological hypotheses of disease pathophysiology and is consistent with relevant test data. Using multiple VPs can substantially reduce risk in pharmaceutical decision making.
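The VP concept can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the method used in the cited work: the two-parameter steady-state model, the sampling ranges, the acceptance range for the baseline biomarker, and all function names are hypothetical. Candidate parameterizations are sampled, only those consistent with the (placeholder) baseline data are kept as VPs, and the predicted response to target inhibition is then compared across VPs.

```python
import random

def baseline(production, feedback):
    """Hypothetical steady-state biomarker level: production damped by feedback."""
    return production / (1.0 + feedback)

def treated(production, feedback, inhibition):
    """Hypothetical level under target inhibition.

    Inhibition lowers production, and the feedback term relaxes in proportion,
    so stronger feedback compensates for more of the drug effect.
    """
    remaining = 1.0 - inhibition
    return production * remaining / (1.0 + feedback * remaining)

def generate_virtual_patients(n_candidates, observed_range, seed=0):
    """Sample candidate parameterizations and keep those matching baseline data."""
    rng = random.Random(seed)
    low, high = observed_range
    accepted = []
    for _ in range(n_candidates):
        candidate = {
            "production": rng.uniform(0.5, 5.0),  # assumed plausible range
            "feedback": rng.uniform(0.0, 4.0),    # assumed plausible range
        }
        if low <= baseline(**candidate) <= high:
            accepted.append(candidate)
    return accepted

# Placeholder acceptance range for the baseline biomarker (not real data).
virtual_patients = generate_virtual_patients(2000, observed_range=(0.8, 1.2))

# Fraction of baseline remaining after 50% target inhibition, per VP.
relative_responses = [
    treated(inhibition=0.5, **vp) / baseline(**vp) for vp in virtual_patients
]
print(f"{len(virtual_patients)} VPs accepted")
print(f"response range: {min(relative_responses):.2f} to {max(relative_responses):.2f}")
```

All accepted VPs match the same baseline data yet predict different treatment responses, which is the sense in which a set of VPs exposes the consequences of an unresolved biological hypothesis (here, the feedback strength).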

The determination of which uncertainty matters most depends on the research context. Most publications include only limited discussion of uncertainties, and this may not be focused on the most relevant biological uncertainties for the new research context. For example, if a novel target is being evaluated, the uncertainty around that specific target warrants a closer look. Even if a publication includes an analysis of the impacts of uncertainties via sensitivity analysis or VPs, the results may differ for the new research context. Different parts of the system may be instrumental in driving different outcomes (e.g., different pathways are key drivers for fasting plasma glucose vs. hepatic glucose production), and sensitivities may shift for different patient types, so sensitivity analysis results may identify different drivers (and corresponding material uncertainties) depending on the focus of the research. Assessing and documenting uncertainty helps to provide context for future creation of VPs.
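The point that sensitivity results depend on the outcome of interest can be illustrated with a small Python sketch. The model below is a deliberately simple, hypothetical algebraic toy with two outputs labeled `hgp` and `fpg`; its structure, parameter names, and values are assumptions chosen for illustration and do not represent real glucose physiology or any published model. Normalized local sensitivities are estimated by central finite differences.

```python
def toy_outputs(params):
    """Hypothetical two-output model (illustrative only, not real physiology)."""
    insulin = params["insulin"]
    hgp = params["k_prod"] / (1.0 + params["s_liver"] * insulin)
    fpg = hgp / (params["k_util"] * (1.0 + params["s_periph"] * insulin))
    return {"hgp": hgp, "fpg": fpg}

def normalized_sensitivities(model, params, rel_step=1e-4):
    """Estimate (dY/dp) * (p / Y) for each output Y and parameter p."""
    base = model(params)
    result = {name: {} for name in base}
    for p, value in params.items():
        up = dict(params, **{p: value * (1.0 + rel_step)})
        down = dict(params, **{p: value * (1.0 - rel_step)})
        y_up, y_down = model(up), model(down)
        for name in base:
            dy_dp = (y_up[name] - y_down[name]) / (2.0 * value * rel_step)
            result[name][p] = dy_dp * value / base[name]
    return result

# Placeholder parameter values (assumptions, not fitted to data).
params = {"k_prod": 2.0, "s_liver": 0.5, "k_util": 0.02,
          "s_periph": 1.0, "insulin": 1.0}

sens = normalized_sensitivities(toy_outputs, params)
for output, by_param in sens.items():
    ranked = sorted(by_param, key=lambda p: abs(by_param[p]), reverse=True)
    print(output, [(p, round(by_param[p], 2)) for p in ranked])
```

In this toy example `k_util` and `s_periph` have no influence on `hgp` but are among the strongest drivers of `fpg`, so the uncertainties that matter most depend on which outcome the new research context emphasizes.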

Variability is another crucial consideration in pharmaceutical research. It is often critical to assess patient heterogeneity because patients differ in their pathophysiology (mechanistic pathway variability), clinical presentation, and/or response to therapy (Chung and Adcock, 2013; Chung, 2016). This variability is separate from variability introduced by data measurement and analysis. Patients are inherently variable due to natural causes (e.g., genetics, age, comorbidities, environment) (Dibbs et al., 1999). VPs can be used to capture and explore the aspects of patient variability relevant to the new research context. New VPs can be added to a model if the existing set of VPs (if any) does not explore all aspects of mechanistic variability thought to be relevant to the new research context.

Model Testing

Testing is a critical step in adapting an existing model. Existing models are often under-tested for the new research context. Furthermore, many publications fail to fully describe the testing procedures or results. Models are constrained by a variety of data types, including physical laws and constraints, health and disease physiology, target and drug mechanisms, preclinical pharmacology, marketed therapies, and clinical trials for the investigational compound(s). Testing, therefore, should be appropriate for the type of available data (e.g., qualitative vs. quantitative, subsystem vs. whole-system behavior, healthy vs. disease physiology, and individual vs. population-level) and should be described clearly. The modeling team and key stakeholders should decide which tests are most relevant to ensure that the model is fit for its intended purpose.
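A minimal Python sketch of how tests of different types might be recorded side by side is shown below. It is illustrative only: the Emax-style response function, its parameter values, the dose levels, and the acceptance range are hypothetical placeholders, and the three tests simply stand in for the qualitative, quantitative, and physical-constraint categories mentioned above.

```python
def toy_response(dose, emax=0.8, ec50=10.0):
    """Hypothetical Emax model: fractional biomarker reduction from baseline."""
    return emax * dose / (ec50 + dose)

def is_monotonic_increasing(model, doses):
    """Qualitative test: response should not decrease as dose increases."""
    responses = [model(d) for d in doses]
    return all(later >= earlier for earlier, later in zip(responses, responses[1:]))

def is_within_range(model, dose, low, high):
    """Quantitative test: response at a given dose falls in a reported range."""
    return low <= model(dose) <= high

def respects_bounds(model, doses):
    """Physical constraint: a fractional reduction must stay between 0 and 1."""
    return all(0.0 <= model(d) <= 1.0 for d in doses)

doses = [0.0, 1.0, 10.0, 100.0]  # placeholder dose levels

tests = {
    "qualitative: response increases with dose":
        is_monotonic_increasing(toy_response, doses),
    "quantitative: response at dose 10 within placeholder range 0.3-0.5":
        is_within_range(toy_response, 10.0, 0.3, 0.5),
    "physical constraint: fractional reduction stays in [0, 1]":
        respects_bounds(toy_response, doses),
}

for description, passed in tests.items():
    print(f"{'PASS' if passed else 'FAIL'}  {description}")
```

Keeping the test descriptions alongside the results gives stakeholders a readable record of which behaviors were checked for the new research context and which were not.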

It is important to consider whether the original data used to construct and qualify the model is still appropriate for the new research context. For example, IBD studies often group Crohn's disease and ulcerative colitis into a single category. An IBD model may therefore not be appropriate for reuse without first considering whether the data used for model qualification is representative of the intended study population. Both disorders may involve similar components (e.g., immune and epithelial cells), and the pathology may be represented by similar model structures. However, the two disorders are significantly different. Using an IBD dataset to test a model might be appropriate under select assumptions, but inappropriate if the new research focus is a single disease. The original model parameters, which assumed similar cellularity, mediator concentrations, and/or rate constants, may not be appropriate for the new research focus.

Conclusions

There is more to a model than equations and parameters. To have meaningful impact, model scope must be relevant to the new research context (fit-for-purpose), relevant exploration of uncertainties and variability should be considered as part of the process of adapting the model, the model must be tested against relevant data to ensure it behaves appropriately, and clinical and management team members should be involved to ensure credibility. If an existing model does not meet all relevant criteria for the new research context, additional modeling and qualification activities should be expected. While adapting a pre-existing model for a new research context is often feasible, modeling teams should do so with appropriate expectations.

Author Contributions

All authors listed have made a substantial, direct and intellectual contribution to the work, and approved it for publication.

Conflict of Interest Statement

MW, RB, and CF are employees of Rosa and Co. LLC.

References

Ajmera, I., Swat, M., Laibe, C., Le Novere, N., and Chelliah, V. (2013). The impact of mathematical modeling on the understanding of diabetes and related complications. CPT Pharmacometrics Syst. Pharmacol. 2:e54. doi: 10.1038/psp.2013.30

Chung, K. F. (2016). Asthma phenotyping: a necessity for improved therapeutic precision and new targeted therapies. J. Intern. Med. 279, 192–204. doi: 10.1111/joim.12382

Chung, K. F., and Adcock, I. M. (2013). How variability in clinical phenotypes should guide research into disease mechanisms in asthma. Ann. Am. Thorac. Soc. 10(Suppl.), S109–S117. doi: 10.1513/AnnalsATS.201304-087AW

Cucurull-Sanchez, L., Chappell, M. J., Chelliah, V., Amy Cheung, S. Y., Derks, G., Penney, M., et al. (2019). Best practices to maximise the use and re-use of QSP models Recommendations from the UK QSP Network. CPT Pharmacometrics Syst. Pharmacol. doi: 10.1002/psp4.12381. [Epub ahead of print]

Dibbs, Z., Thornby, J., White, B. G., and Mann, D. L. (1999). Natural variability of circulating levels of cytokines and cytokine receptors in patients with heart failure: implications for clinical trials. J. Am. Coll. Cardiol. 33, 1935–1942.

Fourier, A., Portelius, E., Zetterberg, H., Blennow, K., Quadrio, I., and Perret-Liaudet, A. (2015). Pre-analytical and analytical factors influencing Alzheimer's disease cerebrospinal fluid biomarker variability. Clin. Chim. Acta 449, 9–15. doi: 10.1016/j.cca.2015.05.024

Friedrich, C. M. (2016). A model qualification method for mechanistic physiological QSP models to support model-informed drug development. CPT Pharmacometrics Syst. Pharmacol. 5, 43–53. doi: 10.1002/psp4.12056

Friedrich, C. M., and Paterson, T. S. (2004). In silico predictions of target clinical efficacy. Drug Discov. Today TARGETS 3, 216–222. doi: 10.1016/s1741-8372(04)02451-x

Guyton, A. C., Coleman, T. G., and Granger, H. J. (1972). Circulation: overall regulation. Annu. Rev. Physiol. 34, 13–46. doi: 10.1146/annurev.ph.34.030172.000305

Hallow, K. M., and Gebremichael, Y. (2017). A quantitative systems physiology model of renal function and blood pressure regulation: model description. CPT Pharmacometrics Syst. Pharmacol. 6, 383–392. doi: 10.1002/psp4.12178

Ji, B., Genever, P. G., Patton, R. J., and Fagan, M. J. (2014). Mathematical modelling of the pathogenesis of multiple myeloma-induced bone disease. Int. J. Numer. Methods Biomed. Eng. 30, 1085–1102. doi: 10.1002/cnm.2645

Kirouac, D. C. (2018). How do we “Validate” a QSP model? CPT Pharmacometrics Syst. Pharmacol. 7, 547–548. doi: 10.1002/psp4.12310

Kirouac, D. C., Cicali, B., and Schmidt, S. (2019). Reproducibility of quantitative systems pharmacology models: current challenges and future opportunities. CPT Pharmacometrics Syst. Pharmacol. doi: 10.1002/psp4.12390. [Epub ahead of print]

Komarova, S. V., Smith, R. J., Dixon, S. J., Sims, S. M., and Wahl, L. M. (2003). Mathematical model predicts a critical role for osteoclast autocrine regulation in the control of bone remodeling. Bone 33, 206–215. doi: 10.1016/S8756-3282(03)00157-1

Lemaire, V., Tobin, F. L., Greller, L. D., Cho, C. R., and Suva, L. J. (2004). Modeling the interactions between osteoblast and osteoclast activities in bone remodeling. J. Theor. Biol. 229, 293–309. doi: 10.1016/j.jtbi.2004.03.023

McShane, L. M. (2017). In pursuit of greater reproducibility and credibility of early clinical biomarker research. Clin. Transl. Sci. 10, 58–60. doi: 10.1111/cts.12449

Peterson, M. C., and Riggs, M. M. (2012). Predicting nonlinear changes in bone mineral density over time using a multiscale systems pharmacology model. CPT Pharmacometrics Syst. Pharmacol. 1, 1–8. doi: 10.1038/psp.2012.15

Rogers, K. V., Bhattacharya, I., Martin, S. W., and Nayak, S. (2018). Know your variability: challenges in mechanistic modeling of inflammatory response in Inflammatory Bowel Disease (IBD). Clin. Transl. Sci. 11, 4–7. doi: 10.1111/cts.12503

Rullmann, J. A., Struemper, H., Defranoux, N. A., Ramanujan, S., Meeuwisse, C. M., and van Elsas, A. (2005). Systems biology for battling rheumatoid arthritis: application of the Entelos PhysioLab platform. Syst. Biol. 152, 256–262. doi: 10.1049/ip-syb:20050053

Sauro, H. M. (2016). “The importance of standards in model exchange, reuse and reproducibility of simulations,” in 3rd Annual Quantitative Systems Pharmacology (QSP) (San Francisco, CA: Hanson Wade).

Shoda, L., Kreuwel, H., Gadkar, K., Zheng, Y., Whiting, C., Atkinson, M., et al. (2010). The Type 1 Diabetes PhysioLab Platform: a validated physiologically based mathematical model of pathogenesis in the non-obese diabetic mouse. Clin. Exp. Immunol. 161, 250–267. doi: 10.1111/j.1365-2249.2010.04166.x

Keywords: quantitative systems pharmacology, modeling and simulation, virtual patient, pharmacometrics, in silico modeling, biomedical research, drug discovery and development

Citation: Weis M, Baillie R and Friedrich C (2019) Considerations for Adapting Pre-existing Mechanistic Quantitative Systems Pharmacology Models for New Research Contexts. Front. Pharmacol. 10:416. doi: 10.3389/fphar.2019.00416

Received: 15 February 2019; Accepted: 02 April 2019;

Published: 18 April 2019.

Edited by:

Hugo Geerts, In Silico Biosciences, United States

Reviewed by:

Peter Bloomingdale, Merck Sharp & Dohme Corp, United States

Copyright © 2019 Weis, Baillie and Friedrich. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Michael Weis, bXdlaXNAcm9zYWFuZGNvLmNvbQ==
