- ¹Department of Physics, University of Helsinki, Helsinki, Finland

- ²Space and Earth Observation Centre, Finnish Meteorological Institute, Helsinki, Finland

Vlasiator is the world’s first global Eulerian hybrid-Vlasov simulation code, going beyond magnetohydrodynamics in the solar wind–magnetosphere–ionosphere system. This paper tells the story of Vlasiator. An important enabler of Vlasiator has been the rapid increase of computational resources over the last decade, together with the open-minded, courageous forerunners who have embraced this new opportunity, both as developers and as co-authors of our papers. Typically, when starting a new coding project, people think about the presently available resources. But when the development continues for multiple years, the resources change. If one instead targets upcoming resources, one always has a code without large legacy parts that cannot utilize the latest resources. It will be interesting to see how many modelling groups will take the opportunity to benefit from the current high-performance computing trends, and where we will be in 10 years. In the following, a simulation that directly handles and manipulates the phase space density f(r,v,t) is referred to as a Vlasov approach, whereas a simulation system that traces phase space samples by their kinetic characteristics of motion is a Particle-in-Cell approach. This terminology is consistent with its use in the magnetospheric simulation community.

Life at the turn of the millennium

In 2004, I was a young postdoc in Boulder, USA. This time was generally marked by high hopes and positive expectations for the future of space physics. The first constellation space physics mission Cluster (Escoubet et al., 2001) had just been launched, introducing an opportunity to distinguish spatial effects from temporal variations for the first time. Simultaneously, we still had many of the International Solar Terrestrial Physics (ISTP) satellites in operation, like Polar and Geotail. I had just finished the methodology to assess magnetospheric global energy circulation using magnetohydrodynamic (MHD) simulations (Palmroth et al., 2003; Palmroth et al., 2004). It felt like anything would be possible, and we could, for example, explain magnetospheric substorms in no time. In fact, I remember wondering what to do once we understood the magnetosphere.

I had conflicting thoughts about the Cluster mission, though. On one hand, this European leadership mission would surely solve all our scientific questions. On the other hand, for me personally, Cluster posed a difficult problem. As a four-point tetrahedron mission, it would provide observations of ion-kinetic physics. This was an intimidating prospect, as I had just written my PhD thesis on fluid physics, using the global MHD simulation GUMICS-4 (Janhunen et al., 2012). In those times, simulations were thought of primarily as context for data, not really as experiments in their own right. It seemed that even though we had built eye-opening methods based on MHD, they would be left behind by the development. With Cluster, observations took a giant leap forward, and it seemed that MHD simulations would soon become obsolete.

The modelling community was also thinking about how to go forward. Code coupling and improving grid resolution were frequent topics of conversation. One of the most challenging places to do MHD is the inner magnetosphere, which most of the societally critical spacecraft traverse. The inner magnetosphere is characterized by co-located multi-temperature plasmas of the cold plasmasphere, the semi-energetic ring current, and the hot radiation belts. It is a source region of the Region-2 field-aligned current system that closes through the resistive ionospheric medium, providing Joule heating that can bring spacecraft down (Hapgood et al., 2022). MHD fails in the inner magnetosphere because it represents the multi-temperature plasmas by a Maxwellian approximation of the temperature (e.g., Janhunen and Palmroth, 2001). Therefore, it does not reproduce the Region-2 current system (Juusola et al., 2014) and is possibly off by orders of magnitude in estimating Joule heating (Palmroth et al., 2005). Hence, many researchers were relying on code coupling to improve the representation of the inner magnetosphere (e.g., Huang et al., 2006).

During the postdoctoral period, I visited the Grand Canyon. While taking pictures, I anticipated that my old camera would not convey the truth about the place. It struck me that this was like me, using an MHD simulation to reproduce our great magnetosphere. MHD was the best we had, and it was very useful in some respects, but it did not really describe the near-Earth space like Cluster would in the coming years. One-way code coupling, like coupling an MHD magnetosphere to a non-MHD inner magnetosphere, would not yield a better global description because the global magnetosphere would still be represented as a fluid. Besides, I had doubts about code coupling (and still do): It would be more about coding than physics, and I was not really interested in that. I wanted to understand how the Cluster measurements would fall into context. But that meant that one would have to change the physics in the simulation.

Beyond MHD?

I remember watching Nick Omidi’s work about the formation of the foreshock (Omidi et al., 2004). They had developed a 2-dimensional (2D) hybrid particle-in-cell (hybrid-PIC) code, in which protons were macroparticles describing ion-kinetic physics, and electrons were a charge-neutralizing fluid. Now we are getting somewhere, I thought. However, even though I was amazed by their new capabilities, the results seemed rather hard to compare with satellite observations in terms of foreshock wave characteristics, like amplitudes and frequencies. The physics in a hybrid-PIC simulation depends on the ion velocity distribution function (VDF) constructed from the macroparticle statistics, and since they were not able to launch very many particles due to computational restrictions, the outcome was noisy. The other option to simulate ion-kinetic physics was the Vlasov approach (e.g., Elkina and Büchner, 2006), which did not launch macroparticles, but modified the VDF itself in time. Many Vlasov solvers were called spectral, i.e., they used the property of the distribution function being constant along the characteristic curves according to the Liouville theorem. The benefit was that there was no noise. However, their problem was filamentation. Formulated as a differential equation on f(r,v,t), nothing prevents the phase space from forming smaller and smaller structures ad infinitum. The spectral Vlasov simulations need to address this issue through filtering.
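The noise argument can be illustrated with a toy calculation. The sketch below is not taken from any hybrid-PIC code; it only assumes that a moment of the VDF is estimated from a finite number of randomly sampled macroparticles. The function names, the choice of a one-dimensional Gaussian, and the particle counts are all hypothetical.

```python
import math
import random

# Exact fraction of a 1D Maxwellian (Gaussian) velocity distribution
# that lies within one standard deviation of the mean.
EXACT_CORE_FRACTION = math.erf(1.0 / math.sqrt(2.0))


def estimate_core_fraction(n_particles, rng):
    """Estimate the core fraction from n_particles random macroparticles."""
    inside = sum(1 for _ in range(n_particles) if abs(rng.gauss(0.0, 1.0)) < 1.0)
    return inside / n_particles


def rms_relative_error(n_particles, trials=200, seed=1):
    """RMS relative error of the estimate over many independent trials."""
    rng = random.Random(seed)  # fixed seed so the experiment is repeatable
    total = 0.0
    for _ in range(trials):
        estimate = estimate_core_fraction(n_particles, rng)
        total += ((estimate - EXACT_CORE_FRACTION) / EXACT_CORE_FRACTION) ** 2
    return math.sqrt(total / trials)


if __name__ == "__main__":
    # ASSUMPTION: particle counts chosen only for illustration.
    for n in (100, 2500):
        print(n, rms_relative_error(n))
```

In this toy setting the statistical error falls roughly as the inverse square root of the particle count, so 25 times more macroparticles buys only about five times less noise.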

Simply mimicking someone else’s approach did not seem very appealing, and a noiseless representation of the same physics would give nice complementarity, I thought, and started to think about how a global hybrid-Vlasov simulation would become possible. Let’s take the number of GUMICS-4 cells in a refined state: this is 300,000 cells in the r-space. Then, let’s set the VDF into each r-space grid cell to form the v-space, use a Eulerian method for propagation in time to get rid of the filamentation, and assume 3,000 v-space grid cells per r-space cell, yielding about 10⁹ phase space cells in total. These numbers turned out to be about 100–1,000 times too small in the end, but they were my starting point. Any code development takes time, so let’s look at where the computational resources are in 5 years. If one started to develop a global hybrid-Vlasov code that currently does not fit into any machine, global runs would eventually become possible if Moore’s law continued to increase available resources. This led to two strategic factors: First, the code would need to be always portable to the best available machine irrespective of the architecture, indicating that the latest parallelisation technologies should be used. Second, I would need sustained funding to develop the code at least for the period during which the computational resources were increasing. So, I thought, my only problem is to get a five-year grant with which I could hire a team to develop the code.
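The back-of-the-envelope estimate above can be written out as a short calculation. The cell counts come from the text; the storage size per phase space cell and the doubling time used for the resource projection are assumptions made only for illustration, and all function names are hypothetical.

```python
# Back-of-the-envelope sizing of a global hybrid-Vlasov grid.
SPATIAL_CELLS = 300_000    # r-space cells, from the text
VELOCITY_CELLS = 3_000     # v-space cells per r-space cell, from the text
BYTES_PER_VALUE = 4        # ASSUMPTION: one single-precision value per cell
DOUBLING_TIME_YEARS = 2.0  # ASSUMPTION: resources double every two years


def phase_space_cells(spatial, velocity):
    """Total number of phase space cells on a uniform grid."""
    return spatial * velocity


def memory_gib(cells, bytes_per_value=BYTES_PER_VALUE):
    """Memory needed to store one value per phase space cell, in GiB."""
    return cells * bytes_per_value / 2**30


def growth_factor(years, doubling_time=DOUBLING_TIME_YEARS):
    """Resource growth after `years` under the assumed doubling time."""
    return 2.0 ** (years / doubling_time)


if __name__ == "__main__":
    cells = phase_space_cells(SPATIAL_CELLS, VELOCITY_CELLS)
    print("initial estimate:", cells, "cells,", memory_gib(cells), "GiB")
    # The text notes the estimate was 100 to 1,000 times too small.
    for factor in (100, 1000):
        print("underestimate x", factor, ":", memory_gib(cells * factor), "GiB")
    print("assumed resource growth over 5 years:", growth_factor(5))
```

The point of the sketch is the shape of the argument rather than the exact figures: the required size is fixed by the physics, while the available resources grow with time.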

In 2004–2005 the plan did not seem plausible, but in 2007 an opportunity presented itself. The European Union established the European Research Council (ERC). ERC’s motto became “Excellence is the sole criterion,” as they wanted to fund frontier paradigm-changing research, bottom up, from all fields. They had a two-stage call to which I submitted an improved version of the old plan. Based on the then available resources it seemed that in a few years even the Finnish Meteorological Institute would have machines that could be utilised. The first stage proposal deadline was also my own deadline, as it was the same day my child was due. In the summer of 2007, I received notice that my proposal was accepted to the second stage. The deadline of the full proposal was in the fall, and the interview in Brussels was on the day my child turned 6 months old. I wrote the second stage proposal in 1-h slots when the baby was sleeping, and to make my time more efficient, I utilised a “power-hour” concept established by Finnish explorers. If you concentrate all your daily courage into 1 h, you can do whatever during that hour. So, I called around and talked to different researchers about Vlasov solvers and managed to submit in time. The interview went beyond all expectations. In May 2008, I received information that of the ∼10,000 submitted proposals, 300 were funded, my proposal among the successful ones.

Vlasiator

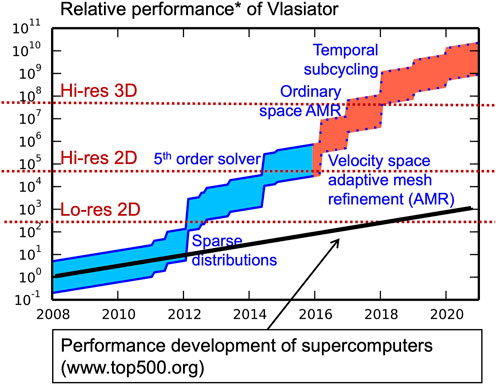

In the beginning, there were many obstacles. Not many people were willing to come to Finland, because the challenge seemed enormous even with knowledge of Moore’s law. Further, the community did not seem to believe in the project. I remember participating in the International Symposium for Space Simulations 2009, where the first ever Vlasiator poster was presented (Daldorff et al., 2009). Most people thought developing Vlasiator was impossible because there would not be enough supercomputer capacity to realize the simulation on the global scale. Figure 1 displays the relative performance of Vlasiator in time, showing that indeed in 2009, we did not have anything concrete yet. Several optimizations enabled our first 3D test-Vlasov simulation (Palmroth et al., 2013). The first real breakthrough in Vlasiator development was the use of a sparse velocity space. As a large part of the VDF is negligible, we decided neither to store nor to propagate those VDF grid cells that were below a certain threshold in phase space density. Sparsity enabled the first 2D paper on the foreshock waves (Pokhotelov et al., 2013). The second breakthrough was when we replaced the diffusive finite volume solver with a semi-Lagrangian approach (see details in Palmroth et al., 2018). At the end of my ERC Starting grant in 2013 we were able to carry out studies similar to those Omidi showed in 2004, but without noise in the solution.
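The idea of a sparse velocity space can be sketched in a few lines. This is a minimal illustration and not Vlasiator's actual data structure: it assumes a Maxwellian VDF on a uniform Cartesian velocity grid and keeps only the cells at or above a threshold. The grid size, plasma parameters, threshold value, and function names are all hypothetical.

```python
import math


def maxwellian(vx, vy, vz, density, thermal_speed):
    """Isotropic Maxwellian phase space density (s^3 m^-6)."""
    norm = density / (math.pi ** 1.5 * thermal_speed ** 3)
    speed_sq = vx * vx + vy * vy + vz * vz
    return norm * math.exp(-speed_sq / thermal_speed ** 2)


def build_sparse_vdf(n_per_dim, v_max, density, thermal_speed, threshold):
    """Store only the velocity cells whose value is at or above threshold."""
    dv = 2.0 * v_max / n_per_dim
    centers = [-v_max + (i + 0.5) * dv for i in range(n_per_dim)]
    stored = {}
    for i, vx in enumerate(centers):
        for j, vy in enumerate(centers):
            for k, vz in enumerate(centers):
                f = maxwellian(vx, vy, vz, density, thermal_speed)
                if f >= threshold:
                    stored[(i, j, k)] = f  # cells below threshold are dropped
    return stored, dv


# ASSUMPTION: illustrative solar-wind-like values, not Vlasiator settings.
N_PER_DIM = 40
stored, dv = build_sparse_vdf(
    n_per_dim=N_PER_DIM,
    v_max=500e3,         # m/s, half-width of the velocity grid
    density=1e6,         # m^-3
    thermal_speed=50e3,  # m/s
    threshold=1e-15,     # s^3 m^-6, hypothetical sparsity threshold
)
stored_fraction = len(stored) / N_PER_DIM ** 3
recovered_density = sum(stored.values()) * dv ** 3

if __name__ == "__main__":
    print("fraction of velocity cells stored:", stored_fraction)
    print("density recovered from stored cells:", recovered_density)
```

In this toy case only a small fraction of the velocity cells needs to be stored, while the density integrated over the stored cells stays very close to the full value, which is why sparsity saves so much memory at little physical cost.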

FIGURE 1. Relative performance of Vlasiator normalized to the performance in the beginning of the project. The target simulation is global, so incorporating parts of the solar wind and a large part of the magnetosphere. The black line gives the performance development of the world’s 500 top supercomputers. The blue and red areas give the relative performance of Vlasiator depending on whether we used a local machine or a top European supercomputer available through Partnership for Advanced Computing in Europe (PRACE). The plot was made in 2016, explaining the discontinuity in colors (blue is past and red is future).

Throughout the project, we developed and utilized new parallelization schemes, and one critical success factor was, and is, the collaboration with the Finnish supercomputing center CSC. Even though the scientific community doubted Vlasiator, CSC believed in it and helped us at every step of the way. They thought it was remarkable that someone was not thinking about the current resources but was aiming for the coming ones. It made sense to them, too, because they had seen how many codes become obsolete and cannot utilize the newest resources efficiently; supercomputing architectures are constantly changing. CSC is tasked to look ahead in high-performance computing (HPC), and by helping us they tested many upcoming HPC architectures and technologies. When a supercomputer is installed, it needs to be piloted, i.e., run under a heavy load to understand how the system behaves. Few codes scale linearly to hundreds of thousands of compute cores, and so we have piloted many supercomputer installations, providing information to both vendors and supercomputing centers. The collaboration with the HPC professionals is very rewarding.

Then, in 2015, I won an ERC Consolidator grant, this time to make Vlasiator 3D and to couple it with the ionosphere. Long story short, at the end of my second ERC grant, this is where we are now. The team has grown from 3 to over 20, and we have held international hackathons and invited guest first authors to utilize the results. We have presented the first 6D results using a global 3D ordinary space, which includes a 3D velocity space in every grid cell. We have coupled the code with an ionospheric solution. Even though we have so far only submitted papers on the new capabilities, I can say that the first results are breathtakingly and utterly beautiful, worth all these years of blood, sweat, tears, and trying to convince people who doubted the project. Figure 2 shows preliminary results. We are finally able to address many of the great mysteries of our field, such as the interplay and spatiotemporal variability of ion-kinetic instabilities and reconnection at substorm onset (Palmroth et al., 2021a). We have made a paradigm shift and finally, for the first time, see what the global magnetosphere looks like in a Eulerian hybrid-Vlasov simulation. We have gone beyond global MHD. We can also compare to the complementary hybrid-PIC simulations that have also recently extended their approach to 3D (e.g., Lin et al., 2021).

FIGURE 2. First global view of the 6D Vlasiator, depicting plasma density. Solar wind enters the simulation box from the right, and a real-size dipole sits at the origin. Every ordinary space grid cell includes a velocity space, where the ion VDF can have an arbitrary form. The solution allows us to investigate ion-kinetic phenomena within the global magnetosphere, including physics that is beyond the MHD description, like Hall fields in reconnection, drifts, instabilities, co-located multi-temperature plasmas, and field-aligned and field-perpendicular velocities, just to name a few.

Vlasiator has two strengths compared to the complementary hybrid-PIC approach: the noiseless representation of the physics, and the fact that we can give the results in non-scaled SI units that are not factors of the ion scale lengths but are directly comparable with spacecraft observations. We also have weaknesses compared to hybrid-PIC: We can only follow the particle trajectories by particle tracing (at run-time we only see how the VDFs evolve), and we are possibly using more computational resources. The latter weakness is not certain, though, because to represent the tenuous parts of the magnetosphere as accurately as a Eulerian hybrid-Vlasov scheme does, the hybrid-PIC simulations would need to use so many macroparticles that the computational resources would possibly be of the same order of magnitude as we use. On the other hand, the Vlasiator performance is also increasing in time, as seen in Figure 1. In 2022, we can carry out 6D global simulations at the cost of about 15 million core hours, the same number we used to carry out our first 5D simulation.

Closing remarks

One of the most important lessons I’ve learnt is that if the motivation to make a paradigm shift comes from the matter itself (in my case the need to understand how our system behaves beyond MHD), the seeds of success have been planted. This is a great shield against the inevitable misfortunes and setbacks, which are an integral part of any success. If I had been foremost interested in appreciation or approval, I would not have pulled this project through. Another lesson concerns recruiting. When doing something that has never been done before, prior skills and knowledge are of lesser importance. In fact, it may even hurt to have too much prior knowledge due to a psychological concept called confirmation bias¹, a tendency to favor information that confirms or supports prior beliefs. When developing Vlasiator, a confirmation bias would have magnified the notion of a shortage of available computational resources, leading to misguided thinking about what is or will become possible in the future. If the will to understand is strong enough, resources will come. Right now, there are more HPC resources than ever before, but they are again changing. In the beginning of the 2010s, the direction was towards central processing unit (CPU) parallelism; now it is towards heterogeneous architectures including graphics processing units (GPUs). To meet this challenge, we of course have an active development branch concentrating on how to harness GPUs to improve Vlasiator performance.

It feels that this age has more frustration than 20 years ago, perhaps because it is more difficult to get new spacecraft missions. Our field possibly suffers from some sort of a first in, first out challenge. It was our field that first started using spacecraft to understand how the near-Earth space behaves (van Allen and Frank, 1959). Increasingly in the past decades, other fields have started to use space as well, and therefore there is more competition in getting new missions. Since we have studied the near-Earth space using spacecraft since the 1960s, the other space-faring fields may have the (wrong) impression that we already know how our system behaves. Right now, there is more need for our field than ever before due to the increasing economic use of space (Palmroth et al., 2021b), and also because society critically depends on space. If we do not understand the physical space environment, it is like sending an increasing number of ships to be wrecked at the Cape of Good Hope.

The new 6D Vlasiator results may improve our chances of convincing the selection boards that new missions are needed. Indeed, it seems that the tides have turned: The Cluster mission was one of the major reasons to develop Vlasiator in the first place. However, now it is the models which lead the search for new physics. For example, we have found that magnetopause reconnection can launch bow waves that deform the bow shock shape upstream and influence the particle reflection conditions in the foreshock (Pfau-Kempf et al., 2016). In fact, Vlasiator results make the whole concept of scales obsolete: If we can look at ion-kinetic physics globally, there is no fluid scale anymore, there is only scale-coupling: how small-scale physics affects global scales and vice versa. For example, we can see how small-scale variability like reconnection finally emerges as large-scale changes, like eruptions of plasmoids and brightening of the aurora. We will not know the answers with the current missions, but with ion-kinetic models we can build a picture that guides future mission development.

My advice for the next generation? First, follow your own nose, and take only projects that are worth carrying out at least for 10 years. Otherwise, you are completing someone else’s dream, and contributing incrementally to the present state-of-the-art. Confirmation bias means that our field does not renew fast enough if we do not dare to think of the impossible. When you do follow your own nose, do not expect that people see or understand what you are working towards. Strategies are very difficult to discern from the outside. When your strategy succeeds, your accomplishments seem easily earned, even if they required an enormous effort. This leads to another problem: only you know how hard it was! When your hard-earned results are showcasing new science, you may get nods of approval and positive feedback, followed by requests to run parameter studies which are feasible with previous-generation tools. For Vlasiator, developing it took over 10 years and several millions of euros, while running it at state-of-the-art scale requires competitive funding and computational proposals.

Our field also needs holistic thinking, unity, and a sense of community. We are sandwiched between the large Earth system sciences and astrophysics. They are often the gatekeepers in deciding who gets funding, new missions, and high-impact papers. Our internal quarrels are interpreted as a weakness, and a sign that investing into us may be wasting resources. Hence, every time someone succeeds, we should celebrate because as our field progresses, so do we on the surf. Every time someone struggles, we should help because at the same time, we help ourselves. We also need to improve the general work-wellbeing to be able to lure new people to our field. Our work forms such a large part of our identity that we might as well enjoy the road and invest in a great, forward-going atmosphere.

Data availability statement

The raw data supporting the conclusion of this article will be made available as per Vlasiator rules of the road (see: http://helsinki.fi/vlasiator).

Author contributions

The author confirms being the sole contributor of this article and has approved it for publication.

Funding

Work was supported by European Research Council Consolidator grant 682068-PRESTISSIMO and Academy of Finland grants 1336805, 1312351, 1339327, 1335554, 1347795, and 1345701.

Acknowledgments

I have been blessed in finding great people in the Vlasiator team. I would like to thank each past and current member equally, as working with you has made the journey enjoyable. I am also extremely grateful to the co-authors of our papers, and the numerous Vlasiator-enthusiasts we have encountered over the years: Your support was crucial to continue. Vlasiator (http://helsinki.fi/vlasiator) was developed with the European Research Council Starting grant 200141-QuESpace, and Consolidator grant 682068—PRESTISSIMO. The CSC—IT Center for Science in Finland and the Partnership for Advanced Computing in Europe (PRACE) Tier-0 supercomputer infrastructure in HLRS Stuttgart are acknowledged for their constant support and absolutely brilliant advice on HPC (grant numbers 2012061111, 2014112573, 2016153521, and 2019204998). Academy of Finland has been a great contributor to Vlasiator work as well, especially through grants 138599, 267144, 1312351, 1336805, 1309937, 1345701, 1347795, 1335554, and 1339327.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

¹ https://en.wikipedia.org/wiki/Confirmation_bias

References

Daldorff, L. K. S., Yando, K. B., Palmroth, M., Hietala, H., Janhunen, P., and Lucek, E. (2009). “Preliminary studies on the development of a global hybrid-Vlasov simulation,” in poster presentation in 9th International Symposium for Space Simulations, July 3–10, Paris France.

Elkina, N. V., and Büchner, J. (2006). A new conservative unsplit method for the solution of the Vlasov equation. J. Comput. Phys. 213, 862–875. doi:10.1016/j.jcp.2005.09.023

Escoubet, C. P., Fehringer, M., and Goldstein, M. (2001). Introduction: The Cluster mission. Ann. Geophys. 19, 1197–1200. doi:10.5194/angeo-19-1197-2001

Hapgood, M., Liu, H., and Lugaz, N. (2022). SpaceX: Sailing close to the space weather? Space Weather 20, e2022SW003074. doi:10.1029/2022SW003074

Huang, C.-L., Spence, H. E., Lyon, J. G., Toffoletto, F. R., Singer, H. J., and Sazykin, S. (2006). Storm-time configuration of the inner magnetosphere: Lyon-Fedder-Mobarry MHD code, Tsyganenko model, and GOES observations. J. Geophys. Res. 111, A11S16. doi:10.1029/2006JA011626

Janhunen, P., and Palmroth, M. (2001). Some observational phenomena are well reproduced by our global MHD while others are not: Remarks on what, why and how. Adv. Space Res. 28, 1685–1691. doi:10.1016/S0273-1177(01)00533-6

Janhunen, P., Palmroth, M., Laitinen, T. V., Honkonen, I., Juusola, L., Facskó, G., et al. (2012). The GUMICS-4 global MHD magnetosphere – ionosphere coupling simulation. J. Atmos. Sol. Terr. Phys. 80, 48–59. doi:10.1016/j.jastp.2012.03.006

Juusola, L., Facsko, G., Honkonen, I., Tanskanen, E., Viljanen, A., Vanhamäki, H., et al. (2014). Statistical comparison of seasonal variations in the GUMICS-4 global model ionosphere and measurements. Space Weather 12, 582–600. doi:10.1002/2014SW001082

Lin, Y., Wang, X. Y., Fok, M. C., Buzulukova, N., Perez, J. D., Cheng, L., et al. (2021). Magnetotail-inner magnetosphere transport associated with fast flows based on combined global-hybrid and CIMI simulation. JGR. Space Phys. 126, e2020JA028405. doi:10.1029/2020JA028405

Omidi, N., Sibeck, D., and Karimabadi, H. (2004). “Global hybrid simulations of solar wind and bow shock related discontinuities interacting with the magnetosphere,” in Proceeding AGU Fall Meeting Abstracts, SM21B-0, December 2004, Available at: https://ui.adsabs.harvard.edu/abs/2004AGUFMSM21B.01O.

Palmroth, M., Pulkkinen, T. I., Janhunen, P., and Wu, C.-C. (2003). Stormtime energy transfer in global MHD simulation. J. Geophys. Res. 108 (A1), 1048. doi:10.1029/2002JA009446

Palmroth, M., Janhunen, P., Pulkkinen, T. I., and Koskinen, H. E. J. (2004). Ionospheric energy input as a function of solar wind parameters: global MHD simulation results. Ann. Geophys. 22, 549–566. doi:10.5194/angeo-22-549-2004

Palmroth, M., Janhunen, P., Pulkkinen, T. I., Aksnes, A., Lu, G., Ostgaard, N., et al. (2005). Assessment of ionospheric Joule heating by GUMICS-4 MHD simulation, AMIE, and satellite-based statistics: towards a synthesis. Ann. Geophys. 23, 2051–2068. doi:10.5194/angeo-23-2051-2005

Palmroth, M., Honkonen, I., Sandroos, A., Kempf, Y., von Alfthan, S., and Pokhotelov, D. (2013). Preliminary testing of global hybrid-Vlasov simulation: magnetosheath and cusps under northward interplanetary magnetic field. J. Atmos. Sol. Terr. Phys. 99, 41–46. doi:10.1016/j.jastp.2012.09.013

Palmroth, M., Ganse, U., Pfau-Kempf, Y., Battarbee, M., Turc, L., Brito, T., et al. (2018). Vlasov methods in space physics and astrophysics. Living Rev. Comput. Astrophys. 4, 1. doi:10.1007/s41115-018-0003-2

Palmroth, M., Ganse, U., Alho, M., Suni, J., Grandin, M., Pfau-Kempf, Y., et al. (2021a). “First 6D hybrid-Vlasov modelling of the entire magnetosphere: Substorm onset and current sheet flapping,” in AGU Fall Meeting 2021. New Orleans, 13–17.

Palmroth, M., Tapio, J., Soucek, A., Perrels, A., Jah, M., Lönnqvist, M., et al. (2021b). Toward sustainable use of space: Economic, technological, and legal perspectives. Space Policy 57, 101428. doi:10.1016/j.spacepol.2021.101428

Pfau-Kempf, Y., Hietala, H., Milan, S. E., Juusola, L., Hoilijoki, S., Ganse, U., et al. (2016). Evidence for transient, local ion foreshocks caused by dayside magnetopause reconnection. Ann. Geophys. 34, 943–959. doi:10.5194/angeo-34-943-2016

Pokhotelov, D., von Alfthan, S., Kempf, Y., Vainio, R., Koskinen, H. E. J., and Palmroth, M. (2013). Ion distributions upstream and downstream of the Earth's bow shock: first results from Vlasiator. Ann. Geophys. 31, 2207–2212. doi:10.5194/angeo-31-2207-2013

Keywords: space physics, modelling, ion-kinetic physics, magnetohydrodynamics, space weather, numerical simulations

Citation: Palmroth M (2022) Daring to think of the impossible: The story of Vlasiator. Front. Astron. Space Sci. 9:952248. doi: 10.3389/fspas.2022.952248

Received: 24 May 2022; Accepted: 18 July 2022;

Published: 29 August 2022.

Edited by:

Elena E. Grigorenko, Space Research Institute (RAS), Russia

Reviewed by:

Gian Luca Delzanno, Los Alamos National Laboratory (DOE), United States

Copyright © 2022 Palmroth. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Minna Palmroth, minna.palmroth@helsinki.fi

Minna Palmroth
