- ¹Department of Statistics and Mathematics, Bindura University of Science Education, Bindura, Zimbabwe

- ²Department of Mathematics, University of Botswana, Gaborone, Botswana

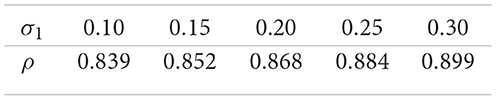

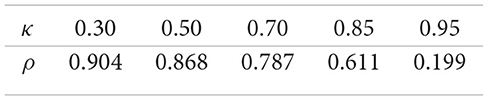

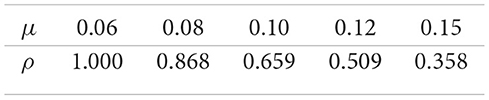

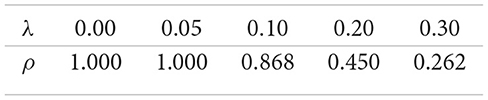

This study develops a unified framework for optimal portfolio selection in jump–uncertain stochastic markets, contributing both theoretical foundations and computational insights. We establish the existence and uniqueness of solutions to jump–uncertain stochastic differential equations, extending earlier results in uncertain–stochastic and Liu–uncertain settings without jumps, and provide a rigorous proof of the principle of optimality, thereby reinforcing the link between dynamic programming and the maximum principle under both continuous and discontinuous uncertainty. Applying this framework to a financial market with jump uncertainty, we demonstrate that under constant relative risk aversion (CRRA) utility, the optimal portfolio rule preserves the constant–proportion property, remaining independent of wealth. Numerical analysis further reveals consistent comparative statics: The optimal fraction ρ allocated to the risk–free asset rises with Brownian volatility σ1 and jump intensity λ, reflecting precautionary behavior under uncertainty, while it declines with the expected risky return μ and the risk–aversion parameter κ, indicating greater exposure to risk when returns are higher or investors are less risk–averse. Taken together, these results confirm the robustness, tractability, and economic relevance of the framework, aligning with classical findings in jump–diffusion models and offering implementable strategies for decision-making in financial markets subject to both continuous and jump risks.

1 Introduction

Optimal control theory, a core area of applied mathematics, focuses on determining strategies that optimize a performance criterion under system dynamics. The two main approaches are dynamic programming, introduced by Bellman [1], and the maximum principle, developed by Pontryagin et al. [2]. This study examines an optimal control problem under a jump-uncertain stochastic framework, using dynamic programming to derive the value function and connect it with the uncertain stochastic maximum principle. As an application, we analyze a portfolio selection problem in a financial market with jump uncertainty, extending Chirima et al. [3], who considered models without jumps.

Dynamic programming solves optimal control problems recursively through a value function that satisfies the Hamilton–Jacobi–Bellman (HJB) equation. In deterministic systems, this yields a first-order partial differential equation, while in stochastic settings with Brownian motion, it becomes a second-order non-linear partial differential equation. Liu's uncertainty theory adapts dynamic programming using belief-based measures, resulting in uncertain differential equations (UDEs) and a modified HJB equation with the Liu expected value. In jump-diffusion models, the HJB includes an integro-differential term that accounts for sudden events, offering more realistic dynamics.
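For orientation, the two classical forms mentioned above can be written side by side. This is a standard textbook sketch, not a formula taken from this article; the symbols f (running reward), α (drift), σ (diffusion coefficient), and G (terminal reward) are borrowed from later sections, and the one-dimensional setting is an assumption.

```latex
% Deterministic control: first-order HJB equation
-V_t(t,x) = \sup_{u}\bigl\{ f(t,x,u) + \alpha(t,x,u)\,V_x(t,x) \bigr\},
\qquad V(T,x) = G(x).

% Brownian-driven control: second-order HJB equation
-V_t(t,x) = \sup_{u}\bigl\{ f(t,x,u) + \alpha(t,x,u)\,V_x(t,x)
            + \tfrac{1}{2}\,\sigma^{2}(t,x,u)\,V_{xx}(t,x) \bigr\},
\qquad V(T,x) = G(x).
```

The only structural difference is the second-order term, which comes from the quadratic variation of Brownian motion.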

Dynamic programming has been applied in many settings. Bellman's work [1] laid the foundation for deterministic control; stochastic extensions were developed by Kushner [4] and Fleming and Rishel [5]. Liu [6] extended the framework to uncertain systems, and Yong and Zhou [7] unified dynamic programming and the maximum principle for stochastic control. Øksendal and Sulem [8] applied dynamic programming to jump-diffusions in finance, while Chirima et al. [3] studied uncertain stochastic systems without jumps.

The maximum principle provides necessary conditions for optimality by introducing adjoint (costate) variables and requiring the maximization of the Hamiltonian function. In deterministic settings, this formulation typically leads to a two-point boundary value problem. In stochastic control, Peng [9] developed a backward stochastic differential equation (BSDE) formulation, which was subsequently extended by Yong and Zhou [7] to broader stochastic contexts. For systems governed by jump-diffusion dynamics, additional terms are incorporated to represent the effects of Poisson, Lévy, or uncertain jumps. In uncertain systems, the principle has been adapted to uncertain differential equations, featuring modified adjoint processes and transversality conditions [10]. Within uncertain stochastic frameworks, uncertain stochastic adjoint equations have been introduced to model both randomness and belief-based uncertainty [11].

Since its introduction by Pontryagin et al. [2], the maximum principle has evolved into a versatile tool for optimal control across diverse settings. Peng's BSDE-based formulation [9] and the extensions by Yong and Zhou [7] advanced its applicability in stochastic environments. Ge and Zhu [10] broadened its scope to uncertain systems, while Chikodza and Hlahla [11] applied it within uncertain stochastic frameworks. In the realm of jump-diffusion control, Framstad et al. [12] demonstrated its effectiveness and established its connection with dynamic programming. These developments collectively underscore the maximum principle's flexibility and its unifying role in addressing optimal control problems across a wide range of mathematical models.

A fundamental link exists between the maximum principle and dynamic programming, the two main approaches in optimal control theory. Dynamic programming characterizes the optimal value function through the Hamilton–Jacobi–Bellman (HJB) equation. When the value function is sufficiently smooth, maximum principle adjoints can be expressed as gradients of the value function, showing consistency between the two methods. Yong and Zhou [7] established this equivalence in stochastic systems, and Framstad et al. [12] extended it to jump-diffusion models, affirming its validity even with discontinuities.

Financial markets often experience sudden, unpredictable events—known as jumps—that cause sharp changes in asset prices. These deviations differ from the continuous variations captured by Brownian models. For instance, Brent crude rose over 13 percent intraday on 13 June 2025 following Israeli airstrikes on Iranian facilities and climbed further after renewed missile attacks [13–15]. Such movements underscore the need to model jumps explicitly.

Ignoring jumps can misrepresent risk and lead to inaccurate pricing, underestimated Value-at-Risk, and suboptimal investment decisions. Models that incorporate jump processes—such as Poisson, Lévy, or V-uncertain terms—better capture market realities and improve portfolio and hedging strategies. Thus, jump modeling is essential for robust financial decision-making.

Liu's uncertainty theory, introduced in 2007 [16], models belief-based uncertainty that arises from subjective judgment rather than randomness. Unlike probability theory, which relies on frequencies, Liu uncertainty theory uses axiomatic uncertain measures to define uncertain variables and processes, such as the canonical Liu process and uncertain differential equations. It is especially useful where probabilities are unreliable or unknown, such as expert-based financial forecasts.

In this framework, Zhu [17] applied dynamic programming to uncertain optimal control and solved a portfolio selection problem. Deng and Zhu [18] addressed uncertain systems with jumps driven by both Liu and V-jump processes, applying dynamic programming to pension fund control. Zhu [19] later introduced optimistic and expected value-based models for uncertain dynamic systems. These studies highlight Liu uncertainty theory's role in addressing ambiguity beyond classical stochastic tools.

Uncertain stochastic systems combine randomness and belief-based uncertainty, capturing dynamics influenced by both Brownian motion and Liu-uncertain processes. This hybrid framework is effective in contexts where some variables are stochastic, while others reflect subjective expectations. Researchers have extended both dynamic programming and the maximum principle to such systems, developed Itô–Liu calculus, and solved problems in portfolio optimization, inventory control, and differential games.

Fei [20] studied uncertain stochastic systems with Markov switching, derived a generalized HJB equation, and solved optimal consumption and investment problems. In related work, Fei [21] proved the existence and uniqueness of backward uncertain stochastic differential equations (BUSDEs). More recently, Chirima et al. [3] used dynamic programming to address uncertain stochastic control without jumps in portfolio models, while Hlahla and Chikodza [11] developed a maximum principle involving both Brownian and Liu-uncertain drivers. These contributions show that Liu uncertain stochastic systems offer a practical and flexible framework for modeling decisions under both randomness and human ambiguity.

Portfolio selection involves deciding how to allocate wealth across financial assets to balance expected return and risk. The goal is to either maximize return for a given risk level or minimize risk for a target return. For example, investing equally in a risk-free bond yielding 2% and a stock expected to return 8% yields an expected return of 5% with lower risk than full stock investment. By adjusting asset proportions, investors create portfolios offering different risk-return combinations, forming the efficient frontier: the set of portfolios that optimize return for each risk level.
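The arithmetic in this example can be checked with a short sketch. The 20% stock volatility is an assumed, purely illustrative figure that does not appear in the text, and the bond is treated as having zero volatility.

```python
def two_asset_portfolio(w_stock, r_bond, r_stock, vol_stock):
    """Expected return and volatility of a bond/stock mix.

    The bond is treated as risk-free (zero volatility), so portfolio
    volatility scales linearly with the stock weight.
    """
    expected_return = (1.0 - w_stock) * r_bond + w_stock * r_stock
    volatility = w_stock * vol_stock
    return expected_return, volatility


# vol_stock = 0.20 is a hypothetical value chosen only for illustration.
mix_return, mix_vol = two_asset_portfolio(0.5, 0.02, 0.08, 0.20)
stock_return, stock_vol = two_asset_portfolio(1.0, 0.02, 0.08, 0.20)
print(f"50/50 mix: return={mix_return:.2%}, volatility={mix_vol:.2%}")
print(f"all stock: return={stock_return:.2%}, volatility={stock_vol:.2%}")
```

Sweeping `w_stock` from 0 to 1 traces the straight risk-return line available when one of the two assets is risk-free.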

Harry Markowitz [22] established the foundation of portfolio theory with the mean–variance optimization model, showing that diversification reduces risk without lowering expected return. Merton [23] later extended this to continuous time, using stochastic calculus to derive dynamic investment strategies. His model showed that, under certain assumptions, optimal investment proportions depend on market parameters and risk preferences, not current wealth. Together, Markowitz and Merton laid the foundation for modern quantitative finance and the analysis of optimal investment under uncertainty.
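Merton's wealth-independence result can be illustrated with the textbook constant-proportion rule for power utility U(x) = x^κ/κ in a Brownian-only market, where the risky fraction is (μ − b)/((1 − κ)σ²). This is the classical no-jump formula, not the rule derived later in this article, and the parameter values below are assumptions chosen for illustration.

```python
def merton_fraction(mu, b, sigma, kappa):
    """Classical Merton risky-asset fraction for U(x) = x**kappa / kappa.

    Textbook Brownian-only result; relative risk aversion is 1 - kappa.
    """
    if not 0.0 < kappa < 1.0:
        raise ValueError("kappa must lie in (0, 1)")
    return (mu - b) / ((1.0 - kappa) * sigma ** 2)


# Illustrative parameters (assumed for this sketch).
mu, b, sigma, kappa = 0.08, 0.02, 0.40, 0.5
pi_star = merton_fraction(mu, b, sigma, kappa)

# The rule takes no wealth argument: the same fraction applies at every
# wealth level, which is the constant-proportion property.
for wealth in (1_000.0, 1_000_000.0):
    print(f"wealth={wealth:>12,.0f}  risky holding={pi_star * wealth:,.2f}")
```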

More recent research addresses complex uncertainties. Deterministic models assume known returns, while stochastic models use processes such as Brownian motion to reflect randomness. Framstad et al. [12] included jump-diffusion to capture price shocks. In contrast, Liu's uncertainty theory models belief-based uncertainty with uncertain variables. Zhu [17] applied uncertain differential equations to portfolio problems under this framework. Hybrid models now combine stochastic and uncertain elements. Chirima et al. [3] used dynamic programming for portfolio selection in a setting with both randomness and belief-based uncertainty but no jumps. These advances reflect a growing need for models that integrate stochastic processes, Liu uncertainty, and jump components in financial decision-making.

The central contribution of this article is the study of an optimal control problem within a jump–uncertain stochastic framework using the dynamic programming approach. First, we establish an existence and uniqueness theorem for jump–uncertain stochastic differential equations, thereby extending the results of Chen and Liu [24], who proved a similar result in a Liu–uncertain framework without jumps, and Chirima et al. [25], who established it in an uncertain–stochastic setting without jumps. Second, we prove the principle of optimality and derive the corresponding Bellman optimality equation in the jump–uncertain stochastic framework, extending the work of Chirima et al. [3], who considered the case without jumps. Third, we extend the work of Framstad et al. [12] on the connection between the maximum principle and dynamic programming in jump–diffusion models, by providing a rigorous link between the dynamic programming principle and the uncertain stochastic maximum principle in a jump–uncertain setting. Finally, we demonstrate the applicability of these theoretical results through a portfolio selection problem, thereby providing a unified framework for optimal portfolio choice that integrates theoretical foundations with computational insights.

The remainder of this study is organized as follows. Section 2 reviews the fundamental concepts and theorems of jump–uncertain stochastic theory and establishes an existence and uniqueness result for jump–uncertain stochastic differential equations. Section 3 proves the principle of optimality and derives the associated Bellman optimality equation. Section 4 develops the maximum principle and shows that, under suitable conditions, the adjoint processes in the uncertain stochastic control problem can be expressed through derivatives of the value function V(t, x). Section 5 applies these theoretical results to a portfolio selection problem in a jump–uncertain stochastic financial market, thereby presenting a unified framework for optimal portfolio choice that integrates both theoretical foundations and computational insights. Finally, Section 6 concludes the study.

2 Preliminary

This study explores an optimal control problem applied to portfolio selection in a Liu-uncertain stochastic market with jumps. To support the analysis, this section reviews key concepts related to the chance space, defined as the product space (Γ, ℒ, ℳ) × (Ω, ℱ, P). Here, (Ω, ℱ, P) represents a classical probability space, where Ω is the sample space, ℱ is a σ-algebra, and P is a probability measure. Meanwhile, (Γ, ℒ, ℳ) denotes an uncertain space, where Γ is the universal set, ℒ is a σ-algebra, and ℳ is an uncertain measure. For a comprehensive treatment of the chance space, we refer the reader to Hou [26], Liu [27], Fei [21], and Liu [28].

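Definition 2.1 Hou [26] Let (Γ, ℒ, ℳ) × (Ω, ℱ, P) be a chance space, and let Θ ∈ ℒ × ℱ be an event. Then, the chance measure of Θ is defined as

$$
\mathrm{Ch}\{\Theta\} = \int_0^1 P\bigl\{\omega \in \Omega : \mathcal{M}\{\gamma \in \Gamma : (\gamma, \omega) \in \Theta\} \ge x\bigr\}\,dx,
$$

where (Ω, ℱ, P) and (Γ, ℒ, ℳ) are a probability space and an uncertainty space, in that order.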

Hou [26] and Liu [27] proved that a chance measure satisfies normality, duality, monotonicity, and subadditivity properties, that is

(i) Normality Ch{Γ × Ω} = 1, Ch{∅} = 0.

(ii) Monotonicity Ch{Θ1} ≤ Ch{Θ2}, for any events Θ1 ⊂ Θ2.

(iii) Self-duality Ch{Θ} + Ch{Θ^c} = 1, for any event Θ.

To illustrate this axiom, consider an event Θ ∈ ℒ × ℱ. When an observation is performed, the total chance that the event Θ either occurs or does not occur must be equal to 1. This reflects the intuitive requirement that one of the two mutually exclusive outcomes must happen with certainty.

(iv) Subadditivity Ch{Θ1 ∪ Θ2 ∪ ⋯} ≤ Ch{Θ1} + Ch{Θ2} + ⋯ for any countable sequence of events Θ1, Θ2, ⋯ .
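The properties above can be checked numerically on a small finite chance space. The sketch below is an illustration under stated assumptions: the two-point sets, the probabilities, and the value 0.6 for the uncertain measure of {g1} are all hypothetical. On a finite space, the integral defining the chance measure reduces to the probability-weighted average of the uncertain measure of each ω-section of the event.

```python
from itertools import product

# Hypothetical finite chance space, chosen only for illustration.
OMEGA = {"w1": 0.3, "w2": 0.7}   # probability of each sample point
GAMMA = ("g1", "g2")             # universal set of the uncertainty space
M_G1 = 0.6                       # uncertain measure assigned to {g1}


def uncertain_measure(subset):
    """Uncertain measure on the two-point set GAMMA (self-dual by design)."""
    s = frozenset(subset)
    if not s:
        return 0.0
    if s == frozenset(GAMMA):
        return 1.0
    return M_G1 if s == frozenset({"g1"}) else 1.0 - M_G1


def chance(event):
    """Ch{event} for a set of (gamma, omega) pairs.

    On a finite space the defining integral equals the probability
    average of the uncertain measure of each omega-section of the event.
    """
    total = 0.0
    for omega, prob in OMEGA.items():
        section = {g for (g, w) in event if w == omega}
        total += prob * uncertain_measure(section)
    return total


universe = set(product(GAMMA, OMEGA))
theta = {("g1", "w1"), ("g1", "w2"), ("g2", "w2")}
theta_c = universe - theta

print("normality:   ", chance(universe), chance(set()))
print("self-duality:", chance(theta) + chance(theta_c))
print("monotonicity:", chance({("g1", "w1")}) <= chance(theta))
```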

Definition 2.2 Liu [28]. An uncertain random variable is a function ξ from a chance space (Γ, ℒ, ℳ) × (Ω, ℱ, P) to the set of real numbers such that {ξ ∈ B} is an event in ℒ × ℱ for any Borel set B of real numbers.

Definition 2.3 Liu [28]. Let ξ be an uncertain random variable. Then the chance distribution of ξ is defined by

$$
\Phi(x) = \mathrm{Ch}\{\xi \le x\}
$$

for any x ∈ ℝ.

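Definition 2.4 (i) Liu [28]. Let ξ be an uncertain random variable. Then, its expected value is defined by

$$
E[\xi] = \int_0^{+\infty} \mathrm{Ch}\{\xi \ge x\}\,dx - \int_{-\infty}^{0} \mathrm{Ch}\{\xi \le x\}\,dx,
$$

provided that at least one of the two integrals is finite.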

(ii) Fei [21]. The expected value of an uncertain random variable ξ is defined by

$$
E_{\mathrm{Ch}}[\xi] = E_P\bigl[E_{\mathcal{M}}[\xi]\bigr],
$$

where E_P and E_ℳ are the expected values under the probability space and under Liu uncertainty, respectively.

The expected value of the uncertain random variable ξ is the probability expectation of the expected value of ξ under Liu uncertainty. In order to simplify the work and presentation, Ech(.) shall be denoted by E(.).

Definition 2.5 Fei [21]. A hybrid process Xt is an uncertain stochastic process if Xt is an uncertain random variable for each t ∈ [0, T].

An uncertain stochastic process X(t) is said to be continuous if the sample paths of X(t) are all continuous functions of t for almost all (γ, ω) ∈ Γ × Ω.

Definition 2.6 Wu [29] (Jump Ito-Liu Integral) Suppose X(t) = (Y(t), Z(t)) is a jump-uncertain stochastic process. For any partition of the closed interval [a, b] with a = t1 < t2 < ⋯ < tN+1 = b, the mesh is expressed as

$$
\Delta = \max_{1 \le i \le N} |t_{i+1} - t_i|.
$$

The jump Ito-Liu integral of X(t) with respect to (W(t), C(t), N(t)) is defined as follows:

In the case above, X(t) is called jump Ito-Liu integrable.

Definition 2.7 Deng and Zhu [18]. An uncertain variable Z(r1, r2, t) is said to be an uncertain Z-jump uncertain variable with parameters r1 and r2 (0 < r1 < r2 < 1) for t > 0 if it has a jump uncertainty distribution

Definition 2.8 Deng and Zhu [18]. An uncertain process Nt is said to be a V-jump process with parameters r1 and r2 (0 < r1 < r2 < 1) for t ≥ 0 if (i) N0 = 0, (ii) Nt has stationary and independent increments, (iii) every increment Ns+t − Ns is an uncertain Z-jump variable Z(r1, r2, t). Let Nt be an uncertain V-jump process, and ΔNt = Nt+Δt − Nt. Then

Definition 2.9 Deng and Zhu [18] (Jump–uncertain stochastic differential equation (jUSDE)) Suppose Bt is a one-dimensional Brownian motion, Ct is a one-dimensional canonical Liu process, and Nt is an uncertain V–jump process with parameters 0 < r1 < r2 < 1, independent of (Bs, Cs), all defined on the chance space (Γ, ℒ, ℳ) × (Ω, ℱ, P) with the natural filtration (ℱ_t) generated by (Bs, Cs, Ns). For T > 0 and t ∈ [0, T], a process Xt is said to satisfy a jump–uncertain stochastic differential equation if

$$
dX_t = \alpha(t, X_t)\,dt + \sigma(t, X_t)\,dB_t + \theta(t, X_t)\,dC_t + \eta(t, X_t)\,dN_t, \tag{6}
$$

where α, σ, θ, η: [0, T] × ℝ → ℝ are progressively measurable with respect to (ℱ_t) in t and continuous in x.

Theorem 2.1 (Existence and uniqueness for jump–uncertain stochastic differential equation) The jump-uncertain stochastic differential equation (Equation 6) admits a unique (up to indistinguishability) adapted solution if its coefficients α(t, x), σ(t, x), θ(t, x), and η(t, x) satisfy the Lipschitz condition

$$
|\alpha(t,x) - \alpha(t,y)| + |\sigma(t,x) - \sigma(t,y)| + |\theta(t,x) - \theta(t,y)| + |\eta(t,x) - \eta(t,y)| \le L\,|x - y|
$$

and the linear growth condition

$$
|\alpha(t,x)| + |\sigma(t,x)| + |\theta(t,x)| + |\eta(t,x)| \le K\,(1 + |x|)
$$

for some constants L > 0 and K > 0, for a.e. t ∈ [0, T] and all x, y ∈ ℝ.

Define

Furthermore, we assume the following

(A1) (Canonical Liu process: pathwise Lipschitz) For each γ ∈ Γ, the path t ↦ Ct(γ) is Lipschitz on [0, T], with Lipschitz constant KC(γ) < ∞, and .

(A2) (Uncertain V–jump: bounded variation/moment bound) N has càdlàg bounded–variation paths and .

Proof of Theorem 2.1 To establish existence, we construct a Picard iteration scheme as in Chen and Liu [24], starting from a constant process and defining successive approximations by plugging the previous iterate into the integrals.

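As in [24], set X^{(0)}_t ≡ X_0 and, for n ≥ 0,

$$
X^{(n+1)}_t = X_0 + \int_0^t \alpha(s, X^{(n)}_s)\,ds + \int_0^t \sigma(s, X^{(n)}_s)\,dB_s + \int_0^t \theta(s, X^{(n)}_s)\,dC_s + \int_0^t \eta(s, X^{(n)}_s)\,dN_s. \tag{7}
$$

Under the hypotheses of Theorem 2.1, each term is well-defined and X^{(n)} is adapted and càdlàg.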
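The successive-approximation idea can be seen in a deterministic special case. The sketch below drops the Brownian, Liu, and jump integrals (an assumption made purely for illustration) and applies Picard iteration to x(t) = x0 + ∫ 0.5·x(s) ds, whose exact solution is x0·exp(0.5t). The function name, the grid, and the trapezoidal quadrature are choices of this sketch, not of the article.

```python
import math


def picard_iterates(drift, x0, t_grid, n_iter):
    """Picard iterates for x(t) = x0 + integral_0^t drift(s, x(s)) ds.

    Noise terms are omitted (an assumption of this sketch); integrals use
    the trapezoidal rule on t_grid. Returns a list of iterates, each a
    list of values on t_grid, starting from the constant path x0.
    """
    current = [x0 for _ in t_grid]
    iterates = [current]
    for _ in range(n_iter):
        values = [drift(t, x) for t, x in zip(t_grid, current)]
        nxt = [x0]
        for i in range(1, len(t_grid)):
            dt = t_grid[i] - t_grid[i - 1]
            nxt.append(nxt[-1] + 0.5 * dt * (values[i] + values[i - 1]))
        iterates.append(nxt)
        current = nxt
    return iterates


n_steps = 1000
grid = [i / n_steps for i in range(n_steps + 1)]
paths = picard_iterates(lambda t, x: 0.5 * x, 1.0, grid, n_iter=8)

exact = math.exp(0.5)
for n, path in enumerate(paths):
    print(f"iterate {n}: x(1)={path[-1]:.6f}, error={abs(path[-1] - exact):.2e}")
```

The shrinking error from one iterate to the next is the numerical counterpart of the contraction estimate that a Lipschitz condition provides.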

We derive uniform bounds for the iterates using inequalities tailored to each noise component: Burkholder–Davis–Gundy for the Brownian part, pathwise Lipschitz bounds for the Liu integral, and bounded-variation estimates for the V–jump part.

(i) For any square–integrable progressively measurable ψ,

(ii) Since C(γ) is Lipschitz with constant KC(γ), for any integrable φ and 0 ≤ a < b ≤ T,

Hence, by Cauchy–Schwarz and Fubini,

(iii) Each sample path of N is of bounded variation. For any integrable ϕ and each γ,

for some finite pathwise constant KN(γ). Consequently,

Using Minkowski inequality, Equations 8–10, and the linear growth condition, there exists C1 > 0 (depending on ) such that

Let . Then

To ensure these bounds remain finite, we apply Gronwall's inequality:
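For reference, the integral form of Gronwall's inequality typically used in arguments of this kind is stated below. It is a standard result; the symbols φ, a, and b are generic placeholders and are not taken from the original equations.

```latex
% Gronwall's inequality (integral form).
% Let \varphi : [0,T] \to [0,\infty) be continuous and let a, b \ge 0 be constants.
\varphi(t) \le a + b \int_0^t \varphi(s)\,ds \quad \text{for all } t \in [0,T]
\quad \Longrightarrow \quad
\varphi(t) \le a\,e^{bt} \quad \text{for all } t \in [0,T].
```

In particular, taking a = 0 forces φ ≡ 0, which is the form of the argument used for uniqueness.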

Let D^{(n+1)} := X^{(n+1)} − X^{(n)}. Subtract Equation 7 for consecutive indices:

The Lipschitz condition of Theorem 2.1, together with Equations 8–10, then yields the following estimate, which shows that the iteration sequence is Cauchy in the space of adapted càdlàg processes with finite second moments:

By dominated convergence, the linear growth condition, and the continuity of the integrals implied by Equations 8–10, we pass to the limit in Equation 7 and conclude that X satisfies Equation 6. Moreover, Equation 11 gives .

To establish uniqueness of the solution to Equation 6, let X and Y be two solutions and define their difference by Z := X − Y. Then, Z satisfies

Applying the Lipschitz condition of Theorem 2.1 together with the inequalities in Equations 8–10, we obtain

By Gronwall's lemma, it follows that

which implies that Zt = 0 almost surely for all t ∈ [0, T]. Therefore, X and Y are indistinguishable, and we conclude that Equation 6 admits a unique adapted solution (up to indistinguishability).

3 The uncertain stochastic optimal control with jump

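This study assumes that the uncertain stochastic optimal control model with jump is given by

$$
\begin{cases}
V(t, x) \equiv \sup\limits_{u} E\left[\displaystyle\int_t^T f(s, X_s, u_s)\,ds + G(X_T)\right] \\[6pt]
\text{subject to} \\[2pt]
dX_s = \alpha(s, X_s, u_s)\,ds + \sigma(s, X_s, u_s)\,dB_s + \theta(s, X_s, u_s)\,dC_s + \eta(s, X_s, u_s)\,dN_s, \quad X_t = x,
\end{cases} \tag{12}
$$

where us is the control variable, and Xs represents the state variable. The functions f and G are the objective function and terminal utility function, respectively. Finally, the four functions α, σ, θ, and η are functions of the state Xs, the control us, and time s. Bs, Cs, and Ns are the Brownian motion, the canonical Liu process, and the uncertain V-jump process with parameters r1, r2 for s > 0, respectively.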

Next, we present the principle of optimality and hence derive the equation of optimality.

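Theorem 3.1 (Principle of optimality). For any (t, x) ∈ [0, T) × ℝ and Δt ∈ (0, T − t], the value function V(t, x) can be expressed as

$$
V(t, x) = \sup_{u} E\left[\int_t^{t + \Delta t} f(s, X_s, u_s)\,ds + V(t + \Delta t,\, x + \Delta X_t)\right],
$$

where x + ΔXt = Xt+Δt.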

The proof of Theorem 3.1 follows the dynamic programming approach of Chirima et al. [3], which we extend to incorporate the Brownian–Liu–V-jump framework.

Proof of Theorem 3.1. Let X := X^{t,x;u} and assume the standard admissibility conditions on the control process u (progressive measurability, integrability, and continuity) so that the system is well posed and satisfies the controlled Markov property. Then, the value function satisfies

For any admissible u, the performance functional can be decomposed as

By the Markov property and independence of future increments (Bs − Bt+Δt, Cs − Ct+Δt, Ns − Nt+Δt) from ℱ_{t+Δt}, the conditional expectation equals the cost-to-go under the tail control starting from Xt+Δt, that is,

Hence,

Since V is the supremum over all admissible tail controls, it follows that

Substituting into Equation 15 and maximizing over all u on [t, T] yields

Conversely, fix ε > 0. For each y ∈ ℝ, let v^{ε,y} be an ε–optimal tail control on [t + Δt, T] such that

Using a measurable selection argument, one may construct the mapping y ↦ v^{ε,y} measurably. Define a combined control ū by setting ū_s = u_s for s ∈ [t, t + Δt) and ū_s = v_s^{ε,y} with y = X_{t+Δt} for s ∈ [t + Δt, T]. Admissibility of ū follows by construction. Applying Equation 15 with u replaced by ū gives

Maximizing over all admissible controls u on the interval [t, t + Δt] and then refining the approximation by taking ε arbitrarily small yields the reverse inequality. Taken together, the two inequalities establish the dynamic programming relation Equation 14, thereby completing the proof of Theorem 3.1.
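The two-inequality argument above has a simple discrete-time, deterministic analogue that can be verified by brute force. The sketch below is an illustration only: the integer state, the control set, the horizon, and the reward functions are all hypothetical, and no noise is modeled. It checks that the value obtained by the one-step Bellman recursion coincides with the value obtained by enumerating every control sequence.

```python
from itertools import product

CONTROLS = (-1, 0, 1)   # hypothetical control set
HORIZON = 4             # hypothetical number of decision steps


def step(x, u):
    """Deterministic state transition (no noise in this sketch)."""
    return x + u


def running_reward(x, u):
    return -(x ** 2) - 0.5 * abs(u)


def terminal_reward(x):
    return -2.0 * x ** 2


def value_by_recursion(t, x):
    """Bellman recursion: V(t, x) = max_u [ f(x, u) + V(t + 1, x') ]."""
    if t == HORIZON:
        return terminal_reward(x)
    return max(
        running_reward(x, u) + value_by_recursion(t + 1, step(x, u))
        for u in CONTROLS
    )


def value_by_enumeration(x0):
    """Maximize the total reward over every full control sequence."""
    best = float("-inf")
    for plan in product(CONTROLS, repeat=HORIZON):
        x, total = x0, 0.0
        for u in plan:
            total += running_reward(x, u)
            x = step(x, u)
        best = max(best, total + terminal_reward(x))
    return best


for x0 in (-2, 0, 3):
    print(x0, value_by_recursion(0, x0), value_by_enumeration(x0))
```

The recursion visits one step at a time, whereas the enumeration searches all 3⁴ plans at once; their agreement is the content of the principle of optimality in this toy setting.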

Theorem 3.2 (Equation of optimality). Let V: [0, T] × ℝ → ℝ be a twice continuously differentiable function. Then

where Vt(t, x) and Vx(t, x) are the partial derivatives of V(t, x) with respect to t and x, respectively, and Vxx(t, x) is the second-order partial derivative of V(t, x) with respect to x.

Proof of Theorem 3.2: For any Δt > 0, we have

By using the Taylor series expansion, we get
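A generic second-order expansion of this kind is sketched below for a function V that is twice continuously differentiable in both arguments. It is the standard two-variable Taylor formula, written here as an aid to the reader; the grouping of terms may differ from the article's own Equation 18.

```latex
V(t + \Delta t,\; x + \Delta X_t)
  = V(t,x) + V_t(t,x)\,\Delta t + V_x(t,x)\,\Delta X_t
  + \tfrac{1}{2} V_{tt}(t,x)\,\Delta t^{2}
  + V_{tx}(t,x)\,\Delta t\,\Delta X_t
  + \tfrac{1}{2} V_{xx}(t,x)\,\Delta X_t^{2}
  + \text{higher-order terms}.
```

Only the terms whose expectation is of order Δt survive after dividing by Δt and letting Δt → 0.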

By substituting Equations 17 and 18 into Equation 13, we generate

We know that , and . Based on the jump-uncertain stochastic differential equation, the constraint in Equation 12, ΔXt = α(t, Xt, ut)Δt + σ(t, Xt, ut)ΔBt + θ(t, Xt, ut)ΔCt + η(t, Xt, ut)ΔNt, we can generate Equations 20 and 21 as follows:

and

Substituting Equations 20, 21 into Equation 19 yields

Since E(ΔBt) = E(ΔCt) = 0, and from Equation 5

Dividing Equation 23 by Δt, we obtain

Thus, Theorem 3.2 has been proved.

4 Relation to uncertain random maximum principle

In this section, we examine the relationship between the maximum principle and dynamic programming within a jump-uncertain stochastic control framework. Under appropriate regularity and non-degeneracy conditions adapted to the uncertain jump setting, we demonstrate that the value function V(t, x) is the unique classical solution to the associated Hamilton-Jacobi-Bellman (HJB) equation. Consequently, the adjoint processes arising in the maximum principle formulation can be identified with the derivatives of the value function, thereby establishing a rigorous correspondence between the two approaches.

Moreover, we verify that the optimal control derived from the HJB equation coincides with that obtained via the maximum principle, confirming their internal consistency in the context of uncertain jump-diffusion models. To illustrate this connection, we present a concrete example from a portfolio selection problem.

4.1 Maximum principle formulation

We consider a controlled uncertain stochastic system:

as defined by Equation 5 and the constraint in Equation 12. We consider a performance criterion J(t, x) of the form

where u is an admissible control process, and f, G are the running and terminal rewards, respectively. The control objective is to maximize J(t, x), such that

provided V(t, x) ∈ C^{1,2}([0, T] × ℝ), so that classical derivatives Vt, Vx, Vxx exist and are continuous. In the stochastic maximum principle framework, we define the Hamiltonian:

The adjoint process (pt, qt, rt, ψt) satisfies the backward uncertain stochastic differential equation (BUSDE):

To rigorously connect the dynamic programming and maximum principle approaches, we require that the value function V(t, x) be sufficiently smooth, specifically V ∈ C^{1,2}([0, T] × ℝ), so that the Hamilton-Jacobi-Bellman (HJB) equation holds in the classical sense.

For classical smoothness, we impose the following conditions:

(H1) The coefficients α, σ, θ, η and the reward functions f, G are Lipschitz continuous in x, uniformly in t and u, and twice continuously differentiable with respect to x.

(H2) The terminal reward G(x) ∈ C2(ℝ) satisfies polynomial growth.

(H3) The control set U⊂ℝm is compact and convex.

(H4) The diffusion coefficients satisfy a uniform non-degeneracy condition:

(H5) The uncertain-jump process Nt is of finite activity, and its expectation is smooth in t.

To achieve regularity via viscosity solution theory, under assumptions (H1)–(H5), the value function satisfies the dynamic programming principle and is a continuous viscosity solution to the Hamilton–Jacobi–Bellman equation:

Existence and uniqueness of viscosity solutions to HJB equations are well-established (see Barles and Imbert [30]), and extensions to uncertain differential equations have been developed in the Liu process framework (see Chen and Liu [24]).

To obtain a classical solution, note that viscosity solutions are generally not smooth. However, under the non-degeneracy condition (H4), if the jump terms have finite activity and the coefficients are smooth, the associated HJB equation becomes uniformly parabolic. In this setting, Krylov's existence–uniqueness theorem for Bellman equations, together with standard regularity theory, ensures that the viscosity solution V is in fact smooth:

$$
V \in C^{1,2}([0, T] \times \mathbb{R}).
$$

Therefore, under standard regularity and non-degeneracy conditions adapted to the uncertain-jump setting, the value function V(t, x) is the unique classical solution to the HJB equation. Consequently, the adjoint processes in the maximum principle approach can be identified with the derivatives of V, ensuring a rigorous connection between dynamic programming and the maximum principle.

4.2 Connection between dynamic programming and maximum principle

Under the smoothness assumption, the value function satisfies the Hamilton–Jacobi–Bellman (HJB) Equation 29.

Given that V(t, x) ∈ C^{1,2}, we can express the adjoint processes from the maximum principle in terms of derivatives of the value function from dynamic programming:

Substituting into the Hamiltonian:

which matches the integrand of the HJB equation. Thus, the maximization condition in the maximum principle is equivalent to the supremum condition in the HJB.

In summary, the connection between the dynamic programming and maximum principle approaches is established through the smoothness of the value function. Under appropriate regularity conditions, the adjoint process pt corresponds to the gradient Vx(t, Xt), while the integrands qt, rt, ψt correspond to expressions involving the second-order derivative Vxx(t, Xt) and the gradient Vx(t, Xt). Moreover, the Hamiltonian in the maximum principle coincides with the integrand appearing inside the supremum in the Hamilton–Jacobi–Bellman (HJB) equation. Therefore, when the value function V ∈ C^{1,2}, both methods provide consistent characterizations of the optimal control, thereby completing the connection between dynamic programming and the maximum principle in the jump uncertain-stochastic framework.

4.3 Example

In order to illustrate how the maximum principle's adjoint process pt = Vx(t, Xt) aligns with the derivative of the value function from dynamic programming in a jump uncertain-stochastic portfolio selection problem, we consider a financial market in which an investor allocates wealth between a risk-free asset and a risky asset. Let Xt denote the investor's wealth at time t, and let the control process denote the proportion of wealth invested in the risky asset. The wealth is governed by the following jump-uncertain stochastic differential equation:

where μ is the excess return of the risky asset over the risk-free asset, and σ > 0, γ > 0, and η > 0 are the volatility coefficients. Bt, Ct, and Nt are a standard Brownian motion, a canonical Liu process, and an uncertain V-jump process with parameters r1 and r2, respectively.

The investor seeks to maximize the expected utility of terminal wealth. Let G(x) = log(x) be the utility function. The value function is

and is assumed to belong to C^{1,2}.

Using Theorem 3.2, the Hamilton–Jacobi–Bellman (HJB) equation is

Combining terms:

where .

On the other hand, the Hamiltonian is

and the adjoint process satisfies

Under the assumption V ∈ C^{1,2}, we identify

Thus, the Hamiltonian becomes

which is the same expression as inside the supremum in the HJB equation.

Maximizing the Hamiltonian or solving the HJB yields

implying the optimal control:

This matches the control derived from the HJB equation, demonstrating the consistency of the dynamic programming and maximum principle approaches under the smoothness of the value function.

5 Application: portfolio selection in a jump-uncertain stochastic market

The portfolio selection problem, a core topic in financial economics, addresses how to allocate wealth between risk-free and risky assets. Seminal works by Merton [23] and Kao [31] employed stochastic optimal control to model this decision. Zhu [17] later incorporated uncertain returns using uncertain optimal control. This study extends Zhu's framework by introducing jump-uncertain stochastic dynamics.

5.1 Optimal portfolio allocation

Let Xt represent the investor's wealth at time t in a market with two assets: a risk-free security and a risky asset. A fraction ρ of the wealth is invested in the risk-free asset and the remainder in the risky asset.

The risk-free asset earns a constant return b. The risky asset has an expected return μ (μ > b), and its volatility is influenced by both randomness and uncertainty, modeled by σ1 (stochastic), σ2 (uncertain), and λ (jump volatility). Asset dynamics are driven by independent processes: Brownian motion Bt, jump process Nt, and canonical Liu process Ct.

The return on the risky asset over (t, t + dt) is given by

Thus,

We assume that the investor's risk aversion remains constant over time and therefore uses the constant relative risk aversion (CRRA) utility function similar to Zhu [17]. Assume that an investor is interested in maximizing the expected utility over an infinite time horizon. Then, a portfolio selection model is provided by

where b > 0, 0 < κ < 1.

By Theorem 3.2, the value function satisfies

In Equation 37, define the function

then the first-order condition with respect to ρ yields

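We conjecture that

$$
V(t, x) = K e^{-\beta t} x^{\kappa},
$$

so that

$$
V_t = -\beta K e^{-\beta t} x^{\kappa}, \qquad
V_x = \kappa K e^{-\beta t} x^{\kappa - 1}, \qquad
V_{xx} = \kappa(\kappa - 1) K e^{-\beta t} x^{\kappa - 2}.
$$

Substituting the derivatives into Equation 38 and dividing by the common factor e^{−βt}x^κ yields the equation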

Hence, the optimal fraction invested in the risk-free asset is independent of total wealth.

Since Equation 39 does not generally admit a closed-form solution, we solve it numerically and project the result onto the interval [0, 1] to ensure feasibility under the standard no-leverage and no-shorting constraints. This implicit optimality condition characterizes the optimal portfolio allocation, where ρ denotes the proportion of wealth invested in the risk-free asset and (1 − ρ) in the risky asset.
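The solve-then-project step can be sketched as follows. Because Equation 39 is not reproduced here, the residual `placeholder_foc` is a stand-in, namely the Brownian-only Merton first-order condition written in terms of ρ, and it is NOT the article's condition. The bisection routine, the bracket [−10, 10], and the value σ1 = 0.40 are likewise assumptions of this sketch.

```python
def bisect_root(g, lo, hi, tol=1e-12, max_iter=200):
    """Bisection for g(rho) = 0 on [lo, hi]; the endpoints must bracket a root."""
    g_lo, g_hi = g(lo), g(hi)
    if g_lo == 0.0:
        return lo
    if g_hi == 0.0:
        return hi
    if g_lo * g_hi > 0.0:
        raise ValueError("root is not bracketed")
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        g_mid = g(mid)
        if g_mid == 0.0 or hi - lo < tol:
            return mid
        if g_lo * g_mid < 0.0:
            hi = mid              # sign change lies in [lo, mid]
        else:
            lo, g_lo = mid, g_mid  # sign change lies in [mid, hi]
    return 0.5 * (lo + hi)


def project_unit_interval(rho):
    """Enforce the no-leverage and no-shorting constraints 0 <= rho <= 1."""
    return min(1.0, max(0.0, rho))


def placeholder_foc(rho, mu=0.08, b=0.02, sigma1=0.40, kappa=0.5):
    """Stand-in residual (Brownian-only Merton condition), NOT Equation 39."""
    return (b - mu) + (1.0 - kappa) * sigma1 ** 2 * (1.0 - rho)


rho_free = bisect_root(placeholder_foc, -10.0, 10.0)  # unconstrained root
rho_star = project_unit_interval(rho_free)            # feasible allocation
print(f"unconstrained rho={rho_free:.6f}, projected rho={rho_star:.6f}")
```

Solving on a bracket wider than [0, 1] and projecting afterwards makes it visible when a constraint binds, since the unconstrained root then falls outside the unit interval.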

5.2 Numerical illustration and comparative statics

We use financially plausible, annualized baseline parameters: risk–free rate b = 0.02, expected risky return μ = 0.08, Brownian volatility σ1 = 0.20, jump volatility scale λ = 0.10, V–jump parameters (r1, r2) = (0.2, 0.8) so that , and CRRA parameter κ = 0.5. We set the value–function scale constant to K = 10 to obtain interior solutions. Each experiment varies one parameter over a common, realistic grid while holding the others at their baseline values.
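The one-at-a-time design described above can be organized as a small sweep harness. This is a minimal sketch under stated assumptions: `Params`, `stand_in_policy`, and `sweep` are illustrative names, and `stand_in_policy` is a hypothetical diffusion-only Merton rule that ignores the jump scale and the uncertain volatility, so it does not reproduce the paper's numbers. Only the baseline values are taken from the text.

```python
from dataclasses import dataclass, replace
from typing import Callable, Iterable, List, Tuple


@dataclass(frozen=True)
class Params:
    """Baseline values quoted in the text (annualized)."""
    b: float = 0.02       # risk-free rate
    mu: float = 0.08      # expected risky return
    sigma1: float = 0.20  # Brownian volatility
    lam: float = 0.10     # jump scale (unused by the stand-in policy)
    kappa: float = 0.5    # CRRA parameter


def stand_in_policy(p: Params) -> float:
    """HYPOTHETICAL policy: diffusion-only Merton rule, clipped to [0, 1]."""
    risky = (p.mu - p.b) / ((1.0 - p.kappa) * p.sigma1 ** 2)
    return min(1.0, max(0.0, 1.0 - risky))


def sweep(policy: Callable[[Params], float], baseline: Params,
          name: str, grid: Iterable[float]) -> List[Tuple[float, float]]:
    """Vary one parameter over grid, holding the others at baseline."""
    return [(v, policy(replace(baseline, **{name: v}))) for v in grid]


if __name__ == "__main__":
    base = Params()
    for name, grid in [("sigma1", [0.2, 0.3, 0.4, 0.5, 0.6]),
                       ("mu", [0.02, 0.03, 0.04, 0.08])]:
        print(name, sweep(stand_in_policy, base, name, grid))
```

Using a frozen dataclass with `dataclasses.replace` guarantees that each experiment starts from the same untouched baseline, which is the property the comparative statics rely on. Swapping `stand_in_policy` for a solver of the actual optimality condition would leave the harness unchanged.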

Response to Brownian volatility σ1.

Response to risk–aversion κ.

Response to expected return μ.

Response to jump scale λ.

5.3 Discussion of computational results

The computational experiments provide important insights into the comparative statics of the optimal portfolio fraction ρ in the jump–uncertain setting, highlighting how different market and preference parameters shape investment behavior.

First, the results show that as the Brownian volatility σ1 increases, the optimal allocation ρ to the risk–free asset rises monotonically. This pattern reflects the natural precautionary response to higher diffusion risk: Investors reduce exposure to the risky asset and seek safety in the risk–free asset, consistent with the negative coefficient of (κ − 1) in Equation 39. This finding underlines the stabilizing role of the risk–free asset in portfolios when market uncertainty is driven by continuous volatility.

Second, the risk–aversion parameter κ exerts a powerful influence on the portfolio rule. For highly risk–averse investors (small κ), ρ is large, meaning that most wealth is allocated to the risk–free asset. As κ increases, reflecting weaker risk aversion and greater willingness to tolerate variability in returns, ρ declines, and wealth is shifted toward the risky asset. This comparative static emphasizes the theoretical consistency of the model with classical portfolio theory.

Third, the effect of the expected return μ is particularly striking: As μ rises, ρ decreases sharply, shifting wealth into the risky asset. This is intuitive since higher expected reward offsets risk, encouraging more aggressive investment. Notably, when μ is low, the no–shorting constraint binds and the model projects ρ to one, implying a complete allocation to the risk–free asset. This binding case illustrates how constraints can dominate investor preferences when returns are insufficient to justify risky exposure.

Finally, the V–jump intensity λ captures discontinuous risks that amplify downside exposure. The numerical results show that higher values of λ significantly increase ρ, reflecting investors' tendency to shield wealth from rare but severe shocks. For small λ, the projection again binds at ρ = 1, consistent with highly conservative behavior under jump risk, while for moderate to large λ, the optimal policy shifts markedly toward the risk–free asset.

Overall, these findings demonstrate that the fraction ρ responds in economically consistent ways to both risk parameters and preference parameters. The comparative statics not only validate the theoretical formulation of the model but also provide practical guidance for portfolio selection, illustrating how investors adjust their allocation between risky and risk–free assets in response to changes in volatility, return, risk aversion, and jump exposure.

5.4 Summary

The computational findings indicate that the proposed model replicates realistic investor decision-making across a range of market environments. The numerical simulations exhibit consistent comparative statics: The optimal proportion ρ allocated to the risk-free asset increases with both the Brownian volatility σ1 and the jump intensity λ, signifying a heightened precautionary stance in response to elevated uncertainty. Conversely, ρ declines as the expected return on the risky asset μ rises, reflecting stronger incentives to assume risk for higher potential gains. Similarly, ρ decreases as the CRRA parameter κ increases, that is, as investors become less risk-averse and allocate a smaller share to safe assets. Crucially, the value of ρ is determined solely by investor preferences and market parameters and remains independent of current wealth levels. This confirms the persistence of the constant-proportion investment rule under CRRA preferences, even in the presence of jump risk. Collectively, these outcomes support the reliability and economic relevance of the proposed framework for guiding portfolio decisions under both continuous and jump-driven sources of uncertainty.

6 Conclusion

This study develops a unified framework for optimal portfolio selection in jump–uncertain stochastic markets, making both theoretical and computational contributions. On the theoretical side, we establish the existence and uniqueness of solutions to jump–uncertain stochastic differential equations, thereby extending the results of Chirima et al. [25], who studied the case without jumps in an uncertain–stochastic setting, and complementing the work of Chen and Liu [24], who proved an existence and uniqueness theorem in a Liu–uncertain framework without jumps. In addition, we provide a rigorous proof of the principle of optimality for jump–uncertain stochastic control problems. This result extends the work of Chirima et al. [3], who considered uncertain–stochastic systems without jumps, and generalizes the dynamic programming foundation developed by Chen and Liu [24], thereby reinforcing the link between dynamic programming and the maximum principle under both continuous and discontinuous uncertainty.

From a computational perspective, our analysis demonstrates that the framework captures realistic investor behavior across diverse market conditions. The numerical experiments reveal consistent comparative statics: The optimal fraction ρ invested in the risk–free asset increases with Brownian volatility σ1 and jump intensity λ, reflecting stronger precautionary allocation under heightened uncertainty, while ρ decreases with the expected risky return μ and the risk–aversion parameter κ, indicating greater exposure to risk when expected returns rise or when investors are less risk–averse. Importantly, ρ depends only on preferences and market parameters, and not on current wealth, thereby confirming that the constant–proportion property of CRRA preferences persists in jump–uncertain settings.

Taken together, these findings underscore the robustness, tractability, and economic relevance of the proposed model. The result that the optimal fraction of wealth allocated to the risk-free asset remains independent of total wealth is a direct consequence of the constant–proportion investment rule under CRRA utility, which holds even in the presence of both Brownian and jump uncertainty. This outcome aligns with classical results in jump–diffusion models, notably those of Øksendal and Sulem [8], who demonstrated the same independence property. Similar conclusions were reached by Zhu [17] and Chirima et al. [3], further reinforcing the generality of this principle. More broadly, by integrating existence and uniqueness theory, the dynamic programming principle, and computational validation, this study offers a mathematically rigorous and practically applicable framework for portfolio optimization in markets subject to both continuous and discontinuous sources of risk.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

CH: Writing – original draft, Writing – review & editing, Conceptualization, Investigation. EC: Supervision, Writing – review & editing. CK: Supervision, Writing – review & editing. MM: Supervision, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.



References

2. Pontryagin LS, Boltyanskii VG, Gamkrelidze RV, Mishchenko EF. The Mathematical Theory of Optimal Processes. New York: Interscience Publishers (1962).

3. Chirima J, Matenda FR, Chikodza E, Sibanda M. Dynamic programming principle for optimal control of uncertain random differential equations and its application to optimal portfolio selection. Rev Busin Econo Stud. (2024) 12:74–85. doi: 10.26794/2308-944X-2024-12-3-74-85

4. Kushner HJ. On the Hamilton–Jacobi–Bellman equation for optimal stochastic control. J Math Anal Appl. (1965) 11:56–74.

7. Yong J, Zhou XY. Stochastic controls: Hamiltonian systems and HJB equations. In: Applications of Mathematics. New York: Springer (1999).

8. Øksendal B, Sulem A. Applied Stochastic Control of Jump Diffusions. 2nd ed. Berlin: Springer (2005).

9. Peng S. A general stochastic maximum principle for optimal control problems. SIAM J Cont Optimizat. (1990) 28:966–79. doi: 10.1137/0328054

10. Ge X, Zhu Y. A necessary condition of optimality for uncertain optimal control problem. Fuzzy Opt Deci Making. (2013) 12:41–51. doi: 10.1007/s10700-012-9147-4

11. Chikodza E, Hlahla CK. Necessary Condition for Optimal Control of Uncertain Stochastic Systems. Singapore: Contemporary Mathematics, Universal Wiser Publisher (2025).

12. Framstad NC, Øksendal B, Sulem A. Sufficient stochastic maximum principle for the optimal control of jump diffusions and applications to finance. J Optimizat Theory Appl. (2004) 121:77–98. doi: 10.1023/B:JOTA.0000026132.62934.96

13. Seba E. Oil Settles up 7% as Israel, Iran Trade Air Strikes (2025). Available online at: https://www.reuters.com/world/china/oil-prices-jump-more-than-4-after-israel-strikes-iran-2025-06-13 (Accessed July 06, 2025).

14. Stephenson A. Oil Prices Up Nearly 3 Percent as Israel-Iran Conflict Escalates, US Response Remains Uncertain (2025). Available online at: https://www.reuters.com/business/energy/oil-falls-investors-weigh-chance-us-intervention-iran-israel-conflict-2025-06-19/?utm_source (Accessed July 06, 2025).

15. Bousso R. Israel-Iran War Highlights Mideast's Declining Influence on Oil Prices (2025). Available online at: https://www.reuters.com/markets/commodities/israel-iran-war-highlights-mideasts-declining-influence-oil-prices-2025-06-25 (Accessed July 06, 2025).

17. Zhu Y. Uncertain optimal control with application to a portfolio selection model. Cybern Syst. (2010) 41:535–47. doi: 10.1080/01969722.2010.511552

18. Deng L, Zhu Y. Uncertain optimal control with jump. Comp Sci Inform Syst. (2012) 9:1453–68. doi: 10.2298/CSIS120225049D

20. Fei W. Optimal control of uncertain stochastic systems with Markovian switching and its applications to portfolio decisions. Cybern Syst. (2014) 45:69–88. doi: 10.1080/01969722.2014.862445

21. Fei W. On existence and uniqueness of solutions to uncertain backwards stochastic differential equations. J Chin Univers. (2014) 29:55–66. doi: 10.1007/s11766-014-3048-y

22. Markowitz H. Portfolio selection. J Finance. (1952) 7:77–91. doi: 10.1111/j.1540-6261.1952.tb01525.x

23. Merton RC. Optimum consumption and portfolio rules in a continuous-time model. J Econ Theory. (1971) 3:373–413. doi: 10.1016/0022-0531(71)90038-X

24. Chen X, Liu B. Existence and uniqueness theorem for uncertain differential equations. Fuzzy Optimiz Deci Making. (2010) 9:69–81. doi: 10.1007/s10700-010-9073-2

25. Chirima J, Chikodza E, Hove-Musekwa SD. Numerical Methods for first order uncertain stochastic differential equations. Int J Mathem Operat Res. (2020) 16:1–23. doi: 10.1504/IJMOR.2020.104679

26. Hou Y. Subadditivity of chance measure. J Uncert Analy Appl. (2014) 2:14. doi: 10.1186/2195-5468-2-14

27. Liu Y. Uncertain random variables: a mixture of uncertainty and randomness. Soft Comp. (2013) 17:625–34. doi: 10.1007/s00500-012-0935-0

29. Wu C, Yang L, Zhang C. Uncertain stochastic optimal control with jump and its application in a portfolio game. Symmetry. (2022) 14:1885. doi: 10.3390/sym14091885

30. Barles G, Imbert C. Second-order elliptic integro-differential equations: viscosity solutions' theory revisited. Ann I H Poincaré – AN. (2008) 25:567–85. doi: 10.1016/j.anihpc.2007.02.007

Keywords: optimal control, jump–uncertain stochastic differential equation, uncertain stochastic maximum principle, V-jump process, backward uncertain stochastic differential equation

Citation: Hlahla CK, Chikodza E, Kazunga C and Magodora M (2025) Optimal portfolio selection in jump-uncertain stochastic markets via maximum principle and dynamic programming. Front. Appl. Math. Stat. 11:1667889. doi: 10.3389/fams.2025.1667889

Received: 17 July 2025; Accepted: 22 August 2025;

Published: 10 September 2025.

Edited by: Indranil SenGupta, Hunter College (CUNY), United States

Reviewed by: Minglian Lin, North Dakota State University, United States; Justin Chirima, University of Malawi, Malawi

Copyright © 2025 Hlahla, Chikodza, Kazunga and Magodora. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Clift Kudzai Hlahla, kudzaihlahla@yahoo.com

Clift Kudzai Hlahla, Eriyoti Chikodza, Cathrine Kazunga, Mangwiro Magodora