Abstract

Aim:

This study employed generative adversarial networks (GANs) to predict the morphology of the opaque bubble layer (OBL) before femtosecond laser scanning during small incision lenticule extraction (SMILE).

Methods:

A retrospective cross-sectional analysis was conducted on 4,442 eyes from 2,265 patients who underwent SMILE surgery at the Ophthalmic Center of the Second Affiliated Hospital of Nanchang University between June 2021 and August 2022. Surgical videos, preoperative panoramic corneal images, and intraoperative OBL images were collected. The dataset was randomly split into a training set of 3,998 images and a test set of 444 images for model development and evaluation, respectively. Structural similarity index (SSIM) and peak signal-to-noise ratio (PSNR) were used to quantitatively assess OBL image quality. The accuracy of intraoperative OBL image predictions was also compared across different models.

Results:

Seven GAN models were developed. Among them, the model incorporating a residual structure and Transformer module within the Pix2pix framework exhibited the best predictive performance. This model’s intraoperative OBL morphology prediction demonstrated high consistency with actual images (SSIM = 0.67, PSNR = 26.02). The prediction accuracy of Trans-Pix2Pix (SSIM = 0.66, PSNR = 25.76), Res-Pix2Pix (SSIM = 0.65, PSNR = 23.08), Pix2Pix (SSIM = 0.64, PSNR = 22.97), Pix2PixHD (SSIM = 0.63, PSNR = 23.46), and DCGAN (SSIM = 0.58, PSNR = 20.46) was slightly lower, while the CycleGAN model (SSIM = 0.51, PSNR = 18.30) showed the least favorable results.

Conclusion:

The GAN model developed for predicting intraoperative OBL morphology based on preoperative panoramic corneal images demonstrates effective predictive capabilities and offers valuable insights for ophthalmologists in surgical planning.

Introduction

Myopia, the most prevalent refractive error, emerges as a significant public health concern due to its rising global incidence (Baird et al., 2020; Medina, 2022). Laser refractive surgery, a widely used treatment, has been proven to enhance visual quality safely and effectively, significantly improving quality of life and work performance in individuals with myopia (Kim et al., 2019; Wilson, 2020). Small Incision Lenticule Extraction (SMILE), a relatively novel femtosecond laser technique, offers several advantages over traditional Laser-Assisted in Situ Keratomileusis (LASIK). However, it is technically demanding and associated with a steep learning curve (Lin et al., 2024; Zhao J. et al., 2023). During the early learning phase, surgeons may encounter unforeseen complications, which can negatively affect surgical outcomes (Wan et al., 2021; Titiyal et al., 2017).

A common intraoperative complication in femtosecond laser photofracture of corneal tissue is the formation of opaque bubble layers (OBL), caused by the accumulation of air bubbles between corneal layers (Zhu et al., 2024). OBL may complicate lenticule separation or otherwise increase the complexity of surgery, potentially delaying postoperative vision recovery (Zhu et al., 2024; Yang S. et al., 2023). While previous studies have identified several risk factors for OBL formation (Yang S. et al., 2023; Son et al., 2017; Ma et al., 2018), no comprehensive model currently exists to predict the morphology of OBL. Such a predictive model could improve surgical decision-making and enhance the safety of SMILE procedures.

The creation of the lenticule during laser scanning is a crucial step in SMILE surgery, and it is fully automated and machine-controlled (Teo and Ang, 2024). If complications arise during this phase, surgeons typically need to halt the surgery promptly and use alternative techniques or adjustments to resolve the issue (Wan et al., 2021). This not only poses a significant challenge to the surgeon’s skills but also increases patient anxiety, potentially affecting both postoperative visual acuity and recovery (Yang S. et al., 2023; Titiyal et al., 2018). To minimize these risks, potential intraoperative complications should be anticipated and identified before laser scanning, especially during the suction-initiation step. Identifying any abnormalities during this phase enables surgeons to interrupt and restart the procedure without affecting the laser scanning. Research has shown that the major risk factors for OBL during SMILE surgery are associated with specific corneal parameters (Yang S. et al., 2023; Son et al., 2017; Ma et al., 2018). An important question arises: Can artificial intelligence (AI) technology predict the morphology of OBL by analyzing the panoramic corneal images captured during the negative pressure suction phase of SMILE surgery?

With its rapid development, AI has increasingly become an important auxiliary tool in the field of ophthalmology, demonstrating substantial potential in improving clinical management and workflows (Zheng et al., 2021; Xu and Yang, 2023; Yang W.-H. et al., 2023). In the field of image prediction, generative adversarial networks (GANs), a key area within AI, offer substantial advantages in processing high-dimensional data and images, showing great promise in automated medical analysis, particularly for managing large, complex datasets related to human diseases (Saeed et al., 2021; Gong et al., 2021; Waisberg et al., 2025). Compared to other models, such as Conditional Diffusion Models or Autoregressive Models, GANs can directly model image-to-image mapping through adversarial training, which is suitable for tasks such as intraoperative OBL prediction that require detail preservation (Waisberg et al., 2025). Pix2Pix is a universal image-to-image translation model in GANs (Kim and Chin, 2023; Zhang et al., 2022; Abdelmotaal et al., 2021). However, when used for medical image generation, it often produces blurred and distorted outputs that fail to capture fine details and lack texture (Zhang et al., 2022; Kim et al., 2025). By contrast, the residual structure helps preserve shallow and high-frequency information across layers (Wang et al., 2017; Park et al., 2019), and the Transformer network enhances global understanding through its attention mechanism (Chen et al., 2021; Cao et al., 2021).

To address this, the study incorporated a combination of residual structure and Transformer module into the Pix2Pix to predict the morphology of OBL during SMILE surgery, providing crucial intraoperative support for surgeons. Given the increased difficulty when OBL forms at the posterior interface of the lenticule during laser scanning, this study focused primarily on analyzing OBL during this critical phase (Son et al., 2017; Titiyal et al., 2018). The model allows surgeons to implement timely interventions, such as promptly releasing negative pressure and adjusting surgical parameters. These measures can effectively reduce OBL incidence, thereby minimizing its negative impact on both the surgical process and postoperative visual recovery. This approach holds significant practical value for enhancing the quality and safety of SMILE surgery.

Materials and methods

Research object

This retrospective cross-sectional study was conducted in strict adherence to the Declaration of Helsinki and received approval from the Ethics Committee of the Second Affiliated Hospital of Nanchang University (Approval No. 2024086). It is registered with ClinicalTrials.gov (Identifier: NCT06577012). The study included patients who underwent SMILE surgery at the Ophthalmic Center of the Second Affiliated Hospital of Nanchang University between June 2021 and October 2022.

Inclusion criteria were as follows: (1) age between 18 and 45 years; (2) Corrected Distance Visual Acuity (CDVA) of 16/20 or better; (3) preoperative spherical equivalent (SE) ≥ −10.0 diopters; (4) relatively stable refractive error, with annual changes of less than 0.50 diopters over the past 2 years; (5) no contact lens use in the 2 weeks preceding surgery.

Exclusion criteria were as follows: (1) presence of ocular conditions other than myopia and astigmatism, such as keratoconus, severe dry eye, uncontrolled glaucoma, visually significant cataracts, or a history of ocular trauma; (2) prior ocular surgery; (3) history of systemic diseases that could compromise surgical outcomes or patient safety, including psychiatric disorders, severe hyperthyroidism, systemic connective tissue diseases, or autoimmune diseases.

Surgical procedure

In this study, all patients underwent SMILE surgery for the correction of myopia and astigmatism. The procedures were performed by two experienced surgeons with 10 and 20 years of refractive surgery experience, respectively, each having performed thousands of procedures including PRK, FS-LASIK, and SMILE.

To minimize the risk of infection, patients were prescribed 0.3% gatifloxacin eye gel starting 3 days before surgery. Fifteen minutes prior to surgery, the nurse administered surface anesthesia using 0.5% proparacaine hydrochloride eye drops, with one drop every 5 min for a total of two drops. During the procedure, a femtosecond laser system (Carl Zeiss, VisuMax, Germany) was used. The laser settings were as follows: pulse energy of 135 nJ, spot and track spacing of 4.5 μm, corneal cap thickness of 100–120 μm, and a cap diameter of 7.5 mm. The femtosecond laser performed sequential cuts in the following order: posterior lenticule cut, lenticule side cut, anterior lenticule cut, and cap side cut. A 2 mm corneal micro-incision was made at the 12 o’clock position, with a side cut angle of 90°. The transition zone for astigmatism treatment was set to the default value of 0.1 mm (Table 1). During the procedure, the surgeon stabilized the eyeball using microscopic toothed forceps with the left hand, while employing a lenticule separation spatula with the right hand to sequentially separate the anterior and posterior interfaces of the lenticule. Once these steps were completed, the lenticule was extracted, followed by the removal of the eyelid speculum, marking the end of the surgery.

TABLE 1

| Surgical parameters | Range |
|---|---|
| Wavelength/nm | 1,053 |
| Pulse duration/fs | 400 |
| Pulse emission frequency/kHz | 500 |
| Optical zone/mm | 6.5 |
| The transition zone for astigmatism treatment/mm | 0.1 |
| Cap diameter/mm | 7.5 |
| Cap thickness/μm | 100–120 |
| The line and spot separations/μm | 4.5 |
| Cap side cut angle/° | 90 |
| Incision width/mm | 2 |
| Energy/nJ | 135 |

SMILE surgical parameters.

Postoperative care began on the first day after surgery, with the following regimen: 0.3% gatifloxacin eye gel four times daily for 1 week; 0.1% fluorometholone eye drops four times daily for 4 weeks, with weekly frequency reductions; and sodium hyaluronate eye drops four times daily for 4 weeks.

Data set building

Data acquisition

The panoramic corneal view during SMILE surgery and the laser scanning image of the posterior lenticule interface were captured from the SMILE surgical video in the VisuMax system.

The OBL area was measured according to the methods described in previous literature (Zhu et al., 2024; Yang S. et al., 2023; Son et al., 2017). Adobe Photoshop 2020 software (Adobe Systems, San Jose, CA) was used, and the OBL area was defined as the percentage of pixels that are two standard deviations (SD) brighter than the average background, with the corneal region selected by the elliptical marquee tool. To ensure measurement accuracy and consistency and reduce software operation errors, each measurement was independently completed by three senior surgeons and cross-checked.
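The thresholding rule described above can be sketched in a few lines of NumPy. This is only an illustration of the "two SD brighter than the average background" definition, not the Photoshop workflow used in the study. The function name `obl_area_percent`, the synthetic image, and the choice to compute the background mean and SD over all pixels inside the corneal mask are assumptions, since the text does not specify the reference region.

```python
import numpy as np

def obl_area_percent(gray, cornea_mask, n_sd=2.0):
    """Percentage of corneal pixels brighter than mean + n_sd * SD.

    Assumption: the background mean and SD are taken over every pixel
    inside the corneal mask; the source does not state the reference region.
    """
    pixels = gray[cornea_mask].astype(np.float64)
    threshold = pixels.mean() + n_sd * pixels.std()
    return 100.0 * np.count_nonzero(pixels > threshold) / pixels.size

# Synthetic example: a uniform 100 x 100 "cornea" with 300 bright pixels.
gray = np.full((100, 100), 50, dtype=np.uint8)
gray[:3, :] = 250  # 3 rows x 100 columns of hypothetical OBL pixels
cornea_mask = np.ones_like(gray, dtype=bool)
area = obl_area_percent(gray, cornea_mask)
```

In this toy image the 300 bright pixels exceed the threshold, so the measured area is 3% of the corneal region.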

Data preprocessing

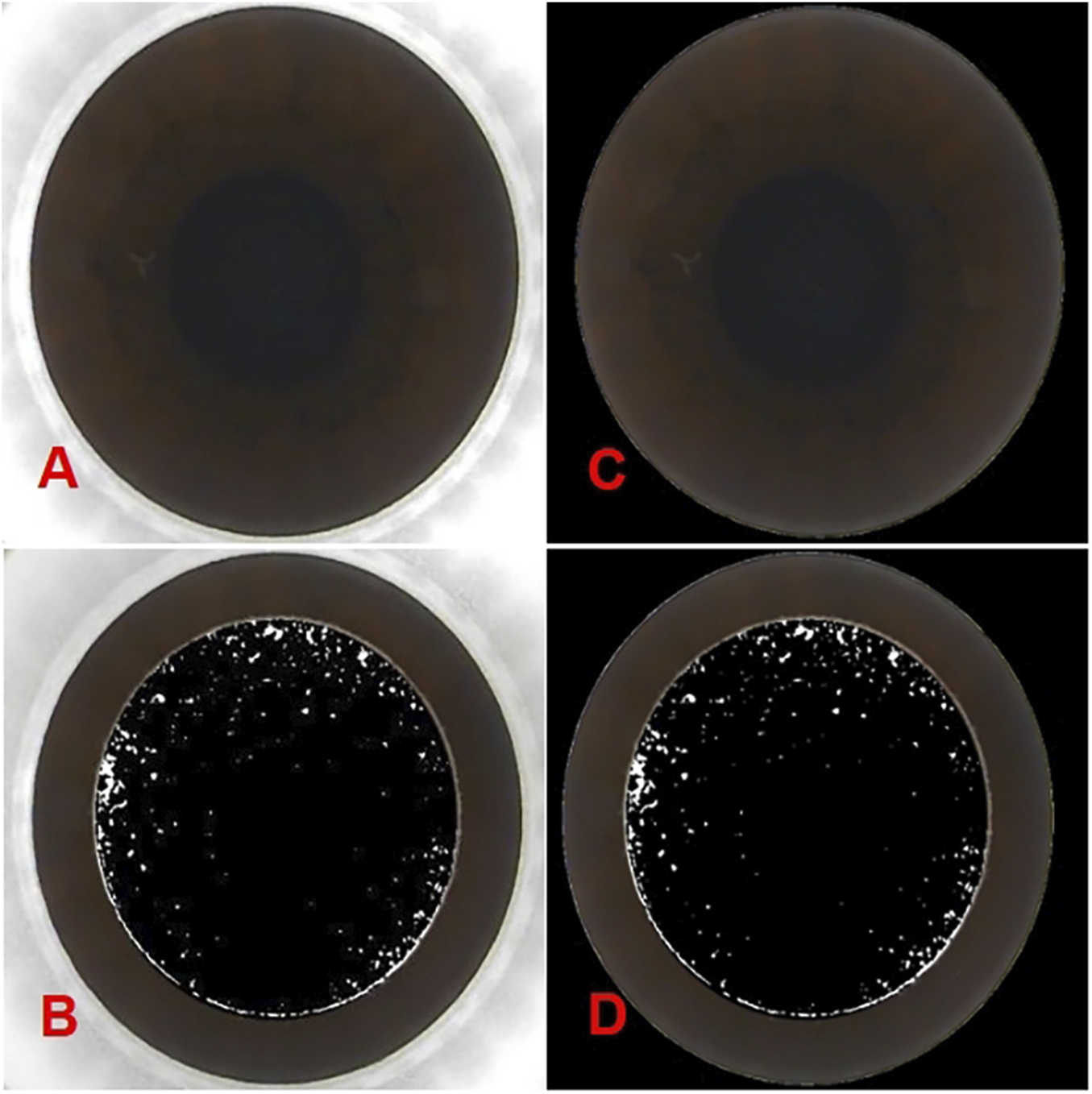

The images obtained from the femtosecond laser system (Carl Zeiss, VisuMax, Germany) include both the cornea and portions of the surgical instrument, whereas OBL prediction concerns only the corneal region. Consequently, it is essential to systematically remove non-corneal elements from the images to minimize potential errors. The images were initially processed using OpenCV within Python, employing binarization and closing operations to distinguish the corneal and non-corneal regions. Next, the Canny algorithm was applied for edge detection to identify and trace the image boundaries. A polygon approximation method was then used to smooth the contours, followed by ellipse fitting to determine the optimal fitting ellipse. The area within the ellipse was marked as the corneal region, and the regions outside this ellipse were masked in black to achieve segmentation. These preprocessing steps were applied consistently to both preoperative and postoperative images. The segmented images are presented in Figure 1.

FIGURE 1

The panoramic corneal view processed using OpenCV within Python. (A) Original panoramic view of the cornea captured from the VisuMax storage system (Carl Zeiss, Germany). (B) Intraoperative OBL image processed by PS. (C,D) The panoramic view of the cornea and the PS-processed intraoperative OBL image, respectively, after Python-based processing.

The dataset was randomly divided into a training set (n = 3,998) and a test set (n = 444), ensuring that the network was trained and tested on different subsets of the data.
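A random split of this size can be sketched with the Python standard library. The identifiers, the seed, and the helper name `split_dataset` are hypothetical. The text does not state whether the split was made per eye or per patient, so this sketch simply splits per image.

```python
import random

def split_dataset(items, n_test, seed=0):
    """Shuffle a copy of `items` and return (train, test) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical image identifiers, one per eye (4,442 in total).
image_ids = [f"eye_{i:04d}" for i in range(4442)]
train_ids, test_ids = split_dataset(image_ids, n_test=444)
```

Fixing the seed keeps the partition reproducible across runs.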

Model construction

The task involves generating an intraoperative image of the posterior interface, including the OBL area, from the panoramic corneal image captured before laser scanning. To tackle this image-to-image challenge, an image generation model that integrates a transformer block and a residual structure into the Pix2Pix framework was developed. This model leverages the Pix2Pix architecture to map the input image to the output image and enhances the network’s deep feature extraction capabilities by incorporating a residual structure. Additionally, the transformer block, with its self-attention mechanism, is adept at capturing long-range dependencies within the sequence, which helps maintain global consistency and structural integrity during the image generation process.

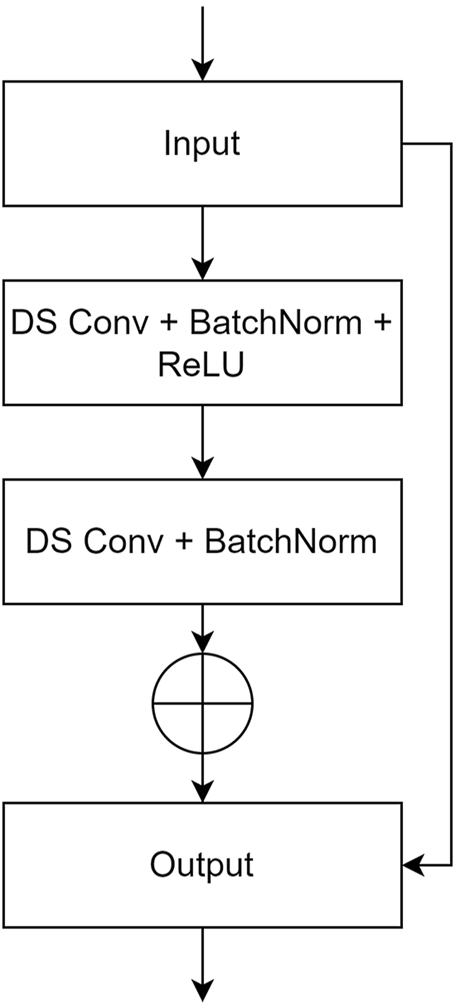

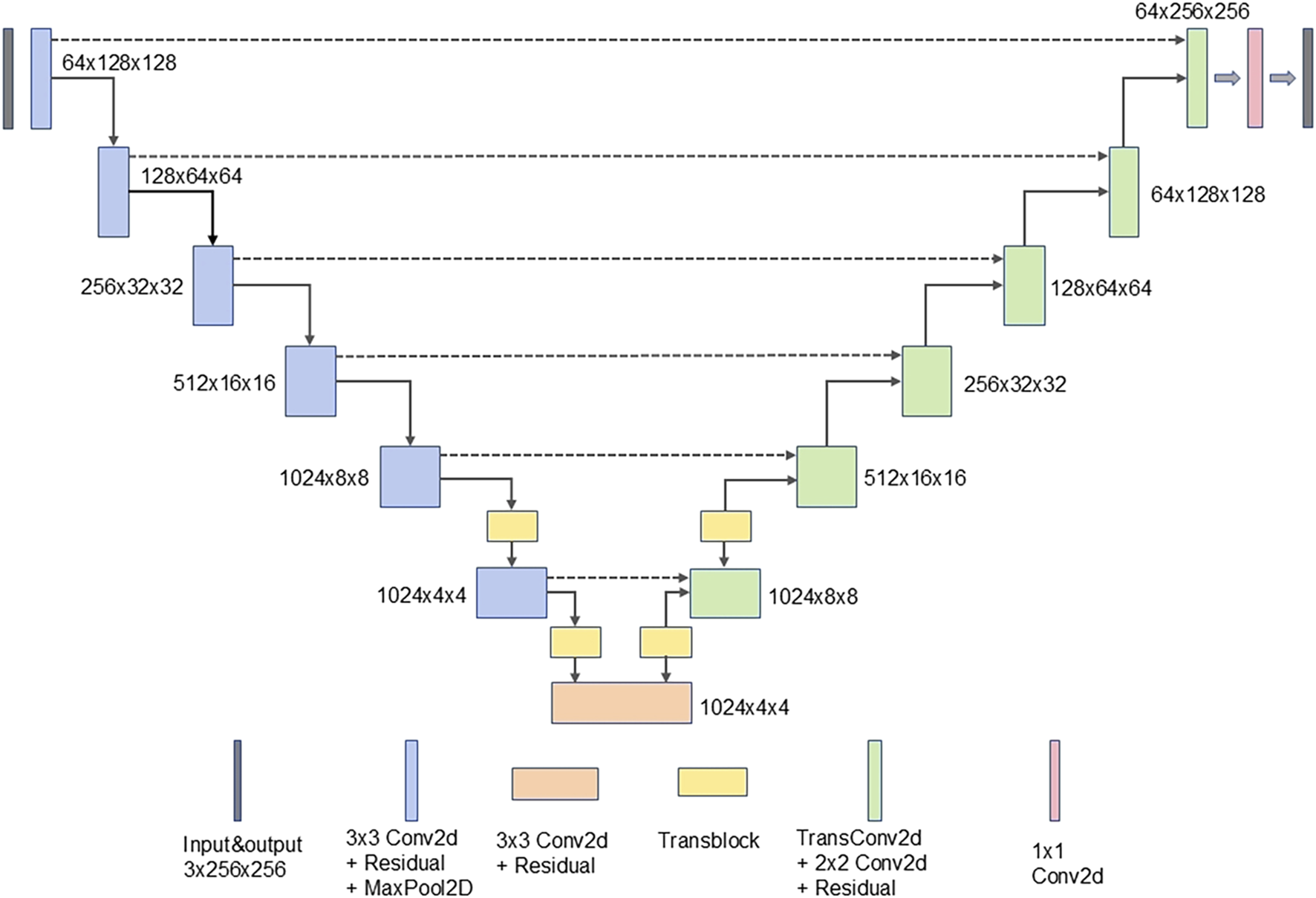

In this study, a deep UNet network was constructed as the generator, using Pix2Pix as the foundational framework. The model integrates a residual structure with depthwise separable convolution and incorporates several transformer blocks. To improve the model’s feature extraction abilities and enhance image generation performance, the UNet network was modified by integrating a custom-designed residual structure into the conventional convolutional blocks in both the encoder and decoder of the UNet-128 model. This modification increased the model’s depth by adding two layers and expanded the maximum channel capacity from 512 to 1,024. Moreover, to mitigate the impact of the increased number of layers on computational efficiency, depthwise separable convolution was incorporated within the residual structure, replacing traditional convolution. This adjustment reduced the model’s parameter count to one-third of the original while also lowering computational complexity. To further enhance the model’s ability to comprehend corneal images at a holistic level, transformer modules were introduced after the fifth and sixth layers of the generator’s encoder. Symmetrical transformer modules were added at corresponding positions within the decoder. The residual structure and the complete generator network are illustrated in Figures 2, 3.
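The parameter saving from depthwise separable convolution can be illustrated with a per-layer weight count. The channel sizes and the 3 x 3 kernel below are assumed for illustration and are not taken from the study's architecture. The whole-model reduction reported above also depends on layers that are not replaced, so it will differ from this single-layer ratio.

```python
def conv_params(c_in, c_out, k=3):
    """Weights in a standard k x k convolution (bias omitted)."""
    return k * k * c_in * c_out

def ds_conv_params(c_in, c_out, k=3):
    """Weights in a depthwise k x k convolution plus a 1 x 1 pointwise convolution."""
    return k * k * c_in + c_in * c_out

# Hypothetical layer: 512 -> 1,024 channels with a 3 x 3 kernel.
standard = conv_params(512, 1024)
separable = ds_conv_params(512, 1024)
ratio = separable / standard  # equals 1 / c_out + 1 / k**2
```

For a single layer the ratio approaches 1/k² as the number of output channels grows, which is about one-ninth for a 3 x 3 kernel.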

FIGURE 2

The user-defined residual structure diagram. (DS Conv: depthwise separable convolution; BatchNorm: batch normalization).

FIGURE 3

The complete generator network.

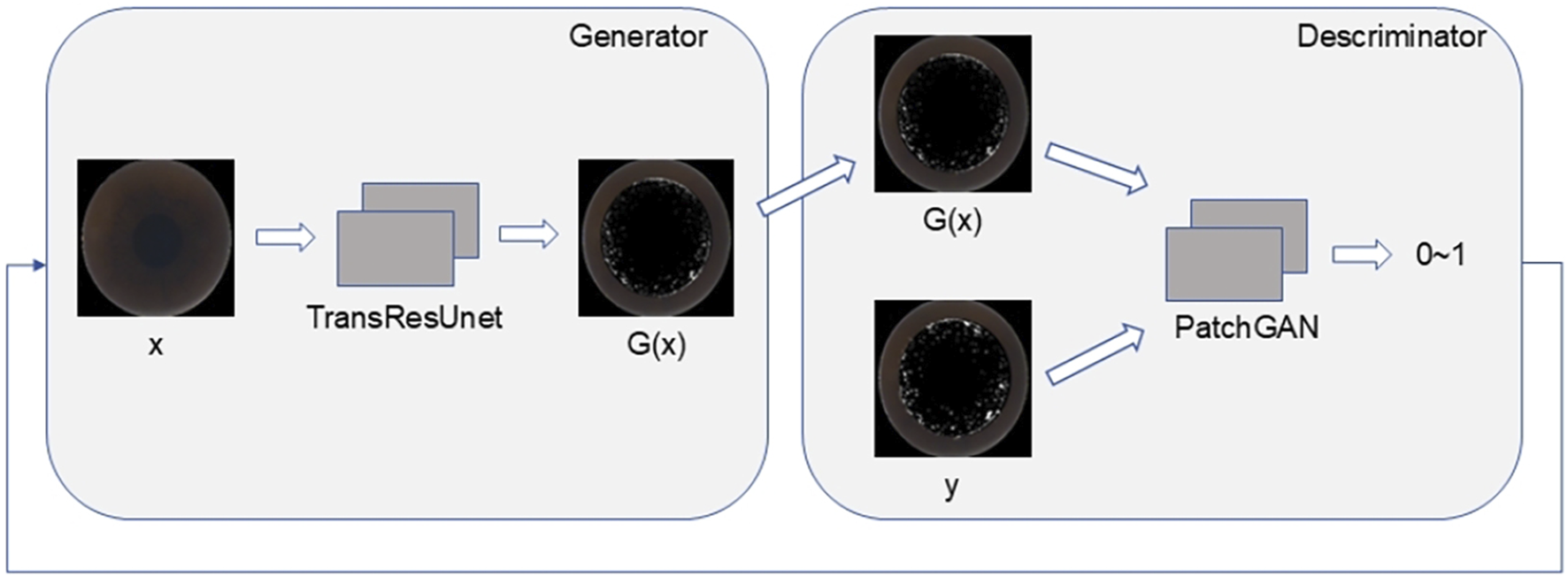

The discriminator in this study utilizes the PatchGAN model, which operates by first concatenating the generated predicted image with the real image along the channel dimension. After passing through a convolution layer, the combined data undergoes further convolution processing. The PatchGAN output is then mapped to a single-channel two-dimensional matrix, maintaining the resolution of the original input. Each matrix element represents a similarity score for the corresponding patch, indicating the probability that the local area at that location is authentic or fabricated. Unlike PixelGAN, PatchGAN does not classify individual pixels but evaluates multiple local areas (patches), enabling it to capture rich texture and contextual information. This enhances the model’s ability to assess the similarity between the generated intraoperative image and the real image. The comprehensive network structure of both the generator and discriminator is illustrated in Figure 4.

FIGURE 4

The TransRes-Pix2Pix integrated network structure.

Several optimization strategies were employed during model training. Binary Cross-Entropy Loss (BCELoss) served as the adversarial loss function, quantifying the discrepancy between the generated and real images within the discriminator. This mechanism improves the visual quality of the generated images, making them more similar to the real images (Ho et al., 2022). Additionally, L1Loss was applied to assess the pixel-level difference between the generated and real images, guiding the model to accurately match the content composition of the real images while enhancing clarity. The Adam optimizer was deployed for both the generator and discriminator to optimize the respective loss functions and expedite model convergence (Qu et al., 2023; Zadeh and Schmid, 2021).
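The combination of adversarial and pixel-level losses described above can be sketched in NumPy as follows. This is an illustrative outline of the usual Pix2Pix-style objective, not the study's training code. The L1 weight `lam=100.0` is the default from the original Pix2Pix formulation and is an assumption here, as are the function names, the patch-map size, and the toy arrays.

```python
import numpy as np

def bce(prob, target, eps=1e-7):
    """Binary cross-entropy averaged over all patch scores."""
    prob = np.clip(prob, eps, 1.0 - eps)
    return float(-np.mean(target * np.log(prob) + (1 - target) * np.log(1 - prob)))

def l1(pred, real):
    """Mean absolute pixel difference."""
    return float(np.mean(np.abs(pred - real)))

def generator_loss(d_fake, fake, real, lam=100.0):
    """Adversarial term (generated patches should score 1) plus weighted L1 term."""
    return bce(d_fake, np.ones_like(d_fake)) + lam * l1(fake, real)

def discriminator_loss(d_real, d_fake):
    """Real patches should score 1 and generated patches 0."""
    return 0.5 * (bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake)))

# Toy values: an undecided discriminator and a constant pixel error of 0.1.
d_fake = np.full((30, 30), 0.5)  # hypothetical patch score map
d_real = np.full((30, 30), 0.5)
fake = np.zeros((3, 64, 64))
real = np.full((3, 64, 64), 0.1)
g_loss = generator_loss(d_fake, fake, real)
d_loss = discriminator_loss(d_real, d_fake)
```

In a PyTorch implementation the same roles would typically be played by `torch.nn.BCELoss` and `torch.nn.L1Loss`, with one Adam optimizer per network.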

Evaluation indicators

To thoroughly evaluate the performance of the generated images, multiple evaluation metrics were used. The Structural Similarity Index (SSIM) was employed to assess the structural similarity between the generated and real images. SSIM captures critical visual elements such as brightness, contrast, and structural integrity, ensuring that the generated image closely mirrors the real one. The SSIM index is calculated using the following formula:

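$$
\mathrm{SSIM}(x, y) = \frac{(2\mu_x \mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)} \tag{1}
$$

In formula 1, $x$ and $y$ represent the generated image data and the real image data, respectively. The values $\mu_x$ and $\mu_y$ are the mean values of $x$ and $y$, while $\sigma_x$ and $\sigma_y$ denote their respective standard deviations. The covariance between $x$ and $y$ is represented by $\sigma_{xy}$. The constants $C_1 = (k_1 \cdot \mathrm{MAX})^2$ and $C_2 = (k_2 \cdot \mathrm{MAX})^2$ are introduced to avoid division by zero, with $k_1$ and $k_2$ taking default values of 0.01 and 0.03, respectively. MAX refers to the maximum value of $x$ and $y$.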
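As a rough illustration of the standard SSIM definition, the following NumPy sketch evaluates it once over the whole image. This is a simplification: practical implementations usually average SSIM over local sliding windows, and the study's exact settings are not stated. The function name `ssim_global`, the 8-bit `max_val`, and the random test image are assumptions.

```python
import numpy as np

def ssim_global(x, y, max_val=255.0, k1=0.01, k2=0.03):
    """SSIM computed once over the whole image (no sliding window)."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1, c2 = (k1 * max_val) ** 2, (k2 * max_val) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    return ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )

rng = np.random.default_rng(0)
real = rng.integers(0, 256, size=(64, 64)).astype(np.uint8)
same_score = ssim_global(real, real)            # identical images
inverted_score = ssim_global(real, 255 - real)  # intensity-inverted copy
```

Identical images give a score of 1, while an inverted copy has negative covariance with the original and therefore a negative score.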

The second metric, Peak Signal-to-Noise Ratio (PSNR), is widely employed to assess reconstruction quality in image processing. It quantifies the pixel-level difference between the predicted and real images. The PSNR is calculated using the following formula:

$$
\mathrm{PSNR} = 10 \log_{10}\left(\frac{\mathrm{MAX}^2}{\mathrm{MSE}}\right) \tag{2}
$$

In formula 2, MAX represents the maximum value of the generated image data, while MSE refers to the mean squared error between the generated and actual image data.
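The PSNR computation can be sketched in NumPy as below. The function name `psnr` and the use of 255 as MAX (the ceiling of 8-bit images) are assumptions for illustration; the toy images differ by a constant 10 gray levels.

```python
import numpy as np

def psnr(pred, real, max_val=255.0):
    """PSNR in dB; returns infinity for identical images."""
    mse = np.mean((pred.astype(np.float64) - real.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / mse))

real = np.full((64, 64), 100, dtype=np.uint8)
pred = np.full((64, 64), 110, dtype=np.uint8)
score = psnr(pred, real)  # MSE = 100, so 10 * log10(65025 / 100)
```

With an MSE of 100 the score is about 28.13 dB, which gives a sense of scale for the values reported in the results.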

Experimental environment

The experimental environment was set up using Python 3.8, PyTorch 2.2.2, and CUDA 12.2. The server configuration included an AMD R9 7950X processor, 64 GB of RAM, and an NVIDIA GeForce RTX 3090 Ti graphics card with 24 GB of video memory.

Statistical methods

IBM SPSS Statistics 26.0 (IBM Corp., Armonk, NY, USA) was utilized for statistical processing and data analysis. All measurements are presented as mean ± standard deviation ($\bar{x} \pm s$). Count data are presented as n (%).

SSIM evaluates the structural similarity between two images, with a value closer to one indicating greater similarity. In contrast, PSNR assesses image reconstruction quality by comparing the peak signal power to the noise power, with a higher PSNR indicating better reconstruction quality.

Results

The study included 2,265 patients, corresponding to 4,442 eyes, all of which successfully underwent lenticule separation and removal during surgery. The average patient age was 21.88 ± 5.32 years. The mean preoperative spherical equivalent (SE) was −4.28 ± 1.83 D, and the average area affected by OBL was 3.26% ± 0.64%. Preoperative patient data are presented in Table 2.

TABLE 2

| Parameters | Values |
|---|---|
| Age/y | 21.88 ± 5.32 |
| Gender/(n, M%) | 3026 (68.12) |
| Eyes/(n, R%) | 2259 (50.84) |
| CDVA/(logMAR) | 0.00 ± 0.08 |
| SE/D | −4.28 ± 1.83 |
| Spherical/D | −3.96 ± 1.63 |
| Cylinder/D | −0.63 ± 0.57 |
| IOP/mmHg | 15.81 ± 3.51 |
| CCT/μm | 545.97 ± 27.57 |
| Corneal curvature K1/D | 42.36 ± 1.37 |
| Corneal curvature K2/D | 43.52 ± 1.45 |
| Corneal diameter/mm | 11.61 ± 0.44 |
| Lenticule thickness/μm | 91.42 ± 30.61 |
| RST/μm | 334.61 ± 37.66 |
| Relative vertical position of posterior surface/% | 0.39 ± 0.06 |

Preoperative general data of patients ($\bar{x} \pm s$, n%).

Data are presented as mean ± SD, or No. (%).

CDVA: corrected distance visual acuity, D: diopters, IOP: intraocular pressure. CCT: central corneal thickness, RST: residual stromal thickness.

In this study, the proposed model was assessed using a test set to highlight the advantages of the network architecture, with comparative experiments conducted for validation. Widely referenced models in the field, including CycleGAN, DCGAN, and Pix2PixHD, were evaluated in parallel, and their performance was compared with that of the proposed model. Additionally, an ablation experiment was performed on key structural components of the model, specifically assessing the performance of Pix2Pix, Res-Pix2Pix, and Trans-Pix2Pix, to evaluate the impact of different architectural modules on the quality of the generated images. The average value from five random experiments was taken as the experimental result. The results of these evaluations are summarized in Table 3 below.

TABLE 3

| Model | SSIM | PSNR |
|---|---|---|
| CycleGAN | 0.51 | 18.30 |
| DCGAN | 0.58 | 20.34 |
| Pix2PixHD | 0.63 | 23.46 |
| Pix2Pix | 0.64 | 22.97 |
| Res-Pix2Pix | 0.66 | 23.08 |
| Trans-Pix2Pix | 0.66 | 25.76 |
| TransRes-Pix2Pix (The method in this paper) | 0.67 | 26.02 |

The performance of image generation by different generative adversarial network (GAN) models.

SSIM: structural similarity index, PSNR: peak signal-to-noise ratio.

As presented in the table, the CycleGAN and DCGAN models primarily rely on unsupervised adversarial training, which lacks explicit structural information and conditional constraints. This limitation makes them prone to mode collapse during training (Yoo et al., 2020; Chaurasia et al., 2024). Additionally, given the subtle and often difficult-to-discern nature of corneal features, these models struggle to capture such details effectively. Consequently, the two models establish only a relatively simplistic mapping relationship, producing generated images that tend to lack diversity and exhibit significant discrepancies in detail and perceptual quality compared to the real images. Their overall performance is therefore suboptimal, suggesting that the unpaired nature of CycleGAN and DCGAN may not be well-suited for tasks requiring precise feature mapping.

In contrast, Pix2Pix benefits from paired data, where the input and output exhibit a direct, strong correlation. The content of the input image directly influences the output, allowing the generation of images that align more closely with the real scenario (Abdelmotaal et al., 2021). Compared with CycleGAN, which relies on unpaired data, Pix2Pix shows a considerable improvement in performance. Pix2PixHD, an extension of Pix2Pix, is designed for high-resolution image translation and is oriented towards multi-scale image generation (Baek et al., 2024). Therefore, in this task, it failed to fully demonstrate its advantages.

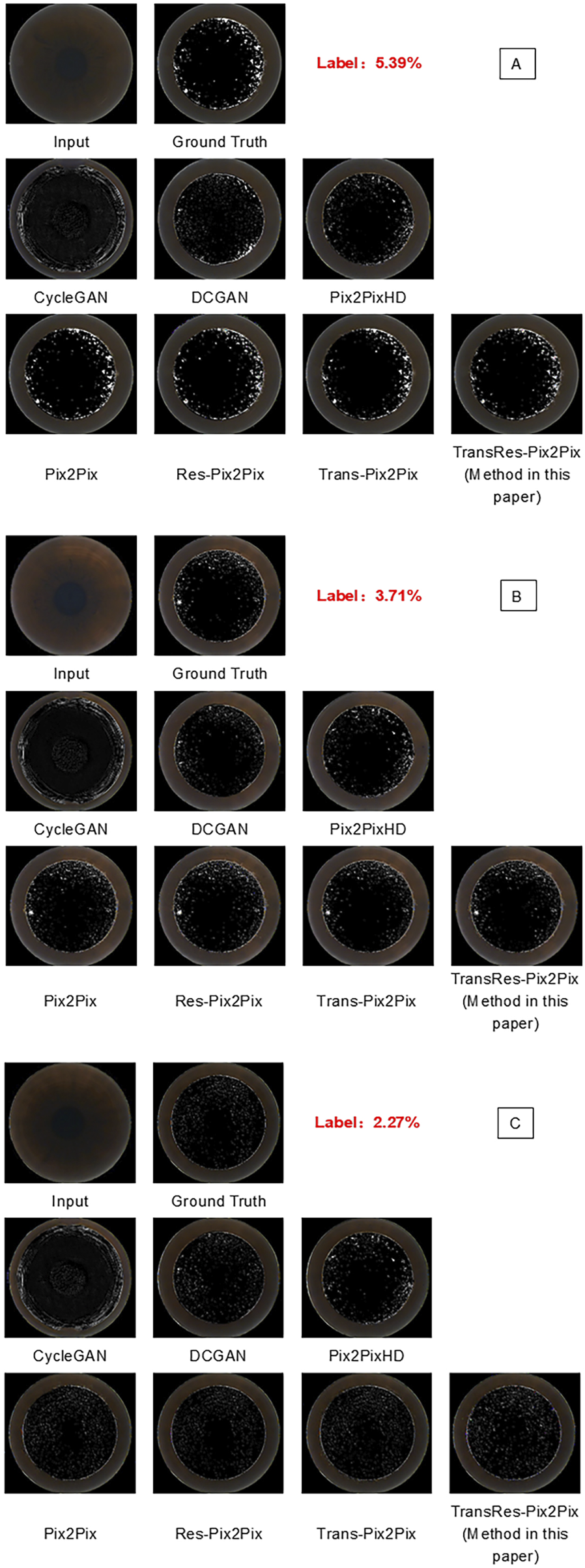

In particular, replacing the original structure with a residual structure in Pix2Pix enhances the model’s ability to learn complex nonlinear mappings, refining image details more accurately. This modification addresses issues like vanishing gradients, stabilizes the training process, and enables the model to capture more intricate features (Zhao B. et al., 2023; Ghanbari and Sadremomtaz, 2024). Moreover, incorporating the Transformer module within Pix2Pix introduces a self-attention mechanism that enhances the model’s understanding of the global structure of the input image. This improvement helps capture long-range dependencies and global context, leading to better image quality and improved retention of global features (Zhao B. et al., 2023; Yan et al., 2022). When both the residual structure and Transformer module are integrated into the Pix2Pix framework, the model leverages their complementary strengths in capturing both detailed and global features. This synergy significantly optimizes the model’s overall performance in image generation tasks (Zhao B. et al., 2023). Some of the generated image results are shown in Figure 5.

FIGURE 5

Prediction visualization results generated by various models. (A–C) show the OBL morphology images predicted by the various models from different original panoramic views of the cornea, compared with the superbright OBL images processed by PS.

Discussion

In this study, seven GAN models were systematically trained and evaluated using SSIM and PSNR as metrics to assess the fidelity of generated OBL images. To determine the optimal model, an internal validation set was created, and comprehensive ablation studies were performed. Upon thorough evaluation, the model built on the Pix2Pix framework and enhanced by the addition of a transformer module and a residual structure demonstrated the strongest capability for capturing critical features. It achieved an SSIM of 0.67 and a PSNR of 26.02, producing results with the highest similarity to actual intraoperative OBL images. To our knowledge, this is the first GAN model developed to predict images of intraoperative complications in corneal refractive surgery, offering potential future support for decision-making in SMILE surgeries.

OBL is a prevalent complication in SMILE and other femtosecond laser surgeries (Asif et al., 2020; He et al., 2022). Previous research has identified several influencing factors for OBL in SMILE, including central corneal thickness (CCT), corneal tissue density, corneal curvature, corneal cap thickness, lenticule thickness, residual stromal thickness (RST), laser energy, and patient astigmatism (Yang S. et al., 2023; Son et al., 2017; Brar et al., 2021; Liu et al., 2017). However, significant challenges remain in this area of study. Analyses often suffer from small sample sizes, limited inclusion criteria, and inconsistent methodologies for quantifying the OBL area. Additionally, conventional methods like linear regression fail to capture complex nonlinear relationships, limiting the development of a robust predictive model for OBL in SMILE based on preoperative parameters (El Hechi et al., 2021; Kapoor et al., 2019).

The lenticule production phase in laser scanning is a critical aspect of SMILE surgery, fully controlled by the femtosecond laser system (Carl Zeiss, VisuMax, Germany) (Titiyal et al., 2017). If complications arise during this phase, surgeons may need to immediately halt the surgery and apply alternative techniques to address the issue. This not only challenges the surgeon’s technical skills but may also increase patient anxiety, potentially affecting postoperative visual outcomes and recovery (Wan et al., 2021; Yang S. et al., 2023). Given these risks, it is crucial to accurately predict and identify potential complications before initiating the laser scan. This is particularly vital during the “negative pressure suction” step, which allows the surgeons to pause and resume the procedure without affecting the laser scan, effectively reducing surgical risks.

The occurrence of OBL typically does not result in severe outcomes; however, an extensive area of OBL can lead to significant intraoperative complications, such as difficulties in lenticule separation, epithelial breakthrough, lenticule residue, and other serious issues (Zhu et al., 2024; Sahay et al., 2021), which compromise the safety and efficacy of SMILE surgery and delay postoperative visual recovery (Aristeidou et al., 2015). Notably, these complications are more likely to arise when scanning the posterior interface of the lenticule (Son et al., 2017). A key contribution of this study is the direct capture of panoramic corneal images under negative pressure aspiration from SMILE surgery videos, providing real-time insights into corneal status and serving as input for GAN models. This method is particularly important as preoperative parameters often fail to accurately reflect the intraoperative conditions, with variables such as CCT, IOP, and others fluctuating throughout the day. This research represents the first use of a GAN model to synthesize intraoperative OBL images from preoperative corneal panoramas. Quantitative evaluation shows that the synthesized OBL images approximate the actual intraoperative images (SSIM = 0.67, PSNR = 26.02), forming a foundation for future autonomous systems and data-driven improvements in surgical techniques.

Several limitations exist in this study. Notably, no prior studies in the field of refraction have compared synthetic images of intraoperative complications with actual images to assess their similarity. Consequently, quantitative metrics from other AI research in ophthalmology have been used as benchmarks. Moving forward, establishing a uniform standard for this type of evaluation is essential. Furthermore, collaboration with additional ophthalmologists for a more thorough analysis will be necessary to improve the validity and applicability of the findings. Additionally, this study focused exclusively on predicting OBL during SMILE surgery, excluding other intraoperative complications such as dark spots and aspiration. Future efforts should aim to develop a comprehensive prediction platform that incorporates all potential intraoperative complications and factors influencing postoperative visual recovery. Moreover, the research relied on an internal test set; future studies should include a broader dataset from multiple eye centers to enhance generalizability. Finally, the practical impact of this model on SMILE surgical procedures remains unclear; further research is needed on how the model can be integrated into the VisuMax system and whether surgeons can reduce the intraoperative OBL area with the aid of the model. There is considerable potential for further exploration and refinement in this area.

Conclusion

In conclusion, this study leverages a Pix2Pix generative adversarial network enhanced with an embedded residual module and a Transformer module, comparing various attention mechanisms to effectively generate the morphology of OBL during SMILE surgery. This approach provides valuable insights for ophthalmologists in refining and customizing refractive surgery plans, underscoring its clinical significance and potential to improve surgical outcomes.

Statements

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Biomedical Research Ethics Committee, the Second Affiliated Hospital of Nanchang University. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

ZZ: Formal Analysis, Visualization, Data curation, Validation, Investigation, Software, Writing – review and editing, Methodology, Supervision, Writing – original draft. PL: Visualization, Validation, Data curation, Software, Writing – original draft. LZ: Writing – review and editing, Validation, Data curation, Writing – original draft, Supervision. QW: Methodology, Investigation, Writing – original draft. JX: Investigation, Writing – original draft. KY: Investigation, Writing – original draft. ZG: Data curation, Writing – original draft. YX: Writing – original draft, Data curation. TQ: Data curation, Methodology, Visualization, Project administration, Conceptualization, Supervision, Writing – review and editing, Formal Analysis. YY: Writing – review and editing, Supervision, Conceptualization, Validation, Funding acquisition.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Acknowledgments

We are grateful for the cooperation of the participants, and we would like to thank Bullet Edits (http://www.bulletedits.cn) for English language editing of the manuscript.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abbreviations

OBL, Opaque bubble layer; SMILE, Small incision lenticule extraction; AI, Artificial intelligence; GAN, Generative adversarial network; SSIM, Structural similarity index; PSNR, Peak signal-to-noise ratio; PS, Adobe Photoshop; D, Dioptre.

References

1. Abdelmotaal H., Abdou A. A., Omar A. F., El-Sebaity D. M., Abdelazeem K. (2021). Pix2pix conditional generative adversarial networks for Scheimpflug camera color-coded corneal tomography image generation. Transl. Vis. Sci. Technol. 10, 21. doi: 10.1167/tvst.10.7.21

2. Aristeidou A., Taniguchi E. V., Tsatsos M., Muller R., McAlinden C., Pineda R., et al. (2015). The evolution of corneal and refractive surgery with the femtosecond laser. Eye Vis. (Lond) 2, 12. doi: 10.1186/s40662-015-0022-6

3. Asif M. I., Bafna R. K., Mehta J. S., Reddy J., Titiyal J. S., Maharana P. K., et al. (2020). Complications of small incision lenticule extraction. Indian J. Ophthalmol. 68, 2711–2722. doi: 10.4103/ijo.IJO_3258_20

4. Baek J., He Y., Emamverdi M., Mahmoudi A., Nittala M. G., Corradetti G., et al. (2024). Prediction of long-term treatment outcomes for diabetic macular edema using a generative adversarial network. Transl. Vis. Sci. Technol. 13, 4. doi: 10.1167/tvst.13.7.4

5. Baird P. N., Saw S.-M., Lanca C., Guggenheim J. A., Smith III E. L., Zhou X., et al. (2020). Myopia. Nat. Rev. Dis. Prim. 6, 99. doi: 10.1038/s41572-020-00231-4

6. Brar S., Ganesh S., Gautam M., Meher S. (2021). Feasibility, safety, and outcomes with standard versus differential spot distance protocols in eyes undergoing SMILE for myopia and myopic astigmatism. J. Refract Surg. 37, 294–302. doi: 10.3928/1081597X-20210121-01

7. Cao H., Wang Y., Chen J., Jiang D., Zhang X., Tian Q., et al. (2021). Swin-unet: unet-like pure transformer for medical image segmentation.

8. Chaurasia A. K., MacGregor S., Craig J. E., Mackey D. A., Hewitt A. W. (2024). Assessing the efficacy of synthetic optic disc images for detecting glaucomatous optic neuropathy using deep learning. Transl. Vis. Sci. Technol. 13, 1. doi: 10.1167/tvst.13.6.1

9. Chen J., Lu Y., Yu Q., Luo X., Adeli E., Wang Y., et al. (2021). TransUNet: transformers make strong encoders for medical image segmentation. ArXiv abs/2102.04306.

10. El Hechi M., Ward T. M., An G. C., Maurer L. R., El Moheb M., Tsoulfas G., et al. (2021). Artificial intelligence, machine learning, and surgical science: reality versus hype. J. Surg. Res. 264, A1–A9. doi: 10.1016/j.jss.2021.01.046

11. Ghanbari S., Sadremomtaz A. (2024). Residual Pix2Pix networks: streamlining PET/CT imaging process by eliminating CT energy conversion. Biomed. Phys. Eng. Express 11, 015037. doi: 10.1088/2057-1976/ad97c2

12. Gong M., Chen S., Chen Q., Zeng Y., Zhang Y. (2021). Generative adversarial networks in medical image processing. Curr. Pharm. Des. 27, 1856–1868. doi: 10.2174/1381612826666201125110710

13. He X., Li S.-M., Zhai C., Zhang L., Wang Y., Song X., et al. (2022). Flap-making patterns and corneal characteristics influence opaque bubble layer occurrence in femtosecond laser-assisted laser in situ keratomileusis. BMC Ophthalmol. 22, 300. doi: 10.1186/s12886-022-02524-6

14. Ho E., Wang E., Youn S., Sivajohan A., Lane K., Chun J., et al. (2022). Deep ensemble learning for retinal image classification. Transl. Vis. Sci. Technol. 11, 39. doi: 10.1167/tvst.11.10.39

15. Kapoor R., Walters S. P., Al-Aswad L. A. (2019). The current state of artificial intelligence in ophthalmology. Surv. Ophthalmol. 64, 233–240. doi: 10.1016/j.survophthal.2018.09.002

16. Kim J., Chin H. S. (2023). Deep learning-based prediction of the retinal structural alterations after epiretinal membrane surgery. Sci. Rep. 13, 19275. doi: 10.1038/s41598-023-46063-6

17. Kim T.-I., Alió Del Barrio J. L., Wilkins M., Cochener B., Ang M. (2019). Refractive surgery. Lancet 393, 2085–2098. doi: 10.1016/S0140-6736(18)33209-4

18. Kim Y. J., Hwang S. H., Kim K. G., Nam D. H. (2025). Automated imaging of cataract surgery using artificial intelligence. Diagn. (Basel) 15, 445. doi: 10.3390/diagnostics15040445

19. Lin M.-Y., Tan H.-Y., Chang C.-K. (2024). Myopic regression after FS-LASIK and SMILE. Cornea 43, 1560–1566. doi: 10.1097/ICO.0000000000003573

20. Liu Y.-C., Rosman M., Mehta J. S. (2017). Enhancement after small-incision lenticule extraction: incidence, risk factors, and outcomes. Ophthalmology 124, 813–821. doi: 10.1016/j.ophtha.2017.01.053

21. Ma J., Wang Y., Li L., Zhang J. (2018). Corneal thickness, residual stromal thickness, and its effect on opaque bubble layer in small-incision lenticule extraction. Int. Ophthalmol. 38, 2013–2020. doi: 10.1007/s10792-017-0692-2

22. Medina A. (2022). The cause of myopia development and progression: theory, evidence, and treatment. Surv. Ophthalmol. 67, 488–509. doi: 10.1016/j.survophthal.2021.06.005

23. Park T., Liu M.-Y., Wang T.-C., Zhu J.-Y. (2019). “Semantic image synthesis with spatially-adaptive normalization,” in 2019 IEEE/CVF conference on computer vision and pattern recognition (CVPR), 2332–2341.

24. Qu Y., Wen Y., Chen M., Guo K., Huang X., Gu L. (2023). Predicting case difficulty in endodontic microsurgery using machine learning algorithms. J. Dent. 133, 104522. doi: 10.1016/j.jdent.2023.104522

25. Saeed A. Q., Sheikh Abdullah S. N. H., Che-Hamzah J., Abdul Ghani A. T. (2021). Accuracy of using generative adversarial networks for glaucoma detection: systematic review and bibliometric analysis. J. Med. Internet Res. 23, e27414. doi: 10.2196/27414

26. Sahay P., Bafna R. K., Reddy J. C., Vajpayee R. B., Sharma N. (2021). Complications of laser-assisted in situ keratomileusis. Indian J. Ophthalmol. 69, 1658–1669. doi: 10.4103/ijo.IJO_1872_20

27. Son G., Lee J., Jang C., Choi K. Y., Cho B. J., Lim T. H. (2017). Possible risk factors and clinical effects of opaque bubble layer in small incision lenticule extraction (SMILE). J. Refract Surg. 33, 24–29. doi: 10.3928/1081597X-20161006-06

28. Teo Z. L., Ang M. (2024). Femtosecond laser-assisted in situ keratomileusis versus small-incision lenticule extraction: current approach based on evidence. Curr. Opin. Ophthalmol. 35, 278–283. doi: 10.1097/ICU.0000000000001060

29. Titiyal J. S., Kaur M., Rathi A., Falera R., Chaniyara M., Sharma N. (2017). Learning curve of small incision lenticule extraction: challenges and complications. Cornea 36, 1377–1382. doi: 10.1097/ICO.0000000000001323

30. Titiyal J. S., Kaur M., Shaikh F., Gagrani M., Brar A. S., Rathi A. (2018). Small incision lenticule extraction (SMILE) techniques: patient selection and perspectives. Clin. Ophthalmol. 12, 1685–1699. doi: 10.2147/OPTH.S157172

31. Waisberg E., Ong J., Kamran S. A., Masalkhi M., Paladugu P., Zaman N., et al. (2025). Generative artificial intelligence in ophthalmology. Surv. Ophthalmol. 70, 1–11. doi: 10.1016/j.survophthal.2024.04.009

32. Wan K. H., Lin T. P. H., Lai K. H. W., Liu S., Lam D. S. C. (2021). Options and results in managing suction loss during small-incision lenticule extraction. J. Cataract. Refract Surg. 47, 933–941. doi: 10.1097/j.jcrs.0000000000000546

33. Wang T.-C., Liu M.-Y., Zhu J.-Y., Tao A., Kautz J., Catanzaro B. (2017). “High-resolution image synthesis and semantic manipulation with conditional GANs,” in 2018 IEEE/CVF conference on computer vision and pattern recognition, 8798–8807.

34. Wilson S. E. (2020). Biology of keratorefractive surgery- PRK, PTK, LASIK, SMILE, inlays and other refractive procedures. Exp. Eye Res. 198, 108136. doi: 10.1016/j.exer.2020.108136

35. Xu Y., Yang W. (2023). Editorial: artificial intelligence applications in chronic ocular diseases. Front. Cell Dev. Biol. 11, 1295850. doi: 10.3389/fcell.2023.1295850

36. Yan S., Wang C., Chen W., Lyu J. (2022). Swin transformer-based GAN for multi-modal medical image translation. Front. Oncol. 12, 942511. doi: 10.3389/fonc.2022.942511

37. Yang S., Wang H., Chen Z., Li Y., Chen Y., Long Q. (2023a). Possible risk factors of opaque bubble layer and its effect on high-order aberrations after small incision lenticule extraction. Front. Med. 10, 1156677. doi: 10.3389/fmed.2023.1156677

38. Yang W.-H., Shao Y., Xu Y.-W. (2023b). Guidelines on clinical research evaluation of artificial intelligence in ophthalmology (2023). Int. J. Ophthalmol. 16, 1361–1372. doi: 10.18240/ijo.2023.09.02

39. Yoo T. K., Choi J. Y., Kim H. K. (2020). CycleGAN-based deep learning technique for artifact reduction in fundus photography. Graefes Arch. Clin. Exp. Ophthalmol. 258, 1631–1637. doi: 10.1007/s00417-020-04709-5

40. Zadeh S. G., Schmid M. (2021). Bias in cross-entropy-based training of deep survival networks. IEEE Trans. Pattern Anal. Mach. Intell. 43, 3126–3137. doi: 10.1109/tpami.2020.2979450

41. Zhang Z., Cheng N., Liu Y., Song J., Liu X., Zhang S., et al. (2022). Prediction of corneal astigmatism based on corneal tomography after femtosecond laser arcuate keratotomy using a pix2pix conditional generative adversarial network. Front. Public Health 10, 1012929. doi: 10.3389/fpubh.2022.1012929

42. Zhao B., Cheng T., Zhang X., Wang J., Zhu H., Zhao R., et al. (2023b). CT synthesis from MR in the pelvic area using Residual Transformer Conditional GAN. Comput. Med. Imaging Graph. 103, 102150. doi: 10.1016/j.compmedimag.2022.102150

43. Zhao J., Li Y., Yu T., Wang W., Emmanuel M. T., Gong Q., et al. (2023a). Anterior segment inflammation and its association with dry eye parameters following myopic SMILE and FS-LASIK. Ann. Med. 55, 689–695. doi: 10.1080/07853890.2023.2181388

44. Zheng B., Wu M.-N., Zhu S.-J., Zhou H.-X., Hao X.-L., Fei F.-Q., et al. (2021). Attitudes of medical workers in China toward artificial intelligence in ophthalmology: a comparative survey. BMC Health Serv. Res. 21, 1067. doi: 10.1186/s12913-021-07044-5

45. Zhu Z., Zhang X., Wang Q., Xiong J., Xu J., Yu K., et al. (2024). Predicting an opaque bubble layer during small-incision lenticule extraction surgery based on deep learning. Front. Cell Dev. Biol. 12, 1487482. doi: 10.3389/fcell.2024.1487482

Keywords

artificial intelligence, generative adversarial networks, opaque bubble layer, small-incision lenticule extraction, complication

Citation

Zhu Z, Lin P, Zhong L, Wang Q, Xu J, Yu K, Guo Z, Xu Y, Qiu T and Yu Y (2025) Based on TransRes-Pix2Pix network to generate the OBL image during SMILE surgery. Front. Cell Dev. Biol. 13:1598475. doi: 10.3389/fcell.2025.1598475

Received: 23 March 2025

Accepted: 05 May 2025

Published: 21 May 2025

Volume: 13 - 2025

Edited by: Weihua Yang, Southern Medical University, China

Reviewed by: Jiajia Chen, Zhejiang University, China; Kunke Li, Jinan University, China

Copyright

© 2025 Zhu, Lin, Zhong, Wang, Xu, Yu, Guo, Xu, Qiu and Yu.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yifeng Yu, 171018170@qq.com; Taorong Qiu, qiutaorong@ncu.edu.cn

†These authors have contributed equally to this work
