- Cognitive Sciences, Department of Linguistics, University of Potsdam, Potsdam, Germany

Introduction: Prosody plays a critical role in linguistic processing at both sentential and information-structural levels, while prosodic impairments in individuals with aphasia can lead to difficulties in sentence comprehension and everyday communication. Despite its importance, prosodic processing in aphasia and its relationship to inter-individual variability within this highly heterogeneous population remain underexplored. This study examined prosodic cue use for structural prediction in individuals with and without aphasia, exploring individual differences in prosodic impairments.

Methods: Sixteen individuals with aphasia and thirty neurotypical control participants completed a sentence type identification task using string-identical (i.e., structurally ambiguous) German sentences (interrogative vs. declarative) presented under two focus conditions (wide vs. narrow). Response accuracy and reaction times were analyzed using linear mixed-effects models. To explore variability among individuals with aphasia, a clustering analysis was conducted based on task performance.

Results: Individuals with aphasia demonstrated significant difficulties in prosodic processing, particularly in identifying questions under wide focus conditions. Wide focus posed challenges for structural prediction due to deficient prosodic cue use, while narrow focus facilitated task performance by providing more salient prosodic cues. The level of speech fluency and abilities in global pitch detection emerged as potential sources of variability. Clustering analysis identified distinct subgroups of individuals with aphasia, each of which was characterized by unique patterns of task performance, suggesting differential underlying mechanisms potentially linked to cognitive abilities and overall processing demands.

Discussion: These findings emphasize challenges and resources of prosodic cue use for structural prediction, advancing the understanding of prosodic impairments and their effects on communication. This study underscores the importance of considering individual differences in prosodic processing for developing targeted diagnostic tools and therapeutic approaches tailored to the needs of individuals with aphasia.

1 Introduction

Prosody serves important linguistic functions that facilitate sentence processing, while prosodic impairments, such as those in aphasia, can lead to difficulties in sentence comprehension and everyday communication. For successful sentence comprehension, listeners must identify both the word sequence and the superimposed prosodic contour (Pell, 2001). Different types of sentences can share the same word sequence but differ in their prosodic contours, which syntactically mark them, for instance, as either interrogative (question) or declarative (statement). Consequently, listeners rely on prosodic cues at the sentential level to distinguish between these string-identical (i.e., structurally ambiguous) sentences. The fast, incremental use of prosodic cues – conveyed through fundamental frequency (f0), duration, and intensity at the acoustic level – to immediately resolve structural ambiguities and distinguish between sentence types has been demonstrated for neurotypical adult listeners in several studies and across different languages (Eady and Cooper, 1986; Hadding-Koch and Studdert-Kennedy, 1964; Heeren et al., 2015; Mulders and Szendrői, 2016; Petrone and Niebuhr, 2014). An example of structurally ambiguous questions and statements in German is provided in (1).

(1) Die Tante grüßt den Bäcker.?

the.NOM.F aunt greets the.ACC.M baker

“The aunt greets the baker.?”

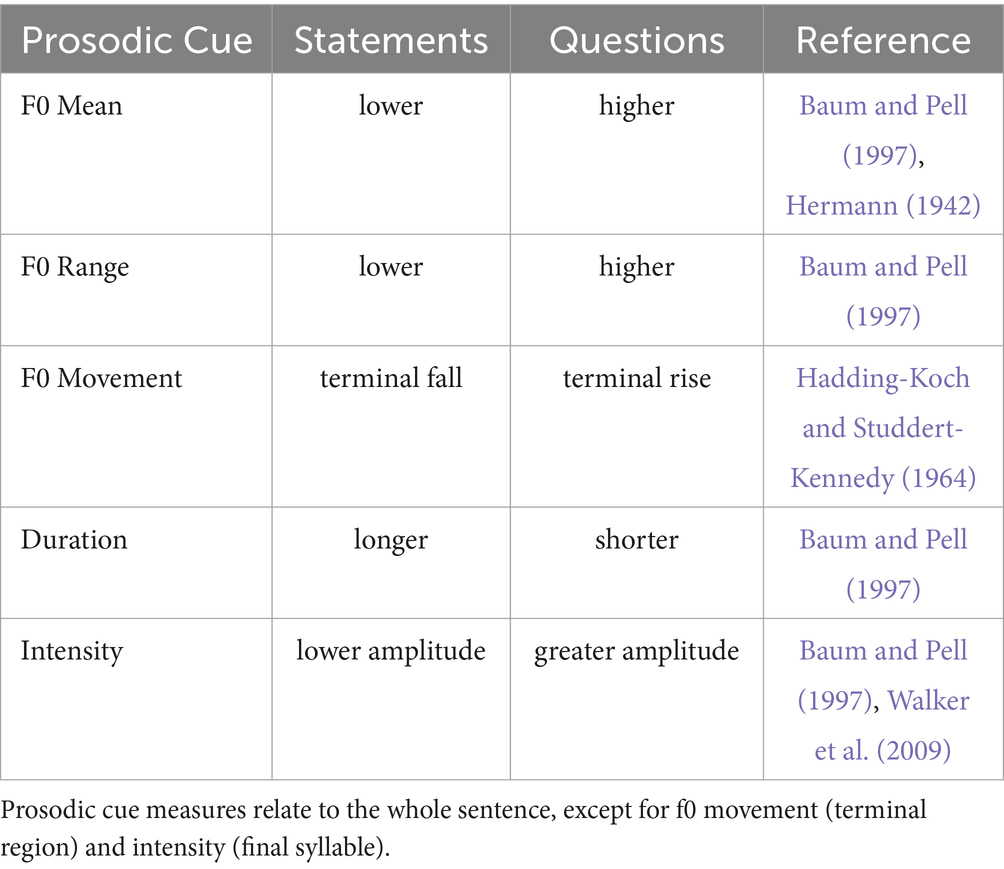

Peng et al. (2012) noted that f0 serves as the primary cue for distinguishing questions with rising prosodic contours from statements with falling contours, whereas changes in duration and intensity provide less reliable information. Following the GToBI annotation system (Grice et al., 2005), prosodic contours can be described in terms of pitch accents (i.e., f0 movements around lexically stressed syllables) and boundary tones (i.e., structure-forming features at syntactically meaningful positions within a sentence). Accordingly, in German – similar to English – questions (i.e., those that are string-identical with statements) are marked by a high boundary tone (H-H%), an L* or L*+H nuclear pitch accent, and a rising contour with a larger f0 range and shallower declination of f0. In contrast, statements are characterized by a low boundary tone (L-L%), an H* nuclear pitch accent, and a falling prosodic contour with a smaller f0 range. Even though there is variability in the production of prosodic cues among speakers (Brinckmann and Benzmüller, 1999), previous studies have reported intonation patterns of the “average speaker” that effectively distinguish structurally ambiguous sentence types by prosodically marking interrogative and declarative structures (see Table 1).

Table 1. Prosodic cues in productions of the “average speaker” for marking interrogative and declarative structures in English and German.

In perception studies investigating the differentiation between sentence types, particular emphasis has been placed on prosodic cues in the terminal portion of the sentence, specifically within the last 150–200 ms of the prosodic contour (Lieberman, 1967; Peters and Pfitzinger, 2008). However, previous literature also suggested that additional features along the entire prosodic contour may contribute to the differentiation and identification of interrogative and declarative structures (Hadding-Koch and Studdert-Kennedy, 1964; Petrone and Niebuhr, 2014). For example, Petrone and Niebuhr (2014) showed that differences in prenuclear f0 alignment, slope, and shape serve as cues in the pre-terminal region of the sentence. Yet, Michalsky (2017) argued that these differences pertain only to phonetic realization, not to tonal structure, suggesting that pitch accents in the pre-terminal region do not syntactically mark interrogativity. These mixed results on the role of the entire prosodic contour call for further investigation of prosodic cue use in sentence type identification.
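The idea of a terminal f0 movement can be made concrete with a small computation. The sketch below is illustrative only and is not a method used in the cited studies: it assumes an f0 track has already been extracted (e.g., with Praat) as time/Hz pairs with unvoiced frames removed, converts f0 to semitones relative to an arbitrary 100 Hz reference (an assumption), and fits a least-squares slope over the final 200 ms. A positive slope indicates a terminal rise and a negative slope a terminal fall.

```python
import math


def hz_to_semitones(f0_hz, ref_hz=100.0):
    """Convert f0 in Hz to semitones relative to ref_hz (reference is an arbitrary choice)."""
    return 12.0 * math.log2(f0_hz / ref_hz)


def terminal_slope(times_s, f0_hz, window_s=0.2):
    """Least-squares f0 slope (semitones per second) over the final window_s seconds.

    Frames with f0 <= 0 (unvoiced) are ignored.
    """
    t_end = times_s[-1]
    pts = [(t, hz_to_semitones(f)) for t, f in zip(times_s, f0_hz)
           if t >= t_end - window_s and f > 0]
    if len(pts) < 2:
        raise ValueError("need at least two voiced frames in the terminal window")
    t_mean = sum(t for t, _ in pts) / len(pts)
    y_mean = sum(y for _, y in pts) / len(pts)
    num = sum((t - t_mean) * (y - y_mean) for t, y in pts)
    den = sum((t - t_mean) ** 2 for t, _ in pts)
    return num / den


# Toy contours sampled every 10 ms over 1 s (hypothetical values, not study data)
times = [i / 100 for i in range(101)]
question_like = [180 + 60 * max(0.0, t - 0.8) / 0.2 for t in times]   # final rise
statement_like = [180 - 40 * max(0.0, t - 0.8) / 0.2 for t in times]  # final fall
print(terminal_slope(times, question_like) > 0)
print(terminal_slope(times, statement_like) < 0)
```

Such a terminal measure captures only the last portion of the contour; pre-terminal cues of the kind discussed by Petrone and Niebuhr (2014) would require separate measures.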

Another crucial aspect of sentence comprehension involves the level of information structure, which refers to the packaging of information to meet communicative needs (Féry and Krifka, 2008). For instance, prosodic cues mark the focus of a sentence, highlighting new or contrasting information as prominent (e.g., Seeliger and Repp, 2023). Focus is typically conveyed through pitch accents on syntactic representations of constituents, with the constituent in question being focused by default in interrogative structures (Schafer et al., 2000). Thus, focus marking constitutes an additional factor for the identification of questions and statements, underscoring its linguistic function. In sentences without focused elements or with sentence-final (wide) focus, differences between sentence types emerge on the last noun phrase, with questions exhibiting a higher f0 maximum than statements. In contrast, under sentence-initial (narrow) focus, prosodic contours of questions and statements diverge markedly at the focused constituent: questions exhibit a rising f0 with a prolonged f0 maximum, while statements show a falling f0 that drops to a low contour by the end of the sentence (Altmann et al., 1989; Eady and Cooper, 1986; Pell, 2001). Neurotypical adult listeners are sensitive to these prosodic differences, showing fast, incremental use of focus to support sentence comprehension (Mulders and Szendrői, 2016).

In summary, prosodic cues are crucial for syntactically marking sentence types and for signaling focus at the level of information structure, thereby influencing to what extent prosody can be used for structural prediction by listeners and serving as an important resource to support sentence comprehension.

Impairments in prosodic processing have been previously described in aphasia, an acquired language disorder predominantly resulting from left-hemispheric lesions (for a review, see, e.g., de Beer et al., 2023). Aphasia can affect linguistic prosody processing to varying degrees across different modalities (perception and production). These prosodic impairments affect the processing of linguistic structures at both sentential and information-structural levels. For instance, individuals with aphasia (IWA) may have difficulty identifying different sentence types – such as failing to recognize that an interlocutor is asking a question that requires a response – or processing focused information – such as new or contrasting elements in discourse. These difficulties illustrate how impairments in linguistic prosody can affect sentence comprehension, potentially leading to significant difficulties in everyday communication. While prosodic impairments pose communicative challenges, they also raise important questions about how aphasia as an underlying disorder shapes the role of prosodic cues.

IWA are often classified as fluent or non-fluent based on their speech production abilities. Following Bayer (1984), Goodglass and Kaplan (1983), and Stadie (2010), fluent IWA typically exhibit a regular or increased speech rate of > 90 words per minute and a mean length of utterances exceeding five words, while presenting symptoms such as mild word-finding difficulties, semantic paraphasias, and production of complex but paragrammatic syntax. In contrast, non-fluent IWA show a reduced speech rate of < 50 words per minute and a mean length of utterances of fewer than five words, along with more severe word-finding difficulties, phonemic paraphasias, and reduced syntactic complexity (Bayer, 1984; Goodglass and Kaplan, 1983; Stadie, 2010). Additionally, many IWA experience impairments in speech comprehension, particularly when processing syntactic structures to derive the correct meaning (for a review, see Caplan, 2006). In this context, impairments in prosody may significantly contribute to the challenges of structural prediction during sentence comprehension.
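The two quantitative criteria above (speech rate and mean length of utterances) can be expressed as a simple decision rule. The following is a minimal sketch under stated assumptions: the function names are hypothetical, and labeling cases that meet neither set of cut-offs as "unclassified" is our own choice, since clinical classification would also draw on qualitative symptoms and rating scales.

```python
from statistics import mean


def speech_rate_wpm(n_words, duration_min):
    """Words per minute in a speech sample."""
    return n_words / duration_min


def mean_length_of_utterance(utterance_word_counts):
    """Mean number of words per utterance."""
    return mean(utterance_word_counts)


def classify_fluency(n_words, duration_min, utterance_word_counts):
    """Apply the > 90 wpm / MLU > 5 and < 50 wpm / MLU < 5 cut-offs.

    Returns "fluent", "non-fluent", or "unclassified" when the sample falls
    between the cut-offs or the two criteria disagree (an assumption here).
    """
    wpm = speech_rate_wpm(n_words, duration_min)
    mlu = mean_length_of_utterance(utterance_word_counts)
    if wpm > 90 and mlu > 5:
        return "fluent"
    if wpm < 50 and mlu < 5:
        return "non-fluent"
    return "unclassified"


print(classify_fluency(300, 3.0, [7, 6, 8, 5]))  # 100 wpm, MLU 6.5 -> fluent
print(classify_fluency(90, 3.0, [2, 3, 1, 4]))   # 30 wpm, MLU 2.5 -> non-fluent
```

Speech samples between 50 and 90 words per minute are not covered by either profile, which is one reason fluency is better treated as a graded dimension than as a strict dichotomy.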

According to functional load hypotheses (e.g., Colsher et al., 1987; Cooper et al., 1984; Van Lancker, 1980), different aspects of prosody are lateralized distinctively in the brain: linguistic prosody, which serves syntactic or semantic functions, is primarily processed in the left hemisphere, while emotional prosody, conveying affective information, is processed in the right hemisphere. Accordingly, previous studies have highlighted particular difficulties in IWA when processing linguistic prosody – in contrast to emotional prosody – in tasks such as sentence type identification (e.g., Pell and Baum, 1997; Seddoh, 2006; Walker et al., 2002). These findings may relate to the generally higher linguistic and cognitive demands of processing multiple cues (semantic, syntactic, and prosodic) in linguistic prosody tasks. The following sections explore linguistic prosody processing in IWA in more depth, discussing results on the identification of sentence types and the role of focus in sentence comprehension, while also considering how the use of prosodic cues may relate to non-linguistic auditory processing skills.

Understanding how IWA process sentence types provides valuable insights into their challenges with linguistic prosody. Previous research has shown that IWA are impaired in sentence type identification, making more errors on questions than on statements as compared to both neurotypical control participants and individuals with right-hemispheric lesions (Walker et al., 2002). These findings support the theory of functional lateralization and highlight general processing difficulties of IWA in linguistic prosody tasks. IWA may rely on a default strategy for sentence type identification, inferring statements from both prosody and word order but questions from prosody only, which may contribute to their higher error rates.

Speech fluency and severity of aphasia also play a critical role in understanding IWA’s difficulties in prosodic processing. Fluent IWA tend to outperform non-fluent IWA in sentence type identification tasks in English (Seddoh, 2006). While mildly to moderately impaired non-fluent IWA struggled with identifying questions, particularly in using prosodic cues in the pre-terminal region of the sentence, severely impaired non-fluent IWA exhibited generally poor performance across both sentence types. Differential findings from pre-terminal and terminal sentence regions further suggest that non-fluent IWA experience particular difficulties in processing prosodic information in smaller units than the global prosodic contour.

Similar findings have been reported for IWA in German when identifying lexically masked or unmasked questions and statements with either congruent intonation following the canonical rule in German (e.g., a statement with declarative content and word order and falling intonation, Die Eltern essen Suppe. “The parents eat soup.”; a question with interrogative content and word order and rising intonation, Kannst du mir heute helfen? “Can you help me today?”) or incongruent intonation (e.g., a statement with declarative content and word order but rising intonation, Die Frau liest eine Zeitung? “The woman is reading a newspaper?”) (Raithel, 2005). Non-fluent IWA significantly underperformed control participants, whereas fluent IWA did not differ significantly from either group. Notably, both non-fluent IWA and control participants appeared to rely more on lexical content and word order than on prosodic cues in the unmasked condition. Additionally, in neurotypical individuals, age-related cognitive decline in, for instance, attention, working memory, and executive functioning may influence linguistic prosody processing (e.g., Raithel and Hielscher-Fastabend, 2004; Shahouzaei et al., 2024). However, the precise mechanisms linking linguistic prosody processing and non-linguistic cognitive abilities remain open to further investigation.

To summarize, processing of sentence types in IWA reveals significant challenges related to linguistic prosody. However, these prosodic impairments are modulated by individual levels of speech fluency, aphasia severity, and cognitive abilities.

Examining how IWA process prosodic cues related to information structure, such as sentence focus, sheds further light on their challenges with linguistic prosody. Focus marking plays a critical role because it can either facilitate or compromise sentence comprehension, as reflected in the mixed findings of the existing literature on focus discrimination and identification in aphasia (Gavarró and Salmons, 2013; Geigenberger and Ziegler, 2001; Kimelman, 1999; Pell, 1998).

Prosodic cues for focus marking may facilitate auditory comprehension of new information in IWA, with previous findings suggesting that severely impaired IWA benefit more from prosody than those with mild or moderate impairments (Kimelman, 1999). This was particularly evident in contexts with low linguistic complexity. However, as linguistic demands increase, resources available for prosodic processing appear to diminish. In addition, IWA have shown difficulties in discriminating between wide and narrow focus in Catalan (Gavarró and Salmons, 2013) and in identifying focus in English questions and statements, relying less on prosodic cues than control participants and individuals with right-hemispheric lesions (Pell, 1998). IWA’s use of prosodic cues in perception may, however, depend on sentence type, with f0 being more crucial in statements, while additional durational cues become more relevant in questions.

In German, impaired performance in discriminating statements with wide and narrow focus was observed for sentences artificially manipulated for f0, duration, and intensity (Raithel, 2005). Notably, both fluent and non-fluent IWA, as well as control participants, performed below chance, suggesting possible issues with the experimental design. Moreover, despite high variability among IWA, deficits have also been observed in identifying narrow focus across different semantic categories – such as person, place, action, and time – in sentences like “Last year we went to Italy by plane” (Geigenberger and Ziegler, 2001).

To sum up, research on focus-related processing in IWA yields inconclusive results, with variability in speech fluency, linguistic complexity, and experimental designs complicating our understanding of prosodic impairments in aphasia at information-structural levels.

Since processing of pitch and rhythm – key spectral and temporal parameters of prosody – is relevant for prosodic cue use at both sentential and information-structural levels, considering non-linguistic auditory processing skills could offer further insights into prosodic impairments in IWA and their implications for sentence comprehension.

For example, Stefaniak et al. (2021) assessed perception of pitch, rhythm, and timbre in IWA and identified impairments in pitch discrimination, various rhythm perception tasks, and dynamic modulation detection. Significant positive correlations were found between auditory processing and speech fluency, particularly for global pitch detection and rhythm discrimination. Similarly, Zipse et al. (2014) and Grube et al. (2016) reported impaired performance in IWA across different measures of pitch and rhythm processing as compared to control participants. Interestingly, previous studies have also linked prosodic processing to musical abilities, showing that musical experience facilitates detection of pitch variations in neurotypical individuals (Magne et al., 2003). Overall, these findings suggest that non-linguistic auditory processing skills are impaired in IWA, potentially influencing their language abilities and limiting their use of prosodic cues for sentence processing.

The literature review above highlights the importance of prosodic processing for sentence comprehension, which may be particularly challenging for IWA due to impairments in linguistic prosody processing. It also reveals mixed findings on the role of the global prosodic contour and sentence focus for structural prediction. However, prosodic processing in aphasia is still underexplored, and questions regarding the interplay of individual differences in language, cognitive, or musical abilities and non-linguistic auditory processing skills in prosodic processing remain open. To our knowledge, no previous study has examined to what extent IWA use prosodic cues for structural prediction in German, including the influence of focus marking on sentence type identification, thereby considering prosodic impairments at both sentential and information-structural levels. Furthermore, there is limited research on how challenges in processing linguistic prosody relate to variability in speech fluency, aphasia severity, cognitive or musical abilities, and non-linguistic auditory processing skills. Exploring these sources of variability could further illuminate how impairments in prosodic processing impact sentence comprehension in aphasia.

In the present study, we aimed: first, to investigate the use of prosodic cues for identifying interrogative and declarative structures with wide and narrow focus in individuals with and without aphasia (RQ1), and second, to explore individual differences in prosodic processing to better understand potential sources of variability in prosodic cue use (RQ2).

RQ1: To what extent is prosodic cue use impaired in individuals with aphasia when identifying German structurally ambiguous questions and statements in two different focus conditions?

To address RQ1, we compared the performance of IWA and a neurotypical control group in a sentence type identification task using structurally ambiguous sentences (statements vs. questions) across two focus conditions (wide vs. narrow).

We formulated the following predictions for response accuracy: first, we predicted that IWA would exhibit overall lower response accuracy due to difficulties in sentence type identification (Pell and Baum, 1997; Seddoh, 2006; Walker et al., 2002). Second, we anticipated that IWA would show lower response accuracy for questions as compared to statements, as previous studies highlighted greater challenges in question identification (Pell and Baum, 1997; Seddoh, 2006; Walker et al., 2002). Third, if focus marking facilitates sentence processing (Kimelman, 1999; Mulders and Szendrői, 2016), we expected higher response accuracy for narrow focus in both participant groups. Conversely, if IWA’s focus perception is impaired (Gavarró and Salmons, 2013; Geigenberger and Ziegler, 2001; Pell, 1998; Raithel, 2005), we would expect similar response accuracy across focus conditions or lower accuracy for narrow compared to wide focus in IWA.

We formulated the following predictions for reaction times: first, we predicted overall slower reaction times in IWA, particularly for questions, while no significant differences between sentence types were expected in the control group, in line with findings by Pell and Baum (1997) and conclusions from Seddoh (2006). Second, if focus marking facilitates sentence processing (Kimelman, 1999; Mulders and Szendrői, 2016) and prosodic cues in pre-terminal regions can be used for sentence type identification, we expected faster reaction times for narrow focus in both participant groups. Conversely, if IWA exhibit impaired focus perception and difficulties using prosodic cues in pre-terminal regions (e.g., Geigenberger and Ziegler, 2001; Pell, 1998; Seddoh, 2006), we anticipated comparable reaction times across focus conditions or slower reaction times for narrow compared to wide focus in IWA.

RQ2: To what extent are individual differences in sentence type identification related to severity of aphasia, speech fluency, language, cognitive or musical abilities, and non-linguistic auditory processing skills?

To address RQ2, we explored effects of aphasia severity, speech fluency, comprehension of linguistic and emotional prosody, global pitch detection, rhythm discrimination, and musical abilities as covariates. Based on previous studies, we predicted lower response accuracy in severely impaired as compared to moderately and mildly impaired IWA, and in non-fluent as compared to fluent IWA (Raithel, 2005; Seddoh, 2006). Following the functional load hypothesis (e.g., Van Lancker, 1980), we also expected a positive correlation between sentence type identification and comprehension of linguistic prosody, but no such correlation for emotional prosody. Additionally, given prior findings on the influence of non-linguistic auditory processing skills (Zipse et al., 2014; Grube et al., 2016; Stefaniak et al., 2021) and musical experience (Magne et al., 2003) on language abilities and prosodic processing, we anticipated positive correlations between sentence type identification and measures of pitch, rhythm, and musical abilities.

Finally, we conducted a clustering analysis to explore individual differences in prosodic cue use within the IWA group based on response accuracy and reaction times in sentence type identification. We expected to identify distinct aphasia subgroups based on task performance, and we descriptively explored relationships with language, cognitive, and musical abilities, as well as non-linguistic auditory processing skills, as potential sources of variability.
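The clustering algorithm, feature set, and number of clusters are not specified at this point in the text, so the following is only a sketch of one common approach, not the analysis actually used: per-participant accuracy and mean reaction time are z-scored so that both features carry equal weight, and plain k-means with a deterministic farthest-point initialization (an assumption made so the example is reproducible) partitions the participants. The feature values are hypothetical.

```python
import math
from statistics import mean, pstdev


def standardize(rows):
    """z-score each column (e.g., accuracy, mean RT) so both features weigh equally."""
    cols = list(zip(*rows))
    stats = [(mean(c), pstdev(c)) for c in cols]
    return [
        [(v - m) / s if s > 0 else 0.0 for v, (m, s) in zip(row, stats)]
        for row in rows
    ]


def kmeans(points, k, max_iter=100):
    """Plain k-means with deterministic farthest-point initialization."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        # Next centroid: the point farthest from all centroids chosen so far
        farthest = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(list(farthest))
    labels = None
    for _ in range(max_iter):
        new_labels = [
            min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points
        ]
        if new_labels == labels:
            break  # assignments are stable: converged
        labels = new_labels
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centroids[j] = [mean(dim) for dim in zip(*members)]
    return labels, centroids


# Hypothetical per-participant features: [proportion correct, mean RT in ms]
features = [[0.95, 1200], [0.92, 1300], [0.90, 1250],
            [0.55, 2600], [0.60, 2500], [0.50, 2700]]
labels, _ = kmeans(standardize(features), k=2)
print(labels)
```

In practice, k would be chosen with a criterion such as silhouette width or by inspecting a dendrogram from hierarchical clustering, and k-means results are usually checked across several initializations.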

2 Materials and methods

2.1 Participants

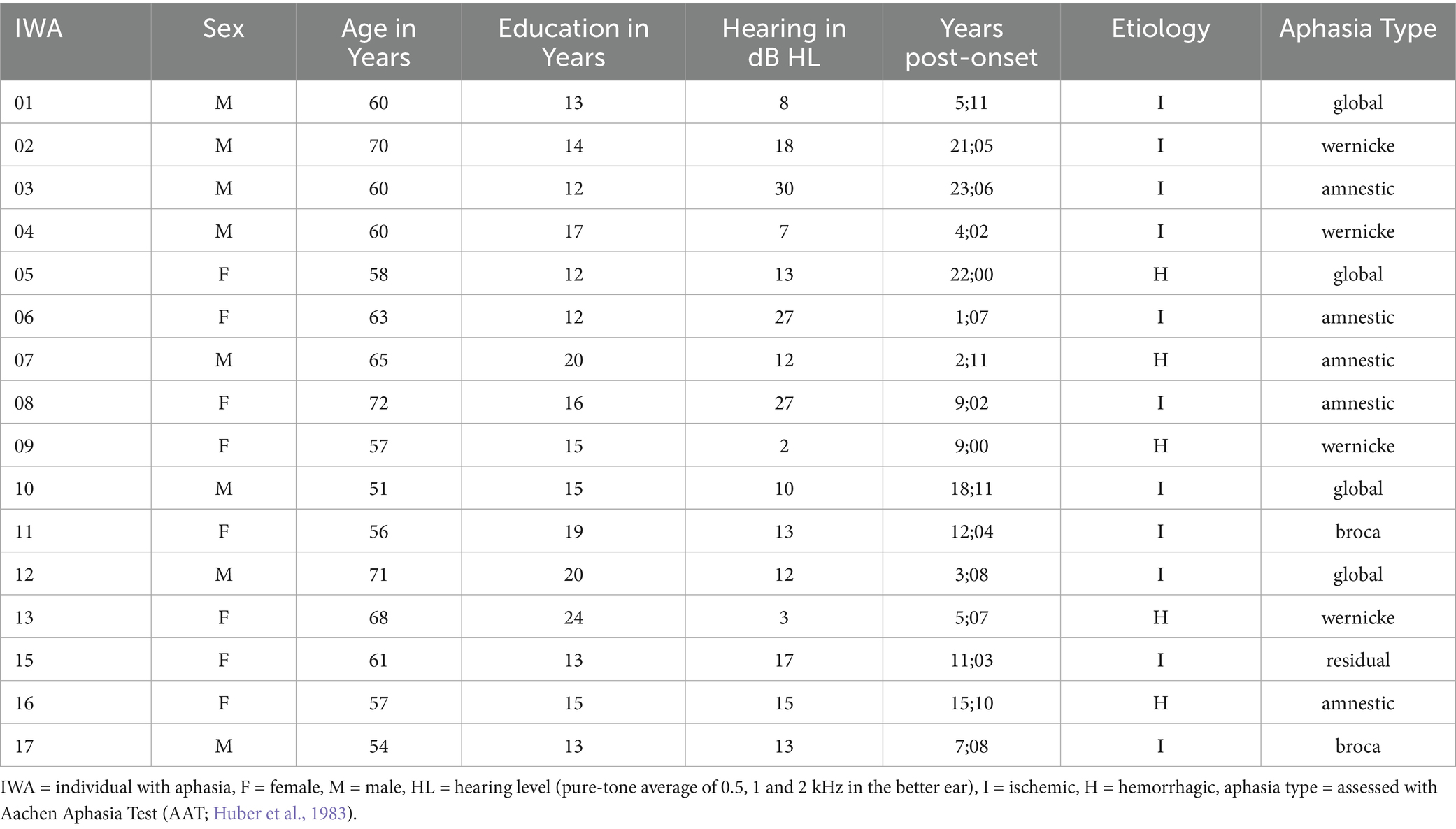

Forty-nine native German speakers participated in this study: 17 individuals with aphasia (IWA) and 32 control participants. Two control participants were excluded due to technical issues during data collection, and one individual with aphasia (AP14) was excluded after being diagnosed with a cognitive communication disorder without aphasia. The final sample included 17 females and 13 males in the control group, aged 53–80 years (M = 66.83, SD = 7.04), with no history of neurological or language impairments. The IWA group (8 females, 8 males) ranged between 51 and 72 years (M = 61.44, SD = 6.25), with post-onset times between 1;07 and 23;06 (years;months). Aphasia in the IWA group was caused by single unilateral lesions in the dominant left hemisphere. Both participant groups were matched for age range (50–80 years), years of education (t(25.6) = 1.94, p = 0.06; Welch’s two-sample t-tests, two-sided, here and elsewhere), and hearing thresholds (t(29.5) = −0.19, p = 0.85). All participants were (pre-morbidly) right-handed as assessed using the Edinburgh Handedness Inventory (Oldfield, 1971), and reported normal or corrected-to-normal vision. A hearing screening was conducted following the guidelines of the American Speech-Language-Hearing Association (ASHA Panel on Audiologic Assessment, 1997), ensuring mean air-conduction thresholds in a pure-tone test at 0.5, 1, and 2 kHz of < 25 dB HL in the better ear (Clark, 1981). Participants failing the pure-tone test were included if they passed the Ventry and Weinstein criterion (< 40 dB HL per frequency in the better ear), indicating a mild hearing loss (Ventry and Weinstein, 1983). Those with moderate to severe hearing impairments or hearing aids were excluded. Demographic and neurological data for IWA are presented in Table 2.
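Two procedures in this paragraph can be stated precisely: the hearing screening decision and the Welch test, whose Welch–Satterthwaite degrees of freedom explain the non-integer values such as t(25.6). The sketch below assumes thresholds are stored per ear as a mapping from frequency (kHz) to dB HL; choosing the better ear by pure-tone average and applying the Ventry and Weinstein cut-off to the same three frequencies are assumptions, and hearing-aid use is not modeled. Function names are hypothetical.

```python
import math
from statistics import mean, variance

FREQS_KHZ = (0.5, 1.0, 2.0)


def pure_tone_average(thresholds):
    """Mean air-conduction threshold (dB HL) at 0.5, 1, and 2 kHz for one ear."""
    return mean(thresholds[f] for f in FREQS_KHZ)


def hearing_screening(left, right):
    """Return 'pass', 'mild loss (included)', or 'exclude' for the better ear."""
    better = min((left, right), key=pure_tone_average)
    if pure_tone_average(better) < 25:
        return "pass"
    if all(better[f] < 40 for f in FREQS_KHZ):  # Ventry-Weinstein criterion
        return "mild loss (included)"
    return "exclude"


def welch_t(a, b):
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of freedom."""
    va = variance(a) / len(a)  # squared standard error of the mean, group a
    vb = variance(b) / len(b)  # squared standard error of the mean, group b
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


print(hearing_screening({0.5: 20, 1.0: 25, 2.0: 30}, {0.5: 30, 1.0: 35, 2.0: 45}))
print(welch_t([1, 2, 3, 4], [2, 3, 4, 5]))  # toy data: df = 6 for equal n and variances
```

Because the degrees of freedom are estimated from the two groups' variances and sizes, they are generally fractional and fall between min(n1, n2) − 1 and n1 + n2 − 2.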

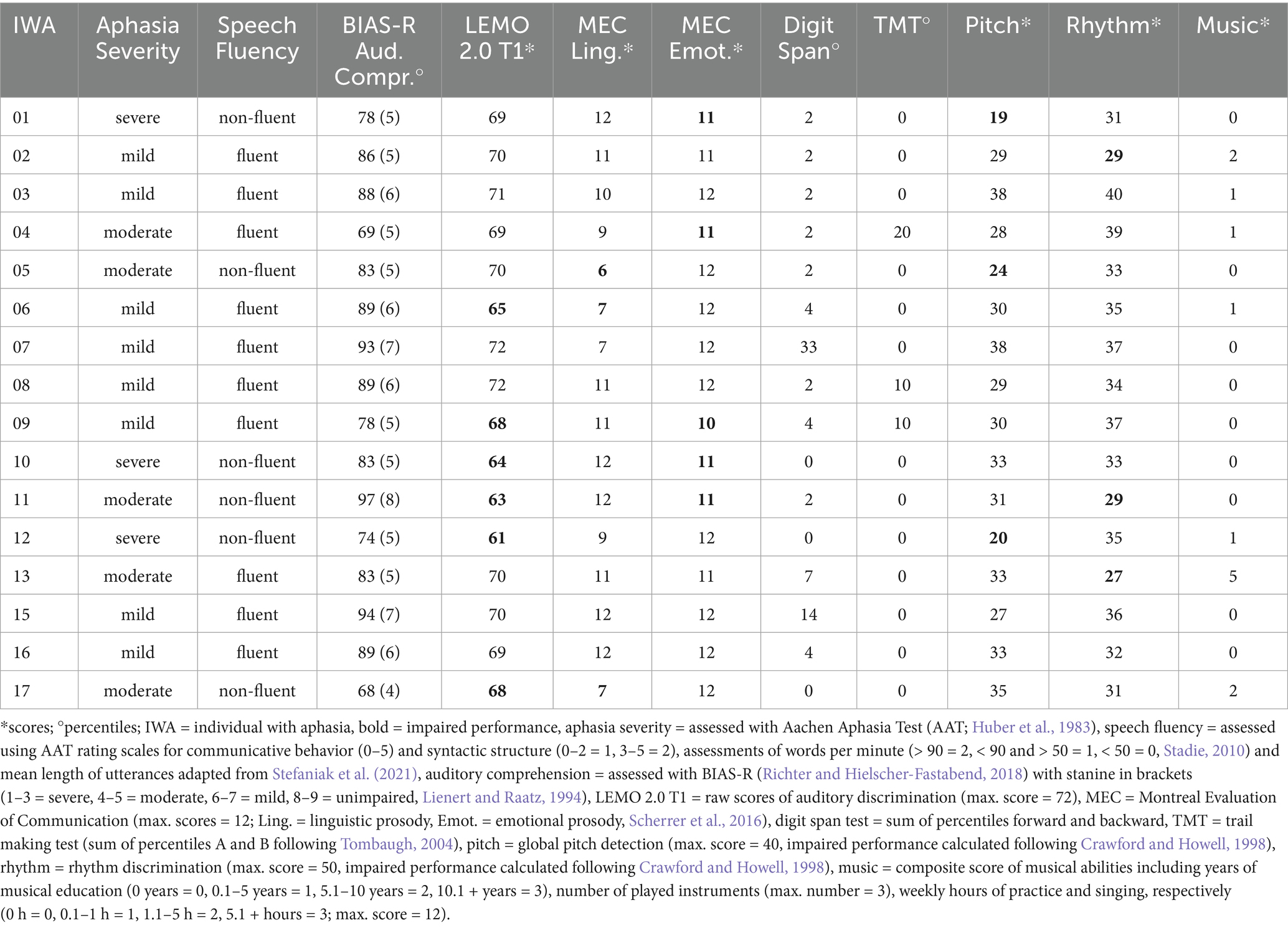

The following background measures were assessed: all participants completed two subtests of the Montreal Evaluation of Communication (MEC; Scherrer et al., 2016) to assess comprehension of linguistic (subtest 5) and emotional (subtest 9) prosody, serving as a sanity check for the present study. Cognitive abilities were evaluated using German versions of the following tests: the Digit Span Test of the Wechsler Memory Scale (WMS-R; Härting et al., 2000) for auditory working memory, and the Trail Making Test A and B for executive functioning (TMT; Reitan, 1979). Non-linguistic auditory processing skills were assessed with a frequency discrimination task for global pitch detection, following the procedure of Grube et al. (2016), and a rhythm discrimination task based on Zipse et al. (2014). All participants completed an additional demographic questionnaire, which gathered information on musical abilities, calculated as a composite score across years of musical education, number of instruments played, and weekly hours of practice and singing. Moreover, control participants completed the Montreal Cognitive Assessment (MoCA; Nasreddine et al., 2005) as a screening for mild cognitive impairment, with all participants meeting the age- and education-corrected thresholds provided by Thomann et al. (2018).
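The text does not state how the musical-abilities composite was weighted. One common way to combine variables on different scales is to z-score each variable across participants and average the z-scores; the sketch below illustrates that approach as an assumption, not as the study's formula. The field names are hypothetical, and practice and singing hours are treated as a single combined variable.

```python
from statistics import mean, pstdev

# Hypothetical field names for the questionnaire variables described above
FIELDS = ("years_musical_education", "n_instruments", "weekly_hours_practice_singing")


def musical_composite(participants):
    """Return one composite score per participant: the mean of z-scored fields."""
    stats = {}
    for field in FIELDS:
        values = [p[field] for p in participants]
        stats[field] = (mean(values), pstdev(values))
    composites = []
    for p in participants:
        zs = [
            (p[field] - m) / s if s > 0 else 0.0  # a constant field contributes 0
            for field, (m, s) in stats.items()
        ]
        composites.append(mean(zs))
    return composites


sample = [
    {"years_musical_education": 0, "n_instruments": 0, "weekly_hours_practice_singing": 0},
    {"years_musical_education": 2, "n_instruments": 1, "weekly_hours_practice_singing": 1},
    {"years_musical_education": 10, "n_instruments": 3, "weekly_hours_practice_singing": 5},
]
print([round(x, 2) for x in musical_composite(sample)])
```

Equal weighting keeps any single variable, such as years of education with its larger numeric range, from dominating the composite. Other schemes, such as principal component scores, would be equally plausible.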

In the IWA group, severity of aphasia was assessed using the Aachen Aphasia Test (AAT; Huber et al., 1983). Additionally, speech fluency was evaluated through a semi-standardized interview, using AAT rating scales for communicative behavior and syntactic structure, as well as assessments of words per minute and mean length of utterances, adapted from Stefaniak et al. (2021). Auditory comprehension was tested using the Bielefeld Aphasia Screening (BIAS-R; Richter and Hielscher-Fastabend, 2018) to ensure basic task understanding and to exclude participants with severe impairments in comprehension at the word and sentence level. Furthermore, IWA completed an auditory discrimination task (subtest T1 of the German psycholinguistic test battery LEMO 2.0; Stadie et al., 2013) to assess auditory analysis. Results of IWA on language, cognitive, and musical abilities, linguistic and emotional prosody, and non-linguistic auditory processing skills are provided in Table 3.

Table 3. Language, cognitive, and musical abilities, linguistic and emotional prosody, and non-linguistic auditory processing skills in individuals with aphasia (n = 16).

The study was approved by the ethics committee of the University of Potsdam (registration number 99/2020), and all participants provided informed consent in accordance with the Declaration of Helsinki (World Medical Association, 2013). Participants were recruited from the University’s patient database, local aphasia support groups, and senior associations. They were compensated with a monetary reimbursement. The study was conducted in the Neurocognition of Language Lab at the University of Potsdam.

2.2 Materials

Linguistic stimuli consisted of 20 German sentences (10 statements, 10 questions) structured as subject (NP1), verb, and object (NP2). The sentences used transitive verbs and bisyllabic animate nouns from the semantic categories of humans, animals, and fairy tale characters in both subject and object positions. All nouns were either feminine (NP1, n = 5) or masculine (NP2, n = 10), with lexical stress on the first syllable. The nouns in both positions were balanced for mean type frequency. The sentences were structurally ambiguous (i.e., string-identical questions and statements) and could only be differentiated by their prosodic contours, which are presented in the following sections on auditory stimuli and acoustic analysis. Visual stimuli consisted of two pictograms: one with a question mark and the other with a full stop, representing questions and statements, respectively.

2.2.1 Auditory stimuli

Auditory stimuli comprised 80 experimental items (20 SVO structures × 2 sentence types × 2 focus conditions) and 4 practice items (1 SVO structure × 2 sentence types × 2 focus conditions). These stimuli were based on a preceding production study: an elicitation task conducted with four pilot participants who were unfamiliar with the project and naïve to its purpose. The production study aimed to explore the prosodic contours of two sentence types (statements vs. questions) and two focus conditions (wide vs. narrow) in German, verifying whether the material could, in principle, elicit distinguishable prosodic contours in the four experimental conditions (as exemplified in (2)), thus ensuring its suitability for the present perception study.

(2) Die Tante grüßt den Bäcker. wide statement

Die Tante grüßt den Bäcker? wide question

Die TANTE grüßt den Bäcker. narrow statement

Die TANTE grüßt den Bäcker? narrow question

the.NOM.F aunt greets the.ACC.M baker

“The aunt greets the baker.?”
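The fully crossed design of the auditory stimuli (each SVO structure realized in all four conditions illustrated in (2)) can be written as a Cartesian product. The sketch below only enumerates the item list; structure identifiers such as "SVO01" are hypothetical placeholders, and presentation order or randomization is not modeled.

```python
from itertools import product

SENTENCE_TYPES = ("statement", "question")
FOCUS_CONDITIONS = ("wide", "narrow")


def build_items(structure_ids):
    """Fully cross SVO structures with sentence type and focus condition."""
    return [
        {"structure": s, "sentence_type": st, "focus": fc}
        for s, st, fc in product(structure_ids, SENTENCE_TYPES, FOCUS_CONDITIONS)
    ]


experimental = build_items([f"SVO{i:02d}" for i in range(1, 21)])  # 20 x 2 x 2
practice = build_items(["SVO_practice"])                             # 1 x 2 x 2
print(len(experimental), len(practice))  # 80 4
```

Because every structure appears in every condition, lexical content is held constant across conditions, so differences between conditions can only come from the prosodic contour.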

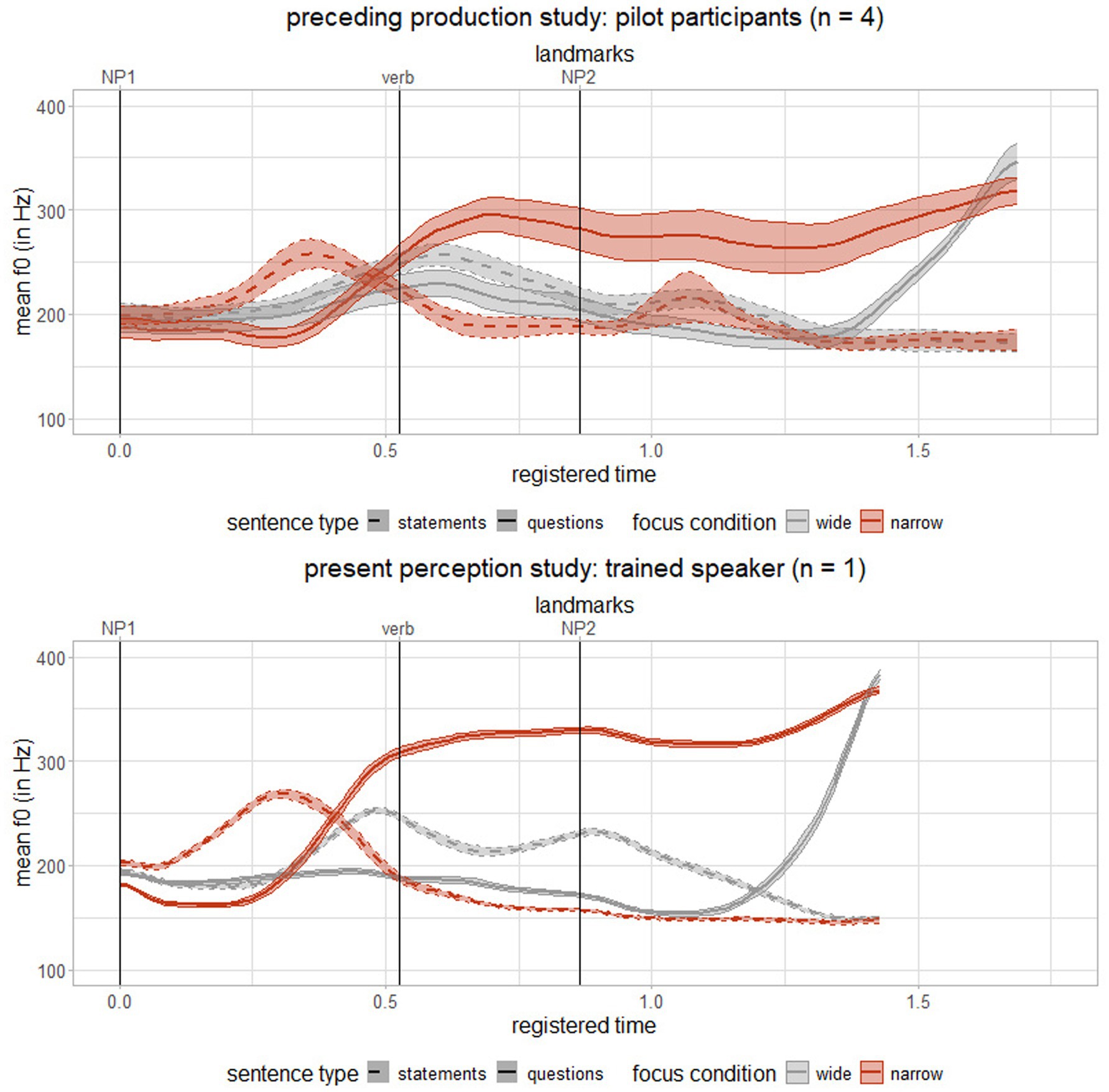

For the present perception study, auditory stimuli were spoken by a trained female native speaker of German, who was familiar with the prosodic contours identified in the production study, to closely resemble the intonation patterns. All recordings were made in a sound-attenuated booth at a sampling rate of 48 kHz, and post-processed using PRAAT (Boersma and van Heuven, 2001). Prosodic contours were analyzed and plotted using RStudio (R Core Team, 2025) and are displayed in Figure 1 for both the preceding production study and the present perception study.

Figure 1. Mean and standard error of time-registered f0 (in Hz) for the preceding production study (top) and the present perception study (bottom) across two sentence types (statements = dashed line, questions = solid line) and two focus conditions (wide = grey, narrow = red). Constituent onsets served as landmarks and were aligned using landmark registration following Asano and Gubian (2018); the time axis is therefore normalized rather than in real time.
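Landmark registration maps each utterance's time axis onto a common reference so that the same constituents line up across items before f0 is averaged. As a simplified illustration only, the sketch below uses a piecewise-linear time warp between landmarks. The procedure of Asano and Gubian (2018) may differ (for example, by using smooth rather than piecewise-linear warping functions). Using mean landmark times across items as the reference is an assumption, and the function names are hypothetical.

```python
from bisect import bisect_right


def register_time(t, landmarks, reference):
    """Piecewise-linearly map time t of one utterance onto a reference time axis.

    landmarks: strictly increasing landmark times of this utterance (e.g.,
    constituent onsets plus the utterance end); reference: the corresponding
    target times (e.g., mean landmark positions across all items).
    """
    if len(landmarks) != len(reference) or len(landmarks) < 2:
        raise ValueError("need matching landmark lists with at least two entries")
    if any(b <= a for a, b in zip(landmarks, landmarks[1:])):
        raise ValueError("landmarks must be strictly increasing")
    if not landmarks[0] <= t <= landmarks[-1]:
        raise ValueError("t lies outside the landmarked interval")
    # Index of the segment [landmarks[i], landmarks[i + 1]] containing t
    i = min(bisect_right(landmarks, t), len(landmarks) - 1) - 1
    frac = (t - landmarks[i]) / (landmarks[i + 1] - landmarks[i])
    return reference[i] + frac * (reference[i + 1] - reference[i])


def register_contour(times, f0, landmarks, reference):
    """Apply register_time to every (time, f0) sample of a contour."""
    return [(register_time(t, landmarks, reference), f) for t, f in zip(times, f0)]


print(register_time(0.5, [0.0, 1.0, 2.0], [0.0, 2.0, 3.0]))  # 1.0
```

After registration, each landmark falls at the same position on the shared axis for every item. This is why the time axis in Figure 1 is normalized rather than in seconds.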

2.2.2 Acoustic analysis

Acoustic analysis of the auditory stimuli and signal detection theory measures were conducted using RStudio (R Core Team, 2025) and are further detailed in Supplementary material 1. First, we extracted acoustic measures to ensure that our auditory stimuli successfully elicited the prosodic contours in the four experimental conditions, aligning with prior research on prosodic cues distinguishing interrogative and declarative structures (e.g., Baum and Pell, 1997; Eady and Cooper, 1986; Hadding-Koch and Studdert-Kennedy, 1964). Second, a perception check was performed to confirm that the control group was sensitive to the experimental conditions, providing normative data for group comparisons with IWA.

2.2.2.1 Acoustic measures

Prosodic contours of the four experimental conditions differed in f0, with questions exhibiting a higher f0 mean than statements, particularly under narrow focus. On NP1, f0 movement depended on focus condition: statements showed a falling contour, while questions exhibited a rising contour with a significantly larger f0 range in narrow vs. wide focus. On NP2, f0 movement showed a fall in statements and a rise in questions, with a significantly larger f0 range for questions in both focus conditions. These results align with previous research on prosodic cues distinguishing questions from statements in two different focus conditions (e.g., Baum and Pell, 1997; Eady and Cooper, 1986; Hadding-Koch and Studdert-Kennedy, 1964). No durational differences between sentence types were observed in either focus condition. The overall speech rate across conditions averaged 4.91 syllables per second (SD = 0.26), consistent with the natural speech rate of three to six syllables per second reported for various languages, including German (Levelt, 2001). Auditory stimuli were scaled to an intensity level of 70 dB, and a silence period of 250 ms was added before and 40 ms after each stimulus to ensure smooth stimulus presentation in PsychoPy (Peirce et al., 2019).
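The intensity scaling and silence padding described above can be sketched as follows. This is a minimal sketch, assuming that sample values are treated as sound pressure in Pa and that mean intensity is expressed in dB relative to 20 µPa (the convention used by Praat, as we understand it); the authors' exact processing settings are not reported, and the function names are hypothetical.

```python
import numpy as np

P_REF = 2e-5  # reference sound pressure in Pa (0 dB SPL)

def mean_intensity_db(x):
    """Mean intensity in dB, treating sample values as sound pressure in Pa."""
    return 10 * np.log10(np.mean(np.square(x)) / P_REF ** 2)

def scale_intensity(x, target_db=70.0):
    """Multiply the waveform by a constant gain so that its mean intensity equals target_db."""
    gain_db = target_db - mean_intensity_db(x)
    return x * 10 ** (gain_db / 20)  # amplitude gain: 20 dB per factor of 10

def pad_silence(x, fs=48_000, pre_ms=250, post_ms=40):
    """Add leading and trailing silence (zeros) around the stimulus."""
    pre = np.zeros(round(fs * pre_ms / 1000))
    post = np.zeros(round(fs * post_ms / 1000))
    return np.concatenate([pre, x, post])


# Hypothetical 1-s, 200-Hz test tone at 48 kHz.
fs = 48_000
t = np.arange(fs) / fs
tone = 0.01 * np.sin(2 * np.pi * 200 * t)
stimulus = pad_silence(scale_intensity(tone), fs=fs)
```

Scaling by a single gain factor preserves the f0 contour and relative loudness within a sentence while equalizing overall level across stimuli.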

2.2.2.2 Perception check

As a perception check, we analyzed the control group's data from the present perception study (for task details, see Section 2.3) using signal detection theory to conduct d’-analyses (Jang et al., 2009). This analysis aimed to determine whether control participants could serve as a normative group for IWA by evaluating their sensitivity in distinguishing prosodic contours across the four experimental conditions. Results revealed very good discriminability between questions and statements, with focus condition influencing sentence type identification independently of any response bias. Higher discriminability was found in narrow vs. wide focus. Additionally, an overall response bias toward statements was identified, which was greater in wide vs. narrow focus. These findings validate our experimental prosodic conditions and confirm the task’s feasibility, demonstrating that control participants were sensitive enough to reliably distinguish prosodic contours across the four experimental conditions and were suitable as a normative group for IWA.
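The d’ and response-bias measures can be computed from the four response counts of a yes/no design. The sketch below treats questions as "signal" trials, so a positive criterion c reflects a bias toward answering "statement". The log-linear correction (+0.5 per count, +1 per total) is an assumption on our part; the correction used in the study is not reported here.

```python
from statistics import NormalDist

def dprime_and_criterion(hits, misses, false_alarms, correct_rejections):
    """Signal detection indices with questions as 'signal' trials.

    hits: questions answered 'question'; misses: questions answered 'statement';
    false_alarms: statements answered 'question';
    correct_rejections: statements answered 'statement'.
    A log-linear correction keeps the rates away from 0 and 1 (assumption).
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    d_prime = z(hit_rate) - z(fa_rate)
    # c > 0: bias toward answering 'statement'; c < 0: bias toward 'question'.
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))
    return d_prime, criterion
```

For example, a hypothetical listener with 10/20 questions and 0/20 statements answered "question" obtains a positive c, the kind of statement bias reported above.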

2.3 Procedure

Before the experiment, participants received study information, signed consent forms, and completed a data protection declaration. Demographic information and musical experience were collected, and participants underwent a hearing screening. Written instructions for the experimental task were provided, and for IWA, the experimenter also read them aloud. Participants sat in front of a computer screen and wore headphones to listen to the auditory stimuli. Key instructions were reiterated on the screen, and participants could ask questions to ensure that they had understood the task.

During the experimental task, each trial started with a central fixation cross displayed for 1,000 ms. Next, two pictograms (full stop vs. question mark) appeared on the screen while participants listened to the auditory stimulus. Their task was to identify the sentence type by selecting the appropriate pictogram via button press, responding as quickly and accurately as possible. The index and middle fingers of their non-dominant left hand were used for button presses. After each response (or a time-out 10 s after sentence onset), a fixation cross was shown during a 1,000 ms inter-stimulus interval, followed by the next trial.

The first four trials comprised practice items, during which participants received immediate feedback on their performance to familiarize themselves with the task. They could repeat the practice trials once. The test phase consisted of 80 trials without feedback, with a short break after 40 trials to maintain attention. The experimental task lasted about 15 min. Afterwards, assessments of handedness, cognitive abilities, linguistic and emotional prosody, and non-linguistic auditory processing skills were conducted. For control participants, the entire experiment took about 120 min in one session. For IWA, testing was split into two 120-min sessions, with tests on language abilities administered during the second appointment.

2.4 Experimental design

The experiment used a 2×2 factorial within-participant design (sentence type × focus). Four pseudorandomized lists of experimental items were created, ensuring no more than three consecutive occurrences of the same sentence type (statements vs. questions) or focus condition (wide vs. narrow). Further constraints ensured a minimum of five items between repetitions of the same lexical content and at least three items between occurrences of the same NP1. Additionally, the position of the visual stimuli (full stop vs. question mark) was counterbalanced across participants. For half of the participants, the full stop appeared on the right side of the screen and the question mark on the left. For the other half of participants, the positions were reversed.
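The ordering constraints above can be enforced with a simple randomized search. The following is a minimal sketch, not the authors' procedure: it uses greedy random selection with restarts, reads "at least k items between" as "no match among the previous k trials", and works on a hypothetical item set (20 lexical contents × 4 conditions, with NP1 nouns shared by pairs of lexical contents). Calling it with four different seeds would yield four lists.

```python
import random

def violates(seq, cand, max_run=3, lex_gap=5, np1_gap=3):
    """True if appending `cand` to `seq` would break one of the ordering constraints."""
    # No more than `max_run` consecutive trials with the same sentence type or focus.
    for key in ("type", "focus"):
        tail = seq[-max_run:]
        if len(tail) == max_run and all(t[key] == cand[key] for t in tail):
            return True
    # At least `lex_gap` intervening trials before the same lexical content recurs.
    if any(t["lex"] == cand["lex"] for t in seq[-lex_gap:]):
        return True
    # At least `np1_gap` intervening trials before the same NP1 recurs.
    return any(t["np1"] == cand["np1"] for t in seq[-np1_gap:])


def pseudorandomize(items, seed=None, max_attempts=10_000):
    """Greedy random ordering with restarts: pick uniformly among trials that keep
    all constraints satisfied; if no trial fits, discard the attempt and start over."""
    rng = random.Random(seed)
    for _ in range(max_attempts):
        remaining, seq = list(items), []
        while remaining:
            options = [i for i, it in enumerate(remaining) if not violates(seq, it)]
            if not options:
                break  # dead end: discard this attempt
            seq.append(remaining.pop(rng.choice(options)))
        else:
            return seq  # every trial was placed without a dead end
    raise RuntimeError("no valid order found; relax the constraints or raise max_attempts")


# Hypothetical item set: 20 lexical contents x 4 conditions = 80 trials;
# lexical contents lex and lex + 10 share the same NP1 noun.
CONDITIONS = [(t, f) for t in ("statement", "question") for f in ("wide", "narrow")]
items = [
    {"lex": lex, "np1": lex % 10, "type": t, "focus": f}
    for lex in range(20)
    for t, f in CONDITIONS
]
order = pseudorandomize(items, seed=1)
```

Restarting on a dead end is crude but easy to verify; a backtracking search would be an alternative if the constraints were much tighter.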

2.5 Data analysis

This study was pre-registered on OSF, where data analysis plans, data and code are openly available: osf.io/3je8q.

Deviations from the pre-registration implemented in the present study are reported in Supplementary material 2. Data analysis and visualizations were conducted using RStudio (R Core Team, 2025) with various packages listed in Supplementary material 3. Sanity checks were performed on the dependent variables (response accuracy and reaction times) to detect systematic response patterns and to identify participants with more than 50% null responses. No participants were excluded based on these pre-registered criteria. However, due to technical issues during data collection, we excluded ten consecutive trials for one control participant.

Response accuracy was coded as incorrect (0), correct (1), or no response (time-out; 2), with time-outs excluded from further analysis. Binomial tests were used to determine whether response accuracy per condition fell above, within, or below the chance range, defined as 30 to 70% correct. Reaction times were measured in ms from sentence onset and normalized to sentence offset to account for durational differences between auditory stimuli. To prevent negative values, reaction times were re-zeroed based on the fastest response. Finally, they were log-transformed, as indicated by a Box-Cox analysis (λ = 0.10, close to λ = 0, which corresponds to a log transformation), to normalize the distribution of the data and better meet model assumptions.
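The 30 to 70% chance range is consistent with a binomial calculation, assuming 20 trials per condition (80 test trials / 4 conditions) and a two-sided α of .05. The sketch below derives that range and adds a reaction time pipeline that mirrors the steps described above. The exact re-zeroing shift is not reported, so mapping the fastest response to 1 ms (which keeps the logarithm defined) is a hypothetical choice.

```python
import math

def binom_cdf(k, n, p=0.5):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k + 1))

def chance_range(n_trials, alpha=0.05):
    """Central (1 - alpha) range of correct responses under pure guessing (p = .5)."""
    lower = next(k for k in range(n_trials + 1) if binom_cdf(k, n_trials) >= alpha / 2)
    upper = next(k for k in range(n_trials + 1) if binom_cdf(k, n_trials) >= 1 - alpha / 2)
    return lower, upper

def classify(n_correct, n_trials, alpha=0.05):
    """Locate a score relative to the binomial chance range."""
    lower, upper = chance_range(n_trials, alpha)
    if n_correct > upper:
        return "above chance"
    if n_correct < lower:
        return "below chance"
    return "at chance"

def preprocess_rts(rt_from_onset_ms, sentence_duration_ms):
    """Offset-normalize, re-zero and log-transform reaction times (correct trials only)."""
    rel = [rt - dur for rt, dur in zip(rt_from_onset_ms, sentence_duration_ms)]
    shift = 1 - min(rel)  # hypothetical re-zeroing: the fastest response becomes 1 ms
    return [math.log(r + shift) for r in rel]
```

With 20 trials, `chance_range(20)` gives 6 to 14 correct responses, that is, 30 to 70%.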

To address RQ1, we conducted statistical analyses of group comparisons between IWA and control participants across two sentence types (statements vs. questions) and two focus conditions (wide vs. narrow). A generalized linear mixed model (binomial distribution, logit link) was applied to response accuracy, and linear mixed models (Gaussian distribution, identity link) were fit to reaction times of correct responses. Fixed effects included sentence type, focus, group, and their interactions, with effect-coded contrasts (±0.5): statements, wide focus, and control participants were coded −0.5, and questions, narrow focus, and IWA were coded +0.5. Linguistic prosody, emotional prosody, global pitch detection, rhythm discrimination, and musical abilities were included as covariates. Models further comprised correlated random intercepts and slopes by participant (for sentence type and focus) and by item (for sentence type, focus, and group). Model specifications are provided in Supplementary material 4. Variance inflation factors (VIF) were used to detect multicollinearity. If multicollinearity (VIF > 5) or convergence issues arose, we reduced models stepwise, following the concept of parsimony in mixed models (Matuschek et al., 2017). Model comparisons were performed using likelihood ratio tests, and residuals were checked for distributional properties. We report coefficient estimates, standard errors, and z-values (for generalized linear mixed models) or t-values (for linear mixed models), reflecting differences in how these models estimate uncertainty, together with p-values indicating statistical significance. In the following, we focus on key main effects and interactions relevant to the research question.
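The VIF criterion above can be illustrated with a short NumPy sketch. Each predictor is regressed on all others, and VIF_j = 1 / (1 − R_j²). The covariate values below are hypothetical and only demonstrate two points: balanced, effect-coded factors are orthogonal (VIF = 1), whereas strongly correlated covariates, such as two auditory processing scores, exceed the cut-off of 5.

```python
import numpy as np

def vif(X):
    """Variance inflation factor for each column of an n x p predictor matrix.

    VIF_j = 1 / (1 - R_j^2), where R_j^2 is from an OLS regression of column j
    on all remaining columns plus an intercept.
    """
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        y = X[:, j]
        A = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        beta, *_ = np.linalg.lstsq(A, y, rcond=None)
        ss_res = np.sum((y - A @ beta) ** 2)
        ss_tot = np.sum((y - y.mean()) ** 2)
        out[j] = ss_tot / ss_res  # identical to 1 / (1 - R^2)
    return out


# Balanced, effect-coded (+/-0.5) factors are orthogonal, so their VIFs equal 1.
sentence_type = np.tile([-0.5, 0.5], 40)
focus = np.tile([-0.5, -0.5, 0.5, 0.5], 20)
# Two hypothetical, strongly correlated covariates inflate each other's VIF.
rng = np.random.default_rng(0)
pitch = rng.normal(size=80)
rhythm = pitch + 0.1 * rng.normal(size=80)
print(vif(np.column_stack([sentence_type, focus, pitch, rhythm])).round(2))
```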

To address RQ2, we conducted statistical analyses focusing solely on IWA. A generalized linear mixed model (binomial distribution, logit link) was applied to response accuracy, including sentence type, focus, and their interaction as fixed effects (effect coded ±0.5). Severity of aphasia and speech fluency were added as covariates: severity was coded with repeated contrasts (mild vs. moderate, moderate vs. severe), while fluency was effect coded (fluent vs. non-fluent). To investigate patterns of impairment beyond the group level, we conducted a clustering analysis following previous studies investigating potential sources of variability in individuals with acquired speech and language disorders (e.g., Akinina et al., 2021; Fernández et al., 2024). Specifically, we explored variability within the IWA group using hierarchical agglomerative clustering, which calculates the similarity between data points and iteratively merges the most similar ones into clusters (Tan et al., 2005). Ward's linkage (ward.D2) was used to minimize total within-cluster variance, and a tree-like diagram (dendrogram) was generated to display the level of similarity at which clusters were joined. Clustering was based on task performance, that is, response accuracy and reaction times by condition. Details on determining the optimal number of clusters are presented in Supplementary material 5. We further explored the resulting aphasia subgroups by identifying demarcating patterns in task performance and determining, for each individual and condition, whether performance was at chance or impaired. Impairment was assessed following the procedure proposed by Crawford and Howell (1998), that is, by testing whether performance fell significantly below the control group mean.
We also considered the influence of potential sources of variability (i.e., aphasia severity, speech fluency, language, cognitive, and musical abilities, comprehension of linguistic prosody, and non-linguistic auditory processing skills) by descriptively analyzing how these factors may be reflected in the task performance of aphasia subgroups.
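A comparable clustering pipeline can be sketched with SciPy. As far as we know, SciPy's `method="ward"` on Euclidean distances corresponds to R's `hclust(method = "ward.D2")`. Z-scoring the features, so that accuracy (%) and reaction times (ms) contribute on comparable scales, is our assumption. The number of clusters is simply passed in here; how it was chosen in the study is described in Supplementary material 5. The function also returns the cophenetic correlation that is reported in the Results.

```python
import numpy as np
from scipy.cluster.hierarchy import cophenet, fcluster, linkage
from scipy.spatial.distance import pdist

def cluster_participants(features, n_clusters):
    """Ward hierarchical clustering of participants (rows) on task-performance features.

    Returns the linkage matrix, the cophenetic correlation, and flat cluster labels.
    """
    X = np.asarray(features, dtype=float)
    # Assumption: z-score each feature so that accuracy and RT columns are comparable.
    X = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    dists = pdist(X, metric="euclidean")
    Z = linkage(dists, method="ward")  # Ward's criterion on Euclidean distances
    coph_corr, _ = cophenet(Z, dists)  # tree (cophenetic) distances vs. original distances
    labels = fcluster(Z, t=n_clusters, criterion="maxclust")
    return Z, coph_corr, labels


# Hypothetical toy data: two well-separated groups of three participants each.
points = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]
Z, coph_corr, labels = cluster_participants(points, n_clusters=2)
```

`scipy.cluster.hierarchy.dendrogram(Z)` would draw the corresponding tree.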

3 Results

3.1 Group comparisons

For RQ1, we conducted statistical comparisons of response accuracy and reaction times between IWA and control participants across two sentence types (statements vs. questions) and two focus conditions (wide vs. narrow). Below, we present group comparisons on background measures first, and results of (generalized) linear mixed models second.

3.1.1 Background measures

Results of the MEC (Scherrer et al., 2016) revealed significant group differences in linguistic prosody (t(17.38) = 2.81, p < 0.05; Welch’s two-sample t-tests, two-sided, here and elsewhere), with IWA scoring lower than control participants. No significant group differences were found for emotional prosody (t(31.95) = 0.84, p = 0.41). Performance on the Digit Span Test (WMS-R; Härting et al., 2000) indicated that IWA scored significantly lower than the control group on auditory working memory (forward: t(35.76) = 10.33, p < 0.001; backward: t(30.31) = 12.73, p < 0.001). Similarly, results from the TMT (Reitan, 1979) showed significantly lower executive functioning in IWA (A: t(32.90) = 6.95, p < 0.001; B: t(29) = 7.32, p < 0.001). Regarding non-linguistic auditory processing, significant group differences were observed for both global pitch detection (t(25.12) = 2.79, p < 0.01) and rhythm discrimination (t(38.89) = 3.46, p < 0.01), with IWA again scoring lower than control participants. In contrast, no significant group differences were found for musical experience (t(43.39) = 1.81, p = 0.08).
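The fractional degrees of freedom above, for example t(17.38), come from the Welch-Satterthwaite approximation, which does not assume equal group variances. A minimal standard-library sketch of the statistic and its degrees of freedom follows. It uses hypothetical data and omits the p-value, which would additionally require the t distribution.

```python
import math
from statistics import mean, variance

def welch_t(a, b):
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of freedom."""
    se2_a = variance(a) / len(a)  # squared standard error of mean(a); variance() uses n - 1
    se2_b = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1))
    return t, df


# Hypothetical scores: unequal variances give non-integer df (here about 5.88).
t_stat, df = welch_t([1, 2, 3, 4, 5], [2, 4, 6, 8, 10])
```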

3.1.2 Response accuracy

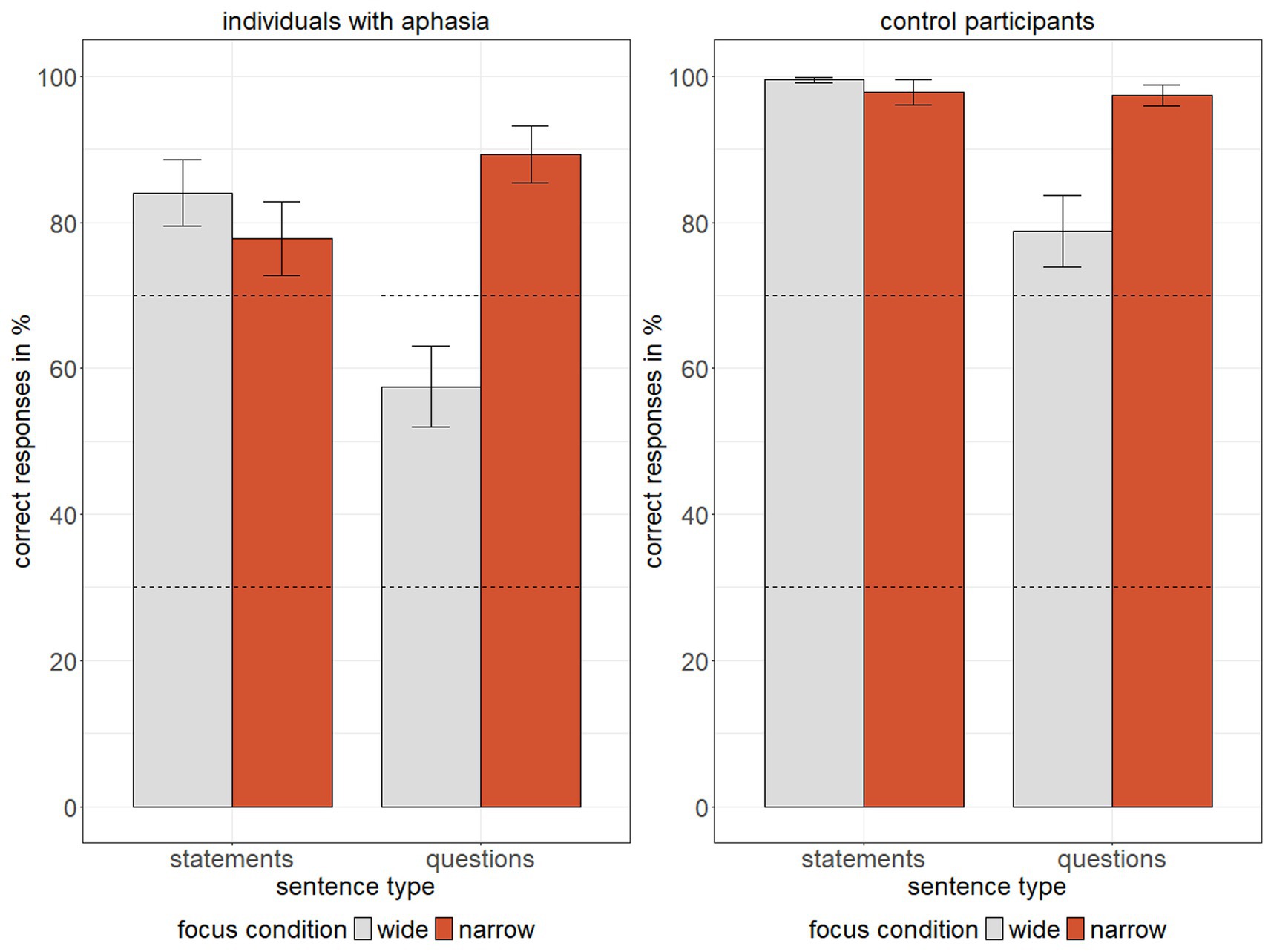

Means and standard errors of response accuracy for IWA and control participants are shown in Figure 2, and model results are presented in Table 4. Results of the generalized linear mixed model revealed statistically significant main effects of sentence type, focus, and group, further qualified by the following statistically significant interactions: first, a significant interaction between sentence type and focus indicated a larger difference in response accuracy between questions (lower) and statements (higher) in wide as compared to narrow focus. Second, a significant interaction between sentence type and group showed that the difference in response accuracy between sentence types was greater for IWA than for control participants. In sum, response accuracy was overall lower in IWA than in the control group, particularly for questions with wide focus. Additionally, global pitch detection, included as a covariate, was a statistically significant predictor, with higher global pitch detection scores corresponding to higher response accuracy.

Figure 2. Mean response accuracy (% correct) for individuals with aphasia and control participants across two sentence types (statements vs. questions) and two focus conditions (wide = grey, narrow = red), whiskers represent +/− 1 standard error, dashed lines indicate chance range per condition.

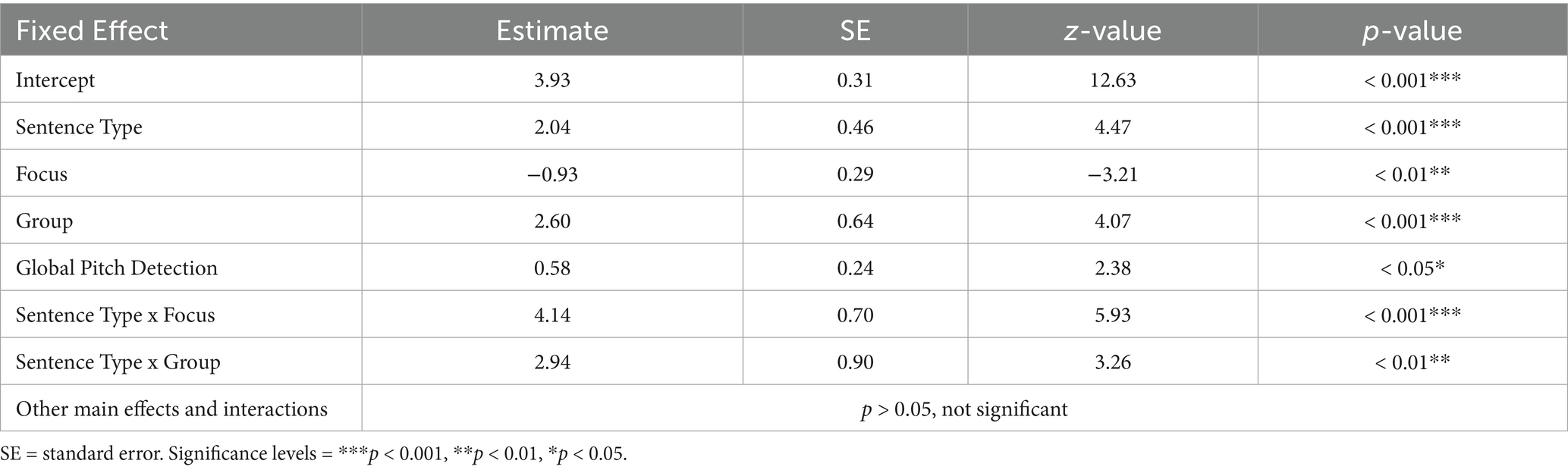

Table 4. Model results of the fixed effects from the generalized linear mixed model on response accuracy.
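With ±0.5 effect coding, the coefficients in Table 4 can be read as logit differences. The intercept is the unweighted mean of the eight cell logits, and each main effect is the average difference between the two levels of a factor. The sentence type × focus coefficient equals the question-minus-statement difference under narrow focus minus the same difference under wide focus, averaged over groups. The sketch below maps a set of coefficients to a model-implied cell accuracy. The term names are hypothetical, the coefficients in the test are illustrative rather than the estimates in Table 4, and random effects and covariates are set to zero.

```python
import math

# Effect codes as described in the text: statements, wide focus, controls = -0.5.
CODES = {
    "statement": -0.5, "question": 0.5,
    "wide": -0.5, "narrow": 0.5,
    "control": -0.5, "IWA": 0.5,
}

def predicted_accuracy(b, sentence_type, focus, group):
    """Model-implied probability of a correct response in one design cell.

    `b` maps (hypothetical) term names to fixed-effect estimates on the logit scale.
    """
    s, f, g = CODES[sentence_type], CODES[focus], CODES[group]
    eta = (b["intercept"] + b["type"] * s + b["focus"] * f + b["group"] * g
           + b["type:focus"] * s * f + b["type:group"] * s * g
           + b["focus:group"] * f * g + b["type:focus:group"] * s * f * g)
    return 1.0 / (1.0 + math.exp(-eta))  # inverse logit
```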

3.1.3 Reaction times

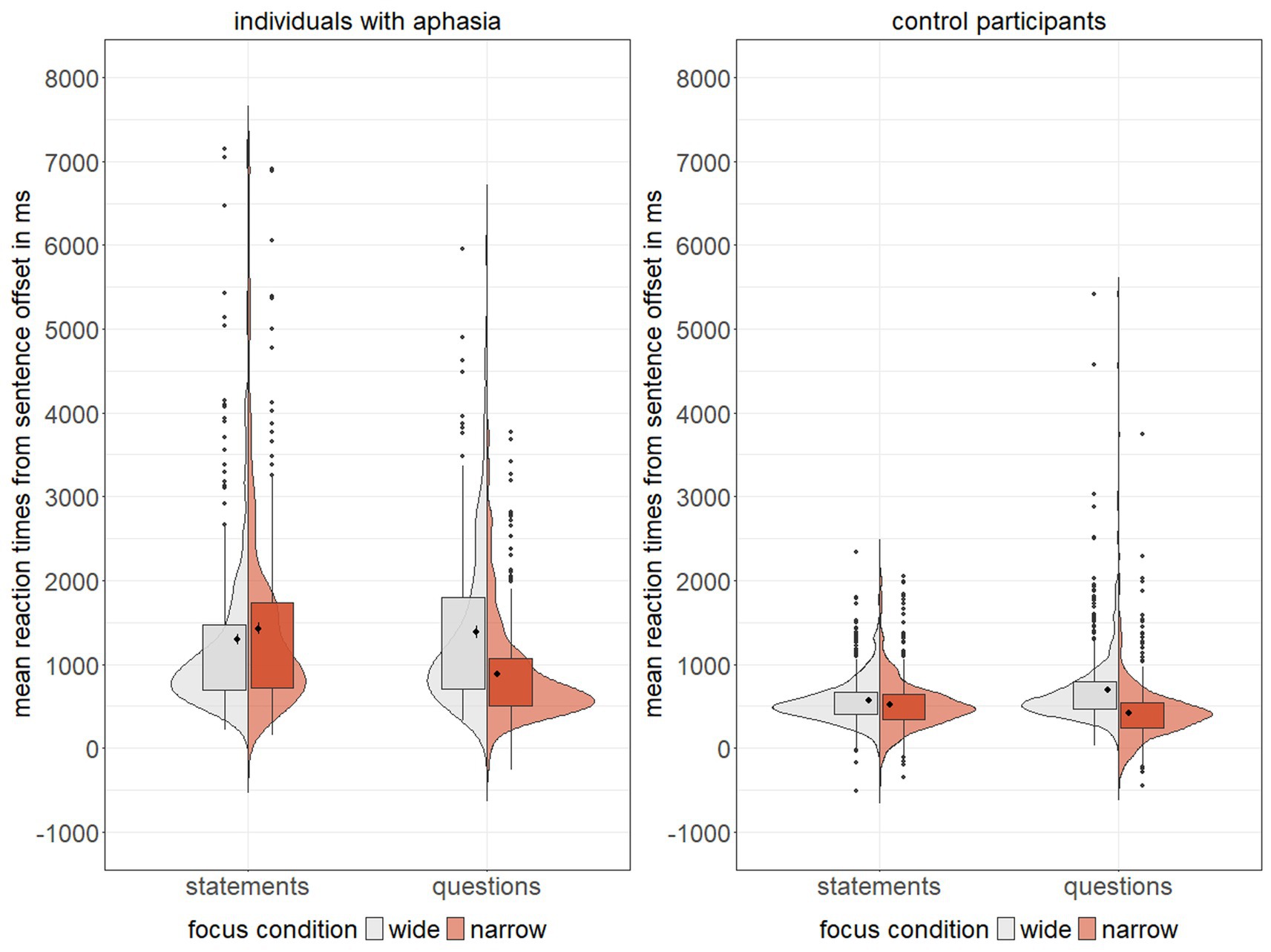

Means and standard errors of reaction times for correct responses, normalized to sentence offset, are shown in Figure 3, and model results are presented in Table 5. Overall, 1.52% of responses occurred before sentence offset; 92.86% of these early responses were correct, and they were predominantly made by control participants (96.43%). This indicates that incorrect responses did not arise from responding too early and that participants generally listened to the entire prosodic contour. The maximal linear mixed model on correct responses did not converge and showed a singular fit. Additionally, variance inflation factors indicated multicollinearity among the covariates of linguistic prosody, global pitch detection, and rhythm discrimination. Hence, we excluded these covariates from further analyses. The model was then reduced stepwise in its random effects structure following Matuschek et al. (2017). The final model did not fit significantly worse than the maximal model (χ²(33) = 46.16, p = 0.06) and had a lower AIC value, indicating a better balance between fit and complexity. Therefore, the final, less complex model was preferred.

Figure 3. Mean reaction times (in ms) for individuals with aphasia and control participants across two sentence types (statements vs. questions) and two focus conditions (wide = grey, narrow = red), violin plots display kernel probability density, boxplots indicate median, interquartile range, and outliers.

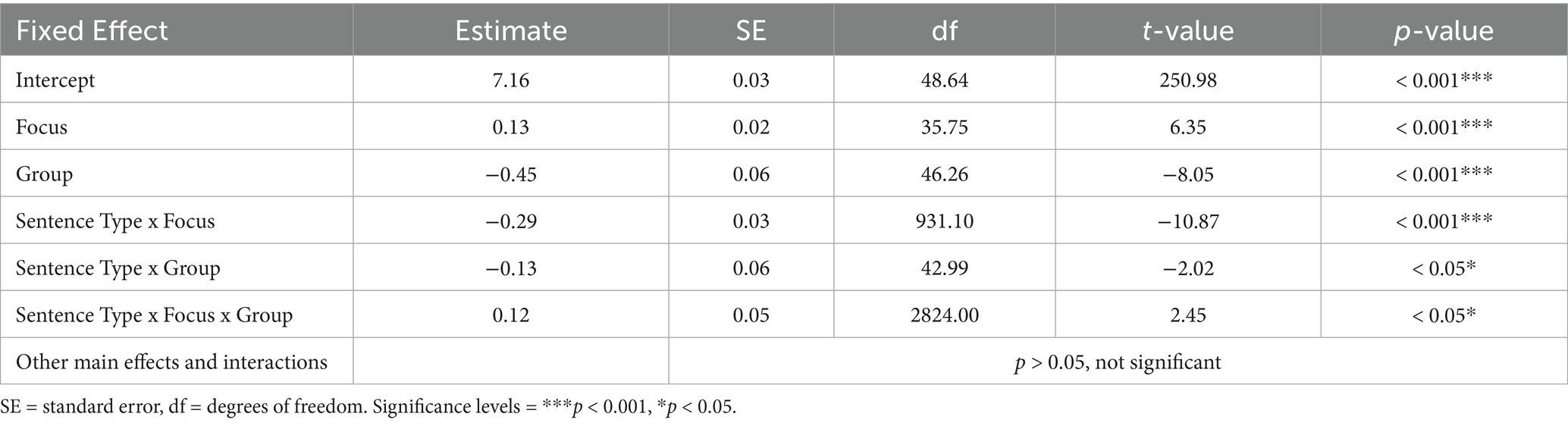

Table 5. Model results of the fixed effects from the final linear mixed model on reaction times for correct responses.

Results revealed statistically significant main effects of focus and group, further qualified by several statistically significant two- and three-way interactions: first, a significant interaction between sentence type and focus indicated faster reaction times for narrow focus as compared to wide focus in questions, while reaction times did not differ between focus conditions in statements. Second, a significant interaction between sentence type and group showed slower reaction times in IWA, with greater differences between groups for statements than for questions. Lastly, a significant three-way interaction between sentence type, focus, and group demonstrated that the relationship between sentence type and focus was influenced by group. Specifically, IWA responded significantly faster to narrow than to wide questions, while showing the reverse pattern for statements. In contrast, control participants exhibited faster reaction times for narrow vs. wide focus in both sentence types. Notably, there was no statistically significant main effect of sentence type, so these interactions should be interpreted with caution.
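The model comparison reported for the reaction time analysis (χ²(33) = 46.16) is a likelihood ratio test between nested models. The test statistic is twice the log-likelihood difference, and its degrees of freedom equal the difference in the number of estimated parameters. The sketch below computes the test and AIC from hypothetical log-likelihoods. Note that models differing in their fixed effects should be compared using maximum likelihood rather than REML fits (lme4's `anova()` refits with ML by default).

```python
from scipy.stats import chi2

def likelihood_ratio_test(loglik_reduced, k_reduced, loglik_full, k_full):
    """LRT for nested models; k_* = number of estimated parameters."""
    stat = 2.0 * (loglik_full - loglik_reduced)
    df = k_full - k_reduced
    return stat, df, chi2.sf(stat, df)  # upper-tail chi-square probability

def aic(loglik, k):
    """Akaike information criterion; lower values indicate a better fit/complexity trade-off."""
    return 2 * k - 2 * loglik


# Hypothetical log-likelihoods: the full model gains 2 log-likelihood units for 1 extra parameter.
stat, df, p = likelihood_ratio_test(-100.0, 5, -98.0, 6)
```

A non-significant test (as in the text, p = 0.06) combined with a lower AIC for the reduced model favors the simpler random effects structure.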

3.2 Aphasia subgroup analysis

For RQ2, we conducted statistical analyses on the effects of language abilities in IWA and performed hierarchical agglomerative clustering to explore aphasia subgroups based on their task performance in sentence type identification. Below, we present results of a generalized linear mixed model that includes severity of aphasia and speech fluency as covariates, followed by the clustering results to further explore individual differences and potential sources of variability in IWA.

3.2.1 Effects of language abilities

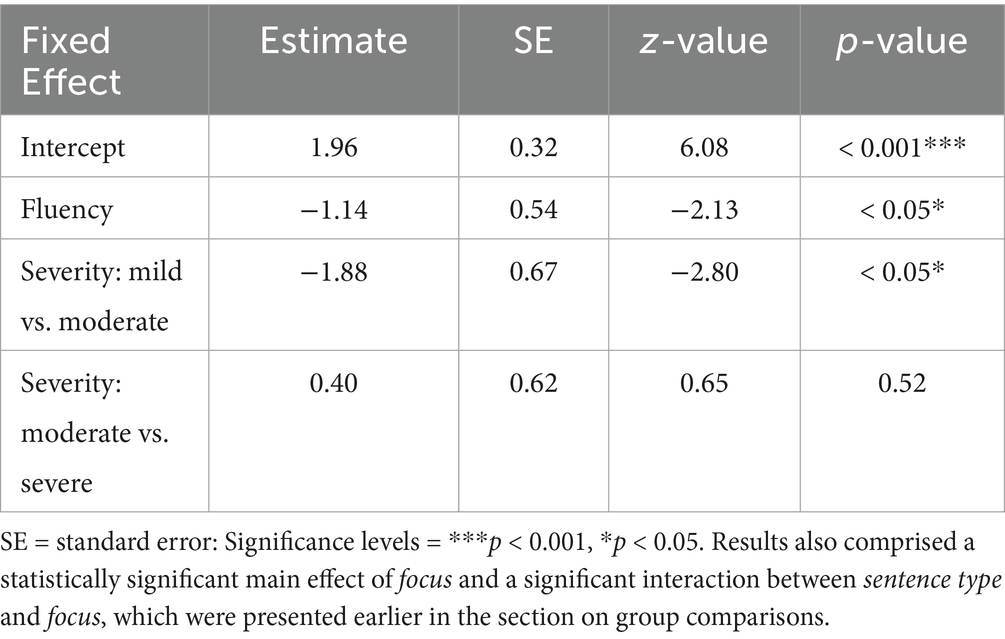

Results of the generalized linear mixed model on response accuracy in IWA indicated a statistically significant main effect of severity of aphasia, showing higher response accuracy in moderately vs. mildly impaired IWA, but no statistically significant difference between moderately vs. severely impaired IWA. Additionally, a statistically significant main effect of fluency showed higher response accuracy in fluent vs. non-fluent IWA (Table 6).

Table 6. Model results of the fixed effects of severity and fluency from the generalized linear mixed model on response accuracy in IWA.

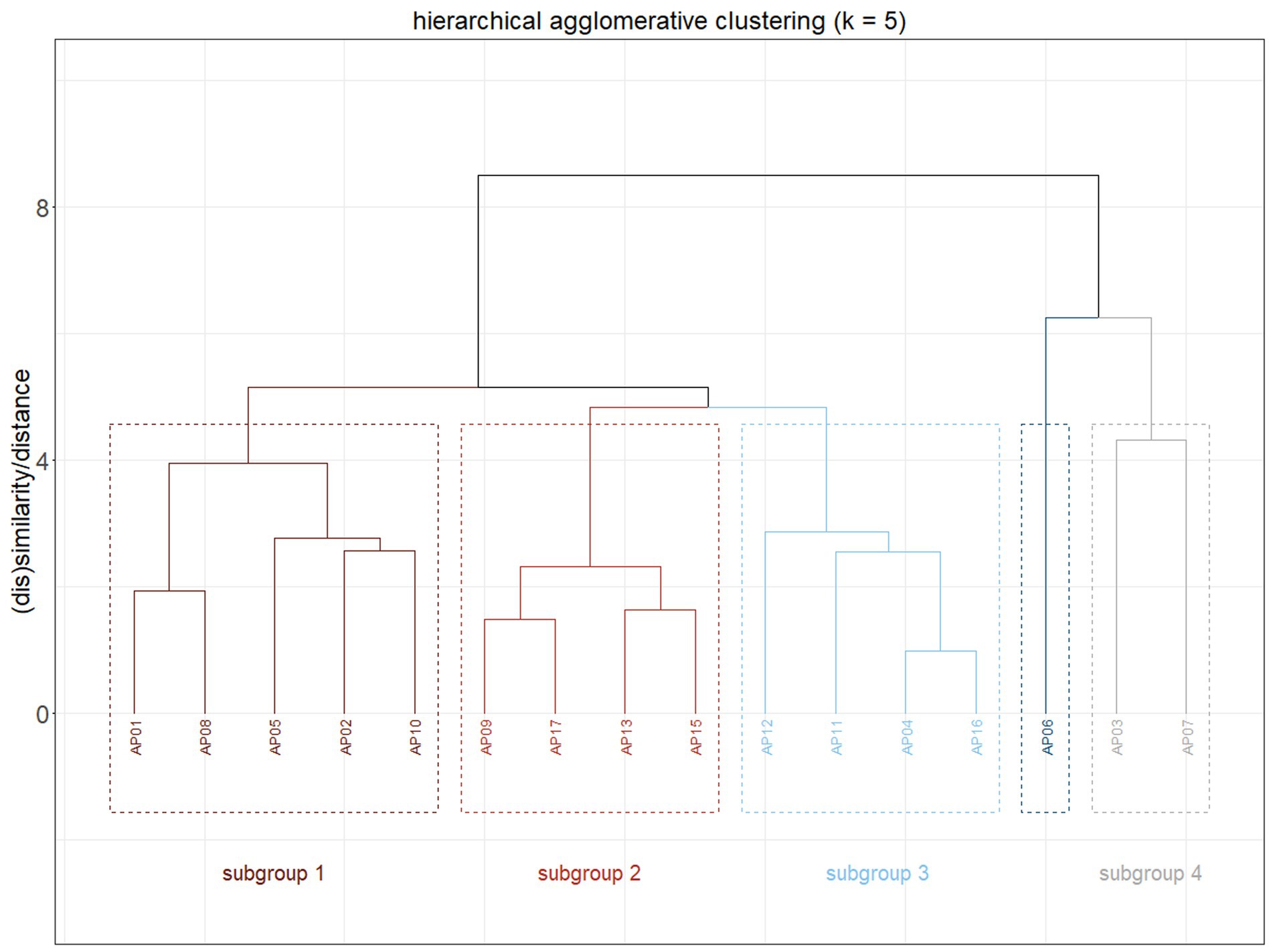

3.2.2 Clustering analysis

Results of the hierarchical agglomerative clustering are shown as a dendrogram in Figure 4. The cophenetic correlation, that is, the correlation between the distances at which data points were joined in the cluster tree and the distances between data points in the original distance matrix, was 0.83. A coefficient of 1 would indicate that the tree perfectly reflects the original distances, and values above 0.75 are generally considered strong (Tan et al., 2005). Clustering analysis was based on response accuracy and reaction times in sentence type identification and aimed to identify distinct subgroups of IWA. The dendrogram revealed five main clusters, from which we identified four subgroups and one outlier (AP06), who did not cluster with any other individual.

Figure 4. Dendrogram for hierarchical agglomerative clustering of individuals with aphasia, k = number of subgroups, position of the line on the y-axis indicates the cophenetic distance between clusters with higher values representing less similarity (0 = absolute similarity).

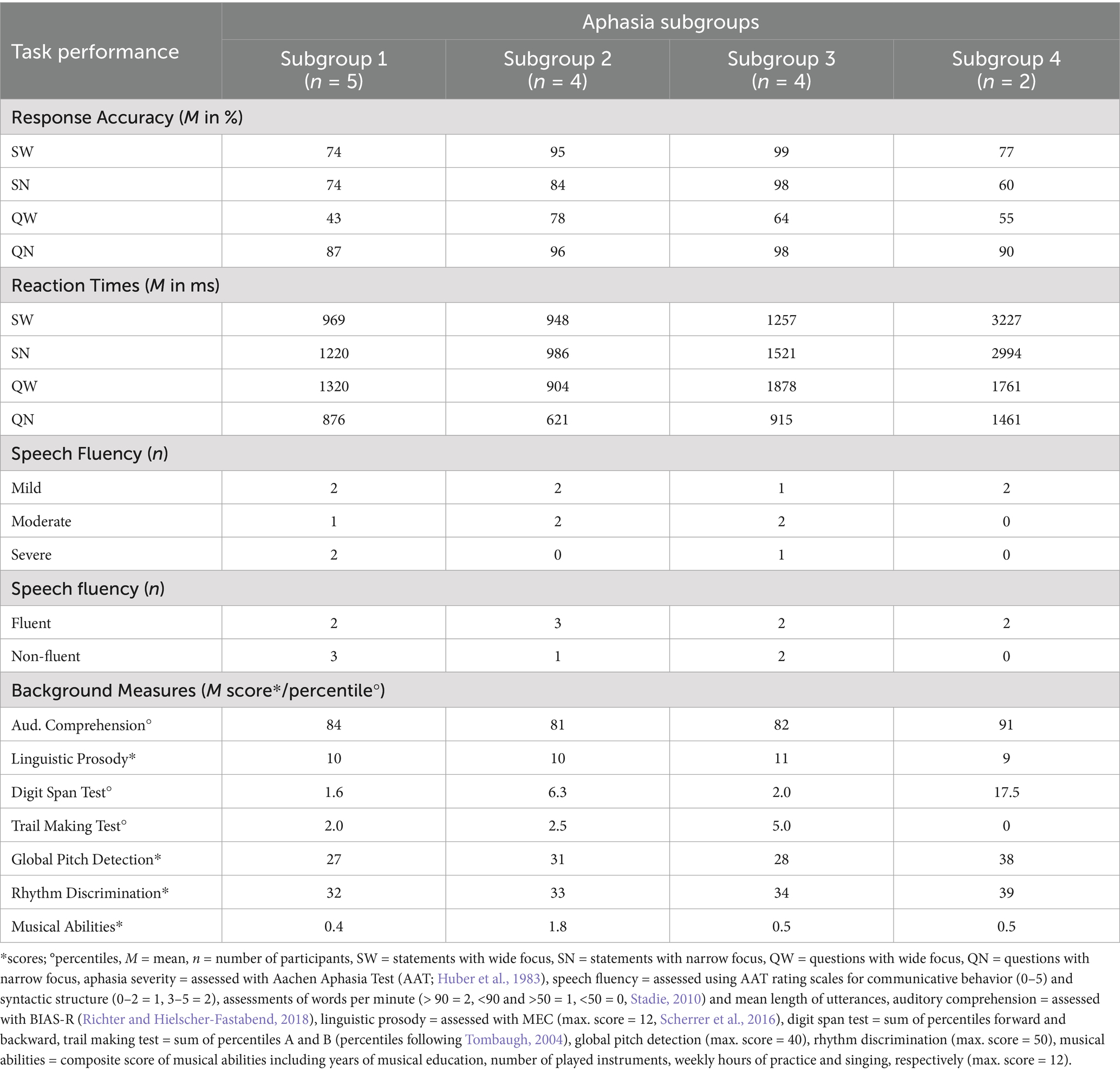

We further explored demarcating patterns between aphasia subgroups, considering the influence of potential sources of variability (i.e., aphasia severity, speech fluency, language, cognitive, and musical abilities, comprehension of linguistic prosody, and non-linguistic auditory processing skills). The results are presented in Table 7 and analyzed descriptively.

Table 7. Mean task performance and background measures on language, cognitive, and musical abilities, linguistic prosody, and non-linguistic auditory processing skills for individuals with aphasia, separated by subgroups 1–4 following hierarchical agglomerative clustering.

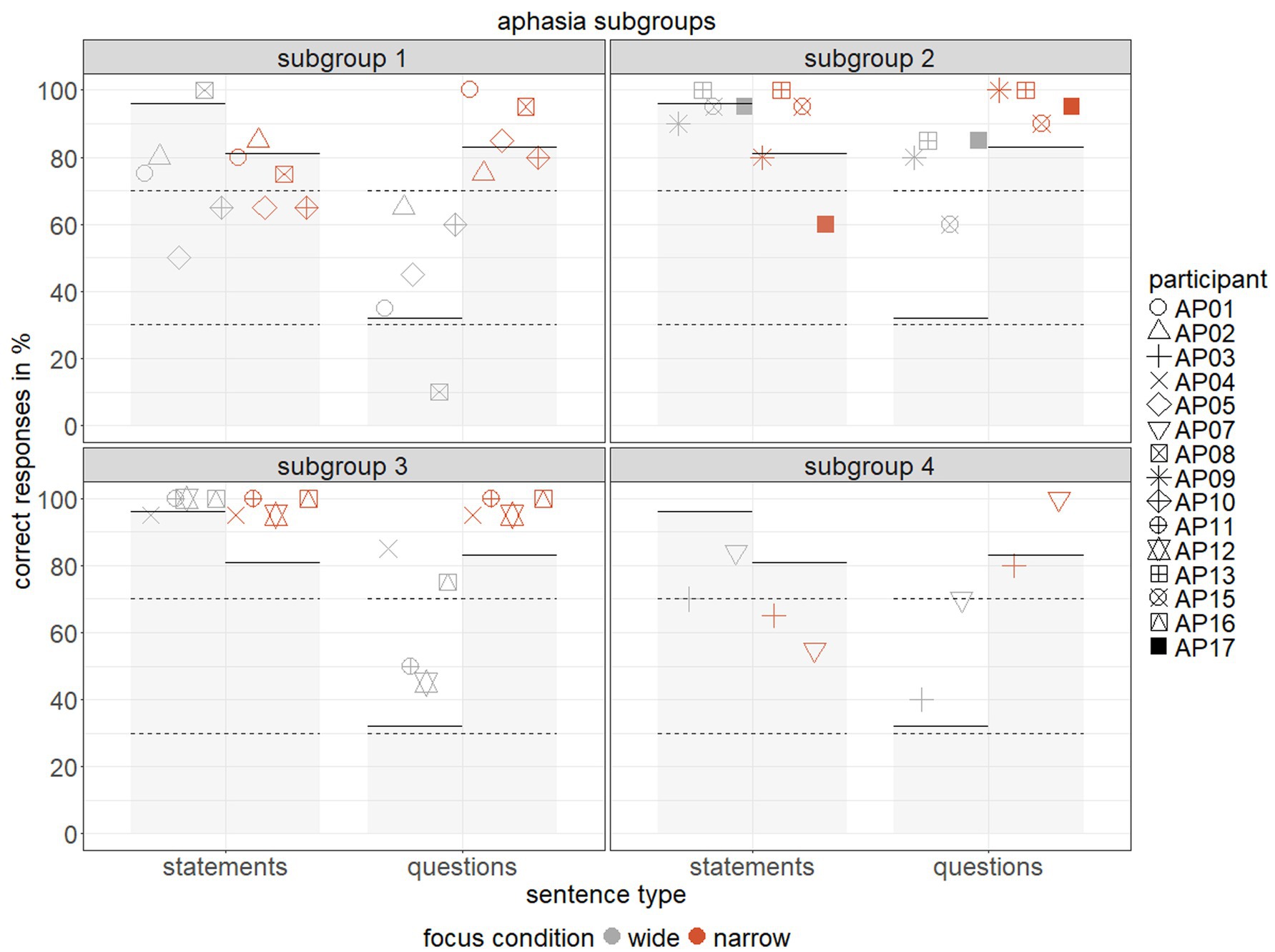

Response accuracy relative to chance and impaired performance in subgroups 1–4 is displayed in Figure 5. To determine impaired performance in IWA, we computed for each individual and condition whether their performance statistically deviated from the mean response accuracy of the control group. Specifically, we calculated the range of neurotypical performance, accounting for sample size, mean, and standard deviation following the method by Crawford and Howell (1998). We then defined condition-specific cut-offs using the software singlimsES (Crawford et al., 2010). Based on these, we determined the range of impaired performance and classified each IWA’s performance as either impaired (i) or not impaired (n) in the respective experimental conditions.

Figure 5. Response accuracy (% correct) for each individual with aphasia, separated by subgroups 1–4 across two sentence types (statements vs. questions) and two focus conditions (wide = grey, narrow = red), grey area indicates impaired task performance (solid lines = cut-off) across conditions following Crawford and Howell (1998), dashed lines represent chance range per condition.
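The Crawford and Howell (1998) procedure compares a single case with a small control sample using a modified t-test: t = (x − x̄) / (s · √((n + 1) / n)), with n − 1 degrees of freedom. The sketch below derives a one-tailed cut-off and the "i"/"n" labels used in Figure 5. The one-tailed α of .05 and the control statistics in the test are assumptions for illustration. The study itself used singlimsES, whose interface is not reproduced here.

```python
import math
from scipy.stats import t as t_dist

def _se_single_case(control_sd, n_controls):
    # SD of the difference between one new case and the control mean.
    return control_sd * math.sqrt((n_controls + 1) / n_controls)

def crawford_howell(x, control_mean, control_sd, n_controls):
    """Modified t-test for a single case vs. a control sample (Crawford and Howell, 1998)."""
    t_stat = (x - control_mean) / _se_single_case(control_sd, n_controls)
    df = n_controls - 1
    return t_stat, df, t_dist.cdf(t_stat, df)  # one-tailed p: case below the control mean

def impairment_cutoff(control_mean, control_sd, n_controls, alpha=0.05):
    """Scores below this value fall significantly below the control mean (one-tailed)."""
    t_crit = t_dist.ppf(alpha, n_controls - 1)  # negative critical value
    return control_mean + t_crit * _se_single_case(control_sd, n_controls)

def classify_performance(x, control_mean, control_sd, n_controls, alpha=0.05):
    """'i' = impaired, 'n' = not impaired, as labeled in the text."""
    cutoff = impairment_cutoff(control_mean, control_sd, n_controls, alpha)
    return "i" if x < cutoff else "n"
```

For a hypothetical control condition with a mean of 90% correct, SD = 10, and n = 30, the cut-off is about 72.7% correct.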

Subgroup 1 included participants across all levels of aphasia severity and speech fluency, who predominantly showed impaired or chance performance with relatively fast reaction times. This subgroup was characterized by low mean scores in global pitch detection, rhythm discrimination, and auditory working memory, though these scores were still comparable to those of subgroups 2 and 3. Additionally, low mean scores in musical abilities were similar to those in subgroups 3 and 4.

Subgroup 2 comprised both fluent and non-fluent IWA with mild to moderate impairments. Their response accuracy was predominantly above chance and not impaired. Participants demonstrated relatively fast reaction times and a higher mean score in musical abilities than the other aphasia subgroups, although this score was still at floor level.

Subgroup 3 also included participants from all levels of severity and fluency, with task performance comparable to that of the control group but notably slower reaction times. Compared to the other subgroups of IWA, subgroup 3 exhibited higher mean scores in executive functioning (although still at floor level) and in the linguistic prosody subtest of the MEC (Scherrer et al., 2016). However, differences in executive functioning and the comprehension of linguistic prosody were minor across all four subgroups.

Subgroup 4 consisted of two mildly impaired, fluent IWA, who predominantly showed impaired or chance performance with relatively slow reaction times. However, this subgroup had the highest mean scores in global pitch detection, rhythm discrimination, auditory working memory, and auditory comprehension, as assessed with the BIAS-R (Richter and Hielscher-Fastabend, 2018), and these advantages over the other subgroups were comparatively pronounced.

To further investigate individual differences, we examined the unique pattern of the outlier AP06, a mildly impaired, fluent IWA. AP06 demonstrated impaired performance across all four experimental conditions, with response accuracy at chance for wide statements and narrow questions and below chance for narrow statements and wide questions. Reaction times were relatively slow for wide and narrow questions and for wide statements, while narrow statements yielded faster reaction times. Performance on cognitive tests was very low (percentile ranks: Digit Span Test = 4; Trail Making Test = 0), though still comparable to that of other subgroups. AP06’s score in musical abilities (1 out of 12) was also low, while scores in global pitch detection (30 out of 40) and rhythm discrimination (35 out of 50) were relatively high, though again similar to those of other subgroups. In contrast, AP06’s performance in the linguistic prosody subtest (7 out of 12) was impaired and clearly below the other subgroups’ means, aligning with the poor task performance in sentence type identification.

4 Discussion

The present study examined the use of prosodic cues for sentence type identification in German interrogative and declarative structures with wide and narrow focus in individuals with and without aphasia. Specifically, we investigated to what extent prosodic cue use is impaired in aphasia (RQ1) by comparing response accuracy and reaction times between IWA and neurotypical control participants. Additionally, we examined individual differences in sentence type identification in aphasia (RQ2) by exploring potential sources of variability (i.e., severity of aphasia, speech fluency, language, cognitive or musical abilities, and non-linguistic auditory processing skills) through aphasia subgroup and clustering analyses. In the following, we address three main aspects: observed impairments in prosodic processing in IWA, potential sources of variability contributing to individual differences in prosodic cue use, and the limitations of the present study alongside directions for future research.

4.1 Impaired prosodic processing in individuals with aphasia (RQ1)

IWA exhibited overall lower response accuracy and slower reaction times in sentence type identification as compared to the control group. These findings suggest impaired prosodic processing in IWA, in line with our predictions and previous studies highlighting significant challenges in the identification of structurally ambiguous sentences (Pell and Baum, 1997; Seddoh, 2006; Walker et al., 2002). Such difficulties are consistent with the functional load hypothesis, which argues that linguistic prosody is primarily processed in the left hemisphere and thus affected by left-hemispheric lesions in IWA (e.g., Van Lancker, 1980). In the following sections, we discuss prosodic impairments in IWA addressing effects of sentence type and focus marking.

4.1.1 Sentence type

Our results revealed overall lower response accuracy for questions as compared to statements, with more pronounced differences for IWA. This was particularly evident in questions with wide focus, where IWA performed at chance and control participants also showed reduced response accuracy as compared to other conditions. Previous studies suggested that processing linguistic prosody may be more challenging for older adults, possibly due to higher cognitive demands when prosody serves linguistic functions (e.g., Raithel and Hielscher-Fastabend, 2004; Shahouzaei et al., 2024). For IWA, these challenges are compounded by left-hemispheric lesions. In wide focus, prosodic cues in the terminal region, such as an f0 rise for questions and an f0 fall for statements, play a critical role in sentence type identification (Pell, 2001; Peters and Pfitzinger, 2008). While prior research emphasizes the contribution of the entire prosodic contour, including the pre-terminal region, to sentence type identification (Hadding-Koch and Studdert-Kennedy, 1964; Petrone and Niebuhr, 2014), our findings suggest that differences in pre-terminal contours of questions and statements with wide focus may have been too subtle for IWA to decode. This aligns with findings by Seddoh (2006), where mildly to moderately impaired non-fluent IWA struggled with pre-terminal question identification. However, unlike the IWA in Seddoh's (2006) study, who successfully identified questions based on terminal cues, IWA in the present study also showed difficulties in using prosodic cues at the end of sentences.

The discrepancy between the identification of wide questions and statements in IWA may have been influenced by a response bias toward statements given the sentences’ SVO structure. This aligns with the perception check results (see Supplementary material 1), which showed a bias toward statements in control participants. Similarly, Walker et al. (2002) suggested that IWA rely on a default strategy, inferring statements from both prosody and word order, while question identification depends solely on prosodic cues. However, in the present study, IWA neither performed at ceiling for statements nor exclusively gave statement responses to question stimuli. Instead, their identification of questions with wide focus was at chance. We propose that this may reflect difficulties in establishing structural predictions due to deficient prosodic cue use. Seddoh (2006) argued that prosodic information is decoded over smaller units rather than over the global prosodic contour, with the terminal f0 movement being the most perceptually salient feature in wide questions (Lieberman, 1967). IWA may have waited for this more salient terminal cue but then struggled to decode or use it for structural prediction, as it appears only very late and briefly in the sentence. This strategy is comparable to syntactic processing of locally ambiguous German sentences, where IWA often misinterpret object-first structures as canonical subject-first structures due to case syncretisms at the first noun phrase. When disambiguating morpho-syntactic case cues appear at the second noun phrase, IWA must revise their initial interpretation, increasing processing demands (Bornkessel et al., 2002; Grewe et al., 2007). Consequently, IWA often adopt a “wait-and-see” strategy and delay establishing predictions until disambiguating cues are available, minimizing prediction errors (Hanne et al., 2015).
In the present study, IWA may have similarly waited for the terminal f0 movement before forming predictions, but their difficulty decoding this cue may have prevented them from generating a prediction at all, resulting in overall chance performance. Thus, challenges in sentence type identification may be linked to both cognitive abilities and impaired use of prosodic cues for structural prediction.

4.1.2 Focus marking

Our results further showed that focus marking influences sentence type identification in both IWA and control participants. Previous studies provided mixed findings on focus perception in IWA, suggesting that focus marking may either facilitate or compromise sentence comprehension (Gavarró and Salmons, 2013; Geigenberger and Ziegler, 2001; Kimelman, 1999; Pell, 1998; Raithel, 2005). The present study reflects these inconsistencies but offers a theoretical explanation for differential processing of focus-related prosody in aphasia: for statements, narrow focus at the first noun phrase led to significantly lower response accuracy in IWA as compared to wide focus. Since statements can already be inferred from word order following the canonical rule in German, additional prosodic cues may have increased processing demands, contributing to impairments in focus-related prosody (e.g., Geigenberger and Ziegler, 2001; Pell, 1998). Conversely, for questions, focus marking facilitated sentence type identification, resulting in significantly higher response accuracy and faster reaction times in narrow vs. wide focus in IWA. In narrow questions, prosodic cues (f0 rise at NP1) appear earlier and are more prolonged throughout the sentence, making them more prominent for IWA to decode. This supports the role of the pre-terminal region, as suggested by Petrone and Niebuhr (2014), who highlighted the importance of cue alignment, slope, and shape of the prosodic contour for prosodic processing. Our findings reveal that IWA can use prosodic cues effectively when cue alignment and prolongation provide sufficient time to establish structural predictions, thereby improving task performance. Since f0 serves as the primary cue for distinguishing questions from statements (Peng et al., 2012), and IWA can use f0 under certain conditions, our results support a relative preservation of f0 processing in individuals with left-hemispheric lesions.
Our findings suggest that difficulties in prosodic cue use in aphasia may not stem from impairments in f0 processing itself but rather from how these cues are temporally aligned within the structure of the sentence: while early and prolonged cues can be used for structural prediction, late and briefly presented cues remain challenging for IWA.

To summarize, our results highlight impairments in prosodic cue use in IWA, influenced by cue alignment and prolongation, as well as cognitive abilities and overall processing demands. Sentence type and focus marking modulated how these cues were used for structural prediction, providing further insights into the challenges of prosodic cue use in aphasia.

4.2 Sources of variability in prosodic impairments in aphasia (RQ2)

The following sections discuss potential sources of variability in prosodic cue use, focusing on how language abilities (i.e., aphasia severity and speech fluency), as well as non-linguistic auditory processing skills (i.e., global pitch detection, rhythm discrimination, musical abilities), influence prosodic processing in aphasia. Additionally, we shed more light on distinct subgroups of IWA with demarcating patterns in sentence type identification, which are potentially related to differential underlying mechanisms of prosodic impairments.

4.2.1 Effects of language abilities

Contrary to our hypothesis on the effects of aphasia severity, moderately impaired IWA showed significantly higher response accuracy as compared to mildly impaired IWA, with no difference between moderately and severely impaired IWA. This contrasts with previous studies reporting differences in linguistic prosody processing between mild-to-moderately and severely impaired IWA (Kimelman, 1999; Seddoh, 2006). One possible reason for this discrepancy is that the sentence type identification task in the present study did not impose complex linguistic demands on auditory comprehension. In contrast to Kimelman (1999), who found that IWA’s resources for prosodic processing diminish with increasing linguistic complexity, our task required more metalinguistic skills, targeting sentence processing in a more explicit way. Another possible explanation could stem from differences in how aphasia severity was defined across studies. For instance, Kimelman (1999) classified severity based on auditory comprehension performance, while Seddoh (2006) used aphasia syndrome classification, with Broca’s aphasia considered less severe than global aphasia among non-fluent IWA. In the present study, severity of aphasia was assessed using the AAT (Huber et al., 1983), which provides a composite score across multiple language modalities. This broader definition of severity may include measures less directly related to prosodic processing, potentially obscuring severity effects. To address this, we conducted a post-hoc analysis using auditory comprehension scores from the BIAS-R (Richter and Hielscher-Fastabend, 2018), which are more closely linked to prosody perception. Results mirrored those of the AAT, with moderately impaired IWA outperforming mildly impaired IWA. These findings underscore the need for further research on how aphasia severity influences linguistic prosody processing, especially given the unexpected results and the small sample size of the present study. 
They also suggest a distinction between the more general language comprehension tasks included in standardized test batteries and the present task, which involved sentence type identification as a more metalinguistic skill without requiring comprehension. This suggests that tasks requiring metalinguistic skills can be accomplished even by IWA with more pronounced impairments, highlighting the potential of prosodic cue use as a resource in tailored therapeutic approaches.

Classifying IWA by speech fluency rather than severity revealed significantly higher response accuracy in fluent than in non-fluent IWA, consistent with our hypothesis and prior research (Raithel, 2005; Seddoh, 2006). Speech fluency has also been linked to non-linguistic auditory processing skills, particularly rhythm discrimination (Stefaniak et al., 2021), with rhythm representing a key temporal parameter of prosody. While f0 is the primary cue for sentence type identification (Peng et al., 2012), additional durational cues, such as those involved in focus marking in questions, may further enhance task performance (Pell, 1998). In the present study, cue alignment and prolongation particularly facilitated the identification of questions with narrow focus. These findings suggest that fluency in speech production may relate to the processing of linguistic prosody at both sentential and information-structural levels, potentially reflecting shared mechanisms of structural prediction in perception and production.

4.2.2 Non-linguistic auditory processing

Our results on the influence of non-linguistic auditory processing skills revealed a positive correlation between global pitch detection and sentence type identification, with significant effects on response accuracy differences between IWA and control participants. This aligns with our predictions and previous studies showing individual impairments in pitch discrimination in aphasia (Zipse et al., 2014; Grube et al., 2016; Stefaniak et al., 2021). Contrary to expectations, however, rhythm discrimination and musical abilities did not significantly affect task performance, suggesting that global pitch detection was more closely aligned with the demands of the sentence type identification task. These findings support those of Peng et al. (2012) and Schafer et al. (2000), highlighting the critical role of f0 in sentence type identification and focus marking.

4.2.3 Aphasia subgroups and individual differences

In a clustering analysis, we identified aphasia subgroups and potential sources of variability in IWA’s use of prosodic cues beyond the group level. Clustering revealed distinct patterns of task performance across subgroups, indicating that variability among IWA has certain limits. For response accuracy, subgroups 1 and 4 showed predominantly impaired or chance performance, while subgroups 2 and 3 displayed response accuracy largely comparable to that of control participants. For reaction times, subgroups 1 and 2 responded relatively fast, whereas subgroups 3 and 4 responded more slowly. Nevertheless, some variability within subgroups was observed, driven by outlier performances in specific experimental conditions. In these cases, participants generally followed the subgroup’s overall pattern but showed notably higher or lower performance in single conditions. For example, AP11 and AP12 exhibited chance performance for questions with wide focus but performed at ceiling in the other conditions, aligning with the subgroup’s overall pattern and thus justifying their clustering. These results underscore both distinct subgroup characteristics and individual differences within broader patterns.

In contrast, the outlier AP06 showed a unique pattern of task performance and was therefore not assigned to any subgroup. AP06 demonstrated impaired performance across all conditions, either at or below chance, resembling subgroup 4 in terms of low response accuracy and slow reaction times. However, below-chance performance for statements with narrow focus and questions with wide focus suggests a default strategy of identifying narrow-focus stimuli as questions and wide-focus stimuli as statements. Unlike most IWA with impaired performance, AP06 did not merely perform at chance but appeared to apply a systematic, incorrect strategy for structural prediction. Specifically, AP06 may have struggled to decode prosodic cues appearing late and briefly in sentences with wide focus, and instead inferred sentence type from word order (Walker et al., 2002). For narrow focus, earlier cues may have been misinterpreted as signaling questions. This severe difficulty in prosodic cue use was also evident in AP06’s performance on the linguistic prosody subtest of the MEC, which was notably below the subgroup means.
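To illustrate why below-chance accuracy points to a systematic strategy rather than guessing, the sketch below classifies accuracy in a single condition using two one-sided exact binomial tests against a guessing rate of 0.5 (two response options: question vs. statement). This is an illustrative assumption and not the procedure used in the present study; the number of trials per condition (20), the significance level (.05), and the function names are hypothetical.

```python
from math import comb


def prob_at_most(k, n, p=0.5):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def prob_at_least(k, n, p=0.5):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def classify_performance(correct, n_trials, alpha=0.05, p_chance=0.5):
    """Hypothetical helper: label accuracy in one condition relative to chance.

    Two one-sided exact binomial tests are used: accuracy significantly
    higher than p_chance is 'above chance', significantly lower is
    'below chance' (i.e., systematically choosing the wrong response),
    and anything else is indistinguishable from guessing.
    """
    if prob_at_least(correct, n_trials, p_chance) < alpha:
        return "above chance"
    if prob_at_most(correct, n_trials, p_chance) < alpha:
        return "below chance"
    return "chance"


# Hypothetical condition with 20 trials
for k in (15, 14, 10, 5):
    print(k, classify_performance(k, 20))
```

Under these assumptions, 15 of 20 correct counts as above chance, 14 or 10 of 20 as chance, and 5 of 20 as below chance. With fewer trials per condition, larger deviations from 50% are required before performance can be classified as above or below chance.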

We also explored potential sources of variability within subgroups, examining whether patterns in task performance reflected language, cognitive, and musical abilities, as well as non-linguistic auditory processing skills. However, the findings were inconclusive. Subgroups displayed mixed patterns regarding aphasia severity and speech fluency, with mean scores and percentiles often similar across subgroups and only slight tendencies toward differential profiles. These results should be interpreted cautiously, as individual differences may strongly affect findings given the small sample sizes within each subgroup. Such limitations are common in clinical populations due to recruitment challenges and strict inclusion criteria, which is why individual differences were examined in an exploratory manner.

The most pronounced differences were observed in subgroup 4, which displayed the highest mean scores in global pitch detection, rhythm discrimination, auditory working memory, and auditory comprehension, yet showed impaired or chance performance with slow reaction times in sentence type identification. This paradoxical profile contrasts with our hypotheses and previous research, suggesting that the relationship between sources of variability and linguistic prosody processing is more complex than anticipated. Sidtis and Van Lancker Sidtis (2003) proposed a neurobehavioral approach to dysprosody, emphasizing that prosodic processing depends on intricate interactions between motor, perceptual, and cognitive mechanisms. Similarly, Wells and Walker (2024) argued that prosodic impairments can arise from “high level” deficits in linguistic representations or from “low level” auditory processing difficulties that hinder prosodic cue use. This complexity is reflected in our findings: while some individuals’ performance correlated with non-linguistic auditory processing skills (i.e., global pitch detection), subgroup 4 showed impairments in prosodic cue use despite relatively intact global pitch detection, suggesting that higher-level prosodic processing is partly independent of lower-level auditory processing skills.

When considering cognitive abilities, such as auditory working memory and executive functioning, generally low percentiles were observed across IWA despite slight subgroup differences. Standardized cognitive measures may not always allow for a clear separation between cognitive and linguistic impairments when interpreting results. Low scores on such measures may, for instance, stem from language-related difficulties in digit repetition or from symptoms of acalculia rather than from cognitive deficits as such. Conversely, genuine cognitive difficulties may have imposed additional demands on IWA during linguistic prosody processing (Raithel and Hielscher-Fastabend, 2004; Shahouzaei et al., 2024), potentially affecting sentence type identification. Future research should develop cognitive measures tailored to individuals with language impairments that account for individual differences associated with the underlying impairment. This would allow for more precise inferences about whether difficulties are primarily linguistic or cognitive in nature, and about how cognitive abilities may shape prosodic processing, thereby informing individualized therapeutic approaches.

4.3 Limitations and outlook

In the following, we discuss possible limitations of the present study. First, our sentence type identification task, which targeted sentence processing in a more explicit way, does not allow conclusions about the use of prosodic cues in auditory comprehension or everyday communication. This may also explain why, contrary to our hypothesis, findings on aphasia severity showed no direct link between impairments in auditory comprehension and task performance. However, examining metalinguistic skills in linguistic prosody processing highlighted that prosodic cues can serve as valuable resources for individuals with more pronounced impairments. Future research should explore how these skills can be applied in more naturalistic settings to increase ecological validity.

Second, to examine whether sentence type identification was related to the comprehension of linguistic or emotional prosody, we used the linguistic (i.e., identification of sentence types: questions, statements, or commands) and emotional (i.e., identification of affective tone: happy, angry, or sad) prosody subtests of the MEC (Scherrer et al., 2016). In line with the functional load hypothesis (e.g., Van Lancker, 1980), we expected linguistic, but not emotional, prosody to relate to our findings. However, our results only partially supported these predictions: as expected, emotional prosody showed no significant effect, but neither did linguistic prosody. The MEC, although standardized for language and communication disorders, is primarily designed for individuals with right-hemispheric lesions resulting in cognitive communication disorders (for details, see Büttner-Kunert et al., 2022; Neumann et al., 2019), which may limit its applicability to IWA. Furthermore, the MEC’s prosody subtests include relatively few trials (n = 12), which could explain the lack of correlation with our task. At the same time, the available prosodic assessment tools remain sparse, and measures of linguistic prosody are limited (Benedetti et al., 2022). Despite the lack of significant correlations, group differences on the MEC revealed that IWA performed worse than control participants on the linguistic prosody subtest but comparably on the emotional prosody subtest, supporting specific impairments in linguistic prosody in aphasia. These findings underscore the need for standardized prosodic assessment tools that more thoroughly address impairments in linguistic prosody and are tailored to individuals with left-hemispheric lesions.

Lastly, the small sample of IWA, a common limitation in clinical research, constrains the analysis of aphasia subgroups with distinct patterns of task performance and of individual differences. Additionally, task demands, the nature of prosodic cues, and experimental design (Paulmann, 2016) likely further influence individual variability. Linguistic prosody processing appears to rely on complex underlying mechanisms, specific linguistic functions, and the brain structures engaged during task performance. The heterogeneity of IWA, including lesion sites and subsequent brain reorganization, may further shape the neurobehavioral system involved in prosodic processing. Future research should focus on individual differences, as factors such as language, cognitive, and musical abilities, as well as non-linguistic auditory processing skills, could reveal differential underlying mechanisms of prosodic impairments.

5 Conclusion