- 1 The University of Sheffield, Sheffield, United Kingdom

- 2 Tubr, Sheffield, United Kingdom

Introduction: This study examines the utilization, challenges, and design principles of data visualization approaches, focusing on their applications within AI-assisted decision-making contexts, by reviewing relevant literature. We explore the types of visualization approaches used and the challenges users face. We also examine key visual elements that influence understanding and the evaluation methods used to assess these visualizations.

Methods: A systematic literature review (SLR) adhering to PRISMA protocols was carried out across five major academic databases, resulting in 127 relevant studies published from 2011 to July 2024. We synthesize insights from existing visualization approaches used in decision-making and evaluate key aspects such as usability, interactivity, accessibility, and cognitive load management.

Results: We identified a range of visualization forms including charts, graphs, dashboards, and interactive platforms aimed at enhancing data exploration and insight extraction. The identified challenges include achieving a balance between complexity and usability, fostering intuitive design, and providing sufficient training to aid accurate interpretation of complex data. Specific visual elements, such as color usage, symbolic representation, and data density control, are highlighted as essential for enhancing user comprehension and supporting effective decision-making. Interactive and customizable visualizations tailored to individual cognitive styles proved especially effective. We further underscore the importance of diverse evaluation methods, including usability testing, surveys, and cognitive assessments, to iteratively refine visualization approaches based on user feedback.

Discussion: Our findings suggest that users benefit most from customizable, interactive approaches that cater to varied cognitive preferences and incorporate continuous training to reduce interpretive biases. This research contributes to best practice development for designing accessible, effective visualization approaches suited to the complex decision-making needs in data-centric environments.

1 Introduction

Data visualization plays a crucial role in transforming complex data into accessible and interpretable formats, including charts, graphs, scatter plots, or other visualization types (Gubala and Meloncon, 2022). Kirk (2016) defines data visualization as “the representation and presentation of data to facilitate understanding” (p. 52), framing comprehension as a dynamic process that involves perception, interpretation, and reasoning. This perspective assumes an active user who derives meaning from visual artifacts.

As data-driven practices expand across domains, there has been increasing attention to how visualization approaches can effectively communicate insights to diverse audiences. Despite the widespread availability of visualization approaches, there remains uncertainty over which specific visual elements (e.g., color, layout, and interactivity) best facilitate user comprehension and decision-making, particularly for users with limited data literacy.

In addition, evaluation techniques for visualizations are evolving, with traditional task-based assessments and user feedback surveys increasingly supplemented by real-time engagement metrics. However, consistent standards for evaluating visualization effectiveness are still limited, particularly as interactive features become more prevalent in visualizations and more central to user experience (Survey Point, 2023).

In this review, we focus on the decision-making context, where stakeholders or domain expert users are responsible for making informed decisions. We use the term domain expert users (or domain experts) to refer to individuals with subject-matter expertise (e.g., in healthcare, education, or business) who engage with data visualizations to support their decision-making. Typically, these users lack formal training in data science, programming, or visual analytics (Wong et al., 2018). This definition aligns with the concept of the lay audience described in Meloncon and Warner (2017). In particular, the interaction of domain experts with visualizations focuses on interpreting and applying the presented information to inform decisions, rather than developing or customizing the visualizations themselves.

We define AI-assisted decision-making tools as systems designed to support human decision-makers in analyzing data, identifying patterns, and generating recommendations. These tools are intended to enhance human-based judgement, particularly in scenarios involving complex or large-scale data, rather than replacing humans in making decisions. In this paper, we adopt a broad definition of Artificial Intelligence (AI), as “a system's ability to interpret external data correctly, to learn from such data, and to use those learnings to achieve specific goals and tasks through flexible adaptation” (Haenlein and Kaplan, 2019). AI encompasses a range of techniques, including machine learning, predictive modeling, rule-based logic and optimization algorithms (Gudigantala et al., 2023).

These AI-assisted tools are designed to process complex data, identify patterns, and provide recommendations that help stakeholders make faster, more accurate, and better-informed decisions. While such tools do not always require a visual interface, this review focuses specifically on those that incorporate data visualization approaches to support interpretability and usability for end users. In these cases, as we will see in Section 3, visualization plays an important role in helping users understand the system's outputs, through approaches such as interactive dashboards, visual summaries, or visual comparisons. Our perspective is consistent with prior research by Miller (2019), which emphasizes the importance of user-centered design for effective decision-making.

In this paper, we distinguish between visualization tools, which are software applications used to create data visualizations (e.g., Tableau, Power BI), and visualization approaches and types, which refer to the specific graphical formats in which data is presented (e.g., bar charts, treemaps).

Given the rapid developments in the data visualization field, there is a growing need to understand how these tools are used by domain experts, who often rely on visualizations for critical decision-making and communication. In addition to academic interest, there is also significant industry demand for better data visualization approaches that serve domain experts. Technology companies building customer-facing platforms face substantial challenges in designing visualizations for domain experts who struggle to interpret complex data representations. Despite incorporating research-backed insights into their designs, UI/UX designers often default to conventional visualization patterns that fail to effectively communicate meaning to users without technical backgrounds. This disconnect creates a critical gap between data availability and usability, particularly in contexts where busy professionals need to quickly extract actionable insights from dashboards viewed on mobile devices. Industry practitioners report that existing visualization solutions frequently overwhelm users, resulting in low engagement and reduced operational value, underscoring the pressing need for this systematic review to bridge theoretical research with practical implementation challenges.

While prior studies like Gubala and Meloncon (2022) and Meloncon and Warner (2017) have explored various visualization techniques and their impact on user comprehension, gaps remain in our understanding of: (1) which specific approaches are most effective for domain experts without technical backgrounds; (2) the challenges these users face in interpreting visualizations; and (3) the influence of individual visual elements on comprehension and decision-making. Furthermore, evaluating these visualizations requires an understanding of not only their design, but also their real-world application and effectiveness. To address these gaps, this systematic literature review investigates the following four research questions:

• RQ1: What types of data visualization approaches are used in AI-assisted decision-making tools to support decision-making by domain experts?

• RQ2: What challenges do domain experts encounter in understanding, interpreting, and comprehending data visualizations?

• RQ3: Which visual aspects and elements in data visualization influence user understanding and decision-making?

• RQ4: What evaluation methods have been employed to assess these visual elements and visualizations?

To address these research questions, this literature review synthesizes current approaches to data visualization, critically examines the challenges domain experts face in interpreting visual information, and explores the visual elements that most significantly influence comprehension and decision-making. By evaluating the methods used to assess visualizations, the review seeks to highlight research gaps and support the development of evidence-based practices that enhance the usability and effectiveness of data visualization approaches for domain experts without technical backgrounds.

Several existing literature reviews have examined the role of data visualization in enhancing user comprehension. Gubala and Meloncon (2022) conducted an integrative analysis of empirical studies across multiple fields, exploring how data visualization enhances comprehension of complex information. Their work builds upon an earlier review by Meloncon and Warner (2017), which examined 25 studies across fields such as health and medicine, underscoring both the potential and limitations of visual representation in specialized domains.

Some recent studies have explored new visualization techniques, such as pictographs, dashboards, and interactive features, aimed at simplifying data presentation. Yet, as noted by Jiang et al. (2023), significant challenges persist in determining how best to design visualizations that optimize user understanding across varying contexts. Moreover, previous reviews have primarily focused on domain-specific applications or the technical dimensions of data visualization. In contrast, this systematic review centers on visualization strategies designed for domain experts without technical backgrounds, bringing together state-of-the-art techniques and evaluation methods. By addressing this gap, we aim to contribute practical insights and recommendations for designing and assessing data visualizations that more effectively support domain experts in decision-making and data communication.

The remainder of the paper is structured as follows. Section 2 outlines the methodology used to conduct the systematic literature search and provides an overview and synthesis of the selected studies. Section 3 presents the main findings and addresses each of the research questions. Section 4 discusses the key factors influencing users' understanding and decision-making, summarizes the findings, outlines the limitations of the review, and concludes with suggestions for future research.

2 Methods

The SLR was conducted in accordance with PRISMA guidelines (Page et al., 2021). The database search was carried out on 1st July 2024.

2.1 Search strategy

This systematic literature review employed a structured keyword search across five major databases: IEEE Xplore, Scopus, Web of Science, the ACM Digital Library (including both the Full-Text Collection and the Guide to Computing Literature), and PubMed, adopting a similar approach to that used by Gubala and Meloncon (2022).

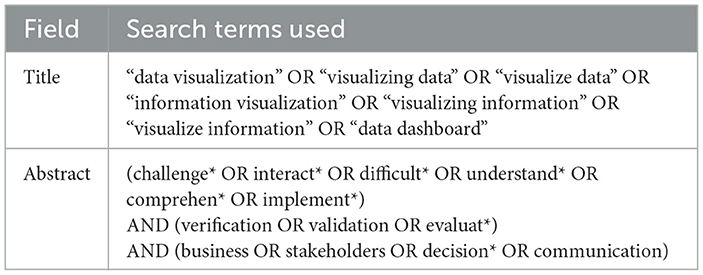

The primary search terms are summarized in Table 1. To ensure comprehensive retrieval, keyword variations including both UK and US spellings (e.g., “visualisation” and “visualization”) were explicitly included across all database queries, accounting for orthographic differences in terminology. For the sake of illustration, only the American spelling is shown here. Because Web of Science does not support wildcard characters within quotation marks, a slightly adjusted set of terms was applied for that platform. A complete list of all keywords used is provided in Appendix Table 1.

To ensure topical relevance, the following search string was applied to the Title field: “data visualization” OR “visualizing data” OR “visualize data” OR “information visualization” OR “visualize information” OR “visualizing information” OR “data dashboard*”. A range of synonymous terms was included to maximize the retrieval of pertinent studies.

To further narrow the scope and target studies aligned with this review's focus, the following additional keywords were applied to the Abstract field:

• challenge* OR interact* OR difficult* OR understand* OR comprehen* OR implement is used to retrieve documents that focus on, or mention, challenges the user might encounter in their interaction with data visualization approaches.

• verification OR validation OR evaluat* is used to retrieve documents that focus on, or mention, how data visualization approaches are evaluated.

• business OR stakeholders OR decision* OR communication is used to retrieve documents that focus on, or mention, how data visualization approaches are used by domain experts in decision-making. Typically, these domain experts are central to interpreting data and making practical decisions (i.e., stakeholders), but lack formal training in technical aspects such as data science, programming, or visual analytics, and thus are also called non-technical users (Wong et al., 2018).
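The truncation operator used in the keyword groups above can be illustrated with a minimal sketch. It assumes that a trailing `*` matches any word ending and that the terms inside one group are joined by OR, as written in the bullets. The helper names `term_to_regex` and `matches_group` and the sample abstract are hypothetical, how the three groups are combined with one another is not modeled, and each database applies its own matching rules.

```python
import re

def term_to_regex(term: str) -> str:
    """Convert one search term to a regex; a trailing * matches any word ending."""
    if term.endswith("*"):
        return r"\b" + re.escape(term[:-1]) + r"\w*"
    return r"\b" + re.escape(term) + r"\b"

def matches_group(text: str, terms: list) -> bool:
    """Return True if any term of an OR-group occurs in the text (case-insensitive)."""
    return any(re.search(term_to_regex(t), text, re.IGNORECASE) for t in terms)

# Keyword groups copied from the bullets above.
CHALLENGE = ["challenge*", "interact*", "difficult*", "understand*", "comprehen*", "implement"]
EVALUATION = ["verification", "validation", "evaluat*"]
DECISION = ["business", "stakeholders", "decision*", "communication"]

# Hypothetical abstract, used only for illustration.
abstract = "We evaluated an interactive dashboard that supports clinical decisions."

for label, group in [("challenge", CHALLENGE), ("evaluation", EVALUATION), ("decision", DECISION)]:
    print(label, matches_group(abstract, group))
```

Under these assumptions, a term without a wildcard, such as `implement`, matches only the exact word and not longer forms such as "implementation".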

After retrieving the articles from the selected databases, the following inclusion criteria were used to determine eligibility for this systematic review. Studies were included if they met all of the following conditions:

• Published in journals or conference proceedings.

• Published in 2011 or later.

• Written in English.

• Available online in full-text.

We target literature published from 2011 onward, as this year marks a significant shift in the data visualization landscape, fueled by advancements in big data technologies and mainstream industry adoption. Notably, Manyika et al. (2011) emphasized the transformative role of data and visualization approaches in unlocking insights across industries, underscoring a foundational change in how data-driven practices are perceived and applied. The limiters in the five databases were used to capture these inclusion criteria.

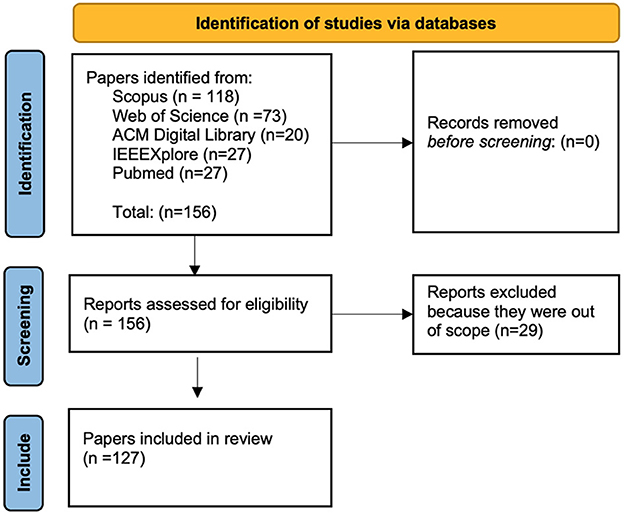

Figure 1 shows the PRISMA flow diagram. After manual deduplication, 156 unique studies remained. The abstracts were then screened to assess their relevance to the review objectives. A total of 29 studies were excluded after abstract or full-text screening due to misalignment with the scope of this review. More specifically, studies were excluded for the following reasons:

• Studies whose primary contribution lies in technical innovation in algorithmic development for visualization, with no or limited consideration of user interaction or decision-making contexts of the visualization, such as the dimensionality reduction method LaptSN (Sun et al., 2023) or large-scale rendering techniques (Park et al., 2018).

• Studies that used data visualization solely to present findings, without examining the visualization design, such as online survey results on language learning challenges (Jhamb et al., 2020).

• Studies focused on proposing an architecture or model, or on developing complex systems, in which visualization was either absent or played a minor role and was not analyzed or evaluated, such as recommender systems (Huang et al., 2019), a data distribution model of regional sports tourism (Chen, 2017), or robot teleoperation systems (Ueda et al., 2015).

• Studies targeting user groups outside the intended scope, such as tools designed for visually impaired users (Aljasem, 2020; Gorniak et al., 2023), which involve accessibility challenges distinct from visualization design considerations for decision-makers.

• Studies that are not related to data visualization in decision-making or user understanding, such as an analysis of children's drawings (Charitos et al., 2024), or explorations of socio-cultural dimensions of visualization (de Almeida, 2022).

After the screening, 127 papers were included in this review.

2.2 Data extraction and synthesis

2.2.1 Domains of the papers

We manually categorized the domains of the papers into six categories.

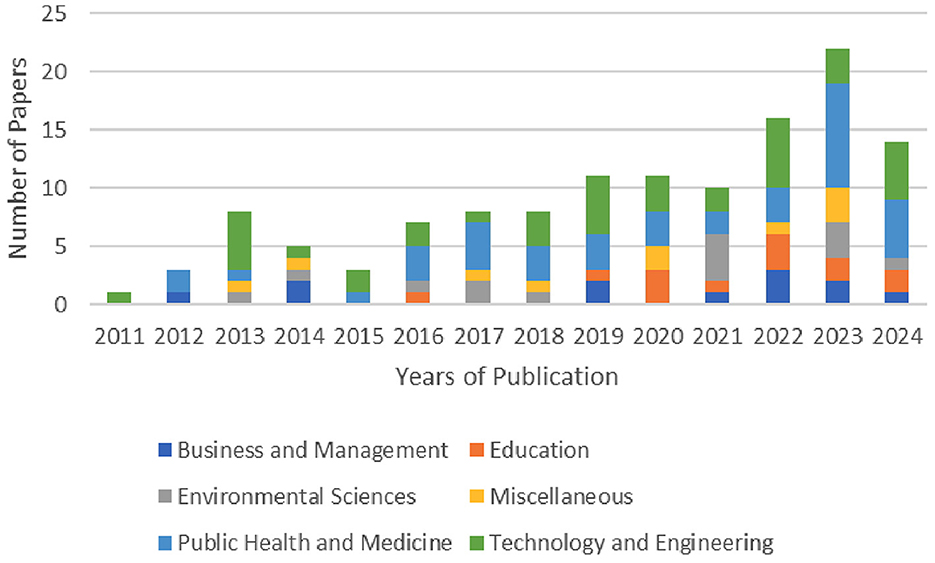

Figure 2 shows the distribution of publications across the six domains over the years from 2011 to 2024. The chart shows a clear upward trend in the total number of relevant publications. The database search was conducted in July 2024; therefore, the publication count for that year may be incomplete.

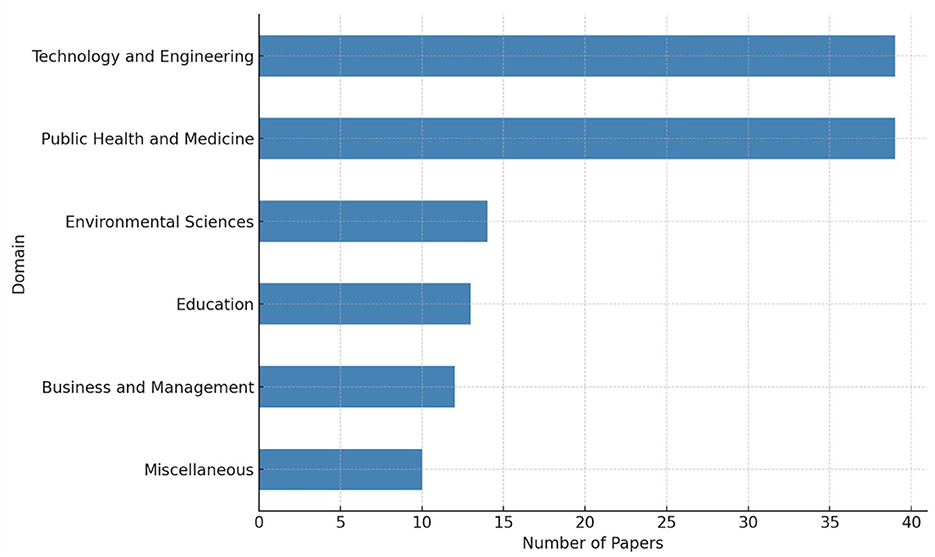

Figure 3 shows the distribution of the 127 studies reviewed, categorized into six domains: (a) Technology and Engineering, (b) Public Health and Medicine, (c) Environmental Sciences, (d) Education, (e) Business and Management, and (f) Miscellaneous. The highest concentration of studies is in Technology and Engineering and Public Health and Medicine (39 each), followed by Environmental Sciences (14), Education (13), Business and Management (12), and Miscellaneous (10). The full list of papers, sorted by domain, is provided in Appendix Table 2. Note that the domain categories were applied post-selection, based on the substantive content of each study already deemed relevant to decision-making tools for domain experts. The details of the categories are listed as follows.
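As a quick consistency check, the domain counts reported above can be summed and converted to shares. The following is a minimal sketch; the counts are taken from the text, while the percentage shares are derived here and are not reported in the review itself.

```python
# Study counts per domain, as reported for Figure 3.
domain_counts = {
    "Technology and Engineering": 39,
    "Public Health and Medicine": 39,
    "Environmental Sciences": 14,
    "Education": 13,
    "Business and Management": 12,
    "Miscellaneous": 10,
}

total = sum(domain_counts.values())

# Derived percentage share of each domain, rounded to one decimal place.
domain_shares = {name: round(100 * n / total, 1) for name, n in domain_counts.items()}

print("Total studies:", total)
for name, share in domain_shares.items():
    print(f"{name}: {domain_counts[name]} studies ({share}%)")
```

The total matches the 127 studies included after screening.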

(a) Technology and engineering. Studies focusing on the development, deployment, or evaluation of visualization approaches in computational, technical, or engineering-related contexts. This includes cybersecurity (Wu et al., 2024), AI interface design (Ai et al., 2022), systems monitoring (Evergreen and Metzner, 2013), and infrastructure analytics (Andreou et al., 2023; Somanath et al., 2014).

(b) Public health and medicine. Studies where data visualization approaches are applied within healthcare delivery, medical decision-making, or public health monitoring. This includes both clinical dashboards (Thayer et al., 2021; Wanderer et al., 2016) and approaches designed for population-level health insights (Albarrak, 2023; Burgan et al., 2024; Gisladottir et al., 2022).

(c) Environmental sciences. Studies that employ visualization in fields such as climate monitoring (Haara et al., 2018), energy systems (Stecyk and Miciula, 2023), ecology (Morini et al., 2023), or sustainability (Medeiros et al., 2016; Zheng et al., 2021). These visualizations often help domain experts engage with spatial or temporal environmental data.

(d) Education. Visualizations developed for educational purposes, including learner analytics (Ismail et al., 2022), performance feedback systems (Alger et al., 2024; Hernández-Calderón et al., 2023), or curriculum-level decision-making (Akanmu and Jamaluddin, 2016; Deshmukh et al., 2023).

(e) Business and management. Studies situated in commercial or organizational contexts, such as business intelligence (Alwi et al., 2023), project management (Sanchez-Ferrer et al., 2019), or supply chain optimization (Couto et al., 2022; Luo, 2023). Data visualization supports managerial or operational decision-making (Ballarini et al., 2022).

(f) Miscellaneous. Studies that do not clearly fall into the above categories. This includes domains such as social services (Ansari et al., 2022), humanitarian logistics (Euman and Abdelnour-Nocera, 2013), community planning (Kukimoto, 2014), or other multi-contextual applications involving experimental or early-stage systems (Lizenberg et al., 2020; Mao et al., 2018; Muller and Tierney, 2017).

2.2.2 Types and methods of the papers

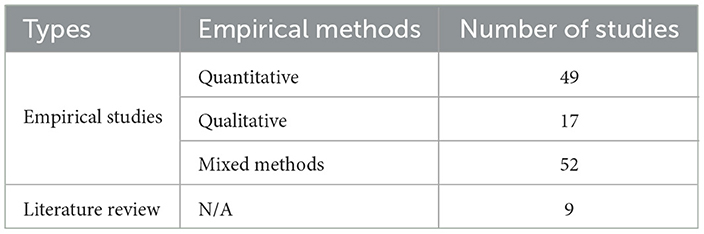

Table 2 summarizes the distribution of papers by type and the research methods employed in the empirical studies. Of the 127 papers reviewed, 9 are literature reviews, while the remaining 118 are empirical studies. Among the empirical studies, 49 adopted quantitative methods; for example, Handoko et al. (2023) applied Structural Equation Modeling Partial Least Squares (SEM-PLS). Seventeen studies employed qualitative approaches; for instance, Daradkeh (2015) utilized direct observations, think-aloud protocols, and content analysis of participant responses. The remaining 52 studies adopted mixed-methods designs. These include studies such as Burgan et al. (2024), which combined stakeholder perception surveys with semi-structured interviews, and Kettelhut et al. (2017), which employed a quasi-experimental design along with pre- and post-questionnaires, supplemented by qualitative participant feedback.
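The counts reported in the screening step and in this breakdown can be cross-checked with simple arithmetic. The sketch below uses only numbers stated in the text; the variable names are illustrative.

```python
# Screening counts reported in Section 2.1.
unique_after_deduplication = 156
excluded_after_screening = 29
included = unique_after_deduplication - excluded_after_screening

# Paper types and empirical methods reported for Table 2.
literature_reviews = 9
empirical_by_method = {"quantitative": 49, "qualitative": 17, "mixed methods": 52}
empirical_total = sum(empirical_by_method.values())

print("Included after screening:", included)
print("Empirical studies:", empirical_total)
print("Reviews plus empirical studies:", literature_reviews + empirical_total)
```

Both routes arrive at the same number of included papers, which indicates that the reported figures are internally consistent.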

3 Findings and implications

Sections 3.1 through 3.4 directly address the four research questions posed in this review. Specifically, Section 3.1 responds to RQ1 by mapping the range of data visualization approaches in AI-assisted decision-making contexts. Section 3.2 addresses RQ2 by identifying the key challenges that hinder users' understanding and effective use of these approaches. Section 3.3 responds to RQ3 by analyzing how specific visual elements influence comprehension and decision-making outcomes. Finally, Section 3.4 answers RQ4 by reviewing the evaluation methodologies employed to assess the usability and effectiveness of visualization types and approaches.

3.1 Data visualization approaches in decision-making tools

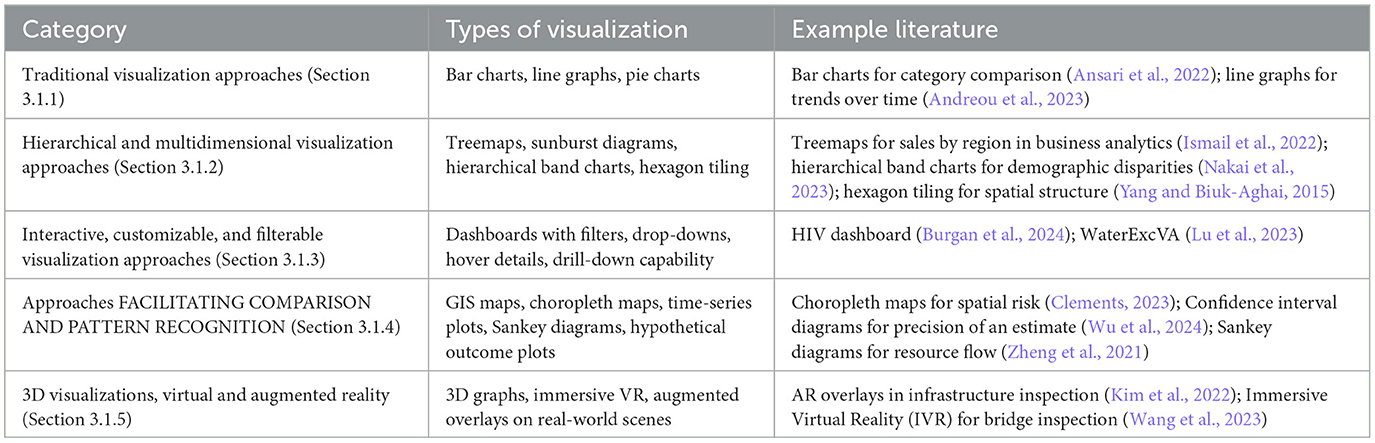

In this section, we detail the types of data visualization approaches used in AI-assisted decision-making tools to support decision-making. Table 3 shows a summary of the approaches, each of which is detailed below.

3.1.1 Traditional visualization approaches

Traditionally, data visualization has depended on familiar types like bar charts, line graphs, and pie charts. These methods have proven to be fundamental in depicting quantitative data clearly and efficiently (Ansari et al., 2022; Luo, 2023; Llaha and Aliu, 2023; Bafna et al., 2019; Shaheen et al., 2019). Bar charts are particularly effective for comparing different categories, while line graphs excel at illustrating trends over time (Andreou et al., 2023). The simplicity of these visualizations also avoids visual noise (i.e., visual clutter) (Evergreen and Metzner, 2013).

However, as the era of big data has emerged, these conventional approaches have struggled to adequately represent large and complex datasets, prompting the creation and adoption of more sophisticated visualization techniques (Muller and Tierney, 2017).

3.1.2 Hierarchical and multidimensional data visualization approaches

As data complexity increases, hierarchical and multidimensional visualization approaches have become prevalent. These approaches allow for the representation of large-scale and structured data in an intuitive manner, helping users explore relationships within datasets. Treemaps, sunburst diagrams, and hierarchical band charts provide hierarchical data representation, allowing users to explore and understand relationships within the data at different levels of detail (Akanmu and Jamaluddin, 2016; Ismail et al., 2022; Diaz et al., 2022).

The treemap is a commonly used visualization for hierarchical data. In business analytics, for example, treemaps are effective for displaying hierarchical information such as sales performance by regions and product categories (Ismail et al., 2022). These visualizations help users identify patterns that may not be immediately apparent in traditional two-dimensional charts. In the security domain, treemap and rules tree visualizations allow users to explore log coverage, helping them understand the distribution and classification of security logs. Euman and Abdelnour-Nocera (2013) note that treemaps are effective for pattern recognition. However, they are less effective at revealing insights when data variation is limited.
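To make the treemap idea concrete, the following minimal sketch computes a slice-and-dice layout, one simple treemap algorithm, for a two-level hierarchy of sales by region and product category. The data values, the function names `weight` and `slice_and_dice`, and the choice of algorithm are illustrative assumptions and are not taken from the reviewed studies.

```python
def weight(node) -> float:
    """Total value of a leaf, or of all leaves below a subtree."""
    if isinstance(node, dict):
        return sum(weight(child) for child in node.values())
    return float(node)

def slice_and_dice(tree: dict, rect: tuple, split_along_x: bool = True, path: tuple = ()) -> list:
    """Divide rect (x, y, width, height) among children in proportion to weight.

    The split direction alternates at each level of the hierarchy.
    Returns a list of (path, rectangle) pairs, one per leaf.
    Assumes every subtree has a positive total weight.
    """
    x, y, w, h = rect
    total = weight(tree)
    leaves = []
    offset = 0.0  # fraction of the parent already assigned to earlier siblings
    for name, child in tree.items():
        frac = weight(child) / total
        if split_along_x:
            child_rect = (x + offset * w, y, frac * w, h)
        else:
            child_rect = (x, y + offset * h, w, frac * h)
        offset += frac
        if isinstance(child, dict):
            leaves.extend(slice_and_dice(child, child_rect, not split_along_x, path + (name,)))
        else:
            leaves.append((path + (name,), child_rect))
    return leaves

# Hypothetical sales figures by region and product category.
sales = {
    "North": {"Hardware": 30.0, "Software": 10.0},
    "South": {"Hardware": 20.0, "Software": 40.0},
}

layout = slice_and_dice(sales, (0.0, 0.0, 100.0, 100.0))
for leaf_path, (x, y, w, h) in layout:
    print("/".join(leaf_path), "area:", round(w * h, 1))
```

Each leaf rectangle has an area proportional to its value, which is the property that lets a reader compare categories within and across regions. When all values are nearly equal the rectangles look alike, which is consistent with the observation that treemaps reveal less when data variation is limited.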

In addition, hierarchical data visualizations are useful in understanding the relationships between metrics. Hierarchical band charts, for instance, visualize data across demographic groups, helping professionals identify disparities and target interventions (Nakai et al., 2023). The hexagon-tiling algorithm can be used to represent hierarchical data in a map-like format, improving spatial understanding (Yang and Biuk-Aghai, 2015).

3.1.3 Interactive, customizable, and filterable visualization approaches

Dashboards have emerged as a particularly effective and efficient visualization approach because of their comprehensive data overview capabilities (Borrego and Lewellen, 2014; Shetty and Keshavjee, 2024; Goodwin et al., 2021). Filterability, a common feature of dashboards, allows users to visualize and focus on the subset of data relevant to them (Stern et al., 2024; Burgan et al., 2024; Ansari and Martin, 2024; Upreti et al., 2024; Balaji et al., 2024; Haara et al., 2018; Ries et al., 2012; Sopan et al., 2012; Porter et al., 2021; da Silva Franco et al., 2019). For instance, the dashboards for HIV pre-exposure data described by Burgan et al. (2024) can be filtered by variables such as ethnicity, region, and gender. The filtering can be accomplished in different ways [e.g., by selecting a specific tab that corresponds to a subset of the data as in Burgan et al. (2024), or by selecting options from a drop-down menu as in Ansari and Martin (2024)].
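The filtering behavior described above can be sketched in a few lines. This is an illustration only: the records, field values, and the function name `filter_records` are hypothetical and do not reproduce any of the cited dashboards.

```python
def filter_records(records: list, **criteria) -> list:
    """Keep only the records whose fields equal every selected filter value."""
    return [
        record for record in records
        if all(record.get(field) == value for field, value in criteria.items())
    ]

# Hypothetical records; in a dashboard these would feed the displayed charts.
records = [
    {"region": "North", "gender": "F", "ethnicity": "A", "count": 12},
    {"region": "North", "gender": "M", "ethnicity": "B", "count": 7},
    {"region": "South", "gender": "F", "ethnicity": "A", "count": 5},
]

# Selections such as a tab or drop-down choice map to keyword filters.
subset = filter_records(records, region="North", gender="F")
subset_total = sum(record["count"] for record in subset)
print(len(subset), "matching record(s), total count:", subset_total)
```

With no filter selected, the function returns all records, which corresponds to the unfiltered overview a dashboard typically shows first.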

Customizability is mentioned as an attribute of usable visualizations [e.g., allowing the user to enlarge certain portions of a graph, as suggested by Wilhelm et al. (2014)]. Likewise, interactivity, such as hover-over details and clickable elements, can improve users' interaction effectiveness and efficiency (Choudhary et al., 2024). This is crucial for decision-makers who need to understand data nuances to make informed choices (Freeman et al., 2023; Bornschlegl et al., 2018; Morgan et al., 2018). For example, the visualization system WaterExcVA not only provides users with an overview of the water supply abnormalities, but also allows them to drill down to specific points in time and space (Lu et al., 2023).

3.1.4 Approaches facilitating comparison and pattern recognition

Another major ability of visualization types is to represent trends and allow comparison, by displaying multiple data points simultaneously (Ancker et al., 2024; Alger et al., 2024; Balaji et al., 2024; Bishop et al., 2013). For example, Cheng and Senathirajah's (2023) experiment points out the effectiveness of a tool which compiles multiple metrics in one graph, enhancing pattern recognition and thus decision-making capabilities. On the other hand, Alger et al. (2024) critique the RateMyProfessor tool interface for its inability to display multiple variables at the same time (e.g., difficulty of the course and perceived expertise of the professor).

As highlighted in Medeiros et al. (2017), Geographic Information Systems (GIS) mapping visualizes the geographical distribution of risks on one screen, which helps users perceive it effectively. Similarly, Clements (2023) points out the usefulness of choropleth maps for spatial data visualization, where various areas (e.g., subnational administrative units) can be represented in different colors. The author states that time-series plots with smoothing functions are “the most common way of demonstrating temporal data, at sub-national, national, and global levels.” Kettelhut et al. (2017) mention the benefit of visualizations that integrate spatial data on hospital settings to highlight the area of risk.

Trend plots are commonly used because they integrate multiple factors and provide a holistic view of the data. They are especially helpful for illustrating trends over time and connecting data to contextual situations (e.g., a physician can associate a higher heart rate with going up a staircase) (Sadhu et al., 2023).

The usefulness of incorporating contextual information in the visualizations has also been pointed out by Alwi et al. (2023) in the context of Business Intelligence (e.g., supplier overview, material overview, service overview). The cumulative distribution function graphs and the quantile dot graphs are useful to compare the distributions of data (Wu et al., 2024).

The tool described in Monsivais et al. (2018) combines geographic specificity and interactivity, enhancing user understanding and decision-making by allowing data exploration at various scales. Also, Herring et al. (2017) use map-based visualizations that allow users to explore the impacts of climate change locally by comparing different emission scenarios.

Color-coded risk maps and correlation analysis (e.g., Kendall's tau) are used to compare different risk rankings, aiding quick decision-making (Medeiros et al., 2016). The Component Network Meta Analysis (CNMA) approach, utilizing various visualization formats like CNMA-UpSet plots, CNMA heat maps, and CNMA-circle plots, allows for the effective display and comparison of data from multiple studies (Freeman et al., 2023).
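For readers unfamiliar with Kendall's tau, the following minimal sketch shows how two risk rankings of the same items can be compared. It implements the tau-a variant and assumes rankings without ties; the rankings themselves and the function name `kendall_tau` are hypothetical and are not drawn from the cited study.

```python
from itertools import combinations

def kendall_tau(rank_a: list, rank_b: list) -> float:
    """Kendall's tau-a for two rankings of the same items (assumes no ties)."""
    if len(rank_a) != len(rank_b):
        raise ValueError("both rankings must cover the same items")
    n = len(rank_a)
    if n < 2:
        raise ValueError("at least two items are required")
    concordant = discordant = 0
    for i, j in combinations(range(n), 2):
        # A pair is concordant when both rankings order items i and j the same way.
        direction = (rank_a[i] - rank_a[j]) * (rank_b[i] - rank_b[j])
        if direction > 0:
            concordant += 1
        elif direction < 0:
            discordant += 1
    return (concordant - discordant) / (n * (n - 1) / 2)

# Hypothetical risk ranks assigned to five areas by two assessment methods.
method_a = [1, 2, 3, 4, 5]
method_b = [2, 1, 3, 5, 4]

tau = kendall_tau(method_a, method_b)
print("Kendall's tau:", tau)
```

A value of 1 means the two rankings agree on every pair of items, and a value of -1 means they are exactly reversed. In this example 8 of the 10 pairs are concordant and 2 are discordant, so tau is (8 - 2) / 10 = 0.6.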

Hypothetical outcome plots (i.e., animated diagrams) can also facilitate pattern recognition, because they can display different possible outcomes over time, allowing users to assess their likelihood (Wu et al., 2024). In addition, confidence interval diagrams are used to indicate the precision of an estimate (Wu et al., 2024). Holdsworth and Zagorecki (2023) suggest that node link diagrams might help the user to create a mental picture of an emergency response.
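As an illustration of what a confidence interval diagram encodes, the sketch below computes an approximate 95% interval for a sample mean. It assumes a normal approximation with z = 1.96, which is a simplification; for small samples a t-based interval would usually be more appropriate. The sample values and the function name `mean_confidence_interval` are hypothetical.

```python
import math
import statistics

def mean_confidence_interval(values: list, z: float = 1.96) -> tuple:
    """Approximate confidence interval for the mean (normal approximation)."""
    n = len(values)
    if n < 2:
        raise ValueError("at least two values are required")
    mean = statistics.mean(values)
    # Standard error: sample standard deviation divided by sqrt(n).
    std_err = statistics.stdev(values) / math.sqrt(n)
    return mean - z * std_err, mean + z * std_err

# Hypothetical measurements, used only for illustration.
sample = [4.0, 5.0, 6.0, 5.0, 5.0]
low, high = mean_confidence_interval(sample)
print(f"mean = {statistics.mean(sample):.2f}, interval = ({low:.2f}, {high:.2f})")
```

A narrower interval indicates a more precise estimate, which is the information such diagrams convey visually through the length of the interval bar.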

Real-time information is also often represented by visualizations (Choudhary et al., 2024). Time-series visualizations such as line charts, area charts, and candlestick charts are often employed to track changes over time across industries, particularly in finance. Heat maps are used to highlight patterns and correlations. Geospatial visualizations are used to display location-based data to optimize routes, as well as to track the spread of diseases. Streaming data visualizations are used to represent continuous data streams (e.g., to monitor social media usage). Sankey diagrams display the flow of resources within a system (Zheng et al., 2021).

3.1.5 3D visualizations, use of virtual reality and augmented reality

3D visualizations can significantly enhance the comprehension of complex datasets for users. Kaya et al. (2023) showed that 3D visualizations facilitate a better understanding of COVID-19 data than traditional 2D approaches. Moreover, 3D visualizations have been used to optimize energy consumption patterns by providing a more intuitive user experience and reducing visualization complexity (Stecyk and Miciula, 2023). In the field of structural engineering, 3D visualizations are particularly effective in displaying the structural condition of a building (Kim et al., 2022). Similarly, Lizenberg et al. (2020) use RViz to generate 3D models to visualize real-time data on vehicles and their surroundings, improving the understanding of dynamic environments. Ntoa et al. (2017) utilize rack close-ups and room views with interactive features to manage and monitor the equipment effectively.

In infrastructure inspections, augmented reality (AR) is used to overlay virtual information onto real-world environments, allowing inspectors to visualize data in real-time and make more informed decisions (Kim et al., 2022). This technology is particularly useful in fields that require precise spatial awareness and real-time data analysis, such as engineering and architecture. In Wang et al. (2023), Immersive Virtual Reality (IVR) was used in conjunction with 2D displays for bridge inspections, where 3D elements were also used to highlight the severity of damages, facilitating more accurate assessments.

3.2 Challenges in understanding visualizations

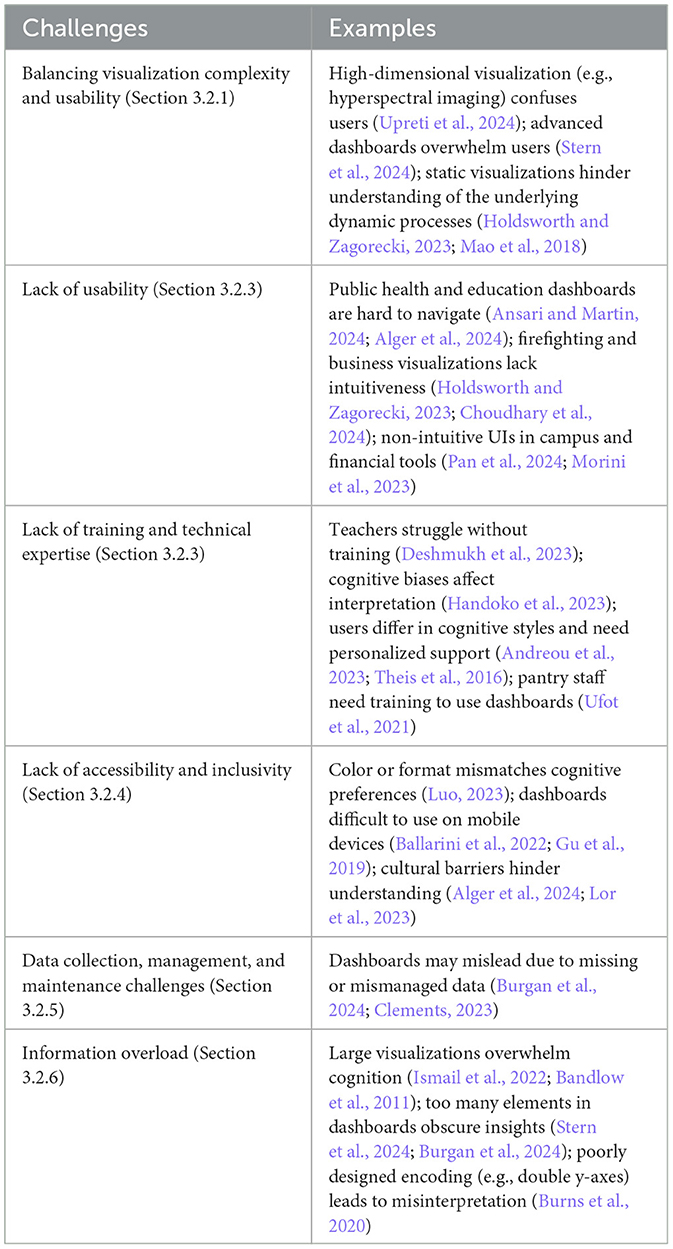

This section synthesizes the findings from the literature to understand the difficulties and challenges that users may encounter when interacting with data visualizations. These challenges often stem from the complexity of the visualizations, the design of the interfaces, and the lack of user training. Table 4 presents a list of the types of challenges, each of which is detailed in this section.

3.2.1 Balancing visualization complexity and usability

A significant challenge consistently identified in the literature is the balance between the complexity and the usability of visualizations. It has been suggested that heterogeneous data represented by various visualization techniques may introduce complexities that are difficult for a lay audience to interpret (Morini et al., 2023; Al-Ghamdi, 2024; Choudhary et al., 2024; Contreras, 2019; Langton and Baker, 2013). For example, density plots and cumulative distribution functions require a higher level of statistical literacy than the average domain expert user has (Wu et al., 2024). Similarly, Upreti et al. (2024) state that horticulture experts struggle to understand high-dimensional hyperspectral data.

Stern et al. (2024) discuss how interactive dashboards and advanced visualization approaches, while powerful, can become overwhelming for users, particularly those without a technical background. The complexity of these approaches can make them difficult to use, reducing their effectiveness and limiting their adoption (Flor et al., 2023; Alger et al., 2024). According to Yan et al. (2013), the ease with which users can access detailed information via menus or touchscreens influences their willingness to use the applications.

Al-Ghamdi (2024), Wu et al. (2024) and Choudhary et al. (2024) also highlight this challenge in the context of technology and business applications. These studies emphasize the importance of designing visualizations that are both powerful enough to handle complex datasets and user-friendly enough for domain experts to use effectively. Achieving this balance requires careful consideration of the user interface design, the level of interactivity, and the way data is presented. According to Golfarelli and Rizzi (2020), domain expert users may struggle in choosing the correct type of visualization for a given dataset and analytical goal. Also, overlapping elements in the visualizations can lead to difficulties in extracting detailed information (Somanath et al., 2014).

Holdsworth and Zagorecki (2023) point out that static representations “do not capture the pace of change and sequence of interactions”, when the visualization is used to help firefighters develop a mental image of an emergency response. Further, Mao et al. (2018) add that static images and textual data do not suit battlefield analysis, which is why their approach involves transforming them into animated representations, which are more aligned with the cognitive processes of the users.

3.2.2 Lack of usability

The lack of usability is a significant challenge for domain expert users, especially when the interface design is overly complex or not intuitive. Ansari and Martin (2024) discuss how public health dashboards, which are designed to manage and present complex health data, often pose usability challenges for domain expert users who may find the interface difficult to navigate. Alger et al. (2024) similarly highlight challenges in educational dashboards, where domain expert users might struggle with interfaces that are not user-friendly.

Holdsworth and Zagorecki (2023) explore how public safety dashboards, particularly those used in firefighting services, present usability challenges. The need for quick decision-making can be hindered by complex, non-intuitive interfaces.

Wu et al. (2024) and Choudhary et al. (2024) address the usability challenges in technology and business applications. They note that domain expert users often struggle with the sophisticated interfaces of data visualizations, which can be a significant barrier to effective data-driven decision-making. Pan et al. (2024) and Morini et al. (2023) focus on campus management and financial services, respectively. These visualizations often require a level of interaction that domain expert users find challenging, particularly when the interface is not intuitive.

3.2.3 Lack of training and technical expertise

Patel (2023) and Lundkvist et al. (2021) highlight the importance of providing training to stakeholders to help them navigate and effectively utilize complex visualizations. Without such training, users may struggle to interpret data accurately. For instance, Deshmukh et al. (2023) discuss the challenges teachers face in interpreting complex data visualizations without training. Handoko et al. (2023) explore how cognitive biases can affect users' interpretation of visual data, emphasizing the need for proper training to mitigate these biases and ensure accurate data interpretation.

Stecyk and Miciula (2023) mention the steep learning curve associated with advanced visualization approaches, indicating that stakeholders often require additional training to use these approaches effectively. Moreover, Andreou et al. (2023) and Theis et al. (2016) highlight that the variability in cognitive styles and processing abilities among lay users can create challenges in understanding complex visualizations. Andreou et al. (2023) suggest that personalized training programs can address these discrepancies, enhancing the accessibility of the approaches.

Luo (2023) also highlights the need for user training to improve the ability to interpret graphical data correctly and consistently, as differences in cognitive styles can impact how users interact with and understand data visualizations. Similarly, Ufot et al. (2021) stress the importance of consistent usage and training to maintain accuracy and improve productivity among non-technical staff (e.g., pantry users), particularly in environments where data interpretation skills vary widely.

Ai et al. (2022) mention the need for continuous training and motivation to help stakeholders effectively use intelligent visualizations, indicating that without ongoing training, the effectiveness of these visualizations may be diminished. Peng and Cao (2022) discuss the complexity of mathematical models, noting that stakeholders may struggle to understand and interpret these without proper training.

3.2.4 Lack of accessibility and inclusivity

Lack of accessibility may prevent the user from interacting with the visualization, or make the interaction more difficult. Patel (2023) discusses the challenges non-technical users face in interpreting complex data presentations, emphasizing the importance of designing accessible visualizations and providing the necessary support to ensure users can utilize them effectively.

Deshmukh et al. (2023) highlight the difficulties teachers face in selecting the right type of visualization to communicate their messages effectively, pointing out the need for visualizations that are both accessible and intuitive. Nakai et al. (2023) discuss the challenges of understanding complex hierarchical visualizations, noting that these visualizations require significant effort to interpret, particularly for users without a technical background. de Camargo et al. (2020) add that non-technical users often find it difficult to synthesize and interpret data presented in traditional tabular formats, suggesting that graphical representations may be more accessible.

Luo (2023) emphasizes the importance of cognitive style differences, noting that users with different cognitive preferences may find certain visualization formats more challenging to interpret (e.g., verbalizers prefer tables, while visualizers prefer graphs). Chiang et al. (2022) and Ballarini et al. (2022) discuss the difficulties non-technical users face when trying to interpret data from multiple sources and formats, particularly when the data must be manually mapped across databases. Ballarini et al. (2022) add that, when displaying the data on a mobile device, it is important to keep the data points visible and understandable. Gu et al. (2019) make a similar observation, adding that different usability considerations are needed for touch screens.

After pointing out that public health data dashboards need to be understood by government workers as well as by health practitioners, Alger et al. (2024) warn against the use of technical language in these visualizations. Cultural and linguistic barriers also play a significant role in how visualizations are interpreted, with Lor et al. (2023) emphasizing that stakeholders such as patients with limited literacy may struggle to comprehend text-heavy visualizations.

3.2.5 Data collection, management, and maintenance challenges

The lack of time and staff availability have been linked to issues with data collection and management, which lead to inaccurate dashboards (Burgan et al., 2024). Once released into the public domain, data visualizations may be misused or misinterpreted (Clements, 2023). Inaccurate data, including incomplete, improperly formatted, and duplicated data, may compromise the accuracy of the visualizations (Alwi et al., 2023). Once the data has been made available, there are issues with accessibility and privacy to consider (Al-Ghamdi, 2024). Developing and maintaining robust visualizations can be resource-intensive (Choudhary et al., 2024).

The preprocessing of data, especially when involving advanced machine learning techniques, may require expert knowledge and considerable time (Upreti et al., 2024).

3.2.6 Information overload

Information overload poses a significant challenge for stakeholders, often leading to misinterpretation and errors in decision-making. Ismail et al. (2022) and Sullivan et al. (2020) highlight that when users are confronted with large volumes of data or an excessive number of visual elements, they can quickly become cognitively overwhelmed. This overload impairs their ability to extract meaningful insights and can result in a failure to identify key patterns or trends within the data. Sanchez-Ferrer et al. (2019) observe that too many details shown in advance on the dashboard may confuse users.

In the context of medical decision-making, Gisladottir et al. (2022) highlight the issue of information overload, where presenting too many risks can overwhelm physicians, making it harder for them to make informed decisions. Sadhu et al. (2023) maintain that busy clinicians need a dashboard that is meaningful and easy to interpret, and that unnecessary data make the tool more difficult to use and understand.

Behavioral users, as opposed to theoretical rational agents, have difficulty understanding data visualizations when information is presented in a complex and uneven way. For instance, a tool might highlight certain data points or trends and omit others (Wu et al., 2024).

Stern et al. (2024), Burgan et al. (2024), and Malik and Sulaiman (2016) highlight the cognitive load that domain expert users experience when interacting with complex dashboards. These approaches often present large volumes of data in a format that can be overwhelming, leading to difficulties in identifying key insights. Choudhary et al. (2024) also note that users can struggle with the amount of information presented, particularly when dashboards lack a clear focus or are cluttered with too many elements. According to Bandlow et al. (2011), large graphs increase cognitive load. Simões Jr et al. (2017) and Lami et al. (2014) make a similar observation for map-based visualizations.

Stecyk and Miciula (2023) and Freeman et al. (2023) emphasize that domain expert users may find it challenging to process and make sense of the information displayed in financial and environmental data visualizations, which often require a high level of understanding to interpret correctly.

Koopman et al. (2020) also address the cognitive load that can occur when visualizations are not designed with user capacity in mind. They point out that if the format is too complex or not intuitive enough, it can overwhelm users, particularly those who lack a technical background, thereby increasing the risk of misinterpretation.

Thayer et al. (2021) and Couto et al. (2022) further elaborate on the problem of information overload by discussing how the fast-paced environment in certain fields, such as emergency departments or project management, can exacerbate the effects of data overload. In these settings, stakeholders may struggle to quickly and accurately process scattered information, leading to potential misinterpretations and slower decision-making.

Finally, Burns et al. (2020) underscore the importance of carefully designing visualizations to avoid cognitive overload, noting that complex visual encodings, such as double y-axes, may cause users to misinterpret the data or draw inaccurate conclusions.

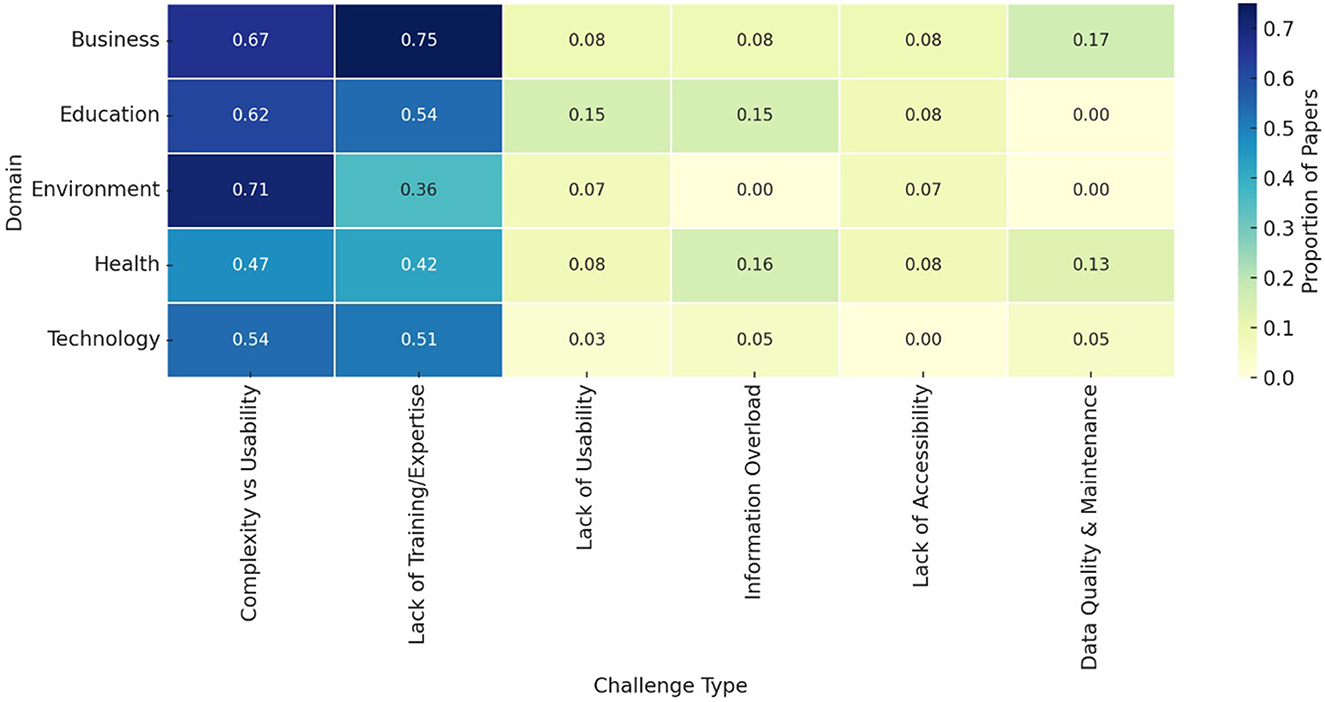

In summary, Figure 4 shows the normalized proportion of studies within each domain that reported specific challenges faced by domain expert users of data visualization approaches. Complexity vs Usability is the most frequently reported challenge across all domains, followed by Lack of Training or Expertise and Lack of Usability. Information Overload is also notable, especially in Public Health and Technology contexts. Less frequent issues include Accessibility and Data Quality & Maintenance. The Miscellaneous category was excluded to ensure domain comparisons remain interpretable and methodologically consistent.
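
As a minimal sketch of how such normalized proportions could be computed, each challenge count can be divided by the number of studies in its domain. The function name, the counts, and the two-domain subset below are hypothetical placeholders for illustration, not the data behind Figure 4.

```python
def normalized_proportions(challenge_counts, studies_per_domain):
    """Return, per domain, the share of its studies reporting each challenge."""
    proportions = {}
    for domain, counts in challenge_counts.items():
        total = studies_per_domain[domain]
        if total <= 0:
            raise ValueError(f"domain {domain!r} has no studies")
        # Dividing by the domain total makes domains of different size comparable.
        proportions[domain] = {name: n / total for name, n in counts.items()}
    return proportions


# Hypothetical counts, for illustration only.
studies_per_domain = {"Public Health": 20, "Technology": 10}
challenge_counts = {
    "Public Health": {"Complexity vs Usability": 10, "Information Overload": 5},
    "Technology": {"Complexity vs Usability": 6, "Information Overload": 3},
}

result = normalized_proportions(challenge_counts, studies_per_domain)
for domain, shares in result.items():
    print(domain, shares)
```

Normalizing within each domain, rather than reporting raw counts, prevents domains with many included studies from dominating the comparison.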

3.3 Impact of visual aspects and elements on user understanding and decision-making

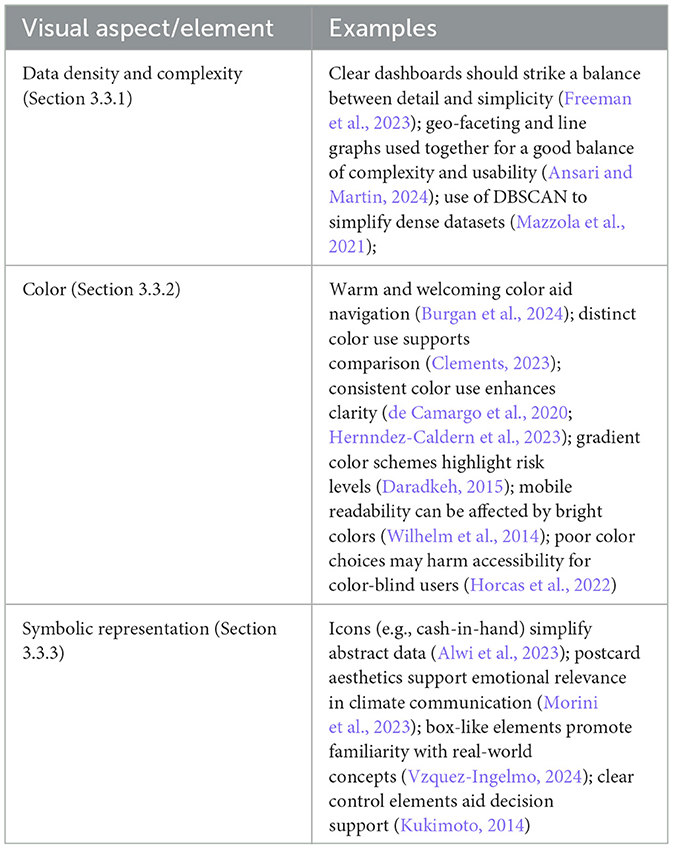

Visual elements play an important role in how the data is perceived and interpreted by the users. The design choices made in data visualization can also greatly influence user understanding and thus the decision-making process. Table 5 lists the three types of visual elements and their examples. In the following, we explore the impact of data complexity and density, color, and symbolic presentation in visualization approaches.

3.3.1 Data density and complexity

Balancing complexity and simplicity in data visualization is essential for ensuring that users can comprehend the information without being overwhelmed. Freeman et al. (2023) argue that visualizations must strike a balance between providing sufficient detail and maintaining clarity to avoid confusing users. Lu et al. (2023) and Andreou et al. (2023) highlight that while simplicity is important, it should not come at the expense of important details. Simplifying visualizations should involve removing unnecessary elements without losing critical information, ensuring that the visualization remains informative. Similarly, Cabitza et al. (2022) warn against misleading simplicity, which might confuse users about the reliability of results. In fact, overly simplistic visualizations may lack the necessary context for making informed decisions.

According to Ansari and Martin (2024), a tool should display “an appropriate balance between complexity and usability.” In their study, line graphs for each variable serve as the usable element, providing clear and intuitive trend visualization, while geo-faceting represents the complex element, allowing users to explore spatial variations by selecting different areas. Using consistent scales and visual elements proportional to the data also aids user understanding (Wu et al., 2024). On the other hand, Nguyen and Song (2016) state that lay users might struggle with interpreting the visualizations if the sampling methods do not preserve essential information.

Managing data density is critical in data visualization. Overloading a visualization with too much information can overwhelm users, making it difficult for them to discern the key insights (Burns et al., 2020). Perdana et al. (2019) suggest that, to mitigate complexity, the tool's characteristics should be aligned with users' tasks and cognitive abilities.

Mazzola et al. (2021) discuss the use of DBSCAN (Density-Based Spatial Clustering of Applications with Noise) in security-related visualizations, which helps in managing complex datasets by identifying outliers. This technique simplifies the data, allowing users to focus on potential threats without being distracted by irrelevant information.
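
To illustrate how DBSCAN separates dense groups from outliers, the following is a minimal pure-Python sketch of the general algorithm. It is not the implementation used by Mazzola et al. (2021); the sample points and the `eps` and `min_pts` values are hypothetical.

```python
import math

NOISE = -1  # label given to points that belong to no dense region


def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or NOISE."""
    labels = [None] * len(points)

    def neighbors(i):
        # Indices of all points within eps of point i (including i itself).
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may be relabeled later as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            reachable = neighbors(j)
            if len(reachable) >= min_pts:
                queue.extend(reachable)  # j is a core point: keep expanding
    return labels


# Two dense groups and one isolated point (hypothetical data).
points = [(0, 0), (0, 1), (1, 0), (1, 1),
          (10, 10), (10, 11), (11, 10), (11, 11),
          (5, 50)]
labels = dbscan(points, eps=1.5, min_pts=3)
outliers = [p for p, label in zip(points, labels) if label == NOISE]
print(labels, outliers)
```

In a visualization, the points labeled as noise could be drawn with a distinct marker so that the viewer's attention goes to them first.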

Sadhu et al. (2023) discuss trend plots that integrate multiple factors, offering a comprehensive view of complex datasets without overwhelming users, allowing users to understand the broader picture without losing sight of the details. Clarity in visualizations also affects how users understand and utilize the information (Azzam et al., 2013). Dashboards should be intuitive enough to allow a non-technical user to engage with them without training (Alwi et al., 2023; Concannon et al., 2019). The information displayed should also be clear and digestible (Morini et al., 2023; Choudhary et al., 2024).

3.3.2 Color

The use of color in visualization plays an important role in enhancing user interaction and comprehension. The vast majority of participants in Burgan et al. (2024) associated a “warm and welcoming color scheme” with ease of navigation, while one participant pointed out that the color red signals “bad” or “wrong.” Wilhelm et al. (2014) observe that bright colors on a mobile device might be difficult to read. Clements (2023) refers to the importance of representing the variables in different colors. A consistent use of colors helps users identify patterns and categories (Wu et al., 2024; Choudhary et al., 2024; Zhang and Padman, 2017; Pitchforth, 2013; Forsman et al., 2013; Price et al., 2016), as well as intensity (Bacic and Henry, 2012). Andreou et al. (2023) and Patel (2023) further emphasize that color plays a crucial role in engaging users by visually separating different datasets, thereby preventing confusion and enhancing interpretation accuracy. Other visual properties can be adjusted in the same spirit: increasing the size of primary elements can help users with low visual acuity, while adjusting the proximity between elements can aid in distinguishing closely related data points (Andreou et al., 2023).

Chen et al. (2023) and Kwong et al. (2022) highlight the role of color in improving the clarity of visualizations. They explain that by using distinct colors for different data elements, users can more easily differentiate and understand the information being conveyed. This reduces cognitive load and makes the visualization more effective for decision-making. Color gradations to indicate varying levels of risk and the layout of information were successfully utilized by Daradkeh (2015). Lee et al. (2015) noted how colors can successfully represent data changes in line graphs.

de Camargo et al. (2020) and Hernández-Calderón et al. (2023) underscore the importance of consistency in color usage. They argue that when colors are used consistently throughout a visualization, it helps maintain a cohesive visual structure, making the data easier to follow. Chiang et al. (2022), Peng and Cao (2022), and Ballarini et al. (2022) further argue that well-chosen color schemes can make visualizations more engaging and easier to understand, capturing users' attention and making the data more approachable, which is essential for maintaining user interest and improving comprehension.

Horcas et al. (2022) recommend the use of distinct colors and scaling. However, they warn against visual clutter and the use of non-distinct colors for color-blind users. He (2022), Peng and Cao (2022), and Wang et al. (2022) suggest that color can guide user interpretation by drawing attention to key areas of the data. They note that strategic color usage can highlight trends or anomalies in the data, making it easier for users to identify and analyze these patterns. Lizenberg et al. (2020) and Holjevac and Jakopec (2020) highlight the importance of using color to create visual consistency within a dataset. They argue that consistent use of color helps unify the various elements of the visualization, making it easier for users to follow and understand the data.
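
One way to put the recommendations on consistent and distinct colors into practice is to fix a single category-to-color mapping and reuse it across every chart. The sketch below is illustrative only: it assumes the Okabe-Ito palette, a widely used color-blind-friendly palette that the reviewed studies do not themselves prescribe, and the function and category names are hypothetical.

```python
# Okabe-Ito palette (assumed here as one color-blind-friendly option).
OKABE_ITO = [
    "#E69F00",  # orange
    "#56B4E9",  # sky blue
    "#009E73",  # bluish green
    "#F0E442",  # yellow
    "#0072B2",  # blue
    "#D55E00",  # vermillion
    "#CC79A7",  # reddish purple
    "#000000",  # black
]


def build_color_map(categories, palette=OKABE_ITO):
    """Assign each distinct category one fixed color, in sorted order."""
    unique = sorted(set(categories))
    if len(unique) > len(palette):
        # Refuse to recycle colors: reuse would make categories indistinguishable.
        raise ValueError("more categories than distinct colors in the palette")
    return {category: palette[i] for i, category in enumerate(unique)}


# Hypothetical category labels, with a repeated value to show deduplication.
color_map = build_color_map(["energy", "agriculture", "energy", "transport"])
print(color_map)
```

Because the mapping is built once from the sorted category names, the same category receives the same color in every view that shares the mapping.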

3.3.3 Symbolic representation

Applying symbols in visualization enhances user understanding and decision-making by making data more interpretable (Ferreira et al., 2020; Cabitza et al., 2022; Ansari et al., 2022). For example, using a cash-in-hand icon to represent total spending simplifies complex information (Alwi et al., 2023). Also, Kukimoto (2014) stresses the importance of the clarity of control elements for decision-making.

For the sake of constructive climate journalism, Morini et al. (2023) utilize the aesthetics of travel postcards to represent key sectors affecting climate change, such as agriculture and energy, fostering a sense of hopefulness and personal relevance. Tools are considered more usable when they resemble the real world. Visualization experts suggested using meta-elements that look like boxes, as these would remind users of the real world (Vázquez-Ingelmo, 2024). In medical contexts, meaningful symbols derived from medical training enhance the usability of clinical data visualizations (Wanderer et al., 2016).

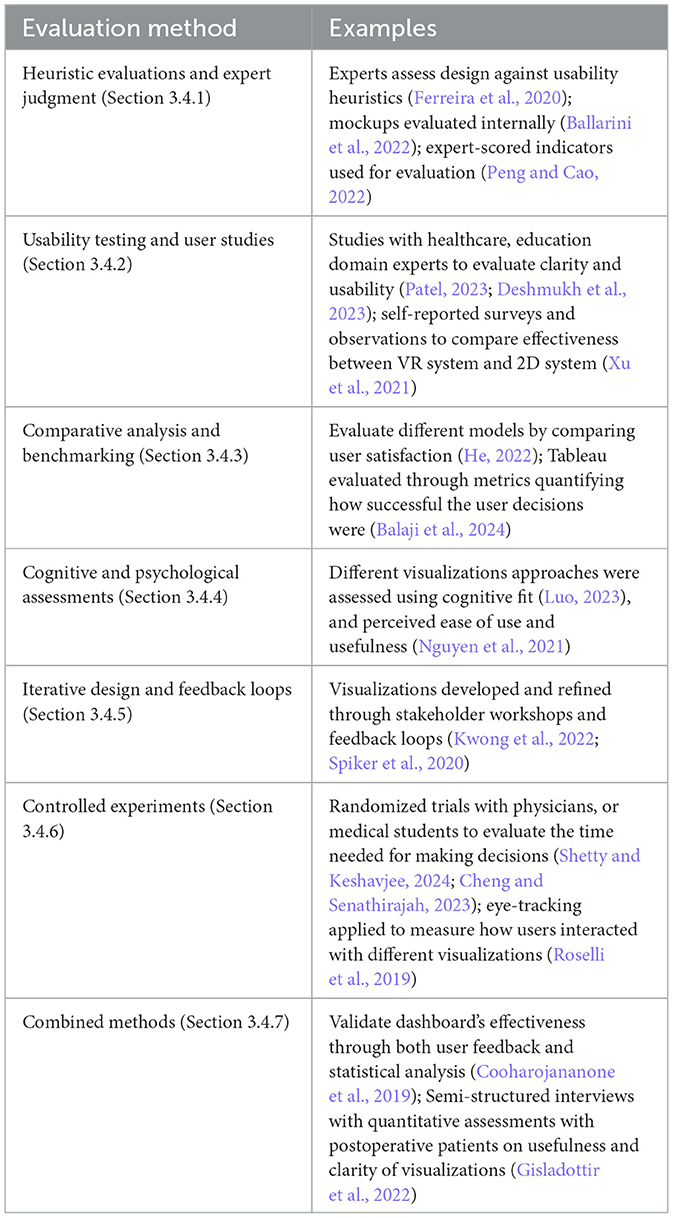

3.4 Evaluation methods of data visualization approaches

Following the exploration of how different visual elements influence user understanding and decision-making, it is important to assess the effectiveness of these elements within data visualization approaches.

This section reviews the diverse methodologies used to evaluate these approaches, as summarized in Table 6, including heuristic evaluations, usability testing, comparative analyses, cognitive and psychological assessments, iterative design feedback, controlled experiments, and mixed-method approaches. These methods leverage expert judgment, user feedback, and quantitative metrics to refine visualizations, ensuring their usability, functionality, and effectiveness across various contexts and user groups, enhancing users' decision-making capabilities.

3.4.1 Heuristic evaluations and expert judgment

Heuristic evaluation and expert judgment involve experts evaluating the design and functionality of visualizations based on established usability criteria to identify areas for improvement. Ballarini et al. (2022) use a design process that involved creating mockups and gathering feedback from project personnel. Internal evaluations showed improvements in readability and usability, with plans for future user feedback to further validate the visualizations. Ferreira et al. (2020) conduct heuristic evaluations and usability tests, with experienced evaluators identifying usability issues in different development phases. Domain experts provided feedback, which was critical in refining the visualization approaches to meet user needs effectively.

Peng and Cao (2022) employ expert judgment and questionnaire surveys to evaluate data visualization approaches. Experts scored the importance of various indicators, which informed the construction of judgment matrices. Consistency tests were conducted to ensure the reliability and validity of these evaluations. Nielsen's 10 usability heuristics have also been used to evaluate a data visualization (Vázquez-Ingelmo, 2024). In that study, two data visualization experts checked the interface against the heuristics, with the purpose of creating a more user-friendly one.
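
The review does not name the method behind the judgment matrices and consistency tests. Such steps are typical of the Analytic Hierarchy Process (AHP), so the sketch below assumes an AHP-style check and should not be read as the procedure of Peng and Cao (2022). The matrix values are hypothetical; the random index values are Saaty's commonly tabulated ones.

```python
# Saaty's random consistency index for matrix sizes 3 to 9.
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def principal_eigen(matrix, iterations=100):
    """Estimate the largest eigenvalue and its normalized eigenvector
    by power iteration."""
    n = len(matrix)
    weights = [1.0 / n] * n
    lam = float(n)
    for _ in range(iterations):
        product = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
        lam = sum(product)  # weights sum to 1, so this estimates lambda_max
        weights = [x / lam for x in product]
    return lam, weights


def consistency_ratio(matrix):
    """Return (CR, weights) for a pairwise comparison matrix."""
    n = len(matrix)
    if n not in RANDOM_INDEX:
        raise ValueError("this sketch supports matrices of size 3 to 9")
    lam, weights = principal_eigen(matrix)
    ci = (lam - n) / (n - 1)  # consistency index
    return ci / RANDOM_INDEX[n], weights


# Hypothetical expert judgments: indicator A is twice as important as B
# and four times as important as C (a perfectly consistent matrix).
matrix = [
    [1.0, 2.0, 4.0],
    [0.5, 1.0, 2.0],
    [0.25, 0.5, 1.0],
]
cr, weights = consistency_ratio(matrix)
print(round(cr, 6), [round(w, 3) for w in weights])
```

In AHP practice, a consistency ratio below 0.1 is conventionally treated as acceptable; larger values suggest that the expert's pairwise judgments contradict one another and should be revisited.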

3.4.2 Usability testing and user studies

Usability testing and user studies are common methods used to assess how well a data visualization serves its users. These methods provide insights into user satisfaction, decision-making efficiency, and the overall effectiveness of visualizations.

Patel (2023) evaluates visualization approaches in healthcare settings through user studies with healthcare professionals, focusing on metrics like decision-making efficiency, interpretation accuracy, and user satisfaction. The goal was to determine how effectively these visualizations supported clinical decision-making. Similarly, Deshmukh et al. (2023) use usability testing involving educational stakeholders to refine a data visualization platform designed to present teacher performance metrics. Feedback was collected to ensure the platform's accessibility and effectiveness in decision-making contexts.

Nakai et al. (2023) employ a participant experiment involving computer science students to evaluate three different visualization techniques. Participants rated these visualizations based on ease of use, bias detection, and overall satisfaction. This approach highlighted the proposed method's superiority over baseline implementations in detecting and understanding biases. Chiang et al. (2022) evaluate the Quality Instructional Management System (QIMS) through trial operations in universities, focusing on feedback from teachers and staff. The study noted improvements in teaching quality through enhanced material retrieval and student performance visualization, though further research was suggested.

Cabitza et al. (2022) conduct a user study via an online questionnaire to assess the effectiveness of visualizations in conveying test results, with a focus on reducing error and conveying predictive uncertainty. Thayer et al. (2021) evaluate an asthma timeline application using usability testing, post-implementation surveys, and application monitoring. These methods measured user satisfaction, perceived utility, and the efficiency of the application compared to standard electronic health records (EHR). Xu et al. (2021) combine self-reported surveys and observational data to evaluate a VR system against a 2D system. The study measured task completion time, accuracy, and user preferences, using statistical analyses to compare the effectiveness of the two systems in engaging users and promoting energy-saving behaviors.

Ufot et al. (2021) assess data visualizations implemented in a food pantry setting. Staff provided feedback on the visualizations, which were used to improve inventory management. The study highlighted the visualizations' effectiveness in simplifying data and enhancing staff collaboration. Koopman et al. (2020) employ iterative user-centered design processes, including focus groups with patients and physicians, to refine visualization approaches. Thematic analysis of qualitative data ensured that the visualizations met user needs and preferences effectively.

Sullivan et al. (2020) utilize various methods, including website metrics, academic citations, and user surveys, to evaluate the engagement and usability of data visualizations. The study also incorporated feedback from advisory committees and public health use cases to refine the visualizations. Diaz et al. (2022) conduct usability experiments with both expert and domain expert users to evaluate their ability to interpret complex visualizations. While experts navigated the visualizations effectively, domain experts struggled, indicating the need for simpler, more intuitive designs for broader user groups.

Quantitative surveys to measure stakeholders' perceptions have been conducted alongside semi-structured interviews with practitioners (Burgan et al., 2024). The Post-Study System Usability Questionnaire (PSSUQ) was used to evaluate Business Intelligence dashboards in Alwi et al. (2023). Eleven domain expert participants completed the questionnaire to assess effectiveness and user satisfaction. Grossman et al. (2018) also evaluate their dashboard via a user satisfaction questionnaire.

Moreover, Sadhu et al. (2023) asked clinicians to evaluate and refine the design of the CarePortal dashboard, which showed patients' data from wearable sensors. A user experience questionnaire was then used to collect feedback.

3.4.3 Comparative analysis and benchmarking

Comparative analysis and benchmarking involve comparing different visualization methods to identify the most effective approach.

He (2022) uses comparative experiments to evaluate different models, focusing on metrics like convergence speed and user satisfaction. Zheng et al. (2021) compare the bricked format and Level of Detail (LOD) algorithm with traditional seismic data reading methods. The findings highlighted significant improvements in loading times and real-time visualization capabilities.

Wang et al. (2022) evaluate ML-assisted visualization approaches using benchmark tasks such as graphic element extraction and visualization generation. The study compares ML methods with non-ML methods, highlighting the effectiveness and efficiency of ML-driven visualizations in solving complex problems. Wu et al. (2024) conduct experiments with human participants (as “behavioral agents”) after a mathematical model (as “rational agent”) set benchmarks. The human participants were asked to engage with various data visualization types to make decisions. They were asked, for example, to decide whether to salt a car park based on the forecasted probability of freezing temperatures, and when to leave for the bus stop based on bus arrival time predictions. Mixed-effects Bayesian regression models were used to estimate behavioral payoffs and identify sources of decision-making errors. The results showed that the rational agent consistently outperformed the behavioral agent. However, data visualizations significantly improved the ability of users to interpret data and make decisions.
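
The idea of benchmarking human choices against a rational agent can be sketched with the car park example. The code below is a simplified illustration under assumed values: the salting cost, the freeze penalty, and the trial data are hypothetical, and it omits the mixed-effects Bayesian regression used by Wu et al. (2024).

```python
def rational_action(p_freeze, salt_cost, freeze_penalty):
    """Pick the action with the higher expected payoff."""
    expected_salt = -salt_cost                  # salting always costs the same
    expected_skip = -p_freeze * freeze_penalty  # risk of an untreated freeze
    return "salt" if expected_salt > expected_skip else "skip"


def realized_payoff(action, froze, salt_cost, freeze_penalty):
    """Payoff actually obtained once the weather outcome is known."""
    if action == "salt":
        return -salt_cost
    return -freeze_penalty if froze else 0.0


SALT_COST = 10       # hypothetical cost of salting
FREEZE_PENALTY = 50  # hypothetical loss if it freezes and nothing was done

# Each trial: (forecast probability, participant's choice, did it freeze?)
trials = [
    (0.1, "skip", False),
    (0.6, "skip", True),
    (0.3, "salt", False),
    (0.8, "salt", True),
]

rational_total = sum(
    realized_payoff(rational_action(p, SALT_COST, FREEZE_PENALTY), froze,
                    SALT_COST, FREEZE_PENALTY)
    for p, _, froze in trials
)
behavioral_total = sum(
    realized_payoff(choice, froze, SALT_COST, FREEZE_PENALTY)
    for _, choice, froze in trials
)
print(rational_total, behavioral_total, rational_total - behavioral_total)
```

The gap between the two totals gives a simple measure of how much payoff the participant lost relative to the benchmark on these trials.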

In Balaji et al. (2024), the effectiveness of Tableau, the data visualization tool used to aid taxi-drivers in decision making, was evaluated through a metric called total reward per episode, which quantifies how successful their decisions were during each episode based on predefined objectives. Results were visually represented through plots depicting the evolution of total rewards, exploration rates, action distributions, cumulative profits, and reward variance across episodes, providing a comprehensive overview of performance dynamics over time.
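
A metric such as total reward per episode can be sketched as a sum over the rewards collected in each episode, optionally smoothed for plotting. The reward values and helper names below are hypothetical and are not taken from Balaji et al. (2024).

```python
import statistics


def total_reward_per_episode(episodes):
    """Sum the step rewards within each episode."""
    return [sum(rewards) for rewards in episodes]


def moving_average(values, window):
    """Smooth a series so that trends across episodes are easier to plot."""
    return [
        sum(values[i:i + window]) / window
        for i in range(len(values) - window + 1)
    ]


# Hypothetical step rewards for three episodes.
episodes = [
    [1.0, -0.5, 2.0],
    [0.5, 0.5],
    [3.0, -1.0, 1.0],
]

totals = total_reward_per_episode(episodes)
smoothed = moving_average(totals, window=2)
variance = statistics.pvariance(totals)  # spread of totals across episodes
print(totals, smoothed, variance)
```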

3.4.4 Cognitive and psychological assessments

Cognitive and psychological assessments focus on understanding how visualizations influence users' cognitive processes and decision-making. Luo (2023) assesses the cognitive fit of different visualization formats using the Verbalizer-Visualiser Questionnaire, linking decision accuracy and confidence to the visualization format. In Hoeber (2018), interfaces have been evaluated based on their ability to support specific information-seeking behaviors and strategies.

Ai et al. (2022) compared traditional teaching methods with intelligent teaching tools, focusing on teacher-student interactions, engagement levels, and quiz performance. The study highlighted improvements in understanding and retention, particularly with the use of tools like Rain Classroom, which provided real-time data for teachers to adjust their strategies. Nguyen (2021) applies the Technology Acceptance Model (TAM) to evaluate visualization approaches, focusing on perceived ease of use and usefulness. The study revealed that while the tool was user-friendly, its focus on word frequency limited its effectiveness in capturing the full depth of student responses, suggesting the need for more advanced analysis techniques.

Kastens et al. (2020) use a structured research design to evaluate the types and quality of questions generated by participants. The study applied Bloom's taxonomy to categorize questions, providing insights into how different visualization formats influence cognitive engagement and comprehension. Similarly, Burns et al. (2020) introduce a novel framework based on Bloom's six-level taxonomy to evaluate visualizations. The study assessed tasks such as retrieving values, summarizing messages, and predicting future outcomes, comparing alternative designs to highlight how different visualizations support varying levels of cognitive understanding.

3.4.5 Iterative design and feedback loops

Iterative design and feedback loops involve continuously refining visualizations based on user feedback. Kwong et al. (2022) use an iterative process to develop and refine visualizations based on feedback from stakeholders, including healthy volunteers, patients, and clinicians. The evaluation through semi-structured interviews and content analysis allowed for successive improvements, ensuring the visualizations met user needs effectively. Similarly, the evaluation in Spiker et al. (2020) consisted of multiple meetings in which users reviewed the tool, completed an evaluation form, and provided feedback on its functionalities and usability.

Goodwin et al. (2021) conduct iterative workshops using design techniques like card sorting and affinity diagramming. Feedback from expert panels and broader practitioners was incorporated to refine and improve the visualization approaches continuously. In Hernández-Calderón et al. (2023), multiple iterations of user feedback were gathered in a detailed evaluation process, involving expert heuristic assessments and real scenario evaluations. The study used virtual sessions to assess user interaction with improved dashboards, emphasizing the importance of iterative feedback in refining visualization approaches.

Mazzola et al. (2021) and Albarrak (2023) focus on refining solutions based on iterative user feedback from various professionals. In Mazzola et al. (2021), this included developers and ERP integrators. The evaluation aimed to improve attractiveness, interpretability, and usefulness, addressing challenges with contextual information for business users.

3.4.6 Controlled experiments

Controlled experiments also play an important role in assessing the practical effectiveness of visualization approaches in enhancing user interaction and decision-making processes.

In the healthcare domain, Shetty and Keshavjee (2024) conducted an experiment involving ten primary care physicians, which showed a significant reduction in information retrieval time when using the newly developed dashboards. Physicians were asked to review ten elements of diabetes care data and were randomly allocated to start with either i4C (the newly developed dashboards) or Native Query (database) before switching to the other method, with the time taken recorded for each. Similarly, Cheng and Senathirajah (2023) conduct an experiment with 15 medical students. Students were asked to diagnose clinical cases after engaging with a visualization for different intervals. The results reveal that short interactions with the visualization can reduce cognitive burden and speed up the diagnosis.

On the other hand, a pilot study conducted by Kaya et al. (2023) explored the preference for 2D vs. 3D visualization among 20 participants following COVID-19 news. The results show that 3D is better for complex data. Kaya et al. (2023) also conducted an immersive study in which participants used a head-mounted display and were able to grab and move the 3D object. All participants favored 3D visualizations over the 2D ones. In Holdsworth and Zagorecki (2023), firefighters were assigned three tasks to be completed with the help of a static node-link diagram (i.e., identifying roles, recognizing stages of a temporal response, and evaluating the response).

Kim et al. (2021) conducted a crowd-sourced experiment with 4,800 participants, testing how well participants updated their beliefs under different visualization conditions. Bayesian assistance techniques improved users' belief updating for small data samples but had mixed results for large data samples. The experiments of Roselli et al. (2019) employ eye-tracking technology to measure how users interacted with different visualizations. Simulation and numerical analysis were also used to evaluate the performance of visualization techniques: numerical simulations on IEEE benchmark systems demonstrated that criticality graphs significantly improved the interpretation of criticality analysis results over traditional tabular presentations (de Camargo et al., 2020).

3.4.7 Combined methods

To address the complexities in evaluating data visualization approaches, studies also employ a combination of methodologies that pairs quantitative metrics with qualitative findings. This ensures a comprehensive assessment of both the performance and the user experience of visualization approaches.

Ansari and Martin (2024) conduct a two-phase evaluation of data dashboards: (1) a user evaluation of existing data dashboards (pairwise user study with community-based organizations and experts) and (2) a usability evaluation of the prototype dashboard with an embedded experiment about visualizing missing race and ethnicity data.

Quantitative methods (i.e., five-point scales) and qualitative insights (i.e., readers' emotional responses) have been combined to evaluate the dashboards in Morini et al. (2023). Usability testing and user feedback sessions are also frequently used to evaluate data visualization approaches (Choudhary et al., 2024). Cooharojananone et al. (2019) combine user feedback with statistical analysis of course evaluation data to validate the dashboard's effectiveness.

Gisladottir et al. (2022) used a mixed-method approach involving semi-structured interviews and quantitative assessments with postoperative patients. The study gathered feedback on the usefulness and clarity of visualizations, focusing on patient preferences, information retention, and decision-making confidence.

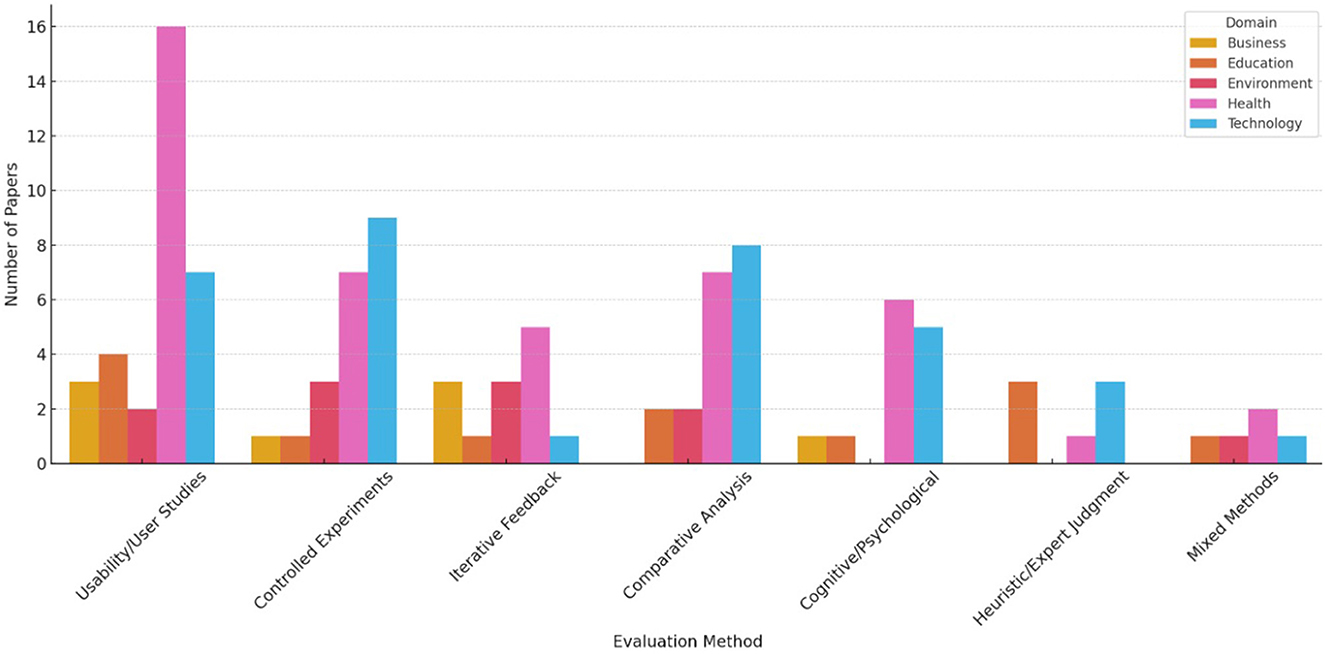

In summary, Figure 5 illustrates the number of studies in each domain that employed specific evaluation methods for assessing data visualization approaches. It enables direct comparison of methodological preferences across fields such as Health, Technology, Education, and Business. Evaluation methods like Usability/User Studies and Controlled Experiments are most frequently applied, while Heuristic Evaluation and Cognitive Assessments appear more selectively. Note that we excluded the Miscellaneous category to improve readability and interpretability, as it grouped heterogeneous studies without a consistent domain classification, which could skew comparisons across clearly defined fields.

4 Discussion

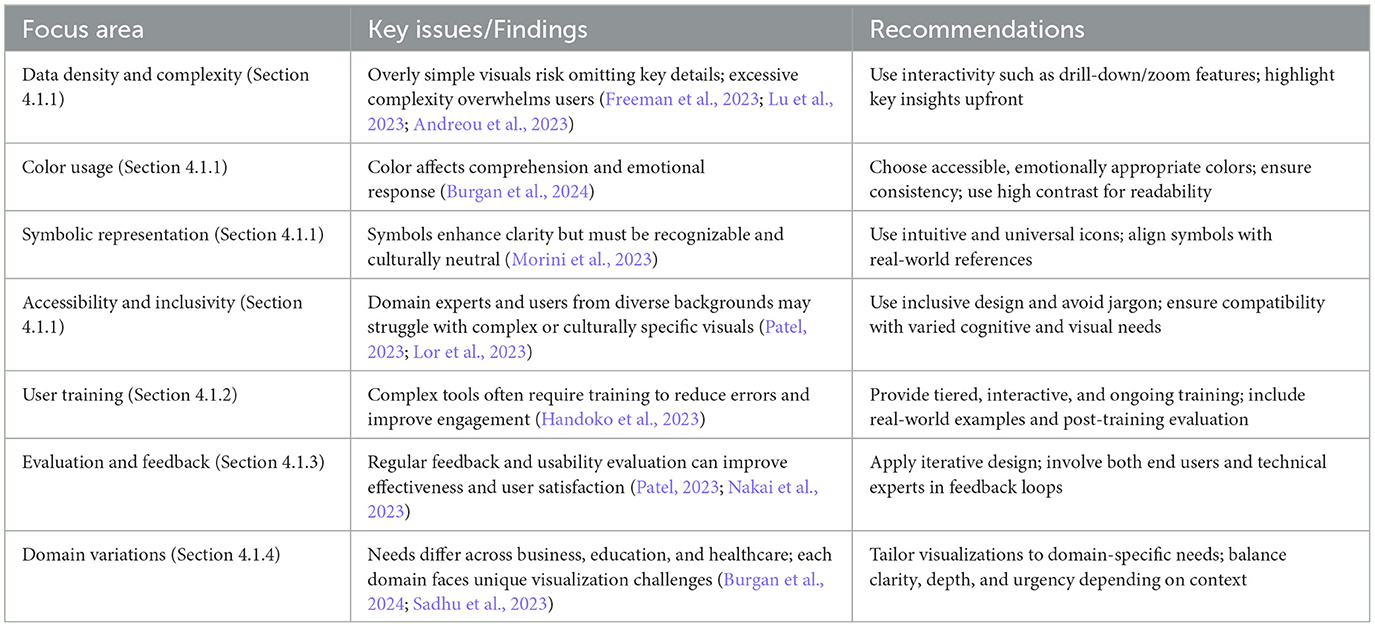

4.1 Summary of main findings

Following the detailed findings in Section 3 covering what challenges users face in understanding visualizations, how visual elements affect user comprehension and decision-making, and the methods used to evaluate these approaches, we next consolidate the key insights that emerged across the reviewed literature. This summary distills the critical design considerations, evaluation practices, and domain-specific challenges into practical and specific recommendations. By synthesizing these findings, we aim to support researchers and practitioners in developing more effective, accessible and inclusive data visualization tools in real-world decision-making contexts.

Table 7 shows the list of focus areas, and highlights some key issues as well as recommendations for each of these areas.

4.1.1 Design considerations

Optimizing data density. Managing data density and complexity is one of the most important considerations in data visualization. A balance must be struck between providing enough detail for informed decision-making and avoiding overwhelming users with too much information (Freeman et al., 2023). While Lu et al. (2023) and Andreou et al. (2023) stress that overly simplistic visualizations risk omitting critical details, which may impair users' decision-making, complexity for complexity's sake should also be avoided, as it increases the cognitive load on users, making it more difficult to focus on key insights.

The use of clustering techniques, such as DBSCAN, highlighted by Mazzola et al. (2021), is one method of managing data complexity. These techniques allow for the simplification of complex datasets by grouping data points and filtering out noise. Visualizations must also be interactive, enabling users to drill down into details when needed. This is particularly important in fields like healthcare and security, where missing key data points can have significant consequences.
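As an illustration of how density-based clustering groups points and filters out noise, the following is a minimal, standard-library sketch of the DBSCAN idea. It is not the implementation used by Mazzola et al. (2021); the sample points and the parameter values `eps` and `min_pts` are hypothetical, and in practice a maintained library implementation (for example, `DBSCAN` in scikit-learn) would normally be preferred.

```python
from math import dist

NOISE = -1


def region_query(points, i, eps):
    """Indices of all points within eps of points[i], including i itself."""
    return [j for j, q in enumerate(points) if dist(points[i], q) <= eps]


def dbscan(points, eps, min_pts):
    """Return one label per point: 0, 1, ... for clusters, -1 for noise."""
    labels = [None] * len(points)
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue  # already assigned to a cluster or marked as noise
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE  # may later be relabeled as a border point
            continue
        cluster += 1  # points[i] is a core point: start a new cluster
        labels[i] = cluster
        queue = [j for j in neighbors if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins, but does not expand
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)  # core point: keep growing the cluster
    return labels


# Hypothetical 2D data: two dense groups and one isolated point.
points = [(1.0, 1.0), (1.2, 0.9), (0.9, 1.1),
          (8.0, 8.0), (8.1, 8.2), (7.9, 8.1),
          (4.5, 0.2)]
labels = dbscan(points, eps=0.5, min_pts=3)
print(labels)
```

In a visualization, the cluster labels could drive grouping or color encoding, while points labeled as noise could be hidden by default and revealed on demand.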

Use of colors. Color is a crucial element in data visualization that aids in distinguishing between different datasets and guiding user attention. However, its misuse can result in confusion, particularly if the colors chosen have unintended emotional connotations or are difficult to distinguish for users with visual impairments. Burgan et al. (2024) mention that users often associate red with danger or errors, which could skew the interpretation of neutral data. Ensuring appropriate, accessible color choices is key to effective visual communication.

Use of symbolic representation. The use of symbols and signs to represent data can enhance the interpretability of complex visualizations. Morini et al. (2023) demonstrate how using aesthetically resonant symbols, such as those inspired by travel postcards for climate journalism, can make visualizations more engaging and relatable to users. However, symbols must be carefully chosen to ensure that they are universally recognizable, especially in contexts involving domain expert users. Tools that closely resemble real-world objects or scenarios tend to be more intuitive.

Accessibility and inclusivity. Accessibility and inclusivity emerged as recurring concerns across multiple studies. Several authors noted the difficulty that domain experts without a technical background face in interpreting complex visualizations, particularly when visual elements are not adapted for diverse cognitive styles, visual acuity, or interaction contexts (Patel, 2023; Nakai et al., 2023; Luo, 2023). Lor et al. (2023) and Alger et al. (2024) also emphasize that linguistic and cultural barriers, such as technical jargon or region-specific symbolism, can hinder comprehension among users with limited literacy or from diverse cultural backgrounds. These findings underscore the importance of designing visualizations that are both accessible and culturally neutral to ensure equitable data interpretation for all user groups.

In summary, we have the following recommendations on design considerations in visualizations:

• Employ interactive features such as zoom and drill-down capabilities to allow users to explore data at varying levels of detail.

• Utilize clustering and aggregation techniques to simplify complex datasets, thus highlighting significant patterns without clutter.

• Highlight key insights first and reveal more details as needed. This can reduce cognitive overload.

• Select colors that support the understanding and context of the data, maintaining consistency across different visualizations.

• Design for accessibility by choosing high-contrast color combinations and including alternatives like patterns or textures for users with color vision deficiencies.

• Use intuitive and culturally universal symbols to decrease cognitive load and aid in quicker data interpretation.
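The recommendation on high-contrast color combinations can be checked numerically. One common way to do so, which is an assumption here rather than something prescribed by the reviewed studies, is the contrast ratio defined in the Web Content Accessibility Guidelines (WCAG) 2.x, where 3:1 is the minimum for graphical objects and 4.5:1 the minimum for normal-size text. The sketch below computes that ratio for two sRGB colors; the function names and example colors are illustrative.

```python
def _linearize(channel_8bit):
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel_8bit / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb):
    """Relative luminance of an (R, G, B) color with 0-255 channels."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(rgb_a, rgb_b):
    """WCAG contrast ratio, from 1.0 (identical) up to 21.0 (black on white)."""
    la, lb = relative_luminance(rgb_a), relative_luminance(rgb_b)
    lighter, darker = max(la, lb), min(la, lb)
    return (lighter + 0.05) / (darker + 0.05)


def meets_minimum(rgb_a, rgb_b, minimum=3.0):
    """True if the pair reaches the given ratio (3.0 assumed for chart marks)."""
    return contrast_ratio(rgb_a, rgb_b) >= minimum


# Illustrative check of a few foreground colors against a white background.
white = (255, 255, 255)
for name, color in [("black", (0, 0, 0)),
                    ("pure red", (255, 0, 0)),
                    ("light gray", (200, 200, 200))]:
    ratio = contrast_ratio(color, white)
    print(f"{name}: {ratio:.2f}:1, passes 3:1 = {meets_minimum(color, white)}")
```

A contrast check of this kind only addresses luminance differences; it does not replace redundant encodings such as patterns or textures for users with color vision deficiencies.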

4.1.2 User training

Training plays a pivotal role in helping users, particularly in complex environments, effectively interpret and engage with data visualizations and decrease the likelihood of errors. While many visualizations aim to be intuitive, professional users, such as those in healthcare, security, or finance, may require more in-depth training to understand the full potential of advanced visualization types. Training can also be useful to mitigate user bias (e.g., Handoko et al., 2023).

We have the following recommendations:

• Provide tiered training programmes that cater to different expertise levels, from beginners to advanced users. This ensures that all users, regardless of their prior experience with data visualization approaches, can engage effectively.

• Offer interactive, hands-on training sessions to allow users to explore the visualizations themselves, which is far more effective than passive learning. Such training should include real-world scenarios where users can practice interpreting data and making decisions based on visualizations.

• Provide ongoing training and support, particularly in environments where the data being visualized changes frequently. Training should be refreshed regularly to ensure users remain proficient as the visualization approaches evolve.

• Use follow-up evaluations to assess the effectiveness of training in improving user proficiency. Post-training surveys, performance metrics, and observational studies can be used to assess whether training was sufficient or further support is required.

4.1.3 Evaluation and feedback

Evaluation is essential to ensure that visualizations meet user needs, remain intuitive, and effectively communicate insights. Evaluation can provide valuable feedback on how users interact with a visualization. For example, Patel (2023) and Nakai et al. (2023) emphasize the role of usability studies in assessing how well visualization approaches facilitate decision-making.

We have the following recommendations on evaluation and feedback:

• Employ a combination of evaluation methods including usability testing, surveys, and expert heuristic evaluations to gather diverse feedback. This will ensure a comprehensive understanding of how users interact with the visualization and where improvements are needed.

• Implement iterative design processes where visualizations are regularly updated based on user feedback. Engaging users early and often helps identify usability issues and clarify areas where the visualizations may be overwhelming or unclear.

• Use heuristic evaluations, particularly with experts in the field, to identify usability issues that might not be apparent to general users. This ensures that the visualization approach meets the necessary standards of clarity and effectiveness.

4.1.4 Domain variations

Visualization challenges vary significantly across domains, shaped by users' roles and decision contexts. In business, visualization approaches like dashboards support strategic decisions but often overwhelm non-specialist users with complex, real-time data (Burgan et al., 2024; Alwi et al., 2023). In education, the emphasis is on accessibility and interpretive clarity, especially for users with limited data literacy (Deshmukh et al., 2023; Chiang et al., 2022). In healthcare, where decisions are high-stakes and time-sensitive, visualizations must balance clinical precision with rapid comprehension (Sadhu et al., 2023; Shetty and Keshavjee, 2024). These variations highlight the need for domain-sensitive visualization design.

4.2 Limitations

In the following, we discuss the potential limitations of this work.

Language bias. One of the inclusion criteria for papers in this review was that they be written in English. This may have introduced a language bias, as we excluded research published in other languages. As a result, the review may have missed valuable insights from non-English speaking regions or perspectives. This limitation might lead to an incomplete understanding of data visualization practices, especially in culturally diverse contexts where unique approaches and challenges may be present.