- 1School of Medical Technology, Beijing Institute of Technology, Beijing, China

- 2Department of Multimedia Design, Kyiv National University of Technologies and Design, Kyiv, Ukraine

- 3Department of Psychology, Capital Normal University, Beijing, China

Introduction: The rapid advancement of intelligent chatbots has transformed human-AI interaction, offering novel opportunities to enhance user experience (UX) through psychological and design interventions. However, the mechanisms by which chatbot design features influence UX remain understudied, particularly regarding the roles of emotional and cognitive mediators.

Methods: This study employed a 2 × 2 within-subjects experimental design with 160 participants to investigate the effects of anthropomorphism (high vs. low) and perceived intelligence (high vs. low) in chatbot avatars on UX. Structural equation modeling (SEM) was utilized to analyze the mediating roles of perceived empathy and trust in this relationship.

Results: Direct effects of anthropomorphism and perceived intelligence on UX were nonsignificant. However, their combined influence was significantly mediated by perceived empathy and trust (β = 0.48, *p* < 0.01). Specifically, highly anthropomorphic avatars correlated with elevated empathy (β = 0.32) and trust (β = 0.27), which in turn improved UX.

Discussion: These findings underscore the importance of emotional engagement over mere intelligence in designing effective chatbots. This research contributes unique insights into the complex mechanisms governing user interactions with intelligent chatbots, emphasizing the need for design strategies that prioritize emotional connections and cognitive ease.

1 Introduction

In recent years, the rapid development of intelligent chatbots has revolutionized human-computer interaction, establishing these digital assistants as indispensable tools across many industries (Bednarek et al., 2024). From customer service to healthcare, education, and entertainment, chatbots have become integral to enhancing user experiences and improving operational efficiency. Advances in artificial intelligence (AI), particularly in natural language processing (NLP) and large language models (LLMs), have expanded chatbot capabilities beyond simple query responses. Chatbots now interact with users in increasingly sophisticated, efficient, and personalized ways, adapting their behavior to user inputs and preferences. Despite these technical improvements, the user experience of chatbots is still shaped by several psychological and design factors (Zhang and Huang, 2024). The anthropomorphic design of chatbots, characterized by the incorporation of human-like attributes in their visual presentation, vocal qualities, and conversational approaches, has been identified as a significant factor influencing user interaction (Mosleh et al., 2024).

A crucial yet often overlooked aspect of chatbot design is the avatar, a digital representation that makes the chatbot’s intangible nature more accessible and relatable to users and thereby enhances user experience (van der Goot and Pilgrim, 2019). Avatar design spans multiple dimensions, including appearance, facial expressions, body movements, and emotional responses. First, anthropomorphic features such as facial expressions, eye contact, and gestures create a sense of connection and attentiveness, making interactions feel more natural. Second, emotional expression conveyed through dynamic facial movements and gestures allows avatars to acknowledge and validate user emotions, which fosters empathy and engagement. Third, personalization and customization enable users to tailor avatars to their preferences, strengthening emotional attachment and making interactions more relatable. As shown in Figure 1, users can modify chatbot avatars (such as Siri, Monica, Call Annie, Google, NIO Motors, Xpeng Motors, and Google Assistant) to reflect their preferences, which can lead to a stronger emotional attachment to the brand and product. Finally, cultural adaptability and interactivity, including culturally relevant symbols and real-time behaviors such as nodding and blinking, help create inclusive and immersive experiences that resonate with diverse global audiences.

These design features align with the principles of media equation theory, which posits that people respond to computers (and, by extension, chatbots) as if they were social entities. This tendency, sometimes referred to as “anthropomorphization bias,” suggests that human-like features in machines can elicit stronger emotional responses, such as empathy and trust. In application areas such as healthcare and education, the anthropomorphic visual features of intelligent chatbots are therefore especially important for user experience. Anthropomorphic visual design also plays a crucial role in building trust and emotional resonance, both of which are key factors in successful human-chatbot interactions (Bird et al., 2024). Trust encourages users to engage deeply and confidently with the technology, and anthropomorphic design elements (such as soft facial expressions, friendly gestures, and empathetic responses) help build it by bridging the gap between humans and machines. Emotional resonance is equally central to effective chatbot design: avatars that mimic human emotions through gestures and facial expressions foster a natural sense of understanding and support. This empathy-driven approach not only boosts user satisfaction but also cultivates loyalty, encouraging more frequent and meaningful interactions (Lee and Li, 2023).

Perceptual intelligence, another key factor, enhances user experience by improving the chatbot’s ability to understand and respond to user emotions and needs (Hoffman et al., 2024). It enables chatbots to recognize users’ emotions through cues such as tone, word choice, and facial expressions, allowing them to adjust their responses with empathy and support. This capability, rooted in emotional intelligence and human-centered design, supports more natural and personalized interactions by adapting dynamically to the conversational context. By understanding user preferences, chatbots can deliver relevant content, fostering satisfaction and loyalty. Perceptual intelligence also improves a chatbot’s handling of complex queries by integrating emotional awareness with factual knowledge. Together, these capabilities make interactions more personalized and emotionally aware, strengthening user experience and deepening human-computer interaction.

In summary, anthropomorphic visual features and perceptual intelligence technologies have a substantial impact on user experience in human-chatbot interactions (Abdelhalim et al., 2024). By mimicking human facial expressions, body language, and emotional responses, these design elements enhance emotional connection, trust, and the naturalness of interaction, making the chatbot feel more relatable and supportive (Du et al., 2022). Theories such as the media equation, emotional intelligence, and human-centered design frameworks offer guidance on how these features can be optimized to enhance user satisfaction, trust, and emotional engagement. Moreover, integrating these elements can smooth transitions between automated responses and human support, allowing users to feel more at ease when switching between chatbot assistance and a live agent (Følstad et al., 2020). This is especially important in service-oriented environments where users may need to escalate issues to human representatives (Hobert et al., 2023).

This study addresses these questions by investigating how the anthropomorphic design and perceived intelligence of chatbot avatars affect user experience, with particular attention to the mediating roles of perceived empathy and trust (Balderas et al., 2023). By examining these psychological constructs, the research aims to generate insights that can inform future chatbot design and development strategies, ultimately leading to more effective and meaningful interactions between users and technology. In a world increasingly dominated by digital interactions, understanding these dynamics will be essential for creating user-friendly and emotionally intelligent systems that resonate with diverse audiences across various domains (Ayanwale and Ndlovu, 2024).

2 Related works

2.1 Anthropomorphic visual design in chatbots

Anthropomorphism refers to the tendency to attribute human characteristics, emotions, and behaviors to non-human entities, such as animals, objects, or technological systems. In the context of human-computer interaction (HCI), anthropomorphic design is the intentional use of human-like features in technology to create more relatable and engaging experiences (Khadija et al., 2021). This design principle draws from theories in psychology, including Media Equation Theory, which posits that people treat computers and media much like real people and situations, applying social rules unconsciously (Reeves and Nass, 1996). According to this theory, users may react to anthropomorphized interfaces in the same way they would respond to human behaviors, explaining why people attribute human-like intentions and emotions to interactive systems with anthropomorphic features (Kim and Song, 2021).

From a psychological perspective, anthropomorphism can be linked to Social Presence Theory, which suggests that increased social presence—how much a system feels like a real person—enhances the user’s emotional and cognitive engagement (Lu et al., 2023). When users perceive a system as more socially present, they are more likely to engage with it on an emotional level, increasing their sense of connection and comfort during interactions. This emotional engagement is often seen in chatbot interactions where users may feel understood and supported, especially if the chatbot employs facial expressions or empathetic language (Meng et al., 2023). As Parasocial Interaction Theory suggests, users may develop parasocial relationships with anthropomorphic systems, where they form emotional bonds similar to those with media characters. These relationships can increase trust, user satisfaction, and even loyalty to the system (Gambino et al., 2024), leading to sustained use and positive feedback.

In Engineering Psychology, anthropomorphism plays a crucial role in reducing cognitive load, as outlined by Cognitive Load Theory (Mihalache et al., 2024). By designing systems that mimic human-like behaviors, such as using gestures, facial expressions, or speech patterns, designers can make interactions more intuitive, which reduces the mental effort required to understand and operate the system. For example, a chatbot with a friendly, human-like demeanor might simplify a user’s decision-making process by providing information in a conversational manner, making the interaction more natural and less cognitively taxing. This reduction in cognitive load can improve user performance, as tasks become easier to understand and execute, leading to higher satisfaction levels (Murtarelli et al., 2021).

Moreover, Attribution Theory suggests that users attribute human traits such as empathy and trustworthiness to anthropomorphized systems. This is particularly relevant in domains where trust is critical, such as healthcare or customer service. For instance, a chatbot that responds with empathetic phrases when a user expresses frustration can create a sense of understanding and emotional support, enhancing perceived empathy and trust. This emotional resonance is key to improving user experience because it not only makes the interaction more pleasant but also fosters a deeper connection between the user and the system, promoting continued use and reliance on the technology.

Overall, the incorporation of anthropomorphic features into technology design is not merely an aesthetic choice but a psychologically grounded strategy that can significantly enhance user experience by fostering emotional engagement, reducing cognitive load, and promoting trust and satisfaction (Pizzi et al., 2021). By leveraging these psychological and engineering principles, designers can create more effective, user-friendly systems that resonate on both emotional and cognitive levels. This makes anthropomorphism a powerful tool in the development of intelligent technologies, particularly in areas that require ongoing interaction and user trust (Shapiro and Lyakhovitsky, 2024).

2.2 Perceived intelligence

Perceived intelligence in chatbots refers to the degree to which users believe the system is capable of intelligent decision-making, reasoning, and adaptation. This concept is rooted in cognitive psychology, particularly Dual-Process Theory (Tai and Chen, 2024), which holds that human cognition operates through two distinct systems: System 1, which is fast, automatic, and intuitive, and System 2, which is slow, deliberate, and analytical. When interacting with systems they perceive as intelligent, users may initially rely on System 1 thinking, accepting the chatbot’s responses without critically analyzing every piece of information. A chatbot that adapts quickly to user input, solves complex problems, or demonstrates contextual understanding triggers this automatic response, leading users to assume the system is intelligent without deep scrutiny (Urbani et al., 2024). When a chatbot fails to meet expectations, however, users may switch to System 2 and evaluate its intelligence more carefully, which can lower their trust and satisfaction.

In Engineering Psychology, perceived intelligence is closely related to Trust in Automation Theory (Muir, 1987). According to this theory, user trust in automated systems develops based on three primary factors: reliability, predictability, and ease of use. Chatbots that integrate NLP and LLMs to understand and process user inputs effectively enhance perceived intelligence. These systems appear more reliable because they can predict and respond to user needs, identifying emotional cues and adjusting their tone or content accordingly (Yahagi et al., 2024). For instance, if a chatbot detects user frustration through tone or keywords and adjusts its response to become more empathetic or supportive, the system exhibits a higher level of perceived intelligence. This adaptability and context-aware interaction contribute to users trusting the system more, as it demonstrates an understanding of both the task and the user’s emotional state, thereby improving user satisfaction (Yuan et al., 2024).

Moreover, Automation Trust theories suggest that when users perceive a chatbot to be intelligent, they are more likely to develop long-term trust, especially in high-stakes environments such as healthcare or financial services, where reliability is critical. The chatbot’s perceived intelligence enables users to delegate more complex tasks without feeling the need to constantly monitor or question the system’s performance. This enhances both short-term satisfaction—due to smoother, more intuitive interactions—and long-term adoption—as users become more reliant on the system over time.

From the perspective of Flow Theory, perceived intelligence also plays a crucial role in maintaining user engagement. Flow occurs when individuals are fully immersed in an activity where the challenge of the task matches their skill level. Intelligent chatbots contribute to this state by adapting to users’ behaviors and keeping the interaction dynamic and engaging. For example, if a chatbot can answer a range of user queries and adapt its responses as the conversation progresses, it keeps the user in a state of flow (Zgonnikov et al., 2024). This balance between challenge and skill promotes sustained user interaction, as the chatbot continually meets the user’s needs without causing frustration or boredom. In turn, this sustained engagement enhances user experience by creating a seamless, enjoyable interaction, leading to higher satisfaction and a greater likelihood of continued use.
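The challenge-skill balance described above can be illustrated with a toy sketch of the flow channel. It assumes, purely for illustration, that task challenge and user skill are both expressed on a 0 to 1 scale and that “balanced” means falling within a hypothetical tolerance band; the function names `flow_state` and `adapt_challenge` are ours and do not come from this study or from any established library.

```python
# Toy sketch of the flow-channel idea: compare task challenge with user skill.
# Assumptions (hypothetical): both quantities lie on a 0-1 scale, and
# "balanced" means their gap is within a fixed tolerance band.


def flow_state(challenge: float, skill: float, tolerance: float = 0.15) -> str:
    """Classify an interaction moment as 'flow', 'anxiety', or 'boredom'."""
    gap = challenge - skill
    if gap > tolerance:
        return "anxiety"  # task demands exceed what the user can handle
    if gap < -tolerance:
        return "boredom"  # task is too easy relative to the user's skill
    return "flow"  # challenge and skill are roughly balanced


def adapt_challenge(challenge: float, skill: float, step: float = 0.1) -> float:
    """Nudge the chatbot's level of complexity toward the user's skill level."""
    state = flow_state(challenge, skill)
    if state == "anxiety":
        return max(0.0, challenge - step)  # simplify, but never below 0
    if state == "boredom":
        return min(1.0, challenge + step)  # add depth, but never above 1
    return challenge  # already in the flow channel: leave unchanged
```

For example, `adapt_challenge(0.9, 0.3)` lowers the challenge to about 0.8, moving the interaction back toward the flow channel. A real chatbot would also need a way to estimate both quantities from the conversation, which this sketch does not address.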

In conclusion, perceived intelligence in chatbots is a multifaceted concept that significantly influences user trust, satisfaction, and engagement (Bai et al., 2024). By leveraging cognitive psychology and engineering psychology principles such as Dual-Process Theory, Automation Trust, and Flow Theory, chatbot designers can create systems that not only meet user expectations for intelligent interaction but also foster deeper emotional and cognitive connections, ultimately improving user experience and long-term adoption of the technology.

2.3 The need for perceived empathy in chatbots

Empathy in human interactions involves the ability to understand and share another person’s emotional state, which is crucial for fostering strong interpersonal relationships. In psychology, empathy theory distinguishes between two primary types of empathy: cognitive empathy, which involves the intellectual understanding of others’ emotions, and emotional empathy, which pertains to the ability to feel and resonate with those emotions. In the context of chatbots, perceived empathy can be effectively simulated through anthropomorphic visual design and intelligent responses that mimic human emotional recognition (Feng et al., 2024).

Cognitive empathy in chatbots is achieved through techniques such as sentiment analysis and contextual response generation (Vizoso et al., 2023). Sentiment analysis enables the chatbot to assess the emotional tone of user inputs so that it can respond appropriately to the user’s current emotional state. For example, if a user expresses frustration, the chatbot can recognize this sentiment and respond by acknowledging the user’s feelings and offering solutions. Emotional empathy, by contrast, is conveyed through human-like expressive cues, such as facial expressions and tone of voice, which strengthen the user’s perception of the chatbot as an empathetic entity.
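As a rough illustration of the sentiment-analysis step, the sketch below scores a message’s tone with a tiny hand-written word list and prepends a matching acknowledgement to the task answer. The lexicons, reply templates, and function names are hypothetical; practical systems typically use trained sentiment models rather than keyword counts.

```python
import re

# Hypothetical mini-lexicons for illustration only; not a validated resource.
NEGATIVE = {"frustrated", "annoyed", "angry", "useless", "broken", "upset"}
POSITIVE = {"thanks", "great", "happy", "perfect", "love", "helpful"}


def sentiment_score(message: str) -> int:
    """Return (number of positive words) minus (number of negative words)."""
    words = re.findall(r"[a-z']+", message.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def empathetic_reply(message: str, solution: str) -> str:
    """Prefix the task answer with an acknowledgement matched to the detected tone."""
    score = sentiment_score(message)
    if score < 0:
        opener = "I'm sorry this has been frustrating. Let's fix it together."
    elif score > 0:
        opener = "Glad to hear that!"
    else:
        opener = "Sure, I can help with that."
    return f"{opener} {solution}"
```

For instance, “The app is broken and I'm frustrated” scores −2, so the reply opens with an acknowledgement of the user’s frustration before giving the solution.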

The field of affective computing, as defined by Picard (1997), focuses on developing systems that can recognize, interpret, and simulate human emotions. This interdisciplinary area bridges emotional psychology and artificial intelligence, enabling systems to respond in ways that resonate emotionally with users. For instance, when a chatbot detects signs of frustration or distress in a user’s voice or text input, it can adjust its responses to be more supportive or reassuring. Such capabilities strengthen the emotional connection between the user and the system, leading to increased user satisfaction and trust.

In engineering psychology, the importance of user-centered design principles becomes evident in the creation of empathetic systems. Chatbots designed to simulate empathy can significantly reduce user frustration by providing emotional support during interactions. By incorporating emotional cues—such as displaying concern or encouragement—these chatbots create a more personalized experience that alleviates users’ feelings of alienation and frustration. This is especially crucial in high-stress contexts, such as mental health support or customer service, where empathy plays a key role in calming users and addressing their concerns effectively (Hu and Sun, 2023).

Furthermore, the ability of chatbots to display perceived empathy can enhance user engagement and promote positive outcomes. For example, in mental health applications, an empathetic chatbot can make users feel heard and understood, which may encourage them to open up more about their feelings and challenges. Similarly, in customer service scenarios, a chatbot that acknowledges a user’s frustration can help defuse tension, leading to more productive interactions. Ultimately, by fostering perceived empathy through thoughtful design and intelligent responses, chatbots can significantly improve the overall user experience, resulting in higher satisfaction, increased trust, and a greater likelihood of continued user engagement (Marks, 2014).

In conclusion, integrating principles of empathy into chatbot design not only enhances the emotional intelligence of the system but also aligns with the fundamental goals of human-computer interaction: to create interfaces that feel intuitive, relatable, and supportive. Here, “integrating principles of empathy” specifically refers to incorporating insights from affective computing—focusing on emotional empathy, which is the ability to feel and resonate with user emotions—rather than the broader spectrum of empathy theories in psychology. This focus on emotional empathy is critical for developing chatbots that build trust, reduce user frustration, and improve overall satisfaction (Feng et al., 2024; Picard, 1997). Through this empathetic approach, chatbots can play a transformative role in various domains, including mental health, education, and customer service, ultimately enriching the interactions between users and technology.

2.4 Perceived trust in chatbots

Trust is a fundamental concept in psychology and human-computer interaction, playing a crucial role in shaping user experiences with technology. Trust theory, as articulated by Mayer et al. (1995), posits that trust is based on three main factors: ability, benevolence, and integrity. In the context of chatbots, perceived trust emerges from the system’s ability to meet user expectations reliably and consistently. Users assess a chatbot’s trustworthiness by evaluating the accuracy of its responses, the transparency of its underlying processes, and its capacity for perceived empathy.

From an engineering psychology perspective, perceived trust is closely linked to the concept of calibrated trust in automation. Lee and See (2004) describe calibrated trust as trust that matches a system’s actual capabilities; users develop it through repeated interactions in which they compare the system’s performance with their expectations and adjust their trust accordingly. This process gives users a more nuanced understanding of what the chatbot can and cannot do. For instance, a chatbot that consistently provides accurate and contextually relevant responses builds a stronger foundation of trust over time.
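The calibration process can be sketched as a simple learning rule. Assuming, hypothetically, that trust lies between 0 and 1 and moves a fixed fraction toward each observed outcome (an exponential moving average; both the rule and the `rate` parameter are our illustrative assumptions, not a model proposed by Lee and See), trust tends toward the chatbot’s actual success rate.

```python
# Hypothetical trust-calibration sketch: after each exchange, trust moves a
# fraction `rate` toward the observed outcome (1 = accurate, relevant answer;
# 0 = failure). This is an exponential moving average, used only for illustration.


def update_trust(trust: float, success: bool, rate: float = 0.2) -> float:
    """Return the updated trust level after one observed outcome."""
    outcome = 1.0 if success else 0.0
    return trust + rate * (outcome - trust)


def simulate(outcomes, initial_trust: float = 0.5, rate: float = 0.2) -> list:
    """Apply update_trust over a sequence of outcomes and record each new trust level."""
    trust = initial_trust
    history = []
    for ok in outcomes:
        trust = update_trust(trust, ok, rate)
        history.append(trust)
    return history
```

With outcomes that repeat four successes followed by one failure, `simulate` yields trust values that, once settled, average about 0.8. Under these assumptions trust tracks the chatbot’s 80% reliability instead of drifting toward complete trust, which is the intuition behind calibration.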

Anthropomorphic visual design can significantly enhance perceived trust by making the chatbot appear more approachable and emotionally intelligent. Features such as human-like facial expressions, gestures, and tone adjustments can create a socially transparent interface that fosters trust. By simulating human behaviors, chatbots can make their processes more understandable and predictable. When users perceive the chatbot as relatable and emotionally aware, they are more likely to trust it and engage in more open and honest interactions.

Perceived trust also has a profound impact on long-term engagement with chatbots. According to commitment-trust theory (Morgan and Hunt, 1994), trust influences users’ willingness to rely on technology over time. As users interact with chatbots that demonstrate reliability and empathy, their trust levels increase, making them more likely to integrate these systems into their daily routines and rely on them for decision-making. This reliance can foster a sense of loyalty toward the technology, resulting in enhanced user satisfaction and engagement.

Moreover, the interplay between trust and user experience can lead to beneficial outcomes across various domains. In customer service, for example, a trusted chatbot can enhance user satisfaction by providing prompt and accurate support, thereby reducing frustration and improving the overall experience (Lin et al., 2022). In mental health applications, perceived trust can encourage users to confide in the chatbot, leading to more meaningful and effective interactions (Mogaji et al., 2021). Thus, intelligent chatbots designed with anthropomorphic features not only have the potential to build long-term relationships with users but also to enhance the overall efficacy of the interaction by consistently demonstrating reliability, empathy, and transparency.

In conclusion, trust is a pivotal factor in the interaction between users and chatbots, influencing not only immediate user satisfaction but also the long-term adoption and integration of these technologies into users’ lives. By prioritizing design elements that foster trust—such as anthropomorphic features and intelligent responses—developers can create chatbots that not only meet user needs but also cultivate a supportive and reliable digital companion (Rietz et al., 2019). This focus on trust ultimately enriches the user experience, ensuring that chatbots serve as valuable assets in various applications, from customer service to mental health support.

2.5 The user experience of chatbots with anthropomorphic visual design

User Experience (UX) refers to a user’s perceptions and responses resulting from the use or anticipated use of a product, system, or service. This includes emotions, beliefs, preferences, perceptions, physical and psychological responses, behaviors, and accomplishments that occur before, during, and after use (Haugeland et al., 2022; Yang and Qi, 2024). The user-centered design philosophy, which is pivotal in UX, underscores the necessity of deeply understanding user needs, behaviors, and emotions. This comprehensive approach allows designers to optimize product interfaces and interactions, making them more intuitive and accessible. Within the realm of chatbots, the strategic combination of anthropomorphic visual design and intelligent behavior plays a vital role in enriching user experience, rendering interactions not only more natural but also significantly more engaging.

The Technology Acceptance Model (TAM) (Davis, 1989) offers a fundamental framework for examining how users come to adopt new technologies. Central to this model are two key concepts: perceived usefulness and perceived ease of use. The anthropomorphic visual design of chatbots enhances perceived ease of use by integrating familiar human-like interaction cues, such as smiling or nodding, which facilitate navigation and encourage user engagement. These human-like features reduce cognitive load by making the chatbot’s responses more relatable and easier to interpret, thus promoting comfort during interactions. In contrast, perceived intelligence enhances perceived usefulness by equipping chatbots with the capability to understand and effectively respond to complex user requests. For instance, a chatbot that adapts its dialogue based on user sentiment not only meets expectations but also reinforces the user’s sense of competence and satisfaction.

Moreover, the overall user experience is profoundly influenced by the chatbot’s ability to establish a balanced interaction between autonomy and control (Deci and Ryan, 1985). Effective chatbots that display anthropomorphic characteristics and intelligent behaviors empower users by giving them a sense of agency in the conversation while allowing the system enough autonomy to facilitate the dialogue. This balance is crucial in preventing feelings of frustration or helplessness often associated with technology interactions. When users feel their input is valued and the chatbot is responsive to their needs, they are more likely to engage deeply with the system, leading to richer and more meaningful interactions.

In addition to these principles, the notion of emotional design plays a significant role in enhancing user experience. Emotional design emphasizes creating products that resonate with users on an emotional level, fostering connections that go beyond mere functionality. When chatbots exhibit characteristics of empathy through their interactions, they not only improve user satisfaction but also cultivate long-term loyalty. The design of a chatbot that can recognize emotional cues—such as distress or joy—and respond appropriately fosters a deeper emotional connection, which is critical in domains such as mental health, where users often seek understanding and support.

Furthermore, social presence theory suggests that the perceived presence of others in communication can enhance user engagement (Parker et al., 1976). Chatbots that effectively use anthropomorphic features can create an illusion of social presence, making interactions feel more like conversations with a human. This illusion can significantly influence how users perceive the chatbot’s reliability and trustworthiness, leading to improved user experiences and a greater likelihood of continued interaction.

In conclusion, the integration of anthropomorphic visual design and intelligent behavior in chatbots significantly enhances user experience by fostering perceived empathy, trust, and overall satisfaction (Roesler et al., 2024). The psychological theories and principles that underpin these interactions highlight the necessity of developing systems that perform well while also connecting with users on emotional and cognitive levels. By prioritizing user-centered design principles and leveraging insights from psychology, developers can create chatbots that not only fulfill functional requirements but also build positive emotional connections with users. Such advancements are poised to expand the potential applications of chatbots across various sectors, including customer service, education, and healthcare, where empathetic and intelligent communication is paramount (Olszewski et al., 2024). By creating chatbots that truly understand and respond to user emotions and needs, we move toward a future where technology seamlessly integrates into our daily lives, enhancing our interactions and improving our overall quality of life (Sutoyo et al., 2019). The continued exploration of these principles will not only drive innovation in chatbot design but will also pave the way for deeper, more meaningful human-technology interactions that resonate with users and meet their evolving needs.

3 Research hypothesis and model development

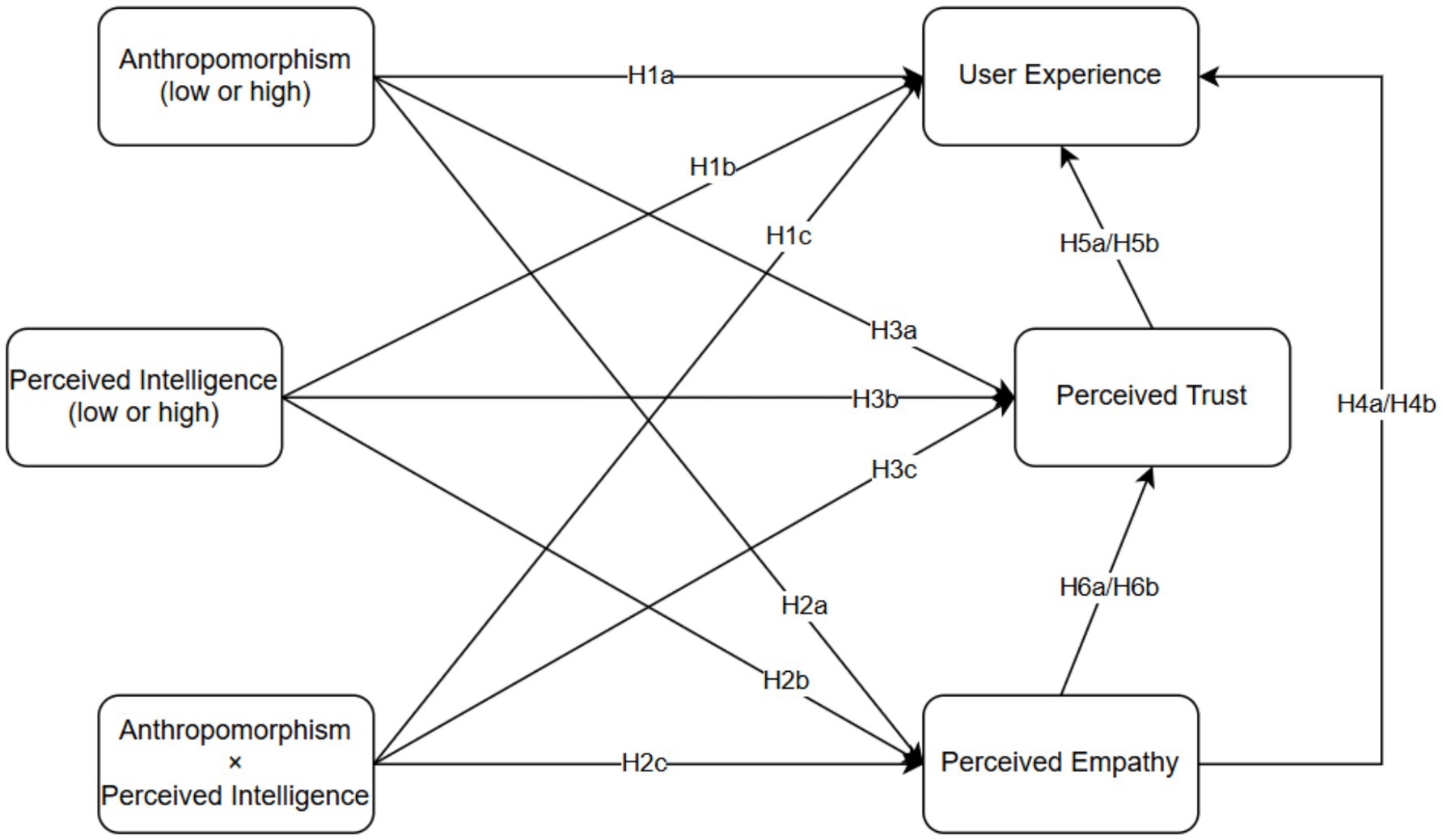

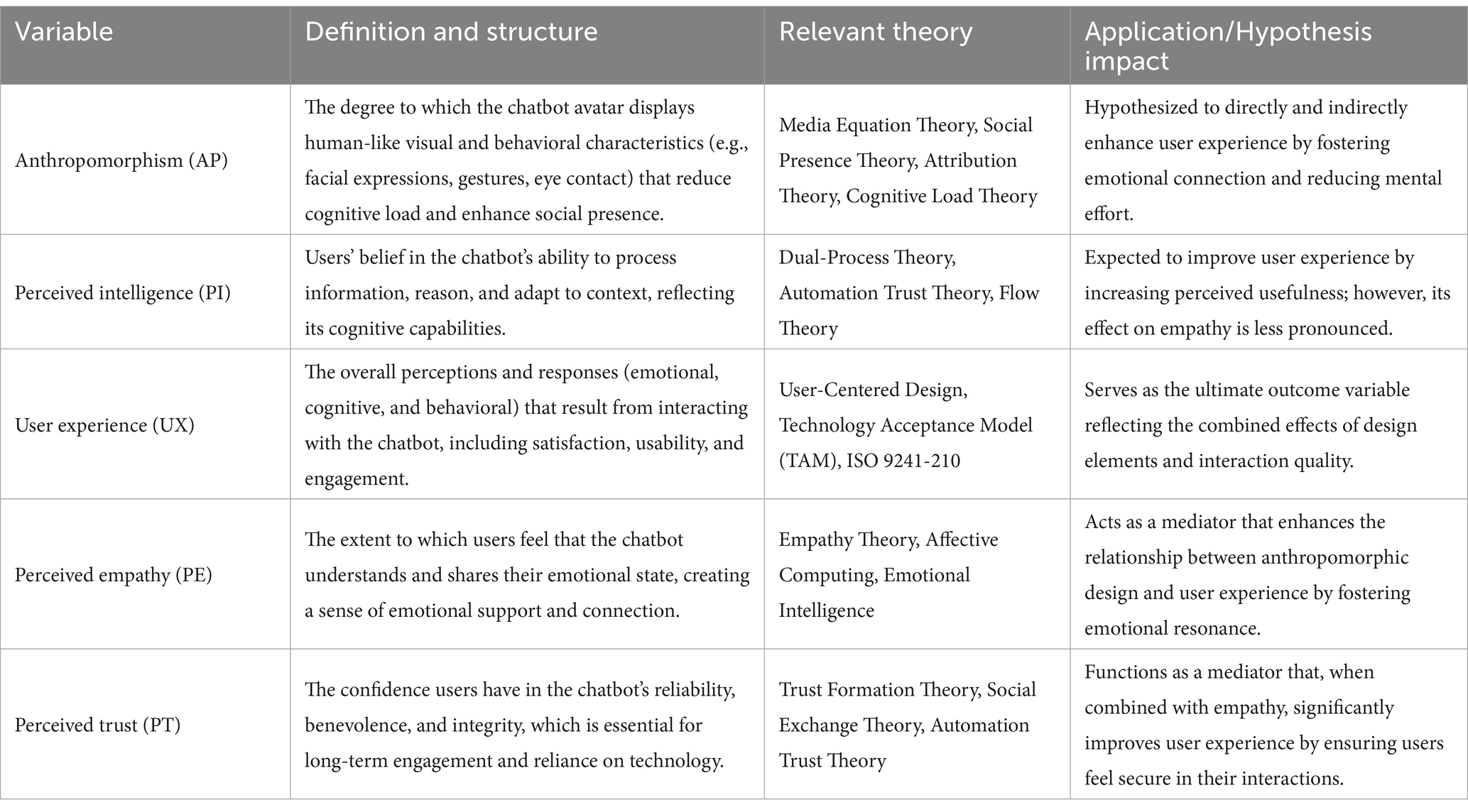

The purpose of our research is to explore the relationships among anthropomorphism, perceived intelligence, user experience, perceived empathy, and perceived trust. Specifically, we aim to analyze how anthropomorphic characteristics influence a user’s perceived empathy toward visually designed intelligent chatbot avatars, thereby affecting their overall user experience. For instance, when a chatbot avatar possesses more anthropomorphic attributes, users are more likely to experience empathy, which can enhance their affection and satisfaction with the interaction. Additionally, we investigate the interplay between perceived intelligence, perceived empathy, and perceived trust, examining whether high levels of perceived intelligence enhance a user’s empathy and trust, and how this relationship varies across different contexts. Our goal is to identify strategies for improving user experience by enhancing these factors and determining which elements are key drivers that can be reinforced through design, interaction, or other means to elevate the user experience with visual design chatbot avatars.
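One way to write the mediation structure described above, assuming linear relations, omitted intercepts, and a single predictor $X$ (either anthropomorphism or perceived intelligence), is a parallel two-mediator model with perceived empathy (PE) and perceived trust (PT). The symbols are illustrative; the authors’ SEM may differ in detail, for example if perceived empathy is also allowed to predict perceived trust.

$$
\begin{aligned}
\mathrm{PE} &= a_1 X + e_1 \\
\mathrm{PT} &= a_2 X + e_2 \\
\mathrm{UX} &= c' X + b_1\,\mathrm{PE} + b_2\,\mathrm{PT} + e_3 \\
\text{indirect effect} &= a_1 b_1 + a_2 b_2, \qquad \text{total effect } c = c' + a_1 b_1 + a_2 b_2
\end{aligned}
$$

Here $c'$ is the direct effect of $X$ on UX. In linear models with observed variables, a nonsignificant $c'$ together with a significant indirect effect $a_1 b_1 + a_2 b_2$ is the pattern usually interpreted as the influence of $X$ on UX being carried by the mediators.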

Previous research has highlighted the significant role of anthropomorphism and perceived intelligence in enhancing user experience (UX) when interacting with intelligent systems. Epley et al. (2007) found that when digital agents or chatbots are designed with human-like characteristics, users tend to form more positive evaluations, which contributes to better overall user satisfaction and engagement. This is because anthropomorphized avatars foster a sense of social presence and relatability, enhancing users’ emotional responses during their interactions. Moreover, Go and Sundar (2019) show that the perceived intelligence of chatbots significantly influences UX, particularly by increasing users’ perceptions of the system’s effectiveness and capability. When users believe that a chatbot can process information and deliver relevant responses intelligently, the interaction becomes more seamless and enjoyable. Finally, Nass and Moon (2000) demonstrate that the combination of anthropomorphism and intelligence in chatbot design can have a synergistic effect, improving the overall user experience by providing both emotional engagement and practical functionality. Users are more likely to have positive interactions when they feel both emotionally connected to the chatbot and confident in its ability to handle complex tasks. For these reasons, we hypothesize that the degree of anthropomorphism and the perceived intelligence of chatbot avatars, as well as their interaction, positively affect user experience.

H1a: Increased anthropomorphism of visually designed intelligent chatbot avatars positively affects the avatar’s user experience (UX).

H1b: Higher perceived intelligence of visually designed intelligent chatbot avatars positively affects the avatar’s user experience (UX).

H1c: The interaction between anthropomorphism and perceived intelligence positively affects the avatar’s user experience (UX).

Previous studies have consistently highlighted the impact of anthropomorphism on users’ perception of empathy in interactions with intelligent agents. When avatars are designed with more human-like features, users are more likely to attribute empathetic qualities to them, as these designs promote a sense of familiarity and emotional connection. Research has shown that anthropomorphic avatars can create a stronger sense of social presence, which in turn enhances perceived empathy (Ryan and Deci, 2000). Similarly, perceived intelligence has been found to contribute to an avatar’s ability to understand and respond to users’ needs, further strengthening the empathy users experience during interactions. Some studies suggest that combining anthropomorphism with high perceived intelligence can have a synergistic effect, strengthening users’ perception of the avatar’s empathetic abilities. Following these assumptions, we hypothesize the following.

H2a: Increased anthropomorphism of visually designed intelligent chatbot avatars positively affects the avatar’s perceived empathy (PE).

H2b: Higher perceived intelligence of visually designed intelligent chatbot avatars positively affects the avatar’s perceived empathy (PE).

H2c: The interaction between anthropomorphism and perceived intelligence positively affects the avatar’s perceived empathy (PE).

Research has consistently demonstrated the importance of anthropomorphism and perceived intelligence in fostering trust between users and digital agents. For instance, De Visser et al. (2016) indicate that anthropomorphized avatars can enhance trust by making users feel more connected and understood in their interactions. This human-like appearance and behavior create a sense of familiarity, which is crucial for building trust in intelligent systems. Perceived intelligence also plays a critical role in shaping trust. As Hoff and Bashir (2015) found, users who perceive a chatbot or digital assistant as more intelligent tend to rely on it more, particularly in decision-making or problem-solving contexts. Higher perceived intelligence is often associated with greater competence, which increases trust in the system’s abilities. Furthermore, Lee and Moray (1994) suggest that the relationship between trust and perceived intelligence is particularly strong when users encounter complex tasks, as the appearance of intelligence reassures users that the system can handle intricate or challenging situations effectively. Accordingly, we hypothesize the following.

H3a: Increased anthropomorphism of visually designed intelligent chatbot avatars positively affects the avatar’s perceived trust (PT).

H3b: Higher perceived intelligence of visually designed intelligent chatbot avatars positively affects the avatar’s perceived trust (PT).

H3c: The interaction between anthropomorphism and perceived intelligence positively affects the avatar’s perceived trust (PT).

Previous research has extensively explored the role of anthropomorphism and perceived intelligence in shaping user experience (UX), perceived empathy (PE), and perceived trust (PT) in human-computer interactions. Studies have shown that anthropomorphic design elements, such as human-like avatars, enhance user engagement and foster more positive interactions by increasing perceived social presence. Nowak and Biocca (2003) suggest that users often attribute human-like characteristics to avatars, which can lead to higher levels of trust and empathy toward digital agents. Furthermore, Waytz et al. (2010) demonstrated that when technology such as a chatbot appears more anthropomorphized, users tend to feel more connected and empathetic during interactions. In parallel, perceived intelligence has been shown to significantly shape user perceptions of competence and reliability in digital agents. Users tend to place more trust in systems that seem intelligent, as such systems are associated with better performance and decision-making abilities. For example, Lee and See (2004) found that perceived intelligence in automated systems enhances user trust, especially in complex or unfamiliar tasks. Accordingly, we propose the following mediation hypotheses for chatbots.

H4a: Perceived empathy (PE) positively mediates the relationship between anthropomorphism and the avatar’s user experience (UX).

H4b: Perceived empathy (PE) positively mediates the relationship between perceived intelligence and the avatar’s user experience (UX).

H5a: Perceived trust (PT) positively mediates the relationship between anthropomorphism and the avatar’s user experience (UX).

H5b: Perceived trust (PT) positively mediates the relationship between perceived intelligence and the avatar’s user experience (UX).

H6a: Perceived empathy (PE) and perceived trust (PT) serially and positively mediate the relationship between anthropomorphism and the avatar’s user experience (UX), along the path anthropomorphism → PE → PT → UX.

H6b: Perceived empathy (PE) and perceived trust (PT) serially and positively mediate the relationship between perceived intelligence and the avatar’s user experience (UX), along the path perceived intelligence → PE → PT → UX.

The research model and the corresponding theoretical variables are depicted in Figure 2 and Table 1, respectively.

4 Methods

4.1 Participants

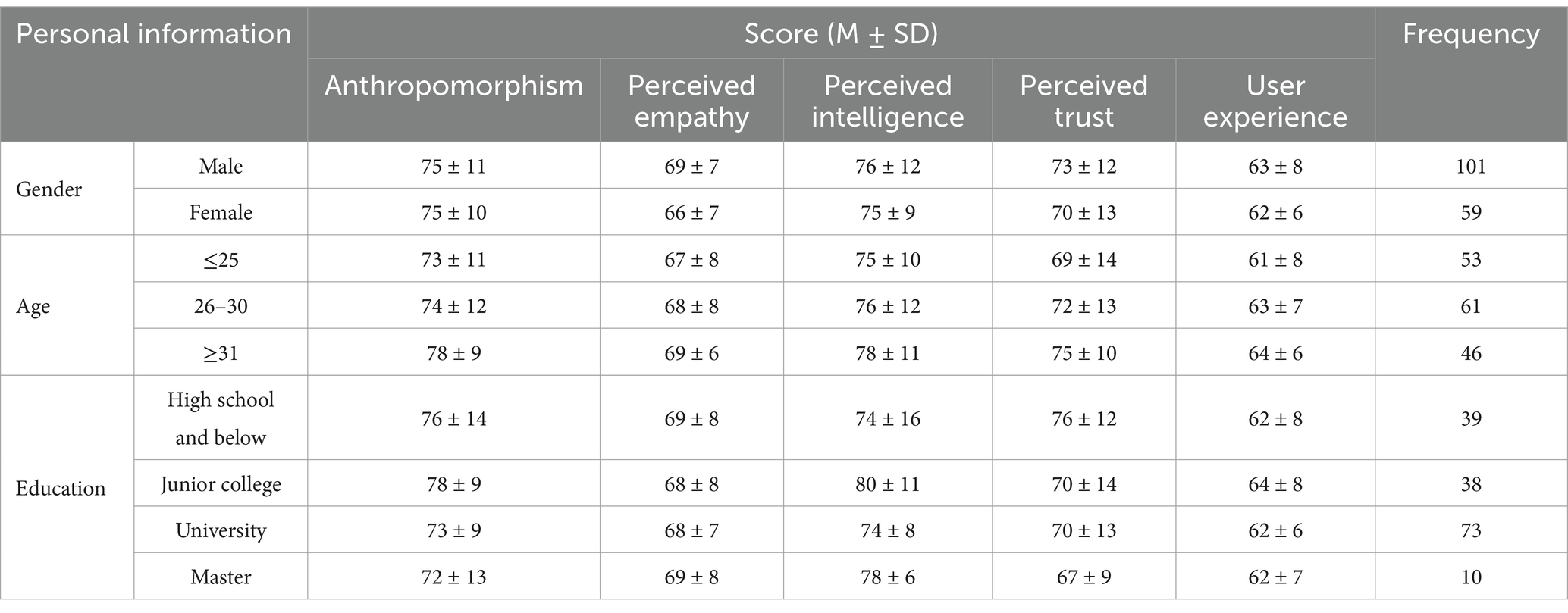

To test the hypotheses of this study, we conducted an in-person experiment at the Beijing Institute of Technology, followed by a survey. Participants were recruited from within the university; to ensure relevant and recent experience with chatbot interactions, the inclusion criterion required that they had interacted with intelligent chatbots at least five times per day over the past 3 months. Participation was voluntary, and participants received gift cards worth approximately 200 RMB. A total of 179 participants took part in the experiment; after 19 were excluded because of missing data and other issues, 160 valid participants remained. The final sample consisted of 101 female and 59 male participants (mean age = 26 years, SD = 5). Table 2 presents the demographic characteristics of the participants and their scores on the various scales. The recruitment and participation procedures followed relevant privacy protocols, as approved by the appropriate data protection body. Informed consent was obtained from all participants, who were made aware of their right to withdraw from the study at any time. All data were handled with strict confidentiality and anonymized to protect participants’ privacy.

4.2 Experimental design and procedure

The primary aim of this study was to investigate the effects of anthropomorphic visual design and perceived intelligence on user experiences during human-chatbot interactions. Specifically, we sought to examine how these elements influence perceived empathy, perceived trust, and overall user experience. To test our hypotheses, we introduced two independent variables: anthropomorphism, manipulated at two levels (high and low), and perceived intelligence, also manipulated at two levels (high and low). These levels were operationalized through variations in the visual design of the chatbot avatars.

The 2 × 2 within-subjects design exposed each participant to all four conditions, enabling more precise comparisons by controlling for inter-individual variability and increasing the statistical power of our analysis. To mitigate order effects, the sequence of the four conditions was determined using a Latin square design, which ensured that each condition was equally represented in each presentation position, minimizing order-related biases and enhancing the internal validity of the study.
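The text does not specify which Latin square was used. For four conditions, one common option is a Williams (balanced) Latin square, in which each condition appears once in every serial position and immediately precedes every other condition exactly once. The Python sketch below assumes that design; the condition labels and the cyclic assignment of participants to rows (participant p receives row p mod 4) are hypothetical illustrations, not the study’s actual allocation.

```python
from collections import Counter

# Hypothetical labels for the four cells of the 2 x 2 design.
CONDITIONS = ["LowA-LowI", "LowA-HighI", "HighA-LowI", "HighA-HighI"]


def williams_square(n: int) -> list[list[int]]:
    """Return an n x n balanced Latin square (Williams design) for even n."""
    if n < 2 or n % 2:
        raise ValueError("this sketch handles only an even number of conditions")
    # The first row follows the pattern 0, 1, n-1, 2, n-2, ...
    first, lo, hi = [0], 1, n - 1
    take_lo = True
    while len(first) < n:
        if take_lo:
            first.append(lo)
            lo += 1
        else:
            first.append(hi)
            hi -= 1
        take_lo = not take_lo
    # Row r adds r (mod n) to every entry of the first row.
    return [[(c + r) % n for c in first] for r in range(n)]


def assign_orders(n_participants: int, conditions: list[str]) -> list[list[str]]:
    """Cycle participants through the rows of the square."""
    square = williams_square(len(conditions))
    return [[conditions[c] for c in square[p % len(square)]]
            for p in range(n_participants)]


orders = assign_orders(160, CONDITIONS)
for position in range(len(CONDITIONS)):
    # Each condition should occupy each serial position equally often.
    print(position + 1, Counter(order[position] for order in orders))
```

With 160 participants, each of the four orders is used 40 times, so every condition occupies each serial position 40 times.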

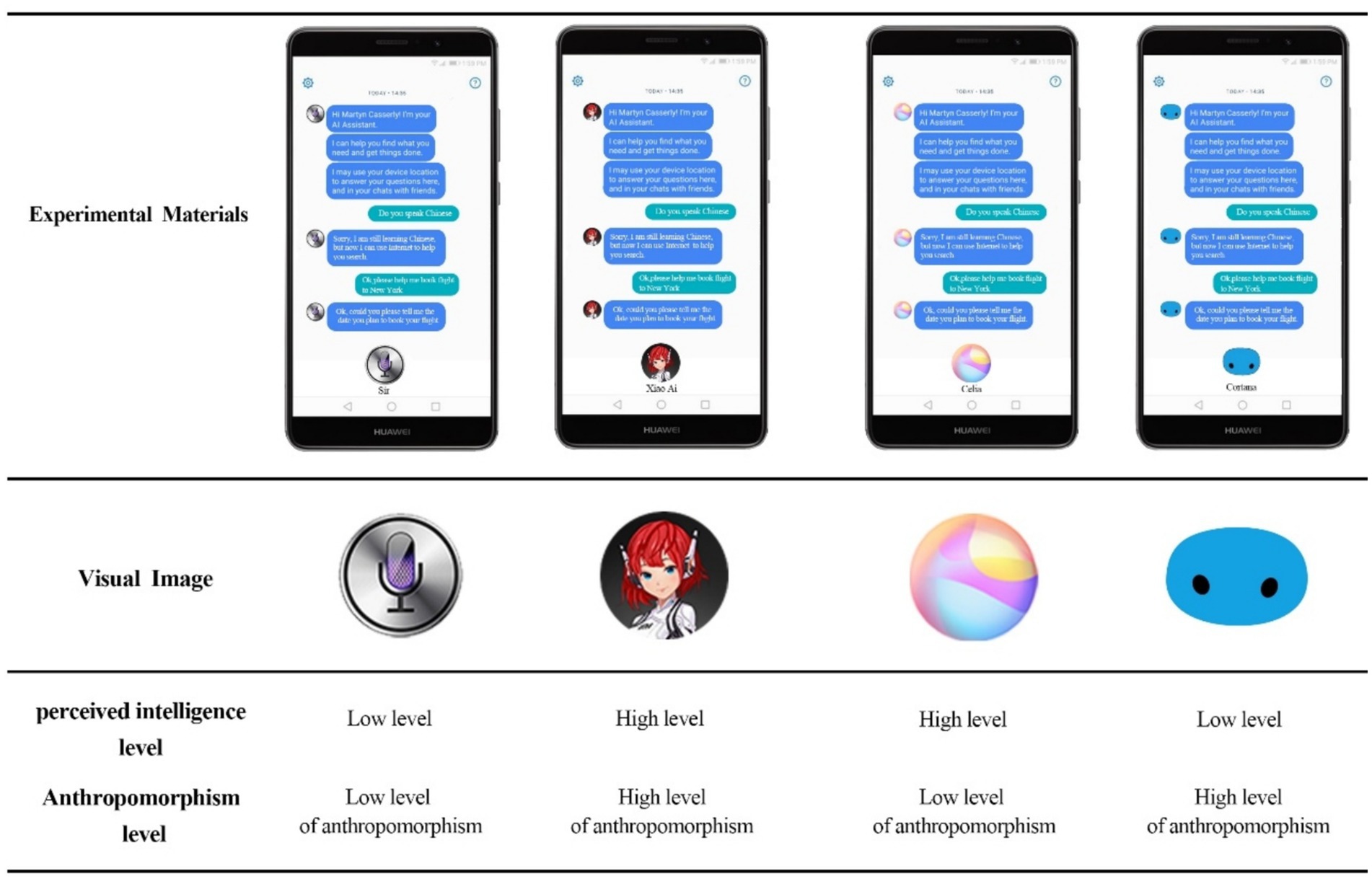

In this research, four chatbot avatar designs were developed as stimulus materials to represent the experimental conditions. The avatars were built on the same platform but differed in visual design to represent the combinations of anthropomorphism and perceived intelligence. As illustrated in Figure 3, the visual designs were presented dynamically to enhance the realism of the interactions. To simulate real-world scenarios, each visual design was paired with a 30-s human-chatbot conversation drawn from a large-scale Chinese short-text conversation dataset. In the questionnaire, each chatbot avatar was also accompanied by a contextual digital scenario image to help participants understand the interaction setting. As shown in Figure 3, the experiment was conducted on a HUAWEI Mate 20 X smartphone (7.2-inch screen, 2244 × 1080 pixels), which displayed the interaction demos for the four chatbot avatar designs. Each participant engaged with all four conditions, with the order of presentation counterbalanced using a Latin square design to control for order effects.

Before the experiment, participants received a brief introduction to chatbots, covering their definitions, functions, and the nature of human-chatbot interactions. To prevent potential biases arising from specific designs, participants were shown images of chatbot avatars that were not part of the experimental conditions. This approach ensured that participants had a general understanding of chatbot avatars and could approach each interaction with an open mindset. Prior to the formal experimental session, the principal investigator conducted short pre-interviews with participants. These interviews aimed to capture participants’ initial impressions and expectations regarding the chatbot avatars, providing qualitative data that enriched the interpretation of the experimental results.

During the main experimental procedure, participants watched demonstration videos of human-chatbot interactions that emphasized the visual design of the chatbot avatars. After each video, participants completed a questionnaire hosted on the Wenjuanxing platform that measured the dependent variables: perceived empathy, perceived trust, and user experience. This process was repeated for each of the four experimental conditions, so every participant viewed all chatbot designs and completed the corresponding questionnaires. The 2 × 2 within-subjects design, combined with Latin square counterbalancing, made the data collected from each participant comparable across conditions, strengthening the study’s internal validity. By integrating quantitative questionnaire data with qualitative insights from the pre-interviews, the study sought a comprehensive and nuanced understanding of how varying levels of anthropomorphism and intelligence in chatbot avatars affect key aspects of user experience during human-chatbot interactions.

4.3 Questionnaire and measures

For each of the four visual designs of intelligent chatbot avatars, participants rated items on a 5-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree). The measures covered five key variables: anthropomorphism, perceived intelligence, perceived trust, perceived empathy, and user experience.

Anthropomorphism was measured using the 6-item Perceived Anthropomorphism of Personal Intelligent Agents Scale (Moussawi and Koufaris, 2019), which gauges participants’ perceptions of the anthropomorphic qualities of chatbot avatars with different visual designs.

Perceived intelligence was assessed with a single item on a 5-point Likert scale. Participants rated the statement, “This chatbot’s digital avatar appears highly intelligent” from 1 (strongly disagree) to 5 (strongly agree). This scale showed high reliability with a Cronbach’s alpha of 0.917.

Perceived trust was measured using the 12-item Human-Machine Trust Scale (Jian et al., 2000), which allowed for an assessment of participants’ confidence in the chatbot’s reliability, integrity, and trustworthiness.

Perceived empathy was evaluated using the 8-item Robot’s Perceived Empathy (RoPE) understanding scale, which assesses participants’ perceptions of the chatbot’s ability to understand and emotionally connect with them.

Lastly, user experience was measured with the User Experience Questionnaire (UEQ), a comprehensive tool that evaluates multiple aspects of user experience, including attractiveness, perspicuity, efficiency, dependability, stimulation, and novelty. The UEQ is well-established and widely validated for its reliability and effectiveness in capturing the multifaceted nature of user experience.

5 Results

5.1 Manipulation check

Paired-samples t-tests confirmed that both manipulations, the perceived anthropomorphism (low vs. high) and the perceived intelligence (low vs. high) of the intelligent chatbot avatars, were successful. For anthropomorphism, the high-level condition (M = 78.46, SD = 12.99) was rated as significantly more anthropomorphic than the low-level condition (M = 75.00, SD = 15.91), t (319) = −4.34, p < 0.001. Similarly, the intelligence manipulation was validated: the high-level condition (M = 80.25, SD = 16.38) received higher perceived intelligence ratings than the low-level condition (M = 76.76, SD = 17.23), t (319) = −3.70, p < 0.001.
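A paired-samples t statistic divides the mean within-participant difference by its standard error, with n − 1 degrees of freedom. The minimal Python sketch below uses toy ratings rather than study data. Subtracting high-level from low-level scores yields a negative t, which is one way negative values such as those above can arise. In practice, `scipy.stats.ttest_rel` returns the same statistic together with a p-value.

```python
import math
from statistics import mean, stdev


def paired_t(x: list[float], y: list[float]) -> tuple[float, int]:
    """Paired-samples t statistic for the differences x - y, and its df."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(x, y)]
    se = stdev(diffs) / math.sqrt(len(diffs))  # standard error of the mean difference
    return mean(diffs) / se, len(diffs) - 1


# Toy ratings (not study data) from five participants under each level.
high = [4, 5, 3, 4, 5]
low = [3, 4, 3, 3, 4]
t, df = paired_t(low, high)  # low minus high, so t is negative here
print(f"t({df}) = {t:.2f}")  # prints t(4) = -4.00
```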

5.2 Main and interaction effect of anthropomorphism and intelligence

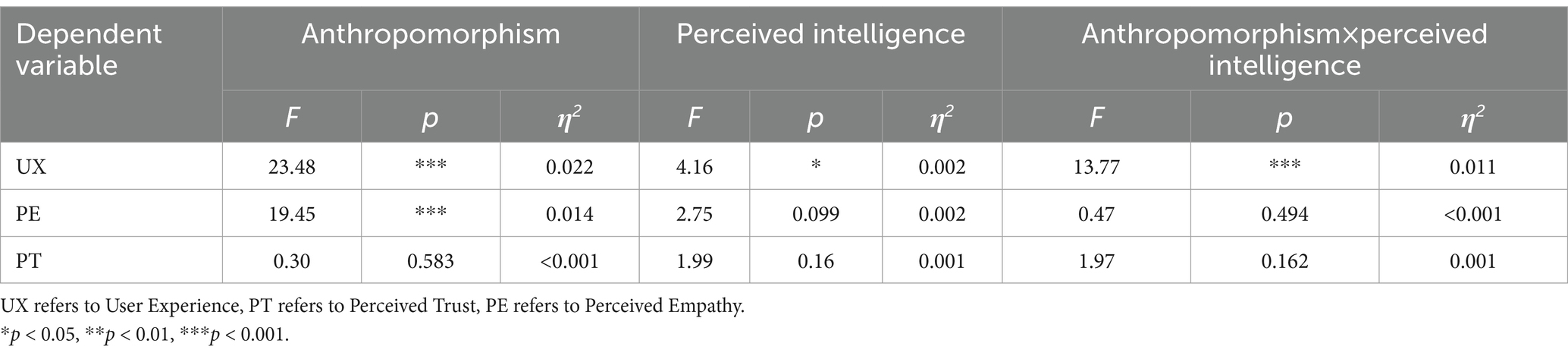

Using repeated measures ANOVA, we examined the main effects and interaction effects of anthropomorphic visual design and intelligence levels on user experience (UX), perceived trust (PT), and perceived empathy (PE). The results are summarized in Table 3.
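In a 2 × 2 within-subjects design every effect has one numerator degree of freedom, so its repeated measures F equals the squared one-sample t statistic of a per-participant contrast score, with df_error = n − 1, and partial η² = SS_effect / (SS_effect + SS_error) = F / (F + df_error). The Python sketch below computes the three effects this way on toy cell means for three hypothetical participants; it illustrates the arithmetic and is not the SPSS procedure used in the study. Some software reports generalized rather than partial eta squared, which is usually smaller for within-subjects effects.

```python
from statistics import mean, variance

# Each participant's cell means, keyed (anthropomorphism, intelligence).
# These toy values are illustrative, not data from the study.
data = [
    {("L", "L"): 3, ("L", "H"): 3, ("H", "L"): 4, ("H", "H"): 5},
    {("L", "L"): 2, ("L", "H"): 3, ("H", "L"): 3, ("H", "H"): 4},
    {("L", "L"): 3, ("L", "H"): 2, ("H", "L"): 4, ("H", "H"): 4},
]

# Contrast weights for the two main effects and the interaction.
CONTRASTS = {
    "anthropomorphism": {("L", "L"): -0.5, ("L", "H"): -0.5, ("H", "L"): 0.5, ("H", "H"): 0.5},
    "intelligence": {("L", "L"): -0.5, ("L", "H"): 0.5, ("H", "L"): -0.5, ("H", "H"): 0.5},
    "interaction": {("L", "L"): 1, ("L", "H"): -1, ("H", "L"): -1, ("H", "H"): 1},
}


def rm_effect(rows, weights):
    """F(1, n - 1) and partial eta squared for one single-df within-subjects effect."""
    scores = [sum(w * row[cell] for cell, w in weights.items()) for row in rows]
    n = len(scores)
    f = n * mean(scores) ** 2 / variance(scores)  # equals the squared one-sample t
    df_error = n - 1
    eta_p2 = f / (f + df_error)  # SS_effect / (SS_effect + SS_error)
    return f, df_error, eta_p2


for name, weights in CONTRASTS.items():
    f, df_error, eta = rm_effect(data, weights)
    print(f"{name}: F(1, {df_error}) = {f:.2f}, partial eta^2 = {eta:.3f}")
```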

For user experience (UX), the main effect of anthropomorphic visual design was significant, F (1, 159) = 23.48, p < 0.001, partial η2 = 0.022, indicating a small effect; accordingly, we accept hypothesis H1a. Perceived intelligence also had a significant main effect on UX, F (1, 159) = 4.16, p < 0.05, partial η2 = 0.002; hence, we accept hypothesis H1b. The interaction between anthropomorphic visual design and perceived intelligence was also significant, F (1, 159) = 13.77, p < 0.001, partial η2 = 0.011, so we accept hypothesis H1c.

Figure 4 shows the main effects and interaction plots for UX, PT, and PE, illustrating the distinct trends in the data. Based on the repeated measures ANOVA results, we accepted Hypotheses 1 and 2a, affirming the positive effects of anthropomorphism and perceived intelligence on user experience and of anthropomorphism on perceived empathy. However, we rejected Hypotheses 2b and 2c, which proposed significant effects of perceived intelligence, and of its interaction with anthropomorphism, on perceived empathy. Hypothesis 3 was also rejected, indicating that neither anthropomorphism nor perceived intelligence significantly affects perceived trust. These insights contribute to a deeper understanding of how visual design and perceived intelligence interact to shape user experiences in chatbot interactions.

For perceived empathy (PE), the main effect of anthropomorphic visual design was significant, F (1, 159) = 19.45, p < 0.001, partial η2 = 0.014; thus, we accept hypothesis H2a. However, the main effect of perceived intelligence on PE was not significant, F (1, 159) = 2.75, p = 0.099, partial η2 = 0.002, so we reject H2b. Similarly, the interaction between anthropomorphic visual design and perceived intelligence was not significant, F (1, 159) = 0.47, p = 0.494, partial η2 < 0.001; hence, we reject H2c.

For perceived trust (PT), the main effect of anthropomorphic visual design was not significant, F (1, 159) = 0.30, p = 0.583, partial η2 < 0.001. Similarly, the main effect of perceived intelligence on PT was also not significant, F (1, 159) = 1.99, p = 0.16, partial η2 = 0.001. The interaction effect between anthropomorphic visual design and perceived intelligence was also not significant, F (1, 159) = 1.97, p = 0.162, partial η2 = 0.001. Therefore, we must reject Hypothesis 3.

In summary, our findings indicate that the level of anthropomorphic visual design significantly affects both user experience (UX) and perceived empathy (PE). Specifically, as the anthropomorphism of chatbot avatars increases, users report higher UX and PE, suggesting that human-like features resonate with users and foster deeper engagement. Perceived intelligence, in contrast, significantly influenced UX but had no significant effect on PE, suggesting that users value the chatbot’s functionality without necessarily feeling greater empathy toward it. Moreover, the interaction between anthropomorphic visual design and perceived intelligence significantly affected UX, underscoring the importance of integrating both elements to enhance user engagement. However, this interaction did not extend to perceived empathy or perceived trust (PT), indicating that although UX benefits from the combination of these factors, users’ feelings of trust and empathy may depend on other design aspects or personal experiences.

5.3 Results of the confirmatory factor analysis (CFA)

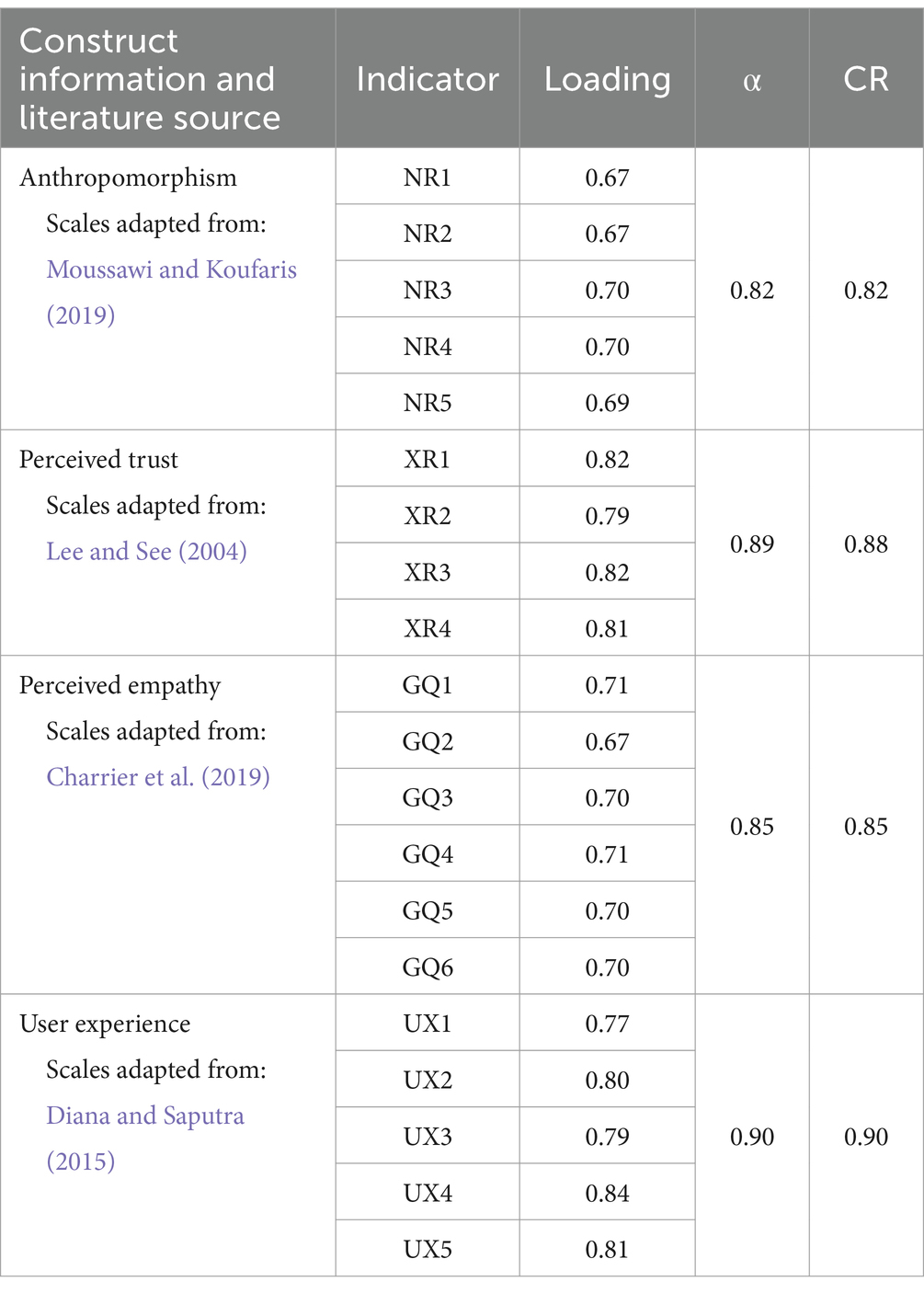

The validation measures for the sample were computed in SPSS 26.0: Cronbach’s Alpha (threshold > 0.7) was used to assess the reliability of each construct, and items with factor loadings below 0.4 were removed (see Table 4).
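Cronbach’s alpha compares the sum of the item variances with the variance of the total score: α = k / (k − 1) × (1 − Σ item variances / total-score variance) for k items. The Python sketch below implements that formula on toy responses (not study data); it is not SPSS’s code, although SPSS’s reliability procedure computes the same coefficient. Because the formula needs at least two items, the function rejects single-item scales.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha, where items[j][i] is respondent i's score on item j."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha is defined only for scales with two or more items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs a score from every respondent")
    totals = [sum(item[i] for item in items) for i in range(n)]  # scale score per respondent
    item_var = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


# Toy responses (not study data): three items answered by four respondents.
scale = [[1, 2, 3, 4], [2, 3, 4, 5], [1, 3, 3, 5]]
print(round(cronbach_alpha(scale), 3))  # prints 0.981
```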

For anthropomorphism, Cronbach’s Alpha was 0.82, and the F-value was 3.88 (p = 0.004). Confirmatory Factor Analysis (CFA) confirmed the validity of all five items, with a mean score of M = 3.84 (SD = 0.003). This suggests that participants rated the anthropomorphic characteristics of the chatbot avatars more highly than their trust in and empathy toward them. For perceived trust, Cronbach’s Alpha was 0.89, and the F-value was 8.46 (p < 0.001). CFA validated all four items, with factor loadings above 0.70. The mean score was M = 2.77 (SD = 0.006), indicating a lower level of trust in the chatbot avatars.

For perceived empathy, Cronbach’s Alpha was 0.85, and the F-value was 10.308 (p < 0.001). All six items were confirmed by CFA with loadings above 0.65. The mean score was M = 3.81 (SD = 1.01), showing that the perception of empathy toward the chatbot avatars was higher than the perception of trust. For user experience, Cronbach’s Alpha was 0.72, and the F-value was 268.84 (p < 0.001). CFA validated all ten items, confirming the reliability of the construct. The mean score for user experience was M = 3.17 (SD = 0.37), suggesting that the overall user experience with the chatbot avatars was average. Full details of the questionnaire quality criteria are reported in Table 4.

5.4 Results of the structural equation models

To test our research hypotheses, we used Amos 24.0 to build a structural equation model with empathy and trust as mediating variables, examining the mechanisms by which the anthropomorphism and perceived intelligence of chatbots influence user experience. The model fit indices were χ2/df = 2.41, GFI = 0.94, CFI = 0.97, AGFI = 0.92, RMSEA = 0.05, and RMR = 0.03, indicating good model fit.
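RMSEA and CFI can both be computed from chi-square statistics. RMSEA = √(max(χ² − df, 0) / (df (N − 1))), which is equivalent to √(max(χ²/df − 1, 0) / (N − 1)), so the same χ²/df ratio yields a smaller RMSEA in a larger sample. CFI compares the model’s noncentrality (χ² − df) with that of a baseline (independence) model. The Python sketch below uses hypothetical chi-square values; programs differ slightly in conventions (for example, N versus N − 1), so it may not reproduce Amos output exactly.

```python
import math


def rmsea(chi2: float, df: int, n: int) -> float:
    """Root mean square error of approximation (N - 1 convention)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_m: float, df_m: int, chi2_b: float, df_b: int) -> float:
    """Comparative fit index from the target (m) and baseline (b) models."""
    d_m = max(chi2_m - df_m, 0.0)  # target-model noncentrality
    d_b = max(chi2_b - df_b, d_m)  # baseline noncentrality, never below d_m
    return 1.0 if d_b == 0 else 1.0 - d_m / d_b


# Hypothetical chi-square values, not the study's output.
print(round(rmsea(100.0, 50, 101), 3))      # prints 0.1
print(round(cfi(60.0, 50, 550.0, 60), 3))  # prints 0.98
```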

The evaluation of this model followed a two-step process (Hair et al., 2012). The first step assessed the measurement (outer) model to establish the reliability and validity of the latent variables. The second step evaluated the structural (inner) model and its relationships (Henseler et al., 2009). The outer model evaluation included only first-order constructs, and its quality criteria are presented in Table 4. We used standardized indicator loadings to assess indicator reliability, with all loadings exceeding the minimum threshold of 0.700 (Hulland, 1999). The internal consistency of the latent variables was assessed with composite reliability (Hair et al., 2012; Henseler et al., 2009); values above 0.700 are considered acceptable (Bagozzi and Yi, 1988), confirming the internal consistency of the latent variables. We applied the PLS algorithm with a path-weighting scheme and 300 iterations to evaluate the structural model and used a bootstrap procedure with 5,000 samples to determine significance levels. The results of the structural model are summarized in Figure 5.
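Composite reliability for a construct with standardized loadings λᵢ is commonly computed as (Σλᵢ)² / ((Σλᵢ)² + Σ(1 − λᵢ²)), assuming uncorrelated measurement errors. The short Python sketch below applies that formula to hypothetical loadings and checks the result against the 0.700 threshold mentioned above.

```python
def composite_reliability(loadings: list[float]) -> float:
    """Composite reliability from standardized loadings (uncorrelated errors assumed)."""
    total = sum(loadings)
    # For a standardized indicator, error variance is 1 minus the squared loading.
    error = sum(1 - lam ** 2 for lam in loadings)
    return total ** 2 / (total ** 2 + error)


# Hypothetical loadings for one construct, not values from the study's tables.
loadings = [0.8, 0.8, 0.8]
cr = composite_reliability(loadings)
print(round(cr, 3), cr > 0.700)  # prints 0.842 True
```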

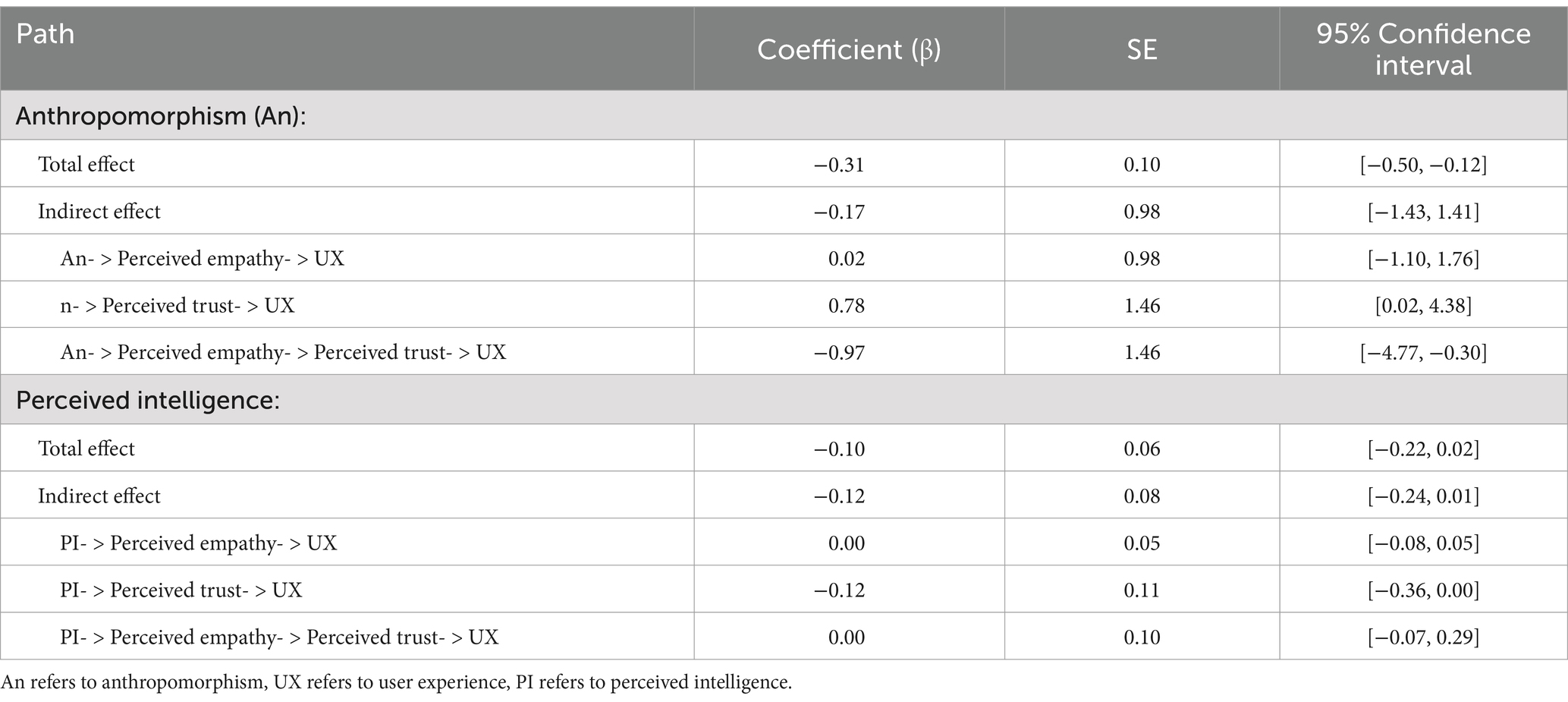

The structural model shows that the direct relationship between the anthropomorphic visual design of chatbot avatars and user experience was not significant (β = −0.10, p > 0.05). Similarly, the direct relationship between the perceived intelligence of chatbot avatars and user experience was not significant (β = 0.01, p > 0.05). The path analysis further shows that higher levels of anthropomorphism in the visual design of chatbot avatars led to higher perceived empathy (β = 0.95, p < 0.001) and higher perceived trust (β = 0.66, p < 0.05). Moreover, perceived empathy had a significant negative effect on perceived trust (β = −0.86, p < 0.01), and perceived intelligence negatively predicted perceived trust (β = −0.13, p < 0.05). Perceived trust was positively related to user experience (β = 0.87, p < 0.001).

Next, we tested the mediation effects of empathy and trust to explore the mechanisms by which the anthropomorphism and perceived intelligence of chatbot avatars influence user experience. The results are shown in Table 5. The indirect effect of anthropomorphism on user experience through perceived trust was significant (β = 0.78, 95% CI [0.02, 4.38], which excludes 0); accordingly, we accept hypothesis H5a. The chain mediation effect from perceived empathy to perceived trust and then to user experience was also significant, although negative in sign (β = −0.97, 95% CI [−4.77, −0.30], which excludes 0); accordingly, we accepted hypothesis H6a. The 95% CIs for the other mediation paths included zero, indicating that those indirect paths were not significant, so the remaining hypotheses could not be accepted. In conclusion, based on the structural equation model results, we accepted Hypotheses 5a and 6a and rejected Hypotheses 4, 5b, and 6b.
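The confidence intervals above test whether an indirect effect differs from zero. The Python sketch below shows a percentile bootstrap for a single-mediator path (X → M → Y) estimated with ordinary least squares on simulated data; the default of 5,000 resamples mirrors the bootstrap size reported earlier. It is a simplification assumed for illustration: it uses observed rather than latent variables, treats cases as independent (ignoring the repeated-measures structure of the study), and does not cover the serial empathy → trust chain, whereas an SEM package would bootstrap the full model.

```python
import random
from statistics import fmean


def indirect_effect(x, m, y):
    """Return a*b for a simple X -> M -> Y model estimated by ordinary least squares."""
    mx, mm, my = fmean(x), fmean(m), fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    smm = sum((mi - mm) ** 2 for mi in m)
    sxm = sum((xi - mx) * (mi - mm) for xi, mi in zip(x, m))
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    smy = sum((mi - mm) * (yi - my) for mi, yi in zip(m, y))
    a = sxm / sxx  # slope of M regressed on X
    b = (sxx * smy - sxm * sxy) / (sxx * smm - sxm ** 2)  # slope of M in Y ~ X + M
    return a * b


def bootstrap_ci(x, m, y, n_boot=5000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for the indirect effect a*b."""
    rng = random.Random(seed)
    n = len(x)
    draws = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        draws.append(indirect_effect([x[i] for i in idx],
                                     [m[i] for i in idx],
                                     [y[i] for i in idx]))
    draws.sort()
    lower = draws[int((1 - level) / 2 * n_boot)]
    upper = draws[int((1 + level) / 2 * n_boot) - 1]
    return lower, upper


# Simulated data with a true indirect effect of 0.5 * 0.7 = 0.35 (not study data).
rng = random.Random(42)
x = [rng.gauss(0, 1) for _ in range(200)]
m = [0.5 * xi + rng.gauss(0, 1) for xi in x]
y = [0.7 * mi + rng.gauss(0, 1) for mi in m]
lower, upper = bootstrap_ci(x, m, y, n_boot=2000)
print(f"a*b = {indirect_effect(x, m, y):.3f}, 95% CI = [{lower:.3f}, {upper:.3f}]")
```

An interval that excludes zero, as in the simulated example, is the criterion applied in the text above.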

6 Discussions

6.1 Discussion

Hypothesis 1 predicted that the anthropomorphism and perceived intelligence of visually designed chatbot avatars significantly influence user experience, with both main and interaction effects. The analysis of variance supports this hypothesis. Specifically, when anthropomorphism is low, lower perceived intelligence is associated with a better user experience, whereas when anthropomorphism is high, higher perceived intelligence is associated with a better user experience. This pattern aligns with Social Presence Theory, which posits that more human-like avatars foster a stronger sense of social presence, leading to increased engagement and satisfaction. In addition, regardless of the intelligence level of the visually designed chatbot avatars, a higher degree of anthropomorphism consistently led to a better user experience. Averaged across anthropomorphism levels, higher perceived intelligence also enhanced the user experience of the avatars, reinforcing the idea that intelligent interactions create a more seamless user experience.

Hypothesis 2 predicted that the anthropomorphism and perceived intelligence of visually designed chatbot avatars significantly influence perceived empathy, with both main and interaction effects. Based on the analysis of variance, we accept only H2a and reject H2b and H2c. Specifically, regardless of the intelligence level of the visually designed chatbot avatars, higher levels of anthropomorphism consistently led to greater perceived empathy among participants. However, perceived intelligence had no significant effect on empathy, and anthropomorphism did not interact with intelligence to affect perceived empathy. This outcome can be explained by Empathy Theory, which posits that more human-like avatars elicit stronger emotional connections and empathy from users (Spaccatini et al., 2023). Research by Bailenson et al. (2008) supports this, demonstrating that increased anthropomorphism in virtual agents enhances users’ empathetic responses. Conversely, the limited impact of perceived intelligence on empathy is consistent with Social Judgment Theory, which suggests that emotional and social cues, rather than cognitive evaluations, are more influential in forming empathetic responses. Thus, while intelligent features may enhance interaction quality, they contribute less to the empathetic experience than the human-like qualities of the avatars do.

Hypothesis 3 predicted that the anthropomorphism and perceived intelligence of visually designed chatbot avatars significantly influence perceived trust, with both main and interaction effects. However, the analysis of variance does not support this hypothesis: the levels of anthropomorphism and intelligence in the avatars had no significant effect on perceived trust. Because our research focuses on how the anthropomorphism and perceived intelligence of visually designed chatbot avatars influence user experience, and previous studies have established that trust is a critical component of user experience with intelligent chatbots (McKnight et al., 2002; Gefen et al., 2003), we developed a structural equation model to further explore the mechanisms through which anthropomorphism and perceived intelligence affect user experience with visually designed chatbot avatars. This approach allows us to examine not only direct effects but also the potential mediating role of trust in shaping user interactions.

According to the structural equation model, there is no significant direct relationship between anthropomorphism and user experience, which aligns with the conclusions of some previous studies. However, when perceived empathy and perceived trust are included as mediating variables, anthropomorphism significantly affects user experience, suggesting that its influence operates through a complex mechanism. Attribution theory offers a framework for explaining this relationship: people tend to attribute human characteristics and behaviors to non-human agents, making interactions more predictable and familiar. In interactions with intelligent chatbots, this tendency may enhance users’ perceived empathy and perceived trust toward the agents. Thus, although anthropomorphism has no significant direct effect on user experience, its cumulative indirect effects through perceived empathy and perceived trust become evident, in line with Social Cognitive Theory.

Although anthropomorphism does not have a significant effect on user experience through any single path, the cumulative effects of multiple paths make the overall effect significant. This indicates that the influence of anthropomorphism on user experience is achieved not through a single route or mechanism but through the accumulation of multiple small effects. At the same time, the indirect effects show that some mediating effects cancel each other out. Specifically, anthropomorphism positively affects user experience through perceived trust, but its effect through the chain from perceived empathy to perceived trust is negative. These positive and negative effects counterbalance each other, resulting in a non-significant total indirect effect; nevertheless, the total effect of anthropomorphism on user experience remains significant. For perceived intelligence, neither the direct, the indirect, nor the total effects on user experience were significant. This suggests that even when the mechanisms involving perceived empathy and perceived trust are considered, perceived intelligence does not influence user experience.

Interestingly, while the results from the analysis of variance suggest that the anthropomorphism and perceived intelligence of visually designed chatbot avatars do not significantly affect perceived trust, the results from the structural equation model reveal a more complex relationship. When both perceived empathy and perceived trust are considered, the impact of anthropomorphism and perceived intelligence on user experience is primarily mediated through perceived trust. This finding suggests that there is a more intricate mechanism at play between anthropomorphism, perceived intelligence, and user experience. From a psychological perspective, this can be explained by Trust Formation Theory, which highlights that trust is often developed through indirect cues, such as perceived empathy, rather than directly through visual or cognitive attributes (Mayer et al., 1995). Moreover, Social Exchange Theory posits that users engage in reciprocal relationships with technology, meaning they may perceive higher levels of trust when the chatbot exhibits empathetic behaviors, thus influencing overall user experience. The structural equation model further supports the idea that anthropomorphism and perceived intelligence enhance user experience not by directly fostering trust, but by enhancing empathetic interactions, which in turn build trust over time.

6.2 Limitations and further research

This study has several limitations. First, the sample size was relatively small, limiting the generalizability of the findings. A larger and more diverse sample might yield different results and provide a more comprehensive understanding of the relationships between anthropomorphism, perceived intelligence, and user experience. Second, the study focused primarily on the visual anthropomorphism and perceived intelligence of chatbot avatars, neglecting other potential dimensions such as voice, behavior, basic personality traits, or interaction styles. These factors could also contribute significantly to user experience and should be considered in future studies. Third, the study used a single-session design in which the mediators and the outcome were measured at the same time, which limits conclusions about the causal ordering of empathy, trust, and user experience. Longitudinal designs would be more appropriate for examining how changes in anthropomorphic design or perceived intelligence affect user experience over time. Lastly, the study explored only empathy and trust as mediating variables, which may have limited our understanding of other relevant psychological constructs, such as perceived ease of use, satisfaction, or emotional engagement, that might shape user experience.

Future research could expand by investigating other dimensions of chatbot design, such as voice or interaction style, to see how these factors affect user experience. Additionally, exploring moderating factors like cultural differences or individual preferences could provide insights into how different user groups respond to anthropomorphic and intelligent designs. Experimental studies, particularly longitudinal ones, could offer more definitive insights into the causal relationships between chatbot design elements and user experience over time.

7 Conclusion

This study investigated the impact of the anthropomorphic visual design and perceived intelligence of intelligent chatbot avatars on user experience, focusing on the mediating roles of perceived empathy and perceived trust. Using SPSS 26.0 and Amos 24.0, we conducted analyses of variance to examine the effects of avatar anthropomorphism and perceived intelligence on perceived empathy, perceived trust, and user experience, and then constructed and evaluated a structural equation model to test the mediating roles of perceived empathy and perceived trust in these relationships.
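For a 2 × 2 within-subjects design such as the one used in this study, the repeated-measures analysis of variance reduces to three one-degree-of-freedom tests (two main effects and the interaction), and each F(1, n − 1) equals the square of a one-sample t statistic computed on per-participant contrast scores. The plain-Python sketch below illustrates that equivalence; it is not the SPSS 26.0 procedure the authors ran, and the function name `rm_anova_2x2`, the cell ordering, and the three participants in the usage example are hypothetical. A p-value could then be obtained from the F distribution, for example with `scipy.stats.f.sf(F, 1, n - 1)`.

```python
import math
import statistics


def rm_anova_2x2(ratings):
    """F tests for a 2 x 2 fully within-subjects design via contrast scores.

    `ratings` holds one tuple per participant, ordered as
    (low A/low I, low A/high I, high A/low I, high A/high I),
    where A = anthropomorphism and I = perceived intelligence (hypothetical labels).
    For two-level factors, each effect's repeated-measures F(1, n - 1) equals the
    squared one-sample t statistic of that effect's per-participant contrast score.
    Raises ZeroDivisionError if a contrast has zero variance across participants.
    """
    n = len(ratings)
    contrasts = {
        "anthropomorphism": [(hl + hh) / 2 - (ll + lh) / 2 for ll, lh, hl, hh in ratings],
        "intelligence": [(lh + hh) / 2 - (ll + hl) / 2 for ll, lh, hl, hh in ratings],
        "interaction": [(hh - hl) - (lh - ll) for ll, lh, hl, hh in ratings],
    }
    results = {}
    for name, scores in contrasts.items():
        se = statistics.stdev(scores) / math.sqrt(n)  # standard error of the mean contrast
        t = statistics.mean(scores) / se
        f = t * t                                      # F(1, n - 1) = t^2
        eta_p2 = f / (f + (n - 1))                     # partial eta squared for a 1-df effect
        results[name] = {"F": f, "df": (1, n - 1), "partial_eta2": eta_p2}
    return results


if __name__ == "__main__":
    demo = [(2, 3, 4, 6), (1, 3, 3, 4), (2, 2, 5, 6)]  # three hypothetical participants
    for effect, r in rm_anova_2x2(demo).items():
        print(effect, round(r["F"], 2), r["df"], round(r["partial_eta2"], 3))
```

Because each factor has only two levels, the sphericity assumption is satisfied automatically, so these particular tests need no Greenhouse-Geisser or similar correction.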

The key findings indicate that the level of anthropomorphism significantly affects user experience with visually designed avatars, particularly through perceived empathy and perceived trust. When anthropomorphism is low, lower perceived intelligence is associated with a better user experience; when anthropomorphism is high, higher perceived intelligence enhances user experience. The results indicate that higher levels of anthropomorphism consistently lead to better user experiences. The ANOVA results for Hypothesis 2 confirmed that higher anthropomorphism enhances perceived empathy but did not support a significant effect of perceived intelligence, or of its interaction with anthropomorphism, on empathy, suggesting that perceived intelligence is less crucial for fostering an empathetic experience. For Hypothesis 3, the ANOVA showed that neither anthropomorphism nor perceived intelligence significantly influenced perceived trust. The SEM analysis, however, revealed a more nuanced relationship in which perceived empathy and perceived trust act as mediators: although anthropomorphism does not directly affect user experience, it exerts a significant indirect influence through these mediators, indicating a complex mechanism of impact. The interplay between perceived empathy and perceived trust further points to the importance of fostering empathetic interactions to build trust over time, consistent with Trust Formation Theory.

In conclusion, this study advances our understanding of the multifaceted relationships between anthropomorphism, perceived intelligence, and user experience in intelligent chatbot interactions. By emphasizing the need for emotional engagement and the reduction of cognitive strain, our findings provide valuable insights for designers aiming to create more effective chatbot interfaces. Future research should consider broader design dimensions and explore additional factors influencing these dynamics to further enhance user experience in human-chatbot interactions.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

NM: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing. RK: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Project administration, Supervision, Validation, Writing – original draft, Writing – review & editing. YH: Conceptualization, Formal analysis, Investigation, Methodology, Software, Validation, Visualization, Writing – original draft, Writing – review & editing. YW: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Supervision, Validation, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was funded by the Foundation of the National Key Laboratory of Human Factors Engineering, grant no. HFNKL2023WW02, the Beijing Natural Science Foundation, grant no. 9244037, and the Beijing Institute of Technology Research Fund Program for Young Scholars, grant no. 3320012222316.

Acknowledgments

We would like to extend our sincere gratitude to all the participants who generously contributed their time and effort to this study.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that Gen AI was used in the creation of this manuscript. During the preparation of this work the authors used ChatGPT in order to improve the readability and language of the manuscript. After using this tool, the authors reviewed and edited the content as needed and take full responsibility for the content of the publication.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abdelhalim, E., Anazodo, K. S., Gali, N., and Robson, K. (2024). A framework of diversity, equity, and inclusion safeguards for Chatbots. Bus. Horiz. 67, 487–498. doi: 10.1016/j.bushor.2024.03.003

Ayanwale, M. A., and Ndlovu, M. (2024). Investigating factors of students’ behavioral intentions to adopt Chatbot Technologies in Higher Education: perspective from expanded diffusion theory of innovation. Comp. Human Behav. Rep. 14:100396. doi: 10.1016/j.chbr.2024.100396

Bagozzi, R. P., and Yi, Y. (1988). On the evaluation of structural equation models. J. Acad. Mark. Sci. 16, 74–94. doi: 10.1007/BF02723327

Bai, S., Yu, D., Han, C., Yang, M., Gupta, B. B., Arya, V., et al. (2024). Warmth trumps competence? Uncovering the influence of multimodal AI anthropomorphic interaction experience on intelligent service evaluation: insights from the high-evoked automated social presence. Technol. Forecast. Soc. Chang. 204:123395. doi: 10.1016/j.techfore.2024.123395

Bailenson, J. N., Yee, N., Blascovich, J., Beall, A. C., Lundblad, N., and Jin, M. (2008). The use of immersive virtual reality in the learning sciences: digital transformations of teachers, students, and social context. J. Learn. Sci. 17, 102–141. doi: 10.1080/10508400701793141

Balderas, A., García-Mena, R. F., Huerta, M., Mora, N., and Dodero, J. M. (2023). Chatbot for communicating with university students in emergency situation. Heliyon 9:e19517. doi: 10.1016/j.heliyon.2023.e19517

Bednarek, H., Przedniczek, M., Wujcik, R., Olszewska, J. M., and Orzechowski, J. (2024). Cognitive training based on human-computer interaction and susceptibility to visual illusions. Reduction of the Ponzo effect through working memory training. Int. J. Human Comp. Stud. 184:103226.

Bird, J. J., and Lotfi, A. (2024). Customer service chatbot enhancement with attention-based transfer learning. Knowl.-Based Syst. 301:112293.

Charrier, L., Rieger, A., Galdeano, A., Cordier, A., Lefort, M., and Hassas, S. (2019). “The RoPE scale: a measure of how empathic a robot is perceived” in 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (Daegu, Korea (South)), 656–657. doi: 10.1109/HRI.2019.8673082

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 13, 319–340. doi: 10.2307/249008

Deci, E. L., and Ryan, R. M. (1985). The general causality orientations scale: self-determination in personality. J. Res. Pers. 19, 109–134.

De Visser, E. J., Monfort, S. S., McKendrick, R., Smith, M. A., McKnight, P. E., Krueger, F., et al. (2016). Almost human: anthropomorphism increases trust resilience in cognitive agents. J. Exp. Psychol. Appl. 22:331.

Diana, N. E., and Saputra, O. A. (2015). “Measuring user experience of a potential shipment tracking application” in Proceedings of the International HCI and UX Conference in Indonesia (CHIuXiD ’15) (New York, NY, USA: Association for Computing Machinery), 47–51.

Du, Y. C., Li, Y. Z., Qin, L., and Bi, H. Y. (2022). The influence of temporal asynchrony on character-speech integration in Chinese children with and without dyslexia: an ERP study. Brain Lang. 233:105175.

Epley, N., Waytz, A., and Cacioppo, J. T. (2007). On seeing human: a three-factor theory of anthropomorphism. Psychol. Rev. 114, 864–886. doi: 10.1037/0033-295X.114.4.864

Feng, C. M., Botha, E., and Pitt, L. (2024). From HAL to GenAI: optimizing Chatbot impacts with CARE. Bus. Horiz. 67, 537–548. doi: 10.1016/j.bushor.2024.04.012

Følstad, A., Araujo, T., Papadopoulos, S., Law, E. L.-C., Granmo, O.-C., Luger, E., et al. (2020). Chatbot research and design. Cham, Switzerland: Springer International Publishing.

Gambino, G., Frinchi, M., Giglia, G., Scordino, M., Urone, G., Ferraro, G., et al. (2024). Impact of “Golden” tomato juice on cognitive alterations in metabolic syndrome: insights into behavioural and biochemical changes in a high-fat diet rat model. J. Funct. Foods 112:105964.

Gefen, D., Karahanna, E., and Straub, D. W. (2003). Trust and TAM in online shopping: an integrated model. MIS Q. 27, 51–90. doi: 10.2307/30036519

Go, E., and Sundar, S. S. (2019). Humanizing chatbots: the effects of visual, identity and conversational cues on humanness perceptions. Comp. Human Behav. 97, 304–316.

Hair, J. F., Sarstedt, M., Ringle, C. M., and Mena, J. A. (2012). An assessment of the use of partial least squares structural equation modeling in marketing research. J. Acad. Mark. Sci. 40, 414–433.

Haugeland, I. K., Fornell, A. F., Taylor, C., and Bjørkli, C. A. (2022). Understanding the user experience of customer service Chatbots: an experimental study of Chatbot interaction design. Int. J. Human Comp. Stud. 161:102788. doi: 10.1016/j.ijhcs.2022.102788

Henseler, J., Ringle, C. M., and Sinkovics, R. R. (2009). “The use of partial least squares path modeling in international marketing” in New challenges to international marketing, advances in international marketing. eds. R. R. Sinkovics and P. N. Ghauri (Emerald Group Publishing Limited), 277–319.

Hobert, S., Følstad, A., and Law, E. L. C. (2023). Chatbots for active learning: a case of phishing email identification. Int. J. Human Comp. Stud. 179:103108.

Hoff, K. A., and Bashir, M. (2015). Trust in automation: integrating empirical evidence on factors that influence trust. Hum. Factors 57, 407–434. doi: 10.1177/0018720814547570

Hoffman, B. D., Oppert, M. L., and Owen, M. (2024). Understanding young adults’ attitudes towards using AI Chatbots for psychotherapy: the role of self-stigma. Comp. Human Behav. Artif. Hum. 2:100086. doi: 10.1016/j.chbah.2024.100086

Hu, Y., and Sun, Y. (2023). Understanding the joint effects of internal and external anthropomorphic cues of intelligent customer service bot on user satisfaction. Data Inf. Manag. 7:100047.

Hulland, J. (1999). Use of partial least squares (PLS) in strategic management research: a review of four recent studies. Strateg. Manag. J. 20, 195–204. doi: 10.1002/(SICI)1097-0266(199902)20:2<195::AID-SMJ13>3.0.CO;2-7

Jian, J. Y., Bisantz, A. M., and Drury, C. G. (2000). Foundations for an empirically determined scale of trust in automated systems. Int. J. Cogn. Ergon. 4, 53–71.

Khadija, A., Zahra, F. F., and Naceur, A. (2021). “AI-powered health Chatbots: toward a general architecture” in The 18th international conference on Mobile systems and pervasive computing (MobiSPC), the 16th international conference on future networks and communications (FNC), the 11th international conference on sustainable energy information technology. Procedia Comput. Sci. 191, 355–360. doi: 10.1016/j.procs.2021.07.048

Kim, T., and Song, H. (2021). How should intelligent agents apologize to restore trust? Interaction effects between anthropomorphism and apology attribution on trust repair. Telematics Inform. 61:101595. doi: 10.1016/j.tele.2021.101595

Lee, K.-W., and Li, C.-Y. (2023). It is not merely a chat: transforming Chatbot affordances into dual identification and loyalty. J. Retail. Consum. Serv. 74:103447. doi: 10.1016/j.jretconser.2023.103447

Lee, J. D., and Moray, N. (1994). Trust, self-confidence, and operators' adaptation to automation. Int. J. Human-Comp. Stud. 40, 153–184. doi: 10.1006/ijhc.1994.1007

Lee, J. D., and See, K. A. (2004). Trust in automation: designing for appropriate reliance. Hum. Factors 46, 50–80. doi: 10.1518/hfes.46.1.50.30392

Lin, X., Shao, B., and Wang, X. (2022). Employees’ perceptions of Chatbots in B2B marketing: affordances vs. disaffordances. Ind. Mark. Manag. 101, 45–56. doi: 10.1016/j.indmarman.2021.11.016

Lu, C., Lu, H., Chen, D., Wang, H., Li, P., and Gong, J. (2023). Human-like decision making for lane change based on the cognitive map and hierarchical reinforcement learning. Transp. Res. Part C Emerg. Technol. 156:104328.

Marks, P. (2014). Want classified information? Talk to the Chatbot. New Scientist 223:22. doi: 10.1016/S0262-4079(14)61485-8

Mayer, R. C., Davis, J. H., and Schoorman, F. D. (1995). An integrative model of organizational trust. Acad. Manag. Rev. 20, 709–734. doi: 10.2307/258792

McKnight, D. H., Choudhury, V., and Kacmar, C. (2002). Developing and validating trust measures for e-commerce: an integrative typology. Inf. Syst. Res. 13, 334–359.