- ¹RITMO Centre for Interdisciplinary Studies in Rhythm, Time and Motion, University of Oslo, Oslo, Norway

- ²Department of Informatics, University of Oslo, Oslo, Norway

- ³Department of Numerical Analysis and Scientific Computing, Simula Research Laboratory, Oslo, Norway

The development of robots that can dance like humans presents a complex challenge due to the disparate abilities involved and various aesthetic qualities that need to be achieved. This article reviews recent advances in robotics, artificial intelligence, and human-robot interaction toward enabling various aspects of realistic dance, and examines potential paths toward a fully embodied dancing agent. We begin by outlining the essential abilities required for a robot to perform human-like dance movements and the resulting aesthetic qualities, summarized under the terms expressiveness and responsiveness. Subsequently, we present a review of the current state-of-the-art in dance-related robot technology, highlighting notable achievements, limitations and trade-offs in existing systems. Our analysis covers various approaches, including traditional control systems, machine learning algorithms, and hybrid systems that aim to imbue robots with the capacity for responsive, expressive movement. Finally, we identify and discuss the critical gaps in current research and technology that need to be addressed for the full realization of realistic dancing robots. These include challenges in real-time motion planning, adaptive learning from human dancers, and morphology independence. By mapping out current methods and challenges, we aim to provide insights that may guide future innovations in creating more engaging, responsive, and expressive robotic systems.

1 Introduction

Getting robots to dance has been a goal since the dawn of the idea of robotics, an ultimate expression of human likeness. For roboticists, this can help facilitate trust and comfort with their creations, while for artists, both human-like and non-human-like motion are powerful expressive tools. Furthermore, the fact that dance incorporates so many abilities also makes robotic dance a prime example of “understanding by simulating” (Simon, 1996).

The specific motor-sensory abilities employed in human dance depend on the setting, but can include motor sequence learning, real-time imitation, spatial awareness, rhythmic entrainment, and fluid improvisation. In solo artistic settings, for example, emphasis is generally placed on precise, expressive, and elaborate motor sequences, possibly in tandem with music or video. Participatory dance, on the other hand, is relatively more dependent on real-time response and adaptation to musical cues, spatial constraints and other bodies. While many of these dance-related abilities have been implemented in robots, combining them requires a common framework for training and executing controllers.

A recent review by LaViers (2024) positions robotic dance as a field at the frontier of the study of movement, where imbuing robots with the ability to dance is a precursor to the general understanding of movement by machines. Indeed, major advances in robotic control are often demonstrated through dance performance. One such advance was bio-inspired robotics, where biological principles are used to overcome differences in morphology, to integrate multimodal information, and to move in tandem with others (Aucouturier et al., 2008). Advances in control techniques led to automated motion retargeting (Ramos et al., 2015), even demonstrated by dancers in real time (Hoffman et al., 2018). In industry, dance has been used as a marketing tool by the well-known robotics company Boston Dynamics. Their dance videos demonstrate their robots' control abilities, even resulting in the development of a Choreographer software development kit. Another recent review of artistic robot dance (Maguire-Rosier et al., 2024) categorizes works according to their primary focus, whether it is motion, responsiveness, or the hybridization of human and robot bodies. The authors point to how dance can help to free human-robot interaction from cultural expectations such as human-ness, machine-ness and efficiency.

Now in the era of generative AI, novel expressive movements of robots and avatars can be learned from training on data (Wallace et al., 2021; Osorio et al., 2024), with implementation in dancing robots likely to be around the corner. With the proliferation of sophisticated machine learning techniques, a survey of computational methods that can be applied and combined for general robotic dance ability is required. In this review, we focus on expressive movement and responsiveness as key complementary qualities of dance, and the sensorimotor abilities behind them. Unlike Maguire-Rosier et al. (2024), we consider a standard human-robot interaction context, where robots are distinct from any human performers. While morphology has a profound influence on affective qualities, we are most interested in control methods that are morphology-independent and hence as widely applicable as possible. For the purposes of this review, we define expressiveness and responsiveness as follows:

• Expressiveness. We define expressiveness as the agent's ability to move in a manner that conveys a thought or feeling. Highly expressive robots should be able to signal agency and express a wide variety of emotional affect. This requires a high level of variability and flexibility in its movement patterns and likely also in the level of complexity of these movements. The dimensions of expressiveness can be captured in various ways, such as in the 12 principles of animation (Yoshida et al., 2022) or Laban movement analysis (Burton et al., 2016).

• Responsiveness. Current neuroscientific theory posits that music and dance help to train predictive networks in the brain (Koelsch et al., 2019), which may explain the pleasure associated with sensorimotor entrainment or “groove” (Witek, 2017). This points to real-time responsiveness as another fundamental aspect of human dance. We define responsiveness as movement initiated by external stimulus, or modified in response to changing stimulus. The appropriateness of the timing of response is generally important. Also included in this category is an awareness of context, such that not all stimuli are treated equally.

Movement that is both expressive and responsive requires combining several abilities, of which we provide an overview in Section 2, focusing on key methods and recent developments. After surveying research performed on these abilities, Section 3 will discuss their relation to expressiveness and responsiveness, and identify shared computational methods and principles. Finally, we provide perspectives on directions for future research on dancing robotic systems.

2 Dance-related abilities in robots: state of the art

The key sensorimotor abilities involved in dance are actively researched within robotics, often with one ability as a sole focus, and not always applied specifically to dance. In the following sections, one for each ability that we have identified, we present a non-exhaustive overview of key papers and recent works in robotics and AI, focusing specifically on methods that can be transferred to dance.

2.1 Movement primitive learning

Movement, including dance, can often be divided into discrete building blocks such as stepping, reaching or kicking, which can be combined into more complex movements. In neuroscience and robotics these are often called “movement primitives” (Ijspeert et al., 2013; Ravichandar et al., 2020), while in human motion analysis they are sometimes termed “movemes” (Bregler, 1997). Such division of movement naturally leads to hierarchical control schemes, where a high-level controller selects and sequences actions, and low-level controllers execute them with the necessary proprioceptive feedback as input (Merel et al., 2019a).

A common method to learn and generate primitives in robots is to learn a trajectory in a low-dimensional space (such as a hand position) which is then transformed into a stable attractor of a dynamical system in this space. In doing so, the system makes use of motor synergies, such that groups of associated joints do not need to be controlled independently. Traditionally, these spaces have been specified manually (Ijspeert et al., 2001). More recent work uses unsupervised learning to find reusable low-dimensional latent spaces (Merel et al., 2019b).
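As a rough illustration of this idea, the following Python sketch rolls out a one-dimensional primitive in the style of the dynamical movement primitives of Ijspeert et al. (2013): a damped spring pulls the state toward a goal, while a phase-gated forcing term shapes the path on the way there. The function name `rollout_dmp`, the gains, the basis-function layout and the example weights are assumptions chosen for illustration, and the weights are arbitrary rather than learned from a demonstration.

```python
import math

def rollout_dmp(y0, goal, weights, tau=1.0, dt=0.001, alpha_z=25.0, alpha_x=4.0):
    """Integrate a one-dimensional primitive with explicit Euler steps.

    All parameter values are illustrative assumptions, not tuned settings.
    """
    beta_z = alpha_z / 4.0  # critical damping of the spring-damper part
    n = len(weights)
    # Basis-function centres spread along the decaying phase variable x.
    centres = [math.exp(-alpha_x * i / max(n - 1, 1)) for i in range(n)]
    widths = [n ** 1.5 / c for c in centres]  # heuristic widths (assumption)
    y, z, x = y0, 0.0, 1.0
    trajectory = [y]
    for _ in range(int(round(2.0 * tau / dt))):
        psi = [math.exp(-h * (x - c) ** 2) for c, h in zip(centres, widths)]
        # Forcing term: weighted basis functions, gated by the phase x so that
        # it vanishes over time and the goal remains a stable attractor.
        forcing = sum(p * w for p, w in zip(psi, weights)) / (sum(psi) + 1e-10)
        forcing *= x * (goal - y0)
        dz = (alpha_z * (beta_z * (goal - y) - z) + forcing) / tau
        dy = z / tau
        dx = -alpha_x * x / tau
        z += dz * dt
        y += dy * dt
        x += dx * dt
        trajectory.append(y)
    return trajectory

# Example: a reach from 0 to 1 shaped by five arbitrary weights.
path = rollout_dmp(0.0, 1.0, [50.0, -30.0, 80.0, -20.0, 10.0])
```

Because the forcing term decays with the phase variable, the end point is governed by the goal alone, which is what makes such primitives robust to perturbation and easy to re-target.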

Primitives can also be learned through automated segmentation of longer demonstrations, using techniques such as change-point detection (Konidaris et al., 2012). Recent work in 3D animation and robot simulation has used vector-quantized variational autoencoders to learn a range of primitives from human movement datasets, also incorporating latent spaces (Siyao et al., 2022; Luo et al., 2023).

For robot dance, movement primitives have been used to incorporate principles of specific dance styles such as neoclassical (Troughton et al., 2022) and tango (Kroma and Mazalek, 2021), albeit in a hand-crafted way. Modulating certain movement primitives in their speed or amplitude can imbue the motion with affective and expressive qualities (Thörn et al., 2020). Depending on how feedback is implemented, primitives can be made more or less responsive to external influences such as engagement (Javed and Park, 2022), tactile feedback (Kobayashi et al., 2022) or musical features (Boukheddimi et al., 2022).
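The speed and amplitude modulation mentioned above can be sketched very simply for a stored one-dimensional trajectory. The helper `modulate` below is hypothetical: it scales displacements about the starting pose and resamples the trajectory by linear interpolation, which is only one of many ways such modulation could be realized.

```python
def modulate(trajectory, amplitude=1.0, speed=1.0):
    """Scale a 1-D primitive about its start pose and replay it at another speed.

    `trajectory` needs at least two samples; speed > 1 shortens the replay.
    """
    last = len(trajectory) - 1
    n_out = max(2, int(round(last / speed)) + 1)
    start = trajectory[0]
    out = []
    for k in range(n_out):
        pos = k * last / (n_out - 1)          # fractional index into the original
        i = min(int(pos), last - 1)
        frac = pos - i
        value = trajectory[i] * (1.0 - frac) + trajectory[i + 1] * frac
        out.append(start + amplitude * (value - start))
    return out

wave = [0, 1, 2, 1, 0]                         # a toy "raise and lower" primitive
energetic = modulate(wave, amplitude=2.0, speed=2.0)   # larger and faster
subdued = modulate(wave, amplitude=0.5, speed=0.5)     # smaller and slower
```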

2.2 Movement sequence generation

Over the past decade, there have been significant advances in sequence modeling tasks such as text generation, driven by data-driven deep learning models including the Transformer (Vaswani et al., 2017) and variants of the recurrent neural network (RNN) such as the LSTM (Hochreiter and Schmidhuber, 1997). Movement data can also be represented as a sequence by segmenting dance recordings into sequences of individual poses and/or motion primitives. Using computers to generate dance steps has a history spanning decades, from the work of pioneers such as Merce Cunningham (Schiphorst, 2013) to data-driven models for creating and exploring sequences of choreography (Pettee et al., 2019; Li et al., 2021; Carlson et al., 2016). In animation, generative movement models have been used to create realistic, fluid movements for characters, allowing for lifelike motion in films, character control in video games (Alemi and Pasquier, 2017), and virtual avatars (Lee and Marsella, 2006). Recently, the automatic generation of movement for robot control using deep learning has also emerged (Osorio et al., 2024; Park et al., 2024).
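To make the sequence view of movement concrete, the sketch below treats a dance as a string of primitive labels and samples new sequences from a first-order Markov chain. This is a deliberately simple stand-in for the Transformer and RNN models cited above, not an implementation of them; the labels, function names and seed are hypothetical.

```python
import random
from collections import defaultdict

def fit_transitions(sequences):
    """Record, for each primitive, every primitive observed to follow it."""
    table = defaultdict(list)
    for seq in sequences:
        for current, following in zip(seq, seq[1:]):
            table[current].append(following)
    return table

def generate(table, start, length, seed=0):
    """Sample a sequence of at most `length` primitives, starting from `start`."""
    rng = random.Random(seed)
    out = [start]
    # Stop early if the current primitive was never followed by anything.
    while len(out) < length and table.get(out[-1]):
        out.append(rng.choice(table[out[-1]]))
    return out

recordings = [
    ["step", "turn", "reach"],
    ["step", "reach", "kick", "step"],
]
phrase = generate(fit_transitions(recordings), "step", length=8)
```

A chain of this kind only ever reproduces transitions present in the recordings; the deep models discussed in this section are of interest precisely because they can capture longer-range structure.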

2.3 Entrainment

When accompanied by music, dancers often need to align the timing of their motions with a beat. The technical term “entrainment” refers to the general ability of an oscillating system to adapt its frequency to that of an external stimulus. Mechanistic models that achieve this can be divided into two categories. The first uses adaptive feedback to adjust an intrinsic oscillation frequency (Mörtl et al., 2014), and has been used to find a natural walking frequency for a given robot weight (Buchli et al., 2006). The second method uses self-organized responses of dynamical systems such as coupled oscillators. This principle has been applied using gradient frequency networks (Large et al., 2015). In general, highly nonlinear neural-like oscillators, when connected in the right way, have a strong tendency to synchronize (Izhikevich, 2007). Neural circuits governing vertebrate locomotion, known as central pattern generators (CPGs) (Ijspeert, 2008), can also show entrainment to a wide range of rhythmic stimuli when their parameters and weights are evolved for this property (Szorkovszky et al., 2023a).
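The first category can be illustrated with a single adaptive-frequency oscillator. The sketch below assumes a Hopf oscillator whose intrinsic frequency is nudged by the stimulus, a form commonly used for adaptive frequency oscillators; the coupling strength, gains, time step and the pure cosine "beat" are all illustrative assumptions rather than values taken from the cited studies.

```python
import math

def entrain(omega0, stimulus_hz, duration=60.0, dt=0.001,
            eps=2.0, gamma=8.0, mu=1.0):
    """Return the oscillator's angular frequency after listening to a periodic stimulus.

    omega0 is the initial intrinsic frequency in rad/s; eps is the coupling strength.
    """
    x, y, omega = 1.0, 0.0, omega0
    omega_stim = 2.0 * math.pi * stimulus_hz
    for k in range(int(duration / dt)):
        force = math.cos(omega_stim * k * dt)       # idealized beat signal
        r = max(math.hypot(x, y), 1e-9)
        dx = gamma * (mu - r * r) * x - omega * y + eps * force
        dy = gamma * (mu - r * r) * y + omega * x
        domega = -eps * force * y / r               # frequency adaptation rule
        x += dx * dt
        y += dy * dt
        omega += domega * dt
    return omega

# Start at 1.5 Hz and listen to a 2 Hz (120 beats per minute) stimulus.
final_omega = entrain(2.0 * math.pi * 1.5, stimulus_hz=2.0)
final_hz = final_omega / (2.0 * math.pi)
```

Under these assumptions the intrinsic frequency drifts toward that of the stimulus and then fluctuates around it; the rate of adaptation depends strongly on the coupling strength and on the initial frequency gap.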

2.4 Imitation

In the context of dance, imitation is used when learning new movements from teacher demonstration. Imitation also occurs in the form of mirroring during dance improvisation. Imitation comprises two essential components: first, motion characteristics of a partner need to be recognized through the senses, then they need to be reproduced (or retargeted) in one's own body. Stoeva et al. (2024) review progress in robotic imitation. Mapping can occur on various levels of abstraction, from pure motion trajectories (e.g. rotation of a hand about an axis formed by its arm), to classifiable gestures or movement primitives (turning a door knob), to achievement of a goal (opening a door) (Lopes et al., 2010). For dance, lower level mapping is most relevant, as in this case motion is not necessarily connected to function.

2.4.1 Motion recognition

Various motion recognition systems provide low-level information about a partner's actions. Motion capture systems with reflective markers or inertial measurement units can provide this directly, although this requires the partner to wear a specialized suit (Welch and Foxlin, 2002). Deep learning-based pose estimation systems, such as OpenPose (Cao et al., 2021), are an emerging way to estimate the same data from single camera feeds.

2.4.2 Motion retargeting

For humanoid robots, an approximately one-to-one low-level mapping is feasible using inverse kinematics to solve for body positions that match the partner's as closely as possible, while dealing with constraints such as balance and stiffness. Various data-driven machine learning approaches also exist to solve the inverse kinematic problem (Xu et al., 2017). Furthermore, by incorporating prediction into the model, a robot can be made to anticipate the partner's motion and hence increase synchronicity (Dallard et al., 2023). The robot Alter3 has been equipped with both automated low-level imitation and a “dream mode,” in which its history is searched for a movement that is closest to matching the target's optical flow, for a more abstract higher level imitation (Masumori et al., 2021).
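A minimal example of the inverse kinematic step, assuming a planar two-link arm, is sketched below. A partner's wrist position (relative to the shoulder) is scaled by the ratio of arm lengths and then converted to joint angles in closed form. The link lengths and function names are hypothetical, and constraints such as balance and stiffness, which matter in practice, are ignored here.

```python
import math

def two_link_ik(x, y, l1, l2):
    """Joint angles (shoulder, elbow) that place the wrist of a planar arm at (x, y)."""
    cos_elbow = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    # Clamp so that out-of-reach targets give a fully stretched or folded arm.
    cos_elbow = max(-1.0, min(1.0, cos_elbow))
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow),
                                             l1 + l2 * math.cos(elbow))
    return shoulder, elbow

def two_link_fk(shoulder, elbow, l1, l2):
    """Wrist position reached by the given joint angles (used to check the IK)."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y

def retarget_wrist(human_wrist, human_arm_length, l1, l2):
    """Scale a human wrist position to the robot's reach, then solve for joint angles."""
    scale = (l1 + l2) / human_arm_length
    return two_link_ik(human_wrist[0] * scale, human_wrist[1] * scale, l1, l2)

# Hypothetical numbers: a 0.70 m human arm mapped onto 0.30 m + 0.25 m robot links.
angles = retarget_wrist((0.4, 0.3), 0.70, 0.30, 0.25)
```

Whole-body retargeting generalizes this to many joints and is typically solved numerically, which is where the data-driven and predictive approaches cited above come in.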

For non-humanoid robots, although low level mapping of joints is not possible, analogous movement primitives can in principle be learned. In this case, the concept of affordance is useful (Lopes et al., 2010) yet underexplored. Laban movement analysis can also be used to describe the characteristics of the motion to be matched (Burton et al., 2016; Cui et al., 2019). Another solution is to specify situations where close position mapping is most important, such as when motion is slowest, and optimize for smooth transitions at other times (Rakita et al., 2017). In a dance context, rhythmic entrainment could also be used to find an analogous movement sequence to that of a partner with a different morphology (Michalowski et al., 2007; Szorkovszky et al., 2023b).

2.5 Stabilization

Balance and stability are important for dancers to execute complex movements in a controlled manner, allowing their movements to look effortless while also protecting themselves from injuries. Similarly, it is essential for legged robots to keep the center of mass supported at all times. Hence, continuous feedback control is ubiquitous for such robots in industrial settings. Typically, this relies on hand-designed motion plans so that the kinematic equations and hence the necessary feedbacks are known (Kuindersma et al., 2016). More recently, machine learning approaches such as reinforcement learning have been used to automatically find motion plans and corresponding feedbacks (Castillo et al., 2021). In both of these cases, robots employing feedback control can be seen as responsive, as the feedback can “respond” to perturbations quickly much in the same way as a dancer would if finding themselves in an unnatural position. However, since adjustments are directed toward a stable subset of positions, feedback control by itself limits expressiveness of motion.
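The trade-off described here can be seen in a toy model. The sketch below assumes an inverted pendulum (angle measured from upright) stabilized by proportional-derivative feedback; the gains, the pendulum constant and the time step are illustrative assumptions, and the torque is expressed per unit of inertia.

```python
import math

def stabilize(theta0, kp=40.0, kd=10.0, g_over_l=9.81, dt=0.001, duration=5.0):
    """Simulate an inverted pendulum under PD feedback and return the angle history."""
    theta, omega = theta0, 0.0
    history = [theta]
    for _ in range(int(duration / dt)):
        torque = -kp * theta - kd * omega           # feedback toward upright
        accel = g_over_l * math.sin(theta) + torque  # gravity destabilizes, feedback corrects
        omega += accel * dt
        theta += omega * dt
        history.append(theta)
    return history

recovered = stabilize(0.2)                  # a 0.2 rad push is corrected
fallen = stabilize(0.2, kp=0.0, kd=0.0)     # without feedback the pendulum falls
```

The same feedback that rejects the perturbation would also pull any deliberately off-balance pose back toward upright, which is the sense in which stabilization on its own constrains expressive motion.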

2.6 Spatial awareness

Human-robot interaction in dance encompasses a variety of modes, each presenting distinct challenges and opportunities. A key distinction can be made between interactions that require real-time responsiveness and those that do not. For performances with minimal or no physical touch, such as those by Merritt Moore with a UR10e robot (Universal Robots, 2020) or Huang Yi with a KUKA industrial robot (Yi, 2017), the movements can be intricate and highly expressive. These performances often create the illusion of responsiveness, despite being pre-designed and static. In such cases, the lack of real-time interaction simplifies the computational demands but limits the dynamic exchange between human and robot. In contrast, partner dancing necessitates real-time responsiveness to a human partner's movements. Recent advances have incorporated tactile (Sun et al., 2024) and haptic sensors (Kobayashi et al., 2022) to enable robots to detect and respond to proxemic cues in partner dance scenarios. For human-robot partner dancing to succeed, the robot's movements must be both predictable and highly responsive to the human's actions. Responsiveness is not limited to partner dance; it can also be applied to other contexts, such as using human movement to control robot swarms (St-Onge et al., 2019).

2.7 Improvisation

Improvisation, the ability to respond spontaneously without a predefined plan, is an important part of many creative practices. During training and the ideation stage of choreographic development, for example, improvisation often plays a central role. In these contexts, a robot's ability to perform unexpected and surprising movements may be favorable compared to more predictable movement patterns. In order to achieve more surprising and interesting interactions, previous work has leveraged the power of generative AI. AI-generated outputs, often characterized by their unconventionality or absurdity, have been explored across various creative domains, including text-based prompts for writing (Singh et al., 2022) and the ideation phase of dance composition (Wallace et al., 2024) and could play an important role in developing robot dancers that can improvise. In the project AI_am (Berman and James, 2015), a dancer interacts with an AI in real time, experiencing its “weird grace, punctuated by a glitchy flow.” This was achieved by sampling trajectories through a low-dimensional “pose map” in an interactive but partially randomized way. Similarly, Carlson et al. (2016) used evolutionary algorithms to mutate keyframes in choreography, producing poses that were unconventional and, at times, impossible for dancers to replicate directly. The unpredictable nature of these systems enriched the dialogue between the dancer and the AI, broadening the dancer's movement vocabulary.
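The notion of an interactive but partially randomized trajectory through a low-dimensional pose space can be sketched as a random walk that is gently pulled toward points suggested by the dancer. This is not the method used in AI_am or by Carlson et al. (2016); the two-dimensional space, the `pull` and `randomness` parameters and the function name are assumptions made purely for illustration.

```python
import random

def improvise(dancer_points, randomness=0.3, pull=0.2, seed=0):
    """Wander through a 2-D pose space bounded to [-1, 1] in each dimension.

    Each step moves a fraction `pull` toward the dancer's current point and
    adds uniform noise scaled by `randomness`.
    """
    rng = random.Random(seed)
    pos = [0.0, 0.0]
    path = []
    for target in dancer_points:
        for i in range(2):
            step = pull * (target[i] - pos[i]) + randomness * rng.uniform(-1.0, 1.0)
            pos[i] = max(-1.0, min(1.0, pos[i] + step))
        path.append((pos[0], pos[1]))
    return path

# The dancer lingers in one corner of the space, then moves to the opposite one.
cues = [(0.8, 0.8)] * 20 + [(-0.8, -0.8)] * 20
path = improvise(cues)
```

Raising `randomness` relative to `pull` shifts the behavior from following toward surprising, which is one crude way to expose the balance between responsiveness and unpredictability as a tunable quantity.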

3 Discussion

How do we imagine a future robot dancer? We might imagine a human dancer sharing a stage with a robot partner. Its body may not be human, but the way it moves and reacts to the human dancer is. When the dancer changes their use of space on the stage, the robot accommodates the dancer by moving closer or farther away. Without mirroring, the robot adjusts the speed and intensity of its movements to complement what the dancer is doing. Without words or designated signals, the robot is capable of interpreting the dancer's movements on the fly. The robot can determine the tempo, emotion, ethnographic roots and genre of the dance and any musical accompaniment, and use these facets to take part in shaping the story of the performance.

By combining the disparate abilities involved in dance under the rubric of expressiveness and responsiveness, the life-like behavior described above can be pursued in a systematic way. Quantitative measures that capture aspects of expressive movement can be derived based on Laban movement analysis (Knight and Simmons, 2014; Burton et al., 2016) or animation principles (Yoshida et al., 2022). Entropy measures can then capture the overall utilization of the affective spaces that span a range of qualities or a learned set of motion primitives (Xiao et al., 2024). Due to the subjective nature of expressiveness, these should be combined with surveys following performance and interaction studies. Metrics that capture responsiveness include Granger causality for low-dimensional data such as agent position (Chang et al., 2017) and Markov-based conditional independence for higher-dimensional data such as motion capture (Castri et al., 2024). It is likely that these methods would need to be complemented with measures of entrainment, such as mean asynchrony (Aschersleben, 2002), as synchronized repetitive motion will eliminate the temporal differences used to infer causality.
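Two of the simpler quantities mentioned above can be computed in a few lines. The sketch below assumes that movement events (for example, footfalls) and beats are available as lists of timestamps in seconds, and that a performance has already been labeled with discrete motion primitives; both function names and the sign convention (movement time minus beat time, so negative values mean the movement leads the beat) are assumptions.

```python
import math
from collections import Counter

def mean_asynchrony(movement_times, beat_times):
    """Average signed offset between each movement event and its nearest beat."""
    offsets = [min((m - b for b in beat_times), key=abs) for m in movement_times]
    return sum(offsets) / len(offsets)

def usage_entropy(labels):
    """Shannon entropy (bits) of how evenly a set of primitives is used."""
    counts = Counter(labels)
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

beats = [0.0, 0.5, 1.0, 1.5]
steps = [-0.03, 0.47, 0.98, 1.46]          # each step slightly ahead of the beat
lead = mean_asynchrony(steps, beats)        # negative: movement precedes the beat

varied = usage_entropy(["step", "turn", "reach", "kick"])   # all primitives used equally
repetitive = usage_entropy(["step", "step", "step", "step"])  # a single primitive repeated
```

Higher entropy indicates broader use of the available repertoire, but says nothing about whether the movement is perceived as expressive, which is why such measures would need to be paired with the survey-based evaluation described above.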

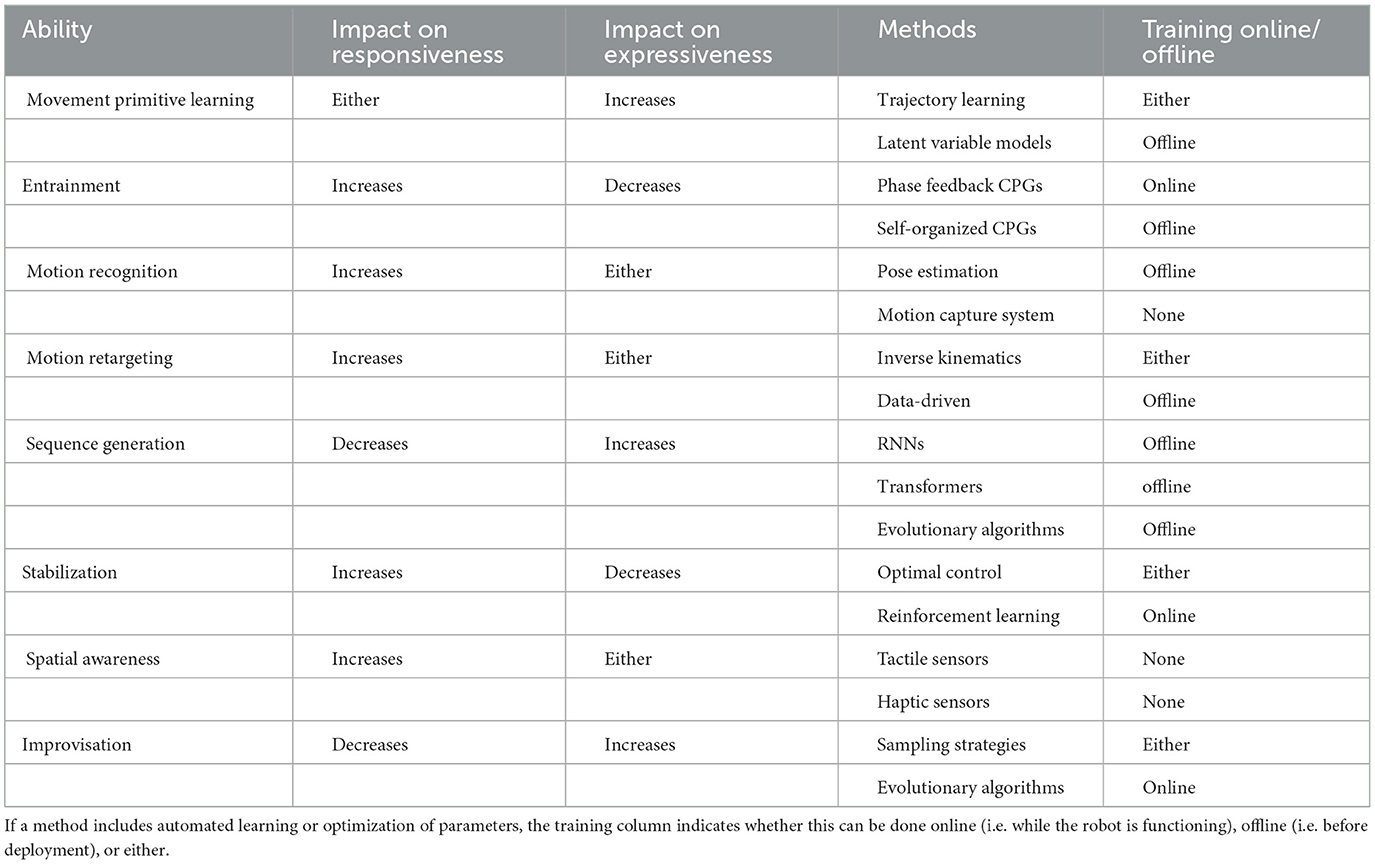

In Table 1, we summarize the abilities and methods reviewed in Section 2. For each ability, we have listed the most promising methods and whether they require online or offline training/optimization (if relevant). While offline training reduces the computational load while running, online learning allows the robot to learn from its environment and interactions, avoiding “reality gaps” (Collins, 2022). Therefore this is an important consideration when combining abilities. We have also specified whether each ability is expected to increase or decrease expressiveness and responsiveness, or whether this depends on context or method (labeled “either”).

Table 1. Desired abilities for dancing robots, relevant methods, and their impact on responsiveness and expressiveness.

Although expressiveness and responsiveness are not mutually exclusive, care needs to be taken to maintain a balance between them. For example, stabilizing feedback will make a robot more reactive to sensory input and less likely to produce extreme motions. Conversely, sequence generation on its own will plan an expressive series of movements that are not conditional on the environment.

A combination of abilities is clearly required to allow a high degree of both qualities. In order to make these compatible, it is necessary to identify shared computational methods and principles. Here, we outline some selected approaches that we believe could be relevant in an integrated design approach:

• Latent spaces: these are low-dimensional representations that capture variation or structure in data, well suited to the high dimensional spatiotemporal data encountered in the study of movement. As such, they have been used for learning movement primitives (Merel et al., 2019b), imitation (Masumori et al., 2021) and improvisation (Berman and James, 2015).

• Hierarchical control: this divides the control problem into that of low-level movement aided by proprioceptive feedback and high level movement selection (Merel et al., 2019a). While this idea is implicit in movement primitives, it has also been fruitfully applied to entrainment (Mörtl et al., 2014). Although end-to-end control approaches with deep neural networks have made tremendous progress in recent years, they would be insufficient in providing the long-term expressive motion planning required in a dance context. A hierarchical approach would enable, for example, entrainment and sequence generation to be executed at the level of discrete motions, with expressivity and stabilization delegated to a continuous control scheme.

• Online adaptivity: a fully improvisational and interactive dancing robot would need to monitor its performance at runtime, and adaptively manage tradeoffs between e.g. responsive and expressive behavior, or improvisation and precision. In this context, an architecture considering computational self-awareness could be a relevant framework in the design process (Lewis et al., 2015). Since it is difficult to optimize several abilities simultaneously, it would also be useful to include some modules pre-trained offline, leaving continuous learning for modules where this is most important.
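The division of labor in hierarchical control can be sketched with two nested loops running at different rates. In this hypothetical example a slow, high-level loop steps through a list of discrete joint targets, while a fast, low-level loop tracks the current target with proportional feedback; the gain, time step and number of fast steps per target are illustrative assumptions.

```python
def run_hierarchy(targets, steps_per_target=50, dt=0.01, gain=10.0):
    """Track a sequence of discrete joint targets with a fast feedback loop."""
    angle = 0.0
    trace = []
    for target in targets:                       # slow loop: discrete motion selection
        for _ in range(steps_per_target):        # fast loop: continuous tracking
            angle += gain * (target - angle) * dt
            trace.append(angle)
    return trace

# Three discrete targets, e.g. chosen by a sequence generator on successive beats.
trace = run_hierarchy([1.0, -0.5, 0.8])
```

In a fuller system the outer loop is where sequence generation and entrainment would sit, and the inner loop is where stabilization and expressive modulation would be applied.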

To achieve the goal of a fully capable robotic dancer, we also identify some important challenges to be considered:

• Morphology dependence: given a common framework for movement, can it be applied to a biped, quadruped, hexapod or other body plan? The field of evolutionary robotics has devised several approaches to adapt controllers to newly generated morphologies (Le Goff et al., 2022). Likewise, in reinforcement learning there have also recently been significant advances in morphology-agnostic control architectures (Bohlinger et al., 2024; Doshi et al., 2024). However, these are generally applied to locomotion or manipulation. Additionally, since the robots we imagine will need to be trained primarily on datasets of human dance, and interact with human partners, finding a morphology-agnostic way to map gestures in tasks such as retargeting remains an important challenge. A promising future source of data in this case is from dancers exploring the affordances of non-anthropomorphic structures (Gemeinboeck and Saunders, 2017).

• Latency: responsive motion, in particular, requires fast motors and rapid computation. Although hierarchical control allows for a repertoire of pre-computed low-level actions, there is very little room to spare when including communication and motor latency. For this reason, sensory feedback loops need to be made as short as possible. Castillo et al. (2021) provide one such architecture that achieves this, with deep reinforcement learning and low level kinematic modules operating asynchronously at different sampling rates. While low-cost platforms can be made rapidly responsive (Michalowski et al., 2007), expressiveness may be limited due to lack of computational power.

• Context-aware motion planning: the responsiveness quality that we have considered so far is purely reactive—a robot that responds to some stimulus need only alter its current movement to be considered responsive. However, to smoothly deal with spatial constraints and aesthetic context, a competent human dancer would also adjust their plans, signaling of intention, and predictions of others' intentions (Kobayashi et al., 2022). The future robot dancer that we imagined would require a context-rich state variable to allow flexibility in where and how it moves ahead in time.

With these obstacles overcome, and with the outlined abilities combined under a common framework, it is possible that a robot can dance both expressively and responsively, untethered to human bodily constraints. It has already been found that AI-generated movement that would be unrealistic for humans can facilitate creativity in dancers (Wallace et al., 2024). In human-robot collaborative dance, we predict that by “closing the loop” with responsiveness, complex feedback can lead to more and more surprising movement, outside the bounds of any training data.

Dance is an artform that expands the boundaries of how we move and perceive motion, and robots are designed, above all, to move. As such, the emerging field of robotic dance has much to offer both pursuits (LaViers, 2024). We have reviewed several sensorimotor abilities that, if integrated in a single robotic system, can greatly expand both its expressiveness and responsiveness. Progressing toward this goal can expand the use of robots as creative tools, advance understanding of the human sensorimotor system, and stimulate future work in adaptive control and social robotics.

Author contributions

BW: Conceptualization, Writing – original draft, Writing – review & editing. KG: Supervision, Writing – original draft, Writing – review & editing. AS: Conceptualization, Project administration, Supervision, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was partially supported by the Research Council of Norway through its Centers of Excellence scheme, project number 262762.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alemi, O., and Pasquier, P. (2017). “Walknet: a neural-network-based interactive walking controller,” in International Conference on Intelligent Virtual Agents (Berlin: Springer), 15–24. doi: 10.1007/978-3-319-67401-8_2

Aschersleben, G. (2002). Temporal control of movements in sensorimotor synchronization. Brain Cogn. 48, 66–79. doi: 10.1006/brcg.2001.1304

Aucouturier, J.-J., Ikeuchi, K., Hirukawa, H., Nakaoka, S., Shiratori, T., Kudoh, S., et al. (2008). Cheek to chip: dancing robots and AI's future. IEEE Intell. Syst. 23, 74–84. doi: 10.1109/MIS.2008.22

Berman, A., and James, V. (2015). “Kinetic dialogues: enhancing creativity in dance,” in Proceedings of the 2nd International Workshop on Movement and Computing (New York, NY: ACM), 80–83. doi: 10.1145/2790994.2791018

Bohlinger, N., Czechmanowski, G., Krupka, M. P., Kicki, P., Walas, K., Peters, J., et al. (2024). “One policy to run them all: an end-to-end learning approach to multi-embodiment locomotion,” in 8th Annual Conference on Robot Learning (Munich).

Boukheddimi, M., Harnack, D., Kumar, S., Kumar, R., Vyas, S., Arriaga, O., et al. (2022). “Robot dance generation with music based trajectory optimization,” in 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (Kyoto: IEEE), 3069–3076. doi: 10.1109/IROS47612.2022.9981462

Bregler, C. (1997). “Learning and recognizing human dynamics in video sequences,” in Proceedings of the 1997 Conference on Computer Vision and Pattern Recognition (CVPR'97) (San Juan, PR: IEEE), 568–574. doi: 10.1109/CVPR.1997.609382

Buchli, J., Iida, F., and Ijspeert, A. J. (2006). “Finding resonance: adaptive frequency oscillators for dynamic legged locomotion,” in 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems (Beijing: IEEE), 3903–3909. doi: 10.1109/IROS.2006.281802

Burton, S. J., Samadani, A.-A., Gorbet, R., and Kulić, D. (2016). “Laban movement analysis and affective movement generation for robots and other near-living creatures,” in Dance Notations and Robot Motion, eds. J. P. Laumond, and N. Abe (Cham: Springer), 25–48. doi: 10.1007/978-3-319-25739-6_2

Cao, Z., Hidalgo, G., Simon, T., Wei, S.-E., and Sheikh, Y. (2021). Openpose: realtime multi-person 2d pose estimation using part affinity fields. IEEE Trans. Pattern Anal. Mach. Intell. 43, 172–186. doi: 10.1109/TPAMI.2019.2929257

Carlson, K., Pasquier, P., Tsang, H. H., Phillips, J., Schiphorst, T., Calvert, T., et al. (2016). “Cochoreo: a generative feature in idanceforms for creating novel keyframe animation for choreography,” in Proceedings of the Seventh International Conference on Computational Creativity (Paris), 380–387.

Castillo, G. A., Weng, B., Zhang, W., and Hereid, A. (2021). “Robust feedback motion policy design using reinforcement learning on a 3d digit bipedal robot,” in 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (Prague: IEEE), 5136–5143. doi: 10.1109/IROS51168.2021.9636467

Castri, L., Beraldo, G., Mghames, S., Hanheide, M., and Bellotto, N. (2024). “Experimental evaluation of ros-causal in real-world human-robot spatial interaction scenarios,” in 2024 33rd IEEE International Conference on Robot and Human Interactive Communication (ROMAN) (Pasadena, CA: IEEE), 1603–1609. doi: 10.1109/RO-MAN60168.2024.10731290

Chang, A., Livingstone, S. R., Bosnyak, D. J., and Trainor, L. J. (2017). Body sway reflects leadership in joint music performance. Proc. Natl. Acad. Sci. 114, E4134–E4141. doi: 10.1073/pnas.1617657114

Collins, J. T. (2022). Simulation to Reality and Back: A Robot's Guide to Crossing the Reality Gap (PhD thesis). Brisbane, QLD: Queensland University of Technology.

Cui, H., Maguire, C., and LaViers, A. (2019). Laban-inspired task-constrained variable motion generation on expressive aerial robots. Robotics 8:24. doi: 10.3390/robotics8020024

Dallard, A., Benallegue, M., Kanehiro, F., and Kheddar, A. (2023). Synchronized human-humanoid motion imitation. IEEE Robot. Autom. Lett. 8, 4155–4162. doi: 10.1109/LRA.2023.3280807

Doshi, R., Walke, H. R., Mees, O., Dasari, S., and Levine, S. (2024). “Scaling cross-embodied learning: one policy for manipulation, navigation, locomotion and aviation,” in 8th Annual Conference on Robot Learning (Munich).

Gemeinboeck, P., and Saunders, R. (2017). “Movement matters: how a robot becomes body,” in Proceedings of the 4th International Conference on Movement Computing (New York, NY: ACM), 1–8. doi: 10.1145/3077981.3078035

Hochreiter, S., and Schmidhuber, J. (1997). Long short-term memory. Neural Comput. 9, 1735–1780. doi: 10.1162/neco.1997.9.8.1735

Hoffman, E. M., Tsagarakis, N. G., and Mouret, J.-B. (2018). “Robust real-time whole-body motion retargeting from human to humanoid,” in 2018 IEEE-RAS 18th International Conference on Humanoid Robots (Humanoids) (Beijing: IEEE), 425–432.

Ijspeert, A. J. (2008). Central pattern generators for locomotion control in animals and robots: a review. Neural Netw. 21, 642–653. doi: 10.1016/j.neunet.2008.03.014

Ijspeert, A. J., Nakanishi, J., Hoffmann, H., Pastor, P., and Schaal, S. (2013). Dynamical movement primitives: learning attractor models for motor behaviors. Neural Comput. 25, 328–373. doi: 10.1162/NECO_a_00393

Ijspeert, A. J., Nakanishi, J., and Schaal, S. (2001). “Trajectory formation for imitation with nonlinear dynamical systems,” in Proceedings 2001 IEEE/RSJ International Conference on Intelligent Robots and Systems. Expanding the Societal Role of Robotics in the Next Millennium (Cat. No. 01CH37180), Volume 2 (Maui, HI: IEEE), 752–757. doi: 10.1109/IROS.2001.976259

Izhikevich, E. M. (2007). Dynamical Systems in Neuroscience. Cambridge, MA: MIT Press. doi: 10.7551/mitpress/2526.001.0001

Javed, H., and Park, C. H. (2022). Promoting social engagement with a multi-role dancing robot for in-home autism care. Front. Robot. AI 9:880691. doi: 10.3389/frobt.2022.880691

Knight, H., and Simmons, R. (2014). “Expressive motion with x, y and theta: Laban effort features for mobile robots,” in The 23rd IEEE International Symposium on Robot and Human Interactive Communication (Edinburgh: IEEE), 267–273. doi: 10.1109/ROMAN.2014.6926264

Kobayashi, T., Dean-Leon, E., Guadarrama-Olvera, J. R., Bergner, F., and Cheng, G. (2022). Whole-body multicontact haptic human-humanoid interaction based on leader-follower switching: a robot dance of the “box step”. Adv. Intell. Syst. 4:2100038. doi: 10.1002/aisy.202100038

Koelsch, S., Vuust, P., and Friston, K. (2019). Predictive processes and the peculiar case of music. Trends Cogn. Sci. 23, 63–77. doi: 10.1016/j.tics.2018.10.006

Konidaris, G., Kuindersma, S., Grupen, R., and Barto, A. (2012). Robot learning from demonstration by constructing skill trees. Int. J. Robot. Res. 31, 360–375. doi: 10.1177/0278364911428653

Kroma, A., and Mazalek, A. (2021). “Interfacing & embodiment: “baby tango” dancing robot attempts to communicate,” in Proceedings of the 13th Conference on Creativity and Cognition (New York, NY: ACM), 1–5. doi: 10.1145/3450741.3466633

Kuindersma, S., Deits, R., Fallon, M., Valenzuela, A., Dai, H., Permenter, F., et al. (2016). Optimization-based locomotion planning, estimation, and control design for the atlas humanoid robot. Auton. Robots 40, 429–455. doi: 10.1007/s10514-015-9479-3

Large, E. W., Herrera, J. A., and Velasco, M. J. (2015). Neural networks for beat perception in musical rhythm. Front. Syst. Neurosci. 9:159. doi: 10.3389/fnsys.2015.00159

LaViers, A. (2024). Robots and dance: a promising young alchemy. Annu. Rev. Control Robot. Auton. Syst. 8, 323–350. doi: 10.1146/annurev-control-060923-100542

Le Goff, L. K., Buchanan, E., Hart, E., Eiben, A. E., Li, W., De Carlo, M., et al. (2022). Morpho evolution with learning using a controller archive as an inheritance mechanism. IEEE Trans. Cogn. Dev. Syst. 15, 507–517. doi: 10.1109/TCDS.2022.3148543

Lee, J., and Marsella, S. (2006). “Nonverbal behavior generator for embodied conversational agents,” in Proceedings of the 6th International Conference on Intelligent Virtual Agents, IVA'06 (Berlin: Springer-Verlag), 243–255. doi: 10.1007/11821830_20

Lewis, P. R., Chandra, A., Faniyi, F., Glette, K., Chen, T., Bahsoon, R., et al. (2015). Architectural aspects of self-aware and self-expressive computing systems: from psychology to engineering. Computer 48, 62–70. doi: 10.1109/MC.2015.235

Li, R., Yang, S., Ross, D. A., and Kanazawa, A. (2021). “AI choreographer: music conditioned 3d dance generation with aist++,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (Montreal, QC), 13401–13412. doi: 10.1109/ICCV48922.2021.01315

Lopes, M., Melo, F., Montesano, L., and Santos-Victor, J. (2010). Abstraction Levels for Robotic Imitation: Overview and Computational Approaches. Berlin: Springer Berlin Heidelberg, 313–355. doi: 10.1007/978-3-642-05181-4_14

Luo, J., Dong, P., Wu, J., Kumar, A., Geng, X., Levine, S., et al. (2023). “Action-quantized offline reinforcement learning for robotic skill learning,” in Conference on Robot Learning (PMLR), 1348–1361.

Maguire-Rosier, K., Abe, N., and Andreallo, F. (2024). What other movement is there?: rethinking human-robot interaction through the lens of dance performance. TDR 68, 87–103. doi: 10.1017/S1054204323000552

Masumori, A., Maruyama, N., and Ikegami, T. (2021). Personogenesis through imitating human behavior in a humanoid robot “alter3”. Front. Robot. AI 7:532375. doi: 10.3389/frobt.2020.532375

Merel, J., Botvinick, M., and Wayne, G. (2019a). Hierarchical motor control in mammals and machines. Nat. Commun. 10, 1–12. doi: 10.1038/s41467-019-13239-6

Merel, J., Hasenclever, L., Galashov, A., Ahuja, A., Pham, V., Wayne, G., et al. (2019b). “Neural probabilistic motor primitives for humanoid control,” in International Conference on Learning Representations (New Orleans, LA).

Michalowski, M. P., Sabanovic, S., and Kozima, H. (2007). “A dancing robot for rhythmic social interaction,” in Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction (New York, NY: ACM), 89–96. doi: 10.1145/1228716.1228729

Mörtl, A., Lorenz, T., and Hirche, S. (2014). Rhythm patterns interaction-synchronization behavior for human-robot joint action. PLoS ONE 9:e95195. doi: 10.1371/journal.pone.0095195

Osorio, P., Sagawa, R., Abe, N., and Venture, G. (2024). A generative model to embed human expressivity into robot motions. Sensors 24:569. doi: 10.3390/s24020569

Park, K. M., Cheon, J., and Yim, S. (2024). A minimally designed audio-animatronic robot. IEEE Trans. Robot. 40, 3181–3198. doi: 10.1109/TRO.2024.3410467

Pettee, M., Shimmin, C., Duhaime, D., and Vidrin, I. (2019). “Beyond imitation: generative and variational choreography via machine learning,” in 10th International Conference on Computational Creativity (Charlotte, NC).

Rakita, D., Mutlu, B., and Gleicher, M. (2017). “A motion retargeting method for effective mimicry-based teleoperation of robot arms,” in Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction (New York, NY: ACM), 361–370. doi: 10.1145/2909824.3020254

Ramos, O. E., Mansard, N., Stasse, O., Benazeth, C., Hak, S., Saab, L., et al. (2015). Dancing humanoid robots: systematic use of osid to compute dynamically consistent movements following a motion capture pattern. IEEE Robot. Autom. Magaz. 22, 16–26. doi: 10.1109/MRA.2015.2415048

Ravichandar, H., Polydoros, A. S., Chernova, S., and Billard, A. (2020). Recent advances in robot learning from demonstration. Annu. Rev. Control Robot. Auton. Syst. 3, 297–330. doi: 10.1146/annurev-control-100819-063206

Schiphorst, T. (2013). “Merce cunningham: making dances with the computer,” in Merce Cunningham, ed. D. Vaughan (London: Routledge), 79–98.

Simon, H. A. (1996). The Sciences of the Artificial: Third Edition, Volume 1. Cambridge, MA: The MIT Press.

Singh, N., Bernal, G., Savchenko, D., and Glassman, E. L. (2022). “Where to hide a stolen elephant: leaps in creative writing with multimodal machine intelligence,” in ACM Transactions on Computer-Human Interaction (New York, NY). doi: 10.18653/v1/2022.in2writing-1.3

Siyao, L., Yu, W., Gu, T., Lin, C., Wang, Q., Qian, C., et al. (2022). “Bailando: 3d dance generation by actor-critic gpt with choreographic memory,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (New Orleans, LA: IEEE), 11050–11059. doi: 10.1109/CVPR52688.2022.01077

Stoeva, D., Kriegler, A., and Gelautz, M. (2024). Body movement mirroring and synchrony in human-robot interaction. ACM Trans. Hum.-Robot Interact. 13, 1–26. doi: 10.1145/3682074

St-Onge, D., Côté-Allard, U., Glette, K., Gosselin, B., and Beltrame, G. (2019). Engaging with robotic swarms: commands from expressive motion. ACM Trans. Hum.-Robot Interact. 8, 1–26. doi: 10.1145/3323213

Sun, Y., Xiao, C., Chen, L., Chen, L., Lu, H., Wang, Y., et al. (2024). Beyond end-effector: utilizing high-resolution tactile signals for physical human-robot interaction. IEEE Trans. Ind. Electron. 72, 5022–5031. doi: 10.1109/TIE.2024.3458180

Szorkovszky, A., Veenstra, F., and Glette, K. (2023a). Central pattern generators evolved for real-time adaptation to rhythmic stimuli. Bioinspir. Biomim. 18:046020. doi: 10.1088/1748-3190/ace017

Szorkovszky, A., Veenstra, F., and Glette, K. (2023b). From real-time adaptation to social learning in robot ecosystems. Front. Robot. AI 10:1232708. doi: 10.3389/frobt.2023.1232708

Thörn, O., Knudsen, P., and Saffiotti, A. (2020). “Human-robot artistic co-creation: a study in improvised robot dance,” in 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN) (Naples: IEEE), 845–850.

Troughton, I. A., Baraka, K., Hindriks, K., and Bleeker, M. (2022). “Robotic improvisers: rule-based improvisation and emergent behaviour in HRI,” in 2022 17th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (IEEE), 561–569. doi: 10.1109/HRI53351.2022.9889624

Universal Robots (2020). Dancing Through the Pandemic: How a Quantum Physicist Taught a Cobot to Dance. Available online at: https://www.universal-robots.com/blog/dancing-through-the-pandemic-how-a-quantum-physicist-taught-a-cobot-to-dance (accessed May 25, 2025).

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., et al. (2017). “Attention is all you need,” in Advances in Neural Information Processing Systems (Long Beach, CA), 5998–6008.

Wallace, B., Martin, C., Tørresen, J., and Nymoen, K. (2021). “Exploring the effect of sampling strategy on movement generation with generative neural networks,” in International Conference on Computational Intelligence in Music, Sound, Art and Design (Part of EvoStar), eds. J. Romero, T. Martins, and N. Rodríguez-Fernández (New York, NY: Springer), 344–359. doi: 10.1007/978-3-030-72914-1_23

Wallace, B., Nymoen, K., Torresen, J., and Martin, C. P. (2024). Breaking from realism: exploring the potential of glitch in ai-generated dance. Digit. Creat. 35, 125–142. doi: 10.1080/14626268.2024.2327006

Welch, G., and Foxlin, E. (2002). Motion tracking: no silver bullet, but a respectable arsenal. IEEE Comput. Graph. Appl. 22, 24–38. doi: 10.1109/MCG.2002.1046626

Witek, M. A. (2017). Filling in: syncopation, pleasure and distributed embodiment in groove. Music Anal. 36, 138–160. doi: 10.1111/musa.12082

Xiao, Y., Shu, K., Zhang, H., Yin, B., Cheang, W. S., Wang, H., et al. (2024). “Eggesture: entropy-guided vector quantized variational autoencoder for co-speech gesture generation,” in Proceedings of the 32nd ACM International Conference on Multimedia (New York, NY: ACM), 6113–6122. doi: 10.1145/3664647.3681392

Xu, W., Chen, J., Lau, H. Y., and Ren, H. (2017). Data-driven methods towards learning the highly nonlinear inverse kinematics of tendon-driven surgical manipulators. Int. J. Med. Robot. Comput. Assist. Surg. 13:e1774. doi: 10.1002/rcs.1774

Yi, H. (2017). A Human-Robot Dance Duet. Available online at: https://www.ted.com/talks/huang_yi_kuka_a_human_robot_dance_duet (accessed May 24, 2025).

Keywords: dance, human-robot interaction, expressive movement, sensory feedback, generative AI, robot control

Citation: Wallace B, Glette K and Szorkovszky A (2025) How can we make robot dance expressive and responsive? A survey of methods and future directions. Front. Comput. Sci. 7:1575667. doi: 10.3389/fcomp.2025.1575667

Received: 12 February 2025; Accepted: 15 May 2025;

Published: 03 June 2025.

Edited by:

Anna Xambó, Queen Mary University of London, United Kingdom

Reviewed by:

Liu Yang, Sultan Idris University of Education, Malaysia

Copyright © 2025 Wallace, Glette and Szorkovszky. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Benedikte Wallace
