- Faculty of Education, Hanoi University of Science and Technology, Hanoi, Vietnam

Introduction: As AI tools become more widely used in higher education, concerns about AI-assisted academic cheating are growing. This study examines how postgraduate students interpret these behaviors.

Methods: We conducted an exploratory qualitative study. We analyzed ten course-embedded reflective essays using conventional content analysis and identified 159 meaning units, 34 codes, 12 categories, and 6 themes.

Results: Students described two main forms of AI-assisted cheating: misusing AI to complete academic tasks and improperly using AI-generated content. They attributed these behaviors to work pressure, ethical ambiguity, AI affordances, and gaps in institutional policies. They also proposed solutions, including clearer guidelines, improved assessment design, and stronger ethics education.

Discussion: The findings show that students construct their views on AI-assisted cheating within social, technological, and institutional contexts. Strengthening policy clarity and promoting a culture of ethical AI use can help institutions address these emerging challenges.

1 Introduction

Artificial intelligence (AI) technologies have rapidly transformed many aspects of modern education and provided powerful tools to support students’ learning and academic productivity (Baidoo-Anu and Owusu Ansah, 2023; Tlili et al., 2023). Among these are AI-driven writing assistants and language models such as ChatGPT, Copilot, and Gemini, which enable users to generate text, solve problems, and access information with unprecedented ease (Houston and Corrado, 2023; Mai et al., 2024). These AI chatbots provide personalized, instant feedback and can engage users in natural-language conversations, making them versatile educational tools with the potential to revolutionize traditional teaching and learning methods (Can and Nguyen, 2025; Firat, 2023; Mai and Van Hanh, 2024). The growing presence of AI in education offers new opportunities for personalized learning, accessibility, and efficiency, thereby supporting individual student needs and educational goals (Halaweh, 2023). Many scholars and educators argue that AI chatbot systems should be viewed as tools for improving educational outcomes rather than as threats to them (Kooli, 2023).

However, alongside these benefits, AI also raises significant ethical and practical concerns. A prominent issue is the rise of AI-assisted academic cheating, in which students use AI tools to generate assignments, essays, or examination answers without genuine personal effort (Sallam et al., 2023). The demonstrated ability of AI chatbots to pass professional and standardized tests, such as the University of Minnesota law school final examinations (Kelly, 2023), the US medical licensing examinations (Abdel-Messih and Kamel Boulos, 2023; Subramani et al., 2023), and UK standardized tests (Giannos and Delardas, 2023), highlights their potential for misuse and poses critical challenges to academic integrity and fairness. Furthermore, the rapid development and adoption of AI technologies have outpaced institutional policies, creating ambiguity about acceptable AI use in academic settings and making rules difficult to enforce. Institutions face the dual challenge of leveraging AI’s benefits while minimizing risks that threaten the authenticity and credibility of academic qualifications (Rodrigues et al., 2025; Sallam et al., 2023). In summary, AI’s growing influence in education marks a transformative era with vast potential to improve learning, but it also requires proactive measures to address its complex ethical implications, especially for academic integrity and the responsible integration of AI tools.

In such a context, there is a pressing need to consider students’ own voices in ongoing debates about AI and academic integrity. Understanding how learners construct meanings around AI-assisted academic cheating is essential for designing responsive policies, pedagogies, and institutional strategies. This study addresses that need by examining a specific case: reflective essays produced in a postgraduate-level ethics course. As a course-embedded task, these essays provide authentic insights into how students articulate their ethical reasoning in the context of AI use in education. Accordingly, the purpose of this study is to explore postgraduate students’ perceptions of AI-assisted academic cheating by analyzing their reflective essays. Specifically, we seek to identify the behaviors they associate with AI-assisted academic dishonesty, the factors that motivate such behaviors, and the strategies they propose for mitigating them.

2 Review of related literature

2.1 Academic integrity and conventional forms of academic misconduct

Academic cheating refers to acts of dishonesty or fraud within academic settings in which students engage in behaviors that violate the principles of academic integrity. These behaviors typically involve attempts to gain an unfair advantage or to deceive others in order to achieve academic success. Academic cheating takes various forms, such as plagiarism, examination cheating, and the fabrication of data or results. Overall, academic cheating undermines the foundational values of fairness, honesty, and intellectual integrity that are essential to the educational process (Lim and See, 2001). For higher education institutions, it also erodes institutional reputation and diminishes the perceived integrity of academic degrees, posing significant challenges to the broader mission of higher education.

Academic dishonesty is a long-standing concern in higher education that predates the emergence of AI. Foundational studies have shown that student cheating behaviors are shaped by institutional context, policies, peer influence, and ethical culture (McCabe et al., 2001; McCabe and Trevino, 1993). Later studies further showed that exemplary academic integrity policies require fairness, clarity, consistency, and an educational rather than purely punitive approach (Bretag, 2013; Bretag et al., 2011), and that both undergraduate and postgraduate students need training to improve their understanding of plagiarism (Bretag, 2013). From a values perspective, academic integrity is grounded in honesty, trust, fairness, respect, responsibility, and courage (International Center for Academic Integrity, 2021), while cultural and pedagogical contexts strongly affect how students interpret these values: students’ interpretations of integrity are deeply influenced by institutional culture, teaching practices, and broader social values (Macfarlane et al., 2014). Similarly, systemic forms of misconduct such as contract cheating and plagiarism illustrate that policy and surveillance alone are insufficient without a parallel effort to build a culture of integrity (Dawson and Sutherland-Smith, 2018; Sutherland-Smith, 2008). Chesney (2009) likewise argued that promoting integrity is not simply about controlling student behavior but about embedding ethical learning across the curriculum.

Overall, these prior studies provide a rich body of evidence that academic integrity requires a dual approach: enforceable policies and the cultivation of an ethical culture. They offer an essential backdrop for understanding AI-assisted academic cheating, which introduces new technological affordances for academic dishonesty but may ultimately be driven by the same foundational factors that have long shaped dishonesty in higher education.

2.2 Emergence of AI-assisted academic cheating

Although student academic cheating has long persisted and become widespread across universities (Yu et al., 2021), the emergence of AI-powered tools such as chatbots has introduced a new layer of complexity, blurring the boundary between legitimate academic support and unethical behavior (Lau et al., 2022).

AI-assisted academic cheating refers to the unethical use of AI technologies to produce academic work without genuine student effort, including generating essays, completing assignments, or answering examination questions (Oravec, 2023). This emerging form of dishonesty poses a serious threat to academic integrity by undermining fairness and diminishing the value of educational credentials (Abd-Elaal et al., 2019; Yusuf et al., 2024). Additionally, AI-generated outputs can easily bypass traditional plagiarism detection tools such as Turnitin and iThenticate, complicating detection and enforcement (Chaudhry et al., 2023). The increasing sophistication and quality of AI-generated content further complicate institutional efforts to ensure credible assessment processes. This phenomenon blurs the line between authentic learning and outsourcing academic work (Lau et al., 2022), forcing educators to reconsider definitions of acceptable AI use and to foster responsible digital citizenship among students (Yan, 2023). However, institutional policies frequently lag behind rapid advances in AI technologies, leading to ambiguity in ethical guidelines and enforcement mechanisms (Sallam et al., 2023).

Additionally, AI-assisted academic cheating encompasses not only the use of AI to generate entire academic assignments but also subtler forms, such as employing AI to rewrite texts, create bibliographies, or assist in problem-solving without attributing sources (Lau et al., 2022; Oravec, 2023). This diversity makes it difficult for educators to identify and address all forms of AI misuse effectively (Abd-Elaal et al., 2019). Modern AI tools enable new types of serious academic misconduct that are not easily detected and, even if detected, are difficult to prove (Abd-Elaal et al., 2019). To address these challenges, some institutions advocate redesigning assessments to emphasize in-person presentations, oral defenses, and process documentation, which are less susceptible to AI exploitation (Chaudhry et al., 2023; Nikolic et al., 2023). Others underscore the importance of digital literacy programs that educate students about ethical AI use and the consequences of academic dishonesty (Chaudhry et al., 2023; Jeon and Lee, 2023). Beyond these institutional responses, understanding students’ perceptions of AI-assisted academic cheating is particularly crucial, as it provides insight into the underlying drivers of AI misuse and informs the development of effective interventions (Nguyen and Goto, 2024; Pariyanti et al., 2025).

2.3 AI-assisted academic cheating among university students

Recent literature reveals growing concern about AI-assisted academic cheating among university students, highlighting both behavioral patterns and underlying factors. A study using interviews and list experiments with 305 undergraduate Economics and Business students in Indonesia showed that, when asked directly, students were often reluctant to admit to dishonest behavior such as using AI to cheat, owing to feelings of shame and social pressure (Pariyanti et al., 2025). However, the list experiment method, which provided anonymous data, elicited higher rates of admitted academic cheating (Pariyanti et al., 2025). The study also found a significant relationship between students’ spiritual values and their ethical behavior, with notable gender differences in the tendency to cheat academically (Pariyanti et al., 2025). Similarly, a quantitative study of 1,386 Vietnamese undergraduates using list experiments found that students tended to conceal AI-assisted cheating when asked directly: anonymized indirect measures yielded cheating rates nearly three times higher than those observed through direct questioning (Nguyen and Goto, 2024). Differences by gender and academic level also emerged: senior female students showed higher cheating tendencies than first-year female students, while male students exhibited cheating behaviors consistently across all years (Nguyen and Goto, 2024). The authors suggest that factors such as gender, academic pressure, and peer influence contribute to these heterogeneous cheating behaviors (Nguyen and Goto, 2024).

An interview study exploring ethical issues in ChatGPT use among 13 undergraduate and 6 postgraduate business students in the UAE found that students were concerned about privacy, data security, bias, plagiarism, and lack of transparency (Benuyenah and Dewnarain, 2024). This may complicate our understanding of students’ attitudes toward using AI for academic tasks, since students may be aware of ethical dilemmas yet still engage in questionable behavior. A survey of 400 undergraduate health students in the United States revealed similar ambivalence: most students saw generative AI as a threat to graded assignments such as tests and essays, but many believed that using these tools for learning and research outside graded assignments was acceptable (Kazley et al., 2025). In Mexico, a survey of 376 undergraduate students found low outright disapproval of academic cheating: approximately one-third of students did not condemn such behaviors, and 85% admitted to at least one cheating behavior (Lau et al., 2022).

Drawing on the theory of planned behavior, a longitudinal survey of undergraduate students in Austria (610 at Time 1, the beginning of the semester, and 212 at Time 2, 3 months later) showed that students’ attitudes, subjective norms, and perceived behavioral control significantly predicted their intention to cheat using AI-generated texts, which in turn predicted actual use (Greitemeyer and Kastenmüller, 2024). The same authors’ earlier survey of 283 undergraduate students found that the personality traits Honesty-Humility, Conscientiousness, and Openness to Experience were negatively correlated with the intention to cheat using chatbot-generated texts, whereas Machiavellianism, narcissism, and psychopathy were positively correlated with it (Greitemeyer and Kastenmüller, 2023). In a multiple regression analysis, Honesty-Humility was the strongest predictor, suggesting that individuals who score high on this factor are less likely to use chatbot-generated texts to cheat because they prioritize fairness over their own interests (Greitemeyer and Kastenmüller, 2023). These psychological insights may therefore point to ways of promoting ethical behavior in academic contexts, such as nurturing students’ Honesty-Humility (Greitemeyer and Kastenmüller, 2023).

2.4 Research gaps

Although AI-assisted academic cheating in higher education has attracted increasing scholarly attention, existing research focuses predominantly on undergraduate populations, with limited exploration of postgraduate students’ experiences. Most studies employ quantitative methods such as surveys and list experiments to measure cheating prevalence and related behavioral factors; these methods cannot capture the rich qualitative insights that reveal the deeper motivations and ethical considerations behind AI misuse. Additionally, the existing literature highlights general concerns about AI’s impact on academic integrity but lacks a nuanced understanding of how postgraduate students define AI-assisted cheating, what drives their engagement in such behaviors, and what solutions they envision. These studies also tend to treat academic cheating as a clear-cut ethical violation, often overlooking the subtle distinctions students draw between acceptable and unacceptable uses of AI tools in their studies. This oversimplification fails to capture the diverse ways in which students interpret and rationalize their behaviors, which can range from outright misconduct to perceived legitimate assistance. Without these viewpoints, institutions risk enforcing policies that are disconnected from students’ realities and that may not address the root causes of misconduct. Addressing these gaps can provide critical contextual knowledge to inform targeted policies and educational strategies aimed at preserving academic honesty in an evolving AI landscape.

2.5 Research questions

Based on the identified gaps, this study seeks to answer the following research questions:

Research question 1 (RQ1): What types of behaviors do postgraduate students perceive as AI-assisted academic cheating?

Research question 2 (RQ2): What factors motivate or drive postgraduate students to engage in AI-assisted academic cheating?

Research question 3 (RQ3): What solutions or strategies do postgraduate students suggest to mitigate AI-assisted academic cheating?

3 Methods

3.1 Theoretical approach

This study adopted a strategy of theoretical triangulation to strengthen the interpretive depth of the analysis. AI-assisted academic cheating is a complex, emergent phenomenon that involves individual meaning-making, the mediating role of technology in shaping ethical judgments, and the influence of social and institutional contexts. Because a single theoretical framework may not be sufficient to explain these multidimensional issues, we intentionally combined three interrelated perspectives to explore postgraduate students’ perceptions of AI-assisted academic cheating: constructivist epistemology (individual meaning-making), technological mediation theory (the mediating role of technology), and situated learning theory (social and institutional contexts). Drawing on these frameworks, our analysis sought to avoid one-sided interpretations and to generate a richer, more comprehensive understanding of how postgraduate students construct, negotiate, and rationalize their perceptions of AI-assisted academic cheating. Importantly, triangulation was not intended to test hypotheses but to provide layered insights into a phenomenon that spans personal, technological, and community domains. Throughout this manuscript, we used the three frameworks as conceptual lenses (sensitizing concepts) to guide the interpretation of qualitative data, in keeping with a theory-generating approach rather than the theory-testing approach typical of quantitative studies. Our research followed conventional content analysis within an exploratory qualitative design; the theories informed how we read the data rather than prescribing a step-by-step methodology.

3.1.1 Constructivist epistemology: AI ethics as socially constructed

The research is grounded in a constructivist epistemology, which posits that knowledge and reality are not objective and fixed but are actively constructed through social interactions, cultural norms, and lived experiences (Creswell and Poth, 2016). While realist models explain academic integrity based on predefined moral goods, constructivist models emphasize autonomous ethical reasoning shaped by context (Sparks and Wright, 2025). This makes constructivism particularly relevant for exploring how postgraduate students actively negotiate and construct ethical meaning in response to the novel, uncertain, and institutionally ambivalent landscape of AI-assisted academic practices (Raskin and Debany, 2018). From a constructivist perspective, students not only accept ethical norms in the use of AI as taught by their instructors, but they also actively formulate and internalize ethical principles in specific academic contexts (Asmarita et al., 2024). Thus, rather than viewing academic cheating as fixed, the study assumes that students’ perceptions of cheating are influenced by their educational contexts, institutional policies, and peer norms. For example, while one student may perceive using ChatGPT to generate a full essay as academic misconduct, another may interpret it as a legitimate form of support, particularly in the absence of clear guidance from instructors or institutions. This constructivist lens enables the study to examine how students co-create ethical boundaries around AI use based on their values, experiences, and pressures.

3.1.2 Technological mediation theory: AI as an ethical actor

Technological mediation theory challenges the notion that technologies are neutral tools and instead recognizes them as active mediators of human experiences, decisions, and moral reasoning (Verbeek, 2005). The theory acknowledges that technologies intervene in our lifeworld and influence how we think and moralize (Liu, 2023; Van Hanh and Turner, 2024). In academic contexts, generative AI systems such as ChatGPT do not simply support student learning; they transform how students understand intellectual effort, authorship, originality, and legitimacy. The immediacy and fluency of AI-generated output can lower the perceived effort required for academic tasks, thereby shifting students’ ethical judgments about what counts as their own work. For example, some students may perceive AI chatbot-generated content as their own (Chaudhry et al., 2023). Furthermore, as AI systems are increasingly designed to simulate human-like interaction, they may be perceived as collaborators rather than passive tools (Jeon and Lee, 2023). This complicates the ethical question: if a machine helps you without asking for credit, is using that help cheating? Thus, AI’s mediation of students’ learning may significantly influence their perceptions of academic integrity in contexts where norms for AI use have yet to solidify.

3.1.3 Situated learning theory: ethical behavior as contextually learned

The third theoretical foundation is situated learning theory (Lave and Wenger, 1991), which conceptualizes learning as inherently social and embedded within communities of practice. In the context of AI-assisted academic study, students’ perceptions and ethical stances are shaped not solely by formal instruction but by the social norms, peer behaviors, and institutional cultures that surround them. Peer norms, informal exchanges, implicit expectations, and faculty silence all contribute to students’ construction of what is considered acceptable AI use. For example, if students observe peers using AI tools to complete assignments with no apparent consequences, or if educators remain silent on the issue, such behaviors may become normalized and even valorized. As found in prior studies, students recognize potential harms of AI misuse (e.g., bias, plagiarism) (Benuyenah and Dewnarain, 2024), yet rationalize use based on situational pressures such as workload, unclear guidelines, or peer conformity (Nguyen and Goto, 2024). This supports the broader constructivist perspective that ethical meaning is not universally fixed but dynamically negotiated within specific contexts. Thus, situated learning provides a valuable lens for understanding how moral reasoning emerges not in isolation but through participation in academic microcultures.

3.2 Research design

This study employed an exploratory qualitative design to investigate how postgraduate students interpret and make meaning of AI-assisted academic cheating. The data consisted of 10 reflective essays submitted as part of a postgraduate-level ethics course. As a course-embedded task, these essays provided a naturalistic source of student voices, allowing participants to present their ethical reasoning in an authentic academic context. The aim of the study was not to generate generalizable claims but to capture rich, situated insights into how students construct their perceptions of academic integrity in relation to AI use.

We applied conventional content analysis to systematically identify, code, and categorize meaning units within the essays, following the procedures outlined in a previous study (Erlingsson and Brysiewicz, 2017). This approach was appropriate for capturing participants’ subjective interpretations and ethical reasoning, consistent with a constructivist epistemological stance (Creswell and Poth, 2016). While the dataset was limited to a single cohort and may not have reached theoretical saturation, this design was sufficient for an exploratory inquiry aimed at illuminating emergent patterns, conceptual categories, and potential pathways for future, more extensive research.

3.3 Materials

The data source for this study was 10 course-based reflective writing assignments by 10 postgraduate students (three men and seven women). The students had an average age of 23 and formed a relatively homogeneous group in terms of age and academic background. During the topic “AI ethics and academic integrity” in Professional Ethics Education, a core course in the master’s program in Teaching and Learning at Hanoi University of Science and Technology (HUST), the students were asked to submit individual essays on AI-assisted academic cheating. The reflective essay was designed as a standard learning task within the course but also served as a means of data collection. The assignment was structured around three guiding questions: (1) What behaviors do you consider to be AI-assisted academic cheating? (2) What factors do you think contribute to students engaging in such behaviors? (3) What strategies or solutions do you propose to mitigate AI-assisted academic cheating? Essays ranged from 2,000 to 3,000 words and were submitted electronically in December 2024. The task was designed to elicit personal reflection and ethical reasoning in a self-paced format, allowing students to express their perspectives without time pressure or external influence.

3.4 Data analysis

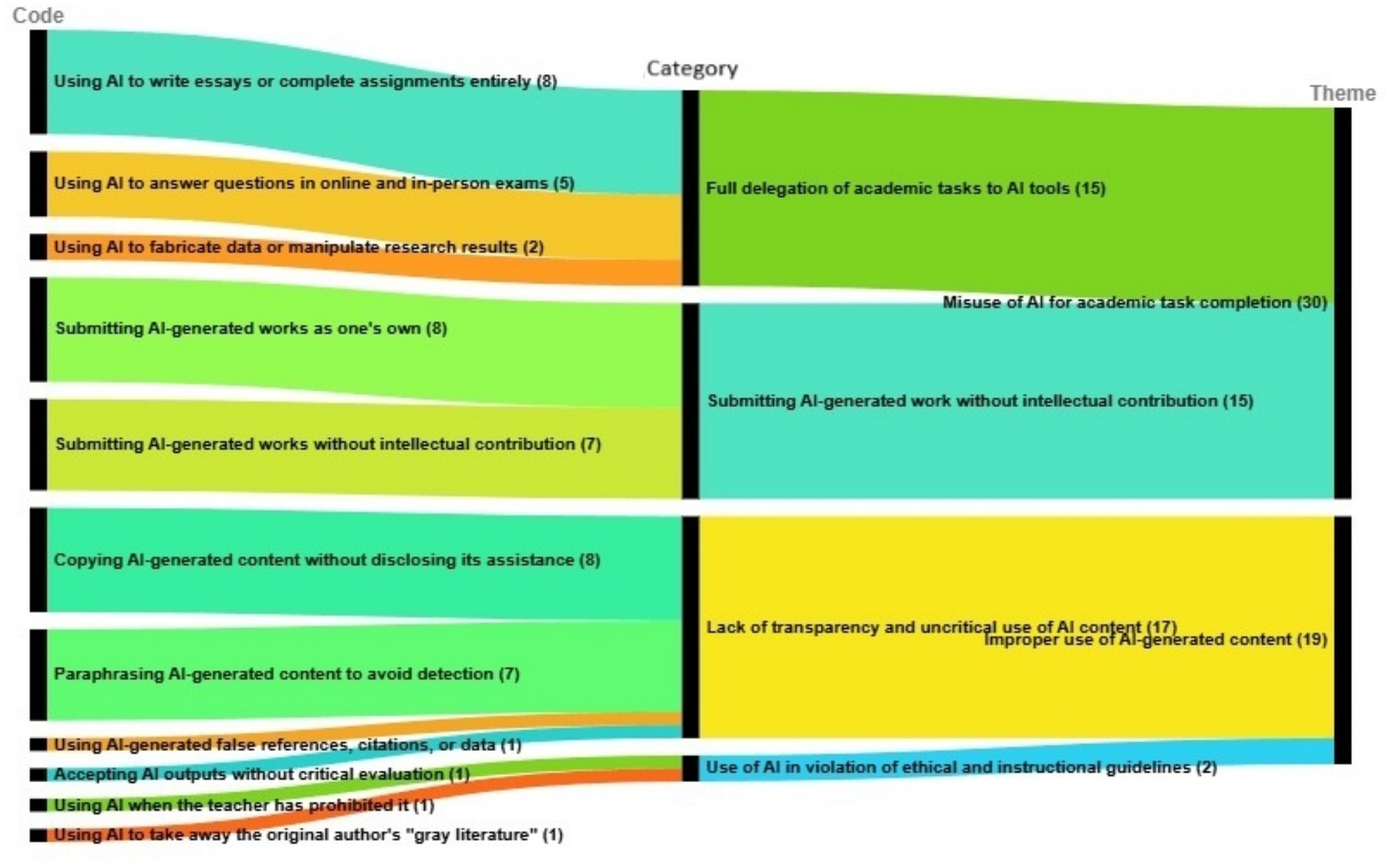

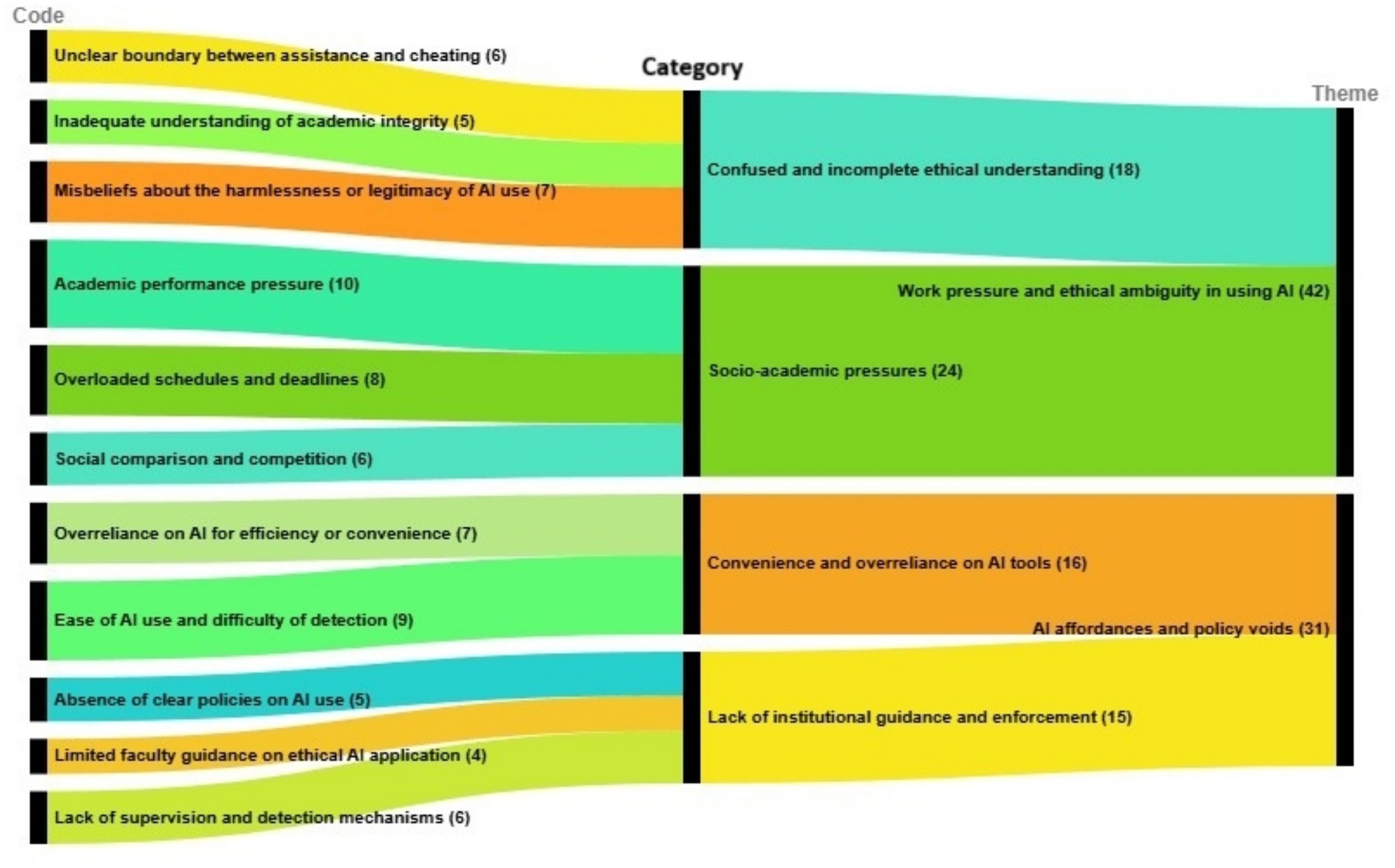

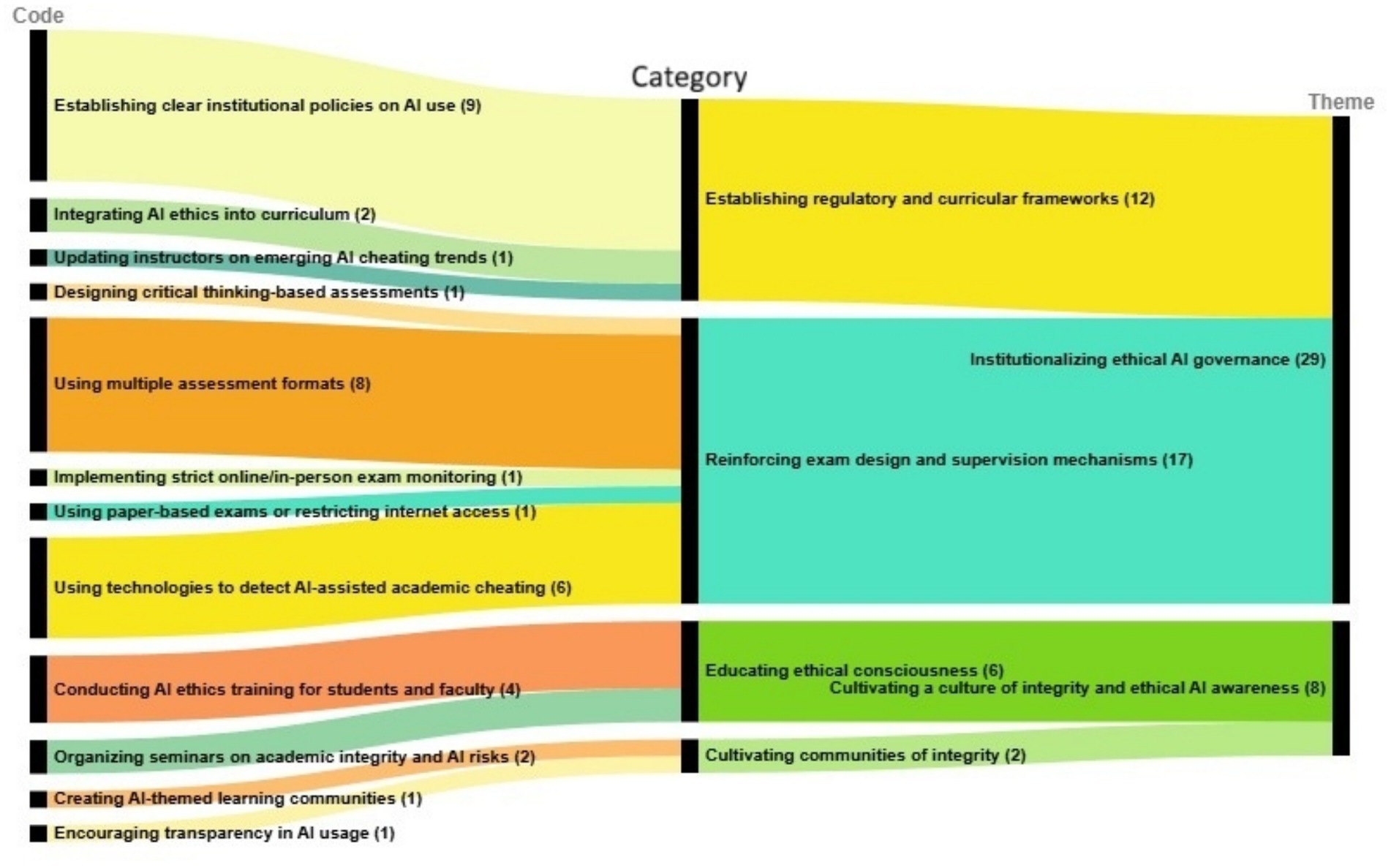

We conducted a conventional content analysis (Erlingsson and Brysiewicz, 2017), identifying meaning units, generating codes, and abstracting these into categories and themes. We began by carefully reading all 10 student essays multiple times to gain a holistic understanding of the content. Through this iterative process, we identified 159 meaning units, which are phrases or text segments directly related to the research topic. These meaning units were condensed and assigned 34 unique codes. We then grouped the codes into 12 categories, which were abstracted into 6 overarching themes aligned with the study’s research questions and theoretical framework, detailed in Figures 1–3.

Figure 1. Alluvial diagram illustrating how postgraduate students describe behaviors associated with AI-assisted academic cheating. The diagram maps 49 meaning units onto specific codes and four categories: full delegation of academic tasks to AI, submitting AI-generated work without intellectual contribution, lack of transparency in acknowledging AI assistance, and using AI in violation of ethical or instructional guidelines. Flow widths represent the frequency of each behavior. These categories converge into two themes: “Misuse of AI for academic task completion” and “Improper use of AI-generated content.”

Figure 2. Alluvial diagram showing how 73 meaning units describing the factors that motivate AI-assisted academic cheating map onto codes, four categories, and two themes. The four categories are confused or incomplete ethical understanding, socio-academic pressures, convenience and overreliance on AI, and lack of institutional guidance and enforcement. These categories flow into two themes: “Work pressure and ethical ambiguity in using AI” and “AI affordances and policy voids.”

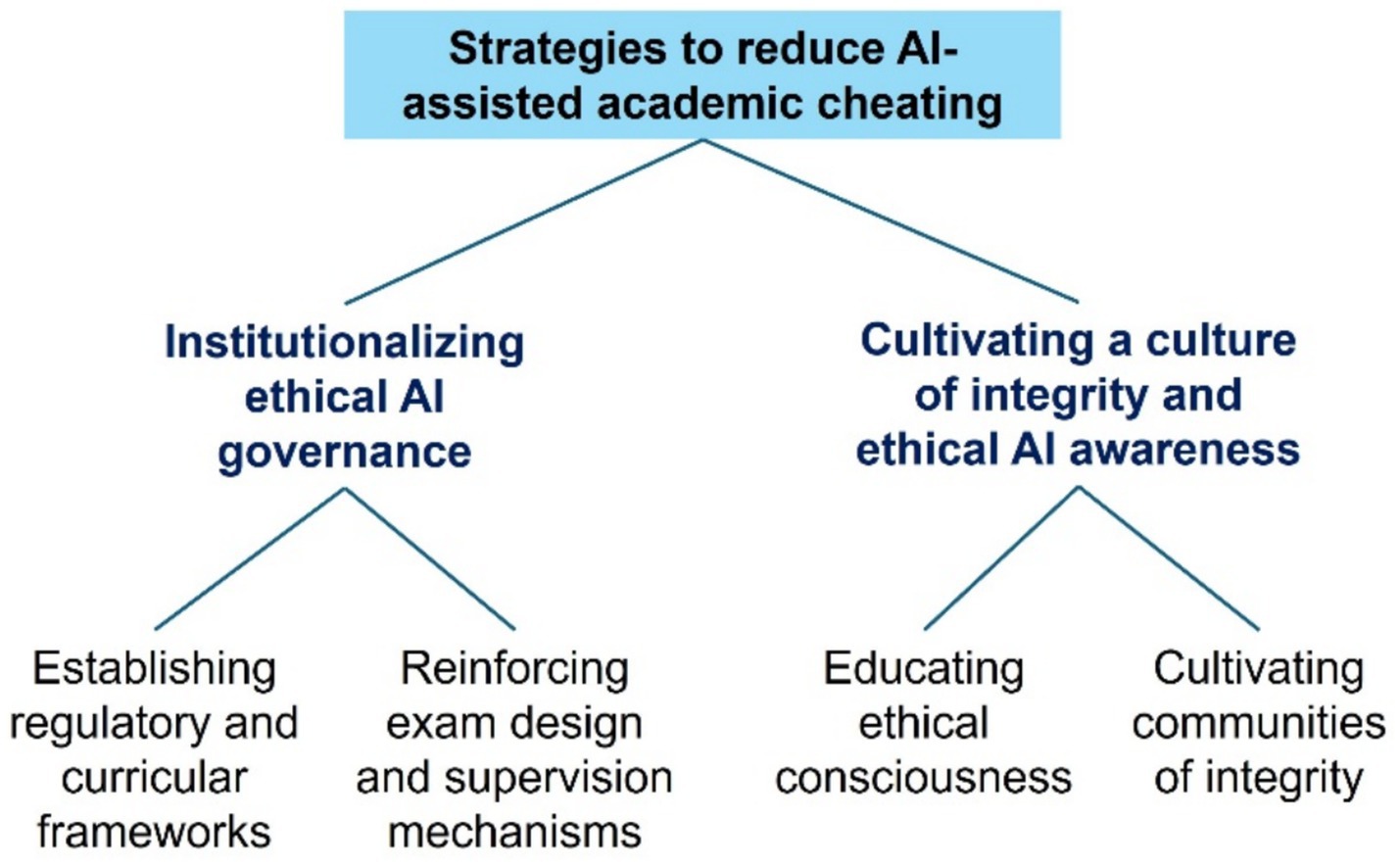

Figure 3. Alluvial diagram that illustrates how 37 meaning units map onto codes, categories, and two themes outlining students’ proposed solutions to reduce AI-assisted academic cheating. The diagram organizes solutions into four categories: establishing regulatory and curricular frameworks, reinforcing exam design and supervision mechanisms, educating ethical consciousness, and building integrity-centered communities. These flow into two themes: “Institutionalizing ethical AI governance” and “Cultivating a culture of integrity and ethical AI awareness.” The largest flow highlights students’ preference for assessment redesign and clear policies, while smaller flows show interest in ethics training and community-based approaches to support responsible AI use.
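To make the coding hierarchy (meaning units, codes, categories, themes) and the alluvial flow widths in Figures 1–3 more concrete, the following Python sketch shows one way coded meaning units could be stored and rolled up. It is an illustrative assumption, not the authors’ tooling (the text names no analysis software): the `MeaningUnit` class, the helper functions, and every label and excerpt in the example are hypothetical. Only the per-question totals of 49, 73, and 37 meaning units, which sum to the reported 159, come from the study.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass

# Per-RQ meaning-unit totals reported in Figures 1-3 of the study.
REPORTED_UNITS_PER_RQ = {"RQ1": 49, "RQ2": 73, "RQ3": 37}
assert sum(REPORTED_UNITS_PER_RQ.values()) == 159  # matches the 159 units overall


@dataclass(frozen=True)
class MeaningUnit:
    """One coded text segment with its (hypothetical) code, category, and theme."""
    essay_id: str
    excerpt: str
    code: str
    category: str
    theme: str


def roll_up(units):
    """Count meaning units at each level of the hierarchy."""
    codes = Counter(u.code for u in units)
    categories = Counter(u.category for u in units)
    themes = Counter(u.theme for u in units)
    return codes, categories, themes


def alluvial_links(units):
    """Flow widths for an alluvial diagram: units per code->category and category->theme link."""
    code_to_category = Counter((u.code, u.category) for u in units)
    category_to_theme = Counter((u.category, u.theme) for u in units)
    return code_to_category, category_to_theme


def inconsistent_codes(units):
    """Return codes filed under more than one category (a strict hierarchy has none)."""
    seen = defaultdict(set)
    for u in units:
        seen[u.code].add(u.category)
    return {code: cats for code, cats in seen.items() if len(cats) > 1}


# Hypothetical example: invented excerpts and shortened labels, not the study's data.
units = [
    MeaningUnit("E01", "excerpt A", "AI writes whole essay", "Full delegation", "Misuse for task completion"),
    MeaningUnit("E04", "excerpt B", "AI writes whole essay", "Full delegation", "Misuse for task completion"),
    MeaningUnit("E07", "excerpt C", "Copying without disclosure", "Lack of transparency", "Improper use of content"),
]

codes, categories, themes = roll_up(units)
code_to_category, category_to_theme = alluvial_links(units)
print(codes)
print(category_to_theme)
```

Counting (code, category) and (category, theme) pairs yields the link widths an alluvial diagram needs, and `inconsistent_codes` flags any code assigned to more than one category, which would break the strict nesting the analysis assumes.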

3.5 Inter-rater reliability

Two authors, NVH and NTD, were involved in the data analysis. To establish inter-rater reliability, we randomly selected three student essays, representing 30% of the dataset. Each author independently reviewed these three essays and conducted open coding. Using a simple percentage agreement method (McHugh, 2012), we calculated an agreement rate of 87% between our independent codings, which we considered acceptable for qualitative content analysis at this stage (Ha et al., 2023). We then met to compare our coding outcomes, discuss discrepancies, and refine the definitions of codes and categories; through this discussion, we reached full consensus on the interpretation of meaning units and the grouping of codes. After achieving this level of agreement, one researcher (NTD) coded the remaining data, while the second researcher (NVH) reviewed the final categories and themes.
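Simple percentage agreement is the share of coding decisions on which both coders assigned the same label. A minimal Python sketch follows; it assumes the two coders’ labels are aligned item by item in two lists, and the five-item example and its labels are invented rather than drawn from the study. This statistic does not correct for agreement expected by chance, which is why McHugh (2012) also discusses Cohen’s kappa.

```python
def percent_agreement(coder_a, coder_b):
    """Percentage of items on which two coders assigned the same code."""
    if len(coder_a) != len(coder_b):
        raise ValueError("Both coders must label the same items in the same order.")
    if not coder_a:
        raise ValueError("At least one coded item is required.")
    # Count positions where the two coders' labels are identical.
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)


# Hypothetical example: five meaning units coded independently by two researchers.
coder_1 = ["delegation", "non-disclosure", "paraphrasing", "exam use", "delegation"]
coder_2 = ["delegation", "non-disclosure", "paraphrasing", "exam use", "fabrication"]
print(f"{percent_agreement(coder_1, coder_2):.1f}%")  # prints "80.0%"
```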

3.6 Limitations

This study has several methodological limitations. The dataset consisted of 10 reflective essays from a single postgraduate cohort, which limited the diversity of perspectives and may not allow for theoretical saturation in the strict sense used in grounded theory or other interpretive traditions. Because this study employed an exploratory qualitative design, the goal was not to achieve full saturation but to generate an initial picture of how postgraduate students perceive AI-assisted academic cheating. From this perspective, the dataset may be sufficient to highlight emergent categories and themes and to provide a conceptual basis for further inquiry. The findings should therefore be interpreted as exploratory insights rather than generalizable conclusions. Future studies could extend this work through semi-structured interviews with participants from multiple programs, cohorts, and phases of study, concluding data collection once thematic saturation is reached. This approach would allow deeper probing of the theoretical dimensions identified here and provide more comprehensive evidence for testing and refining the conceptual model.

4 Findings

4.1 RQ1: What behaviors do postgraduate students perceive as AI-assisted academic cheating?

Twelve distinct behaviors were identified in postgraduate students’ essays, reflecting a wide range of perceptions about what constitutes academic dishonesty in AI use. These behaviors were grouped into four categories: (1) full delegation of academic tasks to AI tools, (2) submitting AI-generated work without intellectual contribution, (3) lack of transparency and uncritical use of AI-generated content, and (4) use of AI in violation of ethical and instructional guidelines. From these categories, two overarching themes emerged: (1) Misuse of AI for academic task completion and (2) Improper use of AI-generated content (see Figure 1).

As shown in Figure 1, the distribution of coded behaviors (N = 49 meaning units) indicates that students most frequently highlighted full delegation to AI and non-disclosure of AI assistance. The five most common codes were using AI to write essays/assignments (n = 8), submitting AI-generated work as one’s own (n = 8), submitting AI-generated work without intellectual contribution (n = 7), copying AI-generated content without disclosure (n = 8), and paraphrasing AI-generated content to avoid detection (n = 7). In contrast, more covert or uncritical practices, such as using AI-fabricated references or accepting outputs uncritically, appeared infrequently (each n = 1). Overall, the pattern suggests that postgraduate students view AI-assisted academic cheating primarily as a matter of delegating responsibility and concealing technological involvement.
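The share of RQ1 meaning units covered by the five most frequent codes follows directly from the counts reported above. The Python sketch below ranks the seven codes whose frequencies the text gives and computes that share; the remaining 9 of the 49 units belong to codes whose individual counts are not itemized in the text, so they are left out. Breaking ties alphabetically is an arbitrary choice made here for reproducibility, not part of the study.

```python
# Code frequencies for RQ1 as reported in Section 4.1 (N = 49 meaning units).
# Only these seven codes have counts given in the text; the other units are omitted.
REPORTED_COUNTS = {
    "Using AI to write essays/assignments": 8,
    "Submitting AI-generated work as one's own": 8,
    "Submitting AI-generated work without intellectual contribution": 7,
    "Copying AI-generated content without disclosure": 8,
    "Paraphrasing AI-generated content to avoid detection": 7,
    "Using AI-fabricated references": 1,
    "Accepting AI outputs uncritically": 1,
}
TOTAL_RQ1_UNITS = 49


def top_codes(counts, k):
    """Return the k most frequent codes; ties are broken alphabetically."""
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))[:k]


top_five = top_codes(REPORTED_COUNTS, 5)
covered = sum(n for _, n in top_five)
share = covered / TOTAL_RQ1_UNITS
unitemized = TOTAL_RQ1_UNITS - sum(REPORTED_COUNTS.values())

for code, n in top_five:
    print(f"{n:>2}  {code}")
print(f"Top five codes cover {covered} of {TOTAL_RQ1_UNITS} meaning units ({share:.0%})")
print(f"Units under codes without reported counts: {unitemized}")
```

Run as written, the last two lines print "Top five codes cover 38 of 49 meaning units (78%)" and "Units under codes without reported counts: 9", which quantifies how strongly the RQ1 data concentrate on delegation and non-disclosure.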

4.1.1 Theme 1: misuse of AI for academic task completion

The codes in this theme include using AI to write essays or complete assignments entirely, using AI to answer questions in online and in-person examinations, fabricating data or manipulating research results with AI, submitting AI-generated work as one’s own, and submitting AI-generated work without intellectual contribution. These practices capture students’ concerns about fully delegating academic tasks to AI systems. Viewed through the lens of constructivist epistemology, they illustrate how learners actively define and interpret academic integrity in contexts where technological capabilities challenge traditional expectations of authorship. Students perceived such actions as clear violations because they removed the individual’s intellectual effort. These perceptions suggest that ethical judgments are not fixed but are constructed through students’ lived learning experiences. In sum, this theme reflects students’ negotiation of the boundary between acceptable assistance and academic dishonesty when AI performs tasks that traditionally represent personal achievement.

4.1.2 Theme 2: improper use of AI-generated content

The codes in this theme include copying AI-generated content without disclosing AI assistance, paraphrasing AI-generated content to avoid detection, using AI-fabricated references, citations, or data, accepting AI outputs without critical evaluation, using AI when the teacher has prohibited it, and using AI to appropriate original authors’ “gray literature.” Together, these codes reflect practices in which students engaged with AI outputs in covert or uncritical ways. Through the lens of technological mediation theory, these behaviors illustrate how AI affordances reshape students’ ethical judgments. The fluency of AI-generated text made it easy to copy or paraphrase without acknowledgment, while the plausibility of fabricated references reduced students’ motivation to verify accuracy. Similarly, the immediacy of AI responses encouraged uncritical acceptance, blurring the line between genuine understanding and mechanical reproduction. Even when institutional or instructor prohibitions were clear, the accessibility of AI tools mediated students’ decisions to disregard those rules. In sum, this theme highlights how AI mediation complicates ethical boundaries, producing a variety of improper uses that students struggled to distinguish clearly from legitimate assistance.

4.2 RQ2: What factors motivate or drive students to engage in AI-assisted academic cheating?

Postgraduate students described AI-assisted academic cheating as a response to multiple pressures and uncertainties. These motivations were grouped into four categories: (1) confused and incomplete ethical understanding, (2) socio-academic pressures, (3) convenience and overreliance on AI tools, and (4) lack of institutional guidance and enforcement. From these, two themes emerged: (3) Work pressure and ethical ambiguity in using AI and (4) AI affordances and policy voids (see Figure 2).

As shown in Figure 2, aggregating frequencies at the category level (N = 73 meaning units) showed socio-academic pressures as the largest contributor (24/73; 32.9%), followed by confused/incomplete ethical understanding (18/73; 24.7%), convenience and overreliance (16/73; 21.9%), and lack of institutional guidance/enforcement (15/73; 20.5%). At the code level, the five most common codes were academic performance pressure (n = 10), ease of AI use/difficulty of detection (n = 9), overloaded schedules (n = 8), overreliance on AI (n = 7), and misbeliefs about harmlessness/legitimacy (n = 7). The remaining codes were mentioned slightly less often, with frequencies ranging from 4 to 6. Overall, postgraduate students perceived AI-assisted academic cheating as emerging from a combination of socio-academic pressure, ethical ambiguity, and the affordances of AI tools, compounded by policy and enforcement gaps.
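As a simple arithmetic check, the category-level percentages for RQ2 can be recomputed from the reported counts. The sketch below is illustrative only: the dictionary keys are shortened paraphrases of the category labels, and the helper name `category_shares` is hypothetical rather than part of the study's analysis workflow.

```python
# Category-level frequencies for RQ2, as reported in Section 4.2 (N = 73 meaning units).
# Keys are shortened paraphrases of the category labels used in the text.
rq2_counts = {
    "socio-academic pressures": 24,
    "confused/incomplete ethical understanding": 18,
    "convenience and overreliance on AI tools": 16,
    "lack of institutional guidance/enforcement": 15,
}


def category_shares(counts):
    """Return the total count and each category's share as a percentage rounded to one decimal."""
    total = sum(counts.values())
    shares = {name: round(100 * n / total, 1) for name, n in counts.items()}
    return total, shares


total, shares = category_shares(rq2_counts)
print(f"N = {total}")
for name, pct in shares.items():
    print(f"{name}: {rq2_counts[name]}/{total} = {pct}%")
```

Running it yields N = 73 and shares of 32.9%, 24.7%, 21.9%, and 20.5%, matching the figures reported for the four categories.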

4.2.1 Theme 3: work pressure and ethical ambiguity in using AI

The codes in this theme include unclear boundaries between assistance and cheating, inadequate understanding of academic integrity, misbeliefs about the harmlessness or legitimacy of AI use, academic performance pressure, overloaded schedules, and social comparison. Together, they illustrate how students negotiate the blurred ethical space of AI-assisted practices. From a constructivist epistemology perspective, students actively constructed personal definitions of integrity in the absence of clear guidance. For example, students described unclear boundaries between assistance and cheating or an inadequate understanding of integrity, and often justified AI use as harmless. At the same time, the codes related to misbeliefs about AI legitimacy resonate with technological mediation theory: the affordances of AI tools, such as speed and fluency, reshaped ethical judgments by lowering the perceived effort of academic work and making transgressive practices seem more acceptable. Finally, the codes for academic performance pressure, overloaded schedules, and social comparison highlight a situated learning dimension. Students’ ethical reasoning was not formed in isolation but was embedded within peer norms, competitive academic environments, and institutional silence, which normalized ambiguous practices. In sum, this theme shows how socio-academic pressures and ethical uncertainty create conditions in which AI-assisted academic cheating can become rationalized and normalized.

4.2.2 Theme 4: AI affordances and policy voids

The codes in this theme include overreliance on AI for efficiency or convenience, ease of AI use and difficulty of detection, absence of clear policies on AI use, limited faculty guidance on ethical AI application, and lack of supervision and detection mechanisms. Together, they indicate how institutional gaps and technological affordances jointly encourage misuse. From the lens of technological mediation theory, students’ dependence on AI reflects the way tool affordances reshape academic practices. For example, AI systems are perceived as fast, free, and capable of generating high-quality output, which encourages dependence, while the difficulty of detection reduces the perceived risk of misconduct. At the same time, the absence of clear policies and limited faculty guidance point to a guidance vacuum in which students are left to construct their own ethical interpretations. This resonates with constructivist epistemology, as learners fill policy gaps by creating personal rules about what constitutes legitimate AI use. Finally, the lack of supervision and detection mechanisms underscores how institutional silence can implicitly normalize questionable practices, a process consistent with situated learning theory, in which community norms and enforcement structures shape students’ ethical reasoning. In sum, these codes suggest that AI affordances and policy voids jointly produce conditions in which AI misuse can appear acceptable, an outcome that reflects both technological mediation and situated learning.

4.3 RQ3: What solutions or strategies do postgraduate students suggest to mitigate AI-assisted academic cheating?

Postgraduate students suggested a combination of institutional and cultural strategies to address AI-assisted academic cheating. Their suggestions were grouped into four categories: (1) establishing regulatory and curricular frameworks, (2) reinforcing examination design and supervision mechanisms, (3) educating ethical consciousness, and (4) cultivating communities of integrity. These were synthesized into two themes: (5) Institutionalizing ethical AI governance and (6) Cultivating a culture of integrity and ethical AI awareness (see Figure 3).

As shown in Figure 3, the solutions proposed by postgraduate students (N = 37 meaning units) concentrated on assessment design/supervision (17/37; 45.9%) and policy/curricular frameworks (12/37; 32.4%), with additional emphasis on ethics education (6/37; 16.2%) and integrity communities (2/37; 5.4%). At the code level, the four most common solutions were clear policies (n = 9), multiple assessment formats (oral/process-based) (n = 8), AI-detection technologies (n = 6), and AI ethics training for students and faculty (n = 4). The remaining codes were mentioned rarely (n = 1 or 2 each). Overall, although postgraduate students proposed diverse strategies, their responses showed a stronger preference for institutional and policy-based solutions than for strategies centered on individual ethical responsibility.
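The RQ3 percentages can likewise be recomputed from the reported counts, which also makes it easy to confirm that the per-question totals reported in Sections 4.1 to 4.3 (49, 73, and 37 meaning units) add up to the 159 meaning units stated in the abstract. This is an illustrative sketch only; the shortened category labels and variable names are chosen here for readability and do not come from the study's coding materials.

```python
# Category-level frequencies for RQ3, as reported in Section 4.3 (N = 37 meaning units).
rq3_counts = {
    "assessment design/supervision": 17,
    "policy/curricular frameworks": 12,
    "ethics education": 6,
    "integrity communities": 2,
}

# Meaning units per research question, as reported in Sections 4.1 to 4.3.
meaning_units = {"RQ1": 49, "RQ2": 73, "RQ3": 37}

total_rq3 = sum(rq3_counts.values())
shares_rq3 = {name: round(100 * n / total_rq3, 1) for name, n in rq3_counts.items()}

for name, pct in shares_rq3.items():
    print(f"{name}: {rq3_counts[name]}/{total_rq3} = {pct}%")

# The rounded shares sum to 99.9 rather than 100.0 because each is rounded to one decimal.
print(f"Sum of rounded shares: {round(sum(shares_rq3.values()), 1)}%")

# The three per-RQ totals should add up to the 159 meaning units reported in the abstract.
print(f"Total meaning units: {sum(meaning_units.values())}")
```

The shares come out as 45.9%, 32.4%, 16.2%, and 5.4%, and the per-question totals sum to 159; the 0.1-point shortfall from 100% is purely a rounding effect.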

4.3.1 Theme 5: institutionalizing ethical AI governance

Eight codes, each corresponding to a solution proposed by the students, were grouped under this theme. Viewed through situated learning theory, students’ suggestions highlight how ethical norms are shaped and reinforced within academic communities. Clear policies and curricular integration signal which practices are legitimate, while instructor training helps faculty consistently model and enforce shared expectations. Similarly, redesigned assessments and monitoring or detection mechanisms create institutional contexts in which opportunities for AI misuse are limited and integrity is encouraged. In this way, students framed governance not simply as rule enforcement but as the establishment of a communal framework that guides ethical learning. This theme therefore underscores students’ belief that institutional policies, curricula, and assessment designs are critical in shaping how members of the academic community practice integrity in the age of AI.

4.3.2 Theme 6: cultivating a culture of integrity and ethical AI awareness

The codes in this theme include conducting AI ethics training for students and faculty, organizing seminars on academic integrity and AI risks, creating AI-themed learning communities, and encouraging transparency in AI usage, reflecting students’ emphasis on cultural strategies in ensuring academic integrity. Viewed through situated learning theory, these practices highlight that ethical understanding is developed and reinforced through participation in academic communities. Training programs and seminars provide shared spaces where norms around AI use can be discussed and clarified, while student- or faculty-led learning communities offer opportunities to internalize these norms through dialog and collaboration. Calls for transparency further suggest that integrity is sustained when openness becomes part of everyday academic practice. Collectively, these codes underscore the view that integrity in the AI era is not only a matter of institutional rules but also of cultivating a community culture where ethical awareness is continuously learned, practiced, and supported.

5 Discussion

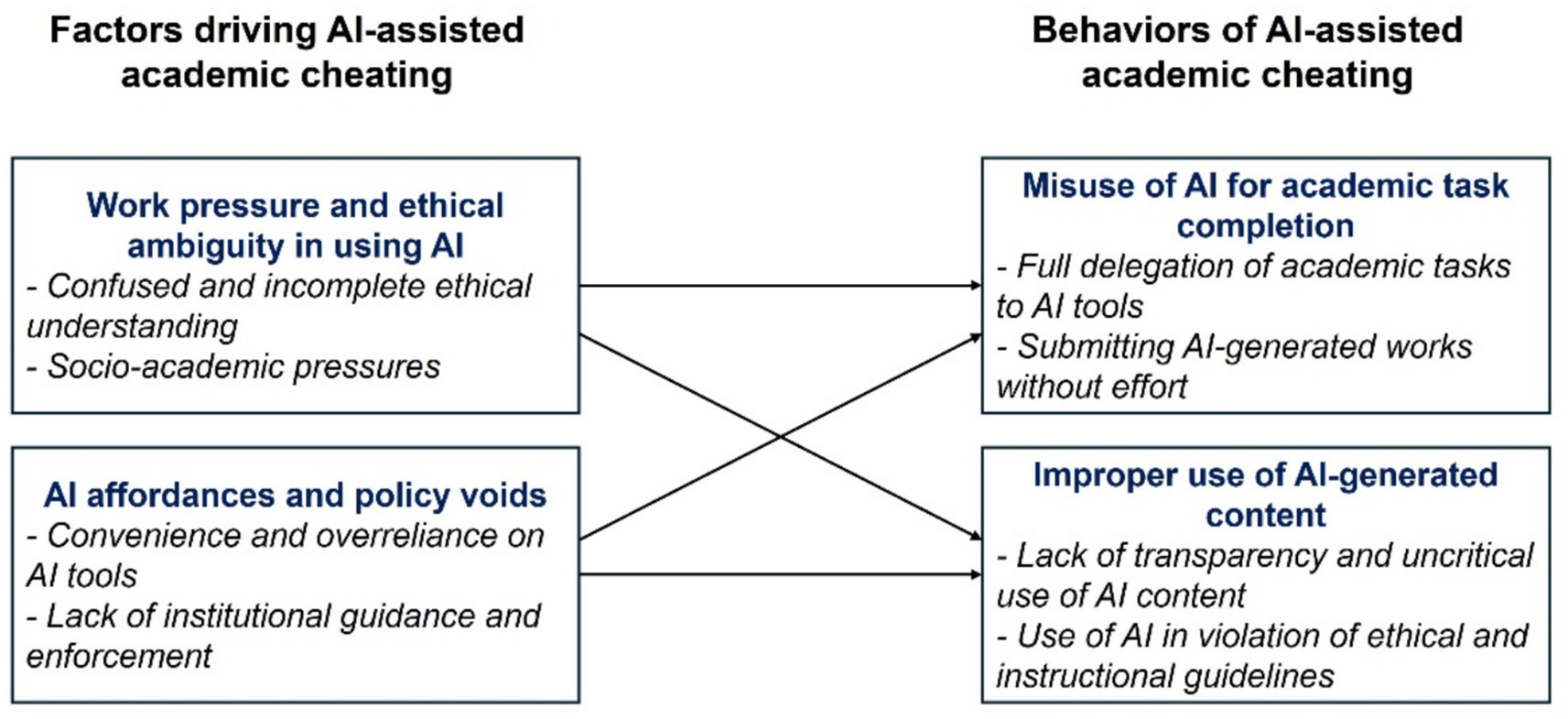

5.1 A conceptual model: from driving factors to behaviors of AI-assisted academic cheating

Through the content analysis of postgraduate students’ essays, we explored how students make sense of emerging forms of AI-assisted academic cheating. Rather than viewing cheating as a simple, individual act of dishonesty, students interpret it as a response to a wider set of conditions, including academic pressure, ethical ambiguity, technological convenience, and institutional silence, a view increasingly supported in the literature (Sallam et al., 2023). Based on students’ reflections, we propose a conceptual model (Figure 4) that illustrates how postgraduate students perceive the connection from driving factors to specific behaviors of AI-assisted academic cheating.

Figure 4. A conceptual model that illustrates how postgraduate students perceive the pathways connecting driving factors to behaviors of AI-assisted academic cheating. The model presents four driving factors: academic pressure, ethical ambiguity, AI affordances, and institutional policy gaps, positioned as inputs. Arrows from these factors point toward two behavioral outcomes: “Misuse of AI for academic task completion” and “Improper use of AI-generated content.”

5.1.1 Work pressure and ethical ambiguity in using AI

One of the most consistent findings in the student reflections is that academic pressure plays a pivotal role in shaping unethical AI use. All ten essays identified high expectations related to grades, scholarships, graduation timelines, and performance comparisons with peers as major stressors. This is consistent with Nguyen and Goto (2024), who found that students under high academic pressure were more likely to rationalize or conceal dishonest behaviors when using AI tools. Ethical ambiguity emerged as a second major factor, as students struggled to locate the blurred line between assistance and cheating. The reflective essays reveal that students are often unsure where acceptable AI use ends and academic dishonesty begins. This ambiguity arises partly from a lack of formal instruction on academic integrity in the context of AI and partly from misbeliefs about AI’s role. Several students likened AI chatbots to online search engines, perceiving them as benign tools rather than agents that could fundamentally compromise intellectual ownership (Chaudhry et al., 2023). These findings are consistent with Oravec (2023), who emphasized the increasing difficulty students face in defining authorship and originality in the age of generative AI.

5.1.2 AI affordances and policy voids

Alongside academic pressure and ethical ambiguity, the technological affordances of AI tools emerged as powerful drivers of misconduct. Students highlighted the efficiency, accessibility, and speed of AI-generated outputs as major temptations. These tools reduce the effort needed to complete academic tasks while offering high-quality responses that are difficult to detect with existing technologies. This finding mirrors Chaudhry et al. (2023), who pointed out that AI tools’ capability to produce seemingly original content often exceeds institutions’ ability to detect or manage it. The ease of use, combined with a minimal risk of being caught, encourages overreliance on AI. In addition, many students expressed frustration with the lack of clear guidelines on ethical AI use, which creates an ethical gray zone in which students are left to determine acceptable boundaries on their own. Prior research (Sallam et al., 2023) suggests that such regulatory ambiguity has contributed significantly to the rise of AI-assisted academic cheating, especially in settings where educational technologies evolve rapidly but ethical frameworks lag behind.

5.1.3 From driving factors to behavioral outcomes

Through their essays, postgraduate students highlighted how specific driving factors, including academic pressure, ethical ambiguity, AI affordances, and policy gaps, create conditions that make AI-assisted academic cheating more likely. These factors shape how students perceive the boundaries of acceptable AI use. As illustrated in Figure 4, students associated these influences with two key behavioral outcomes: (1) the misuse of AI for academic task completion, and (2) the improper use of AI-generated content.

The first behavioral outcome, misuse of AI for academic task completion, refers to situations in which students allow AI tools to perform entire assignments, such as writing essays, solving technical problems, or generating examination answers (Oravec, 2023). This behavior reflects a complete delegation of intellectual effort to technology (Lau et al., 2022; Oravec, 2023). Several essays emphasized that such use of AI undermines the learning process and constitutes clear academic dishonesty. Notably, this misuse is not perceived as arising from bad intentions alone. Students linked it to pressure to meet deadlines and unclear institutional messaging about what constitutes acceptable AI use. These reflections suggest that the behavior is often a response to contextual stress and ethical uncertainty, rather than a deliberate act of cheating.

The second behavioral outcome, improper use of AI-generated content, encompasses more subtle but equally problematic actions. These include copying AI-generated text without disclosing AI assistance, paraphrasing outputs to avoid detection, using AI-fabricated references, or submitting AI-written content without critical review (Lau et al., 2022; Oravec, 2023). Students viewed these practices as ethically ambiguous, often normalized within peer networks and overlooked by instructors. Some justified them as harmless, especially when the content is edited or when institutional policies remain silent on AI disclosure. This gray area, as students described it, makes it easier for such behaviors to spread. More importantly, the normalization of these practices signals a gradual shift in how students understand authorship and responsibility (Nguyen and Goto, 2024). When AI is fluent, fast, and widely used, the distinction between legitimate support and academic dishonesty becomes blurred.

As technological mediation theory (Verbeek, 2005) suggests, tools like ChatGPT not only reshape what is technically possible but also influence users’ ethical judgments. When AI begins to feel like a “neutral co-author,” the sense of academic responsibility can shift from the student to the system, raising urgent questions for educators, institutions, and policymakers alike.

5.2 Strategies to reduce AI-assisted academic cheating

Figure 5 of this study conceptualizes a dual-pathway framework for mitigating AI-assisted academic cheating, reflecting postgraduate students’ belief that effective solutions must integrate both top-down institutional actions and bottom-up cultural engagement. This finding aligns with broader calls in the literature for comprehensive responses to AI’s disruptive impact on education (Oravec, 2023; Sallam et al., 2023).

Figure 5. A dual-pathway framework depicting postgraduate students’ proposed strategies to mitigate AI-assisted academic cheating. It divides into two main branches: “Institutionalizing ethical AI governance” and “Cultivating a culture of integrity and ethical AI awareness.” The first branch includes “Establishing regulatory and curricular frameworks” and “Reinforcing exam design and supervision mechanisms.” The second branch includes “Educating ethical consciousness” and “Cultivating communities of integrity”.

As shown in Figure 5, on the institutional side, students advocated explicit policy frameworks, integration of AI ethics into academic curricula, and robust assessment designs, all of which address the policy vacuum and implementation lag described in prior studies (Sallam et al., 2023). These top-down strategies serve not only as preventive mechanisms but also as norm-setting tools that reduce ambiguity about acceptable AI use (Nguyen and Goto, 2024). The students’ recommendation to redesign assessments around process, personalization, and oral components is consistent with institutional practices proposed in existing work to reduce AI exploitability (Chaudhry et al., 2023; Nikolic et al., 2023).

At the same time, students recognized that policy alone is insufficient. Drawing on their experiences, they emphasized the need to foster an academic culture in which ethical AI use is discussed, internalized, and normalized through communities of practice. These grassroots strategies align with situated learning theory (Lave and Wenger, 1991) and support findings from related studies (Greitemeyer and Kastenmüller, 2024; Nguyen and Goto, 2024) showing that students’ attitudes toward AI ethics are shaped substantially by peer norms and faculty modeling. Student proposals, such as AI-themed ethics workshops and learning communities, suggest that ethical understanding emerges not only from top-down imposition but also from dialogical, socially situated practices (Chaudhry et al., 2023; Jeon and Lee, 2023).

Overall, the dual-pathway model offers a holistic framework for understanding and addressing AI-assisted academic cheating from the perspective of postgraduate students. Their views appear to reflect a broader shift in pedagogical philosophy: from enforcement to ethical empowerment, from punishment to prevention, and from unilateral regulation to co-created norms in the academic landscape of the AI era.

5.3 Connecting to the broader academic integrity literature

A central contribution of this study is that postgraduate students’ perspectives on AI-assisted academic cheating resonate strongly with long-standing research on academic integrity. Foundational studies have shown that student academic misconduct is rarely the result of individual morality alone, but is strongly shaped by contextual factors such as academic pressure, peer influence, pedagogical practices, and institutional silence (Macfarlane et al., 2014; McCabe et al., 2001; McCabe and Trevino, 1993). Our findings confirm and extend these insights into the AI era. The conceptual model developed from postgraduate students’ reflections identifies four key drivers: work pressure, ethical ambiguity, AI affordances, and policy voids, which closely mirror the traditional factors influencing academic misconduct. At the same time, the model highlights how generative AI intensifies these conditions by lowering the effort required for misconduct and blurring the boundary between acceptable assistance and dishonesty.

In terms of solutions, postgraduate students proposed strategies long emphasized in the academic integrity literature, including clarifying institutional policies and fostering an ethical culture (Bretag et al., 2011; Chesney, 2009; Dawson and Sutherland-Smith, 2018; Macfarlane et al., 2014; Sutherland-Smith, 2008). Their proposals suggest that, although the technological landscape is changing with AI, the principles of effective integrity promotion remain consistent.

6 Conclusion

This study provides a nuanced understanding of how postgraduate students perceive, rationalize, and respond to AI-assisted academic cheating. Through qualitative analysis of reflective essays, we uncovered not only the behaviors students associate with academic dishonesty but also the driving forces that enable such practices. Academic pressure, ethical ambiguity, AI affordances, and institutional silence emerged as key contextual factors shaping students’ decisions to misuse AI tools. These findings challenge the notion that AI-related cheating is solely a result of individual ethical failings. Instead, they reveal a broader ecology in which ethical boundaries are blurred, and institutional guidelines lag behind technological realities. The study’s proposed models offer conceptual tools to visualize these driving forces. To mitigate AI-assisted academic cheating, postgraduate students recommended a dual-pathway approach: institutional policy reforms that provide clear and enforceable standards, and cultural strategies that cultivate ethical awareness through community and dialog. Overall, this study highlights the importance of listening to postgraduate student voices and contributes to the development of evidence-based approaches to promote academic integrity in the age of AI.

6.1 Future directions for research

Building on the conceptual model developed from postgraduate students’ voices (Figure 4), future research should conduct quantitative studies to empirically test the relationships between driving factors and AI-assisted cheating behaviors. Using methods such as survey design and path analysis, researchers could examine how variables like academic pressure, ethical ambiguity, AI affordances, and policy gaps predict AI-assisted cheating behaviors such as task delegation to AI or improper content use. Validating this model with larger samples would enhance its generalizability and provide stronger evidence for targeted institutional interventions.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by the Office of Research and Technology Management of Hanoi University of Science and Technology (Approval number T2024-PC-003, December 13, 2024). The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required from the participants or the participants’ legal guardians/next of kin in accordance with the national legislation and institutional requirements.

Author contributions

NH: Funding acquisition, Validation, Project administration, Supervision, Conceptualization, Formal analysis, Writing – review & editing, Methodology, Writing – original draft, Data curation, Visualization, Resources. ND: Writing – review & editing, Formal analysis, Investigation.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This research was funded by Hanoi University of Science and Technology (HUST) under project number T2024-PC-003.

Acknowledgments

The authors sincerely thank the 10 postgraduate students who consented to the use of their reflective essays for research purposes. Their thoughtful reflections greatly contributed to understanding how postgraduate learners perceive AI-assisted academic cheating and its ethical implications.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abd-Elaal, E.-S., Gamage, S. H., and Mills, J. E. (2019). Artificial intelligence is a tool for cheating academic integrity. 30th Annual Conference for the Australasian Association for Engineering Education (AAEE 2019): Educators Becoming Agents of Change: Innovate, Integrate, Motivate, 397–403.

Abdel-Messih, M. S., and Kamel Boulos, M. N. (2023). ChatGPT in clinical toxicology. JMIR Med. Educ. 9:e46876. doi: 10.2196/46876

Asmarita, D., Muyassaroh, I. K., and Saptaputra, I. (2024). Causality of the artificial intelligence paradigm on constructivist perspective for student academic ethics. Proceeding International Conference on Religion, Science and Education, 3, 993–1004.

Baidoo-Anu, D., and Owusu Ansah, L. (2023). Education in the era of generative artificial intelligence (AI): understanding the potential benefits of ChatGPT in promoting teaching and learning. J. AI 7:4337484. doi: 10.2139/ssrn.4337484

Benuyenah, V., and Dewnarain, S. (2024). Students’ intention to engage with ChatGPT and artificial intelligence in higher education business studies programmes: an initial qualitative exploration. Int. J. Dist. Educ. Technol. 22, 1–21. doi: 10.4018/IJDET.348061

Bretag, T. (2013). Challenges in addressing plagiarism in education. PLoS Med. 10:e1001574. doi: 10.1371/journal.pmed.1001574

Bretag, T., Mahmud, S., Wallace, M., Walker, R., James, C., Green, M., et al. (2011). Core elements of exemplary academic integrity policy in Australian higher education. Int. J. Educ. Integr. 7:759. doi: 10.21913/IJEI.v7i2.759

Can, V.-D., and Nguyen, V.-H. (2025). The relationship between perceived usability and perceived credibility of middle school teachers in using AI chatbots. Cogent Educ. 12:2473851. doi: 10.1080/2331186X.2025.2473851

Chaudhry, I. S., Sarwary, S. A. M., El Refae, G. A., and Chabchoub, H. (2023). Time to revisit existing student’s performance evaluation approach in higher education sector in a new era of ChatGPT — a case study. Cogent Educ. 10:2210461. doi: 10.1080/2331186X.2023.2210461

Chesney, T. (2009). Academic integrity in the twenty-first century: a teaching and learning imperative. Rev. High. Educ. 32, 544–545. doi: 10.1353/rhe.0.0088

Creswell, J. W., and Poth, C. N. (2016). Qualitative inquiry and research design: choosing among five approaches. 4th Edn. Thousand Oaks, California, US: Sage Publications.

Dawson, P., and Sutherland-Smith, W. (2018). Can markers detect contract cheating? Results from a pilot study. Assess. Eval. High. Educ. 43, 286–293. doi: 10.1080/02602938.2017.1336746

Erlingsson, C., and Brysiewicz, P. (2017). A hands-on guide to doing content analysis. Afr. J. Emer. Med. 7, 93–99. doi: 10.1016/j.afjem.2017.08.001

Firat, M. (2023). What ChatGPT means for universities: perceptions of scholars and students. J. Appl. Learn. Teach. 6, 57–63. doi: 10.37074/jalt.2023.6.1.22

Giannos, P., and Delardas, O. (2023). Performance of ChatGPT on UK standardized admission tests: insights from the BMAT, TMUA, LNAT, and TSA examinations. JMIR Med. Educ. 9:e47737. doi: 10.2196/47737

Greitemeyer, T., and Kastenmüller, A. (2023). HEXACO, the dark triad, and chat GPT: who is willing to commit academic cheating? Heliyon 9:e19909. doi: 10.1016/j.heliyon.2023.e19909

Greitemeyer, T., and Kastenmüller, A. (2024). A longitudinal analysis of the willingness to use ChatGPT for academic cheating: applying the theory of planned behavior. Technol. Mind Behav. 5, 1–8. doi: 10.1037/tmb0000133

Ha, V. T., Hai, B. M., Mai, D. T. T., and Van Hanh, N. (2023). Preschool STEM activities and associated outcomes: a scoping review. Int. J. Eng. Pedagogy 13, 100–116. doi: 10.3991/ijep.v13i8.42177

Halaweh, M. (2023). ChatGPT in education: strategies for responsible implementation. Contemp. Educ. Technol. 15:ep421. doi: 10.30935/cedtech/13036

Houston, A. B., and Corrado, E. M. (2023). Embracing ChatGPT: implications of emergent language models for academia and libraries. Tech. Serv. Q. 40, 76–91. doi: 10.1080/07317131.2023.2187110

International Center for Academic Integrity (2021). The fundamental values of academic integrity. 3rd Edn. Available online at: https://www.ucd.ie/artshumanities/t4media/20019_ICAI-Fundamental-Values_R12.pdf (Accessed September 24, 2025).

Jeon, J., and Lee, S. (2023). Large language models in education: a focus on the complementary relationship between human teachers and ChatGPT. Educ. Inf. Technol. 28, 15873–15892. doi: 10.1007/s10639-023-11834-1

Kazley, A. S., Christine, A., Angela, M., Clint, B., and Segal, R. (2025). Is use of ChatGPT cheating? Students of health professions perceptions. Med. Teach. 47, 894–898. doi: 10.1080/0142159X.2024.2385667

Kelly, S. M. (2023). ChatGPT passes exams from law and business schools. CNN Business. Available online at: https://edition.cnn.com/2023/01/26/tech/chatgpt-passes-exams (Accessed January 26, 2023).

Kooli, C. (2023). Chatbots in education and research: a critical examination of ethical implications and solutions. Sustainability 15:5614. doi: 10.3390/su15075614

Lau, J., Bonilla Esquivel, J. L., Sanabria Barrios, D. J., and Gárate, A. (2022). “Academic integrity of undergraduates: the CETYS university case” in Information literacy in a post-truth era. eds. S. Kurbanoğlu, S. Špiranec, Y. Ünal, J. Boustany, and D. Kos (Cham, Switzerland: Springer International Publishing), 567–575. doi: 10.1007/978-3-030-99885-1_47

Lave, J., and Wenger, E. (1991). Situated learning: legitimate peripheral participation. Cambridge, England: Cambridge University Press.

Lim, V. K. G., and See, S. K. B. (2001). Attitudes toward, and intentions to report, academic cheating among students in Singapore. Ethics Behav. 11, 261–274. doi: 10.1207/S15327019EB1103_5

Liu, Z. (2023). Technological mediation theory and the moral suspension problem. Hum. Stud. 46, 375–388. doi: 10.1007/s10746-021-09617-z

Macfarlane, B., Zhang, J., and Pun, A. (2014). Academic integrity: a review of the literature. Stud. High. Educ. 39, 339–358. doi: 10.1080/03075079.2012.709495

Mai, D. T. T., Da, C. V., and Hanh, N. V. (2024). The use of ChatGPT in teaching and learning: a systematic review through SWOT analysis approach. Front. Educ. 9:1328769. doi: 10.3389/feduc.2024.1328769

Mai, D. T. T., and Van Hanh, N. (2024). Whether English proficiency and English self-efficacy influence the credibility of ChatGPT-generated English content of EMI students. Int. J. Comput. Assis. Lang. Learn. Teach. 14, 1–21. doi: 10.4018/IJCALLT.349972

McCabe, D. L., and Trevino, L. K. (1993). Academic dishonesty: honor codes and other contextual influences. J. High. Educ. 64, 522–538. doi: 10.1080/00221546.1993.11778446

McCabe, D. L., Trevino, L. K., and Butterfield, K. D. (2001). Cheating in academic institutions: a decade of research. Ethics Behav. 11, 219–232. doi: 10.1207/S15327019EB1103_2

McHugh, M. L. (2012). Interrater reliability: the kappa statistic. Biochem. Med. 22, 276–282. doi: 10.11613/BM.2012.031

Nguyen, H. M., and Goto, D. (2024). Unmasking academic cheating behavior in the artificial intelligence era: evidence from Vietnamese undergraduates. Educ. Inf. Technol. 29, 15999–16025. doi: 10.1007/s10639-024-12495-4

Nikolic, S., Daniel, S., Haque, R., Belkina, M., Hassan, G. M., Grundy, S., et al. (2023). ChatGPT versus engineering education assessment: a multidisciplinary and multi-institutional benchmarking and analysis of this generative artificial intelligence tool to investigate assessment integrity. Eur. J. Eng. Educ. 48, 559–614. doi: 10.1080/03043797.2023.2213169

Oravec, J. A. (2023). Artificial intelligence implications for academic cheating: expanding the dimensions of responsible human-AI collaboration with ChatGPT. J. Interact. Learn. Res. 34, 213–237. doi: 10.70725/304731gmmvhw

Pariyanti, E., Wibowo, M., Sultan, Z., and Siolemba Patiro, S. P. (2025). Navigating the ethical dilemma in digital learning: balancing artificial intelligence with spiritual integrity to address cheating. J. Appl. Res. High. Educ. doi: 10.1108/JARHE-10-2024-0522

Raskin, J. D., and Debany, A. E. (2018). The inescapability of ethics and the impossibility of “anything goes”: a constructivist model of ethical meaning making. J. Constr. Psychol. 31, 343–360. doi: 10.1080/10720537.2017.1383954

Rodrigues, M., Silva, R., Borges, A. P., Franco, M., and Oliveira, C. (2025). Artificial intelligence: threat or asset to academic integrity? A bibliometric analysis. Kybernetes 54, 2939–2970. doi: 10.1108/K-09-2023-1666

Sallam, M., Salim, N., Barakat, M., and Al-Tammemi, A. (2023). ChatGPT applications in medical, dental, pharmacy, and public health education: a descriptive study highlighting the advantages and limitations. Narra J. 3:e103. doi: 10.52225/narra.v3i1.103

Sparks, J., and Wright, A. T. (2025). Models of rational agency in human-centered AI: the realist and constructivist alternatives. AI Ethics 5, 3321–3328. doi: 10.1007/s43681-025-00658-z

Subramani, M., Jaleel, I., and Mohan, S. K. (2023). Evaluating the performance of ChatGPT in medical physiology university examination of phase I MBBS. Adv. Physiol. Educ. 47, 270–271. doi: 10.1152/ADVAN.00036.2023

Sutherland-Smith, W. (2008). Plagiarism, the internet, and student learning: improving academic integrity. 1st Edn. New York: Routledge.

Tlili, A., Shehata, B., Adarkwah, M. A., Bozkurt, A., Hickey, D. T., Huang, R., et al. (2023). What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learn. Environ. 10:15. doi: 10.1186/s40561-023-00237-x

Van Hanh, N., and Turner, A. (2024). “Technology education in Vietnam: fostering creativity” in Education in Vietnam. eds. M. Hayden and T. L. Tran (Routledge), 159–169.

Verbeek, P.-P. (2005). What things do: Philosophical reflections on technology, agency, and design. Pennsylvania: Penn State Press.

Yan, D. (2023). Impact of ChatGPT on learners in a L2 writing practicum: an exploratory investigation. Educ. Inf. Technol. 28, 13943–13967. doi: 10.1007/s10639-023-11742-4

Yu, H., Glanzer, P. L., and Johnson, B. R. (2021). Examining the relationship between student attitude and academic cheating. Ethics Behav. 31, 475–487. doi: 10.1080/10508422.2020.1817746

Keywords: AI-assisted academic cheating, postgraduate students, student voices, academic integrity, conceptual model, reflective essays

Citation: Hanh NV and Duyen NT (2025) AI-assisted academic cheating: a conceptual model based on postgraduate student voices. Front. Comput. Sci. 7:1682190. doi: 10.3389/fcomp.2025.1682190

Edited by: Maha Khemaja, University of Sousse, Tunisia

Reviewed by: Eric Best, University at Albany, United States; Patricia Milner, University of Arkansas, United States

Copyright © 2025 Hanh and Duyen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nguyen Van Hanh, hanh.nguyenvan@hust.edu.vn