Abstract

Objective:

This study aimed to analyze diabetic foot ulcer (DFU) images with deep learning models to achieve automated segmentation and classification of the wounds, and to explore the application of artificial intelligence in diabetic foot care.

Methods:

A total of 671 DFU images were manually annotated for periwound erythema, ulcer boundaries, and wound components (granulation tissue, necrotic tissue, tendons, bone tissue, and gangrene). Three image segmentation models (Mask2former, Deeplabv3plus, and Swin-Transformer) were constructed to identify DFU, and their segmentation and classification results were compared.

Results:

Among the three models, Mask2former exhibited the best recognition performance, with a mean Intersection over Union (mIoU) of 65.79%, slightly above Swin-Transformer's 65.31% and well above Deeplabv3plus's 52.86%. The Intersection over Union (IoU) of Mask2former for wound recognition reached 85.88%, with IoU values of 78.78%, 77.14%, 59.53%, 53.45%, 48.72%, and 45.14% for granulation tissue, gangrene, necrotic tissue, bone tissue, tendons, and periwound erythema, respectively. In the wound classification task, the Mask2former model achieved an accuracy of 0.9185 and an Area Under the Curve (AUC) of 0.9429 for classifying Wagner grade 1-2, grade 3, and grade 4 wounds.

Conclusion:

Among the three deep learning models, the Mask2former model demonstrated the best overall performance. This method may help clinicians recognize DFU and segment the tissues within the wounds.

1 Introduction

Diabetic foot ulcer (DFU) is one of the most severe and costly chronic complications of diabetes; approximately 25% to 34% of people with diabetes experience at least one DFU in their lifetime. If not treated promptly and appropriately, DFU can lead to severe consequences, including amputation and death (1). A systematic review reported that the median delay in referring DFU patients from primary care to specialists ranges from 7 to 31 days, and the median time to initiation of definitive treatment ranges from 6.2 to 56 days (2). In China, 80.8% to 95.6% of DFU patients delay seeking medical care, with an average delay of 54.81 days (3). The complexity of diabetic foot cases, the shortage of specialized healthcare professionals, and limited wound-assessment experience in primary healthcare institutions make the early diagnosis and treatment of diabetic foot difficult. As the diabetic population continues to grow, improving DFU management has become increasingly urgent.

DFUs of varying severity exhibit numerous visual characteristics, such as granulation tissue, necrotic tissue, erythema, tendons, bone, and gangrene. Traditional computer vision algorithms based on machine learning identify abnormal regions through a multi-stage pipeline of image sensing, preprocessing, feature extraction, feature selection, and inference (4, 5). These methods exploit differences in color and texture descriptors between abnormal regions (e.g., wounds) and surrounding skin, and use classifiers such as support vector machines, neural networks, random forests, or Bayesian classifiers to perform binary classification (i.e., healthy versus ulcerated skin) (6–10). In recent years, with rapid advances in computer vision, deep learning has proven highly effective for processing DFU images. Han et al. (11) enhanced the Faster Region-based Convolutional Neural Network (Faster R-CNN) with K-means clustering to automatically recognize and localize diabetic foot wounds according to Wagner grade. Goyal et al. (12) proposed a deep learning-based method for real-time detection and localization of DFUs. Huang et al. (13) combined transfer learning with the Faster R-CNN algorithm to segment DFU images, distinguishing ulcers, sutures, and gangrene caused by vascular occlusion, and achieved up to 90% accuracy in wound image detection. Zhao et al. (14) manually annotated ulcer boundaries and regions of different colors in 1,042 DFU images to achieve wound localization and area measurement.

Deep learning-based artificial intelligence (AI) has shown great potential for image recognition and lesion segmentation in DFU, offering a powerful tool to improve patient management and to ease current challenges in diagnosis and treatment. However, existing visual computing research has focused largely on DFU recognition, wound segmentation, and distinguishing infected from non-infected wounds, while neglecting critical features such as tendons and bone within the wound, which directly determine the grade of a diabetic foot ulcer. In addition, current approaches do not classify wound tissue and recognize infection characteristics simultaneously. This study aimed to optimize deep learning techniques for semantic segmentation of the wound and the surrounding tissue, and to construct models for wound feature recognition and grading, with the goal of developing an AI-based tool to assist in the recognition of diabetic foot.

2 Material and methods

2.1 Patients and images

A total of 671 DFU images were collected from patients treated at the Air Force Medical Center between January 2015 and December 2023. Diabetic foot was defined as infection, ulceration, or tissue destruction of the foot in a patient with newly diagnosed or previously known diabetes, usually accompanied by lower limb neuropathy and/or peripheral arterial disease (15). Inclusion criteria were: images of Wagner grade 1-4 DFU confirmed by professional healthcare personnel; images that clearly displayed the foot ulcer area at a resolution sufficient to distinguish tissue characteristics such as granulation tissue, necrosis, tendons, bone, and gangrene; and images acquired with various devices and under various conditions, to enhance model robustness. Exclusion criteria were: poor-quality images (blurred, underexposed, or overexposed, or with key information obscured); ulcers not caused by diabetes or of unclear etiology; incomplete image information, annotation errors, or missing critical details; images of unknown origin or subject to copyright disputes; and any images that did not meet ethical and legal requirements.

2.2 Data preprocessing

The collected images were preprocessed to improve processing efficiency and accuracy. Because the original dimensions varied substantially (from 1080×1920 to 3864×5152 pixels), we resized rather than cropped the images, preserving critical anatomical details and keeping the pipeline automated. Resizing used bilinear interpolation, in which each output pixel is a distance-weighted average of the four nearest input pixels; this creates new pixel values when upscaling and combines neighboring pixels when downscaling. All images were resized to 1024×1024 pixels.
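The interpolation rule above can be illustrated with a minimal pure-Python sketch on a single-channel image stored as a list of rows. It assumes the half-pixel-centre coordinate mapping used by common libraries (for example OpenCV's `cv2.resize` with `cv2.INTER_LINEAR`); the article does not state which call was used, and a real pipeline would resize each color channel of an array rather than nested lists. The function name `bilinear_resize` is hypothetical.

```python
from typing import List

Image = List[List[float]]  # rows of single-channel pixel values


def bilinear_resize(img: Image, out_h: int, out_w: int) -> Image:
    """Resize a 2-D image with bilinear interpolation (half-pixel centre mapping)."""
    in_h, in_w = len(img), len(img[0])
    scale_y, scale_x = in_h / out_h, in_w / out_w
    out: Image = []
    for i in range(out_h):
        # Map the centre of output row i back into input coordinates, clamped to the image.
        y = min(max((i + 0.5) * scale_y - 0.5, 0.0), in_h - 1)
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        wy = y - y0  # weight of the lower neighbouring row
        row = []
        for j in range(out_w):
            x = min(max((j + 0.5) * scale_x - 0.5, 0.0), in_w - 1)
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            wx = x - x0  # weight of the right neighbouring column
            # Interpolate along x on the two neighbouring rows, then along y.
            top = img[y0][x0] * (1 - wx) + img[y0][x1] * wx
            bottom = img[y1][x0] * (1 - wx) + img[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out


# Example: upscale a 1x2 strip to 1x4.
print(bilinear_resize([[0.0, 10.0]], 1, 4))  # -> [[0.0, 2.5, 7.5, 10.0]]
```

Because the 1024×1024 target is square while the originals (e.g., 3864×5152) are not, this kind of resizing also changes the aspect ratio of the foot in the image.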

To enhance model robustness, we applied five data augmentation techniques (Figure 1):

-

Brightness Adjustment: Modifying the R, G, B channel values of pixels to alter overall image brightness.

-

Contrast Adjustment: Increasing the difference between the brightest and darkest regions to make tonal gradations and image detail more distinct.

-

Horizontal Flip: Mirroring the image along the vertical axis.

-

Vertical Flip: Mirroring the image along the horizontal axis.

-

Transposition: Swapping rows and columns, which reflects the image across its main diagonal.

Figure 1

Data augmentation techniques.
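The five operations listed above can be sketched in pure Python on an RGB image stored as rows of (R, G, B) tuples. The brightness offset and contrast factor are hypothetical parameters, since the article does not report the values or probabilities used; contrast is implemented here as scaling each value's distance from the mean intensity, which is one common definition among several.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255
Image = List[List[Pixel]]


def _clip(value: float) -> int:
    """Round and clamp a channel value to the 8-bit range."""
    return max(0, min(255, int(round(value))))


def adjust_brightness(img: Image, delta: float) -> Image:
    """Add the same (hypothetical) offset to the R, G and B values of every pixel."""
    return [[tuple(_clip(c + delta) for c in px) for px in row] for row in img]


def adjust_contrast(img: Image, factor: float) -> Image:
    """Scale each value's distance from the mean intensity (factor > 1 raises contrast)."""
    values = [c for row in img for px in row for c in px]
    mean = sum(values) / len(values)
    return [[tuple(_clip(mean + factor * (c - mean)) for c in px) for px in row] for row in img]


def horizontal_flip(img: Image) -> Image:
    """Mirror along the vertical axis: reverse the pixel order in every row."""
    return [row[::-1] for row in img]


def vertical_flip(img: Image) -> Image:
    """Mirror along the horizontal axis: reverse the order of the rows."""
    return [list(row) for row in img[::-1]]


def transpose(img: Image) -> Image:
    """Swap rows and columns (reflection across the main diagonal)."""
    return [list(column) for column in zip(*img)]
```

For segmentation training, the geometric operations (the two flips and transposition) must be applied identically to the annotation masks so that labels stay aligned with pixels, whereas brightness and contrast changes apply to the image only.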

2.3 Dataset for the study

All 671 DFU images were annotated by an expert team from the Endocrinology Department of the Air Force Medical Center. These physicians, who have extensive experience in diabetic foot care, used Labelme software for precise annotation. The annotations covered seven categories: ulcer area, granulation tissue, necrotic tissue, tendons, bone, gangrene, and infection. Specifically, 631 images included granulation tissue, 458 necrotic tissue, 130 tendons, 140 bone, 122 gangrene, and 264 infection. Wounds were graded with the Wagner classification system (16). Because ulcers confined to the skin and soft tissue (Wagner grades 1 and 2) are managed with similar treatment strategies, we merged grades 1 and 2 into a single category. This grouping reflects wound characteristics and the extent of tissue damage more closely and gives the model clearly separable classification targets:

-

W1-2 Grade: Wounds limited to the skin and soft tissue, without bone involvement or abscess formation.

-

W3 Grade: Wounds that have invaded deeper tissues, manifested by bone exposure and/or tendon damage, without gangrene.

-

W4 Grade: Wounds accompanied by gangrene, indicating tissue necrosis beyond the capacity for repair.
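The grade definitions above can be read as a simple decision rule over which tissue classes a segmentation model detects. The sketch below is hypothetical: the article does not describe how grades were derived from model output, the class names and the `MIN_PIXELS` area threshold are illustrative assumptions, and abscess formation (part of the W1-2 definition) is not represented because it is not an annotated class.

```python
from typing import Dict, List, Set

Mask = List[List[bool]]  # True where the model predicts the class

# Hypothetical area threshold (pixels) below which a detection is ignored as noise;
# the article reports no such value.
MIN_PIXELS = 50


def present_classes(masks: Dict[str, Mask], min_pixels: int = MIN_PIXELS) -> Set[str]:
    """Classes whose predicted mask covers at least `min_pixels` pixels."""
    return {name for name, mask in masks.items() if sum(map(sum, mask)) >= min_pixels}


def wagner_group(masks: Dict[str, Mask], min_pixels: int = MIN_PIXELS) -> str:
    """Map detected tissue classes to the merged Wagner groups (hypothetical rule)."""
    found = present_classes(masks, min_pixels)
    if "gangrene" in found:
        return "W4"
    if "bone" in found or "tendon" in found:
        return "W3"
    return "W1-2"
```

The rule checks the most severe finding first, so a wound showing both exposed bone and gangrene is assigned W4.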

2.4 Deep learning procedure

In this study, we employed three deep learning models, Deeplabv3Plus, Swin-Transformer, and Mask2Former, chosen for their distinct strengths in image segmentation. Deeplabv3Plus is a well-established convolutional neural network (CNN) model widely used for semantic segmentation (17); Swin-Transformer uses the Transformer architecture to model long-range dependencies, making it suitable for recognizing complex features (18); and Mask2Former combines the global modeling capability of Transformers with the local perception of CNNs, making it particularly effective for multi-label recognition (19). Deeplabv3Plus uses the CNN ResNet101 as its backbone, whereas Swin-Transformer and Mask2Former use Transformer backbones based on self-attention. CNNs exhibit translation invariance and local connectivity and extract mainly local image features through convolution and pooling, whereas Transformer models use self-attention and multilayer perceptron (MLP) blocks to capture global feature representations by modeling long-range dependencies between features. All models were pre-trained on the publicly available ImageNet dataset (20), and the pre-trained parameters were used for initialization. The models were then fine-tuned on the collected DFU images, with training monitored through loss values and accuracy curves on the training and test sets until the loss was sufficiently low and the accuracy curves had stabilized. The overall workflow of the study is illustrated in Figure 2.
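To make the contrast between local convolution and global self-attention concrete, here is a minimal single-head sketch of scaled dot-product self-attention in pure Python: each token's output is a weighted mix of every token, whereas a convolution mixes only a local neighbourhood. The projection matrices are hypothetical toy values. Swin-Transformer actually restricts attention to shifted local windows and Mask2Former's decoder uses masked cross-attention, so this shows only the basic operation both build on.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtracting the maximum keeps exp() from overflowing
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def project(tokens: Matrix, weight: Matrix) -> Matrix:
    """Multiply each token (a row vector) by a d_in x d_out weight matrix."""
    return [[sum(t[i] * weight[i][j] for i in range(len(t))) for j in range(len(weight[0]))]
            for t in tokens]


def self_attention(tokens: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention; returns (outputs, attention weights)."""
    q, k, v = project(tokens, wq), project(tokens, wk), project(tokens, wv)
    scale = math.sqrt(len(k[0]))
    # Row i holds how strongly token i attends to every token j, however far apart.
    weights = [softmax([sum(a * b for a, b in zip(qi, kj)) / scale for kj in k]) for qi in q]
    outputs = [[sum(w * v[j][c] for j, w in enumerate(row)) for c in range(len(v[0]))]
               for row in weights]
    return outputs, weights


eye = [[1.0, 0.0], [0.0, 1.0]]  # toy projection matrices (identity)
out, attn = self_attention([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], eye, eye, eye)
print([round(sum(row), 6) for row in attn])  # each row of weights sums to 1
```

Every attention weight is positive, so each output depends on all inputs; this is the "global" receptive field the paragraph attributes to Transformers.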

Figure 2

Schematic diagram of the study flow.

2.5 Evaluation of the performance of deep learning models

To quantitatively assess model performance, the DFU image set was divided into training and test subsets at an 8:2 ratio. The recognition and segmentation performance of the three models was evaluated with two metrics, Intersection over Union (IoU) and the Dice coefficient. Both quantify the overlap between the region predicted by the model and the ground-truth region annotated by experts: a perfect match yields a value of 1, and no overlap yields 0. For the infection recognition and Wagner grading tasks, we additionally used precision, sensitivity, specificity, accuracy, F1 score, the receiver operating characteristic (ROC) curve, and the area under the curve (AUC). The calculation of each metric is given in Table 1.
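Per-class IoU and Dice can be computed from binary masks as in the following minimal sketch. Scoring each class on its own mask accommodates overlapping categories (granulation tissue lies inside the wound region), and pixel counts are pooled over all test images before dividing, a common convention in segmentation toolkits. The data layout and function names are illustrative assumptions, not the authors' code.

```python
import math
from typing import Dict, List, Sequence, Tuple

Mask = List[List[bool]]    # True = pixel belongs to the class
Pair = Tuple[Mask, Mask]   # (prediction, expert annotation)


def overlap_counts(pred: Mask, truth: Mask) -> Tuple[int, int, int]:
    """Pixel-wise true positives, false positives and false negatives."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
    return tp, fp, fn


def dataset_scores(images: Sequence[Dict[str, Pair]],
                   classes: Sequence[str]) -> Dict[str, Dict[str, float]]:
    """Pool counts over all images, then compute IoU and Dice for each class."""
    scores: Dict[str, Dict[str, float]] = {}
    for name in classes:
        tp = fp = fn = 0
        for image in images:
            if name in image:
                a, b, c = overlap_counts(*image[name])
                tp, fp, fn = tp + a, fp + b, fn + c
        union = tp + fp + fn
        scores[name] = {
            # IoU = TP / (TP + FP + FN); Dice = 2TP / (2TP + FP + FN)
            "iou": tp / union if union else math.nan,
            "dice": 2 * tp / (2 * tp + fp + fn) if union else math.nan,
        }
    return scores


def mean_over_classes(scores: Dict[str, Dict[str, float]], key: str) -> float:
    """Mean of one metric over classes, skipping classes absent from every image."""
    values = [s[key] for s in scores.values() if not math.isnan(s[key])]
    return sum(values) / len(values)
```

`mean_over_classes(scores, "iou")` gives an mIoU-style average; the value depends on which classes are passed in (for example, whether a background class is included).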

Table 1

| Metric | Formula | Description |
|---|---|---|
| Precision | TP / (TP + FP) | Correct positive predictions over all positive predictions |
| Sensitivity (sn) | TP / (TP + FN) | Fraction of actual positives predicted correctly (recall) |
| Specificity (sp) | TN / (TN + FP) | Fraction of actual negatives predicted correctly |
| Accuracy (ACC) | (TP + TN) / (TP + TN + FP + FN) | Ratio of correct predictions |
| F1 Score | 2 × Precision × sn / (Precision + sn) | Harmonic mean of precision and recall |
| AUC (area under the curve) | Area under the ROC curve of sn plotted against 1 − sp | Threshold-invariant prediction quality |
| DICE | 2TP / (2TP + FP + FN) | Overlap between predicted and actual regions; equivalent to the F1 score computed over pixels |
| IoU | TP / (TP + FP + FN) | Overlap between predicted and actual regions (intersection over union) |

Calculation formulae.

sn, Sensitivity; sp, Specificity; TP, True Positives; FN, False Negatives; FP, False Positives; TN, True Negatives.
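The AUC entry in Table 1 can be made concrete with a threshold sweep: sort samples by predicted score, lower the threshold one distinct score at a time, record the point (1 − sp, sn), and integrate with the trapezoidal rule. This is a generic sketch, not the authors' evaluation code; the article does not state how the AUC for the three-class Wagner task was computed, and averaging one-vs-rest curves is only one common choice.

```python
from typing import List, Sequence, Tuple


def roc_points(labels: Sequence[int], scores: Sequence[float]) -> List[Tuple[float, float]]:
    """Return ROC points (1 - specificity, sensitivity) for every distinct score threshold."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative sample")
    # Visit samples from the highest score downwards; lowering the threshold past a
    # score turns every sample with that score into a positive prediction.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    tp = fp = 0
    points = [(0.0, 0.0)]
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Tied scores cross the threshold together.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc(points: List[Tuple[float, float]]) -> float:
    """Trapezoidal area under a piecewise-linear ROC curve."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


print(auc(roc_points([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])))  # 0.75
```

If a model outputs only hard 0/1 labels, the curve has a single operating point and the area reduces to (sn + sp) / 2.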

2.6 Hardware and software specifications

The training server ran the CentOS Linux release 7.4.1708 operating system with an Intel(R) Xeon(R) CPU, an NVIDIA GeForce RTX 3090 GPU, and CUDA 11.4. The models were implemented in the PyTorch framework, with OpenCV version 4.3.0.36.

3 Results

3.1 Model construction and comparison

3.1.1 Training and inference efficiency

Over 100 training epochs, the DeeplabV3Plus model trained fastest, completing the process in approximately 11 hours, with an inference time of about 0.19 seconds per image. The Swin-Transformer model took longer, about 14 hours and 30 minutes, with an inference time of 0.42 seconds per image. Mask2Former took 13 hours and 10 minutes, likely reflecting its more complex architecture and focus on detailed features; its inference time of 0.39 seconds per image indicates that, despite the longer training, the model is fast enough for near-real-time use.
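The reported totals convert directly into per-epoch training time and single-image throughput. The snippet below only rearranges the numbers in the paragraph above; batch size and data-loading overhead are not reported, so these are rough averages.

```python
# Reported wall-clock training time (hours, minutes) for 100 epochs and
# per-image inference time in seconds, copied from the paragraph above.
REPORTED = {
    "DeeplabV3Plus": ((11, 0), 0.19),
    "Swin-Transformer": ((14, 30), 0.42),
    "Mask2Former": ((13, 10), 0.39),
}
EPOCHS = 100


def derived_rates(hours: int, minutes: int, seconds_per_image: float, epochs: int = EPOCHS):
    """Return (minutes per epoch, images per second)."""
    total_minutes = hours * 60 + minutes
    return total_minutes / epochs, 1.0 / seconds_per_image


for name, ((h, m), s) in REPORTED.items():
    per_epoch, throughput = derived_rates(h, m, s)
    print(f"{name}: {per_epoch:.1f} min/epoch, {throughput:.2f} images/s")
```

This gives about 6.6, 8.7, and 7.9 minutes per epoch and roughly 5.3, 2.4, and 2.6 images per second, respectively: comfortably interactive for single photographs taken at the point of care, though not video-rate.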

3.1.2 Wound segmentation performance

The segmentation results of the three models on DFU are presented in Table 2 and Figure 3. In the wound segmentation task, Mask2Former achieved an IoU of 85.88%, slightly below Swin-Transformer's 87.36% and above DeeplabV3Plus's 78.48%. In multi-label tissue recognition, Mask2Former achieved IoU values of 78.78%, 59.53%, 48.72%, 53.45%, and 77.14% for granulation tissue, necrotic tissue, tendons, bone, and gangrene, respectively, the highest of the three models for every one of these classes except gangrene (Swin-Transformer, 77.84%). Swin-Transformer also scored highest for infection (47.22% vs 45.14% for Mask2Former). On the test set, the mean IoU (mIoU) was 65.79% for Mask2Former, 65.31% for Swin-Transformer, and 52.86% for DeeplabV3Plus. Thus, Mask2Former and Swin-Transformer performed comparably in whole-wound segmentation, while Mask2Former held a modest advantage in tissue-level recognition, most clearly for bone (53.45% vs 48.76%).

Table 2

| Model | Indicator | Wound | Infection | Granulation | Necrosis | Tendon | Bone | Gangrene | Mean (mIoU/mAcc/mDice) |
|---|---|---|---|---|---|---|---|---|---|
| DeeplabV3Plus | IoU | 78.48 | 45.45 | 65.77 | 39.12 | 26.20 | 32.25 | 64.22 | 52.86 |
| | ACC | 84.72 | 61.06 | 79.93 | 49.14 | 27.45 | 37.51 | 69.41 | 60.46 |
| | Dice | 87.94 | 62.50 | 79.35 | 56.23 | 41.52 | 48.77 | 78.21 | 66.44 |
| Swin-Transformer | IoU | 87.36 | 47.22 | 78.52 | 59.18 | 47.95 | 48.76 | 77.84 | 65.31 |
| | ACC | 92.71 | 59.50 | 88.19 | 73.26 | 58.63 | 73.12 | 85.85 | 76.80 |
| | Dice | 93.25 | 64.15 | 87.97 | 74.35 | 64.82 | 65.56 | 87.54 | 77.60 |
| Mask2Former | IoU | 85.88 | 45.14 | 78.78 | 59.53 | 48.72 | 53.45 | 77.14 | 65.79 |
| | ACC | 89.60 | 50.62 | 86.67 | 72.00 | 63.12 | 76.61 | 89.31 | 76.84 |
| | Dice | 92.40 | 62.20 | 88.13 | 74.63 | 65.52 | 69.66 | 87.09 | 78.00 |

Segmentation results (%) of the three models on diabetic foot wounds.
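When IoU and Dice for a class are computed from the same pixel counts, they are linked by Dice = 2·IoU / (1 + IoU), because IoU = TP / (TP + FP + FN) and Dice = 2TP / (2TP + FP + FN). The snippet applies this identity to the Mask2Former IoU row of Table 2 as a consistency check; the reported Dice values agree to within rounding of the two-decimal figures. The identity holds per class, not between the averaged mIoU and mDice.

```python
# Mask2Former per-class values from Table 2, in percent.
IOU = {"Wound": 85.88, "Infection": 45.14, "Granulation": 78.78, "Necrosis": 59.53,
       "Tendon": 48.72, "Bone": 53.45, "Gangrene": 77.14}
DICE = {"Wound": 92.40, "Infection": 62.20, "Granulation": 88.13, "Necrosis": 74.63,
        "Tendon": 65.52, "Bone": 69.66, "Gangrene": 87.09}


def dice_from_iou(iou_percent: float) -> float:
    """Convert an IoU in percent to the matching Dice coefficient in percent."""
    iou = iou_percent / 100.0
    return 100.0 * 2.0 * iou / (1.0 + iou)


for name, iou in IOU.items():
    predicted = dice_from_iou(iou)
    # Any difference comes only from rounding in the published values.
    print(f"{name}: Dice from IoU = {predicted:.2f}, reported = {DICE[name]:.2f}")
```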

Figure 3

Demonstration of three model segmentation tasks. Granulation (red), Necrotic tissue (yellow), Bone (green), Tendon (cyan), Infection (blue), Gangrene (purple).

3.1.3 Infection recognition ability

We compared the performance of the three deep learning models in identifying the presence or absence of infection in diabetic foot wounds (Table 3, Figure 4). DeeplabV3Plus showed a high sensitivity of 0.8868 but a lower specificity of 0.7317, giving an overall accuracy of 0.7926 and an area under the curve (AUC) of 0.8093. Swin-Transformer was more balanced, with a sensitivity of 0.7925, a specificity of 0.8780, an accuracy of 0.8444, and an AUC of 0.8353. Mask2Former achieved the highest accuracy (0.8519), specificity (0.9634), and precision for infected wounds (0.9231), with an AUC of 0.8353, equal to that of Swin-Transformer; however, its sensitivity (0.6792) was the lowest of the three models.

Table 3

| Model | Class | Precision | Sensitivity | Specificity | F1 | ACC | AUC |
|---|---|---|---|---|---|---|---|
| DeeplabV3Plus | Infected | 0.6812 | 0.8868 | 0.7317 | 0.7705 | 0.7926 | 0.8093 |
| | Non-infected | 0.9091 | 0.7317 | 0.8868 | 0.8108 | | |
| Swin-Transformer | Infected | 0.8077 | 0.7925 | 0.8780 | 0.8000 | 0.8444 | 0.8353 |
| | Non-infected | 0.8675 | 0.8780 | 0.7925 | 0.8727 | | |
| Mask2Former | Infected | 0.9231 | 0.6792 | 0.9634 | 0.7826 | 0.8519 | 0.8353 |
| | Non-infected | 0.8229 | 0.9634 | 0.6792 | 0.8876 | | |

Results of the three models in recognizing the presence or absence of infection in diabetic foot wounds.
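The ratios in Table 3 are consistent with a test set of 135 images containing 53 infected and 82 non-infected wounds (for example, sensitivity 0.6792 = 36/53 and specificity 0.9634 = 79/82). These counts are inferred from the reported values and are not stated in the article. The sketch applies the Table 1 formulas to a hypothetical 2×2 confusion matrix built on those counts for Mask2Former, first with "infected" as the positive class and then with the roles swapped.

```python
from typing import Dict


def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Table 1 formulas for one positive class."""
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
        "acc": (tp + tn) / (tp + fp + fn + tn),
    }


# Inferred (not reported) counts for Mask2Former with "infected" as the positive class.
infected = binary_metrics(tp=36, fp=3, fn=17, tn=79)
# Swapping roles gives the "non-infected" row: its TP/TN are the infected TN/TP.
non_infected = binary_metrics(tp=79, fp=17, fn=3, tn=36)

for name, row in (("infected", infected), ("non-infected", non_infected)):
    print(name, {k: round(v, 4) for k, v in row.items()})
```

Swapping the positive class exchanges sensitivity and specificity, which is why those two columns mirror each other in every model's pair of rows in Table 3.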

Figure 4

ROC curves of the three models for identifying the presence of infection in diabetic wounds.

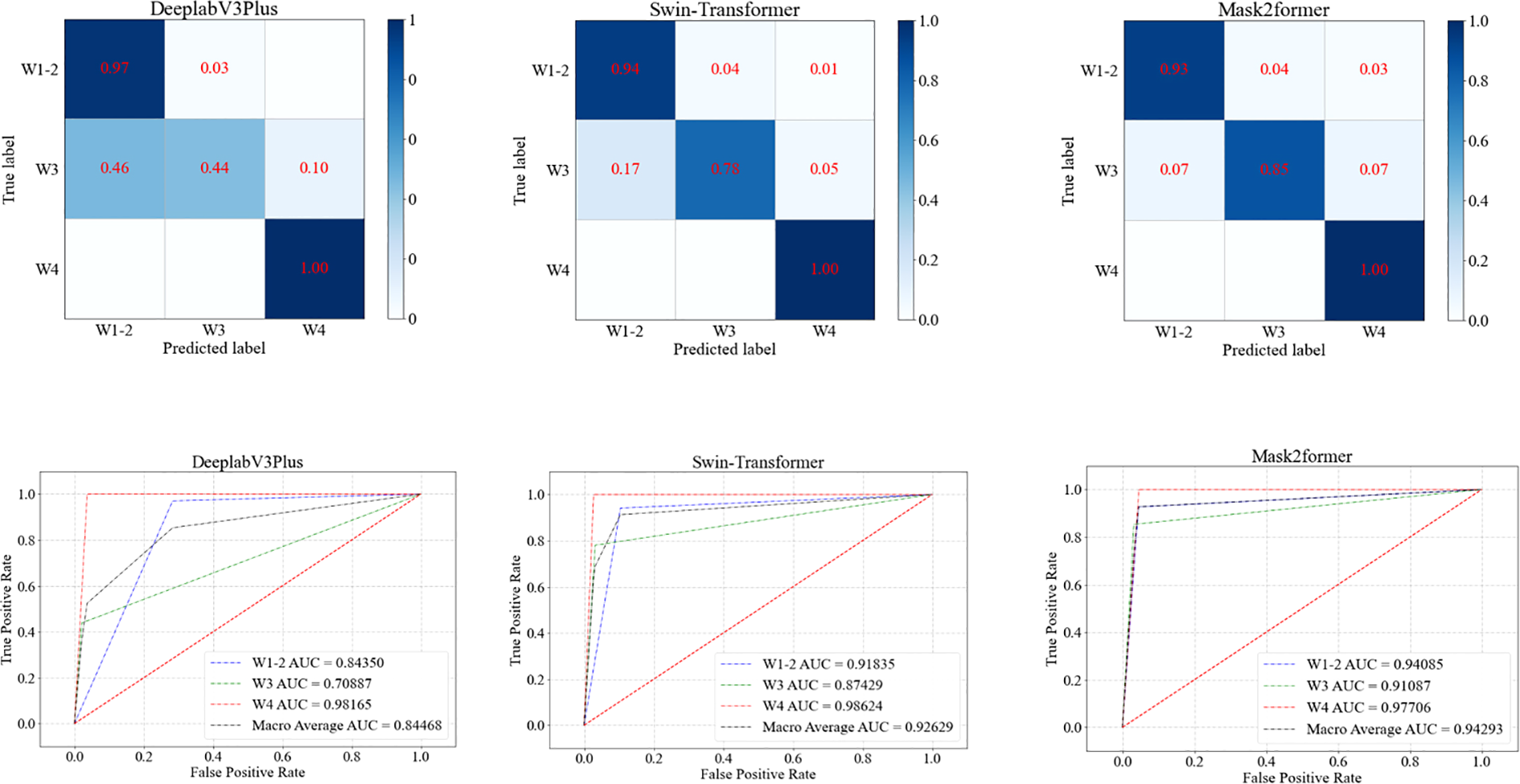

3.1.4 Grading diagnosis of DFU

In the DFU grading task, Mask2Former demonstrated the best overall performance (Table 4, Figure 5). It achieved the highest precision for W1-2 and W3 wounds and the highest overall accuracy (ACC, 0.9185) and AUC (0.9429). DeeplabV3Plus reached an ACC of 0.8148 and an AUC of 0.8447, and Swin-Transformer an ACC of 0.9037 and an AUC of 0.9263. All three models detected every W4 wound (sensitivity 1.0), whereas W3 wounds were the hardest to detect, with sensitivities of 0.4390, 0.7805, and 0.8537 for DeeplabV3Plus, Swin-Transformer, and Mask2Former, respectively. These results highlight the superior performance of Mask2Former in the grading diagnosis of DFU.

Table 4

| Model | Wagner grade | Precision | Sensitivity | Specificity | F1 | ACC | AUC |
|---|---|---|---|---|---|---|---|
| DeeplabV3Plus | W1-2 | 0.7765 | 0.9706 | 0.7164 | 0.8628 | 0.8148 | 0.8447 |
| | W3 | 0.9000 | 0.4390 | 0.9787 | 0.5901 | | |
| | W4 | 0.8667 | 1.0000 | 0.9633 | 0.9286 | | |
| Swin-Transformer | W1-2 | 0.9014 | 0.9412 | 0.8955 | 0.9209 | 0.9037 | 0.9263 |
| | W3 | 0.9143 | 0.7805 | 0.9681 | 0.8421 | | |
| | W4 | 0.8966 | 1.0000 | 0.9725 | 0.9455 | | |
| Mask2Former | W1-2 | 0.9545 | 0.9265 | 0.9552 | 0.9403 | 0.9185 | 0.9429 |
| | W3 | 0.9211 | 0.8537 | 0.9681 | 0.8861 | | |
| | W4 | 0.8387 | 1.0000 | 0.9541 | 0.9123 | | |

Results of the three models in grading diabetic foot wounds.
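For the three-class grading task, each grade is scored one-vs-rest from a confusion matrix. The matrix below reproduces the Mask2Former rows of Table 4 on a 135-image test set; it was inferred from the reported ratios and is not given in the article. With it, the reported ACC (0.9185) and AUC (0.9429) are both recovered if AUC is taken as the macro average of single-threshold per-class AUCs, (sn + sp) / 2; whether the authors computed AUC this way is not stated.

```python
from typing import Dict, List

CLASSES = ["W1-2", "W3", "W4"]
# Rows: true grade; columns: predicted grade. Inferred from the Mask2Former rows of
# Table 4; the article does not report this matrix.
CONFUSION: List[List[int]] = [
    [63, 3, 2],
    [3, 35, 3],
    [0, 0, 26],
]


def one_vs_rest(matrix: List[List[int]]) -> Dict[str, Dict[str, float]]:
    """Per-class metrics, treating each grade in turn as the positive class."""
    total = sum(map(sum, matrix))
    out = {}
    for k, name in enumerate(CLASSES):
        tp = matrix[k][k]
        fn = sum(matrix[k]) - tp                 # true grade k, predicted otherwise
        fp = sum(row[k] for row in matrix) - tp  # predicted grade k, truly otherwise
        tn = total - tp - fn - fp
        sn, sp = tp / (tp + fn), tn / (tn + fp)
        out[name] = {
            "precision": tp / (tp + fp),
            "sensitivity": sn,
            "specificity": sp,
            "f1": 2 * tp / (2 * tp + fp + fn),
            "auc_single_threshold": (sn + sp) / 2,  # one operating point per class
        }
    return out


per_class = one_vs_rest(CONFUSION)
accuracy = sum(CONFUSION[k][k] for k in range(len(CLASSES))) / sum(map(sum, CONFUSION))
macro_auc = sum(m["auc_single_threshold"] for m in per_class.values()) / len(CLASSES)
print(round(accuracy, 4), round(macro_auc, 4))  # 0.9185 0.9429
```

In this matrix every misclassified W3 wound is called either W1-2 or W4, which matches the low W3 sensitivity discussed above.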

Figure 5

ROC curves of three models for grading diabetic foot wounds.

4 Discussion

This study constructed three deep learning models (Mask2Former, DeeplabV3Plus, and Swin-Transformer) for the recognition and segmentation of DFU images and compared their performance. Mask2Former achieved the best mean segmentation performance (mIoU 65.79%, mDice 78.00%) and the best Wagner grading performance (ACC 0.9185, AUC 0.9429), enabling effective AI-based wound feature recognition and Wagner grading.

Previous studies have used deep learning to segment the wound area and identify structural regions within DFU. Cui et al. (21) proposed a CNN-based method for wound area segmentation with an accuracy of 72%. The 2022 DFU Segmentation Challenge (22) showcased recent advances in DFU segmentation; the winning team achieved a Dice score of 0.7287 in the wound recognition task. In our study, Mask2Former achieved an IoU of 85.88% and a Dice score of 0.9240 in wound segmentation, and Swin-Transformer achieved an even higher Dice score of 0.9325. Although these results were obtained on different datasets and are not directly comparable, they suggest that our models segment wounds accurately. The core innovation of Mask2Former is masked attention, which restricts each query's cross-attention to the region of the mask predicted for that query, improving the precision and efficiency of local feature extraction. Its unified architecture and ease of training also allowed it to be adapted to DFU image recognition without task-specific modifications (19).
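A minimal single-query, single-head sketch of masked attention as described for Mask2Former (19): cross-attention scores for pixels outside the mask predicted by the previous decoder layer (probability at or below 0.5) are set to negative infinity before the softmax, so those pixels receive zero weight. Real implementations batch many queries and heads over multi-scale feature maps; the fallback of attending everywhere when the mask is empty is included here as an assumption.

```python
import math
from typing import List, Sequence

Vector = List[float]


def softmax(xs: Sequence[float]) -> Vector:
    m = max(xs)  # finite as long as at least one position is unmasked
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def masked_cross_attention(query: Vector, keys: List[Vector], values: List[Vector],
                           mask_prob: Sequence[float], threshold: float = 0.5) -> Vector:
    """One query attends only to pixels inside its previously predicted mask."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    inside = [p > threshold for p in mask_prob]
    if not any(inside):
        # Assumed safeguard: with an empty mask, attend to every pixel instead.
        inside = [True] * len(keys)
    # Pixels outside the mask get a score of -inf, i.e. zero attention weight.
    masked = [s if keep else -math.inf for s, keep in zip(scores, inside)]
    weights = softmax(masked)
    return [sum(w * v[c] for w, v in zip(weights, values)) for c in range(len(values[0]))]
```

Restricting attention this way lets each query refine features only within the wound or tissue region it is already tracking, which is the local-feature benefit the paragraph describes.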

Compared with earlier studies (13, 14, 23, 24), our study extends tissue recognition within diabetic foot wounds: beyond segmenting and delineating the wound area, it identifies necrotic tissue, tendons, bone, and gangrene within the wound. Detailed wound segmentation and tissue recognition are critical steps in managing DFU. Accurately distinguishing granulation tissue, necrotic tissue, tendons, bone, gangrene, and periwound erythema provides a clear basis for grading ulcer severity and precise information for prognosis assessment and treatment planning. For instance, the presence of necrotic tissue indicates the need for debridement, and exposed bone carries a risk of osteomyelitis, which can increase the likelihood of amputation, prolong hospitalization, extend antibiotic treatment, and delay healing (25).

Diabetic foot infection (DFI) is the most common reason for hospitalization among patients with diabetes-related foot ulcers and a major cause of lower limb amputation. Only about 46% of infected foot ulcers heal within a year (with 10% recurring), 15% of patients die, and 17% require lower limb amputation (26). Goyal et al. (27) developed a new dataset and a superpixel color descriptor technique combined with an ensemble CNN model, improving the recognition of ischemia and infection and achieving an infection recognition accuracy of 73%. Yogapriya et al. (28) constructed the Diabetic Foot Infection Network (DFINET), designed specifically to classify infection and non-infection in DFU images, with a recognition accuracy of 91.98%. In this study, Mask2Former achieved an infection recognition accuracy (ACC) of 0.8519 and an AUC of 0.8353. Previous studies have focused primarily on identifying the presence of infection, yet delineating its extent is essential for distinguishing mild, moderate, and severe infection and directly influences the choice of antibiotics and treatment duration (29). This study therefore also segmented the extent of infection, although performance still needs improvement (IoU 45.14% for Mask2Former), possibly because of image quality and variability in how annotators defined the extent of infection.

Diabetic foot prognosis is closely linked to Wagner grade. In this study, AI-based wound feature recognition was integrated with the Wagner system to achieve automated grading. Wagner grade 5 cases (characterized by extensive necrosis and requiring urgent surgical intervention) were excluded, because their management relies on comprehensive clinical evaluation rather than image analysis alone. Mask2Former distinguished the W1-2, W3, and W4 groups with AUC values of 0.97 (grades 1–2), 0.82 (grade 3), and 0.78 (grade 4). By providing efficient, objective decision support for severity assessment and treatment prioritization, this AI-assisted approach could help address critical challenges in diabetic foot care, including limited expertise and time constraints.

While our study advances wound feature recognition and grading in DFUs, several limitations warrant attention:

-

Narrow applicability: The model focuses solely on DFU wound characterization and lacks utility for differential diagnosis of non-diabetic foot lesions.

-

Data constraints: The pilot-scale dataset (n=671 images) and absence of external validation limit generalizability. Future work will expand sample diversity and incorporate multi-center data.

-

Image standardization: Variability in image quality (e.g., lighting, angles) may impair model robustness. Standardized imaging protocols and scale integration will enhance segmentation accuracy and enable wound area quantification.

-

Infection localization: While detection of infection presence performed reasonably well (ACC 0.8519 for Mask2Former), precise spatial delineation of infection requires improved annotation strategies and more advanced algorithms.

-

Clinical integration: Augmenting image analysis with clinical data (e.g., vascular status, wound location) could refine prognostic predictions and personalize treatment plans.

The Mask2Former model achieved accurate segmentation and classification of DFUs, performing best among the three models in identifying critical tissue components (necrotic tissue, tendons, bone) and in Wagner grading. By automating severity assessment, it could help address clinical challenges such as diagnostic delays and shortages of expertise, particularly in resource-limited settings. These capabilities lay the groundwork for intelligent DFU management systems that support telemedicine and real-time monitoring to optimize patient outcomes.

Statements

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Ethical Committee of the Air Force Medical Center of the Chinese People's Liberation Army. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

G-XZ: Writing – original draft. Y-KT: Writing – original draft. J-ZH: Writing – original draft. H-JZ: Writing – original draft. LX: Writing – original draft. NZ: Writing – original draft. X-WW: Writing – original draft. B-LD: Writing – original draft. DZ: Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This study was supported by a grant from the Clinical Trial Project of the Air Force Medical Center (2021LC018).

Conflict of interest

Authors NZ, X-WW and B-LD were employed by the company Chongqing Zhijian Life Technology Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1

Lin C Liu J Sun H . Risk factors for lower extremity amputation in patients with diabetic foot ulcers: A meta-analysis. PloS One. (2020) 15:e0239236. doi: 10.1371/journal.pone.0239236

2

Lingyan L Liwei X Han Z Xin T Bingyang H Yuanyuan M et al . Identification, influencing factors and outcomes of time delays in the management pathway of diabetic foot: A systematic review. J Tissue Viability. (2024) 33:345–54. doi: 10.1016/j.jtv.2024.04.007

3

Yan J Liu Y Zhou B Sun M . Pre-hospital delay in patients with diabetic foot problems: influencing factors and subsequent quality of care. Diabet Med. (2014) 31:624–9. doi: 10.1111/dme.12388

4

Hazenberg CEVB Van Netten JJ Van Baal SG Bus SA . Assessment of signs of foot infection in diabetes patients using photographic foot imaging and infrared thermography. Diabetes Technol Ther. (2014) 16:370–7. doi: 10.1089/dia.2013.0251

5

Yadav MK Manohar DD Mukherjee G Chakraborty C . Segmentation of chronic wound areas by clustering techniques using selected color space. J Med Imaging Health Inf. (2013) 3:22–9. doi: 10.1166/jmihi.2013.1124

6

Kolesnik M Fexa A . Multi-dimensional color histograms for segmentation of wounds in images. Berlin, Heidelberg: Springer Berlin Heidelberg (2005) p. 1014–22.

7

Kolesnik M Fexa A . "How robust is the SVM wound segmentation?", In: Proceedings of the 7th nordic signal processing symposium-NORSIG. Reykjavik, Iceland: IEEE (2006). p. 50–3. doi: 10.1109/NORSIG.2006.275274

8

Veredas F Mesa H Morente L . Binary tissue classification on wound images with neural networks and bayesian classifiers. IEEE Trans Med Imaging. (2010) 29:410–27. doi: 10.1109/TMI.2009.2033595

9

Veredas FJ Luque-Baena RM Martín-Santos FJ Morilla-Herrera JC Morente L . Wound image evaluation with machine learning. Neurocomputing. (2015) 164:112–22. doi: 10.1016/j.neucom.2014.12.091

10

Wang L Pedersen PC Agu E Strong DM Tulu B . Area determination of diabetic foot ulcer images using a cascaded two-stage SVM-based classification. IEEE Trans Biomed Eng. (2017) 64:2098–109. doi: 10.1109/TBME.2016.2632522

11

Han A Zhang Y Liu Q Dong Q Zhao F Shen X et al . Application of refinements on faster-RCNN in automatic screening of diabetic foot Wagner grades. Acta Med Mediterr. (2020) 36:661–5.

12

Goyal M Reeves N Rajbhandari S Yap MH . Robust methods for real-time diabetic foot ulcer detection and localization on mobile devices. IEEE J Biomed Health Inf. (2018), 1–1. doi: 10.1109/JBHI.2018.2868656

13

Huang HN Zhang T Yang CT Sheen YJ Chen HM Chen CJ et al . Image segmentation using transfer learning and Fast R-CNN for diabetic foot wound treatments. Front Public Health. (2022) 10:969846. doi: 10.3389/fpubh.2022.969846

14

Zhao N Zhou Q Hu J Huang W Xu J Qi M et al . Construction and verification of an intelligent measurement model for diabetic foot ulcer. Zhong Nan Da Xue Bao Yi Xue Ban. (2021) 46:1138–46. doi: 10.11817/j.issn.1672-7347.2021.200938

15

Karabanow AB Zaimi I Suarez LB Iafrati MD Allison GM . An analysis of guideline consensus for the prevention, diagnosis, and management of diabetic foot ulcers. J Am Podiatr Med Assoc. (2022) 112:19–175. doi: 10.7547/19-175

16

Wagner F . A classification and treatment program for diabetic, neuropathic, and dysvascular foot problems. Instr Course Lect. (1979) 28:143–65.

17

Chen LC Papandreou G Schroff F Adam H . Rethinking atrous convolution for semantic image segmentation. (2017). doi: 10.48550/arXiv.1706.05587

18

Liu Z Lin Y Cao Y Hu H Wei Y Zhang Z et al . Swin transformer: hierarchical vision transformer using shifted windows. (2021). doi: 10.48550/arXiv.2103.14030

19

Cheng B Misra I Schwing AG Kirillov A Girdhar R . Masked-attention mask transformer for universal image segmentation. (2021). doi: 10.48550/arXiv.2112.01527

20

Deng J Dong W Socher R Li LJ Li K Li FF . ImageNet: a large-scale hierarchical image database. In: 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2009), Miami, Florida, USA, 20-25 June 2009. Piscataway, New Jersey: Institute of Electrical and Electronics Engineers (IEEE) (2009). doi: 10.1109/CVPR.2009.5206848

21

Cui C Thurnhofer-Hemsi K Soroushmehr R Mishra A Gryak J Dominguez E et al . Diabetic wound segmentation using convolutional neural networks. Annu Int Conf IEEE Eng Med Biol Soc. (2019) 2019:1002–5. doi: 10.1109/EMBC.2019.8856665

22

Yap MH Cassidy B Byra M Liao TY Yi H Galdran A et al . Diabetic foot ulcers segmentation challenge report: Benchmark and analysis. Med Image Anal. (2024) 94:103153. doi: 10.1016/j.media.2024.103153

23

Mukherjee R Manohar DD Das DK Achar A Mitra A Chakraborty C . Automated tissue classification framework for reproducible chronic wound assessment. BioMed Res Int. (2014) 2014:851582. doi: 10.1155/2014/851582

24

Toledo Peral CL Ramos Becerril FJ Vega Martínez G Vera Hernández A Leija Salas L Gutiérrez Martínez J . An application for skin macules characterization based on a 3-stage image-processing algorithm for patients with diabetes. J Healthc Eng. (2018) 2018:9397105. doi: 10.1155/2018/9397105

25

Cazzell S Moyer PM Samsell B Dorsch K McLean J Moore MA . A prospective, multicenter, single-arm clinical trial for treatment of complex diabetic foot ulcers with deep exposure using acellular dermal matrix. Adv Skin Wound Care. (2019) 32:409–15. doi: 10.1097/01.ASW.0000569132.38449.c0

26

Senneville É Albalawi Z van Asten SA Abbas ZG Allison G Aragon-Sanchez J et al . IWGDF/IDSA guidelines on the diagnosis and treatment of diabetes-related foot infections (IWGDF/IDSA 2023). Diabetes Metab Res Rev. (2024) 40:e3687. doi: 10.1002/dmrr.3687

27

Goyal M Reeves ND Rajbhandari S Yap MH . Robust methods for real-time diabetic foot ulcer detection and localization on mobile devices. IEEE J BioMed Health Inform. (2019) 23:1730–41. doi: 10.1109/JBHI.2018.2868656

28

Yogapriya J Chandran V Sumithra MG Elakkiya B Shamila Ebenezer A Suresh Gnana Dhas C . Automated detection of infection in diabetic foot ulcer images using convolutional neural network. J Healthc Eng. (2022) 2022:2349849. doi: 10.1155/2022/2349849

29

Li M . Guidelines and standards for comprehensive clinical diagnosis and interventional treatment for diabetic foot in China (Issue 7.0). J Interv Med. (2021) 4:117–29. doi: 10.1016/j.jimed.2021.07.003

Summary

Keywords

diabetic foot ulcers, artificial intelligence, deep learning, segmentation, classification

Citation

Zhou G-X, Tao Y-K, Hou J-Z, Zhu H-J, Xiao L, Zhao N, Wang X-W, Du B-L and Zhang D (2025) Construction and validation of a deep learning-based diagnostic model for segmentation and classification of diabetic foot. Front. Endocrinol. 16:1543192. doi: 10.3389/fendo.2025.1543192

Received

11 December 2024

Accepted

25 March 2025

Published

09 April 2025

Volume

16 - 2025

Edited by

Ping Wang, Michigan State University, United States

Reviewed by

Huseyin Canbolat, Ankara Yıldırım Beyazıt University, Türkiye

Dimiter Prodanov, Interuniversity Microelectronics Centre (IMEC), Belgium


Copyright

© 2025 Zhou, Tao, Hou, Zhu, Xiao, Zhao, Wang, Du and Zhang.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Da Zhang, zhangda79@aliyun.com
