- 1 Department of Orthopedics, The Fourth Hospital of Hebei Medical University, Shijiazhuang, Hebei, China

- 2 Department of Pharmacy, The Fourth Hospital of Hebei Medical University, Shijiazhuang, Hebei, China

Machine learning (ML) has played a crucial role in advancing precision immunotherapy by integrating multi-omics data to identify biomarkers and predict therapeutic responses. However, a prevalent methodological flaw persists in immunological studies—an overreliance on correlation-based analysis while neglecting causal inference. Traditional ML models struggle to capture the intricate dynamics of immune interactions and often function as “black boxes.” A systematic review of 90 studies on immune checkpoint inhibitors revealed that despite employing ML or deep learning techniques, none incorporated causal inference. Similarly, all 36 retrospective studies modeling melanoma exhibited the same limitation. This “knowledge–practice gap” highlights a disconnect: although researchers acknowledge that correlation does not imply causation, causal inference is often omitted in practice. Recent advances in causal ML, like Targeted-BEHRT, CIMLA, and CURE, offer promising solutions. These models can distinguish genuine causal relationships from spurious correlations, integrate multimodal data—including imaging, genomics, and clinical records—and control for unmeasured confounders, thereby enhancing model interpretability and clinical applicability. Nevertheless, practical implementation still faces major challenges, including poor data quality, algorithmic opacity, methodological complexity, and interdisciplinary communication barriers. To bridge these gaps, future efforts must focus on advancing research in causal ML, developing platforms such as the Perturbation Cell Atlas and federated causal learning frameworks, and fostering interdisciplinary training programs. These efforts will be essential to translating causal ML from theoretical innovation to clinical reality in the next 5-10 years—representing not only a methodological upgrade, but also a paradigm shift in immunotherapy research and clinical decision-making.

1 Introduction

Machine learning (ML) technologies have played a pivotal role in advancing precision immunotherapy by integrating multi-omics data to identify biomarkers, predict treatment responses, discover novel therapeutic targets (1, 2), characterize the tumor microenvironment, and optimize patient stratification. These predictive models have greatly enhanced clinical decision-making capabilities (3, 4). However, the application of ML in immunology has increasingly come under scrutiny. Traditional models often fail to capture the complexity of immune interactions (5), suffer from the “black-box” nature of deep learning (6), and lack standardized data preprocessing protocols (7).

Despite broad recognition that “correlation ≠ causation” is a fundamental statistical principle, this distinction is frequently overlooked in practice. A systematic review of 90 studies on immune checkpoint inhibitors (ICIs) revealed that while 72% employed traditional ML and 22% used deep learning, none incorporated causal inference. Consequently, these models were not included in phase III clinical trial designs or referenced in major clinical guidelines (8). This phenomenon is not isolated: a parallel analysis of 36 melanoma prediction models showed all studies were retrospective correlation-based analyses, with none applying causal inference. As a result, PROBAST evaluations rated them as having moderate to high bias, limiting their translational utility and clinical applicability (9, 10).

This disconnect between knowledge and practice highlights a broader issue in immunology research: an overreliance on data-driven correlations. Researchers may acknowledge the importance of causality but are deterred from applying causal frameworks by the intrinsic complexity of immunological data. High-dimensional, noisy, and temporally dynamic immune responses, combined with treatment-induced nonlinear effects and substantial interindividual heterogeneity (across genotype, phenotype, and microenvironment), pose significant challenges to conventional causal inference methods (11–14).

Fortunately, recent methodological advances have made the integration of causal inference and ML increasingly feasible. For example, the Targeted-BEHRT model combines transformer architecture with doubly robust estimation to infer long-term treatment effects from longitudinal, high-dimensional data (15). Causal network models incorporating selection diagrams, missingness graphs, and structure discovery techniques outperform standard ML in risk evaluation and adverse event prediction for immunotherapies (16). CIMLA exhibits exceptional robustness to confounding in gene regulatory network analysis, offering insights into tumor immune regulation (17). CURE, leveraging large-scale pretraining, improves treatment effect estimation with gains of ~4% in AUC and ~7% in precision-recall performance over traditional methods (18). Causal-StoNet handles multimodal and incomplete datasets effectively, which is crucial for big-data immunology research (19). LiNGAM-based causal discovery models have demonstrated high accuracy (84.84% with logistic regression; 84.83% with deep learning) and can directly identify causative factors, significantly improving reliability in immunological studies (20).

These innovations represent a confluence of causal reasoning and machine learning methodologies (21), which are now being increasingly applied in immunology research (22, 23). They help reveal true causal relationships, mitigate confounding (both observed and unobserved), enhance model interpretability and robustness (24), and integrate heterogeneous data types including genomics, proteomics, clinical phenotypes, and medical imaging (25, 26). Ultimately, they enable the construction of more realistic models with superior generalizability and predictive performance across diverse patient populations (27, 28).

This Perspective aims to systematically highlight the paradigm-shifting value of causal machine learning in immunological research. We focus on the following key questions (Figure 1):

Figure 1. Transitioning from the correlation trap to the causal paradigm in immunotherapy machine learning. This figure illustrates the urgent need and conceptual roadmap for transitioning machine learning applications in immunotherapy research from correlation-based analyses to causal inference frameworks. The left red module highlights critical issues in current practice: among 90 ICI (immune checkpoint inhibitor) studies, none incorporated causal inference; the hazard ratio (HR) for immune-related adverse events (irAEs) shifted from 0.37 to 1.02 after causal bias correction, underscoring the misleading nature of pure correlational analysis. Moreover, some models were excluded from Phase III clinical trials due to a lack of causal validation. The central green bridge represents the solution offered by causal machine learning (Causal ML), characterized by three key strengths: identifying true causal effects, integrating multimodal data (genomics, imaging, and clinical records), and providing interpretable mechanistic insights. The right blue module envisions future breakthroughs over the next 5-10 years, including the development of the Perturbation Cell Atlas, federated causal learning approaches, and eventual clinical translation. The cliff–bridge–shoreline metaphor visually encapsulates the methodological leap required to shift from flawed analytics to a robust scientific paradigm.

1. Pitfalls of correlation-based approaches: Why do conventional models relying solely on correlation lead to conflicting conclusions? For instance, how should we reinterpret established “consensus” when the hazard ratio (HR) of immune-related adverse events (irAEs) for survival shifts from 0.37 to 1.02 after causal correction?

2. Unique advantages of causal ML: How does causal ML bridge the gap from “correlation discovery” to “causal identification”? What breakthrough capabilities does it offer in capturing the complexity of the immune system?

3. Implementation challenges: How do issues such as data quality, model interpretability, and interdisciplinary collaboration hinder the clinical adoption of causal ML?

4. Future directions: From the Perturbation Cell Atlas to federated causal learning, which innovations over the next 5-10 years are most likely to translate causal ML from theory into real-world practice?

2 Misconceptions in immunological research: equating correlation with causation

In current immunotherapeutic research, traditional machine learning (ML) models primarily rely on retrospective data mining of correlations (29), yet they often fail to explore the underlying causal mechanisms (30). For instance, in studies on the gut microbiome and immune checkpoint inhibitors (ICIs), although advanced algorithms such as Random Forests and SVMs were employed, only 4 out of 27 studies conducted cross-validation. Furthermore, key confounding factors such as antibiotic use and dietary differences were not adequately controlled, resulting in highly heterogeneous and unreliable conclusions regarding the efficacy of the same microbial strains (31). Similarly, in the analysis of immune-related adverse events (irAEs) and survival, traditional Cox regression yielded a hazard ratio (HR) of 0.37, implying a protective effect of irAEs. However, causal ML using target trial emulation (TTE) to correct for immortal time bias revealed a true HR of 1.02—completely overturning the conventional belief that irAEs improve prognosis (32). These findings underscore the urgent need for sound causal inference in immunological studies to avoid conclusions that contradict biological plausibility.
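
The mechanism behind immortal time bias can be illustrated with a small simulation. The sketch below is not the analysis of reference 32 and does not reproduce the 0.37 and 1.02 figures. It assumes hypothetical exponential times to death and to irAE, with the irAE having no effect on death, and compares a naive "ever irAE" classification with a time-dependent one. Rate ratios are used in place of Cox hazard ratios; under the constant hazards assumed here the two coincide. All parameter values and function names are illustrative assumptions.

```python
import random


def simulate_immortal_time_bias(n=20000, seed=0, follow_up=36.0):
    """Return (naive, time-dependent) death-rate ratios for irAE vs no irAE.

    By construction the irAE has no effect on death, so the true ratio is 1.
    """
    rng = random.Random(seed)
    death_rate = 1 / 12.0  # assumed: mean survival of 12 months
    irae_rate = 1 / 6.0    # assumed: mean time to irAE of 6 months
    # Each table maps exposure status -> [deaths, person-months].
    naive = {True: [0, 0.0], False: [0, 0.0]}
    time_dep = {True: [0, 0.0], False: [0, 0.0]}
    for _ in range(n):
        t_death = rng.expovariate(death_rate)
        t_irae = rng.expovariate(irae_rate)
        t_end = min(t_death, follow_up)  # administrative censoring
        died = int(t_death <= follow_up)
        ever_irae = t_irae < t_end  # irAE seen while alive and under follow-up
        # Naive: all follow-up is credited to the "ever irAE" label.
        naive[ever_irae][0] += died
        naive[ever_irae][1] += t_end
        # Time-dependent: time before the irAE counts as unexposed.
        if ever_irae:
            time_dep[False][1] += t_irae
            time_dep[True][1] += t_end - t_irae
            time_dep[True][0] += died
        else:
            time_dep[False][1] += t_end
            time_dep[False][0] += died

    def rate_ratio(table):
        exposed = table[True][0] / table[True][1]
        unexposed = table[False][0] / table[False][1]
        return exposed / unexposed

    return rate_ratio(naive), rate_ratio(time_dep)


naive_rr, time_dep_rr = simulate_immortal_time_bias()
print(f"naive rate ratio: {naive_rr:.2f}")
print(f"time-dependent rate ratio: {time_dep_rr:.2f}")
```

In this setup the naive ratio falls well below 1 even though the irAE is harmless by design, because patients must survive long enough to develop an irAE. Assigning the pre-irAE person-time to the unexposed group brings the ratio back close to 1.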

Moreover, the insufficient recognition of the importance of causal inference among researchers (33) has led to multiple problems. Notably, effective therapies may be erroneously rejected due to improper grouping strategies (34), while correlations that appear statistically significant (35) may be misinterpreted as causal relationships (36), leading to misleading clinical implications (37). For example, studies examining the impact of antibiotic exposure on ICI outcomes reported a statistically significant HR of approximately 1.3, yet the authors explicitly acknowledged the presence of residual unmeasured confounders. This raises the risk of inappropriate clinical decisions, such as the unjustified discontinuation of antibiotics due to a presumed class-wide harmful effect (38). Likewise, deep learning models based on CT radiomics for predicting ICI responses reported an AUC of ~0.71, but the signal captured largely reflected confounders such as tumor burden and treatment line rather than true drug sensitivity, casting doubt on the validity of the model’s conclusions (39).

Therefore, neglecting causal inference not only compromises the reliability of study results (40), impedes clinical translation (41–43), and misguides clinical decision-making, but also wastes research resources and delays the development of effective therapies (44). A typical example is seen in COVID-19 vaccine research, where counting non-virus-related hospitalizations (“false-positive cases”) as outcomes led to substantial underestimation of the protective effect of vaccines that primarily prevent severe post-infection complications rather than infection itself, ultimately producing misleading conclusions about vaccine efficacy (45).
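
The dilution effect of such false-positive cases is simple arithmetic, sketched below with entirely hypothetical counts (they are not taken from reference 45). Vaccine efficacy is computed as one minus the risk ratio. Adding the same number of unrelated hospitalizations to both arms pulls the risk ratio toward 1 and lowers the apparent efficacy.

```python
def vaccine_efficacy(cases_vaccinated, n_vaccinated,
                     cases_unvaccinated, n_unvaccinated):
    """Vaccine efficacy as 1 - risk ratio (vaccinated vs unvaccinated)."""
    risk_vaccinated = cases_vaccinated / n_vaccinated
    risk_unvaccinated = cases_unvaccinated / n_unvaccinated
    return 1.0 - risk_vaccinated / risk_unvaccinated


# Hypothetical trial arms of equal size.
arm_size = 10_000
virus_related = {"vaccinated": 10, "unvaccinated": 100}
unrelated_per_arm = 50  # hospitalizations the vaccine cannot prevent

true_ve = vaccine_efficacy(virus_related["vaccinated"], arm_size,
                           virus_related["unvaccinated"], arm_size)
diluted_ve = vaccine_efficacy(virus_related["vaccinated"] + unrelated_per_arm, arm_size,
                              virus_related["unvaccinated"] + unrelated_per_arm, arm_size)

print(f"efficacy on virus-related hospitalizations: {true_ve:.0%}")
print(f"efficacy when unrelated hospitalizations are counted: {diluted_ve:.0%}")
```

With these assumed counts the risk ratio moves from 10/100 to 60/150, so the estimated efficacy drops from 90% to 60% although the vaccine itself is unchanged.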

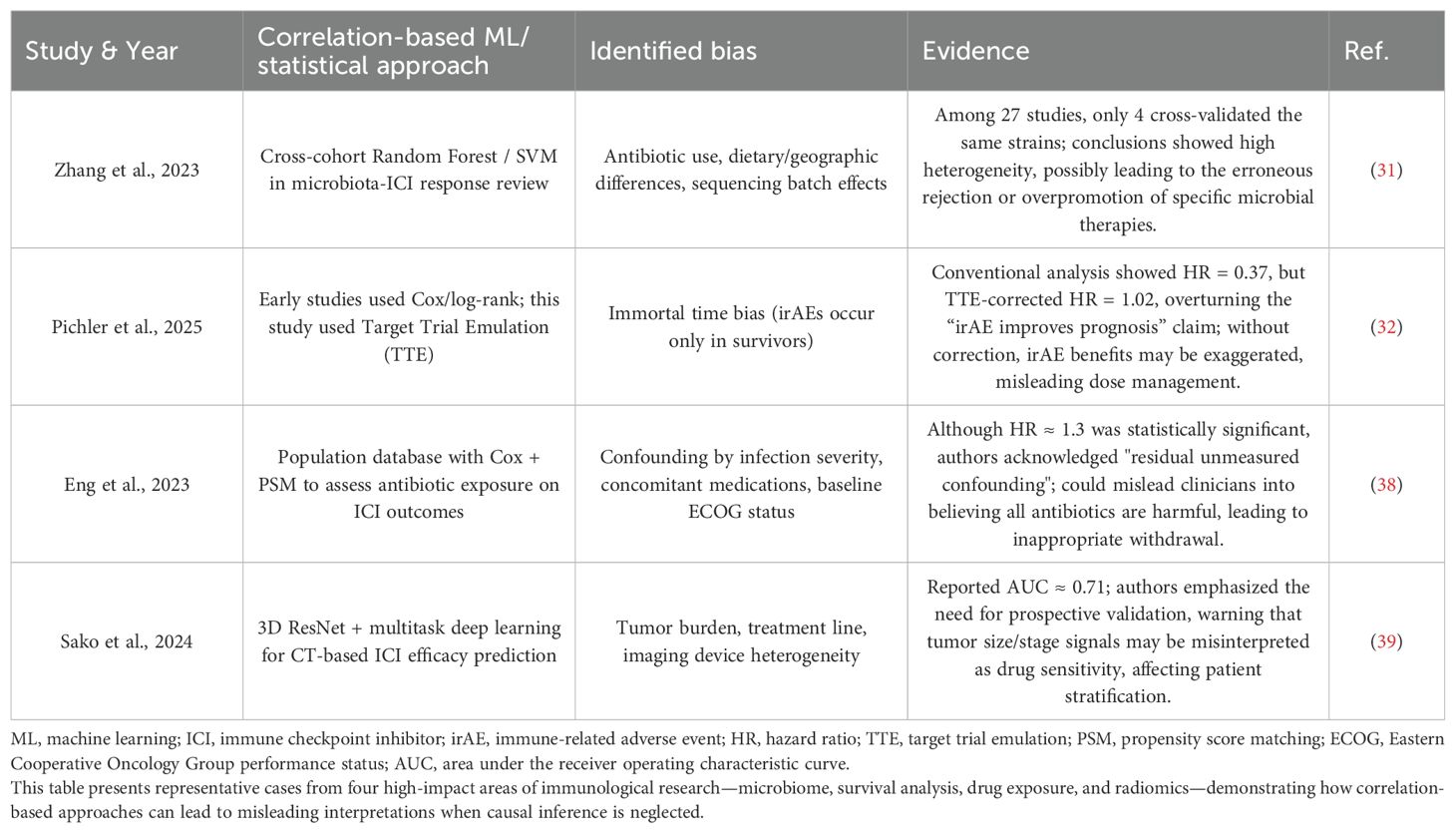

Although the importance of causal inference has been increasingly recognized in clinical research, many studies still rely on conventional causal inference methods, which face significant challenges in practice. Randomized controlled trials (RCTs) are often infeasible due to high costs, ethical constraints, and heterogeneity among patients (46). Stratified designs in observational studies struggle with high-dimensional omics data, and multivariable regression fails to capture the nonlinear characteristics of the immune system (47). Propensity score matching (PSM), which rests on the often unrealistic assumption that all confounders are measured, has been misapplied in 72% of studies (8). Mendelian randomization (MR) also faces methodological limitations, including susceptibility to false associations and estimation bias stemming from the quality of genetic instruments and violations of its core assumptions (48, 49). Specifically, MR applications in immunology face four major hurdles: violation of the instrumental variable assumption due to pleiotropy; weak instruments owing to low heritability of immune exposures; a mismatch between lifelong genetic effects and short-term therapeutic interventions; and systematic bias from population stratification (50–52). Collectively, these limitations have constrained the application and scalability of traditional causal inference approaches in immunology.

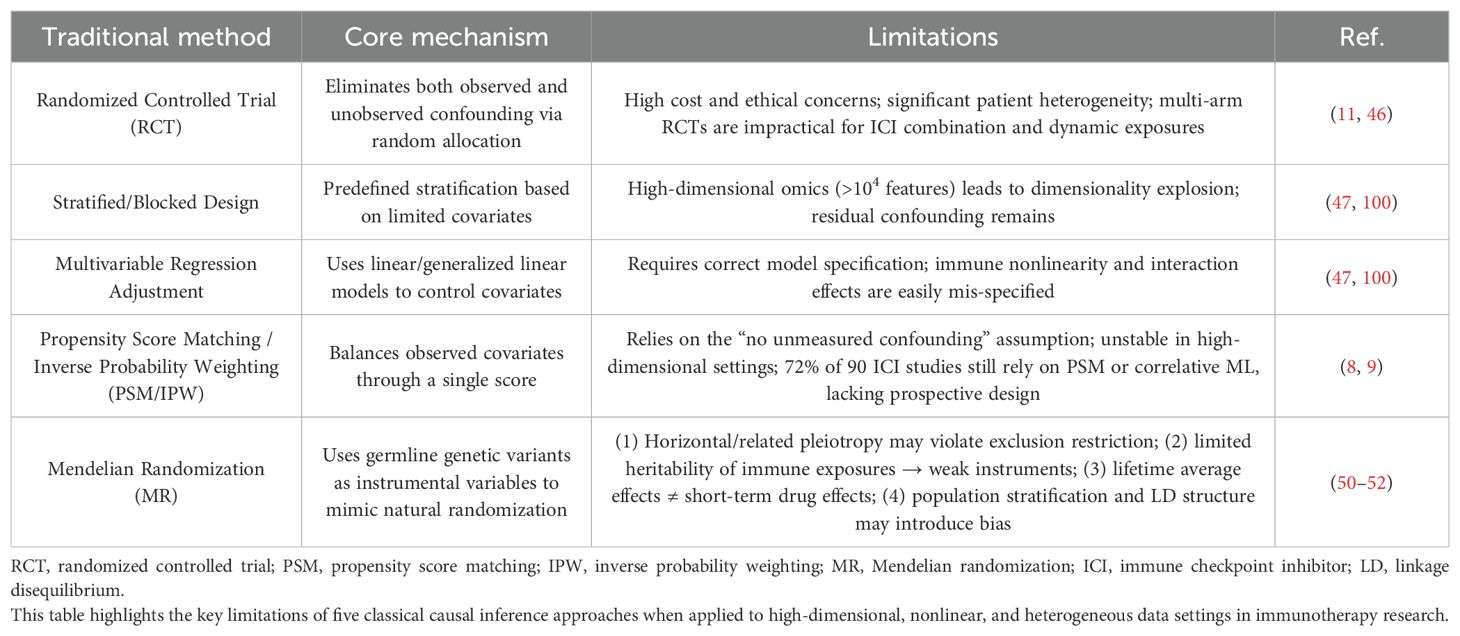

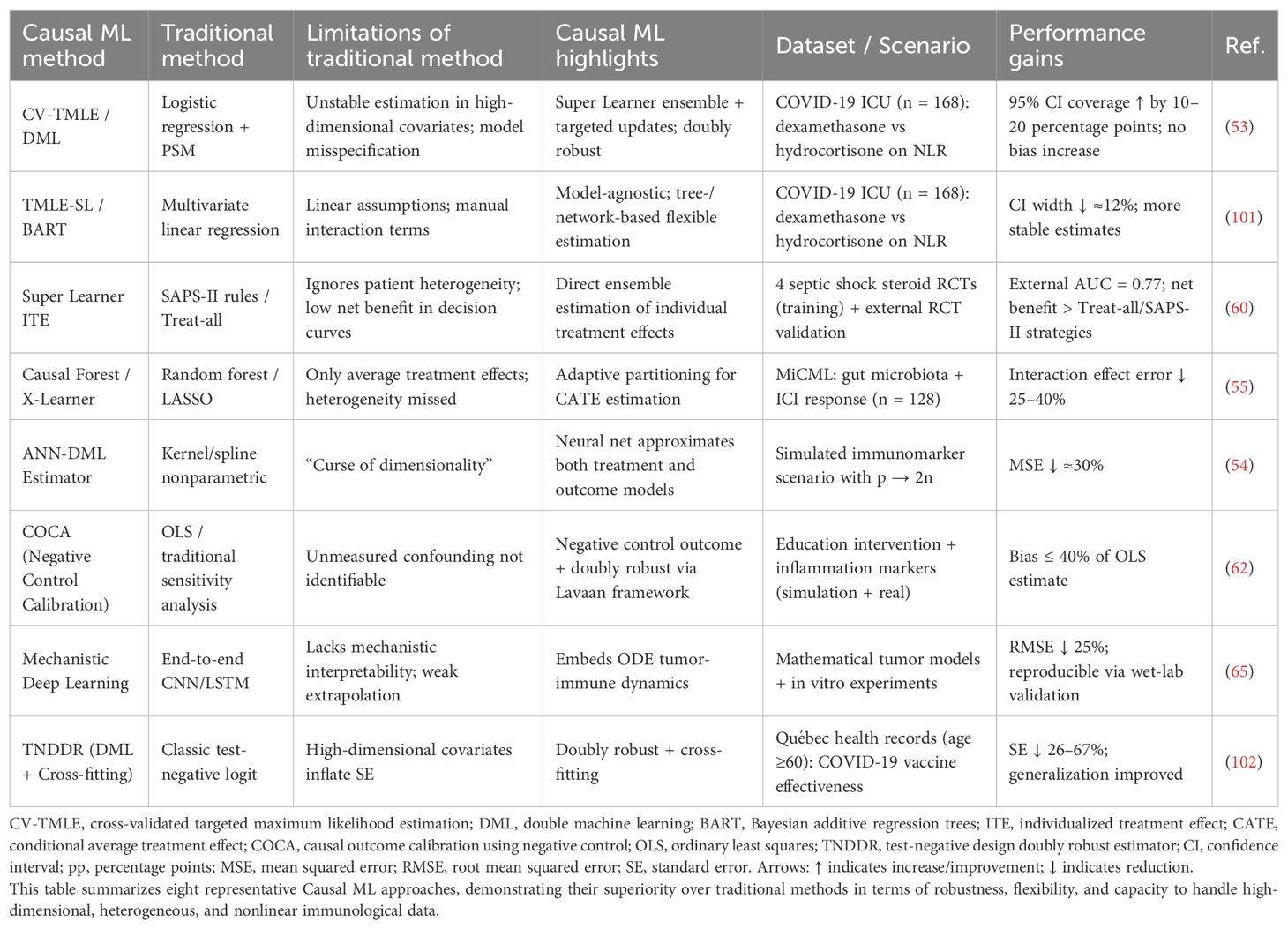

Table 1 presents representative cases where correlation-based analyses failed, while Table 2 summarizes the limitations of traditional causal inference methods.

Table 1. Representative bias cases in immune studies dominated by correlation-based machine learning.

3 Unique advantages of causal inference machine learning models

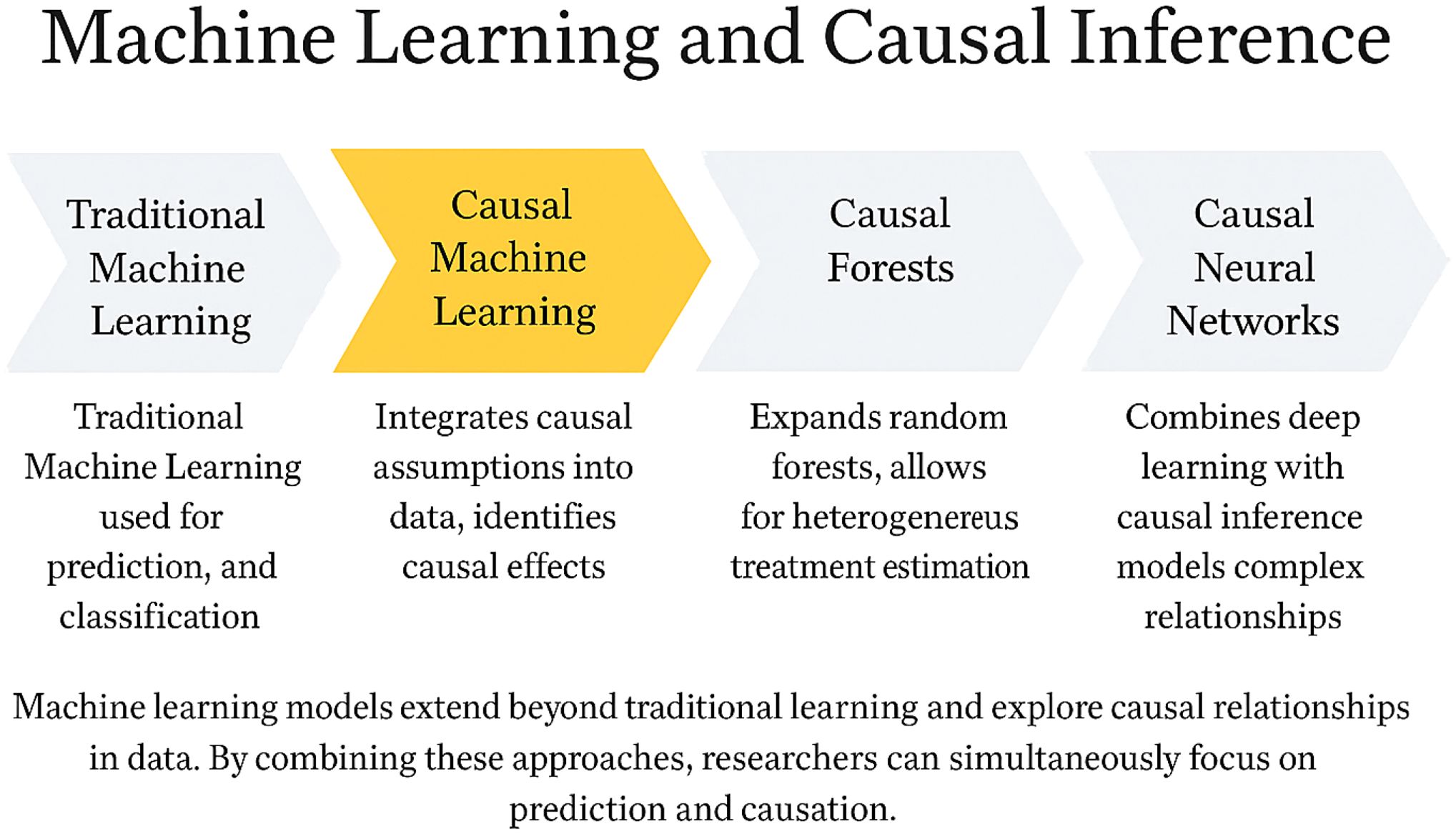

To overcome the limitations of both traditional causal inference and conventional machine learning approaches, causal inference-based machine learning (causal ML) models have emerged (Figure 2). Compared to classical causal methods such as propensity score matching (PSM), Cox regression, or linear models, causal ML lifts the constraints of strict parametric assumptions and rigid model forms, enabling more flexible modeling of the nonlinear dynamics and high-dimensional interactions inherent to immune systems (53–55). For instance, CV-TMLE, when applied in a small-scale study of only 168 ICU patients with COVID-19, employed the Super Learner ensemble approach to effectively relax regularity conditions and increased the 95% confidence interval coverage by 10-20 percentage points compared to standard methods (53). Similarly, the ANN-DML estimator demonstrated a ~30% reduction in mean squared error (MSE) relative to conventional kernel smoothing methods when handling extremely high-dimensional scenarios where the number of immune biomarkers scales with sample size (p → 2n) (54).

Figure 2. Integrating machine learning and causal inference: from predictive models to causal understanding. This figure illustrates the methodological evolution of machine learning from conventional predictive modeling toward causal inference. Traditional machine learning focuses on prediction and classification tasks without addressing underlying causal mechanisms. Causal machine learning integrates causal assumptions into data analysis to estimate true treatment effects. Causal forests extend random forests to enable estimation of heterogeneous treatment effects. Causal neural networks combine deep learning architectures with causal inference to model complex relationships. Together, these approaches bridge the gap between predictive accuracy and causal interpretability, providing a comprehensive analytical framework for immunotherapy research.

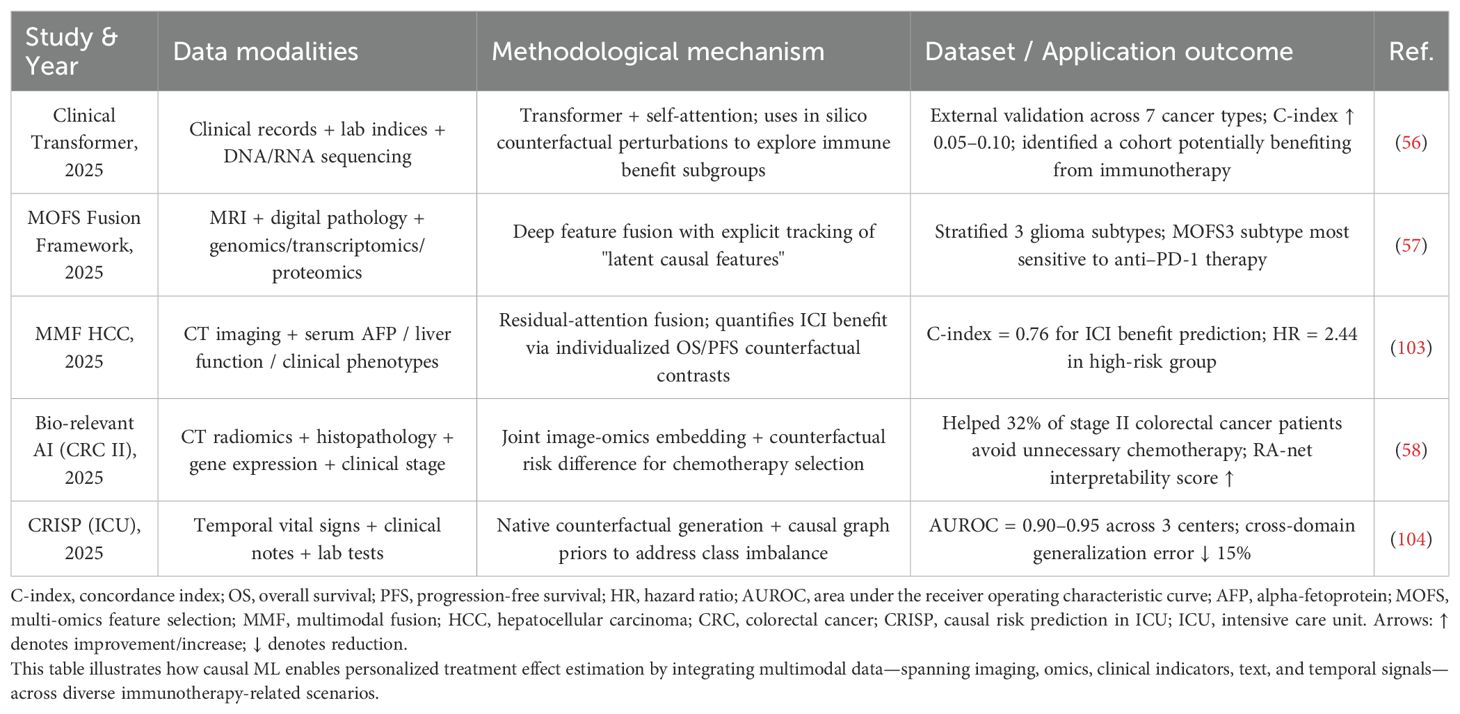

Moreover, causal ML enables multi-modal modeling by integrating imaging, text, time-series, and genomic data. For example, Clinical Transformer can fuse clinical records, laboratory metrics, and sequencing data. By leveraging counterfactual perturbation strategies, it achieved an improvement of 0.05-0.10 in C-index across seven cancer types (56). MOFS effectively integrates MRI, pathology, and multi-omics data to identify glioma subtypes most responsive to anti-PD-1 therapy (57), while Bio-relevant AI combines imaging, pathology, and gene expression data to help 32% of stage II colorectal cancer patients avoid unnecessary chemotherapy (58). These unique strengths contribute to more accurate prediction of therapeutic outcomes (33), optimizing drug use and enhancing treatment efficacy (59).

In contrast to conventional machine learning methods such as random forests, LASSO, or deep learning—models that rely solely on correlational pattern discovery—causal ML shifts the focus from predicting associations to identifying causality. For instance, the Super Learner ITE framework estimates individual treatment effects (ITE) through model ensembling, achieving an AUC of 0.77 in external validation, with decision curve analysis showing a significantly higher net clinical benefit compared to treat-all or SAPS-II strategies (60). Similarly, in the MiCML platform study, Causal Forest utilized adaptive partitioning to estimate conditional average treatment effects (CATE), reducing prediction error for treatment–microbiome interaction effects by 25-40% compared to traditional LASSO regression (55).
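
To make the notion of a conditional average treatment effect concrete, the sketch below estimates CATE within two fixed strata of a simulated randomized trial. It is a deliberately simplified stand-in: a causal forest learns its partition adaptively from the data, whereas here the partition (a hypothetical binary biomarker) is fixed in advance, and treatment is randomized so that no confounding adjustment is needed. All variable names and effect sizes are assumptions chosen for illustration.

```python
import random
from collections import defaultdict


def simulate_trial(n=20000, seed=1):
    """Simulate (biomarker_high, treated, outcome) rows from a randomized trial."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        biomarker_high = rng.random() < 0.5
        treated = rng.random() < 0.5  # randomized assignment
        true_effect = 2.0 if biomarker_high else 0.0  # assumed heterogeneity
        outcome = 1.0 + true_effect * treated + rng.gauss(0.0, 1.0)
        rows.append((biomarker_high, treated, outcome))
    return rows


def cate_by_stratum(rows):
    """Difference in mean outcome (treated - control) within each stratum."""
    cells = defaultdict(lambda: [0.0, 0])  # (stratum, treated) -> [sum, count]
    for stratum, treated, outcome in rows:
        cell = cells[(stratum, treated)]
        cell[0] += outcome
        cell[1] += 1

    def mean(key):
        total, count = cells[key]
        return total / count

    return {s: mean((s, True)) - mean((s, False)) for s in (False, True)}


def average_treatment_effect(rows):
    """Pooled difference in means, ignoring the biomarker."""
    treated = [y for _, t, y in rows if t]
    control = [y for _, t, y in rows if not t]
    return sum(treated) / len(treated) - sum(control) / len(control)


rows = simulate_trial()
cate = cate_by_stratum(rows)
ate = average_treatment_effect(rows)
print(f"CATE, biomarker low:  {cate[False]:.2f}")
print(f"CATE, biomarker high: {cate[True]:.2f}")
print(f"pooled ATE:           {ate:.2f}")
```

The pooled estimate sits between the two stratum-specific effects and hides the fact that, in this simulation, only biomarker-high patients benefit. Recovering that kind of heterogeneity is the purpose of CATE estimators.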

Furthermore, causal ML effectively addresses key limitations of correlational models—namely spurious associations and confounding bias—by enabling robust control of unmeasured confounding (61). This facilitates the clarification of true causal relationships between immune cells and disease (36). For instance, COCA utilizes negative control outcome calibration to restrict estimation bias to less than 40% of that seen in conventional OLS models (62), and CV-TMLE improves 95% confidence interval coverage (53). Collectively, these advantages enhance model performance (63), clinical interpretability (43), and generalizability (64), providing robust scientific guidance for clinical decision-making (40).

In addition, mechanism-aware causal ML approaches embed biological prior knowledge into model structures, achieving a unification of data-driven and mechanism-driven strategies—a closed loop between computation and experimentation (65). This integration enables better capture of complex clinical phenotypes, deeper mechanistic insights (41), and enhanced feasibility and translational value of biomedical research (66). Consequently, causal ML provides promising avenues for early detection strategies (64) and novel drug development pipelines (34).

Table 3 summarizes the unique advantages of causal ML methods, while Table 4 outlines their applications in multi-dimensional data integration.

Table 3. Advantages of causal machine learning (causal ML) over traditional machine learning methods.

Table 4. Applications of multimodal causal ML: integrated modeling of imaging, omics, clinical, textual, and temporal data.

3 Challenges in the application of causal inference machine learning models

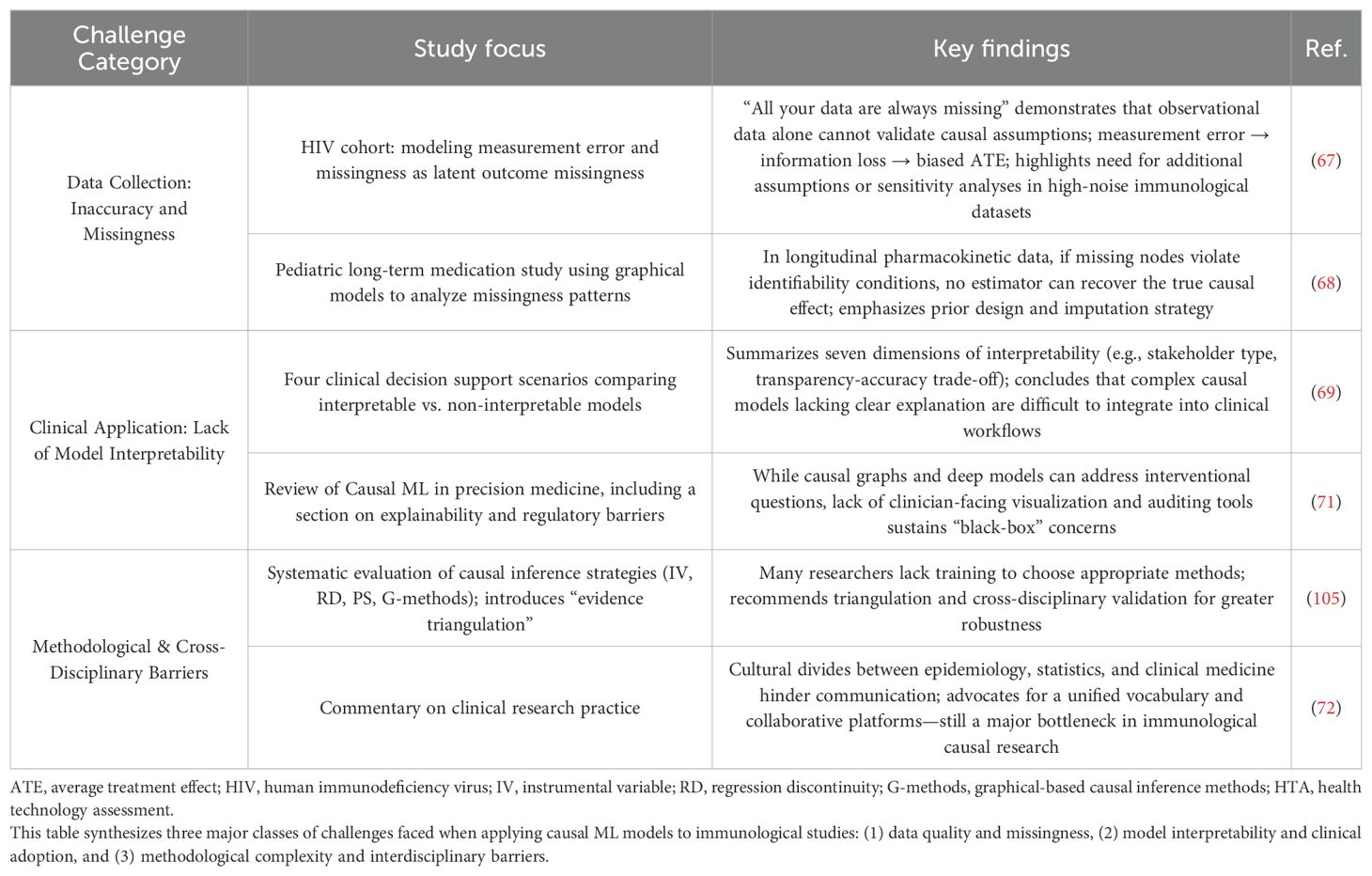

At the data acquisition level, the presence of inaccurate or incomplete data significantly hinders the implementation of causal inference models. In particular, measurement errors can amplify causal bias, thereby undermining the reliability of results (67). Moreover, when missing data violate identifiability assumptions, no estimator can recover the true causal effect, rendering any derived causal inference invalid (68).

At the clinical application level, causal machine learning (Causal ML) models often exhibit a “black-box” nature, which severely limits clinician acceptance (69). When internal parameters and computational processes become overly complex, it becomes difficult for clinicians to understand how conclusions are derived, ultimately impeding clinical translation (70, 71).

At the research methodology level, both methodological selection difficulties and interdisciplinary collaboration barriers constrain the advancement of Causal ML in immunological research. Causal relationships vary in structure and often require tailored methods, yet the abundance of available approaches—each with unique limitations—makes optimal selection challenging, especially for researchers with limited formal training in causal modeling (33). Furthermore, interdisciplinary efforts are frequently impeded by cultural and conceptual gaps between domains. For instance, biomedical scientists tend to focus on clinical applicability, statisticians emphasize methodological validity, and computer scientists prioritize algorithmic performance. These differing priorities can lead to communication breakdowns and ultimately slow scientific progress (72, 73).

Table 5 summarizes the three major challenges faced by Causal ML.

Table 5. Challenges and limitations in applying causal machine learning (causal ML) models in immunological research.

4 Discussion

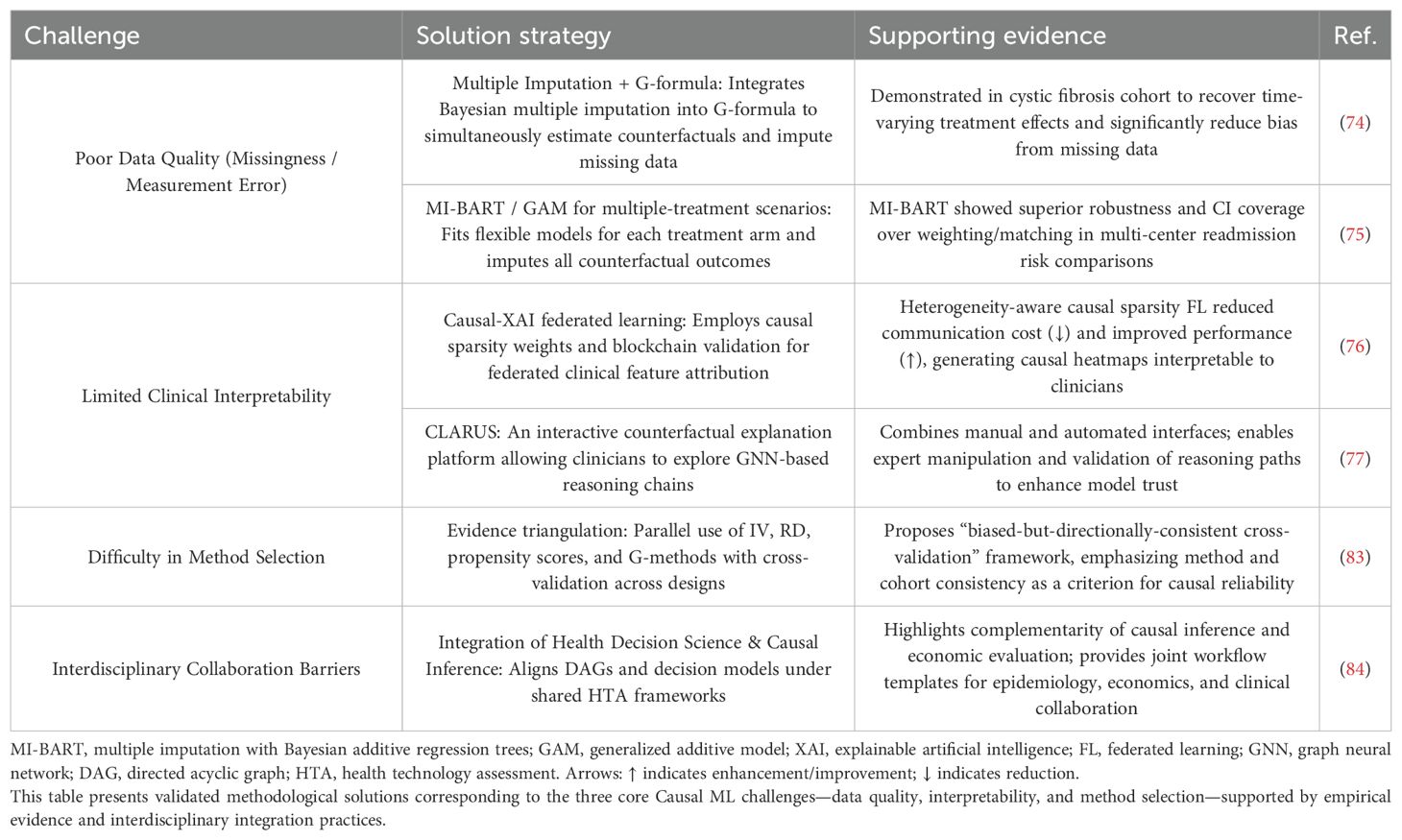

Over the next five years, addressing the two core challenges—data quality and model interpretability—will require the development of innovative technical solutions. In terms of data quality, the integration of multiple imputation with the G-formula has significantly reduced bias caused by missingness in cystic fibrosis studies (74). Likewise, the MI-BART method has demonstrated superior robustness in multi-treatment comparisons (75), offering promising prospects for enhanced data control and quality improvement over the next 5-10 years.
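
For readers unfamiliar with the G-formula, the following sketch shows its simplest form, standardization over a single binary confounder, on simulated complete data. It omits the multiple-imputation step entirely and is not the procedure of reference 74. The confounder, treatment probabilities, and risks are hypothetical values chosen so that sicker patients are treated more often.

```python
import random


def simulate_observational_data(n=40000, seed=2):
    """Simulate (confounder, treated, event) rows with confounding by indication."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        high_burden = rng.random() < 0.5                     # confounder
        treated = rng.random() < (0.8 if high_burden else 0.2)
        risk = 0.2 + 0.4 * high_burden - 0.1 * treated       # true risk difference: -0.10
        event = rng.random() < risk
        data.append((high_burden, treated, event))
    return data


def risk(data, treated, stratum=None):
    """Observed event risk for a treatment level, optionally within one stratum."""
    events = [e for (l, a, e) in data
              if a == treated and (stratum is None or l == stratum)]
    return sum(events) / len(events)


def naive_risk_difference(data):
    """Crude comparison of treated vs untreated, ignoring the confounder."""
    return risk(data, True) - risk(data, False)


def g_formula_risk_difference(data):
    """Standardize stratum-specific risk differences to the confounder distribution."""
    n = len(data)
    total = 0.0
    for stratum in (False, True):
        weight = sum(1 for l, _, _ in data if l == stratum) / n
        total += weight * (risk(data, True, stratum) - risk(data, False, stratum))
    return total


data = simulate_observational_data()
print(f"naive risk difference:     {naive_risk_difference(data):+.3f}")
print(f"G-formula risk difference: {g_formula_risk_difference(data):+.3f}")
```

In this simulation the crude comparison makes the treatment look harmful, while standardization recovers a protective effect close to the value built into the data. With missing covariates, the same standardization would be applied within each imputed dataset and the results pooled.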

Regarding interpretability, studies have shown that Causal-XAI hybrid frameworks can generate causal attribution heatmaps, enabling physicians to better understand image-based decisions (76). In addition, CLARUS, an interactive counterfactual reasoning platform, allows clinical experts to directly manipulate and verify model reasoning chains (77). This effectively addresses the “black-box” issue by clarifying causal pathways underlying model outputs (78), thereby improving both clinical decision-making and regulatory trust, ultimately facilitating clinical translation (79). In the future, the integration of Bayesian nonparametric models and natural language processing (NLP) is expected to further enhance model performance by extracting authentic causal structures from large-scale biomedical data (80), revealing deep causal relationships and identifying novel therapeutic targets (81, 82).

In the next 5-10 years, methodological integration will become a central theme. The emerging “triangulation framework” will be more widely adopted. This framework enhances the robustness of causal inference by integrating and cross-validating multiple approaches such as instrumental variables (IVs), regression discontinuity (RD), and propensity scores (83). In parallel, strengthening interdisciplinary collaboration and talent development will become essential. Multidisciplinary teams can develop shared terminologies and workflows, promoting effective integration across epidemiology, economics, and clinical medicine (84) and enabling each field to contribute its strengths to solve complex problems (42, 73). Cultivating versatile professionals capable of navigating the intricacies of immune-related biological systems (85) will help dismantle disciplinary silos and address challenges in resource allocation and coordination (86). This integrated approach will enable more comprehensive solutions (87) to meet the rapidly evolving demands of immune drug research (88). Furthermore, academic institutions should establish dedicated programs and curricula to train cross-disciplinary talent in causal inference and immunotherapy (42, 73), fostering the convergence of modern science and specialized education (89), promoting skills development (90), and facilitating global collaboration in immunology research (91), injecting new vitality and opportunity into the field.

In the next 5-10 years, causal inference models are expected to be widely implemented in clinical immunology. One notable development is the “Perturbation Cell Atlas” proposed by Rood et al., which represents a conceptual turning point. Future research will likely build on this by leveraging large-scale CRISPR-scRNA-seq perturbation datasets to train and deploy foundational causal models for practical guidance (92). Technologically, tools such as Velorama, which has shown great promise in immune differentiation studies, will play a pivotal role. By integrating RNA velocity to express cellular developmental trajectories as directed acyclic graphs (DAGs), these tools enable causal network inference at single-cell resolution, a capability expected to be expanded in future research (93).
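
As a minimal illustration of what representing a trajectory as a DAG involves, the sketch below encodes a hypothetical, highly simplified T-cell differentiation graph and checks that it is acyclic using Python's standard `graphlib` module. It does not reproduce Velorama or any RNA-velocity computation. The cell-state names and edges are assumptions for illustration only.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical trajectory: each key maps to the states that directly precede it.
predecessors = {
    "effector_T": {"naive_T"},
    "memory_T": {"effector_T"},
    "exhausted_T": {"effector_T"},
}


def check_dag(graph):
    """Return (True, ordering) if the graph is acyclic, else (False, ())."""
    try:
        order = tuple(TopologicalSorter(graph).static_order())
    except CycleError:
        return False, ()
    return True, order


is_acyclic, order = check_dag(predecessors)
print("acyclic:", is_acyclic)
print("one valid ordering:", " -> ".join(order))

# Adding an edge from memory_T back to naive_T creates a cycle.
cyclic = dict(predecessors, naive_T={"memory_T"})
print("acyclic after adding back-edge:", check_dag(cyclic)[0])
```

Acyclicity matters because causal graph methods assume that effects cannot loop back onto their own causes. A topological ordering makes the implied direction of influence explicit.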

With the continued advancement of artificial intelligence, AI-assisted vaccine design is poised to become a prevailing trend. This will necessitate the use of target trial emulation, causal NLP, and federated causal estimation frameworks to identify causally relevant endpoints and accelerate critical discoveries (94, 95). Moreover, as federated learning frameworks mature across institutions (76), interpretable causal tools such as CIMLA will likely become standardized (96), enabling a full transition of causal inference from theoretical development to routine clinical decision support. This process will be further facilitated by improvements in data quality and model robustness through rigorous control of covariates and confounding variables (97–99), which are essential for enhancing the credibility, transparency, and real-world applicability of causal models in clinical settings.

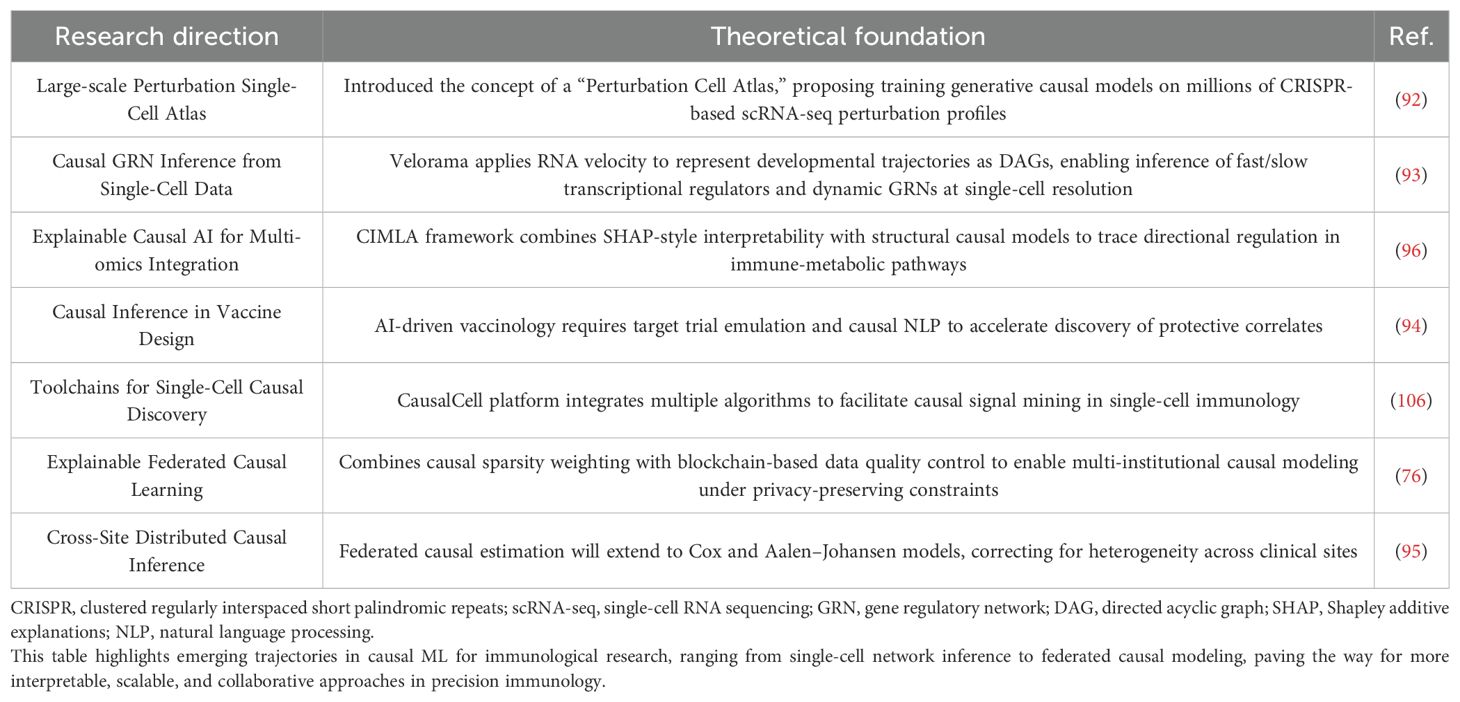

Table 6 presents strategies to address the three major challenges, while Table 7 outlines the projected applications of causal ML in immunology over the next 5-10 years.

Table 6. Technical strategies addressing the three core challenges in causal machine learning (causal ML) applications.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding author.

Author contributions

JW: Writing – original draft, Investigation, Methodology, Conceptualization. MD: Supervision, Writing – review & editing, Visualization. PL: Writing – review & editing. JH: Writing – review & editing. MM: Writing – review & editing, Visualization, Supervision.

Funding

The author(s) declare financial support was received for the research and/or publication of this article. This work was supported by the Key Research Project Plan of Hebei Provincial Medical Science Research (20230962).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Koelzer VH, Sirinukunwattana K, Rittscher J, and Mertz KD. Precision immunoprofiling by image analysis and artificial intelligence. Virchows Archiv: an Int J Pathol. (2019) 474:511–522. doi: 10.1007/s00428-018-2485-z

2. Lu M, Jin R, Ye H, and Ma T. The artificial intelligence and machine learning in lung cancer immunotherapy. J Hematol Oncol. (2023) 16:55. doi: 10.1186/s13045-023-01456-y

3. Li Y, Wu X, Fang D, and Luo Y. Informing immunotherapy with multi-omics driven machine learning. NPJ digital Med. (2024) 7:67. doi: 10.1038/s41746-024-01043-6

4. Roelofsen LM and Thommen DS. Multimodal predictors for precision immunotherapy. Immuno-oncology Technol. (2022) 14:100071–100071. doi: 10.1016/j.iotech.2022.100071

5. Sakhamuri MHR, Henna S, Creedon L, and Meehan K. Graph modelling and graph-attention neural network for immune response prediction. Piscataway, NJ, USA: IEEE (2023).

6. Murray JD, Lange JJ, Bennett-Lenane H, Holm R, Kuentz M, and O’Dwyer PJ. Advancing algorithmic drug product development: Recommendations for machine learning approaches in drug formulation. Eur J Pharm Sci. (2023) 191:106562. doi: 10.1016/j.ejps.2023.106562

7. Wossnig L, Furtmann N, Buchanan A, Kumar S, and Greiff V. Best practices for machine learning in antibody discovery and development. In: arXiv.org abs/2312.08470 Ithaca, NY, USA: arXiv (Cornell University Library) (2023).

8. Prelaj A, Miskovic V, Zanitti M, Trovò F, Genova C, and Viscardi G. Artificial intelligence for predictive biomarker discovery in immuno-oncology: a systematic review. Ann Oncol. (2024) 35:29–65. doi: 10.1016/j.annonc.2023.10.125

9. Li J, Dan K, and Ai J. Machine learning in the prediction of immunotherapy response and prognosis of melanoma: a systematic review and meta-analysis. Front Immunol. (2024) 15:1281940. doi: 10.3389/fimmu.2024.1281940

10. Kaiser I, Mathes S, Pfahlberg AB, Uter W, Berking C, and Heppt MV. Using the prediction model risk of bias assessment tool (PROBAST) to evaluate melanoma prediction studies. Cancers (Basel). (2022) 14:3033. doi: 10.3390/cancers14123033

11. Bao R, Hutson A, Madabhushi A, Jonsson VD, Rosario SR, and Barnholtz-Sloan JS. Ten challenges and opportunities in computational immuno-oncology. J Immunother Cancer. (2024) 12:e009721. doi: 10.1136/jitc-2024-009721

12. Bulbulia JA. Methods in causal inference. Part 2: Interaction, mediation, and time-varying treatments. Evol Hum Sci. (2024) 6:e41. doi: 10.1017/ehs.2024.32

13. Cobey S and Baskerville EB. Limits to causal inference with state-space reconstruction for infectious disease. PloS One. (2016) 11:e0169050. doi: 10.1371/journal.pone.0169050

14. Zhang A, Miao K, Sun H, and Deng C-X. Tumor heterogeneity reshapes the tumor microenvironment to influence drug resistance. Int J Biol Sci. (2022) 18:3019–33. doi: 10.7150/ijbs.72534

15. Rao S, Mamouei M, Salimi-Khorshidi G, Li Y, Ramakrishnan R, and Hassaine A. Targeted-BEHRT: deep learning for observational causal inference on longitudinal electronic health records. Ithaca, NY, USA: arXiv (Cornell University Library) (2022) 1–12.

16. Bernasconi A, Zanga A, Lucas PJF, Scutari M, and Stella F. Towards a transportable causal network model based on observational healthcare data. In: arXiv.org abs/2311.08427. Ithaca, NY, USA: arXiv (Cornell University Library) (2023).

17. Dibaeinia P and Sinha S. CIMLA: Interpretable AI for inference of differential causal networks. In: arXiv.org. Ithaca, NY, USA: arXiv (Cornell University Library) (2023).

18. Li S. Large pre-trained models for treatment effect estimation: Are we there yet. Patterns. (2024) 5:101005–5. doi: 10.1016/j.patter.2024.101005

19. Fang Y and Liang F. Causal-stoNet: causal inference for high-dimensional complex data. In: arXiv.org abs/2403.18994. Ithaca, NY, USA: arXiv (Cornell University Library) (2024).

20. Noh M and Kim YS. Diabetes prediction through linkage of causal discovery and inference model with machine learning models. Biomedicines. (2025) 13:124. doi: 10.3390/biomedicines13010124

21. Deng Z, Zheng X, Tian H, and Zeng DD. Deep causal learning: representation, discovery and inference. In: arXiv.org abs/2211.03374. Ithaca, NY, USA: arXiv (Cornell University Library) (2022).

22. Chernozhukov V, Hansen C, Kallus N, Spindler M, and Syrgkanis V. Applied causal inference powered by ML and AI. In: arXiv.org abs/2403.02467. Ithaca, NY, USA: arXiv (Cornell University Library) (2024).

23. Michoel T and Zhang JD. Causal inference in drug discovery and development. Drug Discov Today. (2023) 28:103737–103737. doi: 10.1016/j.drudis.2023.103737

24. Roy S and Salimi B. Causal inference in data analysis with applications to fairness and explanations. Lecture Notes Comput Sci. (2023) 105:131. doi: 10.1007/978-3-031-31414-8_3

25. Balzer LB and Petersen ML. Invited commentary: machine learning in causal inference-how do I love thee? Let me count the ways. Am J Epidemiol. (2021) 190:1483–1487. doi: 10.1093/aje/kwab048

26. Wu A, Kuang K, Xiong R, and Wu F. Instrumental variables in causal inference and machine learning: A survey. In: arXiv.org abs/2212.05778. Ithaca, NY, USA: arXiv (Cornell University Library) (2022).

27. Kuang K, Li L, Geng Z, Xu L, Zhang K, and Liao B. Causal inference. Amsterdam, Netherlands: Elsevier. (2020).

28. Shi J and Norgeot B. Learning causal effects from observational data in healthcare: A review and summary. Front Med. (2022) 9. doi: 10.3389/fmed.2022.864882

29. Hünermund P, Kaminski J, and Schmitt C. Causal machine learning and business decision making. Soc Sci Res Network (SSRN). (2022) 1:52. doi: 10.2139/ssrn.3867326

30. Ness RO, Sachs K, Mallick P, and Vitek O. A Bayesian active learning experimental design for inferring signaling networks. J Comput Biol. (2018) 25:709–25. doi: 10.1089/cmb.2017.0247

31. Zhang M, Liu J, and Xia Q. Role of gut microbiome in cancer immunotherapy: from predictive biomarker to therapeutic target. Exp Hematol Oncol. (2023) 12:84. doi: 10.1186/s40164-023-00442-x

32. Pichler R, Fritz J, Maier S, Hassler MR, Krauter J, and Andrea DD. Target trial emulation to evaluate the effect of immune-related adverse events on outcomes in metastatic urothelial cancer. Cancer Immunol Immunother. (2024) 74:30. doi: 10.1007/s00262-024-03871-7

33. Feuerriegel S, Frauen D, Melnychuk V, Schweisthal J, Hess K, and Curth A. Causal machine learning for predicting treatment outcomes. Nat Med. (2024) 30:958–968. doi: 10.1038/s41591-024-02902-1

34. Baillie JK, Angus D, Burnham K, Calandra T, Calfee C, and Gutteridge A. Causal inference can lead us to modifiable mechanisms and informative archetypes in sepsis. Intensive Care Med. (2024) 50:2031–42. doi: 10.1007/s00134-024-07665-4

35. Gong P, Lu Y, Li M, Li X, and Carla CJ. WITHDRAWN: Causal relationship between immune cells and idiopathic pulmonary fibrosis based on Mendelian randomization analysis. (2023). doi: 10.21203/rs.3.rs-3704028/v1

36. Cao Z, Zhao S, Hu S, Wu T, Sun F, and Shi LI. Screening COPD-related biomarkers and traditional Chinese medicine prediction based on bioinformatics and machine learning. Int J Chron Obstruct Pulmon Dis. (2024) 19:2073–95. doi: 10.2147/COPD.S476808

37. Sharma A and Kiciman E. Causal inference and counterfactual reasoning. Proceedings of the 7th ACM IKDD CoDS and 25th COMAD (CoDS-COMAD 2020). (2020) 369–370. doi: 10.1145/3371158

38. Eng L, Sutradhar R, Niu Y, Liu N, Liu Y, and Kaliwal Y. Impact of antibiotic exposure before immune checkpoint inhibitor treatment on overall survival in older adults with cancer: A population-based study. J Clin Oncol. (2023) 41:3122–34. doi: 10.1200/JCO.22.00074

39. Sako C, Duan C, Maresca K, Kent S, Gilat-Schmidt T, and Aerts HJWLA. Real-world and clinical trial validation of a deep learning radiomic biomarker for PD-(L)1 immune checkpoint inhibitor response in advanced non-small cell lung cancer. JCO Clin Cancer Inform. (2024) 8:e2400133. doi: 10.1200/CCI.24.00133

40. Ingle SM, Miro JM, May MT, Cain LE, Schwimmer C, and Zangerle R. Early antiretroviral therapy not associated with higher cryptococcal meningitis mortality in people with human immunodeficiency virus in high-income countries: an international collaborative cohort study. Clin Infect Dis. (2023) 77:64–73. doi: 10.1093/cid/ciad122

41. Jordan DM, Choi HK, Verbanck M, Topless R, Won H-H, and Nadkarni G. No causal effects of serum urate levels on the risk of chronic kidney disease: A Mendelian randomization study. PLoS Med. (2019) 16:e1002725. doi: 10.1371/journal.pmed.1002725

42. Chaudhary NS, Tiwari HK, Hidalgo BA, Limdi NA, Reynolds RJ, and Cushman M. APOL1 risk variants associated with serum albumin in a population-based cohort study. Am J Nephrol. (2022) 53:182–190. doi: 10.1159/000520997

43. Chen S, Yu J, Chamouni S, Wang Y, and Li Y. Integrating machine learning and artificial intelligence in life-course epidemiology: pathways to innovative public health solutions. BMC Med. (2024) 22:354. doi: 10.1186/s12916-024-03566-x

44. Hawkes N. Poor quality animal studies cause clinical trials to follow false leads. BMJ. (2015) 351:h5453. doi: 10.1136/bmj.h5453

45. Hansen CH. Bias in vaccine effectiveness studies of clinically severe outcomes that are measured with low specificity: the example of COVID-19-related hospitalization. Euro Surveill. (2024) 29:2300259. doi: 10.2807/1560-7917.ES.2024.29.7.2300259

46. van Amsterdam W, Elias S, and Ranganath R. Causal inference in oncology: why, what, how and when. Clin Oncol (R Coll Radiol). (2025) 38:103616. doi: 10.1016/j.clon.2024.07.002

47. Moodie E. Causal inference and confounding: A primer for interpreting and conducting infectious disease research. J Infect Dis. (2023) 228:365–7. doi: 10.1093/infdis/jiad144

48. Geng Z, Yang T, Chen Y, Wang J, Liu Z, and Miao J. Investigating the causal relationship between immune factors and ankylosing spondylitis: insights from a Mendelian Randomization study. Adv Rheumatol. (2024) 64:89. doi: 10.1186/s42358-024-00428-1

49. Li J, Liu L, Luo Q, Zhou W, Zhu Y, and Jiang W. Exploring the causal relationship between immune cell and all-cause heart failure: a Mendelian randomization study. Front Cardiovasc Med. (2024) 11. doi: 10.3389/fcvm.2024.1363200

50. Burgess S, Woolf B, Mason AM, Ala-Korpela M, and Gill D. Addressing the credibility crisis in Mendelian randomization. BMC Med. (2024) 22:374. doi: 10.1186/s12916-024-03607-5

51. Chen J, Su B, Zhang X, Gao C, Ji Y, and Xue X. Mendelian randomization suggests causal correlations between inflammatory cytokines and immune cells with mastitis. Front Immunol. (2024) 15:1409545. doi: 10.3389/fimmu.2024.1409545

52. Tang B, Lin N, Liang J, Yi G, Zhang L, and Peng W. Leveraging pleiotropic clustering to address high proportion correlated horizontal pleiotropy in Mendelian randomization studies. Nat Commun. (2025) 16:2817. doi: 10.1038/s41467-025-57912-5

53. Smith MJ, Phillips RV, Maringe C, and Luque-Fernández MA. Performance of cross-validated targeted maximum likelihood estimation. In: arXiv:2409.11265. Ithaca, NY, USA: arXiv (Cornell University Library) (2024). doi: 10.48550/arXiv.2409.11265

54. Chen X, Liu Y, Ma S, and Zhang Z. Causal inference of general treatment effects using neural networks with a diverging number of confounders. J Econometrics. (2024) 238:105555. doi: 10.1016/j.jeconom.2023.105555

55. Koh H, Kim J, and Jang H. MiCML: a causal machine learning cloud platform for the analysis of treatment effects using microbiome profiles. BioData Min. (2025) 18:10. doi: 10.1186/s13040-025-00422-3

56. Arango-Argoty G, Kipkogei E, Stewart R, Sun GJ, Patra A, and Kagiampakis I. Pretrained transformers applied to clinical studies improve predictions of treatment efficacy and associated biomarkers. Nat Commun. (2025) 16:2101. doi: 10.1038/s41467-025-57181-2

57. Liu Z, Wu Y, Xu H, Wang M, Weng S, and Pei D. Multimodal fusion of radio-pathology and proteogenomics identify integrated glioma subtypes with prognostic and therapeutic opportunities. Nat Commun. (2025) 16:3510. doi: 10.1038/s41467-025-58675-9

58. Xie C, Ning Z, Guo T, Yao L, Chen X, and Huang W. Multimodal data integration for biologically-relevant artificial intelligence to guide adjuvant chemotherapy in stage II colorectal cancer. EBioMedicine. (2025) 117:105789. doi: 10.1016/j.ebiom.2025.105789

59. Rust J and Autexier S. Causal inference for personalized treatment effect estimation for given machine learning models. 2022 IEEE 21st International Conference on Machine Learning and Applications (ICMLA) (2022), 1289–95. doi: 10.1109/ICMLA55696.2022.00206

60. Pirracchio R, Hubbard A, Sprung CL, Chevret S, Annane D, and RECORDS Collaborators. Assessment of machine learning to estimate the individual treatment effect of corticosteroids in septic shock. JAMA Netw Open. (2020) 3:e2029050. doi: 10.1001/jamanetworkopen.2020.29050

61. Karmakar S, Majumder SG, and Gangaraju D. Causal Inference and Causal Machine Learning with Practical Applications: The paper highlights the concepts of Causal Inference and Causal ML along with different implementation techniques. New York, NY, USA: Association for Computing Machinery (ACM). (2023).

62. Loh WW. Causal inference with unobserved confounding: leveraging negative control outcomes using lavaan. Multivariate Behav Res. (2025), 1–13. doi: 10.1080/00273171.2025.2507742

63. Pavlović M, Al Hajj GS, Kanduri C, Pensar J, Wood M, and Sollid LM. Improving generalization of machine learning-identified biomarkers with causal modeling: an investigation into immune receptor diagnostics. In: arXiv.org abs/2204.09291. Ithaca, NY, USA: arXiv (Cornell University Library) (2022).

64. Papanastasiou G, Scutari M, Tachdjian R, Hernandez-Trujillo V, Raasch J, and Billmeyer K. Causal modeling in large-scale data to improve identification of adults at risk for combined and common variable immunodeficiencies. Cold Spring Harbor, NY, USA: Cold Spring Harbor Laboratory (medRxiv). (2024).

65. Metzcar J, Jutzeler CR, Macklin P, Köhn-Luque A, and Brüningk SC. A review of mechanistic learning in mathematical oncology. Front Immunol. (2024) 15:1363144. doi: 10.3389/fimmu.2024.1363144

66. Oh TR. Integrating predictive modeling and causal inference for advancing medical science. Childhood Kidney Dis. (2024) 28:93–98. doi: 10.3339/ckd.24.018

67. Edwards JK, Cole SR, and Westreich D. All your data are always missing: incorporating bias due to measurement error into the potential outcomes framework. Int J Epidemiol. (2015) 44:1452–9. doi: 10.1093/ije/dyu272

68. Holovchak A, McIlleron H, Denti P, and Schomaker M. Recoverability of causal effects under presence of missing data: a longitudinal case study. Biostatistics. (2024) 26:kxae044. doi: 10.1093/biostatistics/kxae044

69. Hildt E. What is the role of explainability in medical artificial intelligence? A case-based approach. Bioengineering (Basel). (2025) 12:375. doi: 10.3390/bioengineering12040375

70. Raita Y, Camargo CA Jr, Liang L, and Hasegawa K. Big data, data science, and causal inference: A primer for clinicians. Front Med. (2021) 8:678047. doi: 10.3389/fmed.2021.678047

71. Sanchez P, Voisey JP, Xia T, Watson J, O’Neil A, and Tsaftaris SA. Causal machine learning for healthcare and precision medicine. R Soc Open Sci. (2022) 9:220638. doi: 10.1098/rsos.220638

72. Lynch J. It’s not easy being interdisciplinary. Int J Epidemiol. (2006) 35:1119–22. doi: 10.1093/ije/dyl200

73. Miles B, Shpitser I, Kanki P, Meloni S, and Tchetgen E. On semiparametric estimation of a path-specific effect in the presence of mediator-outcome confounding. Biometrika. (2020) 107:159–172. doi: 10.1093/biomet/asz063

74. Bartlett JW, Olarte Parra C, Granger E, Keogh RH, van Zwet EW, and Daniel RM. G-formula with multiple imputation for causal inference with incomplete data. Stat Methods Med Res. (2025) 34:1130–43. doi: 10.1177/09622802251316971

75. Silva GC and Gutman R. Multiple imputation procedures for estimating causal effects with multiple treatments with application to the comparison of healthcare providers. Stat Med. (2022) 41:208–26. doi: 10.1002/sim.9231

76. Mu J, Kadoch M, Yuan T, Lv W, Liu Q, and Li B. Explainable federated medical image analysis through causal learning and blockchain. IEEE J BioMed Health Inform. (2024) 28:3206–18. doi: 10.1109/JBHI.2024.3375894

77. Metsch JM, Saranti A, Angerschmid A, Pfeifer B, Klemt V, and Holzinger A. CLARUS: An interactive explainable AI platform for manual counterfactuals in graph neural networks. J BioMed Inform. (2024) 150:104600. doi: 10.1016/j.jbi.2024.104600

78. Ahmed A, Maity GC, Ashifizzaj A, and Najir I. Artificial Intelligence in the field of pharmaceutical Science. International Journal of Pharmaceutical Research and Applications (IJPRA). (2024) 09:927–33. doi: 10.35629/4494-0905927933

79. Nihalani G. Applications of artificial intelligence in pharmaceutical research: an extensive review. Bioequiv Bioavailab Int J. (2024) 8:1–7. doi: 10.23880/beba-16000231

80. Bhattamisra SK, Banerjee P, Gupta P, Mayuren J, Patra S, and Candasamy M. Artificial intelligence in pharmaceutical and healthcare research. Big Data Cogn Comput. (2023) 7:10. doi: 10.3390/bdcc7010010

81. Arora P, Behera MD, Saraf SA, and Shukla R. Leveraging artificial intelligence for synergies in drug discovery: from computers to clinics. Curr Pharm Des. (2024) 30:2187–205. doi: 10.2174/0113816128308066240529121148

82. Pereira A. A transformação da indústria farmacêutica pela inteligência artificial: impactos no desenvolvimento de medicamentos. Rio de Janeiro, Brazil: Editora FT Ltda. (2024). pp. 24–5.

83. Hammerton G and Munafò MR. Causal inference with observational data: the need for triangulation of evidence. Psychol Med. (2021) 51:563–78. doi: 10.1017/S0033291720005127

84. Kühne F, Schomaker M, Stojkov I, Jahn B, Conrads-Frank A, and Siebert S. Causal evidence in health decision making: methodological approaches of causal inference and health decision science. Ger Med Sci. (2022) 20:Doc12. doi: 10.3205/000314

85. Gong F. The exploration and research of talent training ideas in interdisciplinary background. Adv Soc Sci Educ Humanit Res. (2024) 227:235. doi: 10.2991/978-2-38476-253-8_28

86. Li J. A comparative study on the reform of interdisciplinary talents training model under the background of new liberal arts. Occupation and Professional Education. (2024) 1:25–30. doi: 10.62381/O242104

87. Wang L, Lan Z, Zhang Y, Guo Z, and Sun J. “Dual-agent, six-dimensional, four-drive” talent cultivation model innovation and practice. Adv Educ Humanit Soc Sci Res. (2024) 12:137. doi: 10.56028/aehssr.12.1.137.2024

88. Jia S, Chen X, and Yang T. Research on the training model of financial technology talents based on interdisciplinary and multi-professional integration. Int J New Developments Educ. (2023) 5:21–6. doi: 10.25236/IJNDE.2023.050204

89. Xu Y, Ramli HB, and Khairani MZB. Interdisciplinary talent training model of new media art major at Xi’an University: A literature review. Glob J Arts Humanit Soc Sci. (2023) 11:1–12. doi: 10.37745/gjahss.2013/vol11n7112

90. Xi W, Yue P, Ye XF, and Deng J. Research on the cultivation of innovative talents for science education under the background of new liberal arts. Educ Rev USA. (2024) 8:43–7. doi: 10.26855/er.2024.01.005

91. Sono MG and Diputra GBW. The evolution of talent development programs in academic literature: A bibliometric review. Eastasouth Manage Business. (2024) 3:133–150. doi: 10.58812/esmb.v3i1.336

92. Rood JE, Hupalowska A, and Regev A. Toward a foundation model of causal cell and tissue biology with a Perturbation Cell and Tissue Atlas. Cell. (2024) 187:4520–45. doi: 10.1016/j.cell.2024.07.035

93. Singh R, Wu AP, Mudide A, and Berger B. Causal gene regulatory analysis with RNA velocity reveals an interplay between slow and fast transcription factors. Cell Syst. (2024) 15:462–474.e5. doi: 10.1016/j.cels.2024.04.005

94. Anderson LN, Hoyt CT, Zucker JD, McNaughton AD, Teuton JR, Karis K, et al. Computational tools and data integration to accelerate vaccine development: challenges, opportunities, and future directions. Front Immunol. (2025) 16:1502484. doi: 10.3389/fimmu.2025.1502484

95. Li H, Xu J, Gan K, Wang F, and Zang C. Federated causal inference in healthcare: methods, challenges, and applications. In: arXiv preprint arXiv:2505.02238. Ithaca, NY, USA: arXiv (Cornell University Library) (2025). doi: 10.48550/arXiv.2505.02238

96. Dibaeinia P, Ojha A, and Sinha S. Interpretable AI for inference of causal molecular relationships from omics data. Sci Adv. (2025) 11:eadk0837. doi: 10.1126/sciadv.adk0837

97. Yu X, Zoh RS, Fluharty DA, Mestre LM, Valdez D, and Tekwe CD. Misstatements, misperceptions, and mistakes in controlling for covariates in observational research. Elife. (2024) 13:e82268. doi: 10.7554/eLife.82268

98. Frank KA. Impact of a confounding variable on a regression coefficient. Sociol Methods Res. (2000) 29:147–94. doi: 10.1177/0049124100029002001

99. Kahlert J, Gribsholt SB, Gammelager H, Dekkers OM, and Luta G. Control of confounding in the analysis phase - an overview for clinicians. Clin Epidemiol. (2017) 9:195–204. doi: 10.2147/CLEP.S129886

100. Byrnes J and Dee LE. Causal inference with observational data and unobserved confounding variables. Ecol Lett. (2025) 28:e70023. doi: 10.1111/ele.70023

101. Nkuhairwe IN, Esterhuizen TM, Sigwadhi LN, Tamuzi JL, Machekano R, and Nyasulu PS. Estimating the causal effect of dexamethasone versus hydrocortisone on the neutrophil-lymphocyte ratio in critically ill COVID-19 patients from Tygerberg Hospital ICU using TMLE method. BMC Infect Dis. (2024) 24:1365. doi: 10.1186/s12879-024-10112-w

102. Jiang C, Talbot D, Carazo S, and Schnitzer ME. A double machine learning approach for the evaluation of COVID-19 vaccine effectiveness under the test-negative design: analysis of Québec administrative data. arXiv. (2024) 23.

103. Xu J, Wang T, Li J, Wang Y, Zhu Z, and Fu X. A multimodal fusion system predicting survival benefits of immune checkpoint inhibitors in unresectable hepatocellular carcinoma. NPJ Precis Oncol. (2025) 9:185. doi: 10.1038/s41698-025-00979-6

104. Wang L, Guo X, Shi H, Ma Y, Bao H, and Jiang L. CRISP: A causal relationships-guided deep learning framework for advanced ICU mortality prediction. BMC Med Inform Decis Mak. (2025) 25:165. doi: 10.1186/s12911-025-02981-1

105. Matthay EC, Hagan E, Gottlieb LM, Tan ML, Vlahov D, and Adler NE. Alternative causal inference methods in population health research: Evaluating tradeoffs and triangulating evidence. SSM Popul Health. (2020) 10:100526. doi: 10.1016/j.ssmph.2019.100526

Keywords: causal inference, machine learning, immunotherapy, immune checkpoint inhibitors, confounding bias, treatment effect estimation, multimodal data integration, precision medicine

Citation: Wang J-W, Meng M, Dai M-W, Liang P and Hou J (2025) Correlation does not equal causation: the imperative of causal inference in machine learning models for immunotherapy. Front. Immunol. 16:1630781. doi: 10.3389/fimmu.2025.1630781

Received: 18 May 2025; Accepted: 01 September 2025; Published: 17 September 2025.

Edited by:

Vera Rebmann, University of Duisburg-Essen, Germany

Reviewed by:

Paola Lecca, Free University of Bozen-Bolzano, Italy

Copyright © 2025 Wang, Meng, Dai, Liang and Hou. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Meng Meng, NDg4MDE2NzFAaGVibXUuZWR1LmNu

Jia-Wen Wang1, Meng Meng, Mu-Wei Dai, Ping Liang, Juan Hou