Abstract

Artificial intelligence (AI) is revolutionizing oncology, with deep learning (DL) emerging as a pivotal technology for addressing gynecologic malignancies (GMs). DL-based models are now widely applied to assist in clinical diagnosis and prognosis prediction, demonstrating excellent performance in tasks such as tumor detection, segmentation, classification, and necrosis assessment for both primary and metastatic GMs. By leveraging radiological (e.g., X-ray, CT, MRI, and Single Photon Emission Computed Tomography (SPECT)) and pathological images, these approaches show significant potential for enhancing diagnostic accuracy and prognostic evaluation. This review provides a concise overview of deep learning techniques for medical image analysis and their current applications in GM diagnosis and outcome prediction. Furthermore, it discusses key challenges and future directions in the field. AI-based radiomics presents a non-invasive and cost-effective tool for gynecologic practice, and the integration of multi-omics data is recommended to further advance precision medicine in oncology.

Highlights

-

Synthesizes the integrated application of AI across multi-omics data (radiomics, pathomics, and genomics) for gynecologic malignancies, moving beyond siloed reviews.

-

Details and contrasts a comprehensive array of both traditional machine learning and advanced deep learning architectures tailored for medical image and data analyses.

-

Critically identifies the pervasive challenge of limited, heterogeneous data and the “black box” nature of AI as the primary barriers to clinical translation in GM care.

-

Proposes standardized benchmarking and the development of explainable AI (XAI) frameworks as essential pathways for future clinical integration.

-

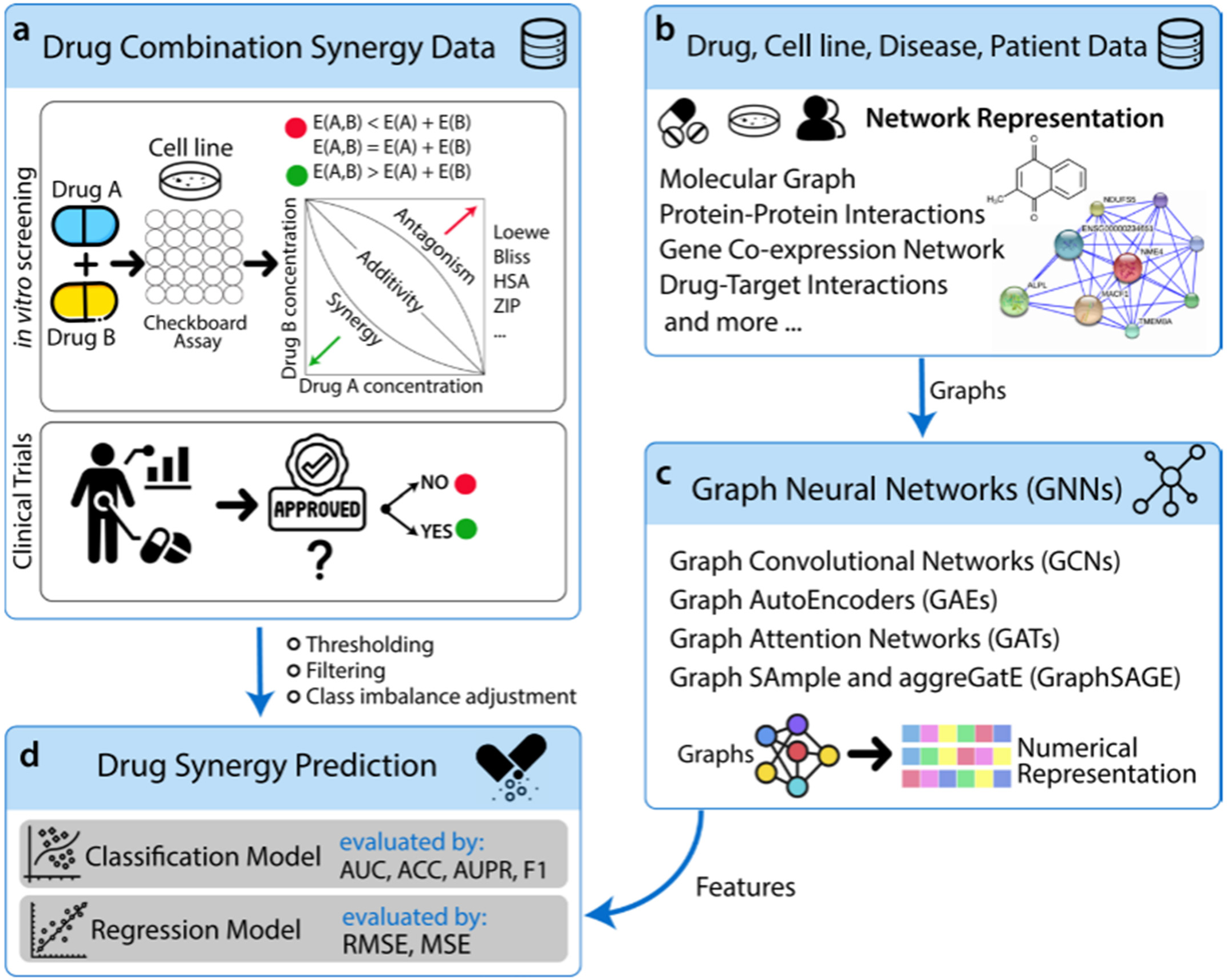

Discusses the emerging role of graph neural networks (GNNs) in predicting drug synergism and analyzing complex biological networks for personalized therapy.

1 Introduction

Advances in computer science over the past decade have propelled the growth of artificial intelligence (AI), leading to its widespread adoption in many scientific domains, including medicine. AI differs from conventional computer programming in a fundamental way. Traditional programs generate outputs by applying predefined rules to input data, whereas AI systems infer rules and patterns from examples of both inputs and their corresponding outputs. Once learned, these rules can be applied to predict outcomes for new, unseen inputs.

AI and machine learning (ML) are becoming part of everyday life and are expected to have a significant impact on digital healthcare in the near future, particularly in disease detection and treatment. Progress in these technologies has enabled the development of automated disease diagnosis tools that draw on large datasets to tackle the difficult problem of identifying human diseases, particularly cancer, at an early stage. ML is a branch of AI that develops algorithms which learn patterns from data rather than following explicitly programmed rules; neural networks, loosely inspired by the human brain, are one prominent family of such algorithms (1). Deep learning (DL) is a subset of ML that uses multi-layer neural networks to emulate aspects of the brain's data processing. It is used to recognize images and detect objects, process natural language, accelerate drug discovery, advance precision medicine, improve diagnosis, and support human decision-making, and once trained it can generate outputs without human intervention (2). DL uses artificial neural networks (ANNs) to analyze data such as medical images. Mimicking the structure of the human nervous system, an ANN consists of an input layer, multiple hidden layers, and an output layer, and this layered depth extends the capabilities of machine learning (Figure 1A) (5).

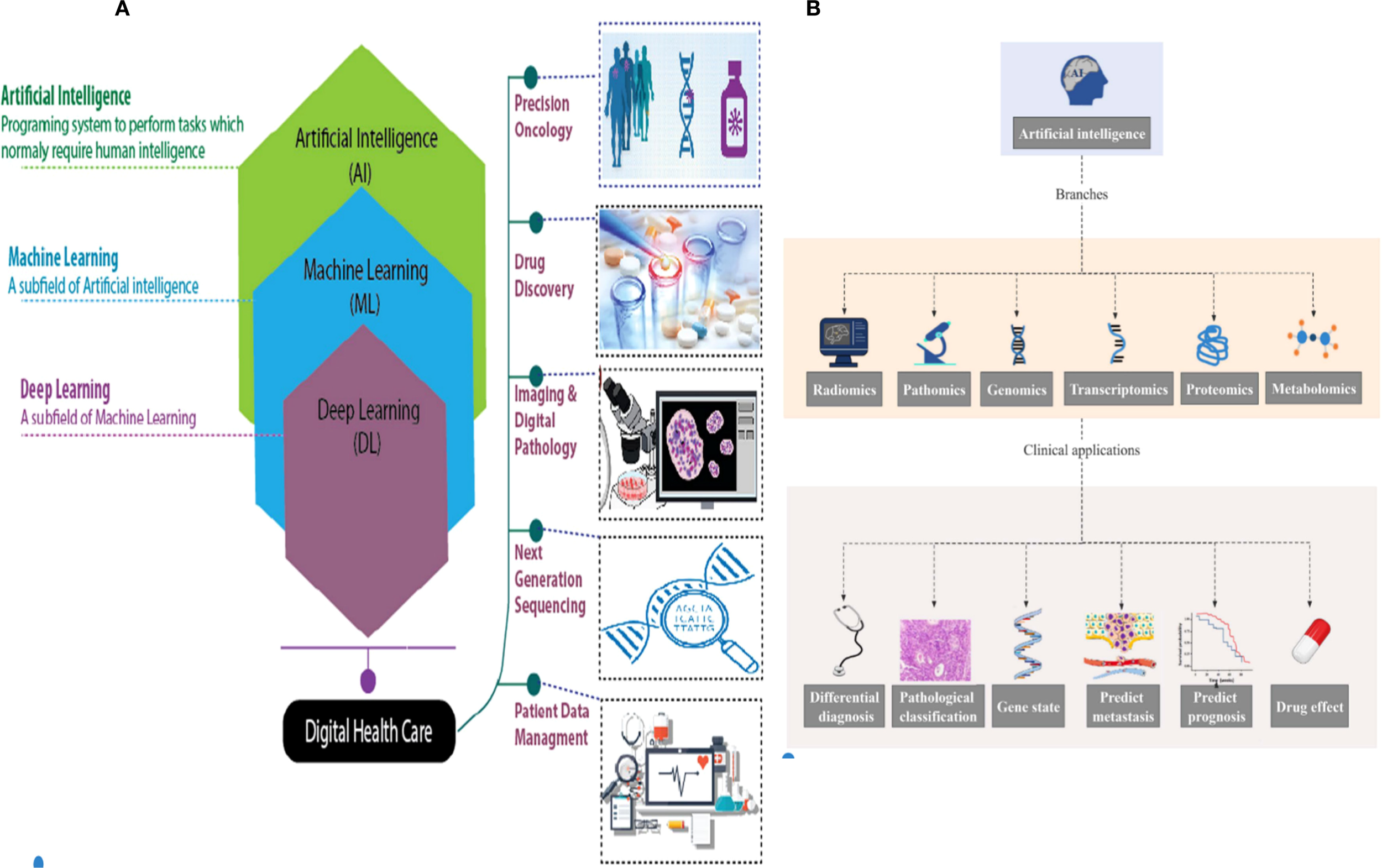

Figure 1

(A) Hierarchy of artificial intelligence (AI), machine learning (ML), deep learning (DL), and their applications in digital healthcare and oncology (e.g., precision oncology, drug discovery, and digital pathology) (3). (B) AI in omics (radiomics, pathomics, genomics, etc.) and related clinical applications (differential diagnosis, prognosis prediction, drug effect evaluation, etc.) (4).

Progress in artificial intelligence has led to the successful use of deep learning techniques for segmentation, detection, classification, and data augmentation in medical imaging (6, 7) (Figure 2A), opening new possibilities for computer-aided diagnostic systems. Recent studies have shown that deep learning-based AI models can improve the accuracy of diagnosis, prediction, and prognostication for gynecologic malignancies (GMs). These models may also improve the identification, classification, segmentation, and visual interpretation of gynecologic tumors. Radiomics, a technology frequently used in conjunction with AI, extracts and analyzes quantitative radiological patterns using image parameters such as geometry, size, texture, and intensity. It is often compared with deep learning: radiomics relies on predefined, hand-crafted features, whereas deep learning learns features directly from the images. Radiomics has been widely recognized as a valuable tool for disease prediction, prognosis, and monitoring (8).

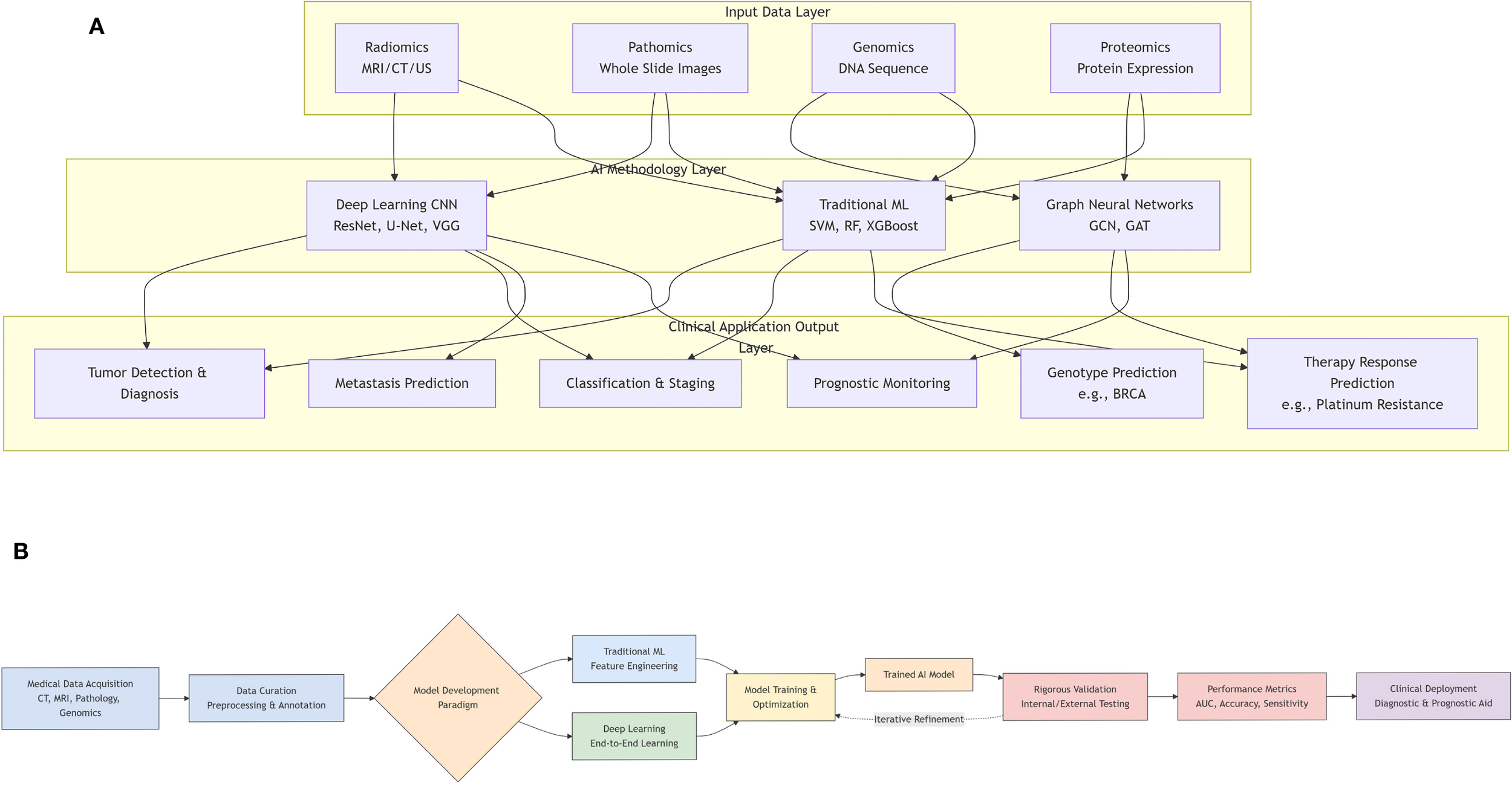

Figure 2

(A) Workflow of artificial intelligence (AI) model development and validation in medical research. (B) A graphical overview of the application of artificial intelligence in gynecologic malignancies.

Unlike specialties such as endoscopy, AI in gynecologic oncology has not yet reached the level required for everyday clinical use. Accurate diagnosis and prognostic prediction strongly influence the treatment of gynecologic malignancies. The objective of this review is to elucidate the current status of AI research in gynecologic cancers and to examine the obstacles to advancing AI in gynecologic oncology. We hope that it will encourage further research and accelerate the implementation of AI in the field.

This review makes several key contributions to the field. First, it provides a comprehensive and up-to-date synthesis of the rapidly evolving application of deep learning across various imaging and omic modalities for gynecologic malignancies (Figure 2B). Second, it offers a detailed technical explanation of fundamental AI/ML/DL concepts and model architectures tailored for a clinical audience. Third, it critically examines not only the promising results but also the significant technical and clinical challenges hindering widespread clinical adoption. Finally, it discusses future directions to overcome these barriers and realize the potential of AI in improving gynecologic oncology care.

The paper is structured as follows: Section 2 introduces fundamental AI, ML, and DL concepts and architectures. Section 3 provides an overview of major gynecologic malignancies and precision oncology. Section 4 details DL applications in radiological image analysis (radiomics) for tasks like tumor detection, classification, and prognosis prediction. Section 5 focuses on DL for pathological image analysis, while Section 6 explores integration with other omics data. A comparative analysis of DL versus conventional imaging is presented in Section 7. Key technical and clinical challenges are discussed in Section 8, followed by future directions in Section 9. The review concludes with a summary in Section 10.

2 Artificial intelligence and deep learning

Artificial intelligence (AI) is a branch of computer science focused on replicating human intelligence to perform tasks that typically require human expertise (9). ML, a subset of AI, employs mathematical algorithms to enable autonomous decision-making (10). DL, a modern ML technique, differs from traditional ML in its data dependency, hardware requirements, feature engineering, problem-solving approach, execution time, and interpretability (11). DL excels in complex classification tasks using diverse inputs such as images, text, or audio, often outperforming classical ML methods (12). DL models consist of multiple layers that form neural network architectures and require extensive training on large labeled datasets.

2.1 Artificial intelligence

AI is an emerging discipline aimed at replicating, enhancing, and extending human intelligence through theoretical and technological innovations (13). The key components of AI technical systems include natural language processing, image recognition, human–computer interaction, and machine learning (14). Natural language processing integrates linguistics, computer science, and mathematics to enable machines to understand, interpret, and generate human language, supporting tasks such as information retrieval, speech recognition, and translation (15). Image recognition builds on image processing, which involves acquisition, filtering, enhancement, and feature extraction and has been reported to improve computational efficiency and reduce energy consumption compared with traditional methods (15). Human–computer interaction technologies, including computer graphics and augmented reality, facilitate seamless communication between users and machines (15). ML encompasses supervised, unsupervised, transfer, reinforcement, and ensemble learning, employing algorithms such as deep learning, artificial neural networks, decision trees (16), and boosting algorithms. Rule-based AI systems have demonstrated clinical utility in lung cancer diagnosis (17), treatment (18), and prognosis (Figure 1B) (19).

AI is increasingly applied in medical research, including imaging, pathomics, genomics, transcriptomics, proteomics, and metabolomics. Recent studies have highlighted its role in multi-omics analysis for diagnosing GMs, distinguishing benign from malignant tumors, and predicting pathological classification, treatment response, and prognosis.

2.2 Machine learning

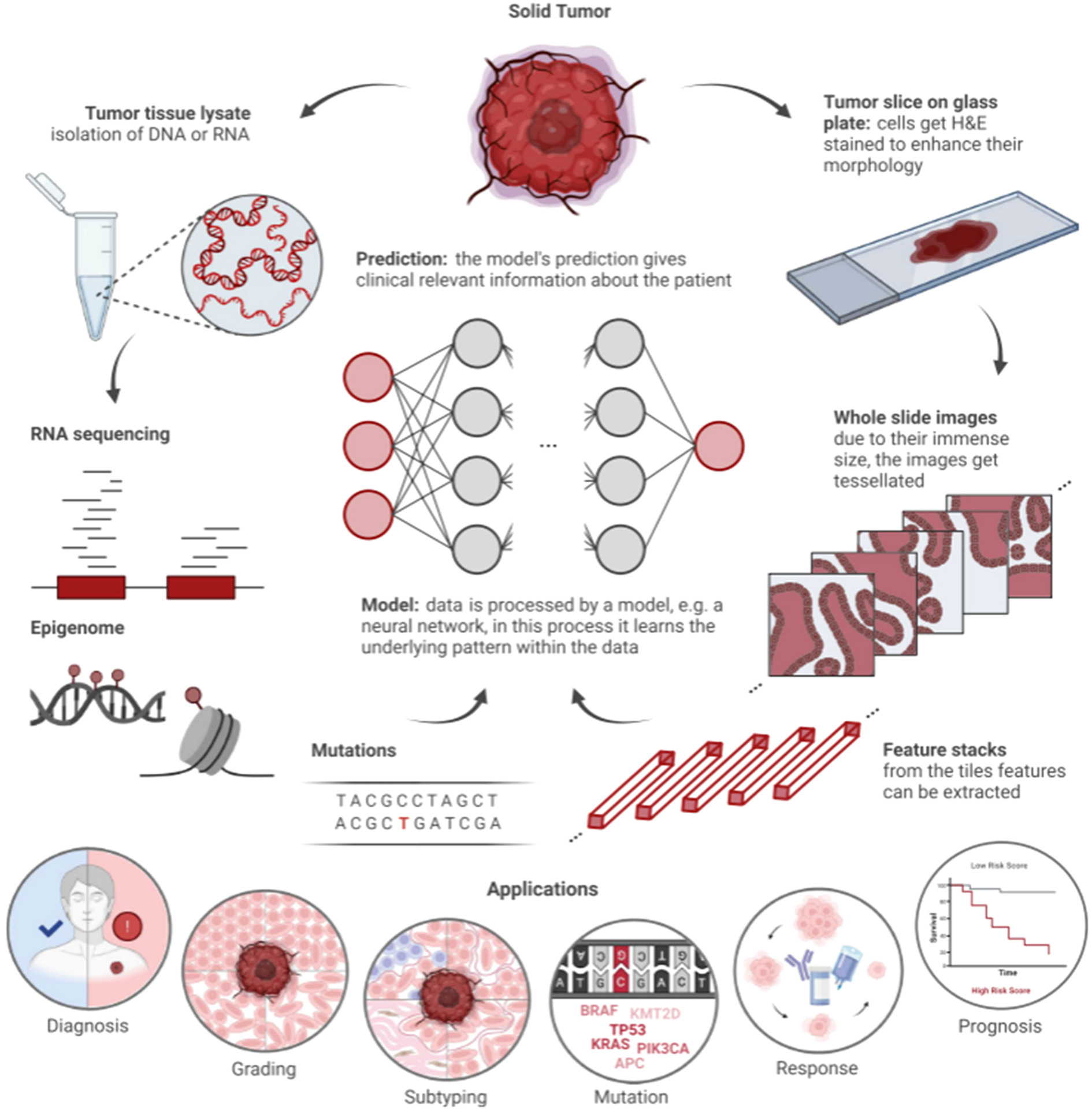

As a core component of AI, ML includes three primary methodologies: supervised, unsupervised, and reinforcement learning. In reinforcement learning, an agent learns by receiving rewards for correct decisions as it interacts with an environment. Unsupervised learning identifies patterns in unlabeled data, for example through clustering algorithms. Supervised learning trains models on human-labeled data, penalizing incorrect predictions through a loss function. Common supervised models include support vector machines (SVMs), decision trees, and artificial neural networks. These models vary in size, with neural networks containing from hundreds to billions of parameters (20). DL, which is based on deep neural networks, is particularly effective for image and text data because its stacked layers can learn hierarchical representations of complex structures. In precision oncology, DL efficiently analyzes histopathologic and genomic data (Figure 3) (22). Multimodal approaches that apply ML and DL to diverse data types, such as histopathological images combined with genetic information, can further enhance model performance by leveraging complementary information (Table 1) (42).
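The difference between supervised and unsupervised learning can be sketched with scikit-learn. This is a minimal illustration under stated assumptions: the two-group data are synthetic (the cluster centers at (-3, 0) and (3, 0) are arbitrary), the labels `y` stand in for human annotations, and reinforcement learning is not shown.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.linear_model import LogisticRegression

# Hypothetical two-group data; y plays the role of human-provided labels
X, y = make_blobs(n_samples=200, centers=[[-3, 0], [3, 0]], random_state=0)

# Supervised: labels are used during training and the loss penalizes wrong predictions
clf = LogisticRegression().fit(X, y)
supervised_acc = clf.score(X, y)

# Unsupervised: labels are never seen; k-means groups points by similarity alone
clusters = KMeans(n_clusters=2, n_init=10, random_state=0).fit_predict(X)

# Cluster IDs are arbitrary, so compare with the labels up to a swap of 0 and 1
agreement = max((clusters == y).mean(), (clusters != y).mean())
print(f"supervised accuracy {supervised_acc:.2f}, cluster-label agreement {agreement:.2f}")
```

Because cluster IDs carry no meaning of their own, the unsupervised result can only be compared with the labels after relabeling.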

Figure 3

Workflow of artificial intelligence (AI) in histopathology and clinical genomics (21). Solid tumors supply two data streams: 1) molecular data (DNA/RNA sequencing, epigenomic profiles, and genetic mutations) and 2) histopathological data (H&E-stained tumor slices, with whole slide images split into tiles for feature extraction). A model (e.g., neural network) analyzes these data to identify patterns, supporting applications including diagnosis, grading, subtyping, mutation detection, treatment response prediction, and prognosis.

Table 1

| Model/method | Key principle | Advantages | Limitations | Typical use cases in GMs |
|---|---|---|---|---|
| Support vector machine (SVM) | Finds the optimal hyperplane that maximizes the margin between classes. | Effective in high-dimensional spaces; robust against overfitting. | Sensitive to kernel/parameters; poor scalability to large datasets. | Classification of tumors based on radiomic features (23). |
| Random forest (RF) | An ensemble of decision trees, using bagging and feature randomness. | High accuracy; handles non-linear data; provides feature importance. | Less interpretable; computationally expensive with many trees. | Variable importance analysis; classification and regression (24, 25). |
| Decision tree (DT) | A tree-like model of decisions and their possible consequences. | Highly interpretable and visualizable; easy to understand. | Prone to overfitting; unstable to data variations. | Base learner for ensembles; preliminary data exploration (26, 27). |
| Artificial neural network (ANN) | Network of interconnected nodes that mimic neurons, learning complex non-linear relationships. | Can model highly complex patterns; universal function approximator. | Can be a black box; requires careful tuning; prone to overfitting without regularization. | Early ML models for classification and prediction tasks (28, 29). |
| k-Nearest Neighbor (k-NN) | Classifies a data point based on how its k-nearest neighbors are classified. | Simple to implement and understand; no training phase (lazy learner). | Computationally intensive during prediction; sensitive to irrelevant features and k-value. | Classification based on similarity in feature space (30, 31). |
| Bayesian network (BN) | A probabilistic graphical model representing variables and their conditional dependencies via a Directed Acyclic Graph (DAG). | Handles uncertainty well; interpretable causal relationships. | Learning network structure can be complex; requires prior knowledge or assumptions. | Probabilistic reasoning and risk assessment (32). |
| Classification and regression tree (CART) | A predictive model that uses a tree structure to go from observations to target value. | Can handle both classification and regression; handles non-linear relationships. | Can create over-complex trees that do not generalize well (overfitting). | Similar to DTs, used for building interpretable models (33). |
| Multivariate adaptive regression splines (MARS) | A non-parametric regression technique that models complex relationships by splitting data into regions. | Flexible in modeling non-linearities; handles high-dimensional data. | Can become overly complex and lose interpretability. | Modeling complex, non-linear relationships in medical data (34). |
| Gray-level co-occurrence matrix (GLCM) | A statistical method that examines texture by considering the spatial relationship of pixels. | Effective for capturing texture features in images; well-established. | Computationally heavy; features can be sensitive to image rotation and scale. | Texture analysis and feature extraction from MR/CT images (35). |
| Feature extraction | The process of transforming raw data into a reduced representation of informative features. | Reduces data dimensionality; can improve model performance and efficiency. | Hand-crafted features may not capture the most discriminative information. | Extracting radiomic features from medical images for downstream ML tasks (36–39). |
| Model building | The integrative process of combining features, clinical data, and algorithms to create a predictive model. | Creates robust and clinically applicable tools; can incorporate multi-modal data. | Requires domain expertise for variable selection and interpretation. | Building nomograms or integrated models for diagnosis/prognosis (40, 41). |

Comparison of selected traditional machine learning and feature engineering methods.

GMs, gynecologic malignancies; ML, machine learning; DTs, decision trees.

2.2.1 Support vector machine

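SVM is a widely used ML method for classification and regression. It identifies the optimal hyperplane that separates classes in an n-dimensional space by maximizing the margin between them. The optimization objective for a linear (hard-margin) SVM is as follows:

$$\min_{\mathbf{w},\,b}\ \frac{1}{2}\lVert\mathbf{w}\rVert^{2}\quad\text{subject to}\quad y_i\left(\mathbf{w}^{\top}\mathbf{x}_i+b\right)\ge 1,\quad i=1,\dots,N$$

where $\mathbf{w}$ is the weight vector defining the hyperplane, $b$ is the bias term, $\mathbf{x}_i$ are the data points, and $y_i \in \{-1, +1\}$ are their corresponding class labels.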

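The hyperplane depends only on the support vectors (the training points lying closest to the decision boundary), and kernel functions implicitly map the data into higher-dimensional spaces so that non-linear separations can be handled. In GM research, SVMs have been applied to tumor detection using features from MR images (23).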
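A minimal scikit-learn sketch of the two kernel choices follows. It assumes synthetic two-class data standing in for radiomic feature vectors (no real MR features are used). Note that `SVC` solves the soft-margin variant of the objective above, in which the parameter `C` trades margin width against margin violations.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Hypothetical synthetic data standing in for radiomic feature vectors (not from any study)
X, y = make_classification(n_samples=200, n_features=20, n_informative=5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

models = {}
for kernel in ("linear", "rbf"):
    # Standardize first: the margin is measured in feature units, so scaling matters
    models[kernel] = make_pipeline(StandardScaler(), SVC(kernel=kernel, C=1.0)).fit(X_train, y_train)

accuracy = {kernel: model.score(X_test, y_test) for kernel, model in models.items()}
# Support vectors per class: the only training points that determine the linear hyperplane
n_support = models["linear"].named_steps["svc"].n_support_
print(accuracy, n_support)
```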

2.2.2 Decision tree

Decision trees (DTs) are supervised learning models that identify attributes and patterns in large datasets for predictive modeling (26). They provide interpretable visual representations of relationships between variables (27). While DTs are easy to construct and explain, ensemble methods like random forests improve predictive stability by combining multiple trees.
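The interpretability of a single tree can be illustrated by printing its learned rules. This sketch uses scikit-learn's bundled Wisconsin breast cancer dataset purely as a convenient stand-in (it is not gynecologic data), and the depth limit of 3 is an arbitrary choice to keep the rules readable.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier, export_text

# Bundled dataset used only as a stand-in; not gynecologic data
data = load_breast_cancer()
X, y = data.data, data.target

# Limiting depth keeps the tree readable and curbs overfitting
tree = DecisionTreeClassifier(max_depth=3, random_state=0)
cv_accuracy = cross_val_score(tree, X, y, cv=5).mean()

tree.fit(X, y)
# Human-readable if/else rules: the main interpretability advantage of a single tree
rules = export_text(tree, feature_names=list(data.feature_names))
print(f"5-fold accuracy: {cv_accuracy:.3f}")
print(rules)
```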

2.2.3 Artificial neural network

ANNs are computational models inspired by biological neural networks, capable of learning patterns from data (28). They adapt through experience, making them suitable for classification and prediction tasks. ANNs exhibit non-linearity, enabling them to model complex data patterns. The output $a$ of a neuron is computed as follows:

$$a = f\left(\sum_{i=1}^{n} w_i x_i + b\right)$$

where $x_i$ is the input, $w_i$ is the corresponding weight, $b$ is the bias term, and $f$ is the non-linear activation function (e.g., Sigmoid and Rectified Linear Unit (ReLU)). ANNs are structured into input, hidden, and output layers, with the configuration denoted as X–Y–Z, indicating the number of neurons in each layer (29).
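The neuron equation can be evaluated directly in NumPy. In this sketch the input values, weights, and bias are hypothetical numbers chosen only for illustration, and the 3–4–2 network at the end uses random, untrained weights to show the X–Y–Z notation.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def neuron_output(x, w, b, f):
    """a = f(sum_i w_i * x_i + b) for a single artificial neuron."""
    return f(np.dot(w, x) + b)

x = np.array([0.5, -1.0, 2.0])   # inputs x_i (hypothetical values)
w = np.array([0.8, 0.2, -0.5])   # weights w_i (hypothetical values)
b = 0.1                          # bias b

z = np.dot(w, x) + b             # 0.4 - 0.2 - 1.0 + 0.1 = -0.7
print(neuron_output(x, w, b, relu))     # 0.0: ReLU clips the negative pre-activation
print(neuron_output(x, w, b, sigmoid))  # about 0.332

# A 3-4-2 network (X-Y-Z notation): 3 inputs, 4 hidden neurons, 2 outputs; weights are random
rng = np.random.default_rng(0)
W1, b1 = rng.normal(size=(4, 3)), np.zeros(4)
W2, b2 = rng.normal(size=(2, 4)), np.zeros(2)
hidden = relu(W1 @ x + b1)
output = sigmoid(W2 @ hidden + b2)
print(output.shape)  # (2,)
```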

2.2.4 k-Nearest neighbor

k-Nearest neighbor (k-NN) is a non-parametric method used for classification and regression (30). It identifies the k training examples most similar to a new input and assigns the majority class among them (or, for regression, their average value). The choice of k affects model complexity: a small k may lead to overfitting to noise, while a large k may include distant, less relevant neighbors and oversmooth the decision boundary. Cross-validation helps select an optimal k (31).
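Selecting k by cross-validation, as described above, can be sketched with scikit-learn's `GridSearchCV`. The data are synthetic stand-ins for imaging features, and the candidate k values are an arbitrary assumption.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical synthetic feature vectors standing in for imaging features
X, y = make_classification(n_samples=300, n_features=10, n_informative=4, random_state=1)

# Scale first: k-NN distances would otherwise be dominated by features with large ranges
pipe = Pipeline([("scale", StandardScaler()), ("knn", KNeighborsClassifier())])

# Odd k values avoid ties in binary majority voting
search = GridSearchCV(pipe, {"knn__n_neighbors": [1, 3, 5, 7, 9, 15, 25]}, cv=5)
search.fit(X, y)
best_k = search.best_params_["knn__n_neighbors"]
print(best_k, round(search.best_score_, 3))
```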

2.2.5 Bayesian network

Bayesian networks (BNs) represent probabilistic relationships among variables using a directed acyclic graph (32). Nodes denote variables, and arcs indicate dependencies. BNs estimate event probabilities rather than provide deterministic predictions.
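How a BN turns a DAG into probabilities can be sketched with exact inference by enumeration, which is feasible only for very small networks. The three-node structure (risk factor R, cancer C, positive imaging finding F) and all conditional probabilities below are hypothetical numbers chosen for illustration.

```python
from itertools import product

# Hypothetical conditional probability tables (illustrative numbers only)
# DAG: R (risk factor) -> C (cancer) -> F (positive imaging finding)
p_r = {1: 0.10, 0: 0.90}           # P(R = r)
p_c_given_r = {1: 0.30, 0: 0.02}   # P(C = 1 | R = r)
p_f_given_c = {1: 0.90, 0: 0.10}   # P(F = 1 | C = c)

def bernoulli(p_one, value):
    return p_one if value == 1 else 1.0 - p_one

def joint(r, c, f):
    # Factorization implied by the DAG: P(R) * P(C | R) * P(F | C)
    return p_r[r] * bernoulli(p_c_given_r[r], c) * bernoulli(p_f_given_c[c], f)

def posterior_cancer(evidence):
    """P(C = 1 | evidence), summing the joint over all assignments consistent with the evidence."""
    num = den = 0.0
    for r, c, f in product((0, 1), repeat=3):
        assignment = {"R": r, "C": c, "F": f}
        if any(assignment[k] != v for k, v in evidence.items()):
            continue
        p = joint(r, c, f)
        den += p
        if c == 1:
            num += p
    return num / den

print(round(posterior_cancer({"F": 1}), 3))          # 0.312
print(round(posterior_cancer({"F": 1, "R": 1}), 3))  # 0.794
```

The output is a probability that updates as evidence is added, not a deterministic label, which is the property described above.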

2.2.6 Random forest

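Random forest (RF) is an ensemble learning method that combines multiple decision trees to reduce variance and improve accuracy (24). Each tree is trained on a bootstrap sample of the data, with a random subset of features considered at each split, and the trees' predictions are aggregated (by majority vote or averaged probabilities for classification, and by averaging for regression). This mitigates overfitting and provides variable importance estimates (25).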
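The sketch below compares a single tree with a forest and reads out variable importances, using scikit-learn. The data are synthetic and generated with `shuffle=False`, so the three informative features sit in columns 0 to 2 and the remaining columns are noise. This is an assumption made so that the importances can be checked.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Hypothetical data: features 0-2 are informative, features 3-14 are pure noise
X, y = make_classification(n_samples=400, n_features=15, n_informative=3,
                           n_redundant=0, shuffle=False, random_state=0)
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

single_tree = DecisionTreeClassifier(random_state=0)
# oob_score=True evaluates each tree on the samples left out of its bootstrap sample
forest = RandomForestClassifier(n_estimators=100, oob_score=True, random_state=0)

tree_acc = cross_val_score(single_tree, X, y, cv=cv).mean()
forest_acc = cross_val_score(forest, X, y, cv=cv).mean()

forest.fit(X, y)
# Impurity-based importances, averaged over all trees in the ensemble
top3 = sorted(range(X.shape[1]), key=lambda i: forest.feature_importances_[i], reverse=True)[:3]
print(f"tree {tree_acc:.3f}  forest {forest_acc:.3f}  OOB {forest.oob_score_:.3f}")
print("most important features:", top3)
```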

2.2.7 Classification and regression trees

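Classification and regression tree (CART) constructs binary trees for classification or regression (33). Internal nodes represent decision rules, and leaves represent outcomes. Split points are chosen to minimize a cost function, such as Gini impurity for classification or squared error for regression; because the method makes no assumptions about the underlying data distribution, it models the structure of the problem directly.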
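A single CART split on one feature can be sketched in NumPy by scanning candidate thresholds and minimizing weighted Gini impurity. The feature values and labels are hypothetical, and a full CART would apply this search to every feature and recurse on each child node.

```python
import numpy as np

def gini(labels):
    """Gini impurity 1 - sum_k p_k^2 of a label array."""
    if labels.size == 0:
        return 0.0
    _, counts = np.unique(labels, return_counts=True)
    p = counts / labels.size
    return 1.0 - np.sum(p ** 2)

def best_split(x, y):
    """Threshold on one feature that minimizes the weighted Gini impurity of the two children."""
    best_threshold, best_cost = None, np.inf
    values = np.unique(x)
    # Candidate thresholds: midpoints between consecutive distinct values
    for t in (values[:-1] + values[1:]) / 2:
        left, right = y[x <= t], y[x > t]
        cost = (left.size * gini(left) + right.size * gini(right)) / y.size
        if cost < best_cost:
            best_threshold, best_cost = t, cost
    return best_threshold, best_cost

# Hypothetical 1-D feature (e.g., a size measurement) with binary labels
x = np.array([1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 5.0, 6.0])
y = np.array([0, 0, 0, 0, 1, 1, 1, 1])
threshold, cost = best_split(x, y)
print(threshold, cost)  # 3.25 0.0: a pure binary split
```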

2.2.8 Multivariate adaptive regression splines

Multivariate adaptive regression splines (MARS) models relationships between continuous dependent and independent variables using piecewise regression equations (34). It handles categorical and continuous data, offering flexibility beyond linear regression.
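The piecewise regression terms in MARS are hinge functions, max(0, x − t) and max(0, t − x), placed at knots t. The NumPy sketch below fits these terms by least squares with a single knot fixed at 5. This is a simplifying assumption: real MARS searches for knots greedily in a forward pass and then prunes terms in a backward pass. The data are synthetic with a slope change at x = 5.

```python
import numpy as np

def hinge_basis(x, knots):
    """Design matrix: intercept plus the mirrored hinge pair max(0, x-t), max(0, t-x) per knot."""
    cols = [np.ones_like(x)]
    for t in knots:
        cols.append(np.maximum(0.0, x - t))
        cols.append(np.maximum(0.0, t - x))
    return np.column_stack(cols)

# Hypothetical data: slope 1 below x = 5, slope 3 above it, plus small noise
rng = np.random.default_rng(0)
x = np.linspace(0, 10, 200)
y = np.where(x < 5, x, 5.0 + 3.0 * (x - 5)) + rng.normal(scale=0.2, size=x.size)

# Knot fixed at 5 for illustration; real MARS would select it from the data
B = hinge_basis(x, knots=[5.0])
coef, *_ = np.linalg.lstsq(B, y, rcond=None)
y_hat = B @ coef
rmse = np.sqrt(np.mean((y - y_hat) ** 2))
print(np.round(coef, 2), round(rmse, 3))  # coefficients approximately [5, 3, -1]
```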

2.2.9 Gray-level co-occurrence matrix

Gray-level co-occurrence matrix (GLCM) is a texture analysis method that computes spatial relationships between pixel pairs in an image (35). It generates a co-occurrence matrix from which statistical features are extracted and applied in MRI-based feature analysis (35).
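A from-scratch NumPy sketch of a symmetric, normalized GLCM for one pixel offset (one pixel to the right), with three common statistical features, is given below. The 4 × 4 image is a toy example already quantized to four gray levels. In practice, tested implementations such as scikit-image's `graycomatrix`/`graycoprops` or PyRadiomics would normally be used instead.

```python
import numpy as np

def glcm(image, dx=1, dy=0, levels=4):
    """Normalized, symmetric co-occurrence matrix for pixel pairs offset by (dy, dx), with dx, dy >= 0."""
    P = np.zeros((levels, levels), dtype=float)
    rows, cols = image.shape
    for r in range(rows - dy):
        for c in range(cols - dx):
            i, j = image[r, c], image[r + dy, c + dx]
            P[i, j] += 1
            P[j, i] += 1  # count each pair in both directions (symmetric GLCM)
    return P / P.sum()

def texture_features(P):
    i, j = np.indices(P.shape)
    return {
        "contrast": np.sum(P * (i - j) ** 2),              # large when neighbors differ strongly
        "homogeneity": np.sum(P / (1.0 + np.abs(i - j))),  # large when mass lies near the diagonal
        "energy": np.sqrt(np.sum(P ** 2)),                  # large for uniform, repetitive texture
    }

# Toy 4x4 image already quantized to 4 gray levels
img = np.array([[0, 0, 1, 1],
                [0, 0, 1, 1],
                [0, 2, 2, 2],
                [2, 2, 3, 3]])
features = texture_features(glcm(img, dx=1, dy=0))
print({k: round(float(v), 3) for k, v in features.items()})
# {'contrast': 0.583, 'homogeneity': 0.819, 'energy': 0.382}
```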

2.2.10 Feature extraction

Feature extraction includes feature selection and transformation (36). Selection identifies relevant variables (e.g., gene expression), while transformation uses dimensionality reduction or neural networks to derive latent features (37–39). Graph neural networks [graph convolutional network (GCN), graph autoencoder (GAE), and graph attention network (GAT)] learn low-dimensional representations from network-structured data for predictive modeling.
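The two routes named above, selection and transformation, can be contrasted with scikit-learn. The data are a synthetic, high-dimensional stand-in (100 samples by 500 features, loosely mimicking expression values), and the choices of 20 selected features and 10 components are arbitrary. In a real study, both steps should be fitted inside each cross-validation fold to avoid information leakage.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.preprocessing import StandardScaler

# Hypothetical high-dimensional data: 100 samples x 500 features
X, y = make_classification(n_samples=100, n_features=500, n_informative=10, random_state=0)

# Feature selection: keep the 20 original features with the largest ANOVA F-statistic
selector = SelectKBest(score_func=f_classif, k=20)
X_selected = selector.fit_transform(X, y)

# Feature transformation: project standardized data onto 10 latent principal components
X_scaled = StandardScaler().fit_transform(X)
pca = PCA(n_components=10)
X_latent = pca.fit_transform(X_scaled)

print(X_selected.shape, X_latent.shape)  # (100, 20) (100, 10)
print(pca.explained_variance_ratio_.sum().round(3))
```

Selection keeps interpretable original variables, whereas transformation produces new latent features that may capture more structure but are harder to interpret.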

2.2.11 Model building

The final step in radiomics integrates clinical data, risk factors, biomarkers, and radiomic features into predictive models (e.g., nomograms) (40). Such models improve disease diagnosis, classification, and prognosis, advancing personalized medicine (41).
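The benefit of adding a radiomic feature to clinical variables can be sketched with a logistic regression model, which is the model a nomogram typically visualizes. Everything here is simulated: the variables (age, a biomarker, a radiomic score), their effect sizes, and the outcome model are hypothetical assumptions, and the split is a single hold-out rather than a full validation.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(42)
n = 500
# Hypothetical predictors: a clinical variable, a biomarker, and a radiomic score
age = rng.normal(55, 10, n)
biomarker = rng.normal(0, 1, n)
radiomic_score = rng.normal(0, 1, n)
# Simulated outcome: the radiomic score is assumed to carry extra signal
logit = -0.5 + 0.03 * (age - 55) + 0.8 * biomarker + 1.2 * radiomic_score
outcome = rng.random(n) < 1 / (1 + np.exp(-logit))

X_clinical = np.column_stack([age, biomarker])
X_combined = np.column_stack([age, biomarker, radiomic_score])

def held_out_auc(X):
    """Fit on 70% of patients and report the AUC on the remaining 30% (same split for both models)."""
    X_tr, X_te, y_tr, y_te = train_test_split(X, outcome, test_size=0.3,
                                              random_state=0, stratify=outcome)
    model = LogisticRegression(max_iter=1000).fit(X_tr, y_tr)
    return roc_auc_score(y_te, model.predict_proba(X_te)[:, 1])

auc_clinical, auc_combined = held_out_auc(X_clinical), held_out_auc(X_combined)
print(f"clinical AUC {auc_clinical:.3f}, combined AUC {auc_combined:.3f}")
```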

2.3 Deep learning

DL is a powerful subset of machine learning that automatically learns hierarchical features from large-scale datasets, such as images, text, and audio. A typical DL model consists of an input layer, multiple hidden layers (e.g., convolutional, pooling, recurrent, and fully connected layers), and an output layer (43). The convolutional layers extract local patterns through learnable filters, pooling layers reduce spatial dimensions and enhance translational invariance, and fully connected layers integrate high-level features for final prediction (44–46). DL encompasses various advanced architectures, including deep neural networks (DNNs), autoencoders (AEs), deep belief networks (DBNs), convolutional neural networks (CNNs), recurrent neural networks (RNNs), and generative adversarial networks (GANs). Among these, CNNs have achieved remarkable success in visual recognition tasks and are increasingly applied in medical image analysis (Figure 4) (48).

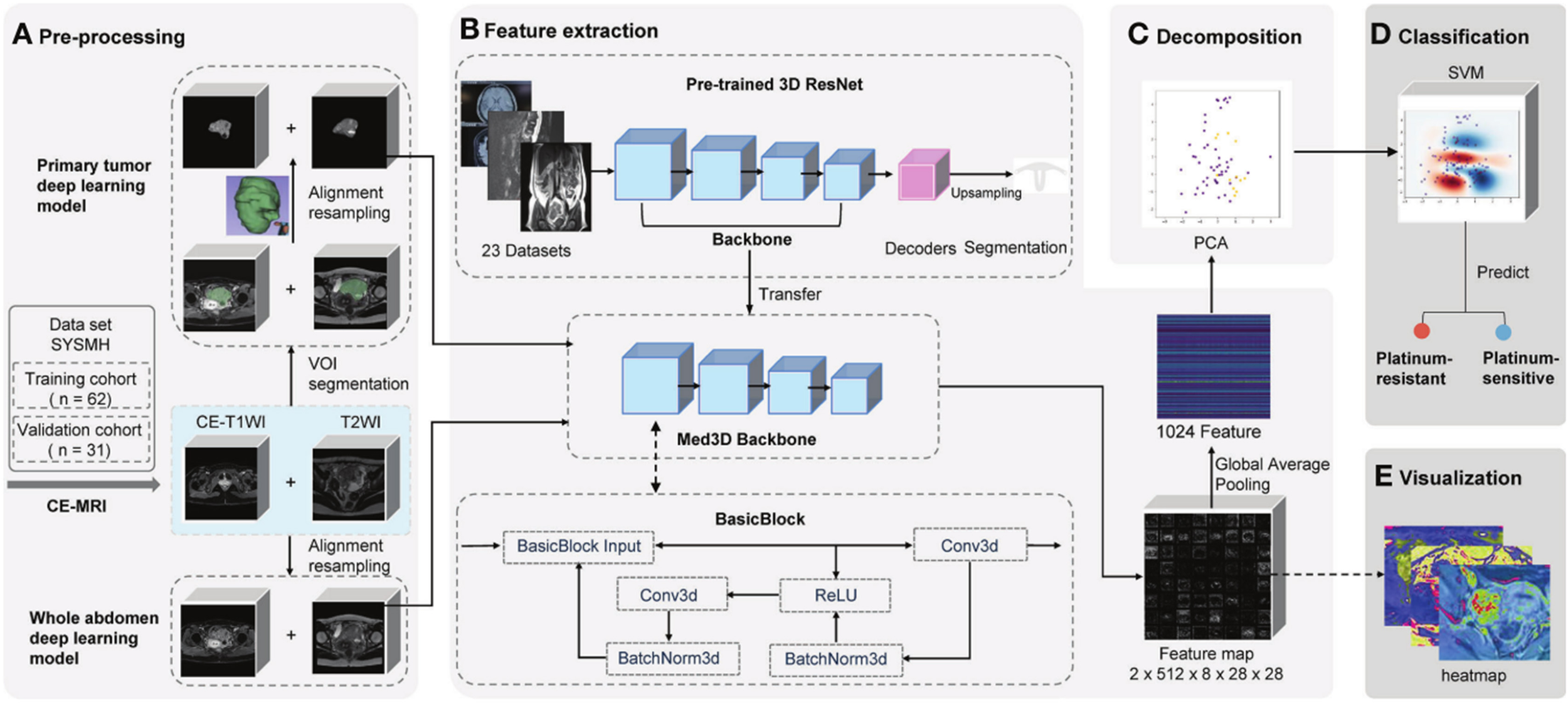

Figure 4

Schematic of deep learning frameworks for predicting platinum sensitivity using MRI (47). Two models use distinct volumes of interest (VOIs). 1) Primary tumor model: manually segmented primary tumor in contrast-enhanced T1-weighted imaging (CE-T1WI)/T2-weighted imaging (T2WI). 2) Whole abdomen model: entire abdomen volume (no manual segmentation). Workflow: (A) pre-processing (segmentation/registration/normalization of CE-T1WI/T2WI VOIs), (B) feature extraction via pre-trained 3D ResNet (transferred backbone + global average pooling to extract 1,024 features per patient), (C) principal component analysis (PCA) for feature decomposition, (D) support vector machine (SVM)-based platinum sensitivity prediction, and (E) heatmap visualization of convolutional layer feature maps.

2.3.1 Convolutional neural networks

A CNN is a type of feedforward neural network commonly composed of convolutional layers, activation functions (e.g., ReLU), pooling layers, and fully connected layers (49). The convolutional filters operate on local receptive fields and share parameters across spatial locations, enabling efficient feature learning without manual design. This capability has proven highly effective in tasks such as tumor segmentation and classification in medical imaging (50). However, CNNs generally require large amounts of annotated data for training and are susceptible to overfitting. Regularization methods such as dropout, weight decay, and data augmentation are widely used to improve generalization (51).

2.3.1.1 Basic structure

The fundamental building blocks of CNNs include the following.

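Convolutional layers: These layers apply a set of learnable filters to the input. Each filter performs convolution operations across the input volume to produce feature maps highlighting specific patterns such as edges, textures, or complex shapes. The discrete convolution operation between an input image $I$ (height $H$ and width $W$) and a kernel $K$ (size $k_h \times k_w$) is defined as follows:

$$S(i,j) = (I * K)(i,j) = \sum_{m=0}^{k_h-1}\sum_{n=0}^{k_w-1} I(i+m,\, j+n)\,K(m,n),\qquad 0 \le i \le H-k_h,\quad 0 \le j \le W-k_w$$

As in most deep learning libraries, this form slides the kernel without flipping it (strictly, a cross-correlation), and without padding it yields a feature map of size $(H-k_h+1)\times(W-k_w+1)$. Sliding the kernel across the entire image in this way produces a feature map that highlights the locations where the kernel’s pattern is detected, allowing the network to detect locally relevant patterns such as edges, textures, and shapes (52).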

Pooling layers: Pooling (e.g., max pooling or average pooling) downsamples the feature maps, reducing computational burden and increasing receptive field size. It also contributes to model robustness against input variations (53).

Fully connected layers: After feature extraction and dimensionality reduction, the features are flattened and processed through one or more fully connected layers. These layers perform global reasoning and generate final outputs, such as class labels or regression values (54).
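The three layer types described above can be chained in a toy forward pass. This NumPy sketch assumes a hand-made 6 × 6 image with a vertical edge, a hand-set 3 × 2 edge kernel, and random, untrained fully connected weights. `conv2d` implements the unflipped (cross-correlation) form used by CNN libraries, and no training is performed.

```python
import numpy as np

def conv2d(image, kernel):
    """Valid 2-D cross-correlation: S(i, j) = sum_m sum_n I(i+m, j+n) K(m, n)."""
    H, W = image.shape
    kh, kw = kernel.shape
    out = np.zeros((H - kh + 1, W - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool(fmap, size=2):
    """Non-overlapping max pooling; trailing rows/columns that do not fill a window are dropped."""
    h, w = fmap.shape[0] // size, fmap.shape[1] // size
    return fmap[:h * size, :w * size].reshape(h, size, w, size).max(axis=(1, 3))

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

# Toy 6x6 image with a vertical edge between columns 2 and 3
image = np.zeros((6, 6))
image[:, 3:] = 1.0
edge_kernel = np.array([[-1.0, 1.0]] * 3)  # 3x2 kernel responding to left-to-right increases

fmap = np.maximum(0.0, conv2d(image, edge_kernel))  # convolution + ReLU -> 4x5 feature map
pooled = max_pool(fmap)                            # 2x2 max pooling -> 2x2 map
flat = pooled.ravel()                              # flatten for the fully connected layer

rng = np.random.default_rng(0)
W_fc, b_fc = rng.normal(size=(2, flat.size)), np.zeros(2)  # random, untrained weights
probs = softmax(W_fc @ flat + b_fc)                # class probabilities for 2 hypothetical classes
print(fmap.shape, pooled.shape, probs.round(3))
```

The feature map is non-zero only at the edge, and pooling keeps that response while halving the spatial size.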

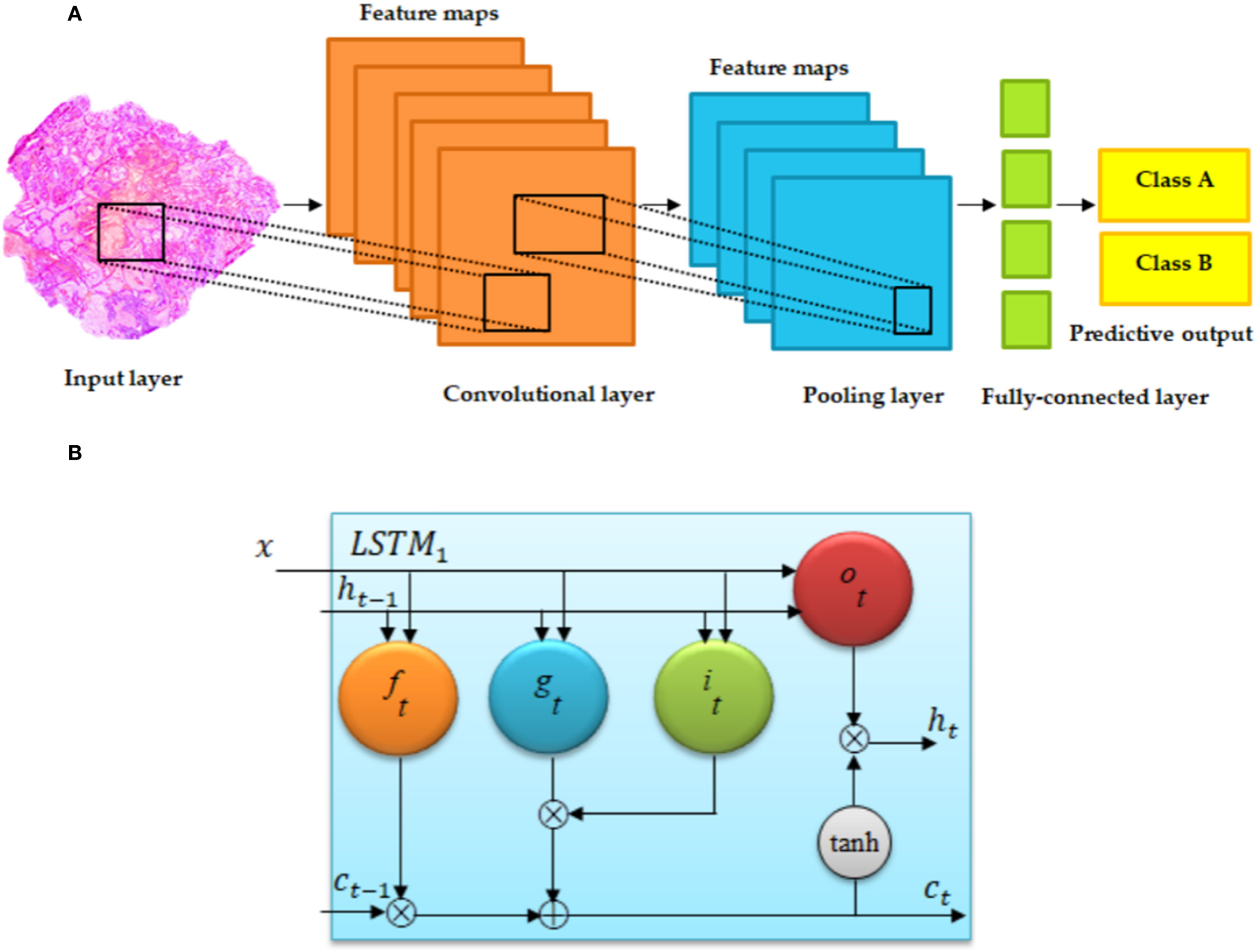

A representative CNN structure is illustrated in Figure 5, showing the flow from input through convolutional and pooling layers to the fully connected output layers.

Figure 5

Basic architecture of (A) convolutional neural network and (B) long short-term memory (LSTM) (55). (A) Regular convolutional neural networks (CNNs) have convolutional, fully connected (FC), and pooling layers. (B) The memory cell c is controlled through a group of gate networks, including the following: f, forget gate network; i, input gate network; and o, output gate network.

2.3.1.2 Network structures

Convolutional, ReLU, pooling, and fully connected layers are stacked to form CNNs, which can be designed as either deep or shallow architectures. Classical deep CNNs include LeNet (56), AlexNet (57), and GoogLeNet (58); representative architectures, including AlexNet and GoogLeNet, are summarized in Table 2. Training deep CNNs requires large amounts of annotated data, which are often limited in medical imaging applications. Therefore, shallower CNN architectures are also widely considered in this domain, offering a balance between performance and data efficiency (Figure 6) (59).

Table 2

| Model | Key architecture | Advantages | Limitations | Typical use cases in medical imaging |
|---|---|---|---|---|
| AlexNet | A pioneering deep CNN with 5 convolutional and 3 fully connected layers, using ReLU activation. | Demonstrated the power of deep CNNs on large-scale datasets; revolutionized the field. | By modern standards, architecture is less efficient, and parameters are not optimized. | Baseline architecture for image classification tasks (57, 59). |
| VGGNet | A very deep CNN with a simple architecture using stacks of 3 × 3 convolutional layers. | High representational power due to depth; simple and uniform architecture. | Very computationally expensive and parameter-heavy due to full connections. | Feature extractor for various medical image analysis tasks. |
| GoogLeNet/Inception | Introduced the Inception module to perform multi-level feature extraction within a single layer. | Improved computational efficiency; reduced number of parameters. | Complex network design can be harder to modify and train from scratch. | Efficient and accurate image classification and detection (58). |
| ResNet | Introduces skip connections (residual blocks) to solve the vanishing gradient problem in very deep networks. | Enables training of extremely deep networks (100+ layers); state-of-the-art performance. | Very deep networks can still be computationally intensive. | Backbone for many state-of-the-art models in classification, segmentation, etc. (60). |
| U-Net | Symmetric encoder–decoder architecture with skip connections for precise localization. | Excellent for semantic segmentation; effective with limited data. | Primarily designed for segmentation, not classification. | Biomedical image segmentation (e.g., tumor and organ delineation) (61, 62). |
| Generative adversarial network (GAN) | Two networks (generator and discriminator) trained adversarially. | Can generate synthetic data; useful for data augmentation. | Training can be unstable (mode collapse). | Data augmentation for rare cancer types; image synthesis (63, 64). |
| Convolutional autoencoder (CAE) | An autoencoder using convolutional layers to encode input into a latent space and decode it. | Learns compressed representations; useful for denoising and dimensionality reduction. | The latent space may not be as interpretable. | Image denoising, compression, and unsupervised feature learning (65, 66). |
| Vision Transformer (ViT) | Applies transformer architecture with self-attention mechanisms to image patches. | Captures global contextual information effectively. | Requires large datasets to outperform CNNs; computationally heavy. | Alternative to CNNs for image classification and analysis (67). |
| Graph neural network (GNN) | A general class of networks that operate on graph-structured data. | Models complex relationships and dependencies between entities. | Not directly applicable to standard image data without graph construction. | Analyzing molecular structures, protein interactions, and relational data (68). |
| Graph convolutional network (GCN) | A type of GNN that performs convolution operations on graphs. | Efficiently captures node features and graph topology. | Requires a defined graph structure as input. | Node classification, link prediction in biological networks (69). |
| Graph attention network (GAT) | Incorporates attention mechanisms into graph learning, weighting the importance of neighbors. | Dynamic and adaptive neighborhood importance; often outperforms GCN. | Higher computational cost than GCN. | Tasks where some connections are more important than others (70). |
| Graph autoencoder (GAE) | Uses GNNs as encoders to learn node/graph embeddings for unsupervised reconstruction. | Learns meaningful latent representations of graph data in an unsupervised way. | Quality of embeddings is tied to the reconstruction task. | Dimensionality reduction, anomaly detection in network data (71). |
| GraphSAGE | An inductive framework that generates node embeddings by sampling and aggregating features from a node’s local neighborhood. | Generalizes to unseen nodes/graphs; not transductive like GCN. | Sampling process can omit important information. | Large-scale graph applications where new nodes are common (72). |
| Graph regularization | A technique to incorporate graph/network information as a constraint into an optimization problem. | Improves model performance by enforcing smoothness or structure on the solution. | Not a standalone model; an add-on technique to guide other algorithms. | Regularizing models in semi-supervised learning (73). |

Comparison of selected deep learning model architectures.

CNNs, convolutional neural networks.

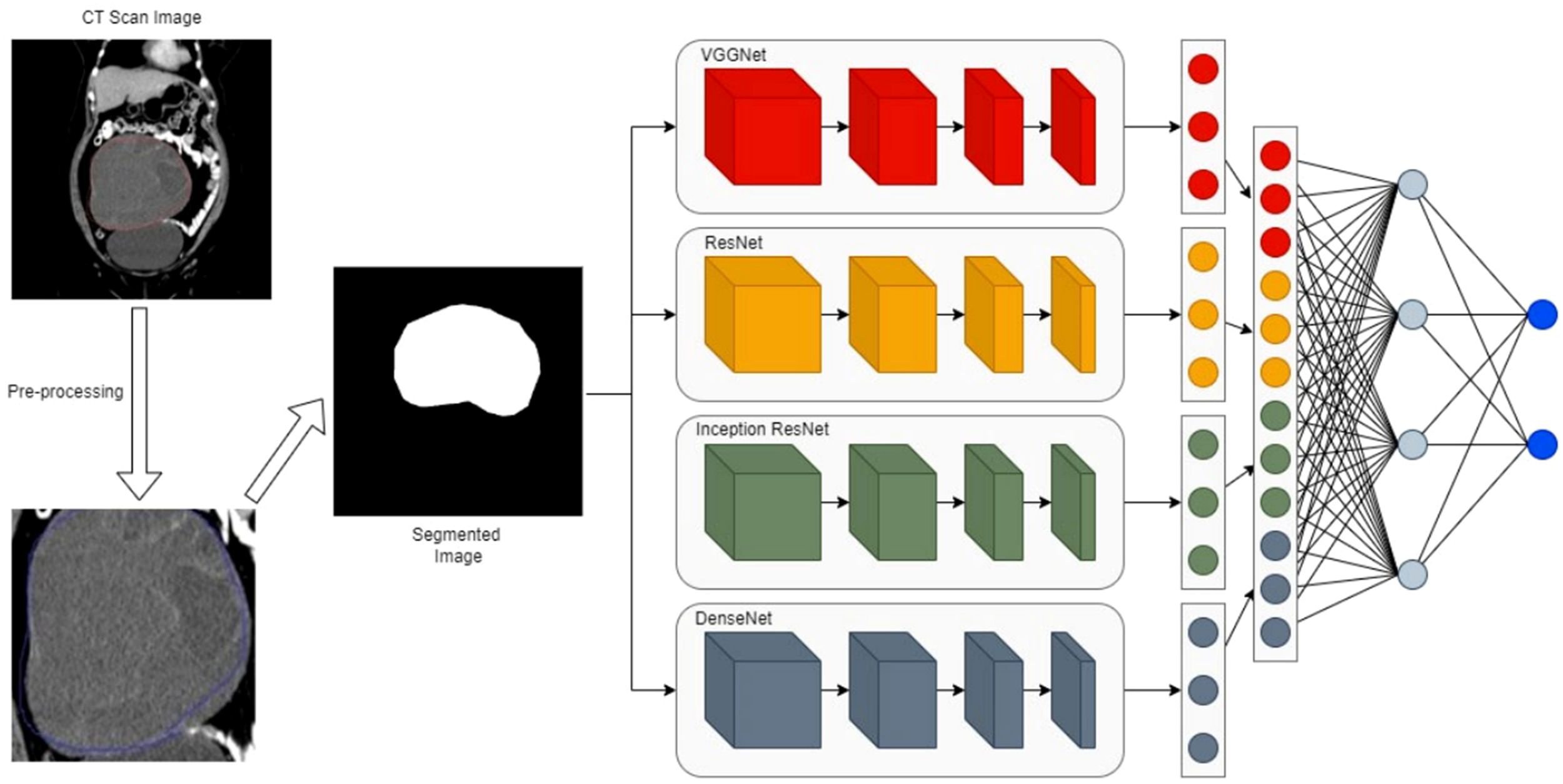

Figure 6

The proposed ensemble network with a four-path convolutional neural network (CNN) of VGGNet, ResNet, Inception, and DenseNet (74). The workflow involves 1) taking a CT scan image as input, 2) pre-processing the CT image to generate a segmented image of the region of interest, 3) extracting features from the segmented image via four parallel CNN branches, and 4) fusing branch-specific features and feeding them into subsequent networks to complete the final task (e.g., classification).

It is essential to ensure that the test dataset follows the same distribution as the training set to obtain a reliable evaluation of model performance. Common metrics include accuracy, precision, recall (sensitivity), specificity, the area under the receiver operating characteristic curve (AUC-ROC), and the F1-score. Although accuracy is sometimes used for quick model comparison, a comprehensive evaluation typically employs multiple metrics, because accuracy alone can be misleading on imbalanced datasets: when malignant cases are rare, a model that misses many of them can still achieve high accuracy. In practice, a trade-off between accuracy and computational efficiency (e.g., inference time within 100 ms) is often necessary, where accuracy is optimized under predefined runtime constraints (75).
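The confusion-matrix metrics listed above can be computed by hand, which makes the imbalance problem concrete. The 10 malignant and 90 benign cases, and the model's predictions, are hypothetical. AUC-ROC is omitted because it needs continuous scores rather than hard labels (scikit-learn's `roc_auc_score` covers that case).

```python
def binary_metrics(y_true, y_pred):
    """Confusion-matrix metrics for binary labels coded 1 (positive) and 0 (negative)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    sensitivity = tp / (tp + fn)  # recall, true positive rate
    specificity = tn / (tn + fp)  # true negative rate
    precision = tp / (tp + fp)    # positive predictive value
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    accuracy = (tp + tn) / len(pairs)
    return {"accuracy": accuracy, "sensitivity": sensitivity,
            "specificity": specificity, "precision": precision, "f1": f1}

# Hypothetical imbalanced test set: 10 malignant (1) and 90 benign (0) cases
y_true = [1] * 10 + [0] * 90
# A model that detects 6 of the 10 malignant cases and raises 4 false alarms
y_pred = [1] * 6 + [0] * 4 + [1] * 4 + [0] * 86
print(binary_metrics(y_true, y_pred))  # accuracy 0.92, but sensitivity only 0.6
```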

AlexNet: This pioneering deep CNN helped popularize deep learning in computer vision. It consists of five convolutional layers and three fully connected layers, utilizing ReLU activations and dropout regularization. AlexNet significantly outperformed traditional methods in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2012 (59).

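VGGNet: Known for its simplicity and depth, VGGNet uses stacks of 3 × 3 convolutional layers followed by max pooling. Stacking small filters increases depth, and with it the number of non-linearities, while reaching the same effective receptive field as a single larger filter with fewer parameters (for example, two stacked 3 × 3 layers cover a 5 × 5 region), improving feature learning capacity (31).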

GoogLeNet: It introduced the Inception module, which performs parallel convolutions with different kernel sizes and merges their outputs. This structure captures multi-scale features efficiently while controlling computational cost (58).

ResNet: Residual Networks address the degradation problem in very deep networks through skip connections. These identity mappings allow gradients to flow directly through layers, enabling stable training of networks with hundreds of layers (60).
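Why skip connections help gradients flow can be illustrated numerically. This NumPy sketch is a toy linear-algebra demonstration, not a trained ResNet. It builds a 30-block stack of blocks F(x) = W2·ReLU(W1·x) with small random weights (the scale 0.1, depth 30, and width 16 are arbitrary assumptions). It then compares the end-to-end input Jacobian with and without the identity term that a skip connection contributes (y = F(x) + x, so dy/dx = dF/dx + I).

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def block_jacobian(x, W1, W2, residual):
    """d/dx of W2 @ relu(W1 @ x), plus the identity matrix when a skip connection adds x back."""
    mask = (W1 @ x > 0).astype(float)  # derivative of ReLU at the pre-activations
    J = W2 @ (mask[:, None] * W1)      # W2 . diag(mask) . W1
    return J + np.eye(x.size) if residual else J

def input_gradient_norm(x, layers, residual):
    """Spectral norm of d(output)/d(input) through the whole stack, via the chain rule."""
    J_total = np.eye(x.size)
    for W1, W2 in layers:
        J_total = block_jacobian(x, W1, W2, residual) @ J_total
        branch = W2 @ relu(W1 @ x)
        x = branch + x if residual else branch
    return np.linalg.norm(J_total, 2)

rng = np.random.default_rng(0)
d, depth = 16, 30
layers = [(0.1 * rng.normal(size=(d, d)), 0.1 * rng.normal(size=(d, d))) for _ in range(depth)]
x0 = rng.normal(size=d)

plain_norm = input_gradient_norm(x0, layers, residual=False)
res_norm = input_gradient_norm(x0, layers, residual=True)
print(f"plain stack: {plain_norm:.1e}   residual stack: {res_norm:.1e}")
```

In the plain stack, the gradient shrinks geometrically with depth. The identity term keeps the residual stack's gradient on the order of one.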

U-Net: Originally designed for biomedical image segmentation, U-Net employs a symmetric encoder–decoder architecture with skip connections. This design combines high-resolution features from the encoder with upsampled decoder features, enabling precise localization (61).

GNNs and Extensions: Graph neural networks (GNNs), including GCNs, GATs, GAEs, and GraphSAGE, extend convolutional operations to graph-structured data. They learn node representations by aggregating information from neighborhoods and have shown promise in modeling biological networks (68–72).
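The neighbourhood aggregation performed by a GCN can be illustrated with the symmetric-normalized propagation rule of Kipf and Welling, H' = ReLU(D^-1/2 (A + I) D^-1/2 H W). The four-node graph, one-hot node features, and random weights below are hypothetical; in a biological application, nodes might represent genes, metabolites, or drugs.

```python
import numpy as np

def gcn_layer(adj: np.ndarray, h: np.ndarray, w: np.ndarray) -> np.ndarray:
    """One GCN layer: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    a_hat = adj + np.eye(len(adj))                 # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))  # inverse square-root degrees
    a_norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(a_norm @ h @ w, 0.0)         # aggregate neighbours, transform, ReLU

# Toy undirected 4-node graph; the edges are hypothetical.
adj = np.array([[0, 1, 1, 0],
                [1, 0, 1, 0],
                [1, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
h0 = np.eye(4)                                     # one-hot node features
w0 = np.random.default_rng(0).normal(size=(4, 8))
h1 = gcn_layer(adj, h0, w0)
print(h1.shape)  # (4, 8)
```

Stacking k such layers lets each node's representation draw on its k-hop neighbourhood.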

GANs: Generative adversarial networks consist of a generator and a discriminator trained adversarially. GANs are highly effective in generating realistic synthetic data and have been used for data augmentation, domain adaptation, and image reconstruction in medical applications (63, 64).
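The adversarial training objective can be made concrete through the two losses computed from discriminator outputs. This sketch uses the standard binary cross-entropy discriminator loss and the common non-saturating generator loss; the discriminator probabilities are illustrative values, not outputs of a trained model.

```python
import numpy as np

EPS = 1e-12  # guards against log(0)

def discriminator_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """Binary cross-entropy: push D(x) toward 1 on real images and D(G(z)) toward 0 on fakes."""
    return float(-np.mean(np.log(d_real + EPS)) - np.mean(np.log(1.0 - d_fake + EPS)))

def generator_loss(d_fake: np.ndarray) -> float:
    """Non-saturating generator loss: push D(G(z)) toward 1, i.e. fool the discriminator."""
    return float(-np.mean(np.log(d_fake + EPS)))

# Discriminator probabilities for a small batch (illustrative values).
d_real = np.array([0.9, 0.8, 0.95])
d_fake = np.array([0.1, 0.2, 0.05])
print(discriminator_loss(d_real, d_fake), generator_loss(d_fake))
```

When the discriminator can no longer tell real from synthetic images (D = 0.5 everywhere), its loss settles at 2 log 2, the theoretical equilibrium of the minimax game.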

CAEs: Convolutional autoencoders employ convolutional layers in both encoder and decoder components. They are used for unsupervised representation learning, denoising, and anomaly detection (65, 66).

ViT: The Vision Transformer (ViT) adapts the transformer architecture to images by dividing them into patches and processing them as a sequence of tokens. ViT captures global contextual information and has achieved competitive performance on several medical imaging benchmarks (67).
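Turning an image into a token sequence is the step that distinguishes ViT from a CNN. The sketch below splits a 224 × 224 × 3 image into 16 × 16 patches, flattens them, and applies a linear embedding plus position embeddings; the embedding width (64) and the random weights are assumptions, and the class token used by the original ViT is omitted for brevity.

```python
import numpy as np

def patchify(img: np.ndarray, p: int) -> np.ndarray:
    """Split an (H, W, C) image into non-overlapping p x p patches, one flattened row per patch."""
    h, w, c = img.shape
    assert h % p == 0 and w % p == 0, "image size must be divisible by the patch size"
    patches = img.reshape(h // p, p, w // p, p, c).transpose(0, 2, 1, 3, 4)
    return patches.reshape(-1, p * p * c)  # (num_patches, p*p*C), row-major patch order

rng = np.random.default_rng(0)
img = rng.normal(size=(224, 224, 3))
tokens = patchify(img, 16)                                # 14 * 14 = 196 patches of length 768
w_embed = rng.normal(scale=0.02, size=(16 * 16 * 3, 64))  # linear patch embedding (D = 64 assumed)
pos = rng.normal(scale=0.02, size=(tokens.shape[0], 64))  # position embeddings (learned in a real ViT)
seq = tokens @ w_embed + pos                              # token sequence fed to the transformer
print(tokens.shape, seq.shape)  # (196, 768) (196, 64)
```

Self-attention over these 196 tokens lets every patch attend to every other patch, which is the source of ViT's global context.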

3 Gynecologic malignancies

Timely identification can reduce the substantial morbidity and mortality associated with neoplasms in women. Breast cancer is the most frequently diagnosed cancer in women, and gynecologic malignancies of endometrial, ovarian, and cervical origin are, collectively, the next most common (76). Although gynecologic cancers are less common than breast cancer, they are associated with higher rates of illness and death. The American Cancer Society estimated approximately 116,760 new cases of and 34,080 deaths from gynecologic cancers in the United States in 2021 (77).

Most existing AI imaging studies of cancers in women have concentrated on breast imaging. An extensive literature search on AI in breast cancer imaging identified 767 studies published from the 1990s to the present, whereas a separate search for AI in gynecologic cancer imaging yielded only 194 studies, most of them published in the last 2 years.

3.1 Ovarian cancer

Ovarian cancer (OC) typically has an insidious onset without distinctive symptoms or signs. In over 70% of patients, the disease remains undetected until an advanced stage, by which time the window for effective treatment has often passed. Consequently, ovarian cancer has the highest mortality among tumors of the female reproductive system (78). Characterized by mild early symptoms and a poor prognosis, OC is the most lethal gynecologic cancer. The four main subtypes of primary epithelial ovarian carcinoma are serous, mucinous, endometrioid, and clear cell carcinoma. Effective screening techniques for ovarian cancer are still lacking. In clinical settings, transvaginal sonography combined with serum carbohydrate antigen 125 (CA125) is commonly used for initial identification, but this approach has limited sensitivity and specificity (79). Transvaginal sonography frequently misclassifies benign pelvic masses as malignant (80), and its accuracy depends heavily on the operator's expertise. Peripheral blood testing, by contrast, is painless, minimally invasive, and rapid, and achieves greater acceptance and compliance. However, CA125 is prone to false-positive results caused by benign tumors, inflammation, and hormone levels, and prior research (81) has consistently shown that its area under the receiver operating characteristic curve (AUC) is below 0.8, which falls short of clinical requirements.

Debulking surgery and platinum-based chemotherapy are the standard treatments for epithelial ovarian cancer (EOC) (82). Although a high remission rate can be achieved, approximately 20% to 30% of patients receive multiple cycles of toxic chemotherapy before platinum resistance becomes apparent. This delay in recognizing resistance and switching to effective drugs has been a significant obstacle to improving patient outcomes (83). Platinum sensitivity is also a convenient marker for identifying patients likely to respond to poly(ADP-ribose) polymerase inhibitors (PARPi) (84), so predicting it could help avoid enrolling unsuitable patients in clinical trials. If platinum sensitivity could be accurately anticipated, patients would derive greater benefit from precision therapy. However, traditional clinical markers such as CA125 and tumor immunohistochemistry have limited predictive ability (85). Biopsy or surgical resection followed by mutation profiling has become routine and informative (86), but the high cost, invasiveness, intratumoral genetic heterogeneity, and need for multiple tumor samples greatly restrict the usefulness of molecular testing and raise significant concerns about its cost-effectiveness.

Because no reliable screening technique exists, ovarian cancer is usually diagnosed at an advanced stage, typically stage III or IV. Radiologists manually analyze and interpret medical images from patients with suspected cancer to determine the cancer type and stage. This manual process is time-consuming and subjective, varies between observers, and can misclassify cancer subtypes, which has motivated the development of a diverse range of machine learning models for tumor identification and prediction. The lack of effective early screening and the difficulty of predicting platinum resistance are significant clinical challenges that AI and deep learning approaches are well positioned to address.

3.2 Cervical cancer

The human cervix is lined by a delicate layer of epithelial tissue. Cervical cancer arises when cells in this lining undergo malignant transformation and grow and divide rapidly, forming a tumor. Early detection of this malignancy is crucial for successful treatment (87).

Cervical cancer is a prevalent malignancy of the female reproductive system with a major impact on health and survival. It occurs worldwide and affects a particularly large number of patients in China (88). Reported risk factors include human papillomavirus (HPV) infection, chlamydia infection, smoking, overweight/obesity, an unhealthy lifestyle, and the use of intrauterine devices (89). Prompt and regular screening with early detection is crucial for prevention and management, because precancerous lesions precede invasive cancer and may take many years to progress to malignancy (90).

Cervical cancer screening aims to identify cervical intraepithelial neoplasia (CIN), also known as cervical dysplasia. CIN is categorized into three grades: CIN1 (mild), CIN2 (moderate), and CIN3 (severe) (91). In clinical practice, the main objective of screening is to assign one of three categories: normal, CIN1, or CIN2/3.

Cervical cancer screening consists primarily of three steps: a Pap/HPV test, colposcopy, and pathological examination. In a Pap test, trained medical staff collect cells from the cervix and examine them under a microscope to detect squamous intraepithelial lesions (SILs) and glandular epithelial abnormalities. The HPV test is a molecular test that identifies the specific HPV strains associated with cervical cancer. If the Pap/HPV test is abnormal, colposcopy is recommended to locate suspicious lesions, followed by pathological examination to determine the CIN grade (92). A personalized treatment plan can then be developed based on the features of the lesions seen at colposcopy, the CIN grade, and the patient's medical history.

Ultrasound is commonly used for cervical cancer screening because it is simple and affordable. Computed tomography (CT), with its high density resolution, can accurately depict organs and soft tissues with subtle density differences. However, its ability to evaluate infiltration and spread of cervical cancer to other regions is limited, which constrains its diagnostic value. Owing to its multiparametric, multisequence imaging and high soft-tissue resolution, non-contrast MRI is well suited to diagnosing and staging cervical cancer (93), although it still has certain drawbacks. Recently, researchers have developed several new multimodal MRI sequences that substantially improve the diagnostic precision of MR imaging for various disorders.

Squamous cell carcinoma (SCC), adenocarcinoma (ADC), and tumors of unclear histological subtype are the most common categories of cervical cancer. SCC is the predominant form, accounting for approximately 80% of cases (94), so detecting SCC is of greater clinical relevance than detecting ADC. HPV testing is more sensitive than cytology for cervical cancer screening (95). HPV genotyping also improves understanding of cervical cancer progression by identifying specific genotypes, including HPV 16 and HPV 18; these two high-risk genotypes are the most prevalent and together account for approximately 70% of cervical cancer cases. The Cancer Genome Atlas project has documented that gene alterations vary across subtypes, indicating that distinct tumor subtypes may require tailored therapeutic interventions (96). Subtype classification is therefore important, because distinguishing between types of cervical cells directly informs the development of personalized treatment.

Although low-grade lesions often resolve on their own, high-grade lesions have the capacity to advance to aggressive malignancy. Hence, it is imperative to promptly detect high-grade lesions in order to intervene and prevent cervical cancer. DL algorithms can effectively and swiftly identify and categorize the extent of abnormalities in acetic acid test images, assisting in the prompt identification of severe abnormalities and enabling appropriate intervention and treatment. Computer-assisted diagnosis of cervical cancer is critical for efficiently preventing cancer development, making it highly important in clinical practice (97). However, the heavy reliance on cytological and colposcopic expertise, coupled with the subjective interpretation of screenings, creates a pressing need for automated, objective, and AI-powered diagnostic tools to improve accessibility and consistency in early detection.

3.3 Endometrial cancer

Endometrial carcinoma (EC) is a malignant tumor arising from the inner epithelial lining of the uterus and is the sixth most common cancer among women. Globally, 417,367 women were diagnosed with EC in 2020, imposing significant financial burdens on patients and caregivers (98). Notably, Asian women tend to develop endometrial cancer at a younger age than other groups and to present with more advanced disease. Accurate early diagnosis is therefore crucial for appropriate management (99).

Endometrial cancer is associated with clinical features and risk factors such as postmenopausal bleeding, diabetes mellitus, arterial hypertension, smoking, nulliparity, and late menopause (100). Endometrial thickness has been proposed as a screening tool for women, but its diagnostic value is severely limited by a high false-positive rate, reported to exceed 70% (101). Combining several of these characteristics appears to improve predictive performance, with recent studies in large cohorts reporting sensitivity and specificity of 70% to 80% (100).

The 2023 International Federation of Gynaecology and Obstetrics (FIGO) staging system distinguishes non-aggressive histological types (low-grade endometrioid, grade 1 or 2), which generally carry a favorable prognosis, from aggressive types (grade 3 endometrioid, serous, clear cell, carcinosarcoma, and undifferentiated/dedifferentiated carcinoma), which carry a poorer prognosis; this broadly corresponds to the classical type I/type II division. These tumors arise through distinct biological pathways with specific molecular changes (102). Current guidelines for the optimal care of patients with endometrial cancer, including those of the World Health Organization (WHO) (103), the European Society of Gynaecological Oncology (ESGO) (104), and the 2023 FIGO (105), all support molecular classification. Rather than evaluating histological characteristics such as cellular atypia and tumor architecture, as grading does, molecular classification assigns tumors to subgroups (POLEmut, MMRd, NSMP, and p53abn) based on specific genetic and molecular alterations in the cancer cells, and it provides a more accurate prognosis and more personalized treatment plans than traditional grading. Nevertheless, its adoption remains limited, especially in low-resource countries, because of resource constraints and the limited availability of specialized diagnostic equipment.

Currently, the diagnosis of EC primarily relies on clinical symptoms, physical examinations, laboratory tests, transvaginal ultrasound, pelvic ultrasonography, endometrial biopsy with hysteroscopy, and various imaging techniques such as computed tomography, positron emission tomography/computed tomography, and magnetic resonance imaging. Diagnostic purposes also utilize certain biomarkers such as CA125 and HE4 (99). The goal of these examinations is to analyze the endometrial cells, assess the degree of disease, and identify the presence or absence of metastases. While these approaches exhibit favorable sensitivity in detecting EC, they also have drawbacks like limited specificity (especially transvaginal ultrasonography), invasiveness, discomfort, and high expense.

AI-based image processing is crucial for the timely identification, tracking, diagnosis, and treatment of EC. These methods help physicians reach a more precise diagnosis and can achieve accuracy that may even exceed human recognition.

After EC is diagnosed, physicians determine the extent of its spread, a process referred to as staging. The stage describes how far the cancer has spread in the body; it helps assess disease severity, plan appropriate treatment, and predict the likely efficacy of that treatment. EC is divided into four stages according to the extent of spread. In stage 1, the cancer is confined to the uterus. In stage 2, it involves both the uterus and the cervix. In stage 3, it has spread beyond the uterus, for example to the fallopian tubes, ovaries, vagina, or adjacent lymph nodes, but has not reached the rectum or bladder. In stage 4, it involves the bladder or rectum and/or has metastasized beyond the pelvis to other distant tissues and organs.

MRI is the most appropriate modality for detecting EC in the endometrial cavity and for assessing tumor infiltration into the myometrium and endocervix, extension into the parametria, and other tumor deposits in the pelvis. Quantitative MRI assessment identifies deep myometrial invasion more effectively than visual inspection by radiologists. However, some EC lesions are not visible on MRI and cannot be reliably diagnosed. The rapid advancement of DL techniques, from early shallow CNNs to deep CNNs, together with transfer learning, data augmentation, and other novel techniques, has motivated their application to the automatic identification of EC.

Surgery remains a critical component of endometrial cancer treatment. Its primary goals are twofold: to remove the primary tumor, and to accurately determine the extent of the disease and assess prognostic factors. The first goal may be achievable with a “simple” hysterectomy, but the second requires a more extensive intervention, including complete omentectomy, pelvic lymphadenectomy, and lumbo-aortic lymphadenectomy (106). The therapeutic value of these procedures, however, remains controversial, and invasive surgery in obese, elderly, and frail patients with endometrial cancer may cause serious complications. Preoperative diagnostic performance should therefore be maximized: better selection of patients for surgery would reduce the risk of unnecessary treatment, complications, and death through personalized care. Preoperative molecular classification and accurate staging for personalized treatment planning are areas in which deep learning models applied to imaging and histopathological data show considerable potential.

3.4 Precision oncology

Precision tumor medicine uses a variety of advanced detection technologies, such as proteomics, transcriptomics, genomics, epigenomics, and metabonomics, to gather tumor-related biological information, which is then used to guide tumor screening, diagnosis, and treatment (107). The discovery of many gene mutations has substantially benefited molecular subtype classification, risk prediction for GMs, and the selection of accurate treatment strategies.

Precision oncology relies on the accurate identification and analysis of the molecular features of individual tumors. It is widely recognized as a crucial therapeutic approach in the fight against cancer and centers on identifying precise molecular targets. It draws on personalized cancer genetic data and can additionally incorporate proteomic data and clinical signatures extracted from electronic records stored in various computational databases (108). Recent advances in clinical oncology have used innovative AI-based molecular techniques, and next-generation sequencing (NGS) is the platform of choice for generating large-scale, high-throughput datasets. Developing algorithms for early-stage cancer detection requires oncology experts with a background in ML; such algorithms aim to identify new biomarkers and target sites, enable accurate diagnosis from NGS data, and enhance high-resolution medical imaging (109). Precision oncology drugs are designed to selectively attack cancer cells by exploiting their genetic heterogeneity, and AI systems can use NGS data to recommend personalized therapy based on individual genetic characteristics. AI is considered one of the leading cutting-edge tools for accurate cancer diagnosis, prognosis, and treatment because it can systematically process large pharmaceutical and clinical datasets. Digital healthcare and clinical practice are expected to shift toward algorithm-based AI for radiological image interpretation, electronic health records, and data mining, with the aim of providing more accurate solutions for cancer treatment.
The integration and interpretation of complex, high-dimensional multi-omics data remain a major hurdle in realizing the full promise of precision oncology, a challenge that requires the sophisticated pattern recognition capabilities of advanced AI and deep learning algorithms.

4 Deep radiomics-based learning in gynecologic malignancies

Radiomics extracts a large number of quantitative imaging features from medical images acquired by non-invasive procedures such as CT, MRI, and ultrasound. The approach was introduced by Lambin et al. in 2012 (110). Medical images contain large amounts of digital data reflecting tumor pathophysiology (111), and radiomics can extract relevant features from these images and combine them with clinical, pathophysiological, and molecular biological information. This can improve clinical diagnosis, prediction of tumor stage and genotype, and prognostic assessment (112). The main stages of radiomics are image acquisition, image segmentation, feature extraction, feature screening, and model development. Radiomics has been widely applied to many tumor types, including thyroid, breast, liver, and prostate cancer and OC (4).
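The feature extraction and feature screening stages of radiomics can be sketched in a few lines. This is a minimal NumPy illustration under stated assumptions: the images and cubic masks are synthetic stand-ins for acquired and segmented scans, only four first-order features plus ROI volume are computed, and the greedy correlation filter with a 0.9 cut-off is one illustrative screening rule among many. Standardized feature definitions are available in dedicated packages such as PyRadiomics.

```python
import numpy as np

def first_order_features(image: np.ndarray, mask: np.ndarray, bins: int = 32) -> dict:
    """A few first-order radiomic features of the voxels inside a binary ROI mask."""
    roi = image[mask.astype(bool)]
    mean, std = roi.mean(), roi.std()
    counts, _ = np.histogram(roi, bins=bins)
    p = counts[counts > 0] / counts.sum()
    return {
        "mean": float(mean),
        "std": float(std),
        "skewness": float(np.mean((roi - mean) ** 3) / std ** 3),
        "entropy": float(-np.sum(p * np.log2(p))),  # histogram entropy in bits
        "volume_voxels": int(roi.size),
    }

def screen_features(X: np.ndarray, max_corr: float = 0.9) -> list:
    """Greedy screening: drop constant features and any feature highly correlated with one already kept."""
    keep = []
    for j in range(X.shape[1]):
        if X[:, j].std() == 0:
            continue
        if all(abs(np.corrcoef(X[:, j], X[:, k])[0, 1]) < max_corr for k in keep):
            keep.append(j)
    return keep

rng = np.random.default_rng(0)
rows, names = [], []
for _ in range(20):  # 20 synthetic "patients"
    img = rng.normal(loc=rng.uniform(50, 100), scale=rng.uniform(5, 20), size=(32, 32, 32))
    mask = np.zeros(img.shape, dtype=bool)
    r = rng.integers(4, 10)
    mask[16 - r:16 + r, 16 - r:16 + r, 16 - r:16 + r] = True  # cubic ROI standing in for a segmentation
    feats = first_order_features(img, mask)
    names = list(feats)
    rows.append(list(feats.values()))
X = np.array(rows)
print([names[j] for j in screen_features(X)])
```

The screened feature matrix would then feed the model-development stage, for example a regularized logistic regression validated on held-out patients.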

Radiomic characterization of tumors could enhance radiological evaluation. Radiomic features are reproducible and quantitative, and they allow non-invasive evaluation of tumor heterogeneity (113). AI in radiology is a new field that efficiently extracts digital medical imaging data to obtain predictive and/or prognostic information about patients and their diseases, by analyzing tumor heterogeneity and indirectly assessing the molecular and genetic features of the tumor. It has the potential to anticipate diagnosis, treatment response, and prognosis. This research topic is rapidly gaining popularity in oncology because of its broad potential applications, particularly in clinical decision-making and personalized treatment (114). Radiomic data have been strongly associated with clinical outcomes, and the approach has already proven effective for preoperative prediction in several solid tumors (115).

The AI approach differs from conventional radiological reading by providing automated, reproducible, and quantitative image analysis that goes beyond human visual capabilities. Using machine learning, AI systems can be trained to analyze predefined criteria such as tumor size, tumor shape, and lymph nodes. Alternatively, with DL they can learn relevant features directly from the images, without human-defined criteria, through a flexible analytical process that may not be easily interpretable by humans. An example of such a flexible analysis chain is the artificial neural network, a set of interconnected functions that takes images as input and produces an analysis as output. The complexity of a neural network varies with its purpose and the type of input. A network is called “deep” when information passes through multiple intermediate layers, known as “hidden layers”; the more hidden layers a network has, the deeper and more complex it becomes. The term “DL” refers to the use of such deep neural networks. An overly complex model can fit a particular training dataset extremely well yet perform poorly on new data, a phenomenon known as “overfitting”. Because overfitting compromises an algorithm's applicability, internal and external validation are used to detect and mitigate it.
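Overfitting can be reproduced with a toy model in which polynomial degree plays the role of network capacity. In this NumPy sketch (synthetic data; the sine signal, noise level, and degrees 3 and 10 are arbitrary choices), the higher-degree model always fits the 15 training points at least as well, while its error on the held-out split typically grows, which is exactly what internal validation is designed to reveal.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)

def sample(n):
    """Noisy observations of a smooth underlying signal."""
    x = rng.uniform(-1, 1, n)
    return x, np.sin(3 * x) + rng.normal(scale=0.2, size=n)

x_train, y_train = sample(15)
x_val, y_val = sample(200)  # held-out data (internal validation split)

def mse(model, x, y):
    return float(np.mean((model(x) - y) ** 2))

results = {}
for degree in (3, 10):  # low-capacity vs high-capacity model
    model = Polynomial.fit(x_train, y_train, degree)  # least-squares polynomial fit
    results[degree] = (mse(model, x_train, y_train), mse(model, x_val, y_val))
    print(degree, "train MSE %.4f, validation MSE %.4f" % results[degree])
```

External validation applies the same idea with data from a different institution or scanner, which also exposes sensitivity to distribution shift.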

4.1 Tumor lesion detection and diagnosis

Early-stage tumors often cause no distinctive symptoms, although some tumor types are accompanied by characteristic symptoms. Recognizing such symptoms early creates an opportunity to detect malignant growth early. When a tumor is suspected, a thorough examination can provide a comprehensive and unbiased assessment of its status, facilitating early treatment and improving the chances of a cure.

Computer-aided diagnosis enables clinicians to turn subjective image interpretation into objective, quantitative image data, facilitating clinical decision-making. Compared with conventional computer-aided diagnosis, however, CNN-based DL has clear advantages: it simplifies feature extraction by automatically learning distinctive features from the data, and its performance is more systematic and easier to adjust. ML and deep data-mining techniques facilitate cancer identification by enabling researchers to extract distinctive information from data, which can then be used for cancer prediction (31). The application of AI extends beyond detection to nuanced prognostic prediction. Studies have shown that deep learning models can decode the complex interplay within the tumor microenvironment, offering insights into disease aggressiveness and patient outcomes that surpass traditional staging systems (116). This supports the prognostic value of AI-derived features.

DL is applied primarily to X-ray, CT, and MR images for lesion detection and classification. Plain radiographs, generated using X-rays, provide image metrics that describe tumor features such as location, size, and margin. Compared with plain radiographs, CT and MRI provide richer radiological information and improve lesion detection. Several advanced DL techniques have been reported for identifying and classifying GMs on CT and MRI scans (117). CNNs, which are well suited to processing spatial information in images because they learn hierarchical features through convolutional filters, have been widely used for this task.
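The basic operations inside a CNN layer (a convolutional filter, a ReLU nonlinearity, and pooling) can be shown on a toy image. In this NumPy sketch the 8 × 8 "slice" with a bright square is synthetic, and the filter is a hand-set Sobel edge detector; in a real CNN the filter weights are learned from data rather than chosen by hand.

```python
import numpy as np

def conv2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2D cross-correlation, the operation CNN layers actually compute."""
    kh, kw = kernel.shape
    h, w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2(x: np.ndarray) -> np.ndarray:
    """2x2 max pooling (a trailing odd row or column is dropped)."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    return x[:h, :w].reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# Toy 8x8 "slice": dark background with a bright square standing in for a lesion.
img = np.zeros((8, 8))
img[2:6, 2:6] = 1.0
sobel_x = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)  # vertical-edge detector

feature_map = np.maximum(conv2d(img, sobel_x), 0.0)  # convolution + ReLU
pooled = max_pool2(feature_map)                       # downsampling
print(feature_map.shape, pooled.shape)  # (6, 6) (3, 3)
```

Stacking such layers is what produces the feature hierarchy: early filters respond to edges like the lesion boundary here, and later layers combine them into shapes and textures.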

For instance, Chen et al. (118) developed a computational method called “GPS-OCM” to accelerate the identification of metabolites associated with ovarian cancer, based on the similarity between metabolites and diseases. The method combines a GCN, principal component analysis (PCA), and an SVM: the GCN extracts network topology features, PCA reduces the dimensionality of the disease and metabolite features, and the SVM performs classification. The authors reported high precision for their approach, reflected in high values of the AUC and the area under the precision-recall curve (AUPR).
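The PCA step in a pipeline such as GPS-OCM can be sketched with a singular value decomposition. This is an illustration only: the input matrix is random stand-in data, the choice of five components is arbitrary, and the exact configuration used by Chen et al. is not described here. The reduced features would then be passed to a classifier such as an SVM (for example, scikit-learn's `SVC`).

```python
import numpy as np

def pca(X: np.ndarray, n_components: int):
    """PCA via SVD of the mean-centred data matrix (rows = samples, columns = features)."""
    Xc = X - X.mean(axis=0)
    _, s, vt = np.linalg.svd(Xc, full_matrices=False)
    components = vt[:n_components]         # principal axes, ordered by variance
    explained = (s ** 2) / np.sum(s ** 2)  # fraction of total variance per axis
    return Xc @ components.T, explained[:n_components]

# Hypothetical feature matrix: 50 metabolite-disease pairs x 20 features.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 20))
Z, ratio = pca(X, 5)
print(Z.shape, ratio.round(3))
```

In practice the number of components is often chosen so that the retained explained-variance ratio reaches a target such as 90%.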

Schwartz et al. (119) developed an automated method that learns to detect ovarian cancer in transgenic mice from optical coherence tomography (OCT) recordings. Classification is performed by a neural network that processes spatially ordered sequences of tomograms. The authors introduced three neural network-based approaches: a feed-forward network backed by VGG, a three-dimensional (3D) convolutional neural network, and a convolutional long short-term memory (LSTM) network. Their experiments showed that the models achieved favorable performance without manual tuning or hand-crafted features, despite severe noise in the OCT images. Their most successful model, the convolutional LSTM network, achieved a mean AUC of 0.81 ± 0.037 (standard error).

Tanabe et al. (120) developed complete serum glycopeptide spectra analysis with AI (CSGSA-AI), a method that uses a CNN to identify abnormal glycans in blood samples from patients with EOC. The researchers converted serum glycopeptide expression patterns into two-dimensional (2D) barcodes so that a CNN could learn to distinguish EOC from non-EOC cases. The CNN was trained on 60% of the samples and validated on the remaining 40%. Aligning the glycopeptides by principal component analysis when building the 2D barcodes substantially improved diagnostic accuracy, to 88%. Training the CNN with 2D barcodes colored according to serum CA125 and HE4 levels raised diagnostic accuracy to 95%. The authors suggest that this simple, inexpensive approach could improve the identification of EOC.
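The idea of turning a one-dimensional expression profile into a 2D barcode that a CNN can read can be sketched as follows. This is a hypothetical interpretation, not the CSGSA-AI procedure: features are ordered by their loading on the first principal component, zero-padded, and reshaped into a square grid. The sample count, the 1,700 features, and the 42 × 42 grid are assumptions, and the CA125/HE4 colouring is omitted.

```python
import numpy as np

def to_barcode(profiles: np.ndarray, side: int) -> np.ndarray:
    """Order features by first-PC loading, zero-pad, and reshape to one side x side image per sample."""
    n, d = profiles.shape
    assert d <= side * side, "grid too small for the number of features"
    centred = profiles - profiles.mean(axis=0)
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    order = np.argsort(vt[0])              # PCA-based alignment: same feature order for all samples
    padded = np.zeros((n, side * side))
    padded[:, :d] = profiles[:, order]     # unused grid cells stay 0
    return padded.reshape(n, side, side)   # one 2D "barcode" per sample

rng = np.random.default_rng(0)
expr = rng.lognormal(size=(10, 1700))      # 10 sera x 1,700 glycopeptides (hypothetical sizes)
barcodes = to_barcode(np.log(expr), side=42)  # 42 * 42 = 1764 >= 1700
print(barcodes.shape)  # (10, 42, 42)
```

Placing correlated features next to each other is what gives a CNN's local filters meaningful neighbourhoods to learn from; a random feature order would not.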

A comprehensive framework for detecting and classifying cervical cancer was created utilizing an optimized SOD-GAN (121). This advanced technique was designed to handle multivariate data sources. The suggested classifier accurately detects the cervix without the need for manual annotations or interventions. Additionally, it categorizes cervical cells as benign, precancerous, or cancerous lesions. The proposed approach has been expanded to include the identification of both the kind and stage of cervical cancer, in addition to its original purpose of diagnosing cervical cancer. Experiments were conducted during the training, validation, and testing phases of the proposed optimized SOD-GAN. Throughout all stages, the proposed approach demonstrated a high level of accuracy, reaching over 97% with a minimal loss of less than 1%. During the clinical analysis of 852 samples, the average duration required to classify the cervical lesion was 0.2 seconds. Therefore, the suggested method may effectively train the network through incremental learning, making it an ideal model for real-time cervical cancer diagnosis and prognosis.

The study by Fekri-Ershad et al. (122) introduced a hybrid machine learning method with a distinct separation between the feature extraction and classification stages, in which deep networks are used for feature extraction. A multi-layer perceptron (MLP) is trained on the resulting deep features, with the number of hidden-layer neurons tuned according to four novel concepts. The MLP is fed with features from the ResNet-34, ResNet-50, and VGG-19 deep networks: in each CNN, the classification layers are removed, and the outputs pass through a flattening layer before entering the MLP. To improve performance, the CNNs are trained on correlated images using the Adam optimizer. Evaluated on the Herlev benchmark database, the method achieved accuracies of 99.23% for the two-class task and 97.65% for the seven-class task, outperforming both the baseline networks and other current methods.

Chandran et al. (123) presented two deep CNN architectures for identifying cervical cancer in colposcopy images: VGG19 with transfer learning, VGG19 (TL), and a novel Colposcopy Ensemble Network (CYENET) designed to classify cervical malignancies automatically. The models' accuracy, specificity, and sensitivity were evaluated. VGG19 (TL) achieved a classification accuracy of 73.3%, a relatively satisfactory result, and its kappa score placed it in the intermediate classification group. CYENET achieved sensitivity, specificity, and kappa scores of 92.4%, 96.2%, and 88%, respectively, and a classification accuracy of 92.3%, 19 percentage points higher than VGG19 (TL).
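Cohen's kappa, used above to grade the two models, measures agreement beyond what chance alone would produce: κ = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement (accuracy) and p_e the agreement expected from the marginal label frequencies. The confusion matrix in this sketch is a made-up example.

```python
import numpy as np

def cohens_kappa(confusion) -> float:
    """kappa = (p_o - p_e) / (1 - p_e) for a square confusion matrix (rows = truth, cols = prediction)."""
    confusion = np.asarray(confusion, dtype=float)
    n = confusion.sum()
    p_o = np.trace(confusion) / n                                         # observed agreement
    p_e = np.sum(confusion.sum(axis=0) * confusion.sum(axis=1)) / n ** 2  # chance agreement
    return float((p_o - p_e) / (1.0 - p_e))

print(round(cohens_kappa([[20, 5], [10, 15]]), 4))  # 0.4 (accuracy 0.7, chance agreement 0.5)
```

The example shows why kappa is stricter than accuracy: 70% accuracy corresponds to a kappa of only 0.4 when half of the agreement is expected by chance.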

Takahashi et al. (124) introduced an AI-powered method that automatically identifies regions affected by endometrial cancer in hysteroscopic images. The study included 177 patients who had previously undergone hysteroscopy: 60 with a normal endometrium, 21 with uterine myoma, 60 with endometrial polyps, 15 with atypical endometrial hyperplasia, and 21 with endometrial cancer. Three widely used deep neural network models were trained, and a continuity analysis method was devised to improve the accuracy of cancer detection. Finally, the authors examined whether accuracy could be further improved by combining all the trained models. Diagnostic accuracy with the standard approach was approximately 80% (78.91%–80.93%), rising to as much as 89% (83.94%–89.13%) with the proposed continuity analysis. When the three neural networks were combined, accuracy exceeded 90% (90.29%), with a sensitivity of 91.66% and a specificity of 89.36%.
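Because the continuity analysis is not detailed here, the sketch below makes two labeled assumptions: the three models are combined by averaging their per-frame probabilities, and "continuity" is approximated by a moving average over consecutive frames. The probability values and window size are hypothetical.

```python
import numpy as np


def ensemble_probs(model_probs):
    """Average per-frame cancer probabilities from several models (each of shape (n_frames,))."""
    return np.mean(np.stack(model_probs), axis=0)


def smooth_over_frames(probs, window=3):
    """Moving average over consecutive frames (odd window); hypothetical continuity step."""
    pad = window // 2
    padded = np.pad(probs, pad, mode="edge")  # repeat edge frames so the length is preserved
    return np.convolve(padded, np.ones(window) / window, mode="valid")


# Hypothetical per-frame outputs of three trained networks on five consecutive frames.
p1 = np.array([0.2, 0.9, 0.3, 0.8, 0.9])
p2 = np.array([0.1, 0.7, 0.4, 0.9, 0.8])
p3 = np.array([0.3, 0.8, 0.2, 0.7, 0.7])

combined = ensemble_probs([p1, p2, p3])  # approximately [0.2, 0.8, 0.3, 0.8, 0.8]
smoothed = smooth_over_frames(combined)
labels = (smoothed >= 0.5).astype(int)
print(labels)  # [0 0 1 1 1]
```

In this toy sequence, the isolated low-probability frame (index 2) inherits the positive call of its neighbors; the authors' actual rule may differ.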

4.2 Tumor classification and typing

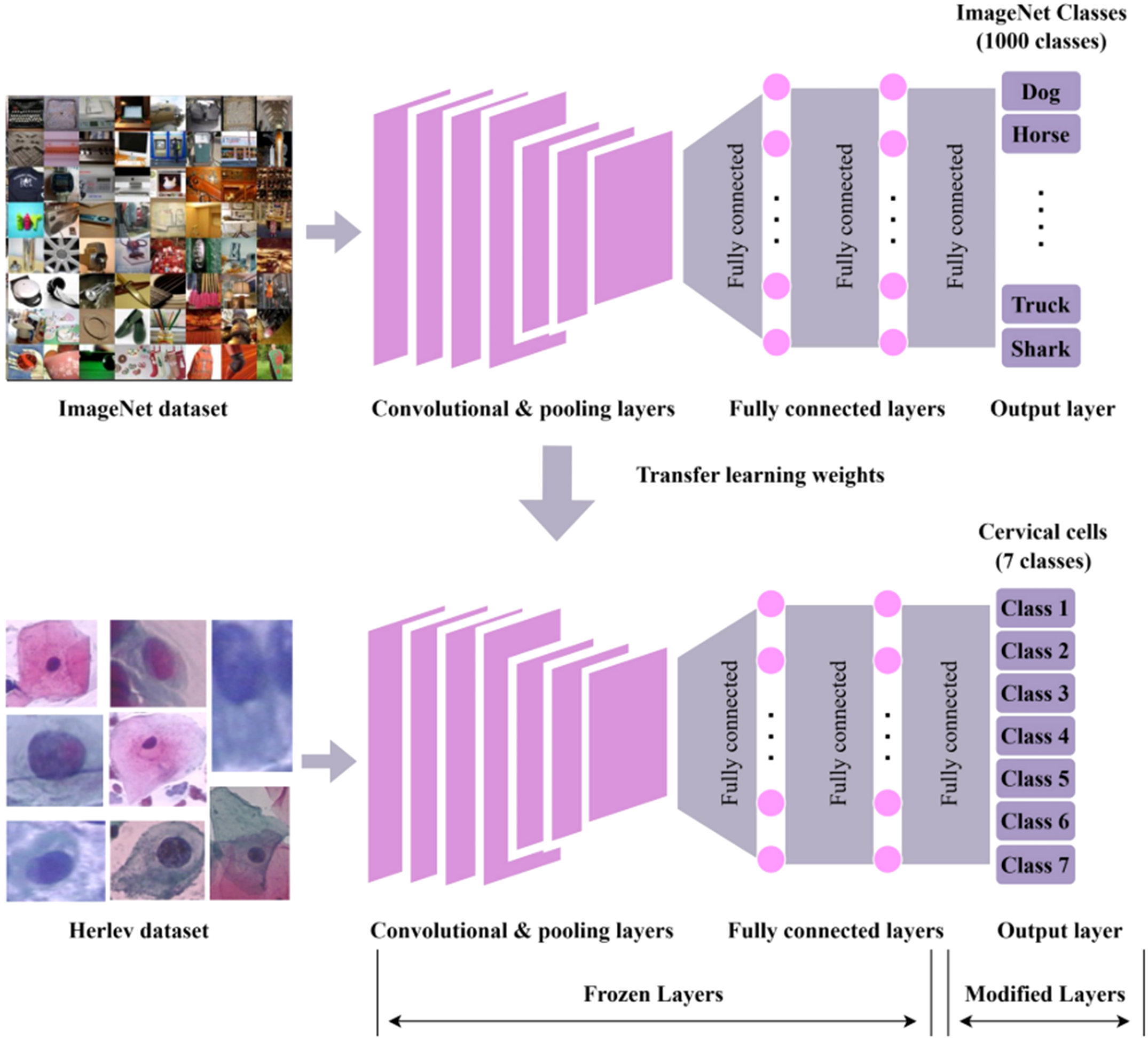

Tumor classification involves detecting growths and differentiating benign from malignant ones. To determine whether a GM is benign or malignant, clinicians first make an initial assessment based on symptoms and on laboratory and imaging tests. Currently, the most reliable method for distinguishing benign from malignant GMs is pathological analysis, through either a puncture biopsy or a postoperative pathological evaluation. However, these techniques are invasive, and a puncture biopsy carries a specific risk of needle-tract metastasis (125). Consequently, several studies have investigated the use of radiomics to distinguish benign from malignant tumors (Figure 7). Here, the ability of AI to learn discriminative features directly from data is crucial. Deep convolutional neural networks (DCNNs), such as the AlexNet architecture (57), whose ImageNet challenge win sparked the modern deep learning revolution, have been adapted for medical image analysis.

Figure 7

The workflow for cervical cancer classification using convolutional neural network (CNN) with transfer learning (126). Top: A CNN is pre-trained on the ImageNet dataset (containing 1,000 classes, e.g., Dog, Horse, Truck, and Shark), utilizing convolutional/pooling layers, fully connected layers, and an output layer to predict ImageNet classes. Bottom: Pre-trained weights are transferred to a new CNN for classifying seven classes of cervical cells from the Herlev dataset. In this transfer process, convolutional/pooling layers and most fully connected layers are frozen (kept unchanged), while only the output layer is modified to fit the seven cervical cell classes.
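The freeze-and-replace step in Figure 7 can be sketched in NumPy under stated assumptions: a fixed random ReLU projection stands in for the frozen pre-trained layers, the seven-class data are synthetic, and the learning rate and iteration count are hypothetical. Only the new seven-class output layer is updated, by gradient descent on the softmax cross-entropy loss.

```python
import numpy as np

rng = np.random.default_rng(42)
n_per_class, n_inputs, n_features, n_classes = 20, 20, 16, 7

# Frozen "backbone": stand-in for the pre-trained conv/pooling and FC layers (never updated).
w_frozen = rng.standard_normal((n_inputs, n_features))
w_frozen_before = w_frozen.copy()


def backbone(x):
    """Fixed ReLU feature extractor; its weights are not touched during training."""
    return np.maximum(x @ w_frozen, 0.0)


# Synthetic 7-class data (hypothetical stand-in for Herlev cervical cell images).
y = np.repeat(np.arange(n_classes), n_per_class)
centers = rng.standard_normal((n_classes, n_inputs)) * 3
x = centers[y] + rng.standard_normal((len(y), n_inputs))

# Because the backbone is frozen, its features are computed once and standardized.
feats = backbone(x)
feats = (feats - feats.mean(axis=0)) / (feats.std(axis=0) + 1e-8)

# New output layer sized for the 7 cervical cell classes: the only trainable parameters.
w_head = np.zeros((n_features, n_classes))
b_head = np.zeros(n_classes)
onehot = np.eye(n_classes)[y]

for _ in range(1000):
    logits = feats @ w_head + b_head
    logits -= logits.max(axis=1, keepdims=True)
    probs = np.exp(logits)
    probs /= probs.sum(axis=1, keepdims=True)
    grad = (probs - onehot) / len(y)  # gradient of mean cross-entropy w.r.t. the logits
    w_head -= 0.1 * feats.T @ grad
    b_head -= 0.1 * grad.sum(axis=0)

train_acc = np.mean(np.argmax(feats @ w_head + b_head, axis=1) == y)
print(f"training accuracy: {train_acc:.2f}")
```

Training only the head keeps the number of learned parameters small, which is the main reason transfer learning suits small medical datasets such as Herlev.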

Wen et al. (127) used a novel 3D texture analysis technique to assess structural alterations in the extracellular matrix (ECM) of various ovarian tissues, including normal ovarian stroma, high-risk ovarian stroma, benign ovarian tumors, low-grade serous ovarian cancers, high-grade serous ovarian cancers, and endometrioid tumors, using 3D second-harmonic generation (SHG) image data. By optimizing the number of textons, the test-image weighting, and the number of nearest neighbors, they achieved accuracies of approximately 83% to 91% across classes, significantly outperforming the corresponding two-dimensional version. This application demonstrates that quantitative computer vision analysis of 3D SHG image features is a possible biomarker for assessing cancer stage and type. Crucially, it does not depend on extracting basic fiber characteristics such as size and alignment. The classification algorithm is a versatile technique trained on SHG images and is particularly suitable for analyzing dynamic fibrillar characteristics in many tissue types.
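A generic texton-histogram classifier illustrates the idea: local 3D features are clustered into a texton dictionary with k-means, each volume becomes a histogram of texton assignments, and a k-nearest-neighbor vote classifies it. This is a sketch under assumptions, not Wen et al.'s implementation: the 2×2×2 sub-block features, the number of textons, the chi-squared distance, and the synthetic smooth and rough volumes are hypothetical, and the test-image weighting step is omitted.

```python
import numpy as np

rng = np.random.default_rng(1)


def local_features(volume, size=2):
    """Flatten non-overlapping size^3 sub-blocks of a 3D volume into local feature vectors."""
    d, h, w = (s // size * size for s in volume.shape)
    v = volume[:d, :h, :w].reshape(d // size, size, h // size, size, w // size, size)
    return v.transpose(0, 2, 4, 1, 3, 5).reshape(-1, size**3)


def nearest_texton(feats, textons):
    return np.argmin(((feats[:, None, :] - textons[None]) ** 2).sum(-1), axis=1)


def learn_textons(feats, k=8, n_iter=20):
    """Plain k-means; the cluster centers form the texton dictionary."""
    textons = feats[rng.choice(len(feats), k, replace=False)]
    for _ in range(n_iter):
        assign = nearest_texton(feats, textons)
        for j in range(k):
            if np.any(assign == j):
                textons[j] = feats[assign == j].mean(axis=0)
    return textons


def texton_histogram(volume, textons):
    """Normalized histogram of texton assignments: the texture descriptor of one volume."""
    counts = np.bincount(nearest_texton(local_features(volume), textons), minlength=len(textons))
    return counts / counts.sum()


def knn_predict(train_hists, train_labels, query_hist, k=3):
    """k-nearest-neighbor vote using the chi-squared distance between histograms."""
    dist = ((train_hists - query_hist) ** 2 / (train_hists + query_hist + 1e-10)).sum(axis=1)
    return np.bincount(train_labels[np.argsort(dist)[:k]]).argmax()


def make_volume(label):
    """Hypothetical 3D stack: class 0 = smooth (low variance), class 1 = rough (high variance)."""
    return rng.normal(0.0, 0.2 if label == 0 else 1.5, size=(8, 8, 8))


train_labels = np.array([0] * 5 + [1] * 5)
train_vols = [make_volume(c) for c in train_labels]
textons = learn_textons(np.concatenate([local_features(v) for v in train_vols]))
train_hists = np.array([texton_histogram(v, textons) for v in train_vols])

pred_smooth = knn_predict(train_hists, train_labels, texton_histogram(make_volume(0), textons))
pred_rough = knn_predict(train_hists, train_labels, texton_histogram(make_volume(1), textons))
print(pred_smooth, pred_rough)  # expected: 0 1
```

The number of textons (`k` in `learn_textons`) and the number of neighbors (`k` in `knn_predict`) correspond to the hyperparameters the study reports optimizing.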

Wu et al. (128) used an AlexNet-based DCNN to automatically classify several types of ovarian tumors from cytological images. The DCNN comprised five convolutional layers, three max pooling layers, and two fully connected layers. The model was trained on two sets of input data: the original images and an augmented set generated through image enhancement and image rotation. Under 10-fold cross-validation, classification accuracy improved from 72.76% to 78.20% when augmented images were used for training, showing that the approach is effective for classifying ovarian tumors from cytological images.
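Rotation and enhancement augmentation combined with 10-fold cross-validation can be sketched as follows. The specific transforms (four 90-degree rotations and a min-max contrast stretch), the image count, and the image size are assumptions for illustration; in this sketch only the training folds are augmented, so the held-out fold never contains transformed copies of training images.

```python
import numpy as np


def augment(image):
    """Four rotations plus one contrast-enhanced copy (illustrative augmentation choices)."""
    rotations = [np.rot90(image, k) for k in range(4)]  # 0, 90, 180, 270 degrees
    lo, hi = image.min(), image.max()
    enhanced = (image - lo) / (hi - lo + 1e-8)  # min-max contrast stretch to [0, 1]
    return rotations + [enhanced]


def k_fold_indices(n, k=10, seed=0):
    """Shuffle sample indices and split them into k nearly equal folds."""
    return np.array_split(np.random.default_rng(seed).permutation(n), k)


rng = np.random.default_rng(0)
images = rng.random((53, 32, 32))  # hypothetical stand-in for 53 cytological images
folds = k_fold_indices(len(images), k=10)

train_sizes = []
for i, test_idx in enumerate(folds):
    train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
    # Only the training folds are augmented; the held-out fold stays untouched.
    train_set = [aug for t in train_idx for aug in augment(images[t])]
    train_sizes.append(len(train_set))
    # A model would be trained on train_set and evaluated on images[test_idx] here.

print(train_sizes)  # [235, 235, 235, 240, 240, 240, 240, 240, 240, 240]
```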

Liu et al. (129) developed a DL algorithm called the light scattering pattern-specific convolutional network (LSPS-net), which is integrated into a 2D light-scattering static cytometry system to enable automatic, label-free analysis of individual cervical cells. It achieved a classification accuracy of 95.46% for distinguishing normal cervical cells from malignant cells (a mixture of C-33A and CaSki cells). When used to classify label-free cervical cell lines, the LSPS-net cytometric approach achieved an accuracy of 93.31%, and three-way classification of these cell types achieved 90.90%. Comparisons with alternative feature descriptors and classification methods demonstrated the superior ability of deep learning to extract features automatically. Because LSPS-net static cytometry is rapid, automated, and label-free, it has potential for early cervical cancer screening.

Ghoneim et al. (87) presented a CNN-based system for detecting and classifying cervical cancer cells. Cell images were input into a CNN model to extract deep learned features, which were then classified by an extreme learning machine (ELM)-based classifier. The CNN model was built using transfer learning and fine-tuning. In addition to the ELM, the study explored MLP- and autoencoder (AE)-based classifiers. Experiments were conducted on the Herlev database, where the CNN-ELM-based system achieved a detection accuracy of 99.5% for the two-class problem and a classification accuracy of 91.2% for the seven-class problem.
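An ELM is a single-hidden-layer network whose hidden weights are drawn at random and left untrained; only the output weights are fitted, in closed form by least squares. The minimal NumPy sketch below assumes synthetic 20-dimensional vectors as stand-ins for the deep CNN features; the hidden size, tanh activation, and weight scaling are hypothetical choices, not those of the study.

```python
import numpy as np


class ExtremeLearningMachine:
    """Single-hidden-layer ELM: random, fixed hidden weights; output weights by least squares."""

    def __init__(self, n_hidden=40, seed=0):
        self.n_hidden = n_hidden
        self.rng = np.random.default_rng(seed)

    def _hidden(self, x):
        return np.tanh(x @ self.w + self.b)

    def fit(self, x, y):
        n_classes = int(y.max()) + 1
        # Hidden-layer weights are drawn at random and never trained.
        self.w = self.rng.standard_normal((x.shape[1], self.n_hidden)) / np.sqrt(x.shape[1])
        self.b = self.rng.standard_normal(self.n_hidden)
        targets = np.eye(n_classes)[y]  # one-hot targets
        # Output weights in closed form via the Moore-Penrose pseudoinverse.
        self.beta = np.linalg.pinv(self._hidden(x)) @ targets
        return self

    def predict(self, x):
        return np.argmax(self._hidden(x) @ self.beta, axis=1)


rng = np.random.default_rng(1)


def make_split(n_per_class):
    """Hypothetical deep-feature vectors for two cell classes."""
    x = np.vstack([rng.normal(-1.0, 1.0, (n_per_class, 20)), rng.normal(1.0, 1.0, (n_per_class, 20))])
    return x, np.repeat([0, 1], n_per_class)


x_train, y_train = make_split(100)
x_test, y_test = make_split(50)
elm = ExtremeLearningMachine().fit(x_train, y_train)
test_acc = np.mean(elm.predict(x_test) == y_test)
print(f"test accuracy: {test_acc:.2f}")
```

Because fitting reduces to one pseudoinverse, an ELM trains much faster than a backpropagation-trained MLP, which makes it a light classifier on top of fixed deep features.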

Li et al. (130) aimed to develop an AI system that automatically identifies and diagnoses abnormal images of endometrial cell clumps (ECCs). The researchers collected endometrial cells from patients using the Li Brush, prepared slides with the liquid-based cytology technique, and digitized and categorized the slides into malignant and benign groups. For image identification, the authors proposed two networks: a U-Net segmentation network and a Dense Convolutional Network (DenseNet) classification network. Four additional classification networks were used for comparative testing. A total of 113 endometrial samples were collected (42 malignant and 71 benign), from which a dataset of 15,913 images was created. The segmentation network yielded 39,000 ECC patches, of which 26,880 were used for training and 11,520 for testing. With the training set reaching 100% accuracy, the testing set achieved an accuracy of 93.5%, a specificity of 92.2%, and a sensitivity of 92.0%. The remaining 600 cancerous patches were used for verification. The resulting AI system accurately classified ECCs as malignant or benign.
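The hand-off from a segmentation network to a patch classifier can be sketched as follows: each connected region in the binary mask is treated as one cell clump, and a fixed-size patch centered on it is cropped for classification. This is an illustration under assumptions: a hand-drawn mask replaces the U-Net output, and the 4-connectivity, 8 × 8 patch size, and centroid-based cropping are hypothetical; the cropped patches would then be passed to a classifier such as DenseNet.

```python
import numpy as np
from collections import deque


def connected_components(mask):
    """Label 4-connected foreground regions of a 2D binary mask; returns (labels, count)."""
    labels = np.zeros(mask.shape, dtype=int)
    count = 0
    for r, c in zip(*np.nonzero(mask)):
        if labels[r, c]:
            continue
        count += 1
        labels[r, c] = count
        queue = deque([(r, c)])
        while queue:  # breadth-first flood fill of the current region
            y, x = queue.popleft()
            for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                ny, nx = y + dy, x + dx
                if (0 <= ny < mask.shape[0] and 0 <= nx < mask.shape[1]
                        and mask[ny, nx] and not labels[ny, nx]):
                    labels[ny, nx] = count
                    queue.append((ny, nx))
    return labels, count


def crop_patches(image, mask, size=8):
    """Crop a fixed-size patch centered on each segmented region."""
    labels, count = connected_components(mask)
    half = size // 2
    padded = np.pad(image, half)  # zero padding so regions near the border still fit
    patches = []
    for k in range(1, count + 1):
        rows, cols = np.nonzero(labels == k)
        cy, cx = int(rows.mean()), int(cols.mean())
        # In padded coordinates the centroid is (cy + half, cx + half), so this slice is centered on it.
        patches.append(padded[cy:cy + size, cx:cx + size])
    return patches


image = np.random.default_rng(0).random((20, 20))  # hypothetical grayscale slide tile
mask = np.zeros((20, 20), dtype=bool)              # hypothetical segmentation output
mask[2:5, 2:5] = True      # first cell clump
mask[12:16, 10:14] = True  # second cell clump

labels, n_regions = connected_components(mask)
patches = crop_patches(image, mask, size=8)
print(n_regions, [p.shape for p in patches])  # 2 [(8, 8), (8, 8)]
```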

In a retrospective study (131), clinical information and the most recent preoperative pelvic MRI were collected from patients who had undergone surgery and were pathologically diagnosed with uterine endometrioid adenocarcinoma. The region of interest (ROI) was delineated on T1-weighted imaging (T1WI), T2-weighted imaging (T2WI), and diffusion-weighted imaging (DWI) MR images, and both classical radiomic features and deep learning image features were extracted from these scans. A comprehensive radiomics nomogram model was then developed by combining conventional radiomic features, DL image features, and clinical information. The model aims to accurately differentiate low-risk from high-risk patients according to the 2020 European Society for Medical Oncology (ESMO)–European Society of Gynaecological Oncology (ESGO)–European Society for Radiotherapy & Oncology (ESTRO) criteria, and its effectiveness was assessed in both the training and validation sets. MRI-based radiomics models can therefore be advantageous for preoperative risk stratification of patients with uterine endometrioid cancer.
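Radiomics nomograms are commonly a graphical form of a multivariable logistic regression, in which each predictor contributes points in proportion to its coefficient. The fitting method used in the study is not stated here, so the sketch below assumes logistic regression trained by gradient descent on entirely synthetic data; the three predictors (a radiomics score, a DL score, and one clinical variable) and the label-generating rule are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 200
# Hypothetical per-patient predictors: radiomics score, deep-learning score, clinical variable.
radiomics = rng.standard_normal(n)
deep = rng.standard_normal(n)
clinical = rng.standard_normal(n)
x = np.column_stack([radiomics, deep, clinical])
# Synthetic high-risk labels from a known linear rule plus noise (illustration only).
y = (1.5 * radiomics + 1.0 * deep + 0.5 * clinical + 0.3 * rng.standard_normal(n) > 0).astype(float)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


w, b = np.zeros(3), 0.0
for _ in range(2000):
    p = sigmoid(x @ w + b)
    w -= 0.1 * x.T @ (p - y) / n  # gradient of the mean log-loss w.r.t. the coefficients
    b -= 0.1 * (p - y).mean()

acc = np.mean((sigmoid(x @ w + b) >= 0.5) == y)
print("coefficients:", np.round(w, 2), "training accuracy:", round(acc, 2))
```

The fitted coefficients set the length of each predictor's scale on the nomogram, and the summed points map back to a predicted probability of high risk.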

4.3 Image segmentation and volume computation

The most common imaging modalities are CT, MRI, positron emission tomography (PET), and ultrasound (132). Images acquired with identical equipment, scanning technique, and slice thickness do not require additional processing before feature extraction. However, images acquired with different equipment or under different acquisition conditions must be pre-processed before feature extraction. Pre-processing involves resampling to achieve a consistent slice thickness and matrix size, together with intensity standardization and high-pass filtering.
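Resampling to a common voxel spacing followed by intensity standardization can be sketched as below. Nearest-neighbor interpolation and z-score standardization are assumptions chosen to keep the example short (radiomics pipelines often use linear or B-spline interpolation for images), the high-pass filtering step is omitted, and the volume and spacings are hypothetical.

```python
import numpy as np


def resample_nearest(volume, spacing, new_spacing):
    """Nearest-neighbor resampling of a 3D volume from `spacing` to `new_spacing` (mm per voxel)."""
    out = volume
    for axis, (old, new) in enumerate(zip(spacing, new_spacing)):
        n_old = out.shape[axis]
        n_new = int(round(n_old * old / new))
        # Map each output index to the input voxel at the same physical position (floor).
        idx = np.minimum((np.arange(n_new) * new / old).astype(int), n_old - 1)
        out = np.take(out, idx, axis=axis)
    return out


def zscore(volume):
    """Intensity standardization to zero mean and unit variance."""
    return (volume - volume.mean()) / (volume.std() + 1e-8)


rng = np.random.default_rng(0)
scan = rng.random((4, 8, 8))  # hypothetical volume: 4 slices of 5 mm, 8x8 pixels of 1 mm
iso = resample_nearest(scan, spacing=(5.0, 1.0, 1.0), new_spacing=(1.0, 1.0, 1.0))
standardized = zscore(iso)
print(iso.shape)  # (20, 8, 8)
```

Nearest-neighbor interpolation is, however, the usual choice for resampling segmentation masks, because it keeps label values intact.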

Once medical images are acquired, a specific ROI is usually defined by automatic, manual, or semi-automatic segmentation. Automatic segmentation delineates lesions efficiently but recognizes them less accurately. Furthermore, tumor boundaries in medical images are often indistinct, and nearby metastases and accompanying conditions, such as inflammation, can readily disrupt the contours produced by semi-automatic and automatic segmentation. Conversely, manual segmentation is subjective and time-consuming because it relies on clinicians identifying lesions and drawing their outlines. Semi-automatic segmentation, which builds on automatic segmentation, allows clinicians to review and correct the delineated edges manually, improving both the efficiency and the accuracy of delineation (132). Standard software for ROI delineation currently includes MIM (www.mimsoftware.com), ITK-SNAP (www.itksnap.com), 3D Slicer (www.slicer.org), and ImageJ (National Institutes of Health).
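Once an ROI mask exists, its volume is the voxel count multiplied by the volume of one voxel. Agreement between two delineations (for example, a manual contour and an automatic one) is commonly summarized with the Dice similarity coefficient; Dice is not named in the text and is introduced here as an assumption. The masks and voxel spacing below are hypothetical.

```python
import numpy as np


def mask_volume_ml(mask, spacing_mm):
    """Lesion volume in millilitres: voxel count x voxel volume (mm^3) / 1000."""
    return mask.sum() * np.prod(spacing_mm) / 1000.0


def dice(a, b):
    """Dice similarity coefficient between two binary masks (1.0 = identical contours)."""
    return 2.0 * np.logical_and(a, b).sum() / (a.sum() + b.sum())


manual = np.zeros((10, 10, 10), dtype=bool)
manual[2:6, 2:6, 2:6] = True  # hypothetical manual contour: 64 voxels
auto = np.zeros_like(manual)
auto[3:7, 2:6, 2:6] = True    # hypothetical automatic contour, shifted by one slice

print(dice(manual, auto))                                  # 0.75
print(mask_volume_ml(manual, spacing_mm=(3.0, 0.5, 0.5)))  # 0.048
```

A one-slice shift already lowers Dice to 0.75 for this small lesion, which illustrates why indistinct boundaries weigh heavily on small tumors.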

CNNs have been widely used in medical image processing and have shown remarkable success in tasks such as image classification and segmentation (30). CNNs (74) are specifically designed to capture local spatial correlations in tasks such as image classification, segmentation, and object detection. Transformers have recently gained prominence in medical image processing, showing promising results across a variety of tasks. Their primary advantage over CNNs is their ability to model long-range dependencies and correlations among pixels within an image. Distant regions of a medical image may share interconnected characteristics that significantly affect diagnosis or therapy, and the self-attention mechanism of transformers can capture these relationships efficiently, improving performance in tasks such as lesion classification or segmentation. Because self-attention processes all image positions simultaneously, global context is captured within a single layer rather than being built up gradually through stacked local convolutions, as in CNNs and U-Nets. Transformers can also be trained on large datasets, allowing them to learn more intricate representations of medical images. However, they perform suboptimally when datasets are small, a particularly important limitation in medical imaging, where large datasets are often unavailable.
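Single-head scaled dot-product self-attention can be written in a few lines of NumPy. In this minimal sketch the patch embeddings and the query, key, and value projection matrices are random placeholders (the patch-embedding step and multi-head structure of a real vision transformer are omitted). The n × n attention matrix is what lets every patch attend to every other patch, and it is also why cost grows quadratically with the number of patches.

```python
import numpy as np


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a sequence of patch embeddings."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[1])  # (n_patches, n_patches): every patch vs every other
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability before exponentiation
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)  # each row is a distribution over patches
    return weights @ v, weights


rng = np.random.default_rng(0)
n_patches, d_model = 16, 8  # e.g., a 4x4 grid of image patches, each embedded in 8 dimensions
x = rng.standard_normal((n_patches, d_model))
w_q, w_k, w_v = (rng.standard_normal((d_model, d_model)) for _ in range(3))
out, attn = self_attention(x, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (16, 8) (16, 16)
```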

DL algorithms have also been used as a diagnostic tool for CT images of the ovarian region (133). The images underwent a series of pre-processing steps, after which the tumor was segmented with the U-Net model and classified as benign or malignant. Classification was performed with deep learning architectures, including a CNN, ResNet, DenseNet, Inception-ResNet, VGG16, and Xception, as well as machine learning models such as Random Forest, Gradient Boosting, AdaBoost, and XGBoost. After model optimization, DenseNet-121 achieved the highest accuracy on this dataset (95.7%).

A CNN (134) was constructed to classify image patches in cervical imaging with the aim of detecting cervical cancer. Manually extracted 15 × 15-pixel patches from both VIA-positive and VIA-negative regions (VIA: visual inspection with acetic acid) were classified with a shallow CNN consisting of a single convolutional layer, a ReLU activation function, a pooling layer, and two fully connected layers. The shallow CNN achieved a classification accuracy of 100%. Although training a CNN is computationally intensive, a trained network can classify a new image in near real time.
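The shallow architecture described above (one convolutional layer, ReLU, pooling, and two fully connected layers) can be sketched as a NumPy forward pass on a 15 × 15 patch. The filter count and size, the 2 × 2 pooling, the 32-unit hidden layer, the ReLU between the two fully connected layers, and the omission of biases are all assumptions; the weights are random, so the output probabilities are meaningless until the network is trained. The single forward pass also shows why inference on a trained network is fast.

```python
import numpy as np


def conv2d_valid(image, kernels):
    """Valid 2D cross-correlation (the CNN 'convolution'): (H, W) x (K, kh, kw) -> (K, H-kh+1, W-kw+1)."""
    k, kh, kw = kernels.shape
    h, w = image.shape
    out = np.zeros((k, h - kh + 1, w - kw + 1))
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            out[:, i, j] = (kernels * image[i:i + kh, j:j + kw]).sum(axis=(1, 2))
    return out


def max_pool_2x2(x):
    """2x2 max pooling with stride 2 (a trailing odd row or column is dropped)."""
    k, h, w = x.shape
    x = x[:, : h // 2 * 2, : w // 2 * 2]
    return x.reshape(k, h // 2, 2, w // 2, 2).max(axis=(2, 4))


rng = np.random.default_rng(0)
patch = rng.random((15, 15))                     # hypothetical 15x15 grayscale patch
kernels = rng.standard_normal((4, 4, 4)) * 0.1   # assumed: 4 filters of size 4x4
w1 = rng.standard_normal((4 * 6 * 6, 32)) * 0.1  # first fully connected layer (assumed 32 units)
w2 = rng.standard_normal((32, 2)) * 0.1          # second FC layer: VIA-negative vs VIA-positive

features = max_pool_2x2(np.maximum(conv2d_valid(patch, kernels), 0.0))  # conv -> ReLU -> pool
hidden = np.maximum(features.ravel() @ w1, 0.0)  # assumed ReLU between the two FC layers
logits = hidden @ w2
probs = np.exp(logits - logits.max()) / np.exp(logits - logits.max()).sum()
print(features.shape, probs.shape)  # (4, 6, 6) (2,)
```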