- 1College of Medicine and Biological Information Engineering, Northeastern University, Shenyang, Liaoning, China

- 2Department of Nuclear Medicine, General Hospital of Northern Theater Command, Shenyang, Liaoning, China

- 3School of Software, Shenyang University of Technology, Shenyang, Liaoning, China

- 4Department of Radiology, Cancer Hospital of China Medical University, Liaoning Cancer Hospital and Institute, Shenyang, Liaoning, China

- 5Department of Nuclear Medicine, Dalian Medical University, Dalian, China

- 6Department of Research and Development, United Imaging Intelligence (Beijing) Co., Ltd., Beijing, China

- 7Biomedical Engineering, Shenyang University of Technology, Shenyang, Liaoning, China

Objective: To develop a deep learning radiomics (DLR) model integrating PET/CT radiomics, deep learning features, and clinical parameters for early prediction of bone oligometastases (≤5 lesions) in breast cancer.

Methods: We retrospectively analyzed 207 breast cancer patients with 312 bone lesions, comprising 107 benign and 205 malignant lesions, including 89 lesions with confirmed bone metastases. Radiomic features were extracted from computed tomography (CT), positron emission tomography (PET), and fused PET/CT images using PyRadiomics embedded in the uAI Research Portal. Standardized feature extraction and feature selection were performed using the Least Absolute Shrinkage and Selection Operator (LASSO) method. We developed and validated three models: a radiomics-based model, a deep learning model using BasicNet, and a deep learning radiomics (DLR) model incorporating clinical and metabolic parameters. Model performance was assessed using the area under the receiver operating characteristic curve (AUC), accuracy, sensitivity, and specificity. Statistical comparisons were conducted using the DeLong test.

Results: Visual assessment of fused PET/CT images identified 227 (72.8%) abnormal lesions, demonstrating greater sensitivity than CT or PET alone. The complex radiomics model achieved a sensitivity of 98.9% [96.1%–99.4%], specificity of 98.2% [88.1%–99.6%], accuracy of 98.7% [89.6%–99.5%], and AUC of 0.989. The BasicNet model outperformed the other transfer learning models, achieving an AUC of 0.961. The DeLong test confirmed that the AUC of the BasicNet model was significantly higher than that of the traditional radiomics model. The DLR+Complex model with a random forest classifier achieved the highest overall performance, with an AUC of 0.990, sensitivity of 98.6%, specificity of 99.8%, and accuracy of 99.7%.

Conclusions: The BasicNet model significantly outperformed traditional radiomics approaches in predicting bone oligometastases in breast cancer patients. The DLR+Complex model demonstrated the best predictive performance across all metrics. Future strategies for precise diagnosis and treatment should incorporate histologic subtype, advanced imaging, and molecular biomarkers.

1 Introduction

Breast cancer is among the most prevalent malignant tumors globally and remains a major health concern for women (1). Approximately 50%–70% of locally advanced breast cancer cases eventually metastasize to distant organs such as the lungs, liver, and bones (2). Globally, bone metastases are a leading cause of death from breast cancer; they occur in 30%–70% of patients with advanced breast cancer and account for approximately 500,000 new cases annually. Bone metastases from breast cancer remain a serious condition, contributing significantly to patients’ morbidity and mortality (3). Patients with bone-only metastases generally exhibit longer overall survival than those with widespread metastases involving visceral organs such as the liver and lungs, and the latter have a median survival of only 24–36 months (4). One prior study also found that patients with bone metastases had a better survival rate than those with visceral metastases, with a 5-year survival rate of up to 20% and a median survival time of more than 72 months in some patients, indicating that a large proportion of breast cancer bone metastases were oligometastatic with indolent biological behavior (5). Definitions of bone oligometastases vary across studies: some trials define them as ≤5 lesions (4, 6–8), whereas others allow no more than 3 lesions (9). In this study, we defined bone oligometastases as five or fewer metastatic lesions confined to the skeletal system, as confirmed by bone scan or PET/CT.

Early recognition and accurate diagnosis of bone oligometastases in breast cancer are critical for guiding further treatment. Advances in medical technology and increased awareness have significantly improved the early detection and treatment of the disease (10). Traditional imaging modalities such as bone scintigraphy, computed tomography (CT), and magnetic resonance imaging (MRI) are commonly used to assess metastatic bone disease (11–13). However, these techniques have notable limitations. For example, the sensitivity, specificity, positive predictive value, and negative predictive value of 99mTc-MDP bone scintigraphy for detecting skeletal metastases are only 67%, 78%, 50%, and 50%, respectively (14). Moreover, early micrometastases (<5 mm), particularly osteolytic lesions, are often missed by CT or scintigraphy, with missed diagnosis rates as high as 40% (15). Inter-observer variability also poses a challenge (Kappa values: 0.65–0.72), and single-modality imaging fails to fully capture tumor metabolic heterogeneity and molecular characteristics (16). Although 18F-FDG PET/CT improves sensitivity (up to 94%), it involves higher radiation exposure (14–20 mSv), and its quantitative analysis of metabolic parameters (e.g., SUVmax slope) often depends on empirical thresholds (13, 17, 18). These limitations have driven research toward more advanced multimodal imaging strategies.

Recent progress in artificial intelligence and deep learning has transformed medical image analysis, particularly in the detection of metastatic breast cancer. Deep learning radiomics (DLR), which integrates features from multiple imaging modalities, has emerged as a promising approach. Ceranka et al. (19) developed a fully automated deep learning method for detecting and segmenting bone metastases on whole-body multiparametric MRI. Their system outperformed existing methods, achieving 63% sensitivity with a mean of 6.44 false positives per image and a Dice coefficient of 0.53. In another study, Shang et al. (20) added a Multi-Perspective Extraction module to the feature extraction phase, using convolutional kernels of three different sizes to enhance sensitivity to bone metastases. Their BMSMM-Net achieved high-performance segmentation, with F1 scores of 91.07% and 95.17% for segmenting bone metastases and bone regions, respectively, along with mIoU scores of 83.60% and 90.78%.

18F-FDG PET/CT imaging is commonly used in the diagnosis and follow-up of metastatic breast cancer, but its quantitative analysis is complicated by heterogeneity in the number and location of metastatic lesions. In some studies, combining MRI, CT, and PET imaging data allowed researchers to assess the risk of bone metastases in breast cancer more fully. Moreau et al. (21) proposed a fully automatic deep learning-based method to detect and segment bones and bone lesions on 18F-FDG PET/CT in 24 patients with metastatic breast cancer, and introduced an automatic PET bone index that could be incorporated into monitoring and decision-making. Moreau et al. (22) also proposed networks to segment metastatic breast cancer lesions on longitudinal whole-body PET/CT in 60 patients, extracting imaging biomarkers from the segmentations and evaluating their potential to determine treatment response. These works provide promising tools for automatic lesion segmentation in metastatic breast cancer, allowing treatment response to be assessed with several biomarkers.

DLR offers a new paradigm for the accurate diagnosis of bone oligometastases. By extracting high-throughput features from PET/CT, including textural features (e.g., Gray Level Co-occurrence Matrix (GLCM) entropy and Gray Level Size Zone Matrix (GLSZM) regional variance) and morphological characteristics (e.g., sphericity), DLR enables quantification of tumor heterogeneity and risk prediction. Such models enhance multimodal feature integration and reduce overfitting. However, few studies have applied DLR specifically to bone oligometastases in breast cancer. This study therefore aims to develop a DLR model integrating PET/CT radiomics, deep learning features, and clinical parameters for early prediction of bone oligometastases (≤5 lesions) in breast cancer.

2 Materials and methods

2.1 Patient population

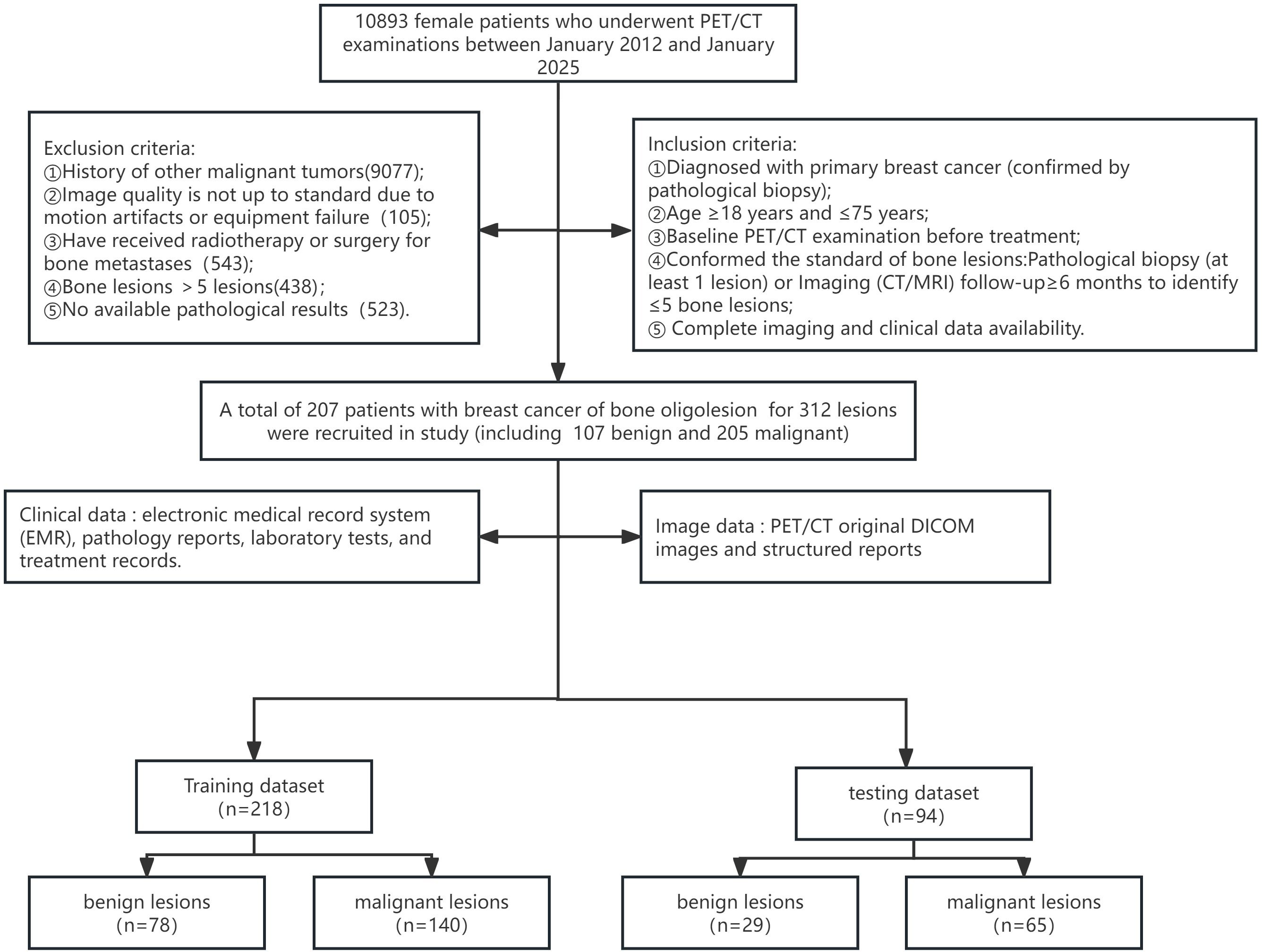

This retrospective study was approved by the institutional review board of our hospital (Approval No. Y (2025)-022), which also waived the requirement for informed consent. A total of 10,893 female patients who underwent PET/CT examinations at our centre between January 2012 and January 2025 were reviewed. Clinical data were obtained from our hospital’s electronic medical record system, including pathology reports, laboratory tests, and treatment records. Imaging data included original PET/CT DICOM images and structured departmental reports.

Inclusion criteria were:

1. Pathological biopsy-confirmed primary breast cancer.

2. Age between 18 and 75 years.

3. Baseline PET/CT performed before treatment.

4. Presence of ≤5 bone lesions, confirmed via pathology (≥1 lesion) or follow-up imaging (CT/MRI) for at least 6 months.

5. Complete imaging and clinical data available.

Exclusion criteria included:

1. History of other malignancies.

2. Poor image quality due to motion artifacts or equipment failure.

3. Prior radiotherapy or surgery for bone metastases.

4. More than five bone lesions.

5. Absence of pathological confirmation.

Ultimately, 207 patients with 312 breast tumor-associated bone lesions were included. Of these, 107 lesions were benign and 205 were malignant. The dataset was split into a training and testing cohort in a 7:3 ratio. The training cohort consisted of 218 lesions (78 benign, 140 malignant), while the internal testing cohort included 94 lesions (29 benign, 65 malignant). The enrollment process is outlined in Figure 1.
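The 7:3 division can be illustrated with a minimal sketch. It assumes a simple lesion-level random shuffle with a fixed seed; the function name, the seed value, and the use of integer lesion IDs are hypothetical, and the cohort's actual class proportions (78/140 and 29/65) would not be reproduced by this shuffle.

```python
import random

def split_lesions(lesion_ids, train_fraction=0.7, seed=42):
    """Shuffle lesion IDs reproducibly and split them into train/test lists."""
    rng = random.Random(seed)            # fixed seed: identical split on every run
    shuffled = list(lesion_ids)
    rng.shuffle(shuffled)
    n_train = int(len(shuffled) * train_fraction)  # 312 lesions -> 218 for training
    return shuffled[:n_train], shuffled[n_train:]

train_ids, test_ids = split_lesions(range(312))
print(len(train_ids), len(test_ids))     # 218 94
```

Fixing the seed keeps the training and testing instances identical across every model that is later compared on them.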

2.2 Image acquisition

Images were acquired using the GE Discovery VCT and GE Discovery 710 PET/CT scanners. 18F-FDG was synthesized on a GE Minitrace cyclotron, with a radiochemical purity >98%. Patients fasted for more than 6 hours before the scan, with blood glucose controlled below 6.1 mmol/L. 18F-FDG was intravenously administered at a dose of 5.5 MBq/kg, and imaging was performed 40–60 minutes post-injection.

The imaging protocol included a non-contrast CT scan, followed by a PET scan, covering from the mid-femur to the skull vertex, and including the lower limbs when necessary. CT parameters were: 120 kV; 110 mA; pitch, 1.0; rotation time, 0.5 s; and slice thickness, 3.27 mm. PET images were acquired over the same range, with a body acquisition time of 2–3 min per bed position. In total, 6–8 bed positions were acquired by 3D PET scanning, with each bed position taking 1.5 min. The computer system automatically performed image reconstruction using Ordered Subsets Expectation Maximization for coronal, sagittal, and transverse views and 3D projections.

2.3 Region of interest segmentation

Bone oligolesions of breast cancer were selected for region of interest (ROI) segmentation on the largest slice of the tumor. ROI segmentation in PET/CT images is a critical step in deep learning and image-based data analysis. To ensure the consistency and reliability of the data, we applied standardized image segmentation. All PET/CT DICOM images were imported into 3D Slicer (version 5.2) (23) and uploaded to the uAI Research Portal (version 20241130) in both DICOM and nii.gz formats for deep learning and radiomics analysis. Lesions were identified on CT by the presence of lytic or sclerotic changes, with or without an associated soft tissue mass or abnormal postcontrast enhancement. On PET, the maximum standardized uptake value (SUVmax) was assessed using both visual and semi-quantitative methods. Abnormal lesions were defined as those with increased FDG uptake, with an SUVmax higher than physiologic hepatic background activity.

Three experienced readers (two radiologists and one nuclear medicine physician with 10–12 years of experience each) independently delineated each ROI along the tumor margin, from the first to the last slice of the whole tumor, using 3D Slicer, under the supervision of a senior radiologist (30 years’ experience). All readers were blinded to histopathological results. Abnormal areas were traced on these images, including, where possible, the spiculated margins (burrs) at the edge of each tumor.

The ROI segmentation followed strict criteria:

1. Include the entire lesion as completely as possible.

2. Minimize inclusion of surrounding non-lesion tissue.

3. For lesions with unclear boundaries, integrate PET and CT data for delineation.

4. Necrotic regions (areas within a bone metastasis formed by tumor cell death and destruction of tissue structure) and calcified regions (areas of higher density within or around a bone metastasis, formed by abnormal local deposition of calcium salts arising from tumor cell metabolism and proliferation and from the body’s repair processes) were included for accurate radiomic feature extraction. These regions were included if they occupied >10% of the lesion volume (verified by 3D Slicer’s volumetric analysis) to ensure radiomic feature stability.

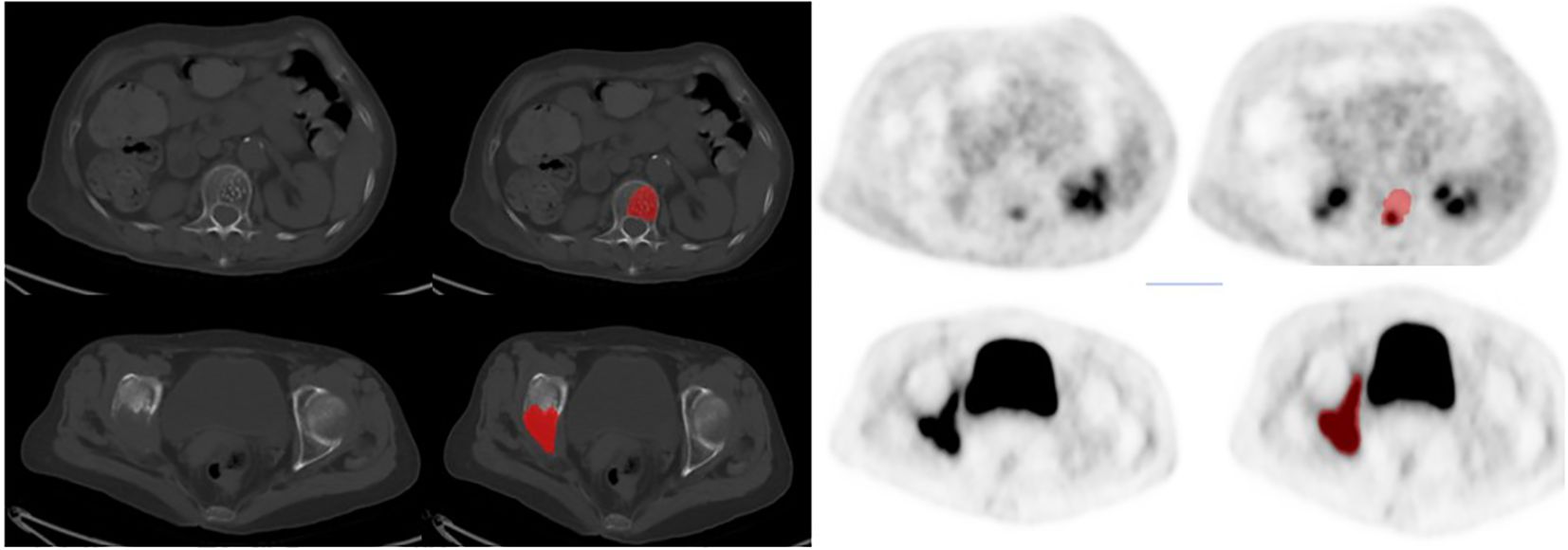

Each patient’s ROI segmentation took approximately 10–15 minutes, with a review time of 5–8 minutes. Example segmentations are shown in Figure 2.

Figure 2. Representative images from two patients. Top row: a 67-year-old woman, 6 months after surgery for right breast cancer. CT showed a low-density focus in the first lumbar vertebra accompanied by multiple punctate high-density foci, and PET showed slightly increased local metabolism; the lesion was diagnosed as a benign hemangioma after 6 months of clinical follow-up. Bottom row: a 72-year-old woman, 4 years after surgery for left breast cancer. CT showed destruction of the right ischium and right ilium, and PET showed markedly increased metabolism in these lesions, which were diagnosed as bone metastases on puncture pathology.

2.4 Model selection and transfer learning

We selected a 3D deeply supervised residual BasicNet model (24) for transfer learning because of its efficiency and proven effectiveness in medical image analysis. BasicNet, a lightweight convolutional neural network architecture with a moderate number of parameters, high computational efficiency, and ease of transfer, is particularly suitable for lesion detection and classification tasks in medical imaging. Compared with ResNet-101 (25) (23.5M parameters) and 3D U-Net (26) (19.7M parameters), BasicNet’s lightweight design (4.2M parameters) achieved faster convergence (98% accuracy by epoch 50 vs. epoch 65 for ResNet) at lower computational cost. The model was pre-trained on ImageNet for robust feature extraction and fine-tuned on PET/CT bone lesion data.

Separate transfer learning processes were implemented for PET and CT to leverage modality-specific information. The network adopted a mainstream encoder–decoder architecture with residual connections and deep supervision layers. Because the training configuration has a decisive impact on model performance, the optimal combination of training parameters was determined through extensive preliminary experiments and a literature review. To address the class imbalance common in medical data, and following the 3D U-Net (27), we used focal loss instead of the traditional cross-entropy loss (28). Class weights were set to reflect the different clinical tolerances for false positives and false negatives, with weights of 0.3 for benign lesions and 0.7 for malignant lesions. This combination was determined by iteratively adjusting the weight values through receiver operating characteristic (ROC) curve analysis and clinical expert assessment. Adaptive Moment Estimation (Adam) was selected as the optimizer. Compared with traditional stochastic gradient descent, Adam adaptively adjusts the learning rate of each parameter, making training more stable and convergence more rapid. The learning rate was initially set to 0.0001 and was gradually reduced during training to help the loss function approach a minimum. The number of training iterations was set to 1001, and the batch size was set to 32 as a balance between model performance and computational resources. To fully utilize the parallel computing capability of the multi-core processor, the number of IO threads was set to 8, which significantly accelerated data loading and preprocessing and reduced the IO bottleneck in training.
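To make the loss choice concrete, the following is a minimal per-lesion sketch of a class-weighted focal loss using the 0.3/0.7 weights given above. The focusing parameter γ is not reported in this paper, so γ = 2 is an assumption, and the function names are illustrative rather than taken from the training code.

```python
import math

ALPHA = {0: 0.3, 1: 0.7}   # class weights from the text: 0 = benign, 1 = malignant
GAMMA = 2.0                # ASSUMED focusing parameter; not reported in the paper

def focal_loss(p_malignant, label, alpha=ALPHA, gamma=GAMMA, eps=1e-7):
    """Focal loss for one lesion, given the predicted probability of malignancy."""
    p = min(max(p_malignant, eps), 1.0 - eps)   # clamp to avoid log(0)
    p_t = p if label == 1 else 1.0 - p          # probability assigned to the true class
    return -alpha[label] * (1.0 - p_t) ** gamma * math.log(p_t)

def weighted_cross_entropy(p_malignant, label, alpha=ALPHA, eps=1e-7):
    """Same class weighting without the focusing term (gamma = 0)."""
    return focal_loss(p_malignant, label, alpha, gamma=0.0, eps=eps)

easy = focal_loss(0.95, 1)   # malignant lesion predicted confidently
hard = focal_loss(0.30, 1)   # malignant lesion predicted poorly
print(f"easy={easy:.5f} hard={hard:.5f} wce_easy={weighted_cross_entropy(0.95, 1):.5f}")
```

The factor (1 − p_t)^γ shrinks the contribution of well-classified lesions, so training concentrates on the difficult ones, while the class weights penalize missed malignant lesions more heavily than false alarms.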

For the training process, we adopted a validation set performance monitoring strategy, in which model performance was evaluated on an independent validation set every 10 epochs. Accuracy, sensitivity, specificity, AUC, and other indicators were assessed. An early stopping mechanism was applied to prevent overfitting: training was automatically terminated when validation set performance did not improve for five consecutive evaluations. At the end of training, the model parameters saved at the epoch with the best validation set performance were retained. This strategy avoided the overfitting that may otherwise occur late in training and helped ensure the generalization capability of the model.
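The monitoring rule described above can be sketched as a small helper. This is an illustration only: the class name is hypothetical and the validation AUC values are invented for the example, not results from this study.

```python
class EarlyStopping:
    """Stop when the validation metric fails to improve for `patience` checks."""

    def __init__(self, patience=5):
        self.patience = patience
        self.best_score = float("-inf")
        self.best_epoch = None
        self.bad_checks = 0

    def update(self, epoch, score):
        """Record one evaluation; return True if training should stop."""
        if score > self.best_score:
            self.best_score, self.best_epoch = score, epoch
            self.bad_checks = 0      # improvement: a checkpoint would be saved here
        else:
            self.bad_checks += 1
        return self.bad_checks >= self.patience

# Hypothetical validation AUCs, one per evaluation (every 10 epochs).
val_auc = [0.80, 0.86, 0.91, 0.90, 0.91, 0.89, 0.90, 0.88, 0.87]
stopper = EarlyStopping(patience=5)
stopped_at = None
for check, auc in enumerate(val_auc, start=1):
    epoch = check * 10
    if stopper.update(epoch, auc):
        stopped_at = epoch
        break
print(stopper.best_epoch, stopped_at)   # 30 80
```

In this example the best checkpoint is the one from epoch 30, and training halts at epoch 80 after five evaluations without a strict improvement.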

The Max Pooling layer (29) was used to extract the most salient features in the image, retaining structural information while reducing data dimensionality and computational complexity. Specifically, the output of the penultimate maximum pooling layer was extracted, to retain sufficient semantic information, while ensuring a high spatial resolution.

We further adopted a pre-fusion (30) approach to obtain joint PET/CT features by fusing deep learning features from both CT and PET. This pre-fusion strategy achieved information integration at the feature level, preserving the semantic relevance of the original features, and capturing inter-modal interactions better than post-fusion (decision-level fusion). For concrete implementation, we first normalized the feature vectors of the two modalities, and then merged them into one augmented feature vector by concatenation operation.
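A minimal sketch of this feature-level fusion is given below. The normalization scheme is not specified in the text, so per-vector L2 normalization is assumed here; the function names and the example vectors are hypothetical.

```python
import math

def l2_normalize(vec):
    """Scale a feature vector to unit Euclidean length (zero vectors pass through)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)

def fuse_features(ct_features, pet_features):
    """Feature-level fusion: normalize each modality, then concatenate."""
    return l2_normalize(ct_features) + l2_normalize(pet_features)

ct_vec = [3.0, 4.0]           # hypothetical CT deep features
pet_vec = [0.0, 6.0, 8.0]     # hypothetical PET deep features
fused = fuse_features(ct_vec, pet_vec)
print(len(fused), fused)
```

Normalizing each modality before concatenation keeps one modality from dominating the fused vector simply because its features have a larger numeric range.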

2.5 Extraction of radiomics features

Radiomics feature extraction, in which PET/CT images are quantitatively analyzed to extract feature information that is difficult to recognize with the naked eye, is one of the core aspects of this study. PyRadiomics (31), embedded in the uAI Research Portal, was applied for standardized feature extraction. Prior to feature extraction, images were preprocessed: voxels were resampled to 1×1×1 mm³ to eliminate differences in scanning parameters between devices, and a standardized grayscale discretization algorithm was applied to quantize the gray values into a fixed number of bins. Radiomic features were extracted from semi-automated segmentations of attenuation-corrected PET images obtained with an absolute SUVmax threshold of 2.2. For PET images, the bin size was 0.7936508 and the number of gray levels in intensity discretization was 64; further, an absolute intensity rescaling with a minimum bound of 0 and a maximum bound of 50 was selected. For intensity discretization of CT images, the bin size was 32 and the number of gray levels was 400. Kernel 3 was applied, together with an intensity rescaling with a minimum bound of −1000 and a maximum bound of 3000 (32). A circular 3D ROI in the region of the most FDG-avid bone lesion was chosen for analysis. These preprocessing steps ensured the stability and comparability of the extracted features.
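As an illustration of the PET discretization settings, the reported bin size agrees numerically with spreading the 0–50 rescaling range over 64 gray levels, (50 − 0)/(64 − 1) ≈ 0.7936508. The sketch below assumes that convention. The function names and the level-assignment rule are hypothetical and are not taken from PyRadiomics or the uAI Research Portal.

```python
def bin_width(lower, upper, n_levels):
    """Bin width that spreads [lower, upper] over n_levels gray levels."""
    return (upper - lower) / (n_levels - 1)

def discretize(value, lower=0.0, upper=50.0, n_levels=64):
    """Map an intensity to a gray level in 1..n_levels after clipping to the bounds."""
    clipped = min(max(value, lower), upper)
    # Multiply before dividing so the upper bound maps exactly to n_levels.
    return int((clipped - lower) * (n_levels - 1) / (upper - lower)) + 1

print(round(bin_width(0.0, 50.0, 64), 7))                          # 0.7936508
print([discretize(suv) for suv in (0.0, 2.2, 25.0, 50.0, 80.0)])   # [1, 3, 32, 64, 64]
```

Values outside the rescaling bounds are clipped, so an SUV of 80 falls into the same top gray level as an SUV of 50.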

The extracted radiomic features fell into the following classes: first-order features, second-order texture features, conventional PET/CT parameters (SUV and TLG), shape features, GLCM, Gray Level Run Length Matrix (GLRLM), Gray Level Size Zone Matrix (GLSZM), Gray Level Dependence Matrix (GLDM), and Neighboring Gray Tone Difference Matrix (NGTDM).

2.6 Feature selection and model construction

Feature selection and model construction are key to ensuring the performance of the final classifier. In the present study, we adopted a multi-step feature selection strategy combined with several machine learning algorithms to achieve accurate qualitative diagnosis of bone oligolesions. First, Z-score normalization was applied to all extracted deep learning and radiomics features so that each feature had a mean of 0 and a standard deviation of 1. To initially reduce feature dimensionality, the Mann–Whitney U test (a nonparametric test) was applied to assess the ability of each feature to discriminate between benign and malignant lesions, using a relatively loose threshold of p<0.05 to avoid premature exclusion of potentially useful features. We chose a nonparametric test rather than a t-test because medical imaging features generally do not conform to the normal distribution assumption. Medical imaging features are also often highly correlated, and this redundant information not only increases computational complexity but may also lead to unstable model performance. Pearson correlation analysis was therefore applied to calculate the correlation between features and construct a feature correlation matrix. A Pearson correlation threshold of |r|>0.85 was selected to balance feature independence and information retention; 10-fold cross-validation showed the optimal AUC at this threshold. Among highly correlated features, those showing a stronger correlation with the target variable (benign vs. malignant classification) were retained. The remaining features were further screened using LASSO, which achieves a sparse representation of the features by introducing an L1 regularization term that drives some of the regression coefficients exactly to zero. Five-fold cross-validation was used to find the optimal value among a series of candidate λ values. With λ set to approximately 0.015, the model reached the optimal balance: the selected feature subset maintained high predictive power while avoiding the risk of overfitting.
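The redundancy-removal step can be sketched as a greedy filter. This is a minimal illustration under stated assumptions: features are visited in order of decreasing absolute correlation with the label, and a feature is kept only if its |r| with every feature already kept does not exceed 0.85. The function names and toy data are hypothetical, and the Mann–Whitney and LASSO steps are not reproduced here.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx > 0 and sy > 0 else 0.0

def drop_redundant(features, labels, threshold=0.85):
    """Keep the more label-relevant feature from each highly correlated group."""
    relevance = {name: abs(pearson(vals, labels)) for name, vals in features.items()}
    kept = []
    for name in sorted(features, key=lambda n: relevance[n], reverse=True):
        if all(abs(pearson(features[name], features[k])) <= threshold for k in kept):
            kept.append(name)
    return kept

# Toy data: "a" and "b" are nearly collinear, "c" is largely independent of both.
labels = [0, 0, 0, 1, 1, 1]
features = {
    "a": [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],
    "b": [2.0, 4.0, 6.0, 8.0, 10.0, 12.5],
    "c": [3.0, 1.0, 4.0, 1.0, 5.0, 2.0],
}
kept = drop_redundant(features, labels)
print(kept)
```

Only one of the two collinear features survives, while the independent feature is retained regardless of its weaker association with the label.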

Overall, this study compared various machine learning classifiers, including Random Forest (RF) (33), Support Vector Machines (SVM) (34), Extra Trees (ET) (34), K-nearest neighbor (KNN) (35), and Mamba (36), ultimately selecting RF as the final classification model. The multi-level fusion of DLR features with clinical and metabolic parameters is one of the most important innovations of our study. The feature selection process was first applied to the deep learning features and the radiomics features separately, to obtain their respective optimal feature subsets. These two feature subsets were then combined with PET metabolic parameters (including SUVmax and SUVmean) and clinical parameters to construct a Complex model integrating multi-source information.

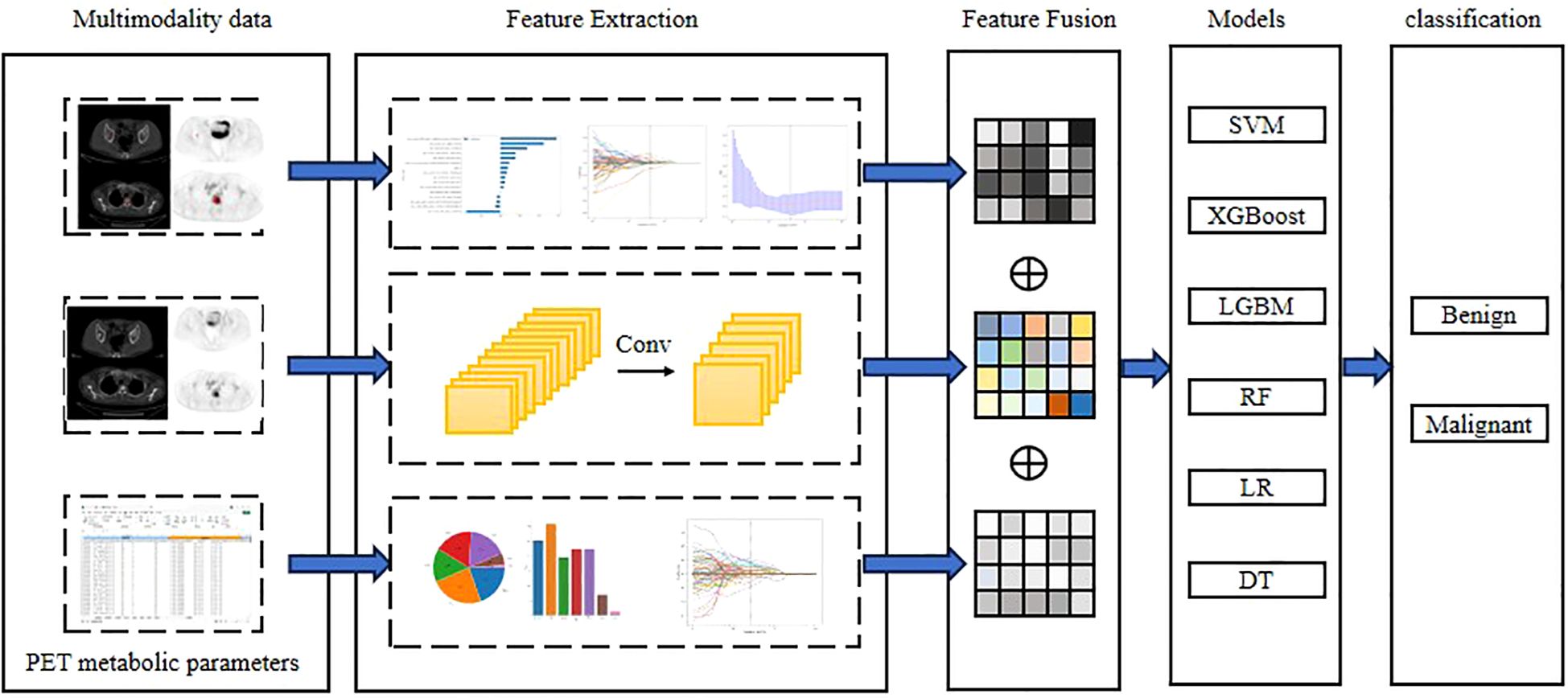

For the fusion strategy, feature-level, rather than decision-level, fusion was used. All selected features were combined into a single feature vector and input into the RF classifier. This strategy allowed the model to automatically learn the complex interactions between different types of features, and fully utilize the complementary advantages of each type of features. Experiments further demonstrated that the Complex model significantly outperformed models using only a single type of features (deep learning, radiomics, clinical parameter model), validating the effectiveness of multi-source feature fusion. The workflow for classification model construction is shown in Figure 3.

Figure 3. Workflow of the deep learning, radiomics, and Complex models with PET metabolic parameters from multimodal data. Conventional radiomic features were extracted from CT, PET, and PET/CT images. Feature selection and fusion techniques were applied to reduce dimensionality and integrate complementary information. BasicNet was employed in transfer learning using a pretrained model. The classification model was constructed using six machine learning algorithms, with visualization of the decision process.

2.7 Statistical analysis

Statistical analysis was performed using SPSS version 26 (IBM Corp., USA) (37). Normally distributed data were expressed as mean ± standard deviation and compared using Student’s t-test. Non-normally distributed data were presented as medians and analyzed with the Mann–Whitney U test. Categorical variables were compared using the chi-square (χ²) test. Model performance was evaluated via ROC curves, sensitivity, specificity, accuracy, precision, and F1-score. The DeLong test was used to compare ROC curves between models, with P < 0.05 considered statistically significant.
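For reference, the threshold-based metrics listed above follow directly from the confusion matrix. The sketch below treats malignant lesions as the positive class; the function name and the example labels are hypothetical and do not represent study data.

```python
def diagnostic_metrics(y_true, y_pred):
    """Confusion-matrix metrics with malignant (1) as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def ratio(num, den):
        return num / den if den else float("nan")

    return {
        "sensitivity": ratio(tp, tp + fn),          # true positive rate
        "specificity": ratio(tn, tn + fp),          # true negative rate
        "accuracy": ratio(tp + tn, len(pairs)),
        "precision": ratio(tp, tp + fp),
        "f1": ratio(2 * tp, 2 * tp + fp + fn),
    }

# Hypothetical labels for ten lesions (1 = malignant, 0 = benign).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
y_pred = [1, 1, 1, 0, 0, 0, 1, 1, 1, 0]
metrics = diagnostic_metrics(y_true, y_pred)
print({name: round(value, 3) for name, value in metrics.items()})
```

In this toy example there are 4 true positives, 1 false negative, 3 true negatives, and 2 false positives, giving a sensitivity of 0.8 and a specificity of 0.6.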

3 Results

3.1 Clinical characteristics

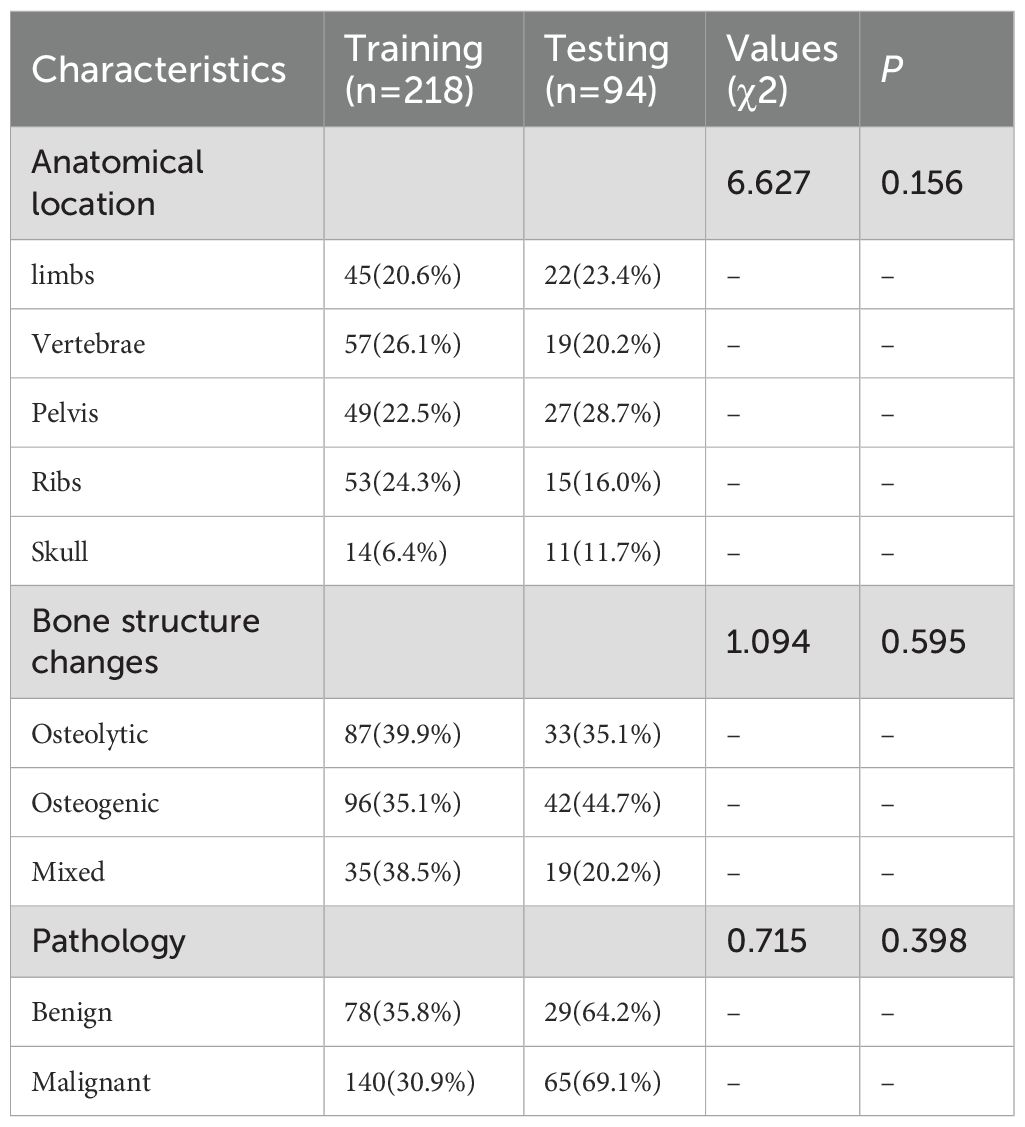

A total of 207 female patients with clinically highly suspected breast cancer bone metastasis were enrolled, contributing 312 bone lesions, yielding an average of 1.5 lesions per patient. The mean age was 58.23 ± 14.05 years. Among the lesions, 107 were benign and 205 were malignant. The lesions were randomly divided into a training cohort (218 lesions) and a testing cohort (94 lesions).

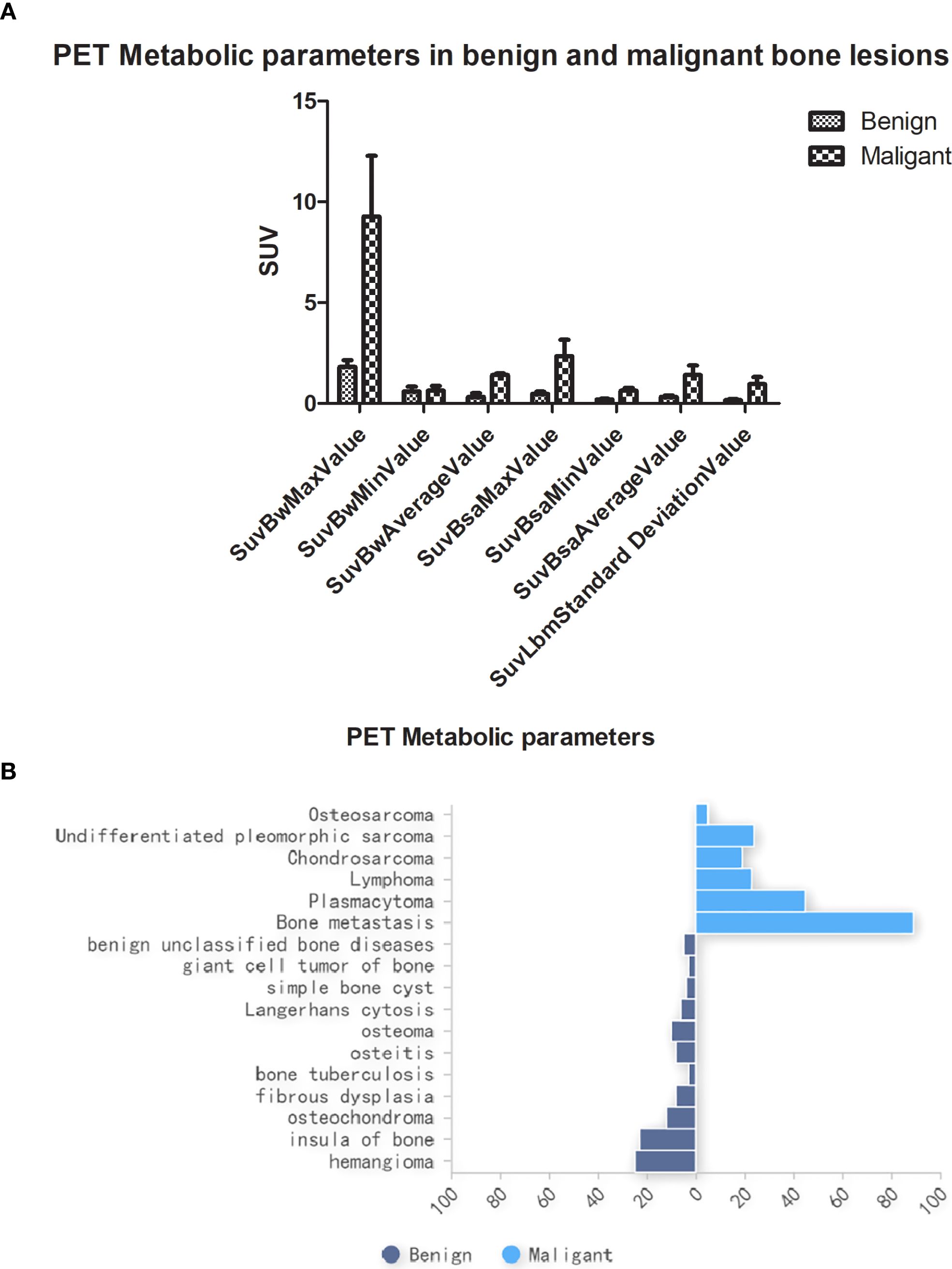

Clinicopathological and multimodal PET/CT imaging data yielded a total of 234,668 data features. Statistically significant differences (P < 0.05) were observed in PET metabolic parameters between benign and malignant bone lesions (Figure 4A). No significant differences were found in other clinical features across cohorts (P > 0.05), as detailed in Table 1. The pathological findings of the bone oligolesions are illustrated in Figure 4B.

Figure 4. (A) PET metabolic parameters in benign and malignant bone oligolesions. (B) The pathology in benign and malignant bone oligolesions.

3.2 Visual assessment based on PET/CT reader performance

A double-blind visual analysis was independently conducted by two experienced physicians (LGX and HSH, each with over 10 years of experience). Physicians were blinded to patients’ clinical and pathological data. Of the 312 lesions suspected of bone metastases, 98 (31.4%) showed morphological abnormalities on CT, 195 (62.5%) showed abnormalities in PET metabolic parameters, and 227 (72.8%) were abnormal on PET/CT fused images.

Through visual assessment, 57 lesions (53.3%) were diagnosed as benign and 138 (67.4%) as malignant. According to the reference standard, 89 of the malignant lesions (43.4%) were ultimately confirmed as bone oligometastases. The diagnostic accuracy of PET/CT was 50.6% (45/89).

3.3 Radiomics analysis of multimodal imaging

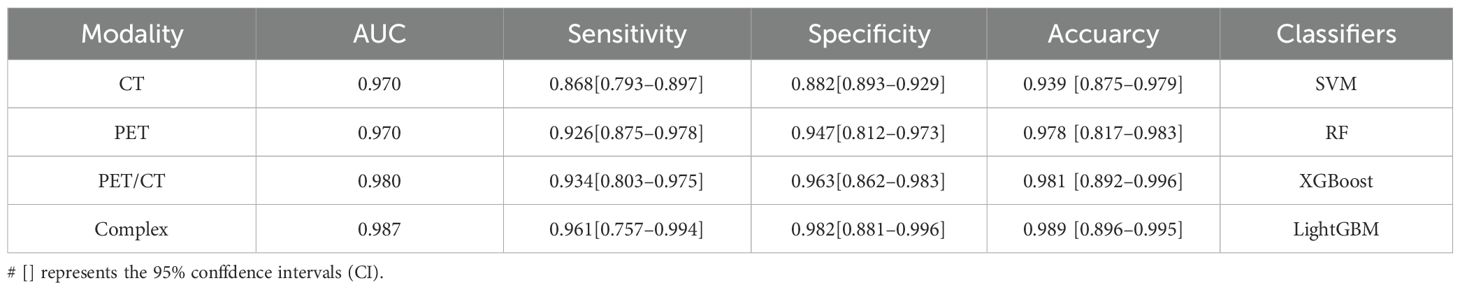

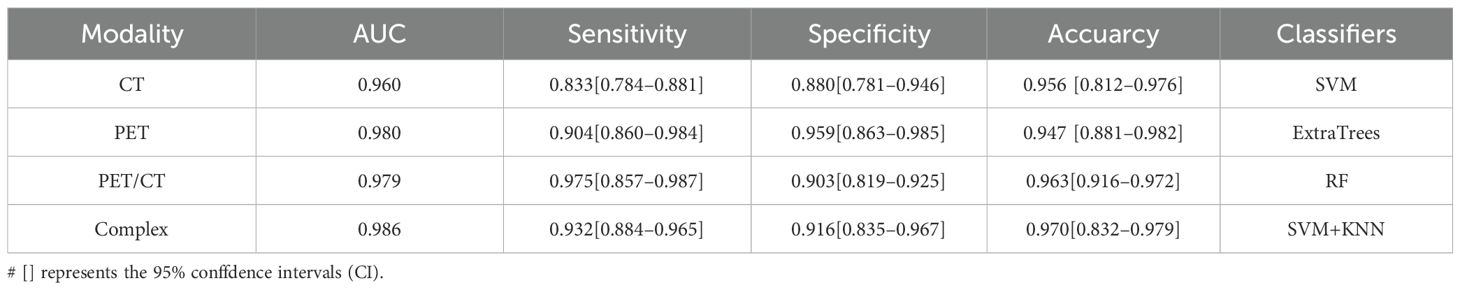

Rectangular ROI images were extracted from 48 groups of CT and PET feature values. Features were classified into single-modality radiomics model features and fusion model (PET/CT) features. Overall, seven classifiers were tested to identify the optimal classification model. Notably, when training the first classification model, we fixed the random seed so that the training and test sets contained the same instances for all classification models, ensuring consistent training and testing and thus a fair model evaluation.

Multimodal fusion models combining CT, PET, and PET/CT images demonstrated high validity and stability. Diagnostic metrics for different imaging modalities were calculated for both the validation and test cohorts. Among the single modalities, PET radiomics achieved higher accuracy (97.8%) and AUC (0.970) compared to CT. The PET/CT fusion model had a sensitivity of 93.4% [80.3%–97.5%]. The best-performing model, a complex classifier, achieved a sensitivity of 96.1% [75.7%–99.4%], specificity of 98.2% [88.1%–99.6%], accuracy of 98.7% [89.6%–99.5%], and AUC of 0.989 [0.927–0.994], as shown in Table 2.

3.4 Deep learning models for multimodal imaging

A CNN-based segmentation model was developed using a 3D U-Net architecture. Input data included CT, PET, and label masks, which were resampled using trilinear interpolation and concatenated along the channel dimension. Each lesion patch had a volume of 100 × 100 × 100 voxels with a voxel size of 3.0 × 1.37 × 1.37 mm. The output of the CNN was a probability map for a 12 × 12 × 12 region at the center of the input patch.

Training employed categorical cross-entropy loss with evenly sampled training data from both classes. To improve learning, more samples were drawn from regions with high SUV uptake and previously misclassified voxels to emphasize difficult-to-classify areas (38–40). For single-modality models, PET achieved an accuracy of 94.7%, sensitivity of 90.4%, and specificity of 95.9%. The multimodal fusion model demonstrated an improved accuracy of 96.3%, with an AUC of 0.979, sensitivity of 97.5% [85.7%–98.7%], and specificity of 90.3% [81.9%–92.5%]. The ensemble fusion model further enhanced performance with an accuracy of 97.0%, AUC of 0.986, sensitivity of 93.2%, and specificity of 91.6%, as summarized in Table 3.

3.5 DLR fusion models

To further improve classification performance, conventional radiomics features were integrated with deep learning (DL) features derived from multimodal imaging of bone lesions in breast cancer oligometastases. The DLR features were standardized using Z-score normalization before fusion. The Spearman correlation coefficient was used to assess feature correlations, retaining one feature from each pair with a correlation above 0.9. LASSO logistic regression was subsequently applied, with the penalty parameter tuned via 10-fold cross-validation, to identify bone lesion features with nonzero coefficients (41). The classification model combining radiomics and DL features showed robust performance.

Evaluation of the PET/CT fusion models revealed that the complex ensemble model, which combined PET/CT fused features with clinical parameters in an RF classifier, achieved the best AUC of 0.990, as well as the highest sensitivity, specificity, and accuracy of 98.6%, 99.8%, and 99.7%, respectively (Table 4).

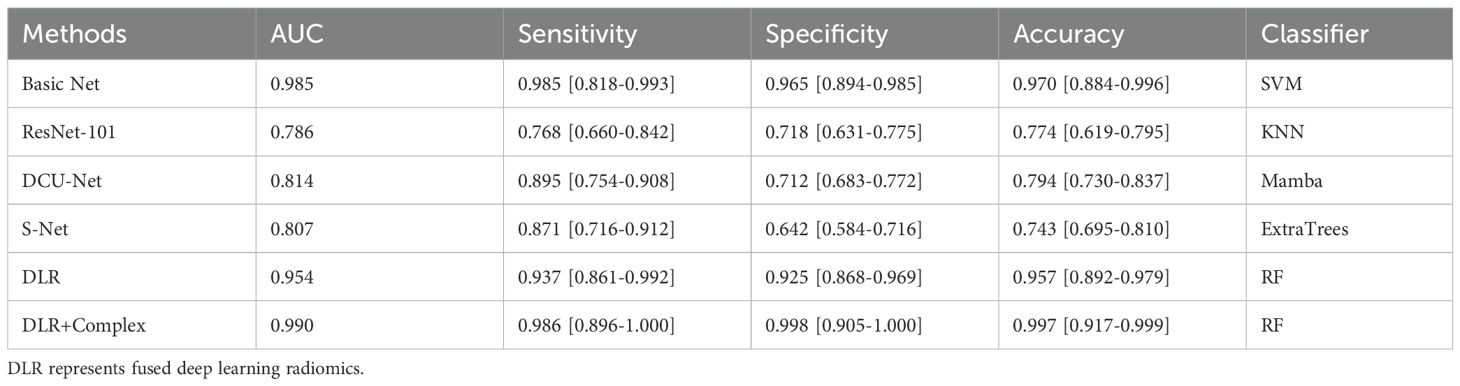

Table 4. The feature fusion results of conventional radiomic features and deep features from transfer learning.

3.6 Comparison of classification models

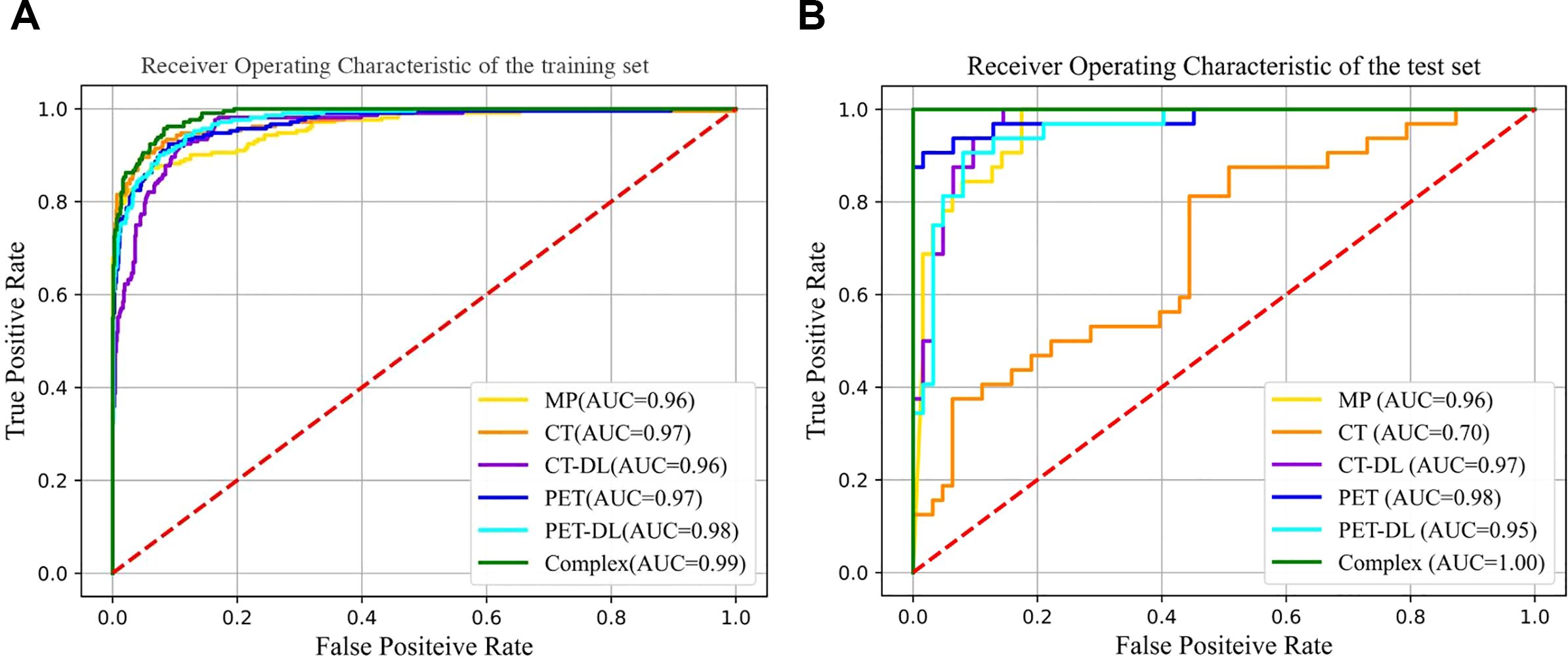

Various classification models were compared to distinguish benign from malignant bone oligolesions in breast cancer. Models based on BasicNet (24), ResNet-101 (42), DCU-Net (43), and S-Net (44) were evaluated. The best performance was achieved by the complex model that combined traditional radiomics, DL, and clinical parameters using the RF classifier. This model performed best in both the training and testing cohorts. The comparative results are illustrated in Figures 5A, B.

Figure 5. Comparison of ROC curves under different classifiers with the training set (A) and testing set (B).

The DLR+Complex model showed the best performance on all evaluation metrics, with an AUC of 0.990, sensitivity of 98.6% [95% CI: 0.896-1.000], specificity of 99.8% [95% CI: 0.905-1.000], and accuracy of 99.7% [95% CI: 0.917-0.999]. Compared with the BasicNet model, the AUC of the DLR+Complex model increased by 0.005 and the accuracy increased by 2.7 percentage points (99.7% vs 97%). The DLR+Complex model also showed higher sensitivity (98.6% vs 98.5%) and specificity (99.8% vs 96.5%), which is of great significance in clinical applications.
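For readers comparing the AUC values above, the quantity can be understood as the probability that a randomly chosen malignant lesion receives a higher score than a randomly chosen benign one. The sketch below computes it directly from that definition; it is a generic illustration with hypothetical scores, not the evaluation code used in the study.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.

    Counts the fraction of (positive, negative) pairs in which the positive
    sample has the higher score; ties count as half a win.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes are required to compute AUC")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical model outputs for two benign (0) and two malignant (1) lesions.
toy_labels = [0, 0, 1, 1]
toy_scores = [0.10, 0.40, 0.35, 0.80]
toy_auc = roc_auc(toy_labels, toy_scores)
```

The pairwise form is quadratic in the number of samples, which is acceptable for a cohort of a few hundred lesions; rank-based formulations give the same value more efficiently on larger datasets.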

4 Discussion

Bone is the third most common site of metastasis after the lungs and liver. Bone metastases most frequently originate from breast and prostate cancers, which together account for approximately 70% of primary tumors (45). Among the malignancies that affect bone, bone oligometastases of breast cancer represent an important subtype, for which early diagnosis and precision treatment are critical for improving patient outcomes. However, the biological characteristics of bone oligometastases still require further investigation, especially the roles of microenvironmental remodeling and the osteogenic/osteoclastic balance, both of which are crucial in determining prognosis (46).

The most commonly used diagnostic modality for detecting bone metastases is whole-body bone scintigraphy (WBS) using 99mTc-MDP due to its high sensitivity and full-body scanning capability (47). However, WBS has limited accuracy in detecting osteoclastic lesions, particularly when the number of lesions is fewer than three or the lesion size is under 1 cm.

Our study offers significant advancements by integrating radiomics and DL models with multimodal PET/CT images to improve the prediction of bone metastasis in breast cancer. Based on expert diagnostic visual assessment, CT scans detected 98 abnormal morphological lesions—56 osteogenic, 29 osteoclastic, and 13 mixed-type. However, CT alone failed to identify 214 lesions, demonstrating its limitations as a single modality.

In contrast, PET/CT provides a multidimensional view by combining metabolic and anatomical information, which is critical for early diagnosis, disease staging, and evaluating treatment efficacy. Fused PET/CT images revealed 227 abnormal foci, including 89 osteoclastic lesions. The diagnostic accuracy of visual evaluation in distinguishing benign from malignant lesions was 53.3% and 67.4%, respectively. Using a conventional radiomics model, fused PET/CT imaging achieved improved diagnostic performance, with an accuracy of 90.1%, specificity of 86.3%, sensitivity of 83.4%, and an AUC of 0.894.

Mannam et al. (32) also found that combined models incorporating PET and contrast-enhanced CT (CECT) outperformed single-modality models in differentiating multiple myeloma from skeletal metastases. Other studies have similarly reported positive outcomes using radiomics and traditional machine learning techniques for bone metastasis prediction. For example, Chen et al. (48) demonstrated the effectiveness of a Vision Transformer model, which achieved an AUC of 0.918 on the test set in predicting bone metastasis in colorectal cancer using both plain and contrast-enhanced CT. Song et al. (49) also developed a semi-automated model integrating radiomics, DL, and clinical features using biparametric MRI, achieving an internal AUC of 0.934 and an external AUC of 0.903 for bone metastasis prediction in prostate cancer.

Our results are consistent with the above findings, confirming the value of radiomics features in predicting metastasis. We also compared a recent Mamba-based classifier with the traditional classifiers. With its distinctive structure and algorithmic optimization, Mamba with multimodal feature fusion can efficiently extract and fuse features from different modalities to enhance system performance and efficiency (50). Its efficiency and accuracy have made it an important strategy for multimodal data processing (9, 51). In our study, however, although the Mamba-based approach, which relies on generative feature extraction, effectively integrated the CT and PET data, it achieved good performance only in the DCU-Net model; the other models underperformed, which may be related to the limited amount of raw data in our study.

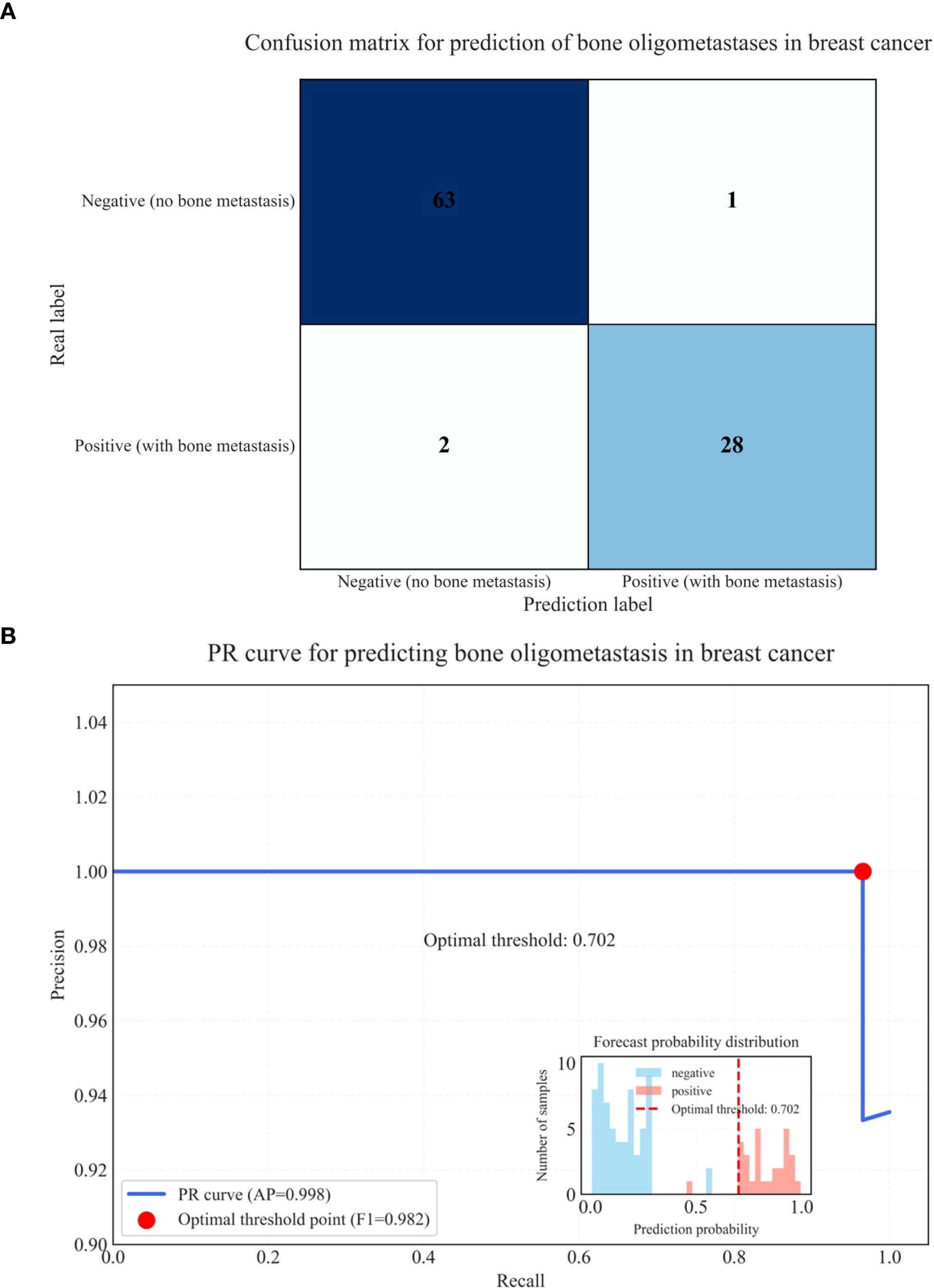

Few studies have specifically targeted bone oligometastases in breast cancer using PET/CT with integrated DLR. In our study, the integration of radiomics, DL (particularly the BasicNet model), and clinical metabolic parameters significantly enhanced predictive performance. The DLR + Complex model achieved outstanding diagnostic metrics, including an AUC of 0.990, accuracy of 99.7%, specificity of 99.8%, and sensitivity of 98.6%, indicating exceptional discriminative ability and generalizability.

Our model’s superior performance may stem from its ability to automatically learn complex features directly from raw CT, PET, and fused PET/CT images, combined with clinical metabolic parameters. DL methods capture intricate spatial relationships and hierarchical patterns. These architectural advantages, such as enhanced feature reuse and improved gradient flow, contributed to the model’s high accuracy and robustness. The DLR + Complex model based on multimodal PET/CT imaging has significant potential in accurately identifying and monitoring bone oligometastases in breast cancer, thereby enabling timely and individualized treatment strategies.

The confusion matrix (28 true positives, 1 false negative, 2 false positives, and 63 true negatives) reveals the core performance characteristics of the model in predicting bone oligometastases of breast cancer (Figure 6A). From a clinical perspective, the high sensitivity (98.6%) shows that the model captures positive cases well, with only one false negative. This is crucial in screening for metastatic cancer, because a missed diagnosis may delay treatment. The high specificity (99.8%) indicates that the model can effectively exclude non-metastatic patients and avoid overtreatment. Together with the overall accuracy of 99.7%, these results indicate that the deep learning radiomics model was superior to conventional diagnostic methods.

The deep learning radiomics model established in this study improved sensitivity, specificity, and accuracy, and its PR curve confirmed its stability under high-recall demands, providing a reliable tool for early intervention in bone metastasis of breast cancer (Figure 6B).
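The standard definitions behind these metrics can be applied to the four confusion-matrix counts quoted above, as in the short sketch below. It is textbook arithmetic only, not the authors' evaluation code; the function name is hypothetical, and because the source does not state which cohort or unit (patient or lesion) the matrix counts, the ratios it yields may differ from the percentages quoted in the text.

```python
def confusion_metrics(tp, fn, fp, tn):
    """Compute standard binary classification metrics from confusion-matrix counts."""
    total = tp + fn + fp + tn
    return {
        "sensitivity": tp / (tp + fn),  # recall: share of positive cases detected
        "specificity": tn / (tn + fp),  # share of negative cases correctly excluded
        "precision": tp / (tp + fp),    # share of positive calls that are correct
        "accuracy": (tp + tn) / total,  # share of all cases classified correctly
    }


# Counts as quoted for Figure 6A.
figure_6a = confusion_metrics(tp=28, fn=1, fp=2, tn=63)
for name, value in figure_6a.items():
    print(f"{name}: {value:.3f}")
```

Precision and sensitivity (recall) are the two axes of the PR curve in Figure 6B, so a single confusion matrix corresponds to one operating point on that curve.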

Figure 6. Confusion matrix for prediction of bone oligometastases in breast cancer (A) and PR curve for predicting bone oligometastases in breast cancer (B).

Despite our promising results, several limitations remain, as follows:

1. The sample size of 312 lesions is relatively small and lacks diversity in oligometastasis types (52).

2. The study has a single-center, retrospective design, which may introduce selection bias, and it does not encompass all subtypes and metastatic patterns of breast cancer (53).

3. The DLR + clinical complex models are still in the experimental stage and require prospective, multicenter validation.

4. This research focused solely on the diagnostic performance of PET/CT images and did not investigate the relationship between imaging features and underlying molecular mechanisms, such as bone microenvironmental remodeling.

Future research should therefore aim to expand the sample size, improve automated analysis pipelines, and integrate multi-omics data with imaging features. These efforts will support the advancement of precision diagnostics and therapeutic strategies for breast cancer patients with bone oligometastases.

5 Conclusion

Overall, this study demonstrates the potential of integrating radiomics, DL, and clinical complex models to predict bone oligometastases in breast cancer patients using fused PET/CT imaging. The DLR + Complex model significantly outperformed traditional radiomics and other deep learning architectures, achieving high AUC, accuracy, specificity, and sensitivity in both training and testing cohorts.

Accurate early prediction of bone oligometastases enables timely, targeted treatment interventions, improving patient outcomes and optimizing resource utilization. Ultimately, while PET/CT-based models show strong predictive power, further refinement incorporating histological subtypes, imaging features, and molecular biomarkers will be essential for comprehensive and personalized diagnosis.

Taking histological subtypes as an example, based on our data analysis and relevant literature, triple-negative breast cancer (TNBC) and HER2-positive breast cancer showed a higher tendency toward bone metastasis. Specifically, the risk of bone metastasis in TNBC patients is about 2–3 times higher than in hormone receptor-positive patients, and the metabolic activity of metastatic lesions is higher, which manifested as higher SUVmax values in our PET/CT imaging. HER2-positive breast cancer tends to present with multiple bone metastases rather than oligometastasis. Therefore, future predictive models should consider these molecular subtypes as key stratification factors, especially in TNBC and HER2-positive patients, who may require more sensitive early detection strategies.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding authors.

Author contributions

GL: Writing – original draft, Writing – review & editing, Conceptualization, Formal analysis, Validation. RT: Conceptualization, Data curation, Software, Validation, Writing – review & editing. WY: Data curation, Resources, Writing – review & editing. WZ: Data curation, Resources, Writing – review & editing. JC: Software, Writing – review & editing. ZX: Data curation, Investigation, Writing – original draft, Writing – review & editing. GZ: Conceptualization, Formal analysis, Funding acquisition, Project administration, Supervision, Writing – original draft, Writing – review & editing. SH: Project administration, Supervision, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was partially supported by grants from the Applied Basic Research Program of Liaoning Province (2022JH2/101500011) and the Livelihood Science and Technology Plan Joint Plan Project of Liaoning Province (2021JH2/10300098).

Conflict of interest

Author WC was employed by United Imaging Intelligence Beijing Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Nathanson SD, Dieterich LC, Zhang XH, Chitale DA, Pusztai L, Reynaud E, et al. Associations amongst genes, molecules, cells, and organs in breast cancer metastasis. Clin Exp metastasis. (2024) 41:417–37. doi: 10.1007/s10585-023-10230-w

2. Lei S, Zheng R, Zhang S, Wang S, Chen R, Sun K, et al. Global patterns of breast cancer incidence and mortality: A population-based cancer registry data analysis from 2000 to 2020. Cancer Commun (Lond). (2021) 41:1183–94. doi: 10.1002/cac2.12207

3. Kovacevic L, Cavka M, Marusic Z, Kresic E, Stajduhar A, Grbanovic L, et al. Percutaneous CT-guided bone lesion biopsy for confirmation of bone metastases in patients with breast cancer. Diagnostics (Basel). (2022) 12:2094. doi: 10.3390/diagnostics12092094

4. Ponzetti M and Rucci N. Switching homes: how cancer moves to bone. Int J Mol Sci. (2020) 21:4124. doi: 10.3390/ijms21114124

5. Salim N, Tumanova K, Popodko A, and Libson E. Second chance for cure: stereotactic ablative radiotherapy in oligometastatic disease. JCO Global Oncol. (2024) 10:e2300275. doi: 10.1200/GO.23.00275

6. Downey RJ and Ng KK. The management of non-small-cell lung cancer with oligometastases. Chest Surg Clin N Am. (2001) 11:121–32,ix. doi: 10.1016/S1052-3359(25)00556-3

7. Singh D, Yi WS, Brasacchio RA, Muhs AG, Smudzin T, Williams JP, et al. Is there a favorable subset of patients with prostate cancer who develop oligometastases? Int J Radiat oncology biology Phys. (2004) 58:3–10. doi: 10.1016/s0360-3016(03)01442-1

8. Yin F-F, Das S, Kirkpatrick J, Oldham M, Wang Z, and Zhou S-M. Physics and imaging for targeting of oligometastases. Semin Radiat Oncol. (2006) 16:85–101. doi: 10.1016/j.semradonc.2005.12.004

9. Wang Z, Tao Q, Zhong Z, Yang M, Wang X, Luo X, et al. Enhancing YOLOv8n with Mamba-like linear attention for defect detection and coating thickness analysis of irregular film tablet. Int J pharmaceutics. (2025) 678:125704. doi: 10.1016/j.ijpharm.2025.125704

10. Cummings MC, Simpson PT, Reid LE, Jayanthan J, Skerman J, Song S, et al. Metastatic progression of breast cancer: insights from 50 years of autopsies. J Pathol. (2014) 232:23–31. doi: 10.1002/path.4288

11. Yilmaz MH, Ozguroglu M, Mert D, Turna H, Demir G, Adaletli I, et al. Diagnostic value of magnetic resonance imaging and scintigraphy in patients with metastatic breast cancer of the axial skeleton: a comparative study. Med Oncol. (2008) 25:257–63. doi: 10.1007/s12032-007-9027-x

12. Lecouvet FE, Geukens D, Stainier A, Jamar F, Jamart J, d’Othée BJ, et al. Magnetic resonance imaging of the axial skeleton for detecting bone metastases in patients with high-risk prostate cancer: diagnostic and cost-effectiveness and comparison with current detection strategies. J Clin Oncol. (2007) 25:3281–7. doi: 10.1200/JCO.2006.09.2940

13. deSouza NM, Liu Y, Chiti A, Oprea-Lager D, Gebhart G, Van Beers BE, et al. Strategies and technical challenges for imaging oligometastatic disease: Recommendations from the European Organisation for Research and Treatment of Cancer imaging group. Eur J Cancer (Oxford England: 1990). (2018) 91:153–63. doi: 10.1016/j.ejca.2017.12.012

14. Aryal A, Kumar VS, Shamim SA, Gamanagatti S, and Khan SA. What is the comparative ability of 18F-FDG PET/CT, 99mTc-MDP skeletal scintigraphy, and whole-body MRI as a staging investigation to detect skeletal metastases in patients with osteosarcoma and ewing sarcoma? Clin Orthop Relat Res. (2021) 479:1768–79. doi: 10.1097/CORR.0000000000001681

15. Zamani-Siahkali N, Mirshahvalad SA, Farbod A, Divband G, Pirich C, Veit-Haibach P, et al. SPECT/CT, PET/CT, and PET/MRI for response assessment of bone metastases. Semin Nucl Med. (2024) 54:356–70. doi: 10.1053/j.semnuclmed.2023.11.005

16. Janssen J-C, Meißner S, Woythal N, Prasad V, Brenner W, Diederichs G, et al. Comparison of hybrid 68Ga-PSMA-PET/CT and 99mTc-DPD-SPECT/CT for the detection of bone metastases in prostate cancer patients: Additional value of morphologic information from low dose CT. Eur Radiol. (2018) 28:610–9. doi: 10.1007/s00330-017-4994-6

17. McVeigh LG, Linzey JR, Strong MJ, Duquette E, Evans JR, Szerlip NJ, et al. Stereotactic body radiotherapy for treatment of spinal metastasis: A systematic review of the literature. Neurooncol Adv. (2024) 6:iii28–47. doi: 10.1093/noajnl/vdad175

18. deSouza NM and Tempany CM. A risk-based approach to identifying oligometastatic disease on imaging. Int J Cancer. (2019) 144:422–30. doi: 10.1002/ijc.31793

19. Ceranka J, Wuts J, Chiabai O, Lecouvet F, and Vandemeulebroucke J. Computer-aided diagnosis of skeletal metastases in multi-parametric whole-body MRI. Comput Methods programs biomedicine. (2023) 242:107811. doi: 10.1016/j.cmpb.2023.107811

20. Shang F, Tang S, Wan X, Li Y, and Wang L. BMSMM-net: A bone metastasis segmentation framework based on mamba and multiperspective extraction. Acad Radiol. (2025) 32:1204–17. doi: 10.1016/j.acra.2024.11.018

21. Moreau N, Rousseau C, Fourcade C, Santini G, Ferrer L, Lacombe M, et al. Deep learning approaches for bone and bone lesion segmentation on 18FDG PET/CT imaging in the context of metastatic breast cancer. Annu Int Conf IEEE Eng Med Biol Soc. (2020) 2020:1532–5. doi: 10.1109/EMBC44109.2020.9175904

22. Moreau N, Rousseau C, Fourcade C, Santini G, Brennan A, Ferrer L, et al. Automatic segmentation of metastatic breast cancer lesions on (18)F-FDG PET/CT longitudinal acquisitions for treatment response assessment. Cancers. (2021) 14:101. doi: 10.3390/cancers14010101

23. Hu S, Lawrence J, Schuster CR, Gunduz Sarioglu A, Yusuf C, Reiche E, et al. Moving to 3D: quantifying virtual surgical planning accuracy using geometric morphometrics and cephalometrics in facial feminization surgery. J Craniofac Surg. (2025). doi: 10.1097/SCS.0000000000011360

24. Ma C, Liu X, and Ling K. Design and development of analysis software for acid-base balance disorder based on Visual Basic.NET. Zhonghua Wei Zhong Bing Ji Jiu Yi Xue. (2021) 33:216–22. doi: 10.3760/cma.j.cn121430-20201012-00667

25. Jang JH, Choi M-Y, Lee SK, Kim S, Kim J, Lee J, et al. Clinicopathologic risk factors for the local recurrence of phyllodes tumors of the breast. Ann Surg Oncol. (2012) 19:2612–7. doi: 10.1245/s10434-012-2307-5

26. Kubo Y, Motomura G, Ikemura S, Hatanaka H, Utsunomiya T, Hamai S, et al. Effects of anterior boundary of the necrotic lesion on the progressive collapse after varus osteotomy for osteonecrosis of the femoral head. J orthopaedic Sci. (2020) 25:145–51. doi: 10.1016/j.jos.2019.02.014

27. Takahashi Y, Sugino T, Onogi S, Nakajima Y, and Masuda K. Improved segmentation of hepatic vascular networks in ultrasound volumes using 3D U-Net with intensity transformation-based data augmentation. Med Biol Eng Comput. (2025) 63:2133–44. doi: 10.1007/s11517-025-03320-2

28. Sachpekidis C, Goldschmidt H, Edenbrandt L, and Dimitrakopoulou-Strauss A. Radiomics and artificial intelligence landscape for [18F]FDG PET/CT in multiple myeloma. Semin Nucl Med. (2024) 55:387–95. doi: 10.1053/j.semnuclmed.2024.11.005

29. Kim H. AresB-Net: accurate residual binarized neural networks using shortcut concatenation and shuffled grouped convolution. PeerJ Comput Sci. (2021) 7:e454. doi: 10.7717/peerj-cs.454

30. Zhang Z, Zhang D, Yang Y, Liu Y, and Zhang J. Value of radiomics and deep learning feature fusion models based on dce-mri in distinguishing sinonasal squamous cell carcinoma from lymphoma. Front Oncol. (2024) 14:1489973. doi: 10.3389/fonc.2024.1489973

31. Li Y, Chen C-M, Li W-W, Shao M-T, Dong Y, and Zhang Q-C. Radiomic features based on pyradiomics predict CD276 expression associated with breast cancer prognosis. Heliyon. (2024) 10:e37345. doi: 10.1016/j.heliyon.2024.e37345

32. Mannam P, Murali A, Gokulakrishnan P, Venkatachalapathy E, and Venkata Sai PM. Radiomic analysis of positron-emission tomography and computed tomography images to differentiate between multiple myeloma and skeletal metastases. Indian J Nucl Med. (2022) 37:217–26. doi: 10.4103/ijnm.ijnm_111_21

33. Bao Y-W, Wang Z-J, Shea Y-F, Chiu PK-C, Kwan JS, Chan FH-W, et al. Combined quantitative amyloid-β PET and structural MRI features improve alzheimer’s disease classification in random forest model - A multicenter study. Acad Radiol. (2024) 31:5154–63. doi: 10.1016/j.acra.2024.06.040

34. Li J, Shi Q, Yang Y, Xie J, Xie Q, Ni M, et al. Prediction of EGFR mutations in non-small cell lung cancer: a nomogram based on 18F-FDG PET and thin-section CT radiomics with machine learning. Front Oncol. (2025) 15:1510386. doi: 10.3389/fonc.2025.1510386

35. Hou X, Chen K, Wan X, Luo H, Li X, and Xu W. Intratumoral and peritumoral radiomics for preoperative prediction of neoadjuvant chemotherapy effect in breast cancer based on 18F-FDG PET/CT. J Cancer Res Clin Oncol. (2024) 150:484. doi: 10.1007/s00432-024-05987-w

36. Ren Z, Zhou M, Shakil S, and Tong RK-Y. Alzheimer’s disease recognition via long-range state space model using multi-modal brain images. Front Neurosci. (2025) 19:1576931. doi: 10.3389/fnins.2025.1576931

37. Hong G, Lee S-I, Kang DH, Chung C, Park HS, and Lee JE. Delta-he as a novel predictive and prognostic biomarker in patients with NSCLC treated with PD-1/PD-L1 inhibitors. Cancer Med. (2025) 14:e70826. doi: 10.1002/cam4.70826

38. Lindgren Belal S, Larsson M, Holm J, Buch-Olsen KM, Sörensen J, Bjartell A, et al. Automated quantification of PET/CT skeletal tumor burden in prostate cancer using artificial intelligence: The PET index. Eur J Nucl Med Mol Imaging. (2023) 50:1510–20. doi: 10.1007/s00259-023-06108-4

39. Perk T, Bradshaw T, Chen S, Im H-J, Cho S, Perlman S, et al. Automated classification of benign and Malignant lesions in 18F-NaF PET/CT images using machine learning. Phys Med Biol. (2018) 63:225019. doi: 10.1088/1361-6560/aaebd0

40. Trägårdh E, Borrelli P, Kaboteh R, Gillberg T, Ulén J, Enqvist O, et al. RECOMIA-a cloud-based platform for artificial intelligence research in nuclear medicine and radiology. EJNMMI Phys. (2020) 7:51. doi: 10.1186/s40658-020-00316-9

41. Zhou P, Zeng R, Yu L, Feng Y, Chen C, Li F, et al. Deep-learning radiomics for discrimination conversion of alzheimer’s disease in patients with mild cognitive impairment: A study based on 18F-FDG PET imaging. Front Aging Neurosci. (2021) 13:764872. doi: 10.3389/fnagi.2021.764872

42. Haennah JHJ, Christopher CS, and King GRG. Prediction of the COVID disease using lung CT images by Deep Learning algorithm: DETS-optimized Resnet 101 classifier. Front In Med. (2023) 10:1157000. doi: 10.3389/fmed.2023.1157000

43. Wang K, Han Y, Ye Y, Chen Y, Zhu D, Huang Y, et al. Mixed reality infrastructure based on deep learning medical image segmentation and 3D visualization for bone tumors using DCU-Net. J Bone Oncol. (2025) 50:100654. doi: 10.1016/j.jbo.2024.100654

44. Shang Q, Wang G, Wang X, Li Y, and Wang H. S-Net: A novel shallow network for enhanced detail retention in medical image segmentation. Comput Methods Programs BioMed. (2025) 265:108730. doi: 10.1016/j.cmpb.2025.108730

45. Tsukasaki M. Dive into the bone: new insights into molecular mechanisms of cancer bone invasion. J Bone Miner Res. (2025) 40:827–33. doi: 10.1093/jbmr/zjaf054

46. Deng M, Ding H, Zhou Y, Qi G, and Gan J. Cancer metastasis to the bone: Mechanisms and animal models (Review). Oncol Lett. (2025) 29:221. doi: 10.3892/ol.2025.14967

47. Van den Wyngaert T, Strobel K, Kampen WU, Kuwert T, van der Bruggen W, Mohan HK, et al. The EANM practice guidelines for bone scintigraphy. Eur J Nucl Med Mol Imaging. (2016) 43:1723–38. doi: 10.1007/s00259-016-3415-4

48. Chen G, Liu W, Lin Y, Zhang J, Huang R, Ye D, et al. Predicting bone metastasis risk of colorectal tumors using radiomics and deep learning ViT model. J Bone Oncol. (2025) 51:100659. doi: 10.1016/j.jbo.2024.100659

49. Xinyang S, Tianci S, Xiangyu H, Shuang Z, Yangyang W, Mengying D, et al. A semi-automatic deep learning model based on biparametric MRI scanning strategy to predict bone metastases in newly diagnosed prostate cancer patients. Front Oncol. (2024) 14:1298516. doi: 10.3389/fonc.2024.1298516

50. Ahamed MA and Cheng Q. TSCMamba: mamba meets multi-view learning for time series classification. Int J Inf fusion. (2025) 120:103079. doi: 10.1016/j.inffus.2025.103079

51. Ren H, Zhou Y, Zhu J, Lin X, Fu H, Huang Y, et al. Rethinking efficient and effective point-based networks for event camera classification and regression. IEEE Trans Pattern Anal Mach Intell. (2025) 47:6228–41. doi: 10.1109/TPAMI.2025.3556561

52. Nevens D, Jongen A, Kindts I, Billiet C, Deseyne P, Joye I, et al. Completeness of reporting oligometastatic disease characteristics in the literature and influence on oligometastatic disease classification using the ESTRO/EORTC nomenclature. Int J Radiat oncology biology Phys. (2022) 114:587–95. doi: 10.1016/j.ijrobp.2022.06.067

Keywords: breast cancer, bone oligometastases, deep learning, radiomics, deep learning radiomics, PET/CT

Citation: Lu G, Tian R, Yang W, Zhao J, Chen W, Xiang Z, Hao S and Zhang G (2025) Prediction of bone oligometastases in breast cancer using models based on deep learning radiomics of PET/CT imaging. Front. Oncol. 15:1621677. doi: 10.3389/fonc.2025.1621677

Received: 01 May 2025; Accepted: 31 July 2025;

Published: 21 August 2025.

Edited by:

Arka Bhowmik, Memorial Sloan Kettering Cancer Center, United States

Reviewed by:

Amrutanshu Panigrahi, Siksha O Anusandhan University, India

Meng Wang, Liaoning Technical University, China

Copyright © 2025 Lu, Tian, Yang, Zhao, Chen, Xiang, Hao and Zhang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Shanhu Hao, haoshanhu3257@163.com; Guoxu Zhang, zhangguoxu_502@163.com
