- 1Department of Radiology, Jiangjin Central Hospital of Chongqing, Chongqing, China

- 2Chongqing General Hospital, Chongqing University, Chongqing, China

- 3Department of Pathology, Jiangjin Central Hospital of Chongqing, Chongqing, China

- 4Department of Research and Development, Shanghai United Imaging Intelligence Co., Ltd., Shanghai, China

Objective: To predict Ki-67 expression levels in non-small cell lung cancer (NSCLC) using an interpretable model combining clinical-radiological, radiomic, and deep learning features.

Methods: This retrospective study included 259 NSCLC patients from Center 1 (training/validation sets) and 112 from Center 2 (independent test set). Patients were grouped by a 40% Ki-67 cutoff. Radiomic features and deep learning features were extracted from CT images, where the deep learning features were obtained via a deep residual network (ResNet18). The least absolute shrinkage and selection operator (LASSO) was used to select optimal features and compute radiomics (rad-score) and deep learning (deep-score) scores. Univariate and multivariate logistic regression were used to identify independent clinical-radiological predictors of Ki-67. Four support vector machine models were developed: a clinical-radiological model (based on independent clinical-radiological features), a radiomic model (using the rad-score), a deep learning model (using the deep-score), and a combined model (integrating all the above features). SHapley Additive exPlanations (SHAP) analysis was used to visualize feature contributions. Models’ performance was assessed using receiver operating characteristic (ROC) curves and the integrated discrimination improvement (IDI) index.

Results: High Ki-67 expression occurred in 76 (42.0%), 38 (48.7%), and 33 (29.5%) patients in the training, validation, and independent test sets, respectively. In the independent test set, the combined model achieved the highest predictive performance, with an AUC of 0.892 (95% CI: 0.828–0.956). This improvement over the clinical-radiological (0.820, 95% CI: 0.721–0.918), radiomics (0.750, 95% CI: 0.655–0.844), and deep learning (0.817, 95% CI: 0.732–0.902) models was statistically significant (all p<0.05), as supported by IDI values of 0.115, 0.288, and 0.095, respectively. SHAP analysis identified the deep-score, histological type, and rad-score as key predictors.

Conclusion: The interpretable combined model can predict Ki-67 expression in NSCLC patients. This approach may provide imaging evidence to assist clinicians in optimizing personalized therapeutic strategies.

1 Introduction

Non-small cell lung cancer (NSCLC) is the most common pathological type of lung cancer and is associated with a relatively low five-year survival rate (1–4). This clinical reality highlights the urgent need for improved prognostic assessment, and Ki-67, a validated indicator of tumor cell proliferation, has therefore attracted considerable attention (5). Its expression level is not only closely linked to the aggressive behavior of NSCLC (6, 7) but is also a critical prognostic determinant: high Ki-67 expression is associated with markedly shorter progression-free and overall survival (8, 9). Furthermore, studies have suggested that the Ki-67 index may help predict the effectiveness of neoadjuvant chemotherapy in stage I–IIIA NSCLC and the response to chemotherapy in advanced NSCLC (10, 11). Currently, Ki-67 expression is typically assessed by immunohistochemistry (IHC); however, this approach faces two key challenges: it is invasive (12), and, given the marked heterogeneity of NSCLC, a localized sample may not represent the entire tumor (13, 14). A non-invasive method that characterizes tumor heterogeneity is therefore needed for accurate evaluation of Ki-67.

Radiomics addresses this need by quantifying image-based features that correlate with histopathology and show potential for Ki-67 prediction (15, 16). However, such predefined features are intrinsically limited in capturing the complex NSCLC microenvironment (17, 18). In contrast, deep learning can automatically extract high-level features directly from images, capturing complex information inaccessible to handcrafted radiomics (19, 20). Previous studies further indicate that integrating radiomic and deep learning features can improve the classification of lung cancer subtypes and prognostic prediction through multi-modal information fusion (21, 22).

Therefore, this study aims to develop a model combining clinical-radiological, radiomic, and deep learning features to predict Ki-67 expression levels. We further use the SHapley Additive exPlanations (SHAP) technique to interpret model outputs, with the goal of providing imaging evidence for personalized treatment planning.

2 Methods

2.1 Patient population

This retrospective study was conducted in accordance with ethical standards and was approved by the institutional review boards of Jiangjin Central Hospital of Chongqing (Center 1; Approval No. KY20241204-001) and Chongqing General Hospital (Center 2; Approval No. KY S2024-058-01), with a waiver of informed consent. Data from patients at both centers between June 2022 and September 2024 were analyzed.

Inclusion criteria were: (1) NSCLC pathologically confirmed by surgical resection or biopsy, with documented Ki-67 IHC results; and (2) chest CT scans obtained before biopsy or surgery. Exclusion criteria were: (1) poor CT image quality or incomplete clinical/imaging data; (2) unclear delineation between the lesion and adjacent obstructive pneumonia or atelectatic tissue; and (3) any treatment or invasive examination before the CT examination.

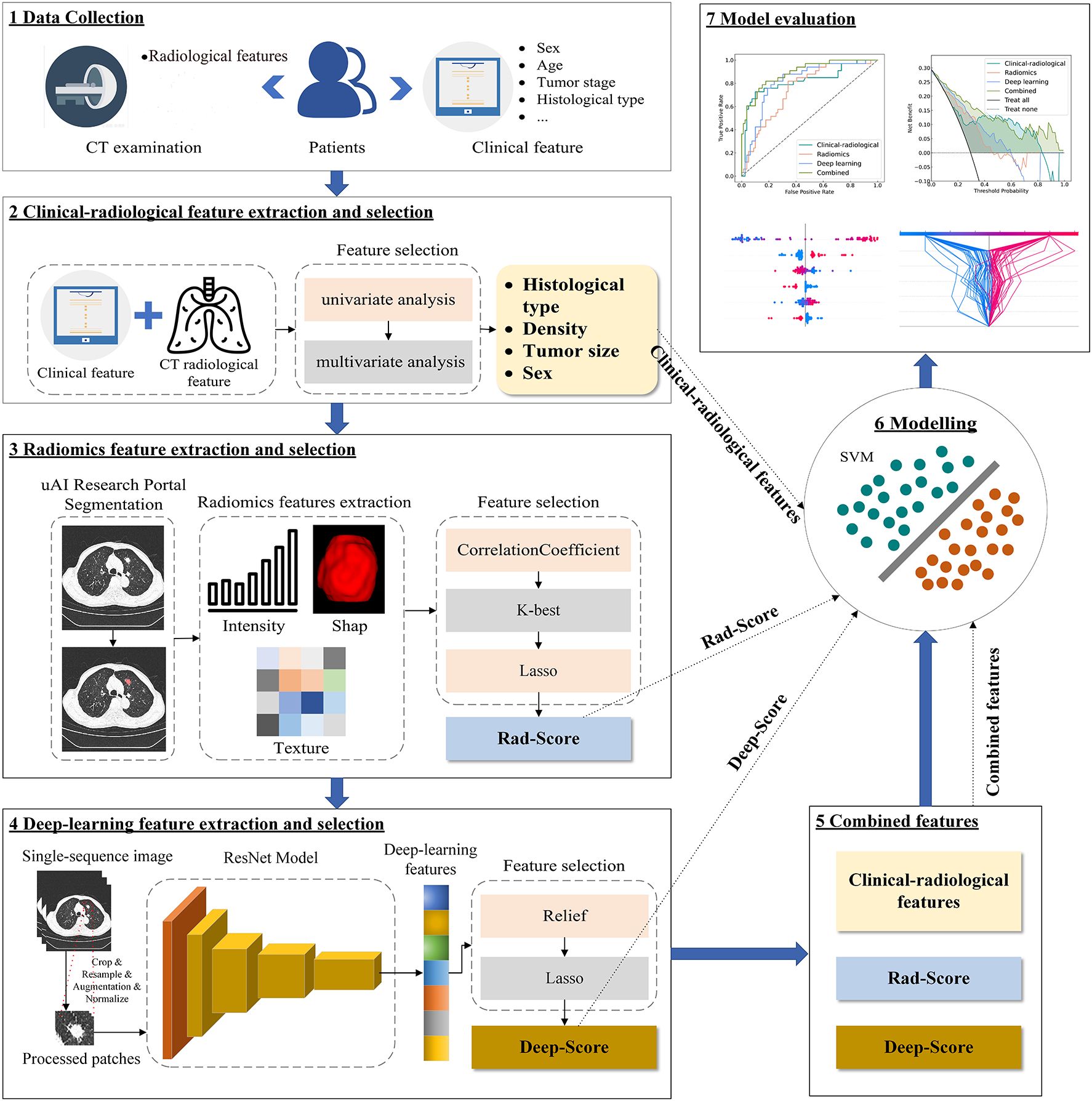

A total of 259 patients were enrolled from Center 1 (163 males, 96 females, mean age 65.4 ± 9.7 years), randomly divided into a training set (N = 181) and a validation set (N = 78) at a ratio of 7:3. At Center 2, an independent test set was established with 112 patients (60 males, 52 females, mean age 65.5 ± 9.6 years). The overall study design and analytical pipeline are summarized in Figure 1.
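The 7:3 split can be illustrated with a minimal Python sketch. It assumes a simple unstratified random permutation with an arbitrary seed (the text does not state whether the split was stratified by Ki-67 group); `random_split` is a hypothetical helper, not a function of the uAI Research Portal.

```python
import numpy as np


def random_split(n_patients, train_frac=0.7, seed=0):
    """Unstratified random split of patient indices into training and validation sets."""
    rng = np.random.default_rng(seed)  # seed is arbitrary (assumption)
    order = rng.permutation(n_patients)
    n_train = int(round(train_frac * n_patients))  # round(0.7 * 259) = 181
    return order[:n_train], order[n_train:]


train_idx, val_idx = random_split(259)
print(len(train_idx), len(val_idx))  # 181 78
```

With 259 patients, 70% is 181.3, which rounds to the 181/78 partition reported above.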

2.2 Pathological assessment

IHC analysis was used to assess Ki-67 expression, defined as the percentage of positively stained tumor cells. Under high magnification (×400), Ki-67-positive tumor cells were counted, and Ki-67 expression in each field was calculated as (number of positive tumor cells / total number of tumor cells) × 100%. Values from five different fields were recorded and averaged. Previous studies have reported significant prognostic differences in NSCLC patients when a 40% Ki-67 cutoff is used (23–26), and several radiomics studies predicting Ki-67 levels have also adopted this threshold (27, 28). This study therefore defined high Ki-67 expression as ≥40% and low expression as <40%.
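The labelling-index arithmetic and the 40% cutoff can be sketched as follows. The per-field counts in the usage line are made-up numbers, and `ki67_index` and `ki67_group` are hypothetical helper names.

```python
def ki67_index(positive_counts, total_counts):
    """Mean Ki-67 labelling index (%) over high-power (x400) fields."""
    if not total_counts or len(positive_counts) != len(total_counts):
        raise ValueError("need matched, non-empty per-field cell counts")
    per_field = [100.0 * pos / tot for pos, tot in zip(positive_counts, total_counts)]
    return sum(per_field) / len(per_field)


def ki67_group(index_percent, cutoff=40.0):
    """Dichotomise at the 40% cutoff: >= cutoff is 'high', otherwise 'low'."""
    return "high" if index_percent >= cutoff else "low"


idx = ki67_index([45, 38, 52, 41, 40], [100] * 5)  # made-up counts from five fields
print(idx, ki67_group(idx))  # 43.2 high
```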

2.3 CT examination

CT examinations were performed on two scanners: a UCT530 40-row helical CT scanner (United Imaging Healthcare, Shanghai, China) at Center 1 and a Philips IQon Spectral 64-row helical CT scanner (Philips Medical Systems, Best, Netherlands) at Center 2. Scanning parameters at Center 1 were: tube voltage 120 kV, automatic tube current modulation (reference 80 mAs), pitch factor 1.075, rotation time 0.6 s/rev, collimation width 22 mm, acquisition matrix 512×512, reconstruction slice thickness/interval 1 mm, and lung window width/level 1200 HU/-600 HU. Center 2 used a tube voltage of 120 kV, automatic tube current modulation (reference 129 mAs), pitch factor 1.000, and rotation time 0.5 s/rev, with the same collimation width (22 mm), acquisition matrix (512×512), reconstruction slice thickness/interval (1 mm), and lung window settings (1200 HU/-600 HU).

2.4 Clinical data collection and CT radiological feature evaluation

Baseline clinical data were systematically documented, including demographic characteristics (gender, age, alcohol consumption, smoking history), tumor profiles (clinical stage, histological type), clinical symptoms (cough, sputum production, fever, breathing difficulty, chest tightness, headache, lymphadenopathy), and laboratory data (white blood cell count, lymphocyte count, platelet count, hemoglobin level, red blood cell count, creatinine level).

Two radiologists independently assessed the CT radiological features, defined as expert-based descriptions of lesion morphology, in a double-blind manner. The assessed features included tumor location, shape, maximum cross-sectional diameter, bronchial cutoff sign, lobulation, air bronchogram, spiculation, pleural tag, vacuole (or cystic component), and tumor density. Any disagreements were resolved by consensus.

2.5 Image segmentation

The uAI Research Portal (29) (version: 20240730, https://urp.united-imaging.com/; Shanghai United Imaging Intelligence Co., Ltd., Shanghai, China), a licensed commercial platform, was used for image segmentation and all subsequent classification modeling. This one-stop analysis system for multimodal clinical research images was developed in Python (version 3.7.3) and incorporates the widely used PyRadiomics package. Access to the platform was granted under our institutional license agreement with the vendor. Using the platform's integrated VB-Net model, regions of interest (ROIs) were automatically segmented and reconstructed into 3D volumes of interest (VOIs). This model has previously been validated for pulmonary nodule segmentation (average Dice similarity coefficient [DSC]: 0.915) (30). In addition, a three-tier arbitration mechanism was established: two radiologists with 5 and 8 years of experience independently corrected the ROIs slice by slice, with an inter-observer DSC of 0.887. Where inter-observer agreement was high (DSC ≥ 0.700), the contours of the more experienced radiologist (8 years) were used; where agreement was low (DSC < 0.700), a senior radiologist with more than 15 years of experience arbitrated and finalized the contours.
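The DSC and the arbitration rule can be expressed in a few lines of NumPy. The sketch assumes binary masks on the same voxel grid; `arbitrate` is a hypothetical callback standing in for the senior radiologist's review, and the function names are illustrative.

```python
import numpy as np


def dice(mask_a, mask_b):
    """Dice similarity coefficient, 2|A∩B| / (|A| + |B|), for two binary masks."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return 2.0 * np.logical_and(a, b).sum() / denom


def final_contour(mask_5y, mask_8y, arbitrate, threshold=0.700):
    """Keep the 8-year reader's contour if DSC >= threshold, otherwise defer to arbitration."""
    if dice(mask_5y, mask_8y) >= threshold:
        return mask_8y
    return arbitrate(mask_5y, mask_8y)


a = np.zeros((10, 10), dtype=bool)
a[2:6, 2:6] = True
b = np.zeros((10, 10), dtype=bool)
b[3:7, 3:7] = True
print(dice(a, b))  # 0.5625, below 0.700, so this case would go to arbitration
```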

2.6 Feature extraction and selection

2.6.1 Radiomic feature extraction and selection

Before feature extraction, all CT images underwent a standardized preprocessing pipeline: resampling to the median voxel size of 0.7×0.7×1 mm³, gray-level discretization with a fixed bin width of 25, and windowing with a width of 1500 HU and a level of -600 HU. Radiomic features were then extracted from the segmented VOIs in compliance with the Image Biomarker Standardization Initiative (IBSI) guidelines. A total of 2264 features were extracted in three groups: 104 shape-based features, 432 first-order statistical features, and 1728 second-order texture features.
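The windowing and fixed-bin-width discretization steps can be sketched with NumPy. This is an illustration under assumptions: it applies the IBSI fixed-bin-size formula, in which bin 1 starts at the lowest intensity inside the ROI, assumes windowing is applied before discretization, and omits resampling. PyRadiomics and the platform may order or implement these steps differently, and the helper names are hypothetical.

```python
import numpy as np


def apply_window(hu, width=1500.0, level=-600.0):
    """Clip HU values to [level - width/2, level + width/2], i.e. [-1350, 150] HU here."""
    lo, hi = level - width / 2.0, level + width / 2.0
    return np.clip(hu, lo, hi)


def discretize_fixed_bin_width(values, bin_width=25.0):
    """IBSI fixed bin size: floor(x / w) - floor(x_min / w) + 1, so the lowest bin is 1."""
    v = np.asarray(values, dtype=float)
    return (np.floor(v / bin_width) - np.floor(v.min() / bin_width) + 1).astype(int)


roi = np.array([-1400.0, -1000.0, -600.0, -575.0, 200.0])  # made-up ROI voxel values
print(discretize_fixed_bin_width(apply_window(roi)))  # [ 1 15 31 32 61]
```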

To avoid data leakage and overfitting, feature selection was conducted exclusively on the training set in four steps: (1) Z-score normalization of all features; (2) removal of features with near-zero variance or high inter-correlation (Pearson's r > 0.8); (3) selection of outcome-associated features with the SelectKBest method (F-statistic); and (4) identification of the most robust, non-redundant feature set for modeling using the least absolute shrinkage and selection operator (LASSO) with 10-fold cross-validation.
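A compact scikit-learn sketch of the four-step selection is shown below. The value k = 20 for SelectKBest, the greedy keep-first rule for correlated pairs, the 1e-8 variance tolerance, and fitting `LassoCV` directly on 0/1 labels are assumptions, since the text does not report them; `select_features` and `drop_correlated` are hypothetical helpers. Every step is fitted on the training set only.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LassoCV
from sklearn.preprocessing import StandardScaler


def drop_correlated(X, r_max=0.8):
    """Greedily keep a column only if |Pearson r| <= r_max with every column kept so far."""
    corr = np.atleast_2d(np.abs(np.corrcoef(X, rowvar=False)))
    keep = []
    for j in range(X.shape[1]):
        if all(corr[j, k] <= r_max for k in keep):
            keep.append(j)
    return np.array(keep, dtype=int)


def select_features(X_train, y_train, k=20, r_max=0.8, seed=0):
    """Return the fitted scaler, selected column indices, LASSO coefficients and intercept."""
    X_train = np.asarray(X_train, dtype=float)
    # Step 1: z-score normalisation, fitted on the training set only.
    scaler = StandardScaler().fit(X_train)
    Z = scaler.transform(X_train)
    # Step 2a: drop (near-)constant features; checked on raw values because
    # every non-constant feature has unit variance after z-scoring.
    idx = np.flatnonzero(X_train.std(axis=0) > 1e-8)
    # Step 2b: drop features highly correlated with an already kept feature.
    idx = idx[drop_correlated(Z[:, idx], r_max)]
    # Step 3: keep the k features with the largest ANOVA F-statistic.
    kbest = SelectKBest(f_classif, k=min(k, idx.size)).fit(Z[:, idx], y_train)
    idx = idx[kbest.get_support()]
    # Step 4: LASSO with 10-fold CV; features with non-zero coefficients survive.
    lasso = LassoCV(cv=10, random_state=seed).fit(Z[:, idx], y_train)
    nonzero = lasso.coef_ != 0
    return scaler, idx[nonzero], lasso.coef_[nonzero], lasso.intercept_
```

On held-out sets, `scaler.transform` is reused with the training-set mean and SD, so no information from validation or test patients enters selection.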

The radiomics score (rad-score) was computed as a linear combination of the LASSO-selected features, weighted by their coefficients. The formula for the rad-score is as follows (Equation 1):

$$\text{Rad-score} = \sum_{i=1}^{n} \beta_i x_i + b \qquad (1)$$

where $n$ is the number of selected features, $x_i$ and $\beta_i$ are the Z-score standardized value and the corresponding LASSO regression coefficient of the $i$-th feature, respectively, and $b$ is the intercept of the model.
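Equation 1 is a weighted sum, shown here as a short NumPy sketch with made-up coefficients (the study's actual features and coefficients are listed in Supplementary Table S3). The same arithmetic applies to the deep-score in Equation 3. `linear_score` is a hypothetical helper.

```python
import numpy as np


def linear_score(z_features, coefficients, intercept):
    """Score = sum_i beta_i * x_i + b for z-scored features; one score per row."""
    z = np.atleast_2d(np.asarray(z_features, dtype=float))
    return z @ np.asarray(coefficients, dtype=float) + float(intercept)


# Made-up values for four selected features of one patient.
score = linear_score([1.0, 0.5, -2.0, 0.25], [0.12, -0.08, 0.05, 0.20], 0.42)
```

Here 0.12 - 0.04 - 0.10 + 0.05 + 0.42 gives a score of about 0.45.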

2.6.2 Deep learning feature extraction and selection

A deep residual network (ResNet18) was implemented for feature extraction and trained from scratch, without pre-trained weights or transfer learning. The original CT images underwent multi-stage preprocessing (see Supplementary Text 1 for details). The preprocessed images were fed into ResNet18, which was optimized with a hybrid loss function combining Focal Loss and Dice Loss. Focal Loss served as the primary classification objective, while Dice Loss, computed between class activation maps (CAM) and the ground-truth lesion regions, guided the network to focus on lesion areas, with the aim of improving both interpretability and classification accuracy. The total loss is defined as (Equation 2):

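$$\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{Focal}} + \lambda\,\mathcal{L}_{\text{Dice}} \qquad (2)$$

where $\lambda$ is an empirically determined weighting coefficient (default $\lambda = 0.05$) that controls the relative contribution of the Dice loss.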
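A framework-agnostic NumPy sketch of Equation 2 follows. It assumes the commonly used focal-loss defaults α = 0.25 and γ = 2 (not reported in the text), a CAM already normalised to [0, 1], and a soft Dice formulation; in actual training these terms would be written in an autodiff framework. Function names are hypothetical.

```python
import numpy as np


def focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-7):
    """Mean binary focal loss; p = predicted P(high Ki-67), y in {0, 1}."""
    p = np.clip(np.asarray(p, dtype=float), eps, 1.0 - eps)
    y = np.asarray(y, dtype=float)
    p_t = np.where(y == 1, p, 1.0 - p)              # probability of the true class
    alpha_t = np.where(y == 1, alpha, 1.0 - alpha)  # class-balancing weight
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))


def soft_dice_loss(cam, mask, eps=1e-6):
    """1 - soft Dice between a [0, 1]-normalised CAM and the binary lesion mask."""
    cam = np.asarray(cam, dtype=float)
    mask = np.asarray(mask, dtype=float)
    inter = np.sum(cam * mask)
    return float(1.0 - (2.0 * inter + eps) / (np.sum(cam) + np.sum(mask) + eps))


def total_loss(p, y, cam, mask, lam=0.05):
    """Equation 2: focal loss plus lambda-weighted Dice loss."""
    return focal_loss(p, y) + lam * soft_dice_loss(cam, mask)
```

With γ = 0 and α = 0.5, the focal term reduces to half the ordinary binary cross-entropy, which is a convenient sanity check.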

The model was trained using the AdamW optimizer (β1 = 0.9, β2 = 0.999, weight decay = 0.01) with a step-based learning rate scheduler (step size: 1000 epochs; decay factor: 0.1) for dynamic learning rate adjustment. Early stopping was used to prevent overfitting. After training, the model with the highest validation AUC was selected as the final model for both end-to-end classification performance evaluation and deep feature extraction.
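The step schedule and checkpoint selection can be sketched in plain Python. The initial learning rate (1e-3) and the early-stopping patience are assumptions, since neither is reported; both helpers are hypothetical. In PyTorch, the same schedule corresponds to `torch.optim.lr_scheduler.StepLR` with `step_size=1000` and `gamma=0.1`, stepped once per epoch.

```python
def step_lr(epoch, base_lr=1e-3, step_size=1000, gamma=0.1):
    """Learning rate after multiplying by gamma once every step_size epochs."""
    return base_lr * gamma ** (epoch // step_size)


def best_epoch_with_early_stopping(val_aucs, patience=50):
    """Return (best_epoch, stop_epoch): stop after `patience` epochs without a new best validation AUC."""
    best_auc, best_epoch = float("-inf"), -1
    for epoch, auc in enumerate(val_aucs):
        if auc > best_auc:
            best_auc, best_epoch = auc, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    return best_epoch, len(val_aucs) - 1


print(best_epoch_with_early_stopping([0.60, 0.70, 0.72, 0.71, 0.70, 0.69], patience=3))  # (2, 5)
```

The checkpoint from `best_epoch` (highest validation AUC), not the last epoch, is the one kept.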

For deep feature extraction, the global average pooling layer was selected as the feature representation layer. Input CT images were preprocessed and fed into the finalized model (with frozen parameters), and the activations from the global average pooling layer were extracted as 256-dimensional feature vectors. Throughout this process, all model weights remained fixed without fine-tuning.
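Global average pooling averages each channel of the final convolutional feature map into a single number. The NumPy sketch below assumes a 3D (channels, depth, height, width) map with 256 channels, matching the 256-dimensional vectors reported above; the spatial sizes are arbitrary and `global_average_pool` is a hypothetical helper.

```python
import numpy as np


def global_average_pool(feature_map):
    """Collapse a (channels, depth, height, width) activation map to one value per channel."""
    fm = np.asarray(feature_map, dtype=float)
    return fm.reshape(fm.shape[0], -1).mean(axis=1)


fm = np.random.default_rng(0).normal(size=(256, 4, 7, 7))  # stand-in activations
deep_features = global_average_pool(fm)  # shape (256,)
```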

Deep learning feature selection comprised three steps: (1) Z-score normalization of all features; (2) evaluation of feature relevance with the Relief algorithm; and (3) final selection and weighting of the most discriminative features using LASSO regression.
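For readers unfamiliar with Relief, the sketch below implements the basic binary-class algorithm of Kira and Rendell: for each sampled case, a feature's weight rises when it differs from the nearest case of the other class (nearest miss) and falls when it differs from the nearest case of the same class (nearest hit). The Manhattan distance, min-max normalisation, and sampling every case once are assumptions; the platform may use a variant such as ReliefF. `relief` is a hypothetical helper.

```python
import numpy as np


def relief(X, y, n_iter=None, seed=0):
    """Basic Relief feature weights for a binary outcome; larger means more relevant."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    n, p = X.shape
    span = X.max(axis=0) - X.min(axis=0)
    span[span == 0] = 1.0          # constant features contribute zero difference anyway
    Xn = X / span                  # so |a - b| equals the range-normalised diff()
    m = n if n_iter is None else n_iter
    rng = np.random.default_rng(seed)
    weights = np.zeros(p)
    for i in rng.choice(n, size=m, replace=m > n):
        dist = np.abs(Xn - Xn[i]).sum(axis=1)  # Manhattan distance to every case
        dist[i] = np.inf                       # never pick the sampled case itself
        same = y == y[i]
        hit = np.argmin(np.where(same, dist, np.inf))
        miss = np.argmin(np.where(~same, dist, np.inf))
        weights += (np.abs(Xn[i] - Xn[miss]) - np.abs(Xn[i] - Xn[hit])) / m
    return weights
```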

The deep learning score (deep-score) was then computed as a linear combination of these selected features, weighted by their LASSO coefficients, following the formula (Equation 3):

$$\text{Deep-score} = \sum_{i=1}^{n} \beta_i x_i + b \qquad (3)$$

where $n$ is the number of selected features, $x_i$ and $\beta_i$ are the Z-score standardized value and the corresponding LASSO regression coefficient of the $i$-th feature, respectively, and $b$ is the intercept of the model.

2.7 Model development and validation

All modeling was performed within the uAI Research Portal using the open-source scikit-learn library (version 0.23.2). To ensure a fair comparison of predictive performance across feature modalities, the clinical-radiological (based on independent clinical-radiological features), radiomics (using the rad-score), and deep learning (using the deep-score) models were all developed with a support vector machine (SVM) classifier. SVM is well suited to high-dimensional data and can model non-linear relationships. For the combined model integrating clinical-radiological features, rad-score, and deep-score, the SVM classifier was compared with four other algorithms: Decision Tree, Random Forest, XGBoost, and Logistic Regression. The SVM performed best on the validation set (Supplementary Table S1) and was therefore selected. Hyperparameters of all models were optimized by grid search, as detailed in Supplementary Table S2.
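A hedged scikit-learn sketch of an SVM tuned by grid search is shown below. The parameter grid, the 5-fold stratified inner cross-validation, AUC as the selection metric, and the scaling step are assumptions standing in for Supplementary Table S2; `fit_svm` is a hypothetical helper. `probability=True` makes the SVM output Platt-scaled probabilities, which ROC, calibration, DCA, and SHAP analyses require.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def fit_svm(X_train, y_train, seed=0):
    """Grid-search an RBF/linear SVM by cross-validated AUC; returns the fitted search."""
    pipe = make_pipeline(StandardScaler(), SVC(probability=True, random_state=seed))
    grid = {  # hypothetical grid, not the study's Table S2
        "svc__kernel": ["linear", "rbf"],
        "svc__C": [0.1, 1.0, 10.0],
        "svc__gamma": ["scale", 0.01, 0.1],  # ignored by the linear kernel
    }
    cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=seed)
    search = GridSearchCV(pipe, grid, scoring="roc_auc", cv=cv)
    return search.fit(X_train, y_train)
```

For the combined model, the input columns would be the independent clinical-radiological features plus the rad-score and deep-score; `search.best_estimator_.predict_proba(X)[:, 1]` then gives P(high Ki-67).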

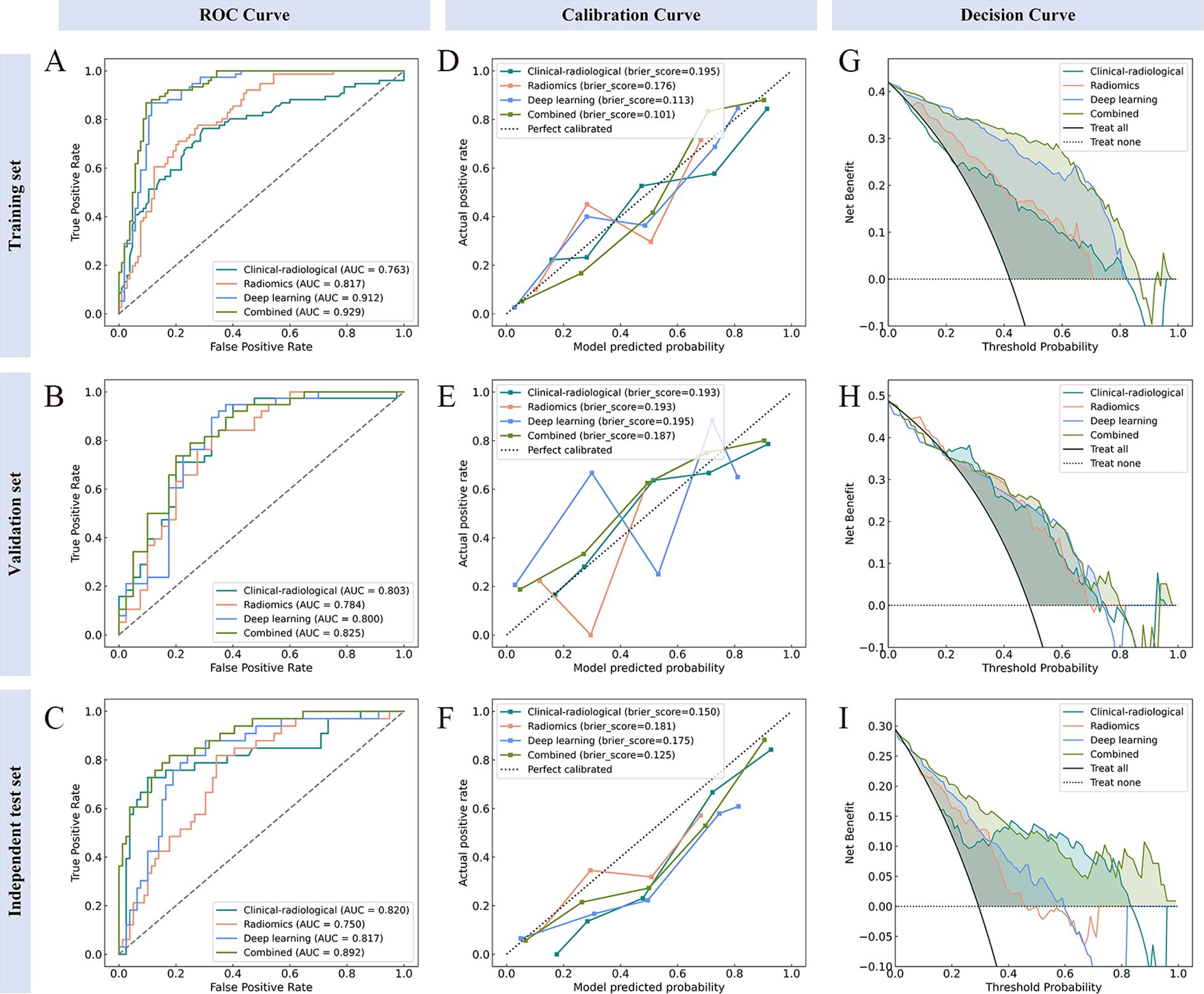

Receiver operating characteristic (ROC) curves were plotted, and the area under the curve (AUC), specificity, sensitivity, and accuracy were calculated. Calibration curves and the Brier score were used to assess the agreement between predicted probabilities and pathological outcomes. Decision curve analysis (DCA) was used to quantify the clinical net benefit across threshold probabilities from 0% to 100%.
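The Brier score and the net benefit plotted in a decision curve can be computed directly. The sketch below uses the standard definition NB = TP/n - FP/n × pt/(1 - pt); because this is undefined at pt = 100%, thresholds must stay strictly below 1. Function names are hypothetical.

```python
import numpy as np


def brier_score(y, p):
    """Mean squared difference between predicted probability and the 0/1 outcome."""
    y = np.asarray(y, dtype=float)
    p = np.asarray(p, dtype=float)
    return float(np.mean((p - y) ** 2))


def net_benefit(y, p, thresholds):
    """Net benefit of calling a case 'high Ki-67' when p >= pt, for each pt in (0, 1)."""
    y = np.asarray(y).astype(bool)
    p = np.asarray(p, dtype=float)
    n = y.size
    out = []
    for pt in thresholds:
        pred = p >= pt
        tp = np.sum(pred & y)
        fp = np.sum(pred & ~y)
        out.append(tp / n - fp / n * pt / (1.0 - pt))  # false positives weighted by the odds of pt
    return np.array(out)


def net_benefit_treat_all(y, thresholds):
    """Reference curve for labelling every patient as high expression ('treat none' is 0)."""
    prev = float(np.mean(np.asarray(y, dtype=float)))
    return np.array([prev - (1.0 - prev) * pt / (1.0 - pt) for pt in thresholds])
```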

2.8 Model interpretation

SHAP is an open-source Python library (RRID: SCR_021362) for machine learning model interpretation. It quantifies the contribution of each feature to model outputs by computing Shapley values. In this study, feature summary plots, beeswarm plots, decision plots, and force plots were generated to provide global and local interpretations.
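To show what the plotted SHAP values represent, the sketch below computes exact Shapley values by enumerating every feature coalition, replacing "absent" features with a single baseline vector. This is a swapped-in, simplified technique: the `shap` library's model-agnostic explainers approximate these values against a background dataset, and exhaustive enumeration is only practical for a handful of features. `exact_shapley` is a hypothetical helper.

```python
from itertools import combinations
from math import factorial


def exact_shapley(f, x, baseline):
    """Exact Shapley values of f at x; features outside a coalition take baseline values."""
    p = len(x)

    def value(subset):
        z = [x[j] if j in subset else baseline[j] for j in range(p)]
        return f(z)

    phi = [0.0] * p
    for i in range(p):
        others = [j for j in range(p) if j != i]
        for size in range(p):
            weight = factorial(size) * factorial(p - size - 1) / factorial(p)
            for s in combinations(others, size):
                s = set(s)
                phi[i] += weight * (value(s | {i}) - value(s))  # marginal contribution of i
    return phi


phi = exact_shapley(lambda z: 2 * z[0] + 3 * z[1] - z[2] + 1, [1, 2, 3], [0, 0, 0])
```

For a linear model with a zero baseline, each value equals coefficient × feature value (here about 2, 6, and -3), and the values sum to f(x) - f(baseline), the additivity that force plots display.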

2.9 Statistical analysis

The Kolmogorov–Smirnov test was applied to assess the normality of data distributions. Normally distributed data were presented as mean ± standard deviation (x̄ ± s), whereas non-normally distributed data were reported as median with interquartile range [M (Q25, Q75)].
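The choice between mean ± SD and median (Q25, Q75) can be sketched with SciPy. It assumes the Kolmogorov–Smirnov test is run on z-scored data against a standard normal at α = 0.05; because the mean and SD are estimated from the same sample, this plain KS test is conservative (the Lilliefors correction addresses this), and SPSS may apply its own variant. `describe` is a hypothetical helper.

```python
import numpy as np
from scipy import stats


def describe(values, alpha=0.05):
    """Format as 'mean ± SD' if the KS test does not reject normality, else 'median (Q25, Q75)'."""
    v = np.asarray(values, dtype=float)
    z = (v - v.mean()) / v.std(ddof=1)
    p_normal = stats.kstest(z, "norm").pvalue
    if p_normal >= alpha:
        return f"{v.mean():.1f} ± {v.std(ddof=1):.1f}"
    q25, med, q75 = np.percentile(v, [25, 50, 75])
    return f"{med:.1f} ({q25:.1f}, {q75:.1f})"
```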

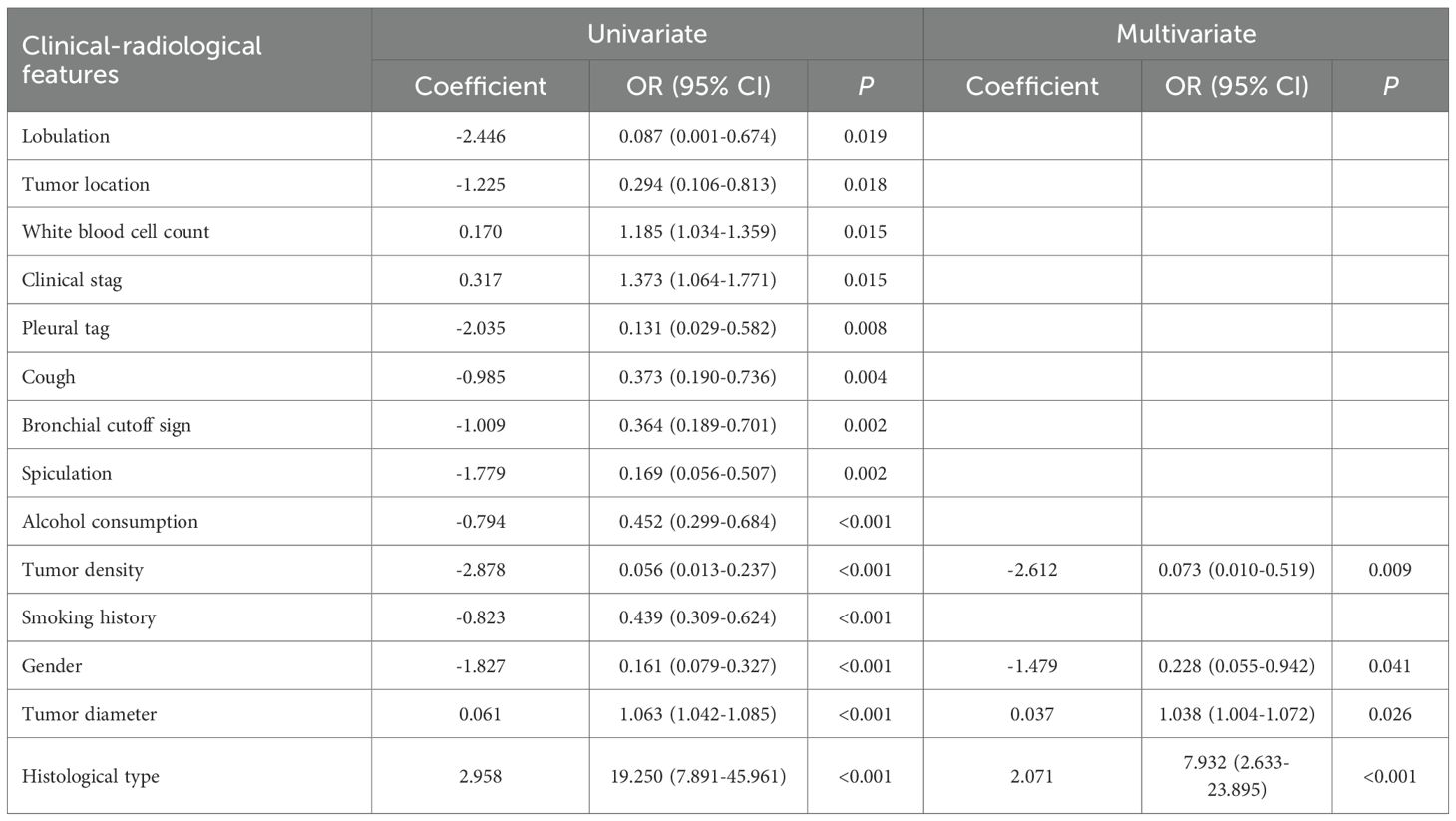

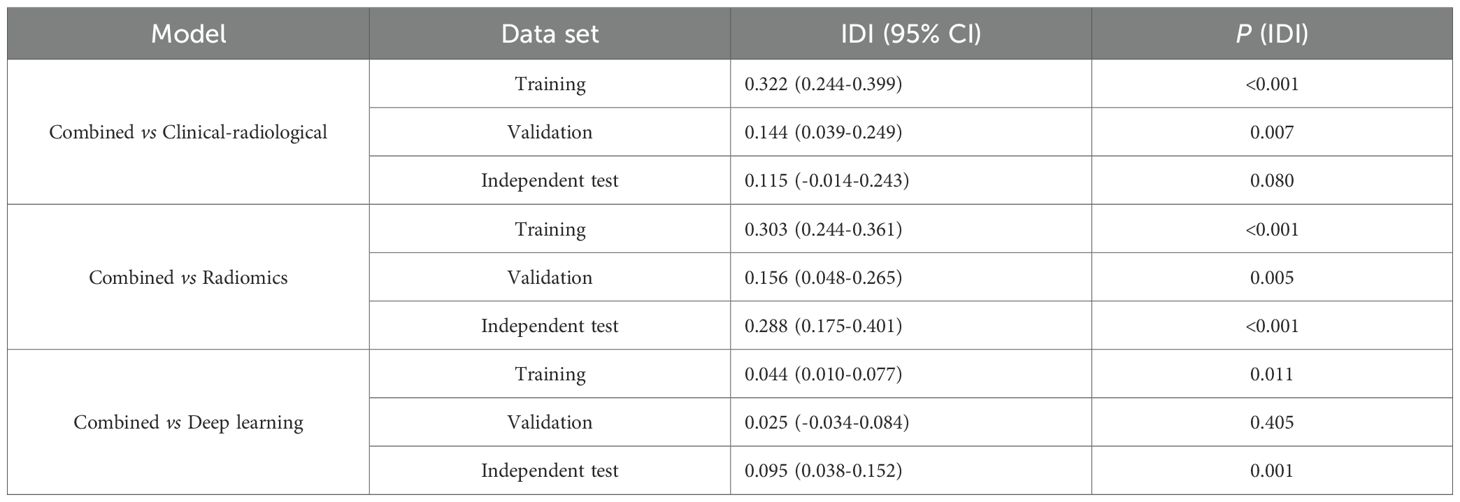

Univariate analysis was performed in the training set to assess the association of each clinical and radiological feature with the outcome. Continuous variables were compared using Student's t-test or the Mann-Whitney U test, and categorical variables using the chi-square test or Fisher's exact test. Features with p < 0.05 in univariate analysis were entered into multivariate logistic regression to identify clinical and radiological features independently associated with Ki-67 expression level. The combined model was compared with each individual model using the integrated discrimination improvement (IDI) index, which quantifies the net improvement in predicted probabilities. Statistical analyses were performed using the uAI Research Portal and SPSS statistical software (version 26.0, https://www.ibm.com). A p-value < 0.05 was considered statistically significant.
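The IDI measures how much a new model widens the gap between the mean predicted probabilities of events (high Ki-67) and non-events. A minimal NumPy sketch of the point estimate follows, using the definition of Pencina and colleagues; significance testing (e.g., a z-statistic or bootstrap) is omitted, `idi` is a hypothetical helper, and the probabilities are made up.

```python
import numpy as np


def idi(y, p_new, p_old):
    """IDI = (mean gain in p among events) - (mean gain in p among non-events)."""
    y = np.asarray(y).astype(bool)
    p_new = np.asarray(p_new, dtype=float)
    p_old = np.asarray(p_old, dtype=float)
    gain_events = p_new[y].mean() - p_old[y].mean()
    gain_nonevents = p_new[~y].mean() - p_old[~y].mean()
    return float(gain_events - gain_nonevents)


value = idi([1, 1, 0, 0], [0.9, 0.8, 0.2, 0.1], [0.7, 0.6, 0.3, 0.3])
```

Here events gain 0.20 on average while non-events drop by 0.15, so the IDI is about 0.35.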

3 Results

3.1 Clinical-radiological feature and clinical-radiological model

The clinical-radiological features of the three datasets are summarized in Table 1. IHC stratification showed high Ki-67 expression (≥40%) in 42.0% (76/181), 48.7% (38/78), and 29.5% (33/112) of cases in the training, validation, and independent test sets, respectively. Multivariate analysis identified histologic type (p<0.001), diameter (p=0.026), density (p=0.009), and gender (p=0.041) as independent clinical-radiological predictors of Ki-67 expression level (Table 2). The clinical-radiological model achieved AUC values of 0.763 (training set), 0.803 (validation set), and 0.820 (independent test set), as detailed in Table 3.

3.2 Prediction performance of the radiomics model

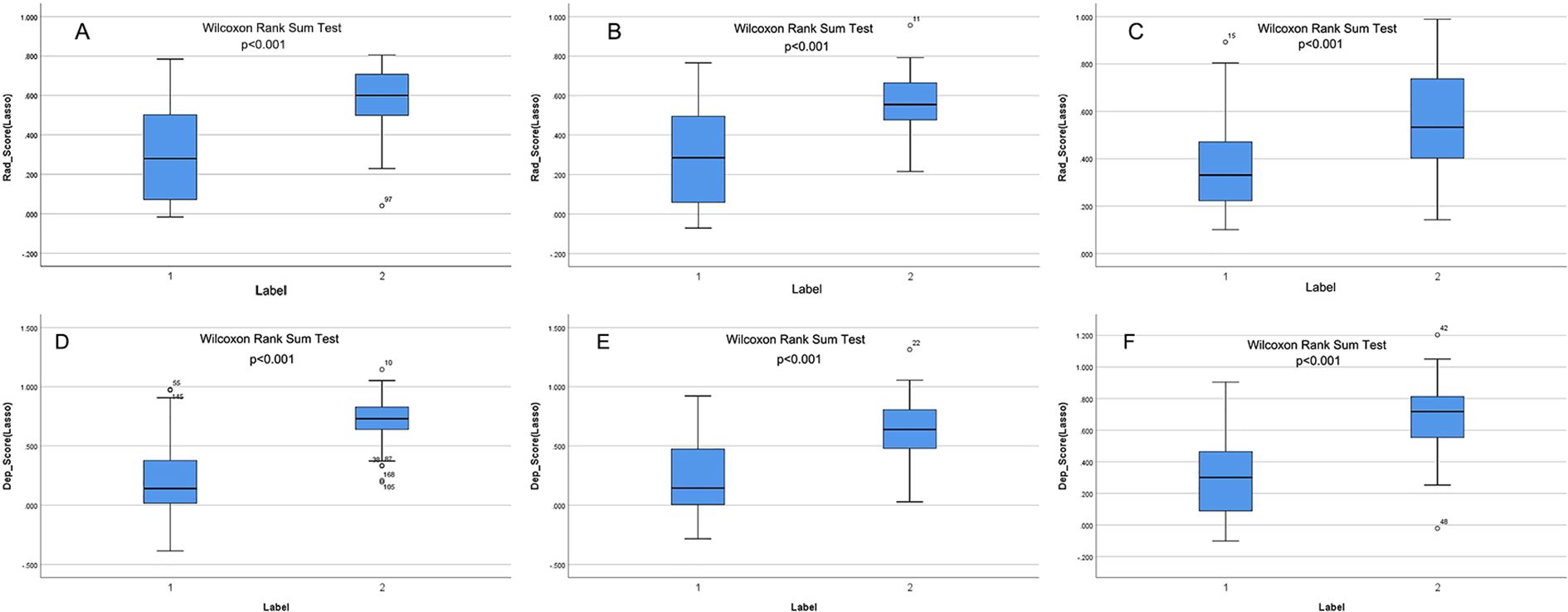

Following feature selection, four robust radiomics features were retained to compute the rad-score (Supplementary Table S3). The high-expression group exhibited a significantly greater rad-score compared to the low-expression group across all three cohorts (training, validation, and independent test; all p<0.001), as shown in Figures 2A–C. The radiomics model achieved AUC values of 0.817 in the training set, 0.784 in the validation set, and 0.750 in the independent test set (Table 3).

Figure 2. Rad-score (A–C) and deep-score (D–F) in the training, validation, and independent test sets, respectively. Label 1 and Label 2 indicate the low- and high-expression groups, respectively.

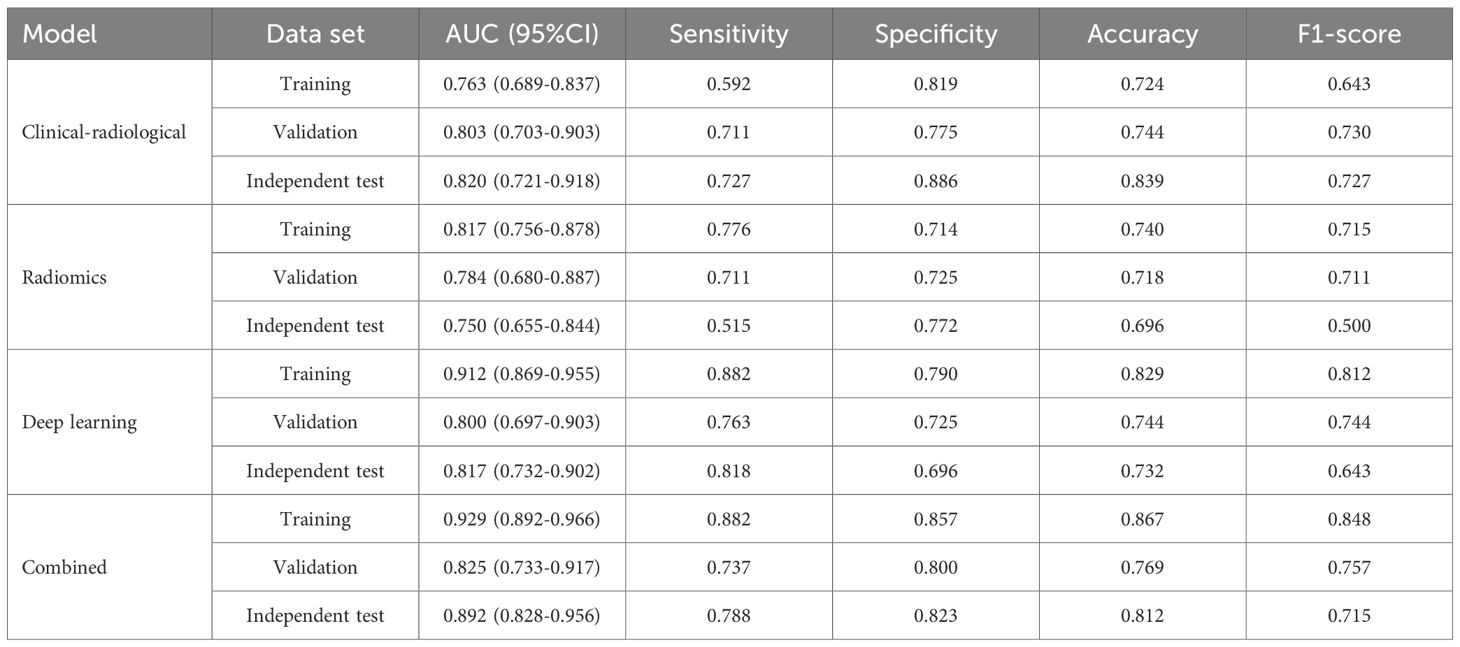

3.3 Prediction performance of the deep learning model

The CAM module was used to visualize the attention regions that the deep learning network focused on when making classification decisions (Figure 3; a detailed explanation is provided in Supplementary Text 2). Four discriminative deep learning features were retained to compute the deep-score (Supplementary Table S4). The high-expression group exhibited a significantly greater deep-score compared to the low-expression group across all three cohorts (all p<0.001), as shown in Figures 2D–F. The AUCs of the training, validation, and independent test sets of the deep learning model were 0.912, 0.800, and 0.817, respectively (Table 3).

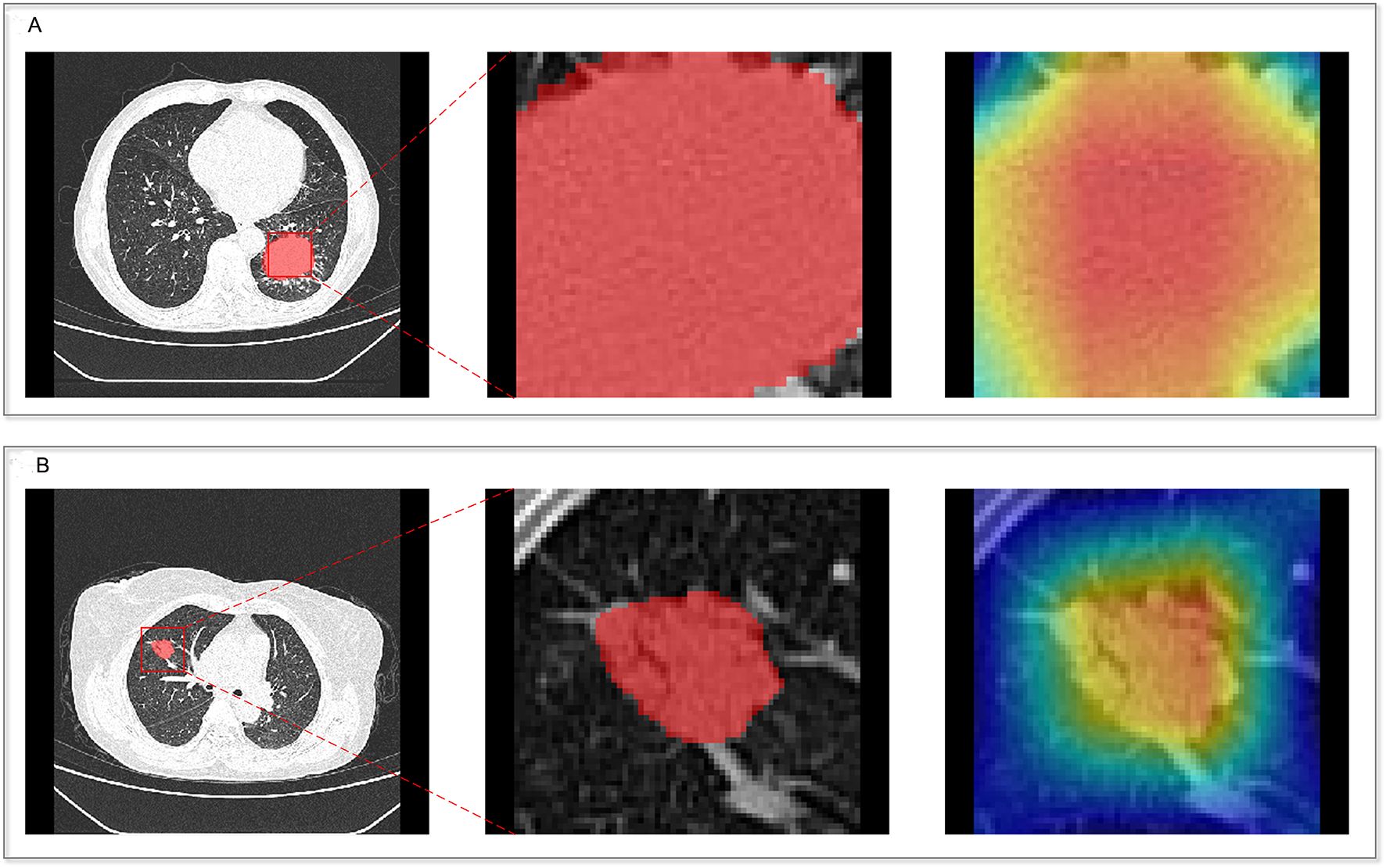

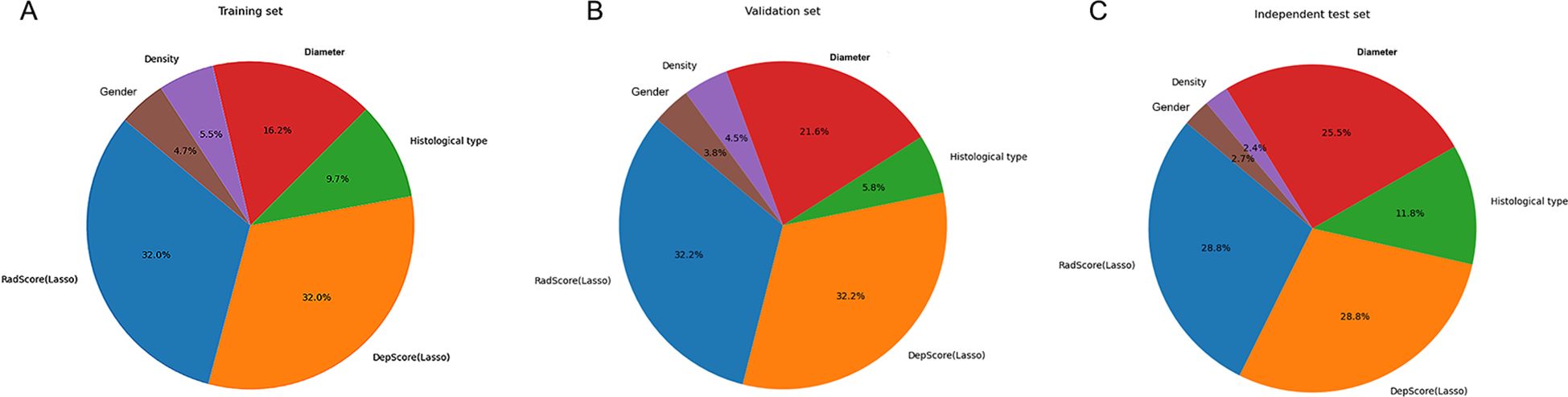

3.4 Prediction performance of the combined model

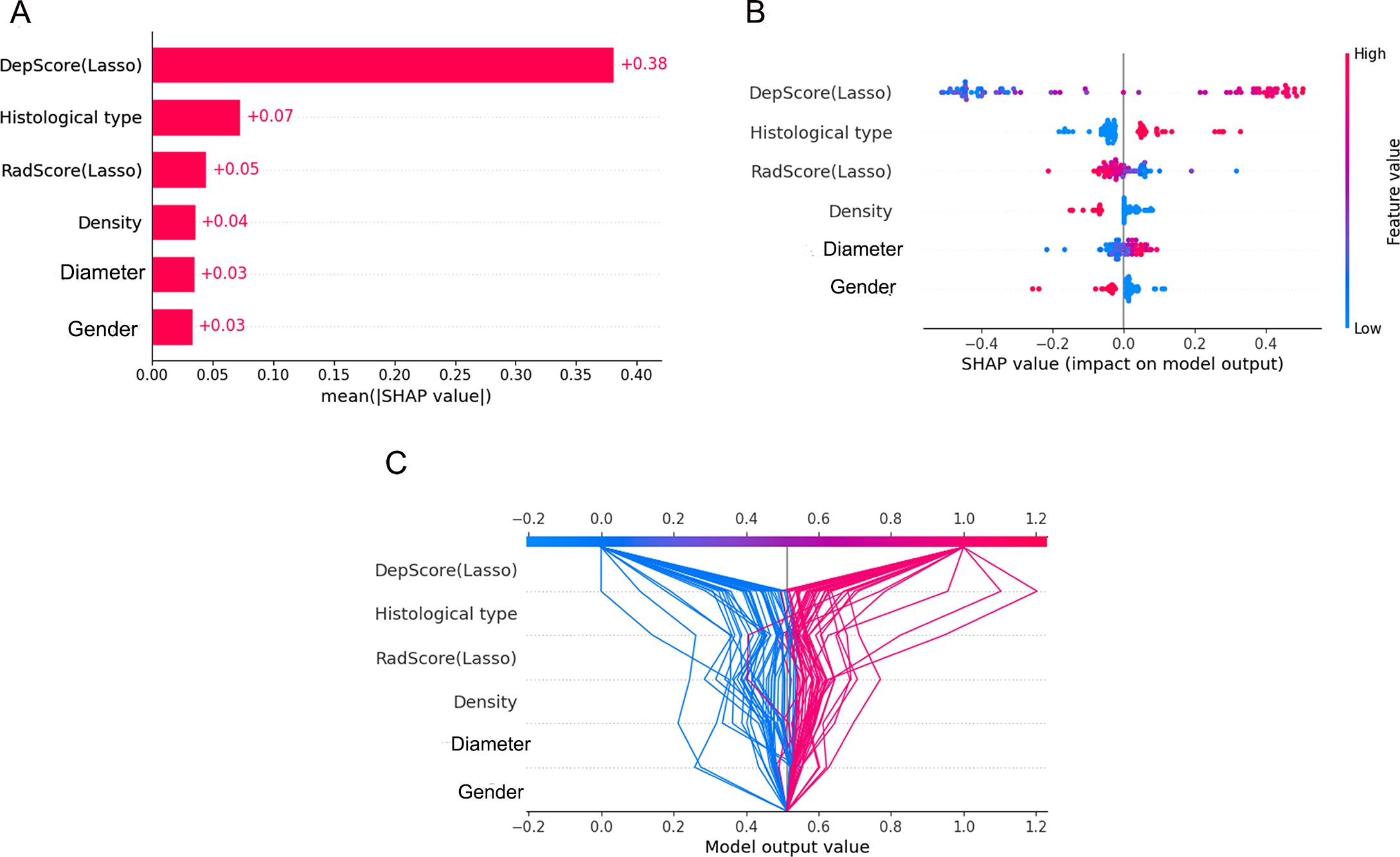

The combined model achieved AUC values of 0.929 in the training set, 0.825 in the validation set, and 0.892 in the independent test set (Table 3, Figures 4A–C). In the independent test set, the combined model significantly improved overall predictive performance, with IDI values of 0.115, 0.288, and 0.095 relative to the clinical-radiological, radiomics, and deep learning models, respectively (Table 4). DCA showed that the combined model provided a greater clinical net benefit than all the other models (Figures 4D–F). The calibration curves showed good agreement between the combined model's predictions and the pathology results (Figures 4G–I), with Brier scores of 0.101 (training set), 0.187 (validation set), and 0.125 (independent test set), indicating good probabilistic calibration. Among all features, deep-score and rad-score contributed the most to the prediction (Figures 5A–C).

Figure 4. Performance evaluation of the model across different datasets. ROC (A–C), calibration (D–F), and decision curve analysis (G–I) curves for the training, validation, and independent test sets, respectively.

Figure 5. The relative contribution of features in the training (A), validation (B), and independent test (C) sets.

3.5 Model visualization and interpretation

For global feature analysis, the importance plot (Figure 6A) ranked features by their relative significance, with deep-score being the most influential; the beeswarm plot (Figure 6B) displayed the SHAP value of each sample for each feature (positive values increased the probability of high Ki-67 expression, while negative values reduced it); and the decision plot (Figure 6C) illustrated the cumulative impact of features on model predictions, with gray lines denoting baseline values, red trajectories representing positive samples (high-expression group), and blue trajectories representing negative samples (low-expression group).

Figure 6. Global visualization plots. (A–C) Feature importance plot, beeswarm plot, and decision plot, respectively.

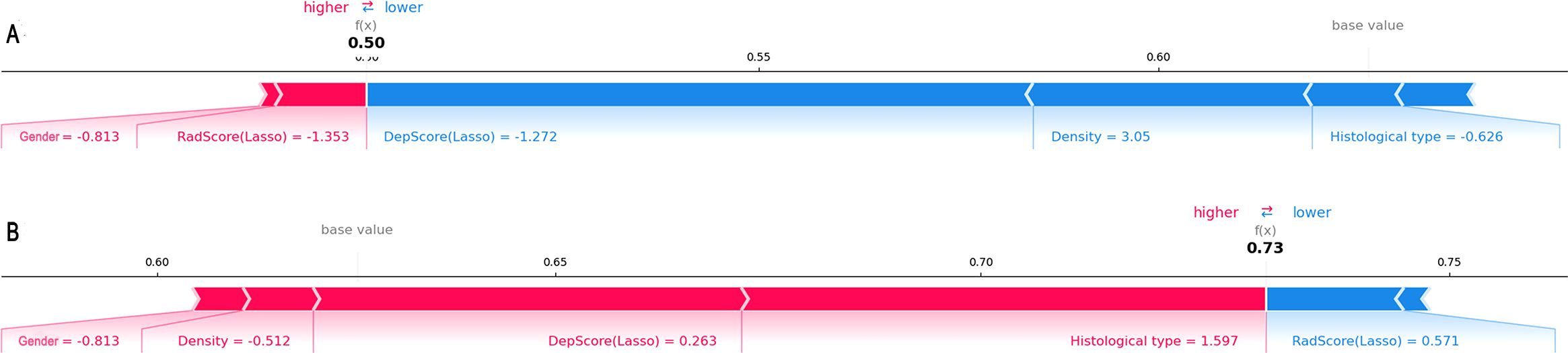

For individual visualization, force plots (Figures 7A, B) illustrated the model’s decision-making process for both high- and low-expression cases. Score calculation started from the baseline value, with each feature represented as a directional force via SHAP values. Feature contributions were quantified by colored bars: bar length corresponded proportionally to the feature’s influence magnitude on the final prediction value f(x). Specifically, red bars indicated increased probability of high expression, while blue bars denoted decreased probability. These directional forces were then cumulatively aggregated to yield the overall effect.

Figure 7. Force plots illustrating individual predictions. Shown are two representative samples from the low-expression group (A) and the high-expression group (B).

4 Discussion

In this study, we developed a combined model integrating clinical-radiological, radiomics, and deep learning features to non-invasively characterize intratumoral heterogeneity and predict Ki-67 expression level in NSCLC. The combined model outperformed the single-modality models and maintained robust generalizability, with an AUC of 0.892 in the independent test set. SHAP analysis elucidated the combined model's decision-making process, improving its transparency and supporting its clinical credibility. This approach may help clinicians preoperatively evaluate tumor invasiveness and optimize personalized therapeutic strategies.

NSCLC with different Ki-67 expression levels exhibits distinct biological behaviors and gene expression patterns. These visually imperceptible differences can be captured by medical imaging and quantified through high-throughput feature extraction, helping to clarify the association between imaging features and the underlying pathophysiological processes (31, 32). Previous studies have explored the value of CT-derived radiomics features for predicting Ki-67 expression levels, reporting test set AUCs of 0.77 to 0.84 (15, 27, 33). However, conventional radiomics features rely predominantly on predefined mathematical formulations and are susceptible to technical variability, such as image noise, manual segmentation variability, and CT scan parameters (34). In contrast, deep learning algorithms can autonomously learn high-level abstract representations from images, overcoming some limitations of handcrafted features; this advantage has driven their widespread use in recent medical research (20, 35). Building on these foundations, our study used the ResNet18 convolutional neural network to extract deep learning features from CT images for Ki-67 prediction. More importantly, we integrated diverse feature types through machine learning to achieve multi-modal information fusion. The combined model showed significantly enhanced predictive performance, underscoring the complementary value of multi-modal features. Similarly, Liu et al. (36) fused radiomics and deep learning features from DCE-MRI images and found that the fusion improved the accuracy of preoperative lymph node metastasis prediction in breast cancer, and Kim et al. (37) combined deep learning and radiomics features extracted from CT images to predict epidermal growth factor receptor mutation status in NSCLC patients, showing that this combination was feasible.

In clinical practice, lung cancer treatment decisions should rely on robust evidence rather than algorithm-generated probabilities. This underscores the critical need to address interpretability challenges inherent in machine learning’s “black-box modeling.” Our study used SHAP technology to enhance the combined model’s interpretability, providing transparency into feature contributions and the model’s decision-making process. Results showed significantly higher deep-score and rad-score in the high-Ki-67 expression group than the low-expression group, consistent with previous research (27). Deep-score and rad-score are typically positively correlated with tumor heterogeneity (38). NSCLC with high Ki-67 expression exhibits stronger proliferative, infiltrative, and invasive abilities, resulting in more complex intra-tumoral texture and greater heterogeneity (9, 39). The high-Ki-67 expression group primarily included males, squamous cell carcinoma cases, and solid tumors with larger diameters, consistent with previous research (28, 40). Warth et al. (41) evaluated Ki-67 expression in three large independent NSCLC cohorts (total n=1,065) and found that squamous cell carcinoma had a mean expression level (52.8%) twice that of adenocarcinoma (25.8%). A meta-analysis further confirmed higher Ki-67 expression in squamous cell carcinoma than in adenocarcinoma (7). Additionally, more solid tumor components and larger diameters indicate more aggressive tumor biology, hence higher Ki-67 expression compared to non-solid tumors and smaller lesions (27, 42). Previous studies reported that CT radiological features (e.g., lobulation, vacuole or cystic component) showed significant differences across Ki-67 expression levels, but findings were inconsistent (15, 43). In the present study, no such differences were observed between the high- and low-expression groups. 
This discrepancy may stem from two key limitations. First, visual inspection of CT radiological features yields only limited information. Second, the evaluation of these features relies on physicians' subjective judgment and clinical experience, leading to poor consistency and reproducibility that can bias research findings.

This study has several limitations. First, its retrospective design carries an inherent risk of selection bias, and the sample size, although validated in an external set, remains limited. The findings should therefore be interpreted as proof-of-concept, and prospective validation in a larger, multi-center cohort is warranted before clinical application. Second, our analysis focused exclusively on intratumoral features, and the predictive value of peritumoral regions warrants future investigation. Third, contrast-enhanced CT data were not included, because previous studies have suggested that high-density contrast in enhanced images may mask the original textural features of lesion tissue (44), and contrast-enhanced scanning carries the risks of iodinated contrast use and renal burden. However, radiomics features based on biphasic contrast-enhanced CT images have also been reported to predict Ki-67 expression level (27), so the inclusion of enhanced-scan data needs further exploration. Finally, future work will aim to incorporate multi-modal data (e.g., pathomics, transcriptomics) to improve the biological interpretability of the models.

5 Conclusion

This study developed and validated an interpretable model combining clinical-radiological, radiomic, and deep learning features for the non-invasive prediction of Ki-67 expression levels. This model is expected to support clinicians in the early assessment of tumor proliferation activity in patients with NSCLC, providing complementary information to inform personalized treatment strategies.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Medical Ethics Committee of Jiangjin Central Hospital of Chongqing. The studies were conducted in accordance with the local legislation and institutional requirements. The ethics committee/institutional review board waived the requirement of written informed consent for participation from the participants or the participants' legal guardians/next of kin because this is a retrospective study utilizing medical records obtained from previous clinical diagnoses and treatments.

Author contributions

SQ: Conceptualization, Data curation, Methodology, Project administration, Writing – original draft. QJ: Methodology, Software, Writing – original draft. CZ: Data curation, Writing – original draft. ML: Writing – review & editing, Data curation, Software. XFZ: Data curation, Investigation, Writing – original draft. XZ: Investigation, Writing – original draft. DS: Data curation, Investigation, Writing – original draft. YL: Data curation, Formal analysis, Investigation, Writing – original draft. JZ: Conceptualization, Funding acquisition, Supervision, Writing – review & editing.

Funding

The author(s) declared financial support was received for this work and/or its publication. This study was funded by the Bureau of Science and Technology of Jiangjin District, Chongqing Municipality (Grant No. Y2023012), and Beijing Medical Award Foundation (Grant No. YXJL-2025-0483-0265).

Conflict of interest

Author ML was employed by the company Shanghai United Imaging Intelligence Co., Ltd.

The remaining authors declared that this work was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that Generative AI was not used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fonc.2025.1655714/full#supplementary-material

References

1. Bray F, Laversanne M, Sung H, Ferlay J, Siegel RL, Soerjomataram I, et al. Global cancer statistics 2022: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin. (2024) 74:229–63. doi: 10.3322/caac.21834

2. Duma N, Santana-Davila R, and Molina JR. Non-small cell lung cancer: epidemiology, screening, diagnosis, and treatment. Mayo Clin Proc. (2019) 94:1623–40. doi: 10.1016/j.mayocp.2019.01.013

3. Wao H, Mhaskar R, Kumar A, Miladinovic B, and Djulbegovic B. Survival of patients with non-small cell lung cancer without treatment: a systematic review and meta-analysis. Syst Rev. (2013) 2:10. doi: 10.1186/2046-4053-2-10

4. Ganti AK, Klein AB, Cotarla I, Seal B, and Chou E. Update of incidence, prevalence, survival, and initial treatment in patients with non-small cell lung cancer in the US. JAMA Oncol. (2021) 7:1824–32. doi: 10.1001/jamaoncol.2021.4932

5. Gerdes J, Lemke H, Baisch H, Wacker HH, Schwab U, and Stein H. Cell cycle analysis of a cell proliferation-associated human nuclear antigen defined by the monoclonal antibody Ki-67. J Immunol. (1984) 133:1710–5. doi: 10.4049/jimmunol.133.4.1710

6. Spiliotaki M, Neophytou CM, Vogazianos P, Stylianou I, Gregoriou G, Constantinou AI, et al. Dynamic monitoring of PD-L1 and Ki67 in circulating tumor cells of metastatic non-small cell lung cancer patients treated with pembrolizumab. Mol Oncol. (2023) 17:792–809. doi: 10.1002/1878-0261.13317

7. Wei D, Chen W, Meng R, Zhao N, Zhang X, Liao D, et al. Augmented expression of Ki-67 is correlated with clinicopathological characteristics and prognosis for lung cancer patients: an up-dated systematic review and meta-analysis with 108 studies and 14,732 patients. Respir Res. (2018) 19:150. doi: 10.1186/s12931-018-0843-7

8. Zhao Y, Shi F, Zhou Q, Li Y, Wu J, Wang R, et al. Prognostic significance of PD-L1 in advanced non-small cell lung carcinoma. Medicine (Baltimore). (2020) 99:e23172. doi: 10.1097/MD.0000000000023172

9. Tabata K, Tanaka T, Hayashi T, Hori T, Nunomura S, Yonezawa S, et al. Ki-67 is a strong prognostic marker of non-small cell lung cancer when tissue heterogeneity is considered. BMC Clin Pathol. (2014) 14:23. doi: 10.1186/1472-6890-14-23

10. Hong X, Yang Z, Wang M, Wang L, and Xu Q. Reduced decorin expression in the tumor stroma correlates with tumor proliferation and predicts poor prognosis in patients with I-IIIA non-small cell lung cancer. Tumour Biol. (2016) 37:16029–38. doi: 10.1007/s13277-016-5431-1

11. Wang D, Chen D, Zhang C, Chai M, Guan M, Wang Z, et al. Analysis of the relationship between Ki-67 expression and chemotherapy and prognosis in advanced non-small cell lung cancer. Transl Cancer Res. (2020) 9:3491–8. doi: 10.21037/tcr.2020.03.72

12. Wiener RS, Schwartz LM, Woloshin S, and Welch HG. Population-based risk for complications after transthoracic needle lung biopsy of a pulmonary nodule: an analysis of discharge records. Ann Intern Med. (2011) 155:137–44. doi: 10.7326/0003-4819-155-3-201108020-00003

13. Koo JM, Kim J, Lee J, Hwang S, Shim HS, Hong TH, et al. Deciphering the intratumoral histologic heterogeneity of lung adenocarcinoma using radiomics. Eur Radiol. (2025) 35:4861–72. doi: 10.1007/s00330-025-11397-4

14. Li J, Qiu Z, Zhang C, Chen S, Wang M, Meng Q, et al. ITHscore: comprehensive quantification of intra-tumor heterogeneity in NSCLC by multi-scale radiomic features. Eur Radiol. (2023) 33:893–903. doi: 10.1007/s00330-022-09055-0

15. Bao J, Liu Y, Ping X, Zha X, Hu S, and Hu C. Preoperative Ki-67 proliferation index prediction with a radiomics nomogram in stage T1a-b lung adenocarcinoma. Eur J Radiol. (2022) 155:110437. doi: 10.1016/j.ejrad.2022.110437

16. Dong Y, Jiang Z, Li C, Dong S, Zhang S, Lv Y, et al. Development and validation of novel radiomics-based nomograms for the prediction of EGFR mutations and Ki-67 proliferation index in non-small cell lung cancer. Quant Imaging Med Surg. (2022) 12:2658–71. doi: 10.21037/qims-21-980

17. Aerts HJ. The potential of radiomic-based phenotyping in precision medicine: a review. JAMA Oncol. (2016) 2:1636–42. doi: 10.1001/jamaoncol.2016.2631

18. Manafi-Farid R, Askari E, Shiri I, Pirich C, Asadi M, Khateri M, et al. [18F]FDG-PET/CT radiomics and artificial intelligence in lung cancer: technical aspects and potential clinical applications. Semin Nucl Med. (2022) 52:759–80. doi: 10.1053/j.semnuclmed.2022.04.004

19. Wang J, Yang Y, Xie Z, Mao G, Gao C, Niu Z, et al. Predicting lymphovascular invasion in non-small cell lung cancer using deep convolutional neural networks on preoperative chest CT. Acad Radiol. (2024) 31:5237–47. doi: 10.1016/j.acra.2024.05.010

20. Tao J, Liang C, Yin K, Fang J, Chen B, Wang Z, et al. 3D convolutional neural network model from contrast-enhanced CT to predict spread through air spaces in non-small cell lung cancer. Diagn Interv Imaging. (2022) 103:535–44. doi: 10.1016/j.diii.2022.06.002

21. Zhang K, Zhao G, Liu Y, Huang Y, Long J, Li N, et al. Clinic, CT radiomics, and deep learning combined model for the prediction of invasive pulmonary aspergillosis. BMC Med Imaging. (2024) 24:264. doi: 10.1186/s12880-024-01442-x

22. Chu X, Niu L, Yang X, He S, Li A, Chen L, et al. Radiomics and deep learning models to differentiate lung adenosquamous carcinoma: a multicenter trial. iScience. (2023) 26:107634. doi: 10.1016/j.isci.2023.107634

23. Ahn HK, Jung M, Ha S, Lee J, Park I, Kim YS, et al. Clinical significance of Ki-67 and p53 expression in curatively resected non-small cell lung cancer. Tumour Biol. (2014) 35:5735–40. doi: 10.1007/s13277-014-1760-0

24. Berghoff AS, Ilhan-Mutlu A, Wöhrer A, Hackl M, Widhalm G, Hainfellner JA, et al. Prognostic significance of Ki67 proliferation index, HIF1 alpha index and microvascular density in patients with non-small cell lung cancer brain metastases. Strahlenther Onkol. (2014) 190:676–85. doi: 10.1007/s00066-014-0639-8

25. Vigouroux C, Casse JM, Battaglia-Hsu SF, Brochin L, Luc A, Paris C, et al. Methyl(R217) HuR and MCM6 are inversely correlated and are prognostic markers in non small cell lung carcinoma. Lung Cancer. (2015) 89:189–96. doi: 10.1016/j.lungcan.2015.05.008

26. Zhu WY, Hu XF, Fang KX, Kong QQ, Cui R, Li HF, et al. Prognostic value of mutant p53, Ki-67, and TTF-1 and their correlation with EGFR mutation in patients with non-small cell lung cancer. Histol Histopathol. (2019) 34:1269–78. doi: 10.14670/HH-18-124

27. Sun H, Zhou P, Chen G, Dai Z, Song P, and Yao J. Radiomics nomogram for the prediction of Ki-67 index in advanced non-small cell lung cancer based on dual-phase enhanced computed tomography. J Cancer Res Clin Oncol. (2023) 149:9301–15. doi: 10.1007/s00432-023-04856-2

28. Fu Q, Liu SL, Hao DP, Hu YB, Liu XJ, Zhang Z, et al. CT radiomics model for predicting the Ki-67 index of lung cancer: an exploratory study. Front Oncol. (2021) 11:743490. doi: 10.3389/fonc.2021.743490

29. Wu J, Xia Y, Wang X, Wei Y, Liu A, Innanje A, et al. uRP: an integrated research platform for one-stop analysis of medical images. Front Radiol. (2023) 3:1153784. doi: 10.3389/fradi.2023.1153784

30. Chen L, Gu D, Chen Y, Shao Y, Cao X, Liu G, et al. An artificial-intelligence lung imaging analysis system (ALIAS) for population-based nodule computing in CT scans. Comput Med Imaging Graph. (2021) 89:101899. doi: 10.1016/j.compmedimag.2021.101899

31. Chen M, Copley SJ, Viola P, Lu H, and Aboagye EO. Radiomics and artificial intelligence for precision medicine in lung cancer treatment. Semin Cancer Biol. (2023) 93:97–113. doi: 10.1016/j.semcancer.2023.05.004

32. Bi WL, Hosny A, Schabath MB, Giger ML, Birkbak NJ, Mehrtash A, et al. Artificial intelligence in cancer imaging: clinical challenges and applications. CA Cancer J Clin. (2019) 69:127–57. doi: 10.3322/caac.21552

33. Yan J, Xue X, Gao C, Guo Y, Wu L, Zhou C, et al. Predicting the Ki-67 proliferation index in pulmonary adenocarcinoma patients presenting with subsolid nodules: construction of a nomogram based on CT images. Quant Imaging Med Surg. (2022) 12:642–52. doi: 10.21037/qims-20-1385

34. Zhao W, Yang J, Ni B, Bi D, Sun Y, Xu M, et al. Toward automatic prediction of EGFR mutation status in pulmonary adenocarcinoma with 3D deep learning. Cancer Med. (2019) 8:3532–43. doi: 10.1002/cam4.2233

35. Caii W, Wu X, Guo K, Chen Y, Shi Y, and Chen J. Integration of deep learning and habitat radiomics for predicting the response to immunotherapy in NSCLC patients. Cancer Immunol Immunother. (2024) 73:153. doi: 10.1007/s00262-024-03724-3

36. Liu W, Chen W, Xia J, Lu Z, Fu Y, Li Y, et al. Lymph node metastasis prediction and biological pathway associations underlying DCE-MRI deep learning radiomics in invasive breast cancer. BMC Med Imaging. (2024) 24:91. doi: 10.1186/s12880-024-01255-y

37. Kim S, Lim JH, Kim CH, Roh J, You S, Choi JS, et al. Deep learning-radiomics integrated noninvasive detection of epidermal growth factor receptor mutations in non-small cell lung cancer patients. Sci Rep. (2024) 14:922. doi: 10.1038/s41598-024-51630-6

38. Lucia F, Visvikis D, Desseroit MC, Miranda O, Malhaire JP, Robin P, et al. Prediction of outcome using pretreatment 18F-FDG PET/CT and MRI radiomics in locally advanced cervical cancer treated with chemoradiotherapy. Eur J Nucl Med Mol Imaging. (2018) 45:768–86. doi: 10.1007/s00259-017-3898-7

39. Lambin P, Leijenaar R, Deist TM, Peerlings J, de Jong E, van Timmeren J, et al. Radiomics: the bridge between medical imaging and personalized medicine. Nat Rev Clin Oncol. (2017) 14:749–62. doi: 10.1038/nrclinonc.2017.141

40. Liu F, Li Q, Xiang Z, Li X, Li F, Huang Y, et al. CT radiomics model for predicting the Ki-67 proliferation index of pure-solid non-small cell lung cancer: a multicenter study. Front Oncol. (2023) 13:1175010. doi: 10.3389/fonc.2023.1175010

41. Warth A, Cortis J, Soltermann A, Meister M, Budczies J, Stenzinger A, et al. Tumour cell proliferation (Ki-67) in non-small cell lung cancer: a critical reappraisal of its prognostic role. Br J Cancer. (2014) 111:1222–9. doi: 10.1038/bjc.2014.402

42. Werynska B, Pula B, Muszczynska-Bernhard B, Piotrowska A, Jethon A, Podhorska-Okolow M, et al. Correlation between expression of metallothionein and expression of Ki-67 and MCM-2 proliferation markers in non-small cell lung cancer. Anticancer Res. (2011) 31:2833–9.

43. Ma X, Zhou S, Huang L, Zhao P, Wang Y, Hu Q, et al. Assessment of relationships among clinicopathological characteristics, morphological computer tomography features, and tumor cell proliferation in stage I lung adenocarcinoma. J Thorac Dis. (2021) 13:2844–57. doi: 10.21037/jtd-21-7

Keywords: radiomics, deep learning, interpretability, non-small cell lung cancer, Ki-67 expression levels

Citation: Qin S, Jia Q, Zhang C, Li M, Zhang X, Zhou X, Su D, Liu Y and Zhou J (2025) Predicting Ki-67 expression levels in non-small cell lung cancer using an explainable CT-based deep learning radiomics model. Front. Oncol. 15:1655714. doi: 10.3389/fonc.2025.1655714

Received: 28 June 2025; Revised: 11 November 2025; Accepted: 26 November 2025; Published: 10 December 2025.

Edited by: Morgan Michalet, Institut du Cancer de Montpellier (ICM), France

Copyright © 2025 Qin, Jia, Zhang, Li, Zhang, Zhou, Su, Liu and Zhou. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jun Zhou, xray1895@163.com

†These authors have contributed equally to this work and share first authorship

Shize Qin1†, Xiufu Zhang, Jun Zhou