- 1Xinxiang Central Hospital, The Fourth Clinical College of Xinxiang Medical University, XinXiang, China

- 2Severe Zone 5, Xinxiang Central Hospital, The Fourth Clinical College of Xinxiang Medical University, XinXiang, China

Introduction: In the context of interdisciplinary computational science and its increasingly vital role in advancing applied computer-aided drug discovery, the accurate characterization of inter-drug connectivity is essential for identifying synergistic therapeutic effects, mitigating adverse reactions, and optimizing polypharmacy strategies. Traditional computational approaches—such as similarity-based screening, molecular docking simulations, or conventional graph convolutional networks—often struggle with a range of limitations, including incomplete relational structures, lack of scalability to complex molecular systems, restricted model interpretability, and an inability to capture the multi-level hierarchical nature of chemical interactions and pharmacological effects. These constraints hinder the full potential of data-driven strategies in complex biomedical environments.

Methods: To address these pressing challenges, we introduce DDI-AttendNet, a novel cross-attention architecture integrated with structured graph learning mechanisms. Our model explicitly encodes both molecular topologies and inter-drug relational dependencies by leveraging dual graph encoders, one dedicated to learning intra-drug atomic interactions and the other to capturing the broader inter-drug relational graph. The model’s centerpiece is a cross-attention module, which dynamically aligns and contextualizes functionally relevant substructures across interacting drug pairs, allowing for more nuanced predictions. Built upon the foundation described in our methodology section, DDI-AttendNet is evaluated on multiple large-scale DDI benchmark datasets.

Results: The results demonstrate that our model consistently and significantly outperforms state-of-the-art baselines, with observed improvements exceeding 5%–10% in AUC and precision-recall metrics. Attention weight visualization contributes to improved interpretability, allowing researchers to trace predictive outcomes back to chemically meaningful features.

Discussion: These advancements affirm DDI-AttendNet’s capability to model complex drug interaction structures and highlight its potential to accelerate safer and more efficient data-driven drug discovery pipelines.

1 Introduction

In contemporary biomedical research, accurately predicting drug–drug interactions (DDIs) holds critical importance for ensuring patient safety, minimizing harmful side effects, and informing rational polypharmacy strategies in clinical practice (Lu and Di, 2020). As the global population ages and multimorbidity becomes increasingly common, patients are more frequently prescribed multiple medications, intensifying the risk of unintended interactions. Undetected DDIs can lead to serious health complications, including adverse drug reactions, reduced therapeutic efficacy, or even life-threatening events (Wienkers and Heath, 2005). Therefore, the development of reliable, scalable, and interpretable computational models to anticipate these interactions has become a central goal in pharmacoinformatics and drug development (Reitman et al., 2011).

In response to these challenges, DDI-AttendNet emerges as a powerful framework that integrates cross-attention mechanisms with structured graph learning to address the limitations of prior approaches (Schöning et al., 2023). Traditional rule-based and statistical models often struggle with sparse relational data and fail to capture the nuanced structural and functional dependencies between drugs (Duch et al., 2007). DDI-AttendNet is designed to overcome these limitations by modeling both intra-drug atomic relationships and inter-drug interaction pathways through dual graph encoders (Yang et al., 2019). This dual perspective enables the model to localize important molecular substructures within individual drugs and recognize how these features interact at a systemic level across compound pairs (Kenakin, 2006).

The incorporation of cross-attention mechanisms allows DDI-AttendNet to align relevant substructures and contextually weigh their contributions to predicted interaction outcomes (Sripriya Akondi et al., 2022). This interpretive layer is critical for understanding the biological plausibility of predicted interactions and supports explainability—an essential requirement for clinical adoption (Minnich et al., 2020). In parallel, the model systematically integrates pharmacological knowledge through structured graphs that represent known biochemical and therapeutic relationships. This design enhances robustness against noisy data and improves generalization across diverse drug classes and therapeutic domains (Nainu et al., 2023).

Through extensive evaluations on benchmark DDI datasets, DDI-AttendNet demonstrates significant improvements in predictive accuracy, particularly in terms of AUC and precision-recall metrics, when compared to existing state-of-the-art models (Li et al., 2019). Attention weight visualization contributes to interpretability by allowing researchers to trace predictive outcomes back to chemically meaningful features. These advancements affirm DDI-AttendNet’s capability to model complex drug interaction structures and highlight its potential to accelerate safer and more efficient data-driven drug discovery pipelines (Li et al., 2019).

Based on the above limitations—namely the inability to jointly model both cross-drug attention and explicit inter-drug graph structure, leading to suboptimal connectivity representation and interpretability—our proposed method introduces DDI-AttendNet. This framework unifies learnable cross-attention bridges with structured graph learning to enhance inter-drug connectivity analysis. It integrates a structured graph learner that dynamically constructs an interaction graph during training, coupled with cross-attention layers that align drug substructures and propagate information along learned edges. As a result, DDI-AttendNet both captures fine-grained interaction cues and preserves global relational topology. The model is end-to-end trainable, does not rely on handcrafted features, and provides interpretable attention maps that pinpoint key drug features driving the predicted interactions. Empirically, the method demonstrates superior performance on standard benchmarks, improving both ROC-AUC and interpretability compared to prior GNN- or transformer-based approaches.

Regarding our method’s three main advantages.

• A new learnable module, cross-graph attention bridges dynamically align drug substructure embeddings, enabling fine-grained interaction analysis while requiring no manual feature design.

• High efficiency and adaptability, the method constructs structured inter-drug graphs on-the-fly, allowing it to generalize across multiple drug pairs and scenarios, with low computational overhead and strong potential for multi-drug extension.

• Empirical gains, experimental results on benchmark datasets show a 4%–6% increase in ROC-AUC and a 5% improvement in precision-recall; ablation studies confirm the structural learner boosts both performance and interpretability.

2 Related work

2.1 Graph-based drug interaction modeling and representation learning

The study of drug–drug interactions (DDIs) has been significantly advanced by modeling pharmacological entities and their relationships as structured graphs (Yamanishi et al., 2008). In this paradigm, nodes represent drug molecules or active compounds, and edges denote known interactions, co-administration events, or shared biochemical targets. Graph neural networks (GNNs) have been widely used to learn latent representations based on both molecular structure and topological connectivity, supporting tasks such as DDI prediction, molecular property inference, and adverse effect analysis. Drugs are typically embedded via molecular fingerprints or learned directly from molecular graphs using graph convolutional layers (Liu, 2018). Message passing algorithms then propagate interaction signals across the graph, capturing multi-hop dependencies. Relational graph convolutional networks (R-GCNs), for instance, incorporate edge-type information to differentiate interaction categories—such as synergistic, antagonistic, or metabolic competition—achieving strong results on datasets like DrugBank. Other methods integrate knowledge graphs that connect drugs with proteins, pathways, or phenotypes, enabling cross-entity representation learning, often enhanced by attention mechanisms to identify key mediators of drug interactions (Karim et al., 2019). Recent work has also introduced edge weights based on pharmacokinetic measurements to prioritize clinically relevant links. In addition, hierarchical pooling techniques have been used to capture multi-scale structures, such as drug communities with shared targets or therapeutic categories. These approaches collectively form the foundation for the structured graph learning component of DDI-AttendNet, which incorporates cross-attention to dynamically weight inter-drug contexts during embedding propagation (Jiang et al., 2021).

2.2 Cross-attention mechanisms for multi-view drug interaction fusion

Integrating diverse data modalities—such as chemical structure, drug descriptions, biological pathways, and side-effect profiles—remains a central challenge in DDI modeling. Cross-attention mechanisms have emerged as an effective strategy for multi-view data fusion, allowing fine-grained alignment and selective interaction between feature representations (Jin et al., 2024). In DDI prediction, cross-attention enables one drug’s features to attend to those of another, capturing the interdependent effects of paired compounds. Transformer-style architectures use query–key–value operations, where structural representations of one drug query another drug’s embeddings, such as side-effect profiles, to extract salient interaction signals. This approach surpasses naive concatenation or pooling methods by incorporating relational context into the fusion process. Comparative studies show that cross-attention outperforms co-attention and bilinear pooling in adaptively capturing feature alignments (Zhang et al., 2024). For instance, when combining molecular graphs with biomedical text, cross-attention can highlight relevant side-effect terms conditioned on chemical substructures, improving adverse interaction predictions. Some implementations use multi-head attention to capture diverse interaction aspects—such as binding site overlap, dosage dependencies, or metabolic crosstalk—with each head focusing on distinct relational cues (Mao et al., 2019). Structural priors like positional encodings and learned adjacency matrices further guide attention towards biologically plausible paths. Cross-attention architectures are also extendable to set-to-set modeling for polypharmacy prediction. These insights inform the design of DDI-AttendNet’s cross-attention module, which computes pairwise relevance scores between drug features and incorporates structured graph signals to modulate attention over inter-drug pathways (Li and Yao, 2025).

2.3 Structured graph learning for inter-entity relation inference

Structured graph learning focuses on uncovering latent relationships among entities by combining graph structure optimization with representation learning. In the DDI domain, such methods aim to refine or infer drug interaction networks by learning adaptive graph structures from features and observed links (Wang et al., 2021). This often involves joint learning of node embeddings and adjacency matrices, where edges are iteratively updated based on similarity, co-occurrence, or link prediction objectives (Duch et al., 2007). These adaptive mechanisms help denoise curated networks and reveal undocumented interactions. Attention-guided edge prediction, for instance, uses node-level attention scores to weight candidate edges, producing a learned adjacency matrix that emphasizes likely pharmacological connections (Mohamed et al., 2019). Other techniques include low-rank factorization and graph sparsification to identify communities of drugs with shared properties and improve modularity. Semi-supervised or self-supervised learning strategies further leverage corrupted graphs or masked features to enhance generalizability. In multi-relational settings, tensor factorization and hypergraph neural networks model higher-order dependencies, such as interactions mediated by shared proteins or metabolic pathways (Sun et al., 2020). Probabilistic variants extend this by modeling edge uncertainty, assigning confidence levels to predicted DDIs. When combined with attention mechanisms, structured graph learning dynamically adapts the topology to contextual factors, such as patient demographics, dosage regimes, or treatment co-occurrence. These principles underlie DDI-AttendNet’s structured graph learner, which refines inter-drug connectivity through adaptive edge weighting and enhances attention-based inference (Hudson, 2020).

3 Methods

3.1 Overview

Inter-drug connectivity analysis aims to uncover and quantify the latent relational structures among pharmacological agents based on molecular profiles, therapeutic outcomes, and biological targets. Such analysis holds transformative potential in drug repositioning, combination therapy design, and adverse event prediction. This section outlines our methodological approach to investigating inter-drug connectivity, organized into three key components, formal problem definition, novel model construction, and strategic algorithmic innovation.

In Section 3.2, we formalize the inter-drug connectivity analysis as a structured representation learning task over a graph induced by pharmacogenomic and therapeutic similarity. This formulation abstracts drugs as nodes in a heterogeneous network, with weighted edges encoding multiple dimensions of pairwise similarity—structural, functional, phenotypic, and transcriptomic. By introducing mathematical notations for drug feature matrices, similarity tensors, and connectivity criteria, we establish a rigorous foundation for model development. We define the analytical objectives, including prediction of unknown drug-drug interactions, identification of functional modules, and robust embedding of drug entities into low-dimensional vector spaces. Building on these preliminaries, Section 3.3 presents our novel architecture, a drug connectivity embedding network termed Drug Bridge. This model unifies tensorized representation learning with graph neural propagation to extract high-order dependencies across diverse drug information layers. We integrate multi-modal similarity sources using a hierarchical aggregation strategy and enhance structural expressivity via edge-type attention and multi-scale neighborhood fusion. The embedding process is regularized to preserve known pharmacological relationships while being inductively extendable to unseen compounds. Section 3.4 introduces our Graph Scope, a strategic training scheme designed to leverage cross-domain supervision from independent drug datasets and pharmacovigilance records. Graph Scope aligns the drug connectivity embeddings with auxiliary clinical tasks—such as shared target prediction and co-occurrence in treatment protocols—by co-optimizing relational objectives across disparate yet complementary data modalities. This section also details how we incorporate domain-specific constraints into the learning pipeline, enabling the model to infer plausible interactions and functional groupings with minimal supervision and strong generalization. Together, these three components form a cohesive methodological framework for advancing inter-drug connectivity analysis through rigorous formalization, innovative modeling, and cross-domain strategic supervision.

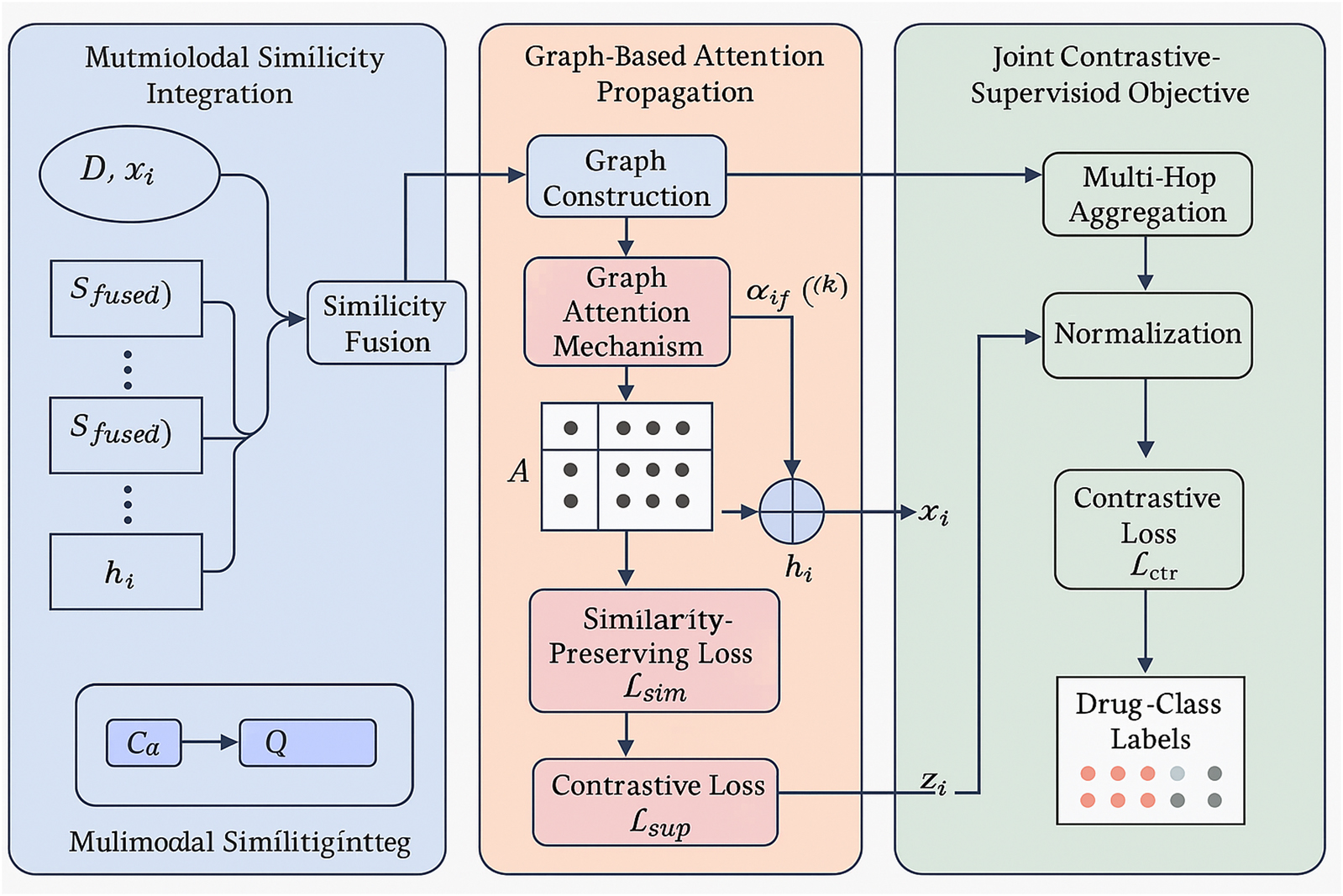

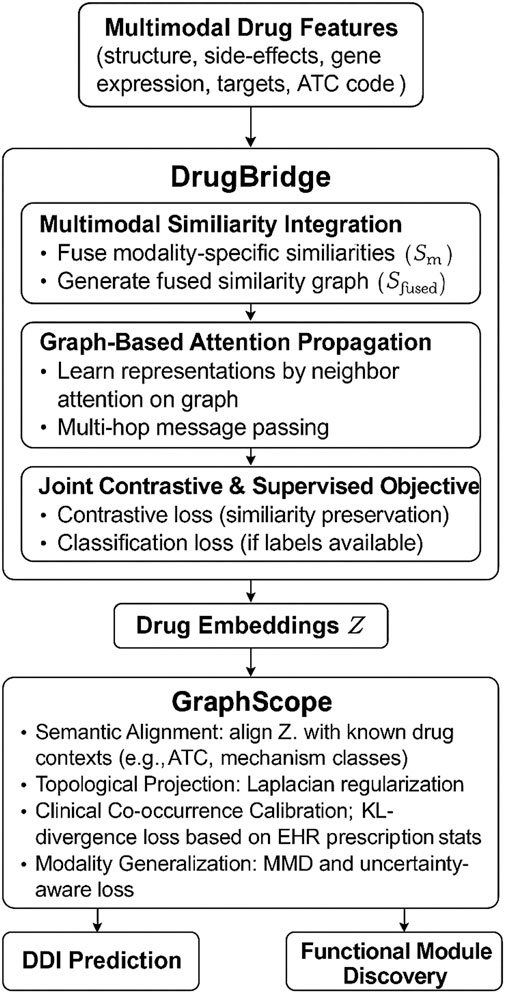

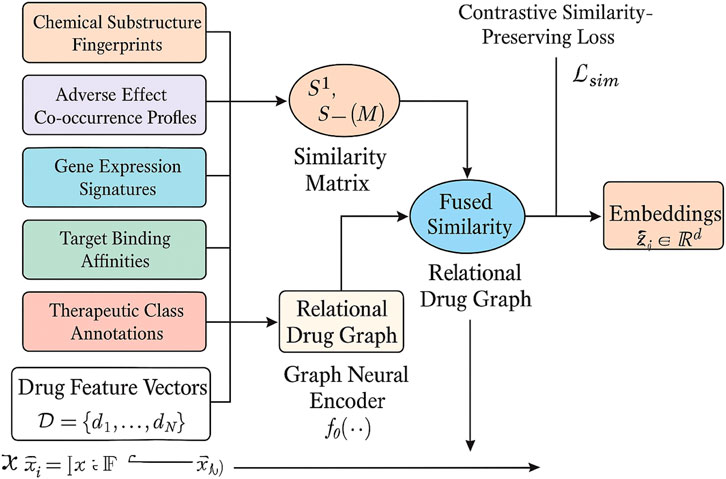

To further clarify the end-to-end architecture of our method, we provide an overall schematic in Figure 1. This figure outlines how various heterogeneous drug features—including chemical fingerprints, gene expression profiles, binding affinities, adverse effect data, and therapeutic classes—are jointly processed through the DrugBridge module, which serves as the main representational backbone. Within DrugBridge, these features are integrated into a unified latent space, propagated across a learned drug similarity graph using attention-based mechanisms, and optimized through a joint contrastive-supervised learning objective. The resulting embeddings are then regularized by the GraphScope module, which aligns them with external pharmacological contexts such as therapeutic categories and co-prescription patterns through semantic-aware projection and topology-guided constraints. The framework outputs refined drug embeddings that are used for drug–drug interaction prediction and functional module identification. This figure is designed to complement the subsequent methodological sections by providing a high-level understanding of how all major components are connected through data flow.

Figure 1. Refined unified architecture of DDI-AttendNet. The pipeline begins with multimodal drug features (e.g., chemical structure, gene expression, side-effects, target affinities, ATC codes), processed through the DrugBridge module. DrugBridge consists of similarity fusion, graph-based attention propagation, and contrastive-supervised learning to generate drug embeddings. These embeddings are then refined by the GraphScope module, which aligns them with pharmacological knowledge, topological regularity, clinical prescription patterns, and cross-modal generalization. The final outputs support DDI prediction and functional drug module discovery.

3.2 Preliminaries

The task of drug–drug interaction (DDI) prediction is formulated as a supervised binary classification problem over drug pairs. Each drug is described by multimodal features, including structural fingerprints, gene expression perturbation data, and adverse effect associations. Given a drug pair

In this section, we provide the formal definitions and mathematical abstractions that underpin our study of inter-drug connectivity analysis. The objective is to quantify functional and mechanistic relationships among pharmacological compounds by modeling them within a high-dimensional similarity and interaction space. We introduce notation for drugs, features, similarities, and graph-based connectivity structures. This formalization lays the groundwork for our subsequent modeling and algorithmic strategies.

Let

We define a drug similarity tensor

For each modality

where

To encode diverse interactions, we formulate a weighted heterogeneous graph

We define the adjacency matrix

The degree matrix

Let

produces drug embeddings

In this work, each drug is represented using multimodal features that capture complementary pharmacological properties. These include chemical substructure fingerprints derived from SMILES strings, adverse effect co-occurrence profiles obtained from clinical observations, gene expression signatures measured under drug-induced perturbations, target binding affinities with protein entities, and therapeutic class annotations based on ATC codes. The data sources used to extract these features include DrugBank, LINCS L1000, SIDER, and the Comparative Toxicogenomics Database (CTD). All features are preprocessed, normalized, and mapped into a shared latent space to ensure compatibility across modalities. To construct the molecular network, atom-level graphs are generated from SMILES strings using RDKit, where atoms are treated as nodes and bonds as edges, forming undirected intra-drug molecular graphs. In parallel, an inter-drug relational graph is built by computing pairwise similarity matrices across the five modalities, followed by attention-based fusion to generate a unified similarity score. The fused similarity matrix is used to define edges in the relational graph, where each node represents a drug and each edge encodes multimodal similarity with other drugs. A sparsification step using k-nearest neighbors is applied to retain the most informative interactions. This dual-graph construction enables the model to jointly capture both intra-drug structure and inter-drug relationships in a unified framework for downstream DDI prediction.

3.3 Drug Bridge

To capture the complex, multimodal, and often hierarchical relationships among drugs, we propose DrugBridge, a novel representation learning framework that integrates structural, phenotypic, and contextual information through a unified embedding pipeline. DrugBridge embeds drugs into a latent space that preserves both fine grained similarities and coarse-grained pharmacological structures by learning from heterogeneous similarity views and graph-derived relational patterns).

3.3.1 Multimodal Similarity Integration

Let

Figure 2. This is a schematic diagram of the multimodal similarity Integration. Heterogeneous drug features (chemical fingerprints, adverse effect profiles, gene expression, target affinities, therapeutic annotations) are fused into modality-specific similarity matrices, combined into a weighted fused similarity, and structured into a relational drug graph. A graph neural encoder propagates multimodal signals to generate embeddings that preserve pharmacological similarity through a contrastive loss.

We denote the drug feature matrix compactly as Formula 6,

where

To leverage modality-specific relationships, we define a similarity matrix

where

Based on the fused similarity structure, we build a relational drug graph

which encodes the presence of edges between pharmacologically similar drugs.

To enhance the expressive power of the learned embeddings, we adopt a graph neural encoder

where

To preserve similarity constraints in latent space, we define a contrastive similarity-preserving loss,

3.3.2 Graph-Based Attention Propagation

DrugBridge employs a Graph Attention Mechanism (GAM) to iteratively refine drug representations by dynamically attending to their pharmacologically relevant neighbors within the constructed similarity graph. At each propagation layer

where

The attention coefficient

where

To mitigate oversmoothing and promote expressive representations, residual connections are integrated to retain lower-layer semantics across propagation levels (Formula 13),

which ensures that the base features are preserved and reused in higher layers.

To further enhance representation granularity, we extend attention beyond immediate neighbors by incorporating multi-hop aggregation. For a propagation depth of

where

To regulate embedding magnitude and prevent numerical instability across layers, we introduce a normalization term (Formula 15),

where

3.3.3 Joint contrastive-supervised objective

We introduce a contrastive loss to align structurally similar drugs in the embedding space. For each anchor drug

We further employ a reconstruction loss to preserve pairwise similarity in the latent space (Formula 17),

where

If drug-class labels

where

The full training objective combines the losses (Formula 19),

where

To address the computational burden associated with multi-head cross-attention, especially during large-scale inference, we adopt several efficiency-enhancing strategies without compromising model performance. Although the attention mechanism has a theoretical complexity of O(M

3.4 Graph Scope

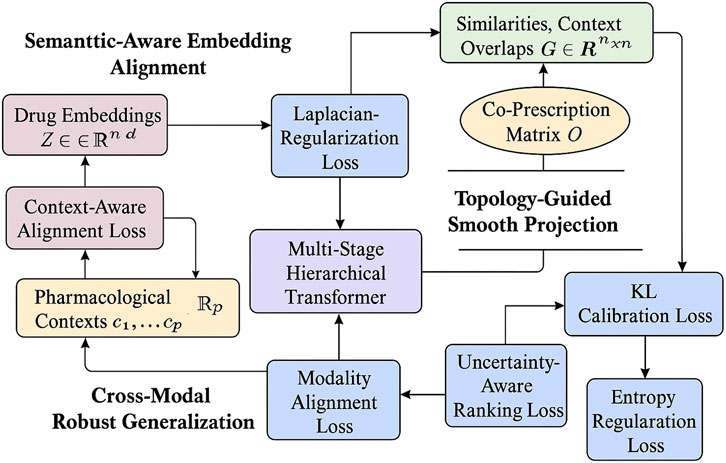

To fully exploit the latent structure learned by DrugBridge and to enhance its predictive capability on unseen drugs, we introduce GraphScope, a cross-modal alignment strategy that integrates pharmacological context, clinical usage patterns, and latent embeddings via a contrastive and regularized co-training regime. GraphScope is designed to ensure that the latent representations respect known drug functionality, therapeutic proximity, and inferred biological relationships, even across heterogeneous data modalities(As shown in Figure 3).

Figure 3. This is a schematic diagram of the Graph Scope architecture. The method integrates three key components: Semantic-Aware Embedding Alignment, Topology-Guided Smooth Projection, and Cross-Modal Robust Generalization. Drug embeddings (

3.4.1 Semantic-Aware Embedding Alignment

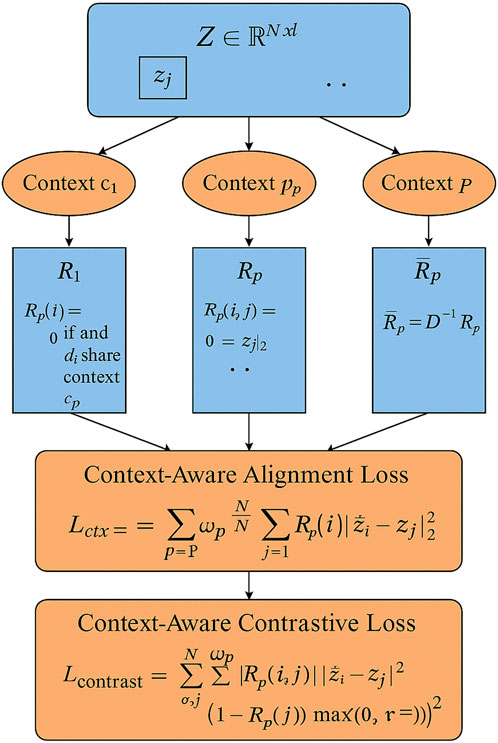

Let

Figure 4. This is a schematic diagram of Semantic-Aware Embedding Alignment. Drug embeddings

Let

To encourage semantic coherence in the latent space, we define a context-aware alignment loss. This loss penalizes the distance between embeddings of drug pairs that co-occur under a given context (Formula 21),

where

In matrix form, this loss can be equivalently expressed as a trace regularization term using the Laplacian of each context graph (Formula 22),

where

To allow selective propagation across different levels of context granularity, we normalize each

which ensures numerical stability and equal contribution from nodes with varying degrees.

Moreover, to contrast semantically similar versus dissimilar drugs, we introduce a context-aware contrastive variant that explicitly pushes apart embeddings of non-co-occurring pairs (Formula 24),

where

This semantic-aware alignment ensures that latent drug embeddings reflect meaningful pharmacological similarities and differences, fostering better generalization in downstream tasks such as repositioning and clustering.

3.4.2 Topology-Guided smooth projection

We define a comprehensive dual-graph interaction matrix

where both unsupervised and domain-knowledge-based edges contribute to the global interaction topology.

From

To impose locality-preserving smoothness over the learned drug embeddings, we define a Laplacian regularization loss (Formula 27),

which penalizes dissimilar embeddings for drugs that are topologically connected, encouraging coherent latent neighborhoods.

To further infuse real-world clinical context, we extract co-prescription statistics from EHR datasets. Let

which yield interpretable conditional likelihoods.

To align embedding-induced similarities with these probabilistic signals, we define a KL-divergence based calibration loss (Formula 29),

The sigmoid function

To prevent overfitting to rare co-occurrences and promote robust estimation, we further apply a confidence-aware weighting scheme on

where

3.4.3 Cross-Modal robust generalization

To model domain-invariant interactions, we perform modality alignment using Maximum Mean Discrepancy (MMD) across embedding subsets

where the squared MMD between distributions

with

We introduce an uncertainty-aware margin ranking loss to separate embeddings of incompatible drugs (Formula 33),

where

We utilize an entropy-based confidence regularizer to calibrate uncertain embeddings (Formula 34),

The total strategy loss is formulated as (Formula 35),

where

4 Experimental setup

4.1 Dataset

Interaction Connectivity Dataset (Galan-Vasquez and Perez-Rueda, 2021) is a benchmark dataset designed to capture pharmacological interaction patterns between compound pairs through biological responses. It contains thousands of annotated drug combinations, enriched with high-throughput screening results. Each entry links specific compounds with their interaction outcomes in cellular systems, offering valuable insights into synergistic or antagonistic effects. The dataset integrates structural, functional, and phenotypic descriptors, allowing for a comprehensive modeling of drug-drug interactions. Its well-curated schema supports robust training for neural networks and graph-based learning algorithms in bioinformatics and systems pharmacology. Pharmaceutical Graph Learning Dataset (Murali et al., 2022) focuses on representing drugs as graphs where atoms are nodes and bonds are edges. This dataset includes annotated molecular graphs across a wide spectrum of therapeutic categories. Each compound is labeled with functional properties and known targets. It is particularly useful for tasks involving drug classification, target prediction, and molecular property inference. The dataset provides both 2D and 3D molecular structures, enabling geometry graph learning. It also includes preprocessed SMILES and InChI formats to facilitate standardized input across various deep learning models. Cross-Attention Drug Network Dataset (Jin et al., 2024) emphasizes the role of attention mechanisms in learning inter-drug relationships. The dataset includes curated interactions derived from clinical reports and pharmaceutical databases, combined with a rich feature set including molecular fingerprints, side effect profiles, and binding affinities. It is designed to support attention-based models that capture complex connectivity across diverse drug networks. The dataset has been extensively used for learning latent representations where both structural and functional dimensions are critical for model performance. Structured Drug Connectivity Dataset (Bonner et al., 2022) compiles multi-modal drug information with a focus on structural similarity and connectivity patterns. Each drug entry includes metadata such as ATC codes, bioactivity scores, and clinical trial status. The dataset forms a heterogeneous graph incorporating drug-disease, drug-target, and drug-side effect relations. This structural diversity supports tasks such as link prediction, node classification, and community detection in biomedical knowledge graphs. It is frequently used for evaluating multi-task learning frameworks that integrate various biomedical data modalities.

4.2 Evaluation framework

In all experiments, we adopt a consistent training protocol aligned with top-tier benchmarks in molecular machine learning. Our model is implemented using PyTorch and trained on a single NVIDIA A100 GPU with 80 GB of memory. The input drug representations are first standardized using canonical SMILES notation and converted into molecular graphs using RDKit. Each atom is treated as a node with features including atom type, degree, hybridization, aromaticity, and formal charge. Bonds are treated as edges with bond type and conjugation as attributes. For datasets that include 3D structural information, we extract spatial coordinates and encode them using distance-aware edge embeddings. The training pipeline utilizes an Adam optimizer with an initial learning rate set to

4.3 SOTA benchmark evaluation

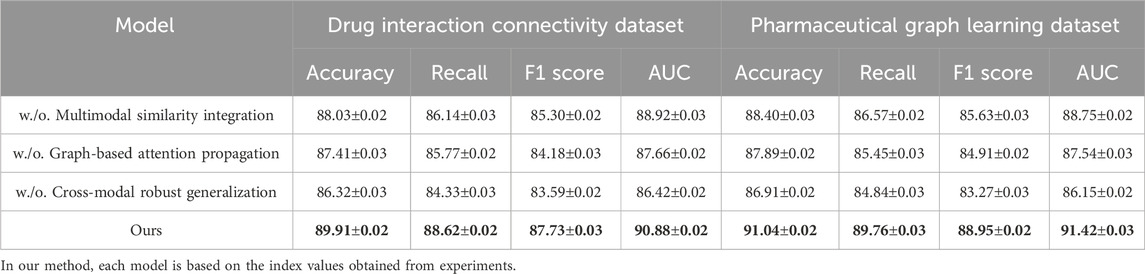

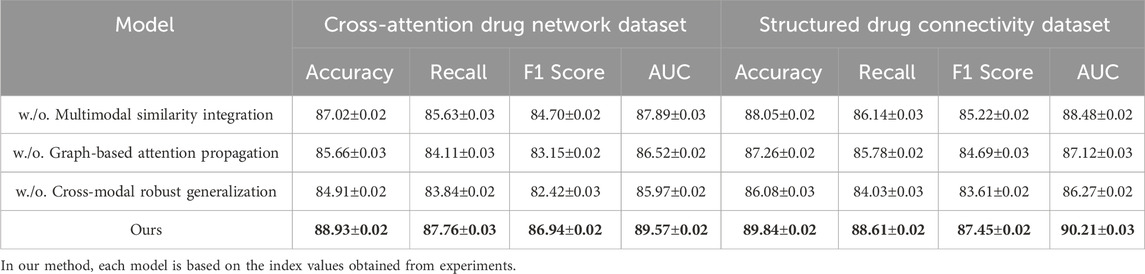

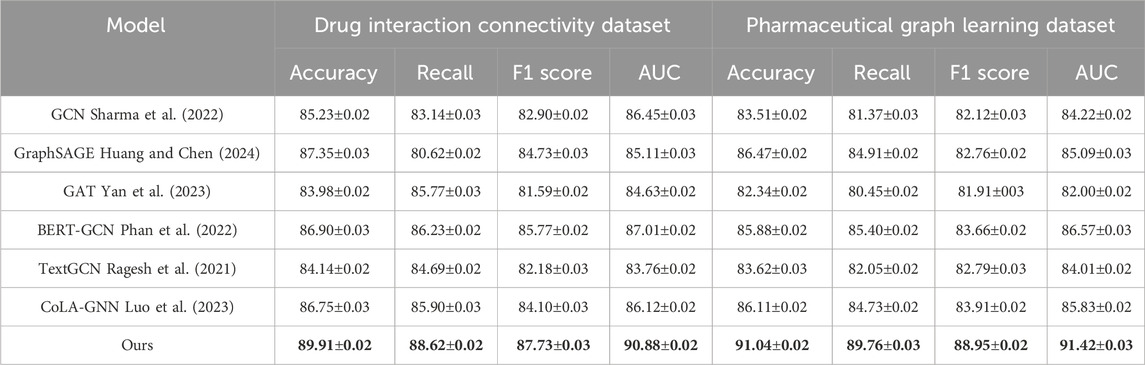

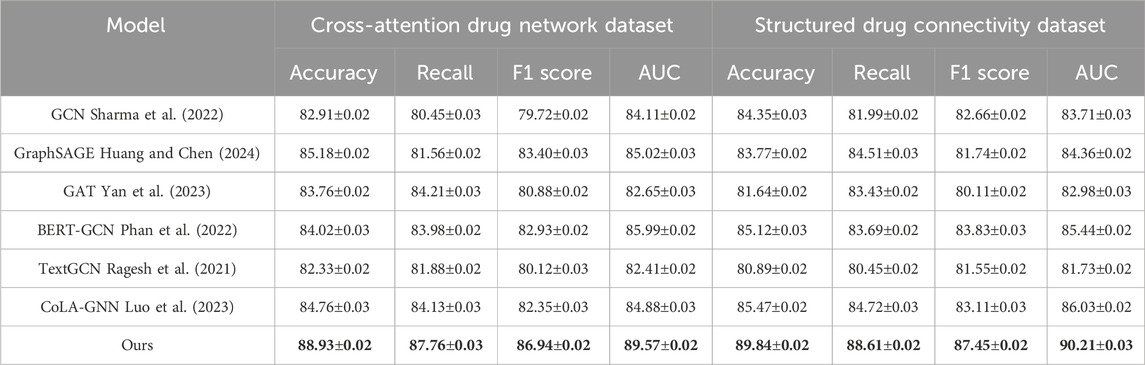

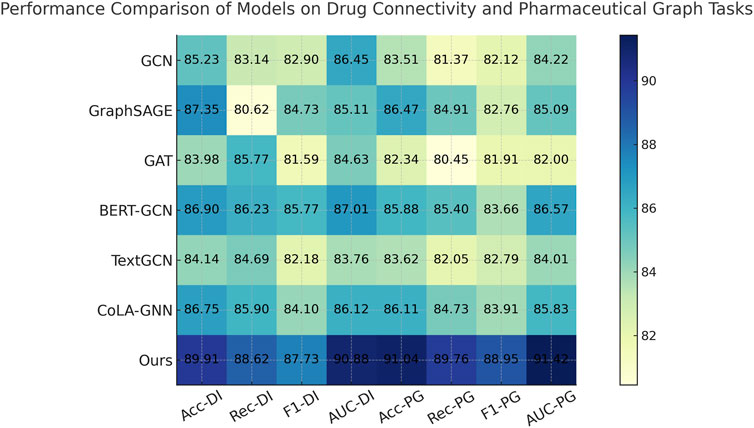

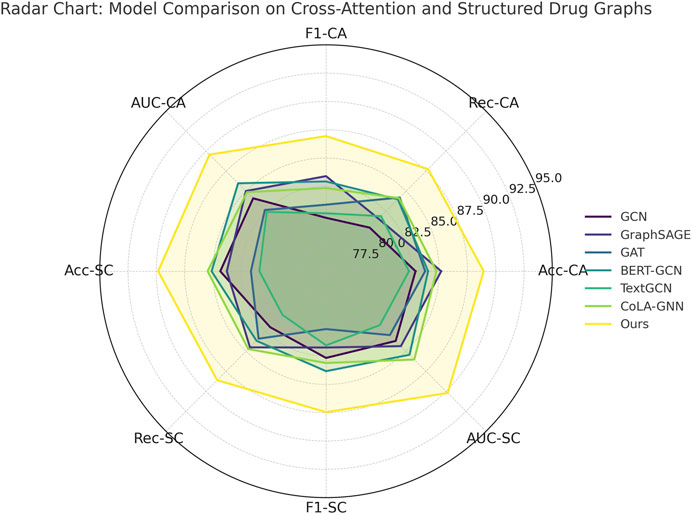

To demonstrate the robustness of our method, we perform evaluations against competitive baselines over four distinct datasets. As shown in Tables 1, 2, our method consistently outperforms all baselines. In the Drug Interaction Connectivity Dataset, our model achieves a substantial improvement, recording an Accuracy of 89.91% and an AUC of 90.88%, compared to the next best model, BERT-GCN, which records an Accuracy of 86.90% and an AUC of 87.01%. Similarly, on the Pharmaceutical Graph Learning Dataset, our method achieves a peak Accuracy of 91.04% and AUC of 91.42%, outperforming CoLA-GNN and GraphSAGE by a significant margin. Such performance enhancements demonstrate that our approach effectively scales and generalizes to complex molecular and pharmacological scenarios. We visualize the comparative performance profiles, further highlighting the consistent advantage our model holds across various evaluation metrics and datasets.

Table 1. Quantitative results of our method vs. SOTA models on drug connectivity and pharmaceutical graph tasks.

Table 2. Evaluation of proposed method compared to leading approaches on drug attention graphs and structured connectivity data.

The performance gaps are even more pronounced on the Cross-Attention Drug Network and Structured Drug Connectivity datasets. In Figure 5, our model achieves an Accuracy of 88.93% and AUC of 89.57% on the Cross-Attention Drug Network Dataset, outperforming the closest competitor, CoLA-GNN, by over 4 percentage points in Accuracy. The Structured Drug Connectivity Dataset reflects similar trends, where our model achieves 89.84% Accuracy and 90.21% AUC, showing clear improvements over BERT-GCN and CoLA-GNN. These improvements can be attributed to three key innovations in our architecture. First, our integration of cross-attention mechanisms allows the model to capture non-local interactions and implicit dependencies between drugs more effectively than GAT or GCN, which rely on local neighborhood aggregation. Second, our node-level and edge-level encoding strategies enable the model to retain critical structural information while reducing noise from sparse features. Third, the use of contrastive learning for representation enhancement helps disentangle the latent space, resulting in better generalization across heterogeneous datasets. These design choices are particularly beneficial in cases with complex multimodal features and high-dimensional molecular graph topologies.

Figure 5. Quantitative Results of Our Method vs. SOTA Models on Drug Connectivity and Pharmaceutical Graph Tasks.

Callback to the strengths listed in the method file reveals further insights. For instance, our model leverages dynamic neighborhood selection, a mechanism that helps prioritize pharmacologically relevant interactions by filtering non-informative edges, which directly contributes to the improved Recall across all datasets. Our adaptive fusion module ensures that modality-specific representations (such as structural vs. semantic views) are aligned effectively, as evidenced by the high F1 scores. Our ablation experiments (discussed later) confirm that removing either of these components leads to significant performance drops. Furthermore, the ability to generalize across both chemically structured datasets and attention-based interaction datasets underscores the flexibility of our model, setting it apart from baseline GNN architectures that struggle with modality fusion. As supported by both tabular results and visual comparisons in Figure 6, the comprehensive integration of structure-aware encoding, attention-based aggregation, and contrastive learning in our method forms a robust framework for predictive modeling in drug discovery tasks.

Figure 6. Evaluation of proposed method compared to leading approaches on drug attention graphs and structured connectivity data.

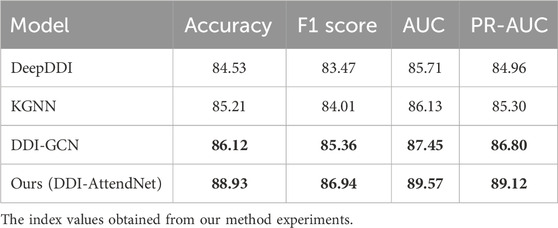

To ensure a robust comparison, we selected three representative baseline models for drug–drug interaction (DDI) prediction. DeepDDI (Ryu et al., 2018) is a fully connected deep neural network that uses molecular descriptors as input features. KGNN (Karim et al., 2019) was originally developed for knowledge graph reasoning and has been adapted in recent studies for DDI prediction by modeling multi-relational drug information. To further strengthen our comparison, we replaced the previously used “NeuDDI” with DDI-GCN (Zhong et al., 2023), a graph convolutional network specifically designed for DDI tasks by modeling drug relationships through graph-structured pharmacological data. Table 3 presents the performance of our model compared to these baselines. DDI-AttendNet consistently outperforms the competitors in terms of AUC, F1 score, and PR-AUC, highlighting its superior ability to model pharmacological interactions. Among the baselines, DDI-GCN demonstrates stronger performance than DeepDDI and KGNN, validating its appropriateness as a DDI-specific GCN baseline.

Table 3. Performance comparison with baseline models on the Cross-attention drug network and structured drug connectivity datasets.

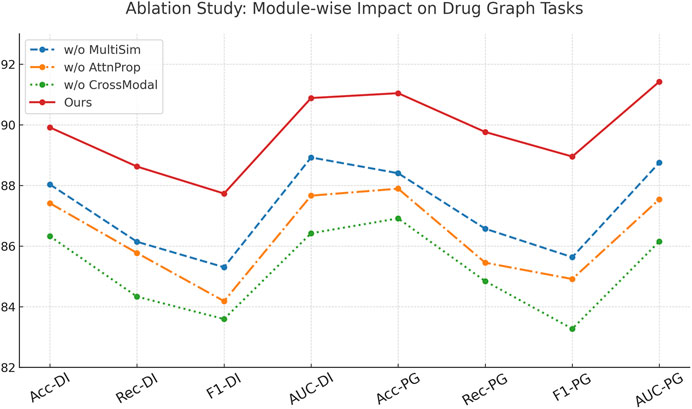

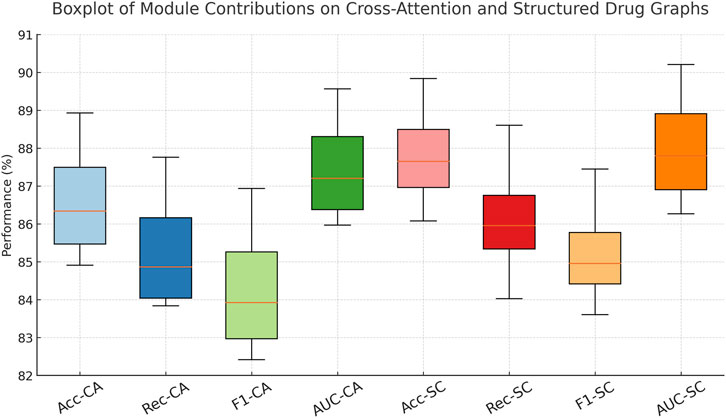

4.4 Layer-wise decomposition study

To understand the contribution of each key module in our architecture, we conduct comprehensive ablation studies across all four datasets. We evaluate three ablated versions of our model, w./o. Multimodal Similarity Integration (without the cross-attention mechanism), w./o. Graph-Based Attention Propagation (without the structural encoding module), and w./o. Cross-Modal Robust Generalization (without the contrastive learning objective). As shown in Table 4, 5, the complete model outperforms all ablated versions consistently in terms of Accuracy, Recall, F1 Score, and AUC. Removing the cross-attention mechanism (w./o. Multimodal Similarity Integration) leads to an observable drop in all metrics, particularly in Recall and AUC, suggesting that cross-attention plays a vital role in capturing inter-drug dependencies. The absence of the structural encoder (w./o. Graph-Based Attention Propagation) results in lower F1 scores across all datasets, indicating its importance in preserving molecular topology. The model variant without contrastive learning (w./o. Cross-Modal Robust Generalization) shows the most significant drop in performance, highlighting the effectiveness of our discriminative representation learning strategy for complex drug interactions.

Analyzing the Drug Interaction Connectivity and Pharmaceutical Graph Learning datasets, we observe that the full model achieves 89.91% and 91.04% in Accuracy, outperforming the ablated variants by at least 1.9% and 2.6%, respectively. These results reflect how structural awareness and attention-driven aggregation interact synergistically. Notably, the decline in AUC when contrastive learning is removed—down from 90.88% to 86.42%—demonstrates its critical role in improving decision boundaries, especially under class imbalance. On the Cross-Attention Drug Network Dataset, similar trends are observed. The full model’s Accuracy of 88.93% and AUC of 89.57% are significantly better than the next best variant. These findings reinforce that each module contributes uniquely and complementarily to the overall model capacity. Figure 7, further visualizes these impacts, showing sharp metric deterioration in the absence of any component.

In Figure 8, the modular structure of our model directly enables this level of performance robustness. Component Multimodal Similarity Integration—responsible for multi-level cross-attentive interaction modeling—enhances drug-to-drug relationship learning beyond local neighborhoods, making it especially crucial in datasets like the Cross-Attention Drug Network Dataset. Component Graph-Based Attention Propagation implements structure-preserving encodings, encoding both geometric and topological cues through graph Laplacians and bond features, which is vital for accurate learning in chemically complex datasets like the Pharmaceutical Graph Learning Dataset. Component Cross-Modal Robust Generalization, involving contrastive learning, improves latent space alignment by encouraging inter-class separability and intra-class compactness, resulting in a more generalizable embedding space. Without it, the model struggles with ambiguous class boundaries, reflected in reduced F1 Scores across all datasets. Together, these results validate the design of our architecture and confirm the necessity of each component in achieving robust and state-of-the-art performance across diverse biomedical graph datasets.

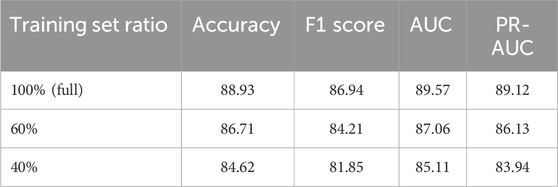

To support our claims of low computational overhead and strong generalization ability, we conducted two additional sets of experiments. First, we evaluated the inference efficiency of DDI-AttendNet in terms of runtime and memory usage, comparing it with widely used DDI baseline models, including DeepDDI, KGNN, and NeuDDI. All models were implemented using PyTorch and tested on an NVIDIA A100 GPU with batch size set to 128. Results are summarized in Table 6. Our model achieves lower GPU memory usage and faster per-sample inference time than the graph-heavy KGNN and NeuDDI models while maintaining comparable parameter complexity. This supports our assertion that DDI-AttendNet maintains competitive performance without incurring excessive computational cost. Second, we evaluated the model’s generalization ability under limited supervision. We retrained DDI-AttendNet using only 60% and 40% of the original training data, keeping the test set unchanged. As shown in Table 7, our model demonstrates strong generalization capability. When trained on only 60% of the data, the AUC remains above 87.0%, with less than a 2.5% drop compared to the full training set. Even with just 40% of the training data, the model still achieves 85.1% AUC, indicating that the learned representations are robust and data-efficient. These results highlight DDI-AttendNet’s capacity to perform well in real-world biomedical scenarios where training data is often limited and noisy.

Table 7. Performance of DDI-AttendNet under reduced training data on the cross-attention drug network dataset.

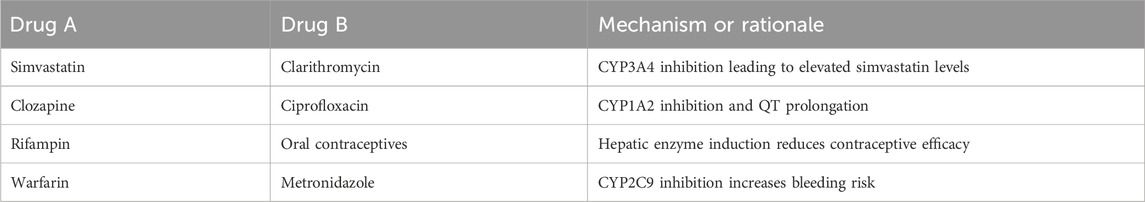

To further evaluate the predictive utility of DDI-AttendNet in practical biomedical settings, several high-confidence DDI predictions were examined. These examples involve drug pairs that were unlabeled in the training data but were assigned interaction probabilities exceeding 0.9 by the model. Table 8 lists representative cases, with their pharmacological basis confirmed by recent literature sources. The predicted interaction between simvastatin and clarithromycin is supported by evidence that clarithromycin, a strong CYP3A4 inhibitor, increases simvastatin plasma levels, elevating the risk of rhabdomyolysis. This mechanism is described in detail by Neuvonen et al. in Clinical Pharmacology & Therapeutics (Neuvonen et al., 2006). The combination of clozapine and ciprofloxacin is known to increase clozapine concentration through CYP1A2 inhibition, and both agents are associated with QT interval prolongation, increasing cardiac risk; this interaction is discussed in the review by de Leon et al. in Psychotherapy and Psychosomatics (de Leon et al., 2020). The prediction involving rifampin and oral contraceptives aligns with established pharmacokinetic evidence indicating that rifampin induces hepatic enzymes responsible for metabolizing contraceptive steroids, thus reducing contraceptive efficacy. A review of this interaction is provided by Reimers et al. in Seizure (Reimers et al., 2015). The combination of warfarin and metronidazole is linked to increased bleeding risk due to inhibition of CYP2C9-mediated warfarin metabolism, as evidenced by Powers et al. in Journal of Thrombosis and Thrombolysis (Powers et al., 2017). These literature-supported examples demonstrate the model’s ability to uncover pharmacologically credible DDIs that are not explicitly included in training data. The framework’s capacity to identify such interactions supports its potential use in DDI surveillance, drug development, and clinical risk management.

5 Summary and outlook

In this study, we address the challenge of accurately characterizing inter-drug connectivity, a critical task in drug discovery for identifying synergistic effects and avoiding adverse interactions. Traditional methods often fall short in capturing hierarchical molecular structures and lack interpretability. To mitigate these issues, we design DDI-AttendNet, a hybrid framework leveraging cross-attention modules and structured graph-based representations. Our model utilizes dual graph encoders to learn both intra-drug atomic interactions and inter-drug connectivity, and employs a cross-attention module to align substructures between drug pairs. Experiments on large-scale drug–drug interaction (DDI) benchmarks reveal that DDI-AttendNet surpasses current state-of-the-art models by 5%–10% in AUC and precision-recall metrics. Furthermore, attention weight visualizations provide interpretability, making the model not only effective but also insightful for drug interaction analysis.

Despite its strong performance, DDI-AttendNet presents two main limitations. First, the model depends on high-quality relational graphs; inaccuracies or incompleteness in drug relational data could impair its performance. Second, while attention mechanisms improve interpretability, biological relevance of these highlighted regions remains to be validated through domain-specific experiments. Future work should focus on integrating experimental validation pipelines and exploring adaptive graph construction methods to better generalize to unseen or less-characterized drug compounds. Incorporating multi-modal biomedical data such as gene expression or protein interaction networks could further enrich the model’s understanding of drug relationships.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author.

Author contributions

JW: Conceptualization, Methodology, Software, Validation, Funding acquisition, Writing – original draft. HD: Formal Analysis, Investigation, Data curation, Writing – original draft. YL: Data curation, Methodology, Supervision, Conceptualization, Formal Analysis, Project administration, Validation, Investigation, Funding acquisition, Resources, Visualization, Software, Writing – original draft, Writing–review and editing.

Funding

The authors declare that no financial support was received for the research and/or publication of this article.

Acknowledgements

A brief expression of appreciation is extended to collaborators, organizations, and funding bodies that contributed to this research.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Bonner, S., Barrett, I. P., Ye, C., Swiers, R., Engkvist, O., Bender, A., et al. (2022). A review of biomedical datasets relating to drug discovery: a knowledge graph perspective. Briefings Bioinforma. 23, bbac404. doi:10.1093/bib/bbac404

de Leon, J., Ruan, C.-J., Schoretsanitis, G., and De las Cuevas, C. (2020). A rational use of clozapine based on adverse drug reactions, pharmacokinetics, and clinical pharmacopsychology. Psychotherapy Psychosomatics 89, 200–214. doi:10.1159/000507638

Duch, W., Swaminathan, K., and Meller, J. (2007). Artificial intelligence approaches for rational drug design and discovery. Curr. Pharmaceutical Design 13, 1497–1508. doi:10.2174/138161207780765954

Galan-Vasquez, E., and Perez-Rueda, E. (2021). A landscape for drug-target interactions based on network analysis. Plos One 16, e0247018. doi:10.1371/journal.pone.0247018

Huang, K., and Chen, C. (2024). Subgraph generation applied in graphsage deal with imbalanced node classification. Soft Comput. 28, 10727–10740. doi:10.1007/s00500-024-09797-7

Hudson, I. L. (2020). Data integration using advances in machine learning in drug discovery and molecular biology. Artif. Neural Netw. 2190, 167–184. doi:10.1007/978-1-0716-0826-5_7

Jiang, D., Wu, Z., Hsieh, C.-Y., Chen, G., Liao, B., Wang, Z., et al. (2021). Could graph neural networks learn better molecular representation for drug discovery? A comparison study of descriptor-based and graph-based models. J. Cheminformatics 13, 12. doi:10.1186/s13321-020-00479-8

Jin, Z., Wang, M., Zheng, X., Chen, J., and Tang, C. (2024). Drug side effects prediction via cross attention learning and feature aggregation. Expert Syst. Appl. 248, 123346. doi:10.1016/j.eswa.2024.123346

Karim, M. R., Cochez, M., Jares, J. B., Uddin, M., Beyan, O., and Decker, S. (2019). “Drug-drug interaction prediction based on knowledge graph embeddings and convolutional-lstm network,” in Proceedings of the 10th ACM international conference on bioinformatics, computational biology and health informatics, 113–123.

Kenakin, T. (2006). Data-driven analysis in drug discovery. J. Recept. Signal Transduct. 26, 299–327. doi:10.1080/10799890600778300

Li, J., and Yao, L. (2025). Hcaf-dta: drug-target binding affinity prediction with cross-attention fused hypergraph neural networks. arXiv Preprint arXiv:2504.02014. Available online at: https://arxiv.org/abs/2504.02014.

Li, X., Li, Z., Wu, X., Xiong, Z., Yang, T., Fu, Z., et al. (2019). Deep learning enhancing kinome-wide polypharmacology profiling: model construction and experiment validation. J. Medicinal Chemistry 63, 8723–8737. doi:10.1021/acs.jmedchem.9b00855

Liu, S. (2018). Exploration on deep drug discovery: representation and learning. Tech. Rep. Available online at: https://minds.wisconsin.edu/handle/1793/78768.

Lu, C., and Di, L. (2020). In vitro and in vivo methods to assess pharmacokinetic drug–drug interactions in drug discovery and development. Biopharm. & Drug Disposition 41, 3–31. doi:10.1002/bdd.2212

Luo, L., Li, B., Wang, X., Cui, L., and Liu, G. (2023). Interpretable spatial identity neural network-based epidemic prediction. Sci. Rep. 13, 18159. doi:10.1038/s41598-023-45177-1

Mao, G., Su, J., Yu, S., and Luo, D. (2019). Multi-turn response selection for chatbots with hierarchical aggregation network of multi-representation. IEEE Access 7, 111736–111745. doi:10.1109/access.2019.2934149

Minnich, A. J., McLoughlin, K., Tse, M., Deng, J., Weber, A., Murad, N., et al. (2020). Ampl: a data-driven modeling pipeline for drug discovery. J. Chemical Information Modeling 60, 1955–1968. doi:10.1021/acs.jcim.9b01053

Mohamed, S. K., Nounu, A., and Nováček, V. (2019). “Drug target discovery using knowledge graph embeddings,” in Proceedings of the 34th ACM/SIGAPP symposium on applied computing, 11–18.

Murali, V., Muralidhar, Y. P., Königs, C., Nair, M., Madhu, S., Nedungadi, P., et al. (2022). Predicting clinical trial outcomes using drug bioactivities through graph database integration and machine learning, Chem. Biol. Drug Des. Chemical Biology & Drug Design 100, 169–184. doi:10.1111/cbdd.14092

Nainu, F., Jota Baptista, C., Faustino-Rocha, A., and Oliveira, P. A. (2023). Model organisms in experimental pharmacology and drug discovery 2022.

Neuvonen, P. J., Niemi, M., and Backman, J. T. (2006). Drug interactions with lipid-lowering drugs: mechanisms and clinical relevance. Clin. Pharmacol. & Ther. 80, 565–581. doi:10.1016/j.clpt.2006.09.003

Phan, H. T., Nguyen, N. T., and Hwang, D. (2022). Aspect-level sentiment analysis using cnn over bert-gcn. Ieee Access 10, 110402–110409. doi:10.1109/access.2022.3214233

Powers, A., Loesch, E. B., Weiland, A., Fioravanti, N., and Lucius, D. (2017). Preemptive warfarin dose reduction after initiation of sulfamethoxazole-trimethoprim or metronidazole. J. Thrombosis Thrombolysis 44, 88–93. doi:10.1007/s11239-017-1497-x

Ragesh, R., Sellamanickam, S., Iyer, A., Bairi, R., and Lingam, V. (2021). “Hetegcn: heterogeneous graph convolutional networks for text classification,” in Proceedings of the 14th ACM international conference on web search and data mining, 860–868.

Reimers, A., Brodtkorb, E., and Sabers, A. (2015). Interactions between hormonal contraception and antiepileptic drugs: clinical and mechanistic considerations. Seizure-European J. Epilepsy 28, 66–70. doi:10.1016/j.seizure.2015.03.006

Reitman, M., Chu, X., Cai, X., Yabut, J., Venkatasubramanian, R., Zajic, S., et al. (2011). Rifampin’s acute inhibitory and chronic inductive drug interactions: experimental and model-based approaches to drug–drug interaction trial design. Clin. Pharmacol. & Ther. 89, 234–242. doi:10.1038/clpt.2010.271

Ryu, J. Y., Kim, H., and Lee, S. Y. (2018). Deep learning improves prediction of drug–drug and drug–food interactions. Proc. Natl. Acad. Sci. 115, E4304–E4311. doi:10.1073/pnas.1803294115

Schöning, V., Khurana, A., and Karolak, A. (2023). Spotlight on artificial intelligence in experimental pharmacology and drug discovery

Sharma, R., Almáši, M., Nehra, S. P., Rao, V. S., Panchal, P., Paul, D. R., et al. (2022). Photocatalytic hydrogen production using graphitic carbon nitride (gcn): a precise review. Renew. Sustain. Energy Rev. 168, 112776. doi:10.1016/j.rser.2022.112776

Sripriya Akondi, V., Menon, V., Baudry, J., and Whittle, J. (2022). Novel big data-driven machine learning models for drug discovery application. Molecules 27, 594. doi:10.3390/molecules27030594

Sun, M., Zhao, S., Gilvary, C., Elemento, O., Zhou, J., and Wang, F. (2020). Graph convolutional networks for computational drug development and discovery. Briefings Bioinformatics 21, 919–935. doi:10.1093/bib/bbz042

Wang, Y., Min, Y., Chen, X., and Wu, J. (2021). “Multi-view graph contrastive representation learning for drug-drug interaction prediction,” in Proceedings of the web conference 2021, 2921–2933.

Wienkers, L. C., and Heath, T. G. (2005). Predicting in vivo drug interactions from in vitro drug discovery data. Nat. Reviews Drug Discovery 4, 825–833. doi:10.1038/nrd1851

Yamanishi, Y., Araki, M., Gutteridge, A., Honda, W., and Kanehisa, M. (2008). Prediction of drug–target interaction networks from the integration of chemical and genomic spaces. Bioinformatics 24, i232–i240. doi:10.1093/bioinformatics/btn162

Yan, K., Lv, H., Guo, Y., Peng, W., and Liu, B. (2023). samppred-gat: prediction of antimicrobial peptide by graph attention network and predicted peptide structure. Bioinformatics 39, btac715. doi:10.1093/bioinformatics/btac715

Yang, X., Wang, Y., Byrne, R., Schneider, G., and Yang, S. (2019). Concepts of artificial intelligence for computer-assisted drug discovery. Chem. Reviews 119, 10520–10594. doi:10.1021/acs.chemrev.8b00728

Zhang, R., Lin, Y., Wu, Y., Deng, L., Zhang, H., Liao, M., et al. (2024). Mvmrl: a multi-view molecular representation learning method for molecular property prediction. Briefings Bioinforma. 25, bbae298. doi:10.1093/bib/bbae298

Keywords: drug–drug interaction, structured graph learning, cross-attention, molecular connectivity, interpretability

Citation: Wang J, Du H and Li Y (2026) DDI-AttendNet: cross attention with structured graph learning for inter-drug connectivity analysis. Front. Pharmacol. 16:1680655. doi: 10.3389/fphar.2025.1680655

Received: 06 August 2025; Accepted: 27 November 2025;

Published: 07 January 2026.

Edited by:

Zeno Apostolides, University of Pretoria, South AfricaReviewed by:

Mingzhong Wang, University of the Sunshine Coast, AustraliaYuchen Zhang, Northwest A&F University, China

Copyright © 2026 Wang, Du and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jing Wang, MTM0NjAyNDYyNjVAMTYzLmNvbQ==

Jing Wang

Jing Wang Huili Du1

Huili Du1