- 1 Psychiatric and Mental Health Nursing Department, Faculty of Nursing, King Abdulaziz University, Jeddah, Saudi Arabia

- 2 Faculty of Nursing, King Abdulaziz University, Jeddah, Saudi Arabia

- 3 Nursing Administration Department, Eradah Mental Health Complex (Eradah Service), Jeddah, Saudi Arabia

- 4 Nursing Department, Eradah Mental Health Complex, Jeddah, Saudi Arabia

- 5 Department of Humanities and Social Sciences, G.P. Koirala Memorial (Community) College, Kathmandu, Nepal

- 6 Department of Behavioral Medicine and Psychiatry, West Virginia University, Morgantown, WV, United States

- 7 School of Nursing, Johns Hopkins University, Baltimore, MD, United States

Background: Artificial intelligence (AI) holds significant potential for enhancing mental health care, but uptake is limited and may be influenced by demographic factors among healthcare professionals. Further, while AI adoption in Saudi Arabia is advancing, its role and impact within mental health services remain largely unexplored.

Objective: This study presents a unique exploration of psychiatric professionals’ perceptions of AI in mental health care in Jeddah, Saudi Arabia.

Methods: A cross-sectional online survey was conducted with a sample of mental health professionals from two governmental mental health hospitals in Jeddah, Saudi Arabia. The study tool comprised two sections: sociodemographic questions and the Shinners Artificial Intelligence Perception (SHAIP) questionnaire, which assesses perceptions of AI in mental healthcare. Data were analyzed using IBM SPSS Statistics software.

Results: A total of 251 mental health professionals participated; 56.6% were female, 50% were aged 31–40 years, 45% were married, and 55.4% were nurses. Although 85.7% were aware of AI, only 24.3% used it in practice. Participants rated AI’s professional impact positively (item means 3.48–3.75) but felt unprepared to use role-specific AI (mean 2.78). Perceived impact of AI differed by specialty (p=0.008), with nurses scoring higher, and was higher among those aware of AI (p<0.0001). Specialty and AI awareness were also associated with AI preparedness (p=0.001 and p=0.029, respectively).

Discussion: The study provides insights into mental health professionals’ views on AI in mental healthcare, emphasizing the need for targeted education to improve AI literacy and preparedness among Saudi healthcare professionals. It also highlights the importance of ethical AI implementation to enhance patient care and advance psychiatric practice in the region.

Introduction

The World Health Organization (WHO) describes mental health as a state of well-being in which an individual recognizes their abilities, manages everyday stress, works effectively, and contributes to their community (1). Mental health disorders include conditions such as depression, anxiety, addiction, and bipolar disorder, which significantly affect a person’s daily life, relationships, and physical health (2). In recent years, Artificial Intelligence (AI) has been rapidly adopted and integrated into mental healthcare with the goal of improving patient care through more efficient processes, better prediction, and resources to individualize care (3, 4). While there is no standard definition of AI, the American Psychological Association defines it as ‘a subdiscipline of computer science that aims to produce programs that simulate human intelligence’ (5).

In practice, there are different types of AI, including machine learning (such as neural networks and deep learning), natural language processing (NLP), rule-based expert systems, robotic process automation, and physical robots (6, 7). Research in AI has primarily focused on components such as learning, reasoning, problem-solving, decision making, creativity, perception, independence, and language use (8–10). Benefits of AI include improved efficiency, accuracy, and productivity (11). AI has been used extensively in healthcare systems (12), including mental health systems (3, 6, 13–21). Attitudes toward AI in healthcare are often positive (22–24), with professionals noting the potential of AI to support medical diagnosis, decision-making, drug discovery, patient experience, data management, and robotic surgery (25, 26). However, many healthcare professionals hold that AI should serve as a partner rather than a replacement, so that both the benefits of AI and the expertise of the clinician are maximized (27). Other concerns raised by healthcare professionals about the integration of AI into healthcare relate to job security, ethical and moral dilemmas, impact on quality of care (including patient-provider relationships), training needs, and legal implications and regulatory standards (27). AI-driven advancements can make care more personalized, precise, and predictive, while challenges to integration include data access, domain expertise, public trust, ethical concerns, cybersecurity, and language barriers (12, 13, 22). Additionally, effective AI implementation depends on robust evaluation, bias mitigation, generalizability, and interpretability to maximize patient benefits (28). However, limited digital literacy remains a barrier: studies show that nearly half (48.2%) of healthcare professionals exhibit poor digital literacy, which may limit the use of AI in supporting clinical practice (29).

AI in mental health

Advances in AI offer promising opportunities for patients and clinicians alike to benefit from mental health interventions (3, 5, 13). AI tools have demonstrated accuracy in detecting, classifying, and predicting mental health disorders, assessing treatment responses, and monitoring prognosis (14). Additionally, AI-driven mental health interventions, including digital apps and web-based therapy, are improving patient access and individualized care (18). AI-based mental healthcare systems (AIMS) integrate chatbots with machine learning to assess patients, predict conditions, and support clinical decisions while prioritizing privacy, autonomy, and accessibility (19). Further, chatbot-based systems show potential to improve patient outcomes through psychoeducation, treatment adherence, and patient engagement by analyzing energy levels, mood, stress, and sleep habits (4, 15). In some instances, patients have also responded positively to care that does not come from a human, because it allowed them to self-pace treatment and education (30). Machine learning models can predict conditions such as bipolar disorder, schizophrenia, anxiety, depression, post-traumatic stress disorder (PTSD), and childhood mental health disorders (20). Although AI can analyze speech, text, and facial expressions to provide insights into mental states (6) and detect patterns related to non-compliance (31), encoding human empathy into AI remains a fundamental challenge (16).

Thus, while preliminary evidence supports the use of chatbots in psychiatry (21), their effectiveness depends on technological advancement, resource availability, and reduction of stigma (16), and ethical concerns remain. Ethical implications are wide-reaching, including but not limited to potential harm to patients, biases and lack of representation in datasets, over-reliance, limited emotional intelligence, and challenges in preserving the patient-therapist relationship (15, 30, 32). A recent systematic review identified 18 ethical considerations within three main domains: the use of AI interventions in mental health and wellbeing; principles to ensure responsible practice and positive outcomes in the development and implementation of AI technology; and guidelines and recommendations for the ethical use of AI in mental health treatment (32). To be trustworthy, AI-driven mental healthcare must be competent, reliable, transparent, and empathetic, with clear communication about its validity and limitations (17). Despite AI’s rapid evolution in healthcare, its application in mental health is also limited by barriers to clinical integration (28, 33). Digital literacy among clinicians is becoming increasingly essential as the volume of AI-generated data grows (34). AI presents transformative potential, but ensuring ethical implementation and clinical effectiveness will be key to its success in mental healthcare (3).

Use of AI in healthcare in Saudi Arabia

AI is rapidly transforming healthcare in Saudi Arabia, aligning with Vision 2030 by enhancing efficiency through AI-powered diagnostics, predictive analytics, personalized treatments, and digital platforms such as the Mawid system, which streamlines scheduling and service delivery (35). These advancements contribute to improved patient outcomes and operational effectiveness across the healthcare sector. Despite AI’s growing role in Saudi Arabia, knowledge gaps persist among its healthcare professionals. Studies indicate that 50.1% of medical students lack basic AI knowledge, 55.8% are unaware of its applications in dentistry, and 40.9% learn about AI primarily through social media. AI awareness increases with academic progression, with first-year students showing the lowest awareness (27.6%) and sixth-year students the highest (64.6%) (36), which may partly explain greater support for AI education in postgraduate (48.9%) than in undergraduate (40.4%) programs (36). In the field of mental health, AI is emerging as a valuable tool for diagnosis, treatment, and accessibility. King Faisal Specialist Hospital & Research Centre (KFSHRC) is leveraging AI-driven digital platforms to enhance mental health services, reduce stigma, and improve care access, particularly in underserved areas (37). These initiatives highlight AI’s potential to bridge gaps in mental healthcare, but further efforts are needed to integrate AI effectively and to ensure healthcare professionals are equipped with the necessary digital skills.

Research gap

AI perceptions among mental health professionals appear to be influenced by demographic factors, although findings are inconclusive and sometimes contradictory. For example, age plays a role, with younger professionals generally more receptive, though findings are mixed (24, 38–41). Gender differences are also inconclusive, with some studies suggesting men favor AI due to socialization (42), while others report higher acceptance among women (24). Specialization also shapes attitudes, with pathologists showing greater willingness than other medical specialists, such as psychiatrists, radiologists, and surgical specialists (39), and cognitive behavioral practitioners demonstrating more positive views (43). Studies of experience, digital literacy, and AI familiarity also yield mixed findings: experience is not a strong predictor, while digital literacy and AI familiarity have been found both to enhance and to diminish positive perceptions (39, 40, 42, 44). Understanding the interplay of these demographic influences is essential for effective AI adoption in mental healthcare.

Despite the studies highlighted above, literature on the intersection of AI and mental health remains relatively scarce (45). AI adoption in healthcare is expanding, but research on its role in mental health care in Saudi Arabia remains limited. Existing studies primarily focus on general healthcare applications and overlook demographic variations in AI perception, particularly in mental health care. Research is lacking on how factors such as age, gender, professional background, and experience influence attitudes toward AI in mental health settings. Such insights are needed to develop targeted strategies that enhance AI integration, improve training programs, and optimize AI tool usage, ultimately fostering more effective and accessible mental health care in Saudi Arabia. This study addresses this gap by exploring AI perceptions in mental health care across diverse demographic groups in Saudi Arabia.

Methods

Research design

A cross-sectional study was conducted to assess the perceptions of mental health professionals towards AI technology in mental healthcare. The study focused on two specialized psychiatric hospitals in Jeddah, Saudi Arabia: XX and XX. These institutions, affiliated with the Ministry of Health (MOH), were selected because of their specialization in psychiatric care and their location. XX, established in 1988, provides comprehensive psychiatric care, while XX has eight wards, comprising six inpatient units (four male and two female wards), an outpatient department (OPD), and an emergency room (ER), with a total of approximately 125 beds.

Ethical considerations

Ethical approval was obtained from the Nursing Research Ethical Committee of The Faculty of Nursing at King Abdulaziz University (NREC Serial No: Ref No 2B. 45) and the Institutional Review Board of The Ministry of Health (IRB Log No: A01892). Participants were informed about the study’s aim, importance, expected completion time, and confidentiality measures through a cover page included in the online survey. Informed consent was implied upon survey completion. Data anonymity was ensured by not collecting personal identifiers, and data security was maintained using password-encrypted storage accessible only to the research team.

Sampling and sample size

The study aimed to include a diverse range of mental health professionals representative of clinicians providing direct care to individuals with mental health disorders in Saudi Arabia. The sample therefore included psychiatrists, medical interns, nurses, nursing interns, psychologists, psychologist interns, sociologists, and other allied health professionals. Assuming that 60% of participants have good knowledge of AI, with a precision of ±6% and a 0.05 level of significance, the required sample size was 253. These 253 participants were selected using systematic random sampling from a sampling frame of 705 professionals across both hospitals.
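The sample size statement can be illustrated with the standard single-proportion formula, n = z²p(1 − p)/d². The sketch below assumes that formula with z = 1.96, p = 0.60, and d = 0.06; the manuscript does not state which formula or rounding was used, and under these assumptions the formula gives about 256.1 (rounded up to 257), slightly above the reported 253, so it should not be read as a reproduction of the authors’ calculation. The systematic sampling helper is likewise a hypothetical illustration: a random start followed by a fixed interval of 705/253 ≈ 2.79 through an ordered sampling frame.

```python
from __future__ import annotations

import math
import random


def sample_size_single_proportion(p: float, d: float, z: float = 1.96) -> int:
    """Sample size for estimating one proportion: n = z^2 * p * (1 - p) / d^2, rounded up.

    p: anticipated proportion (assumed 0.60 here)
    d: absolute precision (assumed 0.06, i.e. +/- 6 percentage points)
    z: standard normal quantile for a two-sided 0.05 level (1.96)
    """
    if not 0 < p < 1 or d <= 0:
        raise ValueError("p must lie in (0, 1) and d must be positive")
    return math.ceil(z ** 2 * p * (1 - p) / d ** 2)


def systematic_sample(frame_size: int, n: int, seed: int | None = None) -> list[int]:
    """Pick n positions from an ordered frame using a random start and a fixed interval.

    The interval k = frame_size / n may be fractional (705 / 253 is about 2.79),
    so positions are floor(start + i * k) with start drawn uniformly from [0, k).
    """
    if not 0 < n <= frame_size:
        raise ValueError("n must be between 1 and frame_size")
    k = frame_size / n
    rng = random.Random(seed)
    start = rng.random() * k
    # Clamp guards against floating-point rounding at the very end of the frame.
    return [min(int(start + i * k), frame_size - 1) for i in range(n)]
```

For example, `systematic_sample(705, 253, seed=1)` returns 253 distinct, increasing positions in a 705-person frame; the function names and the `seed` parameter are illustrative only.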

Instrumentation/data collection method

Data were collected via a questionnaire comprising two sections and a total of 19 questions. Section one comprised the validated Shinners Artificial Intelligence Perception (SHAIP) questionnaire (used with author permission) (46). The SHAIP tool was selected for this study because of its methodological rigor and strong psychometric validation (46). The SHAIP questionnaire consists of 10 items rated on a 5-point Likert scale (totally disagree, disagree, unsure, agree, and totally agree), exploring perceptions of professional impact (Cronbach’s alpha = 0.804) and perceptions of preparedness for AI (Cronbach’s alpha = 0.620) (46). Of the ten items, six address professional impact: I believe that the use of AI in my specialty could improve the delivery of patient care; I believe that the use of AI in my specialty could improve clinical decision making; I believe that AI can improve population health outcomes; I believe that AI will change my role as a healthcare professional in the future; I believe that the introduction of AI will reduce financial cost associated with my role; I believe that one day AI may take over part of my role as a healthcare professional. Four items explore preparedness for AI: I believe that overall healthcare professionals are prepared for the introduction of AI technology; I believe that I have been adequately trained to use AI that is specific to my role; I believe there is an ethical framework in place for the use of AI technology in my workplace; I believe that should AI technology make an error, full responsibility lies with the healthcare professional. The second section comprised nine sociodemographic questions reflecting the same information gathered by Shinners et al. (46).
These included age, gender, marital status, role (including (i) professional role and (ii) clinical or administrative role), years of experience in mental health care, and AI use (including (i) current use of AI in practice, (ii) any prior AI training, and (iii) ability to define AI). The questionnaire takes approximately 5–10 minutes to complete.
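To make the two-factor structure concrete, the sketch below scores one respondent’s SHAIP answers and computes Cronbach’s alpha, α = k/(k − 1) · (1 − Σσ²ᵢ/σ²ₜ), for a set of items. It assumes coding from 1 (totally disagree) to 5 (totally agree), no reverse-scored items, and subscale scores formed by summing items (6 to 30 for professional impact, 4 to 20 for preparedness), which appears consistent with the factor means reported in the Results. The item ordering and the function names are hypothetical and are not part of the published instrument.

```python
from __future__ import annotations

from statistics import variance

# Assumed numeric coding of the 5-point SHAIP response scale.
LIKERT = {
    "totally disagree": 1,
    "disagree": 2,
    "unsure": 3,
    "agree": 4,
    "totally agree": 5,
}

# Hypothetical item order: the six professional-impact items first,
# then the four preparedness items, as listed in the Methods text.
IMPACT_ITEMS = range(0, 6)
PREPAREDNESS_ITEMS = range(6, 10)


def score_shaip(answers: list[str]) -> tuple[int, int]:
    """Return (professional impact sum, preparedness sum) for one respondent.

    Assumes no reverse-scored items; impact ranges 6-30, preparedness 4-20.
    """
    if len(answers) != 10:
        raise ValueError("expected 10 SHAIP responses")
    codes = [LIKERT[a.strip().lower()] for a in answers]
    impact = sum(codes[i] for i in IMPACT_ITEMS)
    preparedness = sum(codes[i] for i in PREPAREDNESS_ITEMS)
    return impact, preparedness


def cronbach_alpha(rows: list[list[int]]) -> float:
    """Cronbach's alpha, where rows[r][i] is respondent r's coded answer to item i.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    item_columns = list(zip(*rows))
    totals = [sum(row) for row in rows]
    item_var_sum = sum(variance(col) for col in item_columns)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))
```

Sample variances are used for both the items and the total; because the same estimator appears in numerator and denominator, using population variances instead would give the same alpha.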

Data collection procedure

A standardized approach to data collection was employed to facilitate robust statistical analysis and derive meaningful insights into professionals’ perceptions of AI integration in mental healthcare. The questionnaire included language about the voluntary nature of participation, anonymization of responses, and a statement that completion of the questionnaire indicated informed consent. Following ethical approval, potential participants were notified about the study through electronic distribution via hospital emails and official WhatsApp groups to ensure participant anonymity and accessibility. The questionnaire remained open for one month (April–May 2024), and two electronic reminders were sent to encourage completion. The survey was closed once the target sample size had been achieved.

Data analysis

Data were analyzed using IBM SPSS Statistics for Windows, version 26.0 (IBM Corp., Armonk, NY, USA). Descriptive statistics (mean, standard deviation, frequencies, and percentages) were used to describe quantitative and categorical variables. Student’s t-test for independent samples (for variables with two categories) and one-way analysis of variance followed by Tukey’s post-hoc tests (for variables with more than two categories) were used to compare the mean scores of the two SHAIP factors across participants’ demographic characteristics, professional characteristics, and AI awareness items. A p-value of <0.05 was considered statistically significant.
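The group comparisons described above rest on two test statistics. As a minimal sketch in plain Python (an illustration, not the SPSS procedure itself), the functions below compute the pooled-variance Student’s t statistic for two groups and the one-way ANOVA F statistic for two or more groups. P-values would then come from the t and F distributions, for example via `scipy.stats.ttest_ind` (pooled variance by default) and `scipy.stats.f_oneway`, with `scipy.stats.tukey_hsd` (SciPy 1.8 or later) available for Tukey’s post-hoc comparisons.

```python
from __future__ import annotations

from statistics import mean, variance


def student_t(a: list[float], b: list[float]) -> tuple[float, int]:
    """Pooled-variance (Student's) t statistic and degrees of freedom for two independent groups."""
    na, nb = len(a), len(b)
    if na < 2 or nb < 2:
        raise ValueError("each group needs at least two observations")
    df = na + nb - 2
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / (pooled * (1 / na + 1 / nb)) ** 0.5
    return t, df


def one_way_anova(groups: list[list[float]]) -> tuple[float, int, int]:
    """One-way ANOVA F statistic with (between-groups, within-groups) degrees of freedom."""
    all_values = [x for g in groups for x in g]
    k, n = len(groups), len(all_values)
    if k < 2 or n <= k:
        raise ValueError("need at least two groups and more observations than groups")
    grand = mean(all_values)
    # Between-groups sum of squares: group size times squared deviation of each group mean.
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    # Within-groups sum of squares: deviations of observations from their own group mean.
    ss_within = sum(sum((x - mean(g)) ** 2 for x in g) for g in groups)
    df_b, df_w = k - 1, n - k
    f = (ss_between / df_b) / (ss_within / df_w)
    return f, df_b, df_w
```

With two groups, F equals t squared, which serves as a quick consistency check: for the groups [1, 2, 3] and [4, 5, 6], `student_t` gives t ≈ −3.674 with 4 degrees of freedom and `one_way_anova` gives F = 13.5.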

Results

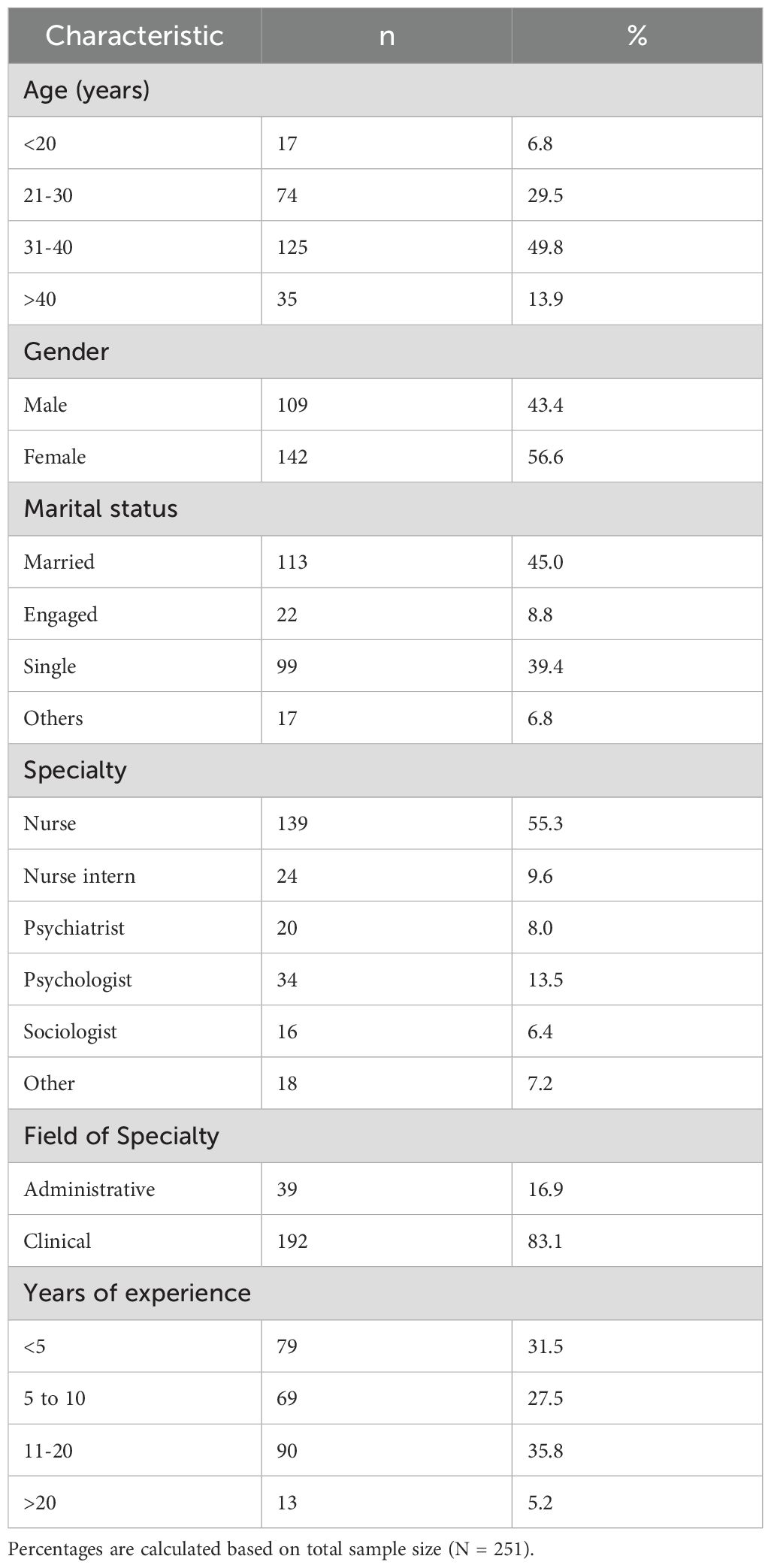

Of the 251 participants, 56.6% were female, about 50% were aged 31–40 years, and 45% were married. Professionally, 55.4% were nurses, and 83.1% were in a clinical role. Work experience varied, with 31.5% having less than 5 years, 27.5% having 5–10 years, and 35.9% having 11–20 years of experience (Table 1).

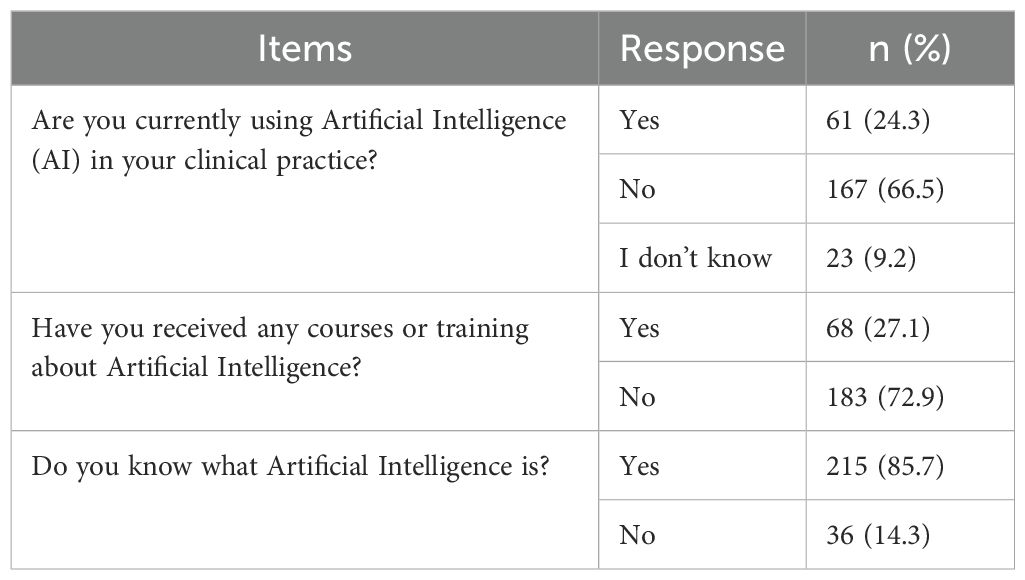

Familiarity with and use of AI varied across the sample. While the majority reported knowing what AI was (85.7%), most had not received any training in its use (72.9%). Notably, 66.5% stated they were not using AI in their clinical practice, 24.3% stated they were using it, and 9.2% were unsure (Table 2).

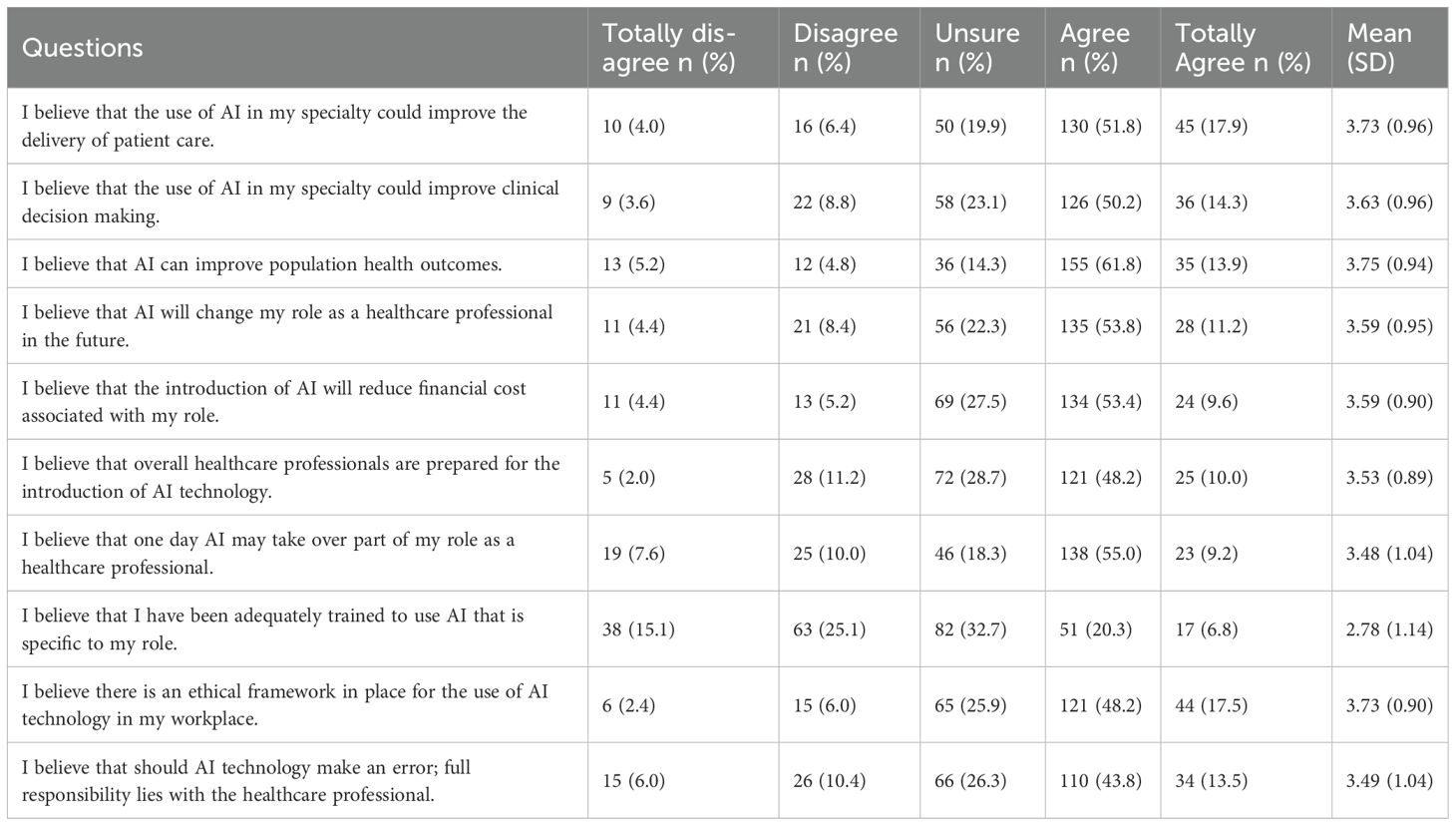

Participant responses to the SHAIP questionnaire highlight important complexities in their attitudes towards AI (Table 3). For example, 61.8% agreed with the item ‘I believe that AI can improve population health outcomes’, 53.8% agreed that AI would change their healthcare role, and 55% agreed that AI may take over part of their healthcare role. However, responses to the preparedness items suggest contradictory perceptions. Only 20.3% agreed they had been adequately trained to use AI within their specialty role, with 32.7% unsure and 25.1% disagreeing, yet 48.2% agreed that healthcare professionals are prepared for the introduction of AI technology.

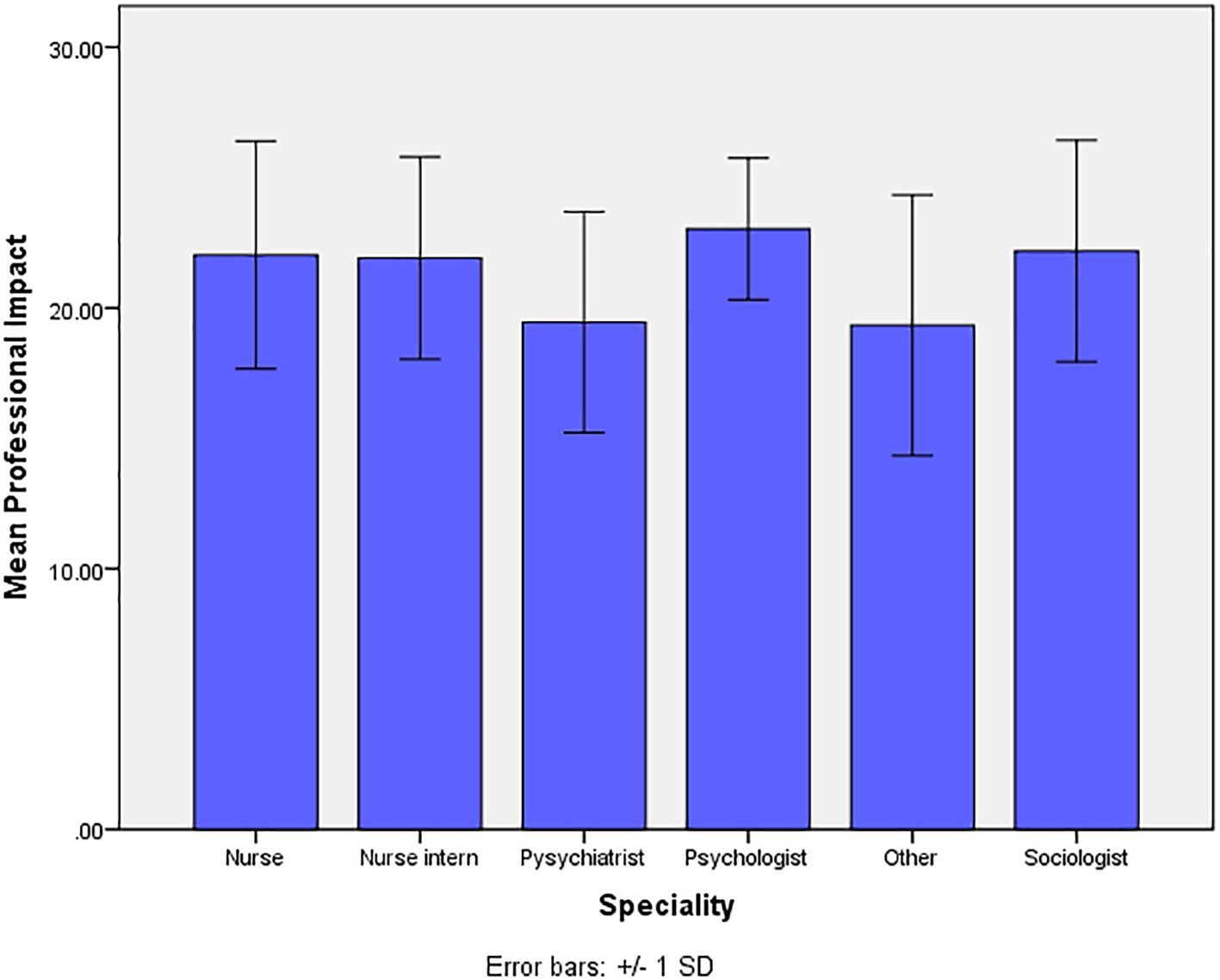

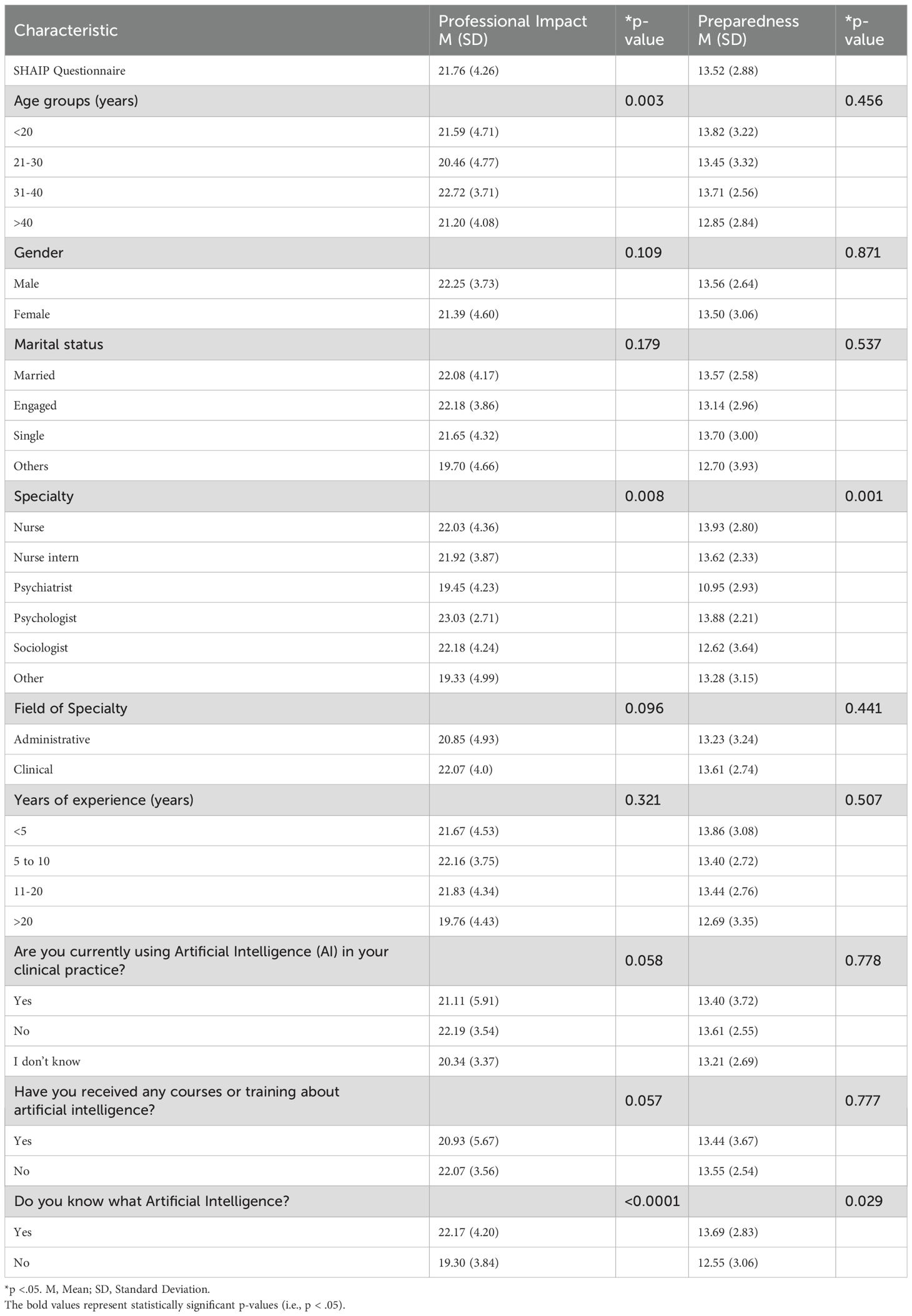

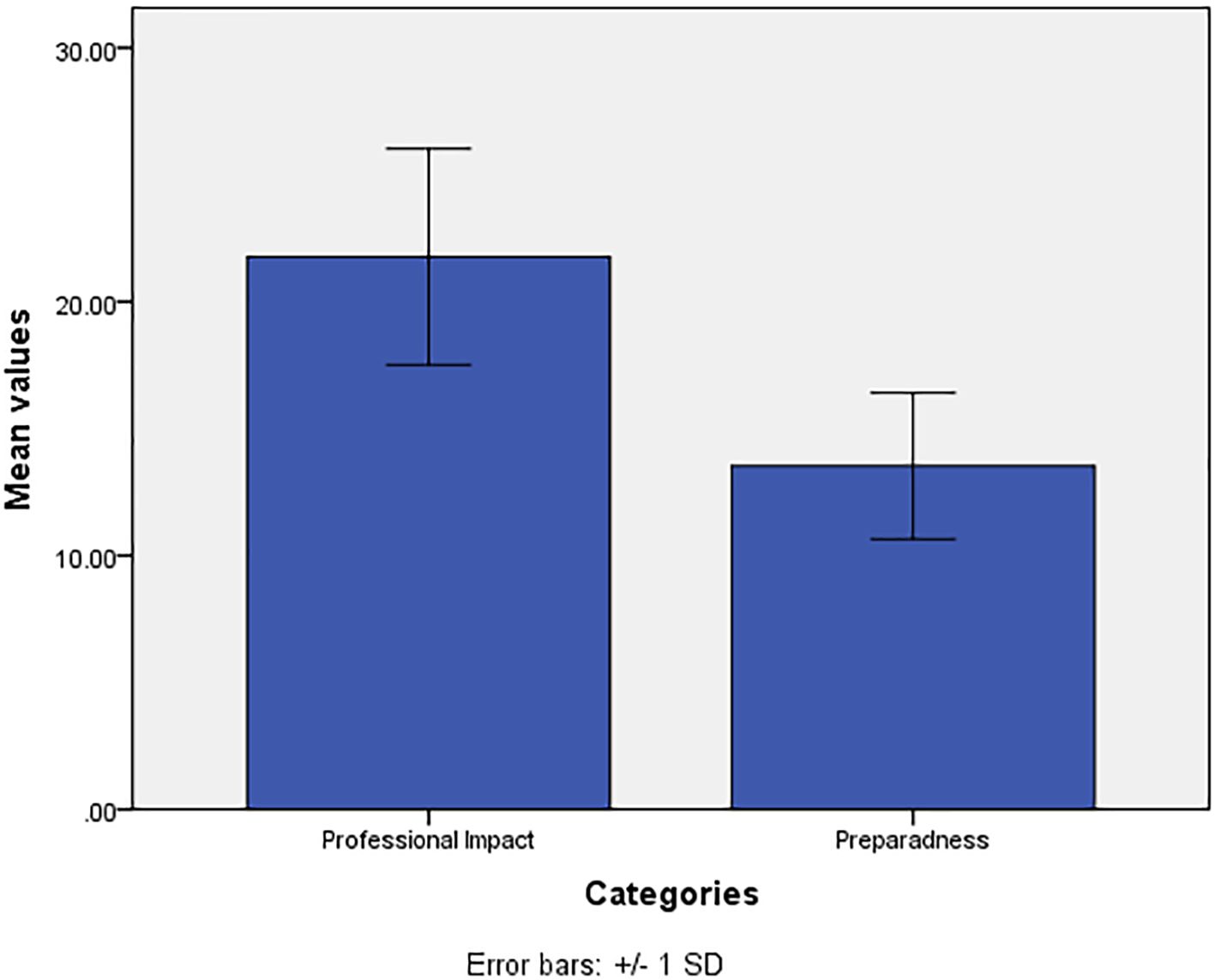

The means (SD) of the two factors under exploration (professional impact of AI and preparedness for AI) were 21.76 (4.26) and 13.52 (2.88), respectively (Figure 1). Comparisons of the mean values of the two SHAIP factors across demographic, professional, and AI awareness items showed statistically significant differences (Table 4). These were found for the variable ‘specialty’ and the item ‘do you know what AI is?’ for both factors. Age was additionally significant for the professional impact factor: mean professional impact scores differed significantly across the four age groups (p=0.003), being significantly higher among participants aged <20, 31–40, and >40 years than among those aged 21–30 years.

Mean professional impact scores were significantly higher among nurses, nurse interns, psychologists, and sociologists than among psychiatrists and participants from other specialties (p=0.008) (Figure 2). Post-hoc tests indicated no significant pairwise differences among nurses, nurse interns, psychologists, and sociologists. The mean professional impact score was also significantly higher among participants who answered ‘yes’ to ‘do you know what AI is?’ than among those who answered ‘no’ (p<0.0001). No statistically significant differences in professional impact were observed for the other variables (Table 4).
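The post-hoc comparisons reported here follow Tukey’s procedure. Because the professional groups differ in size, a common form is the Tukey–Kramer statistic, q = |x̄ᵢ − x̄ⱼ| / √((MS_within/2)(1/nᵢ + 1/nⱼ)), with each q compared against a studentized range critical value for k groups and N − k degrees of freedom. The sketch below computes only the q statistics; whether SPSS applied exactly this unequal-n variant is an assumption, the function name is hypothetical, and critical values could be obtained from `scipy.stats.studentized_range` (SciPy 1.7 or later).

```python
from __future__ import annotations

from itertools import combinations
from statistics import mean


def tukey_kramer_q(groups: dict[str, list[float]]) -> dict[tuple[str, str], float]:
    """Studentized range statistic q for every pair of groups (Tukey-Kramer form for unequal n).

    Each q is compared with a critical value of the studentized range distribution
    for k groups and N - k degrees of freedom (not computed here).
    """
    values = list(groups.values())
    n_total = sum(len(g) for g in values)
    k = len(values)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more observations than groups")
    # Pooled within-group mean square, as in the one-way ANOVA.
    ss_within = sum(sum((x - mean(g)) ** 2 for x in g) for g in values)
    ms_within = ss_within / (n_total - k)
    result = {}
    for (name_a, a), (name_b, b) in combinations(groups.items(), 2):
        se = (ms_within / 2 * (1 / len(a) + 1 / len(b))) ** 0.5
        result[(name_a, name_b)] = abs(mean(a) - mean(b)) / se
    return result
```

For two groups, q equals √2 times the absolute Student’s t statistic, so Tukey’s procedure reduces to the ordinary two-sample comparison in that case.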

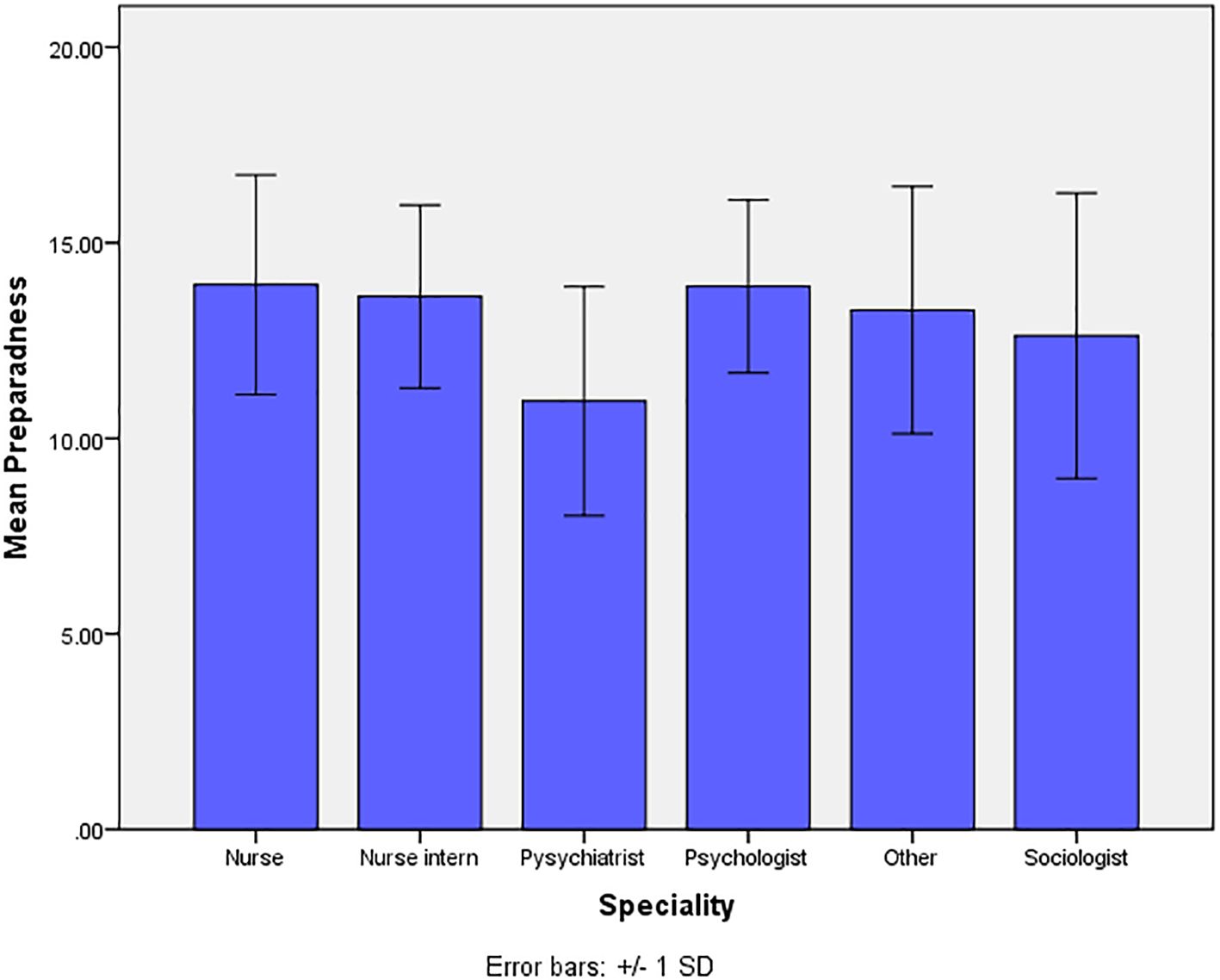

For preparedness for AI, mean scores were significantly higher among nurses, nurse interns, psychologists, and participants from other specialties than among psychiatrists and sociologists (p=0.001) (Figure 3). Post-hoc tests indicated no significant pairwise differences among nurses, nurse interns, psychologists, and other specialties. The mean preparedness score was also significantly higher among participants who answered ‘yes’ to ‘do you know what AI is?’ than among those who answered ‘no’ (p=0.029). No statistically significant differences in preparedness were observed for the other variables (Table 4).

Discussion

This cross-sectional study aimed to explore perceptions of AI among a diverse range of mental healthcare professionals in Saudi Arabia. About half of the sample was aged 31–40 years (49.8%), and the majority were female (56.6%). More participants were married (45.0%) than single (39.4%). Professionally, nurses constituted the majority (55.4%), followed by psychologists (13.5%) and nurse interns (9.6%). Most participants worked in clinical roles (83.1%), with a smaller percentage in administrative positions (16.9%). Over a third of participants had 11–20 years of clinical experience (35.9%), while a substantial portion had less than 5 years (31.5%). These demographic trends reflect a sample with diverse professional backgrounds and experience levels, which may influence perceptions of, and preparedness for, AI integration in healthcare. Notably, the underrepresentation of certain specialties, such as psychiatrists (8.0%) and sociologists (6.4%), may limit the generalizability of findings across all healthcare professions.

The findings of this study shed light on three key elements related to AI preparedness and perceived impact among mental health professionals in Saudi Arabia. First, there was general awareness of AI but a lack of training supporting its use. This suggests a potential risk of poor or unsafe practice if AI interventions are implemented without institutional infrastructure to provide training and support. The second element pertains to the perceived professional impact of AI. Overall, participants were largely positive in their perceptions of the role of AI in mental healthcare. However, while about half believed AI would alter their clinical role and potentially take on aspects of their responsibilities, the survey did not capture whether they viewed this change as positive, an area requiring further investigation. The third element builds on this, with notable differences in perceptions and preparedness across professional roles and demographics (explored further below). Nurses and psychologists reported greater perceived impact and preparedness than psychiatrists, while sociologists reported greater perceived impact but lower preparedness; both outcomes were also higher among participants who reported knowing what AI is. This reinforces the need for training to accompany intervention development if AI is to be integrated to greater effect in the clinical setting, and it suggests a relationship between familiarity and attitude.

The demographic findings reveal complex relationships with AI perception. Age emerged as a significant factor for perceived professional impact but not for preparedness, adding nuance to the existing literature. While some studies found younger age groups (18–42 years) more receptive to AI mental health tools (38), others reported no significant age-related differences in AI perception and attitudes (24, 39–41). The age-related variation in our study appears tied to professional impact rather than general preparedness, suggesting that age influences how professionals view AI’s effect on their work rather than their readiness to adopt it. Relatedly, Brinker et al. (2019) found that diagnostic performance patterns vary with age, with younger clinicians showing higher sensitivity and older clinicians better specificity, indicating that AI tools may need to be tailored to different experience levels (47).

Gender showed no significant relationship with AI perception in our study, in line with several recent studies (39, 40). However, this finding sits within a complex landscape of contradictory evidence. Ofosu-Ampong (2023) found that men perceived AI-based learning tools more positively, attributing this to traditional gender roles and socialization patterns (42). Conversely, Fritsch et al. (2022) reported that women showed more favorable attitudes toward AI in healthcare (24). Gender differences have also been observed in diagnostic performance, with female clinicians showing higher sensitivity and male clinicians greater specificity (47). These contradictory findings suggest the presence of unmeasured moderating variables that warrant further investigation. In our study, marital status was not significantly associated with AI perception, although this demographic variable has received limited attention in previous research.

Professional specialization emerged as a crucial factor in shaping AI perceptions. Psychologists reported the highest professional impact scores, while nurses demonstrated the highest preparedness levels. This finding is particularly noteworthy as previous research often excluded these key healthcare professionals (39). Within mental health specialties, those practicing cognitive behavioral approaches showed more positive attitudes toward AI (43). However, the adoption landscape remains complex, particularly in psychiatry, where evidence gaps regarding AI’s benefits and limitations make implementation decisions challenging (16). This specialty-specific variation suggests the need for tailored implementation strategies that consider each profession’s unique needs and concerns. By contrast, our study found no relationship between type of role (administrative or clinical) and AI perception; participants in both roles reported similar scores on both factors. Years of experience showed no significant relationship with AI perception, consistent with previous findings (39, 40). However, some nuanced differences have been reported: senior physicians were less familiar with AI (40), and more experienced doctors tended to prioritize human expertise over AI in diagnostics (44).

In our analysis, current use of AI in mental health care settings was not associated with AI perception for either professional impact or preparedness. Similarly, we observed no association between professionals’ AI training and AI perception: professionals who had and had not received training reported similar perceptions of AI. Although the literature identifies digital literacy and technology training as key factors influencing healthcare professionals’ attitudes toward AI adoption (29), in our work it was professionals’ knowledge of AI that was significantly associated with AI perception, with those familiar with AI showing more positive perceptions. This aligns with findings on the importance of technology familiarity (42), though, again, the wider literature is not consistent on this relationship (39).

From a cultural perspective, our findings among mental healthcare professionals mirror those in other healthcare specialties in the region. For example, a cross-sectional study in Jeddah, Saudi Arabia found that healthcare workers, including mental health professionals, demonstrated substantial awareness of AI but limited AI experience and training (48). Similarly, a study from the United Arab Emirates reported positive attitudes towards the role of AI in certain administrative and clinical processes, alongside limited training and education opportunities (49). More globally, perceptions of the potential role of AI include questions about its ability, or appropriateness, to replace human connection and empathy in mental healthcare, along with concerns about ethical elements (50). However, a systematic review of the future role of AI in Saudi healthcare more generally suggests that its potential benefits in improving care processes make the work of ensuring ethical application a worthwhile endeavor (51). Within this wider context, the findings from the present study point to three needs in Saudi Arabian mental healthcare: rigorous training across professions to address gaps in preparedness; further work to ensure the safe and ethical integration of AI into patient care processes; and support for, and partnership with, mental healthcare professionals in understanding the potential evolution of their roles and responsibilities.

Strengths and limitations

This study has several strengths and limitations. Its findings underscore the need for targeted training and education for mental health professionals regarding AI technologies and highlight the importance of addressing concerns and misconceptions that may hinder the adoption of AI in mental healthcare. Given the mixed responses towards AI, anonymous reporting may have helped to mitigate social desirability and response biases. However, the cross-sectional design captures perceptions at a single point in time, limiting the ability to assess changes over time. The study was conducted in two specialized psychiatric hospitals in Jeddah, which may restrict the generalizability of findings to other psychiatric settings or healthcare sectors, though the settings were selected because of their specialized nature. Additionally, while the survey demonstrated acceptable reliability, some sections had Cronbach’s alpha values only slightly above 0.5, indicating potential limitations in internal consistency. Recruitment relied on electronic distribution, which may have introduced selection bias favoring participants more comfortable with digital platforms. Lastly, unmeasured factors such as prior AI exposure, institutional policies, and cultural attitudes toward technology may have influenced responses, although these were beyond the scope of our primary research aim. Nonetheless, this is an important area for future research, using longitudinal designs, further exploration across other psychiatric settings to improve generalizability, and qualitative methods to provide context and nuance to better understand mental health professionals’ attitudes, perspectives, and needs for integrating AI into patient care.

Conclusion

Our findings indicate relationships between the characteristics of mental health professionals and their perceptions of AI. Specialization and AI knowledge significantly influenced perceptions. Psychologists reported the highest professional impact scores, while nurses showed the greatest preparedness. Psychiatrists and sociologists exhibited lower preparedness scores, highlighting potential gaps. Participants familiar with AI had more positive perceptions, emphasizing the role of knowledge. Age affected perceived professional impact but not preparedness. Gender, marital status, experience, and training showed no significant relationships with AI perceptions. These results underscore the need for tailored strategies that address specialty-specific needs and enhance AI literacy.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by the Nursing Research Ethical Committee of the Faculty of Nursing at King Abdulaziz University (NREC Serial No: Ref No 2B. 45) and the Institutional Review Board of the Ministry of Health (IRB Log No: A01892). The studies were conducted in accordance with the local legislation and institutional requirements.

Author contributions

LS: Supervision, Methodology, Writing – original draft, Formal analysis, Project administration, Writing – review & editing, Conceptualization. RA: Writing – original draft. AA: Writing – original draft. FAlq: Writing – original draft. FAls: Supervision, Writing – review & editing. SQ: Writing – review & editing. WA: Writing – review & editing. AM: Writing – review & editing. DP: Writing – review & editing. KS: Writing – review & editing. RW: Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. The project was funded by KAU Endowment (WAQF) at King Abdulaziz University, Jeddah, Saudi Arabia. The authors, therefore, acknowledge with thanks WAQF and the Deanship of Scientific Research (DSR) for technical and financial support.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abbreviations

AI, Artificial Intelligence; AIMS, Artificial Intelligence-Based Mental Healthcare Systems; NLP, Natural language processing; SHAIP, Shinners Artificial Intelligence Perception; WHO, World Health Organisation.

References

1. WHO. Mental health. Available online at: https://www.who.int/health-topics/mental-health#tab=tab_1 (Accessed February 2, 2025).

2. Felman A and Tee-Melegrito RA. What is mental health? Medical News Today (2024). Available online at: https://www.medicalnewstoday.com/articles/154543 (Accessed March 2, 2025).

3. Thakkar A, Gupta A, and De Sousa A. Artificial intelligence in positive mental health: a narrative review. Front Digit Health. (2024) 6:1280235. doi: 10.3389/fdgth.2024.1280235

4. Dehbozorgi R, Zangeneh S, Khooshab E, Nia DH, Hanif HR, Samian P, et al. The application of artificial intelligence in the field of mental health: a systematic review. BMC Psychiatry. (2025) 25:132. doi: 10.1186/s12888-025-06483-2

5. American Psychological Association [APA]. Artificial intelligence in mental health care (2025). Available online at: https://www.apa.org/practice/artificial-intelligence-mental-health-care.

6. Davenport T and Kalakota R. The potential for artificial intelligence in healthcare. Future Healthc J. (2019) 6:94–8. doi: 10.7861/futurehosp.6-2-94

7. Cui S, Tseng H-H, Pakela J, Ten Haken RK, and El Naqa I. Introduction to machine and deep learning for medical physicists. Med Phys. (2020) 47:e127–47. doi: 10.1002/mp.14140

8. Copeland BJ. Artificial intelligence (2025). Available online at: https://www.britannica.com/technology/artificial-intelligence (Accessed February 2, 2024).

9. Stryker C and Kavlakoglu E. What is artificial intelligence (AI)? United States: IBM (2024). Available at: https://www.ibm.com/think/topics/artificial-intelligence (Accessed February 5, 2025).

10. Beets B, Newman TP, Howell EL, Bao L, and Yang S. Surveying public perceptions of artificial intelligence in health care in the United States: systematic review. J Med Internet Res. (2023) 25:1–12. doi: 10.2196/40337

11. Mashood K, Kayani HUR, Malik AA, and Tahir A. Artificial intelligence recent trends and applications in industries. Pak J Sci. (2023) 75:219–31. doi: 10.57041/pjs.v75i02.855

12. Bajwa J, Munir U, Nori A, and Williams B. Artificial intelligence in healthcare: transforming the practice of medicine. Future Healthc J. (2021) 8:e188–94. doi: 10.7861/fhj.2021-0095

13. Alhuwaydi AM. Exploring the role of artificial intelligence in mental healthcare: current trends and future directions – A narrative review for a comprehensive insight. Risk Manag Healthc Policy. (2024) 17:1339–48. doi: 10.2147/RMHP.S461562

14. Cruz-Gonzalez P, He AW, Lam EP, Ng IMC, Li MW, Hou R, et al. Artificial intelligence in mental health care: a systematic review of diagnosis, monitoring, and intervention applications. Psychol Med. (2025) 55:1–52. doi: 10.1017/S0033291724003295

15. Denecke K, Abd-Alrazaq A, and Househ M. Artificial intelligence for chatbots in mental health: opportunities and challenges. In: Househ M, Borycki E, and Kushniruk A, editors. Multiple Perspectives on Artificial Intelligence in Healthcare: Opportunities and Challenges. Cham, Switzerland: Springer International Publishing (2021). p. 115–28. doi: 10.1007/978-3-030-67303-1_10

16. Minerva F and Giubilini A. Is AI the future of mental healthcare? Topoi. (2023) 42:809–17. doi: 10.1007/s11245-023-09932-3

17. Higgins O, Chalup SK, and Wilson RL. Artificial Intelligence in nursing: trustworthy or reliable? J Res Nurs. (2024) 29:143–53. doi: 10.1177/17449871231215696

18. D’Alfonso S. AI in mental health. Curr Opin Psychol. (2020) 36:112–7. doi: 10.1016/j.copsyc.2020.04.005

19. Alrashide I, Alkhalifah H, Al-Momen A-A, Alali I, Alshaikh G, Rahman A, et al. AIMS: AI based mental healthcare system. IJCSNS Int J Comput Sci Netw Secur. (2023) 23:225–34. doi: 10.22937/IJCSNS.2023.23.12.24

20. Chung J and Teo J. Mental health prediction using machine learning: taxonomy, applications, and challenges. Appl Comput Intell Soft Comput. (2022) 2022:1–19. doi: 10.1155/2022/9970363

21. Vaidyam AN, Wisniewski H, Halamka JD, Keshavan MS, and Torous JB. Chatbots and conversational agents in mental health: A review of the psychiatric landscape. Can J Psychiatry. (2019) 64:456–64. doi: 10.1177/0706743719828977

22. Vo V, Chen G, Aquino YSJ, Carter SM, Do QN, and Woode ME. Multi-stakeholder preferences for the use of artificial intelligence in healthcare: A systematic review and thematic analysis. Soc Sci Med. (2023) 338:116357. doi: 10.1016/j.socscimed.2023.116357

23. Mousavi Baigi SF, Sarbaz M, Ghaddaripouri K, Ghaddaripouri M, Mousavi AS, and Kimiafar K. Attitudes, knowledge, and skills towards artificial intelligence among healthcare students: A systematic review. Health Sci Rep. (2023) 6:1–23. doi: 10.1002/hsr2.1138

24. Fritsch SJ, Blankenheim A, Wahl A, Hetfeld P, Maassen O, Deffge S, et al. Attitudes and perception of artificial intelligence in healthcare: A cross-sectional survey among patients. Digit Health. (2022) 8:1–16. doi: 10.1177/20552076221116772

25. Daley S. AI in Healthcare: Uses, Examples and Benefits. United States: Builtin (2025). Available at: https://builtin.com/artificial-intelligence/artificial-intelligence-healthcare.

26. Bi WL, Hosny A, Schabath MB, Giger ML, Birkbak NJ, Mehrtash A, et al. Artificial intelligence in cancer imaging: Clinical challenges and applications. CA Cancer J Clin. (2019) 69:127–57. doi: 10.3322/caac.21552

27. Rony MKK, Parvin MR, Wahiduzzaman M, Debnath M, Das Bala S, and Kayesh I. “I wonder if my years of training and expertise will be devalued by machines”: Concerns about the replacement of medical professionals by artificial intelligence. SAGE Open Nurs. (2024) 10:1–17. doi: 10.1177/23779608241245220

28. Kelly CJ, Karthikesalingam A, Suleyman M, Corrado G, and King D. Key challenges for delivering clinical impact with artificial intelligence. BMC Med. (2019) 17:1–9. doi: 10.1186/s12916-019-1426-2

29. Tegegne MD, Tilahun B, Mamuye A, Kerie H, Nurhussien F, Zemen E, et al. Digital literacy level and associated factors among health professionals in a referral and teaching hospital: An implication for future digital health systems implementation. Front Public Health. (2023) 11:1130894. doi: 10.3389/fpubh.2023.1130894

30. Fiske A, Henningsen P, and Buyx A. Your robot therapist will see you now: Ethical implications of embodied artificial intelligence in psychiatry, psychology, and psychotherapy. J Med Internet Res. (2019) 21:1–12. doi: 10.2196/13216

31. Marr B. AI in mental health: Opportunities and challenges in developing intelligent digital therapies. United States: Forbes (2023). Available at: https://www.forbes.com/sites/bernardmarr/2023/07/06/ai-in-mental-health-opportunities-and-challenges-in-developing-intelligent-digital-therapies/.

32. Saeidnia HR, Hashemi Fotami SG, Lund B, and Ghiasi N. Ethical considerations in artificial intelligence interventions for mental health and well-being: Ensuring responsible implementation and impact. Soc Sci. (2024) 13:1–15. doi: 10.3390/socsci13070381

33. Zakhem GA, Fakhoury JW, Motosko CC, and Ho RS. Characterizing the role of dermatologists in developing artificial intelligence for assessment of skin cancer. J Am Acad Dermatol. (2021) 85:1544–56. doi: 10.1016/j.jaad.2020.01.028

34. Stoumpos AI, Kitsios F, and Talias MA. Digital transformation in healthcare: technology acceptance and its applications. Int J Environ Res Public Health. (2023) 20:1–44. doi: 10.3390/ijerph20043407

35. Sauditimes. AI revolution: Saudi Arabia’s Journey Toward a Smarter Society in 2024 (2024). Available online at: https://sauditimes.org/narratives/conversations/ai-revolution-saudi-arabias-journey-toward-a-smarter-society-in-2024/ (Accessed May 2, 2025).

36. Khanagar S, Alkathiri M, Alhamlan R, Alyami K, Alhejazi M, and Alghamdi A. Knowledge, attitudes, and perceptions of dental students towards artificial intelligence in Riyadh, Saudi Arabia. Med Sci. (2021) 25:1857–67.

37. Dharab. KFSHRC enhances mental health with AI (2024). Available online at: https://dharab.com/kfshrc-enhances-mental-health-with-ai/ (Accessed May 2, 2025).

38. Alanzi T, Alsalem AA, Alzahrani H, Almudaymigh N, Alessa A, Mulla R, et al. AI-powered mental health virtual assistants’ acceptance: an empirical study on influencing factors among generations X, Y and Z. Cureus. (2023) 15:e49486. doi: 10.7759/cureus.49486

39. Al-Medfa MK, Al-Ansari AMS, Darwish AH, Qreeballa TA, and Jahrami H. Physicians’ attitudes and knowledge toward artificial intelligence in medicine: Benefits and drawbacks. Heliyon. (2023) 9:e14744. doi: 10.1016/j.heliyon.2023.e14744

40. AlZaabi A, AlMaskari S, and AalAbdulsalam A. Are physicians and medical students ready for artificial intelligence applications in healthcare? Digit Health. (2023) 9:20552076231152167. doi: 10.1177/20552076231152167

41. Syed W, Babelghaith SD, and Al-Arifi MN. Assessment of Saudi public perceptions and opinions towards artificial intelligence in health care. Medicina. (2024) 60:938. doi: 10.3390/medicina60060938

42. Ofosu-Ampong K. Gender differences in perception of artificial intelligence-based tools. J Digit Art Humanit. (2023) 4:52–6. doi: 10.33847/2712-8149.4.2_6

43. Sebri V, Pizzoli SFM, Savioni L, and Triberti S. Artificial intelligence in mental health: Professionals’ attitudes towards AI as a psychotherapist. Annu Rev CyberTher Telemed. (2020) 18:229–33.

44. Oh S, Kim JH, Choi SW, Lee HJ, Hong J, and Kwon SH. Physician confidence in artificial intelligence: an online mobile survey. J Med Internet Res. (2019) 21:e12422. doi: 10.2196/12422

45. Wilson RL, Higgins O, Atem J, Donaldson AE, Gildberg FA, Hooper M, et al. Artificial intelligence: An eye cast towards the mental health nursing horizon. Int J Ment Health Nurs. (2023) 32:938–44. doi: 10.1111/inm.13121

46. Shinners L, Grace S, Smith S, Stephens A, and Aggar C. Exploring healthcare professionals’ perceptions of artificial intelligence: piloting the Shinners Artificial Intelligence Perception tool. Digit Health. (2022) 8:20552076221078110. doi: 10.1177/20552076221078110

47. Brinker TJ, Hekler A, Hauschild A, Berking C, Schilling B, Enk AH, et al. Comparing artificial intelligence algorithms to 157 German dermatologists: the melanoma classification benchmark. Eur J Cancer. (2019) 111:30–7. doi: 10.1016/j.ejca.2018.12.016

48. Serbaya SH, Khan AA, Surbaya SH, and Alzahrani SM. Knowledge, attitude and practice toward artificial intelligence among healthcare workers in private polyclinics in Jeddah, Saudi Arabia. Adv Med Educ Pract. (2024) 15:269–80. doi: 10.2147/AMEP.S448422

49. Issa WB, Shorbagi A, Al-Sharman A, Rababa M, Al-Majeed K, Radwan H, et al. Shaping the future: perspectives on the integration of artificial intelligence in health profession education: a multi-country survey. BMC Med Educ. (2024) 24:1166. doi: 10.1186/s12909-024-06076-9

50. Blease C, Locher C, Leon-Carlyle M, and Doraiswamy M. Artificial intelligence and the future of psychiatry: Qualitative findings from a global physician survey. Digit Health. (2020) 6:1–18. doi: 10.1177/2055207620968355

Keywords: artificial intelligence, mental healthcare, perceptions, psychiatry, Saudi Arabia

Citation: Sharif L, Almabadi R, Alahmari A, Alqurashi F, Alsahafi F, Qusti S, Akash W, Mahsoon A, Poudel DB, Sharif K and Wright R (2025) Perceptions of mental health professionals towards artificial intelligence in mental healthcare: a cross-sectional study. Front. Psychiatry 16:1601456. doi: 10.3389/fpsyt.2025.1601456

Received: 27 March 2025; Accepted: 05 June 2025;

Published: 10 July 2025.

Edited by:

Stefan Borgwardt, University of Lübeck, Germany

Reviewed by:

Mamdouh El-hneiti, The University of Jordan, Jordan

Gabriel Beraldi, University of São Paulo, Brazil

Marwan Abdeldayem, Applied Science University, Bahrain

Ratna Yunita Setiyani Subardjo, University of Aisyiyah Yogyakarta, Indonesia

Copyright © 2025 Sharif, Almabadi, Alahmari, Alqurashi, Alsahafi, Qusti, Akash, Mahsoon, Poudel, Sharif and Wright. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Loujain Sharif, Lsharif@kau.edu.sa
