- 1 Centre for Research in Intellectual and Developmental Disabilities (CIDD), University of Warwick, Coventry, United Kingdom

- 2 Intellectual Disabilities Research Institute (IDRIS), School of Social Policy and Society, University of Birmingham, Birmingham, United Kingdom

- 3 Department of Psychiatry, School of Clinical Sciences at Monash Health, Monash University, Melbourne, VIC, Australia

- 4 Council for Disabled Children, London, United Kingdom

- 5 School of Health & Wellbeing, University of Glasgow, Glasgow, United Kingdom

- 6 Centre for Trials Research, Cardiff University, Cardiff, United Kingdom

- 7 The Great Ormond Street Institute of Child Health, University College London, London, United Kingdom

Background: Evaluating the effectiveness of interventions relies on understanding what change in a main outcome is sufficient to be considered meaningful. Our aim was to estimate a Minimum Clinically Important Difference (MCID) for the Developmental Behaviour Checklist, parent-report (DBC-P), a measure of behavioural and emotional problems in children and adolescents with intellectual disabilities.

Methods: We generated distribution-based estimates through meta-analysis of intervention evaluations using the DBC-P as an outcome measure. We also generated anchor-based estimates using case scenarios with 10 parent carers and 21 professionals working with people with intellectual disabilities.

Results: 21 studies were included in the meta-analyses and indicated an average DBC total raw score decrease of 3.01 or 4.73 (depending on analytic methods) in randomised controlled trials, and an average decrease of 9.16 points in pre-post designs. Parent carers provided a median MCID estimate of 6 (IQR 4, 7) and professionals provided a median estimate of 8 (IQR 5, 14).

Conclusions: These findings contextualise DBC-P score changes in relation to outcomes from other interventions and parent carer and professional views. Which MCID value to choose depends on what factors are prioritised for an intervention.

Introduction

Children with intellectual disabilities are more likely to display elevated behavioural and emotional problems compared with children without intellectual disabilities (1, 2). Moreover, these behavioural and emotional difficulties are often persistent over time (3–5) and, along with the intrinsic importance of directly reducing the distress of the child with an intellectual disability, are associated with a range of other outcomes such as out-of-home care (6), psychotropic polypharmacy (7, 8), and poorer psychological outcomes for family members (3, 9). Reducing behavioural and emotional problems amongst children with intellectual disabilities is, therefore, a critical research and clinical priority.

Rigorous evaluation of the effectiveness of interventions depends upon robust outcome measurement (10, 11). One widely used measure of behavioural and emotional problems amongst children with intellectual disabilities is the Developmental Behaviour Checklist, parent report (DBC-P) (12). The DBC-P was also updated to form the DBC2-P but, given the changes were minor, we use DBC to refer to both measures unless otherwise specified. Evidence has indicated strong psychometric properties for the DBC-P such as its validity, reliability, and responsiveness to change (12). However, evaluating an intervention’s effectiveness also relies upon an understanding of what changes in scores on an outcome measure such as the DBC-P are sufficient to be considered clinically or otherwise meaningful. This relates to the concept of a Minimum Clinically Important Difference (MCID) - the smallest change in an outcome measure that is important to those receiving an intervention and/or other stakeholders (13, 14).

There are several reasons why estimating an MCID for an outcome measure is important. First, with sufficiently large samples, clinically negligible intervention effects may be statistically significant, which makes statistical significance alone a poor standard by which to judge intervention effectiveness. MCID estimates offer a valuable standard for evaluating whether intervention effects are sufficient to be considered meaningful, as well as statistically robust (13, 14). Second, an MCID may be used to inform sample size calculations to ensure that studies are appropriately statistically powered to identify meaningful effects (15, 16). Finally, an MCID can be directly used by clinicians and service providers to evaluate individual treatment outcomes against a meaningful standard and inform clinical decision-making (14).

There are two broad categories of approaches to estimating an MCID (13, 14, 17). Distribution-based methods are based on the statistical characteristics of studies, such as the change in scores that correspond to a chosen effect size cut-off in a sample (13, 14). Anchor-based methods compare a change in scores with an external criterion, often by identifying changes in the scores of participants who describe themselves as having improved (vs not) following an intervention (13, 14). However, it has also been suggested that an MCID could be anchored to stakeholders’ own direct estimates of what change in scores they would consider to be important or meaningful (17). Indeed, direct consultation with parent carers and professionals has been used to estimate an MCID (18) for the Aberrant Behaviour Checklist – Irritability sub-scale (19).

Although these two approaches are widely used (13, 14), both have limitations. Distribution-based approaches are criticised for the arbitrariness of chosen effect size cut-offs and the lack of grounding in stakeholder perspectives, whilst anchor-based approaches have been criticised for their susceptibility to influence by recall bias and individuals’ current health states (14). A valuable approach is, therefore, to adopt both distribution-based and anchor-based methods in conjunction to generate informative MCID estimates (14, 17, 18).

The research question for the current study was: What is a suitable Minimum Clinically Important Difference for the Total Behaviour Problem Score of the Developmental Behaviour Checklist (DBC2), parent carer report-form?

Methods

This study was preregistered before collecting any data or conducting the literature searches (https://osf.io/ctwum/?view_only=c08ab197ce654e608dade4c451df22f0). Data for the meta-analyses and R scripts have also been deposited for transparency (https://osf.io/v6kud/files/osfstorage). The distribution-based MCID estimates were generated through a systematic review and meta-analysis, whilst the anchor-based estimates were generated through consultation with parent carers and healthcare professionals.

Distribution-based approach

To generate distribution-based estimates for an MCID on the DBC-P Total Behaviour Problem Score, we conducted a systematic review and meta-analysis of intervention evaluation studies that included the DBC-P as an outcome measure.

Eligibility criteria

Population

Participants needed to have an intellectual disability, autism, or a genetic syndrome associated with intellectual disability and/or autism. Intellectual disabilities, autism, or associated genetic syndromes could be confirmed by report of a diagnosis by a family member, receipt of intellectual disability education or services, genetic testing, or meeting diagnostic thresholds on psychometric tests within the study. Participants may have had other additional diagnoses as well as intellectual disability, autism, or an associated genetic syndrome. Studies were eligible if data were reported for a group in which ≥70% of participants met this diagnostic criterion. Participants were aged between 4 and 18 years, as this is the age range for the DBC-P. Studies were eligible if data were reported for a group in which ≥70% of participants met this age criterion. Studies were excluded if participants had a specific learning difficulty or other neurodevelopmental condition (e.g. dyslexia, dyscalculia, ADHD) but did not have an identified intellectual disability, autism, or an associated genetic syndrome.

Intervention

Any intervention study which used the DBC-P, parent version as a primary or secondary outcome measure was eligible, irrespective of the nature of the intervention.

Types of study

Randomised controlled trials, non-randomised controlled trials, and uncontrolled pre-post studies were eligible. We excluded case series and case studies, including single-case experimental designs.

Comparator

Studies with any control comparison or none were eligible.

Context

Any context of intervention delivery was eligible for inclusion.

Outcomes

The Developmental Behaviour Checklist, parent-form or Developmental Behaviour Checklist 2, parent form, including authorised translations of the DBC2-P in languages other than English. We excluded studies which reported only on other versions of the DBC-P such as the teacher version, the adult version, the short form, or individual items, subscales, or subsets of items of the DBC but not information required to calculate the Total Behaviour Problems Score.

Search strategy

We searched Medline, Embase, PsycINFO, and Web of Science (all databases) using the terms “Developmental Behaviour Checklist” OR “Developmental Behavior Checklist”. The last searches took place on 22/08/2024 and there were no date restrictions. Using Google Scholar and Web of Science, we also conducted forward citation searches of key DBC-P psychometric publications (12, 20–23). Finally, once eligible full texts had been identified, forwards and backwards citation searches were conducted on these to identify any other eligible research.

Study selection

All identified records were then imported into Covidence (24) and underwent electronic de-duplication. All of the titles and abstracts of the remaining records were independently screened by two reviewers (DS and ET) in Covidence, with discrepancies being discussed and a consensus reached. During title and abstract screening, the reviewers showed a good level of agreement (96.44%, Kappa = 0.702). All remaining records then underwent independent full-text screening by two reviewers, with discrepancies being resolved through discussion. A good level of agreement for inclusion during full-text screening was reached (agreement= 88.57%, Kappa= 0.701).
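For readers less familiar with the agreement statistics reported above, the following is a minimal sketch of how percentage agreement and Cohen's kappa can be computed from two reviewers' include/exclude decisions. The function name and the counts are hypothetical placeholders chosen for illustration; they are not the screening data from this review.

```python
def agreement_and_kappa(both_include, only_a, only_b, both_exclude):
    """Return (percent agreement, Cohen's kappa) for two raters and two categories."""
    n = both_include + only_a + only_b + both_exclude
    observed = (both_include + both_exclude) / n
    # Chance agreement is derived from each rater's marginal inclusion rate.
    a_include = (both_include + only_a) / n
    b_include = (both_include + only_b) / n
    expected = a_include * b_include + (1 - a_include) * (1 - b_include)
    kappa = (observed - expected) / (1 - expected)
    return 100 * observed, kappa


# Hypothetical counts (NOT from this study): most records are excluded by both raters.
percent, kappa = agreement_and_kappa(
    both_include=40, only_a=10, only_b=10, both_exclude=940
)
print(f"agreement = {percent:.2f}%, kappa = {kappa:.3f}")
```

Because most records are excluded by both reviewers at the screening stage, chance agreement is high, which is why kappa can sit well below the raw percentage agreement.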

Data extraction

Data were extracted independently by two reviewers (DS and ET) from 100% of eligible studies. Discrepancies were discussed between the two reviewers and an agreement was reached. If data necessary for meta-analysis were not available, study authors were contacted to request this information.

Analysis

We calculated the pooled SD from the baseline intervention groups of all studies to generate estimates for a change score on the DBC-P for small (d=0.2), medium (d=0.3), and large (d=0.5) effect sizes.

We then conducted random-effects meta-analyses of DBC-P scores based on: 1) ANCOVA estimates for RCTs; 2) final outcome scores for RCTs; and 3) pre-post changes in DBC-P scores for controlled and pre-post studies. The original pre-registration proposed meta-analysing the change in DBC-P scores for all study designs together, after adjusting for the change in control comparisons where one was present. However, we judged that separate analyses of RCTs and pre-post changes would provide more informative estimates of potential MCIDs for each study design. The pre-registration outlined that meta-analyses would be conducted using the R package metafor (25). However, for the analyses of RCTs, we used the R Shiny tool by Papadimitropoulou et al. (26), since this is largely powered by metafor but offers more options for ANCOVA analyses, which better account for baseline differences between trial arms. Since the correlations between baseline and follow-up DBC-P scores that are required for ANCOVA estimates could only be obtained from 9/15 trials, with the remaining correlations being automatically imputed, we also conducted a meta-analysis of RCTs comparing final outcome scores as a more conservative estimate.

Several crossover RCTs were identified in the study selection process. Including all data from these crossover trials in the meta-analysis as though they were parallel group trials would give rise to a unit-of-analysis error and provide overly conservative effect estimates (27). In two trials where data were reported for each group at each timepoint (28, 29), we therefore used only data from the first intervention that participants received (27). However, one trial (30) did not report the data necessary for this approach, and so we evaluated the effect of including/excluding this study in the meta-analyses in Supplementary Materials B.

For one study (31), which compared two active intervention arms, the two arms were analysed separately in the meta-analysis of pre-post studies.
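To illustrate what a random-effects pooling of study-level mean differences involves, the sketch below implements the DerSimonian-Laird estimator in plain Python. This is a swapped-in, simplified estimator chosen for transparency; metafor supports several between-study variance estimators, and the one used in the analyses reported here may differ. The function name and the example inputs are hypothetical.

```python
import math


def random_effects_dl(effects, variances):
    """DerSimonian-Laird random-effects pooling of study-level mean differences."""
    k = len(effects)
    w = [1.0 / v for v in variances]  # inverse-variance (fixed-effect) weights
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    # Cochran's Q: weighted squared deviations from the fixed-effect estimate.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (k - 1)) / c)  # between-study variance, truncated at 0
    w_re = [1.0 / (v + tau2) for v in variances]  # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    i2 = 100.0 * max(0.0, (q - (k - 1)) / q) if q > 0 else 0.0
    return {"estimate": pooled, "se": se, "tau2": tau2, "I2": i2}


# Hypothetical mean differences and sampling variances (NOT data from this review).
result = random_effects_dl(effects=[-2.0, -6.0], variances=[1.0, 1.0])
print(result)
```

The between-study variance (tau²) is added to each study's sampling variance before weighting, so heterogeneous evidence yields a wider interval around the pooled estimate than a fixed-effect model would.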

If the purpose of the meta-analyses were to evaluate the effectiveness of interventions, it would be important to investigate potential sources of heterogeneity in effect sizes. However, since the analyses are focused on exploring the range of outcomes in studies using the DBC, such examinations of heterogeneity were judged not relevant to the current research and were not conducted.

Anchor-based approach

We generated MCID estimates which were anchored to the views of parent carers and professionals through consultation. We developed five clinical vignettes (see Supplementary Materials A) describing children and adolescents with varying severity and range of behavioural and emotional problems. Four of these vignettes were adapted from examples in the DBC2 manual and one was entirely new. The vignettes were deliberately designed to reflect varying severity of intellectual disability, DBC total scores, and presentations of behavioural and emotional problems. Parent carers and professionals were presented with these cases and the individual’s current DBC-P scores before being asked to identify the smallest possible change in DBC-P Total Behaviour Problem Score that would be needed for them to consider an intervention to have had a meaningful beneficial effect for that individual and their family. Participants were asked to provide this judgement both through indicating which individual items on the DBC-P would be important to change and by what amount to be meaningful, and what the minimum meaningful change would be overall across those items. These data were collected from 10 parent carers, who were part of existing co-production research groups, through individual online meetings. Meanwhile, data were collected from 21 health and social care professionals: six through direct consultation meetings and 15 through survey responses. The professionals were recruited through professional networks of the research team, social media, and through distributing the survey through a professional mailing list for clinicians and researchers working with people with an intellectual disability in the United Kingdom. 
There were initially 18 completed survey responses, but three were excluded: two because the respondents subtracted one point from every item, which left it unclear whether they had engaged with the task instructions, and one because the respondent was a General Practitioner who did not report working in a role with a particular focus on children or adolescents with intellectual disabilities. The 21 professionals included 12 psychologists, 3 psychiatrists, 2 speech and language therapists, 1 nursing manager, 2 behaviour analysts, and 1 Positive Behaviour Support practitioner. The pre-registration initially stated that we would report separate MCID estimates for case vignettes with low, moderate, and severe levels of behavioural and emotional problems. However, we decided to report only one combined MCID estimate based upon all cases given the small sample size.

Results

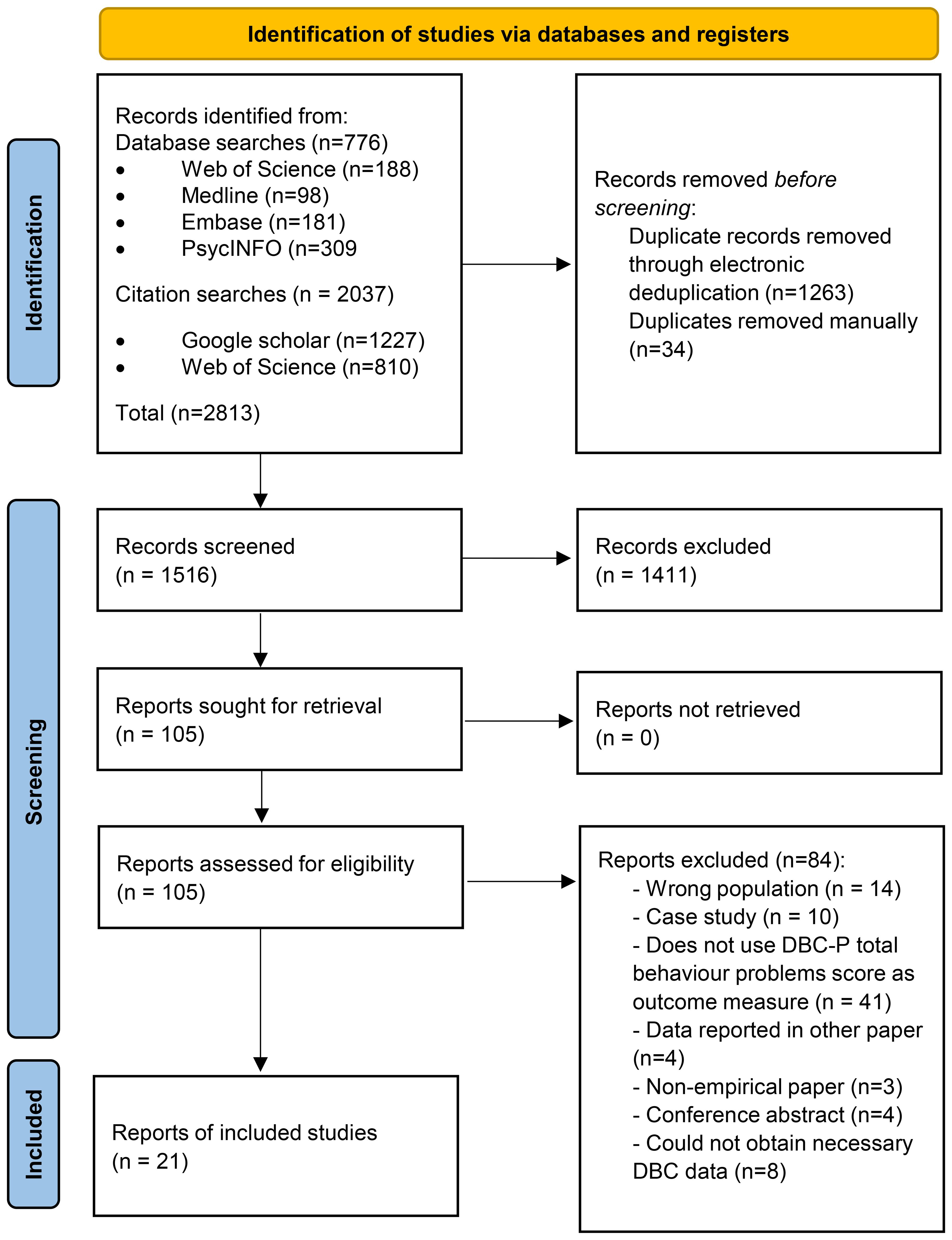

Figure 1 shows a PRISMA diagram (32) illustrating the search and study selection process for the meta-analysis. We identified 776 records through database searches and 2037 records through forwards and backwards searches of the key DBC-P references. 1263 records were removed by electronic de-duplication in Covidence and 34 duplicates were removed manually. Of 1516 records which underwent title and abstract screening, 1411 were excluded, leaving 105 to undergo full-text screening; and 84 of these were excluded at full-text screening, leaving 21 records included in the review.

Figure 1. PRISMA diagram (32) illustrating the search and study selection process.

Study characteristics

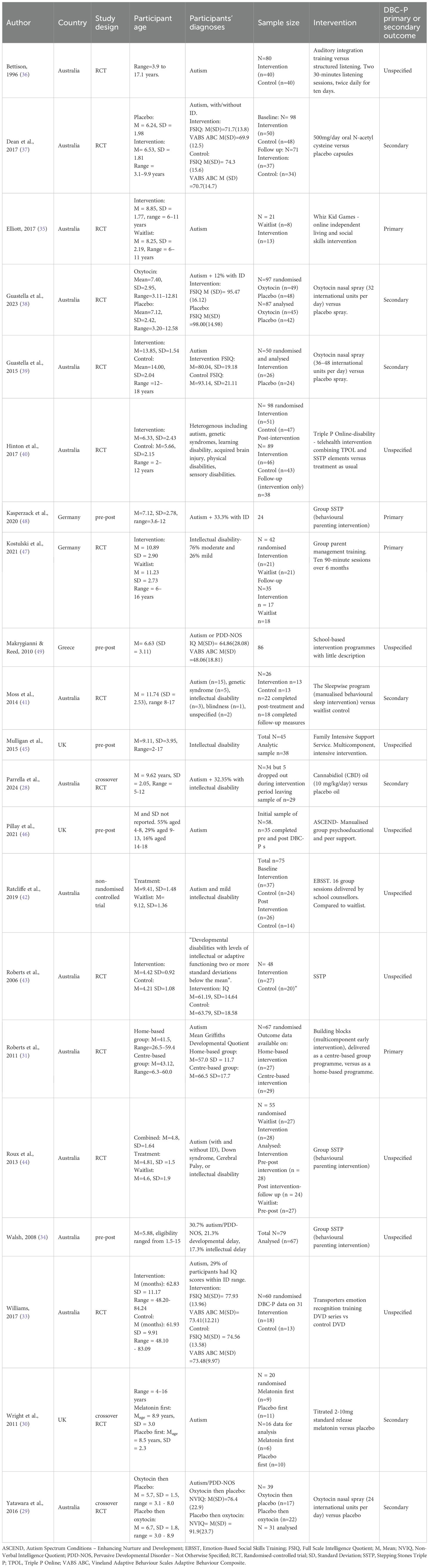

Table 1 summarises the characteristics of each study. Included studies were mostly journal articles (n=17) except for three theses (33–35) and one pre-print (28). Studies were mostly conducted in Australia (n=15) (28, 29, 31, 33–44), where the DBC-P was developed, along with small numbers in the UK (n=3) (30, 45, 46), Germany (n=2) (47, 48), and Greece (n=1) (49). Study designs included 12 randomised controlled trials (31, 33, 35–41, 43, 44, 47), three crossover randomised controlled trials (28–30), five pre-post studies (34, 45, 46, 48, 49), and one non-randomised controlled trial (42). Although many studies included participants who were autistic and had intellectual disabilities, 14 studies (28–31, 33, 35–39, 44, 46, 48, 49) had samples where all participants were autistic, three had samples where all participants had intellectual disabilities (43, 45, 47), and four recruited samples of participants who were either autistic or had intellectual disabilities, or where all participants had both conditions (34, 40–42).

Interventions

Studies included both pharmacological (n=6) and non-pharmacological interventions (n=15). Pharmacological interventions included oxytocin nasal spray (n=3) (29, 38, 39), melatonin (n=1) (30), cannabidiol (CBD) oil (n=1) (28), and N-acetyl cysteine (n=1) (37). The most common non-pharmacological interventions were Triple P behavioural parent training programmes (n=5). Three of these were evaluations of group Stepping Stones Triple P (the version of Triple P for parents of disabled children) (34, 44, 48), one was an evaluation of individual Stepping Stones Triple P (43), and one evaluated Triple P Online – Disability (TPOL-D), an adaptation of Triple P Online for parents of disabled children (40). Several studies (n=3) evaluated social skills interventions. These included Transporters (a DVD-based emotion-recognition training programme) (33), Emotion-Based Social Skills Training (EBSST), a group programme delivered by school counsellors (42), and Whiz Kid, an online videogame-based intervention (35). Two studies evaluated group parent support programmes besides Triple P. These were Autism Spectrum Conditions – Enhancing Nurture and Development (ASCEND), a group-based psychoeducational programme (46), and group parent management training (47). The remaining studies evaluated varied interventions including a manualised behavioural and psychoeducational sleep intervention (41), a family intensive support service (45), auditory integration training (36), ambiguous school-based interventions (49), and Building Blocks, a multicomponent autism early intervention programme (31).

Pooled standard deviation

The pooled SD of the baseline intervention groups of all studies was 23.71 but ranged in individual studies from 14.82 to 32.91. Based upon the pooled standard deviation, changes in DBC-P scores for small (d=0.2), medium (d=0.3), and large (d=0.5) effect sizes would be approximately 4.74, 7.11, and 11.86, respectively.
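The distribution-based thresholds above follow from multiplying each effect-size cut-off by the pooled SD. The sketch below shows that arithmetic, together with one common way of pooling SDs (weighting each group's variance by its degrees of freedom). The pooling formula is an assumption, since the exact formula used is not stated here, and the `example_groups` values are hypothetical; only the pooled SD of 23.71 and the three cut-offs are taken from the text.

```python
import math


def pooled_sd(groups):
    """Pool SDs across groups given (n, sd) pairs, weighting by degrees of freedom."""
    numerator = sum((n - 1) * sd ** 2 for n, sd in groups)
    denominator = sum(n - 1 for n, _ in groups)
    return math.sqrt(numerator / denominator)


# Hypothetical baseline (n, SD) pairs, for illustration only.
example_groups = [(30, 20.0), (45, 25.0), (25, 28.0)]
print(f"pooled SD (hypothetical inputs) = {pooled_sd(example_groups):.2f}")

REPORTED_POOLED_SD = 23.71  # value reported in the text
CUTOFFS = {"small": 0.2, "medium": 0.3, "large": 0.5}  # cut-offs as labelled in this study

# Raw-score change corresponding to each standardised cut-off.
thresholds = {label: d * REPORTED_POOLED_SD for label, d in CUTOFFS.items()}
for label, points in thresholds.items():
    print(f"{label}: d = {CUTOFFS[label]} -> about {points:.3f} DBC-P points")
```

Because the SD in individual studies ranged widely around the pooled value, the same standardised cut-off would translate into noticeably different raw-score thresholds in different samples.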

Meta-analysis of RCTs

ANCOVA meta-analysis of randomised controlled trials

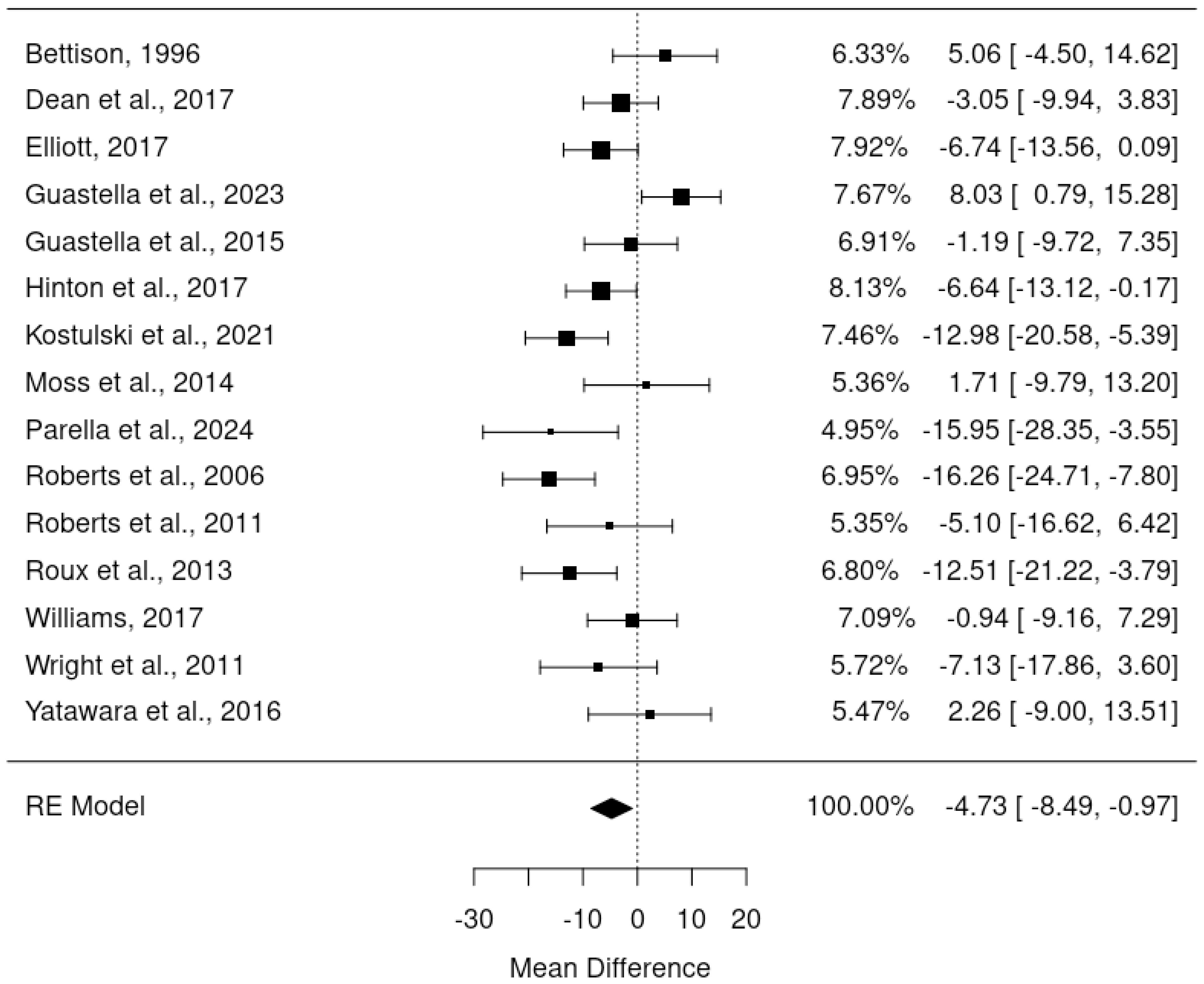

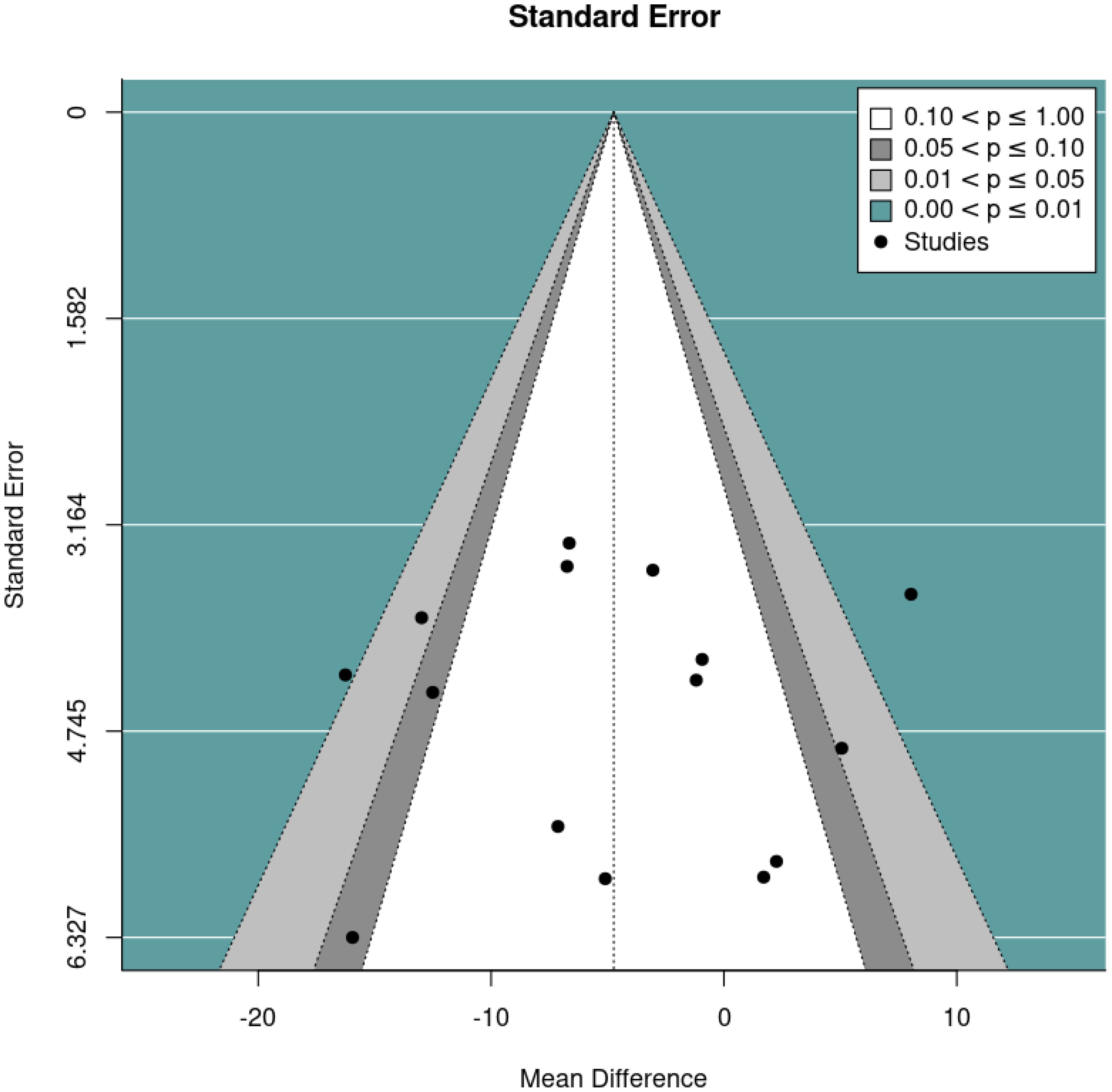

The primary meta-analysis was a random-effects model which adjusted for baseline DBC-P scores based on ANCOVA-recovered effect estimates. This provided an estimated mean difference of -4.73 (standard error = 1.92, 95% CI = -8.49, -0.97, p = 0.014, k = 15, tau² = 34.42, I² = 64.40%). The large I² suggests considerable heterogeneity; yet this is relatively unsurprising given the diversity of the interventions and participant samples included. Figures 2 and 3 show a forest plot and funnel plot respectively for the ANCOVA meta-analysis of RCTs. Removing the crossover trial without complete data at each timepoint (30) resulted in a slightly smaller estimated mean difference of -4.59 but did not substantially alter the overall findings (see Supplementary Materials B). Visual inspection of the funnel plot suggests approximate symmetry and, therefore, little evidence of publication bias.
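As a reading aid, the sketch below shows how a confidence interval and p-value relate to a pooled estimate and its standard error under a normal (Wald) approximation. That approximation is an assumption made for illustration; the analysis tool may use a different interval method. The function name `wald_summary` is hypothetical, and the inputs are the estimate and standard error reported for the ANCOVA meta-analysis.

```python
from statistics import NormalDist


def wald_summary(estimate, se, level=0.95):
    """Return (lower, upper, two-sided p) under a normal approximation."""
    normal = NormalDist()
    z_crit = normal.inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    lower = estimate - z_crit * se
    upper = estimate + z_crit * se
    z = estimate / se
    p = 2 * (1 - normal.cdf(abs(z)))
    return lower, upper, p


# Estimate and standard error as reported for the ANCOVA meta-analysis.
lower, upper, p = wald_summary(-4.73, 1.92)
print(f"95% CI = {lower:.2f}, {upper:.2f}; p = {p:.3f}")
```

Under this approximation the interval excludes zero, which is consistent with the reported p-value falling below 0.05.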

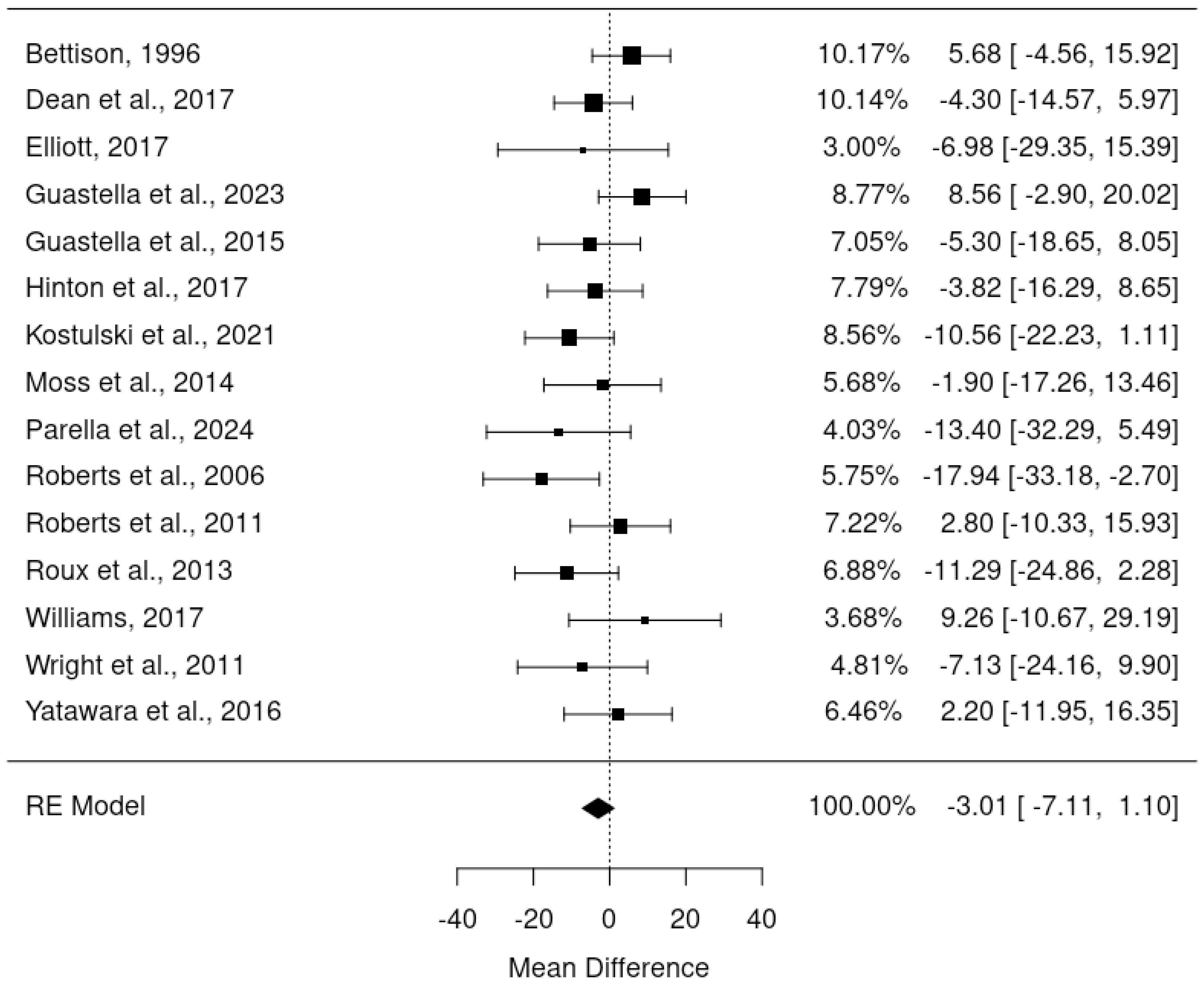

Meta-analysis of randomised controlled trials final outcome scores

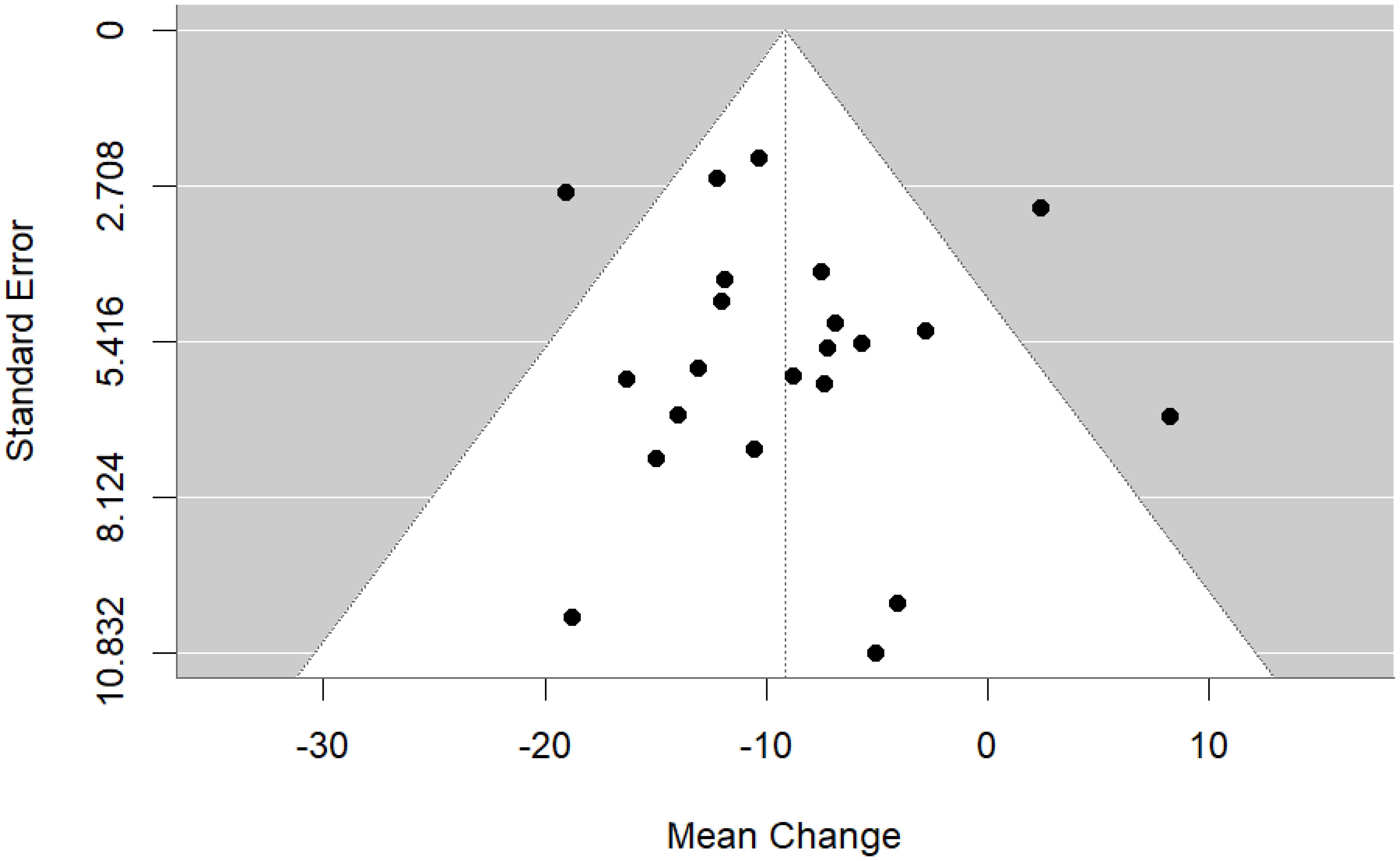

Since the pre-post DBC-P correlations that are required for ANCOVA were not available for all RCTs, we also conducted a random-effects meta-analysis of final outcome scores. This provided an estimated mean difference of -3.01 (standard error = 2.09, 95% CI = -7.11, 1.10, p = 0.15, k = 15, tau² = 15.82, I² = 24.61%). Figures 4 and 5 show forest and funnel plots respectively for the meta-analysis of RCT final outcome scores. Removing the crossover trial without complete data at each timepoint (30) resulted in a slightly smaller estimated mean difference of -2.82 but did not substantially alter the overall findings (see Supplementary Materials B). Visual inspection of the funnel plot indicated possible asymmetry, with fewer studies with higher standard error showing reductions in DBC scores, which could indicate publication bias. However, this is difficult to evaluate with confidence given the small number of studies.

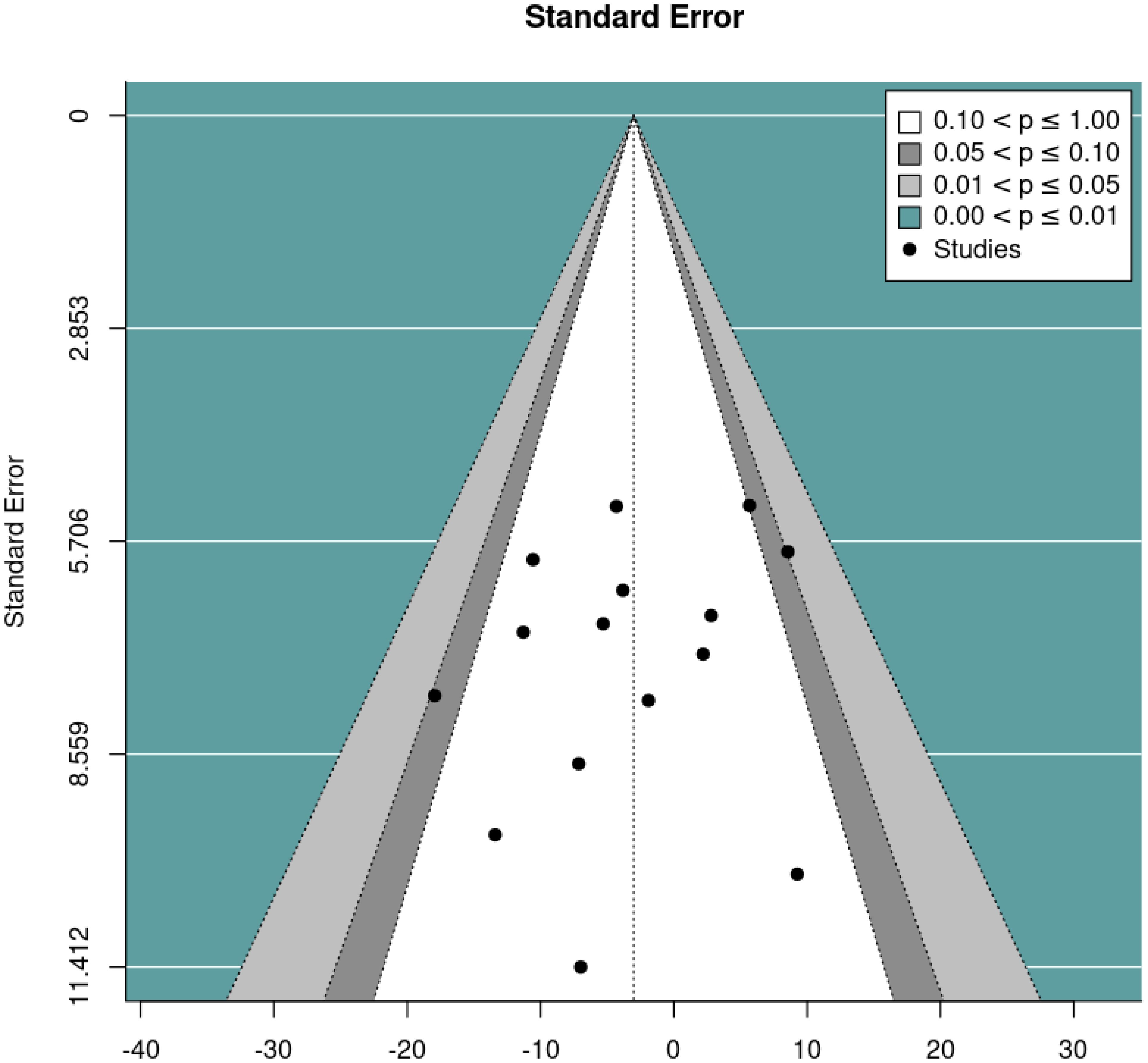

Meta-analysis of pre-post DBC-P change

We then performed a random-effects meta-analysis of pre-post studies. This indicated a mean difference of -9.16 (standard error = 1.49, 95% CI = -12.09, -6.23, p < 0.001, k = 22, tau² = 20.82, I² = 49.69%). Figures 6 and 7 show forest and funnel plots respectively illustrating the results from the meta-analysis of pre-post changes in DBC-P scores. Visual inspection of the funnel plot indicated a possible small amount of skew, which could suggest some publication bias, but this is difficult to evaluate with confidence given the small number of studies.

Anchor-based approach

Consultation with parent carers

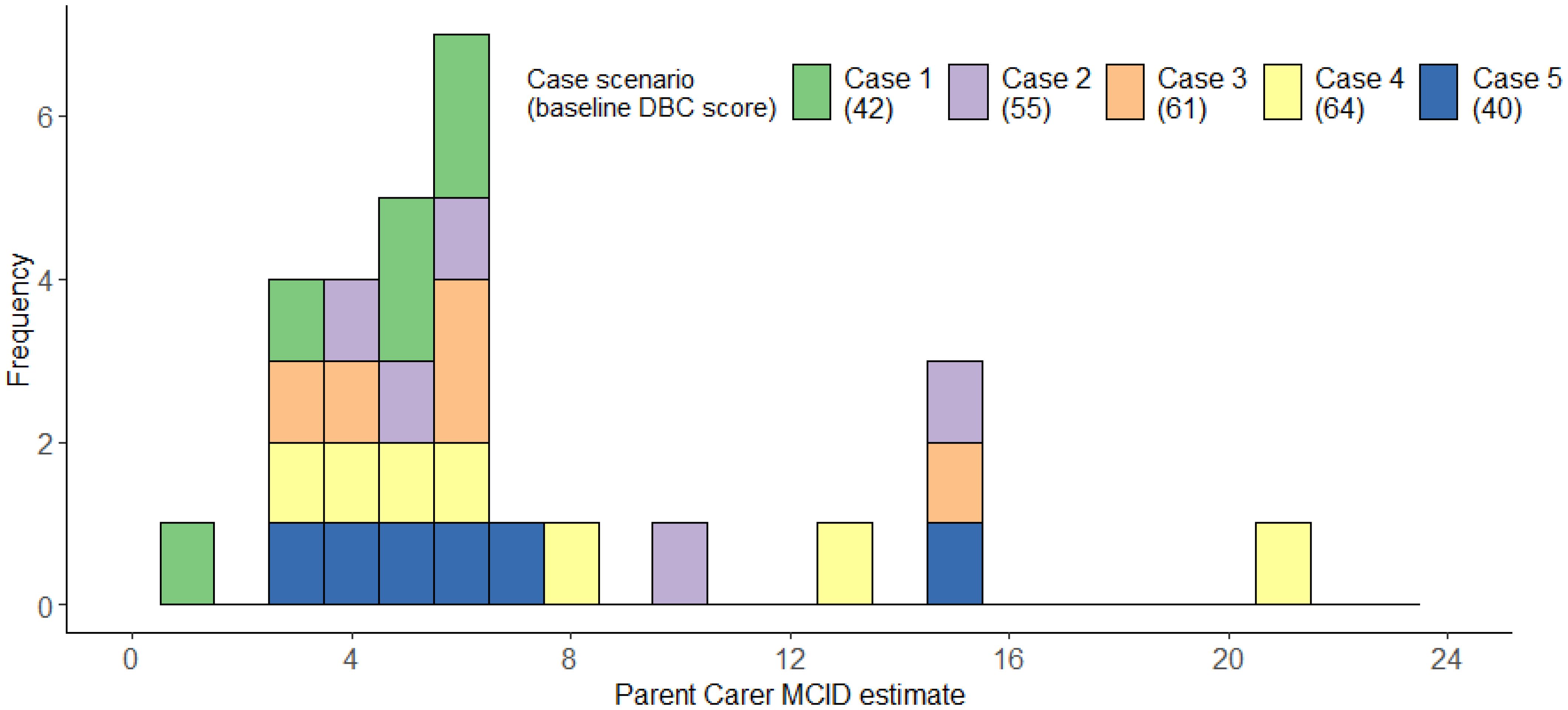

The 10 parent carers we consulted completed an average of 2.90 case scenarios (range = 2-4). The median MCID across scenarios was 6, IQR=4-7; range=1-21, mean=6.90, bootstrapped, bias-corrected 95% confidence intervals (1000 repeats) = 5.55-8.93, SD=4.62 (Figure 8). Figure 8 illustrates that parent carer estimates clustered around 3–6 points, with smaller numbers of substantially higher estimates. Descriptive statistics for each individual case can be found in Supplementary Materials C.

Consultation with professionals

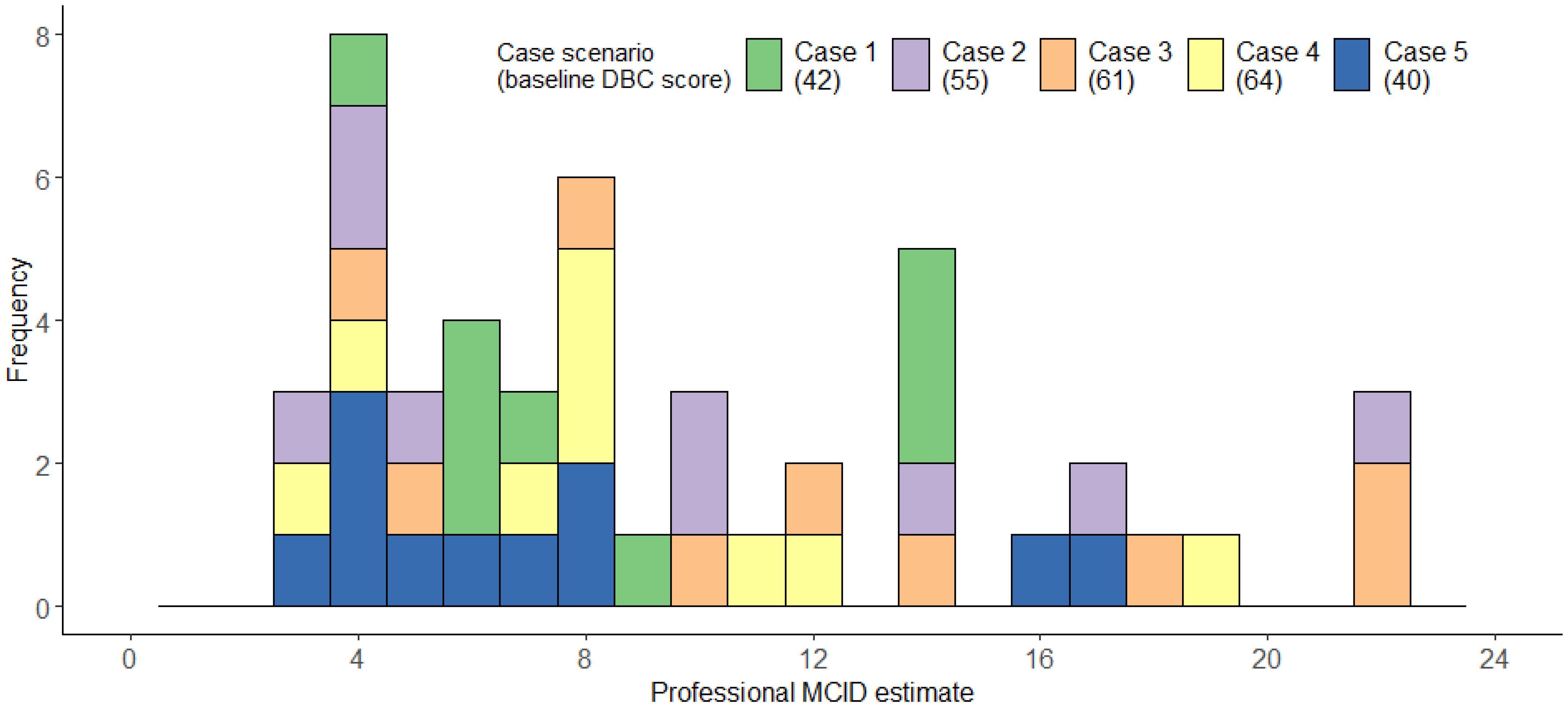

The 21 professionals completed an average of 2.24 cases each, providing a total of 47 MCID estimates. Professionals’ median MCID across scenarios was 8, IQR=5-14; range=3-22, mean=9.49, bootstrapped, bias-corrected 95% confidence intervals (1000 repeats) = 8.06-11.24, SD=5.54 (Figure 9). Figure 9 illustrates that professionals’ MCID estimates were more variable, with some possible clustering around 3–8 points of change but many estimates being much higher. Descriptive statistics for each individual case can be found in Supplementary Materials C.
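The summary statistics reported for parent carers and professionals combine a median, an IQR, and a bootstrapped, bias-corrected confidence interval. The sketch below shows one way such an interval can be computed for the mean. It is a simplified illustration under stated assumptions: the `estimates` list is hypothetical rather than the consultation data, the function name is invented for this example, and the resampling details (and the quartile convention) used in the study's own scripts may differ.

```python
import random
from statistics import NormalDist, mean, median, quantiles


def bias_corrected_ci(data, stat=mean, repeats=1000, level=0.95, seed=0):
    """Bias-corrected (BC) bootstrap confidence interval for a statistic."""
    rng = random.Random(seed)
    observed = stat(data)
    # Resample with replacement and recompute the statistic each time.
    boots = sorted(stat(rng.choices(data, k=len(data))) for _ in range(repeats))
    # Bias correction: share of bootstrap statistics below the observed value.
    below = sum(b < observed for b in boots) / repeats
    below = min(max(below, 1 / repeats), 1 - 1 / repeats)  # keep inv_cdf finite
    normal = NormalDist()
    z0 = normal.inv_cdf(below)
    alpha = (1 - level) / 2
    # Shift the percentile cut-points by the bias-correction constant.
    low_p = normal.cdf(2 * z0 + normal.inv_cdf(alpha))
    high_p = normal.cdf(2 * z0 + normal.inv_cdf(1 - alpha))
    low_idx = min(repeats - 1, max(0, int(low_p * repeats)))
    high_idx = min(repeats - 1, max(0, int(high_p * repeats)))
    return boots[low_idx], boots[high_idx]


# Hypothetical MCID estimates (NOT the consultation data from this study).
estimates = [3, 4, 4, 5, 6, 6, 7, 8, 12, 21]
q1, _, q3 = quantiles(estimates, n=4)  # quartile conventions vary between software
ci_low, ci_high = bias_corrected_ci(estimates)
print(f"median = {median(estimates)}, IQR = {q1}-{q3}, mean = {mean(estimates):.2f}")
print(f"bias-corrected 95% CI for the mean = {ci_low:.2f} to {ci_high:.2f}")
```

With right-skewed estimates such as these, the bias correction shifts the percentile cut-points so that the interval is not forced to be symmetric around the mean.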

Discussion

We describe an approach to estimating an MCID for the DBC-P Total Behaviour Problems Score through both distribution-based and anchor-based methods. Meta-analyses indicated an average decrease of approximately 3.01 to 4.73 points in RCTs, depending on analytic methods, with a larger average decrease of 9.16 in pre-post studies, as would be expected. This overlaps with parent carers’ anchor-based estimates, with 68.97% of parent carers’ MCID estimates being between 3 and 6 points. Professionals’ MCID estimates showed greater variability, with some professionals providing larger estimates, but 38.30% of estimates were still between 3 and 6 and the modal estimate was still a 4-point decrease. What constitutes a meaningful change in a specific context may be influenced by factors such as an individual’s baseline behavioural and emotional problems score, the nature and intensity of an intervention, and the context in which the child or young person is being assessed. However, where standardised MCID estimates may be valuable, such as for trial sample size calculations, our findings suggest that an appropriate MCID may be in the region of approximately 3–6 points. Based upon the pooled SD from studies included in the meta-analyses, this would correspond to a standardised mean difference of between -0.13 and -0.25.
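To make the link to trial sample size calculations concrete, the sketch below converts a raw-score MCID into a standardised difference using the pooled SD of 23.71 and then applies the standard normal-approximation formula for comparing two group means. The two-sided alpha of 0.05, the 90% power, and the function name are assumptions chosen for illustration; the sketch ignores baseline adjustment, attrition, and clustering, all of which a real trial design would need to consider.

```python
import math
from statistics import NormalDist

POOLED_SD = 23.71  # pooled baseline SD reported in this study


def n_per_arm(mcid, sd=POOLED_SD, alpha=0.05, power=0.90):
    """Approximate participants per arm to detect a raw-score difference of `mcid`."""
    d = mcid / sd  # standardised mean difference
    normal = NormalDist()
    z_alpha = normal.inv_cdf(1 - alpha / 2)
    z_beta = normal.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_beta) / d) ** 2)


for mcid in (3, 6):
    smd = mcid / POOLED_SD
    print(f"MCID = {mcid} points -> SMD = {smd:.2f}, n per arm = {n_per_arm(mcid)}")
```

Halving the MCID roughly quadruples the required sample, so the choice between the lower and upper end of the 3–6 point range has substantial practical consequences for trial feasibility.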

It is notable that professionals’ MCID estimates were, on average, approximately 2–3 points larger than those of parents. This should be interpreted with considerable caution given the small sample sizes and variable estimates provided. However, it could indicate that, compared to professionals, parents consider smaller decreases in behavioural and emotional problems to be meaningful perhaps because they are coping with these challenges on a daily basis and even small improvements may lead to considerable positive impact for the family. Professionals may benefit from reflecting on the potential importance to parents of seemingly small changes in behavioural and emotional problems when considering how best to support families. Alternatively, the higher scores among professionals may simply be driven by greater variability in estimates, which could be related to factors such as clinical discipline. Unfortunately, the sample of professionals in this research is too small to allow comparisons between different groups of healthcare professionals.

These MCID estimates should not be viewed as some intrinsic property of the DBC-P, nor uncritically adopted by researchers or clinicians without careful consideration of how they were generated and how this relates to the intended use. Research has demonstrated that MCID estimates generated using different methods are frequently non-convergent (14, 17). Nevertheless, MCID estimates remain useful. Regardless of the complexity of their estimation, researchers, clinicians, and policymakers cannot avoid decisions that rely upon consideration of what constitutes an MCID; for example, when designing RCTs, evaluating patient outcomes, or deciding whether to fund an intervention. Our goal was to provide readers with data regarding the views of stakeholders and the approximate changes in DBC-P scores that may reasonably be anticipated from a broad range of interventions. We hope that this may allow readers to make well-informed and empirically grounded judgements of their own.

Several strengths of this research should be noted. Integrating distribution-based and anchor-based methods from both parent carers and professionals provides a multifaceted understanding of appropriate MCIDs for the DBC-P and allows researchers to prioritise these according to their own circumstances and priorities (14, 17). However, there are also several limitations to this work. We only obtained data from a small number of parent carers, which may impact the reliability and generalisability of the estimate, and the larger variability in professionals’ estimates suggests a lack of consensus in what constitutes an MCID. Another challenge in generating MCID estimates was the perceived non-equivalence of individual DBC-P items. Parents and professionals would frequently comment that a decrease of a single point in one critical item (often relating to self-injury, aggression, school non-attendance, or running away) could be more meaningful than a larger decrease for several items perceived to be less important. In providing MCID estimates, many respondents stated that they generated these based upon changes in the items they perceived to be most important. Many of these estimates may, therefore, reflect a lower bound of a meaningful difference, but a larger decrease may be necessary if changes are distributed across all DBC-P items.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://osf.io/v6kud/files/osfstorage (OSF project: osf.io/v6kud).

Ethics statement

The studies involving humans were approved by University of Warwick Humanities and Social Sciences Research Ethics Committee. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

DS: Visualization, Formal Analysis, Writing – original draft, Data curation, Conceptualization, Project administration, Investigation, Methodology, Writing – review & editing. ET: Data curation, Investigation, Conceptualization, Writing – review & editing, Project administration, Methodology, Validation. KG: Conceptualization, Writing – review & editing, Methodology, Funding acquisition, Supervision. RH: Methodology, Supervision, Conceptualization, Writing – review & editing, Funding acquisition. AA: Writing – review & editing, Conceptualization, Funding acquisition. JC: Writing – review & editing, Conceptualization. JG: Conceptualization, Funding acquisition, Writing – review & editing. NM: Writing – review & editing, Conceptualization, Funding acquisition. ER: Conceptualization, Funding acquisition, Writing – review & editing. DR: Conceptualization, Writing – review & editing. BW-R: Conceptualization, Writing – review & editing. JW: Writing – review & editing, Funding acquisition. PT: Funding acquisition, Visualization, Writing – review & editing, Formal Analysis, Methodology, Supervision, Conceptualization.

Funding

The author(s) declare financial support was received for the research and/or publication of this article. This research was funded by the National Institute for Health and Care Research (NIHR) Health Technology Assessment Programme (NIHR160414).

Conflict of interest

KG is an author of the Developmental Behaviour Checklist (DBC2) manual. All royalties received by the author from the sale of the DBC2 manual are donated to the funding of ongoing research in intellectual and developmental disabilities.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyt.2025.1612911/full#supplementary-material

References

1. Buckley N, Glasson EJ, Chen W, Epstein A, Leonard H, Skoss R, et al. Prevalence estimates of mental health problems in children and adolescents with intellectual disability: A systematic review and meta-analysis. Aust New Z J Psychiatry. (2020) 54:970–84. doi: 10.1177/0004867420924101

2. Totsika V, Liew A, Absoud M, Adnams C, and Emerson E. Mental health problems in children with intellectual disability. Lancet Child Adolesc Health. (2022) 6:432–44. doi: 10.1016/S2352-4642(22)00067-0

3. Gray K, Piccinin AM, Hofer SM, Mackinnon A, Bontempo DE, Einfeld SL, et al. The longitudinal relationship between behavior and emotional disturbance in young people with intellectual disability and maternal mental health. Res Dev Disabil. (2011) 32:1194–204. doi: 10.1016/j.ridd.2010.12.044

4. Emerson E, Blacher J, Einfeld S, Hatton C, Robertson J, and Stancliffe RJ. Environmental risk factors associated with the persistence of conduct difficulties in children with intellectual disabilities and autistic spectrum disorders. Res Dev Disabil. (2014) 35:3508–17. doi: 10.1016/j.ridd.2014.08.039

5. Einfeld SL, Piccinin AM, Mackinnon A, Hofer SM, Taffe J, Gray KM, et al. Psychopathology in young people with intellectual disability. JAMA. (2006) 296:1981. doi: 10.1001/jama.296.16.1981

6. Shannon J, Wilson NJ, and Blythe S. Children with intellectual and developmental disabilities in out-of-home care: A scoping review. Health Soc Care Community. (2023) 2023:1–20. doi: 10.1155/2023/2422367

7. O’Brien MJ, Pauls AM, Cates AM, Larson PD, and Zorn AN. Psychotropic medication use and polypharmacy among children and adolescents initiating intensive behavioral therapy for severe challenging behavior. J Pediatr. (2024) 271:114056. doi: 10.1016/j.jpeds.2024.114056

8. Logan SL, Carpenter L, Leslie RS, Garrett-Mayer E, Hunt KJ, Charles J, et al. Aberrant behaviors and co-occurring conditions as predictors of psychotropic polypharmacy among children with autism spectrum disorders. J Child Adolesc Psychopharmacol. (2015) 25:323–36. doi: 10.1089/cap.2013.0119

9. Woodman AC, Mawdsley HP, and Hauser-Cram P. Parenting stress and child behavior problems within families of children with developmental disabilities: Transactional relations across 15 years. Res Dev Disabil. (2015) 36:264–76. doi: 10.1016/j.ridd.2014.10.011

10. Flake JK and Fried EI. Measurement schmeasurement: questionable measurement practices and how to avoid them. Adv Methods Pract Psychol Sci. (2020) 3:456–65. doi: 10.1177/2515245920952393

11. Fried EI, Flake JK, and Robinaugh DJ. Revisiting the theoretical and methodological foundations of depression measurement. Nat Rev Psychol. (2022) 1:358–68. doi: 10.1038/s44159-022-00050-2

12. Gray K, Tonge B, Einfeld S, Gruber C, and Klein A. DBC2: developmental behavior checklist 2 manual. Western psychol Serv. (2018).

13. Copay AG, Subach BR, Glassman SD, Polly DW, and Schuler TC. Understanding the minimum clinically important difference: A review of concepts and methods. Spine J. (2007) 7:541–6. doi: 10.1016/j.spinee.2007.01.008

14. Wright A, Hannon J, Hegedus EJ, and Kavchak AE. Clinimetrics corner: A closer look at the minimal clinically important difference (MCID). J Manual Manipulative Ther. (2012) 20:160–6. doi: 10.1179/2042618612Y.0000000001

15. Cook JA, Julious SA, Sones W, Hampson LV, Hewitt C, Berlin JA, et al. DELTA 2 guidance on choosing the target difference and undertaking and reporting the sample size calculation for a randomised controlled trial. BMJ (Online). (2018) 363. doi: 10.1136/bmj.k3750

16. Chuang-Stein C, Kirby S, Hirsch I, and Atkinson G. The role of the minimum clinically important difference and its impact on designing a trial. Pharm Stat. (2011) 10:250–6. doi: 10.1002/pst.459

17. Draak THP, de Greef BTA, Faber CG, and Merkies ISJ. The minimum clinically important difference: which direction to take. Eur J Neurol. (2019) 26:850–5. doi: 10.1111/ene.13941

18. Hassiotis A, Melville C, Jahoda A, Strydom A, Cooper SA, Taggart L, et al. Estimation of the minimal clinically important difference on the Aberrant Behaviour Checklist–Irritability (ABC-I) for people with intellectual disabilities who display aggressive challenging behaviour: A triangulated approach. Res Dev Disabil. (2022) 124:104202. doi: 10.1016/j.ridd.2022.104202

19. Aman MG and Singh NN. Aberrant behavior checklist manual. 2nd ed. East Aurora, NY: Slosson Educational Publications, Inc. (2017).

20. Einfeld SL and Tonge BJ. Population prevalence of psychopathology in children and adolescents with intellectual disability: I rationale and methods. J Intellectual Disability Res. (1996) 40:91–8. doi: 10.1046/j.1365-2788.1996.767767.x

21. Einfeld SL and Tonge BJ. Population prevalence of psychopathology in children and adolescents with intellectual disability: II epidemiological findings. J Intellectual Disability Res. (1996) 40:99–109. doi: 10.1046/j.1365-2788.1996.768768.x

22. Einfeld SL and Tonge BJ. Manual for the Developmental Behaviour Checklist: Primary carer version (DBC-P) and teacher version (DBC-T). 2nd ed. Clayton, Melbourne, Australia: Western Psychological Services (2002).

23. Einfeld SL and Tonge BJ. The Developmental Behavior Checklist: The development and validation of an instrument to assess behavioral and emotional disturbance in children and adolescents with mental retardation. J Autism Dev Disord. (1995) 25:81–104. doi: 10.1007/BF02178498

24. Covidence systematic review software. Veritas health innovation. Melbourne, Australia (2024). Available online at: www.covidence.org (Accessed December 9, 2024).

25. Viechtbauer W. Conducting meta-analyses in R with the metafor package. J Stat Softw. (2010) 36. doi: 10.18637/jss.v036.i03

26. Papadimitropoulou K, Riley RD, Dekkers OM, Stijnen T, and le Cessie S. MA-cont: pre/post effect size: An interactive tool for the meta-analysis of continuous outcomes using R Shiny. Res Synthesis Methods. (2022) 13:649–60. doi: 10.1002/jrsm.1592

27. Higgins JP, Eldridge S, and Li T. Including variants on randomized trials. In: Higgins J and Thomas J, editors. Cochrane Handbook For Systematic Reviews of Interventions Version. John Wiley & Sons, Chichester (UK) p. 65. Available online at: https://training.cochrane.org/handbook/current (Accessed October 29, 2024).

28. Parrella NF, Hill AT, Enticott PG, Botha T, Catchlove S, Downey L, et al. Effects of Cannabidiol on Social Relating, Anxiety, and Parental Stress in Autistic Children: A Randomised Controlled Crossover Trial. (2024). doi: 10.1101/2024.06.19.24309024

29. Yatawara CJ, Einfeld SL, Hickie IB, Davenport TA, and Guastella AJ. The effect of oxytocin nasal spray on social interaction deficits observed in young children with autism: A randomized clinical crossover trial. Mol Psychiatry. (2016) 21:1225–31. doi: 10.1038/mp.2015.162

30. Wright B, Sims D, Smart S, Alwazeer A, Alderson-Day B, Allgar V, et al. Melatonin versus placebo in children with autism spectrum conditions and severe sleep problems not amenable to behaviour management strategies: A randomised controlled crossover trial. J Autism Dev Disord. (2011) 41:175–84. doi: 10.1007/s10803-010-1036-5

31. Roberts J, Williams K, Carter M, Evans D, Parmenter T, Silove N, et al. A randomised controlled trial of two early intervention programs for young children with autism: Centre-based with parent program and home-based. Res Autism Spectr Disord. (2011) 5:1553–66. doi: 10.1016/j.rasd.2011.03.001

32. Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. Int J Surgery. (2021) 88:105906. doi: 10.1016/j.ijsu.2021.105906

33. Williams BT. Evaluation of an emotion training programme for young children with autism. Melbourne, Australia: Monash University (2017).

34. Walsh NK. The impact of therapy process on outcomes for families of children with disabilities and behaviour problems attending group parent training. Perth, Australia: Curtin University (2008).

35. Elliott L. Improving social and behavioural functioning in children with autism spectrum disorder: A videogame skills based feasibility trial. Victoria, Australia: Deakin University (2017).

36. Bettison S. The long-term effects of auditory training on children with autism. J Autism Dev Disord. (1996) 26:361–74. doi: 10.1007/BF02172480

37. Dean OM, Gray KM, Villagonzalo KA, Dodd S, Mohebbi M, Vick T, et al. A randomised, double blind, placebo-controlled trial of a fixed dose of N -acetyl cysteine in children with autistic disorder. Aust New Z J Psychiatry. (2017) 51:241–9. doi: 10.1177/0004867416652735

38. Guastella AJ, Boulton KA, Whitehouse AJO, Song YJ, Thapa R, Gregory SG, et al. The effect of oxytocin nasal spray on social interaction in young children with autism: A randomized clinical trial. Mol Psychiatry. (2023) 28:834–42. doi: 10.1038/s41380-022-01845-8

39. Guastella AJ, Gray KM, Rinehart NJ, Alvares GA, Tonge BJ, Hickie IB, et al. The effects of a course of intranasal oxytocin on social behaviors in youth diagnosed with autism spectrum disorders: A randomized controlled trial. J Child Psychol Psychiatry. (2015) 56:444–52. doi: 10.1111/jcpp.12305

40. Hinton S, Sheffield J, Sanders MR, and Sofronoff K. A randomized controlled trial of a telehealth parenting intervention: A mixed-disability trial. Res Dev Disabil. (2017) 65:74–85. doi: 10.1016/j.ridd.2017.04.005

41. Moss AHB, Gordon JE, and O’Connell A. Impact of sleepwise: an intervention for youth with developmental disabilities and sleep disturbance. J Autism Dev Disord. (2014) 44:1695–707. doi: 10.1007/s10803-014-2040-y

42. Ratcliffe B, Wong M, Dossetor D, and Hayes S. Improving emotional competence in children with autism spectrum disorder and mild intellectual disability in schools: A preliminary treatment versus waitlist study. Behav Change. (2019) 36:216–32. doi: 10.1017/bec.2019.13

43. Roberts C, Mazzucchelli T, Studman L, and Sanders MR. Behavioral family intervention for children with developmental disabilities and behavioral problems. J Clin Child Adolesc Psychol. (2006) 35:180–93. doi: 10.1207/s15374424jccp3502_2

44. Roux G, Sofronoff K, and Sanders M. A randomized controlled trial of group stepping stones triple P: A mixed-disability trial. Fam Process. (2013) 52:411–24. doi: 10.1111/famp.12016

45. Mulligan B, John M, Coombes R, and Singh R. Developing outcome measures for a Family Intensive Support Service for Children presenting with challenging behaviours. Br J Learn Disabil. (2015) 43:161–7. doi: 10.1111/bld.12091

46. Pillay M, Alderson-Day B, Wright B, Williams C, and Urwin B. Autism Spectrum Conditions - Enhancing Nurture and Development (ASCEND): An evaluation of intervention support groups for parents. Clin Child Psychol Psychiatry. (2011) 16:5–20. doi: 10.1177/1359104509340945

47. Kostulski M, Breuer D, and Döpfner M. Does parent management training reduce behavioural and emotional problems in children with intellectual disability? A randomised controlled trial. Res Dev Disabil. (2021) 114:103958. doi: 10.1016/j.ridd.2021.103958

48. Kasperzack D, Schrott B, Mingebach T, Becker K, Burghardt R, and Kamp-Becker I. Effectiveness of the Stepping Stones Triple P group parenting program in reducing comorbid behavioral problems in children with autism. Autism. (2020) 24:423–36. doi: 10.1177/1362361319866063

Keywords: intellectual disabilities, autism, minimum clinically important difference (MCID), meta analysis, Developmental Behavior Checklist (DBC)

Citation: Sutherland DL, Taylor EL, Gray KM, Hastings RP, Allard A, Carr J, Griffin J, McMeekin N, Randell E, Russell D, Willoughby-Richards B, Wolstencroft J and Thompson PA (2025) Estimating a minimum clinically important difference for the Developmental Behaviour Checklist – parent report. Front. Psychiatry 16:1612911. doi: 10.3389/fpsyt.2025.1612911

Received: 16 April 2025; Accepted: 25 July 2025; Published: 15 August 2025.

Edited by:

Byungmo Ku, Yong In University, Republic of Korea

Reviewed by:

Jiayang Qu, Third Affiliated Hospital of Zhejiang Chinese Medical University, China

Bumcheol Kim, University of Seoul, Republic of Korea

Copyright © 2025 Sutherland, Taylor, Gray, Hastings, Allard, Carr, Griffin, McMeekin, Randell, Russell, Willoughby-Richards, Wolstencroft and Thompson. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Daniel L. Sutherland, daniel.sutherland.1@warwick.ac.uk

![Forest plot displaying studies with mean change and 95% confidence intervals. Each study is listed on the left, with corresponding mean changes plotted as squares with horizontal lines representing confidence intervals to the right. A diamond at the bottom represents the overall random-effects model mean change of -9.16 with a confidence interval of [-12.09, -6.23]. The vertical line at zero marks the point of no effect.](https://www.frontiersin.org/files/Articles/1612911/fpsyt-16-1612911-HTML-r1/image_m/fpsyt-16-1612911-g006.jpg)