- ¹ Behavioral Research Program, Health Communication and Informatics Research Branch, National Cancer Institute, Rockville, MD, United States

- ² Department of Communication, George Mason University, Fairfax, VA, United States

Growing evidence points to the significant amount of health misinformation on social media platforms, requiring users to assess the believability of messages and the trustworthiness of message sources, yet limited research has examined how users make these assessments. This mixed methods experimental study addresses this gap by examining social media users' (n = 53) trust assessment of simulated cancer-related messages using eye-tracking, surveys, and cognitive interviews. Posts varied by information veracity (evidence-based vs. non-evidence-based) and source type (government agency, health organization, lay individual); topics included HPV vaccination and sun safety. In the full sample, pooled across veracity conditions, participants reported trusting government agencies more than lay individuals. When viewing non-evidence-based messages, participants reported higher trust in health organizations than in individuals. Participants with high trust in the message source tended to report high message believability. Furthermore, attention (measured by total fixation duration) spent viewing the source of the post was not associated with the amount of trust in the message source, which suggests that participants may have used other cognitive heuristics when processing the posts. In post-experiment interviews, participants attributed their higher trust in government to its reputation and their familiarity with it. Further verification of the quality of information is needed to combat the spread of misinformation on Facebook. Future research should consider messaging strategies that include sources that are already trusted and begin to build trust among other credible sources.

Introduction

Facebook is a ubiquitous destination for individuals to seek, obtain, and share health-related information (Perrin and Anderson, 2019), and can be effective for experience sharing, awareness-raising, and support-seeking related to health (Farmer et al., 2009; Bender et al., 2012). While the growth of user-generated content on social media has enabled peer-to-peer health communication within and across social networks, such content also increases the risk of circulating misinformation. Health misinformation is defined as “a health-related claim of fact that is currently false due to a lack of scientific evidence” (Chou et al., 2018, p. 2417). One example of increasing concern on Facebook is the spread of misinformation about vaccine safety, including controversies around vaccine ingredients, misperceptions of efficacy, and overall mistrust in the healthcare system (Kennedy et al., 2011). Another study found that ~67% of cancer-related information exchanged on Facebook was scientifically accurate, while 19% was not scientifically accurate and 14% described unproven treatment modalities (Gage-Bouchard et al., 2018). Thus, even when accurate information is disseminated through social media, the risk of believing misinformation persists, and evidence suggests that health-related misinformation is often more popular or believable than accurate information on social media platforms (Scanfeld et al., 2010; Guidry et al., 2015; Loeb et al., 2019).

Examining how users process messages on social media is needed to understand and improve the dissemination of evidence-based health information. Studies suggest that message source is an important factor in whether health-related messages online and on social media are perceived as credible and trustworthy (Eastin, 2001; Hong, 2006; Van der Meer and Jin, 2019). Because people routinely use “crowd sourcing” and invoke cognitive heuristics to evaluate the credibility and trustworthiness of sources online (Metzger et al., 2010), research is needed to understand how and why message source affects users' perceptions of health information on social media. McGuire's Communication-Persuasion model accounts for the importance of source credibility and trustworthiness and how they may increase the persuasive impact of a message (McGuire, 1984). The model assumes that persuasion results from successfully progressing through several hierarchical steps; therefore, exposure and attention to a message must occur before the message can be comprehended (McGuire et al., 2001).

Communication scholars have recognized the importance of trust in sources that disseminate health content online (Eysenbach and Köhler, 2002; Hesse et al., 2005). Trust is a dynamic process informed by one's perception of a message's source and one's evaluation of the content disseminated by that source. Source attribution is a critical determinant of perceived information quality and subsequently influences the amount of trust an individual places in a message (Giffin, 1967). Studies have shown that trust in sources of vaccine information strongly predicts perceptions of vaccine risks and benefits as well as vaccine uptake (Zhang et al., 2013; Greenberg et al., 2017).

Self-reported trust in message source has been examined in other fields to understand the influence of message credibility, message believability, or attention spent on online messages (Wiener and Mowen, 1986; Austin and Dong, 1994); however, to our knowledge, these factors have not been examined concurrently to evaluate how individuals appraise health misinformation. Individuals' evaluation of trust in a message source may affect their perception of the health message's credibility, leading to acceptance of (mis)information. Eye-tracking studies examining information processing and attention to components of social media messages have identified common patterns in how individuals interact with social media (Cipresso et al., 2019; Hussain et al., 2019). One study showed that users attend to the source of a Facebook news post and use it as a criterion for deciding whether to read or skip the post, spending more time looking at posts from highly credible sources than at posts from less credible sources (Sülflow et al., 2018). One gap in the literature is whether different source types influence source trust. Individuals who trust a source to deliver factual information are more likely to adopt positive attitudes about the information presented to them and thus accept the message (Compton et al., 2016; Jennings and Russell, 2019). Limited research has assessed whether source trust is associated with the believability of health messages on Facebook, and whether attention paid to a health message is influenced by source trust. A better understanding of these associations may improve the effective communication of health information.

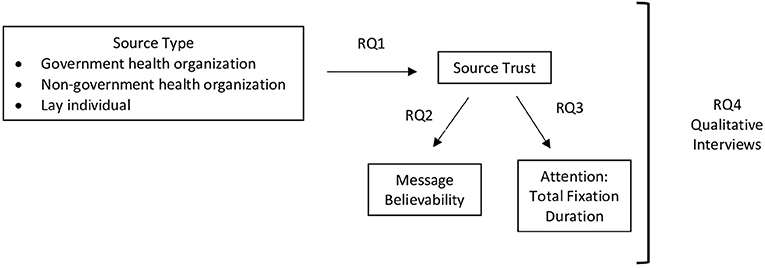

This mixed methods experimental study used eye tracking, surveys, and qualitative interviews to understand participants' trust assessment of simulated cancer-related messages on Facebook. The study's purpose was to assess whether source type is associated with participants' assessment of source trust. We examined the effect of source trust on perceived message believability, stratified by evidence-based and non-evidence-based messages. Research has indicated that the source of an online message may influence message credibility, such that messages from highly credible sources are perceived as more trustworthy (Eastin, 2001; Greer, 2003; Srivastava et al., 2018). We also examined whether source trust is associated with the attention individuals pay to the message, to understand their information processing by source type. Building on previous research, four research questions guide our conceptual model (Figure 1): (1) Does trust in message source differ by source type (government, non-government organization, lay individual), and how does this differ when stratified by evidence-based vs. non-evidence-based messages? (2) What is the association between individuals' trust in message source and their evaluation of message believability, and how does this association differ when stratified by evidence-based vs. non-evidence-based messages? (3) Is trust in message source associated with individuals' attention to health messages? (4) Through qualitative assessment, what strategies did individuals use to assess trust in the message source and the believability of the message?

Figure 1. Conceptual model examining individuals' trust assessment of source on message believability and information processing of simulated cancer-related Facebook posts.

Methods

Recruitment and Participants

Eighty participants from the metropolitan Washington, DC, Maryland, and Virginia area were recruited by phone through a research recruiting firm, which screened participants and collected demographic data to determine eligibility. Eligible participants had to be at least 18 years old and use social media regularly, defined as logging into at least one social media account (e.g., Facebook, Twitter, Instagram, or Pinterest) daily. Efforts were made to recruit respondents from diverse demographic groups. A total of 27 participants were excluded due to cancellations, computer technical issues, or poor eye-tracking calibration and a low proportion of valid gaze samples. The final analytic sample included 53 participants.

Study Stimuli and Procedures

The overall study used a mixed methods approach, integrating eye tracking, survey questionnaires, and qualitative interviews. The protocol was reviewed and deemed exempt by the Ethics Committee and IRB at the authors' institution. Data were collected from June to October 2018 in a computer laboratory setting. Participants consented to the use of quotes from their interviews in this manuscript. After providing informed consent, participants completed a standardized on-screen calibration exercise to check accuracy using a Tobii T120 Eye Tracker (Tobii Technology AB, Stockholm, Sweden). Participants then viewed three simulated Facebook feeds, each containing six posts: five “distractor” posts about non-health topics (e.g., weather, fashion) and one investigator-developed target post, which always appeared as the second post in the feed. A study team member with a background in web design recreated the posts to be consistent with the format and layout of a Facebook post (e.g., links in the same colors, same-size images, same fonts). Examples of target posts and distractor posts can be found in Appendix A (Supplementary Material).

Target posts were developed based on the results of phase one testing, during which manipulation checks were conducted. As a result of phase one testing, some stimuli were adapted to reflect the conditions appropriately (e.g., modifying the source of a message, updating the wording of older posts to sound more current, and adapting existing narratives to generate comparable non-narrative versions). Pairs of target posts varied on the following manipulated conditions. First, message format varied based on whether the text included a personal narrative or anecdote about the topic or presented non-narrative (factual) information only. Second, message source was manipulated such that the post came from either (a) a lay individual; (b) a government health organization or agency (National Institutes of Health); or (c) a non-government health organization (e.g., National Center for Prevention Science). Third, message veracity was manipulated so that posts contained either evidence-based or non-evidence-based information. Evidence-based information was determined by the current best scientific consensus on the topic, and non-evidence-based information represented misinformation as defined by Chou et al. (2018). Messages focused on one of two prominent cancer-prevention topics on social media: human papillomavirus (HPV) vaccination or sunscreen (Kelly et al., 2009; Vance et al., 2009). Although each target post was designed to approximate authentic Facebook feeds, posts were not interactive (i.e., no hyperlinks or “like” button) to facilitate interpretation of eye-tracking data. Distractor and target posts were generally comparable in size, length of text, and use of imagery.

Each participant was randomly assigned to view three of the 16 possible target post stimuli in their feeds, including at least one post about the HPV vaccine and one about sunscreen. Each participant received the stimuli in a random sequence to limit order effects. After viewing the feeds, participants completed an individual survey for each of the randomized posts they viewed, then completed a post-survey on overall trust in sources and social media health content. Lastly, participants took part in a qualitative interview lasting ~10–15 min. A moderator used a semi-structured interview guide with open-ended questions to ask about the strategies participants used to assess the credibility of the posts, which aspects of the posts influenced their trust in the message, and whether the posts were personally relevant. At the end of each session, participants were debriefed with scientifically accurate information about the HPV vaccine and sun safety and received $75.00 in compensation.
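The constrained random assignment described above (three of 16 target posts per participant, covering both topics, shown in random order) can be sketched in Python as follows. This is an illustrative sketch, not the study's actual randomization procedure: the stimulus IDs and the even 8/8 split between HPV and sunscreen posts are hypothetical assumptions, since the split is not reported here.

```python
import random

# Hypothetical stimulus pool: 16 target posts, ASSUMED to be split evenly
# between the two topics (the real split is not reported).
STIMULI = [f"HPV-{i:02d}" for i in range(1, 9)] + [f"SUN-{i:02d}" for i in range(1, 9)]


def topic(stimulus_id: str) -> str:
    """Return the topic prefix ("HPV" or "SUN") of a hypothetical stimulus ID."""
    return stimulus_id.split("-")[0]


def assign_stimuli(rng: random.Random, k: int = 3) -> list[str]:
    """Draw k distinct target posts that cover both topics, in random order.

    Uses rejection sampling: redraw until both topics are represented.
    rng.sample already returns the draw in random order, which doubles as
    the randomized presentation sequence.
    """
    while True:
        draw = rng.sample(STIMULI, k)
        if {"HPV", "SUN"} <= {topic(s) for s in draw}:
            return draw


# Usage: one participant's assignment from a seeded generator.
print(assign_stimuli(random.Random(2018)))
```

Rejection sampling keeps the sketch short and leaves every valid three-post combination equally likely; with only 16 stimuli, redraws are cheap.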

Survey Measures

Source trust was calculated as a composite score from a 5-item scale on the post-survey: “The next question asks your opinion of the message source. Please tell us what you think of the individual or group that provided this message:” 1) They are trustworthy, 2) They provide accurate information, 3) They are unfair, 4) They tell the whole story, 5) They are biased. Response options ranged from 1 (strongly disagree) to 7 (strongly agree), with reverse coding for “They are unfair” and “They are biased.” The five items were averaged to create a mean trust score. Message believability was measured by a single item on the post-survey: “Please tell us what you think about this post. The post is…” Response options ranged from 1 (not believable) to 7 (believable). Health literacy was measured using the Newest Vital Sign, and scores were dichotomized as limited or adequate health literacy.
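The source trust composite can be illustrated with a minimal Python sketch, assuming complete responses on the 1 to 7 scale. The item keys (`trustworthy`, `accurate`, and so on) are hypothetical labels. Reverse coding maps a response r to 8 - r, so 1 becomes 7 and 7 becomes 1 before the five items are averaged.

```python
# Hypothetical item keys in survey order; True marks the reverse-coded items.
ITEMS = [
    ("trustworthy", False),  # They are trustworthy
    ("accurate", False),     # They provide accurate information
    ("unfair", True),        # They are unfair (reverse-coded)
    ("whole_story", False),  # They tell the whole story
    ("biased", True),        # They are biased (reverse-coded)
]

SCALE_MIN, SCALE_MAX = 1, 7


def source_trust_score(responses: dict[str, int]) -> float:
    """Mean of the five items after reverse coding, on the 1-7 scale."""
    total = 0
    for item, reverse in ITEMS:
        value = responses[item]
        if not SCALE_MIN <= value <= SCALE_MAX:
            raise ValueError(f"{item}: {value} is outside {SCALE_MIN}-{SCALE_MAX}")
        if reverse:
            value = SCALE_MIN + SCALE_MAX - value  # 1<->7, 2<->6, 3<->5, 4 stays 4
        total += value
    return total / len(ITEMS)


# Usage with made-up responses: "unfair" = 2 becomes 6, "biased" = 3 becomes 5.
example = {"trustworthy": 6, "accurate": 6, "unfair": 2, "whole_story": 5, "biased": 3}
print(source_trust_score(example))
```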

Eye-Tracking Measure

Total fixation duration, a commonly used metric, was defined in this study as the total time (in seconds) spent fixating on the source area of interest (AOI) of a post (Bergstrom and Schall, 2014; Schall and Bergstrom, 2014). AOIs were created to capture the attention spent on a specific area of the Facebook post (e.g., the source). To account for variability in the amount of visual information per page (e.g., text length, image size), analyses were adjusted for pixel size.
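Total fixation duration on an AOI can be illustrated with a short Python sketch. It assumes fixations have already been detected by the eye tracker's analysis software and exported as screen coordinates with durations, and it treats the source AOI as a rectangle. The `tfd_per_10k_px` helper is a hypothetical example of one way to adjust for AOI size (seconds per 10,000 square pixels); the exact pixel-size adjustment used in the study is not specified here.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    x: float            # horizontal gaze position in screen pixels
    y: float            # vertical gaze position in screen pixels
    duration_ms: float  # fixation duration in milliseconds


@dataclass
class AOI:
    left: float
    top: float
    width: float
    height: float

    def contains(self, x: float, y: float) -> bool:
        """True if a point falls inside this rectangular area of interest."""
        return (self.left <= x < self.left + self.width
                and self.top <= y < self.top + self.height)

    @property
    def area_px(self) -> float:
        return self.width * self.height


def total_fixation_duration(fixations: list[Fixation], aoi: AOI) -> float:
    """Sum of durations (in seconds) of all fixations located inside the AOI."""
    return sum(f.duration_ms for f in fixations if aoi.contains(f.x, f.y)) / 1000.0


def tfd_per_10k_px(fixations: list[Fixation], aoi: AOI) -> float:
    """HYPOTHETICAL size adjustment: seconds of fixation per 10,000 px^2 of AOI."""
    return total_fixation_duration(fixations, aoi) / (aoi.area_px / 10_000)


# Usage with made-up data: the second fixation lies right of the AOI and is ignored.
source_aoi = AOI(left=100, top=50, width=200, height=40)
fixations = [Fixation(150, 60, 250), Fixation(310, 60, 400), Fixation(120, 80, 150)]
print(total_fixation_duration(fixations, source_aoi))
print(tfd_per_10k_px(fixations, source_aoi))
```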

Analytic Approach

Five types of data analyses were conducted. First, frequencies were calculated for participant characteristics. Second, a one-way ANOVA was conducted with source type as the independent variable and trust in message source as the dependent variable. Independent samples t-tests and one-way ANOVAs were then conducted for subgroup analyses testing source type as the independent variable and trust in message source as the dependent variable, stratified by message veracity. Third, Pearson correlation analysis was conducted to assess the association between trust in message source and message believability. These analyses were conducted using IBM SPSS Statistics 23.0. Fourth, linear regression models assessed the association between trust in message source and total fixation duration, using Stata (StataCorp LP, 2015). Lastly, qualitative analysis was conducted to describe how individuals assessed trust in the source and believability of Facebook messages.
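To make the ANOVA step concrete, here is a from-scratch Python sketch of the one-way ANOVA F statistic; the study itself used SPSS. The sketch computes the between-group and within-group sums of squares. The p-value would come from the upper tail of the F distribution, which this standard-library sketch does not compute, and the toy groups in the example are made up, not study data.

```python
from statistics import fmean


def one_way_anova_f(*groups: list[float]) -> tuple[float, int, int]:
    """One-way ANOVA F statistic for k independent groups.

    Returns (F, df_between, df_within). The p-value is the upper tail of
    the F(df_between, df_within) distribution.
    """
    if len(groups) < 2:
        raise ValueError("need at least two groups")
    means = [fmean(g) for g in groups]
    n_total = sum(len(g) for g in groups)
    grand_mean = fmean([x for g in groups for x in g])
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Usage with toy trust scores for three hypothetical source-type groups.
government = [5.2, 6.0, 4.8, 5.6]
organization = [4.9, 5.1, 4.4, 5.5]
individual = [3.8, 4.6, 4.0, 4.2]
print(one_way_anova_f(government, organization, individual))
```

For example, `one_way_anova_f([1, 2, 3], [4, 5, 6], [7, 8, 9])` returns `(27.0, 2, 6)`. If SciPy is installed, `scipy.stats.f_oneway` returns the same statistic along with its p-value; a post hoc comparison such as Tukey's test would follow a significant F.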

Qualitative Analysis

In this mixed methods study, we combined quantitative and qualitative insights. Specifically, we used an explanatory approach in which the quantitative findings informed and drove the qualitative analysis, with the aim of explaining trust and credibility assessments through participants' own words; participants were asked to provide rationale and context for trusting or not trusting certain (real and simulated) Facebook posts. Qualitative data included transcripts of the sessions and observer notes taken during the sessions. Three authors independently reviewed and coded interview transcripts, looking in particular for participants' reflections on drivers of trust and credibility assessment when viewing health-related posts. Major themes emerged, largely driven by the interview guide. After reviewing the transcripts and notes, the authors met to discuss and reconcile differing interpretations, reach consensus on the overall sentiments expressed by participants, and jointly select the most representative and salient excerpts to report in this paper. The qualitative themes focused on source trustworthiness, message relevancy, message credibility, and message believability.

Results

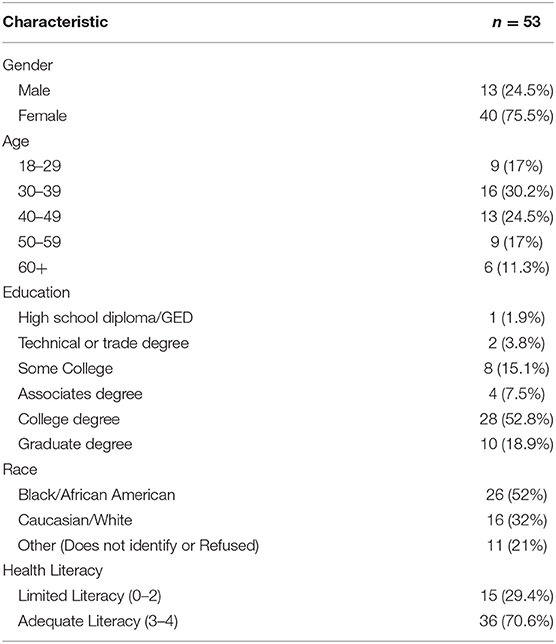

As presented in Table 1, the final sample included more females (n = 40, 75.5%) than males (n = 13, 24.5%). Participants' reported race was nearly evenly divided between Black/African American and other races. Age categories, levels of educational attainment, and levels of health literacy were generally well balanced in the sample.

RQ1 was exploratory, examining differences in participants' reported trust in message source across three source types (government agency, health organization, and lay individual), first in the full sample and then stratified by message veracity. A one-way ANOVA showed that trust differed by source type [F(2, 341) = 5.109, p = 0.007]. A Tukey post hoc test revealed that trust in the message source was lower when the source was a lay individual (M = 4.41, SD = 1.39) than when it was a government agency (M = 5.03, SD = 1.57; p = 0.008). There was no difference in reported source trust between health organizations and government agencies (p = 0.42). However, in the stratified analysis, among non-evidence-based messages, participants reported trusting health organizations (M = 4.67, SD = 1.27) more than lay individuals (M = 4.06, SD = 1.50; p = 0.005). Among evidence-based messages, there was no difference in trust across source types (p = 0.39).

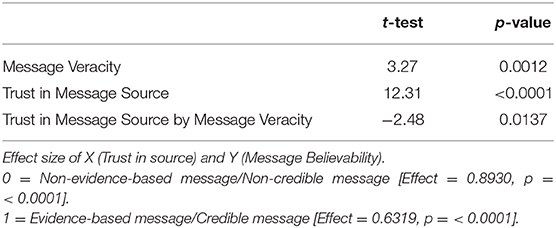

We then assessed whether participants' perceived source trust was associated with reported message believability, to examine whether trust in the message source accompanies acceptance of the presented information as reliable. For RQ2, a Pearson correlation test was conducted. Overall, trust and believability were positively correlated, r = 0.606, n = 346, p < 0.01 (two-tailed), indicating a strong relationship between how much participants trusted the message source and how much they believed the message. We repeated this analysis stratified by veracity condition. Participants with high trust in the message source tended to rate the message as highly believable for both evidence-based and non-evidence-based messages (Table 2). The mean score was 4.62 (SD = 1.41) for trust in message source and 5.23 (SD = 1.81) for message believability. We then calculated Cohen's d to assess the effect size of the difference between mean trust in message source and mean message believability. The effect size was large among non-evidence-based messages (d = 0.89) and medium among evidence-based messages (d = 0.63).
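The correlation and effect-size steps for RQ2 can be sketched in Python as below, using only the standard library. The paper does not state which variant of Cohen's d was used; this sketch assumes the equal-n pooled-SD form, d = (mean believability - mean trust) / sqrt((SD_trust^2 + SD_believability^2) / 2), which ignores the pairing of the two ratings. Inputs are assumed to be paired per-post ratings, and the example values are hypothetical toy data.

```python
from math import sqrt
from statistics import fmean, stdev


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between paired ratings x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must be paired and have at least 2 values")
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))  # co-deviation
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def cohens_d(x: list[float], y: list[float]) -> float:
    """Standardized mean difference (mean(y) - mean(x)) / pooled SD.

    ASSUMES the equal-n pooled-SD form; it does not account for pairing.
    """
    pooled_sd = sqrt((stdev(x) ** 2 + stdev(y) ** 2) / 2)
    return (fmean(y) - fmean(x)) / pooled_sd


# Hypothetical toy ratings on the 1-7 scales (not study data).
trust = [4.2, 5.0, 3.6, 6.4, 4.8]
believability = [5.0, 6.0, 4.0, 7.0, 5.0]
print(f"r = {pearson_r(trust, believability):.3f}")
print(f"d = {cohens_d(trust, believability):.3f}")
```

For perfectly linear toy data such as `pearson_r([1, 2, 3], [2, 4, 6])`, r is 1.0. The correlation describes how strongly the two ratings move together, while d describes how far apart their means are; the two answer different questions.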

Concurrently, for RQ3, we examined whether trust in the message source was associated with the time participants spent viewing the source of the message. This analysis linked self-reported survey data with eye-tracking data to assess the information processing that occurred while participants viewed the source of the simulated Facebook post. Trust in the message source was not associated with attention spent on the source of the post [F(1, 119) = 1.10, p = 0.297].
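The RQ3 test reports an F statistic with one numerator degree of freedom, which is what a regression with a single predictor produces. A minimal Python sketch of that case follows, assuming an ordinary least squares model of total fixation duration on source trust with an intercept and no covariates; the actual Stata model specification is not given here, and the example data are hypothetical.

```python
from statistics import fmean


def simple_regression_f(x: list[float], y: list[float]) -> tuple[float, float, float, int, int]:
    """OLS of y on x with an intercept.

    Returns (slope, intercept, F, df_model, df_residual), where
    F = (SS_model / 1) / (SS_residual / (n - 2)).
    """
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least 3 paired observations")
    mx, my = fmean(x), fmean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    ss_total = sum((b - my) ** 2 for b in y)
    ss_resid = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    ss_model = ss_total - ss_resid  # variation explained by the predictor
    df_model, df_resid = 1, n - 2
    f_stat = (ss_model / df_model) / (ss_resid / df_resid)
    return slope, intercept, f_stat, df_model, df_resid


# Usage with hypothetical data: x = source trust score, y = fixation time (s) on source AOI.
trust = [3.2, 4.0, 4.6, 5.4, 6.0, 6.8]
tfd_seconds = [0.42, 0.35, 0.51, 0.40, 0.47, 0.38]
print(simple_regression_f(trust, tfd_seconds))
```

With one predictor, this F equals the square of the slope's t statistic, and its p-value comes from the F(1, n - 2) distribution.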

Lastly, the quantitative results were complemented by qualitative interview findings examining factors influencing participants' trust in Facebook messages. To capture additional cognitive heuristics that individuals may have used when viewing Facebook posts for health-related information, we asked all participants to reflect on their assessment process. Three dominant themes emerged from the interview data to address RQ4 concerning how individuals evaluated trust in the message source and believability of messages: (1) general high trust in government health agencies, (2) higher trust in health organizations over individuals, and (3) skepticism toward unknown individuals as message sources. Each theme is illustrated below with representative quotes from the interview transcripts.

Theme 1: General High Trust in Government Health Agencies

When participants were asked how they typically decide whether to trust health information on Facebook, the majority stated that they look at the source of the post. More specifically, they examined whether the information came from a reputable government agency. Participants noted that when reviewing the study stimuli, they were more inclined to trust messages from government health agencies because they were familiar with these agencies' reputation, research, and knowledge of health topics. For example, one participant stated,

“Anything that comes from a government site, CDC being one of them. Those health claims aren't biased. Those are based on decades and decades of research from the US government, so those are the things that I would trend to.” (M, 30-39)

Many participants said they rated posts from agencies such as NIH, the U.S. Food and Drug Administration (FDA), and the Centers for Disease Control and Prevention (CDC) as highly believable because of the evidence-based cancer-related research conducted by these agencies. When the same posts were alternately presented by a government agency or individual as the message source, participants indicated greater trust in the government agency message due to their general recognition of these organizations. The following are two examples:

“So, like this source says usa.gov. So, the federal government, I trust generally. But if some random person, like Rachel Miller here, posts about the HPV vaccine, who knows, right? I have no idea who they are. But, you can go online and find thousands of blog posts that say that vaccines cause autism and are not true. So, I look for reputable organizations I've heard of or the federal government, CDC.” (M, 30-39)

“If it's coming from a national organization, National Institutes of Health, I would absolutely trust it. They're nationally run, they cover research, they're an organization that has an affiliation, you know there's doctors, there's not just an individual perception or client. If I had a health-related issue, I would go to the national organization of….” (F, 40-49)

In addition, for some participants, an indication that a message was affiliated with a government agency was a sufficient heuristic cue to establish trust before evaluating the post's actual content. If a message was posted by an agency that sounded reputable, participants could be more inclined to trust it. One participant stated,

“Well, I guess if it's the institute of something, that would make me think it's authentic. If it's .gov or .org, I'll probably consider it more authentic, as I'm familiar with that organization for whatever reason.” (M, 18-29)

Several participants noted that the information presented in posts by government agencies validated their existing beliefs and was consistent with their ideological views about health-related issues. Many participants believed that, unlike personal stories and experiences, messages posted by government agencies are monitored and do not need to be cross-referenced.

Theme 2: Higher Trust in Health Organizations Over Individuals

Similar to the high trust reported in government agencies, many participants stated that they would be more inclined to trust a cancer-related message from a health organization than one from an individual posting on Facebook. As with government health agencies, the organization's reputation mattered for whether participants trusted the post. One participant stated,

“If it's an individual it's something I'd take into consideration, but I would require interaction with… maybe I would reach out to the person that's writing the post. But I don't give that post the same weight as I would the Dermatology Association, if it's a skin issue.” (F, 60+)

One discrepancy noted was that many participants did not differentiate between government agencies and health organizations. At times, these two sources were perceived to be equivalent, but both still elicited higher trust than a lay individual. For example, one participant noted,

“If it's linked to an organization, a reputable medical organization. If it is a .gov website or if it is something connected to a hospital, like the Johns Hopkins website or something like that.” (F, 18-29)

Participants noted that they are more inclined to trust a health organization if the research is linked to a “medical place,” “research facility,” or “doctor doing research and has searchable publications.” Participants stated that such sources lend credibility to the messages they disseminate through Facebook because the messages do not reflect a single individual's perception. A few participants also noted that a health organization seems trustworthy if it has a track record of accuracy and cites the study it is posting about.

Theme 3: Skepticism and Limited Trust in Individuals as Message Sources

Compared to trust in government agencies and organizations, participants reported lower trust in cancer-related posts from lay individuals on Facebook. This stemmed from limited knowledge of the individual's background and a sense that posts could be attention-seeking anecdotes rather than reliable information. The majority of participants stated that if they viewed posts from individuals, they would need to do additional research to verify the content of the post. One participant stated,

“I just feel like people in general on social media post things just to get attention, so I feel like most of the posts on there are just attention grabbing so I don't know how reliable they are. Yeah, I wouldn't trust it as much as if I were to see the same article, if I found an article on Facebook if I could get the same article by Googling it, I'd probably trust it a little bit more if I Googled it.” (M, 18-29)

The need to verify information was more prevalent when participants evaluated individuals posting on Facebook than when they evaluated organizations, and some participants described using organizations to verify individuals' claims. One participant said,

“I would have been more apt to comment/share if I can verify the information through Google. You know, then I might go back and actually share the information whereas, I initially might not. And hopefully I would also find a better source like the CDC that could actually support these claims. If I did a Google search and I found the CDC supported it, I'd be much more likely to share information directly from them.” (M, 30-39)

A few participants added that if similar content from the post was shared by multiple individuals as opposed to just one, they may trust the post more because it is validated by others. In addition, many participants stated that if they knew the individual they would contact the poster to get more information. The following is an example:

“Well they all seem to be like they were from specific people rather than from an organization or from something I chose to follow. Like they're not from National Cancer Institute or NIH, I think there was a mention of NPR, I might pay more attention to it. So the fact that it was from the individual, if I knew those people, I'd reach out then I would read it more carefully.” (F, 60+)

Overall, the qualitative findings suggested that participants reported higher trust in government agencies and health organizations because of their familiarity with the research these agencies and organizations conduct and the reputation they have established over time. These findings help explain the quantitative results of the stratified analyses examining trust in message source by message veracity.

Discussion

This mixed methods study used simulated Facebook posts about cancer to investigate the association between message source type and participants' trust in these sources. The findings emphasize the importance of message source for cancer information conveyed on social media. Participants reported higher trust in government agencies than in individuals as sources of health messages. These findings are important because they underscore the value of government agencies' voices in disseminating health information to the public on social media. Information relayed by government agencies may be trusted more readily than information from individuals, given the agencies' perceived expertise on the content. Thus, these agencies may have a responsibility to engage in direct social media outreach to relay accurate information. Additionally, when viewing non-evidence-based messages, participants placed higher trust in health organizations than in individuals as message sources. These findings suggest that health organizations tend to garner more trust than individuals, which may reflect recognition of or familiarity with the organization; however, some health organizations may not always spread accurate health information. Threats such as trolls, bots, and ideologically extreme organizations may masquerade as legitimate sources and disseminate false information, which may garner more trust than if the message originated from an individual (Shao et al., 2017, 2018; Bratu, 2018). Such threats allow non-credible sources to pass as reliable and contribute to the perpetuation of misinformation. Problems arise when these sources gain legitimacy with the general public because of an official-sounding name. Research shows how various source types readily use social media platforms to disseminate inaccurate health information, gaining wide reach and misleading vulnerable audiences (Syed-Abdul et al., 2013; Mueller et al., 2019). Though difficult, distinguishing between authentic and inauthentic health organizations is imperative to help combat the spread of misinformation.

The results for RQ2, which examined whether participants' perceived source trust was associated with reported message believability, showed that participants who strongly trusted the message source also tended to believe the message. Because this association held for both evidence-based and non-evidence-based messages, misinformation from a trusted source may be believed as readily as accurate information. The intertwined features of trust in the source and believability of the message shape how messages are received (Eastin, 2001; Metzger et al., 2010). Surprisingly, the effect size was larger among participants who viewed non-evidence-based messages, suggesting that individuals may place high trust in sources that disseminate false messages and underscoring the importance of verifying the sources that share these messages. These results highlight how important the source is for a health message (Hu and Shyam Sundar, 2010; Buchanan and Beckett, 2014); it is thus imperative for federal agencies and health organizations to build name recognition and trust with their target audiences so that they have the credibility and online reach to stand out in a sea of health (mis)information.

Furthermore, RQ3 investigated the association between self-reported source trust and attention paid to the post. Participants did not spend more time on messages from sources they trusted. Eye tracking technology allowed us to verify that participants were in fact spending time looking at the source of the message. In terms of McGuire's model, exposure to the simulated Facebook posts did garner attention to the source. However, trusted sources did not receive more viewing time, which raises the question of whether participants fully comprehended the message. Additionally, the findings suggest that individuals navigate information on social media quickly, regardless of how much they trust a message source. This may make them prone to heuristic-driven evaluations of information on Facebook rather than careful processing of what they read. Attentional responses to health messages may differ based on the persuasiveness of individual message components. Therefore, if the source of a message does not elicit attention, other message components may be more persuasive for the individual (Compton et al., 2016).

The findings raise an alarm for public health communication: individuals may treat health information from government agencies and from illegitimate health organizations as equally believable. This could perpetuate the endorsement and even the dissemination of health-related misinformation. Qualitative findings from RQ4 describe how individuals have trouble distinguishing real from fake health organizations and are therefore more susceptible to believing messages that are not supported by evidence-based science. The quote, “Well, I guess if it's the institute of something…” illustrates how individuals try to tell reputable government agencies apart from organizations with similar-sounding names. The qualitative results highlight the degree to which individuals rely on the source to form trust and, in turn, believability. In response, health educators and communicators need to work to familiarize the public with the many government agencies and organizations, in order to increase recognition of reliable sources of health information. Reputable organizations should consider debunking false information through comments or reposts to help combat misinformation on Facebook. Our findings show that people are familiar with and trust the CDC and NIH. It is therefore likely that seeing these sources refute information on a falsified health organization's page will prompt individuals to resist trusting authentic-sounding sources in the future.

Study strengths include its mixed methods experimental design, which allowed analysis of data gathered through multiple modalities. Triangulating the results yields a richer understanding of how messages and their sources are viewed and evaluated. Eye tracking data provided an objective measure of how messages are scanned, complementing self-reported survey data. Interviews allowed us to probe observed similarities and differences. As our results indicate, individuals looked to the message source to determine their trust in the information; however, other message components may help persuade individuals to scrutinize messages on Facebook. Further examining individuals' comprehension of social media messages may help researchers design believable and trustworthy messages on social media.

We also note several study limitations. First, because the Facebook posts were simulated, many participants noted that the individual posters were unfamiliar to them, whereas their personal Facebook feeds contain posts from people they know. Additionally, the simulated posts were static (not clickable), so participants could not interact with them by liking or sharing, which limited the authenticity of the interface. Future research using non-laboratory methods should observe behavior on participants' personal social media accounts to investigate source trust and message believability within their own social networks. In addition, our sample was highly educated and highly literate, which may limit the generalizability of these findings. A further limitation is the relatively small sample size, which limits the study's statistical power. Future eye tracking studies should include larger samples to detect meaningful differences. Finally, it is possible that conducting the study within a government agency introduced bias into the sample and into participants' input. Nonetheless, learning how people respond to social media messaging can help agencies develop credible social media campaigns.

The current study prompts discussion of trust as a construct and of the need to define trust clearly in research. Public health practitioners can help distinguish interpersonal trust from technological trust characteristics. This would help social media users understand the distinctions between trust and believability across source types (Lankton and McKnight, 2011). Collaborative efforts between public health leaders and social media companies to verify the sources of social media posts can reduce the burden on individuals of deciding which health messages are credible. Studies show that individuals verify information by searching for other reputable sources to substantiate a message's importance when deciding whether to share it (Oh and Lee, 2019). This work highlights the complexities of studying audience perceptions of health information in a rapidly evolving information environment. Future studies should consider using similar methods to observe audience responses to live social media feeds and to compare platforms. Such work could evaluate whether the importance of message source holds across social media environments and in health contexts beyond cancer prevention. Lastly, researchers should continue to explore qualitatively why people trust organizations, whether legitimate or fake. They should also examine how exposure to source types online, or an organization's social media presence and posting behavior, influences trust.

Data Availability Statement

The datasets generated for this study are available on request to the corresponding author.

Ethics Statement

The overall study employed a mixed methods approach, integrating eye tracking, survey questionnaires, and qualitative interviews. The protocol was reviewed and deemed exempt by the Ethics Committee and IRB at the National Cancer Institute at NIH. Written and informed consent was obtained from participants for participation and the publication of verbatim quotes.

Author Contributions

All authors listed have made a substantial, direct and intellectual contribution to the work, and approved it for publication. NT led the analysis and the writing of the manuscript, with substantial assistance from all authors, especially the senior author.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to thank the following colleagues for their assistance with various aspects of the project: Anna Gaysynsky, Sheel Shah, Jonathan Strohl, Edward Peirce of Fors Marsh Group, Anita Ousley and Silvia Salazar of the NCI Human-System Integration Lab, Naomi Brown of Userworks, and Dahye Yoon of Georgetown University.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcomm.2020.00012/full#supplementary-material

References

Austin, E. W., and Dong, Q. (1994). Source v. content effects on judgments of news believability. J. Quart. 71, 973–983. doi: 10.1177/107769909407100420

Bender, J. L., Wiljer, D., Matthew, A., Canil, C. M., Legere, L., Loblaw, A., et al. (2012). Fostering partnerships in survivorship care: report of the 2011 Canadian genitourinary cancers survivorship conference. J. Cancer Survivor. 6, 296–304. doi: 10.1007/s11764-012-0220-3

Bergstrom, J. R., and Schall, A. (2014). Eye Tracking in User Experience Design. Waltham, MA: Elsevier.

Bratu, S. (2018). Fake news, health literacy, and misinformed patients: the fate of scientific facts in the era of digital medicine. Anal. Metaph. 17, 122–127. doi: 10.22381/AM1720186

Buchanan, R., and Beckett, R. D. (2014). Assessment of vaccination-related information for consumers available on Facebook®. Health Inf. Libr. J. 31, 227–234. doi: 10.1111/hir.12073

Chou, W.-Y. S., Oh, A., and Klein, W. (2018). Addressing health-related misinformation on social media. JAMA 320, 2417–2418. doi: 10.1001/jama.2018.16865

Cipresso, P., Mauri, M., Semonella, M., Tuena, C., Balgera, A., Villamira, M., et al. (2019). Looking at one's self through facebook increases mental stress: a computational psychometric analysis by using eye-tracking and psychophysiology. Cyberpsychol. Behav. Soc. Netw. 22, 307–314. doi: 10.1089/cyber.2018.0602

Compton, J., Jackson, B., and Dimmock, J. A. (2016). Persuading others to avoid persuasion: inoculation theory and resistant health attitudes. Front. Psychol. 7:122. doi: 10.3389/fpsyg.2016.00122

Eastin, M. S. (2001). Credibility assessments of online health information: the effects of source expertise and knowledge of content. J. Comput. Mediat. Commun. 6. doi: 10.1111/j.1083-6101.2001.tb00126.x

Eysenbach, G., and Köhler, C. (2002). How do consumers search for and appraise health information on the world wide web? Qualitative study using focus groups, usability tests, and in-depth interviews. BMJ 324, 573–577. doi: 10.1136/bmj.324.7337.573

Farmer, A. D., Holt, C. B., Cook, M., and Hearing, S. (2009). Social networking sites: a novel portal for communication. Postgrad. Med. J. 85, 455–459. doi: 10.1136/pgmj.2008.074674

Gage-Bouchard, E. A., LaValley, S., Warunek, M., Beaupin, L. K., and Mollica, M. (2018). Is cancer information exchanged on social media scientifically accurate? J. Cancer Educ. 33, 1328–1332. doi: 10.1007/s13187-017-1254-z

Giffin, K. (1967). The contribution of studies of source credibility to a theory of interpersonal trust in the communication process. Psychol. Bull. 68, 104–120. doi: 10.1037/h0024833

Greenberg, J., Dubé, E., and Driedger, M. (2017). Vaccine hesitancy: in search of the risk communication comfort zone. PLoS Curr. 9:ecurrents.outbreaks.0561a011117a1d1f9596e24949e8690b. doi: 10.1371/currents.outbreaks.0561a011117a1d1f9596e24949e8690b

Greer, J. D. (2003). Evaluating the credibility of online information: a test of source and advertising influence. Mass Commun. Soc. 6, 11–28. doi: 10.1207/S15327825MCS0601_3

Guidry, J. P., Carlyle, K., Messner, M., and Jin, Y. (2015). On pins and needles: how vaccines are portrayed on Pinterest. Vaccine 33, 5051–5056. doi: 10.1016/j.vaccine.2015.08.064

Hesse, B. W., Nelson, D. E., Kreps, G. L., Croyle, R. T., Arora, N. K., Rimer, B. K., et al. (2005). Trust and sources of health information: the impact of the internet and its implications for health care providers: findings from the first health information national trends survey. Arch. Intern. Med. 165, 2618–2624. doi: 10.1001/archinte.165.22.2618

Hong, T. (2006). The influence of structural and message features on web site credibility. J. Am. Soc. Inf. Sci. Technol. 57, 114–127. doi: 10.1002/asi.20258

Hu, Y., and Shyam Sundar, S. (2010). Effects of online health sources on credibility and behavioral intentions. Commun. Res. 37, 105–132. doi: 10.1177/0093650209351512

Hussain, Z., Simonovic, B., Stupple, E. J. N., and Austin, M. (2019). Using eye tracking to explore facebook use and associations with facebook addiction, mental well-being, and personality. Behav. Sci. 9:E19. doi: 10.3390/bs9020019

Jennings, F. J., and Russell, F. M. (2019). Civility, credibility, and health information: the impact of uncivil comments and source credibility on attitudes about vaccines. Public Underst. Sci. 28, 417–432. doi: 10.1177/0963662519837901

Kelly, B. J., Leader, A. E., Mittermaier, D. J., Hornik, R. C., and Cappella, J. N. (2009). The HPV vaccine and the media: how has the topic been covered and what are the effects on knowledge about the virus and cervical cancer? Patient Educ. Couns. 77, 308–313. doi: 10.1016/j.pec.2009.03.018

Kennedy, A., LaVail, K., Nowak, G., Basket, M., and Landry, S. (2011). Confidence about vaccines in the united states: understanding parents' perceptions. Health Aff. 30, 1151–1159. doi: 10.1377/hlthaff.2011.0396

Lankton, N. K., and McKnight, D. H. (2011). What does it mean to trust facebook? Examining technology and interpersonal trust beliefs. ACM SIGMIS Database 42, 32–54. doi: 10.1145/1989098.1989101

Loeb, S., Sengupta, S., Butaney, M., Macaluso, J. N. Jr, Czarniecki, S. W., Robbins, R., et al. (2019). Dissemination of misinformative and biased information about prostate cancer on YouTube. Eur. Urol. 75, 564–567. doi: 10.1016/j.eururo.2018.10.056

McGuire, W. J. (1984). Public communication as a strategy for inducing health-promoting behavioral change. Prev. Med. 13, 299–319. doi: 10.1016/0091-7435(84)90086-0

McGuire, W. J., Rice, R., and Atkin, C. (2001). Input and output variables currently promising for constructing persuasive communications. Public Commun. Campaign 3, 22–48. doi: 10.4135/9781452233260.n2

Metzger, M. J., Flanagin, A. J., and Medders, R. B. (2010). Social and heuristic approaches to credibility evaluation online. J. Commun. 60, 413–439. doi: 10.1111/j.1460-2466.2010.01488.x

Mueller, S. M., Jungo, P., Cajacob, L., Schwegler, S., Itin, P., and Brandt, O. (2019). The absence of evidence is evidence of non-sense: cross-sectional study on the quality of psoriasis-related videos on youtube and their reception by health seekers. J. Med. Internet Res. 21:e11935. doi: 10.2196/11935

Oh, H. J., and Lee, H. (2019). When do people verify and share health rumors on social media? the effects of message importance, health anxiety, and health literacy. J. Health Commun. 24, 837–847. doi: 10.1080/10810730.2019.1677824

Perrin, A., and Anderson, M. (2019). Share of U.S. Adults Using Social Media, Including Facebook, is Mostly Unchanged Since 2018. Available online at: https://pewrsr.ch/2VxJuJ3 (accessed February 8, 2019).

Scanfeld, D., Scanfeld, V., and Larson, E. L. (2010). Dissemination of health information through social networks: Twitter and antibiotics. Am. J. Infect. Control 38, 182–188. doi: 10.1016/j.ajic.2009.11.004

Schall, A., and Bergstrom, J. R. (2014). “Introduction to eye tracking,” in Eye Tracking in User Experience Design, eds M. Dunkerley (Waltham, MA: Elsevier), 3–26.

Shao, C., Ciampaglia, G. L., Varol, O., Flammini, A., and Menczer, F. (2017). The spread of fake news by social bots. arXiv [preprint] arXiv 1707.07592, 96–104.

Shao, C., Hui, P.-M., Wang, L., Jiang, X., Flammini, A., Menczer, F., et al. (2018). Anatomy of an online misinformation network. PLoS ONE 13:e0196087. doi: 10.1371/journal.pone.0196087

Srivastava, J., Saks, J., Weed, A. J., and Atkins, A. (2018). Engaging audiences on social media: Identifying relationships between message factors and user engagement on the American Cancer Society's Facebook page. Telemat. Inform. 35, 1832–1844. doi: 10.1016/j.tele.2018.05.011

StataCorp, LP (2015). Stata Statistical Software: Release 14. [Computer Program]. College Station, TX: StataCorp LP.

Sülflow, M., Schäfer, S., and Winter, S. (2018). Selective attention in the news feed: an eye-tracking study on the perception and selection of political news posts on Facebook. New Media Soc. 21, 168–190. doi: 10.1177/1461444818791520

Syed-Abdul, S., Fernandez-Luque, L., Jian, W.-S., Li, Y.-C., Crain, S., Hsu, M.-H., et al. (2013). Misleading health-related information promoted through video-based social media: anorexia on YouTube. J. Med. Internet Res. 15:e30. doi: 10.2196/jmir.2237

Van der Meer, T. G. L. A., and Jin, Y. (2019). Seeking formula for misinformation treatment in public health crises: the effects of corrective information type and source. Health Commun. 14, 1–16. doi: 10.1080/10410236.2019.1573295

Vance, K., Howe, W., and Dellavalle, R. P. (2009). Social internet sites as a source of public health information. Dermatol. Clin. 27, 133–136. doi: 10.1016/j.det.2008.11.010

Wiener, J. L., and Mowen, J. C. (1986). Source credibility: on the independent effects of trust and expertise. ACR North Am. Adv. 13, 306–310.

Keywords: social media, health misinformation, health communication, cancer, mixed methods, eye-tracking

Citation: Trivedi N, Krakow M, Hyatt Hawkins K, Peterson EB and Chou W-Y S (2020) “Well, the Message Is From the Institute of Something”: Exploring Source Trust of Cancer-Related Messages on Simulated Facebook Posts. Front. Commun. 5:12. doi: 10.3389/fcomm.2020.00012

Received: 31 October 2019; Accepted: 10 February 2020;

Published: 28 February 2020.

Edited by:

Sunny Jung Kim, Virginia Commonwealth University, United States

Reviewed by:

Patrick J. Dillon, Kent State University at Stark, United States

Isaac Nahon-Serfaty, University of Ottawa, Canada

Copyright © 2020 Trivedi, Krakow, Hyatt Hawkins, Peterson and Chou. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Neha Trivedi, neha.trivedi@nih.gov