- Digital Education, Information Services Division, University College London, London, United Kingdom

In recent years there has been growing concern around student wellbeing and in particular student mental health. Numerous newspaper articles (Ferguson, 2017; Shackle, 2019) have been published on the topic, and a BBC 3 documentary (Byrne, 2017) was produced on student suicide. These have coincided with a number of United Kingdom Higher Education sector initiatives and reports, the highest profile of these being the Universities United Kingdom “#StepChange” report (Universities UK, 2017) and the Institute for Public Policy Research “Not By Degrees” report (“Not by Degrees: Improving Student Mental Health in the UK’s Universities”, 2017). Simultaneously, learning analytics has been growing as a field in the United Kingdom, with a number of institutions running services predominantly focused on student retention and progression, the majority of which make use of the Jisc Learning Analytics service. Much of the data used in these services is behavioral data: interactions with various IT systems, attendance at events and/or engagement with library services. Wellbeing research indicates that changes in wellbeing are reflected in changes in behavior, so these changes could be identified via learning analytics. Research has also shown that students react very emotively to learning analytics data and that this may affect their wellbeing. The 2017 Universities United Kingdom (UUK) #StepChange report states: “Institutions are encouraged to align learning analytics to the mental health agenda to identify change in students’ behaviors and to address risks and target support.” (Universities UK, 2017). This study was undertaken in the 2018/19 academic year, a year after the launch of the #StepChange framework and after the formal transition of Jisc’s learning analytics work with partner HEIs to a national learning analytics service. 
With further calls for whole-institution responses to student wellbeing and mental health concerns, including the recently published University Mental Health Charter, this study aims to answer two questions. Firstly, is there evidence of the #StepChange recommendation being adopted in current learning analytics implementations? Secondly, has there been any consideration of the impact on staff and student wellbeing and mental health resulting from the introduction of learning analytics? Analysis of existing learning analytics applications has found that the data used lack the granularity needed to identify changes in an individual’s behavior at the required level; in addition, these data are collected with insufficient context to understand what they truly represent. Where there are connections between learning analytics and student support, these relate to student retention and academic performance. Although it has been identified that learning analytics can affect student and staff behaviors, there is no evidence of staff and student wellbeing being considered in current policies or in existing policy frameworks. The recommendation from the 2017 #StepChange framework has not been met, and current practices need to be reviewed if learning analytics is to be part of Mentally Healthy Universities moving forward. In conclusion, although learning analytics is a growing field and is becoming operationalized within United Kingdom Higher Education, it is still in its reactive infancy. Current data models rely on proxies for student engagement and may not truly represent student behaviors. At this time, learning analytics lacks the sophistication needed to identify student wellbeing concerns. 
However, as with all technologies, learning analytics is not benign: changes to ways of working affect both staff and students. Wellbeing professionals should therefore be included as key stakeholders in the development of learning analytics and student support policies, and wellbeing considerations should be explicitly mentioned and taken into account.

Introduction

In recent years there has been growing concern around student wellbeing and in particular mental health. The World Health Organization defines mental health as “a state of well-being in which every individual realizes his or her own potential, can cope with the normal stresses of life, can work productively and fruitfully, and is able to make a contribution to her or his community.” (Saxena and Setoya, 2014, p. 6). This work is framed against that definition.

Numerous newspaper articles have been published on the topic, and a BBC 3 documentary was produced on student suicide. These have coincided with a number of United Kingdom Higher Education sector initiatives and reports, the highest profile of which are the Universities United Kingdom “#StepChange” report, the Institute for Public Policy Research “Not By Degrees” report and the University Mental Health Charter, whose development is being led by the charity Student Minds in conjunction with other partner organizations.

The 2017 Universities United Kingdom (UUK) #StepChange report and framework aim to encourage university leaders to adopt a whole-institution approach to improving mental health. This is required because student wellbeing is shaped by the environment created by the higher education institution (HEI) attended and the support available to the student, including any learning analytics implementations. As noted in the GuildHE report (GuildHE, 2018, p. 5), “It should not be left to the student services team to develop and implement a wellbeing strategy, but activities should be owned and enacted in every part of institutional life, from security and estates to the academic curriculum.”

The #StepChange report and framework provide a number of recommendations, linked to eight dimensions, on how this can be achieved (Universities UK, 2017). The second of these dimensions, Data, has become increasingly important in the Higher Education sector. Recommendation 2.5 of the #StepChange framework encourages institutions to use traditionally attainment-focused data in a new way: “Institutions are encouraged to align learning analytics to the mental health agenda to identify change in students’ behaviors and to address risks and target support” (Universities UK, 2017).

Data used in learning analytics applications comes from existing university systems: student information systems, VLEs, library data, and attendance monitoring (Wong and Li, 2019), all of which provide behavioral data. Changes in wellbeing can be indicated by changes in behavior. As the data used by learning analytics systems is behavioral, it may be possible to identify wellbeing-related behavior changes via these systems, potentially providing an early alert mechanism for wellbeing issues, as opposed to monitoring students’ wellbeing. For a discussion of the potential of learning analytics to support student wellbeing, see Ahern (2018).
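As an illustration of what identifying a change in behavior could mean computationally, the sketch below compares each student's most recent weekly activity against that same student's preceding weeks rather than against the cohort. Everything in it is an assumption for illustration and is not drawn from any implementation discussed in this paper: the input format (a list of weekly VLE event counts per student), the hypothetical function name `flag_behavior_change`, the four-week baseline, the z-score threshold of -2.0 and the invented example data. A flag indicates only a change in logged activity, not a wellbeing concern.

```python
from statistics import mean, stdev


def flag_behavior_change(weekly_counts, baseline_weeks=4, threshold=-2.0, min_spread=1.0):
    """Hypothetical sketch: flag a drop in a student's latest weekly activity
    relative to that same student's preceding weeks, not to the cohort.

    weekly_counts: weekly event counts (e.g. VLE logins), oldest week first.
    Returns (flagged, z); z is None when there is too little history.
    """
    # Need the baseline weeks plus the week being assessed.
    if len(weekly_counts) < baseline_weeks + 1:
        return False, None
    baseline = weekly_counts[-(baseline_weeks + 1):-1]
    latest = weekly_counts[-1]
    # Floor the spread so a very steady baseline does not turn small dips into alerts.
    spread = max(stdev(baseline), min_spread)
    z = (latest - mean(baseline)) / spread
    return z <= threshold, z


# Invented example data, for illustration only.
students = {
    "A": [12, 14, 13, 15, 3],  # usually active, sharp drop in the latest week
    "B": [2, 3, 2, 3, 2],      # consistently low activity, but stable
}
for student_id, counts in students.items():
    flagged, z = flag_behavior_change(counts)
    print(student_id, flagged, round(z, 2))
```

With these invented data, student A is flagged and student B is not, whereas a single cohort-wide cut-off such as "fewer than five events this week" would flag both. The within-student comparison is only one possible design choice, and it still says nothing about why the recorded behavior changed.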

In addition to potentially being able to flag wellbeing or welfare issues, learning analytics applications can themselves pose a risk to wellbeing. It is recognized that students have a range of emotive responses to dashboards and do not always respond in the most appropriate manner, with some students worrying unnecessarily (Bennett, 2018). Staff may not be prepared for these responses, and introducing an additional system that staff are expected to use can add to their workload, harming their wellbeing.

Learning analytics in the United Kingdom moved from pilot systems to a full service provided by Jisc in Summer 2018 (Jisc, 2019). As this is now an offering to HEIs, and given the potential shortcomings mentioned above, two questions have gained further relevance and urgency: has the #StepChange recommendation been incorporated into these applications, and has any consideration been given to wellbeing in relation to the introduction and use of learning analytics?

Student Support and Learning Analytics

Student Support

The nature and structure of student support can vary greatly amongst and within HEIs. Earwaker (1992, p. 95) identifies the need for HEIs to make strategic decisions about the nature of the support, including:

• Is the student support to be seen as preventative or as a cure?

• Is the HEI prepared to take initiatives or only respond to expressed needs?

• Is the support provision understood to be integral to the educational task, or ancillary to it?

To understand whether recommendation 2.5 has been adopted, we need to understand the nature of the student support offered by HEIs. This will provide a framework for analyzing the nature of the support provided at the HEIs studied and whether the recommendation is aligned with current learning analytics (LA) implementations.

Student support is affected by micro-political perspectives, including the current focus of the HEI’s energy and related discourse, the HEI’s agenda (both public and private), and the position of student support in relation to access to resources. The levels within the institution can be considered as Macro (whole institution), Meso (department or course/subject team) or Micro (individual academic) (Thomas et al., 2006). These levels affect policy development at the institutional level, where and by whom support is provided, and the nature of the provision itself at the individual department level.

In the United Kingdom HEIs are organized as a set of academic units (Schools) and central administrative units (Professional Services). Schools are further disaggregated into Faculties with associated Departments.

• For the purposes of this report, an Advisor is defined as either a Personal Tutor/Faculty Advisor or a member of Professional Services staff who provides specialist advice and guidance to students, for example Careers Officers, Academic Support or Wellbeing Professionals. Advising is defined as the activity undertaken by these staff members in supporting students personally and academically.

• Models for delivering student support can be categorized into three organizational models: Centralized, Decentralized, or Shared (Pardee, 2004). However, these models specify neither the nature of the support nor who provides it.

• In the centralized model, all advisors are located in one academic or administrative unit. In contrast, in the decentralized model all advisors are located within their respective academic departments. In some institutions, decentralized support is provided solely by a department advisor to whom the student is assigned. In the United Kingdom, this is usually an academic staff member from the student’s discipline in the role of Personal Tutor. In the Shared model, some advisors meet with students in a central administrative unit, while others meet them in their academic department. This has also been referred to as the Hybrid professional model (Thomas et al., 2006). The arrangement most commonly seen within United Kingdom HEIs is a combination of faculty-based Personal Tutors and central Professional support.

Advising may be provided by a specific type or a combination of Professional, Faculty (academic staff) or Peer advisors (Migden, 1989). Not all advisor types are offered by all HEIs.

Professional Advisors

Professional advisors are professionally trained staff whose provision is centered on academic or welfare services and whose interaction with students is predicated on student need. Students will often see different advisors at different times, depending on their needs at the time and the expertise of a particular advisor. This potentially limits the development of staff/student relationships, as the majority of students are likely to see a number of different specialists for different needs throughout their time at the institution (Thomas et al., 2006).

Faculty Advisors

• Faculty Advisors are members of teaching staff within an academic unit (usually within a faculty or department) who advise students. Their function can vary widely within and between institutions, and in the United Kingdom they are commonly associated with the role of Personal Tutor.

In this section we discuss the student perception of Personal Tutors and the different types of support provided by Faculty Advisors: Academic, Pastoral and Developmental.

Students perceive the role of personal tutor as providing:

• Academic feedback and development,

• Information about processes, procedures, and expectations,

• Personal welfare support,

• Referral to further information and support,

• and developing their relationship with the HEI and a sense of belonging (Thomas et al., 2006).

Academic, Pastoral, or Developmental support may be provided within the academic unit; however, a given academic unit may provide only one or a combination of these types of support (Mynott, 2016). This can be at odds with students’ perceptions of what should be available.

• The aim of academic support is to support students to gain academic success and their desired qualification (Mynott, 2016). This may take place on a one-to-one basis or as part of a tutorial group. Where tutorials are integrated into the curriculum, students are required to attend a timetabled module with their tutor group. These sessions may incorporate learning skills with information about the institution and higher education more generally. As students are required to attend, it is assumed that all students potentially benefit, and the process enables relationships to develop between students, staff, and peers (Thomas et al., 2006). Some institutions have an academic support-only model in which staff are expected to immediately direct students to centralized services and trained counselor provision for any additional support needs (Smith, 2005).

• Pastoral support in the United Kingdom is often seen as responsive and reactive, centered around crisis intervention (Smith, 2005; Thomas et al., 2006). A specific staff member is assigned to each student and may provide a combination of personal and academic support. Students are often required to arrange meetings and may only do so when they have a problem (Thomas et al., 2006). The support provided may be unstructured and may not meet the students’ expectations or needs. Some approaches to this model are more proactive, requiring meetings at regular intervals throughout the year, and these meetings may be structured.

• Developmental support can include structured personal development planning and may include employability skills. Many aspects are discipline specific (Mynott, 2016).

Peer Advisors

Peer advising programs utilize undergraduate students to provide guidance, support, and referrals for other undergraduate students (Kuba, 2010). They are usually implemented in addition to existing advising provision.

There are a number of advantages to implementing a peer advisory service (Koring, 2005), these include:

• Versatility.

• Compatibility with pre-existing advising programs.

• Sensitivity to student needs.

• Ability to extend the range and scope of advising times and venues when advising is not usually available.

These are in addition to supporting key institutional priorities such as student retention and persistence, promoting student success, and helping students to meet their career goals (Zahorik, 2011). However, peer-led programs have a number of limitations, including difficulties for the advisors in balancing their advisor and student roles, a potential lack of objectivity regarding teaching staff and courses, a lack of knowledge of courses or programs of study, and a lack of grounding in student development theory (King, 1993).

Programs such as Psychology Peer Advising (PPA) at James Madison University and the College of Natural Sciences Peer Advising at Michigan State University illustrate how these limitations can be addressed. In both programs the student advisors undertake structured training and receive ongoing support/mentoring. For the PPA program, this involved the student advisors enrolling in a 2-credit class each semester. In addition to meeting with students, the peer advisors also undertake additional tasks such as producing support materials (Koring, 2005; DuVall et al., 2018). It should be noted that these programs are quite involved and require levels of funding that may not be available to other institutions.

The literature notes (King, 1993; Koring, 2005; Kuba, 2010; Purdy, 2013) that there are often high levels of trust between peer mentors and their mentees, and that this trust is easier to establish than between mentees and other advisor types. It is therefore important that the boundaries and limits of the role are clearly identified, and that training is provided so that peer mentors give accurate information and constructive feedback and know when a mentee should be referred to specialist provision (Kuba, 2010). Peer advisors can be useful supplements to, but not a replacement for, existing advisory systems.

Student Support and Data Usage

For Faculty and Professional advisors to provide timely and informed support to students, they need access to a wide range of data. This has resulted in increased use of dashboards across the sector to present data from a range of sources such as the VLE and student record systems (Lochtie et al., 2018). As it is common in the United Kingdom for Faculty Advisors not to teach their advisees, data are often used as the starting point for advisory meetings. However, as noted by Sclater (2017), this can add to the workload of already over-stretched staff. Increasingly, dashboards are the main user interface for learning analytics implementations.

Learning Analytics

Learning analytics are defined by The Society for Learning Analytics Research as “the measurement, collection, analysis and reporting of data about learners and their contexts, for purposes of understanding and optimizing learning and the environments in which it occurs” (Siemens and Gasevic, 2012).

In the United Kingdom, Jisc provides a national learning analytics service, which came into effect almost a year after the publication of the #StepChange report (Jisc, 2018). Almost all United Kingdom HEI implementations of learning analytics have been developed in partnership with Jisc, in either an advisory or a service provider capacity. The focus of these projects was learner analytics, developing models of learning engagement, with many of the pilot projects predominantly focusing on identifying at-risk students with regard to retention and progression.

Jisc, formerly the Joint Information Systems Committee, is a not-for-profit organization funded mainly by the United Kingdom government and universities. Before 2012, Jisc was directly funded by the United Kingdom Higher Education Funding Councils. It was formed to provide networking and specialist information services to the United Kingdom post-16 education sector, and it currently provides digital solutions and services to the United Kingdom Higher Education, Further Education and skills sectors (Who we are and what we do, 2020).

Development Frameworks

For the United Kingdom post-16 sector, and as part of their initial pilot project work, Jisc developed the Learning Analytics Discovery Toolkit and a Discovery Readiness Questionnaire. These are part of the onboarding process. The focus of the pilot projects and of the Jisc Learning Analytics service has been on identifying students at academic risk, whether in terms of retention or underachievement.

In addition to the Jisc process, there has also been the Erasmus+ funded SHEILA project, focused on creating a policy development framework, and the LA-Deck project, a deck of cards for learning analytics co-design created by a doctoral researcher at the University of Technology Sydney (CIC Editor, 2019).

Jisc Onboarding Process

The Jisc onboarding process for learning analytics consists of 5 steps:

1. Orientation.

2. Discovery.

3. Culture and Organization Setup.

4. Data Integration.

a. Live Data.

b. Historical Data and predictive modeling.

5. Implementation roll-out/planning. (On-boarding Guide | Effective Learning Analytics, 2018).

The Discovery Toolkit and Discovery Questionnaire form part of step 2 (Discovery) of this process. The toolkit has 5 stages of activity:

• Goals for learning analytics,

• Governance and leadership,

• Discovery questionnaire,

• Review areas that need development and create an action plan,

• and start to address readiness recommendations.

The Discovery questionnaire focuses on 5 key areas and consists of 27 questions. The key areas are:

• Culture & Vision.

• Ethics and legal issues.

• Strategy & Investment.

• Structure & Governance.

• Technology & data.

Questions in the Culture & Vision section focus upon the aims of the implementation, management support and institutional buy-in.

The Jisc onboarding process helps institutions to understand their aims for learning analytics and how to implement it, but it does not provide guidance on policy development.

Similarly, the LA-Deck cards focus on designing a learning analytics implementation but do not address policy, although the wild cards could be used for this purpose if desired (Prietoalvarez, 2018).

In contrast, the aim of the SHEILA project (an Erasmus+ funded program encompassing a team of 7 European universities and 58 associate partners) was to build a policy development framework that would assist European universities to become more mature users and custodians of data about their students as they learn online (About–SHEILA, 2018).

The SHEILA Framework

The SHEILA framework focuses on the development of learning analytics policies and consists of 6 dimensions:

1. Map political context.

2. Identify key stakeholders.

3. Identify desired behavior changes.

4. Develop engagement strategy.

5. Analyze internal capacity to effect change.

6. Establish monitoring and learning frameworks.

Each dimension has associated actions, challenges, and policy considerations (SHEILA-framework_Version-2.pdf, 2018), and the framework was designed to be used as part of an iterative process. As it was designed to aid the development of learning analytics policy, its dimensions could also be used as a starting point for identifying key features of existing learning analytics policies. If learning analytics is to be aligned with student wellbeing and mental health activities, the relevant stakeholders (e.g., student support teams) and expected outcomes should be identified as part of dimensions 2 and 3.

Implementations and Interventions

Current applications of learning analytics include early alert and student success (some of these applications focus on improving student outcomes (grades) whilst others focus on student retention and progression), course recommendation, adaptive learning, and curriculum design (Sclater, 2017). At present in the United Kingdom the focus is on early alert and student success, which may in part be due to the growing body of research investigating the relationship between student engagement and student outcomes.

Engagement can be characterized in three ways: Behavioral, Emotional and Cognitive. Behavioral engagement is associated with participation in academic, social and extra-curricular activities, and with students’ academic success. Emotional engagement is associated with students’ feelings about and responses to their educators and place of study, and is thought to affect students’ retention and willingness to study. Cognitive engagement, by contrast, is aligned to the idea of investment: the willingness to engage with cognitively complex tasks and ideas, exerting the extra effort required (Paris et al., 2016).

In the context of learning analytics, student engagement refers to behavioral engagement: the recorded student interactions with the institution’s systems and services, e.g., VLE activity, attendance at a lecture and use of library services. Another factor that may contribute to the current focus on early alert and student success is the Higher Education Commission’s recommendation that “all institutions should consider introducing an appropriate learning analytics system to improve student support and performance.” (Shacklock, 2016, p. 4). However, there has been some recent debate about whether we are measuring engagement or attention. These are quite different things, and we need to be clear about what we are measuring. Much of the data that we use for measuring engagement can be considered a measure of attention: we know students have interacted with something, but we do not know whether, or to what extent, they have engaged with it. This should be taken into account when looking at the data captured by these systems and how we choose to use it (Thomson, 2019).

Much of the published research to date has focused on the development of learning analytics models and on determining their potential usefulness in the context of teaching and learning; relatively little has been written about the resulting interventions and their effectiveness with regard to student academic outcomes and changes in staff advising and student learning behaviors. The most recent review of learning analytics interventions in higher education (Wong and Li, 2019) covers 24 case studies published between 2011 and 2018, 13 of which originated in the United States. Previous reviews of learning analytics interventions covered fewer articles: Na and Tasir (2017) reviewed 13, of which 6 reported empirical intervention practices, and Sonderlund and Smith (2017) found only 11 articles that evaluated the effectiveness of the intervention, of which only 2 were identified as having “Strong” research quality with regard to methodology. Overall, the studies found that the interventions had a positive effect; however, some studies reported negative effects. These reviews highlight the lack of both quantity and quality in evaluations of interventions undertaken as a result of learning analytics. Given the limited evidence base for the effectiveness of current practice, should learning analytics be extended into areas that are more contentious or more complex to model? At present we risk affecting students negatively through inadequate or inappropriate interventions, which may have welfare and wellbeing implications for those students. New analytics or more complex models should not be developed until there is a sound evidence base for the impact of these systems and the effectiveness of the associated interventions.

Visualizations of learner data are the most common form of intervention and a key part of almost all systems, but they remain an active area of research in learning analytics and can have both positive and negative effects. Most visualizations, in the form of dashboards, are deployed to enable staff to identify students at academic risk, obtain an overview of course activity and reflect on their teaching (Verbert et al., 2013).

Although it has been found that early dashboard usage by students is related to academic achievement later in the academic year (Broos et al., 2019), it is recognized that students have a range of emotive responses to dashboards and do not always respond in the most appropriate manner, with some students worrying unnecessarily (Bennett, 2018). In addition, aggregate data visualizations may over- or underestimate the complex and dynamic underlying engagement of learners with different attitudes, behaviors and cognition (Rienties et al., 2017).
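The concern about aggregate visualizations can be made concrete with a toy example. The sketch below uses invented weekly activity counts for two hypothetical students (illustrative data, not from any study cited here): one student's activity rises while the other's falls, yet the week-by-week cohort average, of the kind a course-level dashboard might plot, stays flat. The function names are hypothetical, and the "trend" is deliberately crude (last week minus first week).

```python
from statistics import mean

# Invented weekly activity counts for two hypothetical students (illustration only).
weekly_activity = {
    "student_1": [4, 6, 8, 10, 12],   # activity increasing
    "student_2": [12, 10, 8, 6, 4],   # activity decreasing
}


def cohort_average(activity):
    """Week-by-week mean across students, as a course-level dashboard might show."""
    weeks = zip(*activity.values())  # group each week's counts across students
    return [mean(week) for week in weeks]


def trend(series):
    """Crude per-student trend: last week minus first week."""
    return series[-1] - series[0]


print("cohort average:", cohort_average(weekly_activity))
for name, series in weekly_activity.items():
    print(name, "trend:", trend(series))
```

A view built only on the cohort average would show no change, even though both students' recorded behavior changed substantially in opposite directions.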

Research is currently being undertaken in the United Kingdom on curriculum design (the design of programs of study or of individual modules with a view to increasing teaching effectiveness) and on the intelligent campus. This is also an emerging theme within the international learning analytics research community, as indicated by the theme “Capturing Learning and Teaching” listed in the call for papers for the 2020 Learning Analytics and Knowledge conference (10th International Learning Analytics and Knowledge (LAK) Conference, 2019). The intelligent campus is one “where data from the physical, digital and online environments can be combined and analyzed, opening up vast possibilities for more effective use of learning and non-learning spaces” by staff and students (Owen, 2018). It is envisioned that learning environments will be optimized for student engagement (e.g., temperature and lighting) and that resource usage and allocation will be adjusted to reduce waste and improve timetabling.

Much like student support, the nature and purpose of learning analytics implementations vary between institutions. They are shaped by the political context of the implementation and by institutions’ capacity to effect change.

Learning Analytics and Student Wellbeing

The mental health and wellbeing of young people is an increasingly important topic with regard to United Kingdom government policy. This has included the formation of the Children and Young People’s Mental Health Taskforce, which produced a number of reports and recommendations (Improving mental health services for young people–GOV.UK, 2015). There have since been a number of studies relating to mental health in schools and colleges (Supporting mental health in schools and colleges, 2018). In 2016 UUK adopted mental health as a proactive policy priority, leading to the publication of the #StepChange framework in 2017, which is currently under review. December 2019 saw the launch of the initial version of the University Mental Health Charter (Student Minds, 2019), a collaboration between UUK and the charity Student Minds.

As reported by Wong and Li (2019), students’ behavioral data, online learning, and study performance were amongst the most commonly used data sources along with demographic data. It is the behavioral data that is of interest regarding student wellbeing.

In 2018, Jisc published a report on the opportunities presented by learning analytics to support student wellbeing and mental health (Hall, 2018). The report predominantly focused on students with poor attainment and/or engagement; it did not address potential issues with well-performing and/or highly engaged students who may be at risk of burnout or maladjusted perfectionism. However, the report does identify the need for a broader range of data sources and for a whole-university approach. This has started to become an area of active learning analytics research in the United Kingdom, and in November 2018 Universities United Kingdom hosted the roundtable meeting “Mental health in higher education: Data analytics for student mental health”.

In addition, the recently published update to the StepChange framework, Stepchange: Mentally Healthy Universities, notes that “Universities are extending learning or staff analytics to identify difficulties or encourage positive behaviors” (Universities UK, 2020).

As reflected in the WHO definition of mental health: “a state of well-being in which every individual realizes his or her own potential, can cope with the normal stresses of life, can work productively and fruitfully, and is able to make a contribution to her or his community.” (Saxena and Setoya, 2014, p. 6), wellbeing is multi-faceted and is best considered over multiple domains (Kern et al., 2015).

An example of this from positive psychology, the scientific study of human flourishing and of the strengths and virtues that enable us to thrive, is Seligman’s PERMA model of flourishing (Seligman, 2012):

• P: Positive Emotions – feeling good.

• E: Engagement – psychological connection to activities and organizations.

• R: Relationships – feeling socially integrated.

• M: Meaning – purposeful existence.

• A: Achievement – a sense of accomplishment.

Although individuals may have differing levels of wellbeing for each dimension, they are interrelated. To thrive, and to meet the WHO definition of mental health, it is important to have a good level of wellbeing in each of the dimensions.

By considering and identifying the level of wellbeing in each of these dimensions, HEIs could better meet the ongoing and changing wellbeing needs of their student population. This aligns with the idea of positive education, that is, education for both traditional skills and for happiness (Seligman et al., 2009; Kern et al., 2015). It also aligns with the recommendation in the updated Stepchange framework that institutions "review the design and delivery of the curriculum, teaching and learning to position health gain alongside learning gain" (Universities UK, 2020, p. 14).

Returning to the 2017 #StepChange recommendation: “Institutions are encouraged to align learning analytics to the mental health agenda to identify change in students’ behaviors and to address risks and target support.” (Universities UK, 2017), given the complexities of human wellbeing, how appropriate are learning analytics applications for identifying difficulties? Are they able to provide actionable insights?

As previously noted, one of the main data sources for learning analytics applications is behavioral data. The field of Applied Behavior Analysis defines behavior as anything an individual does when interacting with the physical environment. Behavior is influenced by environmental factors; important factors include:

• the context in which the response occurs,

• motivational factors,

• antecedents that signal which responses will be successful,

• and the consequences or outcomes of responses that influence whether they will recur in the future (Fisher et al., 2013).

Although students may demonstrate the same behavior through their interactions with university systems such as virtual learning environments (e.g., undertaking an online quiz), the factors influencing why they have done so may vary widely: for revision, because it is a required activity, to improve their mark, and so on. This has implications for any models developed from the data. A meaningful analysis requires an understanding of the students and their situation, together with careful analysis and handling of any identified gaps in the data, as opposed to blindly throwing data into a statistical model (Shaffer, 2017; Leitner et al., 2019). This calls into question the validity of existing learning analytics data sources, not just from a wellbeing perspective, but also as a measure of learner engagement (Leitner et al., 2019).

Given that the validity of existing learning analytics data sources is questionable, more expansive and granular data sources would be required to understand the motives behind student behaviors. As wellbeing is multi-dimensional, more intrusive data capture methods would likely be required for learning analytics to be more effective in identifying wellbeing-related changes in student behavior.

Studies focusing on the identification of epistemic emotions, the emotions related to the cognitive processing of information and tasks that students encounter in a learning environment, illustrate what such methods involve. Detection methods for epistemic emotions may include:

• asking students to self-report feelings,

• measuring physiological changes,

• and students’ behavioral expressions (Arguel et al., 2019).

In addition, social network analysis of discussion forum interactions within online learning environments has been identified as a method of identifying potentially isolated students (Dawson, 2010). However, this is only a small part of how students interact, and to have a more representative picture, we would need to incorporate more data sources.
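To make the social network analysis idea concrete, the following is a minimal sketch of the general approach, not the method used by Dawson (2010). It assumes a hypothetical list of forum reply pairs (replier, original author) and flags enrolled students with few or no reply ties. Counting distinct contacts (undirected degree) is an illustrative choice; the identifiers, data, and threshold are all invented for the example.

```python
from collections import defaultdict


def reply_degree(replies, enrolled):
    """Count distinct forum contacts per student from (replier, author) pairs.

    `replies` is a hypothetical list of (replier_id, original_author_id)
    tuples extracted from a discussion forum; `enrolled` is the set of
    student ids. Ties are treated as undirected: a reply links both students.
    """
    contacts = defaultdict(set)
    for replier, author in replies:
        if replier == author:
            continue  # replying to your own post creates no social tie
        contacts[replier].add(author)
        contacts[author].add(replier)
    # Students who never posted, or were never replied to, get a degree of 0
    return {student: len(contacts[student]) for student in enrolled}


def potentially_isolated(replies, enrolled, max_degree=0):
    """Return (sorted) students whose number of forum contacts <= max_degree."""
    degrees = reply_degree(replies, enrolled)
    return sorted(s for s, d in degrees.items() if d <= max_degree)


# Hypothetical toy data for illustration only
enrolled = {"s1", "s2", "s3", "s4", "s5"}
replies = [("s1", "s2"), ("s2", "s3"), ("s3", "s1"), ("s4", "s4")]
print(potentially_isolated(replies, enrolled))
```

As the paragraph above notes, forum activity is only a small part of how students interact, so a zero-degree flag here only signals absence from one channel, not isolation as such.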

At what point do these methods step beyond what is acceptable and come to be perceived as needless surveillance? A study from the Open University examining its implementation of Predictive Learning Analytics over a 4-year period highlighted the limited usage of the system and a number of challenges. The highest level of engagement, 56.5%, was from the Faculty of Business and Law. Most staff access to the service was linked to assignment submission deadlines and to students who were "silent" or raising concerns. This pattern of usage limited the opportunities to identify issues affecting students who were doing well or were active on the system, and so limited the potential usefulness of the system. Challenges included difficulties in interpreting the data presented, which introduced an additional burden for staff. Would academic staff who are already uncomfortable in their role as personal tutors engage with a system that could potentially expose student wellbeing issues? Or should access be limited to already stretched student wellbeing services?

In addition, as some groups of students are more likely than others to experience mental ill-health or poor wellbeing, do we risk stigmatizing these students through this monitoring and the interventions that are put in place? As argued by Prinsloo and Slade (2017), it is therefore important that an ethics of justice and care is developed transparently and in conjunction with all stakeholders (Herodotou et al., 2020). This is particularly important as most interventions and innovations lead to unexpected and potentially negative consequences (Rienties et al., 2017; Bennett, 2018).

Summary

The literature shows that there is a great deal of variety in the student support landscape with regard to the structure and nature of support given to students. There is more homogeneity in the United Kingdom's approach to learning analytics, with a focus on early alert and student success, but there will be divergence in the nature of the implementations and associated interventions.

These implementations and interventions will impact upon institutions' student support models, as they inform who has access to the learning analytics system and for what purpose.

The complex nature of both learning and wellbeing calls into question the validity of the data sources currently used in learning analytics, and raises many questions about the recommendation to use learning analytics tools to identify changes in student behavior related to wellbeing. However, as identified above, the very use of these tools can impact the behaviors and wellbeing of both staff and students. It is therefore important to consider whether, and to what extent, this has been taken into consideration and features in the associated policies.

The rest of this paper aims to identify, firstly, to what extent, if any, the 2017 #StepChange recommendation "Institutions are encouraged to align learning analytics to the mental health agenda to identify change in students' behaviors and to address risks and target support." (Universities UK, 2017) has been met. Secondly, as the use of these tools can impact the behaviors and wellbeing of staff and students, it identifies to what extent, if any, this has been taken into account in the development of both student support and learning analytics policies.

Methodology

This project identifies the current nature and purpose of student support and learning analytics implementations, as well as any synergies or conflicts between these two systems. The aim is to establish if and how the two systems can be aligned to better support student wellbeing and mental health, as recommended in the UUK #StepChange report. A qualitative approach was undertaken, comprising a thematic analysis of student support policy documents using NVivo, a review of learning analytics policy documents, and an online survey designed to capture information about data usage in the student support process. These were conducted during the academic year 2018/19.

Student support implementations vary not only between institutions but also within them. For the purposes of this work, I focused on policies at the macro, institutional level to develop an understanding of service implementation.

United Kingdom Higher Education Institutions were invited to participate in the study via a combination of an open call for participants that was advertised via a blog post, mailing lists and tweets, and targeted emails to institutions that were known to have an interest in learning analytics via their involvement with the Jisc Learning Analytics Network (as either host or presenting HEIs). Details of the recruitment process can be viewed in Supplementary Appendix A.

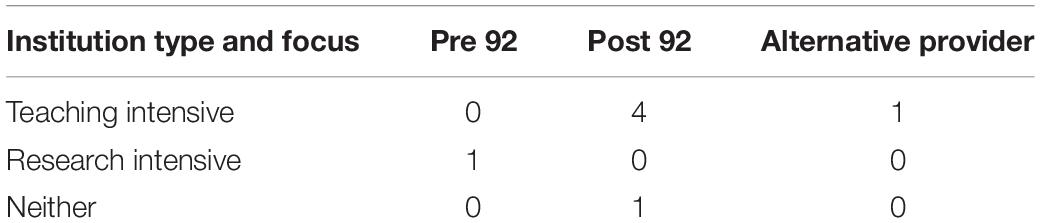

All participating institutions were required to complete a registration form and were recruited between December 2018 and February 2019. Seven English HEIs registered to take part in the study, four of which have learning analytics implementations that are predominantly used to support students' academic development (see Table 1).

Student Support Policies

The analysis of student support policies was conducted as a thematic analysis, using the qualitative data analysis computer software package NVivo 11. A top-down approach was taken, by which pre-identified initial themes from the literature review were used to define the initial nodes and sub-nodes for the analysis. The list of nodes and sub-nodes can be viewed in Supplementary Appendix B.

During the analysis, the researcher recognized that the pre-defined themes used for analysing the student support and wellbeing policies were based on a review of predominantly personal tutoring literature. As a result, these may not be suitable for all the policy documents. Including a broader range of student support literature, covering academic and wellbeing causes for concern and support mechanisms for students with disabilities, would have provided a broader theoretical grounding for the analysis, enabling all the policies to be analyzed effectively.

Some additional nodes were added to capture additional information that was considered important e.g., referral to an additional policy/process. The final list of nodes and sub-nodes used for coding can be viewed in the Supplementary Appendix B. Each HEI was considered as a case for the purposes of the analysis with the following recorded for each institution: Name, Type and Focus.

The primary aims of this analysis were to:

• Identify the support model used;

• Identify whether student support was reactive or proactive;

• Identify what form the support took:

∘ The types of advisor,

∘ The primary type of advising.

Additionally, each policy document was reviewed outside of NVivo to identify specific staff roles, services, outside agencies and other policies explicitly referenced. The purpose of this activity was to identify any relationships between the student support policies and where applicable, learning analytics policies. A full list of the shared policies can be viewed in Supplementary Appendix B.

Learning Analytics Policies

Not all of the institutions in the study have learning analytics implementations. There are fewer policies developed for learning analytics compared to student support where there is usually a suite of associated policies. However, learning analytics policies could be considered as part of the suite of student support policies where the implementation is to form part of the personal tutor support. As there were notably fewer learning analytics policy documents than student support policies it was decided that a thematic analysis would not be conducted for these documents.

As with the student support policies, each learning analytics policy document was reviewed to identify specific staff roles, services, outside agencies and other policies explicitly referenced. The purpose of this activity was to identify any relationships between these policies and the student support policies.

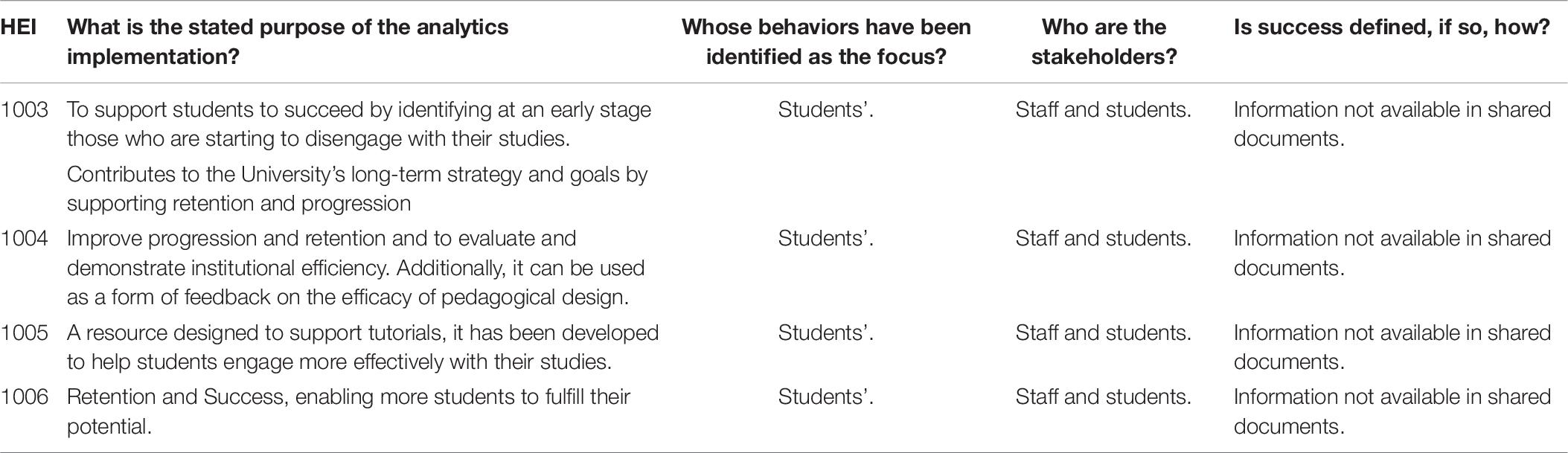

As the SHEILA framework is designed to aid the development of learning analytics policies, I considered it a good starting point for analysing the policies, effectively using the framework prompts to reverse engineer the policy documents. To devise key questions for reviewing the learning analytics policies, prompts from dimensions 2 (Identify key stakeholders), 3 (Identify desired behavior changes) and 6 (Establish monitoring and learning frameworks) of the SHEILA framework (SHEILA-framework_Version-2.pdf, 2018) were used.

These dimensions were selected as their Action prompts were the most relevant to the policy analysis tasks. The prompts were:

• Dimension 2: Write down the people that will need to be involved in the design, implementation, and evaluation phases.

• Dimension 3: Write down the changes that you would like learning analytics to bring to your institutional environment or particular stakeholders. Why are these changes important to your institution?

• Dimension 6: Define success indicators and consider both qualitative and quantitative measurements.

These prompts were adapted and the key questions developed were:

• What is the stated purpose of the analytics implementation?

• Whose behaviors have been identified as the focus?

• Who are the stakeholders?

• Is success defined, if so, how?

Additional policy documents were identified on the websites of the participating institutions to address some of these questions.

Online Survey

Analysis of student support policies identified that at least one policy from each of the participating institutions included the term "monitoring," and for 6 of the institutions at least one policy contained the term "data." However, it is not clear from these policies what the data is or how it is used. As only 4 of the participating institutions have a learning analytics implementation, a survey was developed to build an understanding of this data usage.

An online survey was designed and distributed to capture whether data was being used by personal tutors and student support services and, if so, what data, how it was obtained, and whether and with whom it was shared. For the institutions with learning analytics implementations, it was hoped that this would provide greater insight into how they are used to support students, by whom and for what purposes. The survey contained a combination of closed and open questions.

A link to the survey was sent via email invitation to the contact identified via the registration process. A generic invitation was initially sent, followed by a repeat invitation 20 days later. A reminder was sent a further 13 days later.

Six responses were recorded for the survey; however, it was completed by only 3 institutions.

Analysis – Policies

The #StepChange framework recommendation 2.5 states that “Institutions are encouraged to align learning analytics to the mental health agenda to identify change in students’ behaviors and to address risks and target support.” (Universities UK, 2017). A key finding from analysing the policies shared with this research project from the participating Higher Education Institutions is that there are three elements to student support and wellbeing, each with different institutional behaviors. These elements are day-to-day support, wellbeing cause for concern and academic cause for concern. For the purposes of this report day-to-day support refers to the routine support available to all students such as Personal Tutor sessions and Careers advice. Where a student is at risk of academic failure, an academic cause for concern process may be triggered. Wellbeing cause for concern processes may be triggered if a student is presenting behaviors that may result in a threat to their health or the wellbeing of others e.g., symptoms of mental ill-health or substance abuse. If the #StepChange recommendation has been or is starting to be adopted, then you would expect to find learning analytics referred to in the corresponding policies for the purpose of enhancing student wellbeing and mental health provision.

Day-to-Day Support

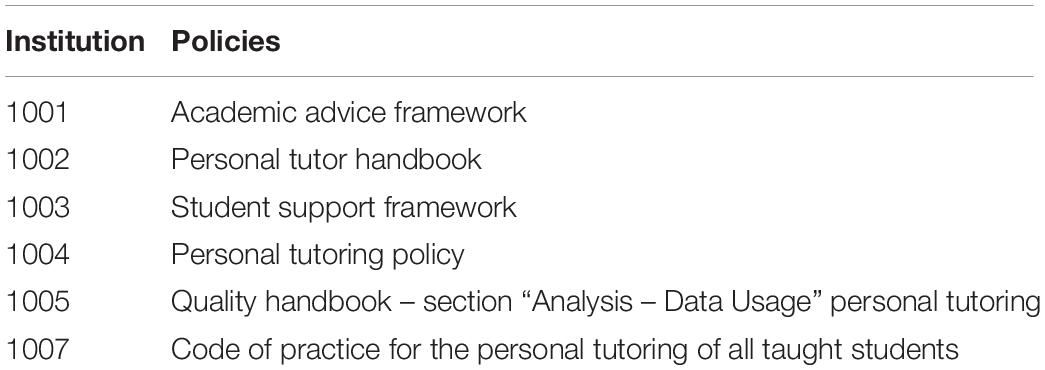

Personal tutoring is part of the day-to-day/routine support of students in HEIs. In the majority of the participating institutions this was provided by academic staff within faculties, with specialist support available centrally via professional staff: a hybrid model of student support. Only one institution had a dedicated tutoring team. These institutions and policies are given in Table 2.

The primary role of the tutors/advisers in these institutions is academic coaching and mentoring, however, they are also expected to provide pastoral support. This is illustrated in the following quote:

“The primary purpose of the Academic Adviser role is to proactively monitor a student’s academic performance and to act as a named contact that a student can approach to discuss their academic, personal and professional development during their time studying at the university1.”

As there is an expectation of providing pastoral support, the personal tutor is often informed of student issues relating to their wellbeing or welfare. If learning analytics were being used to identify wellbeing concerns, staff in these roles would be expected to make initial contact with students (Ahern, 2018).

In some institutions, as illustrated below, there is more emphasis on the academic and professional development of students and tutors are encouraged to refer students to professional colleagues sooner rather than later. Having such an emphasis may deter staff and students from discussing pastoral concerns as these are immediately referred to professional colleagues (Smith, 2005). This is also an illustration of the hybrid professional model of student support.

“Tutors will work in partnership with their students to encourage academic and professional development and include consideration of a student’s progress, including formative or summative assessment feedback, and where relevant, exam feedback in the summer, helping students understand their feedback and prepare for assessments or reassessments, or their transition into the next stage of their career. …. Tutors will refer students directly to central and specialist support services to ensure students receive the correct support as speedily as possible, for example, for support for mental health difficulties. If there is any doubt what the most appropriate service is, they will refer to the Student Support Officer for further guidance2.”

With regard to learning analytics, the terms "analytics" and "dashboard" only appear in the personal tutoring policy for one institution3. It specifies that "The … Student Dashboard is a resource designed to support tutorials. It contains important information useful for different points in the academic year. Where possible and appropriate, staff should use the resource (including recording notes and agreed actions)."

In addition to outlining the role of the tutor/adviser, a number of the policies also specify student responsibilities as tutees. These include notifying their tutor if they are experiencing any academic, health or personal problems that are affecting their academic work and actively engaging with any additional support services4.

Four of the participating institutions specify a minimum number of meetings between tutors and tutees in an academic year, with some additionally specifying how many of these should be face-to-face. For the majority of sessions, there is no specification of the format or scheduling for these meetings, it is for individual departments to decide.

“Each student will have a timetabled meeting with their tutor within 3 weeks of the start of study (whether 1-1 or in a small group tutorial), with attendance recorded5.”

As shown below, the requirements can vary dependent on the students’ stage of study.

“• First Years.

• Students are given the name of their personal tutor (and Subject Advice Tutor where relevant), with whom they will meet during the first week.

• Students should normally meet with their personal tutors on four occasions during the year.

• For subsequent undergraduate years:

• Students should normally engage with their tutors on three occasions in each year6.”

However, at the institution with a dedicated tutoring team, students have timetabled group tutorials and are able to book one-to-one tutorials with their tutor or attend a Personal Tutor Drop-In session, similar to the office hours offered at the other institutions.

Wellbeing Causes for Concern

In contrast to the day-to-day policies, policies pertaining to wellbeing or academic cause for concern outline specific protocols.

Causes for concern policies and processes are either wellbeing, including mental health, or academic focused. Wellbeing cause for concern processes are often linked to Fitness to Study/Practice policies and procedures.

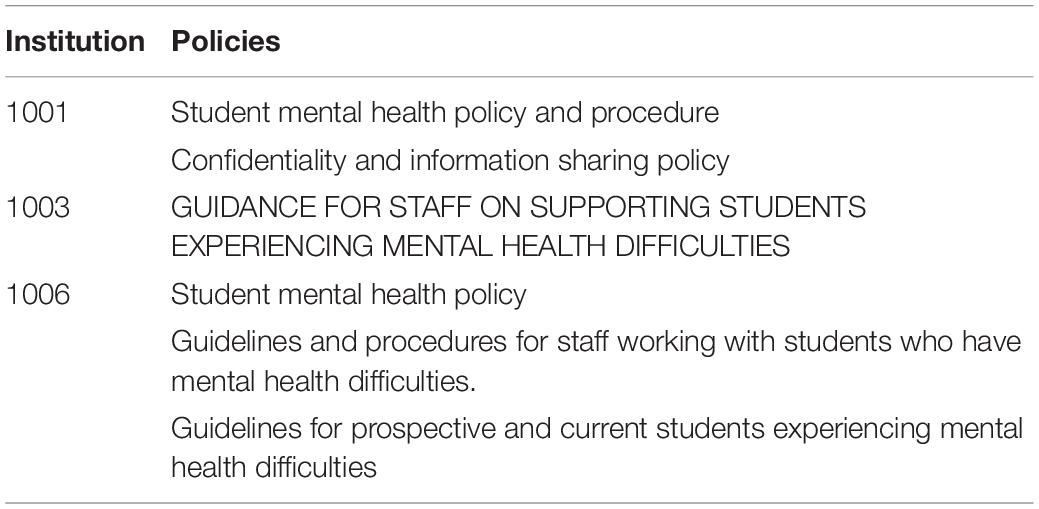

Policies that fall into this category also include specific guidance relating to student mental health. Of the participating institutions, three shared policies explicitly related to student mental health. These institutions and policies are given in Table 3.

Additionally, institutions 1004 and 1007 shared policies related to students with disabilities and long-term health conditions.

Participating institution 1004 shared two additional policies:

• Students Giving Cause for Concern,

• Health, Wellbeing and Fitness to Study Policy & Procedure.

The second policy is of a similar nature to policies from 1002 (Study and Wellbeing Review Policy and Procedures) and 1007 (Support to Study Policy). All three policies present a clear procedure related to 3 levels of concern. Required actions and timelines for each level are specified in addition to possible outcomes and any conditions for automatic escalation.

Academic Causes for Concern

Institutions 1003 and 1007 shared policies relating to students considered to be at academic risk. These policies are enacted if a student does not engage with their program of study in the required manner.

The institution 1003 “Procedure for Students at Risk of Academic Failure” policy, 1003-04, is referenced explicitly in their learning analytics policy “Student Engagement Policy” where students have not met the engagement requirements specified. This is the only example of where a student support policy is referred to in the learning analytics policies analyzed. The procedure for Withdrawal of a Student by the university will be triggered as a result of evidence of one or more of the following:

• failure to attend lectures and/or other timetabled elements of a course;

• failure to submit work for formative or summative assessment;

• failure to engage in any other way with the requirements of a course (e.g., through Moodle);

• Referral from Stage 2 of the Fitness to Study Policy.

Institution 1007 is not currently engaged with learning analytics.

Where data is mentioned in these policies it is in regard to Data Protection regulations and the sharing of data with relevant agencies to facilitate student support. Data is not mentioned in the context of identifying concerns related to wellbeing or mental health, therefore there is no evidence to indicate that the #StepChange framework recommendation 2.5 is currently being implemented.

Analysis – Data Usage

With the exception of the personal tutoring policy of participating institution 1003, learning analytics and explicit data usage are not mentioned in any of the student support and wellbeing policies analyzed by this project.

Three of the participating institutions completed the online survey related to data sharing and usage, a response rate of 42.9%. Of these, 2 have an institutional learning analytics service. The institutions that completed the survey were 1001, 1005, and 1006, all of which are Post 92 and Teaching Intensive.
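The 42.9% figure follows from three completed surveys out of the seven institutions that registered for the study:

$$
\text{response rate} = \frac{3}{7} \approx 0.429 = 42.9\%
$$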

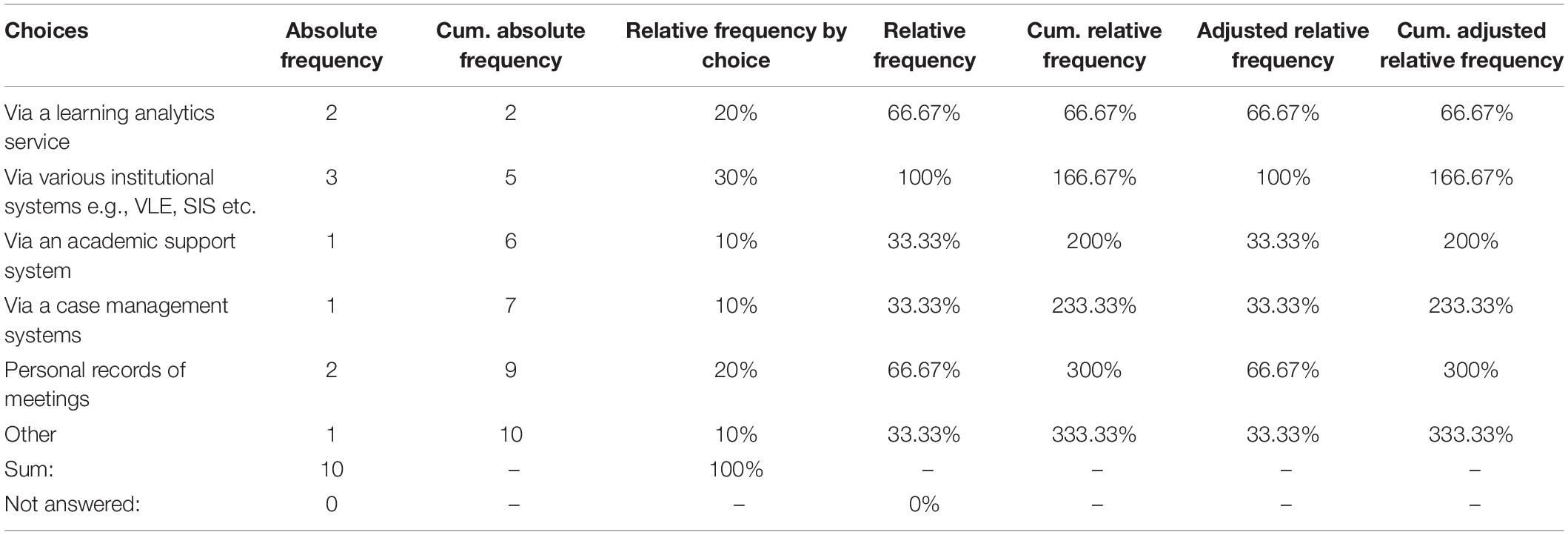

Outcomes of the survey show that for all institutions, academic/personal tutors use data to inform their tutoring. A range of data is used and is made available to tutors via a variety of methods (see Table 4).

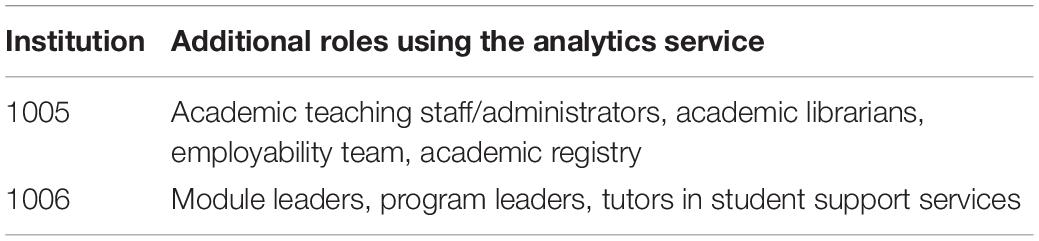

Where surveyed institutions had an institutional learning analytics service tutors were required to make use of the service and received training on how to interpret the data provided. Additionally, in these institutions student support services also had access to the learning analytics service. Other roles also had access to the service (see Table 5).

In addition, at the three institutions that completed the survey, data is shared between tutors and student support services. However, this tends to be from the tutor to student support services. Data related to interactions with student support services are only shared with tutors with student permission. At institution 1005, staff can refer students to student support services via the learning analytics service with the student’s consent. A confirmatory note will be left in the service for the tutor if student support services have successfully contacted the student. Full survey results can be seen in Supplementary Appendix C.

Although a lot of data is available to academic/personal tutors, this is primarily data relating to students' academic performance and demographics, available via the institution's student information system. Unless a student states that they are seeking support, or agrees to data about their visits to student support services being shared, the academic/personal tutor will be unaware of them. There is a tension between protecting students' privacy and providing effective support. Without data sharing it may be difficult for tutors to know whether a student is having non-academic difficulties, or difficulties that may be affecting their academic performance. As a result, guidance may be given or discussions held that negatively impact a student's wellbeing or mental health. For instance, a tutor may discuss concerns about academic performance with a student without knowing they are suffering from anxiety, and so exacerbate it; or, unaware of a student's financial concerns, may insist that the student attends a meeting or lecture that requires travel at peak times.

Learning Analytics

Of the participating institutions, four currently have a learning analytics implementation and associated policy(ies). Analysis of the policies shared and those publicly available against the SHEILA framework-based questions found that for all of the institutions, the aims of the service are to enhance student retention and attainment (see Table 6: Overview analysis of learning analytics).

The majority of these systems primarily focus on identifying students with low engagement who may be at risk of withdrawing early.

“8.1 The purpose of learning analytics at … is to:

a. enhance student retention, by alerting staff and individual students when a student is potentially at risk of early withdrawal7.” “The key driver for this is to support students to succeed by identifying at an early stage those who are starting to disengage with their studies8.” “Our primary focus therefore in using such data is to support students in their personal learning journeys toward degree attainment, concurrently maintaining our reputation as a student-centered university9.”

One institution aims to use learning analytics to enhance teaching quality: "Academic teams can use analytics about student activity (individual or cohort) as part of course review and re-design processes as well as potentially using analytics as a form of in-course monitoring and feedback10."

In addition, no mention is made of using learning analytics in relation to mental health to "identify change in students' behaviors and to address risks and target support." (Universities UK, 2017). For the institutions participating in this study, the main focus is on supporting students' academic success. Student academic success is defined as completing their course of study and/or obtaining a good degree outcome (Upper Second Class or higher).

The institutional learning analytics policies reviewed rarely reference the institutions' student support and wellbeing policies. Many of these policies have direct references to data collection notices, academic regulations and Tier 4 compliance policies. The policy from 1005 mentions their "Policy for crisis intervention – students causing concern/students at risk," but no link is provided to it. In addition to referencing their "Procedures for students at risk of academic failure" policy, 1003 also reference their "Exceptional Factors form" in their learning analytics policy.

A majority of these systems focus on low behavioral engagement, as this has been associated with a risk of academic cause for concern, but this focus is also particularly important for students with a known mental health condition. It should also be noted that poor attendance may be an indicator of low emotional engagement with the HEI. Nottingham Trent University (NTU) found that, among students with a known mental health condition, the figure was 19 percentage points higher for those whose engagement was Low (28%) than for those whose engagement was Good/High (9%). NTU also found that students with a known mental health condition were more likely to have 14-day non-engagement alerts (7%) than their peers without a declared disability (4%) (Foster, 2019).
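The rules behind NTU's alerts are not described here. As a minimal sketch only, assuming a hypothetical mapping from student id to the date of their last recorded interaction, a 14-day non-engagement flag of this general kind could be computed as follows. The function name, data shape, and dates are illustrative assumptions.

```python
from datetime import date, timedelta


def non_engagement_alerts(last_activity, today, window_days=14):
    """Flag students with no recorded activity for `window_days` or more days.

    `last_activity` maps a (hypothetical) student id to the date of their most
    recent recorded interaction, or None if nothing has been recorded.
    """
    cutoff = today - timedelta(days=window_days)
    flagged = []
    for student, last_seen in last_activity.items():
        # Flag if there is no activity at all, or the last activity is at
        # least `window_days` days before `today`
        if last_seen is None or last_seen <= cutoff:
            flagged.append(student)
    return sorted(flagged)


# Hypothetical toy data for illustration only
today = date(2019, 3, 15)
last_activity = {
    "s1": date(2019, 3, 14),  # 1 day ago
    "s2": date(2019, 3, 1),   # 14 days ago
    "s3": None,               # no recorded activity
    "s4": date(2019, 3, 2),   # 13 days ago
}
print(non_engagement_alerts(last_activity, today))
```

A rule like this only registers the absence of recorded activity; it says nothing about why a student has disengaged.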

However, low behavioral engagement (low levels of interaction with an institution's systems and services) should not be our only concern with regard to student wellbeing and welfare. From the information available about these systems, it is unclear whether over-engagement or unhelpful engagement can be identified. These are important to identify, as some students may be prone to overwork. Some may be over-engaging and high-achieving, while others may be over-engaging and have low or average achievement.
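None of the systems reviewed is described as doing this. As a hedged illustration of what identifying over-engagement could involve, the sketch below flags students whose weekly activity count is well above the cohort median. The data, the function name, and the threshold (a multiple of the median, chosen because the median is less distorted by a few very active students than the mean) are all assumptions, not a validated cut-off.

```python
from statistics import median


def possible_over_engagement(weekly_events, factor=3.0):
    """Flag students whose weekly activity exceeds `factor` x cohort median.

    `weekly_events` maps a hypothetical student id to a count of logged
    interactions in one week. The threshold is an illustrative assumption.
    """
    typical = median(weekly_events.values())
    threshold = factor * typical
    return sorted(s for s, n in weekly_events.items() if n > threshold)


# Hypothetical toy data for illustration only
weekly = {"s1": 40, "s2": 55, "s3": 35, "s4": 210, "s5": 48}
print(possible_over_engagement(weekly))
```

Activity volume alone cannot separate the high-achieving over-worker from the struggling one; distinguishing the two groups described above would require combining such a flag with assessment data, and neither source would reveal the reasons behind the behavior.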

The PISA 2015 survey (OECD, 2017) found that 95% of the 15-year-olds surveyed in the United Kingdom wanted to achieve top grades in all or most of their classes, 76% wanted to be one of the best students in their class, and 90% wanted to be the best whatever they do. This motivation is, however, coupled with schoolwork-related anxiety. Fifty-two percent of the surveyed students agreed that they get very tense when they study, and 72% reported feeling very anxious even if they are well prepared for a test. These concerns have been found to be reflected in the HEI student population. Study was found to be the primary cause of stress among students, coupled with pressure to obtain a high-class degree, as "Finding a job after university" is the second highest cause of stress reported by students (Not by Degrees, 2017).

An example of study stress and over-engagement was highlighted in the BBC 3 documentary Death on Campus (Byrne, 2017), in which a state school pupil had obtained a place at Oxford University. The student was struggling and had started to work all night on a regular basis just to keep up. Sadly, the student had not sought help and took their own life.

In addition to the volume of engagement, it is also important to be aware of what students are engaging with. Analysis I have previously undertaken of student VLE interaction data found that repeatedly taking a formative quiz was negatively correlated with outcome.
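The analysis referred to above is not described in detail. Purely to illustrate the mechanics, the sketch below counts each student's attempts at one formative quiz from a hypothetical VLE event log and computes a Pearson correlation between attempt counts and a hypothetical final mark. The data is invented and is not the data from that analysis; the log format and names are assumptions.

```python
from collections import Counter
from math import sqrt


def attempts_per_student(events, quiz_id):
    """Count how many times each student attempted `quiz_id`.

    `events` is a hypothetical VLE log of (student_id, activity_id) tuples,
    one tuple per recorded attempt or interaction.
    """
    return Counter(s for s, activity in events if activity == quiz_id)


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)


# Hypothetical toy data for illustration only
events = [("a", "quiz1"), ("b", "quiz1"), ("b", "quiz1"), ("c", "quiz1"),
          ("c", "quiz1"), ("c", "quiz1"), ("c", "quiz1"), ("a", "forum")]
marks = {"a": 72, "b": 65, "c": 48}
counts = attempts_per_student(events, "quiz1")
students = sorted(marks)
# Counter returns 0 for students with no recorded attempts
r = pearson([counts[s] for s in students], [marks[s] for s in students])
print(round(r, 2))
```

A cohort-level correlation like this identifies a pattern worth investigating; it does not explain why any individual student repeats the quiz.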

Additionally, some engagement may be due to factors other than teaching and learning. These could be welfare related: for example, students who stay overnight in the library may be doing so because they have issues with their accommodation or have become homeless.

Although the data used in these learning analytics systems is behavioral data, it is unclear from the information provided by the institutions how granular the data is and whether it is sufficient to identify behavior changes other than broad changes in engagement. Behavior changes are a known signifier of changes in wellbeing, so it is important to be able to identify changes in an individual's behavior.
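As a hedged sketch of what identifying a change in an individual's behavior could mean in practice, the example below compares a student's latest week of activity with that student's own preceding weeks (a z-score against a personal baseline), flagging both drops and spikes. The weekly-count input, baseline length, and threshold are illustrative assumptions, not taken from any of the institutions studied.

```python
from statistics import mean, pstdev


def behavior_change(weekly_counts, baseline_weeks=6, threshold=2.0):
    """Compare the latest week with the student's own earlier baseline.

    `weekly_counts` is a hypothetical list of one student's weekly activity
    counts, oldest first. Returns (z, flagged), where z is the latest week's
    z-score relative to the preceding `baseline_weeks` weeks and flagged is
    True if |z| exceeds `threshold` (a drop or a spike).
    """
    if len(weekly_counts) < baseline_weeks + 1:
        raise ValueError("not enough history to form a baseline")
    baseline = weekly_counts[-(baseline_weeks + 1):-1]
    latest = weekly_counts[-1]
    mu, sigma = mean(baseline), pstdev(baseline)
    if sigma == 0:
        # Perfectly flat baseline: any difference at all counts as a change
        if latest == mu:
            return 0.0, False
        return (float("inf") if latest > mu else float("-inf")), True
    z = (latest - mu) / sigma
    return z, abs(z) > threshold


# Hypothetical toy data for illustration only
history = [30, 32, 28, 31, 29, 30, 8]   # sharp drop in the latest week
stable = [30, 32, 28, 31, 29, 30, 31]
print(behavior_change(history))
print(behavior_change(stable))
```

This only works if the data is granular and regular enough to build a per-student baseline; coarse engagement totals would detect broad drops but little else.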

Conclusion

This study draws two main conclusions from the documents reviewed. Firstly, there appears to be very little integration of learning analytics with student support and wellbeing. Secondly, there is an overlap in the staff roles and departments involved in implementing both sets of policies.

Where there are direct connections between policies, these are between academic tutoring and learning analytics, driven by institutional student retention and success policies. However, a recent systematic review has raised concerns about the efficacy of these systems and their lack of grounding in educational research, and has also identified issues with how staff and students interpret the data presented (Matcha et al., 2020). At present, these policies give little consideration to the role learning analytics could play in supporting students’ wellbeing and mental health.

If learning analytics and student support and wellbeing are to be aligned as recommended in the 2017 #StepChange report, this should be taken into consideration at the system development stage. The data requirements, and the granularity of data needed, will differ from those currently provided. There would also need to be clear expectations and guidance on how often the system should be accessed and how to respond to the data presented; for many users of these systems, this could mean accessing them more regularly than at present.

Although there is potential for aligning learning analytics with student wellbeing, a number of ethical and data collection issues first need to be addressed. At present, the institutional policies and learning analytics systems in place do not appear sufficiently aligned for this to become a reality.

In addition, no consideration appears to have been given to the potential impact of learning analytics on staff and student wellbeing. As both the updated #StepChange framework and the University Mental Health Charter call for a whole-university approach to wellbeing, this needs to be addressed going forward.

Further Work

This work has limitations: it was a very small study, and the information used was extracted from institutional policy documents. It is acknowledged that these documents may not represent what happens in practice, and may omit details of systems and practices. Many of the policies analyzed were published before the #StepChange framework.

As learning analytics implementations are predominantly designed for use by those supporting students, there is a need to connect the literature on advising and tutoring with research into the impacts of learning analytics on student behavior, in terms of both learning behaviors and welfare (wellbeing and mental health). At present, the design of interventions such as dashboards does not seem to be grounded in this literature.

Next steps for this work would be to review existing learning analytics systems to identify whether or not the granularity of the data provided is sufficient to identify behavior changes other than broad changes in engagement. It would also be beneficial to interview staff using these systems to identify what they are looking for in the data and how they would feel about using this data for student wellbeing purposes.

Further research is also required into the impact of current learning analytics implementations on staff and student mental health, as this does not appear to have been considered in the policies reviewed.

The recommendation for, and research into, aligning learning analytics with student support and wellbeing with regard to mental health is a United Kingdom-specific phenomenon. However, any lessons learned about the wellbeing and mental health impacts on staff and students of implementing a learning analytics system are important for the wider global learning analytics user community.

Data Availability Statement

The data generated from complete survey responses is available in the Supplementary Material. The qualitative data has not been shared as this would identify the participating institutions, some of whom wished to remain anonymous.

Author Contributions

SA was solely responsible for all elements of the research and production of the corresponding manuscript.

Funding

This research was undertaken as part of SA’s daily work; no additional funding was received.

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This research was mentored by the United Kingdom Advising and Tutoring Research Committee. I would also like to extend thanks to Dr. Steve Rowett and Ms. Joanna Stroud for their assistance in proofing and editing the text.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2020.531424/full#supplementary-material

Footnotes

- ^ 1001-02 section “Student Support and Learning Analytics” purpose of academic advising.

- ^ 3-02 section “Learning Analytics” Academic and personal tutors.

- ^ 1005-01.

- ^ 1004-08, 1007-02, 1001-02.

- ^ 3-02.

- ^ 1007-02.

- ^ 1005-01, section Tables 1–6.

- ^ 3 https://www.celt.3.ac.uk/sem/index.php.

- ^ 1006 – Using Student Engagement Data policy.

- ^ 1004 – Learning Analytics Purpose https://www.gre.ac.uk/articles/planning-and-statistics/learning-analytics-purpose.

References

10th International Learning Analytics and Knowledge (LAK) Conference (2019). Available online at: https://lak20.solaresearch.org/ (accessed August 27, 2019).

About– SHEILA (2018). Available online at: http://sheilaproject.eu/about-sheila/ (accessed July 18, 2018).

Ahern, S. J. (2018). The potential and pitfalls of learning analytics as a tool for supporting student wellbeing. J. Learn. Teach. High. Educ. 1, 165–172. doi: 10.29311/jlthe.v1i2.2812

Arguel, A., Pachman, M., and Lockyer, L. (2019). “Identifying epistemic emotions from activity analytics in interactive digital learning environments,” in Learning Analytics in the Classroom: Translating Learning Analytics Research for Teachers, eds J. C. Horvath, J. M. Lodge, and L. Corrin (London: Routledge), 56–67. doi: 10.4324/9781351113038-5

Bennett, L. (2018). Students’ Learning Responses to Receiving Dashboard Data. Huddersfield: University of Huddersfield.

Broos, T., Pinxten, M., Delporte, M., Verbert, K., and Laet, T. D. (2019). Learning dashboards at scale: early warning and overall first year experience. Assess. Eval. High. Educ. 1, 1–20. doi: 10.1080/02602938.2019.1689546

Byrne, A. (2017). Death on Campus: Our Stories. BBC 3. Available online at: https://www.bbc.co.uk/programmes/p05lyqp9 (accessed August 9, 2019).

CIC Editor (2019). Playing Cards That Empower Teachers and Students to Join the Analytics Co-Design Process | UTS:CIC. Available online at: https://cic.uts.edu.au/playing-cards-that-empower-teachers-and-students-to-join-the-analytics-co-design-process/ (accessed July 31, 2020).

Dawson, S. (2010). SNAPP: Realising the Affordances of Real-Time SNA Within Networked Learning Environments. Tehran: SNAPP.

DuVall, K. D. R., Rininger, A., and Sliman, A. T. (2018). Peer Advising: Building a Professional Undergraduate Advising Practicum. Available online at: https://nacada.ksu.edu/Resources/Academic-Advising-Today/View-Articles/Peer-Advising-Building-a-Professional-Undergraduate-Advising-Practicum.aspx

Earwaker, J. (1992). Helping and Supporting Students: Rethinking the Issues. Buckingham: Society for Research into Higher Education and Open UP.

Ferguson, D. (2017). Mental Health: What can new Students do to Prepare for University? The Guardian. Available online at: http://www.theguardian.com/education/2017/aug/29/the-rise-in-student-mental-health-problems-i-thought-my-tutor-would-say-deal-with-it

Fisher, W. W., Piazza, C. C., and Roane, H. S. (eds). (2013). Handbook of Applied Behavior Analysis (1 edition). New York, NY: Guilford Press.

Foster, E. (2019). Can learning analytics warn us early on mental health? | Wonkhe | Analysis. Available online at: https://wonkhe.com/blogs/can-learning-analytics-warn-us-early-on-mental-health/ (accessed August 9, 2019).

GuildHE (2018). Wellbeing in Higher Education. Available online at: https://guildhe.ac.uk/wp-content/uploads/2018/10/GuildHE-Wellbeing-in-Higher-Education-WEB.pdf (accessed August 7, 2019).

Herodotou, C., Rienties, B., Hlosta, M., Boroowa, A., Mangafa, C., and Zdrahal, Z. (2020). The scalable implementation of predictive learning analytics at a distance learning university: insights from a longitudinal case study. Internet High. Educ. 45:100725. doi: 10.1016/j.iheduc.2020.100725

Improving mental health services for young people–GOV.UK (2015). Available online at: https://www.gov.uk/government/publications/improving-mental-health-services-for-young-people (accessed January 22, 2020).

Jisc (2018). Learning Analytics. Jisc. Available online at: https://www.jisc.ac.uk/learning-analytics

Jisc (2019). Learning Analytics: Going Live. Available online at: https://www.jisc.ac.uk/news/learning-analytics-going-live-01-apr-2019 (accessed January 22, 2020).

Kern, M. L., Waters, L. E., Adler, A., and White, M. A. (2015). A multidimensional approach to measuring well-being in students: application of the PERMA framework. J. Posit. Psychol. 10, 262–271. doi: 10.1080/17439760.2014.936962

King, M. C. (1993). Advising models and delivery systems. New Direct. Commun. Colleges 1993, 47–54. doi: 10.1002/cc.36819938206

Koring, H. (2005). Peer Advising: A Win-Win Initiative. Available online at: https://www.nacada.ksu.edu/Resources/Academic-Advising-Today/View-Articles/Peer-Advising-A-Win-Win-Initiative.aspx (accessed October 9, 2018).

Kuba, S. E. (2010). The Role of Peer Advising in the First-Year Experience. Ph.D., The University of Wisconsin, Madison.

Leitner, P., Ebner, M., and Ebner, M. (2019). “Learning analytics challenges to overcome in higher education institutions,” in Utilizing Learning Analytics to Support Study Success, eds D. Ifenthaler, D.-K. Mah, and J. Y.-K. Yau (Cham: Springer), 91–104. doi: 10.1007/978-3-319-64792-0_6

Learning Analytics Discovery Toolkit (n.d.). Available online at: https://docs.analytics.alpha.jisc.ac.uk/docs/learning-analytics/Discovery-Toolkit (accessed August 8, 2019).

Lochtie, D., McIntosh, E., Stork, A., and Walker, B. (2018). Effective Personal Tutoring in Higher Education. St Albans: Critical Publishing.

Matcha, W., Uzir, N. A., Gašević, D., and Pardo, A. (2020). A systematic review of empirical studies on learning analytics dashboards: a self-regulated learning perspective. IEEE Trans. Learn. Technol. 13, 226–245. doi: 10.1109/TLT.2019.2916802

Mynott, G. (2016). Personal tutoring: positioning practice in relation to policy. Innovat. Pract. 10, 103–112.

Na, K. S., and Tasir, Z. (2017). “A systematic review of learning analytics intervention contributing to student success in online learning,” in Proceedings of the 2017 International Conference on Learning and Teaching in Computing and Engineering (LaTICE), Piscataway, NJ, 62–68.

OECD (2017). PISA2015-students-well-being-United-Kingdom.pdf. Available online at: https://www.oecd.org/pisa/PISA2015-students-well-being-United-Kingdom.pdf (accessed July 4, 2019).

On-boarding Guide | Effective Learning Analytics (2018). Available online at: https://analytics.jiscinvolve.org/wp/on-boarding/ (accessed August 8, 2019).

Owen, J. (2018). What Makes an Intelligent Campus? Education Technology. Available online at: https://edtechnology.co.uk/Blog/what-makes-an-intelligent-campus/ (accessed August 27, 2019).

Pardee, C. (2004). Organizational Models for Advising. Available online at: https://www.nacada.ksu.edu/Resources/Clearinghouse/View-Articles/Organizational-Models-for-Advising.aspx (accessed October 8, 2018).

Fredricks, J. A., Blumenfeld, P. C., and Paris, A. H. (2004). School engagement: potential of the concept, state of the evidence. Rev. Educ. Res. 74, 59–109. doi: 10.3102/00346543074001059

Prietoalvarez, C. (2018). LADECK. Available online at: http://ladeck.utscic.edu.au/ (accessed May 1, 2020).

Prinsloo, P., and Slade, S. (2017). “Big data, higher education and learning analytics: Beyond justice, towards an ethics of care,” in Big Data and Learning Analytics in Higher Education, ed. B. K. Daniel (Cham: Springer), 109–124. doi: 10.1007/978-3-319-06520-5_8

Purdy, H. (2013). Peer Advisors: Friend or Foe? Available online at: https://www.nacada.ksu.edu/Resources/Academic-Advising-Today/View-Articles/Peer-Advisors-Friend-or-Foe.aspx (accessed October 9, 2018).

Rienties, B., Cross, S., and Zdrahal, Z. (2017). “Implementing a learning analytics intervention and evaluation framework: what works?,” in Big Data and Learning Analytics in Higher Education, ed. B. K. Daniel (Cham: Springer), 147–166. doi: 10.1007/978-3-319-06520-5_10

Saxena, S., and Setoya, Y. (2014). World Health Organization’s comprehensive mental health action plan 2013-2020. Psychiatry Clin. Neurosci. 68:585. doi: 10.1111/pcn.12207

Seligman, M. E. (2012). Flourish: A visionary New Understanding of Happiness and well-being. New York, NY: Simon and Schuster.

Seligman, M. E., Ernst, R. M., Gillham, J., Reivich, K., and Linkins, M. (2009). Positive education: positive psychology and classroom interventions. Oxf. Rev. Educ. 35, 293–311. doi: 10.1080/03054980902934563

Shackle, S. (2019). ‘The Way Universities are Run is Making us ill’: Inside the Student Mental Health Crisis. The Guardian. Available online at: https://www.theguardian.com/society/2019/sep/27/anxiety-mental-breakdowns-depression-uk-students

Shacklock, X. (2016). From Bricks to Clicks: The Potential of Data and Analytics in Higher Education. London: Policy Connect.

SHEILA-framework_Version-2.pdf (2018). Available online at: http://sheilaproject.eu/wp-content/uploads/2018/08/SHEILA-framework_Version-2.pdf (accessed January 22, 2019).

Siemens, G., and Gasevic, D. (2012). Guest editorial-Learning and knowledge analytics. Educ. Technol. Soc. 15, 1–2.