- ¹ OER Program, Houston Community College, Houston, TX, United States

- ² OpenStax, Rice University, Houston, TX, United States

This article examines the impact of Zero Cost Books (ZCB) courses on two key measures of student success: pass rates and completion rates. We examine three years of data at Houston Community College, ending with the first year of its ZCB program. Following the “access hypothesis,” we expect that students who would not otherwise be able to purchase traditional textbooks will have higher pass and completion rates in ZCB courses. We use Pell recipient status and ethnicity as proxies for socio-economic disadvantage, targeting the populations in which the access hypothesis predicts the greatest impact. We isolate faculty who taught both ZCB and non-ZCB courses during the period under review and conduct a post-hoc analysis, using mixed effects logistic regression, to identify interactions between student and course characteristics and success metrics. We find that HCC's ZCB program had a statistically significant positive effect on pass rates for students overall, but no effect on completion. We find a trend suggesting that the ZCB program may improve success among Black students, but a statistically significant positive effect only among Asian students, and no interaction with Pell recipient status. These results are not well explained by the access hypothesis and suggest the need for further research.

1. Introduction

The adoption and promotion of open textbooks and Open Educational Resources (OER) has become one of the key tools colleges and universities deploy to reduce the cost of higher education. By reducing costs and lowering barriers to access, colleges and universities hope to promote equity and, ultimately, student success. OER and open textbooks allow greater access at reduced cost because they are teaching and learning resources licensed under an open copyright license that permits free use and repurposing by others (UNESCO, 2019). That said, OER are not the only teaching and learning resources that can be provided to students free of charge. Faculty may use resources on the open web, independently developed resources, library resources, or even publisher resources on a trial basis to provide students with free learning materials sufficient to meet curricular goals. There may be pedagogical or personal reasons to prefer one type of free or open resource over another. Nevertheless, all of these strategies rely on free and open instructional materials and result in zero additional textbook costs for students. In this study, we evaluate the impact of a Zero Cost Books Program, which was designed to replace high-cost textbooks with freely available alternatives. We hypothesize that, by reducing textbook costs to zero, faculty using Zero Cost Books improve all students' access to their instructional materials, resulting in higher completion and success rates.

2. Efficacy of OER Textbooks for Student Success

The research questions of primary interest to OER investigators have been characterized by the acronym “COUP” (Cost, Outcomes, Use, and Perceptions) (Bliss, 2013). The present study focuses on the outcomes, or efficacy, of using free and open textbooks by examining student success measures, with a specific focus on student populations who may have lacked access to commercial materials because of their cost. Hilton (2016) provided the first published review of research on efficacy and perceptions; it identified nine articles, published through September 2015, that examined the impact of free and open resources on student learning outcomes. Hilton (2019) provided a similar review covering October 2015 to December 2018, identifying 16 studies that examined the efficacy of implementing OER on student learning. Clinton and Khan (2019) conducted a statistical meta-analysis of published efficacy studies, limited to studies that reported sufficient statistical information for the meta-analysis. They identified 22 published papers containing a total of 23 studies examining the efficacy of open and free resources on student learning.

Among studies that report effects on completion rates, Hilton et al. (2013) found a statistically significant decrease in completion rates in one of five math courses. For the other courses, the results were mixed and not statistically significant. In the course with lower completion, Hilton et al. (2013) also found lower success rates; the authors suggest that this can be partially explained by changes in math placement that occurred at the institution during the study. Robinson (2015) examined seven courses across seven different institutions. He found a mix of positive and negative effects of OER on completion, but only in Biology was there a statistically significant positive effect. Fischer et al. (2015) appear to analyze a similar (or overlapping) dataset to that of Robinson (2015). They found a statistically significant positive effect on success rates in Biology and Business courses and, at least in the case of Business, made clear that this difference was driven by a drop in withdrawal rates (that is, an increase in completion rates). Ross et al. (2018) and Croteau (2017) found no statistically significant difference between OER and commercial textbook classes. Ross et al. (2018) saw very little measurable difference at all, while Croteau (2017) saw variability across institutions and sections, resulting in a lack of statistical significance in the overall analysis.

In general, studies on OER efficacy tend to report withdrawal rates rather than completion rates. The two are likely to be strongly and inversely related, since completion identifies students who have neither withdrawn nor been dropped by the end of the course. Clinton and Khan (2019) provide a meta-analysis of withdrawal rate statistics from 12 studies, including most of the studies cited above. They found that effects vary widely, but of the five cases in which the odds ratio of withdrawal was statistically significant, only one showed a positive effect on withdrawal; that is, only one study found that the use of OER increased withdrawal rates. Consequently, the combined analysis of all studies yielded a negative effect of OER on withdrawal rates, with an odds ratio of 0.71 (p = 0.005). In sum, across these studies, OER appear to have a small but statistically robust effect in reducing withdrawal rates for students enrolled in OER courses.
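To make the pooled odds ratio concrete: an odds ratio acts on odds, not on probabilities, so its effect on the withdrawal rate depends on the baseline rate. The sketch below converts the 0.71 figure into a withdrawal probability, assuming a hypothetical 20% baseline withdrawal rate in commercial-textbook courses; that baseline is purely illustrative and is not a value reported by Clinton and Khan (2019).

```python
def apply_odds_ratio(baseline_prob: float, odds_ratio: float) -> float:
    """Return the probability implied by multiplying the baseline odds by odds_ratio."""
    if not 0.0 < baseline_prob < 1.0:
        raise ValueError("baseline_prob must be strictly between 0 and 1")
    baseline_odds = baseline_prob / (1.0 - baseline_prob)  # p / (1 - p)
    new_odds = baseline_odds * odds_ratio
    return new_odds / (1.0 + new_odds)                     # back to a probability

# Hypothetical baseline: 20% of students withdraw from a commercial-textbook course.
baseline = 0.20
with_oer = apply_odds_ratio(baseline, 0.71)
print(f"{baseline:.3f} -> {with_oer:.3f}")  # prints 0.200 -> 0.151
```

Under that assumed baseline, an odds ratio of 0.71 corresponds to a drop from 20% to roughly 15%; with a different baseline the absolute change would be different.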

Studies have employed different methods to assess the impact of substituting a free or open resource for a traditional commercial textbook. Lovett et al. (2008) used a randomized selection process in their assessment of Open Learning Initiative OER materials. Bowen et al. (2014) expanded the work of Lovett et al. (2008) and employed the same method. More commonly, studies have used quasi-experimental statistical methods to conduct post-hoc analyses of larger or previously implemented free and open textbook projects. See Grimaldi et al. (2019) and Griggs and Jackson (2017) for a discussion of the methodological limitations of these approaches.

Hilton and Laman (2012), Allen et al. (2015), Chiorescu (2017), Choi and Carpenter (2017), Jhangiani et al. (2018), and Clinton (2018) controlled for instructor effects by comparing student grades from the same instructor in earlier semesters with grades from later semesters in which that instructor used an open textbook; the courses studied were, respectively, Psychology, Chemistry, Math, Human Factors and Ergonomics, Psychology, and Psychology. Ross et al. (2018) used a similar method to control for instructor effects in a multi-course study.

Recent discussions among OER researchers have emphasized the importance of identifying the mechanisms by which OER might improve student learning outcomes relative to traditional commercial textbooks. Though many OER practitioners would like to advocate for expanding OER programs by pointing to their positive impact on student success, studies need to clarify why there should be such an impact. Given that OER textbooks are typically designed as textbook replacements, covering the same learning objectives and written with similar learning strategies, it is unclear why swapping the textbook alone would improve student learning outcomes. One plausible mechanism that has been discussed is access (Grimaldi et al., 2019). Several surveys indicate that a sizable portion of students lack access to their textbooks because of cost, and it is likely that this affects their success in the course. The most recent Florida Virtual Campus Textbook Survey found that 64.25% of students in its Florida sample did not purchase a textbook during the school year, 35.62% reported receiving a poor grade because they could not purchase a textbook, and 22.91% reported dropping a course due to textbook cost (Florida Virtual Campus, 2018). The most recent unpublished surveys at HCC (2020) found that 23.68% of students surveyed did not purchase a textbook because they could not afford it. If we assume that textbooks improve learning, then providing free instructional materials should improve learning outcomes for students who would otherwise have avoided purchasing a textbook because of its cost. This relationship is referred to as the access hypothesis (Grimaldi et al., 2019).

One of the keys to testing the access hypothesis is to examine whether the use of open and free resources differentially affects students who otherwise would not have had access to those learning materials. Few existing OER impact studies can plausibly address this question. While several studies have attempted to control for student variables using quasi-experimental statistical designs (Gurung, 2017; Clinton, 2018; Jhangiani et al., 2018; Venegas Muggli and Westermann, 2019), these studies did not evaluate whether the effects of OER differed for demographic groups that may be more likely to have trouble accessing commercial textbooks. By contrast, Colvard et al. (2018) predicted that “students from low socioeconomic backgrounds that require substantial financial assistance to attend college would exceedingly benefit from courses that have adopted a free textbook when compared to previous semesters when traditional, commercial textbooks were used.” Using receipt of a Federal Pell Grant as a proxy for low socioeconomic status, Colvard et al. (2018) found some evidence that OER had a greater impact on students who received Pell grants than on students who did not. However, that study did not use statistical modeling to control for potential confounding factors. In sum, while access is the dominant mechanism proposed to explain the benefits of OER and free resources, the access hypothesis remains underexplored, and solid evidence supporting it is lacking.

3. Zero Cost Books Program

Houston Community College (HCC) is an urban community college with a large minority population. The HCC district spans the Houston metro area and includes 24 campus locations with 6 campus centers. In Fall 2017, HCC launched a Z-Degree program focused on providing a degree path with zero textbook costs for the Associate of Arts (AA) degree plan in Business, as well as for the AA and Associate of Science (AS) general education transfer degrees. The college identified 35 instructors from every program area needed to complete an AA Degree in Business. Instructors were provided with some training on the use of OER but were permitted to use any freely available resources. In most cases, this meant adopting OER (including open textbooks, open courseware¹, and other open learning materials), but in some cases it involved library resources, and in two cases it involved publisher-based resources used on a temporary, free trial basis. For instructors who elected to use an OER courseware platform to deliver course materials, the institution subsidized student access through a private grant, so that students did not incur any cost for those materials.

Prior to the Z-Degree initiative, HCC had sustained some grassroots interest in OER, with some administrative support. The college worked with Rice University's Connexions, through which two faculty members, in History and Computer Science, authored open textbooks. Several psychology faculty used an OER textbook available through Flat World Knowledge; they collected evidence on its efficacy and produced a study of that implementation (Hilton and Laman, 2012). Additionally, HCC librarians produced a LibGuide on OER that won the OEConsortium outstanding website award in 2015. As a result, some faculty were using free and open instructional materials before the launch of the Z-Degree program and continued to do so alongside it. To capture faculty usage prior to the launch of the Z-Degree, we used survey results, sign-in sheets, and word of mouth to identify instructors who may have been using OER. For instructors whose courses were included in our dataset, we checked the online syllabus, or contacted the instructor directly when no syllabus was available, to determine whether they were using zero cost books in the course.

During the 2017–2018 school year, the Z-Degree program was promoted through a website, email, and social media, and the OER Coordinator conducted outreach to interested students. Even though many students completed an online form expressing interest in the program, very few of them actually enrolled. For the years prior to Fall 2017, there was no official notice or policy that would alert students that a class used zero cost books. Consequently, the likelihood that students self-selected into ZCB courses in this sample is very small.

4. Study

4.1. Overview

The following study examines the course-level impacts of providing zero cost books (ZCB) to students. As mentioned previously, the study was prompted by the launch of a Z-Degree (zero cost textbook degree plan) at Houston Community College in Fall 2017. We were primarily interested in the pass rates and completion rates of students in ZCB courses compared with students in courses using traditional commercial publisher materials. The study is guided by the following research questions:

1. Are students more likely to pass (receive a final grade of C or better) a ZCB course than a course with a traditional commercial textbook? Is this effect dependent on socio-economic status?

2. Are students more likely to complete (receive a final grade in) a ZCB course than a course with a traditional commercial textbook? Is this effect dependent on socio-economic status?

Applying the access hypothesis (Grimaldi et al., 2019), students who would have been unable to purchase traditional commercial textbooks gain access to instructional materials when those materials are free. Given that access to instructional materials is, in most cases, important for student success, the access hypothesis predicts that students in ZCB courses will be more likely to pass than students in non-ZCB courses. If we assume that the decision to drop or withdraw from a course is partially motivated by performance early in the course, then we can also predict that students will be more likely to complete a ZCB course than a non-ZCB course. Finally, the access hypothesis predicts that these effects should be disproportionately large for students for whom textbook cost presents a significant barrier, namely, students of lower socio-economic status (SES). Thus, students from lower-SES groups in particular should be more likely to pass and complete ZCB courses than non-ZCB courses.

To test these predictions, we conducted a post-hoc analysis of data obtained from the Houston Community College institutional research office covering Fall 2015 to Spring 2018. The original data request included all students enrolled in courses offered as part of the Z-Degree at Central Campus (the largest and most urban campus in the district) and Online. For the purposes of this study, we limited the data to instructors who had used both ZCB and non-ZCB materials in at least one of their courses during the targeted time period. We restricted the sample in this way so that we could control for instructor-level effects in our analysis, which is not possible if instructor is confounded with ZCB adoption. ZCB materials were defined as any materials provided at zero cost to students (whether OER courseware, open textbooks, library materials, self-authored materials, publisher-provided materials, or other OER). We did not distinguish faculty who used ZCB materials as part of the Z-Degree program from those who used them outside of it: because we evaluate performance only at the course level, whether instructors used ZCB within or outside the program is irrelevant. Finally, we included only students who had a prior GPA, in order to control for overall student aptitude.
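The sample restriction described above can be sketched in a few lines. This is a minimal illustration, not the procedure actually run on the HCC data: the record fields (`student`, `instructor`, `zcb`, `prior_gpa`) are hypothetical, we read the restriction at the instructor level (an instructor qualifies if both ZCB and non-ZCB materials appear anywhere in their records), and we assume eligibility is determined before students without a prior GPA are dropped.

```python
from collections import defaultdict

def restrict_sample(records):
    """Keep records for instructors observed with both ZCB and non-ZCB materials,
    then drop students who have no prior GPA.

    Each record is a dict with the hypothetical keys 'instructor', 'zcb' (bool),
    and 'prior_gpa' (float or None).
    """
    materials_by_instructor = defaultdict(set)
    for r in records:
        materials_by_instructor[r["instructor"]].add(r["zcb"])
    # An instructor qualifies only if both True (ZCB) and False (non-ZCB) were observed.
    eligible = {i for i, kinds in materials_by_instructor.items() if kinds == {True, False}}
    return [r for r in records if r["instructor"] in eligible and r["prior_gpa"] is not None]

rows = [
    {"student": 1, "instructor": "A", "zcb": True,  "prior_gpa": 3.1},
    {"student": 2, "instructor": "A", "zcb": False, "prior_gpa": None},  # no prior GPA
    {"student": 3, "instructor": "A", "zcb": False, "prior_gpa": 2.4},
    {"student": 4, "instructor": "B", "zcb": False, "prior_gpa": 3.8},   # B never used ZCB
]
print([r["student"] for r in restrict_sample(rows)])  # prints [1, 3]
```

An alternative reading would apply the same check to instructor-course pairs rather than instructors; the text above does not settle which was used.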

To evaluate the interaction between ZCB and socio-economic status, we had to rely on proxy variables. Ideally, we would have direct information about students' socio-economic conditions (such as annual income), but these data were not available due to privacy concerns. A commonly used proxy is Pell eligibility, which indicates whether a student meets the requirements for Federal Pell Grant assistance. Unfortunately, because of limitations in the way HCC collects this information, we could not obtain Pell eligibility, only whether a student received a Pell grant. This is less desirable, as not all students who meet the eligibility requirements apply for assistance each year. Several studies suggest that Pell eligibility likely underrepresents the total number of economically disadvantaged students (McKinney and Novak, 2015; Delisle, 2017). Given HCC's student population, which includes large numbers of international and first-generation students, and given that HCC is an open-enrollment community college, we expect Pell receipt to underrepresent low-SES students even more. To supplement it, we use student ethnicity as a proxy variable, as there are profound economic gaps among racial groups in the Houston area. According to a recent local survey (Klineberg, 2019), Hispanic and Black families are far more likely than White or Asian families to have an annual household income under $37,500, and they are also less likely to have health insurance. Given these known economic inequities in the Houston area, ethnicity is likely correlated with socio-economic status. We recognize the limitations of these proxies for assessing the access hypothesis, but such proxies are common in educational research, and without an alternative measure, they remain the best means available for assessing this important variable.

4.2. Description of Analytic Sample

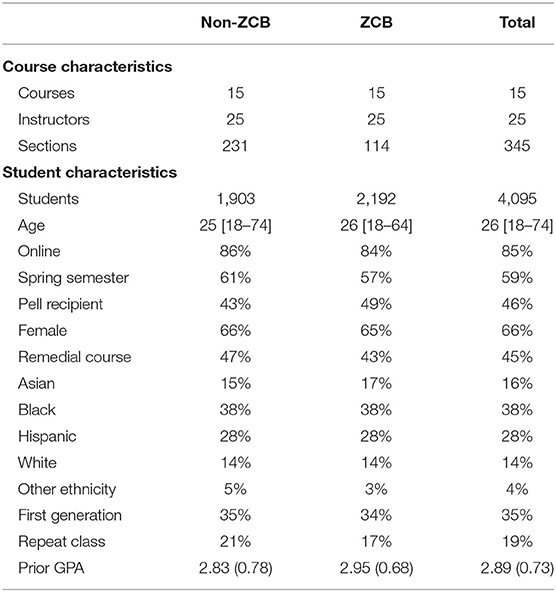

We begin our analysis by describing the analytic sample. Houston Community College has a very large, diverse student body. A summary of the sample is shown in Table 1. The data were drawn from 15 courses (SOCI 1301, CHEM 1305, ASTR 1303, HIST 1302, ENGL 1302, BCIS 1405, MATH 1324, GEOL 1305, EDUC 1300, BIOL 1308, PSYC 2301, HIST 1301, GOVT 2305, ENGL 1301, MATH 1332) taught by a total of 25 instructors. Across the courses, instructors, and sections in this sample, there were 4,095 students. Several students appeared more than once in the dataset. This is not unexpected, as a student could enroll in more than one of these courses by chance. However, it is problematic from a statistical perspective, because our models assume that observations are independent. To resolve this, we retained a single, pseudo-randomly chosen observation for each student. Specifically, we first chose randomly among a student's observations in ZCB sections; if the student was not in any ZCB section, we chose randomly among the remaining observations. This was done to maximize the number of observations in ZCB courses, which were generally fewer than in non-ZCB courses.
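The de-duplication rule above (one observation per student, drawn from ZCB sections first) can be expressed as the following sketch. The field names and the fixed random seed are our assumptions, added for illustration and reproducibility; the original selection code is not described beyond the rule itself.

```python
import random
from collections import defaultdict

def one_row_per_student(records, seed=0):
    """Keep a single pseudo-randomly chosen enrollment per student,
    drawing from the student's ZCB enrollments first when any exist."""
    rng = random.Random(seed)  # fixed seed makes the choice reproducible
    by_student = defaultdict(list)
    for r in records:
        by_student[r["student"]].append(r)
    kept = []
    for enrollments in by_student.values():
        zcb_rows = [r for r in enrollments if r["zcb"]]
        pool = zcb_rows if zcb_rows else enrollments  # fall back to non-ZCB rows
        kept.append(rng.choice(pool))
    return kept

rows = [
    {"student": 1, "course": "HIST 1301", "zcb": False},
    {"student": 1, "course": "ENGL 1301", "zcb": True},
    {"student": 2, "course": "MATH 1324", "zcb": False},
    {"student": 2, "course": "GOVT 2305", "zcb": False},
]
kept = one_row_per_student(rows)
for r in kept:
    print(r["student"], r["course"])
```

Student 1 always keeps the ENGL 1301 enrollment, because it is their only ZCB section; student 2 has no ZCB section, so one of their two enrollments is drawn at random.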

Several aspects of the sample illustrated in Table 1 are worth noting. First, a fairly large percentage of students in the sample (46%) received Pell grant funds during the semester in which they were enrolled in the course. Second, a majority of students belonged to historically underserved ethnic groups (Black or Hispanic). These qualities make this analytic sample particularly well suited for examining the influence of ZCB, as these communities are often the hardest hit by the high cost of course materials. Moreover, if these students are more likely to benefit from zero cost materials, as predicted by the access hypothesis, then we are more likely to detect positive effects in the sample overall (Grimaldi et al., 2019). Another unique aspect of this sample is that the majority of students took the course online. This is noteworthy because, in our models, taking a course in person had a statistically significant positive effect on completion, but not on passing. Finally, as mentioned previously, we included only students who had a prior GPA, in order to control for overall student proficiency. We recognize, however, that prior GPA is not a perfect indicator and can fluctuate across semesters. SAT or ACT scores would be ideal here, but most students did not have them, because HCC is open enrollment and does not require standardized test scores for admission. HCC admissions does, however, review the applications of all incoming students to determine whether they must enroll in a remedial course, based on a holistic evaluation of high school GPA, SAT/ACT, or Texas Success Initiative Assessment scores. We included whether students were flagged for this remedial course as an additional measure of student aptitude. As Table 1 shows, a substantial share of students (45%) were flagged.

Because the analytic sample was drawn from student data aligned with the ZCB program offerings for the 2017 academic year, it is not perfectly representative of HCC as a whole. In particular, our dataset was drawn from courses offered at one campus, Central Campus, and from all online sections. This heavily biases the sample toward online instruction: 85% of students in the analytic sample took their course online, whereas only 19% of all HCC students during the period of our analysis were enrolled in an online course. Compared with the HCC population, our sample also includes more female students (66% vs. 58%), more Black students (38% vs. 31%), slightly more Asian students (16% vs. 14%), fewer Hispanic students (28% vs. 37%), and slightly more Pell recipients (46% vs. 39%). Because the purpose of this study is to assess the access hypothesis, not to make generalizations about HCC students, we made no effort to adjust our sample to better represent the HCC population.

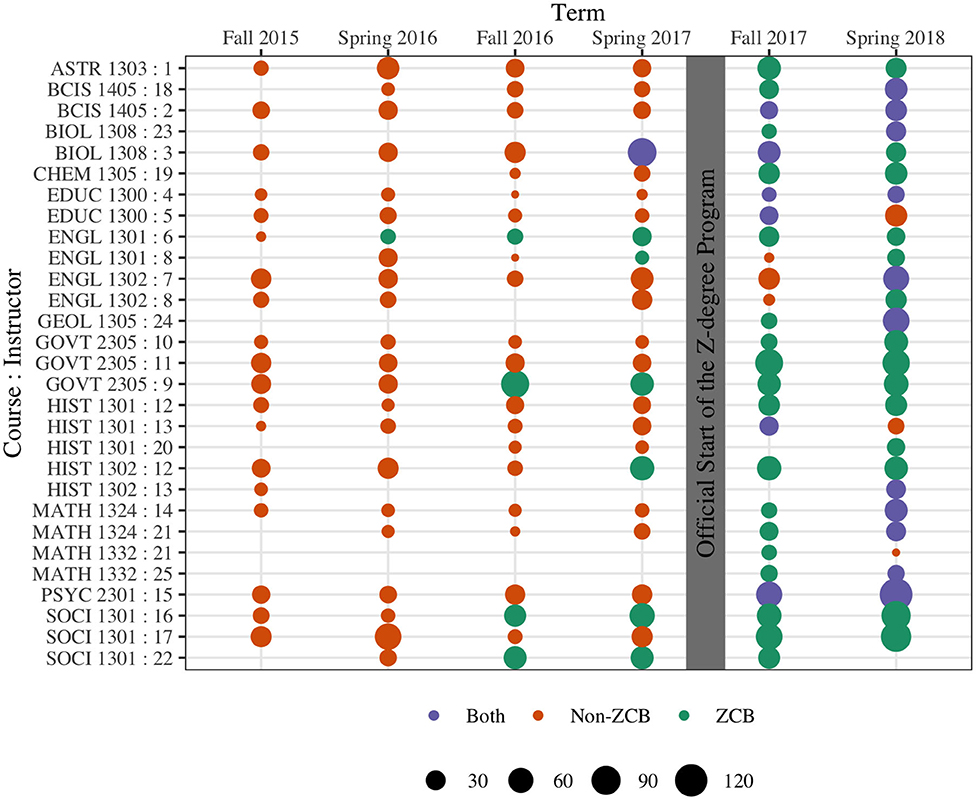

A visual representation of ZCB adoption across instructors and courses throughout the target time period is shown in Figure 1. In the figure, each row represents a course taught by a particular instructor, and each cell is a different semester. The color in each cell indicates whether the course was taught using ZCB or non-ZCB materials, and the size of the point indicates the number of students. There are several important things to note in Figure 1. First, while most instructors in this sample adopted ZCB after the program was implemented, several were using ZCB before the program was conceived. Second, several instructors appear to have taught a course with both ZCB and non-ZCB materials simultaneously; this was always the result of an instructor teaching both online and in-person sections of the course, with each section using different materials. Finally, and most importantly, the courses and instructors are not balanced in terms of the number of ZCB and non-ZCB students they taught. This imbalance illustrates why it is so critical to control for course-level and instructor-level effects, as some of the influence of ZCB is confounded with instructor. As we will see, course- and instructor-level effects are substantial predictors of student success.

Figure 1. Overview of ZCB usage by each instructor. The color of the points indicates the type of material used (ZCB or Non-ZCB); the size of the points indicates the number of students.

4.3. Modeling the Effects of ZCB

To examine the influence of ZCB on student outcomes, we used mixed-effects logistic regression models. Specifically, we fit these models to predict two binary outcome variables: (1) Completion and (2) Passing. Completion was defined as whether a student completed the course (i.e., did not withdraw and was not dropped). Passing was defined as whether the student completed the course with a grade of C or better; when predicting passing, we included only students who completed the course. As fixed effects, we included ZCB (ZCB vs. Non-ZCB), Gender (Male vs. Female), Ethnicity (Asian, Black, Hispanic, White, or Other), Pell (Received vs. Did Not Receive), Prior GPA, Age, First Generation (First Generation vs. Not First Generation), Remedial Course (Remedial Course vs. No Remedial Course), Repeat (Repeat vs. First Attempt), Modality (In Person or Hybrid vs. Online), and Courses Taken. For practical reasons, we excluded students with a gender other than female or male: there were too few observations for such students, and our models did not converge when they were included. As random effects, we included Instructor nested within Course (i.e., the topic domain), specified as varying intercepts only. Including these random effects allows us to control for instructor-level and course-level differences (such as easier or more difficult courses or instructors). For both the completion and pass analyses, we also fit interaction versions of the model, which included terms crossing ZCB with Pell or ZCB with Ethnicity. These interaction terms allowed us to test whether the effects of ZCB depended on socio-economic status.
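For readers who prefer notation, the main effect model described above can be written as follows. This is our reconstruction from the verbal description, not an equation given by the authors: Y_i is the completion (or pass) indicator for student i, x_i collects the remaining fixed-effect covariates, and c[i] and j[i] index the student's course and instructor-within-course, respectively.

```latex
\begin{aligned}
\operatorname{logit} \Pr(Y_i = 1) &= \beta_0 + \beta_{\mathrm{ZCB}}\,\mathrm{ZCB}_i + \mathbf{x}_i^{\top}\boldsymbol{\beta} + u_{c[i]} + v_{j[i]} \\
u_c &\sim \mathcal{N}\!\left(0, \sigma^2_{\mathrm{course}}\right), \qquad
v_j \sim \mathcal{N}\!\left(0, \sigma^2_{\mathrm{instructor}}\right)
\end{aligned}
```

The interaction models add a term such as γ · (ZCB_i × Pell_i), or one such term per non-reference ethnicity level. In R's lme4 package, for example, a model of this form is commonly specified with the random-effects term `(1 | course/instructor)` inside a `glmer(..., family = binomial)` call; we mention this only as an illustration, since the software used for this analysis is not stated here.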

4.4. Results

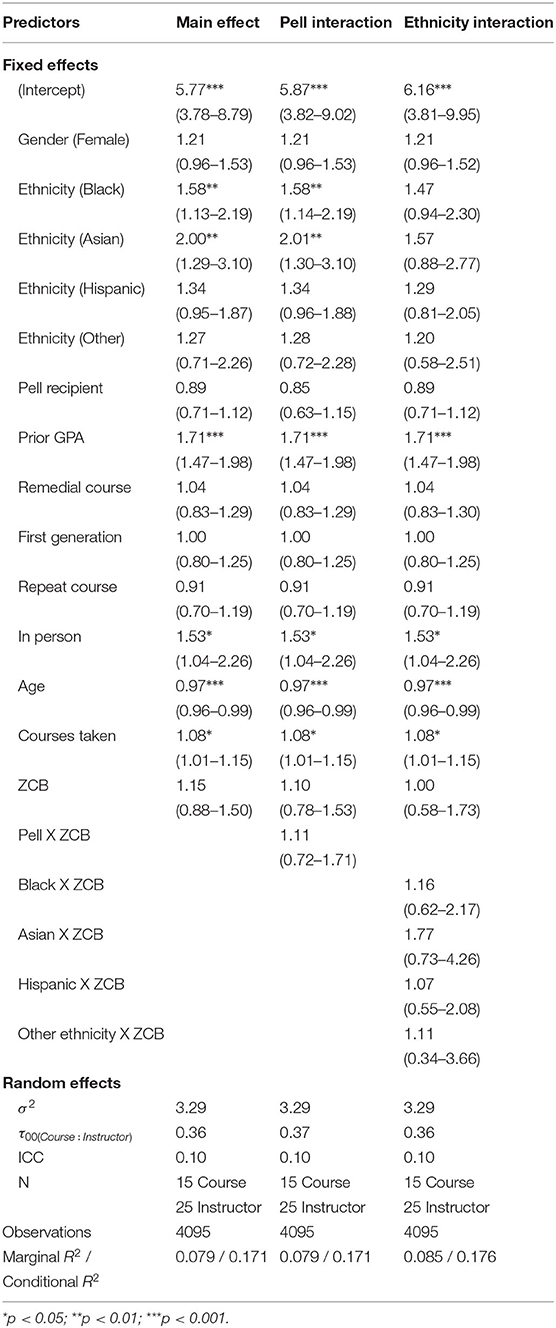

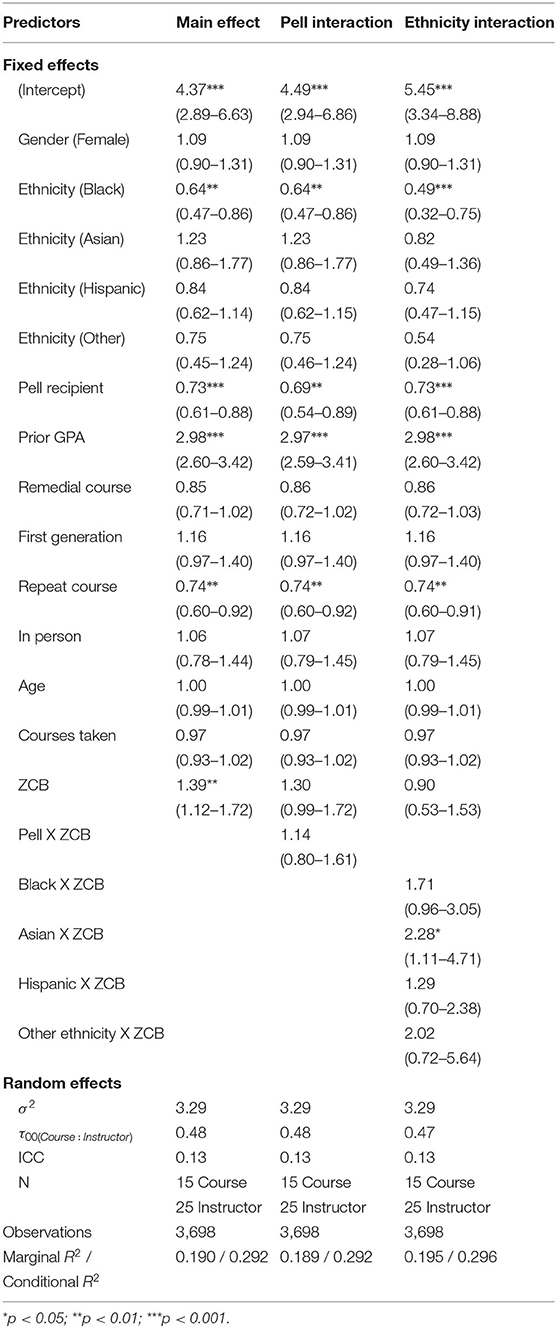

The full results of our models for course completion and passing are shown in Tables 2 and 3, respectively. The tables show the estimated effect of each fixed effect as an odds ratio, with the 95% confidence interval in parentheses. Briefly, an odds ratio indicates the factor by which the odds of completing or passing the course change given a one-unit change in the variable of interest, holding the other variables constant. Thus, an odds ratio of 1 indicates no effect, while values greater or less than 1 indicate a positive or negative relationship, respectively. Table 2 includes both the main effect and interaction models for course completion, and Table 3 shows the corresponding results for course passing.
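The reading of Tables 2 and 3 can be illustrated with a short sketch. Logistic mixed models estimate coefficients on the log-odds scale; exponentiating a coefficient gives the odds ratio, and exponentiating the endpoints of a Wald interval (estimate ± 1.96 standard errors) gives its 95% confidence interval. The coefficient and standard error below are hypothetical, and we assume Wald-type intervals; the tables may have used another interval method (such as profile likelihood).

```python
import math

def odds_ratio_with_ci(beta: float, se: float, z: float = 1.959964):
    """Convert a log-odds coefficient and its standard error into an odds ratio
    and a Wald confidence interval (95% by default, z = 1.96)."""
    odds_ratio = math.exp(beta)
    lower = math.exp(beta - z * se)
    upper = math.exp(beta + z * se)
    return odds_ratio, lower, upper

# Hypothetical estimate: beta = 0.30 on the log-odds scale with SE = 0.12.
or_zcb, lo, hi = odds_ratio_with_ci(0.30, 0.12)
print(f"OR = {or_zcb:.2f} ({lo:.2f}, {hi:.2f})")
```

Because the exponential function is monotone, an interval on the log-odds scale that excludes 0 maps to an odds-ratio interval that excludes 1, which is why, under this approach, an interval excluding 1 signals an effect that is significant at the 5% level.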

We first describe the model results for completion, shown in Table 2. In the main effect model, Ethnicity, Prior GPA, In Person, Age, and Courses Taken were significant predictors of course completion. Black and Asian students were more likely than White students to complete their courses. Students with a higher prior GPA, students with more courses taken, and students taking the course in person were more likely to complete the course. Age was the only negative predictor of course completion: older students were less likely to complete their course. A full discussion of each coefficient is beyond the scope of this paper. The critical result was that ZCB was not associated with a student's likelihood of completing the course. Moreover, ZCB did not interact with Pell status or Ethnicity, indicating that ZCB did not have differential effects on completion.

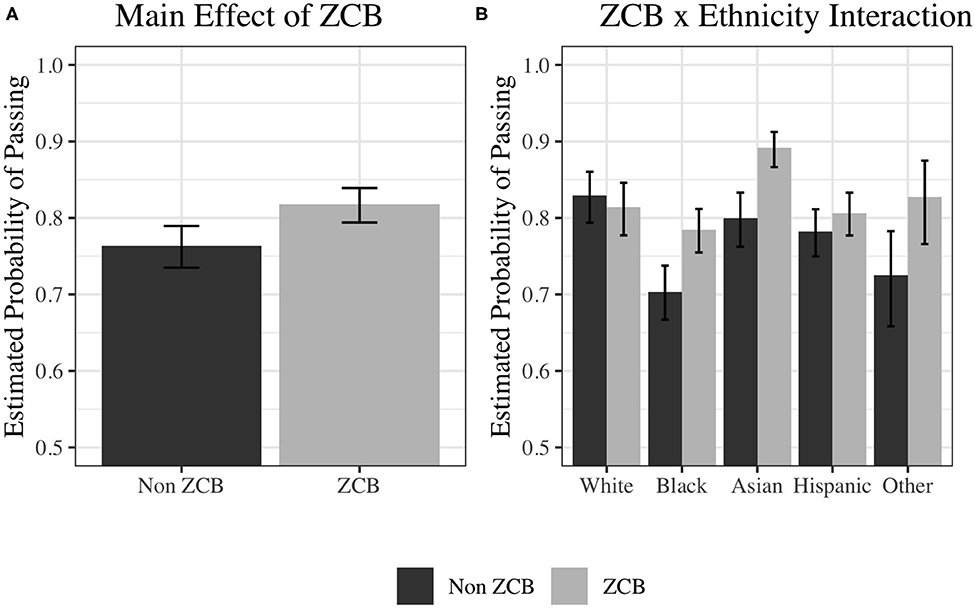

Next, we consider the model results for passing, shown in Table 3. The significant predictors in the pass models differed slightly from those for completion. In the main effect model, Ethnicity, Pell Recipient, Prior GPA, Repeat course, and ZCB were significant predictors. Black students were less likely to pass the course than White students, Pell recipients were less likely to pass than non-recipients, and students repeating the course were less likely to pass than students taking it for the first time. Prior GPA was positively associated with passing. Critically, students in ZCB courses were more likely to pass than students in Non-ZCB courses. In the interaction models, there was no interaction between Pell and ZCB. For the Ethnicity by ZCB interaction, there was a significant interaction between ZCB and Asian, indicating that ZCB affected Asian students differently than White students. The interaction for Black students narrowly missed the threshold for significance (p = 0.07).

To visualize the significant main effect of ZCB and its interaction with ethnicity shown in Table 3, we report corrected marginal probabilities in Figure 2. Note that these are visualizations of the statistically significant differences only; all regression estimates are reported in Table 3. Figure 2A shows the probability of passing the course for ZCB and Non-ZCB students, after correcting for the other covariates in the main effect model. After this correction, the probability of passing was approximately 0.82 for students in ZCB courses and 0.76 for students in Non-ZCB courses. Figure 2B shows the same results broken down by Ethnicity. Recall from the regression results in Table 3 that, relative to White students, the effect of ZCB differed significantly only for Asian students, who had a 0.89 probability of passing in ZCB courses and a 0.80 probability of passing in non-ZCB courses. Although not statistically reliable, a similar trend appears for Black students and students of other ethnicities.
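One common way to produce covariate-corrected probabilities like those in Figure 2A is to set every student's ZCB indicator to 1, then to 0, average the model's predicted probabilities across the sample, and compare the two averages. The sketch below does this with hypothetical coefficients and covariates, holding the random effects at zero (an average course and instructor). It illustrates the general technique and is not necessarily how Figure 2 was computed; tools such as R's emmeans package instead evaluate predictions over a reference grid, which can give somewhat different numbers.

```python
import math

def inv_logit(eta: float) -> float:
    """Logistic function: maps log-odds to a probability."""
    return 1.0 / (1.0 + math.exp(-eta))

def marginal_pass_probability(students, coefs, zcb: int) -> float:
    """Average predicted probability of passing when every student's ZCB
    indicator is set to `zcb`, keeping their other covariates as observed.
    Random intercepts are set to zero (a 'typical' course and instructor)."""
    total = 0.0
    for covariates in students:
        eta = coefs["intercept"] + coefs["zcb"] * zcb
        eta += sum(coefs[name] * value for name, value in covariates.items())
        total += inv_logit(eta)
    return total / len(students)

# Hypothetical log-odds coefficients and covariates, for illustration only.
coefs = {"intercept": -2.0, "zcb": 0.35, "prior_gpa": 1.1, "pell": -0.25}
students = [
    {"prior_gpa": 3.2, "pell": 1},
    {"prior_gpa": 2.5, "pell": 0},
    {"prior_gpa": 3.8, "pell": 1},
]
p1 = marginal_pass_probability(students, coefs, zcb=1)
p0 = marginal_pass_probability(students, coefs, zcb=0)
print(f"ZCB: {p1:.2f}  Non-ZCB: {p0:.2f}")
```

Averaging predictions on the probability scale, rather than plugging average covariates into the model, respects the nonlinearity of the logistic link; the per-ethnicity panel would repeat the same calculation within each group using the interaction model.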

Figure 2. Estimated marginal probability of passing after controlling for confounding variables. (A) The overall main effect of ZCB, generated using the main effect model. (B) The interaction between ethnicity and ZCB, generated using the interaction model. Error bars show standard errors.

As a final observation, the marginal and conditional R² values (which represent the variance explained by the fixed effects and by the fixed plus random effects, respectively) were quite low for both the completion and passing models. For the main effect models, the marginal/conditional R² values were 0.08/0.17 for course completion and 0.19/0.29 for course passing. R² values can be interpreted as the proportion of variance in the outcome variable accounted for by a model. While low R² values are fairly common in social science research, they are important to point out because they indicate that the majority of the variance in course completion and passing was not accounted for by these models. Although the factors examined here are important and have real influence, together they explain less of the variation in student outcomes than the factors we did not measure.
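For logistic mixed models, marginal and conditional R² are commonly computed on the latent (logit) scale using the definition of Nakagawa and Schielzeth (2013), in which the residual variance is fixed at π²/3. The sketch below implements that definition; we have not confirmed that it is the exact variant behind the values reported above, and the variance components in the example are hypothetical.

```python
import math

def r2_marginal_conditional(var_fixed: float, var_random: float):
    """Marginal and conditional R^2 for a logistic mixed model on the latent scale.

    var_fixed:  variance of the fixed-effect linear predictor across observations
    var_random: sum of the random-intercept variances (here course + instructor)
    The logit link's residual variance is fixed at pi^2 / 3.
    """
    var_resid = math.pi ** 2 / 3
    total = var_fixed + var_random + var_resid
    marginal = var_fixed / total                    # fixed effects only
    conditional = (var_fixed + var_random) / total  # fixed + random effects
    return marginal, conditional

# Hypothetical variance components for illustration only.
r2_m, r2_c = r2_marginal_conditional(var_fixed=0.9, var_random=0.3 + 0.2)
print(f"marginal R2 = {r2_m:.2f}, conditional R2 = {r2_c:.2f}")
```

The gap between the two values reflects the variance attributed to the course and instructor intercepts; when that gap is sizable, as in the models above, course- and instructor-level differences matter alongside the student-level predictors.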

5. Discussion

In this study, we examined the effect of adopting zero-cost books (ZCB) on the likelihood of students completing as well as passing a course. We examined these effects in a demographically diverse sample of students enrolled in a range of course topics at Houston Community College. After controlling for a variety of confounding factors and course/instructor level effects, we found that the adoption of ZCB had no effect on whether students completed their course, but it did increase the probability of passing a course. We found no evidence that this effect depended on students' Pell status, and only some evidence that it varied across ethnic groups.

5.1. Implications for the Access Hypothesis

Next, we discuss these findings with respect to the access hypothesis (Grimaldi et al., 2019). As discussed in the introduction, the access hypothesis provides an explanatory mechanism for why low cost materials might ultimately lead to better outcomes for students. Specifically, students who would not otherwise have access to their course materials because of their high cost no longer face this barrier. If we further assume that course materials help students learn the course topics, then we expect these students to perform better in the course. However, one of the most important findings of that study is that the predicted benefits apply only to a subset of students. Practically, this means that benefits should be quite difficult to observe at an overall level, as most students will already have access to their materials and would therefore be unaffected by the adoption of ZCB. Moreover, benefits should be most pronounced for student subgroups that are likely to have trouble accessing materials, typically for financial reasons.
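
The dilution argument can be made concrete with a little arithmetic. If, as the access hypothesis assumes, only students who lacked materials stand to gain, then a group's average effect is roughly the share of such students times their individual gain. The shares and the gain below are hypothetical values chosen only for illustration.

```python
def expected_gain(share_without_access: float, gain_if_access: float) -> float:
    """Expected change in pass probability for a group, assuming (hypothetically)
    that only students who previously lacked materials benefit and that students
    who already had access are unaffected."""
    if not 0.0 <= share_without_access <= 1.0:
        raise ValueError("share_without_access must be a proportion")
    return share_without_access * gain_if_access


GAIN = 0.20  # hypothetical gain in pass probability for a student who newly gains access

# Hypothetical shares of students who could not obtain a purchased textbook.
groups = {"all students": 0.10, "financially constrained subgroup": 0.40}

for name, share in groups.items():
    print(f"{name}: expected gain = {expected_gain(share, GAIN):.3f}")
```

With these placeholder numbers, a 20-point gain for affected students shrinks to a 2-point average gain across all students but remains an 8-point gain in a subgroup where 40% lacked access, which is why the hypothesis predicts effects concentrated in particular subgroups.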

We did observe positive effects of ZCB on student pass rates, as predicted by the access hypothesis. However, we did not observe the predicted interaction between Pell status and ZCB. One interpretation of this lack of an interaction is that a mechanism other than access might explain the effects of ZCB on student passing. Another interpretation is that our Pell variable may not have been well-suited for identifying students of low socio-economic status. As mentioned previously, Pell eligibility is typically used as a proxy for low socio-economic status because eligibility for funding is based on the income of the student or parents (if the student is a dependent) and on expected family contribution. Many schools collect these data to apply for Pell grants on behalf of students. But even in these cases, as national studies have demonstrated (McKinney and Novak, 2015; Delisle, 2017), Pell status is an imperfect gauge of SES. In the present study, we were at a further disadvantage because we were unable to access FAFSA information directly due to privacy concerns. Instead, we were only able to determine whether a student actually received Pell funds in a given semester.

We attempted to remedy some of the deficiencies of "Pell recipient" status by examining the interaction of ZCB and ethnicity. While we did see a trend toward an effect for Black students, the only significant interaction we found was with Asian students, who were more likely to pass in ZCB than in Non-ZCB courses. Whether this was a substantive finding or a random false positive is not clear. We did not expect this finding, as we know of no systematic difference in access between Asian students and other students that would predict such a pattern. Asian populations in Houston typically show levels of economic prosperity similar to those of White residents (Klineberg, 2019). Thus we are left without an explanatory mechanism, and any explanation at this point would be purely conjecture.
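
One reason to treat a single significant interaction among several ethnicity contrasts with caution is the familywise error rate: if each contrast is tested at α = 0.05 and every true effect is zero, the chance that at least one test is significant grows with the number of tests. The sketch assumes independent tests, which is only an approximation for contrasts estimated from one model, and the numbers of contrasts shown are illustrative rather than the exact number tested in this study.

```python
def familywise_error(alpha: float, n_tests: int) -> float:
    """Probability of at least one false positive among n independent tests
    when every null hypothesis is true: 1 - (1 - alpha)^n."""
    return 1.0 - (1.0 - alpha) ** n_tests


for k in (1, 3, 5):
    print(f"{k} contrast(s): P(at least one false positive) = {familywise_error(0.05, k):.3f}")
```

For example, with five independent contrasts the probability of at least one spurious significant result is about 0.23, more than four times the nominal 0.05.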

In their original presentation of the access hypothesis, Grimaldi et al. (2019) focused on performance-based outcomes (such as grades or test results). However, it is reasonable to extrapolate that access might affect course completion, if we assume that students are more likely to withdraw from a course when they are performing poorly before the withdrawal deadline. Course completion (or, conversely, withdrawal) is a frequent measure in OER efficacy studies (see Clinton and Khan, 2019; Hilton, 2019), and evidence that OER reduce withdrawal rates has been somewhat more robust than evidence that OER improve pass rates. However, in this study, we observed no benefit of ZCB on course completion. Moreover, we found no evidence of differential effects of ZCB on course completion by subgroup. Thus, these results would seem not to support the access hypothesis. It is important to note, however, that course completion is a second-order effect of access. Concretely, a student's performance would have to be positively impacted by ZCB, and that benefit would then have to influence their decision to complete the course. Because the joint probability of both events can be no larger than the probability of either one alone, and considering how difficult it is to observe effects of access at all, it is not particularly surprising that we do not see an effect on completion rates.
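
The second-order argument can be written as a product of conditional probabilities. For ZCB to change a student's completion, the student must have lacked access, must have had their performance improved by gaining access, and must then have changed their withdrawal decision because of that improvement. The probabilities below are hypothetical placeholders, and the chain assumes each step is conditional on the previous ones.

```python
from math import prod


def chained_probability(*conditional_probs: float) -> float:
    """P(A and B and C ...) = P(A) * P(B | A) * P(C | A, B) * ..."""
    for p in conditional_probs:
        if not 0.0 <= p <= 1.0:
            raise ValueError("each probability must lie in [0, 1]")
    return prod(conditional_probs)


p_lacked_access = 0.10       # hypothetical share of students without materials
p_performance_helped = 0.50  # hypothetical, given the student lacked access
p_decision_changed = 0.30    # hypothetical, given performance was helped

p_pass_path = chained_probability(p_lacked_access, p_performance_helped)
p_completion_path = chained_probability(
    p_lacked_access, p_performance_helped, p_decision_changed
)
print(f"performance path: {p_pass_path:.3f}, completion path: {p_completion_path:.3f}")
```

Under these placeholder values, about 5 in 100 students could show a performance benefit but only about 1.5 in 100 a completion benefit; because every factor lies in [0, 1], each added link in the chain can only keep the product the same or shrink it.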

In sum, the access hypothesis does not adequately explain the results here. As we pointed out, several limitations of our dataset and variables may reasonably explain why the predictions of the access hypothesis were not observed. Of course, this is not to say that the access hypothesis is rejected or that access has no effect on student learning. Access is only one mechanism underlying the potential benefits of OER and low cost materials, and other mechanisms may have been at play in this particular implementation of ZCB.

5.2. Limitations

There are several limitations of this study that must be addressed. The biggest limitation is that we did not conduct a true experiment in which students and instructors were randomly assigned to ZCB and Non-ZCB classes. Selection bias on the part of students was less of a concern in this study, as students had no indication of whether a course they were signing up for was ZCB or not. Nevertheless, there may have been unobserved reasons why students selected particular courses or instructors that were correlated with ZCB status. While we examined only instructors who taught with both ZCB and Non-ZCB materials, thereby allowing some control of instructor level effects, instructor bias is still a concern. For example, instructors may have adopted ZCB believing it would be effective, and therefore put more effort or attention into those courses.

Another limitation of this study is that there are other possible explanations for the effects we observed that have nothing to do with the cost savings afforded by ZCB. For example, when an instructor adopts any new course materials, it is necessary to redesign many aspects of the course, including new lecture slides, new exams, etc. In the process of this restructuring, the course may be improved, and the resulting improved outcomes may have more to do with that redesign than with the cost of the materials. Alternatively, the exams may have been inadvertently made easier, which would result in higher pass rates. The new ZCB materials may also have been of higher quality than the commercial materials they replaced. Recall that our sample was disproportionately composed of students in online courses; the OER materials may have been better suited to the largely digital environment in which students were studying. Without a tightly controlled experiment, it is impossible to rule out any of these alternative explanations. Indeed, as we noted in the results section, the vast majority of variance in course completion and passing was unaccounted for by our models, making unobserved confounds a real concern. In sum, while these results suggest promising positive effects of ZCB on student achievement, it is important to recognize that they are far from conclusive.

Both of these limitations could be minimized in future research by employing mixed methods approaches. For instance, it is important to understand the economic and social situation of students to better understand how their observed characteristics interact with their access to learning materials. Similarly, information about the course design, effort, and disposition of instructors could be obtained through careful qualitative data collected from those instructors.

6. Conclusions

This study examined the impact of Zero Cost Books (including OER and other free resources) on student learning outcomes, specifically course completion rates and pass rates. We isolated a single instance of each student in our collected data, attempted to control for instructor effects, and used statistical modeling to control for student-level effects. These methods are among the strongest currently available in OER research. Moreover, we focused our analysis on testing the "access hypothesis," which provides an explanatory mechanism for why open and free resources might improve student learning outcomes. While we observed positive effects of ZCB, including an overall improvement in pass rates and a specific improvement in pass rates for Asian students, the specific predictions of the access hypothesis were not observed. There are likely a number of different mechanisms that could explain why switching to open and free resources might improve student learning outcomes, and why it would improve those outcomes for specific student populations more than others. More work needs to be done to identify those mechanisms and to design research studies that can test them.

Data Availability Statement

The data analyzed in this study are subject to the following licenses/restrictions: IRB restrictions required secure access for the authors only. Requests to access these datasets should be directed to Nathan Smith, nathan.smith2@hccs.edu.

Ethics Statement

The studies involving human participants were reviewed and approved by Houston Community College, IRB. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author Contributions

NS conceptualized the project, collected the data, and wrote large sections of the manuscript. PG conducted the majority of the data analysis and wrote large sections of the manuscript. DB contributed to the analysis and made edits to the manuscript. All authors contributed to the article and approved the submitted version.

Funding

Houston Community College received a private grant of $300,000 over 3 years from the Kinder Foundation for the implementation of the Zero Cost Textbooks Degree program.

Conflict of Interest

NS oversees the Z-Degree and OER program at Houston Community College. DB was employed by OpenStax, a provider of free, open source educational resources. PG was employed by OpenStax for the duration of this project.

Acknowledgments

Thank you to the Office of Institutional Research at Houston Community College, especially Dr. Martha Oburn and Hazel Milan for helping to collect data. And thank you to the Kinder Foundation for their support.

Footnote

1. "Open courseware" is publisher-based software that provides learning resources to supplement OER. Courseware may include interactive video and graphic components, formative and summative assessments, communication tools, and other features to assist in the delivery of instructional materials. Many for-profit and non-profit companies provide courseware to support OER, usually at low cost to students.

References

Allen, G., Guzman-Alvarez, A., Molinaro, M., and Larsen, D. S. (2015). Assessing the Impact and Efficacy of the Open-Access ChemWiki Textbook Project. Educause Learning Initiative.

Bliss, T. J. (2013). A model of digital textbook quality from the perspective of college students (Ph.D. thesis). Brigham Young University, Provo, Utah.

Bowen, W. G., Chingos, M. M., Lack, K. A., and Nygren, T. I. (2014). Interactive learning online at public universities: evidence from a six-campus randomized trial. J. Policy Anal. Manage. 33, 94–111. doi: 10.1002/pam.21728

Chiorescu, M. (2017). Exploring open educational resources for College Algebra. Int. Rev. Res. Open Distance Learn. 18, 50–59. doi: 10.19173/irrodl.v18i4.3003

Choi, Y. M., and Carpenter, C. (2017). Evaluating the impact of open educational resources: a case study. Portal 17, 685–693. doi: 10.1353/pla.2017.0041

Clinton, V. (2018). Savings without sacrifice: a case report on open-source textbook adoption. Open Learn. 33, 177–189. doi: 10.1080/02680513.2018.1486184

Clinton, V., and Khan, S. (2019). Efficacy of open textbook adoption on learning performance and course withdrawal rates: a meta-analysis. AERA Open 5:2332858419872212. doi: 10.1177/2332858419872212

Colvard, N. B., Watson, C. E., and Park, H. (2018). The impact of open educational resources on various student success metrics. Int. J. Teach. Learn. Higher Educ. 30, 262–275.

Croteau, E. (2017). Measures of student success with textbook transformations: the affordable learning Georgia initiative. Open Praxis 9:93. doi: 10.5944/openpraxis.9.1.505

Delisle, J. (2017). The Pell Grant Proxy: A Ubiquitous but Flawed Measure of Low-Income Students. Evidence Speaks Reports, Vol 2, #26. Washington, DC: Brookings Institution, Economic Studies.

Fischer, L., Hilton, J., Robinson, T. J., and Wiley, D. A. (2015). A multi-institutional study of the impact of open textbook adoption on the learning outcomes of post-secondary students. J. Comput. Higher Educ. 27, 159–172. doi: 10.1007/s12528-015-9101-x

Florida Virtual Campus (2018). 2018 Student Textbook and Course Materials Survey: Results and Findings. Technical report, Office of Distance Learning & Student Services, Tallahassee, FL.

Griggs, R. A., and Jackson, S. L. (2017). Studying open versus traditional textbook effects on students' course performance: confounds abound. Teach. Psychol. 44, 306–312. doi: 10.1177/0098628317727641

Grimaldi, P. J., Basu Mallick, D., Waters, A. E., and Baraniuk, R. G. (2019). Do open educational resources improve student learning? Implications of the access hypothesis. PLoS ONE 14:e0212508. doi: 10.1371/journal.pone.0212508

Gurung, R. A. R. (2017). Predicting learning: Comparing an open educational resource and standard textbooks. Scholarsh. Teach. Learn. Psychol. 3, 233–248. doi: 10.1037/stl0000092

Hilton, J. (2019). Open educational resources, student efficacy, and user perceptions: a synthesis of research published between 2015 and 2018. Educ. Technol. Res. Dev. 68, 853–876. doi: 10.1007/s11423-019-09700-4

Hilton, J. III, and Laman, C. (2012). One college's use of an open psychology textbook. Open Learn. 27, 265–272. doi: 10.1080/02680513.2012.716657

Hilton, J. III. (2016). Open educational resources and college textbook choices: a review of research on efficacy and perceptions. Educ. Technol. Res. Dev. 64, 573–590. doi: 10.1007/s11423-016-9434-9

Hilton, J. L. III., Gaudet, D., Clark, P., Robinson, J., and Wiley, D. (2013). The adoption of open educational resources by one community college math department. Int. Rev. Res. Open Distance Learn. 14, 37–50. doi: 10.19173/irrodl.v14i4.1523

Jhangiani, R., Dastur, F., Grand, R. L., and Penner, K. (2018). As good or better than commercial textbooks: students' perceptions and outcomes from using open digital and open print textbooks. Can. J. Scholarsh. Teach. Learn. 9. doi: 10.5206/cjsotl-rcacea.2018.1.5

Klineberg, S. L. (2019). The 2019 Kinder Houston Area Survey. Rice University, Kinder Institute for Urban Research.

Lovett, M., Meyer, O., and Thille, C. (2008). The open learning initiative: measuring the effectiveness of the OLI statistics course in accelerating student learning. J. Interact. Media Educ. 2008:13. doi: 10.5334/2008-14

McKinney, L., and Novak, H. (2015). FAFSA filing among first-year college students: who files on time? Who doesn't? And why does it matter? Res. Higher Educ. 56, 1–28. doi: 10.1007/s11162-014-9340-0

Robinson, T. J. (2015). The effects of open educational resource adoption on measures of post-secondary student success (Theses and Dissertations), 5815. Available online at: https://scholarsarchive.byu.edu/etd/5815

Ross, H. M., Hendricks, C., and Mowat, V. (2018). Open textbooks in an introductory sociology course in Canada: student views and completion rates. Open Praxis 10:393. doi: 10.5944/openpraxis.10.4.892

Keywords: OER, open, education, socio-economic status, access hypothesis, cost, textbook, student success

Citation: Smith ND, Grimaldi PJ and Basu Mallick D (2020) Impact of Zero Cost Books Adoptions on Student Success at a Large, Urban Community College. Front. Educ. 5:579580. doi: 10.3389/feduc.2020.579580

Received: 02 July 2020; Accepted: 03 September 2020;

Published: 15 October 2020.

Edited by:

Tom Crick, Swansea University, United Kingdom

Reviewed by:

Robert Farrow, The Open University, United Kingdom

Ibrahim Yildirim, University of Gaziantep, Turkey

Copyright © 2020 Smith, Grimaldi and Basu Mallick. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nathan D. Smith, nathan.smith2@hcc.edu
