- Rossier School of Education, University of Southern California, Los Angeles, CA, United States

O’Keefe et al. (2018) did not sufficiently narrow the implicit theory-of-interest development to accurately address the targeted domain: potential development of an entirely new interest. This was revealed when current participants expressed alternative interpretations of the word “change” in the indicator’s stem. This study therefore sought to first characterize a way to think about implicit theories of interest and refine the wording. However, the revised items revealed low reliability in a targeted population of Singaporeans. Was this due to the manipulation of the questions or the new test population? This was evaluated by following the same sampling procedure as O’Keefe et al. (2018) and presenting participants with both the revised and the original versions of the items. Factor analysis revealed a preferred factor structure for both versions, with potential implications for understanding implicit theories, as well as the dimensions of implicit theories-of-interests specifically.

Introduction

Individual interests are often experienced as core to one’s identity—developed predispositions that are characterized by a desire to reengage content through one’s own volition (Hidi and Renninger, 2006). But how do we believe core interests develop? Our interests and passions are often experienced as if they emerged naturally, but assuming this is the only path for interest development limits our potential interests, closing our eyes to new experiences. Conversely, believing interests can be developed through effortful engagement opens doors to new opportunities in personal, academic, and professional domains (Harackiewicz et al., 2002). However, the effort individuals are willing to put into regulating their interest and enjoyment varies (Sansone and Morgan, 1992; Sansone et al., 1992, 1999; Renninger and Hidi, 2015), and the amount of effort we are willing to put in is thought to be restricted by our implicit beliefs about the nature of development.

Seeking to understand this inter-individual variation has led to a growing focus on creating instruments to measure the implicit beliefs that facilitate or limit the effort put toward exploring potential new interests (O’Keefe et al., 2017). To this end, O’Keefe et al. (2018) offered an instrument modeling an individual’s implicit theory of interest development (ITID) by minimally adapting Dweck’s classic mindset survey (implicit theories of intelligence, ITI) (Dweck, 1999). This survey on which ITID was based assumed a unidimensional construct for mindsets (implicit theories) ranging from beliefs that our intelligence level is fixed to beliefs that our intelligence level can be improved (or, more accurately, is malleable). Like ITI, O’Keefe et al.’s (2018) approach to ITID focuses on a single semantic dimension of “change.” However, instead of concerning itself with beliefs about the nature of performance improvement, O’Keefe et al. (2018) focuses on one’s perceived ability to generate new interests: an “entity” approach suggests that our interests are resistant to “change” while an “incremental” approach suggests that our interests may “change” with effort or experience. Up to this point, O’Keefe et al. (2018) has used this instrument to show how an implicit theory of interest may influence our likelihood to find readings outside our interest area interesting, but this instrument is still in its infancy and has not been widely used as of yet. This present study exposes shortcomings in the adapted form offered by O’Keefe et al. (2018), improves upon it with further adjustments to the survey, and suggests additional next steps based on participant feedback and resulting analysis.

ITID is of importance to researchers and practitioners in education because there may be initial resistance from students to fully engage in educational content and activities that are immediately dismissed as uninteresting. Assuming that interest is a valued part of education, it is important to point out that without an adequate instrument to measure ITID, its impact on a variety of personal and academic outcomes cannot be evaluated.

Theoretically, establishing the validity of an instrument that represents ITID is critical because this implicit theory is thought to play a mediating role in the relationship between “exposure to a potential interest” (an independent variable) and “effort put into the process of developing a new interest” (a dependent variable). This paper focuses on improvements to measuring this mediating variable.

Implicit Theories

What are implicit theories? Implicit theories or “lay theories” are informal, common-sense explanations that individuals hold about how the application of effort may cause changes in their skills, beliefs, or personality (Dweck, 1999, 2012a,b; Yeager et al., 2011). These implicit theories are thought to play a role in the development of cognitive structures called “meaning systems,” which individuals use to interpret, evaluate, or “make meaning of” the outcomes of their efforts, impacting one’s sense of self-efficacy and motivation across academic and non-academic domains (Bernecker and Job, 2019). We make sense of our successes, failures, opportunities, and limitations through implicit theories (Komarraju and Nadler, 2013).

A method of examining implicit theory is to identify the location of an individual’s beliefs along a continuum from the permanence of personal attributes (fixed or entity) vs. the flexibility of personal attributes (growth or incremental) with which individuals understand the potential for effort to affect those attributes. This continuum for implicit theories has been employed across a variety of studies that address different domains: ability to regulate your own anxiety level (Schroder et al., 2019), your level of shyness (Valentiner et al., 2011), your own emotion states (Schroder et al., 2015), your own body weight (Burnette, 2010), romantic relationship development (Franiuk et al., 2002), and domains as specific as focusing on chess playing ability (Tenemaza Kramaley and Wishart, 2020). While it is acknowledged that implicit theories in each of these domains may vary independently (Dweck et al., 1995a), it is also generally accepted that there may tend to be a positive manifold, where a more generalized way of approaching implicit theories emerges (Dweck et al., 1995b). By utilizing the instrument adapted by this class of studies, O’Keefe et al. (2018) is assuming ITID shares this single continuum.

Designing Instruments for Implicit Theories

The dominant approach for instrument design in implicit theory research has been to utilize the “find-and-replace” method (Chiu et al., 1997a,b; Burnette, 2010; Valentiner et al., 2011) for instrument adaptation from the highly reliable 4-item instrument developed for measuring the entity to incremental continuum of implicit theories (Dweck et al., 1995a; Dweck, 1999), i.e., “You can be exposed to (x), but your (x) won’t really change.” However, what exactly do participants believe “change” is referring to: what dimensions of the domain are malleable or fixed? A basic semantic analysis of some of the uses of this find-and-replace methodology in implicit theory research will elucidate some common problems found in survey adaptation (Sousa et al., 2017). Here, I will discuss the semantic domains of the word “change” and what was considered potentially problematic, a priori.

Unidimensional Domains

Interpretations for implicit theory instruments are straightforward when there is essentially one semantic dimension along which a personal attribute can be evaluated. As a result, some domains intrinsically lend themselves well to measuring implicit theory across a single dimension as a change in degree or intensity. Take for example the use of the word “change” in the following sample items indicating an entity theory-of-intelligence (Dweck, 1999) and entity theory-of-anxiety, respectively (Schroder et al., 2019):

a. To be honest, you can’t really change how intelligent you are.

b. To be honest, you can’t really change how anxious you are.

In this context, the complement of “change” asks, “How intelligent are you?”: a unidimensional measure indicating degree from “not at all intelligent” to “very intelligent”; the same can be seen with reference to anxiety level from “not at all anxious” to “very anxious.” An incremental implicit theory would indicate that one believes that with the application of effort one can “change” this single scaled numerical value.

Domains of change may also have intrinsic properties that restrict potential interpretations. The general intelligence quotient has been widely accepted across the general population as a measure of overall intelligence, and the numerical quotient itself has become the construct (Boring, 1923). Interpretations are essentially limited by the semantic space of the domain.

This is not to say these domains cannot be interpreted in multiple ways, but the survey item wording can restrict this interpretation to a single dimension. Simply dropping the “how” from the above items could allow for multiple interpretations of change in anxiety and intelligence. Where one runs into trouble is when adapting these items to other constructs where “change” may be more widely interpreted. Below are two examples that are similar to ITID where assuming a unidimensional construct may be problematic.

Multidimensional Domains

Like ITID, not all domains lend themselves so easily to a single dimensional interpretation. The following are taken from implicit theories of emotion regulation and personality respectively (Schroder et al., 2015):

c. No matter how hard they try, people can’t really change the emotions they have.

d. People can do things differently, but the important parts of who they are can’t really be changed.

While these items are still attempting to place individuals along the implicit theory continuum from entity to incremental, the object of the word “change” is unclear. Ratings are no longer reflective of a single dimension and may not have a scaled interpretation at all. In (c), what does it mean to change emotions? Does it mean to regulate the degree of emotions in the moment? Does it mean to change the type of emotion from anger to joy? Does it mean to change the set of emotions an individual can experience? These are all potential interpretations. Similar issues arise in the personality item. If there are multiple dimensions along which one may interpret the items, we cannot be sure that participants are interpreting the items along the desired dimension, which compromises the reliability and validity of the instrument itself (McDermott and Sarvela, 1998). The semantic spaces of emotions and personality are diverse, making inferences from item responses questionable. Importantly, while implicit theories are associated with separate domains, it is also likely that there are different semantic dimensions within a domain: one could answer the questions concerning (c) above differently, although there may be a positive manifold. It is therefore essential to be as specific as possible until we have better understandings of the constructs under question. ITID has similar issues and Study 2 will explore these in participant responses.

One or Two Factors?

In addition to this potential difference in identifiable dimensions based on the domain’s semantic space for the potential implicit theories, there is also some question in the literature as to whether incremental and entity implicit theories are anchors on the ends of a continuum or two separate but related belief systems. One could potentially believe that core interests are very resistant to change (entity theory) while simultaneously believing that with effort they can change even though they are resistant to change (incremental theory). This two-factor interpretation in mindset theory is not without other examples.

The implicit theory-of-creativity appears to load separately on both incremental and entity (Hass et al., 2016; Puente-Diaz and Cavazos-Arroyo, 2019). Cury et al. (2006) similarly identified two separable implicit theories with reference to math skill with a correlation of -0.36 between the factors. Also, even implicit theory-of-intelligence appears to, at times, load on two separable constructs (Faria and Fontaine, 1997; Abd-El-Fattah and Yates, 2006). This suggests that it is always worth evaluating the factor structure when adapting implicit theory instruments.

If one combines this two-factor possibility with the potential for multiple semantic dimensions, we can rapidly see the problems of reliability and validity compounding when adapting an instrument.

Cronbach’s Alpha

Internal consistency of ITID was supported by Cronbach’s alpha in the three studies in O’Keefe et al. (2018), each of which reported acceptable reliability. However, these studies did not evaluate the homogeneity of variance among the items.

Too often, Cronbach’s alpha is reported for new constructs without an analysis of the factor structure. Cronbach’s alpha is a measure of internal consistency. However, internal consistency is a necessary but not sufficient condition for the measurement of homogeneity of variance (Cortina, 1993; Green et al., 1977). In other words, one may have high internal consistency when potentially separate factors covary. Therefore, factor analysis is a necessary step in evaluating the reliability of the revised instrument–particularly when there are competing theories suggesting both single and multiple factor approaches as is seen above.
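The point that separate but covarying factors can still yield a high alpha can be illustrated with a minimal sketch. The data below are simulated and are not drawn from any of the studies discussed here; the function name `cronbach_alpha`, the factor correlation of 0.6, and the noise level are all assumptions chosen only for illustration.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents, n_items) score matrix."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_variances = items.var(axis=0, ddof=1)      # variance of each item
    total_variance = items.sum(axis=1).var(ddof=1)  # variance of the sum score
    return (k / (k - 1)) * (1.0 - item_variances.sum() / total_variance)


# Simulated (hypothetical) data: two distinct latent factors that covary.
rng = np.random.default_rng(0)
n = 5000
factor_correlation = 0.6  # assumed value, for illustration only
cov = [[1.0, factor_correlation], [factor_correlation, 1.0]]
latent = rng.multivariate_normal([0.0, 0.0], cov, size=n)

# Items 1-2 load on the first factor, items 3-4 on the second.
loadings = np.column_stack(
    [latent[:, 0], latent[:, 0], latent[:, 1], latent[:, 1]]
)
scores = loadings + rng.normal(scale=0.6, size=(n, 4))

alpha = cronbach_alpha(scores)
print(f"alpha for four items generated from two factors: {alpha:.2f}")
```

Under these assumed settings alpha exceeds the conventional 0.70 threshold even though the items were generated from two factors, which is why alpha alone cannot establish that a scale is unidimensional.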

As described above, O’Keefe et al.’s (2018) ITID instrument suffers from two potential problems common in survey adaptation (Sousa et al., 2017): a lack of structural validity (whether the number of factors it measures is accurate) and content validity (whether it appears to measure what it is supposed to measure). In study one below, I refined the instrument by replacing semantically ambiguous words with more descriptive language to improve the content validity. This was verified in the second study where participants were presented with both instruments and given the opportunity to describe the difference to better understand the semantic scope of the items. A factor analysis on both was conducted and revealed that a two-factor structure was a better fit for both the original and the revised. The result is a carefully adapted six-item survey that isolates the dimension of ITID suggested by O’Keefe et al. (2018) while respecting its discriminant validity from the other potential dimensions of implicit theories of interest development revealed by participants.

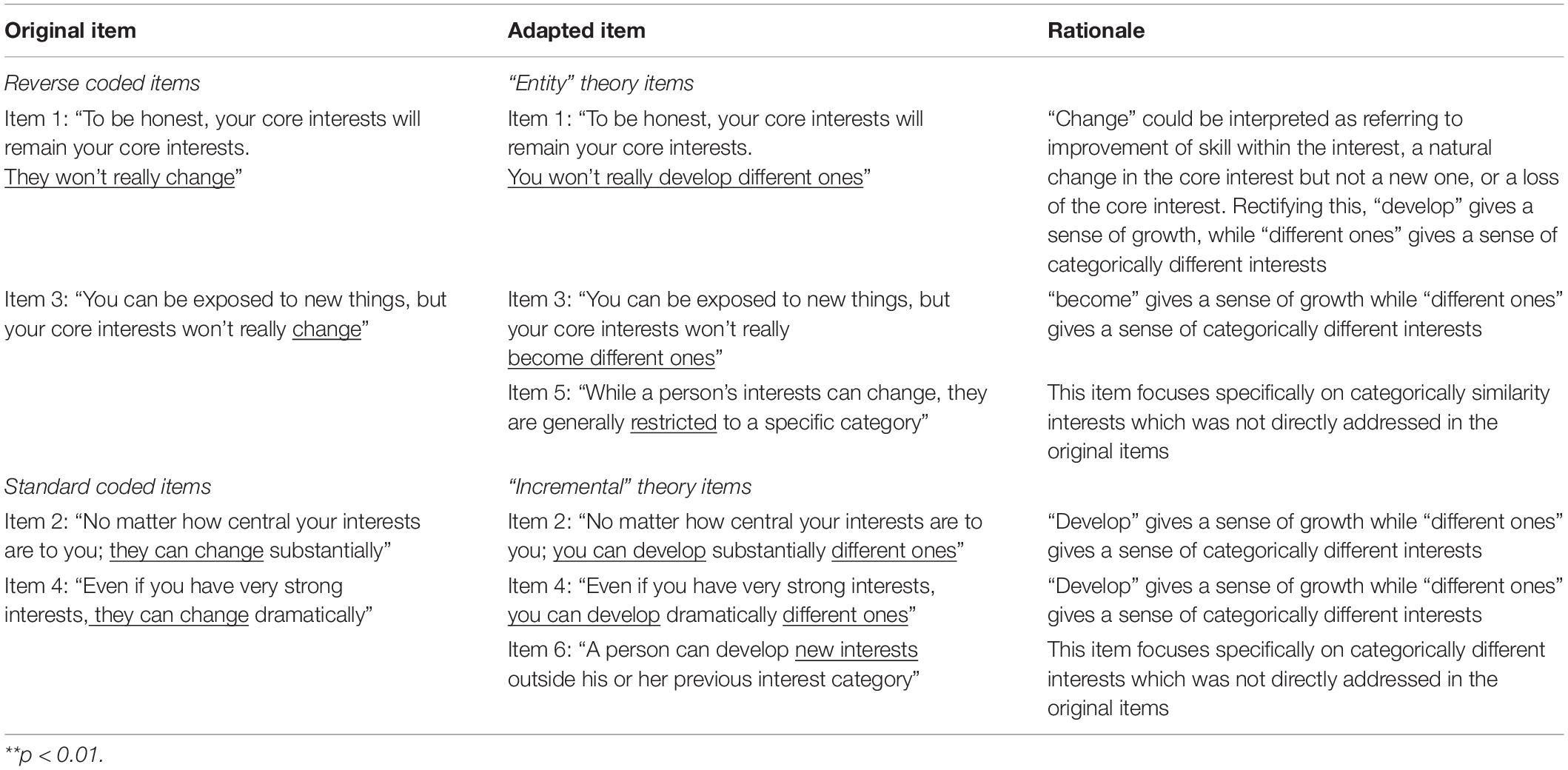

Survey Adaptation

The first step in the adaptation of the instrument using the find-and-replace methodology should be to determine if the instrument is indeed following the same semantic dimensions. Based on the semantic analysis presented above critiquing the use of the word “change” across implicit theory instruments, the word “change” was too semantically broad allowing too much room for participant interpretation. Therefore, the word change was removed from the instrument and replaced with more specific language which attempted to get at the unidimensional aspect of the survey suggested by O’Keefe et al. (2018). Under the guidance of field experts, the instrument was adapted and reviewed via pilot testing with graduate students and researchers at the National Institute of Education, Singapore and international collaborators. Successive refinements resulted in the wording here which was then used in a larger study to examine cultural influences on interest development. The changes and rationale are listed in Table 1.

Study 1: Administration of Revised Instrument With Singaporean Sample

This study introduced the adapted set of items attempting to constrain the items to address the targeted domain of O’Keefe et al. (2018) to a sample of participants from a wider study of interest development in Singapore. While the purposes of this study were wider, the current analysis is restricted to the validity of the instrument at hand, conducting a confirmatory factor analysis on the results.

Methods

Participants

The present study was restricted to college students in graduate courses at a public autonomous research university in Singapore. Keeping in line with the larger study, not discussed here, the 82 students sampled were Singaporean which was verified by the number of years they lived in Singapore matching their age (50 female students, 32 male students; age: M = 25.54 years, SD = 6.88). This study targeted graduate students because of the relative stability of their interests as moving them toward specific areas of study and career objectives. Participants were paid $10SGD in the form of a gift card. Surveys were completed online.

To detect a medium effect size, a sample of at least 80 participants was estimated for the larger study; however, this was also in line with the planned confirmatory factor analysis for this instrument. Kline (2014) suggests that it is important to consider the number of participants in relation to the number of items, citing a high estimate of 20 to 1 (Hair et al., 1995). However, more recent analysis has suggested a more complicated relationship between the number of indicators, factors, and expected factor loading. Given the expected factor loadings at 0.80, an 80-participant sample was viewed as minimally sufficient for 1–2 factors (Wolf et al., 2013).

Procedure

Participants were told that they would be asked to answer questions related to their interests. After consenting to the study via an online presentation of a consent form, participants answered four questions in the format described below, hoping to limit the scope of item interpretation.

Measures

Implicit theories of interest—revised

The revised questions are displayed in Table 1 with the following Likert scale: (1 = strongly disagree, 7 = strongly agree; α = 0.69, M = 4.32, SD = 0.91). Assuming a 1-factor solution, the first and third items were reverse coded. These four items were the first items presented in this larger study, so this study was not concerned about the influence of later surveys on the responses.
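Reverse coding on a 7-point scale maps 1 to 7, 2 to 6, and so on, so that all items point in the same direction before averaging. The following is a minimal sketch of that step; the response values are hypothetical, and the helper name `reverse_code` is an assumption introduced only for this illustration.

```python
import numpy as np

SCALE_MIN, SCALE_MAX = 1, 7  # 7-point Likert scale used in this study


def reverse_code(responses, scale_min=SCALE_MIN, scale_max=SCALE_MAX):
    """Flip Likert responses so that scale_min becomes scale_max and vice versa."""
    return scale_min + scale_max - np.asarray(responses)


# Hypothetical responses from two participants to the four items.
raw = np.array([
    [2, 6, 1, 7],
    [5, 3, 4, 4],
])

reverse_items = [0, 2]  # first and third items (zero-based column indices)
scored = raw.copy()
scored[:, reverse_items] = reverse_code(raw[:, reverse_items])

# One-factor composite: mean of the four items after reverse coding.
composite = scored.mean(axis=1)
print(scored)
print(composite)
```

Note that this step only makes sense under a one-factor solution; if the two item types measure separable constructs, each pair would be scored on its own without reversal.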

Analysis

The Cronbach’s alpha was “questionable” for the four items. This suggested that the items were not tau equivalent; however, analysis continued as the instrument almost met the minimum standard for acceptability.

Following this low reliability, it was hypothesized that the two-factor structure may be a better fit. The revised structure would be consistent with the literature above and posited separate but correlated incremental and entity implicit theories. Being aware of this possibility a priori and taking into account the questionable reliability of the revised scale, a confirmatory factor analysis was conducted.

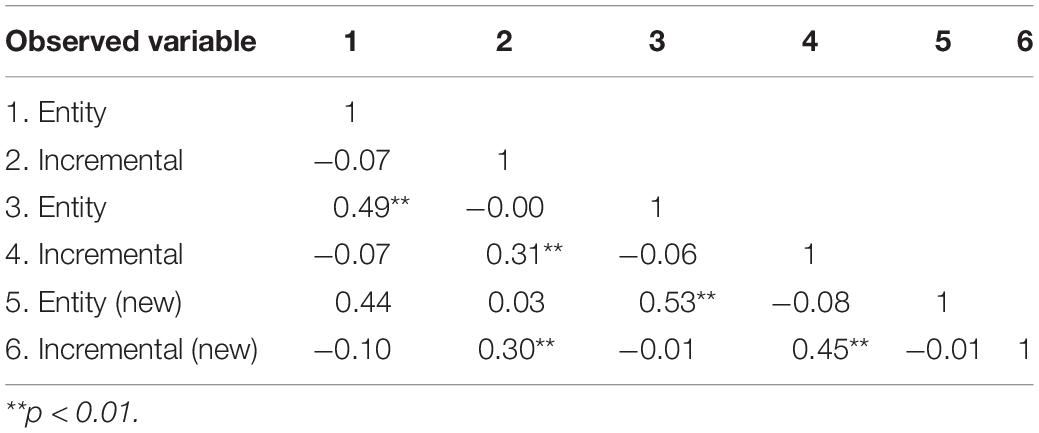

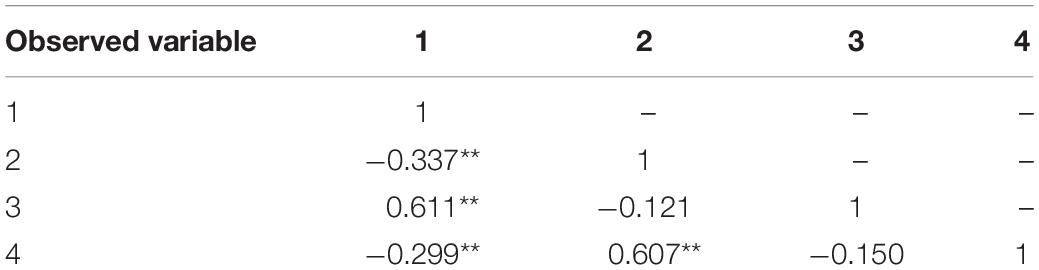

The CFA was conducted using Mplus version 8 software (Muthén and Muthén, 1998–2017). All four items from the revised Implicit Interest Survey were used. However, in the scoring, this analysis did not reverse score the items, as there is no reason to do this if the incremental and entity implicit theories are indeed separable. Interitem correlations are shown in Table 2. I evaluated the assumptions of multivariate normality and linearity and observed no multivariate outliers (p < 0.001). There were no missing data. I chose maximum likelihood parameter estimation over other estimation methods [weighted least squares, two-stage least squares, asymptotically distribution-free (ADF)] because the data were distributed normally (Kline, 2005). Item one and item four were set with a factor loading of 1 while items 2 and 3 were free to vary. In addition, while all residuals were free to vary in the one-factor model, the two-factor model had a negative residual for item one. In the second model, the residual for the first item was therefore fixed at zero. This is acceptable practice, as zero residuals are possible and do occur when one of the items fits too well with the factor in the data set. Notably, this residual was also not significant. Without this constraint, the residual covariance matrix (theta) is not positive definite and estimation exceeds the iteration limit.

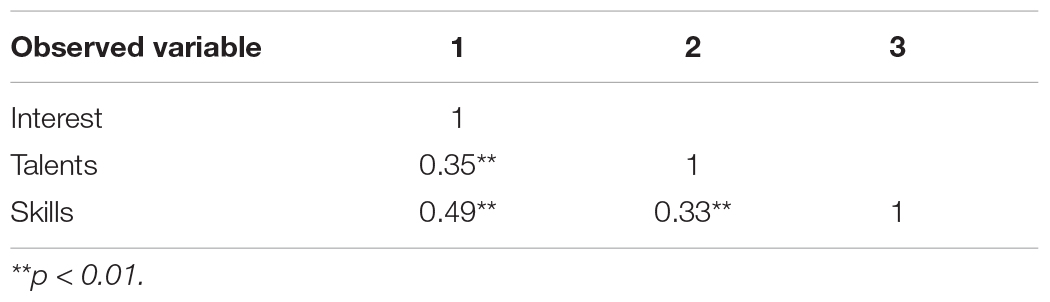

Table 2. Correlations for CFA analysis of revised four-item survey on Singaporean graduate students.

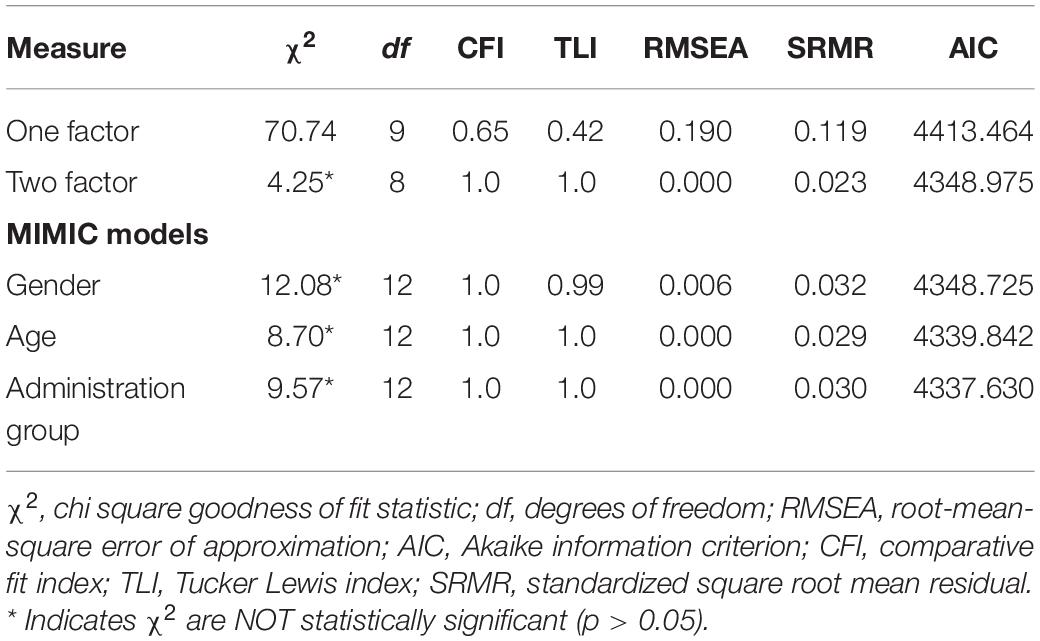

Results

As can be seen in Table 3, the two-factor model is a significantly better fit. All fit indices were appropriate, and only the two-factor model had a nonsignificant chi-square. The hypothesized two-factor model appears to be a good fit for the data: CFI and TLI approach one. The RMSEA (0.00) and SRMR (0.024) approached zero. In accordance with Hu and Bentler’s (1999) recommendations for fit indices, the residual as evaluated by the RMSEA is below 0.06 and the SRMR is below 0.08, and the comparative fit indices suggest good model fit with the TLI and CFI greater than 0.95. In addition, the lower value for the Akaike Information Criterion for the two-factor model in contrast to the one-factor model also suggests that the two-factor model exhibits less entropy.
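The cutoff comparison described above can be written out as a small check. This is a sketch only: the function name `check_fit` is hypothetical, the RMSEA and SRMR values are those reported in the text, and the CFI and TLI values of 1.00 are placeholders, since the text states only that both approach one.

```python
def check_fit(rmsea, srmr, cfi, tli):
    """Compare fit indices against the cutoffs cited from Hu and Bentler (1999)."""
    return {
        "RMSEA < 0.06": rmsea < 0.06,
        "SRMR < 0.08": srmr < 0.08,
        "CFI > 0.95": cfi > 0.95,
        "TLI > 0.95": tli > 0.95,
    }


# RMSEA and SRMR as reported for the two-factor model;
# CFI and TLI are placeholder values (assumed), not reported figures.
two_factor = check_fit(rmsea=0.00, srmr=0.024, cfi=1.00, tli=1.00)

for criterion, passed in two_factor.items():
    print(f"{criterion}: {'met' if passed else 'not met'}")
```

Cutoffs of this kind are conventions rather than strict tests, so the check is best read alongside the chi-square and AIC comparison in Table 3.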

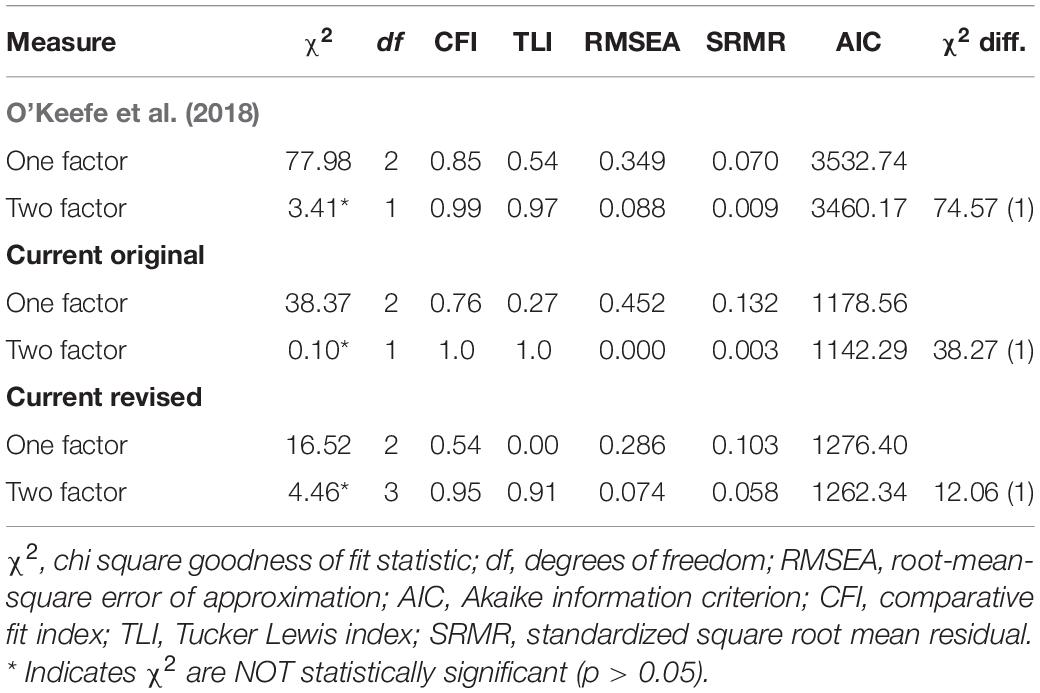

Table 3. Fit indices for alternative factor models of implicit theory-of-interest development on Singaporean graduate students.

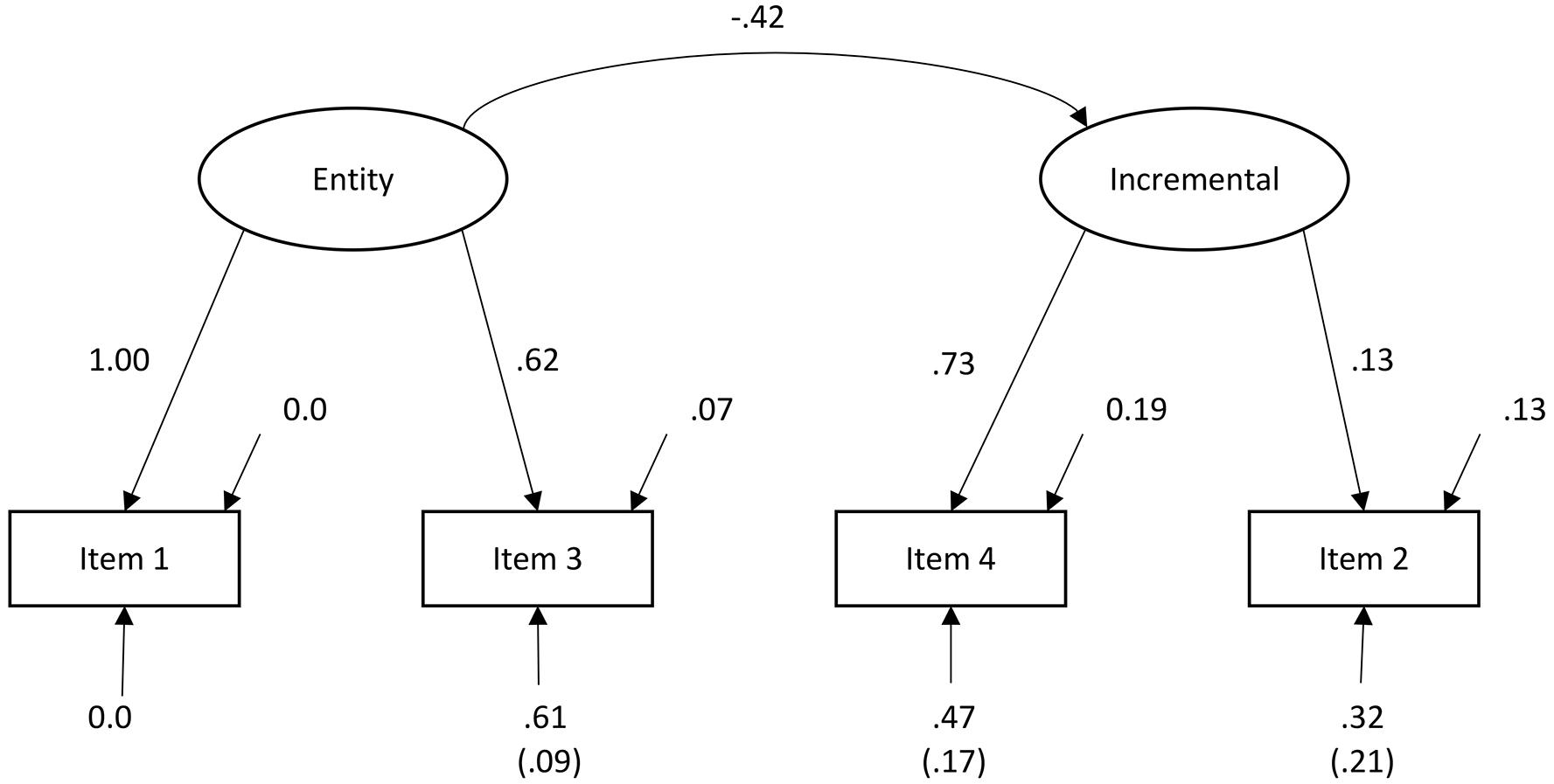

The two items for each construct were then averaged, and the correlation between the resulting scores was examined. The incremental (growth) implicit theory (α = 0.74, M = 4.83, SD = 1.03) and the entity (fixed) implicit theory (α = 0.76, M = 4.20, SD = 1.22) correlated significantly at r = −0.42 (p < 0.01). The model for this 2-factor solution is shown in Figure 1. This correlation is in line with Cury et al. (2006), mentioned above, where the implicit theories had a correlation of r = −0.36.
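The scoring step described here (averaging the two items per construct and correlating the resulting scores) can be sketched as follows. The data are simulated rather than taken from the study, and the latent correlation, noise level, and variable names are assumptions made only for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 2000
latent_r = -0.42  # assumed latent correlation, chosen only for illustration

# Two negatively correlated latent beliefs (simulated, not study data).
latent = rng.multivariate_normal(
    [0.0, 0.0], [[1.0, latent_r], [latent_r, 1.0]], size=n
)
incremental_latent, entity_latent = latent[:, 0], latent[:, 1]


def make_item(latent_score):
    """Generate one noisy indicator of a latent score."""
    return latent_score + rng.normal(scale=0.5, size=latent_score.shape)


# Two indicators per construct, as in the four-item revised survey.
incremental_items = np.column_stack([make_item(incremental_latent) for _ in range(2)])
entity_items = np.column_stack([make_item(entity_latent) for _ in range(2)])

# Subscale scores are item means; no reverse coding is applied.
incremental_score = incremental_items.mean(axis=1)
entity_score = entity_items.mean(axis=1)

r = np.corrcoef(incremental_score, entity_score)[0, 1]
print(f"correlation between subscale scores: {r:.2f}")
```

Because the item means contain measurement error, the observed correlation between subscale scores is somewhat weaker than the latent correlation used to generate the data.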

Figure 1. Factor loading diagrams for confirmatory factor analysis (CFA) model fit to a 2-factor model of implicit theories of interest utilizing 4 items.

Discussion

While the above study suggests a two-factor structure, it is generally advised for each factor to have at least three indicators. A second concern arose from the analysis in study one. While this was an attempt to simply use a minimally adjusted existing instrument, it was unclear if the instrument it was based on also showed a potential for two factors. The second study therefore examined the factor structure of both the original and the revised instrument. In addition, it was preferred to have at least one additional indicator per factor if indeed a two-factor solution continued to be preferable.

Study 2: Instrument Generalizability Understanding the Dimensions and Factor Structure

The second study sought to understand the generalizability of the findings of the first study with a large population of individuals in the United States and India via MTurk, as well as to re-evaluate the findings of O’Keefe et al. (2018) through secondary data analysis using a population similar to the original studies’ participants. Given the findings of study one, study two sought to examine and develop the instrument utilizing similar methods to O’Keefe et al. (2018) with MTurk online data collection. Study two had two research questions. Was a two-factor structure a better fit for both the original and revised items, evidenced via CFA? Was the dimension of interest development constrained as evidenced by a qualitative analysis of participants’ item-interpretations?

Based on study one and the literature, a two-factor model was hypothesized to have an incremental and an entity factor; therefore, two additional indicators were added. Finally, the correlations between beliefs about innateness of interests, talents, and skills and both the original and the revised instrument were used as a way of evaluating whether the construct of interest was targeted.

Methods

Participants

In this study two additional survey administrations were conducted each following the same process as O’Keefe et al. (2018). First, eighty-nine college students in the United States (43 female students, 46 male students; age: M = 30.87 years, SD = 10.38) were recruited from Mechanical Turk, referred to subsequently in this study as “Group A.” Participants were paid $3 for their participation. In the second data collection period, 99 college students in the United States and India (67 female students, 32 male students; age: M = 30.34 years, SD = 8.65) were recruited from Mechanical Turk, referred to subsequently in this study as “Group B.” Participants were paid $3 for their participation, which took a median of 3.25 min; there was no time limit. Except for in the final analysis, these two groups were combined.

Keeping in line with O’Keefe et al. (2018), it was verified whether the participants were college students by asking them whether they were currently enrolled in an undergraduate degree program. In addition, participants were asked their country of residence as well as how long they lived there.

In addition, data from studies one and two in O’Keefe et al. (2018), utilizing their original format, were also analyzed. This combined data set from the original article consisted of 106 males and 161 females (age: M = 23.21 years, SD = 4.28), referred to subsequently in this study as “Group O’Keefe.”

Procedure

Participants from Groups A and B were told that they would be asked to answer questions related to their interests. After consenting to the study via an online presentation of a consent form, participants answered four questions in the format offered by O’Keefe et al. (2018), followed by the four revised questions intended to limit the scope of interpretation. Two additional indicators, one for each potential construct, were also added.

Participants were then presented with one of the items in both formats. They were then asked to explain what they thought each item in turn was communicating. This was followed by a demographics questionnaire and additional supplementary surveys described in the supplementary materials. The entire session took approximately 10 min. The two implicit surveys, a total of 10 items, took approximately 3.5 min to complete.

Because the qualitative part of this study required well thought-out answers, only one of the indicators was presented to participants. It was found in pilot research that, when all questions were asked, the quality of the answers was drastically reduced, and participants became agitated at the repetition. It was then determined that one of the questions might be sufficient to get at whether or not the semantics of the question had been successfully constrained with the replacement of the word “change.” Because there was much overlap between the items a detailed analysis was enough to reveal the potential misinterpretation when researchers use the word change, as will be shown below.

Measures

Implicit Theories of Interest

The items in this section were identical to those found in O’Keefe et al. (2018). These original items were generated following the find-and-replace methodology discussed above on the theories-of-intelligence scale (Dweck, 1999); items are shown in Table 1. However, to keep this scale in line with another scale being used in a larger study, in place of the standard 5- or 6-point Likert, this study used a 7-point Likert (1 = strongly disagree, 7 = strongly agree; α = 0.47, M = 4.72, SD = 1.13). The first and third items were reverse coded. O’Keefe et al. (2018) used a 6-point Likert scale: 1 = strongly disagree, 6 = strongly agree (α = 0.77, M = 3.68, SD = 0.89).

Implicit Theories of Interest—Revised and Expanded

Two items were added: “While a person’s interests can change, they are generally restricted to a specific category,” “A person can develop new interests outside his or her previous interest category.” These questions were generated in an attempt to get specifically at the origin of new interests as being categorically separate from existing interests. The same 7-point Likert scale was used.

Item Interpretation

In the first group, participants were then presented with one of the items they had just completed from each version of the survey: “To be honest, your core interests will remain your core interests. They won’t really change,” “To be honest, your core interests will remain your core interests. You won’t really develop different ones,” with the text of the second sentence of each item bolded. They were then asked to “Explain the bolded phrasing” in a multiple line text box for each item in turn.

Innateness

In addition, items were constructed to get at one’s beliefs about the innateness of interests, talents, and skills. It would be expected that those with a stronger agreement to innateness would also show a greater entity implicit theory-of-interest development. These items utilized the same scale as the implicit theories and were as follows: “A person is born with specific interests,” “A person is born with specific skills,” “A person is born with specific talents.”

Analysis 1: Qualitative Responses

The goal of the semantic coding of participant descriptions of one original and one revised item was to determine what interpretations were made by the participants. While the wording of the revised instrument was influenced by expert review, it was essential to understand how participants spontaneously made sense of the items. The methodology was adapted from Sousa et al.’s (2017) cognitive interviewing technique but was adjusted to get short responses from online participants to obtain a set of potential item interpretations. The participants spent less than 1 min on each question.

Coding

After data collection, descriptions were printed onto cards with one interpretation per card. Individual responses between the two question versions were aggregated and shuffled making it less likely bias would enter the analysis. All coding was completed by one individual; therefore, bias was consistent across items. A priori, the only assumption was that some of the items would be interpreted in line with the ITID dimension offered by O’Keefe et al. (2018): the target of the item is a reference to the possibility of developing a categorically new interest. Otherwise, the coding of the participant interpretations generally followed an inductive method of qualitative data coding allowing for novel categories to emerge through the sorting process.

To sort data into themes, this study employed coding and rating. Two phases of coding were conducted by the current author following the methodology described by Azungah (2018). In the first phase, a short descriptor was written for every response onto a separate set of cards to simplify the data, and these descriptors were organized into first order semantic clusters. In the second phase, links were identified between first order clusters to generate second order themes (clusters of codes). This process was conducted iteratively until acceptable semantic categories were clear. In addition, after coding, the resulting clusters were rated according to their relatedness to the targeted construct mentioned in ITID literature (O’Keefe et al., 2017, 2018). The resulting codes were then transferred back to the original data set for further analysis.

Excluded Responses

First, two types of responses were removed from further analysis: uninterpretable and uninformative. Uninterpretable responses consisted of missing answers or responses like “solid,” “I’m addicted,” or “I like sports more than anything else.” Together, these indicate that either the participant was unclear about the expectations of the qualitative question or their response requires further context to interpret. In addition, some participants responded to the items as if they were another Likert item and responded to the effect of “I agree” or “yes.” Of these uninterpretable responses, five (5.5%) were in response to the original item and 11 (11.6%) were in response to the revised item.

In contrast, uninformative responses were those that were too general or too close to the original item to lend any additional information to understanding item interpretation. These included items which were generic: “they won’t change,” as well as those that were generic plus one qualifier: “it possibly won’t change.” Sixteen (17.6%) of this category of responses were identified in response to the original item and 11 (11.6%) were in response to the revised item. While this generic response does not mean the individuals did not have a narrow interpretation of the construct under examination, it does not give us sufficient information to better understand the construct. Also, note that there were only three additional excluded responses in response to the original item as opposed to the revised item. Excluded responses for original and revised items were 21 (23.1%) and 18 (19.0%), respectively; this is a large but necessary reduction in data available for the current analysis.

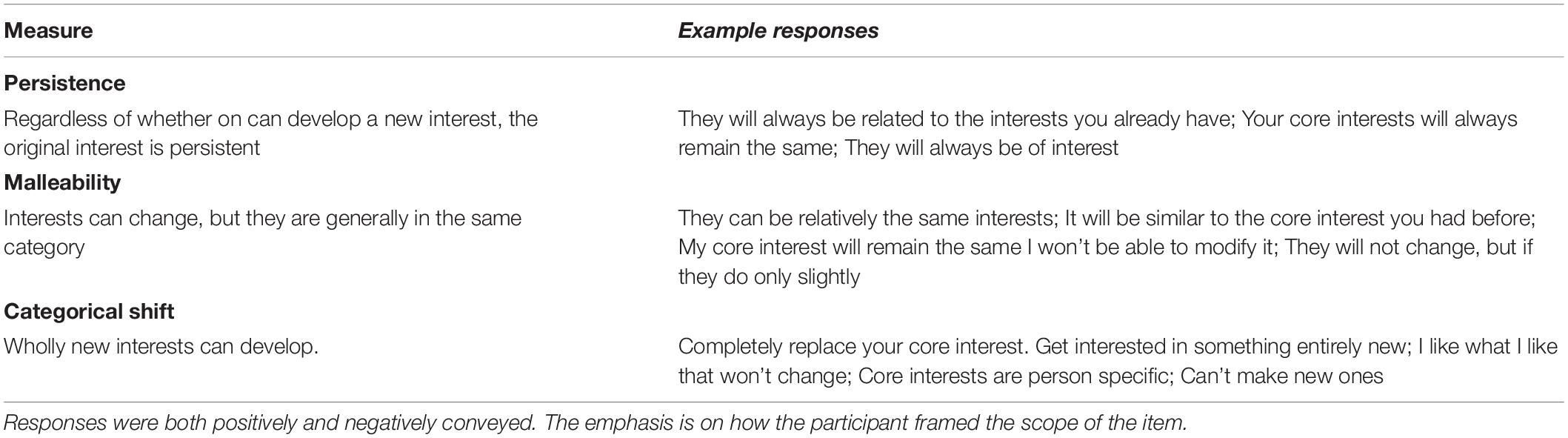

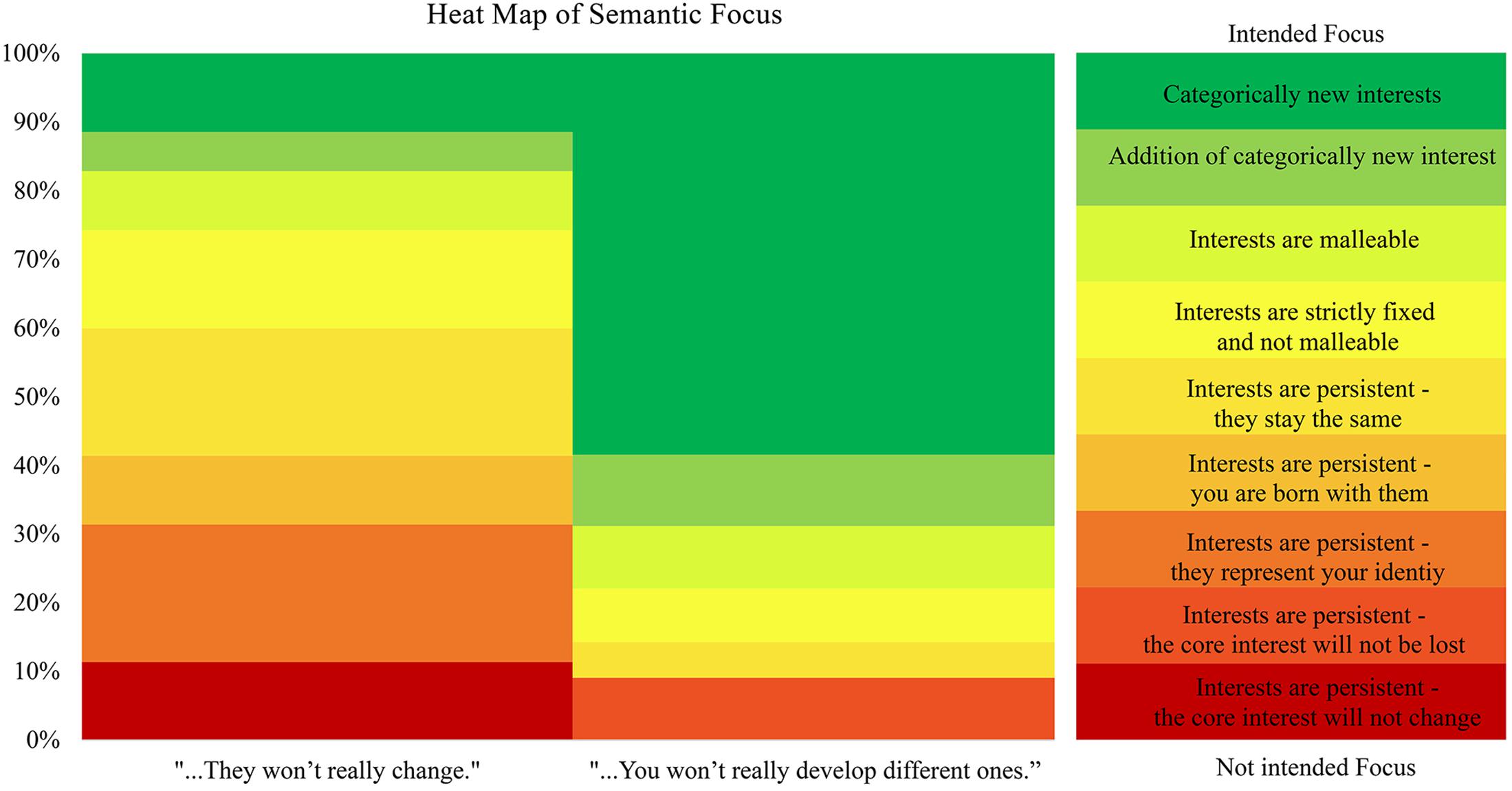

Codes and Themes

The coding process resulted in 9 small categories shown in Figure 2. The subsequent linking revealed three semantic themes for the interpretations: potential change in the persistence of the core interest even when new interests emerge, potential change in the malleability of the ways the core interest was practiced while still assuming a stable core, and categorical shift from the core interest (the targeted interpretation). Examples representing these second order codes may be seen in Table 4.

Figure 2. The percentage of participants who indicated the three categories of code in response to an open-ended request for reflection to the item.

Relatedness to ITID’s Targeted Interpretation

Subsequent to qualitative clustering, each cluster was evaluated to determine the relative semantic distance from the targeted construct. Figure 2 captures this data through a heat map where green is considered the most semantically close to the desired construct target and red is communicative of being far off target.

For example, a category was considered off target if it focused on the idea that a core interest is persistent and won’t change because it is part of one’s identity, but that new interests develop all the time without replacing the core. Thus, someone could see artistic practice as central to their identity and unchangeable but feel that a deep and meaningful interest in mathematics could develop. In this example, the participant might appear entity-oriented in their response because the core did not change, but their actual implicit theory is more complicated.

Another possibility for an off-target response was the dimension of change, or area of interest malleability. These interpretations suggest that an interest may change but only within the bounds of its current domain. An oil painter can learn acrylic painting or watercolor but may not be able to develop a new interest in mathematics. This participant would appear to have a more incremental orientation while they may actually believe that the development of categorically new interests is unlikely. Each of these interpretations is semantically distant from the prototypical example and therefore makes the item interpretation for these respective individuals less valid. While item interpretation in a survey always varies, the aim is to minimize this type of error through item design. As can be seen in Figure 2, the revised question format increased the percentage of responses that were near the targeted construct.

Analysis 2: Number of Factors in the Original Indicators

In this analysis, responses from Group O’Keefe were compared to responses to the same items from both Groups A and B. To do this, a multiple indicators and multiple causes (MIMIC) model assuming invariance was compared to a model assuming non-invariance (Muthén, 1989). A categorical variable distinguishing the current administration from the data in Group O’Keefe was used as a covariate in the model.
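The comparison described above can be stated compactly. The following is a generic sketch of a single-factor MIMIC specification, not the exact model estimated in this study; the symbols are notation introduced here for illustration, with $y_{ij}$ the response of participant $j$ to item $i$, $\eta_j$ the latent factor, and $x_j$ an assumed 0/1 indicator of administration (Group O’Keefe vs. the current study).

```latex
\begin{align}
y_{ij} &= \nu_i + \lambda_i \eta_j + \beta_i x_j + \varepsilon_{ij}, \\
\eta_j &= \gamma x_j + \zeta_j .
\end{align}
% Invariance model:      \beta_i = 0 for every item i
% Non-invariance model:  \beta_i \neq 0 for at least one item i
```

Under this notation, $\gamma$ captures a group difference in the latent factor itself, whereas a nonzero direct effect $\beta_i$ means that item $i$ differs between administrations even among participants with the same level of the factor. A two-factor version would replace $\lambda_i \eta_j$ with a sum over factors.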

Results

Number of Factors

Comparisons of one- and two-factor solutions were conducted on all three data sets: the original data from O’Keefe et al. (2018) using the original items (Group O’Keefe), the re-administration of those original items in the current study (Groups A and B), and the responses to the new 6-item scale (Groups A and B). In all three cases, the confirmatory factor analysis indicated that the two-factor solution fit significantly better than the one-factor solution (see Table 5). This is indicated by the smaller values of the misfit indices (RMSEA and SRMR), the larger values of the fit indices (CFI and TLI), decreased entropy, and a significant drop in the chi-square. In all cases, the chi-square difference test demonstrated a significant improvement in the model when two factors were used instead of one.
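The chi-square difference test used to compare nested factor models can be sketched as follows. This is a minimal illustration assuming ordinary maximum likelihood chi-square values; the function names and the numbers in the usage lines are hypothetical and are not taken from Table 5.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X >= x) for a chi-square variable with integer df."""
    if df < 1 or int(df) != df:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    df = int(df)
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{k=0}^{df/2-1} (x/2)^k / k!
        term, total = 1.0, 1.0
        for k in range(1, df // 2):
            term *= half / k
            total += term
        return math.exp(-half) * total
    # Odd df: erfc term plus a finite series in x^(r-1) / (2r-1)!!
    term, total = 1.0, 0.0
    for r in range(1, (df - 1) // 2 + 1):
        if r > 1:
            term *= x / (2 * r - 1)
        total += term
    return math.erfc(math.sqrt(half)) + math.sqrt(2.0 * x / math.pi) * math.exp(-half) * total


def chi_square_difference(chi2_restricted, df_restricted, chi2_full, df_full):
    """Compare a restricted model (e.g., one factor) with a nested, less restricted one."""
    delta = chi2_restricted - chi2_full
    ddf = df_restricted - df_full
    return delta, ddf, chi2_sf(delta, ddf)


# Hypothetical values for a one-factor vs. two-factor comparison on six items.
delta, ddf, p = chi_square_difference(48.2, 9, 12.6, 8)
print(f"delta chi2 = {delta:.1f}, delta df = {ddf}, p = {p:.2g}")
```

A small p-value here favors the less restricted (two-factor) model. If a robust or categorical estimator is used, the raw difference is not chi-square distributed and a scaled difference test would be needed instead.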

Table 5. Fit indices for alternative factor models of original and revised implicit theories-of-interest development.

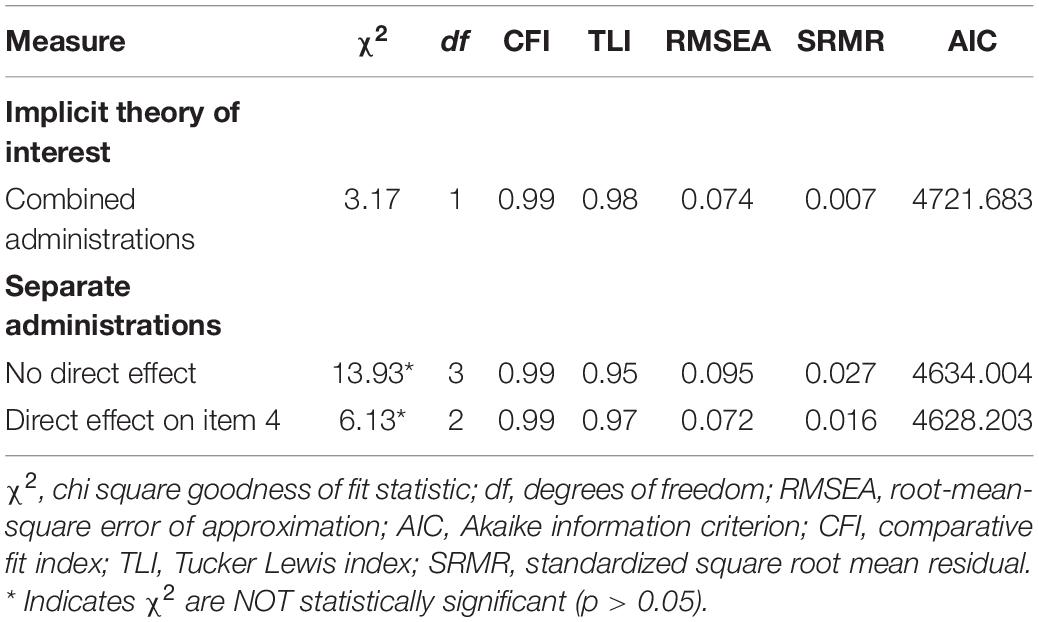

Invariance Between Testing Administrations

In a second step, invariance was examined between the administration of the items in Group O’Keefe and the same items administered to Groups A and B. Item 4 showed a direct effect of the between-group comparison (see Table 6), suggesting measurement non-invariance between O’Keefe et al. (2018) and the current administration of the instrument. Including the direct effect significantly improved the model (χ2 = 7.808, df = 1, p < 0.001). With the direct effect of survey administration demonstrating non-invariance, there are potential problems in the reliability of this instrument, suggesting that participants in O’Keefe et al. (2018) and the current study interpreted the items differently. Additional information on the O’Keefe et al. (2018) interpretations would be necessary to further interpret this non-invariance.

Table 6. Fit indices for MIMIC model on original four items identifying non-invariance for item four.

The Six Item Revised

With regard to the revised six-item measure, the model was first verified, followed by a check for non-invariance between potential groupings. The results of the CFA are in Table 5. First, note that the two-factor solution was a significantly better fit than the one-factor solution. An examination was then conducted using the MIMIC model with potential covariates of gender, age, and testing administration period (Group A vs. Group B).

Covariates and Correlations

Utilizing the two-factor structure, non-invariance was examined using the MIMIC model with gender, age, and testing administration date (Group A vs. B) as potential covariates. This step allowed differences along these independent variables to be examined. The results in Table 7 demonstrate that the factor structure remained robust across all three covariates. Additionally, the model in Figure 3 shows little relationship between the factors in the 6-item scale and strong item loadings on each factor. Correlations between the items are in Table 8. An examination of the modification indices showed that there were no direct effects of any of these three variables on the items. With invariance confirmed, it was then examined whether the independent variables had a significant influence on either factor. No correlations reached significance.

Figure 3. Factor loading diagrams for confirmatory factor analysis (CFA) model fit to a 2-factor model of implicit theories of interest utilizing the final instrument with four revised and two additional items.

Innateness

A final analysis was conducted on the second administration of the survey in the current study. With invariance supported, a correlation analysis considering beliefs in the innateness of skills, talents, and interests was conducted.

Holding an entity theory of interest development correlated positively with beliefs that interests were innate, r = 0.63 (p < 0.001), that talents were innate, r = 0.19 (p < 0.05), and that skills were innate, r = 0.39 (p < 0.001). There were no significant correlations with incremental theories of interest development. For comparison, the original survey items from O’Keefe et al. (2018), as administered here, yielded correlations of 0.39, 0.27, and 0.33, respectively. It is important to note that those who believe interests are innate are more likely to endorse an entity implicit theory of interest development, and that this relationship is stronger for the revised items. This is further evidence of the separation of the two constructs, although no variable in this data set correlated with incremental implicit theories.

Among the three items examining the innateness of interests, talents, and skills there was a small but significant correlation. The low correlations here were not examined further except to note that all of them correlated with entity but not incremental implicit theories of interest. The intercorrelations are shown in Table 9.

Conclusion and Limitation

One limitation is that the participant sample came from two very different sources: college courses in Singapore and Mechanical Turk participants from the United States and India. However, this is consistent with the goals of the current study, which began as a small, adapted survey for a local population in Singapore and then validated the survey with a larger global population recruited through Mechanical Turk. In addition, the survey was not accompanied by extensive qualitative analysis using in-depth interviews. However, it should be pointed out that the original instrument was validated using Mechanical Turk without any item interpretation metrics, so the current study adds metrics of validity relative to O’Keefe et al.’s (2018) study.

Defining “Change” in Interests

This research narrowed the focus of the survey items previously utilized by O’Keefe et al. (2018) from a broad interpretation of implicit theories about one’s ability to “change” to ask very specifically about one’s ability to develop categorically new interests. However, the alternative interpretations of the original items offered by participants in the study above should also be explored in the future as potentially separate factors: perceived persistence of the “core” interests and the drift of interests into related but different interests. These follow-up studies should necessarily include sample sizes large enough to test potential higher order factors.

Increasing Validity

As has been shown here, the find-and-replace method of adapting a survey is not sufficient on its own, and some minimum qualitative review of item interpretation is necessary to validate an instrument. With short open-ended survey items, the current study was able to demonstrate how the revised instrument improved upon the original. Only limited qualitative analysis was possible here; however, even this minimal analysis was able to demonstrate the potential problems of relying on the find-and-replace method alone when adapting instruments. One may argue that the changes here have been shown to be only superficial because the revised instrument is highly correlated with O’Keefe et al.’s (2018) instrument. However, correlations are an upper bound of an association between two constructs. Yes, one would expect categorical change to be related to the broader construct of implicit theories of “interest change,” as would be predicted by the idea that separate implicit theories within an individual may show a positive manifold, as mentioned above; the correlation between the measures is necessary but not sufficient for validation.

Questioning the Number of Factors in Implicit Theory

An additional reminder presented here is that reliability coefficients, such as Cronbach’s alpha, do not necessarily indicate the presence of a single factor, and multiple factors may be hidden if the factors covary in the sample. While the current study found evidence supporting the idea that entity and incremental implicit theories are relatively independent factors, this may simply be due to the influence of positive bias for the non-reverse coded items (Podsakoff et al., 2003).
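The point about alpha can be illustrated with a small constructed example. The sketch below is not based on the study data: it builds a hypothetical correlation matrix for six items that form two factors of three items each (within-factor correlations of 0.7 and between-factor correlations of 0.3 are assumed values) and computes standardized alpha directly from that matrix.

```python
def cronbach_alpha_from_cov(cov):
    """Cronbach's alpha from a k x k covariance (or correlation) matrix."""
    k = len(cov)
    total = sum(sum(row) for row in cov)        # variance of the summed scale
    diag = sum(cov[i][i] for i in range(k))     # sum of the item variances
    return (k / (k - 1)) * (1.0 - diag / total)


def two_factor_corr(items_per_factor, within_r, between_r):
    """Hypothetical correlation matrix for two equally sized item clusters."""
    k = 2 * items_per_factor
    matrix = []
    for i in range(k):
        row = []
        for j in range(k):
            if i == j:
                row.append(1.0)
            elif (i < items_per_factor) == (j < items_per_factor):
                row.append(within_r)    # items on the same factor
            else:
                row.append(between_r)   # items on different factors
        matrix.append(row)
    return matrix


corr = two_factor_corr(3, 0.7, 0.3)
alpha = cronbach_alpha_from_cov(corr)
print(round(alpha, 3))  # 0.836
```

An alpha of about 0.84 would usually be read as good reliability, yet the matrix was built from two distinct clusters of items. Alpha summarizes average inter-item covariance and therefore cannot, by itself, distinguish a one-factor from a two-factor structure.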

However, it is possible that positivity bias is baked into an incremental theory. In other words, positive bias may not be independent of an incremental implicit theory-of-interest and may be a component of it. If it is a separable construct, the search for a covariate that captures it needs to accompany the ongoing development of implicit theory surveys. Nevertheless, the current analysis supports the perspective that both entity and incremental implicit theories-of-interest should be measured, with the inclusion of additional items, when attempting to better understand the internal structure of these constructs. A willingness to apply effort to develop new interests in previously uninteresting activities has the potential to lead to the development of interests with social, economic, physiological, and psychological benefits: success in academic courses, engagement with physical fitness or nutrition habits, career training, or participation in previously disregarded social activities. In addition, reliance on an entity perspective concerning interest development may result in “putting all our eggs into one basket,” which can be dangerous because focusing on a single interest may crowd out other important aspects of our well-being and limit flexible responses to changing conditions when there are impenetrable barriers or impediments to furthering our interest at a particular time in life (O’Keefe et al., 2017, 2018).

One application of research on implicit theories has been based on the idea that an implicit belief system can be primed through readings, writing assignments, or course materials that encourage one to frame situations with a particular implicit theory (Dweck et al., 1995b). Priming an implicit theory is hoped to change the interpretation of effort in a domain. To determine the effectiveness of these interventions, carefully constrained instruments will be important when researchers attempt to interpret the impact (Yeager et al., 2019).

While simple compliance in academic activities may be sufficient, it is important to keep in mind the well-documented benefits of being interested in a topic. In general, interest increases the likelihood of engagement in an activity and the likelihood that one will independently seek additional information or experiences with the object of interest. Moreover, when one is engaged in an activity that they find interesting, they experience intense periods of focus or “flow,” increased memory both for the features learned about their interest and for incidental and unrelated information present in the environment, and increased motivation to reengage with the task even when the task is challenging (see Hidi and Renninger, 2006; Renninger and Hidi, 2015 for excellent reviews). Thus, if implicit theories about interest development are indeed a mediating variable, we need to be able to evaluate individual variance in this construct as both a dependent and an independent variable in behavioral studies, and we need to do so with reliable and valid instruments to determine what the operable features are.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the National Institute of Education, Singapore. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Funding

This research was funded by a grant from the National Institute of Education (No. PG 05/19 EEJ).

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Abd-El-Fattah, S. M., and Yates, G. C. R. (2006). “Implicit theory of intelligence scale: testing for factorial invariance and mean structure,” in Australian Association for Research in Education Conference, Adelaide, (South Australia).

Azungah, T. (2018). Qualitative research: deductive and inductive approaches to data analysis. Qual. Res. J. 18, 383–400. doi: 10.1108/qrj-d-18-00035

Burnette, J. L. (2010). Implicit theories of body weight: entity beliefs can weigh you down. Pers. Soc. Psychol. Bull. 36, 410–422. doi: 10.1177/0146167209359768

Chiu, C. Y., Dweck, C. S., Tong, J. Y. Y., and Fu, J. H. Y. (1997a). Implicit theories and conceptions of morality. J. Pers. Soc. Psychol. 73, 923–940. doi: 10.1037/0022-3514.73.5.923

Chiu, C. Y., Hong, Y. Y., and Dweck, C. S. (1997b). Lay dispositionism and implicit theories of personality. J. Pers. Soc. Psychol. 73, 19–30. doi: 10.1037/0022-3514.73.1.19

Cortina, J. M. (1993). What is coefficient alpha? an examination of theory and applications. J. Appl. Psychol. 78, 98–104. doi: 10.1037/0021-9010.78.1.98

Cury, F., Elliot, A., Da Fonseca, D., and Moller, A. (2006). An approach–avoidance elaboration of the social-cognitive model of achievement motivation. J. Pers. Soc. Psychol. 90:4.

Dweck, C. S. (1999). Self-Theories. Their Role in Motivation, Personality, and Development. Philadelphia: Psychology Press.

Dweck, C. S. (2012a). “Implicit theories,” in Handbook of Theories of Social Psychology, Volume 2, eds P. A. M. Van Lange, A. W. Kruglanski, and E. T. Higgins (London: SAGE Publications Ltd).

Dweck, C. S. (2012b). Mindsets and human nature: promoting change in the middle east, the schoolyard, the racial divide, and willpower. Am. Psychol. 67, 614–622. doi: 10.1037/a0029783

Dweck, C. S., Chiu, C. Y., and Hong, Y. Y. (1995a). Implicit theories and their role in judgments and reactions: a word from two perspectives. Psychol. Inquiry 6, 267–285. doi: 10.1207/s15327965pli0604_1

Dweck, C. S., Chiu, C. Y., and Hong, Y. Y. (1995b). Implicit theories: elaboration and extension of the model. Psychol. Inquiry 6, 322–333. doi: 10.1207/s15327965pli0604_12

Faria, L., and Fontaine, A. M. (1997). Adolescents’ personal conceptions of intelligence: the development of a new scale and some exploratory evidence. Eur. J. Psychol. Educ. 12:51. doi: 10.1007/bf03172869

Franiuk, R., Cohen, D., and Pomerantz, E. M. (2002). Implicit theories of relationships: implications for relationship satisfaction and longevity. Pers. Relationsh. 9, 345–367. doi: 10.1111/1475-6811.09401

Green, S. B., Lissitz, R. W., and Mulaik, S. A. (1977). Limitations of coefficient alpha as an index of test unidimensionality. Educ. Psychol. Meas. 37, 827–838. doi: 10.1177/001316447703700403

Hair, J. F., Babin, B. J., Anderson, R. E., and Black, W. C. (1995). Multivariate Data Analysis, 8th Edn. India: Cengage.

Harackiewicz, J. M., Barron, K. E., Tauer, J. M., and Elliot, A. J. (2002). Predicting success in college: a longitudinal study of achievement goals and ability measures as predictors of interest and performance from freshman year through graduation. J. Educ. Psychol. 94, 562–575. doi: 10.1037/0022-0663.94.3.562

Hass, R. W., Katz-Buonincontro, J., and Reiter-Palmon, R. (2016). Disentangling creative mindsets from creative self-efficacy and creative identity: do people hold fixed and growth theories of creativity? Psychol. Aesthet. Creat. Arts 10, 436–446. doi: 10.1037/aca0000081

Hidi, S., and Renninger, K. A. (2006). The four-phase model of interest development. Educ. Psychol. 41, 111–127. doi: 10.1207/s15326985ep4102_4

Hu, L. T., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Modeling 6, 1–55. doi: 10.1080/10705519909540118

Kline, R. B. (2005). Principles and Practice of Structural Equation Modeling, 3rd Edn. New York, NY: Guilford Press.

Komarraju, M., and Nadler, D. (2013). Self-efficacy and academic achievement: why do implicit beliefs, goals, and effort regulation matter? Learn. Individ. Differ. 25, 67–72. doi: 10.1016/j.lindif.2013.01.005

McDermott, R. J., and Sarvela, P. D. (1998). Health Education Evaluation and Measurement: a Practitioner’s Perspective, 2nd Edn. Dubuque, IA: William C. Brown.

Muthén, B. (1989). Latent variable modeling in heterogeneous populations. Psychometrika 54, 557–585. doi: 10.1007/bf02296397

O’Keefe, P. A., Dweck, C. S., and Walton, G. M. (2018). Implicit theories of interest: finding your passion or developing it? Psychol. Sci. 29, 1653–1664. doi: 10.1177/0956797618780643

O’Keefe, P. A., Horberg, E. J., and Plante, I. (2017). “The multifaceted role of interest in motivation and engagement,” in The Science of Interest, eds P. A. O’Keefe and J. M. Harackiewicz (New York, NY: Springer), 49–67. doi: 10.1007/978-3-319-55509-6_3

Podsakoff, P. M., MacKenzie, S. B., Lee, J. Y., and Podsakoff, N. P. (2003). Common method biases in behavioral research: a critical review of the literature and recommended remedies. J. Appl. Psychol. 88, 879–903. doi: 10.1037/0021-9010.88.5.879

Puente-Diaz, R., and Cavazos-Arroyo, J. (2019). Creative mindsets and their affective and social consequences: a latent class approach. J. Creat. Behav. 53, 415–426. doi: 10.1002/jocb.217

Renninger, K. A., and Hidi, S. (2015). The Power of Interest for Motivation and Engagement. Milton Park: Routledge.

Sansone, C., and Morgan, C. (1992). Intrinsic motivation and education: competence in context. Motiv. Emot. 16, 249–270. doi: 10.1007/bf00991654

Sansone, C., Weir, C., Harpster, L., and Morgan, C. (1992). Once a boring task always a boring task? interest as a self-regulatory mechanism. J. Pers. Soc. Psychol. 63, 379–390. doi: 10.1037/0022-3514.63.3.379

Sansone, C., Wiebe, D. J., and Morgan, C. (1999). Self-regulating interest: the moderating role of hardiness and conscientiousness. J. Pers. 61, 701–733. doi: 10.1111/1467-6494.00070

Schroder, H. S., Callahan, C. P., Gornik, A. E., and Moser, J. S. (2019). The fixed mindset of anxiety predicts future distress: a longitudinal study. Behav. Ther. 50, 710–717. doi: 10.1016/j.beth.2018.11.001

Schroder, H. S., Dawood, S., Yalch, M. M., Donnellan, M. B., and Moser, J. S. (2015). The role of implicit theories in mental health symptoms, emotion regulation, and hypothetical treatment choices in college students. Cogn. Ther. Res. 39, 120–139. doi: 10.1007/s10608-014-9652-6

Sousa, V. E., Matson, J., and Dunn Lopez, K. (2017). Questionnaire adapting: little changes mean a lot. Western J. Nursing Res. 39, 1289–1300. doi: 10.1177/0193945916678212

Tenemaza Kramaley, D., and Wishart, J. (2020). Can fixed versus growth mindset theories of intelligence and chess ability, together with deliberate practice, improve our understanding of expert performance? Gifted Educ. Int. 36, 3–16. doi: 10.1177/0261429419864272

Valentiner, D. P., Mounts, N. S., Durik, A. M., and Gier-Lonsway, S. L. (2011). Shyness mindset: applying mindset theory to the domain of inhibited social behavior. Pers. Individ. Differ. 50, 1174–1179. doi: 10.1016/j.paid.2011.01.021

Wolf, E. J., Harrington, K. M., Clark, S. L., and Miller, M. W. (2013). Sample size requirements for structural equation models: an evaluation of power, bias, and solution propriety. Educ. Psychol. Meas. 73, 913–934. doi: 10.1177/0013164413495237

Yeager, D. S., Hanselman, P., Walton, G. M., Murray, J. S., Crosnoe, R., Muller, C., et al. (2019). A national experiment reveals where a growth mindset improves achievement. Nature 573, 364–369. doi: 10.1038/s41586-019-1466-y

Keywords: growth mindset, entity mindset, motivation, interests, implicit theory of interest, fixed mindset, incremental mindset, implicit theories

Citation: Jahner EE (2021) Can You Develop New Interests? an Improved Instrument for Measuring Implicit Theories of Interest Development. Front. Educ. 6:646970. doi: 10.3389/feduc.2021.646970

Received: 12 January 2021; Accepted: 30 March 2021;

Published: 22 April 2021.

Edited by:

Jon-Chao Hong, National Taiwan Normal University, Taiwan

Reviewed by:

Tzung-Jin Lin, National Taiwan Normal University, Taiwan

Hi-Lian Jeng, National Taiwan University of Science and Technology, Taiwan

Kai-Hsiang Yang, National Taipei University of Education, Taiwan

Copyright © 2021 Jahner. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Erik Erwin Jahner, erik.jahner@usc.edu
