- The Centre for the Advancement of Teaching and Learning, University of Manitoba, Winnipeg, MB, Canada

The rapid shift to online teaching and learning in postsecondary education during COVID-19 forced institutions to provide additional support and resources to instructors, especially those who were teaching online for the first time. The Online and Blended Teaching Readiness Assessment (OBTRA) was designed to assess the perceptions and competencies of instructors moving to online teaching and to identify their strengths and limitations. The present study identified the underlying factor structure of the OBTRA and gathered evidence of its construct validity using a sample of 223 postsecondary instructors (data collected from November 2019 to January 2020). An exploratory factor analysis revealed 5 factors, which were interpreted as Technology, Engagement and Communication, Pedagogy, Perceptions of Teaching Online, and Organization. OBTRA scores were also positively correlated with scores obtained from measures of instructional practices and teacher efficacy. The next steps in the development of the OBTRA are to examine how it can help academic units provide the most appropriate support and resources aligned with instructor needs and guide instructors to the initial steps required for a successful transition to online teaching.

Introduction

Online courses have become increasingly common since the onset of the COVID-19 pandemic. Instructors who had taught students in face-to-face classes for much of their careers were forced to shift to online teaching and learning rapidly and with little preparation. In response, postsecondary institutions developed general resources to assist instructors through the transition, but they had little time to identify the components of online teaching that required the greatest support. Self-assessment instruments that can quickly identify areas for individualized development are therefore important for instructors and academic units (e.g., teaching and learning centres), helping to ensure that resources are aligned with instructor needs. The primary objective of this study was to describe the development of a self-assessment instrument designed to explore instructor readiness to teach online, the Online and Blended Teaching Readiness Assessment (OBTRA), and to begin examining its psychometric properties.

Readiness for teaching online has been defined as the qualities or predispositions of an instructor that exemplify teaching high-quality online courses (Palloff and Pratt, 2011). Mental and physical preparedness (Cutri and Mena, 2020), a willingness to create active, collaborative learning environments that foster a sense of community (Palloff and Pratt, 2011), and acceptance of online teaching (Gibson et al., 2008) also demonstrate readiness for the online teaching and learning modality. An inability or unwillingness to adopt student-focused approaches and the perception that online courses provide low-quality learning environments (Gibson et al., 2008) and are not worthwhile (Allen and Seaman, 2015) can be important barriers to a successful transition to teaching online.

An important step in addressing instructors' readiness to teach online is to examine their perceptions of teaching online. The perceived effectiveness of online courses increases when instructors have more experience and opportunities to teach online courses (Seok et al., 2010) and when they can access both technology and instructional design supports (Roby et al., 2013). Furthermore, identifying and supporting the pedagogical practices that promote high-quality online learning experiences for students is essential. Pedagogical methods, such as active learning strategies (Freeman et al., 2014), can be transferred from face-to-face to online environments (Khan et al., 2017). Student-centered instructional strategies that include active discussion and frequent communication with students play a critical role in successful online teaching and learning. This is particularly true when instructors establish relationships with students that help them feel part of a learning community (Kebritchi et al., 2017). Instructors who already use these teaching strategies in face-to-face settings may be able to transfer them to the online environment more successfully than those implementing such strategies for the first time.

Instructors most ready to teach online are often those who can also align learning outcomes with appropriate learning activities and with the technologies that facilitate completion of those activities (Palloff and Pratt, 2011). Because online teaching relies on technological tools to perform teaching tasks, facilitate learning, and communicate with students, a certain degree of technology competency is required to teach online (Bigatel et al., 2012; Reilly et al., 2012; Kebritchi et al., 2017). As instructors master teaching and learning technologies, their attitudes toward and acceptance of online teaching also improve (Seok et al., 2010; Reilly et al., 2012). Administrative aspects of teaching, such as strong organization skills (Palloff and Pratt, 2011) and leadership characteristics, including maintaining classroom decorum and enforcing policy (Bigatel et al., 2012), have also emerged in the readiness to teach online literature, but evidence is sparse. Other important qualities for the online instructor include visibility, compassion, communication, commitment (Palloff and Pratt, 2011), and general content knowledge (Kebritchi et al., 2017).

No comprehensive theoretical framework outlining the underlying components of an instructor's readiness to teach online had been identified at the time this study was developed; however, a scan of existing readiness to teach online self-assessments revealed that their items related primarily to teaching style, time management, and technology competency. One of the earliest of these self-assessments, the Readiness Assessment Tool (Mercado, 2008), examines technology skills and attitudes (e.g., abilities, motivation, behavior) towards online learning and includes a 59-item student version, a 63-item instructor version, and a 30-item institutional version. Unfortunately, numerous prompts in this instrument appear outdated [e.g., "I know how to log in to the internet service provider (ISP)"; Mercado, 2008, p. 4], and its usability, factor structure, and psychometric properties have not been reported in the literature.

The Faculty Self-Assessment: Preparing for Online Teaching is a publicly accessible, self-administered measure of readiness to teach online (Penn State University and Central Florida University, 2008). This 22-item instrument was designed to assess preparedness to teach online in four competencies: experience, organization, communication, and technical skills. The categories and items retained in this measure were based on the results of reliability and exploratory factor analyses (Bigatel et al., 2012; Ragan et al., 2012). The Assessment of Faculty Readiness to Teach Online (Palloff and Pratt, 2011) adapts the Faculty Self-Assessment: Preparing for Online Teaching (Penn State University and Central Florida University, 2008), adding questions that address teaching philosophy and pedagogy in greater depth. This measure therefore provides more balanced coverage of the critical qualities that make up instructor readiness. Chi's (2015) Readiness to Teach Online measure adheres to the Handbook of Quality Scorecard (Shelton and Moore, 2014) in its focus on course design but includes only a short section on readiness to teach online.

Although these instruments can provide a useful first step in helping instructors understand their readiness to teach online, evidence for reliability and validity is limited and competency in technology use is often the primary focus. The latter may be due, in part, to the fact that most resources available to instructors who teach online (Lane, 2013) and research on readiness to teach online (Wingo et al., 2017) have been technology focused. Emphasizing technology over other areas (e.g., pedagogy and perceptions) is an inadequate approach to measuring readiness to teach online (Hixon et al., 2011; Lane, 2013) as technical training alone is insufficient to ensure quality online instruction (Chang et al., 2014; Teräs and Herrington, 2014). The OBTRA capitalizes on the strengths of existing technology, organizational, and administrative readiness to teach online scales and extends these measures to explore instructor perceptions around student-centred pedagogies for online teaching. The focus of this study was to examine the factor structure of the OBTRA and begin to gather evidence for its construct validity.

Materials and Methods

Instrument Development

OBTRA development began with two activities conducted simultaneously. One activity involved conducting an informal needs assessment with the staff at a teaching and learning centre to learn about instructors’ requests for online teaching support and how these requests are met. The second activity involved a scan of the research literature using the Educational Resources Information Center (ERIC), PsycINFO, Science Direct, Scopus, and Web of Science databases. We restricted our search to articles published from 2008 (to ensure current relevance) to 2018 (time of the scan). An environmental scan of teaching and learning centre websites was conducted to ensure we captured existing readiness to teach online instruments that were currently in use. Items from four existing measures were adapted and included in the OBTRA. Two usability tests were then conducted with teaching and learning centre staff and instructors at a research-intensive Canadian university to obtain feedback about the readability and clarity of the instrument. Improvements to the instructions and items were made based on this feedback.

Instrument Testing

Participant Recruitment

Effective instrument testing requires a wide spectrum of participants with a broad range of teaching experiences to ensure constructs of interest can be measured across a diverse population (McCoach et al., 2013). Our recruitment strategy involved contacting numerous professional academic associations to recruit postsecondary instructors with various years of online and face-to-face teaching experience at the postsecondary level. There were no other inclusion or exclusion criteria. Data from 272 individuals who consented to participate in this study were collected prior to the onset of the COVID-19 pandemic (November 2019 to January 2020).

Instruments and Procedure

After providing informed consent, participants were encouraged to respond to all items in the 20-min survey (in the order described below) based on their experiences in postsecondary education. Participants were given the opportunity to enter a draw for an e-gift card. This study was approved by the Research Ethics Board at the University of Manitoba, Winnipeg, Canada.

Participants responded to items about demographic information such as gender, institution, faculty, role, and years of online teaching. If applicable, participants were asked to report the average student evaluation score they had received when teaching online courses and to self-assess their confidence and skillsets for teaching online using a 4-point Likert scale (1 = Poor, 2 = Below average, 3 = Above average, 4 = Excellent).

Participants then completed the Postsecondary Instructional Practices Survey (PIPS) (Walter et al., 2016) to obtain information about their instructional practices. Responses were made on a 5-point Likert-type scale (1 = Not at all descriptive of my teaching to 5 = Very descriptive of my teaching). The 2-factor model of the PIPS includes instructor-centered practices (PIPS ICP; 9 items) and student-centered practices (PIPS SCP; 13 items). The PIPS has demonstrated reliability (α = 0.80) and validity (Walter et al., 2016).

Next, participants completed the Teachers' Sense of Efficacy Scale - 12 Item Short Form (TSES) (Tschannen-Moran and Hoy, 2001), which is based on Bandura's (1997) explanation of self-efficacy as applied to teachers. The TSES consists of items pertaining to efficacy in three subscales: student engagement (TSES SE), instructional strategies (TSES IS), and classroom management (TSES CM). Responses were made on a 9-point Likert-type scale (1 = Nothing to 9 = A great deal) indicating the extent to which a particular teaching capability can be demonstrated. TSES composite and subscale scores have been shown to be reliable (α = 0.90) and valid (Tschannen-Moran et al., 1998; Tschannen-Moran and Hoy, 2001).

Finally, participants completed the 31-item OBTRA, which was designed to assess perceptions of online courses, perceived ability, and current teaching practices. The OBTRA consisted of four sections. Technology [e.g., basic online and computer skills and literacy, learning management system (LMS) knowledge] consisted of ten items rated on a 4-point Likert-type scale (1 = Never, 2 = Seldom, 3 = Usually, 4 = Frequently). Pedagogy (e.g., perceived online teaching quality, transferable teaching strategies) consisted of seven items rated on a 4-point scale (1 = Never done this, 2 = Done this and had mixed success, 3 = Done this successfully, 4 = I am an expert and can teach others). Perceptions toward online/blended learning consisted of nine items and Administration (e.g., organization, time management, commitment) of five items; both were rated on a 4-point scale (1 = Strongly disagree to 4 = Strongly agree). Higher OBTRA composite scores (mean of all items) and subscale scores (mean of subscale items) indicate greater readiness to teach online.
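The scoring rule just described (composite = mean of all items, subscale = mean of that subscale's items) can be sketched as follows. This is a minimal illustration, not the authors' scoring script: the section sizes follow the text (10 + 7 + 9 + 5 = 31), but the assumption that items appear in contiguous blocks in that order, and the `SECTIONS` map and `score_obtra` helper themselves, are hypothetical.

```python
from statistics import fmean

# Hypothetical item-to-section map for the 31-item OBTRA. Section sizes
# follow the text (10 + 7 + 9 + 5 = 31); the item order is an ASSUMPTION.
SECTIONS = {
    "Technology": range(0, 10),
    "Pedagogy": range(10, 17),
    "Perceptions": range(17, 26),
    "Administration": range(26, 31),
}


def score_obtra(ratings):
    """Return section means and the composite mean for one respondent's 31 ratings (1-4)."""
    if len(ratings) != 31:
        raise ValueError("expected 31 item ratings")
    if any(not 1 <= r <= 4 for r in ratings):
        raise ValueError("ratings must lie on the 1-4 scale")
    scores = {name: fmean(ratings[i] for i in idx) for name, idx in SECTIONS.items()}
    # The composite is the mean of all items, not the mean of the section means.
    scores["Composite"] = fmean(ratings)
    return scores


# Example: a respondent who rates every Technology item 4 and every other item 2.
example = [4] * 10 + [2] * 21
print(score_obtra(example))
```

Because the sections contain different numbers of items, the composite weights each section by its length, so it generally differs from the simple average of the four section means.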

Results

Data were examined for normality, outliers, and independence of errors (intraclass correlation). Missing data across scale items (PIPS, TSES, and OBTRA) ranged from 0 to 5%. Mean substitution was utilized to account for missing subscale item responses.
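The text does not specify whether missing responses were replaced by the mean of that item across respondents or by the respondent's own mean on the other items of the same subscale. The minimal sketch below assumes the former (item-mean substitution across the sample); the function name `mean_substitute` and the toy ratings are hypothetical.

```python
from statistics import fmean


def mean_substitute(data):
    """Replace missing ratings (None) with that item's mean across respondents.

    data: list of respondent rows of equal length; None marks a missing rating.
    ASSUMPTION: item-mean (column-wise) substitution, not person-mean substitution.
    """
    n_items = len(data[0])
    item_means = []
    for j in range(n_items):
        observed = [row[j] for row in data if row[j] is not None]
        if not observed:
            raise ValueError(f"item {j} has no observed responses")
        item_means.append(fmean(observed))
    return [
        [item_means[j] if value is None else value for j, value in enumerate(row)]
        for row in data
    ]


# Hypothetical 3 respondents x 3 items with two missing ratings.
ratings = [
    [4, 3, None],
    [2, None, 3],
    [3, 4, 4],
]
print(mean_substitute(ratings))
```

Mean substitution is simple, but it shrinks item variances and can slightly attenuate correlations; with at most 5% missing data, as reported here, that effect is likely modest.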

Participant Demographics

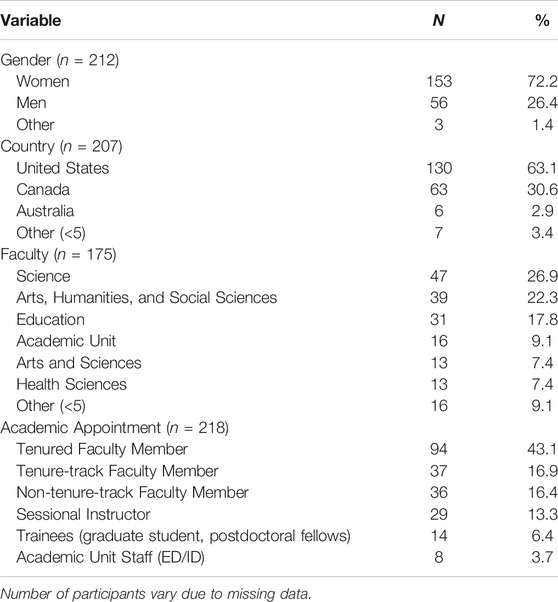

Forty-nine of the 272 individuals did not answer any questions in the survey; therefore, we analyzed the data provided by 223 individuals (Mage = 46 years, SDage = 11 years). Most participants identified as women, were from the United States or Canada, identified their faculty as science, arts, humanities, and social sciences, or education, and were tenured or tenure-track faculty (see Table 1). Many reported having experienced an online course as a student (n = 136, 61.0%) and/or having taught online courses (n = 138, 61.9%). Participants with previous online teaching experience averaged 6.42 years of such experience (SD = 5.15 years), and their typical student evaluation scores were above average (Md = 3.0, Range = 1–4). Over half of participants (n = 130, 58.3%) had designed online courses, reporting an average of 5.83 years (SD = 5.08 years) of experience doing so. Of the 113 participants (58.7%) who reported participating in some form of training on how to teach online, 102 attended a workshop at their own institution, 45 attended a webinar external to their institution, and 36 engaged in other types of training (e.g., courses, conferences, colleagues). Self-rated skillsets and confidence for teaching online were average (M = 2.8, SD = 0.7 and M = 2.8, SD = 0.8, respectively).

Factor Structure

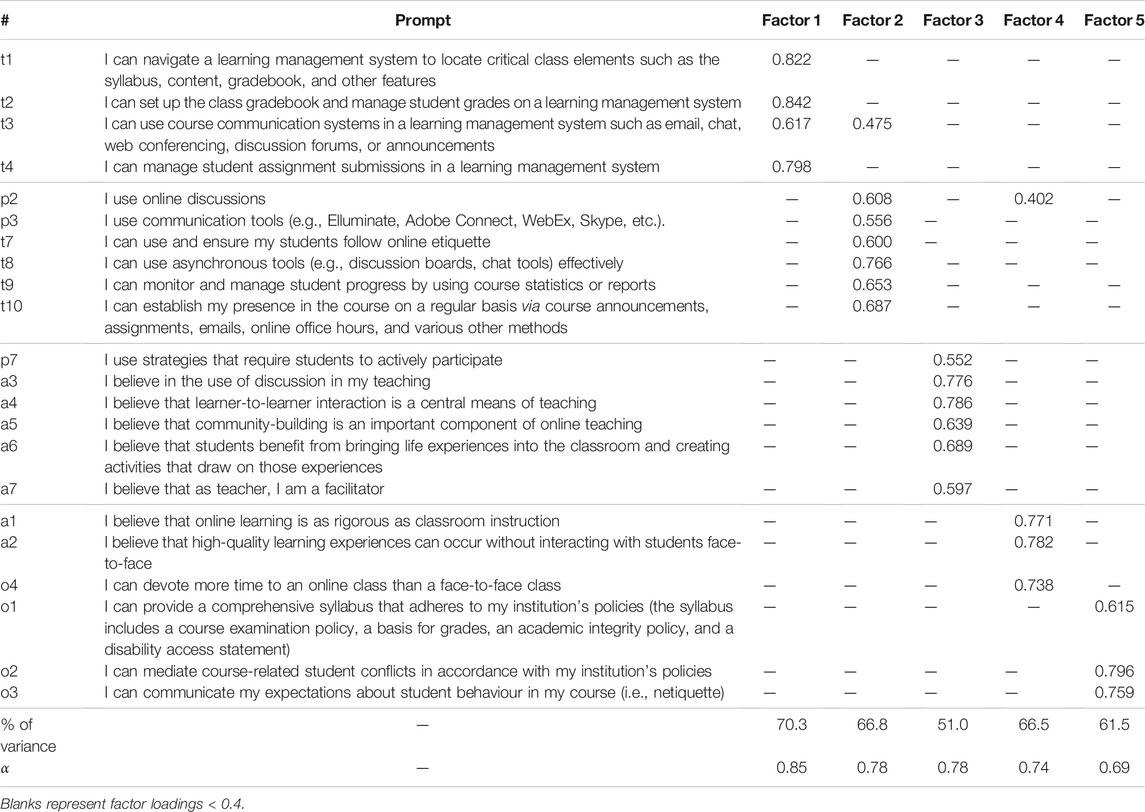

A Kaiser-Meyer-Olkin (KMO) (Kaiser, 1974) value verified that sampling adequacy for the analysis was "meritorious" (KMO = 0.799) (Hutcheson and Sofroniou, 1999). An initial analysis was run to determine the number of factors to extract. Visual inspection of the scree plot (Cattell, 1966) showed five points above the elbow, whereas eight factors had eigenvalues above the Kaiser criterion of 1 (Kim and Mueller, 1978). Five components were retained because this solution was clearer and fit the conceptual model better. Principal component analysis was then conducted with an orthogonal varimax rotation in SPSS version 27 (IBM Corp, 2020). Only items with loadings above 0.40 were retained at this point in the analysis (Ford et al., 1986), resulting in a 22-item OBTRA. The retained items (Table 2) showed a clear factor structure with minimal cross-loadings on secondary factors.
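The sequence of steps above (KMO check, eigenvalue count against the Kaiser criterion, principal component loadings, varimax rotation, 0.40 loading cutoff) can be made concrete with the NumPy sketch below. It runs on synthetic data rather than the study's responses and is not a reproduction of SPSS. It uses Kaiser's classic pairwise varimax algorithm with Kaiser row normalization, which SPSS output reports as its varimax default as far as we are aware; convergence criteria and sign conventions may differ from SPSS. The helper names (`pca_loadings`, `kmo`, `varimax`, `varimax_kaiser`) are our own.

```python
import numpy as np


def pca_loadings(X, n_components):
    """Correlation matrix, eigenvalues (largest first), and unrotated PCA loadings."""
    R = np.corrcoef(X, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(R)            # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # Component loadings = eigenvectors scaled by sqrt(eigenvalue).
    loadings = eigvecs[:, :n_components] * np.sqrt(eigvals[:n_components])
    return R, eigvals, loadings


def kmo(R):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    inv = np.linalg.inv(R)
    # Partial correlations (each pair controlling for all other items).
    partial = -inv / np.sqrt(np.outer(np.diag(inv), np.diag(inv)))
    off = ~np.eye(R.shape[0], dtype=bool)           # off-diagonal mask
    r2, p2 = np.sum(R[off] ** 2), np.sum(partial[off] ** 2)
    return r2 / (r2 + p2)


def varimax(L, max_sweeps=100, tol=1e-8):
    """Kaiser's pairwise varimax: rotate each column pair by the closed-form
    angle that maximizes the varimax criterion, until no pair moves."""
    L = np.array(L, dtype=float)                    # work on a copy
    p, k = L.shape
    for _ in range(max_sweeps):
        largest = 0.0
        for a in range(k - 1):
            for b in range(a + 1, k):
                x, y = L[:, a].copy(), L[:, b].copy()
                u, v = x ** 2 - y ** 2, 2 * x * y
                A, B = u.sum(), v.sum()
                C, D = np.sum(u ** 2 - v ** 2), 2 * np.sum(u * v)
                theta = np.arctan2(D - 2 * A * B / p, C - (A ** 2 - B ** 2) / p) / 4
                c, s = np.cos(theta), np.sin(theta)
                L[:, a], L[:, b] = x * c + y * s, -x * s + y * c
                largest = max(largest, abs(theta))
        if largest < tol:
            break
    return L


def varimax_kaiser(L):
    """Varimax with Kaiser normalization: rotate row-normalized loadings, then rescale."""
    h = np.sqrt(np.sum(L ** 2, axis=1, keepdims=True))
    return varimax(L / h) * h


# Synthetic demo: six items driven by two independent latent traits.
rng = np.random.default_rng(0)
f = rng.normal(size=(500, 2))
X = np.column_stack([f[:, 0]] * 3 + [f[:, 1]] * 3) + 0.5 * rng.normal(size=(500, 6))

R, eigvals, L = pca_loadings(X, n_components=2)
print("KMO:", round(kmo(R), 3))
print("Eigenvalues > 1 (Kaiser criterion):", int(np.sum(eigvals > 1)))
rotated = varimax_kaiser(L)
print("Loads >= 0.40 on each component:\n", np.abs(rotated) >= 0.40)
```

In the study, the number of components was chosen from the scree plot and conceptual fit rather than the Kaiser count alone; in this sketch the eigenvalue count is printed only for comparison.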

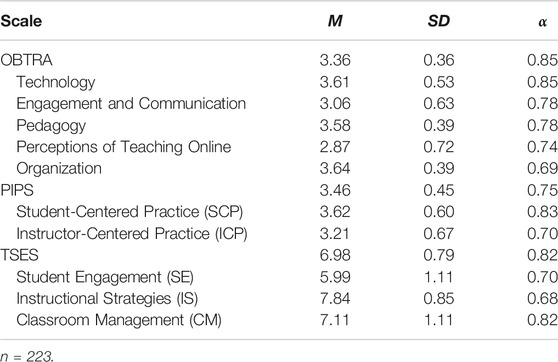

The five-factor solution explained 61.2% of the variance, and each factor was "theoretically meaningful" (Hutcheson and Sofroniou, 1999, p. 222). Technology (Factor 1) had the most explanatory power (Tables 2 and 3) and represents perceived ability to use technology, specifically the LMS. Engagement and Communication (Factor 2) represents perceived ability to facilitate student engagement and use effective communication strategies in the online modality. Pedagogy (Factor 3) represents the use of active teaching strategies and the belief that these strategies are important. Perceptions of Teaching Online (Factor 4) represents the perception that online teaching and learning can be effective and valuable. Organization (Factor 5) represents perceived ability to be administratively competent and flexible in managing a course and adhering to institutional policy. Cronbach's alpha values for all subscales of the 22-item OBTRA (see Table 2) fell within the marginal or relatively reliable ranges (Sattler, 2001).
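Cronbach's alpha, the internal-consistency index behind the subscale reliabilities reported in Table 2, compares the sum of the item variances with the variance of the summed score: alpha = k/(k − 1) × (1 − Σ item variances / total-score variance). The sketch below is a minimal standard-library illustration on made-up data; it does not reproduce the study's values, and the function name `cronbach_alpha` is our own.

```python
from statistics import pvariance


def cronbach_alpha(items):
    """Cronbach's alpha for a subscale given as a list of item columns.

    items: list of k item columns, each a list of n respondents' scores.
    Population variances are used throughout; the ratio is unchanged
    if sample variances are used consistently instead.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    totals = [sum(col[i] for col in items) for i in range(n)]
    item_var = sum(pvariance(col) for col in items)
    return k / (k - 1) * (1 - item_var / pvariance(totals))


# Hypothetical 3-item subscale answered by 5 respondents on a 1-4 scale.
subscale = [
    [3, 4, 2, 4, 3],
    [3, 4, 3, 4, 2],
    [2, 4, 2, 3, 3],
]
print(round(cronbach_alpha(subscale), 3))
```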

TABLE 3. Descriptive statistics for the Online and Blended Teaching Readiness Assessment (OBTRA), Postsecondary Instructional Practices Survey (PIPS), and Teachers' Sense of Efficacy Scale (TSES).

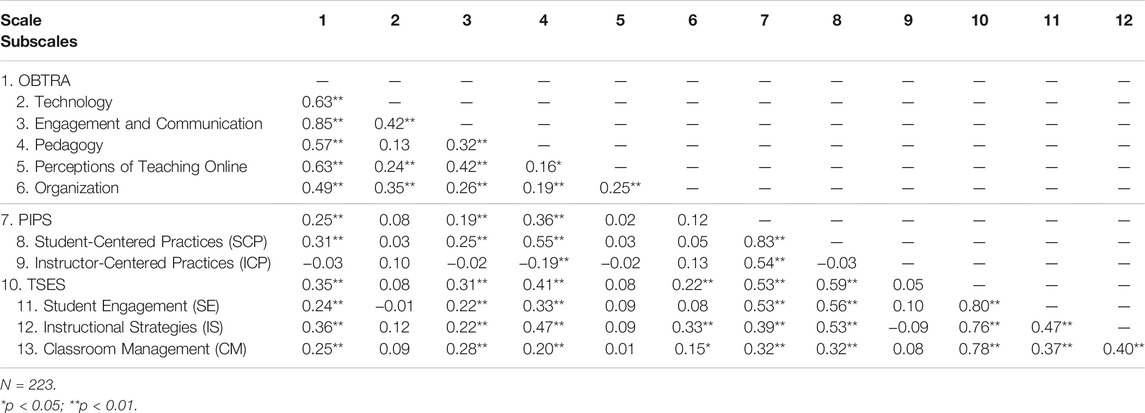

Evidence for Validity

Evidence for the concurrent validity of the OBTRA comes from significant relationships of at least moderate size (i.e., r ≥ 0.30; Nunnally and Bernstein, 1994) between OBTRA scores and PIPS and TSES scores, particularly between the OBTRA Pedagogy subscale scores and the PIPS SCP (r = 0.55, p < 0.01), TSES SE (r = 0.33, p < 0.01), and TSES IS (r = 0.47, p < 0.01) subscale scores. As expected, very weak relationships (r < 0.20) were observed between the OBTRA Pedagogy and Engagement and Communication subscale scores and the PIPS ICP subscale scores, providing evidence for discriminant validity. Correlations between composite and subscale scores are shown in Table 4.
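The validity argument above rests on Pearson correlations and two cut-offs: r ≥ 0.30 as support for concurrent validity and r < 0.20 as support for discriminant validity. The minimal sketch below computes r from scratch and applies those cut-offs; the scores are invented, and the helper names `pearson_r` and `label_correlation` are hypothetical. It does not compute p-values; a library routine such as SciPy's `scipy.stats.pearsonr` returns both r and a p-value if needed.

```python
from math import sqrt
from statistics import fmean


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length score lists."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def label_correlation(r, moderate=0.30, very_weak=0.20):
    """Label r using the cut-offs cited in the text (r >= 0.30; |r| < 0.20)."""
    if r >= moderate:
        return "convergent (r >= 0.30)"
    if abs(r) < very_weak:
        return "very weak (|r| < 0.20)"
    return "in between"


# Hypothetical subscale means for five respondents.
obtra_pedagogy = [1, 2, 3, 4, 5]
pips_scp = [2, 4, 5, 4, 5]
r = pearson_r(obtra_pedagogy, pips_scp)
print(round(r, 2), label_correlation(r))
```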

TABLE 4. Correlations between the Online and Blended Teaching Readiness Assessment (OBTRA), Postsecondary Instructional Practices Survey (PIPS), and Teachers' Sense of Efficacy Scale (TSES) scores.

Discussion

We designed the OBTRA to explore the components of readiness to teach online among instructors moving from face-to-face to online teaching and to identify areas for further instructional development. The present study identified an underlying factor structure and preliminary psychometric properties of the OBTRA using data from a sample of 223 postsecondary instructors. Exploratory factor analysis was used to identify items with high loadings on a limited number of factors. Five factors emerged from this analysis: Technology, Engagement and Communication, Pedagogy, Perceptions of Teaching Online, and Organization. We also found preliminary evidence of the concurrent and discriminant validity of the OBTRA. The results suggest that, with further development, the OBTRA could help academic units provide the most appropriate support and resources for instructors as they transition to online teaching and further develop their online teaching skills.

Previous measures of readiness to teach online are primarily checklists, focus on technology, and/or evaluate instructors' acceptance of online vs face-to-face courses. The strength of the OBTRA lies in its potential to guide instructors to appropriate supports and training related to technology (i.e., the LMS), communication strategies and the use of technology to engage learners, and pedagogy, to better prepare them for online teaching. Cutri and Mena (2020) suggest that instruments measuring readiness to teach online are needed and should serve as practical tools to inform faculty development rather than to shame instructors. The OBTRA was used by a postsecondary teaching and learning centre during the pivot to online learning due to the COVID-19 pandemic. Instructors completed the OBTRA independently via a survey platform, received customized feedback, and were directed to supports that aligned with their responses. Further work to determine whether this feedback was useful to instructors would provide additional evidence of the instrument's validity. It may also be worth examining whether the OBTRA would help K-12 educators with their professional development in online education, as many of them also faced the transition from face-to-face to online teaching and learning due to the COVID-19 pandemic.

Developing an assessment instrument must include examining whether the tool measures what it purports to measure. We found preliminary evidence for the construct validity of the OBTRA by examining relationships between its scores and scores obtained from related instruments. Significant positive correlations between the OBTRA and the PIPS and TSES support the notion that instructors with higher OBTRA scores tend to be more knowledgeable about student-centred instructional practices, more focused on student engagement, more efficacious, and better prepared to teach in the online modality. These findings are important because student-centred teaching practices are known to be highly effective (i.e., to improve student outcomes) in both the face-to-face (Freeman et al., 2014) and online (Palloff and Pratt, 2011; Kirwan and Rounell, 2015; Kebritchi et al., 2017) modalities. The next steps in the development of the OBTRA are to use confirmatory factor analysis with a larger sample to test the factor structure identified in this study and to examine associations between readiness to teach online, the implementation of effective pedagogies, and student outcomes.

Despite its strengths, our study has several limitations. First, the OBTRA is a self-report measure that assumes respondents can assess themselves accurately, although there is evidence that instructor self-assessment is a powerful technique for facilitating professional growth (Ross and Bruce, 2007). In future studies, results from the OBTRA could be compared with other sources of evidence for high-quality teaching (e.g., expert observation). Second, we used a relatively small convenience sample, which limited the extent of the analyses and their interpretation (Hutcheson and Sofroniou, 1999). Future research using larger samples is needed to continue refining the OBTRA and to allow generalization to the population of postsecondary instructors. Future studies should also investigate the "blended" portion of the OBTRA, as recent work suggests that teaching blended courses requires skills distinct from those needed for fully online courses (Archibald et al., 2021). A third limitation is that the data were collected before the onset of the COVID-19 pandemic. The results might have differed had the data been collected during or after the pandemic, when more postsecondary instructors would have experienced teaching online. A follow-up post-pandemic study would provide a unique opportunity to examine the effects of the pandemic on instructor readiness to teach online, or on preparedness to implement blended, hybrid, or flexible approaches when students and academic staff return to campuses.

Another limitation of our work is the narrow focus of the OBTRA Pedagogy subscale. Future research should further define the characteristics of high-quality pedagogy in online courses and refine their measurement. Several researchers have emphasized the importance of instructors' emotional intelligence in the online teaching modality, particularly empathy for student needs (Cutri et al., 2020; Torrisi-Steele, 2020). Given the current environment of the COVID-19 pandemic and the increasing importance of addressing students' needs, flexibility and empathy for students should be considered primary characteristics of an effective online instructor. In an integrative literature review of 44 peer-reviewed studies on online teaching readiness, Cutri and Mena (2020) identified three shared key concepts: affective, pedagogical, and organizational considerations (found in 44.8, 40.0, and 18.2% of studies, respectively). Affective considerations encompass "affective dispositions involved in creating online versions of existing courses, such as response to risk taking, response to change, identity disruption, and stress" (Cutri and Mena, 2020, p. 365). Pedagogical considerations focused on "sharing power with students, apprehensions regarding conveying personality online, and avoiding monologues" (Cutri and Mena, 2020, p. 365). The OBTRA's Perceptions of Teaching Online and Pedagogy subscales begin to address these concepts, whereas the OBTRA's Organization subscale, represented by items such as time management and flexibility, is most closely linked to the organizational considerations outlined by Cutri and Mena.

Finally, we acknowledge that the development of the OBTRA was not guided by an established theoretical framework for readiness to teach online, which is a significant limitation. More recent publications have outlined possible theory-driven approaches to assessing readiness to teach online (Martin et al., 2019; Cutri and Mena, 2020). To develop their readiness instrument, Martin et al. (2019) created a framework, based on a literature review and existing readiness instruments, that included four components: course design, course communication, time management, and technical. The OBTRA subscales align closely with this framework. Cutri and Mena, by contrast, adapted the theory of professional vulnerability (Kelchtermans, 1996; Kelchtermans, 2009) to frame their review of the readiness to teach online research (described above). Professional vulnerability refers to the "feeling that one's professional identity and moral integrity. . . are questioned" (Kelchtermans, 1996, p. 319). During the transition from face-to-face to online teaching, instructors may feel vulnerable when the identities and values they associate with teaching in traditional classroom settings are threatened (Cutri and Mena, 2020). Moreover, "the structure and culture of academia can exacerbate the professional vulnerability that faculty experience when transitioning to online teaching" (Cutri and Mena, 2020, p. 363). Items that measure professional vulnerability related to teaching online could be added to future iterations of the OBTRA and could contribute to the development of a theoretical framework of readiness to teach online.

Conclusion

We present the OBTRA as a new tool that instructors and academic units can use during a shift from face-to-face to online learning environments. The tool can help identify areas for professional development and instructional support. Instructor perceptions of teaching online, accompanied by the willingness to be involved in online education (Hoyt and Oviatt, 2013) and appropriate professional development opportunities (Adnan, 2018), play key roles in the future success of online education and its effectiveness for our students.

Data Availability Statement

The datasets presented in this article are not readily available because permissions to share data publicly were not obtained from participants.

Ethics Statement

The studies involving human participants were reviewed and approved by the Joint Faculty Research Ethics Board, University of Manitoba. The participants provided their written informed consent to participate in this study.

Author Contributions

RL and AD were involved in formulating the project and design of the study. RL collected the data. RL and BS conducted the analyses and drafted the article. All authors contributed to revising the work critically.

Funding

The Centre for the Advancement of Teaching and Learning, University of Manitoba supported this project.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We wish to thank Marisol Soto from The Centre for the Advancement of Teaching and Learning, University of Manitoba, for her assistance with data cleaning.

References

Adnan, M. (2018). Professional Development in the Transition to Online Teaching: The Voice of Entrant Online Instructors. ReCALL 30 (1), 88–111. doi:10.1017/S0958344017000106

Allen, E., and Seaman, J. (2015). Grade Level: Tracking Online Education in the United States. Available at: http://www.onlinelearningsurvey.com/reports/gradelevel.pdf.

Archibald, D. E., Graham, C. R., and Larsen, R. (2021). Validating a Blended Teaching Readiness Instrument for Primary/secondary Preservice Teachers. Br. J. Educ. Technol. 52 (2), 536–551. doi:10.1111/bjet.13060

Bigatel, P. M., Ragan, L. C., Kennan, S., May, J., and Redmond, B. F. (2012). The Identification of Competencies for Online Teaching success. Olj 16 (1), 59–78. doi:10.24059/olj.v16i1.215

Cattell, R. B. (1966). The Scree Test for the Number of Factors. Multivariate Behav. Res. 1 (2), 245–276. doi:10.1207/s15327906mbr0102_10

Chang, C., Shen, H.-Y., and Liu, Z.-F. (2014). University Faculty's Perspectives on the Roles of E-Instructors and Their Online Instruction Practice. Irrodl 15 (3), 72–92. doi:10.19173/irrodl.v15i3.1654

Chi, A. (2015). Development of the Readiness to Teach Online Scale. [University of Denver]. Available at: https://digitalcommons.du.edu/etd/1018.

Cutri, R. M., and Mena, J. (2020). A Critical Reconceptualization of Faculty Readiness for Online Teaching. Distance Education 41 (3), 361–380. doi:10.1080/01587919.2020.1763167

Cutri, R. M., Mena, J., and Whiting, E. F. (2020). Faculty Readiness for Online Crisis Teaching: Transitioning to Online Teaching during the COVID-19 Pandemic. Eur. J. Teach. Education 43 (4), 523–541. doi:10.1080/02619768.2020.1815702

Ford, J. K., MacCallum, R. C., and Tait, M. (1986). The Application of Exploratory Factor Analysis in Applied Psychology: A Critical Review and Analysis. Personnel Psychol. 39 (2), 291–314. doi:10.1111/j.1744-6570.1986.tb00583.x

Freeman, S., Eddy, S. L., McDonough, M., Smith, M. K., Okoroafor, N., Jordt, H., et al. (2014). Active Learning Increases Student Performance in Science, Engineering, and Mathematics. Proc. Natl. Acad. Sci. U S A. 111 (23), 8410–8415. doi:10.1073/pnas.1319030111

Gibson, S. G., Harris, M. L., and Colaric, S. M. (2008). Technology Acceptance in an Academic Context: Faculty Acceptance of Online Education. J. Education Business 83 (6), 355–359. doi:10.3200/JOEB.83.6.355-359

Hixon, E., Barczyk, C., Buckenmeyer, J., and Feldman, L. (2011). Mentoring university Faculty to Become High Quality Online Educators: A Program Evaluation. Online J. Distance Learn. Adm. 14 (5).

Hoyt, J. E., and Oviatt, D. (2013). Governance, Faculty Incentives, and Course Ownership in Online Education at Doctorate-Granting Universities. Am. J. Distance Education 27 (3), 165–178. doi:10.1080/08923647.2013.805554

Hutcheson, G. D., and Sofroniou, N. (1999). Factor Analysis. The Multivariate Social Scientist: Introductory Statistics Using Generalized Linear Models. London: Sage, 217–251.

Kaiser, H. F. (1974). An Index of Factorial Simplicity. Psychometrika 39 (1), 31–36. doi:10.1007/BF02291575

Kebritchi, M., Lipschuetz, A., and Santiague, L. (2017). Issues and Challenges for Teaching Successful Online Courses in Higher Education. J. Educ. Technology Syst. 46 (1), 4–29. doi:10.1177/0047239516661713

Kelchtermans, G. (1996). Teacher Vulnerability: Understanding its Moral and Political Roots. Cambridge J. Education 26 (3), 307–323. doi:10.1080/0305764960260302

Kelchtermans, G. (2009). Who I Am in How I Teach Is the Message: Self‐understanding, Vulnerability and Reflection. Teach. Teach. 15 (2), 257–272. doi:10.1080/13540600902875332

Khan, A., Egbue, O., Palkie, B., and Madden, J. (2017). Active Learning: Engaging Students to Maximize Learning in an Online Course. Electron. J. E-Learning 15 (2), 107–115.

Kim, J., and Mueller, C. W. (1978). Introduction to Factor Analysis: What it Is and How to Do it. Newbury Park, CA: Sage.

Kirwan, J., and Rounell, E. (2015). Building a Conceptual Framework for Online Educator Dispositions. Jeo 12 (1), 30–61. doi:10.9743/jeo.2015.1.7

Lane, L. (2013). An Open, Online Class to Prepare Faculty to Teach Online. Jeo 10 (1). doi:10.9743/JEO.2013.1.1

Martin, F., Wang, C., Jokiaho, A., May, B., and Grübmeyer, S. (2019). Examining Faculty Readiness to Teach Online: A Comparison of US and German Educators. Eur. J. Open, Distance E-Learning 22 (1), 53–69. doi:10.2478/eurodl-2019-0004

McCoach, D. B., Gable, R. K., and Madura, J. P. (2013). Instrument Development in the Affective Domain. New York: Springer-Verlag. doi:10.1007/978-1-4614-7135-6

Mercado, C. A. (2008). Readiness Assessment Tool for an eLearning Environment Implementation. Fifth International Conference on ELearning for Knowledge-Based Society, Bangkok, Thailand, 1–11.

Palloff, R. M., and Pratt, K. (2011). The Excellent Online Instructor: Strategies for Professional Development. Indianapolis, IN: Jossey-Bass.

Penn State University and Central Florida University (2008). Faculty Self-Assessment: Preparing for Online Teaching. Available at: https://hybrid.commons.gc.cuny.edu/teaching/getting-started/faculty-self-assessment-preparing-for-online-teaching/.

Ragan, L. C., Bigatel, P. M., Kennan, S. S., and Dillon, J. M. (2012). From Research to Practice: Towards an Integrated and Comprehensive Faculty Development Program. Olj 16 (5), 71–86. doi:10.24059/olj.v16i5.305

Reilly, J., Vandenhouten, C., Gallagher-Lepak, S., and Ralston-Berg, P. (2012). Faculty Development for E-Learning: A Multi-Campus Community of Practice (COP) Approach. Olj 16 (2), 99–110. doi:10.24059/olj.v16i2.249

Roby, T., Ashe, S., Singh, N., and Clark, C. (2013). Shaping the Online Experience: How Administrators Can Influence Student and Instructor Perceptions through Policy and Practice. Internet Higher Education 17 (1), 29–37. doi:10.1016/j.iheduc.2012.09.004

Ross, J. A., and Bruce, C. D. (2007). Teacher Self-Assessment: A Mechanism for Facilitating Professional Growth. Teach. Teach. Education 23 (2), 146–159. doi:10.1016/j.tate.2006.04.035

Sattler, J. M. (2001). Assessment of Children: Cognitive Applications. 4th ed. San Diego, CA: Jerome M. Sattler Publisher, Inc.

Seok, S., Kinsell, C., DaCoster, B., and Tung, C. K. (2010). Comparison of Instructors' and Students' Perceptions of the Effectiveness of Online Courses. Q. Rev. Distance Education 11 (785), 25–36.

Shelton, K., and Moore, J. (2014). Quality Scorecard for the Administration of Online Programs: A Handbook. Online Learning Consortium Website. Available at: http://onlinelearningconsortium.org/quality-scorecard.

Teräs, H., and Herrington, J. (2014). Neither the Frying Pan Nor the Fire: In Search of a Balanced Authentic E-Learning Design through an Educational Design Research Process. Irrodl 15 (2), 232–253. doi:10.19173/irrodl.v15i2.1705

Torrisi-Steele, G. (2020). Facilitating the Shift from Teacher Centred to Student Centred University Teaching. Int. J. Adult Education Technology 11 (3), 22–35. doi:10.4018/IJAET.2020070102

Tschannen-Moran, M., Hoy, A. W., and Hoy, W. K. (1998). Teacher Efficacy: Its Meaning and Measure. Rev. Educ. Res. 68 (2), 202–248. doi:10.3102/00346543068002202

Tschannen-Moran, M., and Hoy, A. W. (2001). Teacher Efficacy: Capturing an Elusive Construct. Teach. Teach. Education 17 (7), 783–805. doi:10.1016/S0742-051X(01)00036-1

Walter, E. M., Henderson, C. R., Beach, A. L., and Williams, C. T. (2016). Introducing the Postsecondary Instructional Practices Survey (PIPS): A Concise, Interdisciplinary, and Easy-To-Score Survey. CBE Life Sci. Educ. 15 (4), 1–11. doi:10.1187/cbe.15-09-0193

Keywords: COVID-19, exploratory factor analysis, online teaching and learning, readiness to teach, student-centered pedagogy, validity, perceptions of teaching online

Citation: Los R, De Jaeger A and Stoesz BM (2021) Development of the Online and Blended Teaching Readiness Assessment (OBTRA). Front. Educ. 6:673594. doi: 10.3389/feduc.2021.673594

Received: 27 February 2021; Accepted: 27 September 2021;

Published: 15 October 2021.

Edited by:

M. Meghan Raisch, Temple University, United States

Reviewed by:

Denice Hood, University of Illinois at Urbana-Champaign, United States
Charles Graham, Brigham Young University, United States

Copyright © 2021 Los, De Jaeger and Stoesz. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Brenda M. Stoesz, brenda.stoesz@umanitoba.ca
