- 1Expertise Centre for Education and Learning, Thomas More University of Applied Sciences, Antwerp, Belgium

- 2Faculty of Educational Sciences, Open University of the Netherlands, Heerlen, Netherlands

This survey research assessed whether novice secondary school teachers knew and understood the effectiveness of empirically-supported learning strategies, namely spaced practice, retrieval practice, interleaved practice, using multimodal representations, elaborative interrogation and worked-out examples. These ‘proven’ strategies can be contrasted with frequently used learning strategies that have been found to be less effective, such as re-reading, taking verbatim notes, highlighting/underlining, summarizing, and cramming. This study broadens previous research on teachers’ knowledge of learning strategies by both refining and extending the methodology used in the scenario studies, and by administering it to a different, previously unexplored population. Novice teachers enrolled in a teacher training program (N = 180) in Flanders, Belgium were presented with a three-part survey, consisting of open-ended questions, learning scenarios and a list of study strategies. The results show that misconceptions about effective study strategies are widespread among novice teachers and suggest that they are unaware of several specific strategies that could benefit student learning and retention. While popular but less effective strategies such as highlighting and summarizing were commonly named by them in open-ended questions, this was not the case for proven effective strategies (e.g., studying worked-out examples, interleaving, and using multi-modal representations), which were hardly mentioned or not mentioned at all. We conclude that this study adds to the growing literature showing that it is not only students, but also novice teachers who make suboptimal metacognitive judgments when it comes to study and learning. Explicit instruction in evidence-informed learning strategies should be stressed and included in both teacher professional development programs and initial teacher training.

Introduction

Educators are often asked for advice on how to improve their students’ self-study behavior. This requires teachers to expand their teaching of subject-specific information with teaching their students how to best process this information (i.e., how to study; Weinstein and Mayer, 1986). Research into human cognition has provided information on concrete learning strategies that support student learning (e.g., Dunlosky et al., 2013), but has also shown that many learners have flawed mental models of how they learn, making them more likely to mismanage their learning (Bjork et al., 2013). Teachers are in a position to teach students how to optimize their use of study time to promote efficient and effective learning and better retention of knowledge and skills, either in generic learning-to-learn lessons or within their subject-specific classes (Education Council, 2006). Since the beginning of the 21st century, learning strategy instruction has indeed become part of several national curricula (Glogger-Frey et al., 2018). The idea is that teachers’ use of the evidence base on effective study strategies when advising students can improve students’ self-study behavior (see, e.g., Biwer et al., 2022, for a practical implementation of an evidence-based program). To move from the evidence to the actual design of pedagogical practices informed by this best evidence, it is necessary to have a deep understanding of what works, how, and when in optimal circumstances. To improve students’ study behaviors, it is worth exploiting the most promising guidelines that have been shown to work for the largest possible group of pupils. Implementation of a so-called evidence-informed approach to teaching and learning, based on stable and robust scientific findings (best evidence), then offers the chance to raise practice (see, e.g., Slavin, 2020). The question is, however, whether novice teachers have accurate knowledge of the evidence on which they can base their practice. 
Knowledge acquired during teacher education can serve as a starting point in their teaching career, upon which they can build further expertise during their ensuing professional career (Berliner, 2001). In this survey research, we assessed whether novice secondary school teachers in Flanders (Belgium), who recently graduated from initial teacher training, have accurate knowledge of the effectiveness and non-effectiveness of particular study strategies.

Effective strategies for acquiring knowledge and skills

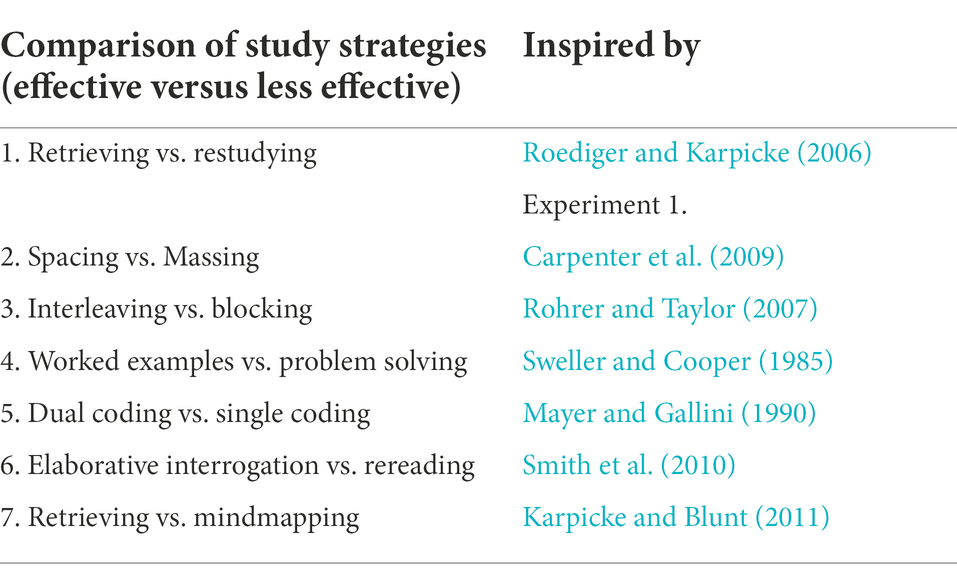

Research on teachers’ knowledge is multifaceted because of the multiple definitions given to the knowledge itself (Elbaz, 1983; Shulman, 1986; Darling-Hammond and Bransford, 2005). Teachers’ knowledge about effective study-strategies is part of what Lee Shulman termed principles of teachers’ propositional knowledge (i.e., ‘know that’, principles derived from empirical research and theory about learning and instruction; Shulman, 1986; Verloop et al., 2001). Well over a century of laboratory and applied research in cognitive and educational psychology has brought us a number of well-established principles: certain learning strategies promote retention more and lead to more durable learning than others (Pashler et al., 2007; Dunlosky et al., 2013; Fiorella and Mayer, 2016). These strategies can be labeled as study strategies when students independently employ them to promote their learning by achieving goal-oriented instructional tasks, often characterized by tests or exams (Winne and Hadwin, 1998; Dinsmore et al., 2016). Many experiments in which learners are taught or encouraged to apply specific study strategies, such as rereading, spacing practice, summarizing or highlighting, have been conducted to determine if and how they work and to determine which lead to longer-lasting learning (as opposed to achievement on exams). Several key reviews reach converging findings (Pashler et al., 2007; Dunlosky et al., 2013; Putnam and Roediger, 2018; Weinstein et al., 2018). In their extensive review, Dunlosky et al. (2013) discussed 10 frequently used and researched strategies: spaced practice, retrieval practice, interleaved practice, rereading, imagery use for text learning, keyword mnemonic, highlighting, summarization, self-explanation and elaborative interrogation. They assessed the effectiveness of these strategies for different age groups, subject areas, types of learning materials, study tasks and types of learning. 
Spaced practice and retrieval practice were, amongst others, qualified as useful strategies that promote learning, whereas highlighting, rereading, summarizing and keyword mnemonics were seen as strategies with low utility. Similarly, Pashler et al. (2007) identified seven effective learning and study strategies that overlap considerably with Dunlosky et al. (2013): spaced practice, studying worked examples, combining graphics with verbal descriptions, using concrete representations, retrieval practice and elaborative interrogation. These findings have led the National Council on Teacher Quality (NCTQ) to describe six learning and study strategies as the core of prospective teachers’ knowledge base on effective learning processes, as their effectiveness is supported by evidence from multiple sources and replications, ranging from lab-based studies with paired associates as study materials to real classroom settings with authentic study materials (Pomerance et al., 2016; Weinstein et al., 2018). Table 1 presents these six strategies, each accompanied by an example of its implementation in students’ self-study.

Distributed or spaced practice (i.e., study sessions of the same material are distributed across time) usually improves retention of that material in comparison to massing study of that same material in one long session, keeping total study time equivalent in both conditions. In a typical experiment, Nazari and Ebersbach (2019) compared two groups of secondary school students on learning mathematical calculations (basic probability) in either spaced fashion (i.e., three practice sessions of 15 min on three consecutive days) or massed fashion (i.e., one 45-min session delivered within a single day). Students in the spaced condition outperformed the students in the massed condition on post-tests after 2 and 6 weeks. Distributing practice extends the total time hypothesis (i.e., people tend to learn more as a simple function of time spent on the learning task; Ebbinghaus, 1964) with a timing aspect: introducing spacing gaps between study sessions enhances long-term retention. This advantage is known as the spacing effect. For recent reviews, see Carpenter (2017), Wiseheart et al. (2019), and Latimier et al. (2021).

A related strategy is interleaved practice, where learners alternate amongst several separate but related topics during one practice session as compared to blocked practice devoted to a single topic (Firth et al., 2021). When interleaving (also known as variability of practice; Van Merriënboer and Kirschner, 2018), practice of each specific topic or task is separated from the next occurrence by the practicing of other topics or tasks. For example, in study sequence A-B-C-B-A-C-A-B-C… there are three tasks between the first and second instance of A, one between the first and second instance of B, and so forth. Thus, by using interleaved practice, learners also achieve spacing effects but the reverse is not necessarily true. Simple spacing (A-A-A-interval-A-A-A) does not lead to interleaving. Interleaving practice is appropriate when students must learn to distinguish among concepts or terms, principles or types of problems that appear to be similar on the surface, or see deeper level similarities in concepts that appear on the surface to be different (e.g., when to use the formulae for acceleration, velocity, and resistance). For recent reviews, see, for example, Firth et al. (2021), Carvalho and Goldstone (2017), and Kang (2016).
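The gap counts in the example sequence above can be checked with a minimal sketch. The function name `gaps_between_repeats` and the blocked comparison sequence A-A-A-B-B-B-C-C-C are illustrative assumptions, not part of the cited studies; only the interleaved sequence A-B-C-B-A-C-A-B-C comes from the text.

```python
def gaps_between_repeats(sequence):
    """Map each topic to the number of other items separating its consecutive occurrences."""
    last_seen = {}  # topic -> index of its most recent occurrence
    gaps = {}       # topic -> list of gap sizes between consecutive occurrences
    for position, topic in enumerate(sequence):
        if topic in last_seen:
            gaps.setdefault(topic, []).append(position - last_seen[topic] - 1)
        last_seen[topic] = position
    return gaps


# Sequence taken from the text (only the part shown before the ellipsis).
interleaved = "A-B-C-B-A-C-A-B-C".split("-")
# Hypothetical blocked schedule, added here purely for contrast.
blocked = "A-A-A-B-B-B-C-C-C".split("-")

interleaved_gaps = gaps_between_repeats(interleaved)
blocked_gaps = gaps_between_repeats(blocked)

print("A, first to second instance:", interleaved_gaps["A"][0])  # three tasks in between
print("B, first to second instance:", interleaved_gaps["B"][0])  # one task in between
print("Blocked gaps:", blocked_gaps)  # every gap is zero: no other topic intervenes
```

In the interleaved sequence every repetition of a topic is separated by at least one other topic, which is why interleaving also produces spacing; in the blocked sequence no other topic ever intervenes between repetitions.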

Retention is also enhanced when learners engage in retrieval practice (practice testing) as a study strategy. Here students retrieve what they have learned either by testing themselves or by being tested by others such as peers or the teacher. Simply put, when students are tested on particular learning material, they are required to retrieve it from their long-term memory to get the correct answer. Note that these are no-stakes tests meant to support learning; they are not meant to assess learning (summative testing), to unfold the study process (formative testing) or to serve as a means for self-evaluation. Retrieval strategies have been shown to be superior to non-retrieval strategies such as restudying, re-reading or copying the information, a benefit known as the testing effect (Adesope et al., 2017; Sotola and Crede, 2021).

Elaboration entails study strategies that foster conscious and deliberate connecting of the to-be-learned material with pre-existing (i.e., prior) knowledge (Hirshman, 2001). To take advantage of elaboration, students can, for instance, engage in what is known as elaborative interrogation (i.e., posing and answering questions about to-be-learned material). The practice of asking epistemic questions such as “why,” “when,” and “how” can help increase students’ understanding and retention of concepts (Ohlsson, 1996; Popova et al., 2014). Elaborative interrogation demands more than just recall of facts: it requires learners to think about information on a deeper level, for example about causal mechanisms and comparisons between important concepts (Pressley et al., 1987).

Learning from multimodal representations of to-be learned material (i.e., complementing text-based study materials with explanatory visual information such as graphs, figures and pictures) facilitates student learning and retention compared to studying single representations. Verbal and pictorial coding has additive effects on recall (Paivio, 1986; Camp et al., 2021; Mayer, 2021). Illustrations are especially helpful when the concept is complex or involves multiple steps (Eitel and Scheiter, 2015).

Finally, students learn more by alternating between studying worked-out examples (i.e., studying example problems with their solution) and solving similar problems on their own than they do when just given problems to solve on their own (Renkl et al., 1998; Kalyuga et al., 2001; Renkl, 2002; Kirschner et al., 2006; Van Gog et al., 2019). Students’ procedural knowledge can be improved by replacing approximately half the practice problems with fully worked-out examples and then removing steps, one at a time (i.e., partially worked-out examples), until only the problem remains. A common variation is to combine worked examples with prompts that encourage students to explain the information to themselves (Bisra et al., 2018). Connecting concrete examples to more abstract representations also allows students to apply concepts in new situations (Weinstein et al., 2018).

Popular but less effective study strategies

Teachers’ propositional knowledge about less effective study-strategies can also be useful; knowing which strategies are less effective should not be ignored in evidence-informed practice (Gorard, 2020). Research has shown that students often employ suboptimal study-strategies such as re-reading, taking verbatim notes, highlighting/underlining, and cramming (see, e.g., Morehead et al., 2016; Anthenien et al., 2018; Dirkx et al., 2019). To recognize, identify and evaluate these strategies when their students use them, and eventually to correct that use, teachers need an accurate understanding of them. Suboptimal strategies can be misleading when it comes to allocating study time in self-paced learning (Dunlosky et al., 2013).

Re-reading texts, an often used and suggested study strategy, is a passive study strategy as it does not require effortful processing of the text (Morehead et al., 2016; Dirkx et al., 2019). Moreover, it provides students with the false impression of successful learning due to the increased perceived fluency at second reading of the text (Rawson and Dunlosky, 2002). That is to say, when reading a text for a second time students recognize the information in the text, but this is quite different from being able to remember it. A similar manifestation of this metacognitive overconfidence can be observed with students copying or rewriting notes or texts (Kobayashi, 2005). Here students passively engage in often verbatim copying of information, which does not require the type of processing that stimulates long-term retention, such as elaboration or retrieval processes. Highlighting or underlining is a popular study strategy because of its ease of use and its assumed potential for assisting the storage of important sections in text materials (Morehead et al., 2016; Dirkx et al., 2019). Although there is evidence to suggest that students recall highlighted information better than non-highlighted information, in general, students’ highlighting habits are mostly ineffective as they usually underline unessential information, or too much or too little information (Ponce et al., 2022). Cramming is a widely used study strategy where students mass their study sessions directly prior to exams or tests (Hartwig and Dunlosky, 2012). Massing study sessions, though fruitful for recall at a short retention interval (i.e., performance on a test), yields sub-standard recall in the long term (i.e., learning).

Although summarizing and concept mapping could be seen as potential examples of active and generative study strategies (Fiorella and Mayer, 2016), the results of their use are often disappointing. Summarizing is the act of concisely stating key ideas from to-be-learned material using one’s own words and excluding irrelevant or repetitive material. While summarizing is effective in certain domains and study tasks (e.g., summarizing short expository texts for history lessons), research has shown that there are a few important boundaries (e.g., procedural knowledge in for instance physics and chemistry is not appropriate for creating a summary as is vocabulary learning; Dunlosky et al., 2013). Concept mapping might be considered as a form of summarizing where a graphic organizer is created by identifying key words or ideas, by placing them in nodes, by drawing lines linking related terms and by writing about the nature of the relationship along those lines (Schroeder et al., 2018). Similar to summarizing, boundary conditions of the strategy have been identified. For instance, Karpicke and Blunt (2011) found that for studying text passages retrieval practice is more effective than concept mapping while observing the learning materials. Studies have also shown that students can struggle to create summaries or concept maps of sufficient quality if they have only received basic instructions (e.g., capturing the main points and on excluding unimportant material, see Rinehart et al., 1986; Bednall and James Kehoe, 2011; Schroeder et al., 2018) or have either not sufficiently practiced summarizing or concept mapping so as to acquire the necessary skills to do it well or lack the necessary prior knowledge to identify what is important.

However, as noted by Miyatsu et al. (2018), even the aforementioned more shallow strategies can be tweaked into a more effective approach by enriching or combining them with effective strategies. For instance, rewriting notes by reorganizing them elicits elaborative processing and studying one’s summary followed by trying to reproduce it without the summary being visible takes advantage of the benefits of the testing effect. It is known that students who solely engage in less effective strategies (e.g., highlighting without engaging in retrieval practice) tend to reduce their potential of recall and transfer (Blasiman et al., 2017).

Why do students not know what is germane to their learning?

The accumulated knowledge from cognitive psychology about how to study effectively and how to avoid ineffective study strategies does not necessarily lead to improved learning behavior by students. The majority of self-report questionnaires reveal that students are often not aware of the advantages of retrieval practice, spaced practice, and elaboration strategies and do not often implement them in their self-regulated learning. Most students use strategies, such as repeatedly rereading their learning materials or massing their study, which hamper, rather than improve, their effectiveness as learners (see, e.g., Kornell and Bjork, 2007; Karpicke et al., 2009; McCabe, 2011; Hartwig and Dunlosky, 2012; Dirkx et al., 2019). This might be partially explained by two accounts. First, students (and teachers, who were once students themselves) are susceptible to (often false) metacognitive intuitions or beliefs about learning, which influence their knowledge (for an overview of biases and classic beliefs in human learning, see, e.g., Koriat, 1997; Bjork et al., 2013). For instance, judgments of learning are typically based on cognitive cues that learners consider to be predictive of their future memory performance; that is, learners confuse initial performance with learning for long-term maintenance (Soderstrom and Bjork, 2015). Ineffective strategies such as massed practice (as opposed to spaced practice), blocked practice (as opposed to interleaved practice), and rereading (as opposed to elaboration and retrieval practice) intuitively seem to be more satisfying and fluent because the learner makes quicker gains during initial study. These quick gains create “illusions of learning” such as the stability bias, which make learners believe that their future performance will remain as high as during initial study (Kornell and Bjork, 2009).

Study strategies such as spaced practice, interleaved practice and retrieval practice reduce this illusion of learning. They can be grouped under the overarching concept of desirable difficulties, learning strategies that initially feel difficult in that they do cause errors and appear to slow down learning, but result in long lasting learning (Bjork, 1994). Even when learners experience memory benefits from these desirable difficulties, earlier research has shown a lack of awareness of the effectiveness of the strategies when predicting their own future learning while using spaced practice (Rawson and Dunlosky, 2011), retrieval practice (e.g., Roediger and Karpicke, 2006), and interleaved practice (e.g., Kornell and Bjork, 2008; Hartwig et al., 2022).

A second reason why students might not use the most effective study techniques is that they never learned how to study effectively, or, having learned it, have not practiced it enough to make it part of their repertoire, or struggle to maintain beneficial study habits (Fiorella, 2020). One influential source of such information is the teacher, who could provide students with metacognitive instructions (discussed in the next section). Research suggests that teachers could improve students’ knowledge about study strategies by embedding explicit strategy instruction into their subject-content teaching (Putnam et al., 2016; Rivers, 2021). However, several surveys indicate that only 20–36% of students report having been taught about study strategies (Kornell and Bjork, 2007; Hartwig and Dunlosky, 2012). In large international assessments, Flemish students self-report that only 55% of their teachers support their learning processes (OECD, 2019).

The case for explicit strategy instruction

Pintrich (2002) and Muijs and Bokhove (2020) suggest that explicit instruction of study-strategies should consist of pointing out the significance of a strategy (‘know that’, i.e., conceptual or propositional knowledge), how to employ the strategy in classroom settings (‘know how’, i.e., prescriptive or procedural knowledge), and monitoring and evaluating proper use of the strategy while providing instructional scaffolds. For instance, students in courses with explicit instruction on implementing retrieval practice in self-study were more likely to use the strategy compared to the control group who did not receive explicit instruction (McCabe, 2011). Biwer et al. (2020) compared two groups of undergraduate students who were randomly assigned to either a 12-week “Study Smart”-program where they received explicit instruction on metacognitive knowledge or a control group. During three sessions students learned about when and why particular learning strategies were effective; reflected on and discussed their strategy use, motivation, and goal-setting; experienced ineffective versus effective strategies (i.e., highlighting versus practice testing) and practiced the strategies in subject-specific courses. Students in the Study-Smart-condition gained more accurate knowledge of effective study strategies (e.g., rated methods based on retrieval practice as more effective and highlighting as less effective) and reported, for instance, an increased use of practice testing and less usage of ineffective study strategies such as highlighting and rereading.

If teachers do not have the propositional knowledge relating to effective study strategies, they cannot be expected to use or model them, or to explicitly teach students to use them. Willingham (2017) describes this as the necessity to “have a mental model of the learner”: because the teacher can recognize the underlying mechanisms in instructional methods or study approaches (e.g., retrieval processes while using flashcards), they can also transfer these strategies to novel situations. Teacher knowledge has indeed been defined as a central element and precursor of teaching competence (for a full discussion on teacher knowledge for teaching and learning, see, e.g., Toom, 2017). Understanding the essential theoretical concepts of the strategies is required to notice, scaffold, and teach strategy-use in generic learning-to-learn courses or subject-specific courses (Glogger-Frey et al., 2018). Earlier studies on the use of research evidence find that teachers pay limited attention to best-evidence findings and rarely consult them to improve their practices (Dagenais et al., 2012; Walker et al., 2019). In addition, there is some evidence that teachers do not begin their careers with this foundational knowledge about effective strategies for learning and study. Research by the National Council on Teacher Quality in the United States showed that essential information on effective learning is inadequately covered in the written study material used in pre-service teacher education programs (Pomerance et al., 2016). This was partially replicated by Surma et al. (2018) for Dutch and Flemish teacher education. They found that in general, teacher education textbooks and syllabi do not sufficiently cover essential learning strategies from cognitive psychology or, in some cases, do not cover them at all. 
For instance, only three teacher education programs (out of 24) provided textbooks and syllabi with full coverage of spaced practice and retrieval practice (i.e., conceptual information, prescriptive information on how to apply the strategy in regular classrooms, and references to research). Such results indicate that teacher candidates may be under-informed, or not informed at all, by their study materials about effective learning strategies.

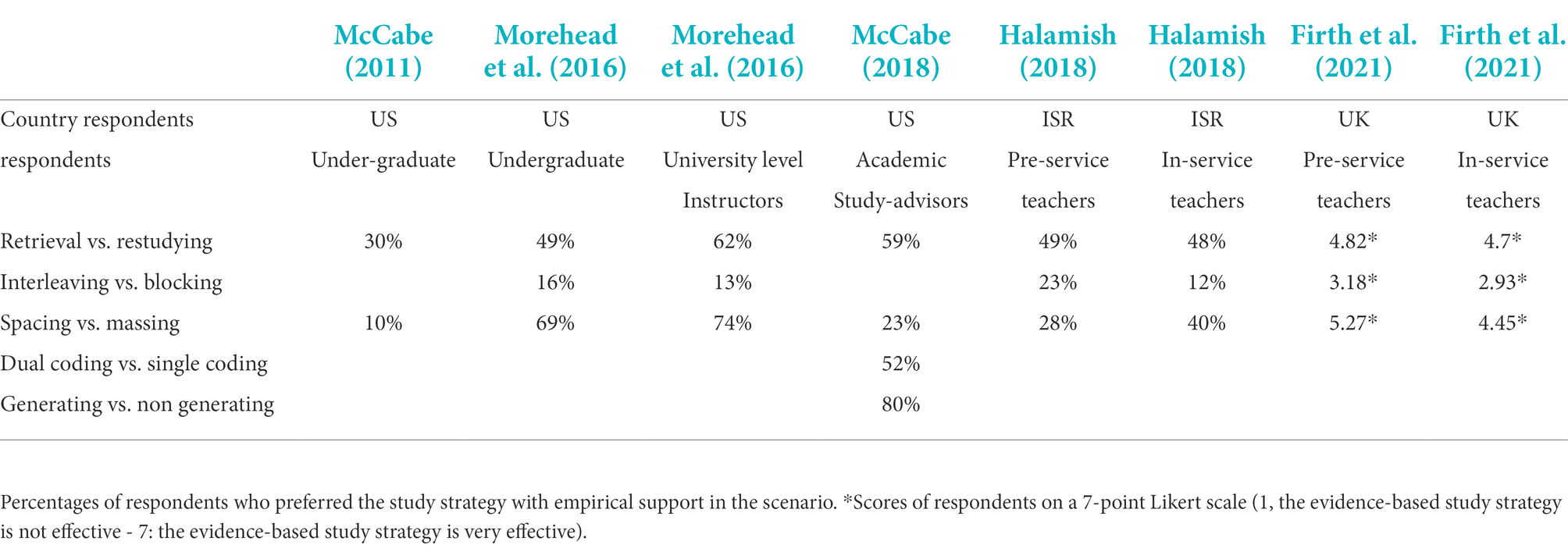

In addition to research on the textbooks and syllabi used in teacher education, survey research is an often-used method to gain insight into teachers’ knowledge. McCabe (2018) had academic support instructors rate a list of 36 study strategies for their effectiveness on a 5-point Likert scale (from not effective to extremely effective). Several effective study strategies were recognized as effective (e.g., retrieval practice, answering questions, spacing study sessions), whereas some (e.g., multi-modal learning, interleaved practice) were less recognized. Ineffective study strategies (e.g., rereading, copying notes verbatim) consistently had lower ratings. McCabe also asked the instructors to predict the outcomes of four learning scenarios in which two study strategies were contrasted, each scenario describing an ecologically valid (realistic) educational situation. Learning scenarios are a type of vignette-based research, which is becoming more popular in social science studies because it allows respondents to react to context-specific cues such as real-life classroom conditions (Aguinis and Bradley, 2014). The use of learning scenarios to grasp instructors’ knowledge has since been replicated and extended to other populations, such as pre-service teachers and in-service teachers (Halamish, 2018; Firth et al., 2021), university instructors (Morehead et al., 2016) and medical faculty (Piza et al., 2019). The results were mixed, with some educators capable of identifying some effective strategies (e.g., retrieval practice contrasted with the more passive restudying; Firth et al., 2021) while simultaneously being unsuccessful in distinguishing an effective strategy from a less effective one (e.g., interleaved practice versus blocked practice in all of the aforementioned studies). Table 2 provides a summary of all the studies on metacognitive judgments of learning strategies using scenario methodology.

Broadening the research base on knowledge of effective study strategies

The current research broadens previous research on teachers’ knowledge of effective study strategies by both refining and extending the methodology used in the earlier scenario studies, and by administering it to a different, previously unexplored population (i.e., novice teachers). Earlier studies using the scenario-method had some limitations regarding the number of study strategies being assessed/rated, the sampling method used, and the lack of open-ended questions that were presented to the respondents.

First, most studies only examined a limited number of learning scenarios where more effective study strategies were contrasted with less effective ones (Morehead et al., 2016; Halamish, 2018; Firth et al., 2021). Only spacing, testing, and interleaving were included in each study, which are all examples of study strategies within the desirable difficulties paradigm (see Table 2). Other study strategies with a robust evidence base, such as studying worked-out examples, elaboration and using multi-modal representations, were rarely or never assessed by teachers. Moreover, retrieval practice, for example, has not been assessed in relation to a non-passive study strategy (such as concept mapping). Increasing the number of scenarios is particularly interesting because the study strategies can also be interpreted as instructional strategies from the teacher’s perspective. For instance, teachers can use retrieval practice by integrating regular low-stakes quizzes in their classrooms (Agarwal et al., 2021). As such, the knowledge about the strategies in the scenarios also provides insight into the teacher’s pedagogical knowledge. In the present research we present the participants with seven learning scenarios that address all the aforementioned limitations.

Second, in previous studies the sample ranged from pre-service teachers to more experienced teachers (Halamish, 2018; Firth et al., 2021), but these studies did not explicitly gauge the knowledge of novice teachers (i.e., teachers who very recently graduated from teacher training institutions; see Participants). This is valuable because novice teachers have benefitted neither from wide-ranging practical classroom experience nor from professional development programs, both of which might help clarify how human memory works in the classroom. Earlier research did not find significant differences between pre-service and in-service teachers (Halamish, 2018; Firth et al., 2021). This study adds a baseline measurement of novice teacher knowledge, which might contribute to the understanding of the impact of teacher education on imparting the essential knowledge and skills to start the profession.

Third, authors of the previous studies indicated that the sampling of teachers was probably not consistently representative due to selection bias arising from convenience sampling (Halamish, 2018; Firth et al., 2021). In the study by Firth et al. (2021), data were collected from students in one teacher training college and in-service teachers were sampled using self-selection. Halamish (2018) recruited respondents by self-selection through a call in an online teacher discussion group. In contrast, we used cluster sampling, in which the sample is selected in groups (clusters) based on location and timing.

Finally, it is also worth pointing out that earlier survey research used closed-answer questioning (McCabe, 2011; Morehead et al., 2016; Blasiman et al., 2017; Halamish, 2018; Firth et al., 2021) and, thus, did not ask for spontaneous recommendations on effective study strategies using open-ended questions (with notable exceptions for McCabe (2018), who asked academic support-centers to prioritize three learning strategies, and Glogger-Frey et al. (2018), who limited their research to comprehension-oriented learning strategies). McCabe (2018) found limited evidence for the use of terms from cognitive psychology (such as retrieval practice or metacognition) which could indicate that the academic support-center heads were not familiar with the evidence-base in the field of effective learning and studying. Open-ended questions examine the respondents’ organization of the knowledge schemes present. If teachers have sufficient in-depth knowledge of effective learning strategies, they will be able to prioritize and coherently explain why one strategy is preferred to another. It is therefore expected that novice teachers can access their knowledge about effective learning-strategies according to the knowledge structures they possess. One would expect that the most effective learning strategies (such as spaced practice and retrieval practice) would be recalled first (Glogger-Frey et al., 2018). This is especially important because an adequate knowledge organization is predictive for the accessibility of that information at a later stage (Prawat, 1989). Open questions should also be positioned at the beginning of the survey because measuring this coherent knowledge is more challenging when the respondents have not already been shown a list of study strategies: prior knowledge is activated by the list, which can lead to bias in the assessment.

The current study

Taken together, the results of earlier research on teachers’ knowledge of study strategies indicate that teacher knowledge might not be sufficient or even available to equip their students with effective study strategies. Based on earlier research, it is hypothesized that novice teachers might not be aware of the effectiveness of study strategies such as retrieval practice, interleaved practice and spaced practice, and that spontaneous study advice might include less effective strategies. Given the methodological concerns in the particular context of survey research in the area of teachers’ propositional knowledge of evidence-based study strategies, more research is needed. The present study examines knowledge about the effectiveness of study strategies among novice secondary school teachers and further examines whether these teachers’ spontaneous study-strategy advice is underpinned by research into human learning. This study thereby gives insight into the baseline level of knowledge of novice teachers and extends the methodology used in previous research by adding learning scenarios and open-ended questions.

Materials and methods

Participants

Participants were 240 novice teachers who followed an introductory course for novice teachers in secondary education, organized in two provinces in Flanders, Belgium. They came from 19 Flemish teacher education institutions encompassing both bachelor- and master-level teacher education programs. Novice teachers were defined, based on the theory of stages of expertise development, as practicing teachers with comparable in-group and between-group professional experience who had not yet reached the stage of advanced beginner, which is reached at approximately 1.5 years of experience and above which an increased level of teacher expertise can be expected (see, e.g., Sabers et al., 1991).

The participants were informed about the research and that the survey data would be used for research purposes. The participants were then asked to consent to their responses being used in this research. One participant did not consent and was excluded from all analyses. Of the remaining 239, 59 participants indicated they had more than 2 years of teaching experience and were excluded from the analysis. This resulted in a final sample of 180 respondents (median age = 25; mean age = 25.7; SD = 6.5; male = 62; female = 118).

Procedure

The survey was administered to a large population at an annual kick-off meeting for all novice teachers in two provinces where 19 teacher education institutions were represented. Permission to conduct the research was requested and obtained from the Flemish pedagogical support network. There was no response bias, as most teachers attending the meeting were expected by their school leaders to participate. The paper-and-pencil survey, which took approximately 30 min to complete, was administered live during the meeting. The survey was completed by the participants anonymously.

The open-ended questions were placed at the start of the survey in order to identify the study strategies that teachers would ‘spontaneously’ recommend (i.e., recall from their long-term memory) before being primed by the learning scenarios or lists of study strategies. Respondents then completed the second part (i.e., seven learning scenarios) and the final part (i.e., study strategy list) of the survey before providing demographic information (age, gender, type of teacher education, teacher education institute, years of teaching experience, subject-domain of teaching). Respondents were restricted from viewing the remaining parts of the survey and could not return to earlier answered questions to limit prior questions influencing subsequent answers.

Materials

The instrument used in this study consisted of three major parts: open-ended questions on study strategy advice; learning scenarios based on the learning scenarios as described by McCabe (2011, 2018), Morehead et al. (2016), and Halamish (2018); and a list of study strategies (based on McCabe, 2018) that respondents had to rate for effectiveness.

Open-ended questions

First, participants were asked to write down three study strategies they would recommend to their students to help them pass a subsequent test. They were instructed to think about general, not subject-specific study strategies. So as not to influence respondents with the direction of their response, no answer categories were provided. In the second open-ended question, more context-specific cues were added by articulating that the test would take place in 3 weeks and the student had already studied the material once, prompting participants to deliberately consider spaced and/or retrieval practice as preferred study strategy. For the second question, teachers were asked to recommend one single study strategy to their students.

Following the open design of qualitative studies (Creswell and Creswell, 2017), the data from the first open question were analyzed before moving to the second open question. First, the first author read every answer to gain a general overview. As a second step, the first author followed a process of mixed coding, both theoretical (i.e., based on the 15-category coding scheme of Dirkx et al., 2019, as described below) and in vivo (i.e., based on the participants’ responses). Third, after coding 20 questions, the first author cleaned the codes and made a final list of codes with relevant example statements. This process resulted in a coding scheme consisting of 16 categories. Fourth, data from the free-response question about the three most recommended study strategies were then classified into these 16 categories. The first 10 codes in the coding frame by Dirkx et al. (2019) correspond to the 10 learning strategies discussed by Dunlosky et al. (2013). The following four codes correspond to strategies that were not covered by the above-mentioned article but are often reported as being used as a study strategy by students. The categories added by Dirkx and colleagues were copying (i.e., copying of course materials; see also Blasiman et al., 2017), generating examples (see Karpicke et al., 2009), cramming (as opposed to spaced practice), and solving practice problems (i.e., solving problems provided in students’ learning materials such as textbooks and electronic learning environments). A final category was added after the second phase of coding. It collects recommendations that form the ‘behind-the-scenes of studying’ and that are not dominated by information processing, such as time management, avoidance of behaviors counterproductive for learning, concentration, study aids, attitude, self-discipline, and intrinsic or extrinsic motivation (Credé and Kuncel, 2008). These constructs are also important in research on learning and metacognition but go beyond the scope of this article, which focuses on cognitive learning strategies that facilitate long-term learning.

After the coding process, the percentage of teachers with a response in each category was calculated. When teachers specified more study strategies than asked for, the additional strategies were nevertheless included in the results. For instance, the recommendation that students should test themselves several times before the test consists of two study strategies (i.e., retrieval practice and spaced practice). Two researchers assessed 25% of the surveys to determine whether the responses were an example of one of the strategies that would fit into the coding frame of Dirkx et al. (2019). The coders discussed their findings, and intercoder reliability was found to be 82%, which was satisfactory. When inconsistencies were uncovered, the researchers re-reviewed the recommendations until they reached agreement. To further establish the reliability of the coding, the researchers reanalyzed the same selection of responses after a period of 4 months and obtained a 96% level of agreement with previous coding results. The first author coded the remaining surveys twice.
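The agreement figures reported above can be read as simple percent agreement between two sets of codes. The sketch below illustrates that calculation under stated assumptions: the function name `percent_agreement` and the example labels are hypothetical, each response is assumed to carry exactly one code, and the source does not state which agreement statistic was actually used.

```python
def percent_agreement(codes_a, codes_b):
    """Return the percentage of responses given the same code by two coders.

    Assumes one category label per response (an illustrative simplification;
    in the survey a response could map onto more than one strategy).
    """
    if len(codes_a) != len(codes_b):
        raise ValueError("Both coders must code the same set of responses.")
    if not codes_a:
        raise ValueError("At least one coded response is required.")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return 100.0 * matches / len(codes_a)


# Hypothetical codes assigned by two coders to the same four responses.
coder_1 = ["summarizing", "retrieval practice", "cramming", "rereading"]
coder_2 = ["summarizing", "retrieval practice", "spaced practice", "rereading"]
agreement = percent_agreement(coder_1, coder_2)  # three of four codes match
```

Percent agreement does not correct for agreement expected by chance; a chance-corrected statistic such as Cohen's kappa would be a stricter alternative.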

Learning scenarios

The second part of the survey consisted of seven hypothetical study scenarios, each describing two students using two different study strategies, one empirically validated as being effective and one not. Each scenario was based on an educationally relevant study that investigated the effectiveness of study strategies (see Table 3). The participants were asked which strategy they would recommend to their students to achieve long-term learning (i.e., better outcomes as measured by delayed-test scores) given a particular situation.

For example, one scenario contrasting spaced practice and massed practice presented the following situation: Two students are preparing for a written test in 3 weeks. They have to study one chapter, comprising both theory and practice problems. Student A spaces their practice and study over the 3 weeks. Student B studies and practices intensively just prior to the test (i.e., the night before). All told, they study an equal amount of time. Rate the effectiveness of both students’ study strategies for long term retention.

In each scenario, participants used a 5-point Likert scale to score the strategy of each student in the scenario. The use of separate scores per strategy made it possible to assess both the absolute perceived effectiveness of each strategy and the difference in perceived effectiveness between the strategies. The authentic context provided in the scenarios was designed to activate prior knowledge about cognitive learning strategies. The retrieving and interleaving scenarios were drawn from previous surveys (McCabe, 2011, 2018; Halamish, 2018) with minor modifications in wording to make the learning scenarios more appropriate for Flemish respondents. This can be seen as replicating and extending the evaluation of learning scenarios presented in the aforementioned studies. The remaining five scenarios were novel (spacing vs. massing; worked examples vs. problem solving; dual coding vs. single coding; elaborative interrogation vs. rereading; retrieving vs. mind mapping), with similar style and length, and were reviewed by a team of international experts in cognitive science and translational research in order to validate their contents. After an iterative process of three rounds of feedback, full consensus was reached on the content and wording of the new scenarios.

Study strategy list

In the final part of the survey, participants were provided with a list of 22 specific study strategies (obtained and adapted from McCabe, 2018) and were asked to rate on a 5-point Likert scale how effective, on average, they thought each strategy was for their students’ learning. The list was slightly refined by adding some elaborated comments to the initial statements by McCabe. For example, the original item ‘Using pictures’ was adapted to ‘Search pictures in order to clarify difficult concepts’, as the original statement did not describe how pictures should be used.

Statistical analyses

All survey data were analyzed using SPSS. The alpha level was set to 0.05 for all statistical tests reported. For the analysis of the learning scenarios, paired-samples t-tests were used to compare the mean ratings given to the empirically validated and non-empirically validated study strategies for each scenario, and the resulting effect sizes are reported as Cohen’s d (De Winter and Dodou, 2010). Positive effect sizes indicate effects supporting the evidence-based study strategy, while negative effect sizes indicate effects supporting the non-evidence-based strategy. Hinge-points for small, medium and large effects were 0.2, 0.5, and 0.8, respectively. The data from the seven scenarios were combined to form an overall accuracy score for each participant. For each scenario question, each individual participant was coded as a 0 if the non-empirically validated strategy was given a higher rating than the empirically validated strategy and as a 1 if the empirically validated strategy was given a higher rating than the non-empirically validated strategy. Accuracy scores ranged from a minimum score of 0 (zero correct scenario judgments) to a maximum score of 7 (all scenarios were judged correctly). Overall accuracy across all scenarios was compared between groups (e.g., master’s vs. bachelor’s level and gender) using chi-square tests.
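The scoring rules above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the authors’ SPSS procedure: the function names are hypothetical, tied ratings are scored as 0 (the text does not say how ties were handled), and Cohen’s d is computed as the mean paired difference divided by the standard deviation of the differences, which is only one of several conventions for paired designs.

```python
import math
import statistics


def accuracy_score(scenario_ratings):
    """Count scenarios in which the empirically validated (EV) strategy
    received a strictly higher rating than the non-EV strategy.

    scenario_ratings: list of (ev_rating, non_ev_rating) pairs, one per scenario.
    Ties are scored as 0 here; this is an assumption, not stated in the source.
    """
    return sum(1 for ev, non_ev in scenario_ratings if ev > non_ev)


def paired_t_and_d(ev_ratings, non_ev_ratings):
    """Return (t, d) for one scenario across participants.

    d is the mean difference divided by the SD of the differences, so a
    positive d favors the EV strategy, matching the sign convention above.
    """
    if len(ev_ratings) != len(non_ev_ratings):
        raise ValueError("Ratings must be paired per participant.")
    diffs = [ev - non_ev for ev, non_ev in zip(ev_ratings, non_ev_ratings)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD; needs at least two pairs
    if sd_diff == 0:
        raise ValueError("All differences are identical; t and d are undefined.")
    d = mean_diff / sd_diff
    t = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return t, d


# Hypothetical ratings of one participant across the seven scenarios.
participant = [(5, 2), (4, 4), (2, 5), (5, 1), (4, 3), (3, 2), (5, 3)]
score = accuracy_score(participant)  # 5 of 7 scenarios judged correctly

# Hypothetical ratings of five participants for a single scenario.
t_value, d_value = paired_t_and_d([5, 4, 4, 5, 3], [2, 3, 4, 3, 2])
```

With real data, the t statistic would be referred to a t distribution with n - 1 degrees of freedom to obtain the p value.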

For the analysis of the study strategy list, descriptive statistics were calculated. Paired t-tests were used to compare items that rely on the same strategy (e.g., “test yourself with practice tests” and “use flashcards to test yourself” both rely on the testing effect).

Results

To identify relevant clustering in the dataset, a number of exploratory analyses were first carried out. Correct strategy endorsements in the learning scenarios did not differ significantly by self-reported teacher education type (collapsed into three categories: universities, universities of applied sciences, and adult education programs; χ2 = 6.141; p > 0.05) or by bachelor/master level (χ2 = 4.872; p = 0.56). Nor were strategy endorsements associated with teachers’ years of experience (i.e., 0 or 1 year of teaching experience; χ2 = 6.244; p = 0.396), age (χ2 = 154.732; p = 0.256), or gender (χ2 = 6.620; p = 0.357). It was not possible to compare the various teacher education institutions and subject domains due to the limited number of respondents per teacher education institution or subject domain. As a result, associations with the demographic factors mentioned earlier will not be examined further.

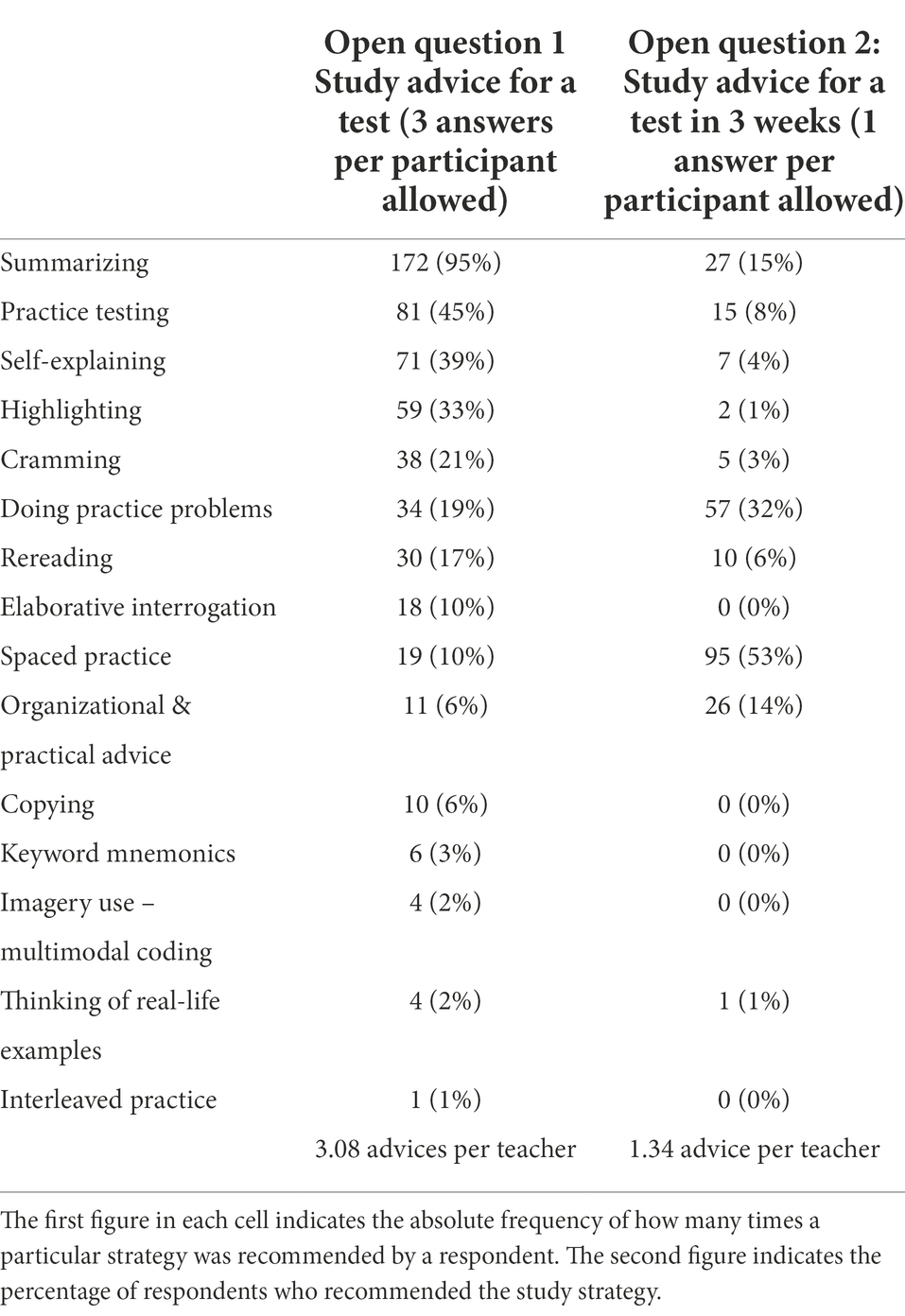

Learning strategy recommendations

For a full overview of the top-three recommendations that would be given to students if they were studying for a test, see Table 4. Here, we present the most notable results: Summarization was advised by 95% of the teachers. Less than half suggested taking a practice test and only 19 (10%) explicitly mentioned that repeating the subject matter in more than one session (spaced practice) was advantageous. In contrast, 38 teachers (21%) said that students should cram the material just before the test. Self-explanation was a relatively often suggested strategy (39%), especially in the context of trying to explain the subject matter to yourself or explaining it to someone else. Some effective strategies, such as studying worked examples, interleaving, and using multimodal representations were not or hardly mentioned.

Less effective study strategies such as copying notes, using mnemonics or re-reading were given less attention. When highlighting was mentioned as a recommendation (33%), it was in combination with another study strategy, such as rereading and summarizing (e.g., “highlight the most important information while rereading”; “make a summary using the highlighted text.”).

In the second open question, when it was explicitly stated that the test would only take place in 3 weeks and that students had already studied once, spacing of study moments was explicitly mentioned by 53% of the teachers. Taking a practice test, however, was only suggested by 15 teachers (8%). Note that teachers were only allowed to provide one piece of study advice on the second open-ended question. Less effective study strategies such as copying notes, highlighting and cramming were hardly mentioned. Similar to McCabe (2018), there was limited evidence for the use of terms originating from cognitive or educational psychology in both open questions; that is, there was no mention of concepts such as “retrieval,” “metacognition,” “testing effects,” etc.

Learning scenarios

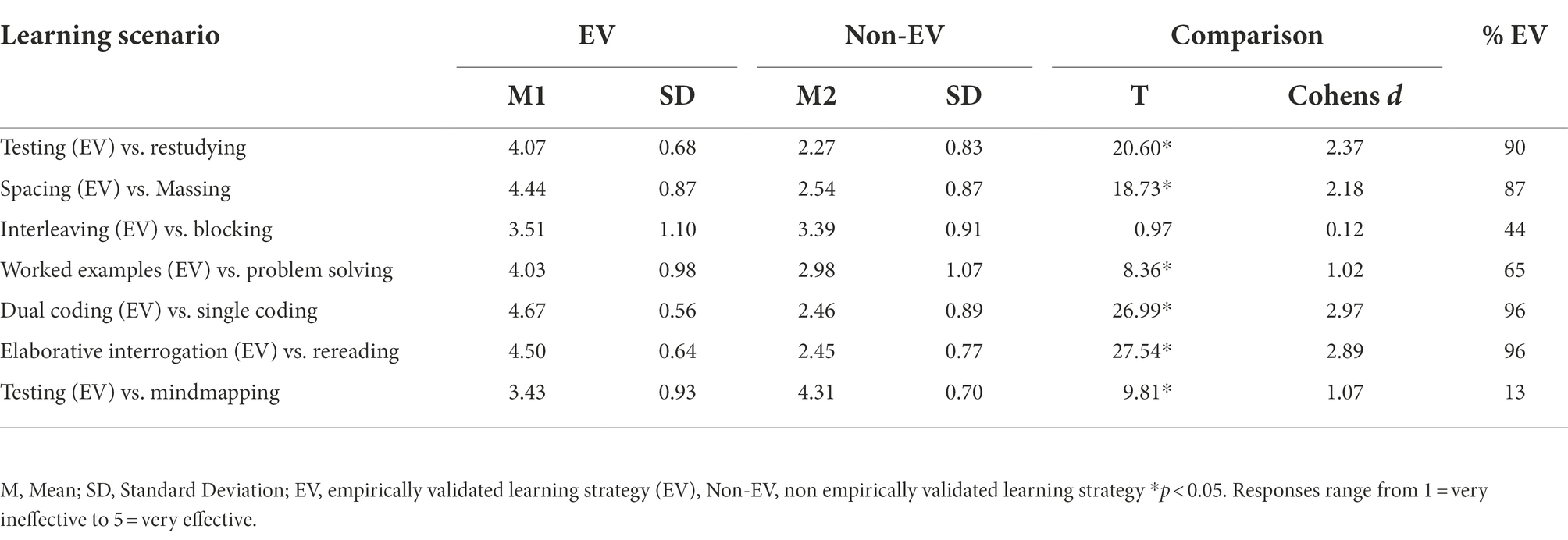

Novice teachers in the current study made predictions about learning outcomes for scenarios representing seven evidence-based study strategies. In Table 5 the descriptive and inferential statistics per scenario are presented. In all cases, the responses ranged from the minimum (1) to the maximum (5). For five of the seven scenarios, participants provided mean ratings indicating their endorsement of the evidence-based strategy. Interleaved practice and retrieval practice were not seen as being effective in scenarios when compared with blocked practice and mind mapping, respectively. Retrieval practice was judged as being effective in comparison with restudying.

Table 5. Mean ratings (and standard deviations) for empirically validated learning strategies (EV) and non-empirically validated learning strategies (non-EV) for the learning scenario questions.

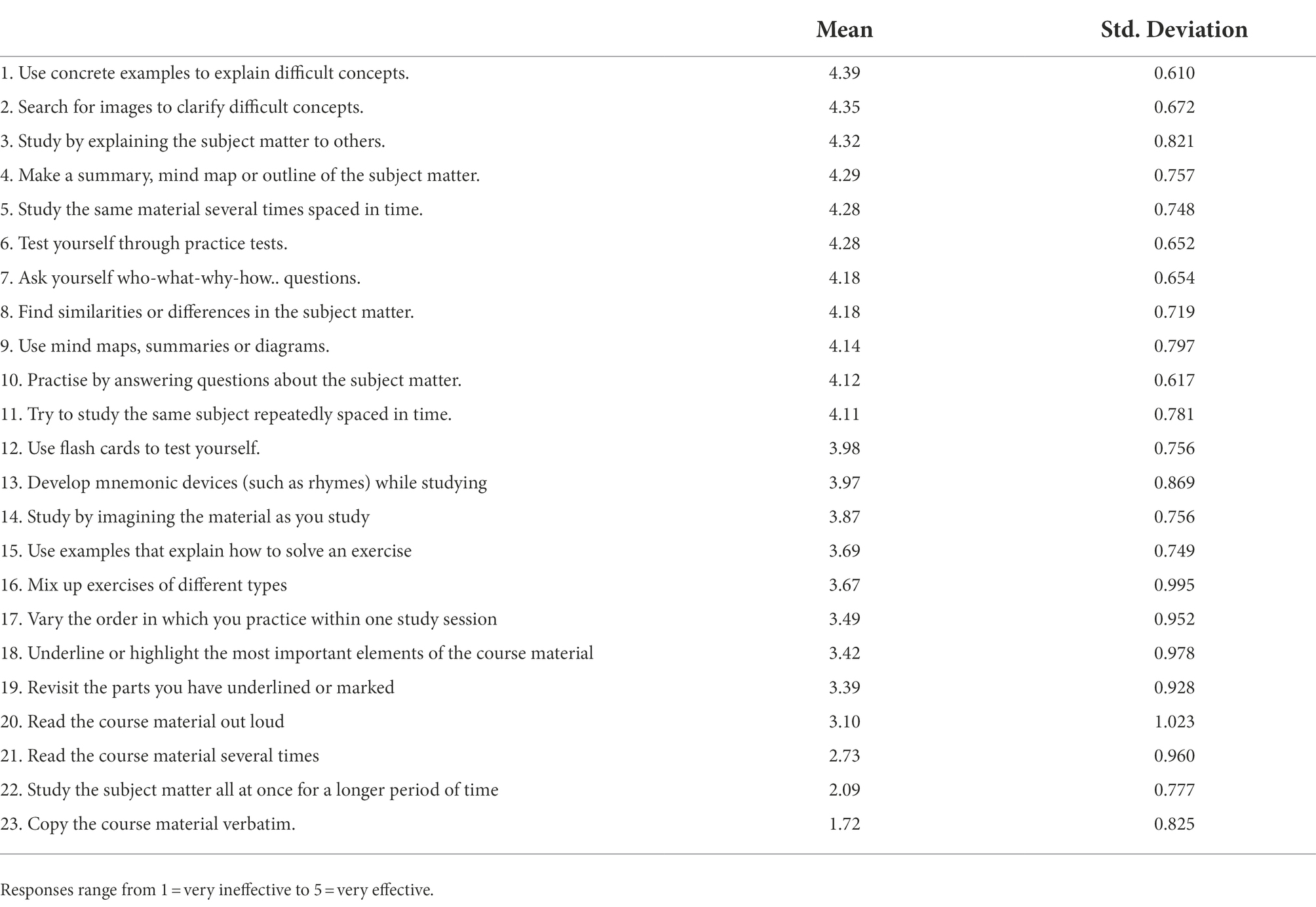

Study strategy list

Ratings of the strategies’ perceived effectiveness (rated on a 5-point scale with 5 indicating highest effectiveness) are found in Table 6. The study strategies that are described in the literature as the least effective (i.e., copying notes, cramming, rereading …) are also rated the lowest by novice teachers. Novice teachers consider study strategies that are based on spaced practice, retrieval practice, elaboration, multimodal representations, and worked examples to be effective. Generative study strategies such as summarizing and mind mapping are also evaluated as being effective. Items 6 (“test yourself with practice tests”) and 12 (“use flashcards to test yourself”), which both rely on the underlying mechanism of retrieval practice, were not perceived as equally effective (t(179) = 8.85, p < 0.01). A similar pattern was found for items related to spacing (i.e., items 5 and 11; t(179) = 3.10, p < 0.01), interleaving (i.e., items X and X; t(179) = 2.420, p < 0.05), and rereading (i.e., items 5 and 11; t(179) = 3.10, p < 0.01). Items concerning elaboration (i.e., items 3 and 7; t(179) = 0, p = 1.00) and marking (i.e., items X and X; t(179) = 0.533, p = 0.594) were perceived as equally effective.

Discussion

This study explored novice teachers’ knowledge of effective study strategies. The results of a three-part survey in which participants were asked to provide study advice for their students (open-ended questions) and assess the effectiveness of given study strategies (closed questions) were presented. The results showed that some misconceptions about effective study strategies are widespread among novice teachers, albeit with a pattern that differs from previous empirical research. The results were consistent across demographic factors. For instance, teachers who had recently completed a master’s program did not tend to have a broader knowledge of effective study strategies. This can be explained by the curriculum used: a master’s program in teacher education in Flanders does not encapsulate a more in-depth package of, for instance, educational psychology, but mainly expands subject-specific learning content. Overall, we found two main results. First, there is considerable variability in the perceived effectiveness of the most effective study strategies when comparing answers from open questions (i.e., section 1 of this survey) and closed questions (i.e., sections 2 and 3 in this survey; learning scenarios and study strategy list). Second, teachers often have incomplete knowledge about strategies that do not tend to produce durable learning; they sometimes prefer strategies in their study recommendations that have been shown not to work. In what follows, we elaborate on these two observations.

Perceived effectiveness of the most effective strategies

This study contrasts with prior work in that respondents were asked to answer open-ended questions on effective strategy use before assessing learning scenarios contrasting two commonly used study strategies. There was considerable variation between recommendations of highly effective study strategies in the open-ended questions (requiring recall from long-term memory) and the endorsement of these strategies in closed questions (possibly requiring only recognition). Results show that the respondents very often, but not always, provided appropriate judgments (i.e., preferring the strategy which is backed up by evidence) when they had to weigh two study strategies against each other, but the same effective study strategies were not recommended spontaneously to their students in the open-ended questions. Strong endorsements in learning scenarios do not automatically turn into obvious recommendations. Spaced practice, for instance, was mentioned as a strategy by less than half of the teachers after it was prompted in the second open-ended question (i.e., that students had already studied for the test once and that the test would take place within 3 weeks), while the majority of the respondents identified spaced practice as a more effective strategy than massed practice in a learning scenario. A similar tendency was observed in the third section of the survey, where items referring to the spacing effect (i.e., “study the same materials several times spaced in time”) were considered highly effective. Likewise, retrieval practice was assessed as effective when contrasted with a rather passive study strategy (i.e., rereading) but was suggested as a strategy by less than half of the teachers in the first open-ended question. Interleaved practice, elaboration, using worked examples, and using multi-modal representations were also only marginally recommended in the open-ended questions. However, when they were presented in opposition to a less effective study strategy in the learning scenarios, all except for interleaved practice were appropriately and almost unanimously identified as effective.

When novice teachers are presented with forced-choice questions, in many cases they opt for the right answer, which paints a relatively optimistic picture. That is, they remember or are capable of discerning in a paired comparison what works (i.e., they might possess the tacit knowledge) but cannot freely recall it when only prompted to do so (i.e., they might not possess deep conceptual propositional knowledge). The chance that those who do not freely recall a strategy will use it in their teaching repertoire is probably limited. Possessing certain propositional knowledge is known to precede competently handling the pedagogical skills related to the knowledge areas in real classroom situations (Munby et al., 2001). In optimal circumstances, they should also spontaneously recommend the strategy to their students, which is not entirely the case with strategies such as spaced practice, retrieval practice, interleaved practice, using multimodal representations, and using worked examples. This also supports the case for introducing open-ended questions as a methodological improvement for measuring learners’ knowledge about study strategies: performing well on the learning scenarios does not necessarily imply that teachers spontaneously transfer their knowledge to more ecologically valid settings.

Another noteworthy observation was that even within a single study strategy such as retrieval practice, there were considerable differences in perceived effectiveness. For instance, concept mapping, which is essentially a generative strategy, was considered to be more effective than retrieval practice in a learning scenario, while the memory benefits of retrieval practice (i.e., engaging immediately in trying to remember after a first reading) are more profitable over time than merely generating concept maps from open books (Karpicke and Blunt, 2011; Camerer et al., 2018). Nevertheless, when contrasted with rereading, retrieval practice yielded superior results. In the first open-ended question, retrieval practice was advised by less than half of the teachers, but we were unable to determine from the responses whether retrieval practice was conceived as merely self-testing (a strategy for self-evaluation at the very end of the study process) or as a study strategy to strengthen one’s memory. This suggests that the respondents might not be fully aware of the cognitive principles supporting strategies such as retrieval practice (Rivers, 2021). This limits novice teachers’ ability to generalize the strategies to novel situations and instructional methods (Willingham, 2017). Whether teachers and learners are aware of the full advantages of retrieval practice, and why retrieval practice is considered not a study strategy but merely a self-evaluation strategy, should be tackled by future research (see, e.g., Rivers, 2021).

When novice teachers had to assess the effectiveness of the strategies in a list of 36 study strategies, on the whole, the most effective strategies were more often rated higher than those with a weaker evidence base. A notable exception, again, is interleaving, where both items were rated low in effectiveness (“Mix up exercises of different types”; “Alternate the order in which you practice within one study session”). The lower accuracy of the strategy endorsements related to mixing up study sequence (i.e., interleaving) is consistent with earlier research (McCabe, 2018; Firth et al., 2021). Some well-recognized study strategies such as interleaving are counterintuitive to people as they pose difficulties during the initial learning process (Bjork, 1994; Clark and Bjork, 2014). Metacognitive insight into desirable difficulties may differ from insight into other effective strategies and may require explicit instruction and practice, as some of the advantages do not appear to be obvious to learners and teachers at first sight (Soderstrom and Bjork, 2015). Conditions of retrieval practice and interleaved practice that often facilitate long-term retention may appear unhelpful in the short term as they appear to impede current performance.

One might suspect that when the information on effective strategies is presented clearly and in contrast to less effective strategies, the former will appear obvious in hindsight. However, this does not explain why Flemish novice teachers assess the study scenarios using desirable difficulties (i.e., spaced practice, retrieval practice, interleaved practice) differently than other populations. Compared to earlier studies with similar scenarios, Flemish novice teachers seem to be notably more accurate in identifying desirable difficulties than their mostly Anglo-Saxon counterparts. Table 2 shows three study scenarios which were replicated for seven different population groups in different countries. The explanation for these differences may be that novice teachers recently graduated from teacher education and topics regarding memory and cognition are still vivid in their minds. If, however, that was the case, more effective strategies should have been spontaneously mentioned and more subject-specific terms from cognitive psychology should have been generated in the open questions. This explanation is also at odds with the findings of Surma et al. (2018) on the contents of teacher education textbooks and their accompanying syllabi. This research therefore also identifies possible geographical and curricular issues in surveys on respondents’ knowledge: generalizations about teachers’ mental models of learning across countries and related teacher education curricula do not seem to be self-evident.

Perceived effectiveness of the least effective strategies

The respondents tended to suggest strategies that have been shown not to work while avoiding strategies that do work. For example, the vast majority of novice teachers recommend summarizing as a principal study strategy while this strategy is described by Dunlosky et al. (2013) as a low-utility strategy. In the list of study strategies, however, while summarizing was also seen as highly effective, highlighting and cramming were listed among the least effective strategies. Copying notes was not often spontaneously mentioned in the open questions, nor was it strongly appreciated in the study strategies list.

This dispersed perception of effectiveness for summarizing versus copying/highlighting/cramming may have several explanations. First, Pressley et al. (1989, p.5) stated that “summarizing is not one strategy but a family of strategies.” When a participant notes that summarizing is a robust strategy, it is not necessarily known what the participant considers to be summarizing (i.e., declarative knowledge: for one student, summarizing is perceived as schematizing single words while for another it might be making a verbatim transcription of their textbook) and the way in which summarizing proceeds (i.e., procedural knowledge: do I summarize with the textbook open or closed? Do I summarize after I have already studied the material thoroughly? Do I use a summary to review afterwards or to test myself? Do I summarize aloud or in writing?). For copying and highlighting, the conceptual and procedural interpretation appears to be more straightforward. In reality, the manifestations of summarizing as a study strategy are probably more diverse and prone to individual differences than the narrow definition that researchers assign to the concept (Miyatsu et al., 2018). Despite the fact that teachers do prefer summarizing over retrieval practice, this choice is unlikely to be a symptom of their knowledge of effective studying because learners appear, based on earlier research, not always fully aware of the boundary conditions of certain study strategies (Bjork et al., 2013).

A second explanation as to why the novice teachers spontaneously suggest suboptimal strategies can be found in a theory-practice gap. Study strategies are often studied in cognitive science literature as “singletons,” that is, as individual and generic phenomena, whereas in ecologically valid situations, a given study strategy is often sequentially linked within a series of other study strategies and linked with a specific type of learning content. For instance, a student who first reads the learning material, rereads the material while highlighting relevant information, summarizes, and finishes by testing themselves, uses a number of strategies labeled as less effective (i.e., rereading, highlighting, summarizing). As noted earlier by Miyatsu et al. (2018), ineffective strategies, under certain conditions, can be potent. For example, a strategy labeled as ineffective such as massed or blocked practice will sometimes result in a good performance on an immediate test even though it does little for long-term retention and misleads the learner by producing suboptimal judgments of future learning. So far, research on learning strategies has been fairly myopic, focusing on study strategies in isolation but not often tracing optimal combinations or study arrangements in holistic, ecologically valid settings (Dirkx et al., 2019). Follow-up research should look at how learners perceive effective study strategies from a semantic point of view, which strategies they choose depending on the type of learning content or subject area, how they combine study strategies chronologically, and why they do so. A more qualitative research design may be appropriate for this purpose.

Limitations

One must be careful when interpreting the results of this study, because multiple factors could have contributed to the discrepancy between the results of the open-ended and closed questions, and to the lack of consistency regarding the perceived effectiveness of the study strategies. The limitations with respect to semantics (i.e., do all respondents interpret the term summarizing identically?), the focus on individual strategies (i.e., students are likely to use more than one study strategy during the study process) and the geographical differences (i.e., Flemish novice teachers score better on scenarios that probe desirable difficulties than respondents from other countries) were outlined earlier. The validity of a measurement instrument is not established in one or two (sets of) studies. For example, in follow-up studies learning scenarios can be added that contrast popular and frequently used strategies such as summarizing with other generative strategies such as mind mapping to gain a more fine-grained picture of novice teachers’ knowledge of study strategies. A more qualitative approach can be used to determine how teachers interpret certain (combinations of) study strategies. Finally, and to state the obvious: responses are self-reported and may not reflect the educational advice novice teachers actually give in real classrooms.

Conclusion

There remains a noticeable gap between the typical way learners perceive study strategies and the empirical evidence regarding their effect on learning, and novice teachers seem to be no different from their peers elsewhere. The results from this study add to the growing literature showing that not only students, experienced teachers, university instructors, and pre-service teachers, but also novice teachers, can be suboptimal in their judgments (Morehead et al., 2016; Halamish, 2018; McCabe, 2018; Firth et al., 2021). Overall, our data suggest that Flemish novice teachers are consistent in evaluating given study strategies (specifically: spaced practice, multimodal representations, and elaboration), but are less able to spontaneously formulate study advice about the same study strategies. Other aspects of the results are more complex. Novice teachers appeared to be less consistent in their evaluation of study strategies that rely on the desirable difficulties framework. Indeed, strategies with the strongest evidence base, such as spaced practice and retrieval practice, were not often spontaneously recommended in open-ended questions. Since there are large discrepancies between spontaneously recommended study strategies and the effectiveness scores of the same strategies in closed questions, it is possible that novice teachers do not yet hold a coherent image of the learner’s cognitive architecture. Student teachers’ knowledge of learning strategies has previously been described as ‘knowledge in pieces’ (Glogger-Frey et al., 2018, p. 228), and the same conundrums are found in novice teachers’ study strategy knowledge. Our indications of a lack of sophistication in novice teachers’ knowledge highlight the need for teaching them about and training them in the use of evidence-based strategies (McCabe, 2018).

Teacher learning and classroom skills should be seen as a dynamic process and a continuum rather than as a judgment of teachers’ knowledge at a fixed time (Blömeke et al., 2015). From the perspective of translational research (the amalgam of processes and activities associated with the use of findings from empirical research to incorporate best-evidence guidelines into everyday practice; see, e.g., Gorard, 2020), the present study offers an opportunity to examine whether curricula in both teacher education and continuing professional development disseminate the most consistent research results regarding learning processes. Where best evidence is used as part of initial teacher education and continuing professional development curricula, teacher performance is found to be superior (Brown and Zhang, 2016). Explicit strategy instruction in teacher education and continuing professional development may thus provide a tangible solution for this ‘knowledge in pieces’ in novice teachers. Explicit instruction about the concepts, use and advantages of empirically supported learning strategies might thus promote teachers’ understanding of the mental model of the learner (Willingham, 2017). As argued by Lawson et al. (2019), learning about learning and cognition should perhaps be seen as a separate knowledge domain so that pre-service and in-service teachers can transfer that propositional knowledge both implicitly and explicitly to their students. It is important that all teachers have deep conceptual knowledge of study strategies, as teachers might be considered ‘memory workers’ who have the responsibility of teaching their students how learning happens and how to use effective study strategies to create lasting learning.

Data availability statement

The datasets presented in this article are not readily available because in the informed consent, it was stated that the dataset would only be used for this particular research. Requests to access the datasets should be directed to tim.surma@thomasmore.be.

Ethics statement

The studies involving human participants were reviewed and approved by Open University of the Netherlands. The participants provided their written informed consent to participate in this study.

Author contributions

TS, GC, PK, and RG contributed to the conception and the design of the study. TS analyzed the data and wrote, revised, and reviewed sections of the manuscript. GC, RG, and PK provided feedback during the writing process. All authors contributed to the article and approved the submitted version.

Acknowledgments

We would like to thank Peter Verkoeijen, Pedro Debruyckere, Dominique Sluijsmans, Kristel Vanhoyweghen, Tine Hoof, Eva Maesen, and Laurie Delnoij for their feedback during various stages of this study.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Adesope, O. O., Trevisan, D. A., and Sundararajan, N. (2017). Rethinking the use of tests: a meta-analysis of practice testing. Rev. Educ. Res. 87, 659–701. doi: 10.3102/0034654316689306

Agarwal, P. K., Nunes, L. D., and Blunt, J. R. (2021). Retrieval practice consistently benefits student learning: a systematic review of applied research in schools and classrooms. Educ. Psychol. Rev. 33, 1409–1453. doi: 10.1007/s10648-021-09595-9

Aguinis, H., and Bradley, K. J. (2014). Best practice recommendations for designing and implementing experimental vignette methodology studies. Organ. Res. Methods 17, 351–371. doi: 10.1177/1094428114547952

Anthenien, A. M., DeLozier, S. J., Neighbors, C., and Rhodes, M. G. (2018). College student normative misperceptions of peer study habit use. Soc. Psychol. Educ. 21, 303–322. doi: 10.1007/s11218-017-9412-z

Bednall, T. C., and James Kehoe, E. (2011). Effects of self-regulatory instructional aids on self-directed study. Instr. Sci. 39, 205–226. doi: 10.1007/s11251-009-9125-6

Berliner, D. C. (2001). Learning about and learning from expert teachers. Int. J. Educ. Res. 35, 463–482. doi: 10.1016/S0883-0355(02)00004-6

Bisra, K., Liu, Q., Nesbit, J. C., Salimi, F., and Winne, P. H. (2018). Inducing self-explanation: a meta-analysis. Educ. Psychol. Rev. 30, 703–725. doi: 10.1007/s10648-018-9434-x

Biwer, F., de Bruin, A., and Persky, A. (2022). Study smart – impact of a learning strategy training on students’ study behavior and academic performance. 1–21. Adv. Health Sci. Educ. 1–11. doi: 10.1007/s10459-022-10149-z

Biwer, F., Oude Egbrink, M. G., Aalten, P., and de Bruin, A. B. (2020). Fostering effective learning strategies in higher education–a mixed-methods study. J. Appl. Res. Mem. Cogn. 9, 186–203. doi: 10.1016/j.jarmac.2020.03.004

Bjork, R. A. (1994). “Memory and metamemory considerations in the training of human beings,” in Metacognition. eds. J. Metcalfe and A. Shimamura (Cambridge, MA: The MIT Press), 185–205.

Bjork, R. A., Dunlosky, J., and Kornell, N. (2013). Self-regulated learning: beliefs, techniques, and illusions. Annu. Rev. Psychol. 64, 417–444. doi: 10.1146/annurev-psych-113011-143823

Blasiman, R. N., Dunlosky, J., and Rawson, K. A. (2017). The what, how much, and when of study strategies: comparing intended versus actual study behaviour. Memory 25, 784–792. doi: 10.1080/09658211.2016.1221974

Blömeke, S., Hoth, J., Döhrmann, M., Busse, A., Kaiser, G., and König, J. (2015). Teacher change during induction: development of beginning primary teachers’ knowledge, beliefs and performance. Int. J. Sci. Math. Educ. 13, 287–308. doi: 10.1007/s10763-015-9619-4

Brown, C., and Zhang, D. (2016). Is engaging in evidence‐informed practice in education rational? What accounts for discrepancies in teachers’ attitudes towards evidence use and actual instances of evidence use in schools? Br. Educ. Res. J. 42, 780–801.

Camerer, C. F., Dreber, A., Holzmeister, F., Ho, T. H., Huber, J., Johannesson, M., et al. (2018). Evaluating the replicability of social science experiments in Nature and Science between 2010 and 2015. Nat. Hum. Behav. 2, 637–644. doi: 10.1038/s41562-018-0399-z

Camp, G., Surma, T., and Kirschner, P. A. (2021). “Foundations of multimedia learning,” in The Cambridge handbook of multimedia learning. eds. R. E. Mayer and L. Fiorella (Cambridge, UK: Cambridge University Press), 17–24.

Carpenter, S. K. (2017). “Spacing effects on learning and memory,” in Cognitive Psychology of Memory: Learning and Memory. Vol. 2. ed. J. T. Wixted, (Oxford, UK: Elsevier).

Carpenter, S. R., Mooney, H. A., Agard, J., Capistrano, D., DeFries, R. S., Díaz, S., et al. (2009). Science for managing ecosystem services: beyond the Millennium Ecosystem Assessment. PNAS 106, 1305–1312.

Carvalho, P. F., and Goldstone, R. L. (2017). The sequence of study changes what information is attended to, encoded, and remembered during category learning. J. Exp. Psychol. Learn. Mem. Cogn. 43, 1699–1719. doi: 10.1037/xlm0000406

Clark, C. M., and Bjork, R. A. (2014). “When and why introducing difficulties and errors can enhance instruction” in Applying science of learning in education: Infusing psychological science into the curriculum. eds. V. A. Benassi, C. E. Overson, and C. M. Hakala. Society for the Teaching of Psychology, 20–30.

Credé, M., and Kuncel, N. R. (2008). Study habits, skills, and attitudes: the third pillar supporting collegiate academic performance. Perspect. Psychol. Sci. 3, 425–453. doi: 10.1111/j.1745-6924.2008.00089.x

Creswell, J., and Creswell, D. (2017). Research Design: Qualitative, Quantitative, and Mixed Methods approaches (5th ed.) London: Sage Publications.

Dagenais, C., Lysenko, L., Abrami, P., Bernard, R., Ramde, J., and Janosz, M. (2012). Use of research-based information by school practitioners and determinants of use: a review of empirical research. Evid. Policy 8, 285–309. doi: 10.1332/174426412X654031

Darling-Hammond, L., and Bransford, J. (2005). Preparing Teachers for a Changing World: What Teachers should Learn and be Able to Do. San Francisco: Jossey-Bass.

De Winter, J. F., and Dodou, D. (2010). Five-point Likert items: t test versus Mann-Whitney-Wilcoxon. Pract. Assess. Res. Eval. 15, 1–16. doi: 10.7275/bj1p-ts64

Dinsmore, D. L., Grossnickle, E. M., and Dumas, D. (2016). “Learning to study strategically,” in Handbook of Research on Learning and Teaching. 2nd Edn. eds. R. E. Mayer and P. A. Alexander. (New York: Routledge).

Dirkx, K. J. H., Camp, G., Kester, L., and Kirschner, P. A. (2019). Do secondary school students make use of effective study strategies when they study on their own? Appl. Cogn. Psychol. 33, 952–957. doi: 10.1002/acp.3584

Dunlosky, J., Rawson, K. A., Marsh, E. J., Nathan, M. J., and Willingham, D. T. (2013). Improving students’ learning with effective learning techniques: promising directions from cognitive and educational psychology. Psychol. Sci. Public Interest 14, 4–58. doi: 10.1177/1529100612453266

Ebbinghaus, H. (1964). Memory: A contribution to experimental psychology. eds. H. A. Ruger, C. E. Bussenius, and E. R. Hilgard. New York, NY: Dover Publications.

Education Council (2006). Recommendation of the European Parliament and the council of 18 December 2006 on key competencies for lifelong learning (Brussels, Official Journal of the European Union), 30 December.

Eitel, A., and Scheiter, K. (2015). Picture or text first? Explaining sequence effects when learning with pictures and text. Educ. Psychol. Rev. 27, 153–180. doi: 10.1007/s10648-014-9264-4

Fiorella, L. (2020). The science of habit and its implications for student learning and well-being. Educ. Psychol. Rev. 32, 603–625. doi: 10.1007/s10648-020-09525-1

Fiorella, L., and Mayer, R. E. (2016). Eight ways to promote generative learning. Educ. Psychol. Rev. 28, 717–741. doi: 10.1007/s10648-015-9348-9

Firth, J., Rivers, I., and Boyle, J. (2021). A systematic review of interleaving as a concept learning strategy. Rev. Educ. 9, 642–684. doi: 10.1002/rev3.3266

Glogger-Frey, I., Ampatziadis, Y., Ohst, A., and Renkl, A. (2018). Future teachers’ knowledge about learning strategies: Misconcepts and knowledge-in-pieces. Think. Skills Creat. 28, 41–55. doi: 10.1016/j.tsc.2018.02.001

Gorard, S. (2020). Getting Evidence into Education. Evaluating the Routes to Policy and Practice. London, UK: Routledge.

Halamish, V. (2018). Pre-service and in-service teachers' metacognitive knowledge of learning strategies. Front. Psychol. 9:2152. doi: 10.3389/fpsyg.2018.02152

Hartwig, M. K., and Dunlosky, J. (2012). Study strategies of college students: are self-testing and scheduling related to achievement? Psychon. Bull. Rev. 19, 126–134. doi: 10.3758/s13423-011-0181-y

Hartwig, M. K., Rohrer, D., and Dedrick, R. F. (2022). Scheduling math practice: students’ underappreciation of spacing and interleaving. J. Exp. Psychol. Appl. 28, 100–113. doi: 10.1037/xap0000391

Hirshman, E. (2001). “Elaboration in memory,” in International Encyclopedia of the Social & Behavioral Sciences. eds. N. J. Smelser and P. B. Baltes (Oxford, England: Pergamon).

Kalyuga, S., Chandler, P., and Sweller, J. (2001). Learner experience and efficiency of instructional guidance. Educ. Psychol. 21, 5–23. doi: 10.1080/01443410124681

Kang, S. H. (2016). “The benefits of interleaved practice for learning,” in From the Laboratory to the Classroom: Translating the Science of Learning for Teachers. eds. J. C. Horvath, J. M. Lodge, and J. Hattie (London, UK: Routledge).

Karpicke, J. D., and Blunt, J. R. (2011). Retrieval practice produces more learning than elaborative studying with concept mapping. Science 331, 772–775. doi: 10.1126/science.1199327

Karpicke, J. D., Butler, A. C., and Roediger, H. L. (2009). Metacognitive strategies in student learning: do students practise retrieval when they study on their own? Memory 17, 471–479. doi: 10.1080/09658210802647009