- School of Education, University of Exeter, Exeter, United Kingdom

There is growing interest in multi-dimensional approaches to investigating student engagement with written feedback (WF), drawing and building on prior theoretical work carried out both within and beyond second language teaching and learning. Understanding developing L2 writers’ affective, behavioural, and cognitive processes and responses is thought to help explain the utility they gain from WF. The present study constitutes a systematic methodological review of 35 empirical studies of student engagement, examining their conceptual orientations, methodologies and methods, contexts and sampling approaches, written texts, and WF. The review identified a pre-eminent methodological approach: mixed methods case studies (often situated in Chinese tertiary settings) that triangulate textual measures with student verbal reports (usually semi-structured or stimulated recall interviews), albeit with variations in how behavioural and cognitive engagement are operationalised. Teachers were the main feedback providers investigated (frequently to examine engagement with corrective feedback), yet they were seldom recruited as informants to provide their perspectives on student engagement. Relatively few studies contrasted engagement across multiple feedback sources, such as peers or automated writing evaluation (AWE) applications. Texts receiving written feedback tended to be short (and perhaps elicited for research purposes), with fewer studies investigating engagement with WF on authentic high-stakes or longer-form writing (e.g., thesis drafts, research articles). Methodological limitations of existing scholarship are identified and suggestions for future research outlined.

Introduction

Written feedback (WF) on second language writing offers considerable possibilities to support the development of learners’ language and/or writing skills. Yet, because developing writers sometimes find the information confusing, excessive, or difficult to act on (Goldstein, 2004), WF does not always live up to its potential (Ferris, 2006; Bitchener, 2018). Increasingly, researchers are turning their attention to the mental and behavioural states and processes that underlie students’ processing of and response to WF as the key to unlocking how and why learners do or do not gain utility from it (e.g., Ferris et al., 2013; Han and Hyland, 2015; Han, 2017; Mahfoodh, 2017). Such research, in which the student writer is situated as an informant, has contributed insights into key issues of feedback recipience, including students’ awareness and understanding of the teacher’s corrective intent (Qi and Lapkin, 2001; Sachs and Polio, 2007; Storch and Wigglesworth, 2010), the sense students are able to make (or not) of written commentary, the (potentially hidden) messages they take away from it (Hyland, 2019; Yu and Jiang, 2020), and how and why students choose (not) to act on WF (Storch and Wigglesworth, 2010; Uscinski, 2017; Yu et al., 2018; Ranalli, 2021).

A concept that collectively captures students’ mental and behavioural responses to written feedback is engagement. In L2 learning settings, engagement may usefully be defined as the quantity and quality of learners’ processing of, and written responses to, written feedback in light of their ability and willingness to respond. The definition captures the importance of observable artefacts of engagement (e.g., revision operations, verbal reports) as well as quantitative (frequency counts of revision operations) and qualitative (illustrative textual excerpts) ways of knowing. The emphasis on ability reflects the cognitive demands placed on learners to make sense of WF and to respond appropriately (Simard et al., 2015; Yu et al., 2018; Zheng and Yu, 2018), while the notion of willingness recognises that learners are unlikely to develop their language or writing skills without a sense of personal agency (Price et al., 2011; Simard et al., 2015). The definition also captures the complexity underlying engagement (Han and Hyland, 2015; Yu et al., 2018; Zhang and Hyland, 2018; Zheng and Yu, 2018; Fan and Xu, 2020), which calls for a diversity of instruments to generate information that is characteristically rich and detailed (Hyland, 2003). The states and processes of engagement are believed to be mediated by individual and contextual characteristics, especially learner beliefs, agency, motivation, language proficiency, feedback literacy, and the content and delivery of written feedback (Hyland, 2003; Han and Hyland, 2015; Han, 2017; Mahfoodh, 2017; Chong, 2021). Owing to this complexity, such features may constitute the locus of discrete inquiries in their own right (e.g., Han, 2017; Pearson, 2022b).

Engagement as a phenomenon in teaching and learning is a broad, multi-faceted construct (Fredricks et al., 2004; Price et al., 2011; Eccles, 2016). Like a Rorschach image, engagement means different things to different people (Eccles, 2016), as exhibited in the diversity of approaches to operationalising the construct in L2 writing research. These include SLA-orientated studies that explore students’ depth of WF processing and uptake (Sachs and Polio, 2007; Storch and Wigglesworth, 2010), naturalistic case studies focusing on learners’ behavioural responses (Hyland, 2003; Uscinski, 2017; Ranalli, 2021), and studies that operationalise engagement as encompassing affective, behavioural, and cognitive sub-dimensions (referred to as multi-dimensional or componential research; e.g., Han and Hyland, 2015; Zhang and Hyland, 2018; Yu and Jiang, 2020), the focus of the present review. Multi-dimensional engagement was initially posited as a heuristic by Ellis (2010). He theorised that engagement with corrective feedback (CF) can be analysed from three distinct, but interrelated perspectives:

a cognitive perspective (where the focus is on how learners attend to the CF they receive), a behavioural perspective (where the focus is on whether and in what way learners uptake oral corrections or revise their written texts), and an affective perspective (where the focus is on how learners respond attitudinally to the CF) (p. 342).

Ellis (2010) drew on the work of Fredricks et al. (2004), who posited the three dimensions in the context of school engagement. Fredricks et al. (2004) situated the concept as a means of addressing student alienation (i.e., reductions in student effort and motivation and rising school dropout rates). This emancipatory potential has transferred into research located in L2 writing settings. Engagement offers a prism through which powerful explanations of the utility learners gain from written corrective feedback (WCF) or content-focused written feedback (CFWF) can be developed (Ellis, 2010; Han and Hyland, 2015; Zhang and Hyland, 2018). It is hoped that new knowledge generated by research will cascade down to practitioners by offering insights into: (a) content and delivery strategies, (b) the value of supplementary computer-mediated feedback applications, (c) the role of ancillary practices such as feedback conferences, (d) strategies for acknowledging and understanding learners’ perspectives, and (e) the provision of response guidance (Han and Hyland, 2015, 2019; Han, 2017; Uscinski, 2017; Ma, 2019; Koltovskaia, 2020).

The study

The present study constitutes a critical review of the state of scholarship on multi-dimensional student engagement with written feedback in second language writing settings from a methodological perspective. The review is motivated by the recent upsurge in interest in multi-dimensional student engagement since the publication of Han and Hyland’s (2015) influential empirical study. While a recent qualitative research synthesis usefully explored multi-dimensional engagement from a conceptual perspective (see Shen and Chong, 2022), the wider methodological features of studies adopting variations of Fredricks et al.’s (2004) or Han and Hyland’s (2015) frameworks (e.g., methodologies, methods, sampling, features of written feedback) have not yet been systematically reviewed and have rarely been subjected to extended discussion (see Ma, 2019). In contrast, in non-language education disciplines, there has been much debate surrounding the multi-dimensional nature of engagement, including the extent to which the dimensions can be considered independent contributors to written outcomes (or aggregated to capture their synergistic qualities) and the potentially malleable nature of the construct (Boekaerts, 2016; Eccles, 2016).

This is, thus, an opportune moment to take stock of the multi-dimensional approach to investigating student engagement with WF, not least by synthesising the salient concepts, methodologies, and methods involved and identifying prevalent contexts, approaches to written feedback, and texts on which feedback is provided. As in other areas of language education (e.g., Plonsky and Gass, 2011; Liu and Brown, 2015), this methodological review seeks to generate insights into study strengths and weaknesses across the research topic, identify prospects for future inquiries, and challenge, enrich, and refine existing concepts and epistemologies. The following research questions guide the design of the review:

1. What are the conceptual characteristics of multi-dimensional research into student engagement with written feedback on L2 writing?

2. What research designs and procedures do authors use to investigate student engagement?

3. What are the contextual and sampling characteristics of current research?

4. How have authors of existing research gone about investigating engagement in terms of student texts and written feedback?

Study identification

As with other systematic reviews, the present study followed PRISMA guidelines (Page et al., 2021), beginning with the identification of relevant studies using appropriate search terms across multiple online research indices. The following search string was developed after reviewing the titles, abstracts, and keywords found in several influential studies (Han and Hyland, 2015; Zhang, 2017; Yu et al., 2018; Zhang and Hyland, 2018):

“engag*” OR “student* engagement” OR “learner* engagement” OR “affective engagement” OR “behavioural engagement” OR “behavioral engagement” OR “cognitive engagement” AND “written feedback” OR “written corrective feedback”
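The string above mixes OR and AND without parentheses, and Boolean precedence rules are not uniform across search indices, so the same string could be parsed as intended (any engagement term AND any feedback term) by one database and differently by another. The minimal Python sketch below assumes that two-group reading is the intended one and shows how an explicitly parenthesised version could be assembled; `or_group` and `build_query` are hypothetical helpers for illustration, not part of any database’s API.

```python
def or_group(terms):
    """Quote each term and join the group with OR inside parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(*groups):
    """Join OR-groups with AND so the logic does not rely on operator precedence."""
    return " AND ".join(or_group(g) for g in groups)


# Term groups transcribed from the search string; the grouping is an assumption.
engagement_terms = [
    "engag*", "student* engagement", "learner* engagement",
    "affective engagement", "behavioural engagement",
    "behavioral engagement", "cognitive engagement",
]
feedback_terms = ["written feedback", "written corrective feedback"]

query = build_query(engagement_terms, feedback_terms)
print(query)
```

Reporting the parenthesised form alongside the original makes the retrieval logic reproducible regardless of which index a reader re-runs it on.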

A variety of online sources were searched to retrieve published material. The logical starting point was established databases of indexed research, namely the Web of Science (WoS), Scopus, ERIC (Education Resources Information Center), ScienceDirect, and the Wiley Online Library. Search terms were applied to the abstract field, and the resulting bibliographic records were exported to a CSV file. As not all journals devoted to second language writing are listed on these indices, the catalogues of five journals (Journal of Academic Writing, Journal of Basic Writing, Journal of Response to Writing, Journal of Writing Research, and Writing & Pedagogy) were also searched using the above string. Finally, an ancestral search of the reference lists of all identified studies was undertaken, along with a search of the first five pages of Google Scholar results for the term “student engagement with written feedback”, to identify potentially missed papers.
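Because the same article is often indexed in several of these databases, merged exports usually need de-duplication before screening. The paper does not describe how this was handled, so the following is only one hypothetical approach in Python: it assumes each CSV export has `Title` and `DOI` columns (real exports name their fields differently) and keeps the first record seen for any DOI or normalised title.

```python
import csv
import io
import re


def normalise_title(title):
    """Lower-case a title and collapse punctuation/whitespace for matching."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def merge_exports(csv_texts):
    """Merge CSV exports, dropping records whose DOI or title was already seen.

    Assumes hypothetical 'Title' and 'DOI' columns in every export.
    """
    seen_dois, seen_titles, merged = set(), set(), []
    for text in csv_texts:
        for row in csv.DictReader(io.StringIO(text)):
            doi = (row.get("DOI") or "").strip().lower()
            title = normalise_title(row.get("Title") or "")
            if (doi and doi in seen_dois) or (title and title in seen_titles):
                continue  # duplicate of a record retained from an earlier export
            if doi:
                seen_dois.add(doi)
            if title:
                seen_titles.add(title)
            merged.append(row)
    return merged


# Invented example exports from two indices.
wos = "Title,DOI\nEngagement with feedback: a study,10.1/abc\nAnother paper,\n"
scopus = "Title,DOI\nEngagement With Feedback - A Study,10.1/ABC\nAnother Paper!,\n"
records = merge_exports([wos, scopus])
print(len(records))  # 2
```

Matching on either DOI or title catches records that carry a DOI in one index but not in another; fuzzier matching would be needed for titles that differ in wording.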

Study selection

A range of inclusion/exclusion criteria was applied at multiple stages of the PRISMA process. The retrieved literature was limited to studies published in academic journals and edited book chapters, the main repositories for primary L2 research (Plonsky, 2013). No articles published before 2004 were retrieved, 2004 being the publication year of Fredricks et al.’s influential paper conceptualising engagement as multi-dimensional, while 2022, the most recent completed year when data retrieval took place, was chosen as the cut-off. Non-English documents were automatically filtered out by the search indices or were disregarded during manual analysis of Google Scholar results. A total of 380 discrete documents were identified using the search string. Titles and abstracts were initially screened to determine study relevance, with 128 records being removed because they were clearly unrelated to written feedback on L2 writing. The remaining 95 articles were retrieved for closer analysis, of which three full texts were unavailable. In total, 60 titles were removed at this stage: the three unavailable full texts and a further 57 because:

• they did not investigate student engagement with written feedback (N = 34),

• engagement was not conceived of as multi-dimensional (N = 11),

• not all three dimensions of the framework were considered (N = 6),

• the study was not empirical (N = 4),

• the study was not peer-reviewed (N = 2).

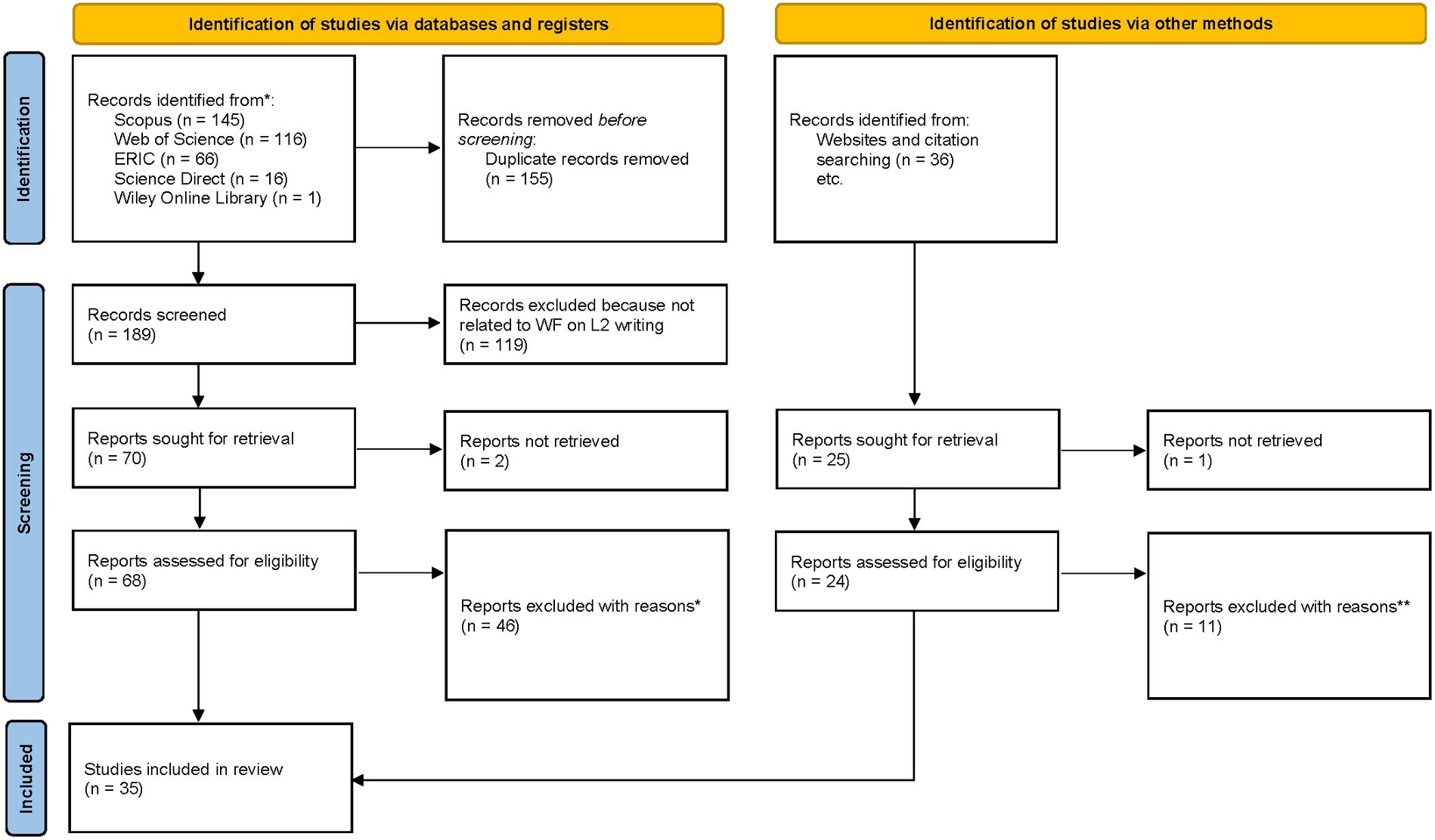

As a result of the PRISMA checking process (summarised in Figure 1), 35 empirical studies employing the componential framework were retrieved for review.

Figure 1. PRISMA flow diagram for article selection. * Not a study of student engagement with WF (29), engagement not conceived of as multi-dimensional (8), not all three dimensions of the framework considered (5), not an empirical study (2), not peer reviewed research (2). ** Not a study of student engagement with WF (5), engagement not conceived of as multi-dimensional (3), not an empirical study (2), not all three dimensions of the framework considered (1).
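The exclusion counts in the two groups marked * and ** in the caption can be reconciled with the bulleted totals in the text. The short Python check below simply re-adds the reported figures (the reason labels are abbreviations chosen for illustration). It shows that the reasons sum to 57, which is consistent with the 60 removals including the three unavailable full texts and with a final sample of 35.

```python
# Exclusion counts transcribed from the Figure 1 caption (* and ** groups).
stage_one = {"not engagement with WF": 29, "not multi-dimensional": 8,
             "not all three dimensions": 5, "not empirical": 2,
             "not peer reviewed": 2}
stage_two = {"not engagement with WF": 5, "not multi-dimensional": 3,
             "not empirical": 2, "not all three dimensions": 1}

# Combine the two groups reason by reason (missing reasons count as zero).
combined = {reason: stage_one.get(reason, 0) + stage_two.get(reason, 0)
            for reason in stage_one.keys() | stage_two.keys()}

retrieved, unavailable = 95, 3          # figures reported in the text
excluded = sum(combined.values())
included = retrieved - unavailable - excluded

for reason in sorted(combined):
    print(f"{reason}: {combined[reason]}")
print("excluded:", excluded, "included:", included)
```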

Data analysis

The study employs quantitative data analysis methods, centred on a deductive scheme to code conceptual and methodological features of research into student engagement with WF. First, systematic (e.g., Plonsky, 2013; Hiver et al., 2021) and methodological reviews in other areas of language education (e.g., Plonsky and Gass, 2011; Liu and Brown, 2015) were consulted to determine a set of candidate categories and variables of interest. The scheme was then iteratively and recursively developed by identifying and refining values, variables, and categories of interest across the 35 studies. The draft coding scheme was piloted on 15 studies, whereupon revisions were made in response to consistency issues (e.g., in how authors operationalise engagement), particularly with variables that were either more open to interpretation (e.g., data analysis procedures) or had not been foreseen in initial development.

Four pre-eminent categories of interest emerged from design and piloting: conceptual considerations (addressing research question 1), study methodologies and methods (research question 2), contexts and sampling (research question 3), and student texts and written feedback (research question 4). Each category contained a number of variables, which were either open-ended (e.g., research methods, operationalisation of engagement) or categorical (e.g., country context, type of feedback provider). In most instances, values could be objectively identified through careful reading of research reports, often the Method and Results sections. However, a number of variables were subject to interpretation (e.g., approach of the feedback provider, text genres), requiring a recursive coding approach across the retrieved literature to ensure consistency. To reduce the threat of coding unreliability, all variables were re-inspected by the researcher after a two-month interval. Additionally, an intra-rater reliability check of 96 values was undertaken a further two months later, yielding a level of agreement of 0.917. Discrepancies were examined and the affected variables reviewed across the wider dataset. The findings are presented in the form of frequency counts/proportions of the uncovered methodological features, indicating prevalent and unusual practices, reporting practices, gaps in the literature, and study quality.
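A reported agreement of 0.917 on 96 values corresponds to 88 matching values (88/96 ≈ 0.917). Simple percent agreement does not correct for matches expected by chance, and Cohen’s kappa is one common complement. The Python sketch below computes both for two coding passes; it is an illustration only, the category labels and data are invented, and the paper does not report computing kappa.

```python
from collections import Counter


def percent_agreement(first, second):
    """Share of values coded identically in two coding passes."""
    if len(first) != len(second) or not first:
        raise ValueError("coding passes must be non-empty and equal in length")
    return sum(a == b for a, b in zip(first, second)) / len(first)


def cohens_kappa(first, second):
    """Chance-corrected agreement, treating the two passes as two raters."""
    n = len(first)
    observed = percent_agreement(first, second)
    c1, c2 = Counter(first), Counter(second)
    # Expected agreement if each pass assigned categories independently.
    expected = sum(c1[k] * c2[k] for k in c1.keys() | c2.keys()) / (n * n)
    if expected == 1:
        return float("nan")  # kappa is undefined when only one category is used
    return (observed - expected) / (1 - expected)


# Hypothetical recoding of 10 categorical values (not the study's data).
pass_1 = ["case", "case", "mixed", "qual", "case",
          "mixed", "qual", "case", "case", "mixed"]
pass_2 = ["case", "case", "mixed", "qual", "case",
          "qual", "qual", "case", "mixed", "mixed"]

print(percent_agreement(pass_1, pass_2))        # 0.8
print(round(cohens_kappa(pass_1, pass_2), 3))   # 0.692
print(round(88 / 96, 3))                        # 0.917
```

Reporting kappa alongside raw agreement is most informative for variables with few, unevenly used categories, where chance matches are common.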

Findings and discussion

Overview of the sample

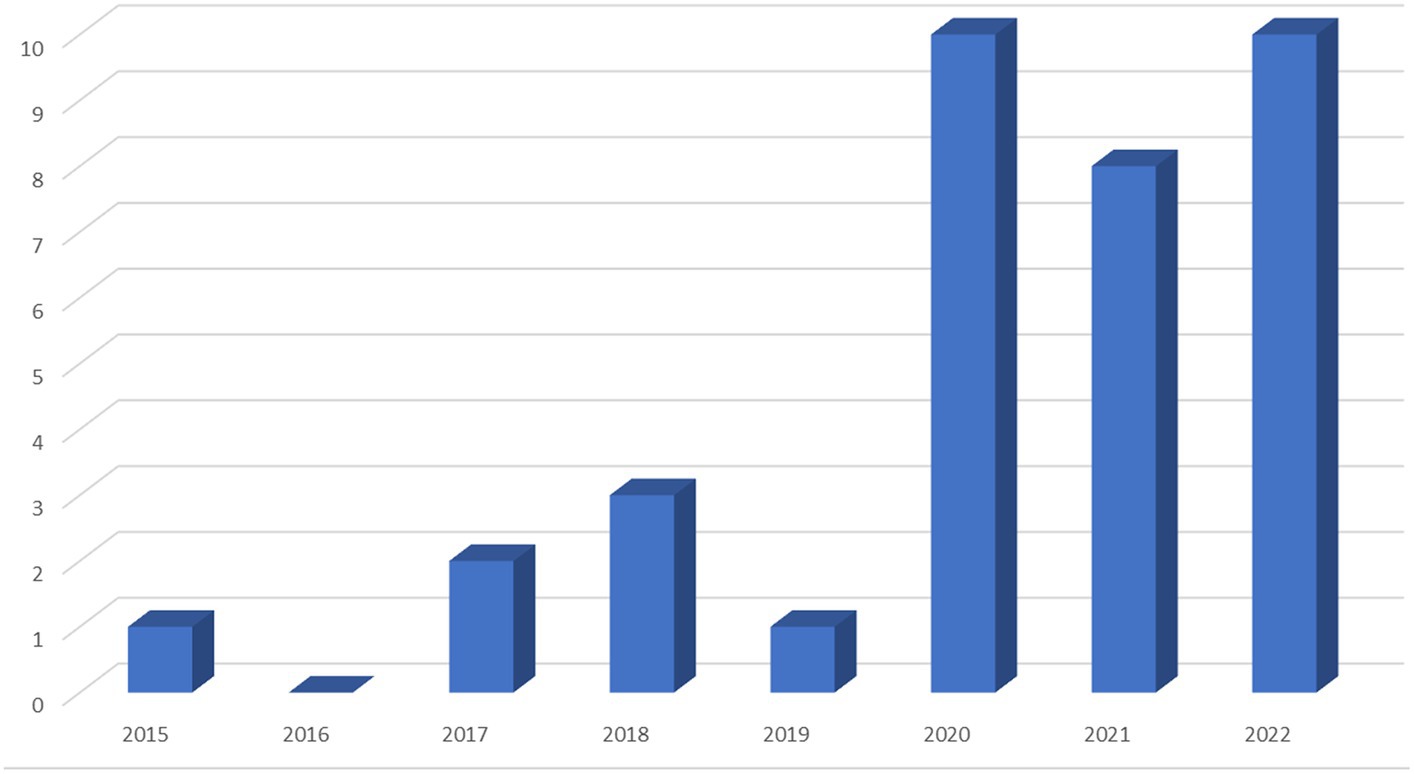

PRISMA screening and eligibility processes yielded 35 multi-dimensional studies of student engagement with written feedback published between 2015 and 2022. As shown in Figure 2, many of these were published between 2020 and 2022. All but one study (Han and Hyland, 2019) appeared in an academic journal, with much research located in high-quality publications (defined as SSCI- or ESCI-indexed), especially Assessing Writing (N = 6) and System (N = 3). Beyond these two journals, engagement research is dispersed across a wide range of applied linguistics/TESOL publications, with no other journal featuring more than two studies. This suggests that student engagement is a somewhat diffuse interest across applied linguistics/TESOL, which may lessen research visibility and complicate its retrieval. Further reducing retrievability, only eight studies were available through open access publishing arrangements.

Conceptual characteristics

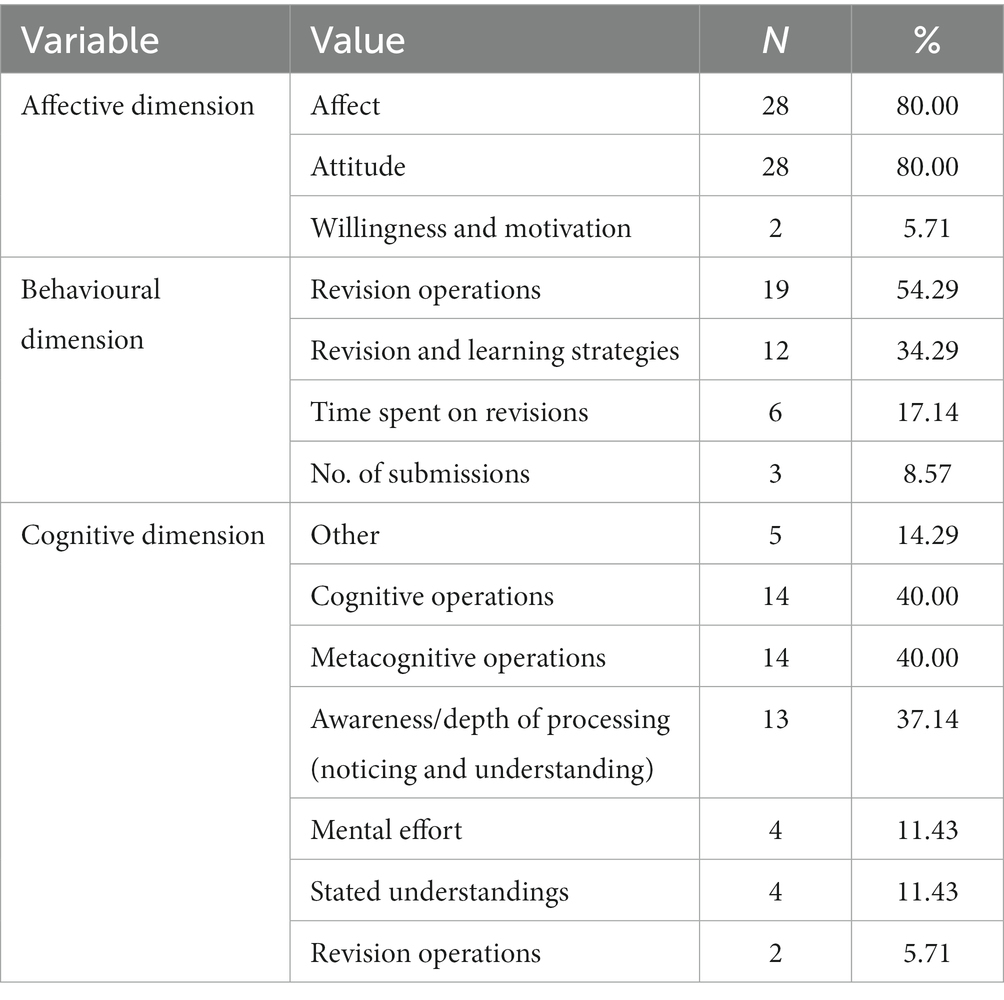

Reflecting the umbrella nature of the concept, multi-dimensional studies defined engagement atomistically, as the interlocking and overlapping synthesis of affective, behavioural, and cognitive dimensions (e.g., Han and Hyland, 2015; Zhang and Hyland, 2018; Koltovskaia, 2020). To counter the fragmentary tendency of this approach, some authors characterised student engagement globally or along a single dimension, adopting positive (actively, developing, dynamic, extensive, fully) and negative labels (inconsistent, nonchalant, perfunctory, selectively, superficial) derived by triangulating the various forms of evidence (e.g., Han and Hyland, 2015; Zhang and Hyland, 2018; Tian and Zhou, 2020; Zhang, 2020; Zheng et al., 2020b). Most operationalisations were built upon the initial heuristic of Ellis (2010), with Han and Hyland’s (2015) categories and sub-categories of engagement being influential. A few studies (e.g., Zhang, 2017; Yu et al., 2018; Zhang and Hyland, 2018) explicitly drew upon the work of Fredricks et al. (2004). Table 1 outlines the variations across operationalisations of the three dimensions of engagement, which are discussed in turn.

Affective engagement

The affective dimension exhibited the least conceptual diversity, with 80% of studies querying students’ affective and attitudinal responses. As some studies adopted longitudinal designs (e.g., Lyu and Lai, 2022; Tay and Lam, 2022), the focus was sometimes on identifying and measuring changes in students’ affect or attitude (e.g., Yu and Jiang, 2020; Zhang, 2021). Reference to theoretical constructs of affective responses in existing literature was rare, with the exception of Martin and Rose’s (2003) framework, comprising affect, judgement, and appreciation, on which five studies drew (e.g., Zheng et al., 2020a; Lira-Gonzales et al., 2021).

The sub-dimension of affect encompassed students’ emotional reactions towards WF upon receiving it, usually explored via oral reporting, sometimes after a delay of one or more days once feedback had been received (e.g., Han and Hyland, 2015; Koltovskaia, 2020). Collecting student verbal reports during receipt of WF could improve the authenticity of the captured affective responses (e.g., Zheng and Yu, 2018; Zheng et al., 2020a). Likewise, interviewing in participants’ L1 better ensures students are able to express themselves clearly and that responses are more representative of their inner thoughts and feelings (Zhang, 2020; Zhang and Hyland, 2022). Analysing student affect poses challenges, not least in attributing labels to complex and diverse responses to WF and potentially misrepresenting participants’ emotions in research reports. I recommend researchers emphasise the likely complexity in this area (e.g., Han and Hyland, 2015; Shi, 2021) and avoid simplifying representations to mere “positive” and “negative” emotions (e.g., Farsani and Aghamohammadi, 2021; Jin et al., 2022).

Attitude was operationalised in varying ways across studies. Interestingly, attitude conceived as students’ judgements of the value of particular content and delivery features of written feedback was rather rare, perhaps reflecting students’ inexperience as informants on pedagogical matters. Instead, a conception of attitude addressing broader features of teacher practice (e.g., the extent of face-to-face support provided, the amount and tone of feedback provided) and the teacher’s personal attributes (e.g., their perceived effort and investment in the student) was investigated, nearly always through semi-structured interviewing. Most studies queried students’ attitudes towards WF retrospectively. While the goal of describing and explaining student engagement with written feedback is a commendable one, researchers may be missing opportunities to explore an important quality of engagement: its malleability (Fredricks et al., 2004). Future research could consider students’ perspectives prior to delivering feedback and seek to tailor content and delivery based on students’ views (e.g., Pearson, 2022a,b). This is not always possible, for example with AWE provision (e.g., Zhang, 2017) or where the feedback provider needs to remain anonymous, as in peer review (e.g., Yu and Jiang, 2020).

Behavioural engagement

As shown in Table 1, behavioural engagement was commonly operationalised in two ways: students’ revision operations (54.29%) and revision and learning strategies (34.29%). The former centres on textual artefacts themselves, denoting the alterations developing writers make in response to WF over a series of drafts as well as the perceived success of revisions, usually coded deductively. The latter is a more heterogeneous category of actions and processes that go beyond the text (often uncovered via interviewing), including, for example, consultation with peers (e.g., Han and Xu, 2021; Man et al., 2021) and/or the teacher (e.g., Han and Hyland, 2015, 2019), use of dictionaries and grammars (e.g., Fan and Xu, 2020; Koltovskaia, 2020; Liu, 2021), and digital tools (e.g., Tian and Zhou, 2020; Zhang, 2020). In some cases, the collected data cut across behavioural and cognitive engagement, such as examining what information or knowledge (e.g., linguistic, intuitive) learners refer to (Shi, 2021) and the perceived extent of learners’ efforts to respond (e.g., Han and Hyland, 2019; Bastola, 2020).
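Because every percentage in this review is a proportion of the same 35 studies, each reported figure maps back to a whole-number count (e.g., 54.29% is 19 of 35). The small Python sketch below recovers those counts and checks the round trip; `as_percent` and `implied_count` are illustrative helper names, not part of the study’s procedure.

```python
N_STUDIES = 35  # size of the reviewed sample


def as_percent(count, total=N_STUDIES):
    """Express a count of studies as a percentage rounded to two decimals."""
    return round(100 * count / total, 2)


def implied_count(percent, total=N_STUDIES):
    """Recover the whole-number count behind a reported percentage."""
    return round(percent * total / 100)


reported = {
    "affective/attitudinal responses": 80.0,
    "revision operations": 54.29,
    "revision and learning strategies": 34.29,
}
for label, pct in reported.items():
    n = implied_count(pct)
    assert as_percent(n) == pct  # the round trip confirms a whole-number count
    print(f"{label}: {n}/{N_STUDIES} ({pct}%)")
```

Reporting the count beside each percentage (e.g., “19/35”) would spare readers this back-calculation, which matters when samples are this small.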

Methodologically, some authors opted to query students’ self-reports of such behaviours (e.g., Man et al., 2021; Shi, 2021), while others recorded and examined students’ observable strategies as they responded to feedback (e.g., Han and Hyland, 2015, 2019; Han and Xu, 2021). Clearly, such approaches are liable to generate differing accounts of students’ behavioural engagement; the two methods could thus be combined to provide a more comprehensive account. Finally, the functionality of AWE systems provides additional indicators of behavioural engagement, namely the time students spend revising their texts and the number of submissions to the application (e.g., Zhang, 2017; Zhang and Hyland, 2018). Such metrics, which can be considered indicators of “effort”, are unfortunately rarely extended to studies involving human feedback providers.
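Comparable “effort” indicators could, in principle, be derived in human-feedback studies if draft or submission timestamps were logged. The Python sketch below assumes a hypothetical log of (student, timestamp) pairs and reports the number of submissions and the minutes between first and last submission per student; that span is only a crude proxy for revision time, not a measure of active editing.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical submission log: (student_id, ISO timestamp of a submission).
log = [
    ("S1", "2022-03-01T10:00"), ("S1", "2022-03-01T10:25"),
    ("S1", "2022-03-01T11:05"), ("S2", "2022-03-01T14:00"),
    ("S2", "2022-03-01T14:10"),
]


def effort_metrics(entries):
    """Per student: number of submissions and minutes from first to last one."""
    times = defaultdict(list)
    for student, stamp in entries:
        times[student].append(datetime.fromisoformat(stamp))
    metrics = {}
    for student, stamps in times.items():
        stamps.sort()
        span = (stamps[-1] - stamps[0]).total_seconds() / 60
        metrics[student] = {"submissions": len(stamps), "minutes": span}
    return metrics


print(effort_metrics(log))
```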

Cognitive engagement

Cognitive engagement was the dimension operationalised in the most divergent ways. Forty percent of studies opted to investigate developing writers’ cognitive and metacognitive operations, either at the point of processing WF or when undertaking revisions (or both) (e.g., Han and Hyland, 2015, 2019; Zheng and Yu, 2018; Fan and Xu, 2020). Cognitive operations encompass the mental strategies and skills learners use to process and respond to WF (Han and Hyland, 2015), such as their use of reasoning, language knowledge, and the context of writing. Perhaps because learners have limited access to such interior states, or because these states are challenging for researchers to elicit, the cognitive operations typically uncovered lack specificity (e.g., Zheng and Yu, 2018; Zheng et al., 2020a) or overlap with features of behavioural engagement, such as asking for help (Lyu and Lai, 2022) or attempting to memorise a word (Zheng et al., 2020c). In contrast, metacognitive operations denote “strategies and skills the learner employs to regulate his or her mental processes, practices, and emotional reactions” (Han and Hyland, 2015, p. 43). These include being able to move on from an unresolved error, reading to see whether a revision made a sentence read better, and relying on intuition and self-knowledge. As learners seem better able to report on such processes, and because these processes are more observable, studies tend to focus more intensively on querying metacognitive operations, often through interviewing (e.g., Yu et al., 2018; Yu and Jiang, 2020; Zheng et al., 2020b).

Han and Hyland (2015) were the first to operationalise cognitive engagement in this way, drawing upon the taxonomy of cognitive and metacognitive strategies developed by Oxford (2011). The authors rightly dispensed with the term strategies, given that it is not always clear whether students are implementing strategies deliberately or applying skills less consciously when responding to feedback. All subsequent studies of engagement querying students’ (meta)cognitive operations have followed this approach, refraining from specifying whether an operation embodied a definite strategy or skill.

The focus on mental operations was complemented by another prevalent line of inquiry, depth of processing (37.14%). This appeared particularly prevalent in studies of engagement with WCF (Han and Hyland, 2015) or where there was a prominent focus on accuracy (e.g., Zheng et al., 2020c; Tay and Lam, 2022). Conceptually, such studies took their cues from several influential earlier works (e.g., Qi and Lapkin, 2001; Sachs and Polio, 2007; Storch and Wigglesworth, 2010) that differentiate between noticing (i.e., detecting an error, diagnosing the teacher’s corrective intent, and attending to accuracy) and understanding (successfully diagnosing an error and providing an accurate metalinguistic explanation) (Han and Hyland, 2015). Unlike these leading works, few authors observed learners working through feedback to determine student noticing or understanding of discrete errors or WF (e.g., Han and Hyland, 2015; Zheng and Yu, 2018), using what are termed language-related episodes (see Storch and Wigglesworth, 2010). Instead, many took a broader view, conceiving of depth of processing as how well students reported they understood WF (e.g., Yu et al., 2018; Choi, 2021; Shi, 2021; Cheng and Liu, 2022), joining four studies that queried students’ understandings of WF and how/why they made revisions without reference to the depth of processing (e.g., Zhang, 2021; Pearson, 2022a). A further four studies conceived of cognitive engagement as a learner’s mental effort exerted in comprehending and handling the feedback information (e.g., Man et al., 2021; Mohammed and Al-Jaberi, 2021).
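The noticing/understanding distinction described above can be expressed as a simple coding rule applied to each observed episode. The Python sketch below is a simplified, hypothetical rule built only from the definitions given in this paragraph; the `Episode` fields are invented, and actual schemes in the reviewed studies may use finer-grained levels.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Episode:
    """One coded language-related episode (hypothetical coding fields)."""
    error_detected: bool              # learner registers the problem the WF flags
    correctly_diagnosed: bool         # learner identifies what the error is
    metalinguistic_explanation: bool  # learner states an accurate rule or reason


def depth_of_processing(ep):
    """Assign a level following the noticing/understanding distinction."""
    if ep.error_detected and ep.correctly_diagnosed and ep.metalinguistic_explanation:
        return "understanding"
    if ep.error_detected:
        return "noticing"
    return "no noticing"


episodes = [
    Episode(True, True, True),
    Episode(True, False, False),
    Episode(False, False, False),
    Episode(True, True, False),   # diagnosed but not explained: noticing only
]
levels = [depth_of_processing(e) for e in episodes]
print(Counter(levels))
```

Making the rule explicit in this way also shows where coders must exercise judgement, for instance in deciding what counts as an “accurate” explanation.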

Interestingly, some studies opted for a more behavioural conception of cognitive engagement, with a few citing learning and revision strategies as evidence of cognitive engagement (e.g., Zheng et al., 2020a; Lyu and Lai, 2022) and others drawing on revision operations as proof of student understanding (or lack thereof) (e.g., Zhang, 2017; Zhang and Hyland, 2018). On a few occasions, moreover, how authors operationalised cognitive engagement was not clearly explained or illustrated, leaving the reader to speculate on what was meant by “students’ seriousness in work and self-regulation of learning” (Bastola, 2020), “students’ mental processes of WCF” (Han and Xu, 2021), and “sophisticated, deep, and personalised learning strategies” (Zhang, 2020). Such ambiguities can be usefully resolved through an illustrative coding table, perhaps in the form of appendices or supplementary material (e.g., Han and Hyland, 2015).
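An illustrative coding table of this kind can be kept as structured data and exported for supplementary material. The Python sketch below is one hypothetical way to do so: the field names, the definitions, and the bracketed example placeholders are invented for illustration, with the two codes taken from operations mentioned earlier in this review.

```python
import csv
import io

FIELDS = ["dimension", "sub_dimension", "code", "definition", "example"]
DIMENSIONS = {"Affective", "Behavioural", "Cognitive"}

# Hypothetical codebook rows; examples are placeholders, not real data.
CODEBOOK = [
    {"dimension": "Behavioural",
     "sub_dimension": "Revision and learning strategies",
     "code": "Consulting a dictionary",
     "definition": "Learner uses a dictionary or grammar to respond to WF",
     "example": "<interview excerpt>"},
    {"dimension": "Cognitive",
     "sub_dimension": "Metacognitive operations",
     "code": "Moving on from an unresolved error",
     "definition": "Learner stops working on a flagged error they cannot resolve",
     "example": "<stimulated recall excerpt>"},
]


def codebook_csv(rows):
    """Validate codebook rows and render them as CSV text."""
    for row in rows:
        if set(row) != set(FIELDS):
            raise ValueError(f"row has unexpected fields: {sorted(row)}")
        if row["dimension"] not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {row['dimension']}")
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


print(codebook_csv(CODEBOOK))
```

Validating each row against the three dimensions makes any code that sits awkwardly between dimensions visible before publication rather than after.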

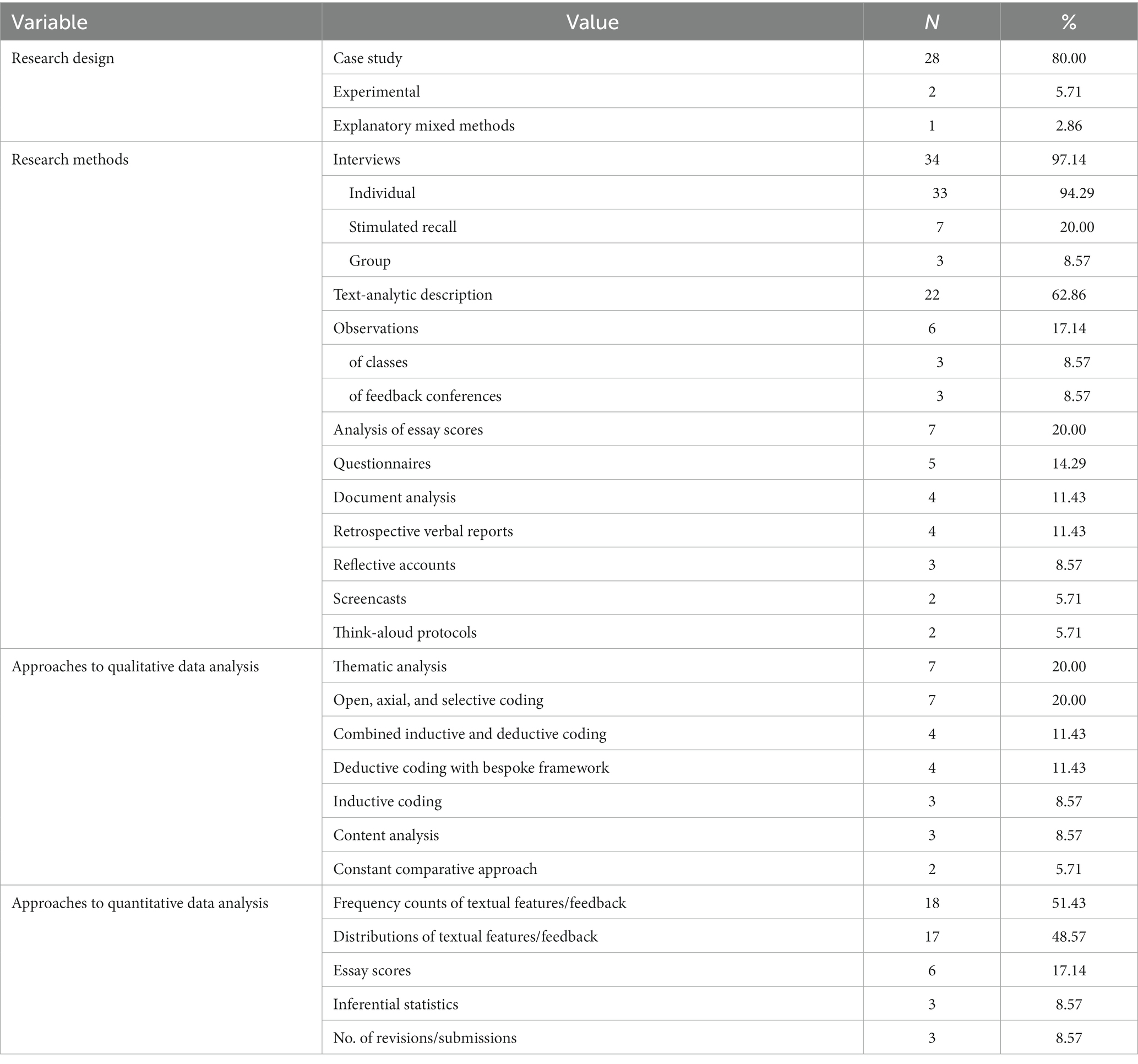

Methodologies and research methods

Given the complex and multi-faceted nature of engagement as a construct, researchers use a range of methods to collect diverse forms of evidence. This is reflected in the preponderance of mixed methods designs, which accounted for 82.86% of studies. A common mixed methods approach combined textual data in the form of text-analytic description (Ferris, 2012) with one or more forms of student self-report. The triangulation of multiple data sources, particularly the synthesis of the more objective features of engagement evinced through students’ texts and their more subjective oral reports, helps capture the complexity and dynamism of engagement (e.g., Han and Hyland, 2015; Liu, 2021), while providing greater trustworthiness and completeness (e.g., Bastola, 2020; Farsani and Aghamohammadi, 2021; Lyu and Lai, 2022).

Some studies (usually implicitly) prioritised the qualitative dimension, attaching more importance to students’ understandings and conceptions of feedback and their affective responses to it (Han and Hyland, 2019; Mohammed and Al-Jaberi, 2021; Pearson, 2022a). Others prioritised textual evidence (e.g., Zhang, 2017; Yu et al., 2018; Zhang and Hyland, 2018), reflecting the prominence attributed to learners’ revision behaviours through metrics such as revision operations, document editing times, and frequencies of submissions/drafts. Few studies (17.14%) adopted solely qualitative approaches (Han, 2017; Pearson, 2022b), conceivably owing to an underlying ontological perspective that characterises engagement as an interpretive process centred on human meaning making. Alternatively, written texts or feedback may not have been available (Yu and Jiang, 2020). As can be seen from Table 2, case study research prevailed (80%), most of which was collective (i.e., involving multiple participants) and bound by the phenomenon of multi-dimensional engagement (i.e., intrinsic case study research). Aside from its use as a tool to recruit participants (e.g., Zhang, 2017; Zhang and Hyland, 2018), questionnaire research was surprisingly uncommon (e.g., Bastola, 2020; Tian and Zhou, 2020). While two studies self-identified as “experimental” (Man et al., 2021; Santanatanon and Chinokul, 2022), qualitative data were also gathered in both.

Qualitative data collection and analysis

All studies featured a prominent qualitative component, involving one or a combination of semi-structured interviewing (97.14%, e.g., Zhang and Hyland, 2018; Fan and Xu, 2020; Yu and Jiang, 2020), stimulated recall (20%, e.g., Koltovskaia, 2020; Tian and Zhou, 2020; Lira-Gonzales et al., 2021), observations of feedback conferences or classes (17.14%, e.g., Han and Hyland, 2015; Fan and Xu, 2020), and retrospective verbal reports (11.43%, e.g., Han and Hyland, 2015; Han, 2017; Mohammed and Al-Jaberi, 2021). Typically, semi-structured interviews queried students’ feelings upon receiving feedback, their decision-making and actions taken in response, and the extent to which they understood the WF (e.g., Yu and Jiang, 2020; Zhang, 2020; Pearson, 2022a). Oral reporting occasionally involved thinking aloud (e.g., Choi, 2021; Cheng and Liu, 2022), or immediately followed the process of revisions (e.g., Zheng and Yu, 2018; Liu, 2021). Perhaps to reduce the cognitive load or mitigate logistical constraints, students’ reports were frequently undertaken retrospectively (e.g., Han and Hyland, 2015; Han and Xu, 2021), tapping into participants’ short-term memories of processing WF.

Student recall was often stimulated (e.g., Yu et al., 2018; Zheng et al., 2020b), with learners prompted by their written drafts (e.g., Han and Hyland, 2015; Zheng and Yu, 2018) or a screencast of themselves processing the feedback (e.g., Koltovskaia, 2020; Koltovskaia and Mahapatra, 2022). Often, the focus was on revealing students’ understandings (e.g., Koltovskaia, 2020), though other scholars (e.g., Yu et al., 2018) explored students’ affective responses in this way. Think-alouds and immediate verbal reports offer the benefit of capturing real-time aspects of student response (especially their emotional states and immediate awareness or understanding of the WF) and are often used in conjunction with interviewing to triangulate observations and gain more complete insights (Yu et al., 2018; Zheng and Yu, 2018; Koltovskaia, 2020). Alternatively, a hybrid approach encompassing prompted retrospective reporting was undertaken (e.g., Tian and Zhou, 2020; Yu and Jiang, 2020; Zheng et al., 2020b), offering the advantage of participant recall scaffolded by in-situ questioning.

Taking authors’ descriptions of their research at face value, a raft of qualitative data analysis approaches was exhibited across the included studies, with no obvious preferred approach (see Table 2). While many studies emphasised the inductive nature of coding and pattern formation (e.g., Choi, 2021; Lira-Gonzales et al., 2021; Liu, 2021), abductive approaches that were neither completely data-driven nor completely theory-driven prevailed. While authors attempted to keep an open mind about the data, the multi-dimensional model naturally set the parameters of what they were looking for. Initial coding tended to be inductive, with authors identifying points of interest at the individual or cross-case level before refining codes and developing themes that cohered with the dimensions of engagement; this is most evident in the open, axial, and selective coding approach used by Zhang and Hyland (2018) and in subsequent studies (e.g., Koltovskaia, 2020; Tian and Zhou, 2020). A few papers did not specify a discrete analytical approach (e.g., Zhang, 2017; Yu and Jiang, 2020; Choi, 2021), instead applying general principles of qualitative analysis, such as data reduction, pattern formation, and conclusion drawing (Miles and Huberman, 1994). As in other disciplinary areas, detailed description and illustration of qualitative coding processes (e.g., Han and Hyland, 2015; Zhang and Hyland, 2018) help improve the transparency, and hence the trustworthiness, of research.

Quantitative data collection and analysis

How students engage behaviourally is usually approached from the text-analytic description tradition in written feedback research (62.86%; see Ferris, 2012), i.e., through frequency counts (51.43%) and/or proportions (48.57%) of students’ textual issues (usually lexicogrammatical errors), WF content and delivery characteristics, and/or students’ revisions (e.g., Han and Hyland, 2015; Zhang and Hyland, 2018; Zheng and Yu, 2018; Fan and Xu, 2020; Zheng et al., 2020c). Such characteristics are coded deductively, with researchers drawing upon various taxonomies of second language writing and written feedback. The result is a quantitative picture of student writing and teacher feedback, allowing researchers to make more objective claims about student engagement. In a few studies that were purely qualitative (e.g., Han, 2017; Saeli and Cheng, 2021) or CFWF-focused (e.g., Yu and Jiang, 2020; Pearson, 2022b), text-analytic findings were either not reported or served merely as contextual background, with conclusions concerning behavioural engagement drawn solely from learners’ reported revision and learning strategies.

Of particular interest is the quantitative analysis of students’ revisions in light of WF, referred to as revision operations (Han and Hyland, 2015), with approaches varying depending on whether the feedback encompassed WCF or CFWF. Naturally, studies of WCF incorporated form-focused revision operations (FFROs) as measures of behavioural (and at times, cognitive) engagement (e.g., Han and Hyland, 2015; Zhang and Hyland, 2018; Zheng and Yu, 2018). Just under a third of studies (31.43%) opted for a binary distinction in revision outcomes, although the delineations varied, for example, “incorporated”/“not incorporated” (Shi, 2021), “response”/“no response” (e.g., Lyu and Lai, 2022), and “target-like revision”/“non-target-like revision” (Zheng et al., 2020a). Other studies opted for more fine-grained measures, with six studies (e.g., Han and Xu, 2021; Lira-Gonzales et al., 2021) following the FFRO coding scheme of Han and Hyland (2015), adapted from Ferris (2006), which features five discrete categories of revision (“correct revision”, “incorrect revision”, “deletion”, “substitution”, and “no revision”).

A few studies analysed both form- and content-focused revision operations using the same scheme (e.g., Tian and Zhou, 2020; Shi, 2021), sometimes modifying Han and Hyland’s (2015) framework by adding supplementary categories to account for content-focused revision operations (CFROs), notably “reorganisation” (e.g., Zhang and Hyland, 2018; Zhang, 2020) and “rewriting” (e.g., Zhang and Hyland, 2018; Liu, 2021). Although somewhat crude, these measures have gained wider traction in the literature (e.g., Liu, 2021). Other, less sophisticated measures of revisions targeting CFWF include the binary distinction between “adopted” and “not adopted” (Tian and Zhou, 2020; Choi, 2021; Lyu and Lai, 2022). Only one study, Pearson (2022a), used a coding scheme targeting CFWF alone, incorporating Christiansen and Bloch’s (2016) seven categories of CFROs, which capture the extent to which the student followed the teacher’s instructions and made other, unrequested changes. Frequency counts of revision operations are usually presented in tabular form and are often discussed in context with reference to textual extracts, although these are rarely presented as “before and after WF” comparisons to illustrate claims (e.g., Koltovskaia, 2020; Zhang, 2020; Zheng et al., 2020b).

Contexts and sampling

Contexts

A notable feature of written feedback, and by extension student engagement, is its contextually and culturally bound nature (Fredricks et al., 2004; Price et al., 2011). As such, and consistent with a study’s paradigmatic approach, features of the teaching and learning context (as well as individual learner factors) that mediate the manner and intensity of student engagement are central to generating understandings (Yu and Jiang, 2020; Shi, 2021). Indeed, such features constituted the focal area of a handful of inquiries (e.g., Han, 2017; Pearson, 2022b).

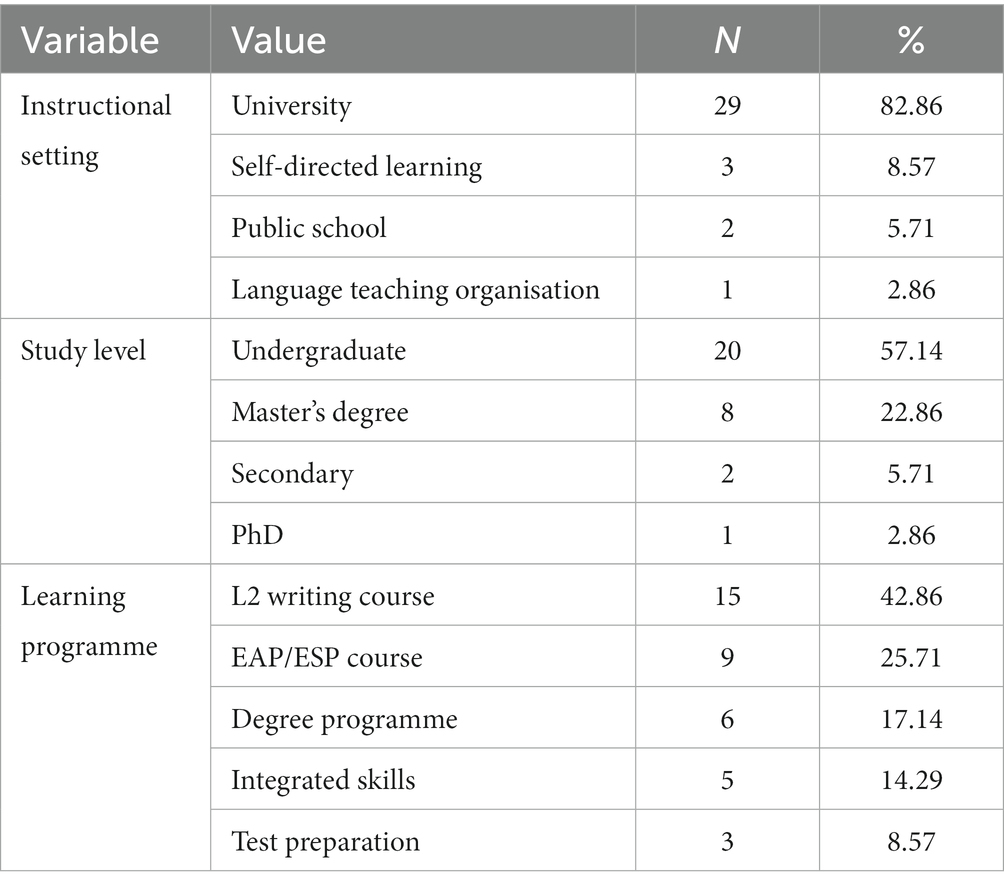

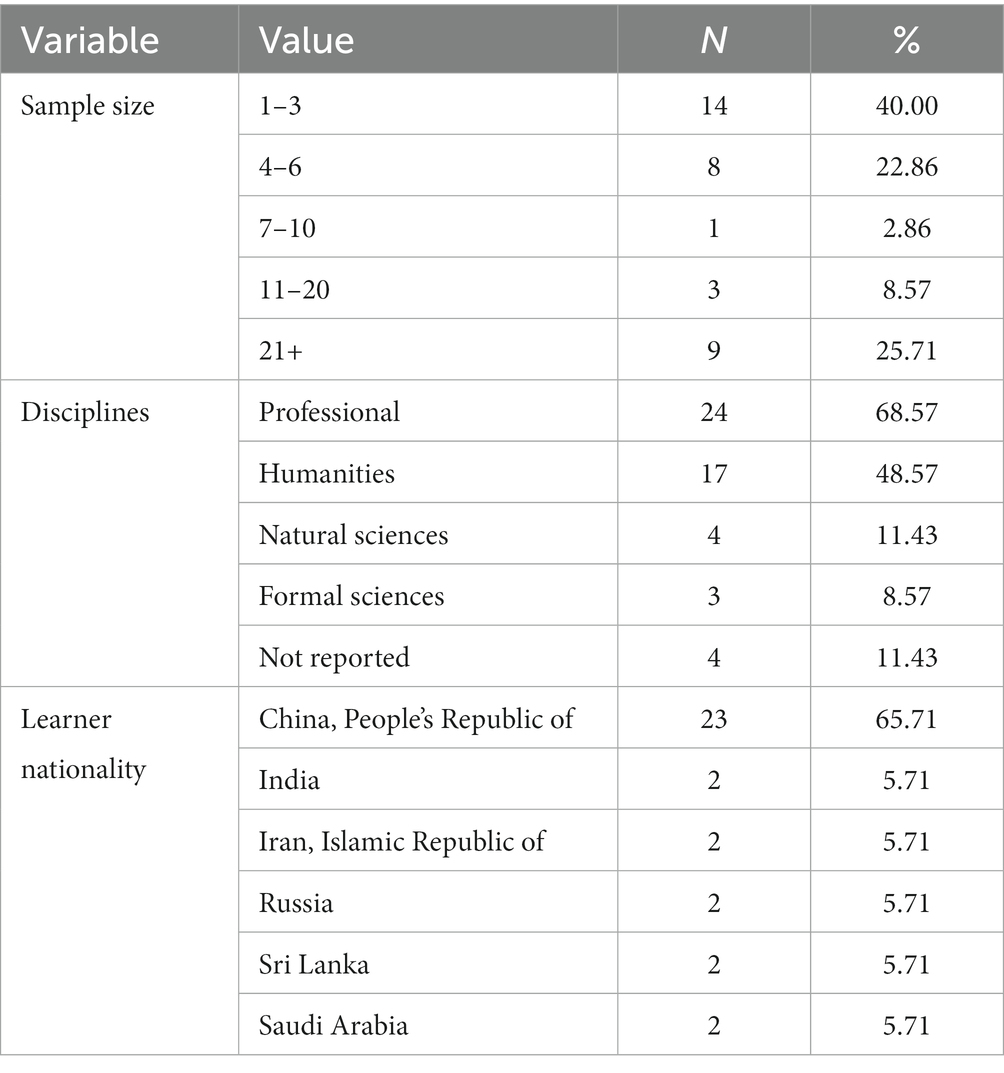

In terms of the contextual features of research, several key trends were apparent across the 35 studies. As indicated in Table 3, all but six studies were situated within an HEI instructional setting (and only two in an Anglophone country context), mostly featuring samples of students on Bachelor’s (57.14%) and Master’s programmes (22.86%), perhaps for reasons of sampling convenience. While it is important to avoid overgeneralising and to acknowledge contextual diversity, if the findings of the current body of research reflect the features of such contexts and learners, it is probable that the existing literature base reflects the engagement of more educationally successful and socioeconomically advantaged individuals, who, through their attachment to an HEI, have wider access to resources that support learning (Bastola, 2020) and are likely to be more familiar with, or even trained in, responding to written feedback. This could mean the literature portrays a more optimistic view of engagement than if more educationally and socioeconomically diverse samples of learners had been studied. However, it is important to recognise the key role feedback literacy plays in engagement (Lee, 2008; Han and Xu, 2021), and that potential gains could be cancelled out by the tendency of tertiary-level feedback to be deeply coded in the rhetorical conventions of expert academic discourse (Williams, 2005).

More research involving adult learners in private language teaching organisations is necessary to confirm the extent to which existing findings transfer to non-academic settings. Given that operationalisations of behavioural engagement highlight the role of external sources of human and non-human input, limited access to library resources and supportive peers may constrain behavioural engagement outside of HEI settings (Man et al., 2021). On the other hand, a lack of resources or structured supervision could promote a more intense form of engagement (Bastola, 2020; Man et al., 2021), as the instructor’s feedback becomes a key conduit of learning (Bastola, 2020; Pearson, 2022a). Additionally, academic and non-academic learners’ writing goals differ, and a lower-stakes setting may provide a lower-risk environment for engagement. Only two studies recruiting young language learners in public school settings could be retrieved (Santanatanon and Chinokul, 2022; Tay and Lam, 2022). Since existing research indicates that some learners modify their engagement to reflect differences between school- and university-based learning (Han, 2017; Han and Hyland, 2019), additional studies at the primary and secondary levels could highlight longitudinal learner development trajectories. Given that one of the goals of engagement research is to identify how to enhance it (Shi, 2021), it would also seem prudent to conduct further experimental or action research, perhaps with young learners, whose engagement may be more malleable through training in feedback response.

Two finer distinctions can be discerned across tertiary-level learning-to-write contexts, with implications for written tasks and WF. First, studies are most commonly situated in L2 English writing (42.86%), EAP/ESP (25.71%), and, to a lesser extent, integrated skills (14.29%) courses, undertaken either as part of students’ main discipline (in the case of English-major participants) or as a supplementary programme (e.g., Han and Hyland, 2015, 2019; Zheng and Yu, 2018; Fan and Xu, 2020; Koltovskaia, 2020). Typically, these constitute process writing environments reflective of Chinese HEIs, involving learners drafting one or more short essays structured around the provision of teacher, peer, and/or AWE feedback. Settings involving product approaches (e.g., school, language test preparation) may not be as conducive to deeper engagement, particularly if revision processes are unfamiliar or not expected (see Pearson, 2022a,b). Second, in several studies, participation in a supplementary English course was noted as mandatory (e.g., Han and Hyland, 2015; Han, 2017; Yu et al., 2018). Some students, particularly those not majoring in English, might fail to see value in the given writing tasks or even in the modules themselves. The (in)authenticity of tasks, the compulsory nature of learning programmes (and, possibly, of feedback response), and the implications of course outcomes are salient mediators of engagement, although these were rarely accounted for in the discussion of findings (e.g., Yu et al., 2018). As such, situating research within students’ main degree programmes (e.g., assignment and dissertation writing, which constituted 17.14% of settings) could generate useful insights for instructors who provide feedback on student writing in Anglophone settings and who may not be specialists in language education or EAP.

Student samples

Table 4 outlines relevant study features as they pertain to students, the primary type of participant in engagement research. Reflecting qualitative or mixed methods approaches in which learners are framed as individuals with distinct perspectives on and responses to feedback, sample sizes of one to six participants were common (62.86%). Notable exceptions included the mixed methods studies of Bastola (2020) and Man et al. (2021), which incorporated larger samples of survey informants (N = 50 and 118, respectively). As such, research tends to focus on capturing the complexity and particularity of learner engagement. Authors should therefore be cautious about generalising findings, a limitation that is often acknowledged in the conclusions of research reports (e.g., Zhang and Hyland, 2018; Lira-Gonzales et al., 2021). Future research could be enhanced by authors reflecting on the transferability of their findings, an important quality criterion of qualitative research (Miles and Huberman, 1994).

Participants were usually recruited purposively (68.57%), often on the basis of a combination of their willingness to participate, language proficiency, experience using WF, or teacher recommendation, which several studies clearly spelled out (e.g., Zhang and Hyland, 2018; Zheng and Yu, 2018; Yu and Jiang, 2020; Zhang, 2020; Zheng et al., 2020c). In seven studies, the approach to participant sampling was not explained, which, given that each of these studies featured a qualitative component, can be considered a limitation. Greater transparency in reporting relevant mediating student factors would help improve quality and allow future researchers to make more explicit connections across the body of literature. This applies both to categorical learner factors (age, gender, and language proficiency) and to more qualitative insights (e.g., feedback literacy, experience of engaging with feedback). Regarding the former, gender was well reported, being stated in 85.71% of studies, followed by age (65.71%) and language proficiency (57.14%). Given that language proficiency is highlighted as a key mediating factor (Qi and Lapkin, 2001; Zheng and Yu, 2018; Fan and Xu, 2020; Tian and Zhou, 2020; Liu, 2021), it is incumbent on authors to provide this information in a meaningful way, perhaps by referencing the instrument used to determine students’ language proficiency levels (e.g., IELTS, TOEFL). Learners’ familiarity with, and experience of, responding to written feedback tended to be ignored in descriptions of samples and contexts, with the exception of pedagogical efforts integrated into the research process (e.g., Fan and Xu, 2020; Choi, 2021). This appears to be a missed opportunity to enrich the thickness of study description.

A notable feature of research into student engagement is that it predominantly samples Chinese learners (65.71%, including learners from Hong Kong and Macau). This reflects the uptake of the multi-dimensional model and its application to written feedback, which have been led by Chinese researchers. As such, the current body of knowledge needs to be interpreted in light of these learners’ shared characteristics. These encompass a tendency to follow teachers’ instructions meticulously, respect for teachers’ authority (Ho and Crookall, 1995), and a preference for knowledge transmission, which may encourage repetition, reviewing, and memorisation (Hu, 2002; Han, 2019). Such characteristics might explain the largely positive perceptions of WF reported (e.g., Fan and Xu, 2020; Zhang, 2020; Cheng and Liu, 2022). Owing to the selectivity of Chinese higher education institutions and the sampling of students on Master’s (Yu et al., 2018) or doctoral programmes (Yu and Jiang, 2020), such learners can be considered academically gifted or successful. It may be assumed that they exhibit more sophisticated engagement than learners in non-tertiary contexts or prospective students who have not yet gained admission, by virtue of having developed or refined strategies and skills to regulate their mental processes, respond to WF, and identify and undertake additional learning to enhance the quality of their writing. Clearly, a wider and more diverse student sample (e.g., in terms of L1, age, language level, and time spent learning the language) should be a priority in order to reflect the nuances and complexity of engagement with respect to individual differences (Zheng et al., 2020a).

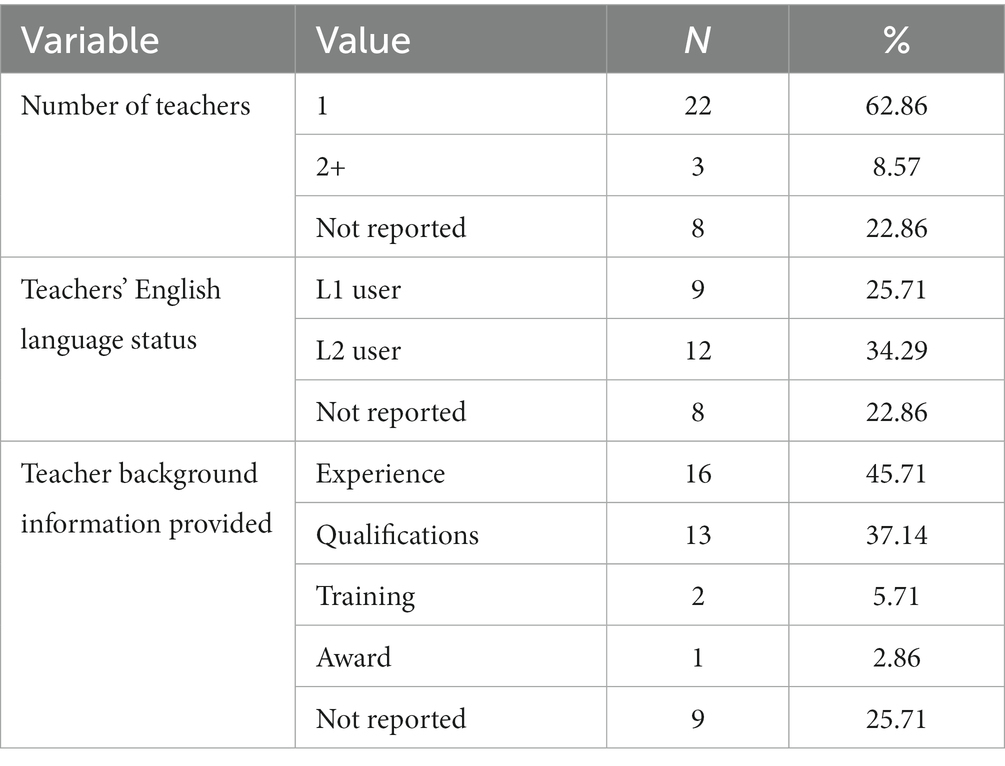

Teacher samples

The teacher (including in the role of supervisor) constituted one of the feedback providers, or the only one, in 74.29% of studies. Typically, the teacher was working within the chosen context and was not a member of the research team (the exceptions being four studies). In only six papers did teacher participation extend beyond the provision of feedback (e.g., Han, 2017; Bastola, 2020; Zhang, 2021; Zhang and Hyland, 2022). In these studies, teachers participated in one or more rounds of semi-structured interviews, although only in Bastola’s (2020) comparative investigation of supervisor/student feedback are the perspectives of feedback providers addressed in significant detail. It is my belief that further research incorporating the teacher as an informant would yield more nuanced understandings of student engagement. Feedback provision is mediated by teachers’ training, experience, values, emotions, beliefs, and interpretations of institutional/programmatic policies relating to written feedback (Diab, 2005; Junqueira and Payant, 2015; Bastola, 2020), while the back-and-forth between teacher and student is underscored by socially constructed interpersonality, not only information transmission (Hyland and Hyland, 2019; Han and Xu, 2021). Teachers’ accounts of how and why items of feedback arose may help to elaborate more fully the nature, intensity, and outcomes of student engagement. Likewise, understanding teacher/student social relationships can yield insights into students’ affective reactions, the extent to which they feel comfortable providing feedback on feedback, and their investment in undertaking textual revisions, which have yet to be comprehensively explored.

With the locus of the teacher’s role centred on the provision of written feedback in a classroom learning context, authors usually devoted a paragraph of methodological description to explicating teachers’ background characteristics. Table 5 provides a summary of the key features synthesised across the studies. It can be seen that, commensurate with qualitative approaches featuring small sample sizes, most studies (62.86%) incorporated one teacher as written feedback provider. Perhaps as a claim of practitioner expertise or to enhance the richness of description, a range of relevant background characteristics was presented, most frequently the teacher’s experience measured in years (45.71%) and qualifications (37.14%), and less often relevant training undertaken (5.71%). Furthermore, while two studies were situated in an L1 context (Koltovskaia, 2020; Koltovskaia and Mahapatra, 2022), L1-speaking teachers constituted slightly fewer participants recruited onto engagement studies (N = 9) relative to L2 users (N = 12). Han’s (2017) exploration of teachers’ mediating beliefs was one of only three studies to sample more than one teacher, and was the only one to recruit both L1 and L2 teachers. Future comparative research involving L1 and L2 teachers may help shed light on how engagement varies in light of teacher practices or student expectations (e.g., Han, 2017; Tian and Zhou, 2020; Choi, 2021).

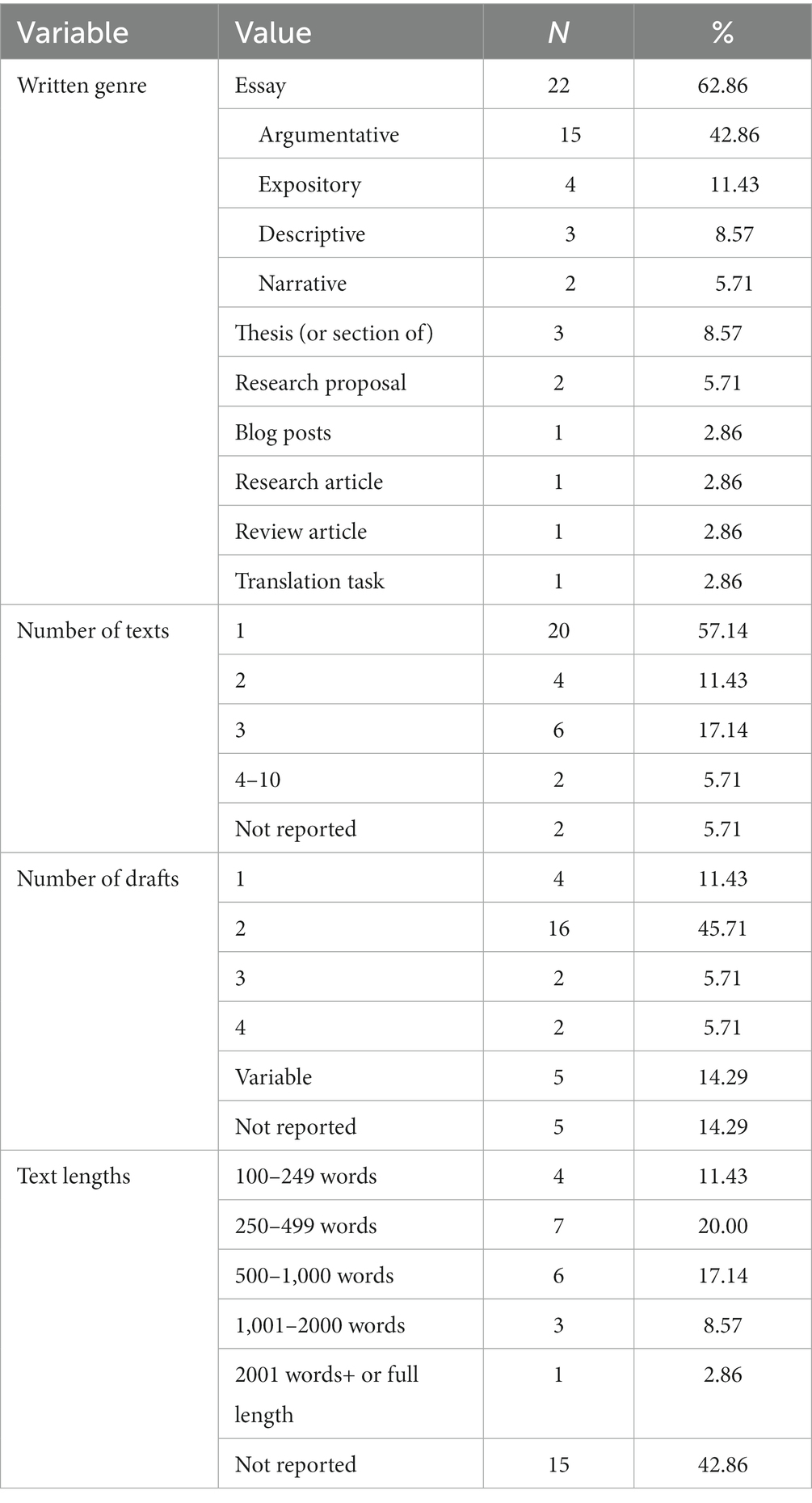

Texts

Clearly, the nature of the text, including the purposes for writing, the intended audience, the expectation (or lack thereof) of revision, and the implications of writing outcomes, plays a substantial mediating role in how students engage with written feedback. Given the preponderance of studies situated in tertiary-level settings, it is not surprising that engagement with written feedback on academic texts has tended to preoccupy researchers. As shown in Table 6, academic essays constituted the most common text type (62.86%), particularly of the argumentative (42.86%) and, to a lesser extent, expository (11.43%) rhetorical tradition. Such essays were characteristically short, usually between 200 and 1,000 words, although a concerning number of studies (42.86%) omitted information about text length. The preponderance of such texts likely reflects the importance attributed to argumentative writing in the academy (Rapanta et al., 2013) and the frequency with which it constitutes assessed writing tasks on EFL/ESL programmes there (e.g., Han and Hyland, 2019; Zheng et al., 2020a; Shi, 2021), rather than the salience of argumentative genres to engagement per se, although this is not always the case (e.g., Farsani and Aghamohammadi, 2021; Pearson, 2022b).

Among research that featured essays, it was found that 42.86% of studies investigated feedback provided on texts written as part of normal course requirements. In several instances, performance in the essay task contributed to students’ overall programme outcomes (e.g., Han, 2017; Choi, 2021; Zhang and Hyland, 2022), heightening the expectation for students to engage actively. A further 25.71% of studies incorporated student writing that was (or in some cases, could be interpreted as being) elicited for the purposes of research (e.g., Saeli and Cheng, 2021; Pearson, 2022b). Here, student motivation to engage seemed to stem from more intrinsic sources, often the achievement of short- and medium-term educational and/or career goals. Beyond essays, a smaller group of studies explored engagement with feedback on authentic high-stakes writing, such as texts that mirrored or contributed to students’ main programme award (e.g., Yu et al., 2018; Koltovskaia, 2020; Zheng et al., 2020b), research proposals (e.g., Mohammed and Al-Jaberi, 2021; Koltovskaia and Mahapatra, 2022), or early career research article writing (e.g., Yu and Jiang, 2020). It was found that WF on such writing served prominent content-focused purposes (e.g., Yu et al., 2018; Yu and Jiang, 2020), although the situating of Zheng et al. (2020c) in a Chinese-to-English translation course meant that the WF it examined was necessarily form-focused. Given the individual nature of student supervision (Bastola, 2020), further inquiries into engagement with feedback on authentic longer-form academic writing, possibly inviting the perspectives of the supervisor, would provide more nuanced insights into the role of interpersonality in feedback engagement.

As indicated by the number of texts (and drafts) students were required to write, much research into engagement with written feedback is cross-sectional. Over half of the studies (57.14%) required students to produce only one text, while students were required to write only two drafts in 45.71% of cases. Such approaches capture only a cross-section of understandings and are necessarily limited in the insights they provide. As Barnes (1992) stresses, “we do not one moment fail to understand something and the next moment grasp it entirely” (p. 123). Rather, learning unfolds and emerges over time through learners constructing their own understandings via an interpersonal feedback dialogue with a facilitator in a situated context (Boud and Molloy, 2013). Key to the temporal dimension of knowledge construction is the dynamic role of learner agency (Mercer, 2012), which constantly fluctuates and adapts to contextual changes while remaining intimately bound to perceptions of past experiences and a more stable, longer-term sense of agency. A “less and more often” approach to data collection (e.g., Mercer, 2012), incorporating multiple opportunities to explore students’ multi-dimensional feedback responses, better reflects engagement as a malleable, longer-term process (Fredricks et al., 2004). Designs that feature three or four rounds of multi-draft composition writing (with post-feedback written outcomes clearly conveyed) would both lower the stakes of feedback response and allow learners to get a better sense of what successful engagement encompasses. Methodologically, a longitudinal approach would lower the cognitive demands of interviews and help students become more acquainted with the potentially unfamiliar and taxing process of orally reporting, or thinking aloud about, their cognitive and behavioural responses, for which learners benefit from training and practice. Alternatively, designs that give students choices about how often they write (e.g., Zhang, 2017; Zhang and Hyland, 2018, 2022) are valuable, since both the number of drafts and the number of submissions are indicators of engagement.

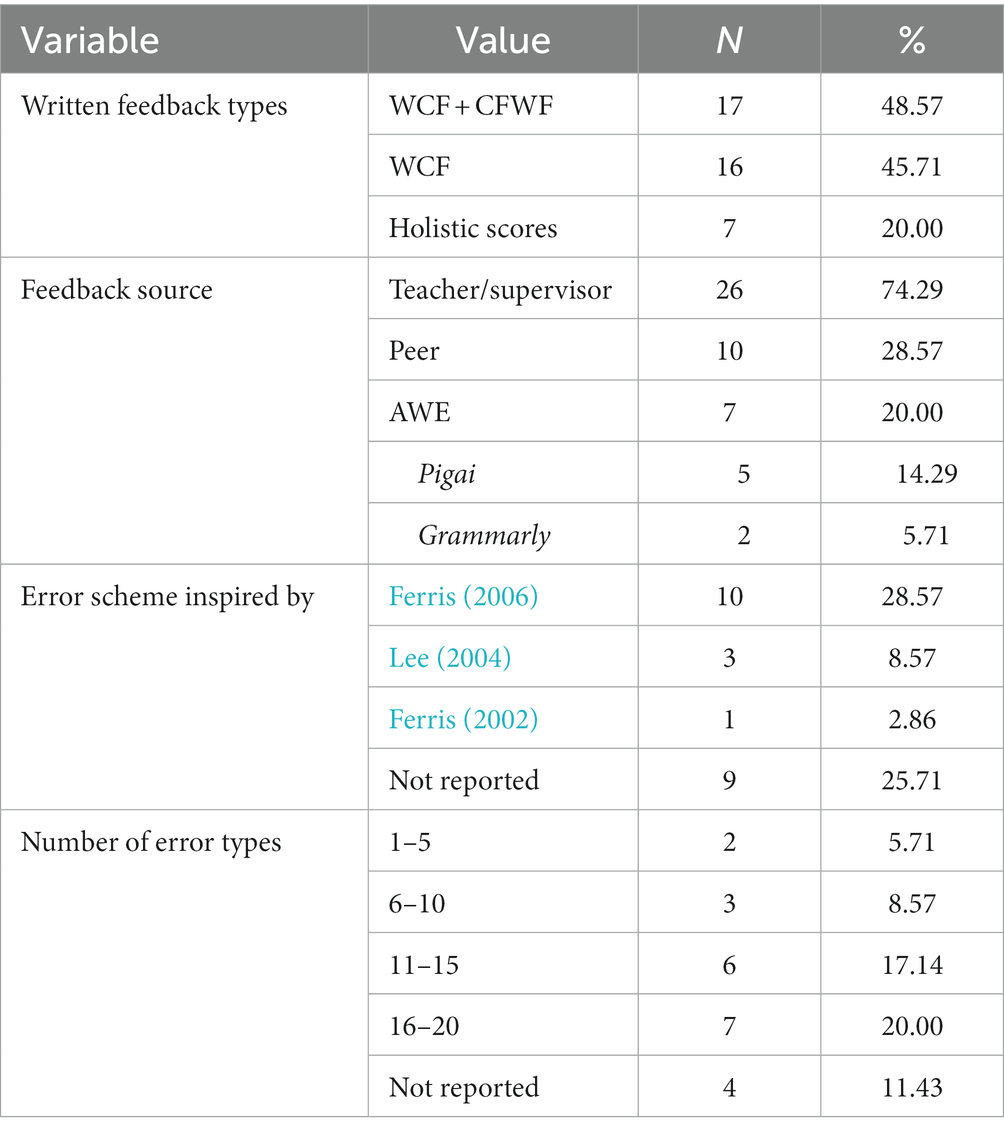

Written feedback

Table 7 outlines the characteristics of written feedback among studies of student engagement. Although engagement was originally operationalised in terms of WCF provision (see Han and Hyland, 2015), the most common study pattern investigated student engagement with both CFWF and FFWF (form-focused written feedback) (48.57%, e.g., Zhang and Hyland, 2018; Liu, 2021; Saeli and Cheng, 2021). This perhaps reflects classroom realities, where the goals and purposes of WF extend beyond the treatment of error (Junqueira and Payant, 2015). However, in most cases, FFWF received greater attention in both feedback provision and reporting, as evident from the number of feedback points and categories of revision operations (e.g., Yu et al., 2018; Fan and Xu, 2020; Tian and Zhou, 2020).

While a handful of studies featured a predominant focus on CFWF (e.g., Yu and Jiang, 2020; Choi, 2021; Pearson, 2022a), it was evident that writers in these contexts also received language-related feedback comments. Also of note are a handful of studies in which students received some form of summative score for their writing along with corrections and/or comments, for example, in the test preparation contexts of Pearson (2022a,b) and in studies involving AWE (although scores were not always addressed). Marks and grades on high-stakes writing are well known to provoke powerful affective responses (Carless and Boud, 2018), a phenomenon visible in a few studies (e.g., Zheng et al., 2020c; Pearson, 2022a,b). Nevertheless, their mediating role in the behavioural and cognitive dimensions of engagement has yet to be properly explored. Researchers would also do well to account for the complexity of feedback delivery by addressing responses via ad hoc channels, such as email interactions and unplanned conferences or discussions (e.g., Han and Hyland, 2015; Zheng et al., 2020b).

Written feedback provided by an instructor (including a research supervisor), unsurprisingly, constituted the focal area of much research (74.29%). To a lesser extent, authors have also examined engagement with peer (28.57%, e.g., Yu et al., 2018; Saeli and Cheng, 2021) and AWE feedback (20%, e.g., Zhang, 2017; Koltovskaia, 2020). Peer feedback in English courses (e.g., Farsani and Aghamohammadi, 2021; Shi, 2021) has been studied more extensively than peer feedback within students’ actual majors (see Zheng and Yu, 2018), while only one study (Yu and Jiang, 2020) has focused on peer review feedback in high-stakes early career academic publishing. Recent developments in AWE have prompted a flurry of inquiries into student engagement, with a particular interest in the Chinese application Pigai (N = 5) and, to a lesser extent, Grammarly (N = 2). Research comparing student engagement across multiple feedback providers (e.g., Zhang and Hyland, 2018, 2022; Tian and Zhou, 2020; Shi, 2021) is still relatively rare, although it represents an important avenue for future inquiry in light of the mediating role of interpersonality, WF individualisation and immediacy, and the provider’s authority and emotional support in WF provision.

In coding students’ lexicogrammatical errors, authors tended to adopt the influential taxonomy of Ferris (2006) (28.57%) and, to a lesser extent, that of Lee (2004) (8.57%), often with modifications (e.g., Fan and Xu, 2020; Zhang, 2020; Lira-Gonzales et al., 2021). While the number of studies that did not report the coding of errors may seem concerningly high, it was apparent that categories of errors were not always a study focus (e.g., Saeli and Cheng, 2021; Lyu and Lai, 2022). Among studies (especially WCF-focused ones) that did investigate behavioural engagement with varying error types (e.g., Han and Hyland, 2015; Koltovskaia, 2020), the number of analytical codes ranged from 17 error types to just four (Shi, 2021) or five (Tian and Zhou, 2020), making cross-study comparisons of behavioural engagement difficult. In 42.86% of papers, a screenshot or photo extract of the feedback was provided, which usefully demonstrated how it was conveyed to students (e.g., Zheng et al., 2020b; Mohammed and Al-Jaberi, 2021; Lyu and Lai, 2022).

Another feature of study quality was the transparency with which the approach of the feedback provider was conveyed. Clearly, teacher (and peer) written feedback provision is a highly interpersonal process (Hyland and Hyland, 2019), with engagement being prominently mediated by the (potentially idiosyncratic) content and delivery choices of the human provider. Over half of the studies (51.43%) enriched their description by explicating the general approach of the teacher provider, sometimes contextualised within their wider second language writing instructional practice (e.g., Han, 2017; Han and Hyland, 2019; Zheng et al., 2020a; Choi, 2021). For AWE, this included information about feedback content and delivery rather than explanations of how the underlying system worked (e.g., Koltovskaia, 2020; Zhang, 2020). A less common reporting strategy was conveying text-analytic information about feedback provision, i.e., a breakdown of WF points by feedback form or by focal area (both 28.57%). Another notable contextual feature that mediates engagement is student feedback literacy (Carless and Boud, 2018; Han and Xu, 2021). Yet 71.43% of studies did not explain whether students had received any prior training in responding to WF, what their prior experiences of WF response were, or what instructions they were given; where such information was reported, it usually took the form of explanations of peer feedback training or written instructions. Student readiness to engage with WF is therefore a point worth clarifying in future research.

Conclusion

Student engagement is considered a crucial factor mediating the learning outcomes of written feedback on second language writing (Han and Hyland, 2015; Han, 2017; Zhang and Hyland, 2018), making it an exciting and important research concern within L2 writing. The multi-dimensional framework, comprising affective, behavioural, and cognitive dimensions, originally developed by Ellis (2010) and drawing inspiration from Fredricks et al. (2004), has gained traction in empirical research in recent years, particularly among scholars investigating L2 learners’ engagement with (mostly) WCF provided by teachers on short compositions set on English skills, EAP, and ESP courses in tertiary-level contexts. As evidenced by the rapidly increasing number of studies, some of which are well cited on indices such as the Web of Science and Scopus, the multi-dimensional model constitutes a robust framework that enables researchers to generate rich and comprehensive datasets manageably, accounting for the well-recognised complexity of the phenomenon (Han and Hyland, 2015). Nevertheless, it is recognised that learner qualities (notably beliefs and feedback literacy) and contextual factors (the teacher-student relationship, WF content and delivery) mediate student engagement (Han, 2017; Zhang and Hyland, 2018; Han and Xu, 2021), making for a complex form of inquiry. For reasons that are not clear, the model has neither been widely adopted outside of China nor been subjected to rigorous discussion, unlike engagement research beyond language education (e.g., Eccles, 2016).

While operationalisations of student engagement were heterogeneous, a number of patterns emerged across the 35 included studies. The majority of researchers (although by no means all) operationalised affective engagement as student affect and/or attitudes; behavioural engagement as revision operations and/or observed or self-reported revision and learning strategies; and cognitive engagement as students’ awareness and/or cognitive and metacognitive operations. Research designs encompassing mixed methods case studies drawing on a combination of student texts (especially in the form of quantitative text-analytic descriptions of feedback forms and focal areas, and of student revisions) and oral reports (usually semi-structured or stimulated recall interviews) prevailed (82.86%). The triangulation of text-analytic descriptions with verbal reports strengthens the validity of insights into the quantity and quality of learners’ processing and responses (Han and Hyland, 2015). Since not acting on WF may indicate either growing writer autonomy or helplessness, it would be perilous to rely on revision operations alone to draw conclusions about students’ understandings. Similarly, textual data are essential to support claims about the extent to which learners can act on their purported understandings. Only 17.14% of studies adopted solely qualitative approaches, either to focus on students’ self-reported mental and behavioural states and processes rather than revision operations, or perhaps because ethical barriers prohibited publishing extracts of student texts or written feedback. The lack of longitudinal designs featuring multiple writing tasks limits the conclusions that can be drawn about the relationship between engagement and WF uptake or about the malleability of engagement.

Given the preponderance of grammar correction in L2 classrooms and the preoccupation with WCF in second language writing research (see Lee, 2004; Ferris, 2006, 2012), it is unsurprising that all included papers addressed student engagement with corrective feedback, with the teacher being the most frequent feedback provider. Although there is increasing interest in the potential of peer feedback (28.57% of studies) and AWE feedback (20%), only five studies explicitly contrasted engagement across multiple feedback providers. Reflecting the complex, messy realities of L2 writing classrooms, 48.57% of studies incorporated CFWF, although schemes for analysing revision operations remain rather crude and often neglect the effect of a revision on the reader. Studies also adopted a diverse range of error schemes and approaches to operationalising revisions, which may hinder the synthesis of existing findings. Perhaps for reasons of manageability, only 17.14% of studies recruited the teacher as a research informant. Yet the teacher is a crucial participant in the feedback process and may be able to shed light on student engagement, particularly from the perspective of interpersonality, since the teacher-student relationship mediates student engagement (Hyland, 2019). It is hoped that the issues discussed in this review will renew theoretical debate on student engagement with written feedback on L2 writing, with a view to enhancing the breadth, diversity, and robustness of the body of literature.

Author contributions

WP: Conceptualization, Formal analysis, Investigation, Methodology, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Barnes, D. (1992). “The role of talk in learning” in Thinking voices: the work of the national oracy project. ed. K. Norman (London: Hodder & Stoughton), 123–128.

*Bastola, M. N. (2020). Engagement and challenges in supervisory feedback: supervisors’ and students’ perceptions. RELC J. 53, 56–70. doi: 10.1177/0033688220912547

Bitchener, J. (2018). “Teacher written feedback” in The TESOL encyclopedia of English language teaching. ed. J. I. Liontas (New York: John Wiley & Sons), 1–7.

Boekaerts, M. (2016). Engagement as an inherent aspect of the learning process. Learn. Instr. 43, 76–83. doi: 10.1016/j.learninstruc.2016.02.001

Boud, D., and Molloy, E. (2013). Rethinking models of feedback for learning: the challenge of design. Assess. Eval. High. Educ. 38, 698–712. doi: 10.1080/02602938.2012.691462

Carless, D., and Boud, D. (2018). The development of student feedback literacy: enabling uptake of feedback. Assess. Eval. High. Educ. 43, 1315–1325. doi: 10.1080/02602938.2018.1463354

*Cheng, X., and Liu, Y. (2022). Student engagement with teacher written feedback: insights from low-proficiency and high-proficiency L2 learners. System 109:102880. doi: 10.1016/j.system.2022.102880

*Choi, J. (2021). L2 writers’ engagement and needs for teacher written feedback: a case of a Korean college English composition class. Korean J. Engl. Lang. Linguist. 21, 551–580. doi: 10.15738/kjell.21.202106.551

Chong, S. W. (2021). Reconsidering student feedback literacy from an ecological perspective. Assess. Eval. High. Educ. 46, 92–104. doi: 10.1080/02602938.2020.1730765

Christiansen, M. S., and Bloch, J. (2016). Papers are never finished, just abandoned: the role of written teacher comments in the revision process. J. Response Writ. 2, 6–42.

Diab, R. L. (2005). Teachers’ and students’ beliefs about responding to ESL writing: a case study. TESL Can. J. 23, 28–43. doi: 10.18806/tesl.v23i1.76

Eccles, J. S. (2016). Engagement: where to next? Learn. Instr. 43, 71–75. doi: 10.1016/j.learninstruc.2016.02.003

Ellis, R. (2010). Epilogue: a framework for investigating oral and written corrective feedback. Stud. Second. Lang. Acquis. 32, 335–349. doi: 10.1017/S0272263109990544

*Fan, Y., and Xu, J. (2020). Exploring student engagement with peer feedback on L2 writing. J. Second. Lang. Writ. 50:100775. doi: 10.1016/j.jslw.2020.100775

*Farsani, M. A., and Aghamohammadi, N. (2021). Exploring students’ engagement with peer- and teacher written feedback in an EFL writing course: a multiple case study of Iranian graduate learners. MEXTESOL J. 45, 1–17.

Ferris, D. R. (2002). Treatment of error in second language student writing. Ann Arbor, MI: University of Michigan Press.

Ferris, D. R. (2006). “Does error feedback help student writers? New evidence on the short- and long-term effects of written error correction” in Feedback in second language writing: contexts and issues. eds. K. Hyland and F. Hyland (Cambridge: Cambridge University Press), 81–104.

Ferris, D. R. (2012). Written corrective feedback in second language acquisition and writing studies. Lang. Teach. 45, 446–459. doi: 10.1017/S0261444812000250

Ferris, D. R., Liu, H., Sinha, A., and Senna, M. (2013). Written corrective feedback for individual L2 writers. J. Second. Lang. Writ. 22, 307–329. doi: 10.1016/j.jslw.2012.09.009

Fredricks, J. A., Blumenfeld, P. C., and Paris, A. H. (2004). School engagement: potential of the concept, state of the evidence. Rev. Educ. Res. 74, 59–109. doi: 10.3102/00346543074001059

Goldstein, L. M. (2004). Questions and answers about teacher written commentary and student revision: teachers and students working together. J. Second. Lang. Writ. 13, 63–80. doi: 10.1016/j.jslw.2004.04.006

*Han, Y. (2017). Mediating and being mediated: learner beliefs and learner engagement with written corrective feedback. System 69, 133–142. doi: 10.1016/j.system.2017.07.003

Han, Y. (2019). Written corrective feedback from an ecological perspective: the interaction between the context and individual learners. System 80, 288–303. doi: 10.1016/j.system.2018.12.009

*Han, Y., and Hyland, F. (2015). Exploring learner engagement with written corrective feedback in a Chinese tertiary EFL classroom. J. Second. Lang. Writ. 30, 31–44. doi: 10.1016/j.jslw.2015.08.002

*Han, Y., and Hyland, F. (2019). “Learner engagement with written feedback: a sociocognitive perspective” in Feedback in second language writing: contexts and issues. eds. K. Hyland and F. Hyland. 2nd Edn (Cambridge: Cambridge University Press), 247–264.

*Han, Y., and Xu, Y. (2021). Student feedback literacy and engagement with feedback: a case study of Chinese undergraduate students. Teach. High. Educ. 26, 181–196. doi: 10.1080/13562517.2019.1648410

Hiver, P., Al-Hoorie, A. H., Vitta, J. P., and Wu, J. (2021). Engagement in language learning: a systematic review of 20 years of research methods and definitions. Lang. Teach. Res. 28, 201–230. doi: 10.1177/13621688211001289

Ho, J., and Crookall, D. (1995). Breaking with Chinese cultural traditions: learner autonomy in English language teaching. System 23, 235–243. doi: 10.1016/0346-251X(95)00011-8

Hu, G. (2002). Potential cultural resistance to pedagogical imports: the case of communicative language teaching in China. Lang. Cult. Curric. 15, 93–105. doi: 10.1080/07908310208666636

Hyland, F. (2003). Focusing on form: student engagement with teacher feedback. System 31, 217–230. doi: 10.1016/S0346-251X(03)00021-6

Hyland, K. (2019). “What messages do students take from teacher feedback?” in Feedback in second language writing: contexts and issues. eds. K. Hyland and F. Hyland (Cambridge: Cambridge University Press), 265–284. doi: 10.1017/9781108635547.016

Hyland, K., and Hyland, F. (2019). “Interpersonality and teacher-written feedback” in Feedback in second language writing: contexts and issues. eds. K. Hyland and F. Hyland (Cambridge: Cambridge University Press). doi: 10.1017/9781108635547.011

*Jin, X., Jiang, Q., Xiong, W., Feng, Y., and Zhao, W. (2022). Effects of student engagement in peer feedback on writing performance in higher education. Interact. Learn. Environ. 1–16. doi: 10.1080/10494820.2022.2081209

Junqueira, L., and Payant, C. (2015). “I just want to do it right, but it’s so hard”: a novice teacher’s written feedback beliefs and practices. J. Second. Lang. Writ. 27, 19–36. doi: 10.1016/j.jslw.2014.11.001

*Koltovskaia, S. (2020). Student engagement with automated written corrective feedback (AWCF) provided by Grammarly: a multiple case study. Assess. Writ. 44:100450. doi: 10.1016/j.asw.2020.100450

*Koltovskaia, S., and Mahapatra, S. (2022). Student engagement with computer-mediated teacher written corrective feedback: a case study. JALT CALL J. 18, 286–315. doi: 10.29140/jaltcall.v18n2.519

Lee, I. (2004). Error correction in L2 secondary writing classrooms: the case of Hong Kong. J. Second. Lang. Writ. 13, 285–312. doi: 10.1016/j.jslw.2004.08.001

Lee, I. (2008). Understanding teachers’ written feedback practices in Hong Kong secondary classrooms. J. Second. Lang. Writ. 17, 69–85. doi: 10.1016/j.jslw.2007.10.001

*Lira-Gonzales, M.-L., Nassaji, H., and Chao Chao, K.-W. (2021). Student engagement with teacher written corrective feedback in a French as a foreign language classroom. J. Response Writ. 7, 37–73.

*Liu, Y. (2021). Understanding how Chinese university students engage with teacher written feedback in an EFL context: a multiple case study. Lang. Teach. Res. Q. 25, 84–107. doi: 10.32038/ltrq.2021.25.05

Liu, Q., and Brown, D. (2015). Methodological synthesis of research on the effectiveness of corrective feedback in L2 writing. J. Second. Lang. Writ. 30, 66–81. doi: 10.1016/j.jslw.2015.08.011

*Lyu, B., and Lai, C. (2022). Analysing learner engagement with native speaker feedback on an educational social networking site: an ecological perspective. Comput. Assist. Lang. Learn. 1–35. doi: 10.1080/09588221.2022.2030364

Ma, J. J. (2019). “L2 students’ engagement with written corrective feedback” in The TESOL encyclopedia of English language teaching. eds. J. I. Liontas, TESOL International Association, and M. Delli Carpini (New York: John Wiley & Sons).

Mahfoodh, O. H. A. (2017). “I feel disappointed”: EFL university students’ emotional responses towards teacher written feedback. Assess. Writ. 31, 53–72. doi: 10.1016/j.asw.2016.07.001

*Man, D., Chau, M. H., and Kong, B. (2021). Promoting student engagement with teacher feedback through rebuttal writing. Educ. Psychol. 41, 883–901. doi: 10.1080/01443410.2020.1746238

Miles, M. B., and Huberman, A. M. (1994). Qualitative data analysis: a sourcebook of new methods. 2nd Edn. Thousand Oaks, CA: SAGE Publications.

*Mohammed, M. A. S., and Al-Jaberi, M. A. (2021). Google Docs or Microsoft Word? Master’s students’ engagement with instructor written feedback on academic writing in a cross-cultural setting. Comput. Compos. 62:102672. doi: 10.1016/j.compcom.2021.102672

Page, M. J., McKenzie, J. E., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., et al. (2021). The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. The BMJ 372, 1–9. doi: 10.1136/bmj.n71

*Pearson, W. S. (2022a). Student engagement with teacher written feedback on rehearsal essays undertaken in preparation for IELTS. SAGE Open 12:215824402210798. doi: 10.1177/21582440221079842

*Pearson, W. S. (2022b). The mediating effects of student beliefs on engagement with written feedback in preparation for high-stakes English writing assessment. Assess. Writ. 52:100611. doi: 10.1016/j.asw.2022.100611

Plonsky, L. (2013). Study quality in SLA: An assessment of designs, analyses, and reporting practices in quantitative L2 research. Stud. Second. Lang. Acquis. 35, 655–687. doi: 10.1017/S0272263113000399

Plonsky, L., and Gass, S. (2011). Quantitative research methods, study quality, and outcomes: the case of interaction research. Lang. Learn. 61, 325–366. doi: 10.1111/j.1467-9922.2011.00640.x

Price, M., Handley, K., and Millar, J. (2011). Feedback: focusing attention on engagement. Stud. High. Educ. 36, 879–896. doi: 10.1080/03075079.2010.483513

Qi, D. S., and Lapkin, S. (2001). Exploring the role of noticing in a three-stage second language writing task. J. Second. Lang. Writ. 10, 277–303. doi: 10.1016/S1060-3743(01)00046-7

Ranalli, J. (2021). L2 student engagement with automated feedback on writing: potential for learning and issues of trust. J. Second. Lang. Writ. 52:100816. doi: 10.1016/j.jslw.2021.100816

Rapanta, C., Garcia-Mila, M., and Gilabert, S. (2013). What is meant by argumentative competence? An integrative review of methods of analysis and assessment in education. Rev. Educ. Res. 83, 483–520. doi: 10.3102/0034654313487606

Sachs, R., and Polio, C. (2007). Learners’ uses of two types of written feedback on a L2 writing revision task. Stud. Second. Lang. Acquis. 29, 67–100. doi: 10.1017/S0272263107070039

*Saeli, H., and Cheng, A. (2021). Peer feedback, learners’ engagement, and L2 writing development: the case of a test-preparation class. TESL-EJ 25, 1–18.

*Santanatanon, T., and Chinokul, S. (2022). Exploring and analysis of student engagement in English writing: grammar accuracy based on teacher written corrective feedback. Pasaa 63, 35–65.

Shen, R., and Chong, S. W. (2022). Learner engagement with written corrective feedback in ESL and EFL contexts: a qualitative research synthesis using a perception-based framework. Assess. Eval. High. Educ. 48, 276–290. doi: 10.1080/02602938.2022.2072468

*Shi, Y. (2021). Exploring learner engagement with multiple sources of feedback on L2 writing across genres. Front. Psychol. 12:758867. doi: 10.3389/fpsyg.2021.758867

Simard, D., Guénette, D., and Bergeron, A. (2015). L2 learners’ interpretation and understanding of written corrective feedback: insights from their metalinguistic reflections. Lang. Aware. 24, 233–254. doi: 10.1080/09658416.2015.1076432

Storch, N., and Wigglesworth, G. (2010). Learners’ processing, uptake, and retention of corrective feedback on writing: case studies. Stud. Second. Lang. Acquis. 32, 303–334. doi: 10.1017/S0272263109990532

*Tay, H. Y., and Lam, K. W. L. (2022). Students’ engagement across a typology of teacher feedback practices. Educ. Res. Policy Prac. 21, 427–445. doi: 10.1007/s10671-022-09315-2