Abstract

Many mating signals consist of multimodal components that need decoding by several sensory modalities on the receiver's side. For methodological and conceptual reasons, the communicative functions of these signals are often investigated only one at a time. Likewise, variation in single signal traits is frequently correlated by researchers with senders' quality or receivers' behavioral responses. Consequently, the two classic and still dominant hypotheses regarding the communicative meaning of multimodal mating signals postulate that different components either serve as back-up messages or provide multiple meanings. Here we discuss how this conceptual dichotomy might have hampered a more integrative, perception-encompassing understanding of multimodal communication: neither the multiple-message nor the back-up signal hypothesis addresses the possibility that multimodal signals are integrated neurally into one percept. Therefore, when studying multimodal mating signals, we should be aware that they can give rise to multimodal percepts. This means that receivers can gain access to additional information that is inherent only in combined signal components (“the whole is something different than the sum of its parts”). We review the evidence for the importance of multimodal percepts and outline potential avenues for discovery of multimodal percepts in animal communication.

Multimodality is a Characteristic of Many Mating Signals

To attract mates, many animals simultaneously signal in more than one modality. Signals with components in more than one modality are multimodal signals (for glossary see Box 1). Multimodal signals are taxonomically widespread: flies court with a display that combines visual, acoustic, vibratory, and chemical signal components. Frog calls are often accompanied by visually conspicuous vocal sac movements and/or water surface vibrations (Figure 1A). In both these examples, signal variants in single vs. combined modalities result in different behavioral reactions of receivers (Narins et al., 2005; Bretman et al., 2011). A closer look at other taxonomic groups shows more examples: many species of birds show complex, rhythmic visual displays during singing (Williams, 2001; Dalziell et al., 2013; Ullrich et al., 2016), fish grunt and quiver (Estramil et al., 2014; de Jong et al., 2018), spiders and grasshoppers have visual-vibratory courtship displays (Stafstrom and Hebets, 2013; Kozak and Uetz, 2016; Vedenina and Shestakov, 2018), and some bats sing songs while fanning odors from a wing-pouch toward their intended mates (Voigt et al., 2008). Although multimodal mating signals are common, the single modalities are mostly studied separately (often owing to the technical specializations required to conduct the research). Consequently, descriptions, analyses, and experimental tests of the form and function of animal mating signals have mostly been unimodal. This changed in the 1990s when behavioral ecologists started to draw attention to multi-component and multimodal signaling (Møller and Pomiankowski, 1993; Partan and Marler, 1999; Candolin, 2003) and to how (multimodal) receiver psychology might in itself exert selective pressures on signal evolution (Rowe, 1999). Experimental studies in diverse fields, e.g., aposematic signaling (Rowe and Guilford, 1996), started to investigate how multimodal signals might be integrated by receivers. The research field on mating signals conceptually took a different direction by focusing more on signal content (discussed in Hebets et al., 2016). Perhaps owing to the field's strong focus on function rather than behavioral mechanisms, influential reviews at the time (Møller and Pomiankowski, 1993; Johnstone, 1996; Candolin, 2003) centered on two signal-content hypotheses:

Multimodal signals are backup signals—the same message (e.g., species identity) is given in multiple sensory modalities and if one channel is blocked, a potential receiver can still receive the intended message.

Multimodal signals convey multiple messages—simultaneously emitted signal components in multiple modalities contain different information content (e.g., one component conveys species identity and the other the intention to mate).

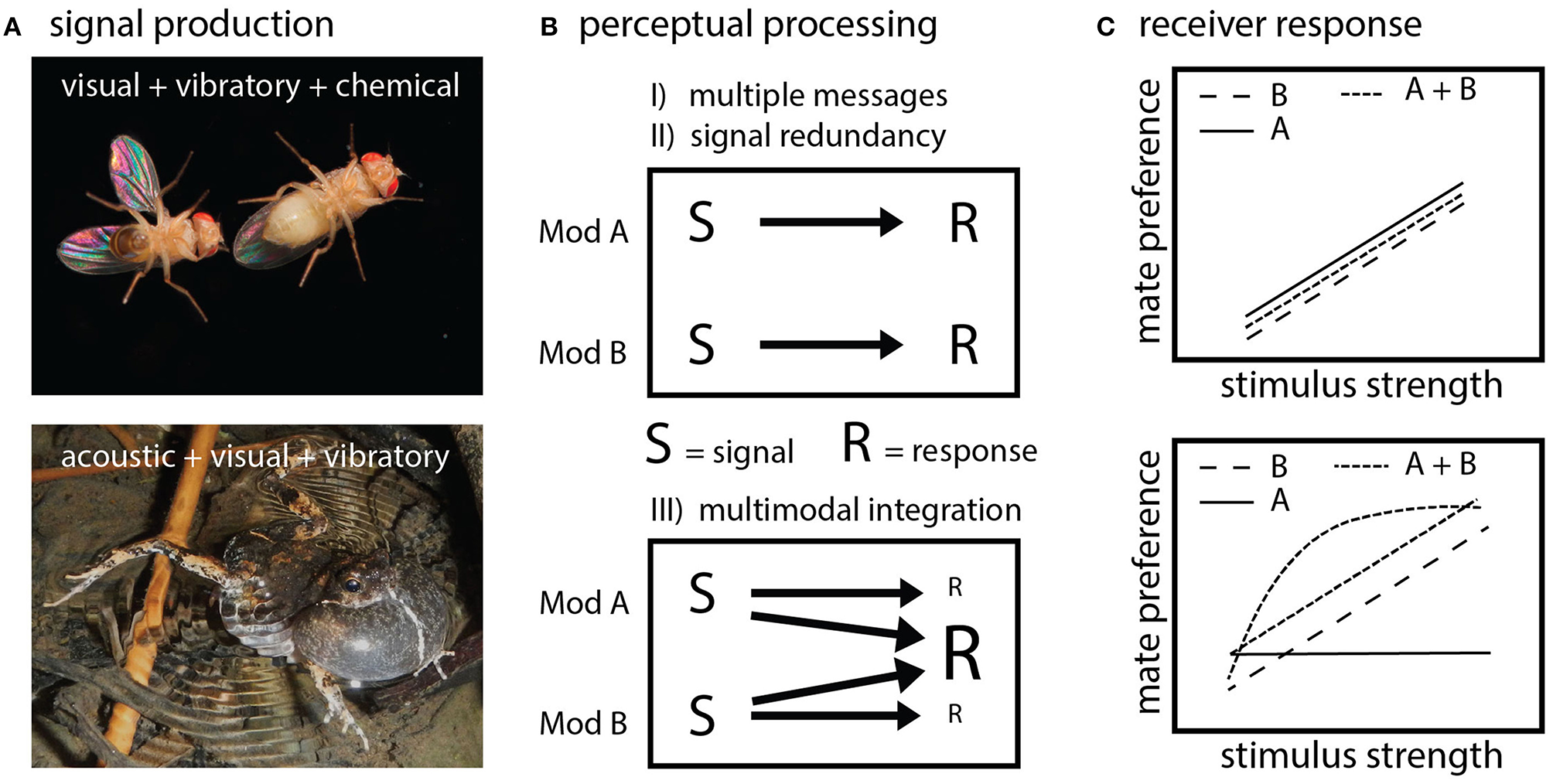

Figure 1

Multimodal signal production, perception and response. (A) Many mating displays involve components that are produced in and received through different physical modalities. Drosophila fruit fly displays combine chemical signal components with visual and vibratory wing components (top panel). Tungara frogs produce sounds while simultaneously inflating a visually conspicuous vocal sac and making water surface vibrations (bottom panel). (B) Multimodal components are either independently processed by the receiver's brain (top panel), as proposed by the multiple messages and backup signal hypotheses, or are integrated at higher levels of sensory processing, in so-called centers of multisensory integration (bottom panel). Mod A refers to one signal modality (e.g., visual) and Mod B refers to another signal modality (e.g., acoustic). S refers to the signal component as produced by the sender, R refers to the receiver response. (C) Expected receiver responses under the backup hypothesis, the multiple messages hypothesis, or models incorporating multisensory integration, illustrated using preference function plots (the x-axis depicts a relative/normalized stimulus dimension or trait value, e.g., calling rate or plumage hue, and the y-axis depicts preference strength). The two classic hypotheses of backup and multiple messages predict that the response to signal components presented in isolation does not differ from the response to their combined presentation (top panel). The difference between the two classic models is that the response to A in isolation is either similar to (backup) or different from (multiple messages) the response to B. During multisensory integration, responses to unimodal vs. multimodal presentation differ: they could increase, decrease or show non-linear characteristics. For illustration, two cases are plotted where the response to multimodal presentation reflects either a linear or a non-linear (curved line) process.

Box 1

| Term | Definition |
|---|---|
| Multi-component signals | Displays with >1 component, but all received within one sensory modality |
| Multiple signals | Multiple signals within the same modality but eliciting separate responses (in same or different receivers) |
| Multimodal signal | >1 component and >1 sensory modality |
| Cross-sensory integration | Information from different sensory modalities is integrated at higher sensory levels |
| Multimodal perception | >1 component and >1 sensory modality are integrated to form 1 multimodal percept |
| Perceptual binding (also: sensory binding) | Process through which multimodal cues or signals (in communication) are grouped by the brain as belonging to one object or being one signal |
| Unity assumption | Well-studied sensory phenomenon in humans: two stimuli close in space or time are assumed to belong to the same object; the basis of the ventriloquism effect |

Glossary.

Both these hypotheses focus heavily on message coding on the sender's side rather than on perceptual processes on the receiver's side (Hebets et al., 2016). Growing empirical and theoretical insights show that this focus on signal production should be complemented by studying receivers' perceptual mechanisms to fully characterize the complexity of (multimodal) animal communication (Rowe, 1999; Hebets and Papaj, 2005; Partan and Marler, 2005; Starnberger et al., 2014; Halfwerk and Slabbekoorn, 2015; Hebets et al., 2016; Ryan et al., 2019). Importantly, documenting multimodal signals is but a first step; it will not reveal whether receivers process the signal components of the different modalities separately (as suggested by the backup-signal and multiple-message hypotheses) or integrate them into one single multimodal percept with perhaps a qualitatively different meaning. It is this process of multimodal perception in the narrow sense, i.e., higher sensory integration of multimodal input (see Box 1), for which we aim to raise further awareness in the context of mate signaling, because sensory integration resulting in multimodal percepts can lead to receiver responses that may fundamentally differ from responses to unimodal components. Multimodal perception is intensively researched in human psychobiology and the cognitive neurosciences (see e.g., Spence, 2011; Stein, 2012; Chen and Vroomen, 2013), and cross-sensory integration has been demonstrated in different vertebrate species, for example, non-human primates (Maier et al., 2008; Perrodin et al., 2015), cats (Meredith and Stein, 1996), and birds (Whitchurch and Takahashi, 2006).

A multimodal percept sensu stricto arises whenever the central nervous system integrates simultaneous information from separate sensory modalities so that the resulting percept is qualitatively different from the sum of the properties of its components. A multimodal percept is thus contingent on the combined input of the involved modalities and absent when only a single component is present. A multimodal percept involves a concurrent larger, smaller or unique neural and/or behavioral response to multimodal vs. unimodal stimulation (Stein et al., 2014). This means that a multimodal percept can convey unique messages, a notion that is different from the “back-up” or “multiple-messages” concepts. This has important consequences for empirical work because some components, when studied in isolation, may be inadvertently dismissed as irrelevant in mate attraction or mate choice (Figure 1). In extremis, unimodal experimental presentations can lead to false negatives, wrongly dismissing the ecological function and evolutionary importance of a particular sexual display. With this perspective paper our foremost aim is to raise awareness of multimodal perception in the context of mating signals by first reviewing how it is identified in human psychology and the neurosciences and then discussing selected candidate examples of similar phenomena in animals.
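The contrast between larger, smaller, and unique responses to multimodal vs. unimodal stimulation can be made concrete with a small calculation. The Python sketch below is illustrative only: it expresses the response to a combined presentation relative to the strongest response to either component alone, in the spirit of the enhancement indices used in the multisensory literature. The function names, the 10% tolerance, and the use of a single response score per condition are assumptions made for illustration, not a method taken from the studies cited here.

```python
def enhancement_index(resp_a, resp_b, resp_ab):
    """Percent change of the multimodal response (resp_ab) relative to
    the strongest unimodal response (the larger of resp_a and resp_b)."""
    best_unimodal = max(resp_a, resp_b)
    if best_unimodal <= 0:
        raise ValueError("index is undefined without a positive unimodal response")
    return 100.0 * (resp_ab - best_unimodal) / best_unimodal


def describe_interaction(resp_a, resp_b, resp_ab, tol_percent=10.0):
    """Label the response pattern; tol_percent is a hypothetical threshold
    below which the multimodal and unimodal responses count as equal."""
    if max(resp_a, resp_b) <= 0:
        # Neither component works alone: the index cannot be computed,
        # but a response to the combination would be the "unique" case.
        if resp_ab > 0:
            return "response only to the combination"
        return "no response"
    index = enhancement_index(resp_a, resp_b, resp_ab)
    if index > tol_percent:
        return "larger than best unimodal response"
    if index < -tol_percent:
        return "smaller than best unimodal response"
    return "no detectable difference"


# Hypothetical response scores (e.g., approaches per trial).
print(enhancement_index(10, 20, 30))
print(describe_interaction(0, 0, 5))
```

Single scores are used here only to keep the sketch short; an actual analysis would compare distributions of responses across individuals with appropriate statistics.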

Multimodal Perception: Evidence From Psychophysical Studies in Humans

Multisensory processing in humans is evident in daily life and perhaps best illustrated by multisensory illusions (Shams et al., 2000). For example, in the double-flash illusion, subjects will perceive two visual light flashes during the presentation of a single flash if the latter is accompanied by two quick repetitions of a sound. The illusion demonstrates that auditory information can alter the perception of visual information and that the information from the two modalities is combined into one percept. The double-flash illusion exemplifies the process of cross-sensory integration (also referred to as “perceptual or sensory binding,” see Box 1). Ventriloquist effects, on the other hand, demonstrate how visual information modifies auditory information (Stein, 2012). If concurrent auditory and visual cues come from different locations (i.e., provide conflicting spatial information), the visual cue dominates the auditory cue. Puppeteers use this effect when speaking with unmoving lips while simultaneously moving the puppet's mouth, leaving the audience with the impression that the puppet is the source of the sound. Another striking example of multisensory integration, in speech perception, is the McGurk effect (McGurk and Macdonald, 1976). Mismatching lip movements during speech production can alter what subjects hear: for example, when human subjects see a video of a person's face articulating the syllables “ga-ga-ga” while hearing a person saying “ba-ba-ba,” most native speakers of American English will report hearing “da-da-da.” The simultaneous presentation of mismatched visual and auditory speech cues that provide ambiguous information can lead to a novel percept that is different from the physical properties of the two original stimuli: the visual information changes what subjects are hearing (McGurk and Macdonald, 1976). These phenomena result from the process of perceptual binding, which is the capacity to group different stimuli as belonging to the same source (see Box 1). Cues that arrive from the same direction or at similar time intervals likely belong together and are thus grouped as such (Stein, 2012).

Multimodal Perception in non-Human Animals

There are plenty of examples of multisensory processing in animals: predators, such as bats, locate their prey faster if they can use information in more than one modality (Rhebergen et al., 2015; Leavell et al., 2018). Bumblebees learn new food sources faster if they can combine visual and weakly electric signals of flowers (Clarke et al., 2013). Birds learn to avoid predators more quickly if vision is combined with sound (Rojas et al., 2018). Such changes in behavioral output during multi- vs. unimodal presentations are potential, but not conclusive, evidence for a multimodal percept sensu stricto. These changes can also arise from other cognitive processes, like faster reaction times arising from alerting effects (Rowe, 1999). However, well-designed experiments can demonstrate sensory integration resulting in multimodal percepts. Many prey animals use toxic substances as primary defense and advertise these with conspicuous signals (e.g., the bright yellow and red warning colorations of many invertebrates or poison frogs). Naïve young chickens will peck equally often at novel yellow or green colored food grains but prefer green and avoid yellow in the presence of the chemical cue pyrazine (a substance that makes many insects unpalatable). Here multisensory integration results in a new emergent percept that is different from the sum of its parts: pyrazine odors trigger a color aversion not shown in the absence of these odors (Rowe and Guilford, 1996). Multimodal integration is also evidenced by ventriloquism effects in frogs and birds (Narins and Smith, 1986; Narins et al., 2005; Feenders et al., 2017). In an operant task simulating a “temporal-order-judgment-task” used to test sensory binding in humans (involving the presentation of simple tones and light flashes), starlings received a food reward if they identified which of two different lights was activated first by pecking an associated response key. The starlings showed better discrimination when the visual stimuli were preceded or followed by a sound (Feenders et al., 2017). Because sounds both before (“leading”) and after (“trailing”) the visual presentation improved visual temporal resolution, an alerting function of the sound can be excluded. Trailing (or leading) sounds seem to perceptually attract the second light flash, thereby perceptually increasing the gap between the two visual stimuli and thus improving their discrimination. In this example, the starlings were trained in a foraging context, but clearly, multimodal perceptual grouping would improve identifying and locating competitors and mates in situations with high sensory information load, like frogs' mate advertising choruses (Figure 2). So how strong is the case for multimodal perception in a sexually selected context?

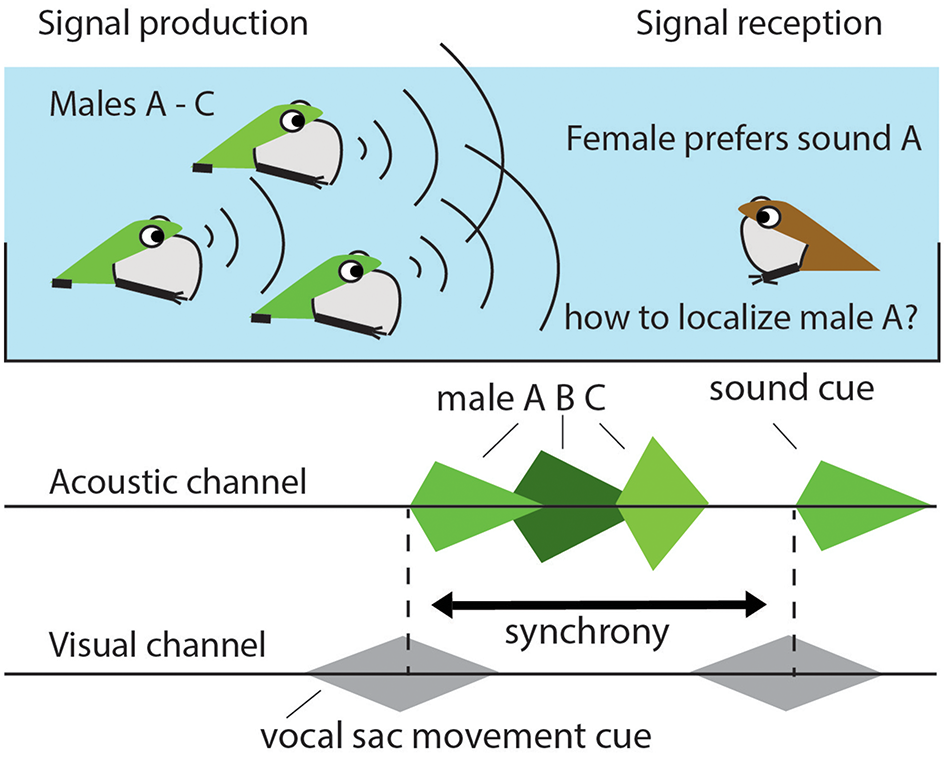

Figure 2

Multimodal perception requires comparing cues across sensory systems. A female frog that prefers to mate with the male that makes (to her) the most attractive sound can face the problem of not being able to locate that male in a dense mating chorus. By focusing on the synchrony between sound production and the movements of a male's water surface waves or vocal sac, she may be able to pick out her mate. Synchrony can be assessed, for example, by focusing on the timing in intensity between multimodal cues, as illustrated, or on the onset or offset of cues.
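For researchers who want to quantify the kind of synchrony sketched in Figure 2, one possible starting point is to cross-correlate the intensity envelopes of two signal components. The following Python sketch is a hedged illustration, not a procedure from the studies discussed here: the function names, the equal-length sampled envelopes, and the example values are all assumptions.

```python
def best_lag(envelope_a, envelope_b, max_lag):
    """Return the shift (in samples) of envelope_b relative to envelope_a
    that gives the highest mean product of the mean-centered envelopes.
    A positive value means that envelope_b trails envelope_a."""
    if len(envelope_a) != len(envelope_b):
        raise ValueError("envelopes must have the same number of samples")
    n = len(envelope_a)
    mean_a = sum(envelope_a) / n
    mean_b = sum(envelope_b) / n
    a = [x - mean_a for x in envelope_a]
    b = [x - mean_b for x in envelope_b]

    best = None
    for lag in range(-max_lag, max_lag + 1):
        total, count = 0.0, 0
        for i in range(n):
            j = i + lag
            if 0 <= j < n:  # use only the overlapping samples
                total += a[i] * b[j]
                count += 1
        if count == 0:
            continue
        score = total / count
        if best is None or score > best[1]:
            best = (lag, score)
    return best[0]


def lag_in_seconds(lag_samples, sample_rate_hz):
    """Convert a lag in samples to seconds for a given sampling rate."""
    return lag_samples / sample_rate_hz


# Hypothetical envelopes: the second cue peaks two samples after the first.
sound = [0, 0, 1, 3, 1, 0, 0, 0, 0, 0]
vocal_sac = [0, 0, 0, 0, 1, 3, 1, 0, 0, 0]
print(best_lag(sound, vocal_sac, max_lag=4))
```

Because the score is averaged over the overlapping samples only, `max_lag` should stay small relative to the envelope length; onset or offset times could be compared instead when envelopes are not available.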

Multimodal Perception in Mate Attraction and Resource Defense

Numerous observations show that identification of potential mates or rivals requires information from more than one sensory modality: female fish approach potential mates faster or exclusively if presented with signals in two modalities, e.g., vision and sound (Estramil et al., 2014) or vision and pheromones (McLennan, 2003). Fruit flies only react to conspecifics if stimulated by species-specific signals in at least two modalities (interestingly, in any combination of the acoustic, volatile, visual, gustatory or vibratory components) (Bretman et al., 2011). These examples are highly suggestive of multimodal percepts, but as discussed above, a stronger response to a multimodal signal is not yet a conclusive demonstration of multimodal perception. Fortunately, alternative explanations, such as increased attention resulting from alerting or additive effects on motivation, can often be excluded at the behavioral level with suitable experimental designs.

A first example is provided by a study of tungara frogs. Males can produce two different call elements, a whine and a chuck. Females will only react to a chuck when it shortly follows the whine. Experimentally increasing the temporal gap between the call elements reduces the attractiveness of the playback to female frogs. However, when a robot frog inflates and deflates its vocal sac during the silent gaps in the unattractive audio playbacks, females will prefer the combined audio-visual over the audio-only stimulus. The visual cue thus perceptually binds the two acoustic cues together (Taylor and Ryan, 2013). Inflating the vocal sac prior to or after the gap between the acoustic elements does not restore mate choice, excluding increased attention, discrimination or memorability as alternative explanations (Rowe, 1999). Cross-modal perceptual binding has also been shown to help females locate and choose males in other taxonomic groups, for example spiders and birds (e.g., Lombardo et al., 2008; Kozak and Uetz, 2016).

Another example, which shows how multimodal percepts not only help in locating a sender but also lead to a qualitative change in judgment compared to a unimodal signal, concerns the multimodal displays shown by duetting avian species. Duets have an important function in joint territory defense and pair bonding (Hall, 2009). Although the next example concerns males' and females' joint breeding territory defense rather than mate attraction, we discuss it in this section for its methodology and because it provides an experimental demonstration of how an avian multimodal display can be crossmodally integrated. Duetting magpie-larks often produce synchronized visual wing-waving movements during joint singing (Rek and Magrath, 2017). Combining taxidermic robotic birds with acoustic playbacks revealed that adding visual cues changed the interpretation of auditory cues. During unimodal audio presentations, behavioral responses were weaker during solo than during duet playbacks. During multimodal presentations, adding two wing-waving birds always caused a strong response, whereas adding one wing-waving bird always caused a weak response, regardless of whether the audio was playing back solo or duet singing. The authors did not set out to test for multimodal percepts in this study but addressed a functional question (whether pseudo-duets are deceptive), and they interpret their findings as showing that receivers weight visual information more strongly than auditory information. We would expand this interpretation by suggesting that the observed perceptual weighting indicates cross-sensory binding: the crucial observation here is that adding a single wing-waving bird weakened the previously stronger response shown to duet singing in the audio-only condition, an example of a response that differs from “the sum of its parts.” We would argue that, akin to the “double-flash illusion” that triggers humans to “see” two flashes when hearing two sounds, the birds that previously heard a duet are now perhaps tricked into “hearing” only one singer when seeing only one bird displaying. In the experiment, the robobirds tricked the receivers, but in real life, cross-modal comparisons would enable receivers to detect the deceptive “pseudo-duets” sometimes used by single singers that, when out of sight, successfully mimic the structure and complexity of two duetting birds (Rek and Magrath, 2017).

Using Ecological Variation in Space and Time to Test for Multimodal Perception and Communication

In this last section we want to place multimodal mating signals in their ecological context, since both signal production and perception are affected by social and environmental factors. Thus, for a complete characterization of the function of multimodal signals we also need to study the when and how of signal production on the sender's side, where intended signal receivers are located in relation to the signaler in time and space, habitats' transmission properties (often varying even at a small scale), as well as the processes of reception and perception. An additional dimension of an ecological vs. a laboratory context is the potentially higher number of possible receivers of mating signals: in addition to mates and rivals, these often include eavesdropping predators (Ratcliffe and Nydam, 2008; Rhebergen et al., 2015).

As discussed above, ventriloquism effects based on sensory binding require temporal and/or spatial proximity (Narins et al., 2005). It is thus crucial whether signal components of multimodal displays are produced synchronously or asynchronously (temporally and spatially). Many frogs call by inflating and deflating a vocal sac, which incidentally creates synchronous water-borne vibratory signal components (Halfwerk et al., 2014a). Wolf spiders, on the other hand, can use one set of legs for drumming and wave another set in the air to create visual signals, not being constrained by mechanical linkage between signal components (Uetz and Roberts, 2002). However, synchrony in the production of signal components can still (and often will) disintegrate during transmission. Light, for example, travels nearly a million times faster than airborne sound. The components of a synchronously produced audio-visual mating display will therefore arrive with a temporal lag that increases with distance. Multimodal perception could help in detecting senders: synchronously produced visual and acoustic signal components arriving from the same direction likely belong to the same sender, and by perceptually binding the acoustic cue to a visual cue, receivers might be able to locate their preferred mate (Figure 2), as indeed seems to be the case in diverse species, e.g., frogs, spiders, and birds (see examples above).
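The size of this transmission lag is easy to estimate. As a minimal sketch, assuming sound travels at about 343 m/s (dry air near 20 °C) and treating the speed of light in air as equal to its vacuum value, the arrival delay of the acoustic component behind the visual component grows linearly with distance. The constant and function names below are illustrative choices, not taken from the cited studies.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # vacuum value; air differs only marginally
SPEED_OF_SOUND_M_PER_S = 343.0          # assumed: dry air at roughly 20 degrees C


def audiovisual_lag_s(distance_m,
                      sound_speed=SPEED_OF_SOUND_M_PER_S,
                      light_speed=SPEED_OF_LIGHT_M_PER_S):
    """Delay (in seconds) with which the sound of a synchronously produced
    audio-visual display arrives after its visual component."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return distance_m / sound_speed - distance_m / light_speed


# Lag at a few hypothetical sender-receiver distances.
for distance in (1.0, 10.0, 50.0):
    lag_ms = 1000.0 * audiovisual_lag_s(distance)
    print(f"{distance:5.1f} m -> {lag_ms:6.1f} ms")
```

With these assumed values the speed ratio is close to 9 × 10^5, so the light travel time is negligible and the lag is about 2.9 ms per metre. Other component pairs, such as airborne sound and water surface waves, would need their own propagation speeds, which are not assumed here.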

Manipulating either the temporal or the spatial configuration of the signal components can thus be used in field tests. By delaying the timing of water surface vibrations in relation to the timing of the airborne sound, male tungara frogs were tricked into perceiving their rival as displaying from a location outside of their territory (Halfwerk et al., 2014b). Likewise, the synchrony between signal components can be manipulated to assess whether females use temporal cues during multimodal perceptual binding (Figure 2). Changing the location from which different signal components are broadcast to receivers may reveal whether spatial cues are also important for binding (Lombardo et al., 2008; Kozak and Uetz, 2016). Future work can make use of the fast technological progress in audio-video presentations (for review and caveats see Chouinard-Thuly et al., 2017) or combine acoustic or chemical playbacks with robots to present different signal components synchronously or asynchronously (Rek and Magrath, 2017; Stange et al., 2017).

Conclusions

With this brief perspective we hope to have raised interest and awareness regarding the potential presence of multimodal signals in mating contexts and the importance of studying perception to understand their function. Supporting previous appeals to integrate cognitive processes on the receiver's side into the study of animal communication (“receiver psychology”; Rowe, 1999; Bateson and Healy, 2005; Ryan et al., 2019), we hope to have shown that asking how receivers integrate multiple signal components from different modalities into integrated percepts might add an important dimension to studying multimodal mating signals. Well-designed behavioral experiments have already demonstrated how stimuli of two modalities are coupled via perceptual binding, which can eventually lead to a multimodal percept. However, to date, this process has been predominantly studied in contexts unrelated to mate choice (e.g., foraging or predator-prey interactions), but its increasing documentation across contexts and taxa suggests that the same perceptual processes will also apply to (some) mating signals. A perception-oriented approach can thus shed new light on the discussion of multiple messages vs. backup signals. When co-occurring signals in two or more modalities are perceptually integrated, the behavioral and evolutionary implications may be different than when those signals are processed in parallel. Multimodal integration thus provides an additional hypothesis with its own predictions regarding message meaning that might need testing when studying multimodal mating signals. Ignoring this possibility can yield misleading results regarding the relative importance of the different signal components when only unimodal tests are conducted: a crucial component could look irrelevant for mate choice when tested in isolation.

Statements

Author contributions

WH and KR conceived the idea for the review. JV, RS, EM, CS, WH, and KR contributed to literature search and writing.

Funding

Funding is gratefully acknowledged from the Human Frontier Science Program # RGP0046/2016.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1

Bateson M. Healy S. D. (2005). Comparative evaluation and its implications for mate choice. Trends Ecol. Evol.20, 659–664. 10.1016/j.tree.2005.08.013

2

Bretman A. Westmancoat J. D. Gage M. J. G. Chapman T. (2011). Males use multiple, redundant cues to detect mating rivals. Curr. Biol.21, 617–622. 10.1016/j.cub.2011.03.008

3

Candolin U. (2003). The use of multiple cues in mate choice. Biol. Rev.78, 575–595. 10.1017/S1464793103006158

4

Chen L. H. Vroomen J. (2013). Intersensory binding across space and time: a tutorial review. Atten. Percept. Psychophys.75, 790–811. 10.3758/s13414-013-0475-4

5

Chouinard-Thuly L. Gierszewski S. Rosenthal G. G. Reader S. M. Rieucau G. Woo K. L. et al . (2017). Technical and conceptual considerations for using animated stimuli in studies of animal behavior. Cur. Zool.63, 5–19. 10.1093/cz/zow104

6

Clarke D. Whitney H. Sutton G. Robert D. (2013). Detection and learning of floral electric fields by bumblebees. Science.340, 66–69. 10.1126/science.1230883

7

Dalziell A. H. Peters R. A. Cockburn A. Dorland A. D. Maisey A. C. Magrath R. D. (2013). Dance choreography is coordinated with song repertoire in a complex avian display. Curr. Biol.23, 1132–1135. 10.1016/j.cub.2013.05.018

8

de Jong K. Amorim M. C. P. Fonseca P. J. Heubel K. U. (2018). Noise affects multimodal communication during courtship in a marine fish. Front. Ecol. Evol.6:113. 10.3389/fevo.2018.00113.

9

Estramil N. Bouton N. Verzijden M. N. Hofker K. Riebel K. Slabbekoorn H. (2014). Cichlids respond to conspecific sounds but females exhibit no phonotaxis without the presence of live males. Ecol. Freshw. Fish.23, 305–312. 10.1111/eff.12081

10

Feenders G. Kato Y. Borzeszkowski K. M. Klump G. M. (2017). Temporal ventriloquism effect in European starlings: evidence for two parallel processing pathways. Behav. Neurosci.131, 337–347. 10.1037/bne0000200

11

Halfwerk W. Jones P. L. Taylor R. C. Ryan M. J. Page R. A. (2014a). Risky ripples allow bats and frogs to eavesdrop on a multisensory sexual display. Science.343, 413–416. 10.1126/science.1244812

12

Halfwerk W. Page R. A. Taylor R. C. Wilson P. S. Ryan M. J. (2014b). Crossmodal comparisons of signal components allow for relative distance assessment. Curr. Biol.24, 1751–1755. 10.1016/j.cub.2014.05.068

13

Halfwerk W. Slabbekoorn H. (2015). Pollution going multimodal: the complex impact of the human-altered sensory environment on animal perception and performance. Biol. Lett.11:20141051. 10.1098/rsbl.2014.1051

14

Hall M. L. (2009). A review of vocal duetting in birds. Adv. Stud. Behav.40, 67–121. 10.1016/s0065-3454(09)40003-2

15

Hebets E. A. Barron A. B. Balakrishnan C. N. Hauber M. E. Mason P. H. Hoke K. L. (2016). A systems approach to animal communication. Proc. R. Soci. B Biol. Sci.283:20152889. 10.1098/rspb.2015.2889

16

Hebets E. A. Papaj D. R. (2005). Complex signal function: developing a framework of testable hypotheses. Behav. Ecol. Sociobiol.57, 197–214. 10.1007/s00265-004-0865-7

17

Johnstone R. A. (1996). Multiple displays in animal communication: ‘Backup signals' and ‘multiple messages'. Phil. Trans. R. Soc. B.351, 329–338. 10.1098/rstb.1996.0026

18

Kozak E. C. Uetz G. W. (2016). Cross-modal integration of multimodal courtship signals in a wolf spider. Anim. Cogn.19, 1173–1181. 10.1007/s10071-016-1025-y

19

Leavell B. C. Rubin J. J. McClure C. J. W. Miner K. A. Branham M. A. Barber J. R. (2018). Fireflies thwart bat attack with multisensory warnings. Sci. Adv.4:eaat6601. 10.1126/sciadv.aat6601

20

Lombardo S. R. Mackey E. Tang L. Smith B. R. Blumstein D. T. (2008). Multimodal communication and spatial binding in pied currawongs (Strepera graculina). Anim. Cogn.11, 675–682. 10.1007/s10071-008-0158-z

21

Maier J. X. Chandrasekaran C. Ghazanfar A. A. (2008). Integration of bimodal looming signals through neuronal coherence in the temporal lobe. Curr. Biol.18, 963–968. 10.1016/j.cub.2008.05.043

22

McGurk H. Macdonald J. (1976). Hearing lips and seeing voices. Nature.264, 746–748. 10.1038/264746a0

23

McLennan D. A. (2003). The importance of olfactory signals in the gasterosteid mating system: sticklebacks go multimodal. Biol. J. Linnean Soc.80, 555–572. 10.1111/j.1095-8312.2003.00254.x

24

Meredith M. A. Stein B. E. (1996). Spatial determinants of multisensory integration in cat superior colliculus neurons. J. Neurophysiol.75, 1843–1857. 10.1152/jn.1996.75.5.1843

25

Møller A. P. Pomiankowski A. (1993). Why have birds got multiple sexual ornaments. Behav. Ecol. Sociobiol.32, 167–176. 10.1007/BF00173774

26

Narins P. M. Grabul D. S. Soma K. K. Gaucher P. Hodl W. (2005). Cross-modal integration in a dart-poison frog. Proc. Natl. Acad. Sci. U.S.A.102, 2425–2429. 10.1073/pnas.0406407102

27

Narins P. M. Smith S. L. (1986). Clinal variation in alduran advertisement calls: basis for acoustic isolation?Behav. Ecol. Sociobiol.19, 135–141. 10.1007/BF00299948

28

Partan S. Marler P. (1999). Communication goes multimodal. Science.283, 1272–1273. 10.1126/science.283.5406.1272

29

Partan S. R. Marler P. (2005). Issues in the classification of multimodal communication signals. Am. Nat.166, 231–245. 10.1086/431246

30

Perrodin C. Kayser C. Logothetis N. K. Petkov C. I. (2015). Natural asynchronies in audiovisual communication signals regulate neuronal multisensory interactions in voice-sensitive cortex. Proc. Natl. Acad. Sci. U.S.A.112, 273–278. 10.1073/pnas.1412817112

31

Ratcliffe J. M. Nydam M. L. (2008). Multimodal warning signals for a multiple predator world. Nature.455, 96–U59. 10.1038/nature07087

32

Rek P. Magrath R. D. (2017). Deceptive vocal duets and multimodal display in a songbird. Proc. R. Soc. B.284:20171774. 10.1098/rspb.2017.1774

33

Rhebergen F. Taylor R. C. Ryan M. J. Page R. A. Halfwerk W. (2015). Multimodal cues improve prey localization under complex environmental conditions. Proc. R. Soc. Lond. B Biol. Sci.282:20151403. 10.1098/rspb.2015.1403

34

Rojas B. Burdfield-Steel E. De Pasqual C. Gordon S. Hernández L. Mappes J. et al . (2018). Multimodal aposematic signals and their emerging role in mate attraction. Front. Ecol. Evol.6:93. 10.3389/fevo.2018.00093

35

Rowe C. (1999). Receiver psychology and the evolution of multicomponent signals. Anim. Behav. 58, 921–931. 10.1006/anbe.1999.1242

36

Rowe C. Guilford T. (1996). Hidden colour aversions in domestic chicks triggered by pyrazine odours of insect warning displays. Nature 383, 520–522. 10.1038/383520a0

37

Ryan M. J. Page R. A. Hunter K. L. Taylor R. C. (2019). ‘Crazy love’: nonlinearity and irrationality in mate choice. Anim. Behav. 147, 189–198. 10.1016/j.anbehav.2018.04.004

38

Shams L. Kamitani Y. Shimojo S. (2000). Illusions–what you see is what you hear. Nature 408, 788. 10.1038/35048669

39

Spence C. (2011). Crossmodal correspondences: a tutorial review. Atten. Percept. Psychophys. 73, 971–995. 10.3758/s13414-010-0073-7

40

Stafstrom J. A. Hebets E. A. (2013). Female mate choice for multimodal courtship and the importance of the signaling background for selection on male ornamentation. Curr. Zool. 59, 200–209. 10.1093/czoolo/59.2.200

41

Stange N. Page R. A. Ryan M. J. Taylor R. C. (2017). Interactions between complex multisensory signal components result in unexpected mate choice responses. Anim. Behav. 134, 239–247. 10.1016/j.anbehav.2016.07.005

42

Starnberger I. Preininger D. Hoedl W. (2014). From uni- to multimodality: towards an integrative view on anuran communication. J. Comp. Physiol. A 200, 777–787. 10.1007/s00359-014-0923-1

43

Stein B. E. (2012). The New Handbook of Multisensory Processing. Cambridge, MA: MIT Press.

44

Stein B. E. Stanford T. R. Rowland B. A. (2014). Development of multisensory integration from the perspective of the individual neuron. Nat. Rev. Neurosci. 15, 520–535. 10.1038/nrn3742

45

Taylor R. C. Ryan M. J. (2013). Interactions of multisensory components perceptually rescue Tungara frog mating signals. Science 341, 273–274. 10.1126/science.1237113

46

Uetz G. W. Roberts J. A. (2002). Multisensory cues and multimodal communication in spiders: insights from video/audio playback studies. Brain Behav. Evol. 59, 222–230. 10.1159/000064909

47

Ullrich R. Norton P. Scharff C. (2016). Waltzing Taeniopygia: integration of courtship song and dance in the domesticated Australian zebra finch. Anim. Behav. 112, 285–300. 10.1016/j.anbehav.2015.11.012

48

Vedenina V. Y. Shestakov L. S. (2018). Loser in fight but winner in love: how does inter-male competition determine the pattern and outcome of courtship in cricket Gryllus bimaculatus? Front. Ecol. Evol. 6:197. 10.3389/fevo.2018.00197

49

Voigt C. C. Behr O. Caspers B. von Helversen O. Knornschild M. Mayer F. et al. (2008). Songs, scents, and senses: sexual selection in the greater sac-winged bat, Saccopteryx bilineata. J. Mammal. 89, 1401–1410. 10.1644/08-mamm-s-060.1

50

Whitchurch E. A. Takahashi T. T. (2006). Combined auditory and visual stimuli facilitate head saccades in the barn owl (Tyto alba). J. Neurophysiol. 96, 730–745. 10.1152/jn.00072.2006

51

Williams H. (2001). Choreography of song, dance and beak movements in the zebra finch (Taeniopygia guttata). J. Exp. Biol. 204, 3497–3506.

Keywords

multimodal percepts, sensory integration, mating signals, emergent properties, perceptual or sensory binding, mate choice, animal communication

Citation

Halfwerk W, Varkevisser J, Simon R, Mendoza E, Scharff C and Riebel K (2019) Toward Testing for Multimodal Perception of Mating Signals. Front. Ecol. Evol. 7:124. doi: 10.3389/fevo.2019.00124

Received

05 December 2018

Accepted

27 March 2019

Published

17 April 2019

Volume

7 - 2019

Edited by

Astrid T. Groot, University of Amsterdam, Netherlands

Reviewed by

Daizaburo Shizuka, University of Nebraska-Lincoln, United States; Keith Tarvin, Oberlin College, United States

Copyright

© 2019 Halfwerk, Varkevisser, Simon, Mendoza, Scharff and Riebel.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Wouter Halfwerk w.h.halfwerk@vu.nl

This article was submitted to Behavioral and Evolutionary Ecology, a section of the journal Frontiers in Ecology and Evolution
