Abstract

The abstract basis of modern computation is the formal description of a finite state machine, the Universal Turing Machine, based on manipulation of integers and logic symbols. In this contribution to the discourse on the computer-brain analogy, we discuss the extent to which analog computing, as performed by the mammalian brain, is like and unlike the digital computing of Universal Turing Machines. We begin with ordinary reality being a permanent dialog between continuous and discontinuous worlds. So it is with computing, which can be analog or digital, and is often mixed. The theory behind computers is essentially digital, but efficient simulations of phenomena can be performed by analog devices; indeed, any physical calculation requires implementation in the physical world and is therefore analog to some extent, despite being based on abstract logic and arithmetic. The mammalian brain, comprised of neuronal networks, functions as an analog device and has given rise to artificial neural networks that are implemented as digital algorithms but function as analog models would. Analog constructs compute with the implementation of a variety of feedback and feedforward loops. In contrast, digital algorithms allow the implementation of recursive processes that enable them to generate unparalleled emergent properties. We briefly illustrate how the cortical organization of neurons can integrate signals and make predictions analogically. While we conclude that brains are not digital computers, we speculate on the recent implementation of human writing in the brain as a possible digital path that slowly evolves the brain into a genuine (slow) Turing machine.

Introduction

The present essay explores key similarities and differences between computation by the brains of animals and digital computing, anchoring the exploration on the essential properties of a Universal Turing Machine, the abstract foundation of modern digital computing. In this context, we try to explicitly distance eighteenth-century mechanical automata from modern machines, understanding that when computation allows recursion, it changes the consequences of determinism. A mechanical device is usually both deterministic and predictable, while computation involving recursion is deterministic but not necessarily predictable. For example, while it is possible to design an algorithm that computes the decimal digits of π, the value of any finite sequence following the nth digit cannot (yet) be computed, hence predicted, when n is sufficiently large. This implies that the consequences of replacing feedback (a common principle in mechanics) with recursion (a much deeper process, using a program that calls itself) are not yet properly addressed because they do not belong to widely shared knowledge. It is remarkable that recursion, associated with appropriate energy management, creates information (Landauer, 1961; Hofstadter et al., 1979). How this happens has been illustrated by Douglas Hofstadter in his book Gödel, Escher, Bach: An Eternal Golden Braid, through what he named a “strange loop,” exemplified by a painting in an art gallery representing a person contemplating the painting in that very gallery. This illustration shows how a completely open new world, where paradoxes are the rule, is emerging (Hofstadter, 2007).
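As a concrete illustration of a short deterministic rule whose output must be unfolded step by step, the following minimal sketch generates the decimal digits of π one at a time. It uses Gibbons' unbounded spigot algorithm, which is our choice for illustration and is not discussed in the essay; the function name `pi_digits` is hypothetical.

```python
from itertools import islice


def pi_digits():
    """Yield the decimal digits of pi one by one (Gibbons' unbounded spigot).

    The state is six integers; each step is a fixed arithmetic rule, so the
    process is fully deterministic, yet the generator reaches the n-th digit
    only after producing all the digits before it.
    """
    q, r, t, k, n, l = 1, 0, 1, 1, 3, 3
    while True:
        if 4 * q + r - t < n * t:
            # The next digit is now certain: emit it and rescale the state.
            yield n
            q, r, n = 10 * q, 10 * (r - n * t), (10 * (3 * q + r)) // t - 10 * n
        else:
            # Not enough information yet: consume one more term of the series.
            q, r, t, k, n, l = (
                q * k,
                (2 * q + r) * l,
                t * l,
                k + 1,
                (q * (7 * k + 2) + r * l) // (t * l),
                l + 2,
            )


if __name__ == "__main__":
    print(list(islice(pi_digits(), 10)))
```

The integers held in the state grow without bound as digits are produced, a reminder that even this abstract procedure has a material cost once it is run on a physical machine.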

However, this happens on condition that a material support is involved, introducing a certain level of analogical information even in electronic computers. This involvement of the basic currencies of Reality other than information (mass, energy, space and time) opens up computing to another universe. This has consequences very similar to the result of Gödel’s demonstration that arithmetic is incomplete: nothing in the coded integers used in the demonstration can say, within the number system, that there is a contradiction that can never be solved. It is only by going outside the coded system (so as to be able to observe it) that one can see the incompleteness. The very fact that the outcome of the demonstration can only be understood outside the frame of its construction—namely in a world where judgments exist—introduced a certain level of analogical information into the picture. The meaning of Gödel’s last sentence (I cannot be proved) is not valid within the framework of the axioms and definitions of Number Theory, but only when one looks at Number Theory from the outside. Recursion is possible even in a world where analogical computing dominates, and the structure of the brain, organized in cortical layers, and through feedforward and feedback loops, may well allow the development of this procedure. However, the introduction of language, and of writing in particular, could well allow the modern human brain to behave like a Turing Machine, thus explaining how Homo sapiens could generate demonstrations of the type of Gödel’s incompleteness theorems (Hofstadter et al., 1979).

Analog and Digital Computing

We use “digital” to describe information and computation involving explicit numerical representations and manipulations, no matter how the numbers are themselves represented. In contrast, “analog,” as it refers to neuronal information representation and computation, means a biological or other physical process, such as an action potential, that has another (i.e., is analogous to some) representational meaning or manipulation. In order to know whether thinking of the “brain as a computer” is more than a metaphor, we need to agree on a description of computing. We generally assume that computing involves an abstract process, the manipulation of integers with the standard rules of arithmetic. In this context the number three lies in an abstract world, beyond the way it is denoted: trois in French, drei in German, τρία in Greek, 三 in Chinese, etc. It belongs to the abstract domain of “information.” This conception is based on the assumption that information is a true physical currency of reality (Landauer, 1996), along with mass, energy, space and time, allowing us to work in the abstract domain of digital computing. We must recognize, however, that the very concept of information, although widely used as a word, is not usually considered by biologists as an explicit physical entity, although some synaptic physiologists may conjecture that neural information is embodied in synaptic “strengths.” As a consequence, when it comes to describing the role of the cell or the brain in computation, we have to oscillate between deep abstraction and concrete physiology. With a little more insight, when we use electronic computers, we combine Number Theory (Rosen, 2011) with the rules of logic, in particular Boolean logic (Sikorski, 1969). The vast majority of computing approaches are simply based on manipulation of bits. 
Computer users create algorithms by subsuming a binary frame of reference, usually referred to as “digital” as a consequence of the way we calculate in the decimal system. However, the construction of relevant digital processing units requires an understanding of the consequences of recursion, and therefore of Number Theory. Indeed, a consequence of Gödel’s theorems is that it remains impossible to design a processor that would be “hacking-free.” This requirement is visible in constructs such as those belonging to the class of Verifiable Integrated Processor for Enhanced Reliability [VIPER, (Brock and Hunt, 1991)]. This vision remains a very crude abstract view of what computing is. It is based entirely on a discontinuous, discrete conception of physical reality. In contrast, the material world behaves as if it were continuous. In biology, the biochemical networks used in synthetic biology constructs are based on processes that amplify, synchronize and integrate signals, and store information, in a continuous way. Even computers are not exempt from the constraints imposed by the material world. The computer you are using to read this text is a material machine, made of components that have mass and obey the laws of physics. For example, the rules of logic are implemented as thresholds operating on continuous parameters, and even the very definition of a threshold cannot be entirely digitized: a threshold displays an inherent variability due to thermal noise. The history of digital computing acknowledges that, in parallel with an authentic shift toward a digital world possibly beginning with the ENIAC in 1946, computing kept being developed with analog devices (Misa, 2007).

In that sense, computation can be seen as both analog and digital. The idea of analog computation is not new. It seems to have been present very early, even in the ancient Greek civilization. In Greek, ἀνάλογος means “proportionate,” with the notion that the due proportions associated with solving a certain problem can be used to solve that same problem via its simulation, not necessarily requiring understanding. The efficiency of analog computing is strikingly illustrated by an extraordinary device built more than 2,000 years ago, the Antikythera Mechanism, which seems to work like an analog computer to calculate a large number of properties of meteors in the sky, planets and stars (Freeth et al., 2021).

Today, the central processing unit that runs computers manipulates electrons, not cogs, in an organized way. It does not directly manipulate logical bits. How does the modern computer, which is constructed with components that have mass and space, perform its digital calculations? This may be a key question in our quest for the interaction between the analog world (that of matter with mass) and the digital world (that of the abstract genomic sequences manipulated by bioinformaticians for example) when we want to understand how the brain works. The question is indeed at the heart of what life is all about. How do we articulate the analog/digital interaction? We can recognize at least two different physical processes taking place in the electronic circuits of a digital computer. Continuous signals are transformed into digital (in fact usually Boolean) computation by: (1) exploiting the non-linearity of the circuits (transistors are either off or saturated, capacitors are either empty or fully charged). This entails that changes are rapid and large when compared to thermal fluctuations and means that we introduce thresholds such that a digital coding becomes reasonably robust. (2) Error correction mechanisms, such as redundancy, to overcome possible errors due to thermal electronic fluctuations. Essentially, this is the result of combining careful design of the basic electronic and physical phenomena with a coarse shaping (in a sense, a “clustering”) and “constraining process” of the physical observables.
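The two processes just listed, thresholding of a continuous signal and redundancy-based error correction, can be sketched in a few lines. This is a toy model under stated assumptions, not a description of real circuitry: bits are encoded as nominal voltages 0.0 and 1.0, thermal fluctuations are modeled as Gaussian noise, and redundancy is a simple majority vote over an odd number of copies. All names and parameter values are hypothetical.

```python
import random


def transmit(bit, noise_sd, rng):
    """Return the analog voltage for a bit (0.0 or 1.0) plus Gaussian noise."""
    return float(bit) + rng.gauss(0.0, noise_sd)


def threshold(voltage, level=0.5):
    """Process (1): a non-linear decision that maps a voltage to a bit."""
    return 1 if voltage > level else 0


def majority_read(bit, copies, noise_sd, rng):
    """Process (2): send several noisy copies and keep the majority value."""
    votes = sum(threshold(transmit(bit, noise_sd, rng)) for _ in range(copies))
    return 1 if 2 * votes > copies else 0


def error_rate(copies, noise_sd, trials=20000, seed=0):
    """Estimate the fraction of bits misread for a given redundancy level."""
    rng = random.Random(seed)
    errors = 0
    for _ in range(trials):
        bit = rng.randint(0, 1)
        if majority_read(bit, copies, noise_sd, rng) != bit:
            errors += 1
    return errors / trials


if __name__ == "__main__":
    for copies in (1, 3, 5):
        print(copies, error_rate(copies, noise_sd=0.4))
```

When the noise is small compared with the gap between the two voltage levels, the threshold alone makes the coding robust; when the noise grows, redundancy lowers the residual error rate at the price of more material resources.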

A frightening example illustrates the dichotomy between analog and digital information, not in the brain, but when we see cells as computers making computers, with their genetic program both analog, when it has to be accommodated within the cell’s cytoplasm, and digital when it is interpreted as an algorithm for the survival of a cell and construction of its progeny. Viruses illustrate this dichotomy. Smallpox is a lethal virus. The sequence of its genome (digital information) is available, and can be exchanged via the Internet without direct action on the analog setup of living organisms, hence harmless. However, synthetic biology techniques (gene synthesis) allow this digital information to “transmute” into the analog information of the chemistry of nucleotides, regenerating an active virus. This coupling makes the digital virus deadly (Danchin, 2002). The difference between the textual information of the virus sequence and the final information of the finished material virus illustrates the complementarity between analog and digital computation. The analog implementation is extremely powerful, and we will have to remember this observation when exploring the way the brain appears to compute.

Physiological experiments meant to illustrate the first steps of computing in living systems are based on discrete digital designs but implemented in continuous properties of matter, such as concentration of ingredients. Typically, an early synthetic implementation of computing in cells, the toggle switch, consisted of the design of a pair of coding sequences of two genes, lacI and tetR, with relevant regulatory signals where the product of each gene inhibits the expression of the other (Gardner et al., 2000). This is possibly the simplest circuit capable of performing a calculation in a cell, in this case the ability to store one bit of information. Other digital circuits have been built since, and recent work has highlighted the level of complexity achieved by digital biological circuits, where metabolic constraints blur the picture. A deeper understanding of what happens in the cells where this construct has been implemented shows that their behavior is not fully digital (Soma et al., 2021). Cells, however, can still be seen as computers making computers and this performance can be described in a digital way (Danchin, 2009a). When we discuss the algorithmic view of the cell, we implicitly assume a digital view of reality. This is how we can introduce information, through “bits,” i.e., entities that can have two states, 0 and 1. This is very similar to the way physics describes states as specific energy levels, for example (and this can be seen when an atom is illuminated, in the form of optically detected lines, which allows researchers to characterize the nature of that particular atom). 
However, because this oversimplified vision overlooks the analog dimension of computation, it omits material processes such as aging, which require specific maintenance steps involving specific functions that are rarely considered in digital machines [see (Danchin, 2015) and note that, in computers, processors also age, with important consequences for computing speed and possibly accuracy (Gabbay and Mendelson, 2021)].
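The toggle switch described above can be sketched numerically to show how a one-bit memory rests on continuous concentrations. The sketch assumes the dimensionless two-repressor form commonly associated with the toggle switch, du/dt = α/(1 + v^n) − u and dv/dt = α/(1 + u^n) − v, integrated with a simple Euler scheme; the parameter values and function names are illustrative assumptions, not those of the published construct.

```python
def toggle_step(u, v, alpha=10.0, n=2.0, dt=0.01):
    """Advance the two repressor concentrations by one Euler step.

    u and v stand for the (dimensionless) levels of the two mutually
    repressing gene products; each one inhibits synthesis of the other.
    """
    du = alpha / (1.0 + v ** n) - u
    dv = alpha / (1.0 + u ** n) - v
    return u + dt * du, v + dt * dv


def settle(u, v, steps=5000):
    """Integrate until the circuit has relaxed toward a steady state."""
    for _ in range(steps):
        u, v = toggle_step(u, v)
    return u, v


if __name__ == "__main__":
    # A small initial bias decides which of the two stable states is reached.
    print(settle(3.0, 1.0))  # u ends high, v ends low
    print(settle(1.0, 3.0))  # u ends low, v ends high
```

The stored "bit" is read as which product dominates, but the variables themselves never stop being continuous quantities, which is one way to picture why the behavior of the implemented construct is not fully digital.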

Unlike digital circuits, where a species has only two states, analog circuits represent ranges of values using continuous ranges of concentrations. In cases where energy, resources and molecular components are limited, analog circuits can allow more complex calculations than digital circuits. Using a relatively small set of components, Daniel et al. (2013) designed in cells a synthetic circuit that performs analog computations. This matches well with the common vision of synthetic biology, that of a cell factory which allows the expression of complex programs that can answer many metabolic engineering questions, as well as the development of computational capabilities. The take-home message of this brief discussion on the difference between analog and digital computing is that, in order to calculate, it is not necessary to do so numerically. Nature, in fact, appears as a dialog between continuous and discontinuous worlds. Some reject the idea of discontinuity. Forgetting about Number Theory, the French mathematician René Thom emphasized the continuous nature of the Universe and rejected the discontinuous view proposed by molecular biology, such as the way in which the genetic program is written as a sequence of nucleotides, acting as letters in a linear text written with a four-letter alphabet. He insisted on continuity even in the evolution of language (Thom et al., 1990), a point of view that we will discuss at the end of this essay. In summary, there are many facets of natural computing that we need to be aware of if we are to explore the brain computing metaphor (Kari and Rozenberg, 2008). We have seen how the Antikythera Mechanism was an early attempt using analog computing, and this line of engineering has been pursued over centuries. 
For example, in 1836 a way was proposed to solve differential equations using a thread wrapped around a cylinder (Coriolis, 1836), and more recently using analog computers (Hartree, 1940; Little and Soudack, 1965; Barrios et al., 2019). Finally, after simulations of the behavior of neuronal networks, hardware was created that implemented analog computing in microprocessors (Wijekoon and Dudek, 2012; Martel et al., 2020).

Variations on the Concept of the Turing Machine

Our digital computers are built according to an abstract vision, the Turing Machine (TM), elaborated by Alan Turing in the 1930s and developed in descriptions intended to make it more concrete by Turing and John von Neumann after the Second World War. The “machine” is often seen as a device reading a tape-like medium triggering specific behaviors, as we see them performed by computers. This view is rather superficial. It does not capture the key properties of the TM that created a general model of logic and computation, including the identification of impossibilities (Copeland, 2020). The machine is an abstract entity and, as in all other cases where we consider information as a genuine currency of physics, its implementation in objects with mass will create a considerable number of idiosyncratic constraints that can only be solved by what are sometimes called “kludges” in hardware machines, i.e., clumsy but critical solutions to a specific problem (Danchin, 2021b). This necessary overlap between information and (massive/spatial) matter creates the immense diversity of life, explaining why we witness so many “anecdotes” that interfere with our efforts to identify basic principles of life. Examples range from various solutions to the question opened by the presence of proline in the translation machinery, because proline is not an amino acid (Hummels and Kearns, 2020), to the need for a specific protease that cleaves off the first nine residues of the ribosomal protein L27 in the bacterial clade Firmicutes (Danchin and Fang, 2016), to the extraordinary macroscopic display of color and behavior in birds of paradise (Wilts et al., 2014). It is important to remember that the way in which machine states could be concretely implemented, which marks the analog world, had no impact on the way the TM was used to contribute to the mathematical field of Number Theory (Turing, 1937). The “innards” of the machine were not taken into consideration.

The TM is a finite-state machine. In manipulating an abstract tape, it performs the following operations (the operations performed by computers are conceptually the same, although they appear to the general observer to be performed in a very detailed and therefore less comprehensible manner):

• Changing a symbol in a finite number of places, after reading the symbols found there (note that changing more than one symbol at a time can be reduced to a finite number of successive basic changes).
• Changing from the point which is being read to other points, at a given maximum distance away in the message.
• Changing the state of the machine.

All this can be summarized as specified by a series of quintuples, which each have one of the three possible following forms:

pαβLq or pαβRq or pαβNq

where a quintuple means that the machine is in configuration p, where symbol α is read, and is replaced by β to enter into configuration q, while displacing the reading toward the (L)eft, the (R)ight, or staying at the same (N)eutral place.
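The quintuple notation can be made concrete with a minimal simulator. In this sketch each rule is a tuple (p, α, β, move, q) read exactly as above; the blank symbol "_", the step budget, the state names and the binary-increment rule table are illustrative assumptions introduced here.

```python
from collections import defaultdict


def run_tm(quintuples, tape, state, halt, blank="_", max_steps=10_000):
    """Run a single-tape machine described by quintuples (p, a, b, move, q).

    In configuration p, reading symbol a: write b, move the head Left, Right
    or stay (N), then enter configuration q. Returns the final tape contents.
    """
    rules = {(p, a): (b, move, q) for p, a, b, move, q in quintuples}
    cells = defaultdict(lambda: blank, enumerate(tape))  # unbounded tape
    head, steps = 0, 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("step budget exhausted")
        written, move, state = rules[(state, cells[head])]
        cells[head] = written
        head += {"L": -1, "R": 1, "N": 0}[move]
        steps += 1
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells[i] for i in range(lo, hi + 1)).strip(blank)


# Hypothetical rule table: add 1 to a binary number written on the tape.
INCREMENT = [
    ("right", "0", "0", "R", "right"),  # scan to the right end of the number
    ("right", "1", "1", "R", "right"),
    ("right", "_", "_", "L", "carry"),  # step back onto the last digit
    ("carry", "1", "0", "L", "carry"),  # 1 + 1 = 0, carry moves left
    ("carry", "0", "1", "N", "done"),   # absorb the carry and stop
    ("carry", "_", "1", "N", "done"),   # number was all ones: new digit
]

if __name__ == "__main__":
    print(run_tm(INCREMENT, "1011", state="right", halt="done"))
```

Note that the machine both reads and writes, and that the head moves in both directions: the "carry" configuration walks back over cells that the "right" configuration had already passed.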

Several points need to be made here. The machine does not just read, it reads and writes. The machine can move forward, backward, and jump from one place to another. Thus, despite the impression that it uses a linear strip marked by sequences of symbols, its behavior is considerably more diverse. This is important when considering genomes as hardware implementations of a TM tape. For example, we tend to think of the processes of transcription, translation and replication as unidirectional, when in fact they are designed to be able to backtrack and change the building blocks that they had implemented in the forward steps, a process that is essential to their activity. More complex processes such as splicing and trans-splicing are also compatible with the TM metaphor. Furthermore, the above description corresponds to the Universal Turing Machine (UTM), which Turing showed to be equivalent to any construction using a finite multiplicity of tapes in parallel. A highly parallel machine can be imitated by a single-tape machine, which is of course considerably slower, but with exactly the same properties in terms of computational performance.

An essential point of the machine is that it is a finite-state machine. Despite its importance, this point is often overlooked, and it is here that the analogy between the cell or brain and a TM needs to be critically explored. What are and where are the states of the cell- (resp. brain-) machine located? Allosteric proteins have well-defined states, usually an active and an inactive state, and synapses are turned on or off, depending on the presence of effective neurotransmission. Their activity depends on the state of specific post-synaptic receptors, and often allosteric proteins (Changeux, 2013). More complex views may also take space into account, with the state of a protein or a complex defined by their presence at specific locations such as mid-cell or at the cell’s poles for bacteria, or particular dendritic compartments of neurons (Hsieh et al., 2021). How do they evolve over time as the cell (resp. brain) “computes”? Of course, the same question can be asked of the hardware that makes up a computer, but in this case this is generally a key function of its memory parts, with specific addressing functions. We must also try to identify the vehicles that carry the information. In standard electronics, this role is played by electrons (with a specific role for the electric potential, which can move extremely quickly with an effect at long distance, whereas the physical movement of individual electrons is always slow). In optoelectronics, photons are used as information carriers, rather than electrons. What about cells? One could assume, in fact, that in most cases the information carriers are protons, which travel mainly on water molecules (forming hydrogen bonds) and on the surface of macromolecules and metabolites, also in forming hydrogen bonds (Danchin, 2021a). 
Part of the difficulty we have in visualizing what is going on in the cell is that we do not know well how water is organized, particularly around macromolecules, and how this might provide a series of hydrogen-bond channels carrying information from one place to another.

At this point the question becomes: how are the states of the cell fixed locally, i.e., what type of memory is retained, for how long and with which consequences? When we come to the way the brain computes, we will have to answer all these same questions. In the case of neurons, the carriers of information are primarily transmembrane potentials involving ionic currents that are initiated from dendrites, accumulate at the soma of neurons and, after initiating action potentials, propagate along the axons. But this is only part of the story: at synapses (except for electrical synapses), information is carried forward by specific neurotransmitters that trigger both ionotropic and metabotropic neurotransmission, which introduces a strong coupling between information transfers and cellular metabolism (Chen and Lui, 2021), a feature that may be difficult to reconcile with the actual functioning of computers. It should be noted, however, that this organization creates de facto a range of relevant time frames that are considerably slower than the movements and state changes of the entities subject to thermal noise. This allows even short-term memories to be available for creation and recall without too much interference from temperature (recall that thermal vibrations typically occur in the femto- to picosecond range, while diffusion of a neurotransmitter occurs in the micro- to millisecond range).

To transpose the TM concept into biology, further developments are needed in physics (what is information, how to represent it, etc.) and perhaps in mathematics (is there a need for mathematical developments other than number theory and logic, which are the basis of the formal description of the TM and its parallel equivalents?), in order to be able to embody it explicitly in soft matter. It is worth noting that, despite progress, there have not been many recent developments in Information Theory since the time of Elements of Information Theory (Cover and Thomas, 2006) and Decoding Reality: The Universe as Quantum Information (Vedral, 2012). The main problem is simple: in Turing’s description, nothing is said about the machine, which is purely abstract, whereas it needs to be given some “flesh,” with management of space, mass, time and energy.

Indeed, this tells us that there is a huge conceptual opportunity for recording the informational state of the cell as a TM (not only the transsynaptic cell membrane, cytoplasm, etc., but also the conformation of the chromosome, for example), which is much larger than the information carried by the genetic program as described as a sequence of abstract nucleotides (Danchin, 2012). Therefore, the transcription/translation machinery of the cell, as the concrete implementation of the mechanical part of the TM, the one that decides to move the program forward or backward, to read and write it, has enough opportunity to store and modify its states (its information). This is probably where the information retained by natural selection operates when cells multiply (and no longer just survive). Living systems can therefore act as information traps, storing for a time some of the most common states of the environment. Indeed, one might expect that what is involved in the machine’s “decision” to progress (explore and produce offspring) is only a tiny subset of its information. From this point of view, natural selection seems to have a gigantic field of possibilities. We shall see that the problem is even more difficult to solve if we consider the brain. However, among the many features that characterize the TM, including the fact that it is a finite-state machine, it seems essential for systems that would ask to be recognized as a TM, to present distinct physical entities between the data/program set, and the machine that will interpret it into actions that modify the states of the machine.

Besides Enzymes and Templates: Learning and Memory in the Brain

In 1949, Donald Hebb proposed that changes in the effective strength of synapses could explain associative learning (or conditioning, the process by which two unrelated elements become connected in the brain when one predicts the other). The idea was that the strength of a synapse could increase when the use of that synapse contributed to the generation of action potentials in the postsynaptic neuron (Hebb, 2002). With the intention of representing by an adequate formalism the learning phenomenon in the vertebrate central nervous system, based on Hebb’s postulate, we have developed a theory of learning in the developing brain. This theory, implemented according to the axiomatic method, is placed within the general theory of systems where the nervous system is represented by a particular automaton. It is based on the idea of selective stabilization of synapses, depending on their activity (Changeux et al., 1973), phenomena that have been validated (Bliss and Collingridge, 1993; Bear and Malenka, 1994). The key to this vision (CCD model) is an epistemological premise: we seek to account for the properties of neural systems by means of a selective theory [in contrast with instructive theories, see discussion in Darden and Cain (1989)].

Based on families of experimental observations, this work restricted the study of memory and learning to neural networks (thus neglecting neuroglia and other features of the nervous system) and more specifically to the connections between neurons, the synapses. It proposed that, in addition to the now classic properties of networks traversed by impulses as found in computers (with the numerical logic rules that this imposes, as well as the feedback and feedforward loops), there is an original characteristic of neural networks, namely the possibility of a qualitative (and not only quantitative) evolution of synapses according to their activity. For simplicity, the model postulated that a synapse evolves, changing its state, in a graph where it passes during growth from a virtual state (V) to an unstable, labile state (L), then, depending on its local activity and the general activity of the posterior neuron, can either regress and disconnect (D) or stabilize in an active form (S).
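The state graph described in words above can be sketched as a small state machine. This is only a reading of the verbal description: reducing "activity" to a single number compared with a threshold, and treating D and S as terminal states, are simplifying assumptions made here, and all names are hypothetical.

```python
from enum import Enum


class Synapse(Enum):
    VIRTUAL = "V"       # not yet formed
    LABILE = "L"        # formed but unstable
    DISCONNECTED = "D"  # regressed
    STABLE = "S"        # stabilized in an active form


def evolve(state, grown, activity, threshold=0.5):
    """Return the next state of a synapse.

    `grown` says whether growth has produced the contact; `activity` is an
    assumed scalar summary of local and postsynaptic activity.
    """
    if state is Synapse.VIRTUAL:
        return Synapse.LABILE if grown else Synapse.VIRTUAL
    if state is Synapse.LABILE:
        return Synapse.STABLE if activity >= threshold else Synapse.DISCONNECTED
    return state  # assumption: D and S are not left once reached


if __name__ == "__main__":
    s = Synapse.VIRTUAL
    s = evolve(s, grown=True, activity=0.0)   # V -> L
    s = evolve(s, grown=True, activity=0.8)   # L -> S
    print(s)
```

The point of the sketch is the qualitative character of the change: a synapse does not merely adjust a weight, it either remains part of the network or is removed from it.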

With these very general premises, it was shown that a neural network is able to acquire the stable associative ability to recognize the form of afferent signals after a finite time. This memory and learning capacity comes from a transformation of the connectivity during the operation of the network. Thus, a temporal pattern is stored in the nervous network as a geometric spatial form. Besides quantitative involvement of synapse efficiency, the main originality of the approach lies in the fact that learning comes from the loss of connectivity. Learning carves a figure in the brain tissue that is memorized as a neural network. Moreover, even if the genetic constraints necessary to code for the implementation rules of this learning were very small, the system would nevertheless lead to the storage of an immense amount of information: each memorized event corresponds to a particular path traveling among the 10^15 synapses of the network, so that the number of possible paths (and thus of memorized events) is combinatorially infinite. The only limit to our ability to learn (but it is a terribly constraining limit) is the slow access to our brain by our sensory organs, as well as the slow speed of the brain activity (as compared to that of modern computers, for example).

The CCD model explored the role of selective stabilization in learning and memory in the nervous system. This exploration preceded the fashion for neural networks, but with a twist rarely highlighted: living brain synapses evolved in such a way that they could regress and irreversibly disconnect from their downstream dendrite, making memorization irreversible at least for a time. In contrast, in most artificial neural networks, the state of the synapses is actually a quantitative feature that can revert to the initial values if the training set is noisy [see for example the initial model of the Perceptron (Lehtiö and Kohonen, 1978)]. As quantity is favored over quality, the latter has an important consequence: the outcome of the learning process is considerably sensitive to the length of the training period. The positive learning outcome first increases in parallel with the training period, then stabilizes and then gradually decreases if the training continues.

The role of neural networks has been and still is the subject of a considerable amount of work. An important sequel was the idea of Neural Darwinism proposed by Gerald Edelman (1987). The central idea of this work is that the nervous system of each individual functions as a selective system composed of groups of neurons evolving under selective pressure, much as selection operates in the generation of the immune response and in the evolution of species. By providing a fundamental neural basis for categorizing things, the aim of this hypothesis was to unify perception (network inputs), action (network outputs) and learning. The theory also revised our view of memory as a dynamic process of re-categorization rather than a replicative storage of attributes. This has profound implications for the interpretation of various psychological states, from attention to dreams, and of course, for the brain’s computational capacity. Many other models of the links between memory, learning and computation have been proposed in recent decades. Most of them are based on neural networks, showing individual and collective behaviors with interesting properties that are not discussed here [see some eclectic examples in a vast literature (Dehaene and Changeux, 2000; Miller and Cohen, 2001; Mehta, 2015; Chaudhuri and Fiete, 2016; Mashour et al., 2020; Tsuda et al., 2020)].

To return to our question of whether the brain can be described as a computer: the basic idea behind these developments is that groups of neurons can allow the emergence of global behaviors while respecting the local organization of specific brain architectures. However, in general, this is mere conjecture, as there is no explicit demonstration of the behavior of the postulated structures. Nevertheless, this has triggered the emergence of a multitude of artificial neural networks (ANNs) that have developed metaphorically, independently of our knowledge of the brain. It is therefore interesting to see briefly how computation with neural networks has been implemented, which may now lead to a re-evaluation of their renewed link to brain behavior.

Neural Networks

Simulation vs. Understanding

The work just mentioned is all centered on the interconnections of neurons, with the key view that neural networks are the objects that we should prioritize, before understanding the cell biology of neurons. In an ANN, a neural program is given which takes into account the “genetic” data (the “genetic envelope”) of the phenomenon, the geometric data (essentially the maximum possible graph of all connections compatible with the genetic program, as well as, in some cases, the length of the axons in the form of propagation delays of the nerve impulse between one synapse and the next) and the operating data. Each neuron displays an integration function which, depending on the multi-message (afferent via the neuron dendrites), specifies the efferent message and the evolution function which, for each synapse, specifies its evolution toward a stable functional state which can be quantified. Again, in the CCD model a key property was that functioning under a genetically-programmed threshold led the synapse to evolve toward a degenerated non-functional state, thus disappearing as a connection. These processes depended on the afferent multi-message, as well as on a temporal law taking growth into account, i.e., the emergence of a new synapse in a functional state. During the operation of the system, a realization of the neural program is obtained at each time, which represents the effective anatomy of the network at that instant as well as its internal functioning.
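The two functions described above can be made concrete with a toy sketch. It is only an illustration under stated assumptions, not the CCD model itself: the names `Synapse`, `integrate`, `evolve` and `run`, the update rule and all numerical values (gain, decay, thresholds) are hypothetical choices made for this example. The integration function turns the afferent multi-message into an efferent message, and the evolution function moves each synapse toward a stable state or, below a survival threshold, toward irreversible regression.

```python
from dataclasses import dataclass


@dataclass
class Synapse:
    weight: float        # quantified functional state of the synapse
    alive: bool = True   # False once the synapse has regressed for good


def integrate(inputs, synapses, firing_threshold=1.0):
    """Integration function: afferent multi-message -> efferent message (0 or 1)."""
    total = sum(x * s.weight for x, s in zip(inputs, synapses) if s.alive)
    return 1 if total >= firing_threshold else 0


def evolve(inputs, output, synapses, gain=0.2, decay=0.1, survival_threshold=0.2):
    """Evolution function: update each synapse from its own input and the output.

    Assumed rule (hypothetical): a synapse that is active together with the
    output is stabilized; otherwise it decays. Below the survival threshold
    it degenerates and is never restored.
    """
    for x, s in zip(inputs, synapses):
        if not s.alive:
            continue
        if x and output:
            s.weight = min(1.0, s.weight + gain)
        else:
            s.weight = max(0.0, s.weight - decay)
        if s.weight < survival_threshold:
            s.alive = False
            s.weight = 0.0


def run(pattern, steps=10):
    """Present the same afferent pattern repeatedly and return the synapses."""
    synapses = [Synapse(0.6) for _ in pattern]
    for _ in range(steps):
        out = integrate(pattern, synapses)
        evolve(pattern, out, synapses)
    return synapses


if __name__ == "__main__":
    for i, s in enumerate(run([1, 1, 0])):
        print(i, s)
```

In this sketch the two synapses that carry the repeated input are stabilized, while the silent one regresses and disappears as a connection. This is the qualitative difference from a purely quantitative weight that could later drift back to its initial value.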

Since it is quite difficult to understand the internal behavior of networks, especially when they consist of a large number of individual elements, neural networks have been studied mainly by modeling. In the absence of precise biological data, it has been necessary to propose hypotheses on the neural program data, especially regarding the function and structure of synapses. It has not yet been possible to create a detailed model of the synapse based on plausible physicochemical assumptions, so very approximate assumptions have been proposed for the integration and evolution functions of the neuron. The consequence is that, in general, the path followed is the development of ANNs that do not really mimic authentic neural networks. They are implemented as algorithms and then run on fast computers. It should be noted that ANNs can be trained to perform arithmetic operations with significant accuracy. Models of neural arithmetic logic units are continuously being improved (Schlör et al., 2020). Whether such structures can be explicitly observed in authentic neural circuits remains to be seen. ANNs often produce remarkably interesting results, but at a cost: it is not possible to understand how they achieved their performance. Many achievements made headlines, especially after AlphaGo beat European Go champion Fan Hui in 2015 and then Korean champion Lee Sedol in 2016. This demonstrated that deep learning techniques are extremely powerful. They continue to be developed by improving the structures and functioning of various networks (Silver et al., 2018; Czech et al., 2020). The use of these neural networks is currently limited to image or shape analysis or related diagnostic methods based on recognition of generally imperceptible patterns. As classic examples, these networks are used for classifying objects, protein function prediction, protein-protein interaction prediction or in silico drug discovery and development (Muzio et al., 2021).

Unfortunately, successful predictions do not provide an explanation of the underlying phenomena, but only a phenomenological simulation of the process of interest, i.e., a process aimed at reproducing the observables we have chosen of a given phenomenon. These approaches, while extremely useful for diagnostic purposes, are unable to distinguish correlation from causation. To make the most of ANNs and use them as an aid to discovery, the result of their operation must be traceable in a causal chain. This restriction explains why legal regulators, in particular in the European Union, now require creators of AI-based models, often based on ANNs, to be able to demonstrate the internal causal chain of their successful models. This is understood as a way to associate prediction with understanding [for an example of the way understanding can be visualized in an AI model, see Prifti et al. (2020)]. A major reason for the difficulty in tracing causality is the sheer size of networks required to perform simple tasks. For example, a simple visual image involves at least a million neurons in object-related cortex and about two hundred million neurons in the entire visual cortex (Levy et al., 2004). In this context, understanding causal relationships is often related to the ability of ANNs to generate systematic errors [see, e.g., Coavoux (2021)], while error identification and correction is also important as it relates to intrinsic vulnerabilities against attacks, with the concomitant generation of spurious results (Comiter, 2019).

Neural Networks Organization: Cortical Layers

The fundamental organization of the cerebral cortical circuit of vertebrates remains poorly understood. In particular, it is not fully clear whether the considerable diversity of neuron types (Hobert, 2021) always forms modular units that are repeated across the cortex in a way similar to what is observed in the cerebellum, for example (Brain Initiative Cell Census Network [BICCN], 2021; Farini et al., 2021; Kim and Augustine, 2021). The cortex of mammals has long been perceived as different from that of birds, in particular because in birds the folding of the cortical surface is particularly marked, but it now appears that the general organization in neuronal layers is quite similar in both phyla (Ball and Balthazart, 2021). This may be related to similar aptitudes in cognition/computation. There are so many models and conjectures about the role of the brain tissue organization that we had to make a choice for this essay. We will use the description/conceptualization proposed by Hawkins and Blakeslee in their book On Intelligence: How a New Understanding of the Brain Will Lead to the Creation of Truly Intelligent Machines (Hawkins and Blakeslee, 2005) because it provides a compelling description of how the brain might work, notwithstanding the identification of new or alternative brain structures and functions over time. The title of the book comes from the idea that the cerebral cortex is composed of repeated micro-columns of microcircuits stacked side by side that cooperate to generate cognitive capacity. The book proposes that each of these columns has a good deal of innate capacity (“intelligence”), but only very partial information about the overall context. Yet, the cortical columns work together to reach a consensus about how the world works.

Hawkins and Blakeslee pictured the cortex as a sheet of cells the size of a dinner napkin, and thick as six business cards, where the connections between various regions give the whole thing a hierarchical structure. An important feature in this description is its hierarchical organization, a feature identified as critical since the early work of Simon (1991). The cerebral cortex of mammals comprises six layers of specific neurons organized into columns. Layers are defined by the cell body (soma) of the neurons they contain (Shamir and Assaf, 2021). About two and a half millimeters thick, they are composed of repetitive units (Wagstyl et al., 2020). The strongest connections are vertical, from cells in the upper layers to those in the lower layers and back again. Layers seem to be divided into micro-columns, each about a millimeter in diameter, which function semi-independently, as we discuss below. The outermost layer of the neocortex, Layer I, is highly conserved across cortical areas and even species. It is the predominant input layer for top-down information, relayed by a rich and dense network of long-range projections that provide signals to the branches of the pyramidal cell tufts (Schuman et al., 2021). Layer II is an immature neuron reservoir, important for the global plasticity of the brain connections. Within the view of the CCD model, it is an important place where synapses are expected to emerge from a virtual to a labile state. This layer contains small pyramidal neurons and numerous stellate neurons but seems dominated by neurons that remain immature even in adulthood, being a source of considerable plasticity (La Rosa et al., 2020). Pyramidal cells of different classes are predominant in layer III. In addition, multipolar, spindle, horizontal, and bipolar cells with vertically oriented intra-cortical axons are present in this layer. It also contains important inhibitory neurons and receives connections from adjacent and more distant columns while projecting to distant cortical areas. This layer has been explicitly implicated in learning and aging (Lin et al., 2020). Layers I-III are referred to as supragranular layers.

Layer IV is another site of cortical plasticity. It contains different types of stellate and pyramidal cells, and is the main target of thalamocortical afferents that project into distinct areas of the cortex, with, at the molecular level, specific involvement of phosphorylation regulatory cascades (Zhang et al., 2019). The major cell types in cortical layer V form a network structure combining excitatory and inhibitory neurons that form radial micro-columns specific to each cell type. Each micro-column functions as an information processing unit, suggesting that parallel processing by massively repeated micro-columns underlies various cortical functions, such as sensory perception, motor control and language processing (Hawkins and Blakeslee, 2005; Hosoya, 2019). Interestingly, the micro-columns are organized in periodic hexagonal structures, which is consistent with the planar tiling of a layered organization (Danchin, 1998). Individual micro-columns are organized as modular synaptic circuits. Three-dimensional reconstructions of anatomical projections suggest that inputs of several combinations of thalamocortical projections and intra- and trans-columnar connections, specifically those from infragranular layers, could trigger active action potential bursts (Sakmann, 2017). Layer VI contains a few large inverted and upright pyramidal neurons, fusiform cells and a specific category, von Economo neurons, characterized by a large, spindle-like soma with little dendritic arborization at both the basal and apical poles, suggesting a significant role of bottom-up inputs (González-Acosta et al., 2018). This layer sends efferent fibers to the thalamus, establishing a reciprocal interconnection between the cortex and the thalamus. These connections are both excitatory and inhibitory and they are important for decision making (Mitchell, 2015).

Integrating Inputs and Outputs

The brain is connected to the various organs of the body. The sense organs provide it with information about the environment, while the internal organs allow it to monitor the states of the body, both in space and in time. This family of inputs is distributed in different areas of the brain, connected to cortical layers organized to integrate these inputs and allow them to drive specific outputs, in particular motor outputs (O’Leary et al., 2007). In this general structural signal processing, signals that reach a specific area of the brain connected to a given receptor organ pass through other areas, with feedback signals to connect to other sense organs. Locally, the integration structures of the brain are the micro-columns covering the six layers just described. The layered organization results in a limited number of neurons that integrate signals from other layers and parallel columns. In many cases signal integration may end up in a single cell, giving rise to the disputed concept of “grandmother cell,” individual neurons that would memorize complex signals, such as the concept of one’s grandmother or famous individuals like Halle Berry and Jennifer Aniston (Hawkins and Blakeslee, 2005; Quiroga et al., 2005, 2008; Bowers et al., 2019). To place this controversy in perspective, note that even complex brains can assign vital functions to individual neurons. For example, the deletion of a single neuron in a vertebrate brain abolishes essential behavior forever: the giant Mauthner cell, the largest known neuron in the vertebrate brain, is essential for rapid escape, so its loss means that rapid escape is also lost forever (Hecker et al., 2020).

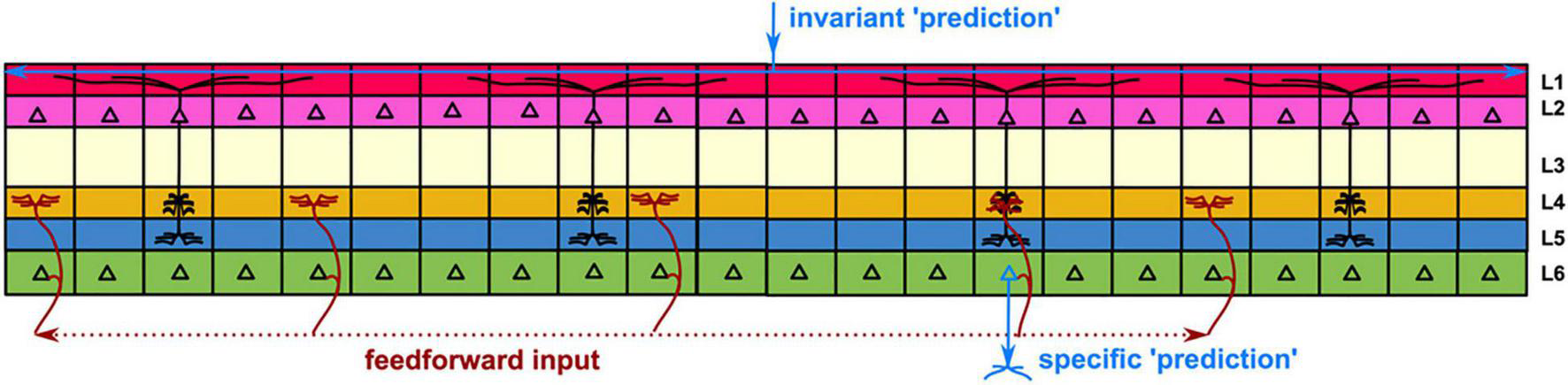

The details of the integration of the input signals have been explored by Hawkins and Blakeslee, who provided an overview with a plausible scenario. The idea is that the individual columns are trained by experience via selective stabilization to represent and memorize particular families of environmental features. This implies that they encode invariant properties that can be used as a substrate to store and make invariant “predictions” (i.e., anticipation of future behavior) related to those particular features, from top to bottom (layer I to layer VI). Now, when the brain receives a particular input that matches one of these predictions, rather than triggering the activity of all the columns that represent similar features, it can be prompted to make an explicit individual prediction via a feedforward input that feeds the columns from the bottom up, consistent with the anatomy of cortical layer VI (Figure 1). This view is illustrated by Hawkins with the following image that shows how convolution of top/down and bottom/up inputs may result in a meaningful output. Imagine two sheets of paper with many small holes in them. The holes on one paper represent the columns that have active layer II or layer III cells, marking invariant predictions. The holes on the other paper represent columns with partial inputs from below. If you place one sheet of paper on top of the other, some holes will line up, others will not. The holes that line up represent the columns that should be active in making a specific prediction. This mechanism not only allows specific predictions to be made, but also resolves ambiguities in sensory input. This bottom/up top/down matching mechanism allows the brain to decide between two or more interpretations and to anticipate events that it has never witnessed before. 
Further developments of the modular organization of the animal brain may have developed with the emergence of Homo sapiens, resulting in specific amplification of new connection modules (Changeux et al., 2021). This important behavior results from an organization that combines the columns into layers with overlapping lateral connections, a feature we explore later.

FIGURE 1

Redrawn from Hawkins and Blakeslee (2005). Formation of a specific “prediction” in the cortex. The cortex is represented by six layers connecting micro-columns. Generic “predictions” result from memories entered in the columns of the supragranular layers (see text) and triggered by interaction with the environment in a top-down manner. To obtain a specific “prediction” that will result in a specific output, a family of anticipatory feedforward signals is input from the lower layers in a bottom-up manner. The convolution of the descending and ascending signals produces the specific output.
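The two-sheets-of-paper image can be written down in a few lines. The sketch below is a deliberately minimal reading of that metaphor, not a model of cortical tissue: each column is reduced to one bit, and the function name `matching_columns` and the example vectors are hypothetical. Although the text speaks of a convolution of descending and ascending signals, what the metaphor depicts, and what is assumed here, is a simple column-by-column coincidence (a logical AND).

```python
def matching_columns(top_down, bottom_up):
    """Return indices of columns where a generic top-down prediction
    (a hole in the first sheet) coincides with a partial bottom-up input
    (a hole in the second sheet)."""
    return [i for i, (t, b) in enumerate(zip(top_down, bottom_up)) if t and b]


if __name__ == "__main__":
    # 1 = hole in the sheet (active column), 0 = no hole
    top_down = [1, 0, 1, 1, 0, 1, 0, 0]    # invariant predictions
    bottom_up = [0, 0, 1, 0, 0, 1, 1, 0]   # partial sensory evidence
    print(matching_columns(top_down, bottom_up))  # -> [2, 5]
```

Only the columns where both sheets have a hole remain active. This is how a broad family of generic predictions is narrowed to a specific one, and how an ambiguous input is resolved by the context supplied from above.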

Synchronization

Finally, a critical feature for computation is the need for synchronization of processes. Understanding how cortical activity generates sensory perceptions requires a detailed dissection of the function of time in cortical layers (Adesnik and Naka, 2018). This is the case, for example, of the eye saccade movement that controls vision, allowing proper positioning of the retina to keep proper focus while the eye moves (Girard and Berthoz, 2005). Synchronization is important not only within single computing units but especially when computing proceeds in parallel. For this reason it seems relevant, before understanding whether brain computing can become digital, to identify at least some families of synchronization processes. Many regular waves, spanning a wide time frame, have been identified in the brain. They bear witness to large-scale synchronization processes that are particularly important for information processing in virtually all domains, including sensation, memory, movement and language (Buzsáki, 2010; Meyer, 2018). Time keeping can be achieved, for example, by coupling two autonomous dynamic systems (Pinto et al., 2019). Recent work follows older work where small populations with a feedback loop were shown to mimic the behavior of authentic neural networks (Zetterberg et al., 1978). In addition, the need to make use of the states that have been stored requires a scanning process that is essential to enable functions such as memory recall, which is distinct from encoding the information from experience (Dvorak et al., 2018).

Indeed, the neural oscillations observed in local field potentials that result from spatially and temporally synchronized excitatory and inhibitory synaptic currents (Buzsáki et al., 2012) provide powerful network mechanisms to segregate and discretize neural computations operating within a hierarchy of time scales such as theta (~140 ms) cycles, within which slow gamma (~30 ms) and fast gamma (~14 ms) oscillations are nested and theta-phase organized. This temporal organization is intrinsic, arising from the biophysical properties of the transmembrane currents through ion-conducting channel proteins. The information processing modes within and between cortical processing modules that these oscillations enable are themselves controlled by top-down synchronous inputs such as medial entorhinal cortex-originating dentate spike events (Schomburg et al., 2014; Dvorak et al., 2021). By hierarchically synchronizing synaptic activations, the intrinsic biophysics of neural transmission accomplishes a remarkable form of digitization. Continuous inputs at the level of individual neurons are converted into oscillation-delineated population synchronized activity with digital features of a syntax for discretized information processing (Buzsáki, 2010), disturbances of which result in mental dysfunction (Fenton, 2015).
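As a rough illustration of how nested rhythms can discretize continuous time, the sketch below maps a time stamp to a pair (theta cycle, gamma slot within that cycle), using the approximate periods quoted above (140, 30 and 14 ms). It is a bookkeeping exercise only: the function names are hypothetical, the oscillations are assumed to be perfectly regular and phase-locked, and no biophysics is modeled.

```python
THETA_MS = 140.0        # approximate theta period quoted in the text
SLOW_GAMMA_MS = 30.0    # approximate slow gamma period
FAST_GAMMA_MS = 14.0    # approximate fast gamma period


def slots_per_theta(gamma_ms, theta_ms=THETA_MS):
    """Number of complete gamma cycles that fit in one theta cycle."""
    return int(theta_ms // gamma_ms)


def discretize(t_ms, gamma_ms, theta_ms=THETA_MS):
    """Map a continuous time (ms) to (theta cycle index, gamma slot index)."""
    theta_cycle, phase_ms = divmod(t_ms, theta_ms)
    return int(theta_cycle), int(phase_ms // gamma_ms)


if __name__ == "__main__":
    print(slots_per_theta(SLOW_GAMMA_MS))     # 4 complete slow gamma cycles
    print(slots_per_theta(FAST_GAMMA_MS))     # 10 fast gamma cycles
    print(discretize(300.0, FAST_GAMMA_MS))   # (2, 1)
```

Under these assumptions, events that fall within the same gamma slot of the same theta cycle become indistinguishable in time. This is the sense in which a continuous signal acquires a discrete, syntax-like structure.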

An Automatic Scanning Process, the Unexpected Benefit of Fuzziness

As described above, large parts of the animal brain are organized as an association of local micro-networks of similar structure, arranged along planar layers and micro-columns. It is therefore of interest to identify the basic units that might play a role in this organization. Phylogenetic analyses are important in trying to identify functions of neuronal structures that appear for the first time in a particular lineage. Typically, at the onset of the emergence of animals, a neuron was a kind of relay structure that couples a sensory process to a motor process. At the very beginning of the development of such structures during the evolution of multicellular organisms, the role of neurons was simply to couple sensing with the movement produced by distant organs. However, this simple process is bound to have a variety of undesirable consequences if it does not resolve its role within a well-defined spatial and temporal framework. This means that the sequence associating the presence of a signal to its physiologic or motor consequence must be delimited in time and space. A relevant design to ensure the quality of this process is to divert a small part of the output to inhibit the effect of the upstream input, in short, to achieve a homeostat (Cariani, 2009).

Homeostasis: The Negative Feedback Loop

In his Neural Darwinism Edelman developed the concept of “reentry,” a key mechanism for the integration of brain functions (Edelman and Gally, 2013). This concept is based on the idea that a small part of the output signal of a network is diverted to the input region and fed back into the network with a time delay. This phenomenon belongs to the family of signals that ensure homeostasis. A central theme governing the functional design of biological networks is their ability to maintain stable function despite intrinsic variability, including noise. In neural networks, local heterogeneities progressively disrupt the emergence of network activity and lead to increasingly large perturbations in low frequency neural activity. Many network designs can mitigate this constraint. For example, targeted suppression of low-frequency perturbations could ameliorate heterogeneity-induced perturbations in network activity. The role of intrinsic resonance, a physiological mechanism for suppressing low-frequency activity, either by adding an additional high-pass filter or by incorporating a slow negative feedback loop, has been successfully explored in model neurons (Mittal and Narayanan, 2021).
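The principle of diverting part of the output back to the input with a time delay can be sketched as a discrete-time loop. This is a generic negative feedback toy, not Edelman's reentry and not the resonance model cited above. The function `run_loop`, the gain, the delay and the number of steps are all assumptions chosen for illustration.

```python
from collections import deque


def run_loop(drive, feedback_gain=0.5, delay=3, steps=60):
    """Simulate y[n] = drive - feedback_gain * y[n - delay].

    A fraction of the output is fed back, `delay` steps later, to oppose
    the constant input `drive`.
    """
    # history[0] always holds the output produced `delay` steps earlier
    history = deque([0.0] * delay, maxlen=delay)
    outputs = []
    for _ in range(steps):
        y = drive - feedback_gain * history[0]
        history.append(y)
        outputs.append(y)
    return outputs


if __name__ == "__main__":
    trace = run_loop(1.0)
    print(trace[:8])
    print(trace[-1])  # settles close to drive / (1 + feedback_gain)
```

With a gain below 1 the output settles, after damped swings, at `drive / (1 + feedback_gain)`, which is the homeostatic regime. With a gain of 1 the same delayed loop never settles and oscillates indefinitely. This gives a first intuition of why delayed feedback can either stabilize a network or make it rhythmic.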

The cerebellum, with its highly regular organization and single-fiber output from Purkinje cells, is a good example of repetitive networks. Mutual inhibition of granule cells, mediated by feedback inhibition from Golgi cells—much less numerous than their granule counterparts—prevents simultaneous activation. Granule cells differentiate by their priming threshold, resulting in bursts of spikes in a “winner take all” sequential pattern (Bratby et al., 2017). Taken together, the local implementation of networks with embedded feedback loops as a strong output used to re-enter relevant cortical large networks resulted in a pattern that was proposed to explain the origins of consciousness and its scanning properties (Edelman et al., 2011), and further extended into the Global Neuronal Workspace hypothesis that attempts to account for key scientific observations regarding the basic mechanisms of conscious processing in the human brain (Mashour et al., 2020). These views, where inhibition is crucial, are strongly supported by the considerable importance of circuits comprising inhibitory neurons. Inhibition in the cortical areas is implemented by GABAergic neurons, which comprise about 20–30% of all cortical neurons. Witnessing the importance of this negative function, this proportion is conserved across mammalian species and during the lifespan of an animal (Sahara et al., 2012).

Finally, the role of inhibition, which typically occurs locally but is typically triggered by inputs from distant areas of the brain, is particularly important for the discrimination of classes of processes. When neural network excitatory inputs are both mutually excitatory and also recruit inhibition globally, the motif generates winner-take-all dynamics such that the strongest and earliest neural inputs will dominate and suppress weaker and later inputs, which in turn causes further enhancement of the dominant inputs. The net result is not merely a signal-to-noise enhancement of the dominant activity, but a network selection and discretization of what would be otherwise continuously variable activity. This motif is learned, improves with experience and in the entorhinal cortex-hippocampal circuit is responsible for learning to learn (Chung et al., 2021). The study of child brain development shows that there is a progressive overlap of organized responses to specific inputs (in the way objects and then numbers are identified) with other types of input from, for example, visual areas. The consequence of this overlap is that “intuitive” conceptions, resulting from prior anchoring in a particular environment, are barriers to conceptual learning. This implies that the inhibition of these inputs is important to allow the development of rationality (Brault Foisy et al., 2021). Interestingly, this duality between intuition and rational reasoning can be attributed to a difference between heuristics and algorithmic reasoning (Roell et al., 2019), a feature that may support the transition from a purely analog to a digital process.
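The winner-take-all motif lends itself to a short numerical sketch. The version below is a common textbook simplification and only an assumption about how such a motif could be written: each unit excites itself and is inhibited in proportion to the summed activity of all the others, and activities are renormalized at each step to stay bounded. The function name and parameter values are hypothetical.

```python
def winner_take_all(inputs, self_excitation=0.2, global_inhibition=0.3, steps=50):
    """Iterate a winner-take-all motif and return the final activities."""
    activity = list(inputs)
    for _ in range(steps):
        total = sum(activity)
        # each unit reinforces itself and is suppressed by all the others
        activity = [
            max(0.0, a + self_excitation * a - global_inhibition * (total - a))
            for a in activity
        ]
        peak = max(activity)
        if peak > 0:
            # renormalize so that the strongest unit has activity 1
            activity = [a / peak for a in activity]
    return activity


if __name__ == "__main__":
    # three nearly identical graded inputs
    print(winner_take_all([0.5, 0.6, 0.55]))  # -> [0.0, 1.0, 0.0]
```

Three graded, nearly equal inputs end up as an all-or-none pattern. The network has not merely improved the signal-to-noise ratio but has converted continuously variable activity into a discrete choice.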

Consequences of Imperfect Feedback: Endogenous Scanning of Brain Areas

The phenomenon of consciousness suggests that the brain generates an autonomous process that allows it to continuously scan the network, extracting information to promote action, at least during the waking period. It is therefore important to propose conjectures about how this process is generated. Many connection schemes using feedback or feedforward signaling are well suited to enable homeostasis, but there is a particularly simple one that seems to have interesting properties for producing scanning behavior. Suppose that at some level sensory inputs are split and thus follow parallel paths, only to be re-associated downstream. For example, one of the cells in one path activates an inhibitory neuron that negatively controls the downstream neuron, and then activates the neurons in the other path, so that one signal is subtracted from the other only at the level of a specific class of cells, forming an XOR-like local network (Kimura et al., 2011; Michiels van Kessenich et al., 2018). Measuring fine differences is a way to extract subtle information from the environment and make it relevant. Cells of the latter class are then assumed to return one of the duplicated sensory inputs or intermediate inputs corresponding to “modifications” of these inputs (see a metaphoric illustration in Figure 2). If the difference read by the cell integrating the commands from the two parallel pathways is very small, the feedback inhibition command will have no effect; if the difference is large, this command may cancel or reinforce one of the upstream pathways, so as to cancel the difference, thus resulting in homeostatic behavior. If the cell in the last layer remains activated, it tends on the one hand to produce an action via its connection to a motor center, and on the other hand to correct the influence of the input system that leads it to command the action.

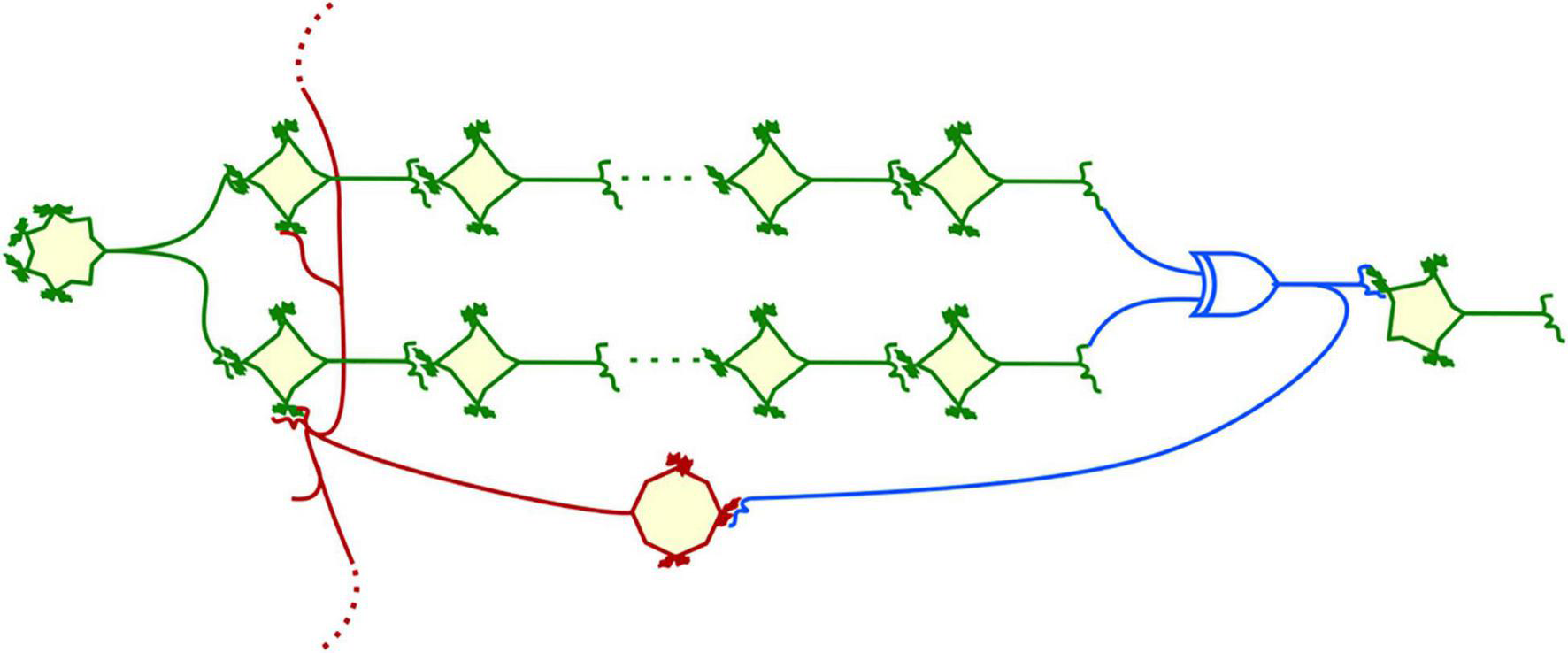

FIGURE 2

A representation of a unit cell for a quasi-homeostat. An input cell is connected to an array of duplicated column cells that feeds into a microcircuit behaving as an XOR logic gate (blue), subtracting signals from the two parallel columns. A fraction of the network output is diverted to activate an inhibitory neuron (red) that feeds back to the origin of the duplicated columns. Since the corresponding connections cannot be coded individually, they will also connect to adjacent columns and trigger their activity, initiating a scanning process.
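The behavior of such a unit can be sketched numerically. The code below is one possible reading of the scheme, with hypothetical names and parameters throughout. Two parallel pathways are compared by a difference-reading cell; for binary signals the absolute difference is exactly XOR. When the difference exceeds a tolerance, feedback inhibition weakens the stronger pathway until the difference is cancelled.

```python
def xor_like(a, b):
    """Absolute difference of two signals; equals XOR when a, b are 0 or 1."""
    return abs(a - b)


def quasi_homeostat(input_a, input_b, tolerance=0.05, feedback_gain=0.5, steps=20):
    """Compare two parallel pathways and let feedback inhibition cancel
    their difference. Returns the final pathway activities and the
    sequence of differences read by the comparing cell."""
    a, b = input_a, input_b
    trace = []
    for _ in range(steps):
        difference = a - b
        trace.append(difference)
        if abs(difference) <= tolerance:
            break  # difference too small: the feedback command has no effect
        # the inhibitory neuron weakens whichever pathway is stronger
        if difference > 0:
            a -= feedback_gain * difference
        else:
            b += feedback_gain * difference  # difference < 0, so b decreases
    return a, b, trace


if __name__ == "__main__":
    print([xor_like(x, y) for x, y in [(0, 0), (0, 1), (1, 0), (1, 1)]])
    a, b, trace = quasi_homeostat(1.0, 0.2)
    print(a, b)
    print(trace)  # the difference shrinks by half at each step
```

A perfectly wired unit of this kind would simply return to rest. The interest of the biological version lies in the fact that the feedback connection cannot be targeted this precisely.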

Now, consider how these structures are built during brain development. Living matter, unlike standard inorganic matter, is soft matter. This intrinsic flexibility must be taken into account when considering the fine architecture of authentic neural networks. When we describe columns of cells organized into a hexagonal planar structure, it cannot consist of structures with precisely defined boundaries (Tecuatl et al., 2021). In another level of fuzziness, involving time, the effectiveness of individual synapses is not strictly defined, resulting in pervasive synaptic noise (Kohn, 1998). Moreover, the individuality of each synapse can only be programmed exceptionally as such: this would require at least one gene per synapse, and remember that there are at least 10^15 synapses. This implies that there is considerable variation in the temporal and spatial dependence of neuronal connectivity. Rather than being an obstacle, this weakness gives rise to a new strength: it is because neural networks cannot be programmed exactly to create precise homeostatic structures that they lead to the repetition of approximate structures that are quasi-homeostatic, creating interactions with their neighbors that can be used to implement emerging functions.

Indeed, such networks have the interesting property of being able to trigger an automatic network scanning process. When an input signal triggers a homeostatic response from one column meant to inactivate it after a time, it inevitably activates the response of adjacent columns to which it is connected because of unavoidable variation in dendrite and axon connections. In turn, this initiates a homeostatic response evolved to silence them. In so doing they now activate adjacent columns, thus initiating a local scan of the memorized information stored in those columns, progressing by contiguity as a wave. In line with the notion of reentry, why not propose this process as the origin of consciousness? This metaphoric vision developed into a dialog between biology and the formal properties of syntactic structures proposed by Noam Chomsky [see Danchin/Marshall exchange in Modgil and Modgil (1987)]. Of course, an infinite number of variations on this theme, playing on the differences between nearly identical signals, can act as a scanning process that will recall memories via the sequential activation followed by the inactivation of parallel structures. Since this process is spatially constrained by contiguity, it will give the recall of memories a spatial component, such as we all experience when we have to retrace our steps to find a memory that has just escaped our attention.
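The scanning wave can be caricatured on a one-dimensional row of columns. The sketch below is a toy cellular automaton under strong assumptions: an active column always excites both of its immediate neighbors (standing in for imprecise wiring), then silences itself for a fixed refractory period (standing in for the homeostatic response). The function name and all parameters are hypothetical.

```python
def scan_wave(n_columns, start, max_steps=20, refractory_period=2):
    """Return, step by step, the sorted list of active columns."""
    refractory = [0] * n_columns   # remaining silent time of each column
    active = {start}
    order = []
    while active and len(order) < max_steps:
        order.append(sorted(active))
        # imprecise wiring: every active column also reaches its neighbors
        candidates = {
            n for c in active for n in (c - 1, c + 1) if 0 <= n < n_columns
        }
        # time passes for the columns that are already silenced
        refractory = [max(0, r - 1) for r in refractory]
        # homeostatic response: the columns that just fired are silenced
        for c in active:
            refractory[c] = refractory_period
        # only columns that are not silenced can be recruited
        active = {c for c in candidates if refractory[c] == 0}
    return order


if __name__ == "__main__":
    for step, cols in enumerate(scan_wave(7, 3)):
        print(step, cols)
    # 0 [3]
    # 1 [2, 4]
    # 2 [1, 5]
    # 3 [0, 6]
```

Activity started in the middle column travels outward by contiguity and dies at the borders, visiting each column once. If the refractory period is too short the wave can re-enter columns it has already visited, which hints at how sustained, self-generated scanning might arise.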

The Computing Brain

A TM must separate the machine and the data/program physically, noting that the data/program entity cannot be split into specific entities but belongs to a single category that is processed by the machine to modify its state. Where does the brain fit in this context? Can we distinguish between a set of data/programs and the state machine that manages it? An interesting observation from an interview by the Edge Magazine with Freeman Dyson in 2001 gives us a hand in broadening our discussion. Freeman Dyson, as usual, is an extraordinary mind: The two ways of processing information are analog and digital. [...]. We define analog-life as life that processes information in analog form, digital-life as life that processes information in digital form. To visualize digital-life, think of a transhuman inhabiting a computer. To visualize analog-life, think of a Black Cloud [reference to the novel of Fred Hoyle (1957)]. The next question that arises is, are we humans analog or digital? We don’t yet know the answer to this question. The information in a human is mostly to be found in two places, in our genes and in our brains. The information in our genes is certainly digital, coded in the four-level alphabet of DNA. The information in our brains is still a great mystery. Nobody yet knows how the human memory works. It seems likely that memories are recorded in variations of the strengths of synapses connecting the billions of neurons in the brain with one another, but we do not know how the strengths of synapses are varied. It could well turn out that the processing of information in our brains is partly digital and partly analog. If we are partly analog, the down-loading of a human consciousness into a digital computer may involve a certain loss of our finer feelings and qualities.

An important feature that can be added to the question posed by Dyson is that the brain, through its learning process, constructs a hierarchical tree structure and symbolic links from the data submitted to it (basically, it throws away most of the data, condenses it into another form at a higher level of abstraction to sort and order it). Capturing the involvement of elusive information is difficult (we still do not have a proper formalism to describe what it is). The most common approach to tie information to energy has been proposed by Rolf Landauer and Charles Bennett, with the understanding that during computation, creation of information is reversible (hence does not dissipate energy) while erasing memory to make the result of computation stand out against the background costs kT ln 2 per bit of information (Landauer, 1961; Bennett, 1988b). It is well established that the brain consumes a considerable amount of energy, but the relationships with information processing have not been investigated in depth.
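To give an order of magnitude for this bound, the following sketch evaluates kT ln 2 numerically. The Boltzmann constant is the exact SI value. The choice of 310 K as an approximate mammalian body temperature is an assumption made for this example, as is the function name.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact value in the 2019 SI definition


def landauer_limit_joules(temperature_kelvin, bits=1):
    """Minimum energy (in joules) dissipated when erasing `bits` bits
    at the given absolute temperature, according to Landauer's principle."""
    return bits * BOLTZMANN_J_PER_K * temperature_kelvin * math.log(2)


if __name__ == "__main__":
    # assumed body temperature of about 310 K (roughly 37 degrees Celsius)
    print(landauer_limit_joules(310.0))  # about 3e-21 J per erased bit
```

The result, roughly 3 × 10^-21 J per erased bit, is a theoretical lower bound only. It says nothing about how much energy real neurons spend per elementary operation, a question that, as noted above, remains to be investigated.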

The word “program,” often used loosely to describe the concrete implementation of a TM, implies the anthropocentric requirement of a goal. However, a TM does not have an objective; it is “declarative,” i.e., it functions as soon as a tape carrying a string of data is introduced into its read/write machinery. Its operation is therefore better understood as the manipulation of data than as the manipulation of a program. The distinction between data and program raises a difficult issue for the concept of information. Data has no meaning in itself, whereas the program depends on the context (Danchin, 2009b). This distinction is evident in the cell, where the duplication of genetic information by DNA replication starts as soon as a DNA double helix meets a DNA polymerase machinery. This process does not see the biological significance of the encoded genes or other features of the DNA sequence. This has been well established with Bacillus subtilis cells transformed with a cyanobacterial genome that is faithfully replicated but not expressed, whereas it drives the synthesis of an offspring when present in its parent host (Watanabe et al., 2012). This distinction between Shannon-like information (meaningless) and information with “value” has long been discussed under the name “semantic” information [(Bar-Hillel and Carnap, 1953; Deniz et al., 2019; Lundgren, 2019; Miłkowski, 2021); see also the emphasis on the requirement for recursive modeling to account for information in the brain (Conant and Ashby, 1970)]. However, with the exception of the idea of logical depth, proposed by Bennett in 1988 (Bennett, 1988a), there is still no well-developed theory on the subject. We will restrict our discussion to the role of data in the TM.

The possibility of moving from one set of data to a smaller set, as illustrated in the functioning of cortical layers, is quite similar to the measure proposed by Bennett when he illustrated Landauer’s principle by the process of arithmetic division. In this illustration, Bennett showed that this operation could be implemented in a reversible way, leaving the remainder of the division as its result (Bennett, 1988b). However, in order to bring out the division remainder, to make it visible, it is necessary to erase all the steps that led to the result: this is what costs energy. What matters, then, is the sorting that allows the relevant data to be isolated from the background. To carry out a sorting, a choice, it is necessary to carry out a measurement, as Herbert Simon pointed out in his decision theory (Simon, 1974). In living cells, this process is fairly easy to identify in the discrimination between classes of entities, for example young and old proteins. In this case, the question is how to verify that the cell is measuring something before “deciding” to degrade a protein. Many ways of achieving this discrimination can be proposed. In a cell, the cleaning process could simply be a prey-predator competition between proteins and peptidases. It could result from spatial arrangement, with proteins being produced in, or moved to, a place where there are few degrading enzymes, or from the fact that functioning proteins form a block, an aggregate, that is difficult to attack. All these processes dissipate energy at steps that specifically involve information management (Boel et al., 2019). But what about neural networks?
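The contrast between a reversible computation and the erasure that isolates its result can be made concrete with a toy example. The sketch below is not Bennett’s construction; it is a simplified illustration, with hypothetical function names, in which division by repeated subtraction keeps a full trace of its steps. As long as the trace is kept, the dividend can be recovered; once everything but the remainder is discarded, it cannot.

```python
from typing import List


def divide_keeping_trace(dividend: int, divisor: int) -> List[int]:
    """Divide by repeated subtraction, recording every intermediate value.

    The last element of the returned trace is the remainder.
    """
    if dividend < 0 or divisor <= 0:
        raise ValueError("expects dividend >= 0 and divisor > 0")
    trace = [dividend]
    while trace[-1] >= divisor:
        trace.append(trace[-1] - divisor)
    return trace


def recover_dividend(trace: List[int], divisor: int) -> int:
    """Run the computation backward: possible only while the trace exists."""
    steps = len(trace) - 1  # number of subtractions, i.e., the quotient
    return trace[-1] + steps * divisor


def keep_only_remainder(trace: List[int]) -> int:
    """Discard the trace. This is the logically irreversible (erasing) step."""
    return trace[-1]


trace = divide_keeping_trace(17, 5)
print(trace)                       # every step is still available
print(recover_dividend(trace, 5))  # the input can be reconstructed
print(keep_only_remainder(trace))  # only the remainder survives
print(keep_only_remainder(divide_keeping_trace(22, 5)))  # same remainder, different input
```

Because 17 and 22 leave the same remainder when divided by 5, the remainder alone no longer identifies the input. In this toy picture, discarding the trace is the step that corresponds to the erasure, and hence the energy cost, discussed above.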

There is no doubt that the brain manipulates information and computes. But where are its states stored and how are they managed? Discrimination processes can easily be identified in the way the brain tackles its environment, but where do we find specific energy-dependent processes underlying discrimination? Furthermore, there does not seem to be any data/program entity that can be exchanged between brains. Homo sapiens is perhaps an exception, once true language has been established. The same may apply to animal communication, but less obviously, and certainly not if we follow the Chomskian definition of language (Hauser et al., 2002). Sentences can be exchanged between different brains in a way that alters the behavior of the machine that carries the brain. This is particularly visible with writing, which is the metaphor used by Turing, and it certainly holds as soon as writing is established, which makes writing the benchmark of humanity.

Writing: Toward a Turing Machine?

We enter here a very speculative section of this essay, meant to help generate new visions of the brain’s competence and performance. In fact, while von Neumann and others invented computers with the mimicking of the brain in mind, the brain does not appear to behave as a TM. Table 1 compares the key features of a Turing Machine, a computer, and the human brain. If we accept that the brain could work as a digital computer, several features of the digital world should be highlighted, as they should have prominent signatures. Among those that have unexpected but recognizable consequences, we find recursion (Danchin, 2009a), which fits with the concept of reentry. An original feature of the TM is that it allows recursion. Recursion is built into numerical worlds when a routine executes a program that calls itself. A consequence of recursion, which was addressed by Hofstadter in his Gödel, Escher, Bach (Hofstadter, 1999), is that it produces inherently creative behavior (i.e., giving rise to something that has no precedent), a feature commonly observed in the workings of the brain. This happens in cells, even before they multiply (especially when they repair their DNA, during the stationary phase). The digital life of the cell provides a recursive way to creatively explore its future. Creation is also a key feature of TMs, and brains. Consider the wiring diagram of the mammalian brain, composed as it is of parallel cortico-striatal-thalamo-cortical loops, each specialized for motor, visual, motivational, or executive functions (Alexander et al., 1986; Seger, 2006). These canonical loops provide the essential circuitry for recursive information processing, as observed in the creation of complex abstract rules from simple sensory motor sequences (Miller and Buschman, 2007).
An elegant study stimulated the mossy fiber component of the cerebellar circuitry within the additional parallel cortico-cerebellar-thalamo-cortical loop to causally demonstrate recursion in the formation of a classically conditioned eyeblink response (Khilkevich et al., 2018). Indeed, such recursion-implementing circuitry is widespread and characteristic of the mammalian brain (Alexander et al., 1986).

TABLE 1

| Feature | Turing Machine | Computer | Brain |
|---|---|---|---|
| Separation data/machine | Yes | Yes | No in general; yes for grammatical language performance and numbering |
| Declaration | Yes | No (not yet) | Yes |
| Prescription | No | Yes | Yes in social organisms |
| Digital | Yes | Yes | Limited to numbering and language performance |
| Analog | No | Yes for the machine | Yes |
| Recursive | Yes | Yes | Yes |
| States | Finite | Many finite states | Unlimited, poorly defined |
| Organizational granularity | Two separate levels | Multilevel | Multilevel |
| Memory | Past state | RAM and ROM | Distributed, limited only by lifespan |
| Computation | Algorithmic | Algorithmic (heuristics can be implemented via algorithms) | Heuristic and algorithmic |

Some specific features of a Turing Machine, a computer, and the human brain.

Among the characteristics necessary to identify a TM is the physical separation of the data/program from the machine that interprets it. The data/program entity is illustrated as a string of symbols, a purely digital representation. The metaphoric string of the Universal TM can be embodied into a variety of strings (parallelization is allowed). A brain, on the other hand, seems to work with a completely different approach. There is no separate program involved in its operation. It is simply “programmed” by the interconnections between its active components, neurons. The brain does not appear to fetch instructions or data from a memory located in a well-defined area, decode and interpret instructions etc. Neurons get input data from other neurons, operate upon these data and generate output data that are fed to receiving neurons. Memory is distributed all over the brain tissue. This view is a bit oversimplified but it is enough to bring us to the following questions. Do we find entities that can be separated from the brain, extracted and reintroduced, in the way it works? In an animal brain, the question is to understand what might play the role of strings of symbols.
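The separation between the machine and the string of symbols it processes can be sketched in a few lines. In the minimal example below, the transition table plays the role of the machine and the tape is an ordinary string that can be swapped freely without touching the table. The bit-inverting rule set, the state names, and the `run` function are hypothetical choices made for illustration; any other small rule set would serve equally well.

```python
from typing import Dict, Tuple

BLANK = "_"

# The "machine": a fixed transition table.
# (state, symbol read) -> (symbol to write, head move, next state)
Rules = Dict[Tuple[str, str], Tuple[str, int, str]]

# Hypothetical example machine: flips every bit, then halts on the first blank.
INVERTER: Rules = {
    ("scan", "0"): ("1", +1, "scan"),
    ("scan", "1"): ("0", +1, "scan"),
    ("scan", BLANK): (BLANK, 0, "halt"),
}


def run(rules: Rules, tape: str, start: str = "scan", max_steps: int = 10_000) -> str:
    """Apply `rules` to `tape` until the state "halt" is reached."""
    cells = dict(enumerate(tape))  # the "data": kept apart from the rules
    head, state, steps = 0, start, 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step budget exhausted before halting")
        symbol = cells.get(head, BLANK)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += move
        steps += 1
    return "".join(cells[i] for i in sorted(cells)).strip(BLANK)


# The same machine runs as soon as it is handed a tape, whatever the tape is.
print(run(INVERTER, "1011"))
print(run(INVERTER, "000"))
```

Nothing in `INVERTER` refers to a particular tape, and nothing on the tape refers to the rules. It is this kind of extractable, replaceable string that is hard to identify in an animal brain.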

Separation has a considerable consequence: it requires some kind of communication and exchange, which could occur between parts of the brain. This feature was discussed in an interesting essay by Julian Jaynes, where he surmised that consciousness emerged from a dialog of coded sequences exchanged between the brain hemispheres [our ancestors had “voices” (Jaynes, 2000)]. While this vision is now obsolete, it points to what could be the first step of a pre-TM, in which the brain exchanges strings of signals between hemispheres (contemporary views consider areas rather than hemispheres, with particular emphasis on inhibition) to generate novel information. In fact, such phenomena have been experimentally demonstrated by interhemispheric transfer and interhemispheric synthesis of lateralized engrams, in studies that exploited the ability to reversibly silence one and then the other of the brain’s bilateral structures (Nadel and Buresova, 1968; Fenton et al., 1995). This organization of the cortex is also used by animals to map future navigation goals (Basu et al., 2021). Alongside this evolution of information transfer within the brain, strings of symbols could be exchanged between brains, implying that the social brain is at the heart of what is needed for a brain to become a TM. When language comes into play (probably first through grammatically organized phonemes and then through writing), part of the brain may behave as a digital device, with important properties derived from the corresponding TM scenario. This is what is happening now, as you read this text: your brain behaves like a TM, and you can modify the text, exchange it with someone else’s brain, etc. In fact, some of this may already be true of the ability to see. The images seen by the retina are in a way digitized by the very construction of the retina as layers of individual cells that “pixelize” the image of the environment. 
It is not far-fetched to assume that the processing of the corresponding information by brain neural networks has retained some of the characteristics of this digitization.

Before the invention of writing, making reusable tools would also represent a primitive way of implementing a TM. Homo sapiens is one of the very few animals to do so. In birds, tools can be made, but tool reuse and tool exchange have rarely been observed. It may therefore be that the genus Homo began to build a TM-like brain, but that its actual implementation as an important feature only appeared with complex languages (i.e., with grammatical properties linked to a syntax of the type described by Noam Chomsky) and, most importantly, with the invention of writing. Looking again at the network layer organization of the cortex, we can see that there is a certain analogy between the simplest elements of syntactic structures and this neural structure. The afferent pathways (and not the cells), all constructed in the same way (but not identical), would represent the nominal syntagm (with all that the numerous variants of afferents, interferences, and modifications can bring to meaning), and the cells integrating their output commands would represent the verbal syntagm (with all that this implies in terms of motor actions, including imaginary ones, since by construction the verbal syntagm acts on the nominal syntagm). This is a gradual evolution, which will certainly undergo further stages in the future. The invention of language, with its linear sequences of phonemes when spoken and of letters when written, would mark the moment of transition when Homo sapiens began to behave as a Turing Machine, separating human beings from other animals. One caveat, though. The emphasis here has been on one of the characteristics of the TM, namely the physical separation of the data from the machine, where data can be replaced by other data without changing the specific nature of the machine. However, there is a second essential characteristic of a TM: it is a finite state machine. It would be difficult to accept that the brain behaves like such a machine. 
Even its states are quite difficult to identify (although progress in identifying the functioning of various areas may provide some insight into the localized features of specific states). To validate the hypothesis discussed here, it would be necessary to couple the way writing is used with specific states. This remains quite futuristic.

In Guise of Conclusion: The Brain Is not a Digital Computer, but it could Evolve to Become One