- 1Department of Radiation Oncology, The University of Texas MD Anderson Cancer Center, Houston, TX, United States

- 2Department of Radiation Medicine, Oregon Health & Science University, Portland, OR, United States

- 3Medical Scientist Training Program, Oregon Health & Science University, Portland, OR, United States

- 4Department of Clinical Oncology, Menoufia University Shibin El Kom, Shibin El Kom, Egypt

- 5Department of Computer Science, Aalto University School of Science, Espoo, Finland

Background/Purpose: Sarcopenia is a prognostic factor in patients with head and neck cancer (HNC). Sarcopenia can be determined using the skeletal muscle index (SMI) calculated from cervical neck skeletal muscle (SM) segmentations. However, SM segmentation requires manual input, which is time-consuming and variable. Therefore, we developed a fully-automated approach to segment cervical vertebra SM.

Materials/Methods: 390 HNC patients with contrast-enhanced CT scans were utilized (300-training, 90-testing). Ground-truth single-slice SM segmentations at the C3 vertebra were manually generated. A multi-stage deep learning pipeline was developed, where a 3D ResUNet auto-segmented the C3 section (33 mm window), the middle slice of the section was auto-selected, and a 2D ResUNet auto-segmented the auto-selected slice. Both the 3D and 2D approaches trained five sub-models (5-fold cross-validation) and combined sub-model predictions on the test set using majority vote ensembling. Model performance was primarily determined using the Dice similarity coefficient (DSC). Predicted SMI was calculated using the auto-segmented SM cross-sectional area. Finally, using established SMI cutoffs, we performed a Kaplan-Meier analysis to determine associations with overall survival.

Results: The mean test set DSCs of the 3D and 2D models were 0.96 and 0.95, respectively. Predicted SMI was highly correlated with the ground-truth SMI in males and females (r ≥ 0.96). Predicted SMI stratified patients for overall survival in males (log-rank p = 0.01) but not females (log-rank p = 0.07), consistent with ground-truth SMI.

Conclusion: We developed a high-performance, multi-stage, fully-automated approach to segment cervical vertebra SM. Our study is an essential step towards fully-automated sarcopenia-related decision-making in patients with HNC.

Introduction

Sarcopenia – the excessive loss of skeletal muscle (SM) mass and function – is a common and debilitating phenomenon in head and neck cancer (HNC) patients (1). Weight loss is frequent in HNC due to nutritional deficiencies induced by tumor geometry affecting normal tissues (2) and/or side effects caused by therapeutic interventions (3). Although the link between treatment-associated weight loss and survival in HNC is unclear (4), sarcopenia has been strongly associated with oncologic outcomes and late radiation-induced toxicities (5–7). Notably, in a recent meta-analysis of HNC patients by Surov et al. (5), sarcopenia was significantly associated with lower overall survival (hazard ratio = 1.64, p < 0.00001) and disease-free survival (hazard ratio = 2.00, p < 0.00001). Therefore, sarcopenia prediction is of paramount importance in patients with HNC.

Sarcopenia can be identified using different diagnostic criteria (8). One quantitative method investigated in various studies is using a threshold based on the skeletal muscle index (SMI), the cross-sectional area of skeletal muscle measured on axial imaging normalized to the square of the patient’s height (9). The SMI is most commonly calculated and referenced using CT imaging of abdominal musculature (10–14). However, abdominal imaging is not available for all HNC patients. Importantly, Swartz et al. (15), van Rijn-Dekker et al. (6), and Olson et al. (16) have recently suggested the C3 cervical vertebra musculature cross-sectional area may also be used to quantify sarcopenia accurately.

Current approaches to generate C3 musculature segmentations needed for SMI calculation rely on either semi-automated or completely manual segmentation (6), which can be time-consuming, introduce unnecessary errors, and suffer from interobserver variability. A fully-automated approach would be an attractive alternative to the current manual/semi-automated standard. Deep learning, which has found success in medical image segmentation (17–20), may be an ideal choice for fully-automated segmentation of SM. Several recent studies have utilized deep learning methods for automated SM measurement based on abdominal CT scans with reasonable performance (21–26). However, to date, no studies have attempted to automate the SMI calculation workflow based on head and neck imaging.

The primary objective of this study was to develop a fully-automated approach to segment skeletal muscle at the C3 vertebral level for use in SMI calculations. These calculations could be directly used to determine sarcopenia status for predicting prognostic outcomes. To achieve this goal, we developed and implemented a two-stage deep learning system that utilizes 3D and 2D ResUNets to detect the C3 vertebra and segment the corresponding C3 musculature, respectively. We show that our approach can faithfully generate segmentations comparable to ground-truth human-generated segmentations. By fully automating the sarcopenia determination workflow, we can ensure rapid, reproducible, and accurate measurements for use in clinical decision-making.

Materials and methods

Patient and imaging data

Data for 495 patients from the publicly available head and neck squamous cell carcinoma (HNSCC) collection on The Cancer Imaging Archive (TCIA) (27–29) were retrospectively collected in 2021. All patients had a histopathologically-proven diagnosis of squamous cell carcinoma of the oropharynx and were treated with curative-intent intensity-modulated radiotherapy. DICOM-formatted contrast-enhanced CT scans were acquired from the TCIA databases (27–29). Of the 495 patients available in the HNSCC collection, 396 were selected because the C3 vertebra was included on imaging. Subsequently, 6 patients were removed due to image reconstruction errors (n=1), image processing errors (n=1), or oblique image orientations (n=4), leading to a final set of 390 patients used in this analysis. The clinical and demographic characteristics of these patients are shown in Table 1. The majority of patients were male (86.6%) with base of tongue tumors (51.6%). SM (paraspinal and sternocleidomastoid muscles) was manually segmented for each CT image in one slice (2D image) at the level of the C3 vertebra. The segmentations were performed in sliceOmatic, version 5.0 (Tomovision), using previously published Hounsfield unit thresholds to define muscle and fat (12, 30); specifically, a range of -29 to +150 Hounsfield units was used to initially define SM, followed by manual corrections. No pathological tissue was located in the segmented SM. The single-slice 2D CT images selected for segmentation and the corresponding SM segmentation masks were exported as DICOM files and tag files, respectively. Segmentations are made publicly available on Figshare (doi: 10.6084/m9.figshare.18480917); additional information on the dataset used in this analysis can be found in the corresponding data descriptor (31).

Image processing

The DICOM 3D volumetric and single-slice 2D CT images were converted to Neuroimaging Informatics Technology Initiative (NIfTI) format using the DICOM processing toolkit DICOMRTTool v. 0.3.21 (32). The SM segmentation .tag files were converted to NIfTI format using an in-house Python script. The NIfTI files for the single-slice 2D CT images and SM segmentations were used to train the 2D segmentation model (described below). The 2D CT slice location in the C3 vertebra was extracted from the DICOM file and used to generate the ground-truth segmentation mask for the C3 section, defined as a volume 33 mm in thickness centered at the location of the 2D CT slice. The tissue regions in the 3D CT images were distinguished from the background by thresholding the images at values greater than -500 Hounsfield units, with any air gaps within the tissue region filled, to generate a binary mask of the external boundaries. The generated external boundary masks and the locations of the 2D CT slices were used to create the ground-truth C3 section segmentations to train the 3D model (described below). As we have described elsewhere (33), all the images and masks were resampled to a fixed image resolution of 1 mm across all dimensions. The CT intensities were truncated to the range of [-250, 250] Hounsfield units to increase soft tissue contrast and then normalized to the range [-1, 1] (Figures 1A, B). We used the Medical Open Network for AI (MONAI) (34) software transformation functions to rescale and normalize images.
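The thresholding and intensity normalization steps described above can be illustrated with a minimal NumPy sketch. This is not the pipeline used in the study, which relied on MONAI transformation functions; the function names `body_mask` and `normalize_ct` are hypothetical, and air-gap filling and resampling to 1 mm are omitted.

```python
import numpy as np


def body_mask(ct_hu, threshold=-500.0):
    """Binary mask of voxels above the air threshold (in Hounsfield units).

    Air gaps inside the body are NOT filled here; that step is omitted.
    """
    return ct_hu > threshold


def normalize_ct(ct_hu, hu_min=-250.0, hu_max=250.0):
    """Truncate intensities to [hu_min, hu_max] HU, then map linearly to [-1, 1]."""
    clipped = np.clip(ct_hu, hu_min, hu_max)
    return 2.0 * (clipped - hu_min) / (hu_max - hu_min) - 1.0


# Toy intensities standing in for a CT volume (illustrative values only).
toy = np.array([-1000.0, -100.0, 0.0, 50.0, 1200.0])
print(body_mask(toy))
print(normalize_ct(toy))
```

Values outside the truncation window saturate at -1 or 1, so the full output range is spent on soft tissue.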

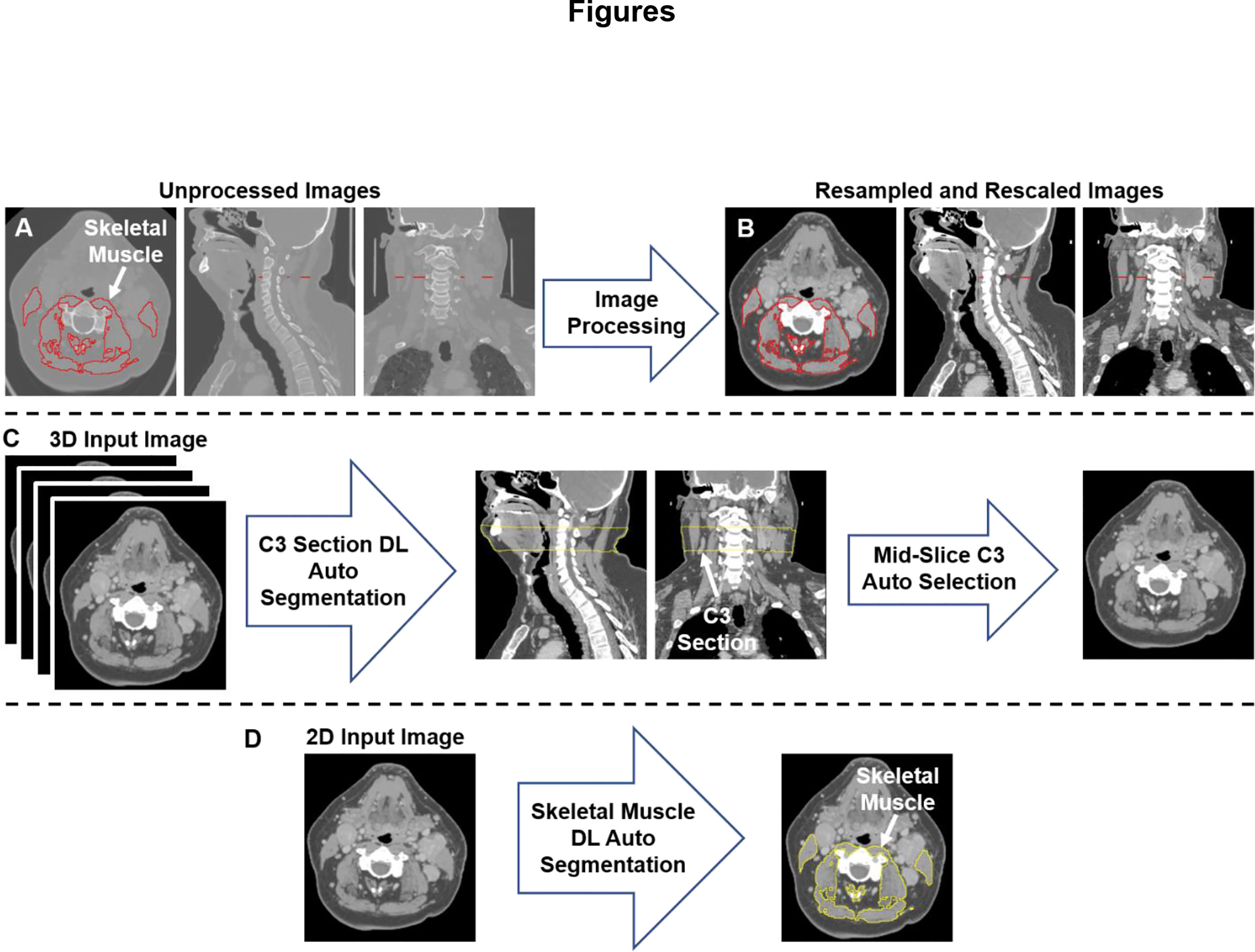

Figure 1 An illustration of the workflow used for skeletal muscle (SM) auto-segmentation at the C3 vertebra. (A) Overlays of the ground-truth SM segmentation and the original CT images. (B) Overlays of the ground-truth SM segmentation and the processed CT images. (C) An illustration of the workflow used to auto-select a single CT slice at the C3 vertebra for SM auto-segmentation. The auto-selected slice is the middle slice of the auto-segmented C3 section (33 mm in height) using a 3D ResUNet applied to the 3D volumetric CT image. (D) Auto-segmentation of SM using a selected C3 vertebra CT image using a 2D ResUNet model.

Segmentation model

We used a multi-stage deep learning convolutional neural network approach for SM segmentation. Our approach was based on the UNet architecture with residual connections (ResUNet) included in the MONAI software package, as we have described in previous publications (33, 35). In the first stage of our approach (Figure 1C), a 3D ResUNet model auto-segmented the C3 vertebra section (33 mm), which was then followed by auto-selection of the middle slice of the section. In the second stage of our approach (Figure 1D), a 2D ResUNet model auto-segmented the SM on the auto-selected slice of the C3 section. Additional details of our architecture are described in Appendix A.
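As an illustration of the slice auto-selection step, the sketch below picks the axial slice midway through a binary C3 section mask. It assumes the mask is indexed as (slice, row, column) and takes the midpoint of the axial extent as the "middle slice"; the exact selection rule in our implementation may differ, and the function name is hypothetical.

```python
import numpy as np


def middle_slice_index(section_mask):
    """Return the axial index midway between the first and last slices
    that contain any foreground voxel of the predicted section mask."""
    slices_with_mask = np.flatnonzero(section_mask.any(axis=(1, 2)))
    if slices_with_mask.size == 0:
        raise ValueError("mask is empty; no C3 section was predicted")
    return int((slices_with_mask[0] + slices_with_mask[-1]) // 2)


# Toy mask: a 33-slice slab (33 mm at 1 mm spacing) inside a 50-slice volume.
toy_mask = np.zeros((50, 4, 4), dtype=bool)
toy_mask[10:43] = True
print(middle_slice_index(toy_mask))
```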

Model implementation

We randomly split the data into 300 patients for training and 90 patients for testing. For training, we used a 5-fold cross-validation approach where the 300 patients from the training data were divided into five non-overlapping sets. Each set (60 patients) was used for model validation while the 240 patients in the remaining sets were used for training, i.e., each set was used once for validation and four times for training, leading to five sub-models. The processed CT images and corresponding masks for the 3D ResUNet and 2D ResUNet models (C3 section and SM, respectively) were randomly cropped to four fixed-size regions (patches) per patient, of size (96, 96, 96) and (96, 96), respectively. Additional details on the model implementation are described in Appendix A. We applied additional data augmentation to both image and mask patches to minimize overfitting, including random horizontal flips with a probability of 50% and random affine transformations with an axial rotation range of 12 degrees and a scale range of 10%. We used the Adam optimizer for computing the parameter updates and the soft Dice loss function. The models were trained for 300 iterations with a learning rate of 2×10⁻⁴ for the first 250 iterations and 1×10⁻⁴ for the remaining 50 iterations. The values for the Adam optimizer coefficients β1 and β2 were 0.9 and 0.999, respectively. Data augmentation and loss functions were provided by the MONAI framework (34). The final segmentations on the test set for both models were obtained by a majority vote on a pixel-by-pixel basis for all predicted segmentation masks by the 5-fold cross-validation sub-models (model ensemble), as described in a previous study (33).
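The cross-validation split and the pixel-wise majority vote can be sketched as follows. This is a simplified illustration rather than the study code: the helper names, the shuffling seed, and the toy masks are assumptions made for the example.

```python
import random

import numpy as np


def five_fold_splits(patient_ids, n_folds=5, seed=0):
    """Split patients into non-overlapping folds; each fold serves once
    as the validation set while the remaining folds form the training set."""
    ids = list(patient_ids)
    random.Random(seed).shuffle(ids)
    folds = [ids[i::n_folds] for i in range(n_folds)]
    splits = []
    for k in range(n_folds):
        train = [p for j, fold in enumerate(folds) if j != k for p in fold]
        splits.append((train, folds[k]))
    return splits


def majority_vote(masks):
    """Label a pixel as foreground when more than half of the sub-model
    masks label it as foreground."""
    stacked = np.stack(masks).astype(np.int32)
    votes = stacked.sum(axis=0)
    return votes * 2 > stacked.shape[0]


splits = five_fold_splits(range(300))
print([(len(train), len(val)) for train, val in splits])

# Five toy 2x2 sub-model predictions (illustrative values only).
toy_masks = [
    np.array([[1, 0], [1, 1]]),
    np.array([[1, 0], [0, 1]]),
    np.array([[1, 1], [0, 0]]),
    np.array([[0, 0], [0, 1]]),
    np.array([[1, 0], [1, 0]]),
]
print(majority_vote(toy_masks))
```

With an odd number of sub-models, ties cannot occur, so the vote is always well defined.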

Model validation

For both the 3D ResUNet and 2D ResUNet models, we evaluated the performance on the corresponding cross-validation sets as well as the final ensemble segmentation on the test set using the Dice similarity coefficient (DSC) (36). Specific to the 3D model, we also evaluated the accuracy of the C3 section segmentation by quantifying the absolute difference (in mm) between the slice location of the middle of the C3 section predicted by the 3D model and that of the 2D CT ground-truth image. Specific to the 2D model, we compared the SM cross-sectional areas obtained using the SM ground-truth segmentation with (1) the 2D model-predicted SM segmentations on the same ground-truth CT image (Pred_GT) and (2) the 2D model-predicted SM segmentations on the slices auto-selected by the 3D model (Pred_C3). We evaluated the correlation between the SM cross-sectional areas using the Pearson correlation coefficient; we also used a two-sided Wilcoxon signed-rank test to determine if these SM values were significantly different. Additionally, to derive the SMI, we normalized the SM cross-sectional areas (in cm²) by the square of the patients’ heights (in m²). We then examined the correlation between the SMI values produced by the ground-truth and deep learning segmentations using the Pearson correlation coefficient; we also used a two-sided Wilcoxon signed-rank test to determine if these SMI values were significantly different. Based on previous work by Swartz et al. (15) and van Rijn-Dekker et al. (6), we used Equation 1 to calculate the cross-sectional area (CSA) at the L3 lumbar level based on the CSA at the C3 cervical level and subsequently Equation 2 to calculate the lumbar SMI:

Based on previous work by Prado et al. (30), SMI thresholds of 52.4 cm²/m² (males) and 38.5 cm²/m² (females) were applied to lumbar SMI derived from SM ground-truth and deep learning segmentations to stratify patients by sarcopenia status (‘normal’ and ‘depleted’ muscle); body composition-related measurements in the training and testing sets are shown in Appendix B. These stratifications were then used for Kaplan-Meier analysis to determine associations between sarcopenia status and overall survival probabilities. To determine the sarcopenia status for the whole data set (i.e., 390 patients), we implemented Kaplan-Meier analysis on the 5-fold cross-validation data and the test data. We aggregated the SMI estimated for each cross-validation fold (i.e., 60 patients per fold) using the corresponding trained 3D and 2D models in addition to the SMI for the test data using the average predictions of the five cross-validation models.
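A short sketch of the SMI normalization and threshold-based stratification is given below. The input is assumed to be a lumbar CSA already estimated from the C3 CSA; the conversion itself is not reproduced here. The function names are hypothetical, and treating values strictly below the cutoff as ‘depleted’ is an assumption about boundary handling.

```python
# Sex-specific lumbar SMI cutoffs in cm^2/m^2, as stated in the text.
SMI_CUTOFFS = {"male": 52.4, "female": 38.5}


def skeletal_muscle_index(csa_cm2, height_m):
    """SMI = cross-sectional area (cm^2) divided by height squared (m^2)."""
    if height_m <= 0:
        raise ValueError("height must be positive")
    return csa_cm2 / height_m ** 2


def muscle_status(lumbar_smi, sex):
    """Classify as 'depleted' below the sex-specific cutoff, else 'normal'.

    Assumption: a value exactly equal to the cutoff counts as 'normal'.
    """
    return "depleted" if lumbar_smi < SMI_CUTOFFS[sex] else "normal"


# Hypothetical patient: estimated lumbar CSA of 160 cm^2, height of 1.80 m.
smi = skeletal_muscle_index(160.0, 1.80)
print(round(smi, 2), muscle_status(smi, "male"))
```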

Results

3D ResUNet model performance: C3 section auto-segmentation

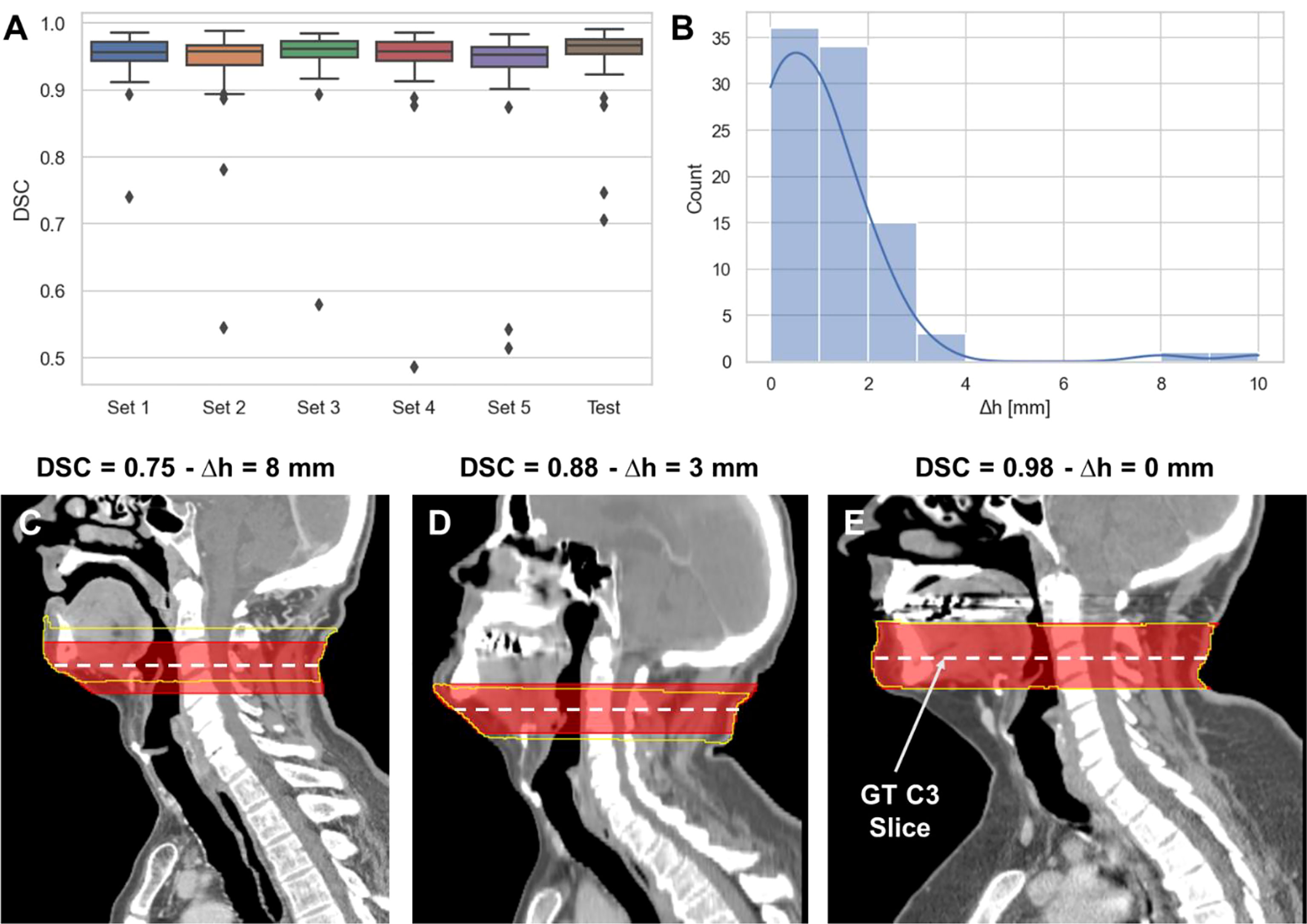

The performance of the 3D ResUNet model for segmenting the C3 section of the neck is summarized in Figure 2A. When assessing the performance of each individual sub-model from our 5-fold cross-validation, the DSCs calculated between the predicted region segmentations and the ground-truth region segmentations were high and consistent between all training folds, with a mean (± standard deviation) DSC of 0.95 ± 0.01. When combining the cross-validation fold predictions using our ensemble approach, the performance on the test set increased to 0.96 ± 0.06. The middle slices of the predicted 3D regional segmentations for the test set were mostly within 4 mm of the ground-truth segmentation slice locations, with the greatest number of patients being within 1 mm (Figure 2B); the maximum outlier was at a distance of 10 mm. Examples of test set predictions for cases with low, medium, and high performance compared to the mean DSC are shown in Figures 2C–E. As can be visually confirmed, the low-performance case still generated a segmentation such that the middle slice was contained in the C3 region.
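For reference, the DSC between a predicted and a ground-truth binary mask can be computed as in the minimal sketch below. The function name and the convention of returning 1.0 when both masks are empty are assumptions made for the example, not details taken from our implementation.

```python
import numpy as np


def dice_similarity(pred, truth):
    """DSC = 2 * |pred AND truth| / (|pred| + |truth|) for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    denominator = pred.sum() + truth.sum()
    if denominator == 0:
        return 1.0  # assumption: two empty masks count as perfect agreement
    return float(2.0 * np.logical_and(pred, truth).sum() / denominator)


# Toy 1D masks: 6 foreground elements each, 5 of them shared.
truth = np.zeros(10, dtype=bool)
truth[2:8] = True
pred = np.zeros(10, dtype=bool)
pred[3:9] = True
print(dice_similarity(pred, truth))
```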

Figure 2 3D ResUNet model performance for segmentation of C3 vertebra section. (A) Boxplots of the Dice similarity coefficient (DSC) distributions for the 5-fold cross-validation data sets (Set 1 to Set 5 – 60 patients each) and the test data (90 patients). (B) Histogram of the absolute difference (in mm) of the C3 slice location at the middle slice of the auto-segmented C3 section and the location of the ground-truth manually segmented CT slice. Illustrative examples overlaying the C3 ground-truth segmentations (red) (33 mm centered at the ground-truth manually segmented CT slice) and predicted segmentations (yellow) on the CT images with different DSC values (low – 0.75 (C), medium – 0.88 (D), and high – 0.98 (E) performance compared to the mean DSC value of 0.95). The middle slice at the center of mass of the segmented C3 region was auto-selected for further skeletal muscle auto-segmentation by the 2D ResUNet model.

2D ResUNet model performance: SM auto-segmentation

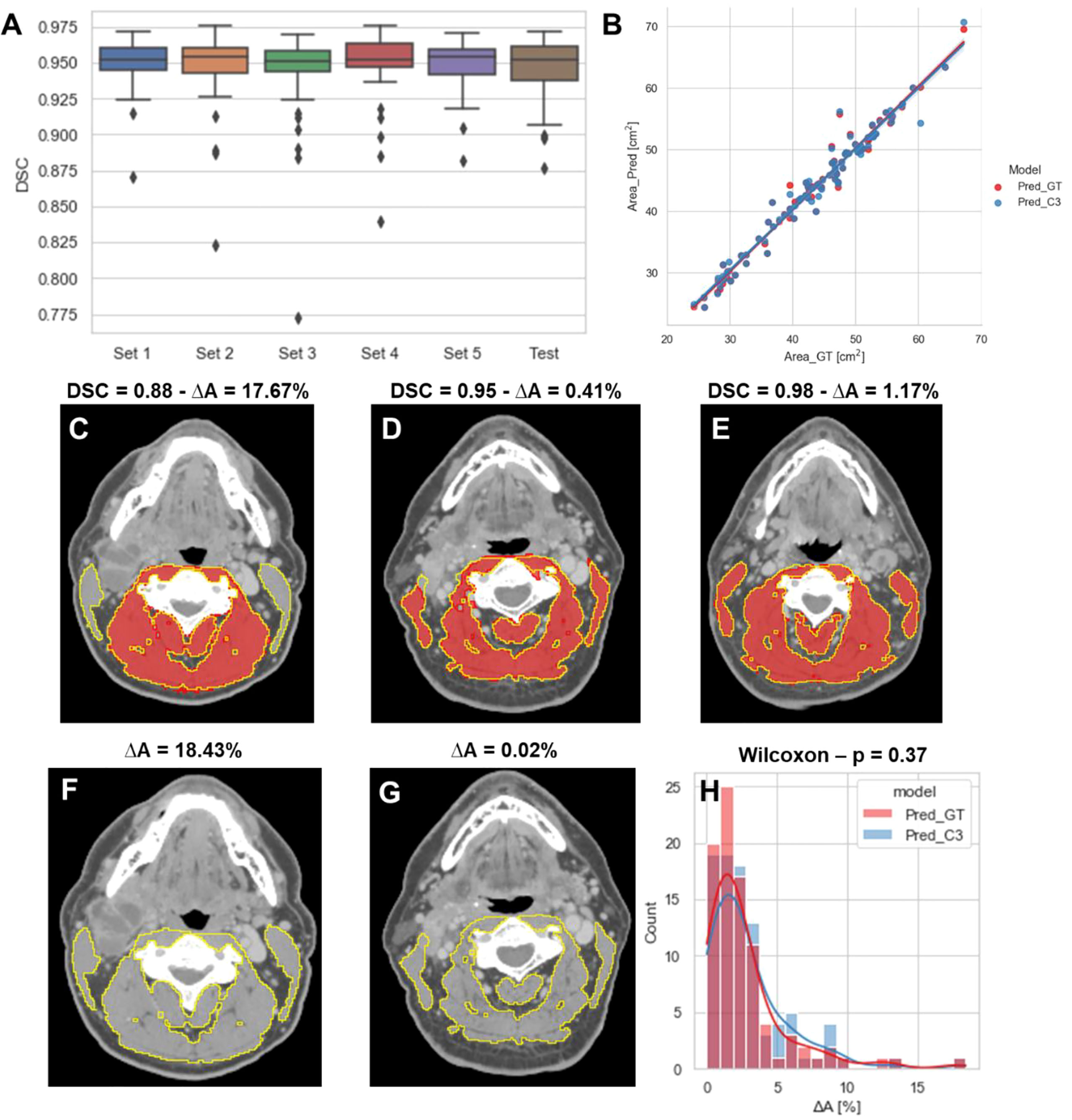

The performance of our 2D ResUNet model for segmenting the C3 vertebra SM is summarized in Figure 3A. The DSCs calculated between the model-predicted segmentations and the ground-truth segmentations were high and consistent between all training folds, with a mean DSC of 0.95 ± 0.002. When combining the cross-validation fold predictions using our ensemble approach, the mean DSC performance on the test set remained consistent at 0.95 ± 0.02. The cross-sectional areas derived from the 2D model predictions using both the ground-truth slice locations and auto-selected slice locations from the 3D ResUNet model were highly correlated to the cross-sectional areas derived from the ground-truth segmentations (Figure 3B). The predicted areas using the ground-truth slice locations had a Pearson r=0.98 (p < 0.0001) and nonsignificant Wilcoxon test (p=0.43). Similarly, the predicted areas using the auto-selected slice locations had a Pearson r=0.98 (p < 0.0001) and nonsignificant Wilcoxon test (p=0.22). Examples of test set predictions for cases with low, medium, and high performance compared to the mean DSC for predictions using ground-truth slice location are shown in Figures 3C–E. As can be visually confirmed, the low-performance case successfully generated a segmentation for musculature that was not included in the ground-truth segmentation. Moreover, the predictions using the auto-selected slice location from the 3D ResUNet model yielded virtually indistinguishable results for the low-performance and medium-performance cases (Figures 3F, G) and identical results for the high-performance case (Figure 3E). Finally, when investigating the percentage difference in cross-sectional areas between the model-generated and ground-truth segmentations, there was no significant difference when using the ground-truth slice location or the auto-selected slice location (p=0.37) (Figure 3H).
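The cross-sectional area and percentage-difference comparison can be illustrated with the following sketch. It assumes masks resampled to 1 mm in-plane resolution, as described in the image processing section; the function names and the sign convention for the percentage difference (predicted minus ground-truth) are assumptions.

```python
import numpy as np


def cross_sectional_area_cm2(mask, pixel_spacing_mm=(1.0, 1.0)):
    """Area of a 2D binary mask in cm^2 (100 mm^2 = 1 cm^2)."""
    pixel_area_mm2 = pixel_spacing_mm[0] * pixel_spacing_mm[1]
    return float(np.count_nonzero(mask)) * pixel_area_mm2 / 100.0


def percent_area_difference(pred_area, truth_area):
    """Percentage difference of the predicted area relative to ground truth."""
    return 100.0 * (pred_area - truth_area) / truth_area


# Toy masks: 60 x 60 ground-truth pixels versus 60 x 63 predicted pixels.
truth_mask = np.zeros((96, 96), dtype=bool)
truth_mask[10:70, 10:70] = True
pred_mask = np.zeros((96, 96), dtype=bool)
pred_mask[10:70, 10:73] = True

truth_area = cross_sectional_area_cm2(truth_mask)
pred_area = cross_sectional_area_cm2(pred_mask)
print(truth_area, pred_area, percent_area_difference(pred_area, truth_area))
```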

Figure 3 2D ResUNet model performance for segmentation of C3 skeletal muscle (SM). (A) Boxplots of the Dice similarity coefficient (DSC) distributions for the 5-fold cross-validation datasets (Set 1 to Set 5 – 60 patients each) and the test data (90 patients). (B) A scatter plot of the SM cross-sectional area using the ground-truth manual segmentation (x-axis) and the SM cross-sectional areas (y-axis) using predicted segmentations of the 2D ResUNet applied to the ground-truth CT image slice (Pred_GT) and the auto-selected CT image slice using the C3 section auto-segmentation (Pred_C3). Illustrative examples overlaying the skeletal muscle (SM) ground-truth segmentations (red) and predicted segmentations (yellow) on the same ground-truth CT images (C–E) and auto-selected CT images (F, G) with different DSC values (low – 0.88, medium – 0.95, and high – 0.98 compared to the mean estimated DSC value of 0.95). The auto-selected CT image for the high-performance example was identical to the ground-truth image and therefore provided the same segmentation as shown in panel E. (H) Histogram of the percentage difference between the ground-truth and predicted SM cross-sectional areas (ΔA%) for the model using the ground-truth slice location (red) or the auto-selected slice location (blue).

SMI measurement comparisons

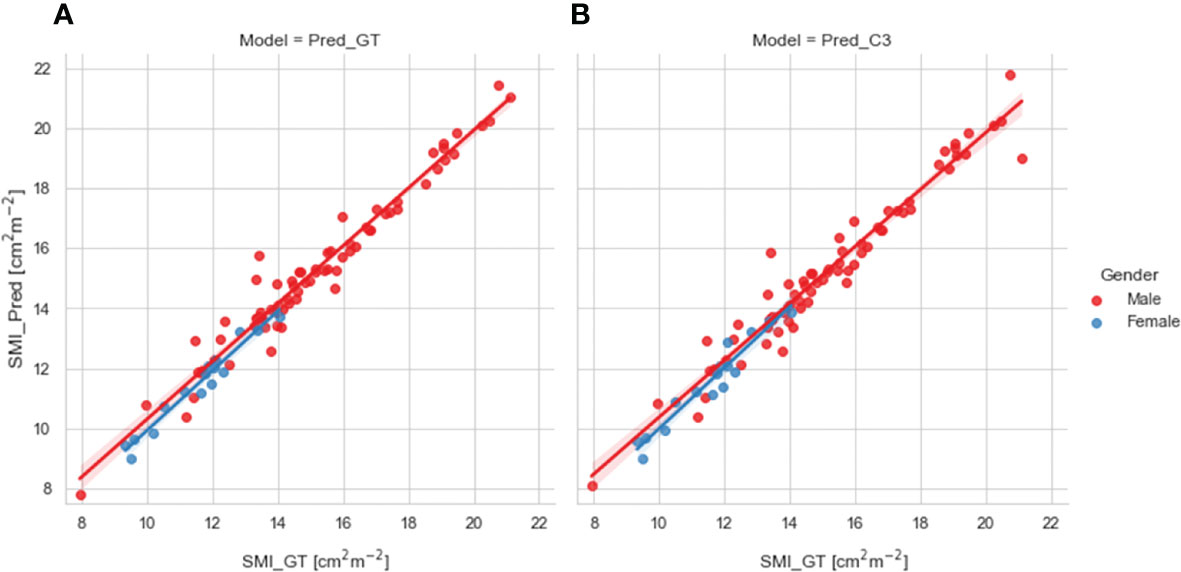

We compared SMI values for test set patients calculated using ground-truth SM segmentations with predicted SMI values calculated using SM segmentations generated from our 2D ResUNet models using the ground-truth slice location (Figure 4A) or auto-selected slice location (Figure 4B). Both model SM segmentations led to predicted SMI values that were highly correlated to the ground-truth SMI values. The predicted SMI values using the ground-truth slice location for males and females both had a Pearson r=0.98 (p < 0.0001) and nonsignificant Wilcoxon signed-rank tests (p=0.17 and p=0.43, respectively) compared to ground-truth SMI values. Similarly, the predicted SMI values using the auto-selected slice location for males and females had Pearson r values of 0.97 and 0.96, respectively (both p < 0.0001) and nonsignificant Wilcoxon signed-rank tests (p=0.19 and p=0.98, respectively) compared to the ground-truth SMI values.

Figure 4 Scatter plots of the skeletal muscle index (SMI) values determined for test set patients (stratified by gender) using the ground-truth manual segmentation (x-axis) and those determined using predicted segmentations of the 2D ResUNet (y-axis) using (A) the ground-truth CT image slice (Pred_GT) and (B) the auto-selected CT image slice using the C3 section auto-segmentation (Pred_C3).

Survival analysis

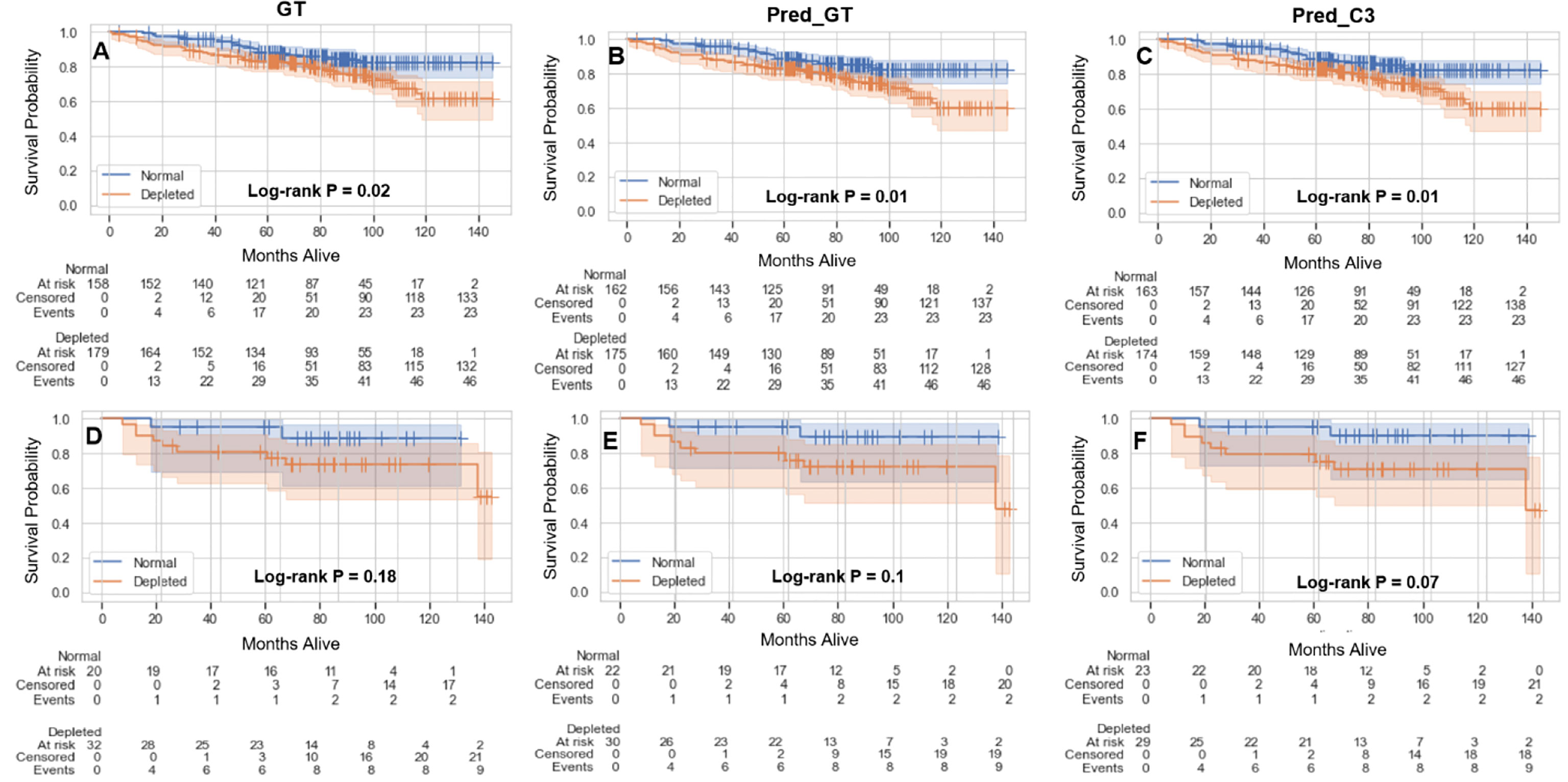

The results of the overall survival analysis based on sarcopenia thresholds are shown in Figure 5. Independent of the method of SMI calculation (GT, Pred_GT, or Pred_C3), there were significant differences in overall survival of males between those with normal and depleted muscle tissue (Figures 5A–C), while females exhibited no significant differences (Figures 5D–F). Hazard ratios (95% confidence intervals) in males for GT, Pred_GT, and Pred_C3 were 1.82 (1.1-3.0), 1.95 (1.18-3.22), and 1.97 (1.19-3.25), respectively. Hazard ratios (95% confidence intervals) in females for GT, Pred_GT, and Pred_C3 were 2.76 (0.59-13.02), 3.4 (0.73-15.83), and 3.72 (0.8-17.31), respectively.
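For readers unfamiliar with the Kaplan-Meier method, the product-limit estimate underlying the survival curves can be sketched in plain Python as below. This is an illustrative implementation on made-up follow-up times, not the analysis code or data from this study; the function name is hypothetical, and events are encoded as 1 (death) or 0 (censored).

```python
def kaplan_meier(times, events):
    """Return [(time, survival probability)] at each distinct event time.

    times: follow-up times; events: 1 if death observed, 0 if censored.
    """
    order = sorted(zip(times, events))
    at_risk = len(order)
    survival = 1.0
    curve = []
    i = 0
    while i < len(order):
        t = order[i][0]
        deaths = 0
        leaving = 0
        # Group all patients who share the same follow-up time.
        while i < len(order) and order[i][0] == t:
            deaths += order[i][1]
            leaving += 1
            i += 1
        if deaths:
            survival *= 1.0 - deaths / at_risk
            curve.append((t, survival))
        at_risk -= leaving
    return curve


# Made-up follow-up times (days) and event indicators, for illustration only.
toy_times = [5, 8, 8, 12, 15]
toy_events = [1, 1, 0, 1, 0]
for t, s in kaplan_meier(toy_times, toy_events):
    print(t, round(s, 3))
```

Applying such an estimator separately to the ‘normal’ and ‘depleted’ groups yields the two curves compared in each panel.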

Figure 5 Kaplan-Meier plots showing overall survival probabilities (test and validation set combined, 390 patients) as a function of time in days for estimated skeletal muscle (SM) index (normal vs depleted) in male (A-C) and female (D-F) patients using the ground-truth SM segmentation (GT) (A, D), auto-segmented SM using the ground-truth slice location (Pred_GT) (B, E), and auto-segmented SM using the auto-selected slice location (Pred_C3) (C, F).

Discussion

In this study, we utilized a multi-stage deep learning approach to segment the C3 region of the head and neck, auto-select a single representative slice, and auto-segment the corresponding SM. Our approach determined slice location and segmented SM with high accuracy when compared to ground-truth segmentations. By fully automating this workflow, we have enabled more rapid testing and application of sarcopenia-related clinical decision-making. To our knowledge, this is the first study to fully automate sarcopenia prediction based on non-abdominal HNC imaging.

We utilized both 2D and 3D ResUNet models in our approach. By decomposing the C3 detection and SM segmentation problem into two separate tasks, we ensure that accurate representations of patient anatomy are identified by the models (C3 region) and subsequently maximize performance for SM segmentation. While previous SM auto-segmentation studies often required specific slices as model inputs (21, 26) or utilized separate pre-processing software (23, 25), multi-stage deep learning methods have recently been adapted in this domain as well (22, 24). Both the 2D and 3D ResUNet models that make up our segmentation pipeline had high performance, with mean DSC values in the test set of 0.95 or higher. Importantly, the performance of our C3 SM segmentation model is comparable to that of previous L3 SM deep learning segmentation models, which also demonstrate test set DSCs of ~0.95 (21–26). Moreover, for cases with relatively low performance, we visually confirmed that results were reasonable, i.e., the auto-selected slice was still contained within the C3 region for the 3D model, and the correct musculature was segmented by the 2D model. Importantly, we also showed minimal differences in the 2D SM segmentation model regardless of how the slice location was determined, indicating the model is robust to the specific C3 slice location. Consistent with quantitative measures of segmentation performance, using our deep learning segmentations to calculate SMI demonstrated a high correlation with ground-truth SMI independent of gender stratification.

A recent meta-analysis by Surov et al. calculated the cumulative prevalence of sarcopenia in HNC patients at 42% (5), highlighting the clinical need for accurate quantification of sarcopenia. Several previous studies have demonstrated that SMI values can be used to stratify patients into sarcopenia subgroups that are strongly associated with prognostic outcomes (5–7). Using lumbar SMI conversion equations previously derived by Swartz et al. (15) and van Rijn-Dekker et al. (6) combined with validated SMI thresholds (12), we demonstrated that calculations based on our deep learning segmentations predict similar overall survival outcomes as calculations based on ground-truth segmentations. Moreover, p-values for all methods were significant for males but not females. These results are consistent with recent literature by Olson et al. (16) which emphasized that sarcopenia was associated with poor survival outcomes in males but not in females. Our results suggest that our automated methods are dependable for use in prognostic outcome prediction.

While our study presents encouraging results towards full automation of sarcopenia-related clinical decision-making for HNC patients, there were some limitations. First, we only tested our method on pre-therapy images. Importantly, some studies have suggested prognostic evidence for sarcopenia measurements based on alternative or additional timepoints (e.g., body composition changes) (7, 37). Therefore, further confirmatory work is needed to ensure our methods can be used accurately and reproducibly for intra-therapy and post-therapy imaging. Additionally, when defining sarcopenia using SMI cutoffs, we have relied on historically accepted thresholds in the literature, but several recent approaches to standardizing SMI values, e.g., through body mass index (38), have been proposed that warrant further exploration. We must also note that while no universal consensus on sarcopenia definitions currently exists, European consensus guidelines (39) emphasize the importance of evaluating muscle performance and strength in addition to muscle mass; therefore, by European consensus guidelines we have only investigated “presarcopenia” in this analysis. Moreover, we have limited our analysis to CT images as CT is the most common imaging modality for HNC radiotherapy treatment planning. However, the use of additional modalities for SM segmentation, e.g., MRI, as has been utilized in other studies (40), may warrant additional auto-segmentation investigations. Finally, while we believe current model performance is satisfactory for clinical applications as demonstrated by comparisons with ground-truth segmentations and SMI measures, different architectural choices or ensemble approaches could be further explored to improve performance.

Conclusions

In summary, using open-source toolkits and public data, we applied 3D and 2D deep learning approaches to head and neck CT images to develop an end-to-end automated workflow for SM segmentation at the C3 vertebral level. When evaluated on independent test data, our fully-automated approach yielded mean DSCs of 0.96 for segmenting the C3 vertebra region and 0.95 for segmenting the corresponding SM. Cross-sectional areas and calculated SMI values derived from our approach were highly correlated with ground-truth values (r>0.95), indicating their potential clinical acceptability. Moreover, our methods can be reliably combined with validated SMI thresholds for use in prognostic stratification. Our study is an essential first step towards fully-automated workflows for sarcopenia-related clinical decision-making. Future studies should consider incorporating additional imaging timepoints and modalities for automated sarcopenia prediction.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: Segmentations generated in this project are available on Figshare, doi: 10.6084/m9.figshare.18480917. The original unprocessed images used in this project can be found on The Cancer Imaging Archive: https://wiki.cancerimagingarchive.net/display/DOI/Radiomics+outcome+prediction+in+Oropharyngeal+cancer.

Ethics statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author contributions

Study concepts: MN, KW, AG, BO, RJ, AM, KK, and CF; Study design: MN, KW, AG, BO, RJ, and AM; Data acquisition: AG, BO, RJ, DE-H, CD, VS, and MA; Quality control of data and algorithms: MN, KW, RH, JJ, JS, and KK; Data analysis and interpretation: MN, KW, AG, BO, RH, JJ, and JS; Manuscript editing: MN, KW, AG, BO, RJ, AM, and KK. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by the National Institutes of Health (NIH)/National Cancer Institute (NCI) through a Cancer Center Support Grant (CCSG; P30CA016672-44). MN is supported by an NIH grant (R01DE028290-01). KW is supported by the Dr. John J. Kopchick Fellowship through The University of Texas MD Anderson UTHealth Graduate School of Biomedical Sciences, the American Legion Auxiliary Fellowship in Cancer Research, and an NIH/National Institute for Dental and Craniofacial Research (NIDCR) F31 fellowship (1 F31DE031502-01). AG received funding from the National Cancer Institute (K08 245188, R01 CA264133) and the American Association for Cancer Research/Mark Foundation “Science of the Patient” Award (20-60-51-MARK). BO received funding from the Radiologic Society of North America Research Medical Student Grant (RMS2026). VS received funding from The University of Texas, Graduate School of Biomedical Sciences Graduate research assistantship. CF received funding from the NIH/NIDCR (1R01DE025248-01/R56DE025248); an NIH/NIDCR Academic-Industrial Partnership Award (R01DE028290); the National Science Foundation (NSF), Division of Mathematical Sciences, Joint NIH/NSF Initiative on Quantitative Approaches to Biomedical Big Data (QuBBD) Grant (NSF 1557679); the NIH Big Data to Knowledge (BD2K) Program of the NCI Early Stage Development of Technologies in Biomedical Computing, Informatics, and Big Data Science Award (1R01CA214825); the NCI Early Phase Clinical Trials in Imaging and Image-Guided Interventions Program (1R01CA218148); an NIH/NCI Pilot Research Program Award from the UT MD Anderson CCSG Radiation Oncology and Cancer Imaging Program (P30CA016672); an NIH/NCI Head and Neck Specialized Programs of Research Excellence (SPORE) Developmental Research Program Award (P50CA097007); and the National Institute of Biomedical Imaging and Bioengineering (NIBIB) Research Education Program (R25EB025787).

Acknowledgments

The authors acknowledge Sunita Patterson (Research Medical Library, MD Anderson Cancer Center) for editorial assistance.

Conflict of interest

CF has received direct industry grant support, speaking honoraria, and travel funding from Elekta AB.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fonc.2022.930432/full#supplementary-material

Abbreviations

DSC, Dice similarity coefficient; HNC, head and neck cancer; ROI, region of interest; SM, skeletal muscle; SMI, skeletal muscle index.

Keywords: auto-segmentation, deep learning, skeletal muscle index, head and neck cancer, sarcopenia

Citation: Naser MA, Wahid KA, Grossberg AJ, Olson B, Jain R, El-Habashy D, Dede C, Salama V, Abobakr M, Mohamed ASR, He R, Jaskari J, Sahlsten J, Kaski K and Fuller CD (2022) Deep learning auto-segmentation of cervical skeletal muscle for sarcopenia analysis in patients with head and neck cancer. Front. Oncol. 12:930432. doi: 10.3389/fonc.2022.930432

Received: 27 April 2022; Accepted: 29 June 2022; Published: 28 July 2022.

Edited by:

Puneeth Iyengar, University of Texas Southwestern Medical Center, United States

Reviewed by:

Remco De Bree, University Medical Center Utrecht, Netherlands

Bianca Muresan, Hospital General Universitario De Valencia, Spain

Copyright © 2022 Naser, Wahid, Grossberg, Olson, Jain, El-Habashy, Dede, Salama, Abobakr, Mohamed, He, Jaskari, Sahlsten, Kaski and Fuller. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Clifton D. Fuller, cdfuller@mdanderson.org
