- 1 The First Clinical Medical College of Lanzhou University, Lanzhou, China

- 2 National Health Commission of People’s Republic of China (NHC) Key Laboratory of Diagnosis and Therapy of Gastrointestinal Tumor, Gansu Provincial Hospital, Lanzhou, China

- 3 The First Clinical Medical College of Gansu University of Chinese Medicine, Lanzhou, China

- 4 Department of Center of Medical Cosmetology, Chengdu Second People’s Hospital, Chengdu, China

- 5 Department of Urology, The 940 Hospital of Joint Logistics Support Force of Chinese PLA, Lanzhou, China

- 6 Department of Radiology, Gansu Provincial Hospital, Lanzhou, China

- 7 Department of Urology, Second People’s Hospital of Gansu Province, Lanzhou, China

- 8 Department of Urology, Gansu Provincial Hospital, Lanzhou, China

Purpose: Patients with advanced prostate cancer (PCa) often develop castration-resistant PCa (CRPC), which has a poor prognosis. Prognostic information obtained from multiparametric magnetic resonance imaging (mpMRI) and histopathology specimens can be effectively utilized through artificial intelligence (AI) techniques. The objective of this study was to construct an AI-based model for predicting progression to CRPC by integrating multimodal data.

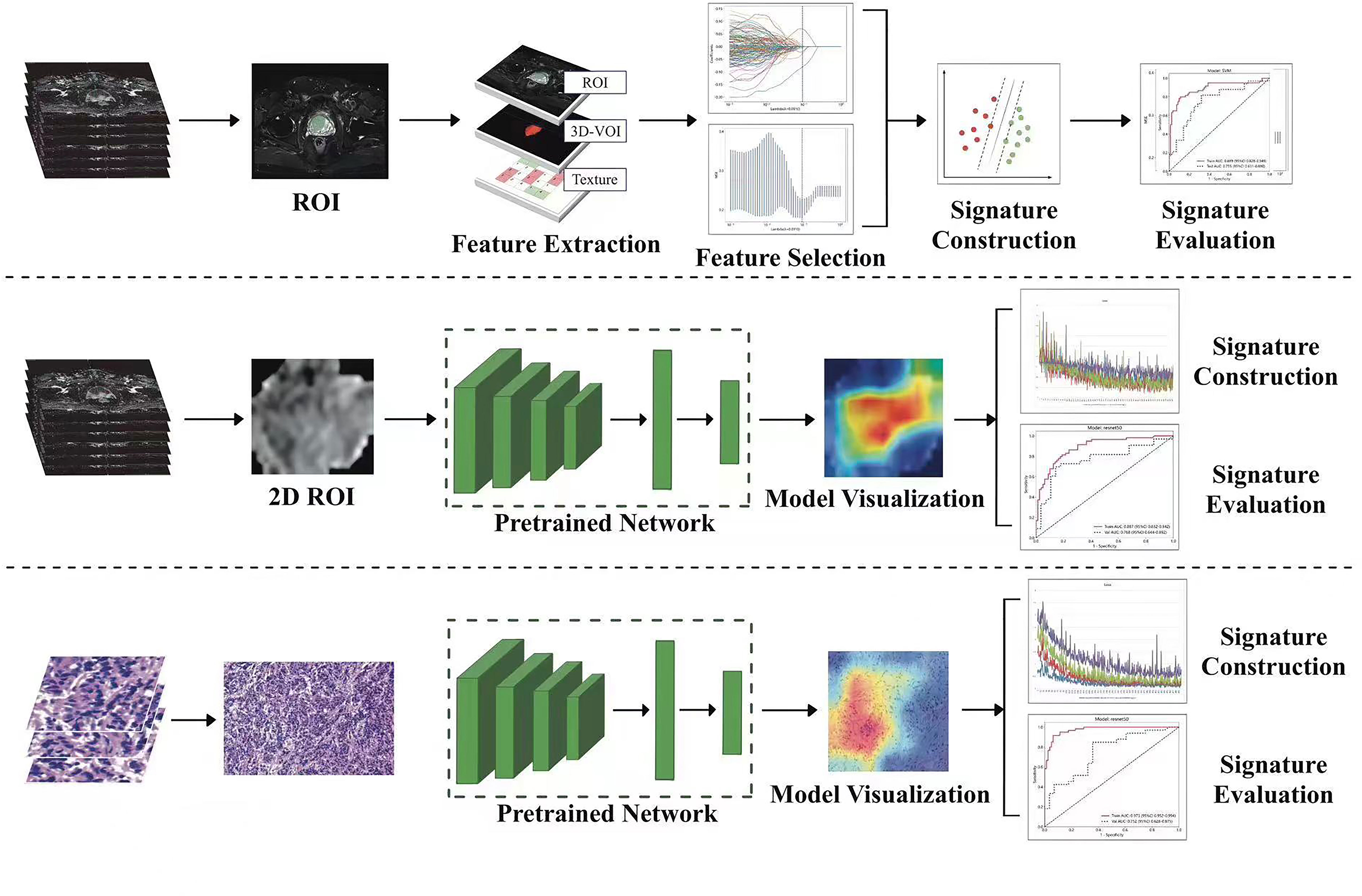

Methods and materials: Data from 399 patients diagnosed with PCa at three medical centers between January 2018 and January 2021 were collected retrospectively. We delineated regions of interest (ROIs) on three MRI sequences, namely T2WI, DWI, and ADC, and used a cropping tool to extract the largest cross-section of each ROI. We selected representative pathological hematoxylin and eosin (H&E) slides for deep-learning model training. A nomogram of the combined model was constructed. ROC curves and calibration curves were plotted to assess the predictive performance and goodness of fit of the model. We generated decision curve analysis (DCA) curves and Kaplan–Meier (KM) survival curves to evaluate the clinical net benefit of the model and its association with progression-free survival (PFS).

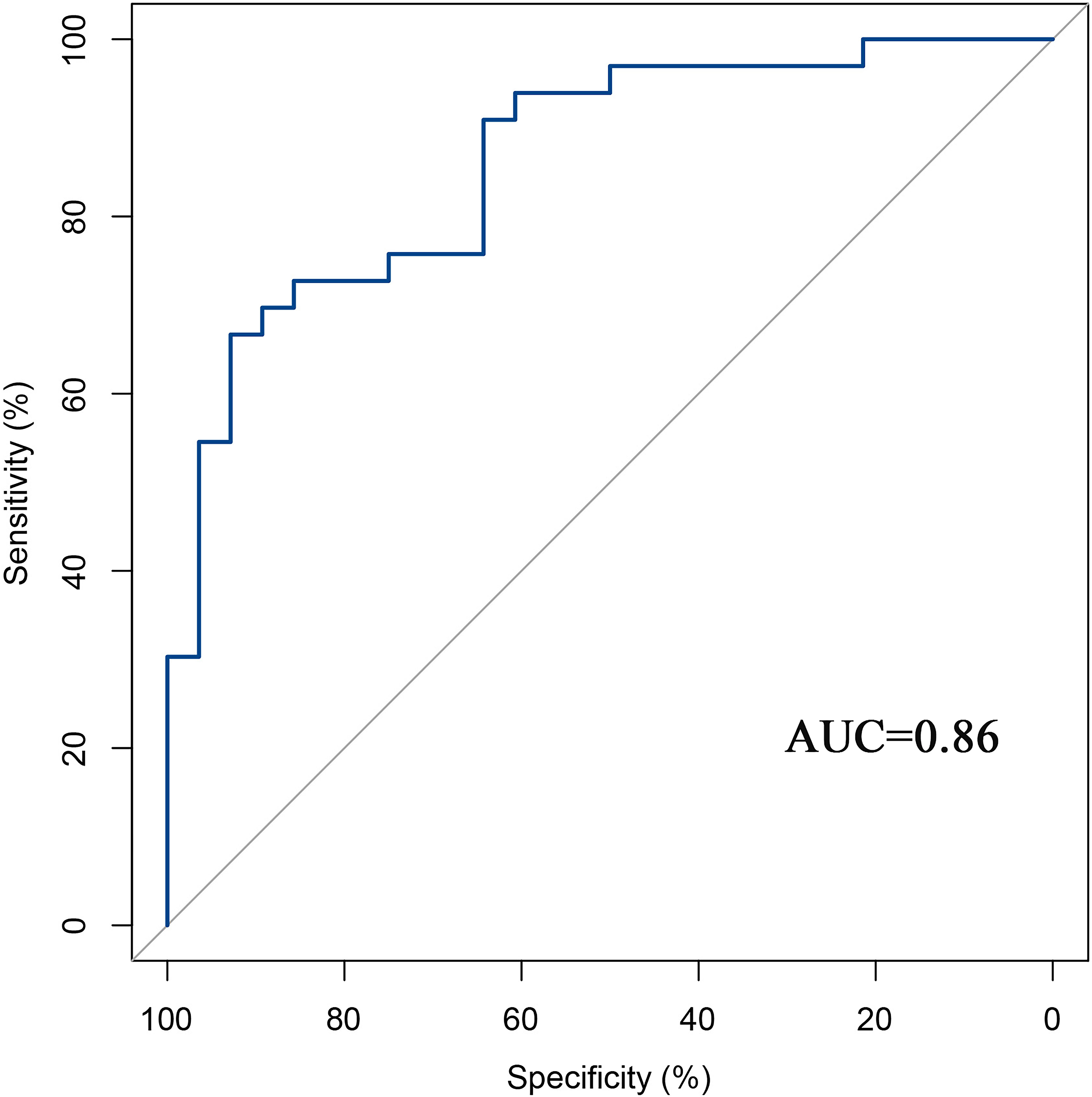

Results: The AUC of the machine learning (ML) model was 0.755. The best deep learning (DL) model for both radiomics and pathomics was ResNet-50, with AUCs of 0.768 and 0.752, respectively. The nomogram showed that the DL model contributed the most, and the AUC of the combined model was 0.86. The calibration curves and DCA indicated that the combined model had good calibration and a net clinical benefit. The KM curves indicated that the model integrating multimodal data can guide patient prognosis and management strategies.

Conclusion: The integration of multimodal data effectively improves the prediction of risk for the progression of PCa to CRPC.

1 Introduction

Prostate cancer (PCa) affects men worldwide and is a significant health concern, with a global incidence rate of 13.5% (1). Additionally, with a mortality rate of 6.7%, PCa is the fifth leading cause of cancer death among men (2). Androgen deprivation therapy (ADT) is considered the primary treatment modality for men diagnosed with advanced symptomatic PCa, also known as castration-sensitive PCa (CSPC) (3). However, after an initial favorable response, many patients show a declining response to treatment and eventually progress to CRPC, which carries a dismal prognosis (3). The median time to progression to CRPC and the mean survival period range from 18 to 24 months and from 24 to 30 months, respectively (4, 5). A castrate serum testosterone level (testosterone [TST] < 50 ng/dL or 1.7 nmol/L) and subsequent disease progression (a sustained rise in prostate-specific antigen [PSA] and/or radiographic progression) are currently the two most important criteria for detecting CRPC. However, tailored precision medicine is limited by reliance on monomodal indicators such as PSA and serum testosterone (6, 7). Early detection of CRPC can help physicians determine the optimal timing for second-line therapies, possibly improving patient survival. Predicting the risk of CRPC is therefore important for the prognosis of patients with advanced PCa, and there is an urgent need for early diagnosis and precise management of CRPC.

Despite advances in technology, accurately detecting, characterizing, and monitoring cancers remains challenging (8). The radiographic assessment of disease relies primarily on visual evaluation, which can be enhanced by advanced computational analyses. Notably, AI holds the potential to significantly improve the qualitative interpretation of cancer imaging by expert clinicians (9). This includes the ability to accurately delineate tumor volumes over time, infer the tumor’s genotype and biological progression from its radiographic phenotype, and predict clinical outcomes (10). Radiomics and pathomics have rapidly emerged as cutting-edge techniques to aid and enhance the interpretation of vast amounts of medical imaging data, which may benefit clinical applications. These techniques can process images directly, giving rise to numerous subdomains for further research (11). Clinical outcomes such as survival, response to treatment, and recurrence may be accurately predicted using AI models that draw on multimodal data (12–14). Radiomics and pathomics show significant promise for enhancing clinical decision-making and, ultimately, patient outcomes through medical imaging (15–17).

Hence, to predict the likelihood of developing CRPC effectively, precisely, and without invasive procedures, we constructed radiomics and pathomics prediction models based on deep-learning algorithms and investigated their value in clinical decision-making and the prognosis of PCa. This approach may allow more accurate prediction of CRPC risk and provide a reference for the accurate diagnosis and treatment of PCa.

2 Materials and methods

Clinicopathological data from patients with PCa were acquired retrospectively from the electronic medical record system of the three centers (center A; center B; center C) after receiving approval from the ethics committee of the local institution. This retrospective study was also approved by the Ethics Committee of the Gansu Provincial Geriatrics Association (2022-61), and the requirement for informed consent was waived. Our research program was designed based on the AI model of a local institution.

2.1 Participants

We conducted a retrospective study including patients with a pathologically confirmed diagnosis of PCa from the three centers between January 2018 and February 2021. The inclusion criteria were (a) first pathological diagnosis of PCa; (b) use of the same ADT treatment regimen; (c) availability of all MRI scans within 30 days of PCa diagnosis to exclude confounding effects of medication on measurements; and (d) no missing stained tissue slides. The exclusion criteria were (a) missing clinical information; (b) poor quality of MRI images (inability to identify the specific location of the lesion); (c) poor quality of stained tissue slides (uneven staining); and (d) missing follow-up information.

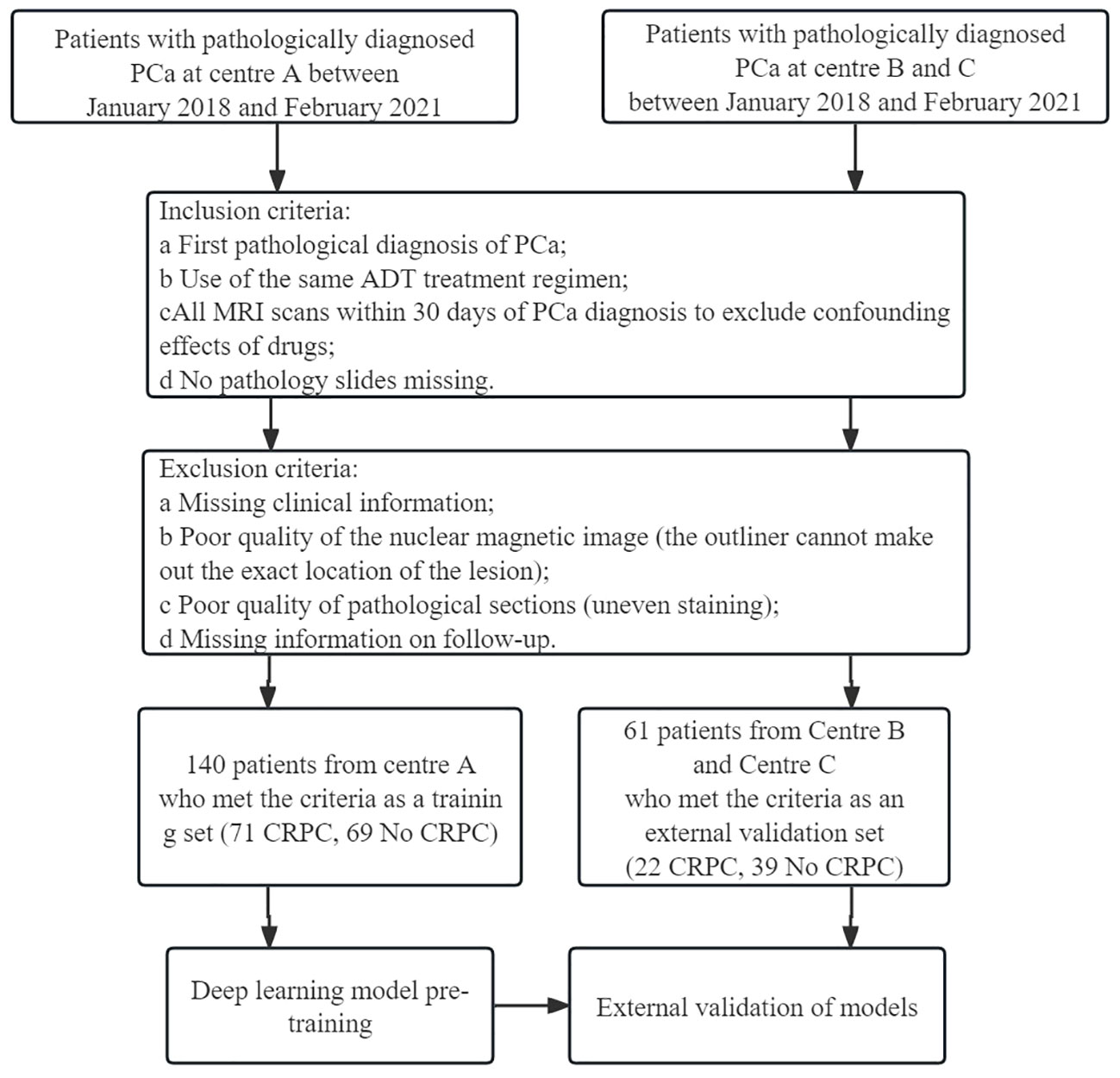

Clinical data from 399 patients with PCa were collected, including 254 from the Gansu Provincial Hospital (Center A), 112 from the 940 Hospital of Joint Logistics Support Force of Chinese PLA (Center B), and 33 from the Second People’s Hospital of Gansu Province (Center C). Figure 1 shows the flowchart for patient recruitment.

Figure 1 Flow chart of patient recruitment. Center A, Gansu Provincial Hospital; Center B, The 940 Hospital of Joint Logistics Support Force of Chinese PLA; Center C, Second People’s Hospital of Gansu Province.

2.2 Prostate tumor segmentation

A radiologist (R.W) with 5 years of experience in prostate MRI diagnosis and a urologist (FH.Z) with 30 years of experience in PCa MRI diagnosis delineated the regions of interest (ROIs). Disagreements regarding individual lesions were resolved by consultation with a third radiologist (LP.Z) until consensus was reached. The readers were blinded to the patients’ CRPC status and adhered to the guidelines outlined in the Prostate Imaging Reporting and Data System Version 2 (PI-RADS-V2). After ROI delineation was finalized, 11 features extracted from the ADC sequences were randomly selected, and Mann–Whitney U tests were performed on the two experts’ feature sets to check for potential bias between the delineations of R.W and FH.Z. The main mp-MRI sequence parameters are listed in Supplementary Table 1. ITK-SNAP software, version 4.0.0 (http://itk-snap.org), was used to annotate the ROIs for each patient on three sequences: T2-weighted imaging (T2WI), diffusion-weighted imaging (DWI), and apparent diffusion coefficient (ADC) maps. The volume of interest was created by combining the ROIs of each patient. To pretrain the DL model, 2-dimensional (2D) ROIs were extracted from the original images of the three sequences using a cropping tool based on the tumor’s 3D segmentation mask. The Digital Imaging and Communications in Medicine (DICOM) standard is commonly used for managing medical imaging information and related data. To ensure data quality, we resampled all images to a resolution of 1 cm × 1 cm × 1 cm and performed N4 bias correction before delineation.
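The 2D input described above (the largest tumor cross-section cropped using the 3D segmentation mask) can be sketched with NumPy as follows. This is a minimal illustration, not the authors' cropping tool: it assumes the image and mask are already co-registered arrays ordered (slice, row, column), and the function name and `margin` parameter are hypothetical.

```python
import numpy as np


def largest_cross_section_crop(volume: np.ndarray, mask: np.ndarray, margin: int = 0):
    """Crop `volume` on the slice where the binary `mask` covers the most pixels.

    Hypothetical helper: assumes (slice, row, col) ordering and a co-registered mask.
    Returns the slice index and the 2D crop bounded by the ROI (plus `margin` pixels).
    """
    if volume.shape != mask.shape:
        raise ValueError("volume and mask must have the same shape")
    areas = (mask > 0).reshape(mask.shape[0], -1).sum(axis=1)  # ROI pixel count per slice
    if areas.max() == 0:
        raise ValueError("mask contains no ROI pixels")
    z = int(np.argmax(areas))  # slice with the largest cross-section
    rows, cols = np.nonzero(mask[z])
    r0 = max(int(rows.min()) - margin, 0)
    r1 = min(int(rows.max()) + margin + 1, mask.shape[1])
    c0 = max(int(cols.min()) - margin, 0)
    c1 = min(int(cols.max()) + margin + 1, mask.shape[2])
    return z, volume[z, r0:r1, c0:c1]


# Toy example: the ROI is largest on slice 2 (4 x 3 pixels).
vol = np.arange(4 * 6 * 6, dtype=float).reshape(4, 6, 6)
msk = np.zeros(vol.shape, dtype=np.uint8)
msk[1, 2:4, 2:4] = 1
msk[2, 1:5, 1:4] = 1
z, crop = largest_cross_section_crop(vol, msk)
print(z, crop.shape)  # 2 (4, 3)
```

Applying the same slice index to T2WI, DWI, and ADC would only be meaningful if those sequences are co-registered; the text does not state how slices were matched across sequences.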

A pathologist (X.Z) selected one hematoxylin and eosin (H&E)-stained histopathological slide (20×10 magnification) of a typical tumor area as the pathological image for each patient. To reduce data heterogeneity, we used Photoshop to resize each histopathological image to the same size (640 × 480 pixels) for pretraining the DL model. Overall, 141 patients from Center A were included in the training group, and 60 patients from Centers B and C were included in the external validation group for building the ML and DL models.

2.3 Signature construction

2.3.1 Radiomics signature construction

PyRadiomics (http://www.radiomics.io/pyradiomics.html) was used to extract radiomics features. The dataset was standardized with the Z-score, that is, by subtracting the mean of each feature and dividing by its standard deviation. Spearman’s correlation coefficient was used to evaluate inter-observer consistency of feature extraction; features with a correlation coefficient greater than 0.9 were considered reliable and formed the feature set for subsequent analysis. The least absolute shrinkage and selection operator (LASSO) algorithm was used for feature selection and signature construction, with multiple iterations to assess the importance of each feature. Finally, ML classifiers such as logistic regression (LR) and support vector machines (SVM) were used to build the predictive models.
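The standardization and inter-observer filtering steps above can be sketched in NumPy. This assumes each observer's features are stored as a (patients × features) array with matching columns; the helper names are hypothetical, tied values receive average ranks (a common convention, assumed here), and the 0.9 cut-off is the one stated in the text.

```python
import numpy as np


def zscore(features: np.ndarray) -> np.ndarray:
    """Column-wise z-score: (value - column mean) / column standard deviation."""
    mean = features.mean(axis=0)
    std = features.std(axis=0)
    std = np.where(std == 0, 1.0, std)  # constant columns become 0 instead of dividing by 0
    return (features - mean) / std


def average_ranks(x: np.ndarray) -> np.ndarray:
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = np.argsort(x, kind="mergesort")
    ranks = np.empty(len(x), dtype=float)
    ranks[order] = np.arange(1, len(x) + 1)
    for value in np.unique(x):
        tied = x == value
        ranks[tied] = ranks[tied].mean()
    return ranks


def spearman(a: np.ndarray, b: np.ndarray) -> float:
    """Spearman's rho = Pearson correlation of the rank-transformed values."""
    return float(np.corrcoef(average_ranks(a), average_ranks(b))[0, 1])


def reproducible_features(obs_a: np.ndarray, obs_b: np.ndarray, threshold: float = 0.9):
    """Indices of features whose inter-observer Spearman rho exceeds `threshold`.

    A constant feature gives rho = NaN, which fails the comparison and is dropped.
    """
    return [j for j in range(obs_a.shape[1])
            if spearman(obs_a[:, j], obs_b[:, j]) > threshold]
```

For example, if observer B's values for a feature are a monotone rescaling of observer A's, rho is 1 and the feature is kept; a feature whose ordering is reversed between observers (rho = −1) is dropped.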

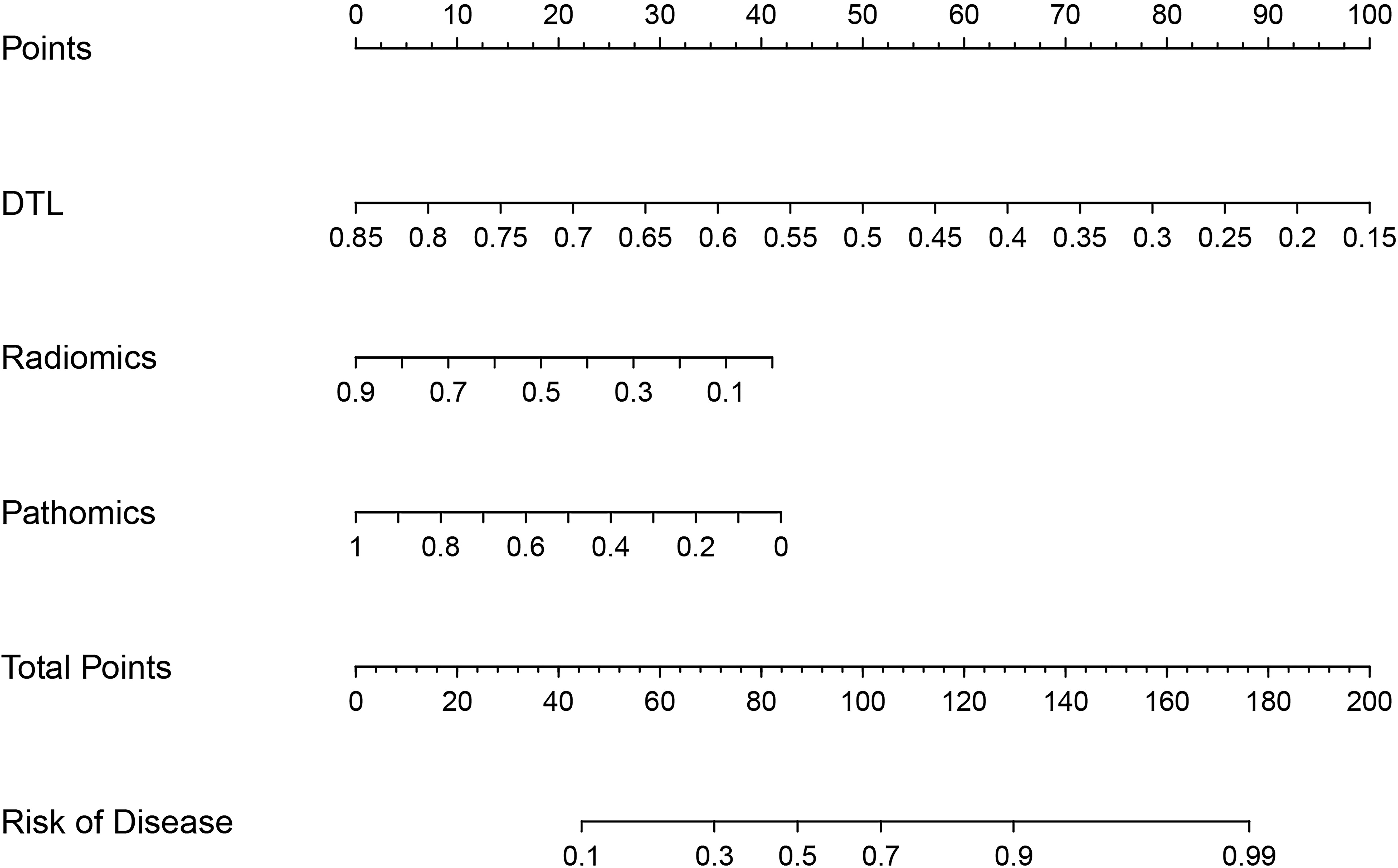

2.3.2 DL signature construction

In this study, ResNet-50, ResNet-34, ResNet-18, VGG19, and other deep transfer learning (DTL) models were pretrained. The number of training epochs was set to 100, with a batch size of 32. ImageNet was used as the source dataset for transfer learning. To improve the interpretability of the model’s decision-making, we applied Gradient-weighted Class Activation Mapping (Grad-CAM) for visual analysis. This method uses the gradients flowing into the last convolutional layer of the network to weight that layer’s feature maps, which are then combined into a class activation map. The map highlights the regions of the image that are most important for the predicted class, allowing us to better understand the basis of the model’s decisions.
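Once the last convolutional layer's feature maps and the gradients of the class score with respect to them are available, the Grad-CAM computation described above reduces to a few array operations. The NumPy sketch below assumes both are already extracted as (channels × height × width) arrays; in practice they come from a DL framework's backward pass, and upsampling the map to the input image size is omitted. The function name is hypothetical.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heat map from feature maps A^k and gradients dy^c/dA^k, both (K, H, W).

    alpha_k = spatial mean of the gradients for channel k;
    map = ReLU(sum_k alpha_k * A^k), scaled to [0, 1].
    """
    if activations.shape != gradients.shape:
        raise ValueError("activations and gradients must have the same shape")
    weights = gradients.mean(axis=(1, 2))             # one importance weight per channel
    cam = np.tensordot(weights, activations, axes=1)  # weighted sum over channels -> (H, W)
    cam = np.maximum(cam, 0.0)                        # keep only positive evidence for the class
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

The ReLU step is what makes the map class-discriminative: regions whose features push the score of the target class down are zeroed rather than shown as negative attention.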

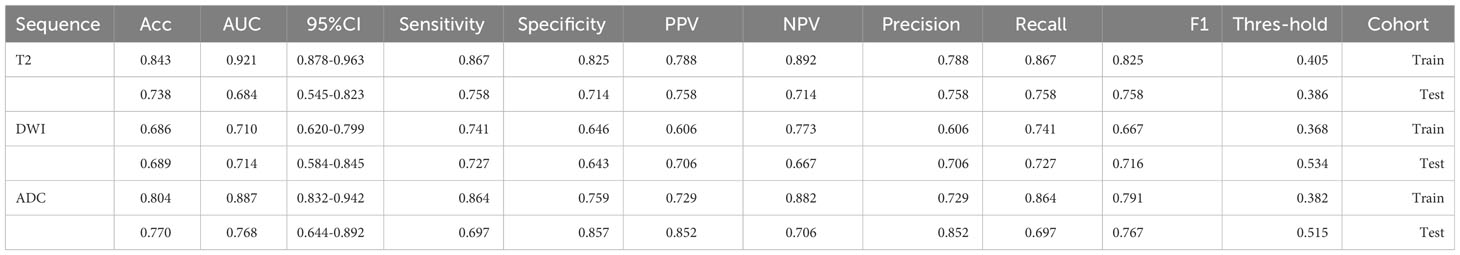

2.3.3 Construction of nomogram

We integrated the radiomics, DL, and pathomics models to construct a nomogram and investigated the contribution of each modality to the joint model.
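A nomogram is a graphical form of a (typically logistic) regression model: each predictor's contribution β·x is rescaled to points, the points are summed, and the total maps to a predicted probability. The sketch below shows that arithmetic under stated assumptions. The coefficients, predictor ranges, and intercept are hypothetical placeholders, not the fitted values behind the study's nomogram, and the scaling convention (the predictor with the widest contribution spans 0 to 100 points) is a common one, assumed here.

```python
import math


def nomogram_points(coefs, ranges, values):
    """Points per predictor; the predictor with the widest |beta| * range spans 0-100 points."""
    spans = {k: abs(b) * (ranges[k][1] - ranges[k][0]) for k, b in coefs.items()}
    scale = 100.0 / max(spans.values())
    points = {}
    for k, beta in coefs.items():
        lo, hi = ranges[k]
        ref = lo if beta >= 0 else hi  # 0 points at the lowest-risk end of each scale
        points[k] = scale * beta * (values[k] - ref)
    return points


def predicted_probability(intercept, coefs, values):
    """Logistic model: p = 1 / (1 + exp(-(b0 + sum_k beta_k * x_k)))."""
    linear_predictor = intercept + sum(b * values[k] for k, b in coefs.items())
    return 1.0 / (1.0 + math.exp(-linear_predictor))


# Hypothetical per-modality model scores (0-1) and illustrative coefficients.
coefs = {"radiomics": 1.0, "deep_learning": 2.0, "pathomics": 1.5}
ranges = {k: (0.0, 1.0) for k in coefs}
patient = {"radiomics": 1.0, "deep_learning": 1.0, "pathomics": 1.0}
pts = nomogram_points(coefs, ranges, patient)
print(pts)  # {'radiomics': 50.0, 'deep_learning': 100.0, 'pathomics': 75.0}
print(round(predicted_probability(-2.0, coefs, patient), 3))  # 0.924
```

Because total points are a linear transform of the linear predictor, reading the probability off the nomogram's total-points axis is equivalent to evaluating the logistic model directly; the predictor with the longest points axis is the one contributing most.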

2.4 Model evaluation

To evaluate the predictive performance of the models, we plotted ROC curves for each model and calculated the area under the curve (AUC) values. Decision curve analysis (DCA) curves and calibration curves were used to assess the net clinical benefit and goodness of fit of the joint model. Kaplan–Meier (KM) curves were used to evaluate its relationship with progression-free survival (PFS).
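The two main evaluation quantities above can be computed directly from predicted probabilities and observed outcomes. This pure-Python sketch uses the rank (Mann–Whitney) interpretation of the AUC and the standard decision-curve net benefit, NB = TP/n − FP/n × p_t/(1 − p_t), at a threshold probability p_t; the function names and toy data are illustrative, not from the study.

```python
def roc_auc(y_true, scores):
    """AUC = P(score of a random positive > score of a random negative); ties count 1/2."""
    pos = [s for s, y in zip(scores, y_true) if y == 1]
    neg = [s for s, y in zip(scores, y_true) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def net_benefit(y_true, probs, threshold):
    """Net benefit of treating patients whose predicted risk is >= threshold."""
    n = len(y_true)
    tp = sum(1 for y, p in zip(y_true, probs) if p >= threshold and y == 1)
    fp = sum(1 for y, p in zip(y_true, probs) if p >= threshold and y == 0)
    return tp / n - fp / n * threshold / (1 - threshold)


def net_benefit_treat_all(y_true, threshold):
    """Reference strategy in DCA: treat everyone (the 'treat none' line is 0)."""
    prevalence = sum(y_true) / len(y_true)
    return prevalence - (1 - prevalence) * threshold / (1 - threshold)


y = [0, 0, 1, 1]
p = [0.10, 0.40, 0.35, 0.80]
print(roc_auc(y, p))  # 0.75
for pt in (0.1, 0.3, 0.5):
    print(pt, round(net_benefit(y, p, pt), 3), round(net_benefit_treat_all(y, pt), 3))
```

A decision curve is this net benefit plotted over a range of thresholds; a model adds clinical value where its curve lies above both the treat-all and treat-none lines.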

2.5 Statistical analysis

Statistical Package for the Social Sciences (SPSS) 23.0 and R statistical software (R version 3.6.1, https://www.r-project.org/) were used for statistical analysis. The Kolmogorov–Smirnov test was used to evaluate the normality of continuous measures; normally distributed measures were expressed as mean ± standard deviation (x̄ ± s), and non-normally distributed measures as median (lower and upper quartiles). An independent-samples t-test (normal distribution with equal variances) or the Mann–Whitney U test (skewed distribution or unequal variances) was used to compare measures between groups. Multivariable LR analysis was used to identify independent predictors for constructing the prediction model and plotting the nomogram. The area under the receiver operating characteristic (ROC) curve (AUC) was calculated to evaluate the discriminative power of the model, and a DCA curve was plotted to compare the clinical value of the models. A p-value < 0.05 was considered statistically significant.
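The Mann–Whitney U test used above for skewed variables (and for the inter-observer check on ADC features) can be sketched in pure Python. This version assigns average ranks to ties and uses the large-sample normal approximation without tie or continuity correction, so its p-values can differ slightly from SPSS or R, which can apply exact or corrected methods; it is an illustration, not the study's implementation.

```python
import math


def mann_whitney_u(x, y):
    """Return (U for sample x, two-sided p-value) via the normal approximation."""
    values = list(x) + list(y)
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # extend j over a run of tied values
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    n1, n2 = len(x), len(y)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2  # the first n1 values belong to x
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u1 - mean_u) / sd_u
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return u1, p
```

For very small groups, an exact test is preferable to this approximation.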

3 Results

3.1 Clinical characteristics

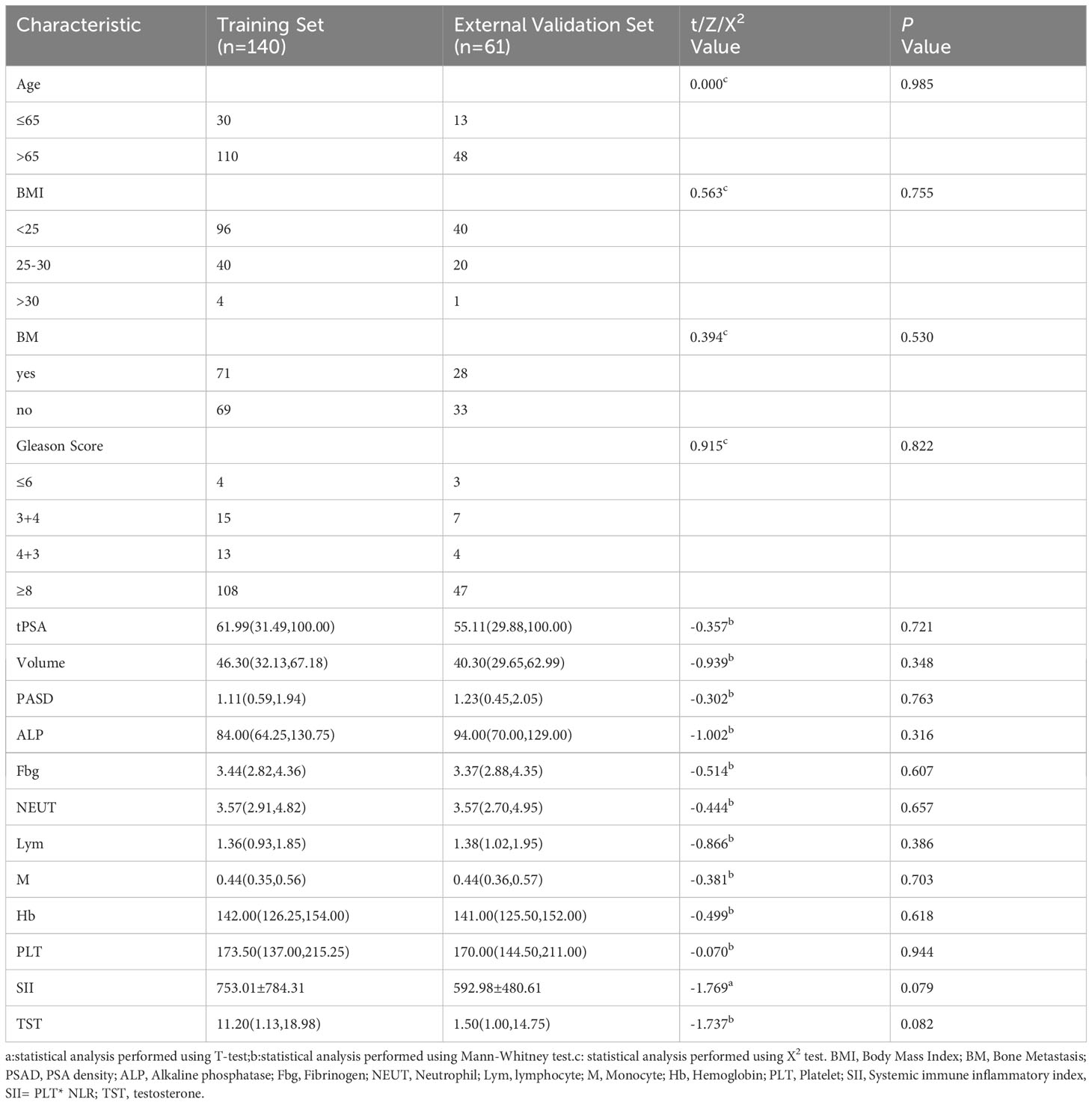

The study flow is shown in Figure 2. A total of 198 patients were excluded for not meeting the inclusion criteria, and 201 patients were included, of whom 93 progressed to CRPC. There were no significant differences in clinical features between the training and validation groups (Table 1).

Table 1 Comparison of clinical data of patients with prostate cancer in the training set and validation set.

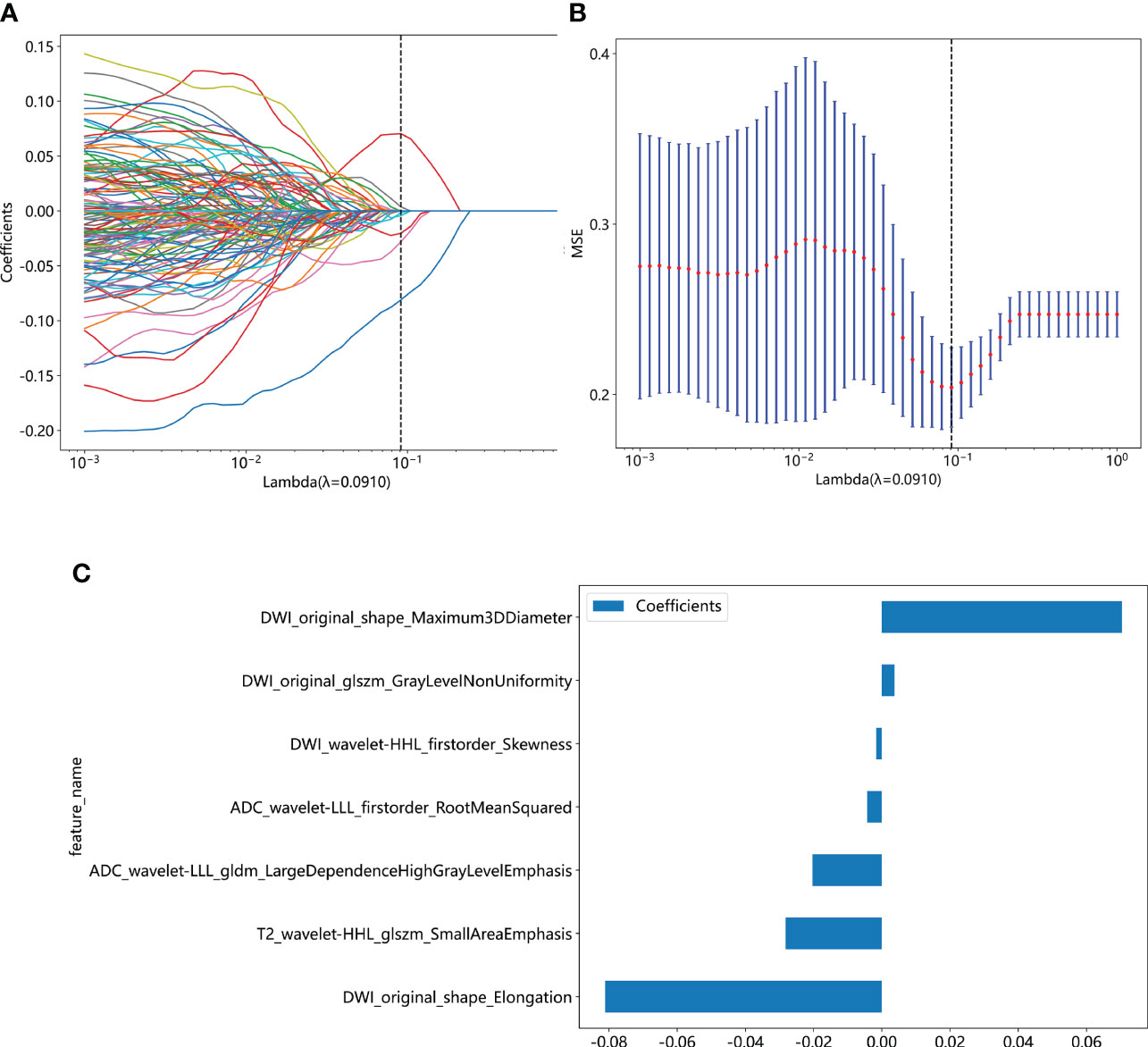

3.2 Feature selection and signature construction

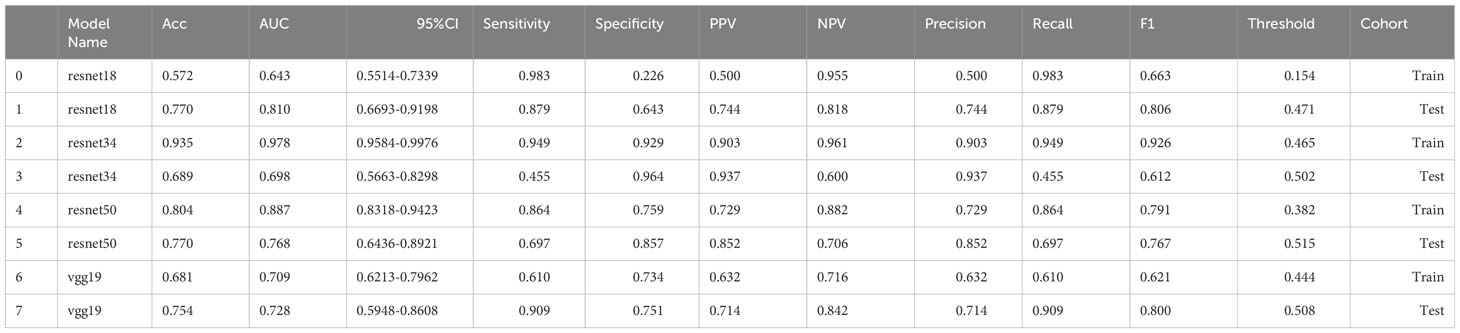

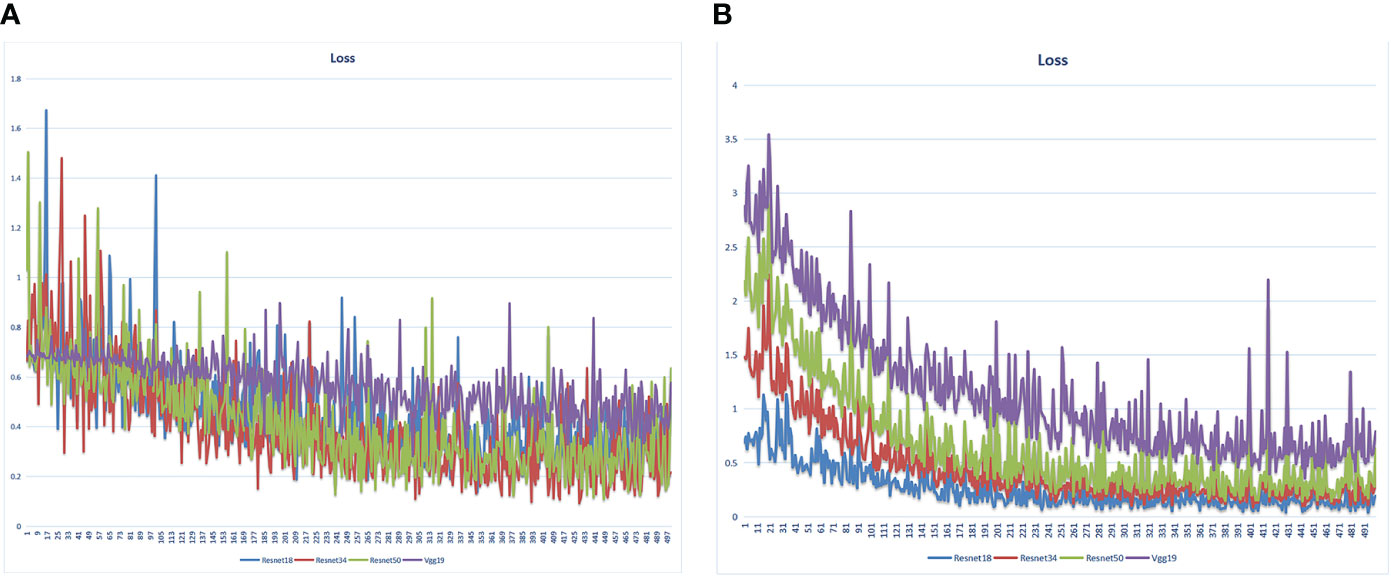

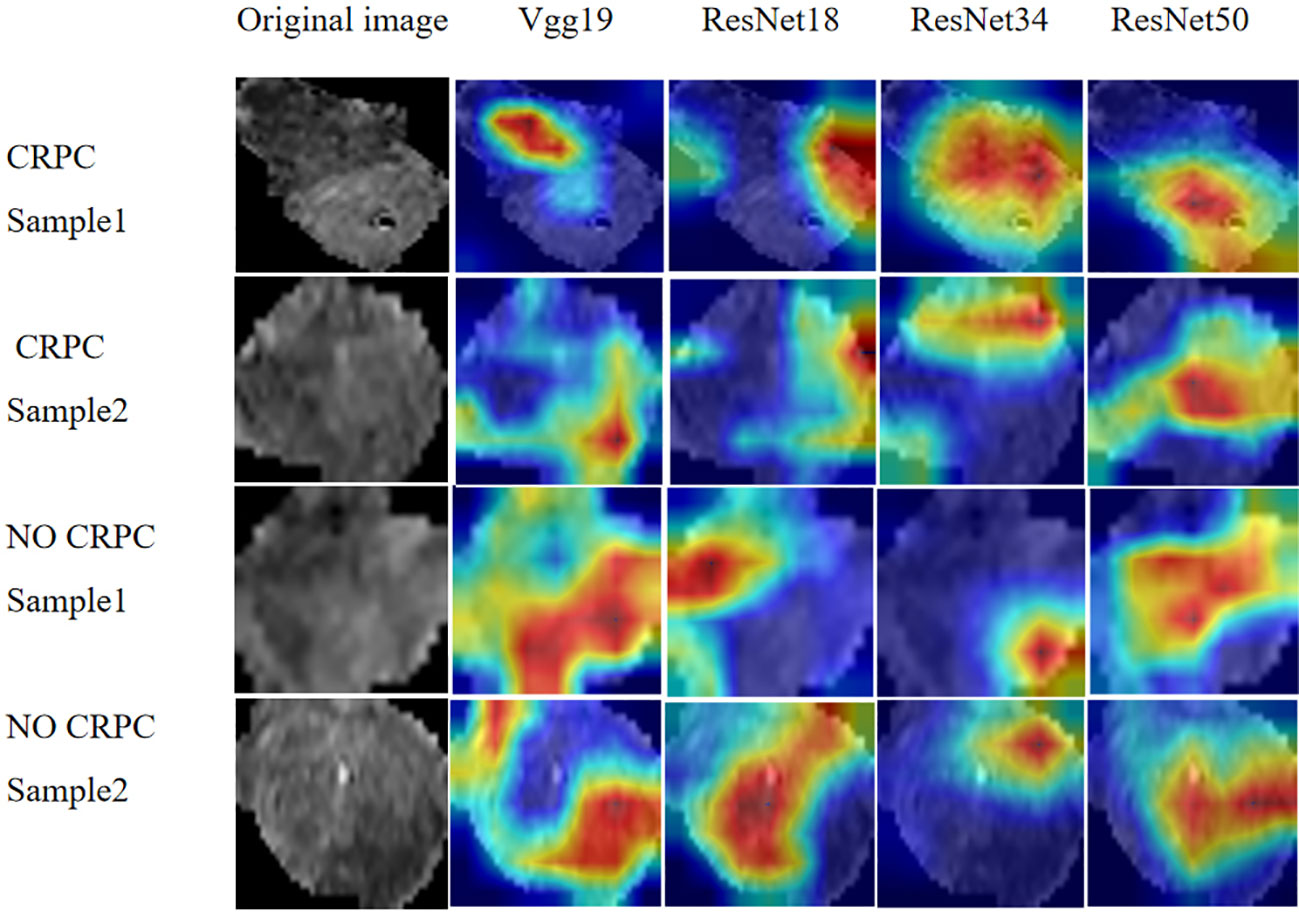

We extracted 2553 radiomic features using PyRadiomics. For the ROIs delineated by the two experts, 11 randomly selected ADC-derived features were compared using the Mann–Whitney U test, which revealed no statistically significant difference between the two sets of features (Supplementary Table 2). Seven radiomic features were selected using the LASSO algorithm (Figures 3A–C). The three 2D ROIs with the maximum cross-sections were chosen, and different DL models were used for pretraining and external validation. Model evaluation (Table 2) showed that ResNet-50 had the best overall performance in the external validation set, with the lowest loss value. This suggests that ResNet-50 made fewer errors during training and converged faster than the other convolutional neural network (CNN) models (Figures 4A, B). In terms of interpretability, each model attended to distinct regions of the samples. ResNet-50 had clearer attention regions, focused primarily on the interior of the tumor, whereas the surrounding tissue was not activated (Figure 5). Furthermore, among the three sequences, the ResNet-50 model performed best on the ADC sequence (Table 3).

Figure 3 (A) Coefficient profiles of the features in the LASSO model are shown. Each feature is represented by a different color line indicating its corresponding coefficient. (B) Tuning parameter (λ) selection in the LASSO model. (C) Weights for each feature in the model. LASSO, least absolute shrinkage and selection operator.

Figure 4 Loss values of different DL models in the training set across iterations. (A) Radiomics model. (B) Pathomics model.

Figure 5 Regions of attention in prostate cancer MRI analysis with different DL models. MRI, magnetic resonance imaging.
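The LASSO step (Figure 3) shrinks coefficients with an L1 penalty so that uninformative features drop to exactly zero. The coordinate-descent sketch below illustrates that mechanism for a squared-error LASSO, minimizing (1/2n)‖y − Xβ‖² + λ‖β‖₁ on standardized features. It is a simplification: the text does not specify the loss, the λ-selection procedure, or the software used, so the penalized-logistic and cross-validated variants common for binary outcomes are not shown, and the function names are hypothetical.

```python
import numpy as np


def soft_threshold(rho: float, lam: float) -> float:
    """S(rho, lam) = sign(rho) * max(|rho| - lam, 0): the L1 shrinkage operator."""
    return float(np.sign(rho)) * max(abs(rho) - lam, 0.0)


def lasso_coordinate_descent(X, y, lam, n_iter=500, tol=1e-10):
    """Minimize (1/(2n))||y - X b||^2 + lam * ||b||_1 by cyclic coordinate descent.

    Assumes X columns are standardized and y is centered (so no intercept is fitted).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    beta = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n  # (1/n) x_j^T x_j for each feature
    residual = y - X @ beta
    for _ in range(n_iter):
        max_change = 0.0
        for j in range(p):
            if col_sq[j] == 0:
                continue
            old = beta[j]
            rho = X[:, j] @ residual / n + col_sq[j] * old  # partial-residual correlation
            beta[j] = soft_threshold(rho, lam) / col_sq[j]
            if beta[j] != old:
                residual -= X[:, j] * (beta[j] - old)  # keep residual in sync with beta
                max_change = max(max_change, abs(beta[j] - old))
        if max_change < tol:
            break
    return beta
```

Sweeping `lam` from large to small and recording `beta` traces coefficient paths of the kind shown in Figure 3A; the features whose coefficients remain non-zero at the chosen λ form the signature.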

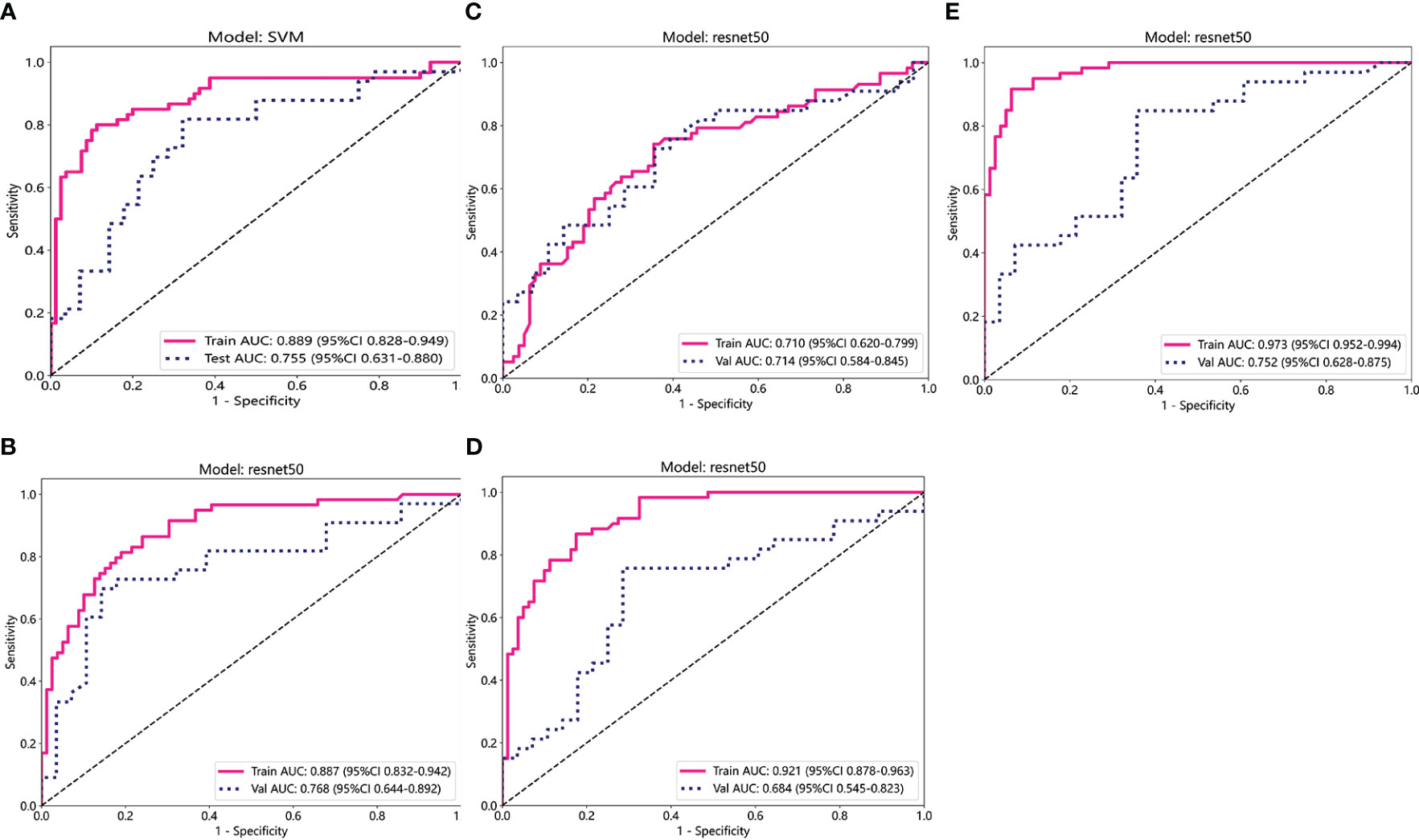

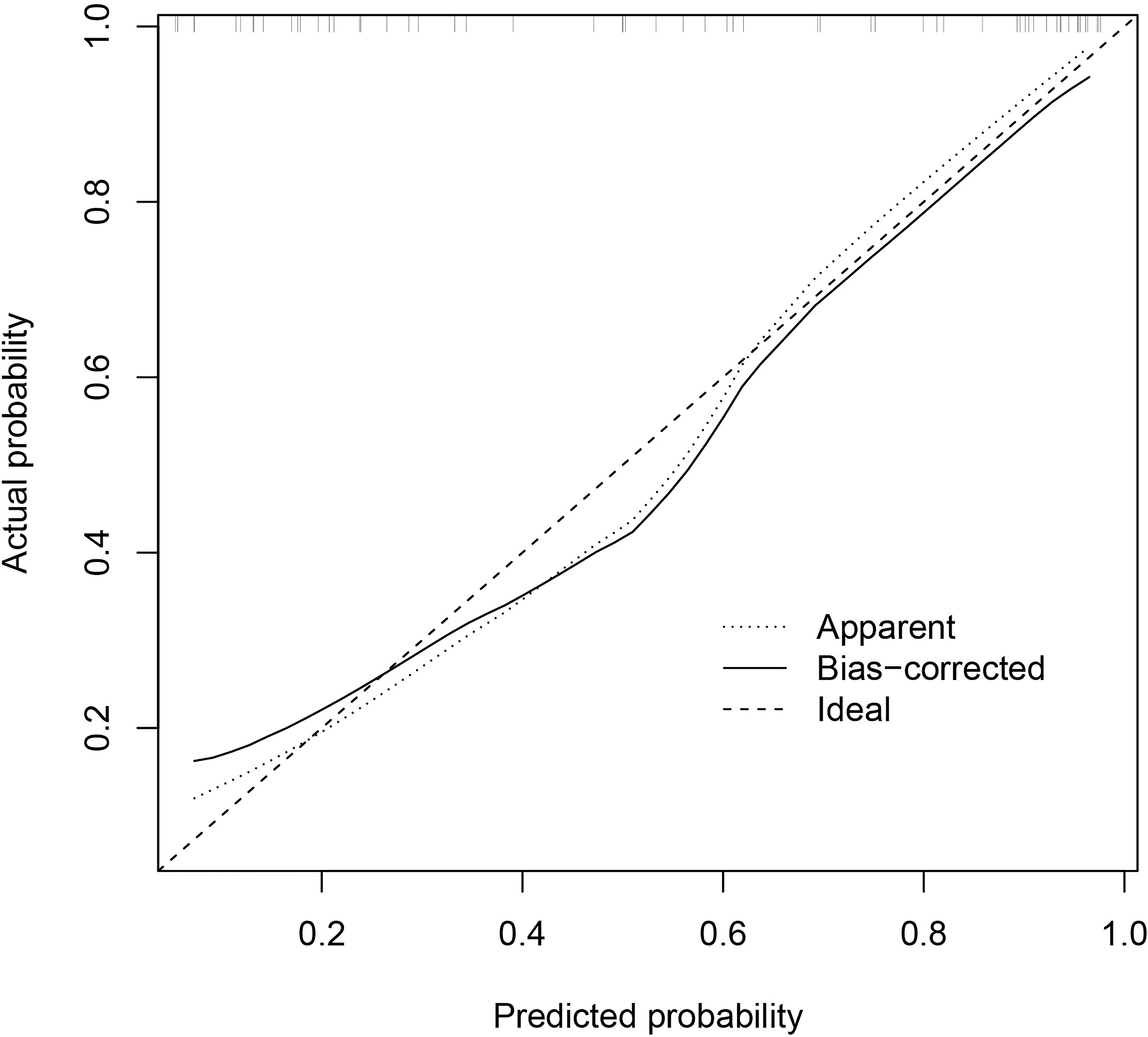

3.3 Validation of radiomics and pathomics signature

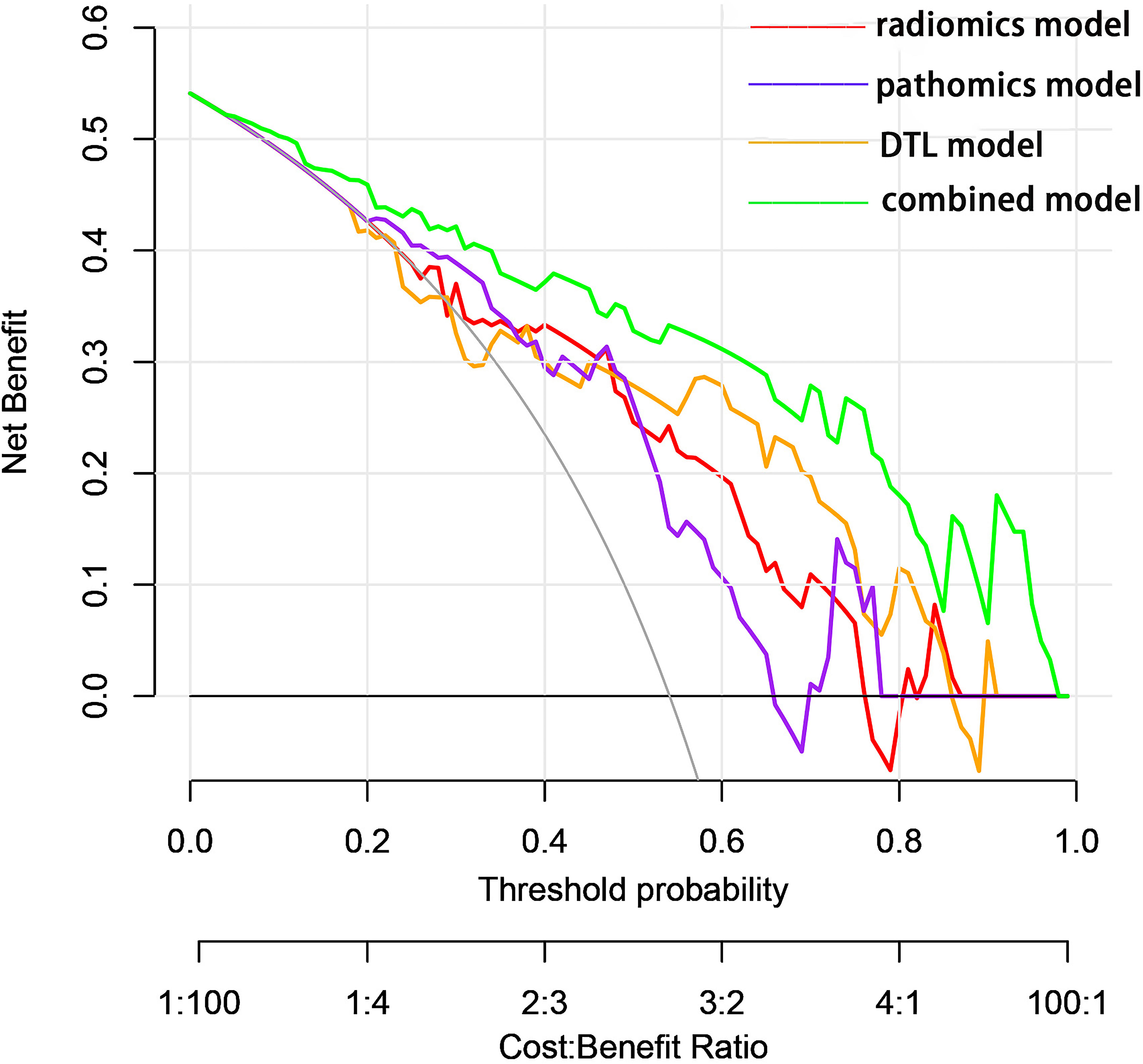

The predictive performance of the models was evaluated using ROC analysis. The best ML model for radiomics was SVM, with an AUC of 0.755 (Figure 6A). For both DTL and pathomics, the best model was ResNet-50, with AUCs of 0.768 (ADC), 0.714 (DWI), and 0.684 (T2WI) for the DTL models and 0.752 for the pathomics model (Figures 6B–E). The nomogram showed that DTL contributed the most to the combined model (Figure 7), and the AUC of the combined model was 0.86 (Figure 8). Calibration curve analysis showed that the joint model had a good fit and strong calibration (Figure 9). The DCA curves showed that all models provided a clinical net benefit, with the combined model showing the highest net benefit (Figure 10).

Figure 6 ROC curve analysis for each model. (A) Radiomics. (B–D) DL (ADC, DWI, and T2WI). (E) Pathomics. T2WI, T2-weighted imaging; DWI, diffusion-weighted imaging; ADC, apparent diffusion coefficient; ROC, receiver operating characteristic.

Figure 9 Calibration curve of the combined model, indicating good agreement between the predicted probabilities and the actual observed frequencies.

Figure 10 Decision curves showed that each model could achieve clinical benefit and that the net benefit of the combined model was better.
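A calibration curve like the one in Figure 9 compares the mean predicted risk with the observed event rate within groups of patients. The sketch below uses equal-width probability bins; the number of bins and the binning scheme are assumptions (quantile bins or smoothed curves are also common), and the function name is hypothetical.

```python
def calibration_bins(y_true, probs, n_bins=5):
    """Return (mean predicted probability, observed event rate, count) for each non-empty bin."""
    bins = [[] for _ in range(n_bins)]
    for y, p in zip(y_true, probs):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes into the last bin
        bins[idx].append((p, y))
    curve = []
    for members in bins:
        if members:
            mean_pred = sum(p for p, _ in members) / len(members)
            observed = sum(y for _, y in members) / len(members)
            curve.append((mean_pred, observed, len(members)))
    return curve
```

Plotting `observed` against `mean_pred` gives the calibration curve; points close to the diagonal indicate that predicted risks match observed event rates.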

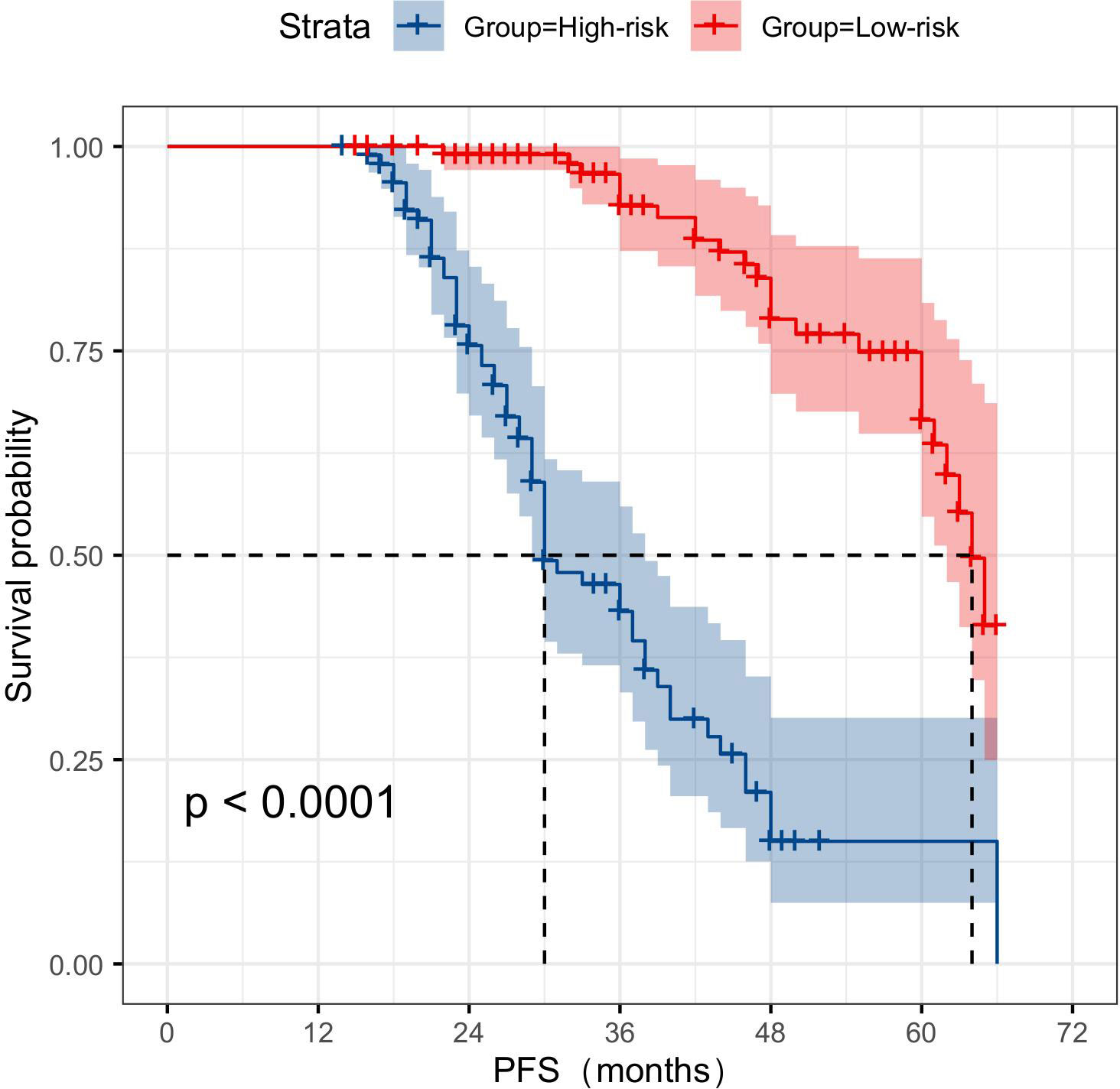

3.4 Prognosis

In the CRPC risk classification analysis, a total of 87 patients experienced tumor progression-related events. KM analysis showed that patients classified by the joint model as being at high risk of CRPC had significantly lower PFS than those at low risk (Figure 11).

Figure 11 KM survival curve analysis demonstrates that multimodal data can serve as a reliable predictor of the risk of CRPC occurrence. CRPC, castration-resistant prostate cancer.
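The PFS comparison above rests on the Kaplan–Meier product-limit estimator, S(t) = ∏(1 − d_i/n_i) over event times t_i ≤ t, where d_i is the number of events and n_i the number still at risk. The pure-Python sketch below computes it and splits patients by a risk cut-off; the cut-off value and helper names are hypothetical (the text does not state how high- and low-risk groups were defined), and a significance test such as the log-rank test is not shown.

```python
def kaplan_meier(times, events):
    """Product-limit estimate. events: 1 = progression observed, 0 = censored.

    Returns [(t, S(t))] at each distinct time with at least one event.
    """
    data = sorted(zip(times, events))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        events_at_t = removed = 0
        while i < len(data) and data[i][0] == t:  # group all subjects with this time
            events_at_t += data[i][1]
            removed += 1
            i += 1
        if events_at_t:
            survival *= 1 - events_at_t / at_risk
            curve.append((t, survival))
        at_risk -= removed  # events and censorings both leave the risk set
    return curve


def km_by_risk_group(times, events, risk_scores, cutoff):
    """Separate KM curves for high-risk (score >= cutoff) and low-risk patients."""
    high = [(t, e) for t, e, r in zip(times, events, risk_scores) if r >= cutoff]
    low = [(t, e) for t, e, r in zip(times, events, risk_scores) if r < cutoff]
    return (kaplan_meier([t for t, _ in high], [e for _, e in high]),
            kaplan_meier([t for t, _ in low], [e for _, e in low]))
```

Censored patients contribute to the risk set until their last follow-up but never reduce S(t) themselves, which is why the estimator remains valid with incomplete follow-up.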

4 Discussion

To our knowledge, this multicenter retrospective cohort study is the first to develop and validate a prediction model that integrates radiomics, DTL, and pathomics data, providing strong predictive capability for the progression of primary prostate cancer to CRPC after two years of ADT. The multiparametric radiological modeling used in this investigation may help urologists evaluate the probability of progression to CRPC and formulate personalized treatment strategies.

The prognosis of CRPC is notably unfavorable, and the challenges in its treatment vary among patients (18). In clinical practice, reliable data from the initial diagnosis of localized PCa managed with ADT are limited (19). Previous research has demonstrated a significant correlation between the N-glycan score and adverse prognosis in CRPC (20). Additionally, the skeletal muscle index and skeletal muscle attenuation have predictive value for the prognosis of metastatic CRPC (21). PSA nadir and Grade 5 were both associated with progression to CRPC (22), and AR-V7 mRNA was shown to significantly predict biochemical recurrence and progression to CRPC (23). However, none of these studies provided a specific, prospective indication of the likelihood that patients with PCa will progress to CRPC. Our approach showed good predictive performance and a clinical net benefit. In addition, the calibration and KM survival curves supported the model’s fit and its ability to provide useful prognostic information for patients with PCa. These results may be attributable to the integration of multimodal data and the selection of suitable AI methods.

4.1 Multimodal data integration

Data fusion addresses inference problems by amalgamating data from various modalities that provide different viewpoints on a shared phenomenon (24, 25). Consequently, the integration of multiple modalities may facilitate the resolution of such challenges with greater precision compared to the utilization of singular modalities (26). This is particularly important in medicine, as similar results from different measurement techniques might provide different conclusions (27, 28). In recent years, the growing prevalence of original studies utilizing imaging and pathology images in the field of prostate cancer has created an opportunity for AI technology to demonstrate its potential (29, 30). Additionally, DL approaches have direct applications for segmentation, multimodal data integration and model construction (31).

We used late fusion, also known as decision-level fusion, in which a separate model is trained for each modality and the predictions of these models are aggregated to produce a final prediction. Aggregation can be performed by averaging, majority voting, or Bayesian rules, among other methods (32). During data collection, we found that some data were missing or incomplete; late fusion nevertheless maintained predictive power. Because each model is trained individually, aggregation methods such as majority voting can be applied even if one modality is missing. Moreover, if the unimodal data do not complement one another or have weak interdependencies, late fusion may be preferred because of its simpler design and fewer parameters compared with other fusion strategies. This is also advantageous when data are limited. In this study, MRI and H&E tissue sections were only weakly complementary, and our late-fusion model demonstrated good predictive ability. Examples of late fusion include the integration of imaging data with non-imaging inputs, such as the fusion of MRI scans and PSA blood tests for PCa diagnosis (33). Chen et al. also performed survival prediction by fusing genomic and histology profiles (34).
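The aggregation rules named above (averaging and majority voting), and the way late fusion tolerates a missing modality, can be sketched as follows. This is an illustration only: the study combined modalities in a nomogram, the 0.5 decision threshold and tie-breaking rule are assumptions, the helper names are hypothetical, and `None` stands in for a modality that is unavailable for a patient.

```python
from typing import List, Optional, Sequence


def _available(probs: Sequence[Optional[float]]) -> List[float]:
    present = [p for p in probs if p is not None]  # drop missing modalities
    if not present:
        raise ValueError("no modality prediction available")
    return present


def fuse_mean(probs: Sequence[Optional[float]]) -> float:
    """Average the per-modality probabilities that are present."""
    present = _available(probs)
    return sum(present) / len(present)


def fuse_majority(probs: Sequence[Optional[float]], threshold: float = 0.5) -> bool:
    """Positive if more than half of the available modalities vote positive (ties -> negative)."""
    present = _available(probs)
    votes = sum(1 for p in present if p >= threshold)
    return votes * 2 > len(present)


# Per-patient outputs in the order (radiomics, DL-MRI, pathomics); pathomics missing here.
print(fuse_mean([0.8, 0.6, None]))      # about 0.7
print(fuse_majority([0.8, 0.6, None]))  # True
```

Because each modality's model is queried independently, a patient lacking one modality still receives a fused prediction from the remaining ones; an early-fusion model trained on concatenated inputs would instead need imputation.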

4.2 Supervised method

In this study, we selected a supervised AI approach for training the radiomics models, in which radiology image annotations and patient outcomes are used to map input data onto predefined labels (e.g., cancer/non-cancer) (35). Because feature extraction was not part of the learning process, the models typically had simpler architectures and lower computational costs. An additional benefit was a high level of interpretability, because the predictive features could be related to the data. On the other hand, feature extraction was time-consuming and could introduce human bias into the models. Given the sample size of this study, the supervised method was sufficient because of its simplicity and its ability to learn from our radiomics features.

Self-supervised techniques leverage accessible unlabeled data to learn better image features and then transfer this knowledge to supervised models. In this setting, architectures such as CNNs are trained on diverse pretext tasks in which labels are generated automatically from the data (36). Notably, self-supervised methods are particularly well suited to settings with greater computational resources and higher-resolution images (37, 38).

4.3 Model selection for DL

DL is a state-of-the-art family of ML algorithms inspired by the connections between neurons in the human brain. It learns and extracts complex high-level features from input data through multi-layer neural networks, enabling automatic classification, recognition, and prediction. Traditional deep CNNs often encounter vanishing or exploding gradients as the number of layers increases, which makes training difficult. ResNet addresses this problem by introducing residual (shortcut) connections, which promote the flow of gradients and information and thereby facilitate the training of deeper networks. In this study, we pretrained DL models including ResNet-50, ResNet-34, ResNet-18, and VGG19, and ResNet-50 outperformed the others. The main advantage of ResNet-50 is that very deep networks can be trained effectively while avoiding vanishing and exploding gradients; consequently, it performs well in image classification and can handle large, complex datasets. Because of its versatility and strong performance, ResNet-50 serves as a benchmark model in many computer vision tasks and is widely used in object detection, image segmentation, and image generation. In Lei et al.’s MRI-based DL study of 396 patients with PCa, training a DL model for PCa classification using pairs of ResNet-50 anti-paradigms improved the model’s generalization and classification abilities (39). In a pathomics study, texture features captured with a ResNet DL framework better distinguished unique Gleason patterns (40).
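The residual idea described above is that a block learns a correction F(x) and adds it to its input, y = ReLU(F(x) + x). The NumPy sketch below is a deliberately simplified, fully connected stand-in (real ResNet-50 blocks use convolutions, batch normalization, and bottleneck layers), and the function names are hypothetical. It shows that when the learned branch contributes little, as with small initial weights, the identity shortcut still carries the signal through a deep stack, whereas a plain stack without shortcuts does not. It is a forward-pass illustration of the intuition behind easier gradient flow, not a gradient computation.

```python
import numpy as np


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def plain_block(x, w1, w2):
    """Two layers without a shortcut: y = ReLU(W2 ReLU(W1 x))."""
    return relu(w2 @ relu(w1 @ x))


def residual_block(x, w1, w2):
    """Same layers plus an identity shortcut: y = ReLU(W2 ReLU(W1 x) + x)."""
    return relu(w2 @ relu(w1 @ x) + x)


rng = np.random.default_rng(0)
x = np.array([1.0, 2.0, 3.0])
# 50 blocks with small random weights, roughly as at the start of training.
weights = [(rng.normal(scale=0.01, size=(3, 3)), rng.normal(scale=0.01, size=(3, 3)))
           for _ in range(50)]

plain, residual = x.copy(), x.copy()
for w1, w2 in weights:
    plain = plain_block(plain, w1, w2)
    residual = residual_block(residual, w1, w2)

print(np.abs(plain).max())  # collapses toward 0 after 50 plain blocks
print(residual)             # stays close to the input [1, 2, 3]
```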

4.4 Limitations

This study has limitations. First, it is a retrospective multicenter study, and potential biases, such as differences in MRI acquisition parameters, are inevitable. However, as described above, we aligned the data and preprocessed the images to minimize the impact of these differences on the results. Second, key prognostic factors in clinical characterization were not considered because clinical data were incomplete for most patients. Third, our sample size was relatively small, and patients were unevenly distributed across Gleason score categories, which may affect the stability and reproducibility of our model. Therefore, the results of this study need to be validated externally in large, multi-region, multicenter cohorts in the future.

5 Conclusions

In summary, we collected a multimodal dataset from patients with PCa, including those who progressed to CRPC, and used it to develop and integrate radiological and histopathological models that improve CRPC risk prediction. These results encourage further large-scale studies using multimodal DL.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material. Further inquiries can be directed to the corresponding authors.

Ethics statement

The studies involving humans were approved by The Ethics Committee of the Gansu Provincial Geriatrics Association (2022-61). The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants’ legal guardians/next of kin. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

CZ: Conceptualization, Data curation, Funding acquisition, Investigation, Software, Supervision, Validation, Writing – original draft, Writing – review & editing. Y-FZ: Data curation, Formal analysis, Investigation, Methodology, Software, Validation, Visualization, Writing – original draft. SG: Data curation, Formal analysis, Investigation, Methodology, Software, Writing – review & editing. Y-QH: Data curation, Formal analysis, Investigation, Methodology. X-NQ: Investigation, Methodology, Writing – review & editing. RW: Investigation, Methodology, Writing – review & editing. L-PZ: Investigation, Methodology, Writing – review & editing. D-HC: Resources, Writing – review & editing. L-MZ: Resources, Writing – review & editing. M-XD: Conceptualization, Funding acquisition, Resources, Writing – review & editing, Data curation, Formal analysis, Visualization, Writing – original draft. F-HZ: Conceptualization, Funding acquisition, Resources, Supervision, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was funded by grants from the following sources: National Natural Science Foundation of China (No.81860047), The Natural Science Foundation of Gansu Province (No.22JR5RA650), Key Science and Technology Program in Gansu Province (NO.21YF5FA016).

Acknowledgments

We thank the Chinese Medical Association of Gansu Province Geriatrics Society for providing guidance and suggestions during the project. We thank Dr. Yu-Qian Huang for contributing all the figures in this paper.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fonc.2024.1287995/full#supplementary-material

Abbreviations

ADC, apparent diffusion coefficient; ADT, androgen deprivation therapy; AI, artificial intelligence; AUC, area under the curve; CNN, convolutional neural network; CRPC, castration-resistant prostate cancer; CSPC, castration-sensitive prostate cancer; DCA, decision curve analysis; DICOM, Digital Imaging and Communications in Medicine; DTL, deep transfer learning; DWI, diffusion-weighted imaging; Grad-CAM, gradient-weighted class activation mapping; KM curve, Kaplan–Meier curve; LASSO, least absolute shrinkage and selection operator; LR, logistic regression; MRI, magnetic resonance imaging; PCa, prostate cancer; PFS, progression-free survival; PI-RADS-V2, Prostate Imaging Reporting and Data System Version 2; PSA, prostate-specific antigen; ROI, region of interest; SVM, support vector machine; T2WI, T2-weighted imaging.

References

1. Bray F, Ferlay J, Soerjomataram I, Siegel RL, Torre LA, Jemal A. Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin (2018) 68(6):394–424. doi: 10.3322/caac.21492

2. Sung H, Ferlay J, Siegel RL, Laversanne M, Soerjomataram I, Jemal A, et al. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin (2021) 71(3):209–49. doi: 10.3322/caac.21660

3. Pernigoni N, Zagato E, Calcinotto A, Troiani M, Mestre RP, Calí B, et al. Commensal bacteria promote endocrine resistance in prostate cancer through androgen biosynthesis. Science (2021) 374(6564):216–24. doi: 10.1126/science.abf8403

4. Ryan CJ, Smith MR, Fizazi K, Saad F, Mulders PF, Sternberg CN, et al. Abiraterone acetate plus prednisone versus placebo plus prednisone in chemotherapy-naive men with metastatic castration-resistant prostate cancer (COU-AA-302): final overall survival analysis of a randomised, double-blind, placebo-controlled phase 3 study. Lancet Oncol (2015) 16(2):152–60. doi: 10.1016/S1470-2045(14)71205-7

5. Harris WP, Mostaghel EA, Nelson PS, Montgomery B. Androgen deprivation therapy: progress in understanding mechanisms of resistance and optimizing androgen depletion. Nat Clin Pract Urol (2009) 6(2):76–85. doi: 10.1038/ncpuro1296

6. Cornford P, van den Bergh R, Briers E, Van den Broeck T, Cumberbatch MG, De Santis M, et al. EAU-EANM-ESTRO-ESUR-SIOG guidelines on prostate cancer. Part II-2020 update: treatment of relapsing and metastatic prostate cancer. Eur Urol (2021) 79(2):263–82. doi: 10.1016/j.eururo.2020.09.046

7. Heidenreich A, Bastian PJ, Bellmunt J, Bolla M, Joniau S, van der Kwast T, et al. EAU guidelines on prostate cancer. Part II: Treatment of advanced, relapsing, and castration-resistant prostate cancer. Eur Urol (2014) 65(2):467–79. doi: 10.1016/j.eururo.2013.11.002

8. Aerts HJ, Velazquez ER, Leijenaar RT, Parmar C, Grossmann P, Carvalho S, et al. Decoding tumour phenotype by noninvasive imaging using a quantitative radiomics approach. Nat Commun (2014) 5:4006. doi: 10.1038/ncomms5006

9. Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts H. Artificial intelligence in radiology. Nat Rev Cancer (2018) 18(8):500–10. doi: 10.1038/s41568-018-0016-5

10. Boeken T, Feydy J, Lecler A, Soyer P, Feydy A, Barat M, et al. Artificial intelligence in diagnostic and interventional radiology: Where are we now. Diagn Interv Imaging (2023) 104(1):1–5. doi: 10.1016/j.diii.2022.11.004

11. van Timmeren JE, Cester D, Tanadini-Lang S, Alkadhi H, Baessler B. Radiomics in medical imaging - "how-to" guide and critical reflection. Insights Imaging (2020) 11(1):91. doi: 10.1186/s13244-020-00887-2

12. Lai YH, Chen WN, Hsu TC, Lin C, Tsao Y, Wu S. Overall survival prediction of non-small cell lung cancer by integrating microarray and clinical data with deep learning. Sci Rep (2020) 10(1):4679. doi: 10.1038/s41598-020-61588-w

13. Echle A, Grabsch HI, Quirke P, van den Brandt PA, West NP, Hutchins GGA, et al. Clinical-grade detection of microsatellite instability in colorectal tumors by deep learning. Gastroenterology (2020) 159(4):1406–1416.e11. doi: 10.1053/j.gastro.2020.06.021

14. Stahlschmidt SR, Ulfenborg B, Synnergren J. Multimodal deep learning for biomedical data fusion: a review. Brief Bioinform (2022) 23(2):bbab569. doi: 10.1093/bib/bbab569

15. Wang R, Dai W, Gong J, Huang M, Hu T, Li H, et al. Development of a novel combined nomogram model integrating deep learning-pathomics, radiomics and immunoscore to predict postoperative outcome of colorectal cancer lung metastasis patients. J Hematol Oncol (2022) 15(1):11. doi: 10.1186/s13045-022-01225-3

16. Bera K, Braman N, Gupta A, Velcheti V, Madabhushi A. Predicting cancer outcomes with radiomics and artificial intelligence in radiology. Nat Rev Clin Oncol (2022) 19(2):132–46. doi: 10.1038/s41571-021-00560-7

17. Bi WL, Hosny A, Schabath MB, Giger ML, Birkbak NJ, Mehrtash A, et al. Artificial intelligence in cancer imaging: Clinical challenges and applications. CA Cancer J Clin (2019) 69(2):127–57. doi: 10.3322/caac.21552

18. Xiong Y, Yuan L, Chen S, Xu H, Peng T, Ju L, et al. WFDC2 suppresses prostate cancer metastasis by modulating EGFR signaling inactivation. Cell Death Dis (2020) 11(7):537. doi: 10.1038/s41419-020-02752-y

19. Cheng Q, Butler W, Zhou Y, Zhang H, Tang L, Perkinson K, et al. Pre-existing castration-resistant prostate cancer-like cells in primary prostate cancer promote resistance to hormonal therapy. Eur Urol (2022) 81(5):446–55. doi: 10.1016/j.eururo.2021.12.039

20. Matsumoto T, Hatakeyama S, Yoneyama T, Tobisawa Y, Ishibashi Y, Yamamoto H, et al. Serum N-glycan profiling is a potential biomarker for castration-resistant prostate cancer. Sci Rep (2019) 9(1):16761. doi: 10.1038/s41598-019-53384-y

21. Lee J, Park JS, Heo JE, Ahn HK, Jang WS, Ham WS, et al. Muscle characteristics obtained using computed tomography as prognosticators in patients with castration-resistant prostate cancer. Cancers (Basel) (2020) 12(7):1864. doi: 10.3390/cancers12071864

22. Nakamura K, Norihisa Y, Ikeda I, Inokuchi H, Aizawa R, Kamoto T, et al. Ten-year outcomes of whole-pelvic intensity-modulated radiation therapy for prostate cancer with regional lymph node metastasis. Cancer Med (2023) 12(7):7859–67. doi: 10.1002/cam4.5554

23. Khurana N, Kim H, Chandra PK, Talwar S, Sharma P, Abdel-Mageed AB, et al. Multimodal actions of the phytochemical sulforaphane suppress both AR and AR-V7 in 22Rv1 cells: Advocating a potent pharmaceutical combination against castration-resistant prostate cancer. Oncol Rep (2017) 38(5):2774–86. doi: 10.3892/or.2017.5932

24. Hall DL, Llinas J. An introduction to multisensor data fusion. Proc IEEE (1997) 85(1):6–23. doi: 10.1109/5.554205

25. Sleeman WC IV, Kapoor R, Ghosh P. Multimodal classification: current landscape, taxonomy and future directions. ACM Comput Surv (2021) 55(7):1–3. doi: 10.1145/3543848

26. Durrant-Whyte HF. Sensor models and multisensor integration. Int J Robotics Res (1988) 7(6):97–113. doi: 10.1177/027836498800700608

27. Boehm KM, Khosravi P, Vanguri R, Gao J, Shah SP. Harnessing multimodal data integration to advance precision oncology. Nat Rev Cancer (2022) 22(2):114–26. doi: 10.1038/s41568-021-00408-3

28. Boehm KM, Aherne EA, Ellenson L, Nikolovski I, Alghamdi M, Vázquez-García I, et al. Multimodal data integration using machine learning improves risk stratification of high-grade serous ovarian cancer. Nat Cancer (2022) 3(6):723–33. doi: 10.1038/s43018-022-00388-9

29. Barone B, Napolitano L, Calace FP, Del Biondo D, Napodano G, Grillo M, et al. Reliability of multiparametric magnetic resonance imaging in patients with a previous negative biopsy: comparison with biopsy-naïve patients in the detection of clinically significant prostate cancer. Diagnostics (Basel) (2023) 13(11):1939. doi: 10.3390/diagnostics13111939

30. Massanova M, Vere R, Robertson S, Crocetto F, Barone B, Dutto L, et al. Clinical and prostate multiparametric magnetic resonance imaging findings as predictors of general and clinically significant prostate cancer risk: A retrospective single-center study. Curr Urol (2023) 17(3):147–52. doi: 10.1097/CU9.0000000000000173

31. Abrol A, Fu Z, Salman M, Silva R, Du Y, Plis S, et al. Deep learning encodes robust discriminative neuroimaging representations to outperform standard machine learning. Nat Commun (2021) 12(1):353. doi: 10.1038/s41467-020-20655-6

32. Ramanathan TT, Hossen MJ, Sayeed MS. Naïve Bayes based multiple parallel fuzzy reasoning method for medical diagnosis. J Eng Sci Technol (2022) 17(1):472–90.

33. Reda I, Khalil A, Elmogy M, Abou El-Fetouh A, Shalaby A, Abou El-Ghar M, et al. Deep learning role in early diagnosis of prostate cancer. Technol Cancer Res Treat (2018) 17:1533034618775530. doi: 10.1177/1533034618775530

34. Chen RJ, Lu MY, Wang J, Williamson D, Rodig SJ, Lindeman NI, et al. Pathomic fusion: an integrated framework for fusing histopathology and genomic features for cancer diagnosis and prognosis. IEEE Trans Med Imaging (2022) 41(4):757–70. doi: 10.1109/TMI.2020.3021387

35. Bertsimas D, Wiberg H. Machine learning in oncology: methods, applications, and challenges. JCO Clin Cancer Inform (2020) 4:885–94. doi: 10.1200/CCI.20.00072

36. Jing L, Tian Y. Self-supervised visual feature learning with deep neural networks: A survey. IEEE Trans Pattern Anal Mach Intell (2021) 43(11):4037–58. doi: 10.1109/TPAMI.2020.2992393

37. Li P, He Y, Wang P, Wang J, Shi G, Chen Y. Synthesizing multi-frame high-resolution fluorescein angiography images from retinal fundus images using generative adversarial networks. BioMed Eng Online (2023) 22(1):16. doi: 10.1186/s12938-023-01070-6

38. Eun DI, Jang R, Ha WS, Lee H, Jung SC, Kim N. Deep-learning-based image quality enhancement of compressed sensing magnetic resonance imaging of vessel wall: comparison of self-supervised and unsupervised approaches. Sci Rep (2020) 10(1):13950. doi: 10.1038/s41598-020-69932-w

39. Hu L, Zhou DW, Guo XY, Xu WH, Wei LM, Zhao JG. Adversarial training for prostate cancer classification using magnetic resonance imaging. Quant Imaging Med Surg (2022) 12(6):3276–87. doi: 10.21037/qims-21-1089

Keywords: radiomics, pathomics, castration-resistant prostate cancer, deep learning, multi-modal

Citation: Zhou C, Zhang Y-F, Guo S, Huang Y-Q, Qiao X-N, Wang R, Zhao L-P, Chang D-H, Zhao L-M, Da M-X and Zhou F-H (2024) Multimodal data integration for predicting progression risk in castration-resistant prostate cancer using deep learning: a multicenter retrospective study. Front. Oncol. 14:1287995. doi: 10.3389/fonc.2024.1287995

Received: 03 September 2023; Accepted: 25 January 2024;

Published: 14 March 2024.

Edited by:

Fabio Grizzi, Humanitas Research Hospital, Italy

Reviewed by:

Biagio Barone, Azienda Ospedaliera di Caserta, Italy

Shady Saikali, AdventHealth, United States

Jeffrey Tuan, National Cancer Centre Singapore, Singapore

Copyright © 2024 Zhou, Zhang, Guo, Huang, Qiao, Wang, Zhao, Chang, Zhao, Da and Zhou. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ming-Xu Da, ldyy_damx@lzu.edu.cn; Feng-Hai Zhou, ldyy_zhoufh@lzu.edu.cn

†These authors have contributed equally to this work

Chuan Zhou1,2†

Feng-Hai Zhou