- ¹ Department of Urology, Qidong People’s Hospital, Qidong Liver Cancer Institute, Affiliated Qidong Hospital of Nantong University, Qidong, Jiangsu, China

- ² Central Laboratory, Qidong People’s Hospital, Qidong Liver Cancer Institute, Affiliated Qidong Hospital of Nantong University, Qidong, Jiangsu, China

Introduction: Machine learning (ML) has shown significant potential in improving prostate cancer (PCa) diagnosis, prognosis, and treatment planning. Despite rapid advancements, a comprehensive quantitative synthesis of global research trends and the knowledge structure of ML applications in PCa remains lacking. This study aimed to systematically map the evolution, research hotspots, and collaborative landscape of ML-PCa research.

Methods: A systematic bibliometric review was performed on English-language articles and reviews published between January 2005 and December 2024. Publications were retrieved from the Web of Science (WOS) and Scopus databases. Analytical tools including CiteSpace, VOSviewer, and the R-bibliometrix package were employed to assess publication growth trends, country and institutional contributions, collaboration networks, author productivity, journal outlets, and keyword co-occurrence patterns.

Results: A total of 2,632 publications were identified. Annual output increased from fewer than 20 papers during 2005–2014 to 661 in 2024, with 82% of all studies published since 2021. Emerging frontiers included deep learning, radiomics, and multimodal data fusion. China (649 publications) and the United States (492 publications) led in research volume, while Germany demonstrated the highest proportion of multinational collaboration (39.29%). Leading institutions by output were the Chinese Academy of Sciences, the University of British Columbia, and Shanghai Jiao Tong University. In terms of citation impact, the University of Toronto, Case Western Reserve University, and the University of Pennsylvania ranked highest. The journals Cancers, Frontiers in Oncology, and Scientific Reports published the most ML-PCa studies, highlighting the cross-disciplinary nature of the field. Madabhushi Anant emerged as the most central author hub in global collaboration networks.

Discussion: ML applications in PCa research have experienced exponential growth, with methodological innovations driving interest in deep learning and radiomics. However, a persistent translational gap exists between algorithmic development and clinical implementation. Future directions should focus on fostering interdisciplinary collaboration, conducting prospective multicenter validation studies, and aligning with regulatory standards to accelerate the integration of ML models into clinical PCa workflows.

Introduction

In recent years, prostate cancer (PCa) has emerged as a leading public-health challenge for men (1): new cases account for approximately 14.1% of all cancers diagnosed in men, and PCa-specific deaths for about 7% of cancer deaths among men worldwide (2). Although the widespread adoption of prostate-specific antigen (PSA) screening since the 1990s and advances in surgical and radiotherapeutic techniques have improved early detection and treatment (3, 4), existing biomarkers still have limited specificity, contributing to overdiagnosis rates as high as 30%–40% (5, 6). Moreover, high-risk subtypes such as castration-resistant PCa still carry a poor prognosis, with five-year survival rates below 30% (7), underscoring the urgent need to move beyond traditional diagnostic and therapeutic paradigms.

Machine learning (ML) has demonstrated substantial promise across multiple facets of PCa management (8). In imaging and pathology, radiomics models and deep-learning algorithms applied to MRI and histopathology images have enabled early tumor detection and automated Gleason grading, markedly improving diagnostic accuracy (9–11). In genomics, multimodal ML approaches can mine complex gene-expression profiles and exosomal signatures to uncover novel biomarkers (12, 13). At the therapeutic level, deep neural networks have been used to predict patient outcomes under different treatment regimens, guiding personalized medication strategies and radiotherapy planning (14, 15).

Despite these advances, concerns persist regarding reproducibility, external validation, and the clinical utility of ML applications. Multiple systematic reviews have highlighted a pattern of methodological innovation outpacing clinical readiness. For example, among AI systems benchmarked against clinicians (11, 16, 17), only a minority were prospectively tested or deployed in real-world settings, and adherence to reporting guidelines such as CONSORT-AI remains inconsistent (18). This imbalance underscores the need for bibliometric evaluations to characterize the trajectory of research outputs, identify key domains of progress, and expose areas where translational gaps remain.

Against this backdrop, the present study conducts a systematic review and bibliometric analysis of ML-PCa literature published between 2005 and 2024 and indexed in the Web of Science (WOS) and Scopus databases. Using CiteSpace, VOSviewer, and the R-bibliometrix package, we analyze publication trends, authorship and institutional networks, journal and reference co-citations, and the evolution of thematic keywords, providing a structured overview of this rapidly evolving field. Beyond these descriptive analyses, we examine simple proportion-based indicators of clinical validation and translation. Specifically, within our corpus (2005–2024; n=2,632), the author keyword “validation” appeared 73 times, corresponding to only ~2.8% of records (73/2,632), and terms directly reflecting prospective evaluation, randomization, or real-world implementation were absent among the most frequent author keywords. By combining quantitative bibliometric mapping with a critical appraisal of clinical integration, we aim to pinpoint both the technological and the implementation gaps that must be bridged for ML to fulfil its promise in PCa care.

Methods

Data collection and preprocessing

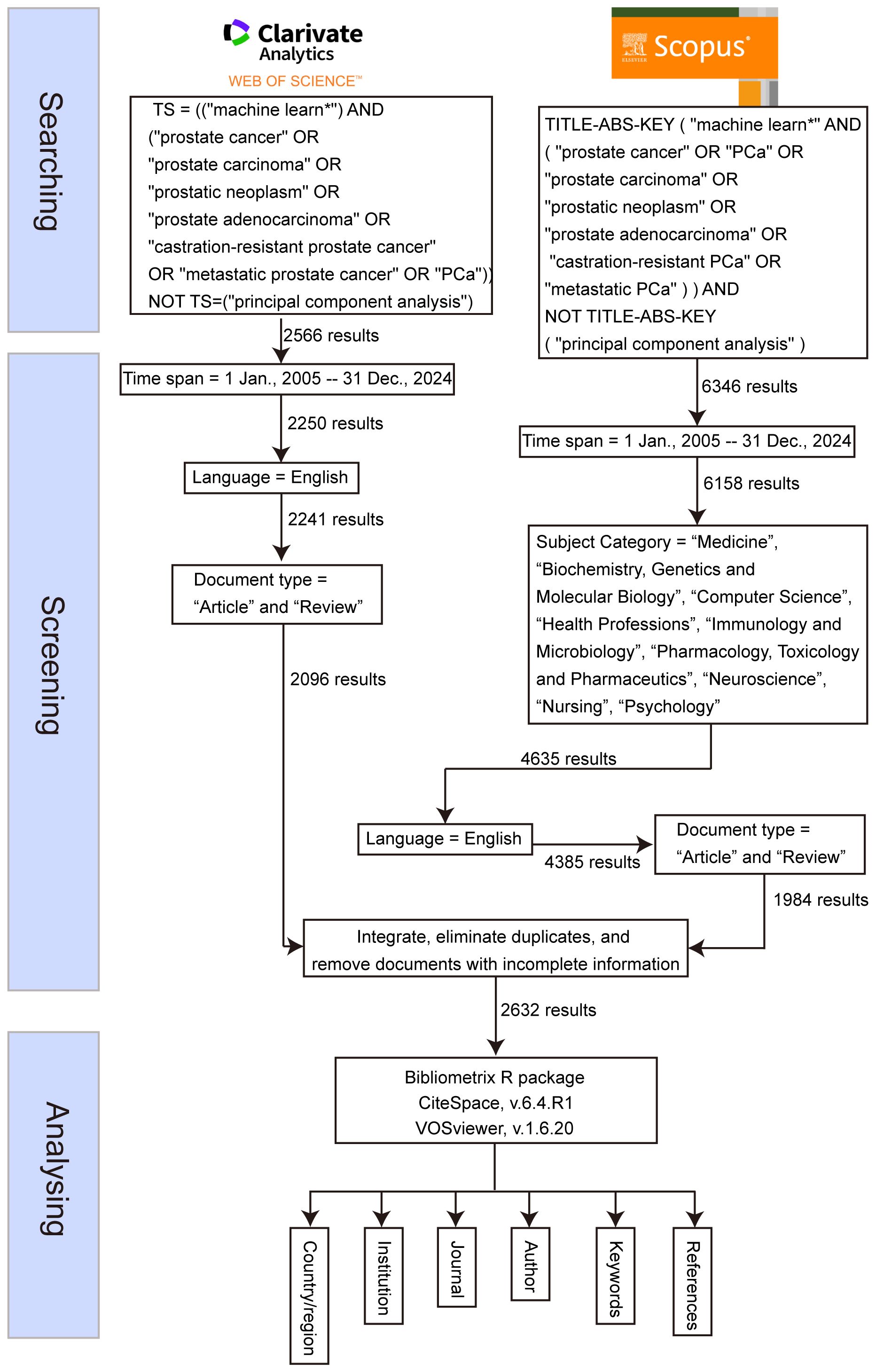

On July 5, 2025, a systematic literature search was conducted in two widely used databases: the WOS Core Collection and Scopus. The WOS search used the query TS=(“machine learn*”) AND (“prostate cancer” OR “prostate carcinoma” OR “prostatic neoplasm” OR “prostate adenocarcinoma” OR “castration-resistant prostate cancer” OR “metastatic prostate cancer” OR “PCa”), and the Scopus search used TITLE-ABS-KEY (“machine learn*” AND (“prostate cancer” OR “PCa” OR “prostate carcinoma” OR “prostatic neoplasm” OR “prostate adenocarcinoma” OR “castration-resistant PCa” OR “metastatic PCa”)). Both searches were limited to publications from January 1, 2005, to December 31, 2024, and restricted to English-language articles and reviews. In Scopus, results were further restricted to the following subject areas: “Medicine,” “Biochemistry, Genetics and Molecular Biology,” “Computer Science,” “Health Professions,” “Immunology and Microbiology,” “Pharmacology, Toxicology and Pharmaceutics,” “Neuroscience,” “Nursing,” and “Psychology.” After retrieval, records from both databases were merged using Python (version 3.9.14), with field names and terms harmonized across the two datasets. Duplicate entries were removed, and records with incomplete or missing information were excluded (Figure 1); the remaining documents were retained for analysis.
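The merge-and-deduplicate step can be sketched in Python, the language the authors report using. This is a minimal illustration under stated assumptions, not the authors’ script: it assumes WOS exports carrying the field tags TI (title), DI (DOI), PY (year), and AU (authors), Scopus CSV exports with Title, DOI, Year, and Authors columns, and a hypothetical matching rule (DOI first, otherwise normalized title plus year). The helper names `harmonize`, `dedup_key`, and `merge_sources` are illustrative.

```python
import re
from typing import Dict, List

# Assumed export fields (WOS tags, Scopus CSV columns) mapped onto one
# hypothetical shared schema.
FIELD_MAPS = {
    "wos": {"TI": "title", "DI": "doi", "PY": "year", "AU": "authors"},
    "scopus": {"Title": "title", "DOI": "doi", "Year": "year", "Authors": "authors"},
}
REQUIRED = ("title", "year", "authors")  # records missing any of these are dropped


def harmonize(record: Dict[str, str], source: str) -> Dict[str, str]:
    """Rename source-specific fields to the shared schema (missing -> "")."""
    return {new: (record.get(old) or "").strip() for old, new in FIELD_MAPS[source].items()}


def dedup_key(rec: Dict[str, str]) -> str:
    """Match on DOI when present, otherwise on normalized title plus year."""
    if rec["doi"]:
        return "doi:" + rec["doi"].lower()
    title = re.sub(r"[^a-z0-9]+", " ", rec["title"].lower()).strip()
    return f"title:{title}|{rec['year']}"


def merge_sources(wos: List[Dict[str, str]], scopus: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Merge both exports, keeping the first occurrence of each duplicate."""
    merged: List[Dict[str, str]] = []
    seen = set()
    for source, records in (("wos", wos), ("scopus", scopus)):
        for raw in records:
            rec = harmonize(raw, source)
            if any(not rec[field] for field in REQUIRED):
                continue  # incomplete record: excluded
            key = dedup_key(rec)
            if key in seen:
                continue  # duplicate of a record already kept
            seen.add(key)
            rec["source"] = source
            merged.append(rec)
    return merged
```

Because WOS records are processed first, WOS metadata is kept whenever a paper appears in both databases. A record that has a DOI in one export but not in the other would escape this simple key, so a production pipeline would likely also compare titles across keys.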

Bibliometric toolchain configuration

The analysis used three complementary tools. First, CiteSpace 6.4.R1 (19, 20) was used to detect citation bursts and temporal trends. It was configured with 1-year time slices (2005–2024), a g-index selection criterion (k=25), and Pathfinder network pruning (γ=0.7) to optimize cluster resolution. Second, VOSviewer 1.6.20 (21) was used to generate co-authorship and keyword co-occurrence networks. For country and institution maps, a minimum threshold of five documents per node was applied, with full counting and association strength normalization to reduce bias toward high-frequency items. Third, for statistical analysis and tracking of thematic evolution, bibliometrix (version 4.1.0) (22) in R (version 4.3.1) was used to perform Latent Dirichlet Allocation topic modeling, with 10 topics estimated by Gibbs sampling over 2,000 iterations. Exponential smoothing (α=0.8) was applied to model productivity trends.
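To make two of these parameters concrete, the Python sketch below implements simple exponential smoothing with the stated α=0.8 and an association-strength normalization of the form c_ij / (w_i · w_j). It is illustrative only: we assume w_i is the total co-occurrence weight of item i, and VOSviewer’s own implementation may differ by a constant factor and in how it computes w_i. The function names are hypothetical.

```python
from typing import Dict, List, Tuple


def exponential_smoothing(series: List[float], alpha: float = 0.8) -> List[float]:
    """Simple exponential smoothing: s_t = alpha * x_t + (1 - alpha) * s_(t-1)."""
    if not series:
        return []
    smoothed = [float(series[0])]  # initialize with the first observation
    for x in series[1:]:
        smoothed.append(alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed


def association_strength(cooc: Dict[Tuple[str, str], int]) -> Dict[Tuple[str, str], float]:
    """Normalize each co-occurrence count c_ij by w_i * w_j, where w_i is the
    total co-occurrence weight of item i (an assumed choice of w_i)."""
    totals: Dict[str, int] = {}
    for (i, j), c in cooc.items():
        totals[i] = totals.get(i, 0) + c
        totals[j] = totals.get(j, 0) + c
    return {(i, j): c / (totals[i] * totals[j]) for (i, j), c in cooc.items()}
```

A high α such as 0.8 makes the smoothed series track recent annual counts closely, which suits a field whose output changes quickly. Dividing by w_i · w_j keeps very frequent items from dominating simply because they co-occur with everything.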

Results

Publication trends and document characteristics

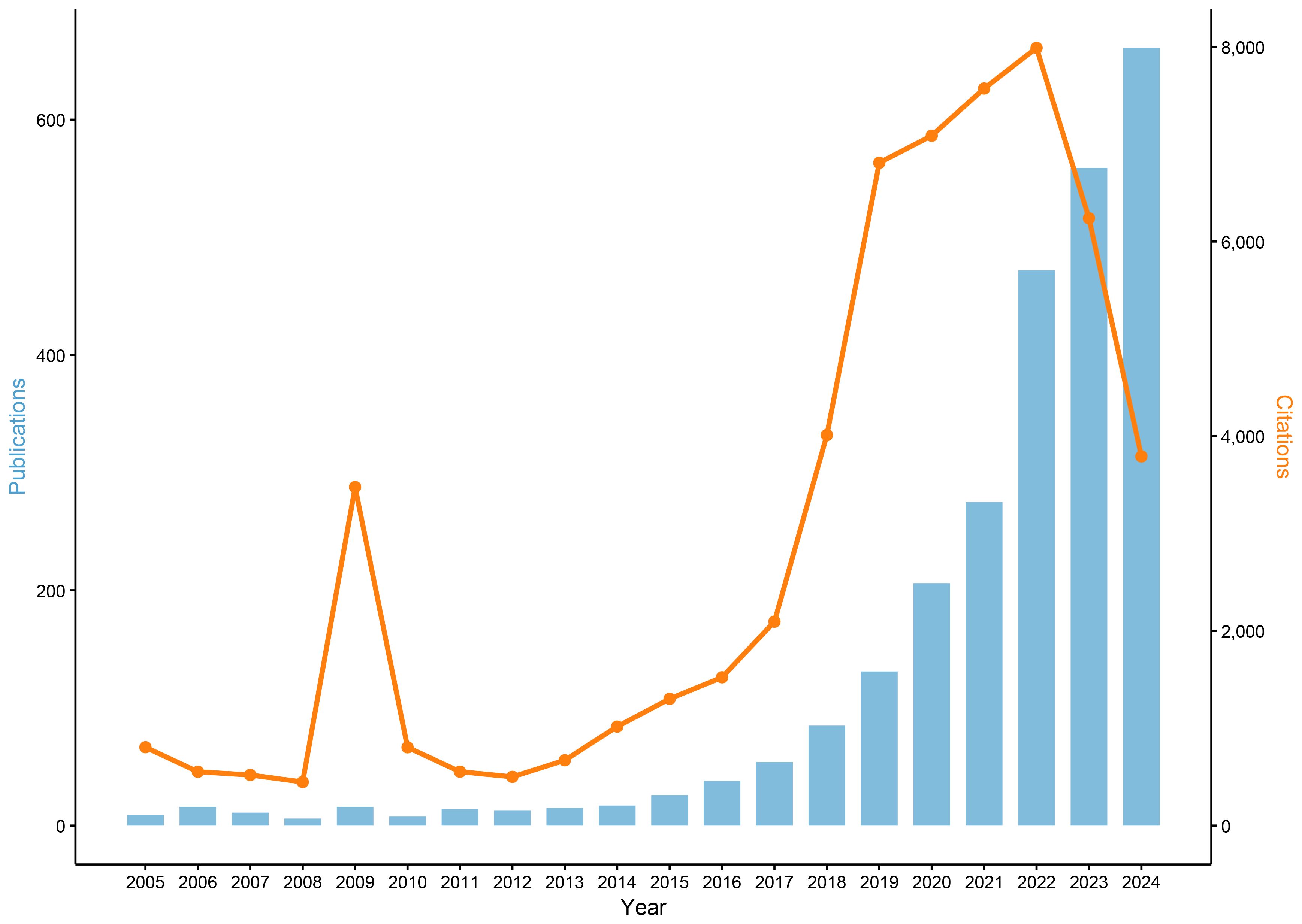

Between 2005 and 2024, 2,632 publications on ML-PCa were identified (Figure 2). Growth was initially slow, with fewer than 20 publications per year throughout 2005–2014. Output accelerated from 2015 onward, exceeding 100 articles per year by 2018 and reaching 206 in 2020. The period 2021–2024 saw exponential expansion, with annual output reaching 472 (2022), 559 (2023), and 661 (2024). Over the two decades, this trajectory corresponds to an average annual growth rate of 25.4%, with 82.6% of cumulative publications (2,173/2,632) concentrated in the final four years (2021–2024). Cumulative citations reached 57,771, attesting to the field’s scholarly significance. Annual citations fluctuated moderately (400–800 per year) during 2005–2013, apart from a transient spike in 2009 (3,478 citations) attributable to highly influential works. From 2014 onward, citations grew robustly, surpassing 4,000 in 2018 and rising to 6,809 in 2019. The 2020–2022 period sustained 7,000–8,000 citations annually, peaking at 7,989 in 2022. Citations then fell to ~3,700 per year in 2023–2024; because recent papers have had less time to accumulate citations, this drop at least partly reflects a shorter citation window, and the remaining level still indicates sustained academic relevance.
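The growth figures above rest on two small formulas, sketched here in Python. The source does not say how the 25.4% average annual growth rate was computed. The sketch assumes a compound annual growth rate, CAGR = (end / start)^(1 / years) − 1, which is one common definition; an arithmetic mean of year-on-year growth would give a different value. The share calculation uses the reported counts. The function names are illustrative.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two annual counts `years` apart."""
    if start <= 0 or years <= 0:
        raise ValueError("start count and number of years must be positive")
    return (end / start) ** (1 / years) - 1


def percent_share(part: int, total: int) -> float:
    """Percentage of `total` accounted for by `part`, rounded to one decimal."""
    return round(100 * part / total, 1)


# Reported counts: 2,173 of 2,632 publications appeared in 2021-2024.
print(percent_share(2173, 2632))  # 82.6
# Illustrative numbers only: a count that quadruples over two years doubles each year.
print(cagr(10, 40, 2))  # 1.0
```

Because CAGR depends only on the endpoints, it is sensitive to the small and noisy counts of the earliest years. This is one reason a smoothed trend, rather than the endpoint ratio alone, is useful for describing productivity.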

Country contributions

Researchers from 92 countries/regions have contributed to ML-PCa research. China was the dominant contributor with 649 publications (24.66% of total output), followed by the United States (492 publications, 18.69%) and India (162 publications, 6.16%) (Table 1). Network analysis positioned the United States and China as central hubs (Figure 3B), with the United Kingdom, Canada, and India forming key peripheral connections. Annual output accelerated globally after 2018, and by 2024 China and the United States were clearly dominant (Figure 3A).

Table 1. Top 10 most productive countries in ML-PCa research and their international collaboration patterns.

Figure 3. Country contributions and collaboration network in ML-PCa research. (A) Annual publication trends of the most productive countries from 2005 to 2024. (B) Global collaboration map illustrating international cooperation between countries or regions based on co-authorship. (C) Chord diagram of country-level collaborations, where the ribbon width represents the collaboration intensity between countries. (D) Country citation clustering network, where node size reflects the number of citations received by each country and node color indicates different citation clusters.

Analysis of international collaboration revealed significant strategic differences: While China maintained a relatively low multinational collaboration proportion (MCP ratio=13.56%), the United States exhibited higher collaborative engagement (MCP ratio=20.93%). Germany demonstrated the most extensive international integration among top contributors (MCP ratio=39.29%) (Table 1).
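The MCP ratio is the share of a country’s publications with co-authors from at least one other country: MCP / (SCP + MCP), where SCP counts single-country publications. The Python sketch below assumes that each paper is attributed to the corresponding author’s country, which is the convention we understand bibliometrix to use. The input format, a tuple of corresponding-author country and all author countries, and the function name are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical input: (corresponding-author country, all author countries) per paper.
Paper = Tuple[str, List[str]]


def collaboration_profile(papers: List[Paper]) -> Dict[str, Dict[str, float]]:
    """Count single-country (SCP) and multiple-country (MCP) publications per
    corresponding-author country and compute MCP ratio = MCP / (SCP + MCP)."""
    scp: Counter = Counter()
    mcp: Counter = Counter()
    for corr_country, countries in papers:
        if len(set(countries) | {corr_country}) > 1:
            mcp[corr_country] += 1  # at least one co-author from another country
        else:
            scp[corr_country] += 1
    profile: Dict[str, Dict[str, float]] = {}
    for country in set(scp) | set(mcp):
        total = scp[country] + mcp[country]
        profile[country] = {
            "SCP": scp[country],
            "MCP": mcp[country],
            "MCP_ratio": mcp[country] / total,
        }
    return profile
```

Under this definition, a country with many domestically authored papers can rank high in volume and still have a low MCP ratio. That is the pattern described above for China relative to Germany.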

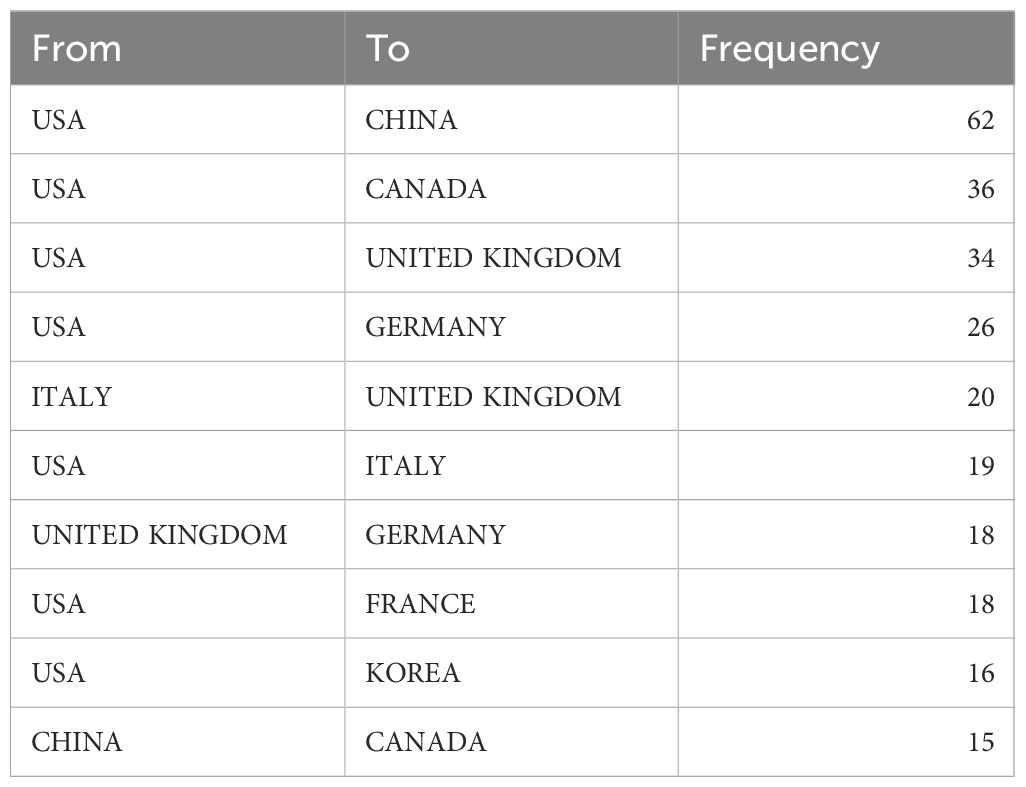

Bilateral analysis identified the United States-China partnership (62 joint publications) as the strongest collaborative dyad, followed by United States-Canada (36) and United States-United Kingdom (34) (Figure 3C, Table 2). Citation-based clustering confirmed the dual centrality of the United States and China, while revealing distinct regional clusters: Italy, Germany, and the United Kingdom formed European-oriented groupings, whereas India anchored a separate citation community (Figure 3D).

Institution features

In total, 3,638 unique organizations contributed to ML-PCa research. Annual publication counts accelerated markedly after 2015, with particularly steep growth after 2020 (Figure 4A). Leading institutions by publication volume include the Chinese Academy of Sciences (42 publications), the University of British Columbia (32 publications), and Shanghai Jiao Tong University (25 publications). Scholarly impact presents a different picture: although the Chinese Academy of Sciences leads in volume, its citations per publication (CPP=38.31) trail those of several Western institutions. The University of Toronto has the highest CPP (70.54), followed closely by Case Western Reserve University (69.86) and the University of Pennsylvania (59.83), indicating highly influential research from these institutions (Table 3).
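Citations per publication (CPP) is total citations divided by the number of publications. The Python sketch below assumes full counting: a paper listing two institutions contributes one publication and its full citation count to each. The study does not state its exact counting rule for CPP, and the record format and function name are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple


def citations_per_publication(records: List[Tuple[List[str], int]]) -> Dict[str, float]:
    """records: (affiliated institutions, citation count) per paper.
    Full counting: every listed institution receives the paper and all its citations."""
    pubs: Counter = Counter()
    cites: Counter = Counter()
    for institutions, citations in records:
        for inst in set(institutions):  # count each institution once per paper
            pubs[inst] += 1
            cites[inst] += citations
    return {inst: cites[inst] / pubs[inst] for inst in pubs}
```

CPP is a mean, so a few very highly cited papers can lift it substantially for institutions with modest output. This is worth keeping in mind when comparing high-volume and high-CPP institutions.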

Figure 4. Institutional contributions to ML-PCa research. (A) Annual publication statistics of the top contributing institutions from 2005 to 2024. (B) Institutional collaboration network in ML-PCa research. Each node represents a research institution, with node size proportional to the number of publications. Colors denote distinct clusters of collaborating institutions, and connecting lines represent co-authorship links. (C) Cluster map of keyword co-occurrence for institutions. Each colored cluster corresponds to a major thematic area, with node size reflecting keyword frequency and link strength indicating co-occurrence. (D) Institutional citation clustering network. Nodes represent institutions, sized by total citation counts, and colored by citation clusters; link thickness reflects citation relationships.

Table 3. Top 10 most productive institutions in ML-PCa research, including citation count, average citations per article, and centrality.

The institutional collaboration network reveals distinct structural patterns (Figure 4B). Western institutions, particularly the University of British Columbia (highest centrality, 0.06), Stanford University, and the University of Pennsylvania, act as primary global connectors with extensive international links. Major Chinese institutions, including the Chinese Academy of Sciences and Shanghai Jiao Tong University, participate actively in global networks while showing stronger regional cohesion. Research specialization clusters align clearly with geography (Figure 4C): North American and European institutions concentrate on digital pathology and artificial intelligence applications, whereas Chinese institutions focus more on cell-free DNA analysis and medical imaging diagnostics.
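In CiteSpace, centrality values such as the 0.06 reported for the University of British Columbia are betweenness centrality scores: the fraction of shortest paths between other node pairs that pass through a node. The sketch below is a plain-Python version of Brandes’ algorithm for an unweighted, undirected network, normalized to [0, 1]. CiteSpace’s exact normalization and any edge weighting may differ, so treat it as illustrative. The function name and the adjacency-set input format are our own choices.

```python
from collections import deque
from typing import Dict, List, Set


def betweenness_centrality(adj: Dict[str, Set[str]]) -> Dict[str, float]:
    """Brandes' algorithm for an unweighted, undirected graph (adj must be
    symmetric), normalized by the number of node pairs excluding the node."""
    bc = {v: 0.0 for v in adj}
    for s in adj:
        stack: List[str] = []
        preds: Dict[str, List[str]] = {v: [] for v in adj}
        sigma = {v: 0 for v in adj}   # number of shortest paths from s
        dist = {v: -1 for v in adj}
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:  # breadth-first search counts shortest paths
            v = queue.popleft()
            stack.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        delta = {v: 0.0 for v in adj}
        while stack:  # accumulate dependencies in reverse BFS order
            w = stack.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                bc[w] += delta[w]
    n = len(adj)
    # Each undirected pair was counted twice (once from each endpoint), so
    # divide by 2 * (n-1)(n-2)/2 = (n-1)(n-2).
    scale = 1.0 / ((n - 1) * (n - 2)) if n > 2 else 0.0
    return {v: c * scale for v, c in bc.items()}
```

A node can have few links yet high betweenness if it is the only bridge between two dense groups. This is why institutions with moderate output can still act as global connectors.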

Citation network analysis indicates a multipolar global impact landscape (Figure 4D). The Chinese Academy of Sciences and the University of British Columbia both anchor densely connected citation cores, and additional regional clusters emerge: Chinese institutions, including Huazhong University of Science and Technology, form a distinctive citation group, while institutions from the United States and the United Kingdom form self-contained high-impact modules. This configuration suggests that China is moving from peripheral contributor to central knowledge producer, while Western institutions retain leadership in specialized methodologies and collaborative infrastructure.

Journal distribution and citation impact

ML-PCa research has been disseminated across 10,437 unique journals, with Cancers (82 publications), Frontiers in Oncology (70 publications), and Scientific Reports (52 publications) as the dominant outlets (Table 4). Cancers is the most cited journal (1,127 total citations), whereas Medical Physics has the highest per-article influence (CPP=27.79) despite moderate output. Cancers, Scientific Reports, and IEEE Access share the same H-index within this corpus (17), indicating comparable sustained impact in the domain (Table 4).
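The H-index of a journal or author within this corpus is the largest h such that h of its ML-PCa papers have at least h citations each. A short Python sketch follows. The citation counts in the example are invented for illustration, and the function name is our own.

```python
from typing import Iterable


def h_index(citations: Iterable[int]) -> int:
    """Largest h such that at least h papers have >= h citations each."""
    h = 0
    for rank, count in enumerate(sorted(citations, reverse=True), start=1):
        if count >= rank:
            h = rank
        else:
            break  # counts are sorted, so no later paper can qualify
    return h


print(h_index([10, 8, 5, 4, 3]))  # 4
```

Because the H-index ignores citations beyond the h-th paper’s threshold, two journals can share an H-index of 17 while differing widely in total citations or CPP.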

Table 4. Top 10 most productive journals in ML-PCa research, including citation metrics and 2024 impact factors.

Annual publication trends exhibit exponential growth after 2018, peaking in 2024 with Cancers, Frontiers in Oncology, and Applied Sciences-Basel leading this expansion (Figure 5A). Thematic clustering delineates distinct journal specializations: Cancers and Frontiers in Oncology anchor oncology-focused research, while Sensors and IEEE Access concentrate on computational modeling applications. Medical Physics emerges as a critical interdisciplinary hub through its bridging position between clinical and technological clusters (Figure 5B).

Figure 5. Journal-level bibliometric and thematic analysis of ML-PCa research. (A) Annual article output by top journals from 2010 to 2024, shown as a stacked bar chart where each color represents one of the leading journals. (B) Thematic clustering of journals based on their publication profiles in ML-PCa research: nodes represent journals, colored by dominant thematic cluster, with size proportional to publication volume. (C) Journal co-citation network: nodes represent journals sized by the number of times they are cited in ML-PCa articles, colors denote co-citation clusters, and edges indicate co-citation links.

Co-citation network analysis positions high-impact journals including Nature, IEEE Transactions on Medical Imaging, and Cancers as foundational knowledge sources within tightly interconnected citation modules (Figure 5C). These citation relationships underscore the integration of machine learning methodologies into medical research paradigms. Temporal keyword evolution further reveals a transformative trajectory: pre-2015 research emphasized conventional techniques like feature extraction and support vector machines, whereas post-2020 publications increasingly prioritize deep learning, convolutional neural networks (CNN), and radiogenomics (Supplementary Figure 1).

Analysis of cross-domain knowledge flows revealed three primary diffusion trajectories, each following a distinct transdisciplinary pathway (Figure 6). The most prominent trajectory originates in systems and computer engineering journals, whose knowledge is subsequently drawn on by publications in medicine and genetics. A second pathway carries methodological innovations from mathematical modeling sources into clinical medical imaging applications. The third trajectory captures how sensor technology literature progressively informs molecular biology and immunology studies. Together, these three pathways characterize ML-PCa research as a multidisciplinary convergence domain in which computational innovations continually feed advances in precision oncology.

Figure 6. Dual-map overlay of citing and cited journals across scientific domains: left-hand map shows subject areas of citing journals, right-hand map shows domains of cited journals, and curved paths trace knowledge flows.

Author productivity and collaboration networks

The global ML-PCa research community comprises 12,345 authors, and 23.21% of publications involve international collaboration. As shown in Table 5 and Figure 7A, Madabhushi Anant is the foremost contributor, with 19 publications, 1,475 total citations (an average of 77.63 citations per paper), and an H-index of 16. Other prolific authors include Abolmaesumi Purang and Mousavi Parvin (8 publications each), while authors such as Shiradkar Rakesh (average citations = 45.13) and Cuocolo Renato (47.50) have substantial influence through focused, high-quality output despite modest publication volumes.

Table 5. Top 10 most productive authors in ML-PCa research, including documents, citation count, average citations per article, and H-index.

Figure 7. Author and topic-level mapping of ML-PCa research. (A) Publication productivity of authors in the current study: each node represents an author, node size reflects the number of articles they published. (B) Author citation network: nodes sized by citation counts of authors, edges represent citation relationships, indicating authors’ scholarly impact. (C) Cited-author co-citation network: nodes are authors cited in ML-PCa publications, sized by co-citation frequency, with colors denoting co-citation clusters.

Collaboration network analysis reveals clear structural patterns (Figure 7B). Madabhushi Anant occupies the central hub, maintaining strong ties with Abolmaesumi Purang, Comelli Albert, and Cacciamani Giovanni E., which together form the nucleus of a densely interconnected cluster. Distinct national subgroups, notably Italian, Spanish, and U.S. research groups, form peripheral subnetworks, reflecting the domain’s international character.

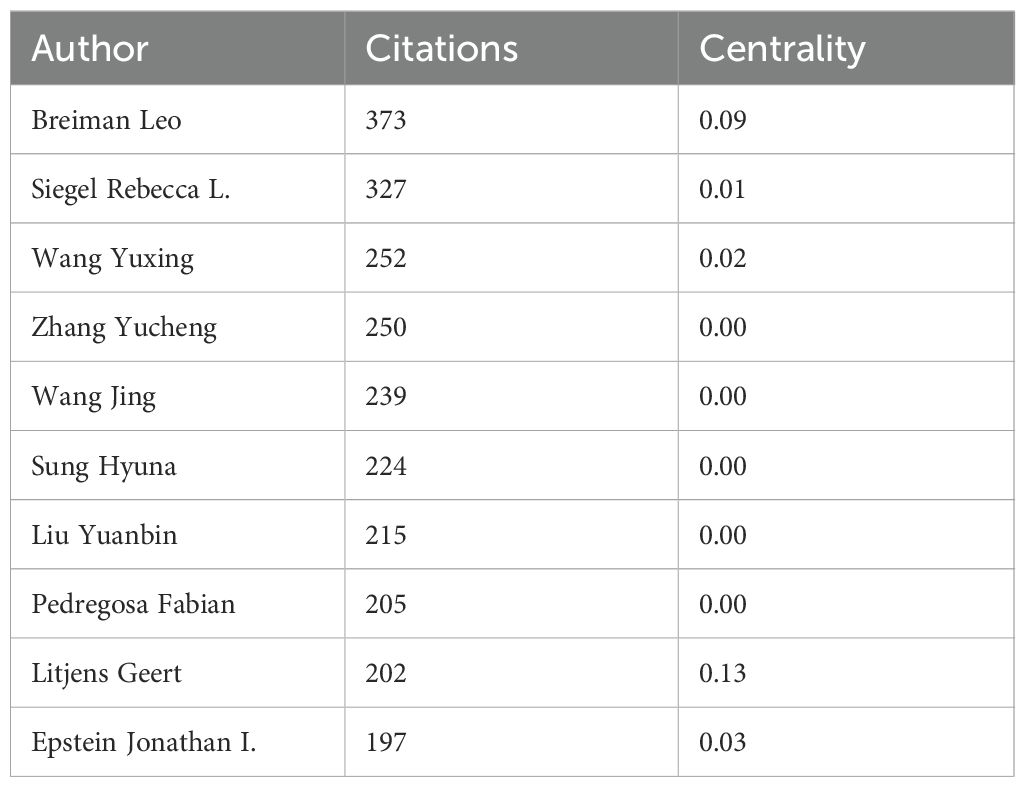

Co-citation analysis identifies foundational contributors whose influence extends beyond their direct publication output (Figure 7C; Table 6). Leo Breiman is the most frequently co-cited author (373 co-citations) and has high centrality (0.09), underscoring the methodological importance of his work. Other key authorities include Siegel Rebecca L. (327 co-citations) in epidemiological foundations and Wang Yuxing (252 co-citations) in statistical applications. Thematic clustering separates specialized knowledge streams: the red and pink clusters concentrate on computational innovations in image recognition and deep learning, while the blue and green clusters anchor clinical diagnostics and statistical epidemiology. Litjens Geert has an even higher bridging centrality (0.13) despite moderate co-citation counts (202), indicating his role in cross-domain knowledge integration.

Longitudinal keyword evolution documents a profound methodological transition (Supplementary Figure 2). Pre-2015 research emphasized hand-crafted features and conventional classifiers (“support vector machines”, “texture analysis”, “TRUS imaging”). During 2015–2020, the focus shifted toward integrated approaches (“radiomics”, “multiparametric MRI”, “deep learning”). Post-2020 work features advanced architectures (“transformer models”, “attention mechanisms”) and multimodal integration (“radiogenomics”). This trajectory traces the field’s progression from manual feature extraction toward sophisticated multimodal AI frameworks that increasingly prioritize automated pattern discovery and biological correlation.

Keyword co-occurrence and thematic evolution

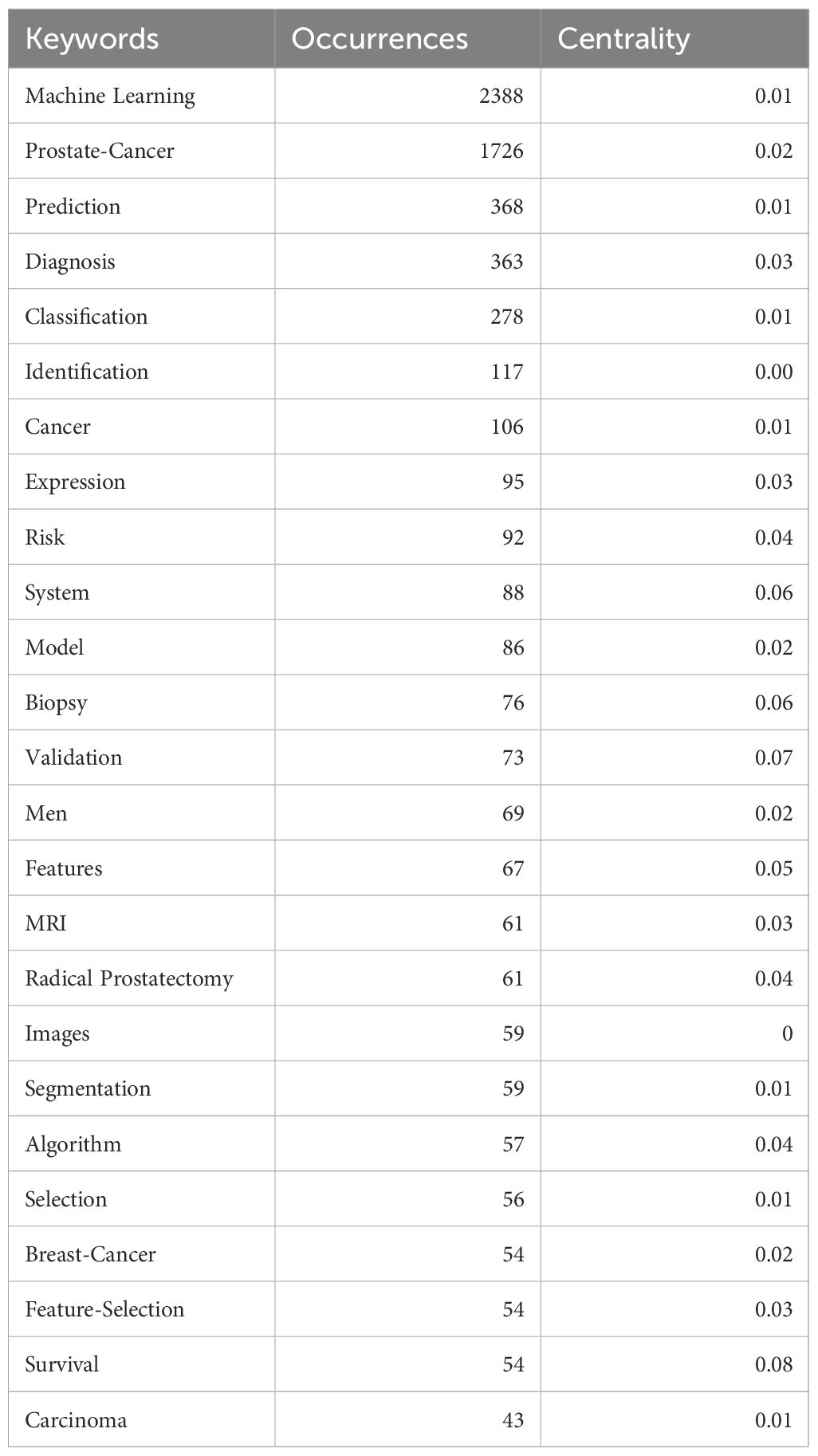

Keyword co-occurrence analysis reveals a multidisciplinary framework for ML-PCa research that integrates computational methods with clinical diagnostics (Figure 8A; Table 7). The keyword “machine learning” is the most frequent (2,388 occurrences), followed by “prostate cancer” (1,726 occurrences), confirming their foundational importance. Centrality analysis identifies key bridging terms, including “Survival” (centrality=0.08), “System”, “Biopsy”, and “Validation” (centrality > 0.06), which link technical and clinical domains. Although less frequent, “Identification” and “MRI” also show substantial network connectivity, underscoring their role in thematic integration.
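Keyword frequencies and co-occurrence counts of the kind summarized in Table 7 can be sketched in Python. The sketch assumes case-folding and within-record deduplication, so “Survival” and “survival” merge and a keyword counts at most once per record. The study’s actual keyword cleaning, including any synonym merging, is not described here, and the function name and example keyword lists are hypothetical.

```python
from collections import Counter
from itertools import combinations
from typing import List, Tuple


def keyword_network(keyword_lists: List[List[str]]) -> Tuple[Counter, Counter]:
    """Return (keyword frequency, co-occurrence count) over a set of records.
    Each record's keywords are case-folded and deduplicated before counting."""
    frequency: Counter = Counter()
    cooccurrence: Counter = Counter()
    for keywords in keyword_lists:
        terms = sorted({k.strip().lower() for k in keywords if k.strip()})
        frequency.update(terms)
        # sorted terms give each unordered pair a single, stable key
        cooccurrence.update(combinations(terms, 2))
    return frequency, cooccurrence
```

The resulting pair counts are the raw edge weights of a co-occurrence network. Tools such as VOSviewer then apply frequency thresholds and normalization before layout and clustering.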

Figure 8. Keyword-based bibliometric analysis of ML-PCa research. (A) Co-occurrence network of author-assigned keywords: each node represents a keyword, node size reflects occurrence frequency, edges indicate co-occurrence relationships, and color intensity denotes citation centrality. (B) Keyword clustering map: keywords are grouped into thematic clusters based on co-occurrence; node size represents frequency and color indicates cluster membership.

Thematic mapping reveals distinct research concentrations through cluster analysis (Figure 8B). Key groupings include Cluster #0 (“deep learning”), Cluster #2 (“prostate cancer”), Cluster #6 (“artificial intelligence”), and Cluster #15 (“predictive modeling”), delineating core domains spanning diagnostic modeling and AI applications. Density visualization (Supplementary Figure 3) further highlights intensive research activity around “prostate cancer”, “diagnosis”, “biopsy”, and “identification”, forming the field’s substantive core.

Temporal evolution demonstrates a paradigm shift in research priorities (Supplementary Figure 4; Supplementary Figure 5). Early-phase research (pre-2015) emphasized conventional techniques like “pattern recognition”, “algorithm”, “classification”, and “support vector machine”. Post-2015 witnessed accelerating adoption of “deep learning”, “radiomics”, and “image segmentation”, with post-2020 research dominated by emergent concepts including “transfer learning”, “segmentation”, and “radiogenomics”. This progression reflects the field’s transition from feature engineering toward complex multimodal integration.

Burst detection analysis (Figure 9) reinforces this trajectory. Foundational methodological terms such as “algorithm” and “automated pattern recognition” showed strong bursts during 2005–2018, whereas recent bursts feature “transfer learning”, “radiotherapy dosage”, and “radiology” (2020–2024). This shift suggests that the field’s emphasis is moving from methodological exploration toward clinically oriented applications in precision oncology, although keyword bursts alone do not demonstrate clinical implementation.
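CiteSpace’s burst detection builds on Kleinberg’s algorithm. The algorithm models a term’s yearly frequency as generated by either a baseline-rate state or an elevated-rate state, and it finds the cheapest state sequence. The Python sketch below is a simplified two-state, batched version. The scaling factor s=2 and transition weight γ=1 are illustrative defaults, not the CiteSpace settings used in this study, and CiteSpace’s implementation may differ in detail. The sketch does not compute the burst strength values shown in Figure 9, and the function name is hypothetical.

```python
import math
from typing import List, Optional, Tuple


def detect_bursts(r: List[int], d: List[int], s: float = 2.0, gamma: float = 1.0) -> List[Tuple[int, int]]:
    """Two-state burst detection after Kleinberg's batched model.
    r[t]: records containing the term in period t; d[t]: all records in period t.
    Returns inclusive (start, end) period indices spent in the burst state."""
    n = len(r)
    if n == 0 or sum(r) == 0 or sum(r) >= sum(d):
        return []
    p0 = sum(r) / sum(d)                 # baseline rate (state 0)
    p1 = min(s * p0, 0.9999)             # elevated rate (state 1), kept below 1
    rates = (p0, p1)

    def cost(state: int, t: int) -> float:
        # Binomial negative log-likelihood; the binomial coefficient is the
        # same in both states, so it is omitted.
        p = rates[state]
        return -(r[t] * math.log(p) + (d[t] - r[t]) * math.log(1 - p))

    up = gamma * math.log(n)              # cost of entering the burst state; leaving is free
    best = [cost(0, 0), up + cost(1, 0)]  # the process starts in state 0
    back: List[Tuple[int, int]] = []
    for t in range(1, n):
        into0 = (best[0], best[1])        # from state 0 or 1 into state 0 (free)
        into1 = (best[0] + up, best[1])   # entering state 1 from state 0 costs `up`
        prev0 = 0 if into0[0] <= into0[1] else 1
        prev1 = 0 if into1[0] < into1[1] else 1
        back.append((prev0, prev1))
        best = [into0[prev0] + cost(0, t), into1[prev1] + cost(1, t)]

    # Viterbi traceback of the cheapest state sequence
    state = 0 if best[0] <= best[1] else 1
    states = [state]
    for prev in reversed(back):
        state = prev[state]
        states.append(state)
    states.reverse()

    # Collapse consecutive burst periods into (start, end) intervals
    bursts: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for t, st in enumerate(states):
        if st == 1 and start is None:
            start = t
        elif st == 0 and start is not None:
            bursts.append((start, t - 1))
            start = None
    if start is not None:
        bursts.append((start, n - 1))
    return bursts
```

The entry penalty γ·ln(n) prevents single noisy years from registering as bursts. Raising γ or s makes the detector more conservative.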

Figure 9. Top 25 keywords with the strongest citation bursts (2005–2024): red bars mark the active burst period for each keyword, and numbers indicate burst strength and timing.

To quantify the extent of clinically oriented research, we relied on bibliometric proxies. Author keyword frequency provides a conservative lower bound, because studies that perform validation do not necessarily list it as a keyword: “validation” appeared 73 times, corresponding to approximately 2.8% of the corpus (73/2,632; Table 7). Keywords such as “prospective”, “randomized”, “trial”, “implementation”, and “real-world” were absent from the top 25, suggesting that they are uncommon overall.
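The keyword-share proxy can be sketched in Python. The sketch counts records whose author keywords contain a term by exact, case-insensitive match. Exact matching misses variants such as “external validation”, which is one reason such counts act as a lower bound. It also assumes each keyword is listed at most once per record, so occurrences equal records. The function name and example keyword lists are hypothetical.

```python
from typing import List, Tuple


def keyword_record_share(keyword_lists: List[List[str]], term: str) -> Tuple[int, float]:
    """Count records whose author keywords contain `term` (exact, case-insensitive
    match) and return (count, share of all records)."""
    target = term.strip().lower()
    hits = sum(1 for kws in keyword_lists if target in {k.strip().lower() for k in kws})
    share = hits / len(keyword_lists) if keyword_lists else 0.0
    return hits, share


# Reported figures: 73 occurrences of "validation" among 2,632 records.
print(round(100 * 73 / 2632, 1))  # 2.8
```

Switching to substring matching would capture variants like “external validation” but also unrelated compounds. In practice, a curated synonym list is a safer way to widen the count.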

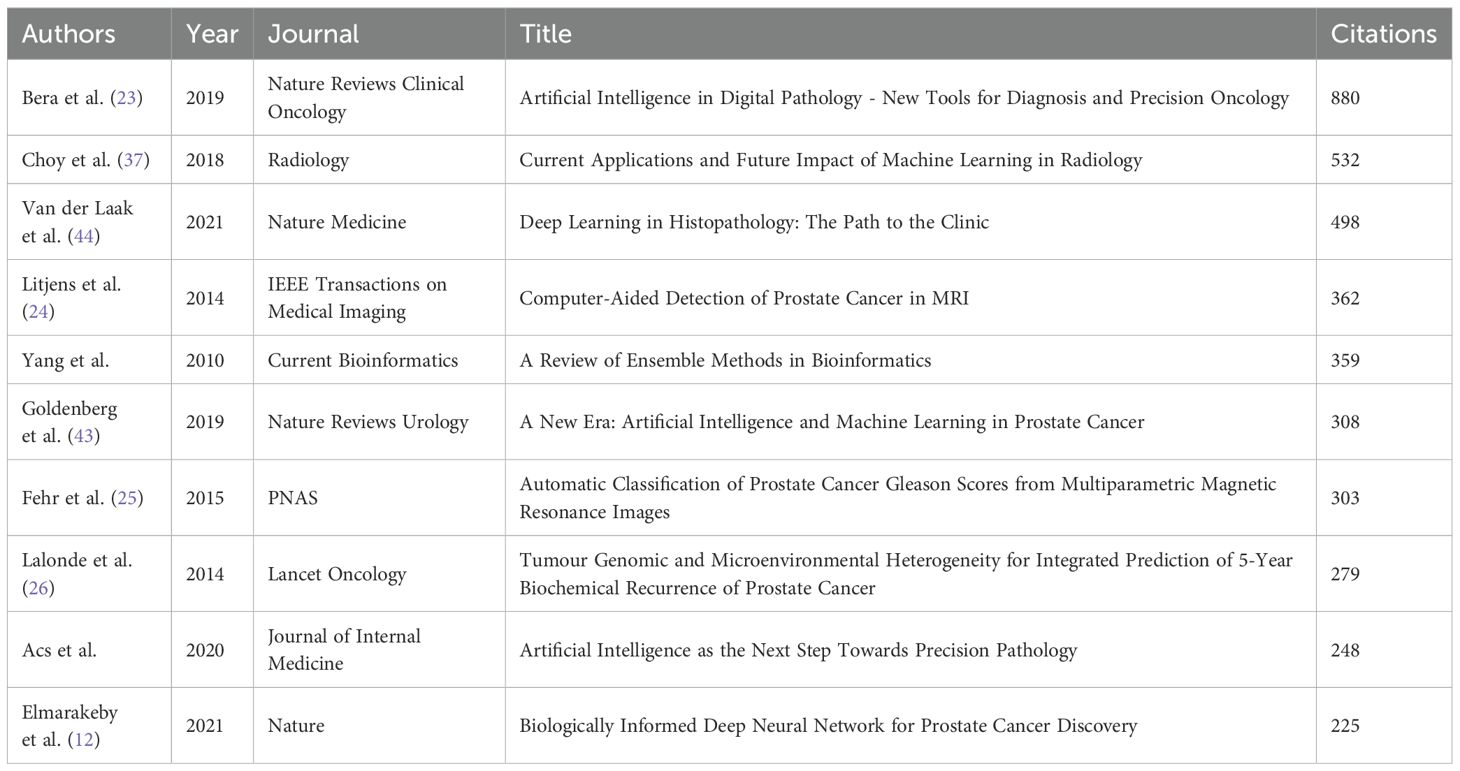

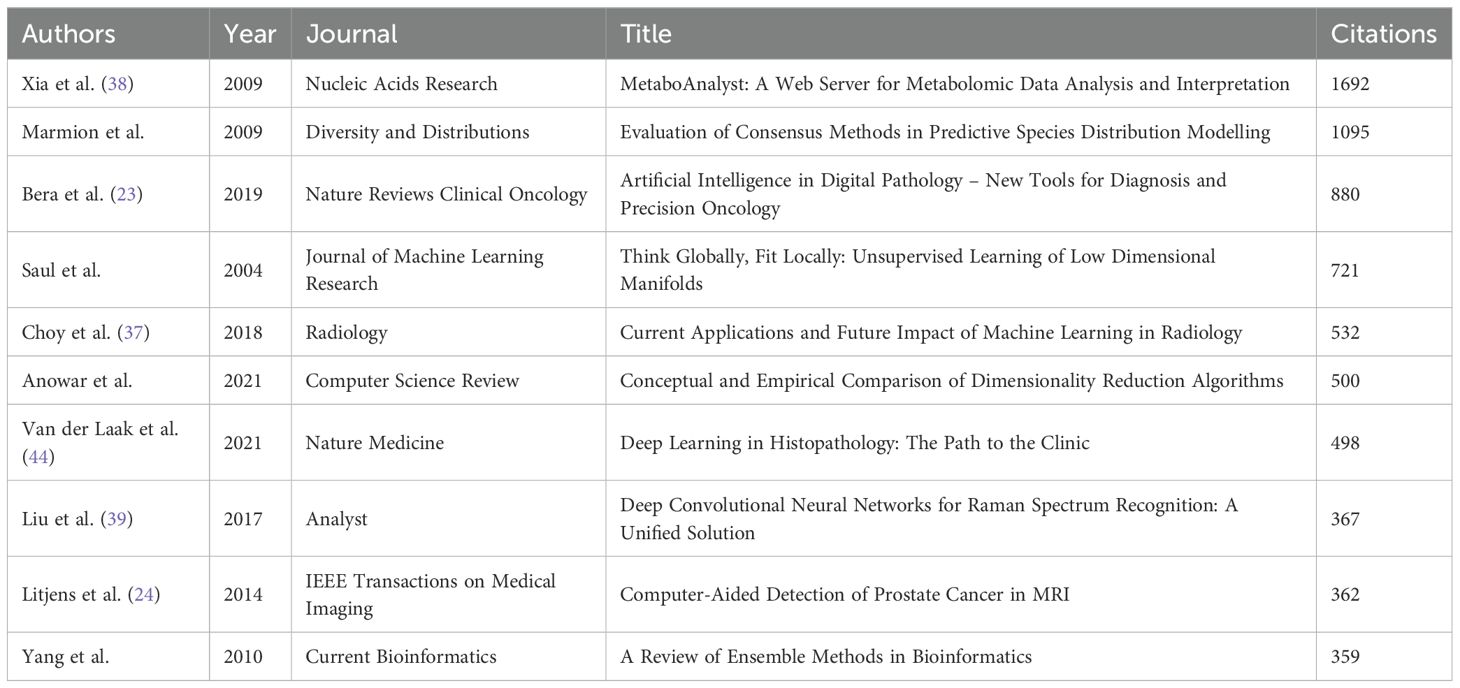

References and knowledge base

Core literature analysis identifies seminal works that anchor the intellectual structure of ML-PCa research. Bera et al. (2019) (23) (Nature Reviews Clinical Oncology, 880 citations) describes AI’s transformative role in digital pathology and precision oncology, while Litjens et al. (2014) (24), Fehr et al. (2015) (25), and Lalonde et al. (2014) (26) represent foundational advances in MRI-based detection and tumor microenvironment analysis (Figure 10A; Table 8). These highly connected works reflect the domain’s emphasis on integrating AI into clinical radiology and pathology.

Figure 10. Reference-based bibliometric analysis in ML-PCa research. (A) Local co-citation network based on included literature: Constructed using VOSviewer, where each node represents a document, node size indicates the number of local citations, edges denote co-citation relationships between documents, and colors correspond to different thematic clusters. (B) Citation network analysis of external references cited by ML-PCa publications. Each node corresponds to a referenced article, with size proportional to the number of times it was cited. Nodes are color-coded by citation centrality. (C) Co-citation network of references. Nodes represent frequently co-cited references within ML-PCa literature. Clusters denote groups of papers that are often cited together, revealing major research themes and intellectual structure.

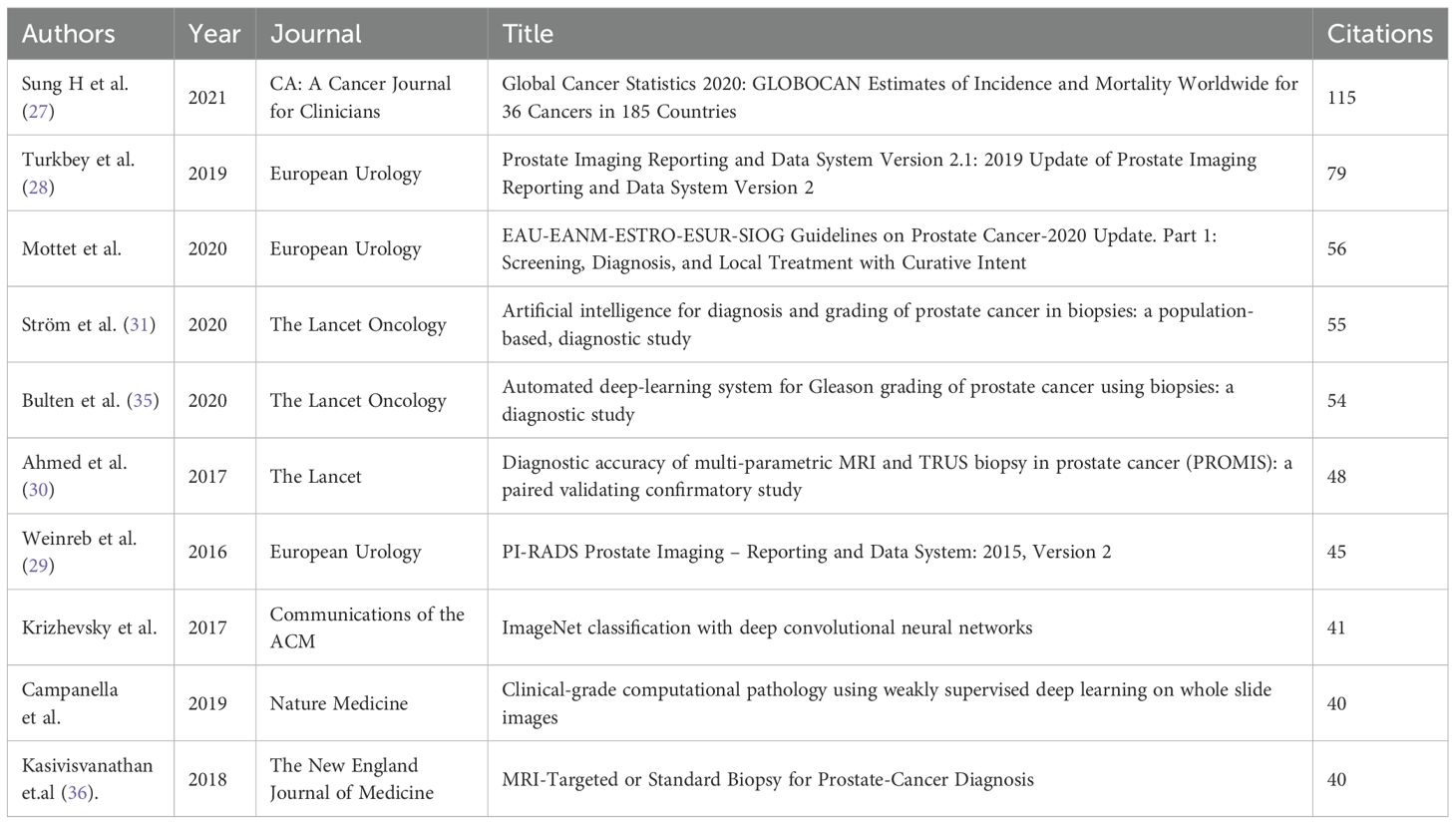

The cited-reference network reveals the key clinical references underpinning ML-PCa development (Figure 10B; Table 9). Sung et al. (2021) (27) (115 citations) provides essential epidemiological data from GLOBOCAN 2020, while Turkbey et al. (2019) (28) and Weinreb et al. (2016) (29) standardize mpMRI interpretation through PI-RADS v2.1 and v2, respectively. Key validation studies, namely the PROMIS trial of Ahmed et al. (2017) (30) and the AI-assisted biopsy assessment system of Ström et al. (2020) (31), demonstrate the translational impact of these frameworks. Keyword clustering (Supplementary Figure 6) confirms this cross-disciplinary focus, with “machine learning,” “multiparametric MRI,” and “predictive models” forming dominant conceptual hubs.

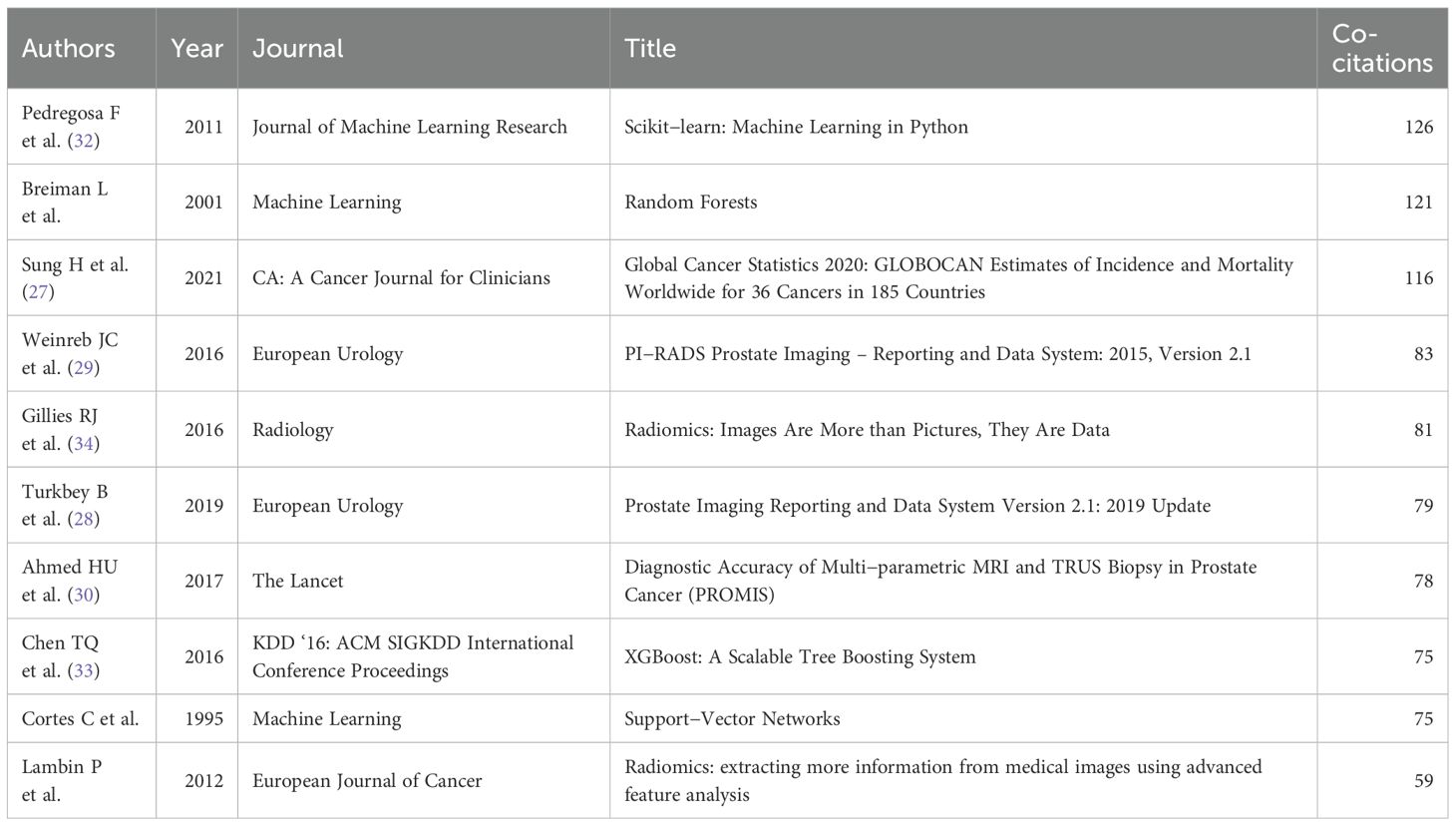

Co-citation analysis reveals five interconnected thematic clusters that define the methodological architecture of ML-PCa research (Figure 10C; Table 10). The red cluster anchors the field in algorithmic foundations and is dominated by the Scikit-learn framework of Pedregosa et al. (2011) (32) (126 co-citations) and the XGBoost system of Chen et al. (2016) (33), reflecting the prevalence of Python-based machine learning workflows. The adjacent blue cluster covers clinical and imaging standardization, combining epidemiological benchmarks such as the global cancer statistics of Sung et al. (2021) (27) with PI-RADS validation studies that operationalize mpMRI interpretation guidelines. Complementing these clinical pillars, the purple cluster develops radiomics frameworks for extracting quantitative imaging biomarkers, exemplified by the seminal work of Gillies et al. (2016) (34) positioning medical images as mineable data. The green cluster covers bioinformatics methods for multi-omics data integration, including gene expression analysis tools such as GSEA and Limma, while the yellow cluster traces clinical translation pathways that connect algorithm development to diagnostic applications.

Highly cited and co-cited reference sets further reinforced this pattern. Landmark clinical validation studies (Ström et al., 2020 (31); Bulten et al., 2020 (35); Kasivisvanathan et al., 2018 (36)) were represented, yet most influential works emphasized methodological development, algorithmic benchmarking, or standardization (Tables 9, 10). Taken together, these bibliometric signals indicate that robust clinical validation and implementation studies constitute only a small minority of the field.

Bibliographic coupling (Supplementary Figure 7; Table 11) further supports the convergence of research into distinct domains: AI-driven clinical imaging applications (Choy et al., 2018 (37); Bera et al., 2019 (23)), multi-omics integration platforms (MetaboAnalyst; Xia et al., 2009 (38)), and emerging multimodal fusion techniques (a Raman spectroscopy approach; Liu et al., 2017 (39)).
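Bibliographic coupling and co-citation are duals computed from the same citing-to-reference links. Two citing papers are coupled by the references they share, and two references are co-cited by the papers that cite both. The Python sketch below computes raw counts for both. The paper identifiers, reference labels, and function name are hypothetical, and VOSviewer, CiteSpace, and bibliometrix apply their own thresholds and normalization on top of such counts.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, Set, Tuple


def coupling_and_cocitation(refs: Dict[str, Set[str]]) -> Tuple[Counter, Counter]:
    """refs maps each citing paper to the set of references it cites.
    Bibliographic coupling: number of references shared by two citing papers.
    Co-citation: number of citing papers that cite two references together."""
    coupling: Counter = Counter()
    for a, b in combinations(sorted(refs), 2):
        shared = len(refs[a] & refs[b])
        if shared:
            coupling[(a, b)] = shared
    cocitation: Counter = Counter()
    for cited in refs.values():
        cocitation.update(combinations(sorted(cited), 2))
    return coupling, cocitation
```

Coupling is fixed once the citing papers are published, whereas co-citation keeps changing as new papers cite older work. Coupling therefore tends to map the current research front, and co-citation the established knowledge base.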

Citation burst analysis reveals temporal trends (Figure 11). Early bursts (pre-2018) feature radiomics methods (Gillies et al., 2016 (34)) and PI-RADS standardization (Weinreb et al., 2016 (29)), while later bursts prioritize clinical-AI integration, including the epidemiology reference of Sung et al. (2021) (27) (burst strength 17.36, 2022–2024) and the validation of MRI-targeted biopsy by Kasivisvanathan et al. (2018) (36). Around the 2018–2020 inflection, deep learning and gradient-boosting methods (LeCun et al., 2015 (40); Chen et al., 2016 (33)) gained prominence, supporting the move toward clinically deployable tools.

Figure 11. Top 25 references with the strongest citation bursts from 2005 to 2024. Red bars denote the active burst period for each reference, and the burst strength quantifies the sudden increase in citations over time, highlighting pivotal or trending literature in the field.

Discussion

The evolution of ML-PCa research reflects a convergence of computational innovation and precision oncology. In line with our stated aims, these findings both map the field’s technological evolution and show how far these advances have translated into clinical application. From its nascent interdisciplinary origins, the field has rapidly matured into a dynamic knowledge ecosystem characterized by accelerating global engagement, structural diversification across methodological and clinical domains, and a shifting geography of knowledge production. This growth underscores strong synergies between algorithmic advances and biomedical discovery, yet persistent gaps in clinical validation, interdisciplinary harmonization, and equitable implementation remain critical challenges.

Publication trajectory and intellectual emergence

The exponential growth in ML-PCa publications after 2015 signals a shift from theoretical exploration toward clinical integration. This inflection coincides with three enabling developments: the maturation of deep learning (CNN architectures enabling automated image analysis), the proliferation of data infrastructure (public repositories such as PROSTATEx and TCIA), and cross-disciplinary consortia bridging computational and clinical domains (41, 42). The concentration of 82% of output in 2021–2024, together with 57,771 cumulative citations, underscores ML-PCa’s rapid maturation into a core oncology subfield. The recent dip in citations (2023–2024) partly reflects the shorter window recent papers have had to accumulate citations, but it may also signal early saturation of algorithm-focused studies, arguing for a pivot toward clinical validation and implementation research.

Geopolitical dynamics and collaborative networks

China’s volumetric lead (24.66% of publications) likely reflects state-led investment in AI and healthcare priorities, although its lower multiple-country publication (MCP) ratio (13.56%) points to a predominantly domestic collaboration pattern. Conversely, the United States, despite a smaller share of output (18.69%), occupies a more central network position and has a higher MCP ratio (20.93%), reinforcing its role in international knowledge diffusion. Germany’s outlying MCP ratio (39.29%) is consistent with strategic multilateralism within EU frameworks such as Horizon Europe. The bipolar US-China collaboration hub (62 joint publications), alongside India’s autonomous citation cluster, reveals a stratified network topology. Risks include knowledge siloing (Western-centric clinical standards vs. an Eastern focus on imaging) and resource asymmetry, which call for policies that incentivize Global South inclusion and equitable data sharing.
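The country shares and MCP ratios above are simple proportions. The minimal sketch below assumes the bibliometrix-style definition MCP ratio = MCP / (SCP + MCP), where SCP counts single-country publications and each country’s own publication count is the denominator. The MCP counts (88 for China, 103 for the United States) are not reported in this section. They are back-calculated from the stated ratios purely for illustration.

```python
def share_of_output(country_pubs: int, total_pubs: int) -> float:
    """Percentage of all retrieved publications attributed to one country."""
    return 100.0 * country_pubs / total_pubs


def mcp_ratio(mcp: int, scp: int) -> float:
    """Percentage of a country's papers that are multiple-country
    publications: MCP / (SCP + MCP)."""
    return 100.0 * mcp / (mcp + scp)


TOTAL = 2632                 # publications in the corpus (reported)
china, usa = 649, 492        # publications per country (reported)
china_mcp, usa_mcp = 88, 103  # back-calculated from reported ratios (assumption)

print(f"China share {share_of_output(china, TOTAL):.2f}%, "
      f"MCP ratio {mcp_ratio(china_mcp, china - china_mcp):.2f}%")
print(f"USA share {share_of_output(usa, TOTAL):.2f}%, "
      f"MCP ratio {mcp_ratio(usa_mcp, usa - usa_mcp):.2f}%")
```

Because the MCP ratio is normalized by each country’s own output, a small producer such as Germany can show the highest ratio while contributing few papers in absolute terms.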

Institutional output and impact asymmetries

Although Chinese institutions dominate publication volume (e.g., Chinese Academy of Sciences: 42 papers), Western institutions lead in influence (University of Toronto citations per publication, CPP: 70.54 vs. 38.31). This quantity-impact divergence may stem from differential specialization: North American and European institutions focus on high-impact AI methodology and digital pathology (e.g., the University of Pennsylvania’s radiomics innovations), while Chinese clusters prioritize imaging diagnostics. The central network positions of Western institutions (e.g., University of British Columbia, centrality 0.06) accelerate clinical translation, yet China’s emerging citation cores signal growing methodological credibility. Future gains will require bidirectional collaboration that integrates Chinese computational efficiency with Western clinical-validation pipelines.
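As we understand it, the centrality values reported in CiteSpace are betweenness centrality. This measure captures how often a node lies on shortest paths between other nodes, so it flags brokers between clusters. Below is a minimal pure-Python sketch of Brandes’ algorithm for an unweighted, undirected network, normalized by (n − 1)(n − 2)/2 so values fall between 0 and 1. CiteSpace’s own weighting and normalization may differ, and the toy institution network is hypothetical.

```python
from collections import deque
from typing import Dict, Hashable, Iterable, List, Set, Tuple


def betweenness_centrality(edges: Iterable[Tuple[Hashable, Hashable]]) -> Dict[Hashable, float]:
    """Normalized betweenness centrality (Brandes' algorithm) for an
    unweighted, undirected graph given as an edge list."""
    adj: Dict[Hashable, Set[Hashable]] = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    bc = dict.fromkeys(adj, 0.0)
    for s in adj:
        # Breadth-first search from s, counting shortest paths (sigma).
        order: List[Hashable] = []
        preds: Dict[Hashable, List[Hashable]] = {v: [] for v in adj}
        sigma = dict.fromkeys(adj, 0)
        dist = dict.fromkeys(adj, -1)
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Accumulate pair dependencies in reverse BFS order.
        delta = dict.fromkeys(adj, 0.0)
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1.0 + delta[w])
            if w != s:
                bc[w] += delta[w]
    n = len(adj)
    if n < 3:
        return dict.fromkeys(adj, 0.0)
    # Each unordered pair was counted twice (once from each endpoint).
    norm = (n - 1) * (n - 2) / 2
    return {v: (b / 2) / norm for v, b in bc.items()}


# Hypothetical collaboration network: "Hub" brokers between three partners.
edges = [("Hub", "A"), ("Hub", "B"), ("Hub", "C"), ("C", "D")]
print({k: round(v, 3) for k, v in betweenness_centrality(edges).items()})
# {'Hub': 0.833, 'A': 0.0, 'B': 0.0, 'C': 0.5, 'D': 0.0}
```

A node can therefore have modest output yet high centrality if it bridges otherwise separate groups. This is the sense in which centrality and publication volume diverge in the institutional analysis.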

Journal integration and cross-domain synthesis

ML-PCa research exhibits robust interdisciplinary diffusion across three dominant pathways. Engineering venues (IEEE Access, Sensors) originate methodological innovations, oncology journals (Cancers, Frontiers in Oncology) report their clinical adoption, and Medical Physics (CPP = 27.79) bridges methodological and clinical domains with superior per-article influence. Temporal keyword evolution confirms deepening integration: the post-2020 prominence of “transformer models” and “radiogenomics” reflects the field’s shift from siloed applications toward biologically contextualized AI systems. Despite this convergence, fragmentation persists between computational and clinical clusters. Standardized reporting frameworks such as MI-CLAIM are urgently needed to streamline translation, particularly as Scientific Reports and IEEE Access have become key venues for methodological prototyping that precedes clinical validation.

Author productivity and methodological evolution

Madabhushi Anant’s leadership (H-index: 16; CPP: 77.63) exemplifies the combination of computational expertise and clinical partnership that drives high-impact innovation. Author clusters reveal globalized specialization: North American and European teams pioneer deep learning integration, while regional hubs (e.g., Italy’s Comelli cluster) refine clinical applications. Co-citation patterns confirm dual foundations: Breiman’s ML theory (centrality: 0.09) and Siegel’s epidemiological frameworks. Temporal keyword shifts, from SVM and feature engineering (before 2015) to deep learning (2015–2020) and multimodal AI (after 2020), show models capturing increasingly complex biology. Future success hinges on nurturing “bilingual” researchers fluent in both biomedicine and algorithm design.
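The H-index and CPP quoted for authors and institutions follow standard definitions. The H-index is the largest h such that h papers have at least h citations each. CPP is total citations divided by the number of papers. In bibliometric mapping studies, both are typically computed only over papers in the retrieved corpus, so they can differ from an author’s career figures. The sketch below uses hypothetical citation counts.

```python
from typing import Sequence


def h_index(citations: Sequence[int]) -> int:
    """Largest h such that h papers each have at least h citations."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, c in enumerate(ranked, start=1):
        if c >= rank:
            h = rank
        else:
            break
    return h


def citations_per_publication(citations: Sequence[int]) -> float:
    """CPP: total citations divided by the number of publications."""
    return sum(citations) / len(citations) if citations else 0.0


papers = [120, 45, 30, 9, 4, 2]  # hypothetical citation counts for one author
print(h_index(papers), round(citations_per_publication(papers), 2))  # 4 35.0
```

The two metrics answer different questions. A single highly cited paper raises CPP sharply but can raise the H-index by at most one.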

Thematic trajectories and translational bottlenecks

The evolution from technical exploration to clinical implementation defines ML-PCa’s maturation. Early emphases on “support vector machines” and “feature extraction” (before 2015) gave way to integrative paradigms (“deep learning,” “multiparametric MRI”) during 2015–2020, culminating in today’s focus on multimodal frameworks (“attention mechanisms,” “radiogenomics”). Burst detection corroborates this trajectory: algorithm-centric bursts (2005–2018) gave way to clinical implementation keywords (“radiotherapy dosage,” burst intensity 5.72, 2020–2024). However, network centrality metrics expose critical translational gaps. Terms central to clinical translation, such as “validation” (centrality = 0.06), “survival,” and “biopsy,” remain underdeveloped relative to methodological terms, indicating insufficient linkage between AI performance metrics and clinical endpoints. Similar concerns have been raised in other reviews of AI in oncology, where methodological innovation often outpaces clinical integration (23, 43). For example, Elmarakeby et al. (12) showed that biologically informed neural networks have strong discovery potential, yet such models still lacked systematic validation in prospective cohorts. This pattern mirrors the implementation gap in our bibliometric evidence. The imbalance, compounded by geographical bias in training data (78% Western cohorts), hampers real-world deployment despite technical sophistication.
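Keyword networks of the kind analyzed here are built from co-occurrence counts. Two keywords are linked each time they appear in the same paper, and the link weight is the number of such papers. The minimal sketch below assumes full counting and uses simple lower-casing as a stand-in for the thesaurus-based keyword merging that tools such as VOSviewer support. The keyword lists are hypothetical.

```python
from collections import Counter
from itertools import combinations
from typing import Iterable, List


def cooccurrence(keyword_lists: Iterable[List[str]]) -> Counter:
    """Count how many papers list each unordered keyword pair together."""
    pairs: Counter = Counter()
    for kws in keyword_lists:
        # Normalize case and drop duplicates within a paper before pairing.
        unique = sorted({k.strip().lower() for k in kws})
        pairs.update(combinations(unique, 2))
    return pairs


papers = [
    ["Deep learning", "MRI", "validation"],
    ["deep learning", "MRI"],
    ["radiomics", "MRI"],
]
print(cooccurrence(papers).most_common(1))  # [(('deep learning', 'mri'), 2)]
```

Centrality measures such as the value quoted for “validation” are computed on the weighted network these pair counts define.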

Our results are consistent with other domain-level bibliometric analyses, which have similarly observed rapid output growth coupled with translational inertia. For instance, recent mapping of AI in radiology underscored that fewer than 15% of studies incorporated prospective validation or multicenter trials, despite exponential publication growth (37, 44). By situating ML-PCa within this broader landscape, our analysis reinforces that bibliometric expansion alone is not a surrogate for clinical readiness.

Knowledge foundations and future imperatives

Five interconnected thematic clusters underpin ML-PCa’s intellectual architecture. Algorithmic foundations (Scikit-learn, XGBoost) are closely linked to clinical-imaging standards (PI-RADS, the PROMIS trial), while radiomics frameworks (Gillies et al.) and multi-omics integration tools (GSEA, Limma) support biologically anchored discovery. This convergence opens translational pathways in which methodologies evolve into clinical tools (e.g., the digital pathology frameworks reviewed by Bera et al.). Citation bursts confirm an accelerating clinical emphasis: Sung et al.’s global cancer statistics (burst intensity 17.36, 2022–2024) and Kasivisvanathan et al.’s biopsy trial dominate post-2020 citations. Yet significant gaps persist in the knowledge base: fewer than 3% of highly cited works address ethical governance, health economics, or regulatory science.

Our bibliometric analysis is consistent with broader evidence syntheses: while publication volume and methodological innovation are expanding, high-quality clinical validation remains the exception rather than the rule. For instance, a systematic review of 41 ML RCTs found only a handful conducted with full adherence to CONSORT-AI (18). Among 81 non-randomized deep learning imaging studies comparing AI with clinicians, only nine were prospective and just six were tested in clinical settings (45, 46). Among primary-care predictive algorithms, only 28% of FDA- or CE-marked tools satisfied even half of the Dutch AIPA guideline’s evidence criteria (47).

These external findings align closely with our internal bibliometric signals: validation appeared in just ~2.8% of articles (Table 7), trial-related terms were absent from high-frequency keywords, and clinical trial reports were under-represented in the most-cited clusters (Tables 9, 10). Together, these patterns underscore a structural imbalance—abundant retrospective algorithmic benchmarks but scarce prospective, validated, and implemented studies.

Looking forward, closing this gap will require (1) multi-institutional validation studies using standardized imaging biomarkers (48); (2) federated learning solutions for data-scarce populations (49, 50); and (3) SNOMED-CT integration to bridge EHR silos (51). Without these, ML-PCa risks becoming a methodological echo chamber rather than a transformative clinical discipline.
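Federated learning keeps patient data at each institution and shares only model parameters. The best-known aggregation rule, federated averaging (FedAvg), weights each site’s parameters by its local sample size. The sketch below shows only that aggregation step for a flat parameter vector. Real deployments, including the configurations studied in (50), add local training rounds, secure communication, and often different aggregation rules. The site values are hypothetical.

```python
from typing import List, Sequence


def federated_average(site_weights: Sequence[Sequence[float]],
                      site_sizes: Sequence[int]) -> List[float]:
    """FedAvg aggregation step: average each parameter across sites,
    weighting every site by its number of local training cases."""
    if len(site_weights) != len(site_sizes) or not site_weights:
        raise ValueError("need one parameter vector per site")
    n_params = len(site_weights[0])
    if any(len(w) != n_params for w in site_weights):
        raise ValueError("all sites must share the same model shape")
    total = sum(site_sizes)
    return [
        sum(w[i] * n for w, n in zip(site_weights, site_sizes)) / total
        for i in range(n_params)
    ]


# Hypothetical: three hospitals send locally trained parameters, never raw images.
local = [[0.2, 1.0], [0.4, 0.0], [0.1, 0.5]]
sizes = [100, 300, 100]
print(federated_average(local, sizes))
```

Weighting by sample size keeps large centers from being diluted by small ones. By the same logic, data-scarce populations can still be underweighted, which is one motivation for the alternative configurations explored in the federated learning literature (49, 50).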

Research hotspots

Multimodal MRI deep learning diagnosis

Research in PCa diagnostics increasingly applies machine learning to multiparametric MRI (mpMRI) to improve detection, grading, and characterization (52). Deep learning models now integrate T2-weighted, diffusion-weighted imaging (DWI), and dynamic contrast-enhanced (DCE) sequences to improve identification of clinically significant prostate cancer (csPCa) (53). For instance, the Deep Radiomics model was trained on 615 patients from four cohorts (PROSTATEx, Prostate158, PCaMAP, NTNU/St. Olavs Hospital). In independent testing it achieved a patient-level AUROC of 0.91, comparable to PI-RADS assessment (AUROC 0.94) with no statistically significant difference (54). Similarly, an MRI-TRUS fusion 3D U-Net model tested on 3,110 patients outperformed MRI-alone approaches in sensitivity (80% vs. 73%) and lesion Dice coefficient (42% vs. 30%). It also showed higher specificity (88% vs. 78%) in 110 controls (55). Such approaches may complement PI-RADS v2.0 assessment and strengthen clinical decision support.
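Sensitivity, specificity, and the lesion Dice coefficient quoted above are standard confusion-matrix and overlap measures. The minimal sketch below assumes binary lesion masks represented as sets of voxel coordinates and patient-level counts. It illustrates the definitions only and is not the evaluation code of the cited studies. All values are hypothetical.

```python
from typing import Set, Tuple

Voxel = Tuple[int, int, int]


def dice(pred: Set[Voxel], truth: Set[Voxel]) -> float:
    """Dice coefficient 2|P ∩ T| / (|P| + |T|) between two voxel sets."""
    if not pred and not truth:
        return 1.0  # both masks empty: treat as perfect agreement
    return 2 * len(pred & truth) / (len(pred) + len(truth))


def sensitivity_specificity(tp: int, fn: int, tn: int, fp: int) -> Tuple[float, float]:
    """Patient-level sensitivity TP/(TP+FN) and specificity TN/(TN+FP)."""
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical lesion masks as sets of (z, y, x) voxel coordinates.
pred = {(0, 1, 1), (0, 1, 2), (0, 2, 2)}
truth = {(0, 1, 2), (0, 2, 2), (0, 2, 3), (0, 3, 3)}
print(round(dice(pred, truth), 3))                          # 0.571
print(sensitivity_specificity(tp=40, fn=10, tn=45, fp=5))   # (0.8, 0.9)
```

Dice rewards voxel-level overlap, whereas sensitivity and specificity are computed per patient, so a model can detect a lesion correctly yet still score a modest Dice coefficient.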

CNN imaging feature engineering

CNNs automatically learn discriminative features from prostate images and often outperform traditional handcrafted-feature methods. Recent innovations include lightweight 3D-CNN variants (XmasNet, ResNet-based blocks) with transfer learning, which converge rapidly on small datasets; XmasNet achieved an AUC of 0.84 using 199 training and 200 test cases from PROSTATEx (56). Automated segmentation with nnU-Net, followed by voxel-wise radiomics feature extraction and XGBoost classification, balances interpretability and performance (54). Further enhancements integrate channel and spatial attention mechanisms to weight multiscale features. These improve tumor boundary delineation and heterogeneity detection and increase sensitivity by more than 5% (57).
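AUC values such as those reported for XmasNet and Deep Radiomics summarize how well a classifier ranks positive cases above negative ones. The minimal sketch below uses the pairwise (Mann-Whitney) formulation. It is quadratic in the number of cases and meant only to illustrate the definition. The scores and labels are hypothetical.

```python
from typing import Sequence


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """ROC AUC as the probability that a random positive case scores higher
    than a random negative case (ties count one half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


scores = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1]
labels = [1, 1, 1, 0, 0, 0]
print(round(auc(scores, labels), 3))  # 0.889
```

Because AUC depends only on ranking, it is threshold-free. Reported sensitivity and specificity, by contrast, depend on the operating point chosen for deployment.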

Large-scale public datasets and shared platforms

Multicenter public datasets address the limitations of single-institution data and establish validation benchmarks. The SPIE-AAPM-NCI Prostate MR Classification Challenge (PROSTATEx) provided 330 training and 208 testing lesions with standardized mpMRI quality control, and its successor, PROSTATEx-2, focused on Gleason grade prediction (42, 58). The TCIA Prostate-MRI-US-Biopsy dataset of Natarajan et al. (1,151 patients) (42) has been used in more than 17 core publications. Combined analysis of six independent microarray datasets identified high-confidence biomarker gene sets, significantly improving cross-cohort generalization and enabling multi-omics integration (42, 59).

Challenge-driven interdisciplinary collaboration

Public competitions foster synergy among clinical, physics, and computational experts to accelerate translation. Initiatives such as the SPIE-AAPM-NCI PROSTATEx Challenge (launched 2016) catalyzed algorithmic innovation, with real-time validation at SPIE conferences (58, 60). The MVP-CHAMPION project integrates clinical, genomic, and imaging data within the Million Veteran Program, enabling closed-loop refinement of ML-PCa models for clinical deployment. Open-science platforms (e.g., Grand-Challenge.org) share preprocessing scripts, model code, and visualization tools, establishing transparent, reproducible community standards (61, 62).

Overcoming the translational gap: future opportunities

ML holds transformative potential across prostate cancer research. In diagnostics, integrating multi-omics data (genomic, proteomic, metabolic) with high-resolution imaging enables identification of novel biomarker combinations that may substantially improve early detection sensitivity and specificity (63). Therapeutically, ML supports personalized treatment by predicting optimal drug combinations and sequences from patient genetics, the tumor microenvironment, and treatment history, improving efficacy while reducing adverse effects (64). It also refines radiotherapy planning through precision dose delivery tailored to tumor morphology, maximizing therapeutic impact while sparing healthy tissue (15). Future advances center on enhanced data processing and modeling. Automated AI-driven data annotation (e.g., deep learning for pathology images) will streamline workflows (65), ensemble and transfer learning will improve model robustness and accelerate task-specific adaptation (66), and the development of explainable models remains critical for clinical trust and adoption (67).

Strengths and limitations of the study

This study offers a comprehensive analysis of the rapidly growing field of ML in PCa, identifying key trends, influential authors, journals, and research hotspots. By combining multiple bibliometric tools, it provides a multidimensional view of the field. However, its reliance on bibliometric data introduces limitations, particularly in evaluating research quality beyond citation metrics. Language bias (only English-language publications were included) and self-citation may also introduce error. Finally, although bibliometrics offer valuable insights into publication trends, they cannot provide a nuanced assessment of the methodologies and clinical applicability of the included studies.

Conclusion

This study reveals explosive growth in ML-PCa research, dominated by contributions from China and the United States. Key advances center on multimodal imaging integration, deep learning-driven tumor classification, and large-scale dataset utilization, offering transformative potential for early diagnosis and personalized therapy. However, critical barriers persist, particularly the limited proportion of studies progressing to clinical validation and real-world testing. This echoes prior systematic reviews of AI in cancer care that found fewer than one-third of published models underwent external or prospective validation (31, 35). Thus, despite methodological sophistication, clinical adoption remains the exception rather than the rule. Encouragingly, recent international initiatives such as the PI-CAI challenge and consortium have begun to address these barriers directly by fostering cross-population validation, methodological standardization, and benchmarking, and several prostate AI spin-offs are already benefiting from this framework. Future progress hinges on establishing international consortia for validation studies, developing explainable AI systems, and creating open-access data repositories to accelerate clinical translation and optimize global prostate cancer management.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material. Further inquiries can be directed to the corresponding authors.

Author contributions

SG: Investigation, Methodology, Validation, Writing – original draft, Writing – review & editing. JC: Investigation, Methodology, Writing – original draft, Writing – review & editing. CF: Data curation, Methodology, Validation, Writing – original draft, Writing – review & editing. XH: Data curation, Methodology, Validation, Writing – original draft, Writing – review & editing. LL: Conceptualization, Methodology, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing. HZ: Conceptualization, Data curation, Funding acquisition, Investigation, Supervision, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research and/or publication of this article. This research was funded by Nantong Municipal Science and Technology Program, grant number JCZ2022071; Qidong Municipal Science and Technology Program (2023-04); Nantong University Special Research Fund for Clinical Medicine, grant number 2024JZ026; Nantong Municipal Health Commission, grant number MS2024103; Qidong Municipal Science and Technology Program (2024).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fonc.2025.1675459/full#supplementary-material

References

1. Cornford P, van den Bergh RCN, Briers E, Van den Broeck T, Brunckhorst O, Darraugh J, et al. EAU-EANM-ESTRO-ESUR-ISUP-SIOG guidelines on prostate cancer-2024 update. Part I: screening, diagnosis, and local treatment with curative intent. Eur Urol. (2024) 86:148–63. doi: 10.1016/j.eururo.2024.03.027

2. Bray F, Laversanne M, Sung H, Ferlay J, Siegel RL, Soerjomataram I, et al. Global cancer statistics 2022: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin. (2024) 74:229–63. doi: 10.3322/caac.21834

3. Marshall CH. Acting on actionable mutations in metastatic prostate cancer. J Clin Oncol. (2023) 41:3295–9. doi: 10.1200/JCO.23.00350

4. Pinsky PF and Parnes H. Screening for prostate cancer. N Engl J Med. (2023) 388:1405–14. doi: 10.1056/NEJMcp2209151

5. Beltran A, Parker LA, Moral-Pélaez I, Caballero-Romeu JP, Chilet-Rosell E, Hernandez-Aguado I, et al. Overdiagnosis for screen-detected prostate cancer incorporating patient comorbidities. Eur J Public Health. (2024) 34:ckae144.1304. doi: 10.1093/eurpub/ckae144.1304

6. James ND, Tannock I, N’Dow J, Feng F, Gillessen S, Ali SA, et al. The Lancet Commission on prostate cancer: planning for the surge in cases. Lancet. (2024) 403:1683–722. doi: 10.1016/S0140-6736(24)00651-2

7. Vellky JE, Kirkpatrick BJ, Gutgesell LC, Morales M, Brown RM, Wu Y, et al. ERBB3 overexpression is enriched in diverse patient populations with castration-sensitive prostate cancer and is associated with a unique AR activity signature. Clin Cancer Res. (2024) 30:1530–43. doi: 10.1158/1078-0432.CCR-23-2161

8. Ning J, Spielvogel CP, Haberl D, Trachtova K, Stoiber S, Rasul S, et al. A novel assessment of whole-mount Gleason grading in prostate cancer to identify candidates for radical prostatectomy: a machine learning-based multiomics study. Theranostics. (2024) 14:4570–81. doi: 10.7150/thno.96921

9. Cao Y, Sutera P, Silva Mendes W, Yousefi B, Hrinivich T, Deek M, et al. Machine learning predicts conventional imaging metastasis-free survival (MFS) for oligometastatic castration-sensitive prostate cancer (omCSPC) using prostate-specific membrane antigen (PSMA) PET radiomics. Radiother Oncol. (2024) 199:110443. doi: 10.1016/j.radonc.2024.110443

10. Harder C, Pryalukhin A, Quaas A, Eich ML, Tretiakova M, Klein S, et al. Enhancing prostate cancer diagnosis: artificial intelligence-driven virtual biopsy for optimal magnetic resonance imaging-targeted biopsy approach and Gleason grading strategy. Mod Pathol. (2024) 37:100564. doi: 10.1016/j.modpat.2024.100564

11. Padhani AR and Turkbey B. Detecting prostate cancer with deep learning for MRI: A small step forward. Radiology. (2019) 293:618–9. doi: 10.1148/radiol.2019192012

12. Elmarakeby HA, Hwang J, Arafeh R, Crowdis J, Gang S, Liu D, et al. Biologically informed deep neural network for prostate cancer discovery. Nature. (2021) 598:348–52. doi: 10.1038/s41586-021-03922-4

13. Elmarakeby HA, Hwang J, Arafeh R, Crowdis J, Gang S, Liu D, et al. Novel machine learning model uncovers key prostate cancer pathways. Cancer Discov. (2021) 11. doi: 10.1158/2159-8290.CD-RW2021-140

14. Koo KC, Lee KS, Kim S, Min C, Min GR, Lee YH, et al. Long short-term memory artificial neural network model for prediction of prostate cancer survival outcomes according to initial treatment strategy: development of an online decision-making support system. World J Urol. (2020) 38:2469–76. doi: 10.1007/s00345-020-03080-8

15. Shiradkar R, Podder TK, Algohary A, Viswanath S, Ellis RJ, and Madabhushi A. Radiomics based targeted radiotherapy planning (Rad-TRaP): a computational framework for prostate cancer treatment planning with MRI. Radiat Oncol. (2016) 11:148. doi: 10.1186/s13014-016-0718-3

16. Li R, Zhu J, Zhong WD, and Jia Z. Comprehensive evaluation of machine learning models and gene expression signatures for prostate cancer prognosis using large population cohorts. Cancer Res. (2022) 82:1832–43. doi: 10.1158/0008-5472.CAN-21-3074

17. Cysouw MCF, Jansen BHE, van de Brug T, Oprea-Lager DE, Pfaehler E, de Vries BM, et al. Machine learning-based analysis of [(18)F]DCFPyL PET radiomics for risk stratification in primary prostate cancer. Eur J Nucl Med Mol Imaging. (2021) 48:340–9. doi: 10.1007/s00259-020-04971-z

18. Plana D, Shung DL, Grimshaw AA, Saraf A, Sung JJY, and Kann BH. Randomized clinical trials of machine learning interventions in health care: A systematic review. JAMA Netw Open. (2022) 5:e2233946. doi: 10.1001/jamanetworkopen.2022.33946

19. Chen C and Leydesdorff L. Patterns of connections and movements in dual-map overlays: A new method of publication portfolio analysis. J Assoc Inf Sci Technol. (2014) 65:334–51. doi: 10.1002/asi.22968

20. Sabe M, Pillinger T, Kaiser S, Chen C, Taipale H, Tanskanen A, et al. Half a century of research on antipsychotics and schizophrenia: A scientometric study of hotspots, nodes, bursts, and trends. Neurosci Biobehav Rev. (2022) 136:104608. doi: 10.1016/j.neubiorev.2022.104608

21. Perianes-Rodriguez A, Waltman L, and van Eck NJ. Constructing bibliometric networks: A comparison between full and fractional counting. J Informetrics. (2016) 10:1178–95. doi: 10.1016/j.joi.2016.10.006

22. Aria M and Cuccurullo C. bibliometrix: An R-tool for comprehensive science mapping analysis. J Informetrics. (2017) 11:959–75. doi: 10.1016/j.joi.2017.08.007

23. Bera K, Schalper KA, Rimm DL, Velcheti V, and Madabhushi A. Artificial intelligence in digital pathology - new tools for diagnosis and precision oncology. Nat Rev Clin Oncol. (2019) 16:703–15. doi: 10.1038/s41571-019-0252-y

24. Litjens G, Debats O, Barentsz J, Karssemeijer N, and Huisman H. Computer-aided detection of prostate cancer in MRI. IEEE Trans Med Imaging. (2014) 33:1083–92. doi: 10.1109/TMI.2014.2303821

25. Fehr D, Veeraraghavan H, Wibmer A, Gondo T, Matsumoto K, Vargas HA, et al. Automatic classification of prostate cancer Gleason scores from multiparametric magnetic resonance images. Proc Natl Acad Sci U S A. (2015) 112:E6265–73. doi: 10.1073/pnas.1505935112

26. Lalonde E, Ishkanian AS, Sykes J, Fraser M, Ross-Adams H, Erho N, et al. Tumour genomic and microenvironmental heterogeneity for integrated prediction of 5-year biochemical recurrence of prostate cancer: a retrospective cohort study. Lancet Oncol. (2014) 15:1521–32. doi: 10.1016/S1470-2045(14)71021-6

27. Sung H, Ferlay J, Siegel RL, Laversanne M, Soerjomataram I, Jemal A, et al. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin. (2021) 71:209–49. doi: 10.3322/caac.21660

28. Turkbey B, Rosenkrantz AB, Haider MA, Padhani AR, Villeirs G, Macura KJ, et al. Prostate imaging reporting and data system version 2.1: 2019 update of prostate imaging reporting and data system version 2. Eur Urol. (2019) 76:340–51. doi: 10.1016/j.eururo.2019.02.033

29. Weinreb JC, Barentsz JO, Choyke PL, Cornud F, Haider MA, Macura KJ, et al. PI-RADS prostate imaging - reporting and data system: 2015, version 2. Eur Urol. (2016) 69:16–40. doi: 10.1016/j.eururo.2015.08.052

30. Ahmed HU, El-Shater Bosaily A, Brown LC, Gabe R, Kaplan R, Parmar MK, et al. Diagnostic accuracy of multi-parametric MRI and TRUS biopsy in prostate cancer (PROMIS): a paired validating confirmatory study. Lancet. (2017) 389:815–22. doi: 10.1016/S0140-6736(16)32401-1

31. Strom P, Kartasalo K, Olsson H, Solorzano L, Delahunt B, Berney DM, et al. Artificial intelligence for diagnosis and grading of prostate cancer in biopsies: a population-based, diagnostic study. Lancet Oncol. (2020) 21:222–32. doi: 10.1016/S1470-2045(19)30738-7

32. Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, et al. Scikit-learn: machine learning in python. J Mach Learn Res. (2011) 12:2825–30. Available online at: http://jmlr.org/papers/v12/pedregosa11a.html

33. Chen T and Guestrin C. (2016). XGBoost: A scalable tree boosting system, in: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ‘16), New York, NY, USA: Association for Computing Machinery. p. 785–94. doi: 10.1145/2939672.2939785

34. Gillies RJ, Kinahan PE, and Hricak H. Radiomics: images are more than pictures, they are data. Radiology. (2016) 278:563–77. doi: 10.1148/radiol.2015151169

35. Bulten W, Pinckaers H, van Boven H, Vink R, de Bel T, van Ginneken B, et al. Automated deep-learning system for Gleason grading of prostate cancer using biopsies: a diagnostic study. Lancet Oncol. (2020) 21:233–41. doi: 10.1016/S1470-2045(19)30739-9

36. Kasivisvanathan V, Rannikko AS, Borghi M, Panebianco V, Mynderse LA, Vaarala MH, et al. MRI-targeted or standard biopsy for prostate-cancer diagnosis. N Engl J Med. (2018) 378:1767–77. doi: 10.1056/NEJMoa1801993

37. Choy G, Khalilzadeh O, Michalski M, Do S, Samir AE, Pianykh OS, et al. Current applications and future impact of machine learning in radiology. Radiology. (2018) 288:318–28. doi: 10.1148/radiol.2018171820

38. Xia J, Psychogios N, Young N, and Wishart DS. MetaboAnalyst: a web server for metabolomic data analysis and interpretation. Nucleic Acids Res. (2009) 37:W652–60. doi: 10.1093/nar/gkp356

39. Liu J, Osadchy M, Ashton L, Foster M, Solomon CJ, and Gibson SJ. Deep convolutional neural networks for Raman spectrum recognition: a unified solution. Analyst. (2017) 142:4067–74. doi: 10.1039/C7AN01371J

40. LeCun Y, Bengio Y, and Hinton G. Deep learning. Nature. (2015) 521:436–44. doi: 10.1038/nature14539

41. Liu S, Zheng H, Feng Y, and Li W. Prostate cancer diagnosis using deep learning with 3D multiparametric MRI. arXiv preprint. (2017).

42. Natarajan S, Priester A, Margolis D, Huang J, and Marks L. Prostate MRI and Ultrasound With Pathology and Coordinates of Tracked Biopsy (Prostate-MRI-US-Biopsy). Little Rock, AR, USA: The Cancer Imaging Archive (2020).

43. Goldenberg SL, Nir G, and Salcudean SE. A new era: artificial intelligence and machine learning in prostate cancer. Nat Rev Urol. (2019) 16:391–403. doi: 10.1038/s41585-019-0193-3

44. van der Laak J, Litjens G, and Ciompi F. Deep learning in histopathology: the path to the clinic. Nat Med. (2021) 27:775–84. doi: 10.1038/s41591-021-01343-4

45. Nagendran M, Chen Y, Lovejoy CA, Gordon AC, Komorowski M, Harvey H, et al. Artificial intelligence versus clinicians: systematic review of design, reporting standards, and claims of deep learning studies. BMJ. (2020) 368:m689. doi: 10.1136/bmj.m689

46. Otles E, Oh J, Li B, Bochinski M, Joo H, Ortwine J, et al. Mind the Performance Gap: Examining Dataset Shift During Prospective Validation. In: Jung K, Yeung S, Sendak M, Sjoding M, and Ranganath R, editors. Proceedings of the 6th Machine Learning for Healthcare Conference. Cambridge, MA, USA: Virtual: PMLR (2021). p. 506–34.

47. Rakers MM, van Buchem MM, Kucenko S, de Hond A, Kant I, van Smeden M, et al. Availability of evidence for predictive machine learning algorithms in primary care: A systematic review. JAMA Netw Open. (2024) 7:e2432990. doi: 10.1001/jamanetworkopen.2024.32990

48. Rajagopal A, Redekop E, Kemisetti A, Kulkarni R, Raman S, Sarma K, et al. Federated learning with research prototypes: application to multi-center MRI-based detection of prostate cancer with diverse histopathology. Acad Radiol. (2023) 30:644–57. doi: 10.1016/j.acra.2023.02.012

49. Ankolekar A, Boie S, Abdollahyan M, Gadaleta E, Hasheminasab SA, Yang G, et al. Advancing breast, lung and prostate cancer research with federated learning. A systematic review. NPJ Digit Med. (2025) 8:314. doi: 10.1038/s41746-025-01591-5

50. Moradi A, Zerka F, Bosma JS, Sunoqrot MRS, Abrahamsen BS, Yakar D, et al. Optimizing federated learning configurations for MRI prostate segmentation and cancer detection: A simulation study. Radiol Artif Intell. (2025) 7:e240485. doi: 10.1148/ryai.240485

51. SNOMED International. SNOMED-CT Clinical Implementation Guide for Cancer Synoptic Reporting. London, UK: SNOMED International (2025).

52. Algohary A, Viswanath S, Shiradkar R, Ghose S, Pahwa S, Moses D, et al. Radiomic features on MRI enable risk categorization of prostate cancer patients on active surveillance: Preliminary findings. J Magn Reson Imaging. (2018) 48:111–21. doi: 10.1002/jmri.25983

53. Gaudiano C, Mottola M, Bianchi L, Corcioni B, Cattabriga A, Cocozza MA, et al. Beyond multiparametric MRI and towards radiomics to detect prostate cancer: A machine learning model to predict clinically significant lesions. Cancers (Basel). (2022) 14:6156. doi: 10.3390/cancers14246156

54. Nketiah GA. Deep radiomics detection of clinically significant prostate cancer on multicenter MRI: initial comparison to PI-RADS assessment. arXiv. (2024).

55. Jahanandish H. Multimodal MRI-ultrasound AI for prostate cancer detection outperforms radiologist MRI interpretation: A multi-center study. arXiv. (2025).

56. Noujeim JP, Belahsen Y, Lefebvre Y, Lemort M, Deforche M, Sirtaine N, et al. Optimizing multiparametric magnetic resonance imaging-targeted biopsy and detection of clinically significant prostate cancer: the role of perilesional sampling. Prostate Cancer Prostatic Dis. (2023) 26:575–80. doi: 10.1038/s41391-022-00620-8

57. Alkadi R, Taher F, El-Baz A, and Werghi N. A deep learning-based approach for the detection and localization of prostate cancer in T2 magnetic resonance images. J Digit Imaging. (2019) 32:793–807. doi: 10.1007/s10278-018-0160-1

58. AAPM. SPIE-AAPM-NCI Prostate MR Gleason Grade Group Challenge (PROSTATEx-2). Alexandria, VA, USA: American Association of Physicists in Medicine (AAPM). (2022).

59. Puthiyedth N, Riveros C, Berretta R, and Moscato P. A new combinatorial optimization approach for integrated feature selection using different datasets: A prostate cancer transcriptomic study. PloS One. (2015) 10:e0127702. doi: 10.1371/journal.pone.0127702

60. SPIE-AAPM-NCI. The PROSTATEx Challenge. Bellingham, WA, USA: Society of Photo-Optical Instrumentation Engineers (SPIE), in collaboration with AAPM and NCI (2017).

61. Rusu M, Shao W, Kunder CA, Wang JB, Soerensen SJC, Teslovich NC, et al. Registration of presurgical MRI and histopathology images from radical prostatectomy via RAPSODI. Med Phys. (2020) 47:4177–88. doi: 10.1002/mp.14337

62. Justice AC, McMahon B, Madduri R, Crivelli S, Damrauer S, Cho K, et al. A landmark federal interagency collaboration to promote data science in health care: Million Veteran Program-Computational Health Analytics for Medical Precision to Improve Outcomes Now. JAMIA Open. (2024) 7:ooae126. doi: 10.1093/jamiaopen/ooae126

63. Chawla S, Rockstroh A, Lehman M, Ratther E, Jain A, Anand A, et al. Gene expression based inference of cancer drug sensitivity. Nat Commun. (2022) 13:5680. doi: 10.1038/s41467-022-33291-z

64. Saeed K, Rahkama V, Eldfors S, Bychkov D, Mpindi JP, Yadav B, et al. Comprehensive drug testing of patient-derived conditionally reprogrammed cells from castration-resistant prostate cancer. Eur Urol. (2017) 71:319–27. doi: 10.1016/j.eururo.2016.04.019

65. Kott O, Linsley D, Amin A, Karagounis A, Jeffers C, Golijanin D, et al. Development of a deep learning algorithm for the histopathologic diagnosis and Gleason grading of prostate cancer biopsies: A pilot study. Eur Urol Focus. (2021) 7:347–51. doi: 10.1016/j.euf.2019.11.003

66. Sherafatmandjoo H, Safaei AA, Ghaderi F, and Allameh F. Prostate cancer diagnosis based on multi-parametric MRI, clinical and pathological factors using deep learning. Sci Rep. (2024) 14:14951. doi: 10.1038/s41598-024-65354-0

Keywords: prostate cancer, machine learning, bibliometric analysis, deep learning, radiomics, translational research

Citation: Gu S, Chen J, Fan C, Huang X, Li L and Zhang H (2025) Algorithms on the rise: a machine learning–driven survey of prostate cancer literature. Front. Oncol. 15:1675459. doi: 10.3389/fonc.2025.1675459

Received: 29 July 2025; Accepted: 17 September 2025;

Published: 02 October 2025.

Edited by:

Neil Mendhiratta, George Washington University, United States

Reviewed by:

Remco Jan Geukes Foppen, IRCCS Istituto Nazionale Tumori Regina Elena, Italy

Gabriel Addio Nketiah, Norwegian University of Science and Technology (NTNU), Norway

Copyright © 2025 Gu, Chen, Fan, Huang, Li and Zhang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Linbo Li, qdsrmyy_Linbo@aliyun.com; Hua Zhang, 717829801@qq.com
