- ¹Department of Advertising and Public Relations, Michigan State University, East Lansing, MI, United States

- ²Department of Life Sciences Communication, University of Wisconsin-Madison, Madison, WI, United States

People are increasingly exposed to science and political information from social media. One consequence is that these sites play host to “alternative influencers,” who spread misinformation. However, content posted by alternative influencers on different social media platforms is unlikely to be homogeneous. Our study uses computational methods to investigate how two dimensions of social media platforms, which we refer to as audience and channel, influence the emotion and topics in content posted by alternative influencers on different platforms. Using COVID-19 as an example, we find that alternative influencers’ content contained more anger and fear words on Facebook and Twitter than on YouTube. We also find that these actors discussed substantively different topics in their COVID-19 content on YouTube compared to Twitter and Facebook. Drawing on these findings, we discuss how the audience and channel of different social media platforms affect alternative influencers’ ability to spread misinformation online.

Introduction

People are increasingly exposed to science and political information from social media (Brossard and Scheufele, 2013), while traditional sources of information, such as television and newspapers, are increasingly ceding their share of the information marketplace. As a result, online platforms provide new opportunities to educate the public (Sugimoto and Thelwall, 2013) but have also become playgrounds for misinformation (Syed-Abdul et al., 2013) and manipulation (Lewis, 2018). Individuals who post political and science information on social media vary widely in their expertise and intentions. While social media empower non-expert actors to contribute traditionally undervalued expertise in local knowledge and community preferences to debates (Wynne, 1992), they also increase the ability of ill-intentioned actors (i.e., alternative influencers) to circulate empirically false claims, such as the vaccine-autism link (Kata, 2012).

Research into political and scientific misinformation is an active research area which has explored patterns of misinformation use and effects on individuals. However, one major gap in that literature is a theoretically-informed accounting of how communication platforms, such as Facebook, YouTube, or Twitter, fundamentally differ from one another and how those differences affect the information available there. The content of social media platforms, we argue, is in part a function of differences in audience makeup and user interactions. Together, these two factors affect the structure of the information that actors choose to post with respect to what content they post and how they present the content (Hiaeshutter-Rice, 2020). This study uses this framework to begin building theoretically-informed expectations of informational differences across platforms, drawing on COVID-19 content posted by actors known to spread misinformation.

Though we know that alternative influencers (Lewis, 2018) spread science and political misinformation on social media, and that social media platforms shape information in different ways, we have very little understanding, in theory or in practice, of how platforms and actors intersect to influence the information to which the public is exposed on social media. Content posted by alternative influencers on different social media platforms is unlikely to be homogeneous. Instead, platform differences are likely to alter the emotions expressed and the topics discussed by alternative influencers, features known to affect public attitudes and trust in science and politics (Cobb, 2005; Brader, 2006; Nisbet et al., 2013; Hiaeshutter-Rice, 2020). Given increasing concern during the COVID-19 pandemic about the deleterious effects of social media content on democratic society and public health, understanding how different social media platforms shape and circulate content produced by actors known to spread misinformation is vital for unpacking how some segments of the public become misinformed about controversial issues (Pew Research Center, 2015), including COVID-19, and for shedding light on how to slow the spread of misinformation online.

Content Differences Across Communication Platforms

Communication Platforms

There is more information available to individuals now than at any point in history, a fact that will likely remain true going forward. Yet where people access information is also vitally important, because communication platforms shape the structure of information. For example, Wikipedia may be very similar to a physical encyclopedia, but the capacity for editing and hyperlinking means that information on Wikipedia is structured differently than it can be in a physical book. Communication platforms differ fundamentally from one another, and those differences have consequences for the content and structure of information communicated via each platform. Importantly, these informational differences can shape public knowledge and beliefs.

Despite this, most scholarship on misinformation has relied on investigations of single communication platforms. These studies are valuable, and we have learned a great deal about how individual platforms operate. However, this single-platform focus has masked the realities of the broader information ecosystem. In part, this focus has been a function of data-collection constraints: we have an abundance of information about platforms from which data is easier to collect, such as Twitter. Yet overreliance on these platforms limits our understanding of, and ability to make claims about, media ecosystems more broadly, and in particular how information moves across platforms (Thorson et al., 2013). While some authors have examined communication across platforms (e.g., Bossetta, 2018; Golovchenko et al., 2020; Lukito, 2020), we argue that the literature still lacks a systematic analysis of the underlying structures of the communication ecosystem and their consequences for content (Bode and Vraga, 2018). Here, we outline how different dimensions of platforms structure content (Hiaeshutter-Rice, 2020), followed by a discussion of how this content affects belief in misinformation online.

Platform Audiences and Channels

Although platforms do not exist independently of one another, they are distinct in how they are constructed and used (Segerberg and Bennett, 2011; Hiaeshutter-Rice, 2020). Platform differences are, we argue, vital to understanding differences in information across platforms. In this paper, we propose two relevant dimensions to consider: audience and channel. This new framework offers a way to think about how information on platforms is shaped by the ways content creators view a platform’s technical features and intended use. Much like political campaigns, content creators develop their messages and information with a platform’s audience and channel in mind, which necessarily has consequences for content. By defining the audience and channel of platforms, we can move toward building expectations about content differences between platforms. Before explaining each dimension, we note that these are broad categorizations rather than discrete components of a platform.

A platform’s audience can range from narrow to broad, referring to the homogeneity of the recipients. Broader audiences may be characterized by diversity with respect to political partisanship, age, racial demographics, location, or other interests, while narrow audiences are more similar in their demographic makeup or beliefs. We should note that broad does not mean large but instead refers to degree of diversity. Further, a platform’s audience is defined by the content creator’s perceptions, not necessarily how the platform functions as a whole. We provide specific examples below.

Platforms we categorize as having narrow audiences are ones whose users largely share a constrained set of beliefs, ideologies, or partisan affiliations. For instance, the audience of a sports team’s Twitter account is likely to be made up of fans of that team, and this logic broadly applies to users on such platforms as a whole. A broad audience, by contrast, is one with a wider and, at times, potentially conflicting set of beliefs and preferences. By this definition, platforms like Facebook and Twitter have narrow audiences. While these social media platforms have wide and diverse user bases, regular direct exposure to an account on Facebook or Twitter is predicated on following that account.1 Actors on Facebook and Twitter can be reasonably assured that their content is largely being viewed by interested users, and they are therefore incentivized to tailor that content to the narrower range of interests that their audiences want (Wang and Kraut, 2012). In comparison to Facebook or Twitter, we characterize YouTube as a broad audience platform (Iqbal, 2020). Though users have the option to follow content on YouTube, they are exposed to a much wider range of content creators as they use the site. This is in part because YouTube provides access to content through at least three distinct modes. The first is direct connection with users, such as subscribing to an account. The second is goal-oriented exposure, such as searching for specific content without a particular content creator in mind. The third is exposure through recommendations, such as popular content on the main page, suggested videos alongside a playing video, and videos that play automatically after the current one ends. It is notably the second and third mechanisms that we theorize encourage content that resonates with a broader audience.

For content creators on broad audience platforms, videos are shown to a much wider and more diverse audience than they might encounter on other social media platforms. We argue that content creators on YouTube are thus incentivized, indeed financially so, to tailor their content to a wide range of audiences. Differences in the perceived audience of a platform may therefore affect the content and presentation of information. For example, content on platforms with narrower audiences may focus on emotions and topics that are more motivating to one’s loyal base, while content on platforms with broader audiences may use emotions and topics with wider appeal.

The other important dimension of a platform is the degree to which actors must share the attention of the audience. Here, we refer to the channel of the platform, which may be independent (free of interaction from other, possibly opposing, actors) or shared (in which creators must anticipate and respond to others). Importantly, this is not a binary classification but rather a range as perceived by users of the platform. For example, Facebook and Twitter are shared channel platforms because other users can quickly respond to what creators post, both in their own spaces and directly on the creator’s page. Yet Twitter is likely more shared than Facebook; for instance, opposing political candidates have regularly and directly engaged with each other on Twitter (such as Hillary Clinton telling Donald Trump to “delete your account” during the 2016 United States Presidential Election) but have not connected to a similar degree on Facebook. YouTube is a more independent channel than Facebook and Twitter because the content of a video cannot be interrupted by other actors, but it is less independent than traditional broadcast television because viewers can post comments under a video. Whether the platform has a more shared channel, in which content creators compete for attention, or a more independent channel, in which their messages are largely uncontested, is likely to affect the content of their messages. For example, compared to content in more independent channels, content in shared channels may include more emotional cues that attract audience attention or may respond to topics raised by others.

We note that there are myriad other influences that may drive differences in content across communication platforms, including business practices, curation methods, and economic incentives (Thorson and Wells, 2016; Caplan and Gillespie, 2020). Further, we are not prescribing hard and fast rules as to how platforms operate. Instead, we offer a theoretically-informed framework for thinking about platform differences and the dimensions that may influence the content and information that are produced. These categorizations are based on our reading of the vast extant literatures on how platforms are used, rather than on any one study or source of descriptive data. Moreover, we attempt to be specific about which components of a platform fit into which category. For instance, we consider Facebook to be a narrow and shared platform when it comes to users posting content to their pages, whereas paying for an advertisement through the Facebook interface would likely be narrow and independent, as the structure of that communication is different. Classifying platforms by their audience and channel offers an initial framework for building theoretically informed expectations about content differences across platforms. We therefore apply this structure to our investigation of alternative influencers’ COVID-19 content to build understanding of how platform audiences and channels may have enabled or minimized the spread of misinformation during a global pandemic.

Content Differences Across Platforms and Misinformation

Actors’ awareness of platform differences with respect to audience and channel is likely to affect the content of the information they post on different platforms. That is, the content that actors share on two platforms is likely to differ even if it is about the same issue. Two differences are particularly relevant to the spread of misinformation on social media: 1) the emotional cues in messages, particularly anger and anxiety or fear, and 2) content differences, referring to the surrounding topics prevalent in content about a specific issue.

Emotion

Emotions can be broadly defined as brief, intense mental states that reflect an evaluative response to some external stimulus (Lerner and Keltner, 2000; Nabi, 2003). Content that contains emotional cues or language is more attention-grabbing than non-emotional content, leading to greater online viewing (Most et al., 2007; Maratos, 2011; Bail, 2016). This is particularly the case for high-arousal emotions, such as awe, anger, or anxiety (Berger and Milkman, 2012). In misinformation research, investigating the appraisal tendencies and motivations associated with discrete emotions has been particularly fruitful with respect to anger and anxiety or fear (Nabi, 2010; Weeks, 2015). Anger is experienced as a negative emotion in response to an injustice, offense, or impediment to one’s goals, and is characterized by an “approach” tendency or motivation to act (Nabi, 2003; Carver and Harmon-Jones, 2009). Similar to anger in their negative valence, the related emotions of anxiety and fear are aroused in response to a threat of harm, an encounter with the unknown, or the anticipation of something negative (Carver and Harmon-Jones, 2009; Nabi, 2010; Weeks, 2015). In contrast to anger, though, anxiety and fear are characterized by “avoidance” tendencies, or a lack of motivation to engage, confront, or act (Carver and Harmon-Jones, 2009; Weeks, 2015). Both anger and fear are known to be associated with misinformation sharing and belief, so investigating their prevalence in content posted by alternative influencers on different platforms offers insight into the role that audience and channel may play in distributing misinformation online.

Emotion and Misinformation Spread on Social Media

Both anger and anxiety or fear have been tied to information behaviors that spread misinformation on social media. Angry individuals are more likely to selectively expose themselves to content that reinforces prior beliefs or identities (MacKuen et al., 2010), a behavior that increases the likelihood of being exposed to false information online (Garrett et al., 2016). In addition, anger-inducing content online is more likely to be clicked (Vargo and Hopp, 2020) and circulated (Berger and Milkman, 2012; Hasell and Weeks, 2016) than less emotional content. Like anger, fear or anxiety cues increase attention to content (Ali et al., 2019; Zhang and Zhou, 2020) and can promote the circulation of misinformation. For example, in the absence of consistent, credible information about extreme events like natural disasters, anxiety and fear can facilitate the spread of rumors and misinformation on social media as individuals seek information to alleviate uncertainty (Oh et al., 2010). But fear and anxiety have also been deployed strategically to bring attention to and spread false information; by intentionally provoking fear, doubt, and uncertainty in their online content, anti-vaccine activists sow confusion and misperceptions about vaccines (Kata, 2012). Similarly, conspiracy theories, characterized by paranoia, distrust, and fear of supposedly powerful groups posing some threat, are spread widely on social media because users are encouraged to engage with conspiratorial content (Aupers, 2012; Prooijen, 2018; Katz and Mays, 2019).

The emotions of anger and anxiety or fear can promote information behaviors that spread misinformation because they increase attention to and engagement with content. Consequently, they can increase the visibility of an influencer’s content (e.g., clicks on the content or shares to users’ own accounts). These information behaviors may be particularly desirable for actors posting content on shared channel platforms, where actors compete with many others to convey their messages. That said, such strategies may also be used on independent channel platforms. In sum, anger and fear language increases engagement with content, and such language might be unevenly distributed across platforms with different audiences and channels.

Emotion and (Mis) Information Processing

Importantly, these emotions affect not only attention and sharing behaviors but also how individuals evaluate information. Attaching emotions to information facilitates that information’s retrieval from memory (Nabi et al., 2018) and affects cognitive processing (Kühne and Schemer, 2015; Lee and Chen, 2020; Chen et al., 2021). Angry individuals are more likely to rely on heuristic or biased information processing that supports their prior beliefs, leading to greater belief in identity-supporting misinformation (Weeks, 2015). In addition, angry individuals are more likely to perceive content as hostile to their political beliefs or positions (Weeks et al., 2019), which may motivate them to dismiss or counterargue accurate information. In contrast, anxious individuals engage in less biased information processing (Weeks, 2015) and are instead inclined to seek additional information (MacKuen et al., 2010). However, studies in some health contexts have found that fear-inducing content without efficacy information may lead to reactance or information avoidance (Maloney et al., 2011). Thus, while there may be some boundary conditions regarding the intensity of fear or anxiety, in general individuals are more likely to reach accurate conclusions about information when they are anxious or fearful than when they are angry, due to the different ways these emotions motivate information processing (Nabi, 2010; Weeks, 2015).

In the context of alternative influencers’ COVID-19 content, investigating platform differences in emotional language may offer insight into where individuals are exposed to misinformation and why they may be inclined to believe it. The information processing tendencies associated with these emotions (anger vs. fear, as discussed above) could drive content differences across platforms. On platforms with narrow audiences composed of users who actively choose to follow content, anger-inducing language may mobilize a loyal base. In contrast, content with fear and anxiety cues may be more engaging on platforms with broader audiences, as it could draw users who are not yet persuaded of a position to an actor’s content as a means of seeking further information. However, actors could also use similar emotional strategies across different platforms to maximize engagement and reach. Given little prior literature to support these predictions, we ask the following research questions, whose answers will aid us in building theoretically-informed expectations regarding how emotional language may be shaped by the channel and audience of platforms.

RQ1: How does the proportion of anger language in alternative influencers’ COVID-19 content differ between platforms with different audience and channel (YouTube vs. Facebook vs. Twitter)?

RQ2: How does the proportion of fear language in alternative influencers’ COVID-19 content differ between platforms with different audience and channel (YouTube vs. Facebook vs. Twitter)?

Content Differences

Differences in platform audience and channel may additionally drive differences in the topics within content about the same issue across platforms. That is, the substance of actors’ content is expected to differ by platform, even when that content is ostensibly on a single issue.

The topics an actor discusses on a platform may vary based on their perceived audience (narrow or broad) and the channel of the platform on which they are posting (independent or shared). Even when discussing the same issue, actors may emphasize shared identities or concerns on platforms with broad audiences (e.g., Americans, infection rates) and narrower identities or interests on platforms with narrower audiences (e.g., opposition to local lawmakers). Topics may also differ between platforms with shared and independent channels; an actor must respond to others’ arguments, concerns, or questions on a shared channel platform but is less motivated to do so on a more independent platform. Thus, not only the emotionality but also the topics of the content an actor posts are likely to differ across platforms with different audiences and channels.

Importantly, these content differences may alter how audiences react to issues, which could affect attitudes and belief accuracy (Chong and Druckman, 2010). For example, work on climate change news coverage has shown that content featuring skeptical positions alongside consensus positions leads to less accurate beliefs about climate change than content emphasizing scientists’ views (Dunwoody and Kohl, 2017). Politicizing cues can shift attitudes about scientific topics because evoking partisan identities and values leads individuals to follow partisan elites over other experts, even if those elites’ positions are incorrect (Bolsen and Druckman, 2018). Similarly, emphasizing narratives about “naturalness” can reduce support for vaccination and GM foods (Blancke et al., 2015; Bradshaw et al., 2020; Hasell and Stroud, 2020). In sum, the language and topics discussed alongside an issue may influence people’s conceptualization or interpretation of that issue.

For these reasons, differences in the topics in alternative influencers’ COVID-19 content across platforms are important to understand. The presence of content differences means that individuals exposed to content posted by these actors on different platforms may come away with systematically different information and attitudes. Identifying the extent to which topic differences in content are driven by a platform’s audience and channel may help us uncover the roles that different platforms play in spreading misinformation. However, while we expect the prevalence of different topics to vary by platform in COVID-19 content posted by alternative influencers, we are unsure what those differences may be. We therefore ask the following research question:

RQ3: How does alternative influencers’ COVID-19 content, as observed via the prevalence of associated topics, differ between platforms with different audience and channel (YouTube vs. Facebook vs. Twitter)?

The Present Study

In this study, we address gaps in extant literature concerning how platforms affect the structure of information by investigating how alternative influencers’ content about COVID-19 differs with respect to emotion and topics across different social media platforms. Here we collect and analyze a novel dataset of content posted by actors who are infamous for spreading misinformation (Lewis, 2018) from Facebook, Twitter, and YouTube. We investigate platform differences using dictionary methods and structural topic modeling. In doing so, we advance the field’s theoretical understanding about misinformation online and explicate the roles that platform audience and channel play in shaping information online.

Materials and Methods

Data Collection

We collected data from Lewis’s (2018, Appendix B) list of “alternative influencers,” actors who are known to share misinformation online. There are a total of 66 alternative influencers, who represent numerous ideologies from “classical liberal” to “conservative white nationalist” (see Figure 1 in Lewis, 2018). The people on this list are not media elites. In fact, as Lewis described, the list focuses on political influencers from both the extreme left and the right wing. Some are professors, while the majority are individual content creators who founded their own talk shows or vlogs on YouTube (see Appendix A in Lewis, 2018, for biographical information on these influencers). We searched for the alternative influencers’ accounts on three platforms: Twitter, YouTube, and Facebook. We could not collect data from all influencers on all platforms; some did not have accounts on all platforms, others had been banned or de-platformed, and still others had “private” settings on their accounts. Among these 66 influencers, 77% had YouTube accounts, 38% had Facebook accounts, and 56% had Twitter accounts from which we were able to collect data. Though starting dates varied by platform (our Twitter data begin in 2008, Facebook in 2012, and YouTube in 2008), we were able to collect all publicly available data posted by these users on all platforms through mid-November 2020. Supplementary Table S1 in the supplemental materials lists all accounts from which we collected data.

To collect all Facebook posts made by these influencers, we used CrowdTangle, a third-party platform that provides researchers with historical data for public content on Facebook pages (content that has been removed either by the user or by Facebook is not included in the dataset). The CrowdTangle API, owned by Facebook, is marketed as containing all posts for public-facing Facebook pages. Though we are relying on their API to produce results, we feel reasonably confident that the data collected is close to, if not actually, population-level data for these pages. The data we collected include the text of the post, engagement metrics, the date the post was made, the unique ID for the post, and various other metrics that we do not use here.

Twitter data was collected using a two-step process. First, we used the Python package “snscrape” to collect a list of URLs for up to 50,000 tweets by each account. We then used the Python package “tweepy” to crawl through the list of URLs and download the relevant components of each tweet: the screen name of the account, the text of the tweet (including any links), the date the tweet was sent, Twitter’s unique ID for the tweet, and the number of retweets.
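The two-step Twitter collection can be sketched offline as follows. This is a minimal illustration, not the authors’ script: it assumes status URLs of the form https://twitter.com/<screen_name>/status/<id>, and the helper names `tweet_ids_from_urls` and `batches` are hypothetical. Batching to 100 IDs reflects the per-request limit of Twitter’s v1.1 status-lookup endpoint; the network call itself is omitted because tweepy’s lookup method name differs across tweepy versions.

```python
import re
from typing import Iterable, Iterator, List

# Assumed URL shape: https://twitter.com/<screen_name>/status/<numeric_id>
STATUS_URL = re.compile(r"twitter\.com/[^/]+/status/(\d+)")


def tweet_ids_from_urls(urls: Iterable[str]) -> List[str]:
    """Extract tweet IDs in order, skipping malformed URLs and duplicates."""
    seen = set()
    ids: List[str] = []
    for url in urls:
        match = STATUS_URL.search(url)
        if match and match.group(1) not in seen:
            seen.add(match.group(1))
            ids.append(match.group(1))
    return ids


def batches(items: List[str], size: int = 100) -> Iterator[List[str]]:
    """Yield consecutive slices of at most `size` IDs (one lookup request each)."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


if __name__ == "__main__":
    urls = [
        "https://twitter.com/example_user/status/123",
        "https://twitter.com/example_user/status/456",
        "https://twitter.com/example_user/status/123",  # duplicate URL
        "not a tweet url",
    ]
    print(tweet_ids_from_urls(urls))  # ['123', '456']
```

Deduplicating before hydration avoids spending lookup requests on the same tweet twice when a scraper returns overlapping result pages.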

To collect all the YouTube videos posted by these influencers, we used a YouTube Application Programming Interface (API) wrapper from GitHub developed by Yin and Brown (2018).2 This wrapper allows researchers to collect all the videos posted by a channel along with video-level information such as the video description and the number of views, likes, and shares. We then used the open-source Python package youtube-transcript-api to collect the transcript of each video. Around 10% of the videos do not have transcripts available, either because the videos were censored or because of content creators’ privacy settings.
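Transcripts arrive as many short caption segments that must be joined into one document per video before text analysis. The sketch below assumes each transcript is a list of segment dictionaries with a "text" key (as returned by older releases of youtube-transcript-api) and represents an unavailable transcript as `None`; both function names are hypothetical, and the toy data are illustrative, not the study’s.

```python
from typing import Dict, List, Optional


def flatten_transcript(segments: Optional[List[dict]]) -> Optional[str]:
    """Join caption segments into one transcript string.

    Returns None when no transcript could be retrieved (e.g., captions disabled).
    """
    if not segments:
        return None
    pieces = (seg.get("text", "").strip() for seg in segments)
    return " ".join(piece for piece in pieces if piece)


def share_missing(transcripts: Dict[str, Optional[str]]) -> float:
    """Fraction of videos with no retrievable transcript."""
    if not transcripts:
        return 0.0
    missing = sum(1 for text in transcripts.values() if text is None)
    return missing / len(transcripts)


if __name__ == "__main__":
    segments = [
        {"text": "hello ", "start": 0.0, "duration": 1.2},
        {"text": "world", "start": 1.2, "duration": 0.8},
    ]
    print(flatten_transcript(segments))  # hello world
```

Keeping missing transcripts as `None` rather than an empty string makes it easy to report the share of videos that could not be analyzed.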

Both the Twitter and Facebook APIs as well as the YouTube transcript script produce .csv files with each post/video being represented by a row. The text of the post is contained in its own cell with the corresponding metadata (date published, author, etc.) in separate cells. This allows us to cleanly analyze the textual content of the posts without having to remove superfluous information.
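Because post text often contains commas, quotes, or line breaks, the one-row-per-post layout depends on standard CSV quoting to keep each post in a single cell. A minimal reading sketch follows; the column names (`post_id`, `author`, `date_published`, `text`) and the sample rows are hypothetical, and dropping empty-text rows is an illustrative choice rather than a documented step of the study.

```python
import csv
import io
from typing import Dict, List

# Hypothetical export: metadata columns plus a quoted text column.
SAMPLE = '''post_id,author,date_published,text
1,example_user,2020-03-01,"Masks, testing, and the ""new normal"""
2,example_user,2020-03-02,
'''


def load_posts(csv_text: str, text_column: str = "text") -> List[Dict[str, str]]:
    """Parse a one-row-per-post CSV, dropping rows whose text cell is empty."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row for row in reader if (row.get(text_column) or "").strip()]


if __name__ == "__main__":
    for post in load_posts(SAMPLE):
        print(post["author"], "|", post["text"])
```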

Table 1 describes the number of posts/videos we collected from each platform, and how many influencers we were able to find for each platform. In our analyses, we only included the alternative influencers who were active on the platforms we were comparing (discussed further below), so that our results reflected platform differences, not user differences.
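Restricting each pairwise comparison to influencers active on both platforms amounts to a set intersection on influencer identity. Because the same person usually has different handles on different platforms, this sketch assumes each post already carries a hypothetical harmonized `influencer_id` field (for example, built from a hand-made handle-to-person mapping such as the one in Supplementary Table S1); the function name is also hypothetical.

```python
from typing import Dict, List, Set, Tuple

Post = Dict[str, str]


def restrict_to_overlap(
    posts_a: List[Post], posts_b: List[Post], key: str = "influencer_id"
) -> Tuple[List[Post], List[Post], Set[str]]:
    """Keep only posts from influencers who appear on both platforms."""
    shared = {p[key] for p in posts_a} & {p[key] for p in posts_b}
    kept_a = [p for p in posts_a if p[key] in shared]
    kept_b = [p for p in posts_b if p[key] in shared]
    return kept_a, kept_b, shared


if __name__ == "__main__":
    twitter = [{"influencer_id": "A", "text": "t1"}, {"influencer_id": "B", "text": "t2"}]
    youtube = [{"influencer_id": "B", "text": "v1"}, {"influencer_id": "C", "text": "v2"}]
    kept_tw, kept_yt, shared = restrict_to_overlap(twitter, youtube)
    print(shared)  # {'B'}
```

Filtering this way keeps any platform contrast from being driven by which influencers happen to be present on each platform.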

In this paper, we focus on the textual features of these posts and videos; thus, we did not collect the visual content of the YouTube videos or of the Twitter and Facebook posts. This means that we do not have information on the visual components of the videos, although their audio information is partially captured by the transcripts. We acknowledge that it would be fruitful for future research to extend our analysis to examine differences in image use across platforms.

Analytical Approach

As this paper is interested in comparing the emotion and topics in COVID-19 content posted by these alternative influencers across platforms, we first used a dictionary keyword search to identify COVID-19-related content. Drawing from Hart et al. (2020), our search included the keywords “corona,” “coronavirus,” “covid,” and “covid-19,” as well as “pandemic,” “china virus,” “wuhan flu,” and “china flu.” Facebook or Twitter posts that contained at least one of these keywords were included in our dataset. However, YouTube video transcripts are longer, and COVID-19 could be mentioned briefly in a video that is about another topic. Therefore, we only included YouTube video transcripts that mentioned a COVID-19 keyword two or more times, to ensure that some portion of the video was substantively about COVID-19.
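The platform-specific selection rule (at least one keyword hit for Facebook and Twitter posts, at least two for YouTube transcripts) can be sketched as below. Matching details are not reported in the text, so this is an assumed implementation: case-insensitive substring matching, with longer keywords tried first so that “covid-19” counts once rather than also as “covid.” One consequence of substring matching is that “corona” would also match inside unrelated words such as “coronation”; word boundaries could be added if that matters. Function names are hypothetical.

```python
import re

COVID_KEYWORDS = ["corona", "coronavirus", "covid", "covid-19",
                  "pandemic", "china virus", "wuhan flu", "china flu"]

# Longer keywords first, so "covid-19" is consumed as one match instead of "covid".
_PATTERN = re.compile(
    "|".join(re.escape(k) for k in sorted(COVID_KEYWORDS, key=len, reverse=True)),
    flags=re.IGNORECASE,
)


def keyword_hits(text: str) -> int:
    """Number of non-overlapping COVID-19 keyword matches in `text`."""
    return len(_PATTERN.findall(text))


def is_covid_content(text: str, platform: str) -> bool:
    """Apply the inclusion threshold: 2+ hits for YouTube, 1+ elsewhere."""
    threshold = 2 if platform == "youtube" else 1
    return keyword_hits(text) >= threshold


if __name__ == "__main__":
    print(keyword_hits("The coronavirus pandemic"))  # 2
    print(is_covid_content("A video that mentions covid once", "youtube"))  # False
```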

Our analytic approach uses two methods to understand emotion and topic differences across platforms. We use dictionary methods to look at the prevalence of fear and anger language in COVID-19 content on each platform. We used the NRC Word-Emotion Association Lexicon that was developed by Mohammad and Turney (2013). The NRC has eight basic emotion (anger, fear, anticipation, trust, surprise, sadness, joy and disgust) and two sentiments (negative and positive). We used the anger dictionary and fear dictionary (which contained anxiety-related words as well) in these analyses. However, we excluded the word “pandemic” from the fear dictionary, as it overlapped with the keywords used to select COVID-19 content. There are several reasons we chose the NRC emotion dictionary over others. First, this dictionary, was built through a crowdsourcing method by asking participants to indicate which word is closest to an emotion (for around 85% of the words, at least four of five workers reached agreement). Thus, these emotion dictionaries are built from the user’s perspective, rather than constructed prescriptively by researchers. Second, in terms of validation and suitability of NRC to analyzing social media posts, scholars have conducted extensive validity checks on different emotion dictionaries for studying emotion on social media. For instance, Kusen et al. (2017) applied three widely used emotion dictionaries to code social media posts and then validated the performance of these dictionaries with online human coders. They found that NRC is more accurate at identifying emotion compared to other emotion dictionaries such as EmosenticNet and DepecheMood. Following the formal validation of dictionary approach suggested in González-Bailón and Paltoglou (2015) and van Atteveldt et al. (2021), we selected a random sample of messages covering different accounts from the three platforms (102 Facebook messages, 113 Twitter messages, and 101 YouTube segments). 
Three researchers coded each message for the presence of fear and of anger (each a binary variable). We then calculated precision and recall for anger and fear on each platform by comparing the hand-annotated results with the dictionary results. Precision for anger ranged from 53 to 83% across the three platforms, and recall for anger ranged from 64 to 95%. For fear, precision ranged from 60 to 80% and recall ranged from 88 to 98% (see Supplementary Table S4 for details).
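The precision and recall figures above compare a dictionary label with a human label for each sampled message. A minimal sketch of that comparison follows. The text does not say how the three coders' labels were combined, so the majority vote used here is an assumption, and all names and toy labels are hypothetical.

```python
from typing import Sequence, Tuple


def majority_vote(codes: Sequence[int]) -> bool:
    """Assumed aggregation rule: positive if more than half of the coders said yes."""
    return sum(codes) > len(codes) / 2


def precision_recall(human: Sequence[bool], dictionary: Sequence[bool]) -> Tuple[float, float]:
    """Precision and recall of dictionary labels, treating human labels as ground truth."""
    if len(human) != len(dictionary):
        raise ValueError("label sequences must have equal length")
    tp = sum(h and d for h, d in zip(human, dictionary))        # both say emotion present
    fp = sum((not h) and d for h, d in zip(human, dictionary))  # dictionary-only positives
    fn = sum(h and (not d) for h, d in zip(human, dictionary))  # missed by the dictionary
    precision = tp / (tp + fp) if tp + fp else float("nan")
    recall = tp / (tp + fn) if tp + fn else float("nan")
    return precision, recall


if __name__ == "__main__":
    # Toy codes from three coders for five messages (invented for illustration).
    coder_codes = [(1, 1, 0), (1, 1, 1), (0, 0, 1), (0, 0, 0), (1, 0, 1)]
    human = [majority_vote(c) for c in coder_codes]
    dictionary = [True, False, True, False, True]
    print(precision_recall(human, dictionary))  # both equal 2/3 for these toy labels
```

High recall paired with lower precision, as reported for fear, means the dictionary rarely misses emotional messages but also flags some that coders did not consider emotional.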

We then used the R software and its quanteda.dictionaries package to apply these dictionaries to our text data. The package's liwcalike() function returns the percentage of emotion words relative to the total number of words, which we computed for all platforms. For example, this method first counts the number of fear or anger words in each YouTube video transcript and then calculates the percentage of emotion words relative to the total number of words in the transcript. The fear or anger score assigned to the YouTube dataset represents the average fear or anger score across all video transcripts. After computing the fear and anger scores for Facebook and Twitter in the same way, we conducted linear regressions to examine whether influencers used emotions differently on different platforms (results are discussed below). Specifically, we conducted three linear regressions, each comparing the posts of the overlapping accounts on two platforms. In each regression, the dependent variable is the percentage of emotion language in a post or video, predicted by a categorical variable that captures which platform the post comes from (e.g., Twitter or Facebook). We controlled for account in each regression to address non-independence among these posts, which come from an overlapping group of influencers; this allowed us to hold constant the impact of account on emotion. We report the marginal effect of platform on emotion, computed with the R ggeffects package, in the Results section.
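The per-document score described above is the share of dictionary words among all words, averaged within a platform. The sketch below mimics that calculation in Python under simplifying assumptions: it is not quanteda's liwcalike(), which has its own tokenizer and supports wildcard dictionary entries, and the tiny word set is a placeholder rather than the NRC lexicon.

```python
import re
from statistics import mean
from typing import Iterable, Set

# Placeholder word list standing in for the NRC fear dictionary (NOT the real lexicon).
TOY_FEAR = {"fear", "panic", "threat", "danger", "pandemic"}
# As in the text, drop "pandemic" because it doubles as a COVID-19 selection keyword.
FEAR_WORDS = TOY_FEAR - {"pandemic"}

_TOKEN = re.compile(r"[a-z']+")  # simplified tokenizer (assumption)


def emotion_percentage(text: str, lexicon: Set[str]) -> float:
    """Percentage of tokens in `text` that appear in `lexicon`."""
    tokens = _TOKEN.findall(text.lower())
    if not tokens:
        return 0.0
    hits = sum(1 for tok in tokens if tok in lexicon)
    return 100.0 * hits / len(tokens)


def platform_score(texts: Iterable[str], lexicon: Set[str]) -> float:
    """Average of the per-document percentages for one platform."""
    return mean(emotion_percentage(t, lexicon) for t in texts)


if __name__ == "__main__":
    docs = ["Panic and fear spread during the pandemic", "Stay calm"]
    print(emotion_percentage(docs[0], FEAR_WORDS))  # 2 of 7 tokens, about 28.57
    print(platform_score(docs, FEAR_WORDS))          # about 14.29
```

Because "pandemic" is removed from the word list, the first toy sentence scores two fear words rather than three.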

Second, to analyze topic differences in COVID-19 content across platforms, we used structural topic modeling (STM; Roberts et al., 2019). STM has been widely used across subfields of political research and is a useful tool for text analysis (e.g., Farrell, 2016; Kim, 2017; Rothschild et al., 2019). Functionally speaking, STM uses the co-occurrence of all words in a given corpus (for example, all COVID-19 Facebook posts) to identify topics reflected in groups of words that regularly co-occur. We chose STM over other frequently used models (LDA or CTM) because STM incorporates document metadata, which allows us to account for the platform each text comes from. Given our research questions, this is an extremely useful component of the model. Here, we were not focused on investigating the content of the topics per se, but were instead interested in the degree to which topics vary by platform.

Running the STM on our corpus required a fair amount of text pre-processing to yield understandable topics. We eliminated emoticons, dates, numbers, URLs, Bitcoin wallets, and other topically meaningless text using base R's gsub() function. We then removed stopwords, common words such as “the” and “its,” using the STM stopword removal function.3 Finally, we stemmed the remaining text (e.g., “essential” and “essentials” were both stemmed to “essenti”). This left us with 34,450 words spread across our corpus. We should note that, compared with the entire corpus, the COVID-19 subset that we use here contains longer texts on average: the median word count is 52 for COVID-19 texts, compared with 15 for the overall corpus. Longer texts make topic modeling easier, which is a further reason to use the STM package.

However, there are reasons to consider not stemming, notably those raised by Schofield and Mimno (2016). We chose to stem the corpus for a few reasons, the most pressing of which is that the sheer quantity of words prevented us from evaluating each word in context. The concerns raised by Schofield and Mimno may be addressed by following their recommendation of a Porter stemmer, which we employ here.
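A rough Python analogue of the cleaning steps described above is sketched below. The regular expressions (including the wallet heuristic) and the short stopword set are illustrative assumptions, not the authors' gsub() patterns or the stopword list cited in the footnote. Stemming is omitted to keep the example dependency-free, though a Porter stemmer such as NLTK's PorterStemmer could be applied to the returned tokens.

```python
import re
from typing import List

URL_RE = re.compile(r"https?://\S+|www\.\S+")
# Heuristic (assumption) for wallet-like strings: 25+ alphanumerics containing a digit.
WALLET_RE = re.compile(r"\b(?=[a-z0-9]*\d)[a-z0-9]{25,}\b")
DIGITS_RE = re.compile(r"\d+")           # numbers, including numeric dates
NON_LETTER_RE = re.compile(r"[^a-z\s]")  # emoticons, punctuation, other symbols

# Tiny illustrative stopword set; the study used a much longer list.
STOPWORDS = {"the", "its", "a", "an", "and", "of", "to", "is", "in", "on"}


def preprocess(text: str) -> List[str]:
    """Lowercase, strip URLs, wallets, numbers, and symbols, then drop stopwords."""
    text = text.lower()
    text = URL_RE.sub(" ", text)
    text = WALLET_RE.sub(" ", text)  # run before digit removal so wallets vanish whole
    text = DIGITS_RE.sub(" ", text)
    text = NON_LETTER_RE.sub(" ", text)
    return [tok for tok in text.split() if tok not in STOPWORDS]


if __name__ == "__main__":
    print(preprocess("The vaccine is HERE! :) See https://example.com on 03/15/2020"))
    # ['vaccine', 'here', 'see']
```

Order matters in this sketch: removing digits first would break a wallet address into letter fragments that survive as spurious tokens.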

From there, we used the spectral initialization function to produce our topics (see Mimno and Lee, 2014; Roberts et al., 2019). This algorithm provides a good baseline number of topics and is recommended for corpora that require long processing times.4 While we use spectral initialization, we also show some optimization results in Supplementary Tables S5–S10 and Figure S1. For these, we used a sample of 800 observations randomly drawn from our COVID-19 dataset. Starting with the default fixed beta of 1/K, we first varied K around the value produced by the spectral initialization (80, 90, and 100). We then fixed K = 92 and varied alpha (0.01, 0.05, and 0.1). These six models (K = 80, alpha = 0.01; K = 90, alpha = 0.01; K = 100, alpha = 0.01; K = 92, alpha = 0.01; K = 92, alpha = 0.05; K = 92, alpha = 0.1) are all shown in the Appendix. This background work provides a useful test of our hyperparameter optimization. Nevertheless, we use the results of the spectral initialization throughout the remainder of this article, as we find the resulting topics reasonably clear and coherent. The result is 92 topics (see Supplementary Table S2 for a full list of topics and the top seven words that distinguish them). The extent to which different topics were associated with different platforms is discussed in the Results below.

Results

Emotion

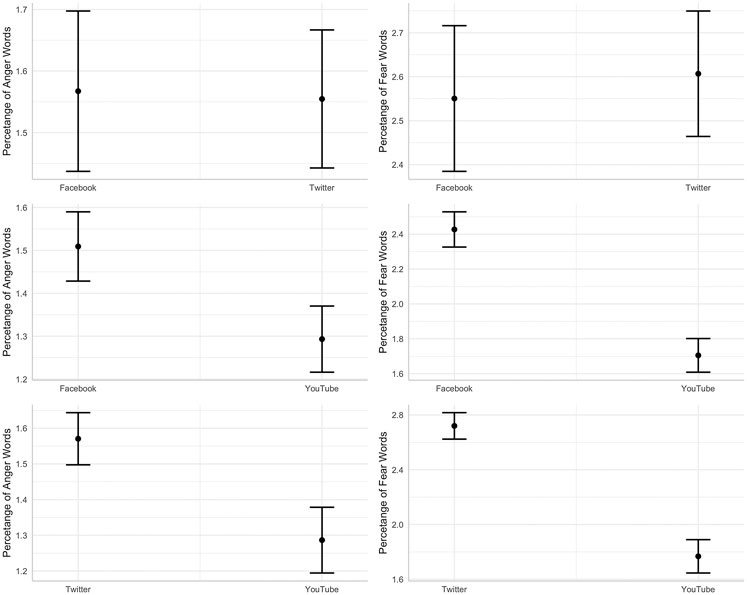

We compared how anger and fear, two of the most important emotions in misinformation and conspiratorial content, were used by alternative influencers across the three platforms in their COVID-19 content, controlling for account. Figure 1 presents the marginal effects of platform on fear and anger, with 95% confidence intervals. Regression tables are in Supplementary Table S3. We conducted three linear regressions, each comparing two platforms (e.g., Facebook vs. Twitter). In each model, the platform variable uses only data from influencers who are active on both platforms being compared, and we additionally controlled for which account the post came from. This design helps ensure that differences in content reflect differences in the audience and channel of the platforms rather than different content creators. The Twitter vs. YouTube model includes data from 32 influencers, the YouTube vs. Facebook model from 24, and the Twitter vs. Facebook model from 20.
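The overlapping-account design can be illustrated with a small sketch: keep only accounts active on both platforms, then estimate the platform difference within accounts. Demeaning by account yields the same platform coefficient as ordinary least squares with one dummy per account (the Frisch–Waugh–Lovell result), which is one common way to control for account. The record fields, function names, and toy data are hypothetical, and this is not the authors' R model, which also produced standard errors and ggeffects marginal means.

```python
from collections import defaultdict
from typing import Any, Dict, List

Post = Dict[str, Any]  # hypothetical record: {"account", "platform", "emotion_pct"}


def overlapping_posts(posts: List[Post], a: str, b: str) -> List[Post]:
    """Posts on platforms a or b from accounts that are active on both."""
    on_a = {p["account"] for p in posts if p["platform"] == a}
    on_b = {p["account"] for p in posts if p["platform"] == b}
    shared = on_a & on_b
    return [p for p in posts if p["account"] in shared and p["platform"] in (a, b)]


def within_account_effect(posts: List[Post], a: str, b: str) -> float:
    """OLS coefficient on an indicator for platform b, with account fixed effects.

    The indicator and the outcome are demeaned within each account, which gives
    the same slope as including a dummy variable for every account.
    """
    groups = defaultdict(list)
    for p in overlapping_posts(posts, a, b):
        x = 1.0 if p["platform"] == b else 0.0
        groups[p["account"]].append((x, float(p["emotion_pct"])))
    sxy = sxx = 0.0
    for rows in groups.values():
        mx = sum(x for x, _ in rows) / len(rows)
        my = sum(y for _, y in rows) / len(rows)
        for x, y in rows:
            sxy += (x - mx) * (y - my)
            sxx += (x - mx) ** 2
    if sxx == 0.0:
        raise ValueError("no within-account variation in platform")
    return sxy / sxx


# Invented toy records (not study data).
TOY_POSTS: List[Post] = [
    {"account": "A", "platform": "youtube", "emotion_pct": 2.0},
    {"account": "A", "platform": "twitter", "emotion_pct": 3.0},
    {"account": "A", "platform": "twitter", "emotion_pct": 3.0},
    {"account": "B", "platform": "youtube", "emotion_pct": 0.5},
    {"account": "B", "platform": "youtube", "emotion_pct": 0.5},
    {"account": "B", "platform": "twitter", "emotion_pct": 1.5},
    {"account": "C", "platform": "twitter", "emotion_pct": 9.0},  # no YouTube: excluded
]

if __name__ == "__main__":
    print(within_account_effect(TOY_POSTS, "youtube", "twitter"))  # about 1.0
```

With these toy records the within-account estimate is 1.0 (up to floating-point rounding), whereas the raw Twitter minus YouTube gap among the shared accounts is 1.5, because the low-emotion account B contributes two of the three YouTube posts.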

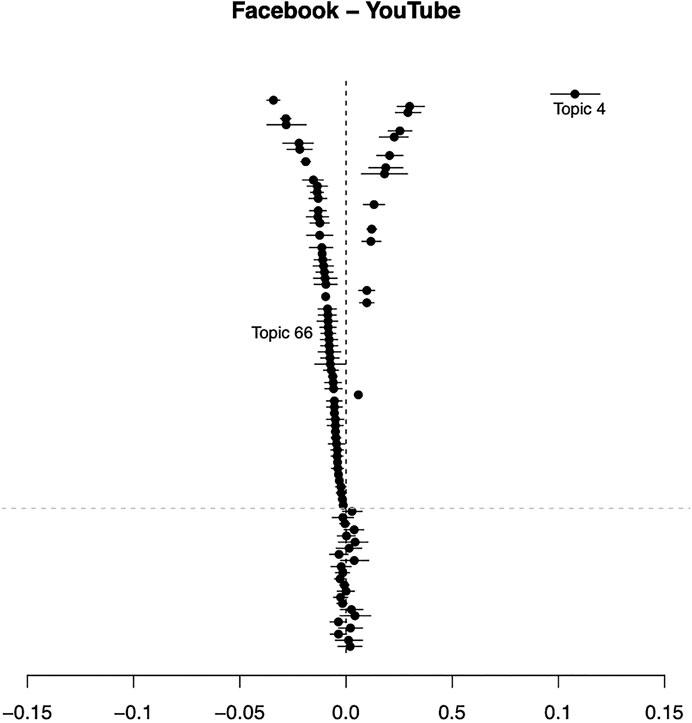

FIGURE 1. Pairwise Comparison of the Use of Anger and Fear across Facebook, Twitter, and YouTube in COVID-19 Content. Note: Each point in Figure 1 is the estimated mean percentage of emotion words on a platform, with 95% confidence intervals. The top panel compares Facebook vs. Twitter in the use of anger and fear; the middle panel compares Facebook vs. YouTube; the bottom panel compares Twitter vs. YouTube.

Examining the marginal effects from the linear regression models, we found a greater proportion of fear and anger language on Facebook and Twitter than on YouTube. In their COVID-19 content, alternative influencers used a much higher percentage of fear words on Facebook (2.43%) than on YouTube (1.71%) (p < 0.01). They also used a higher percentage of anger words on Facebook (1.51%) than on YouTube (1.29%) (p < 0.01). This pattern held when comparing Twitter and YouTube: alternative influencers used more fear words on Twitter (2.72%) than on YouTube (1.77%) (p < 0.01) and a higher percentage of anger words on Twitter (1.57%) than on YouTube (1.29%) (p < 0.01). Comparing Facebook and Twitter, whose audience and channel are more similar, we saw fewer differences. Although we observed a slightly lower percentage of fear words on Facebook (2.55%) than on Twitter (2.61%), the difference is not statistically significant (p = 0.63). Nor was there a significant difference in anger between the two platforms (p = 0.89). In sum, in response to our first two research questions, we found that the shared channel platforms (Twitter, Facebook) contained more fear and anger language than the independent channel platform (YouTube) (RQ1, RQ2).

Topics in COVID-19 Content

We also examined how topics varied in COVID-19 content across platforms. Recall that this series of tests is designed to answer RQ3, which asks whether the topics extracted through the structural topic model vary across platforms. Our intention here is to highlight variations in topics, not necessarily to do a deep dive into the topics themselves. Accordingly, we focus largely on the distribution of topics by platform rather than on the content of the topics. A full list of the topics extracted by the STM is included in Supplementary Table S1. Again, this analysis focuses only on the COVID-19 content from these alternative influencers, who have demonstrated a pattern of spreading misinformation and radicalized messages on social media (Lewis, 2018).

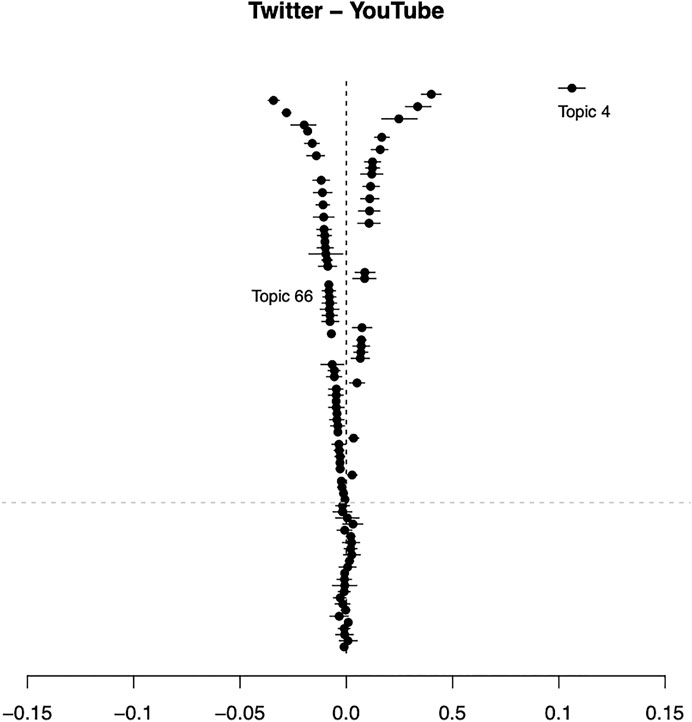

Figures 2–4 below show pairwise comparisons between the three platforms. As with the pairwise comparisons above, these results only include data from alternative influencers with accounts on both platforms. Topics are shown as points with 95% confidence intervals, and a vertical line at zero marks no difference between the platforms. Points whose intervals do not overlap the zero line are statistically associated with whichever platform is on that side of the line. We have ordered the topics by the strength of their association with one platform over the other, with the most strongly associated topics at the top and the most weakly associated (or most equally shared between the platforms) at the bottom. What we are looking for here are divergent trends across these comparisons. If topics are deployed differently by platform, then we ought to see different distributions of topics. Moreover, if our contention that platform structure matters is correct, then the Facebook vs. YouTube and Twitter vs. YouTube comparisons ought to show similar distributions, because Facebook and Twitter are similar in our categorization and should have similar patterns of topics.
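The reading rule for these figures can be expressed compactly. In the sketch below, each topic has an estimated difference in expected topic proportion between two platforms and a standard error, such as those produced by the R stm package's estimateEffect(). A topic counts as platform-associated when its normal-approximation 95% interval excludes zero, and ordering by absolute estimate is our assumed reading of "strength of association." The estimates are invented toy numbers, not results from this study.

```python
from typing import List, NamedTuple

Z_95 = 1.959963984540054  # two-sided 95% normal critical value


class TopicEffect(NamedTuple):
    topic: int
    estimate: float  # difference in expected topic proportion (platform B minus A)
    se: float        # standard error of that difference


class TopicSummary(NamedTuple):
    topic: int
    estimate: float
    lower: float
    upper: float
    significant: bool  # True when the 95% interval excludes zero


def summarize_topics(effects: List[TopicEffect]) -> List[TopicSummary]:
    """Attach 95% intervals, flag intervals excluding zero, sort strongest first."""
    rows = []
    for e in effects:
        lower = e.estimate - Z_95 * e.se
        upper = e.estimate + Z_95 * e.se
        rows.append(TopicSummary(e.topic, e.estimate, lower, upper, lower > 0 or upper < 0))
    return sorted(rows, key=lambda r: abs(r.estimate), reverse=True)


if __name__ == "__main__":
    toy = [TopicEffect(1, 0.001, 0.002), TopicEffect(2, 0.03, 0.005), TopicEffect(3, -0.02, 0.004)]
    for row in summarize_topics(toy):
        print(row.topic, round(row.estimate, 3), row.significant)
```

Summing the significant flags for one pairwise comparison yields a count of platform-associated topics for that pair of platforms.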

This is exactly the pattern we anticipated. Facebook and Twitter do diverge in content, but 47 of the 92 topics do not differ significantly between them. We use a dashed gray line to indicate the point at which topics above the line are statistically associated with one platform more than the other at the p < 0.05 level. By comparison, many more topics differ when either platform is compared with YouTube (69 topics for Facebook vs. YouTube and 68 topics for Twitter vs. YouTube). Our argument, as presented above, is that topics should differ based on audience and channel, and we see that reflected in these relationships. That is, alternative influencers’ COVID-19 posts on Twitter and Facebook are associated with similar topics; in contrast, YouTube content about COVID-19 posted by these same influencers is dissimilar from Twitter and Facebook content. There are, of course, topic differences between Facebook and Twitter, though many lie close to the zero line, suggesting that the magnitude of these differences is smaller.

In practice, this means that alternative influencers discuss similar topics in COVID-19 content on Facebook and Twitter, whereas their YouTube videos contain different considerations, connections, and topics. While we are primarily interested in whether topics vary systematically as a function of platform audience and channel, the substance of these topic differences is also important to evaluate. Perhaps the most interesting finding concerns topics closely related to COVID-19 that are strongly associated with different platforms. For example, Topic 4 (differentiated by the words coronavirus, pandem, covid, travel, februari, downplay, and panic) is explicitly about COVID-19, using language we might expect to be common to all platforms’ COVID-19 content. However, we find that discussion of Topic 4 is unequally distributed across platforms. Topic 4 is more closely associated with Twitter than with Facebook by a fair margin (see Figure 2), and it appears in YouTube content more than in Facebook or Twitter content. That is, across content that mentions COVID-19, this topic is more likely to appear on YouTube than on the other platforms, and YouTube users may be systematically more exposed to this topic than users of other platforms. We see a different pattern for Topic 66, which appears to concern critically ill COVID-19 patients and the outbreak in Italy (top words: ventil, icu, model, itali, beard, bed, peak). This topic is more closely associated with Twitter and Facebook than with YouTube. In contrast to the more general discussion of COVID-19 in Topic 4, Topic 66 is more specific and potentially more fear-inducing.
These differences in topics related to COVID-19 between narrower audience, shared channel platforms (Facebook and Twitter) and broader audience, independent channel platforms (YouTube) support our contention that topics are not equally distributed by platform and that these differences may be driven by these platform characteristics.

Though we selected alternative influencers because of existing evidence that they spread misinformation on political and scientific topics (Lewis, 2018), the STM topics also offer evidence that these actors are likely spreading misinformation surrounding COVID-19, and that exposure to misinformation may vary by platform. For example, Topic 23 (differentiating words: chines, china, wuhan, taiwan, hong, kong, beij), which appeared to focus on the Chinese origins of or blame for COVID-19 (e.g., “China, of course, appears to have worked alongside the WHO to hide the virus’s true nature, leaving many countries, including the United States, unprepared for the severity of the coronavirus epidemic.” Facebook post from the Daily Wire), was more prevalent on Facebook than on YouTube or Twitter, and more prevalent on Twitter than YouTube. Similarly, allegations of protesters committing crimes (Topic 75; top words: riot, portland, protest, loot, rioter, antifa, violenc) and antisemitic claims (Topic 92; top words: jewish, jew, roger, semit, holocaust, conspiraci, milo) are more common on Facebook than Twitter and are more common on Twitter than YouTube. Additionally, alternative influencers’ allegations of “fake” mainstream news (Topic 45; top words: press, cnn, journalist, fox, fake, media, news) are similarly prevalent on Twitter and Facebook, but less common on YouTube.

Discussion

Past work stresses that misinformation preys on our emotions; attention to, sharing of, and belief in false information are often associated with emotions like anger and fear (Maratos, 2011; Weeks, 2015; Vosoughi et al., 2018). This study presents a novel examination of how emotion varies across prominent communication platforms in COVID-19 content likely to contain misinformation. As we showed, emotional language on YouTube differed substantially from that on the other two platforms, Twitter and Facebook. When communicating about COVID-19, alternative influencers used more anger and fear words on Facebook and Twitter than on YouTube. In part, this could be due to a technical feature: the actor is limited by the length of the post and thus needs to maximize emotional language to draw continuous attention and interaction from the audience. Compared with YouTube, Twitter and Facebook are more interactive and competitive, so this observation suggests that content on shared channel platforms contains more emotional language than content on independent channel platforms, likely to draw audience attention (RQ1, RQ2). As a reminder, we consider platform structures such as audience and channel to exist on a spectrum, and our classifications describe these platforms’ positions relative to one another rather than hard and fast rules. We did not find many differences in the use of fear and anger language. Across all platforms, alternative influencers’ COVID-19 content contained a greater proportion of fear words than anger words. This may be attributable to the topic; at the time of data collection, COVID-19 cases were rising nationally, and a vaccine had not yet been approved. These results are important to the study of misinformation, which tends to be presented with more emotional language that facilitates its spread.
We do note that, while Facebook and Twitter content contained similar levels of anger, Facebook content had slightly more fear language than Twitter content about COVID-19. It is not immediately clear why this is the case. We note that, although the differences are significant, a corpus this large is likely to yield significant differences of similar magnitude to what we see here. Our estimation is that the difference, while statistically notable, is not functionally meaningful. However, as always, further work will need to investigate whether this result reflects a systematic difference attributable to the platforms or is a function of the topic of COVID-19.

We also highlighted how the topics in content surrounding mentions of COVID-19 on these platforms vary in systematic ways. Results show that content on narrow audience, shared channel platforms (Facebook and Twitter) discusses similar topics surrounding COVID-19 but differs systematically from content on the broader audience, independent channel platform (YouTube) (RQ3). The content of these variations is also notable, though we discussed just a few of the topics themselves. Further, while many of the topics are readily identifiable as related to COVID-19, some are not. In our view, the tangential connection between some topics and COVID-19 is less of an issue than it may seem. We were primarily interested in how the substance of COVID-19 content, as observed through these topics, would vary as a function of the audience and channel of the platform. We argue that our work shows both systematic variations in content and meaningful topic associations with different platforms. We also want to point out that the accounts studied here are identified as members of the Alternative Influencer Network: individuals or organizations that spread alternative facts about social issues to foment radicalization and challenge established norms (Lewis, 2018). It remains difficult to distinguish disinformation campaigns from misinformation inadvertently spread by alternative media, particularly on an issue like COVID-19, in which the best available information changes quickly (Freiling et al., 2021). In response to calls for a better understanding of the close connection between disinformation and alternative facts across platforms (Ong and Cabanes, 2019; Wilson and Starbird, 2020), our paper demonstrates how platform characteristics are associated with different topics and emotions that facilitate the spread of misinformation in the cross-platform ecosystem of the post-normal science and post-truth age (Funtowicz and Ravetz, 1993; Fischer, 2019).

Strengths and Limitations

This study proposes a novel framework for building theoretically informed expectations of how content is likely to differ across communication platforms, which we apply and test in the context of alternative influencers’ COVID-19 content. We do this by drawing on a novel dataset, namely, all publicly available Facebook, Twitter, and YouTube content posted by alternative influencers (Lewis, 2018). With these data, we are able to test expectations about how a platform’s audience and channel shape content likely to contain misinformation. Previous research on misinformation has largely relied on single-platform studies; by comparing such content across platforms, we offer novel insight into how platform structure might enable or inhibit the spread and acceptance of misinformation.

There are also limitations worth noting. First, and perhaps foremost, we limit this study to the spread of misinformation, and specifically COVID-19 misinformation, so our results are necessarily limited to that specific topic. However, our intention is to highlight how platforms are fundamentally different and to encourage scholarship along those lines. In addition to our study, there is also evidence that platform audience and channel play crucial roles in political campaigning (Hiaeshutter-Rice, 2020). Further, while we were able to collect all publicly available content, we were not able to retrieve content that had been removed (either by the influencer or by the platform) or content by alternative influencers who had been banned from different platforms (e.g., Gavin McInnes, the founder of the Proud Boys). Social media platforms are not uniform in their terms of service or in their application of punitive actions against those who violate the terms of service by spreading misinformation or hate speech. Therefore, content initially posted on these platforms may have been more or less similar than our results indicate, with observed differences resulting from platforms’ inconsistencies in reporting and removing content. Even if content on these platforms was similar before being subjected to moderation, users of different social media platforms still see different emotional cues and associated topics on broader, independent channel platforms than on narrower, shared channel platforms. In addition, alternative influencers who have not been banned are likely to be aware of platforms’ terms of service and moderation practices, which would inform the content they share. This brings up a related point: we focus on alternative influencers, raising the question of whether these findings generalize to other content creators.
We suspect that there are similarities between influencers and the general population of these sites, as the overarching structures of the sites are the same. However, we also suspect that influencers may be more adept at using the sites and may operate with a somewhat different set of goals. Thus, we constrain our findings to influencers and leave open the possibility of further study of users as a whole. A final limitation concerns the insight gained from this study’s methodological approach. Though we are able to broadly describe differences in emotion and topics in alternative influencers’ content via computational content analysis, close reading of these posts could reveal additional connections and information about the content of the misinformation being spread by these actors regarding COVID-19.

Conclusion

This study offers insight into the under-researched area of how the audience and channel of platforms shape the structure of information to which individuals are exposed. This work is particularly important to understanding why and how misinformation is shared and believed online, as well as for informing corrective interventions tailored to specific platforms and audiences.

Perhaps more importantly, audiences differ by platform. That is, audience demographics differ fundamentally from platform to platform (Perrin and Andrews, 2020), with older generations on Facebook and younger ones on YouTube and Twitter. Functionally, this means that audiences are being systematically exposed to different content (as we have shown here) and that those differences are likely not randomly distributed across the population. While many people use multiple sites, as audiences become further segmented into different platforms as their primary sources of information, our results suggest that they will have access to different information than if they had used a different site. This has potentially serious implications for how citizens understand political and social issues.

These findings also suggest several practical implications for those involved in mitigating and correcting misinformation online. Corrective interventions need to be tailored to respond to content differences across platforms; for instance, when correcting misinformation on shared channel platforms, whose content contains stronger negative emotions, the corrective message may need to use more positive emotions like hope (Newman, 2020) and positive framing (Chen et al., 2020a) than corrective interventions for independent channel platforms. For social media companies, understanding what emotional language and topics alternative influencers use to spread misinformation on different platforms may enable them to develop more sophisticated, platform-specific moderation strategies (Gillespie, 2018). Finally, our findings suggest that users should be more alert to manipulation in messages containing strong negative emotions. Awareness and skepticism of anger- or fear-based messages may help individuals become more resistant to misinformation (Chen et al., 2020b; Pennycook et al., 2020).

Though this study offered novel insight into the ways in which platforms shaped the COVID-19 content of actors prone to spreading misinformation, there are many pathways for future work on the role that communication platforms play in enabling or inhibiting misinformation. Future work should investigate whether the patterns we observe here, regarding emotion and topic differences between platforms with different audiences and channels, are consistent across different issues (e.g., election misinformation). Additionally, it is not known whether content posted by actors who share more accurate information (e.g., NASA astronauts) differs by platform in the same ways we observe here. Finally, research into misinformation on social media must go further in tracking the movement of misinformation and rumors across platforms, with particular attention to how platform structure gives rise to and amplifies false information.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpos.2021.642394/full#supplementary-material

Footnotes

1Of course, the other way that individuals see content is to be algorithmically exposed to it through interactions by their connections. For instance, if User A follows User B and User B follows User C, who is not followed by User A, sometimes User A will see User C’s content even though they do not follow them. As we are primarily interested in how the initial user creates content, this type of indirect exposure is of less interest for our project.

2For details about this GitHub wrapper, see https://github.com/SMAPPNYU/youtube-data-api; a tutorial is available at http://bit.ly/YouTubeDataAPI.

3The list of stopwords can be found here: http://www.ai.mit.edu/projects/jmlr/papers/volume5/lewis04a/a11-smart-stop-list/english.stop.

4The default hyperparameters for the model (alpha = 0.01 and beta = 1/K) produced convergence around iteration 116.

References

Ali, K., Zain-ul-abdin, K., Li, C., Johns, L., Ali, A. A., and Carcioppolo, N. (2019). Viruses Going Viral: Impact of Fear-Arousing Sensationalist Social media Messages on User Engagement. Sci. Commun. 41 (3), 314–338. doi:10.1177/1075547019846124

Aupers, S. (2012). 'Trust No One': Modernization, Paranoia and Conspiracy Culture. Eur. J. Commun. 27 (1), 22–34. doi:10.1177/0267323111433566

Bail, C. A. (2016). Emotional Feedback and the Viral Spread of Social media Messages about Autism Spectrum Disorders. Am. J. Public Health 106 (7), 1173–1180. doi:10.2105/AJPH.2016.303181

Berger, J., and Milkman, K. L. (2012). What Makes Online Content Viral?. J. Marketing Res. 49 (2), 192–205. doi:10.1509/jmr.10.0353

Blancke, S., Van Breusegem, F., De Jaeger, G., Braeckman, J., and Van Montagu, M. (2015). Fatal Attraction: The Intuitive Appeal of GMO Opposition. Trends Plant Sci. 20 (7), 414–418. doi:10.1016/j.tplants.2015.03.011

Bode, L., and Vraga, E. K. (2018). Studying Politics across media. Polit. Commun. 35 (1), 1–7. doi:10.1080/10584609.2017.1334730

Bolsen, T., and Druckman, J. N. (2018). Do partisanship and Politicization Undermine the Impact of a Scientific Consensus Message about Climate Change?. Group Process. Intergroup Relations 21 (3), 389–402. doi:10.1177/1368430217737855

Bossetta, M. (2018). The Digital Architectures of Social Media: Comparing Political Campaigning on Facebook, Twitter, Instagram, and Snapchat in the 2016 U.S. Election. Journalism Mass Commun. Q. 95 (2), 471–496. doi:10.1177/1077699018763307

Brader, T. (2006). Campaigning for Hearts and Minds: How Emotional Appeals in Political Ads Work. University of Chicago Press.

Bradshaw, A. S., Shelton, S. S., Wollney, E., Treise, D., and Auguste, K. (2020). Pro-Vaxxers Get Out: Anti-vaccination Advocates Influence Undecided First-Time, Pregnant, and New Mothers on Facebook. Health Commun. 36, 693–702. doi:10.1080/10410236.2020.1712037

Brossard, D., and Scheufele, D. A. (2013). Science, New media, and the Public. Science 339 (6115), 40–41. doi:10.1126/science.1232329

Caplan, R., and Gillespie, T. (2020). Tiered Governance and Demonetization: The Shifting Terms of Labor and Compensation in the Platform Economy. Soc. Media + Soc. 6 (2), doi:10.1177/2056305120936636

Carver, C. S., and Harmon-Jones, E. (2009). Anger Is an Approach-Related Affect: Evidence and Implications. Psychol. Bull. 135 (2), 183–204. doi:10.1037/a0013965

Chen, K., Bao, L., Shao, A., Ho, P., Yang, S., Wirz, C., et al. (2020b). How Public Perceptions of Social Distancing Evolved over a Critical Time Period: Communication Lessons Learnt from the American State of Wisconsin. Jcom 19 (5), A11. doi:10.22323/2.19050211

Chen, K., Chen, A., Zhang, J., Meng, J., and Shen, C. (2020a). Conspiracy and Debunking Narratives about COVID-19 Origins on Chinese Social media: How it Started and Who Is to Blame. HKS Misinfo Rev. 1 (8), 1–30. doi:10.37016/mr-2020-50

Chen, K., Shao, A., Jin, Y., and Ng, A. (2021). I Am Proud of My National Identity and I Am superior to You: The Role of Nationalism in Knowledge and Misinformation. Available at: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3758287.

Chong, D., and Druckman, J. N. (2010). Dynamic Public Opinion: Communication Effects over Time. Am. Polit. Sci. Rev. 104 (04), 663–680. doi:10.1017/S0003055410000493

Cobb, M. D. (2005). Framing Effects on Public Opinion about Nanotechnology. Sci. Commun. 27 (2), 221–239. doi:10.1177/1075547005281473

Dunwoody, S., and Kohl, P. A. (2017). Using Weight-Of-Experts Messaging to Communicate Accurately about Contested Science. Sci. Commun. 39 (3), 338–357. doi:10.1177/1075547017707765

Farrell, J. (2016). Corporate Funding and Ideological Polarization about Climate Change. Proc. Natl. Acad. Sci. USA 113 (1), 92–97. doi:10.1073/pnas.1509433112

Fischer, F. (2019). Knowledge Politics and post-truth in Climate Denial: On the Social Construction of Alternative Facts. Crit. Pol. Stud. 13 (2), 133–152. doi:10.1080/19460171.2019.1602067

Freiling, I., Krause, N. M., Scheufele, D. A., and Brossard, D. (2021). Believing and Sharing Misinformation, Fact-Checks, and Accurate Information on Social media: The Role of Anxiety during COVID-19. New Media & Society, doi:10.1177/14614448211011451

Funtowicz, S. O., and Ravetz, J. R. (1993). Science for the post-normal Age. Futures 25 (7), 739–755. doi:10.1016/0016-3287(93)90022-l

Garrett, R. K., Weeks, B. E., and Neo, R. L. (2016). Driving a Wedge between Evidence and Beliefs: How Online Ideological News Exposure Promotes Political Misperceptions. J. Comput-mediat Comm. 21 (5), 331–348. doi:10.1111/jcc4.12164

Gillespie, T. (2018). Custodians of the Internet: Platforms, Content Moderation and the Hidden Decisions that Shape Social Media. Yale University Press.

Golovchenko, Y., Buntain, C., Eady, G., Brown, M. A., and Tucker, J. A. (2020). Cross-Platform State Propaganda: Russian Trolls on Twitter and YouTube during the 2016 U.S. Presidential Election. The Int. J. Press/Politics 25 (3), 357–389. doi:10.1177/1940161220912682

González-Bailón, S., and Paltoglou, G. (2015). Signals of Public Opinion in Online Communication. ANNALS Am. Acad. Polit. Soc. Sci. 659 (1), 95–107. doi:10.1177/0002716215569192

Hart, P. S., Chinn, S., and Soroka, S. (2020). Politicization and Polarization in COVID-19 News Coverage. Sci. Commun. 42 (5), 679–697. doi:10.1177/1075547020950735

Hasell, A., and Stroud, N. J. (2020). The Differential Effects of Knowledge on Perceptions of Genetically Modified Food Safety. Int. J. Public Opin. Res. 32 (1), 111–131. doi:10.1093/ijpor/edz020

Hasell, A., and Weeks, B. E. (2016). Partisan Provocation: The Role of Partisan News Use and Emotional Responses in Political Information Sharing in Social Media. Hum. Commun. Res. 42 (4), 641–661. doi:10.1111/hcre.12092

Hiaeshutter-Rice, D. (2020). Political Platforms: Technology, User Affordances, and Campaign Communication. Ann Arbor, United States of America: Doctoral Dissertation, University of Michigan. Retrieved from: https://deepblue.lib.umich.edu/handle/2027.42/163218.

Iqbal, M. (2020). YouTube Revenue and Usage Statistics. Business of Apps. Retrieved from: https://www.businessofapps.com/data/youtube-statistics/.

Kata, A. (2012). Anti-vaccine Activists, Web 2.0, and the Postmodern Paradigm - an Overview of Tactics and Tropes Used Online by the Anti-vaccination Movement. Vaccine 30 (25), 3778–3789. doi:10.1016/j.vaccine.2011.11.112

Katz, J. E., and Mays, K. K. (2019). Journalism and Truth in an Age of Social Media. Oxford University Press.

Kim, I. S. (2017). Political Cleavages within Industry: Firm-Level Lobbying for Trade Liberalization. Am. Polit. Sci. Rev. 111 (1), 1–20. doi:10.1017/S0003055416000654

Kühne, R., and Schemer, C. (2015). The Emotional Effects of News Frames on Information Processing and Opinion Formation. Commun. Res. 42 (3), 387–407. doi:10.1177/0093650213514599

Lee, Y.-H., and Chen, M. (2020). Emotional Framing of News on Sexual Assault and Partisan User Engagement Behaviors. Journalism Mass Commun. Q, 1–22. doi:10.1177/1077699020916434

Lerner, J. S., and Keltner, D. (2000). Beyond Valence: Toward a Model of Emotion-specific Influences on Judgement and Choice. Cogn. Emot. 14 (4), 473–493. doi:10.1080/026999300402763

Lewis, R. (2018). Alternative Influence: Broadcasting the Reactionary Right on YouTube. Data & Society Research Institute. Retrieved from: https://datasociety.net/wp-content/uploads/2018/09/DS_Alternative_Influence.pdf.

Lukito, J. (2020). Coordinating a Multi-Platform Disinformation Campaign: Internet Research Agency Activity on Three U.S. Social Media Platforms, 2015 to 2017. Polit. Commun. 37 (2), 238–255. doi:10.1080/10584609.2019.1661889

MacKuen, M., Wolak, J., Keele, L., and Marcus, G. E. (2010). Civic Engagements: Resolute Partisanship or Reflective Deliberation. Am. J. Polit. Sci. 54 (2), 440–458. doi:10.1111/j.1540-5907.2010.00440.x

Maloney, E. K., Lapinski, M. K., and Witte, K. (2011). Fear Appeals and Persuasion: A Review and Update of the Extended Parallel Process Model. Social Personal. Psychol. Compass 5 (4), 206–219. doi:10.1111/j.1751-9004.2011.00341.x

Maratos, F. A. (2011). Temporal Processing of Emotional Stimuli: The Capture and Release of Attention by Angry Faces. Emotion 11 (5), 1242–1247. doi:10.1037/a0024279

Mimno, D., and Lee, M. (2014). “Low-Dimensional Embeddings for Interpretable Anchor-Based Topic Inference,” in Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), 1319–1328.

Mohammad, S. M., and Turney, P. D. (2013). Crowdsourcing a Word-Emotion Association Lexicon. Comput. Intelligence 29 (3), 436–465. doi:10.1111/j.1467-8640.2012.00460.x

Most, S. B., Smith, S. D., Cooter, A. B., Levy, B. N., and Zald, D. H. (2007). The Naked Truth: Positive, Arousing Distractors Impair Rapid Target Perception. Cogn. Emot. 21 (5), 964–981. doi:10.1080/02699930600959340

Nabi, R. L. (2003). Exploring the Framing Effects of Emotion. Commun. Res. 30 (2), 224–247. doi:10.1177/0093650202250881

Nabi, R. L. (2010). The Case for Emphasizing Discrete Emotions in Communication Research. Commun. Monogr. 77 (2), 153–159. doi:10.1080/03637751003790444

Nabi, R. L., Gustafson, A., and Jensen, R. (2018). Framing Climate Change: Exploring the Role of Emotion in Generating Advocacy Behavior. Sci. Commun. 40 (4), 442–468. doi:10.1177/1075547018776019

Newman, T. (2020). Science Elicits Hope in Americans – Its Positive Brand Doesn’t Need to Be Partisan. The Conversation. doi:10.1287/ab5eef8e-195a-498b-b72a-63d0b359bc33. Retrieved from: https://theconversation.com/science-elicits-hope-in-americans-its-positive-brand-doesnt-need-to-be-partisan-124980.

Nisbet, E. C., Hart, P. S., Myers, T., and Ellithorpe, M. (2013). Attitude Change in Competitive Framing Environments? Open-/closed-Mindedness, Framing Effects, and Climate Change. J. Commun. 63 (4), 766–785. doi:10.1111/jcom.12040

Oh, O., Kwon, K. H., and Rao, H. R. (2010). “An Exploration of Social Media in Extreme Events: Rumor Theory and Twitter during the Haiti Earthquake 2010,” in ICIS 2010 Proceedings, 231, 7332–7336.

Ong, J. C., and Cabañes, J. V. A. (2019). When Disinformation Studies Meets Production Studies: Social Identities and Moral Justifications in the Political Trolling Industry. Int. J. Commun. 13, 20.

Pennycook, G., McPhetres, J., Zhang, Y., Lu, J. G., and Rand, D. G. (2020). Fighting COVID-19 Misinformation on Social Media: Experimental Evidence for a Scalable Accuracy-Nudge Intervention. Psychol. Sci. 31 (7), 770–780. doi:10.1177/0956797620939054

Perrin, A., and Anderson, M. (2020). Share of U.S. Adults Using Social Media, Including Facebook, Is Mostly Unchanged since 2018. Retrieved from: https://www.pewresearch.org/fact-tank/2019/04/10/share-of-u-s-adults-using-social-media-including-facebook-is-mostly-unchanged-since-2018/ (Accessed December 15, 2020).

Pew Research Center (2015). Public and Scientists’ Views on Science and Society. Pew Research Center. Retrieved from: https://www.pewresearch.org/science/2015/01/29/public-and-scientists-views-on-science-and-society/.

Roberts, M. E., Stewart, B. M., and Tingley, D. (2019). Stm: An R Package for Structural Topic Models. J. Stat. Softw. 91 (1), 1–40. doi:10.18637/jss.v091.i02

Rothschild, J. E., Howat, A. J., Shafranek, R. M., and Busby, E. C. (2019). Pigeonholing Partisans: Stereotypes of Party Supporters and Partisan Polarization. Polit. Behav. 41 (2), 423–443. doi:10.1007/s11109-018-9457-5

Schofield, A., and Mimno, D. (2016). Comparing Apples to Apple: The Effects of Stemmers on Topic Models. TACL 4, 287–300. doi:10.1162/tacl_a_00099

Segerberg, A., and Bennett, W. L. (2011). Social Media and the Organization of Collective Action: Using Twitter to Explore the Ecologies of Two Climate Change Protests. Commun. Rev. 14 (3), 197–215. doi:10.1080/10714421.2011.597250

Sugimoto, C. R., and Thelwall, M. (2013). Scholars on Soap Boxes: Science Communication and Dissemination in TED Videos. J. Am. Soc. Inf. Sci. Tec 64 (4), 663–674. doi:10.1002/asi.22764

Syed-Abdul, S., Fernandez-Luque, L., Jian, W.-S., Li, Y.-C., Crain, S., Hsu, M.-H., et al. (2013). Misleading Health-Related Information Promoted through Video-Based Social Media: Anorexia on YouTube. J. Med. Internet Res. 15 (2), e30. doi:10.2196/jmir.2237

Thorson, K., Driscoll, K., Ekdale, B., Edgerly, S., Thompson, L. G., Schrock, A., et al. (2013). YouTube, Twitter and the Occupy Movement. Inf. Commun. Soc. 16 (3), 421–451. doi:10.1080/1369118X.2012.756051

Thorson, K., and Wells, C. (2016). Curated Flows: A Framework for Mapping Media Exposure in the Digital Age. Commun. Theor. 26 (3), 309–328. doi:10.1111/comt.12087

van Atteveldt, W., van der Velden, M. A. C. G., and Boukes, M. (2021). The Validity of Sentiment Analysis: Comparing Manual Annotation, Crowd-Coding, Dictionary Approaches, and Machine Learning Algorithms. Communication Methods and Measures, 1–20. doi:10.1080/19312458.2020.1869198

Vargo, C. J., and Hopp, T. (2020). Fear, Anger, and Political Advertisement Engagement: A Computational Case Study of Russian-linked Facebook and Instagram Content. Journalism Mass Commun. Q. 97 (3), 743–761. doi:10.1177/1077699020911884

Vosoughi, S., Roy, D., and Aral, S. (2018). The Spread of True and False News Online. Science 359 (6380), 1146–1151. doi:10.1126/science.aap9559

Wang, Y. C., and Kraut, R. (2012). “Twitter and the Development of an Audience: Those Who Stay on Topic Thrive!,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 1515–1518.

Weeks, B. E. (2015). Emotions, Partisanship, and Misperceptions: How Anger and Anxiety Moderate the Effect of Partisan Bias on Susceptibility to Political Misinformation. J. Commun. 65 (4), 699–719. doi:10.1111/jcom.12164

Weeks, B. E., Kim, D. H., Hahn, L. B., Diehl, T. H., and Kwak, N. (2019). Hostile Media Perceptions in the Age of Social Media: Following Politicians, Emotions, and Perceptions of Media Bias. J. Broadcasting Electron. Media 63 (3), 374–392. doi:10.1080/08838151.2019.1653069

Wilson, T., and Starbird, K. (2020). Cross-platform Disinformation Campaigns: Lessons Learned and Next Steps. Harv. Kennedy Sch. Misinformation Rev. 1 (1).

Wynne, B. (1992). Misunderstood Misunderstanding: Social Identities and Public Uptake of Science. Public Underst Sci. 1, 281–304. doi:10.1088/0963-6625/1/3/004

Keywords: misinformation, social media, alternative influencers, platforms, computational social science

Citation: Hiaeshutter-Rice D, Chinn S and Chen K (2021) Platform Effects on Alternative Influencer Content: Understanding How Audiences and Channels Shape Misinformation Online. Front. Polit. Sci. 3:642394. doi: 10.3389/fpos.2021.642394

Received: 16 December 2020; Accepted: 10 May 2021;

Published: 31 May 2021.

Edited by:

Dominik A. Stecula, Colorado State University, United States

Reviewed by:

Dror Walter, Georgia State University, United States

Sean Long, University of California, Riverside, United States

Copyright © 2021 Hiaeshutter-Rice, Chinn and Chen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Dan Hiaeshutter-Rice, dhrice@msu.edu
