- 1Department of Psychiatry and Behavioral Neurosciences, International St. Mary’s Hospital, Catholic Kwandong University College of Medicine, Incheon, Republic of Korea

- 2Catholic Kwandong University Industry Cooperation Foundation, Incheon, Republic of Korea

Introduction: Amotivation in depression is linked to impaired reinforcement learning and effort expenditure via the dopaminergic reward pathway. To understand its computational and neural basis, we modeled incentive, temporal and cognitive burden effects, identifying key components and brain networks of cost-benefit valuation.

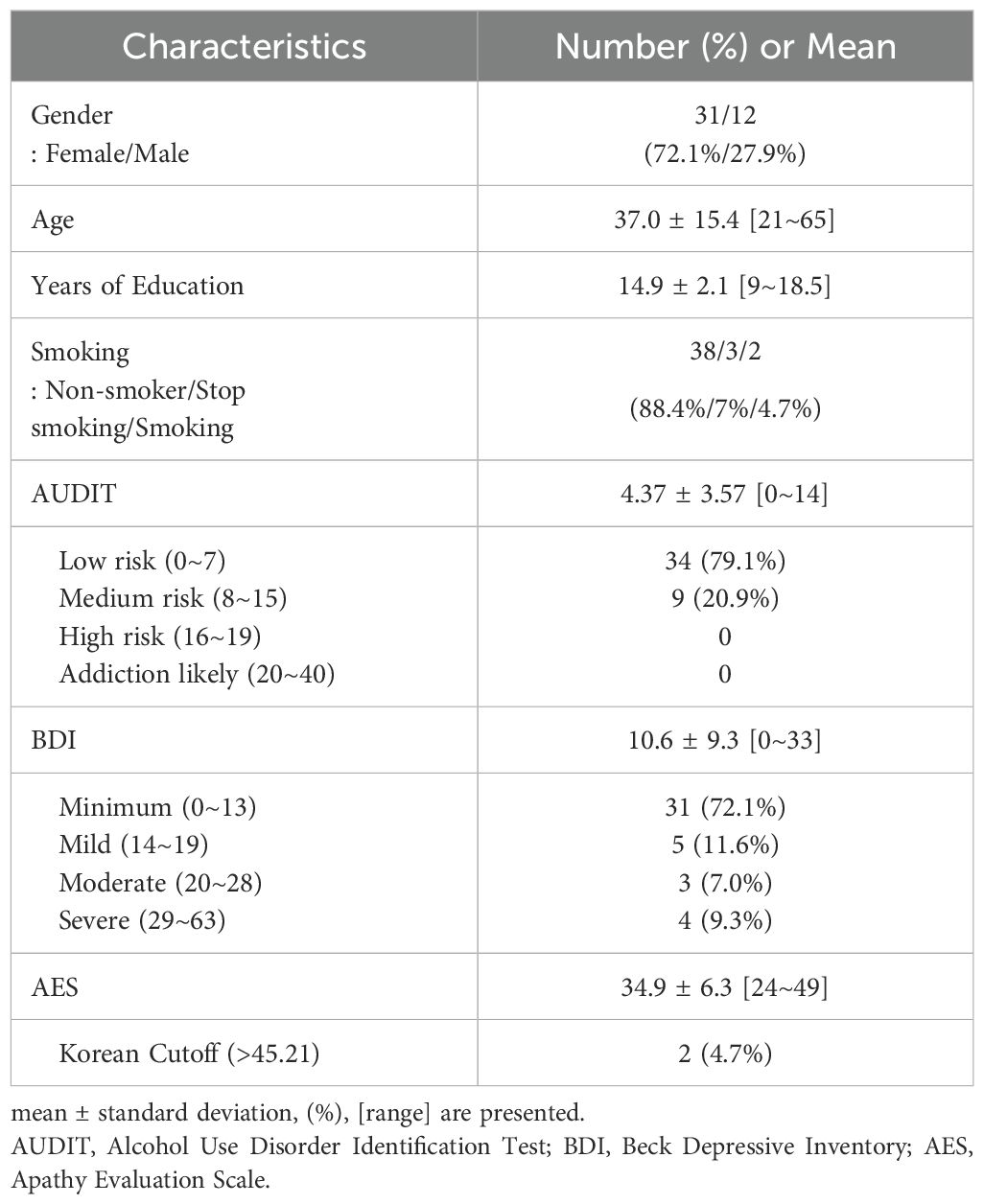

Methods: Data from 43 psychotropic-free individuals (31 non- or minimally depressed individuals), including Beck Depression Inventory (BDI), Apathy Evaluation Scale (AES), n-back task performance, and resting-state fMRI, were analyzed. Cost-benefit valuation was modeled using loss aversion, learning, temporal, and cognitive effort discounting factors. Model fitting and comparison (two-learning rate vs. two-temporal discounting) were performed. Principal Component Analysis and linear regression identified factors predicting amotivation severity. Correlations of estimated factors with nucleus accumbens and anterior insular cortex (AIC) functional connectivity were analyzed.

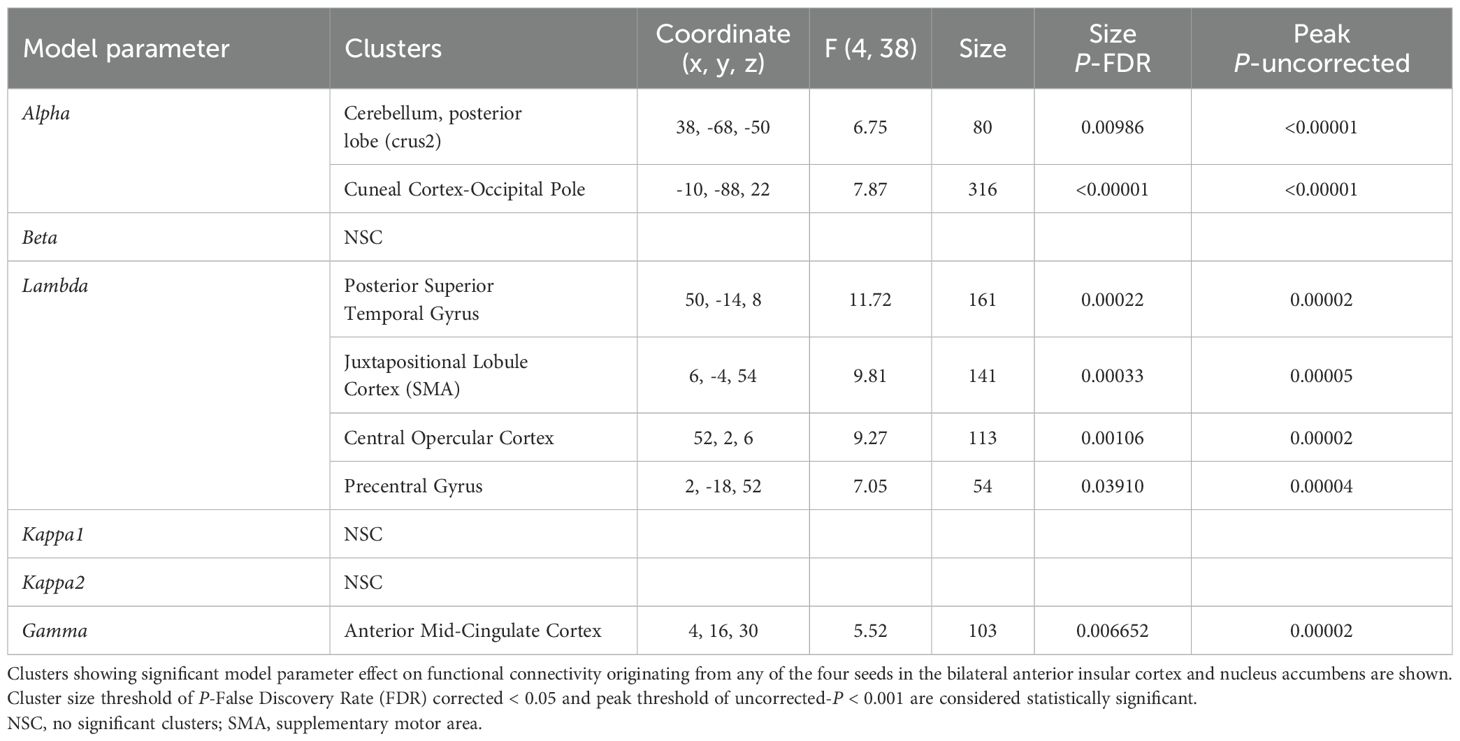

Results: Overall, greater 2-back than 0-back accuracy occurred in longer, positively incentivized tasks. Non- or minimally depressed individuals showed accuracy difference by N-back load at higher rewards, with divergence between reward and loss tasks at higher incentive and longer lengths. The two-temporal discounting model best explained these results. Cognitive effort discounting specifically predicted amotivation scores, derived from BDI and AES, and correlated with AIC-anterior mid cingulate cortex (aMCC) functional connectivity.

Conclusions: Our findings demonstrate amotivation is specifically associated with cognitive effort devaluation in a cost-benefit analysis incorporating loss aversion, incentive learning, temporal discounting, and cognitive effort discounting. Modulation of effort valuation via the AIC-aMCC network suggests a potential treatment target.

1 Introduction

Major depression is characterized by a marked decrease in interest or pleasure in most or all activities. It can lead to motivational deficit, which is associated with functional impairment in patients with major depressive disorder (1). These symptoms of apathy, anhedonia, and amotivation are related to and can arise from impairment in any component of goal-directed behavior, including learning of rewards, approach-related behaviors, and willingness to exert effort to obtain rewards (2). In particular, motivation facilitates overcoming the cost of an effortful action to achieve the desired rewarding outcome (3).

Preference to choose approach/avoidance and effortful behavior are implicitly learned through reinforcement. Reward learning, which may involve separate neural pathways for approach and avoidance behavior, occurs in both positive and negative outcomes (4). Reward incentives can also boost attentional effort and conversely, effort can discount choice values in decision-making modulated by dopamine (5–7). Individuals typically place a higher value on effort when it is directed towards avoiding punishment rather than obtaining rewards (8, 9). Thus, intrinsic motivation for loss aversion is greater particularly for effortful goal-directed behavior. Discounting of learned reward values is sensitive to time delay and reward magnitude (10, 11). Additionally, temporal discounting of learned values is less steep for losses than rewards, and the magnitude effect on discounting in rewarding outcomes is absent in monetary loss outcomes (12, 13).

Reward-based decision making involves brain regions that receive dopaminergic projections, including the nucleus accumbens (NAc), caudate, putamen, orbitofrontal cortex, anterior insula (AIC), and anterior and posterior cingulate cortex (14). In particular, the NAc and AIC are involved in evaluating effort. The NAc is more active when making rewarding choices requiring less physical effort, while the AIC is associated with devaluing effortful options (15, 16). Moreover, variability in dopamine responses in the NAc is associated with willingness to exert effort for larger, low-probability rewards, whereas such variability in the insula is negatively correlated with willingness to exert effort for rewards (7).

A meta-analysis of behavioral data using a reinforcement learning framework revealed that anhedonia and major depressive disorder were associated with diminished reward sensitivity but not impaired learning (17). Considering that effort enhances both reward and loss sensitivity to outcomes (18) and can devalue choice options in decision-making (6, 7), effort expenditure may be a critical factor in the reinforcement learning processes of depressed individuals. Indeed, studies of effort-based decision-making have demonstrated that individuals with major depressive disorder are less inclined to exert effort for rewards (19, 20). Moreover, another study indicated that these patients exhibit reduced effort in both reward acquisition and loss avoidance, despite intact anticipation of negative outcomes (9). Consequently, the neurocognitive underpinnings of anhedonia and amotivation in depression encompass effort cost valuation. However, the extent to which different dimensions of effort cost, such as exertion time and magnitude, are differentially impacted in depression remains unclear.

To examine the implicit neural processing of cost-benefit analysis, we developed and conducted a monetary incentive n-back task and employed resting-state fMRI. We manipulated cognitive load, incentive valence, incentive magnitude and task length to investigate the influence of integrated valuation on cognitive performance and associated brain networks. Integrating reward and effort-based valuation models, we aimed to identify the computational mechanisms and the neural network underlying cost-benefit valuation, and to identify the components associated with amotivation. We hypothesized that cognitive effort discounting is linked to amotivation and explored the relationship between model-derived parameters and functional connectivity within the NAc and AIC, key valuation processing hubs.

2 Materials and methods

2.1 Participants and procedure

Forty-nine participants consented to the procedures approved by the Institutional Review Board of International St. Mary’s Hospital. Participants aged 19 to 65 years were included in the study. Exclusion criteria encompassed a history of major psychiatric disorders, neurological disorders, acute or severe physical illnesses requiring hospitalization or surgery, alcohol or substance abuse disorders, or neurodevelopmental disorders. In addition, individuals who had taken any CNS drugs within the past 2 weeks were excluded. We screened for potential alcohol use disorder using the Alcohol Use Disorder Identification Test (AUDIT), with a score greater than 15 indicating harmful alcohol consumption (21). Self-rating scales, including the Beck Depression Inventory – II (BDI) (22, 23) and the Apathy Evaluation Scale (AES) (24, 25), were used to measure the severity of depressive symptoms and amotivation in all participants. All participants performed a monetary incentive N-back task on a laptop computer and then underwent a brain scan including structural and functional Magnetic Resonance Imaging (MRI).

2.2 Monetary incentive N-back task

We modified a prior task (9) by incorporating an n-back task to examine the implicit effects of incentive valence, incentive magnitude, and costs of temporal delay and cognitive effort on cognitive performance.

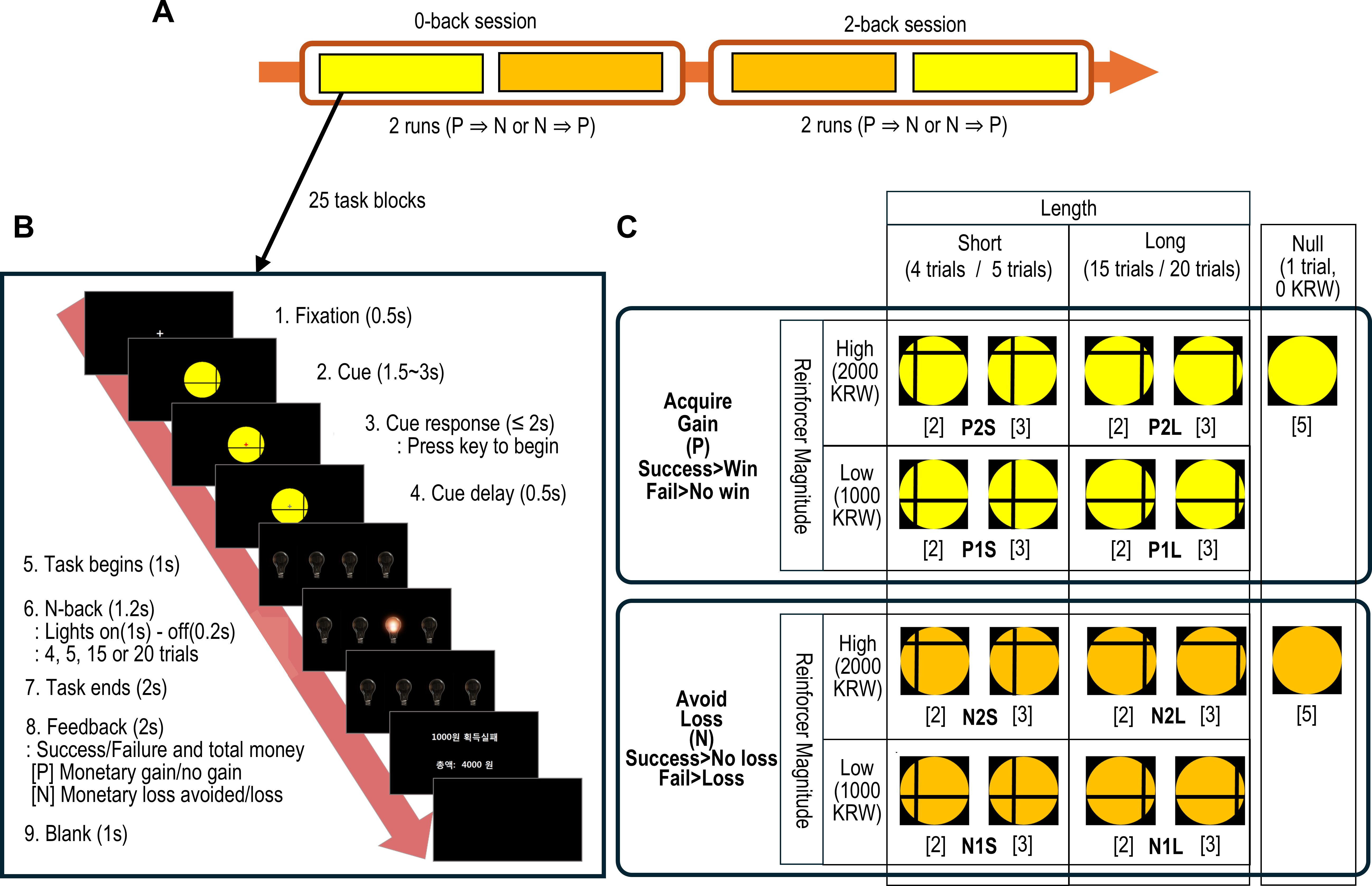

The task was conducted in two sessions, in the order of 0-back and 2-back. Each session comprised one positive and one negative incentive run, and their order was counterbalanced across participants. In positive incentive runs, participants earned monetary rewards for successful completions and received no reward for unsuccessful ones. Conversely, in negative incentive runs, they avoided monetary losses for successful completions and incurred losses for unsuccessful ones (Figure 1A).

Figure 1. The schematic description of the monetary incentive N-back task. (A) The task was performed sequentially from a 0-back to a 2-back session. Each session consisted of a reward (positive incentive) and a loss (negative incentive) run in a pseudo-randomly counterbalanced order across participants. (B) A single task block consisted of a cue presentation, the n-back trials and performance feedback. (C) The cues indicated the combination of task block conditions defined by 2 incentive valences (i.e., reward and loss), 2 incentive magnitudes as monetary value in South Korean Won (KRW) (i.e., high and low), and 2 task lengths (i.e., short and long). Additionally, null conditions were denoted by 2 cues. The number of blocks per cue is specified in brackets. Each condition comprised 5 n-back task blocks.

The task began with the presentation of a cue that indicated the condition for the current task block. Participants then pressed a start key to initiate the trial. Subsequently, four light bulbs were displayed, one of which illuminated randomly and consecutively. Participants were required to press a key corresponding to the currently illuminated bulb for the first session (0-back), or the bulb lit two sequences prior for the second session (2-back). Following the completion of 4 to 20 trials in each n-back block, feedback of money earned/loss avoided after successful performance, or no money earned/money lost after failed performance was presented (Figure 1B).

The n-back blocks varied in task length (i.e., short or long trials) and incentive magnitude (i.e., high or low). A single task run comprised a randomized sequence of 20 n-back blocks (each with a fixed incentive type and n-back task) and 5 null blocks (each consisting of a single trial without any contingencies) (Figure 1C).

Participants received instructions on n-back task procedures and were informed that the cue figure indicated the length of the task block and the magnitude of potential monetary earnings or losses avoided for successful completion. However, the specific task lengths and contingencies assigned to each cue were not explained. Participants were informed of the task load (i.e., 0-back or 2-back) at the beginning of each session. A practice session was administered to ensure participants comprehended and correctly executed the task.

After completing the task, all participants filled out a cue assessment questionnaire to ascertain the formation of subjective cue values. The post-task cue assessment questionnaire had three sections: subjective cue value assessment, cue preference within task incentive valences, and cue preference between task incentive valences. In the subjective cue value assessment, participants rated the value of individual cues using a scale of 0 to 3,000 Korean Won, in 500 Won increments. They were explicitly instructed to assign these values subjectively, independent of the actual task values. For cue preference within incentive valences, participants ranked each of the 12 cue triplets from 1 to 3. Each triplet set had identical incentive valence and included one null cue and all possible incentive cue pairs. Cue preference between incentive valences was assessed by participants selecting a preferred cue from comparisons of reward and loss task cue pairs (16 pairs total).

2.3 Image data acquisition

The MRI data were obtained using a SIEMENS 3.0T scanner (MAGNETOM Skyra, SIEMENS, Germany). High-resolution T1-weighted images were acquired using a 3D magnetization prepared rapid acquisition gradient echo (MP-RAGE) sequence with the following parameters: field of view = 256 mm, voxel size = 1.0 × 1.0 × 1.0 mm³, TR = 2300 ms, TE = 2.19 ms, flip angle = 9°. Then functional MRI (fMRI) data were acquired using a T2*-weighted single-shot echo-planar imaging (EPI) sequence with the following parameters: field of view = 195 mm, voxel size = 2.5 × 2.5 × 4.0 mm³, number of slices = 34, TR = 2000 ms, TE = 30 ms, flip angle = 90°. Participants were instructed to keep their eyes fixed on a projected crosshair and not think about anything, while being scanned for 6 min 46 s.

2.4 Behavioral task data analysis

To differentiate the within-task block effects of incentive magnitude and task length, mean accuracy rates were calculated in two distinct ways. First, eight mean accuracy rates were computed for each combination of N-back load, incentive valence, and incentive magnitude, disregarding task length. Second, mean accuracy rates for each combination of N-back load, incentive valence, and task length were calculated, disregarding incentive magnitude.

Since the distribution of the n-back task accuracy rates was skewed due to ceiling effects, we conducted non-parametric tests. For within-group comparisons of task performance between conditions, Wilcoxon signed-rank tests were applied separately for the mean accuracy rates by incentive magnitude and task length. The relationships of the accuracy rates with the BDI and AES scores were examined using Spearman’s rank correlation tests. A post-hoc analysis excluding participants with a BDI score greater than 13 (i.e., mild to severe depression) was conducted to clarify the conditional effects on performance unconfounded by depression. Results were considered statistically significant at Bonferroni-corrected P < 0.05 (i.e., P × 8 for Wilcoxon tests; P × 16 for Spearman tests). Statistical tests of accuracy were performed using R Statistical Software (v4.3.1; R Foundation for Statistical Computing, 2023).
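The Bonferroni adjustment described above (multiplying each raw P value by the number of tests in the family) can be illustrated with a minimal Python sketch. The function name and the example P values are hypothetical and are not study data; the study itself used R.

```python
def bonferroni(p_values, n_tests):
    """Multiply each raw P value by the number of tests and cap the result at 1."""
    return [min(1.0, p * n_tests) for p in p_values]

# Hypothetical raw P values for illustration only (not results from the study).
raw_p = [0.001, 0.004, 0.02, 0.2]

# The text uses a family of 8 Wilcoxon tests (P x 8) and 16 Spearman tests (P x 16).
adjusted = bonferroni(raw_p, n_tests=8)
significant = [p < 0.05 for p in adjusted]
```

With a family of 8 tests, a raw P value must fall below 0.05 / 8 = 0.00625 to remain significant after correction.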

2.5 The post-task cue assessment data analyses

The cue preference rank score was defined as the average of each cue’s rank-reversed scores derived from the within-incentive-valence cue preference data. Scores ranged from 1 to 3, with higher scores indicating a greater preference for the cue. For the between-incentive-valence cue preference data, relative cue preference was defined as the ratio of preferring reward cues over loss cues. We calculated six distinct relative cue preference ratios, each corresponding to specific combinations of incentive magnitude and task length. These included the relative preference for completely matched and mismatched reward over loss (N→P [C], N→P [IC]), low reward over high loss and high reward over low loss regardless of task length (N2→P1, N1→P2), and short reward over long loss tasks and long reward over short loss tasks regardless of incentive size (NL→PS, NS→PL).
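The two preference measures can be sketched in Python as follows. This is only an illustration: it assumes that rank 1 denotes the most preferred cue in a triplet (so the rank-reversed score is 4 minus the rank) and that the relative preference "ratio" is the proportion of reward-versus-loss pairs in which the reward cue was chosen. All function names and example responses are hypothetical.

```python
def rank_reversed_score(rank, n_items=3):
    """Reverse a rank so that the most preferred cue (rank 1) gets the highest score."""
    return n_items + 1 - rank

def cue_preference_rank_score(ranks):
    """Average the rank-reversed scores one cue received across its triplets."""
    scores = [rank_reversed_score(r) for r in ranks]
    return sum(scores) / len(scores)

def relative_cue_preference(choices):
    """Proportion of reward-versus-loss pairs in which the reward cue was chosen."""
    return sum(1 for c in choices if c == "reward") / len(choices)

# Hypothetical responses for illustration only (not study data).
ranks_for_one_cue = [1, 2, 1, 3]
pair_choices = ["reward", "reward", "loss", "reward"]

rank_score = cue_preference_rank_score(ranks_for_one_cue)
preference = relative_cue_preference(pair_choices)
```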

Wilcoxon one-sample tests were performed on the subjective cue values to compare their median to the actual cue values. For the cue preference rank scores, Wilcoxon signed-rank tests were conducted to compare reward or loss cue scores of either matched incentive magnitude or task length. The significance of the relative cue preference ratio was assessed using Wilcoxon one-sample tests. Additionally, Wilcoxon signed-rank tests were used to investigate the effects of incentive magnitude and/or task length by comparing matched to other unmatched relative cue preference ratios. Wilcoxon signed-rank tests were considered statistically significant at Bonferroni-corrected P < 0.05 (i.e. P × 2 for cue preference rank scores; P × 5 for relative cue preference).

2.6 Computational model

We assumed that the intra-individual variability in n-back task performance is modulated by the task’s anticipated value. This value is derived from a cost-benefit analysis that incorporates incentive valence and magnitude, task duration, and cognitive load. To capture these conditional components within a unified value function, we integrated three distinct models: the loss aversion model, to account for the different impact of monetary gains versus avoided losses; the temporal difference learning model, for learning the reward magnitude and temporal delay associated with each cue; and the effort discounting model, which accounted for cognitive burden. Lastly, the softmax function was used to model performance as determined by cognitive capacity.

Based on prospect theory, outcomes of “gains” and “losses” have different value functions (26, 27). When x is the amount of reward, the value of the reward is determined by rho (ρ), the degree of risk aversion, and lambda (λ), the loss aversion factor, as follows.
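A commonly used form of the prospect theory value function is given here as a reference sketch. It is the standard textbook parameterization and is assumed, not confirmed, to match the form used in this study.

```latex
V_a(x) =
\begin{cases}
x^{\rho}, & x \ge 0 \\
-\lambda \, (-x)^{\rho}, & x < 0
\end{cases}
```

Under this form, with ρ = 1 the value reduces to x for gains and λx for losses, so a loss weighs λ times as much as a gain of the same size.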

We used the hyperbolic function for modeling cognitive effort (28). At time t, the value is obtained from the reward amount (x), the discounting factor for cognitive effort (γ), and the cognitive load (c). The value of c was 0 for the 0-back session and 2 for the 2-back session. The γ values ranged between 0 and 1.
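A generic hyperbolic discounting form consistent with this description is sketched below. The exact expression used by the authors is not shown in the text, so this form is an assumption.

```latex
V = \frac{x}{1 + \gamma c}
```

Under this form, the 0-back session (c = 0) leaves the value undiscounted (V = x), while the 2-back session (c = 2) gives V = x / (1 + 2γ). For example, γ = 0.5 would halve the value and γ = 1 would reduce it to one third.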

Then we assumed ρ = 1 for simplicity and combined the reward and effort value functions by substituting the x in the cognitive effort model with the reward value function, Va(x). Thus, the trial cue stimulus value at n-back turn t (i.e., V(St)) is formulated as follows.

For positive incentive (i.e., x ≥ 0),

For negative incentive (i.e., x < 0),
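The substitution described above can be sketched in Python under stated assumptions: ρ is fixed at 1, losses are coded as negative amounts and scaled by the loss aversion factor, and the result is divided by a hyperbolic effort term 1 + γc. The function name, the sign convention, and the example numbers are illustrative assumptions, not the authors' code.

```python
def cue_value(x, lam, gamma, c):
    """Effort-discounted incentive value with rho fixed at 1 (assumed form).

    x     : signed incentive amount (positive = reward, negative = loss)
    lam   : loss aversion factor applied to negative incentives
    gamma : cognitive effort discounting factor, between 0 and 1
    c     : cognitive load (0 for 0-back, 2 for 2-back)
    """
    subjective = x if x >= 0 else lam * x   # prospect-theory value with rho = 1
    return subjective / (1.0 + gamma * c)   # hyperbolic effort discounting

# Hypothetical parameter values for illustration only.
reward_2back = cue_value(2000, lam=2.0, gamma=0.5, c=2)
loss_2back = cue_value(-2000, lam=2.0, gamma=0.5, c=2)
reward_0back = cue_value(1000, lam=2.0, gamma=0.5, c=0)
```

In this sketch a larger γ shrinks the magnitude of both reward and loss values in the 2-back session while leaving 0-back values unchanged.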

The cue stimulus value is further updated by reward received, learning rate (α), and temporal discounting factor (κ) by the temporal difference learning model (29). The α and κ values ranged between 0 and 1.
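A textbook temporal difference, TD(0), update is sketched below to illustrate how a learning rate and a temporal discounting factor enter the value update. The exact update rule used in the study is not shown in the text, so the form, the function names, and the assignment of κ1 to positive and κ2 to negative incentive trials are assumptions.

```python
def td_update(v_current, v_next, reward, alpha, kappa):
    """One TD(0) step: move the current value toward reward + discounted next value."""
    prediction_error = reward + kappa * v_next - v_current
    return v_current + alpha * prediction_error

def td_update_two_kappa(v_current, v_next, reward, alpha,
                        kappa1, kappa2, positive_incentive):
    """Variant with separate discounting factors by incentive valence.

    Which factor belongs to which valence is assumed here for illustration.
    """
    kappa = kappa1 if positive_incentive else kappa2
    return td_update(v_current, v_next, reward, alpha, kappa)

# Hypothetical values for illustration only.
v_after_reward = td_update(0.0, 0.0, reward=1.0, alpha=0.5, kappa=0.9)
v_bootstrapped = td_update(0.5, 1.0, reward=0.0, alpha=0.5, kappa=0.9)
v_loss_trial = td_update_two_kappa(0.0, 1.0, 0.0, 0.5, 0.9, 0.5,
                                   positive_incentive=False)
```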

Given prior reports of the differential impairments in reward and punishment reinforcement and the reported abnormalities in delay discounting in depression, we constructed two distinct learning models (30, 31): one incorporating dual learning rate factors (α1 and α2) and another featuring dual temporal discounting factors (κ1 and κ2).

We assumed that the probability of correct response was determined by the cognitive capacity (β) and the cue stimulus value before receiving reward. The softmax function was modified to incorporate 4 response choices so that random response would result in a probability of 25% (i.e., when β = 0) as follows.
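One way to obtain a 25% chance level with four response keys is a softmax in which the correct key carries the cue value and the three incorrect keys carry a value of zero. The sketch below uses that form as an assumption; the authors' exact modification is not shown in the text, and the function name is hypothetical.

```python
import math

def p_correct(value, beta, n_choices=4):
    """Softmax probability of the correct key, assuming the other keys have value 0."""
    z = beta * value
    if z >= 0:
        # Algebraically equal form that avoids overflow for large positive z.
        return 1.0 / (1.0 + (n_choices - 1) * math.exp(-z))
    e = math.exp(z)
    return e / (e + (n_choices - 1))

chance = p_correct(value=1.0, beta=0.0)    # beta = 0 gives random responding
better = p_correct(value=1.0, beta=2.0)    # higher capacity raises accuracy
```

With β = 0 the expression reduces to 1 / (1 + 3) = 0.25, which matches the chance level stated above.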

2.7 Model fitting and comparison

Two models were compared: the dual learning rate model (2-LR) and the dual temporal discounting model (2-TD), in which separate learning rates or separate temporal discounting rates, respectively, were assigned for positive and negative incentive trials (Supplementary Methods S1, code for the 2-TD model; Supplementary Methods S2, code for the 2-LR model).

We estimated the model parameters, identified individually responsible models, and performed a group-level model comparison using the hierarchical Bayesian inference (HBI; https://payampiray.github.io/cbm) (32). First, each model was fit to individual subject data using Laplace approximation. Then HBI was conducted for parameter estimation and to generate model frequency and protected exceedance probability. The estimate of how much each model is expressed across the group (i.e., model frequency) and the probability that each model is the most likely across the group while considering differences between model frequency arising by chance (i.e., protected exceedance probability, Ppx) were computed. The model with higher Ppx was selected for model validation.

2.8 Model validation

To validate the task model for clinical amotivation, we performed a multiple linear regression to examine whether the parameters from the winning model (higher Ppx) can predict the dependent variable representing clinical amotivation. First, a Principal Component Analysis (PCA) was conducted to extract the general component scores representing the overlapping clinical construct of amotivation from the BDI and AES scores. Following standardization (centering and scaling) of the BDI and AES scores, PCA was performed on the correlation matrix. The amotivation component was identified if a principal component met Kaiser’s criterion (Eigenvalue > 1) and contributed to a cumulative proportion of variance of at least 80%. For interpretability, only BDI and AES scores with positive loadings on this component were considered.
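For two standardized variables, PCA on the correlation matrix has a closed form: the eigenvalues are 1 + |r| and 1 − |r|, where r is the Pearson correlation, and each loading has magnitude 1/√2 ≈ 0.707. The Python sketch below illustrates this and the selection rule described above. It is not the prcomp-based analysis used in the study, and the data and function names are hypothetical.

```python
import math

def pearson_r(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def pca_two_standardized(a, b):
    """Closed-form PCA of the 2 x 2 correlation matrix [[1, r], [r, 1]]."""
    r = pearson_r(a, b)
    s = 1.0 / math.sqrt(2.0)
    eigenvalues = [1.0 + abs(r), 1.0 - abs(r)]
    # PC1 loads with the same sign on both variables when r is positive.
    pc1 = (s, s) if r >= 0 else (s, -s)
    pc2 = (s, -s) if r >= 0 else (s, s)
    proportions = [ev / 2.0 for ev in eigenvalues]
    return eigenvalues, proportions, (pc1, pc2)

def meets_selection_rule(eigenvalue, proportion):
    """Kaiser's criterion plus the 80% variance threshold described in the text."""
    return eigenvalue > 1.0 and proportion >= 0.80

# Hypothetical scores for illustration only (not study data).
scale_a = [1, 2, 3, 4, 5]
scale_b = [2, 1, 4, 3, 5]
eigenvalues, proportions, loadings = pca_two_standardized(scale_a, scale_b)
selected = meets_selection_rule(eigenvalues[0], proportions[0])
```

In this two-variable case the first component can satisfy both criteria only when the absolute correlation between the two scales is at least 0.6.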

Then, we used the amotivation component scores (ACS) as the dependent variable and the five parameters (excluding beta, the cognitive capacity factor) from the winning model as predictors in an Ordinary Least Squares (OLS) linear regression. The regression equation comprised the ACS of the i-th individual (yi), the intercept (β0), the regression coefficients for the five winning-model parameters (β1 to β5), the values of those parameters for the i-th individual (x1i to x5i), and the error term or residual (εi), as follows.
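Written out from the terms defined above, the regression takes the standard multiple linear regression form. The summation index j is introduced here only for compactness.

```latex
y_i = \beta_0 + \sum_{j=1}^{5} \beta_j \, x_{ji} + \epsilon_i
```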

All model validation procedures were conducted using R Statistical Software (v4.3.1; R Foundation for Statistical Computing, 2023). PCA was performed using the prcomp function, and OLS linear regression was carried out using the lm function. To address potential heteroscedasticity, robust standard errors were calculated using the HC3 estimator, implemented via the coeftest function (from the lmtest package) in conjunction with the vcovHC function (from the sandwich package).

2.9 Image data analysis

Preprocessing and functional connectivity analysis of the fMRI data were conducted using the CONN toolbox (conn v.21.a, http://www.nitrc.org/projects/conn, RRID: SCR: 006394) and SPM12 (RRID: SCR:007037) procedures. Realignment and unwarp procedures were conducted in which all scans were coregistered and resampled to the first scan as a reference image to adjust head motion and match the deformation field. Next, slice-timing differences were corrected, and outlier scans were identified. Functional and anatomical data were normalized into standard MNI space and segmented into grey matter, white matter, and CSF tissue classes. Lastly, functional data was spatially smoothed by convolution with a 6 mm full-width-at-half-maximum Gaussian kernel.

In the denoising step, an anatomical component-based noise correction method (aCompCor) was implemented to regress out noise components from the white matter and cerebrospinal fluid areas, estimated subject-motion parameters, identified outlier scans and quality assurance metrics. Then despiking was conducted to remove artificially high signals, and a temporal band-pass filter at 0.001~0.1 Hz was applied to focus specifically on slow-frequency fluctuations. Subsequently, seed-to-whole brain (seed-to-voxel) correlation maps were computed using seeds in the anterior insular cortex (AIC) selected from the salience network defined by CONN’s independent component analyses of the Human Connectome Project dataset. Additionally, the nucleus accumbens (NAc), defined by the Harvard-Oxford atlas and generated using FSLeyes (https://git.fmrib.ox.ac.uk/fsl/fsleyes/fsleyes), was also used as a seed in the first-level analyses.

In the 2nd-level analyses, regression analyses with each model parameter as covariate across all subjects were conducted examining the seed-to-voxel functional connectivity arising from the four seeds in the bilateral AIC and the NAc. First, F-tests were conducted to find any effects among the four seeds of interest. Then, post-hoc T-tests were performed to specify the seed that was the main contributor to the F-test results. Results were considered statistically significant with cluster threshold at False Discovery Rate-corrected P (FDR-P) < 0.05 and voxel threshold at uncorrected-P < 0.001 using random field theory parametric statistics.

3 Results

Thirty-one females and twelve males with a mean age of 37.0 years (range 21~65, SD = 15.4) completed the study. The participants’ depression severity by BDI scores ranged from minimal to severe (0~33) with a mean score of 10.6 (SD = 9.3), and 12 participants (27.9%) were mildly to severely depressed (BDI > 13). The mean AES score was 34.9 (SD = 6.26), and only two participants (4.7%) had an AES score greater than the Korean cutoff value of 45.2 (Table 1).

3.1 Task accuracy

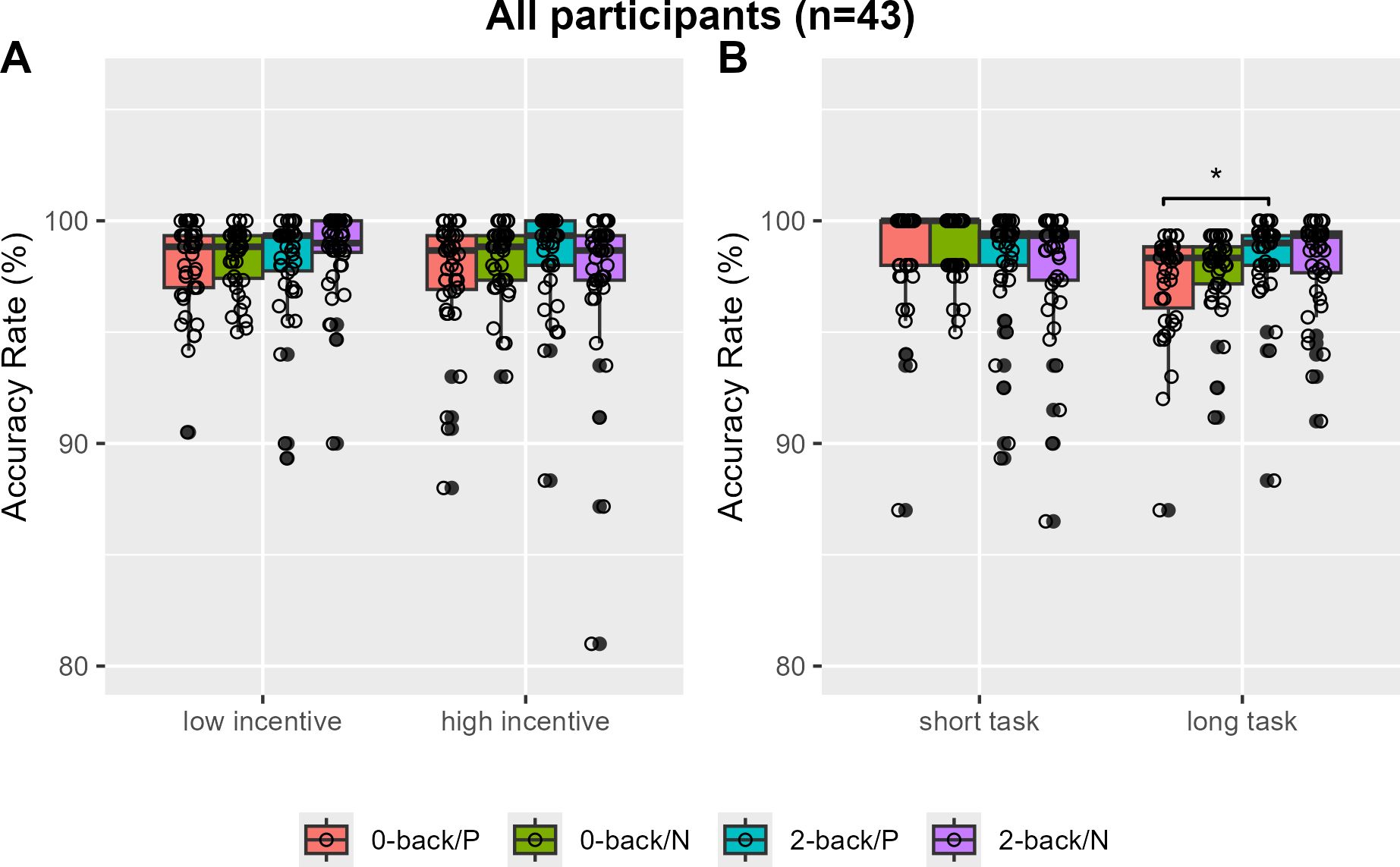

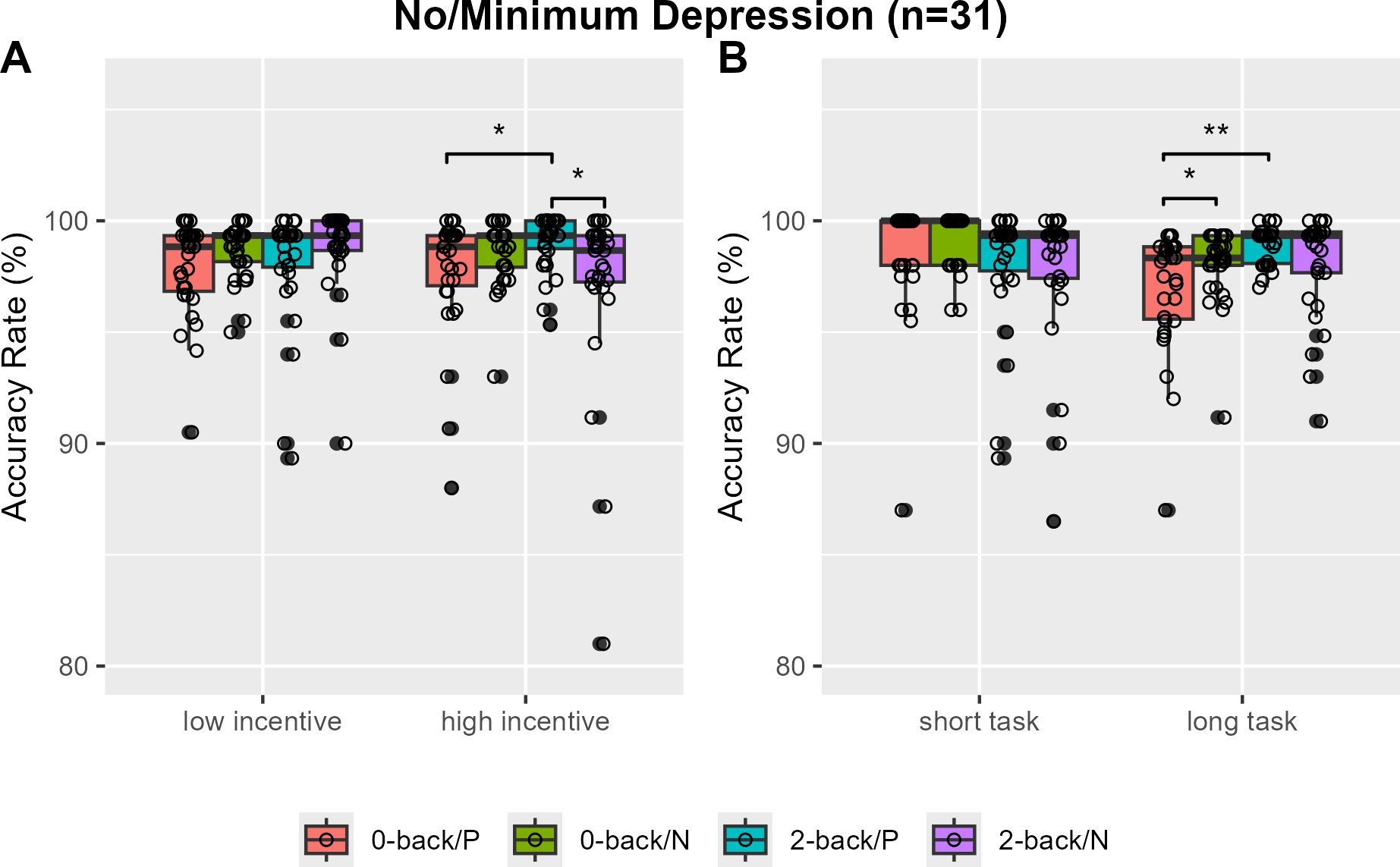

The 2-back task showed a significantly higher overall accuracy rate compared to the 0-back task, with median rates of 0.983 (IQR = 0.020) and 0.985 (IQR = 0.022), respectively (V=175, P=0.0016). Specifically, no accuracy rate differences by N-back load or incentive valence were found in low and high incentive trials (Figure 2A). However, greater 2-back than 0-back task accuracy rates were observed only with positively reinforced longer trials (V=202, Bonferroni-corrected P=0.0250, Cohen’s d=-0.357) (Figure 2B). When analysis was restricted to individuals with no or minimal depression (n=31), the accuracy rates of the 2-back task were greater than those of the 0-back task in positive incentive trials with higher reward or longer trials (V=56, Bonferroni-corrected P=0.0115, Cohen’s d=-0.572; V=43, Bonferroni-corrected P=0.0008, Cohen’s d=-0.761) (Figures 3A, B). Furthermore, accuracy was higher in 2-back trials featuring positive incentives of higher magnitude, in contrast to trials involving negative incentives (V=349.5, Bonferroni-corrected P=0.0353, Cohen’s d=0.481). Conversely, accuracy was lower in long 0-back trials under conditions of positive incentives compared to negative incentives (V=72.5, Bonferroni-corrected P=0.0424, Cohen’s d=-0.522) (Figures 3A, B).

Figure 2. Accuracy rates of the monetary incentive n-back task in all participants. Accuracy rates are compared by N-back and incentive valence in low and high magnitude conditions (A) and in short and long task length conditions (B). P, positive incentive (reward); N, negative incentive (loss); Bonferroni-corrected *P <0.05.

Figure 3. Accuracy rates of the monetary incentive N-back task in individuals with no or minimal depression. Accuracy rates are compared by N-back and incentive valence in low and high magnitude conditions (A) and in short and long task block length conditions (B). P, positive incentive (reward); N, negative incentive (loss); Bonferroni-corrected *P <0.05, **P<0.001.

BDI scores were significantly associated with accuracy rates in the 2-back task involving positive incentives of high magnitude, showing a moderate effect size (Figure 4A). Conversely, AES scores correlated with accuracy rates in the 0-back task with negative incentives of high magnitude, showing a moderate effect size (Figure 4B). AUDIT scores did not correlate with any of the task accuracy rates.

![Scatterplots labeled A and B. Plot A shows the relationship between sqrt(BDI) and sqrt(1 - Accuracy[2-back/P/high]), with a positive trend. Plot B shows AES against sqrt(1 - Accuracy[0-back/N/high]), also with a positive trend. Both include a trend line with shaded confidence intervals.](https://www.frontiersin.org/files/Articles/1581802/fpsyt-16-1581802-HTML/image_m/fpsyt-16-1581802-g004.jpg)

Figure 4. Scatter plots with the trend fitted lines (i.e. grey solid lines) using locally estimated scatterplot smoothing showing the association of the accuracy of the 2-back task with the Beck Depression Inventory (BDI) and Apathy Evaluation Scale (AES) scores. The transformed (i.e. square root, sqrt) accuracy values of the 2-back task blocks with high reward (positive incentive) magnitudes (A), and high loss (negative incentive) magnitude (B) are presented for better visualization. (A) Spearman’s rho = -0.460, Bonferroni-corrected P = 0.031; (B) Spearman’s rho = -0.457, Bonferroni-corrected P = 0.033.

3.2 Post-task cue valuation and preference

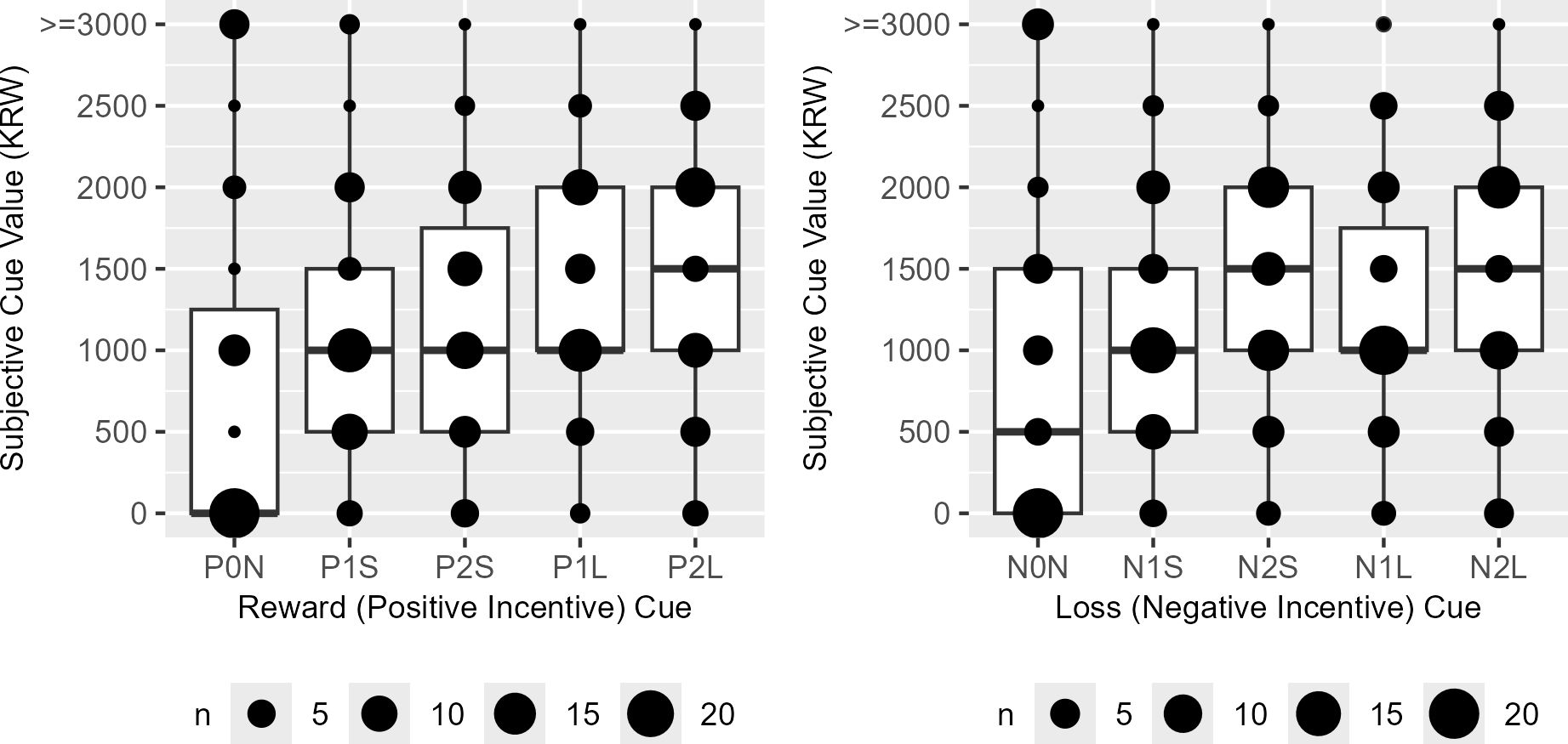

The subjective cue values exhibited a distribution symmetric around their actual incentive magnitude only when reward and loss cues were associated with short trials and low incentive magnitudes (P1S, V = 207, P = 0.421; N1S, V = 246, P = 0.165); in all other conditions, a significant difference in distribution was observed (P0N, V = 190, P < 0.001; P2S, V = 30, P < 0.001; P1L, V = 313, P = 0.003; P2L, V = 49, P < 0.001; N0N, V = 276, P < 0.001; N2S, V = 26, P < 0.001; N1L, V = 222, P = 0.038; N2L, V = 44, P < 0.001) (Figure 5).

Figure 5. The boxplots of the reward (P) and loss (N) cue subjective values in Korean Won (KRW). P0N/N0N, null cues; P1S/N1S/P1L/N1L, 1000 KRW incentive cues; P2S/N2S/P2L/N2L, 2000 KRW incentive cues; P1S/N1S/P2S/N2S, short task cues; P1L/N1L/P2L/N2L, long task cues.

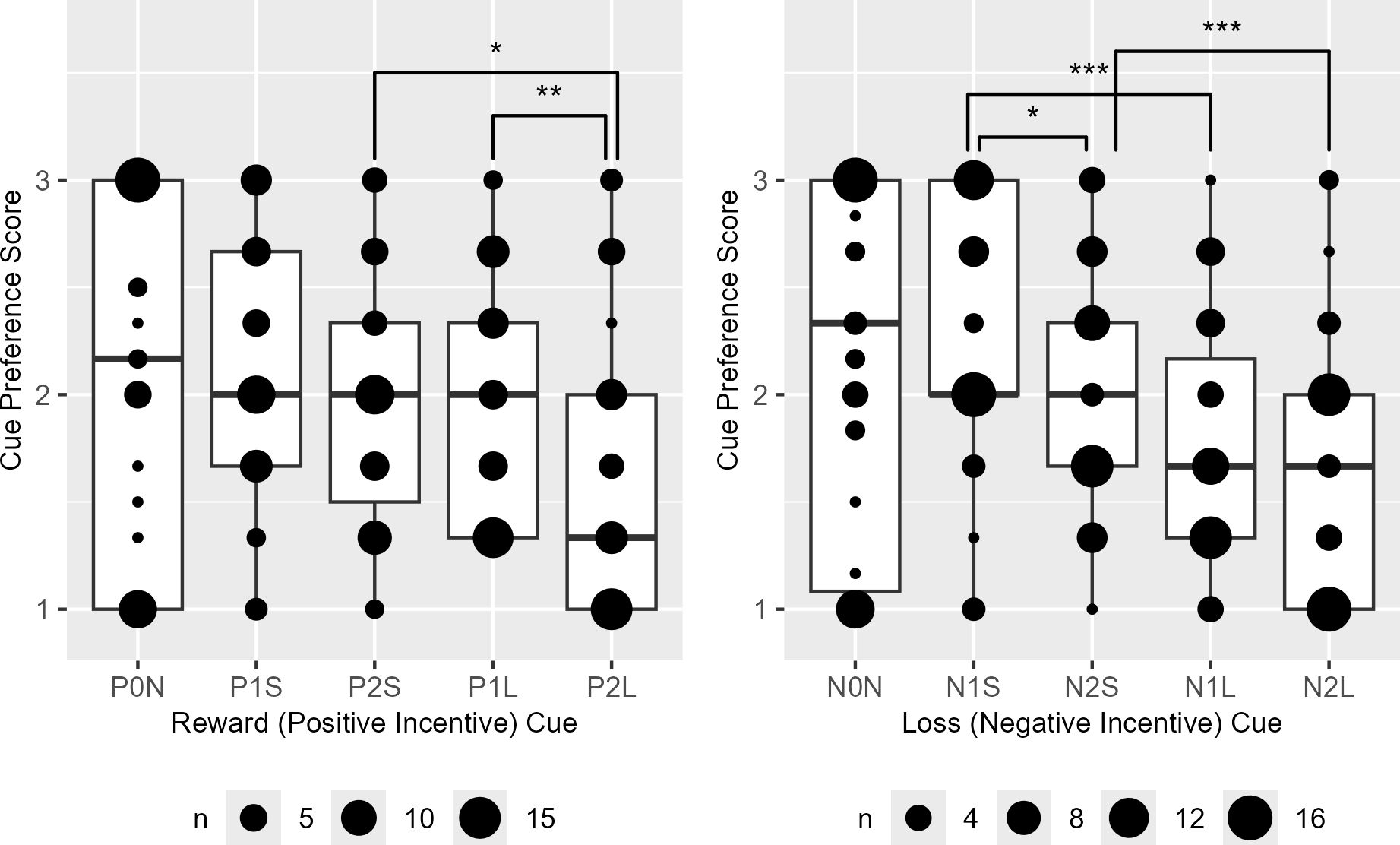

Among the reward cues, preference scores were significantly higher for cues associated with shorter trials delivering high rewards (P2S > P2L) and long trials offering lower rewards (P1L > P2L). Conversely, for loss incentive cues, higher preference scores were observed for cues representing short trials with lower losses (N1S > N2S), shorter trials incurring low losses (N1S > N1L), and shorter trials with high losses (N2S > N2L) (Figure 6).

Figure 6. The boxplots of the reward (P) and loss (N) cue preference rank scores. P0N/N0N, null cues; P1S/N1S/P1L/N1L, 1000 KRW incentive cues; P2S/N2S/P2L/N2L, 2000 KRW incentive cues; P1S/N1S/P2S/N2S, short task cues; P1L/N1L/P2L/N2L, long task cues. Significant differences were observed in P1L > P2L (V = 604), P2S > P2L (V = 511), N1S > N2S (V = 631), N1S > N1L (V = 782) and N2S > N2L (V = 708). Bonferroni-corrected P < *0.05, **0.01, ***0.001.

Overall cues associated with reward incentives were significantly preferred over those with loss incentives regardless of incentive magnitudes and task lengths with the exception when the task was shorter in loss cues than in reward cues (N→P [C], V = 629, P < 0.001; N→P [IC], V = 280, P = 0.001; N2→P1, V = 609, P < 0.001; N1→P2, V = 464, P = 0.034; NL→PS, V = 693, P < 0.001; NS→PL, V = 402, P = 0.437). The distribution of reward-over-loss cue preference became significantly less skewed toward reward when incentive magnitudes and task lengths were mismatched, when incentive magnitude was lower in loss than reward cues and when task lengths were shorter in loss than reward cues (Figure 7).

![Box plot showing relative cue preference with a scale from zero to one on the x-axis. Six conditions, N->P[C], N->P[IC], N2->P1, N1->P2, NL->PS, and NS->PL, are plotted along the y-axis. Circle sizes indicate sample sizes, ranging from five to twenty. Significant differences are marked with asterisks on the right side.](https://www.frontiersin.org/files/Articles/1581802/fpsyt-16-1581802-HTML/image_m/fpsyt-16-1581802-g007.jpg)

Figure 7. The boxplots of relative cue preference for reward (P) over loss (N) cues. [C]/[IC], compatible/incompatible cues with matched/mismatched incentive magnitudes and task lengths; P1/N1, 1000 Korean Won; P2/N2, 2000 Korean Won; PL/NL, long trials; PS/NS, short trials. Significant differences from N→P[C] were observed in N→P[IC] (V = 189), N1→P2 (V = 219), and NS→PL (V = 288). Bonferroni-corrected P < *0.05, **0.001.

3.3 Model comparison

The two-temporal discounting (2-TD) model was the most likely model across the group as compared to the two-learning rate (2-LR) model (Ppx = 1 for the 2-TD model and Ppx = 3.387 × 10⁻¹² for the 2-LR model; model frequency 97.67% in 2-TD and 2.33% in 2-LR). The estimated responsibility and the log evidence of each model generated in each individual dataset are shown in Supplementary Table 1. The median values of the parameter estimates from the two-temporal discounting model are presented in Supplementary Table 2.

3.4 Model validation: OLS linear regression for amotivation component score

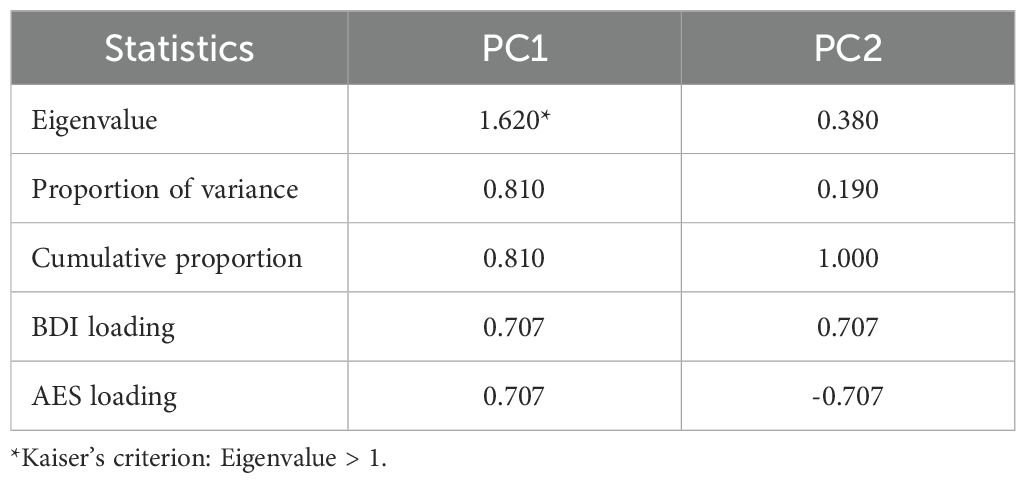

The PCA revealed two principal components (PC). PC1 accounted for 81.0% of the total variance with an Eigenvalue greater than 1, while PC2 accounted for 19.0% of the total variance. Together, these two components explained 100% of the total variance in the BDI and AES scores. PC1 showed strong positive loading from both BDI (0.707) and AES (0.707) suggesting that this component represents a general construct related to both depression and apathy severity. PC2, on the other hand, showed a positive loading for BDI and negative loading for AES, indicating a discordant component. Therefore, PC1 was identified as the amotivation component score (ACS) for the regression analysis (Table 2).

Table 2. Results of the principal component (PC) analysis for Beck Depression Inventory (BDI) and Apathy Evaluation Scale (AES) scores.

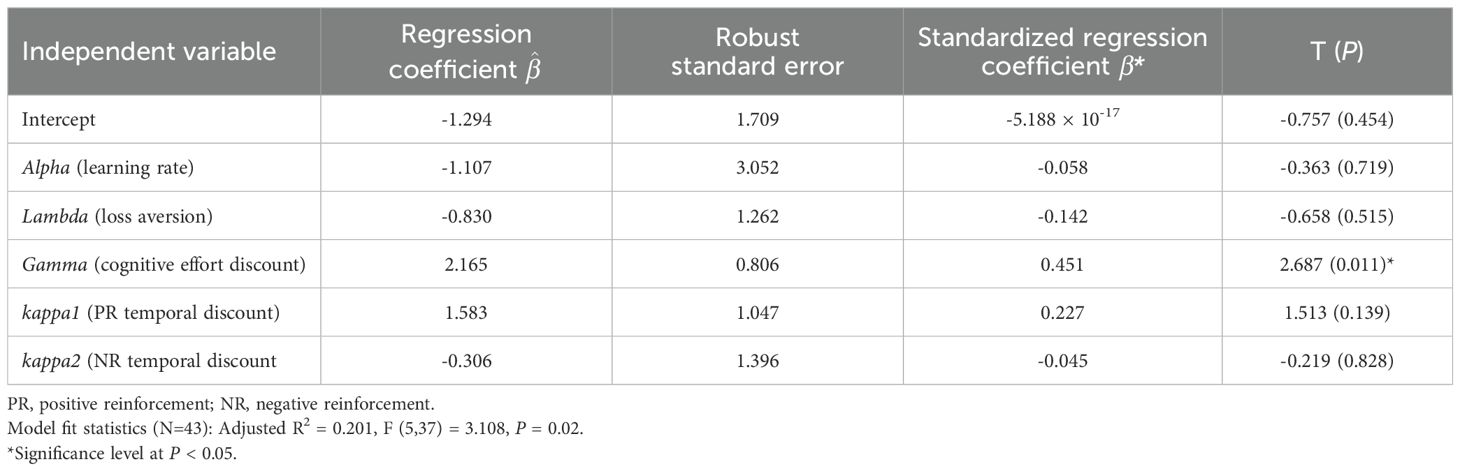
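The equal loadings of 0.707 are what PCA of any two standardized scores produces: the 2 × 2 correlation matrix has eigenvectors (1, 1)/√2 and (1, −1)/√2 with eigenvalues 1 + r and 1 − r. The NumPy sketch below illustrates this. It assumes the PCA was run on the correlation matrix, and the value r = 0.62 is not reported in the text; it is simply the correlation implied by PC1 explaining 81.0% of the variance, since (1 + r)/2 = 0.81.

```python
import numpy as np

def pca_two_standardized(r):
    """PCA of two standardized variables with Pearson correlation r."""
    corr = np.array([[1.0, r], [r, 1.0]])
    eigvals, eigvecs = np.linalg.eigh(corr)      # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]            # reorder so PC1 comes first
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # Flip signs so the first variable loads positively on every component.
    eigvecs = eigvecs * np.sign(eigvecs[0, :])
    explained = eigvals / eigvals.sum()
    return eigvals, eigvecs, explained

# r = 0.62 is an implied (assumed) BDI-AES correlation, not a reported value.
eigvals, loadings, explained = pca_two_standardized(0.62)
print("eigenvalues:", eigvals)
print("loadings (rows: BDI, AES; columns: PC1, PC2):")
print(loadings)
print("explained variance ratio:", explained)
```

Under these assumptions PC1 has an eigenvalue of 1.62 and PC2 an eigenvalue of 0.38, in line with only PC1 exceeding 1.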

The full regression model was statistically significant (F(5,37) = 3.108, P < 0.05), indicating that the alpha, lambda, gamma, kappa1, and kappa2 parameters collectively explained a significant portion of the variance in the ACS. Moreover, 20% of the variance in the ACS could be accounted for by the five independent variables in the model (adjusted R² = 0.201). Among the five independent variables, only gamma (cognitive effort discounting factor) emerged as a statistically significant predictor of the ACS: a one-standard-deviation increase in gamma was associated with a 0.45 standard deviation increase in the ACS, holding other variables constant (β* = 0.451, robust SE = 0.806, T = 2.685, P < 0.05) (Table 3).

Table 3. Ordinary least squares linear regression results for the principal component score of the Beck Depression Inventory and Apathy Evaluation Scale.
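As a consistency check on the reported fit statistics, R² can be recovered from the overall F statistic and then adjusted for the number of predictors. The Python sketch below uses the classical OLS identities with the reported F(5,37) = 3.108 and n = 43. It assumes the reported F is the standard (non-robust) overall F test; if a robust Wald statistic was used instead, the identity holds only approximately. The function names are illustrative.

```python
def r2_from_f(f_stat, df_model, df_resid):
    """Recover R^2 from the overall F statistic of an OLS model."""
    return (f_stat * df_model) / (f_stat * df_model + df_resid)

def adjusted_r2(r2, n_obs, n_predictors):
    """Adjusted R^2 penalizes R^2 for the number of predictors."""
    return 1.0 - (1.0 - r2) * (n_obs - 1) / (n_obs - n_predictors - 1)

# Reported values: F(5, 37) = 3.108 with 43 participants and 5 predictors.
r2 = r2_from_f(3.108, df_model=5, df_resid=37)
adj = adjusted_r2(r2, n_obs=43, n_predictors=5)
print(round(r2, 3), round(adj, 3))
```

Under that assumption the recovered adjusted R² rounds to 0.201, matching the reported value.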

3.5 Functional connectivity

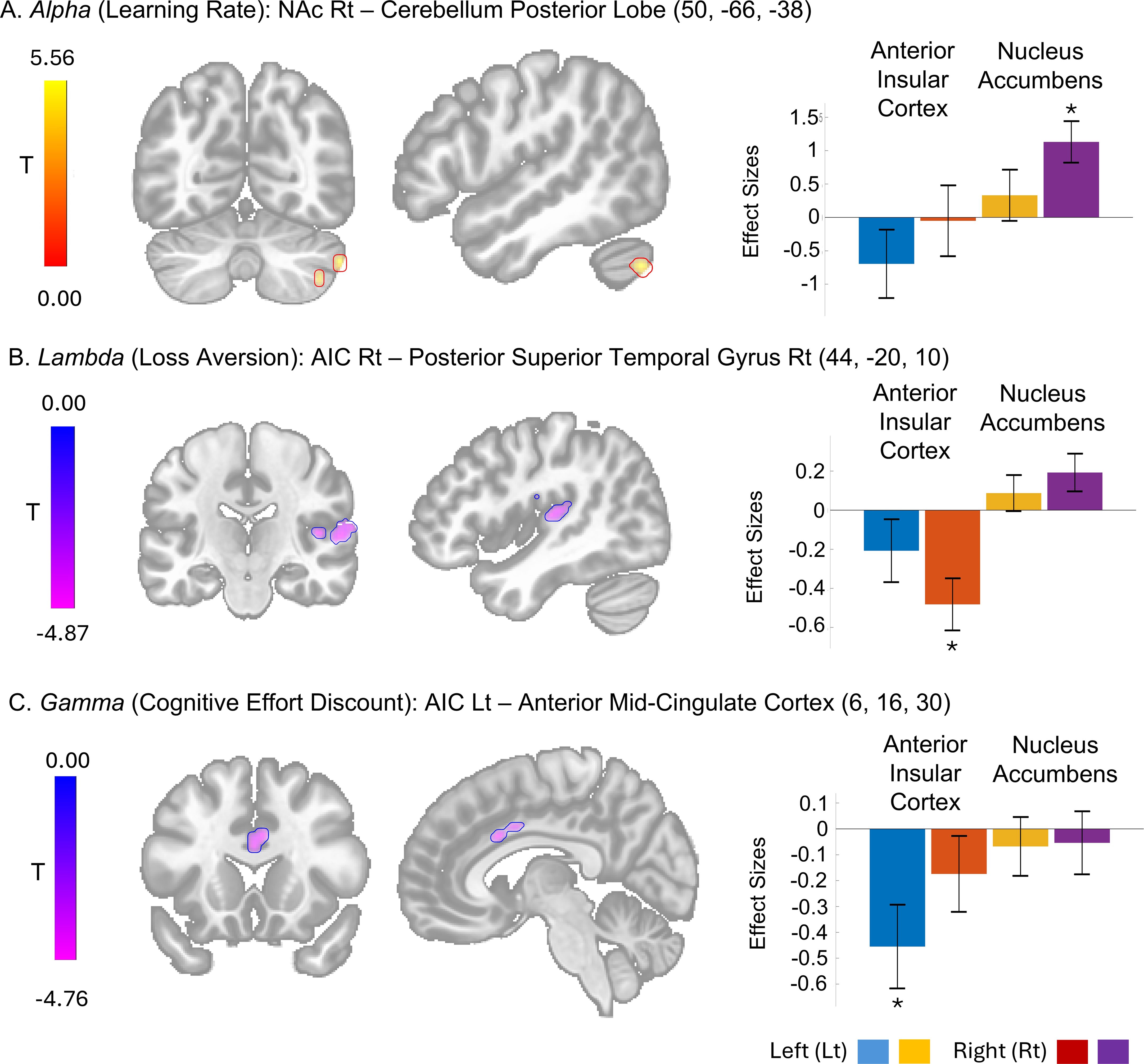

Multivariate analyses (F-tests) revealed that the seed-to-voxel functional connectivity originating from the AIC and NAc covaried with the alpha, lambda, and gamma parameters. Specifically, the learning factor (alpha) covaried with functional connectivity to the posterior lobe of the cerebellum and the cuneal cortex extending to the occipital pole. The loss aversion factor (lambda) covaried with functional connectivity to the right posterior superior temporal gyrus, the juxtapositional lobule cortex (or supplementary motor area), the central opercular cortex, and the precentral gyrus. Furthermore, the cognitive effort discounting factor (gamma) covaried with functional connectivity to the anterior mid-cingulate cortex. In contrast, the cognitive capacity (beta) and temporal discounting factors (kappa1 and kappa2) did not exhibit any significant effects (Table 4).

The post-hoc t-tests revealed that the learning rate factor (alpha) positively correlated with functional connectivity between the right NAc and the posterior lobe of the cerebellum (Figure 8A). In contrast, the loss aversion factor (lambda) negatively covaried with functional connectivity between the right AIC and the right posterior superior temporal gyrus (Figure 8B). The cognitive effort discounting factor (gamma) also showed a negative correlation with functional connectivity between the left AIC and the anterior mid-cingulate cortex (aMCC) (Figure 8C).

Figure 8. Seed-to-voxel functional connectivity with model parameter effects and their effect sizes. Clusters with significant effects in the T-tests for specific seeds of interest, including the bilateral anterior insular cortex (AIC) and nucleus accumbens (NAc), at cluster-level False Discovery Rate-corrected P < 0.05 and voxel-level uncorrected P < 0.001 are shown. The effect sizes, expressed as Fisher-transformed correlation coefficients, and their error bars representing 90% confidence intervals are presented. *Bar graphs representing statistically significant effect sizes [(A) size = 131, T(41) = 6.12; (B) size = 272, T(41) = -6.08; (C) size = 97, T(41) = -4.73].
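To illustrate how a Fisher-transformed connectivity value can be related to a model parameter across individuals, the following Python sketch uses synthetic time series. It is a simplified, single-target stand-in for a seed-to-voxel analysis and not the pipeline used in this study; the function name, the simulated coupling, and the parameter values are all hypothetical.

```python
import numpy as np

def fisher_z_connectivity(seed_ts, target_ts):
    """Pearson correlation between two time series, Fisher z-transformed."""
    r = np.corrcoef(seed_ts, target_ts)[0, 1]
    return np.arctanh(r)

rng = np.random.default_rng(0)
n_subjects, n_timepoints = 43, 200

# Hypothetical standardized parameter estimates (e.g., an effort discounting factor).
param = rng.normal(size=n_subjects)

z_values = []
for p in param:
    seed = rng.normal(size=n_timepoints)
    noise = rng.normal(size=n_timepoints)
    # Synthetic assumption: coupling weakens as the parameter increases.
    coupling = 0.4 - 0.2 * p
    target = coupling * seed + noise
    z_values.append(fisher_z_connectivity(seed, target))
z_values = np.array(z_values)

# Group-level association between the parameter and connectivity strength.
group_r = np.corrcoef(param, z_values)[0, 1]
print("correlation between parameter and Fisher z connectivity:", group_r)
```

The Fisher transformation is applied before group-level statistics because it makes the sampling distribution of correlation coefficients approximately normal.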

The AUDIT score did not show a significant effect on the functional connectivity originating from the seeds of interest.

4 Discussion

We initially established the validity of the monetary incentive n-back task in differentiating the interactive effects of incentive valence and magnitude, task length, and cognitive load. Task trials with greater cognitive load exhibited enhanced performance when reinforced with higher positive incentives or during longer tasks. The divergent performance outcomes observed with positive and negative incentives were mediated by the incentive magnitude and the task length. The conditional effects became evident only after individuals with depression were excluded from the behavioral data. Additionally, the formation of subjective cue values through task performance was evident in the varied cue preference across both reward and loss tasks and the reduced reward-over-loss cue preference linked to incentive magnitude and task length. Subsequently, we demonstrated that a computational model incorporating temporal difference learning, loss aversion, cognitive effort discounting and dual temporal discounting factors accounted for the variability in the n-back task performance across conditions of incentive valence, incentive magnitude, task length, and cognitive load. Finally, among the model parameters, the cognitive effort discounting factor accounted for the variance in clinical amotivation severity as hypothesized. Moreover, in partial support of the model’s neural underpinnings, learning rate, loss aversion, and cognitive effort discounting were associated with distinct neural networks originating in the NAc and AIC.

The effort-based decision-making paradigm has been employed to neurobehaviorally operationalize motivation. Previous research examining the influence of reward and effort values on preferences has demonstrated an association between depression and reduced willingness to exert effort for reward, consistent with anticipatory anhedonia (20, 33, 34). Moreover, a recent study suggests that expenditure of effort and reward sensitivity may be independently linked to anhedonia (35). Computational modeling studies have revealed that elevated sensitivity to effort cost is associated with depression across preference, performance, and learning tasks, with individuals with major depressive disorder exhibiting a steeper discounting of rewards in response to cognitive effort (36, 37). While these studies explicitly assessed willingness to exert effort through choice-based paradigms, our investigation examined the implicit impact of reward and effort on cognitive performance, yielding congruent yet extended findings. Specifically, the cognitive effort discounting factor, within the valuation process affecting cognitive performance variability, emerged as the key correlate of amotivation, as opposed to the loss aversion, learning or temporal discounting factors. These results indicate that the detrimental effects of heightened effort devaluation extend beyond decision-making to influence behavioral performance, potentially contributing to the concentration difficulties observed in depression.

The observed functional connectivity patterns associated with loss aversion and learning rate align with previous research findings. The loss aversion-related functional connectivity centered on the AIC corroborates prior studies implicating the AIC in aversive conditioning and decisions to avoid imminent loss (38, 39). Given the established role of the right posterior superior temporal gyrus (pSTG) in serial visual feature search and object identification involving complex goal-directed movement (40, 41), the AIC-right pSTG connectivity likely reflects the process of assigning or identifying aversive value from visual cue features.

Learning rate, a critical parameter in reinforcement learning models, has been extensively linked to prediction error. Previous animal and human studies have demonstrated that learning rate modulates reward prediction error signals originating from midbrain dopaminergic projections to the NAc (42–44). Notably, our findings revealed learning rate-associated NAc connectivity to the cerebellum. Prior clinical and animal research has established the cerebellum’s role in reward-based reversal learning and its influence over reward circuitry via projections to the ventral tegmental area (45, 46). Furthermore, the cerebellum has been implicated in indirectly affecting reinforcement learning by reducing motor noise (47). Considering the cerebellum’s established role in reward learning, we hypothesize that it may regulate the extent to which dopaminergic prediction error signals in the NAc contribute to updating previously acquired value representations.
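For readers less familiar with how a learning rate scales prediction error signals, the Python sketch below shows the generic delta-rule value update used in reinforcement learning. It is a simplified single-state illustration and not the full model fitted in this study, which includes further terms such as loss aversion and discounting; the function names and example rewards are hypothetical.

```python
def td_update(value, reward, alpha):
    """One delta-rule step: move the value toward the reward by a fraction alpha."""
    delta = reward - value            # reward prediction error
    return value + alpha * delta, delta

def learn(rewards, alpha, v0=0.0):
    """Apply the update over a sequence of rewards and return the value trajectory."""
    value = v0
    history = []
    for reward in rewards:
        value, _ = td_update(value, reward, alpha)
        history.append(value)
    return history

# With alpha = 0.5, each step closes half of the remaining gap to the reward.
fast = learn([1.0, 1.0, 1.0], alpha=0.5)
slow = learn([1.0, 1.0, 1.0], alpha=0.1)
print(fast)
print(slow)
```

A larger learning rate lets each prediction error revise the stored value more strongly, which is the sense in which the learning rate governs how much prediction errors update previously acquired value representations.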

Regarding cost valuation, we demonstrated distinct functional neural correlates for cognitive effort discounting in the AIC, while those for temporal discounting were not observed. Previous studies indicate partially separate valuation networks for delay and effort costs, including the ventromedial prefrontal cortex, ventral striatum, posterior cingulate cortex, and lateral parietal cortex for delayed reward valuation, the AIC and anterior cingulate cortex (ACC) for effort valuation, and the right orbitofrontal cortex and lateral temporal and parietal cortices for encoding the value of chosen options related to delay and effort discounting (16, 48). The lack of observed neural correlates for temporal discounting may be due to the limitations of resting-state functional connectivity in capturing the temporal variability required for valuation. Task-activated imaging data might be more suitable for examining the neural correlates of temporal discounting.

Our model-based analysis revealed the role of the left AIC and aMCC network in modulating cognitive effort valuation, a finding that is partially consistent with prior studies. Previous research demonstrated the role of AIC and ACC in decision-making based on effortful reward, distinct from effects of reward delays (16, 49). While prior studies primarily investigated physical effort, Aben et al. (50) established the dorsal ACC’s involvement in processing cognitive effort demands through its connectivity with task-specific cortical regions. The adjacent aMCC is also functionally connected to the AIC, as demonstrated during both resting and task performance, and is associated with cognitive-motor control (51). Moreover, Touroutoglou et al. (52) have suggested the aMCC’s role in motivation by predicting energy needs and guiding behavior toward allostatic energy balance. We observed activity in the aMCC instead of more rostral ACC regions because our task primarily required motor execution and cognitive control and did not elicit conflict or incorporate decision-making. Our results expand on prior findings by linking AIC-aMCC connectivity to cognitive effort discounting and its impact on performance variability. This association reflects the implicit influence of effort valuation in cognitive processing.

Furthermore, the effects of effort discounting on incentive valence, which our results address, have been underexplored. Notably, Hernandez Lallement et al. (18) showed that cost-benefit valuation may engage distinct neural networks for gains and losses. They found that AIC activity is significantly modulated by loss magnitude when effort is involved. Our observations of AIC functional connectivity related to loss aversion and effort discounting are consistent with and extend their findings.

Notably, we observed laterality in the AIC, with the right and left AIC differentially associated with loss aversion and cognitive effort discounting, respectively. Lateralized engagement of the AIC has been observed in different aspects of loss aversion in prior studies. Specifically, right dominance was observed for aversive salience (53, 54), while bilateral or left-lateralized activation was found in learning-related risk prediction errors (55–57). Therefore, our observed association between resting-state functional connectivity in the right AIC and loss aversion is congruent with the AIC’s role in the salience component of loss aversion.

Conversely, the left lateralization of the AIC in cognitive effort discounting observed in our study contrasts with some prior studies reporting right AIC activation related to expected effort cost, effort prediction during probabilistic learning, and the valuation of prospective effort (58, 59). However, this finding may reflect a resting-state network involved in the subjective experience of task demand, consistent with prior studies reporting left AIC activation during self-evaluation of mental effort investment modulated by task demands (60).

Crucially, using model-based analysis, we were able to demonstrate the relationship between baseline AIC-aMCC connectivity and the amotivation-related tendency to discount cognitive effort during a working memory task. These results implicate the AIC-aMCC network as a potential pathophysiological mechanism underlying concentration difficulties in depression. Our findings therefore imply that enhancing baseline salience network function, particularly concerning cognitive effort valuation, could be a promising therapeutic target for depressive disorders.

A key limitation of this study is the overall small sample size. Given the number of parameters included in our computational and regression models, the small sample size could decrease the statistical power of our analyses and increase the potential for overfitting, which might affect the generalizability of our findings. Another limitation is the absence of task-based fMRI data to confirm that the functional connectivity patterns identified by the model-based analyses correspond to the regional brain networks recruited during task performance. Lastly, the inclusion of nine medium-risk drinkers in our sample could introduce confounding effects on the behavioral and neural results. Prior studies report that severe alcohol use can diminish neural capacity in working memory task performance and affect resting-state neural networks (61, 62). However, we did not observe any correlations between AUDIT scores and either task accuracy rates or resting-state functional connectivity within our sample.

In conclusion, we found that among the components of cost-benefit analysis in cognitive performance, devaluation of effort, involving the AIC-aMCC network, may underlie a general mechanism of amotivation. We demonstrated that a computational model of temporal difference learning and value discounting is feasible for examining the implicit behavioral effect of cost-benefit analysis and identifying the subconstruct behind amotivation. Furthermore, our results suggest interventions targeting the salience network to modulate effort valuation as a potential therapy for amotivation. To validate the applicability of this model and to establish the pathophysiological role of these findings in depressive disorders, larger-scale studies are required for cross-validation and comparison between individuals with major depressive disorder and healthy controls.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Institutional Review Board of International St. Mary’s Hospital. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

IP: Conceptualization, Formal analysis, Funding acquisition, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing. CL: Data curation, Formal analysis, Investigation, Project administration, Writing – original draft. KJ: Resources, Supervision, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research and/or publication of this article. This work was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (NRF-2017R1D1A1B04035829).

Acknowledgments

We thank Sohyun Ahn and Dohyung Koo for early data collection and Dr. Terry Lohrenz (Fralin Biomedical Research Institute at VTC, U.S.A.) for advice on the computational modelling methods.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyt.2025.1581802/full#supplementary-material

References

1. Fervaha G, Foussias G, Takeuchi H, Agid O, and Remington G. Motivational deficits in major depressive disorder: Cross-sectional and longitudinal relationships with functional impairment and subjective well-being. Compr Psychiatry. (2016) 66:31–8. doi: 10.1016/j.comppsych.2015.12.004

2. Cooper JA, Arulpragasam AR, and Treadway MT. Anhedonia in depression: biological mechanisms and computational models. Curr Opin Behav Sci. (2018) 22:128–35. doi: 10.1016/j.cobeha.2018.01.024

3. Chong TT, Bonnelle V, and Husain M. Quantifying motivation with effort-based decision-making paradigms in health and disease. Prog Brain Res. (2016) 229:71–100. doi: 10.1016/bs.pbr.2016.05.002

4. Frank MJ, Seeberger LC, and O’Reilly RC. By carrot or by stick: cognitive reinforcement learning in parkinsonism. Science. (2004) 306:1940–3. doi: 10.1126/science.1102941

5. Massar SA, Lim J, Sasmita K, and Chee MW. Rewards boost sustained attention through higher effort: A value-based decision making approach. Biol Psychol. (2016) 120:21–7. doi: 10.1016/j.biopsycho.2016.07.019

6. Randall PA, Pardo M, Nunes EJ, Lopez Cruz L, Vemuri VK, Makriyannis A, et al. Dopaminergic modulation of effort-related choice behavior as assessed by a progressive ratio chow feeding choice task: pharmacological studies and the role of individual differences. PLoS One. (2012) 7:e47934. doi: 10.1371/journal.pone.0047934

7. Treadway MT, Buckholtz JW, Cowan RL, Woodward ND, Li R, Ansari MS, et al. Dopaminergic mechanisms of individual differences in human effort-based decision-making. J Neurosci. (2012) 32:6170–6. doi: 10.1523/JNEUROSCI.6459-11.2012

8. Chen X, Voets S, Jenkinson N, and Galea JM. Dopamine-dependent loss aversion during effort-based decision-making. J Neurosci. (2020) 40:661–70. doi: 10.1523/JNEUROSCI.1760-19.2019

9. Park IH, Lee BC, Kim JJ, Kim JI, and Koo MS. Effort-based reinforcement processing and functional connectivity underlying amotivation in medicated patients with depression and schizophrenia. J Neurosci. (2017) 37:4370–80. doi: 10.1523/JNEUROSCI.2524-16.2017

10. Ballard K and Knutson B. Dissociable neural representations of future reward magnitude and delay during temporal discounting. Neuroimage. (2009) 45:143–50. doi: 10.1016/j.neuroimage.2008.11.004

11. McClure SM, Laibson DI, Loewenstein G, and Cohen JD. Separate neural systems value immediate and delayed monetary rewards. Science. (2004) 306:503–7. doi: 10.1126/science.1100907

12. Green L, Myerson J, Oliveira L, and Chang SE. Discounting of delayed and probabilistic losses over a wide range of amounts. J Exp Anal Behav. (2014) 101:186–200. doi: 10.1002/jeab.56

13. Mitchell SH and Wilson VB. The subjective value of delayed and probabilistic outcomes: Outcome size matters for gains but not for losses. Behav Processes. (2010) 83:36–40. doi: 10.1016/j.beproc.2009.09.003

14. Liu X, Hairston J, Schrier M, and Fan J. Common and distinct networks underlying reward valence and processing stages: a meta-analysis of functional neuroimaging studies. Neurosci Biobehav Rev. (2011) 35:1219–36. doi: 10.1016/j.neubiorev.2010.12.012

15. Kurniawan IT, Seymour B, Talmi D, Yoshida W, Chater N, and Dolan RJ. Choosing to make an effort: the role of striatum in signaling physical effort of a chosen action. J Neurophysiol. (2010) 104:313–21. doi: 10.1152/jn.00027.2010

16. Prévost C, Pessiglione M, Metereau E, Clery-Melin ML, and Dreher JC. Separate valuation subsystems for delay and effort decision costs. J Neurosci. (2010) 30:14080–90. doi: 10.1523/JNEUROSCI.2752-10.2010

17. Huys QJ, Pizzagalli DA, Bogdan R, and Dayan P. Mapping anhedonia onto reinforcement learning: a behavioural meta-analysis. Biol Mood Anxiety Disord. (2013) 3:12. doi: 10.1186/2045-5380-3-12

18. Hernandez Lallement J, Kuss K, Trautner P, Weber B, Falk A, and Fliessbach K. Effort increases sensitivity to reward and loss magnitude in the human brain. Soc Cognit Affect Neurosci. (2014) 9:342–9. doi: 10.1093/scan/nss147

19. Treadway MT, Bossaller NA, Shelton RC, and Zald DH. Effort-based decision-making in major depressive disorder: a translational model of motivational anhedonia. J Abnorm Psychol. (2012) 121:553–8. doi: 10.1037/a0028813

20. Yang XH, Huang J, Zhu CY, Wang YF, Cheung EF, Chan RC, et al. Motivational deficits in effort-based decision making in individuals with subsyndromal depression, first-episode and remitted depression patients. Psychiatry Res. (2014) 220:874–82. doi: 10.1016/j.psychres.2014.08.056

21. Saunders JB, Aasland OG, Babor TF, de la Fuente JR, and Grant M. Development of the alcohol use disorders identification test (AUDIT): WHO collaborative project on early detection of persons with harmful alcohol consumption–II. Addiction. (1993) 88:791–804. doi: 10.1111/j.1360-0443.1993.tb02093.x

22. Lim SY, Lee EJ, Jeong SW, Kim HC, Jeong CH, Jeon TY, et al. The validation study of Beck Depression Scale 2 in Korean Version. Anxiety Mood. (2011) 7:48–53.

23. Beck AT, Steer RA, and Brown GK. Manual for the Beck Depression Inventory-II. San Antonio, TX: Psychological Corporation (1996).

24. Lee YM, Park IH, Koo MS, Ko SY, Kang HM, and Song JE. The reliability and validity of the Korean version of Apathy Evaluation Scale and its application in patients with schizophrenia. Korean J Schizophr Res. (2013) 16:80–5. doi: 10.16946/kjsr.2013.16.2.80

25. Marin RS, Biedrzycki RC, and Firinciogullari S. Reliability and validity of the apathy evaluation scale. Psychiatry Res. (1991) 38:143–62. doi: 10.1016/0165-1781(91)90040-v

26. Sokol-Hessner P and Rutledge RB. The psychological and neural basis of loss aversion. Curr Dir Psychol Sci. (2019) 28:20–7. doi: 10.1177/0963721418806510

27. Tversky A and Kahneman D. Loss aversion in riskless choice: A reference-dependent model. Q J Econ. (1991) 106:1039–61. doi: 10.2307/2937956

28. Chong TT, Apps M, Giehl K, Sillence A, Grima LL, and Husain M. Neurocomputational mechanisms underlying subjective valuation of effort costs. PLoS Biol. (2017) 15:e1002598. doi: 10.1371/journal.pbio.1002598

29. Sutton RS and Barto AG. Reinforcement Learning: An Introduction. Cambridge: The MIT Press (1998).

30. Eshel N and Roiser JP. Reward and punishment processing in depression. Biol Psychiatry. (2010) 68:118–24. doi: 10.1016/j.biopsych.2010.01.027

31. Pulcu E, Trotter PD, Thomas EJ, McFarquhar M, Juhasz G, Sahakian BJ, et al. Temporal discounting in major depressive disorder. Psychol Med. (2014) 44:1825–34. doi: 10.1017/S0033291713002584

32. Piray P, Dezfouli A, Heskes T, Frank MJ, and Daw ND. Hierarchical Bayesian inference for concurrent model fitting and comparison for group studies. PLoS Comput Biol. (2019) 15:e1007043. doi: 10.1371/journal.pcbi.1007043

33. Hershenberg R, Satterthwaite TD, Daldal A, Katchmar N, Moore TM, Kable JW, et al. Diminished effort on a progressive ratio task in both unipolar and bipolar depression. J Affect Disord. (2016) 196:97–100. doi: 10.1016/j.jad.2016.02.003

34. Sherdell L, Waugh CE, and Gotlib IH. Anticipatory pleasure predicts motivation for reward in major depression. J Abnorm Psychol. (2012) 121:51–60. doi: 10.1037/a0024945

35. Slaney C, Perkins AM, Davis R, Penton-Voak I, Munafo MR, Houghton CJ, et al. Objective measures of reward sensitivity and motivation in people with high v. low anhedonia. Psychol Med. (2023) 53:4324–32. doi: 10.1017/S0033291722001052

36. Vinckier F, Jaffre C, Gauthier C, Smajda S, Abdel-Ahad P, Le Bouc R, et al. Elevated effort cost identified by computational modeling as a distinctive feature explaining multiple behaviors in patients with depression. Biol Psychiatry Cognit Neurosci Neuroimaging. (2022) 7:1158–69. doi: 10.1016/j.bpsc.2022.07.011

37. Ang YS, Gelda SE, and Pizzagalli DA. Cognitive effort-based decision-making in major depressive disorder. Psychol Med. (2023) 53:4228–35. doi: 10.1017/S0033291722000964

38. Büchel C, Morris J, Dolan RJ, and Friston KJ. Brain systems mediating aversive conditioning: an event-related fMRI study. Neuron. (1998) 20:947–57. doi: 10.1016/s0896-6273(00)80476-6

39. Fukunaga R, Brown JW, and Bogg T. Decision making in the Balloon Analogue Risk Task (BART): anterior cingulate cortex signals loss aversion but not the infrequency of risky choices. Cognit Affect Behav Neurosci. (2012) 12:479–90. doi: 10.3758/s13415-012-0102-1

40. Ellison A, Schindler I, Pattison LL, and Milner AD. An exploration of the role of the superior temporal gyrus in visual search and spatial perception using TMS. Brain. (2004) 127:2307–15. doi: 10.1093/brain/awh244

41. Schultz J, Imamizu H, Kawato M, and Frith CD. Activation of the human superior temporal gyrus during observation of goal attribution by intentional objects. J Cognit Neurosci. (2004) 16:1695–705. doi: 10.1162/0898929042947874

42. Hart AS, Rutledge RB, Glimcher PW, and Phillips PE. Phasic dopamine release in the rat nucleus accumbens symmetrically encodes a reward prediction error term. J Neurosci. (2014) 34:698–704. doi: 10.1523/JNEUROSCI.2489-13.2014

43. O’Doherty JP, Dayan P, Friston K, Critchley H, and Dolan RJ. Temporal difference models and reward-related learning in the human brain. Neuron. (2003) 38:329–37. doi: 10.1016/s0896-6273(03)00169-7

44. Schultz W, Dayan P, and Montague PR. A neural substrate of prediction and reward. Science. (1997) 275:1593–9. doi: 10.1126/science.275.5306.1593

45. Carta I, Chen CH, Schott AL, Dorizan S, and Khodakhah K. Cerebellar modulation of the reward circuitry and social behavior. Science. (2019) 363:eaav0581. doi: 10.1126/science.aav0581

46. Thoma P, Bellebaum C, Koch B, Schwarz M, and Daum I. The cerebellum is involved in reward-based reversal learning. Cerebellum. (2008) 7:433–43. doi: 10.1007/s12311-008-0046-8

47. Therrien AS, Wolpert DM, and Bastian AJ. Effective reinforcement learning following cerebellar damage requires a balance between exploration and motor noise. Brain. (2016) 139:101–14. doi: 10.1093/brain/awv329

48. Massar SA, Libedinsky C, Weiyan C, Huettel SA, and Chee MW. Separate and overlapping brain areas encode subjective value during delay and effort discounting. Neuroimage. (2015) 120:104–13. doi: 10.1016/j.neuroimage.2015.06.080

49. Arulpragasam AR, Cooper JA, Nuutinen MR, and Treadway MT. Corticoinsular circuits encode subjective value expectation and violation for effortful goal-directed behavior. Proc Natl Acad Sci U.S.A. (2018) 115:E5233–42. doi: 10.1073/pnas.1800444115

50. Aben B, Buc Calderon C, Van den Bussche E, and Verguts T. Cognitive effort modulates connectivity between dorsal anterior cingulate cortex and task-relevant cortical areas. J Neurosci. (2020) 40:3838–48. doi: 10.1523/JNEUROSCI.2948-19.2020

51. Hoffstaedter F, Grefkes C, Caspers S, Roski C, Palomero-Gallagher N, Laird AR, et al. The role of anterior midcingulate cortex in cognitive motor control: evidence from functional connectivity analyses. Hum Brain Mapp. (2014) 35:2741–53. doi: 10.1002/hbm.22363

52. Touroutoglou A, Andreano JM, Adebayo M, Lyons S, and Barrett LF. Motivation in the service of allostasis: the role of anterior mid cingulate cortex. Adv Motiv Sci. (2019) 6:1–25. doi: 10.1016/bs.adms.2018.09.002

53. Liljeholm M, Dunne S, and O’Doherty JP. Anterior insula activity reflects the effects of intentionality on the anticipation of aversive stimulation. J Neurosci. (2014) 34:11339–48. doi: 10.1523/JNEUROSCI.1126-14.2014

54. Simmons A, Strigo I, Matthews SC, Paulus MP, and Stein MB. Anticipation of aversive visual stimuli is associated with increased insula activation in anxiety-prone subjects. Biol Psychiatry. (2006) 60:402–9. doi: 10.1016/j.biopsych.2006.04.038

55. Kim JC, Hellrung L, Nebe S, and Tobler PN. The anterior insula processes a time-resolved subjective risk prediction error. J Neurosci. (2025) 45:e2302242025. doi: 10.1523/JNEUROSCI.2302-24.2025

56. Metereau E and Dreher JC. Cerebral correlates of salient prediction error for different rewards and punishments. Cereb Cortex. (2013) 23:477–87. doi: 10.1093/cercor/bhs037

57. Preuschoff K, Quartz SR, and Bossaerts P. Human insula activation reflects risk prediction errors as well as risk. J Neurosci. (2008) 28:2745–52. doi: 10.1523/JNEUROSCI.4286-07.2008

58. Aridan N, Malecek NJ, Poldrack RA, and Schonberg T. Neural correlates of effort-based valuation with prospective choices. Neuroimage. (2019) 185:446–54. doi: 10.1016/j.neuroimage.2018.10.051

59. Skvortsova V, Palminteri S, and Pessiglione M. Learning to minimize efforts versus maximizing rewards: computational principles and neural correlates. J Neurosci. (2014) 34:15621–30. doi: 10.1523/JNEUROSCI.1350-14.2014

60. Otto T, Zijlstra FR, and Goebel R. Neural correlates of mental effort evaluation–involvement of structures related to self-awareness. Soc Cognit Affect Neurosci. (2014) 9:307–15. doi: 10.1093/scan/nss136

61. Fede SJ, Grodin EN, Dean SF, Diazgranados N, and Momenan R. Resting state connectivity best predicts alcohol use severity in moderate to heavy alcohol users. NeuroImage Clin. (2019) 22:101782. doi: 10.1016/j.nicl.2019.101782

Keywords: depression, motivation, apathy, reward valuation, working memory, insular cortex, anterior cingulate cortex, effort discounting

Citation: Park IH, Lee CE and Jhung K (2025) Cognitive effort devaluation and the salience network: a computational model of amotivation in depression. Front. Psychiatry 16:1581802. doi: 10.3389/fpsyt.2025.1581802

Received: 23 February 2025; Accepted: 29 July 2025; Published: 01 September 2025.

Edited by:

Claire Marie Rangon, Hôpital Raymond-Poincaré, France

Reviewed by:

Laith Alexander, King’s College London, United Kingdom

Michele Bertocci, University of Pittsburgh, United States

Copyright © 2025 Park, Lee and Jhung. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Il Ho Park, eihpark@gmail.com
