- ¹Institute of Biomaterials and Biomedical Engineering, University of Toronto, Toronto, ON, Canada

- ²Autism Research Centre, Bloorview Research Institute, Holland Bloorview Kids Rehabilitation Hospital, Toronto, ON, Canada

Affecting 1 in 68 children, autism spectrum disorder (ASD) is a complex neurodevelopmental disorder characterized by social skill impairments. While prognosis can be significantly improved with intervention, few evidence-based interventions exist for social skill deficits in ASD. Existing interventions are resource-intensive, their outcomes vary widely across individuals, and they often do not generalize to new contexts. Technology-aided intervention is a motivating, low-cost, and versatile approach for social skills training in ASD. Although early studies support the feasibility of technology-aided intervention, existing approaches have been criticized for teaching social skills through human-to-computer interaction, paradoxically leading to increased social isolation. To address this gap, we propose Holli, a wearable technology that helps guide human-to-human interaction by serving as a social skills coach for children with ASD. The Google Glass-based application listens to conversations and prompts the user with appropriate social responses. In this paper, we describe a usability study we conducted to determine the feasibility of using wearable technology to prompt children with ASD throughout social conversations. Fifteen children with ASD (mean age = 12.92 ± 2.33 years, verbal intelligence quotient = 103.3 ± 18.73) used the application while engaging in a restaurant-themed interaction with a research assistant. The application was evaluated on its effectiveness (i.e., how accurately the application responds), efficiency (i.e., how quickly the user and the application respond), and user satisfaction (based on a post-session questionnaire). All users were able to successfully complete the 10-turn exchange while using Holli. The results indicated that Holli accurately detected and recognized user utterances in real time. Participants reported positive experiences of using the application.
To the best of our knowledge, this system is the first technology-aided intervention for ASD that employs human-to-human social coaching, and our results demonstrate the device is a viable medium for treatment delivery.

Introduction

Autism spectrum disorder (ASD) is a complex neurodevelopmental disorder characterized by impairments in social communication and the presence of restricted and repetitive behaviors. Affecting 1 in 68 children (Developmental Disabilities Monitoring Network Surveillance Year 2010 Principal Investigators, 2014), ASD is a lifelong condition that profoundly impacts individuals, families, and society at large. A defining and debilitating feature of ASD is difficulty interpreting social information and participating in social interactions. Social impairment in ASD has been associated with negative outcomes such as unemployment, low rates of independent living, and increased risk of psychiatric disorders (e.g., anxiety and depression) (Howlin et al., 2013).

Various behavioral interventions for social skills have been proposed, including video modeling (D’Ateno et al., 2003), social stories (Gray, 2010), social skills training groups (Cappadocia and Weiss, 2011), cognitive behavioral therapy (Bauminger, 2007), and school-based training (Bellini et al., 2007). Further research on these social intervention strategies is still needed to ensure that training promotes generalization and peer acceptance (Autism Ontario, 2011). Many of these interventions are expensive and difficult to implement because of the human resources and training they require. This issue is exacerbated by the fact that effective learning and generalization of skills require rehearsal and repetition over periods of weeks or months. The resource needs of these interventions therefore significantly limit access to treatment, as evidenced by service wait times that range from months to years (Autism Ontario, 2011). Some of these barriers can be reduced by using technology for treatment delivery.

Technology is a motivating learning medium for children with ASD (Williams et al., 2002; Hourcade et al., 2011), and several studies have shown that children’s attention, communication, and social skills improve when computers or tablets are used (Bernard-Opitz et al., 2001; Gal et al., 2009; Burke et al., 2013; Murdock et al., 2013). Moreover, most children with ASD show an affinity for computers (Moore, 1998) and a preference for touchscreens (Hourcade et al., 2013), which supports the potential of technology as an effective and cost-efficient teaching tool for social skills. Specifically, the shift toward multi-touch tablet and mobile-based interventions as instructional and assistive tools offers children with ASD a socially acceptable means to experience a level of safety and control that may not be attained when interacting with other individuals (Konstantinidis et al., 2009). This approach to teaching new skills permits the development of a broad range of accessible applications for skill learning, behavior management, and facilitation of social communication (Bernard-Opitz et al., 2001; Williams et al., 2002; Gal et al., 2009; Hourcade et al., 2011; Burke et al., 2013).

Accordingly, the idea of applying technology to assist and teach children with ASD has been around for over 40 years (Colby, 1973), and there have been many studies that demonstrate its effectiveness (Weiss et al., 2011; Hourcade et al., 2013; Ploog et al., 2013). Various types of technology applications have been developed for children with ASD, including interactive robots (Zheng et al., 2013; Bekele et al., 2014), virtual characters (Bosseler and Massaro, 2003; Tartaro and Cassell, 2006; Hopkins et al., 2011), video games (Gotsis et al., 2010), and virtual reality (Ke and Im, 2013).

Technology-aided intervention may be used to reduce some of the barriers limiting access to social skills intervention. In particular, technology-aided intervention can provide a highly motivating medium for rehearsing skills in a safe, controlled, and self-paced manner (Parsons et al., 2000), allow treatment programs to be implemented with high precision, high fidelity, and less variability, and reduce the cost of intervention and other accessibility barriers (Ploog et al., 2013). Despite this promise, technology-aided intervention in ASD is an emerging field (Ploog et al., 2013). An important criticism of existing technologies is that they teach skills through human–computer interaction, which may paradoxically increase social isolation and hinder the generalization of skills. To address this gap, we propose Holli, a wearable technology that helps guide human-to-human interaction by serving as a social skills coach for children with ASD.

Holli is currently implemented on the Google Glass platform (Google Inc., 2016). Feasibility of the Google Glass in clinical applications has been demonstrated in several studies: as an assistive tool for doctors in surgery (Muensterer et al., 2014), as a job interview guide for individuals with ASD (Xu et al., 2015), and to support dietary management for individuals with diabetes (Wall et al., 2014).

In this paper, we describe a usability study we conducted to determine the feasibility of using the Google Glass to prompt children with ASD throughout social conversations. Usability describes the ease of use of a particular device and how well it matches a user’s specific needs. This study is the first step in our system’s development, ensuring that it meets users’ needs and is an acceptable medium for technology-based social skills coaching for children with ASD.

Materials and Methods

System Development

Google Glass

The Google Glass is a head-mounted display designed in the shape of eyeglasses, with lightweight titanium frames, as seen in Figure 1. It can be worn as a standalone frame or mounted on top of prescription glasses. The Glass was chosen as the platform for our application because it includes a microphone and a display while allowing the user to look at his/her conversation partner. The Glass has a touchpad on the side of the device that is used to navigate the device’s user interface. In addition to the touchpad, the device can be controlled through its microphone and speech recognition capabilities. It also has a front-facing camera that can take photos and record 720p HD video, and it uses a transparent display over the user’s right eye to overlay images onto the user’s field of view. At the time of the study, the cost of Holli was $1,500. When using Holli, the battery can last several hours.

Holli

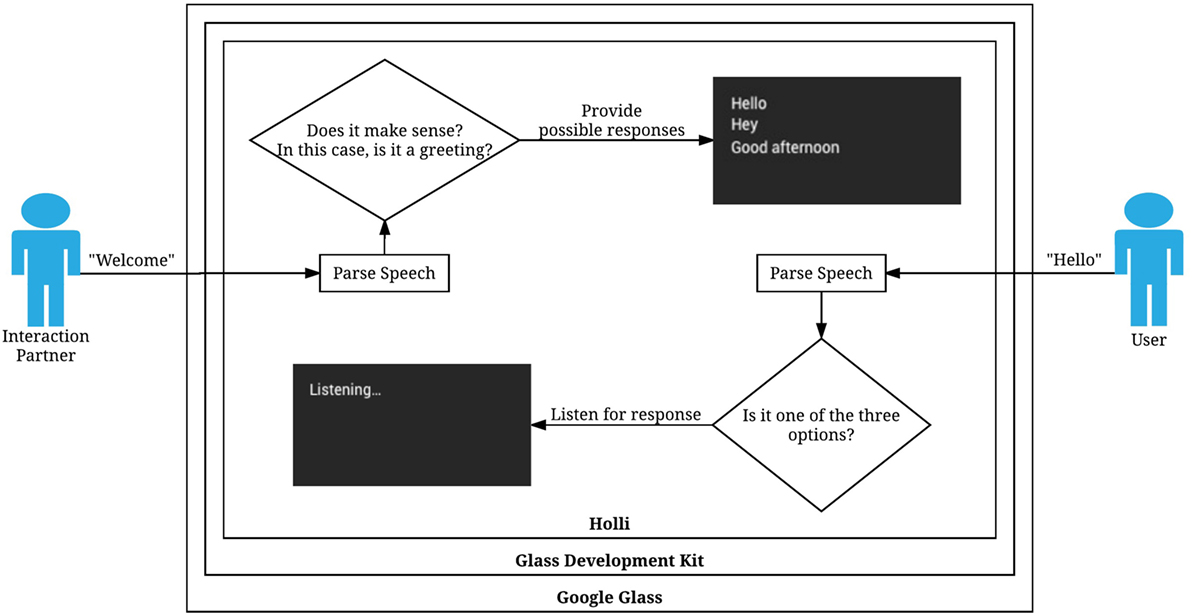

Holli is a software application, developed using the Glass Development Kit, an open source software package, that runs directly on the Google Glass. The speech of both the user and the interaction partner is captured through the on-board microphone, as seen in Figure 2. The Google speech recognition engine transcribes the spoken words into text. We developed a rule-based system that processes the transcribed text and generates appropriate responses, which are then displayed to the user through the head-mounted display. Figures 3 and 4 are screenshots taken from Holli that show what the user sees in each phase. In this example, the screen displays “Listening…” until the interaction partner says a greeting, such as “Welcome.” Holli then provides various greetings for the user to choose from, as seen in Figure 4. When Holli recognizes that the user has spoken one of the responses, the prompts disappear and Holli begins to listen for the next exchange in the conversation. This process can be seen in Figure 5.
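The prompt cycle described above (listen, match the partner’s words to a rule, display candidate responses, clear them once the user says one) can be sketched as a small state machine. This is a minimal illustration under stated assumptions, not Holli’s actual rule engine: the `Rule` and `PromptEngine` names, the keyword-trigger matching, and the example phrases are all hypothetical, and the input is assumed to arrive already transcribed by the speech recognizer.

```python
from dataclasses import dataclass, field


def normalize(text: str) -> str:
    """Lowercase and strip simple punctuation so transcripts and prompts compare cleanly."""
    return " ".join(w.strip(".,!?").lower() for w in text.split())


@dataclass
class Rule:
    """Hypothetical rule: trigger words in the partner's speech map to candidate prompts."""
    triggers: set[str]
    prompts: list[str]


@dataclass
class PromptEngine:
    """Hypothetical keyword-driven prompt loop; not the published Holli implementation."""
    rules: list[Rule]
    active_prompts: list[str] = field(default_factory=list)

    def on_partner_speech(self, text: str) -> list[str]:
        """Show the prompts of the first rule whose trigger words occur in the transcript."""
        words = set(normalize(text).split())
        for rule in self.rules:
            if rule.triggers & words:
                self.active_prompts = list(rule.prompts)
                return self.active_prompts
        return []  # no rule matched: the display keeps showing "Listening..."

    def on_user_speech(self, text: str) -> bool:
        """Clear the prompts if the user said one of them; True means the turn is complete."""
        spoken = normalize(text)
        if any(spoken == normalize(p) for p in self.active_prompts):
            self.active_prompts = []
            return True
        return False


engine = PromptEngine([
    Rule({"welcome", "hello"}, ["Hi!", "Hello, thank you.", "Good evening."]),
    Rule({"order"}, ["I would like a pizza, please.", "Can I see the menu?"]),
])
print(engine.on_partner_speech("Welcome to the restaurant!"))
print(engine.on_user_speech("hello thank you"))  # True: matches "Hello, thank you."
```

Exact matching after normalization is deliberately strict in this sketch; a deployed system would need some tolerance for partial or disfluent matches.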

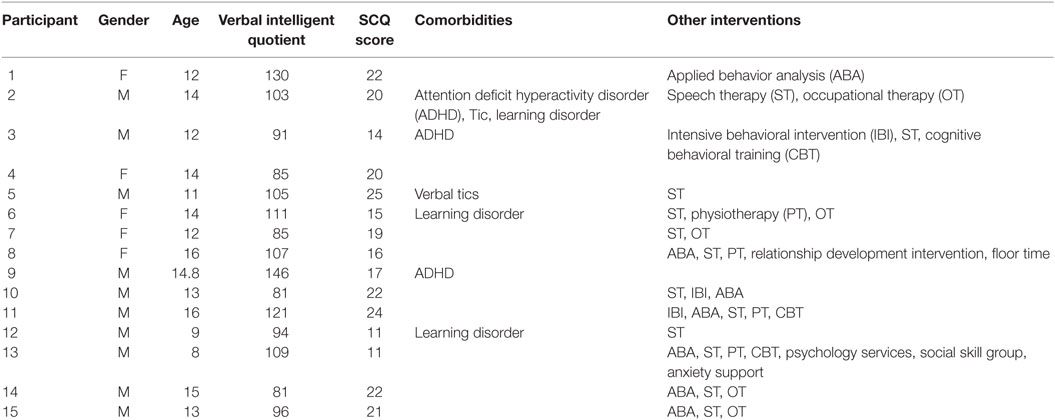

Participants

A convenience sample of 15 children (male = 10) with ASD was recruited for this study. This sample size is sufficient to reveal at least 90% of usability problems (Faulkner, 2003). Our inclusion criteria were a clinical diagnosis of ASD, age between 8 and 16 years, verbal intelligence quotient (IQ) greater than or equal to 80, and the ability to read without glasses.
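Faulkner (2003) arrived at the 90% figure empirically, by sampling subsets of participants. A common analytic way to reason about the same question, swapped in here purely for illustration, is the cumulative problem-discovery model 1 − (1 − p)^n, where p is the probability that one participant encounters a given problem and n is the number of participants. The p values below are assumptions, not estimates from this study, and the model assumes every problem is equally easy to find, which real samples rarely satisfy.

```python
def expected_discovery(p: float, n: int) -> float:
    """Expected fraction of problems found by n independent participants,
    assuming each problem is encountered by any one participant with probability p."""
    if not 0.0 <= p <= 1.0 or n < 0:
        raise ValueError("p must be in [0, 1] and n must be non-negative")
    return 1.0 - (1.0 - p) ** n


# Illustrative (assumed) per-participant detection probabilities.
for p in (0.1, 0.2, 0.31):
    print(f"p={p:.2f}: 5 users -> {expected_discovery(p, 5):.0%}, "
          f"15 users -> {expected_discovery(p, 15):.0%}")
```

Under this model, 15 participants reach the 90% threshold only when p is roughly 0.14 or higher; with p = 0.2, the expected share rises from about 67% with 5 participants to about 96% with 15.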

Participants were recruited through the Autism Research Centre at Holland Bloorview Kids Rehabilitation Hospital. All participants were diagnosed by an expert clinical team using DSM-IV criteria, supported by the Autism Diagnostic Observation Schedule (Lord et al., 2000). Intellectual functioning was assessed using the Wechsler Abbreviated Scale of Intelligence (first or second edition). ASD symptom severity and anxiety were measured using the Social Communication Questionnaire (SCQ). Other information collected on the participants included age, gender, comorbidities, and other interventions received.

The Bloorview Research Institute research ethics board approved the study. Written consent was obtained from all participants who were deemed to have the capacity for consent. For all other participants, assent and written consent were obtained from the children and their legal guardians, respectively.

Outcome Measures

This study investigates the usability of our design based on the ISO 9241-11 guidelines on usability evaluation. This standard recommends the use of measures of effectiveness, efficiency, and user satisfaction (Abran et al., 2003).

Effectiveness

This measure evaluates the system’s ability to accurately detect and recognize the participants’ exchanges. To this end, we used two effectiveness measures, namely detection accuracy and recognition accuracy. Detection accuracy, as defined below in Eq. 1, measures whether the device detected an utterance. Detection accuracy is determined for both the user and the interaction partner and is calculated as follows.

Recognition accuracy, as defined below in Eq. 2, measures how accurately the device recognized specific phrases. Recognition accuracy is determined only for the user because an inaccuracy in recognizing the interaction partner’s speech may cause the conversation to diverge from the planned study script.
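Written out, one plausible form of the two measures is given below. It assumes both are proportions over the utterances actually spoken, with detection accuracy computed separately for each speaker and recognition accuracy computed for the user only; the symbols N are hypothetical notation introduced here, and the recognition denominator could instead be the number of detected utterances.

```latex
\text{Detection accuracy} = \frac{N_{\text{detected}}}{N_{\text{spoken}}} \times 100\%,
\qquad
\text{Recognition accuracy} = \frac{N_{\text{recognized}}^{\text{user}}}{N_{\text{spoken}}^{\text{user}}} \times 100\%
```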

Both accuracy measures were computed by reviewing video recordings of the session and automatically generated logs that document the system’s detected phrases and the corresponding responses.

Efficiency

This measure evaluates the system’s speed in interpreting and responding to speech, as well as the user’s response time to prompts. The user response time is defined as the time between a prompt being displayed and the beginning of the user’s next utterance. The system response time is defined as the time between the end of an utterance and the system’s recognition of a phrase. These measures were determined by cross-referencing the session videos, reviewed at 12.5% playback speed to identify the beginning and end of actual utterances, against logs created by Holli that record the timing of phrase detection and prompt display.
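Both efficiency measures reduce to differences between timestamps. The sketch below assumes a hypothetical per-exchange record that combines video-annotated utterance boundaries with Holli’s logged detection and prompt times on a shared clock, in seconds; the field names are illustrative, and the standard deviation is the sample SD, which the paper does not specify.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class ExchangeTiming:
    """Hypothetical timestamps (seconds, shared clock) for one exchange."""
    prompt_shown: float       # app log: prompts appear on the display
    user_speech_start: float  # video review: user begins the next utterance
    utterance_end: float      # video review: the utterance actually ends
    phrase_recognized: float  # app log: the system recognizes the phrase


def user_response_time(ex: ExchangeTiming) -> float:
    """Time from prompt display to the start of the user's next utterance."""
    return ex.user_speech_start - ex.prompt_shown


def system_response_time(ex: ExchangeTiming) -> float:
    """Time from the end of an utterance to recognition; negative if recognized early."""
    return ex.phrase_recognized - ex.utterance_end


def summarize(values: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation (needs at least two values)."""
    return mean(values), stdev(values)


session = [
    ExchangeTiming(prompt_shown=10.0, user_speech_start=12.5, utterance_end=14.0, phrase_recognized=13.7),
    ExchangeTiming(prompt_shown=30.0, user_speech_start=32.0, utterance_end=33.5, phrase_recognized=33.4),
]
user_mean, user_sd = summarize([user_response_time(e) for e in session])
sys_mean, sys_sd = summarize([system_response_time(e) for e in session])
print(f"user: {user_mean:.2f} ± {user_sd:.2f} s, system: {sys_mean:.2f} ± {sys_sd:.2f} s")
```

In this made-up session the phrase is recognized before the speaker stops talking in both exchanges, so the mean system response time is negative, which is the situation the Efficiency discussion describes.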

User Satisfaction

This aspect of usability was evaluated using a post-session satisfaction questionnaire, shown in Appendix A. The questionnaire included a 5-point Likert scale to measure the participants’ level of agreement with 10 statements. The questionnaire also included open-ended questions to help guide a semi-structured interview.

Procedures

After providing consent, the participants filled out a demographics questionnaire and were given an overview of Holli. The participants were then guided through a restaurant-themed practice conversation, which included 10 unique exchanges and 128 unique words. Each exchange consisted of one phrase from each conversation partner. The restaurant theme was chosen to emphasize functional interactions. The participants were given the Glass to adjust until comfortable, and the Holli app was then started. A research assistant played the role of a restaurant staff member (interaction partner) and prompted the participant for their order. Holli then provided possible responses to the participant on the heads-up display. The conversation continued in this manner for 10 exchanges. Only the participant wore the Google Glass equipped with Holli; the interaction partner had no supplementary device, with the intent that their voice would be picked up by the participant’s Glass. After the conversation, participants filled out a satisfaction questionnaire and took part in a semi-structured interview.

Analysis

Descriptive statistics were computed for measures of effectiveness and efficiency, and for each item on the user satisfaction questionnaire. In addition, quantitative content analysis was undertaken to quantify the results from the semi-structured interview and extract several common themes.
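For the satisfaction questionnaire, the per-item descriptive statistics amount to a column-wise mean and standard deviation over participants. A minimal sketch, assuming responses are stored as one list of 1 to 5 ratings per participant with the items in a fixed order (a hypothetical layout) and using the sample SD:

```python
from statistics import mean, stdev


def describe_items(responses: list[list[int]]) -> list[tuple[float, float]]:
    """Return (mean, sample SD) for each questionnaire item.

    responses[p][i] is participant p's 5-point Likert rating for item i.
    """
    if len(responses) < 2:
        raise ValueError("need at least two participants for a standard deviation")
    n_items = len(responses[0])
    for row in responses:
        if len(row) != n_items or not all(1 <= r <= 5 for r in row):
            raise ValueError("each participant needs one rating from 1 to 5 per item")
    # zip(*responses) turns participant rows into item columns.
    return [(mean(col), stdev(col)) for col in zip(*responses)]


# Three hypothetical participants rating two items.
for i, (m, sd) in enumerate(describe_items([[5, 4], [3, 4], [4, 4]]), start=1):
    print(f"item {i}: {m:.2f} ± {sd:.2f}")  # item 1: 4.00 ± 1.00, item 2: 4.00 ± 0.00
```

Reporting means treats the ordinal ratings as interval data, as the Results section does; per-item medians are a common alternative.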

Results

Participants

The participants’ mean age was 12.92 ± 2.33 years, with a mean verbal IQ of 103.3 ± 18.73 and a mean SCQ score of 18.1 ± 4.42. Comorbidities included attention-deficit hyperactivity disorder (ADHD), tic disorders, and learning disorders. Other interventions varied across participants, but common elements included applied behavior analysis, speech therapy, occupational therapy, physiotherapy, intensive behavioral intervention, relationship development intervention, and cognitive behavioral training. Participant characteristics are shown in Table 1.

Effectiveness

All users were able to successfully complete the 10-turn exchange while using Holli. No user showed a preference for a single prompt location; 13 of the 15 participants selected prompts from all three locations.

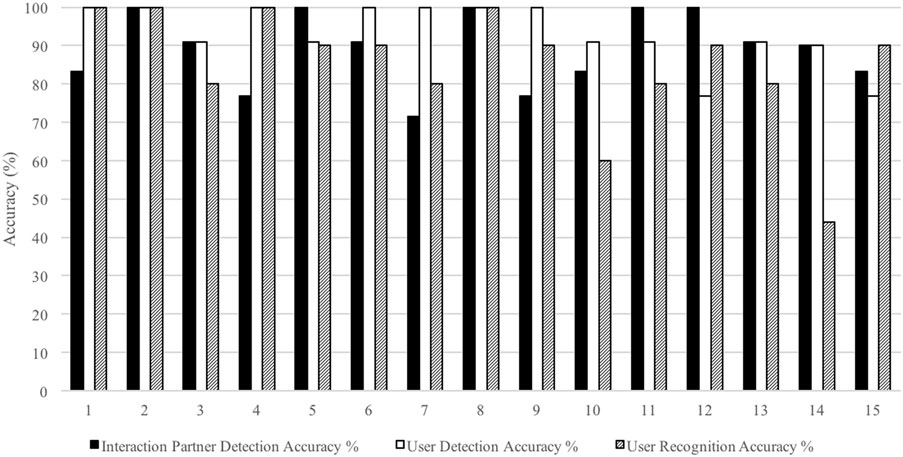

The average detection and recognition accuracies are shown in Figure 6. The average interaction partner detection accuracy was 89.2 ± 9.69%, the average user detection accuracy was 93.23 ± 2.05%, and the average user recognition accuracy was 84.93 ± 4.04%.

Efficiency

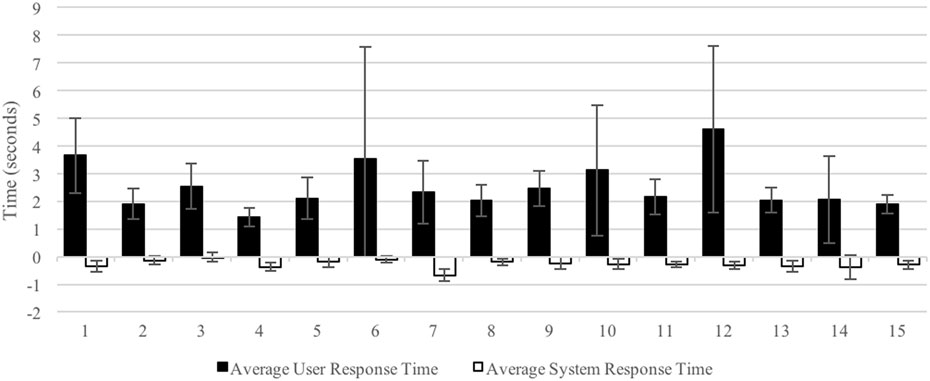

The mean user response time and system response time were determined by averaging the times for each of the 10 exchanges, as seen in Figure 7. The mean user response time was 2.52 ± 0.84 s and mean system response time was −0.28 ± 0.15 s.

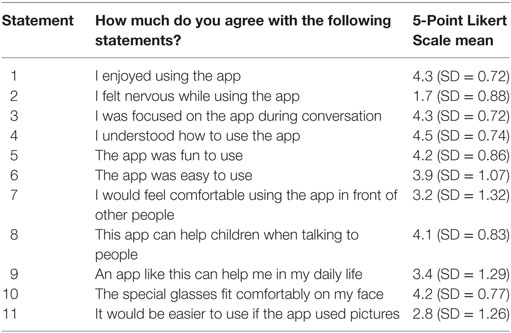

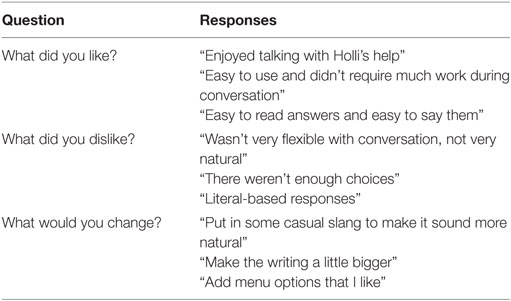

User Satisfaction

Likert scale results were averaged across participants to obtain an overall snapshot of the users’ opinions (Table 2). Example representative responses from the semi-structured interview can be seen below in Table 3.

Discussion

In this study, we examined the usability of a wearable social skills training technology for children with ASD. The goal of the technology is to prompt children with ASD throughout social conversations, listening to what is said in the environment and providing various appropriate responses to choose from. This investigation was the first step in our system’s development, ensuring it meets the needs and is an acceptable mechanism of delivery for children with ASD. We examined prompt order preference to ensure that participants considered all three presented prompts.

Effectiveness

As additional measures of effectiveness, we examined the system’s ability to detect utterances and recognize specific phrases. On average, the device detected approximately 9 of every 10 utterances during the conversation, and most conversations were completed without error. We expected the interaction partner detection accuracy to be lower than the user detection accuracy because of the position of the microphone on the device and because the microphone is intended for use by the wearer alone. This expectation was reflected in the interaction partner detection accuracy of 89.2% and the user detection accuracy of 93.23%. Potential mitigation strategies include improving the speech recognition search criteria and the system’s decision-making algorithms; both detection accuracies could be improved with a more robust algorithm in a future iteration.

Users’ speech disfluencies were a source of recognition error in our system. Some participants had comorbid verbal tics, and 12/15 participants had received or were receiving speech therapy. Video analysis revealed that the lowest recognition accuracy (participant 10) was due to stuttering throughout the session, and that other participants’ low accuracies were also caused by speech disfluencies. This limitation could be mitigated in future iterations by extending the speech recognition algorithm to handle filler words and stutters.
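The filler-and-stutter handling proposed above could start as a text-level normalization step applied to both the recognizer’s transcript and each prompt before they are compared. The sketch below is hypothetical: `normalize_disfluent` and the filler list are illustrative, and it assumes the recognizer actually emits fillers and repetitions in its transcript, which a given engine may not.

```python
FILLERS = {"um", "umm", "uh", "er", "erm", "ah", "hmm"}  # illustrative, not exhaustive


def normalize_disfluent(text: str) -> str:
    """Drop fillers, in-word stutters (w-w-water), and immediate word repeats."""
    out: list[str] = []
    for raw in text.lower().split():
        parts = [p.strip(".,!?") for p in raw.split("-")]
        parts = [p for p in parts if p]
        if not parts:
            continue
        word = parts[-1]
        # Keep genuine hyphenated words (ice-cream); collapse stutters whose
        # earlier fragments are all prefixes of the final fragment.
        if len(parts) > 1 and not all(word.startswith(p) for p in parts[:-1]):
            word = "-".join(parts)
        if word in FILLERS:
            continue
        if out and out[-1] == word:
            continue  # whole-word repetition, e.g. "the the"
        out.append(word)
    return " ".join(out)


print(normalize_disfluent("Um, I-I'd like the the w-w-water, please."))
# -> i'd like the water please
```

Collapsing repeated words also merges intentional repeats such as "very very", which is usually acceptable when the goal is only to match a transcript against a short list of prompts.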

Overall, our results indicate that the sample population can effectively use Holli to complete the 10-exchange conversation used in our experiment. A potential functionality issue identified in this study was the effect of ASD-related speech disfluencies on the accuracy of the recognition system. As such, future development should focus on speech recognition technology geared toward the speech characteristics of children with ASD.

Efficiency

In the context of usability, efficiency examines the ease and speed with which users can complete the intended task. In this study, we quantified efficiency through the system response time (i.e., the time it takes for the system to respond once a phrase has been spoken) and the user response time (i.e., the time it takes for the user to begin speaking after being shown prompts). A positive result was the mean system response time of −0.28 s. A negative system response time indicates that Holli recognized what the user was saying before he/she finished saying it. This result indicates that the speech recognition is fast enough to process speech, make appropriate predictions, and generate responses in real time, and it supports further development on the Glass for similar applications.

The mean user response time was 2.52 s, which represents the average time for a user to read all possible prompts and choose one. Although this is much longer than the average gap of 250 ms between turns in typical conversation (Stivers et al., 2009), the additional time is attributable to the user reading and choosing between the prompts.

Overall, the system response time was not an issue in this design iteration; however, the user response time varied noticeably across participants. The user response time varied due to the time it took each user to read, process, and choose a response. Future work in this area should focus on reducing processing time by supporting customization for individual users and allowing caregivers to change prompt location, size, and medium to cater to the child’s unique preferences and abilities.

User Satisfaction

In this study, the participants showed a strong affinity for using Holli. The Likert scale confirmed several positive aspects about our application, such as the participants enjoying the app, the participants understanding how to use the app, and that the app can help children when talking to people. The participants also confirmed their comfort with using the Google Glass and that they felt minimal nervousness while using the app.

The questionnaire allowed us to extract several themes regarding what the participants liked, disliked, and would like to see changed. Overall, the participants enjoyed the prompts and found the app easy to use. Participants also reported that they could focus on the app effectively, confirming our expectation that a technology-based medium would be appropriate for prompt delivery in this population. Participants also found the Google Glass comfortable to wear. Interestingly, speech recognition errors did not seem to affect participants’ satisfaction with the device.

Another theme that emerged from the satisfaction questionnaire was the need for enhanced personalization. For example, some participants proposed personalization with respect to prompt modality and esthetics. These included different modalities for prompt presentation (visual display) and changing the font size. Other participants suggested more personalization with respect to content of the prompts, including customization of the food items and inclusion of slang.

With regard to customization of food items and more sophisticated conversation options, the next iteration of the design will include a more complex artificial intelligence algorithm that will automatically learn user preferences over time.

Implications

To the best of our knowledge, this system is the first technology-aided intervention for ASD that employs human-to-human coaching in naturalistic settings. Despite some of the discussed potential limitations, we believe that this study can help promote the development of technology-aided intervention in children with ASD by demonstrating its positive acceptance and overall feasibility in this population.

Future directions will include improvements to the prototype that incorporate relevant usability feedback, such as adding personalization options. In addition, more robust speech recognition algorithms that account for the unique speech characteristics of children with ASD are needed to improve accuracy. An artificial intelligence algorithm that learns users’ preferences and generates appropriate responses accordingly would be an interesting enhancement. Future studies should also examine alternative prompt modalities as well as the appropriate number of prompt choices.

Participants agreed only moderately with the notion that Holli could help them in their daily life. This may be due to perceived stigma associated with wearing technology such as Holli in everyday settings. This hypothesis is supported by the moderate responses to the question addressing the participants’ level of comfort in wearing Holli in public.

Conclusion

In this study, we evaluated the usability of a wearable device, called Holli, for social skills coaching of higher-functioning and verbal children with ASD. Holli uses the Google Glass and real-time speech recognition and processing to provide appropriate prompts to users during a conversation. As a first step in evaluating the effectiveness of this device for children with ASD, we conducted a usability study. Overall, our results support the usability of Holli in this sample. Future improvements to the system include improving the speech recognition accuracy by considering unique characteristics of children with ASD and modifying the user interface to reduce processing demands on the participants. While evidence strongly supports the use of technology-aided intervention in ASD, a wave of landscape-altering developments and designs has not yet followed. This study shows that our novel design is an appropriate and feasible intervention and may ultimately create new ways to promote and support human-to-human interaction in ASD.

Ethics Statement

The Bloorview Research Institute research ethics board approved the study. Written consent was obtained from all participants who were deemed to have the capacity for consent. For all other participants, assent and written consent were obtained from the children and their legal guardians, respectively.

Author Contributions

BK, SC, and AK developed the study protocols. BK and SC completed the study sessions with participants. BK and AK wrote the manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to thank the participants and their families for volunteering their time. The authors would also like to thank the Kimel Family for their support.

Funding

This work was supported in part by the Holland Bloorview Kids Rehabilitation Hospital Foundation and the Kimel Graduate Student Scholarship in Pediatric Rehabilitation.

References

Abran, A., Khelifi, A., Suryn, W., and Seffah, A. (2003). Usability meanings and interpretations in ISO Standards. Softw. Qual. J. 11, 323–336. doi:10.1023/A:1025869312943

Autism Ontario. (2011). Social Matters: Improving Social Skills Interventions for Ontarians with ASD. Available at: http://www.autismontario.com/Client/ASO/AO.nsf/object/SocialMatters/$file/Social+Matters.pdf [accessed June 30, 2017].

Bauminger, N. (2007). Brief report: individual social-multi-modal intervention for HFASD. J. Autism Dev. Disord. 37, 1593–1604. doi:10.1007/s10803-006-0245-4

Bekele, E., Crittendon, J. A., Swanson, A., Sarkar, N., and Warren, Z. E. (2014). Pilot clinical application of an adaptive robotic system for young children with autism. Autism 18, 598–608. doi:10.1177/1362361313479454

Bellini, S., Peters, J. K., Benner, L., and Hopf, A. (2007). A meta-analysis of school-based social skills interventions for children with autism spectrum disorders. Remed. Spec. Educ. 28, 153–162. doi:10.1177/07419325070280030401

Bernard-Opitz, V., Sriram, N., and Nakhoda-Sapuan, S. (2001). Enhancing social problem solving in children with autism and normal children through computer-assisted instruction. J. Autism Dev. Disord. 31, 377–384. doi:10.1023/A:1010660502130

Bosseler, A., and Massaro, D. W. (2003). Development and evaluation of a computer-animated tutor for vocabulary and language learning in children with autism. J. Autism Dev. Disord. 33, 653–672. doi:10.1023/B:JADD.0000006002.82367.4f

Burke, R. V., Allen, K. D., Howard, M. R., Downey, D., Matz, M. G., and Bowen, S. L. (2013). Tablet-based video modeling and prompting in the workplace for individuals with autism. J. Vocat. Rehabil. 38, 1–14. doi:10.3233/JVR-120616

Cappadocia, M. C., and Weiss, J. A. (2011). Review of social skills training groups for youth with Asperger syndrome and high functioning autism. Res. Autism Spectr. Disord. 5, 70–78. doi:10.1016/j.rasd.2010.04.001

Colby, K. M. (1973). The rationale for computer-based treatment of language difficulties in nonspeaking autistic children. J. Autism Child. Schizophr. 3, 254–260. doi:10.1007/BF01538283

D’Ateno, P., Mangiapanello, K., and Taylor, B. A. (2003). Using video modeling to teach complex play sequences to a preschooler with autism. J. Posit. Behav. Interv. 5, 5–11. doi:10.1177/10983007030050010801

Developmental Disabilities Monitoring Network Surveillance Year 2010 Principal Investigators. (2014). Prevalence of autism spectrum disorder among children aged 8 years: autism and developmental disabilities monitoring network, 11 sites, United States, 2010. MMWR Surveill. Summ. 63(2), 1.

Faulkner, L. (2003). Beyond the five-user assumption: benefits of increased sample sizes in usability testing. Behav. Res. Methods Instrum. Comput. 35, 379–383. doi:10.3758/BF03195514

Gal, E., Bauminger, N., Goren-Bar, D., Pianesi, F., Stock, O., Zancanaro, M., et al. (2009). Enhancing social communication of children with high-functioning autism through a co-located interface. AI Soc. 24, 75–84. doi:10.1007/s00146-009-0199-0

Google Inc. (2016). GLASS FAQ. Available at: https://sites.google.com/site/glasscomms/faqs

Gotsis, M., Piggot, J., Hughes, D., and Stone, W. (2010). “SMART-games: a video game intervention for children with autism spectrum disorders,” in Proceedings of the 9th International Conference on Interaction Design and Children – IDC ’10 (New York, NY: ACM Press), 194.

Hopkins, I. M., Gower, M. W., Perez, T. A., Smith, D. S., Amthor, F. R., Wimsatt, F. C., et al. (2011). Avatar assistant: improving social skills in students with an ASD through a computer-based intervention. J. Autism Dev. Disord. 41, 1543–1555. doi:10.1007/s10803-011-1179-z

Hourcade, J. P., Bullock-Rest, N. E., and Hansen, T. E. (2011). Multitouch tablet applications and activities to enhance the social skills of children with autism spectrum disorders. Pers. Ubiquitous Comput. 16, 157–168. doi:10.1007/s00779-011-0383-3

Hourcade, J. P., Williams, S. R., Miller, E. A., Huebner, K. E., and Liang, L. J. (2013). “Evaluation of tablet apps to encourage social interaction in children with autism spectrum disorders,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems – CHI ’13, 3197, Paris.

Howlin, P., Moss, P., Savage, S., and Rutter, M. (2013). Social outcomes in mid- to later adulthood among individuals diagnosed with autism and average nonverbal IQ as children. J. Am. Acad. Child Adolesc. Psychiatry 52, 572.e–581.e. doi:10.1016/j.jaac.2013.02.017

Ke, F., and Im, T. (2013). Virtual-reality-based social interaction training for children with high-functioning autism. J. Educ. Res. 106, 441–461. doi:10.1080/00220671.2013.832999

Konstantinidis, E. I., Luneski, A., and Nikolaidou, M. M. (2009). “Using affective avatars and rich multimedia content for education of children with autism,” in Proceedings of the 2nd International Conference on Pervasive Technologies Related to Assistive Environments, Corfu, Greece.

Lord, C., Risi, S., Lambrecht, L., Cook, E. H., Leventhal, B. L., DiLavore, P. C., et al. (2000). The autism diagnostic observation schedule-generic: a standard measure of social and communication deficits associated with the spectrum of autism. J. Autism Dev. Disord. 30, 205–223. doi:10.1023/A:1005592401947

Moore, D. (1998). Computers and people with autism/Asperger Syndrome. Communication (the magazine of The National Autistic Society), Summer, 20–1.

Muensterer, O. J., Lacher, M., Zoeller, C., Bronstein, M., and Kübler, J. (2014). Google Glass in pediatric surgery: an exploratory study. Int. J. Surg. 12, 281–289. doi:10.1016/j.ijsu.2014.02.003

Murdock, L. C., Ganz, J., and Crittendon, J. (2013). Use of an iPad play story to increase play dialogue of preschoolers with autism spectrum disorders. J. Autism Dev. Disord. 43, 2174–2189. doi:10.1007/s10803-013-1770-6

Parsons, S., Beardon, L., Neale, H. R., Reynard, G., Eastgate, R., Wilson, J. R., et al. (2000). “Development of social skills amongst adults with Asperger’s Syndrome using virtual environments: the ‘AS Interactive’ project,” in Proceedings of the 3rd International Conference on Disability, Virtual Reality and Associated Technologies (ICDVRAT), Sardinia, Italy, 23–25.

Ploog, B. O., Scharf, A., Nelson, D., and Brooks, P. J. (2013). Use of computer-assisted technologies (CAT) to enhance social, communicative, and language development in children with autism spectrum disorders. J. Autism Dev. Disord. 43, 301–322. doi:10.1007/s10803-012-1571-3

Stivers, T., Enfield, N. J., Brown, P., Englert, C., Hayashi, M., Heinemann, T., et al. (2009). Universals and cultural variation in turn-taking in conversation. Proc. Natl. Acad. Sci. U.S.A. 106, 10587–10592. doi:10.1073/pnas.0903616106

Tartaro, A., and Cassell, J. (2006). “Authorable virtual peers for autism spectrum disorders,” in Proceedings of the Combined Workshop on Language Enabled Educational Technology and Development and Evaluation for Robust Spoken Dialogue Systems at the 17th European Conference on Artificial Intelligence, Vol. 174 (Riva del Garda, Italy), 169–174.

Wall, D., Ray, W., Pathak, R. D., and Lin, S. M. (2014). A Google Glass application to support shoppers with dietary management of diabetes. J. Diabetes Sci. Technol. 8, 1245–1246. doi:10.1177/1932296814543288

Weiss, P. L. T., Gal, E., Eden, S., Zancanaro, M., and Telch, F. (2011). “Usability of a multi-touch tabletop surface to enhance social competence training for children with autism spectrum disorder,” in Proceedings of the Chais Conference on Instructional Technologies Research: Learning in the Technological Era (Raanana), 71–78.

Williams, C., Wright, B., Callaghan, G., and Coughlan, B. (2002). Do children with autism learn to read more readily by computer assisted instruction or traditional book methods? Autism 6, 71–91. doi:10.1177/1362361302006001006

Xu, Q., Cheung, S. S., and Soares, N. (2015). “LittleHelper: an augmented reality glass application to assist individuals with autism in job interview,” in 2015 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA) (Hong Kong: IEEE), 1276–1279.

Zheng, Z., Zhang, L., Bekele, E., Swanson, A., Crittendon, J. A., Warren, Z., et al. (2013). “Impact of robot-mediated interaction system on joint attention skills for children with autism,” in IEEE International Conference on Rehabilitation Robotics: [Proceedings], Seattle, Washington, 6650408.

Appendix A

User Satisfaction Questionnaire

Investigating the Usability of a Social Skills Training App in Children with Autism Spectrum Disorder

Child Satisfaction Questionnaire

Date Completed: ______________________________

Name of Person Completing Questionnaire: ________________

Please check or write down the answers to the questions below:

| How much do you agree with each of the following statements? (1 = not at all, 3 = somewhat, 5 = a lot) | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| I enjoyed using the app | | | | | |
| I felt nervous while using the app | | | | | |
| I was focused on the app during conversation | | | | | |
| I understood how to use the app | | | | | |
| The app was fun to use | | | | | |
| The app was easy to use | | | | | |
| I would feel comfortable using the app in front of other people | | | | | |
| This app can help children when talking to people | | | | | |
| An app like this can help me in my daily life | | | | | |
| The special glasses fit comfortably on my face | | | | | |
| It would be easier to use if the app used pictures | | | | | |

What did you like about Holli?

What did you dislike about Holli?

What would you change about Holli?

What did you like about the special glasses?

What did you dislike about the special glasses?

What would make the special glasses better?

Keywords: autism spectrum disorder, social skills, wearable technology, technology-aided intervention, usability study, prompt, Google Glass

Citation: Kinsella BG, Chow S and Kushki A (2017) Evaluating the Usability of a Wearable Social Skills Training Technology for Children with Autism Spectrum Disorder. Front. Robot. AI 4:31. doi: 10.3389/frobt.2017.00031

Received: 18 October 2016; Accepted: 16 June 2017; Published: 12 July 2017

Edited by:

Kaspar Althoefer, Queen Mary University of London, United Kingdom

Reviewed by:

Huan Tan, GE Global Research, United States

Luis Gomez, University of Las Palmas de Gran Canaria, Spain

Copyright: © 2017 Kinsella, Chow and Kushki. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Azadeh Kushki, akushki@hollandbloorview.ca
