- Department of Communication and Cognition, Tilburg Center for Cognition and Communication, Tilburg School of Humanities and Digital Sciences, Tilburg University, Tilburg, Netherlands

Brainstorming is a creative technique used to support productivity and creativity during the idea generation phase of an innovation process. In professional practice, a facilitator structures, regulates, and motivates those behaviors of participants that help maintain productivity and creativity during a brainstorm. Emerging technologies, such as social robots, are being developed to support or even automate the facilitator’s role. However, little is known about whether and how brainstorming with a social robot influences productivity. To take a first look, we conducted a between-subjects experiment (N = 54) that explored 1) whether brainstorming with a Wizard-of-Oz operated robot facilitator, compared to with a human facilitator, influences productivity; and 2) whether any effects on productivity might be explained by the robot’s negative effects on social anxiety and evaluation apprehension. The results showed no evidence for an effect of brainstorming with a teleoperated robot facilitator, compared to brainstorming directly with a human facilitator, on productivity. Although the results did suggest that overall, social anxiety caused evaluation apprehension, and evaluation apprehension negatively affected productivity, there was no effect of brainstorming with a robot facilitator on this relationship. Herewith, the present study contributes to an emerging body of work on the efficacy and mechanisms of the facilitation of creative work by social robots.

Introduction

Originally developed by Osborn (1957), the brainstorming technique motivates people to generate and express as many outrageous ideas as they can, while refraining from criticizing each other’s ideas. In this way, they can build upon each other’s ideas freely, under the assumption that quantity will ultimately lead to creativity. The role of a facilitator is to structure, regulate, and motivate those behaviors that enable participants in a brainstorm to maintain productivity and creativity throughout (Isaksen et al., 2010). For example, by enforcing brainstorm rules when participants deviate from these. However, facilitation requires advanced knowledge and skill about creative thinking that is hard to come by. Emerging technologies, such as co-creative agents and specifically social robots, are therefore increasingly looked at as an alternative to professional human facilitation (Davis et al., 2015; Frich et al., 2019). This research program is further emboldened by experimental findings that suggest that generating ideas with a social robot facilitator can enhance productivity and creativity, when compared to facilitation delivered via other technologies (Kahn et al., 2016; Ali et al., 2021; Alves-Oliveira et al., 2020). Although social robots are generally defined as being (semi) autonomous (Bartneck and Forlizzi 2004), in the present work we have used teleoperation to explore the potential future in which social robots would be able to autonomously facilitate brainstorming. Therefore, in the present study “social” mainly refers to the humanlike appearance and behavior of the robot as perceived by others, rather than its social intelligence. Surprisingly little is known about how working with a social robot facilitator compares to working with a human facilitator, and what the mechanisms may be that underlie its potentially advantageous effects on productivity and creativity. The present study takes a first look at how brainstorming with a social robot facilitator compares to brainstorming with a human facilitator.

Compared to virtual co-creative agents, brainstorming with a social robot facilitator shows great potential because these embodied machines can be designed to perceive and understand the world around them, and to communicate with humans using natural language (Fong et al., 2003). Thus, they can deliver facilitation via known and readily understandable communication channels, and in situ (Zawieska, 2014). Recent findings support that doing brainstorming and other creative work with a social robot facilitator might be advantageous over using other technologies. Alves-Oliveira et al. (2020), for example, showed that using the social robot YOLO as a character in a storytelling task, led children to generate more original ideas when YOLO actively facilitated creative thinking than when YOLO was turned off. In addition, Ali et al. (2021) showed that facilitating figural creativity by engaging and managing turn-taking in a drawing completion task by means of the social robot Jibo, increased productivity, flexibility, and originality scores of children’s drawings, compared to facilitation by an iPad application. Furthermore, Kahn et al. (2016) found that facilitation by a (teleoperated) social robot led adult participants to generate more creative expressions while designing a Zen rock garden, than when facilitation was delivered via a PowerPoint presentation. The authors of the present paper, however, propose that understanding the true efficacy of brainstorming with a social robot facilitator also requires comparison with a human facilitator, rather than with another technology.

To explore this open scientific and applied problem, the following research question will be answered:

“Does brainstorming with a social robot facilitator, compared to brainstorming with a human facilitator, influence productivity?”

Previous research on brainstorming in groups suggests that social interactions with other people may cause productivity losses (Sawyer 2011). Specifically, past experimental work by Camacho and Paulus (1995) showed how people that have a stronger, compared to a weaker, disposition to experience a fear of being watched or judged by others produced fewer ideas when they brainstormed with others, compared to when they brainstormed alone. Such a disposition, or trait social anxiety, is thought to increase the chance that people experience this anxiety in transient emotional form, state social anxiety, while interacting with another human being (Spielberger 1966). In turn, the social anxiety experienced may cause evaluation apprehension (Leary 1983; Bordia, Irmer, and Abusah 2006), where people during a brainstorm or other creative task do not express all of their ideas because they fear the social consequences of sharing these ideas (Diehl and Stroebe 1987; Warr and O’Neill 2005). Experiencing social anxiety would thus result in a productivity loss during brainstorming due to its effects on evaluation apprehension, while the likelihood that this occurs is moderated by the disposition to experience social anxiety.

Interestingly, there is also evidence that suggests that social robots can help mitigate social anxiety. A recent study by Nomura et al. (2020) showed that when anticipating collaboration, people with a stronger, compared to a weaker, disposition to experience social anxiety were more likely to prefer collaborating with a social robot than with a human being. Speculatively, this may be because some social robots tend to be perceived as non-judgmental and patient (Breazeal 2011), or because of the perception that social robots do not possess the same agency as human beings, but are rather considered as being somewhere in between inanimate toys and animate social beings (Scassellati et al., 2012). This unique relationship between human and social robot might lead people to engage in social interactions with these machines, with a decreased chance of experiencing the feeling that what they say or do is being evaluated or judged in any way by the robot. Though a mere conjecture, this previous work suggests that brainstorming with a social robot facilitator, compared to brainstorming with a human facilitator, might increase productivity because it prevents triggering a psychological mechanism where social anxiety causes evaluation apprehension to occur, with productivity loss as a consequence.

Based on these conjectures, the following working hypothesis will be explored:

“Brainstorming with a social robot facilitator, compared to brainstorming with a human facilitator, increases productivity due to its effects on the relationship between state social anxiety and evaluation apprehension, which is moderated by trait social anxiety.”

Materials and Methods

To explore the research question and working hypothesis an experiment was conducted with a between-subjects design, where participants were asked to brainstorm with either a social robot, teleoperated by a professional human facilitator, or directly with the human facilitator.

Participants

Fifty-four people participated in the experiment (Mage = 23.21, SDage = 3.24, Rangeage = [18, 35], 34 females, 20 males). The participants were recruited via the researchers’ own network and the human subjects pool of Tilburg University. The participants were predominantly Dutch (N = 29). Only a few participants that brainstormed with the robot facilitator had seen (N = 7) or collaborated (N = 5) with the social robot before in another situation. The participants possessed an acceptable to good level of knowledge about the brainstorming task topic (M = 3.35, SD = 0.96), and experienced an acceptable to good ability to think creatively during the facilitated brainstorm sessions (M = 3.56, SD = 0.74). Participants that were recruited through the human subject pool received study credits. The study was approved by the TSHD Research Ethics and Data Management Committee, Tilburg University.

Materials and Measures

The protocol, source code for the robot interaction, and measurement instruments are all available in the supplementary files.1

Brainstorming Task

The participants were asked to brainstorm ideas using Osborn's, (1957) now classical brainstorm rules for the problem: “How can you help to reduce mental illness among students?”. This topic was chosen for its sensitiveness and actuality among the participants (RIVM 2018). The former increases the chance that evaluation apprehension occurs (Diehl and Stroebe 1987; Pinsonneault et al., 1999). There were no criteria of what constituted an idea: this could be an initial thought, or a concrete solution (e.g., mindfulness app). All ideas were written down by the participant on Post-Its, which were color coded to indicate whether the idea originated from the participant or the facilitator. The brainstorm task took 15 min.

Robot vs. Human Facilitator

Participants were randomly assigned to brainstorm with a social robot facilitator (N = 27; coded: 0) or a human facilitator (N = 27; coded: 1). For the social robot facilitator, the Wizard-of-Oz method was used where the participants sat face-to-face with a social robot (SoftBank Robotics NAO v5) that was invisibly controlled from another room by the same professional facilitator that was present in the human facilitator condition. The Wizard-of-Oz method is used in related work as well (Kahn et al., 2016), and allowed us to maximize consistency between the two conditions. To enable robot facilitation, the Choregraphe software (Pot et al., 2009) was used to remotely send pre-defined and custom responses to the participant while a camera was used to monitor the brainstorm (Figure 1). The responses were vocalized to the participants through the robot’s text-to-speech capabilities. The robot was “breathing” (swaying its arms and legs slightly) to simulate life-likeness, but did not use any other forms of non-verbal communication. In both conditions, the facilitator used the same response protocol. This protocol was developed to strike a balance between the rich role that facilitators play in a brainstorm, while maintaining the believability of the robot and the human as a facilitator. This entailed pre-defining short general purpose responses that covered instructions needed to structure the different phases of the brainstorm (e.g., mentioning Osborn’s brainstorm rules), and process-regulating (e.g., “Do you know another way to solve the problem?”) and motivating messages (e.g., “I like that idea as well!”) needed to keep a brainstorm going. When participants were stuck or too fixated on a line of thinking, the facilitator deviated from using only pre-defined messages and relied on their experience to provide the participant with an idea to keep the brainstorm going. This unscripted assistance was provided in both conditions, and the number of facilitator-proposed ideas was counted to control for variation between participants (see Assessment of Facilitator Intervention). Five participants suspected or were unsure whether the social robot was controlled by a human being, but only when explicitly asked after the brainstorm and not during the brainstorm. Although an influence therefore cannot be ruled out, it is likely to be small. Thus, their data was included in the analyses to prevent an imbalanced distribution across the experimental conditions.

Assessment of Trait and State Social Anxiety

Trait and state social anxiety were both assessed using a 13-item five-point Likert scale (1 = strongly disagree, 5 = strongly agree) from the Social Interaction Anxiety Scale (Heimberg et al., 1992). Seven items were removed from the original 20-item scale because they did not apply to both trait and state anxiety. Two items were reverse coded. To assess trait anxiety the original items were administered. Cronbach alpha suggested good internal consistency, α = 0.821. State anxiety was assessed with rephrased questions that fit the experience of social anxiety during the brainstorming task. For example, the item “I find myself worrying that I don’t know what to say in social situations” was rephrased as “I found myself worrying that I wouldn’t know what to say in the session”. Here, Cronbach alpha suggested minimally acceptable internal consistency, α = 0.679. The means for the trait and state anxiety items were used in the analysis.

Assessment of Evaluation Apprehension

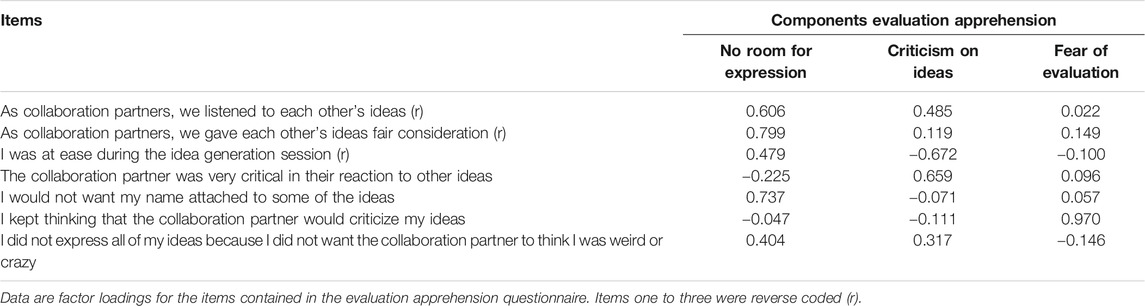

Evaluation apprehension was assessed with a seven-item five-point Likert scale developed by Bolin and Neuman (2006) (1 = strongly disagree, 5 = strongly agree). The original items were reformulated to better fit the dyadic nature of the present study. For example, items such as “As a group, we listened to … ” were reformulated into “As collaboration partners, we listened to … ”. The first three items were reverse coded. Although previous work suggested good consistency of the scale, the Cronbach alpha in the present study was not acceptable, α = 0.181. To check whether one or more unwieldly items may be responsible, Cronbach alphas were calculated while excluding items from the scale. This to no avail. Therefore, principle component analysis (oblique rotation) was used to explore whether the scale measured different factors (Field 2013). The results showed three factors with an eigenvalue over 1.00 that together explained 60.82% of the variance. Sampling adequacy was acceptable, KMO = 0.60. Inspection of the items suggested that these three factors could be interpreted as measures of “no room for expression,” “criticism on ideas,” and “fear of evaluation.” The three factors were used in the analysis. The items and factor loadings are presented in Table 1.

Assessment of Productivity

To measure the participants’ productivity the number of ideas they produced during the brainstorm was counted (Diehl and Stroebe 1987; Paulus and Yang 2000). This is in line with common instructions used during brainstorming in professional practice where there is an initial focus on producing many ideas (quantity), without criticizing or otherwise evaluating generated ideas (quality) (Paulus and Yang 2000). Only non-redundant ideas, written down on Post-Its by each participant, were counted.

Assessment of Facilitator Intervention

Because the facilitator intervenes at times to keep the brainstorm going by generating an idea, the number of ideas introduced by the facilitator was also counted. The number of ideas introduced may confound the tested relationships between state anxiety, evaluation apprehension, and productivity. If this is the case, these will be included as a covariate in the statistical analysis.

Demographics and Task-Relevant Sample Characteristics

Participants filled in basic demographic information (age, gender, and nationality) and were asked to “… indicate your level of expertise about the topic of the brainstorm” and “… rate your level of creativity during the idea generation session” on a five-point Likert scale (1 = very poor, 5 = very good). As knowledge is at the basis of creativity (Abraham 2018), and good facilitation entails ensuring that people feel they are creative (Isaksen et al., 2010), these are reported as relevant sample characteristics. These are reported in Participants.

Procedure

The study was conducted at the Media Design Lab of Tilburg University. There, participants were seated in a room at a table (Figure 1) and read the study information, COVID-19 protocols, task instructions, and signed informed consent. Information that could reveal the use of the Wizard-of-Oz method and the true purpose of the experiment was not yet shared. After this, the participants filled in the trait social anxiety questionnaire. Then, they engaged in the brainstorm task with either the robot or the human facilitator. After the brainstorm, the participants filled in the questionnaires used to assess state social anxiety, evaluation apprehension, and their demographics. Finally, they were fully debriefed and thanked for taking part in the experiment. After they left, the researcher recorded the number of ideas generated by the facilitator and by the participant.

Results

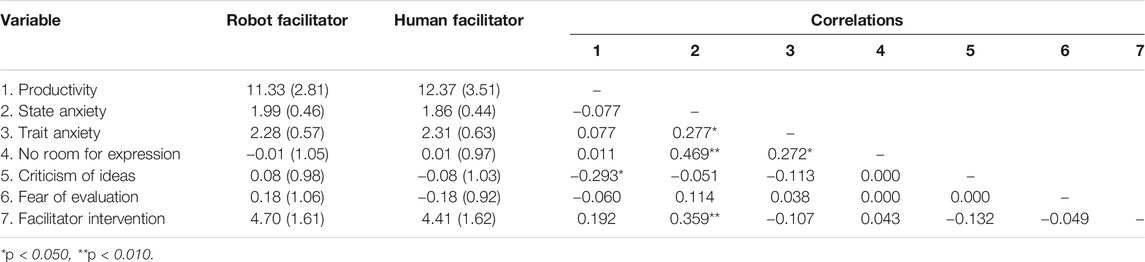

To explore whether brainstorming with a social robot facilitator, compared to brainstorming with a human facilitator, influences productivity, an independent-samples t-test was conducted with facilitator type (robot facilitator code = 0; human facilitator code = 1) as the independent variable and productivity as the dependent variable. See Table 2 for an overview of the descriptive statistics and correlations.

The results showed no significant difference between brainstorming with a social robot facilitator (M = 11.33, SD = 2.81) and a human facilitator (M = 12.37, SD = 3.51), for productivity, t (52) = −1.20, p = 0.236, 95% CI [−2.77 0.70]. Further checks suggested that these findings could not be explained by facilitator intervention. That is, an independent-samples t-test showed no significant difference between brainstorming with a social robot facilitator (M = 4.70, SD = 1.61) and a human facilitator (M = 4.41, SD = 1.62), for the number of ideas the facilitator brought in, t (52) = 0.67, p = 0.504, 95% CI [−0.59 1.18]; and no significant correlation was found between facilitator intervention and productivity, r (54) = 0.192, p = 0.163. These findings suggest no evidence for a difference in productivity when people brainstorm with a social robot facilitator, compared to when they brainstorm with a human facilitator.

To explore whether brainstorming with a social robot facilitator, compared to brainstorming with a human facilitator, might increase productivity due to its effects on the relationship between state social anxiety and evaluation apprehension, and whether this is moderated by trait social anxiety, further analyses were conducted. That is, additional independent-samples t-tests were conducted with facilitator type as the independent variable, and social anxiety and the three evaluation apprehension factors as the dependent variables. Furthermore, correlations were calculated to explore whether the expected relationships between social anxiety, evaluation apprehension, and productivity could be confirmed. Combined, significant results could justify further exploration by means of (moderated) mediation analyses (Hayes 2017).

The results showed no significant difference between brainstorming with a social robot facilitator (M = 1.99, SD = 0.46) and a human facilitator (M = 1.86, SD = 0.44), for state social anxiety, t (52) = 1.08, p = 0.286, 95% CI [−0.11 0.37]. This finding was not likely to be unduly influenced by sampling errors. That, is an independent-samples t-test showed no significant difference between brainstorming with a social robot facilitator (M = 2.28, SD = 0.57) and a human facilitator (M = 2.31, SD = 0.63), for trait social anxiety, t (52) = −0.20, p = 0.846, 95% CI [−0.36 0.30]. As a consequence, trait anxiety does not moderate the relationship between facilitator type and state social anxiety. Further checks showed a significant positive correlation between facilitator intervention and state social anxiety, r (54) = 0.359, p = 0.008.

Regarding the three evaluation apprehension factors, the results showed no significant difference between brainstorming with a social robot facilitator (M = −0.01 SD = 1.05) and a human facilitator (M = 0.01, SD = 0.97), for no room for expression, t (52) = −0.11, p = 0.917, 95% CI [−0.55 0.49]; between brainstorming with a social robot facilitator (M = 0.08, SD = 0.98) and a human facilitator (M = −0.08, SD = 1.03), for criticism on ideas, t (52) = 0.56, p = 0.576, 95% CI [−0.40 0.70]; nor between brainstorming with a social robot facilitator (M = 0.18, SD = 1.06) and a human facilitator (M = −0.18, SD = 0.92), for fear of evaluation, t (52) = 1.37, p = 0.178, 95% CI [−0.18 0.87]. Note that the Shapiro-Wilk tests showed that the data of no room for expression and feature of evaluation were not normally distributed (p < 0.050). Therefore, emphasis must be placed on the bootstrapped 95% confidence intervals, rather than on the p-values.

The results did, however, show a significant positive correlation between state social anxiety and the evaluation apprehension factor no room for expression, r (54) = 0.469, p < 0.001; and a significant negative correlation between the evaluation apprehension factor criticism on ideas and productivity, r (54) = −0.293, p = 0.032.

Given these results further exploration by means of (moderated) mediation analyses is unlikely to provide further insight into the results. Therefore, these were not conducted (Hayes 2017). These findings suggest that, at least to some extent, the expected relationship between social anxiety, evaluation apprehension, and productivity was replicated. Brainstorming with a social robot facilitator, compared to a human facilitator, however, did not appear to influence this relationship in any way.

Discussion

The presented study was conducted to take a first look at how brainstorming with a social robot facilitator compares to brainstorming with a human facilitator.

Summary and Interpretation of the Results

The results showed no evidence that brainstorming with a teleoperated social robot facilitator, compared to brainstorming with a human facilitator, influenced productivity. Where previous studies found positive effects of social robot facilitation compared to other technologies, such as an iPad application (Ali et al., 2021), PowerPoint presentation (Kahn et al., 2016) or a social robot that was turned off (Alves-Oliveira et al., 2020), the present study thus adds no evidence indicative that robot facilitation led people to generate more ideas than human facilitation. However, participants also did not generate fewer ideas with a robot than with a human facilitator. When a human facilitator is unavailable, or undesirable, a social robot might be a suitable replacement, provided that it can be programmed to facilitate brainstorming autonomously.

The results also showed no evidence that brainstorming with a social robot facilitator, compared to with a human facilitator, increased productivity due to its effects on the relationships between social anxiety and evaluation apprehension. This finding adds to previous work, which suggested that when people anticipate to collaborate on a task, people with a strong, compared to a weak disposition to experience social anxiety prefer to work with a social robot rather than a with human collaborator (Nomura et al., 2020). In the present study, participants actually worked with the social robot, but this had no notable effect on state social anxiety, nor was this effect moderated by trait social anxiety. Actually working with a social robot may thus not affect social anxiety, at least not within the context of an appropriately facilitated brainstorm, in this case by a professional facilitator via teleoperation. Further conjectures about subsequent effects on productivity via evaluation apprehension were therefore by extension also inaccurate.

The results did confirm, at least partly, the general theoretical assumptions about the relationships between social anxiety, evaluation apprehension, and productivity during brainstorming (Table 2). Social anxiety positively influenced participants’ experience that there was no room for expression, and experienced criticism on their ideas negatively affected productivity (Leary 1983; Bordia et al., 2006). Moreover, a stronger disposition to experience social anxiety, led participants to experience more state social anxiety during the brainstorm (Spielberger 1966). Trait and state anxiety, however, did not influence productivity. Although this seems to contradict Camacho and Paulus’s (1995) findings, their study was about brainstorming with peers rather than with a facilitator. Instead, the present study showed that the facilitator shared more ideas to keep the brainstorm going with participants that experienced more state social anxiety, compensating rightly for their reduced productivity (Sanders and Stappers 2008). The general psychological mechanism by which a social robot facilitator was thought to affect productivity, was therefore at least partially confirmed. It was just that no evidence was found of an effect of brainstorming with a robot facilitator, compared to a human facilitator, on these relationships between trait and state anxiety, evaluation apprehension, and productivity during brainstorming.

Limitations and Future Research

As with any first look, there are limitations that need to be taken into account when interpreting and building upon the results.

Firstly, next to any limitations introduced by the modest sample size, it may have been the case that the social anxiety experienced during the brainstorm was not sufficiently strong to lay bare effects of brainstorming with a social robot thereon. The scores on the trait and state anxiety questionnaires were low, indicating on average slight disagreement with statements indicative of social anxiety. This limits the generalizability of the results. Even so, if people with high trait and state social anxiety are the only demographic for which brainstorming with a social robot facilitator may be advantageous, this may not provide a strong case for investing in further research and development in social robot facilitators. Before such conclusions can be drawn, however, it may be advantageous to do further exploratory testing, a second look if you will, that includes further variables that may affect the relationship between human and robot facilitation, such as variation in level of training, facilitation styles, different group sizes, perceived robot autonomy, and online vs. offline differences.

Secondly, analysis of the evaluation apprehension scale revealed that three separate constructs were measured (Table 1). Although there were relationships between state social anxiety and no room for expression, and between criticism on ideas and productivity, these factors showed that state anxiety and productivity could not be correlated directly via the mechanisms that underlie evaluation apprehension. The imposed reliance on factor analysis, here, rather being able to rely on more in-depth theory to tease out the cause-and-effect relationships between social anxiety, evaluation apprehension, and productivity, threatens the study’s internal and construct validity; which is further threatened by the resultant reliance on a non-simple factor structure (items 1 and 7), inclusion of factor loadings of close but inverted intensity (item 3), a factor expressed by a single item (factor “fear of evaluation” and item 6), and deviations from normality (factors “no room for expression” and “fear of evaluation”) (e.g., Sellbom and Tellegen, 2019; Thurstone, 1947). See also Table 1. Combined with the fact that only one type of robot form was tested (the SoftBank Robotics NAO v5), further work could benefit from testing the effects of dedicated robotic forms and behaviors on the precise psychological mechanisms that drive the relationships between social anxiety, evaluation apprehension and productivity during brainstorming.

Thirdly, it must be noted that relying on the Wizard-of-Oz method threatens the study’s external validity, because it remains to be seen whether the AI of a social robot can be developed to effectively deliver our brainstorm facilitation protocol. Although the Wizard-of-Oz method is widely used in research on the efficacy of brainstorming or doing other types of creative work with a social robot facilitator (Kahn et al., 2016; Alves-Oliveira et al., 2020), more research is needed to develop the computational backbone of social robot facilitators. In this regard, researchers such as Ali et al. (2021) are leading the way.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the TSHD Research Ethics and Data Management Committee, Tilburg University. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

All authors contributed to developing the theory, method and article, and approved the submitted version. JG collected the data. AR conducted the statistical analysis.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

1https://osf.io/g5bhy/?view_only=6606a7779fed43188914d803bc053407.

References

Abraham, Anna. (2018). The Neuroscience of Creativity. Cambridge University Press. doi:10.1017/9781316816981

Ali, S., Park, H. W., and Breazeal, C. (2021). A Social Robot's Influence on Children's Figural Creativity during Gameplay. Int. J. Child-Computer Interaction 28, 100234. doi:10.1016/j.ijcci.2020.100234

Alves-Oliveira, P., Arriaga, P., Cronin, M. A., and Paiva, A. (2020). “Creativity Encounters between Children and Robots,” in Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, 379–388. doi:10.1145/3319502.3374817

Bartneck, C., and Forlizzi, J. (2004). A Design-Centred Framework for Social Human-Robot Interaction. ” Proceedings - IEEE International Workshop on Robot and Human Interactive Communication, 591–594. doi:10.1109/roman.2004.1374827

Bolin, A. U., and Neuman, G. A. (2006). Personality, Process, and Performance in Interactive Brainstorming Groups. J. Bus Psychol. 20 (4), 565–585. doi:10.1007/s10869-005-9000-7

Bordia, P., Irmer, B. E., and Abusah, D. (2006). Differences in Sharing Knowledge Interpersonally and via Databases: The Role of Evaluation Apprehension and Perceived Benefits. Eur. J. Work Organizational Psychol. 15 (3), 262–280. doi:10.1080/13594320500417784

Breazeal, C. (2011). Social Robots for Health Applications. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA. IEEE, 5368–5371. doi:10.1109/IEMBS.2011.6091328

Camacho, L. M., and Paulus, P. B. (1995). The Role of Social Anxiousness in Group Brainstorming. J. Personal. Soc. Psychol. 68 (6), 1071–1080. doi:10.1037/0022-3514.68.6.1071Mabel

Davis, N., Hsiao, C.-P., Popova, Y., and Magerko, B. (2015). An Enactive Model of Creativity for Computational Collaboration and Co-creation. In Springer Series on Cultural Computing. Springer, 109–133. doi:10.1007/978-1-4471-6681-8_7

Diehl, M., and Stroebe, W. (1987). Productivity Loss in Brainstorming Groups: Toward the Solution of a Riddle. J. Personal. Soc. Psychol. 53 (3), 497–509. doi:10.1037/0022-3514.53.3.497

Field, Andy. (2013). Discovering Statistics Using IBM SPSS Statistics. Washington, DC: Sage Publications.

Fong, T., Nourbakhsh, I., and Dautenhahn, K. (2003). A Survey of Socially Interactive Robots. Robotics Autonomous Syst. 42 (3–4), 143–166. doi:10.1016/S0921-8890(02)00372-X

Frich, J., MacDonald Vermeulen, L., Remy, C., Biskjaer, M. M., and Dalsgaard, P. (2019). “Mapping the Landscape of Creativity Support Tools in HCI,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, 1–18. doi:10.1145/3290605.3300619

Hayes, A. F. (2017). Introduction to Mediation, Moderation, and Conditional Process Analysis: A Regression-Based Approach. New York, NY: Guilford publications.

Heimberg, R. G., Mueller, G. P. Craig. S. Holt, Debra. A. Hope., Holt, C. S., Hope, D. A., and Liebowitz, M. R. (1992). Assessment of Anxiety in Social Interaction and Being Observed by Others: The Social Interaction Anxiety Scale and the Social Phobia Scale. Behav. Ther. 23 (1), 53–73. doi:10.1016/S0005-7894(05)80308-9

Isaksen, Scott. G., Brain, K., and Treffinger, Donald. J. (2010). Creative Approaches to Problem Solving: A Framework for Innovation and Change. Thousand Oaks, CA: Sage Publications.

Kahn, P. H., Kanda, T., Ishiguro, H., Gill, B. T., Shen, S., Ruckert, J. H., et al. (2016). Human Creativity Can Be Facilitated through Interacting with a Social Robot. In ACM/IEEE International Conference on Human-Robot Interaction, 2016-April. 173–180. doi:10.1109/HRI.2016.7451749

Leary, M. R. (1983). Social Anxiousness: The Construct and its Measurement. J. Personal. Assess. 47 (1), 66–75. doi:10.1207/s15327752jpa4701_8

Nomura, T., Kanda, T., Suzuki, T., and Yamada, S. (2020). Do People with Social Anxiety Feel Anxious about Interacting with a Robot? AI Soc. 35 (2), 381–390. doi:10.1007/s00146-019-00889-9

Paulus, P. B., and Yang., H.-C. (2000). Idea Generation in Groups: A Basis for Creativity in Organizations. Organizational Behav. Hum. Decis. Process. 82 (1), 76–87. doi:10.1006/obhd.2000.2888

Pinsonneault, A., Barki, H., Gallupe, R. B., and Hoppen, N. (1999). Electronic Brainstorming: The Illusion of Productivity. Inf. Syst. Res. 10 (2), 110–133. doi:10.1287/isre.10.2.110

Pot, E., Monceaux, J., Gelin, R., Maisonnier, B., and Robotics, Aldebaran. (2009). Choregraphe: A Graphical Tool for Humanoid Robot Programming. In Proceedings - IEEE International Workshop on Robot and Human Interactive Communication, 46–51. doi:10.1109/ROMAN.2009.5326209

RIVM (2018). Factsheet Mentale Gezondheid Van Jongeren: Enkele Cijfers En Ervaringen. Rijsinstituut Voor Volksgezondheid En Milieu. Available at: https://www.rivm.nl/documenten/factsheet-mentale-gezondheid-van-jongeren-enkele-cijfers-en-ervaringen .

Sanders, E. B.-N., and Stappers, P. J. (2008). Co-Creation and the New Landscapes of Design. CoDesign 4 (1), 5–18. doi:10.1080/15710880701875068

Sawyer, R. Keith. (2011). Explaining Creativity: The Science of Human Innovation. Oxford University Press. doi:10.1093/oxfordhb/9780199204540.003.0003

Scassellati, B., Henny Admoni, Henny., and Matarić, M. (2012). Robots for Use in Autism Research. Annu. Rev. Biomed. Eng. 14, 275–294. doi:10.1146/annurev-bioeng-071811-150036

Sellbom, M., and Tellegen, A. (2019). Factor Analysis in Psychological Assessment Research: Common Pitfalls and Recommendations. Psychol. Assess. 31 (12), 1428.

Spielberger, C. D. (1966). Theory and Research on Anxiety. Anxiety Behav. 1 (3), 3–20. doi:10.1016/b978-1-4832-3131-0.50006-8

Thurstone, L. L. (1947). Multiple-Factor Analysis; A Development And Expansion of the Vectors of Mind. Chicago, IL: University of Chicago Press.

Warr, A., and O'Neill, E. (2005). “Understanding Design as a Social Creative Process,” in Proceedings of the 5th Conference on Creativity & Cognition, 118–127. doi:10.1145/1056224.10562422005

Keywords: social robot, brainstorming, facilitator, creativity, social anxiety, evaluation apprehension

Citation: Geerts J, de Wit J and de Rooij A (2021) Brainstorming With a Social Robot Facilitator: Better Than Human Facilitation Due to Reduced Evaluation Apprehension?. Front. Robot. AI 8:657291. doi: 10.3389/frobt.2021.657291

Received: 22 January 2021; Accepted: 14 May 2021;

Published: 25 June 2021.

Edited by:

Patricia Alves-Oliveira, University of Washington, United StatesReviewed by:

Natalia Calvo-Barajas, Uppsala University, SwedenTesca Fitzgerald, Carnegie Mellon University, United States

Claude Houssemand, University of Luxembourg, Luxembourg

Alla Gubenko, University of Luxembourg, Luxembourg, in collaboration with reviewer CH

Copyright © 2021 Geerts, de Wit and de Rooij. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Alwin de Rooij, QWx3aW5kZVJvb2lqQHRpbGJ1cmd1bml2ZXJzaXR5LmVkdQ==

Julia Geerts

Julia Geerts Jan de Wit

Jan de Wit Alwin de Rooij

Alwin de Rooij