- 1Human Robotics Laboratory, Department of Mechanical Engineering, The University of Melbourne, Parkville, VIC, Australia

- 2Department of Surgery, St. Vincent’s Hospital, The University of Melbourne, Parkville, VIC, Australia

- 3Aikenhead Centre for Medical Discovery (ACMD), St. Vincent’s Hospital, Parkville, VIC, Australia

Haptic perception is one of the key modalities in obtaining physical information of objects and in object identification. Most existing literature has focused on improving the accuracy of identification algorithms, with less attention paid to efficiency. This work aims to investigate the efficiency of haptic object identification, that is, to reduce the number of grasps required to correctly identify an object out of a given object set. Thus, in a case where multiple grasps are required to characterise an object, the proposed algorithm seeks to determine where the next grasp should be on the object to obtain the most distinguishing information. As such, the paper proposes the construction of an object description that preserves the association of the spatial information and the haptic information on the object. A clustering technique is employed both to construct the description of the object in a data set and for the identification process. An information gain (IG) based method is then employed to determine which pose would yield the most distinguishing information among the remaining possible candidates in the object set to improve the efficiency of the identification process. The proposed algorithm is validated experimentally. A ReFlex TakkTile robotic hand with integrated joint displacement and tactile sensors is used to perform both the data collection for the dataset and the object identification procedure. The proposed IG approach was found to require a significantly lower number of grasps to identify the objects compared to a baseline approach where the decision was made by random choice of grasps.

1 Introduction

Haptics is one of the important sensing modalities used to perceive object physical properties, surface properties, and interaction forces between the end-effectors and the objects (Shaw Cortez et al., 2019; Scimeca et al., 2020; Mayer et al., 2020). Object identification is one of the most important applications of haptics, particularly, in cases where the identification process needs to rely on the information provided only through the physical interaction between the end-effector and the objects, or when it cannot be conveniently achieved by other means (Dargahi and Najarian, 2004). While haptics is most likely used in conjunction with other sensing modalities in practice (e.g. with vision) (Liu et al., 2017; Faragasso et al., 2018), it is also important for haptic-based object identification to be studied in isolation to understand the extent of its capabilities. It should be noted that haptics refers to the description of an object through all the information obtained by touching the object (Overvliet et al., 2008; Grunwald, 2008; Hannaford and Okamura, 2016). In this work, the haptic information involves not only the tactile information at the contact points/surface but also the proprioceptive information such as the pose of the fingers upon touching the objects.

Accuracy and efficiency are two important evaluation metrics in object identification in general as well as in haptic object identification. In haptic-based object identification, multiple grasps of the object presented are often required to identify it from the given set of objects. This is also naturally observed in human efforts of object identification when relying on handling the object without vision (Gu et al., 2016; Luo et al., 2019). Efficiency of the identification process therefore means identifying the object in as few grasps as possible. Most existing literature focuses on improving the accuracy. Examples include histogram-based methods (Luo et al., 2015; Schneider et al., 2009; Pezzementi et al., 2011; Zhang et al., 2016) and various supervised learning techniques (such as random forests and neural networks) (Spiers et al., 2016; Schmitz et al., 2015; Liu et al., 2016; Funabashi et al., 2018; Mohammadi et al., 2019). Relative to accuracy, the efficiency of haptic object identification techniques has been less investigated, with only a few reported studies (Kaboli et al., 2019; Xu et al., 2013). In (Xu et al., 2013), an efficient exploratory algorithm was presented specifically for texture identification. In (Kaboli et al., 2019), a method was presented to improve the efficiency of the learning process of object physical properties for the purpose of constructing object descriptions, not object identification.

The objective of this paper is to provide a systematic method for analysing and quantifying the efficiency of haptic object identification. The method covers both stages of the exercise: the object dataset construction process and the haptic object identification process based on an information gain technique. An approach for the object dataset construction is proposed to preserve the association of the spatial information on the objects and the haptic information they yield. During the dataset construction, a clustering technique is employed to reduce the dimensionality and complexity of the object descriptions, such as in (Pezzementi et al., 2011). Based on the preserved spatial information (haptic object description), the haptic information associated with each pose on the object is available to the algorithm. In contrast, histogram-based approaches (Luo et al., 2015; Schneider et al., 2009; Pezzementi et al., 2011; Zhang et al., 2016) construct object descriptions by counting how many times each grasp cluster appears in each object, but not which poses they belong to, and thus cannot be utilised to determine where to grasp the objects to obtain specific information.

The proposed object label construction in the object dataset is then combined with a proposed information gain based technique to calculate the amount of information at each pose that can be obtained to distinguish the object to be identified from the rest of the objects in the object set. Efficiency in haptic object identification is pursued in this algorithm by selecting the pose that contains the largest amount of information. This leads to a significant reduction in the number of grasps required to correctly identify the object out of a given object set compared to the conventional practice where such object information is not utilised in the identification process.

2 Proposed Methodology

Given a set of objects

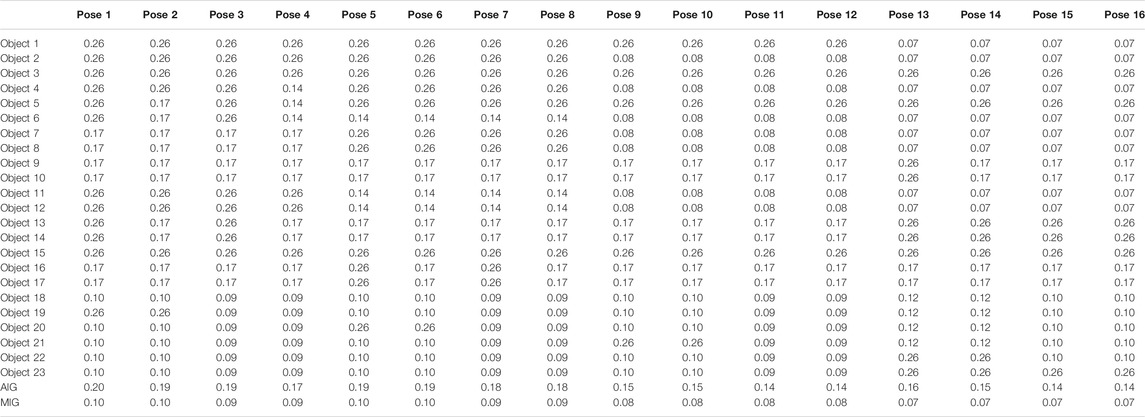

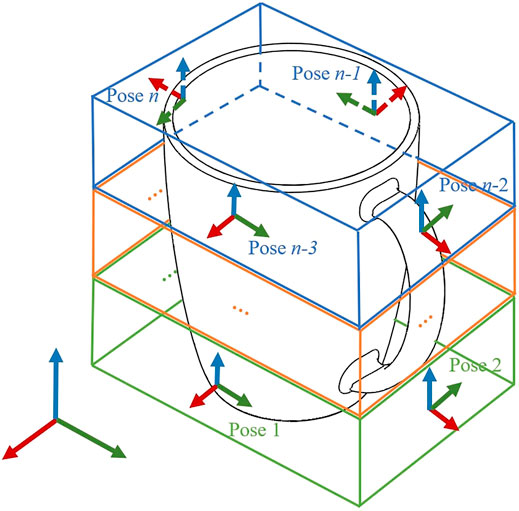

FIGURE 1. The characteristics of an object are regarded to be fully captured by grasping the object at n different poses. The n poses are spread, if possible uniformly, across the surface of the object.

The objective of this work, therefore, is to identify an object presented to the algorithm, among the possible objects in the object dataset, with the fewest grasps, where the possible grasp poses (the location and orientation at which a robotic hand grasps the object, relative to the object) are common to all objects.

The process involved in this exercise includes the construction of the object dataset (which associates the grasp pose information with the corresponding haptic information) and the object identification. Once the object dataset is established according to the proposed method, the problem is to identify which object (out of the object set) is being presented to the algorithm.

2.1 Object Dataset Construction

The object dataset construction procedure is presented in three steps: data acquisition and normalisation, categorising the grasp clusters, and constructing the object description. The details of each step are presented below.

2.1.1 Data Acquisition and Normalisation

Haptic measurements for all objects are taken at the predefined poses and the signals are processed. Each grasp on the object yields an M-dimensional measurement, where M is the number of sensors on the robotic hand. To collect the data, the robotic hand grasps each object at n different poses covering the object, for all ℓ objects in the object set. The grasp at each pose is repeated T times to account for the expected amount of uncertainty associated with the grasping process, for example, the sensor noise and the robot hand pose uncertainties. Because the physical variables involved (joint displacement and pressure) have different dimensions and units, a normalisation method is needed to remove the influence of the dimension and the unit. In this work, the “Min-Max” normalisation method (Jain et al., 2005), which scales the values of all variables to the range from zero to one, is employed.
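As a minimal sketch of the Min-Max step, the snippet below rescales each sensor channel independently to the range from zero to one. The function name `min_max_normalise`, the toy measurements, and the choice to map a constant channel to zero are illustrative assumptions, not details taken from the experiment.

```python
from typing import List


def min_max_normalise(rows: List[List[float]]) -> List[List[float]]:
    """Scale every column (sensor channel) of `rows` to the range [0, 1]."""
    if not rows:
        return []
    n_cols = len(rows[0])
    lows = [min(r[c] for r in rows) for c in range(n_cols)]
    highs = [max(r[c] for r in rows) for c in range(n_cols)]
    out = []
    for r in rows:
        scaled = []
        for c in range(n_cols):
            span = highs[c] - lows[c]
            # Assumption: a channel that never changes is mapped to 0.0.
            scaled.append((r[c] - lows[c]) / span if span > 0 else 0.0)
        out.append(scaled)
    return out


# Toy data: one joint displacement (rad) and one pressure reading per grasp.
grasps = [[0.5, 100.0], [1.0, 300.0], [1.5, 200.0]]
normalised = min_max_normalise(grasps)
print(normalised)
```

After this step both channels span the same unit range, so neither dominates the distance computations used for clustering.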

2.1.2 Categorising the Grasp Clusters

An unsupervised learning technique is then utilised to cluster all the M-dimensional normalised measurements for all n poses on an object, for all objects in the dataset, into K grasp clusters. In this paper, the well-established K-Means approach is adopted due to its high-speed performance. Details of the K-Means algorithm can be found in (Parsian, 2015). To determine the number of clusters, the ‘elbow method’ is used (Bholowalia and Kumar, 2014).

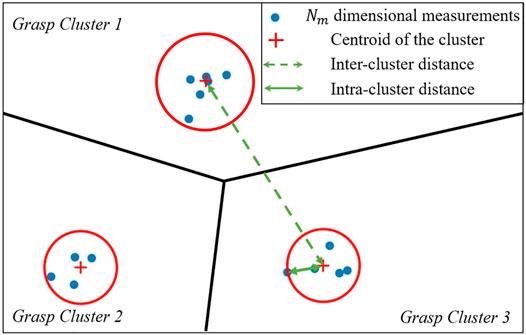

As an illustrative example, a 2-dimensional plot representing the clustering results is shown in Figure 2. Each point in Figure 2 represents one M-dimensional normalised measurement (for ease of reading, the M-dimensional space is conceptually represented in a 2-dimensional plot). In order to focus the study on the efficiency of the algorithm, it is assumed that the object dataset is well designed with distinguishable objects, and that noise/uncertainty in the haptic measurements is bounded within a range that is clearly smaller than the variation in the distinguishing information between distinct grasps. Therefore, grasping an object at a given pose multiple times will result in measurements that are all categorised into the same cluster, so that the identification accuracy can be decoupled from the efficiency. Furthermore, for each cluster, the spread of measurements will be completely bounded (represented by the red circles in Figure 2) and the minimum distance between the centroids of distinct clusters (inter-cluster distance) should be much larger than the radius of this bound (intra-cluster distance). Note that this assumption is only used for the object dataset construction. During the object identification step, practical uncertainties are included in the test, and the effect of such uncertainties is evaluated in the experiment.

FIGURE 2. An illustration of a 2D projection of the clusters formed by the M-dimensional normalised measurements (represented by each point on the plot) for all n poses on an object, for all ℓ objects in the dataset. The points are categorised into K grasp clusters.
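The clustering step and the elbow method can be sketched as follows. This is a plain Lloyd-style K-Means written for illustration only; the function names, the random initialisation from data points, the iteration count, and the toy points are all assumptions rather than the implementation used in the study. The elbow method then inspects how the within-cluster sum of squares (here called `inertia`) falls as K grows.

```python
import random
from typing import List, Sequence, Tuple


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two measurements."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[List[float]], k: int, iters: int = 100,
           seed: int = 0) -> Tuple[List[int], float]:
    """Return (cluster index per point, within-cluster sum of squares)."""
    rng = random.Random(seed)
    # Assumption: initial centroids are k distinct data points.
    centroids = [list(p) for p in rng.sample(points, k)]

    def assign_all() -> List[int]:
        return [min(range(k), key=lambda j: sq_dist(p, centroids[j]))
                for p in points]

    for _ in range(iters):
        assign = assign_all()
        for j in range(k):
            members = [p for p, a in zip(points, assign) if a == j]
            if members:  # keep the old centroid if a cluster goes empty
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    assign = assign_all()
    inertia = sum(sq_dist(p, centroids[a]) for p, a in zip(points, assign))
    return assign, inertia


# Toy normalised measurements forming three tight groups.
points = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
          [1.0, 1.0], [0.9, 1.0], [1.0, 0.9],
          [0.0, 1.0], [0.1, 1.0], [0.0, 0.9]]
inertias = {k: kmeans(points, k)[1] for k in range(1, 6)}
for k, value in inertias.items():
    print(k, round(value, 4))
```

The value of K is read off where the printed curve stops dropping sharply; a single random initialisation can land in a local optimum, so in practice several seeds would normally be compared.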

2.1.3 Constructing the Object Description

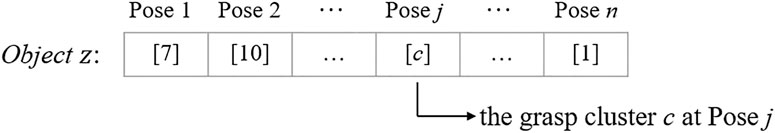

After categorisation, an object can be labelled uniquely by the string (of length n) for the n poses across the object. Each pose p1 … pn is assigned a cluster number, which is the cluster that the haptic measurements at that pose for that object have been categorised into. An example of the label of an object in the set is represented in Figure 3. In this example, when grasped at Pose 1, the object yields haptic measurements across the M sensors on the robotic grasper that have been categorised into grasp cluster number seven through the clustering process. The resulting object description therefore preserves the information of which pose yields which grasp measurement, which is now represented as the grasp cluster number.
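A minimal sketch of how such a label could be assembled is given below, assuming the cluster centroids are already available from the clustering step. The names `nearest_cluster` and `describe_object`, the toy centroids, and the majority vote over repeated grasps are illustrative assumptions; under the bounded-noise assumption stated earlier, all repeats at a pose fall into the same cluster, so the vote is only a safeguard.

```python
from collections import Counter
from typing import List, Sequence


def nearest_cluster(x: Sequence[float],
                    centroids: Sequence[Sequence[float]]) -> int:
    """Index of the centroid closest to x (squared Euclidean distance)."""
    dists = [sum((a - b) ** 2 for a, b in zip(x, c)) for c in centroids]
    return dists.index(min(dists))


def describe_object(grasps_by_pose: List[List[List[float]]],
                    centroids: Sequence[Sequence[float]]) -> List[int]:
    """Build the length-n label: one grasp cluster number per pose."""
    label = []
    for repeats in grasps_by_pose:
        votes = Counter(nearest_cluster(x, centroids) for x in repeats)
        label.append(votes.most_common(1)[0][0])
    return label


# Hypothetical centroids and one object grasped twice at each of three poses.
centroids = [[0.1, 0.1], [0.9, 0.9], [0.1, 0.9]]
grasps_by_pose = [
    [[0.12, 0.08], [0.09, 0.11]],  # pose 1
    [[0.88, 0.93], [0.91, 0.90]],  # pose 2
    [[0.10, 0.85], [0.15, 0.92]],  # pose 3
]
label = describe_object(grasps_by_pose, centroids)
print(label)
```

Because the label is indexed by pose, it records where on the object each grasp cluster occurs, which a histogram of cluster counts would discard.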

2.2 Object Identification

The proposed object identification approach in this paper utilises the information gain based (IG-based) technique. It is compared to a baseline approach, where the knowledge of the characteristics in the object dataset is not utilised. In this work, any object presented for identification should belong to the object set. If a new object (which does not belong to the object set) is presented, it should first be added to the object dataset by undergoing the Object Dataset Construction step.

It should be noted that when performing the object identification, the relative pose between the robotic grasper and the object is assumed to be known within the bound of uncertainties. This is a common assumption made in the studies of haptic-based object identification techniques, such as in (Madry et al., 2014; Luo et al., 2015; Schmitz et al., 2015; Liu et al., 2016; Spiers et al., 2016), to simplify the analysis and to keep the paper focused on the main question at hand. In practice, the relative displacements of subsequent poses grasped by the robotic end-effector are known to the algorithm that commands the robot. Only the pose of the initial grasp (the first grasp in which the robot makes contact with the object) is unknown. This, however, can be obtained as the robot makes its subsequent grasps, in a technique analogous to the well-established simultaneous localisation and map building (SLAM). This technique, adapted to the haptic-based object identification problem, is established in the literature and was presented in (Lepora et al., 2013).

2.2.1 Information Gain Based Approach (The Proposed Approach)

Information gain is a commonly used concept to represent the amount of information that is gained by knowing the value of a feature (variable) (Gray, 2011). Specifically, IG assigns the highest information value to the most distinguishing feature. IG has been applied to decision trees, simultaneous localisation and mapping (SLAM), and feature selection techniques (Kent, 1983; Lee and Lee, 2006), as well as to object shape reconstruction, where it improves the exploration efficiency (Ottenhaus et al., 2018).

2.2.1.1 Background on Information Gain Approach

For a random variable X with a possible outcomes $x_\alpha$, $\alpha = 1, \dots, a$, assume that each outcome has a probability of $p(x_\alpha)$. The information entropy H(X) is defined as:

$$H(X) = -\sum_{\alpha=1}^{a} p(x_\alpha)\,\log_2 p(x_\alpha)$$

Conditional entropy is then used to quantify the information needed to describe the outcome of a random variable X given the value of another random variable Y. Assume that there are b possible outcomes of the random variable Y. Each outcome $y_\beta$ of the random variable Y has a probability of $p(y_\beta)$, $\beta = 1, \dots, b$. Let $p(x_\alpha \mid y_\beta)$ be the conditional probability of $x_\alpha$ given $y_\beta$. H(X|Y) represents the information entropy of the variable X conditioned upon the random variable Y, which can be computed as:

$$H(X \mid Y) = -\sum_{\beta=1}^{b} p(y_\beta) \sum_{\alpha=1}^{a} p(x_\alpha \mid y_\beta)\,\log_2 p(x_\alpha \mid y_\beta)$$

Information gain represents the degree to which uncertainty in the information is reduced when the value of the random variable Y is known, which is defined as

$$IG(X, Y) = H(X) - H(X \mid Y)$$
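The three quantities can be illustrated numerically with a short sketch. The function names are illustrative, and logarithms are taken to base 2 (entropy in bits) as an assumption. The two test cases are the extremes: a variable Y that fully determines X, and a variable Y that is independent of X.

```python
import math
from typing import Sequence


def entropy(p: Sequence[float]) -> float:
    """H(X) in bits; zero-probability outcomes contribute nothing."""
    return -sum(q * math.log2(q) for q in p if q > 0)


def conditional_entropy(p_y: Sequence[float],
                        p_x_given_y: Sequence[Sequence[float]]) -> float:
    """H(X|Y): entropy of X under each outcome of Y, weighted by p(y)."""
    return sum(py * entropy(row) for py, row in zip(p_y, p_x_given_y))


def information_gain(p_x: Sequence[float], p_y: Sequence[float],
                     p_x_given_y: Sequence[Sequence[float]]) -> float:
    """IG(X, Y) = H(X) - H(X|Y)."""
    return entropy(p_x) - conditional_entropy(p_y, p_x_given_y)


# Y fully determines X: all uncertainty about X is removed.
ig_full = information_gain([0.5, 0.5], [0.5, 0.5], [[1.0, 0.0], [0.0, 1.0]])
# Y is independent of X: nothing is learned about X.
ig_none = information_gain([0.5, 0.5], [0.5, 0.5], [[0.5, 0.5], [0.5, 0.5]])
print(ig_full, ig_none)
```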

2.2.1.2 IG-Based Approach for Haptic Object Identification

An IG-based approach is proposed here that estimates the degree to which the information uncertainty is reduced by each choice of the pose in the haptic identification applied to a set of ℓ candidate objects.

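At each iteration, the algorithm calculates the information gain for each pose for every remaining object in the set. Firstly, the information entropy is calculated for each object. Considering that N objects remain in the set, the objects are sequentially indexed as $o_1, o_2, \dots, o_N$. Note that at the first iteration, N = ℓ. When the object to be identified is assumed to be $o_i$, all other remaining objects in the set are named $\neg o_i$ (i.e., not $o_i$). Therefore, the object set for object $o_i$ can be represented as a random variable with two possible outcomes: $O_{o_i} = \{o_i, \neg o_i\}$. Taking each of the N remaining objects to be equally likely, the probability of each possible outcome can then be calculated as:

$$p(o_i) = \frac{1}{N}, \qquad p(\neg o_i) = \frac{N-1}{N}$$

Therefore, the information entropy for the object $o_i$ can be calculated as:

$$H(O_{o_i}) = -p(o_i)\,\log_2 p(o_i) - p(\neg o_i)\,\log_2 p(\neg o_i)$$

A second variable considered here is $C_{p_j} = \{c_{p_j,1}, \dots, c_{p_j,g_j}\}$, containing the non-repeating grasp cluster numbers at pose $p_j$ for all remaining objects in the set, where $g_j$ is the number of non-repeating grasp cluster numbers in the set $C_{p_j}$. At pose $p_j$, each possible outcome $c_{p_j,t}$, $t = 1, \dots, g_j$, has the probability

$$p(c_{p_j,t}) = \frac{N_{p_j,t}}{N}$$

where $N_{p_j,t}$ denotes the number of remaining objects whose grasp cluster number at pose $p_j$ is $c_{p_j,t}$.

Therefore, the conditional entropy for object $o_i$ at pose $p_j$ can be computed as

$$H(O_{o_i} \mid C_{p_j}) = -\sum_{t=1}^{g_j} p(c_{p_j,t}) \Big[ p(o_i \mid c_{p_j,t})\,\log_2 p(o_i \mid c_{p_j,t}) + p(\neg o_i \mid c_{p_j,t})\,\log_2 p(\neg o_i \mid c_{p_j,t}) \Big]$$

with the convention $0 \log_2 0 = 0$. The conditional probabilities depend on whether $o_i$ yields the cluster $c_{p_j,t}$ at pose $p_j$. If the grasp cluster number of $o_i$ at $p_j$ is $c_{p_j,t}$, then

$$p(o_i \mid c_{p_j,t}) = \frac{1}{N_{p_j,t}}, \qquad p(\neg o_i \mid c_{p_j,t}) = \frac{N_{p_j,t}-1}{N_{p_j,t}}$$

otherwise, if the grasp cluster number of $o_i$ at $p_j$ is not $c_{p_j,t}$, then

$$p(o_i \mid c_{p_j,t}) = 0, \qquad p(\neg o_i \mid c_{p_j,t}) = 1$$

The information gain (IG) for object $o_i$ at pose $p_j$ can be calculated as:

$$IG(O_{o_i}, C_{p_j}) = H(O_{o_i}) - H(O_{o_i} \mid C_{p_j})$$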

The information gain can be calculated for all remaining objects for each pose. The algorithm then selects the pose that contains the highest IG. In other words, the algorithm will grasp the object at the pose that would provide the most information gain. For each pose, each object has its IG value, representing the degree of distinction of this object from all other remaining objects in the set at this pose. At a given pose, the greater the IG value of an object, the greater the degree of distinction between this object and other remaining objects at this pose. There may be a few ways to quantify the measure of the “highest information gain”. In this paper, the algorithm first selects the pose with the largest value of minimum information gain (MIG), where the minimum is taken over the remaining objects at that pose. When the largest value of MIG is shared by more than one pose, the algorithm then chooses the pose with the highest value of average IG (AIG) out of these poses. If the highest value of AIG is still shared by more than one pose, any of these poses can be chosen since they all have the same amount of information for the object identification purpose. If such a situation arises, in this paper, the pose selection follows the order of poses.
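The selection rule can be sketched as below. The sketch assumes that every remaining object is equally likely and that logarithms are base 2, in which case only the cluster shared with the hypothesised object contributes to the conditional entropy. The names `binary_entropy`, `info_gain` and `select_pose`, and the four-object toy dataset, are illustrative and not taken from the experiment.

```python
import math
from typing import Dict, List, Sequence


def binary_entropy(p: float) -> float:
    """Entropy in bits of a two-outcome variable with probabilities p, 1 - p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def info_gain(labels: Dict[str, List[int]], obj: str, pose: int) -> float:
    """IG of the split {obj, not obj} when the cluster at `pose` is observed."""
    n = len(labels)
    # m: remaining objects sharing obj's cluster number at this pose.
    m = sum(1 for lab in labels.values() if lab[pose] == labels[obj][pose])
    # Clusters that exclude obj contribute zero conditional entropy.
    return binary_entropy(1 / n) - (m / n) * binary_entropy(1 / m)


def select_pose(labels: Dict[str, List[int]], poses: Sequence[int]) -> int:
    """Largest minimum IG first, then largest average IG, then pose order."""
    best_pose, best_key = None, None
    for pose in poses:
        gains = [info_gain(labels, obj, pose) for obj in labels]
        # Rounding guards the tie-breaks against floating-point noise.
        key = (round(min(gains), 9), round(sum(gains) / len(gains), 9))
        if best_key is None or key > best_key:  # ">" keeps the earliest pose
            best_pose, best_key = pose, key
    return best_pose


# Toy dataset: four objects, three poses (indices 0..2), made-up clusters.
labels = {"A": [1, 1, 1], "B": [1, 2, 2], "C": [1, 2, 3], "D": [1, 3, 4]}
print(select_pose(labels, [0, 1, 2]))
```

In the toy dataset, pose index 0 yields the same cluster for every object and so carries no information, whereas pose index 2 separates all four objects and is selected.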

The summary of the proposed method is shown in Algorithm 1. Note that for a given object dataset, the first pose in the identification process is always the same, as the algorithm will go first for the pose with the largest value of minimum IG for the entire object dataset. After the first grasp, depending on the measurements encountered on the object to be identified, the appropriate candidate objects are eliminated from consideration and a “reduced object set” is generated, containing the remaining possible options (of objects) that could not be eliminated. The proposed algorithm is repeated until the number of objects in the set is less than or equal to one. The outcome of the object identification process can be one of the following: 1) the object is correctly identified, or 2) the object is misidentified (as another in the object set). It is also possible that, owing to a misidentified grasp, the resulting object description does not match any in the object dataset. In this case, 3) the object is considered as unidentified. Note that this does not imply that the presented object is not contained in the object dataset. It simply means that the uncertainties in the measurements resulted in an object description that cannot be matched to the dataset.

2.2.2 Baseline Approach

In this paper, a baseline approach is constructed for comparison with the proposed IG-based approach, and it is summarised in Algorithm 2. By baseline approach, we mean that the decision on where to grasp next in the haptic object identification process does not consider the information content of the object dataset. The procedure of the baseline approach is similar to the IG-based approach (it still eliminates inadmissible candidates following the grasps performed), but the subsequent grasp pose is selected at random. The grasp poses where measurements have already been taken are removed from the set p, which means these grasp poses cannot be chosen in the following identification iterations. The baseline approach has also been utilised in (Corradi et al., 2015; Vezzani et al., 2016; Zhang et al., 2016), where efficiency is not the focus of the study.
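Both approaches share the same grasp-and-eliminate loop and differ only in how the next pose is chosen. The sketch below makes that explicit by passing the pose chooser in as a function and running it with a random chooser, which corresponds to the baseline. It is an illustration under stated assumptions, not a transcription of Algorithm 1 or Algorithm 2: the names `identify`, `observe` and `choose_pose`, the toy labels, and the noise-free observation function are all hypothetical.

```python
import random
from typing import Callable, Dict, List, Optional, Tuple

Labels = Dict[str, List[int]]


def identify(labels: Labels,
             observe: Callable[[int], int],
             choose_pose: Callable[[Labels, List[int]], int]
             ) -> Tuple[Optional[str], int]:
    """Grasp, eliminate, repeat. Returns (object name or None, grasps used)."""
    candidates = dict(labels)
    unused = list(range(len(next(iter(labels.values())))))
    grasps = 0
    while len(candidates) > 1 and unused:
        pose = choose_pose(candidates, unused)
        unused.remove(pose)          # a pose is never grasped twice
        cluster = observe(pose)      # grasp cluster measured at this pose
        grasps += 1
        candidates = {o: lab for o, lab in candidates.items()
                      if lab[pose] == cluster}
    if len(candidates) == 1:
        return next(iter(candidates)), grasps
    return None, grasps              # no unique match: unidentified


# Toy dataset (made up) and a noise-free presentation of object "C".
labels = {"A": [1, 1, 1], "B": [1, 2, 2], "C": [1, 2, 3], "D": [1, 3, 4]}
rng = random.Random(0)


def random_chooser(cands: Labels, unused: List[int]) -> int:
    """Baseline: pick any pose that has not been grasped yet."""
    return rng.choice(unused)


name, grasps = identify(labels, lambda pose: labels["C"][pose], random_chooser)
print(name, grasps)
```

Substituting an IG-based chooser for `random_chooser` leaves the loop unchanged. Whether a returned name counts as correct or misidentified can only be judged against the object actually presented, whereas the `None` outcome corresponds to the unidentified case.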

3 Experimental Evaluation

3.1 Experimental Setup

To evaluate the performance of the proposed object identification algorithm, the following experimental setup is used in this paper:

3.1.1 Object Set

To represent a wide range of objects with different values of stiffness and shapes, 23 objects are selected in this study (as shown in Figure 4). Objects used for this study are: 1) Glass bottle, 2) Cylindrical can, 3) Peppercorn dispenser, 4) Mug, 5) Spray bottle, 6) Cuboid can, 7) 3D-printed cylinder, 8) Arbitrarily shaped 3D-printed object, 9) Pepsi bottle, 10) Pepsi bottle otherwise identical to Object 9 but with a bump on the bottle cap, 11) Tennis ball (soft), 12) Silicone ball (hard), 13) Oral-B blue bottle, 14) Oral-B white bottle, 15) Soft drink bottle, 16) Sports drink bottle (full), 17) Sports drink bottle (empty), 18) Assembled Lego blocks, 19) Assembled Lego blocks with a specific part at Height 1, 20) Assembled Lego blocks with a specific part at Height 2, 21) Assembled Lego blocks with a specific part at Height 3, 22) Assembled Lego blocks with a specific part at Height 4, and 23) Assembled Lego blocks with a shorter height. All selected objects satisfy the assumption on distinguishability of the objects.

Objects in the set are intentionally selected to have a high level of similarity, such as Objects 9 and 10, which are identical except for the bump on the bottle cap of Object 10. Objects 11 and 12 have the same main body shape but differ in overall stiffness. Objects 13 and 14 have similar sizes and shapes but different stiffness at Pose 1. Therefore, when grasping these two, the joint angles will be similar, but the tactile maps will be different. Objects 15 and 16 have similar convex envelopes, such that they result in similar finger displacements when grasped. However, they differ in that one has clearly defined edges while the other has a rounded shape. The tactile measurements upon grasping these two objects are significantly different, while the finger displacements of the grasps are similar. Objects 16 and 17 are identical plastic bottles, but one is filled with water while the other is empty. Objects 18–23 have similar shapes over the majority of their surfaces, except for one specific part on each object.

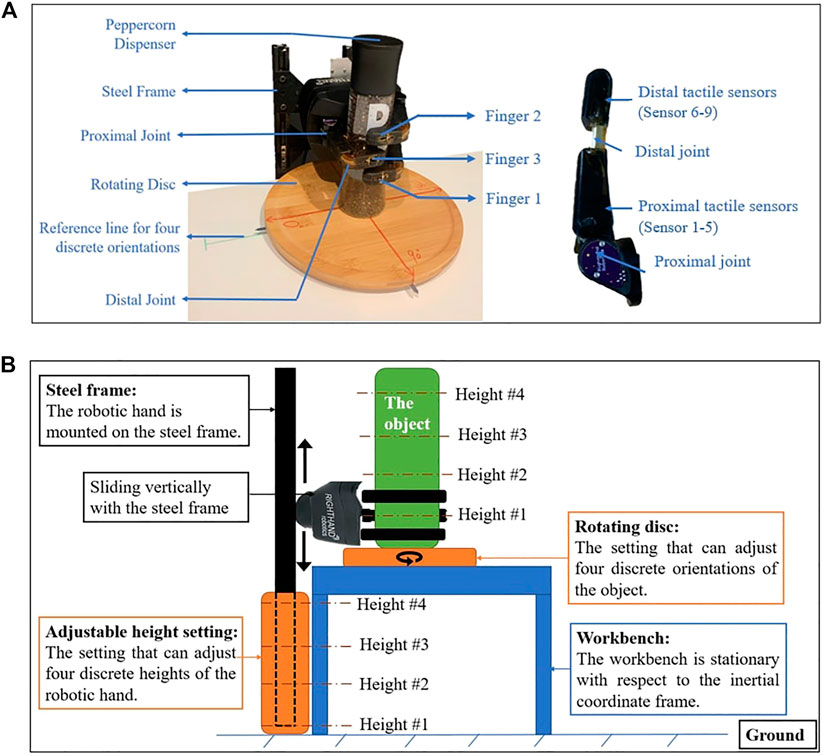

3.1.2 Robotic Hand and Tactile Sensors

The experimental platform is shown in Figure 5. The ReFlex TakkTile (RightHand Robotics, US), which consists of three under-actuated fingers, is used in the study. The ReFlex hand has 4 Degrees of Freedom (DoFs): the flexion/extension of each finger and one coupled rotation between the orientations of fingers No. 1 and No. 2. In this work, only the three DoFs of finger flexion/extension are used. Each under-actuated finger is controlled by an actuator that drives the tendon spanning both the proximal and distal joints. The proximal joint connects the proximal link to the base, and the distal joint connects the distal link to the proximal link. Each finger has one proximal joint encoder, one tendon spool encoder, and nine embedded TakkTile pressure sensors arranged along the finger. The reading of the tendon spool encoder represents the angular displacement of the whole finger (the sum of the angular displacements of the proximal joint and the distal joint), which provides the proprioceptive information. Therefore, the degree of flexion of the distal joint can be calculated from the difference between the readings of the tendon spool encoder and the proximal joint encoder.
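Written out, the relation between the two encoder readings and the distal joint flexion is as follows, where the symbols $\theta_{\text{spool}}$, $\theta_{\text{prox}}$ and $\theta_{\text{dist}}$ are introduced here for illustration only and denote the tendon spool reading, the proximal joint reading, and the distal joint flexion, respectively:

$$\theta_{\text{spool}} = \theta_{\text{prox}} + \theta_{\text{dist}} \quad\Longrightarrow\quad \theta_{\text{dist}} = \theta_{\text{spool}} - \theta_{\text{prox}}$$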

FIGURE 5. Experimental platform. (A) The physical experimental setup. (B) The schematic of the experimental setup (side view).

In this work, the ReFlex hand is mounted on a steel frame and its three fingers are positioned parallel to the horizontal direction. The height of the robotic hand can be set by adjusting the vertical position of the steel frame. All the data are collected at a sampling rate of 40 Hz. During each grasp, all fingers are commanded to move 3 rad over 6 s. A torque threshold is also set for each motor. For each finger, once this threshold is reached, the finger stops moving and holds that pose as the final pose for measurements.

3.1.3 Software

Robotic hand operation and data collection are all performed via the Robot Operating System (ROS), version ‘ROS-indigo’, on a computer running Ubuntu 14.04 Linux. The information (joint angles and pressure values) is recorded via the ‘rosbag’ command and saved in a ‘comma-separated values’ (‘.csv’) file.

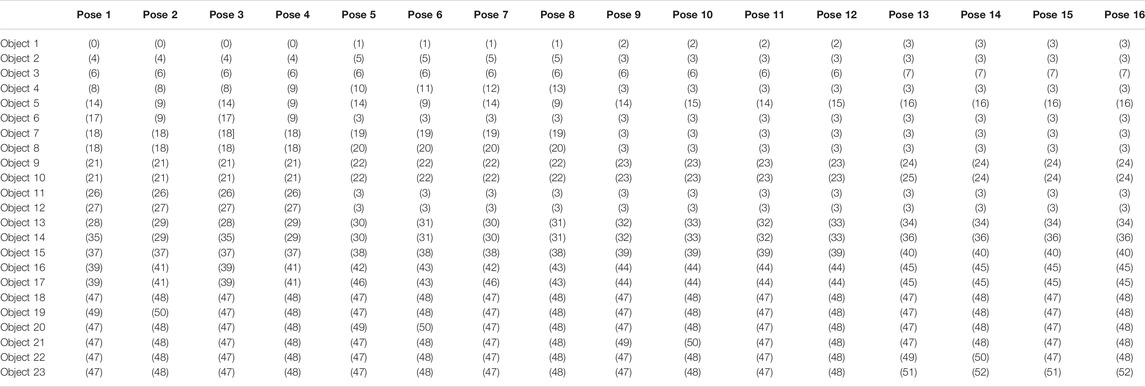

3.2 Object Dataset Construction

Each object oℓ ∈ O, ℓ = 1, … , 23 is grasped at 16 different poses, n = 16, divided across four grasp heights (with 55 mm increments). The object is grasped in four different orientations about the vertical axis at each height setting (with 90° increments). For each object, the grasp is repeated 10 times at each pose. The collected data are then normalised using the “Min-Max” normalisation method. To categorise the data, fifty-three grasp clusters are identified through the ‘elbow method’. Since each object is grasped at n = 16 different poses, the label for each object is made up of a series of n = 16 grasp cluster numbers.
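The 16-pose grid can be enumerated as in the short sketch below. The height of the lowest grasp and the order in which the poses are numbered are not specified here, so the zero height offset and the height-major ordering are assumptions made purely for illustration.

```python
from itertools import product

N_HEIGHTS, N_ORIENTATIONS = 4, 4
HEIGHT_STEP_MM, ANGLE_STEP_DEG = 55, 90

# Each pose: (height offset above the lowest grasp height in mm,
#             rotation about the vertical axis in degrees).
poses = [(h * HEIGHT_STEP_MM, a * ANGLE_STEP_DEG)
         for h, a in product(range(N_HEIGHTS), range(N_ORIENTATIONS))]

print(len(poses))
print(poses[0], poses[-1])
```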

3.3 Object Identification

Using the established data set, the object identification procedure with IG-based algorithm for efficiency was validated for two scenarios, where the measurements taken for the object identification process were done:

• Scenario 1: without uncertainty. This represents a sanity check, representing the theoretical-best-possible outcome, evaluating only the ability of the IG-based algorithm in utilising the information of the objects in the dataset to make decisions on where to make the subsequent measurement grasp. Note that the sensor measurement errors (of the robotic grasper encoders and tactile sensors) are still present in this case but were not significant to cause any false outcomes in identifying the grasp types.

• Scenario 2: with an amount of uncertainties approximated to a typical practical process. In this paper, the amount of practical uncertainties was realised as the positioning error of the grasper relative to the object, bounded within ±20 degrees of grasping orientation uncertainty (with respect to the object). This scenario evaluates the performance of the proposed method in a practical situation where measurement and grasp positioning noise are present.

Two tests are conducted in both scenarios: the accuracy evaluation and the efficiency evaluation. In the accuracy evaluation, ten sets of identification trials are conducted for each object, and the identification accuracy of each object is recorded. The procedure is shown in Algorithm 3. In the efficiency evaluation, each object presented is to be correctly identified ten times. The procedure of the efficiency evaluation is summarised in Algorithm 4. The measure of efficiency is defined as the number of grasps used to correctly identify the presented object. When uncertainties are involved, the algorithm may misidentify the grasp type when measuring one of the poses. Under this definition, for each of the ten correct identifications per object, the grasps made during attempts that did not achieve a correct identification are also counted towards the efficiency. Each time the algorithm misidentifies the presented object, it starts a new attempt, identifying the object from the beginning.
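One reading of this efficiency measure is sketched below: the grasps spent on failed attempts are charged to the correct identifications, so the average is the total number of grasps divided by the number of correct identifications. The function name and the attempt log are hypothetical, and Algorithm 4 may organise the bookkeeping differently.

```python
from typing import List, Tuple


def grasps_per_correct_identification(attempts: List[Tuple[bool, int]]) -> float:
    """attempts: (was_correct, grasps_used) for each attempt, in order."""
    total_grasps = sum(g for _, g in attempts)
    n_correct = sum(1 for ok, _ in attempts if ok)
    if n_correct == 0:
        raise ValueError("no correct identification recorded")
    return total_grasps / n_correct


# Hypothetical log: four correct identifications and one failed attempt.
attempts = [(True, 2), (False, 3), (True, 2), (True, 2), (True, 1)]
efficiency = grasps_per_correct_identification(attempts)
print(efficiency)
```

In this log the failed attempt adds three grasps without adding a correct identification, raising the average from 1.75 to 2.5 grasps, which is how lower accuracy shows up as lower efficiency.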

4 Results and Discussion

4.1 Object Dataset Construction

The result of the object dataset construction used in the experimental evaluation is represented in Table 1. In the resulting object dataset, each of the 23 objects is described as a 16-number long string, representing the 16 cluster numbers for that specific object from the measurements taken at the 16 grasping poses performed on the object.

4.2 Object Identification

4.2.1 Scenario 1 (Without Uncertainties)

Accuracy Evaluation

Ten sets of identification trials for each object are conducted. In this scenario where no uncertainties were included, it was confirmed that all trials yielded a 100% accuracy for both the IG-based approach and the baseline approach, as expected.

Efficiency Evaluation

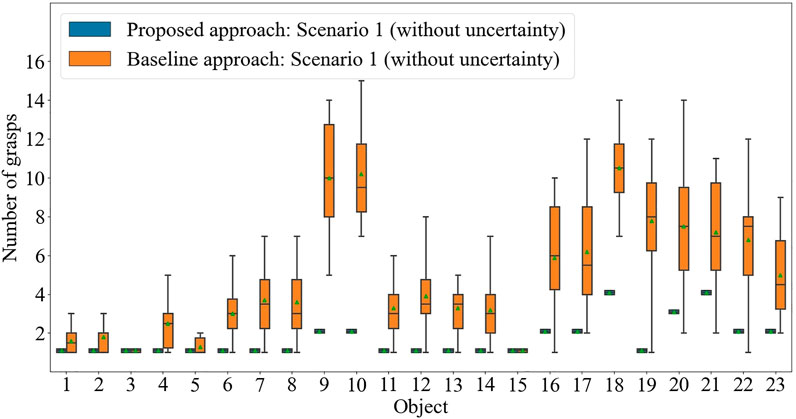

The number of grasps required to correctly identify the presented object, using the baseline approach and the proposed IG-based approach, is shown in Figure 6. Using the constructed object dataset, the IG value for each object at each pose can be calculated for the first iteration. The IG values for all the objects for all possible poses are shown in Table 2. To decide on the pose for the first grasp, the algorithm looks for the largest minimum IG (MIG) value among all the poses in Table 2. In our example, the largest MIG value of 0.10 is found for Poses 1 and 2, and Poses 5 and 6 (see the last row of Table 2). The algorithm then selects, out of these poses, the one with the highest average IG (AIG) value, which is 0.20 for Pose 1. Therefore, for the first grasp, Pose 1 is selected. Note that once the first grasp is made, depending on the grasp type identified, inadmissible candidates are removed and the IG values for the subsequent grasp need to be recalculated with the set of the remaining objects.

FIGURE 6. The number of grasps required to correctly identify each of 23 objects using the proposed approach and the baseline approach: Scenario 1 (without uncertainty).

The experimental results for the number of grasps required to correctly identify the object through the IG-based approach are shown in Figure 6. It is observed that the IG-based approach outperforms the baseline approach in general, although the margin varies from object to object. The average number of grasps needed for the baseline approach is 4.81, while the IG-based approach was observed to correctly identify the object in 1.65 grasps on average. As expected, the number of grasps required by the baseline approach to identify an object varies widely between different attempts due to the random selection in deciding the subsequent grasps. The IG-based approach is repeatable for all objects in the same set in the absence of practical uncertainties. This demonstrates the theoretically achievable performance of the proposed approach.
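Expressed as relative figures, the two reported averages correspond to:

$$\frac{4.81 - 1.65}{4.81} = \frac{3.16}{4.81} \approx 0.657, \qquad \frac{4.81}{1.65} \approx 2.92$$

that is, roughly a 66% reduction in the average number of grasps, or about 2.9 times fewer grasps than the baseline in this scenario.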

The advantage of the proposed approach is highlighted in the more challenging cases, where objects are more similar to others in the object set. Objects 9 and 10 are identical except for a bump on one of the two bottle caps, and the baseline approach struggled to identify the object without exploiting the knowledge of the objects in the dataset. Similar observations can be made for Objects 18 to 23 (see Figure 4).

4.2.2 Scenario 2 (With Uncertainty)

This section explores how the proposed object identification approach performs when the measurement includes a level of uncertainty typical to that seen in practical conditions. The identification procedure remains the same as shown in Section 4.2.1 where the first grasp is carried out at Pose 1.

Accuracy Evaluation

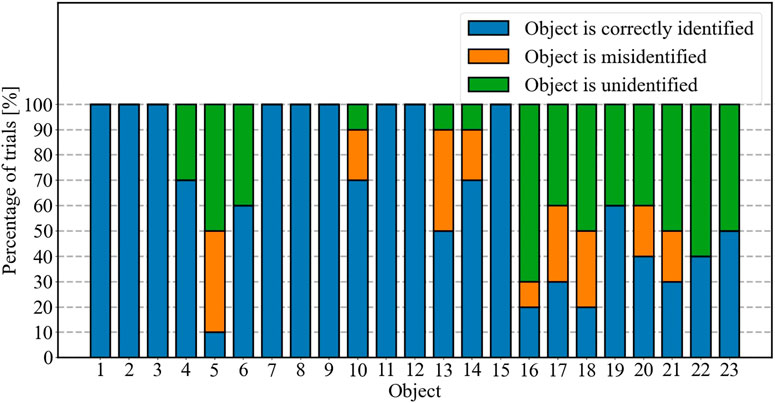

The resulting percentage accuracy of the proposed approach in the presence of practical uncertainties is shown in Figure 7. The percentages of misidentified outcomes (when an object is identified as another) and unidentified outcomes (when the resulting descriptor does not match any object in the dataset due to the uncertainties involved) are also shown. It can be seen that some objects are more susceptible to uncertainties. In general, more cylindrical objects are less susceptible, while objects with sharp, angular features are more prone to misidentification. This is due to the nature of the tactile sensors on the robotic grasper used in our experiment, where individual sensors are embedded in the fingers. A change in the grasping pose can cause a sharp angular feature on an object to press on an adjacent tactile sensor, activating a completely different sensor in the resulting reading.

FIGURE 7. The accuracy of identifying each of 23 objects through the proposed approach: Scenario 2 (with uncertainty).

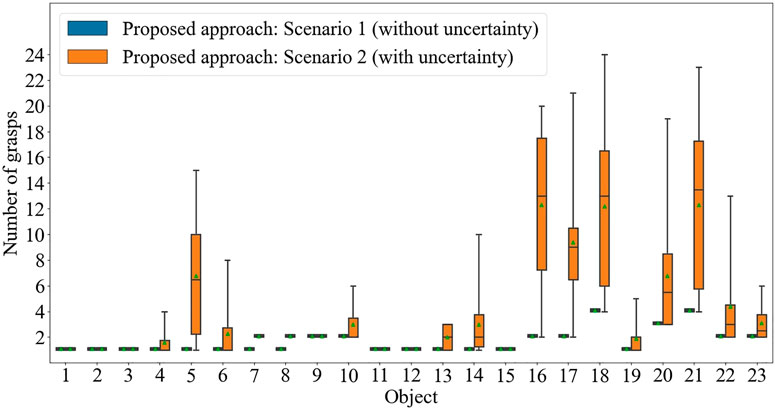

Efficiency Evaluation

The effect of including the practical uncertainties on the performance of the proposed approach is shown in Figure 8, where it is compared to the performance without practical uncertainties. The average number of grasps required for correct identification increases from 1.65 in Scenario 1 to 4.08 in this scenario.

FIGURE 8. The number of grasps required to correctly identify each of 23 objects using the proposed approach: Scenario 2 (with uncertainty).

As seen in the outcome of the accuracy evaluation, the identification efficiency of rectangular objects, which have sharp angular features on their surfaces (such as Objects 5, 6, 13, 14, and 18), is the most affected. Because of their poor identification accuracy, these objects require, on average, more attempts (and thus more grasps) to make a correct identification. This also demonstrates that the constructed measure of efficiency successfully accounts for the accuracy of the identification approach.
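The coupling between accuracy and efficiency can be made concrete with a short sketch. It assumes one possible reading of the efficiency measure, in which the grasps spent on failed attempts are charged to the next correct identification; this reading, the function name, and the attempt logs are assumptions for illustration, not the definition or data used in this paper.

```python
def grasps_per_correct_identification(attempts):
    """Average number of grasps spent per correct identification.

    attempts: list of (num_grasps, was_correct) tuples, one per attempt.
    Grasps from failed attempts are included in the total, so lower
    accuracy directly raises the value (assumed reading of the measure).
    """
    total_grasps = sum(grasps for grasps, _ in attempts)
    num_correct = sum(1 for _, was_correct in attempts if was_correct)
    if num_correct == 0:
        return float("inf")  # the object was never identified correctly
    return total_grasps / num_correct


# Hypothetical logs: a cylindrical object identified correctly every time,
# and an angular object misidentified on every other attempt.
cylinder_attempts = [(2, True), (1, True), (2, True), (2, True)]
box_attempts = [(2, False), (3, True), (2, False), (3, True)]

print(grasps_per_correct_identification(cylinder_attempts))
print(grasps_per_correct_identification(box_attempts))
```

Under this reading, the angular object needs more grasps per correct identification even though each individual attempt is of similar length, because half of its attempts are wasted.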

5 Conclusion

This paper presents an approach that utilises the information in the object dataset to improve the efficiency of haptic object identification. It is shown that, compared to an equivalent haptic-based object identification approach that does not exploit such information (representative of the conventional approaches), the number of grasps required to correctly identify the presented object was significantly reduced. In the experimental evaluation with 23 objects in the object set, the proposed approach required on average 1.65 grasps, compared to 4.81 grasps for the baseline approach. The presence of uncertainties in any practical application, such as inaccuracies in positioning the robotic grasper on the object or noise in the haptic sensors onboard the robotic grasper, affects the performance of the approach. This is because inaccuracies in the identification mean that the algorithm requires more attempts to achieve the same number of correct identifications. It was observed that the shapes of the objects and their interaction with the robotic grasper used in the task also determine the susceptibility of the object to these uncertainties.

Data Availability Statement

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

Author Contributions

YX, AM, YT, and DO: literature, experiment, data analysis, and paper. BC and PC: paper design, experiment design, and paper review.

Funding

This project was funded by the Valma Angliss Trust and the University of Melbourne.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Bholowalia, P., and Kumar, A. (2014). Ebk-Means: A Clustering Technique Based on Elbow Method and K-Means in Wsn. Int. J. Computer Appl. 105 (9), 17–24. doi:10.5120/18405-9674

Corradi, T., Hall, P., and Iravani, P. (2015). “Bayesian Tactile Object Recognition: Learning and Recognising Objects Using a New Inexpensive Tactile Sensor,” in Proceedings of IEEE International Conference on Robotics and Automation, Seattle, WA, IEEE, 3909–3914. doi:10.1109/icra.2015.7139744

Dargahi, J., and Najarian, S. (2004). Human Tactile Perception as a Standard for Artificial Tactile Sensing - a Review. Int. J. Medi. Robotics and Computer Assisted Surgery. 01, 23–35. doi:10.1581/mrcas.2004.010109

Faragasso, A., Bimbo, J., Stilli, A., Wurdemann, H., Althoefer, K., and Asama, H. (2018). Real-Time Vision-Based Stiffness Mapping. Sensors 18, 1347. doi:10.3390/s18051347

Funabashi, S., Morikuni, S., Geier, A., Schmitz, A., Ogasa, S., Torno, T. P., et al. (2018). “Object Recognition through Active Sensing Using a Multi-Fingered Robot Hand with 3D Tactile Sensors,” in Proceedings of IEEE International Conference on Intelligent Robots and Systems, Madrid, Spain, IEEE, 2589–2595. doi:10.1109/iros.2018.8594159

Gray, R. M. (2011). Entropy and information theory. Berlin, Germany: Springer. doi:10.1007/978-1-4419-7970-4

Grunwald, M. (2008). Human Haptic Perception: Basics and Applications. Berlin, Germany: Springer Science and Business Media. doi:10.1007/978-3-7643-7612-3

Gu, H., Fan, S., Zong, H., Jin, M., and Liu, H. (2016). Haptic Perception of Unknown Object by Robot Hand: Exploration Strategy and Recognition Approach. Int. J. Hum. Robot. 13, 1650008. doi:10.1142/s0219843616500080

Hannaford, B., and Okamura, A. M. (2016). Haptics. In Springer Handbook of Robotics. Berlin, Germany: Springer, 1063–1084. doi:10.1007/978-3-319-32552-1_42

Jain, A., Nandakumar, K., and Ross, A. (2005). Score Normalization in Multimodal Biometric Systems. Pattern Recognition. 38, 2270–2285. doi:10.1016/j.patcog.2005.01.012

Kaboli, M., Yao, K., Feng, D., and Cheng, G. (2019). Tactile-based Active Object Discrimination and Target Object Search in an Unknown Workspace. Auton. Robot. 43, 123–152. doi:10.1007/s10514-018-9707-8

Kent, J. T. (1983). Information Gain and a General Measure of Correlation. Biometrika 70, 163–173. doi:10.1093/biomet/70.1.163

Lee, C., and Lee, G. G. (2006). Information Gain and Divergence-Based Feature Selection for Machine Learning-Based Text Categorization. Inf. Process. Management. 42, 155–165. doi:10.1016/j.ipm.2004.08.006

Lepora, N. F., Martinez-Hernandez, U., and Prescott, T. J. (2013). Active Bayesian Perception for Simultaneous Object Localization and Identification. Proc. Robotics: Sci. Syst. Berlin, Germany. doi:10.15607/rss.2013.ix.019

Liu, H., Guo, D., and Sun, F. (2016). Object Recognition Using Tactile Measurements: Kernel Sparse Coding Methods. IEEE Trans. Instrum. Meas. 65, 656–665. doi:10.1109/tim.2016.2514779

Liu, H., Wu, Y., Sun, F., and Di, G. (2017). Recent Progress on Tactile Object Recognition. Int. J. Adv. Robotic Syst. 14, 1–12. doi:10.1177/1729881417717056

Luo, S., Mou, W., Althoefer, K., and Liu, H. (2015). Novel Tactile-SIFT Descriptor for Object Shape Recognition. IEEE Sensors J. 15, 5001–5009. doi:10.1109/jsen.2015.2432127

Luo, S., Mou, W., Althoefer, K., and Liu, H. (2019). Iclap: Shape Recognition by Combining Proprioception and Touch Sensing. Auton. Robot. 43, 993–1004. doi:10.1007/s10514-018-9777-7

Madry, M., Bo, L., Kragic, D., and Fox, D. (2014). “St-hmp: Unsupervised Spatio-Temporal Feature Learning for Tactile Data,” in Proceedings of IEEE International Conference on Robotics and Automation, Hong Kong, China, IEEE, 2262–2269. doi:10.1109/icra.2014.6907172

Mayer, R. M., Garcia-Rosas, R., Mohammadi, A., Tan, Y., Alici, G., Choong, P., et al. (2020). Tactile Feedback in Closed-Loop Control of Myoelectric Hand Grasping: Conveying Information of Multiple Sensors Simultaneously via a Single Feedback Channel. Front. Neurosci. 14, 348. doi:10.3389/fnins.2020.00348

Mohammadi, A., Xu, Y., Tan, Y., Choong, P., and Oetomo, D. (2019). Magnetic-based Soft Tactile Sensors with Deformable Continuous Force Transfer Medium for Resolving Contact Locations in Robotic Grasping and Manipulation. Sensors 19, 4925. doi:10.3390/s19224925

Ottenhaus, S., Kaul, L., Vahrenkamp, N., and Asfour, T. (2018). Active Tactile Exploration Based on Cost-Aware Information Gain Maximization. Int. J. Hum. Robot. 15, 1850015. doi:10.1142/s0219843618500159

Overvliet, K., Smeets, J. B., and Brenner, E. (2008). The use of proprioception and tactile information in haptic search. Acta Psychologica. 129, 83–90. doi:10.1016/j.actpsy.2008.04.011

Parsian, M. (2015). Data Algorithms: Recipes for Scaling up with Hadoop and Spark. Sebastopol, California: O’Reilly Media, Inc.

Pezzementi, Z., Plaku, E., Reyda, C., and Hager, G. D. (2011). Tactile-object Recognition from Appearance Information. IEEE Trans. Robot. 27, 473–487. doi:10.1109/tro.2011.2125350

Schmitz, A., Bansho, Y., Noda, K., Iwata, H., Ogata, T., and Sugano, S. (2015). “Tactile Object Recognition Using Deep Learning and Dropout,” in Proceedings of IEEE International Conference on Humanoid Robots, Madrid, Spain, IEEE, 1044–1050.

Schneider, A., Sturm, J., Stachniss, C., Reisert, M., Burkhardt, H., and Burgard, W. (2009). Object Identification with Tactile Sensors Using Bag-Of-Features. Proceedings of IEEE International Conference on Intelligent Robots and Systems, St. Louis, MO, IEEE, 243–248. doi:10.1109/iros.2009.5354648

Scimeca, L., Maiolino, P., Bray, E., and Iida, F. (2020). Structuring of Tactile Sensory Information for Category Formation in Robotics Palpation. Autonomous Robots. 44 (8), 1–17. doi:10.1007/s10514-020-09931-y

Shaw-Cortez, W., Oetomo, D., Manzie, C., and Choong, P. (2019). Robust Object Manipulation for Tactile-Based Blind Grasping. Control. Eng. Pract. 92, 104136. doi:10.1016/j.conengprac.2019.104136

Spiers, A. J., Liarokapis, M. V., Calli, B., and Dollar, A. M. (2016). Single-Grasp Object Classification and Feature Extraction with Simple Robot Hands and Tactile Sensors. IEEE Trans. Haptics. 9, 207–220. doi:10.1109/toh.2016.2521378

Vezzani, G., Jamali, N., Pattacini, U., Battistelli, G., Chisci, L., and Natale, L. (2016). A Novel Bayesian Filtering Approach to Tactile Object Recognition. Proceedings of IEEE International Conference on Humanoid Robots, Cancun, Mexico, IEEE, 256–263. doi:10.1109/humanoids.2016.7803286

Xu, D., Loeb, G. E., and Fishel, J. A. (2013). Tactile Identification of Objects Using Bayesian Exploration. Proceedings of IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, IEEE, 3056–3061. doi:10.1109/icra.2013.6631001

Keywords: haptic based object identification, identification efficiency, object description, clustering, information gain

Citation: Xia Y, Mohammadi A, Tan Y, Chen B, Choong P and Oetomo D (2021) On the Efficiency of Haptic Based Object Identification: Determining Where to Grasp to Get the Most Distinguishing Information. Front. Robot. AI 8:686490. doi: 10.3389/frobt.2021.686490

Received: 26 March 2021; Accepted: 08 July 2021;

Published: 29 July 2021.

Edited by:

Perla Maiolino, University of Oxford, United Kingdom

Reviewed by:

Alessandro Albini, University of Oxford, United Kingdom

Joao Bimbo, Italian Institute of Technology (IIT), Italy

Gang Yan, Waseda University, Japan

Copyright © 2021 Xia, Mohammadi, Tan, Chen, Choong and Oetomo. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yu Xia
