- ¹Social Cognition in Human-Robot Interaction, Istituto Italiano di Tecnologia (IIT), Genoa, Italy

- ²DIBRIS, Università degli Studi di Genova, Genoa, Italy

- ³Piccolo Cottolengo Genovese di Don Orione, Genoa, Italy

In this paper, we investigate the impact of sensory sensitivity on robot-assisted training for children diagnosed with Autism Spectrum Disorder (ASD). Adapting robot-based therapies to the user could help users focus on the training, and thus increase the benefits of the interactions. Children diagnosed with ASD often experience atypical sensory sensitivity and can show hyper- or hypo-reactivity to sensory events, such as reacting strongly, or not at all, to sounds, movements, or touch. Taking sensory sensitivity into account during robot-assisted therapy may improve the overall interaction. In the present study, thirty-four children diagnosed with ASD underwent a joint attention training with the robot Cozmo. The eight-session training was embedded in the standard therapy. The children were screened for their sensory sensitivity with the Sensory Profile Checklist Revised. Their social skills were screened before and after the training with the Early Social Communication Scale. We recorded their performance and the amount of feedback they received from the therapist through animations of happy and sad emotions played on the robot. Our results showed that visual and hearing sensitivity predicted the improvement in the ability to initiate joint attention. Also, the therapists of children with high hearing sensitivity chose to play fewer robot animations during the training phase of the robot activity. The animations did not include sounds, but the robot produced motor noise. These results support the idea that the sensory sensitivity of children diagnosed with ASD should be screened before engaging them in robot-assisted therapy.

1 Introduction

In Human-Robot Interaction (HRI), and especially in settings where robots serve as social assistants, user adaptation is an important factor to study. Indeed, fitting the behavior of the robot to the user’s needs can improve the social interaction during HRI. Over the last decades, inter-individual differences among users, such as personality (Robert, 2018) or prior experience with robots (Bartneck et al., 2007), have been investigated in HRI to observe how much they affect the interaction with robots. Similarly, the characteristics of robots were investigated, for example how much a robot’s embodiment (Deng et al., 2019) or displayed personality (Mou et al., 2020) can influence the interaction. Understanding inter-individual differences among users would help robot designers endow artificial agents with features that smoothen the interaction, making it more engaging for the human counterpart. This aspect is crucial in socially assistive robotics, where tailor-made technical solutions could have an impact on the clinical outcome (Khosla et al., 2015; Mataric, 2017; Clabaugh et al., 2019).

To address inter-individual differences among users, sensory sensitivity profiles can be crucial. Sensory sensitivity is defined as the way an individual detects and reacts to sensory events (e.g., visual, auditory, touch, taste, smell, vestibular, and proprioceptive events) (Dunn, 1999). Individuals perceive and react differently to sensory information. For example, some individuals seek a quiet environment to work in, whereas others turn on the TV or the radio for background noise. Some prefer to work in a bright environment, whereas others close the blinds. During social interaction we constantly process sensory information (e.g., visual cues when we look at our interlocutor’s facial expression, or auditory cues when we listen to our interlocutor’s tone of voice). Robots are a complex source of sensory information in their own way (particular embodiment, mechanical parts, LEDs, or motor noise) and can affect the interaction with the user (Deng et al., 2019). A number of studies reported that sensory sensitivity could predict behaviors in both clinical and general (healthy) populations (clinical populations: Chevalier et al., 2016; Chevalier et al., 2017; Chevalier et al., 2021a; general population: Agrigoroaie and Tapus, 2017; Chevalier et al., 2021b). Sensory sensitivity affects the task performance of healthy adults during human-robot interaction: performance in a Stroop task with the Tiago robot (PAL Robotics, Pages et al., 2016) was shown to be influenced by the participants’ auditory sensitivity (Agrigoroaie and Tapus, 2017). In a recent work (Chevalier et al., 2021b), the authors investigated the influence of visual sensitivity on performance in an imitation task with both a physical and a virtual version of the R1 robot (Parmiggiani et al., 2017).
Results showed that higher visual sensitivity increased imitation accuracy, providing evidence that screening users’ sensory sensitivity is a helpful tool to evaluate and design agent-user interactions. Sensory sensitivity was also investigated in clinical populations. In a series of studies, visual and proprioceptive sensitivity were found to predict the performance of children diagnosed with Autism Spectrum Disorder (ASD) in imitation (Chevalier et al., 2017) and joint attention (Chevalier et al., 2016) during an interaction with the robot NAO (SoftBank Robotics, Gouaillier et al., 2008). Furthermore, visual and proprioceptive sensitivity predicted performance in an emotion recognition task that involved two robots (NAO from SoftBank Robotics and R25 from Robokind), an avatar (Mary from MARC, Courgeon and Clavel, 2013), and a human agent. In all the above-mentioned studies, participants with visual hyper-reactivity and proprioceptive hypo-reactivity performed better in all the tasks than participants with visual hypo-reactivity and proprioceptive hyper-reactivity. Finally, in Chevalier et al. (2021a), children diagnosed with ASD played a “Simon says” game with the iCub robot presented on a monitor screen, once with the motor noises turned on and once with the motor noises turned off. The results showed that participants who reported being overwhelmed by unexpected loud noises were better able to focus on the game when the robot’s motor noises were turned off.

The findings from these studies show that sensory sensitivity appears to affect social interactions between a human and a robot in both clinical and general populations. Additionally, the sensory stimuli generated by the robot’s embodiment are reported to affect the interactions. Therefore, understanding the effect of sensory sensitivity in HRI appears to be of great importance for both healthy and clinical populations.

This is particularly true for the clinical population of individuals with ASD. Indeed, this population seems to benefit from the use of socially assistive robots during standard therapy (Aresti-Bartolome and Garcia-Zapirain, 2014; Coeckelbergh et al., 2016; Ghiglino et al., 2021). This might be due to the specific impairments of individuals with ASD, which include deficits in communication and social skills, along with restricted and repetitive patterns of behaviors, interests, or activities (APA, 2013). Robots are believed to be a fitting tool for this population, as their mechanistic and predictable nature appears to be attractive and reassuring for children with ASD (Scassellati et al., 2012; Cabibihan et al., 2013). Children with ASD participating in robot-assisted interventions showed improvements in their social skills or a reduction of repetitive behaviors (Scassellati et al., 2012). However, the sensory stimulation caused by the robots’ mechanical embodiment and motor noise during interactions designed for children diagnosed with ASD needs to be addressed. Indeed, individuals with ASD also experience sensory hypo- or hypersensitivity (APA, 2013), and some authors claim that robots are an overwhelming source of sensory stimulation (Ferrari et al., 2009). Thus, even if robots seem to be beneficial for individuals with ASD (Scassellati et al., 2012; Pennisi et al., 2016), the attentional engagement they require from the individual might be detrimental to the processing of the social aspects of the interaction (van Straten et al., 2018). Understanding how sensory sensitivity affects HRI would improve the design of tailor-made robot-assisted interventions for children with ASD.

The aim of this study is to explore the effect of sensory sensitivity on improvements in social skills related to a joint attention training with the Cozmo robot (Anki Robotics/Digital Dream Labs). Joint attention training is often included in standard ASD treatment plans, as impairments of joint attention are typical of individuals with ASD (Mundy and Newell, 2007; APA, 2013; Mundy, 2018). Joint attention is claimed to be a fundamental prerequisite of mentalizing abilities, as its development allows an individual to share the focus of his/her attention with another individual, attending to the same object or event (Emery, 2000). However, children diagnosed with ASD use atypical joint attention strategies (Charman et al., 1997; Johnson et al., 2007; Mundy and Newell, 2007). Including joint attention training in the standard treatment plan for ASD showed positive effects on social learning (Johnson et al., 2007; Mundy and Newell, 2007). Robot-assisted therapies for ASD targeting joint attention showed encouraging results [see (Chevalier et al., 2020) for a review].

2 Methods

2.1 Participants

Thirty-six children diagnosed with ASD were recruited at the Piccolo Cottolengo Genovese di Don Orione (Genoa, Italy) (age: 5.69 ± 1.06 years; five females). Prior to the beginning of the study, participants’ formal diagnosis of ASD was confirmed by healthcare professionals of Piccolo Cottolengo Genovese di Don Orione, using the ADOS screening tool (Rutter et al., 2012). Parents or legal guardians of the children recruited for the study were asked to provide the healthcare professionals with signed written informed consent. Our experimental protocols followed the ethical standards laid down in the Declaration of Helsinki and were approved by the local Ethics Committee (Comitato Etico Regione Liguria).

2.2 Experimental Design

Our previous paper (Ghiglino et al., 2021) describes the training protocol and its efficacy in more detail. Here, we focus specifically on how the sensory sensitivity of the children, screened with the Sensory Profile Checklist Revised (SPCR, Bogdashina, 2003), affected the interaction with the robot.

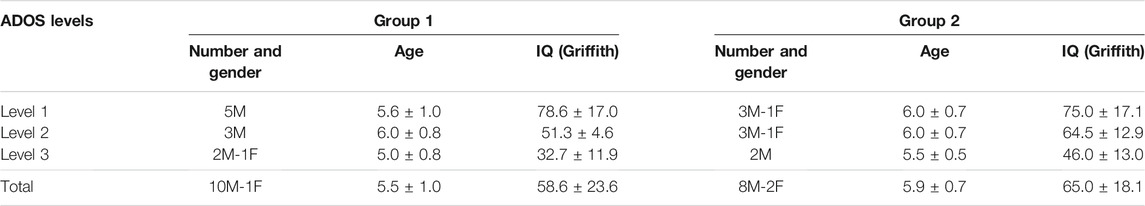

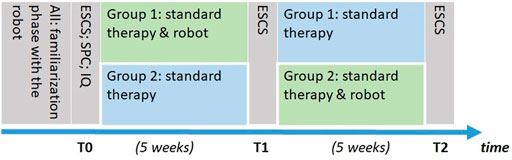

Due to the limited sample size, and to give all the children involved in the study the opportunity to interact with the robot, we investigated the efficacy of the training using a two-period crossover design. Participants were pseudo-randomly assigned to two groups, balanced by chronological age and ADOS score. Participants in one group (Group 1) received the robot-assisted intervention during the first period of the study, while participants in the other group (Group 2) received it during the second period (see Figure 1). A trained psychologist screened the children’s social skills with the Early Social Communication Scale (ESCS) three times during the study: before the beginning of the experiment (T0), after the first period (T1), and after the second period (T2) (see Figure 1). Additionally, prior to the beginning of the activities, participants’ IQ and sensory sensitivity were screened using the Italian versions of the Griffiths Developmental Scales (Green et al., 2015) and the Sensory Profile Checklist Revised (SPCR, Bogdashina, 2003).

FIGURE 1. Timeline of the study. First, all participants completed the familiarization phase. Participants remaining in the training protocol were separated into two groups. All remaining participants were then screened for their social skills with the Early Social Communication Scale (ESCS), their sensory sensitivity with the Sensory Profile Checklist Revised (SPCR), and their IQ (Griffiths) at T0. Then, Group 1 followed the Standard therapy and robot condition and Group 2 the Standard therapy condition. After 5 weeks, children from both groups were screened with the ESCS at T1. Then, Group 1 followed the Standard therapy condition and Group 2 the Standard therapy and robot condition. After 5 weeks, children from both groups were screened with the ESCS at T2.
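The paper does not specify how the pseudo-random assignment balanced by age and ADOS score was carried out. One common way to balance two groups on such covariates is matched-pair randomization, and the sketch below illustrates that technique under this assumption only. The `Child` record and the `assign_groups` function are hypothetical names introduced for illustration; they are not the study's code.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Child:
    """Hypothetical participant record used only for this illustration."""
    child_id: str
    age_years: float
    ados_score: int


def assign_groups(children, seed=None):
    """Matched-pair pseudo-randomization into two groups (an assumed technique).

    Children are sorted by ADOS score and then by age, so neighbours in the
    sorted list are similar on both covariates. Each consecutive pair is split
    at random between Group 1 and Group 2. With an odd number of children, the
    last one is assigned to a randomly chosen group.
    """
    rng = random.Random(seed)
    ordered = sorted(children, key=lambda c: (c.ados_score, c.age_years))
    group1, group2 = [], []
    for i in range(0, len(ordered) - 1, 2):
        pair = [ordered[i], ordered[i + 1]]
        rng.shuffle(pair)  # the random order decides who goes to which group
        group1.append(pair[0])
        group2.append(pair[1])
    if len(ordered) % 2 == 1:
        (group1 if rng.random() < 0.5 else group2).append(ordered[-1])
    return group1, group2
```

Passing a fixed `seed` makes the allocation reproducible, which is useful for documenting how groups were formed.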

The activities with the robot were always embedded in the standard therapy. Therefore, children interacted with the Cozmo robot during their 1-h therapy session with their usual therapist. Prior to the training activity, all participants underwent a familiarization phase with a simpler version of the training. The familiarization phase was intended to ensure that the children involved in the study were all able to understand the instructions of the training and were at ease with the robot. After the familiarization phase, ten participants were excluded from the experiment, as they were not able to perform the task or were too uncomfortable with the robot. Two participants were withdrawn from the training for family reasons. Two participants were excluded because their SPCR evaluation was missing. In total, 22 participants were considered for the analysis in the present study (see Table 1 for details on the groups and participants).

2.3 Setup and Training Procedure

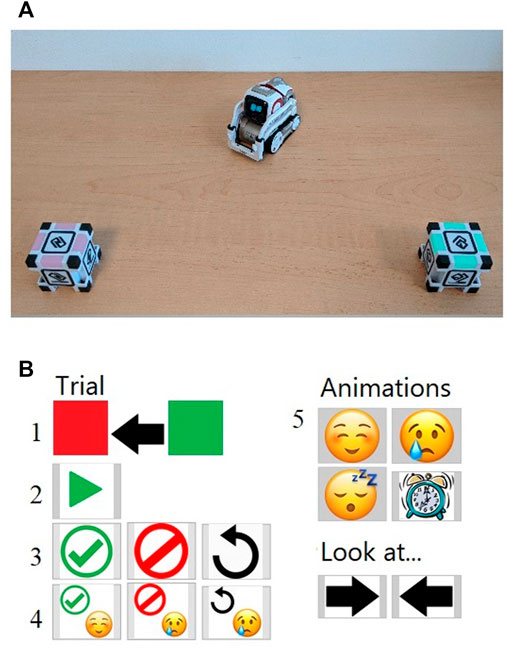

We used the robot Cozmo, a low-cost commercial toy robot provided with cubes that can be lit in different colors. We designed a program for Cozmo that enabled the therapists to conduct the experiment autonomously, without the need for an experimenter to support them. The therapists were trained to prepare the setup and launch the experiment. Following Anki’s SDK setup¹, we developed a custom Python program to control the robot via a webpage. As specified in Anki’s documentation for using Cozmo with a custom program, the setup required the robot Cozmo and a tablet (here, Android) running the Anki application in SDK mode, connected via USB to a computer (here, Windows 10) running the Python code. A Python script controlled the training sequence and the robot’s behaviors, and a webpage developed in HTML and JavaScript acted as the user interface. Flask² served as the server handling communication between the Python script and the webpage. The user interface enabled the therapist to navigate through the training and to record the participant’s identifier and correct/incorrect answers.

The activity with the robot lasted 10–15 min and was conducted once or twice a week (depending on the child’s therapy frequency) over a period of 5 weeks, for a total of eight sessions with the robot per child. The therapists were trained to control the robot in full autonomy (no experimenter was present on-site during the experiment). The robot activity was a joint attention training based on a spatial attention cueing paradigm, with Cozmo delivering twelve joint attention cues. In each trial, the robot turned and gazed at one of the two cubes. Then, the cubes lit up in different colors (chosen among red, blue, green, and yellow). The therapist asked the child which cube Cozmo was looking at and then recorded the child’s answer through the interface. Finally, the robot went back to its initial position and the cubes turned off. The robot could deliver two emotional animations during the training: happiness and sadness. These were two simple custom animations, with a smiling or a frowning face appearing on Cozmo’s LED display and simple body movements (moving the arms up and down with the face looking up for the happy animation, and looking down with small body movements to the left and right for the sad animation). The therapist chose whether the robot performed these animations to reinforce correct or incorrect answers, or at the end of the twelve trials as a reward for participation. The therapist could skip the robot’s animations if she/he considered that they were a distraction rather than a reinforcement (for example, if a child disliked the animations). The therapist was able to restart a trial if the child was not attending to the robot. Each session contained twelve gaze cueing trials. At the end of the session, the therapist asked the child whether she/he wanted to see the robot’s animations again before saying goodbye to it, and could record additional comments about the session.
Then, standard therapy with the child continued. The full protocol is described in Ghiglino et al. (2021). Figure 2 depicts the setup and the interface used in the training.

FIGURE 2. (A) The robot and the cubes; (B) Interface provided to the therapist. After the therapist clicks the “play” button (2), the robot moves into the position displayed in (A). On the interface (B), the position and the colors of the cubes are reproduced (1). The therapist can enter the child’s response as “correct” or “incorrect”, or repeat the movement if the child was not paying attention. The feedback given to children can be accompanied or not by an animation (3 and 4, respectively). At the end of the session, the therapist can play some animations on the robot as a reward (5).
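To make the trial structure concrete, the sketch below generates one session of twelve gaze-cueing trials as described above: on each trial the robot gazes at one of two cubes, the cubes light up in two different colors drawn from red, blue, green, and yellow, and the expected answer is the color of the gazed-at cube. How the study's program actually chose targets and colors is not reported, so the uniform random choice, the `left`/`right` labels, and the `make_session` name are assumptions for illustration; the code does not drive a robot.

```python
import random

# Cube colors listed in the protocol; the two cubes are assumed to always differ.
COLORS = ("red", "blue", "green", "yellow")
SIDES = ("left", "right")  # hypothetical labels for the two cube positions


def make_session(n_trials=12, seed=None):
    """Generate a hypothetical sequence of gaze-cueing trials for one session.

    Each trial records the cube the robot gazes at ("left" or "right"), the
    color assigned to each cube, and the expected answer, i.e., the color of
    the gazed-at cube.
    """
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        left, right = rng.sample(COLORS, 2)  # two distinct colors
        target = rng.choice(SIDES)
        trials.append({
            "target": target,
            "left": left,
            "right": right,
            "expected": left if target == "left" else right,
        })
    return trials
```

The therapist's interface would then compare the child's answer with `expected` to log a correct or incorrect response.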

2.4 Measurements

2.4.1 Early Social Communication Scale

The ESCS (Seibert and Hogan, 1982) provides measures of individual differences in nonverbal communication skills that typically emerge in children between 8 and 30 months of age. It is a videotaped structured observation measure that takes between 15 and 25 min to administer. The scale evaluates three nonverbal communication skills: joint attention (subscales: initiating, responding to, and maintaining joint attention), social interaction (subscales: initiating, responding to, and maintaining social interaction), and behavioral request (subscales: initiating and responding to behavioral requests). The ESCS was used as a pre- and post-test to measure changes in the children’s social skills in both conditions (i.e., standard therapy with the robot activity and standard therapy without the robot activity).
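Because of the crossover design, the pre- and post-test for each condition come from different assessment points depending on the group: for Group 1 the robot condition spans T0 to T1 and the standard-therapy-only condition spans T1 to T2, and the reverse holds for Group 2. The sketch below computes per-condition change scores for one ESCS item, assuming that improvement is the post-test score minus the pre-test score; `condition_improvements` is a hypothetical name.

```python
def condition_improvements(group, t0, t1, t2):
    """Change scores (post-test minus pre-test) for one ESCS item.

    group: 1 if the child received the robot activity in the first period,
           2 if in the second period of the crossover.
    t0, t1, t2: the item score at the three assessments.
    """
    first_period = t1 - t0   # change between T0 and T1
    second_period = t2 - t1  # change between T1 and T2
    if group == 1:
        return {"robot": first_period, "standard": second_period}
    if group == 2:
        return {"robot": second_period, "standard": first_period}
    raise ValueError("group must be 1 or 2")
```

With identical scores, a Group 1 child and a Group 2 child get their robot and standard-therapy changes swapped, which is exactly what the crossover requires.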

2.4.2 Sensory Profile Checklist Revised

The Sensory Profile Checklist Revised (second edition) (Bogdashina, 2003) assesses an individual’s sensitivity in vision, audition, touch, smell, taste, proprioception, and vestibular perception. The questionnaire helps clarify a child’s strengths and weaknesses regarding their sensory profile. Each sense is assessed through 20 categories that explore the child’s sensitivity. The categories cover different aspects of sensory sensitivity, for example being unable to stop feeling a sensory change (category 2), or acute sensitivity to some stimuli (category 7). For each sense, each category has specific questions (the number of questions per category varies across senses, for a total of 312 questions) that are answered to evaluate whether the child displays the behavior assessed in that category. Thus, the more atypical sensory behaviors a child shows, the more categories are marked as true. For each sense, the categories marked as true are summed to obtain a score out of 20, with a higher score corresponding to a higher sensitivity in that sense. This questionnaire is not intended as a diagnostic tool, but as an aid for parents and therapists to understand the children’s sensory sensitivity and adapt their environment and activities accordingly (Robinson, 2010). The SPCR was administered by the therapists in charge of the children at the Piccolo Cottolengo Genovese di Don Orione.
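The per-sense scoring rule described above amounts to counting categories. The sketch below assumes each sense's 20 category judgements are already available as booleans; how individual questions are combined into a category judgement is not detailed here and is left out. The names `spcr_scores`, `SENSES`, and `N_CATEGORIES` are hypothetical.

```python
SENSES = ("vision", "hearing", "touch", "smell", "taste", "proprioception", "vestibular")
N_CATEGORIES = 20


def spcr_scores(category_flags):
    """Return one SPCR score per sense: the number of categories marked true.

    category_flags maps a sense name to a sequence of 20 booleans, one per
    category (True = the child displays the behavior assessed in it).
    """
    scores = {}
    for sense, flags in category_flags.items():
        if sense not in SENSES:
            raise ValueError(f"unknown sense: {sense!r}")
        if len(flags) != N_CATEGORIES:
            raise ValueError(f"{sense}: expected {N_CATEGORIES} categories, got {len(flags)}")
        scores[sense] = sum(1 for marked in flags if marked)
    return scores
```

Only the vision, hearing, touch, and proprioception scores would then enter the analysis described in Section 2.5.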

2.4.3 Performance Score

Participants’ performance score was computed as the proportion of correct answers over all the joint attention trials they performed during the whole training.
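The performance score is a simple proportion, sketched below. Because the therapist could restart a trial when the child was not attending, the sketch assumes that "repeat" entries in the log are not counted as answered trials; the paper does not state how restarted trials were handled, and `performance_score` is a hypothetical name.

```python
def performance_score(answers):
    """Proportion of correct answers over all answered joint attention trials.

    answers: the entries logged across all sessions for one child, each one of
    "correct", "incorrect" or "repeat". A "repeat" entry (trial restarted because
    the child was not attending) is assumed not to count as an answered trial.
    """
    answers = list(answers)
    unknown = set(answers) - {"correct", "incorrect", "repeat"}
    if unknown:
        raise ValueError(f"unexpected entries: {sorted(unknown)}")
    correct = answers.count("correct")
    answered = correct + answers.count("incorrect")
    if answered == 0:
        raise ValueError("no answered trials")
    return correct / answered
```

For example, a hypothetical child with 72 correct answers out of 96 answered trials (eight sessions of twelve trials) would score 0.75.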

2.4.4 Number of Robot’s Animation Played

During the sessions with the robot, the therapist could choose to make the robot perform the “happy” or “sad” animations during the training. We considered these animations as events providing visual and auditory cues to the children: the robot’s body movements can trigger visual sensitivity, and the noise of its motors can trigger auditory sensitivity. As the therapist could choose to trigger the animations if they considered it beneficial for the child, we considered that the number of animations played could reflect the child’s sensory sensitivity towards the robot. We recorded how many times the animations were played on the robot during the whole training. Thus, for each participant we collected three measures: the total number of animations played during the sessions, the number of animations played as reinforcement for a correct or incorrect answer, and the number of animations played as a reward for participation.
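The three per-participant animation measures can be derived from a single event log. The sketch below assumes each played animation is logged as a `(context, emotion)` pair, where the context is either "reinforcement" (after a correct or incorrect answer) or "reward" (at the end of a session); this log format and the `animation_counts` name are hypothetical.

```python
from collections import Counter

CONTEXTS = ("reinforcement", "reward")


def animation_counts(events):
    """Count the robot animations played over the whole training.

    events: iterable of (context, emotion) pairs, where context is
    "reinforcement" (animation following a correct or incorrect answer) or
    "reward" (animation at the end of a session), and emotion is "happy" or "sad".
    Returns the three per-participant measures used in the analysis.
    """
    by_context = Counter()
    for context, _emotion in events:
        if context not in CONTEXTS:
            raise ValueError(f"unknown context: {context!r}")
        by_context[context] += 1
    return {
        "total": sum(by_context.values()),
        "reinforcement": by_context["reinforcement"],
        "reward": by_context["reward"],
    }
```

Skipped animations simply produce no log entry, so a therapist's choice to skip feedback lowers the corresponding count.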

2.5 Data Analysis

We used multiple linear regression analyses to test whether the SPCR scores in vision, hearing, touch, and proprioception (smell, taste, and vestibular perception were discarded from the analysis) predicted the following dependent variables: the children’s improvements in the ESCS items (for both standard therapy with the robot activity and standard therapy without the robot activity), the children’s performance in the joint attention activity with the robot, and the children’s exposure to the robot’s animations (in total, as reinforcement for an answer, and at the end of the training sessions).
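The regression described above can be sketched without external libraries: ordinary least squares with an intercept, the coefficient of determination R², and the overall F statistic with (k, n − k − 1) degrees of freedom, which for four SPCR predictors and 22 participants gives the F(4,17) reported in the Results. This is a minimal illustration, not the authors' analysis code: it returns unstandardized coefficients and omits p-values, which require the F and t distributions from a statistics package. The function names are hypothetical.

```python
def _solve(a, b):
    """Solve the linear system a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("design matrix is singular (collinear predictors)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols_with_f(X, y):
    """Ordinary least squares with an intercept, R^2 and the overall F statistic.

    X: one row per participant with k predictor values (e.g., vision, hearing,
       touch, and proprioception SPCR scores).
    y: one outcome per participant (e.g., an ESCS change score).
    Returns (coefficients [intercept, b1..bk], R^2, F, (df1, df2)).
    """
    n, k = len(X), len(X[0])
    if n <= k + 1:
        raise ValueError("need more participants than predictors + 1")
    design = [[1.0] + [float(v) for v in row] for row in X]
    p = k + 1
    # Normal equations: (X'X) beta = X'y
    xtx = [[sum(row[a] * row[b] for row in design) for b in range(p)] for a in range(p)]
    xty = [sum(row[a] * yi for row, yi in zip(design, y)) for a in range(p)]
    beta = _solve(xtx, xty)
    fitted = [sum(b * x for b, x in zip(beta, row)) for row in design]
    mean_y = sum(y) / n
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    if ss_tot == 0:
        raise ValueError("outcome has zero variance")
    r2 = 1.0 - ss_res / ss_tot
    df1, df2 = k, n - k - 1
    # With an intercept, explained SS = ss_tot - ss_res.
    f_stat = float("inf") if ss_res == 0 else ((ss_tot - ss_res) / df1) / (ss_res / df2)
    return beta, r2, f_stat, (df1, df2)
```

Solving the normal equations directly is adequate for five unknowns; for larger or ill-conditioned designs a QR-based solver from a numerical library is the safer choice.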

3 Results

3.1 Children’s Improvements in the ESCS Items

3.1.1 During Standard Therapy With the Robot Activity

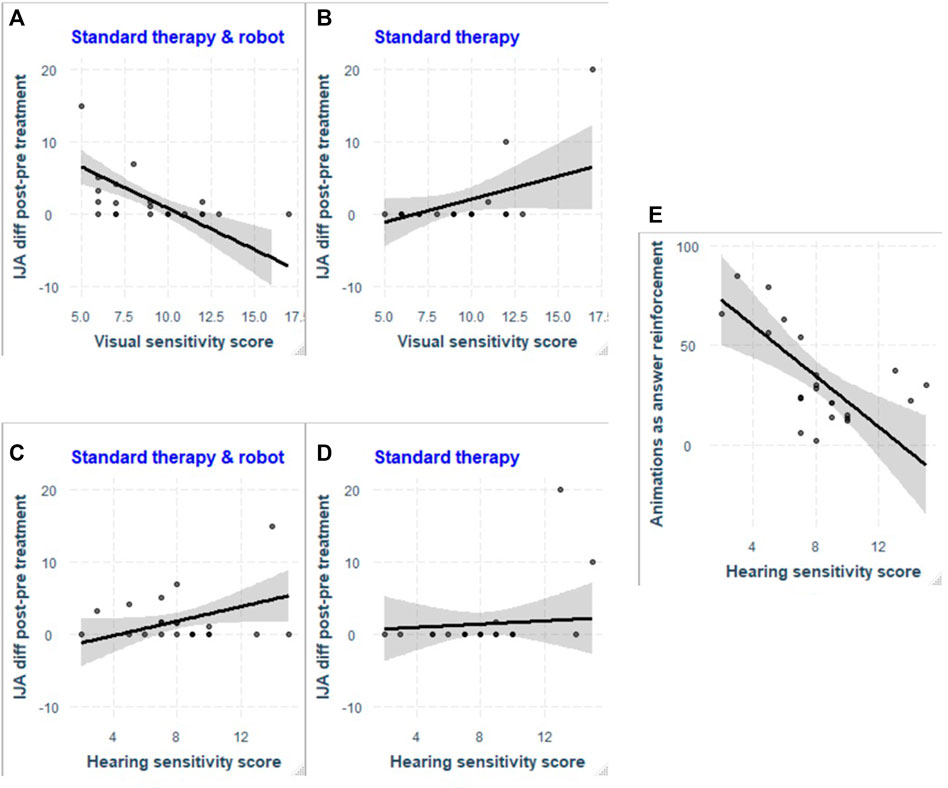

We found a significant regression for the Initiating Joint Attention item of the ESCS with the SPCR scores as predictors during the standard therapy augmented by the robot activity (R² = 0.584, F(4,17) = 5.965, p < 0.01). Visual and hearing sensitivity scores significantly predicted the improvement in Initiating Joint Attention (visual: β = −1.14, p < 0.001; hearing: β = 0.5, p < 0.05); see Figures 3A,C. We did not find other significant regressions between sensory sensitivity and the improvements in the ESCS items during standard therapy augmented by the robot activity.

FIGURE 3. Scatter plots of the difference in scores on the Initiating Joint Attention item of the ESCS as a function of the participants’ visual sensitivity score, during the Standard therapy and robot condition (A) and the Standard therapy condition (B); and as a function of the participants’ hearing sensitivity score, during the Standard therapy and robot condition (C) and the Standard therapy condition (D). The visual and hearing sensitivity scores significantly predicted the improvement in Initiating Joint Attention during the Standard therapy and robot condition [(A) and (C), respectively], but not during the Standard therapy alone [(B) and (D), respectively]. (E) Scatter plot of the number of animations played as reinforcement for a correct or an incorrect answer as a function of the participants’ hearing sensitivity.
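As a consistency check on the statistics reported for the standard therapy with the robot activity, the overall F statistic of a multiple linear regression with k predictors and n participants follows from R² alone. With k = 4 SPCR predictors and n = 22 participants, the degrees of freedom are (4, 17), matching those reported:

$$
F = \frac{R^2/k}{(1 - R^2)/(n - k - 1)} = \frac{0.584/4}{0.416/17} \approx \frac{0.1460}{0.02447} \approx 5.97
$$

This agrees with the reported F(4,17) = 5.965 up to the rounding of R².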

3.1.2 Standard Therapy Without the Robot Activity

During the standard therapy without the robot activity, we found a significant regression for the Initiating Joint Attention item of the ESCS with the SPCR scores as predictors (R² = 0.557, F(4,17) = 5.34, p < 0.01). However, none of the individual predictors included in the model was significant. Figures 3B,D show the corresponding plots for visual and hearing sensitivity, for comparison with the standard therapy with the robot activity. We did not find other significant regressions between sensory sensitivity and the improvements in the ESCS items during standard therapy without the robot activity.

3.2 Children’s Performance to the Joint Attention Activity

We did not find any significant regressions between the SPCR scores and the performance of the children in the joint attention task.

3.3 Number of Animations Played

We found a significant regression between the number of animations played as reinforcement for a correct or incorrect answer and the participants’ SPCR scores (R² = 0.557, F(4,17) = 5.35, p < 0.01). Hearing sensitivity significantly predicted the number of animations played as reinforcement for a correct or incorrect answer (β = −6.39, p < 0.01) (see Figure 3E). We did not find significant relationships between sensory sensitivity and the total number of animations played or the number of animations played at the end of the sessions.

4 Discussion

In this study, we investigated how sensory sensitivity can affect robot-assisted therapy for children with ASD. Individuals’ visual and auditory sensitivity seem to affect the clinical improvement in initiating joint attention when the therapy included the activity with the robot. More precisely, children with ASD with lower visual sensitivity improved more in their initiating joint attention skill than participants with higher visual sensitivity. We can speculate that participants with lower visual sensitivity process social cues from the robot more easily. Importantly, visual sensitivity did not affect the clinical outcome of the standard therapy alone. The opposite pattern of results was found when we considered hearing sensitivity as a predictor of the clinical outcome. Specifically, children with ASD who were more sensitive to hearing improved more in their initiating joint attention skill than children with lower hearing sensitivity, when the standard therapy was combined with the activity with the robot. We hypothesize that participants highly sensitive to hearing could be more sensitive to the motor sounds produced by the robot during the training. As with visual sensitivity, hearing sensitivity did not affect the clinical outcome of the standard therapy alone. These results suggest that children’s improvements in social skills during this type of intervention could benefit from different robot behaviors. Future experiments assessing different robot behaviors would allow for a proper evaluation of users’ needs.
For example, regarding visual sensitivity, we can speculate that participants with higher visual sensitivity could benefit from a robot that shows fewer social cues at the beginning of the training and progressively increases their complexity during the course of the training (e.g., starting with simple animations in terms of body behavior or facial expressions, and gradually moving towards more complex behaviors and expressions). Similarly, regarding hearing sensitivity, we can hypothesize that participants with higher hearing sensitivity could benefit from a quieter robot [see (Chevalier et al., 2021a) for similar results]. Robots designed for individuals with high sensitivity could, for example, perform behaviors with fewer movements, or movements involving quieter actuators, to fit user preferences.

Interestingly, the effect of sensory sensitivity was observed on a skill that was not directly addressed during the activity with the robot. Indeed, sensory sensitivity predicted the improvement in Initiating Joint Attention, whereas Responding to Joint Attention was the skill trained in this protocol. According to the ESCS, responding to joint attention refers to the ability to follow the direction of gaze and gestures of others, whereas initiating joint attention refers to the ability to use the direction of gaze and gestures to direct the attention of others in order to spontaneously share experiences (Seibert and Hogan, 1982). Following these definitions, the training with the robot focused on responding to joint attention, as the children had to follow the movement of the robot to identify which color the robot was looking at. Mundy et al. (2009) proposed that variation in initiating joint attention is driven by social motivation, with infants who show more interest in social events engaging more in initiating joint attention. We speculate that the improvement of this skill predicted by visual and auditory sensitivity may be driven by increased interest and motivation during the interaction with the robot, which in turn benefited the children during interactions with an adult. Participants’ performance in the task was not found to be linked to their sensory sensitivity, in contrast to the results obtained by Chevalier et al. (2016).

We also observed that participants with higher hearing sensitivity were exposed to fewer animations during the joint attention trials than participants with lower sensitivity. The children’s therapists, who were in charge of activating the animations, seemed to avoid providing positive or negative feedback through the robot to children with higher hearing sensitivity. However, the same pattern was not observed for the concluding animation played when the training session was completed. This result suggests that children with high hearing sensitivity might have preferred fewer animations from the robot, meaning less noise from the robot’s motors, during the training phase. However, as the animations were regulated by the therapists, there may have been other reasons for not playing them during the training. The therapists did not report why they played (or did not play) the animations during the training phase.

Future work aimed at adapting robot interventions to the sensory sensitivity of children diagnosed with ASD could implement one of the following strategies. The first would be to assess sensory sensitivity with a questionnaire (such as the Sensory Profile Checklist Revised, Bogdashina, 2003; the Short Sensory Profile, Tomchek and Dunn, 2007; or the Sensory Profile, Dunn, 2009) before the interaction, and to adapt the interaction to the child “manually”. A second would be to study whether sensory sensitivity could be measured using machine learning techniques, for example via computer vision, by tracking events such as avoidance behaviors (e.g., covering the ears when a motor sound occurs) or seeking behaviors (e.g., engaging with the robot during animations).

In conclusion, this work suggests that sensory sensitivity has an impact on the outcomes of robot-assisted therapy, and encourages further research in this direction. In particular, the animations implemented in assistive robots as rewards during therapeutic activities with individuals with ASD should include different levels of intensity that can be adapted to each individual’s sensitivity.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by Comitato Etico Regione Liguria. Written informed consent to participate in this study was provided by the participants’ legal guardian/next of kin.

Author Contributions

AW, DG, and PC conceptualized the paper. AW, DG, TP, FF, and PC conceptualized the experimental procedure. All authors revised the manuscript.

Funding

This project has received funding from the European Union’s Horizon 2020 research and innovation program under the Marie Skłodowska-Curie grant agreement No. 754490—MINDED project. This research also received support from Istituto Italiano di Tecnologia, and was conducted as a joint collaborative project between Istituto Italiano di Tecnologia and Don Orione Italia. The content of this work reflects the authors’ view only and the EU Agency is not responsible for any use that may be made of the information it contains.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We thank the healthcare professionals of Piccolo Cottolengo Genovese di Don Orione, the participants, and their families. We thank AloSpeak for allowing us to use their tablets.

Footnotes

1 See http://cozmosdk.anki.com/docs/index.html.

2 https://flask.palletsprojects.com/en/1.1.x/.

References

Agrigoroaie, R., and Tapus, A. (2017). “Influence of Robot's Interaction Style on Performance in a Stroop Task,” in Social Robotics. Lecture Notes in Computer Science. Editors A. Kheddar, E. Yoshida, S. S. Ge, K. Suzuki, J.-J. Cabibihan, F. Eyssel, et al. (New York: Springer International Publishing), 95–104. doi:10.1007/978-3-319-70022-9_10

APA (2013). Diagnostic and Statistical Manual of Mental Disorders (DSM-5®). Washington, D.C.: American Psychiatric Pub.

Aresti-Bartolome, N., and Garcia-Zapirain, B. (2014). Technologies as Support Tools for Persons with Autistic Spectrum Disorder: A Systematic Review. Int. J. Environ. Res. Public Health 11, 7767–7802. doi:10.3390/ijerph110807767

Bartneck, C., Suzuki, T., Kanda, T., and Nomura, T. (2006). The Influence of People's Culture and Prior Experiences with Aibo on Their Attitude towards Robots. AI Soc. 21, 217–230. doi:10.1007/s00146-006-0052-7

Bogdashina, O. (2003). Sensory Perceptual Issues in Autism and Asperger Syndrome: Different Sensory Experiences, Different Perceptual Worlds. London, UK: Jessica Kingsley.

Cabibihan, J.-J., Javed, H., Ang, M., and Aljunied, S. M. (2013). Why Robots? A Survey on the Roles and Benefits of Social Robots in the Therapy of Children with Autism. Int. J. Soc. Robotics 5, 593–618. doi:10.1007/s12369-013-0202-2

Charman, T., Swettenham, J., Baron-Cohen, S., Cox, A., Baird, G., and Drew, A. (1997). Infants with Autism: An Investigation of Empathy, Pretend Play, Joint Attention, and Imitation. Develop. Psychol. 33, 781–789. doi:10.1037/0012-1649.33.5.781

Chevalier, P., Floris, F., Priolo, T., De Tommaso, D., and Wykowska, A. (2021a). “‘iCub Says: Do My Motor Sounds Disturb You?’ Motor Sounds and Imitation with a Robot for Children with Autism Spectrum Disorder,” in The Thirteenth International Conference on Social Robotics, November 10–13, 2021 (Singapore: Springer LNAI series).

Chevalier, P., Kompatsiari, K., Ciardo, F., and Wykowska, A. (2020). Examining Joint Attention with the Use of Humanoid Robots-A New Approach to Study Fundamental Mechanisms of Social Cognition. Psychon. Bull. Rev. 27, 217–236. doi:10.3758/s13423-019-01689-4

Chevalier, P., Martin, J.-C., Isableu, B., Bazile, C., Iacob, D.-O., and Tapus, A. (2016). “Joint Attention Using Human-Robot Interaction: Impact of Sensory Preferences of Children with Autism,” in 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN) (New York, NY: Columbia University), 849–854. doi:10.1109/ROMAN.2016.7745218

Chevalier, P., Raiola, G., Martin, J.-C., Isableu, B., Bazile, C., and Tapus, A. (2017). “Do Sensory Preferences of Children with Autism Impact an Imitation Task with a Robot?,” in HRI ’17: Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction (New York, NY, USA: ACM), 177–186. doi:10.1145/2909824.3020234

Chevalier, P., Vasco, V., Willemse, C., De Tommaso, D., Tikhanoff, V., Pattacini, U., et al. (2021b). Upper Limb Exercise with Physical and Virtual Robots: Visual Sensitivity Affects Task Performance. Paladyn J. Behav. Robot. 12, 199–213. doi:10.1515/pjbr-2021-0014

Clabaugh, C., Mahajan, K., Jain, S., Pakkar, R., Becerra, D., Shi, Z., et al. (2019). Long-Term Personalization of an in-Home Socially Assistive Robot for Children with Autism Spectrum Disorders. Front. Robot. AI 6, 110. doi:10.3389/frobt.2019.00110

Coeckelbergh, M., Pop, C., Simut, R., Peca, A., Pintea, S., David, D., et al. (2016). A Survey of Expectations about the Role of Robots in Robot-Assisted Therapy for Children with ASD: Ethical Acceptability, Trust, Sociability, Appearance, and Attachment. Sci. Eng. Ethics 22, 47–65. doi:10.1007/s11948-015-9649-x

Courgeon, M., and Clavel, C. (2013). MARC: a Framework that Features Emotion Models for Facial Animation during Human-Computer Interaction. J. Multimodal User Inter. 7, 311–319. doi:10.1007/s12193-013-0124-1

Deng, E., Mutlu, B., and Mataric, M. J. (2019). Embodiment in Socially Interactive Robots. FNT in Robotics 7, 251–356. doi:10.1561/2300000056

Emery, N. J. (2000). The Eyes Have it: the Neuroethology, Function and Evolution of Social Gaze. Neurosci. Biobehavioral Rev. 24, 581–604. doi:10.1016/S0149-7634(00)00025-7

Ferrari, E., Robins, B., and Dautenhahn, K. (2009). “Therapeutic and Educational Objectives in Robot Assisted Play for Children with Autism,” in RO-MAN 2009-The 18th IEEE International Symposium on Robot and Human Interactive Communication (Toyama, Japan: IEEE), 108–114. doi:10.1109/roman.2009.5326251

Ghiglino, D., Chevalier, P., Floris, F., Priolo, T., and Wykowska, A. (2021). Follow the White Robot: Efficacy of Robot-Assistive Training for Children with Autism Spectrum Disorder. Res. Autism Spectr. Disord. 86, 101822. doi:10.1016/j.rasd.2021.101822

Gouaillier, D., Hugel, V., Blazevic, P., Kilner, C., Monceaux, J., Lafourcade, P., et al. (2008). The Nao Humanoid: a Combination of Performance and Affordability. CoRR arXiv:0807.3223.

Green, E., Stroud, L., Bloomfield, S., Cronje, J., Foxcroft, C., Hurter, K., et al. (2015). Griffiths Scales of Child Development. 3rd Edn. Göttingen, Germany: Hogrefe Publishing.

Johnson, C. P., Myers, S. M., and American Academy of Pediatrics Council on Children With Disabilities (2007). Identification and Evaluation of Children with Autism Spectrum Disorders. Pediatrics 120, 1183–1215. doi:10.1542/peds.2007-2361

Khosla, R., Nguyen, K., and Chu, M.-T. (2015). “Service Personalisation of Assistive Robot for Autism Care,” in IECON 2015 - 41st Annual Conference of the IEEE Industrial Electronics Society (Yokohama, Japan: IEEE), 2088–2093. doi:10.1109/IECON.2015.7392409

Mataric, M. J. (2017). Socially Assistive Robotics: Human Augmentation versus Automation. Sci. Robot. 2, aam5410. doi:10.1126/scirobotics.aam5410

Mou, Y., Shi, C., Shen, T., and Xu, K. (2020). A Systematic Review of the Personality of Robot: Mapping its Conceptualization, Operationalization, Contextualization and Effects. Int. J. Human-Computer Interaction 36, 591–605. doi:10.1080/10447318.2019.1663008

Mundy, P. (2018). A Review of Joint Attention and Social-Cognitive Brain Systems in Typical Development and Autism Spectrum Disorder. Eur. J. Neurosci. 47 (6), 497–514.

Mundy, P., and Newell, L. (2007). Attention, Joint Attention, and Social Cognition. Curr. Dir. Psychol. Sci. 16, 269–274. doi:10.1111/j.1467-8721.2007.00518.x

Mundy, P., Sullivan, L., and Mastergeorge, A. M. (2009). A Parallel and Distributed-Processing Model of Joint Attention, Social Cognition and Autism. Autism Res. 2, 2–21.

Pages, J., Marchionni, L., and Ferro, F. (2016). Tiago: the Modular Robot that Adapts to Different Research Needs. Int. Workshop Robot Modul. IROS.

Parmiggiani, A., Fiorio, L., Scalzo, A., Sureshbabu, A. V., Randazzo, M., Maggiali, M., et al. (2017). “The Design and Validation of the R1 Personal Humanoid,” in 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (Vancouver, BC, Canada: IEEE), 674–680. doi:10.1109/IROS.2017.8202224

Pennisi, P., Tonacci, A., Tartarisco, G., Billeci, L., Ruta, L., Gangemi, S., et al. (2016). Autism and Social Robotics: A Systematic Review. Autism Res. 9, 165–183. doi:10.1002/aur.1527

Robert, L. (2018). Personality in the Human Robot Interaction Literature: A Review and Brief Critique. Rochester, NY: Social Science Research Network. Available at: https://papers.ssrn.com/abstract=3308191 (Accessed September 18, 2019).

Robinson, L. D. (2010). Towards Standardisation of the Sensory Profile Checklist Revisited: Perceptual and Sensory Sensitivities in Autism Spectrum Conditions. Available at: https://era.ed.ac.uk/handle/1842/5308 (Accessed July 28, 2021).

Rutter, M., DiLavore, P., Risi, S., Gotham, K., and Bishop, S. (2012). Autism Diagnostic Observation Schedule: ADOS-2. Torrance, CA: Western Psychological Services.

Scassellati, B., Admoni, H., and Matarić, M. (2012). Robots for Use in Autism Research. Annu. Rev. Biomed. Eng. 14, 275–294. doi:10.1146/annurev-bioeng-071811-150036

Seibert, J. M., and Hogan, A. E. (1982). Procedures Manual for the Early Social-Communication Scales (ESCS). Coral Gables, FL: Mailman Center for Child Development, University of Miami.

Tomchek, S. D., and Dunn, W. (2007). Sensory Processing in Children with and without Autism: A Comparative Study Using the Short Sensory Profile. Am. J. Occup. Ther. 61, 190–200. doi:10.5014/ajot.61.2.190

van Straten, C. L., Smeekens, I., Barakova, E., Glennon, J., Buitelaar, J., and Chen, A. (2018). Effects of Robots' Intonation and Bodily Appearance on Robot-Mediated Communicative Treatment Outcomes for Children with Autism Spectrum Disorder. Pers Ubiquit Comput. 22, 379–390. doi:10.1007/s00779-017-1060-y

Keywords: autism, robot, human-robot interaction, robot-assisted therapy, sensory sensitivity

Citation: Chevalier P, Ghiglino D, Floris F, Priolo T and Wykowska A (2022) Visual and Hearing Sensitivity Affect Robot-Based Training for Children Diagnosed With Autism Spectrum Disorder. Front. Robot. AI 8:748853. doi: 10.3389/frobt.2021.748853

Received: 28 July 2021; Accepted: 09 December 2021;

Published: 12 January 2022.

Edited by:

Adham Atyabi, University of Colorado Colorado Springs, United States

Reviewed by:

Fernanda Dreux M. Fernandes, University of São Paulo, Brazil

Konstantinos Tsiakas, Eindhoven University of Technology, Netherlands

Copyright © 2022 Chevalier, Ghiglino, Floris, Priolo and Wykowska. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: A. Wykowska, agnieszka.wykowska@iit.it

P. Chevalier, D. Ghiglino, F. Floris, T. Priolo3 and A. Wykowska