- Hank Greenspun School of Journalism and Media Studies, University of Nevada, Las Vegas, NV, United States

Research on Generative Artificial Intelligence (GAI) in higher education primarily focuses on faculty use and experiences, with limited attention given to why some faculty abstain from using it. Drawing on Innovation Resistance Theory, this study addresses this gap by exploring the perceptions of both faculty users and non-users of GAI and identifying the reasons and concerns that lead non-users to avoid it. A survey of 294 full-time higher education faculty from two mid-size U.S. public universities was conducted. Using qualitative and quantitative analysis, results show that over one-third of the faculty members opted out of using GAI for five primary reasons: not ready/not now, no perceived value, identity in tension, threat to human intelligence, and future fears and present risks. While both groups expressed concerns about academic dishonesty, non-users associated GAI with broader negative societal consequences, whereas users viewed it as related to innovation and potential benefits. For non-users, top concerns included a perceived lack of originality and accountability, while users were primarily concerned with accuracy. Surprisingly, general comfort with technology emerged as a significant predictor of non-user faculty’s behavioral intention to use GAI. This research contributes to understanding faculty resistance to GAI, emphasizing the need to balance its benefits with drawbacks in higher education.

Introduction

As Generative AI (GAI) becomes more widespread, it has the potential to transform the landscape of higher education, bringing significant benefits and challenges that warrant careful consideration (Cordero et al., 2024). Faculty play a pivotal role in shaping the educational experience, and their use of or reluctance to embrace AI could influence the evolution of academic practices (Shata and Hartley, 2025). Past research has highlighted that many educators view GAI as a valuable tool for enhancing teaching efficiency, enriching the learning experience, and improving student engagement (Francis et al., 2025). However, Mah and Groß’s (2024) analysis revealed that 61.2% of the faculty profiles express more concerns and challenges about GAI than benefits. Many concerns have emerged around academic integrity, accuracy, cheating, false information, and skill development (Nikolic et al., 2024; Williams and Ingleby, 2024). Faculty also worry that GAI often prioritizes commercial interests over pedagogical objectives (Aad and Hardey, 2025).

Past research on GAI in higher education has been largely exploratory, with a primary focus on students’ perceptions (Chan and Hu, 2023; Mansoor et al., 2024). Much of the existing literature on faculty is primarily descriptive, often presented as systematic or literature reviews (Crompton and Burke, 2023; Sekli et al., 2024). While the limited empirical research focuses on uses, benefits, and strategies for effective AI integration in academia (Chiu, 2024; Noviandy et al., 2024), there remains a significant gap in understanding educators’ hesitations and concerns, particularly the underlying reasons for limited GAI adoption in academia. For example, Cervantes et al. (2024) found that 42% of faculty had not used AI at all despite being aware of it, yet did not deeply explore the reasons behind this lack of adoption. Most existing literature tends to list common concerns without fully explaining why they arise. In response, there is a rising need for robust ethical frameworks to guide GAI use and for a deeper dialog within academic communities (Nikolic et al., 2024). These ongoing hesitations and concerns, coupled with the absence of clear guidelines and institutional support, underscore the urgent need to understand why some educators choose to opt out of using GAI.

Innovation resistance theory (IRT) explains why individuals resist adopting innovations (Ram and Sheth, 1989). Rooted in the tendency to preserve the status quo, IRT posits that resistance to innovation stems from two main types of barriers: functional barriers related to practical concerns about the innovation’s use, such as usability, value, and risk, and psychological barriers linked to users’ beliefs, perceptions, and cultural factors. For example, IRT has been applied to examine students’ resistance to AI technologies, highlighting barriers such as usage, value, risk, image, tradition, and cost factors (Alghamdi and Alhasawi, 2024). In healthcare, patients’ resistance to AI was shaped by the need for personal contact, perceived technological dependence, and general skepticism (Sobaih et al., 2025).

Understanding faculty concerns and the reasons behind their hesitations is crucial for overcoming barriers to AI integration in academia and for ensuring an ethical implementation that supports, rather than replaces, human creativity and critical thinking, while also preparing students for an AI-driven workforce. This research aims to explore and compare GAI perceptions and concerns among faculty non-users and users of AI, to understand why faculty abstain from using GAI, and to identify the factors affecting their decision to adopt. This will offer valuable insights that will help create a balanced, responsible approach to AI adoption in higher education, maximizing its benefits while minimizing potential risks. Thus, the following are proposed:

RQ: What are faculty members’ perceptions and concerns regarding GAI, and what are the reasons non-users choose to opt out of GAI in their practice?

H1: There is a significant difference between faculty users and non-users of GAI in terms of their (a) concerns and (b) comfort levels, such that non-users report higher concerns and lower comfort with the technology compared to users.

H2: Among non-users of GAI, (a) concerns about the technology are negatively associated with the intention to use it, whereas (b) general comfort with technology is positively associated with the intention to use GAI.

Research design

Participants

An online survey was designed using Qualtrics and administered to full-time higher education faculty members recruited from two mid-size public U.S. universities, one on the East Coast and one in the Southwest. The sample (N = 294) comprised faculty members in the social sciences and humanities disciplines, as these disciplines use GAI in a similar manner that differs fundamentally from its application in STEM disciplines.

Procedures

Faculty emails were compiled from an online directory, then narrowed to social sciences and humanities. Using Qualtrics, we sent email invitations to participate in the study along with a survey link. Upon clicking, participants first completed a consent form, followed by a filter question asking whether they use GAI. Non-users were asked about their perceptions, concerns, reasons for non-use, behavioral intentions, and technology comfort, followed by demographics. Users answered the same questions and also reported their current GAI uses and impressions. To ensure data quality, attention-check questions were included to detect inattention or rushed responses. Data collection was conducted in 2024.

Thematic analysis was employed to identify, organize, and interpret patterns of meaning within the data. The process began with close reading to become familiar with the content and taking notes of initial observations. Using an inductive approach, the data were re-examined closely and assigned initial codes, grouping relevant parts together based on emerging concepts. Next, the codes and fragmented ideas that link the data were brought together, the data were analyzed for patterns of similarity or contrast, and the codes were synthesized into potential themes. Finally, the themes were refined and modified to reflect the data and patterns of shared meaning.

Measures

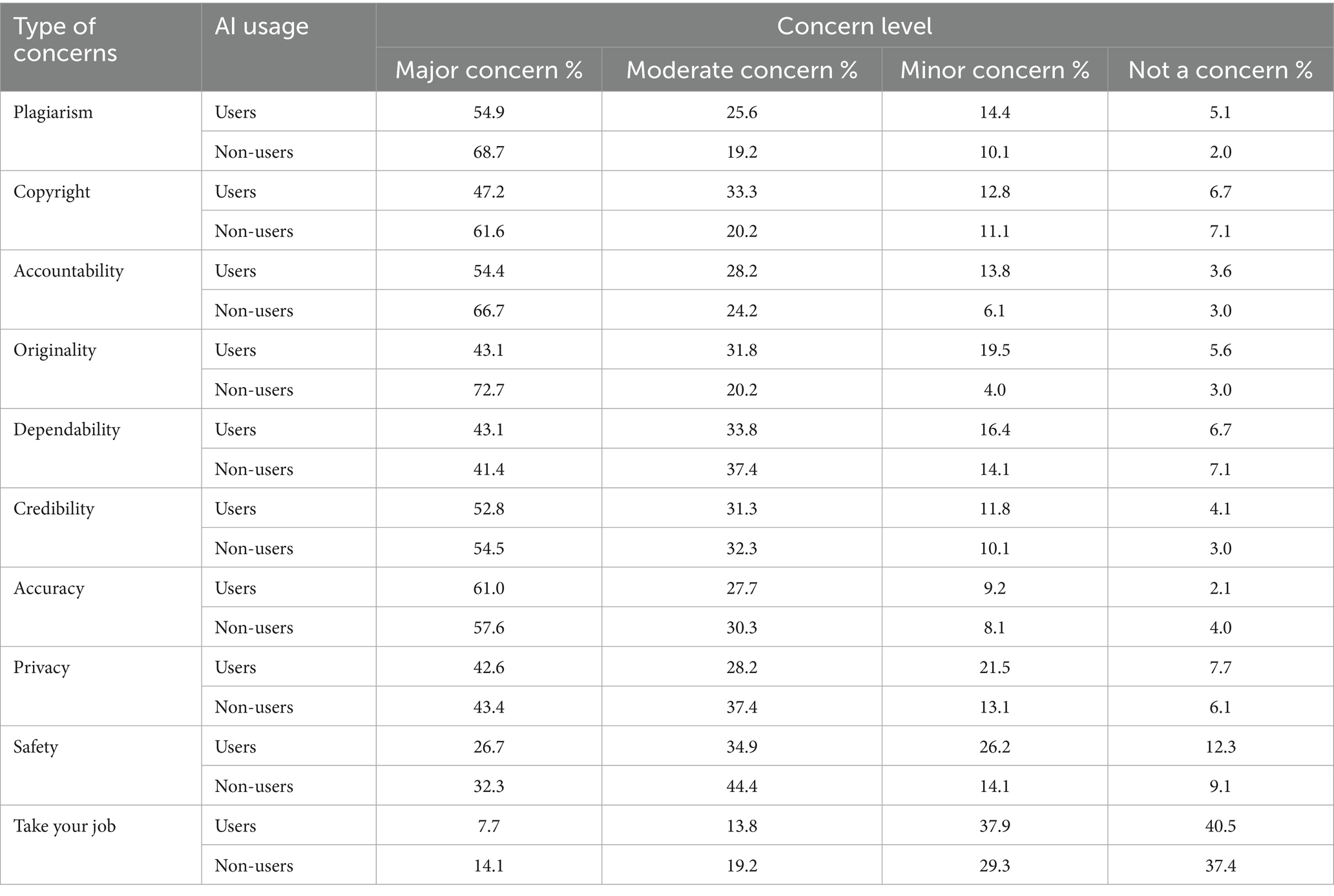

All the theoretical constructs used existing measures, adapted to fit the AI context and measured using multi-item scales validated in previous research. Behavioral intention is the level of intention to adopt GAI, measured with four statements adapted from Youk and Park (2023) on a five-point Likert scale (α = 0.957). Comfort with technology captures the acceptance, use, and overall level of comfort with technology. It was measured with six statements adapted from Rosen et al. (2013) on a five-point Likert scale (α = 0.820). GAI concerns are worries about the potential negative impacts of GAI. The measure included nine items obtained from interviews and discussions with college academics and from media reports. Each item was assessed on a five-point scale (see Table 1) (α = 0.842).
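The α values reported for each scale are Cronbach's alpha, a standard internal-consistency coefficient. The following is a minimal sketch of how such a coefficient can be computed from item-level responses; the function name `cronbach_alpha` and the `demo` responses are hypothetical illustrations, not the study's data or code.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    # Sample variance of each respondent's summed scale score.
    total_var = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# HYPOTHETICAL five-point responses from five respondents to a four-item scale.
demo = [
    [4, 5, 4, 4],
    [2, 2, 3, 2],
    [5, 4, 5, 5],
    [3, 3, 3, 4],
    [1, 2, 1, 2],
]
print(round(cronbach_alpha(demo), 3))
```

Values closer to 1 indicate that the items vary together, which is why the coefficient is commonly reported alongside multi-item Likert scales.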

Sample characteristics

A total of 294 higher-education faculty completed the survey; 33.6% reported not using GAI. Faculty roles included Full Professors (24.5%), Associate Professors (23%), Assistant Professors (19%), Faculty in residence (7.5%), Adjunct Faculty (6.5%), and Visiting Faculty (6.5%). Most (82%) held no administrative roles. Experience levels were: 20+ years (35%), 9–14 years (21%), 4–8 years (20%), and 15–20 years (15%). Participants identified as Female (45%) or Male (43%), or preferred not to say (8%). Participants identified as Caucasian (69%), African American (7%), Asian/Pacific Islander (7%), and Hispanic (6%). The most common age groups were 41–50 (25%), 51–60 (23%), 31–40 (21%), and 61–70 (15%).

Findings

Qualitative analysis

Key reasons for faculty NOT using GAI

Thematic analysis highlighted five key themes/barriers:

1. Not Ready, Not Now: This theme highlights faculty’s lack of sufficient knowledge and information about GAI technology, as reflected in repeated comments such as “Do not know how -Do not know enough.” They are not familiar with the technology or how to use it and have not explored it yet. Additionally, faculty feel busy with other responsibilities such as research, teaching, and service, and they cannot take on another new responsibility. One faculty member said, “I’m busy enough without one more thing to learn.” The implicit expectation that faculty should already comprehend how AI is being applied in the industry, while the field itself remains in a state of rapid development with no universally accepted frameworks or standardized practices, puts considerable pressure on faculty, making them feel overwhelmed and more hesitant to use it.

2. No Perceived Value: This theme focuses on faculty’s perceptions of GAI. Some see no usefulness or meaningful value in using it, arguing that there is no need, no value, and no benefit. They question its utility and think it is irrelevant and offers no advantage to their disciplines or expertise. For example, one faculty member said, “No motivation to use it, or figure out how to use it. I’m fine with writing and searching for myself. I do not see much to be gained from using GenAI.” Faculty raised the further concern that relying on GAI may increase dependency on technology and weaken individuals’ critical thinking and analytical skills. Additionally, some faculty believe GAI not only fails to offer meaningful improvements but also produces poor outcomes, arguing “results are gibberish and unsettling, which can change academia in a negative way.”

3. Identity in Tension: This theme centers on faculty’s self-image, perceptions about oneself, and how others see them. It is rooted in a sense of ownership, pride, and professional authenticity in relation to their work. At its core, it is about being satisfied with who you are, what you do, and how others see you. Faculty see GAI as a questionable tool for experts and serious academic work, one that undermines professional integrity because they associate its use with a lack of effort or expertise. One faculty member said, “I’m an accomplished researcher and writer. When I put my name on something, it is my own. I’m uncomfortable with work that is not one’s own effort.” Additionally, some faculty are worried about their image and how colleagues and students see them, arguing, “I want my students to see me as an authentic scholar who can teach them how to learn without technology-aided software.” Underlying these concerns is a deeper struggle with finding purpose and value in their work and academic roles. GAI challenges the aspects of their work that bring them fulfillment; as one participant put it, “it challenges most of the meaningfulness I get from the job.”

4. Threat to Human Intelligence: This theme reflects faculty concerns that using GAI can undervalue, replace, or erase human creativity, critical thinking, individuality, and original work. Many worry that overreliance on GAI may lead to intellectual laziness, diminishing the value of deep thought, undervaluing human work, and substituting it with GAI work. For example, one faculty member shared, “I do not use it because I object to it on a moral and creative basis; I know these tools are going to be used to undermine authors, artists, and other creators to devalue, steal from, and replace their work.” Many faculty value their freedom to express themselves, stand up for their beliefs, and appreciate human intelligence. One explained, “I’m a humanist disciplinarily and intellectually. I do not want human creativity and intellectual thought outsourced to computers.”

5. Future Fears and Present Risks: Some faculty members expressed several ethical concerns about GAI. A primary concern is misuse, particularly in relation to privacy and copyright, where GAI may exploit information in harmful or unauthorized ways. Faculty also worry about potential harmful consequences due to risks of falsification, fabrication, plagiarism, and cheap labor. One faculty member said, “reading about the underpaid workers who are traumatized by plunging the darkest depths of the internet to flag content, so ChatGPT does not return inappropriate content is an example of unethical and horrific human labor.” Another concern is distrust in the output generated and its lack of accuracy, which led faculty to further question the quality and reliability of the data. Additionally, some faculty expressed concern about letting technology determine the outcome of human endeavors. They warned that “Technology on a societal level furthers socio-political interest and accelerates societal devolution towards a dystopia as never has been seen in this world.”

Faculty perceptions of GAI

A qualitative analysis of faculty members’ perceptions of GAI was conducted based on their brief descriptions of the GAI technology. Non-users associate GAI with academic dishonesty, with concerns centered on cheating, plagiarism, copyright violations, inaccuracy, unethical use, cheap shortcuts, invasion of privacy, and a lack of deep third-level thought that causes “intellectual laziness.” Many express fears about its possible negative societal impact, describing it with terms like the “Terminator, Skynet, Pandora’s box, dystopian future, uncharted, I-robot,” warning that it could cause trouble or lead to significant complications such as a perceived “loss of humanity,” or dismissing it as “garbage.” However, some faculty acknowledge its powerful potential, describing it as helpful, smart, fast, and full of possibilities. A few faculty adopt a more balanced perspective, viewing it as a “double-edged sword - opportunity, yet scary, good and bad, strange but awesome.”

Faculty users perceive it as “an evolution, advanced technology or advanced Google” that has the potential to bring about “revolutionary change.” Many see significant benefits, particularly in its role as an “assistant,” where it is praised for being “efficient, helpful, convenient, saves time, good for brainstorming, and a powerful tool” for boosting the 3 Ps: productivity, prediction, and problem-solving. Participants successfully identified and described GAI as “LLMs, machine learning, and language model.” However, some faculty express concerns about its experimental nature, especially its unreliability and unpredictability, questioning its trustworthiness with questions such as “What if…. - what is true?” This uncertainty about its future impact leaves them uneasy, viewing it as inherently risky. They share the same academic dishonesty concerns as non-users, in addition to “hallucinations, biased algorithms, lack of regulation, and reduced agency.”

Faculty concerns about GAI

As shown in Table 1, non-users of GAI are most concerned with its potential lack of originality and accountability, supporting the above-mentioned theme on the threat to creativity and human intelligence, while users of GAI prioritize concerns about accuracy. Both groups, however, share common concerns regarding plagiarism, accountability, and copyright issues. Interestingly, “job displacement” was the least significant concern for both GAI users and non-users.

Quantitative analysis

For H1, an independent-samples t-test showed that GAI concerns were higher among faculty users (M = 1.94, SD = 0.56) than among non-users (M = 1.77, SD = 0.60), and this difference was statistically significant, t(292) = 2.394, p < 0.05. Because the difference ran opposite to the predicted direction, H1a is not supported. However, another independent-samples t-test revealed a statistically significant difference in comfort with technology between the faculty groups, t(292) = 2.744, p < 0.05, such that non-users reported lower comfort levels (M = 3.66, SD = 0.76) than faculty users (M = 3.92, SD = 0.76). Thus, H1b is supported.
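As a rough plausibility check, an independent-samples t statistic can be recomputed from the reported means and standard deviations. The sketch below uses the pooled-variance (equal variances) form and assumes group sizes of 195 users and 99 non-users, which are inferred from the reported 33.6% non-use rate and N = 294 rather than stated in the text; the function name `pooled_t` is hypothetical.

```python
import math


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Pooled-variance t statistic and degrees of freedom from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# ASSUMPTION: group sizes inferred from 33.6% of 294; not reported directly.
N_USERS, N_NONUSERS = 195, 99

t_concerns, df = pooled_t(1.94, 0.56, N_USERS, 1.77, 0.60, N_NONUSERS)
t_comfort, _ = pooled_t(3.92, 0.76, N_USERS, 3.66, 0.76, N_NONUSERS)
print(f"concerns: t({df}) = {t_concerns:.2f}")
print(f"comfort:  t({df}) = {t_comfort:.2f}")
```

Under these assumptions the recomputed statistics land close to, though not exactly on, the reported values; rounding of the published means and standard deviations and the unreported exact group sizes could account for the small gaps.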

For H2, a multiple regression found that the model was significant, F(2, 98) = 9.741, p < 0.001, R² = 0.169. Only general comfort with technology was a significant positive predictor of the intention to use GAI (β = 0.576, t(98) = 4.095, p < 0.001); concerns about GAI were not a significant predictor (p = 0.056). Thus, only H2b was supported.
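For an ordinary least squares model with an intercept, the overall F statistic is tied to R² by F = (R²/k) / ((1 − R²)/(n − k − 1)), where k is the number of predictors. The sketch below applies that identity to the reported figures; the sample size n = 101 is an assumption inferred from the reported degrees of freedom (2, 98), and the function name `f_from_r2` is hypothetical.

```python
def f_from_r2(r2, k, n):
    """Overall F statistic and its degrees of freedom from R-squared.

    r2: coefficient of determination, k: number of predictors,
    n: number of observations (model assumed to include an intercept).
    """
    df_model = k
    df_resid = n - k - 1
    f_stat = (r2 / df_model) / ((1 - r2) / df_resid)
    return f_stat, df_model, df_resid


# ASSUMPTION: n = 101 is inferred from the residual df of 98 with two predictors.
f_stat, df1, df2 = f_from_r2(0.169, k=2, n=101)
print(f"F({df1}, {df2}) = {f_stat:.2f}")
```

With the rounded R² of 0.169 the identity gives a value of roughly 9.97, in the neighborhood of the reported 9.741; the difference may stem from rounding or from model details not reported in the text.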

Discussion and conclusion

The study findings address the gap in the literature by providing new empirical evidence on the faculty perspective, moving beyond surface-level concerns to explore in depth the underlying reasons or barriers for opting out of GAI. The five themes/barriers align with Innovation Resistance Theory (Ram and Sheth, 1989), which highlights functional and psychological barriers to adopting new technology. The themes Not ready/Not now, No perceived value, and Future fears and present risks are consistent with the functional barriers related to performance and outcomes. The Identity in tension and Threat to human intelligence themes represent the psychological barriers, as they challenge faculty’s established values, core beliefs, and professional identities, creating dissonance that results in faculty resisting GAI (Talwar et al., 2024).

Regarding faculty perceptions, users tend to emphasize the potential benefits of GAI, while non-users adopt a more cautious and pessimistic perspective. These findings align with Mah and Groß’s (2024) faculty profiles, such that users correspond with the “optimistic” profile, which emphasizes the benefits of AI tools, whereas non-users align with the “critical” and “critically reflective” profiles, which recognize more of the challenges and, to varying degrees, the benefits of AI. This distinction reflects a form of skepticism toward technology, rather than complete resistance.

Faculty expressed concern about academic dishonesty, consistent with past literature (Cervantes et al., 2024; Nikolic et al., 2024). Yet, a deeper analysis revealed distinct differences: non-users were concerned about GAI’s lack of originality, threat to creative work, and negative societal impact. In contrast, users were more concerned with “post-use” issues, such as the accuracy and reliability of outputs, potential bias in the information, and the lack of control over data. Interestingly, both groups rated job displacement as their least significant concern. Contrary to common narratives and fears reported in the media and among the public, faculty do not see GAI as a threat to employment, perhaps because they feel their roles require complex, human-centered skills that AI cannot replicate (Benzinger et al., 2023).

Quantitative analysis revealed that non-users reported lower levels of general comfort with technology, and that lower comfort was associated with weaker intention to use GAI. Their reluctance appears rooted in a broader discomfort with new technologies that fall outside their comfort zone, potentially contributing to limited adoption. Although the effect of concerns about GAI fell just short of statistical significance (p = 0.056), concerns may still play a subtle role in shaping adoption decisions, which warrants further investigation across diverse contexts and populations.

Contributions, limitations and future directions

This research offers valuable insights into the barriers driving faculty resistance to GAI in higher education, contributing to the literature on technology adoption/resistance. These findings challenge existing technology acceptance models by highlighting that faculty resistance is not solely a function of perceived usefulness or ease of use, but is also rooted in deeper identity-based, value/belief system, and ethical concerns. This suggests that current models must expand to account for the emotional and value-driven reasons, not just practical ones.

The findings inform the development of institutional policies that address faculty concerns through training, support, and clear guidelines. They also encourage ongoing academic dialog to balance GAI’s benefits with ethical implications, ensuring its use enhances rather than undermines human creativity and critical thinking, and prepares students for an AI-integrated future while preserving academic values. However, a key limitation of this study is its focus on two U.S. universities within the humanities and social sciences. Thus, findings may not generalize to STEM disciplines or to institutions in international contexts. Future research can track changes over time and focus on disciplinary differences, especially in STEM.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by UNLV Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

AS: Investigation, Writing – review & editing, Formal analysis, Methodology, Writing – original draft, Data curation, Supervision, Conceptualization, Project administration.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. The publication fees for this article were supported by the UNLV University Libraries Open Article Fund.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author declares that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Aad, S., and Hardey, M. (2025). Generative AI: hopes, controversies, and the future of faculty roles in education. Qual. Assur. Educ. 33, 267–282. doi: 10.1108/QAE-02-2024-0043

Alghamdi, S., and Alhasawi, Y. (2024). Exploring the factors influencing the adoption of ChatGPT in educational institutions: insights from innovation resistance theory. J Appl Data Sci 5, 474–490. doi: 10.47738/jads.v5i2.198

Benzinger, L., Ursin, F., Balke, W. T., Kacprowski, T., and Salloch, S. (2023). Should artificial intelligence be used to support clinical ethical decision-making? A systematic review of reasons. BMC Med. Ethics 24:48. doi: 10.1186/s12910-023-00929-6

Cervantes, J., Smith, B., Ramadoss, T., D'Amario, V., Shoja, M. M., and Rajput, V. (2024). Decoding medical educators' perceptions on generative artificial intelligence in medical education. J. Investig. Med. 72, 633–639. doi: 10.1177/10815589241257215

Chan, C. K. Y., and Hu, W. (2023). Students’ voices on generative AI: perceptions, benefits, and challenges in higher education. Int. J. Educ. Technol. High. Educ. 20:43. doi: 10.1186/s41239-023-00411-8

Chiu, T. K. F. (2024). Future research recommendations for transforming higher education with generative AI. Comput. Educ. Artif. Intell. 6:100197. doi: 10.1016/j.caeai.2023.100197

Cordero, J., Torres-Zambrano, J., and Cordero-Castillo, A. (2024). Integration of generative artificial intelligence in higher education: best practices. Educ. Sci. 15:32. doi: 10.3390/educsci15010032

Crompton, H., and Burke, D. (2023). Artificial intelligence in higher education: the state of the field. Int. J. Educ. Technol. High. Educ. 20:22. doi: 10.1186/s41239-023-00392-8

Francis, N. J., Jones, S., and Smith, D. P. (2025). Generative AI in higher education: balancing innovation and integrity. Br. J. Biomed. Sci. 81:14048. doi: 10.3389/bjbs.2024.14048

Mah, D.-K., and Groß, N. (2024). Artificial intelligence in higher education: exploring faculty use, self-efficacy, distinct profiles, and professional development needs. Int. J. Educ. Technol. High. Educ. 21:58. doi: 10.1186/s41239-024-00490-1

Mansoor, H. M., Bawazir, A., Alsabri, M. A., Alharbi, A., and Okela, A. H. (2024). Artificial intelligence literacy among university students: a comparative transnational survey. Front. Commun. 9:24. doi: 10.3389/fcomm.2024.1478476

Nikolic, S., Wentworth, I., Sheridan, L., Moss, S., Duursma, E., Jones, R. A., et al. (2024). A systematic literature review of attitudes, intentions and behaviours of teaching academics pertaining to AI and generative AI (GenAI) in higher education: an analysis of GenAI adoption using the UTAUT framework. Australas. J. Educ. Technol. 40, 56–75. doi: 10.14742/ajet.9643

Noviandy, T. R., Maulana, A., Idroes, G. M., Zahriah, Z., Paristiowati, M., Emran, T. B., et al. (2024). Embrace, don’t avoid: reimagining higher education with generative artificial intelligence. J Educ Manage Learn. 2, 81–90. doi: 10.60084/jeml.v2i2.233

Ram, S., and Sheth, J. N. (1989). Consumer resistance to innovations: the marketing problem and its solutions. J. Consum. Mark. 6, 5–14. doi: 10.1108/EUM0000000002542

Rosen, L. D., Whaling, K., Carrier, L. M., Cheever, N. A., and Rokkum, J. (2013). The media and technology usage and attitudes scale: An empirical investigation. Comput. Hum. Behav. 29, 2501–2511.

Sekli, G. M., Godo, A., and Véliz, J. C. (2024). Generative AI solutions for faculty and students: a review of literature and roadmap for future research. J. Inf. Technol. Educ. Res. 23:014. doi: 10.28945/5304

Shata, A., and Hartley, K. (2025). Artificial intelligence and communication technologies in academia: faculty perceptions and the adoption of generative AI. Int. J. Educ. Technol. High. Educ. 22:14. doi: 10.1186/s41239-025-00511-7

Sobaih, A. E. E., Chaibi, A., Brini, R., and Abdelghani Ibrahim, T. M. (2025). Unlocking patient resistance to AI in healthcare: a psychological exploration. Eur J Invest Health Psychol Educ 15:6. doi: 10.3390/ejihpe15010006

Talwar, M., Corazza, L., Bodhi, R., and Malibari, A. (2024). Why do consumers resist digital innovations? An innovation resistance theory perspective. Int. J. Emerg. Mark. 19, 4327–4342. doi: 10.1108/IJOEM-03-2022-0529

Williams, R. T., and Ingleby, E. (2024). Artificial intelligence (AI) in practitioner education in higher education (HE). Practice 6, 105–111. doi: 10.1080/25783858.2024.2380282

Keywords: AI, higher education, faculty, concerns, AI resistance, innovation resistance theory

Citation: Shata A (2025) “Opting Out of AI”: exploring perceptions, reasons, and concerns behind faculty resistance to generative AI. Front. Commun. 10:1614804. doi: 10.3389/fcomm.2025.1614804

Edited by:

Davide Girardelli, University of Gothenburg, Sweden

Reviewed by:

Rashmi Kodikal, Graphic Era University, India

Gila Kurtz, Holon Institute of Technology, HIT, Israel

Copyright © 2025 Shata. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Aya Shata
