- ¹School of Languages and Literature, Wuhan University of Bioengineering, Wuhan, China

- ²Research Institute of Communication, Communication University of China, Beijing, China

Introduction: Digital assistive technologies are transforming social inclusion for visually impaired individuals, yet their public health implications remain contested. This study aims to examine how interface cues and platform algorithms shape affective labor in assistive technologies, and what implications this has for health equity. Focusing on the transnational platform “Be My Eyes,” we analyzed how technology-mediated caregiving reshapes social support networks and impacts health equity through affective labor.

Methods: The study employed digital ethnography. Semi-structured interviews were conducted with 11 volunteers who used Be My Eyes heavily. In addition, the respondents’ help diaries were analyzed.

Results: Four interlinked themes emerged. (1) Feeling rules: interface cues (urgency banners, countdowns, re-matching scripts, default anonymity, notification cadence) specify when to step in, how fast to act, and what tone to use; (2) Surface acting: volunteers manage voice, wording, and pacing to keep calls steady under time pressure; (3) Deep acting: stance shifts from “savior” to collaborator, using shared metaphors and pacing to co-construct meaning; and (4) Emotional dissonance: speed cues, metrics, and modality limits (e.g., tactile gaps) can pull felt emotion and displayed composure apart.

Conclusion: Our findings critique “algorithmic altruism,” wherein empathy is rendered computable via metrics such as response speed and closure, and highlight hidden public health risks, such as emotional exhaustion in volunteers. We identify scope-bounded design levers—tempo flexibility for openings/closings, minimal opt-in identity cues to allow warmth without losing anonymity, and light relational continuity—to support responsiveness and emotional integrity. We also mark boundary conditions (task type, modality demands, cultural fit). This study urges a shift toward sensory-diverse, equity-oriented design and policy protections for affective labor, advancing health equity by centering disabled agency rather than perpetuating market-driven disparities. These insights complement Sustainable Development Goals (SDG 3 and SDG 10) by specifying interface-level mechanisms through which technologies can bridge, rather than widen, inequalities.

1 Introduction

Digital media have significantly improved the daily lives of individuals with disabilities (Ellis et al., 2019). For those with visual impairments, smartphones and assistive platforms now perform tasks such as navigation and entertainment, reducing barriers to social participation. In this sense, technological advancements have bridged gaps caused by disabilities, mitigating inequalities in social participation to some extent (Zyskowski et al., 2015; Khetarpal, 2015). Importantly, these tools transcend functional utility: they reshape social networks by connecting users beyond homogeneous circles, challenging the stigmatized identities imposed on disabled groups (Hamraie, 2017; Dobransky and Hargittai, 2016). As argued by Alabi and Mutula (2020), virtual environments can temporarily obscure disability markers, enabling visually impaired individuals to engage in social interactions with greater confidence, reducing communication barriers with non-disabled individuals, and strengthening their social self-efficacy.

However, this narrative of digital inclusion conceals a paradox: while technologies eliminate spatial barriers, they risk consolidating new forms of segregation (Badr et al., 2024). As Roulstone (2016) cautions, digitally mediated spaces may intensify isolation by trapping disabled users in algorithmic echo chambers, reinforcing withdrawal and disconnection (Shpigelman and Gill, 2014). Combined with persistent infrastructural inequalities, such as inaccessible urban design (Imrie, 2012), and an overreliance on smart devices (Ellis et al., 2019), visually impaired individuals remain caught between digital liberation and deeper marginalization.

At the same time, the rapid development of algorithms and platform technologies, particularly with the integration of artificial intelligence, has made algorithmic care increasingly embedded in everyday life, especially in the health domain. Digital accessibility now provides unprecedented convenience for disability communities. For the visually impaired, assistive platforms such as intelligent voice assistants, accessible navigation applications, and telemedicine services aim to bridge information gaps and enhance health and wellbeing. Yet recent research also highlights new tensions: these platforms can foster “enclosed” virtual circles that reinforce social isolation rather than integration (Rauchberg, 2025). In contexts such as China, where structural barriers such as insufficient transport accessibility and incomplete social recognition remain prevalent (Jin, 2024), reliance on digital platforms has become both a coping strategy and a new burden. Thus, platform design does not merely enable or constrain use; it actively shapes users’ affective labor in adapting to interfaces and negotiating stigma. This dual role positions digital accessibility as a key determinant of health equity, with platform rules and defaults functioning as hidden mechanisms that may either mitigate or exacerbate existing inequalities (Kerdar et al., 2024). Groom et al. (2024) further propose the Digital Health Equity–Focused Implementation Research Conceptual Model (DH-EquIR), which integrates health equity into each stage of digital health deployment—from planning and design to implementation and evaluation—highlighting how inclusive frameworks can systematically reduce inequities embedded in technological defaults.

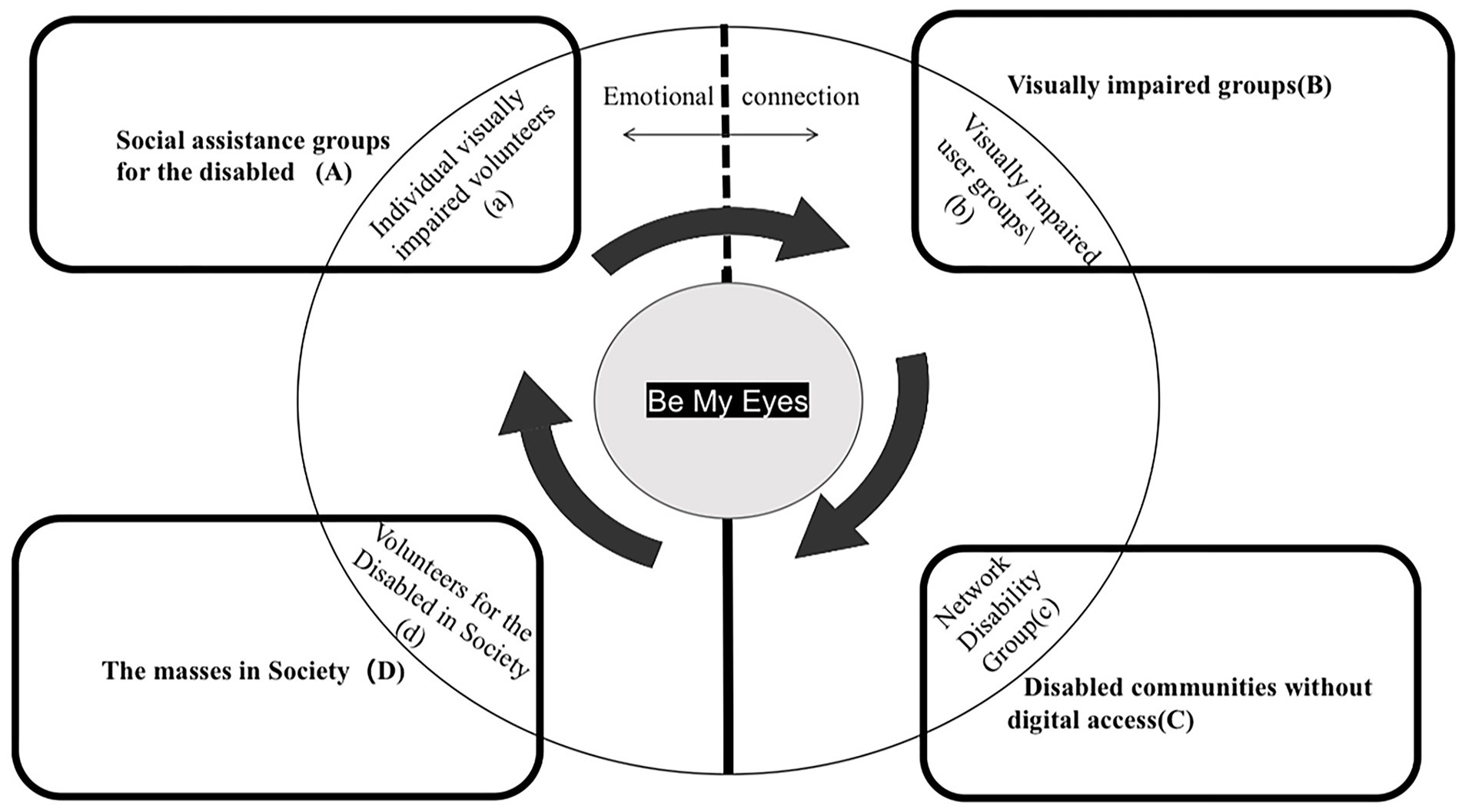

Amid these tensions, platforms such as Be My Eyes function as socio-technological experiments. Launched in 2015, this crowdsourced assistance app connects over 700,000 visually impaired users with 7 million global volunteers via real-time video calls, providing immediate visual support for tasks like reading labels or navigating public transit. Unlike traditional charity models, Be My Eyes exemplifies Latour’s (2005) concept of “hybrid collectives,” which involve collaborations between human and non-human actors (e.g., algorithms, interfaces) to reconfigure social relations. From a digital society perspective, the platform successfully integrates grassroots volunteer networks with institutional resources via bottom-up, agentic communication, creating an efficient and inclusive disability assistance system (Oomen and Aroyo, 2011). This model not only provides substantial aid to visually impaired individuals but also highlights digital media’s potential to foster social inclusion and communal wellbeing (Alper and Goggin, 2017), and transforms “digital liberation” from abstract rhetoric into tangible reality (Figure 1).

Recent studies indicate that interface cues and default settings in digital health services operate as affective rules that shape users’ emotional regulation and engagement, with significant implications for health equity (Pettersson et al., 2023). Yet little is known about how such mechanisms unfold in assistive platforms for the visually impaired, where design choices may carry unique cultural and affective implications. Moreover, although such platforms enhance accessibility (Oomen and Aroyo, 2011), their impact on public health, particularly on mental health and equity, remains underexplored. For instance, how does technology-mediated care affect emotional wellbeing? Might algorithmic indicators like response speed inadvertently reinforce health disparities? To address these questions, we ask how interface cues and platform defaults operate as feeling rules that shape pace, tone, and the scope of help; how volunteers manage displays under time pressure; when stance shifts toward collaboration enable shared meaning; and where misalignment between speed cues, metrics, or modality limits produces strain. Situated in the Chinese context, where mianzi (face concerns around evaluation and social standing), notification-dense mobile ecologies, and strong efficiency norms may amplify or soften these dynamics, we treat Hochschild’s concepts as sensitizing concepts, rather than a priori categories, that link environmental factors to participation and sensory translation. This framing clarifies not only benefits but also risks (e.g., “algorithmic altruism”) and motivates design and policy levers that prioritize sensory diversity and affective labor protections.

2 Literature review

2.1 Re-examining digital inclusion

Scholars have long analyzed the relationship between digital technologies and disability inclusion through the “digital divide” framework, which emphasizes disparities in access to information and skills among marginalized groups (Khasawneh, 2024; Lythreatis et al., 2022; Wei and Hindman, 2011). Early research prioritized material barriers like inaccessible interfaces (Goggin and Newell, 2003), whereas recent critiques increasingly examine how digital platforms reinforce or challenge structural ableism (Hamraie, 2017; Dobransky and Hargittai, 2016; Wolbring et al., 2024). For visually impaired individuals, smartphones and assistive tools appear to democratize social participation, enabling navigation of both physical and virtual spaces (Zyskowski et al., 2015). However, as Oliver (2013) argues, disability exclusion stems not only from physical limitations but also from socio-material systems that privilege normative abilities. This “social model” of disability highlights dual barriers faced by visually impaired communities: infrastructural inaccessibility (Kitchin and Law, 2001) and attitudinal stigmatization (Shakespeare, 1994), both embedded within Campbell’s (2009) concept of the “production of disability.”

The emergence of digital technologies complicates this dichotomy by blurring disability markers (Alabi and Mutula, 2020; Alper, 2017) and creating a seemingly egalitarian space. For people with disabilities, how digital platforms help them find a sense of relational belonging is crucial. Baumeister and Leary’s (1995) belongingness theory suggests that sustained and effective interpersonal connections are fundamental to human motivation. Yet, current technological applications may amplify the “algorithmic isolation” described by Roulstone (2016), where technological systems structurally exclude people with disabilities through interface design, missing training data, and value differences. This contradiction also reflects a broader debate in critical disability studies: does digital inclusion merely accommodate disabled bodies within existing systems, or does it subvert ableist norms (Alper and Goggin, 2017; Kim and Park, 2020)?

2.2 The affective turn in disability studies

The “affective turn” in the social sciences (Ahmed, 2013; Papacharissi, 2015) offers a critical perspective on these contradictions. Affectivity, once seen as marginal to technological design, is now recognized as central to human-technology assemblages (Latour, 2005). Emotional communication involves the expression, contagion, and sharing of individuals’ or groups’ emotions and associated information. With new media technologies and evolving social realities, emotional communication mechanisms mainly involve the generation (Papacharissi, 2015), transmission (Hatfield et al., 1993), and reception (Nabi, 2010) of emotions.

In the context of disability, affective labor—the affective work to sustain caring relationships—is both a liberating force and a site of control. Building on Hochschild’s formulation of emotional labor as the rule-governed management of feeling and display in service relations—signaling the broader marketization of affect (Hochschild, 1979, 2012)—subsequent work highlights emotional expression as a core medium of role communication shaped by institutional expectations (Morris and Feldman, 1997; Silard et al., 2023). Kim and Cameron’s (2011) crisis-emotion processing evidence draws analytic attention to how specific events trigger discrete emotions that shape information processing and subsequent responses. See also Nabi (2010) on discrete emotions in communication.

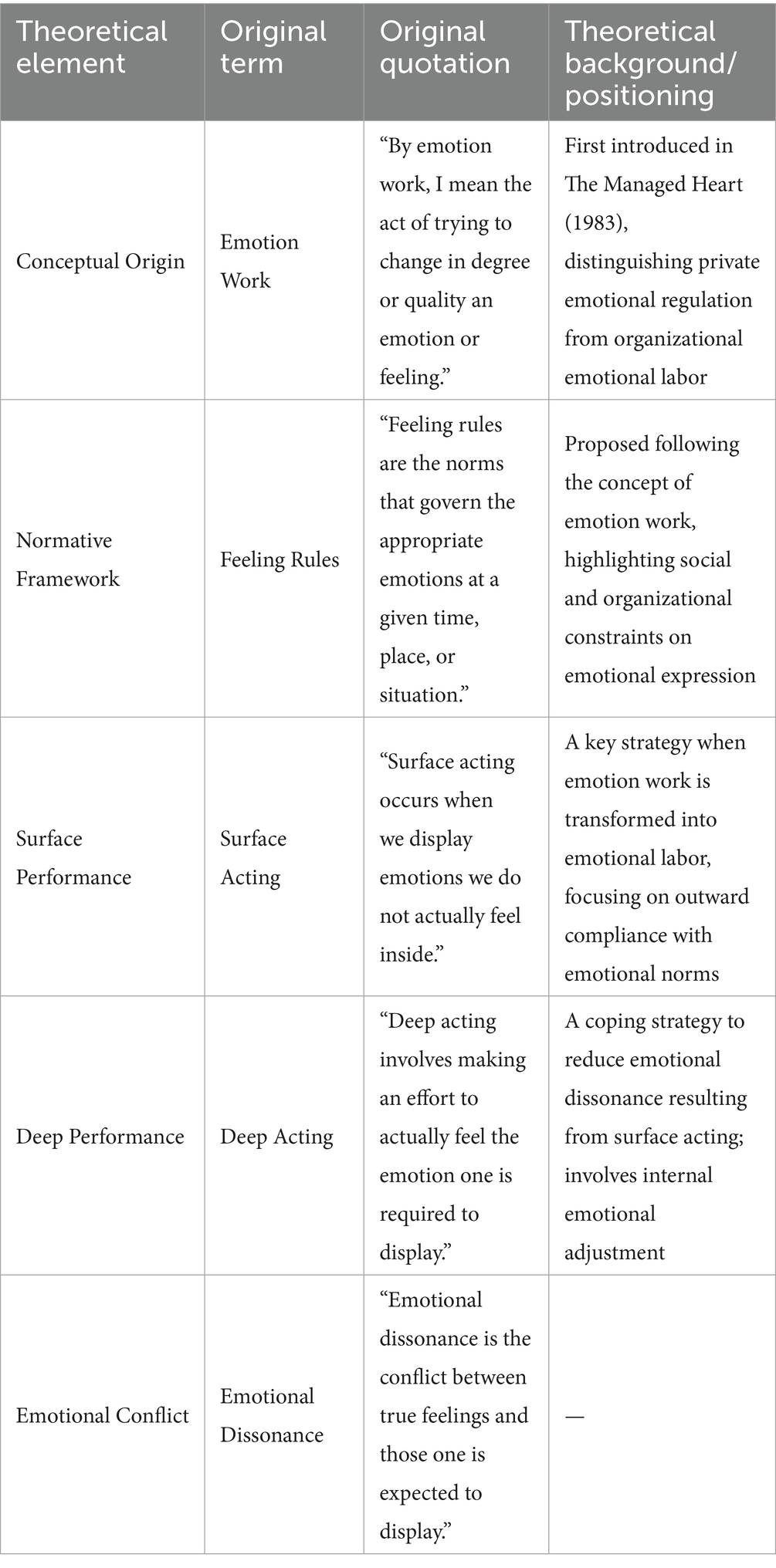

Read through this lens, digitally mediated, non-institutional volunteering for visually impaired users surfaces platform-specific “feeling rules” (e.g., urgency badges, countdown timers, notification cadence, anonymity, and re-matching) that contour when and how care is performed. These strands jointly sensitize our coding to two recurrent experiential currents (empowerment and exhaustion) and to the structuring role of algorithmic cues, providing the theoretical scaffolding for our operationalization in this study (Table 1).

Recent research has expanded this conversation. Erdoğan et al. (2025) conceptualize assistive platforms as “affective infrastructures” that configure both practical support and emotional rhythms, while van Kessel et al. (2024) highlight how digital health governance must incorporate “friendly defaults” and readability to reduce inequities. In contrast, Irani’s (2015) analysis of platforms like Amazon Mechanical Turk shows how they convert affective labor into quantifiable performance through algorithmic metrics like response speed, leading 72% of volunteers to sacrifice interaction depth for efficiency-based rewards. Building on this, Graham and Woodcock (2019) demonstrate how gig economy platforms more broadly discipline workers’ emotions by rendering them calculable, while Sveen et al. (2023) highlight how digital volunteering embeds “soft coercion” through algorithmic prompts that script both timing and tone of engagement. Could “Be My Eyes” become another such case? Might its volunteers, in maintaining fulfilling interactions beyond transactional assistance, be forced to adjust their behavior and attitudes due to platform algorithms? Could communication efficiency replace sustained emotional connections?

Existing research outlines the functional outcomes of digital inclusion, such as skill-learning progress (Dobransky and Hargittai, 2016), and emphasizes the emotional drivers of disability support (Soldatic, 2013), yet rarely explores how affective labor functions through technological mediation. How do digital platforms encode emotions into quantifiable transactions? Do algorithms prioritize efficiency over meaningful connections, and can they accommodate the complexity of human care? Could volunteers’ emotional exhaustion and users’ dependency risks offset the benefits of expanded social support networks? These questions are pressing for health communication.

Accordingly, framing affective labor as a health communication process, we examine how platform logics shape its enactment in assistive encounters and what this means for equitable, sustainable communication practice.

2.3 Interface cues as feeling rules

Over the past five years, scholars have increasingly recognized that interface cues and platform default settings in digital health services function as affective rules that shape user behavior. Design features that appear neutral—such as persistent reminders, pop-up alerts, or default settings—often impose implicit demands for affective labor, influencing how users acquire health information, engage with services, and sustain social interactions, with direct consequences for health equity. For instance, persistent metric reminders and pop-up alerts can induce anxiety about being “passively evaluated,” subsequently undermining long-term engagement (Milkman et al., 2021). Similarly, large-scale studies demonstrate that uneven access to portals and complex interaction paths disproportionately disadvantage users with low health literacy (Anthony et al., 2024).

Moreover, the accessibility and readability of interface prompts directly constitute distributive rules. A nationwide survey found that individuals with disabilities reported significantly greater difficulties across nearly all eHealth services, with variation across disability types closely linked to minor design elements such as interface language, icon contrast, and process prompts (Pettersson et al., 2023). Visually impaired users or those with higher cognitive load often need to invest extra patience and self-regulation, thereby incurring elevated affective costs.

In response, recent frameworks in international health and informatics have advocated incorporating “friendly defaults,” “easy opt-out,” “polite tone,” and “low-threshold readability” into digital health governance. This trend underscores that platforms should proactively reclaim users’ affective labor rather than outsourcing it to vulnerable populations, positioning technological design as an institutional lever for health equity (Kim and Backonja, 2025; Bucher et al., 2024).

Building on this foundation, the present study critically examines how, in assistive platforms for the visually impaired community, interface cues and platform defaults function as implicit or explicit feeling rules that guide affective labor. These mechanisms require specific forms of emotional regulation, adaptive learning, and affective expression when seeking help, which in turn shape users’ access to health information, service utilization, and social interactions, ultimately affecting health equity.

3 Methodology

3.1 Research design and epistemological foundations

This study is situated within an interpretivist, abductive paradigm to examine how volunteers experience and make sense of algorithmically mediated caregiving on the Be My Eyes platform. We operationalize “digital ethnography” as the process of building an in-depth understanding of emotional labor in algorithmic environments through two sources: platform-mediated emotional documentation (help diaries) and reflective interviews.

To guide the analysis, we integrate digital ethnography with inductive thematic analysis, treating Hochschild’s concepts—feeling rules, surface/deep acting, and emotional dissonance—as sensitizing concepts rather than a priori categories. We thus treat reality as socially constructed and interactional, and we trace how platform cues (e.g., urgency badges, countdown timers, re-matching, default anonymity) shape the intersubjective “feeling rules” that organize help encounters and volunteers’ affective regulation. This design is appropriate for unpacking the dialectic between structure and experience—how algorithmic governance and local cultural norms are interpreted, negotiated, and sometimes resisted in lived practice.

3.2 Participants

Primary data were collected between January and April 2025. We used purposive sampling via Xiaohongshu to recruit volunteers who met three criteria: at least three months of active use, 3–5 assistance sessions in the prior 30 days, and willingness to keep digital diaries. We recruited 15 volunteers; four were excluded due to incomplete diaries, yielding a final sample of n = 11 predominantly urban, tech-savvy participants.

While this homogeneity limits generalizability, it strategically focuses on the platform’s most active users, offering valuable insights into idealized care behaviors.

3.3 Data collection

To enhance methodological transparency and reproducibility, this study employed a phased research design that combined semi-structured interviews with digital diary collection. During the remote interview phase, encrypted video platforms were used for audio recording, with informed consent obtained from all participants. Interviews were held in quiet, private settings with no third parties present. Each interview lasted no more than one hour and revolved around three thematic dimensions: technological affordances (e.g., “How do urgent help notifications affect your sense of responsibility?”), relational dynamics (e.g., “Can you recall a moment when the help recipient took control of the interaction?”), and algorithmic perception (e.g., “Do you think your response speed affects your matching priority on the platform?”). In addition, researchers recorded field notes immediately after each interview to supplement contextual information.

In the second phase, participants submitted digital diaries via a secure app to document real-time emotional reactions, contextual specifics, and platform-related reflections following help interactions. This method draws on sensory ethnography as proposed by Pink et al. (2015), enabling the capture of fleeting emotional residues that are often inaccessible in retrospective interviews. Digital diaries served, on the one hand, as source triangulation to verify interview accounts against in-situ practice, and on the other hand, as fine-grained documentation of supportive actions; accordingly, we treat them as part of our contextual field observations. Together with interviews, the diaries formed an evidentiary chain that captured stage-specific dynamics of emotional labor over time. All diaries were normalized and coded alongside interviews.

3.4 Data analysis

We employed an iterative–inductive thematic analysis (Timmermans and Tavory, 2012), with two principal researchers manually and independently completing the first-cycle open coding, tagging experiential segments and affective meanings. A second-cycle axial coding then grouped categories and examined relationships (e.g., “time–pressure cues ↔ emotional strain”). To enhance emotional sensitivity and strengthen coding reliability, we invited a medical humanities scholar from Zhongnan Hospital of Wuhan University to perform secondary coding (an independent review uninfluenced by the first-cycle codes). Discrepancies were reconciled through adjudication meetings to harmonize code definitions and consolidate a shared codebook and thematic framework.

Throughout the analysis, we monitored thematic saturation; by the eleventh case, novel codes had become infrequent, and the final case introduced no new core themes, indicating that theoretical saturation was largely achieved. Although full member checking was not feasible, the team circulated summary memos of key diary themes to selected participants to confirm interpretive accuracy and solicit clarifications. We did not use CAQDAS; coding was managed with spreadsheets and reflexive memos.

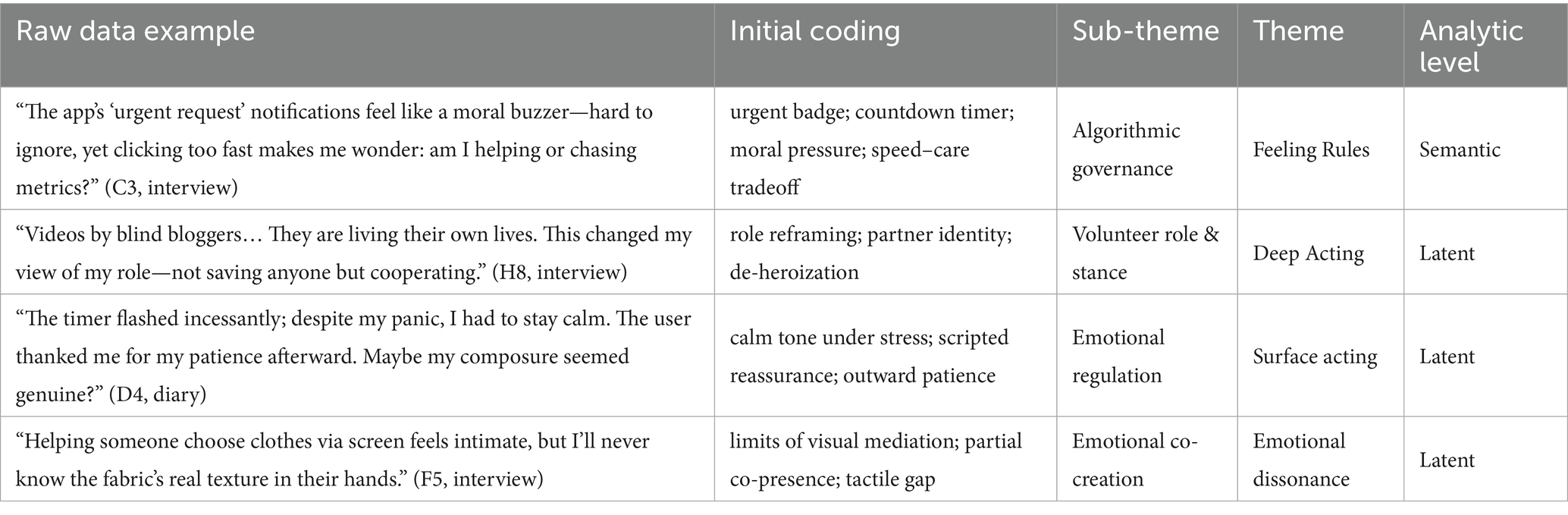

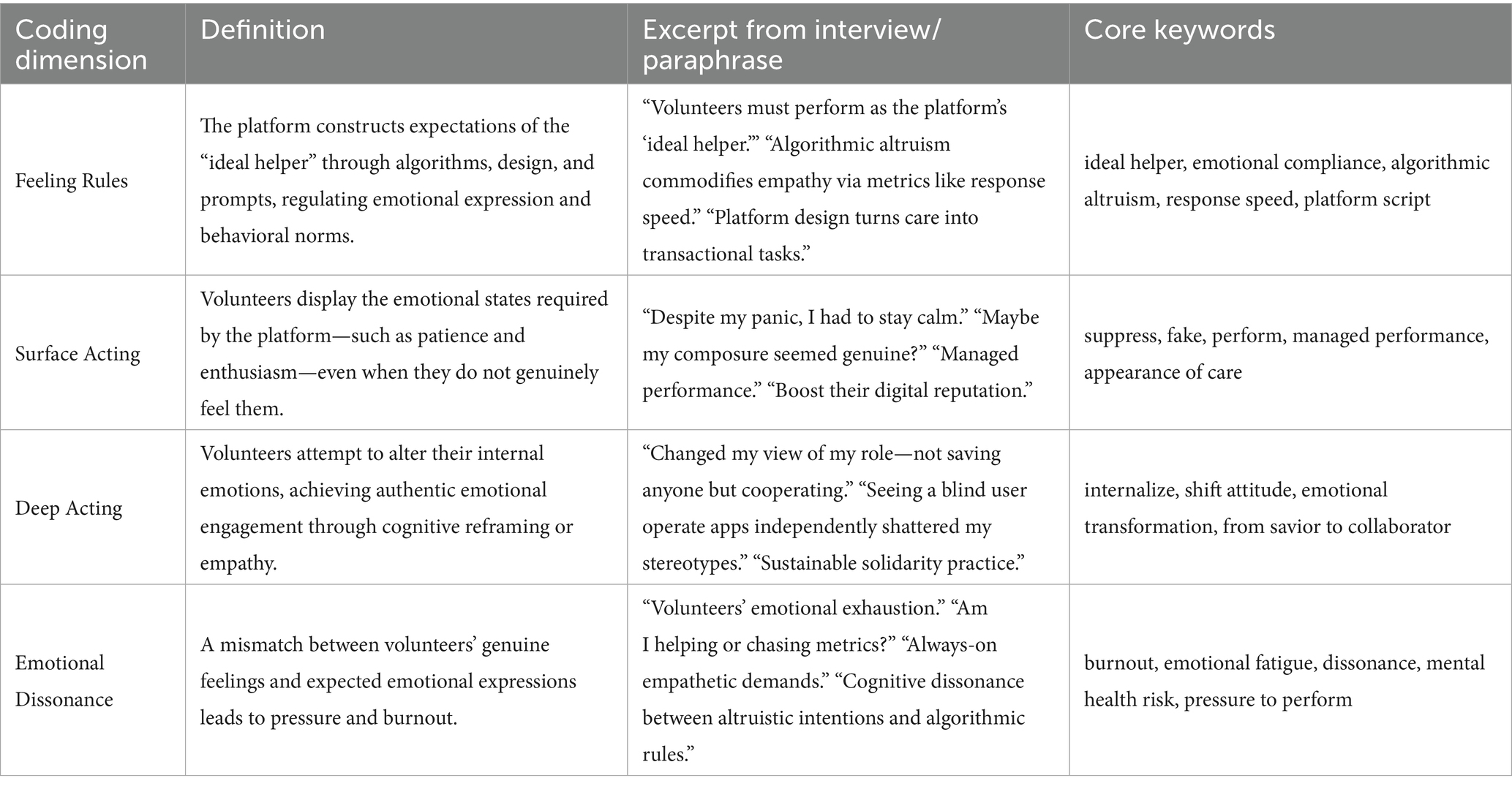

To ensure transparency without over-formalizing the process, we provide Table 2, a chain-of-evidence that maps raw excerpts → initial codes → second-cycle sub-themes → emergent analytic themes (e.g., Algorithmic governance); the Sensitizing concept column indicates how these themes align with Hochschild’s framework summarized in Table 3 (Feeling Rules, Surface Acting, Deep Acting, Emotional Dissonance).

As shown in Table 2, we traced a chain of evidence from raw excerpts to initial codes, then to second-cycle sub-themes and themes aligned with Hochschild’s sensitizing concepts. C3’s line that the app’s “urgent request” feels like a moral buzzer was first coded as urgent badge, countdown timer, moral pressure, and speed–care trade-off. These codes clustered into the sub-theme Algorithmic governance and were located under the Feeling Rules theme to indicate how urgency cues operate as situational norms. D4’s diary note about keeping a calm voice while the timer flashed produced codes such as calm tone under stress, scripted reassurance, and outward patience. We grouped these into Emotional regulation and interpreted the display as Surface acting.

H8’s reflection on “not saving anyone but cooperating” yielded role reframing, partner identity, and de-heroization, which formed Volunteer role and stance and were read as Deep acting. F5’s account of intimacy through a screen yet uncertainty about fabric texture generated limits of visual mediation, partial co-presence, and tactile gap. This became Emotional co-creation (limits) and was interpreted as Emotional dissonance.

Analytic memos documented the links among these steps. A memo on time and strain noted frequent co-occurrence of countdown cues with reports of panic alongside outward calm, supporting the relation between time pressure and surface-acting displays. A memo on role reframing traced how exposure to competent blind creators and repeated matches shifted volunteers from a savior posture to a collaborative one, consistent with deep acting. A memo on mediation limits connected “intimacy at a distance” with the tactile gap, clarifying why some encounters produced dissonance despite positive intent.

Through this chain of evidence, themes emerged inductively and were mapped onto Hochschild’s sensitizing concepts rather than imposed in advance.
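For readers who wish to keep a comparable audit trail, the chain of evidence described above can be sketched as a small data structure. The following Python fragment is only an illustration, not the procedure used in this study (coding here was managed with spreadsheets and memos). The names `EvidenceLink`, `CHAIN`, and `codes_by_concept` are hypothetical, and the four rows simply transcribe the examples given in the text.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class EvidenceLink:
    """One row of the chain: excerpt -> initial codes -> sub-theme -> concept."""
    participant: str      # participant ID as reported in the text
    initial_codes: tuple  # first-cycle open codes
    sub_theme: str        # second-cycle sub-theme
    concept: str          # Hochschild sensitizing concept


# Rows transcribed from the four worked examples in the text (illustrative only).
CHAIN = [
    EvidenceLink("C3",
                 ("urgent badge", "countdown timer", "moral pressure",
                  "speed-care trade-off"),
                 "Algorithmic governance", "Feeling rules"),
    EvidenceLink("D4",
                 ("calm tone under stress", "scripted reassurance",
                  "outward patience"),
                 "Emotional regulation", "Surface acting"),
    EvidenceLink("H8",
                 ("role reframing", "partner identity", "de-heroization"),
                 "Volunteer role and stance", "Deep acting"),
    EvidenceLink("F5",
                 ("limits of visual mediation", "partial co-presence",
                  "tactile gap"),
                 "Emotional co-creation (limits)", "Emotional dissonance"),
]


def codes_by_concept(chain):
    """Group first-cycle codes under the sensitizing concept they were mapped to."""
    grouped = defaultdict(list)
    for link in chain:
        grouped[link.concept].extend(link.initial_codes)
    return dict(grouped)
```

Calling `codes_by_concept(CHAIN)` returns a dictionary keyed by the four sensitizing concepts, which makes it easy to check that every initial code can be traced to exactly one sub-theme and concept.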

4 Results

Our analysis yielded four thematic areas on “Be My Eyes.” Feeling Rules captures how urgency badges, countdowns, and default anonymity set situational norms of helping. Surface Acting describes composed tone and scripted reassurance under time pressure. Deep Acting reflects a shift from “savior” to “collaborator” and the use of sensory metaphors to co-construct meaning. Emotional Dissonance gathers tensions such as metric anxiety, exhaustion, and the tactile gap of screen-mediated intimacy. We link quotations to sub-themes and state the analytic moves that connect data to interpretation.

4.1 Feeling rules: algorithmic cues as situational norms

Volunteers consistently described how interface prompts and platform defaults came to define when, how fast, and in what tone help should be delivered. Urgency banners, countdown timers, scripted prompts, anonymity defaults, and notification cadence were experienced not merely as technical features but as normative cues that organized interaction tempo and emotional display.

Urgency signals were perceived as moral imperatives. One participant explained: “The app’s ‘urgent request’ notifications feel like a moral buzzer—hard to ignore, yet clicking too fast makes me wonder: am I helping or chasing metrics?” (C3, interview). This ambivalence captures a recurring tension: the same cue that mobilizes attention can narrow the space for exploratory talk, nudging exchanges toward brisk reassurance and rapid task completion.

Short scripted prompts and automatic re-matching were also reported to compress interaction into a standardized rhythm. As one participant put it, these features “make you get in, identify the task, finish, and hand off,” with little room for lingering (G7, interview, paraphrase). The result is a recognizable cadence—greet, solve, close—that stabilizes what counts as a proper helping exchange. Volunteers read this cadence as an implicit rule about tempo and talk length, one that privileges efficiency as the default display of care even when both parties might benefit from a slower pace.

Default anonymity also shaped the emotional climate. During video calls, volunteers act as “eyes” for the visually impaired via cameras to solve specific problems, such as checking medication expiration dates or confirming traffic light status. Many appreciated anonymity for reducing evaluative pressure: “Platform anonymity allows me to help more naturally and makes my actions feel pure, free from external judgments” (H8, interview).

In the Chinese context, participants explicitly connected this comfort to mianzi concerns: anonymity lowers the felt risk of evaluation for both asking and offering help, normalizing help-seeking and making interactions feel lighter and less awkward. At the same time, a few volunteers observed that full anonymity can mute warmth, hinting at a trade-off between reduced face pressure and reduced familiarity that we return to in the section on divergent cases.

Notification cadence further produced a background sense of obligation. A diary entry described “repeated pings” that make the need feel ever-present, leading the volunteer to stay “on” even when intending to take a break (J10, diary, paraphrase). This background hum functions as a soft rule of availability, cultivating low-intensity vigilance and a readiness to respond before a specific request arrives.

Taken together, these accounts show that interface cues do more than inform; they organize a local moral order of helping by setting tempo, tone, and acceptable scope. Urgency signals and scripted hand-offs encourage quick, task-first action; anonymity lowers face work while standardizing a restrained intimacy; notification cadence sustains ambient availability. These normative expectations establish the framework within which volunteers manage their displayed emotions, laying the foundation for the strategies of regulation and adaptation examined in the next section on Surface Acting.

4.2 Surface acting: managing displays under time pressure

Surface acting refers to the ways volunteers consciously manage their outward tone, demeanor, and verbal displays in order to appear calm, cheerful, and reassuring under conditions of time pressure. Even when internally stressed or fatigued, volunteers stabilize the emotional atmosphere by treating composure and warmth as essential parts of “doing the job right.”

Volunteers often described the timer as an ever-present pressure, yet they deliberately maintained steady voices. One diary entry noted: “The timer kept flashing; despite my panic, I had to keep a calm voice. The user later thanked me for my ‘patience’.” (D4, diary). Here, the outward display of steadiness reassured the caller even while the volunteer managed hidden stress.

Fatigue also prompted deliberate efforts to sound upbeat. As one participant recalled: “After three calls in a row I was exhausted, but I kept my voice bright so they would not worry.” (E5, interview, paraphrase). The deliberate use of a cheerful tone functioned as a protective strategy, preserving the other person’s experience even at personal cost.

Micro-repairs were another common strategy. One diary account described: “When I misread a label, I apologized quickly and reframed—‘let us try that again from the top’—then slowed my speech.” (K11, diary, paraphrase). Quick apologies and reframing smoothed over small failures and restored order without disrupting the overall flow.

Volunteers also relied on scripted reassurance as a reliable display rule. As one participant explained: “I find myself using the same lines—‘you are doing great,’ ‘we have got this’—because they keep people calm, even if I’m not feeling calm.” (B2, interview, paraphrase). Familiar phrases signaled reliability and anchored the interaction, particularly when timers and prompts heightened pressure.

Taken together, these accounts illustrate surface acting as a practical craft: stabilizing tone, employing reassuring scripts, narrating steps, and deploying micro-repairs to maintain smooth, predictable interactions. These techniques ensure efficiency and stability for callers but also create a gap between displayed and felt emotion. This gap contributes to the pressures that culminate in Emotional Dissonance, while in some cases, the repetition of these strategies opens space for deeper alignment, foreshadowing the more authentic stance shifts explored in Deep Acting.

4.3 Deep acting: reframing roles and co-constructing meaning

Deep acting refers to inward reorientations in stance and feeling that move beyond surface display management. Volunteers described shifting from a mindset of “helper fixes a problem” toward a collaborative posture, aligning their inner attitudes with a partner identity. Through reframing roles, deploying metaphors, and sustaining familiarity across encounters, volunteers engaged in meaning-making that transformed tasks into shared experiences.

Several participants emphasized role reframing as central to their shift from one-way aid to joint work. One interviewee recalled: “Videos made by blind bloggers… They are living their own lives instead of waiting for rescue. This changed my view of my role—not saving anyone but cooperating.” (H8, interview). Here, the stance change was not only about sounding cooperative but also about genuinely internalizing collaboration as the guiding orientation.

Volunteers also described using metaphor and imagery to co-construct shared scenes. A diary entry recounted: “When I guided a user to ‘see’ cherry blossoms through metaphor—comparing petals to ‘strawberry yogurt’—she inhaled and said she could smell sweetness.” (D4, diary). By adjusting descriptions until resonance was reached, volunteers and users leaned into a sensory frame together. Similarly, another participant noted: “I described a sweater’s color as ‘pre-storm sky gray-blue.’ I wasn’t just naming a hue; it opened space for her sadness about her daughter’s last gift.” (F5, interview). In such moments, practical description expanded into emotional understanding, shifting the volunteer’s focus from task accuracy to attending to meaning for the user.

Continuity across repeated encounters also nurtured deeper alignment. One volunteer reflected: “After a few matches with the same person, we do not start from zero. They tell me how they like directions. I find myself caring about their day, not just the task.” (J10, interview, paraphrase). Repeated matching fostered a thin but genuine thread of familiarity, allowing volunteers to internalize a partner mindset. This relational stance was reinforced through language choices. Another participant explained: “I stopped saying ‘do not worry, I’ll handle it’ and started asking, ‘how do you want to do this together?’ It feels more respectful.” (K11, interview, paraphrase). Here, linguistic shifts signaled internal changes in orientation—from control to collaboration—described as sincerely felt rather than superficially performed.

The broader disability discourse provides further context (Goggin et al., 2024). Under the medical model, disability has often been framed as an individual defect to be corrected (Garland-Thomson, 2017), emphasizing narratives of “overcoming difficulties.” In contrast, assistive platforms such as Be My Eyes offer a relational model of accessibility, where empathy is enacted as reciprocity rather than pity. Deep acting thus aligns with this alternative framing, recasting caregiving as shared participation in meaning-making rather than unilateral aid.

Together, these accounts demonstrate deep acting as an inward shift: volunteers reframe roles from rescuer to partner, employ metaphor and pacing to co-construct sensory and emotional scenes, and carry forward a thread of continuity through repeated encounters. These practices buffer strain by infusing meaning and relational depth into caregiving. Yet they also depend on conditions not always available—time, relational fit, and willingness to slow down. When such conditions are disrupted by speed cues, metrics, or modality constraints, volunteers experience tensions that manifest as Emotional Dissonance, the focus of the next section.

4.4 Emotional dissonance: metric anxiety and intimacy-at-a-distance

Emotional dissonance emerges when volunteers’ inner feelings diverge from the emotions they are expected to display. On the platform, this gap often arises under speed cues, metric pressures, screen-mediated intimacy, and technical frictions. While volunteers strive to present composure and warmth, the disjunction between inward exhaustion and outward reassurance produces fatigue, guilt, and second-guessing.

Volunteers frequently highlighted the pressure of metrics. One participant reflected: “After a string of ‘urgent’ calls I feel emptied out. It starts to feel like keeping my response score up instead of really helping.” (E5, interview, paraphrase). Here, efficiency is equated with success, but inwardly the work feels hollow. Another participant described urgency signals as a moral provocation: “The app’s ‘urgent request’ notifications feel like a moral buzzer—hard to ignore, yet clicking too fast makes me wonder: am I helping or chasing metrics?” (C3, interview). Together, these accounts illustrate how the platform’s design, which favors frequent, low-cost interactions, converts care into quantifiable outputs, a dynamic we term “algorithmic altruism.”

Feelings of guilt often surfaced after efficient exchanges. As one diary entry noted: “Even when the person thanks me, I close the app and feel oddly guilty, as if I rushed them.” (D4, diary). Gratitude at the surface did not erase the unease of having cut short a potentially deeper exchange. Volunteers desired authentic reciprocity, yet the platform’s optimization for speed and scalability often transformed care into transactional tasks.

Participants also described the limitations of screen-based intimacy. One interviewee explained: “Helping someone choose clothes via screen feels intimate, but I’ll never know the fabric’s real texture in their hands.” (F5, interview). Here, the missing tactile dimension created a sense of incompleteness—an embodied reminder of the limits of visual mediation. Despite claims of inclusivity, such design reinforces what Hamraie (2017) terms “sensory imperialism,” privileging vision at the expense of multisensory experience. The example of ubiquitous QR codes in urban China further illustrates how design choices in digital cities normalize able-bodied assumptions, requiring renewed reflection on accessibility infrastructures.

Technical disruptions compounded these tensions. One participant recalled: “When the connection lags I catch myself speaking faster to fill the space, and then I hear the user getting tense. Afterward I feel off.” (H5, interview, paraphrase). Such adjustments to mask technical issues inadvertently heightened anxiety on both sides. Another diary entry captured the lingering pull of availability norms: “I mute notifications to rest, then worry I’m letting someone down.” (J10, diary, paraphrase). Even efforts to protect energy were shadowed by guilt, showing how expectations of constant availability extended beyond the app itself.

Taken together, these accounts illustrate how algorithmic signals, scripted closures, missing sensory modalities, and technical frictions create dissonance between outward warmth and inward feeling. This misalignment often surfaced after calls—as fatigue, guilt, or self-doubt—and sometimes during calls when glitches forced volunteers into inauthentic displays. While some participants described coping strategies such as brief resets, slower pacing, or step-by-step narration, these depended on time and relational fit. Where such conditions were absent, strain accumulated, highlighting the fragility of emotional equilibrium under algorithmic care.

5 Discussion

This study demonstrates that digitally mediated care is neither a neutral inclusion tool nor merely an extension of charity logic, but rather a contested space where affective labor, algorithmic governance, and disability politics collide. Building on the four themes reported in Section 4, we show how interface cues operate as feeling rules that set pace, tone, and scope; how volunteers stabilize calls through surface display work; when deeper stance shifts support collaboration; and where dissonance emerges under speed cues and modality limits. Together these patterns explain when care tilts toward speed and closure, and when it can remain relational and meaningful.

5.1 From platform cues to feeling rules

Urgency banners, countdowns, scripted prompts/re-matching, default anonymity, and notification cadence do more than pass on information; they specify how help should feel in the moment—fast, composed, task-first, restrained in intimacy, and generally available. Reading these interface features as feeling rules extends Hochschild’s framework to platform-mediated, non-institutional volunteering and clarifies why the same surface techniques (steady prosody, micro-repairs) recur across calls, when deep acting becomes possible, and where emotional dissonance accumulates.

These findings resonate with Graham and Woodcock’s (2019) observation that platform architectures render emotions calculable and measurable, thereby disciplining workers’ affective performance (Griesser et al., 2024). Beyond interface discipline, our findings also align with JD–R evidence that high demands and work–home interference predict volunteer burnout, indicating that efficiency-first configurations can erode affective resources over time (Magrone et al., 2024).

5.2 Algorithmic altruism

The concept of “algorithmic altruism” reveals how empathy is commodified through quantifiable metrics like response time and task completion rates. Volunteers’ poetic descriptions of invisible colors and textures serve dual purposes: moral care and performative data points. When empathy becomes computable, volunteers internalize platform efficiency standards to boost their digital reputation. This process risks reducing human connections to transactional efficiency, masking the depth of relationships between volunteers and people with disabilities, and exacerbating participants’ emotional exhaustion.

Our findings resonate with recent work on assistive platforms as affective infrastructures. Pai et al. (2023) argue that platforms like Be My Eyes not only deliver practical support but also configure the affective rhythms of everyday life for disability communities. By showing how volunteers recalibrate their speech, metaphors, and tones in response to algorithmic prompts, our study extends this insight to demonstrate how platform governance codifies affective reciprocity into calculable outputs.

At the same time, design choices such as interface language, icon contrast, and readability directly shape users’ participation. Pettersson et al. (2023) found that accessibility barriers in eHealth services disproportionately taxed users with disabilities, creating uneven affective costs. Our participants’ accounts of fatigue and guilt mirror this dynamic, as they struggled to meet implicit rules of constant availability and composure. Similarly, frameworks proposed by Bucher et al. (2024) on “friendly defaults” and “low-threshold readability” reinforce the need for governance models that reclaim affective labor for users rather than outsourcing it to already vulnerable groups.

Taken together, algorithmic altruism reveals a paradox: while assistive platforms expand opportunities for engagement, they also risk privatizing welfare responsibilities, shifting burdens from collective systems to individuals and intensifying health inequities. By situating our findings within these debates, we demonstrate that platform-mediated care is not only about individual goodwill but also about institutional design choices that distribute emotional and social costs.

5.3 Interplay of surface and deep acting: affective infrastructure

Our results suggest a practical division of labor: surface acting keeps the call steady under time pressure (calm tone, brief narration, quick repairs), while deep acting—role reframing from “savior” to collaborator, shared metaphors, paced breathing—makes the work feel worthwhile and sustains dignity. The shift from “helping behavior” to emotional co-creation reflects broader questions about technology’s role in reshaping human relationships.

These dynamics echo Timmermans and Kaufman’s (2020) view of algorithmic care as both enabling and inequitable, and van Kessel et al.’s (2024) argument that digital health often outsources emotional regulation to users. While surface acting secures efficiency at emotional cost, deep acting offers meaning but relies on conditions the platform does not consistently support. Our findings also resonate with Hamraie’s (2017) critique of “sensory imperialism”: by privileging visual mediation, Be My Eyes limits multisensory reciprocity and reinforces able-bodied assumptions.

From this perspective, Be My Eyes can be seen as a hybrid collective of humans and algorithms, but one where metrics risk outweighing meaningful reciprocity. We therefore argue that assistive platforms should be positioned as public health infrastructure rather than market-driven tools, designed to redistribute rather than privatize affective labor.

5.4 Digital empathy’s duality and governance implications

Our central finding is the duality of digital empathy. While platforms enable transcendent connections through practices such as metaphor-based sensory translation, they also risk reducing care to transactional data points. This duality extends Hochschild’s framework into algorithmic environments, showing how platform cues codify feeling rules and how the oscillation between surface and deep acting shapes affective outcomes.

Consequently, volunteers experience both fulfillment and fatigue: their labor sustains moral responsibility and self-actualization but is systematically devalued as “volunteer” work. These findings clarify when care tilts toward speed and closure and when it remains relational, highlighting tempo, fit, and relational continuity as key determinants of emotional integrity in assistive interactions.

Our results point to governance levers that can redistribute affective labor rather than outsourcing it to volunteers alone. These include tempo-flexible openings and closings, gentler re-matching protocols, minimal identity cues to balance warmth with anonymity, light relational continuity through preference memory or repeated pairing, and protections such as do-not-disturb windows, batched notifications, and post-call resets. Rather than framing technology as a means to “fix” disabled users, design and policy should address who manages the affective infrastructure. Current arrangements privatize emotional data and shift care burdens to unstable volunteer forces, underscoring the need for regulatory safeguards.

6 Conclusions, limitations, and future directions

This study examined how algorithmically mediated care on the Be My Eyes platform structures affective labor for volunteers assisting visually impaired users. Drawing on digital ethnography and thematic analysis, we identified four interrelated dynamics: (1) Feeling Rules, where interface cues such as urgency banners, anonymity defaults, and notification cadences establish normative expectations; (2) Surface Acting, where volunteers stabilize calls through composure, scripted reassurance, and micro-repairs; (3) Deep Acting, where stance shifts, metaphors, and repeated encounters foster collaborative meaning-making; and (4) Emotional Dissonance, where metrics, sensory gaps, and technical frictions generate fatigue, guilt, and strain.

These findings extend Hochschild’s framework of emotional labor into the domain of digital accessibility and algorithmic governance, highlighting how platform cues codify affective expectations in non-institutional caregiving. Empirically, the study provides a grounded account of volunteers’ experiences in China, showing how cultural norms of mianzi, efficiency, and mobile notification ecologies mediate platform interactions. Practically, the results point to governance levers—such as tempo flexibility, minimal identity cues, relational continuity, and affective protections—that could sustain both responsiveness and emotional integrity in assistive platforms. Ultimately, digital empathy emerges as a dual phenomenon: enabling transcendent connections while simultaneously risking reduction to transactional data points. Recognizing this duality reframes assistive technologies not as neutral inclusion tools, but as affective infrastructures whose design and governance directly influence health equity.

Despite these contributions, the study has limitations. Our sample over-represented tech-savvy urban volunteers (8 of 11, or 72.7%, were internet professionals or students from cities such as Beijing and Shanghai), and it centered on algorithm-recommended “popular users.” In addition, those willing to be interviewed were often “model volunteers” with high emotional energy. Both patterns amount to a form of survivorship bias shaped by the platform’s recommendation algorithm. Marginalized groups, such as rural elderly users, those who abandoned the platform after unsuccessful requests, and silent users who do not showcase their assistance on social media, remained overlooked. This exposes the harsh irony of digital inclusion: technologies aiming to “see” minority groups often create new blind spots.

In future research, we must address the limitations arising from sample concentration in specific regions and occupational homogeneity and reflect on the causes of these phenomena. Furthermore, future research must investigate how urban–rural disparities and Global South contexts reshape platform-mediated disability support. For instance, how do infrastructure gaps, such as unstable internet, transform emotional reciprocity into frustration?

Longitudinal studies are also essential. Our 4-month study failed to capture seasonal emotional cycles (like holiday-related loneliness peaks) or platform algorithm updates that quietly restructure care practices.

If feasible, we will also compare across disability groups to examine similarities and contrasts in platform-mediated caregiving. At the cultural level, we will test transferability through cross-cultural comparisons, specifically examining how mianzi, notification ecologies, and efficiency norms shape feeling rules in different settings. At the platform level, we will pursue design-informed evaluation by piloting scope-bounded changes suggested by our findings—such as an optional “slow mode,” gentler re-matching windows, minimal opt-in identity cues, and lightweight continuity—in collaboration with user organizations. Together, these directions clarify the scope and boundaries of our claims, situate the findings within disability policy and cultural contexts, and chart a feasible path for cumulative, design-relevant research aimed at sustaining responsiveness and emotional integrity in assistive platforms.

Finally, this study challenges public health to confront its ableist assumptions. As Kafer (2013) reminds us, an equitable future requires viewing disability as a generative force that compels us to reimagine connection and belonging in a mediated world. The path forward demands not only technical fixes but a reconstruction of socio-technical systems that respect human vulnerability rather than exploit it as data capital. Looking ahead, we will broaden participants and perspectives by incorporating the accounts of visually impaired users alongside volunteers, thereby centering disability as an analytic category.

Data availability statement

The data analyzed in this study is subject to the following licenses/restrictions: to protect the privacy of study participants, it is not appropriate to disclose the data from this study. Requests to access these datasets should be directed to yexiangxiaojie@yeah.net.

Ethics statement

Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

ZZ: Formal analysis, Methodology, Software, Conceptualization, Writing – original draft, Investigation. JL: Visualization, Investigation, Conceptualization, Formal analysis, Supervision, Methodology, Data curation, Writing – review & editing, Writing – original draft.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alabi, A. O., and Mutula, S. M. (2020). Digital inclusion for visually impaired students through assistive technologies in academic libraries. Libr. Hi Tech News 37, 14–17. doi: 10.1108/LHTN-11-2019-0081

Alper, M. (2017). Giving voice: Mobile communication, disability, and inequality. Cambridge, MA: MIT Press.

Alper, M., and Goggin, G. (2017). Digital technology and rights in the lives of children with disabilities. New Media Soc. 19, 726–740. doi: 10.1177/1461444816686323

Anthony, D., Campos-Castillo, C., and Nishii, A. (2024). Patient-centered communication, disparities, and patient portals in the US, 2017-2022. Am. J. Managed Care 30, 19–25. doi: 10.37765/ajmc.2024.89483

Badr, J., et al. (2024). Digital health technologies and inequalities: a scoping review. Health Policy 138:106049. doi: 10.1016/j.healthpol.2024.105122

Baumeister, R. F., and Leary, M. R. (1995). The need to belong: desire for interpersonal attachments as a fundamental human motivation. Psychol. Bull. 117, 497–529. doi: 10.1037/0033-2909.117.3.497

Bucher, A., Chaudhry, B. M., Davis, J. W., Lawrence, K., Panza, E., Baqer, M., et al. (2024). How to design equitable digital health tools: a narrative review of design tactics, case studies, and opportunities. PLOS Digital Health 3:e0000591. doi: 10.1371/journal.pdig.0000591

Campbell, F. K. (2009). Contours of ableism: The production of disability and abledness. Palgrave Macmillan. doi: 10.1057/9780230245181

Dobransky, K., and Hargittai, E. (2016). Unrealized potential: exploring the digital disability divide. Poetics 58, 18–28. doi: 10.1016/j.poetic.2016.08.003

Ellis, K., Goggin, G., Haller, B., and Curtis, R. (2019). The Routledge companion to disability and media. 1st Edn. London: Routledge.

Erdoğan, F., Alper, B., and Kalkan, S. (2025). Beyond cognition: a socio-emotional perspective on collaborative learning in mathematics education. International Journal of Research in Teacher Education (IJRTE), 16. doi: 10.29329/ijrte.2025.1300.01

Garland-Thomson, R. (2017). Extraordinary bodies: Figuring physical disability in American culture and literature. New York, NY: Columbia University Press.

Goggin, G., Hawkins, W., and Schokman, A. (2024). Disability & digital citizenship: Australian consumers and citizens with disability navigating digital society [research report]. Sydney, NSW: Institute for Culture and Society, Western Sydney University & Australian Communications Consumer Action Network.

Goggin, G., and Newell, C. (2003). Digital disability: The social construction of disability in new media. Lanham, MD: Rowman & Littlefield.

Graham, M., and Woodcock, J. (2019). The gig economy: A critical introduction. London: Polity Press.

Griesser, A., Mzoughi, M., Bidmon, S., and Cherif, E. (2024). How do opt-in versus opt-out settings nudge patients toward electronic health record adoption? An exploratory study of facilitators and barriers in Austria and France. BMC Health Serv. Res. 24:439. doi: 10.1186/s12913-024-10929-w

Groom, L. L., Schoenthaler, A. M., Mann, D. M., and Brody, A. A. (2024). Construction of the digital health equity-focused implementation research conceptual model-bridging the divide between equity-focused digital health and implementation research. PLoS Digit. Health 3:e0000509. doi: 10.1371/journal.pdig.0000509

Hamraie, A. (2017). Building access: Universal design and the politics of disability. Minneapolis, MN: University of Minnesota Press.

Hatfield, E., Cacioppo, J. T., and Rapson, R. L. (1993). Emotional contagion. Curr. Dir. Psychol. Sci. 2, 96–100. doi: 10.1111/1467-8721.ep10770953

Hochschild, A. R. (1979). Emotion work, feeling rules, and social structure. Am. J. Sociol. 85, 551–575. doi: 10.1086/227049

Hochschild, A. R. (2012). The managed heart: Commercialization of human feeling. Oakland, CA: University of California Press.

Imrie, R. (2012). Universalism, universal design and equitable access to the built environment. Disabil. Rehabil. 34, 873–882. doi: 10.3109/09638288.2011.624250

Irani, L. (2015). The cultural work of microwork. New Media Soc. 17, 720–739. doi: 10.1177/1461444813511926

Jin, S. (2024). Inequalities changes in health services utilization among middle-aged and older adult persons with disabilities in China. Front. Public Health 12:1434106. doi: 10.3389/fpubh.2024.1434106

Kerdar, S. H., Bächler, L., and Kirchhoff, B. M. (2024). The accessibility of digital technologies for people with visual impairment and blindness: A scoping review. Discover Computing, 27. doi: 10.1007/s10791-024-09460-7

Khasawneh, M. A. (2024). Accessibility matters: investigating the usability of social media platforms for individuals with motor disabilities. Stud. Media Commun. 12, 1–12. doi: 10.11114/smc.v12i2.6615

Khetarpal, A. (2015). Information and communication technology (ICT) and disability. Rev. Market Integr. 6, 96–113. doi: 10.1177/0974929214560117

Kim, H. K., and Park, J. (2020). Examination of the protection offered by current accessibility acts and guidelines to people with disabilities in using information technology devices. Electronics 9:742. doi: 10.3390/electronics9050742

Kim, K. K., and Backonja, U. (2025). Digital health equity frameworks and key concepts: a scoping review. JAMIA 32, 932–944. doi: 10.1093/jamia/ocaf017

Kim, H. J., and Cameron, G. T. (2011). Emotions matter in crisis: the role of anger and sadness in shaping information processing and outcomes in a crisis. Commun. Res. 38, 826–855. doi: 10.1177/0093650210385813

Kitchin, R., and Law, R. (2001). The socio-spatial construction of (in)accessible public toilets. Urban Stud. 38, 287–298. doi: 10.1080/00420980124395

Latour, B. (2005). Reassembling the social: An introduction to actor-network-theory. Oxford: Oxford University Press.

Lythreatis, S., Singh, S. K., and El-Kassar, A. N. (2022). The digital divide: a review and future research agenda. Technol. Forecast. Soc. Change 175:121359. doi: 10.1016/j.techfore.2021.121359

Magrone, M., Montani, F., Emili, S., Bakker, A. B., and Sommovigo, V. (2024). A new look at job demands, resources, and volunteers’ intentions to leave: the role of work–home interference and burnout. Voluntas 35, 1118–1130. doi: 10.1007/s11266-024-00679-y

Milkman, K. L., Gandhi, L., Patel, M. S., Graci, H. N., Gromet, D. M., Ho, H., et al. (2021). A megastudy of text-based nudges encouraging patients to get vaccinated at an upcoming doctor’s appointment. Proc. Natl. Acad. Sci. USA 118:e2101165118. doi: 10.1073/pnas.2101165118

Morris, J. A., and Feldman, D. C. (1997). Managing emotions in the workplace. J. Manag. Issues 9, 257–274.

Nabi, R. L. (2010). The case for emphasizing discrete emotions in communication research. Commun. Monogr. 77, 153–159. doi: 10.1080/03637751003790444

Oliver, M. (2013). The social model of disability: thirty years on. Disabil. Soc. 28, 1024–1026. doi: 10.1080/09687599.2013.818773

Oomen, J., and Aroyo, L. (2011). “Crowdsourcing in the cultural heritage domain: Opportunities and challenges,” in Proceedings of the 5th International Conference on Communities and Technologies (138–149). ACM.

Pai, Y. S., Armstrong, M., Skiers, K., Kundu, A., Peng, D., Wang, Y., et al. (2023). The empathic metaverse: An assistive bioresponsive platform for emotional experience sharing. arXiv. doi: 10.48550/arXiv.2311.16610

Papacharissi, Z. (2015). Affective publics: Sentiment, technology, and politics. Oxford: Oxford University Press.

Pettersson, S., Johansson, S., Demmelmaier, I., et al. (2023). Disability digital divide: survey of accessibility of eHealth services as perceived by people with and without impairment. BMC Public Health 23:181. doi: 10.1186/s12889-023-15094-z

Pink, S., Horst, H., Lewis, T., Hjorth, L., and Postill, J. (2015). Digital ethnography: Principles and practice. London: SAGE.

Rauchberg, J. (2025). Articulating algorithmic ableism: the suppression and surveillance of disabled TikTok creators. J. Gend. Stud. 22, 1–12. doi: 10.1080/09589236.2025.2477116

Roulstone, A. (2016). Disability and technology: An interdisciplinary and international approach. New York, NY: Palgrave Macmillan.

Shakespeare, T. (1994). Cultural representation of disabled people: dustbins for disavowal? Disabil. Soc. 9, 283–299. doi: 10.1080/09687599466780341

Shpigelman, C. N., and Gill, C. J. (2014). How do adults with intellectual disabilities use Facebook? Disabil. Soc. 29, 1601–1616. doi: 10.1080/09687599.2014.966186

Silard, A., Watson-Manheim, M. B., and Lopes, N. J. (2023). The influence of text-based technology-mediated communication on the connection quality of workplace relationships: the mediating role of emotional labor. Rev. Manag. Sci. 17, 2035–2053. doi: 10.1007/s11846-022-00586-w

Soldatic, K. (2013). The transnational sphere of justice: disability praxis and the politics of impairment. Disabil. Soc. 28, 744–755. doi: 10.1080/09687599.2013.802218

Sveen, S., Anthun, K. S., Batt-Rawden, K. B., and Tingvold, L. (2023). Volunteering: a tool for social inclusion and promoting the well-being of refugees? A qualitative study. Societies 13:12. doi: 10.3390/soc13010012

Timmermans, S., and Kaufman, R. (2020). Technologies and health inequities. Annu. Rev. Sociol. 46, 583–602. doi: 10.1146/annurev-soc-121919-054802

Timmermans, S., and Tavory, I. (2012). Theory construction in qualitative research: from grounded theory to abductive analysis. Sociol. Theory 30, 167–186. doi: 10.1177/0735275112457914

van Kessel, R., Seghers, L-E., Anderson, M., and Nienke, M. (2024). A scoping review and expert consensus on digital determinants of health. Bulletin of the World Health Organization. doi: 10.2471/BLT.24.292057

Wei, L., and Hindman, D. B. (2011). Does the digital divide matter more? Comparing the effects of new media and old media use on the education-based knowledge gap. Mass Commun. Soc. 14, 216–235. doi: 10.1080/15205431003642707

Wolbring, G., Nasir, L., and Mahr, D. (2024). Academic coverage of online activism of disabled people: a scoping review. Societies 14:215. doi: 10.3390/soc14110215

Zyskowski, K., Morris, M. R., Bigham, J. P., Gray, M. L., and Kane, S. K. (2015). “Accessible crowdwork? Understanding the value in and challenge of microtask employment for people with disabilities,” in Proceedings of the 18th ACM Conference on Computer Supported Cooperative Work & Social Computing (1682–1693). ACM.

Keywords: algorithmic care, health equity, affective labor, assistive platforms, visual disability communities

Citation: Zheng Z and Li J (2025) Algorithmic care and health equity: affective labor in assistive platforms for visual disability communities. Front. Commun. 10:1628426. doi: 10.3389/fcomm.2025.1628426

Edited by:

Raj Kumar Bhardwaj, University of Delhi, India

Reviewed by:

Ángel Freddy Rodríguez Torres, Central University of Ecuador, Ecuador

Jose Manuel Salum Tome, Universidad Autonoma de Chile, Chile

Copyright © 2025 Zheng and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jinqian Li, yexiangxiaojie@yeah.net
