- ¹Policy Evaluation and Research Unit, Department of Sociology, Manchester Metropolitan University, Manchester, United Kingdom

- ²School of Education, Manchester Metropolitan University, Manchester, United Kingdom

Background: Improving reading skills in primary school pupils is a crucial focus for educators, researchers, and policymakers worldwide. Within the United Kingdom many schools are opting to use “evidence-based” reading programs to deliver or supplement the teaching of reading. This article reports a protocol for a rigorous efficacy study of DreamBox Reading Plus, an online adaptive program aimed at improving reading fluency, comprehension, and vocabulary for primary school pupils.

Methods and analysis: We conduct a pragmatic, parallel cluster randomized controlled trial in English primary schools, with schools serving as the unit of randomisation. Schools are allocated on a 1:1 basis either to a treatment group, which receives the DreamBox Reading Plus intervention, or to a control group, which continues with standard practice. The study’s primary outcome is reading attainment, assessed using a standardized reading test taken by the cohort of pupils who start Year 5 in September 2024. Secondary outcomes include measures of reading fluency, comprehension, vocabulary, reading self-efficacy, and reading motivation. The intervention is scheduled to begin in October 2024, with outcome analysis planned for August 2025.

Discussion: By generating high-quality evidence on the efficacy of DreamBox Reading Plus, this study aims to inform best practices and contribute to the broader discourse on effective educational technologies in the classroom.

1 Introduction

Improving reading skills in primary school pupils is a crucial focus for educators, researchers, and policymakers in England and worldwide (Scammacca et al., 2015; Education Endowment Foundation [EEF], 2021a). While a wealth of research has been conducted into reading development, there is a lack of consensus around the most effective classroom approaches to the teaching of reading, especially in the late primary phase of schooling (Education Endowment Foundation [EEF], 2021b; Wyse and Bradbury, 2022). In England, the Department for Education’s Reading Framework sets out its “evidence-informed position on the best way to teach reading” (Department for Education [DFE], 2023). However, schools vary in how they implement these principles in practice (Lewin et al., 2024). While some schools have chosen to adopt commercial reading programs, others have developed bespoke and/or less formalized approaches to teaching reading at Key Stage 2¹ (KS2; Boyle, 2024). Faced with this diversity of approaches and available schemes to support reading, headteachers, literacy leads and classroom teachers in KS2 must decide which approach(es) will work best for their school (Boyle, 2024). Schools are increasingly turning to research evidence to guide their choices, including the Education Endowment Foundation’s (EEF) Teaching and Learning Toolkit, evidence reviews and evaluations of specific interventions (Education Endowment Foundation [EEF], 2019a). This article sets out the protocol for an EEF-funded evaluation of the reading program DreamBox Reading Plus (hereinafter Reading Plus), which will contribute to this evidence base.

1.1 The process of learning to read

The developmental process of learning to read is complex and multi-faceted; however, it may broadly be conceptualized as taking place along two dimensions: word reading and language comprehension (Department for Education [DFE], 2023; Gough and Tunmer, 1986; Hoover and Gough, 1990; Hoover and Tunmer, 2018). In the early stages of learning to read English, there is a predominant focus on decoding. As children’s word recognition skills become more secure, they gradually develop reading fluency: the ability to read accurately, automatically and with prosody (with appropriate stress and intonation) (e.g., Breadmore et al., 2019; Hoover and Tunmer, 2018; Rasinski, 2014). Studies have highlighted that fluency is initially more closely connected to decoding skills, because pupils need to decode words automatically to read smoothly and at pace, but that it becomes increasingly important for reading comprehension in later years, because it is difficult for pupils to focus on the meaning of what they are reading until they can easily “lift” the words off the page (Rasinski et al., 2017). This shift underscores the transition from “learning to read,” with a focus on word knowledge, to “reading to learn,” which involves the advancement of vocabulary and comprehension skills for gaining knowledge (Chall, 1996; Spichtig et al., 2019). This transition usually starts around Year 5 in the United Kingdom (equivalent to 4th grade in the United States), but it can be delayed by insufficient word knowledge or language skills, so pupils may be at varying stages of reading development during this period (Hirsch, 2003).

1.2 Empirical evidence in relation to the effectiveness of reading programs in KS2

While there are a growing number of Randomized Controlled Trials (RCTs) being conducted to evaluate the efficacy of reading programs in England (Education Endowment Foundation [EEF], 2021b), the majority of these have involved targeted interventions to help struggling readers “catch up” with their peers and/or are designed for younger pupils (e.g., Culliney et al., 2021; Rutt, 2015; King and Kasim, 2015; Gorard et al., 2015a; Molotsky et al., 2022). Relatively few RCTs have been conducted to evaluate whole-class reading programs in KS2. The RCTs of this kind that have been conducted in England are evaluations of Peer Assisted Learning Strategies UK (PALS-UK) (Lewin et al., 2024), FFT Reciprocal Reading (O’Hare et al., 2019) and Accelerated Reader (Sutherland et al., 2021).

PALS-UK is a highly structured peer tutoring program which, like Reading Plus, focuses on reading comprehension and fluency; however, its mode of implementation is very different. For example, PALS-UK is a paired reading intervention in which children read aloud to one another, whereas Reading Plus involves children reading silently and independently. Furthermore, PALS-UK involves pupils reading from (paper) books, whereas Reading Plus involves children reading shorter texts online. A recent efficacy trial (Lewin et al., 2024) found that pupils receiving PALS-UK made 2 months’ additional progress in reading attainment, reading comprehension and fluency rate. It will be interesting to see whether similar gains are found for Reading Plus, which has similar target outcomes but a very different mode of implementation. Similarly, FFT Reciprocal Reading differs substantially from Reading Plus. In FFT Reciprocal Reading, pupils work together as a group to understand texts, taking on the roles of predictors, clarifiers, questioners and summarisers. This is very different to the focus on silent independent reading in Reading Plus. An efficacy trial of FFT Reciprocal Reading found that when it was used as a targeted intervention (for struggling readers only), participating pupils made 2 months’ additional progress compared to the control group in both overall reading and reading comprehension; but when it was used as a whole-class intervention, pupils did not make more progress than the control group (O’Hare et al., 2019). Again, it will be interesting to see whether Reading Plus compares favorably as a whole-class intervention, given its shared aims but very different design.

The final whole-class reading intervention that has been tested in England with pupils of a similar age is Accelerated Reader (Sutherland et al., 2021). It differs considerably from PALS-UK and FFT Reciprocal Reading and shares with Reading Plus an emphasis on independent reading. Accelerated Reader is a digital reading management system which provides quizzes for pupils to complete after reading a (paper) book, allowing teachers to monitor their progress. Reading Plus also uses comprehension quizzes to assess pupils’ progress and to motivate them to read for meaning; but unlike in Accelerated Reader, the reading itself also happens online, and pupils can engage with a number of additional features: text selections automatically calibrated to pupils’ scores, a “guided window” that helps pupils track the text (and moves at an adaptive speed), and vocabulary activities. A whole-class effectiveness trial in Year 5 found that pupils using Accelerated Reader did not make additional progress relative to controls (Sutherland et al., 2021), although an earlier efficacy trial with struggling readers in Year 7 found that intervention pupils made 3 months’ additional progress (Gorard et al., 2015b).

1.3 Evidence for the effectiveness of EdTech reading programs

Fully digital reading interventions provide a range of potential affordances for both pupils and teachers which might enhance their impact. Here we will focus on two advantages of EdTech that are particularly relevant to digital reading programs: the potential to provide personalized instruction for pupils in an automated way; and the potential to reduce workload for teachers.

1.3.1 Personalized instruction

Adaptive EdTech programs that respond to pupils’ assessed proficiency can facilitate differentiated reading practice (Cheung and Slavin, 2013). While teacher-led approaches also aim for tailored instruction, their ability to continuously assess and adapt to each pupil is constrained by staff time, expertise and class sizes. According to See et al. (2022), formative assessment through EdTech can enhance reading skills at the primary level. Similarly, a meta-analysis by Silverman et al. (2020) suggests that technology interventions can positively impact reading comprehension. This finding is generally supported by a review commissioned by the EEF (Lewin et al., 2019), though the evidence does not consistently indicate a positive effect.

Two popular EdTech reading programs in the United Kingdom and the United States that provide personalized instruction informed by ongoing assessments are Lexia Reading Core5 and Reading Plus (the focus of the current trial). Lexia Reading Core5 can be used from preschool through the primary phase (pupils aged 3–11 years), but it focuses more on foundational reading skills (e.g., phonological awareness and phonics) for younger children and/or older lower-attaining readers. A recent efficacy trial of Lexia Reading Core5 in England provided promising evidence of the benefits of this program (Tracey et al., 2022). Studies of Lexia Reading Core5 in the United States have also found encouraging results (What Works Clearinghouse, 2009; Macaruso et al., 2019; Taylor, 2018).

It is important to note that the personalization of learning built into EdTech programs can be based on interest as well as proficiency. Intrinsic motivation to read is an important enabler within the process of becoming a skilled reader (Schiefele et al., 2012). Both Accelerated Reader and Reading Plus allow children to self-select texts from recommendations that the system makes on the basis of their interests (as reflected in the texts they have chosen to read previously) as well as their attainment level.

While the evidence briefly outlined above suggests that EdTech interventions may have the potential to boost pupils’ reading attainment, on average, compared with pupils who are not using a digital reading program, it is also important to consider whether EdTech reading interventions have differential impacts on different groups of pupils. For example, Silverman et al. (2020) found that students from low-income families gained less from EdTech language comprehension interventions than their peers, but that students learning English as an additional language (EAL) experienced greater improvements from the EdTech interventions than their native English-speaking peers. On the other hand, the review by Lewin et al. (2019) identified two studies suggesting that socio-economically disadvantaged students may benefit more from such interventions, although the studies differed in their operationalization of “disadvantage”: Takacs et al. (2015) defined disadvantaged students broadly as students with low socioeconomic status, special educational needs or disability, whereas McNally et al. (2016) considered only socioeconomic status. Given the lack of consistent evidence across studies, further research is warranted into whether EdTech reading interventions have differential impacts on particular groups of pupils and into the mechanisms that may underlie such differences.

1.3.2 Reduced teacher workload

Recent research in England indicates that headteachers and teachers perceive EdTech interventions to be potentially helpful in reducing workload, particularly by aiding in assessment tasks (CooperGibson Research, 2021, 2022). Schools are likely to regard this as a significant advantage, given that workload is commonly cited as one of the biggest factors influencing teacher wellbeing and retention (Ainsworth and Oldfield, 2019; Oldfield and Ainsworth, 2022; Education Support, 2023). However, EdTech interventions can also have disadvantages for teachers, depending on the nature of the intervention, the teacher’s knowledge and experience, and a range of other implementation factors (Fernández-Batanero et al., 2021). For example, in an evaluation of the EdTech mathematics program MathsFlip, teachers’ lack of familiarity with the technology was raised as an issue (Rudd et al., 2017), and some studies have found evidence that certain types and uses of EdTech can increase teacher stress and anxiety (see Fernández-Batanero et al., 2021).

Reading Plus and similar interventions like Lexia Reading Core5 have the potential to reduce teacher workload significantly. In the case of Reading Plus, once the pupils are up and running on the system, the program is designed to run in an almost entirely automated fashion. The ongoing assessments that are built into the program and inform future pupil activities are also automated, meaning that teachers do not need to plan or implement their own assessment activities for these sessions. Teachers are, however, encouraged to monitor pupil progress and engagement using the data dashboard and to intervene as needed (e.g., putting a “hold” on a pupil’s progress through the program if it appears that they are rushing through texts without engaging with them properly). Similarly, Lexia Reading Core5 has assessments and ready-made activities built into the program, so it too has the potential to reduce teacher workload, although it differs from Reading Plus in placing more emphasis on teachers supplementing the online activities with teacher-led group work.

1.4 The intervention

Reading Plus is an adaptive, online silent reading program designed to improve pupils’ reading fluency, comprehension, and vocabulary. A distinctive feature of the program is the Guided Window, a patented visual tracking tool that moves text across the screen at a pace tailored to each pupil’s reading ability. This feature is intended to support smooth and sustained reading, helping pupils shift from “learning to read” to “reading to learn” (Spichtig et al., 2019). Evidence suggests that this type of scaffolded presentation can enhance reading efficiency and attentional focus, particularly when combined with targeted instruction (Rasinski et al., 2011; Reutzel et al., 2012; Radach and Kennedy, 2013).

In addition to the Guided Window, Reading Plus includes Visual Skills activities aimed at improving eye movement control and fluency, especially for pupils identified as weaker readers. The program also allows pupils to choose from a range of high-interest, age-appropriate texts, supporting engagement and reading stamina. Furthermore, it aims to improve reading outcomes indirectly by raising teachers’ awareness of the importance of silent reading fluency, which is a key component of skilled reading (Klauda and Guthrie, 2008).

1.4.1 Theory of change

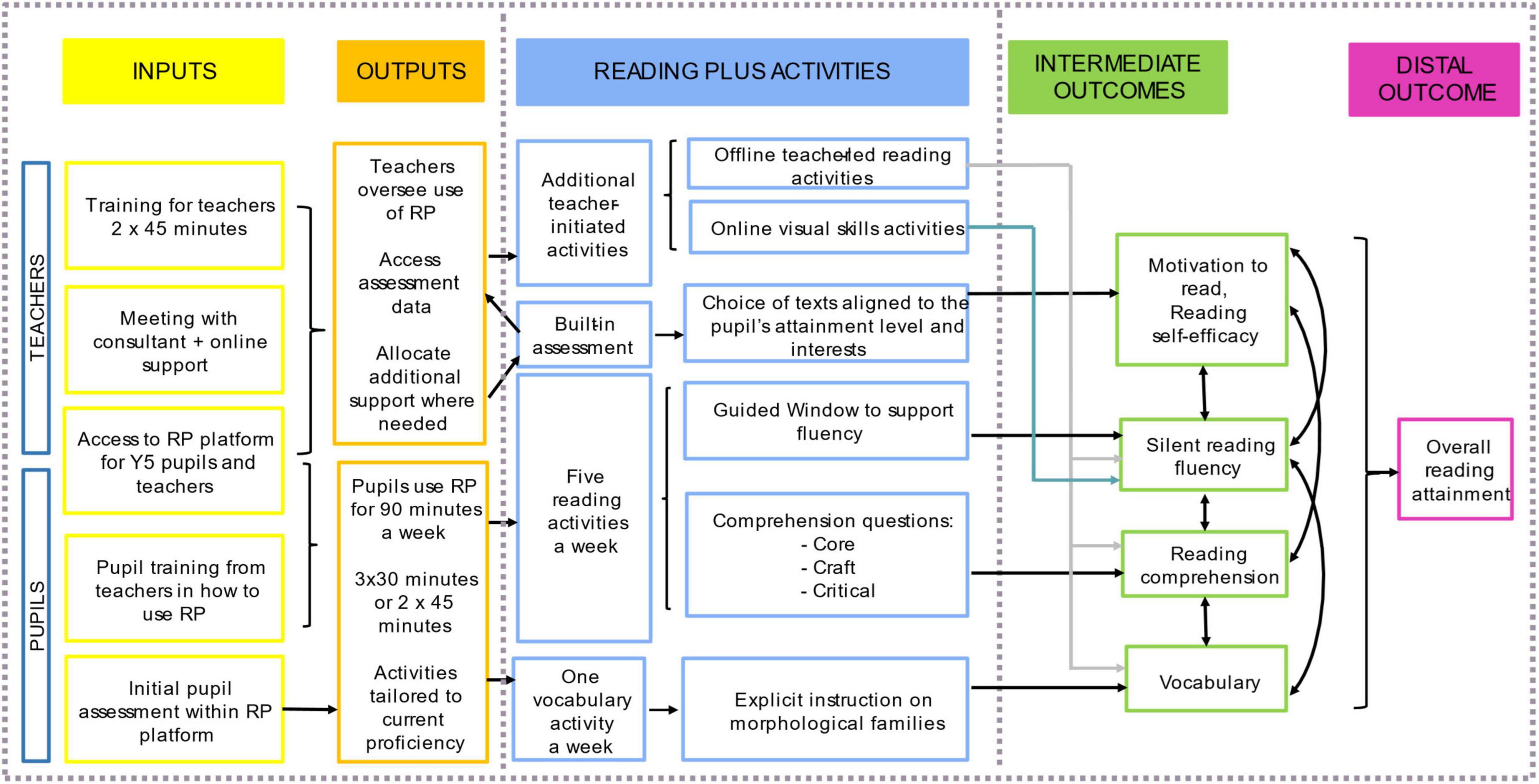

The study is guided by a theory of change that outlines the mechanisms through which Reading Plus is expected to enhance reading outcomes. Key to this theory is the program’s adaptive design, which delivers personalized reading instruction tailored to pupils’ individual needs on the basis of continuous formative assessment. This approach is intended to ensure that lessons are dynamically adjusted to match each pupil’s developmental level, allowing targeted support, especially for those who struggle, while also reducing teacher workload.

Pupils complete five reading tasks per week, each followed by comprehension questions that become progressively more complex as the pupil advances. This scaffolded approach is intended to build reading confidence and self-efficacy by aligning tasks with the pupil’s current skill level. Vocabulary development is another key focus, with pupils engaging in vocabulary tasks alongside reading activities. These tasks are designed to expose pupils to increasingly complex words, including academic vocabulary that supports broader learning and comprehension across subjects (Quinn et al., 2015). By providing access to a diverse array of texts, Reading Plus is also intended to help build cultural knowledge and enrich pupils’ academic experiences, which are critical to their overall educational outcomes (Stopforth and Gayle, 2022).

Motivation and engagement are fundamental aspects of Reading Plus, achieved through high-interest texts, gamification elements, and instant feedback. These features are designed to create a positive reading environment that fosters autonomy and sustained engagement, encouraging pupils to develop independent reading habits (Guthrie and Klauda, 2014). The program’s motivational strategies are intended to keep pupils engaged and committed to improving their reading skills, supporting their preparation for the KS2 reading assessments and their broader educational journey.

A logic model was developed by the evaluators in collaboration with Reading Solutions UK. The model indicates that by targeting reading fluency, comprehension, vocabulary, reading motivation, and self-efficacy simultaneously, the program aims to enhance overall reading proficiency. It is anticipated that Reading Plus will help narrow the reading gap for disadvantaged pupils because of its adaptive technology and its focus on fluency and vocabulary development, areas in which socially disadvantaged children often need additional support (e.g., Pace et al., 2017; Martins et al., 2021). As pupils become more confident and skilled readers, they are also expected to expand their content knowledge and access the wider curriculum. Finally, by aligning reading tasks with pupils’ interests, the program seeks to enhance reading stamina and enjoyment, contributing to long-term improvements in reading proficiency (see Figure 1).

Figure 1. Logic model (reproduced with permission from the project protocol, Gellen et al., 2024, available at https://d2tic4wvo1iusb.cloudfront.net/production/documents/projects/reading_plus_2024-25_trial_-_evaluation-protocol.pdf?v=1729174942).

1.5 The present study

To contribute to the growing research base on the value of EdTech reading interventions, this study evaluates the impact of Reading Plus on Year 5 pupils’ reading attainment in England through a two-arm, parallel, cluster randomized controlled trial (RCT) starting in September 2024. Reading Plus has seen widespread implementation in England, with several studies indicating positive effects on pupil outcomes. However, the current evidence base remains limited, particularly in the United Kingdom context. A small, non-randomized study in England reported that pupils eligible for pupil premium made significantly more progress using Reading Plus than peers in comparator schools (Reading Solutions, 2021). In the United States, a randomized controlled trial found positive impacts on reading achievement compared to standard practice (Spichtig et al., 2019). Yet, there is a need for more independent and large-scale evaluations to robustly establish the program’s effectiveness.

To address the limitations of previous research and inform practice, this large-scale efficacy trial evaluates whether Reading Plus improves pupils’ reading outcomes.

The study focuses on children’s reading at a crucial developmental period (ages 9–10 years). It evaluates the potential impact of Reading Plus on three key competences (reading comprehension, vocabulary and fluency), each of which, as outlined above, is implicated in the process of becoming a skilled reader. Reading Plus is designed to support fluency by increasing the volume of children’s independent reading, which is a key predictor of reading attainment (Sparks et al., 2014). The study also investigates the potential impact on how children feel about reading (i.e., their motivation to read and reading self-efficacy). These affective dimensions have been shown to profoundly influence the likelihood that a child will develop into a confident, engaged reader (Breadmore et al., 2019). There is likely to be a bidirectional relationship between affective factors (such as reading motivation and reading self-efficacy) and reading attainment: the more you read, the better you get at it; and the more confident you feel, the more motivated you are to read (Cunningham and Stanovich, 1998; Morgan and Fuchs, 2007). Reading Plus aims to promote this virtuous circle by developing children’s confidence with reading (through the provision of texts and activities that are closely aligned to their attainment level), while simultaneously trying to promote an interest in reading by allowing learners to choose from a broader range of electronic texts (designed to be of interest to that age group).

These factors are considered crucial in preparing pupils to overcome academic challenges and transitions effectively. We hypothesize that pupils using Reading Plus will demonstrate greater improvements in the targeted outcomes following the intervention compared to those who receive standard reading instruction.

Furthermore, the study will address the evidence gap around whether EdTech reading interventions have differential impacts on different groups of pupils, by exploring whether Reading Plus is particularly beneficial for pupils from disadvantaged backgrounds, including those eligible for free school meals (FSM)² and those with special educational needs and disabilities (SEND)³, and by examining how patterns of use may help explain any differences in impact.

2 Methods and analysis

2.1 Study design

The impact evaluation will be conducted as a parallel cluster-randomized controlled trial to assess the efficacy of Reading Plus, involving two arms with schools randomly assigned to either the intervention or control group on a 1:1 basis. All Year 5 pupils in schools assigned to the intervention group will be encouraged to use the Reading Plus program, while pupils in control group schools will not have access to the program.
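A 1:1 school-level allocation of this kind can be sketched in Python. This is a minimal illustration assuming simple, unstratified randomisation with a fixed seed; the protocol text here does not describe stratification, the randomisation software or the seed, and the function name and school identifiers are hypothetical.

```python
import random


def allocate_schools(school_ids, seed):
    """Allocate schools 1:1 to intervention or control (hypothetical helper).

    Shuffles a copy of the school list with a seeded RNG and splits it in
    half. If the number of schools is odd, the extra school goes to control.
    """
    rng = random.Random(seed)  # seeded so the allocation can be reproduced
    shuffled = list(school_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    allocation = {}
    for school in shuffled[:half]:
        allocation[school] = "intervention"
    for school in shuffled[half:]:
        allocation[school] = "control"
    return allocation


# Usage with hypothetical school identifiers (126 = the recruitment limit).
schools = [f"school_{i:03d}" for i in range(1, 127)]
allocation = allocate_schools(schools, seed=2024)
print(sum(arm == "intervention" for arm in allocation.values()))
```

Because the randomisation unit is the school, every pupil inherits their school’s arm; re-running with the same seed reproduces the same allocation, which supports an auditable trial record.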

The primary outcome of the study will be reading attainment, measured using the New Progress in Reading Assessment reading test (PiRA; Ruttle et al., 2020), administered online to pupils in both intervention and control schools at the end of the intervention period. Secondary outcomes will include assessments of reading fluency, comprehension, and vocabulary using the Kaufman Test of Educational Achievement, Third Edition (KTEA-3) standardized assessment tool. Additionally, measures of reading self-efficacy and reading motivation will be collected through the Feelings about Reading (FAR) questionnaire. Secondary analyses will estimate the effects of the intervention on reading self-efficacy and motivation outcomes for the entire Year 5 cohort. Because the KTEA-3 assessments require individual, face-to-face administration by trained assessors, these outcomes will be measured in a randomly selected subset of 10 pupils per school to manage costs.
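The within-school KTEA-3 subsample can be illustrated as a simple random sample of up to 10 pupils per school. This is a sketch under stated assumptions: the protocol text here does not specify the sampling procedure, how schools with fewer than 10 eligible pupils are handled, or the seed, and the function name and rosters are hypothetical.

```python
import random


def select_ktea_subsample(rosters, per_school=10, seed=0):
    """Draw a simple random sample of pupils within each school (hypothetical).

    rosters maps a school identifier to a list of pupil identifiers.
    Schools with fewer than per_school pupils contribute all of them
    (an assumption, not a rule stated in the protocol).
    """
    rng = random.Random(seed)
    subsample = {}
    for school in sorted(rosters):  # fixed order keeps the draw reproducible
        pupils = rosters[school]
        k = min(per_school, len(pupils))
        subsample[school] = rng.sample(pupils, k)  # sampling without replacement
    return subsample


# Usage with hypothetical rosters.
rosters = {
    "school_A": [f"A{i}" for i in range(28)],
    "school_B": [f"B{i}" for i in range(7)],  # smaller than the target of 10
}
subsample = select_ktea_subsample(rosters, seed=42)
for school, pupils in subsample.items():
    print(school, len(pupils))
```

Sampling within each school, rather than from the pooled pupil list, keeps the number of assessed pupils balanced across clusters, which matches the “10 pupils per school” design described above.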

The impact of Reading Plus will be estimated by comparing average scores between the two groups at the end of the summer term in 2025. The evaluation also includes an Implementation and Process Evaluation (IPE) to explore how the program is delivered in practice and how it influences outcomes. Whilst not discussed in detail here, the IPE will investigate various aspects of program implementation, including fidelity, quality of delivery, and contextual factors that may affect its effectiveness.

2.2 Selection of subjects

The focal participants are pupils entering Year 5 in September 2024 from primary schools recruited to the trial. These pupils are to be identified while in Year 4, prior to their transition into Year 5.

2.2.1 School selection and eligibility criteria

Schools must meet a combination of mandatory and preferred eligibility criteria. Mandatory criteria are essential and non-negotiable, while the preferred criteria provide flexibility if recruitment targets prove challenging. To be eligible, schools must be state-maintained primary, junior, middle, or all-through schools located in England. They must not have held a KS2 Reading Plus license in the 12 months before the trial delivery (academic year 2023/24) and must not be involved in another EEF-funded trial targeting Year 5 pupils and teachers in 2024/25. Eligible schools must provide one-to-one access to devices such as PCs, laptops, or tablets, enabling whole-class participation in Reading Plus and the completion of online assessments at the end of the intervention (June/July 2025). Additionally, they must be able to schedule 90 min per week for Reading Plus, divided into either three 30 min sessions or two 45 min sessions.

Preference is given to schools without mixed-age literacy classes; those with mixed-age groups may join a recruitment waitlist if still interested. Schools are also preferred if they are not participating in any other EEF trials in 2024/25, to avoid potential conflicts that might affect engagement. However, if schools participating in other EEF trials express interest, they may also be added to a recruitment waitlist.

Schools are to be identified and approached during the spring term of 2023/24. Schools meeting the eligibility criteria are invited to sign the Memorandum of Understanding (MoU), which details the project aims, potential benefits for participants, a timetable of activities, data protection considerations, and the responsibilities of all parties involved. Once a school signs the MoU, it is officially considered recruited.

2.2.2 Pupil inclusion and withdrawal process

The study sample will include all Year 4 pupils enrolled in eligible schools at the time of recruitment and set to enter Year 5 in September 2024. Once the MoU is signed, schools are required to distribute withdrawal letters and information sheets to parents, who are given a 2-week window to opt their child out of the study. During this period, schools must keep records of any pupils who withdraw and ensure that no personal information about these pupils is shared with the evaluators. If a pupil withdraws after the initial 2-week period, the school must notify Manchester Metropolitan University (MMU) using a secure link so that any existing data for that pupil are destroyed. Pupils who withdraw or join the school after baseline assessments will not be included in the evaluation. Although new pupils who enter schools in the intervention group will participate in Reading Plus, they will not complete endline assessments. Parents retain the right to withdraw their child from the evaluation at any time during the study.

2.3 Sample size

We use the concept of Minimum Detectable Effect Size (MDES) for our power calculations (Dong and Maynard, 2013). The MDES indicates the smallest true effect (expressed as a standardized difference in means) of Reading Plus on the primary outcome that the study can detect, given its sample size, design, and specified levels of statistical significance and power. With the Delivery team’s recruitment limit set at 126 schools, the study is projected to detect an MDES of 0.18 if there is no attrition, increasing to 0.19 with 10% attrition. This effect size corresponds to an estimated 2–3 months’ additional progress in reading attainment for pupils receiving the intervention (Higgins et al., 2015).
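The logic of an MDES calculation for a school-randomised design can be sketched in Python. This is a minimal sketch assuming the standard two-level cluster-randomised MDES formula described by Dong and Maynard (2013), with normal quantiles substituted for the t multiplier (an approximation that is reasonable with more than 100 schools). The intraclass correlation, pupils per school, R² values and the modelling of attrition as lost schools are hypothetical placeholders, not the trial’s design parameters, so the output should not be expected to reproduce the 0.18 and 0.19 figures above.

```python
from math import sqrt
from statistics import NormalDist


def mdes_cluster_rct(J, n, icc, r2_school=0.0, r2_pupil=0.0, p_treat=0.5,
                     alpha=0.05, power=0.8):
    """Approximate MDES for a two-level cluster RCT randomised at school level.

    J: number of schools; n: pupils per school; icc: intraclass correlation;
    r2_school / r2_pupil: variance explained by covariates at each level;
    p_treat: proportion of schools allocated to the intervention.
    Uses normal quantiles in place of the t multiplier (large-J approximation).
    """
    z = NormalDist()
    multiplier = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)  # two-tailed test
    denom = p_treat * (1 - p_treat) * J
    between = icc * (1 - r2_school) / denom          # school-level variance term
    within = (1 - icc) * (1 - r2_pupil) / (denom * n)  # pupil-level variance term
    return multiplier * sqrt(between + within)


# Usage with hypothetical inputs (not the trial's parameters).
params = dict(n=25, icc=0.15, r2_school=0.5, r2_pupil=0.5)
m_full = mdes_cluster_rct(J=126, **params)
m_attrition = mdes_cluster_rct(J=113, **params)  # roughly 10% fewer schools
print(round(m_full, 3), round(m_attrition, 3))
```

The between-school term shrinks only as the number of schools grows, which is why losing schools through attrition raises the MDES more than losing the same share of pupils within schools would.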

2.4 Treatment of subjects

Implementation of Reading Plus will occur from October 2024 to May 2025, during which Year 5 teachers in intervention schools will deliver the program for at least 90 min per week, divided into two or three sessions. These lessons will replace other reading activities, such as guided reading, ensuring that the program is fully integrated into regular reading instruction.

To support effective implementation, teachers will participate in two 45 min online training sessions delivered by Reading Solutions UK. These sessions will cover the pedagogy underlying Reading Plus and how to use the program’s assessment data to guide targeted instruction. Additional training resources, including tutorial videos and access to the Reading Plus platform, will be available to teachers to enhance their understanding and application of the program.

2.4.1 Program implementation

The intervention primarily occurs online through the Reading Plus platform, supplemented by optional offline activities that teachers may use with pupils requiring additional support. At the start of the intervention, all pupils will undergo assessments measuring reading speed, comprehension, vocabulary, and motivation through a series of online tasks. These baseline scores will determine the lessons available to them on the platform, in line with the program’s premise that effective reading comprehension depends on matching texts to the pupil’s developmental level, considering vocabulary, syntax, semantic complexity, background knowledge, and text length (Spichtig et al., 2019). Teachers will receive a Class Screening Report, identifying pupils performing below, at, or above year-level expectations and highlighting those needing extra support with reading comprehension, vocabulary, or fluency.

Year 5 teachers will oversee pupils’ use of Reading Plus and monitor their engagement through a teacher dashboard, allowing them to identify skill gaps. Teachers can use this data to conduct targeted interventions, such as small group or one-to-one sessions, using supplementary lesson materials provided by the program. The reporting tools available also enable schools and trusts to track reading progress throughout the year, including the progress of disadvantaged pupils.

Within the platform, pupils choose texts that match their current reading level. The platform recommends texts based on each pupil’s reading ability and interests, helping to guide their selections. As they read, they are supported by the Guided Window.

The Guided Window becomes optional once pupils achieve age-appropriate reading proficiency, at which point the system continues to develop fluency by gradually increasing text length.

Pupils typically complete five reading tasks per week, focusing on fluency and comprehension, although this can be adjusted based on individual needs. After each text, pupils answer ten comprehension questions that influence their progression within the program. The questions increase in complexity and depth, designed to support meaningful engagement with the text and promote self-efficacy in reading. Pupils move to the next level when they consistently achieve at least 80% on these questions; this adaptive feature is designed to ensure that text difficulty aligns with pupil proficiency to build confidence and fluency.
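The progression rule can be illustrated with a short Python sketch. The text states only that pupils advance when they “consistently” score at least 80% on the ten questions that follow each text; it does not say how consistency is judged. The sketch therefore treats consistency as every one of the last three texts meeting the threshold. The window length and the function name are hypothetical assumptions, not the actual Reading Plus rule.

```python
def ready_to_advance(correct_counts, questions_per_text=10, threshold=0.8,
                     window=3):
    """Return True if each of the last `window` texts scored >= threshold.

    correct_counts lists the number of comprehension questions answered
    correctly on each completed text, oldest first. The `window` of 3 is a
    hypothetical stand-in for "consistently", not a documented rule.
    """
    if len(correct_counts) < window:
        return False  # not enough evidence yet to judge consistency
    recent = correct_counts[-window:]
    return all(c / questions_per_text >= threshold for c in recent)


# Usage: an early 6/10 is outweighed by three recent scores of 8/10 or more.
print(ready_to_advance([6, 9, 8, 8]))
print(ready_to_advance([9, 9, 7]))
```

Requiring several consecutive texts at or above the threshold, rather than a single high score, is one way to avoid promoting a pupil on the strength of a lucky result.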

The platform also supports vocabulary development, crucial for reading comprehension and broader learning. Pupils encounter increasingly sophisticated vocabulary as they progress through the program, and they complete one vocabulary task per week in addition to reading tasks. Reading Plus also aims to foster motivation through high-interest texts, gamification, and instant feedback, which are key elements of its design.

2.5 Data collection

Quantitative data for the impact evaluation will be collected at baseline (prior to randomisation) and endline (following program delivery). Key Stage 1 (KS1) Teacher Assessment scores from the National Pupil Database (NPD) will be used as baseline covariates in the analyses of reading attainment, silent reading fluency, comprehension, and vocabulary. Baseline levels of reading motivation and self-efficacy will be measured by administering the FAR questionnaire at baseline.

Additionally, the IPE will address its research questions using a combination of qualitative and quantitative methods. Guided by the theory of change, the IPE will examine how the delivery of the Reading Plus program affects pupil outcomes (Humphrey et al., 2016). This will include gathering survey data, conducting interviews with headteachers and Year 5 teachers, and carrying out case study visits. The IPE will also involve exploratory quantitative analysis of the platform usage data and pupil progress measures generated within Reading Plus, to help us understand mechanisms of change and explore explanations for the findings of the IE. These data will also allow us to test the logic model’s prediction that the formative assessment data collected within Reading Plus provide useful monitoring information for schools (i.e., whether they predict relevant summative assessments).

2.6 Outcome measures

2.6.1 New PiRA reading test

The primary outcome, reading attainment, will be assessed using the Summer-Term Year 5 PiRA, administered online in June/July 2025. The PiRA test is highly reliable (Cronbach’s alpha above 0.9) and shows strong concurrent validity, correlating well with national test scores. It measures overall reading attainment across key reading skills, including vocabulary, comprehension, summary, inference, prediction, structure, impact, and comparison, with scores ranging from 0 to 45. Because the logic model links reading attainment to these skills, PiRA is an appropriate choice for the primary outcome measure. It is also closely aligned to the National Curriculum in England, having been designed to measure the key content domains for reading (Department for Education [DFE], 2016), which makes it particularly appropriate for the English context.

The test will be administered online by teachers, with all Year 5 students participating unless withdrawn. Teachers’ involvement aims to minimize trial costs and school disruptions, avoiding logistical issues associated with external test administrators. However, teacher administration presents risks, such as non-standard delivery or influencing pupil performance, either deliberately or inadvertently. Mitigation measures include providing clear instructions, a step-by-step guide, and a video outlining proper test administration, informed by previous PiRA use in evaluations. The evaluation team will quality-assure the administration by piloting these resources in six case study schools in May 2025. PiRA tests are automatically scored online, eliminating scoring bias, and take approximately 40–50 min.

The impact of the Reading Plus intervention on reading attainment will be analyzed using multilevel regression, with PiRA scores as the dependent variable and prior attainment, measured by KS1 reading scores, as a covariate. KS1 scores will be obtained from the NPD.

2.6.2 Kaufman test of educational achievement

Secondary outcomes of silent reading fluency, reading comprehension, and vocabulary will be assessed using the KTEA-3, a reliable and valid measure (Breaux and Lichtenberger, 2016) whose subtests provide robust measures of three secondary outcomes of central interest within the logic model. The KTEA-3 will be administered to 10 randomly selected pupils per school by trained assessors in June/July 2025. Assessors will receive thorough training, including in safeguarding protocols, and will always conduct assessments in a supervised environment in accordance with guidance on research with children.
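The protocol does not state how the ten pupils per school will be drawn. A minimal sketch of one reproducible approach, seeded sampling without replacement, is shown below; the school and pupil IDs are hypothetical, and taking every pupil when a school has fewer than ten is an assumption.

```python
import random

PUPILS_PER_SCHOOL = 10  # subsample size per school, from the protocol


def select_ktea_subsample(rosters: dict[str, list[str]], seed: int) -> dict[str, list[str]]:
    """Draw up to PUPILS_PER_SCHOOL pupils per school, without replacement.

    rosters maps a (hypothetical) school ID to its Year 5 pupil IDs. Sorting the
    inputs and using a seeded generator makes the draw reproducible and auditable.
    """
    rng = random.Random(seed)
    sample: dict[str, list[str]] = {}
    for school in sorted(rosters):
        pupils = sorted(rosters[school])
        k = min(PUPILS_PER_SCHOOL, len(pupils))  # ASSUMPTION: small schools contribute all pupils
        sample[school] = sorted(rng.sample(pupils, k))
    return sample


rosters = {
    "school_A": [f"A{i:02d}" for i in range(28)],
    "school_B": [f"B{i:02d}" for i in range(6)],
}
subsample = select_ktea_subsample(rosters, seed=2025)
print({school: len(ids) for school, ids in subsample.items()})
```

Recording the seed alongside the rosters would let the evaluation team regenerate the same subsample if the selection were queried.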

2.6.3 Feelings about reading questionnaire

The final secondary outcomes, reading self-efficacy and motivation, will be assessed using a modified version of the FAR questionnaire. This instrument consists of two parts, a 20-item self-efficacy scale and a 10-item motivation scale, both rated on a seven-point Likert scale. The reading self-efficacy scale is derived from self-efficacy theory (Bandura, 1993) and adapted, with slight modifications, from Carroll and Fox’s (2017) version; it has a Cronbach’s alpha of .90 (Vardy et al., 2025). The reading motivation scale, created by Vardy et al. (2025), is based on self-determination theory (Deci and Ryan, 1985) and also shows high reliability, with a Cronbach’s alpha of .83. The questionnaire will be administered online to all pupils at both baseline and endline. Teachers will receive specific instructions on how to administer it, supported by training materials that include a script to be read aloud to pupils to ensure standardization.
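The reliability figures quoted for the FAR scales are Cronbach’s alpha values, $\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_{i=1}^{k} \sigma^2_i}{\sigma^2_X}\right)$, where $k$ is the number of items, $\sigma^2_i$ is the variance of item $i$ and $\sigma^2_X$ is the variance of pupils’ summed scores. A minimal sketch of that calculation follows; the toy ratings are hypothetical, and scoring each scale as the mean item rating is an assumption rather than the instrument’s documented scoring rule.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a pupils-by-items matrix of ratings.

    responses[p][i] is pupil p's rating on item i (e.g., 1 to 7 on a Likert scale).
    """
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    items = list(zip(*responses))  # transpose: one tuple of ratings per item
    sum_item_var = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])  # variance of summed scores
    return k / (k - 1) * (1 - sum_item_var / total_var)


def scale_score(row: list[float]) -> float:
    """Mean rating across a scale's items (ASSUMED scoring rule, for illustration)."""
    return sum(row) / len(row)


# Toy two-item example with hypothetical ratings from three pupils.
toy = [[2, 3], [4, 4], [6, 8]]
print(round(cronbach_alpha(toy), 3), [scale_score(r) for r in toy])
```

Because the sample variance is used for both the items and the total, the $n-1$ denominators cancel in the ratio, so population variances would give the same alpha.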

2.7 Statistical analysis

The primary outcome, reading attainment, is a continuous measure that is expected to be approximately normally distributed. To evaluate the effect of Reading Plus, the following three-level linear mixed model will be fitted:

$$Y_{ijk} = \beta_0 + \beta_1 T_k + \beta_2 X_{ijk} + \beta_3\,\text{birth}_{ijk} + v_k + \varsigma_{jk} + \varepsilon_{ijk}$$

where $Y_{ijk}$ is the raw reading attainment score for pupil $i$ in class $j$ and school $k$. $T_k$ is a binary variable coded 1 if school $k$ is assigned to the intervention and 0 otherwise, so the sample estimate of $\beta_1$ is the estimated treatment effect of Reading Plus. $X_{ijk}$ is the KS1 reading score for pupil $i$ in class $j$ and school $k$; although it is entered as a pupil-level covariate, it is expected to explain variance at all three levels (school, class and pupil). $\text{birth}_{ijk}$ captures the month of birth of pupil $i$ in class $j$ and school $k$. $v_k$ and $\varsigma_{jk}$ are random effects at the school and class levels, respectively, and $\varepsilon_{ijk}$ is the pupil-level residual. Month of birth is included to adjust for age because the outcome measures are not age-standardized, following the methodology of a similar recent EEF trial (Gellen and Morris, 2023). Parameter estimates will be obtained using restricted maximum likelihood with the “mixed” command in STATA v18. The intervention effect will be reported as an effect size analogous to Hedges’ g.
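To make the nesting in the primary model concrete, the sketch below simulates data from a model of this form: schools are allocated 1:1, and every pupil carries a school effect, a class effect and a pupil-level residual. All parameter values, the numbers of schools, classes and pupils, the KS1 scale and the birth-month coding are hypothetical and chosen only for illustration. The sketch generates data; it does not fit the model, which the protocol specifies will be done with Stata’s `mixed` command.

```python
import random
from dataclasses import dataclass


@dataclass
class PupilRecord:
    school: int
    class_id: int
    treated: int      # T_k: 1 if the pupil's school is allocated to Reading Plus
    ks1: float        # X_ijk: prior attainment (hypothetical scale)
    birth_month: int  # birth_ijk: 1 = oldest in year group, 12 = youngest (ASSUMED coding)
    score: float      # Y_ijk: outcome


def simulate_trial(n_schools: int = 20, classes_per_school: int = 2,
                   pupils_per_class: int = 25, seed: int = 1) -> list[PupilRecord]:
    rng = random.Random(seed)
    # HYPOTHETICAL parameter values, for illustration only.
    b0, b1, b2, b3 = 20.0, 1.5, 0.6, -0.1
    sd_school, sd_class, sd_pupil = 2.0, 1.5, 6.0

    # 1:1 allocation of whole schools (the unit of randomisation).
    order = list(range(n_schools))
    rng.shuffle(order)
    treated_schools = set(order[: n_schools // 2])

    records = []
    for k in range(n_schools):
        t_k = int(k in treated_schools)
        v_k = rng.gauss(0, sd_school)  # school random effect
        for j in range(classes_per_school):
            s_jk = rng.gauss(0, sd_class)  # class random effect
            for _ in range(pupils_per_class):
                x = rng.gauss(15, 4)
                birth = rng.randint(1, 12)
                e = rng.gauss(0, sd_pupil)  # pupil-level residual
                y = b0 + b1 * t_k + b2 * x + b3 * birth + v_k + s_jk + e
                records.append(PupilRecord(k, j, t_k, x, birth, y))
    return records


data = simulate_trial()
print(len(data), sum(r.treated for r in data))
```

Because $T_k$ varies only between schools, the precision of $\beta_1$ depends heavily on the school-level variance, which is why the pupil-level KS1 covariate’s ability to explain school-level variance matters for power.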

Secondary analyses will estimate the effects of Reading Plus on silent reading fluency, comprehension, and vocabulary, using KTEA-3 data from the random subsample of pupils; these models will mirror the primary analysis model. Effects on reading motivation and self-efficacy will be analyzed using data from the Feelings about Reading questionnaire administered at baseline and endline. Two regression models will be used, one for each construct, each including the corresponding baseline score as a covariate.

2.7.1 Effect sizes

The numerator of each effect size will be the coefficient on the intervention indicator ($\beta_1$) from the corresponding multilevel model. The denominator will be the total standard deviation, that is, the square root of the total variance (the sum of the school-, class- and pupil-level variance components) from the same multilevel model fitted without covariates, which makes the effect size analogous to Hedges’ g. To obtain a 95% confidence interval for each effect size, we will take the lower and upper limits of the 95% confidence interval for $\beta_1$ from the fitted model and divide both by the same denominator.
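The effect size calculation can be sketched as follows: the treatment coefficient and both confidence limits are divided by the square root of the summed variance components from the model without covariates, placing them in standard deviation units. The input numbers below are hypothetical, and no small-sample correction of the kind used in Hedges’ g proper is applied.

```python
import math


def effect_size_with_ci(beta1: float, ci_low: float, ci_high: float,
                        var_school: float, var_class: float,
                        var_pupil: float) -> tuple[float, float, float]:
    """Scale a treatment coefficient and its 95% CI by the total SD.

    The variance components are assumed to come from the multilevel model
    fitted WITHOUT covariates (school, class and pupil levels).
    """
    total_sd = math.sqrt(var_school + var_class + var_pupil)
    return beta1 / total_sd, ci_low / total_sd, ci_high / total_sd


# Hypothetical inputs: beta1 = 1.2 (95% CI 0.2 to 2.2); variance components sum to 36.
es, lo, hi = effect_size_with_ci(1.2, 0.2, 2.2, 4.0, 2.25, 29.75)
print(f"ES = {es:.2f} (95% CI {lo:.2f} to {hi:.2f})")
```

Dividing the confidence limits by the same fixed denominator treats that denominator as known, so the interval does not reflect uncertainty in the variance components themselves.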

2.7.2 Additional analyses

Sub-group analyses will focus on two groups of pupils entering Year 5: those who are Ever-FSM and those designated SEND at baseline. Two analytical approaches will be used: restricted sub-sample analyses and interaction analyses. The restricted sub-sample approach applies the primary analysis model to subsets of pupils categorised as “SEND” and “non-SEND,” as well as “Ever-FSM” and “not Ever-FSM.” The interaction approach incorporates interaction terms between subgroup indicators and treatment allocation within the primary analysis model. The results from these analyses will be reported as effect sizes with 95% confidence intervals.
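As an aid to interpreting the interaction approach: in a linear model containing only the treatment indicator $T$, a subgroup indicator $S$ (e.g., Ever-FSM) and their product, the coefficient on $T \times S$ equals the treatment-control gap within the subgroup minus the gap outside it. The sketch below computes that difference of differences from cell means. The scores are hypothetical, and the trial’s models will also include covariates and random effects, so this illustrates the meaning of the interaction term rather than the planned estimator.

```python
from statistics import mean


def interaction_from_cells(rows: list[tuple[int, int, float]]) -> tuple[float, float, float]:
    """Return (effect in subgroup, effect outside subgroup, their difference).

    rows holds (treated, in_subgroup, score) with 0/1 indicators. In a model with
    only T, S and T*S, the T*S coefficient equals the third returned value.
    Every one of the four cells must contain at least one pupil.
    """
    cells: dict[tuple[int, int], list[float]] = {(t, s): [] for t in (0, 1) for s in (0, 1)}
    for t, s, y in rows:
        cells[(t, s)].append(y)
    m = {cell: mean(scores) for cell, scores in cells.items()}
    effect_in = m[(1, 1)] - m[(0, 1)]
    effect_out = m[(1, 0)] - m[(0, 0)]
    return effect_in, effect_out, effect_in - effect_out


# Hypothetical scores: (treated, ever_fsm, score)
rows = [(0, 0, 20), (0, 0, 22), (1, 0, 24), (1, 0, 26),
        (0, 1, 16), (1, 1, 21), (1, 1, 23)]
print(interaction_from_cells(rows))
```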

In addressing non-compliance, that is, pupils in intervention schools not using Reading Plus effectively, several compliance measures will be considered: schools that fail to attend training, schools that attend training but show no evidence of subsequent use, and those demonstrating minimal engagement, defined as completing fewer than 15 texts over the three terms. The Reading Plus software will track usage, and compliance data will be cross-referenced with training attendance logs. A Complier Average Causal Effect (CACE) will be estimated using a binary compliance variable where 1 denotes compliers and 0 denotes non-compliers.
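A minimal sketch of the CACE logic follows, using the Wald ratio: the intention-to-treat (ITT) difference in mean outcomes divided by the difference in compliance rates between arms. With allocation as the only instrument and no covariates, this ratio equals the two-stage least squares estimate. Several parts are assumptions for illustration: the pupil-level rule combining school training attendance with the 15-text threshold (`is_complier`), the toy data, and one-sided non-compliance (control pupils have no access to Reading Plus). Clustering and covariates are ignored.

```python
from statistics import mean

MIN_TEXTS = 15  # from the protocol: fewer than 15 texts counts as minimal engagement


def is_complier(school_trained: bool, texts_completed: int) -> int:
    """HYPOTHETICAL pupil-level rule combining two of the protocol's compliance measures."""
    return int(school_trained and texts_completed >= MIN_TEXTS)


def wald_cace(scores_t: list[float], scores_c: list[float],
              complied_t: list[int], complied_c: list[int]) -> float:
    """CACE = ITT effect / difference in compliance rates between arms."""
    itt = mean(scores_t) - mean(scores_c)
    uptake = mean(complied_t) - mean(complied_c)
    if uptake <= 0:
        raise ValueError("compliance must be higher in the intervention arm")
    return itt / uptake


# Hypothetical intervention-arm pupils: (school trained?, texts completed, endline score)
intervention = [(True, 40, 30), (True, 20, 28), (True, 5, 24), (False, 0, 22)]
complied_t = [is_complier(trained, texts) for trained, texts, _ in intervention]
scores_t = [score for _, _, score in intervention]
scores_c = [22, 24, 23, 23]
complied_c = [0] * len(scores_c)  # ASSUMPTION: control pupils cannot access Reading Plus
print(wald_cace(scores_t, scores_c, complied_t, complied_c))
```

Here the ITT difference is spread over only the half of intervention pupils who complied, so the CACE is twice the ITT effect.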

Further analyses and robustness checks will fit a series of models to explore the effects of covariates and design features: a simple model with only the intervention dummy variable; a design model that adds month of birth to the intervention dummy; and a comprehensive model that adds further available covariates, such as KS1 scores, baseline self-efficacy and motivation scores, FSM status, gender, and EAL. Mediation analysis will also be performed to examine whether improvements in reading fluency mediate the effect of Reading Plus on reading attainment. The natural indirect effect (NIE) will be estimated using the “mediate” command in STATA v18.

Further exploratory analyses will incorporate a time-series element using Reading Plus platform data. These will include tracking weekly trends in reading proficiency across the academic year, disaggregated by key pupil characteristics (e.g., FSM, EAL, SEND, gender, and prior attainment). In addition, we will examine whether the number of texts completed (dose) is associated with reading attainment outcomes, using multilevel (mixed) linear regression models. These analyses will provide a temporal, process-oriented perspective on patterns of engagement and progress over time.
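For intuition about the NIE: with a continuous mediator and outcome, linear models, no treatment-by-mediator interaction, and no unmeasured confounding of the fluency-attainment relationship, the natural indirect effect reduces to the product $a \times b$, where $a$ is the effect of allocation on fluency and $b$ is the effect of fluency on attainment holding allocation fixed. The sketch below computes that product by ordinary least squares, obtaining $b$ by the Frisch-Waugh-Lovell step of regressing arm-demeaned outcomes on the arm-demeaned mediator. It ignores covariates and clustering, uses hypothetical data, and is not a reimplementation of Stata’s `mediate` command.

```python
from statistics import fmean


def product_of_coefficients(t: list[int], m: list[float], y: list[float]) -> dict[str, float]:
    """NIE = a * b from two linear models with no treatment-mediator interaction.

    a: coefficient of T in  M ~ T      (difference in arm means of M)
    b: coefficient of M in  Y ~ T + M  (Frisch-Waugh-Lovell: regress
       arm-demeaned Y on arm-demeaned M)
    """
    arms = (0, 1)
    mean_m = {g: fmean([mi for ti, mi in zip(t, m) if ti == g]) for g in arms}
    mean_y = {g: fmean([yi for ti, yi in zip(t, y) if ti == g]) for g in arms}
    a = mean_m[1] - mean_m[0]
    r_m = [mi - mean_m[ti] for ti, mi in zip(t, m)]  # mediator with arm means removed
    r_y = [yi - mean_y[ti] for ti, yi in zip(t, y)]  # outcome with arm means removed
    b = sum(rm * ry for rm, ry in zip(r_m, r_y)) / sum(rm * rm for rm in r_m)
    total = mean_y[1] - mean_y[0]
    nie = a * b
    # In OLS the total effect splits exactly into direct + indirect parts.
    return {"total": total, "nie": nie, "nde": total - nie}


# Hypothetical data generated as Y = 2 + 0.5*T + 3*M, with fluency M higher by 1 in the treated arm.
t = [0, 0, 0, 1, 1, 1]
m = [1.0, 2.0, 3.0, 2.0, 3.0, 4.0]
y = [2 + 0.5 * ti + 3 * mi for ti, mi in zip(t, m)]
print(product_of_coefficients(t, m, y))
```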

2.7.3 Missing data analysis

Sensitivity analyses will assess the impact of missing data on the primary analysis. Sources of missing data include parental withdrawals, pupil absences, and school withdrawals. We will explore the likely missingness mechanism (MCAR, MAR or MNAR) and use multiple imputation by chained equations (MICE) to address missing values. If attrition is imbalanced between trial arms, we will also estimate Lee bounds for the treatment effect, calculated using the “leebounds” package in STATA v18 (Tauchmann, 2014).
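The idea behind Lee bounds can be sketched as follows. Assuming monotonicity (allocation can affect whether a pupil’s outcome is observed in one direction only), the arm with the higher observation rate is trimmed by the excess share $p = (q_{\text{high}} - q_{\text{low}})/q_{\text{high}}$, once from the top of its outcome distribution and once from the bottom, giving lower and upper bounds on the mean difference. This is a simplified illustration with hypothetical data: it ignores covariates and clustering, rounds the trimmed count to the nearest whole pupil (an assumption), and does not reproduce the quantile handling of the `leebounds` command.

```python
from statistics import fmean


def lee_bounds(y_t: list[float], n_t: int, y_c: list[float], n_c: int) -> tuple[float, float]:
    """Simplified Lee bounds on the mean difference (treatment minus control).

    y_t, y_c: outcomes observed in each arm; n_t, n_c: pupils randomised to each arm.
    """
    q_t, q_c = len(y_t) / n_t, len(y_c) / n_c  # observation (non-attrition) rates
    if q_t >= q_c:
        # Treatment arm has excess observed pupils: trim it.
        p = (q_t - q_c) / q_t
        ys = sorted(y_t)
        k = round(p * len(ys))  # ASSUMPTION: trim to the nearest whole pupil
        lower = fmean(ys[: len(ys) - k]) - fmean(y_c)  # drop the k highest treated scores
        upper = fmean(ys[k:]) - fmean(y_c)             # drop the k lowest treated scores
    else:
        # Control arm has excess observed pupils: trim it instead.
        p = (q_c - q_t) / q_c
        ys = sorted(y_c)
        k = round(p * len(ys))
        lower = fmean(y_t) - fmean(ys[k:])             # drop the k lowest control scores
        upper = fmean(y_t) - fmean(ys[: len(ys) - k])  # drop the k highest control scores
    return lower, upper


# Hypothetical arms of 10 pupils: all treated outcomes observed, 2 control outcomes missing.
treated = list(range(10, 30, 2))  # 10 observed scores
control = list(range(10, 26, 2))  # 8 observed scores
print(lee_bounds(treated, 10, control, 10))
```

When observation rates are equal, nothing is trimmed and both bounds collapse to the simple difference in means.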

3 Discussion

3.1 Implications for practice

This study aims to evaluate the efficacy of educational technologies, specifically Reading Plus, in enhancing reading outcomes for Year 5 pupils. Given the widespread adoption of Reading Plus across England and the positive preliminary results reported in several smaller-scale studies (Reading Solutions, 2021; Spichtig et al., 2019), it is crucial to evaluate its efficacy through a large-scale, rigorously designed trial. As highlighted in the introduction, schools are increasingly turning to commercial reading programs in the drive to raise reading attainment; when deciding which programs to invest in, they often rely on evidence produced by What Works Centers such as the EEF in the UK. This trial will make an important contribution to the EEF’s growing evidence base on the efficacy and effectiveness of reading programs in the UK. The results will provide robust evidence of the efficacy of the program, which may be used to inform decision making by teachers, school leaders/administrators and parents.

The trial findings will also contribute to the growing body of work examining the efficacy of EdTech interventions. As noted by Lewin et al. (2019, p. 29), “technology can be beneficial for pupils, but it depends on a range of factors including the context, the subject area, the content, the pedagogy, access to technology, training/support, the length of the intervention and how it is integrated with other classroom teaching.” The implementation and process evaluation will allow us to interpret any observed effects of Reading Plus on attainment (or their absence) in relation to these factors. By observing the program being delivered and exploring practitioner and pupil perspectives on Reading Plus, we will be able to draw conclusions about the extent to which the potential benefits of this digital reading program are realized in practice. These benefits include continuous formative assessment that provides automated, tailored instruction, and the use of a guided window to promote fluency. The findings will provide evidence on the efficacy of Reading Plus specifically, but are also likely to provide insights into the affordances and limitations of digital instruction more broadly.

3.2 Contribution to knowledge

As well as exploring whether Reading Plus is effective in promoting gains in pupils’ reading attainment, the trial aims to investigate why Reading Plus works (if indeed it does). Within the theory of change, it is predicted that Reading Plus will promote overall reading attainment through gains in fluency, vocabulary, reading self-efficacy, reading motivation and reading comprehension. Reading Plus is designed to boost pupils’ self-efficacy by ensuring that the difficulty level of the text and the pace of the ‘guided window’ are well aligned to pupils’ current proficiency levels, allowing them to gradually increase their fluency and comprehension and experience success in their reading. It is predicted that when pupils experience such success, and gradually become more confident at reading texts of greater complexity at greater speed, they will become more motivated to read; motivation is also promoted within the program by allowing pupils to self-select from texts recommended on the basis of their interest ratings of texts they have read previously.

By collecting a range of secondary outcome measures (silent reading fluency, vocabulary, reading comprehension, motivation for reading, and reading self-efficacy) in addition to the primary outcome measure of reading attainment, we will be able to conduct a comprehensive evaluation of whether the mechanisms proposed within the theory of change operate as predicted. For example, by incorporating measures of “feelings about reading,” we will be able to investigate the extent to which Reading Plus fosters positive attitudes toward reading and boosts pupils’ self-confidence, in addition to its primary aim of raising attainment. These factors are critical as they underpin academic success and support pupils in navigating academic transitions (Breadmore et al., 2019).

3.3 Methodological contribution

The RCT protocol reported here has been rigorously constructed following current best practice (Education Endowment Foundation [EEF], 2022, 2019b) and is in line with the EEF philosophy that a trial’s validity hinges on its design. The analytical strategies and methods to be used are consistent with the trial’s design, randomisation choices (Rubin, 2008), and the nested structure of educational data (Gelman et al., 2012; Gelman and Hill, 2007). Moreover, analytical choices have been made to maximize the statistical power available, given the trial’s design (Education Endowment Foundation [EEF], 2022). The two-arm, parallel, cluster randomized controlled trial addresses the need for more comprehensive and unbiased data on Reading Plus’s impact, extending beyond the confines of previous research predominantly led by the developer.

Additionally, the inclusion of both quantitative and qualitative methods in the study allows for a richer understanding of how Reading Plus influences various aspects of reading development. While this paper focuses on the quantitative impact evaluation, this will be complemented by in-depth qualitative analysis of the perceived impacts of the intervention from the perspectives of the teachers, school leaders/administrators and pupils involved. These qualitative data, collected through interviews, surveys and observations, will be analyzed using thematic analysis. A coding framework derived from the ToC will be applied deductively, and additional themes will be developed inductively. This analysis of data from the IPE will aid interpretation of the impact analyses, providing the opportunity to develop further hypotheses around mediators and sources of heterogeneity. This mixed methods approach not only quantifies improvements in reading skills but also explores the contextual factors and user experiences that may contribute to or hinder these outcomes.

One relatively unusual aspect of this trial is the substantial quantitative component of the IPE. In addition to in-depth exploration of stakeholder experiences, we will have access to data from the Reading Plus platform, which records progress each time pupils engage with it. These data will allow us to track how pupils’ reading rates, comprehension scores and interest levels change over time. In this way, the IPE will be able to look inside the ‘black box’ of the intervention in a way that is not usually possible in randomized controlled trials.

4 Ethics and dissemination

Ethical approval for the project has been granted by Manchester Metropolitan University. The submission included detailed project design, ethical procedures, participant information sheets, consent forms, and privacy notices.

All assessment data will be handled by the evaluation team. Data will be anonymised and securely stored in compliance with GDPR and the Data Protection Act 2018. A data sharing agreement will be in place, and personal identifiers will not be used in any reports. Pupils can withdraw from the evaluation at any time, and parents can request data deletion until 31 August 2025. Schools may also withdraw and request data deletion until 31 August 2026. All collected data will be used solely for research purposes. Personal data held by stakeholders will be destroyed in accordance with GDPR by 31 July 2026. The research findings will be published in 2026. Following the release of the main reports, the evaluation team may also submit articles to academic journals. All publications will be written in a manner that conceals the identities of the research participants.

Data availability statement

The original contributions presented in this study are included in this article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

SG: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Writing – original draft, Writing – review & editing. SA: Conceptualization, Investigation, Methodology, Project administration, Supervision, Writing – original draft, Writing – review & editing. SM: Conceptualization, Funding acquisition, Investigation, Methodology, Project administration, Supervision, Writing – review & editing. CL: Conceptualization, Investigation, Methodology, Writing – review & editing. KW: Data curation, Investigation, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This project was funded by the Education Endowment Foundation (EEF). While the EEF did not directly contribute to the study design, they were consulted during the design phase. Their involvement during the execution phase is limited to assisting with study promotion to support recruitment. They will not be involved in the data analysis or interpretation. Publication was funded by the Policy Evaluation and Research Unit.

Acknowledgments

We wish to express our appreciation to the Policy Evaluation and Research Unit for funding this publication.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1. ^Key Stage 2 (KS2) refers to the stage of education in England and Wales for pupils aged 7 to 11, typically corresponding to grades 2 through 5 in the U.S. education system.

2. ^FSM eligibility refers to a student’s eligibility for Free School Meals (FSM), a government initiative in the UK aimed at providing meals at no cost to children from low-income families.

3. ^This term refers to children and young people who require additional or different support compared with their peers due to a range of needs, which may include, but are not limited to, learning difficulties and physical disabilities.

References

Ainsworth, S., and Oldfield, J. (2019). Quantifying teacher resilience: Context matters. Teach. Teach. Educ. 82, 117–128. doi: 10.1016/j.tate.2019.03.012

Bandura, A. (1993). Perceived self-efficacy in cognitive development and functioning. Educ. Psychol. 28, 117–148. doi: 10.1207/s15326985ep2802_3

Boyle, K. (2024). A Multiple-Case Study of Reading Comprehension: How do Teachers Understand and Teach Reading Comprehension?. Ormond: Manchester Metropolitan University.

Breadmore, H. L., Vardy, E. J., Cunningham, A. J., Kwok, K. W., and Carroll, J. M. (2019). Literacy Development: Evidence Review. London: Education Endowment Foundation.

Breaux, K. C., and Lichtenberger, E. O. (2016). Essentials of KTEA-3 and WIAT-III Assessment. Hoboken, NJ: John Wiley and Sons.

Carroll, J. M., and Fox, A. C. (2017). Reading self-efficacy predicts word reading but not comprehension in both girls and boys. Front. Psychol. 7:2056. doi: 10.3389/fpsyg.2016.02056

Cheung, A. C., and Slavin, R. E. (2013). The effectiveness of educational technology applications for enhancing mathematics achievement in K–12 classrooms: A meta-analysis. Educ. Res. Rev. 9, 88–113. doi: 10.1016/j.edurev.2013.01.001

CooperGibson Research (2021). Education Technology (EdTech) Survey 2020-21. Research Report. London: Department for Education.

CooperGibson Research (2022). Education technology: Exploring digital maturity in Schools. London: Department for Education.

Culliney, M., Daniels, K., Booth, J., Coldwell, M., and Demack, S. (2021). REACH Primary Evaluation Report. London: Education Endowment Foundation.

Cunningham, A. E., and Stanovich, K. E. (1998). “The impact of print exposure on word recognition,” in Word Recognition in Beginning Literacy, eds J. L. Metsala and L. C. Ehri (Mahwah, NJ: Lawrence Erlbaum Associates Publishers), 235–262.

Deci, E. L., and Ryan, R. M. (1985). The general causality orientations scale: Self-determination in personality. J. Res. Pers. 19, 109–134. doi: 10.1016/0092-6566(85)90023-6

Department for Education [DFE] (2016). English Reading Test Framework. London: Department for Education.

Dong, N., and Maynard, R. (2013). PowerUp!: A tool for calculating minimum detectable effect sizes and minimum required sample sizes for experimental and quasi-experimental design studies. J. Res. Educ. Effect. 6, 24–67. doi: 10.1080/19345747.2012.673143

Education Endowment Foundation [EEF] (2021a). Reading Comprehension Strategies. London: Education Endowment Foundation.

Education Endowment Foundation [EEF] (2021b). Improving Literacy in Key Stage 2. Guidance Report. London: Education Endowment Foundation.

Education Endowment Foundation [EEF] (2022). Statistical Analysis Guidance for EEF evaluations (Version 2022.14.11). London: Education Endowment Foundation.

Education Endowment Foundation [EEF] (2019a). Guide for Governing Boards. London: Education Endowment Foundation.

Education Endowment Foundation [EEF] (2019b). Classification of the Security of Findings from EEF Evaluations. London: Education Endowment Foundation.

Fernández-Batanero, J. M., Román-Graván, P., Reyes-Rebollo, M. M., and Montenegro-Rueda, M. (2021). Impact of educational technology on teacher stress and anxiety: A literature review. Int. J. Environ. Res. Public Health 18:548. doi: 10.3390/ijerph18020548

Gellen, S., and Morris, S. (2023). Evaluation of the Peer Assisted Learning Strategies for Reading UK (PALS-UK) Intervention, a Two-Armed Cluster Randomised Trial. London: Education Endowment Foundation.

Gellen, S., Wicker, K., Ainsworth, S., Morris, S., and Lewin, C. (2024). Using the DreamBox Reading Plus Adaptive Literacy Intervention to Improve Reading Attainment, a Two-Armed Cluster Randomised Trial Evaluation Protocol. London: Education Endowment Foundation.

Gelman, A., and Hill, J. (2007). Data Analysis using Regression and Multilevel/Hierarchical Models. Cambridge: Cambridge University Press.

Gelman, A., Hill, J., and Yajima, M. (2012). Why we (usually) don’t have to worry about multiple comparisons. J. Res. Educ. Effect. 5, 189–211. doi: 10.1080/19345747.2011.618213

Gorard, S., Siddiqui, N., and See, B. H. (2015a). Fresh Start Evaluation Report and Executive Summary. London: Education Endowment Foundation.

Gorard, S., Siddiqui, N., and See, B. H. (2015b). Accelerated reader Evaluation Report and Executive Summary. London: Education Endowment Foundation.

Gough, P. B., and Tunmer, W. E. (1986). Decoding, reading and reading disability. Remed. Spec. Educ. 7, 6–10. doi: 10.1177/074193258600700104

Guthrie, J. T., and Klauda, S. L. (2014). Effects of classroom practices on reading comprehension, engagement, and motivations for adolescents. Read. Res. Q. 49, 387–416. doi: 10.1002/rrq.81

Higgins, S., Katsipataki, M., Coleman, R., Henderson, P., Major, L., Coe, R., et al. (2015). The Sutton Trust-Education Endowment Foundation Teaching and Learning Toolkit. London: Education Endowment Foundation.

Hirsch, E. D. (2003). Reading comprehension requires knowledge—of words and the world. Am. Educ. 27, 10–13.

Hoover, W. A., and Gough, P. B. (1990). The simple view of reading. Read. Writ. 2, 127–160. doi: 10.1007/BF00401799

Hoover, W. A., and Tunmer, W. E. (2018). The simple view of reading: Three assessments of its adequacy. Remed. Spec. Educ. 39, 304–312. doi: 10.1177/0741932518773154

Humphrey, N., Lendrum, A., Ashworth, E., Frearson, K., Buck, R., and Kerr, K. (2016). Implementation and Process Evaluation (IPE) for Interventions in Education Settings: An Introductory Handbook. London: The Education Endowment Foundation.

King, B., and Kasim, A. (2015). Rapid Phonics Evaluation Report and Executive Summary. London: Education Endowment Foundation.

Klauda, S. L., and Guthrie, J. T. (2008). Relationships of three components of reading fluency to reading comprehension. J. Educ. Psychol. 100:310.

Lewin, C., Morris, S., Ainsworth, S., Gellen, S., and Wicker, K. (2024). Peer Assisted Learning Strategies – UK (PALS-UK): A Whole-Class Reading Approach. London: Education Endowment Foundation.

Lewin, C., Smith, A., Morris, S., and Craig, E. (2019). Using Digital Technology to Improve Learning: Evidence Review. London: Education Endowment Foundation.

Macaruso, P., Wilkes, S., Franzén, S., and Schechter, R. (2019). Three-Year Longitudinal Study: Impact of a blended learning program – Lexia Core5 reading – on reading gains in Low-SES Kindergarteners. Comput. Sch. 36, 2–18. doi: 10.1080/07380569.2018.1558884

Martins, M., Reis, A. M., Castro, S. L., and Gaser, C. (2021). Gray matter correlates of reading fluency deficits: SES matters, IQ does not. Brain Struct. Funct. 226, 2585–2601. doi: 10.1007/s00429-021-02353-1

McNally, S., Ruiz-Valenzuela, J., and Rolfe, H. (2016). ABRA: Online Reading Support. Evaluation Report and Executive Summary. London: Education Endowment Foundation.

Molotsky, A., Dias, P., and Nakamura, P. (2022). Evaluation report: Read Write Inc. Phonics and Fresh Start. London: Education Endowment Foundation.

Morgan, P. L., and Fuchs, D. (2007). Is there a bidirectional relationship between children’s reading skills and reading motivation? Except. Child. 73, 165–183. doi: 10.1177/001440290707300203

O’Hare, L., Stark, P., Cockerill, M., Lloyd, K., McConnellogue, S., Gildea, A., et al. (2019). Reciprocal Reading Evaluation Report. London: Education Endowment Foundation.

Oldfield, J., and Ainsworth, S. (2022). Decentring the ‘resilient teacher’: Exploring interactions between individuals and their social ecologies. Camb. J. Educ. 52, 409–430. doi: 10.1080/0305764X.2021.2011139

Pace, A., Luo, R., Hirsh-Pasek, K., and Golinkoff, R. M. (2017). Identifying pathways between socioeconomic status and language development. Annu. Rev. Linguist. 3, 285–308. doi: 10.1146/annurev-linguistics-011516-034226

Quinn, J. M., Wagner, R. K., Petscher, Y., and Lopez, D. (2015). Developmental relations between vocabulary knowledge and reading comprehension: A latent change score modelling study. Child Dev. 86, 159–175. doi: 10.1111/cdev.12292

Radach, R., and Kennedy, A. (2013). Eye movements in reading: Some theoretical context. Q. J. Exp. Psychol. 66, 429–452. doi: 10.1080/17470218.2012.750676

Rasinski, T., Paige, D., Rains, C., Stewart, F., Julovich, B., Prenkert, D., et al. (2017). Effects of intensive fluency instruction on the reading proficiency of third-grade struggling readers. Read. Writ. Q. 33, 519–532. doi: 10.1080/10573569.2016.1250144

Rasinski, T., Samuels, S. J., Hiebert, E., Petscher, Y., and Feller, K. (2011). The relationship between a silent reading fluency instructional protocol on students’ reading comprehension and achievement in an urban school setting. Read. Psychol. 32, 75–97. doi: 10.1080/02702710903346873

Reading Solutions (2021). Reading Plus Efficacy Study in Partnership with Derby Research School. Gateshead: Reading Solutions UK.

Reutzel, D. R., Petscher, Y., and Spichtig, A. N. (2012). Exploring the value added of a guided, silent reading intervention: Effects on struggling third-grade readers’ achievement. J. Educ. Res. 105, 404–415. doi: 10.1080/00220671.2011.629693

Rubin, D. B. (2008). For objective causal inference, design trumps analysis. Ann. Appl. Stat. 2, 808–840. doi: 10.1214/08-AOAS187

Rudd, P., Berenice, A., Aguilera, V., Elliott, L., and Chambers, B. (2017). MathsFlip: Flipped Learning Evaluation Report and Executive Summary. London: Education Endowment Foundation.

Rutt, S. (2015). Catch Up® Literacy: Evaluation Report and Executive Summary. London: Education Endowment Foundation.

Ruttle, K., Lallaway, M., Bennett, M., Pepper, L., Kilburn, V., Swift, J., et al. (2020). New PiRA primary: Progress in Reading Assessment. Key Stage One and Key Stage Two Interim Test Guidance. Abingdon: RS Assessment from Hodder Education.

Scammacca, N. K., Roberts, G., Vaughn, S., and Stuebing, K. K. (2015). A meta-analysis of interventions for struggling readers in grades 4–12: 1980–2011. J. Learn. Disabil. 48, 369–390. doi: 10.1177/0022219413504995

Schiefele, U., Schaffner, E., Möller, J., and Wigfield, A. (2012). Dimensions of reading motivation and their relation to reading behavior and competence. Read. Res. Q. 47, 427–463. doi: 10.1002/RRQ.030

See, B. H., Gorard, S., Lu, B., Dong, L., and Siddiqui, N. (2022). Is technology always helpful?: A critical review of the impact on learning outcomes of education technology in supporting formative assessment in schools. Res. Papers Educ. 37, 1064–1096. doi: 10.1080/02671522.2021.1907778

Silverman, R. D., Johnson, E., Keane, K., and Khanna, S. (2020). Beyond decoding: A meta-analysis of the effects of language comprehension interventions on K–5 students’ language and literacy outcomes. Read. Res. Q. 55, S207–S233. doi: 10.1002/rrq.346

Sparks, R. L., Patton, J., and Murdoch, A. (2014). Early reading success and its relationship to reading achievement and reading volume: Replication of ‘10 years later’. Read. Writ. 27, 189–211. doi: 10.1007/s11145-013-9439-2

Spichtig, A., Gehsmann, K., Pascoe, J., and Ferrara, J. (2019). The impact of adaptive, web-based, scaffolded silent reading instruction on the reading achievement of students in grades 4 and 5. Element. Sch. J. 119, 443–467. doi: 10.1086/701705

Stopforth, S., and Gayle, V. (2022). Parental social class and GCSE attainment: Re-reading the role of ‘cultural capital’. Br. J. Sociol. Educ. 43, 680–699. doi: 10.1080/01425692.2022.2045185

Sutherland, A., Broeks, M., Ilie, S., Sim, M., Krapels, J., Brown, E. R., et al. (2021). Accelerated Reader Evaluation Report. London: Education Endowment Foundation.

Takacs, Z. K., Swart, E. K., and Bus, A. G. (2015). Benefits and pitfalls of multimedia and interactive features in technology-enhanced storybooks: A meta-analysis. Rev. Educ. Res. 85, 698–739. doi: 10.3102/0034654314566989

Tauchmann, H. (2014). Lee (2009) treatment-effect bounds for nonrandom sample selection. Stata J. 14, 884–894.

Taylor, M. (2018). Using the Lexia Reading Program to Increase NWEA MAP Reading Scores in Grades 1 to 3. Springfield, MA: American International College.

Tracey, L., Elliott, L., Fairhurst, C., Mandefield, L., Fountain, I., and Ellison, S. (2022). Lexia Reading Core5® Evaluation Report. London: Education Endowment Foundation.

Vardy, E. J., Breadmore, H. L., and Carroll, J. M. (2025). Measuring the will and the skill of reading: validation of the self-efficacy and motivation to read scale (under review).

What Works Clearinghouse (2009). Beginning Reading: Lexia Reading. Washington, DC: Institute of Education Sciences.

Keywords: digital education, RCT, reading attainment, primary school, literacy

Citation: Gellen S, Ainsworth S, Morris S, Lewin C and Wicker K (2025) Methodological approach to evaluating the DreamBox Reading Plus intervention: a cluster randomised trial. Front. Educ. 10:1513465. doi: 10.3389/feduc.2025.1513465

Received: 18 October 2024; Accepted: 30 July 2025;

Published: 18 August 2025.

Edited by:

Sonsoles López-Pernas, University of Eastern Finland, Finland

Reviewed by:

Lina Mukhopadhyay, English and Foreign Languages University, India

Meryem Üstün-Yavuz, The University of Sheffield, United Kingdom

Copyright © 2025 Gellen, Ainsworth, Morris, Lewin and Wicker. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sandor Gellen, s.gellen@mmu.ac.uk
