- 1Curriculum Studies, Ferdowsi University of Mashhad, Mashhad, Iran

- 2Department of Curriculum Studies and Instruction, Faculty of Educational Sciences and Psychology, Ferdowsi University of Mashhad, Mashhad, Iran

- 3Department of Biostatistics, School of Health, Mashhad University of Medical Sciences, Mashhad, Iran

Purpose: Learners with a design thinking mindset can improve several levels of cognitive processes, such as thinking skills, research skills, learning skills, self-exploration, creativity, and innovation. The present study aimed to translate, culturally adapt, and evaluate the psychometric characteristics of the design thinking mindset questionnaire for Iranian students.

Methods: The study was performed in four steps. After the questionnaire was translated using the translation and back-translation method, content validity was examined using the content validity ratio (CVR) and content validity index (CVI). Construct validity was then assessed using confirmatory factor analysis, for which the translated questionnaire was presented to 300 students. Reproducibility (stability) was evaluated using the intraclass correlation coefficient. Internal consistency was evaluated using Cronbach’s alpha.

Results: The results of confirmatory factor analysis (CFA) confirmed the structure of eight factors (risk tolerance, human-centeredness, holistic view, problem reframing, teamwork, experiment, abductive thinking, and creative self-confidence) with 38 questions. Cronbach’s alpha coefficient for the whole instrument was 0.925, and the intraclass correlation coefficient (ICC) was 0.842 (p = 0.001), which indicates the stability and good reproducibility of the tool.

Conclusion: Overall, the findings support the validity and reliability of the Persian version of the design thinking mindset questionnaire. This means that the instrument can be used to measure the design thinking mindset.

Introduction

In recent decades, with increasing pressure to connect university graduates with the labor market, employability has become a key concept in higher education around the world (Cheng et al., 2021; Small et al., 2017) and has been highlighted as a necessary skill for entering the world of work (Suarta et al., 2018). Essentially, employability is a set of soft skills and attributes that make graduates more successful in their chosen careers and contribute to the workplace, society, and economy (Yorke, 2006). These skills include self-management, creativity, and innovation (Suarta et al., 2018), teamwork and problem-solving (Kenayathulla et al., 2019; Zinser, 2003), communication and interpersonal skills (Clarke, 2007; Kenayathulla et al., 2019; Suarta et al., 2018; Zinser, 2003), and thinking skills, information skills, system skills, and technology (Clarke, 2007). Employability skills are general rather than job-specific and can be applied to all industries, businesses, and job levels, from entry-level to senior positions (Robinson, 2006). To find a common language between higher education, society, and the labor market, the scope of employability or soft skills can be narrowed down to a manageable concept such as design thinking. Design thinking in higher education can potentially bridge the gap between higher education institutions and employers (Denning-Smith, 2020).

Design thinking is a model of thinking that equips people with tools to effectively face the growing needs and challenges of today’s world and complex social issues (Li et al., 2019; Liu and Li, 2023; Vignoli et al., 2023) and enables learners to develop soft skills (Goldman and Zielezinski, 2016; Lor, 2017; Naghshbandi, 2020). Design Thinking promotes collaboration among stakeholders in the education industry (Senivongse and Bennet, 2023). In addition, researchers also agree on the importance of promoting design thinking (DT) in educational environments to address complex issues and nurture professionals who can face complex social issues (Vignoli et al., 2023). Design thinking is a mindset (Luka, 2014; Thi-Huyen et al., 2021) and a human-centered approach to learning, collaboration, and problem-solving (Brown, 2008). It allows learners to develop different levels of cognitive processes, including thinking skills, inquiry skills, social competence, learning skills, self-exploration, creativity, and innovation (Dell’Era et al., 2020; Lorusso et al., 2021; Tsai, 2021). In addition, skills such as problem-solving, teamwork (McLaughlin et al., 2022), critical thinking, empathy, flexibility, and communication are all inherent in the design thinking process. They can be easily integrated at the classroom level into the higher education system (Denning-Smith, 2020). Therefore, various efforts have been made in higher education to develop design thinking (Crites and Rye, 2020) and identify the characteristics of people with design thinking. Design thinking is a problem-solving method that relies on skills, processes, and mindsets that help people create new solutions to problems (Koh et al., 2015). This approach promotes innovative thinking and an entrepreneurial mindset among students, enabling them to thrive in today’s rapidly changing business environment (Senivongse and Bennet, 2023). 
One of the most essential elements identified in the design thinking approach is the mindset of design thinking, which includes elements such as flexibility, risk-taking, human-centeredness, empathy and sympathy, mindfulness, awareness of the process, a holistic view, problem-reframing, teamwork, multidisciplinary collaboration, openness to different perspectives and diversity, learning-oriented, experimentation or learning from mistakes, experiential intelligence, bias toward action, critical questioning, abductive thinking, envisioning new things, creative confidence, desire to make a difference, and optimism to have an impact (Dosi et al., 2018; Vignoli et al., 2023).

According to what has been stated, research and practice in design thinking are very important for developing employability competencies and 21st-century skills. However, what is more important is to measure them using a valid scale. Essentially, a tool that measures design thinking capabilities allows individuals to understand their current capabilities and weak points. A careful review of various sources identified several scales that measure design thinking in different disciplines (Blizzard et al., 2015; Ladachart et al., 2021; Vignoli et al., 2023). One of the existing scales is the design thinking mindset questionnaire (Dosi et al., 2018), which has been used in several studies (Ladachart et al., 2021; Thi-Huyen et al., 2021).

The use of an instrument in a new setting requires attention to the culture and characteristics of the people of that society, and in Persian culture and language, there is no valid and reliable tool to measure the design thinking mindset. Historically, Iranian society has a diverse ethnic, cultural, linguistic, and religious context (Saberi, 2019). Iranian people are usually described as hospitable, humane, justice-oriented, and perfection-oriented. Attention to science and knowledge, legalism and rationality, and adherence to moral and social principles such as honesty, patience, and tolerance are among the significant characteristics of Iranian culture. Therefore, the present study was conducted to translate, culturally adapt, and evaluate the psychometric characteristics of the design thinking mindset questionnaire for Iranian students. Such validation is essential for two reasons. First, it ensures that the scale measures what it is intended to measure in the Iranian context. Second, it provides a reliable tool for researchers and practitioners to assess the design thinking mindset among Iranian students accurately.

Materials and methods

The purpose of this study was to translate and validate the design thinking mindset questionnaire (Dosi et al., 2018) in four phases. In the first phase, the instrument was translated based on the Brislin model (Brislin, 1970). In the second stage, its face validity and content validity were established. In the third stage, its construct validity was established through confirmatory factor analysis. Finally, its reliability was evaluated using the test-retest method and the internal consistency of the scale.

The design thinking mindset questionnaire, which measures self-awareness of the design thinking mindset, was developed based on a review of the literature and the opinions of experts from academia and companies with more than 8 years of experience in this field (Dosi et al., 2018; Vignoli et al., 2023). An exploratory factor analysis of the responses of two samples (N = 307) of design thinking professionals with some level of experience resulted in 70 items in 19 components: tolerance of ambiguity and uncertainty (5 items), embracing risk (2 items), human-centeredness (3 items), empathy/sympathy (4 items), mindfulness and awareness of the process (3 items), holistic view (3 items), problem reframing (3 items), teamwork (4 items), multi- and interdisciplinary collaboration (4 items), openness to different perspectives and diversity (4 items), learning-oriented (5 items), experimentation or learning from mistakes or failure (6 items), experiential intelligence/bias toward action (4 items), critical questioning (3 items), abductive thinking (4 items), envisioning new things (3 items), creative self-confidence (4 items), desire to make a difference (3 items), and optimism to have an impact (3 items). Respondents rate each item on a five-point Likert scale: strongly agree, agree, have no opinion, disagree, and strongly disagree. The total scores range from 71 to 355, and higher scores indicate a stronger design thinking mindset. Based on the scores, the design thinking mindset was classified as low (71–142), medium (142–213), or high (213–355).

The estimated composite reliabilities of the factors ranged from 0.78 to 0.88, demonstrating a level higher than the recommended threshold of .70 for the latent variables. Goodness-of-fit statistics for all measurement models were high (NFI, NNFI, and CFI ≥ 0.90). The average variance extracted (AVE) was over 50% for each dimension, demonstrating good convergent validity. In sum, the loadings, fit statistics, and AVEs suggest that each scale captures a significant amount of variation in the latent DT mindset dimensions (Dosi et al., 2018; Vignoli et al., 2023).

Phase 1: translation

After receiving the developer’s permission for the design thinking mindset questionnaire, this tool was translated based on the Brislin model using the back translation method (Brislin, 1970). First, the English version of the questionnaire was translated into Farsi by two people fluent in both English and Farsi. There was no change in the meaning of the phrases or their level of difficulty. For this purpose, the conceptual equivalence of phrases and sentences was emphasized. Then, two Persian translations of the questionnaire were checked and revised by the translators, and finally, a single Persian version of the design thinking mindset questionnaire was prepared. The prepared Farsi text was back-translated into English by a translator fluent in both English and Farsi. The English translation was compared with the original version and examined in terms of compatibility with the original tool by the project organizers. Finally, after modifying some items, the final version was prepared.

Phase 2: modifying the questionnaire to achieve the desired form and content validity

The set of initial questionnaire items was checked and modified for face and content validity. Face validity is a subjective evaluation; the questionnaire is presented to people and checked for relevance, reasonableness, and clarity. Examining face validity is important because it provides insight into how respondents will later interpret the items (Devon et al., 2007). Therefore, the initial questionnaire containing 70 items was given to 20 randomly selected undergraduate students, who were asked to give feedback on the difficulty and clarity of the questionnaire, including the items and response scale, and to report any problems. Item analysis was used at this stage to evaluate the importance of the questions from the students’ point of view. An item was retained for subsequent analysis if its impact score was ≥ 1.5. The impact score of nine items fell below 1.5; based on the feedback received, these nine items were modified to be clearer and more understandable.
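The item impact score is not defined in the text. A minimal sketch follows, assuming the commonly used definition in which the impact score is the proportion of respondents who rate an item 4 or 5 on a five-point importance scale, multiplied by the item's mean importance rating. The function name and the ratings are hypothetical and are not taken from the study data.

```python
def impact_score(ratings, high=(4, 5)):
    """Item impact score = frequency x importance (assumed definition).

    frequency  : proportion of raters giving a high importance rating (4 or 5)
    importance : mean importance rating across all raters
    """
    n = len(ratings)
    frequency = sum(1 for r in ratings if r in high) / n
    importance = sum(ratings) / n
    return frequency * importance


# Hypothetical importance ratings (1-5) from 20 students for two items.
clear_item = [5, 4, 4, 5, 3, 4, 5, 4, 4, 5, 3, 4, 5, 4, 4, 5, 4, 3, 4, 5]
weak_item = [2, 3, 1, 2, 3, 4, 2, 1, 3, 2, 2, 3, 1, 2, 4, 2, 3, 2, 1, 2]

for name, ratings in [("clear_item", clear_item), ("weak_item", weak_item)]:
    score = impact_score(ratings)
    decision = "retain" if score >= 1.5 else "revise or remove"
    print(f"{name}: impact score = {score:.2f} -> {decision}")
```

Under this definition, the 1.5 cutoff corresponds to half of the respondents rating an item as important combined with a mean rating of 3.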

In the next phase, content validity was examined to ensure that the questionnaire adequately covered the entire content (Devon et al., 2007; Pallant, 2020). For this purpose, 11 experts in educational sciences were asked to evaluate whether the proposed questionnaire sufficiently covered the components of the design thinking mindset. The content validity ratio (CVR) was used to evaluate the necessity of the items: the experts rated each item for the desired construct by selecting one of the following alternatives: “not necessary,” “useful but not necessary,” and “necessary.” The content validity index (CVI) was used to evaluate the relevance of the items, using the options “not relevant,” “relatively relevant,” “relevant,” and “completely relevant.” Items were retained, modified, or deleted after item analysis, expert feedback, and consideration of theoretical support from the literature. In the end, by removing 23 items and improving the wording of several items based on the experts’ suggestions, a questionnaire with 47 items was developed.
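The two indices can be illustrated with a short sketch. It assumes Lawshe's formula for the CVR, (n_e − N/2) / (N/2), where n_e is the number of experts who rate an item "necessary" and N is the panel size, and the usual item-level CVI, taken here as the share of experts choosing one of the two highest relevance options. The function names and ratings are hypothetical.

```python
def content_validity_ratio(n_essential, n_experts):
    """Lawshe's CVR = (n_e - N/2) / (N/2)."""
    half = n_experts / 2
    return (n_essential - half) / half


def item_cvi(relevance_ratings):
    """Item-level CVI: share of experts rating the item 3 or 4
    on a 4-point relevance scale (assumed scoring rule)."""
    relevant = sum(1 for r in relevance_ratings if r >= 3)
    return relevant / len(relevance_ratings)


N_EXPERTS = 11          # panel size reported in the study
CVR_CUTOFF = 0.59       # Lawshe's minimum for an 11-member panel

for n_essential in (8, 9, 11):
    cvr = content_validity_ratio(n_essential, N_EXPERTS)
    status = "keep" if cvr >= CVR_CUTOFF else "below cutoff"
    print(f"{n_essential}/11 'necessary' -> CVR = {cvr:.3f} ({status})")

# Hypothetical relevance ratings from the 11 experts for one item.
ratings = [4, 4, 3, 4, 3, 4, 4, 2, 3, 4, 4]
print(f"I-CVI = {item_cvi(ratings):.3f}")
```

With 11 panelists, at least 9 "necessary" ratings are needed to reach the 0.59 cutoff (9 of 11 gives about 0.64, whereas 8 of 11 gives about 0.45).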

Phase 3: construct validity

Because the factor structure was already specified by the original instrument and the content validity stage, confirmatory factor analysis was used to evaluate construct validity. The purpose of measuring construct validity and conducting confirmatory factor analysis is to understand to what extent the questionnaire structure is consistent with the primary objectives of the questionnaire. Confirmatory factor analysis makes it possible to test the proposed conceptual model based on the correlations between the latent factors and the variability of the observed variables.

Phase 4: determining reliability

The internal consistency of the questionnaire was evaluated using Cronbach’s alpha coefficient for the entire questionnaire and each component. To measure the stability of the questionnaire, the test-retest method was used, and the intraclass correlation coefficient (ICC) was calculated. For this purpose, the approved questionnaire version was distributed to 30 students in a preliminary study. Then, after an interval of 2 weeks, the same participants completed the same questionnaire again under the same conditions. After the questionnaires were collected, the intraclass correlation coefficient between the two stages was calculated.
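The text does not state which form of the ICC was computed. As an illustration only, the sketch below implements one common choice, the two-way random-effects, absolute-agreement, single-measurement coefficient, often written ICC(2,1), from the usual ANOVA mean squares. The function name and the test-retest totals are hypothetical.

```python
def icc_2_1(scores):
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    scores: list of rows, one row per participant and one column per occasion.
    """
    n = len(scores)          # participants
    k = len(scores[0])       # occasions (test, retest)
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical total scores of six students at test and at retest 2 weeks later.
test_retest = [[150, 148], [132, 137], [170, 165], [121, 126], [160, 158], [143, 140]]
print(f"ICC(2,1) = {icc_2_1(test_retest):.3f}")
```

Other ICC forms (for example, consistency rather than absolute agreement) use the same mean squares but a different denominator, so the reported coefficient depends on the model selected in the statistical software.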

Participants

Following the recommendation of Habibi and Kolah (2016), 300 participants were included for confirmatory factor analysis, of whom 99 were men (33.0%) and 201 were women (67.0%). The years they entered the university were 2018 (73 people, 24.3%), 2019 (73 people, 24.3%), 2020 (79 people, 26.3%), and 2021 (75 people, 25.0%).

Data collection procedure

Data were collected online using the Porsline platform. The participants had access to the questionnaire by clicking the provided link. They were first informed about the study, and their contribution was appreciated. They were then asked to sign the consent form. Moreover, the first question assessed whether participants met the inclusion criteria. For those whose university entry date was before the interval, the survey was automatically terminated. The participants who met the inclusion criteria were first asked to determine their gender, and then they were asked to complete the 47 items of the design thinking mindset questionnaire on a 5-point Likert scale. The time for the completion of the questionnaire was 10 min.

Statistical analysis

To evaluate the construct validity and factor structure of the design thinking mindset questionnaire, confirmatory factor analysis (CFA) was performed using AMOS version 24. The assumptions of CFA were checked before the analysis. The sample size (n = 300) meets the minimum of 300 indicated by Habibi and Kolah (2016). Data are presumed to be normally distributed if skewness values are between −2 and +2 and kurtosis values are between −10 and +10 (Collier, 2020). In this study, skewness values ranged from −2.002 to −0.23 and kurtosis values ranged from −1.19 to 0.94, which supported approximate normality of the distribution. Since the assumptions were not violated, CFA was used. The goodness of fit of the model was evaluated using the chi-square to degrees of freedom ratio (χ²/df), the comparative fit index (CFI), the Tucker-Lewis index (TLI), and the root mean square error of approximation (RMSEA).
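The normality screen described above can be sketched as follows. The example uses simple moment-based estimators of skewness and excess kurtosis; AMOS and other packages may apply small-sample corrections, so the values need not match software output exactly. The function names and item responses are hypothetical.

```python
def _central_moment(xs, order):
    """Population central moment of the given order."""
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** order for x in xs) / len(xs)


def skewness(xs):
    """Moment-based skewness: m3 / m2^(3/2)."""
    return _central_moment(xs, 3) / _central_moment(xs, 2) ** 1.5


def excess_kurtosis(xs):
    """Moment-based excess kurtosis: m4 / m2^2 - 3 (0 for a normal distribution)."""
    return _central_moment(xs, 4) / _central_moment(xs, 2) ** 2 - 3.0


def within_bounds(xs, skew_limit=2.0, kurt_limit=10.0):
    """Screen one item against the +/-2 skewness and +/-10 kurtosis bounds."""
    return abs(skewness(xs)) <= skew_limit and abs(excess_kurtosis(xs)) <= kurt_limit


# Hypothetical responses of 12 students to one 5-point Likert item.
item = [5, 4, 4, 5, 3, 4, 5, 4, 2, 5, 4, 4]
print(f"skewness = {skewness(item):.3f}, kurtosis = {excess_kurtosis(item):.3f}, "
      f"within bounds = {within_bounds(item)}")
```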

Results

Initially, the questionnaire was translated and re-translated following the Brislin model. Then, the Persian version of the questionnaire (Dosi et al., 2018), with 70 items in 19 areas, was prepared.

Content validity

The analyzed indicators show relatively good content validity in general. For a panel of 11 members, the minimum acceptable value is CVR ≥ 0.59 (Lawshe, 1975). The CVR was less than 0.59 for some items, and a total of 23 items were removed from the questionnaire. In addition, the minimum acceptable value of the CVI is 0.78; the overall CVI was 0.85, which showed good validity. However, several items with a value between 0.70 and 0.79 were modified, and finally, the questionnaire with 47 items was approved. A total of 11 components were deleted or integrated into other components, and eight components remained.

Confirmatory factor analysis

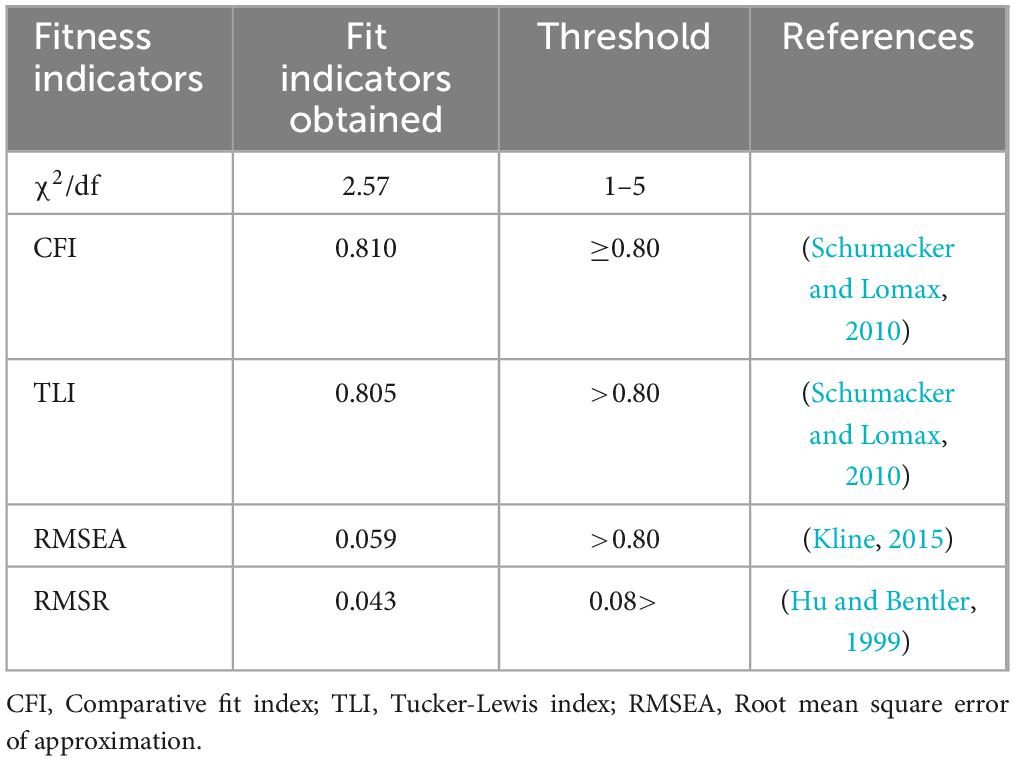

Confirmatory factor analysis was used to establish construct validity. The analysis yielded a suitable and reasonable estimate based on the general indicators of model fit: the chi-square divided by the degrees of freedom (χ²/df), the root mean square error of approximation (RMSEA), the comparative fit index (CFI), and the Tucker-Lewis index (TLI) indicated an acceptable fit. Table 1 shows the confirmatory factor analysis fit indices for the Persian version of the design thinking mindset questionnaire.
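For readers who want to see how these indices relate to the chi-square statistics, a minimal sketch is given here. It uses the standard textbook definitions (RMSEA computed with N − 1 in the denominator; some programs use N instead). The input values are hypothetical placeholders and are not the values reported in Table 1.

```python
import math


def fit_indices(chi2_model, df_model, chi2_null, df_null, n):
    """Common SEM fit indices from model and baseline (null) chi-square values."""
    d_model = max(chi2_model - df_model, 0.0)
    d_null = max(chi2_null - df_null, d_model, 0.0)
    ratio_model = chi2_model / df_model
    ratio_null = chi2_null / df_null
    return {
        "chi2/df": ratio_model,
        "RMSEA": math.sqrt(d_model / (df_model * (n - 1))),
        "CFI": 1.0 - d_model / d_null if d_null > 0 else 1.0,
        "TLI": (ratio_null - ratio_model) / (ratio_null - 1.0),
    }


# Hypothetical chi-square values for a sample of 300 respondents.
indices = fit_indices(chi2_model=1200.0, df_model=600, chi2_null=4000.0, df_null=666, n=300)
for name, value in indices.items():
    print(f"{name}: {value:.3f}")
```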

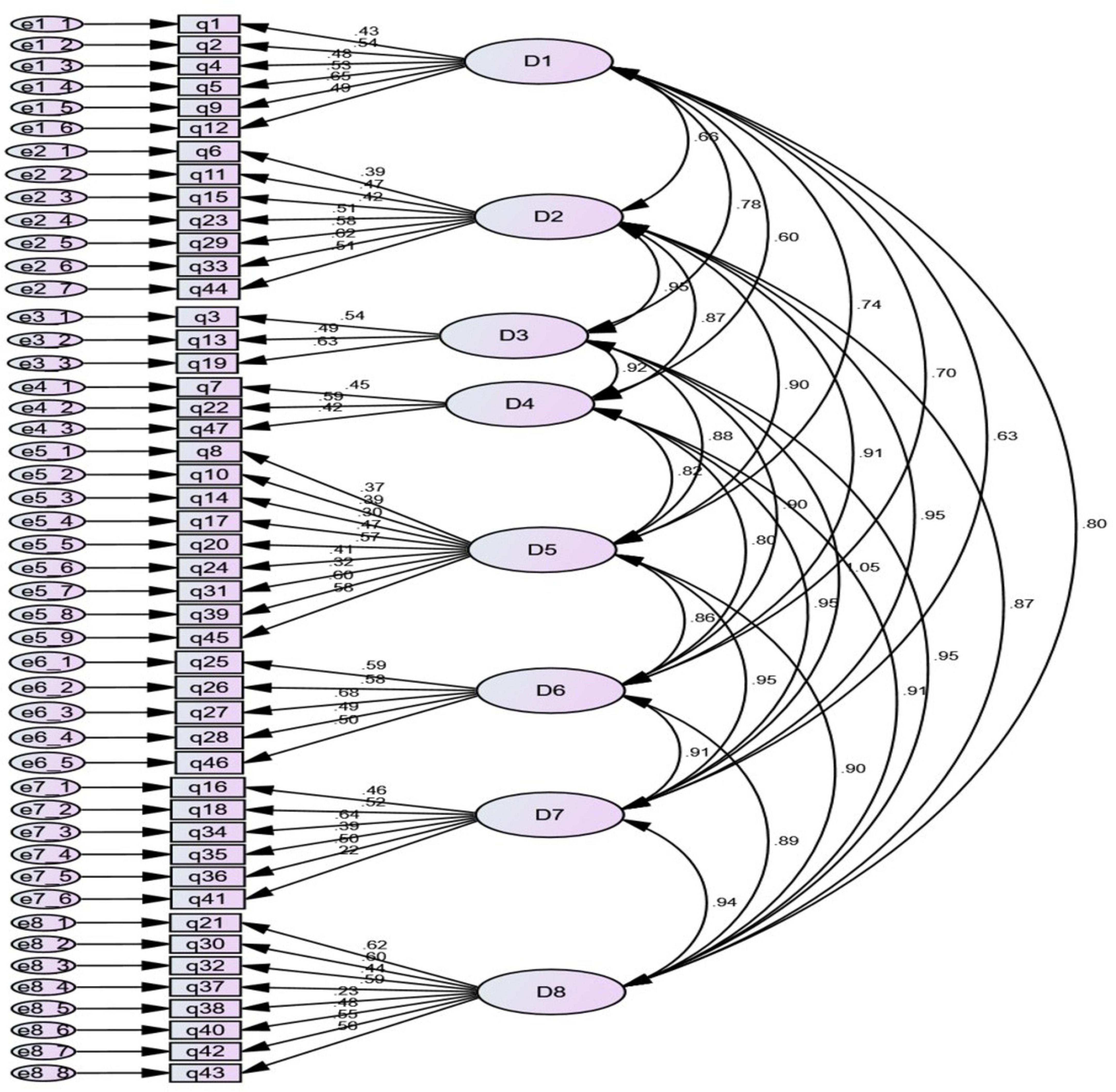

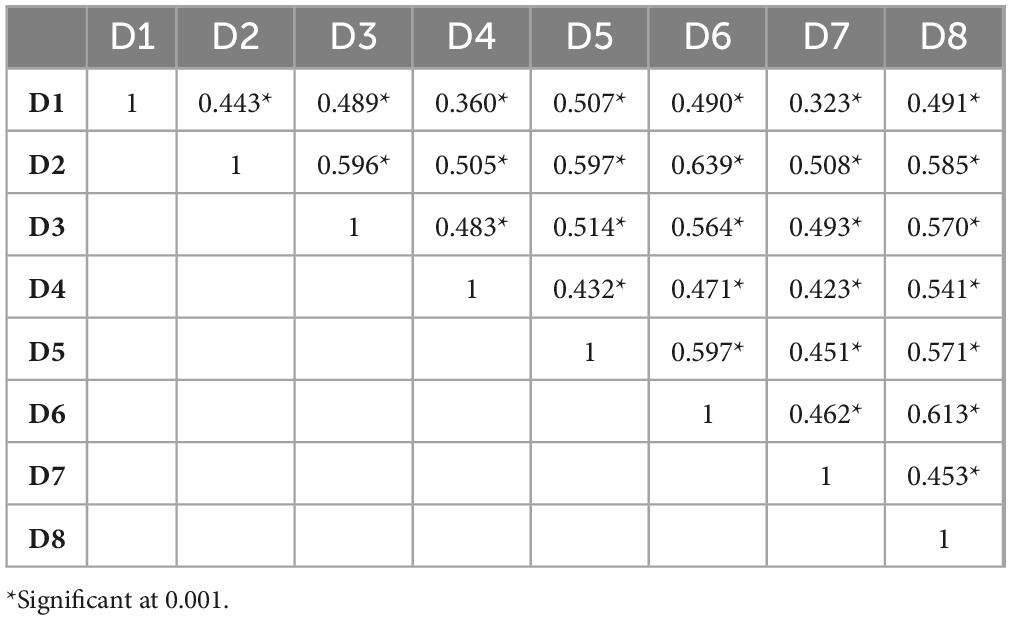

After confirmatory factor analysis (Figure 1), nine items whose factor loadings were less than 0.4 were removed. Table 2 shows the inter-factor correlations.

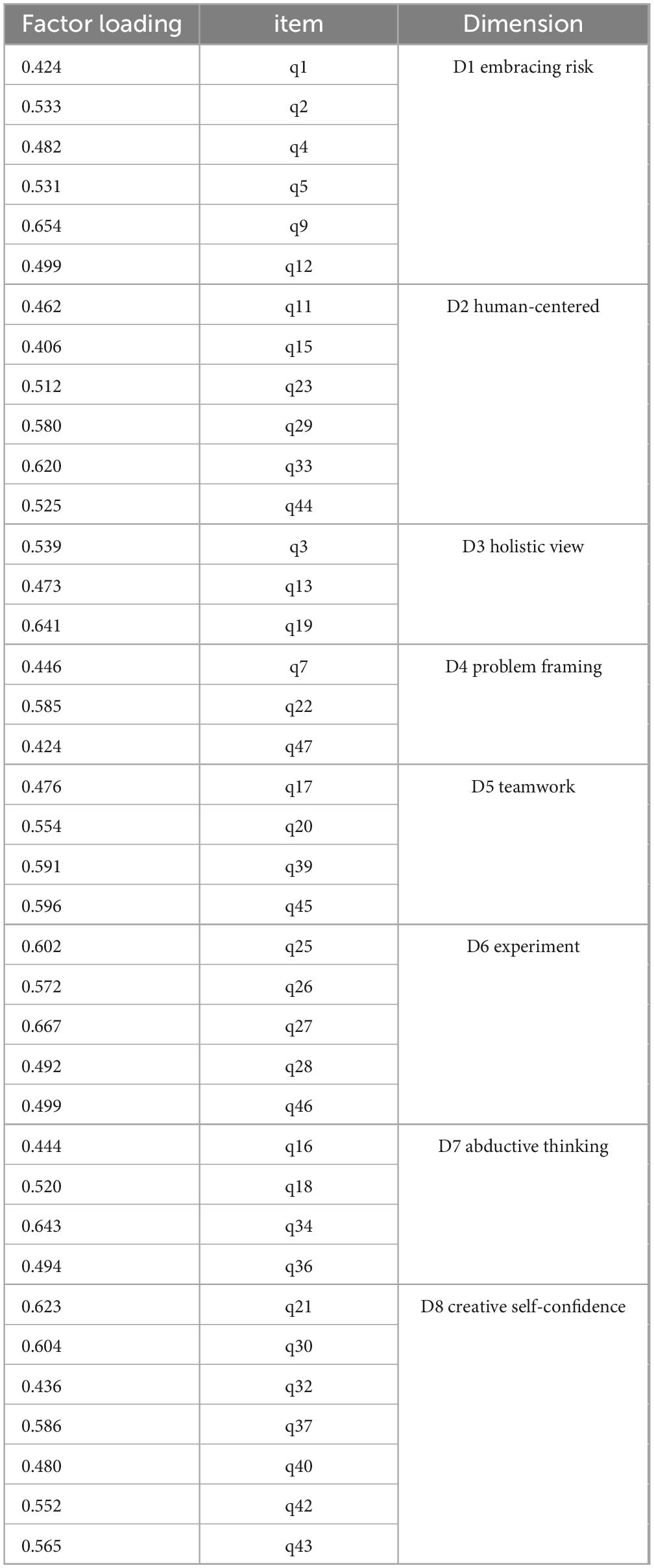

Finally, the Persian version of the questionnaire with eight components and 38 items was obtained. The first component has six items associated with embracing risks, the second component has six items measuring human-centeredness, the third component has three items related to holistic view, the fourth component has three items related to problem reframing, the fifth component has four items on teamwork, the sixth component has five items on experiment, the seventh component has four items on analytical thinking, and the eighth component has seven items associated with creative self-confidence. The factor loadings in the final model ranged from 0.424 to 0.667, all of which were higher than the acceptable value of 0.4 and significant (Table 3).

Reliability

The reliability of the questionnaire was evaluated using two approaches: internal consistency and reproducibility. Cronbach’s alpha was used to check internal consistency, and the test-retest method with the intraclass correlation coefficient (ICC) was used to measure reproducibility. Thirty undergraduate students were selected and asked to answer the Persian version of the questions on two occasions, 2 weeks apart. The correlation between test and retest responses for the whole instrument (ICC = 0.842) was significant at p = 0.001, which indicates the good stability and reproducibility of the instrument.

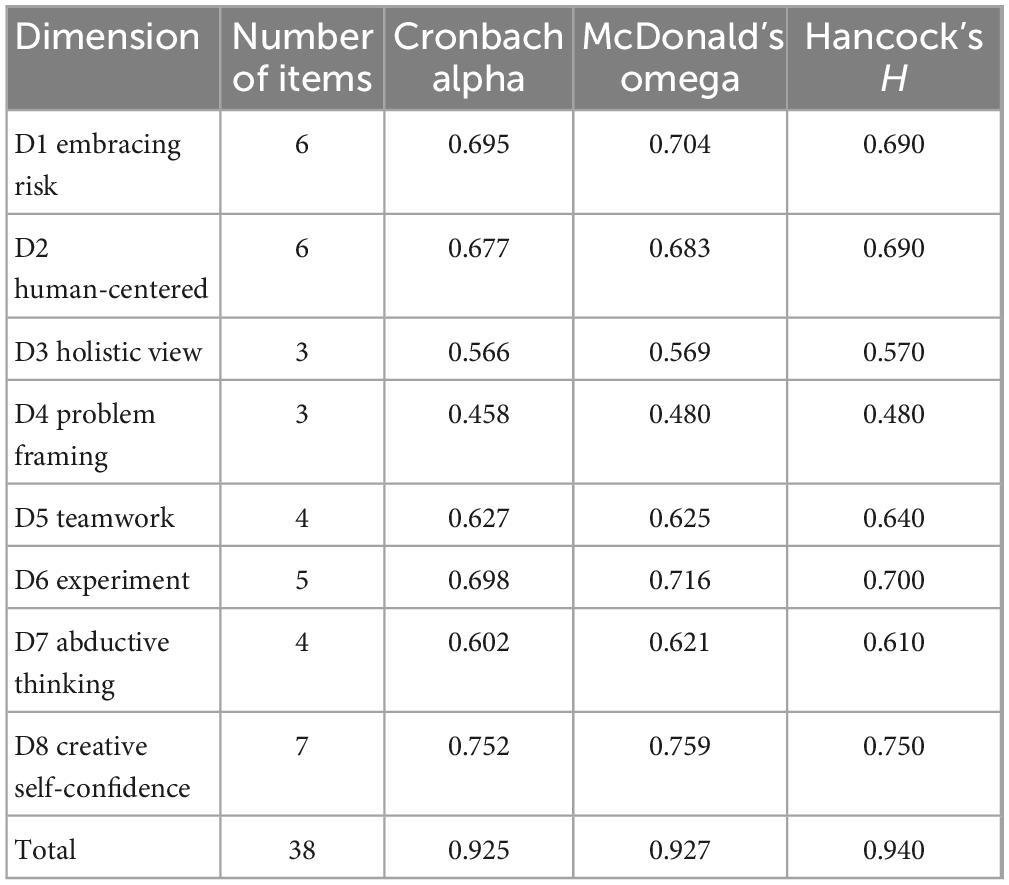

Cronbach’s alpha coefficient for the whole tool after factor analysis was 0.925, and the coefficients for its components ranged from 0.458 to 0.752 (Table 4).
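As a reminder of how the coefficient is obtained, the sketch below computes Cronbach's alpha from a respondents-by-items matrix using the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of total scores). The function names and the response matrix are hypothetical and serve only to illustrate the calculation.

```python
def sample_variance(xs):
    """Unbiased sample variance (n - 1 in the denominator)."""
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** 2 for x in xs) / (len(xs) - 1)


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows (one per respondent, one column per item)."""
    k = len(responses[0])
    item_variances = [sample_variance([row[j] for row in responses]) for j in range(k)]
    total_variance = sample_variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical responses of six students to a three-item subscale (5-point Likert).
subscale = [
    [4, 5, 3],
    [3, 3, 4],
    [5, 4, 4],
    [2, 3, 2],
    [4, 4, 5],
    [3, 2, 3],
]
print(f"alpha = {cronbach_alpha(subscale):.3f}")
```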

Discussion

This study was conducted to evaluate the psychometric properties of the design thinking mindset questionnaire (Dosi et al., 2018) for Iranian students. To evaluate a tool for use in research, at least four standards seem necessary: at least one type of content validity, one type of construct validity, and two types of stability evaluation (Norbeck, 1985). In this research, face and content validity were established based on the views of experts in the fields of educational sciences and design thinking. At the end of the face and content validity stages, some items that were not clear enough were revised based on the opinion of the research team. At this stage, items were removed from the original version of the questionnaire or integrated with other items if they overlapped conceptually or culturally with other items or had a CVR of less than 0.59.

Two components, tolerance of ambiguity (five items, of which one was deleted) and embracing risk (two items), were combined and presented as the risk tolerance component. Their initial seven items were reduced to six items.

Three components, human-centeredness (three items), learning-oriented (five items, of which four were deleted), and empathy/sympathy (four items, of which one was deleted), were combined and presented as a human-centeredness component with seven items.

Three components, teamwork (four items), multi- and interdisciplinary collaborative teams (four items, of which one was deleted), and openness to different perspectives and diversity (four items, of which two were deleted), were combined and presented as a teamwork component with nine items.

Three components, experimentation (three items, of which one was deleted), experiential intelligence or bias toward action (four items, of which three were deleted), and learning from mistakes or failure (three items, of which one was deleted), were combined and presented as an experiment component with five items.

Three components, critical questioning (four items, of which two were removed), positive thinking (three items), and envisioning new things (three items, of which two were removed), were combined into the innovative thinking component with six items. Creative self-confidence (four items, of which one was deleted), desire to make a difference (three items, of which one was deleted), and optimism to have an impact (three items) were combined into a creative self-confidence component with eight items. The holistic view component with three items and the problem reframing component with three items remained unchanged. The mindfulness component was removed completely.

Finally, the Persian version of the questionnaire, which included eight components and 38 items, was prepared. Based on the results of the present study, the Persian version of the questionnaire showed good psychometric properties overall, with a high Cronbach’s alpha coefficient for the total scale and modest to acceptable coefficients for the subscales. These findings are consistent with the results of the 31-question, 10-dimension scale (Vignoli et al., 2023) and the 19-factor scale (Karshenas, 2023). Other previous empirical studies (Carlgren et al., 2016; Schweitzer et al., 2016) showed that, despite agreement in the literature on specific construct definitions, professionals fail to recognize them as distinct characteristics of the DT mindset. In an attempt to develop a reliable instrument measuring the design thinking mindset of Thai elementary teachers, Ladachart et al. (2021) obtained a questionnaire with 24 items. Five of its components (being comfortable with problems, user empathy, collaborative working with diversity, orientation to learning, and creative confidence) are consistent with the results of the present research; however, mindfulness, which was omitted in this research, was recognized there as one of the main components (Ladachart et al., 2021).

The lower alpha values for dimensions with fewer items (e.g., D3: 3 items, α = 0.566; D4: 3 items, α = 0.458) align with established psychometric principles. Cronbach’s alpha is highly sensitive to the number of items in a scale, and shorter subscales naturally yield lower reliability estimates. For instance, Nunnally and Bernstein (1994) emphasize that alpha values below 0.7 may still be acceptable for exploratory or newly adapted scales, especially when subscales have fewer than 5–6 items (Tavakol and Dennick, 2011). This is further supported by Bonett (2002), who notes that alpha increases with additional related items. In our study, subscales such as D3 and D4 were intentionally concise to maintain focus on distinct constructs, which may explain their lower alphas.
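The dependence of alpha on scale length can be illustrated with the Spearman-Brown prophecy formula, which projects the reliability of a scale lengthened by a factor m as m·r / (1 + (m − 1)·r). The sketch assumes that any added items would be parallel to the existing ones, which is an idealization, and the projected values are illustrations rather than results of this study.

```python
def spearman_brown(reliability, length_factor):
    """Projected reliability when a scale is lengthened by `length_factor`,
    assuming the added items are parallel to the existing ones."""
    return length_factor * reliability / (1 + (length_factor - 1) * reliability)


# Alphas reported for the two three-item subscales (D3 and D4).
reported = {"D3": 0.566, "D4": 0.458}

for name, alpha in reported.items():
    doubled = spearman_brown(alpha, 2)  # from three to six comparable items
    print(f"{name}: alpha = {alpha:.3f} with 3 items -> about {doubled:.2f} with 6 items")
```

Under this assumption, doubling D4 from three to six comparable items would raise its projected reliability from 0.458 to roughly 0.63, which shows how much of the shortfall can be attributed to brevity alone.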

While the conventional threshold of α ≥ 0.7 is widely cited, recent methodological critiques argue against rigid adherence to this standard. Cortina (1993) and Taber (2018) highlight that alpha values must be interpreted in context, particularly for multidimensional constructs or novel instruments (Taber, 2018; Tavakol and Dennick, 2011). For example, in the validation of the Persian Self-Compassion Scale Youth Version, a Cronbach’s alpha of 0.60 for test-retest reliability was deemed acceptable due to the scale’s complexity and cultural adaptation challenges (Nazari et al., 2022). Similarly, our study focuses on design thinking mindsets—a multifaceted construct where subscales may reflect diverse cognitive and behavioral traits, warranting a nuanced evaluation of reliability.

The high overall Cronbach’s alpha for the full questionnaire (α = 0.925) indicates strong internal consistency at the total scale level, which aligns with recommendations for instruments measuring broad constructs (Tavakol and Dennick, 2011). Additionally, we acknowledge that alpha alone does not fully capture reliability. In Table 4, McDonald’s omega was reported, which is less affected by tau-equivalence assumptions and provides a more accurate estimate for multidimensional scales (Nazari et al., 2022). For instance, in the validation of the Escapism Scale, both alpha (0.73) and omega (0.90) were reported to provide a holistic reliability assessment (Nooripour et al., 2023).

For subscales with notably low alpha (e.g., D4: Problem Reframing, α = 0.458), we conducted post-hoc item analysis. Items with poor inter-item correlations (r < 0.3) were identified, suggesting potential cultural or linguistic nuances in the Persian adaptation. Following the recommendations of Tavakol and Dennick (2011), we plan to revise or replace these items in subsequent iterations. Furthermore, the confirmatory factor analysis (CFA) supported the structural validity of these subscales (e.g., CFI = 0.810, RMSEA = 0.059), indicating that the low alpha may reflect scale brevity rather than conceptual inconsistency (Khodaei and Shokri, 2023).

We emphasize that while the subscale alphas are modest, they are consistent with similar studies on complex psychological constructs (Nooripour et al., 2023; Taber, 2018). For example, in the Academic Parenting Scale validation, subscales with 3–4 items also reported alphas between 0.49 and 0.72, yet the instrument was deemed valid for exploratory use (Khodaei and Shokri, 2023). We also propose follow-up studies with larger samples to refine item phrasing and test-retest stability, as recommended (Bonett, 2002). We calculated Hancock’s H for each subscale using the formula:

$$H = \left[1 + \left(\sum_{i=1}^{k} \frac{\lambda_i^{2}}{\theta_i}\right)^{-1}\right]^{-1}$$

where λ_i are the standardized factor loadings, θ_i are the error variances (θ_i = 1 − λ_i² for standardized loadings), and k is the number of items in the subscale.
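A short sketch of the Hancock's H calculation follows. It assumes standardized loadings, so that each error variance equals one minus the squared loading and H can be computed from the loadings alone as S / (1 + S), with S the sum of λ² / (1 − λ²) over the items. The loadings are hypothetical values chosen within the range reported for the final model (0.424 to 0.667) and are not the actual estimates.

```python
def hancocks_h(loadings):
    """Hancock's H (construct replicability) from standardized factor loadings.

    Each error variance is taken as 1 - loading**2 (standardized solution).
    """
    s = sum(l ** 2 / (1 - l ** 2) for l in loadings)
    return s / (1 + s)


# Hypothetical standardized loadings for a three-item subscale.
subscale_loadings = [0.45, 0.52, 0.61]
print(f"H = {hancocks_h(subscale_loadings):.3f}")
```

Because every item contributes a positive term to the sum, H never decreases when an item is added, which makes it less sensitive than alpha to weak items in short subscales.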

It should be noted that other studies have developed tools to measure design thinking (Liu, 2023; Rusmann and Ejsing-Duun, 2022; Trung et al., 2024), which usually emphasize factors such as empathy, problem identification, ideation, modeling, reasoning, and process management. Perhaps the reason for the distinction between these factors and the factors confirmed in this study is that the various tools consider design thinking as a general competency and do not focus solely on design thinking as a mindset.

Research efforts have also been made to provide a self-evaluation scale to measure the five characteristics Brown (2008) identified as the characteristics of design thinking (Blizzard et al., 2015), which is comparable and compatible with the present research. The test-retest result showed a satisfactory and reasonable level of reliability. Overall, the results of this study showed that the Persian version of the design thinking mindset questionnaire has good validity and reliability.

Given that the design thinking mindset plays a role in developing different characteristics in students, educational programs should be designed and implemented in such a way that they foster the formation and development of design thinking. However, the research had some limitations, such as the selection of undergraduate students as the target population and sample sizes that were inadequate for subgroup comparisons; we therefore recommend that future studies test measurement invariance. It is hoped that this translation and adaptation of the Persian form will be an introduction to this type of study and, more importantly, a suitable tool for measuring the design thinking mindset in Iranian society.

Conclusion

This study presents a Persian version of the design thinking mindset questionnaire that can potentially be used for educational and research purposes. It is suggested that this instrument be used in future research to measure the design thinking mindset of students, managers, and educational experts.

The present questionnaire can be used in future studies, and the information obtained can be used for planning in the field of education. The respondents to this research were students of Ferdowsi University of Mashhad. Replicating the research with subjects in different fields can enrich the discussion.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding authors.

Ethics statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent from the participants was not required to participate in this study in accordance with the national legislation and the institutional requirements.

Author contributions

ZV: Writing – original draft, Writing – review & editing, Data curation. MK: Supervision, Writing – review & editing. JJ: Conceptualization, Formal Analysis, Writing – review & editing. MSR: Conceptualization, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Blizzard, J., Klotz, L., Potvin, G., Hazari, Z., Cribbs, J., and Godwin, A. (2015). Using survey questions to identify and learn more about those who exhibit design thinking traits. Design Stud. 38, 92–110. doi: 10.1016/J.DESTUD.2015.02.002

Bonett, D. (2002). Sample size requirements for testing and estimating coefficient alpha. J. Educ. Behav. Stat. 27, 335–340. doi: 10.3102/10769986027004335

Brislin, R. W. (1970). Back-translation for cross-cultural research. J. Cross Cult. Psychol. 1, 185–216. doi: 10.1177/135910457000100301

Carlgren, L., Rauth, I., and Elmquist, M. (2016). Framing design thinking: The concept in idea and enactment. Creativity Innov. Manag. 25, 38–57. doi: 10.1111/CAIM.12153

Cheng, M., Adekola, O., and Albia, J. (2021). Employability in higher education: A review of key stakeholders’ perspectives. High. Educ. Eval. Dev. 16, 16–31. doi: 10.1108/HEED-03-2021-0025

Clarke, M. (2007). Where to from here? Evaluating employability during career transition. J. Manag. Organ. 13, 196–211. doi: 10.5172/JMO.2007.13.3.196

Collier, J. (2020). Applied structural equation modeling using AMOS: Basic to advanced techniques, 1st Edn. New York, NY: Routledge. doi: 10.4324/9781003018414

Cortina, J. M. (1993). What is coefficient alpha? An examination of theory and applications. J. Appl. Psychol. 78:98. doi: 10.1037/0021-9010.78.1.98

Crites, K., and Rye, E. (2020). Innovating language curriculum design through design thinking: A case study of a blended learning course at a Colombian university. System 94:102334. doi: 10.1016/J.SYSTEM.2020.102334

Dell’Era, C., Magistretti, S., Cautela, C., Verganti, R., and Zurlo, F. (2020). Four kinds of design thinking: From ideating to making, engaging, and criticizing. Creativity Innov. Manag. 29, 324–344. doi: 10.1111/CAIM.12353

Denning-Smith, J. (2020). Design Thinking as a Common Language between Higher Education and Employers. Bowling Green, KY: Western Kentucky University. doi: 10.13140/RG.2.2.10091.87848

Devon, H. A., Block, M. E., Moyle-Wright, P., Ernst, D. M., Hayden, S. J., Lazzara, D. J., et al. (2007). A psychometric toolbox for testing validity and reliability. J. Nurs. Scholarsh. 39, 155–164. doi: 10.1111/J.1547-5069.2007.00161.X

Dosi, C., Rosati, F., and Vignoli, M. (2018). Measuring design thinking mindset. Proc. Int. Design Conf. Design 5, 1991–2002. doi: 10.21278/IDC.2018.0493

Goldman, S., and Zielezinski, M. B. (2016). Teaching with design thinking: Developing new vision and approaches to twenty-first century learning. Contemp. Trends Issues Sci. Educ. 44, 237–262. doi: 10.1007/978-3-319-16399-4_10

Habibi, A., and Kolah, I. B. (2016). Structural equation modeling and factor analysis (practical training of LISREL software). Tehran: Press Organization Jahade Daneshgahi.

Hu, L. -t., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Struct. Equ. Model. 6, 1–55. doi: 10.1080/10705519909540118

Karshenas, Z. (2023). Validation of a design thinking mindset scale in design students. Institute for Cognitive Science Studies, Department of Cognitive Science.

Kenayathulla, H. B., Ahmad, N. A., and Idris, A. R. (2019). Gaps between competence and importance of employability skills: Evidence from Malaysia. High. Educ. Eval. Dev. 13, 97–112. doi: 10.1108/HEED-08-2019-0039

Khodaei, A., and Shokri, O. (2023). Factorial validity and psychometric properties of the Persian version of the academic parenting scale. Iranian J. Fam. Psychol. 10, 30–45. doi: 10.22034/IJFP.2024.2004801.1238

Koh, J. H. L., Chai, C. S., Wong, B., and Hong, H.-Y. (2015). Design Thinking for Education: Conceptions and Applications in Teaching and Learning. Berlin: Springer.

Ladachart, L., Ladachart, L., Phothong, W., and Suaklay, N. (2021). Validation of a design thinking mindset questionnaire with Thai elementary teachers. J. Phys. Conf. Ser. 1835:012088. doi: 10.1088/1742-6596/1835/1/012088

Lawshe, C. H. (1975). A quantitative approach to content validity. Pers. Psychol. 28, 563–575. doi: 10.1111/J.1744-6570.1975.TB01393.X

Li, Y., Schoenfeld, A. H., DiSessa, A. A., Graesser, A. C., Benson, L. C., English, L. D., et al. (2019). Design and design thinking in STEM education. J. STEM Educ. Res. 2, 93–104. doi: 10.1007/s41979-019-00020-z

Liu, H. Y. (2023). Measuring design thinking competence in Taiwanese nursing students: A cross-cultural instrument adaptation. BMC Med. Educ. 23:927. doi: 10.1186/S12909-023-04911-Z

Liu, S., and Li, C. (2023). Promoting design thinking and creativity by making: A quasi-experiment in the information technology course. Think. Skills Creativity 49:101335. doi: 10.1016/j.tsc.2023.101335

Lor, R. (2017). “Design thinking in education: A critical review of literature,” in Proceedings of the Asian Conference on Education & Psychology, Bangkok.

Lorusso, L., Lee, J. H., and Worden, E. A. (2021). Design thinking for healthcare: Transliterating the creative problem-solving method into architectural practice. HERD 14, 16–29. doi: 10.1177/1937586721994228

Luka, I. (2014). Design thinking in pedagogy. J. Educ. Cult. Soc. 5, 63–74. doi: 10.15503/JECS20142.63.74

McLaughlin, J. E., Chen, E., Lake, D., Guo, W., Skywark, E. R., Chernik, A., et al. (2022). Design thinking teaching and learning in higher education: Experiences across four universities. PLoS One 17:e0265902. doi: 10.1371/JOURNAL.PONE.0265902

Naghshbandi, S. (2020). Exploring the impact of experiencing design thinking on teachers’ conceptualizations and practices. TechTrends 64, 868–877. doi: 10.1007/S11528-020-00517-0

Nazari, N., Hernández, R. M., Ocaña-Fernandez, Y., and Griffiths, M. D. (2022). Psychometric validation of the Persian self-compassion scale youth version. Mindfulness 13, 385–397. doi: 10.1007/S12671-021-01801-7

Nooripour, R., Ghanbari, N., Hosseinian, S., Lavie, C. J., Mozaffari, N., Sikström, S., et al. (2023). Psychometric properties of Persian version of escapism scale among Iranian adolescents. BMC Psychol. 11:323. doi: 10.1186/S40359-023-01379-W

Norbeck, J. (1985). What constitutes a publishable report of instrument development? Nursing Res. 34, 380–382.

Nunnally, J. C., and Bernstein, I. H. (1994). The Assessment of Reliability. Psychom. Theory 3, 248–292.

Pallant, J. (2020). SPSS Survival Manual: A Step by Step Guide to Data Analysis using IBM SPSS, 7th Edn. New York: McGraw-Hill Education.

Robinson, J. S. (2006). Graduates and Employers Perceptions of Entry-Level Employability Skills Needed by Agriculture, Food and Natural Resources Graduates. PhD Thesis. Columbia, MO: University of Missouri.

Rusmann, A., and Ejsing-Duun, S. (2022). When design thinking goes to school: A literature review of design competences for the K-12 level. Int. J. Technol. Design Educ. 32, 2063–2091. doi: 10.1007/S10798-021-09692-4

Saberi, R. (2019). Ethnic enclosure in multicultural Muslim community life: Case study in Golestan province, I.R. Iran. J. Studi Sosial Dan Politik 3, 84–96. doi: 10.19109/JSSP.V3I2.4333

Schumacker, R. E., and Lomax, R. G. (2010). A beginners guide to structural equation modeling. New York, NY: Routledge.

Schweitzer, J., Groeger, L., and Sobel, L. (2016). The design thinking mindset: An assessment of what we know and what we see in practice. J. Design Bus. Soc. 2, 71–94. doi: 10.1386/dbs.2.1.71_1

Senivongse, C., and Bennet, A. (2023). University-as-a-service: Designing thinking approach for Bangkok University business innovation curriculum and service development. Innov. Educ. Teach. Int. 61, 1–24. doi: 10.1080/14703297.2023.2253251

Small, L., Shacklock, K., and Marchant, T. (2017). Employability: A contemporary review for higher education stakeholders. J. Vocat. Educ. Training 70, 148–166. doi: 10.1080/13636820.2017.1394355

Suarta, I. M., Suwintana, I. K., Fajar Pranadi, Sudana, I. G. P., and Dessy Hariyanti, N. K. (2018). Employability skills for entry level workers: A content analysis of job advertisements in Indonesia. J. Techn. Educ. Train. 10, 49–61. doi: 10.30880/JTET.2018.10.02.005

Taber, K. S. (2018). The use of Cronbach’s alpha when developing and reporting research instruments in science education. Res. Sci. Educ. 48, 1273–1296. doi: 10.1007/S11165-016-9602-2

Tavakol, M., and Dennick, R. (2011). Making sense of Cronbach’s alpha. Int. J. Med. Educ. 2, 53–55. doi: 10.5116/ijme.4dfb.8dfd

Thi-Huyen, N., Xuan-Lam, P., Thanh, and Tu, N. T. (2021). The impact of design thinking on problem solving and teamwork mindset in a flipped classroom. Eur. J. Educ. Res. 96, 30–50. doi: 10.14689/EJER.2021.96.3

Trung, T. T., Ngan, D. H., Nam, N. H., and Quynh, L. T. T. (2024). Framework for measuring high school students’ design thinking competency in STE(A)M education. Int. J. Technol. Design Educ. 35, 557–583. doi: 10.1007/S10798-024-09922-5

Tsai, M. F. (2021). Exploration of students’ integrative skills developed in the design thinking of a Psychology course. Think. Skills Creativity 41:100893. doi: 10.1016/J.TSC.2021.100893

Vignoli, M., Dosi, C., and Balboni, B. (2023). Design thinking mindset: Scale development and validation. Stud. High. Educ. 48, 926–940. doi: 10.1080/03075079.2023.2172566

Yorke, M. (2006). Employability in Higher Education: What It Is, What It Is Not. Available online at: https://www.researchgate.net/publication/225083582_Employability_in_Higher_Education_What_It_Is_What_It_Is_Not (accessed May 3, 2023).

Keywords: design thinking mindset, questionnaire, reliability, translation, validity

Citation: Vaqari Zamharir Z, Karami M, Jamali J and Saeidi Rezvani M (2025) Psychometric properties of the Persian version of the design thinking mindsets questionnaire. Front. Educ. 10:1525702. doi: 10.3389/feduc.2025.1525702

Received: 10 November 2024; Accepted: 02 June 2025; Published: 30 June 2025.

Edited by:

Noureddin Nakhostin Ansari, Tehran University of Medical Sciences, Iran

Reviewed by:

Fiorela Anaí Fernández Otoya, Universidad Católica Santo Toribio de Mogrovejo, Peru

Thanh-Trung Ta, Ho Chi Minh City Pedagogical University, Vietnam

Copyright © 2025 Vaqari Zamharir, Karami, Jamali and Saeidi Rezvani. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zahra Vaqari Zamharir; Morteza Karami
